- 1 Hunan Province People's Hospital (The First Affiliated Hospital of Hunan Normal University), Changsha, China

- 2 The College of Literature and Journalism, Central South University, Changsha, China

- 3 Mobile Health Ministry of Education-China Mobile Joint Laboratory, Changsha, China

- 4 School of Computer and Communication Engineering, Changsha University of Science and Technology, Changsha, China

- 5 School of Computer Science and Engineering, Central South University, Changsha, China

- 6 School of Computing Science and Engineering, Vellore Institute of Technology University, Chennai, Tamil Nadu, India

Retinal vessel extraction plays an important role in the diagnosis of several pathologies, such as diabetic retinopathy and glaucoma. In this article, we propose an efficient method based on the B-COSFIRE filter to tackle two challenging problems in fundus vessel segmentation: (i) the difficulty of improving segmentation performance and time efficiency together and (ii) the difficulty of distinguishing thin vessels from vessel-like noise. In the proposed method, we first use contrast limited adaptive histogram equalization (CLAHE) for contrast enhancement and then extract the region of interest (ROI) by thresholding the luminosity plane of the CIELab version of the original RGB image. We employ a set of B-COSFIRE filters to detect vessels and morphological filters to remove noise. Binary thresholding is used for vessel segmentation. Finally, a post-processing method based on connected domains eliminates unconnected non-vessel pixels and produces the final vessel image. Based on the binary vessel maps obtained, we evaluate the performance of the proposed algorithm on three publicly available databases of manually labeled images (DRIVE, STARE, and CHASEDB1). The proposed method requires little processing time (around 12 s per image) and achieves average accuracy, sensitivity, and specificity of 0.9604, 0.7339, and 0.9847 on the DRIVE database and 0.9558, 0.8003, and 0.9705 on the STARE database, respectively. The results demonstrate that the proposed method has potential for use in computer-aided diagnosis.

Introduction

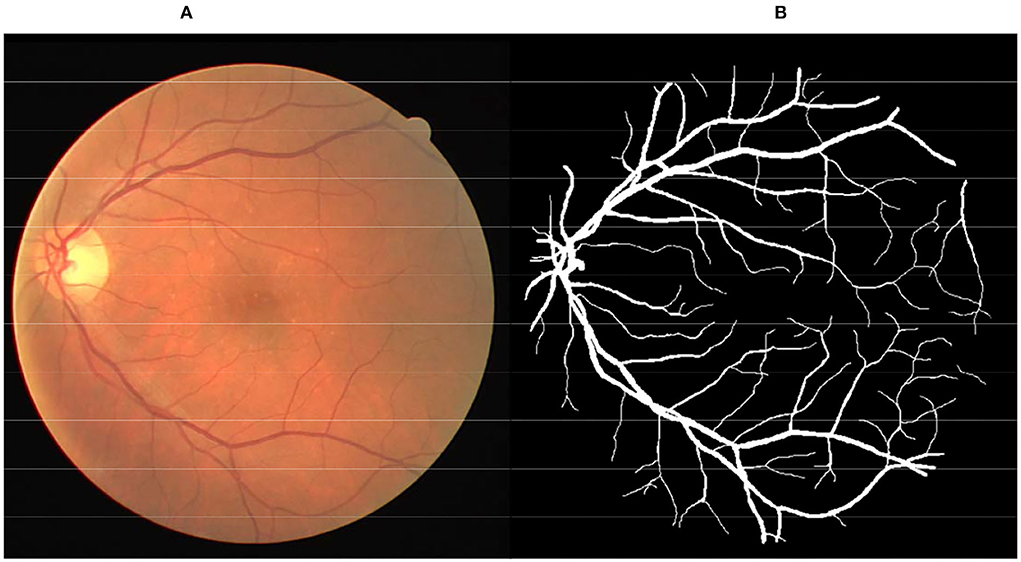

The color fundus image (Figure 1A) is a non-invasive tool commonly used to diagnose various pathologies, including diabetic retinopathy, glaucoma, and age-related macular degeneration. Retinal vessels are the only part of the human circulatory system that can be observed directly and non-invasively (1, 2). Inspecting retinal vessel attributes such as width, tortuosity, branching pattern, and angles can play a significant role in early disease diagnosis. Moreover, vessel segmentation is an indispensable step for further processing, so its accuracy strongly affects the diagnostic outcome. Manual segmentation, however, is repetitive and tedious work for ophthalmologists, as shown in Figure 1B. Consequently, to save medical resources and improve diagnosis, computer-aided diagnosis needs an effective vessel segmentation algorithm that is both accurate and fast.

A bar-selective COSFIRE filter (B-COSFIRE) is highly efficient at detecting bar-shaped structures (3), which makes it a novel and effective tool for the automatic segmentation of blood vessels. It is based on the Combination of Receptive Fields (CORF) computational model of a simple cell in the visual cortex (4) and its implementation, called Combination of Shifted Filter Responses (COSFIRE) (5). The COSFIRE filter is trainable, meaning that it is not predefined in the implementation but is determined from a user-specified prototype pattern (3). COSFIRE is versatile and can be configured to solve many image processing and pattern recognition tasks.

In this article, we propose an efficient retinal vessel segmentation method that builds on the B-COSFIRE method of Azzopardi et al. (3). The original method has low time complexity, but its segmentation performance is not as satisfactory as expected. We therefore add further operations, such as denoising and post-processing. The proposed method achieves better performance without sacrificing the low time complexity of the original.

The rest of this article is organized as follows. In Related Works, we review existing blood vessel segmentation methods and other applications of COSFIRE filters. In Proposed Methodology, we present our method in detail. In Experimental Results and Analysis, we introduce the datasets used for the experiments and report the experimental results and comparisons with existing methods. Future work and conclusions are discussed in Findings and Conclusion, respectively.

Related Works

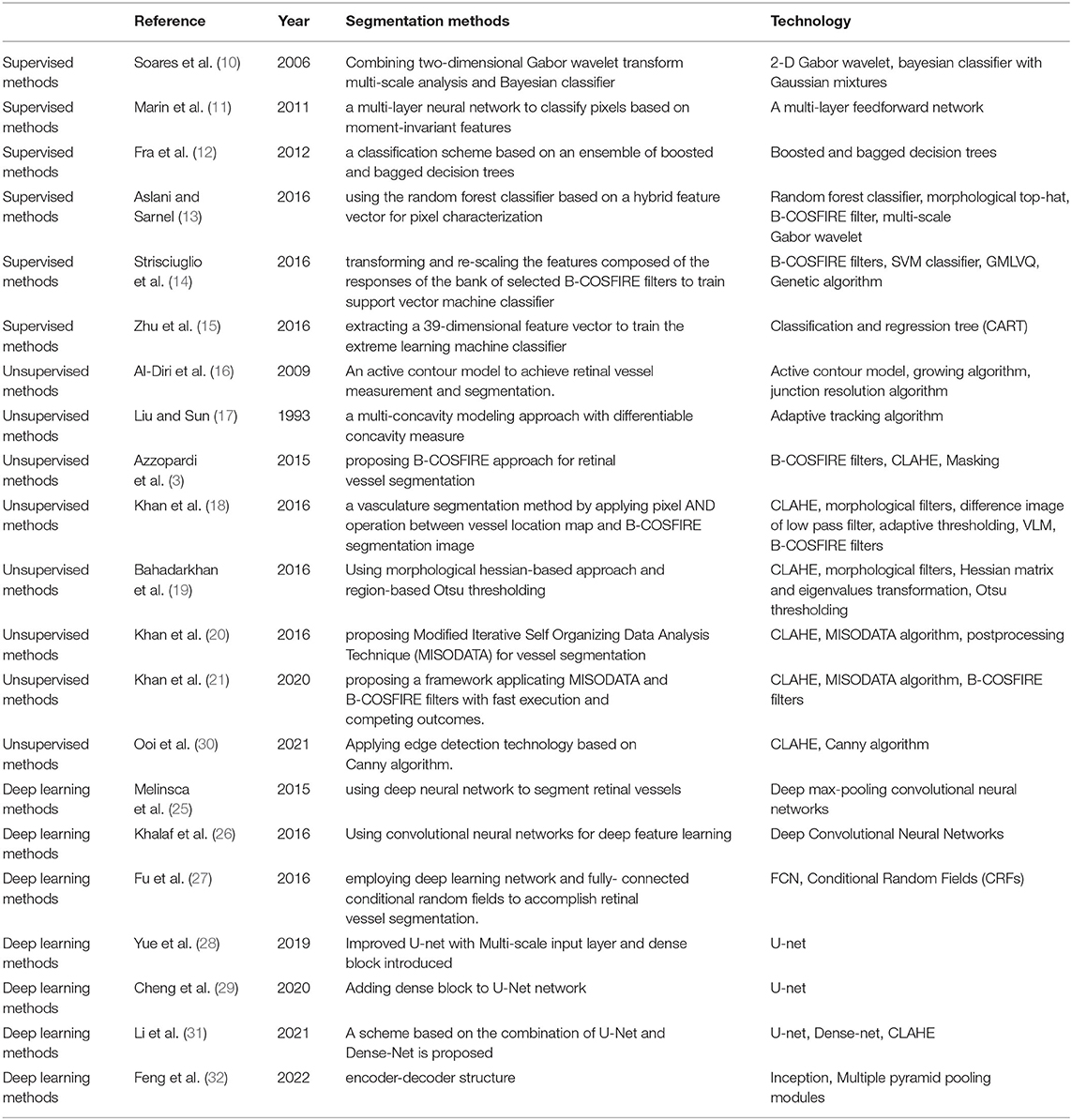

Because vessel segmentation is so important in computer-aided diagnosis, efficient vessel segmentation algorithms have long been an active research topic. Existing approaches for retinal blood vessel segmentation in fundus images fall into two groups: unsupervised methods, which include matched filtering, vessel tracking, model-based approaches, and morphological processing, and supervised methods, which use feature vectors to train a binary classification model (6–8). The distinction depends only on whether manually labeled data are used as prior information.

Supervised methods use pixel-wise feature vectors with label information, where manually segmented images serve as the gold standard, to train a classifier that distinguishes vascular from non-vascular pixels. Such methods mainly involve two steps: forming pixel-wise feature vectors by feature extraction and learning a classification model from vessel and non-vessel training feature vectors (9). Soares et al. (10) reported a segmentation method based on a Bayesian classifier combined with multi-scale analysis using the two-dimensional Gabor wavelet transform. Marin et al. (11) proposed a supervised method for retinal vessel segmentation that applies a multi-layer neural network to classify pixels based on moment-invariant features. Fraz et al. (12) proposed a classification scheme that fuses boosted and bagged decision trees. Aslani et al. (13) presented a supervised method that constructs a rich 17-dimensional feature vector, including the B-COSFIRE filter response, and uses it to train a random forest classifier for segmenting fundus images. Strisciuglio et al. (14) proposed a retinal vessel segmentation method that transforms and re-scales features composed of the responses of a bank of selected B-COSFIRE filters to train a support vector machine classifier. Zhu et al. (15) presented a supervised ensemble method that extracts a 39-dimensional feature vector to train an extreme learning machine classifier.

Unsupervised methods mainly extract vessel features through linear filtering with predefined kernels. Al-Diri et al. (16) proposed an unsupervised method based on an active contour model to measure and segment retinal vessels. Lam et al. (17) presented a multi-concavity modeling approach based on a differentiable concavity measure, which can handle both healthy and pathological retinal images. Azzopardi et al. (3) proposed a retinal vessel segmentation method based on the COSFIRE approach, called the B-COSFIRE filter, by constructing two kinds of B-COSFIRE filters that are selective for vessels and vessel endings, respectively. Khan et al. (18) presented an unsupervised vasculature segmentation method that applies a pixel-wise AND operation between a vessel location map and the B-COSFIRE segmentation image. Bahadarkhan et al. (19) proposed a computationally inexpensive unsupervised automated technique with promising results for detecting retinal vasculature, using a morphological Hessian-based approach and region-based Otsu thresholding. Khan et al. (20) used distinctive preprocessing, thresholding, and post-processing steps to enhance and segment retinal blood vessels. Khan et al. (21) proposed a fast framework with competitive results that uses MISODATA and B-COSFIRE filters to improve segmentation.

Generally, supervised methods perform better than unsupervised methods in retinal vessel segmentation, but they spend a lot of time on classifier training. Unsupervised methods, on the other hand, do not require manual labels to train a classifier, which makes them more versatile and easier to implement (6, 22). In short, computer-aided diagnosis needs a robust method that performs well without requiring much preparation time.

Deep learning has become a major research topic in computer science. It can achieve high performance but requires more training time than conventional supervised learning (23, 24), so both its advantages and its disadvantages are clear. Many researchers have applied deep learning to segment vessels in retinal images. Melinščak et al. (25) proposed a method using a deep neural network to segment retinal vessels. Khalaf et al. (26) proposed a retinal vessel segmentation method based on convolutional neural networks for deep feature learning. Fu et al. (27) combined a deep learning network with fully connected conditional random fields for retinal vessel segmentation. Deep learning often outperforms conventional machine learning; however, it requires long training times and better hardware. Yue et al. (28) introduced a multi-scale input layer and dense blocks into the conventional U-Net so that the network can exploit richer spatial context information. Cheng et al. (29) added dense blocks to the U-Net so that each layer receives the outputs of all preceding layers as input, thereby improving the segmentation accuracy for small blood vessels. For ease of reference, the studies discussed are summarized in Table 1.

The COSFIRE method was proposed by Azzopardi et al. (5) based on the CORF computational model. Because COSFIRE is fast and accurate, it has received considerable attention in image processing and pattern recognition. Besides retinal vessel segmentation, COSFIRE filters have been applied in other areas. Azzopardi et al. used trainable COSFIRE filters as shape descriptors to recognize handwritten digits (33), to detect vascular bifurcations in segmented retinal images (34), and to recognize gender from face images (35). Gecer et al. (36) proposed a method that recognizes objects with the same shape but different colors by configuring COSFIRE filters in different color channels. Guo et al. (37, 38) further developed the COSFIRE method by adding an inhibition mechanism to the COSFIRE filter to recognize architectural and electrical symbols and to detect keypoints and recognize objects.

The works discussed above have the following problems:

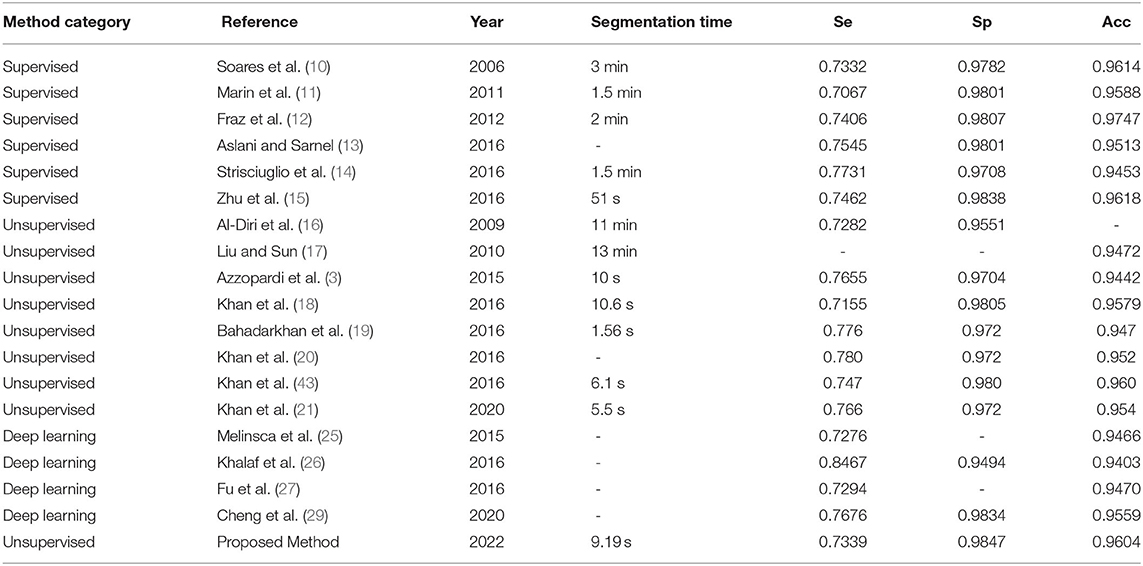

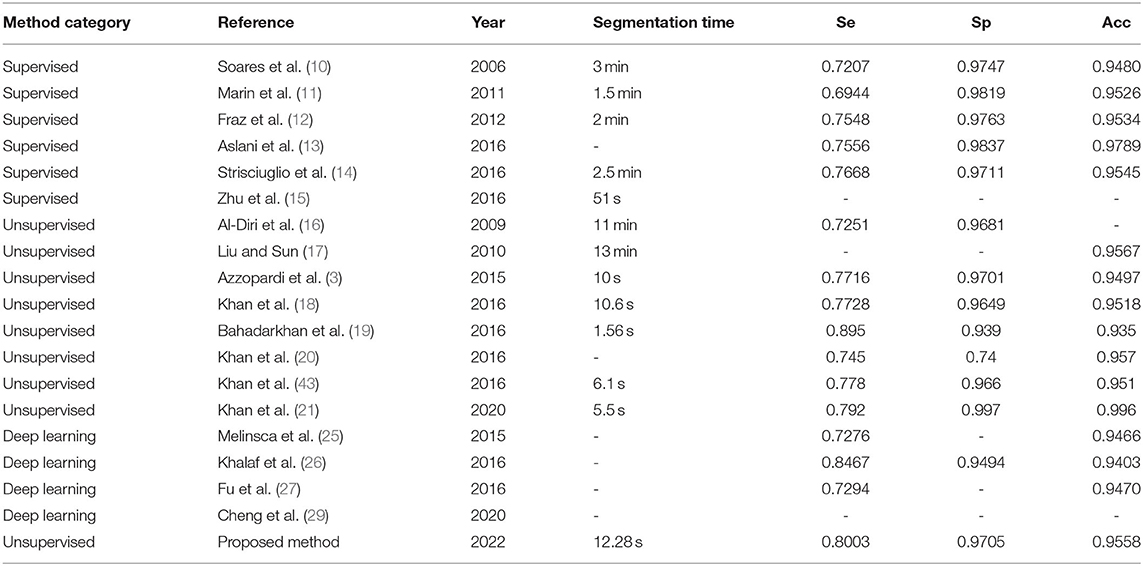

1) The related supervised methods (10–15) have high computational and time costs for model training. Supervised methods require a large number of pixel-level labels, and producing segmentation results takes a long time. As shown in Tables 6, 7, the segmentation time of these supervised methods is about 1 min.

2) When performance and time overhead are considered together, some unsupervised methods (16–21, 30) are of limited practical value. Some achieve higher segmentation performance at the cost of higher time overhead, while others achieve lower time overhead at the cost of lower segmentation performance. In other words, these methods cannot guarantee low time overhead and good segmentation at the same time; in addition, they are not versatile and do not achieve good results across multiple datasets.

3) Images produced by binary thresholding are corrupted by noise, and distinguishing thin vessels from vessel-like noise is still a challenge (18, 20, 21).

In this article, we present an improved unsupervised method based on the B-COSFIRE filter that is more robust than traditional methods. The proposed method uses image contrast enhancement and morphological operations together with the B-COSFIRE filter, which effectively extracts bar-like blood vessels from fundus images. It improves segmentation performance while reducing time overhead. In addition, a post-processing algorithm based on connected domains effectively distinguishes small connected blood vessel pixels from vessel-like noise. These properties indicate that the proposed method has strong potential for computer-aided diagnosis.

Proposed Methodology

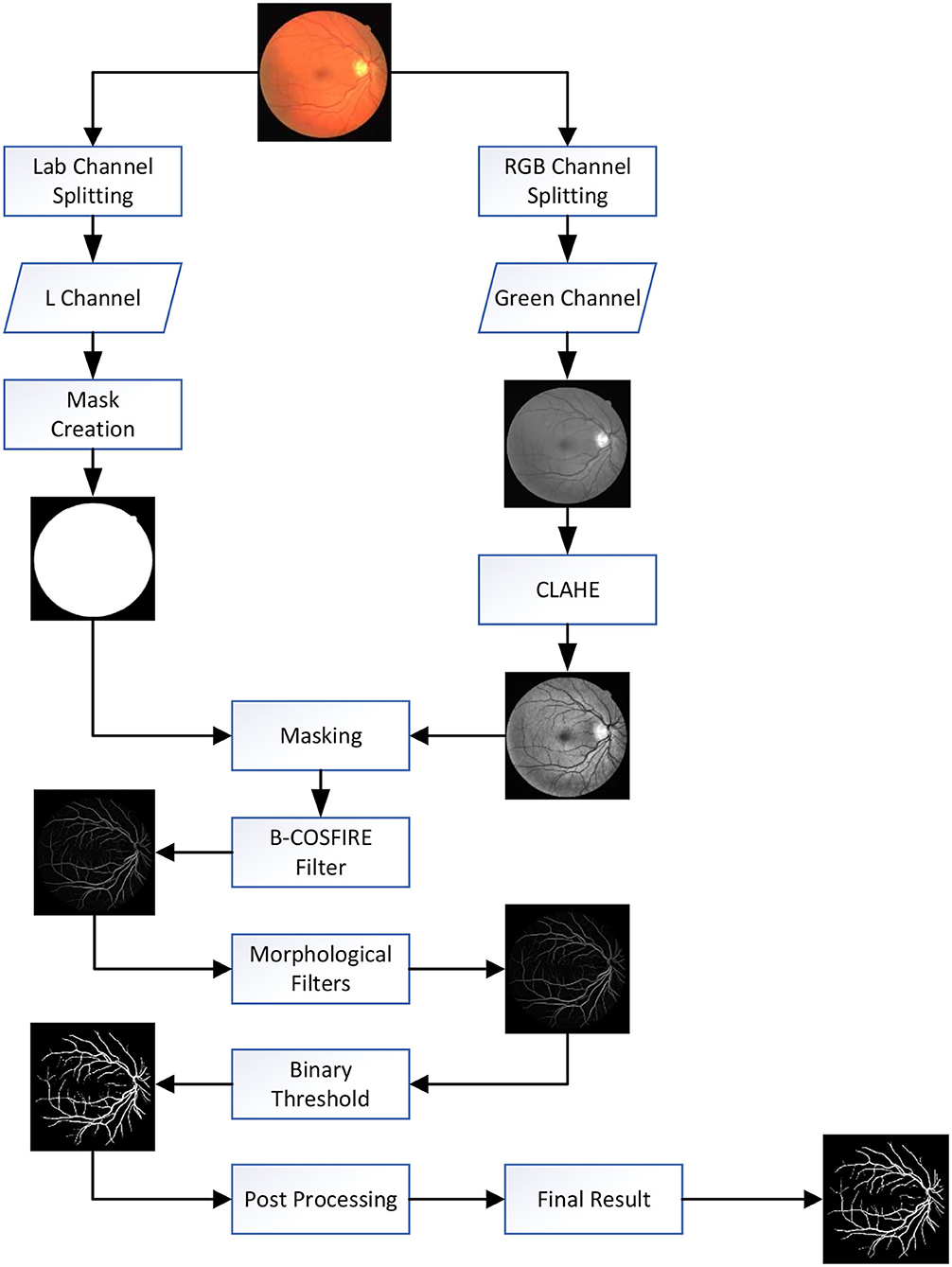

Vessel segmentation is a basic step in fundus image processing, so it should be fast and efficient. The main idea is to exploit the high efficiency of the B-COSFIRE filter and combine it with other operations that take little time, in order to obtain better results. Figure 2 shows a flowchart of the proposed method with its main processing steps, and Algorithm 1 lists the implementation steps of the proposed system. The role of the B-COSFIRE filter is to detect all vessels in the fundus image, while the other operations enhance vascular features, suppress background noise, and reduce misclassification.

Figure 2. Flowchart of the proposed method: first, contrast limited adaptive histogram equalization (CLAHE) is used for contrast enhancement. Second, the luminosity plane of the CIELab version of the original RGB image is thresholded to produce a mask. Third, B-COSFIRE filters and a morphological filter are applied to detect blood vessels and remove noise. Fourth, binary thresholding is used for vessel segmentation. Finally, unconnected non-vessel pixels are eliminated by post-processing to obtain the final segmentation map.

Preprocessing

Before segmenting the vessels, we apply the following operations to enhance the characteristics of blood vessels:

a. Green channel extraction: The green band is extracted from the RGB retinal image. Previous works by Niemeijer et al. (39), Mendonca et al. (40), Soares et al. (10), and Ricci et al. (41) have shown that the green channel of RGB fundus images best highlights the difference between blood vessels and background, whereas the red and blue channels show low contrast and are very noisy.

b. Mask generation: The field of view (FOV) mask is an important tool for determining the ROI in vessel segmentation. Although the DRIVE dataset provides FOV masks, most other datasets do not. To keep the proposed method general, we obtain FOV masks by converting the original RGB images to the CIELab color space and then thresholding the luminosity plane.

c. CLAHE: Next, the contrast limited adaptive histogram equalization (CLAHE) algorithm is used to enhance the image and accentuate vessel features. CLAHE improves local contrast while limiting noise amplification in relatively uniform areas, and it is commonly used as a preprocessing step in retinal image analysis (42).
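The three preprocessing steps can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: `srgb_to_lightness`, `fov_mask`, and `clip_limited_equalize` are hypothetical helpers, the sRGB-to-L* conversion assumes a D65 white point, and the mask threshold of 10 L* units is an assumed value because the text does not give one. `clip_limited_equalize` shows only the contrast-limiting step of CLAHE on a single tile; full CLAHE also divides the image into tiles and interpolates between their mappings, as done by library routines such as `skimage.exposure.equalize_adapthist` or OpenCV's `cv2.createCLAHE`.

```python
import numpy as np

def srgb_to_lightness(rgb):
    """CIELab L* (0-100) of an sRGB image with values in [0, 1], assuming a D65 white point."""
    rgb = np.asarray(rgb, dtype=float)
    # Undo the sRGB gamma to obtain linear RGB.
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    # Relative luminance Y, normalized so that reference white has Y = 1.
    y = linear @ np.array([0.2126, 0.7152, 0.0722])
    delta = 6 / 29
    f = np.where(y > delta ** 3, np.cbrt(y), y / (3 * delta ** 2) + 4 / 29)
    return 116 * f - 16

def fov_mask(rgb, threshold=10.0):
    """Hypothetical FOV mask: pixels whose L* exceeds an assumed threshold."""
    return srgb_to_lightness(rgb) > threshold

def clip_limited_equalize(tile, clip_limit=0.01, n_bins=256):
    """Histogram-equalize one uint8 tile with a clipped histogram (the core step of CLAHE)."""
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(float)
    limit = max(clip_limit * tile.size, 1.0)          # maximum count allowed per bin
    excess = np.maximum(hist - limit, 0.0).sum()       # counts clipped off the peaks
    hist = np.minimum(hist, limit) + excess / n_bins   # redistribute them uniformly
    cdf = np.cumsum(hist)
    lut = np.round(cdf / cdf[-1] * (n_bins - 1)).astype(np.uint8)
    return lut[tile]

# Usage on an RGB image `rgb` (H x W x 3, floats in [0, 1]):
# green = rgb[..., 1]; mask = fov_mask(rgb)
```

A smaller `clip_limit` caps each histogram bin lower, so nearly uniform regions are stretched less; this is how CLAHE limits noise amplification.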

Segmentation Processing

The proposed segmentation method exploits the strong selectivity of the B-COSFIRE filter for bar-shaped (vessel-like) structures. The B-COSFIRE filter was originally proposed in Azzopardi et al. (3). It takes as input the responses of a group of difference-of-Gaussians (DoG) filters at certain positions with respect to the center of its area of support. In the proposed method, the B-COSFIRE filter is used to obtain vessel-like structures efficiently. The B-COSFIRE filter is trainable and is configured to be selective for bar-like structures (3). The term trainable refers to the ability to determine these positions in an automatic configuration process using a synthetic vessel or vessel-ending image.

Applying the B-COSFIRE filter involves four main steps: convolution with DoG filters, blurring of the DoG responses, shifting of the blurred responses, and computation of a point-wise weighted geometric mean. These steps are described below.

A center-on DoG function with a positive central region and a negative surround, denoted by $DoG_\sigma(x, y)$, is given by (3):

$$DoG_\sigma(x,y) = \frac{1}{2\pi(0.5\sigma)^2}\exp\left(-\frac{x^2+y^2}{2(0.5\sigma)^2}\right) - \frac{1}{2\pi\sigma^2}\exp\left(-\frac{x^2+y^2}{2\sigma^2}\right)$$

where σ is the standard deviation of the outer Gaussian function, which determines the extent of the surround, 0.5σ is the standard deviation of the inner Gaussian function, and (x, y) is a pixel location in an image I. The response $C_\sigma(x, y)$ of a DoG filter with kernel function $DoG_\sigma(x - x', y - y')$ to the intensity distribution $I(x', y')$ of the image is computed by convolution:

$$C_\sigma(x,y) = \left| \sum_{x'}\sum_{y'} I(x',y')\, DoG_\sigma(x-x', y-y') \right|^+$$

where $|\cdot|^+$ denotes half-wave rectification, which suppresses (sets to 0) negative values.
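A minimal NumPy sketch of the DoG kernel and the rectified response $C_\sigma$ defined above. The helper names, the kernel half-size of ⌈3σ⌉ pixels, and the reflect padding at the image border are assumptions made for illustration; the original implementation may handle these details differently.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dog_kernel(sigma):
    """Center-on DoG: inner Gaussian (std 0.5*sigma) minus outer Gaussian (std sigma)."""
    r = int(np.ceil(3 * sigma))                  # half-size covering about 3 sigma of the outer Gaussian
    x, y = np.meshgrid(np.arange(-r, r + 1), np.arange(-r, r + 1))
    d2 = x ** 2 + y ** 2

    def gauss(s):
        return np.exp(-d2 / (2 * s ** 2)) / (2 * np.pi * s ** 2)

    return gauss(0.5 * sigma) - gauss(sigma)

def dog_response(image, sigma):
    """Half-wave rectified response C_sigma = |I * DoG_sigma|^+ (reflect padding at borders)."""
    k = dog_kernel(sigma)
    r = k.shape[0] // 2
    windows = sliding_window_view(np.pad(image, r, mode="reflect"), k.shape)
    # Convolution flips the kernel; the DoG is symmetric, so the flip only keeps this a true convolution.
    response = np.einsum("ijkl,kl->ij", windows, k[::-1, ::-1])
    return np.maximum(response, 0.0)             # |.|^+ sets negative values to 0
```

Because both Gaussians are normalized, the kernel sums to approximately zero, so uniform regions produce almost no response while small bright structures produce a positive peak.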

In the B-COSFIRE filter (3), each point i is described by a tuple of three parameters $(\sigma_i, \rho_i, \phi_i)$, where $\sigma_i$ is the standard deviation of the DoG filter that provides the input, and $\rho_i$ and $\phi_i$ are the polar coordinates of the point with respect to the center of the B-COSFIRE filter. The set of tuples of a B-COSFIRE filter is denoted by $S = \{(\sigma_i, \rho_i, \phi_i) \mid i = 1, \ldots, n\}$, where n is the number of considered DoG responses.

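Next, each DoG response is blurred, which allows some tolerance in the positions of the specific points. The blurring operation takes the maximum of the DoG responses weighted by a Gaussian function $G_{\sigma'}(x', y')$ whose standard deviation grows linearly with the distance $\rho_i$:

$$\sigma' = \sigma'_0 + \alpha\rho_i$$

where $\sigma'_0$ and α are constants.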

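Each blurred DoG response is then shifted by a distance $\rho_i$ in the direction opposite to $\phi_i$, so that all responses meet at the support center of the B-COSFIRE filter. The blurred and shifted response of the DoG filter for each tuple $(\sigma_i, \rho_i, \phi_i)$ in the set S is denoted by $s_{\sigma_i,\rho_i,\phi_i}(x, y)$ and is defined as:

$$s_{\sigma_i,\rho_i,\phi_i}(x,y) = \max_{x',y'}\left\{ C_{\sigma_i}(x - \Delta x_i - x',\, y - \Delta y_i - y')\, G_{\sigma'}(x',y') \right\}$$

where $\Delta x_i = -\rho_i\cos\phi_i$, $\Delta y_i = -\rho_i\sin\phi_i$, and $-3\sigma' \le x', y' \le 3\sigma'$.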
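The blurring and shifting of one DoG response can be sketched as below. The sketch assumes integer (rounded) shifts, x as the column index and y as the row index, a Gaussian weight with peak value 1, and zero values outside the image. `blur_and_shift` and its default `sigma0` and `alpha` values are hypothetical placeholders, not the parameters used in (3) or in this study.

```python
import numpy as np

def blur_and_shift(c, rho, phi, sigma0=0.5, alpha=0.1):
    """s(x, y) = max over |x'|, |y'| <= 3 sigma' of C(x - dx - x', y - dy - y') * G(x', y')."""
    sigma_p = sigma0 + alpha * rho                    # sigma' grows linearly with rho
    dx = int(round(-rho * np.cos(phi)))               # shift opposite to phi (x = column index)
    dy = int(round(-rho * np.sin(phi)))               # (y = row index, an assumed convention)
    r = int(np.ceil(3 * sigma_p))
    h, w = c.shape
    pad = r + max(abs(dx), abs(dy))
    cp = np.pad(c, pad)                               # zero padding outside the image
    out = np.zeros((h, w))                            # C is rectified (>= 0), so 0 is a safe start
    for yp in range(-r, r + 1):
        for xp in range(-r, r + 1):
            g = np.exp(-(xp ** 2 + yp ** 2) / (2 * sigma_p ** 2))  # Gaussian weight, peak 1
            oy, ox = pad - dy - yp, pad - dx - xp     # slice origin giving C(x - dx - x', y - dy - y')
            out = np.maximum(out, g * cp[oy:oy + h, ox:ox + w])
    return out
```

A response located at distance ρ in direction ϕ from a pixel is moved back onto that pixel, which is what lets the responses of all tuples meet at the filter's support center.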

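Finally, the output of the B-COSFIRE filter is defined as the weighted geometric mean of all the blurred and shifted DoG responses:

$$r_S(x,y) = \left| \left( \prod_{i=1}^{|S|} \left(s_{\sigma_i,\rho_i,\phi_i}(x,y)\right)^{\omega_i} \right)^{1/\sum_{i=1}^{|S|}\omega_i} \right|_t$$

where $\omega_i = \exp\left(-\frac{\rho_i^2}{2\hat\sigma^2}\right)$ with $\hat\sigma = \frac{1}{3}\max_{i \in \{1,\ldots,|S|\}}\{\rho_i\}$, and $|\cdot|_t$ denotes thresholding the response at a fraction t (0 ≤ t ≤ 1) of its maximum. This is an AND-type function: the B-COSFIRE filter responds only when all the DoG filter responses are greater than zero.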
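The weighted geometric mean and the threshold $|\cdot|_t$ can be sketched as follows, taking a list of already blurred-and-shifted response arrays $s_{\sigma_i,\rho_i,\phi_i}$ together with their distances $\rho_i$. The function name is hypothetical, the product is computed in log space for numerical stability, and the small guard for the case where every $\rho_i = 0$ (which would make $\hat\sigma = 0$) is our own assumption.

```python
import numpy as np

def bcosfire_output(shifted, rhos, t=0.0):
    """Weighted geometric mean of blurred-and-shifted DoG responses, thresholded at t * max."""
    rhos = np.asarray(rhos, dtype=float)
    sigma_hat = max(rhos.max() / 3.0, 1e-12)       # guard: all rho = 0 gives equal weights
    w = np.exp(-rhos ** 2 / (2 * sigma_hat ** 2))  # omega_i
    stack = np.stack(shifted)                      # shape (n, H, W)
    # Product of s_i ** w_i in log space; any zero response gives log = -inf, so the output is 0 (AND-type).
    with np.errstate(divide="ignore"):
        log_mean = np.tensordot(w, np.log(stack), axes=1) / w.sum()
    r = np.exp(log_mean)
    r[r < t * r.max()] = 0.0                       # |.|_t: suppress values below t * max
    return r
```

Points closer to the center (small $\rho_i$) receive larger weights, so the filter output is dominated by the DoG responses near the support center.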

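Moreover, to achieve multi-orientation selectivity, several B-COSFIRE filters selective for different orientations are configured. Instead of configuring each one from a prototype pattern in a different orientation, a new set $R_\psi(S)$, selective for the prototype rotated by an angle ψ, is created by manipulating the parameter $\phi_i$ of each tuple:

$$R_\psi(S) = \{(\sigma_i, \rho_i, \phi_i + \psi) \mid \forall\, (\sigma_i, \rho_i, \phi_i) \in S\}$$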

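A rotation-tolerant response is obtained by merging the responses of B-COSFIRE filters with different orientation preferences and taking the maximum value at each location (x, y):

$$\hat r_S(x,y) = \max_{\psi \in \Psi}\left\{ r_{R_\psi(S)}(x,y) \right\}$$

where Ψ is the set of considered orientations.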

Two kinds of B-COSFIRE filter are configured (3): a symmetric B-COSFIRE filter, which is selective for vessels, and an asymmetric B-COSFIRE filter, which is selective for vessel endings. For more details, refer to Azzopardi et al. (3).
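Rotation tolerance reduces to two small operations, sketched below: building $R_\psi(S)$ by adding ψ to every $\phi_i$, and taking the point-wise maximum over the rotated filters' responses. The tuple layout `(sigma, rho, phi)`, the function names, and the configuration `S` are hypothetical; the 12 orientations in steps of π/12 are an example, not necessarily the set used in this study.

```python
import numpy as np

def rotate_tuples(tuples, psi):
    """R_psi(S): add psi to the polar angle phi_i of every (sigma_i, rho_i, phi_i) tuple."""
    return [(sigma, rho, phi + psi) for sigma, rho, phi in tuples]

def rotation_tolerant(responses):
    """Point-wise maximum over the responses r_{R_psi(S)}(x, y) for all considered psi."""
    return np.max(np.stack(responses), axis=0)

# Hypothetical symmetric configuration: DoG points at the center and on both sides along phi = 0.
S = [(2.4, 0.0, 0.0), (2.4, 4.0, 0.0), (2.4, 4.0, np.pi)]
orientations = [k * np.pi / 12 for k in range(12)]      # example set Psi
rotated_sets = [rotate_tuples(S, psi) for psi in orientations]
```

Each rotated set would then be evaluated with the blurring, shifting, and geometric-mean steps, and `rotation_tolerant` merges the resulting response maps.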

Morphological filters are used to remove noise, reduce the influence of ophthalmic disorders, and extract useful information in small image regions. Combining image subtraction with openings and closings results in the top-hat and bottom-hat transformations (15). Both transformations serve the same purpose: making non-uniform backgrounds uniform and enhancing image contrast. The top-hat transform is used for light objects on a dark background, so it makes vessels stand out more clearly against the dark background. We apply the top-hat transform to the response image produced by the B-COSFIRE filter to enhance vessel structures and reduce noise. As a result, more vessel pixels are correctly classified and fewer noise pixels are retained during threshold segmentation.

The top-hat transformation is defined as follows:

$$T_{hat}(I) = I - (I \circ S)$$

where I is a gray-scale image, S is a structuring element, and ∘ denotes the opening operation; that is, the top-hat transformation of I is I minus the opening of I. The opening removes bright structures smaller than the structuring element and thus estimates the background; subtracting it from I therefore keeps those small bright structures on a relatively uniform background.

In this study, the structuring element is square. We found experimentally that applying the morphological top-hat transformation reduces noise and improves vessel segmentation performance.
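The top-hat step can be sketched with a flat square structuring element, implemented here with NumPy sliding windows rather than a library routine. The function names are hypothetical, and the side length of 3 pixels is an assumed placeholder because the text specifies only that the element is square. Equivalent library functions include `scipy.ndimage.white_tophat` and `skimage.morphology.white_tophat`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _square_filter(image, size, reducer, pad_value):
    """Apply min or max over a size x size window; the padding value never wins the reduction."""
    r = size // 2
    padded = np.pad(image, r, mode="constant", constant_values=pad_value)
    return reducer(sliding_window_view(padded, (size, size)), axis=(-2, -1))

def white_tophat(image, size=3):
    """Top-hat T(I) = I - (I o S) with a flat square structuring element S of odd side `size`."""
    image = np.asarray(image, dtype=float)
    eroded = _square_filter(image, size, np.min, np.inf)     # erosion = local minimum
    opened = _square_filter(eroded, size, np.max, -np.inf)   # dilation of the erosion = opening
    return image - opened
```

A one-pixel-wide bright line survives the top-hat almost unchanged, whereas a bright block wider than the structuring element is absorbed into the opening and removed; this is why the element size controls which structures are treated as vessels.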

There are two ways to choose the threshold for binarizing the vessel response images. The first is to select a fixed threshold for each dataset, as in Azzopardi et al. (3). This requires little processing time but may give somewhat poorer results. The second, called adaptive thresholding, automatically selects a threshold for each image rather than for the whole dataset, as in Khan et al. (18). In the proposed method, we choose the first approach so that vessels are segmented quickly. The results obtained by the two approaches did not differ significantly, but the first approach saves processing time, as we expected.
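The two thresholding strategies can be contrasted in a short sketch. `binarize` applies a single fixed threshold inside the FOV, as in the first approach; the threshold value must be tuned per dataset and is not given here. `otsu_threshold` is included only as one common example of an adaptive, per-image threshold (Otsu's method); we do not claim it is the adaptive scheme used by Khan et al. (18). Both function names are hypothetical.

```python
import numpy as np

def binarize(response, fov, threshold):
    """First approach: one fixed, per-dataset threshold applied inside the FOV."""
    return (response >= threshold) & fov

def otsu_threshold(values, n_bins=256):
    """Example adaptive rule (Otsu): the cut that maximizes the between-class variance."""
    hist, edges = np.histogram(values, bins=n_bins)
    centers = (edges[:-1] + edges[1:]) / 2
    p = hist / hist.sum()
    w0 = np.cumsum(p)                        # weight of the class below each candidate cut
    mu = np.cumsum(p * centers)              # cumulative mean up to each cut
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu[-1] * w0 - mu) ** 2 / (w0 * (1 - w0))
    between = np.nan_to_num(between, nan=0.0, posinf=0.0)
    return edges[np.argmax(between) + 1]     # upper edge of the best bin
```

The fixed threshold costs a single comparison per pixel, while an adaptive rule adds a histogram pass per image; the difference is small here, but it illustrates the trade-off described above.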

Post-processing

Images produced by binary thresholding are corrupted by noise, and the segmentation at this stage is far from satisfactory: some vessel pixels are misclassified as background and disappear from the segmented image, while many noise pixels are classified as vessels. We therefore apply post-processing to reduce these errors and obtain a better segmentation result.

The adopted post-processing method recovers vessels based on connected domains. The specific steps are as follows:

Step 1. Apply a thinning operation to the binary image Ire, then a dilation with a 3 × 3 structuring element, followed by a morphological fill operation that sets to 1 every 0-valued pixel whose eight neighbors are all 1.

Step 2. Repeat Step 1 to obtain a relatively complete connected vascular domain; the resulting image is Ifin.

Step 3. Obtain Iτ as the intersection of Ire and Ifin, then subtract Iτ from Ifin to obtain the connected regions Iin that can fill gaps in Ire.

Step 4. Using eight-connectivity, each connected domain of Iin is used to recover vessel pixels that were removed as noise. The number of connected domains before and after the recovery operation is compared. If it decreases, the region contains misclassified vessel bifurcations or crossovers, and the vessel pixels should be recovered. If it increases, the region is genuine noise and should not be recovered.

Step 5. Remove connected domains of fewer than 20 pixels to obtain a better optimized result.

The segmentation results usually contain small isolated regions caused by noise, and these regions are sometimes wrongly detected as vessels (18, 20). Our post-processing method, based on connected domains, removes unconnected components of 20 pixels or fewer, which are considered non-vessel pixels or background noise. In this way, unconnected non-vessel pixels are eliminated while thin connected vessels are preserved. Moreover, by identifying and recovering vessel bifurcations and breakpoints, these steps improve the continuity and accuracy of the vessel segmentation. The post-processing operation yields the final binary image.
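Steps 4 and 5 rely on 8-connected component labeling, which can be sketched in plain Python and NumPy as below. This is an illustrative reading of the text, not the authors' code: the function names are hypothetical, Steps 1–3 (thinning, dilation, and filling) are not reproduced, and a region that leaves the number of components unchanged is not recovered, which is our assumption because the text only covers the increasing and decreasing cases. `remove_small` drops components with fewer than `min_size` pixels, matching Step 5; removing components of 20 pixels or fewer, as worded in the summary paragraph, would correspond to `min_size=21`.

```python
import numpy as np
from collections import deque

OFFSETS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]

def label8(binary):
    """Label 8-connected foreground components by breadth-first search; returns (labels, count)."""
    labels = np.zeros(binary.shape, dtype=int)
    count = 0
    h, w = binary.shape
    for y, x in zip(*np.nonzero(binary)):
        if labels[y, x]:
            continue                          # already part of a labeled component
        count += 1
        labels[y, x] = count
        queue = deque([(y, x)])
        while queue:
            cy, cx = queue.popleft()
            for dy, dx in OFFSETS:
                ny, nx = cy + dy, cx + dx
                if 0 <= ny < h and 0 <= nx < w and binary[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = count
                    queue.append((ny, nx))
    return labels, count

def recover_regions(i_re, i_in):
    """Step 4 sketch: add a component of I_in to I_re only if the component count decreases."""
    result = i_re.copy()
    regions, n = label8(i_in)
    for k in range(1, n + 1):
        candidate = result | (regions == k)
        if label8(candidate)[1] < label8(result)[1]:
            result = candidate                # the region bridges vessel pieces: recover it
    return result

def remove_small(binary, min_size=20):
    """Step 5 sketch: drop 8-connected components with fewer than `min_size` pixels."""
    labels, _ = label8(binary)
    keep = np.bincount(labels.ravel()) >= min_size
    keep[0] = False                           # label 0 is the background
    return keep[labels]
```

Recounting components after each candidate region is simple but repeats the labeling; an efficient implementation would instead inspect only the labels adjacent to each region.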

Experimental Results and Analysis

In our experiments, the proposed method was evaluated on the publicly available DRIVE and STARE databases. The scarcity of manually annotated image data is a major obstacle to medical image processing research and development. Therefore, although each database contains relatively few images, these two databases occupy a very important position in research on blood vessel segmentation in fundus images. Because they contain ground truth maps manually segmented by different experts, they have become popular in the field of retinal vessel segmentation, and experiments on them also make it easy to compare the proposed method with other methods. Three different measures are used to evaluate performance. The experiments were run in MATLAB on a 2.5 GHz Intel i5-3210M CPU with 4 GB of memory.

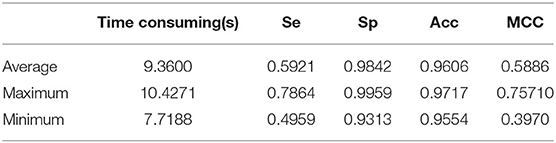

To verify the versatility and feasibility of the proposed method, we added a set of experiments in which our method segments the fundus images of the publicly available CHASEDB1 database, evaluated with the same three metrics as above. In addition, to obtain a more meaningful measure of pixel-wise segmentation quality and to compare with recent literature, we introduced the Matthews correlation coefficient (MCC) (6, 34). This supplementary experiment was run in MATLAB on a 2.5 GHz Intel i5-10300H CPU with 16 GB of memory.

Database

The DRIVE database consists of 40 color images taken with a Canon CR5 3CCD camera with a 45° FOV, divided into a training set and a test set of 20 images each. Each image was captured at 768 × 584 pixels with 8 bits per color channel, and the FOV is circular with a diameter of approximately 540 pixels. Each image in the DRIVE database comes with a mask delineating the FOV and a manual binary vessel segmentation. The images in the test set were segmented by two human observers, and those in the training set by one observer.

The STARE database consists of 20 color images, half of which contain signs of pathology. The images were acquired with a Topcon TRC-50 fundus camera at a 35° FOV. Each image is 700 × 605 pixels with 8 bits per color channel, and the FOV in the images is around 650 × 550 pixels. The images in the STARE database were manually segmented by two different observers.

The CHASEDB1 database consists of 28 color images of the eyes of 14 schoolchildren. Usually, the first 20 images are used for training and the remaining eight for testing. Each image is 999 × 960 pixels, and the binary field-of-view (FOV) masks and segmentation ground truth were obtained manually.

For comparability, the performance of the proposed method is measured on the test set of the DRIVE database and on all images of the STARE and CHASEDB1 databases, by comparing the automatically generated binary images with those segmented by the first observer, which serve as the gold standard.

Evaluation Method

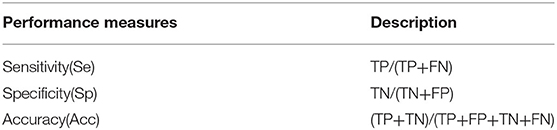

Each resulting binary image was compared with the corresponding gold standard by counting four quantities: true positives (TP), the number of pixels correctly classified as vessel; false positives (FP), the number of pixels misclassified as vessel; true negatives (TN), the number of pixels correctly classified as background; and false negatives (FN), the number of pixels misclassified as background.

To evaluate the performance of our method and compare it with state-of-the-art methods, we computed the accuracy (Acc), sensitivity (Se), and specificity (Sp) measures listed in Table 2.

The Acc of an image is the ratio of the number of correctly classified pixels to the total number of pixels in the image FOV, Acc = (TP + TN)/(TP + FP + TN + FN). Se is the number of pixels correctly classified as vessel divided by the total number of vessel pixels in the manual segmentation, Se = TP/(TP + FN); thus, Se measures the ability to correctly identify vessel pixels. Sp is the number of pixels correctly classified as background divided by the total number of background pixels in the manual segmentation, Sp = TN/(TN + FP); thus, Sp reflects the ability to detect non-vessel pixels.

In addition, following Ricci et al. (41), we evaluated the MCC. The MCC (7, 21) is a more appropriate indicator of binary classification quality when the classes are unbalanced, as here, where vessel pixels are a small minority of the FOV. It is defined as follows:

$$MCC = \frac{TP/N - S \times P}{\sqrt{P \times S \times (1 - S) \times (1 - P)}}$$

where N = TN + TP + FN + FP, S = (TP + FN)/N, and P = (TP + FP)/N.
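The four counts and the resulting measures, including the MCC formula above, can be computed as in the following sketch. It assumes boolean NumPy arrays for the prediction, the gold standard, and the FOV mask, and it counts only pixels inside the FOV, as the definition of Acc specifies. The function name is hypothetical.

```python
import numpy as np

def segmentation_metrics(pred, truth, fov):
    """Acc, Se, Sp, and MCC of a binary vessel map, counting only pixels inside the FOV."""
    pv, gv = pred[fov], truth[fov]
    tp = float(np.sum(pv & gv))     # vessel pixels detected as vessel
    fp = float(np.sum(pv & ~gv))    # background pixels detected as vessel
    tn = float(np.sum(~pv & ~gv))   # background pixels detected as background
    fn = float(np.sum(~pv & gv))    # vessel pixels missed
    n = tp + tn + fp + fn
    s, p = (tp + fn) / n, (tp + fp) / n   # S and P from the MCC definition
    return {
        "Acc": (tp + tn) / n,
        "Se": tp / (tp + fn),
        "Sp": tn / (tn + fp),
        "MCC": (tp / n - s * p) / np.sqrt(p * s * (1 - s) * (1 - p)),
    }
```

This form of the MCC is algebraically equivalent to the more common expression (TP·TN − FP·FN)/√((TP + FP)(TP + FN)(TN + FP)(TN + FN)).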

Performance of the Proposed Method

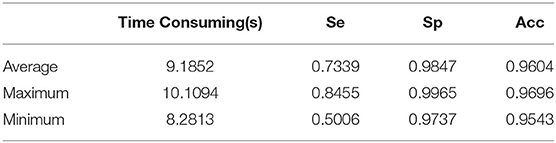

Because the proposed method does not need to train a classifier, the images in the training set of the DRIVE database were not used. We tested on the 20 fundus images in the DRIVE test set. The total segmentation time is about 185 s, or about 9.19 s per image. The retinal vessel segmentation results on the DRIVE database are shown in Table 3. The average Acc, Se, and Sp of the proposed method are 0.9604, 0.7339, and 0.9847, respectively.

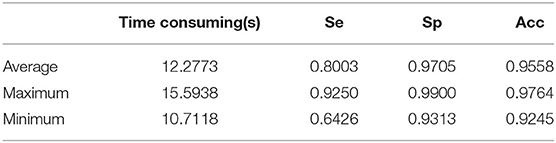

For the STARE database, we tested all 20 fundus images, since the proposed method does not require selecting images to train a classifier. The total segmentation time is about 242 s, or about 12.28 s per image. The retinal vessel segmentation results on the STARE database are shown in Table 4. The average Acc, Se, and Sp of the proposed method are 0.9558, 0.8003, and 0.9705, respectively.

For the CHASEDB1 database, we tested all 28 fundus images for the same reason. The total segmentation time is about 251 s, or about 8.96 s per image. The average Acc, Se, and Sp of the proposed method are 0.9606, 0.6921, and 0.9842, respectively, and the MCC is 0.5886. The retinal vessel segmentation results on the CHASEDB1 database are shown in Table 5.

As shown in Tables 3–5, the proposed method generates the segmentation map of a fundus image in a short time. The per-image processing time on the DRIVE, STARE, and CHASEDB1 data can be as low as 8.2318, 10.7188, and 7.7188 s, respectively, which suggests that the method is fast enough for practical use. In addition, the experiments were run in MATLAB on two different hardware configurations, and the results in Tables 3–5 show that the method is feasible on both.

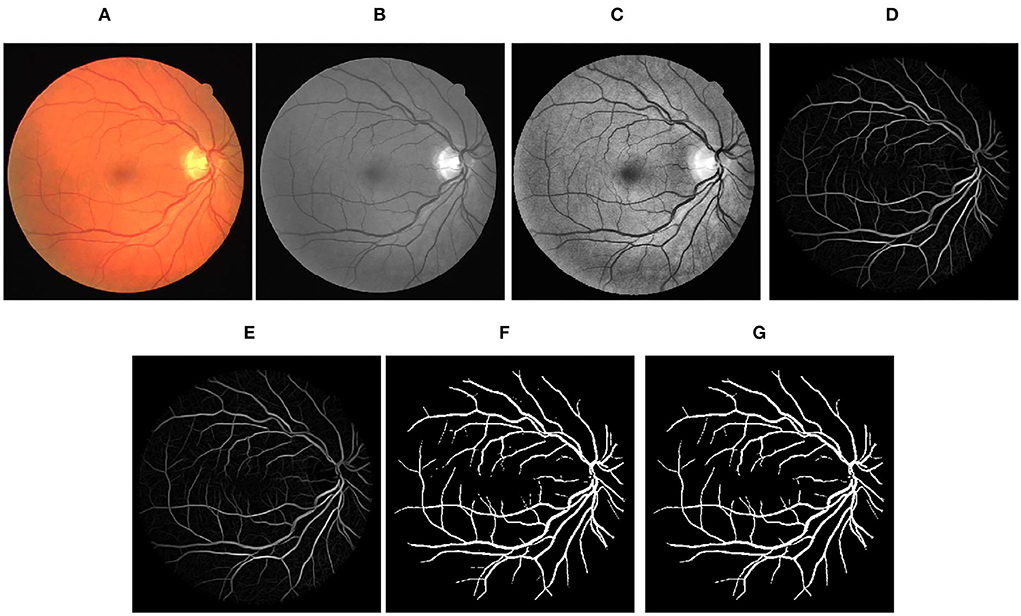

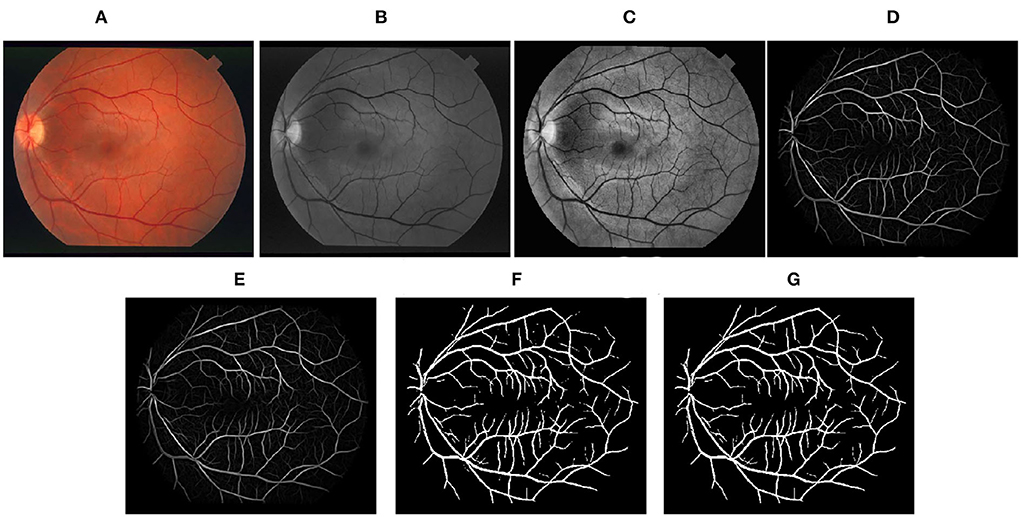

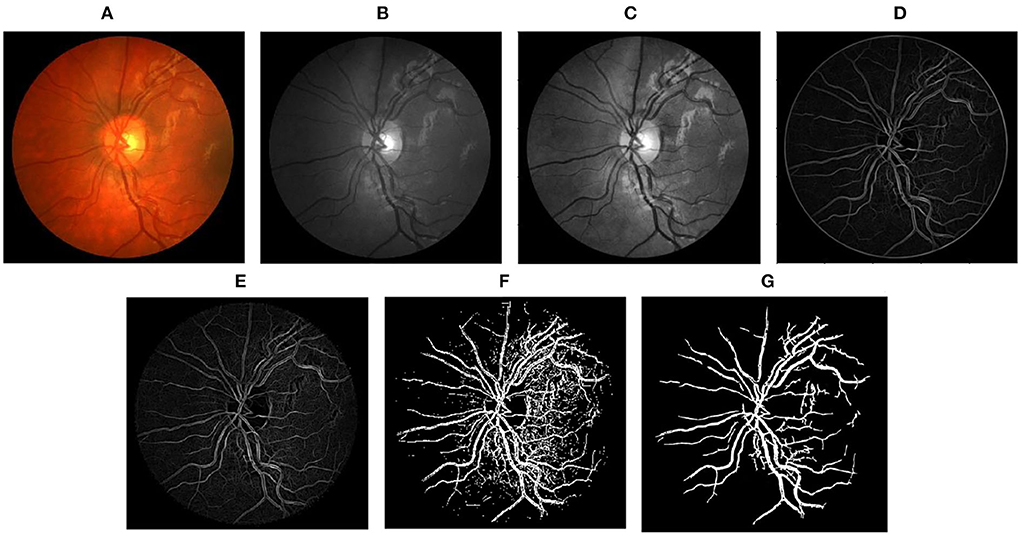

Figures 3–5 show the step-by-step results of the proposed framework on images from the DRIVE, STARE, and CHASEDB1 databases, respectively. A close look at the vessel ends in the final segmentation results shows that the method distinguishes effectively between vessel-like noise and thin vessels.

Figure 3. Stepwise illustration of the proposed method. (A) Color retinal fundus image from the DRIVE database. (B) Green channel of the fundus image. (C) Result after CLAHE. (D) Result after B-COSFIRE filtering. (E) Result after morphological filtering. (F) Result after binary thresholding. (G) Final segmented image.

Figure 4. Stepwise illustration of the proposed method. (A) Color retinal fundus image from the STARE database. (B) Green channel of the fundus image. (C) Result after CLAHE. (D) Result after B-COSFIRE filtering. (E) Result after morphological filtering. (F) Result after binary thresholding. (G) Final segmented image.

Figure 5. Stepwise illustration of the proposed method. (A) Color retinal fundus image from the CHASEDB1 database. (B) Green channel of the fundus image. (C) Result after CLAHE. (D) Result after B-COSFIRE filtering. (E) Result after morphological filtering. (F) Result after binary thresholding. (G) Final segmented image.
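Step (D) in Figures 3–5 is the B-COSFIRE filtering stage. The sketch below illustrates a simplified symmetric B-COSFIRE response in the spirit of Azzopardi et al. (3). A rectified center-off difference-of-Gaussians (DoG) response is blurred with Gaussian weights whose width grows with the distance ρ from the filter center. The blurred responses are then shifted so that points along a line are read at the center, combined by a weighted geometric mean, and maximized over orientations. The sketch makes several simplifications:

- it assumes dark vessels on a brighter background, as in the green channel;
- it uses integer-rounded shifts;
- it covers only a symmetric (line-shaped) configuration;
- it is not optimized.

The default parameter values are illustrative only and are not the settings used in this study.

```python
import numpy as np


def gaussian_kernel(sigma, radius):
    """Normalized 2-D Gaussian sampled on a (2*radius+1) x (2*radius+1) grid."""
    ax = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(ax, ax)
    return np.exp(-(xx**2 + yy**2) / (2 * sigma**2)) / (2 * np.pi * sigma**2)


def shift_read(img, dx, dy):
    """out[y, x] = img[y + dy, x + dx], with coordinates clamped at the borders."""
    h, w = img.shape
    ys = np.clip(np.arange(h) + dy, 0, h - 1)
    xs = np.clip(np.arange(w) + dx, 0, w - 1)
    return img[np.ix_(ys, xs)]


def dog_response(img, sigma):
    """Half-wave rectified center-off DoG: positive on dark, line-like structures."""
    r = int(np.ceil(3 * sigma))
    kernel = gaussian_kernel(sigma, r) - gaussian_kernel(0.5 * sigma, r)
    out = np.zeros(img.shape)
    for v in range(-r, r + 1):
        for u in range(-r, r + 1):
            out += kernel[v + r, u + r] * shift_read(img, u, v)
    return np.maximum(out, 0.0)


def max_weighted_blur(c, sigma):
    """s(x, y) = max over (u, v) of c(x + u, y + v) * exp(-(u^2 + v^2) / (2 sigma^2))."""
    r = int(np.ceil(3 * sigma))
    out = np.zeros(c.shape)
    for v in range(-r, r + 1):
        for u in range(-r, r + 1):
            weight = np.exp(-(u * u + v * v) / (2 * sigma * sigma))
            out = np.maximum(out, weight * shift_read(c, u, v))
    return out


def b_cosfire(img, sigma=2.4, rhos=(0, 2, 4, 6, 8), sigma0=3.0, alpha=0.7, n_orient=12):
    """Rotation-tolerant response of a simplified symmetric B-COSFIRE filter."""
    img = np.asarray(img, dtype=float)
    c = dog_response(img, sigma)
    sigma_hat = max(rhos) / 3.0 if max(rhos) > 0 else 1.0
    # the blur width grows linearly with the distance rho from the filter center
    blurred = {rho: max_weighted_blur(c, sigma0 + alpha * rho) for rho in rhos}
    best = np.zeros(img.shape)
    for k in range(n_orient):
        psi = np.pi * k / n_orient  # a symmetric line filter only needs [0, pi)
        log_sum, w_sum = np.zeros(img.shape), 0.0
        for rho in rhos:
            weight = np.exp(-rho**2 / (2 * sigma_hat**2))
            for phi in ([psi] if rho == 0 else [psi, psi + np.pi]):
                dx = int(round(rho * np.cos(phi)))
                dy = int(round(rho * np.sin(phi)))
                # small epsilon avoids log(0) where the DoG response is zero
                log_sum += weight * np.log(shift_read(blurred[rho], dx, dy) + 1e-12)
                w_sum += weight
        # weighted geometric mean of the shifted responses, max over orientations
        best = np.maximum(best, np.exp(log_sum / w_sum))
    return best
```

The geometric mean is close to zero whenever any of the combined responses is near zero. The filter therefore responds strongly only where line-like evidence is present at all sampled points along an orientation, which makes it more selective for vessels than a single DoG response.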

Comparative Experiment

We compared the proposed B-COSFIRE filter approach with other state-of-the-art methods on the DRIVE and STARE databases. The comparison covers supervised methods (the top six rows), unsupervised methods (the middle rows), and deep learning methods (the bottom rows). Tables 6 and 7 list the accuracy, sensitivity, and specificity on DRIVE and STARE, respectively.

On the DRIVE images, the proposed framework achieves results close to the best among the supervised, unsupervised, and deep learning methods compared, with Acc = 0.9604, Se = 0.7339, and Sp = 0.9847. On the STARE dataset, the proposed technique also performs well in sensitivity and specificity across all kinds of techniques. Its accuracy (Acc = 0.9558) is competitive with the other methods compared.

In the experiments on the DRIVE and STARE datasets, the proposed method required much less time than the supervised and deep learning methods, and it was also faster than some unsupervised methods. In the supplementary experiment on CHASEDB1, segmenting 28 fundus images took 251 s in total, an average of 8.96 s per image, which confirms that the time overhead is relatively low. Furthermore, the method does not need a large number of annotated images for training. Although this study used only three small public datasets, it obtained relatively high test performance, as shown in Tables 6 and 7. The proposed method therefore has very low data requirements.

As shown above, the proposed unsupervised method outperforms the other unsupervised methods compared. Its performance is close to that of the supervised methods, although slightly below that of the best deep learning methods. Importantly, the proposed method is very efficient, whereas supervised and deep learning methods both spend considerable time on classifier training. These results support the applicability of the proposed method as a fast and effective tool for computer-aided diagnosis.

Findings

In this work, we proposed a vessel segmentation method for fundus images. During the experiments, we identified several other issues that may be worth studying.

First, in preprocessing, applying a contrast enhancement algorithm does not necessarily improve segmentation. Some contrast enhancement algorithms also enhance background features (44), so the segmented image contains more noise. In a supplementary experiment, we replaced CLAHE in our method with the GLM algorithm of Khan et al. (43) and found that the segmentation results were comparable. We suspect that this outcome depends on the experimental environment and data. We plan to investigate this in future work and to build on the method of Khan et al. (43) to further optimize the segmentation.

Second, part of the binarized images contained white, bar-shaped noise inside the blood vessels after segmentation. Morphological operations removed most of it, but there is still room for improvement; multi-scale input fusion is one approach we plan to consider.
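To illustrate the contrast enhancement trade-off discussed above, the sketch below implements contrast-limited histogram equalization for a single tile, the building block of CLAHE. The histogram is clipped at a limit, the excess counts are redistributed uniformly, and the cumulative distribution is then used as the intensity mapping. Full CLAHE also divides the image into tiles and interpolates between their mappings; this sketch omits that step and performs only one redistribution pass. Its `clip_limit` convention (a fraction of the tile's pixel count allowed per bin) is our own assumption rather than that of any particular library.

```python
import numpy as np


def clip_limited_equalize(tile, clip_limit=0.01, nbins=256):
    """Contrast-limited histogram equalization of one tile with intensities in [0, 1]."""
    tile = np.asarray(tile, dtype=float)
    hist, _ = np.histogram(tile, bins=nbins, range=(0.0, 1.0))
    limit = max(1, int(clip_limit * tile.size))  # maximum count allowed per bin
    excess = np.sum(np.maximum(hist - limit, 0))  # counts above the limit
    # clip every bin and spread the excess uniformly over all bins
    clipped = np.minimum(hist, limit) + excess / nbins
    cdf = np.cumsum(clipped) / np.sum(clipped)
    # map each pixel through the normalized cumulative histogram of its bin
    idx = np.clip((tile * nbins).astype(int), 0, nbins - 1)
    return cdf[idx]
```

A lower clip limit pushes the mapping toward the identity and so amplifies background texture less. Without clipping, the tile is fully equalized, which stretches both the vessels and the background noise.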

Conclusion

This article presents an improved unsupervised method for vessel segmentation in color retinal fundus images. The method is based on the B-COSFIRE filter and produces the final binary vessel image through a series of operations: CLAHE, B-COSFIRE filtering, morphological filtering, and post-processing. It was tested on the public DRIVE, STARE, and CHASEDB1 databases. It requires little processing time (around 9 s per image for DRIVE and CHASEDB1 and 12 s per image for STARE). Its average accuracy, sensitivity, and specificity are 0.9604, 0.7339, and 0.9847 on DRIVE; 0.9558, 0.8003, and 0.9705 on STARE; and 0.9606, 0.6921, and 0.9842 on CHASEDB1, respectively. In general, the method has the following advantages: (1) low time overhead and low dataset overhead; (2) good versatility in computer-aided diagnosis; and (3) relatively high segmentation performance at a relatively low time cost. The experimental results indicate that the proposed method is competitive and effective for retinal blood vessel segmentation. The method can therefore be used for computer-aided diagnosis, disease screening, and other settings that require fast delineation of blood vessels. It may also help in the early detection of related diseases such as diabetic retinopathy and glaucoma. In future work, we plan to configure COSFIRE filters of different shapes and combine them with other image processing methods to extract lesions from retinal fundus images.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://blogs.kingston.ac.uk/retinal/chasedb1/; http://www.isi.uu.nl/Research/Databases/DRIVE/download.php.

Author contributions

WL, YX, and HH designed and performed the experiments, analyzed the data, and wrote the first draft of the paper. CZ, HW, and ZL participated in the experimental design and the analysis of the experimental results. AS designed and directed the project and oversaw the experimental design, data analysis, manuscript writing, and revision. All authors read and approved the final manuscript.

Funding

This work is supported by the Scientific and Technological Innovation Leading Plan of High-tech Industry of Hunan Province (2020GK2021), the National Natural Science Foundation of China (61702559), the Research on the Application of Multi-modal Artificial Intelligence in Diagnosis and Treatment of Type 2 Diabetes under Grant No. 2020SK50910, the International Science and Technology Innovation Joint Base of Machine Vision and Medical Image Processing in Hunan Province (2021CB1013), and the Natural Science Foundation of Hunan Province (No. 2022JJ30762).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Yusup M, Chen XY. Epidemiology survey of visual loss. Int J Ophthalmol. (2010) 10:304–7. doi: 10.3969/j.issn.1672-5123.2010.02.033

2. Resnikoff S, Pascolini D, Etya'Ale D, Kocur I, Pararajasegaram R, Pokharel GP, et al. Global data on visual impairment in the year 2002. Bull World Health Organ. (2004) 82:844–51. PMID: 15640920

3. Azzopardi G, Strisciuglio N, Vento M, Petkov N. Trainable COSFIRE filters for vessel delineation with application to retinal images. Med Image Anal. (2015) 19:46–57. doi: 10.1016/j.media.2014.08.002

4. Azzopardi G, Petkov N. A CORF computational model of a simple cell that relies on LGN input outperforms the Gabor function model. Biol Cybern. (2012) 106:177–89. doi: 10.1007/s00422-012-0486-6

5. Azzopardi G, Petkov N. Trainable COSFIRE filters for keypoint detection and pattern recognition. IEEE Trans Pattern Anal Mach Intell. (2012) 35:490–503. doi: 10.1109/TPAMI.2012.106

6. Khan KB, Khaliq AA, Jalil A, Iftikhar MA, Ullah N, Aziz MW, et al. A review of retinal blood vessels extraction techniques: challenges, taxonomy, and future trends. Pattern Anal Appl. (2018) 22:767–802. doi: 10.1007/s10044-018-0754-8

7. Owen CG, Rudnicka AR, Mullen R, Barman SA, Monekosso D, Whincup PH, et al. Measuring retinal vessel tortuosity in 10-year-old children: validation of the Computer-Assisted Image Analysis of the Retina (CAIAR) program. Invest Ophthalmol Vis Sci. (2009) 50:2004–10. doi: 10.1167/iovs.08-3018

8. Mittal M, Iwendi C, Khan S, Javed AR. Analysis of security and energy efficiency for shortest route discovery in low-energy adaptive clustering hierarchy protocol using Levenberg-Marquardt neural network and gated recurrent unit for intrusion detection system. Trans Emerg Telecommun Technol. (2021) 32:e3997. doi: 10.1002/ett.3997

9. Strisciuglio N, Azzopardi G, Vento M, Petkov N. Multiscale blood vessel delineation using B-COSFIRE filters. In: International Conference on Computer Analysis of Images and Patterns. Valletta: Springer, Cham. (2015).

10. Soares J, Leandro J, Cesar RM, Jelinek HF, Cree M. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans Med Imaging. (2006) 25:1214–22. doi: 10.1109/TMI.2006.879967

11. Marin D, Aquino A, Gegundez-Arias ME, Bravo JM. A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans Med Imaging. (2011) 30:146. doi: 10.1109/TMI.2010.2064333

12. Fraz MM, Remagnino P, Hoppe A, Uyyanonvara B, Rudnicka AR, Owen CG, et al. Blood vessel segmentation methodologies in retinal images – a survey. Comput Methods Programs Biomed. (2012) 108:407–33. doi: 10.1016/j.cmpb.2012.03.009

13. Aslani S, Sarnel H. A new supervised retinal vessel segmentation method based on robust hybrid features. Biomed Signal Process Control. (2016) 30:1–12. doi: 10.1016/j.bspc.2016.05.006

14. Strisciuglio N, Azzopardi G, Vento M, Petkov N. Supervised vessel delineation in retinal fundus images with the automatic selection of B-COSFIRE filters. Mach Vision Appl. (2016) 27:1137–49. doi: 10.1007/s00138-016-0781-7

15. Zhu C, Zou B, Xiang Y, Cui J, Wu H. An ensemble retinal vessel segmentation based on supervised learning in fundus images. Chin J Electron. (2016) 25:503–11. doi: 10.1049/cje.2016.05.016

16. Al-Diri B, Hunter A, Steel D. An active contour model for segmenting and measuring retinal vessels. IEEE Trans Med Imaging. (2009) 28:1488–97. doi: 10.1109/TMI.2009.2017941

17. Liu I, Sun Y. Recursive tracking of vascular networks in angiograms based on the detection-deletion scheme. IEEE Trans Med Imaging. (1993) 12:334–41. doi: 10.1109/42.232264

18. Khan KB, Khaliq AA, Shahid M. B-COSFIRE filter and VLM based retinal blood vessels segmentation and denoising. In: International Conference on Computing. Quetta: IEEE. (2016).

19. Bahadarkhan K, Khaliq AA, Shahid M. A morphological hessian based approach for retinal blood vessels segmentation and denoising using region based otsu thresholding. PLoS ONE. (2016) 11:e0158996. doi: 10.1371/journal.pone.0158996

20. Khan KB, Khaliq AA, Shahid M, Khan S. An efficient technique for retinal vessel segmentation and denoising using modified isodata and CLAHE. Int Islamic Univ Malays Eng J. (2016) 17:31–46. doi: 10.31436/iiumej.v17i2.611

21. Khan KB, Siddique MS, Ahmad M, Mazzara M, Khattak DK. A hybrid unsupervised approach for retinal vessel segmentation. BioMed Res Int. (2020) 2020:8365783. doi: 10.1155/2020/8365783

22. Chen C, Chuah JH, Ali R, Wang Y. Retinal vessel segmentation using deep learning: a review. IEEE Access. (2021) 9:111985–2004. doi: 10.1109/ACCESS.2021.3102176

23. Dhiman P, Kukreja V, Manoharan P, Kaur A, Kamruzzaman MM, Dhaou IB, et al. A novel deep learning model for detection of severity level of the disease in citrus fruits. Electronics. (2022) 11:495. doi: 10.3390/electronics11030495

24. Gadamsetty S, Ch R, Ch A, Iwendi C, Gadekallu TR. Hash-based deep learning approach for remote sensing satellite imagery detection. Water. (2022) 14:707. doi: 10.3390/w14050707

25. Melinščak M, Prentašić P, Lončarić S. Retinal vessel segmentation using deep neural networks. In: International Conference on Computer Vision Theory and Applications. Coimbatore. (2015).

26. Khalaf AF, Yassine IA, Fahmy AS. Convolutional neural networks for deep feature learning in retinal vessel segmentation. IEEE International Conference on Image Processing. IEEE. (2016) 10:385–8. doi: 10.1109/ICIP.2016.7532384

27. Fu H, Xu Y, Wong D, Jiang L. Retinal vessel segmentation via deep learning network and fully-connected conditional random fields. In: 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI 2016). Prague: IEEE. (2016).

28. Yue K, Zou B, Chen Z, Liu Q. Retinal vessel segmentation using dense U-net with multiscale inputs. J Med Imaging. (2019) 6:034004. doi: 10.1117/1.JMI.6.3.034004

29. Cheng Y, Ma M, Zhang L, Jin CJ, Zhou Y. Retinal blood vessel segmentation based on Densely Connected U-Net. Math Biosci Eng. (2020) 17:3088–108. doi: 10.3934/mbe.2020175

30. Ooi AZH, Embong Z, Abd Hamid AI, Zainon R, Wang SL, Ng TF, et al. Interactive blood vessel segmentation from retinal fundus image based on canny edge detector. Sensors. (2021) 21:6380. doi: 10.3390/s21196380

31. Li Z, Jia M, Yang X, Xu M. Blood vessel segmentation of retinal image based on dense-U-net network. Micromachines (Basel). (2021) 12:1478. doi: 10.3390/mi12121478

32. Zhai ZL, Feng S, Yao LY, Li PH. Retinal vessel image segmentation algorithm based on encoder-decoder structure. Multimedia Tools Appl. (2022) 13167:157. doi: 10.1007/s11042-022-13176-5

33. Azzopardi G, Petkov N. A shape descriptor based on trainable COSFIRE filters for the recognition of handwritten digits. In: International Conference on Computer Analysis of Images & Patterns. Berlin, Heidelberg: Springer. (2013).

34. Azzopardi G, Petkov N. Automatic detection of vascular bifurcations in segmented retinal images using trainable COSFIRE filters. Pattern Recognit Lett. (2013) 34:922–33. doi: 10.1016/j.patrec.2012.11.002

35. Azzopardi G, Greco A, Vento M. Gender recognition from face images with trainable COSFIRE filters. In: IEEE International Conference on Advanced Video & Signal Based Surveillance. Colorado Springs: IEEE. (2016).

36. Gecer B, Azzopardi G, Petkov N. Color-blob-based COSFIRE filters for object recognition. Image Vision Comput. (2017) 57:165–74. doi: 10.1016/j.imavis.2016.10.006

37. Guo J, Shi C, Azzopardi G, Petkov N. Recognition of Architectural and Electrical Symbols by COSFIRE Filters with Inhibition. In: Computer analysis of Images and Patterns. Valletta: Springer, Cham. (2015).

38. Guo J, Shi C, Azzopardi G, Petkov N. Inhibition-augmented trainable COSFIRE filters for keypoint detection and object recognition. Mach Vision Appl. (2016) 27:1197–211. doi: 10.1007/s00138-016-0777-3

39. Niemeijer M, Staal JJ, Ginneken BV, Loog M, Abramoff MD. Comparative study of retinal vessel segmentation methods on a new publicly available database. Proc SPIE. (2004) 5370:648–56. doi: 10.1117/12.535349

40. Mendonca AM, Campilho A. Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Trans Med Imaging. (2006) 25:1200–13. doi: 10.1109/TMI.2006.879955

41. Ricci E, Perfetti R. Retinal blood vessel segmentation using line operators and support vector classification. IEEE Trans Med Imaging. (2007) 26:1357. doi: 10.1109/TMI.2007.898551

42. Fadzil M, Nugroho HA, Nugroho HA, znita IL. Contrast enhancement of retinal vasculature in digital fundus image. In: International Conference on Digital Image Processing. Bangkok: IEEE. (2009).

43. Khan KB, Khaliq AA, Shahid M. A novel fast GLM approach for retinal vascular segmentation and denoising. J Inf Sci Eng. (2017) 33:1611–27. doi: 10.6688/JISE.2017.33.6.14

Keywords: retinal vessel segmentation, COSFIRE, postprocess, computer-aided diagnosis, medical image segmentation

Citation: Li W, Xiao Y, Hu H, Zhu C, Wang H, Liu Z and Sangaiah AK (2022) Retinal Vessel Segmentation Based on B-COSFIRE Filters in Fundus Images. Front. Public Health 10:914973. doi: 10.3389/fpubh.2022.914973

Received: 07 April 2022; Accepted: 14 June 2022;

Published: 09 September 2022.

Edited by:

Celestine Iwendi, School of Creative Technologies, University of Bolton, United Kingdom

Reviewed by:

Ebuka Ibeke, Robert Gordon University, United Kingdom

Shweta Agrawal, Sage University, India

Copyright © 2022 Li, Xiao, Hu, Zhu, Wang, Liu and Sangaiah. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chengzhang Zhu
