- ¹Institute of Modern Physics, Chinese Academy of Sciences, Lanzhou, China

- ²Key Laboratory of Basic Research on Heavy Ion Radiation Application in Medicine, Lanzhou, China

- ³Key Laboratory of Heavy Ion Radiation Biology and Medicine of Chinese Academy of Sciences, Lanzhou, China

- ⁴University of Chinese Academy of Sciences, Beijing, China

Objective: Precise segmentation of human organs and anatomic structures (especially organs at risk, OARs) is the basis and prerequisite for radiotherapy treatment planning. To enable rapid and accurate treatment planning, an automatic organ segmentation technique based on a deep learning convolutional neural network was investigated.

Method: We modified and further developed a deep learning convolutional neural network (CNN) algorithm called BCDU-Net. Twenty-two thousand CT images from 329 patients, together with the corresponding contours of 17 types of organs delineated manually by experienced physicians, were used to train and validate the algorithm. Randomly selected CT images were used to test the modified BCDU-Net algorithm. The weight parameters of the model were obtained by training the convolutional neural network.

Result: The average Dice similarity coefficient (DSC) between the automatic and manual segmentations of the 17 types of human organs reached 0.8376, and the best coefficient reached 0.9676. With our method, it took 1.5–2 s to automatically segment the contour of one organ in a single CT image, and about 1 h to segment all 17 organs in the CT dataset of one patient.

Conclusion: The modified deep neural network algorithm can automatically segment 17 types of human organs quickly and accurately. The accuracy and speed of the method meet the requirements for its application in radiotherapy.

Introduction

Radiation therapy, which delivers lethal doses to a target volume while sparing the surrounding normal tissues as much as possible, is a key modality of cancer treatment. Accurate and rapid identification and delineation of normal organs and target volumes are therefore the basis for precision radiotherapy (1–5). In the conventional radiotherapy workflow, physicians spend a great deal of time processing CT images manually, and the accuracy of organ segmentation depends heavily on their professional skill (6). Poorly delineated organ contours can seriously compromise the curative effect of radiotherapy.

Conventional automatic and semi-automatic organ segmentation algorithms are based on gray value, texture, template setting, and other features of CT images. As a result, these methods often fail to identify all organs in CT images and to delineate their contours (7–9).

With the rapid development of artificial intelligence (AI) technologies, especially the application of convolutional neural networks (CNNs), medical image recognition and segmentation are becoming increasingly reliable and accurate, and this is a hot topic in current research (10–15). Many new CNNs (such as BDR-CNN-GCN) have been designed to classify cancers and have proved significantly effective (16). The emergence of the semantic-segmentation fully convolutional network and of U-Net enabled AI technology to achieve pixel-to-pixel prediction, which had a wide impact on the field of computer vision as soon as they appeared. They have been widely used in image segmentation, object detection, object recognition, and so on. In the field of biomedical imaging, CNNs are widely used in the automatic detection and classification of diseases, the prediction of therapeutic effects, the segmentation and recognition of specific tissues and organs, etc. (15, 17–21). Medical data annotated by experienced physicians are used to train and validate a CNN, so that the CNN can extract features from new medical data and make predictions. Based on the features obtained from the designed CNNs, the algorithm can accurately predict and segment medical images (22–26).

To achieve precision radiotherapy, automatic and accurate identification and segmentation of human organs in medical images are essential. Therefore, we modified a U-shaped CNN (BCDU-Net: Bi-Directional ConvLSTM U-Net with Densely Connected Convolutions) (27–29), and used CT images and the corresponding organ contour (RT-structure) datasets from 339 patients to train, validate, and test the network. Automatic and accurate segmentation of human organs in medical images can help reduce the workload of physicians and support the development of precision radiotherapy in the future.

Materials and Methods

Test Data

In this study, the data were randomly selected from more than 22,000 CT images and corresponding tissue and organ contours from 339 patients who received radiotherapy in a tumor hospital in 2018. All of these tissue and organ contours which had been used in radiotherapy were generated and verified by several experienced physicians using the conventional commercial treatment planning system in the hospital.

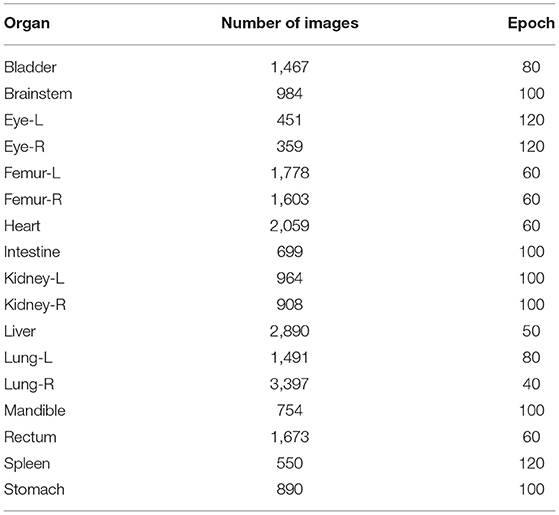

The CT images and corresponding tissue and organ contours used in the experiment were as follows: 984 bladder images, 451 brainstem images, 451 left eye (eye-L) images, 359 right eye (eye-R) images, 1,778 left femur (femur-L) images, 1,603 right femur (femur-R) images, 2,059 heart images, 699 intestine images, 964 left kidney images, 908 right kidney images, 2,890 liver images, 1,491 left lung images, 3,397 right lung images, 754 mandible images, 1,673 rectum images, 550 spleen images, and 890 stomach images.

In this study, for each organ, 70% of the contour images and corresponding CT images were randomly selected for training, 20% for validation, and 10% for testing.
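The per-organ 70/20/10 split described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `split_indices`, the fixed random seed, and the integer rounding rule (any remainder goes to the test set) are assumptions, and the image counts are those listed in the text.

```python
import random

# Per-organ image counts as listed in the text.
ORGAN_IMAGE_COUNTS = {
    "bladder": 984, "brainstem": 451, "eye-L": 451, "eye-R": 359,
    "femur-L": 1778, "femur-R": 1603, "heart": 2059, "intestine": 699,
    "kidney-L": 964, "kidney-R": 908, "liver": 2890, "lung-L": 1491,
    "lung-R": 3397, "mandible": 754, "rectum": 1673, "spleen": 550,
    "stomach": 890,
}


def split_indices(n_images, seed=0):
    """Shuffle image indices and cut them 70/20/10 (train/validation/test).

    The seed and the rounding rule are hypothetical choices made for
    reproducibility; the source only states that the selection was random.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)
    n_train = n_images * 7 // 10  # integer arithmetic avoids float rounding
    n_val = n_images * 2 // 10
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder, roughly 10%
    return train, val, test


# One independent split per organ, as each organ has its own image set.
splits = {organ: split_indices(n) for organ, n in ORGAN_IMAGE_COUNTS.items()}

for organ, (train, val, test) in splits.items():
    print(f"{organ:10s} train={len(train):5d} val={len(val):4d} test={len(test):4d}")
```

Splitting each organ separately keeps the 70/20/10 ratio for organs with few images (for example, the right eye) as well as for those with many (for example, the right lung).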

Deep CNN Algorithm

To realize automatic segmentation of organs in CT images, a new U-Net algorithm based on a deep CNN was designed and developed in Python. It builds on and improves the BCDU-Net deep neural network published by Azad et al. (27). In BCDU-Net, the authors included batch normalization (BN) after each up-convolutional layer to speed up the learning process of the network, and BN also helps to improve network performance. In addition, dense connections can improve accuracy. The key idea of dense convolutions is to share feature maps between blocks through direct connections between convolutional blocks. Consequently, each dense block receives the outputs of all preceding layers as input and therefore produces more diversified and richer features. As a result, BCDU-Net achieves better performance (28, 29).

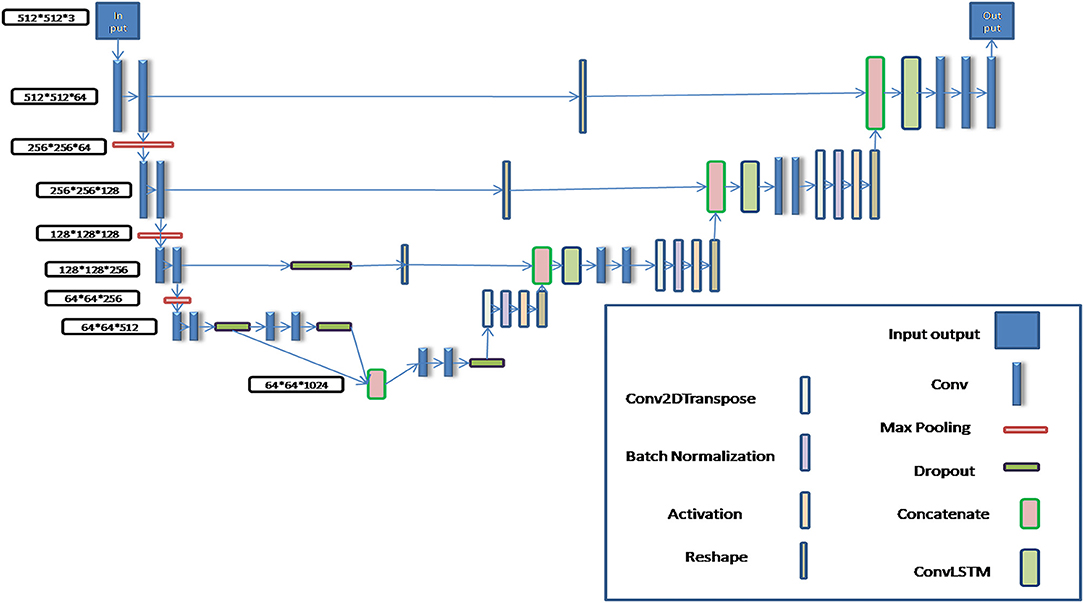

The schematic diagram of the modified algorithm is shown in Figure 1. In the conventional U-Net algorithm, feature maps from the encoder part of the network are copied directly and concatenated into the deconvolution (decoder) part, so that the automatic segmentation prediction can be realized. In contrast to the conventional U-Net, BCDU-Net uses a bi-directional ConvLSTM (28, 29) to extract features. In this work, several conventional U-Net algorithms were tested, and the BCDU-Net model showed excellent performance in automatic organ recognition in CT images. Therefore, the BCDU-Net model was chosen and modified for use in this work.

During algorithm development, to make the BCDU-Net model suitable for 512 × 512 × 3 CT images as input and 512 × 512 × 3 tissue and organ contour sets as output, we added two convolution layers after the deconvolution layer so as to obtain multi-channel segmentation images. The predicted organ contours were then output automatically.
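The modification described above, two convolutions appended after the decoder to produce a 512 × 512 × 3 output, might look roughly like the following Keras sketch. It is an illustration under stated assumptions rather than the authors' implementation: the helper name `add_output_head`, the filter count (16), the kernel sizes (3 and 1), and the activations are all hypothetical, and a single convolution stands in for the whole BCDU-Net body.

```python
from tensorflow.keras import Model, layers


def add_output_head(decoder_features, n_channels=3):
    """Append two convolutions to the decoder output (hypothetical settings).

    Filter count, kernel sizes, and activations are assumptions; the source
    only states that two convolutions were added after the deconvolution layer.
    """
    x = layers.Conv2D(16, 3, padding="same", activation="relu")(decoder_features)
    # The final convolution maps the features to the multi-channel contour image.
    return layers.Conv2D(n_channels, 1, padding="same", activation="sigmoid")(x)


# 512 x 512 x 3 CT image input, as stated in the text.
inputs = layers.Input(shape=(512, 512, 3))

# Stand-in for the BCDU-Net encoder/decoder, which is not reproduced here.
decoder_features = layers.Conv2D(8, 3, padding="same", activation="relu")(inputs)

outputs = add_output_head(decoder_features)
model = Model(inputs, outputs)

print(model.output_shape)
```

Using `padding="same"` in both convolutions keeps the spatial size at 512 × 512, so the output contour image aligns pixel for pixel with the input CT image.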

Assessment Method

Accuracy, Precision, and Dice similarity coefficient (DSC) were used to evaluate the algorithm effectiveness in this paper.

Accuracy: the proportion of correct prediction results in all test data. Accuracy ranges over [0,1]; the larger the value, the better the result.

Precision: the proportion of predicted positives that are correct. Precision ranges over [0,1]; the larger the value, the better the result.

DSC: an important parameter for evaluating the quality of the network prediction. It is calculated as

$$\mathrm{DSC} = \frac{2\,|A \cap B|}{|A| + |B|},$$

where $A$ is the set of pixels inside the manually delineated contour and $B$ is the set of pixels inside the automatically segmented contour.

The DSC ranges over [0,1]; the closer it is to 1, the more accurate the prediction.

In this paper, all of the evaluation parameters mentioned above were used to evaluate the prediction results for the contours of each organ.
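As an illustration of these evaluation parameters, the sketch below computes them pixel by pixel for a pair of binary masks. It assumes the usual pixel-wise definitions based on true/false positives and negatives, which the text does not spell out; the function names and the toy 4 × 4 masks are hypothetical. Recall, which is reported alongside the other parameters in the Results, is included for completeness.

```python
def confusion_counts(pred, truth):
    """Count true/false positives and negatives over two binary masks."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    """Return DSC, accuracy, precision, and recall for binary masks.

    Empty denominators are mapped to 1.0 (an assumption: two empty
    masks are treated as a perfect match).
    """
    tp, fp, fn, tn = confusion_counts(pred, truth)
    total = tp + fp + fn + tn
    return {
        # 2|A ∩ B| / (|A| + |B|) written in terms of pixel counts.
        "dsc": 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0,
        "accuracy": (tp + tn) / total if total else 1.0,
        "precision": tp / (tp + fp) if (tp + fp) else 1.0,
        "recall": tp / (tp + fn) if (tp + fn) else 1.0,
    }


# Toy example: the manual contour covers 4 pixels, the prediction covers 6.
truth = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]

metrics = segmentation_metrics(pred, truth)
print(metrics)  # dsc = 0.8, accuracy = 0.875, precision ≈ 0.667, recall = 1.0
```

In this toy case the prediction over-segments by two pixels, which lowers precision and the DSC while leaving recall at 1.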

Testing Platform

The hardware platform used in this work was as follows: Dell T5820/P5820X (tower workstation); CPU: Intel Core i7-7800X (6-core, 3.5 GHz, Core X series); GPU: NVIDIA Titan RTX (24 GB); Memory: 32 GB DDR4; Hard disk: 1 TB solid-state drive + 4 TB mechanical drive.

Operating system: Ubuntu Linux 16.04; Development tools: Spyder + TensorFlow + Keras; Development language: Python.

Results

More than 22,000 CT images and the corresponding organ contours from 329 patients were randomly divided into a training set (70%), a validation set (20%), and a testing set (10%). The labeled CT images and corresponding organ contour images were used to train and validate the algorithm modified in this paper, yielding the weight parameters of the modified BCDU-Net model.

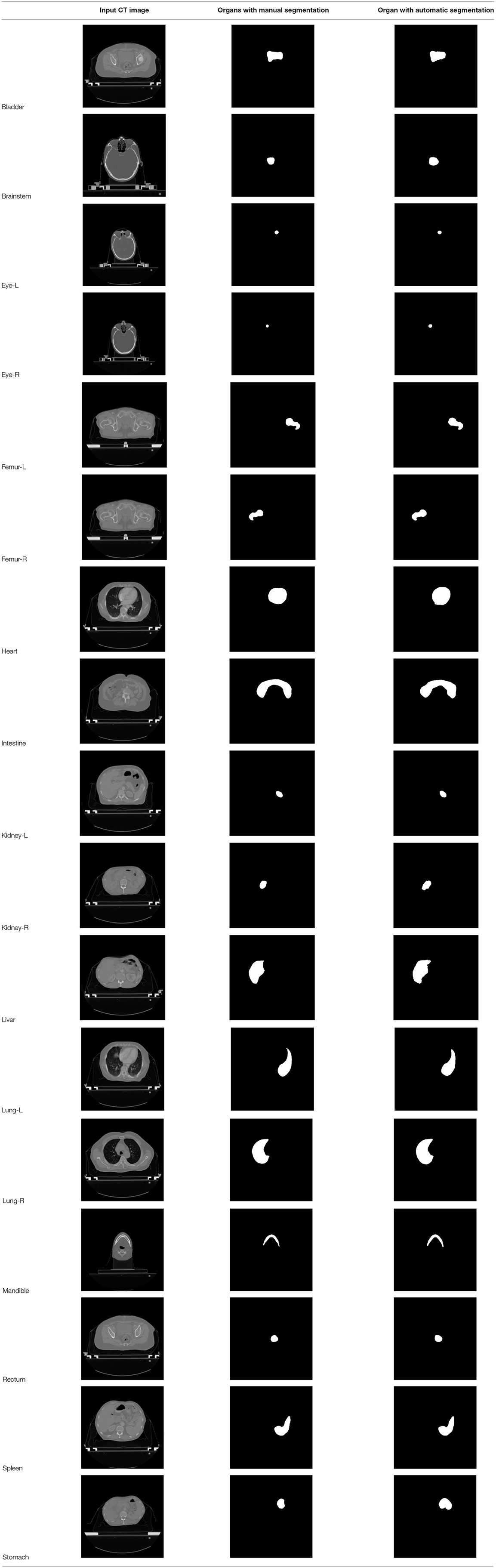

Using the model parameters obtained above, the testing set was evaluated. The performance of the modified algorithm for automatic organ segmentation in CT images is shown in Table 1. The organ contours segmented automatically by the algorithm were similar to those delineated manually by physicians. The DSC, accuracy, recall, and precision were used to evaluate the segmentation of each organ in the validation and testing sets. In our work, the BCDU-Net model was used to segment different organs automatically with different training parameters, such as the number of epochs and the learning rate. CT images randomly selected from the patients were fed into the network model for training, and the organ contours delineated automatically by the AI and manually by physicians were then compared using the similarity coefficients. The results are given in Table 2. Most of the DSC values were better than 0.85, and the best reached 0.9676. In general, the automatic segmentation results met the requirements of clinical practice.

Discussion

At present, physicians spend a great deal of time and energy identifying and delineating human organs in CT images for radiotherapy treatment planning. Achieving both high accuracy and high efficiency in manual segmentation remains a major challenge. With the development of AI technology, the performance of deep learning CNN algorithms for image processing keeps improving, and CNNs are finding more applications in medical image processing for the automatic detection of diseases and the delineation of specific tissues and organs (malignant and benign). The new BDR-CNN-GCN algorithm was designed and used to classify breast cancer and achieved better results (16). Other new AI algorithms have been designed and applied in different areas of healthcare, strongly supporting the development of healthcare automation (2–4, 7–9). In our work, the BCDU-Net deep learning CNN model was modified, then trained and validated with 22,000 CT images and the corresponding contours of 17 types of human organs from 339 patients. Compared with manual segmentation, the average DSC of the modified algorithm for automatic segmentation of the 17 types of organs was 0.8376, and the best DSC was 0.9676. Moreover, the DSC of 13 of the 17 organs was better than 0.82. These results indicate that the modified algorithm is effective.

The number of images used for training and validation differed among the 17 types of organs, so different epoch values were set when training for different organs. The results are given in Table 3. The epoch value was set small when the number of training images was large and, conversely, set large when the number of training images was small. In this way, the accuracy of the automatic organ segmentation was improved and the DSC values became better.

In this work, the DSC values of four organs (intestine, stomach, rectum, and brainstem) were lower than those of the other organs. The probable reason is that these four organs are difficult for physicians to delineate accurately, so the labeling quality of the images used to train and validate the network was poor. It took 6–8 h to train and validate the parameters of the modified BCDU-Net model for each organ. However, the contour of an organ could then be segmented automatically from a CT image in about 1.5–2 s. Clearly, automatic organ segmentation with the modified algorithm is much more efficient than manual delineation by physicians.

Even so, we will improve the precision of the modified model by cooperating closely with more experienced physicians, so that the proposed method can be applied in clinical practice as early as possible.

Conclusion

To achieve accurate automatic organ segmentation in CT images, the structure of the BCDU-Net CNN model was modified and improved. More than 22,000 CT images and the contours of 17 types of human organs from 339 patients were used to train and validate the model and thereby obtain its parameters. The algorithm achieved an average DSC of 0.8376 and a segmentation time of about 1.5–2 s per organ per image. Thus, the algorithm can be used to segment 17 types of human organs in CT images automatically and efficiently. Closer cooperation with experienced physicians should make the modified model more suitable for clinical use.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://pan.cstcloud.cn/s/gO5MpMG9TNQ.

Ethics Statement

The studies involving human participants were reviewed and approved by the Academic Committee of the Institute of Modern Physics, Chinese Academy of Sciences. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

GS and QL: conception, design, and experimental testing. XJ and CS: administrative support and data interpretation. All authors contributed to the article and approved the submitted version.

Funding

This work was jointly supported by the Key Deployment Project of Chinese Academy of Sciences (Grant No. KFZD-SW-222) and the West Light Foundation of Chinese Academy of Sciences [Grant No. (2019)90].

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Wang SH, Sun J, Phillips P, Zhao G, Zhang YD. Polarimetric synthetic aperture radar image segmentation by convolutional neural network using graphical processing units. J. Real Time Image Process. (2018) 15:631–42. doi: 10.1007/s11554-017-0717-0

2. Wang SH, Tang C, Sun J, Yang J, Huang C, Phillips P, et al. Multiple sclerosis identification by 14-layer convolutional neural network with batch normalization, dropout, and stochastic pooling. Front Neurosci. (2018) 12: 818. doi: 10.3389/fnins.2018.00818

3. Zhang YD, Pan C, Sun J, Tang C. Multiple sclerosis identification by convolutional neural network with dropout and parametric ReLU. J Comput Sci. (2018) 28:1–10. doi: 10.1016/j.jocs.2018.07.003

4. Chen L, Shen C, Zhou Z, Maquilan G, Albuquerque K, Folkert MR, et al. Automatic PET cervical tumor segmentation by combining deep learning and anatomic prior. Phys Med Biol. (2019) 64:085019. doi: 10.1088/1361-6560/ab0b64

5. Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, et al. Artificial intelligence in cancer imaging: clinical challenges and applications. CA Cancer J Clin. (2019) 69:127–57. doi: 10.3322/caac.21552

6. James C, Luis S, Issam EN. Big data analytics for prostate radiotherapy. Front Oncol. (2016) 6:149. doi: 10.3389/fonc.2016.00149

7. Ibragimov B, Xing L. Segmentation of organs-at-risks in head and neck CT images using convolutional neural networks. Med Phys. (2017) 44:547–57. doi: 10.1002/mp.12045

8. Babier A, Boutilier JJ, McNiven AL, Chan T. Knowledge-based automated planning for oropharyngeal cancer. Med Phys. (2018) 45:2875–83. doi: 10.1002/mp.12930

9. Meyer P, Noblet V, Mazzara C, Lallement A. Survey on deep learning for radiotherapy. Comput Biol Med. (2018) 98:126–46. doi: 10.1016/j.compbiomed.2018.05.018

10. Xing L, Krupinski EA, Cai J. Artificial intelligence will soon change the landscape of medical physics research and practice. Med Phys. (2018) 45:1791–3. doi: 10.1002/mp.12831

11. Le MH, Chen J, Wang L, Wang Z, Liu W, Cheng KT, et al. Automated diagnosis of prostate cancer in multi-parametric MRI based on multimodal convolutional neural networks. Phys. Med. Biol. (2017) 62:6497–14. doi: 10.1088/1361-6560/aa7731

12. Basheera S, Satya SRM. Classification of brain tumors using deep features extracted using CNN. J. Phys. Conf. (2019) 1172:012016. doi: 10.1088/1742-6596/1172/1/012016

13. Junyoung P, Donghwi H, Yun KK, Kang SK, Kyeong KY, Sung LJ. Computed tomography super-resolution using deep convolutional neural network. Phys. Med. Biol. (2018) 63:145011. doi: 10.1088/1361-6560/aacdd4

14. Liang H, Tsui B, Ni H, Valentim C, Baxter SL, Liu G, et al. Evaluation and accurate diagnoses of pediatric diseases using artificial intelligence. Nat Med. (2019) 25:433–8. doi: 10.1038/s41591-018-0335-9

15. Jochems A, Deist TM, Naqa IE, Kessler M, Mayo C, Reeves J, et al. Developing and validating a survival prediction model for NSCLC patients through distributed learning across 3 countries. Int J Radiat Oncol Biol Phys. (2017) 99:344–52. doi: 10.1016/j.ijrobp.2017.04.021

16. Zhang YD, Satapathy SC, Guttery DS, Górriz JM, Wang SH. Improved breast cancer classification through combining graph convolutional network and convolutional neural network. Inform Process Manag. (2021) 58:102439. doi: 10.1016/j.ipm.2020.102439

17. Lustberg T, Van Soest J, Gooding M, Peressutti D, Aljabar P, Van der Stoep J, et al. Clinical evaluation of atlas and deep learning based automatic contouring for lung cancer. Radiother. Oncol. (2017) 126:312–7. doi: 10.1016/j.radonc.2017.11.012

18. Zhen X, Chen J, Zhong Z, Hrycushko BA, Zhou L, Jiang SB, et al. Deep convolutional neural network with transfer learning for rectum toxicity prediction in cervical cancer radiotherapy: a feasibility study. Phys Med Biol. (2017) 62:8246–63. doi: 10.1088/1361-6560/aa8d09

19. Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans Image Process. (2017) 26:3142–55. doi: 10.1515/9783110524116

20. Xu M, Qi S, Yue Y, Teng Y, Wei Q. Segmentation of lung parenchyma in CT images using CNN trained with the clustering algorithm generated dataset. Biomed Eng Online. (2019) 18:2. doi: 10.1186/s12938-018-0619-9

21. Chen X, Men K, Li Y, Yi J, Dai J. A feasibility study on an automated method to generate patient-specific dose distributions for radiotherapy using deep learning. Med Phys. (2019) 46:56–64. doi: 10.1002/mp.13262

22. Komatsu S, Oike T, Komatsu Y, Kubota Y, Nakano T. Deep learning-assisted literature mining for in vitro radiosensitivity data. Radiother Oncol. (2019) 139:87–93. doi: 10.1016/j.radonc.2019.07.003

23. Sun Y, Shi H, Zhang S, Wang P, Zhao W, Zhou X, et al. Accurate and rapid CT image segmentation of the eyes and surrounding organs for precise radiotherapy. Med Phys. (2019) 46:2214–22. doi: 10.1002/mp.13463

24. Cardenas CE, McCarroll R, Court LE, Elgohari BA, Elhalawani H, Fuller CD, et al. Deep learning algorithm for auto-delineation of high-risk oropharyngeal clinical target volumes with built-in dice similarity coefficient parameter optimization function. Int J Radiat Oncol Biol Phys. (2018) 101:468–78. doi: 10.1016/j.ijrobp.2018.01.114

25. Der Veen JV, Willems S, Deschuymer S, Drb C, Wc A, Fm B, et al. Benefits of deep learning for delineation of organs at risk in head and neck cancer. Radiother Oncol. (2019) 138:68–74. doi: 10.1016/j.radonc.2019.05.010

26. Dolensek N, Gehrlach DA, Klein AS, Nadine G. Facial expressions of emotion states and their neuronal correlates in mice. Science. (2020) 368:89–94. doi: 10.1126/science.aaz9468

27. Azad R, Asadiaghbolaghi M, Fathy M, Escalera S. Bi-directional ConvLSTM U-Net with Densley connected convolutions. In: IEEE/CVF International Conference on Computer Vision Workshop (ICCVW). Seoul. (2019). doi: 10.1109/ICCVW.2019.00052

28. Song H, Wang W, Zhao S, Shen J, Lam KM. Pyramid dilated deeper ConvLSTM for video salient object detection. In: European Conference on Computer Vision. Munich (2018). p. 744–60. doi: 10.1007/978-3-030-01252-6_44

Keywords: convolutional neural network (CNN), human organs, CT images, automatic segmentation, Dice similarity coefficient (DSC)

Citation: Shen G, Jin X, Sun C and Li Q (2022) Artificial Intelligence Radiotherapy Planning: Automatic Segmentation of Human Organs in CT Images Based on a Modified Convolutional Neural Network. Front. Public Health 10:813135. doi: 10.3389/fpubh.2022.813135

Received: 11 November 2021; Accepted: 24 March 2022;

Published: 15 April 2022.

Edited by:

Dariusz Leszczynski, University of Helsinki, Finland

Reviewed by:

Yu-Dong Zhang, University of Leicester, United Kingdom

Zhonghong Xin, First Hospital of Lanzhou University, China

Copyright © 2022 Shen, Jin, Sun and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qiang Li, liqiang@impcas.ac.cn
