BRIEF RESEARCH REPORT article

Front. Public Health, 13 May 2022

Sec. Public Health Education and Promotion

Volume 10 - 2022 | https://doi.org/10.3389/fpubh.2022.765581

This article is part of the Research Topic: COVID-19: Risk Communication and Blame

The COVID-19 outbreak triggered a massive spread of unverified news on social media and has become a source of rumors. This paper studies the impact of a virtual rumor control center (RCC) on Weibo user behavior. The collected COVID-19 breaking news stories were divided into positive, negative, and neutral categories, while the moderating effect model was used to analyze the influence of anti-rumor on user behavior (forwarding, liking, and commenting). Our research found that rumor refuting does not directly affect user behavior but does have an indirect moderating effect. Rumor refuting has a profound impact on user forwarding behavior in cases of positive and negative news. Specifically, when the epidemic becomes more serious, the role of rumor refuting becomes critical, and vice versa. Refuting rumors reduces user willingness to forward positive or negative news, with more impact on negative news. Time lag analysis shows a significant moderation of unverified news within 72 h of refuting rumors but indicated an apparent weakening trend over time. Furthermore, we discovered non-linear feature and counter-cyclical phenomena in the moderating effect of rumor refutation.

The COVID-19 pandemic has exerted unprecedented and devastating effects on human societies. Unlike previous pandemics such as the H1N1 flu in 1918, COVID-19 is spreading rapidly through an interconnected world. As countries adopt strict physical distancing and other measures to control the virus, people increasingly depend on global digital social media networks such as Facebook, Twitter, and Weibo. These platforms help users sustain contact with others, enhance interpersonal interactions, and share virus-related information. However, these digital social networks also serve to promote a different type of virus, misinformation. The viral dissemination of inaccurate scientific information via digital social media may be used as a political weapon to destroy public trust in governments (1, 2). The WHO uses the term “infodemic” for the wide distribution of large amounts of misinformation through social networks (3, 4). Infodemics must be controlled because of the potential harm they inflict on human societies. Some administrations have sought to limit COVID-19-related misinformation dissemination on social media by pressuring corporations such as Facebook, Weibo, and Twitter to take appropriate actions (5). Social media has enormous power to manage rumors and is considered a potential rumor control center (RCC).

COVID-19 has been a continuous hot topic on social networks since early 2020, with massive news generated every moment. An information cascade begins when a user asserts news in a tweet (6). RCC is an authority whose goal is to minimize the spread of fake news by generating a positive cascade (7). However, fake news is not simply false information. It may also be polarized content, satire, misreporting, commentary, persuasive information, and citizen journalism (8). In most instances, the sharer either does not know or does not suspect the news they are sharing is fake. Because sharing fake news may negatively impact relationships, in the presence of RCC, users may be more inclined to share positive information (9). McIntyre and Gibson (10) found that valence plays a significant role in readers' affect, in that positive news makes readers feel good. In theory, positive news has a beneficial effect on restraining the speed of spreading rumors (11), but a lack of empirical analysis exists on the role of RCC in this process. Unlike previous research that focused on true or false news, this study looks at the impact of RCC on social network user behavior from the perspective of positive and negative information to help us understand how RCC works. Classification of news according to positive, negative, and neutral allows us to provide a new path for the design of RCC strategies. Existing research often aims at the short-term impact of a single or separate news cascade. Fortunately, COVID-19, as a continuous event with a series of information cascades, creates a natural experiment for us to study the mid-to-long-term impacts of RCC on user social network behavior. This article examines how RCC changes user behavior from the vantage of positive, negative, and neutral news.

This study first collected breaking news data and rumor-refuting information on social media during the first wave of the COVID-19 outbreak in China in 2020, then classified the news according to its valence, and used regression models to analyze the impact of rumor refuting on user behavior. The marginal contribution of this paper is its analysis of the impact of RCC on the spread of rumors in the early stage of COVID-19, which provides valuable insights for improving RCC strategies in responding to sudden disease disasters. Further, this paper studies a series of epidemic news events, which can reveal the pattern of RCC's effect on rumors more completely than a focus on a single event. The research results explain how RCC changes the news cascade and provide guidance for designing social media anti-rumor strategies. Rumors may be real or fake news, but since this article takes the RCC perspective, the term rumor here refers to fake news.

Online media is the site of information propagation and of the persistent discussions surrounding such information. When individuals receive news about COVID-19, for instance a rumor, they may turn to other sources to understand, evaluate, debunk, or verify the information, often depending on their prior beliefs (12). Users will also use RCC as an important source for assessing the credibility of the information. There are currently two ways to control fake news on social networks: one is to tag misinformation so that users can identify suspicious information (13, 14), as Twitter does; the other is to continuously broadcast rumor-refuting information through RCC accounts, as Weibo does. Both approaches have benefits and glaring limitations. The first approach allows users to see the suspicious-information tag, but flaws in the algorithm may miss some potential rumor seeds, such as puns or ironic expressions. The second approach uses an “anti-rumor” process, akin to the way rumors are spread (15, 16), but this process is subject to lag and uncertainty. Existing studies on the effectiveness of rebuttals have reached mixed findings. Some studies showed that rebuttals help reduce belief in rumors (17–19), while other studies revealed opposite results. For instance, there is the “backfire effect,” where corrections actually increase belief in rumors (20). Opposing views on the role of RCC may signify undiscovered mechanisms.

Like Twitter, Weibo is a platform for users to share, distribute, and obtain information based on their associations. Users can receive all information about COVID-19, including official announcements, news, rumors, and anti-rumors. Weibo publishes relevant messages related to COVID-19 in real-time in a prominent location, informing users of details such as the current number of infections and deaths, etc. The platform also established an official rumor-defying account to control the spread of rumors (Weibo RCC). As of 26 July 2020, this account had about 2.33 million registered followers and a total of 9,607 messages.

User behaviors on Weibo include clicking, forwarding, liking, and commenting. Clicking signifies user interest, forwarding represents user action to disseminate information, liking represents a positive user attitude, and commenting indicates user interest in public discourse on a topic. Weibo builds a real-time Hot Topic Ranking (HTR) list based on the above data and makes recommendations on the user's homepage. The HTR is a structured news cascade, composed of the 50 most popular news items at the time. After clicking on one of the news items, users see a summary and the most popular user comments right below it. Although HTR describes news in an objective way, the news itself may be positive, negative, or neutral, which is a crucial factor affecting user behavior (9, 11).

The definition of positive and negative news is the basis of this research. Harcup and O'Neill (21) defined good news as “stories with particularly positive overtones such as rescues and cures” and bad news as “stories with particularly negative overtones, such as conflict or tragedy.” McIntyre and Gibson (10) defined positive news as news that focuses on the benefits of an event or issue and negative news as news that focuses on the harmful outcomes of an event or issue. The definition of positive and negative news in this study is based on this previous research and on the characteristics of COVID-19 news. Positive news helps build public confidence in the fight against the epidemic. Such news includes posts on medical staff actively treating patients, online charity concerts held by celebrities, public donations of medical supplies, and signs of improvement in the epidemic, such as zero new confirmed cases in a region, reopening of closed roads, or active development of a new vaccine. Negative news, on the other hand, can harm public sentiment. Such posts include government announcements of city closures and delays in the opening of schools. News items that were neither positive nor negative were collectively classified as neutral.

There is a growing body of work suggesting that responses to positive and negative information are asymmetric—that negative information has a much greater impact on individuals' attitudes than does positive information (22). Scholars who focus on information diffusion have suggested that people might be more likely to share positive rather than negative messages in an effort to signal their identity or enhance their self-presentation (23, 24). In contrast to their findings, Hansen et al. (25) found that negative news messages were shared more than positive news messages on Twitter. Soroka and McAdams (26) conducted a psychophysiological experiment showing that negative news elicits stronger and more sustained reactions than does positive news. When the news content is negative, it produces a stronger reaction and/or higher attention, which may be due to the framing effect caused by the mediating role of user emotions (27). Emotion is the regulator of people's social behavior (28), and the content characteristics of social media will be regulated by emotions and affect people's engagement (29), and even trigger aggressive behaviors (30). In the face of disease risk, it has also been proven that emotional variables such as fear and anger can generate positive preventive behaviors through the use of social media (31). In the process of dispelling rumors, users' social media behaviors will probably be affected by the valence of news. In order to quantify this impact, this article uses the frequency of rumor refuting to measure the intensity of rumor refuting, and proposes the following hypotheses:

H1. The intensity of anti-rumor affects users' behaviors with different impacts on positive, negative and neutral news.

H2. The intensity of anti-rumor plays a moderating role between public panic and user behaviors with different impacts on positive, negative and neutral news.

A linear regression model was used for empirical analysis, where the dependent variable was user social behavior on COVID-19 news. Online user behavior regarding COVID-19 information resulted from the combined effects of the varied information received during the study period. Therefore, the data on user behavior can effectively measure the public response to rumor rebuttals. This study collected public comments on Weibo as the basis of the analysis; no patients or members of the public participated in the experiment.

The social media panic is closely related to the spread of the pandemic and media reports (32). Therefore, we used the reported incidence of infections to measure public panic. Furthermore, the peak time of the epidemic (5 February 2020) also exerted a powerful impact on public panic; thus, a peak dummy variable was introduced.

To test H1, the main effect model is as follows:

To test H2, the moderating effect model was postulated in the following manner:

where behavior_t represents user behavior, and anti_rumor_t and panic_t represent the intensity of rumor rebuttals and the degree of public panic, respectively. The dummy variable peak_dum_t represents the epidemic peak (before or after the peak). α0, α1, α2, α3, and α4 are coefficients. T reflects the lag time, and panic_t × anti_rumor_t represents the interaction effect.
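One specification consistent with the variable definitions above is sketched below. The assignment of α1 through α4 to particular terms and the error term ε_t are assumptions made for illustration; they are not taken from the source.

```latex
\begin{align}
\mathit{behavior}_{t+T} &= \alpha_0 + \alpha_1\,\mathit{anti\_rumor}_t + \alpha_2\,\mathit{panic}_t
  + \alpha_3\,\mathit{peak\_dum}_t + \varepsilon_t \tag{1}\\
\mathit{behavior}_{t+T} &= \alpha_0 + \alpha_1\,\mathit{anti\_rumor}_t + \alpha_2\,\mathit{panic}_t
  + \alpha_3\,\mathit{peak\_dum}_t
  + \alpha_4\,(\mathit{panic}_t \times \mathit{anti\_rumor}_t) + \varepsilon_t \tag{2}
\end{align}
```

Under this reading, H1 concerns α1 in model (1) and H2 concerns α4 in model (2), with T = 0 corresponding to the contemporaneous case.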

This study collected epidemic-related data on Weibo, China's largest social networking platform, from 1 January 2020 to 31 March 2020, including 4,004 COVID-19-related news items and 1,150 RCC anti-rumor messages. The daily number of infections comes from official disclosures. The data were collected on 23 April 2020. COVID-19 news items were filtered and classified manually into three categories according to content attributes: positive, negative, or neutral. Furthermore, since the collected data were cross-sectional, we restructured them by the hour according to the “48-h allocation” method described below. Finally, 1,657 time-series samples were obtained. The calculation method of each variable is outlined below:

behavior_t: A total of 4,004 news items on COVID-19 were obtained after manual screening, and the number of clicks, forwards, likes, and comments of each post were also collated. The number of clicks, forwards, likes, and comments was counted by the hour. We subsequently computed the values of forwards/clicks, likes/clicks, and comments/clicks for every hour to use as dependent variables. Finally, behavior_t was recalculated according to the “48-h allocation” approach.

anti_rumor_t: The data were extracted from Weibo's RCC and yielded a total of 1,150 valid anti-rumor records. Weibo RCC released rumor refutations at different times every day, and we counted the release frequency every hour. The number of RCC releases per hour, after being processed through the “48-h allocation,” was used as the indicator of rumor refutation intensity.

panic_t: China's official daily release of newly confirmed COVID-19 cases (confirm_add), new suspected infections (suspect_add), and new COVID-19-related deaths (dead_add) were compiled to measure public panic. Since the number of suspected cases had a great impact on the Chinese public in the early stage of the epidemic, the model used suspect_add as the main indicator. For the sake of robustness, we used confirm_add and dead_add as alternative indicators (see Supplementary Material).

peak_dum_t: Public panic may differ significantly before and after the peak of the pandemic. Therefore, this dummy variable was recorded as 0 before 5 February 2020, and as 1 after that date.
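The peak dummy can be sketched as a simple date comparison. This is a minimal illustration rather than the authors' code; the function name `peak_dummy` is hypothetical, and coding the peak day itself as 1 is an assumption, since the text only specifies "before" and "after."

```python
from datetime import date, datetime

PEAK_DATE = date(2020, 2, 5)  # epidemic peak date stated in the text


def peak_dummy(timestamp: datetime) -> int:
    """Return 0 before the peak date and 1 from the peak date onward.

    Assumption: the peak day itself is coded 1; the text does not say.
    """
    return 0 if timestamp.date() < PEAK_DATE else 1


print(peak_dummy(datetime(2020, 1, 20, 14)))  # an hour before the peak
print(peak_dummy(datetime(2020, 3, 1, 9)))    # an hour after the peak
```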

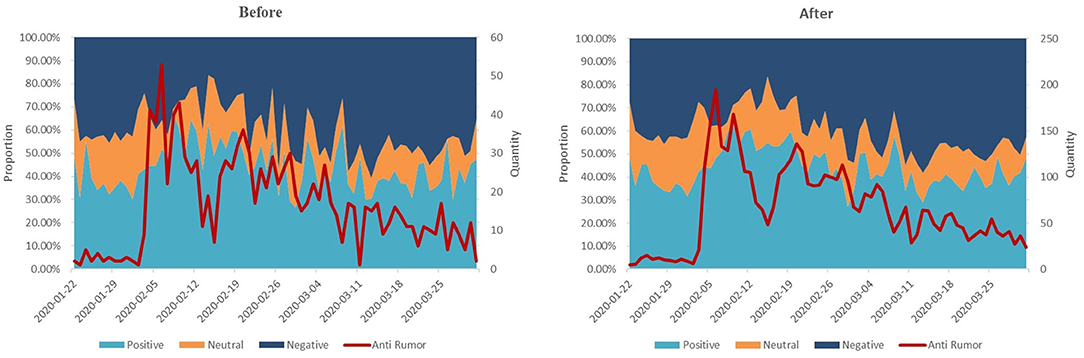

Forty-eight-hour allocation: The power of information dissemination on social networks declines non-linearly. According to Kwak et al. (33) on the spread of Twitter information, more than 50% of the forwarding behavior occurred within 1 h of an item being posted, and more than 75% occurred within 1 day. Based on that research, we used a weight allocation method to simulate the characteristics of social network information dissemination within 48 h, with the weight decreasing over time; this is the so-called “48-h allocation.” Both behavior_t and anti_rumor_t were processed in this way. The allocation starts from the release time of the entry: 50% of the weight was allocated to the first hour, 25%/23 to each of the following 23 h, and 25%/24 to each of the next 24 h. For example, if an entry was listed at 0:00 on 1 January 2020, it was recorded as 50% × (number of clicks, forwards, comments, likes) for 0:00 to 1:00 on 1 January 2020, as 25%/23 × (number of clicks, forwards, comments, likes) for each hourly slot from 1:00 to 23:00 on that day, and as 25%/24 × (number of clicks, forwards, comments, likes) for each hourly slot from 0:00 to 23:00 on 2 January 2020. Table 1 outlines the variable definitions, together with the statistical description and correlation coefficients after data processing. Figure 1 shows the daily accumulation of positive, negative, and neutral news and the number of anti-rumors before (left) and after (right) the “48-h allocation.”
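The weighting scheme described above can be sketched as follows. This is a minimal illustration under the stated weights (50% for the first hour, 25%/23 for each of the next 23 h, 25%/24 for each of the final 24 h); the function names and the example count of 1,000 forwards are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def allocation_weights():
    """48 hourly weights: 50% in hour 0, 25% over hours 1-23, 25% over hours 24-47."""
    return [0.5] + [0.25 / 23] * 23 + [0.25 / 24] * 24


def allocate(release_time, count, series=None):
    """Spread `count` over the 48 hourly slots starting at `release_time`.

    `series` maps the start of each hourly slot to an accumulated value, so
    several entries can be allocated into the same hourly time series.
    """
    series = defaultdict(float) if series is None else series
    start = release_time.replace(minute=0, second=0, microsecond=0)
    for offset, weight in enumerate(allocation_weights()):
        series[start + timedelta(hours=offset)] += weight * count
    return series


# Hypothetical entry: 1,000 forwards on an item released at 0:00 on 1 January 2020.
hourly = allocate(datetime(2020, 1, 1, 0, 0), 1000)
print(hourly[datetime(2020, 1, 1, 0)])   # share assigned to the first hour
print(round(sum(hourly.values()), 6))    # the total count is preserved
```

Because the weights sum to one, the allocation redistributes each entry's count over time without changing its total.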

Figure 1. Daily accumulation graph of positive, negative, and neutral news and the number of anti-rumors before (left) and after (right) the “48-h allocation.” The primary vertical axis represents the proportion of positive, neutral and negative rumors, while the secondary vertical axis represents the strength of the rumor-refuting information.

According to model (1), the main effect regression was performed on positive news, negative news, and neutral news, with behavior_t as the dependent variable, and anti_rumor_t, panic_t, and peak_dum_t as explanatory variables. Then, according to model (2), the moderating effect variable panic_t × anti_rumor_t was added; in total, 18 regression models were established. To reduce collinearity, panic_t and anti_rumor_t were mean-centered. The regression results show that rumor refuting has a complex impact on user behavior that differs by news type (as shown in Table 2).
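The mean-centering and interaction step can be illustrated with a small ordinary least squares sketch in plain Python. Everything here is an assumption for illustration: the helper names, the ordering of coefficients (intercept, anti-rumor, panic, peak dummy, interaction), and the synthetic data, which are generated without noise from made-up coefficients so that the fit can be checked.

```python
def center(values):
    """Subtract the sample mean from every value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols(rows, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def fit_moderation(behavior, anti_rumor, panic, peak_dum):
    """Fit intercept, centered anti_rumor, centered panic, peak dummy, interaction."""
    a, p = center(anti_rumor), center(panic)
    rows = [[1.0, ai, pi, float(d), ai * pi] for ai, pi, d in zip(a, p, peak_dum)]
    return ols(rows, behavior)


# Hypothetical hourly data, for illustration only (not from the study).
anti_rumor = [1, 3, 2, 5, 4, 6, 2, 7]
panic = [10, 40, 20, 30, 60, 50, 80, 70]
peak_dum = [0, 0, 0, 0, 1, 1, 1, 1]
true_coef = [2.0, -0.5, 0.03, -1.0, 0.02]  # made-up alpha_0 .. alpha_4
a_c, p_c = center(anti_rumor), center(panic)
behavior = [
    sum(c * x for c, x in zip(true_coef, [1.0, a, p, d, a * p]))
    for a, p, d in zip(a_c, p_c, peak_dum)
]
coef = fit_moderation(behavior, anti_rumor, panic, peak_dum)
print([round(c, 4) for c in coef])
```

Centering before forming the product keeps the interaction term from being strongly correlated with its two components, which is the collinearity concern mentioned above. A statistics package would normally be used in practice to obtain standard errors and p-values.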

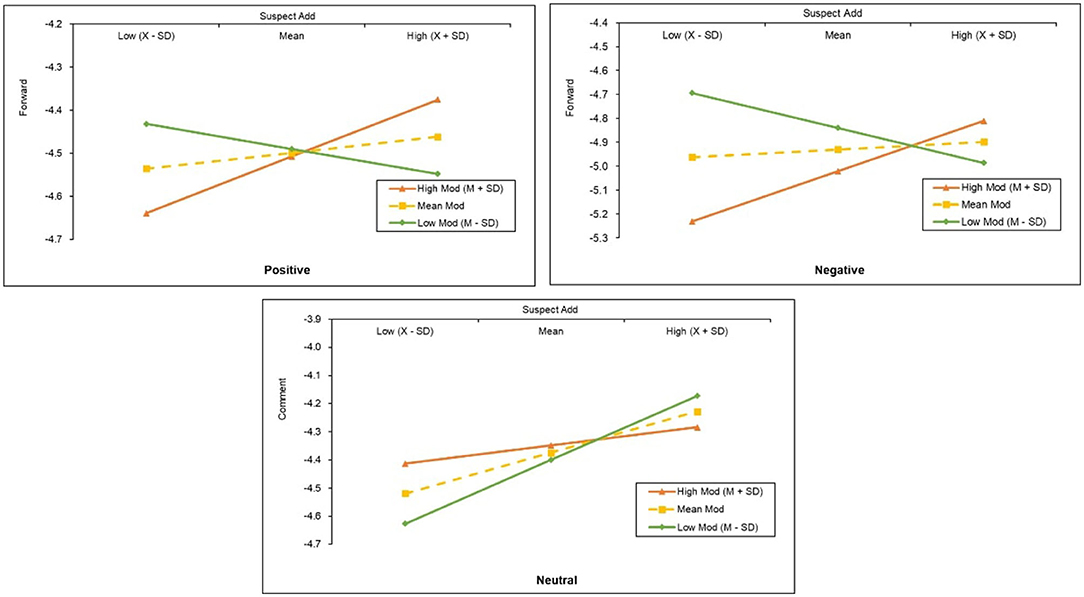

For positive news, the number of new suspected cases (suspect_add) significantly affected user forwarding and commenting behavior via the moderating effect. Before the moderating effect was added, the intensity of rumor refutation did not affect the spread of positive news (rejecting H1). However, there was an interaction effect between the anti-rumor intensity (anti_rumor) and the number of new suspected cases (suspect_add) (β = 0.0320, p < 0.001), so H2 cannot be rejected. β is the unstandardized coefficient. The simple slope graph shows that as new suspected cases increased, refuting rumors stimulated user enthusiasm for forwarding positive news (Figure 2). The dummy variable for the epidemic peak time (peak_dum) was negatively significant in all regressions, demonstrating the impact of the epidemic peak time on user behavior. After the peak of the epidemic, there was a decline in reposting, liking, and commenting on positive news.

Figure 2. Simple slope test graphs. A simple slope plot represents the direction and strength of the moderating effect.

For negative news, the number of new suspected cases (suspect_add) also significantly affected user forwarding and commenting behavior, via both main and moderating effects. Anti-rumors can significantly inhibit the spread of negative news: the regression coefficients of the variable anti_rumor for forwarding (forward_d_clicks) and comments (comment_d_clicks) were β = −0.0606 (p < 0.001) and β = −0.0309 (p < 0.05), respectively, so H1 cannot be rejected. Furthermore, the model also had a significant moderating effect on forwarding behavior, consistent with the positive news results. However, the dummy variable peak_dum was negatively significant only for forwarding behavior. This shows that, compared to positive and neutral news, users still maintained strong enthusiasm for negative news even after the peak.

For neutral news, the number of new suspected cases (suspect_add) also significantly affected user forwarding and commenting behavior, but only through the moderating effect. Before the moderating effect was added, the intensity of rumor refutation had no significant impact on forwarding of or comments on neutral news. Although it was significant for liking (β = 0.0374, p < 0.05), it became insignificant after the moderating effect was added. The moderating effect for commenting was significant (β = −0.0274, p < 0.001).

To further analyze the meaning of the moderating effect, a simple slope test was conducted (34) (see Figure 2). Figure 2 shows that the moderating effect on public behavior differed significantly across intensities of anti-rumor (the high, median, and low modes represent high, middle, and low levels, respectively). For positive and negative news, the moderating effect had a non-linear feature. When the intensity of anti-rumor was higher than a certain critical value, the greater the intensity, the stronger the influence on user behavior, and vice versa. There was no similar rule for neutral news, but as the intensity of rumor refuting increased, user enthusiasm for neutral news declined precipitously.
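A simple slope test evaluates the slope of the focal predictor at chosen levels of the moderator. The sketch below assumes a common convention (moderator at one standard deviation below the mean, at the mean, and one above) and treats panic as the focal predictor and anti-rumor intensity as the moderator. Only the interaction coefficient 0.0320 comes from the text; the focal coefficient and the moderator values are hypothetical, and the function name is illustrative.

```python
from statistics import mean, stdev


def simple_slopes(b_focal, b_interaction, moderator):
    """Slope of the focal predictor at low (-1 SD), mean and high (+1 SD) moderator levels.

    With a mean-centered moderator m, the slope is b_focal + b_interaction * m.
    """
    centered = [m - mean(moderator) for m in moderator]
    sd = stdev(centered)
    levels = {"low": -sd, "mean": 0.0, "high": sd}
    return {name: b_focal + b_interaction * value for name, value in levels.items()}


# 0.0320 is the reported interaction coefficient for forwarding positive news;
# the focal coefficient 0.01 and the moderator sample are made up.
slopes = simple_slopes(b_focal=0.01, b_interaction=0.0320, moderator=[0, 1, 2, 3, 4])
for level, value in slopes.items():
    print(level, round(value, 4))
```

A positive interaction coefficient means the slope of the focal predictor is steeper at the high moderator level than at the low one, which is what the diverging lines in a simple slope plot display.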

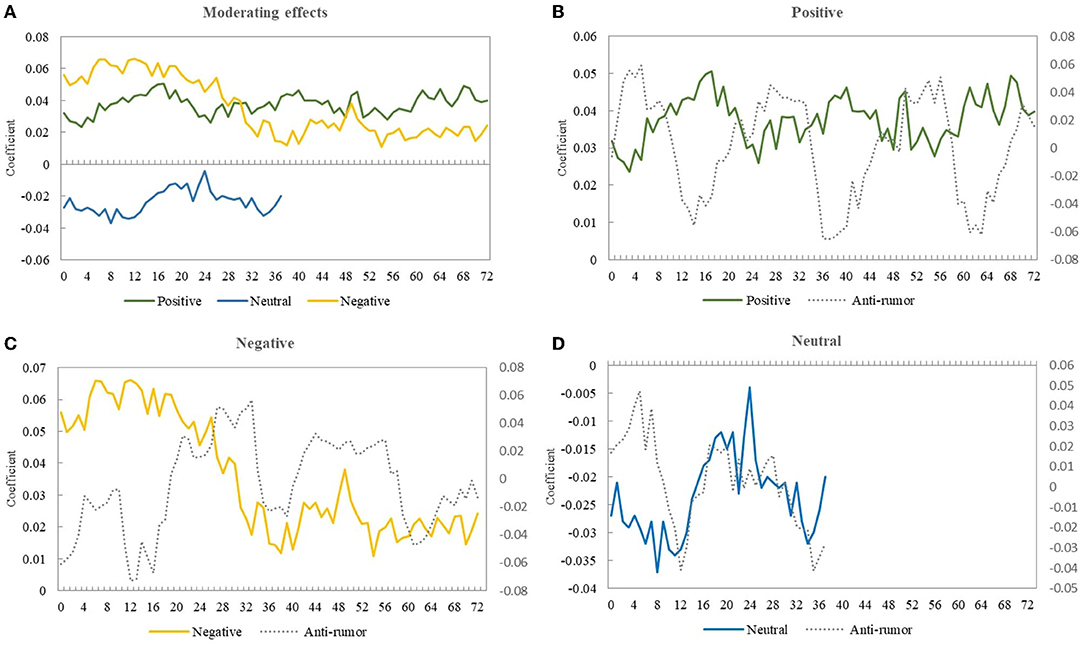

The dissemination of rumor refutation information takes a certain amount of time; thus, a lag effect may exist. Therefore, the lagged dependent variable behavior_(t+T) was used to test the moderating effects of anti-rumor messages released within 72 h. The results showed a significant moderating effect of rumor refutation within 72 h but indicated an apparent weakening trend over time (see Figure 3A). Specifically, the impact of rumor rebuttal on negative news peaked within 12 h and then continued to decline until it was reduced to half after 32 h. The moderating effect for positive news fluctuated within a relatively stable range. For neutral news, the moderating effect was no longer significant after 36 h.
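The lag construction can be sketched as pairing each explanatory observation at hour t with the behavior observed T hours later, then re-estimating the model for each T. The function below is a minimal illustration that assumes two equally long hourly series; its name and the example values are hypothetical.

```python
def lagged_pairs(explanatory, behavior, lag_hours):
    """Pair explanatory values at hour t with behavior at hour t + lag_hours.

    Assumes both series are hourly, aligned, and of equal length.
    """
    if lag_hours < 0:
        raise ValueError("lag_hours must be non-negative")
    n = len(behavior) - lag_hours
    return [(explanatory[t], behavior[t + lag_hours]) for t in range(max(n, 0))]


# Hypothetical four-hour series with a two-hour lag.
print(lagged_pairs([1, 2, 3, 4], [10, 20, 30, 40], 2))
```

Each additional hour of lag drops one observation from the end of the sample, so the estimates at longer lags rest on slightly fewer data points.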

Figure 3. The moderating effect of rumor refutation over time. (A) Represents the trend of the moderating effect of positive, neutral and negative information over time. (B–D) Represent the comparison of the three types of information and the corresponding moderating effects of refuting rumors.

Further analysis of the trends in the coefficients of the anti-rumor main effect and the moderating effect revealed a clear counter-cyclical relationship between them, which was especially obvious for positive news (see Figures 3B–D). When the anti-rumor effect decreased, the moderating effect began to rise. A reasonable explanation for this phenomenon is that the role of anti-rumor has a certain lead time. After it reaches a peak, the moderating effect starts to work, and the lead time is estimated at 16–20 h.

This study reveals the complicated mechanism by which rumor refuting, delivered through RCC broadcasts, influences user behavior. Refuting rumors does not directly affect user behavior but changes it indirectly through a moderating effect. For both positive and negative news, rumor refuting has a positive impact on user forwarding behavior through interaction effects. When the epidemic becomes more serious, the role of rumor refuting intensifies, and vice versa. Further, there is a counter-cyclical phenomenon between the main effect of refuting rumors and the moderating effect: when the main effect begins to weaken, the moderating effect increases instead. This suggests that RCC first affects the spread of rumors directly and then affects the wider social behavior of users. In other words, refuting rumors can not only reduce the spread of rumors but also affect users' reactions to negative news more widely, which further shrinks the environment in which rumors spread. This finding has not received sufficient attention in past research. As far as neutral information is concerned, a fascinating discovery has emerged from our data. We found that dispelling rumors stimulates users to be more expressive and opinionated as the epidemic worsens. This finding indicates that neutral news is more likely to give rise to rumors, because users interpret uncertainty in a variety of ways, often promoting the appearance of hearsay.

From the vantage of user behavior, rumor refuting reduces user willingness to forward both positive and negative news, with a deeper impact on negative news, which is consistent with previous research (23, 24). However, refuting rumors does not alter user liking and commenting behavior, suggesting that RCC affects the spread of information but hardly affects user enthusiasm for participating in discussions. The non-linear feature in the moderating effect of rumor refuting needs further examination. If it exists, rumor-refuting strategies must be designed with greater flexibility. In any case, categorizing news into positive, negative, and neutral and then formulating targeted strategies to dispel rumors can effectively improve the efficiency of RCC. It is much cheaper to classify news in advance than to identify rumors after the fact. Furthermore, the peak time of the epidemic was found to exert a significant impact on user behavior. After the epidemic peaked, user enthusiasm for all types of news dropped significantly. These findings suggest that RCC can break the framing effect produced by public sentiment (27), thereby alleviating public panic caused by COVID-19, but it requires sophisticated intervention strategies.

The main contribution of this study is the finding that RCC can not only suppress the spread of rumors but also affect the wider behavior of users, thereby helping to dispel rumors. This finding helps to optimize the design of RCC strategies. For example, targeting technology can be used to broadcast rumor-refuting information to specific groups of people based on the valence of news. The research also has some limitations. First, because Weibo data were used for the investigation, its conclusions may apply only to China's cultural environment. Therefore, a cross-cultural comparative study of user behavior is necessary. Second, the timing of this study was limited to the outbreak stage of the epidemic in China. However, the global transmission characteristics of COVID-19 have undergone significant changes, and user psychology may have changed. Finally, this study uses behavioral data for correlation analysis, which cannot fully reveal the operating mechanism of RCC.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding authors.

The studies involving human participants were reviewed and approved by Central University of Finance and Economics. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

BL: writing and editing of the paper. JS and BC: literature review and methodological design. QW: data collection and processing. QT: discussion part of the paper. All authors contributed to the article and approved the submitted version.

This paper is supported by the National Social Science Foundation (Project Number 19BGL300).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2022.765581/full#supplementary-material

1. Guess A, Nyhan B, Reifler J. Selective Exposure to Misinformation: Evidence From the Consumption of Fake News During the 2016 US Presidential Campaign. European Research Council (2018).

2. Singer PW, Brooking ET. LikeWar: The Weaponization of Social Media. New York, NY: Eamon Dolan Books (2018).

3. Garrett L. COVID-19: the medium is the message. The Lancet. (2020) 395:942–3. doi: 10.1016/S0140-6736(20)30600-0

4. Krumholz HM, Bloom T, Ross JS. Preprints Can Fill a Void in Times of Rapidly Changing Science. (2020). Available online at: https://www.statnews.com/2020/01/31/preprints-fill-void-rapidly-changing-science/ (accessed March 01, 2022).

5. Shu C, Shieber J. Facebook, Reddit, Google, LinkedIn, Microsoft, Twitter and YouTube issue joint statement on misinformation. TechCrunch. (2020).

6. Vosoughi S, Roy D, Aral S. The spread of true and false news online. Science. (2018) 359:1146–51. doi: 10.1126/science.aap9559

7. Tong G, Wu W, Du DZ. Distributed rumor blocking with multiple positive cascades. IEEE Trans Comput Soc Syst. (2018) 5:468–80. doi: 10.1109/TCSS.2018.2818661

8. Molina MD, Sundar SS. Technological affordances can promote misinformation: what journalists should watch out for when relying on online tools and social media. In: Journalism and Truth in an Age of Social Media. New York, NY: Oxford University Press (2019). p. 182–98. doi: 10.1093/oso/9780190900250.003.0013

9. Duffy A, Tandoc E, Ling R. Too good to be, too good not to share: the social utility of fake news. Inf Commun Soc. (2020) 23:1965–79. doi: 10.1080/1369118X.2019.1623904

10. McIntyre KE, Gibson R. Positive news makes readers feel good: a “silver-lining” approach to negative news can attract audiences. South Commun J. (2016) 81:304–15. doi: 10.1080/1041794X.2016.1171892

11. Luo Y, Ma J. The influence of positive news on rumor spreading in social networks with scale-free characteristics. Int J Mod Phys C. (2018) 29:1850078. doi: 10.1142/S012918311850078X

12. Lewandowsky S, Ecker UK, Seifert CM, Schwarz N, Cook J. Misinformation and its correction: continued influence and successful debiasing. Psychol Sci Public Interest. (2012) 13:106–31. doi: 10.1177/1529100612451018

13. Castillo C, Mendoza M, Poblete B. Information credibility on twitter. In: Proceedings of the 20th International Conference on World Wide Web. New York, NY (2011).

14. Chu Z, Gianvecchio S, Wang H, Jajodia S. Detecting automation of twitter accounts: are you a human, bot, or cyborg? IEEE Trans Dependable Secure Comput. (2012) 9:811–24. doi: 10.1109/TDSC.2012.75

15. Tripathy RM, Bagchi A, Mehta S. A study of rumor control strategies on social networks. In: Proceedings of the 19th ACM International Conference on Information and Knowledge Management. New York, NY (2010).

16. Pal A, Chua AY, Goh DHL. How do users respond to online rumor rebuttals? Comput Hum Behav. (2020) 106:106243. doi: 10.1016/j.chb.2019.106243

17. Weeks BE, Garrett RK. Electoral consequences of political rumors: motivated reasoning, candidate rumors, and vote choice during the 2008 US presidential election. Int J Public Opin Res. (2014) 26:401–22. doi: 10.1093/ijpor/edu005

18. Tanaka Y, Sakamoto Y, Matsuka T. Toward a social-technological system that inactivates false rumors through the critical thinking of crowds. In: 2013 46th Hawaii International Conference on System Sciences. Wailea, HI (2013).

19. Huang H. A war of (mis) information: the political effects of rumors and rumor rebuttals in an authoritarian country. Br J Polit Sci. (2017) 47:283–311. doi: 10.1017/S0007123415000253

20. Nyhan B, Reifler J. When corrections fail: the persistence of political misperceptions. Polit Behav. (2010) 32:303–30. doi: 10.1007/s11109-010-9112-2

21. Harcup T, O'Neill D. What is news? Galtung and Ruge revisited. Journal Stud. (2001) 2:261–80. doi: 10.1080/14616700118449

22. Soroka SN. Good news and bad news: asymmetric responses to economic information. J Polit. (2006) 68:372–85. doi: 10.1111/j.1468-2508.2006.00413.x

23. Wojnicki AC, Godes D. Word-of-Mouth as Self-Enhancement. Harvard Business School Marketing Unit, Research Paper No. 06–01. (2008).

24. Berger J, Milkman K. What makes online content viral? J Mark Res. (2012) 49:192–205. doi: 10.1509/jmr.10.0353

25. Hansen LK, Arvidsson A, Nielsen FA, Colleoni E, Etter M. Good friends, bad news: affect and virality in Twitter. Commun Comput Inf Sci. (2011) 185:34–43. doi: 10.1007/978-3-642-22309-9_5

26. Soroka S, McAdams S. News, politics, and negativity. Polit Commun. (2015) 32:1–22. doi: 10.1080/10584609.2014.881942

27. Lecheler S, Schuck AR, De Vreese CH. Dealing with feelings: positive and negative discrete emotions as mediators of news framing effects. Communications. (2013) 38:189–209. doi: 10.1515/commun-2013-0011

28. Beckes L, Edwards WL. Emotions as regulators of social behavior. In: Beauchaine TP, Crowell SE, editors. The Oxford Handbook of Emotion Dysregulation. Oxford: Oxford University Press (2018), p. 30–2. doi: 10.1093/oxfordhb/9780190689285.013.3

29. Schreiner M, Fischer T, Riedl R. Impact of content characteristics and emotion on behavioral engagement in social media: literature review and research agenda. Electron Commer Res. (2021) 21:329–45. doi: 10.1007/s10660-019-09353-8

30. Smeijers D, Benbouriche M, Garofalo C. The association between emotion, social information processing, and aggressive behavior. Eur Psychol. (2020) 25:23–30. doi: 10.1027/1016-9040/a000395

31. Oh SH, Lee SY, Han C. The effects of social media use on preventive behaviors during infectious disease outbreaks: the mediating role of self-relevant emotions and public risk perception. Health Commun. (2021) 36:972–81. doi: 10.1080/10410236.2020.1724639

32. Depoux A, Martin S, Karafillakis E, Preet R, Wilder-Smith A, Larson H. The pandemic of social media panic travels faster than the COVID-19 outbreak. J Travel Med. (2020) 27:taaa031. doi: 10.1093/jtm/taaa031

33. Kwak H, Lee C, Park H, Moon S. What is Twitter, a social network or a news media? In: Proceedings of the 19th International Conference on World Wide Web. New York, NY (2010).

Keywords: COVID-19, behavior, social network, Weibo, rumor control

Citation: Lu B, Sun J, Chen B, Wang Q and Tan Q (2022) A Study on the Effectiveness of Rumor Control via Social Media Networks to Alleviate Public Panic About COVID-19. Front. Public Health 10:765581. doi: 10.3389/fpubh.2022.765581

Received: 27 August 2021; Accepted: 25 April 2022;

Published: 13 May 2022.

Edited by:

Rukhsana Ahmed, University at Albany, United States

Copyright © 2022 Lu, Sun, Chen, Wang and Tan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jinlu Sun, amlubHVAYnVhYS5lZHUuY24=; Bo Chen, YmNoZW5AY3VmZS5lZHUuY24=; Qi Wang, MjAxOTIxMDE4NEBlbWFpbC5jdWZlLmVkdS5jbg==
