METHODS article

Front. Public Health, 18 October 2019

Sec. Public Health Education and Promotion

Volume 7 - 2019 | https://doi.org/10.3389/fpubh.2019.00293

Heather L. Shepherd1,2*, Liesbeth Geerligs1, Phyllis Butow1,2, Lindy Masya1, Joanne Shaw1, Melanie Price1, Haryana M. Dhillon1,2, Thomas F. Hack3,4, Afaf Girgis5, Tim Luckett6, Melanie Lovell7,8, Brian Kelly9, Philip Beale10, Peter Grimison11,12, Tim Shaw13, Rosalie Viney14, Nicole M. Rankin15

Objective and Study Setting: Research efforts to identify factors that influence successful implementation are growing. This paper describes methods of defining and measuring outcomes of implementation success, using a cluster randomized controlled trial with 12 cancer services in Australia comparing the effectiveness of implementation strategies to support adherence to the Australian Clinical Pathway for the Screening, Assessment and Management of Anxiety and Depression in Adult Cancer Patients (ADAPT CP).

Study Design and Methods: Using the StaRI guidelines, a process evaluation was planned to explore participant experience of the ADAPT CP, resources and implementation strategies according to the Implementation Outcomes Framework. This study focused on identifying measurable outcome criteria, prior to data collection for the trial, which is currently in progress.

Principal Findings: We translated each implementation outcome into clearly defined and measurable criteria, noting whether each addressed the ADAPT CP, resources or implementation strategies, or a combination of the three. A consensus process defined measures for the primary outcome (adherence) and secondary (implementation) outcomes; this process included literature review, discussion and clear measurement parameters. Based on our experience, we present an approach that could be used as a guide for other researchers and clinicians seeking to define success in their work.

Conclusions: Defining and operationalizing success in real-world implementation yields a range of methodological challenges and complexities that may be overcome by iterative review and engagement with end users. A clear understanding of how outcomes are defined and measured, based on a strong theoretical framework, is crucial to meaningful measurement and outcomes. The conceptual approach described in this article could be generalized for use in other studies.

Trial Registration: The ADAPT Program to support the management of anxiety and depression in adult cancer patients: a cluster randomized trial to evaluate different implementation strategies of the Clinical Pathway for Screening, Assessment and Management of Anxiety and Depression in Adult Cancer Patients was prospectively registered with the Australian New Zealand Clinical Trials Registry (Registration Number: ACTRN12617000411347).

Implementation science is defined as “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services. It includes the study of influences on healthcare professional and organizational behavior” (1). Clinical pathways, increasingly used to inform patient management and care, provide evidence-based recommendations to guide best practice and consistent care for specific patient concerns in homogeneous patient groups. Whilst carefully designed evidence-based clinical pathways have demonstrated success in bringing about change in patient management (2) and improved patient outcomes (3, 4), they are not always successfully implemented and hence rarely lead to the desired real world practice change (5, 6).

The discipline of implementation science has grown considerably over the past decade, focusing on informing effective research translation into practice, through study of strategies which support the uptake of evidence-based interventions, and understanding of barriers impeding this process (1). Implementation research acknowledges that awareness of context and use of appropriately tailored implementation strategies are critical to success (7). To enable evaluation of such strategies, clear and well-defined markers of success should be selected and operationalized prior to embarking on implementation.

A key issue delaying progress in the discipline is a lack of clarity in defining the “success” against which strategies are judged. Defining success for clinical pathways in particular is recognized as complex, given that they comprise multiple interacting components (8), each of which may require its own definition of success. A recent systematic review of guideline dissemination and implementation strategies (9) noted that definitions of effectiveness used to date are diverse, rarely explained in detail or grounded in theory, and often confound intervention and implementation success. They also tend to be categorical rather than continuous, meaning that findings are hard to interpret and cannot be generalized or made useful to practitioners and policy makers.

The Standards for Reporting in Implementation studies (StaRI) guidelines (10) facilitate clarity of reporting, thereby overcoming some of these issues. These guidelines highlight the importance of separating intervention success (e.g., treatment effectiveness) from implementation success (successful roll-out) to distinguish whether failure occurred due to the intervention not working (intervention failure), or whether the intervention was not implemented effectively including lack of uptake (implementation failure) (11).

To assist researchers in defining and reporting specific success outcomes, various evaluation frameworks have been developed (12), including the implementation outcomes taxonomy by Proctor et al. (11), which proposes eight distinct outcomes: acceptability, adoption, appropriateness, feasibility, fidelity, implementation cost, penetration and sustainability. Whilst these outcomes provide a working model for how to conceptualize success, they must still be operationalized via practical tools and measures. Also, collection of success data for real-world implementation research poses a challenge in busy, time-poor settings, where research is not the primary focus, even for engaged stakeholders (13–15). Thus, pragmatically, success measures should be brief, broadly applicable and sensitive to change (16). Currently there is little research that provides worked examples of how researchers and clinicians can respond to these challenges, engaging with the complex issues of defining and operationalizing success.

The aim of this paper is to provide a conceptual approach to developing a clear definition and criteria for implementation success in a current cluster randomized controlled trial (ADAPT Cluster RCT); data collection and final results for this trial will be complete in 2020. The ADAPT Cluster RCT compares the effectiveness of implementation strategies to influence adherence to the Australian Clinical Pathway for the Screening, Assessment and Management of Anxiety and Depression in Adult Cancer Patients (ADAPT CP) (17). In line with StaRI guidelines, we specify the implementation strategies designed by the ADAPT Program Measurement and Implementation Working Group to support implementation, how they are anticipated to work, and how their success will be assessed in relation to pre-defined outcomes. To do this, we focus specifically on the StaRI reporting standards around methods of evaluation, which encourage researchers to report “defined, pre-specified primary and other outcomes of the implementation strategy and how they were assessed” (10). Using the ADAPT Cluster RCT as an example, and specifically the methodological work in designing and developing measurable outcomes for this trial, we illustrate how researchers can define implementation success when designing and developing implementation studies with guidance to translate measurement concepts into measurable data.

Full descriptions of the ADAPT CP (17, 18) and the ADAPT Cluster RCT protocol (19) are reported elsewhere but brief details are provided here to give context. The ADAPT Cluster RCT is registered with the Australian New Zealand Clinical Trials Registry ACTRN12617000411347. The ADAPT CP is designed to facilitate evidence-based responses to anxiety and depression in patients with cancer. It was developed to address research showing that, despite high rates of anxiety and depression in this population (3) and high acceptance that psychosocial care is integral to quality cancer care, anxiety and depression are often undetected, under-estimated and poorly managed in busy cancer services (20, 21). The ADAPT CP incorporates iterative screening and five steps of care, with specific recommendations for staffing, content and timing of interventions based on the level of anxiety and/or depression symptoms reported by the patient; and can be tailored to individual cancer services' available resources. Development was guided by existing empirical evidence and wide stakeholder consultation involving in-depth clinician interviews and a Delphi consensus process with >80 experienced multi-disciplinary clinicians working across a range of cancer services in Australia (18, 22). Implementation of the ADAPT CP by cancer services was anticipated to require significant change to workflow and resourcing.

Following a barriers and facilitators analysis (22), a range of evidence-based online resources was developed to facilitate successful implementation of the ADAPT CP. Online resources include education for staff about how to discuss anxiety and depression with patients, explain screening and make referrals if necessary (available on eviQ, a portal for oncology health professional education hosted by the Cancer Institute NSW); information for patients about anxiety and depression; a self-guided cognitive-behavioral program (iCanADAPT) for anxiety and depression (23); and an individualized referral network map. These resources are accessible via the ADAPT Portal, an operational web-based system that facilitates staff and patient access to regular screening and the evidence-based step allocation and referral recommendations outlined in the ADAPT CP (24).

Development of implementation strategies for ADAPT was guided by the Promoting Action Research in Health Services (PARiHS) framework (25) (see Table 1). As part of this large implementation research program, we designed a cluster randomized trial, the ADAPT Cluster RCT, to evaluate the level of implementation support required (core vs. enhanced) to achieve adherence to the ADAPT CP over a 12-month period. Within the ADAPT Cluster RCT, 12 cancer services (including approximately 2,000 patients) implementing the ADAPT CP are randomized to receive one of two different implementation support packages.

In selecting and defining outcomes of implementation success for the ADAPT Cluster RCT, we were informed by the Implementation Outcomes framework and the StaRI Statement and Checklist (10, 11). Both documents propose that their frameworks be used as a catalyst for discussing and defining how implementation studies are conceived, planned, and reported. These documents prompted discussion within the ADAPT Steering Committee and a smaller working group with specialized interest and expertise in measurement of success, around the structure and collection of implementation outcome data.

To capture the dynamic nature of success outcomes, baseline, mid-point and endpoint data collection using questionnaires and semi-structured interviews was planned. Thus, data collection occurs just after staff have been trained in the ADAPT CP and associated resources and been exposed to some implementation strategies (T0), and again after staff have experience of the pathway in practice, as well as the full suite of implementation strategies at 6 and 12 months following implementation (T1 and T2).

A major challenge in the development of implementation outcomes for the ADAPT Cluster RCT, and the subject of this methodology paper, was differentiating between the three concepts underlying the implementation: the ADAPT CP; the intervention components; and the implementation strategies. To address this, we describe how the ADAPT Program investigators, led by the Measurement and Implementation Working Group membership, agreed to measure successful implementation of the ADAPT CP, and then agreed on methods of measuring success of the intervention components and implementation strategies, with the goal of choosing theoretically, empirically and psychometrically rigorous measurement approaches that matched implementation goals and frameworks.

Based on a comprehensive literature review and iterative discussion within the Measurement and Implementation Working Group, the primary outcome agreed on for the ADAPT Cluster RCT was site adherence to the key tasks of screening, assessment, referral and management defined by the ADAPT CP. Much of the existing research addresses medication adherence and is therefore focused on algorithms describing patient medication intake. Such outcomes are rigid, clearly defined and easily measurable. Measuring adherence to a clinical pathway is more complex as it is a multifaceted and multi-disciplinary therapeutic intervention. To address this issue, we defined adherence as the delivery, by any appropriate staff (individually defined at each site), of the main components of the ADAPT CP.

The ADAPT CP uses a stepped care model, allocating patients based on their screening scores and, if required, a triage conversation with a clinical staff member, into one of five steps, from minimal to very severe level of anxiety and/or depression, each with its own recommended treatment regimen. An added complexity is the need to consider individual differences between patients, meaning that the relevant recommendations of the ADAPT CP may differ even within patients allocated to the same step. To address this complexity, we specified adherence as the percentage of all actions appropriate to the patient's level of anxiety and depression as confirmed by the triage conversation, undertaken for each patient at each screening episode. Quantitative data collected via the ADAPT Portal enables capture of all adherence data.
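The continuous adherence measure described above can be illustrated with a minimal sketch. The function name, record layout and action labels below are hypothetical and are not taken from the ADAPT Portal; the sketch assumes only that, for each screening episode, the set of actions appropriate to the patient and the set of actions actually undertaken are both known.

```python
def episode_adherence(recommended, completed):
    """Percentage of recommended actions completed for one screening episode.

    `recommended` and `completed` are collections of action labels
    (hypothetical names, for illustration only).
    """
    recommended = set(recommended)
    if not recommended:
        # No action was appropriate for this episode, so adherence is undefined.
        return None
    done = recommended & set(completed)  # ignore actions that were not recommended
    return 100.0 * len(done) / len(recommended)


# Example: three of four recommended actions were undertaken.
example = episode_adherence(
    recommended={"screening", "triage", "referral", "re-screening"},
    completed={"screening", "triage", "referral"},
)
print(example)  # 75.0
```

Returning `None` when nothing was recommended is a design choice of this sketch, not a rule stated in the trial protocol; it keeps such episodes from being counted as either fully adherent or non-adherent.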

To provide a more clinically relevant measure of adherence, we further defined a categorical outcome based on accepted implementation targets (26, 27). A site is classed as adherent if ≥70% of patients experience ≥70% of the key components recommended by the ADAPT CP (e.g., screening, triage, referral and re-screening), and as non-adherent if <70% of patients experience ≥70% of those components.
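As a hedged illustration of this categorical rule, the sketch below classifies a site from a list of per-patient percentages of key components received. The function and parameter names are hypothetical, and the input values are invented for illustration; only the two 70% thresholds come from the text.

```python
def site_is_adherent(patient_percentages, patient_threshold=70.0, site_threshold=70.0):
    """Return True if at least `site_threshold` % of patients received
    at least `patient_threshold` % of the key pathway components."""
    if not patient_percentages:
        raise ValueError("no patients recorded for this site")
    meeting = sum(1 for p in patient_percentages if p >= patient_threshold)
    share = 100.0 * meeting / len(patient_percentages)
    return share >= site_threshold


# Illustrative (made-up) data: 8 of 10 patients reach the 70% mark.
site_a = [100, 75, 80, 50, 70, 90, 70, 40, 100, 75]
# Illustrative (made-up) data: 2 of 4 patients reach the 70% mark.
site_b = [100, 50, 60, 75]

print(site_is_adherent(site_a))  # True
print(site_is_adherent(site_b))  # False
```

Keeping both thresholds as parameters makes it easy to check how sensitive a site's classification is to the chosen cut-off.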

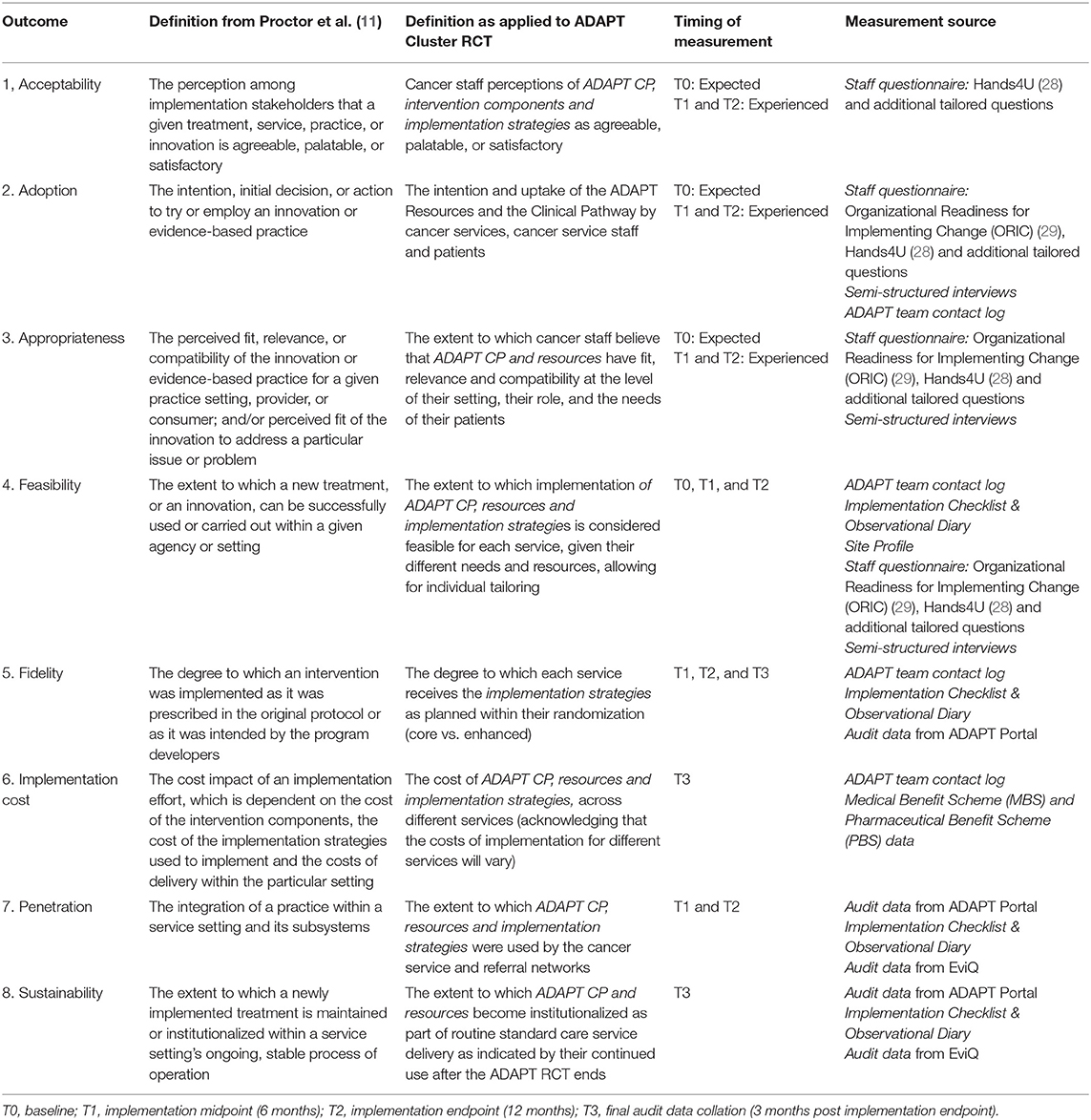

Using the StaRI guidelines, a process evaluation was planned to explore participant experience of the ADAPT CP, resources and implementation strategies according to the Proctor Implementation Outcomes framework. We translated each outcome into clearly defined and measurable criteria for our study outcomes (Table 2), noting whether each addressed the ADAPT CP, resources or implementation strategies, or a combination of the three. We applied the Proctor definitions by inserting the context and the relevant participants from whom data were being collected. The expert working group agreed that this step would clarify the data and outcomes to support reporting of the ADAPT Cluster RCT findings. Table 2 also indicates the agreed timepoints of data collection: prior to the 12-month supported implementation period (T0), and at 6 months (T1) and 12 months (T2) following implementation. Collecting data at these timepoints adds to the evidence on how implementation outcomes may shift over time, potentially allowing research to identify “leading” and “lagging” indicators of success (11).

Table 2. Success outcomes based on Proctor et al. (11) as defined for the ADAPT RCT.

A rapid review of the literature identified measures of success with strong psychometric properties and face validity that mapped well to the PARiHS domains, and that were concise to minimize participant burden. We chose the Organizational Readiness for Implementing Change (ORIC) (29) (measuring staff perceptions of organizational confidence, commitment and motivation to adopt ADAPT CP) and the Hands4U questionnaire (30), tailored for the purposes of the ADAPT CP (measuring staff personal confidence in implementing ADAPT CP, attitudes, intention, satisfaction regarding ADAPT CP, the resources and implementation strategies and perceived peer and leadership support for ADAPT CP).

After mapping these measures onto the eight outcomes described above, we identified gaps in the selected validated measures where important outcomes in the context of implementation of the ADAPT CP remained, including: perceived need, belief in the evidence base, perceived strength of leadership support, credibility of the research team and organizational leaders, perceived workload/burden of implementing, organizational fit and benefit and the organizational burden/costs. We developed 13 study-specific items to address these gaps, listed in Table 3.

The design also included study logs and checklists to document interactions between the research team and study sites, combined with semi-structured interviews with multidisciplinary service staff involved in the implementation of ADAPT. These complement the quantitative survey data and give the most holistic impression of success. The semi-structured interviews comprise questions designed to assess all elements of the implementation process. Creation of the interview guide was informed by the PARiHS framework, the Consolidated Framework for Implementation Research [CFIR (31)] and a recent systematic review of hospital-based implementation barriers and facilitators (32). It includes questions both about specific components of the ADAPT CP and more general insights into each service context. It was pilot tested by two members of the research team (LG and PB).

We describe how each of the eight implementation outcomes is applied within the ADAPT Cluster RCT, demonstrating how quantitative and qualitative data are aligned to each outcome.

Proctor and colleagues note that assessment of acceptability must focus on the stakeholder's “knowledge of or direct experience with various dimensions of the treatment to be implemented, such as its content, complexity, or comfort” (p. 67), ascertained via formal, objective data-collection of stakeholder views. Quantitatively, we operationalized acceptability with the questions (see Table 3) covering confidence, commitment, motivation, credibility, evidence, perceived importance and alignment with service or institutional goals.

Qualitatively, interview questions probe attitudes toward the ADAPT CP, the resources and strategies. Questions address perceptions regarding: the utility, helpfulness and likely efficacy of the ADAPT CP; service attitudes toward research utilization, quality improvement (QI), professional development, new initiatives, mental health and technology; whether services support staff with time and resources to implement new initiatives; staff receptivity to change; service attitudes to implementation strategies; and perceived role of champions and leadership within services.

Mid-way, and at the end of the implementation phase (T1 and T2), additional interview questions explore experience of the ADAPT CP implementation and the implementation strategies to date, positive or negative elements of these, whether strategies and resources are a suitable fit for the service and the staff profile, and whether strategies occur as planned.

The implementation of a multi-faceted intervention like a clinical pathway means that adoption must be considered in relation to both the intervention and the implementation process, the dual strands highlighted by the StaRI checklist. In considering adoption, we sought to differentiate between adoption of the clinical pathway, the intervention components and implementation strategies and decided to measure adoption of the implementation strategies separately as part of feasibility and fidelity.

In line with the StaRI checklist (10), we differentiated between adoption of the ADAPT CP and the intervention components, noting that the distinction between these dual strands is not always clear cut but can aid in clarity of study design and reporting (11).

We decided that adoption of the ADAPT CP was covered by the primary outcome of adherence. At baseline, we supplement this with questionnaire items assessing intention to use the CP (Table 3).

Adoption of ADAPT resources is measured by quantifying staff training user numbers, page hits on referral templates within the ADAPT Portal, and patient user numbers for the online therapy program, iCanADAPT, and the anxiety/depression resources.

Adoption of ADAPT implementation strategies is measured quantitatively via page hits on system generated reports, ADAPT logs of staff attendance at meetings and training sessions, and email/phone contacts with each site regarding IT and training.

While the above measures are at organizational level using data from the ADAPT Portal and online registration numbers, qualitative data assesses adoption of implementation strategies at the individual level, ensuring that levels of adoption are measured at the staff and organizational level (10). Qualitatively, interview questions probe barriers and facilitators to staff engagement, whether implementation strategies are actioned, which strategies are most or least helpful and how they enable implementation.

“Appropriateness” and “acceptability” are often used interchangeably in the literature; however, a distinction between these two constructs is crucial. Proctor et al. suggest that appropriateness encompasses provider perceptions of the fit of a program to their service (including mission or values), their role or skillset, as well as fit to the patient population. They also note that such perceptions may be shaped by the organizational culture. Assessment of this outcome requires recognition and understanding of the site context and its role in shaping provider perceptions. We assess appropriateness at the organizational level via both staff interviews and field observations made by the research team.

Quantitatively, we operationalized appropriateness with the questions covering organizational fit, confidence, commitment, motivation, evidence, perceived benefit, importance and alignment with service or institutional goals (Table 3).

Qualitatively, appropriateness is assessed with interview questions probing whether the ADAPT CP, the resources and strategies are needed, would work within the service, and would fit with a service's mission and values.

Proctor et al. note that an intervention may be appropriate for a service in terms of fit to values and goals, but not feasible due to lack of resources or infrastructure.

Quantitatively we assess feasibility of the ADAPT CP with questionnaire items covering confidence, perception of service capacity and capability (Table 3).

Similarly to appropriateness, feasibility has much to do with our understanding of the environment and resources (staff profile and workload). We capture current staffing numbers and overall staff profile supporting each cancer service during the engagement period with each service and reflect on any impact on the ability to implement the ADAPT CP, deliver and respond to implementation strategies.

Qualitatively, at all timepoints we assess feasibility through questions related to barriers and facilitators to the ADAPT CP and the implementation strategies in practice, and how or if they are overcome.

Fidelity is defined as the degree to which an intervention was implemented as it was prescribed in the protocol or as intended by the program developers (11). Fidelity to the intervention is measured in efficacy and effectiveness trials, yet there are few instruments for measuring fidelity to implementation. It is typically measured by comparing the original evidence-based intervention and the disseminated/implemented intervention in terms of factors such as adherence, quality of delivery, program component differentiation, exposure to the intervention, and participant responsiveness or involvement (26, 27, 33).

Defining success is challenging for flexible interventions that allow local adaptation in order to increase their relevance and applicability (34–36), and which respond reflexively to unique characteristics and unpredictable reactions in their intervention settings (37). The “fidelity/adaptation dilemma” (38) and its resolution is regarded as one of the most important challenges for evaluation (39). The StaRI guidelines highlight the important distinction between active or core components of an intervention (to which fidelity is expected), and modifiable components, which may be adapted by local sites to aid implementation.

We defined non-modifiable components of the ADAPT CP and implementation strategies, as well as those that could (and should) be tailored to individual site requirements (see Table 4). Within the ADAPT Cluster RCT, we are able to apply fidelity first to the implementation of the ADAPT CP, measured by adherence to the recommendations (screening, triage, step allocation and intervention where appropriate), and second, to the level of implementation strategies received as planned according to randomization (core vs. enhanced). The primary data source for assessing fidelity to the ADAPT CP is the captured data points from the ADAPT Portal which contribute to the primary outcome of adherence. The content of key information in the online education and therapy programs was not flexible, and fidelity data are captured during delivery.

Flexibility or tailoring was permitted in the delivery of the listed implementation strategies (Table 4), governed by the service scheduling (e.g., training), and tailored content for awareness campaigns to meet service priorities. Additional flexibility was permitted to respond to audit and feedback provided to participating services and to requests for further support throughout the implementation period. Assessment of these outcomes is challenging, as they do not fit well with the traditional method of measuring fidelity (checklists based on audio or video recordings, self-report, direct observation). Within the ADAPT Cluster RCT, the ADAPT team record the planned and actual delivery of the implementation strategies in the ADAPT Implementation Checklist and Observational Diary. ADAPT Contact logs are used by the ADAPT team delivering the implementation strategies to record any instances of tailoring or variation in non-modifiable aspects of both the CP and implementation strategies. Therefore, data from both the ADAPT Implementation Checklist and Observational Diary and ADAPT Contact log will be utilized to assess fidelity to the planned implementation strategies.

Much cost research focuses on quantifying the cost of the intervention (40), while comparatively little data exists on the best ways to capture implementation costs (41). Direct measures of implementation cost are needed to inform decision-making on implementation strategies, yet implementation costs are challenging to measure, as they need to include billable costs of all resources used as well as administrative costs and employee time (42), particularly in the case of complex interventions with multi-component strategies. It is recognized that implementation costs will vary according to setting and there are many challenges involved in assessing the true cost. StaRI guidelines advocate the importance of separating implementation process costs from intervention costs at the design stage.

In the ADAPT Cluster RCT, we sought to address this issue by costing each implementation strategy and each intervention component. Implementation costs include direct costs, time spent at each site by the research team and opportunity costs. To capture these, all contacts with services, whether received or initiated by the ADAPT team, are logged and categorized as planned (linked to the implementation strategies listed in Table 1) or unplanned, giving the number of contacts with services throughout the implementation period. Time, type of contact, issue and related strategy are also collected, enabling a monetary cost to be calculated for staff time in supporting the ADAPT CP implementation, as well as costs associated with services provided and funded by the Australian Department of Health via the Medicare Benefits Schedule, drawing on audit data, staff wages and expenditure reports. These data will contribute to a final cost and value of implementing the ADAPT CP, with success being determined through health economic principles.
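To show how logged contacts might be turned into a staff-time cost, the following is a minimal sketch under stated assumptions. The field names (`site`, `planned`, `minutes`), the log entries and the hourly rate are all hypothetical placeholders chosen for illustration; they are not values or structures from the ADAPT Contact logs.

```python
from collections import defaultdict


def staff_time_cost(contacts, hourly_rate):
    """Sum the staff-time cost of logged contacts per site and per
    planned/unplanned category.

    `contacts` is a list of dicts with hypothetical keys:
    'site' (str), 'planned' (bool) and 'minutes' (number).
    """
    totals = defaultdict(float)
    for contact in contacts:
        category = "planned" if contact["planned"] else "unplanned"
        totals[(contact["site"], category)] += contact["minutes"] / 60.0 * hourly_rate
    return dict(totals)


# Made-up log entries and a placeholder hourly rate of 60.0 currency units.
log = [
    {"site": "Site A", "planned": True, "minutes": 30},
    {"site": "Site A", "planned": True, "minutes": 90},
    {"site": "Site A", "planned": False, "minutes": 60},
]
costs = staff_time_cost(log, hourly_rate=60.0)
print(costs[("Site A", "planned")])    # 120.0
print(costs[("Site A", "unplanned")])  # 60.0
```

Separating planned from unplanned contacts in the key mirrors the categorization described above and would allow unplanned support to be costed on its own.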

In the data collected from services, we determine staffing profile at the beginning of the implementation and at midpoint and endpoint. One question in our staff survey directly asks for agreement or not to the statement “Implementing the anxiety and depression pathway will cost the organization too much money.” These data will provide some contextual information which may support health economic analysis tapping into perceived value rather than monetary outlay.

To date, penetration has been captured in a range of ways by implementation scientists, from examining service recipients who received the desired care (43), to looking at the number of providers who delivered the desired intervention. Proctor et al. note that penetration is not frequently used in the literature as a term, but may have overlap with terms such as saturation, research or institutionalization.

In the ADAPT RCT, penetration is operationalized to mean the extent to which ADAPT resources and Clinical Pathway components are used by the cancer service and referral networks. This outcome is assessed quantitatively in surveys completed by staff at midpoint and endpoint where items ask whether staff are aware of implementation strategies (e.g., posters, newsletters, reports). Qualitatively, interview questions about penetration focus on staff engagement, whether staff follow the ADAPT CP in practice and use the resources, whether the experience is positive and therefore a good news story within and beyond the service or department, and whether the implementation strategies enable the implementation by bedding down new processes and practice.

Whilst integration is not an explicit outcome in the Implementation Outcomes framework, adoption, fidelity and penetration all provide valuable information from which integration of the ADAPT CP into routine care can be extrapolated. Integration reflects the medium to longer-term outcomes of whether an intervention becomes an integrated component of standard care, while longer-term integration will be determined by outcomes measuring sustainability.

Sustainability, the last aspect of the Proctor Implementation Outcome framework, is a term with varied meanings and interpretation (11). Proctor et al.'s definition incorporates key aspects of other theories, emphasizing program integration into organizational policy and practice and encouraging exploration of the processes through which a program becomes institutionalized. They note that penetration and sustainability are likely to be linked, as higher penetration of an intervention may contribute to long-term sustainability. To date, there has been greater focus on sustainability in conceptual rather than empirical papers. Where sustainability has been explored in trials, outcomes have focused on patient level changes, rather than measuring factors related to institutionalization in health services (44).

Within the ADAPT Cluster RCT, we defined sustainability in a way that would capture the extent of maintenance and institutionalization of the ADAPT CP within the service (as shown by the ADAPT Portal audit data at the end of the 12-month implementation period), as well as exploring stakeholder intent to maintain implementation of the ADAPT CP from qualitative data.

In terms of staff-reported data, we have aligned one question in the Staff Survey to the theme of sustainability, namely: “People who work here feel confident that they can keep the momentum going in implementing the anxiety and depression pathway (ORIC).” As with the themes we aligned with penetration, qualitative questions probing sustainability focus on staff engagement with the ADAPT CP in practice, their positive or negative experiences and, as a consequence, whether staff perceive the initiative continuing beyond the ADAPT Cluster RCT. Ideally, data collection would continue for at least 12 months beyond the implementation period in services using the ADAPT Portal, providing more robust sustainability and integration data that can contribute to health services implementation. Within the ADAPT Cluster RCT itself, sustainability can only be predicted from service engagement over the 12-month supported implementation period, whether or not that engagement is influenced by randomization to one of two different implementation packages.

In undertaking the process of defining success for the ADAPT Cluster RCT, it became clear that there is a paucity of research that explores and systematically recounts the ways in which outcomes for success are selected and operationalized. We noted the importance of specifying theoretically and contextually sensitive definitions of success outcomes and drew from both existing frameworks and ongoing consultation with key stakeholders and clinical service staff to tailor and revise our outcome definitions. Our attempt to separate outcomes, components and strategies described above in the ADAPT Cluster RCT organization and design has shown that measuring implementation success using existing frameworks was not straightforward. There is likely to be interaction across success outcomes, for example the impact of acceptability on adoption, penetration and sustainability. Therefore, using a conceptual approach to mapping the relationships between success outcomes may provide some valuable insights into this issue.

We have generated a combination of qualitative and quantitative measures for our primary and secondary success outcomes, to capture both the breadth of data and the depth of information that can be provided by the lived experience of the staff engaging in the intervention. Consistent application of these outcomes can further our understanding of whether and how the implementation process was successful, what made it successful and which intervention components and implementation strategies had the strongest impact.

Our approach to operationalizing success outcomes highlighted several key lessons and useful steps to consider when measuring success. First, selecting and consulting key theoretical frameworks such as the Implementation Outcomes framework and StaRI guidelines was essential to ensure we were capturing relevant dimensions. This approach is consistent with the implementation science principle of using theory in project design, conduct, analysis and evaluation.

Second, we needed to define each success outcome within the context of our specific intervention (ADAPT CP) and research design (evaluating implementation strategies within a cluster RCT) before moving on to defining measures. This entailed deciding on the unit of analysis (individual or organizational level) for which success is most meaningful. We decided to address both, by gathering objective data on organizational outcomes, as well as at the individual level via staff perceptions of organization outcomes and of their own individual outcomes.

Third, we needed to define the elements (intervention, resources and implementation strategies) that were addressed under each success outcome. As shown in Table 2, we mapped how definitions were applied across the cluster RCT, the timing of measurements and the existing sources of measurement. Systematically mapping our defined success outcomes and elements against existing measures was particularly helpful in giving us confidence that we had fully captured all relevant aspects of success.

Fourth, we needed to be flexible in combining psychometrically proven measures (to ensure measurement reliability and validity and allow comparison with other literature) and creating our own quantitative and qualitative questions and checklists (to ensure we had covered all theoretically important and contextual aspects).

Fifth, our guiding frameworks highlighted the need to provide a rich description of context to assist understanding of the interplay between the intervention, the providers, and the service, particularly if seeking to assess whether the implementation strategies can be transposed to other settings or will require adaptation. Thus, we were careful to include a longitudinal qualitative component to measurement of success.
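The mapping step described in the third lesson above can be sketched as a simple coverage check of outcomes against elements and data sources. This is a minimal sketch under stated assumptions: the entries are a partial, hypothetical mapping assembled from the penetration and sustainability descriptions in this paper, not a reproduction of Table 2, and the function and variable names are illustrative.

```python
from itertools import product

# Elements that each success outcome definition may address.
ELEMENTS = ("ADAPT CP", "resources", "implementation strategies")

# Hypothetical, partial mapping for illustration only (not the content of Table 2).
# Each entry: (outcome, element, data source).
MEASURES = [
    ("penetration", "implementation strategies", "staff survey"),
    ("penetration", "ADAPT CP", "staff interviews"),
    ("penetration", "resources", "staff interviews"),
    ("sustainability", "ADAPT CP", "ADAPT Portal audit data"),
    ("sustainability", "ADAPT CP", "staff survey"),
    ("sustainability", "ADAPT CP", "staff interviews"),
]


def coverage_gaps(outcomes, elements, measures):
    """List (outcome, element) pairs that have no mapped data source."""
    covered = {(outcome, element) for outcome, element, _ in measures}
    return [pair for pair in product(outcomes, elements) if pair not in covered]


gaps = coverage_gaps(("penetration", "sustainability"), ELEMENTS, MEASURES)
for outcome, element in gaps:
    print(f"No measure mapped for {outcome} x {element}")
```

A reported gap is a prompt for review rather than an error, since an outcome may deliberately address only the ADAPT CP, the resources, the implementation strategies, or some combination of the three.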

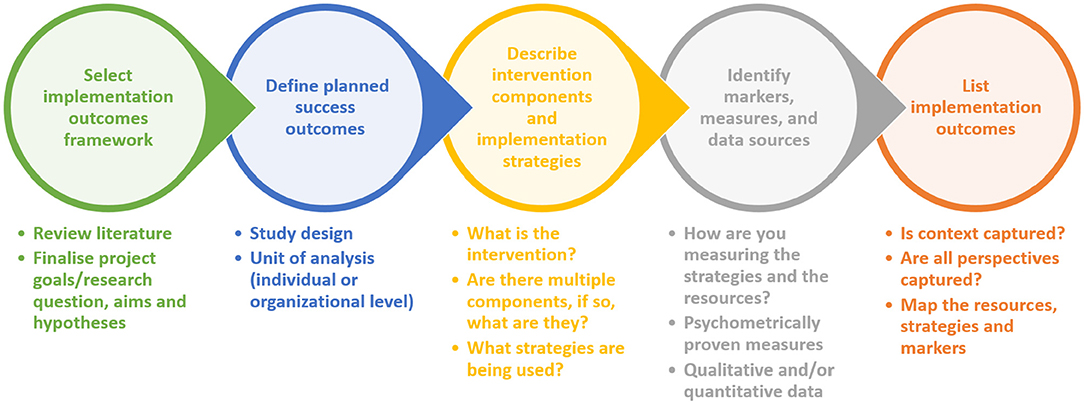

Figure 1 illustrates how we approached defining implementation success and applying it to a cluster RCT focused on implementation effectiveness. It shows the concepts and questions that may be helpful to researchers in evaluating the success of the intervention being implemented as well as of the implementation itself.

Figure 1. Targeting definable implementation outcomes. A conceptual approach to enable comparison of the effectiveness of implementation strategies and intervention components in a cluster randomized trial.

An ongoing challenge for the ADAPT Cluster RCT will be balancing the process of obtaining data for measurement of success without unduly influencing the process of the intervention and implementation, or overburdening participants with data collection throughout the implementation process.

Finally, the process of decision-making about success outcomes early in the process was recognized as a key part of the ADAPT research program, requiring regular iterative review and openness to flexibility, noting that assumed “essential” intervention components or implementation strategies may not turn out to be so. This work highlights the need to carefully consider multi-faceted success and end user perspectives as a key component in the evaluation of complex interventions and their implementation.

This paper provides an example of how, in planning a large cluster randomized trial with implementation success as the focus of the research questions, we tackled the planned collection and mapping of data to an implementation outcomes framework. We do not present the results of the cluster randomized trial; rather, we provide guidance to researchers and health services about how to approach projects in this field. For this research project, we were able to manage data collection using the resources of the research team. We do not address the issues facing those seeking ongoing evaluation of initiatives, although reporting set up during initial implementation may be sustainable within institutions. This aspect warrants further enquiry and recommendations for researchers and health services.

We have described a conceptual approach to selecting and defining success outcomes and the issues that arose in making these specific to the ADAPT Cluster RCT. We believe this approach provides guidance to researchers and program leaders in implementation science in carefully designing studies that collect data for both intervention components and implementation strategies. We contend that these findings have a range of implications for other implementation researchers, health services staff and policy makers, including the need to think about multi-faceted success and to ground definitions in theoretical frameworks. We also note the importance of thoroughly reviewing the range of available measures to identify those that best fit the implementation strategy and intervention context, and of avoiding extensive additional data collection that adds to the burden of the intervention or its implementation.

No trial data are presented in this paper. The study protocol for the cluster RCT used as an example for this paper is published in Butow et al. (19).

The Cluster RCT used as an example for this methodological paper was approved by the Sydney Local Health District (RPAH Zone) Human Research Ethics Committee, Protocol X16-0378 HREC/16/RPAH/522.

HS and LG wrote the first draft of the manuscript. NR and PBu made significant contributions to subsequent drafts. HS compiled revisions from authors and prepared the final draft of the manuscript for submission. All authors were involved in developing the content of the manuscript through their participation in the ADAPT Working Groups, conceptualizing the markers of success and mapping them to the ADAPT Cluster RCT design. All authors contributed to making revisions and responding to reviewer feedback, and read and approved the final manuscript.

This manuscript was prepared as part of the Anxiety and Depression Pathway (ADAPT) Program, led by the Psycho-oncology Cooperative Research Group (PoCoG). The ADAPT Program is funded by a Translational Program Grant from the Cancer Institute NSW 14/TPG/1-02. The funding body had no role in study design, data collection, analysis or writing of the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors acknowledge the support of The ADAPT Program Group representing the investigators and advisors to the ADAPT Program (pocog.adapt@sydney.edu.au): PBu, JS, Melanie Price, HS, LM, NR, AG, BK, TH, RV, Josephine Clayton, PBe, Laura Kirsten, HD, PG, Michael Murphy, Jill Newby, John Stubbs, Frances Orr, Toni Lindsay, Gavin Andrews, TS, Joseph Coll, Karen Allison, Jessica Cuddy, Fiona White, LG, ML, TL, Kate Baychek, Don Piro, Alison Pearce, and Jackie Yim. The authors would additionally like to acknowledge the commitment and contribution to this work of Melanie Price (1965-2018). Melanie Price was a respected member of the psycho-oncology and palliative care community in Australia for over 22 years. She was a tireless advocate for people affected by cancer, their families and psycho-oncology as a discipline.

1. Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. (2006) 1:1–1. doi: 10.1186/1748-5908-1-1

2. Rotter T, Kinsman L, James E, Machotta A, Gothe H, Willis J, et al. Clinical pathways: effects on professional practice, patient outcomes, length of stay and hospital costs. Cochrane Database Syst Rev. (2010) 3:CD006632. doi: 10.1002/14651858.CD006632.pub2

3. Mitchell AJ, Chan M, Bhatti H, Halton M, Grassi L, Johansen C, et al. Prevalence of depression, anxiety, and adjustment disorder in oncological, haematological, and palliative-care settings: a meta-analysis of 94 interview-based studies. Lancet Oncol. (2011) 12:160–74. doi: 10.1016/S1470-2045(11)70002-X

4. Ski CF, Page K, Thompson DR, Cummins RA, Salzberg M, Worrall-Carter L. Clinical outcomes associated with screening and referral for depression in an acute cardiac ward. J Clin Nurs. (2012) 21:2228–34. doi: 10.1111/j.1365-2702.2011.03934.x

5. Grimshaw J, Eccles M, Tetroe J. Implementing clinical guidelines: current evidence and future implications. J Contin Educ Health Profess. (2004) 24:S31–7. doi: 10.1002/chp.1340240506

6. Grol R, Grimshaw J. From best evidence to best practice: effective implementation of change in patients' care. Lancet. (2003) 362:1225–30. doi: 10.1016/S0140-6736(03)14546-1

7. Allen D, Gillen E, Rixson L. Systematic review of the effectiveness of integrated care pathways: what works, for whom, in which circumstances? Int J Evid Based Healthcare. (2009) 7:61–74. doi: 10.1111/j.1744-1609.2009.00127.x

8. Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: medical research council guidance. BMJ. (2015) 350:h1258. doi: 10.1136/bmj.h1258

9. Grimshaw J, Eccles M, Thomas R, MacLennan G, Ramsay C, Fraser C, et al. Toward evidence-based quality improvement. Evidence (and its limitations) of the effectiveness of guideline dissemination and implementation strategies 1966–1998. J Gen Intern Med. (2006) 21:S14–20. doi: 10.1111/j.1525-1497.2006.00357.x

10. Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, et al. Standards for reporting implementation studies (StaRI) statement. BMJ. (2017) 356:i6795. doi: 10.1136/bmj.i6795

11. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Administr Policy Ment Health. (2011) 38:65–76. doi: 10.1007/s10488-010-0319-7

12. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. (2015) 10:53. doi: 10.1186/s13012-015-0242-0

13. Nutting PA, Beasley JW, Werner JJ. Practice-based research networks answer primary care questions. J Am Med Assoc. (1999) 281:686–8. doi: 10.1001/jama.281.8.686

14. Mays GP, Hogg RA. Expanding delivery system research in public health settings: lessons from practice-based research networks. J Public Health Manag Pract. (2012) 18:485–98. doi: 10.1097/PHH.0b013e31825f75c9

15. Stange KC, Woolf SH, Gjeltema K. One minute for prevention: the power of leveraging to fulfill the promise of health behavior counseling. Am J Prevent Med. (2002) 22:320–3. doi: 10.1016/S0749-3797(02)00413-0

16. Glasgow R. What does it mean to be pragmatic? Pragmatic methods, measures, and models to facilitate research translation. Health Educ Behav. (2013) 40:257–65. doi: 10.1177/1090198113486805

17. Butow P, Price MA, Shaw JM, Turner J, Clayton JM, Grimison P, et al. Clinical pathway for the screening, assessment and management of anxiety and depression in adult cancer patients: Australian guidelines. Psychooncology. (2015) 24:987–1001. doi: 10.1002/pon.3920

18. Shaw JM, Price MA, Clayton JM, Grimison P, Shaw T, Rankin N, et al. Developing a clinical pathway for the identification and management of anxiety and depression in adult cancer patients: an online Delphi consensus process. Support Care Cancer. (2016) 24:33–41. doi: 10.1007/s00520-015-2742-5

19. Butow P, Shaw J, Shepherd HL, Price M, Masya L, Kelly B, et al. Comparison of implementation strategies to influence adherence to the clinical pathway for screening, assessment and management of anxiety and depression in adult cancer patients (ADAPT CP): study protocol of a cluster randomised controlled trial. BMC Cancer. (2018) 18:1077. doi: 10.1186/s12885-018-4962-9

20. Fallowfield L, Ratcliffe D, Jenkins V, Saul J. Psychiatric morbidity and its recognition by doctors in patients with cancer. Brit J Cancer. (2001) 84:1011–5. doi: 10.1054/bjoc.2001.1724

21. Sanson-Fisher R, Girgis A, Boyes A, Bonevski B, Burton L, Cook P. The unmet supportive care needs of patients with cancer. Supportive Care Review Group. Cancer. (2000) 88:226–37.

22. Rankin NM, Butow PN, Thein T, Robinson T, Shaw JM, Price MA, et al. Everybody wants it done but nobody wants to do it: an exploration of the barrier and enablers of critical components towards creating a clinical pathway for anxiety and depression in cancer. BMC Health Serv Res. (2015) 15:28. doi: 10.1186/s12913-015-0691-9

23. Karageorge A, Murphy MJ, Newby JM, Kirsten L, Andrews G, Allison K, et al. Acceptability of an internet cognitive behavioural therapy program for people with early-stage cancer and cancer survivors with depression and/or anxiety: thematic findings from focus groups. Support Care Cancer. (2017) 25:2129–36. doi: 10.1007/s00520-017-3617-8

24. Shepherd H, Shaw J, Price M, Butow P, Dhillon H, Kirsten L, et al. Making screening, assessment, referral and management of anxiety and depression in cancer care a reality: developing a system addressing barriers and facilitators to support sustainable implementation. Asia Pacific J Clin Oncol. (2017) 13:60–105. doi: 10.1111/ajco.12798

25. Kitson A, Harvey G, McCormack B. Enabling the implementation of evidence-based practice: a conceptual framework. Qual Health Care. (1998) 7:149–58. doi: 10.1136/qshc.7.3.149

26. Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: are implementation effects out of control? Clin Psychol Rev. (1998) 18:23–45. doi: 10.1016/S0272-7358(97)00043-3

28. van der Meer EW, Boot CR, Jungbauer FH, van der Klink JJ, Rustemeyer T, Coenraads PJ, et al. Hands4U: a multifaceted strategy to implement guideline-based recommendations to prevent hand eczema in health care workers: design of a randomised controlled trial and (cost) effectiveness evaluation. BMC Public Health. (2011) 11:669. doi: 10.1186/1471-2458-11-669

29. Shea CM, Jacobs SR, Esserman DA, Bruce K, Weiner BJ. Organizational readiness for implementing change: a psychometric assessment of a new measure. Implement Sci. (2014) 9:7. doi: 10.1186/1748-5908-9-7

30. van der Meer EW, Boot CR, Twisk JW, Coenraads PJ, Jungbauer FH, van der Gulden JW, et al. Hands4U: the effectiveness of a multifaceted implementation strategy on behaviour related to the prevention of hand eczema-a randomised controlled trial among healthcare workers. Occup Environ Med. (2014) 71:492–9. doi: 10.1136/oemed-2013-102034

31. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. (2009) 4:50. doi: 10.1186/1748-5908-4-50

32. Geerligs L, Rankin NM, Shepherd HL, Butow P. Hospital-based interventions: a systematic review of staff-reported barriers and facilitators to implementation processes. Implement Sci. (2018) 13:36. doi: 10.1186/s13012-018-0726-9

33. Lewis CC, Fischer S, Weiner BJ, Stanick C, Kim M, Martinez RG. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci. (2015) 10:155. doi: 10.1186/s13012-015-0342-x

34. Pérez D, Lefèvre P, Castro M, Sánchez L, Toledo ME, Vanlerberghe V, et al. Process-oriented fidelity research assists in evaluation, adjustment and scaling-up of community-based interventions. Health Policy Plann. (2010) 26:413–22. doi: 10.1093/heapol/czq077

35. Hasson H, Blomberg S, Dunér A. Fidelity and moderating factors in complex interventions: a case study of a continuum of care program for frail elderly people in health and social care. Implement Sci. (2012) 7:23. doi: 10.1186/1748-5908-7-23

36. Zwarenstein M, Treweek S, Gagnier JJ, Altman DG, Tunis S, Haynes B, et al. Improving the reporting of pragmatic trials: an extension of the CONSORT statement. BMJ. (2008) 337:a2390. doi: 10.1136/bmj.a2390

37. Moore G, Audrey S, Barker M, Bond L, Bonell C, Cooper C, et al. Process evaluation in complex public health intervention studies: the need for guidance. J Epidemiol Commun Health. (2014) 68:101–2. doi: 10.1136/jech-2013-202869

38. Cherney A, Head B. Evidence-based policy and practice key challenges for improvement. Aust J Soc Issues. (2010) 45:509–26. doi: 10.1002/j.1839-4655.2010.tb00195.x

39. Glasgow RE. Key evaluation issues in facilitating translation of research to practice and policy. In: Williams B, Sankar M, Editors. Evaluation South Asia. Kathmandu: UNICEF Regional Office for South Asia (2008). p. 15–24.

40. Ronckers ET, Groot W, Steenbakkers M, Ruland E, Ament A. Costs of the 'Hartslag Limburg' community heart health intervention. BMC Public Health. (2006) 6:51. doi: 10.1186/1471-2458-6-51

41. Hoomans T, Severens J. Economic evaluation of implementation strategies in health care. Implement Sci. (2014) 9:168. doi: 10.1186/s13012-014-0168-y

42. Chamberlain P, Snowden LR, Padgett C, Saldana L, Roles J, Holmes L, et al. A strategy for assessing costs of implementing new practices in the child welfare system: adapting the English cost calculator in the United States. Admin Policy Ment Health. (2011) 38:24–31. doi: 10.1007/s10488-010-0318-8

43. Stiles PG, Boothroyd RA, Snyder K, Zong X. Service penetration by persons with severe mental illness: how should it be measured? J Behav Health Serv Res. (2002) 29:198–207. doi: 10.1097/00075484-200205000-00010

Keywords: implementation science, health services research, outcome measurement, methodology, psycho-oncology, clinical pathways

Citation: Shepherd HL, Geerligs L, Butow P, Masya L, Shaw J, Price M, Dhillon HM, Hack TF, Girgis A, Luckett T, Lovell M, Kelly B, Beale P, Grimison P, Shaw T, Viney R and Rankin NM (2019) The Elusive Search for Success: Defining and Measuring Implementation Outcomes in a Real-World Hospital Trial. Front. Public Health 7:293. doi: 10.3389/fpubh.2019.00293

Received: 23 July 2019; Accepted: 27 September 2019;

Published: 18 October 2019.

Edited by:

William Edson Aaronson, Temple University, United States

Reviewed by:

Jo Ann Shoup, Kaiser Permanente, United States

Copyright © 2019 Shepherd, Geerligs, Butow, Masya, Shaw, Price, Dhillon, Hack, Girgis, Luckett, Lovell, Kelly, Beale, Grimison, Shaw, Viney and Rankin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Heather L. Shepherd, heather.shepherd@sydney.edu.au

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
