CURRICULUM, INSTRUCTION, AND PEDAGOGY article

Front. Public Health, 07 May 2018

Sec. Public Health Education and Promotion

Volume 6 - 2018 | https://doi.org/10.3389/fpubh.2018.00113

This article is part of the Research Topic Methods and Applications in Implementation Science.

Background: Implementation science lacks a systematic approach to the development of learning strategies for online training in evidence-based practices (EBPs) that takes the context of real-world practice into account. The field of instructional design offers ecologically valid and systematic processes to develop learning strategies for workforce development and performance support.

Objective: This report describes the application of an instructional design framework—Analyze, Design, Develop, Implement, and Evaluate (ADDIE) model—in the development and evaluation of e-learning modules as one strategy among a multifaceted approach to the implementation of individual placement and support (IPS), a model of supported employment for community behavioral health treatment programs, in New York State.

Methods: We applied quantitative and qualitative methods to develop and evaluate three IPS e-learning modules. Throughout the ADDIE process, we conducted formative and summative evaluations and identified determinants of implementation using the Consolidated Framework for Implementation Research (CFIR). Formative evaluations consisted of qualitative feedback received from recipients and providers during early pilot work. The summative evaluation, guided by the Kirkpatrick model for training evaluation, consisted of quantitative and qualitative data at level 1 (reaction to the training, self-reported knowledge, and practice change) and level 2 (knowledge assessed by quizzes embedded in each module).

Results: Formative evaluation with key stakeholders identified a range of learning needs that informed the development of a pilot training program in IPS. Feedback on this pilot training program informed the design documents for three e-learning modules on IPS: Introduction to IPS, IPS Job Development, and Using the IPS Employment Resource Book. Each module was developed iteratively, and its evaluation provided an assessment of learning needs that informed successive modules. All modules were disseminated and evaluated through a learning management system. Summative evaluation revealed that learners rated the modules positively, and self-report of knowledge acquisition was high (mean range: 4.4–4.6 out of 5). About half of learners indicated that they would change their practice after watching the modules (range: 48–51%). All learners who completed the level 1 evaluation demonstrated 80% or better mastery of knowledge on the level 2 evaluation embedded in each module. The CFIR was used to identify implementation barriers and facilitators in the evaluation data, which informed planning for subsequent implementation support activities in the IPS initiative.

Conclusion: Instructional design approaches such as ADDIE may offer implementation scientists and practitioners a flexible and systematic approach for the development of e-learning modules as a single component or one strategy in a multifaceted approach for training in EBPs.

A recent Institute of Medicine report, Best Care at Lower Cost: The Path to Continuously Learning Health Care in America (1), stated: “Achieving higher quality care at lower cost will require fundamental commitments to the incentives, culture, and leadership that foster continuous learning, as the lessons from research and each care experience are systematically captured, assessed, and translated into reliable care.” Central to the translation from research to practice and reliable care is training health-care providers in evidence-based practices (EBPs). In behavioral health care, training in EBPs often involves developing new clinical competencies. This training should take into account the context and needs of the practice community as well as strategies to facilitate adoption and implementation (2). Increasingly, training uses online modalities to expand its reach and efficiency, digital media to promote active engagement, shorter learning sessions to foster knowledge retention, and methods to demonstrate and practice skills that can be applied in the workplace (3).

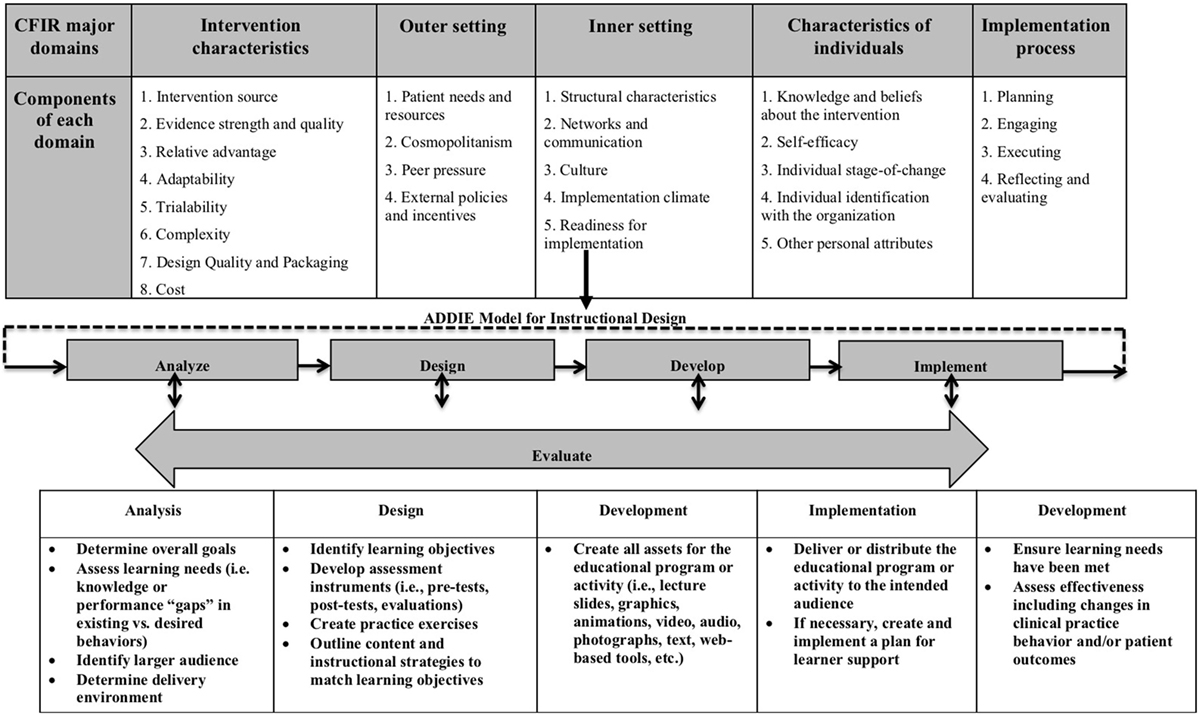

Implementation science, a field dedicated to understanding the targeted dissemination and implementation of EBPs and the use of strategies to improve their adoption in community health-care settings, has guided the work of translating research to practice. Numerous frameworks in implementation science provide a menu of constructs that have been associated with effective implementation. Damschroder et al. (4) combined 19 published implementation theories into one such framework, the Consolidated Framework for Implementation Research (CFIR; Figure 1). The CFIR is organized into five domains: intervention characteristics, inner setting, outer setting, characteristics of individuals, and process. Within the inner setting domain, one key subconstruct of readiness for implementation is access to knowledge and information, defined as the ease of access to digestible information and knowledge about the practice and how to incorporate it into work tasks. Providing this access is the function of training. It has been proposed that implementation is more likely to succeed when timely on-the-job training is available (5).

Figure 1. Using an instructional design framework [Analyze, Design, Develop, Implement, and Evaluate (ADDIE)] as a systematic process to develop training in an implementation framework [Consolidated Framework for Implementation Research (CFIR)].

Although training is an important determinant of successful implementation (5), the field of implementation science lacks systematic approaches for developing training that takes into account learners’ needs, context, and optimal modalities for learning. Training in EBPs and its evaluation have been identified as a priority on the National Institutes of Health research agenda (Program Announcement in Dissemination and Implementation Research in Health; https://grants.nih.gov/grants/guide/pa-files/PAR-16-238.html), and training is a commonly used strategy in implementation practice and research (6). Intervention or EBP developers are unlikely to have expertise in instructional design and may fail to engage busy practitioners in training for several reasons. First, didactic approaches may not take into account practitioners’ level of interest or needs. Second, traditional training approaches may not consider organizational factors (e.g., time available for provider training) that are also key to successful implementation (7). Third, how individuals learn and process information is evolving with widespread access to the Internet and technology. The field of implementation science may therefore benefit from an ecologically valid approach to developing learning experiences for health-care practitioners.

Recent reports have pointed to the utility of instructional design in the dissemination and implementation of EBPs in behavioral health (8, 9). The field of instructional design offers one model, Analyze, Design, Develop, Implement, and Evaluate (ADDIE) (10), that takes into account learning theory, the learner’s needs and environment, and approaches to training practitioners in EBPs. The foundations of ADDIE can be traced to World War II, when the U.S. military developed strategies for rapidly training people to perform complex technical tasks. The ADDIE model is used to create curricula or training programs geared toward producing specific learning outcomes and behavioral changes. It provides a systematic approach to analyzing learning needs, designing and developing a curriculum, and implementing and initially evaluating a training program (11, 12). This model is particularly useful when a training program aims to change participant behavior and improve performance. ADDIE is increasingly being adopted in industries such as health care (13). Recent studies have successfully applied the ADDIE model to improve patient safety, procedural competency, and disaster simulation (14, 15). It has also been used effectively in medical training and education to change practice behaviors in the management of various medical conditions (16–18).

The options for delivering training and the modalities it uses (e.g., mobile devices, webcasts, and podcasts) have expanded significantly in the last decade. With online learning technology, there is an opportunity to reach learners anytime and anywhere to provide performance support. One example of online learning technology is the e-learning module. E-learning modules are self-paced lessons that enable the learner to read text, listen to narrated content, observe video scenarios, and respond to questions or prompts in a multimedia format designed to maximize engagement and retention. Learning management systems (LMSs) host e-learning modules and capture learning metrics and performance. The learning analytics provided by LMSs make it possible to track individual and group performance, which may be used to provide feedback and support continuous learning in large systems of care.

In this report, we provide an example of the application of ADDIE in the development and evaluation of e-learning modules as one strategy among a multifaceted approach to the dissemination and implementation of the individual placement and support (IPS) model of supported employment in community treatment programs in New York State (NYS). Specifically, we (1) describe the application of an instructional design framework, ADDIE, in the iterative development of e-learning modules for IPS; (2) conduct a large-scale dissemination of the IPS e-learning modules throughout the state using an LMS; (3) evaluate learner reaction, self-reported knowledge, and practice change after completion of the IPS e-learning modules; and (4) identify key barriers and facilitators to future IPS implementation using formative and summative ADDIE evaluation data and the CFIR.

We used three frameworks: ADDIE to guide the development of the e-learning modules, the CFIR to identify determinants of future training and implementation, and the Kirkpatrick model (19) to evaluate the IPS e-learning modules. The ADDIE model consists of five phases, beginning with identifying key stakeholder needs, educational goals, and optimal methods of content delivery (analysis). This information was used to establish a design document for the training (design), which was vetted by key stakeholders before the e-learning modules were built (development). After iterative refinement, the e-learning modules were disseminated and evaluated using the Kirkpatrick model for training evaluation (19) (implementation/evaluation). Results from the formative and summative evaluations conducted during the ADDIE process were used to identify barriers and facilitators to implementation according to CFIR domains (Figure 1). Doing so allowed the IPS team to attend iteratively to contextual variables, align with the larger conceptual and empirical implementation literature (9, 20), and select strategies to build a multifaceted approach to IPS implementation.

In November 2007, the NYS Office of Mental Health (OMH) and the Department of Psychiatry, Columbia University, established the Center for Practice Innovations (CPI) at Columbia Psychiatry and New York State Psychiatric Institute to promote the widespread use of EBPs throughout NYS. CPI uses innovative approaches to build stakeholder collaborations, develop and maintain providers’ expertise, and build agency infrastructures that support implementing and sustaining these EBPs. CPI works with OMH to identify and involve consumer, family, provider, and scientific-academic organizations as partners in supporting the goals of OMH and CPI. CPI’s initial charge was to provide training for the NYS behavioral health-care workforce. Given the size and geographical dispersion of this workforce, CPI turned to distance-learning technologies and e-learning modules (21, 22). Distance technologies may offer cost-effective alternatives to typical training methods, and some evidence suggests that such technologies are at least as effective as face-to-face training (21). CPI has collaborated with key stakeholders and content experts to create more than 100 e-learning modules for its initiatives. CPI’s online modules and resources are delivered through an LMS, an online learning platform that facilitates access to online training, event registration, and resource libraries for each initiative.

One of these initiatives, IPS, provides training and implementation support in an evidence-based approach to supported employment (23). Competitive employment rates were low across NYS: 9.2% in 2011, prior to systematic IPS implementation (Patient Characteristics Survey data, 2011, obtained from https://www.omh.ny.gov/omhweb/statistics/). In response, OMH leadership identified supported employment as a key service in personalized recovery-oriented services (PROS) programs, a comprehensive model that integrates rehabilitation, treatment, and support services for people with serious mental illness. The number of PROS programs in New York has increased substantially over the past decade; in 2017, 86 programs were serving 10,500 individuals. To reach these 86 programs statewide, the IPS initiative developed a series of three e-learning modules: Introduction to IPS, IPS Job Development, and Using the IPS Employment Resource Book. The module development team included an instructional designer, subject matter experts (SMEs), course developers, and a project manager.

In the analysis phase, the instructional problem was clarified, the instructional goals and objectives were established, and the learners’ environment, existing knowledge, and skills were identified. The module development team discussed the instructional problem and the expectations for performance after completion of the modules. Because IPS had not previously been implemented in NYS, learners’ existing knowledge and skills in IPS were expected to be minimal. Formative evaluation via preliminary discussions with agency administrators, employment supervisors, and employment staff members in PROS programs included questions about learners’ experiences with and opinions about traditional vocational rehabilitation methods, attitudes about IPS principles (e.g., zero exclusion), awareness of or experiences with IPS, and expectations and attitudes about the likelihood that recipients in their programs would work competitively. These discussions revealed several needs: a lack of understanding of the evidence for IPS (CFIR: intervention characteristics), discomfort with some IPS principles that are inconsistent with traditional approaches to vocational rehabilitation (characteristics of individuals), a lack of knowledge about the specific skills and tasks involved in the model (characteristics of individuals), a lack of familiarity with how to do job development and why it is important (characteristics of individuals), and a lack of tools that can be used in real-time meetings with potential employers (implementation process). These data informed the development of a curriculum for a pilot training program in IPS that consisted of in-person training, webinars, and on-site technical assistance. Through this pilot process, observations were made about learners’ strengths, additional training needs, and the PROS program environment.
In addition, the employment needs of program recipients (adults diagnosed with serious mental illness who live in the community, many with histories of hospitalization and treatment), such as jobs consistent with their personal strengths and interests, part-time work for many, and workplaces easily accessible by public transportation (outer setting), prompted another cycle of modifications to the IPS curriculum and informed decisions about pedagogical format. Because the initiative required scalability across New York State, e-learning modules were judged to be an important, resource-efficient implementation strategy.

The design phase established learning objectives, exercises, content, lesson plans, and media selection via a design document, which served as the blueprint for building the training program. The instructional designer gathered feedback from the analysis phase and resources on the topic provided by SMEs (e.g., books, research publications, and information available online) and identified content to support the learning objectives (Table 1) for all three IPS e-learning modules. The module development team designed a 10-item knowledge quiz and a 10-item level 1 reaction survey consisting of both closed- and open-ended questions. The instructional designer iteratively presented design documents to the module development team for review and feedback. An example design document for the IPS Job Development module is provided in Figure S1 in the Supplementary Material.

During the development phase, the course developer received the reviewed design document and used authoring software to create multimedia e-learning modules according to it. In this phase, the IPS modules were built with video, narrated graphics, photographs, and knowledge checks. Formative evaluation from the analysis phase led to the development of a tool, the Employment Resource Book (24), that could be used by key stakeholders (providers, supervisors, and recipients) during any phase of employment (e.g., considering work, actively seeking employment, maintaining employment), and one module was developed to provide guidance on using this resource. The IPS training was divided into three short e-learning modules to reflect learners’ available time and attention span during the workday; the modules were then tested as prototypes with the module development team and revised.

During the implementation phase, the e-learning modules were uploaded to the CPI LMS for usability testing, in which a module’s functionality is evaluated before training implementation. For example, the module development team tested whether videos played and whether navigation worked (e.g., next buttons and links to additional resources) on a variety of web browsers and devices. Feedback from usability testing was used to fix navigation errors and improve the user experience (25). After usability issues were addressed, the modules were ready for implementation.

When the IPS initiative began, the NYS-OMH Rehabilitation Services Unit sent an official email strongly encouraging PROS program providers and supervisors to participate in the training offered by the CPI IPS initiative. In addition, each PROS program supervisor received an email alerting them that the new IPS e-learning module was available in CPI’s LMS. Through the LMS, PROS program participation in the modules was tracked, and completion could be monitored by PROS programs and NYS-OMH.

We applied quantitative and qualitative methods as part of formative and summative evaluation in the ADDIE process. Formative evaluations consisted of qualitative feedback received from recipients and providers during early pilot work, which identified training needs. The summative evaluation consisted of quantitative and qualitative data and was guided by the Kirkpatrick model for training evaluation (19). The model’s four levels of evaluation are (1) the learner’s reaction to the training experience, (2) the learning and increase in knowledge resulting from the training experience, (3) the learner’s behavioral change and improvement after applying the skills on the job, and (4) the results or effects of the learner’s performance on the care provided. In this report, we focus on the first two levels: level 1, the learner’s reaction (training experience, self-reported knowledge acquisition, and self-reported practice change), assessed through a survey; and level 2, resulting knowledge, assessed through post-module quizzes.
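To make the scope of the summative evaluation explicit, the sketch below encodes the four Kirkpatrick levels as a small Python data structure and records which instrument, if any, addressed each level in this report. The class name `KirkpatrickLevel` and the helper `assessed_levels` are hypothetical illustrations, not part of any tool used in the initiative; the instruments listed are taken from the description above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class KirkpatrickLevel:
    """One level of the Kirkpatrick training-evaluation model (hypothetical encoding)."""
    number: int
    name: str
    focus: str
    instrument: Optional[str]  # None means the level was not assessed in this report


LEVELS: List[KirkpatrickLevel] = [
    KirkpatrickLevel(1, "Reaction", "learner's reaction to the training experience",
                     "level 1 survey: ratings, self-reported knowledge and practice change"),
    KirkpatrickLevel(2, "Learning", "knowledge gained from the training",
                     "knowledge quiz embedded in each module"),
    KirkpatrickLevel(3, "Behavior", "practice change after applying skills on the job", None),
    KirkpatrickLevel(4, "Results", "effects of learner performance on care provided", None),
]


def assessed_levels(levels: List[KirkpatrickLevel]) -> List[int]:
    """Return the numbers of the levels that have an evaluation instrument attached."""
    return [level.number for level in levels if level.instrument is not None]


if __name__ == "__main__":
    for level in LEVELS:
        status = level.instrument or "not assessed in this report"
        print(f"Level {level.number} ({level.name}): {status}")
```

Keeping levels 3 and 4 in the structure with no instrument makes the gap that the limitations discussion returns to visible at a glance.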

To keep the learner experience seamless, we decided to embed the knowledge quiz, which assessed knowledge of IPS model-related concepts, skills, and tools, within each module. For a module to be marked as completed, learners were required to answer at least 80% of the knowledge items correctly, which satisfies continuing education accreditation requirements. Learners could retake the quiz as many times as needed to meet this criterion. Once the module was completed, the learner was prompted to complete the level 1 survey. The level 1 reaction survey was based on the learning objectives set forth in each e-learning module, accreditation requirements, and example questions from Kirkpatrick level 1 (19). Questions asked learners to rate the module overall, whether it met its stated learning objectives, and whether the information presented was new to them, along with module-specific questions about self-reported knowledge and practice change. In addition, three open-ended questions were included: What could we improve? What do you like the most about this module? Where do you think you might use what you learned in this module?
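The completion rule described above (at least 80% of the quiz items correct, unlimited retakes, then a prompt for the level 1 survey) can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the logic of CPI’s actual LMS: the function names are hypothetical, and the 10-item default comes from the quiz length reported for the design phase. Integer arithmetic is used for the threshold check so that exactly 8 of 10 counts as passing without floating-point surprises.

```python
from typing import Dict, Sequence

PASS_PERCENT = 80  # minimum share of knowledge items answered correctly
QUIZ_ITEMS = 10    # quiz length reported for the IPS modules


def attempt_passes(correct: int, total: int = QUIZ_ITEMS, pass_percent: int = PASS_PERCENT) -> bool:
    """True if an attempt meets the criterion; integer math avoids float rounding issues."""
    if total <= 0 or not 0 <= correct <= total:
        raise ValueError("expected total > 0 and 0 <= correct <= total")
    return correct * 100 >= pass_percent * total


def module_status(attempt_scores: Sequence[int], total: int = QUIZ_ITEMS) -> Dict[str, object]:
    """Replay quiz attempts in order; retakes are unlimited, so the first pass completes the module."""
    for attempt_number, correct in enumerate(attempt_scores, start=1):
        if attempt_passes(correct, total):
            # Completion unlocks the level 1 reaction survey prompt.
            return {"completed": True, "attempts": attempt_number, "prompt_level1_survey": True}
    return {"completed": False, "attempts": len(attempt_scores), "prompt_level1_survey": False}


if __name__ == "__main__":
    # Hypothetical learner: 7/10 on the first try, 9/10 on the retake.
    print(module_status([7, 9]))
```

Under this rule the level 1 survey is only reachable after a passing attempt, which is consistent with the observation that every learner who completed the level 1 evaluation had demonstrated at least 80% mastery.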

The NYS Psychiatric Institute Institutional Review Board determined that this evaluation did not meet the definition of human subject research.

Using IBM© SPSS© Statistics Version 24, we applied descriptive statistics to the quantitative level 1 summative evaluation data. For the qualitative formative and summative evaluation data, we employed thematic analysis to identify themes within the open-ended question data (26). Two coders independently reviewed the open-ended responses to identify codes and develop an initial code list. The coders then combined codes into overarching themes and met to review and label them. They met twice to discuss discrepancies and reach consensus on key barriers and facilitators within the CFIR. We report themes that were raised by at least 10% of the sample.
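The analysis itself was run in SPSS and coded by hand; the Python sketch below only illustrates the two computational steps described above, using made-up data: descriptive statistics (n, mean, and SD) for a level 1 rating item, and the rule of reporting a theme only when at least 10% of respondents raised it. The function names, the data layout (one set of consensus theme labels per respondent), and the use of all survey respondents as the denominator are assumptions for illustration.

```python
from collections import Counter
from statistics import mean, stdev
from typing import Dict, Iterable, List, Set, Tuple


def describe(ratings: Iterable[float]) -> Dict[str, float]:
    """n, mean, and sample SD for one Likert-type item (e.g., a 1-5 overall rating)."""
    values = list(ratings)
    sd = stdev(values) if len(values) > 1 else 0.0
    return {"n": len(values), "mean": round(mean(values), 2), "sd": round(sd, 2)}


def reportable_themes(coded: Dict[str, Set[str]], min_percent: int = 10) -> List[Tuple[str, float]]:
    """Themes raised by at least `min_percent` of respondents, most frequent first.

    `coded` maps a respondent ID to the set of consensus theme labels assigned to
    that respondent's open-ended answers (an empty set if none). Each respondent
    counts at most once per theme.
    """
    n = len(coded)
    if n == 0:
        return []
    counts = Counter(theme for themes in coded.values() for theme in set(themes))
    # Integer comparison: keep a theme if count / n >= min_percent / 100.
    kept = [(theme, count / n) for theme, count in counts.items() if count * 100 >= min_percent * n]
    return sorted(kept, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical data only; not the evaluation results reported in this article.
    print(describe([5, 4, 4, 5, 4]))
    coded = {f"r{i}": set() for i in range(20)}
    for i in range(5):
        coded[f"r{i}"].add("theme A")  # 5 of 20 respondents (25%)
    for i in range(5, 7):
        coded[f"r{i}"].add("theme B")  # 2 of 20 respondents (10%)
    coded["r7"].add("theme C")         # 1 of 20 respondents (5%), below the cutoff
    print(reportable_themes(coded))
```

Counting each respondent at most once per theme keeps the 10% threshold tied to the share of the sample raising a theme rather than the number of coded text fragments.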

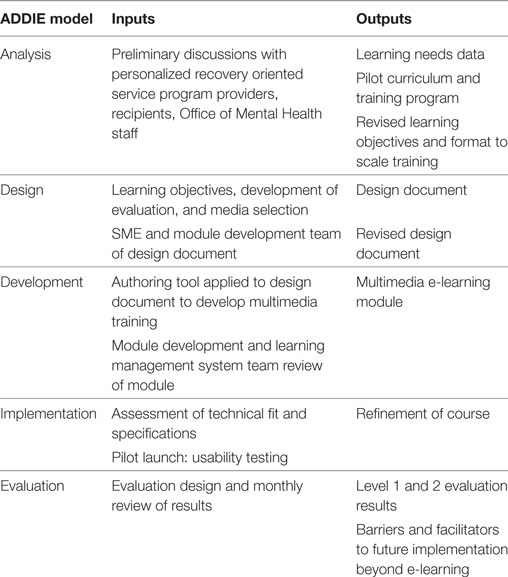

We describe the inputs and outputs during each phase of IPS module development using ADDIE in Table 2. Formative evaluation during each stage of ADDIE allowed for the iterative revision of the content for each module and the identification of needs for subsequent modules. Feedback received from the evaluation of the first module led to the development of the second module (i.e., desire to learn more about job development) and to the development of the Employment Resource Book including the associated third module (i.e., desire to be better equipped to deal with common challenges).

Table 2. Using Analyze, Design, Develop, Implement, and Evaluate (ADDIE) to develop individual placement and support modules.

Summative evaluation examined the impact of the IPS training modules and helped identify barriers and facilitators for future IPS implementation. Table 3 summarizes level 1 evaluation data for all three IPS modules. Learners’ backgrounds and experience varied considerably across programs; many were rehabilitation counselors or social workers, and some had backgrounds outside behavioral health. Learners rated all three modules highly (mean range: 4.4–4.5 out of 5). Learners also indicated that the modules presented new information and met their stated learning objectives (mean range: 4.3–4.4 out of 5). Similarly, learners’ self-report of knowledge acquisition was high (mean range: 4.4–4.6 out of 5). About half of learners indicated that they would change their practice after watching the modules (range: 48–51%). All learners who completed the level 1 evaluation demonstrated 80% or better mastery of knowledge on the level 2 evaluation embedded in each module.

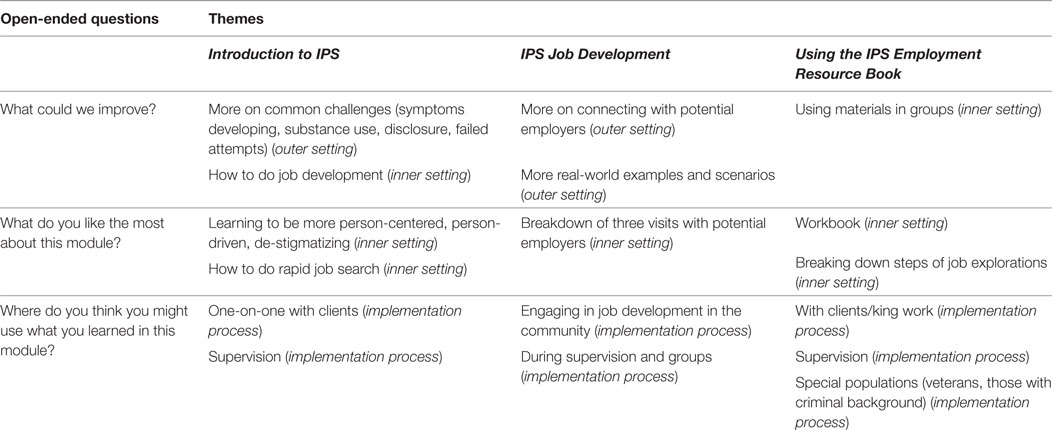

Open-ended question themes and related CFIR domains from these e-learning modules helped identify additional implementation support needs to be addressed by the multifaceted approach to implementing IPS (i.e., statewide webinars; regional online meetings on special topics such as IPS fidelity and supervision; an IPS library with tools to support IPS implementation; and individualized program consultations that address implementation challenges and enhance provider competence). Themes from the open-ended questions for all three IPS modules are described using the CFIR in Table 4. These themes related to three CFIR domains: outer setting, inner setting, and implementation process. They provided information on the acceptability of the modules, future learning needs, and how the information learned would be used in everyday practice.

Table 4. Themes and CFIR domains from level 1 survey open-ended questions for individual placement and support (IPS) modules.

This report provides one example of how an instructional design approach may be applied to the development of e-learning modules as one strategy in a multifaceted approach to implementing IPS supported employment among community program providers in a large state public behavioral health system. Through iterative development, we applied the ADDIE model to develop a series of e-learning modules for IPS. Using an LMS, these modules were disseminated to and evaluated by PROS program providers throughout NYS. Results from the level 1 and level 2 evaluations suggest that the modules developed with the ADDIE model supported practitioner knowledge acquisition: learners reported high knowledge gains, and all who completed the level 1 evaluation had met the 80% mastery criterion on the embedded knowledge quiz. In addition, learners received the e-learning modules favorably, rating them highly overall and noting that they met stated learning objectives and presented new information. Throughout the development process, data from the e-learning modules were mapped onto CFIR domains to identify needs that led to additional e-learning modules and to strategies for subsequent implementation support through a statewide learning collaborative (27).

The ADDIE model and CFIR were used as complementary approaches in the development of e-learning resources for training providers in an EBP. Our experience in this process produced several lessons learned and recommendations for implementation researchers and practitioners. The analysis phase of the ADDIE model required early assessment of multistakeholder needs and context in the process of developing training. We recommend taking the time to assess and include end users and recipients to shape the training and increase its ecological validity. In addition, the use of the CFIR domains allowed us to anticipate barriers and map future implementation strategies. During the design process, establishing clear and measurable learning objectives was important and facilitated focus on, and evaluation of, knowledge and skill acquisition. We recommend assembling e-learning module development teams a priori to work with the instructional designer and establishing an efficient process for reviewing training content and format through weekly iterative review meetings during the design and development stages. Although the ADDIE process points to the introduction of the learning platform (e.g., website, LMS) at the implementation stage, we recommend that the team with technical expertise (in our case, the courseware developers and LMS administrators) be introduced earlier, during the development stage. This is crucial to the feasibility and usability of the end product. Once the modules are implemented, we recommend a scheduled monthly review of the evaluation data being collected as learners participate in the e-learning modules. This review can identify any needed revisions to the training content, the need for future content development, and barriers and facilitators for future implementation.

This article reports on the development of e-learning modules that were one part of a larger implementation effort in a state system. This implementation was not part of a rigorous research evaluation. Limitations of this report include the inability to formally assess pre–post knowledge, practice, and readiness for IPS implementation using validated scales based on accepted standards in the literature; variation in sample sizes across the e-learning modules, which precluded examination of a stable cohort of learners over time; and the inability to directly assess the specific impact of these e-learning modules on employment outcomes apart from other elements of the entire initiative. Notably, only half of the providers who completed the evaluation reported an intention to change their practice, and at this stage of IPS implementation we did not have the capacity to assess practice change at the individual provider level (level 3). However, in our subsequent work (27), program fidelity assessments using established measures showed improvement over time, suggesting level 3 practice change; moreover, fidelity self-assessed by program sites has been shown to be associated with higher employment rates (level 4) that are sustained over time (28). Future research may focus on more rigorous evaluation of knowledge and practice change, mixed-methods assessment of how the content of e-learning modules influences practice, and the essential role of care recipients in helping to design training within implementation efforts.

From adoption to sustainability, implementation science focuses on strategies to promote the systematic uptake of research findings into routine practice. Successful implementation relies on iterative, interacting activities that follow a systematic process for strategy development. When training is the implementation strategy, instructional design offers such a systematic and iterative process. First, it applies instructional theory to the development of training regardless of subject matter. Second, it identifies fundamental elements of the learners’ needs and real-world setting factors in addition to the EBP being implemented. Third, it creates accountability by aligning training content with measurable learning objectives and assessing learner knowledge and skill acquisition based on that content. Lastly, it uses novel multimedia approaches in developing educational and training resources.

Compared with more intensive approaches to training and workforce development, the development of e-learning modules informed by an instructional design approach gives implementation science the opportunity to scale and support training at the level of knowledge and skill acquisition for a range of EBPs. These modules can be used as stand-alone resources or as part of blended learning activities and implementation supports, as in the IPS initiative. Another example, for a complex, multicomponent intervention or model of care, is CPI’s work with Assertive Community Treatment, in which instructional design is used to develop e-learning modules as a first step in a blended learning curriculum for practitioners in a state system (29). Accordingly, there is increasing interest in examining the effect of an instructional design approach to training in behavioral health, especially for large systems of care.

Instructional design approaches such as ADDIE may offer implementation scientists and practitioners a flexible and systematic guideline for the development of e-learning modules as a single component or one strategy in a multifaceted approach for training practitioners in EBPs. This approach thus facilitates the translation from science to practice, taking into account the learner’s context and leveraging technology for expanded reach; both are promising directions for workforce development and a learning health-care system (1, 30).

SP, CL, and LD conceived the study and conceptual framework. SP and NC managed data and analyses. SP, PM, NC, and CL contributed to writing the manuscript with feedback and supervision from LD.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer AR and the handling Editor declared their shared affiliation.

SP is a fellow of the Implementation Research Institute (IRI) at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (R25 MH080916) and the Department of Veterans Affairs, Health Services Research & Development Service, Quality Enhancement Research Initiative (QUERI).

The Supplementary Material for this article can be found online at https://www.frontiersin.org/articles/10.3389/fpubh.2018.00113/full#supplementary-material.

Figure S1. IPS job development design document.

1. IOM (Institute of Medicine). Best Care at Lower Cost: The Path to Continuously Learning Health Care in America. Washington, DC: The National Academies Press (2013).

2. Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health (2011) 38(1):4–23. doi:10.1007/s10488-010-0327-7

3. Dam NV. Inside the Learning Brain. Association for Talent Development (2013). Available from: https://www.td.org/Publications/Magazines/TD/TD-Archive/2013/04/Inside-the-Learning-Brain (Accessed: January 21, 2018).

4. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci (2009) 4(1):50. doi:10.1186/1748-5908-4-50

5. Mattox T, Kilburn R. Supporting Effective Implementation of Evidence-Based Practices: A Resource Guide for Child-Serving Organizations. Santa Monica, CA: RAND Corporation (2016).

6. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci (2013) 8:139. doi:10.1186/1748-5908-8-139

7. Lyon AR, Stirman SW, Kerns SEU, Bruns EJ. Developing the mental health workforce: review and application of training approaches from multiple disciplines. Adm Policy Ment Health (2011) 38(4):238–53. doi:10.1007/s10488-010-0331-y

8. Weingardt K. The role of instructional design and technology in the dissemination of empirically supported, manual-based therapies. Clin Psychol Sci Pract (2004) 11:331–41. doi:10.1093/clipsy.bph087

9. Beidas RS, Koerner K, Weingardt KR, Kendall PC. Training research: practical recommendations for maximum impact. Adm Policy Ment Health (2011) 38(4):223–37. doi:10.1007/s10488-011-0338-z

10. Dick W, Carey L. The Systematic Design of Instruction. 4th ed. New York: Harper Collins College Publishers (1996).

11. Reiser RA, Dempsey JV, editors. Trends and Issues in Instructional Design and Technology. Upper Saddle River, NJ: Merrill Prentice Hall (2002).

12. Allen WC. Overview and evolution of the ADDIE training system. Adv Dev Hum Resour (2006) 8:430–41. doi:10.1177/1523422306292942

13. Tariq M, Bhulani N, Jafferani A, Naeem Q, Ahsan S, Motiwala A, et al. Optimum number of procedures required to achieve procedural skills competency in internal medicine residents. BMC Med Educ (2015) 15(1):179. doi:10.1186/s12909-015-0457-4

14. Battles JB. Improving patient safety by instructional systems design. Qual Saf Health Care (2006) 15(Suppl 1):i25–9. doi:10.1136/qshc.2005.015917

15. Miller GT, Motola I, Brotons AA, Issenberg SB. Preparing for the worst. A review of the ADDIE simulation model for disaster-response training. JEMS (2010) 35(9):suppl 11–3.

16. Malan Z, Mash B, Everett-Murphy K. Development of a training programme for primary care providers to counsel patients with risky lifestyle behaviours in South Africa. Afr J Prim Health Care Fam Med (2015) 7(1):a819. doi:10.4102/phcfm.v7i1.819

17. Mash B. Development of the programme mental disorders in primary care as internet-based distance education in South Africa. Med Educ (2001) 35(10):996–9. doi:10.1111/j.1365-2923.2001.01031.x

18. Mash B. Diabetes education in primary care: a practical approach using the ADDIE model. Contin Med Educ (2010) 28:485–7.

19. Kirkpatrick DL. Evaluating Training Programs. San Francisco, CA: Berrett-Koehler Publishers, Inc. (1994).

20. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci (2015) 10:53. doi:10.1186/s13012-015-0242-0

21. Covell NH, Margolies PJ, Smith MF, Merrens MR, Essock SM. Distance training and implementation supports to scale-up integrated treatment for people with co-occurring mental health and substance use disorders. J Dual Diagn (2011) 7:162–72. doi:10.1080/15504263.2011.593157

22. Covell NH, Margolies PJ, Myers RW, Ruderman D, Fazio ML, McNabb LM, et al. Scaling up evidence-based behavioral health care practices in New York State. Psychiatr Serv (2014) 65:713–5. doi:10.1176/appi.ps.201400071

23. Drake RE, Bond GR, Becker DR. Individual Placement and Support: An Evidence-Based Approach to Supported Employment. New York: Oxford University Press (2012).

24. Jewell T, Margolies P, Salerno A, Scannevin G, Dixon LB. Employment Resource Book. New York City: Research Foundation for Mental Hygiene (2014).

25. Running a Usability Test. (2018). Available from: http://www.usability.gov/ (Accessed: March 22, 2018).

26. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol (2006) 3(2):77–101. doi:10.1191/1478088706qp063oa

27. Margolies PJ, Broadway-Wilson K, Gregory R, Jewell TC, Scannevin G, Myers RW, et al. Use of learning collaboratives by the Center for Practice Innovations to bring IPS to scale in New York State. Psychiatr Serv (2015) 66:4–6. doi:10.1176/appi.ps.201400383

28. Margolies PJ, Humensky JL, Chiang IC, Covell NH, Broadway-Wilson K, Gregory R, et al. Is there a role for fidelity self-assessment in the individual placement and support model of supported employment? Psychiatr Serv (2017) 68(9):975–8. doi:10.1176/appi.ps.201600264

29. Thorning H, Marino L, Jean-Noel P, Lopez L, Covell NH, Chiang I, et al. Adoption of a blended training curriculum for ACT in New York State. Psychiatr Serv (2016) 67(9):940–2. doi:10.1176/appi.ps.201600143

Keywords: e-learning, supported employment, implementation science, instructional design, training

Citation: Patel SR, Margolies PJ, Covell NH, Lipscomb C and Dixon LB (2018) Using Instructional Design, Analyze, Design, Develop, Implement, and Evaluate, to Develop e-Learning Modules to Disseminate Supported Employment for Community Behavioral Health Treatment Programs in New York State. Front. Public Health 6:113. doi: 10.3389/fpubh.2018.00113

Received: 31 January 2018; Accepted: 04 April 2018;

Published: 07 May 2018

Edited by:

Ross Brownson, Washington University in St. Louis, United States

Reviewed by:

Geetha Gopalan, University of Maryland, United States

Copyright: © 2018 Patel, Margolies, Covell, Lipscomb and Dixon. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sapana R. Patel, sapana.patel@nyspi.columbia.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
