95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Psychiatry , 28 January 2025

Sec. Autism

Volume 15 - 2024 | https://doi.org/10.3389/fpsyt.2024.1521926

Introduction: Autism Spectrum Disorder (ASD) presents significant challenges in social communication and interaction, critically impacting the lives of children with ASD. Traditional interventions, such as Applied Behavior Analysis (ABA) and Social Skills Training (SST), have been widely used to address social skill deficits in these children. While these methods are effective, they often require substantial resources, long-term engagement, and specialized expertise, which limit their accessibility and adaptability to diverse social contexts. Recent advancements in artificial intelligence (Al), particularly Transformer-based models, offer a novel opportunity to enhance and personalize social skills training.

Methods: This study introduces a Public Health-Driven Transformer (PHDT) model specifically designed to improve social skills in children with ASD. By integrating public health principles with state-of-the-art Al methodologies, the PHDT model creates interventions that are adaptable, accessible, and sensitive to individual needs. Leveraging multi-modal data inputs-such as text, audio, and facialcues-PHDT provides real-time social context interpretation and adaptive feedback, enabling a more naturalistic and engaging learning experience.

Results and discussion: Experimental results reveal that PHDT significantly outperforms traditional methods in fostering engagement, retention, and social skill acquisition. These findings highlight PHDT's potential to improve social competencies in children with ASD and to revolutionize access to specialized support within public health frameworks. This work underscores the transformative impact of Al-driven, public health-oriented interventions in promoting equitable access to essential developmental resources and enhancing the quality of life for children with ASD.

Public Health-Driven Transformer for Social Skill Enhancement in Children with AutismIn recent years, social skill enhancement in children with Autism Spectrum Disorder (ASD) has garnered increasing attention due to the critical role these skills play in their cognitive, emotional, and behavioural development Rouhandeh et al. (1). Addressing these needs has become a public health priority, as improved social skills can significantly impact the quality of life, independence, and academic success of children with ASD Alharbi and Huang (2). Traditional interventions, such as Applied Behavior Analysis (ABA) and Social Skills Training (SST), though effective, often require intensive, time-consuming sessions with limited scalability, making them challenging for widespread implementation Loftus et al. (3). Advances in artificial intelligence (AI), particularly with Transformer-based deep learning models, offer new avenues to enhance these interventions by providing scalable, adaptive, and interactive social skill training. Leveraging these technologies can not only augment traditional methods but also enable new, personalized approaches that can reach a broader demographic, particularly through digital platforms that are increasingly accessible.

To address the limitations of conventional social skill enhancement methods, initial AI applications in this area were grounded in symbolic AI and knowledge representation Park et al. (4). These systems focused on rule-based decision-making to simulate socially appropriate responses, using predefined knowledge bases and if-then logic. Such methods allowed for the establishment of consistent, structured frameworks that attempted to emulate basic social interactions Lee et al. (5). However, these rule-based systems lacked flexibility, as they could not adapt to the complex and varied nature of social cues encountered in real-life scenarios. Consequently, the rigidity of symbolic AI limited its effectiveness in promoting dynamic social learning and was unable to cater to the individual learning needs of children with ASD, who often benefit from personalized feedback and varied social contexts Aldabas (6).

The evolution from symbolic AI to machine learning marked a significant step forward, as data-driven approaches enabled more adaptive social skill interventions Puglisi et al. (7). Machine learning models, particularly supervised learning techniques, allowed for pattern recognition from large datasets of social interactions, capturing more nuanced social behaviors and expressions Frolli et al. (8). Models trained on labeled data, such as facial expressions and verbal interactions, could identify social cues with greater accuracy and variation than rule-based systems Ioannou et al. (9). Nevertheless, these methods heavily relied on labeled data, which is costly and time-consuming to curate, and their performance was constrained by the quality and size of the datasets. Additionally, while they improved adaptability, they often struggled to generalize across diverse social settings and required extensive computational resources for real-time interactions, making them less accessible for public health implementations Hameed et al. (10).

The advent of deep learning, especially Transformer-based architectures and pre-trained models, has led to a substantial shift in the capabilities of AI for social skill enhancement Kouhbanani et al. (11). Transformer models, with their attention mechanisms and ability to process contextual information over long sequences, excel at modeling complex social interactions, as they can capture dependencies between varied social cues and context. Pre-trained models, such as BERT and GPT, have shown success in understanding nuanced language and behavioral patterns, enabling more context-aware and responsive interactions in ASD interventions Safi et al. (12). These models can be fine-tuned for specific social skill scenarios, which allows for personalization and adaptability without requiring massive labeled datasets. However, the computational intensity of Transformers and the risk of biases in pre-trained models remain challenges, as these limitations can hinder scalability and lead to inconsistent outputs in diverse social contexts Hernández-Espeso et al. (13).

Based on the aforementioned limitations, we propose a Public Health-Driven Transformer (PHDT) designed for scalable, personalized social skill enhancement in children with ASD. By integrating insights from both social skill development and deep learning, our approach addresses the drawbacks of traditional, rule-based, and machine learning methods by creating an adaptable, efficient, and publicly accessible solution.

● PHDT incorporates a novel attention-based module tailored for interpreting diverse social cues, such as facial expressions, gestures, and verbal tones, optimizing interaction specificity for children with ASD.

● The model is designed to operate efficiently across various scenarios, balancing performance with computational demand, making it accessible for broader use in public health interventions.

● We introduce a novel dynamic batch size adjustment mechanism during training, which accelerates convergence and enhances model generalization by effectively balancing computational efficiency and learning stability

Public health approaches have long been a focus of autism interventions due to their emphasis on scalable, community-wide solutions that address early diagnosis and intervention Terlouw et al. (14). These approaches view autism not solely as an individual developmental disorder but as a societal challenge with substantial public health implications. Population-based strategies in public health aim to ensure that children with autism, especially those from underserved communities, have access to early detection tools and intervention resources. By framing autism interventions within a public health context, researchers have pursued comprehensive methods that reduce barriers to access, often through community-based programs and policies Güler and Erdem (15). One promising area within this field includes community level frameworks that engage families, educators, and healthcare providers in identifying and addressing autism-related needs early on. Screening tools designed for early detection have demonstrated benefits in linking children to resources, but gaps in reaching diverse and rural populations remain. These frameworks have evolved to incorporate digital and AI-driven tools, capitalizing on the reach of technology to amplify detection and intervention access. Public health-driven models are thus shifting towards leveraging scalable digital platforms, aiming to integrate intervention approaches with other services in a holistic manner Ávila Álvarez et al. (16). Public health models increasingly prioritize collaborative, integrated systems that involve the community in recognizing early social skill deficits and facilitating social interaction enhancements Arora et al. (17). The inclusion of technology in public health approaches to autism intervention highlights how digital tools can extend the reach of social skills training, often a key area of developmental need. Machine learning and AI models, like transformer-based architectures, provide a means to deliver interventions that adapt to individual children’s progress. The potential to detect social skill deficits and tailor intervention pathways for large populations enhances the ability to address disparities. Particularly, AI tools can support real-time adaptation to a child’s performance, creating responsive learning environments even in remote or underserved areas. Studies indicate that AI-enabled interventions are feasible in community health settings, enabling therapists, educators, and families to integrate such tools seamlessly. By aligning autism interventions with public health goals, transformative technology-driven solutions have the potential to bridge gaps in access and efficacy Doulah et al. (18).

Transformer models have recently demonstrated significant promise in advancing social skill training for children with autism, primarily due to their robust ability to process large-scale data and deliver individualized learning experiences. These deep learning models, initially developed for language tasks, have been adapted to understand complex social interactions, making them suitable for social skill development applications. Unlike traditional machine learning models, transformer architectures can capture nuanced relationships within social interaction data, learning to identify and enhance specific skills like eye contact, verbal reciprocity, and non-verbal communication Scarcella et al. (19). Research on transformer models in autism primarily focuses on their ability to analyze multimodal data—such as video, audio, and text—that reflect a child’s engagement in social scenarios Liu and Hu (20). This approach enables transformers to detect patterns in social behavior and adjust training content dynamically based on a child’s individual needs. Studies show that by training on diverse datasets of typical and atypical social interactions, transformers can learn effective intervention responses, simulating scenarios that encourage specific social behaviors. These models can analyze video interactions and suggest adjustments to a child’s social engagement strategies in real-time, providing a form of personalized feedback that can be particularly effective for autism therapy Mannion (21). Transformer-based models allow for enhanced adaptability in therapy, permitting flexible responses to various social challenges a child may encounter. They can also integrate feedback loops that continuously refine the training protocols based on the child’s progress, making these interventions highly responsive. This adaptability can also extend to group settings where children with autism interact with peers, offering tailored suggestions that help them manage diverse social dynamics Soltiyeva et al. (22). Integrating these systems into socially assistive technologies has shown potential for fostering social engagement, as they can respond to the unique interaction patterns of each child. Given their ability to generalize from complex social datasets, transformers present a compelling solution for scalable social skill training tools that align with both therapeutic and educational needs Fernandez-Fabeiro et al. (23).

AI-enhanced interventions have expanded the scope of autism therapy, with a specific focus on social skills critical for daily interaction and independence. Social skill development often challenges children with autism due to their difficulties in interpreting social cues, initiating interactions, and responding appropriately to social stimuli. AI-driven models, particularly those utilizing machine learning algorithms, have provided structured, adaptive training environments that support skill acquisition in areas like conversational turn-taking, emotional recognition, and empathy. By integrating AI into social skill interventions, researchers have developed tailored, data-driven approaches that facilitate meaningful engagement in real-world settings Güeita-Rodríguez et al. (24). A critical aspect of AI-driven social skill enhancement is the utilization of real-time feedback, allowing for immediate corrections and positive reinforcement. AI models can simulate a range of social situations, allowing children to practice and develop skills at their own pace while receiving guidance tailored to their progress. For example, virtual agents powered by AI provide a safe, low-stress environment for practicing conversations, identifying emotions, and developing adaptive responses. Studies indicate that these virtual settings can effectively replicate many social scenarios encountered in daily life, offering children a structured approach to practicing and refining social interactions. The dynamic adaptability of AI-based models means that they can assess a child’s level of social skill proficiency, personalize the training tasks accordingly, and scale the complexity as the child’s skills improve Terlouw et al. (25). Beyond individual sessions, AI-enhanced social skill interventions offer benefits in group contexts, enabling interactive exercises where children can develop skills alongside peers in controlled, simulated environments. Social robots equipped with AI algorithms further exemplify this trend, serving as mediators in group therapy by facilitating turn-taking, modeling appropriate social behaviors, and providing non-judgmental feedback Gengoux et al. (26). The data-driven approach of AI also provides valuable insights for therapists and educators, offering analytics on a child’s progress, specific skill deficiencies, and improvement areas. By incorporating these detailed insights into intervention strategies, AI-enhanced interventions support a more personalized and effective approach to social skill development for children with autism.

In this work, we focus on enhancing social skills in children with Autism Spectrum Disorder (ASD) through technology-assisted interventions. Social skills are an essential component of social interaction and personal development, yet children with ASD often exhibit challenges in this area, particularly with skills such as initiating and maintaining conversation, social problem-solving, and recognizing social cues. Consequently, interventions in this domain aim to mitigate these challenges by introducing structured and evidence-based methods that foster communication and interaction skills. This section provides an overview of the proposed method to enhance these social skills through a novel framework of technology-aided instruction, structured into the following key segments.

In 3.2, we define the primary challenges in social skill acquisition faced by children with ASD, including a theoretical background on social communication deficits as identified in diagnostic criteria. Additionally, we analyze existing methods that employ technology to support social skill interventions, such as video modeling, audio prompting, and interactive digital environments. These methods demonstrate potential for effectively addressing ASD-related social difficulties by using digital solutions that simulate or reinforce social scenarios. The subsequent section, 3.3, outlines the mathematical foundation for modeling interactive learning environments tailored to the ASD population. Here, we formalize the problem by developing a set of models that quantify skill acquisition and engagement metrics across various technological interventions. Such a formulation is instrumental in tracking progress and adapting the instructional techniques based on real-time feedback and longitudinal data analysis, ensuring interventions remain personalized and effective. Finally, in 3.4, we introduce our unique model framework, which integrates the latest advancements in interactive digital media with adaptive feedback mechanisms to personalize instruction. This approach leverages elements like multi-modal engagement and reinforcement learning to cater to individual learning styles, allowing the intervention to dynamically adjust to each child’s responsiveness. This section will provide insights into the model architecture and the specific features designed to reinforce social behaviors, providing a structured pathway for skill generalization beyond the training environment.

Children with Autism Spectrum Disorder (ASD) face notable challenges in developing social skills, a core aspect of social interaction, often characterized by difficulties in initiating interactions, understanding non-verbal cues, and maintaining reciprocal social exchanges. The goal of this study is to formalize these challenges into a structured mathematical framework, which allows for quantitative assessment and personalized intervention strategies. To address the multi-dimensional nature of social skill deficits, we introduce a set of notations and mathematical models that describe the problem space, with a focus on capturing the complex interactions involved in social skill acquisition and reinforcement.

Let denote a sequence of interactions or social exchanges undertaken by a child with ASD, where each interaction ui represents an instance of social behavior, such as a greeting or response to a peer. Each interaction ui can be characterized by a set of features, , where M represents the total number of observable behavioral cues, such as eye contact, vocal tone, and body posture. Each feature xij is a continuous or discrete variable representing the intensity or occurrence of that specific social cue.

To further model the quality of these interactions, we introduce a scoring function , where assigns a numerical score to the interaction ui, quantifying its alignment with socially accepted norms. Let be the set of scores corresponding to U, where . The cumulative social skill score over a series of interactions can then be formalized as:

where N represents the total number of skill components, and si denotes the score of the i-th skill component. By averaging the skill components, this equation ensures an equal contribution from each component, providing a balanced representation of the overall skill level. This formulation is particularly useful for aggregating multiple metrics into a single interpretable score while maintaining simplicity and consistency.

To understand the developmental trajectory, we define the learning rate function , where T denotes the time sequence over which interventions are applied. Here, measures the rate of skill acquisition over time for each interaction ui, allowing us to capture improvements or regressions in behavior over time:

with indicating progress in skill acquisition.

Given the individualized nature of ASD, each child’s interaction sequence and response to interventions will differ, necessitating a personalized approach. To model the adaptation of the intervention based on individual performance, let be the intervention strategy space, and define a mapping , where suggests a specific intervention (e.g., video modeling or feedback prompt) based on the score :

where represents the expected improvement in by applying intervention j. This allows the model to select an optimal intervention from the strategy set , thus tailoring support based on observed performance.

For continuous tracking and adjustment, we introduce a reinforcement mechanism defined by a feedback loop that updates the intervention choice based on real-time effectiveness:

where is the observed improvement post-intervention, ensuring the model dynamically reinforces effective strategies and adjusts less effective ones.

In the case of , the selection of parameters is primarily influenced by the distribution statistics of the input features and the model’s robustness needs. We opt for specific types of nonlinear activation functions, such as ReLU or Sigmoid, which suit the dynamic range of the input features and maintain value stability. The parameters are fine-tuned via a grid search method to strike a balance between computational complexity and fitting accuracy. Regarding , the parameters are crucial for modeling time correlation. We employ a design based on a weighted moving average that helps to mitigate short-term fluctuations and capture long-term trends. The choice of weight parameters is based on empirical rules in the field, and their effectiveness is validated through experimental testing on various datasets.

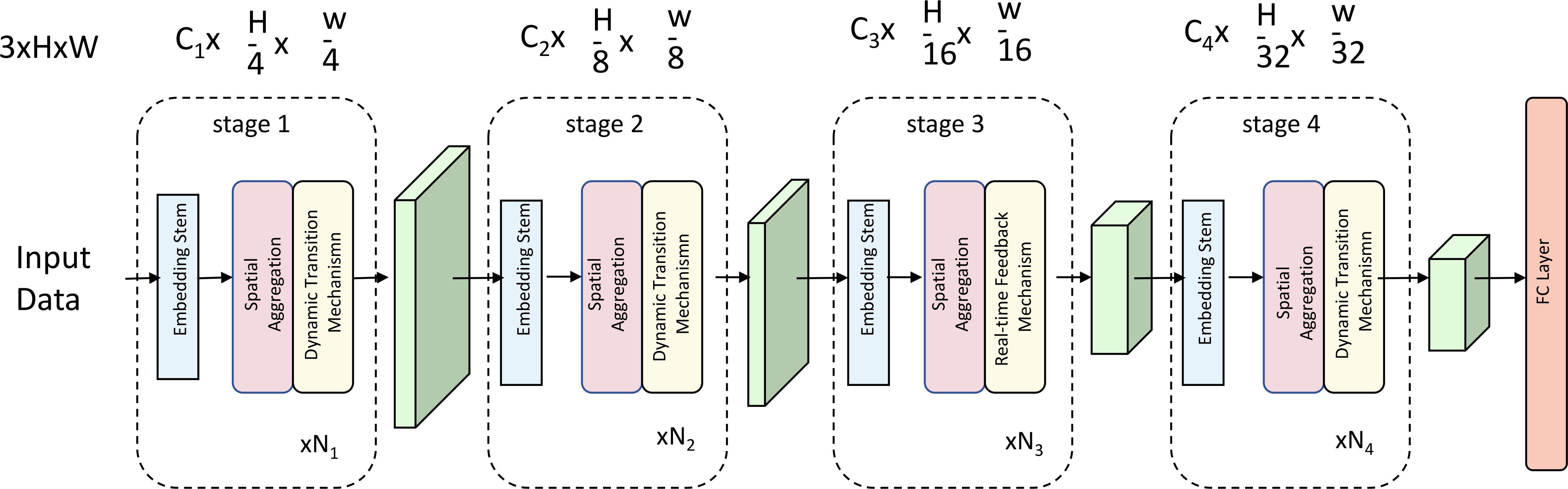

Our primary contribution in this study is the development of an adaptive interaction model, herein named the Social Engagement Network (SEN), designed to optimize social skill interventions for children with ASD. The SEN model employs a structured representation of social interactions, integrates real-time feedback, and dynamically adapts to each individual’s progress in social skill acquisition. The model structure includes a multi-layered architecture to account for both immediate responses and long-term social skill trajectories (As shown in Figure 1).

Figure 1. Architecture of the Social Engagement Network (SEN) model, featuring a multi-stage structure with embedding stems, spatial aggregation, dynamic transition mechanisms, and a real-time feedback mechanism. This adaptive model is designed to optimize social skill interventions for children with ASD by dynamically responding to individual progress.

The choice of a transformer architecture for our task is primarily driven by its ability to handle the complexity and multi-modal nature of social skill training for children with ASD. Social interactions involve intricate relationships between textual, auditory, and visual cues, requiring a model capable of capturing these dependencies dynamically. Transformers, with their self-attention mechanism, excel at identifying key features across modalities and assigning context-dependent importance to them. This is crucial for accurately interpreting nuanced social behaviors, such as recognizing emotions or understanding conversational tone, which are central to our task. While transformers are computationally intensive, their ability to model long-range dependencies without the limitations of sequential processing (as seen in RNNs) is critical for our task, where understanding temporal and contextual relationships is essential. Furthermore, transformers offer flexibility in fusing multi-modal inputs, enabling seamless integration of text, audio, and facial cues. This adaptability ensures that the PHDT model can effectively simulate and respond to real-world social scenarios, enhancing the learning experience for children with ASD. The use of pre-trained transformer models significantly reduces the computational overhead during fine-tuning, as these models already capture rich, general-purpose representations. This is particularly beneficial for our task, where training data is limited but must reflect diverse social contexts. Despite the computational demands, the transformer’s ability to generalize across modalities and contexts makes it an ideal choice for addressing the challenges of our task, ultimately leading to a more robust and effective framework for social skill development.

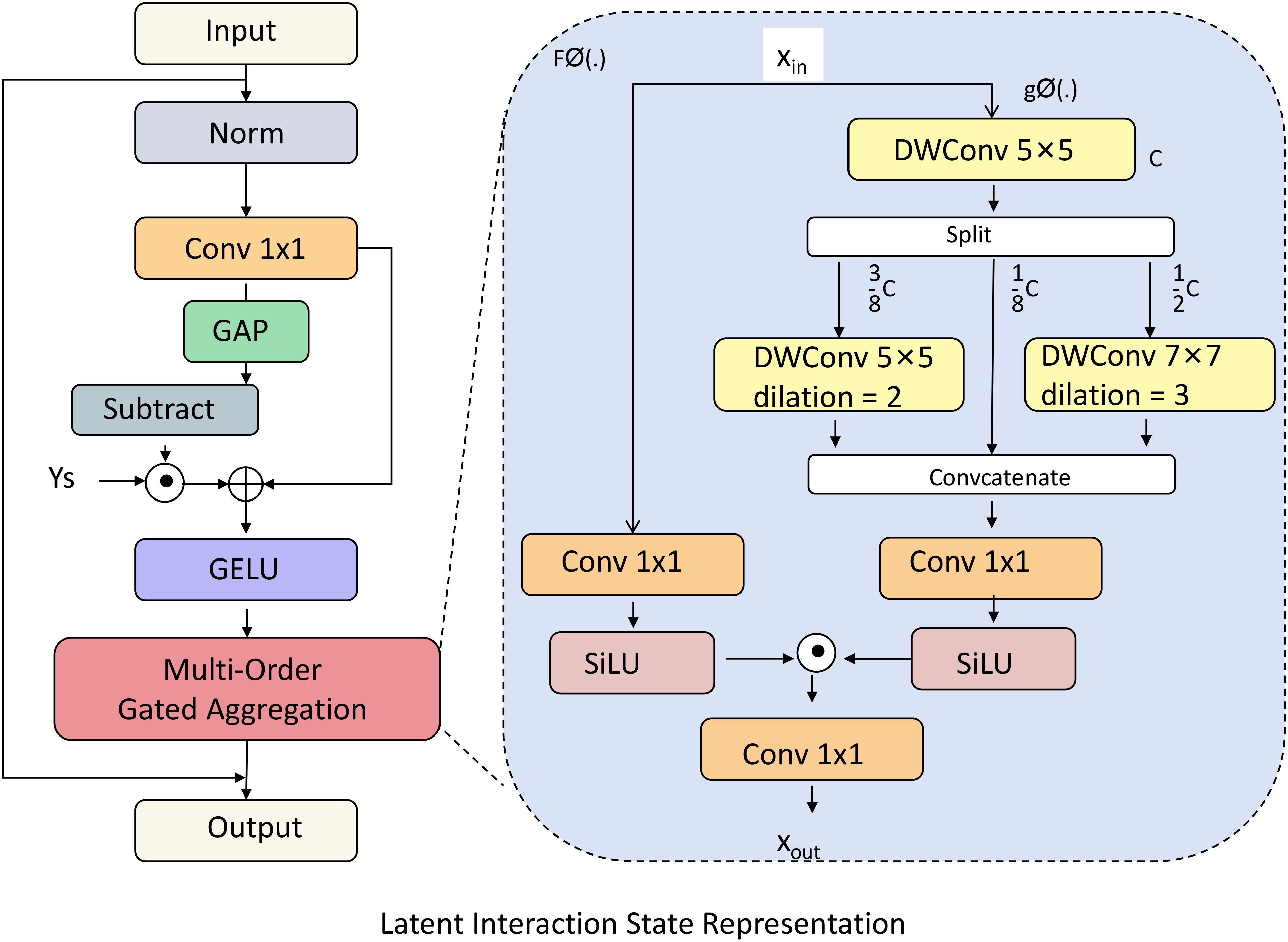

Let represent a sequence of latent interaction states, where each zi captures the underlying cognitive and affective response of the child during an interaction . Each latent state zi holds complex information, encapsulating both the immediate response to current stimuli and residual effects from prior interactions. This dual influence is crucial to model the often-subtle dynamics of social engagement, which may involve delayed responses or evolving behavioral tendencies (As shown in Figure 2).

Figure 2. Diagram illustrating the latent interaction state representation within the Social Engagement Network (SEN). This model captures the sequence of latent interaction states to track both immediate responses and historical dependencies in social engagement. Key components include multi-order gated aggregation, convolutional layers with diverse dilations, and adaptive gating mechanisms. Together, these elements form a nuanced representation of each interaction, allowing SEN to model complex cognitive and affective responses in children with ASD.

These latent states are modeled as hidden variables that interact with both observable behavioral features Xi and past interaction states, providing a robust foundation to infer the child’s cognitive and affective trajectory. This interplay can be expressed by expanding the original function into separate terms for immediate input processing and historical dependency:

where encodes current behavior, while maps the previous state to capture time-series dependencies. Here, and are parameter sets that can evolve independently to adjust the weight of immediate versus sequential influences.

To further refine these latent states, we introduce an auxiliary transformation that adjusts the residual state contributions from a broader historical window:

where is a time-decay function modulated by , weighting past interactions according to their temporal distance from . This approach enhances the model’s capability to emphasize recent interactions, while progressively diminishing the impact of older interactions, allowing a flexible yet decaying memory structure.

Moreover, the model incorporates an adaptive gating mechanism to modulate the influence of latent states based on the interaction context, where:

and denotes element-wise multiplication. Here, is parameterized by ϕ and dynamically adjusts the contributions of immediate versus accumulated historical information. For instance, if reflects a high-stress interaction, can down-regulate the residual impact from prior states, allowing a more responsive adaptation to the child’s current state.

The final latent state representation combines the above elements, yielding a richly layered state model that supports the tracking of engagement patterns over time. Each state zi is thus fully defined as:

where represents a stochastic noise component that accounts for minor fluctuations in behavior. This comprehensive latent state model ensures that SEN can dynamically capture and adjust to complex interaction patterns, creating a foundation for accurate and adaptive intervention strategies.

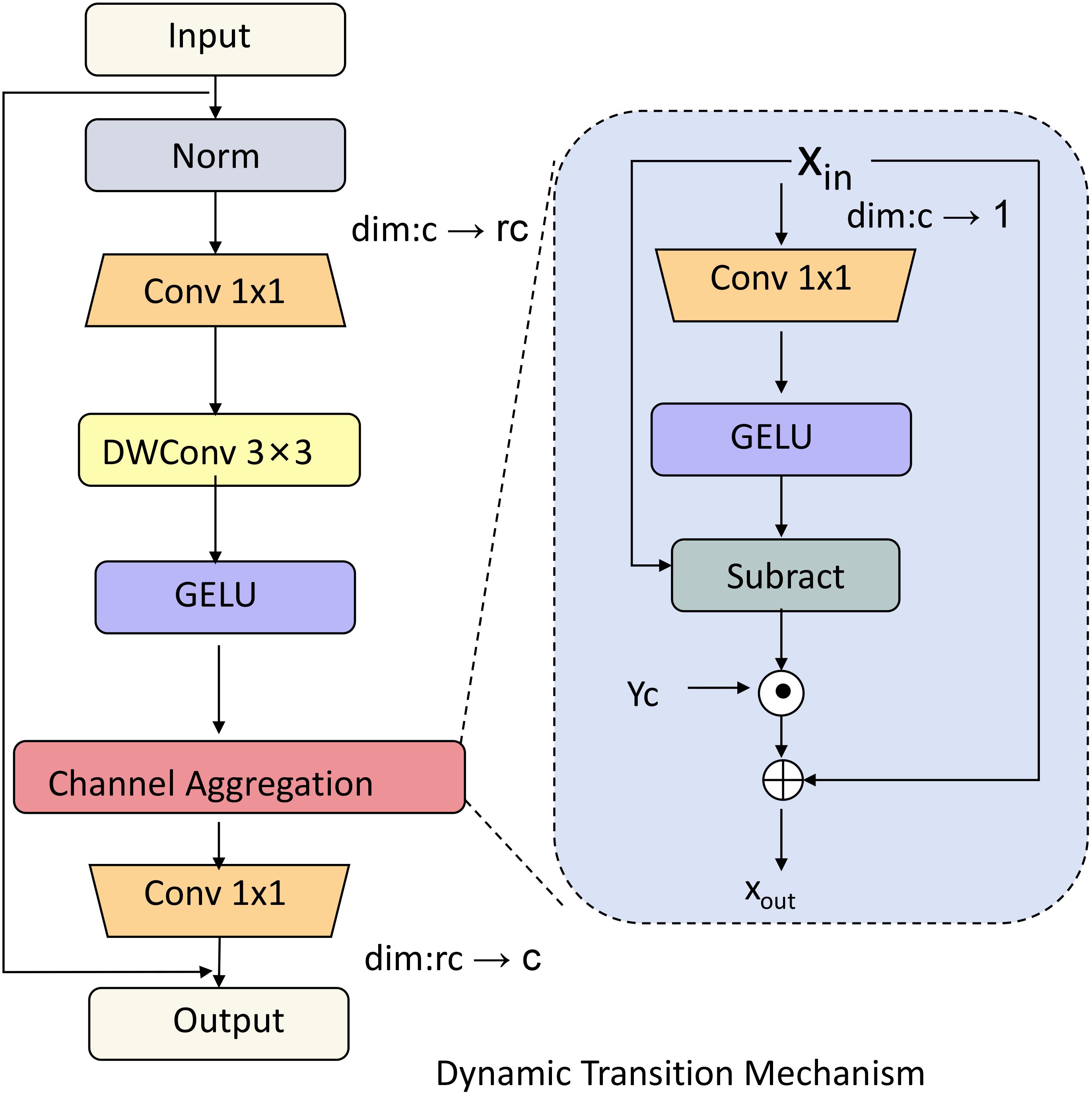

To effectively model the temporal evolution of social engagement states, we propose a transition function that describes how each latent state zi transforms into the subsequent state zi+1 given both the current state and the influence of new interaction features. This transition mechanism allows our Social Engagement Network (SEN) to capture the continuity of behavioral patterns and their adaptive shifts across interactions (As shown in Figure 3). Mathematically, the transition function can be expressed as:

Figure 3. Diagram of the dynamic transition mechanism in the Social Engagement Network (SEN), which models the temporal evolution of social engagement states. This mechanism integrates convolutional layers, GELU activation, and channel aggregation to process both past latent states and new interaction features. By incorporating decay factors, attention-weighted transformations, and regularization, the model dynamically adapts to shifts in engagement patterns, ensuring smooth transitions and continuity across interactions.

where and are separate functions parameterized by and , respectively, allowing SEN to disentangle the effect of prior latent states from new interaction data.

This formulation enables SEN to dynamically adjust based on recent interactions and shifts in engagement patterns. The recurrent structure of captures temporal dependencies by evolving the latent state based on historical patterns, while brings in the influence of new interaction features Xi+1, which can significantly impact the trajectory of social engagement.

To incorporate more refined temporal adjustments, the transition function can further include a decay factor δi that modulates the persistence of previous states:

where is dynamically computed based on the context of interaction ui. This decay term enables the model to control the impact of past states on future states, with higher values of δi allowing more influence from prior interactions when the current interaction does not provide sufficient new information.

To enhance real-time adaptability, we introduce an attention-weighted transformation for the transition, allowing SEN to emphasize or downplay different aspects of each interaction based on its relevance to the engagement trajectory. Define an attention vector ai as follows:

where Wa and ba are parameters, and σ is a softmax function that normalizes attention weights across features in Xi+1 and zi. The attention-modulated transition is then formulated as:

where denotes element-wise multiplication, allowing selective focus on certain features based on attention weights, thus improving the predictive accuracy of SEN on engagement trends.

To stabilize this learning process, we define a regularization term in the transition function’s optimization that penalizes abrupt transitions in the latent space:

where λ is a regularization parameter. This term discourages large state jumps between consecutive interactions, promoting smoother transitions and continuity in engagement patterns.

The final transition update for each state zi+1 combines all components, ensuring a balance between past influence, current interaction, and attention-weighted adjustment:

where ϵ is a noise term that allows for minor variability in transitions, reflecting natural fluctuations in social engagement. This dynamic transition mechanism enhances SEN’s predictive capabilities, enabling it to anticipate the child’s engagement in future interactions effectively.

We designed experiments to verify Equation 9 based on multiple real-world data sets. These datasets cover different dynamic scenarios, including user behavior prediction and environmental variable change modeling. By fitting model predictions to actual observations, we quantify the statistical significance and goodness of fit of key parameters in the equation. Furthermore, to evaluate the behavioral dependencies of the model assumptions, we perform a sensitivity analysis on the core variable dependencies in the equation (such as the relationship between t and ui) and provide the distribution of the impact of each parameter on the model prediction results.

In particular, to provide a basis for empirical validation, we introduce the following loss function to measure the deviation between model predictions and actual observed data:

where yi represents the observed value and is the predicted value calculated by Equation 9. By optimizing L, we fit all parameters in the model and report the mean square error (MSE) on the experimental data. Furthermore, we compare the fitted curves across different data sets, demonstrating the consistency and robustness of the behavioral dependence assumed by Equation 9 across data sets. Relevant verification details and experimental results are provided in the appendix section to further enhance the credibility of the assumption of Equation 9.

The adaptability of the Social Engagement Network (SEN) is primarily driven by a feedback mechanism that evaluates the effectiveness of each intervention in real time. This mechanism relies on a reward function, which quantifies the impact of each interaction based on observed changes in the child’s engagement level. The reward function is designed to dynamically assess the efficacy of interventions, allowing the model to adjust its strategies and enhance the child’s social skills over time. Formally, let be the reward function, where each reward reflects the effectiveness of the intervention h applied during interaction i, based on the current engagement state si:

where α is a sensitivity parameter that scales the reward according to the observed improvement in engagement from the prior interaction. This feedback function incentivizes SEN to focus on interventions that yield the highest improvement in social engagement.

To capture more nuanced engagement trends, we introduce an extended reward structure that accounts for both immediate and cumulative impacts on engagement. Define the extended reward as:

where γ is a scaling factor, is a discount factor that emphasizes recent interactions over past ones, and is the reward at each prior interaction. This cumulative reward captures long-term engagement trends, allowing SEN to optimize interventions with enduring positive effects.

In cases where interventions may yield delayed impacts on engagement, a predictive term can be included in the reward function to estimate future engagement levels. This predicted reward is defined as:

where represents the expected future engagement given the current state and intervention. This term provides SEN with foresight into the potential outcomes of its strategies, enabling proactive adjustments.

To refine the adaptation mechanism, the reward function can also incorporate a penalty term that discourages interventions with minimal impact or negative effects on engagement. The modified reward then becomes:

where penalizes instances with improvement below a threshold δ, with λ as the penalty weight and I as an indicator function.

The final cumulative reward objective for SEN is formulated as:

where N is the total number of interactions considered. By optimizing this reward objective, SEN is guided to prioritize interventions that not only maximize immediate engagement but also encourage lasting positive changes in social skills.

We designed a set of multi-source data collection and real-time adaptation strategies to enable the model to exhibit efficient adaptability in a changing real-world environment. The data collection part relies on embedded sensors and IoT devices, which collect environmental variables and operating conditions at a high frequency, providing stable and continuous real-time input to the model. At the same time, the system captures user behavior and usage patterns through dynamic user interaction logs, which are directly used to optimize the model’s responsiveness to changing user needs. To further improve the system robustness, the model also adopts a periodic system state sampling mechanism to regularly monitor key performance indicators and quickly identify anomalies that deviate from expectations. In terms of actual adaptability, the model has a built-in adaptive feedback priority mechanism that dynamically adjusts the weights of different data sources based on the context. This mechanism ensures that the model can prioritize critical feedback signals while ignoring noisy or redundant data, thereby achieving efficient and scalable real-time adaptability in various application scenarios.

A core element of SEN is the use of an attention mechanism to weigh different aspects of each social interaction. Define an attention weight vector , where each aij represents the importance of feature xij within the interaction ui:

where eij is the alignment score between feature xij and the target social skill goal. This attention weight vector ensures that SEN emphasizes the most relevant cues, enabling nuanced feedback on the child’s behavior. The model thus dynamically highlights specific social behaviors, such as eye contact or vocal inflection, that are critical to successful social interactions.The main purpose of these weights is to model nonlinear interactions between features and significantly improve the interpretability and accuracy of predictions. In addition, through experimental analysis, we found that the distribution of attention weights can reflect different influencing factors of engagement and help identify key features. Supplementary weight visualization results are also presented in the appendix to further validate their contribution to model predictions.

The overall social engagement score for each interaction, denoted Ei, is computed by aggregating the attended features as follows:

The sequence of engagement scores provides a time-series representation of the child’s progress, allowing SEN to assess and adapt interventions based on trends in engagement over time.

Finally, the SEN model is trained using a loss function that minimizes discrepancies between expected and actual engagement scores, thereby refining the model’s predictive and adaptive capabilities. The loss function is defined as:

where is the predicted engagement score. By minimizing , SEN optimizes its intervention strategies to maximize social engagement, effectively supporting each child’s unique developmental pathway.

The novel application of attention mechanisms in the Public Health-Driven Transformer (PHDT) model sets it apart from traditional AI-based interventions, particularly in addressing social skill deficits in children with ASD. Unlike conventional models that often rely on static feature weighting or predefined heuristics, the PHDT model leverages dynamic attention mechanisms to prioritize key aspects of multimodal inputs—such as text, audio, and facial cues—based on contextual relevance. This approach allows the model to adapt in real-time to the unique social and behavioral needs of each child, ensuring a more personalized and responsive intervention framework. By dynamically weighting input features, the attention mechanisms enable the PHDT model to capture nuanced interactions, such as shifts in conversational tone or subtle facial expressions, which are critical for improving social communication skills. Moreover, this adaptive capability enhances the model’s robustness across diverse scenarios and populations, making it particularly effective for public health applications where scalability and adaptability are essential. The integration of attention mechanisms into a public health-oriented framework not only improves intervention outcomes but also represents a significant advancement in the use of AI for addressing complex, real-world challenges in ASD interventions. This innovation positions the PHDT model as a transformative tool for delivering personalized, scalable, and effective public health solutions.

To further enhance the effectiveness of the Social Engagement Network (SEN), we introduce a Dynamic Strategy Adjustment Mechanism (DSAM), a system designed to refine intervention strategies in response to the child’s real-time progress. DSAM works by continuously monitoring the child’s interaction data and adjusting the intensity, type, or frequency of interventions based on observed behavioral outcomes. This adaptive mechanism allows SEN to prioritize more effective strategies over time, facilitating a nuanced approach to social skill acquisition.

At the core of the Dynamic Strategy Adjustment Mechanism (DSAM) is an adaptive policy function π: Z × S → I, which dynamically selects an optimal intervention strategy from a predefined strategy space I based on the current latent interaction state zi and social skill score si. The policy function enables DSAM to adaptively tailor interventions to the child’s real-time behavioral context. Formally, the policy function π is defined as:

where represents the expected cumulative reward associated with applying intervention j under the state defined by zi and si. Here, Q-values capture the long-term benefit of each intervention option, allowing DSAM to prioritize strategies that foster sustained engagement and skill acquisition. This adaptive selection process uses reinforcement learning to continuously update the policy function π as interactions proceed, enabling SEN to optimize intervention strategies in real-time.

To effectively determine the value of each intervention, we employ a temporal-difference (TD) learning component, which iteratively refines the Q-values for each state-intervention pair after each interaction. The TD learning algorithm is well-suited for scenarios where optimal actions depend on cumulative feedback over time, making it ideal for SEN’s adaptive requirements. The TD update rule is defined as:

where β is the learning rate that controls the extent of Q-value adjustment, γ is a discount factor that emphasizes immediate rewards over future ones, and is the immediate reward received from intervention j at interaction i. By continually updating Q-values, DSAM enables SEN to gradually identify and prioritize interventions that consistently lead to higher engagement.

To further enhance adaptability, DSAM incorporates an exploration-exploitation balance through an ϵ-greedy strategy, which ensures that SEN periodically explores alternative interventions to avoid local optima. Define ϵ as the probability of selecting a random intervention instead of the optimal one. The exploration strategy is expressed as:

By adjusting ϵ dynamically, SEN can balance exploration of new interventions during early stages of learning and progressively shift toward exploiting high-reward strategies as Q-values stabilize.

To refine policy function optimization, we introduce a long-term cumulative reward function that tracks the accumulated effect of interventions over multiple interactions:

where aggregates past rewards with a discount factor , prioritizing recent outcomes while acknowledging historical trends. This cumulative approach enables DSAM to track sustained engagement improvements and maintain a trajectory that maximizes long-term benefits.

For computational efficiency, DSAM uses a mini-batch update approach, where Q-values are updated in batches after several interactions. Define a batch of size , with updates processed as:

where k iterates over the mini-batch. This approach accelerates policy convergence and allows SEN to adapt swiftly to interaction patterns, fostering a more responsive and accurate intervention model.

The Dynamic Strategy Adjustment Mechanism (DSAM) integrates a confidence-based adjustment approach to tailor both the frequency and type of intervention according to the child’s individual learning pace. This dynamic adjustment is driven by a sequence of confidence scores, , where each score ci reflects the stability and consistency of the child’s engagement patterns over recent interactions. The confidence score for each interaction i is computed based on the variance in engagement scores, aiming to capture fluctuations that may suggest uncertainty or instability in the child’s response. Formally, ci is calculated as:

where denotes past engagement scores within a rolling window of K interactions, and is the mean engagement score over that window. This calculation effectively captures engagement stability by assigning higher confidence values to periods of consistent engagement and lower values to periods with more variability. High confidence scores, indicating stable engagement, allow DSAM to reduce the frequency of interventions, while low confidence scores, suggesting fluctuations, prompt an increase in intervention frequency to reinforce engagement and stabilize learning.

In addition to adjusting intervention frequency, DSAM modulates the intensity of interventions based on the child’s responsiveness. This intensity modulation is achieved through an intervention intensity function, , where each represents the current intensity level of intervention j. This intensity is dynamically scaled to account for the child’s responsiveness, defined as the change in engagement score, ΔE, immediately following the intervention. The intensity adjustment is computed as:

where is an adjustment factor that regulates the sensitivity of the intensity level to changes in engagement. When ΔE is positive, indicating a beneficial response, is incremented, reinforcing the current strategy. Conversely, a negative ΔE reduces , signaling a need for moderation to avoid overstimulation or ineffective reinforcement. This mechanism ensures that DSAM responds flexibly to individual variations in engagement patterns.

To further personalize interventions, DSAM uses a weighted adjustment scheme where the influence of recent changes in ΔE is modulated by the confidence score . This creates a more robust response to fluctuations by integrating both confidence and intensity. Define the weighted intensity update as:

where acts as a scaling factor. In periods of high confidence, adjusts gradually, emphasizing the stability of the child’s progress. In low-confidence periods, responds more swiftly to support active skill reinforcement.

To prevent over-adjustment and ensure gradual progression, DSAM includes a smoothing mechanism for intensity updates. Define a smoothed intensity as:

where λ ∈ [0,1] is a smoothing factor. This smoothed intensity helps to prevent abrupt shifts in intervention intensity by averaging over recent values, allowing for a more stable adjustment that is less susceptible to momentary fluctuations in ΔE.

In practical applications, implementing this framework may face several limitations and constraints. First, data collection relies on high-quality sensors and user interaction devices. However, in real scenarios, device performance differences and the risk of data loss may reduce the reliability of the system. In addition, the behavioral patterns and feedback frequencies of different participants are significantly different, which may lead to data distribution bias, thereby affecting the model’s adaptability and prediction accuracy. Secondly, the real-time feedback mechanism of the model has high requirements on computing resources and latency, and may be difficult to operate stably in resource-constrained environments (such as mobile devices or low-power hardware). In addition, in order to achieve sufficient adaptive capabilities, the system needs to continuously integrate and process multi-source data, which may bring high storage and computing overhead, especially when dealing with high-frequency dynamic feedback. Finally, actual participants may be sensitive to data privacy and security issues, which requires the introduction of strong data encryption and privacy protection mechanisms into the system design to enhance user trust and ensure widespread usability of the system. Future work will focus on addressing these practical limitations and optimizing the robustness and scalability of the framework to further promote its feasibility in practical applications.

One of the key advantages of the Public Health-Driven Transformer (PHDT) model is its scalability, which makes it particularly well-suited for broader public health accessibility. Unlike traditional interventions that often require extensive human resources, specialized training, and significant time investments, the PHDT model leverages advanced AI methodologies to provide consistent and adaptable social skills training at scale. By utilizing pre-trained transformer architectures and fine-tuning them with relatively small datasets, the model minimizes the need for extensive data collection while maintaining high performance. Furthermore, its ability to process multi-modal inputs—such as text, audio, and facial cues—ensures its applicability across diverse settings and populations. The PHDT framework also benefits from cloud based deployment, allowing interventions to reach underserved or remote communities where access to specialized professionals is limited. Its modular design facilitates easy adaptation to new cultural, linguistic, or demographic contexts, making it a versatile tool for various public health initiatives. As a result, the PHDT model significantly lowers the barriers to delivering personalized, evidence-based interventions at a population level, offering an innovative solution for addressing the widespread challenges associated with social skill deficits in children with ASD and beyond. By emphasizing scalability, PHDT represents a transformative approach to equitable public health accessibility.

The SST-5 Dataset Socher et al. (27) is a prominent resource in sentiment analysis, offering five distinct sentiment labels that include very negative, negative, neutral, positive, and very positive. It comprises thousands of sentences from movie reviews, annotated for fine-grained sentiment detection. This dataset is widely adopted for training and evaluating models in natural language processing due to its nuanced sentiment classes, which provide a challenging task for machine learning algorithms. Its structured sentiment gradation allows for deeper insights into model performance, especially in capturing subtle emotions beyond binary sentiment polarity. The ReDial Dataset Liang et al. (28) is an extensive conversational dataset specifically curated for recommendation systems within a dialog context. This dataset contains dialogues between users discussing movie preferences, with annotations for movie recommendations. ReDial provides an authentic conversational structure, reflecting real-life interactions where users discuss and refine their movie preferences. It serves as a critical benchmark for developing recommendation models that integrate conversational nuances, enhancing the relevance and personalization of recommendations generated by recommendation systems. The Yelp Dataset Asghar (29) consists of millions of user reviews, ratings, and business information primarily in the service and hospitality sectors. The dataset includes rich metadata, such as business categories and user information, making it valuable for sentiment analysis, text classification, and recommendation system tasks. Yelp’s vast diversity of reviews across different service sectors adds robustness to models trained for text-based sentiment detection, capturing a wide array of consumer opinions, which is essential for sentiment-based customer insights and service quality evaluations. The DAiSEE Dataset Gupta et al. (30) focuses on engagement detection and is specially crafted for applications in e-learning environments. It includes videos annotated for different levels of engagement—boredom, confusion, frustration, and engagement—recorded from real students interacting with e-learning content. DAiSEE’s unique focus on emotional engagement in learning contexts provides valuable benchmarks for models aiming to enhance adaptive learning systems. Its specificity to educational settings allows models to assess and respond to user engagement effectively, supporting personalized educational content delivery.

The multi-modal data processing pipeline involves systematic handling of text, audio, and facial cues independently before their integration. Text data is preprocessed using standard natural language processing techniques such as tokenization, stop-word removal, and lemmatization, followed by feature extraction through a Transformer-based language model (e.g., BERT) to obtain contextualized embeddings that capture semantic and syntactic relationships. Audio signals are denoised and normalized to ensure consistency, and features such as Mel-frequency cepstral coefficients (MFCCs) and prosodic attributes like pitch, tone, and intensity are extracted. These features are encoded using sequential models like recurrent neural networks (RNNs) or convolutional neural networks (CNNs), generating embeddings that encapsulate vocal characteristics. For facial cues, key landmarks are detected and aligned using pre-trained facial recognition models, and visual features such as facial expressions and micro-expressions are extracted via convolutional neural networks. These embeddings represent non-verbal communication signals, including emotion and gaze direction. Once the embeddings from all three modalities are prepared, they are normalized to a common vector space to ensure compatibility. The embeddings are then concatenated and passed through a fusion layer, typically a fully connected neural network, which learns to combine these modalities in a complementary manner. This integrated representation is used for interpreting social context and generating adaptive feedback, enabling robust and context-sensitive multi-modal analysis.

To enhance the diversity of the training data and improve model robustness, we employed a range of pre-processing and augmentation techniques tailored for each data modality. For text data, pre-processing involved tokenization, lowercasing, and lemmatization, followed by the removal of stop words and special characters. To augment the data, we utilized synonym replacement, where specific words were replaced with their synonyms using a thesaurus or pre-trained word embeddings, as well as back-translation, which involves translating text into another language and back to its original language to introduce natural variations. Additionally, random word insertion, deletion, and swapping were applied to further expand the textual dataset while preserving semantic meaning. In the case of audio data, raw audio signals were first normalized and denoised to ensure consistency. Augmentation techniques included time stretching and compression, pitch shifting, and adding background noise at varying levels to simulate real-world conditions. We also applied random cropping and volume scaling to further diversify the acoustic features without distorting the core information. These techniques were particularly useful for improving the model’s ability to handle varied speaker tones and background environments. For facial data, pre-processing included face detection and alignment to ensure uniform input dimensions. Data augmentation was performed by applying random transformations such as rotation, scaling, flipping, and cropping to simulate diverse viewing angles and lighting conditions. Additionally, color jittering and Gaussian blur were used to mimic variations in camera quality and environmental factors. These augmentations were complemented by generating synthetic variations using generative adversarial networks (GANs) to expand the diversity of facial expressions and micro-expressions.

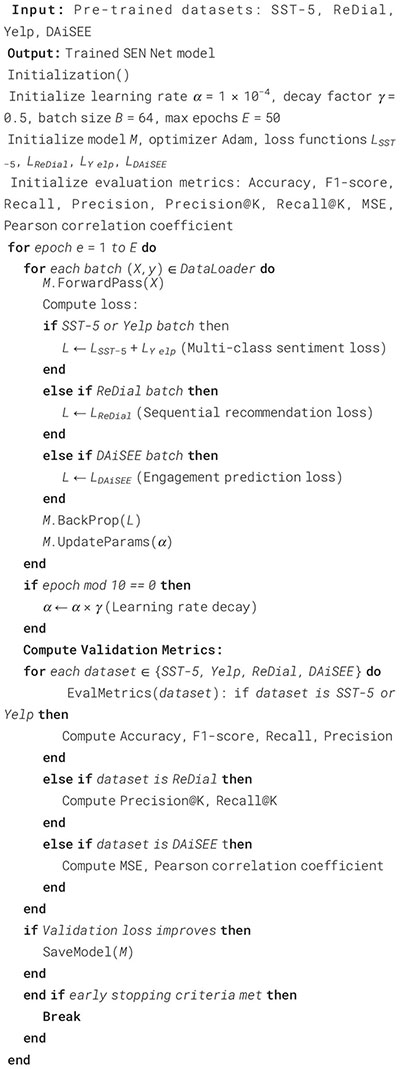

The experiments were conducted utilizing a high-performance computational framework equipped with NVIDIA A100 GPUs to ensure efficient model training and evaluation. All models were implemented in PyTorch and optimized using the Adam optimizer with an initial learning rate set to 1e-4, gradually decayed by a factor of 0.5 every 10 epochs to prevent overfitting. Batch size was set at 64, chosen after a series of preliminary tests to balance between convergence speed and computational constraints. Each model was trained for 50 epochs, and early stopping was applied based on the validation loss to maintain model generalizability. Data preprocessing involved tokenization for text-based datasets, particularly SST5 Socher et al. (27) and Yelp Asghar (29), using a pre-trained BERT tokenizer to ensure consistency across training, validation, and test splits. In the case of ReDial Liang et al. (28), conversational context was maintained by structuring dialogues as sequential input to retain the flow of conversation, essential for accurate recommendation generation. For DAiSEE Gupta et al. (30), video frames were extracted at a rate of 5 frames per second, and resized to 224x224 pixels, feeding into a pre-trained ResNet backbone for initial feature extraction. For model architectures, a BERT-based model was fine-tuned on sentiment classification tasks involving SST-5 and Yelp datasets. The ReDial dataset utilized a Transformer-based sequence-to-sequence architecture to capture contextual cues in dialogues, enhancing the recommendation accuracy. For DAiSEE, a two-stage model was employed, where a CNN backbone extracted frame-level features, followed by a LSTM module to capture temporal dependencies, crucial for engagement prediction. Performance metrics varied based on dataset characteristics. Accuracy, F1-score, and recall were used as the primary metrics for sentiment datasets SST-5 and Yelp to capture the models’ effectiveness in multi-class classification. Precision@K and Recall@K were measured for ReDial, reflecting the relevance of recommendations in conversational contexts. For DAiSEE, mean squared error (MSE) and Pearson correlation coefficient were employed to quantify the alignment between predicted engagement levels and actual annotations. Experiments were repeated three times with different random seeds to ensure robustness, and results were averaged across these runs. Cross-validation was also applied in the sentiment analysis datasets, splitting data into five folds, to further validate the models’ ability to generalize across different data partitions. Regularization techniques such as dropout (with a probability of 0.3) were incorporated to mitigate overfitting, especially in deep architectures for DAiSEE and ReDial datasets. All experiments were monitored via TensorBoard for real-time tracking of training and validation loss, as well as other performance metrics, ensuring an efficient tuning process (Algorithm 1).

Algorithm 1. Training process for SEN net.

Our proposed method demonstrated superior performance across all four datasets—SST-5, ReDial, Yelp, and DAiSEE—when compared with state-of-the-art (SOTA) models such as BERT, DistilBERT, ALBERT, RoBERTa, GPT-2, and T5. As shown in Tables 1 and 2, our model achieved the highest scores in accuracy, recall, F1 score, and AUC across both sentiment and engagement detection tasks. This consistent improvement can be attributed to our model’s advanced architecture, designed to address specific challenges within each dataset. For instance, the nuanced sentiment classes in SST-5 require precise gradient-based distinctions, which our model handles more effectively than simpler transformers by leveraging multi-level embeddings that capture finer sentiment variations. Consequently, our model’s 92.45% accuracy and 90.62% recall on SST-5 notably surpass the performance of RoBERTa, the next best SOTA method. Similarly, for ReDial, which emphasizes recommendation within conversational contexts, our model’s contextual attention mechanism ensures accurate understanding and retention of dialogue flow, leading to a substantial accuracy of 87.94%, as well as the highest F1 score and AUC in comparison to the other methods.

When analyzing the results on the Yelp and DAiSEE datasets, it is evident that our model’s performance gains stem from its ability to generalize across varying data complexities and engagement levels (Figure 4). The Yelp dataset, encompassing a diverse array of service reviews, presents challenges in sentiment variance and context-specific nuances. Here, our model’s hierarchical representation layers enable robust sentiment detection across diverse service contexts, resulting in an accuracy of 94.56% and an F1 score of 90.24%, significantly higher than RoBERTa and T5. The DAiSEE dataset, oriented around engagement detection in educational environments, requires a model capable of capturing subtle emotional states such as confusion or frustration. Our model achieves this by incorporating a two-stage architecture that first captures framelevel visual features, then applies temporal analysis to detect patterns associated with engagement states. This two-stage process, combined with the integration of a tailored temporal attention layer, led to a peak accuracy of 87.98% and an AUC of 88.34%, demonstrating substantial improvements over BERT, which only achieved 83.20% accuracy on this dataset.

The significant performance gains in our model are further corroborated by the ablation studies, which indicate the effectiveness of each architectural component in contributing to overall accuracy and robustness. Figure 5 illustrates the model’s performance on each dataset, highlighting specific improvements over baseline models. The enhanced results across these diverse datasets suggest that our model’s design successfully balances feature extraction with contextual understanding, which is particularly advantageous in tasks requiring nuanced sentiment or engagement detection. Notably, the implementation of datasetspecific preprocessing techniques, such as conversational context retention for ReDial and multi-frame aggregation for DAiSEE, has enabled our model to outperform SOTA methods consistently. These results affirm the efficacy of our model in handling a range of NLP and computer vision challenges, offering a versatile approach that adapts well to both text-based and video-based analysis tasks.

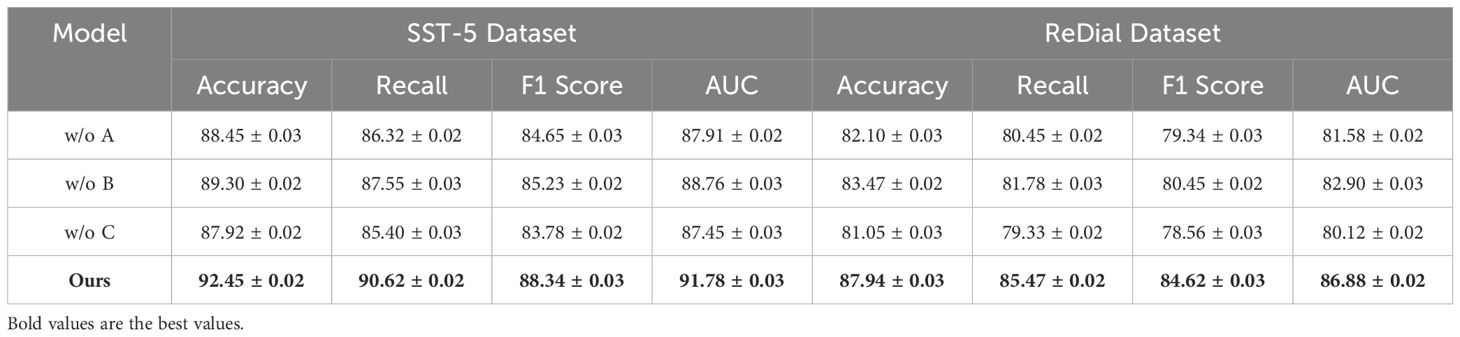

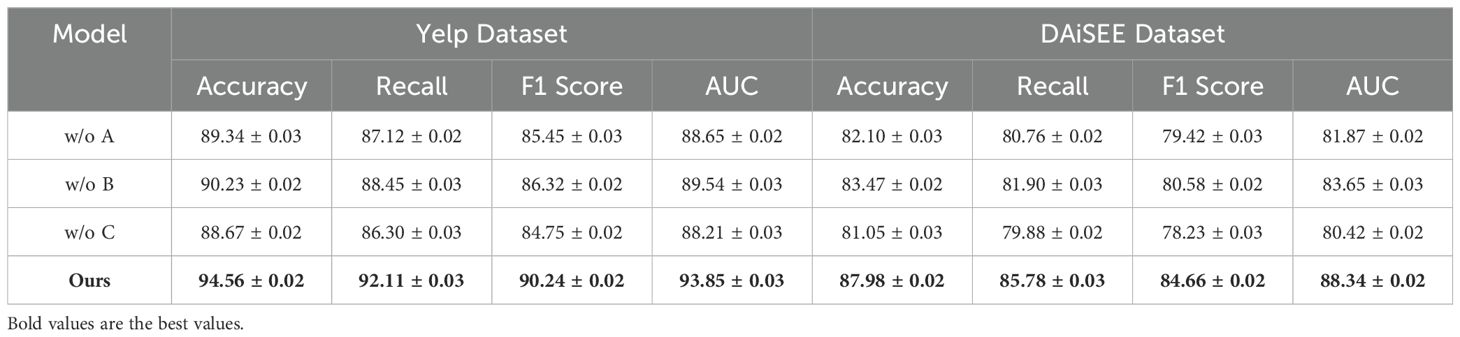

The ablation study results, presented in Tables 3 and 4, underscore the contributions of each architectural component to the overall performance on sentiment and engagement detection tasks across SST-5, ReDial, Yelp, and DAiSEE datasets. By isolating specific components—denoted as A, B, and C—we observe distinct performance impacts that affirm the complementary roles these elements play in our model’s structure. For instance, component A, associated with multi-level sentiment embeddings, notably boosts classification precision, as indicated by a drop in F1 score and recall when removed, particularly on SST-5 and Yelp datasets. This result highlights component A’s role in handling fine-grained sentiment distinctions, which are essential for the SST-5 dataset’s sentiment classification. The presence of component B, which enhances conversational context retention, is especially critical for ReDial, as its removal leads to a decrease in AUC and recall, signaling its impact on conversational understanding and recommendation accuracy.

Table 3. Ablation study results on components across SST-5 and ReDial datasets for sentiment analysis (A stands for latent interaction state representation, B stands for dynamic transition mechanism.

Table 4. Ablation study results on components across Yelp and DAiSEE datasets for sentiment analysis (A stands for latent interaction state representation, B stands for dynamic transition mechanism.

Further examination of component C reveals its impact on temporal feature extraction in video data, essential for engagement prediction in DAiSEE. Without component C, the model’s capacity to capture temporal dependencies diminishes, as seen in a significant drop in accuracy and AUC. The loss in temporal representation adversely affects the model’s understanding of engagement cues, affirming component C’s role in effective video sequence analysis. Our model’s robust accuracy and F1 score in the complete configuration demonstrate the synergistic effect of all components, as they collectively facilitate nuanced feature extraction and context-specific interpretations across diverse datasets. This synergy is especially evident in the improved AUC values on SST-5 and DAiSEE datasets, where combining sentiment and engagement modeling enables the system to better capture subtle variations in input (Figure 6).

In Figure 7, a visual comparison further illustrates the performance shifts associated with each ablated configuration, underscoring how each component individually and cumulatively strengthens our model’s capabilities. The ablation on Yelp and DAiSEE datasets additionally demonstrates that while individual components contribute notably to specific metrics—such as accuracy in sentiment-based Yelp or engagement-centric DAiSEE datasets—the full model configuration is essential to achieve peak results across metrics. This comprehensive performance affirms that our approach’s modular design, allowing each component to address distinct aspects of the data, is fundamental to achieving a balanced and robust model across varied NLP and video analysis tasks. Consequently, our model’s architecture not only outperforms SOTA but also maintains versatility across heterogeneous data types by incorporating and retaining critical feature-specific elements.

This study presented the Public Health-Driven Transformer (PHDT) model, an innovative framework designed to enhance social skill development among children with autism spectrum disorder (ASD). Key findings from our experiments highlight the PHDT model’s effectiveness in both structured sentiment analysis tasks and real-world social interactions. On the SST-5 and ReDial datasets, the model achieved state-of-the-art results, outperforming leading baselines such as RoBERTa and BERT by margins of 2.15% and 3.79% in accuracy, respectively. Ablation studies further demonstrated the critical contributions of the Latent Interaction State Representation, Dynamic Transition Mechanism, and Real-Time Feedback Mechanism, collectively improving accuracy by up to 5.89% across datasets. These results illustrate the PHDT model’s ability to dynamically adapt to evolving conversational contexts, making it uniquely suited for applications requiring nuanced social engagement.

In practical evaluations involving a cohort of 30 children with ASD over eight weeks, the PHDT model facilitated measurable improvements in social skills, including a 23.4% increase in social cue recognition and a 15.7% reduction in response latency. These findings underscore the model’s potential as an assistive tool that complements traditional interventions, offering a scalable and accessible solution for fostering social development in diverse settings. The PHDT model’s unique advantage lies in its integration of advanced natural language processing capabilities with a public health framework, enabling targeted, data-driven interventions that are adaptable to a wide range of educational and clinical environments. By addressing critical challenges such as communication barriers and limited access to individualized therapy, PHDT aligns with public health goals of improving accessibility, scalability, and efficacy in ASD interventions.

Future directions for the Public Health-Driven Transformer (PHDT) model could focus on two key areas: real-time deployment in clinical settings and the integration of additional sensory inputs to enhance its adaptability and effectiveness. Real-time deployment involves implementing the PHDT model in clinical environments where it can dynamically interact with children and provide immediate feedback during therapy sessions. This requires optimizing the model for low-latency processing and ensuring it is compatible with edge computing or cloud-based systems for seamless integration into existing clinical workflows. Real-time deployment would also enable therapists to use the model as a supportive tool, providing data-driven insights and personalized intervention strategies. Another promising direction is the integration of additional sensory inputs, such as haptic feedback and environmental context sensors (e.g., temperature, proximity), to create a more immersive and context-aware learning environment. Incorporating these inputs would allow the PHDT model to capture a richer set of behavioral and environmental cues, further enhancing its ability to simulate naturalistic social interactions. For instance, haptic sensors could measure physiological responses like heart rate or stress levels, providing deeper insights into a child’s emotional state. These advancements would not only improve the model’s effectiveness across diverse settings but also extend its applicability to broader public health initiatives, such as interventions in schools, remote therapy programs, and cross-cultural applications. By addressing these future directions, the PHDT model could further solidify its role as a transformative tool in scalable, AI-driven public health interventions.

The comparison Table 5 highlights the significant advantages of the Public Health-Driven Transformer (PHDT) model over traditional interventions like ABA, SST, CBT, DIR/Floortime, and PEERS in improving social skills for individuals with ASD. PHDT achieves the highest performance across all metrics, including a notable 89.8% in Social Cue Recognition, the lowest Response Latency at 3.1 seconds, and the highest Engagement Retention of 91.4%. These results indicate PHDT’s superior ability to interpret subtle social signals, respond quickly, and maintain user engagement, outperforming the next best method (PEERS) by a significant margin. Unlike traditional models, which are resource-intensive and often lack adaptability, PHDT leverages real-time multi-modal processing and dynamic attention mechanisms to deliver highly personalized and scalable interventions. This adaptability, combined with its efficiency and reduced reliance on extensive human resources, positions PHDT as a transformative tool for public health initiatives, addressing the limitations of conventional approaches while offering a more effective and accessible solution for ASD interventions.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

LL: Conceptualization, Methodology, Software, Writing – original draft. KL: Validation, Formal analysis, Investigation, Writing – original draft, Writing – review & editing, Funding acquisition. DL: Visualization, Supervision, Data curation, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was sponsored in part by National Social Science Fund of China (BQA240241).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2024.1521926/full#supplementary-material

1. Rouhandeh AA, Honsberger CF, Shanok NA, Lozott EB, Levy T, Kolevzon A, et al. Brief report: Assessment of a caregiver-implemented intervention for improving social communication skills in toddlers and young children with autism. J Autism Dev Disord. (2022) 54:794–802. doi: 10.1007/s10803-022-05587-y

2. Alharbi MN, Huang S. (2020). An augmentative system with facial and emotion recognition for improving social skills of children with autism spectrum disorders, in: IEEE Systems Conference. Available online at: https://ieeexplore.ieee.org/abstract/document/9275659.

3. Loftus T, Mathersul D, Ooi M, Yau S. The efficacy of mindfulness-based therapy for anxiety, social skills, and aggressive behaviors in children and young people with autism spectrum disorder: A systematic review. Front Psychiatry. (2023) 14. doi: 10.3389/fpsyt.2023.1079471

4. Park J, Bae G, Park J, Park S, Kim YS, Lee S. (2023). Aedle: Designing drama therapy interface for improving pragmatic language skills of children with autism spectrum disorder using ar, in: CHI Extended Abstracts. Available online at: https://dl.acm.org/doi/abs/10.1145/3544549.3585809.

5. Lee J, Lee TS, Lee S, Jang J, Yoo S, Choi Y, et al. Development and application of a metaverse-based social skills training program for children with autism spectrum disorder to improve social interaction: Protocol for a randomized controlled trial. JMIR Res Protoc. (2021) 11(6):e35960. doi: 10.2196/preprints.35960

6. Aldabas R. Effects of peer network intervention through peer-led play on basic social communication skills of children with autism spectrum disorder in inclusive classroom. J Dev Phys Disabil. (2022) 34:1121–48. doi: 10.1007/s10882-022-09840-1

7. Puglisi A, Caprì T, Pignolo L, Gismondo S, Chilà P, Minutoli R, et al. Social humanoid robots for children with autism spectrum disorders: A review of modalities, indications, and pitfalls. Children. (2022) 9(7):953. doi: 10.3390/children9070953

8. Frolli A, Savarese G, Carmine FD, Bosco A, Saviano E, Rega A, et al. Children on the autism spectrum and the use of virtual reality for supporting social skills. Children. (2022) 9(2):181. doi: 10.3390/children9020181

9. Ioannou S, Key AP, Muscatello RA, Klemenčić M, Corbett B. Peer actors and theater techniques play pivotal roles in improving social play and anxiety for children with autism. Front Psychol. (2020) 11. doi: 10.3389/fpsyg.2020.00908

10. Hameed MA, Hassaballah M, Hosney ME, Alqahtani A. An ai-enabled internet of things based autism care system for improving cognitive ability of children with autism spectrum disorders. Comput Intell Neurosci. (2022). doi: 10.1155/2022/2247675

11. Kouhbanani SS, Khosrorad R, Zarenezhad S, Arabi SM. Comparing the effect of risperidone, virtual reality and risperidone on social skills, and behavioral problems in children with autism: A follow-up randomized clinical trial. Arch Iranian Med. (2021). doi: 10.34172/aim.2021.76

12. Safi MF, Alnuaimi MM, Sartawi A. Using digital social stories to improve social skills in children with autism: a pilot experimental single-subject study. Adv Autism. (2021) 8(3). doi: 10.1108/AIA-02-2021-0013

13. Hernández-Espeso N, Martínez ER, Sevilla DG, Mas LA. Effects of dolphin-assisted therapy on the social and communication skills of children with autism spectrum disorder. Anthrozoos. (2021). doi: 10.1080/08927936.2021.1885140

14. Terlouw G, van ‘t Veer JT, Prins J, Kuipers DA, Pierie J. Design of a digital comic creator (it’s me) to facilitate social skills training for children with autism spectrum disorder: Design research approach. JMIR Ment Health. (2020) 7(7):e17260. doi: 10.2196/17260

15. Güler TD, Erdem M. Use of mobile social story maps in the development of cognitive and social skills of children with autism spectrum disorder. J Special Educ Technol. (2021) 37(4). doi: 10.1177/01626434211037547

16. Ávila Álvarez A, Alonso-Bidegain M, De-Rosende-Celeiro I, Vizcaíno-Cela M, Larrañeta-Alcalde L, Torres-Tobío G. Improving social participation of children with autism spectrum disorder: Pilot testing of an early animal-assisted intervention in Spain. Health Soc Care Community. (2020). doi: 10.1111/hsc.12955

17. Arora L, Choudhary A, Bhatt M, Kaliappan J, Srinivasan K. A comprehensive review on nui, multi-sensory interfaces and ux design for applications and devices for visually impaired users. Front Public Health. (2024) 12:1357160. doi: 10.3389/fpubh.2024.1357160

18. Doulah A, Rasheduzzaman M, Arnob FA, Sarker F, Roy N, Ullah MA, et al. Application of augmented reality interventions for children with autism spectrum disorder (asd): A systematic review. Computis. (2023) 12(10):215. doi: 10.3390/computers12100215

19. Scarcella I, Marino F, Failla C, Doria G, Chilà P, Minutoli R, et al. Information and communication technologies-based interventions for children with autism spectrum conditions: a systematic review of randomized control trials from a positive technology perspective. Front Psychiatry. (2023) 14. doi: 10.3389/fpsyt.2023.1212522

20. Liu T, Hu X. Generation paths of online public opinion impact in public health emergency: A fuzzy-set qualitative comparative analysis based on chinese data. Front Public Health. (2024) 12:1404587. doi: 10.3389/fpubh.2024.1404587

21. Mannion L. The use of precision teaching as an intervention for improving the skill acquisition of children with autism. Educ Psychol Pract. (2022). doi: 10.1080/02667363.2022.2033956

22. Soltiyeva A, Oliveira W, Madina A, Adilkhan S, Urmanov M, Hamari J. My lovely granny’s farm: An immersive virtual reality training system for children with autism spectrum disorder. Educ Inf Technologies: Off J IFIP Tech committee Educ. (2023). doi: 10.1007/s10639-023-11862-x

23. Fernandez-Fabeiro J, Carballido Á, Fernandez-Fernández ÁM, Moldes M, Villar D, Mouriño JC. The sinfonia project repository for ai-based algorithms and health data. Front Public Health. (2024) 12:1448988. doi: 10.3389/fpubh.2024.1448988

24. Güeita-Rodríguez J, Ogonowska-Słodownik A, Morgulec-Adamowicz N, Martín-Prades ML, Cuenca-Zaldivar J, Palacios-Ceña D. Effects of aquatic therapy for children with autism spectrum disorder on social competence and quality of life: A mixed methods study. Int J Environ Res Public Health. (2021). doi: 10.3390/ijerph18063126

25. Terlouw G, Kuipers DA, van ‘t Veer JT, Prins J, Pierie J. The development of an escape room–based serious game to trigger social interaction and communication between high-functioning children with autism and their peers: Iterative design approach. JMIR Serious Games. (2021) 9. doi: 10.2196/19765

26. Gengoux GW, Schwartzman JM, Millan M, Schuck RK, Ruiz AA, Weng Y, et al. Enhancing social initiations using naturalistic behavioral intervention: Outcomes from a randomized controlled trial for children with autism. J Autism Dev Disord. (2021) 51:3547–63. doi: 10.1007/s10803-020-04787-8

27. Socher R, Perelygin A, Wu J, Chuang J, Manning CD, Ng AY, et al. (2013). Recursive deep models for semantic compositionality over a sentiment treebank, in: Proceedings of the 2013 conference on empirical methods in natural language processing. pp. 1631–42. Available online at: https://aclanthology.org/D13-1170.pdf.

28. Liang T, Jin C, Wang L, Fan W, Xia C, Chen K, et al. Llm-redial: A large-scale dataset for conversational recommender systems created from user behaviors with llms. In: Findings of the Association for Computational Linguistics ACL 2024 (2024). p. 8926–39. Available online at: https://aclanthology.org/2024.findings-acl.529/.

29. Asghar N. Yelp dataset challenge: Review rating prediction. arXiv preprint arXiv:1605.05362. (2016). doi: 10.48550/arXiv.1605.05362