ORIGINAL RESEARCH article

Front. Psychiatry, 19 February 2024

Sec. Autism

Volume 15 - 2024 | https://doi.org/10.3389/fpsyt.2024.1328708

This article is part of the Research Topic World Autism Awareness Day 2024.

Introduction: Individuals with Autism Spectrum Disorder (ASD) show atypical recognition of facial emotions, which has been suggested to stem from atypical arousal and attention allocation. Recent studies have focused on the ability to perceive an average expression from multiple expressions presented at different spatial locations. This study investigated the effect of autistic traits on temporal ensemble perception, that is, the perception of the average expression from a sequence of changing expressions.

Methods: We conducted a simplified temporal-ensemble task and analyzed behavioral responses, pupil size, and viewing times for the eyes of the face. Participants with and without a diagnosis of ASD viewed serial presentations of facial expressions that randomly switched between emotional and neutral. The temporal ratio of the emotional expression was manipulated. The participants estimated the intensity of the facial emotion over the entire presentation.

Results: We obtained three major results: (a) the temporal ensembles of many participants with ASD were less susceptible to the ratio of angry expressions, (b) participants with ASD showed significantly larger pupils for angry expressions (within-participant comparison) and smaller pupils for sad expressions (between-group comparison), and (c) pupil size and viewing time for the eyes were not correlated with the temporal ensemble.

Discussion: These results suggest atypical temporal integration of angry expressions and atypical arousal characteristics in individuals with ASD; however, the atypical integration is not fully explained by arousal or attentional allocation.

An essential feature of autism spectrum disorder (ASD) is difficulty with social communication, which is related to an impaired ability to recognize facial emotions (1, 2). One method for investigating the underlying mechanisms is eye tracking. Individuals with ASD tend to fixate on the eyes of a face for shorter periods than typically developing (TD) individuals (3, 4). This tendency has frequently been observed, especially in adults with ASD (5, 6). Given that attention to the eyes enhances facial emotion recognition (7), atypical gaze patterns may contribute to the impaired recognition of facial emotions.

Previous studies have proposed the social salience and eye-avoidance hypotheses to explain the atypical recognition of facial emotions in individuals with ASD. The social salience hypothesis presumes that individuals with ASD are less sensitive to socially informative areas (i.e., the eyes; 8). The eyes convey information about mental states, intentions, and emotions (9). However, the attention of individuals with ASD is not captured by the eyes because of decreased social brain activity (10, 11). These individuals instead attend to physically salient parts of the face (e.g., the mouth), which impairs their recognition of facial emotions. In contrast, the eye-avoidance hypothesis presumes that social stimuli increase arousal levels in individuals with ASD, especially eyes in negative expressions (12). According to this hypothesis, individuals with ASD are motivated to avoid the eye region to decrease their arousal (4). This hypothesis is consistent with reports of increased amygdala activity in individuals with ASD for fear expressions (13). It is also consistent with reports that autistic traits mainly impair the recognition of negative emotions (14). Stuart et al. (15) noted mixed evidence for these two hypotheses. Moriuchi et al. (16) conducted a cueing experiment in which participants’ attention was directed to the eyes of facial images before presentation. They found that the time before eye avoidance began did not differ between the ASD and TD groups. Additionally, they did not observe eye avoidance when the participants’ attention was cued to the eyes of facial images. Thus, it has been argued that the atypical recognition of facial emotions in individuals with ASD is related to arousal and attentional patterns, but the underlying mechanism remains unclear.

Recent studies have investigated the characteristics of facial ensembles in individuals with ASD. Ensemble perception refers to perceiving the average of multiple items presented at different spatial or temporal positions (17). Ensemble perception has also been observed for facial emotions (18). Integrating multiple emotional expressions has various advantages for social communication. For example, to understand the mood of a group, one must recognize the facial emotion of each member and also integrate these emotions into a summary. Moreover, everyday expressions change within a given time window. Dynamic facial expressions therefore need to be perceived integratively to estimate others’ emotions. Such integration has been suggested to be difficult for individuals with ASD because of problems with global processing (19, 20) or a bias toward local processing (21). Therefore, autistic traits are considered to influence ensemble perception. Previous studies have investigated the effects of autistic traits on spatial facial ensembles. Karaminis et al. (22) reported no differences in facial ensembles between individuals with and without ASD. Recently, Chakrabarty and Wada (23) reported that half of the participants with autism spectrum condition showed a decreased facial ensemble, whereas the other half did not. Group differences in spatial ensembles are not apparent, but there seem to be considerable individual differences.

However, the effects of autistic traits on temporal facial ensembles remain unclear. Given weak central coherence (19, 20) or a local bias (21), individuals with ASD may perceive emotions based on part of a serial facial presentation rather than the entire presentation. To investigate this issue, Harada et al. (24) examined whether autistic traits influenced temporal facial ensembles, measuring autistic traits with the Autism-Spectrum Quotient (AQ: 25, 26). In that study, the participants viewed facial expressions that serially switched between emotional and neutral. The participants evaluated the perceived intensity of the facial emotion over the entire presentation. Harada et al. found that facial ensembles were influenced by the ratio of emotional expression time to total presentation time. Participants with higher autistic traits tended to rate the intensity of angry expressions lower (24). However, a higher AQ score does not always indicate a clinical diagnosis of ASD (27). This limitation can be addressed by examining group differences in the temporal ensemble between participants with and without a clinical diagnosis of ASD.

This study investigated the temporal ensemble characteristics of individuals with ASD. The experimental procedure followed Harada et al. (24). Facial expressions were presented serially for three seconds and pseudo-randomly switched between emotional and neutral stimuli. The temporal ratio of the emotional expression was manipulated at six levels. Participants then reported the perceived intensity of the facial emotion. Previous studies have suggested that the impaired recognition of facial emotions is related to atypical arousal and attentional patterns (8, 12). Therefore, in addition to the perceived intensity of facial emotions (an indicator of the temporal ensemble), we measured pupil size and viewing time for facial images throughout the experiment. Pupil size is frequently used to measure arousal levels (28).

We tested three predictions. First, the perceived intensity of emotional expressions in individuals with ASD would be less influenced by temporal ensemble effects than that in TD individuals. This is plausible because autistic traits impair the integration of multiple (or global) items (19, 20). Given that autistic traits impair the recognition of negative expressions (14), this reduced susceptibility may be observed mainly for negative emotions. Second, if the first prediction was supported, we examined how arousal was related to the outcome. Pupil size reflects arousal (28). Under the social salience hypothesis, the pupils of individuals with ASD would therefore be smaller in response to facial expressions. Under the eye-avoidance hypothesis, they would be larger. This is because the social salience hypothesis presumes that autistic traits decrease sensitivity to social information, whereas the eye-avoidance hypothesis presumes that these traits increase arousal to social information. Third, both hypotheses predict that individuals with ASD would have shorter viewing times for the eyes of the face. We therefore examined whether this was the case.

Twenty individuals with ASD and 17 TD individuals, matched for age and IQ, participated in this study. The participants were naïve to the purpose of the study until they were debriefed. One of the authors, a graduate student, participated in the experiment before joining the research project and was unaware of the study’s purpose at that time. The sample size was determined based on previous studies (15–21 participants with ASD; 29–31). This study was approved by the Research Institute of the National Rehabilitation Center for Persons with Disabilities (approval number: 2020-012). Written informed consent was obtained from all participants.

The participants were broadly recruited from residents and university students near the institute. The inclusion criteria were (1) an age of 15–40 years and (2) a full-scale IQ of 80 or higher. To confirm the IQ criterion, the participants completed the Japanese version (32) of the Wechsler Adult Intelligence Scale-III (33). Participants in the TD group were required not to have a diagnosis of a developmental disorder. Participants in the ASD group were required to have a diagnosis such as ASD, Asperger’s disorder, or Pervasive Developmental Disorder-Not Otherwise Specified. The diagnosis was confirmed with the Japanese version (34) of the Autism Diagnostic Observation Schedule, Second Edition (ADOS-2; 35). An occupational therapist (M.S.) with a research license for the ADOS-2 assessed the ADOS-2 scores of each participant in the ASD group.

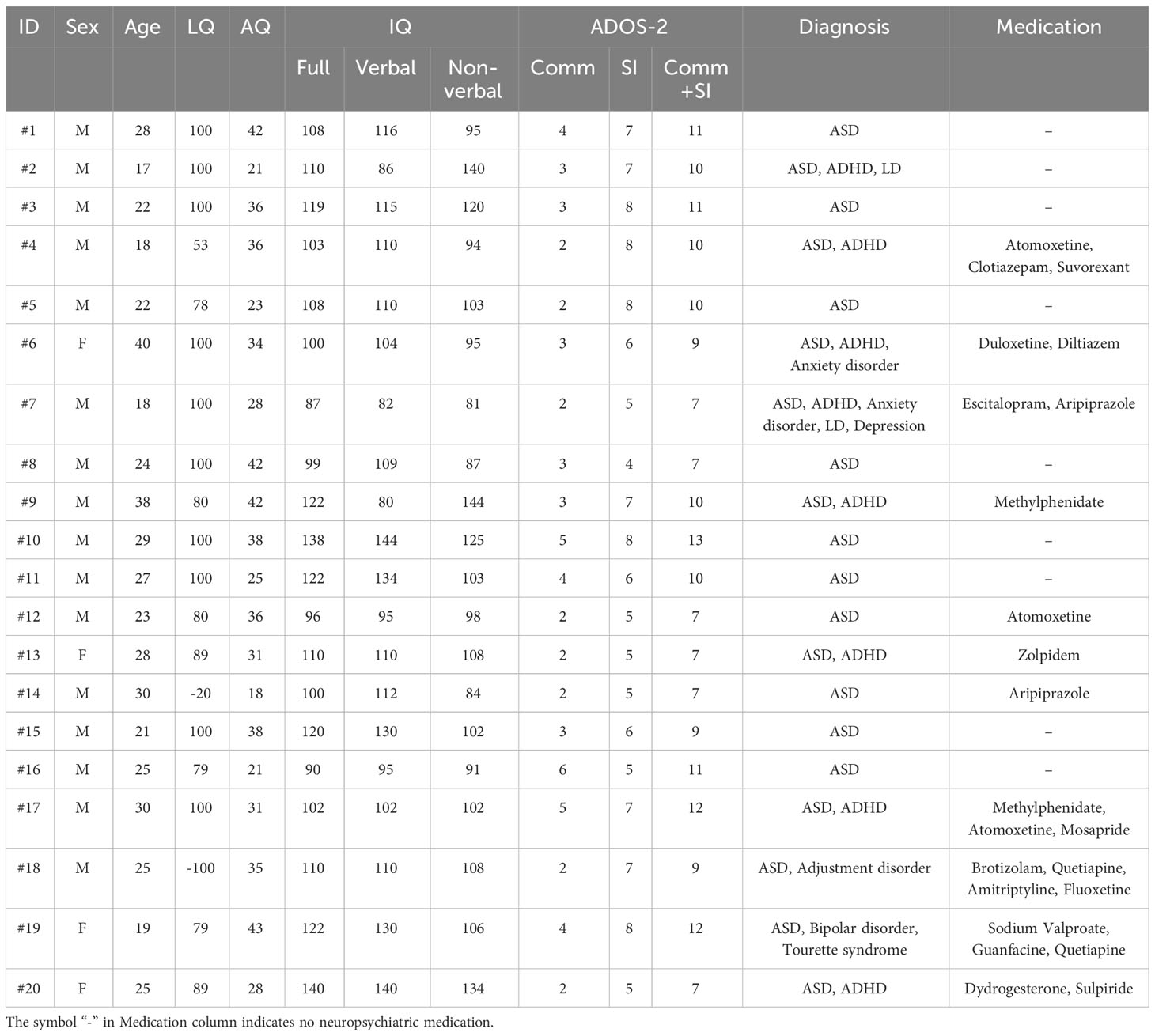

The profile data of the participants with ASD are shown in Table 1 (see Table 2 for details of the TD participants). Age and IQ were matched between the two groups [t (35) = 1.148, p = 0.259 for age; t (35) = 1.318, p = 0.196 for verbal IQ; t (35) = 0.296, p = 0.769 for non-verbal IQ; t (35) = 0.647, p = 0.522 for full-scale IQ]. Ages ranged from 17 to 40 years (M = 25.45, SD = 6.13) in participants with ASD and from 16 to 37 years (M = 23.29, SD = 5.12) in TD participants. The mean IQs of the participants with ASD were 110.70 (SD = 18.21) for verbal, 106.00 (SD = 17.98) for non-verbal, and 110.30 (SD = 14.26) for full-scale IQ. The mean IQs of the TD participants were 117.65 (SD = 12.84) for verbal, 104.53 (SD = 10.62) for non-verbal, and 113.12 (SD = 11.84) for full-scale IQ. The mean AQ score was significantly higher in participants with ASD (M = 32.40, SD = 7.79) than in TD participants (M = 17.71, SD = 6.57) [t (35) = 6.136, p < 0.001]. All TD participants had AQ scores below 30. The laterality quotient (LQ) for handedness (36) was matched between the two groups [t (35) = 0.054, p = 0.957]. Gender ratios did not differ significantly between the ASD and TD groups (χ2 = 0.079, p = 0.506, chi-square test). The study included a wide range of participant ages. However, neither age nor gender differed significantly between the two groups, so we believe their effect on the group comparisons was limited.
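The reported group-matching t-values can be checked against the summary statistics above. The sketch below assumes Student's independent-samples t-test with pooled variance. The reported df = 35 (20 + 17 - 2) is consistent with this test, but the text does not name it. Small discrepancies are expected because the means and SDs are rounded.

```python
import math

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t from summary statistics (equal variances assumed)."""
    df = n1 + n2 - 2
    # Pooled variance: each group's variance weighted by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df

# (mean, SD) for ASD (n = 20) and TD (n = 17), as reported in the text.
summary = {
    "age": ((25.45, 6.13), (23.29, 5.12)),
    "verbal IQ": ((110.70, 18.21), (117.65, 12.84)),
    "non-verbal IQ": ((106.00, 17.98), (104.53, 10.62)),
    "full-scale IQ": ((110.30, 14.26), (113.12, 11.84)),
    "AQ": ((32.40, 7.79), (17.71, 6.57)),
}

results = {}
for name, ((m_asd, sd_asd), (m_td, sd_td)) in summary.items():
    t, df = pooled_t(m_asd, sd_asd, 20, m_td, sd_td, 17)
    results[name] = t
    print(f"{name}: t({df}) = {t:.3f}")
```

The printed values lie within about 0.01 of the reported ones. The sign reflects ASD minus TD, whereas the text reports absolute values.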

Table 1 Details of the 20 participants with ASD. All participants were residents of Japan (East Asian).

The apparatus and stimuli were the same as those described in Harada et al. (24). An LED monitor and a desktop PC were used. The stimuli were presented on the monitor and controlled using MATLAB (MathWorks) with Psychtoolbox (37–39). An EyeLink 1000 Plus (SR Research) was used to measure the participants’ pupil sizes and gaze positions.

Facial images of six basic emotions (anger, disgust, fear, happiness, sadness, and surprise) and neutral expressions were selected from the Facial Database of Advanced Industrial Science and Technology (40). A total of 42 facial images [(six emotions and neutral expressions) × six actors] were used.

The Japanese version of the AQ (26) was used to evaluate autistic traits. Participants indicated their level of agreement with 50 items describing social communication situations. Scoring followed Wakabayashi et al. (26). One point was counted for each “agree” or “partially agree” response to an autistic item. One point was also counted for each “disagree” or “partially disagree” response to a reverse-keyed item.
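The counting rule can be written as a small function. This is only a sketch. The response labels are illustrative, and the set of items keyed in the "agree" direction is a hypothetical placeholder supplied by the caller. The actual item key is given in Wakabayashi et al. (26) and is not reproduced here.

```python
from typing import Mapping, Set

AGREE = {"agree", "partially agree"}
DISAGREE = {"disagree", "partially disagree"}

def score_aq(responses: Mapping[int, str], agree_keyed_items: Set[int]) -> int:
    """Return the AQ total: one point per response in the autistic direction.

    responses          -- item number -> response label (four-point scale)
    agree_keyed_items  -- hypothetical key: items scored when the participant agrees;
                          all other items are treated as reverse-keyed (scored on disagree)
    """
    score = 0
    for item, response in responses.items():
        if response not in AGREE | DISAGREE:
            raise ValueError(f"item {item}: unexpected response {response!r}")
        scoring_side = AGREE if item in agree_keyed_items else DISAGREE
        score += int(response in scoring_side)
    return score

# Toy example with four items and a hypothetical key (items 1 and 2 are agree-keyed).
example = {1: "agree", 2: "disagree", 3: "partially disagree", 4: "agree"}
print(score_aq(example, agree_keyed_items={1, 2}))  # 2
```

With all 50 items and the real key, the total ranges from 0 to 50.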

After receiving instructions and giving informed consent, the participants sat on a chair in front of the monitor with their heads stabilised on a chin rest. The participants performed a facial emotion recognition task and a temporal ensemble task for approximately 60–80 minutes (including rest time).

A facial emotion recognition task was conducted to confirm the recognition accuracy of static facial emotions. After the participant pressed the space key, a black fixation cross (‘+’) was presented at the centre of the monitor for one second. A facial image showing one of the emotions was then presented together with six choices (anger, disgust, fear, happiness, sadness, and surprise). Both remained on screen until the participant responded. After the participant selected the most appropriate choice, the next trial began. There were 72 trials (six facial emotions × six actors × two repetitions). Pupil size and gaze position were not recorded in this task.

A nine-point eye-tracking calibration was conducted before the ensemble task. Participants were asked to fixate on a dot presented at each of nine locations (upper left, upper centre, upper right, middle left, middle centre, middle right, lower left, lower centre, and lower right of the monitor). The calibration was repeated, up to three times, if either of two problems occurred. The first was that the corneal reflection was not stably detected. The second was that the calculated gaze locations deviated substantially from the actual dot locations. If all three attempts failed, the ensemble task was conducted without eye tracking (six participants in the ASD group and three in the TD group). The number of participants without eye tracking was comparable to that in a previous study (24). The failures were most likely caused by participants wearing glasses to correct astigmatism.

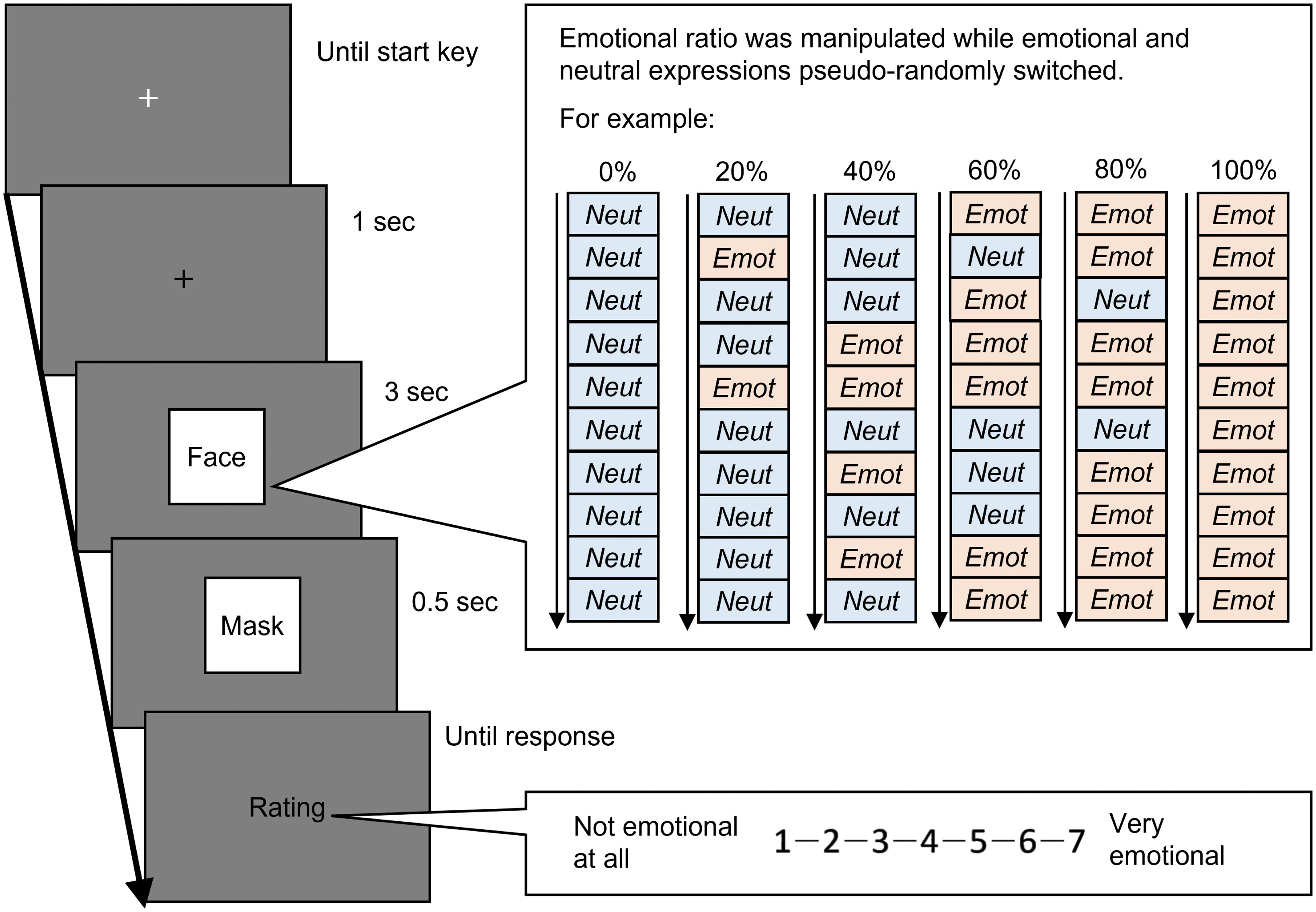

The temporal ensemble task was performed to investigate the effect of autistic traits on the temporal facial ensemble. The trial sequence is shown in Figure 1. A black fixation cross (‘+’) was presented at the centre of the monitor. Subsequently, facial images of the same actor (7° × 7° of visual angle) were presented serially for three seconds. The facial presentation comprised 10 frames (0.3 seconds each), and the facial images pseudo-randomly switched between emotional and neutral. The temporal ratio of the emotional expression to the total presentation was manipulated at six levels (0, 20, 40, 60, 80, and 100%). While the participants viewed the facial images, their pupil size and gaze position were recorded (the sampling rate was set at 60 Hz by Psychtoolbox). After the stimuli were masked, the participants rated the perceived intensity of the facial emotion over the entire presentation. They used a seven-point scale (1 = not emotional to 7 = very emotional). The next trial began after the response. A total of 216 trials were conducted (six facial emotions × six ratios × six actors). Facial emotion was manipulated between experimental blocks, and ratio was manipulated within each block. The order of facial emotions was not counterbalanced across participants, but it did not differ significantly between the ASD and TD groups [χ2 (5) = 1.802, p = 0.876].

Figure 1 The trial sequence of the temporal ensemble task. In this task, the facial presentation comprised 10 frames, each showing either a neutral or an emotional expression. Facial expressions pseudo-randomly switched between neutral and emotional. The ratio of the total duration of the emotional expression was manipulated at six levels. The inter-stimulus interval was 0 ms. Ratios of 0% and 100% indicate static neutral and emotional expressions, respectively.
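The trial structure of the ensemble task can be sketched as follows. It uses 10 frames of 0.3 s, six emotional-frame ratios, emotion blocked, and 216 trials in total. The authors used MATLAB with Psychtoolbox. This Python sketch only generates the frame schedule. The pseudo-randomization is simplified to an unconstrained shuffle because any constraints the authors applied are not reported. The within-block randomization of actor and ratio is likewise an assumption.

```python
import random

N_FRAMES = 10                      # 10 frames x 0.3 s = 3-s presentation
RATIOS = (0, 20, 40, 60, 80, 100)  # % of frames showing the emotional expression

def frame_sequence(ratio_pct, rng):
    """Return 10 frame labels with the requested share of emotional frames."""
    n_emotional = N_FRAMES * ratio_pct // 100   # 20% -> 2 frames, 60% -> 6 frames, ...
    frames = ["emotion"] * n_emotional + ["neutral"] * (N_FRAMES - n_emotional)
    rng.shuffle(frames)  # simplified stand-in for the (unreported) pseudo-random order
    return frames

def build_trials(emotions, actors, rng):
    """One block per emotion; actor and ratio are shuffled within each block."""
    trials = []
    for emotion in emotions:
        block = [(actor, ratio) for actor in actors for ratio in RATIOS]
        rng.shuffle(block)
        for actor, ratio in block:
            trials.append({"emotion": emotion, "actor": actor, "ratio": ratio,
                           "frames": frame_sequence(ratio, rng)})
    return trials

rng = random.Random(2024)
emotions = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]
trials = build_trials(emotions, actors=range(6), rng=rng)
print(len(trials))  # 216 = 6 emotions x 6 ratios x 6 actors
```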

Data from nine of the TD participants overlapped with those reported by Harada et al. (24). We analysed three dependent variables to examine our hypotheses: the perceived intensity of facial emotion, pupil size, and viewing time. The perceived intensity of the facial emotions was calculated from the ratings in the ensemble task. The pupillary response mainly consists of two parts. The first is an initial reflex, primarily influenced by the luminance of the visual image. The second is a later component, approximately 2 s after stimulus onset (28), which can be influenced by changes in arousal (41). Following previous studies, we analysed the average pupil size from 2 to 3 s after face onset to evaluate the effect of emotional expressions on arousal. Data from the 20–80% ratios were excluded from these analyses to control for the initial reflex. The pupil values from the eye tracker were converted into two measures. The first was a Z-score, calculated within each experimental block and used for within-participant comparisons. The second was a relative pupil size, used for between-group comparisons. It was obtained by dividing the pupil value in the 100% condition by the mean value in the 0% condition. For the viewing-time analyses, the areas of interest were two rectangles over the eyes (120 × 100 pixels, width × height) and one rectangle over the mouth (280 × 200 pixels). The gaze duration within these areas during the facial presentation was taken as the viewing time for the eyes and mouth, respectively.
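The 2–3 s analysis window and the two pupil measures can be sketched as follows. Several assumptions are not specified in the text. Each trial's trace is taken to be an array sampled at 60 Hz starting at face onset. The Z-score is computed over trial-level window means within a block, using the sample standard deviation. The relative measure divides each 100%-condition value by the mean of the same participant's 0%-condition values.

```python
import numpy as np

FS_HZ = 60              # sampling rate reported for the ensemble task
WINDOW_S = (2.0, 3.0)   # late pupil component: 2-3 s after face onset

def window_mean(trace, fs_hz=FS_HZ, window_s=WINDOW_S):
    """Mean pupil value in the analysis window; trace[0] is the sample at face onset."""
    start, stop = (int(round(t * fs_hz)) for t in window_s)
    return float(np.nanmean(np.asarray(trace, dtype=float)[start:stop]))

def zscore_within_block(block_values):
    """Standardize trial-level pupil values within one experimental block (assumption)."""
    v = np.asarray(block_values, dtype=float)
    return (v - v.mean()) / v.std(ddof=1)

def relative_pupil(values_100, values_0):
    """Emotional (100%) values divided by the mean of the neutral (0%) values."""
    return np.asarray(values_100, dtype=float) / np.mean(values_0)

# Toy data: a 3-s trace (180 samples) whose value equals its sample index.
print(window_mean(np.arange(180)))             # 149.5 (mean of samples 120..179)
print(zscore_within_block([1.0, 2.0, 3.0]))    # [-1.  0.  1.]
print(relative_pupil([4.4, 4.6], [4.0, 4.0]))  # approx. 1.10 and 1.15
```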

Statistical tests were conducted using R (version 4.2.1). Analyses of variance (ANOVAs) were conducted using the anovakun function (version 4.8.9; 42) in R. For multiple comparisons, p-values were corrected using Holm’s method.
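Holm's step-down correction is illustrated below for readers unfamiliar with it. The sketch follows the same rule as R's `p.adjust(p, method = "holm")`. It is an illustration only, not the code used in the study.

```python
def holm_adjust(pvalues):
    """Holm step-down adjusted p-values, returned in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is multiplied by (m - k + 1).
        candidate = min(1.0, (m - rank) * pvalues[i])
        # Adjusted values must not decrease as the raw p-values increase.
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted

print(holm_adjust([0.01, 0.04, 0.03]))  # approx. [0.03, 0.06, 0.06]
```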

Before the analyses, we excluded the eye data of 11 participants for two reasons. The first was calibration failure (six participants with ASD and three TD participants). The second was that the corneal reflection was detected in less than half of the data in some facial emotion conditions (two participants with ASD). Both problems arose mainly because participants wore glasses to correct astigmatism. Thus, we analysed the eye data of 12 participants in the ASD group and 14 in the TD group. To mitigate the effect of eye blinks, pupil data within ±100 ms of missing pupil samples were linearly interpolated, following the method described by Bradley et al. (28). Additionally, we excluded trials in which interpolated data accounted for more than half of the total data points.
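The blink-handling rule can be sketched with NumPy. It interpolates within ±100 ms of missing samples and drops trials that are more than half interpolated. Several assumptions are made here. Missing pupil samples are coded as NaN; EyeLink sample data may use 0 for missing pupil size, which would need converting first. The ±100 ms margin is applied around every missing sample. Values beyond the first or last valid sample are held constant by `np.interp`.

```python
import numpy as np

def interpolate_blinks(trace, fs_hz=60, pad_ms=100):
    """Linearly interpolate pupil samples within +/- pad_ms of any missing sample.

    Returns the cleaned trace and the fraction of samples that were interpolated.
    """
    x = np.asarray(trace, dtype=float)
    missing = np.isnan(x)
    pad = int(round(pad_ms * fs_hz / 1000))  # 100 ms -> 6 samples at 60 Hz
    mask = missing.copy()
    for idx in np.flatnonzero(missing):
        mask[max(0, idx - pad): idx + pad + 1] = True
    valid = ~mask
    if not valid.any():
        return x, 1.0  # nothing to interpolate from
    t = np.arange(x.size)
    cleaned = x.copy()
    # np.interp holds the edge value constant beyond the first/last valid sample.
    cleaned[mask] = np.interp(t[mask], t[valid], x[valid])
    return cleaned, float(mask.mean())

def keep_trial(fraction_interpolated):
    """Exclude trials in which interpolated samples exceed half of the data."""
    return fraction_interpolated <= 0.5

trace = np.linspace(4.0, 5.0, 180)   # toy 3-s trace at 60 Hz
trace[90] = np.nan                   # one missing sample (e.g., a blink)
cleaned, frac = interpolate_blinks(trace)
print(round(frac, 3), keep_trial(frac))  # 0.072 True (13 of 180 samples)
```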

For a comprehensive summary of the ANOVAs, please refer to Table 3.

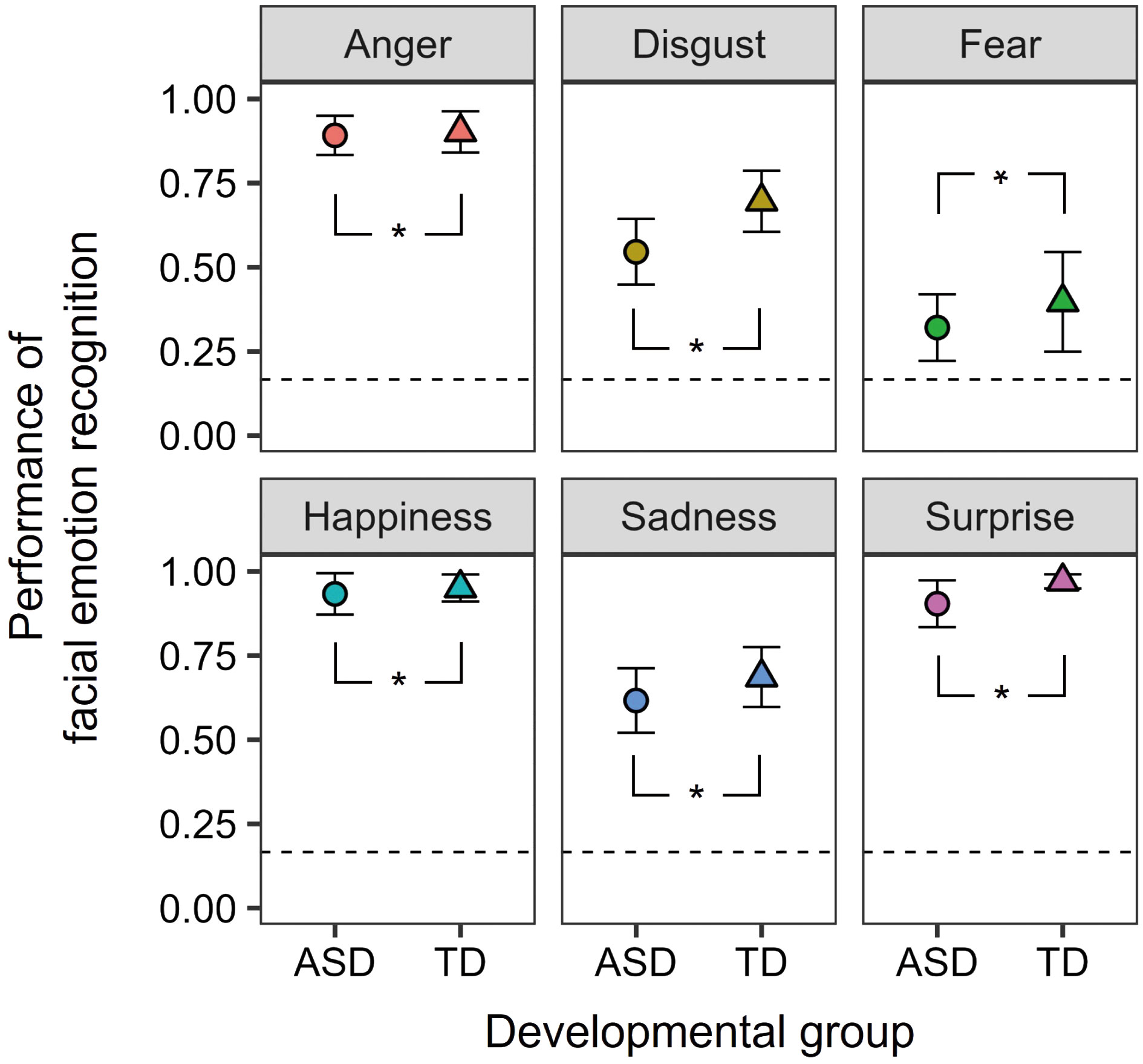

Figure 2 shows performance in the facial emotion recognition task. Participants in both groups recognized facial emotions more accurately than chance. To examine the effects of autistic traits, a two-way mixed-design ANOVA was conducted with the factors of developmental group (ASD and TD) and facial emotion (anger, disgust, fear, happiness, sadness, and surprise). The main effects of developmental group [F (1, 35) = 6.161, p = 0.0180, ηp2 = 0.150] and facial emotion [F (5, 175) = 72.075, p < 0.0001, ηp2 = 0.673] were significant. The main effect of group indicates that individuals with ASD recognized facial emotions less accurately than TD individuals, irrespective of emotion type. The two-way interaction was not significant [F (5, 175) = 0.840, p = 0.523, ηp2 = 0.023]. Error patterns are analyzed in the Supplementary Information (Supplementary Figure S1).
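Partial eta squared can be recovered from each F-statistic and its degrees of freedom. This follows from ηp2 = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error). The short check below is an illustration, not the anovakun output. It reproduces the values reported for this ANOVA.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F-statistic and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)

# Values reported for the facial emotion recognition ANOVA.
print(round(partial_eta_squared(6.161, 1, 35), 3))    # 0.15  (group)
print(round(partial_eta_squared(72.075, 5, 175), 3))  # 0.673 (facial emotion)
print(round(partial_eta_squared(0.840, 5, 175), 3))   # 0.023 (interaction)
```

The same identity reproduces the other ηp2 values in the Results. For example, F (3, 105) = 47.084 gives 0.574.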

Figure 2 Mean performance in the facial emotion recognition task for the ASD and TD groups. Error bars represent 95% confidence intervals. Dashed lines represent chance level. Asterisks (*) indicate significant differences between the two groups (p < .05).

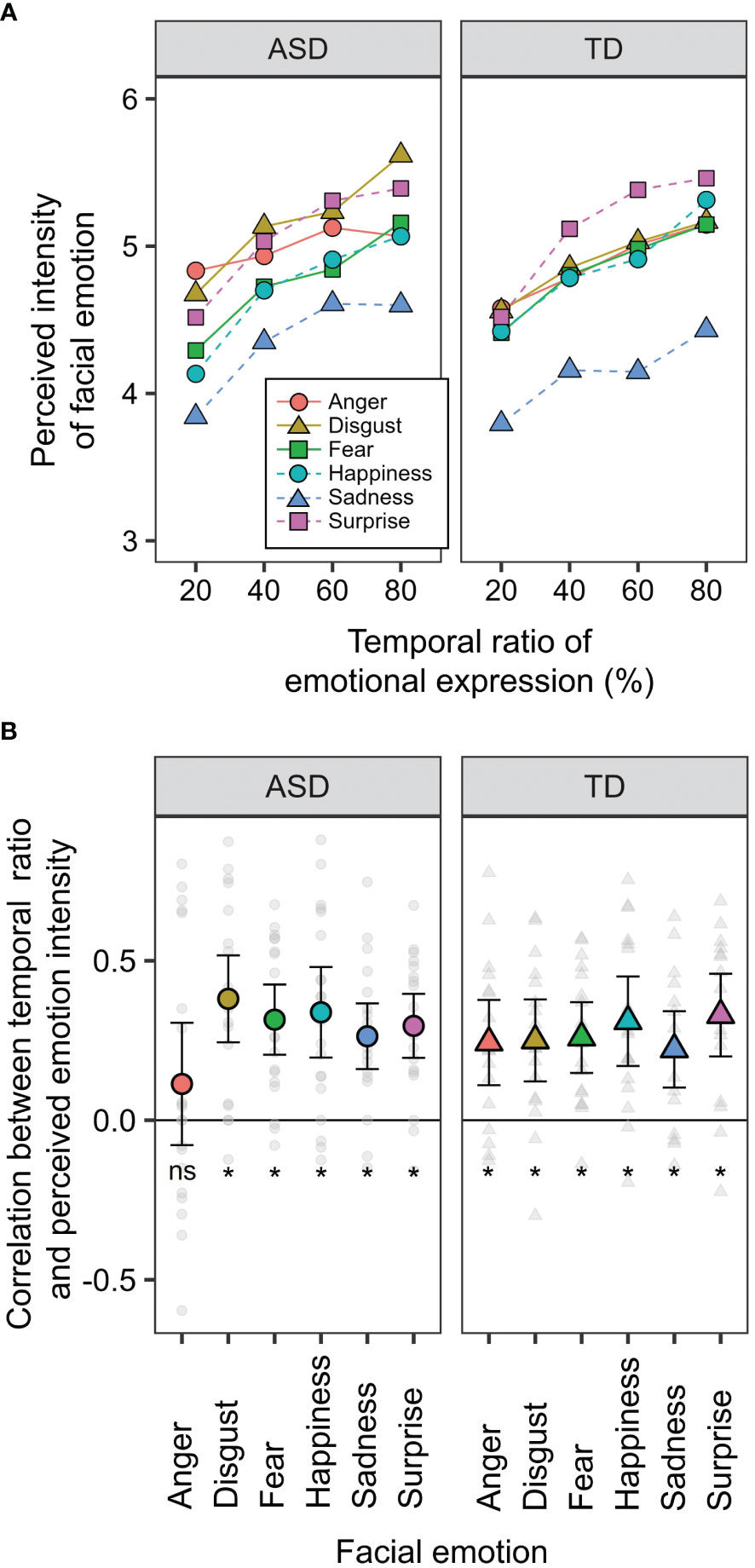

Figure 3A shows the perceived intensity of the facial emotions in the two groups. Ratios of 0 and 100% were excluded from the analysis because these conditions were static (complete data are shown in Supplementary Figure S2). A three-way mixed-design ANOVA was performed with the factors of developmental group, facial emotion, and ratio (20, 40, 60, and 80%). The main effects of facial emotion [F (5, 175) = 11.407, p < 0.0001, ηp2 = 0.246] and ratio [F (3, 105) = 47.084, p < 0.0001, ηp2 = 0.574] were significant. Furthermore, the facial emotion × ratio interaction was significant [F (15, 525) = 2.281, p = 0.004, ηp2 = 0.061]. The other main effects and interactions were not significant (Fs < 1.195, ps > 0.271, ηp2s < 0.033). In TD individuals, the perceived intensity of anger has been reported to correlate with the AQ score (24). To confirm reproducibility, we calculated the correlation coefficients between the AQ score and perceived intensity in TD participants (Supplementary Table S1). The correlation coefficient for the anger condition was -0.337 [t (15) = 1.388, p = 0.185], which is comparable to the value (r = -0.353) obtained in the previous study. The present correlation was not significant, possibly because our sample size was smaller than that of the previous study.
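The t-value reported for a Pearson correlation follows from r and the sample size. The formula is t = r·sqrt(n - 2) / sqrt(1 - r²), with df = n - 2. The sketch reproduces the reported value to rounding precision; the sign follows r.

```python
import math

def t_from_r(r, n):
    """t statistic (df = n - 2) for H0: population correlation = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)

t = t_from_r(-0.337, 17)   # anger condition, 17 TD participants -> df = 15
print(f"t(15) = {t:.3f}")  # t(15) = -1.386; reported |t| = 1.388 (r is rounded)
```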

Figure 3 Behavioural data of the temporal ensemble task. (A) Mean perceived intensity of facial emotion as a function of the ratio of emotional expression. (B) Mean ratio effect on the facial ensemble, calculated as the correlation coefficient between ratio and perceived intensity. Small dots indicate data from individual participants. Error bars represent 95% confidence intervals. Asterisks (*) indicate values significantly different from zero (p < .05); "ns" indicates no significant difference.

To examine the temporal ensemble characteristics in individuals with ASD, we calculated the correlation coefficients between the ratio and perceived intensity of facial emotions (Figure 3B). This correlation indicates the extent to which the temporal ensemble depended on the presentation time of the emotional expression (temporal ratio effect). If individuals with ASD perceived emotions based on part of the serial facial presentation rather than the entire presentation, the temporal ratio effect would decrease. To examine the temporal ratio effect, one-sample t-tests against zero (i.e., no correlation) were conducted, and multiplicity was corrected using Holm’s method. The values were significantly larger than zero (|t|s > 3.865, ps < 0.005), except for the anger condition in the ASD group [t (18) = 1.242, p = 0.228]. These results suggest that the temporal ensembles of many individuals with ASD are less susceptible to the presentation time of angry expressions, consistent with our first hypothesis. This may be related to atypical social perception of negative emotional faces among individuals with ASD. Tanaka and Sung (12) argued that individuals with ASD are sensitive to angry expressions, which would increase their arousal and cause them to avoid the eyes. To examine this possibility, the effects of arousal and attentional allocation on temporal ensembles are analyzed below.

Moreover, a two-way mixed-design ANOVA was performed on the temporal ratio effect with the factors of developmental group and facial emotion. The main effect of facial emotion was significant [F (5, 175) = 3.219, p = 0.008, ηp2 = 0.084], but that of developmental group was not [F (1, 35) = 0.051, p = 0.823, ηp2 = 0.001]. The two-way interaction was marginally significant [F (5, 175) = 1.982, p = 0.084, ηp2 = 0.054]. The interaction may have been only marginal because five participants with ASD showed a stronger temporal ratio effect for angry expressions (Figure 3B). This is consistent with reports of larger individual differences in facial ensembles among individuals with ASD (23).

These results are partially inconsistent with those of Harada et al. (24). In the TD group, the temporal ratio effect did not differ significantly between anger and other emotions, whereas Harada et al. reported significant differences. A potential reason for this inconsistency is that we recruited TD participants based on AQ scores (AQ < 30), whereas the previous study included TD participants irrespective of their AQ scores. Given that autistic traits are related to the temporal ratio effect, the results of Harada et al. (24) may have been driven by participants with higher AQ scores.

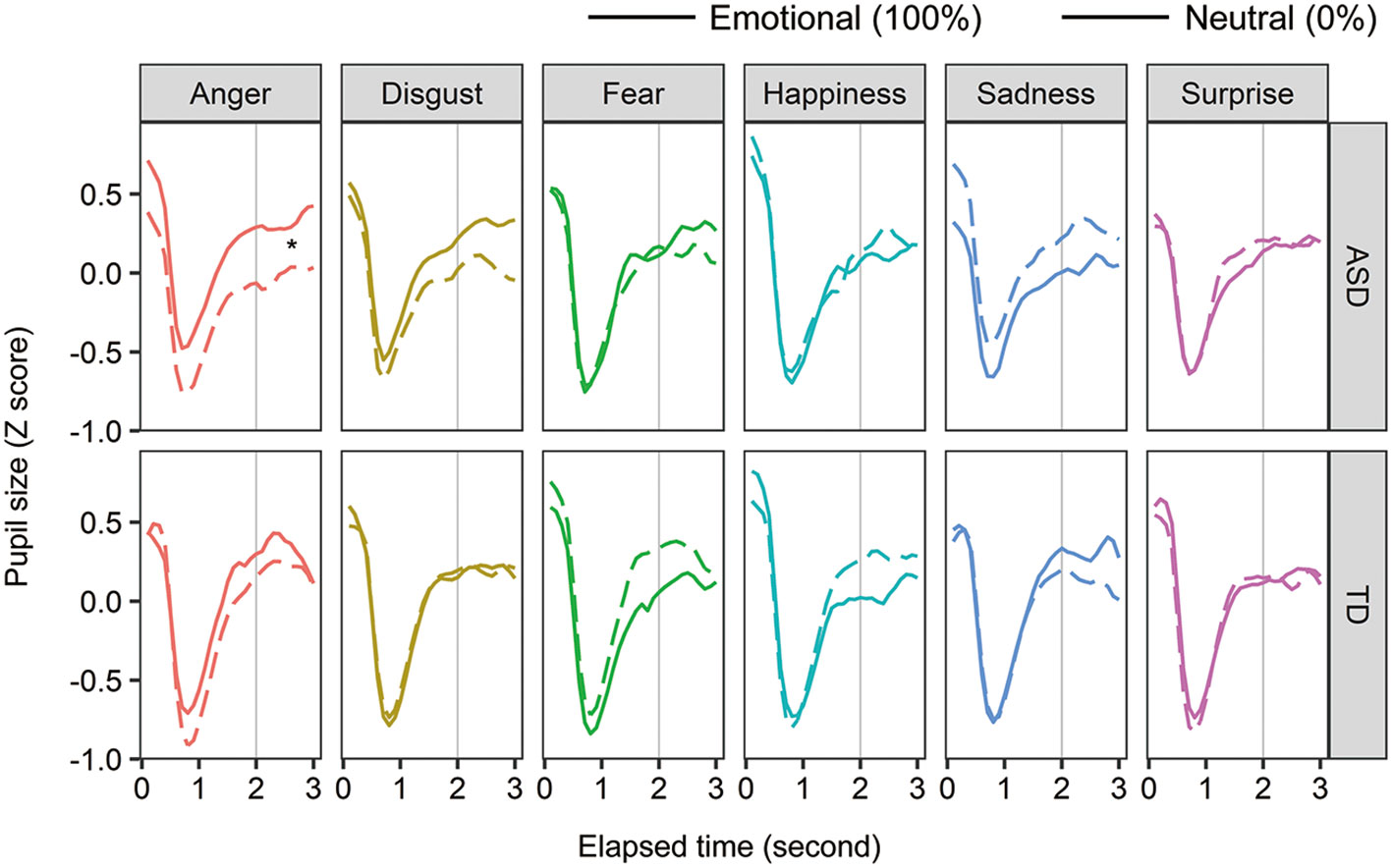

To examine the effect of facial emotions on arousal in individuals with ASD, pupil size was analyzed with within-participant and between-participant comparisons. For the within-participant comparison, we calculated the Z-score of the pupil value in each facial emotion condition (Figure 4) and averaged it from 2 to 3 s after facial expression onset. For each group, a two-way within-participant ANOVA was conducted with the factors of facial emotion and ratio (emotion: 100%, neutral: 0%). In the ASD group, the main effects of facial emotion and ratio were not significant (Fs < 0.577, ps > 0.463, ηp2s < 0.012), but the two-way interaction was significant [F (5, 55) = 2.929, p = 0.021, ηp2 = 0.210]. For the anger condition, the simple main effect of ratio was significant [F (1, 11) = 8.434, p = 0.014, ηp2 = 0.434]: pupil size in the ASD group was significantly larger for angry than for neutral expressions. The simple main effect of facial emotion in the emotional (100%) condition was significant [F (5, 55) = 2.396, p = 0.049, ηp2 = 0.179]. However, multiple comparisons showed no significant differences between facial emotions. Other simple main effects were not significant. For the TD group, neither main effect nor the two-way interaction was significant (Fs < 2.182, ps > 0.067, ηp2s < 0.144). These within-participant comparisons show that individuals with ASD had larger pupils for angry than for neutral expressions, whereas TD individuals did not.

Figure 4 The mean change in pupil size for facial expressions. Asterisks (*) indicate significant differences (p < .05).

For the between-participant comparison, we divided the pupil value in the 100% condition by the mean pupil value in the 0% condition. We then averaged this relative pupil size from 2 to 3 s after facial expression onset. A two-way mixed-design ANOVA was conducted with the factors of developmental group and facial emotion. The main effect of facial emotion and the two-way interaction were significant [F (5, 120) = 2.817, p = 0.019, ηp2 = 0.105; F (5, 120) = 2.955, p = 0.015, ηp2 = 0.110], whereas the main effect of developmental group was not [F (1, 24) = 0.328, p = 0.572, ηp2 = 0.014]. For sad expressions, the simple main effect of developmental group was significant [F (1, 24) = 4.327, p = 0.048, ηp2 = 0.153], with a smaller relative pupil size in the ASD group. This suggests that sad expressions elicit lower arousal in individuals with ASD than in TD individuals. For the ASD group, the simple main effect of facial emotion was significant [F (5, 55) = 3.507, p = 0.008, ηp2 = 0.242], but multiple comparisons showed no significant differences between facial emotions. Other simple main effects were not significant.

Thus, participants with ASD showed increased arousal for angry expressions and decreased arousal for sad expressions. One possible explanation is that individuals with ASD are sensitive to the arousal level of facial stimuli. Because angry expressions are more arousing than other expressions (40), arousal in individuals with ASD would increase during their presentation. Similarly, because sad expressions are less arousing, their arousal would decrease during presentation.

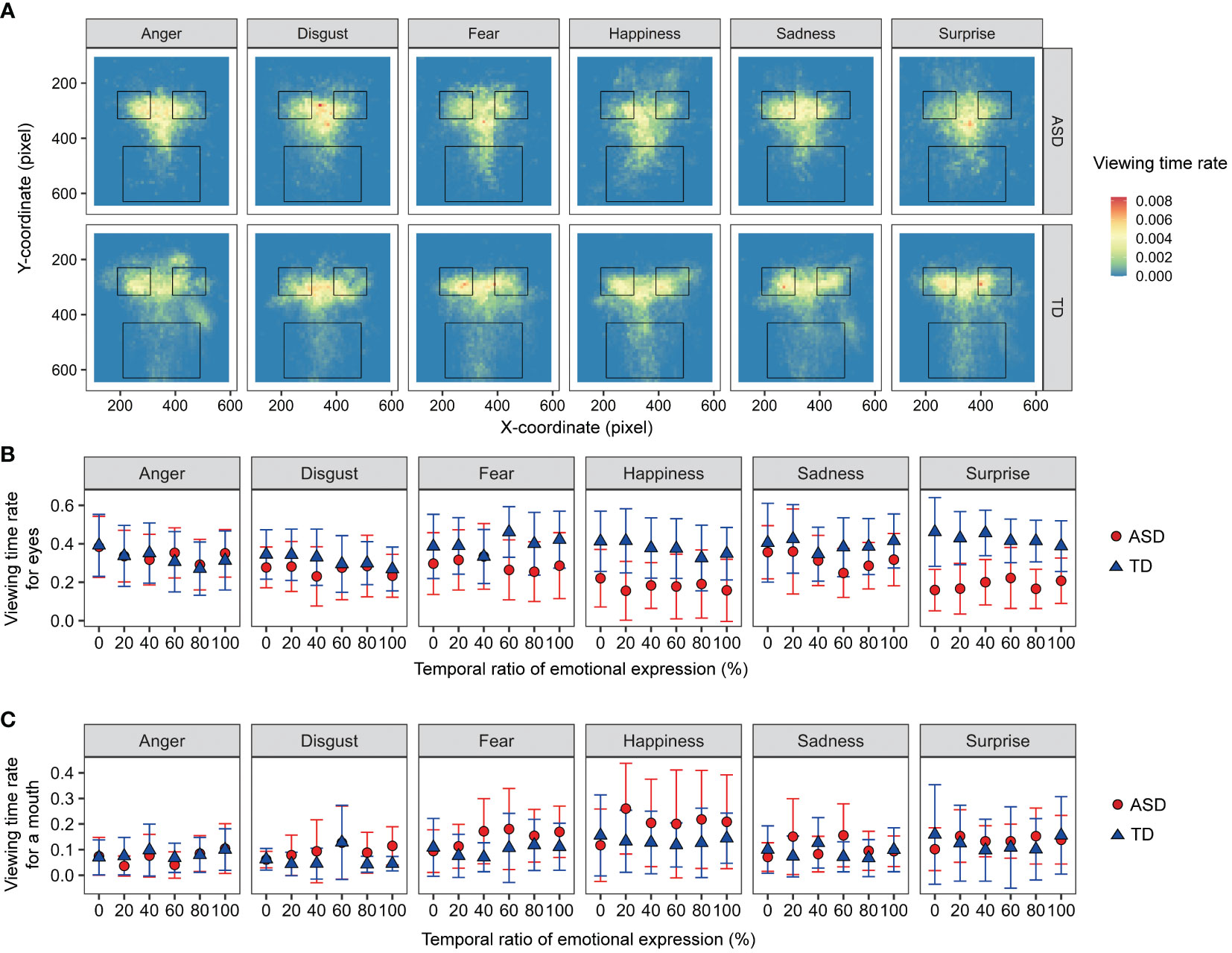

Viewing-time rates were analyzed to investigate the effects of autistic traits on attentional allocation. Figure 5 shows the mean viewing-time rates for the facial images. Separate three-way mixed-design ANOVAs were performed on the viewing-time rates for the eyes and for the mouth, with the factors of developmental group, facial emotion, and ratio (0, 20, 40, 60, 80, and 100%). For viewing times on the eyes, the main effect of ratio was significant [F (5, 120) = 4.948, p < 0.001, ηp2 = 0.171]. The other main effects and interactions were not significant (Fs < 1.932, ps > 0.053, ηp2s < 0.092). For viewing times on the mouth, two effects were significant. The first was the main effect of facial emotion [F (5, 120) = 2.698, p = 0.024, ηp2 = 0.101]. The second was the interaction between developmental group and ratio [F (5, 120) = 2.719, p = 0.023, ηp2 = 0.102]. For the ASD group, the simple main effect of ratio was significant [F (5, 55) = 3.225, p = 0.0127, ηp2 = 0.227], but multiple comparisons showed no significant differences between ratios. The other main effects and interactions were not significant (Fs < 1.958, ps > 0.090, ηp2s < 0.075). Thus, attentional allocation did not clearly differ between individuals with and without ASD.
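Viewing-time rates for areas of interest (AOIs) can be computed by counting the gaze samples that fall inside each rectangle. The AOI sizes in this sketch follow the text: two 120 × 100-pixel eye rectangles and one 280 × 200-pixel mouth rectangle. Their screen positions are hypothetical, and lost samples are coded as `None`. The rate is taken relative to all samples of the 3-s presentation. The text does not say whether lost samples were excluded from the denominator.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

@dataclass(frozen=True)
class Rect:
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)

Sample = Optional[Tuple[float, float]]   # (x, y) in pixels, or None if gaze was lost

def viewing_time_rate(gaze: Sequence[Sample], aois: Sequence[Rect]) -> float:
    """Fraction of presentation samples whose gaze falls inside any of the AOIs."""
    inside = sum(1 for s in gaze if s is not None and any(a.contains(*s) for a in aois))
    return inside / len(gaze)

# Hypothetical AOI positions; sizes as described in the text.
eyes = [Rect(100, 100, 120, 100), Rect(300, 100, 120, 100)]
mouth = [Rect(200, 300, 280, 200)]

# Toy 3-s trace at 60 Hz (180 samples): 30 on each eye, 60 on the mouth, 60 lost.
gaze = [(150, 150)] * 30 + [(350, 150)] * 30 + [(250, 350)] * 60 + [None] * 60
print(viewing_time_rate(gaze, eyes), viewing_time_rate(gaze, mouth))  # both 1/3
```

Dividing the sample count by the 60 Hz sampling rate would instead give the viewing time in seconds.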

Figure 5 The viewing-time data for facial images. (A) Heat maps of viewing times. The three black rectangles indicate the areas of interest for the eyes and mouth. (B) Mean viewing-time rates on the eye areas. (C) Mean viewing-time rates on the mouth area. Error bars represent 95% confidence intervals.

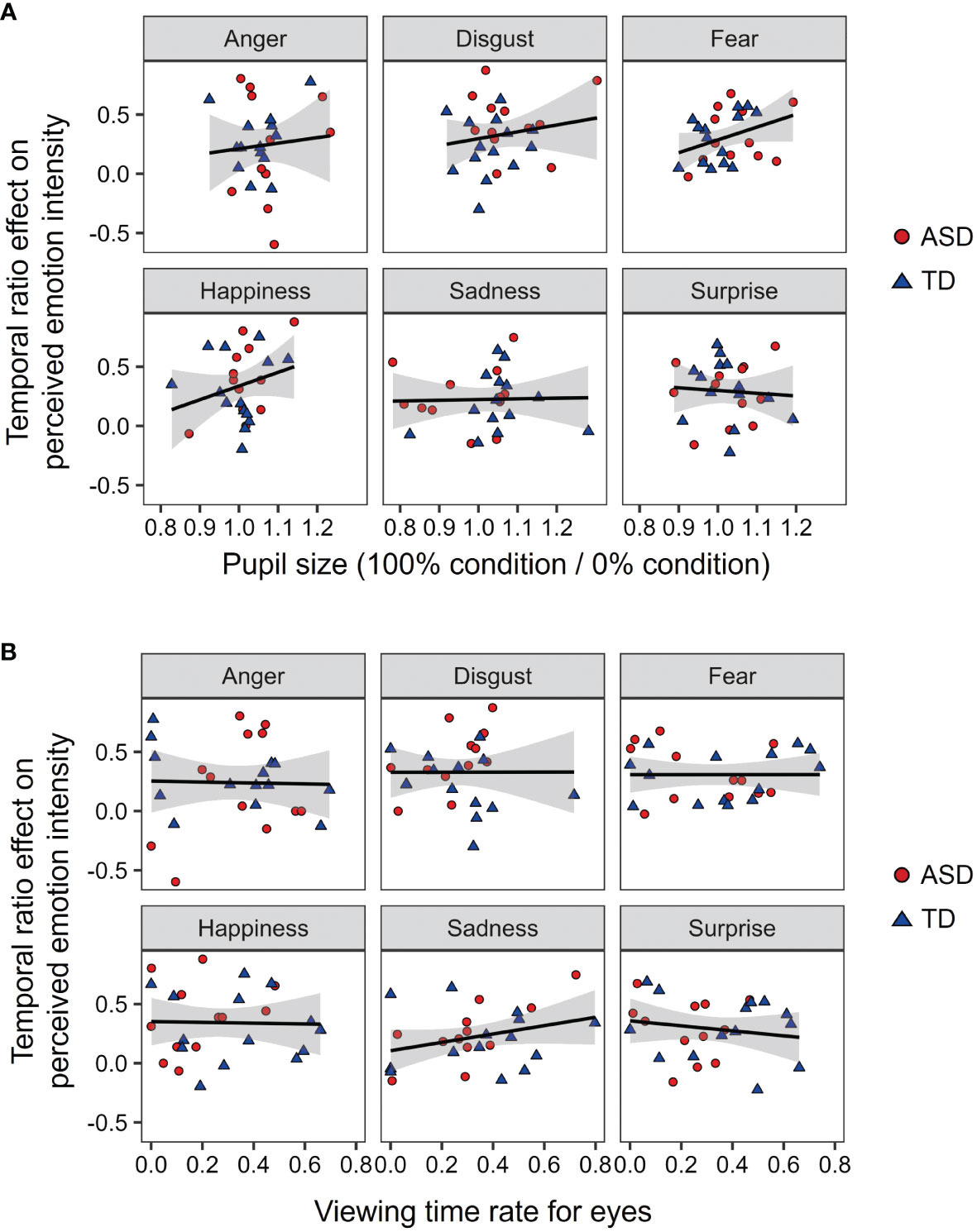

To probe the mechanisms underlying the effect of autistic traits on temporal ensembles, we examined the effects of pupil size and viewing time for the eyes on the temporal ensemble of facial emotions (Figure 6). For each facial emotion, we conducted a multiple regression analysis on the temporal ratio effect, with developmental group, pupil size, and viewing time for the eyes as predictors. None of the models were significant (Fs < 1.322, ps > 0.293, R2s < 0.153). This suggests that the temporal ensemble was not fully explained by arousal or attentional allocation.
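The per-emotion regression can be sketched with ordinary least squares in NumPy. It shows how R² and the overall model F (df = k, n - k - 1) relate. It is not the authors' R code, and the synthetic data below are random and only exercise the code. Suppose all 26 participants with usable eye data entered each model with the three predictors named above. Under that assumption the model df would be (3, 22), and R² = 0.153 would correspond to F ≈ 1.32, in line with the reported bounds.

```python
import numpy as np

def ols_r2_f(X, y):
    """Fit y = b0 + X @ b by least squares; return coefficients, R^2, F and its df."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])      # add intercept column
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    r2 = 1.0 - residuals @ residuals / np.sum((y - y.mean()) ** 2)
    f = (r2 / k) / ((1.0 - r2) / (n - k - 1))      # overall model F-test
    return beta, float(r2), float(f), (k, n - k - 1)

def f_from_r2(r2, k, n):
    """Overall F implied by R^2 with k predictors and n observations."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))

# Synthetic predictors: group (0/1), relative pupil size, eye viewing-time rate.
rng = np.random.default_rng(0)
n = 26
X = np.column_stack([rng.integers(0, 2, n), rng.normal(1.0, 0.05, n), rng.uniform(0, 1, n)])
y = rng.normal(0.8, 0.2, n)   # stand-in for per-participant temporal ratio effects
beta, r2, f, df = ols_r2_f(X, y)
print(df, round(r2, 3), round(f, 3))
print(round(f_from_r2(0.153, 3, 26), 3))   # 1.325
```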

Figure 6 The relationship between the temporal ensemble, arousal, and attentional allocation. (A) The relationship between pupil size and the temporal ratio effect. (B) The relationship between viewing time for the eyes and the temporal ratio effect. An asterisk (*) indicates a significant correlation (p < .05).

This study investigated the temporal ensemble characteristics of emotional expressions in individuals with ASD. The temporal ensembles of many participants with ASD were less susceptible to the temporal ratio of angry expressions, consistent with our hypothesis. Moreover, neither pupil size nor viewing time for the eyes predicted the temporal ratio effect (Figure 6). These results suggest that the temporal ensemble of angry expressions is weakened in individuals with ASD; however, arousal or attention to the eyes cannot fully explain this effect.

We found that many individuals with ASD had difficulty averaging angry expressions over time. This finding could be used to assess autistic traits from the perspective of temporal facial ensembles. Recent studies have proposed behavioral assessments using computational models of facial expressions (43, 44). Leo et al. (44) developed a computational model that evaluates individuals’ ability to express their emotional states. The model analyzes the state of facial muscles using computer vision and machine learning. This idea can be applied to facial emotion recognition. For example, a typical ensemble of neutral-anger expressions could be modelled using data obtained from individuals without ASD. Such a model would be useful for quantitatively assessing how atypical an individual’s temporal ensemble ability is. Furthermore, the findings of this study may be useful for developing tools that support communication in situations such as online meetings. If the temporal ensemble of angry facial expressions causes excessive arousal, then tempering the display may facilitate communication, and vice versa.

The pupil data produced two results regarding whether individuals with ASD are sensitive to emotional expressions. The participants with ASD showed larger pupils while viewing angry expressions but smaller pupils while viewing sad expressions. As noted above, previous studies have reported mixed evidence: participants with ASD have shown both smaller (45) and larger (46) pupil sizes in response to facial expressions. Our results may reconcile these mixed findings under the idea that individuals with ASD are sensitive to the arousal level of stimuli. Angry expressions are more arousing, whereas sad expressions are less arousing (40). Accordingly, a larger pupil size for angry expressions and a smaller one for sad expressions can be regarded as sensitive responses, which might be related to hypersensitivity in individuals with ASD. This idea can explain some of the mixed results but not others (e.g., 11), and further studies are required to examine this hypothesis.

Alternatively, the smaller pupil size for sad expressions may stem from decreased affective empathy in individuals with ASD. Empathy has been discussed in terms of both affective and cognitive components (47). The pupillary response is considered to be influenced by affective empathy because it is controlled by the autonomic nervous system. Interestingly, social responsiveness has been reported to be related to sensitivity to sad expressions (48), suggesting that affective empathy influences responses to sadness. However, we did not observe any specific effect of sadness on facial emotion recognition (Figure 2) or on the temporal ensemble (Figure 3B). This suggests that individuals with ASD can recognize sad expressions but may show atypical emotional responses to them.

Viewing times for the eyes did not differ between individuals with ASD and those with TD, which is inconsistent with previous results. Eye-tracking studies have shown that individuals with ASD fixate on the eyes of static facial images for shorter durations (49). One possible reason for this inconsistency is that our stimuli may have been perceived as dynamic. Unlike static facial expressions, dynamic facial expressions are less likely to produce shorter fixations on the eyes (50). Because our facial images switched between emotional and neutral expressions, they may have attracted the attention of individuals with ASD.

This study had three potential limitations. First, the present study included a wide range of participant ages, and we did not examine whether the results generalize to different age groups of individuals with ASD. As the participants with ASD were older than 16 years, the present results represent trends in adolescents and adults with ASD. It has been suggested that atypical recognition processes for facial emotions change with age. Black et al. (49) reported that shorter fixations on the eyes of faces were less evident in children with ASD (51, 52) than in adolescents and adults with ASD. Moreover, increased arousal may stem from negative social feedback (49), and such social effects increase during adolescence (e.g., social anxiety: 53). Future studies should include children with ASD to examine the impact of autistic traits on temporal ensembles. Second, the diversity of the participants with ASD may have influenced the results. Some participants with ASD had co-occurring conditions (e.g., ADHD, anxiety disorders, and learning disorders) and were taking psychoactive drugs (e.g., methylphenidate). The co-occurrence of such conditions is common in individuals with ASD (54). Therefore, the present results cannot be fully attributed to autistic traits. Third, a simplified procedure was used to measure the temporal ensembles. As Harada et al. (24) noted, the classical temporal ensemble procedure presents facial images whose emotional intensity is manipulated. In contrast, we presented emotional and neutral images to measure the temporal ratio effect. Future studies should use the classical temporal ensemble procedure to address this limitation.
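To make the third limitation concrete, the sketch below contrasts the two ways of building a frame sequence. It assumes an ideal observer who reports the mean intensity of the sequence. The function names, frame counts, ratios, intensities, and the evenly spaced morph levels of the classical-style sequence are all hypothetical choices. They are not the parameters used in this study or by Harada et al. (24).

```python
import random
from statistics import mean


def simplified_sequence(n_frames, emotional_ratio, intensity=1.0, seed=None):
    """Simplified procedure: a fraction `emotional_ratio` of frames shows the
    emotional face (at `intensity`) and the rest are neutral (0.0); the order
    is shuffled so the display switches randomly between the two."""
    if not 0.0 <= emotional_ratio <= 1.0:
        raise ValueError("emotional_ratio must lie in [0, 1]")
    n_emotional = round(n_frames * emotional_ratio)
    frames = [intensity] * n_emotional + [0.0] * (n_frames - n_emotional)
    random.Random(seed).shuffle(frames)
    return frames


def classical_sequence(n_frames, mean_intensity, spread, seed=None):
    """Classical-style procedure (hypothetical parameters): every frame is
    emotional, with morph intensities evenly spaced within +/- `spread` of
    `mean_intensity`, then shuffled."""
    if n_frames < 2:
        raise ValueError("need at least two frames")
    frames = [mean_intensity + spread * (2 * i / (n_frames - 1) - 1)
              for i in range(n_frames)]
    random.Random(seed).shuffle(frames)
    return frames


# An ideal temporal averager reports the mean intensity of the sequence.
for ratio in (0.25, 0.5, 0.75):
    frames = simplified_sequence(20, ratio, seed=1)
    print(f"simplified, ratio={ratio}: mean intensity = {mean(frames):.2f}")

frames = classical_sequence(20, mean_intensity=0.5, spread=0.3, seed=1)
print(f"classical-style: mean intensity = {mean(frames):.2f}")
```

In the simplified sequence, the ideal average equals the emotional ratio times the emotional intensity, so only the ratio drives it. In the classical-style sequence, every frame is emotional and the average is carried by the morph intensities themselves.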

Two main results were obtained. First, the temporal ensembles of many individuals with ASD were less susceptible to the temporal ratio of angry expressions. Second, individuals with ASD showed larger pupils when viewing angry expressions and smaller pupils when viewing sad expressions. However, neither pupil size nor viewing time for the eyes predicted the temporal ensembles. These results suggest that (a) autistic traits influence the temporal integration of angry expressions, (b) individuals with ASD show atypical emotional responses that are sensitive to facial expressions, and (c) the temporal ensemble of facial emotions is not fully explained by arousal or attentional allocation.

The datasets presented in this study can be found in an online repository: https://osf.io/hkcys/.

The studies involving humans were approved by the Ethics Committee of the National Rehabilitation Center for Persons with Disabilities. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

YH: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft. JO: Conceptualization, Funding acquisition, Methodology, Resources, Writing – review & editing. MS: Methodology, Writing – review & editing. NI: Methodology, Resources, Validation, Writing – review & editing. KM: Methodology, Writing – review & editing. MeW: Methodology, Writing – review & editing. MaW: Conceptualization, Data curation, Funding acquisition, Project administration, Supervision, Validation, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was supported in part by Grant-in-Aid for Scientific Research on Innovative Areas no. 20H04595 and Japan Society for the Promotion of Science KAKENHI grant nos. 21H05053, 22K18666, 21K19750, and 20K19855.

We would like to thank Dr. Na Chen for her helpful comments and Drs Reiko Fukatsu and Yuko Seko for their continuous encouragement.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2024.1328708/full#supplementary-material

1. Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: A review of behavioral and neuroimaging studies. Neuropsychol Rev (2010) 20:290–322. doi: 10.1007/s11065-010-9138-6

2. Uljarevic M, Hamilton A. Recognition of emotions in autism: A formal meta-analysis. J Autism Dev Disord (2013) 43:1517–26. doi: 10.1007/s10803-012-1695-5

3. Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry (2002) 59:809–16. doi: 10.1001/archpsyc.59.9.809

4. Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, et al. Gaze fixation and the neural circuitry of face processing in autism. Nat Neurosci (2005) 8:519–26. doi: 10.1038/nn1421

5. Pelphrey K, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. J Autism Dev Disord (2002) 32:249–61. doi: 10.1023/a:1016374617369

6. Hernandez N, Metzger A, Magné R, Bonnet-Brilhault F, Roux S, Barthelemy C, et al. Exploration of core features of a human face by healthy and autistic adults analyzed by visual scanning. Neuropsychologia (2009) 47:1004–12. doi: 10.1016/j.neuropsychologia.2008.10.023

7. Falkmer M, Bjällmark A, Larsson M, Falkmer T. Recognition of facially expressed emotions and visual search strategies in adults with Asperger syndrome. Res Autism Spectr Disord (2011) 5:210–7. doi: 10.1016/j.rasd.2010.03.013

8. Baron-Cohen S, Jolliffe T, Mortimore C, Robertson M. Another advanced test of theory of mind: Evidence from very high functioning adults with autism or Asperger syndrome. J Child Psychol Psychiatry (1997) 38:813–22. doi: 10.1111/j.1469-7610.1997.tb01599.x

9. Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The “Reading the Mind in the Eyes” Test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. J Child Psychol Psychiatry (2001) 42:241–51. doi: 10.1017/S0021963001006643

10. Adolphs R, Sears L, Piven J. Abnormal processing of social information from faces in autism. J Cogn Neurosci (2001) 13:232–40. doi: 10.1162/089892901564289

11. Pelphrey KA, Morris JP, McCarthy G, LaBar KS. Perception of dynamic changes in facial affect and identity in autism. Soc Cogn Affect Neurosci (2007) 2:140–9. doi: 10.1093/scan/nsm010

12. Tanaka JW, Sung A. The “Eye Avoidance” hypothesis of autism face processing. J Autism Dev Disord (2016) 46:1538–52. doi: 10.1007/s10803-013-1976-7

13. Lassalle A, Åsberg JJ, Zürcher NR, Hippolyte L, Billstedt E, Ward N, et al. Hypersensitivity to low intensity fearful faces in autism when fixation is constrained to the eyes. Hum Brain Mapp (2017) 38:5943–57. doi: 10.1002/hbm.23800

14. Song Y, Hakoda Y. Selective impairment of basic emotion recognition in people with autism: discrimination thresholds for recognition of facial expressions of varying intensities. J Autism Dev Disord (2018) 48:1886–94. doi: 10.1007/s10803-017-3428-2

15. Stuart N, Whitehouse A, Palermo R, Bothe E, Badcock N. Eye gaze in autism spectrum disorder: A review of neural evidence for the eye avoidance hypothesis. J Autism Dev Disord (2023) 53:1884–905. doi: 10.1007/s10803-022-05443-z

16. Moriuchi J, Klin A, Jones W. Mechanisms of diminished attention to eyes in autism. Am J Psychiatry (2017) 174:26–35. doi: 10.1176/appi.ajp.2016.15091222

17. Whitney D, Leib Y. Ensemble perception. Annu Rev Psychol (2018) 69:105–29. doi: 10.1146/annurev-psych-010416-044232

18. Haberman J, Harp T, Whitney D. Averaging facial expression over time. J Vision (2009) 9:1–13. doi: 10.1167/9.11.1

19. Frith U, Happé F. Autism: beyond “theory of mind”. Cognition (1994) 50:115–32. doi: 10.1016/0010-0277(94)90024-8

20. Happé F, Frith U. The weak coherence account: Detail-focused cognitive style in autism spectrum disorders. J Autism Dev Disord (2006) 36:5–25. doi: 10.1007/s10803-005-0039-0

21. Mottron L, Dawson M, Soulières I, Hubert B, Burack J. Enhanced perceptual functioning in autism: An update, and eight principles of autistic perception. J Autism Dev Disord (2006) 36:27–43. doi: 10.1007/s10803-005-0040-7

22. Karaminis T, Neil L, Manning C, Turi M, Fiorentini C, Burr D, et al. Ensemble perception of emotions in autistic and typical children and adolescents. Dev Cogn Neurosci (2017) 24:51–62. doi: 10.1016/j.dcn.2017.01.005

23. Chakrabarty M, Wada M. Perceptual effects of fast and automatic visual ensemble statistics from faces in individuals with typical development and autism spectrum conditions. Sci Rep (2020) 10:2169. doi: 10.1038/s41598-020-58971-y

24. Harada Y, Ohyama J, Wada M. Effects of temporal properties of facial expressions on the perceived intensity of emotion. R Soc Open Sci (2023) 10:220585. doi: 10.1098/rsos.220585

25. Baron-Cohen S, Wheelwright S, Skinner R, Martin J, Clubley E. The autism-spectrum quotient (AQ): evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J Autism Dev Disord (2001) 31:5–17. doi: 10.1023/A:1005653411471

26. Wakabayashi A, Tojo Y, Baron-Cohen S, Wheelwright S. The Autism-Spectrum Quotient (AQ) Japanese version: Evidence from high-functioning clinical group and normal adults. Shinrigaku Kenkyu (2004) 75:78–84. doi: 10.4992/jjpsy.75.78

27. Ashwood KL, Gillan N, Horder J, Hayward H, Woodhouse E, McEwen FS, et al. Predicting the diagnosis of autism in adults using the Autism-Spectrum Quotient (AQ) questionnaire. Psychol Med (2016) 46:2595–604. doi: 10.1017/S0033291716001082

28. Bradley MM, Miccoli L, Escrig MA, Lang PJ. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology (2008) 45:602–7. doi: 10.1111/j.1469-8986.2008.00654.x

29. Law Smith MJ, Montagne B, Perrett DI, Gill M, Gallagher L. Detecting subtle facial emotion recognition deficits in high-functioning Autism using dynamic stimuli of varying intensities. Neuropsychologia (2010) 48:2777–81. doi: 10.1016/j.neuropsychologia.2010.03.008

30. Kliemann D, Dziobek I, Hatri A, Baudewig J, Heekeren HR. The role of the amygdala in atypical gaze on emotional faces in autism spectrum disorders. J Neurosci (2012) 32:9469–76. doi: 10.1523/JNEUROSCI.5294-11.2012

31. Sun S, Webster PJ, Wang Y, Yu H, Yu R, Wang S. Reduced pupil oscillation during facial emotion judgment in people with autism spectrum disorder. J Autism Dev Disord (2023) 53:1963–73. doi: 10.1007/s10803-022-05478-2

32. Fujita H, Maekawa H, Dairoku K, Yamanaka K. A Japanese version of the WAIS-III. Bunkyo, Tokyo: Nihon Bunka Kagakusha. (2006).

33. Wechsler D. Wechsler adult intelligence scale-III. San Antonio, TX: The Psychological Corporation. (1997).

34. Kuroda M, Inada N. Autism diagnostic observation schedule 2nd ed. Bunkyo, Tokyo: Kaneko Shobo. (2015).

35. Lord C, Rutter M, DiLavore PC, Risi S, Gotham K, Bishop SL. Autism diagnostic observation schedule 2nd ed. Los Angeles, CA: Western Psychological Services. (2012).

36. Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia (1971) 9:97–113. doi: 10.1016/0028-3932(71)90067-4

37. Brainard DH. The psychophysics toolbox. Spatial Vision (1997) 10:433–6. doi: 10.1163/156856897X00357

38. Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision (1997) 10:437–42. doi: 10.1163/156856897X00366

39. Kleiner M, Brainard D, Pelli D, Ingling A, Murray R, Broussard C. What's new in Psychtoolbox-3? Perception (2007) 36:1–16. doi: 10.1068/v070821

40. Fujimura T, Umemura H. Development and validation of a facial expression database based on the dimensional and categorical model of emotions. Cogn Emotion (2018) 32:1663–70. doi: 10.1080/02699931.2017.1419936

41. Bradley M, Sapigao R, Lang P. Sympathetic ANS modulation of pupil diameter in emotional scene perception: Effects of hedonic content, brightness, and contrast. Psychophysiology (2017) 54:1419–35. doi: 10.1111/psyp.12890

42. Iseki R. Anovakun (2023). Available online at: http://riseki.php.xdomain.jp/index.php?ANOVA%E5%90%9B (Accessed 15 May, 2023).

43. Leo M, Carcagni P, Distante C, Spagnolo P, Mazzeo PL, Rosato AC, et al. Computational assessment of facial expressions production in ASD children. Sensors (2018) 18:3993. doi: 10.3390/s18113993

44. Leo M, Carcagni P, Distante C, Mazzeo PL, Spagnolo P, Levante A, et al. Computational analysis of deep visual data for quantifying facial expression production. Appl Sci (2019) 9:4542. doi: 10.3390/app9214542

45. Anderson CJ, Colombo J, Shaddy DJ. Visual scanning and pupillary responses in young children with autism spectrum disorder. J Clin Exp Neuropsychol (2006) 28:1238–56. doi: 10.1080/13803390500376790

46. Wagner JB, Luyster RJ, Tager-Flusberg H, Nelson CA. Greater pupil size in response to emotional faces as an early marker of social-communicative difficulties in infants at high risk for autism. Infancy (2016) 21:560–81.

47. Chrysikou E, Thompson W. Assessing cognitive and affective empathy through the interpersonal reactivity index: An argument against a Two-Factor Model. Assessment (2016) 23:769–77. doi: 10.1177/1073191115599055

48. Wallace G, Case L, Harms M, Silvers J, Kenworthy L, Martin A. Diminished sensitivity to sad facial expressions in high functioning autism spectrum disorders is associated with symptomatology and adaptive functioning. J Autism Dev Disord (2011) 41:1475–86. doi: 10.1007/s10803-010-1170-0

49. Black MH, Chen NTM, Iyer K, Lipp OV, Bölte S, Falkmer M, et al. Mechanisms of facial emotion recognition in autism spectrum disorders: Insights from eye tracking and electroencephalography. Neurosci Biobehav Rev (2017) 80:488–515. doi: 10.1016/j.neubiorev.2017.06.016

50. Han B, Tijus C, Le Barillier F, Nadel J. Morphing technique reveals intact perception of object motion and disturbed perception of emotional expressions by low-functioning adolescents with autism spectrum disorder. Res Dev Disabil (2015) 47:393–404. doi: 10.1016/j.ridd.2015.09.025

51. Nuske HJ, Vivanti G, Dissanayake C. Reactivity to fearful expressions of familiar and unfamiliar people in children with autism: an eye-tracking pupillometry study. J Neurodev Disord (2014) 6:14. doi: 10.1186/1866-1955-6-14

52. Nuske HJ, Vivanti G, Hudry K, Dissanayake C. Pupillometry reveals reduced unconscious emotional reactivity in autism. Biol Psychol (2014) 101:24–35. doi: 10.1016/j.biopsycho.2014.07.003

53. Kessler RC, Berglund P, Demler O, Jin R, Merikangas KR, Walters EE. Lifetime prevalence and age-of-onset distributions of DSM-IV disorders in the national comorbidity survey replication. Arch Gen Psychiatry (2005) 62:593–602. doi: 10.1001/archpsyc.62.6.593

Keywords: facial emotion, temporal ensemble, pupil size, eye tracking, eye-avoidance

Citation: Harada Y, Ohyama J, Sano M, Ishii N, Maida K, Wada M and Wada M (2024) Temporal characteristics of facial ensemble in individuals with autism spectrum disorder: examination from arousal and attentional allocation. Front. Psychiatry 15:1328708. doi: 10.3389/fpsyt.2024.1328708

Received: 27 October 2023; Accepted: 02 February 2024;

Published: 19 February 2024.

Edited by:

Adham Atyabi, University of Colorado Colorado Springs, United States

Reviewed by:

Wan-Chun Su, National Institutes of Health (NIH), United States

Copyright © 2024 Harada, Ohyama, Sano, Ishii, Maida, Wada and Wada. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Makoto Wada, d2FkYS1tYWtvdG9AcmVoYWIuZ2UuanA=

†ORCID: Makoto Wada, orcid.org/0000-0002-2183-5053

