- 1Department of Psychology and Educational Counseling, The Center for Psychobiological Research, Max Stern Yezreel Valley College, Emek Yezreel, Israel

- 2Department of Brain Sciences, Faculty of Medicine, Imperial College London, London, United Kingdom

- 3Faculty of Graduate Studies, Oranim Academic College, Kiryat Tiv'on, Israel

ChatGPT, an artificial intelligence language model developed by OpenAI, holds the potential for contributing to the field of mental health. Nevertheless, although ChatGPT theoretically shows promise, its clinical abilities in suicide prevention, a significant mental health concern, have yet to be demonstrated. To address this knowledge gap, this study aims to compare ChatGPT’s assessments of mental health indicators to those of mental health professionals in a hypothetical case study that focuses on suicide risk assessment. Specifically, ChatGPT was asked to evaluate a text vignette describing a hypothetical patient with varying levels of perceived burdensomeness and thwarted belongingness. The ChatGPT assessments were compared to the norms of mental health professionals. The results indicated that ChatGPT rated the risk of suicide attempts lower than did the mental health professionals in all conditions. Furthermore, ChatGPT rated mental resilience lower than the norms in most conditions. These results imply that gatekeepers, patients or even mental health professionals who rely on ChatGPT for evaluating suicidal risk or as a complementary tool to improve decision-making may receive an inaccurate assessment that underestimates the actual suicide risk.

Introduction

ChatGPT is a tool developed by OpenAI that is based on GPT language model technology and available in the public domain (1). In the few months since it was launched on 30 November 2022, ChatGPT has gained a remarkable 100 million users, making it the most rapidly growing consumer application to date (2). ChatGPT is a highly sophisticated chatbot that can handle text-based requests ranging from simple queries to more advanced tasks. It comprehends and interprets user requests and generates appropriate responses in nearly natural human language (3). This ability to generate human-like language and perform complex tasks makes ChatGPT a significant breakthrough in the fields of natural language processing and artificial intelligence (2, 3). Most studies conducted on ChatGPT have focused on its use in academia (4), whereas exploration of its applications in the field of applied psychology has been limited. While ChatGPT demonstrates promising theoretical potential (5), its clinical capabilities in the field of mental health remain unclear, and particularly its ability to address critical issues such as suicide prevention, a significant mental health concern.

Suicide constitutes a major health problem and cause of death across the globe. Of the approximately 90–100 suicide attempts per 100,000 individuals each year in Israel, 7.9 result in death (6, 7). In the United States, the death rate is 14.2 per 100,000 suicide attempts (8). Psychiatric diseases are at least 10 times more prevalent among individuals who attempt and/or commit suicide than in the general population (9). Suicide attempts are often impulsive and occur at times of crisis as a response to being unable to manage daily stresses and demands (10). Among patients discharged from the hospital following a suicide attempt, the risk of a subsequent attempt during the first 3 years after hospitalization is 12%–30% (11).

In response, considerable efforts have been invested in developing effective suicide prevention strategies to reduce the risk of recurring attempts (12). Early identification of individuals at risk of suicide is a fundamental prevention strategy, particularly among the high-risk population of mental health patients (13). Hence, clinicians’ ability to recognize indicators of suicide potential is essential to implement appropriate crisis management and suicide intervention strategies, especially during times of acute crisis (14). Given the magnitude of the problem, significant investment in suicide prevention programs is crucial. Recent initiatives have focused on training gatekeepers from various community groups (e.g., teachers, policymakers, and military commanders) to detect indicators of suicide risk (15, 16). These efforts aim to broaden the pool of professionals capable of evaluating suicide risk beyond psychiatrists and clinical psychologists working in hospital settings. The term “gatekeeper” refers to individuals who are in direct contact with those who may be at risk of suicide and can be trained to identify significant suicide risk factors. Gatekeeper training programs are often part of broader suicide prevention initiatives aimed at enhancing knowledge and providing training for identifying risk factors and suicidal behaviors. These programs also aim to equip participants with the necessary skills to assess an individual’s level of risk and to manage the situation effectively by providing access to appropriate resources and treatment referrals (17). The role of gatekeepers is to facilitate access to appropriate care and treatment for those at risk of suicide (17). Research suggests that such gatekeepers can be useful in reducing suicide as part of a systematic approach to suicide prevention (18–20). In theory, artificial intelligence (AI) has the potential to support gatekeepers in their decision-making processes and improve the effectiveness of formal psychometric tools and clinical assessments in predicting suicide behavior. Currently, these methods are often found to have insufficient predictive capabilities (21, 22).

The current study

In the current research, we investigated the identifiable limitations of ChatGPT to evaluate suicide risk and to identify associated factors. In addition, we examined whether suicidality risk assessment contains fundamental principles of the Interpersonal Theory of Suicide (ITS), a well-established and empirically supported theoretical framework proposed by Joiner (23, 24) for assessing the risk of suicide and identifying associated factors. Specifically, we examined how the two core dimensions of ITS, namely perceived burdensomeness and perceived thwarted belongingness, influence therapists’ perceptions and evaluations of suicide ideation and behavior. The term perceived burdensomeness refers to a psychological state in which individuals perceives that their very existence imposes a burden on their family, friends, and/or society. According to Joiner et al. (25), this perception leads individuals to believe that their death would be more beneficial than their continued life, in terms of the impact on their family, friends, society, and so on. As defined by Van Orden et al. (24), the concept of thwarted belongingness refers to a feeling of estrangement from others, with particular emphasis on the distressing sensation of being excluded from one’s family, friends, or other cherished groups. This construct appears to play a crucial role in suicide, as it represents a basic psychological requirement. Failure to meet this need may lead to an inescapable sense of distress (26). We specifically selected perceived burdensomeness and thwarted belongingness, excluding other risk factors like gender, age, access to weapons, etc., because they provide a closer reflection of the patient’s mental experience. By adopting this approach, we aim to investigate ChatGPT’s identifiable limitations to assess suicidal risk based on how the patient interprets their interpersonal experiences.

The objective of the current study:

To exploring identifiable limitations using ChatGPT as a suicide risk assessment tool across two identifiable variables perceived burdensomeness and thwarted belongingness.

Methods

Artificial intelligence procedure

Using the free version of ChatGPT (OpenAI San Francisco), we conducted three evaluations during the period of 20–30 March 2023 (ChatGPT March 14 version) to examine how perceived burdensomeness and thwarted belongingness affect the performance of ChatGPT in assessing various aspects of suicidal risk compared to the performance of human therapists.

Input source

In the present investigation, we adopted the text vignette methodology as elucidated by Bresin et al. (27) to examine the observer’s perspective of suicidal acts. A specific vignette from Levi-Belz and Gamliel (28) was entered to the ChatGPT interface. The chosen vignette contains general background and two additional paragraphs: one for evaluating low or high perceived burdensomeness and the other for assessing low or high thwarted belongingness.

General background

Jane is a 37-year-old single woman without children, currently living alone, but in her past spent a long time living with her father, who passed away 2 years ago following a severe illness. Jane holds a B.A. degree and has kept a steady job for 10 years. Recently, however, her manager informed her that, due to financial cuts, she must dismiss her. Jane had a close, intimate relationship for the past year with a boyfriend, her first serious and meaningful one; she was very excited about the relationship, hoping it would lead to marriage. However, following a period of repeated quarrels, her boyfriend informed her he no longer loved her, and he left her. Jane was left very sad and hurt. In recent weeks she keeps thinking about the relationship over and over again, trying to analyze what happened. Jane is experiencing a heavy emotional load, she is very tired, lacking energy, and finding it hard to sleep nights. Her routine tasks have become difficult for her, and she finds it hard to concentrate and to function properly. She dreads the future, and it seems to her that there is no chance that she would find a love like that again.

Low perceived burdensomeness condition

In general, Jane feels she has abilities. She feels she has something to contribute to the world and to others around her, and sometimes people come to her for help and advice. She feels important and meaningful, and she seems to believe in herself.

High perceived burdensomeness condition

Jane feels worthless. Often she experiences herself as a burden to the people around her and she feels that it would be better if she were gone. She often sees herself as having no real meaning in other people’s lives and she experiences feelings of self-hatred. For example, she is angry at herself and thinks that the departure of her boyfriend was her fault.

Low thwarted belongingness condition

Jane has some close friends, and she feels that she can tell them about what she has been going through in recent weeks. In general she makes connections relatively easily and, despite the recent crisis, she feels lucky because there are people around who care for her.

High thwarted belongingness condition

Jane is experiencing significant loneliness in general and she has only some, if any, close friends around her. She is very withdrawn, distant from others, and she feels that there are no people to whom she could turn in times of trouble. Therefore, she has not shared her feelings with anyone since the breakup.

Measures

After each of the four conditions (1. low burdensomeness and low belongingness; 2. low burdensomeness and high belongingness; 3. high burdensomeness and low belongingness; 4. high burdensomeness and high belongingness), we submitted six questions to ChatGTP taken from Levi-Belz and Gamliel (28). Each question was categorized into one of four subscales: (1) Psychache (i.e., psychological pain) (Q1: “What do you think is Jane’s level of distress?”; Q2: “What do you think is Jane’s level of mental pain?”); (2) Suicidal ideation (Q3: “What is the likelihood Jane will have suicidal thoughts?”); (3) Risk of suicide attempt (Q4: “What is the likelihood Jane will attempt suicide?”; Q5: “What is the likelihood Jane will make a serious suicide attempt?”); and (4) Resilience (Q6: “How do you assess Jane’s level of resilience?”). All Six questions employed a seven-point Likert type scale, estimating intensity (Q1, Q2, Q6) and likelihood (Q3–Q5), ranging from 1 (very slight) to 7 (very high).

Scoring

ChatGPT’s performance was scored according to Levi-Belz and Gamliel (28). We then compared the ChatGPT scores to the norms of 379 participants trained in mental health professions (21% male, mean age 36 ± 8.8), 53 participants who were psychology graduate students, 266 with a master’s degree, and 60 with a doctorate. In terms of professional roles, 43 participants held certifications as supervisors in their mental health specialty, 108 were certified experts, 128 were interns, and 100 either had not begun their internship or were in professions not mandating an internship. The majority of the sample, accounting for 84%, comprised practicing mental health professionals, while the remaining individuals had previous experience in the mental health field but were currently inactive (28).

Statistical analysis

The data were presented as means ± SDs and as percentages of the first second and third evaluations. Two-sample t-tests were used to evaluate the differences between the average ChatGPT performance on the three evaluations and the norms of the mental health professionals reported by Levi-Belz and Gamliel (28).

Results

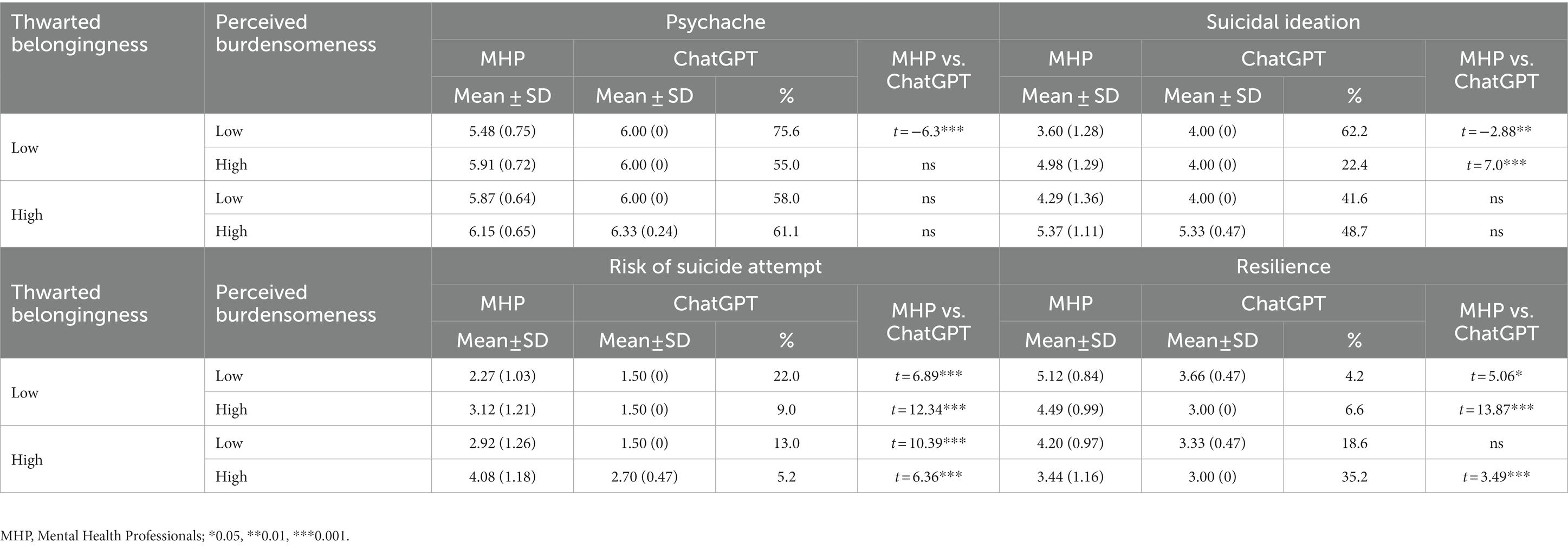

Table 1 depicts ChatGPT’s performance (mean ± SD) for all four conditions (1. low burdensomeness and low belongingness; 2. low burdensomeness and high belongingness; 3. high burdensomeness and low belongingness; 4. high burdensomeness and high belongingness) for the four dependent variables (1. psychache; 2. suicidal ideation; 3. risk of suicide attempt, and 4. resilience) compared to the norms of the health professionals reported by Levi-Belz and Gamliel (28).

Table 1. Descriptive statistics of the four variables—psychache, suicidal ideation, risk of suicide attempt, and resilience—as a function of perceived burdensomeness and perceived thwarted belongingness.

Psychache

In the low burdensomeness and low belongingness condition, ChatGPT assessed the level of psychache as higher than the sample of mental health professionals (75th percentile, t = −6.3, p < 0.001). In the other conditions, no significant differences were found (percentile range 55–61). The ChatGPT mean scores and standard deviations were the same (6 ± 0) in the first three conditions.

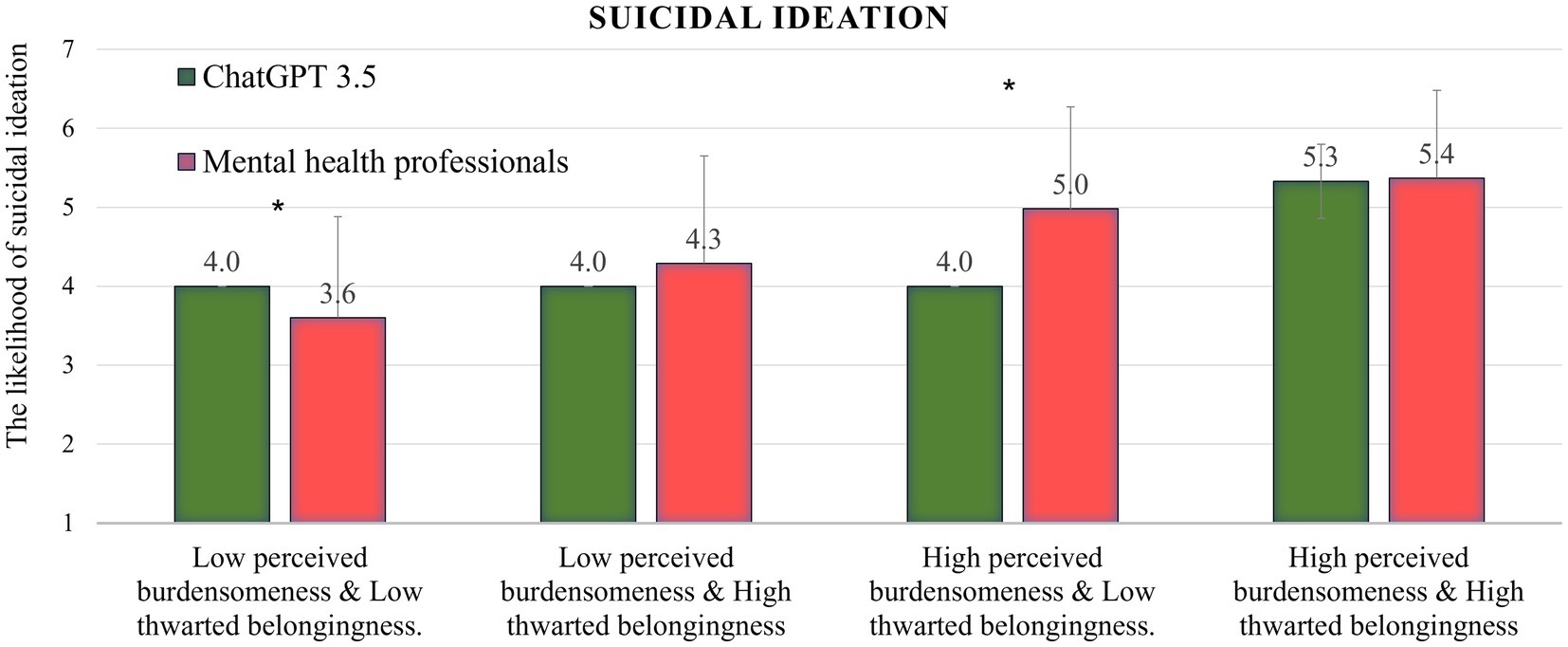

Suicidal ideation

As can be seen in Figure 1, in the low burdensomeness and low belongingness condition, ChatGPT assessed the level of suicidal ideation as higher than the sample of mental health professionals (62nd percentile, t = −2.8, p < 0.01). In the low burdensomeness and high belongingness condition, ChatGPT assessed the level of suicidal ideation as low compared to the mental health professionals sample (22nd percentile, t = 7.00, p < 0.001). In the other two conditions, no significant differences were found (percentile range 41–48). The ChatGPT mean scores and standard deviations were the same (4 ± 0) in the first three conditions.

Figure 1. ChatGPT’s performance in all four conditions on the suicidal ideation variable, compared to the norms of mental health professionals; *p < 0.01.

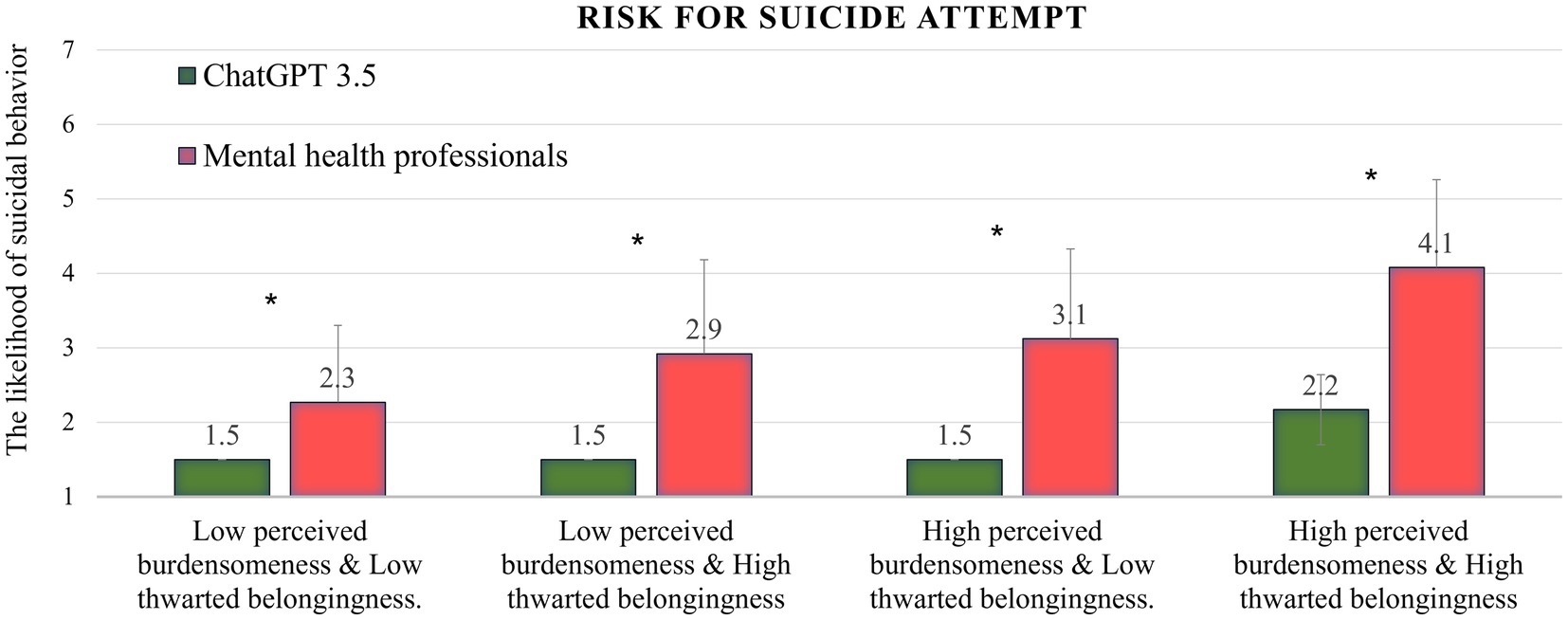

Risk of suicide attempt

Figure 2 shows that in all four conditions, ChatGPT assessed the level of risk of suicide attempts significantly lower than did the mental health professionals sample (percentile range 5–22; t-test range = 6.36–12.34; p < 0.001 for all conditions). In the condition reflecting the highest level of risk (high burdensomeness and high belongingness), the ChatGPT assessment was ranked in the lowest percentile (5th). The ChatGPT mean scores and standard deviations were the same (1.5 ± 0) in the first three conditions.

Figure 2. ChatGPT’s performance in all four conditions on the risk for suicide attempt variable, compared to the norms of mental health professionals; *p < 0.001.

Resilience

In all conditions except the low burdensomeness and high belongingness condition, ChatGPT assessed the level of resilience as significantly lower compared to the mental health professionals sample (percentile range 4–35; t-test range = 3.49–13.87; p < 0.001 for low burdensomeness and high belongingness and high burdensomeness and high belongingness, p < 0.05 for low burdensomeness and low belongingness). In the low burdensomeness and high belongingness conditions, no significant differences were found (18th percentile).

Discussion

This study aimed to examine exploring identifiable limitations of ChatGPT evaluates suicidal behavior and contributing factors in comparison to the norms established by Levi-Belz and Gamliel (28) for healthcare professionals. This study offers a unique contribution by evaluating ChatGPT ability to assess suicidal risk in the context of perceived burdensomeness and thwarted belongingness. To the best of our knowledge, this issue has not been explored in previous research.

The findings show that ChatGPT consistently underestimated the likelihood of suicide attempts across all scenarios when compared to mental health professionals. Specifically, in the condition involving high burdensomeness and high belongingness, which has the highest risk level according to the Interpersonal Theory of Suicide (ITS), ChatGPT ranked the risk in the lowest percentile (5th). The absolute value of suicidal behavior was greater in this condition than in the other three, suggesting that ChatGPT takes perceived burdensomeness and thwarted belongingness into account to some extent, albeit to a lesser degree than what is suggested by theory and clinical experience (23, 24, 28). While the assessment of mental health professionals was influenced by the presence of either perceived burdensomeness or thwarted belongingness, ChatGPT’s assessment was only affected when both factors were present, and even then, only to a minimal extent.

In most conditions, ChatGPT tended to rate mental resilience lower than the norms. In addition, the psychache and suicidal ideation variables were pretty similar to the norms in most of the conditions. These results indicate that ChatGPT’s assessment of risk of suicide attempt is less influenced by factors such as resilience (28–30). Based on the findings, we can deduce that ChatGPT’s overall evaluation might not fully encompass the complexities of assessing suicidal risk, and its capacity to identify potential risk and protective factors may deviate from established clinical expertise. While chatbots show promise in improving the insufficient predictive capability of clinical assessments and suicide risk questionnaires (21, 22), it is evident that, within the context of this study, they are unable to effectively fulfill this role.

These results differ from those of contemporary artificial intelligence (AI) models for evaluating suicidal risk (31, 32). Consequently, the study emphasizes the importance of exercising prudence and further refining AI models for appraising suicidal risk. The purpose of this study was to explore the potential of artificial intelligence (AI) in evaluating mental health risks beyond its theoretical proficiency and semantic knowledge, as discussed in previous literature (33, 34). Specifically, ChatGPT shows major potential for contributing to assessment processes in mental health (35), among other things due to its vast knowledge, high accessibility on any phone or computer, and ability to reduce the feeling of stigma and shame associated with psychological or psychiatric settings. Previous research on the application of AI in mental health has focused primarily on its potential to aid in technical tasks, thereby reducing the need for clinical interactions. This approach suggests that AI technologies can assist in completing non-personalized tasks, thus freeing up clinicians’ time to focus on delivering more empathic care and “humanizing” their practice, as the quality of mental health care often relies on the clinician-patient relationship (36). Scholars have proposed several potential applications of AI in the mental health field, including assisting clinicians with time-consuming tasks such as updating medical records, improving diagnostic and prognostic accuracy, promoting understanding of mental illnesses mechanisms, and enhancing treatment based on biological feedback (37–40). The findings of the current study suggest that using ChatGPT to evaluate suicide risk through vignettes or as a tool for improved decision-making among clinicians or gatekeepers is not in line with clinical experience and is not recommended. The implication is that ChatGPT should be used to direct individuals who pose questions about suicide to receive mental health treatment or assistance.

The present study has several limitations. First, it focused on the March 13 version of ChatGPT. More recent versions have since been released, and forthcoming studies should investigate this issue using these updates. Second, the study focused on a vignette featuring a female participant, whereas the incidence of suicide deaths is higher among males. Therefore, we recommend conducting additional studies to investigate vignettes featuring various demographic groups, including male participants, psychiatric patients, adolescents, and older individuals. Third, we compared the ChatGPT data with a sample of data from mental health professionals in Israel. Therefore, we recommend investigating the appraisal of therapists from other countries to assess cross-cultural differences. Fourth limitation is that the research on which we were based (28) did not report the degree of reliability between the judges. Accordingly, an assessment of the degree of variability in human responses can be roughly estimated through standard deviations alone. Lastly, This research delved into the intricate realm of suicide risk assessment using artificial intelligence. However, in order to establish a more expansive understanding, further studies are necessary. These subsequent investigations should explore supplementary risk factors, incorporate additional large language models, analyze data at various time points, and compare findings with a wider range of clinical samples.

Conclusion

The objective of this research was to investigate the extent of ChatGPT’s ability to evaluate suicide risk and to identify few factors compared to the assessment of mental health professionals and to explore how this assessment is influenced by perceived burdensomeness and thwarted belongingness. The findings revealed that in all scenarios ChatGPT consistently assessed the risk of suicide attempt as lower than did mental health professionals. The results of this study imply that ChatGPT’s evaluation of suicidal ideation and suicide attempt risk may be less affected by elements such as resilience, perceived burdensomeness, and thwarted belongingness. The finding that ChatGPT underestimates the risk of suicide attempts in comparison to mental health care professionals, especially in the most severe condition, is a significant cause for concern. It implies that gatekeepers, patients, or even mental health professionals who are considering using ChatGPT for evaluating suicidal risk may receive an inaccurate assessment that underestimates the actual risk. Despite the theoretical possibility that ChatGPT’s assessments are more precise, it is incumbent upon mental health professionals to prioritize human life and treat the chat assessments in this domain as unprofessional until further evidence becomes available.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

ZE: conception and design of the study, acquisition and analysis of data, and drafting of a significant portion of the manuscript and figures. IL: conception and design of the study, acquisition and analysis of data, and drafting of a significant portion of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by Oranim College (no. 5100260).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Kirmani, AR. Artificial intelligence-enabled science poetry. ACS Energy Letters. (2022) 8:574–76.

2. Liu, Y, Deng, G, Xu, Z, Li, Y, Zheng, Y, Zhang, Y, et al. Jailbreaking chatgpt via prompt engineering: An empirical study. arXiv Preprint. (2023) arXiv:2305.13860.

3. Emenike, ME, and Emenike, BU. Was this title generated by ChatGPT? Considerations for artificial intelligence text-generation software programs for chemists and chemistry educators. J Chem Educ. (2023) 100:1413–8. doi: 10.1021/acs.jchemed.3c00063

4. Khalil, M., and Er, E. Will ChatGPT get you caught? Rethinking plagiarism detection. (2023). arXiv [Preprint]. arXiv:2302.04335.

5. Guo, B., Zhang, X., Wang, Z., Jiang, M., Nie, J., Ding, Y., et al. How close is ChatGPT to human experts? Comparison corpus, evaluation, and detection. (2023). arXiv [Preprint] arXiv:2301.07597.

6. Lew, B, Lester, D, Mustapha, FI, Yip, P, Chen, Y, Panirselvam, RR, et al. Decriminalizing suicide attempt in the 21st century: an examination of suicide rates in countries that penalize suicide, a critical review. BMC Psychiatry. (2022) 22:424. doi: 10.1186/s12888-022-04060-5

7. Zalsman, G. Suicide: epidemiology, etiology, treatment and prevention. Harefuah. (2019) 158:468–72.

8. Stone, DM, Jones, CM, and Mack, KA. Changes in suicide rates—United States, 2018–2019. Morb Mortal Wkly Rep. (2021) 70:261–8. doi: 10.15585/mmwr.mm7008a1

9. Bachmann, S. Epidemiology of suicide and the psychiatric perspective. Int J Environ Res Public Health. (2018) 15:1425. doi: 10.3390/ijerph15071425

10. Gvion, Y, and Apter, A. Aggression, impulsivity, and suicide behavior: a review of the literature. Arch Suicide Res. (2011) 15:93–112. doi: 10.1080/13811118.2011.565265

11. Chung, DT, Ryan, CJ, Hadzi-Pavlovic, D, Singh, SP, Stanton, C, and Large, MM. Suicide rates after discharge from psychiatric facilities: a systematic review and meta-analysis. JAMA Psychiat. (2017) 74:694–702. doi: 10.1001/jamapsychiatry.2017.1044

12. Zalsman, G, Hawton, K, Wasserman, D, van Heeringen, K, Arensman, E, Sarchiapone, M, et al. Suicide prevention strategies revisited: 10-year systematic review. Lancet Psychiatry. (2016) 3:646–59. doi: 10.1016/S2215-0366(16)30030-X

13. Bolton, JM, Gunnell, D, and Turecki, G. Suicide risk assessment and intervention in people with mental illness. BMJ. (2015) 351:4978. doi: 10.1136/bmj.h4978

14. Wasserman, D, Rihmer, Z, Rujescu, D, Sarchiapone, M, Sokolowski, M, Titelman, D, et al. The european psychiatric association (EPA) guidance on suicide treatment and prevention. Eur Psychiatry. (2012) 27:129–41. doi: 10.1016/j.eurpsy.2011.06.003

15. Burnette, C, Ramchand, R, and Ayer, L. Gatekeeper training for suicide prevention: a theoretical model and review of the empirical literature. Rand Health Q. (2015) 5:16. doi: 10.7249/j.ctt13x1ftq

16. Stickl Haugen, J., Sutter, C. C., Tinstman Jones, J. L., and Campbell, L. O. Teachers as youth suicide prevention gatekeepers: an examination of suicide prevention training and exposure to students at risk of suicide. (2022). In: Paper Presented at the Child & Youth Care Forum, 52. 583-601.

17. Morton, M, Wang, S, Tse, K, Chung, C, Bergmans, Y, Ceniti, A, et al. Gatekeeper training for friends and family of individuals at risk of suicide: a systematic review. J Community Psychol. (2021) 49:1838–71. doi: 10.1002/jcop.22624

18. Holmes, G, Clacy, A, Hermens, DF, and Lagopoulos, J. The long-term efficacy of suicide prevention gatekeeper training: a systematic review. Arch Suicide Res. (2021) 25:177–207. doi: 10.1080/13811118.2019.1690608

19. Levkovich, I, and Vigdor, I. How school counsellors cope with suicide attempts among adolescents—a qualitative study in Israel. J Psychol Couns Sch. (2021) 31:63–75. doi: 10.1017/jgc.2020.14

20. Torok, M, Calear, AL, Smart, A, Nicolopoulos, A, and Wong, Q. Preventing adolescent suicide: a systematic review of the effectiveness and change mechanisms of suicide prevention gatekeeping training programs for teachers and parents. J Adolesc. (2019) 73:100–12. doi: 10.1016/j.adolescence.2019.04.005

21. Franklin, JC, Ribeiro, JD, Fox, KR, Bentley, KH, Kleiman, EM, Huang, X, et al. Risk factors for suicidal thoughts and behaviors: a meta-analysis of 50 years of research. Psychol Bull. (2017) 143:187–232. doi: 10.1037/bul0000084

22. Vera-Varela, C, Manrique Mirón, PC, Barrigón, ML, Álvarez-García, R, Portillo, P, Chamorro, J, et al. Low level of agreement between self-report and clinical assessment of passive suicidal ideation. Arch Suicide Res. (2022) 26:1895–910. doi: 10.1080/13811118.2021.1945984

24. Van Orden, KA, Witte, TK, Gordon, KH, Bender, TW, and Joiner, TE. Suicidal desire and the capability for suicide: tests of the interpersonal-psychological theory of suicidal behavior among adults. J Consult Clin Psychol. (2008) 76:72–83. doi: 10.1037/0022-006X.76.1.72

25. Joiner, TE, Van Orden, KA, Witte, TK, Selby, EA, Ribeiro, JD, Lewis, R, et al. Main predictions of the interpersonal–psychological theory of suicidal behavior: empirical tests in two samples of young adults. J Abnorm Psychol. (2009) 118:634–46. doi: 10.1037/a0016500

26. Shneidman, E. S. A psychological approach to suicide. Paper presented at the American Psychological Association convention, 1986, Washington, DC, US; this chapter is based upon one of the 1986 master lectures that were presented at the aforementioned convention. (1987).

27. Bresin, K, Sand, E, and Gordon, KH. Non-suicidal self-injury from the observer’s perspective: A vignette study. Arch Suicide Res. (2013) 17:185–95.

28. Levi-Belz, Y, and Gamliel, E. The effect of perceived burdensomeness and thwarted belongingness on therapists' assessment of patients' suicide risk. Psychother Res. (2016) 26:436–45. doi: 10.1080/10503307.2015.1013161

29. Gautam, A, and Nagle, YK. Moderating effect of resilience: thwarted belongingness and perceived burdensomeness as predictors of suicidal ideation. J Psychosoc Res. (2016) 11:459.

30. Kinory, D, Aisenberg, D, and Levi-Belz, Y. The cost of being apart: the contribution of perceived burdensomeness and thwarted belongingness to suicide ideation among Israeli older adults. J Nerv Ment Dis. (2020) 208:663–70. doi: 10.1097/NMD.0000000000001198

31. Bernert, RA, Hilberg, AM, Melia, R, Kim, JP, Shah, NH, and Abnousi, F. Artificial intelligence and suicide prevention: a systematic review of machine learning investigations. Int J Environ Res Public Health. (2020) 17:5929. doi: 10.3390/ijerph17165929

32. Fonseka, TM, Bhat, V, and Kennedy, SH. The utility of artificial intelligence in suicide risk prediction and the management of suicidal behaviors. Austral New Z J Psychiatry. (2019) 53:954–64. doi: 10.1177/0004867419864428

33. Kung, TH, Cheatham, M, Medenilla, A, Sillos, C, De Leon, L, Elepaño, C, et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digit Health. (2023) 2:e0000198. doi: 10.1371/journal.pdig.0000198

34. Rudolph, J, Tan, S, and Tan, S. ChatGPT: bullshit spewer or the end of traditional assessments in higher education? J Appl Learn Teach. (2023) 6:342–63. doi: 10.37074/jalt.2023.6.1.9

35. Elyoseph, Z, Hadar Shoval, D, Asraf, K, and Lvovsky, M. ChatGPT outperforms humans in emotional awareness evaluations. Front Psychol. (2023) 14:2116. doi: 10.3389/fpsyg.2023.1199058

36. Topol, E. Deep medicine: How artificial intelligence can make healthcare human again. UK: Hachette (2019).

37. Braun, U, Schaefer, A, Betzel, RF, Tost, H, Meyer-Lindenberg, A, and Bassett, DS. From maps to multi-dimensional network mechanisms of mental disorders. Neuron. (2018) 97:14–31. doi: 10.1016/j.neuron.2017.11.007

38. Bzdok, D, and Meyer-Lindenberg, A. Machine learning for precision psychiatry: opportunities and challenges. Biol Psychiatry Cogn Neurosci Neuroimag. (2018) 3:223–30. doi: 10.1016/j.bpsc.2017.11.007

39. Doraiswamy, PM, Blease, C, and Bodner, K. Artificial intelligence and the future of psychiatry: insights from a global physician survey. Artif Intell Med. (2020) 102:101753. doi: 10.1016/j.artmed.2019.101753

Keywords: artificial intelligence, ChatGPT, diagnosis, psychological assessment, suicide risk, risk assessment, text vignette

Citation: Elyoseph Z and Levkovich I (2023) Beyond human expertise: the promise and limitations of ChatGPT in suicide risk assessment. Front. Psychiatry. 14:1213141. doi: 10.3389/fpsyt.2023.1213141

Edited by:

Beth Krone, Icahn School of Medicine at Mount Sinai, United StatesReviewed by:

M. David Rudd, University of Memphis, United StatesAmna Mohyud Din Chaudhary, Case Western Reserve University, United States

Copyright © 2023 Elyoseph and Levkovich. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zohar Elyoseph, em9oYXJlQHl2Yy5hYy5pbA==

†These authors have contributed equally to this work

Zohar Elyoseph

Zohar Elyoseph Inbar Levkovich

Inbar Levkovich