- ¹Department of Systems Innovation, Graduate School of Engineering Science, Osaka University, Osaka, Japan

- ²Department of Neuropsychiatry, Keio University School of Medicine, Tokyo, Japan

- ³National Center of Neurology and Psychiatry, Department of Preventive Intervention for Psychiatric Disorders, National Institute of Mental Health, Tokyo, Japan

- ⁴Department of Neuropsychiatry, Graduate School of Biomedical Sciences, Nagasaki University, Nagasaki, Japan

- ⁵College of Science and Engineering, Kanazawa University, Ishikawa, Japan

Introduction: Job interviews are a major barrier to employment for individuals with autism spectrum disorders (ASD). During the coronavirus pandemic, establishing online job interview training at home was indispensable. However, many hurdles prevent individuals with ASD from concentrating on online job interview training. To facilitate the acquisition of interview skills from home for individuals with ASD, we developed a group interview training program with a virtual conferencing system (GIT-VICS Program) that uses computer graphics (CG) robots.

Methods: This study used a pre-post design to investigate the feasibility of the GIT-VICS Program in facilitating skill acquisition for face-to-face job interviews. In the GIT-VICS Program, participants were placed in groups of five and played the roles of interviewee (1), interviewers (2), and human resources staff (i.e., meta-evaluators; 2). They took turns practicing each role in GIT-VICS Program sessions conducted on 8 or 9 days across three consecutive weeks. Before and after the GIT-VICS Program, the participants underwent a mock face-to-face job interview with two experienced human interviewers (MFH) to evaluate the program’s effect.

Results: Fourteen participants completed the trial procedures without experiencing any technological challenges or distress that would have led to the termination of the session. The GIT-VICS Program improved their job interview skills (verbal competence, nonverbal competence, and interview performance).

Discussion: Given the promising results of this study, future studies that add appropriate expert guidance on job interview manners are needed to draw clear conclusions about the efficacy of CG robots for mock online job interview training.

1. Introduction

Autism spectrum disorder (ASD) comprises a range of conditions characterized by challenges with social skills, repetitive behaviors, and verbal and nonverbal communication (1). The Centers for Disease Control and Prevention (CDC) in the US estimates that one in 44 children has ASD (2). A previous study (3) estimated that approximately 50,000 youth with ASD turn 18 years old every year in the US. As a necessary component of adult life, employment is one of the most desirable achievements for individuals with ASD when they enter adulthood (4, 5). However, it is challenging for them to obtain or maintain meaningful jobs (6). The Office for National Statistics recently reported that just 22% of adults with ASD are in employment of any kind (7).

Job interviews are major barriers to employment for individuals with ASD (8, 9). Many are not good at verbal communication or at conveying job-relevant content, and they lack confidence in their ability to perform in job interviews (10). Most importantly, they experience problems with nonverbal communication, which is directly related to poor performance during job interviews (9). Nonverbal communication is as important as verbal communication in job interviews: certain nonverbal mistakes (e.g., not looking the interviewer in the eye or not making adequate facial expressions) can reduce the chances of a job offer even if the answers to the interview questions are excellent. Moreover, most job interview settings include multiple interviewers, which makes job interviews even more difficult for individuals with ASD.

Applicants need to practice for in-person job interviews. During the coronavirus pandemic, establishing online job interview training at home became indispensable. However, many hurdles prevent individuals with ASD from concentrating on online job interview training. In our previous study, we found that individuals with ASD experienced higher interpersonal tension during online interview training and had lower motivation in an online job interview setting (11).

“The Social Motivation Theory of Autism” (12) suggests that ASD can be interpreted as an extreme case of diminished social motivation. Social motivation is a powerful force that guides human behavior. It can be described as a set of psychological dispositions and biological mechanisms that bias individuals to preferentially orient toward the social world, seek social interactions, find them pleasurable, and work to foster and maintain social bonds. Social motivation allows individuals to establish smooth relationships and promotes coordination with others. Social communication intervention approaches may be effective in providing motivating activities and settings for individuals with ASD (13).

A previous study using realistic virtual humans in a virtual reality interview setting reported that, while talking, individuals with ASD looked at virtual peers less frequently than typically developing individuals (14). Our preliminary study confirmed that many individuals with ASD are fearful of realistic virtual humans and avoid looking at them because of the complexity of their realism. Unlike humans, virtual humanoid robots operate in predictable, rule-governed systems and can therefore provide a highly structured learning environment that allows individuals with ASD to focus on relevant stimuli. Individuals with ASD may be highly motivated to communicate with virtual humanoid robots and to exhibit social behaviors toward them (11).

When designing objects for use by individuals with ASD, researchers often subscribe to the idea that “simple is better”; that is, they gravitate toward simple and mechanical objects (15–19). Based on these considerations, using a simple virtual agent to train individuals with ASD can be inferred to be appropriate. Studies of virtual exposure in clinical samples have shown that even simple virtual agents can significantly increase anxiety and that, for individuals with phobias, speaking in front of such agents is more effective than speaking in front of an empty virtual seminar room (11, 20). In designing virtual robot-based mock online job interview training for individuals with ASD, it is important to consider how to design the agent’s eyes, because individuals with ASD pay less attention to others’ eyes than individuals with typical development (21). Increasing eye contact is widely recognized as an important and promising treatment goal for individuals with ASD (22, 23). To create online job interview training that benefits many of these individuals, it is important to draw their attention to the agent’s eyes during training. If individuals with ASD can practice eye contact with virtual agents, they may overcome their fear of the interviewer’s gaze and reduce their anxiety during the interview process.

Low motivation for interview training may be partly due to the inability of individuals with ASD to understand others’ perspectives and how they behave in an interview setting. Challenges in this area may arise from an impaired ability to attribute mental states to oneself and others (24), an ability often called Theory of Mind (ToM). ToM is the cognitive ability to infer the mental states of oneself and others; it is essential to social cognitive functioning and is a core area of impairment in individuals with ASD (25). If individuals with ASD cannot put themselves in the position of an interlocutor because of impairments in ToM (24), they are unlikely to understand the impact of their actions on others. Moreover, they may not be motivated to learn the verbal and nonverbal communication required for successful job interviews. Therefore, interventions from the perspective of ToM are required.

To facilitate at-home interview skill acquisition for individuals with ASD, we developed a group interview training program using a virtual conferencing system (GIT-VICS Program). In this program, five or six individuals with ASD were assigned to a group. Each group comprised one interviewee, two interviewers, and two meta-evaluators. The participants performed all the roles multiple times in random order.

The GIT-VICS Program differs from the method used previously (11) in that (1) the meta-evaluators evaluated the performance of both the interviewee and the interviewers, (2) the virtual interview sessions included two interviewers (compared with only one in the previous study), and (3) the interviewee was given an additional means of controlling the gaze of the computer graphics (CG) robot, together with the task of directing attention appropriately to both interviewers. Most importantly, to investigate the effectiveness of the GIT-VICS Program, this study used a face-to-face mock job interview setting (the previous study used a mock online job interview setting). The CG robots used in the GIT-VICS Program display a range of simplified expressions that are less complex than those of real human faces. Careful design of the eyes and multiple degrees of freedom (DoFs) dedicated to controlling gaze contribute to rich eye expressions. In this environment, users take the roles of interviewee and interviewer and can safely rehearse initiating and responding. The GIT-VICS Program offers trainees the following benefits: (1) active participation rather than passive observation, (2) a unique training experience, and (3) low cost and high accessibility. We expected that by not only performing the role of interviewee but also evaluating interviewees and meta-evaluating the interviewers, participants would learn the perspectives of others (i.e., those of the interviewer and the meta-evaluator). Thus, we considered that our system would be effective in facilitating the acquisition of interview skills by individuals with ASD. This study investigated the effectiveness of the GIT-VICS Program in facilitating skill acquisition for face-to-face interviews.

2. Materials and methods

2.1. Participants

This study was approved by the Ethics Committee of Kanazawa University. The participants were recruited through flyers explaining the details of the experiment. All procedures performed in studies involving human participants were conducted in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Declaration of Helsinki and its subsequent amendments. After receiving a full explanation of the study, all participants and their guardians agreed to participate. Written informed consent for the release of any potentially personally identifiable images or data contained in this article was obtained from the individuals and/or legal guardians of the minors. The authors declare that no conflicts of interest exist in this study. The inclusion criteria were (1) a diagnosis of ASD according to the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5) (1) made by an experienced psychiatrist, (2) intelligence quotient (IQ) ≥70, (3) age 20–29 years, (4) being unemployed and actively seeking employment, and (5) not taking medication. The exclusion criterion was a medical condition associated with ASD (e.g., SHANK3 mutations, fragile X syndrome, and Rett syndrome). At enrollment, the diagnoses of all participants were confirmed by a psychiatrist with more than 15 years of experience in ASD using DSM-5 criteria and the standardized criteria of the Diagnostic Interview for Social and Communication Disorders (DISCO) (26). The DISCO has been reported to exhibit good psychometric properties (27). All participants had been acquaintances for at least 1 year. The Mini-International Neuropsychiatric Interview (28) was conducted to rule out other psychiatric diagnoses.

The participants completed the Autism Spectrum Quotient-Japanese version (AQ-J) (29) to assess behaviors and symptoms specific to ASD. The AQ-J is a short questionnaire with five subscales (social skills, attention switching, attention to detail, imagination, and communication). Findings with the AQ have been replicated across cultures (30) and ages (31, 32). Notably, the AQ is sensitive to the broader autism phenotype. In this study, we did not set an AQ-J cutoff score; only the DSM-5 and DISCO were used to diagnose ASD and determine eligibility.

Full-scale IQ scores were measured with either the Wechsler Adult Intelligence Scale–Third Edition (WAIS-III) or the Japanese Adult Reading Test (JART) (33). The JART is a standardized cognitive test used to estimate the premorbid IQ of individuals with cognitive impairment; its validity as an IQ estimate was established by comparing its results with those of the WAIS-III (33).

The severity of each participant’s social anxiety symptoms was measured using the Liebowitz Social Anxiety Scale (LSAS) (34). This clinician-administered scale consists of 24 items: 13 describing performance situations and 11 describing social interactions. Each item was rated separately for “fear” and “avoidance” on a four-point categorical scale. Receiver operating characteristic (ROC) curve analyses showed that an LSAS score of 30 corresponds to minimal symptoms and is the optimal cutoff for distinguishing between individuals with and without social anxiety disorder (35).
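The LSAS scoring described above can be illustrated with a minimal sketch. It assumes the conventional 0–3 coding of the four-point fear and avoidance ratings and that the total is the sum of both ratings over all 24 items (range 0–144). The helper names, and the choice to treat a total of exactly 30 as at or above the cutoff, are assumptions rather than details given in the text.

```python
from dataclasses import dataclass
from typing import List

N_ITEMS = 24   # 13 performance + 11 social-interaction items
CUTOFF = 30    # cutoff value reported in the text


@dataclass(frozen=True)
class LsasItem:
    fear: int       # assumed coding: 0 (none) to 3 (severe)
    avoidance: int  # assumed coding: 0 (never) to 3 (usually)


def lsas_total(items: List[LsasItem]) -> int:
    """Sum fear and avoidance ratings over all 24 items (range 0-144)."""
    if len(items) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} items, got {len(items)}")
    for item in items:
        if not (0 <= item.fear <= 3 and 0 <= item.avoidance <= 3):
            raise ValueError("each rating must be between 0 and 3")
    return sum(item.fear + item.avoidance for item in items)


def at_or_above_cutoff(total: int, cutoff: int = CUTOFF) -> bool:
    """Hypothetical screening rule: flag totals at or above the cutoff."""
    return total >= cutoff


if __name__ == "__main__":
    ratings = [LsasItem(fear=1, avoidance=1)] * N_ITEMS
    total = lsas_total(ratings)
    print(total, at_or_above_cutoff(total))  # 48 True
```

Keeping fear and avoidance as separate fields mirrors the two ratings per item, so subscale totals (e.g., fear only) could be added without changing the data layout.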

The ADHD Rating Scale (ADHD-RS) (36) includes 18 items related to inattentive and hyperactive–impulsive symptoms, scored on a four-point scale (0 = never, 1 = sometimes, 2 = often, 3 = very often), and assesses symptom severity over the past week. The total score is calculated as the sum of the scores for all 18 items.
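A minimal scoring sketch for the ADHD-RS as described above (18 items, each coded 0–3, total = sum of all items). The split into inattentive and hyperactive–impulsive subscales by odd and even item numbers follows the common ADHD-RS-IV layout and is an assumption here, because the text does not specify item order.

```python
from typing import Dict, List

# Response anchors as given in the text.
RESPONSE_CODES: Dict[str, int] = {"never": 0, "sometimes": 1, "often": 2, "very often": 3}
N_ITEMS = 18


def adhd_rs_scores(responses: List[str]) -> Dict[str, int]:
    """Score 18 ADHD-RS responses given as anchor labels.

    The total follows the text. The subscale split assumes odd-numbered
    items are inattentive and even-numbered items are hyperactive-impulsive
    (ADHD-RS-IV layout); check the version actually administered.
    """
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    codes = [RESPONSE_CODES[r.strip().lower()] for r in responses]
    return {
        "total": sum(codes),
        "inattentive": sum(codes[0::2]),            # items 1, 3, 5, ... (1-based)
        "hyperactive_impulsive": sum(codes[1::2]),  # items 2, 4, 6, ...
    }


if __name__ == "__main__":
    print(adhd_rs_scores(["often"] * N_ITEMS))
```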

2.2. Apparatus

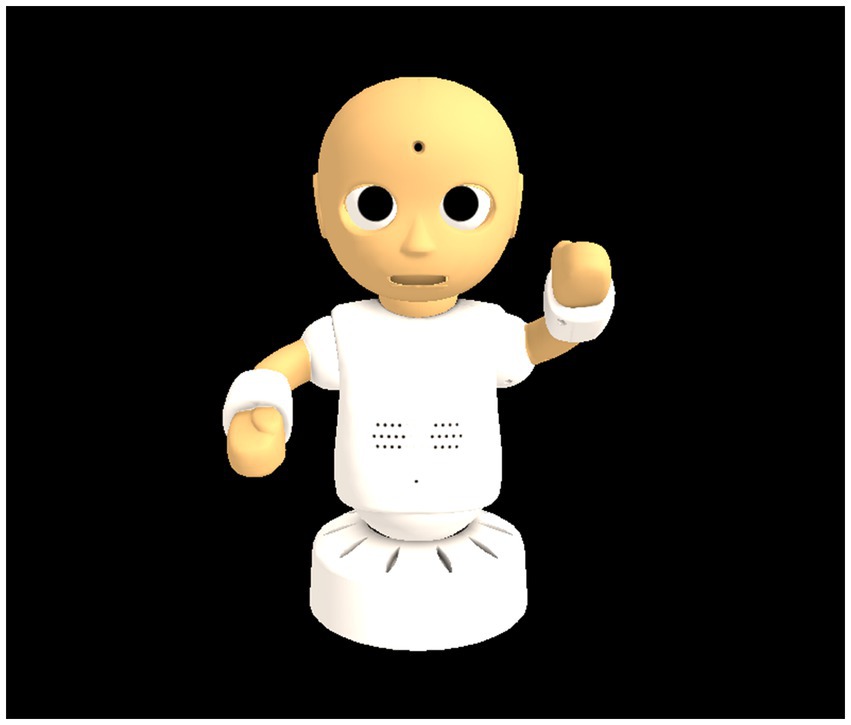

An online virtual conferencing system was used, with CG robots representing the proxy agents of the participants (Figure 1). A humanoid robot, CommU (Vstone Co., Ltd.), which has been used in several studies on the treatment and education of individuals with ASD (17, 37, 38), was rendered as a three-dimensional CG model. The participants could talk to each other as if they had become a CommU in a virtual conversation room; therefore, this conferencing system was called CommU-Talk. The CommU has 14 DoFs: waist (2), left shoulder (2), right shoulder (2), neck (3), eyes (3), eyelids (1), and lips (1). The CommU’s face, whose design focuses on the eyes and incorporates multiple DoFs dedicated to gaze control, can display a variety of simplified facial expressions that are less complex than those of a real human face. Its small, cute appearance is expected to alleviate fear in participants with ASD. In this experiment, three CG robots were teleoperated by three participants (one interviewee and two interviewers) and displayed on the screens of their laptops.
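The DoF breakdown above can be written as a small configuration table, which makes it easy to confirm that the listed joint groups add up to 14. The grouping of "head" joints below is a hypothetical illustration, not a classification from the text.

```python
from typing import Dict, Iterable, Optional

# Degrees of freedom per joint group of the CommU model, as listed in the text.
COMMU_DOFS: Dict[str, int] = {
    "waist": 2,
    "left_shoulder": 2,
    "right_shoulder": 2,
    "neck": 3,
    "eyes": 3,
    "eyelids": 1,
    "lips": 1,
}

# Hypothetical grouping of the joints that shape gaze and facial expression.
HEAD_JOINTS = ("neck", "eyes", "eyelids", "lips")


def count_dofs(dofs: Dict[str, int], joints: Optional[Iterable[str]] = None) -> int:
    """Total DoFs, optionally restricted to a subset of joint groups."""
    names = dofs.keys() if joints is None else joints
    return sum(dofs[name] for name in names)


if __name__ == "__main__":
    print(count_dofs(COMMU_DOFS))               # 14
    print(count_dofs(COMMU_DOFS, HEAD_JOINTS))  # 8
```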

In CommU-Talk, the participants’ voices were captured with microphones and played back to the other participants. The captured voices were also used to generate the CG robots’ nonverbal behaviors automatically, so that the robots appeared to be actively speaking and attentively listening to each other: the speaking CG robot moved its lips and made hand gestures in synchrony with its voice, while the listening robots directed their gaze toward the speaking robot and nodded. This automatic, voice-synchronized generation of nonverbal behavior is expected not only to make the CG robots easy to control but also to give users a rich sense of agency and a sense of being attended to by others, independent of the participant’s usual behavior. In addition, users can click another participant’s CG robot on the screen to make their own CG robot look toward it, which is expected to accustom users to consciously looking at their listeners.
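CommU-Talk’s implementation is not described beyond the behavior above, so the following is only a hypothetical sketch of that control logic. It assumes a simple RMS-energy voice-activity threshold and a "loudest speaker wins" rule; the class, function, and threshold names are illustrative and are not CommU-Talk’s API. Listeners automatically look and nod toward the speaker, while the speaker’s gaze follows the user’s last click.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical RMS threshold above which a microphone frame counts as speech.
SPEECH_THRESHOLD = 0.02


def rms(frame: List[float]) -> float:
    """Root-mean-square energy of one audio frame (samples in [-1, 1])."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


@dataclass
class RobotState:
    lips_moving: bool = False
    gesturing: bool = False
    nodding: bool = False
    gaze_target: Optional[str] = None
    clicked_target: Optional[str] = None  # last robot the user clicked


def click(robot: RobotState, target: str) -> None:
    """User clicks another participant's robot to steer their own robot's gaze."""
    robot.clicked_target = target


def update(robots: Dict[str, RobotState], frames: Dict[str, List[float]]) -> Optional[str]:
    """Drive nonverbal behavior from current audio frames; return the active speaker."""
    energies = {name: rms(frames.get(name, [])) for name in robots}
    speakers = [name for name, e in energies.items() if e > SPEECH_THRESHOLD]
    # Assumption: if several people talk at once, the loudest is the active speaker.
    active = max(speakers, key=lambda name: energies[name]) if speakers else None
    for name, robot in robots.items():
        speaking = name == active
        robot.lips_moving = speaking   # lip movement synchronized with voice
        robot.gesturing = speaking     # hand gestures while speaking
        if speaking:
            # The speaker's gaze follows the user's last click (may be None).
            robot.gaze_target = robot.clicked_target
            robot.nodding = False
        elif active is not None:
            # Listeners automatically look and nod toward the speaker.
            robot.gaze_target = active
            robot.nodding = True
        else:
            robot.nodding = False      # silence: keep the previous gaze
    return active


if __name__ == "__main__":
    robots = {n: RobotState() for n in ("interviewee", "interviewer_1", "interviewer_2")}
    click(robots["interviewee"], "interviewer_1")
    frames = {
        "interviewee": [0.1, -0.1] * 160,  # audible speech
        "interviewer_1": [0.0] * 320,
        "interviewer_2": [0.0] * 320,
    }
    print(update(robots, frames), robots["interviewer_2"])
```

Separating the automatic listener behavior from the click-driven speaker gaze reflects the text’s distinction between behavior generated from voice and gaze the user controls deliberately.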

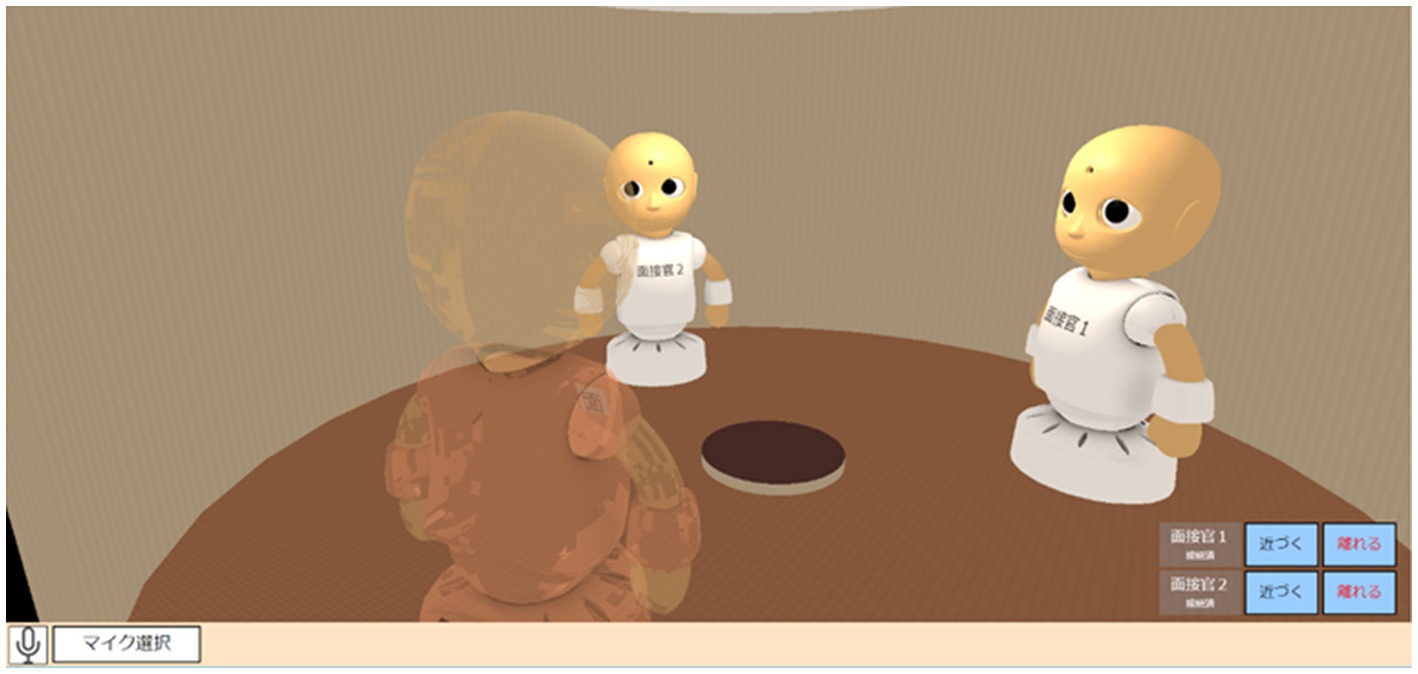

On the screen of the interviewee’s laptop computer, the avatar operated by the interviewee showed its back, whereas the other two avatars operated by the interviewers faced each other (Figure 2). On the screen of each interviewer’s laptop computer, an avatar operated by the interviewer displayed its back, while the other two avatars operated by the interviewee and the other interviewer displayed their faces. The same interview scene captured from different angles was recorded and played during the discussion phase using a conventional online conferencing system (Zoom) to share with the meta-evaluators.

2.3. Procedures

Fifteen male participants were divided into three groups of five. In each group, they took turns playing the roles of interviewer, interviewee, and meta-evaluator in GIT-VICS Program sessions conducted on 8 or 9 days across 3 consecutive weeks. Fourteen participants played the role of interviewee with a CG robot five times during the GIT-VICS Program, whereas one participant missed it twice because of an unexpected absence due to a family misfortune. In all sessions, two interviewers operated CG robots and, together with two meta-evaluators, evaluated the interviewee. When only one participant was absent, the number of meta-evaluators was reduced; when more than one was absent, the session was postponed. The mean (SD) number of times each participant played the roles of interviewee, interviewer, and meta-evaluator was 4.9 (0.5), 9.7 (1.9), and 8.8 (1.5), respectively.
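Read as numbers of turns in each role, the interviewee average can be reproduced from the counts stated in the text (14 participants with five interviewee turns and one participant with three). The nominal per-group schedule below assumes that every member is interviewee five times and that nobody is absent; that the reported interviewer and meta-evaluator means fall below the nominal value of 10 would be consistent with absences, although the text does not break this down.

```python
from statistics import mean, stdev

# Interviewee turns per participant as reported in the text:
# 14 participants took the role 5 times; 1 participant missed it twice.
interviewee_turns = [5] * 14 + [3]
print(f"{mean(interviewee_turns):.1f} ({stdev(interviewee_turns):.1f})")  # 4.9 (0.5)

# Nominal schedule per group under the simplifying assumption that every
# member is interviewee 5 times and nobody is absent.
GROUP_SIZE = 5
TURNS_AS_INTERVIEWEE = 5
sessions_per_group = GROUP_SIZE * TURNS_AS_INTERVIEWEE      # 25 sessions
interviewer_turns = 2 * sessions_per_group / GROUP_SIZE     # 2 interviewers per session
meta_evaluator_turns = 2 * sessions_per_group / GROUP_SIZE  # 2 meta-evaluators per session
print(sessions_per_group, interviewer_turns, meta_evaluator_turns)  # 25 10.0 10.0
```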

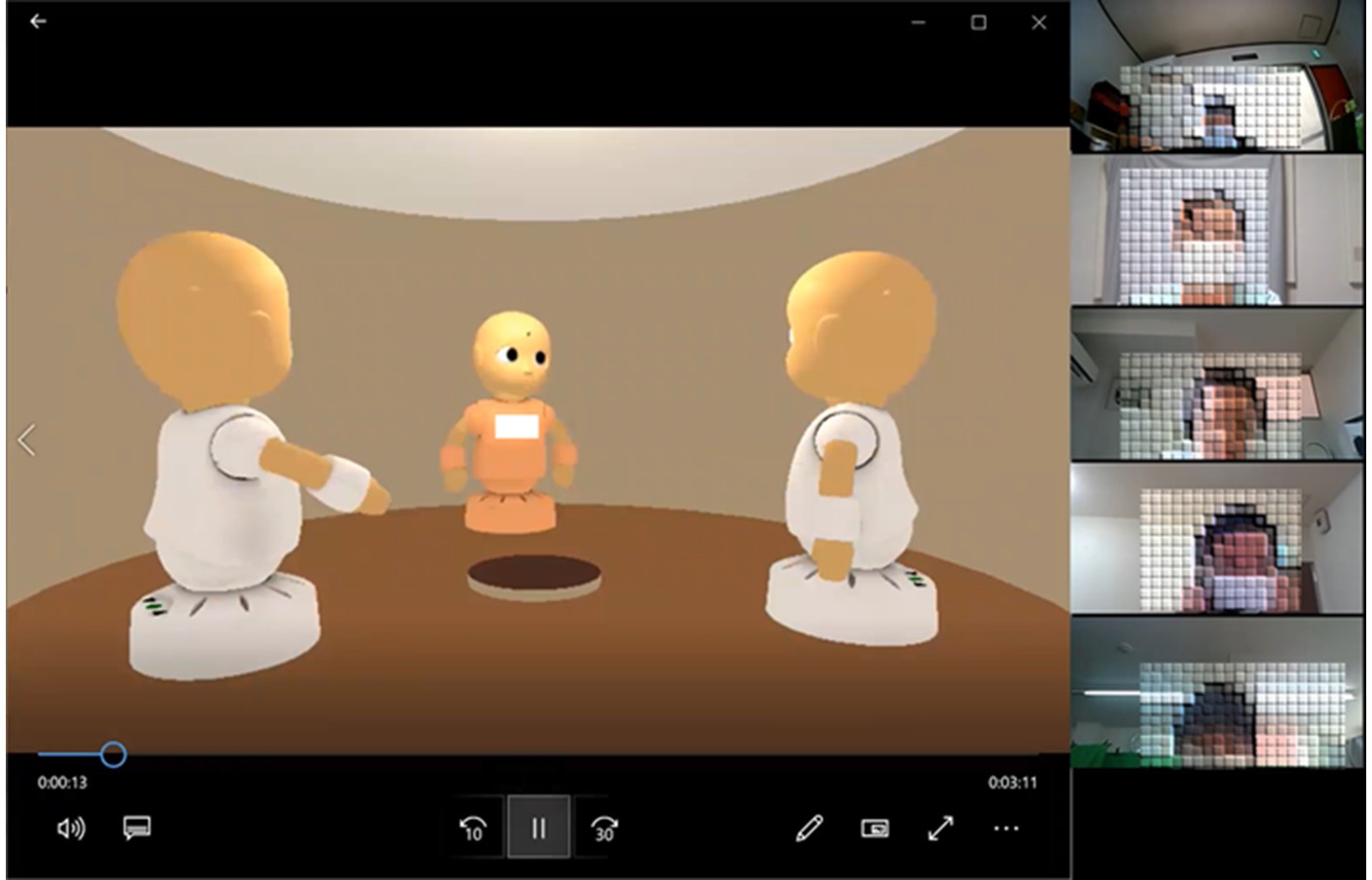

Each session consisted of four phases: first job interview, feedback, second job interview, and final comments. The first mock online job interview was conducted in CommU-Talk for approximately 3 min with only the two interviewers and the interviewee. The feedback phase then took place in a conventional online meeting system (Zoom), joined not only by the participants in the first phase but also by the meta-evaluators and experimental assistants (Figure 3). The experimental assistant shared the recorded video of the first-phase interview and facilitated the discussion to evaluate the interviewee. The interviewee then completed a second job interview with the same two interviewers in CommU-Talk. Finally, a conversation for final comments was held in the same online meeting system, and all participants except the experimental assistant gave brief final comments on the interviewee’s second performance.

To allow the participants to simulate an interview situation, each interviewee was given fictitious recruitment information listing company names, job types, and working conditions, from which they selected one company to apply to. The job types included data entry clerk, supermarket inventory staff, janitor, restaurant cooking assistant, nursing assistant, and newspaper delivery person. The two other participants, acting as interviewers, took turns asking questions from prepared lists (Supplementary material S1), which the interviewees answered. In their first and second sessions, the interviewees were asked to concentrate on answering the questions. From the third session onward, they were also asked to direct their robot’s gaze alternately toward the two interviewers by clicking on the interviewers’ CG robots on the screen.

In the feedback phase, all participants talked with their faces visible in Zoom. The experimental assistant shared a video clip of the interview scene in CommU-Talk and facilitated a discussion to evaluate the interviewee’s performance based on prepared scripts (Supplementary material S2). The interviewers and meta-evaluators assessed the interviewee’s performance by completing and discussing a prepared scoring sheet. The meta-evaluators thus evaluated performances that they had not experienced directly as interviewers. The scoring sheet included verbal items (appropriate word use, enthusiasm, and appropriate question responses). In addition, it included a nonverbal item that depended on the session number of the interviewee being evaluated: appropriate speaking speed, vocal fluency, eye contact, response with appropriate timing, and vocal volume were added in the first to fifth sessions, respectively. In the fourth and fifth sessions, an item on appropriate eye contact was also included. For each item, the interviewers and meta-evaluators explained and discussed the reasons for their scores to agree on a final score. The interviewee listened to the discussion as an observer.
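One reading of the scoring-sheet schedule above, written as a lookup table: the three verbal items appear in every session, one nonverbal item is introduced per session, and the eye contact item is retained in the fourth and fifth sessions. Item wording is abbreviated, and this interpretation of the schedule is an assumption.

```python
from typing import Dict, Tuple

VERBAL_ITEMS: Tuple[str, ...] = (
    "appropriate word use",
    "enthusiasm",
    "appropriate question responses",
)

# Nonverbal item(s) per session under the reading described above.
NONVERBAL_BY_SESSION: Dict[int, Tuple[str, ...]] = {
    1: ("appropriate speaking speed",),
    2: ("vocal fluency",),
    3: ("eye contact",),
    4: ("response with appropriate timing", "eye contact"),
    5: ("vocal volume", "eye contact"),
}


def scoring_items(session: int) -> Tuple[str, ...]:
    """Items on the scoring sheet for an interviewee's n-th session (1-5)."""
    if session not in NONVERBAL_BY_SESSION:
        raise ValueError("sessions are numbered 1-5")
    return VERBAL_ITEMS + NONVERBAL_BY_SESSION[session]


if __name__ == "__main__":
    for n in range(1, 6):
        print(n, scoring_items(n)[len(VERBAL_ITEMS):])
```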

In the third phase, the interviewee completed a second mock online job interview using CG robots in the same setup as the first. Each session lasted approximately 50–60 min (3 min each for the first and second job interviews, 40 min for feedback, and 8 min for final comments).
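As a quick arithmetic check, the phase durations listed above add up to a value inside the stated 50–60 min range. The tuple layout is only an illustrative data structure.

```python
from typing import List, Tuple

# (phase, platform, approximate minutes) as described in the text.
PHASES: List[Tuple[str, str, int]] = [
    ("first job interview", "CommU-Talk", 3),
    ("feedback", "Zoom", 40),
    ("second job interview", "CommU-Talk", 3),
    ("final comments", "Zoom", 8),
]


def session_minutes(phases: List[Tuple[str, str, int]]) -> int:
    """Total planned duration of one session in minutes."""
    return sum(minutes for _, _, minutes in phases)


if __name__ == "__main__":
    total = session_minutes(PHASES)
    print(total, 50 <= total <= 60)  # 54 True
```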

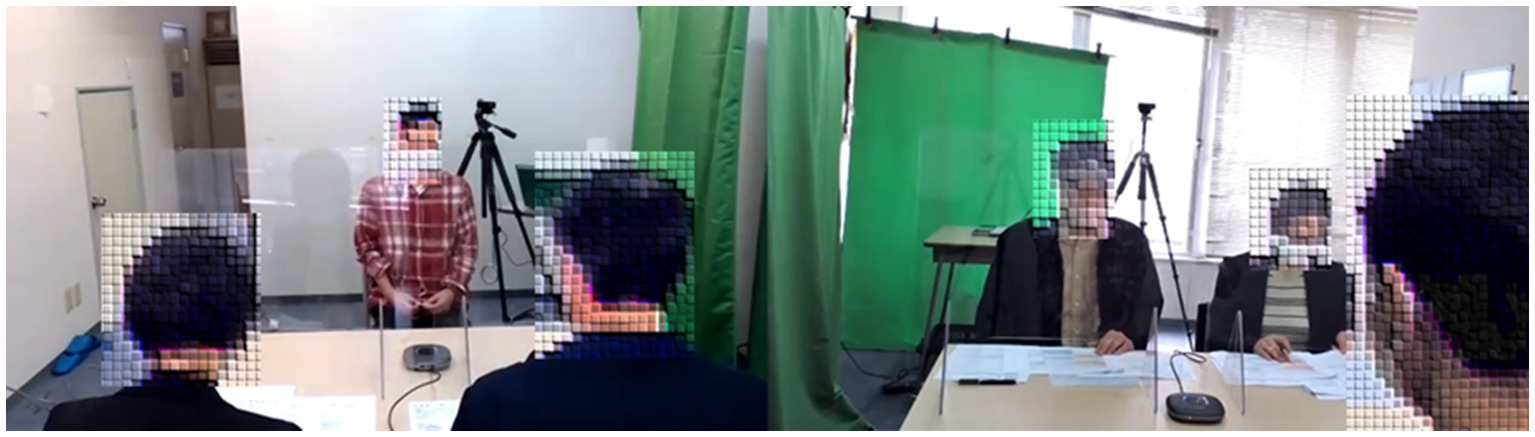

Before and after the GIT-VICS Program, the participants underwent a mock face-to-face job interview with two experienced human interviewers (MFH) (Figure 4) to evaluate its effect.

2.4. Self-evaluation

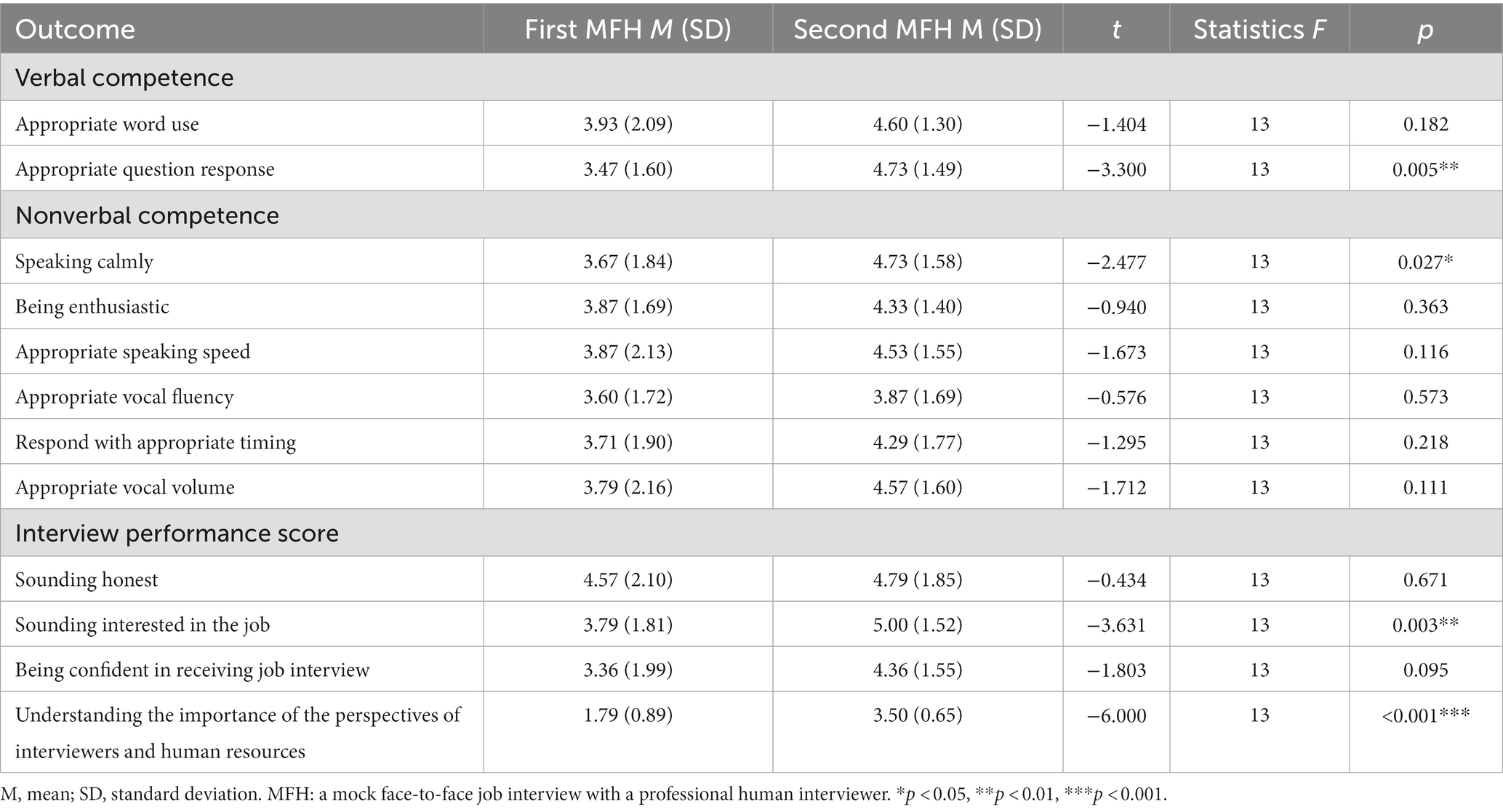

After the first MFH (i.e., before the GIT-VICS Program) and the second MFH (i.e., after the GIT-VICS Program), we asked the participants to complete a questionnaire about their interview performance. The items surveyed verbal competence (appropriate word use, appropriate question response), nonverbal competence (speaking calmly, being enthusiastic, appropriate speaking speed, appropriate vocal fluency, responding with appropriate timing, and appropriate vocal volume), and interview performance (sounding honest, sounding interested in the job, and being confident in taking job interviews). These items were rated on a seven-point Likert scale (from 1 = “strongly disagree” to 7 = “strongly agree”). In addition, to assess the extent to which each participant, as an interviewee, understood that their point of view differed from those of the interviewers and meta-evaluators, we asked them to rate how well they understood those points of view on a scale of 1–5 (from 1 = “I cannot understand the perspectives of the interviewers and meta-evaluators in a job interview setting at all” to 5 = “I can understand the perspectives of the interviewers and meta-evaluators in a job interview setting perfectly”).

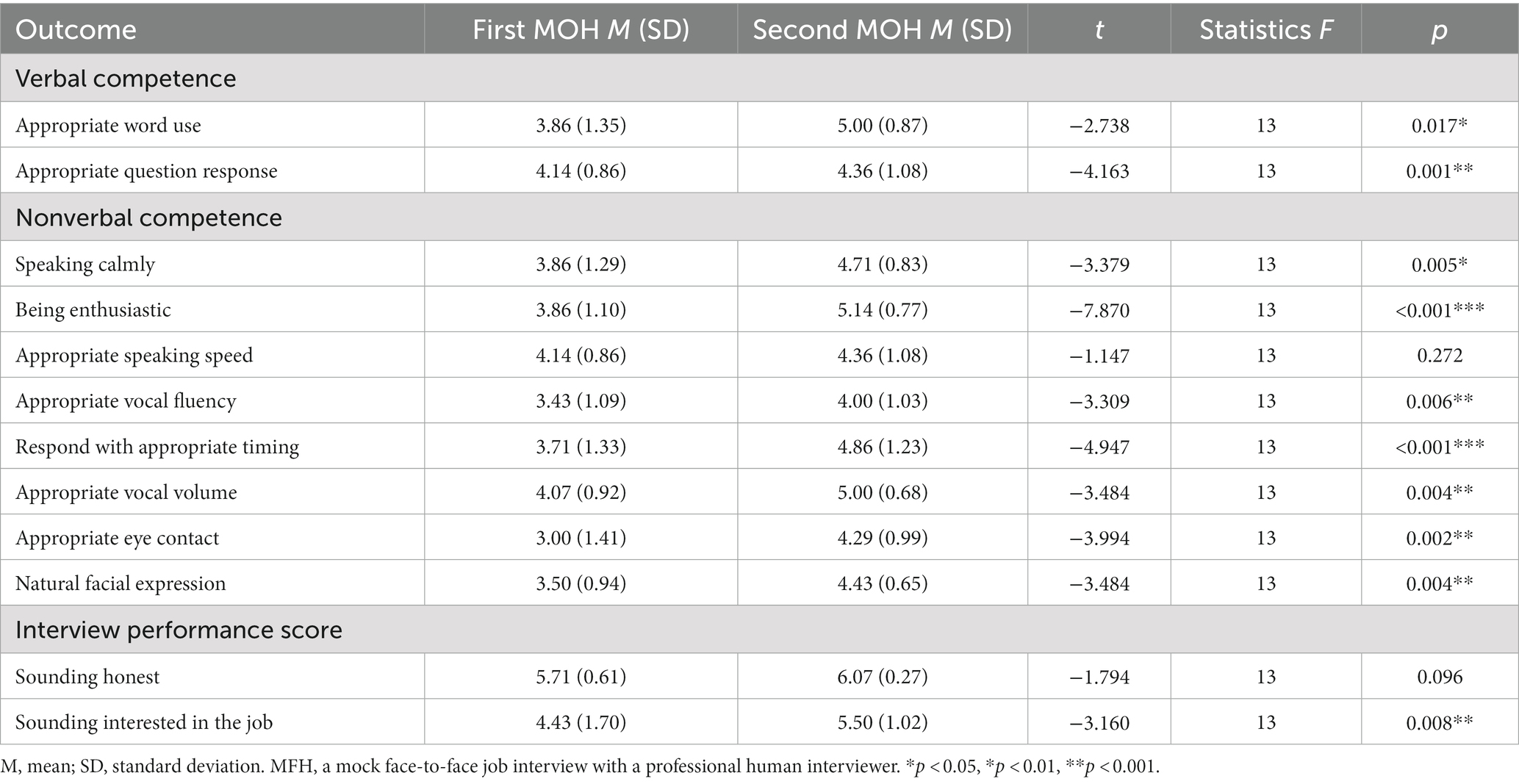

2.5. Others’ evaluation

Two raters independently evaluated the interview performances at the first MFH (before the GIT-VICS Program) and the second MFH (after the GIT-VICS Program) by watching video recordings of the sessions. Before the experiment, both raters received approximately 10 h of training in interview scoring using videos of interview scenes. The raters scored the interviews on a seven-point Likert scale covering verbal competence (appropriate word use, appropriate question response), nonverbal competence (speaking calmly, being enthusiastic, appropriate speaking speed, appropriate vocal fluency, responding with appropriate timing, appropriate vocal volume, appropriate eye contact, and natural facial expressions), and interview performance (sounding honest and sounding interested in the job). The ratings ranged from 1 (very poor) to 7 (excellent).

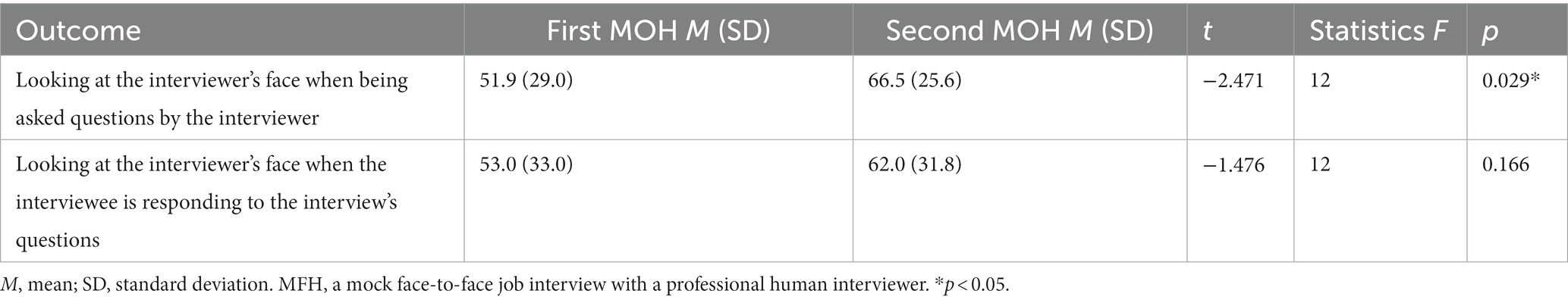

The raters also coded, every 5 s during the MFH, whether the participant was looking at the interlocutor’s face. From these coded frames, they calculated the percentage of frames in which the interviewee looked at the interviewer’s face while being asked questions and, separately, while responding to the interview questions.
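The gaze measure above can be sketched as time sampling every 5 s followed by a per-phase percentage. The phase labels "asked" and "answering", the handling of frames at phase boundaries, and the example codes are hypothetical; the text does not state these details.

```python
from typing import List, Sequence

SAMPLE_INTERVAL_S = 5  # coding interval stated in the text


def sample_times(duration_s: int, interval_s: int = SAMPLE_INTERVAL_S) -> List[int]:
    """Time points (in seconds) at which a rater inspects the video."""
    return list(range(0, duration_s + 1, interval_s))


def gaze_percentage(looking: Sequence[bool], phase: Sequence[str], target: str) -> float:
    """Percentage of coded frames in the `target` phase with gaze on the interviewer's face."""
    if len(looking) != len(phase):
        raise ValueError("each coded frame needs one phase label")
    selected = [look for look, label in zip(looking, phase) if label == target]
    if not selected:
        raise ValueError(f"no frames labelled {target!r}")
    return 100.0 * sum(selected) / len(selected)


if __name__ == "__main__":
    # Hypothetical codes for a 35-s excerpt: frames at 0, 5, ..., 35 s.
    times = sample_times(35)
    phase = ["asked"] * 4 + ["answering"] * 4
    looking = [True, False, False, True, True, True, False, True]
    assert len(times) == len(phase)
    for label in ("asked", "answering"):
        print(label, gaze_percentage(looking, phase, label))  # 50.0, then 75.0
```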

Both raters were blinded to the recording session (i.e., the first or second MFH). The primary and secondary raters showed moderate reliability [intraclass correlation coefficient (ICC) = 0.64] for interview performance scores and substantial reliability (ICC = 0.89) for whether the interviewee looked at the interviewer’s face. The primary rater’s scores were used in this study because he was a more experienced evaluator than the secondary rater. After the intervention, the participants’ supporters, trainers, and job coaches were asked the following question: “Did the participants learn to understand the point of view of the interviewer after the intervention?”
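The text reports ICCs of 0.64 and 0.89 but does not state which ICC form was used. The sketch below computes two common Shrout–Fleiss single-rater forms from a two-way ANOVA decomposition: ICC(2,1) (absolute agreement) and ICC(3,1) (consistency). Which form matches the authors’ analysis is left open, and the ratings in the usage example are made up, not study data.

```python
from typing import List, Sequence, Tuple


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def icc_single_rater(ratings: List[List[float]]) -> Tuple[float, float]:
    """Return (ICC(2,1), ICC(3,1)) for an n-subjects x k-raters table.

    ICC(2,1): two-way random effects, absolute agreement, single rater.
    ICC(3,1): two-way mixed effects, consistency, single rater.
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a rectangular table with >= 2 subjects and >= 2 raters")
    grand = _mean([x for row in ratings for x in row])
    row_means = [_mean(row) for row in ratings]
    col_means = [_mean([row[j] for row in ratings]) for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_error = ss_total - ss_rows - ss_cols                  # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    icc2 = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)
    icc3 = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    return icc2, icc3


if __name__ == "__main__":
    # Made-up scores from two raters for four interviews (not study data).
    primary = [1, 3, 5, 6]
    secondary = [2, 4, 6, 7]  # always one point higher
    table = [list(pair) for pair in zip(primary, secondary)]
    icc2, icc3 = icc_single_rater(table)
    print(f"ICC(2,1) = {icc2:.3f}, ICC(3,1) = {icc3:.3f}")  # ICC(2,1) = 0.908, ICC(3,1) = 1.000
```

In the example, ICC(3,1) is 1 because the raters differ only by a constant offset, whereas ICC(2,1) is lower because absolute agreement also penalizes that offset; this is why the chosen form matters when interpreting values such as 0.64.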

2.6. Statistical analysis

Statistical analyses were performed using IBM SPSS Statistics for Windows, version 24.0 (IBM Corp., Armonk, NY, United States). Descriptive statistics were calculated for the sample.

To assess the degree of improvement between the first and second MFH, paired t-tests were performed on the self-evaluation items (appropriate word use, appropriate question response, speaking calmly, being enthusiastic, appropriate speaking speed, appropriate vocal fluency, responding with appropriate timing, appropriate vocal volume, sounding honest, sounding interested in the job, being confident in taking job interviews, and understanding the perspectives of interviewers and meta-evaluators in the job interview setting) and on the evaluation by others (appropriate word use, appropriate question response, speaking calmly, being enthusiastic, appropriate speaking speed, appropriate vocal fluency, responding with appropriate timing, appropriate vocal volume, appropriate eye contact, natural facial expressions, sounding honest, sounding interested in the job, recognition of the point of view of interviewers and meta-evaluators, and looking at the interlocutor’s face while being asked questions by the interviewer and while responding to the interviewer’s questions). We used an alpha level of 0.05 for these analyses.
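The paired t-test described above compares each participant’s first- and second-MFH scores through their within-person differences. The following minimal standard-library sketch computes the test statistic and degrees of freedom; it is not the authors’ SPSS procedure, and the p-value step is only noted (for example, `scipy.stats.ttest_rel(after, before)` returns the same t together with a two-sided p-value). The example ratings are made up.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(before: Sequence[float], after: Sequence[float]) -> Tuple[float, int]:
    """Return (t, df) for a paired t-test on the after - before differences."""
    if len(before) != len(after):
        raise ValueError("before and after must have the same length")
    if len(before) < 2:
        raise ValueError("at least two pairs are required")
    diffs = [a - b for b, a in zip(before, after)]
    sd = stdev(diffs)  # sample SD of the differences
    if sd == 0:
        raise ZeroDivisionError("all differences are identical; t is undefined")
    standard_error = sd / sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1


if __name__ == "__main__":
    # Made-up 7-point ratings for five participants (not study data).
    first_mfh = [3, 4, 3, 2, 4]
    second_mfh = [5, 5, 4, 4, 5]
    t, df = paired_t(first_mfh, second_mfh)
    print(f"t({df}) = {t:.2f}")
```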

3. Results

3.1. Demographic data

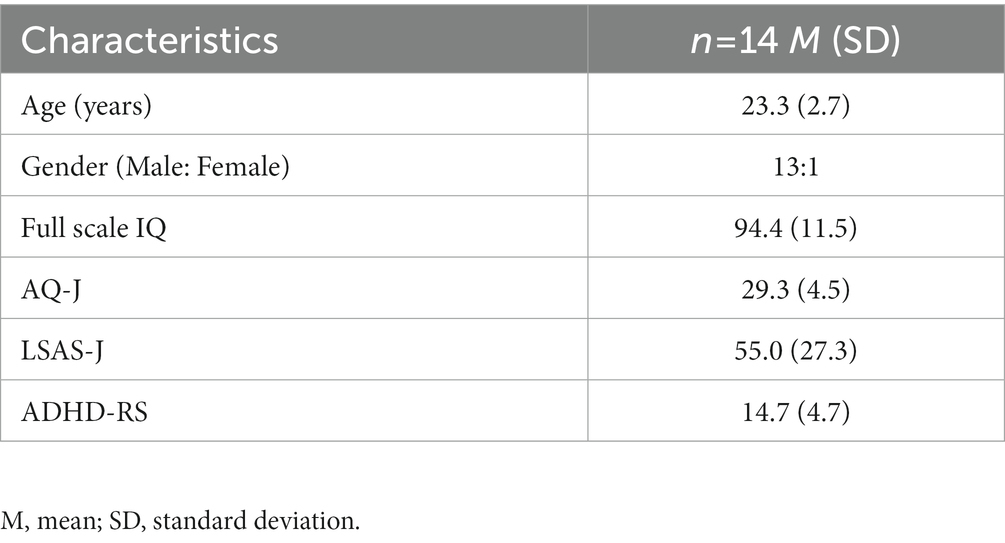

Fifteen individuals with ASD participated in this study. One participant dropped out before the second MFH because of a family misfortune (i.e., he completed the first MFH but not the entire GIT-VICS Program). Fourteen participants completed the trial procedures without any technical challenges or participant distress that would have led to the termination of a session (see Table 1 for the demographic details of the participants who completed the study). The participants’ performance was carefully monitored; all participants appeared focused during the trial and remained highly motivated from the beginning to the end of the experiment.

3.2. Main result

The self-evaluation showed significant increases from the first to the second MFH in appropriate question response (3.47 vs. 4.73; p = 0.005), speaking calmly (3.67 vs. 4.73; p = 0.027), and sounding interested in the job (3.79 vs. 5.00; p = 0.003). Recognition of the importance of the perspectives of interviewers and meta-evaluators also increased significantly from the first to the second MFH (1.79 vs. 3.50; p < 0.001; Table 2).

The evaluation of others showed significant increases between the first and second MFH in appropriate word use (3.86 vs. 5.00, p = 0.017), appropriate question response (3.43 vs. 4.86, p = 0.001), speaking calmly (3.86 vs. 4.71, p = 0.005), being enthusiastic (3.86 vs. 5.14, p < 0.001), appropriate vocal fluency (3.43 vs. 4.00, p = 0.006), responding with appropriate timing (3.71 vs. 4.86, p < 0.001), appropriate vocal volume (4.07 vs. 5.00, p = 0.004), appropriate eye contact (3.00 vs. 4.29, p = 0.002), natural facial expressions (3.50 vs. 4.43, p = 0.004), and sounding interested in the job (4.43 vs. 5.50, p = 0.008; Table 3).

We could not evaluate whether one participant looked at the interlocutor’s face because part of the video of his first MFH was blurry. Thus, gaze was evaluated for the remaining 13 participants. The percentage of coded frames in which the interviewees looked at the interviewer’s face increased significantly from the first to the second MFH while they responded to questions (51.9% vs. 66.5%; p = 0.029) but not while they were being asked questions (53.0% vs. 62.0%; p = 0.166; Table 4).

Table 4. Means and standard errors of the mean for the percentage of coded frames in which participants looked at the interviewer’s face at the first and second MFH.

In the semi-structured interview, the participants’ supporters responded: “All students seemed to learn to better understand the point of view of the interviewer.” At the 1-year follow-up, nine participants had passed job interviews and gained employment. In our interview 1 year after the intervention, all participants answered, “The experience with the GIT-VICS Program was the trigger to put ourselves in the interviewer’s shoes.”

4. Discussion

The current study evaluated the feasibility of the GIT-VICS Program, a new group-based online job interview training program that uses CG robots. The completion rate suggested that participants who received the GIT-VICS Program continued to participate without losing motivation. Using a CG robot and learning the importance of interview skills by experiencing other perspectives (i.e., the viewpoints of the interviewer and meta-evaluators) may have sustained their motivation. The GIT-VICS Program contributed to improvements in various job interview skills (i.e., verbal competence, nonverbal competence, and interview performance). These results occurred in the absence of specific interview skill training by professionals (i.e., the improvements were based only on practice and feedback from participants with ASD).

In this program, participating in the mock interviews through the CG CommU had many advantages over conversing face-to-face in an online setting. Sensory overstimulation from humans in face-to-face conversations is a serious problem for individuals with ASD and interferes with the processing of social signals (39). Because the present program used this system, the interviewers and meta-evaluators could not see the actual appearance of the interviewees. The interviewees were therefore free from the awareness of being watched by others, which may be linked to decreased interpersonal anxiety during online mock job interview training. Furthermore, a growing body of literature indicates that many individuals with ASD have the desire and motivation to use technology (40); thus, the technology behind the CG CommU might have increased users’ enthusiasm for and focus on the program.

The participants in this study improved on some self-evaluation items (appropriate question responses, speaking calmly, sounding interested in the job, and recognizing the importance of the perspectives of interviewers and meta-evaluators) but did not report improved self-confidence. In the GIT-VICS Program, only individuals with ASD took part in the feedback phases; they pointed out the interviewees’ weak points without due consideration, which may explain why the interviewees’ self-confidence did not improve. Evidence indicates that the more confident one is in performing an interview, the greater one’s social engagement during the interview and the more effective one’s verbal and nonverbal communication strategies (41, 42). Future programs should be modified so that participants point out the interviewees’ strengths as well as their weaknesses.

The participants received training only in an online setting, not in a face-to-face job interview setting. In addition, we used a simple humanoid robot, the CG CommU, from which skills may be difficult to generalize to human interviewers. As individuals with ASD tend to have low self-esteem (43) and many have difficulty generalizing skills (44), they may still lack confidence in face-to-face job interview settings after the GIT-VICS Program.

The participants in this study could take the viewpoint not only of an interviewer evaluating the interviewee but also of a meta-evaluator who discusses the validity of the evaluations made by the interviewers and by the other meta-evaluator. These experiences may have deepened the participants' understanding of the interviewer's perspective in job interview situations: how they would be evaluated and what they should do to be evaluated highly.

The participants showed improvements in most items rated by others. These items were appropriate word use, appropriate question responses, speaking calmly, being enthusiastic, appropriate vocal fluency, responding with appropriate timing, appropriate vocal volume, eye contact, natural facial expressions, sounding interested in the job, and recognizing the importance of the perspectives of interviewers and meta-evaluators. They also included looking at the interlocutor's face when asked questions by the interviewer and when responding to the interview questions. Completing the GIT-VICS Program increased the participants' recognition of the importance of the perspectives of interviewers and meta-evaluators. The participants also concentrated during the trials and remained highly motivated from the start to the end of the program. All of these factors may have contributed to the improvements in most items. Many individuals with ASD have difficulty with generalization (44), so the improvement in ratings by others in a face-to-face job interview setting after the GIT-VICS Program is significant.

The results of this study showed that the GIT-VICS Program did not improve looking at the interlocutor's face while the interviewee listened to the interviewers' utterances. It did improve looking at the interlocutor's face while he or she spoke to them. This difference may be explained by how the participants experienced looking in the GIT-VICS Program. When the interviewee listened to the utterances of the interviewers' CG robots, the interviewee's CG robot automatically looked toward the speaking CG robot. However, when the interviewee spoke to answer questions, he or she was asked, in the last three trials, to consciously control the gaze of his or her CG robot by clicking on the target CG robot. Moreover, looking performance was explicitly evaluated in the feedback session. In other words, the participants practiced actively controlling their gaze only when answering. This may explain why looking at the interlocutors' faces improved only in that situation.

This study has several limitations. First, the sample size was relatively small, and most participants were male. Future studies with larger samples that include more female participants are required to provide more meaningful data on the potential use of this system. Second, this study had no control group: there was no sham training group to compare with the online CG robot training group. At the time this experiment was conducted, the Japanese government had declared a state of emergency due to the spread of coronavirus disease 2019 (COVID-19). We therefore could not ask participants to take part in a controlled setting. Given the urgent need for individuals with ASD to prepare for online job interviews, we conducted an uncontrolled pilot study.

Nonetheless, we consider it worthwhile to report the results in their current form. They demonstrated improvements in areas in which intervention is challenging, and they underscore the need for a large-scale follow-up study with a control group to establish the efficacy of the intervention. The ultimate goal of the program is to improve the participants' communication skills in everyday life and give them a competitive edge when seeking employment or volunteer positions. Implementation at employment support facilities will be required to confirm that our system works in practice. Given the high cost of caring for individuals with ASD (45), helping these individuals become competitive in the job market is of critical economic importance. Future long-term longitudinal studies at work support facilities are needed to test whether this program can achieve this goal.

This is the first study to evaluate the effect of mock online job interview training using CG robots on the ability of individuals with ASD to participate in face-to-face job interviews. Our program improved most of the interview performance items rated by others in individuals with ASD, even without specific interview skill training by professionals. With appropriate guidance from professionals, individuals with ASD may also improve on other items. These results are promising. To draw clear conclusions about the efficacy of CG robots for mock online job interview training, future studies that add expert guidance on job interview manners are needed.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Ethics Committee of Kanazawa University. The patients/participants provided their written informed consent to participate in this study.

Author contributions

YY and HK designed the study, conducted the experiments, conducted the statistical analyses, analyzed and interpreted the data, and drafted the manuscript. YY, TM, KS, HI, MM, HH, and AK conceptualized the study, participated in its design, assisted with data collection and scoring of behavioral measures, analyzed and interpreted the data, drafted the manuscript, and critically revised the manuscript for important intellectual content. HK approved the final version to be published. All the authors have read and approved the final version of the manuscript.

Funding

This work was supported by JST Moonshot R&D, Grant Number JPMJMS2011.

Acknowledgments

The authors would like to thank all participants and their families for their cooperation in this study. They thank M. Miyao and H. Ito for their support in our experiments.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2023.1198433/full#supplementary-material

References

1. American Psychiatric Association. Diagnostic and statistical manual of mental disorders. Arlington, VA: American Psychiatric Publishing (2013).

2. Maenner, MJ, Shaw, KA, Bakian, AV, Bilder, DA, Durkin, MS, Esler, A, et al. Prevalence and characteristics of autism spectrum disorder among children aged 8 years–autism and developmental disabilities monitoring network, 11 sites, United States, 2018. MMWR Surveill Summ. (2021) 70:1–16. doi: 10.15585/mmwr.ss7011a1

3. Shattuck, PT, Roux, AM, Hudson, LE, Taylor, JL, Maenner, MJ, and Trani, JF. Services for adults with an autism spectrum disorder. Can J Psychiatry. (2012) 57:284–91. doi: 10.1177/070674371205700503

4. Roux, AM, Shattuck, PT, Cooper, BP, Anderson, KA, Wagner, M, and Narendorf, SC. Postsecondary employment experiences among young adults with an autism spectrum disorder. J Am Acad Child Adolesc Psychiatry. (2013) 52:931–9. doi: 10.1016/j.jaac.2013.05.019

5. Wilczynski, SM, Trammell, B, and Clarke, LS. Improving employment outcomes among adolescents and adults on the autism spectrum. Psychol Sch. (2013) 50:876–87. doi: 10.1002/pits.21718

6. Hendricks, D. Employment and adults with autism spectrum disorders: challenges and strategies for success. J Vocat Rehabil. (2010) 32:125–34. doi: 10.3233/JVR-2010-0502

7. National Autistic Society. New shocking data highlights the autism employment gap. (2021). Available at: https://www.autism.org.uk/what-we-do/news/new-data-on-the-autism-employment-gap. (Accessed February 19, 2021).

8. Higgins, KK, Koch, LC, Boughfman, EM, and Vierstra, C. School-to-work transition and Asperger syndrome. Work. (2008) 31:291–8.

9. Strickland, DC, Coles, CD, and Southern, LB. JobTIPS: a transition to employment program for individuals with autism spectrum disorders. J Autism Dev Disord. (2013) 43:2472–83. doi: 10.1007/s10803-013-1800-4

10. Kumazaki, H, Muramatsu, T, Yoshikawa, Y, Corbett, BA, Matsumoto, Y, Higashida, H, et al. Job interview training targeting nonverbal communication using an android robot for individuals with autism spectrum disorder. Autism. (2019) 23:1586–95. doi: 10.1177/1362361319827134

11. Kumazaki, H, Yoshikawa, Y, Muramatsu, T, Haraguchi, H, Fujisato, H, Sakai, K, et al. Group-based online job interview training program using virtual robot for individuals with autism spectrum disorders. Front Psychiatry. (2022) 12:704564. doi: 10.3389/fpsyt.2021.704564

12. Chevallier, C, Kohls, G, Troiani, V, Brodkin, ES, and Schultz, RT. The social motivation theory of autism. Trends Cogn Sci. (2012) 16:231–9. doi: 10.1016/j.tics.2012.02.007

13. Warren, ZE, Zheng, Z, Swanson, AR, Bekele, E, Zhang, L, Crittendon, JA, et al. Can robotic interaction improve joint attention skills? J Autism Dev Disord. (2015) 45:3726–34. doi: 10.1007/s10803-013-1918-4

14. Jarrold, W, Mundy, P, Gwaltney, M, Bailenson, J, Hatt, N, McIntyre, N, et al. Social attention in a virtual public speaking task in higher functioning children with autism. Autism Res. (2013) 6:393–410. doi: 10.1002/aur.1302

15. Costa, S, Lehmann, H, Dautenhahn, K, Robins, B, and Soares, F. Using a humanoid robot to elicit body awareness and appropriate physical interaction in children with autism. Int J Soc Robot. (2014) 7:265–78. doi: 10.1007/s12369-014-0250-2

16. Iacono, I, Lehmann, H, Marti, P, Robins, B, and Dautenhahn, K. Robots as social mediators for children with autism - a preliminary analysis comparing two different robotic platforms. 2011 IEEE International Conference on Development and Learning (ICDL), pp. 1–6. (2011).

17. Kumazaki, H, Muramatsu, T, Yoshikawa, Y, Matsumoto, Y, Ishiguro, H, Kikuchi, M, et al. Optimal robot for intervention for individuals with autism spectrum disorders. Psychiatry Clin Neurosci. (2020) 74:581–6. doi: 10.1111/pcn.13132

18. Ricks, DJ, and Colton, MB. Trends and considerations in robot-assisted autism therapy. 2010 IEEE International Conference on Robotics and Automation, pp. 4354–4359. (2010).

19. Robins, B, Dautenhahn, K, and Dubowski, J. Does appearance matter in the interaction of children with autism with a humanoid robot? Interact Stud. (2006) 7:479–512. doi: 10.1075/is.7.3.16rob

20. Kumazaki, H, Muramatsu, T, Kobayashi, K, Watanabe, T, Terada, K, Higashida, H, et al. Feasibility of autism-focused public speech training using a simple virtual audience for autism spectrum disorder. Psychiatry Clin Neurosci. (2019) 74:124–31. doi: 10.1111/pcn.12949

21. Pelphrey, KA, Sasson, NJ, Reznick, JS, Paul, G, Goldman, BD, and Piven, J. Visual scanning of faces in autism. J Autism Dev Disord. (2002) 32:249–61. doi: 10.1023/A:1016374617369

22. Cook, JL, Rapp, JT, Mann, KR, McHugh, C, Burji, C, and Nuta, R. A practitioner model for increasing eye contact in children with autism. Behav Modif. (2017) 41:382–404. doi: 10.1177/0145445516689323

23. Ninci, J, Lang, R, Davenport, K, Lee, A, Garner, J, Moore, M, et al. An analysis of the generalization and maintenance of eye contact taught during play. Dev Neurorehabil. (2013) 16:301–7. doi: 10.3109/17518423.2012.730557

24. Baron-Cohen, S, Tager-Flusberg, H, and Cohen, DJ. Understanding other minds: Perspectives from developmental cognitive neuroscience. Oxford: Oxford University Press (2000).

25. Baron-Cohen, S, Leslie, AM, and Frith, U. Does the autistic child have a “theory of mind”? Cognition. (1985) 21:37–46. doi: 10.1016/0010-0277(85)90022-8

26. Leekam, SR, Libby, SJ, Wing, L, Gould, J, and Taylor, C. The diagnostic interview for social and communication disorders: algorithms for ICD-10 childhood autism and Wing and Gould autistic spectrum disorder. J Child Psychol Psychiatry. (2002) 43:327–42. doi: 10.1111/1469-7610.00024

27. Wing, L, Leekam, SR, Libby, SJ, Gould, J, and Larcombe, M. The diagnostic interview for social and communication disorders: background, inter-rater reliability and clinical use. J Child Psychol Psychiatry. (2002) 43:307–25. doi: 10.1111/1469-7610.00023

28. Sheehan, DV, Lecrubier, Y, Harnett Sheehan, K, Janavs, J, Weiller, E, Keskiner, A, et al. The validity of the Mini international neuropsychiatric interview (MINI) according to the SCID-P and its reliability. Eur Psychiatry. (1997) 12:232–41. doi: 10.1016/S0924-9338(97)83297-X

29. Wakabayashi, A, Tojo, Y, Baron-Cohen, S, and Wheelwright, S. The autism-spectrum quotient (AQ) Japanese version: evidence from high-functioning clinical group and normal adults. Shinrigaku Kenkyu. (2004) 75:78–84. doi: 10.4992/jjpsy.75.78

30. Wakabayashi, A, Baron-Cohen, S, Uchiyama, T, Yoshida, Y, Tojo, Y, Kuroda, M, et al. The autism-spectrum quotient (AQ) children’s version in Japan: a cross-cultural comparison. J Autism Dev Disord. (2007) 37:491–500. doi: 10.1007/s10803-006-0181-3

31. Auyeung, B, Baron-Cohen, S, Wheelwright, S, and Allison, C. The autism spectrum quotient: children's version (AQ-child). J Autism Dev Disord. (2008) 38:1230–40. doi: 10.1007/s10803-007-0504-z

32. Baron-Cohen, S, Hoekstra, RA, Knickmeyer, R, and Wheelwright, S. The autism-spectrum quotient (AQ)–adolescent version. J Autism Dev Disord. (2006) 36:343–50. doi: 10.1007/s10803-006-0073-6

33. Hirata-Mogi, S, Koike, S, Toriyama, R, Matsuoka, K, Kim, Y, and Kasai, K. Reliability of a paper-and-pencil version of the Japanese adult Reading test short version. Psychiatry Clin Neurosci. (2016) 70:362. doi: 10.1111/pcn.12400

34. Liebowitz, MR. Social phobia. Mod Probl Pharmacopsychiatry. (1987) 22:141–73. doi: 10.1159/000414022

35. Mennin, DS, Fresco, DM, Heimberg, RG, Schneier, FR, Davies, SO, and Liebowitz, MR. Screening for social anxiety disorder in the clinical setting: using the Liebowitz social anxiety scale. J Anxiety Disord. (2002) 16:661–73. doi: 10.1016/S0887-6185(02)00134-2

36. DuPaul, GJ, Reid, R, Anastopoulos, AD, Lambert, MC, Watkins, MW, and Power, TJ. Parent and teacher ratings of attention-deficit/hyperactivity disorder symptoms: factor structure and normative data. Psychol Assess. (2016) 28:214–25. doi: 10.1037/pas0000166

37. Kumazaki, H, Yoshikawa, Y, Yoshimura, Y, Ikeda, T, Hasegawa, C, Saito, DN, et al. The impact of robotic intervention on joint attention in children with autism spectrum disorders. Mol Autism. (2018) 9:46. doi: 10.1186/s13229-018-0230-8

38. Shimaya, J, Yoshikawa, Y, Matsumoto, Y, Kumazaki, H, Ishiguro, H, Mimura, M, et al. Advantages of indirect conversation via a desktop humanoid robot: case study on daily life guidance for adolescents with autism spectrum disorders. 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pp. 831–836. (2016).

39. Hurtig, T, Kuusikko, S, Mattila, M-L, Haapsamo, H, Ebeling, H, Jussila, K, et al. Multi-informant reports of psychiatric symptoms among high-functioning adolescents with Asperger syndrome or autism. Autism. (2009) 13:583–98. doi: 10.1177/1362361309335719

40. Diehl, JJ, Schmitt, LM, Villano, M, and Crowell, CR. The clinical use of robots for individuals with autism spectrum disorders: a critical review. Res Autism Spectr Disord. (2012) 6:249–62. doi: 10.1016/j.rasd.2011.05.006

41. Hall, NC, Jackson Gradt, SE, Goetz, T, and Musu-Gillette, LE. Attributional retraining, self-esteem, and the job interview: benefits and risks for college student employment. J Exp Educ. (2011) 79:318–39. doi: 10.1080/00220973.2010.503247

42. Tay, C, Ang, S, and Van Dyne, L. Personality, biographical characteristics, and job interview success: a longitudinal study of the mediating effects of interviewing self-efficacy and the moderating effects of internal locus of causality. J Appl Psychol. (2006) 91:446–54. doi: 10.1037/0021-9010.91.2.446

43. van der Cruijsen, R, and Boyer, BE. Explicit and implicit self-esteem in youth with autism spectrum disorders. Autism. (2020) 25:349–60. doi: 10.1177/1362361320961006

44. Low, J, Goddard, E, and Melser, J. Generativity and imagination in autism spectrum disorder: evidence from individual differences in children’s impossible entity drawings. Br J Dev Psychol. (2009) 27:425–44. doi: 10.1348/026151008X334728

Keywords: autism spectrum disorders, job interview, interview skill, robot, computer graphics

Citation: Yoshikawa Y, Muramatsu T, Sakai K, Haraguchi H, Kudo A, Ishiguro H, Mimura M and Kumazaki H (2023) A new group-based online job interview training program using computer graphics robots for individuals with autism spectrum disorders. Front. Psychiatry. 14:1198433. doi: 10.3389/fpsyt.2023.1198433

Edited by:

Rosa Calvo Escalona, Hospital Clinic of Barcelona, Spain

Reviewed by:

Marie-Maude Geoffray, Centre Hospitalier Le Vinatier, France
Armand Manukyan, Université de Lorraine, France

Copyright © 2023 Yoshikawa, Muramatsu, Sakai, Haraguchi, Kudo, Ishiguro, Mimura and Kumazaki. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hirokazu Kumazaki, kumazaki@tiara.ocn.ne.jp
