PERSPECTIVE article

Front. Psychiatry, 09 June 2022

Sec. Digital Mental Health

Volume 13 - 2022 | https://doi.org/10.3389/fpsyt.2022.926286

Vincent P. Martin1,2, Jean-Luc Rouas1, Pierre Philip2,3, Pierre Fourneret4, Jean-Arthur Micoulaud-Franchi2,3, Christophe Gauld4,5*

In order to create a dynamic for the psychiatry of the future, bringing together digital technology and clinical practice, we propose in this paper a cross-teaching translational roadmap comparing clinical reasoning with computational reasoning. Based on the relevant literature on clinical ways of thinking, we differentiate the process of clinical judgment into four main stages: collection of variables, theoretical background, construction of the model, and use of the model. We detail, for each step, parallels between: i) clinical reasoning; ii) the ML engineer methodology to build a ML model; iii) and the ML model itself. Such analysis supports the understanding of the empirical practice of each of the disciplines (psychiatry and ML engineering). Thus, ML does not only bring methods to the clinician, but also supports educational issues for clinical practice. Psychiatry can rely on developments in ML reasoning to shed light on its own practice in a clever way. In return, this analysis highlights the importance of subjectivity of the ML engineers and their methodologies.

Today's gospel in clinical psychiatry is that the field struggles with the absence of useful biomarkers, has to rely on an operationalized phenomenological level (1), and that interrater reliability for common psychiatric disorders should be strengthened by various measures of transdiagnostic symptoms (2, 3). Faced with the inefficiency of the responses provided in recent decades (4), the focus has shifted to better definitions of phenotypes (5): how can clinical phenomenology be refined in order to find biomarkers, improve reliability, or better qualitatively measure symptoms?

One answer to this thorny question is a refinement of the analysis of clinical judgment and reasoning, which relies on a large literature in psychiatry that has been continuously developed for more than 50 years (6). While this issue is not specific to psychiatry, this domain could be chosen as a privileged clinical field in which to study it, inasmuch as it is a complex medical discipline possessing a set of deep reflections, salient questions and subtle counter-examples (7).

On one side, clinicians can be considered as a non-explicit black-box model. They make choices based on clinical decision-making processes that are not necessarily explicit. These choices are made according to an “embodied model,” i.e., a clinical model used to support the clinical reasoning and clinical decision.

In parallel, the growing interest in computational sciences allows considering “objective” automated methods, such as Artificial Intelligence (AI), and more specifically Machine Learning (ML) (8, 9). However, computational decision-making processes are created and designed by an engineer, who makes choices about the conception of such models that are not devoid of any subjectivity (10). In this way, ML engineers are driven by their experiential and subjective way of thinking, i.e., “embodied models”—like clinicians.

In this article, we hypothesize that the mutual illumination of reciprocal reasoning and cross-teaching between AI and clinician reasoning could be fruitful (11, 12). This interdisciplinary dialog would not only shed light on clinical practice but also create a dynamic for the challenges of the psychiatry of the future, bringing together digital technology and clinical practice (13–15).

Thus, we do not propose to list the different computational methods designed to replace the judgment of the clinician. Rather, we aim to discuss in a systematic way how comparing clinical reasoning with data modeling could help to understand the formulation of the clinical judgment.

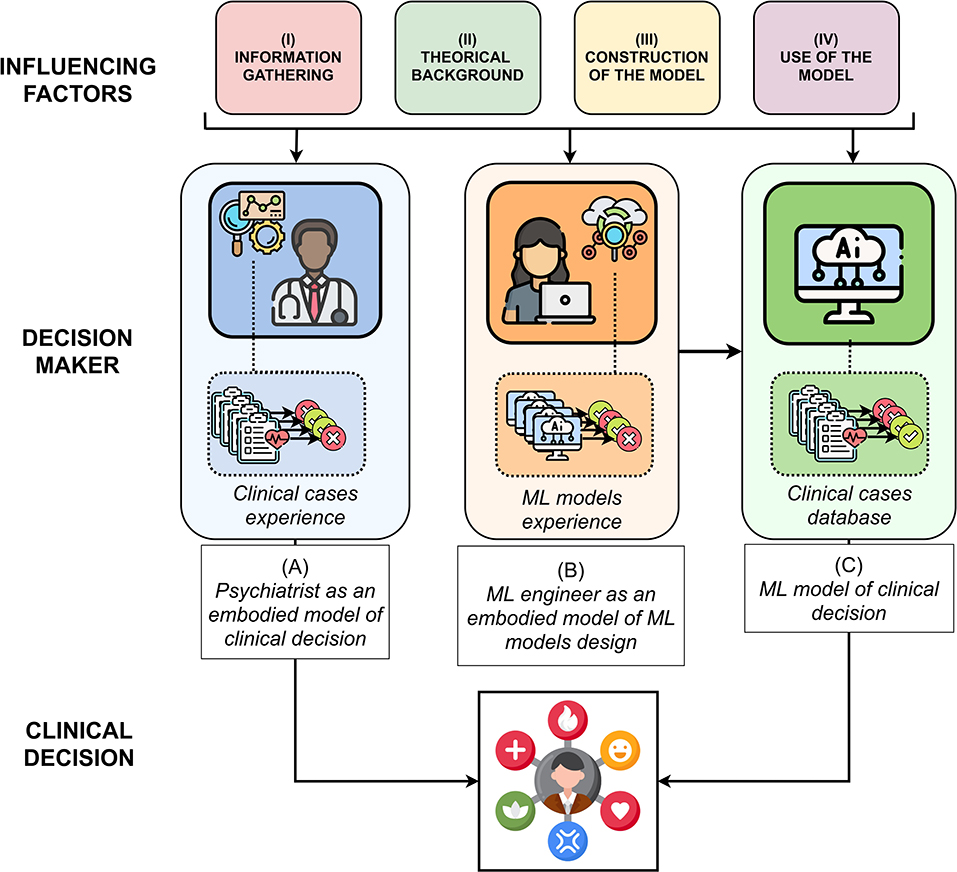

The aim of this paper is to provide a translational roadmap comparing clinical reasoning with computational reasoning, as proposed in Figure 1. Such a translational roadmap corresponds to a set of definitions and tools, necessarily not exhaustive, which allows feeding the understanding of clinical reasoning for the ML engineer and the understanding of ML for the clinician. Consequently, this article is intended for both psychiatrists interested in ML and ML engineers interested in clinical reasoning.

Figure 1. A translational roadmap in four steps accounting for computational reasoning (i.e., ML engineer as an embodied model) by analogy with clinical reasoning (i.e., psychiatrist as an embodied model), in order to support clinical decisions.

First, based on the relevant literature on clinical ways of thinking (16–25) and ML (26–32), we have differentiated the process of clinical judgment into four main stages. Such a translational effort allows each step of the two modes of reasoning, clinical and computational, to be detailed analytically. Secondly, we have brought to light parallels between clinical reasoning, the engineer methodology to build a ML model and the ML model itself. Such identification necessarily leads us to distinguish the decision-making model of clinicians, embodied in them, the decision-making model of ML engineers (about how to design a ML model), embodied in them, and the ML models themselves. The details of these parallels are decomposed into four steps: 1) collection of variables, 2) theoretical background, 3) construction of the model, 4) use of the model. They are detailed in Table 1. A more complete version of this table with examples is available in Supplementary Table S1.

In each of the four steps, we detail the factors taking part in (A) the embodied clinical model of the psychiatrist; (B) then the embodied model of the ML engineer; (C) then, if it exists, the ML algorithm which renders the clinical decision.

The first step of clinical reasoning is data gathering, divided into two main steps: collection of variables, including the choice, labeling, prioritization, and granularity of these variables; and relations between them. The collection of variables is a sensitive step, during which the characteristics of the patients are projected into main dimensions.

First, numerous variables can be collected both by the clinicians and the ML engineers. For clinicians, these variables can be symptoms, risk factors, harms and values, external validators, or even biomarkers (33).

On their side, data scientists in charge of the database design also select one or multiple input data that can be measured, e.g., voice, facial expressions, Internet of Things (IoT) data, biological, or genetic data (34).

Then, clinicians annotate the phenomena expressed by their patients, to create data potentially integrated into their clinical model, with a potential loss of information (16, 19).

To a lesser extent, labeling matters in the same way when the ML engineer names a variable or uses an alias for a parametrized and complicated function (35, 36).

These variables are hierarchically selected from the patient according to their expected importance, i.e., their epistemic gain, with a view to prediction and/or prognosis and/or clinical care. For instance, psychiatric medication is generally prescribed in a transdiagnostic manner, based on symptoms belonging to different diagnostic categories (37). Indeed, the same treatment for auditory verbal hallucinations can be given whether the diagnosis is schizophrenia or another type of delusional disorder (e.g., paranoid).

On their side, to prioritize the variables, ML engineers choose an algorithm that corresponds to the criterion they want to satisfy, e.g., according to their discriminative power (38).
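As a minimal sketch of this prioritization step, the snippet below ranks input variables by a Fisher-type score (squared difference of class means divided by the sum of class variances), which is only one possible measure of discriminative power. The function names, variable names and values are hypothetical and purely illustrative; they do not come from any dataset discussed in this article.

```python
from statistics import mean, pvariance

def fisher_score(values, labels):
    """Squared gap between class means divided by summed class variances."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    gap = (mean(pos) - mean(neg)) ** 2
    spread = pvariance(pos) + pvariance(neg)
    if spread == 0:
        # No within-class variation: perfect separation if the means differ.
        return float("inf") if gap > 0 else 0.0
    return gap / spread

def rank_variables(table, labels):
    """Return variable names sorted from most to least discriminative."""
    scores = {name: fisher_score(col, labels) for name, col in table.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical toy data: 1 = clinical label present, 0 = absent.
labels = [1, 1, 1, 0, 0, 0]
table = {
    "sleep_latency_min": [55, 60, 65, 15, 20, 25],        # classes well separated
    "speech_rate_wps": [2.0, 3.0, 2.5, 2.4, 3.1, 2.2],    # classes overlap
}
ranking = rank_variables(table, labels)
print(ranking)
```

Changing the criterion (for instance, to a correlation or an information-based score) may change the ranking, which is one place where the engineer's choice shapes the model.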

The clinician adjusts the granularity of the collected variables through the clinical interview (39). For instance, if the patient describes a "sleep disorder," the clinician will seek to question the type of disorder, e.g., insomnia, and more specifically early or late insomnia (40).

On the other side, the granularity available to ML algorithms, both in terms of time and concept, is restricted to that of the (static) datasets, as chosen by the dataset designer (41).

Clinicians account for a causal chain between symptoms, i.e., correlations between variables (42, 43). Clinicians tend to think intuitively about psychiatric disorders in terms of a mutual causal influence between clinical manifestations (44).

Regarding ML, the transition from statistical models to causal learning is one of the biggest challenges for AI in the coming years: causal AI is not (yet) a reality. For now, the concern for causality is expressed in the computer science community through explainable AI, which aims at extracting clues from black-box models so that engineers can interpret them and reconstruct for themselves the causal chain between the inputs of the system and the label (45).

However, recent and ongoing work focuses on causal learning, i.e., the design of a model that “contains the mechanisms giving rise to the observed statistical dependences” (46).

Once the data is collected, different theoretical backgrounds (i.e., set of rules) allow structuring the data.

The embodied models of clinicians are rooted in different theoretical traditions. For example, the medical model corresponds to a vision primarily guided by the consideration of common causes that explain a set of symptoms, sometimes anchored in a mainly neurobiological model. These different theoretical traditions influence the definition of what a psychiatric disorder is [e.g., harm and dysfunction in the Wakefield model (47), which postulates that a medical disorder is defined by a dysfunction resulting in harm to the patient], and by extension the consideration of the patient. Thus, psychiatrists are regulated by theoretical sets of laws and rules structuring their way of conceiving their embodied clinical model (and therefore of structuring their data).

This consideration echoes the different trends existing in the machine learning field, which is at the crossroads of multiple approaches. One example is the recent advent of data-driven AI led by Andrew Ng, in opposition to the classical model-driven approach that is usually employed in ML (48). In the latter, the focus is on the design of the machine learning model (e.g., a classifier or regressor) that will best model the relationship between the features extracted from the data and the label on a given dataset, whereas data-driven AI focuses on data engineering processes to obtain higher performances with a given model.

Psychiatry is regulated by a set of guidelines and recommendations which are beyond the control and subjectivity of the clinician. Some of these guidelines are internationally recognized, e.g., the National Institute for Health and Care Excellence (NICE) Guidelines or the American Psychiatric Association Practice Guidelines.

Although there are some guidelines on the application of ML in the medical field [e.g., (49)], guidelines on how to build such systems are few and rarely used or followed. Usually, machine learning engineers follow implicit guidelines picked from “rules of good practice” and from reference conferences, articles or ML engineers that act as “jurisprudence” and guide the design of their models [e.g., the yearly conference NeurIPS (50), exposing the latest trends in artificial intelligence].

Clinicians' personality will influence their theoretical choices. For instance, if they are inclined to take risks, or conversely to be rather conservative, they will tend to favor different diagnostic practices. Likewise, uncertain decisions will lead to different diagnostic and therapeutic responses, and more generally to different decision-making paths, depending on the clinicians' and patients' willingness to take risks.

Regarding ML engineers, personality also influences their choices. Indeed, depending on their recklessness (or adventurousness) or conservatism, their sense of the aesthetics, and other aspects of their personality, engineers will choose one implementation over another (51, 52). For example, enterprising engineers try new and funky pipelines despite the risk of low performances, while “conservative” engineers stick to state-of-the-art-inspired pipelines (53).

After having collected a set of data under the influence of the theoretical background, the structuring of this data is done by the clinician or ML engineer / ML algorithm according to the training of the model, experience and expertise, and cognitive reasoning.

Educational training in psychiatry is largely based on case studies. This repeated confrontation with clinical cases (fictitious or not) allows clinicians to be trained. They are rewarded or penalized according to their skills, which allows their internal model to be trained to face new cases (e.g., the National Classifying Exam in France or the Psychiatry Certification examination in the US).

Similarly, at the end of their schooling, ML engineers' models of problem solving with ML have been shaped by the examples they have dealt with in class, whether they are fictional examples or real data.

Regarding the ML model, an initial version is trained with the whole dataset (i.e., input variables annotated with some clinical label) when the best pipeline and configuration (or “hyperparameters”) have been selected, before being deployed in real conditions and confronted with new data (54). Thus, the training of an initial model is undertaken systematically.
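The two-stage procedure described above (select a configuration, then train on the whole dataset) can be sketched as follows. This is an illustrative toy under stated assumptions, not the workflow of any cited reference: the model is a one-dimensional k-nearest-neighbor classifier, the hyperparameter is k, selection uses leave-one-out cross-validation, and all names and data are hypothetical.

```python
def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x (1-D input)."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0

def loo_accuracy(data, k):
    """Leave-one-out accuracy: each sample is predicted from all the others."""
    hits = 0
    for i, (x, y) in enumerate(data):
        rest = data[:i] + data[i + 1:]
        hits += knn_predict(rest, x, k) == y
    return hits / len(data)

def select_and_train(data, candidate_ks):
    """Pick k by cross-validation, then keep the whole dataset for deployment."""
    best_k = max(candidate_ks, key=lambda k: loo_accuracy(data, k))
    # For k-NN, "training on the whole dataset" amounts to storing every sample.
    return {"k": best_k, "train": list(data)}

# Hypothetical (measurement, clinical label) pairs.
data = [(1.0, 0), (1.5, 0), (2.0, 0), (2.2, 1), (2.4, 0),
        (6.0, 1), (6.5, 1), (7.0, 1)]
model = select_and_train(data, candidate_ks=[1, 3, 5])

# The deployed model is then confronted with new, unseen data.
print(model["k"], knn_predict(model["train"], 6.2, model["k"]))
```

The candidate values of k and the validation scheme are themselves choices made by the engineer before any data reach the final model.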

Learning is not a static process. The models of both psychiatrists and engineers do not stay in their initial state. The constitution of the theoretical background is largely influenced by two factors: time and expertise.

Concerning time, the number of cases encountered by clinicians changes their initial theoretical background. For example, young clinicians, who tend to follow their personal readings and training, will open up to other theoretical backgrounds over time (55). Regarding expertise, the knowledge of clinicians in one area influences their practices in other areas (i.e., diagnostic and therapeutic practice). For example, clinicians working with neuroscientists in a specialized center for autism might tend to apply their neurodevelopmental models to other psychiatric disorders (16).

The same tenet about time and expertise applies to ML engineers. On the one hand, the more they confront diversified problems and interact with their peers, the more their perspective of the field grows and allows them to embrace new theoretical backgrounds (10). On the other hand, engineers working in a neurocomputational team and those working in a theoretical computer science team do not approach similar problems in the same way, and develop different approaches to solve them.

Regarding the ML model, when the pipeline is put into production (real-life situation), no background modification is possible (the pipeline is static—it has been chosen during the training phase), but a shift of specialization is possible depending on the data it is fed with (54, 56).

Clinical cognitive reasoning is a field of research in its own right. In this article, we have specified how the clinical decision pertains to each of these steps. However, the way of using the embodied clinical model also has parallels with the embodied model of the ML engineer and the ML algorithm.

Indeed, clinicians use each set of variables for each patient, i.e., they build as many models as there are cases, based on their theoretical rules. Thus, clinicians can rely more or less on theoretical hypotheses or on data presented to them in the clinical practice, in order to build their model. Consequently, they can either strongly constrain their data with predefined laws in a theory-driven manner, or on the contrary use clinical reasoning by trial and error in a data-driven manner (e.g., constraining their model with descriptive categorical criteriological approaches).

On the contrary, ML engineers always try to balance their theoretical knowledge against the data. Indeed, significant parts of the model of the engineer rely on rules that depend on data distributions and tasks (26, 32).

Regarding ML models, when they are put into production, the only degree of freedom that remains is the choice of whether to fine-tune the model with the new samples and, if so, how much importance to give to them (54, 56).

Considering diagnostic and therapeutic issues constitutes only part of medical care. Indeed, other challenges, such as the cost of a clinician to society, necessarily influence clinical practice. For instance, on a day of hospital consultation, clinicians are expected to see a certain number of patients, which requires that they limit the time dedicated to each of them. Thus, cost and time are two intrinsically related uncontrollable factors that should be considered in the clinical modeling of practitioners.
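A minimal sketch of this remaining degree of freedom, assuming a logistic model updated by gradient steps: the hypothetical `importance` argument scales how strongly newly observed samples move the deployed parameters, and a value of zero leaves the model untouched. The weights and samples are invented for illustration.

```python
import math

def predict_proba(weights, x):
    """Logistic model: probability of the positive label for feature vector x."""
    z = sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune(weights, new_samples, importance, learning_rate=0.1):
    """One gradient pass over the new samples; importance=0 freezes the model."""
    updated = list(weights)
    for x, y in new_samples:
        error = y - predict_proba(updated, x)
        step = learning_rate * importance * error
        updated = [w + step * xi for w, xi in zip(updated, x)]
    return updated

# Hypothetical deployed weights (bias term first) and newly observed cases.
deployed = [0.0, 1.0]
new_samples = [([1.0, -2.0], 1), ([1.0, -1.5], 1)]

frozen = fine_tune(deployed, new_samples, importance=0.0)   # no adaptation
adapted = fine_tune(deployed, new_samples, importance=1.0)  # full adaptation

print(predict_proba(deployed, [1.0, -2.0]), predict_proba(adapted, [1.0, -2.0]))
```

Intermediate values of `importance` trade stability of the deployed model against responsiveness to the population it actually encounters.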

The same factors shape the engineers' work, but through another temporality: these factors influence the ML engineer and ML system during the design of the ML model, not during its use. In fact, both the engineers' salary and the number of projects they are working on may affect the way they code. For instance, engineers could limit themselves to pre-coded pipelines [such as the Python library scikit-learn (57)] because of lack of time, whereas other solutions could have worked better but would have required more time. Time and cost factors also influence the precision of the parameters of the ML model, e.g., through the hardware needed to accelerate their computation (e.g., GPU cost).

To these constraints are added external influences, such as the team within which clinicians work, and more generally the social and institutional pressures and requirements that weigh on them. For instance, these pressures and requirements are more or less burdensome depending on whether the decision makers are novices or experienced clinicians, the latter theoretically being better able to withstand conflicts and external constraints. Finally, beyond the external constraints, clinicians work voluntarily in an interdisciplinary manner. This requires compromises on the part of clinicians, who find themselves at the intersection of external viewpoints that modify their clinical judgment.

The same constraints can be applied to ML engineers, depending on the specialization they come from and the environment they are working in.

An equivalent of interdisciplinarity for ML models could be transfer learning, in which a model trained on a specific task from one domain is fine-tuned on new data and used for another task (58).
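To make the idea concrete, here is a deliberately small sketch of one variant of transfer learning, in which the part learned on the source domain is reused unchanged and only a small "head" is fitted on the target task. This feature-reuse variant is an assumption chosen for brevity; updating the transferred part as well would be closer to fine-tuning in the strict sense. All names and numbers are hypothetical.

```python
from statistics import mean, pstdev

def fit_extractor(source_values):
    """Learn a standardization on the source domain; it is reused as-is later."""
    mu, sigma = mean(source_values), pstdev(source_values)
    return lambda v: (v - mu) / sigma

def fit_head(features, labels):
    """Fit a one-parameter head: the midpoint between the two class means."""
    pos = mean(f for f, y in zip(features, labels) if y == 1)
    neg = mean(f for f, y in zip(features, labels) if y == 0)
    return (pos + neg) / 2

# Source domain (hypothetical): many unlabeled measurements.
extract = fit_extractor([100, 120, 140, 160, 180, 200])

# Target task (hypothetical): only a few labeled samples are available.
target_values, target_labels = [110, 125, 175, 190], [0, 0, 1, 1]
threshold = fit_head([extract(v) for v in target_values], target_labels)

def predict(value):
    """Frozen source extractor followed by the head fitted on the target task."""
    return int(extract(value) > threshold)

print(predict(180), predict(115))
```

The knowledge carried over from the source domain here is only a scaling of the input, but the structure (transferred component plus task-specific component) is the same as in larger models.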

From this fruitful comparison emerges the idea that the understanding of AI does not only bring methods to the clinician, but also sustains secondary benefits: due to the necessary decomposition of its operation (59), it supports educational issues for clinical practice. Thus, understanding how the ML works could inform clinical reasoning. Far from the possibility that psychiatric diagnosis no longer requires clinicians (60), the discipline can conversely rely on developments in ML reasoning to shed light on its own practice in a clever way.

In return, the understanding of such an embodied clinical model could help to understand the importance of the subjectivity of ML engineers. While this effect has already been documented in the literature [e.g., (10)], this comparison brought into light some of the factors involved in the choice of engineers when designing a ML model, continuing the deconstruction of the myth of a desubjectivized and neutral AI.

Such developments proposed in this article not only have an interdisciplinary scope, allowing for the understanding of the empirical practice of each of the disciplines (psychiatry and ML engineering), but also a transdisciplinary scope of clinical reasoning, providing new methods for dialectically grasping what cannot be understood through the prism of a single discipline—even in interaction with another field.

This three-model dialog (embodied model of the psychiatrist, embodied model of the engineer and ML model) only partially considers, however, a crucial fourth actor: the embodied model of subjectivity of the patients. Like those presented in this perspective paper, the latter collects information through a theoretical background which is then processed with its own embodied model. Just as the comparison between clinical reasoning in psychiatry and reasoning in ML has led to the emergence of new methods for studying clinical decision-making, a dialog between these fields and the patients' subjectivity would complement this transdisciplinary approach, while placing patient ethics at the center of the discussion.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

VM: writing, original draft preparation, conceptualization, and editing. CG: writing, original draft preparation, conceptualization, methodology, and editing. J-LR: supervision, reviewing, and validation. PP: reviewing, methodology, and validation. PF: reviewing, resources, and validation. J-AM-F: supervision, methodology, reviewing, resources, and validation. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We thank Dr. Guillaume Dumas for his positive feedback and encouragement on this project.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2022.926286/full#supplementary-material

1. Regier DA, Kuhl EA, Kupfer DJ. The DSM-5: classification and criteria changes. World Psychiatry. (2013) 12:92–8. doi: 10.1002/wps.20050

2. Fried EI. The 52 symptoms of major depression: lack of content overlap among seven common depression scales. J Affect Disord. (2017) 208:191–7. doi: 10.1016/j.jad.2016.10.019

3. Santor DA, Gregus M, Welch A. FOCUS ARTICLE: eight decades of measurement in depression. Measurement. (2006) 4:135–55. doi: 10.1207/s15366359mea0403_1

4. Kendler KS. Toward a philosophical structure for psychiatry. Am J Psychiatry. (2005) 162:433–40. doi: 10.1176/appi.ajp.162.3.433

5. Nelson B, McGorry PD, Fernandez AV. Integrating clinical staging and phenomenological psychopathology to add depth, nuance, and utility to clinical phenotyping: a heuristic challenge. Lancet Psychiatry. (2021) 8:162–8. doi: 10.1016/S2215-0366(20)30316-3

6. Kienle GS, Kiene H. Clinical judgement and the medical profession: clinical judgement and medical profession. J Eval Clin Pract. (2011) 17:621–627. doi: 10.1111/j.1365-2753.2010.01560.x

7. Morenz B, Sales B. Complexity of ethical decision making in psychiatry. Ethics Behav. (1997) 7:1–14. doi: 10.1207/s15327019eb0701_1

8. Servan-Schreiber D. Artificial intelligence and psychiatry. J Nervous Mental Dis. (1986) 174:191–202. doi: 10.1097/00005053-198604000-00001

9. Doraiswamy PM, Blease C, Bodner K. Artificial intelligence and the future of psychiatry: insights from a global physician survey. Artif Intell Med. (2020) 102:101753. doi: 10.1016/j.artmed.2019.101753

10. Cummings ML, Li S. Subjectivity in the creation of machine learning models. J Data Inform Quality. (2021) 13:1–19. doi: 10.1145/3418034

11. Starke G, De Clercq E, Borgwardt S, Elger BS. Computing schizophrenia: ethical challenges for machine learning in psychiatry. Psychol Med. (2020) 51:2515–21. doi: 10.1017/S0033291720001683

12. Gauld C, Micoulaud-Franchi JA, Dumas G. Comment on Starke et al. Computing schizophrenia: ethical challenges for machine learning in psychiatry: from machine learning to student learning: pedagogical challenges for psychiatry. Psychol Med. (2021) 51:2509–11. doi: 10.1017/S0033291720003906

13. Faes L, Sim DA, van Smeden M, Held U, Bossuyt PM, Bachmann LM. Artificial intelligence and statistics: just the old wine in new wineskins? Front Digital Health. (2022) 4:833912. doi: 10.3389/fdgth.2022.833912

14. Insel TR. Digital phenotyping: a global tool for psychiatry. World Psychiatry. (2018) 17:276–77. doi: 10.1002/wps.20550

15. Torous J, Bucci S, Bell IH, Kessing LV, Faurholt-Jepsen M, Whelan P, et al. The growing field of digital psychiatry: current evidence and the future of apps, social media, chatbots, and virtual reality. World Psychiatry. (2021) 20:318–35. doi: 10.1002/wps.20883

16. Bhugra D, Easter A, Mallaris Y, Gupta S. Clinical decision making in psychiatry by psychiatrists. Acta Psychiatr Scandinavica. (2011) 124:403–11. doi: 10.1111/j.1600-0447.2011.01737.x

17. Zarin DA, Earls F. Diagnostic decision making in psychiatry. Am J Psychiatry. (1993) 150:197–206. doi: 10.1176/ajp.150.2.197

18. Redelmeier DA, Schull MJ, Hux JE, Tu JV, Ferris LE. Problems for clinical judgement: 1. Eliciting an insightful history of present illness. CMAJ. (2001) 164:647–51. Available online at: https://www.cmaj.ca/content/164/5/647

19. Croskerry P. A universal model of diagnostic reasoning. Acad Med. (2009) 84:1022–8. doi: 10.1097/ACM.0b013e3181ace703

20. Groopman J, Prichard M. How doctors think. J Med Person. (2009) 7:49–50. doi: 10.1007/s12682-009-0009-y

21. Crumlish N, Kelly BD. How psychiatrists think. Adv Psychiatr Treatment. (2009) 15:72–9. doi: 10.1192/apt.bp.107.005298

22. Bhugra D. Decision-making in psychiatry: what can we learn? Acta Psychiatr Scandinavica. (2008) 118:1–3. doi: 10.1111/j.1600-0447.2008.01220.x

23. Bhugra D, Malliaris Y, Gupta S. How shrinks think: decision making in psychiatry. Austr Psychiatry. (2010) 18:391–3. doi: 10.3109/10398562.2010.500474

24. Bhugra D, Tasman A, Pathare S, Priebe S, Smith S, Torous J, et al. The WPA-Lancet Psychiatry Commission on the future of psychiatry. Lancet Psychiatry. (2017) 4:775–818. doi: 10.1016/S2215-0366(17)30333-4

25. Galanter CA, Patel VL. Medical decision making: a selective review for child psychiatrists and psychologists. J Child Psycholo Psychiatry. (2005) 46:675–89. doi: 10.1111/j.1469-7610.2005.01452.x

26. Jung A. Machine Learning: The Basics. Singapore: Springer (2022). Available online at: https://books.google.fr/books?hl=fr&lr=&id=1IBaEAAAQBAJ&oi=fnd&pg=PR8&dq=Jung+A.+Machine+Learning:+the+Basics.+(2022).&ots=XSbLxiuEU-&sig=FmFsJFT-nykqNinWsOtmCZ_FXk0&redir_esc=y#v=onepage&q=Jung%20A.%20Machine%20Learning%3A%20the%20Basics.%20(2022).&f=false

28. Rebala G, Ravi A, Churiwala S. Machine learning definition and basics. In: An Introduction to Machine Learning. Cham: Springer International Publishing (2019). p. 1–17.

29. Sarkar D, Bali R, Sharma T. Machine learning basics. In: Practical Machine Learning with Python. Berkeley, CA: Apress (2018). p. 3–65.

30. Chatzilygeroudis K, Hatzilygeroudis I, Perikos I. Machine learning basics. In: Eslambolchilar P, Komninos A, Dunlop M, editors. Intelligent Computing for Interactive System Design, 1st Edn. New York, NY: ACM (2021). p. 143–93.

31. Ayyadevara VK. Basics of machine learning. In: Pro Machine Learning Algorithms. Berkeley, CA: Apress (2018). p. 1–15.

32. Gerard C. The basics of machine learning. In: Practical Machine Learning in JavaScript. Berkeley, CA: Apress (2021). p. 1–24.

34. Mohr DC, Zhang M, Schueller SM. Personal sensing: understanding mental health using ubiquitous sensors and machine learning. Annu Rev Clin Psychol. (2017) 13:23–47. doi: 10.1146/annurev-clinpsy-032816-044949

35. Deissenboeck F, Pizka M. Concise and consistent naming. Software Quality J. (2006) 14:261–82. doi: 10.1007/s11219-006-9219-1

36. Xu W, Xu D, Deng L. Measurement of source code readability using word concreteness and memory retention of variable names. In: 2017 IEEE 41st Annual Computer Software and Applications Conference (COMPSAC). vol. 1. Turin: IEEE (2017). p. 33–8.

37. Waszczuk MA, Zimmerman M, Ruggero C, Li K, MacNamara A, Weinberg A, et al. What do clinicians treat: diagnoses or symptoms? The incremental validity of a symptom-based, dimensional characterization of emotional disorders in predicting medication prescription patterns. Compr Psychiatry. (2017) 79:80–8. doi: 10.1016/j.comppsych.2017.04.004

38. Ferri FJ, Pudil P, Hatef M, Kittler J. Comparative study of techniques for large-scale feature selection. In: Machine Intelligence and Pattern Recognition. vol. 16. Elsevier (1994). p. 403–13. doi: 10.1016/B978-0-444-81892-8.50040-7

39. Cohen AS, Schwartz E, Le T, Cowan T, Cox C, Tucker R, et al. Validating digital phenotyping technologies for clinical use: the critical importance of “resolution”. World Psychiatry. (2020) 19:114–5. doi: 10.1002/wps.20703

40. Edinger JD, Bonnet MH, Bootzin RR, Doghramji K, Dorsey CM, Espie CA, et al. Derivation of research diagnostic criteria for insomnia: report of an American Academy of Sleep Medicine work group. Sleep. (2004) 27:1567–96. doi: 10.1093/sleep/27.8.1567

41. Martin VP, Rouas JL, Micoulaud-Franchi JA, Philip P, Krajewski J. How to design a relevant corpus for sleepiness detection through voice? Front Digital Health. (2021) 3:124. doi: 10.3389/fdgth.2021.686068

42. Borsboom D. A network theory of mental disorders. World Psychiatry. (2017) 16:5–13. doi: 10.1002/wps.20375

43. Borsboom D, Cramer AOJ. Network analysis: an integrative approach to the structure of psychopathology. Ann Rev Clin Psychol. (2013) 9:91–121. doi: 10.1146/annurev-clinpsy-050212-185608

44. Kim NS, Ahn WK. The influence of naive causal theories on lay concepts of mental illness. Am J Psychol. (2002) 115:33–65. doi: 10.2307/1423673

45. Rudin C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell. (2019) 1:206–15. doi: 10.1038/s42256-019-0048-x

46. Schölkopf B, Locatello F, Bauer S, Ke NR, Kalchbrenner N, Goyal A, et al. Towards causal representation learning. arXiv:2102.11107 [cs] (2021). doi: 10.48550/arXiv.2102.11107

47. Wakefield JC. The concept of mental disorder: on the boundary between biological facts and social values. Am Psychol. (1992) 47:373. doi: 10.1037/0003-066X.47.3.373

48. Motamedi M, Sakharnykh N, Kaldewey T. A Data-centric approach for training deep neural networks with less data. arXiv:2110.03613. (2021). doi: 10.48550/arXiv.2110.03613

49. Luo W, Phung D, Tran T, Gupta S, Rana S, Karmakar C, et al. Guidelines for developing and reporting machine learning predictive models in biomedical research: a multidisciplinary view. J Med Internet Res. (2016) 18:e5870. doi: 10.2196/jmir.5870

50. Ranzato M, Beygelzimer A, Nguyen K, Liang P, Vaughan JW, Dauphin Y. Advances in Neural Information Processing Systems, Vol.34. Neural Information Processing Systems Foundation, Inc. (2021).

51. Knuth DE. Computer programming as an art. In: ACM Turing Award Lectures, Vol. 17. San Diego, CA: ACM (2007). p. 667–73. doi: 10.1145/361604.361612

52. Berry D. The Philosophy of Software: Code and Mediation in the Digital Age. Palgrave Macmillan (2016).

53. Kording K, Blohm G, Schrater P, Kay K. Appreciating diversity of goals in computational neuroscience. Open Sci Framework. (2018) 1–8. doi: 10.31219/osf.io/3vy69

54. Soh J, Singh P. Machine learning operations. In: Data Science Solutions on Azure. Berkeley, CA: Apress (2020). p. 259–79.

56. Breck E, Cai S, Nielsen E, Salib M, Sculley D. The ML test score: a rubric for ML production readiness and technical debt reduction. In: 2017 IEEE International Conference on Big Data (Big Data). Boston, MA: IEEE (2017). p. 1123–32.

57. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: machine learning in python. J Mach Learn Res. (2011) 12:2825–30. doi: 10.5555/1953048.2078195

58. Torrey L, Shavlik J. Transfer Learning [chapter]. IGI Global (2010). ISBN: 9781605667669. Available online at: https://www.igi-global.com/chapter/transfer-learning/36988

59. Bechtel W, Richardson RC. Discovering Complexity: Decomposition and Localization as Strategies in Scientific Research. Cambridge: MIT Press (2010).

Keywords: clinical decision, artificial intelligence, machine learning, clinical practice, cross-talk

Citation: Martin VP, Rouas J-L, Philip P, Fourneret P, Micoulaud-Franchi J-A and Gauld C (2022) How Does Comparison With Artificial Intelligence Shed Light on the Way Clinicians Reason? A Cross-Talk Perspective. Front. Psychiatry 13:926286. doi: 10.3389/fpsyt.2022.926286

Received: 22 April 2022; Accepted: 13 May 2022;

Published: 09 June 2022.

Edited by:

Maj Vinberg, University Hospital of Copenhagen, Denmark

Reviewed by:

Morten Tønning, Region Hovedstad Psychiatry, Denmark

Copyright © 2022 Martin, Rouas, Philip, Fourneret, Micoulaud-Franchi and Gauld. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christophe Gauld, Z2F1bGRjaHJpc3RvcGhlQGdtYWlsLmNvbQ==
