
OPINION article

Front. Psychiatry, 05 August 2022

Sec. Digital Mental Health

Volume 13 - 2022 | https://doi.org/10.3389/fpsyt.2022.900615

This article is part of the Research Topic Digital Mental Health Research: Understanding Participant Engagement and Need for User-centered Assessment and Interventional Digital Tools.

Large unmet needs in mental health, combined with the stress caused by pandemic mitigation measures, have accelerated the use of digital mental health apps and software-based solutions (1). Global investor funding for virtual behavioral services and mental health apps in 2021 exceeded $5.5 billion, a 139% jump from 2020, according to CB Insights (2). While thousands of apps claim to improve various aspects of mental wellbeing, many of them have never gone through clinical trials or regulatory scrutiny. The term “digital therapeutic” is used in the literature to distinguish high-quality, evidence-based software programs from wellness apps (3). Regulators use the terms “software as a medical device” (SaMD) and “software in a medical device” (SiMD) to refer to software that functions as a medical device and is promoted to treat a specific condition. When a SaMD or SiMD is deployed on a phone, it is referred to as a mobile medical app (MMA) (4, 5). The International Medical Device Regulators Forum (IMDRF), a voluntary group of medical device regulators from around the world, has developed detailed guidance on definitions, a framework for risk categorization, quality management, and the clinical evaluation of such devices (6–8). Non-traditional approaches to evaluating the efficacy of such tools outside of randomized controlled trials (RCTs) have also been discussed elsewhere (9).

To date, only a few clinically tested software devices have been authorized by the U.S. Food and Drug Administration for treating specific mental health disorders (excluding devices marketed under pandemic-related emergency use authorization). These include reSET for substance use disorder (10), reSET-O for opioid use disorder (11), Somryst for chronic insomnia (12, 13), and EndeavorRx for pediatric attention deficit hyperactivity disorder (ADHD) (14, 15). SaMDs and MMAs for treating mild cognitive impairment, Alzheimer's disease, schizophrenia, autism, depression, social anxiety disorder, phobias, and PTSD are in clinical trials (1, 3, 5, 16, 17) and may also come to market soon. The evidence on efficacy for non-regulated wellness apps (e.g., for mindfulness or stress management) is beyond the scope of this article, and readers are referred elsewhere for information on these apps (16).

While digital therapeutics and apps undoubtedly hold promise, relatively little attention has been paid to attrition. Even effective apps will have limited impact if they fail to engage users and consequently suffer high attrition (18, 19). Attrition, the loss of randomized subjects from a study sample, is very common in clinical trials and has several causes, such as refusal to participate after randomization, early dropout from the study, and loss of a subject's study data. Attrition can substantially bias estimates of efficacy and reduce generalizability (20). Regulatory trials of psychopharmacological agents have traditionally used the last observation carried forward (LOCF) method to handle attrition, but LOCF has increasingly been replaced by mixed-effects models and by pattern-mixture and propensity adjustments (20). Compliance in trials of psychopharmacological agents is traditionally measured via pill counts. In virtual platform trials of digital therapeutics, however, compliance cannot simply be measured by the number of times a subject logs on to an app; it is also important to measure and report how engaged users were with the app (21). Currently, there is no standard definition of meaningful engagement and no standard way to compare engagement across different digital therapeutic devices (21). There is also no consensus on how to handle users who remain in the study but do not engage.
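To make the LOCF approach concrete, here is a minimal Python sketch, assuming each participant's outcome is stored as a list of visit scores with `None` marking visits missed after dropout (the function name `locf` and the scores are hypothetical). It shows why LOCF can bias efficacy estimates: a participant who drops out is assumed to stay at their last observed score for the rest of the trial.

```python
from typing import List, Optional


def locf(scores: List[Optional[float]]) -> List[Optional[float]]:
    """Last observation carried forward: replace each missing visit (None)
    with the most recent observed score. Leading missing values stay None
    because there is nothing to carry forward yet."""
    filled: List[Optional[float]] = []
    last: Optional[float] = None
    for score in scores:
        if score is not None:
            last = score  # remember the latest observed value
        filled.append(last)
    return filled


# Hypothetical weekly depression scores for a participant who drops out after week 2.
weekly = [24.0, 20.0, 17.0, None, None, None]
print(locf(weekly))  # [24.0, 20.0, 17.0, 17.0, 17.0, 17.0]
```

Mixed-effects models avoid this imputation by modeling all observed visits directly, usually under a missing-at-random assumption; fitting one requires a statistics library and is not shown here.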

As patients typically use apps on their own time, they must be intrinsically motivated to do so and must perceive the benefits of the app as meaningful (18). Such intrinsic motivation may be low in psychiatric patients with depression, anhedonia, or cognitive difficulties. For example, in one study of internet-based cognitive behavioral therapy for depression, the highest engagers comprised just 10.6% of the sample (22). This is further highlighted by a 2020 meta-analysis of 18 randomized trials of mobile apps for treating depression that were not FDA cleared (trial duration ranging from 10 days to 6 months), in which the pooled dropout rate was 26.2% and rose to 47.8% when adjusted for publication bias (19). The authors concluded that this raises concern over whether efficacy was overstated in these studies. Real-world attrition rates for non-FDA-cleared mental wellness apps are not readily available for direct comparison. However, one study of 93 non-FDA-approved Android apps targeting mental wellbeing (median installs, 100,000) found that the median 15-day and 30-day retention rates were very low, at 3.9% and 3.3%, respectively (23). In that study, the daily active user rate (median open rate) was only 4.0% (23). These data highlight that the number of app installs correlates poorly with sustained daily use.
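Retention and open rates of this kind can be computed from app usage logs. The sketch below assumes one common definition (day-N retention is the percentage of installers who open the app on day N after installing, with day 0 as the install day); the exact definitions used in the cited study may differ, and the function names and example data are hypothetical.

```python
from typing import Dict, Set


def day_n_retention(opens: Dict[str, Set[int]], n: int) -> float:
    """Percentage of installers who opened the app on day n (day 0 = install day)."""
    if not opens:
        return 0.0
    retained = sum(1 for days in opens.values() if n in days)
    return 100.0 * retained / len(opens)


def mean_daily_open_rate(opens: Dict[str, Set[int]], window: int) -> float:
    """Average, over days 1..window, of the percentage of installers who opened the app that day."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return sum(day_n_retention(opens, d) for d in range(1, window + 1)) / window


# Hypothetical usage log: user id -> days (since install) on which the app was opened.
opens = {
    "u1": {0, 1, 15, 30},
    "u2": {0, 1},
    "u3": {0},
    "u4": {0, 15},
}
print(day_n_retention(opens, 15), day_n_retention(opens, 30))  # 50.0 25.0
print(mean_daily_open_rate(opens, 2))  # 25.0
```

Because every installer counts in the denominator, a large install count does not raise these rates; only repeated use does.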

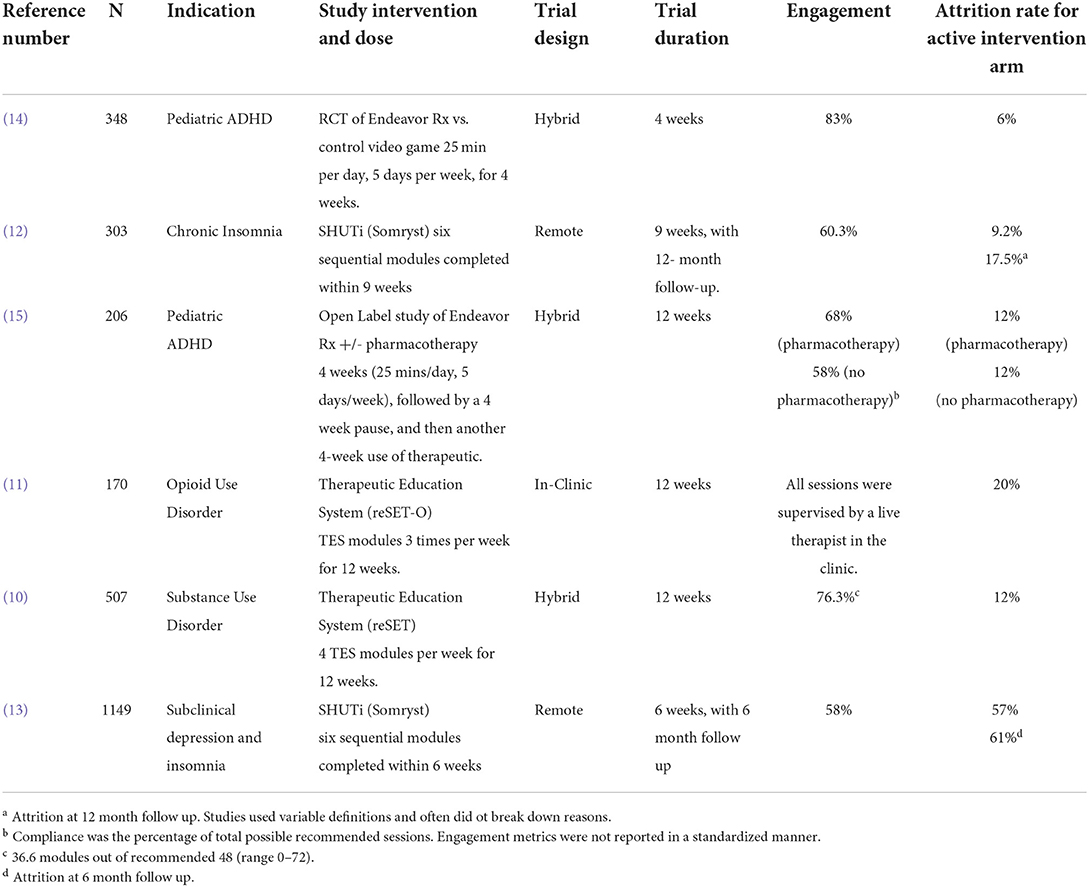

Attrition and engagement rates (as defined by each study's sponsor) in the pivotal studies of four FDA-authorized neuropsychiatric digital therapeutics are shown in Table 1. The studies reported significant benefits for the digital therapeutic vs. a control condition (Table 1) (10–15). Sample sizes were adequate, ranging from 170 to 1,149 participants, and active intervention durations were relatively short, ranging from 4 to 12 weeks (Table 1). Trial design, nature of therapy, incentives, and diagnosis influenced attrition. The Somryst trials additionally reported 6- and 12-month follow-up data. Attrition was lowest and compliance highest in the pivotal study (14) of EndeavorRx for pediatric ADHD (Table 1); this was a short, 4-week trial of an interactive videogame in which compliance was monitored electronically and parents supervised closely. However, in the open-label 12-week study of EndeavorRx (15), the average number of missions engaged (with the videogame therapeutic) dropped by 34% at week 4 and by 50% at week 12 (Table 1). In the Somryst study for chronic insomnia (12), only 60% of subjects completed all 6 core CBT modules, and the number of logins per subject ranged from 0 to 142 (median, 25). Although efficacy was sustained at 12-month follow-up, the decrease in insomnia score was greater in subjects who completed all 6 modules than in those who did not. In the Somryst study for subclinical depression with insomnia (13), the attrition rate was 58% at 6 weeks, and on average only 3.5 of the 6 modules were completed. Patients completing fewer than 4 modules had no significant overall benefit vs. the control condition and did not differ from the control condition at 6 months.

In the reSET study for substance use disorder (10), the dropout rate was low (12%), likely because subjects were seen twice a week in the clinic, were supervised by therapists, and were eligible for compliance prizes ranging from “thank you” notes to $100 in cash, earning on average $277 in prizes over 12 weeks. In the long-term follow-up (10), once this contingency incentive ended, the superiority of the digital therapeutic over the control condition also ended.
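The engagement figures above are relative declines, and several module-completion findings compare participants by how much of the program they used. A minimal Python sketch of both calculations follows; the function names and all numbers are hypothetical. Splitting participants by modules completed is an observational comparison within the treated arm: completers self-select, so a larger benefit among completers does not by itself show that completing more modules causes more improvement.

```python
from statistics import mean
from typing import List, Tuple


def relative_drop(baseline: float, later: float) -> float:
    """Percentage decline from a baseline value (positive means engagement fell)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - later) / baseline


def mean_change_by_modules(records: List[Tuple[int, float]], threshold: int) -> Tuple[float, float]:
    """Split (modules_completed, symptom_change) pairs at `threshold` and return
    (mean change for >= threshold, mean change for < threshold).
    Raises statistics.StatisticsError if either group is empty."""
    high = [change for modules, change in records if modules >= threshold]
    low = [change for modules, change in records if modules < threshold]
    return mean(high), mean(low)


# Hypothetical mean missions per week: 20 at the start, 13.2 at week 4, 10 at week 12.
print(round(relative_drop(20, 13.2), 1), relative_drop(20, 10))  # 34.0 50.0

# Hypothetical (modules completed, change in symptom score) pairs; negative = improvement.
records = [(6, -8.0), (6, -6.0), (2, -2.0), (3, -4.0)]
print(mean_change_by_modules(records, threshold=4))  # (-7.0, -3.0)
```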

Table 1. Engagement and attrition rates in the pivotal studies of FDA-authorized digital therapeutics for mental health.

Mental health conditions, like major depressive disorder, ADHD, and PTSD, require sustained treatment. Because the field of digital therapeutics is still in its early stages, few long-term efficacy data are currently available. If even well-designed, gamified digital therapeutics show a 50% drop in engagement within 3 months, the outlook for long-term efficacy is grim. While dropout rates in clinical trials can be kept low through frequent clinician contact, gamification, feedback, and cash incentives, this is not practical in the real world, so real-world attrition rates will be far higher. Finally, if the cost of increasing engagement and compliance equals that of live psychiatric care, digital therapies will become less attractive as a scalable, low-cost solution.
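The cost argument can be made explicit with simple arithmetic. Assuming spending is incurred for every enrolled patient while benefit accrues mainly to those who stay engaged, the effective cost per engaged patient is per-enrollee spending divided by the fraction who remain engaged. The function name and all figures below are hypothetical and illustrate only the direction of the effect.

```python
def cost_per_engaged_patient(software_cost: float, support_cost: float,
                             engaged_fraction: float) -> float:
    """Per-enrollee spending (software plus engagement support) spread over engaged patients only."""
    if not 0 < engaged_fraction <= 1:
        raise ValueError("engaged_fraction must be in (0, 1]")
    return (software_cost + support_cost) / engaged_fraction


# Hypothetical: $200 for the software plus $100 of engagement support per enrollee.
print(cost_per_engaged_patient(200, 100, 0.5))   # 600.0
print(cost_per_engaged_patient(200, 100, 0.25))  # 1200.0
```

Halving the engaged fraction doubles the effective cost, and every dollar spent on engagement support is divided by the same fraction, which is how these costs could approach those of live care.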

Our scrutiny of published data also reveals several scientific gaps. First is the lack of standardized definitions of attrition and engagement in the field of digital therapeutics. Second is the lack of standardized reporting requirements by journals. A single digital therapeutic session can generate a dozen or more different metrics of how a user interacts with the app, yet even widely used clinical trial reporting checklists, such as CONSORT, do not yet require the reporting of all such engagement metrics in digital trials. This makes it hard to extract such data from published reports and to compare metrics across trials and products. Third is the lack of a standardized definition of compliance. Fourth is the lack of agreement on which statistical methods (e.g., mixed models or last observation carried forward) should be used to account for attrition and engagement biases in digital trials.
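One way to narrow the reporting gap would be for each trial arm to report a small, fixed set of counts alongside a prespecified engagement definition. The Python sketch below is hypothetical (the class and field names do not come from CONSORT or any existing standard) and shows how attrition and engagement percentages would then be computed identically across trials.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EngagementReport:
    """Hypothetical per-arm engagement summary for a digital therapeutic trial."""
    randomized: int                # participants randomized to this arm
    with_primary_outcome: int      # participants who provided primary outcome data
    met_engagement_threshold: int  # participants meeting the prespecified engagement definition
    engagement_definition: str     # that definition, stated in words

    def __post_init__(self) -> None:
        if self.randomized <= 0:
            raise ValueError("randomized must be positive")
        for count in (self.with_primary_outcome, self.met_engagement_threshold):
            if not 0 <= count <= self.randomized:
                raise ValueError("counts must lie between 0 and randomized")

    @property
    def attrition_pct(self) -> float:
        """Percentage of randomized participants lost before the primary outcome."""
        return 100.0 * (self.randomized - self.with_primary_outcome) / self.randomized

    @property
    def engaged_pct(self) -> float:
        """Percentage of randomized participants who met the engagement definition."""
        return 100.0 * self.met_engagement_threshold / self.randomized


arm = EngagementReport(200, 150, 90, "completed at least 4 of 6 modules")
print(arm.attrition_pct, arm.engaged_pct)  # 25.0 45.0
```

Using the randomized count as the shared denominator keeps both percentages comparable and makes users who stay in the study without engaging visible rather than hidden.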

Fortunately, several constructs are emerging as promising features to increase engagement, related both to external motivational factors and to UX design (24). Factors such as ease of use, gamification, the ability to personalize the app, in-app symptom monitoring, numerical feedback, the ability to chart progress, socialization within the app, and integration with clinical services have been reported to increase engagement (17, 18). A machine learning analysis of 54,604 adult patients with depression and anxiety identified 5 distinct patterns of engagement with digital cognitive behavioral therapy over 14 weeks: low engagers (36.5% of the sample), late engagers (21.4%), high engagers with rapid disengagement (25.5%), high engagers with moderate decrease (6.0%), and high persistent engagers (10.6%). Depression improvement rates were lowest among the low engagers (22). This study suggested that machine learning algorithms may be useful for tailoring interventions, including the degree of human support, to each of these five groups. Kaiser Permanente found that integrating digital mental health solutions, provided via clinician referral, into its health care delivery system successfully enhanced engagement (25). Fears about the privacy and security of mental health data may contribute to disengagement and attrition for some participants and should be addressed upfront. While we do not have all the solutions, encouraging the availability of raw data from clinical trials through trial registries, and analyzing long-term real-world data on patient-reported outcomes, user experience (engagement and compliance), and product reliability (18), will be important to enhance the utility of digital therapeutics.
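Grouping participants by the shape of their engagement over time can be sketched with a simple clustering step. The cited study used its own machine learning model, which is not reproduced here; the Python sketch below swaps in plain k-means (Lloyd's algorithm) on hypothetical weekly engagement minutes, with the number of groups chosen by the analyst and the first k trajectories used as starting centroids for reproducibility.

```python
from typing import List, Sequence


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length trajectories."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _mean_vector(points: List[Sequence[float]]) -> List[float]:
    """Element-wise mean of a non-empty list of trajectories."""
    return [sum(col) / len(points) for col in zip(*points)]


def kmeans(data: List[Sequence[float]], k: int, max_iters: int = 100) -> List[int]:
    """Plain k-means: returns a cluster index for each trajectory in `data`."""
    if not 0 < k <= len(data):
        raise ValueError("k must be between 1 and len(data)")
    centroids = [list(p) for p in data[:k]]  # deterministic start: first k rows
    labels: List[int] = []
    for _ in range(max_iters):
        # Assignment step: nearest centroid for every trajectory.
        new_labels = [min(range(k), key=lambda c: _sq_dist(p, centroids[c])) for p in data]
        if new_labels == labels:
            break  # assignments unchanged, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(data, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = _mean_vector(members)
    return labels


# Hypothetical weekly engagement minutes over 6 weeks for six participants.
weekly_minutes = [
    [0, 0, 1, 0, 0, 0],        # low engager
    [10, 10, 10, 10, 10, 10],  # high persistent engager
    [0, 0, 2, 6, 9, 10],       # late engager
    [1, 0, 0, 0, 1, 0],        # low engager
    [9, 10, 11, 10, 9, 10],    # high persistent engager
    [0, 1, 3, 7, 10, 9],       # late engager
]
print(kmeans(weekly_minutes, k=3))  # [0, 1, 2, 0, 1, 2]
```

The labels are arbitrary indices; an analyst would inspect each centroid (near-zero use, rising use, sustained use) to name the pattern and relate it to outcomes. Unlike a probabilistic model, k-means gives hard assignments and favors roughly spherical clusters.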

Digital therapeutics for mental health are here to stay. As the pivotal studies demonstrate, they benefit a substantial number of patients. However, the gap between intention and real-world efficacy for digital therapeutics remains large. There is an urgent need to recognize this gap and for stakeholders (regulators, technology developers, clinicians, and patients) to come together to close it and ensure that this form of treatment is useful to clinical populations.

AN and PMD drafted the article. SB and MH provided critical edits. All authors helped with data interpretation of cited studies, contributed to the article, and approved the submitted version.

The study was funded by a grant from the National Institute on Aging to PMD.

MH has received grants from NIMH, NIA, Brain Initiative and Stanley Foundation for other projects. For other projects, PMD has received grants from NIA, DARPA, DOD, ONR, Salix, Avanir, Avid, Cure Alzheimer's Fund, Karen L. Wrenn Trust, Steve Aoki Foundation, and advisory fees from Apollo, Brain Forum, Clearview, Lumos, Neuroglee, Otsuka, Verily, Vitakey, Sermo, Lilly, Nutricia, and Transposon. PMD is a co-inventor on patents for diagnosis or treatment of neuropsychiatric conditions and owns shares in biotechnology companies. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Doraiswamy PM, London E, Varnum P, Harvey B, Saxena S, Tottman S, et al. Empowering 8 billion minds: Enabling better mental health for all via the ethical adoption of technologies. NAM Perspect. (2019). doi: 10.31478/201910b. Available online at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8406599/ (accessed May 9, 2022).

2. CB Insights. State of Mental Health Tech 2021 report. CB Insights Research. (2022). Available online at: https://www.cbinsights.com/research/report/mental-health-tech-trends-2021/ (accessed May 9, 2022).

4. U.S. Food and Drug Administration. Policy for device software functions and mobile medical applications. (2019). Available online at: https://www.fda.gov/media/80958/download (accessed May 9, 2022).

5. Shuren J, Doraiswamy PM. Digital Therapeutics for MCI and Alzheimer's disease: A Regulatory Perspective – Highlights From The Clinical Trials on Alzheimer's Disease conference (CTAD). J Prev Alzheimers Dis. (2022) 9:236. doi: 10.14283/jpad.2022.28

6. International Medical Device Regulators Forum. Software as a medical device (SaMD): key definitions. (2013). Available online at: https://www.imdrf.org/sites/default/files/docs/imdrf/final/technical/imdrf-tech-131209-samd-key-definitions-140901.pdf (accessed May 9, 2022).

7. International Medical Device Regulators Forum. “Software as a medical device”: possible framework for risk categorization and corresponding considerations. (2014). Available online at: https://www.imdrf.org/sites/default/files/docs/imdrf/final/technical/imdrf-tech-140918-samd-framework-risk-categorization-141013.pdf

8. U.S. Food and Drug Administration. Software as a medical device (SaMD) clinical evaluation. Guidance for Industry and Food and Drug Administration staff. (2017). Available online at: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/software-medical-device-samd-clinical-evaluation/ (accessed May 9, 2022).

9. Guo C, Ashrafian H, Ghafur S, Fontana G, Gardner C, Prime M. Challenges for the evaluation of digital health solutions – a call for innovative evidence generation approaches. NPJ Digit Med. (2020) 3:110. doi: 10.1038/s41746-020-00314-2

10. Campbell ANC, Nunes EV, Matthews AG, Stitzer M, Miele GM, Polsky D, et al. Internet-delivered treatment for substance abuse: a multisite randomized controlled trial. Am J Psychiatry. (2014) 171:683–90. doi: 10.1176/appi.ajp.2014.13081055

11. Christensen DR, Landes RD, Jackson L, Marsch LA, Mancino MJ, Chopra MP, et al. Adding an internet-delivered treatment to an efficacious treatment package for opioid dependence. J Consult Clin Psychol. (2014) 82:964–72. doi: 10.1037/a0037496

12. Ritterband LM, Thorndike FP, Ingersoll KS, Lord HR, Gonder-Frederick L, Frederick C, et al. Effect of a web-based cognitive behavior therapy for insomnia intervention with 1-year follow-up: a randomized clinical trial. JAMA Psychiatry. (2016) 74:68–75. doi: 10.1001/jamapsychiatry.2016.3249

13. Christensen H, Batterham PJ, Gosling JA, Ritterband LM, Griffiths KM, Thorndike FP, et al. Effectiveness of an online insomnia program (SHUTi) for prevention of depressive episodes (the GoodNight study): a randomised controlled trial. Lancet Psychiatry. (2016) 3:333–41. doi: 10.1016/S2215-0366(15)00536-2

14. Kollins SH, DeLoss DJ, Cañadas E, Lutz J, Findling RL, Keefe RSE, et al. A novel digital intervention for actively reducing severity of paediatric ADHD (STARS-ADHD): a randomised controlled trial. Lancet Digit Health. (2020) 2:e168–78. doi: 10.1016/S2589-7500(20)30017-0

15. Kollins SH, Childress A, Heusser AC, Lutz J. Effectiveness of a digital therapeutic as adjunct to treatment with medication in pediatric ADHD. NPJ Digit Med. (2021) 4:58. doi: 10.1038/s41746-021-00429-0

16. Lau N, O'Daffer A, Colt S, Yi-Frazier JP, Palermo TM, McCauley E, et al. Android and iPhone mobile apps for psychosocial wellness and stress management: systematic search in app stores and literature review. JMIR Mhealth Uhealth. (2020) 8:e17798. doi: 10.2196/17798

17. Bodner KA, Goldberg TE, Devanand DP, Doraiswamy PM. Advancing computerized cognitive training for MCI and Alzheimer's disease in a pandemic and post-pandemic world. Front Psychiatry. (2020) 11:557571. doi: 10.3389/fpsyt.2020.557571

18. Boardman S. Creating wellness apps with high patient engagement to close the intention-action gap. Int Psychogeriatr. (2021) 33:551–2. doi: 10.1017/S1041610220003385

19. Torous J, Lipschitz J, Ng M, Firth J. Dropout rates in clinical trials of smartphone apps for depressive symptoms: a systematic review and meta-analysis. J Affect Disord. (2020) 263:413–9. doi: 10.1016/j.jad.2019.11.167

20. Leon AC, Mallinckrodt CH, Chuang-Stein C, Archibald DG, Archer GE, Chartier K. Attrition in randomized controlled clinical trials: methodological issues in psychopharmacology. Biol Psychiatry. (2006) 59:1001–5. doi: 10.1016/j.biopsych.2005.10.020

21. Torous J, Michalak EE, O'Brien HL. Digital health and engagement - looking behind the measures and methods. JAMA Netw Open. (2020) 3:e2010918. doi: 10.1001/jamanetworkopen.2020.10918

22. Chien I, Enrique A, Palacios J, Regan T, Keegan D, Carter D, et al. A machine learning approach to understanding patterns of engagement with internet-delivered mental health interventions. JAMA Netw Open. (2020) 3:e2010791. doi: 10.1001/jamanetworkopen.2020.10791

23. Baumel A, Muench F, Edan S, Kane JM. Objective user engagement with mental health apps: systematic search and panel-based usage analysis. J Med Internet Res. (2019) 21:e14567. doi: 10.2196/14567

24. Szinay D, Jones A, Chadborn T, Brown J, Naughton F. Influences on the uptake of and engagement with health and well-being smartphone apps: systematic review. J Med Internet Res. (2020) 22:e17572. doi: 10.2196/17572

Keywords: ADHD, depression, anxiety, Alzheimer's disease, PTSD, autism, schizophrenia, addiction

Citation: Nwosu A, Boardman S, Husain MM and Doraiswamy PM (2022) Digital therapeutics for mental health: Is attrition the Achilles heel? Front. Psychiatry 13:900615. doi: 10.3389/fpsyt.2022.900615

Received: 21 March 2022; Accepted: 29 June 2022;

Published: 05 August 2022.

Edited by:

Benjamin Nelson, Meru Health Inc., United States

Reviewed by:

Alicia Salamanca-Sanabria, ETH Zürich, Singapore

Copyright © 2022 Nwosu, Boardman, Husain and Doraiswamy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: P. Murali Doraiswamy, murali.doraiswamy@duke.edu

