- Sapien Labs, Arlington, VA, United States

Assessment of mental illness typically relies on a disorder classification system that is considered to be at odds with the vast disorder comorbidity and symptom heterogeneity that exists within and across patients. Patients with the same disorder diagnosis exhibit diverse symptom profiles and comorbidities creating numerous clinical and research challenges. Here we provide a quantitative analysis of the symptom heterogeneity and disorder comorbidity across a sample of 107,349 adult individuals (aged 18–85 years) from 8 English-speaking countries. Data were acquired using the Mental Health Quotient, an anonymous, online, self-report tool that comprehensively evaluates symptom profiles across 10 common mental health disorders. Dissimilarity of symptom profiles within and between disorders was then computed. We found a continuum of symptom prevalence rather than a clear separation of normal and disordered. While 58.7% of those with 5 or more clinically significant symptoms did not map to the diagnostic criteria of any of the 10 DSM-5 disorders studied, those with symptom profiles that mapped to at least one disorder had, on average, 20 clinically significant symptoms. Within this group, the heterogeneity of symptom profiles was almost as high within a disorder label as between 2 disorder labels and not separable from randomly selected groups of individuals with at least one of any of the 10 disorders. Overall, these results quantify the scale of misalignment between clinical symptom profiles and DSM-5 disorder labels and demonstrate that DSM-5 disorder criteria do not separate individuals from random when the complete mental health symptom profile of an individual is considered. Greater emphasis on empirical, disorder agnostic approaches to symptom profiling would help overcome existing challenges with heterogeneity and comorbidity, aiding clinical and research outcomes.

Introduction

The mental health of our society is a problem of growing concern. In 2017, 792 million people lived with a mental health disorder globally (1), while depression is the leading cause of disability as measured by Years Lived with Disability (YLDs) and a major contributor to the global burden of disease (2, 3). As people grapple with the consequences of the Covid-19 pandemic, the number reporting challenges with their mental health has further increased (4–7), emphasizing the importance of improving our understanding of mental illness to enable better outcomes.

In the absence of an understanding of underlying etiologies and biology of mental health challenges, the classification systems of DSM-5 (8) and ICD-11 (9) evolved to define mental health disorders by symptom criteria whereby specific groupings of symptoms are each assigned “disorder” labels. This approach presupposes that (i) the specific groupings of symptoms are good at separating individuals based on their symptom profile, such that individuals with a particular diagnosis have similar symptom presentations and (ii) that these symptom-based diagnostic groups each share a common underlying etiology. However, a large literature now highlights major misalignments between these disorder classifications and the symptomatic experience of patients. Firstly, the criteria-based approach to diagnosis, where one must have a subset of symptoms out of a larger group, means there are many ways to be diagnosed with the same disorder. For example, by some estimates there are 636,120 different possible symptom combinations that can lead to a diagnosis of PTSD (10) and 227 different possible ways to be diagnosed with depression (11), indicating considerable heterogeneity in symptom profiles within disorders. The observed heterogeneity in symptom profiles within disorders (12–19) has also led to various new definitions of disorder subtypes (20, 21). Secondly, there are many possible ways that patients can be comorbid across DSM-5 disorders (22), with studies showing that individuals commonly meet the criteria for multiple disorders (23–31) and that evolution of disorders across a lifetime is a pervasive phenomenon (28, 32–34). This misalignment is further exacerbated by an array of mental health assessment tools that are heterogeneous and overlapping, creating a system of diagnosis and evaluation that is poorly standardized and introducing further ambiguity (35, 36).

As a result, there has been considerable discussion over the validity of the DSM-5 classification approach (37–46). In addition, numerous studies have reported on the consequences of this misalignment between disorder classifications and patient symptom profiles. First, by focusing on a specific subset of symptoms for the definition of a disorder, this classification system precludes an understanding of the true range and diversity of symptom profiles present across clinical populations as the objective is typically to narrow down to a single disorder diagnosis, rather than embrace individual differences (47) or symptom complexity (48–50). However, the nuances of an individual's symptom profile hold important information that can guide clinical decision-making (51–58). Second, it results in inaccurate, not otherwise specified (NOS) or mixed diagnoses (59–63) where those with symptom profiles that are a poor fit for the clinical criteria, may have to embark on a long struggle to find effective treatment (64). Third, from a research perspective, studies aiming to develop new therapies and medications for mental health disorders typically select participants based on a diagnosis, whereas this group may be substantially heterogeneous in terms of their symptom profiles, and therefore outcomes (19, 21). To try to overcome some of these challenges, alternative transdiagnostic frameworks such as the Research Domain Criteria (RDoC) from the National Institute of Mental Health (NIMH) (65, 66) and the Hierarchical Taxonomy of Psychopathology (HiTOP) (67–69) have been proposed. These offer alternative ways to evaluate symptom profiles, either in terms of specific transdiagnostic constructs and subconstructs (RDoC), or dimensions at multiple levels of hierarchy (HiTOP), where the peak of the hierarchy can be denoted as a single overall factor of psychopathology, or p-factor (70, 71).

However, despite these various criticisms, the disorder classification system laid out by the DSM-5 is ingrained in psychiatric decision-making, social policy and popular discourse, and continues to dominate the field of mental health. One reason for the continued debate is that there has not been a clear empirical assessment of how well the disorder classification system separates individuals into groups based on symptom criteria. For example, although there may be many combinations of symptoms that deliver a diagnosis of any individual disorder (10, 11), the overall symptom profiles of individuals with one diagnosis may nonetheless be substantially different relative to individuals in another disorder group. If so, the classification system would then serve to broadly discriminate between groups, which has a first-order utility for differential determination of treatment pathways.

Here we assessed quantitatively the degree to which the symptom profiles of individuals associated with one disorder classification, as determined by the DSM-5 symptom criteria, were distinct from those of another, and how much they differed from a random selection of individuals with any disorder. To do so, we used a self-report mental well-being assessment tool, the Mental Health Quotient (MHQ) (72) that comprehensively covers mental health symptoms pertaining to 10 common DSM-5 disorders in a manner that is easy to administer, and on completion provides individuals with an aggregate score (quotient) along with a detailed report with feedback and recommendations for help seeking and self-care.

The MHQ was developed through a systematic analysis of the question content from 126 commonly used mental health questionnaires and interviews which typically conform to criteria laid down in the DSM (35). The 126 assessments included commonly used diagnostic scales and assessments of depression, anxiety, bipolar disorder, attention-deficit/hyperactivity disorder (ADHD), posttraumatic stress disorder (PTSD), obsessive compulsive disorder (OCD), addiction, schizophrenia, eating disorder, and autism spectrum disorder (ASD), as well as cross-disorder tools. Forty-three identified symptom categories were then combined with additional elements from RDoC to develop a questionnaire that comprehensively assessed a complete profile of 47 mental elements using a 9-point life impact rating scale [for details on the question format see (72) and Supplementary Figures 1A,B]. In this study, we used data from 107,349 respondents across 8 English-speaking countries (73) who completed the MHQ between April 2020 and June 2021. The 47 MHQ elements were mapped to the diagnostic criteria, as outlined in DSM-5, for the 10 mental health disorders on which the MHQ was based. We then evaluated the heterogeneity of symptom profiles of those within DSM-5 disorder groups relative to between disorder groups and random groupings of individuals who met the criteria for any one of the 10 disorders.

We show here that, contrary to expectation, the heterogeneity of the symptom profiles across individuals selected randomly across any disorder group was no different from that across individuals selected from within any specific disorder group. Furthermore, symptom profiles were as heterogeneous within a disorder group as they were between disorder groups. This challenges the fundamental premise on which the DSM-5 has been developed and has significant implications from both a clinical and research perspective.

Materials and Methods

Data Acquisition

The data sample was taken from the Mental Health Million open-access database which acquires data by offering the MHQ online and free of charge in multiple languages (72, 73). Respondents took this 15–20-min assessment anonymously online for the purpose of receiving a mental well-being score (the mental health quotient) and personalized report on completion, based on their responses (see below and Supplementary Figure 1C). The MHQ was publicized primarily through social media and Google Ads targeting a broad cross section of adults aged 18 and above, where self-selecting respondents may have had a specific mental health interest or concern. Outreach directed participants to the MHQ website (https://sapienlabs.org/mhq/) to complete the assessment. No financial compensation was provided. The Mental Health Million Project is a public interest project that tracks the evolving mental well-being of the global population, and its social determinants, and is governed by an academic advisory committee. The project has ethics approval from the Health Media Lab Institutional Review Board (HML IRB), an independent IRB that provides assurance for the protection of human subjects in international social & behavioral research (OHRP Institutional Review Board #00001211, Federal Wide Assurance #00001102, IORG #0000850).

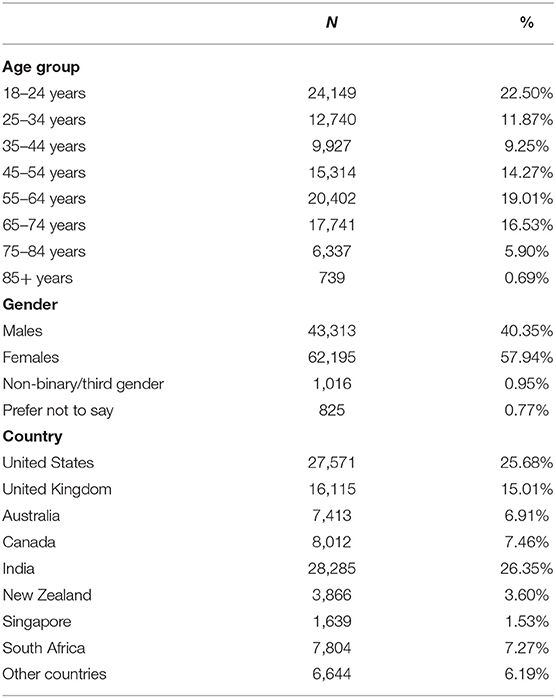

For this study, we utilized responses to the English version of the MHQ between April 2020 and June 2021, which included 107,349 respondents predominantly from the United States, Canada, United Kingdom, Australia, New Zealand, South Africa, Singapore and India. The largest proportions of respondents lived in India (26.35%) and the United States (25.68%), with smaller proportions living in Singapore (1.53%) and New Zealand (3.60%). Across all countries, 40.35% of the sample were male; 57.94% female; 0.95% reported as non-binary/third gender and 0.77% preferred not to say. The sample covered all age brackets but had larger samples in the younger (18–24; 22.50%) and older (55–64; 19.01%) age groups relative to their proportion in the population. These differences likely reflect the self-selected nature of the sample (see Limitations section in the Discussion). The demographic breakdown (including age, gender, country, employment status, and education) of the sample is shown in Table 1 and Supplementary Table 1.

MHQ Assessment

The MHQ is a transdiagnostic assessment that comprehensively covers all possible symptoms across 10 major mental health disorders as well as elements derived from RDoC constructs and subconstructs. The development and structure of the MHQ are described in previously published papers (35, 72). Briefly, the list of MHQ elements or items was determined based on a comprehensive coding of mental health symptoms assessed in questions across 126 different mental health questionnaires and interviews spanning 10 major mental health disorders as well as cross-disorder assessments. These included questionnaires for depression [e.g., Patient Health Questionnaire, PHQ-9 (74)], anxiety [e.g., Generalized Anxiety Disorder Assessment, GAD-7 (75)], bipolar disorder [e.g., Mood Disorder Questionnaire, MDQ (76)], ADHD [e.g., Conners Adult ADHD Rating Scale, CAARS (77)], PTSD [e.g., Clinician-Administered PTSD Scale for DSM-5, CAPS-5 (78, 79)], OCD [e.g., Yale Brown Obsessive Compulsive Symptom Scale & Checklist™, Y-BOCS (80, 81)], addiction [e.g., Addiction Severity Index, ASI-5 (82)], schizophrenia [e.g., Brief Psychiatric Rating Scale, BPRS (83, 84)], eating disorder [e.g., Eating Disorder Inventory, EDI-3 (85)], and ASD [e.g., Autism Diagnostic Interview Revised, ADI-R (86, 87)], as well as cross-disorder tools [e.g., Structured Clinical Interview for DSM-5, SCID-5-CV (88); see (72) for more details and a full list of the 126 assessment tools]. These disorders were selected based on their inclusion in the DSM-5 clinical interview (SCID-CV) (88). In addition, ASD and eating disorder were included due to both their prevalence and their broad public and scientific interest. A total of 10,154 questions were coded and consolidated into a set of 43 symptom categories. 
The resultant items were then reviewed in the context of other transdiagnostic frameworks including RDoC constructs and subconstructs put forward by the NIMH (65), and a few additions (e.g., selective attention, coordination) were made to ensure that the list of items reflected components within this non-DSM framework. The resulting categories were then reorganized into a set of 47 elements that describe mental health and mental well-being (Figure 3 and Supplementary Table 2).

Within the MHQ, each of these 47 elements was rated by respondents using a 9-point life impact rating scale reflecting the impact of a particular mental aspect on one's ability to function (Supplementary Figures 1A,B). The anchors of this scale depended on whether the item exists on a spectrum from positive to negative (spectrum items such as memory) or as varying degrees of problem severity (problem items such as suicidal thoughts). For spectrum items, 1 on the 9-point scale referred to “Is a real challenge and impacts my ability to function,” 9 referred to “It is a real asset to my life and my performance,” and 5 referred to “Sometimes I wish it was better, but it's ok.” For problem items, 1 on the 9-point scale referred to “Never causes me any problems,” 9 referred to “Has a constant and severe impact on my ability to function,” and 5 referred to “Sometimes causes me difficulties or distress but I can manage.” Respondents made rating responses based on their current perception of themselves rather than a specific time frame. However, ratings on the 9-point life impact scale have been shown to correlate well with more common metrics of symptom frequency and severity, and ratings across elements are highly reliable from sample to sample (89).

Data across 30 descriptors relating to the demographic, life experience, lifestyle and situational profile of the individual were also collected. Demographic descriptors relating to the age, gender, geographical location, employment status, and education of the individual were obtained prior to the collection of mental well-being ratings, while life experience (e.g., life traumas, Covid-19 impacts, medical conditions, life satisfaction, substance use), lifestyle (sleep, exercise and socializing), and help seeking (including reasons for/ not) were obtained after the collection of mental well-being ratings [see (73) for a complete list].

On completion, respondents received their MHQ (a composite mental well-being score that places them along a spectrum from distressed to thriving), along with a personal report that provided recommendations for help-seeking and self-care. Provision of a personal report aimed to ensure greater interest of the respondent to answer questions thoughtfully and accurately. An extract of an example MHQ report detailing the MHQ score and sub-scores is presented in Supplementary Figure 1C; see (72, 73) for further information on how these scores were calculated. The MHQ score has substantial criterion validity, relating systematically to both productivity and clinical burden (89).

Exclusion Criteria

The following exclusion criteria were applied to the data. First, respondents who took under 7 min (an indication that the questions were not actually read) or over 1 h to complete the assessment (suggesting that the individual was not focused on the assessment) were excluded. Second, individuals who found the assessment hard to understand (i.e., responded no to the question, “Did you find this assessment easy to understand?”) were excluded. Third, respondents who made unusual or unrealistic responses (e.g., those who stated they had not eaten for 48+ hours or who stated they had slept for 30+ hours) were excluded. Such responses might have been poorly considered by the respondent, or might reflect circumstances where thinking was impaired, and were therefore treated as invalid. Altogether, this resulted in the exclusion of 6.9% of respondents, with 107,349 respondents available for the final analysis.
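The three exclusion rules can be expressed as a simple filter. The following is a minimal sketch only: the `Respondent` record and its field names are hypothetical and are not taken from the Mental Health Million database, and the eating and sleeping cut-offs are the two examples of unrealistic responses given above rather than a complete list.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    # Hypothetical field names, chosen for illustration only.
    minutes_to_complete: float
    easy_to_understand: bool
    hours_since_eaten: float
    hours_slept: float


def keep_respondent(r: Respondent) -> bool:
    """Return True if the respondent passes all three exclusion rules."""
    # Rule 1: completion time under 7 min or over 1 h is excluded.
    if r.minutes_to_complete < 7 or r.minutes_to_complete > 60:
        return False
    # Rule 2: respondents who did not find the assessment easy to understand.
    if not r.easy_to_understand:
        return False
    # Rule 3: unrealistic responses (only the two examples named in the text).
    if r.hours_since_eaten >= 48 or r.hours_slept >= 30:
        return False
    return True


sample = [
    Respondent(15, True, 5, 7),    # kept
    Respondent(5, True, 5, 7),     # too fast
    Respondent(20, False, 5, 7),   # hard to understand
    Respondent(20, True, 50, 7),   # unrealistic response
]
kept = [r for r in sample if keep_respondent(r)]
print(len(kept), "of", len(sample), "respondents retained")
```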

Symptom Criteria

For each of the 47 MHQ items, responses were determined to be clinically significant symptoms if they met a particular threshold of impact on the individual's ability to function (hereafter referred to as “clinical symptom(s)” or “symptom(s)”). For problem items which represented a unidimensional scale of symptom severity from 1 to 9, the threshold selection for a clinical symptom was ≥8. For spectrum items where elements of mental function could be either a negative symptom or a positive asset (e.g., memory) and the 1–9 scale ranged from negative symptom (1–4) to positive asset (6–9), the threshold selection for a clinical symptom was a rating of ≤1. Testing of the 9-point scale for one problem element (Feelings of Sadness, Distress or Hopelessness) demonstrated that a selection of 8 corresponded to an average symptom frequency of 5 days per week (89). This threshold thus corresponds to the DSM-5 criteria of experiencing the symptom “nearly every day” for depression. However, we note that the correspondence between frequency and life impact rating may differ from item to item (see Limitations section of the Discussion).
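As an illustration of this thresholding step, the sketch below binarizes 1–9 ratings into a symptom profile. The item names and the two small item sets are placeholders (the full list of 47 items is in Supplementary Table 2), and the `shift` parameter is an assumption introduced here to reproduce the one-point relaxed threshold (≥7 and ≤2) reported in the Results.

```python
from typing import Dict, Set

# Placeholder item sets; the real MHQ has 47 items of these two types.
PROBLEM_ITEMS: Set[str] = {"suicidal_thoughts", "fear_and_anxiety"}
SPECTRUM_ITEMS: Set[str] = {"memory", "energy_level"}


def is_clinical_symptom(item: str, rating: int, shift: int = 0) -> bool:
    """Return True if a 1-9 rating crosses the clinical threshold.

    shift=0: >=8 for problem items, <=1 for spectrum items.
    shift=1: >=7 for problem items, <=2 for spectrum items.
    """
    if not 1 <= rating <= 9:
        raise ValueError("rating must be between 1 and 9")
    if item in PROBLEM_ITEMS:
        return rating >= 8 - shift
    if item in SPECTRUM_ITEMS:
        return rating <= 1 + shift
    raise KeyError(f"unknown item: {item}")


def symptom_profile(ratings: Dict[str, int], shift: int = 0) -> Dict[str, int]:
    """Code each item as 1 (clinical symptom) or 0 (not a symptom)."""
    return {
        item: int(is_clinical_symptom(item, rating, shift))
        for item, rating in ratings.items()
    }


ratings = {"suicidal_thoughts": 7, "fear_and_anxiety": 8, "memory": 1, "energy_level": 2}
print(symptom_profile(ratings))           # two symptoms at the main threshold
print(symptom_profile(ratings, shift=1))  # four symptoms at the relaxed threshold
```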

Computing Dissimilarity of Symptom Profiles

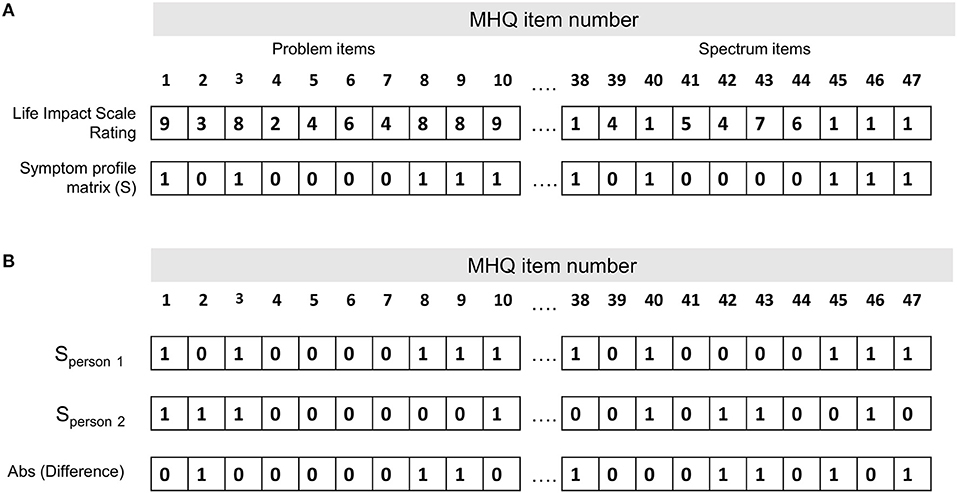

Symptom dissimilarity between each pair of individuals was calculated as the percentage of symptoms that differed (i.e., where one person had the symptom and the other did not) out of the total number of possible symptoms (i.e., 47 MHQ items representing 47 possible symptoms; Supplementary Table 2). Figure 1A shows the symptom profile determined for one person whereby each of the 47 MHQ elements (representing a comprehensive set of possible symptoms) are coded as 1 if it was a symptom (i.e., the rating selected was above the threshold where it was considered a symptom, generally in this paper ≥8 for problem items and ≤1 for spectrum items), and 0 if it was not a symptom (i.e., did not meet the threshold). Symptom dissimilarity between two people was calculated as the sum of the absolute difference between the symptom matrix for each person as a fraction of the total number of symptoms (Figure 1B). Restated:

$$\text{Symptom dissimilarity} = \frac{\sum_{i=1}^{N} \left| Sp1_i - Sp2_i \right|}{N}$$

where Sp1 is the symptom profile of person 1, Sp2 is the symptom profile of person 2, and N is the total number of possible symptoms (here 47).

Figure 1. Calculation of the symptom dissimilarity between two people. (A) Symptom profile for one person (person 1 in B). Each of the 47 MHQ elements (representing a comprehensive set of possible mental symptoms) are coded as 1 if it is a symptom (i.e., the rating selected is above the threshold where it is considered a symptom, generally in this paper ≥8 for problem items and ≤1 for spectrum items), and 0 if it is not a symptom (i.e., does not meet the threshold). (B) Symptom profile for two people. Symptom dissimilarity is calculated as the sum of the absolute difference between the symptom matrix for each person/total number of symptoms.

For example, out of 47 possible symptoms, if each person had 20 symptoms which did not overlap at all they would have a dissimilarity of 40/47, or 85%. If they each had just 10 symptoms which did not overlap, they would have a dissimilarity of 20/47, or 43%. Conversely, if all 10 or 20 symptoms perfectly overlapped, they would have a dissimilarity of 0/47 or 0%. This measure thus reflects not only which symptoms the two people had in common, but also the common lack of symptoms.
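The worked examples above can be checked with a few lines of code. This is a minimal sketch of the dissimilarity measure as described in the text (sum of absolute differences between two binary profiles, divided by the number of possible symptoms); the helper `profile` and the choice of which item indices are symptomatic are arbitrary and for illustration only.

```python
from typing import Iterable, List, Sequence

N_ITEMS = 47  # number of MHQ items, i.e., possible symptoms


def symptom_dissimilarity(sp1: Sequence[int], sp2: Sequence[int]) -> float:
    """Fraction of items on which two binary symptom profiles differ."""
    if len(sp1) != len(sp2):
        raise ValueError("profiles must have the same length")
    return sum(abs(a - b) for a, b in zip(sp1, sp2)) / len(sp1)


def profile(symptom_indices: Iterable[int]) -> List[int]:
    """Build a 47-item binary profile from the indices of symptomatic items."""
    chosen = set(symptom_indices)
    return [1 if i in chosen else 0 for i in range(N_ITEMS)]


# 20 symptoms each, no overlap: 40/47
print(round(100 * symptom_dissimilarity(profile(range(0, 20)), profile(range(20, 40)))))  # 85
# 10 symptoms each, no overlap: 20/47
print(round(100 * symptom_dissimilarity(profile(range(0, 10)), profile(range(10, 20)))))  # 43
# 20 symptoms each, perfect overlap: 0/47
print(round(100 * symptom_dissimilarity(profile(range(0, 20)), profile(range(0, 20)))))   # 0
```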

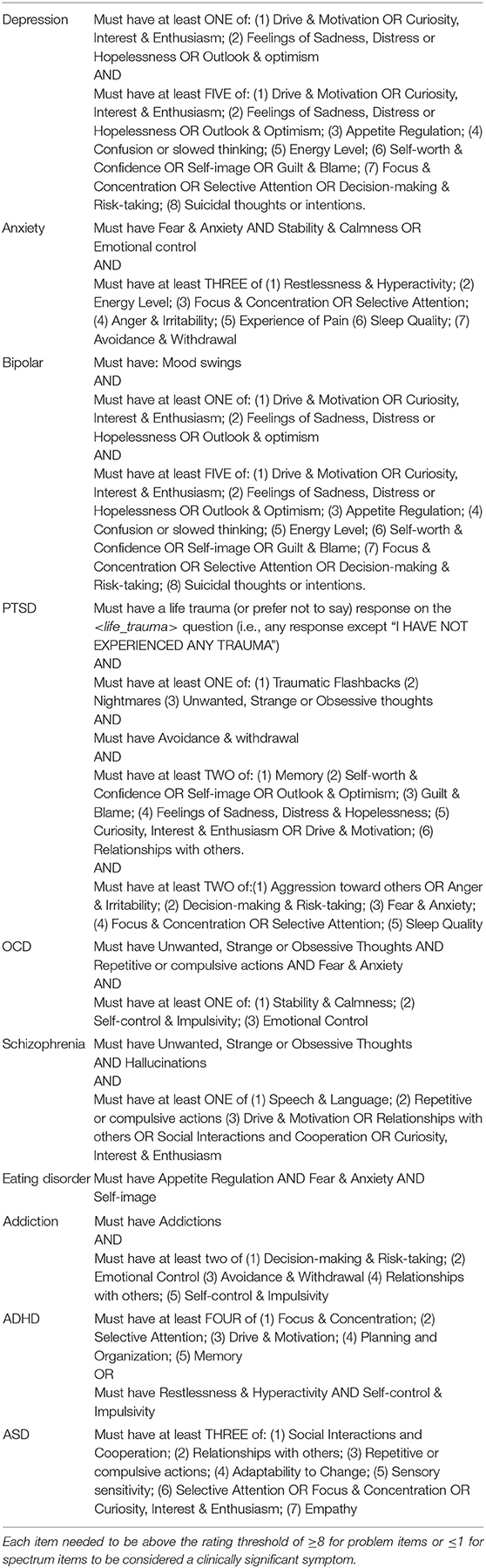

Mapping of the MHQ to DSM-5 Criteria

MHQ items were first mapped to the symptoms described within the criteria of each of the 10 DSM-5 disorders, based on the closest semantic match (Figure 3 and Table 2). We note that some disorder criteria included multiple symptoms and therefore mapped to more than one MHQ item. For example, one criterion for depression includes “Diminished ability to think or concentrate, or indecisiveness,” which mapped to MHQ items of Focus and concentration, Selective attention, and Decision-making & risk-taking. As MHQ questions were formulated from DSM-based assessment tools, all symptoms described in the DSM-5 criteria for these 10 disorders had an MHQ match. The specific criteria rules of the DSM-5 were then applied to arrive at diagnostic rules using the MHQ for each of the 10 disorders (see Table 2).

For example, within the DSM-5, a positive diagnosis for depression requires that the individual has experienced at least 5 out of a list of 8 different criteria with one of them being a depressed mood or loss of interest or pleasure. These criteria, when applied to the MHQ elements, required that the symptom profile (MHQ elements meeting the symptom threshold) included at least Feelings of Sadness, Distress or Hopelessness or poor Outlook & Optimism AND/OR poor Drive & Motivation or poor Curiosity, Interest & Enthusiasm, AND must have at least 5 of (1) Feelings of Sadness, Distress or Hopelessness or poor Outlook & Optimism; (2) poor Drive & Motivation or poor Curiosity, Interest & Enthusiasm; (3) poor Appetite Regulation; (4) Confusion or slowed thinking; (5) low Energy Level; (6) low Self-image or low Self-worth & Confidence or Guilt & Blame; (7) poor Decision-making & Risk-taking or poor Focus & Concentration or poor Selective Attention; (8) Suicidal thoughts or intentions (Figure 3 and Table 2).
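The depression rule above can be sketched as a small function. This is an illustration under stated assumptions, not the authors' implementation: the item keys are hypothetical identifiers for the MHQ elements named in the text, a criterion is treated as met when any one of its mapped items is a clinical symptom, and each criterion counts once regardless of how many of its items are present.

```python
from typing import List, Set

# One set of (hypothetically named) MHQ items per criterion, in the order
# listed in the text. Criteria at index 0 and 1 are the two core criteria.
DEPRESSION_CRITERIA: List[Set[str]] = [
    {"sadness_distress_hopelessness", "outlook_optimism"},
    {"drive_motivation", "curiosity_interest_enthusiasm"},
    {"appetite_regulation"},
    {"confusion_slowed_thinking"},
    {"energy_level"},
    {"self_image", "self_worth_confidence", "guilt_blame"},
    {"decision_making_risk_taking", "focus_concentration", "selective_attention"},
    {"suicidal_thoughts_intentions"},
]
CORE_CRITERIA = (0, 1)
MIN_CRITERIA = 5


def maps_to_depression(symptoms: Set[str]) -> bool:
    """Apply the rule: at least one core criterion AND at least 5 of 8 criteria."""
    met = [bool(items & symptoms) for items in DEPRESSION_CRITERIA]
    has_core = any(met[i] for i in CORE_CRITERIA)
    return has_core and sum(met) >= MIN_CRITERIA


# Core criterion plus four others: maps to depression.
print(maps_to_depression({
    "sadness_distress_hopelessness", "appetite_regulation",
    "confusion_slowed_thinking", "energy_level", "suicidal_thoughts_intentions",
}))  # True

# Six criteria met but neither core criterion: does not map.
print(maps_to_depression({
    "appetite_regulation", "confusion_slowed_thinking", "energy_level",
    "guilt_blame", "focus_concentration", "suicidal_thoughts_intentions",
}))  # False
```

Note that several items within one criterion (for example, low Self-image and Guilt & Blame) contribute a single criterion in this sketch, which is why an individual can hold many more clinical symptoms than the number of criteria met.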

Although this MHQ diagnostic criteria mapping does not mean that the individual would be diagnosed with that disorder in the context of a clinical interview, it indicates that their pattern of clinical symptoms broadly aligned with the diagnostic criteria for that disorder. However, we note the caveat that for bipolar disorder, symptoms denoted extreme versions of positive assets (e.g., grandiosity and decreased need for sleep) that were not fully articulated within the MHQ, while for OCD the MHQ items were broader (e.g., obsessive thoughts were incorporated within a general item reflecting Strange, unwanted and obsessive thoughts; see section Limitations). Furthermore, specific criteria relating to symptom timing were not included, as this is not included in the MHQ which assesses the individual's current perception of themselves.

Within Disorder Analysis

To determine the heterogeneity of symptom profiles within a disorder group (i.e., all those who mapped to a particular disorder as described in section Mapping of the MHQ to DSM-5 Criteria), we computed the symptom dissimilarity (as defined in section Computing Dissimilarity of Symptom Profiles) between all pairs of individuals within the group and determined the average symptom dissimilarity and the standard deviation (SD) of the dissimilarity between all pairs. We similarly computed the dissimilarity of symptom profiles of each individual within a disorder group to each individual in a random demographically matched group, computing statistics on these distributions as described in section Statistics. We note that 10 iterations of random groupings (each N = 3,333) yielded similar results (average symptom dissimilarity across all pairs of individuals ± SD was 40.5 ± 11.3% and ranged from 39.5 to 41.2%) indicating that any comparison would provide a similar result.
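A minimal sketch of the within-group computation follows, using toy 4-item profiles rather than real data. The use of the population standard deviation (`statistics.pstdev`) is an assumption, since the text does not state which SD estimator was used.

```python
from itertools import combinations
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def symptom_dissimilarity(sp1: Sequence[int], sp2: Sequence[int]) -> float:
    """Fraction of items on which two binary symptom profiles differ."""
    return sum(abs(a - b) for a, b in zip(sp1, sp2)) / len(sp1)


def within_group_dissimilarity(profiles: List[Sequence[int]]) -> Tuple[float, float]:
    """Mean and SD of dissimilarity over all unordered pairs in one group."""
    if len(profiles) < 2:
        raise ValueError("need at least two individuals")
    values = [symptom_dissimilarity(a, b) for a, b in combinations(profiles, 2)]
    return mean(values), pstdev(values)


# Toy group of three individuals with 4-item profiles (synthetic, not study data).
group = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1]]
avg, sd = within_group_dissimilarity(group)
print(f"mean = {100 * avg:.1f}%, SD = {100 * sd:.1f}%")
```

The number of pairs grows quadratically with group size, so for groups of several thousand individuals a vectorized or sampled computation would likely be needed in practice.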

Between Disorder Analysis

We next compared the symptom profiles of individuals between two disorder groups. To do so, we first computed the symptom dissimilarity between each individual from one disorder group and each individual from another disorder group. We did so by including all individuals in each group (but excluding self-comparisons), and also by removing those individuals who were part of both disorder groups (i.e., would be considered “comorbid”). This computation was performed across the whole sample, and separately across different country, age and gender demographic groups.
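The two variants of the between-group comparison (all individuals, and comorbid individuals removed) can be sketched as follows. The respondent identifiers and the dictionary layout are hypothetical; the toy profiles are synthetic.

```python
from statistics import mean
from typing import Dict, Sequence


def symptom_dissimilarity(sp1: Sequence[int], sp2: Sequence[int]) -> float:
    """Fraction of items on which two binary symptom profiles differ."""
    return sum(abs(a - b) for a, b in zip(sp1, sp2)) / len(sp1)


def between_group_dissimilarity(
    group_a: Dict[str, Sequence[int]],
    group_b: Dict[str, Sequence[int]],
    exclude_comorbid: bool = False,
) -> float:
    """Mean dissimilarity over all cross-group pairs of individuals.

    Groups map a (hypothetical) respondent ID to a binary symptom profile.
    Self-comparisons are always skipped; with exclude_comorbid=True, anyone
    belonging to both groups is dropped from the comparison entirely.
    """
    shared = set(group_a) & set(group_b)
    values = []
    for id_a, sp_a in group_a.items():
        if exclude_comorbid and id_a in shared:
            continue
        for id_b, sp_b in group_b.items():
            if id_a == id_b or (exclude_comorbid and id_b in shared):
                continue
            values.append(symptom_dissimilarity(sp_a, sp_b))
    if not values:
        raise ValueError("no pairs left to compare")
    return mean(values)


# Toy data: respondent "p2" maps to both disorders (comorbid).
disorder_a = {"p1": [1, 1, 0, 0], "p2": [1, 0, 0, 0]}
disorder_b = {"p2": [1, 0, 0, 0], "p3": [0, 0, 1, 1]}
print(between_group_dissimilarity(disorder_a, disorder_b))
print(between_group_dissimilarity(disorder_a, disorder_b, exclude_comorbid=True))
```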

Statistics

To determine whether individuals within a disorder group were statistically more homogeneous than a randomly selected group of individuals with at least one disorder, we computed the p-value of the difference in the distribution of dissimilarities for each disorder group compared to a randomly selected group using a two-tailed t-test.

To test the hypothesis that each disorder group represented sufficient symptom homogeneity such that it represented a cluster that could be differentiated from across the disorder space, we used the Hopkins Statistic (H) as described in (90). This statistic compares the distance (i.e., symptom dissimilarity) of elements from each disorder group to the nearest neighbor within the disorder group and to the nearest neighbor in the randomly selected group as follows:

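$$H = \frac{\sum_{i} r_i}{\sum_{i} d_i + \sum_{i} r_i}$$

where, for each individual i in the disorder group, d is the nearest neighbor distance within the disorder group and r is the nearest neighbor distance to the random group.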

If the total nearest neighbor distance between symptom profiles within the disorder group is, on average, the same as the distance to the nearest neighbor from the random group, H should be about 0.5, implying that the disorder group does not represent a cluster that can be differentiated from random. On the other hand, if the disorder groups each represent relatively compact and isolated clusters, H should be larger than 0.5 and almost equal to 1.0 for very well-defined clusters.
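The sketch below computes H for toy data. It assumes the form H = Σr / (Σd + Σr), which is the form consistent with the interpretation given above (H ≈ 0.5 when the two nearest neighbor distances match, H approaching 1 for compact clusters); the exact sampling scheme described in (90) is not reproduced here, and every member of the disorder group is used.

```python
from typing import List, Sequence


def symptom_dissimilarity(sp1: Sequence[int], sp2: Sequence[int]) -> float:
    """Fraction of items on which two binary symptom profiles differ."""
    return sum(abs(a - b) for a, b in zip(sp1, sp2)) / len(sp1)


def hopkins_statistic(
    disorder_group: List[Sequence[int]],
    random_group: List[Sequence[int]],
) -> float:
    """Assumed form: H = sum(r) / (sum(d) + sum(r)).

    d: distance from each disorder-group member to its nearest neighbor
       within the disorder group (excluding itself).
    r: distance from the same member to its nearest neighbor in the
       random group.
    """
    if len(disorder_group) < 2 or not random_group:
        raise ValueError("need >= 2 disorder profiles and >= 1 random profile")
    total_d = 0.0
    total_r = 0.0
    for i, sp in enumerate(disorder_group):
        total_d += min(
            symptom_dissimilarity(sp, other)
            for j, other in enumerate(disorder_group)
            if j != i
        )
        total_r += min(symptom_dissimilarity(sp, other) for other in random_group)
    if total_d + total_r == 0:
        raise ValueError("H is undefined when all nearest neighbor distances are 0")
    return total_r / (total_d + total_r)


# Synthetic 4-item profiles: the disorder group is tighter than its
# distance to the comparison group, so H comes out above 0.5.
disorder = [[1, 1, 0, 0], [1, 0, 0, 0]]
comparison = [[0, 0, 1, 1], [0, 0, 0, 1]]
h = hopkins_statistic(disorder, comparison)
print(f"H = {h:.3f}")
```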

Results

Prevalence and Profile of Clinical Symptoms

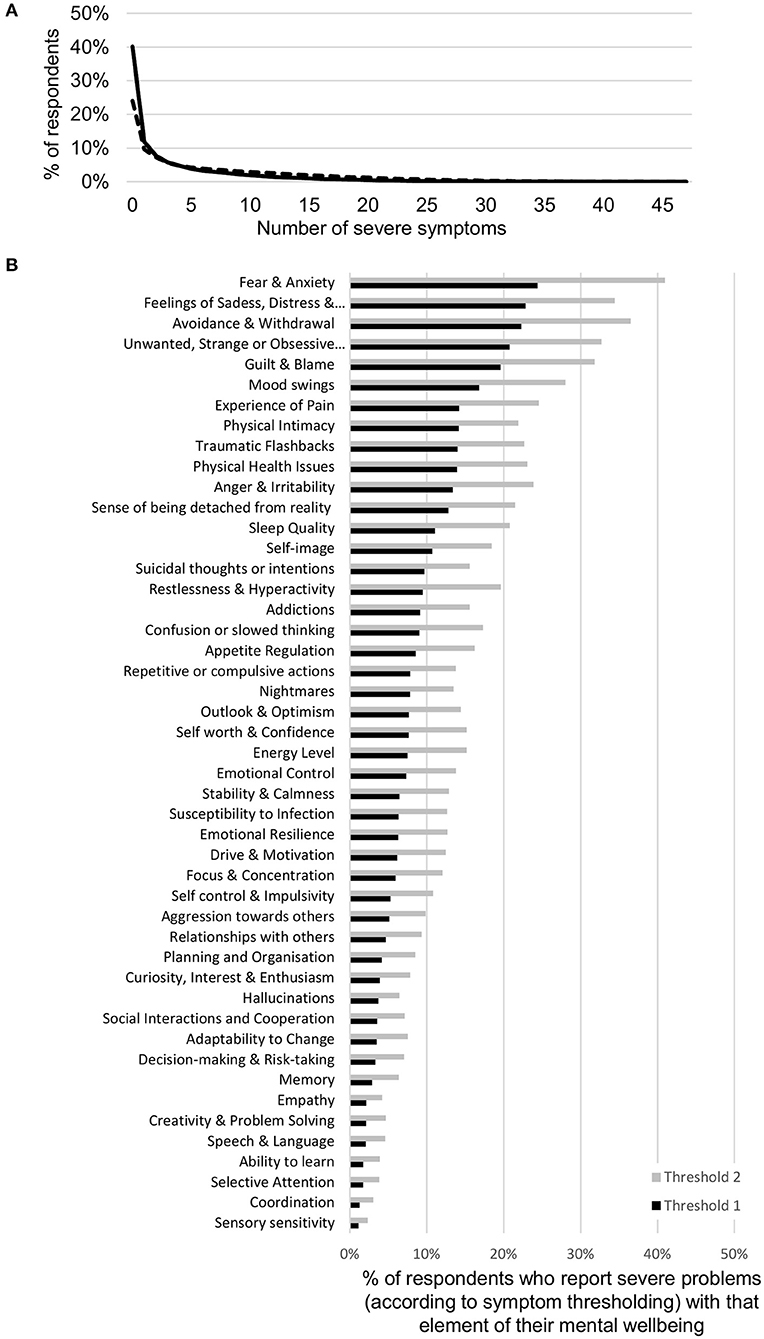

The prevalence of clinical symptoms in the sample was dependent on the threshold used to determine clinical significance. While the DSM-5 typically specifies that a mental aspect should be considered a symptom if it occurs “nearly every day,” “persistently,” or “more days than not,” in reality, thresholding is determined using a wide variety of criteria across diagnostic screening tools, from severity and frequency to timing and duration of symptoms (35). Here the criterion used was severity of impact on one's ability to function. At the stringent threshold criterion used to define a clinical symptom throughout this paper (see Symptom Criteria section in the Methods), 40.1% of all respondents across the sample had no clinical symptoms, while 25.9% had more than 5 clinical symptoms (Figure 2A). However, when the threshold was shifted one point (dotted line in 2A; ≥7 for problem items; ≤2 for spectrum items), it resulted in only 24.0% of all respondents having no clinical symptoms while 44.4% had more than 5. The fraction of the sample with successively larger numbers of clinical symptoms decreased in a manner that was best fit by an exponential function. Thus, purely from the perspective of number of clinical symptoms, there was no specific distinction where one might draw the line between normal and disordered. Changing the threshold to define a clinical symptom less stringently in terms of severity decreased the fraction of people with no clinical symptoms but did not change the shape of this curve (Figure 2A dotted line). Within this distribution, some clinical symptoms were more prevalent than others (Figure 2B; black bars). Four clinical symptoms were reported by >20% of the sample including Unwanted, strange or obsessive thoughts, Feelings of sadness, distress or hopelessness, Fear and anxiety and Avoidance and withdrawal. Others such as problems with Sensory sensitivity, Coordination and Selective attention were rarer, with rates <2%. 
When the threshold was shifted by one point (gray bars in Figure 2B; ≥7 for problem items; ≤2 for spectrum items), the prevalence of every symptom increased, although the size of the increase varied across elements. We note that while there were significant differences in symptom prevalence between age and gender groups, the exponential structure of symptom prevalence was the same. While not shown here, differences between age and gender groups have been shown previously with a subset of these data (91). Given these differences, our analysis looked at the whole sample as well as across different demographic groups.

Figure 2. Symptom prevalence in the sample. (A) Prevalence of number of clinical mental health symptoms in the sample as defined by a threshold of severity in the MHQ (≥8 for problem items and ≤1 for spectrum items; black line). Dotted line denotes the prevalence when the threshold is shifted by one point (≥7 for problem items and ≤2 for spectrum items). The percentage with each higher number of clinical symptoms decreases exponentially. (B) The proportion of respondents who reported severe problems with each of the 47 elements of mental well-being included in the MHQ, as defined by a threshold of severity in the MHQ (≥8 for problem items and ≤1 for spectrum items; black bars). Prevalence of specific symptoms across the sample ranged from 24.4 to 1.1%. Gray bars denote the proportion when the threshold is shifted by one point (≥7 for problem items and ≤2 for spectrum items).

Mapping of Clinical Symptoms to the DSM-5 Criteria

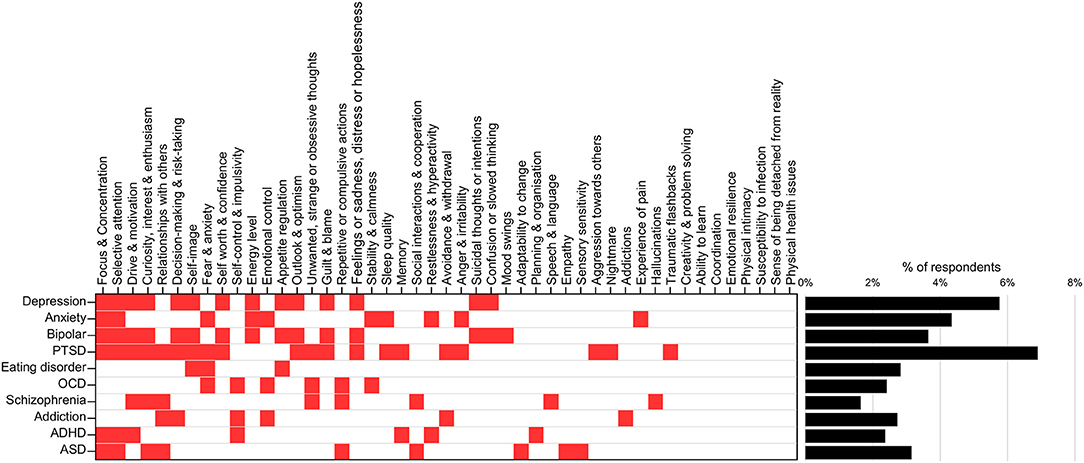

Figure 3 (left) shows the mapping of elements or items of the MHQ to each of 10 mental health disorders, based on the DSM-5 diagnostic criteria. Only 12 out of 47 MHQ items that mapped to DSM-5 disorder criteria were unique to one “disorder” label. However, the specific criteria for each disorder differed (see Table 2). Applying these rules to the clinical symptoms of each individual (as determined in Symptom Criteria section in the Methods), the prevalence for each of these disorders ranged from 1.6% (schizophrenia) to 6.9% (PTSD) (Figure 3, right). These fall within the broad ranges of prevalence across epidemiological studies. For example, the prevalence of ADHD within the current adult sample (2.4%) closely matched the estimated global prevalence of ADHD of 2.8% (92). Similarly, the prevalence of depression within this study (5.8%) lay within the range of prevalence estimated by some studies (3, 93, 94), although it was lower than more recent estimates from other sources (95, 96). However, prevalence estimates vary substantially depending on the assessment tool used, the geographical location, and also the timing in relation to the Covid-19 pandemic (7, 96–103). For example, the epidemiological estimates for PTSD varied between 7 and 53.8% in one recent meta-analysis (7).

Figure 3. Mapping of MHQ symptoms to DSM-diagnostic criteria. MHQ items corresponding to each DSM-5 defined disorder (left; red squares) and associated prevalence of each disorder following application of diagnostic rules (right). Full mapping and rules shown in Table 2.

DSM-Diagnostic Comorbidity Profiles

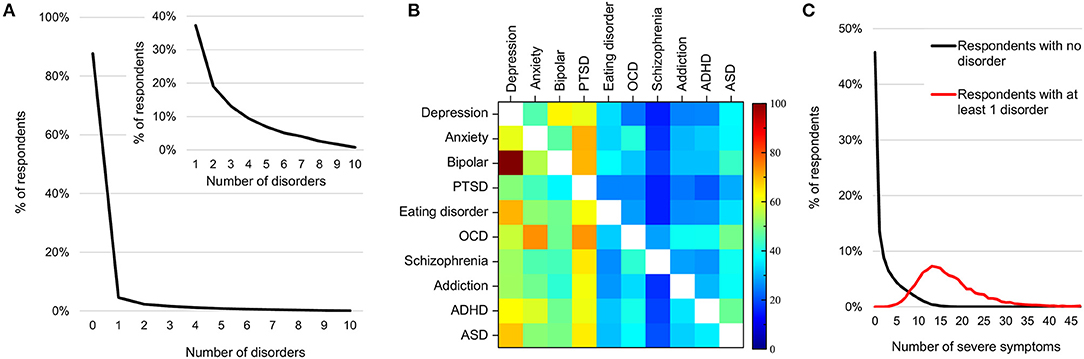

Overall, 12.3% of all respondents mapped to one or more disorders (Figure 4A), of whom 62.7% mapped to more than one disorder (Figure 4A, inset), in line with findings that comorbidity is the norm (33, 34, 71). Figure 4B shows comorbidity for the 10 disorders by rows (numbers in Supplementary Table 3). For example, row 1 represents the fraction of individuals whose symptom profiles aligned with the criteria for depression and who also aligned with the criteria for each of the other disorders (shown across the columns). Here 25.7 and 37.3% of those with depression also met the criteria for ADHD and ASD, respectively. Conversely, 62.6 and 68.1% of individuals who mapped to ADHD and ASD criteria, respectively, also aligned with criteria for depression. Note from the prevalence estimates in Figure 3 that, while 5.8% of individuals met the criteria for depression, only 2.4 and 3.2% met the criteria for ADHD and ASD, respectively.
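As an illustration of how a row-conditional comorbidity matrix such as that in Figure 4B can be read, the following is a minimal sketch in Python. The function name `comorbidity_matrix` and the toy data are hypothetical and are not taken from the study; each individual is simply represented as the set of disorder labels to which their symptom profile maps.

```python
from typing import Dict, List, Set


def comorbidity_matrix(
    diagnoses: List[Set[str]], disorders: List[str]
) -> Dict[str, Dict[str, float]]:
    """Return matrix[a][b] = percentage of individuals with disorder a who also map to b."""
    matrix: Dict[str, Dict[str, float]] = {}
    for a in disorders:
        # Row population: everyone who maps to disorder a
        with_a = [labels for labels in diagnoses if a in labels]
        row: Dict[str, float] = {}
        for b in disorders:
            if b == a or not with_a:
                continue
            both = sum(1 for labels in with_a if b in labels)
            row[b] = 100.0 * both / len(with_a)
        matrix[a] = row
    return matrix


# Toy data (hypothetical): 4 individuals map to depression, 3 to ADHD, 2 to both
toy = [
    {"depression", "ADHD"},
    {"depression"},
    {"depression"},
    {"depression", "ADHD"},
    {"ADHD"},
    set(),
]
m = comorbidity_matrix(toy, ["depression", "ADHD"])
print(m["depression"]["ADHD"])   # 50.0
print(round(m["ADHD"]["depression"], 1))  # 66.7
```

The matrix is asymmetric because each row is normalized by a different group size. The reported values are consistent with this reading: 5.8% × 25.7% and 2.4% × 62.6% both come to approximately 1.5% of the sample, i.e., the same overlapping group of individuals viewed from the depression row and from the ADHD row.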

Figure 4. Prevalence and comorbidity among DSM-disorders. (A) Percentage of individuals by number of disorders to which they map. Inset shows the mapping of the 12.3% with one or more disorder. (B) Percentage of individuals with each disorder who also map to each other disorder (numbers in Supplementary Table 3). (C) Distribution of number of clinical symptoms across individuals who do not map to any disorder criteria (black line) and those who map to at least one (red line).

Symptom Prevalence Beyond DSM-Disorder Criteria

The minimum number of clinical symptoms required for a diagnosis of each of these 10 disorders, as per the DSM-5, ranges from 3 to 6. For instance, depression requires a minimum of 5 symptoms across 5 criteria out of a possible 18 symptoms and 8 criteria. Nonetheless, among those who mapped to at least one disorder, the average number of clinical symptoms was 20 (median 15), substantially higher than the minimum criteria for any one disorder (Figure 4C; red curve). On the other hand, 58.7% of respondents with 5 or more clinical symptoms did not map to any of the 10 disorder criteria (Figure 4C, black curve).

Symptom Heterogeneity Within Disorders

If a disorder group had significantly lower symptom dissimilarity, i.e., more homogeneous symptom profiles, compared to a randomly selected group, this would indicate that the DSM-5 diagnostic criteria for that disorder were good at separating individuals by a particular profile. We therefore first evaluated the heterogeneity of symptom profiles within each DSM-5 disorder group to see how it compared to the heterogeneity of groups of randomly selected individuals from across any of the 10 disorder groups. We hypothesized that, overall, the symptom profiles of individuals within each DSM-5 disorder group would be more homogeneous than those of a randomly selected group and therefore reasonably well-separated.

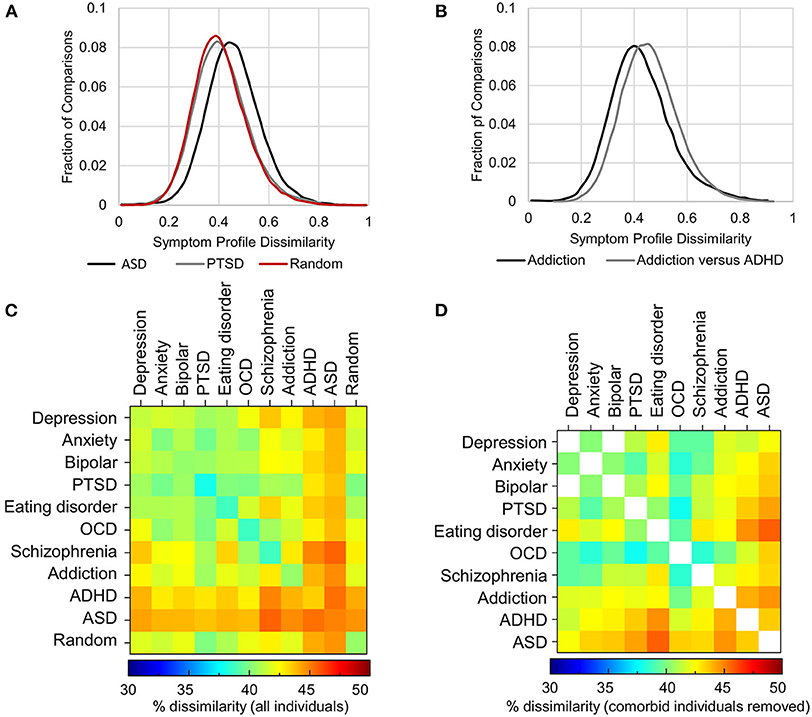

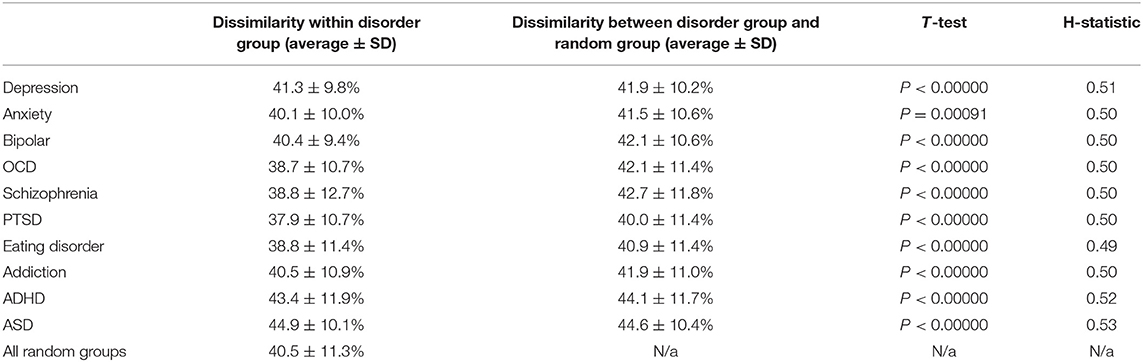

First, within each disorder, the heterogeneity (average dissimilarity ± SD) ranged from 37.9 ± 10.7% for PTSD (i.e., the most consistent) to 44.9 ± 10.1% for ASD (the most heterogeneous), while the mean heterogeneity for randomly selected groups was 40.5 ± 11.3% (Figure 5A, diagonal of Figure 5C and Table 3). Only PTSD (which had the highest prevalence in the sample, see Figure 3) had more than 5% lower average dissimilarity (6.4%) and therefore greater homogeneity than the average of the random groups, while ADHD and ASD had more than 5% higher average dissimilarity and therefore greater heterogeneity than the average of the random groups (7.2 and 10.9%, respectively; Figures 5A,C and Table 3). Although the differences in dissimilarity values were small, all were statistically significant by t-test (all p < 0.0009) because the sample size was large.
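The percentage differences quoted above are consistent with a relative difference taken against the random-group mean. This formula is inferred from the reported values rather than stated explicitly in the text, so it should be read as an assumption; the symbols are introduced here only for illustration.

```latex
\[
\Delta_{\text{rel}}
  = \frac{\bar{d}_{\text{disorder}} - \bar{d}_{\text{random}}}{\bar{d}_{\text{random}}} \times 100\%
\]
\[
\Delta_{\text{PTSD}} = \frac{37.9 - 40.5}{40.5} \times 100\% \approx -6.4\%,
\qquad
\Delta_{\text{ASD}} = \frac{44.9 - 40.5}{40.5} \times 100\% \approx +10.9\%
\]
```

Under this reading, the 5% cut-off refers to a relative change in mean dissimilarity, which corresponds to an absolute shift of roughly 2 percentage points around the random-group mean of 40.5%.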

Figure 5. Heterogeneity of symptom profiles within and between disorders. (A) Distribution of symptom profile dissimilarity across all individuals who map to criteria for PTSD (gray), ASD (black) and a randomly selected group of individuals from the pool of individuals with at least one of the 10 disorders (red). (B) Distribution of the dissimilarity of symptom profiles of all individuals mapping to the diagnostic criteria for addiction (black) and the dissimilarity of symptom profiles between those with addiction and those with ADHD excluding comorbid individuals. (C) Symptom dissimilarity within and between each pair of disorders ranges from 37.9% (within PTSD) to 45.6% (between ASD and Schizophrenia). (D) Symptom dissimilarity as in (C) but with comorbid individuals excluded ranged from 37.8 to 45.7%.

Table 3. Dissimilarity of symptom profiles within each disorder group and the comparison against symptom profiles within groups of randomly selected individuals from any disorder group.

Given that the difference in heterogeneity from the random groups was significant, although in both positive and negative directions (i.e., more and less heterogeneous), we also looked at the Hopkins Statistic, a measure of how well disorder groups could be separated. The Hopkins Statistic measures how much the distance (dissimilarity) between nearest neighbors of elements in the disorder group differs from the distance to the nearest neighbor in the random group, such that a value of 0.5 would indicate that there was no difference. On the other hand, separable groups where the elements have closer neighbors within their group relative to a random group would typically have a Hopkins Statistic value >0.7 (see Methods section). Here the Hopkins Statistic ranged from 0.49 to 0.53 across all 10 disorders, indicating that the disorder groups were not distinguishable from a random sample and therefore did not represent valid clusters.
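To make the logic of this comparison concrete, the following is a minimal sketch of a Hopkins-style ratio of nearest-neighbor distances. It is not the implementation used in the study: the exact procedure and dissimilarity measure are defined in the Methods, and here a Jaccard-style dissimilarity on binary symptom profiles is assumed as a stand-in. The names `jaccard_dissimilarity`, `nearest_neighbor_distances` and `hopkins_like` are hypothetical.

```python
from typing import Callable, List, Sequence

Profile = Sequence[int]  # binary vector: 1 = clinical symptom present


def jaccard_dissimilarity(a: Profile, b: Profile) -> float:
    """Assumed measure: share of symptoms present in either profile that are not shared."""
    either = sum(1 for x, y in zip(a, b) if x or y)
    if either == 0:
        return 0.0
    differing = sum(1 for x, y in zip(a, b) if x != y)
    return differing / either


def nearest_neighbor_distances(
    group: List[Profile], dist: Callable[[Profile, Profile], float]
) -> List[float]:
    """Distance from each member to its closest other member (group needs >= 2 members)."""
    return [
        min(dist(p, q) for j, q in enumerate(group) if j != i)
        for i, p in enumerate(group)
    ]


def hopkins_like(
    disorder_group: List[Profile],
    reference_group: List[Profile],
    dist: Callable[[Profile, Profile], float] = jaccard_dissimilarity,
) -> float:
    """Ratio u / (u + w): 0.5 means no difference, values near 1 mean a tighter disorder group."""
    w = sum(nearest_neighbor_distances(disorder_group, dist))   # within the disorder group
    u = sum(nearest_neighbor_distances(reference_group, dist))  # within the random reference group
    if u + w == 0:
        return 0.5
    return u / (u + w)


# Toy profiles (hypothetical): a tight group versus a spread-out reference group
tight = [[1, 1, 0, 0], [1, 1, 0, 0], [1, 1, 1, 0]]
spread = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
print(round(hopkins_like(tight, spread), 2))  # 0.9
print(hopkins_like(spread, spread))           # 0.5
```

In this sketch the reference group is passed in explicitly so that the result is deterministic; in practice it would be drawn at random from the pool of individuals with at least one disorder, and both groups would be of equal size so that the summed distances are comparable.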

We similarly ran this analysis for different demographic segments in order to determine whether there was any difference for a particular demographic (e.g., age, gender, country) such that the DSM-5 criteria better separated individuals in one particular group. However, the results were similar irrespective of demographic (Supplementary Table 4).

Symptom Dissimilarity Between Disorders

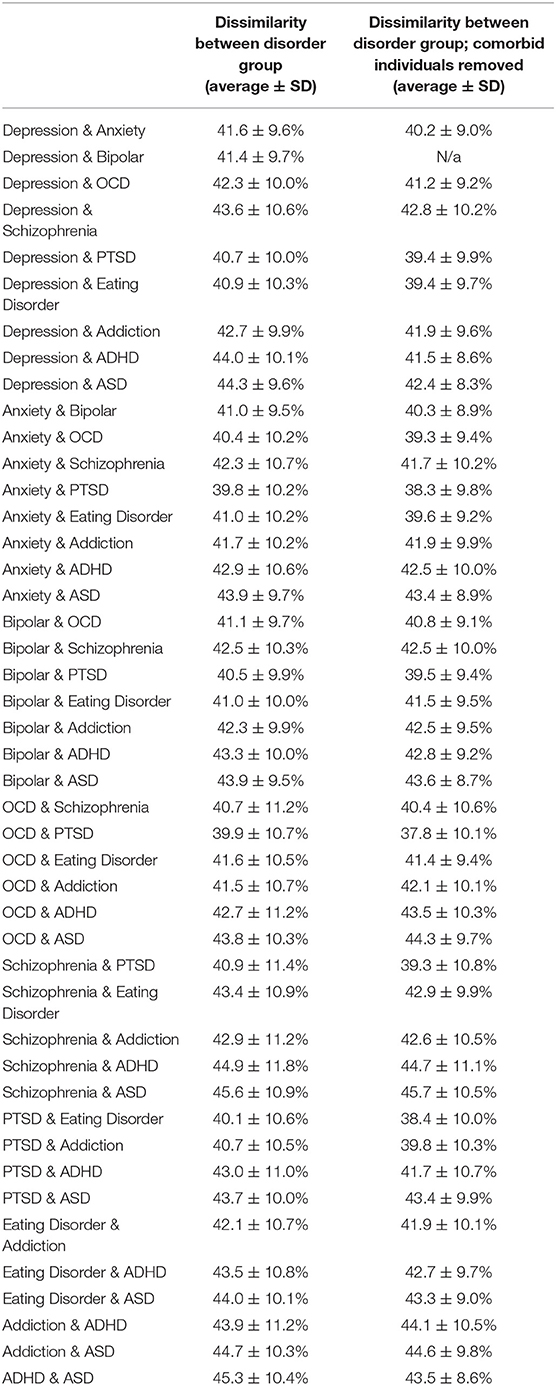

As randomly selected groups would typically have a composition that reflected the prevalence of disorders and their comorbidities, we next hypothesized that the symptom profiles between disorders might be significantly different from one another when comorbid individuals were removed. Notably, the dissimilarity between disorders was not much higher than the within-disorder dissimilarity, ranging from 39.8% (between Anxiety and PTSD) to 45.6% (between Schizophrenia and ASD) (Figure 5C and Table 4). In addition, contrary to expectation, excluding all comorbid individuals from the comparison decreased heterogeneity, although not substantially, as comorbid individuals tended to have a larger number of symptoms overall, and therefore greater symptom dissimilarity (Figure 5D and Table 4). Only in 18% of comparisons did heterogeneity increase. Overall, in either direction, the maximal difference was 5.7%. Figure 5B shows an example of the within-disorder heterogeneity for addiction compared to the heterogeneity between individuals with addiction and ADHD after removing those individuals who were comorbid for both. This demonstrates the very small difference in dissimilarity among those with addiction compared to the dissimilarity between those who had addiction and those who had ADHD (but not both).
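The within- and between-disorder comparison described above, with and without comorbid individuals, can be sketched as follows. This is an illustrative sketch only: the pairwise measure is an assumed Jaccard-style dissimilarity expressed as a percentage, not necessarily the measure defined in the Methods, and the function names and toy data are hypothetical.

```python
from itertools import combinations
from statistics import mean
from typing import List, Sequence, Set, Tuple

Person = Tuple[Sequence[int], Set[str]]  # (binary symptom profile, disorder labels)


def dissimilarity(a: Sequence[int], b: Sequence[int]) -> float:
    """Assumed measure: % of symptoms present in either profile that are not shared."""
    either = sum(1 for x, y in zip(a, b) if x or y)
    differing = sum(1 for x, y in zip(a, b) if x != y)
    return 100.0 * differing / either if either else 0.0


def within(people: List[Person], label: str) -> float:
    """Mean pairwise dissimilarity among all individuals mapping to one disorder."""
    group = [profile for profile, labels in people if label in labels]
    return mean(dissimilarity(a, b) for a, b in combinations(group, 2))


def between(
    people: List[Person], label_a: str, label_b: str, exclude_comorbid: bool = True
) -> float:
    """Mean dissimilarity over pairs with one member from each disorder group."""
    idx_a = [i for i, (_, labels) in enumerate(people) if label_a in labels]
    idx_b = [i for i, (_, labels) in enumerate(people) if label_b in labels]
    if exclude_comorbid:
        both = set(idx_a) & set(idx_b)
        idx_a = [i for i in idx_a if i not in both]
        idx_b = [i for i in idx_b if i not in both]
    # Unordered pairs of distinct individuals, so nobody is compared with themselves
    pairs = {(min(i, j), max(i, j)) for i in idx_a for j in idx_b if i != j}
    return mean(dissimilarity(people[i][0], people[j][0]) for i, j in sorted(pairs))


# Toy data (hypothetical): the last individual is comorbid for both disorders
people = [
    ([1, 1, 0, 0], {"addiction"}),
    ([1, 0, 0, 0], {"addiction"}),
    ([0, 0, 1, 1], {"ADHD"}),
    ([1, 1, 1, 1], {"addiction", "ADHD"}),
]
print(round(within(people, "addiction"), 1))                       # 58.3
print(between(people, "addiction", "ADHD"))                         # 100.0
print(between(people, "addiction", "ADHD", exclude_comorbid=False))  # 75.0
```

The toy values are chosen only to exercise the code and do not reproduce the direction or size of the effect reported in Table 4.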

Table 4. Dissimilarity of symptom profiles between each disorder group, with and without comorbid individuals removed.

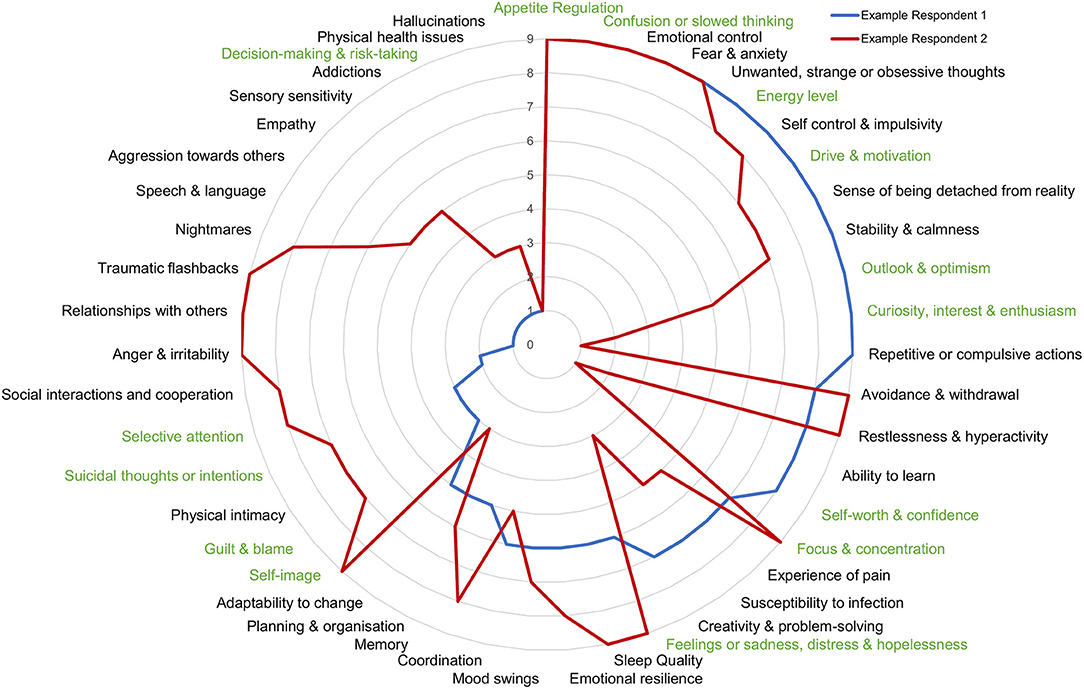

Thus, contrary to expectation, the DSM-5 criteria by which disorders are defined do not separate individuals based on their overall symptom profile and are closely equivalent to a random drawing of individuals. As a demonstration of the heterogeneity within disorders, we show the clinical symptom profiles of 2 individuals who met the diagnostic criteria for depression (Figure 6). If these individuals were only considered on the basis of a depression screening, their dramatically different symptom profiles would be missed.

Figure 6. Symptom profile of 2 individuals who map to DSM-criteria for depression. Comparison of symptom profiles of 2 individuals mapping to diagnostic criteria for depression is an example of the within disorder symptom heterogeneity. Higher numbers toward the outside of the circle represent a greater problem.

Discussion

The heterogeneity of symptom profiles within disorders, and comorbidity across disorders, are known to present a significant challenge to the effective diagnosis, treatment and research of mental illness (20, 23, 31). Here we quantify the degree of heterogeneity of comprehensive symptom profiles of 107,349 individuals within and across DSM-diagnostic criteria. While 58.7% of those with 5 or more clinically significant symptoms did not map to the diagnostic criteria of any of the 10 DSM-5 disorders, those with symptom profiles that mapped to at least one disorder reported, on average, 20 clinically significant symptoms. The heterogeneity of symptom profiles was almost as high within a disorder group (average dissimilarity of 40.5% across all disorders) as between 2 disorder groups (41.8% on average with comorbid individuals removed) and no individual disorder group was separable from randomly selected groups of individuals with at least one disorder, with the Hopkins statistic ranging between 0.49 and 0.53. Overall, the DSM-5 disorder criteria failed to separate individuals by symptom profiles any better than random assignment. Thus, rather than representing a method of separating groups based on symptoms, disorder labels primarily serve to emphasize a particular subset of symptoms without consideration of the entire symptom profile. Given that the symptom criteria of the DSM-5 do not specifically reflect any known underlying biology or etiology, this calls into question their utility as a diagnostic system and suggests that they likely play a greater role in hindering than helping progress in the understanding and treatment of mental health challenges. We discuss here the implications of these results for how we consider mental health epidemiology, clinical diagnosis, treatment, and research.

Estimating Prevalence and Mental Health Epidemiology

Across the sample, symptom prevalence decreased systematically with an exponential decay. Thus, drawing the line between how many severe symptoms are normal vs. clinical is essentially an arbitrary judgement. Across the sample, using the more stringent threshold of negative life impact to define clinical significance, 12.3% had symptom profiles that aligned with at least one DSM-5 disorder diagnosis. However, changing the threshold of clinical significance within the MHQ rating framework by one severity point changed clinical prevalence to 25.8%. While prevalence estimates in these data, based on point estimates and using the most stringent threshold criteria, matched up with some epidemiological prevalence estimates (3, 92–94), studies have wide ranges that depend on the tool used, the thresholds considered, as well as geography and time period (7, 96–103). For example, a recent meta-analysis found that point prevalence of depression ranged from 3.1 to 87.3% across 48 studies and 7 populations (97). Notwithstanding the heterogeneity of disorders, this highlights that there is no absolute epidemiological estimate but rather that each estimate must be qualified by the thresholds used, as well as other factors. Thus, changes in prevalence across time periods cannot be assessed using different tools. It also raises the question of who decides the appropriate threshold, or boundary, between a disorder and the normal variation of human existence (104–106). This has implications for numerous societal aspects, such as the threshold at which a person warrants medical attention as well as debate over financial and resource allocation.
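The sensitivity to the severity threshold discussed above can be illustrated with a minimal sketch that counts clinical symptoms for a single respondent under the stricter thresholds (≥8 for problem items and ≤1 for spectrum items) and under thresholds shifted by one point (≥7 and ≤2). The function name `count_clinical`, the item names and the ratings are hypothetical and are not MHQ data.

```python
from typing import Dict, List


def count_clinical(
    ratings: Dict[str, int],
    problem_items: List[str],
    spectrum_items: List[str],
    shift: int = 0,
) -> int:
    """Count items rated as clinical; shift=1 relaxes both thresholds by one point."""
    problem_cut = 8 - shift    # problem items: clinical if rating >= cut
    spectrum_cut = 1 + shift   # spectrum items: clinical if rating <= cut
    n = sum(1 for item in problem_items if ratings[item] >= problem_cut)
    n += sum(1 for item in spectrum_items if ratings[item] <= spectrum_cut)
    return n


# Hypothetical respondent with two ratings sitting one point inside the stricter thresholds
ratings = {"problem_a": 8, "problem_b": 7, "spectrum_a": 2, "spectrum_b": 1}
problem_items = ["problem_a", "problem_b"]
spectrum_items = ["spectrum_a", "spectrum_b"]

print(count_clinical(ratings, problem_items, spectrum_items))           # 2
print(count_clinical(ratings, problem_items, spectrum_items, shift=1))  # 4
```

Because disorder criteria are applied to these per-item classifications, any respondent with ratings close to the cut-off can move into or out of a disorder label when the threshold shifts by a single point.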

Implications for Clinical Diagnosis and Research

These results highlight the quantitative extent of the challenge of utilizing DSM-5 based criteria for diagnosis and treatment selection, where symptoms of those who map to DSM-5 criteria are as heterogeneous within disorder labels as between and cannot be differentiated from random. Given this heterogeneity, it is no surprise that only 39% of clinicians report often or always referring to written DSM criteria during an initial evaluation and approximately a third often or always use not otherwise specified (NOS) categories, primarily due to the patient not meeting criteria for a specific category (107). These results also illustrate the challenges of selecting patients or participants into a research study based on their meeting criteria for a disorder label. When participants are recruited into a trial based on these diagnostic criteria, they are as symptomatically heterogeneous a pool as if one were to simply recruit randomly among those with mental health distress from any of these 10 disorders. This likely contributes substantially to the challenge of obtaining clear results in clinical trials where outcomes are rarely unequivocal and typically successful for a low proportion of patients (15). In addition, efficacy of a treatment with respect to the subset of symptoms emphasized by a DSM-5 based disorder label may not reflect overall efficacy in treating mental well-being, as other symptoms that are not considered in a particular diagnosis may fail to improve or even get worse.

Limitations

It is important that we acknowledge some limitations of these data and this study. First, symptom profiles were obtained through a self-report questionnaire. While the assessment has now been extensively tested for reliability and validity (89), this would nonetheless limit the inclusion of individuals who are so impaired that they may be unable to take an online survey, or unable to understand the questions sufficiently to answer them.

Second, the sample consisted of self-selected individuals whose symptom profiles may differ from those who chose not to take an assessment. However, this concern is mitigated by the large sample size, broad demographic representation and similar results obtained across demographics. Even if the results only apply to those who would present for mental health assessment this is nonetheless important as they represent the population more likely to be seeking help.

Third, the sample was acquired during the Covid-19 pandemic during which symptom profiles may differ from pre-pandemic times. Should this be the case, it would highlight the challenge of a hard and fast diagnostic system that does not consider the evolution of mental health challenges over time.

Fourth, the criteria mapping process was limited by the absence of specific information pertaining to symptom frequency within the MHQ for each item. The threshold of 8 was determined as an appropriate selection for at least one problem item (Feelings of sadness, distress or hopelessness), equivalent on average to experiencing the symptom 5 days per week, in alignment with the DSM-5 requirement of experiencing the symptom “nearly every day” for depression. However, it is possible that the correspondence between frequency and life impact rating may differ from item to item and so other threshold values may have been more appropriate for other items. Nonetheless, while one would expect that most thresholds would not be far off the mark, this highlights the challenges of a threshold-based approach, which plague most, if not all, assessment tools.

Finally, the presence of broad or imperfect matches for certain symptoms pertaining to OCD and bipolar disorder could have affected the accuracy of the mapping and the specific values of prevalence and overlap as derived from this data. While this must be verified in future studies, the inaccurate mapping of one or two symptoms is not likely to impact the overall finding—that overall symptom profiles are as heterogeneous within disorder groups as between and not separable as a group from randomly selected individuals with any disorder. This is because the DSM-5 typically considers only between 3 and 6 symptoms for a diagnosis whereas individuals present with 20 symptoms on average (Figure 4C). Therefore, while more accurate mapping may improve the symptom overlap by one or two symptoms, this would not move the needle substantially since the 15–17 other symptoms that the diagnosis did not consider would contribute far more to the heterogeneity.

Nonetheless, it is important to compare the MHQ criteria mapping for diagnostic determination to more commonly used assessments, for example mapping against the PHQ-9 (74) and the GAD-7 (75) to determine the alignment between MHQ criteria for depression and anxiety, and the scores from these two questionnaires, respectively. Furthermore, validation of these results with an alternative questionnaire where questions are phrased differently but still cover all symptoms comprehensively would also be important.

Future Directions

Despite the many challenges of the DSM-5, there is no denying that it is deeply embedded in the fabric of clinical, economic and social decision-making. Indeed 64% of clinicians often or always use the diagnostic codes for administrative or billing purposes and 55% find it very or extremely useful for communicating clinical diagnoses with colleagues and other healthcare professionals (107). However, given the broad reaching negative implications of a disorder classification system that is indistinguishable from random groupings, it is exceedingly important to identify and develop alternative approaches.

From the symptom perspective, we advocate for an approach that is rooted in empirical understanding of symptom clusters. A stepwise change toward disorder agnostic phenotypes of symptom profiles would have multiple benefits. First, it would allow clinicians to obtain a complete picture of patient symptoms so that they could make more informed treatment decisions based on the whole patient experience, leading to more streamlined or personalized treatment pathways. Second, it would further support the application of transdiagnostic frameworks such as RDoC (65). Third, it would aid the search for underlying etiologies, and the identification of social determinants, by allowing phenotypic testing of the efficacy of medications and interventions. In the quest to construct empirical phenotypes of symptoms, different mechanisms of constructing and comparing symptom profiles should be explored. For example, dissimilarity is likely amplified by thresholding, and comparisons that look across the scale of impact may yield a clearer picture.

Finally, it would also be important to utilize large-scale data of comprehensive symptom profiles, such as we have used here, to understand the relative stability of symptoms within individuals and the relationships between symptoms. Multiple approaches have already been proposed in this direction. For example, network approaches (49, 50) have been utilized to identify which symptoms are having the greatest impact in sustaining other symptoms, introducing possibilities for specific targeting of symptoms that will likely have the greatest impact on clinical outcomes (56–58). Another approach, HiTOP (67–69), proposes a hierarchical framework that combines individual symptoms into components or traits, assembling them into empirically derived syndromes, and finally grouping them into psychopathology spectra (e.g., internalizing and externalizing). We note that the MHQ is a symptom profiling tool, rather than a diagnostic framework, and thus would be envisaged as a transdiagnostic or disorder agnostic assessment that could be used to support insights arising from network studies, or those using frameworks such as HiTOP and RDoC. Ultimately, various approaches proposed should be tested to determine which provides the closest correspondence to clinical trial response criteria and insight in relation to physiological parameters.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://sapienlabs.org/mhm-data-access-request/.

Ethics Statement

The studies involving human participants were reviewed and approved by Health Media Lab Institutional Review Board. Participation was anonymous and involved online informed consent.

Author Contributions

JN and TT conceptualized, designed, led the study, interpreted the data, drafted the manuscript, and made critical revisions. JN and VP performed the analysis. JN created the figures. JN, TT, and VP approved the final version and agreed to be accountable for all aspects of the work. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by Sapien Labs using private unrestricted donor funding. Sapien Labs is a 501(c)(3) not for profit research organization founded in 2016 with a mission to understand and enable the human mind.

Conflict of Interest

TT received a grant award from the National Institute of Mental Health (NIMH) to develop a commercial version of the MHQ tool referenced herein.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2021.775762/full#supplementary-material

References

1. Ritchie H, Roser M. Published Online at OurWorldInData.org. Available online at: https://ourworldindata.org/mental-health (2021).

2. Friedrich MJ. Depression is the leading cause of disability around the world. JAMA. (2017). 317:1517. doi: 10.1001/jama.2017.3826

4. Pierce M, Hope H, Ford T, Hatch S, Hotopf M, John A, et al. Mental health before and during the COVID-19 pandemic: a longitudinal probability sample survey of the UK population. Lancet Psychiatry. (2020). 7:883–92. doi: 10.1016/S2215-0366(20)30308-4

5. Serafini G, Parmigiani B, Amerio A, Aguglia A, Sher L, Amore M. The psychological impact of COVID-19 on the mental health in the general population. QJM. (2020). 113:531–7. doi: 10.1093/qjmed/hcaa201

6. Varma P, Junge M, Meaklim H, Jackson ML. Younger people are more vulnerable to stress, anxiety and depression during COVID-19 pandemic: a global cross-sectional survey. Prog Neuropsychopharmacol Biol Psychiatry. (2020). 109:110236. doi: 10.1016/j.pnpbp.2020.110236

7. Xiong J, Lipsitz O, Nasri F, Lui LMW, Gill H, Phan L, et al. Impact of COVID-19 pandemic on mental health in the general population: a systematic review. J Affect Disord. (2020). 277:55–64. doi: 10.1016/j.jad.2020.08.001

9. WHO. International Statistical Classification of Diseases and Related Health Problems. 11th Rev. Geneva (2018).

10. Galatzer-Levy IR, Bryant RA. 636,120 ways to have posttraumatic stress disorder. Perspect Psychol Sci. (2013). 8:651–62. doi: 10.1177/1745691613504115

11. Zimmerman M, Ellison W, Young D, Chelminski I, Dalrymple K. How many different ways do patients meet the diagnostic criteria for major depressive disorder? Compr Psychiatry. (2015). 56:29–34. doi: 10.1016/j.comppsych.2014.09.007

12. Goldberg D. The heterogeneity of “major depression”. World Psychiatry. (2011). 10:226–8. doi: 10.1002/j.2051-5545.2011.tb00061.x

13. Buch AM, Liston C. Dissecting diagnostic heterogeneity in depression by integrating neuroimaging and genetics. Neuropsychopharmacology. (2021). 46:156–75. doi: 10.1038/s41386-020-00789-3

14. Nandi A, Beard JR, Galea S. Epidemiologic heterogeneity of common mood and anxiety disorders over the lifecourse in the general population: a systematic review. BMC Psychiatry. (2009). 9:31. doi: 10.1186/1471-244X-9-31

15. Khan A, Mar KF, Brown WA. The conundrum of depression clinical trials: one size does not fit all. Int Clin Psychopharmacol. (2018). 33:239–48. doi: 10.1097/YIC.0000000000000229

16. Fried EI, Nesse RM. Depression is not a consistent syndrome: an investigation of unique symptom patterns in the STAR*D study. J Affect Disord. (2015). 172:96–102. doi: 10.1016/j.jad.2014.10.010

17. Luo Y, Weibman D, Halperin JM, Li X. A review of heterogeneity in attention deficit/hyperactivity disorder (ADHD). Front Hum Neurosci. (2019). 13:42. doi: 10.3389/fnhum.2019.00042

18. Masi A, DeMayo MM, Glozier N, Guastella AJ. An overview of autism spectrum disorder, heterogeneity and treatment options. Neurosci Bull. (2017). 33:183–93. doi: 10.1007/s12264-017-0100-y

19. Drysdale AT, Grosenick L, Downar J, Dunlop K, Mansouri F, Meng Y, et al. Resting-state connectivity biomarkers define neurophysiological subtypes of depression. Nat Med. (2017). 23:28–38. doi: 10.1038/nm.4246

20. Feczko E, Miranda-Dominguez O, Marr M, Graham AM, Nigg JT, Fair DA. The heterogeneity problem: approaches to identify psychiatric subtypes. Trends Cogn Sci. (2019). 23:584–601. doi: 10.1016/j.tics.2019.03.009

21. Zhang Y, Wu W, Toll RT, Naparstek S, Maron-Katz A, Watts M, et al. Identification of psychiatric disorder subtypes from functional connectivity patterns in resting-state electroencephalography. Nat Biomed Eng. (2021). 5:309–23. doi: 10.1038/s41551-020-00614-8

22. Young G, Lareau C, Pierre B. One quintillion ways to have PTSD comorbidity: recommendations for the disordered DSM-5. Psychol Injury Law. (2014). 7:61–74. doi: 10.1007/s12207-014-9186-y

23. Maj M. ‘Psychiatric comorbidity’: an artefact of current diagnostic systems? Br J Psychiatry. (2005). 186:182–4. doi: 10.1192/bjp.186.3.182

24. Hirschfeld RMA. The comorbidity of major depression and anxiety disorders: recognition and management in primary care. Prim Care Companion J Clin Psychiatry. (2001). 3:244–54. doi: 10.4088/PCC.v03n0609

25. Kessler RC, Merikangas KR, Wang PS. Prevalence, comorbidity, and service utilization for mood disorders in the United States at the beginning of the twenty-first century. Annu Rev Clin Psychol. (2007). 3:137–58. doi: 10.1146/annurev.clinpsy.3.022806.091444

26. Kaufman J, Charney D. Comorbidity of mood and anxiety disorders. Depress Anxiety. (2000). 12(Suppl. 1):69–76. doi: 10.1002/1520-6394(2000)12:1+<69::AID-DA9>3.0.CO;2-K

27. Brady KT. Posttraumatic stress disorder and comorbidity: recognizing the many faces of PTSD. J Clin Psychiatry. (1997). 58(Suppl. 9):12–5.

28. Buckley PF, Miller BJ, Lehrer DS, Castle DJ. Psychiatric comorbidities and schizophrenia. Schizophr Bull. (2009). 35:383–402. doi: 10.1093/schbul/sbn135

29. Jensen PS, Martin D, Cantwell DP. Comorbidity in ADHD: implications for research, practice, and DSM-V. J Am Acad Child Adolesc Psychiatry. (1997). 36:1065–79. doi: 10.1097/00004583-199708000-00014

30. Angold A, Costello EJ, Erkanli A. Comorbidity. J Child Psychol Psychiatry. (1999). 40:57–87. doi: 10.1111/1469-7610.00424

31. Allsopp K, Read J, Corcoran R, Kinderman P. Heterogeneity in psychiatric diagnostic classification. Psychiatry Res. (2019). 279:15–22. doi: 10.1016/j.psychres.2019.07.005

32. Kessler RC, Nelson CB, McGonagle KA, Liu J, Swartz M, Blazer DG. Comorbidity of DSM-III-R major depressive disorder in the general population: results from the US National Comorbidity Survey. Br J Psychiatry. (1996). 168:17–30. doi: 10.1192/S0007125000298371

33. Plana-Ripoll O, Pedersen CB, Holtz Y, Benros ME, Dalsgaard S, de Jonge P, et al. Exploring comorbidity within mental disorders among a Danish national population. JAMA Psychiatry. (2019). 76:259–70. doi: 10.1001/jamapsychiatry.2018.3658

34. Caspi A, Houts RM, Ambler A, Danese A, Elliott ML, Hariri A, et al. Longitudinal assessment of mental health disorders and comorbidities across 4 decades among participants in the Dunedin birth cohort study. JAMA Netw Open. (2020). 3:e203221. doi: 10.1001/jamanetworkopen.2020.3221

35. Newson JJ, Hunter D, Thiagarajan TC. The heterogeneity of mental health assessment. Front Psychiatry. (2020). 11:76. doi: 10.3389/fpsyt.2020.00076

36. Fried EI. The 52 symptoms of major depression: lack of content overlap among seven common depression scales. J Affect Disord. (2017). 208:191–7. doi: 10.1016/j.jad.2016.10.019

37. Wakefield JC. Diagnostic issues and controversies in DSM-5: return of the false positives problem. Annu Rev Clin Psychol. (2016). 12:105–32. doi: 10.1146/annurev-clinpsy-032814-112800

38. Stein DJ, Lund C, Nesse RM. Classification systems in psychiatry: diagnosis and global mental health in the era of DSM-5 and ICD-11. Curr Opin Psychiatry. (2013). 26:493–7. doi: 10.1097/YCO.0b013e3283642dfd

39. Pierre JM. The borders of mental disorder in psychiatry and the DSM: past, present, and future. J Psychiatr Pract. (2010). 16:375–86. doi: 10.1097/01.pra.0000390756.37754.68

40. Rössler W. What is normal? The impact of psychiatric classification on mental health practice and research. Front Public Health. (2013). 1:68. doi: 10.3389/fpubh.2013.00068

41. Krueger RF, Hopwood CJ, Wright AG, Markon KE. Challenges and strategies in helping the DSM become more dimensional and empirically based. Curr Psychiatry Rep. (2014). 16:515. doi: 10.1007/s11920-014-0515-3

42. First MB, Wakefield JC. Diagnostic criteria as dysfunction indicators: bridging the chasm between the definition of mental disorder and diagnostic criteria for specific disorders. Can J Psychiatry. (2013). 58:663–9. doi: 10.1177/070674371305801203

43. Clark LA, Cuthbert B, Lewis-Fernández R, Narrow WE, Reed GM. Three approaches to understanding and classifying mental disorder: ICD-11, DSM-5, and the national institute of mental health's research domain criteria (RDoC). Psychol Sci Public Interest. (2017). 18:72–145. doi: 10.1177/1529100617727266

44. Hickie IB, Scott J, Hermens DF, Scott EM, Naismith SL, Guastella AJ, et al. Clinical classification in mental health at the cross-roads: which direction next? BMC Med. (2013). 11:125. doi: 10.1186/1741-7015-11-125

45. Kapur S, Phillips AG, Insel TR. Why has it taken so long for biological psychiatry to develop clinical tests and what to do about it? Mol Psychiatry. (2012). 17:1174–9. doi: 10.1038/mp.2012.105

46. McElroy E, Patalay P. In search of disorders: internalizing symptom networks in a large clinical sample. J Child Psychol Psychiatry. (2019). 60:897–906. doi: 10.1111/jcpp.13044

47. Zimmerman M, Mattia JI. Psychiatric diagnosis in clinical practice: is comorbidity being missed? Compr Psychiatry. (1999). 40:182–91. doi: 10.1016/S0010-440X(99)90001-9

48. Fried EI, Robinaugh DJ. Systems all the way down: embracing complexity in mental health research. BMC Medicine. (2020). 18:205. doi: 10.1186/s12916-020-01668-w

49. Borsboom D. A network theory of mental disorders. World Psychiatry. (2017). 16:5–13. doi: 10.1002/wps.20375

50. Cramer AO, Waldorp LJ, van der Maas HL, Borsboom D. Comorbidity: a network perspective. Behav Brain Sci. (2010). 33:137–50; discussion: 50–93. doi: 10.1017/S0140525X09991567

51. Fried EI, Nesse RM. Depression sum-scores don't add up: why analyzing specific depression symptoms is essential. BMC Med. (2015). 13:72. doi: 10.1186/s12916-015-0325-4

52. Waszczuk MA, Zimmerman M, Ruggero C, Li K, MacNamara A, Weinberg A, et al. What do clinicians treat: diagnoses or symptoms? The incremental validity of a symptom-based, dimensional characterization of emotional disorders in predicting medication prescription patterns. Compr Psychiatry. (2017). 79:80–8. doi: 10.1016/j.comppsych.2017.04.004

53. Tanner J, Zeffiro T, Wyss D, Perron N, Rufer M, Mueller-Pfeiffer C. Psychiatric symptom profiles predict functional impairment. Front Psychiatry. (2019). 10:37. doi: 10.3389/fpsyt.2019.00037

54. Contreras A, Nieto I, Valiente C, Espinosa R, Vazquez C. The study of psychopathology from the network analysis perspective: a systematic review. Psychother Psychosomatics. (2019). 88:71–83. doi: 10.1159/000497425

55. Robinaugh DJ, Hoekstra RHA, Toner ER, Borsboom D. The network approach to psychopathology: a review of the literature 2008–2018 and an agenda for future research. Psychol Med. (2020). 50:353–66. doi: 10.1017/S0033291719003404

56. Bak M, Drukker M, Hasmi L, van Os J. An n=1 clinical network analysis of symptoms and treatment in psychosis. PLoS ONE. (2016). 11:e0162811. doi: 10.1371/journal.pone.0162811

57. Blanken TF, Borsboom D, Penninx BW, Van Someren EJ. Network outcome analysis identifies difficulty initiating sleep as a primary target for prevention of depression: a 6-year prospective study. Sleep. (2020). 43:1–6. doi: 10.1093/sleep/zsz288

58. Isvoranu AM, Ziermans T, Schirmbeck F, Borsboom D, Geurts HM, de Haan L. Autistic symptoms and social functioning in psychosis: a network approach. Schizophr Bull. (2021). sbab084. doi: 10.1093/schbul/sbab084

59. Tzur Bitan D, Grossman Giron A, Alon G, Mendlovic S, Bloch Y, Segev A. Attitudes of mental health clinicians toward perceived inaccuracy of a schizophrenia diagnosis in routine clinical practice. BMC Psychiatry. (2018). 18:317. doi: 10.1186/s12888-018-1897-2

60. Pacchiarotti I, Kotzalidis GD, Murru A, Mazzarini L, Rapinesi C, Valentí M, et al. Mixed features in depression: the unmet needs of diagnostic and statistical manual of mental disorders fifth edition. Psychiatr Clin North Am. (2020). 43:59–68. doi: 10.1016/j.psc.2019.10.006

61. Fairburn CG, Bohn K. Eating disorder NOS (EDNOS): an example of the troublesome “not otherwise specified” (NOS) category in DSM-IV. Behav Res Ther. (2005). 43:691–701. doi: 10.1016/j.brat.2004.06.011

62. Rajakannan T, Safer DJ, Burcu M, Zito JM. National trends in psychiatric not otherwise specified (NOS) diagnosis and medication use among adults in outpatient treatment. Psychiatr Serv. (2016). 67:289–95. doi: 10.1176/appi.ps.201500045

63. Zimmerman M. A review of 20 years of research on overdiagnosis and underdiagnosis in the Rhode Island methods to improve diagnostic assessment and services (MIDAS) project. Can J Psychiatry. (2016). 61:71–9. doi: 10.1177/0706743715625935

64. Harris MG, Kazdin AE, Chiu WT, Sampson NA, Aguilar-Gaxiola S, Al-Hamzawi A, et al. Findings from world mental health surveys of the perceived helpfulness of treatment for patients with major depressive disorder. JAMA Psychiatry. (2020). 77:830–41. doi: 10.1001/jamapsychiatry.2020.1107

65. Insel T, Cuthbert B, Garvey M, Heinssen R, Pine DS, Quinn K, et al. Research domain criteria (RDoC): toward a new classification framework for research on mental disorders. Am J Psychiatry. (2010). 167:748–51. doi: 10.1176/appi.ajp.2010.09091379

66. Insel TR. The NIMH research domain criteria (RDoC) project: precision medicine for psychiatry. Am J Psychiatry. (2014). 171:395–7. doi: 10.1176/appi.ajp.2014.14020138

67. Conway CC, Forbes MK, Forbush KT, Fried EI, Hallquist MN, Kotov R, et al. A hierarchical taxonomy of psychopathology can transform mental health research. Perspect Psychol Sci. (2019). 14:419–36. doi: 10.31234/osf.io/wsygp

68. Kotov R, Krueger RF, Watson D, Achenbach TM, Althoff RR, Bagby RM, et al. The hierarchical taxonomy of psychopathology (HiTOP): a dimensional alternative to traditional nosologies. J Abnormal Psychol. (2017). 126:454–77. doi: 10.1037/abn0000258

69. Kotov R, Krueger RF, Watson D, Cicero DC, Conway CC, DeYoung CG, et al. The hierarchical taxonomy of psychopathology (HiTOP): a quantitative nosology based on consensus of evidence. Annu Rev Clin Psychol. (2021). 17:83–108. doi: 10.1146/annurev-clinpsy-081219-093304

70. Caspi A, Houts RM, Belsky DW, Goldman-Mellor SJ, Harrington H, Israel S, et al. The p factor: one general psychopathology factor in the structure of psychiatric disorders? Clin Psychol Sci. (2014). 2:119–37. doi: 10.1177/2167702613497473

71. Caspi A, Moffitt TE. All for one and one for all: mental disorders in one dimension. Am J Psychiatry. (2018). 175:831–44. doi: 10.1176/appi.ajp.2018.17121383

72. Newson JJ, Thiagarajan TC. Assessment of population well-being with the mental health quotient (MHQ): development and usability study. JMIR Ment Health. (2020). 7:e17935. doi: 10.2196/17935

73. Newson J, Thiagarajan T. Dynamic dataset of global population mental wellbeing. PsyArXiv. [Preprint]. (2021). doi: 10.31234/osf.io/vtzne

74. Spitzer RL, Kroenke K, Williams JBW, the Patient Health Questionnaire Primary Care Study Group. Validation and utility of a self-report version of PRIME-MD. The PHQ primary care study. JAMA. (1999). 282:1737–44. doi: 10.1001/jama.282.18.1737

75. Spitzer RL, Kroenke K, Williams JBW, Löwe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. JAMA Internal Med. (2006). 166:1092–7. doi: 10.1001/archinte.166.10.1092

76. Hirschfeld RMA, Williams JBW, Spitzer RL, Calabrese JR, Flynn L, Keck PE, et al. Development and validation of a screening instrument for bipolar spectrum disorder: the mood disorder questionnaire. Am J Psychiatry. (2000). 157:1873–5. doi: 10.1176/appi.ajp.157.11.1873

77. Conners C, Erhardt D, Sparrow E. Conners' Adult ADHD Rating Scales (CAARS). New York: Multi-Health Systems, Inc. (1999).

78. Weathers FW, Blake DD, Schnurr PP, Kaloupek DG, Marx BP, Keane TM. The Clinician-Administered PTSD Scale for DSM-5 (CAPS-5) – Past Month [Measurement Instrument]. (2015). Available online at: https://www.ptsd.va.gov/

79. Weathers FW, Bovin MJ, Lee DJ, Sloan DM, Schnurr PP, Kaloupek DG, et al. The Clinician-Administered PTSD Scale for DSM-5 (CAPS-5): development and initial psychometric evaluation in military veterans. Psychol Assessment. (2018). 30:383–95. doi: 10.1037/pas0000486

80. Goodman WK, Price LH, Rasmussen SA, Mazure C, Fleischmann RL, Hill CL, et al. The Yale-Brown Obsessive Compulsive Scale: I. Development, use, and reliability. Arch General Psychiatry. (1989). 46:1006–11. doi: 10.1001/archpsyc.1989.01810110048007

81. Storch EA, Rasmussen SA, Price LH, Larson MJ, Murphy TK, Goodman WK. Development and psychometric evaluation of the Yale–Brown Obsessive-Compulsive Scale—Second Edition. Psychol Assessment. (2010). 22:223–32. doi: 10.1037/a0018492

82. McLellan AT, Kushner H, Metzger D, Peters R, Smith I, Grissom G, et al. The fifth edition of the Addiction Severity Index. J Substance Abuse Treatment. (1992). 9:199–213. doi: 10.1016/0740-5472(92)90062-S

83. Overall JE, Gorham DR. The brief psychiatric rating scale. Psychol Rep. (1962). 10:799–812. doi: 10.2466/pr0.1962.10.3.799

84. Ventura J, Lukoff D, Nuechterlein K, Liberman RP, Green MF, Shaner A. Brief Psychiatric Rating Scale Expanded version 4.0: scales anchor points and administration manual. Int J Meth Psychiatr Res. (1993). 13:221–44.

85. Garner DM. Eating Disorder Inventory-3. Professional Manual. Lutz, FL: Psychological Assessment Resources, Inc. (2004).

86. Lord C, Rutter M, Le Couteur A. Autism Diagnostic Interview-Revised: a revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. J Autism Dev Disord. (1994). 24:659–85. doi: 10.1007/BF02172145

87. Rutter M, Le Couteur A, Lord C. Autism Diagnostic Interview - Revised: Manual. Los Angeles, CA: Western Psychological Services (2003).

88. First M, Williams J, Karg R, Spitzer R. Structured Clinical Interview for DSM-5 Disorders, Clinician Version (SCID-5-CV). Arlington, VA: American Psychiatric Association (2016).

89. Newson J, Pastukh V, Thiagarajan T. Reliability and Validity of the Mental Health Quotient (MHQ). (2021). Available online at: psyarxiv.com/n7e9p

90. Banerjee A, Dave RN editors. Validating clusters using the Hopkins statistic. In: 2004 IEEE International Conference on Fuzzy Systems (IEEE Cat No04CH37542). Budapest (2004).

91. Newson J, Pastukh V, Sukhoi O, Taylor J, Thiagarajan T. Mental State of the World 2020, Mental Health Million Project. Washington, DC: Sapien Labs (2021).

92. Fayyad J, Sampson NA, Hwang I, Adamowski T, Aguilar-Gaxiola S, Al-Hamzawi A, et al. The descriptive epidemiology of DSM-IV Adult ADHD in the World Health Organization World Mental Health Surveys. Atten Defic Hyperact Disord. (2017). 9:47–65. doi: 10.1007/s12402-016-0208-3

93. Wittchen HU, Jacobi F, Rehm J, Gustavsson A, Svensson M, Jönsson B, et al. The size and burden of mental disorders and other disorders of the brain in Europe 2010. Eur Neuropsychopharmacol. (2011). 21:655–79. doi: 10.1016/j.euroneuro.2011.07.018

94. Bromet E, Andrade LH, Hwang I, Sampson NA, Alonso J, de Girolamo G, et al. Cross-national epidemiology of DSM-IV major depressive episode. BMC Med. (2011). 9:90. doi: 10.1186/1741-7015-9-90

95. Ettman CK, Abdalla SM, Cohen GH, Sampson L, Vivier PM, Galea S. Prevalence of depression symptoms in US adults before and during the COVID-19 pandemic. JAMA Netw Open. (2020). 3:e2019686. doi: 10.1001/jamanetworkopen.2020.19686

96. Cénat JM, Blais-Rochette C, Kokou-Kpolou CK, Noorishad PG, Mukunzi JN, McIntee SE, et al. Prevalence of symptoms of depression, anxiety, insomnia, posttraumatic stress disorder, and psychological distress among populations affected by the COVID-19 pandemic: a systematic review and meta-analysis. Psychiatry Res. (2021). 295:113599. doi: 10.1016/j.psychres.2020.113599

97. Wu T, Jia X, Shi H, Niu J, Yin X, Xie J, et al. Prevalence of mental health problems during the COVID-19 pandemic: a systematic review and meta-analysis. J Affect Disord. (2021). 281:91–8. doi: 10.1016/j.jad.2020.11.117

98. Baxter AJ, Scott KM, Vos T, Whiteford HA. Global prevalence of anxiety disorders: a systematic review and meta-regression. Psychol Med. (2013). 43:897–910. doi: 10.1017/S003329171200147X

99. Steel Z, Marnane C, Iranpour C, Chey T, Jackson JW, Patel V, et al. The global prevalence of common mental disorders: a systematic review and meta-analysis 1980-2013. Int J Epidemiol. (2014). 43:476–93. doi: 10.1093/ije/dyu038

100. McGrath J, Saha S, Welham J, El Saadi O, MacCauley C, Chant D. A systematic review of the incidence of schizophrenia: the distribution of rates and the influence of sex, urbanicity, migrant status and methodology. BMC Med. (2004). 2:13. doi: 10.1186/1741-7015-2-13

101. Lim GY, Tam WW, Lu Y, Ho CS, Zhang MW, Ho RC. Prevalence of Depression in the Community from 30 Countries between 1994 and 2014. Sci Rep. (2018). 8:2861. doi: 10.1038/s41598-018-21243-x

102. Fontenelle LF, Mendlowicz MV, Versiani M. The descriptive epidemiology of obsessive-compulsive disorder. Prog Neuropsychopharmacol Biol Psychiatry. (2006). 30:327–37. doi: 10.1016/j.pnpbp.2005.11.001

103. Atwoli L, Stein DJ, Koenen KC, McLaughlin KA. Epidemiology of posttraumatic stress disorder: prevalence, correlates and consequences. Curr Opin Psychiatry. (2015). 28:307–11. doi: 10.1097/YCO.0000000000000167

104. Cooper RV. Avoiding false positives: zones of rarity, the threshold problem, and the DSM clinical significance criterion. Can J Psychiatry. (2013). 58:606–11. doi: 10.1177/070674371305801105

105. Bolton D. Overdiagnosis problems in the DSM-IV and the new DSM-5: can they be resolved by the distress-impairment criterion? Can J Psychiatry. (2013). 58:612–7. doi: 10.1177/070674371305801106

106. Wakefield JC. The concept of mental disorder: diagnostic implications of the harmful dysfunction analysis. World Psychiatry. (2007). 6:149–56.

Keywords: diagnosis, symptom profiles, DSM-5, comorbidity, heterogeneity, depression, ADHD, autism spectrum disorder (ASD)

Citation: Newson JJ, Pastukh V and Thiagarajan TC (2021) Poor Separation of Clinical Symptom Profiles by DSM-5 Disorder Criteria. Front. Psychiatry 12:775762. doi: 10.3389/fpsyt.2021.775762

Received: 14 September 2021; Accepted: 05 November 2021;

Published: 29 November 2021.

Edited by:

M. Zachary Rosenthal, Duke University, United States

Reviewed by:

Gerald Young, York University, Canada

Matthew Southward, University of Kentucky, United States

Aaron Reuben, Duke University, United States

Copyright © 2021 Newson, Pastukh and Thiagarajan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jennifer Jane Newson, amVubmlmZXJAc2FwaWVubGFicy5vcmc=
