- ¹Department of Psychology, University of Nevada, Las Vegas, NV, United States

- ²Department of Biobehavioral Nursing and Health Informatics, University of Washington, Seattle, WA, United States

- ³Krembil Centre for Neuroinformatics, Centre for Addiction and Mental Health, Toronto, ON, Canada

- ⁴Vector Institute for Artificial Intelligence, Toronto, ON, Canada

- ⁵Department of Biomedical Informatics and Medical Education, University of Washington, Seattle, WA, United States

- ⁶Institute of Psychiatry, Psychology & Neuroscience, King's College London, London, United Kingdom

Artificial intelligence (AI) in healthcare aims to learn patterns in large multimodal datasets within and across individuals. These patterns may either improve understanding of current clinical status or predict a future outcome. AI holds the potential to revolutionize geriatric mental health care and research by supporting diagnosis, treatment, and clinical decision-making. However, much of this momentum is driven by data and computer scientists and engineers and runs the risk of being disconnected from pragmatic issues in clinical practice. This interprofessional perspective bridges the experiences of clinical scientists and data scientists. We provide a brief overview of AI with the main focus on possible applications and challenges of using AI-based approaches for research and clinical care in geriatric mental health. We suggest that future AI applications in geriatric mental health account for the pragmatic realities of clinical practice and the methodological differences between data and clinical science, and that they address issues of ethics, privacy, and trust.

Introduction

Artificial intelligence (AI) learns patterns in large multimodal datasets both within and across individuals (1) to help improve understanding of current clinical status [e.g., calculating a risk score for heart disease (2)] or predict a future outcome [e.g., predicting daily mood fluctuations (3)]. Such technology is increasingly critical and timely in the ongoing digital healthcare revolution. Advances in technology, such as the ubiquity of smartphones, wearables, and embedded sensors, in addition to the emergence of large datasets (e.g., electronic health records), have altered the landscape of clinical care and research. AI approaches can dynamically interpret such complex data and generate insights that could improve clinical methods and results. AI holds the potential to revolutionize geriatric mental health care and research by learning and applying such individualized predictions to guide clinical decision-making. Specifically, AI can contribute to the proactive and objective assessment of mental health symptoms to aid in diagnosis and treatment delivery to suit individual needs, including long-term monitoring and care management.

The big promise for AI in mental health care and research—largely due to its reliance on big data—is to facilitate understanding of what works for whom, and when. However, much of this momentum is driven by machine learning experts (e.g., data and computer scientists and engineers) and runs the risk of being disconnected from pragmatic issues in clinical practice. In this piece, we bring the perspectives of clinician-scientists in clinical geropsychology (BNR and MS) and geriatric nursing (OZ) to bear on expertise in AI and data science (AP). We provide a brief overview of AI in mental health with the main focus on possible applications and challenges of using AI-based approaches for research and clinical care in geriatric mental health.

Clinical Applications of AI

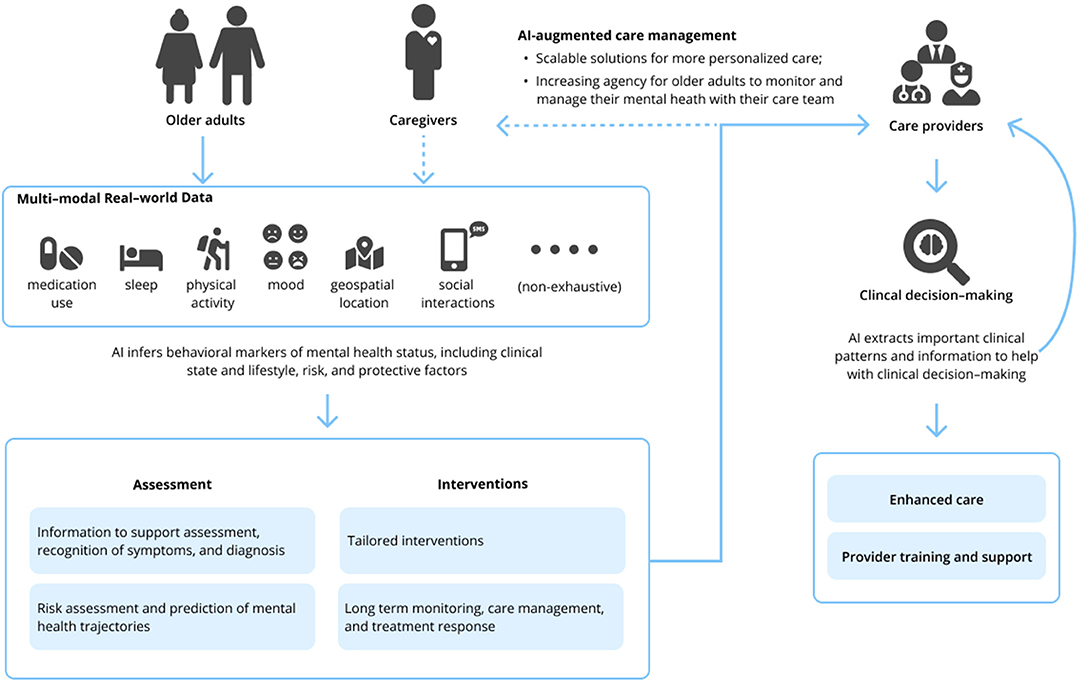

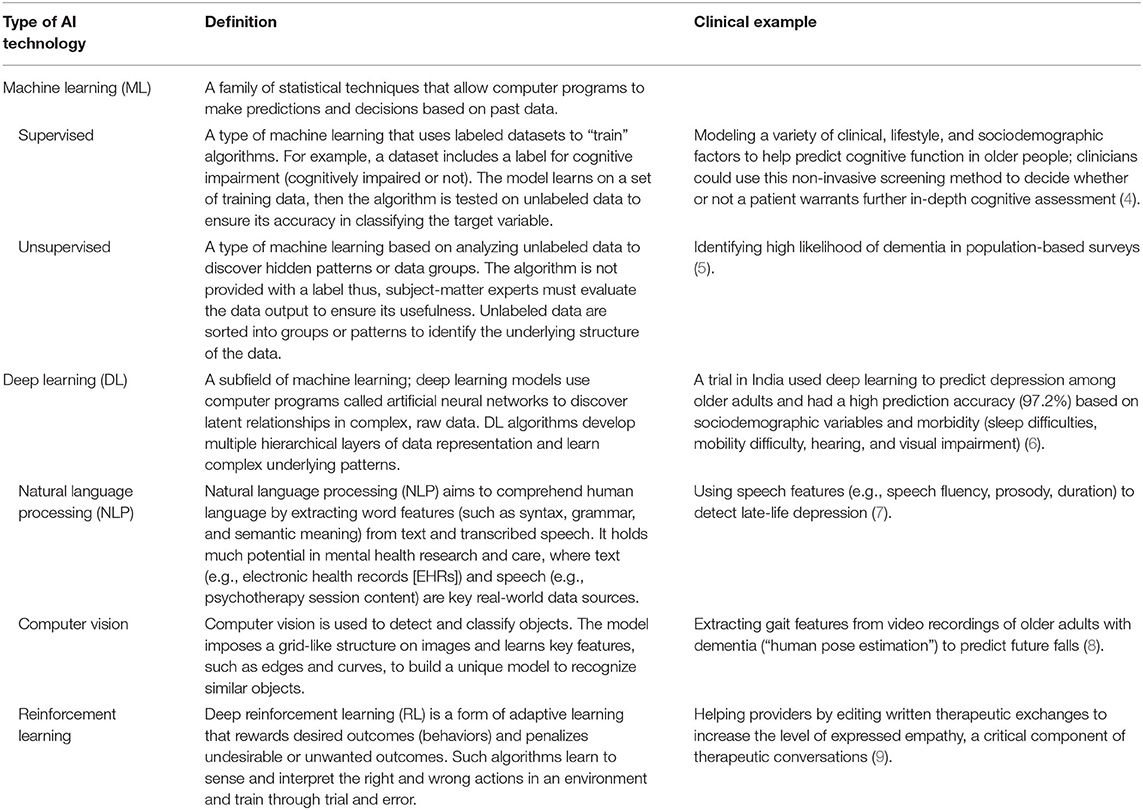

The field of geriatric mental health focuses on both normal and pathological aging from a biological and psychological perspective; this encompasses acute and chronic physical illness, neurodegeneration and cognitive impairment, and mental disorders in people aged 65 and older. Research and clinical applications within geriatric mental health focus on both care delivery and the evaluation, diagnosis, prevention, and treatment of such disorders. The appeal of AI-enabled technology to advance geriatric mental health care is twofold. First, AI technologies hold the potential to develop precision models that are both personalized and conceivably more accurate than traditional clinical care by using vast amounts of real-world multimodal data about patients, including the influence of environmental and other risk and protective factors. Second, technology in general has long been heralded as a means to overcome traditional access barriers of cost, time, distance, and stigma, all of which are relevant for older adults. While a thorough review of AI is beyond the scope of this Perspective, relevant machine learning (ML) and deep learning applications [including natural language processing (NLP)] of AI are presented in Table 1. Interested readers are directed to other reviews for more in-depth descriptions of AI in mental health (10–13). We briefly review three clinical domains relevant to geriatric mental health care below and subsequently suggest specific areas where AI can assist clinical care (see Figure 1).

Table 1. Overview of artificial intelligence (AI) technologies with relevance to geriatric mental health.

Assessment, Symptom Recognition, and Diagnosis

A major issue in geriatric mental health care and research is accurate classification of a disorder. Many mental health conditions, including late-life depression, go undetected and untreated (14). When symptoms are recognized, diagnosis primarily relies on subjective recollections of symptoms, which leads to a considerable amount of diagnostic variability and may be subject to patient recall bias (15). Moreover, differential diagnosis can be particularly challenging in older adult patients with multimorbidities or when considering conditions with overlapping symptoms. A compelling application of AI is accurately predicting who needs mental health treatment before someone realizes they need it—or, before symptoms become too burdensome—by tracking early cues related to a change in an individual's daily behavior. One of the most ubiquitous opportunities is personal sensing, which converts the huge amount of sensor data collected by our phones (or other wearable devices) into clinically meaningful information about behaviors, thoughts, and emotions to make inferences about clinical status and/or disorders (16). These data sources can be rich and multimodal, encapsulating sleep, social interaction, and physical activity, to name a few features. Such data may serve as objective measures for hallmark symptoms (e.g., fatigue and sleep disturbances) in the diagnosis of depression in older adults (17).
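
To make the idea of personal sensing more concrete, the following is a minimal Python sketch of how raw phone-sensor samples might be aggregated into daily behavioral features. It is illustrative only: the record fields (`steps`, `screen_on`, `calls`), the 22:00 to 06:00 "night" window used as a crude proxy for sleep disturbance, and the function name are all assumptions made for this example, not a description of any specific sensing platform or of the methods used in the cited studies.

```python
from collections import defaultdict
from datetime import datetime


def daily_features(samples):
    """Aggregate raw sensor samples into per-day behavioral features.

    Each sample is a dict with hypothetical keys:
      'time' (ISO 8601 string), 'steps' (int), 'screen_on' (bool), 'calls' (int).
    """
    days = defaultdict(
        lambda: {"steps": 0, "calls": 0, "night_screen_samples": 0, "n_samples": 0}
    )
    for s in samples:
        t = datetime.fromisoformat(s["time"])
        day = days[t.date().isoformat()]
        day["steps"] += s["steps"]      # physical activity
        day["calls"] += s["calls"]      # social interaction
        day["n_samples"] += 1           # how much data the device produced that day
        # Assumption: screen use between 22:00 and 06:00 hints at disturbed sleep.
        if s["screen_on"] and (t.hour >= 22 or t.hour < 6):
            day["night_screen_samples"] += 1
    return dict(days)


# Made-up samples for two days.
samples = [
    {"time": "2021-06-01T09:00:00", "steps": 400, "screen_on": True, "calls": 1},
    {"time": "2021-06-01T23:30:00", "steps": 0, "screen_on": True, "calls": 0},
    {"time": "2021-06-02T03:00:00", "steps": 10, "screen_on": True, "calls": 0},
    {"time": "2021-06-02T14:00:00", "steps": 900, "screen_on": False, "calls": 2},
]
features = daily_features(samples)
for day, values in sorted(features.items()):
    print(day, values)
```

Features of this kind would still need to be validated against clinical measures before they could be treated as markers of symptoms such as fatigue or sleep disturbance; the `n_samples` count is kept because days with little device use yield less trustworthy features.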

A small but growing body of literature has begun to apply AI approaches to geriatric mental health assessment and diagnosis, largely in the context of depression (10) and neurocognitive impairment (11). For example, language ability and processing—including spontaneous speech—is often an early affected cognitive domain in the course of dementia, especially Alzheimer's disease, and has been proposed as a target for early recognition and diagnosis (18). However, traditional methods of early recognition and diagnosis often produce significant overlap with “normal” cognitive functioning among older adults, and thus have reduced clinical utility in early detection (19). AI techniques such as NLP may detect speech features (e.g., acoustic features such as pause duration and emotion) that are sensitive to cognitive decline and may better differentiate those with early impairment than traditional neuropsychological assessment (19, 20).
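
As a rough illustration of one such speech feature, the Python sketch below computes simple pause statistics from word-level timestamps. It assumes the timestamps have already been produced by some transcription or alignment step, which is not shown; the 0.25-second pause threshold, the feature names, and the example timings are hypothetical choices for this sketch and are not taken from the cited studies.

```python
def pause_features(word_times, min_pause=0.25):
    """Summarize silent pauses between consecutive words.

    word_times: list of (start_sec, end_sec) tuples, one per spoken word, in order.
    min_pause:  gaps shorter than this (in seconds) are not counted as pauses
                (hypothetical threshold).
    """
    # Gap between the end of each word and the start of the next one.
    gaps = [
        next_start - prev_end
        for (_, prev_end), (next_start, _) in zip(word_times, word_times[1:])
    ]
    pauses = [g for g in gaps if g >= min_pause]
    total = word_times[-1][1] - word_times[0][0]
    return {
        "n_pauses": len(pauses),
        "mean_pause_sec": sum(pauses) / len(pauses) if pauses else 0.0,
        "pause_fraction": sum(pauses) / total if total > 0 else 0.0,
    }


# Made-up timings for five words, with two long silences.
words = [(0.0, 0.5), (0.625, 1.0), (2.0, 2.5), (2.625, 3.0), (4.0, 4.5)]
speech = pause_features(words)
print(speech)
```

In practice, features like these would be only one input among many (lexical, syntactic, and acoustic) to a model that is then evaluated against neuropsychological assessment.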

Treatment and Treatment Monitoring

The shortage of geriatric mental health specialists (21) and barriers to treatment seeking among older adults (22–24) mean that patients with mental health needs are often delayed in obtaining treatment, if they receive treatment at all. In current clinical practice, access to evidence-based treatment is often limited (25), and when treatment is implemented, decisions are often guided by trial and error. Ongoing assessment is also crucial to evaluate the effectiveness of treatment but may be overlooked or untenable in routine practice, resulting in ineffective treatment decisions. As AI aims to predict who needs mental health services, the next compelling application of such technology will be to answer the question of “What works for whom, and when?” A promising application of AI for mental health, inspired by precision medicine, is to identify subgroups of patients with similar symptom expressions and outcomes to guide treatment decisions, commonly referred to as “subtyping” (26). Once treatment is initiated, AI could also help clinicians monitor response to treatment and symptom trajectory, such as through passive recording of behavioral data using wearable sensors.

Another way to optimize treatment is to quickly mobilize tailored supports using just-in-time adaptive interventions. These adapt the type, timing, and intensity of support to the individual's needs, delivering it at the moment and in the context in which it is most needed (27). Such efforts in geriatrics include computational modeling based on smartphone data to target health behavior change (i.e., low physical activity and sedentary behavior) in older adults (28). These models use sensor data to monitor health states with the goal of delivering personalized interventions to mitigate behavioral and psychological factors that contribute to health risk.
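
The logic of a just-in-time adaptive intervention can be sketched as a simple decision rule that maps the current context to a type and intensity of support, or to no support at all. The Python example below is a hypothetical illustration for sedentary behavior: the thresholds (60 and 120 min), the quiet hours, the daily prompt cap, and the intervention names are assumptions made for this sketch, not parameters from the cited work. Real systems typically learn or tune such rules from data.

```python
from dataclasses import dataclass


@dataclass
class Context:
    sedentary_minutes: int  # minutes since movement was last detected
    hour: int               # local hour of day, 0-23
    prompts_today: int      # prompts already delivered today


def choose_intervention(ctx, max_prompts=3):
    """Return a (type, intensity) pair, or None when no support should be sent."""
    # Timing: stay silent during quiet hours and cap the daily burden
    # (hypothetical limits).
    if ctx.hour < 8 or ctx.hour >= 20 or ctx.prompts_today >= max_prompts:
        return None
    # Type and intensity scale with how long the person has been sedentary.
    if ctx.sedentary_minutes >= 120:
        return ("guided_walk_suggestion", "high")
    if ctx.sedentary_minutes >= 60:
        return ("stand_up_reminder", "low")
    return None


print(choose_intervention(Context(sedentary_minutes=130, hour=10, prompts_today=0)))
print(choose_intervention(Context(sedentary_minutes=70, hour=15, prompts_today=1)))
print(choose_intervention(Context(sedentary_minutes=130, hour=22, prompts_today=0)))
```

Keeping the rule explicit like this has a practical benefit: clinicians can inspect and adjust when and how often a patient is prompted, which matters for sustained engagement.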

Intelligent voice assistants, virtual health agents, and conversational agents (e.g., chatbots) are designed to reduce health care system burden (29) and improve patient autonomy and self-management (30). While mainstream conversational agents have yet to be tested with older adults, preliminary evidence suggests that older adults are comfortable self-disclosing with other conversational agents (19). It is conceivable that such AI may one day be used to support “aging in place,” such as allowing older adults to complete remote assessments for routine monitoring. Researchers are also prototyping AI-based “smart homes” to support safety and independence among older adults and individuals with disabilities and chronic conditions (31). However, ongoing engagement is required for AI to assist with long-term monitoring or treatment delivery. For example, while intelligent voice assistants such as Amazon's Echo have the potential to support independence among older adults, users may discontinue such products if they do not realize benefits or experience challenges using such devices in shared spaces (32).

Clinical Decision-Making, Provider Training and Support

AI may free up time for the clinician to implement treatment decisions and focus on other therapeutic targets (e.g., client rapport) where the application of current AI technologies has been ineffective (33). AI-based data collection and harmonization may streamline patient flow, automate assessments, monitor longitudinal trajectories and outcomes, reduce paperwork, and monitor medication(s) and potential contraindications (34), thus freeing providers to practice the “human” elements of mental health care. AI may also be used to train mental health professionals. This is particularly relevant to the current geriatric workforce shortage (21). Examples include virtual patient simulations to train and evaluate clinical skills (e.g., asking proper diagnostic questions) (35) and NLP to analyze the quality of engagement between a therapist and a patient in a psychotherapy session (36). However, limitations to this technology remain; this work found models only modestly predicted patient-rated alliance from psychotherapy session content (36).

Challenges and Opportunities

Here we highlight some challenges and propose how AI solutions can be applied to real-world problems in geriatric mental health care and research. We suggest that the AI community partner with clinician-researchers and care teams (including nursing staff, providers, and caregivers), and vice versa, so that such technology realizes its potential where it is most relevant. This is particularly germane to issues of geriatric mental health care.

Unique Challenges in Geriatrics

Aging is a complex process that involves interconnected changes spanning cellular to psychological to sociocultural processes, the results of which present unique challenges when working in geriatrics. First, older adults are less likely than younger adults to receive accurate diagnosis and treatment for mental health issues (37), and barriers are greater among racially and ethnically diverse older adults compared to their non-Latino White counterparts (23). Workforce shortages, specifically lack of providers with competencies in the specific needs of older adults, contribute to these issues (21). Older adults also present with greater comorbidity, chronicity, and complexity than their younger adult counterparts; acute and chronic physical health conditions, medication use, and cognitive, sensory, or functional impairments can all complicate the detection and diagnosis of a mental health condition. Additionally, the variation in manifestation of mental health symptoms and treatment responses in older adults affects timely and accurate diagnosis. For example, an enduring finding in geriatric mental health care is that older adults with depressive symptoms are less likely than younger adults to present with sadness and are more apt to endorse anhedonia (loss of interest or pleasure), apathy, and somatic symptoms such as fatigue, diffuse aches and pain, or malaise (38). Somatic symptoms of late-life depression also overlap with symptoms of chronic disease, potentially obscuring or complicating diagnosis of mental health conditions. Moreover, older adults may be poor utilizers of mental health services if they are uncertain whether their symptoms are due to psychological problems or normal aging (39). Thus, AI holds promise to capture real-world behavioral data to aid in the recognition and diagnosis of mental health conditions in older adults. The majority of the literature points to applications of AI among younger adults (often college-aged convenience samples). 
Next steps are to prototype, train, and validate AI approaches on data from diverse respondents, including older adults, to capture the specific clinical needs and heterogeneity in the population.

Social, environmental, and familial contexts are important considerations in geriatric mental health. Caregiving is one such relevant factor. Persons with chronic or life-limiting disease (often older adults) require progressively more extensive attention and assistance with activities of daily living. This care is often provided by family members or other unpaid caregivers. AI technologies may better prepare and support caregivers in their tasks. A systematic review of 30 studies (40) described a range of assistive AI devices designed to facilitate caregiving, such as support with dressing or handwashing or detecting falls. However, the review noted that most studies were descriptive or exploratory, offering very limited evidence in support of such technology to date.

Social factors such as social isolation and loneliness may also exacerbate mental health issues; indeed, a recent federal report highlighted the epidemic of social isolation and loneliness among older adults (41). AI could be used both to assess loneliness and to offer supports for it. For example, a proof-of-concept study used NLP to identify loneliness among U.S. community-dwelling older adults based on speech from qualitative interviews (42). Importantly, this study attempted to understand sex differences in the reporting of such a complex psychological construct, something with which clinicians may struggle. As with many of the AI applications to date in geriatric mental health, the authors note that future work will need larger, more diverse samples and will need to incorporate multimodal data streams to improve predictions. In any case, AI supports designed for older adults will need to address not only psychological and biological/medical factors but also social and environmental factors to be most relevant.

The term “older adult” encompasses a wide range of the lifespan and includes diverse individuals from various birth cohorts; racial/ethnic, cultural, and socioeconomic backgrounds; and functional abilities. As healthcare in general, and AI opportunities specifically, relies on technology, there is concern that older adults will be left out of such a digital health revolution. Even though many members of the “young-old” (65–74 years) and older cohorts may be accustomed to smart devices and other technologies, older adults are often left out of the design and marketing of such innovations (43). Sensory and motor issues, ranging from tremors to limited vision, may also impede the use of conventional technological devices designed for users with normative abilities. When innovations are marketed toward older people, they often reflect a pathological view of aging and are limited to support for emergency monitoring (e.g., fall detection). Our call to action is that AI developers leverage a user-centered perspective, including diverse older adults with a range of health-related quality of life, during design and evaluation (44) to determine such technology's viability and fitness for purpose in the target population.

Methodological, Practical, and Other Challenges

Given the pursuit of such rapid and novel innovation, not all AI developments will readily translate to clinical or other real-world settings. While not exhaustive, we outline a few key challenges in an attempt to bridge data science with clinical science in geriatric mental health and suggest next steps in addressing such challenges.

First, there has been a paradigm shift away from traditional experimental studies that typify mental health research to rapid innovations in AI (13). The empirical approaches familiar to clinicians—namely hypothesis testing and reliance on evidence-based practice—are potentially at odds with the proof-of-concept, hypothesis-generating demonstrations that characterize much AI research to date. The innovations propelling AI forward are often tested on small samples to demonstrate proof-of-concept (40); however, this runs the risk that ML models will be overfit, leading to spurious findings and lacking generalizability to new data sources. External validation of the model (that is, testing in new datasets) is essential to improve prediction, yet only three of 51 studies in a recent review of ML in psychotherapy research did so (45). When large datasets are available, they are often prone to bias arising from differential recruitment, attrition, and engagement over time (46). Importantly, adults over the age of 60 are those least represented in digital health study samples, and such studies rarely reflect the racial/ethnic and geographical diversity of the U.S., limiting the validity of findings (46). Moreover, researchers from non-health science fields may use different reporting norms than clinician scientists, resulting in missing key pieces of information, including participant demographics and other aspects of methods (e.g., location of data collection) (40), which limit inferences and generalizability.
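
The risk of overfitting on small samples, and the role of external validation in exposing it, can be shown with a toy Python simulation. This is a sketch on synthetic data, not a reanalysis of any study: the features are pure noise and the labels are coin flips, so there is nothing real to learn. The one-nearest-neighbor classifier, the sample sizes (20 and 200), and the random seed are arbitrary choices for illustration.

```python
import random


def nearest_neighbor_predict(train_X, train_y, x):
    """Predict the label of x from its single closest training point (1-NN)."""
    dists = [sum((a - b) ** 2 for a, b in zip(row, x)) for row in train_X]
    return train_y[dists.index(min(dists))]


def accuracy(train_X, train_y, X, y):
    """Fraction of points in (X, y) that the 1-NN model labels correctly."""
    hits = sum(
        nearest_neighbor_predict(train_X, train_y, x) == label
        for x, label in zip(X, y)
    )
    return hits / len(y)


def synthetic_cohort(rng, n, n_features=10):
    """Noise features with coin-flip labels: no true signal exists."""
    X = [[rng.gauss(0, 1) for _ in range(n_features)] for _ in range(n)]
    y = [rng.randint(0, 1) for _ in range(n)]
    return X, y


rng = random.Random(0)
train_X, train_y = synthetic_cohort(rng, n=20)   # small development sample
ext_X, ext_y = synthetic_cohort(rng, n=200)      # independent "external" dataset

# Apparent accuracy: the model is scored on the same data it memorized.
internal_acc = accuracy(train_X, train_y, train_X, train_y)
# External accuracy: the model is scored on data it has never seen.
external_acc = accuracy(train_X, train_y, ext_X, ext_y)
print(f"apparent accuracy: {internal_acc:.2f}, external accuracy: {external_acc:.2f}")
```

The model scores perfectly on its own development sample because each point is its own nearest neighbor, while its accuracy on the independent dataset stays close to chance. Only the second number says anything about how the model would perform on new patients.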

When it comes to implementation of AI, clinicians may override algorithm-based recommendations, or patients may be wary of algorithm-recommended treatment. Although computational modeling is a powerful tool to sift through predictors to develop complex algorithms, the “black box” of such computations may be off-putting to clinicians who have long relied on their own clinical reasoning to drive decision-making, or who may not fully understand the statistical models (47). Moreover, algorithm recommendations may not fully incorporate all clinical considerations, including patient restrictions or preferences. A major pitfall of using AI for mental health care, geriatric or otherwise, is that such systems will sometimes be wrong, resulting in patient harm. For example, a patient with a depressive disorder may be misclassified and not treated. While such errors also occur in human decision-making, it will be important to build in safeguards when implementing such AI systems at scale (e.g., transparency around computational inferences and classification; routine clinician assessment to augment such AI classification for greater reliability; development of other safety nets in healthcare). Finally, even if we could use AI to accurately predict a patient's clinical state or worsening via sensor data or other algorithmic prediction, what would a clinic or individual clinicians actually do with such data? A clear bridge between developing and implementing such prediction-based models is the development of appropriate clinical workflows and interventions to act on such predictions.

Data scientists must also partner with clinicians and clinical scientists to ensure that data features are meaningful and valid for older adults (16). In our own work using ML to model daily variation in depressive symptoms based on mobility data, we were unable to access raw mobility data from the proprietary sensor software and translate such data into meaningful variables (48). We also ran into issues with intra- and interindividual variation in phone usage patterns; data are only as robust as the degree to which users use the device (3, 48), which may vary between older and younger adults. More work is needed to understand older adults as unique users of devices, such as smartphones, rather than simply extrapolating assumptions from younger users. Finally, sensors and other multimodal sources of data may detect considerable variability in clinical states and behavioral markers. However, for practical utility, AI models need to be trained to differentiate features that are clinically relevant (that is, diagnostic) from transient mood states. This will again require models based on large and diverse samples of older adults to ascertain features associated with geriatric mental health conditions.

One cannot tread into the topic of AI without running into discussion of ethics, structural inequalities, privacy, and trust issues. A full discussion of these topics is beyond the scope of this paper but is available elsewhere (49–51). Briefly, these will be critical issues to consider as the innovation of data science meets the practical applications of clinical work. For example, what are the bioethical considerations if an AI algorithm recommends a particular intervention, the clinician decides against it, and the patient decompensates? Or, conversely, where does liability lie if a patient dies after a clinician deploys an algorithm-recommended treatment (52)? It is also crucial to acknowledge that racial, gender, and ageist biases and discrimination are deeply embedded in healthcare and, as a result, in the AI systems that learn from such data sources. When unchecked, the inferences drawn from such technologies are likely to perpetuate systemic injustices in healthcare. These may result from bias and a lack of transparency in developing algorithms, such as using training data from a preponderance of young White men or using flawed proxy variables to calculate risk scores (53). Such bias is then further maintained in how providers respond to such algorithmic predictions. Thus, understanding and preventing the root causes of bias in AI systems must be a priority to monitor and mitigate such consequences. Privacy concerns among users of various technology-based assessments and interventions have also been a central theme arising in research from our group (54–56). Trust may vary as a function of who is conducting the research; for example, trust in internet-based research is higher (and participants are more likely to share their data) when the research is conducted by university researchers compared to private companies (55). Building trustworthiness of AI in geriatric mental health care and research will rely on reconciling some of the issues discussed above, namely explainability (the ability to understand or describe how a model arrived at its prediction), transparency (clear and transparent methodology), and generalizability (related to methodology; exhaustive testing and validation of models) (57).

Conclusion

AI holds promise for more accurate diagnosis and personalized treatment recommendations, yet the field is nascent with no established pathway for integration into routine clinical care. A recent market research survey found that healthcare providers remain highly skeptical of consumer technology, remote data collection, and the integrity of such data (58). Moreover, development and implementation of such technology must incorporate clinicians, patients, and caregivers as key stakeholder groups to build trust and adopt user-centered approaches that address privacy and usability issues. We may be on the cusp of a new era that will allow the full potential of AI to take hold in mental health care broadly, and geriatrics specifically. However, until clinicians join forces with data scientists, engineers, and developers—and until such technology addresses the pragmatic concerns that clinicians and patients face—we will only scratch the surface of such potential for these technologies.

Author Contributions

BNR and AP conceptualized and designed the work. BNR, MS, and AP drafted the manuscript. All authors critically read and revised the manuscript for important intellectual content and approved the submitted version.

Funding

This project was supported in part by the National Institute of Mental Health [grant P50 MH115837] and the National Institute on Aging [grant K23 AG059912]. AP's effort was supported by a grant from the Krembil Foundation, Toronto, Canada. Open access publication fees were provided by BNR's startup funds from the University of Nevada, Las Vegas. The sponsors played no role in the design of this manuscript, nor did they have any role during its execution or decision to submit.

Conflict of Interest

BNR receives unrelated research support from Sanvello.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank Alejandra Lopez from the University of Washington ALACRITY Center for her assistance in formatting and designing our figure.

References

1. Wiens J, Shenoy ES. Machine learning for healthcare: on the verge of a major shift in healthcare epidemiology. Clin Infect Dis. (2018) 66:149–53. doi: 10.1093/cid/cix731

2. Alaa AM, Bolton T, Di Angelantonio E, Rudd JHF, van der Schaar M. Cardiovascular disease risk prediction using automated machine learning: a prospective study of 423,604 UK Biobank participants. PLoS ONE. (2019) 14:e0213653. doi: 10.1371/journal.pone.0213653

3. Pratap A, Atkins DC, Renn BN, Tanana MJ, Mooney SD, Anguera JA, et al. The accuracy of passive phone sensors in predicting daily mood. Depress Anxiety. (2019) 36:72–81. doi: 10.1002/da.22822

4. Rankin D, Black M, Flanagan B, Hughes CF, Moore A, Hoey L, et al. Identifying key predictors of cognitive dysfunction in older people using supervised machine learning techniques: observational study. JMIR Med Inform. (2020) 8:e20995. doi: 10.2196/20995

5. Cleret de Langavant L, Bayen E, Yaffe K. Unsupervised machine learning to identify high likelihood of dementia in population-based surveys: development and validation study. J Med Internet Res. (2018) 20:e10493. doi: 10.2196/10493

6. Sau A, Bhakta I. Artificial neural network (ANN) model to predict depression among geriatric population at a Slum in Kolkata, India. J Clin Diagn Res. (2017) 11:VC01–4. doi: 10.7860/JCDR/2017/23656.9762

7. DeSouza DD, Robin J, Gumus M, Yeung A. Natural language processing as an emerging tool to detect late-life depression. Front Psychiatry. (2021) 12:719125. doi: 10.3389/fpsyt.2021.719125

8. Ng K-D, Mehdizadeh S, Iaboni A, Mansfield A, Flint A, Taati B. Measuring gait variables using computer vision to assess mobility and fall risk in older adults with dementia. IEEE J Transl Eng Health Med. (2020) 8:1–9. doi: 10.1109/JTEHM.2020.2998326

9. Sharma A, Lin IW, Miner AS, Atkins DC, Althoff T. Towards facilitating empathic conversations in online mental health support: a reinforcement learning approach. In: Proceedings of the Web Conference 2021. Ljubljana: ACM (2021). p. 194–205. doi: 10.1145/3442381.3450097

10. Graham S, Depp C, Lee EE, Nebeker C, Tu X, Kim H-C, et al. Artificial intelligence for mental health and mental illnesses: an overview. Curr Psychiatry Rep. (2019) 21:116. doi: 10.1007/s11920-019-1094-0

11. Graham SA, Lee EE, Jeste DV, Van Patten R, Twamley EW, Nebeker C, et al. Artificial intelligence approaches to predicting and detecting cognitive decline in older adults: a conceptual review. Psychiatry Res. (2020) 284:112732. doi: 10.1016/j.psychres.2019.112732

12. Lee EE, Torous J, De Choudhury M, Depp CA, Graham SA, Kim H-C, et al. Artificial intelligence for mental health care: clinical applications, barriers, facilitators, and artificial wisdom. Biol Psychiatry Cogn Neurosci Neuroimaging. (2021) 6:856–64. doi: 10.1016/j.bpsc.2021.02.001

13. Chekroud AM, Bondar J, Delgadillo J, Doherty G, Wasil A, Fokkema M, et al. The promise of machine learning in predicting treatment outcomes in psychiatry. World Psychiatry. (2021) 20:154–70. doi: 10.1002/wps.20882

15. Folsom DP, Lindamer L, Montross LP, Hawthorne W, Golshan S, Hough R, et al. Diagnostic variability for schizophrenia and major depression in a large public mental health care system dataset. Psychiatry Res. (2006) 144:167–75. doi: 10.1016/j.psychres.2005.12.002

16. Mohr DC, Zhang M, Schueller SM. Personal sensing: understanding mental health using ubiquitous sensors and machine learning. Annu Rev Clin Psychol. (2017) 13:23–47. doi: 10.1146/annurev-clinpsy-032816-044949

17. Berke EM, Choudhury T, Ali S, Rabbi M. Objective measurement of sociability and activity: mobile sensing in the community. Ann Fam Med. (2011) 9:344–50. doi: 10.1370/afm.1266

18. Taler V, Phillips NA. Language performance in Alzheimer's disease and mild cognitive impairment: a comparative review. J Clin Exp Neuropsychol. (2008) 30:501–56. doi: 10.1080/13803390701550128

19. Beltrami D, Gagliardi G, Rossini Favretti R, Ghidoni E, Tamburini F, Calzà L. Speech analysis by natural language processing techniques: a possible tool for very early detection of cognitive decline? Front Aging Neurosci. (2018) 10:369. doi: 10.3389/fnagi.2018.00369

20. Gil D, Johnsson M. Diagnosing Parkinson by using artificial neural networks and support vector machines. Glob J Comput Sci Technol. (2009) 9:63–71.

21. Committee on the Mental Health Workforce for Geriatric Populations, Board on Health Care Services, Institute of Medicine. The mental health and substance use workforce for older adults: in whose hands? In: Eden J, Maslow K, Le M, Blazer D, editors. Washington, DC: National Academies Press (US) (2012). Available online at: http://www.ncbi.nlm.nih.gov/books/NBK201410/ (accessed June 25, 2021).

22. Brenes GA, Danhauer SC, Lyles MF, Hogan PE, Miller ME. Barriers to mental health treatment in rural older adults. Am J Geriatr Psychiatry. (2015) 23:1172–8. doi: 10.1016/j.jagp.2015.06.002

23. Jimenez DE, Bartels SJ, Cardenas V, Dhaliwal SS, Alegría M. Cultural beliefs and mental health treatment preferences of ethnically diverse older adult consumers in primary care. Am J Geriatr Psychiatry. (2012) 20:533–42. doi: 10.1097/JGP.0b013e318227f876

24. Mojtabai R, Olfson M, Sampson NA, Jin R, Druss B, Wang PS, et al. Barriers to mental health treatment: results from the National Comorbidity Survey Replication. Psychol Med. (2011) 41:1751–61. doi: 10.1017/S0033291710002291

25. Areán PA, Renn BN, Ratzliff A. Making psychotherapy available in the United States: implementation challenges and solutions. Psychiatr Serv. (2021) 72:222–4. doi: 10.1176/appi.ps.202000220

26. Saria S, Goldenberg A. Subtyping: what it is and its role in precision medicine. IEEE Intell Syst. (2015) 30:70–5. doi: 10.1109/MIS.2015.60

27. Nahum-Shani I, Smith SN, Spring BJ, Collins LM, Witkiewitz K, Tewari A, et al. Just-in-time adaptive interventions (JITAIs) in mobile health: key components and design principles for ongoing health behavior support. Ann Behav Med. (2017) 52:446–62. doi: 10.1007/s12160-016-9830-8

28. Müller AM, Blandford A, Yardley L. The conceptualization of a Just-In-Time Adaptive Intervention (JITAI) for the reduction of sedentary behavior in older adults. mHealth. (2017) 3:37. doi: 10.21037/mhealth.2017.08.05

29. Davenport T, Kalakota R. The potential for artificial intelligence in healthcare. Future Healthc J. (2019) 6:94–8. doi: 10.7861/futurehosp.6-2-94

30. Inkster B, Sarda S, Subramanian V. An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: real-world data evaluation mixed-methods study. JMIR MHealth UHealth. (2018) 6:e12106. doi: 10.2196/12106

31. Euronews. Smarter Than the Average Home—Technology and Assisted Living. euronews (2020). Available online at: https://www.euronews.com/next/2020/09/25/smarter-than-the-average-home-how-technology-is-aiding-assisted-living (accessed June 25, 2021).

32. Trajkova M, Martin-Hammond A. “Alexa is a toy”: exploring older adults' reasons for using, limiting, and abandoning echo. In: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems. New York, NY: Association for Computing Machinery (2020). p. 1–13. doi: 10.1145/3313831.3376760

33. Luxton DD. Recommendations for the ethical use and design of artificial intelligent care providers. Artif Intell Med. (2014) 62:1–10. doi: 10.1016/j.artmed.2014.06.004

34. Bindoff I, Stafford A, Peterson G, Kang BH, Tenni P. The potential for intelligent decision support systems to improve the quality and consistency of medication reviews. J Clin Pharm Ther. (2012) 37:452–8. doi: 10.1111/j.1365-2710.2011.01327.x

35. Bond WF, Lynch TJ, Mischler MJ, Fish JL, McGarvey JS, Taylor JT, et al. Virtual standardized patient simulation: case development and pilot application to high-value care. Simul Healthc. (2019) 14:241–50. doi: 10.1097/SIH.0000000000000373

36. Goldberg SB, Flemotomos N, Martinez VR, Tanana MJ, Kuo PB, Pace BT, et al. Machine learning and natural language processing in psychotherapy research: alliance as example use case. J Couns Psychol. (2020) 67:438–48. doi: 10.1037/cou0000382

37. Bor JS. Among the elderly, many mental illnesses go undiagnosed. Health Aff. (2015) 34:727–31. doi: 10.1377/hlthaff.2015.0314

38. Gallo JJ, Rabins PV, Anthony JC. Sadness in older persons: 13-year follow-up of a community sample in Baltimore, Maryland. Psychol Med. (1999) 29:341–50. doi: 10.1017/S0033291798008083

39. Knight BG, Winterbotham S. Rural and urban older adults' perceptions of mental health services accessibility. Aging Ment Health. (2020) 24:978–84. doi: 10.1080/13607863.2019.1576159

40. Xie B, Tao C, Li J, Hilsabeck RC, Aguirre A. Artificial intelligence for caregivers of persons with Alzheimer's disease and related dementias: systematic literature review. JMIR Med Inform. (2020) 8:e18189. doi: 10.2196/18189

41. National Academies of Sciences Engineering and Medicine. Social Isolation and Loneliness in Older Adults: Opportunities for the Health Care System. Washington, DC: National Academies Press (2020). Available online at: https://www.nap.edu/catalog/25663 (accessed May 16, 2020).

42. Badal VD, Graham SA, Depp CA, Shinkawa K, Yamada Y, Palinkas LA, et al. Prediction of loneliness in older adults using natural language processing: exploring sex differences in speech. Am J Geriatr Psychiatry. (2021) 29:853–66. doi: 10.1016/j.jagp.2020.09.009

43. Seifert A, Cotten SR, Xie B. A double burden of exclusion? Digital and social exclusion of older adults in times of COVID-19. J Gerontol Ser B. (2021) 76:e99–103. doi: 10.1093/geronb/gbaa098

44. Kolovson S, Pratap A, Duffy J, Allred R, Munson SA, Areán PA. Understanding participant needs for engagement and attitudes towards passive sensing in remote digital health studies. In: Proceedings of the 14th EAI International Conference on Pervasive Computing Technologies for Healthcare. Atlanta, GA: ACM (2020). p. 347–62. doi: 10.1145/3421937.3422025

45. Aafjes-van Doorn K, Kamsteeg C, Bate J, Aafjes M. A scoping review of machine learning in psychotherapy research. Psychother Res. (2021) 31:92–116. doi: 10.1080/10503307.2020.1808729

46. Pratap A, Neto EC, Snyder P, Stepnowsky C, Elhadad N, Grant D, et al. Indicators of retention in remote digital health studies: a cross-study evaluation of 100,000 participants. Npj Digit Med. (2020) 3:21. doi: 10.1038/s41746-020-0224-8

47. Windish DM, Huot SJ, Green ML. Medicine residents' understanding of the biostatistics and results in the medical literature. J Am Med Assoc. (2007) 298:1010–22. doi: 10.1001/jama.298.9.1010

48. Renn BN, Pratap A, Atkins DC, Mooney SD, Areán PA. Smartphone-based passive assessment of mobility in depression: challenges and opportunities. Ment Health Phys Act. (2018) 14:136–9. doi: 10.1016/j.mhpa.2018.04.003

49. Mittelstadt B. Principles alone cannot guarantee ethical AI. Nat Mach Intell. (2019) 1:501–7. doi: 10.1038/s42256-019-0114-4

50. Mooney SJ, Pejaver V. Big data in public health: terminology, machine learning, and privacy. Annu Rev Public Health. (2018) 39:95–112. doi: 10.1146/annurev-publhealth-040617-014208

51. Cirillo D, Catuara-Solarz S, Morey C, Guney E, Subirats L, Mellino S, et al. Sex and gender differences and biases in artificial intelligence for biomedicine and healthcare. Npj Digit Med. (2020) 3:81. doi: 10.1038/s41746-020-0288-5

52. Price WN, Gerke S, Cohen IG. Potential liability for physicians using artificial intelligence. J Am Med Assoc. (2019) 322:1765. doi: 10.1001/jama.2019.15064

53. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. (2019) 366:447–53. doi: 10.1126/science.aax2342

54. Areán PA, Pratap A, Hsin H, Huppert TK, Hendricks KE, Heagerty PJ, et al. Perceived utility and characterization of personal google search histories to detect data patterns proximal to a suicide attempt in individuals who previously attempted suicide: pilot cohort study. J Med Internet Res. (2021) 23:e27918. doi: 10.2196/27918

55. Pratap A, Allred R, Duffy J, Rivera D, Lee HS, Renn BN, et al. Contemporary views of research participant willingness to participate and share digital data in biomedical research. JAMA Netw Open. (2019) 2:e1915717. doi: 10.1001/jamanetworkopen.2019.15717

56. Renn BN, Hoeft TJ, Lee HS, Bauer AM, Areán PA. Preference for in-person psychotherapy versus digital psychotherapy options for depression: survey of adults in the US. Npj Digit Med. (2019) 2:6. doi: 10.1038/s41746-019-0077-1

57. Chandler C, Foltz PW, Elvevåg B. Using machine learning in psychiatry: the need to establish a framework that nurtures trustworthiness. Schizophr Bull. (2019) 2019:sbz105. doi: 10.1093/schbul/sbz105

58. Shinkman R. Survey Casts Doubt on Utility of Wearable Devices in Healthcare. Healthcare Dive (2021). Available online at: https://www.healthcaredive.com/news/survey-casts-doubt-on-utility-of-wearable-devices-in-healthcare/598846/ (accessed May 10, 2021).

Keywords: machine learning, deep learning, psychotherapy, older adults, technology, depression, natural language processing, personalized medicine/personalized health care

Citation: Renn BN, Schurr M, Zaslavsky O and Pratap A (2021) Artificial Intelligence: An Interprofessional Perspective on Implications for Geriatric Mental Health Research and Care. Front. Psychiatry 12:734909. doi: 10.3389/fpsyt.2021.734909

Received: 01 July 2021; Accepted: 07 October 2021; Published: 15 November 2021.

Edited by: Ellen E. Lee, University of California, San Diego, United States

Reviewed by: Helmet Karim, University of Pittsburgh, United States; Huali Wang, Peking University Sixth Hospital, China

Copyright © 2021 Renn, Schurr, Zaslavsky and Pratap. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Brenna N. Renn, brenna.renn@unlv.edu
