- Department of Psychiatry, University of Michigan, Ann Arbor, MI, United States

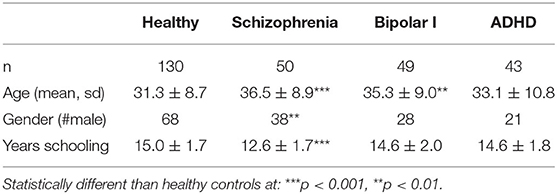

There is substantial interest in identifying biobehavioral dimensions of individual variation that cut across heterogeneous disorder categories, and computational models can play a major role in advancing this goal. In this report, we focused on efficiency of evidence accumulation (EEA), a computationally characterized variable derived from sequential sampling models of choice tasks. We created an EEA factor from three behavioral tasks in the UCLA Phenomics dataset (n = 272), which includes healthy participants (n = 130) as well as participants with schizophrenia (n = 50), bipolar disorder (n = 49), and attention-deficit/hyperactivity disorder (n = 43). We found that the EEA factor was significantly reduced in all three disorders, and that it correlated with an overall severity score for psychopathology as well as self-report measures of impulsivity. Although EEA was significantly correlated with general intelligence, it remained associated with psychopathology and symptom scales even after controlling for intelligence scores. Taken together, these findings suggest EEA is a promising computationally-characterized dimension of neurocognitive variation, with diminished EEA conferring transdiagnostic vulnerability to psychopathology.

Introduction

The standard approach to psychiatric nosology, reflected in the widely used DSM (1) and ICD (2) systems, emphasizes discrete diagnostic categories, each assumed to have its own distinct pathophysiology. An alternative approach, central to the Research Domain Criteria (RDoC) initiative (3–5), understands mental disorders in terms of fundamental biobehavioral dimensions of variation that span disorders (6, 7). In this second approach, there is a critical need to identify these fundamental dimensions of variation, which are assumed to operate at a latent mechanistic level and may not have easily appreciated, one-to-one relationships with observable symptoms (3).

Computational psychiatry (8–12) is a research field that aims to formally model complex behaviors, typically performance during carefully constructed experimental tasks, in order to better understand abnormal patterns of functioning in psychiatric disorders. Standard models of task performance used in this field typically feature a small number of parameters that reflect latent psychological functions, and which might represent candidate biobehavioral dimensions on which individuals vary in clinically-significant ways. Here we focus on sequential sampling models (SSMs) (13–16), a class of models developed in mathematical psychology that are widely-used in cognitive neuroscience and have a substantial track record of success in explaining behavioral and neural phenomena on choice tasks (17–19).

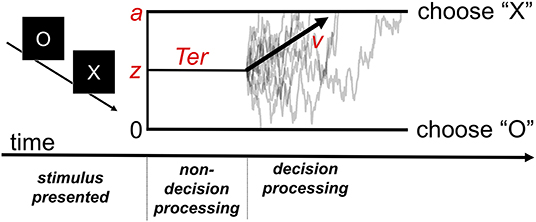

SSMs aim to capture performance on forced choice tasks in which subjects select from two or more response options—a class of tasks that subsumes a large portion of experimental cognitive paradigms. In the diffusion decision model (DDM) (14, 15, 20), a popular and well-validated SSM, task performance is described by a noisy decision process that drifts over time toward one of two decision boundaries. The average rate at which the process moves toward the correct decision boundary is determined by the “drift rate” parameter v. The model also includes several additional parameters that determine other aspects of the decision process, which are discussed in Figure 1.

Figure 1. The diffusion decision model (DDM). The model explains performance in a simple decision task in which participants must decide whether the stimulus presented was an “X” or “O.” Following stimulus presentation, the decision process drifts between the boundaries for each possible response. Gray traces represent the paths of the stochastic decision processes for individual trials. The black arrow represents the critical drift rate parameter, v, which determines the average rate at which the process drifts toward the correct response boundary (e.g., toward an “X” response on a trial in which an “X” stimulus was presented). The z parameter represents the starting point bias of the process (in the example, it is set midway between the two decision boundaries representing absence of bias). The a parameter determines where the upper boundary is set (with the lower boundary always set at 0) and can be used to index “response caution,” the quantity of evidence that is required before the decision boundary is reached. The model also includes a parameter for time spent on processes peripheral to the decision (Ter).
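The decision process described in Figure 1 can be illustrated with a short simulation. The following is a minimal sketch, not the procedure used in this study: it assumes a simple Euler discretization with a hypothetical time step `dt`, an unbiased starting point (z = a/2), and illustrative parameter values. The function names are introduced here for illustration only.

```python
import random


def simulate_ddm_trial(v, a, z, ter, s=1.0, dt=0.001, rng=random):
    """Simulate one trial; return (correct, response_time_in_seconds)."""
    x = z                # accumulated evidence starts at the starting point z
    t = 0.0              # elapsed decision time
    sd = s * dt ** 0.5   # within-trial noise per time step (assumed Euler scheme)
    while 0.0 < x < a:   # lower boundary at 0, upper boundary at a
        x += v * dt + rng.gauss(0.0, sd)
        t += dt
    correct = x >= a     # the upper boundary is treated as the correct response
    return correct, t + ter  # add non-decision time (Ter)


def simulate_ddm(n_trials, v, a, ter, s=1.0, seed=0):
    """Return (accuracy, mean RT over all trials) for an unbiased process."""
    rng = random.Random(seed)
    trials = [simulate_ddm_trial(v, a, a / 2.0, ter, s=s, rng=rng)
              for _ in range(n_trials)]
    accuracy = sum(c for c, _ in trials) / n_trials
    mean_rt = sum(rt for _, rt in trials) / n_trials
    return accuracy, mean_rt
```

With boundary separation and non-decision time held fixed, raising the drift rate `v` in this sketch yields more accurate and faster responses, which is the sense in which drift rate indexes efficient accumulation.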

Although the DDM and related SSMs are used in experimental contexts to simulate and measure a wide variety of latent neurocognitive processes, the drift rate parameter is often of key interest. Individual differences in this parameter reflect the ability of the system to efficiently accumulate stimulus information that is relevant to selecting an appropriate response in the context of noisy information. Importantly, SSMs allow the drift rate to be disentangled from multiple other factors that affect task performance, which are indexed with the other model parameters, yielding much more precise estimates.

Trait efficiency of evidence accumulation (EEA), as indexed by the drift rate in SSMs, has several properties that make it attractive as a candidate transdiagnostic dimension. First, people exhibit stable individual differences in EEA (21, 22), suggesting it has trait-like qualities. Second, EEA manifests across a wide variety of tasks, and individual differences in EEA are correlated across these tasks (22, 23). This suggests EEA has some generality in influencing performance across multiple psychological domains. Third, EEA has been observed to be diminished in several psychiatric disorders (16, 24, 25), though most studies thus far have examined attention-deficit/hyperactivity disorder (ADHD) (26–29), and different tasks were used across these studies, making comparisons across disorders difficult. Fourth, recent work links EEA conceptually as well as empirically to poor self-regulation and impulsive decision-making (“impulsivity”) (30–32). Since impulsivity is itself found to be elevated across multiple psychiatric disorders (33, 34), a connection between EEA and impulsivity adds to the evidence that EEA might be implicated in psychopathology transdiagnostically.

The present study investigates EEA across multiple psychiatric disorders, while addressing some of the limitations in previous research. We take advantage of the publicly-available UCLA Phenomics dataset, a large sample (n = 272, with 142 having schizophrenia, bipolar disorder, or ADHD) that was extensively characterized with structured clinical interviews, self-report scale measures of symptom dimensions, behavioral measures of cognition, and a number of forced choice tasks that can be analyzed in an SSM framework (35). We constructed an EEA factor from three behavioral tasks available in this sample, the Stroop task, Go/No Go task, and the Stop Signal task. We hypothesized that EEA would be significantly reduced across three disorder categories, and that it would be significantly negatively associated with global psychopathology severity and trait impulsivity.

Methods

Participants

Participants were recruited as part of a larger study within the Consortium for Neuropsychiatric Phenomics at University of California, Los Angeles (www.phenomics.ucla.edu). Healthy controls were recruited through community advertisements from the Los Angeles area, while patients were recruited through outreach to local clinics and online portals. All participants were between ages 21 and 50, had no major medical illnesses, and provided urinalyses negative for drugs. In addition, healthy controls were excluded if they had a lifetime diagnosis of schizophrenia, bipolar disorder or substance abuse/dependence, or current diagnosis of other psychiatric disorders. All participants underwent structured clinical interviews, neuropsychological testing, and administration of a number of behavioral tasks. They also underwent two fMRI scanning sessions, but imaging data are not part of the present analysis. Comprehensive descriptions of the sample and measures collected are available elsewhere (35). Study procedures were approved by the UCLA institutional review board and all participants provided written informed consent.

The present analysis relies on a sample of 272 subjects. Psychiatric diagnosis was established by the Structured Clinical Interview for DSM-IV (36). Primary diagnoses and demographic information are shown in Table 1. As shown in this table, participants with schizophrenia and participants with bipolar disorder were younger than healthy participants. In addition, participants with schizophrenia were more likely to be male and had fewer years of school. Thus, we report results with statistical correction for these demographic factors. Some subjects were missing data for certain measures (Stroop Task n = 2, Stop Signal Task n = 2). As a result, the sample size available for most comparisons was 268 subjects.

Behavioral Tasks and Construction of an Evidence Accumulation Factor

Three behavioral tasks in the UCLA Phenomics dataset met the basic assumptions of SSMs. In particular, they were all choice tasks with discrete response alternatives, all afforded relatively rapid responses (under 1.5 s mean reaction time), and all had a sufficient number of trials (ranging from 54 to 360 trials per task/condition). Full descriptions of all measures are available at the UCLA Phenomics Wiki page (http://lcni-3.uoregon.edu/phenowiki/index.php/HTAC). Brief descriptions follow:

Color/Word Stroop Task–This is a computerized version of the traditional Stroop task in which individuals are asked to respond with the ink color (red, green or blue) of a word stimulus. The meaning of the word is either congruent with the color (e.g., “RED” in red coloring) or incongruent, matching a different possible color response (e.g., “BLUE” in red coloring). Participants were presented with 98 congruent and 54 incongruent trials in pseudorandom order. Each stimulus was presented for 150 ms following a 250 ms fixation cross and participants were allowed to respond during a subsequent 2,000 ms interval. Responses were immediately followed by an 1,850–1,950 ms blank screen delay.

Go/No-Go Task–Participants were presented with a sequence of letters and were instructed to press a button as quickly as possible after the presentation of any letter except “X,” but to withhold their response after the presentation of “X.” The task contained 18 blocks presented in random order, with six blocks in each of three inter-trial interval (ITI) conditions: 1,000, 2,000, and 4,000 ms. Each stimulus was presented for 250 ms and followed by a blank screen response interval of 750, 1,750, or 3,750 ms depending on the ITI condition. Each block contained 20 trials (two of which were “X” trials) for a total of 360 trials across the entire task.

Stop Signal Task–Participants were presented with a series of “X” and “O” stimuli for 1,000 ms and were asked to press a button corresponding to the stimulus presented on that trial. Trials were preceded by a 500 ms fixation cross and followed by a 100 ms blank screen interval. On a subset of trials (25%, “stop” trials) an auditory tone which indicated that the participant should withhold their response to that trial (“stop signal”) was played following the stimulus presentation. The latency of the stop signal following the stimulus onset, or “stop signal delay” (SSD), was determined using a standard staircase tracking algorithm which dynamically adjusted the SSD on each “stop” trial in 50 ms increments with the goal of obtaining an inhibition rate of ~50% for each individual. Participants completed two blocks of 32 “stop” trials and 96 “go” (i.e., non-“stop”) trials each. The task's primary dependent measure of response inhibition, stop-signal reaction time (SSRT), which is thought to index the latency of a top-down process that inhibits responses on “stop” trials (37), was estimated using a quantile-based method outlined by (38). For the model-based analysis, we focused exclusively on the 192 “go” trials, following previous applications of SSMs to the stop signal task (26, 30, 39).
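The staircase rule described above can be sketched as follows. This is a minimal illustration of a one-up/one-down tracking procedure with 50 ms steps, which converges toward roughly 50% inhibition. The starting delay and the lower and upper bounds are hypothetical values chosen for illustration; they are not reported in the task description.

```python
def update_ssd(ssd_ms, inhibited, step_ms=50, min_ms=0, max_ms=1000):
    """Return the stop-signal delay (SSD) to use on the next "stop" trial."""
    if inhibited:
        ssd_ms += step_ms  # successful stop: present the next signal later (harder)
    else:
        ssd_ms -= step_ms  # failed stop: present the next signal earlier (easier)
    # Keep the delay inside the assumed (hypothetical) bounds.
    return max(min_ms, min(max_ms, ssd_ms))


def run_staircase(outcomes, start_ms=250):
    """Return the SSD presented on each "stop" trial, given inhibition outcomes."""
    ssd = start_ms  # hypothetical starting delay
    presented = []
    for inhibited in outcomes:
        presented.append(ssd)
        ssd = update_ssd(ssd, inhibited)
    return presented
```

For example, `run_staircase([True, True, False])` presents delays of 250, 300, and 350 ms under these assumed settings.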

The UCLA Phenomics dataset does not provide trial-level data for these tasks, but instead provides detailed per-subject summary statistics characterizing a wide variety of task dimensions. Thus, we used the “EZ-diffusion model” (EZDM) approach (40). This method provides a closed-form analytic solution for estimating the main parameters of the DDM (see Introduction). Inputs to the EZDM procedure are three subject-level summary statistics: the proportion of correct decisions, the mean of correct response times, and the variance of correct response times. The EZDM procedure produces parameter estimates for individuals' drift rate (v), boundary separation (a: an index of response caution), and non-decision time (Ter: time taken up by perceptual and motor processes peripheral to processing of the choice).
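To make the closed-form solution concrete, the sketch below implements the standard EZ-diffusion equations as we understand them from the original EZDM article (40). It is an illustrative Python translation, not the R code used in this study; the function name and argument names are our own. Response times are assumed to be in seconds, and `s` is the scaling parameter.

```python
import math


def ez_diffusion(pc, vrt, mrt, s=1.0):
    """Return (v, a, ter) from three summary statistics.

    pc  : proportion of correct decisions (strictly between 0 and 1, not 0.5)
    vrt : variance of correct response times (seconds squared)
    mrt : mean of correct response times (seconds)
    s   : scaling parameter
    """
    if not 0.0 < pc < 1.0 or pc == 0.5:
        raise ValueError("pc must be in (0, 1) and differ from 0.5; "
                         "apply an edge correction first")
    if vrt <= 0.0:
        raise ValueError("vrt must be positive")
    logit = math.log(pc / (1.0 - pc))
    x = logit * (logit * pc ** 2 - logit * pc + pc - 0.5) / vrt
    v = math.copysign(1.0, pc - 0.5) * s * x ** 0.25   # drift rate
    a = s ** 2 * logit / v                              # boundary separation
    y = -v * a / s ** 2
    mdt = (a / (2.0 * v)) * (1.0 - math.exp(y)) / (1.0 + math.exp(y))  # mean decision time
    ter = mrt - mdt                                     # non-decision time
    return v, a, ter
```

As a check, `ez_diffusion(0.802, 0.112, 0.723, s=0.1)` gives approximately v = 0.10, a = 0.14, and Ter = 0.30. Drift rate and boundary separation scale linearly with `s`, so estimates obtained with `s = 1` are ten times those obtained with `s = 0.1`, while Ter is unaffected.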

The EZ-diffusion model has been shown to produce parameter estimates and inferences that are highly similar to those drawn from more complex modeling methods (41, 42), and some data suggest it recovers individual differences (the main interest in this study) better than model-fitting methods that use trial-to-trial data (43). Previous comprehensive simulation studies suggest that EZDM can precisely recover drift rate with roughly 70 trials per task (44). Since the trial counts (i.e., 98, 54, 192, and 360) for our task conditions typically exceed this number, we can be reasonably confident that drift rates for these tasks were accurately recovered, and even more confident that the latent factor, which draws strength across trials in all four conditions, was accurately recovered. We also note that, although the DDM is intended to describe two-choice tasks, it is possible to obtain comparable parameter estimates for three-choice paradigms, such as the current study's Stroop task, under the assumption that correct and error responses are similar across trials requiring different choices (15). Hence, we adopted this simplifying assumption in order to allow drift rate estimates to be obtained from the Stroop task in this unique data set.

The EZ-diffusion model was applied separately to four task conditions: Stroop congruent trials, Stroop incongruent trials, Go/No-Go trials, and Stop Signal “go” trials. Parameters were estimated in R (45) using the code from the original EZDM manuscript (40) with the scaling parameter (within-trial drift variability) set at 1. For the Go/No-Go task, proportion correct was computed by considering all trials (i.e., both “X” and non-“X”), and the response time mean and variance were set to the values for correct hits (trials on which individuals correctly inhibited their response do not have an observed response time), a method similar to that used in (27). For participants with perfect accuracy on any task, the edge correction recommended in the original article was used: We replaced the proportion correct value of 1 with a value that corresponds to the output of the following equation, where n is the number of trials in the task:

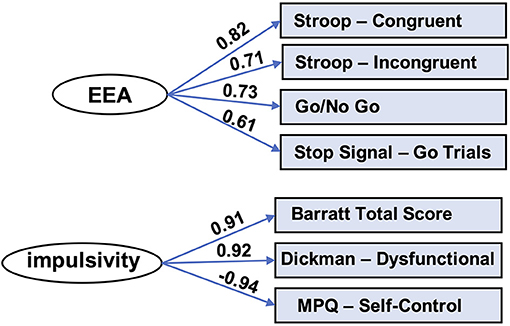
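As an illustration, the edge correction might be applied as in the sketch below. It assumes the correction takes the form 1 − 1/(2n), which is the half-an-error correction commonly attributed to the original EZDM article; the equation shown above should be treated as authoritative if it differs. The function name is hypothetical.

```python
def edge_correct(pc, n_trials):
    """Replace perfect accuracy with an assumed half-an-error correction."""
    if n_trials <= 0:
        raise ValueError("n_trials must be positive")
    if pc >= 1.0:
        # Assumed form of the correction: 1 - 1/(2n)
        return 1.0 - 1.0 / (2.0 * n_trials)
    return pc
```

Under this assumption, a participant with perfect accuracy on the 54 incongruent Stroop trials would be assigned a proportion correct of 1 − 1/108, or about 0.991.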

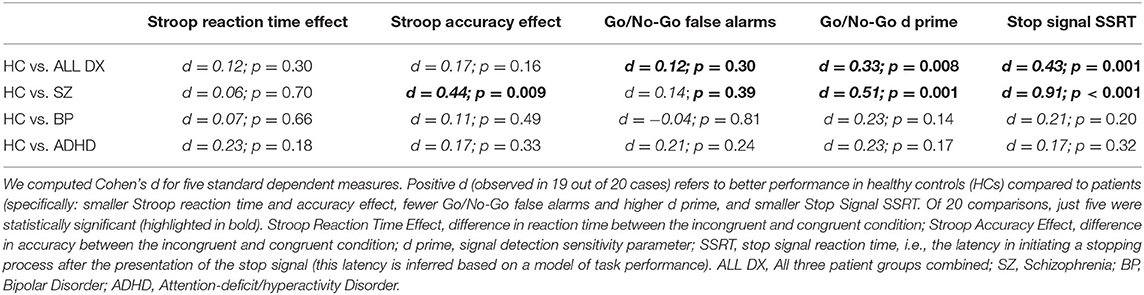

Next, factor analysis, implemented in SPSS 25 (IBM, Armonk, NY), was used to produce an EEA factor from the drift rate parameters derived from the four task conditions. This factor accounted for 50% of the variance in the drift rates. Factor loadings were, respectively, for the four task conditions listed above: 0.82, 0.71, 0.73, and 0.61. Cronbach's alpha was 0.69, and it did not increase by dropping any items (Figure 2, top panel).
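For readers unfamiliar with the internal-consistency statistic reported here, the sketch below computes Cronbach's alpha from raw item scores using the standard variance-based formula. It is illustrative only: the analyses in this study were run in SPSS, and whether items were standardized before computing alpha is not stated, so raw scores are assumed. The toy data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    items: list of k lists, each holding one item's scores for the same
    subjects in the same order (here, an "item" would be the drift rate
    from one task condition).
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items must have the same number of subjects")
    totals = [sum(scores) for scores in zip(*items)]   # per-subject sum score
    item_var_sum = sum(variance(col) for col in items)  # sum of item variances
    return (k / (k - 1)) * (1.0 - item_var_sum / variance(totals))


# Hypothetical toy data: two items measured on three subjects.
alpha = cronbach_alpha([[1, 2, 3], [2, 1, 3]])
```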

Figure 2. Factor models for EEA and impulsivity. Factor analysis yielded latent variables for efficiency of evidence accumulation (EEA) and impulsivity. MPQ, Multidimensional Personality Questionnaire.

Additional Neuropsychological and Symptom/Trait Factors

General Intelligence Factor

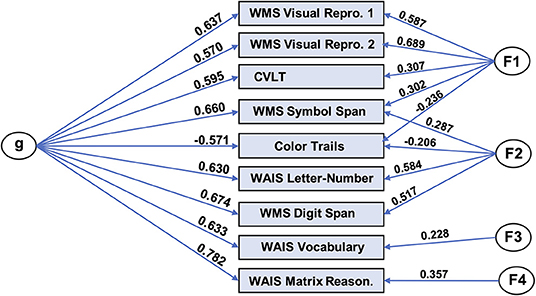

We produced a general intelligence factor from scores from nine cognitive tasks: WAIS-letter number sequencing, WAIS-vocabulary, WAIS-matrix reasoning, color trails test, WMS IV-symbol span, WMS IV-digit span, WMS IV-visual reproduction 1, WMS IV-visual reproduction 2, and California Verbal Learning Test. We used bifactor modeling, given prior results supporting the superiority of this type of model in this domain (46). In particular, we utilized code from Dubois and colleagues (47), which in turn uses the omega function in the psych (v 1.8.4) package (48) in R (v3.4.4) (45). The code performs maximum likelihood-estimated exploratory factor analysis (specifying a bifactor model), oblimin factor rotation, followed by a Schmid-Leiman transformation (49), yielding a general factor (“g”) as well as specific factors.

Using this code, we found a bifactor model fit the data very well by conventional standards (CFI = 0.991; RMSEA = 0.030; SRMR = 0.019; BIC = 7.23). The solution, which included a general factor and four group factors, is depicted in Figure 3. The general factor accounted for 72.2% of the variance [coefficient omega hierarchical ω (50)], while the four specific factors accounted for 16.7% of the variance.

Figure 3. Bifactor model of general intelligence. Bifactor modeling was performed on nine cognitive tasks in the UCLA dataset. The resulting model consisted of a general factor (“g”) and four group factors and exhibited excellent fit with the data. WMS, Wechsler Memory Scale; Repro, Reproduction; WAIS, Wechsler Adult Intelligence Scale; Reason, Reasoning.

Trait Impulsivity Factor

We used factor analysis to produce a trait impulsivity factor from scores on three self-report scales measuring impulsivity: Barratt Impulsivity Scale (total score) (51, 52), Dickman Impulsivity Scale (total dysfunctional) (53), and Multidimensional Personality Questionnaire (self-control subscale) (54). This factor accounted for 85% of the variance in the scores. Factor loadings for these scales, respectively, were: 0.91, 0.92, and −0.94. Cronbach's alpha was 0.77 and did not improve appreciably by removal of any of the scales (Figure 2, bottom panel).

General Severity Score for Psychopathology

Participants completed the Hopkins Symptom Checklist (55), a 56-item validated scale for symptoms associated with a broad range of psychiatric disorders. We used the global severity of psychopathology score from the Hopkins checklist, which is a summary score of total symptom load across all scale items.

Results

Evidence Accumulation Is Reduced Across Three Mental Disorders

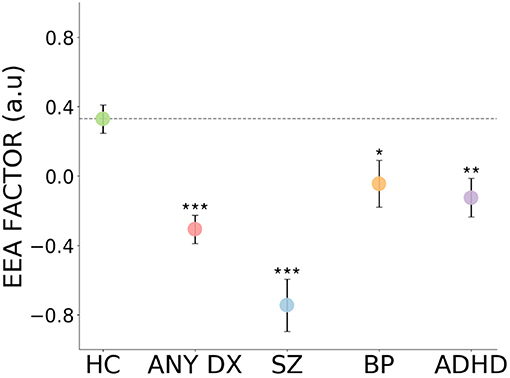

As shown in Figure 4 and Table 2, the EEA factor was significantly reduced in all patients (collapsing across the three diagnoses) in comparison to healthy controls. It was also significantly reduced in each patient group separately in comparison to healthy controls. Effect sizes ranged from large (schizophrenia) to medium (all diagnoses and ADHD) to small-to-medium (bipolar disorder). We repeated these analyses controlling for age, gender, and years of schooling, and differences remained highly statistically significant. Means and standard deviations per group for all task summary metrics and clinical score variables are shown in Supplementary Table 1.

Figure 4. Impaired evidence accumulation across psychiatric diagnoses. The evidence accumulation factor is significantly reduced in all patients, collapsing across the three diagnoses, in comparison to healthy controls. It is also reduced in each patient group separately in comparison to healthy controls. HC, Healthy Controls; ALL DX, All three patient groups combined; SZ, Schizophrenia; BP, Bipolar Disorder; ADHD, Attention-deficit/hyperactivity Disorder; a.u., arbitrary units. Error bars represent standard error. ***p < 0.001, **p < 0.01, *p < 0.05.

Table 2. Reduced efficiency of evidence accumulation (EEA) in patients with psychiatric disorders vs. healthy controls.

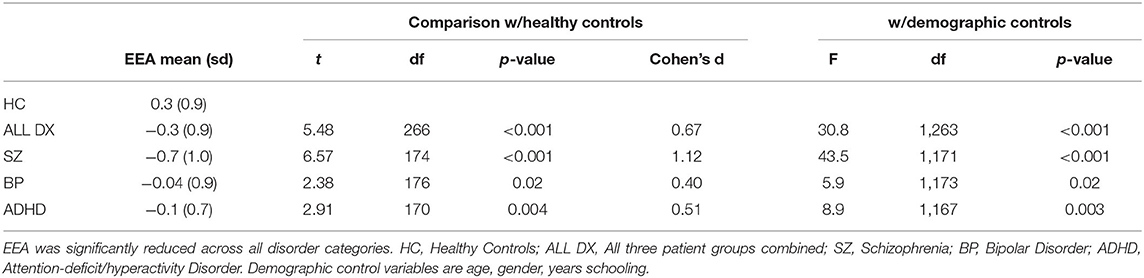

Evidence Accumulation Is Inversely Associated With the Global Psychopathology Severity Score and Self-Reported Impulsivity

Across the whole sample, the EEA factor was inversely correlated with the global psychopathology severity score (r = −0.20, p = 0.001; Figure 5), and this relationship remained significant after controlling for age, gender, and years of schooling [t(263) = 2.6, p = 0.009]. The EEA factor was also inversely correlated with the impulsivity factor (r = −0.22, p < 0.001; Figure 5), and this relationship remained significant after controlling for age, gender, and years of schooling [t(263) = 3.3, p = 0.001]. We also performed analyses that, in addition to demographic variables, included categorical regressors that control for the effect of individual psychiatric diagnoses (i.e., schizophrenia, bipolar disorder, and ADHD). The relationship between EEA and impulsivity remained statistically significant [t(259) = 2.0, p = 0.04], but the relationship between EEA and global psychopathology did not [t(259) = 0.65, p = 0.52].

Figure 5. Evidence accumulation is related to global psychopathology and impulsivity. The efficiency of evidence accumulation (EEA) factor was statistically significantly correlated with a global psychopathology severity score derived from a self-report general symptom scale. The evidence accumulation factor was also significantly correlated with an impulsivity factor derived from three self-report scales of trait impulsivity. Confidence intervals represent 95% predictive intervals, a.u., arbitrary units.

Evidence Accumulation Remains Associated With Psychopathology After Controlling for General Intelligence

The EEA factor was correlated with general intelligence (r = 0.43, p < 0.001), consistent with EEA playing a general role in diverse forms of cognitive processing. Given this correlation, we assessed whether, after controlling for general intelligence, the EEA factor remained related to psychopathology, the global psychopathology severity score, and impulsivity. We found that even after these controls, EEA remained statistically significantly reduced in all patients (collapsing across the three diagnoses) in comparison to healthy controls [F(1, 265) = 34.4, p < 0.001, eta2 = 0.10]. It was also reduced in schizophrenia [F(1, 173) = 47.7, p < 0.001, eta2 = 0.20], bipolar disorder [F(1, 175) = 6.5, p = 0.01, eta2 = 0.03], and ADHD [F(1, 171) = 9.8, p = 0.002, eta2 = 0.05]. Additionally, the EEA factor remained statistically significantly associated with the impulsivity factor (standardized beta = −0.17, p = 0.003) and the global psychopathology severity score (standardized beta = −0.12, p = 0.03). We additionally examined all the preceding relationships with demographic controls (age, gender, years of schooling), and found that all remained significant, with levels of statistical significance essentially unchanged.

Additionally, we assessed the preceding relationships in healthy controls alone. In healthy controls, EEA remained significantly correlated with general intelligence (r = 0.40, p < 0.001), but not with global psychopathology (r = −0.10, p = 0.22) or impulsivity (r = −0.08, p = 0.31).

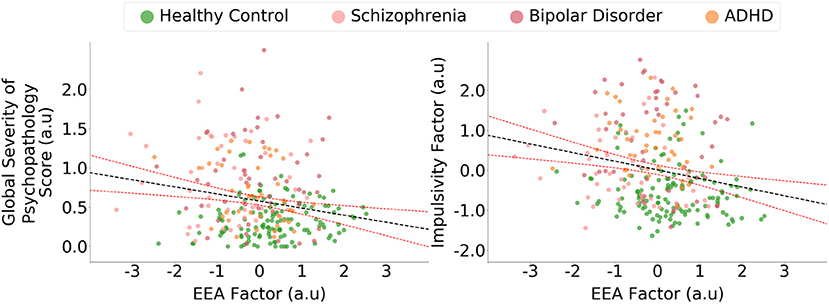

Evidence Accumulation Is More Reliably Linked to Psychopathology Than Traditional Dependent Measures From the Three Behavioral Tasks

We additionally assessed the relationship between more traditional dependent measures from the three behavioral tasks and measures of psychopathology. Table 3 shows five traditional dependent measures for these tasks (further clarification of these measures is found in the Table caption). We found that across 20 total comparisons, just five were statistically significant. It is also notable that in all five cases, the corresponding relationship between EEA and the respective measure of psychopathology was larger (compare Cohen's d values from Tables 2, 3).

Table 3. Relationship between standard dependent measures in three behavioral tasks and measures of psychopathology.

Discussion

Computational psychiatry opens up opportunities to rigorously identify and characterize clinically-significant biobehavioral dimensions of individual variation using precisely-specified mathematical models (8–12). This study appears to be the first to examine in a single group of subjects the relationships between efficiency of evidence accumulation (EEA), a key computational parameter in sequential sampling models (SSMs) of choice tasks, and multiple measures of psychopathology diagnosis and symptoms. Our major findings are that: (1) EEA is significantly reduced in schizophrenia, bipolar disorder, and ADHD; (2) it is significantly negatively correlated with a global severity of psychopathology score as well as self-reported impulsivity; (3) EEA outperforms traditional metrics from behavioral tasks, such as reaction time and accuracy difference scores, in being more strongly associated with psychopathology and symptom scales. Taken together, these findings suggest diminished EEA is a promising computationally characterized transdiagnostic vulnerability factor in psychopathology.

Sequential sampling models are among the most widely used computational models in psychology and neuroscience (13–16). A key advantage of these models is that they capture complex and subtle features of response profiles across a broad range of psychological tasks (56). Previous studies have also found that EEA values, as indexed by drift rate parameters in SSMs, are related across tasks (21, 22) and stable across sessions (22, 23), suggesting they have trait-like properties. The current study extends these results by showing that EEA has broad, transdiagnostic relationships with psychopathology: It is reduced across all three disorder categories present in this sample, and it is inversely correlated with clinically relevant symptom scales.

Interestingly, while the EEA construct has been extensively invoked to explain task performance in hundreds of studies, the mechanistic basis of individual differences in EEA, and how these differences relate to differences in other cognitive constructs, are much less explored; but see (39, 57, 58). Several studies using perceptual decision tasks find drift rates are related to general intelligence (59–62), similar to the pattern we found in the present study. But, again similar to prior work, the correlations we found in this study between EEA and general intelligence were moderate in size, with individuals' general intelligence explaining <25% of the variance in EEA in this sample. Furthermore, we found that EEA continued to be related to multiple measures of psychopathology, including schizophrenia, ADHD, trait impulsivity, and global psychopathology severity, even after adjusting for individuals' general intelligence. Hence, although additional research is needed, current evidence suggests that EEA and intelligence are related, but psychometrically dissociable, individual-difference dimensions.

In contrast to EEA, standard dependent measures from the Stroop, Go/No-Go, and Stop Signal tasks performed relatively poorly in terms of being associated with psychopathology measures, with just five significant effects observed in 20 comparisons (without applying multiple comparisons correction), and these effects were scattered across different comparisons [see also (63, 64)]. We propose two explanations for why EEA was more strongly related to psychopathology than traditional metrics.

First, a recent pair of influential studies, each examining multiple behavioral tasks, found that standard dependent measures from these tasks exhibit relatively poor test-retest reliability (65, 66). These observations are further supported by theoretical work with simulations that shows that difference score metrics (for example, Stroop interference effects) fare particularly poorly (67, 68). One reason is that subtracting scores from one condition against another will, given plausible assumptions, increase noise relative to signal, thus diminishing test-retest reliability (67). Since the reliability of a measure sets a ceiling on how well it can correlate with another variable (69), the low reliability of standard metrics derived from these tasks could help to explain their weaker relationship with psychopathology. In contrast, EEA estimates from tasks with sufficient numbers of trials have generally been demonstrated to exhibit good reliability (e.g., rs > 0.70) (21), thus potentially enabling higher correlation with psychopathology.
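The two psychometric points in this argument can be made concrete with textbook formulas from classical test theory. The sketch below is illustrative and uses hypothetical reliability values rather than estimates from this dataset. The first function gives the expected observed correlation given the reliabilities of two measures (the attenuation formula); the second gives the reliability of a difference score under the simplifying assumption that the two component scores have equal variances.

```python
import math


def max_observed_correlation(rel_x, rel_y, true_r=1.0):
    """Expected observed correlation given the reliabilities of x and y.

    With true_r = 1.0 this is the ceiling that measurement reliability
    places on any observed correlation.
    """
    return true_r * math.sqrt(rel_x * rel_y)


def difference_score_reliability(rel_a, rel_b, r_ab):
    """Reliability of the difference A - B, assuming equal variances.

    rel_a, rel_b : reliabilities of the two condition scores
    r_ab         : correlation between the two condition scores
    """
    if r_ab >= 1.0:
        raise ValueError("r_ab must be less than 1")
    return ((rel_a + rel_b) / 2.0 - r_ab) / (1.0 - r_ab)
```

For instance, two condition scores that each have reliability 0.80 and correlate 0.60 with one another yield a difference score with reliability of only 0.50, and a measure with reliability 0.49 cannot be expected to correlate above 0.70 with a perfectly reliable criterion.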

A second possible interpretation for the lack of predictivity of standard metrics, one that is not mutually exclusive with the first, is that these metrics reflect complex interactions among multiple factors, some of which relate to psychopathology but others of which do not (67). Computational modeling can play a key role in disentangling these interacting factors, allowing researchers to identify underlying parameters that are more directly associated with clinically-significant dimensions (24–26, 70).

For example, standard dependent measures from behavioral tasks, such as task accuracy or reaction time, cannot distinguish whether lower values in an individual are due to lower intrinsic ability on the task or preferences to trade off lower accuracy for greater speed (or lower speed for greater accuracy) (71). Sequential sampling models, in contrast, provide an explicit model of this tradeoff (14, 56, 70), which allows the rate of evidence accumulation for the correct response option to be distinguished from the threshold of evidence at which a response is selected (where higher evidence thresholds correspond to greater preference for accuracy over speed). The results of this study provide support for the view that computational models enable better quantification of underlying dimensions of inter-individual variation and yield stronger relationships with measures of psychopathology (16, 25–27).

We found that EEA is reduced transdiagnostically, and the nature of EEA may shed light on why it manifests across diverse disorder types. Evidence accumulation is conceptualized as a basic ability to rapidly extract information from a stimulus to select contextually appropriate responses. This is a highly general ability and thus reductions in EEA would be expected to increase the probability that inappropriate responses are produced across diverse psychological domains (e.g., attention, threat detection, and motivation). Consistent with recent hierarchical models of psychopathology, which posit abnormalities at multiple levels of generality, other abnormalities might operate more locally in individual psychological domains (e.g., enhanced sensitivity of threat detection systems). Together, interactions between high-level abnormalities such as impaired EEA and more local domain-specific abnormalities could produce the observed clinical pattern, which involves both substantial covariance and specificity in psychiatric symptoms.

The current study has several limitations. First, we used an EEA factor derived from three tasks in the UCLA Phenomics sample that met basic assumptions of SSMs. Future studies should extend this work by examining a more diverse range of tasks. Second, because only subject-level summary statistics were available, we used the EZDM approach for calculating SSM parameters, rather than performing trial-by-trial modeling. EZDM results, however, usually correlate highly with results from trial-by-trial approaches, and some studies suggest EZDM can be more effective in quantifying individual differences (41–43). We also note that newer specialized modeling approaches are emerging for modeling “conflict” (e.g., Stroop) tasks in which unique features of these tasks are explained by a process in which correct responding requires overriding a pre-potent response (72–74). This is a fast-evolving area of research and future studies could employ these newer approaches. Relatedly, it is also possible that performance in the Go/No-Go task analyzed in this paper is affected by processes that are not described well by the simple EZDM model, e.g., response bias toward the upper boundary (75). However, we note that for tasks that similarly encourage response biases, such as the continuous performance task (CPT), initial findings of EEA deficits in ADHD from simple EZDM fits (27) have been strongly upheld after more comprehensive modeling (76). Finally, this sample included three major mental disorders: schizophrenia, bipolar disorder, and ADHD. A number of other disorders (for example, major depression, obsessive compulsive disorder, and autism) were not well-represented in this sample, and future studies should examine a wider range of clinical populations to assess the robustness of our results across disorder categories.

In sum, this study demonstrates that EEA holds promise as a basic dimension of inter-individual neurocognitive variation, and impaired EEA may be a transdiagnostic vulnerability factor in psychopathology.

Data Availability Statement

Code and links to the primary data are available at the following Open Science Framework (OSF) page: https://osf.io/cqksz/.

Ethics Statement

The studies involving human participants were reviewed and approved by the UCLA Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

CS and AW contributed to all aspects of the manuscript including study conception, data analysis, interpretation of results, and manuscript writing. Both authors contributed to the article and approved the submitted version.

Funding

CS was supported by R01MH107741. AW was supported by T32 AA007477.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2021.627179/full#supplementary-material

References

1. American Psychiatric Association DSM-5 Task Force. Diagnostic and Statistical Manual of Mental Disorders: DSM-5. Arlington, VA: American Psychiatric Association (2013). doi: 10.1176/appi.books.9780890425596

2. World Health Organization. International Statistical Classification of Diseases and Related Health Problems (11th Revision). Geneva: WHO (2018).

3. Cuthbert BN, Insel TR. Toward the future of psychiatric diagnosis: the seven pillars of RDoC. BMC Med. (2013) 11:126. doi: 10.1186/1741-7015-11-126

4. Cuthbert BN. The RDoC framework: facilitating transition from ICD/DSM to dimensional approaches that integrate neuroscience and psychopathology. World Psychiatry Off J World Psychiatr Assoc WPA. (2014) 13:28–35. doi: 10.1002/wps.20087

5. Morris SE, Cuthbert BN. Research domain criteria: cognitive systems, neural circuits, and dimensions of behavior. Dialogues Clin Neurosci. (2012) 14:29–37. doi: 10.31887/DCNS.2012.14.1/smorris

6. McTeague LM, Goodkind MS, Etkin A. Transdiagnostic impairment of cognitive control in mental illness. J Psychiatr Res. (2016) 83:37–46. doi: 10.1016/j.jpsychires.2016.08.001

7. Goodkind M, Eickhoff SB, Oathes DJ, Jiang Y, Chang A, Jones-Hagata LB, et al. Identification of a common neurobiological substrate for mental illness. JAMA Psychiatr. (2015) 72:305–15. doi: 10.1001/jamapsychiatry.2014.2206

8. Wang X-J, Krystal JH. Computational psychiatry. Neuron. (2014) 84:638–54. doi: 10.1016/j.neuron.2014.10.018

9. Paulus MP, Huys QJ, Maia TV. A roadmap for the development of applied computational psychiatry. Biol Psychiatry Cogn Neurosci Neuroimaging. (2016) 1:386–92. doi: 10.1016/j.bpsc.2016.05.001

10. Montague PR, Dolan RJ, Friston KJ, Dayan P. Computational psychiatry. Trends Cogn Sci. (2012) 16:72–80. doi: 10.1016/j.tics.2011.11.018

11. Huys QJ, Maia TV, Frank MJ. Computational psychiatry as a bridge from neuroscience to clinical applications. Nat Neurosci. (2016) 19:404. doi: 10.1038/nn.4238

12. Adams RA, Huys QJ, Roiser JP. Computational psychiatry: towards a mathematically informed understanding of mental illness. J Neurol Neurosurg Psychiatry. (2016) 87:53–63. doi: 10.1136/jnnp-2015-310737

13. Forstmann BU, Ratcliff R, Wagenmakers E-J. Sequential sampling models in cognitive neuroscience: advantages, applications, and extensions. Annu Rev Psychol. (2016) 67:641–66. doi: 10.1146/annurev-psych-122414-033645

14. Ratcliff R, McKoon G. The diffusion decision model: theory and data for two-choice decision tasks. Neural Comput. (2008) 20:873–922. doi: 10.1162/neco.2008.12-06-420

15. Voss A, Nagler M, Lerche V. Diffusion models in experimental psychology. Exp Psychol. (2013) 60:385–402. doi: 10.1027/1618-3169/a000218

16. White CN, Curl RA, Sloane JF. Using decision models to enhance investigations of individual differences in cognitive neuroscience. Front Psychol. (2016) 7:81. doi: 10.3389/fpsyg.2016.00081

17. Smith PL, Ratcliff R. Psychology and neurobiology of simple decisions. Trends Neurosci. (2004) 27:161–8. doi: 10.1016/j.tins.2004.01.006

18. Turner BM, Van LM, Forstmann BU. Informing cognitive abstractions through neuroimaging: the neural drift diffusion model. Psychol Rev. (2015) 122:312–36. doi: 10.1037/a0038894

19. Cassey PJ, Gaut G, Steyvers M, Brown SD. A generative joint model for spike trains and saccades during perceptual decision-making. Psychon Bull Rev. (2016) 23:1757–78. doi: 10.3758/s13423-016-1056-z

20. Ratcliff R. A theory of memory retrieval. Psychol Rev. (1978) 85:59. doi: 10.1037/0033-295X.85.2.59

21. Lerche V, Voss A. Retest reliability of the parameters of the Ratcliff diffusion model. Psychol Res. (2017) 81:629–52. doi: 10.1007/s00426-016-0770-5

22. Schubert A-L, Frischkorn G, Hagemann D, Voss A. Trait characteristics of diffusion model parameters. J Intell. (2016) 4:7. doi: 10.3390/jintelligence4030007

23. Ratcliff R, Thapar A, McKoon G. Individual differences, aging, and IQ in two-choice tasks. Cognit Psychol. (2010) 60:127–57. doi: 10.1016/j.cogpsych.2009.09.001

24. Heathcote A, Suraev A, Curley S, Gong Q, Love J, Michie PT. Decision processes and the slowing of simple choices in schizophrenia. J Abnorm Psychol. (2015) 124:961. doi: 10.1037/abn0000117

25. White CN, Ratcliff R, Vasey MW, McKoon G. Using diffusion models to understand clinical disorders. J Math Psychol. (2010) 54:39–52. doi: 10.1016/j.jmp.2010.01.004

26. Karalunas SL, Huang-Pollock CL, Nigg JT. Decomposing attention-deficit/hyperactivity disorder (ADHD)-related effects in response speed and variability. Neuropsychology. (2012) 26:684. doi: 10.1037/a0029936

27. Huang-Pollock CL, Karalunas SL, Tam H, Moore AN. Evaluating vigilance deficits in ADHD: a meta-analysis of CPT performance. J Abnorm Psychol. (2012) 121:360. doi: 10.1037/a0027205

28. Weigard A, Huang-Pollock C, Brown S. Evaluating the consequences of impaired monitoring of learned behavior in attention-deficit/hyperactivity disorder using a Bayesian hierarchical model of choice response time. Neuropsychology. (2016) 30:502. doi: 10.1037/neu0000257

29. Weigard A, Huang-Pollock C. A diffusion modeling approach to understanding contextual cueing effects in children with ADHD. J Child Psychol Psychiatry. (2014) 55:1336–44. doi: 10.1111/jcpp.12250

30. Karalunas SL, Huang-Pollock CL. Integrating impairments in reaction time and executive function using a diffusion model framework. J Abnorm Child Psychol. (2013) 41:837–50. doi: 10.1007/s10802-013-9715-2

31. Ziegler S, Pedersen ML, Mowinckel AM, Biele G. Modelling ADHD: a review of ADHD theories through their predictions for computational models of decision-making and reinforcement learning. Neurosci Biobehav Rev. (2016) 71:633–56. doi: 10.1016/j.neubiorev.2016.09.002

32. Weigard AS, Clark DA, Sripada C. Cognitive efficiency beats subtraction-based metrics as a reliable individual difference dimension relevant to self-control. PsyArXiv [Preprint]. (2020). doi: 10.31234/osf.io/qp2ua

33. Moeller FG, Barratt ES, Dougherty DM, Schmitz JM, Swann AC. Psychiatric aspects of impulsivity. Am J Psychiatry. (2001) 158:1783–93. doi: 10.1176/appi.ajp.158.11.1783

34. Chamorro J, Bernardi S, Potenza MN, Grant JE, Marsh R, Wang S, et al. Impulsivity in the general population: a national study. J Psychiatr Res. (2012) 46:994–1001. doi: 10.1016/j.jpsychires.2012.04.023

35. Poldrack RA, Congdon E, Triplett W, Gorgolewski KJ, Karlsgodt KH, Mumford JA, et al. A phenome-wide examination of neural and cognitive function. Sci Data. (2016) 3:160110. doi: 10.1038/sdata.2016.110

36. First MB, Spitzer RL, Gibbon M, Williams JB. Structured Clinical Interview for DSM-IV Axis I Disorders, Research Version, Patient Edition (SCID-IP). New York, NY: Biometrics Research, New York State Psychiatric Institute (2004).

37. Logan GD, Cowan WB, Davis KA. On the ability to inhibit simple and choice reaction time responses: a model and a method. J Exp Psychol Hum Percept Perform. (1984) 10:276–91. doi: 10.1037/0096-1523.10.2.276

38. Band GPH, van der Molen MW, Logan GD. Horse-race model simulations of the stop-signal procedure. Acta Psychol. (2003) 112:105–42. doi: 10.1016/S0001-6918(02)00079-3

39. White CN, Congdon E, Mumford JA, Karlsgodt KH, Sabb FW, Freimer NB, et al. Decomposing decision components in the stop-signal task: a model-based approach to individual differences in inhibitory control. J Cogn Neurosci. (2014) 26:1601–14. doi: 10.1162/jocn_a_00567

40. Wagenmakers E-J, Van Der Maas HL, Grasman RP. An EZ-diffusion model for response time and accuracy. Psychon Bull Rev. (2007) 14:3–22. doi: 10.3758/BF03194023

41. Dutilh G, Annis J, Brown SD, Cassey P, Evans NJ, Grasman RP, et al. The quality of response time data inference: a blinded, collaborative assessment of the validity of cognitive models. Psychon Bull Rev. (2018) 26:1–19. doi: 10.3758/s13423-017-1417-2

42. van Ravenzwaaij D, Donkin C, Vandekerckhove J. The EZ diffusion model provides a powerful test of simple empirical effects. Psychon Bull Rev. (2017) 24:547–56. doi: 10.3758/s13423-016-1081-y

43. van Ravenzwaaij D, Oberauer K. How to use the diffusion model: parameter recovery of three methods: EZ, fast-dm, and DMAT. J Math Psychol. (2009) 53:463–73. doi: 10.1016/j.jmp.2009.09.004

44. Lerche V, Voss A, Nagler M. How many trials are required for parameter estimation in diffusion modeling? A comparison of different optimization criteria. Behav Res Methods. (2017) 49:513–37. doi: 10.3758/s13428-016-0740-2

45. R Core Team. R: A Language and Environment for Statistical Computing (3.6.0). Vienna: R Foundation for Statistical Computing (2019).

46. Cucina J, Byle K. The bifactor model fits better than the higher-order model in more than 90% of comparisons for mental abilities test batteries. J Intell. (2017) 5:27. doi: 10.3390/jintelligence5030027

47. Dubois J, Galdi P, Paul LK, Adolphs R. A distributed brain network predicts general intelligence from resting-state human neuroimaging data. Philos Trans R Soc B Biol Sci. (2018) 373. doi: 10.1098/rstb.2017.0284

48. Revelle W. psych: Procedures for Psychological, Psychometric, and Personality Research. R package version 1.10 (2018).

49. Schmid J, Leiman JM. The development of hierarchical factor solutions. Psychometrika. (1957) 22:53–61. doi: 10.1007/BF02289209

50. Zinbarg RE, Revelle W, Yovel I, Li W. Cronbach's α, Revelle's β, and McDonald's ωH: their relations with each other and two alternative conceptualizations of reliability. Psychometrika. (2005) 70:123–33. doi: 10.1007/s11336-003-0974-7

51. Barratt ES. Anxiety and impulsiveness related to psychomotor efficiency. Percept Mot Skills. (1959) 9:191–8. doi: 10.2466/pms.1959.9.3.191

52. Patton JH, Stanford MS, Barratt ES. Factor structure of the Barratt impulsiveness scale. J Clin Psychol. (1995) 51:768–74. doi: 10.1002/1097-4679(199511)51:6<768::aid-jclp2270510607>3.0.co;2-1

53. Dickman SJ. Functional and dysfunctional impulsivity: personality and cognitive correlates. J Pers Soc Psychol. (1990) 58:95. doi: 10.1037/0022-3514.58.1.95

54. Tellegen A, Waller NG. Exploring personality through test construction: development of the multidimensional personality questionnaire. SAGE Handb Personal Theory Assess. (2008) 2:261–92. doi: 10.4135/9781849200479.n13

55. Derogatis LR, Lipman RS, Rickels K, Uhlenhuth EH, Covi L. The hopkins symptom checklist (HSCL): a self-report symptom inventory. Behav Sci. (1974) 19:1–15. doi: 10.1002/bs.3830190102

56. Ratcliff R, Rouder JN. Modeling response times for two-choice decisions. Psychol Sci. (1998) 9:347–56. doi: 10.1111/1467-9280.00067

57. Shine JM, Bissett PG, Bell PT, Koyejo O, Balsters JH, Gorgolewski KJ, et al. The dynamics of functional brain networks: integrated network states during cognitive task performance. Neuron. (2016) 92:544–54. doi: 10.1016/j.neuron.2016.09.018

58. Brosnan MB, Sabaroedin K, Silk T, Genc S, Newman DP, Loughnane GM, et al. Evidence accumulation during perceptual decisions in humans varies as a function of dorsal frontoparietal organization. Nat Hum Behav. (2020) 4:844–55. doi: 10.1038/s41562-020-0863-4

59. Schmiedek F, Oberauer K, Wilhelm O, Süß H-M, Wittmann WW. Individual differences in components of reaction time distributions and their relations to working memory and intelligence. J Exp Psychol Gen. (2007) 136:414. doi: 10.1037/0096-3445.136.3.414

60. Ratcliff R, Thapar A, McKoon G. Effects of aging and IQ on item and associative memory. J Exp Psychol Gen. (2011) 140:464. doi: 10.1037/a0023810

61. Schulz-Zhecheva Y, Voelkle M, Beauducel A, Biscaldi M, Klein C. Predicting fluid intelligence by components of reaction time distributions from simple choice reaction time tasks. J Intell. (2016) 4:8. doi: 10.3390/jintelligence4030008

62. Schubert A-L, Frischkorn GT. Neurocognitive psychometrics of intelligence: how measurement advancements unveiled the role of mental speed in intelligence differences. Curr Dir Psychol Sci. (2020) 29:140–6. doi: 10.1177/0963721419896365

63. Duckworth AL, Kern ML. A meta-analysis of the convergent validity of self-control measures. J Res Personal. (2011) 45:259–68. doi: 10.1016/j.jrp.2011.02.004

64. Toplak ME, West RF, Stanovich KE. Practitioner review: do performance-based measures and ratings of executive function assess the same construct? J Child Psychol Psychiatry. (2013) 54:131–43. doi: 10.1111/jcpp.12001

65. Hedge C, Powell G, Sumner P. The reliability paradox: why robust cognitive tasks do not produce reliable individual differences. Behav Res Methods. (2018) 50:1166–86. doi: 10.3758/s13428-017-0935-1

66. Enkavi AZ, Eisenberg IW, Bissett PG, Mazza GL, MacKinnon DP, Marsch LA, et al. Large-scale analysis of test–retest reliabilities of self-regulation measures. Proc Natl Acad Sci USA. (2019) 116:5472–7. doi: 10.1073/pnas.1818430116

67. Miller J, Ulrich R. Mental chronometry and individual differences: modeling reliabilities and correlations of reaction time means and effect sizes. Psychon Bull Rev. (2013) 20:819–58. doi: 10.3758/s13423-013-0404-5

68. Rouder JN, Haaf JM. A psychometrics of individual differences in experimental tasks. Psychon Bull Rev. (2019) 26:452–67. doi: 10.3758/s13423-018-1558-y

70. Ratcliff R, Love J, Thompson CA, Opfer JE. Children are not like older adults: a diffusion model analysis of developmental changes in speeded responses. Child Dev. (2012) 83:367–81. doi: 10.1111/j.1467-8624.2011.01683.x

71. Wickelgren WA. Speed-accuracy tradeoff and information processing dynamics. Acta Psychol. (1977) 41:67–85. doi: 10.1016/0001-6918(77)90012-9

72. Ulrich R, Schröter H, Leuthold H, Birngruber T. Automatic and controlled stimulus processing in conflict tasks: superimposed diffusion processes and delta functions. Cognit Psychol. (2015) 78:148–74. doi: 10.1016/j.cogpsych.2015.02.005

73. White CN, Ratcliff R, Starns JJ. Diffusion models of the flanker task: discrete versus gradual attentional selection. Cognit Psychol. (2011) 63:210–38. doi: 10.1016/j.cogpsych.2011.08.001

74. Hübner R, Steinhauser M, Lehle C. A dual-stage two-phase model of selective attention. Psychol Rev. (2010) 117:759. doi: 10.1037/a0019471

75. Gomez P, Ratcliff R, Perea M. A model of the go/no-go task. J Exp Psychol Gen. (2007) 136:389. doi: 10.1037/0096-3445.136.3.389

Keywords: computational psychiatry, evidence accumulation, transdiagnostic, research domain criteria, schizophrenia, bipolar disorder, attention-deficit/hyperactivity disorder

Citation: Sripada C and Weigard A (2021) Impaired Evidence Accumulation as a Transdiagnostic Vulnerability Factor in Psychopathology. Front. Psychiatry 12:627179. doi: 10.3389/fpsyt.2021.627179

Received: 08 November 2020; Accepted: 20 January 2021; Published: 17 February 2021.

Edited by: Cynthia H. Y. Fu, University of East London, United Kingdom

Reviewed by: Deniz Vatansever, Fudan University, China; Jeffrey Bedwell, University of Central Florida, United States

Copyright © 2021 Sripada and Weigard. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chandra Sripada, sripada@umich.edu
