- ¹Ahmanson-Lovelace Brain Mapping Center, University of California, Los Angeles, Los Angeles, CA, United States

- ²Department of Psychiatry and Biobehavioral Sciences, University of California, Los Angeles, Los Angeles, CA, United States

- ³Staglin IMHRO Center for Cognitive Neuroscience, University of California, Los Angeles, Los Angeles, CA, United States

Autism spectrum disorder (ASD) is a neurodevelopmental disorder characterized by lack of attention to social cues in the environment, including speech. Hypersensitivity to sensory stimuli, such as loud noises, is also extremely common in youth with ASD. While a link between sensory hypersensitivity and impaired social functioning has been hypothesized, very little is known about the neural mechanisms whereby exposure to distracting sensory stimuli may interfere with the ability to direct attention to socially relevant information. Here, we used functional magnetic resonance imaging (fMRI) in youth with and without ASD (N=54, age range 8–18 years) to (1) examine brain responses during presentation of brief social interactions (i.e., two-person conversations) shrouded in ecologically valid environmental noises, and (2) assess how brain activity during encoding relates to later accuracy in identifying what was heard. During exposure to conversation-in-noise (vs. conversation or noise alone), both neurotypical youth and youth with ASD showed robust activation of canonical language networks. However, in youth with ASD, the extent to which temporal language regions, including voice-selective cortex (i.e., posterior superior temporal sulcus), were activated predicted later discriminative accuracy in identifying what was heard. Further, relative to neurotypical youth, ASD youth with higher discriminative accuracy showed significantly greater activity in left-hemisphere speech-processing cortex (i.e., angular gyrus) while listening to conversation-in-noise (vs. conversation or noise alone). Notably, in youth with ASD, increased activity in this region was associated with higher social motivation and better social cognition. This heightened activity in voice-selective and speech-processing regions may serve as a compensatory mechanism allowing youth with ASD to hone in on the conversations they heard in the context of non-social distracting stimuli. These findings further suggest that attending to social and non-social stimuli simultaneously may be more challenging for youth with ASD, requiring the recruitment of additional neural resources to encode socially relevant information.

Introduction

Autism spectrum disorder (ASD) is a common neurodevelopmental disorder characterized by difficulties in social interaction and communication, the presence of repetitive behaviors and restricted interests, and sensory processing atypicalities (1). Research in infants who later receive an ASD diagnosis has consistently shown that the allocation of attention to social stimuli is disrupted early in development [for a review, see (2)]. For instance, young children with ASD fail to show a preference for listening to their mother's voice (3) or to child-directed speech (4); disrupted attention to language early in life may set the stage for atypical language acquisition and altered development of the neural systems responsible for language processing. Importantly, the ability to selectively attend to and learn from social interactions in one's environment often requires simultaneously filtering out competing non-social stimuli. As heightened sensitivity to mildly aversive auditory stimuli (e.g., loud noises) is observed in a significant number of children with ASD (5), we hypothesize that this sensitivity may be one mechanism through which attention is drawn away from social input and toward other non-social stimuli in the environment. Despite growing interest in the relationship between sensory processing and social impairments in ASD (6–8), little research to date has investigated how individual variability in neural responses to simultaneous social and non-social sensory stimuli relates to the ability to “hone in” on socially relevant input.

Converging neuroimaging data indicate altered brain responses to language in individuals with ASD. Although ASD is highly heterogeneous (9), young children with ASD who go on to have poorer language skills show hypoactivity in temporal cortex during language listening (10), as well as reduced functional connectivity between nodes of the language network (11). In children and adolescents with ASD, functional MRI (fMRI) studies have found reduced functional lateralization, with increased rightward asymmetry during a variety of language processing tasks rather than the leftward asymmetry observed in neurotypical individuals (12–17), as well as reduced connectivity between voice-selective cortex and reward-related brain regions (18).

Importantly, however, in most real-life situations language is not heard in isolation but against a background of competing sensory distractors (e.g., a buzzing fan, a barking dog). In neurotypical adults, the bilateral posterior superior temporal sulcus (pSTS) responds selectively to vocal stimuli, and activity in this region is reduced when voice stimuli are degraded or masked by background noise (19, 20). In contrast, individuals with ASD fail to activate voice-selective regions in the pSTS during exposure to vocal stimuli (12) and show increased recruitment of right-hemisphere language homologues (21). Furthermore, individuals with ASD are poorer at identifying speech heard against background noise (22, 23). Interestingly, a recent study showed that sensory processing atypicalities modulate brain activity in youth with ASD during simultaneous processing of sarcastic remarks and distracting tactile stimulation (24). However, it has yet to be examined how sensory distractors in the same modality as speech may affect the allocation of attention to language during social interactions. Such a study has implications for understanding how auditory filtering deficits may affect the encoding of social information in everyday life, where conversations commonly occur against background noise.

In adults with ASD, heightened sensory over-responsivity (SOR), characterized by extreme behavioral responses to everyday sensory stimuli, is related to higher autism traits (25). Roughly 65% of children with ASD show atypical sensory responsivity to non-social auditory stimuli (26, 27), including lower tolerance for loud noises (28, 29) and hypersensitivity to certain environmental noises, such as the sound of a dog barking or a vacuum cleaner (30). A growing body of neuroimaging research also indicates that children with ASD who have high levels of SOR display neural hyper-responsivity to aversive visual, tactile, and auditory stimuli in primary sensory brain regions and in areas important for salience detection (31, 32), pointing to a possible over-allocation of attentional resources to sensory stimuli in youth with ASD. Together, these data suggest that language processing in social contexts with competing sensory stimuli, such as those that occur in the natural environment, may be particularly challenging for some individuals with ASD.

Here, we examined brain responses to auditory social and non-social stimuli in a paradigm in which participants heard brief conversations between two people shrouded in competing environmental noises. Ecologically valid stimuli were developed to examine the effects of ASD diagnosis on neural processing of commonly encountered environmental noise, conversation, and conversation-in-noise (i.e., noise and conversation presented simultaneously). In addition, participants completed a post-scan computerized test that probed recognition of the noises and conversation topics presented during the fMRI paradigm, thus providing a measure of attention to, and encoding of, social and non-social information. We hypothesized that, relative to neurotypical youth, youth with ASD would show reduced activity in left-hemisphere language cortices when listening to conversation alone, as well as increased activity in sensory cortices when exposed to aversive noise. Further, we expected that the presence of distracting noises during speech processing would result in greater activation of subcortical and cortical brain regions involved in sensory processing in youth with ASD relative to neurotypical youth. Finally, we expected that the ability to recognize details from conversations heard in the presence of background noise would be associated with increased activity in canonical left-hemisphere language regions and voice-selective cortex in the pSTS in both groups, reflecting the recruitment of additional neural resources to “hone in” on social stimuli in the context of non-social distractors. To the extent that some youth with ASD may be hypersensitive to auditory stimuli, we expected this effect to be more pronounced in this group.

Materials and Methods

Participants

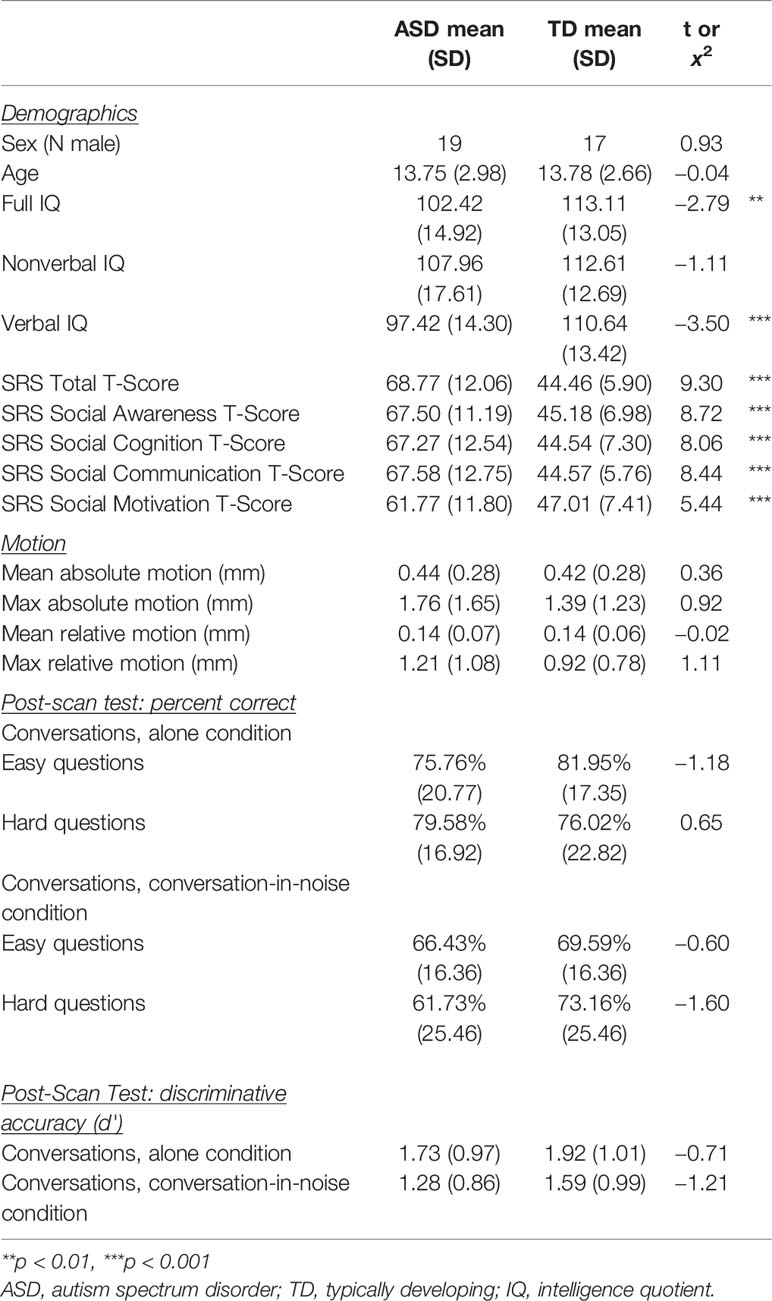

Participants were 26 youth with ASD and 28 age-matched typically developing (TD) youth recruited through referrals from the University of California, Los Angeles (UCLA) Child and Adult Neurodevelopmental (CAN) Clinic, as well as through posted advertisements throughout the greater Los Angeles area. Exclusion criteria included any diagnosed neurological or genetic disorder, structural brain abnormalities, or metal implants. ASD participants had a prior clinical diagnosis, which was confirmed by licensed clinicians at the UCLA CAN Clinic using the Autism Diagnostic Observation Schedule, Second Edition (ADOS-2) (33) and the Autism Diagnostic Interview-Revised (ADI-R) (34). All participants had full-scale IQ above 70 as assessed by the Wechsler Abbreviated Scale of Intelligence (35) (Table 1). Data were originally acquired for 30 ASD and 30 TD youth; 4 ASD and 2 TD participants were excluded from the final sample due to excessive head motion during fMRI data acquisition (i.e., greater than 3.5 mm of maximum relative motion; see Table 1 for mean motion parameters in the final sample). Study procedures were approved by the UCLA Institutional Review Board, and written informed consent and assent were obtained from legal guardians and study participants, respectively.

Behavioral Measures

Social functioning was assessed in both ASD and TD youth using the Social Responsiveness Scale—2nd Edition (SRS-2) (36). The SRS-2 is intended for use in both neurotypical populations and individuals with ASD and provides a measure of the severity of social impairment associated with autism. In the current study, we examined the relationship between t-scores for the socially-relevant subscales of the SRS-2 (i.e., social awareness, social cognition, social communication, and social motivation) and neural activity during conversation-in-noise listening.

Experimental Design

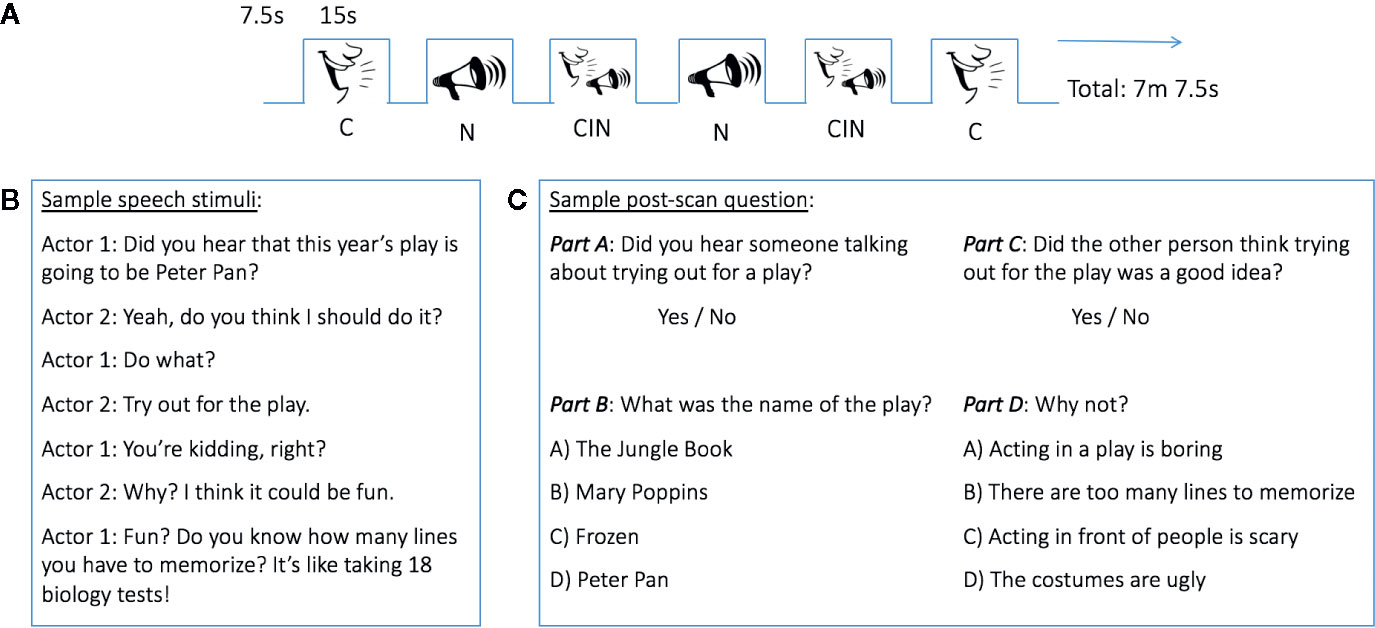

During the fMRI scan, auditory stimuli were presented in a canonical block design (Figure 1A) using E-Prime 2.0 Software on a Dell Latitude E6430 laptop computer. Each block consisted of 15 s of auditory stimulus presentation followed by 7.5 s of rest. A crosshair was presented at the center of a white screen throughout the scan. There were three block types: conversation (C), noise (N), and conversation-in-noise (CIN; i.e., conversation and noise presented simultaneously). Stimuli were ecologically valid and mimicked situations encountered in everyday life, in which one overhears two people engaged in a conversation shrouded by competing auditory stimuli, forcing the listener to “hone in” on the socially relevant speech. Conversation topics were inspired by scripted television series focusing on childhood and adolescence (Figure 1B). Speech passages were recorded by two actors (one male, one female) using GarageBand 6.0.5 and an Apogee MiC digital microphone connected to a Macintosh computer. Noise stimuli were downloaded from Freesound.org and selected to be ecologically valid (i.e., commonly encountered in everyday life). The aversiveness of the selected noises was rated in an independent sample (N=30) on a 7-point Likert scale (1=not aversive, 7=extremely aversive); the final 12 noise stimuli used in the fMRI paradigm were rated as moderately aversive (M=4.7, range 3.6–5.5) and included sounds such as a jackhammer, a police siren, and a blender. Root-mean-square (RMS) amplitude was normalized across all stimuli to control for loudness. Stimuli were counterbalanced such that half of the participants heard a given conversation without noise, whereas the other half heard the same conversation masked by noise (i.e., in the CIN condition). Likewise, for any given noise, half of participants heard the noise alone, while the other half heard it in the CIN condition. Each block type (C, N, CIN) was presented six times, and block order was counterbalanced across subjects. The total run time was 7 min 7.5 s. Before the fMRI scan, participants were told that they would hear some people talking and some noises, and were instructed simply to listen and look at the crosshair on the screen. Participants were not specifically instructed to pay attention to what was said, because we wanted the paradigm to have high ecological validity by mimicking everyday situations in which we overhear others talking without being explicitly asked to pay attention to or remember what was said.
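The RMS normalization step can be sketched as follows. This is a minimal illustration, assuming mono floating-point waveforms and a hypothetical common target level (`TARGET_RMS`); the text does not say which software or reference level was used, and the signals below are synthetic stand-ins, not the study's recordings.

```python
import numpy as np

def rms(signal: np.ndarray) -> float:
    """Root-mean-square amplitude of a mono waveform."""
    return float(np.sqrt(np.mean(np.square(signal.astype(np.float64)))))

def normalize_rms(signal: np.ndarray, target_rms: float) -> np.ndarray:
    """Rescale a waveform so that its RMS amplitude equals target_rms."""
    current = rms(signal)
    if current == 0.0:
        raise ValueError("cannot RMS-normalize a silent signal")
    return signal * (target_rms / current)

# Synthetic stand-ins for a quiet speech-like tone and a loud noise (not the study's audio)
sr = 44_100                                   # hypothetical sampling rate (Hz)
t = np.arange(sr) / sr                        # 1 s of samples
quiet_tone = 0.05 * np.sin(2 * np.pi * 220 * t)
loud_noise = 0.8 * np.random.default_rng(0).standard_normal(sr)

TARGET_RMS = 0.1                              # hypothetical common level
stimuli = [normalize_rms(x, TARGET_RMS) for x in (quiet_tone, loud_noise)]
print([round(rms(x), 4) for x in stimuli])    # [0.1, 0.1]
# Peak levels still differ after RMS matching, so check for clipping before writing audio files
print([round(float(np.max(np.abs(x))), 3) for x in stimuli])
```

Matching RMS equates average power rather than peak level, which is why the sketch also reports peak amplitudes: a normalized stimulus could exceed the ±1.0 range of a fixed-point audio file and clip.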

Figure 1 Experimental design. (A) Block design functional MRI (fMRI) task. (B) Example of a conversation heard during fMRI data acquisition. (C) Sample of post-scan questions. CIN, conversation-in-noise; C, conversation; N, noise.

To assess participants' ability to recognize stimuli presented in the three experimental conditions, and thus obtain a proxy measure of in-scanner attention, a brief post-scan questionnaire was administered using E-Prime 2.0 Software on a Dell Latitude E6430 laptop computer. During this test, participants heard and read questions about the conversations and noises they had been exposed to during fMRI data acquisition, interspersed with foils (i.e., questions about conversations and noises they had not heard). For each conversation and noise stimulus presented during the scan, participants were first asked whether they had heard that particular conversation topic or noise. For the conversations, the test was tiered: if a participant's response to this initial yes/no question was correct (Figure 1C, top), a more nuanced multiple-choice question about that conversation was then presented (Figure 1C, bottom); after an incorrect response, the participant moved on to the questions about a different conversation topic. Participant responses were recorded in E-Prime. A sensitivity index (d') was calculated to assess how well youth discriminated between stimuli heard during scanning and foils. d' was calculated as the standardized (i.e., z-transformed) hit rate minus the standardized false-alarm rate.
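The d' computation described above can be sketched with the Python standard library's `statistics.NormalDist`. The counts in the example are hypothetical, and the log-linear correction for hit or false-alarm rates of exactly 0 or 1 is an assumption added for illustration; the text does not state whether any correction was applied.

```python
from statistics import NormalDist

def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int,
            loglinear: bool = True) -> float:
    """Sensitivity index: d' = z(hit rate) - z(false-alarm rate).

    With loglinear=True (an assumption; the study does not say how it handled
    rates of exactly 0 or 1), 0.5 is added to each count and 1 to each total so
    that perfect scores do not produce infinite z-scores.
    """
    pad, total_pad = (0.5, 1.0) if loglinear else (0.0, 0.0)
    hit_rate = (hits + pad) / (hits + misses + total_pad)
    fa_rate = (false_alarms + pad) / (false_alarms + correct_rejections + total_pad)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)

# Hypothetical counts: 6 targets heard in the scanner and 6 foils
print(round(d_prime(hits=5, misses=1, false_alarms=1, correct_rejections=5), 2))
print(round(d_prime(hits=3, misses=3, false_alarms=3, correct_rejections=3), 2))  # 0.0 (chance)
```

Without some correction (`loglinear=False`), a participant who endorsed every target and no foils would have rates of exactly 1 and 0, and `inv_cdf` raises an error because the corresponding z-scores are infinite.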

MRI Data Acquisition

MRI data were collected on a 3.0 Tesla Siemens Prisma MRI scanner using a 64-channel head coil. For each subject, functional data were acquired with a multiband echo-planar imaging (EPI) sequence: 595 volumes; repetition time (TR) = 720 ms; multiband acceleration factor = 8; matrix size = 104 × 104; field of view (FOV) = 208 × 208 mm; in-plane resolution = 2 × 2 mm; slice thickness = 2 mm, no gap; 72 slices; bandwidth = 2,290 Hz per pixel; echo time (TE) = 37 ms. Visual and auditory stimuli were presented via MR-compatible goggles and headphones (Optoacoustics LTD, Or Yehuda, Israel). Subjects wore earplugs and headphones to attenuate scanner noise.
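A quick arithmetic check of these acquisition parameters: the in-plane resolution follows from FOV divided by matrix size, and 595 volumes at a 720 ms TR give 428.4 s (about 7 min 8 s) of functional data, within about a second of the 7 min 7.5 s task run described under Experimental Design. With 72 slices and a multiband factor of 8, each TR contains 9 simultaneous-multislice excitations. This sketch uses only the numbers listed above; it is not derived from the scanner protocol itself.

```python
# Values copied from the acquisition parameters above
TR_MS = 720
N_VOLUMES = 595
FOV_MM, MATRIX = 208, 104
N_SLICES, MB_FACTOR = 72, 8

in_plane_mm = FOV_MM / MATRIX                  # field of view divided by matrix size
run_s = N_VOLUMES * TR_MS / 1000               # total functional acquisition time (s)
excitations_per_tr = N_SLICES // MB_FACTOR     # slice groups excited together per TR
print(in_plane_mm, run_s, excitations_per_tr)  # 2.0 428.4 9
```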

Functional MRI Data Analysis

Data were processed using FSL (FMRIB's Software Library, www.fmrib.ox.ac.uk/fsl) (37) and AFNI (Analysis of Functional NeuroImages) (38). Functional data were motion corrected to the average functional volume with FSL's Motion Correction Linear Registration Tool (MCFLIRT) (39) using sinc interpolation, and skull stripped using FSL's Brain Extraction Tool (BET) (40). Time-series statistical analyses were run in FSL's FMRI Expert Analysis Tool (FEAT) version 6.0. Functional images were spatially smoothed [full width at half maximum (FWHM) 5 mm], and a temporal high-pass filter of 67.5 s was applied. Functional data were linearly registered to the Montreal Neurological Institute (MNI) 2 mm standard brain with 12 degrees of freedom. Motion outliers were identified using FSL's motion outliers tool (which flags volumes based on the root-mean-square intensity difference from the center volume) and were included as confound explanatory variables in the single-subject analyses; the mean number of censored volumes did not differ between ASD and TD participants (p=0.31). Condition effects were estimated by convolving a boxcar function for each condition with a double-gamma hemodynamic response function, with temporal derivatives included. Each condition (C, N, CIN) was modeled relative to the resting baseline, and single-subject models were combined in a group-level mixed-effects model (FLAME 1+2). Verbal IQ was entered as a covariate in all group-level analyses. Within-group and between-group maps were pre-threshold masked with a grey-matter mask and thresholded at z > 3.1 (p < 0.001), cluster-corrected for multiple comparisons at p < 0.05. Between-group comparisons (i.e., ASD vs. TD) were masked by the sum of within-group activity for each condition of interest.
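The first-level regressors described above can be sketched as a boxcar per condition convolved with a double-gamma HRF, plus temporal derivatives. This is a minimal numpy illustration, not FEAT's implementation: the HRF parameters (SPM-style gamma shapes 6 and 16, undershoot ratio 1/6), the fixed block order, and the finite-difference derivative are assumptions, and the sketch omits the 67.5 s high-pass filter and the motion-outlier confounds.

```python
import math
import numpy as np

TR = 0.72        # s, from the acquisition parameters
N_VOLS = 595
DT = 0.01        # s, fine time grid used before sampling at each TR

def gamma_pdf(t: np.ndarray, shape: float) -> np.ndarray:
    """Gamma density with unit scale (t in seconds)."""
    return t ** (shape - 1) * np.exp(-t) / math.gamma(shape)

def double_gamma_hrf(t: np.ndarray) -> np.ndarray:
    """SPM-style double-gamma HRF: peak near 5 s, undershoot near 15 s, ratio 1/6.
    These parameters are an assumption; FSL's default double-gamma is similar but not identical."""
    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()

def block_regressor(onsets_s, duration_s):
    """Boxcar for one condition, convolved with the HRF, then sampled once per TR."""
    n_fine = int(round(N_VOLS * TR / DT))
    t = np.arange(n_fine) * DT
    box = np.zeros(n_fine)
    for onset in onsets_s:
        box[(t >= onset) & (t < onset + duration_s)] = 1.0
    hrf = double_gamma_hrf(np.arange(0.0, 32.0, DT))
    conv = np.convolve(box, hrf)[:n_fine]
    sample_idx = np.round(np.arange(N_VOLS) * TR / DT).astype(int)
    return conv[sample_idx]

# Hypothetical fixed order CIN, C, N repeated six times (the study counterbalanced order):
# 15 s of stimulus plus 7.5 s of rest puts a new block every 22.5 s.
conditions = ["CIN", "C", "N"]
onsets = {c: [k * 22.5 for k in range(18) if k % 3 == i] for i, c in enumerate(conditions)}
X = np.column_stack([block_regressor(onsets[c], 15.0) for c in conditions])
X_deriv = np.gradient(X, TR, axis=0)           # finite-difference temporal derivatives
design = np.column_stack([X, X_deriv, np.ones(N_VOLS)])
print(design.shape)  # (595, 7)
```

Convolving on a fine grid and then sampling at each TR keeps block onsets that do not fall on a TR boundary (e.g., 22.5 s is not a multiple of 0.72 s) correctly placed in time.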

Statistical Analysis

Two-tailed t-tests were performed to assess between-group differences in age, IQ, and motion parameters. To test whether participants' discriminative accuracy (d') varied as a function of diagnostic group or stimulus condition, a repeated-measures ANOVA was conducted with group (ASD vs. TD) as the between-subjects factor and condition (noises heard alone, noises heard in CIN, conversations heard alone, conversations heard in CIN) as the within-subjects factor. To further examine differences in behavioral performance, we ran separate repeated-measures ANOVAs on the percentage of correct responses for easy (yes/no) and hard (multiple-choice) questions, with group (ASD vs. TD) as the between-subjects factor and condition (C vs. CIN) as the within-subjects factor.

Results

Demographics

There were no statistically significant differences between ASD and TD youth in sex, age, or non-verbal IQ, or in any of the four motion parameters tested (Table 1). Two-sample t-tests revealed significant differences in full-scale and verbal IQ, with TD youth having higher IQ than their ASD counterparts. As expected, ASD and TD youth also differed significantly in t-scores on the social awareness, social cognition, social communication, and social motivation subscales of the Social Responsiveness Scale (SRS), as well as in SRS Total t-scores, indicating poorer parent-reported social functioning in youth with ASD.

Post-Scan Recognition Test

To assess participants' ability to discriminate between what was actually heard and foils (i.e., correctly identifying a conversation or noise that was heard, a “hit”, vs. incorrectly endorsing a conversation or noise that was not heard, a “false alarm”), we calculated a sensitivity index (d') for each participant. In ASD youth, mean d' was 0.64, 0.59, 1.73, and 1.28 for noises heard in the alone condition, noises heard in the conversation-in-noise condition, conversations heard in the alone condition, and conversations heard in the conversation-in-noise condition, respectively. In TD youth, the corresponding mean d' values were 0.65, 0.67, 1.92, and 1.59. A repeated-measures ANOVA revealed no significant group × condition interaction [F(3,156)=0.56, p=0.64] and no main effect of group [F(1,52)=0.83, p=0.37]. However, the main effect of condition was significant [F(3,156)=46.46, p < 0.001]; pairwise comparisons showed that both ASD and TD participants had higher accuracy (d') for conversations than for noises, both when each was heard alone and when they were heard together in the conversation-in-noise condition.

To further examine differences in behavioral performance, we also compared percent accuracy using separate repeated-measures ANOVAs for easy (yes/no) and hard (multiple-choice) questions. For the easy questions, the main effect of condition was significant [F(1,52)=19.77, p < 0.001], with both groups more accurate at identifying topics of conversation heard in the conversation-alone condition than in the conversation-in-noise condition. There was no significant group × condition interaction [F(1,52)=0.38, p=0.54] or main effect of group [F(1,52)=1.02, p=0.32]. For the hard (multiple-choice) questions, there was also a main effect of condition [F(1,52)=10.00, p < 0.01], with both groups more accurate for conversations heard in the conversation-alone condition than in the conversation-in-noise condition. While there was no main effect of group [F(1,52)=0.51, p=0.48], there was a significant group × condition interaction [F(1,52)=5.53, p=0.02]. Post hoc tests showed that, although the ASD and TD groups did not differ in percent accuracy in either the conversation-alone or the conversation-in-noise condition, the ASD group was significantly more accurate in the conversation-alone condition than in the conversation-in-noise condition (p < 0.01); this was not the case for TD youth (p > 0.05).
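The reported degrees of freedom can be checked against the design. This minimal sketch assumes a standard mixed ANOVA with no sphericity correction (none is mentioned in the text), 54 participants in 2 groups, and either 4 stimulus conditions (the d' analysis) or 2 conditions (the percent-accuracy analyses).

```python
def mixed_anova_df(n_subjects: int, n_groups: int, n_conditions: int) -> dict:
    """Uncorrected degrees of freedom for a mixed ANOVA with one between-subjects
    factor (group) and one within-subjects factor (condition)."""
    df_group = n_groups - 1
    df_subjects_error = n_subjects - n_groups           # subjects within groups
    df_condition = n_conditions - 1
    df_within_error = df_condition * df_subjects_error  # condition x subjects-within-groups
    return {
        "group": (df_group, df_subjects_error),
        "condition": (df_condition, df_within_error),
        "group x condition": (df_group * df_condition, df_within_error),
    }

# d' ANOVA: noises alone, noises in CIN, conversations alone, conversations in CIN
print(mixed_anova_df(54, 2, 4))
# {'group': (1, 52), 'condition': (3, 156), 'group x condition': (3, 156)}
# Percent-accuracy ANOVAs: C vs. CIN only, so every term has (1, 52)
print(mixed_anova_df(54, 2, 2))
```

These values match the F(3,156) and F(1,52) statistics reported above, which is consistent with four within-subjects levels in the d' analysis.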

Functional MRI Results

Within-Condition Analyses

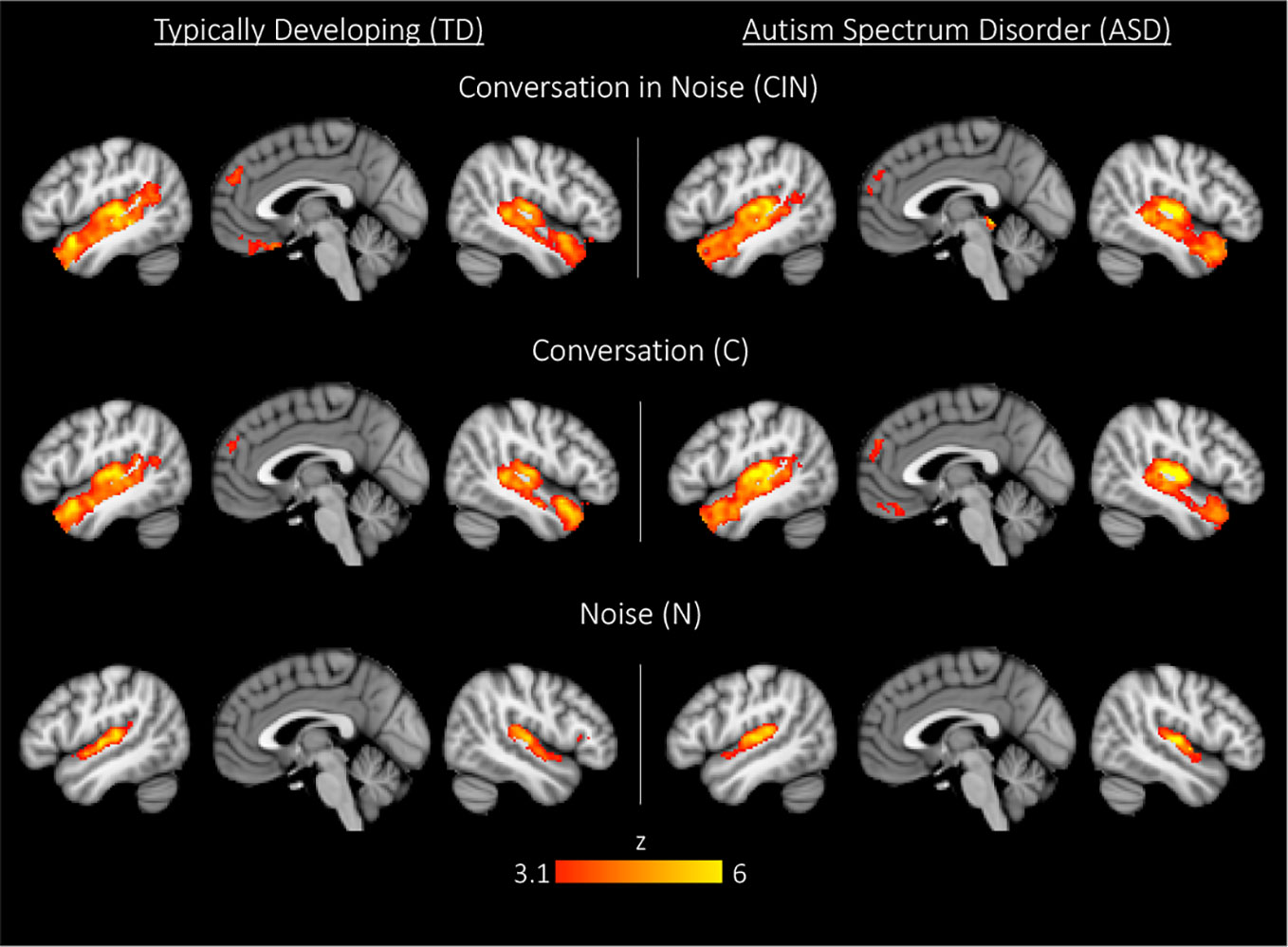

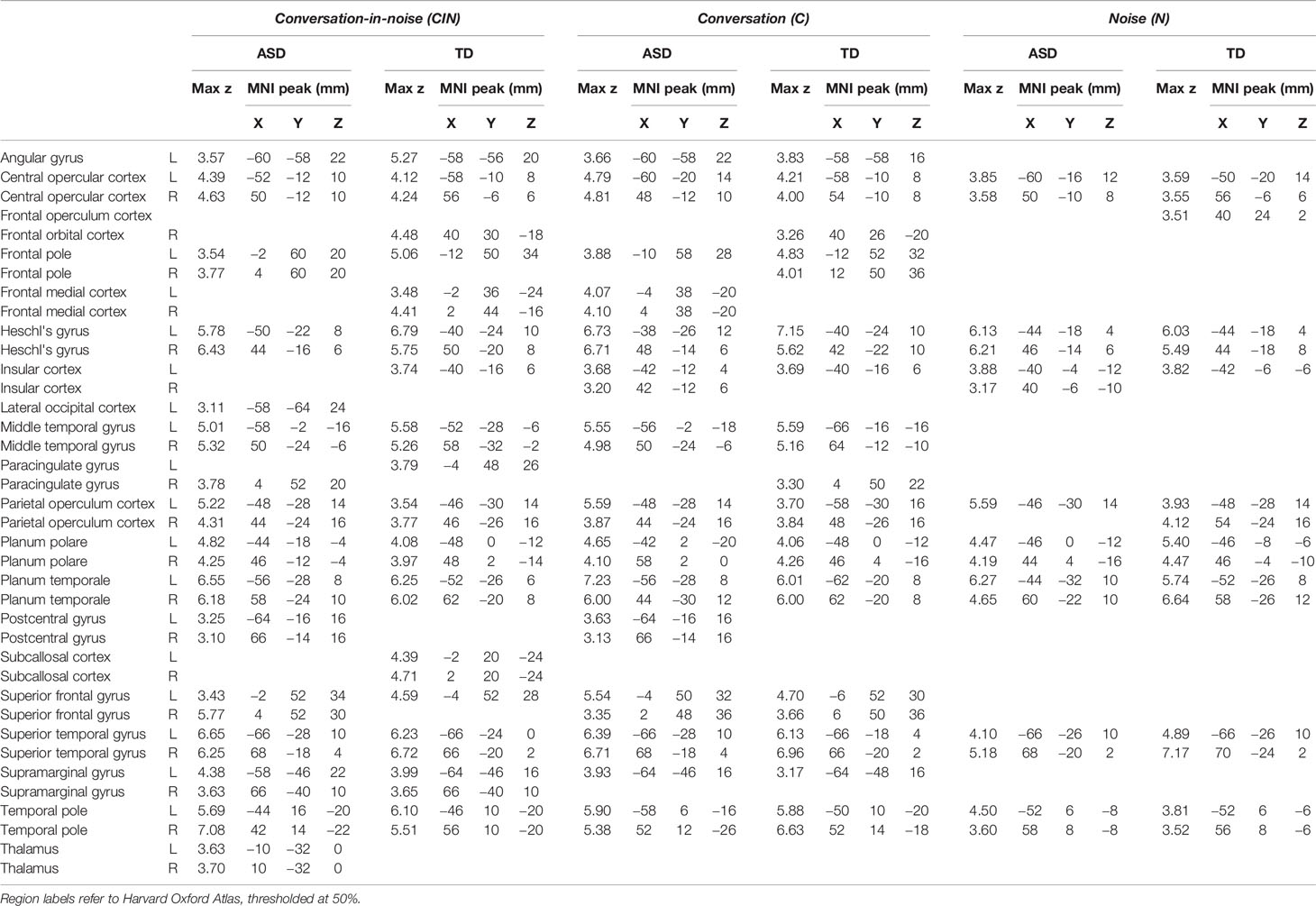

Across each of the three conditions, both youth with ASD and TD youth showed the expected activity in bilateral Heschl's gyrus, superior temporal gyrus, planum temporale, and planum polare (Figure 2, Table 2). During exposure to conversation-in-noise (CIN) and conversation alone (C), both groups showed robust activation in auditory and language cortices, including bilateral superior temporal gyrus (STG), middle temporal gyrus, temporal pole, left angular gyrus, and superior frontal gyrus. Activity in ventromedial prefrontal cortex, a region involved in theory of mind and mentalizing, was observed in TD youth in the CIN condition and in ASD youth in the C condition. In contrast to the extended network of regions activated during conditions in which speech was presented (i.e., CIN and C), brain activity during the noise condition (N) was restricted to primary and secondary auditory cortices; ASD youth showed additional activation in right inferior frontal gyrus and pars triangularis. No between-group differences were observed for any of the three experimental conditions at this statistical threshold (z > 3.1, cluster-corrected p < 0.05).

Figure 2 Whole-brain activation in typically developing (TD) youth and youth with autism spectrum disorder (ASD) during exposure to conversation-in-noise (CIN), conversation (C), and noise (N). Maps are thresholded at z > 3.1, corrected for multiple comparisons at the cluster level (p < 0.05).

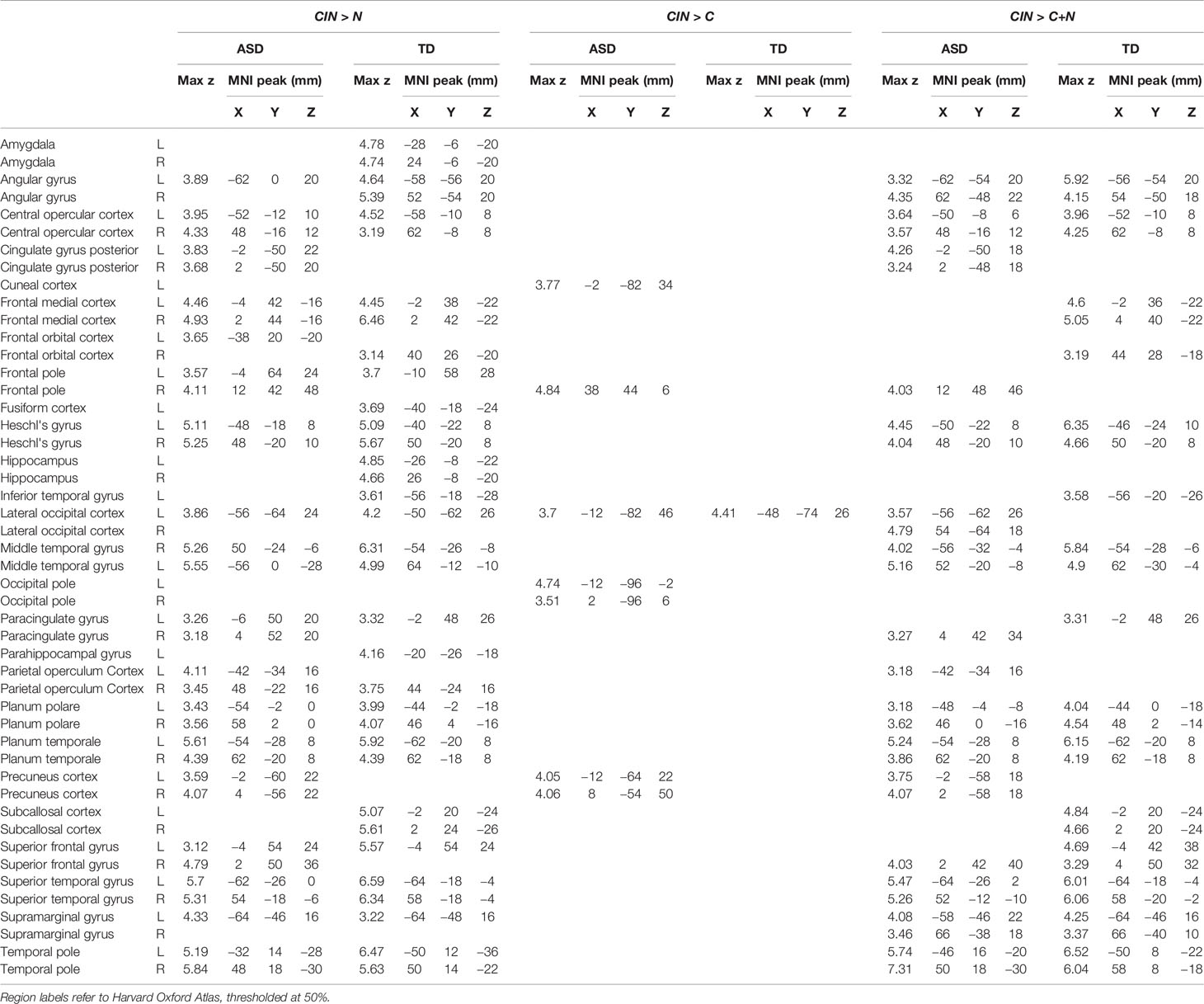

Table 2 Montreal Neurological Institute (MNI) coordinates for each condition (conversation-in-noise, CIN; conversation, C; noise, N) compared to baseline.

Between-Condition Analyses

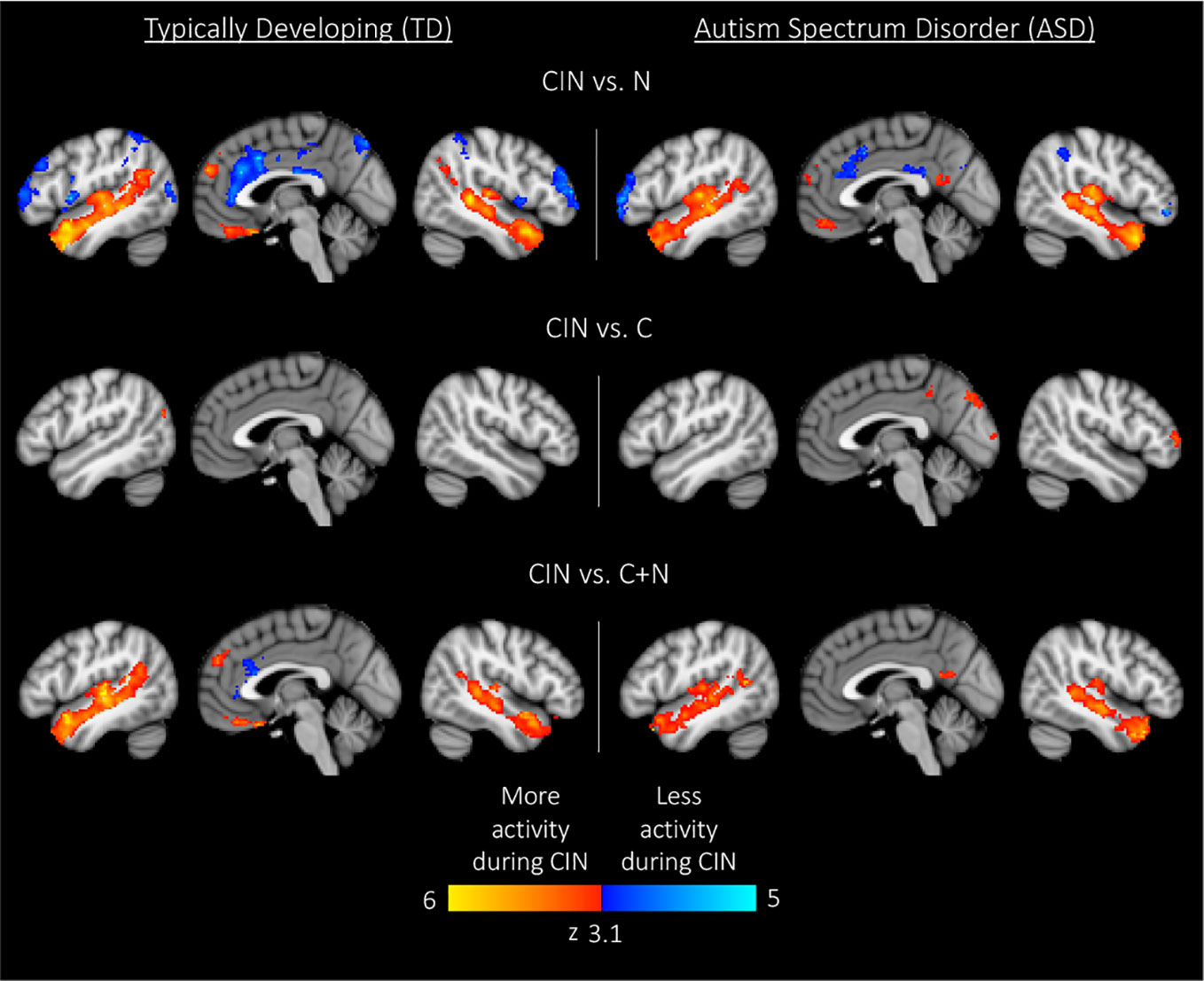

Here we compared brain activity between experimental conditions. First, we examined differences in brain activity when listening to conversation-in-noise relative to listening to noise alone (CIN > N). For this contrast, both TD and ASD youth showed increased activity in bilateral temporal pole, superior temporal gyrus, Heschl's gyrus, superior frontal gyrus, and medial prefrontal cortex (Figure 3, Table 3), consistent with increased attention to language stimuli in the CIN condition. TD youth also showed activation in the right angular gyrus and bilateral hippocampus, whereas ASD youth showed significant activation in the precuneus. No regions showed significant between-group differences when comparing CIN and N conditions.

Figure 3 Within-group results for comparisons between experimental conditions. Maps are thresholded at z > 3.1, corrected for multiple comparisons at the cluster level (p < 0.05). CIN, conversation-in-noise; N, noise; C, conversation.

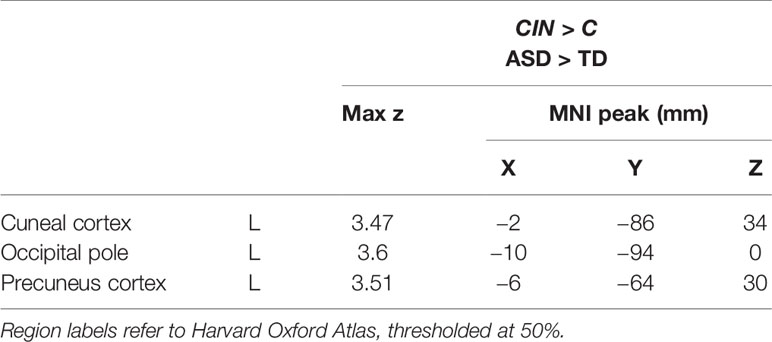

Next, we assessed differences in brain activity when listening to conversation-in-noise versus conversation alone (CIN > C). For this contrast, TD youth showed increased activity in lateral occipital cortex, whereas ASD youth had increased activity in right frontal pole, precuneus, and occipital pole (Figure 3, Table 3). Between-group comparisons revealed that the ASD group had greater activity in primary visual cortex and precuneus relative to TD youth for the contrast of CIN > C; there were no brain regions where TD youth showed greater activity relative to ASD youth (Table 4). No brain regions showed greater activity when listening to conversation alone vs. conversation-in-noise (i.e., C > CIN).

Table 4 Montreal Neurological Institute (MNI) coordinates for between-condition between-group contrasts.

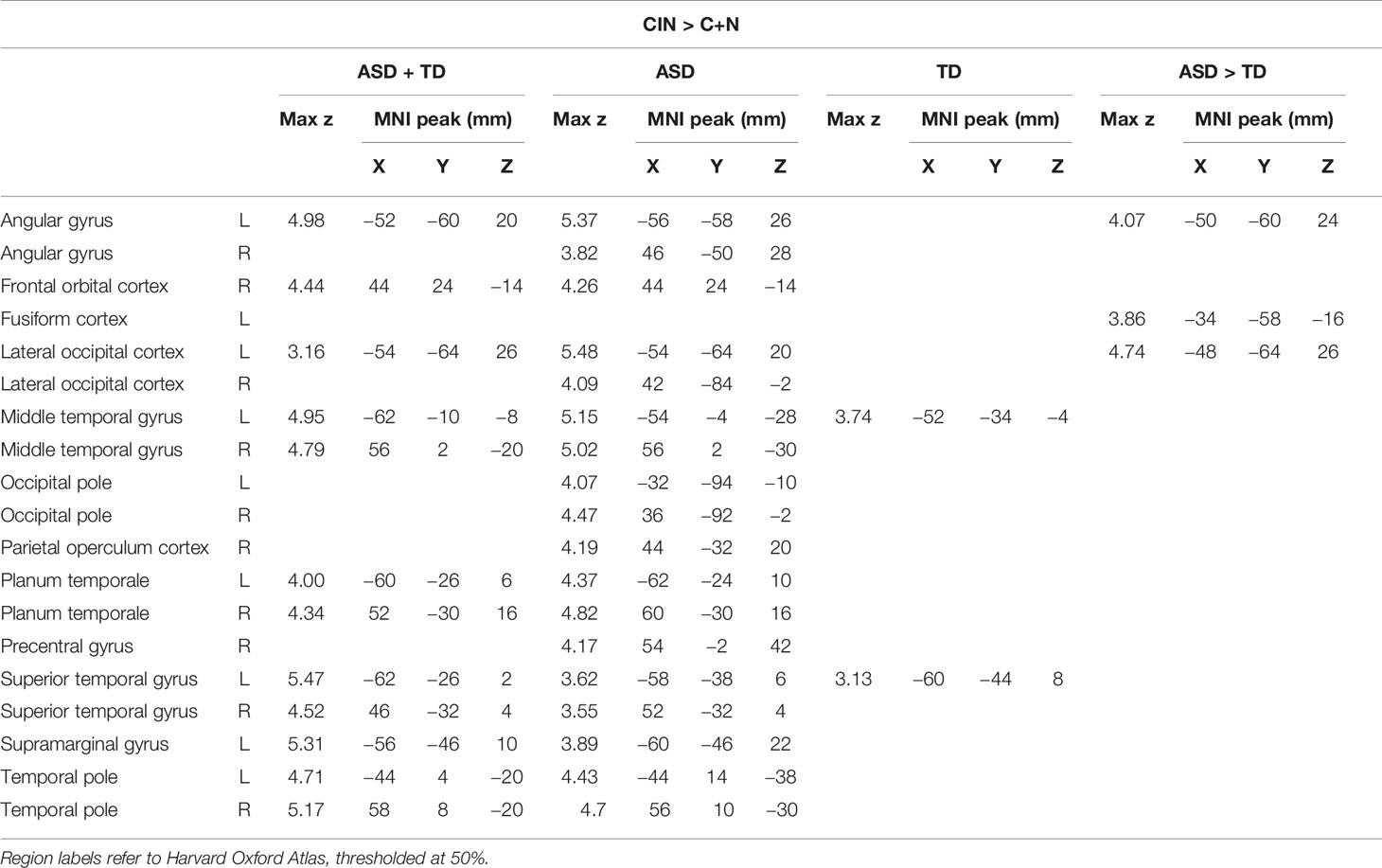

Lastly, to tap into the neural correlates of social attention (i.e., selective attention to speech in the context of background noise), we examined brain activity specifically associated with listening to conversation-in-noise, above and beyond activity observed for the conversation and noise alone conditions (CIN > C+N). For this contrast, both TD and ASD youth displayed activity in brain regions involved in auditory and language processing as well as theory of mind (i.e., angular gyri, superior frontal gyrus, and superior temporal regions); ASD youth displayed additional activity in the precuneus whereas TD youth showed activity in ventral medial frontal cortex (Figure 3, Table 3). No significant between-group differences were observed for this contrast.
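The text does not give the contrast weights used for CIN > C+N, so the sketch below is a hedged illustration of two common readings, applied to made-up parameter estimates ordered (CIN, C, N). Weights of [1, −0.5, −0.5] compare CIN with the average of the single-stream conditions; weights of [1, −1, −1] compare CIN with their sum, which tests for a super-additive response.

```python
import numpy as np

# Two hypothetical ways to weight CIN > C+N over parameter estimates ordered (CIN, C, N).
# The text does not give the weights; both vectors are assumptions.
CIN_VS_MEAN = np.array([1.0, -0.5, -0.5])   # CIN vs. average of C and N (weights sum to 0)
CIN_VS_SUM = np.array([1.0, -1.0, -1.0])    # CIN vs. sum of C and N (tests super-additivity)

def contrast_estimate(betas, weights) -> float:
    """Contrast of condition parameter estimates for one voxel: c^T beta."""
    return float(np.dot(weights, betas))

betas = np.array([3.0, 3.5, 0.5])             # made-up estimates for one voxel
print(contrast_estimate(betas, CIN_VS_MEAN))  # 1.0, even though C > CIN here
print(contrast_estimate(betas, CIN_VS_SUM))   # -1.0
```

The first example shows that a positive CIN-versus-average contrast does not by itself imply that CIN exceeds each single condition; that claim would require separate CIN > C and CIN > N tests or a conjunction. Rescaling the weights (e.g., [2, −1, −1]) changes the contrast estimate but not the resulting z-statistic.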

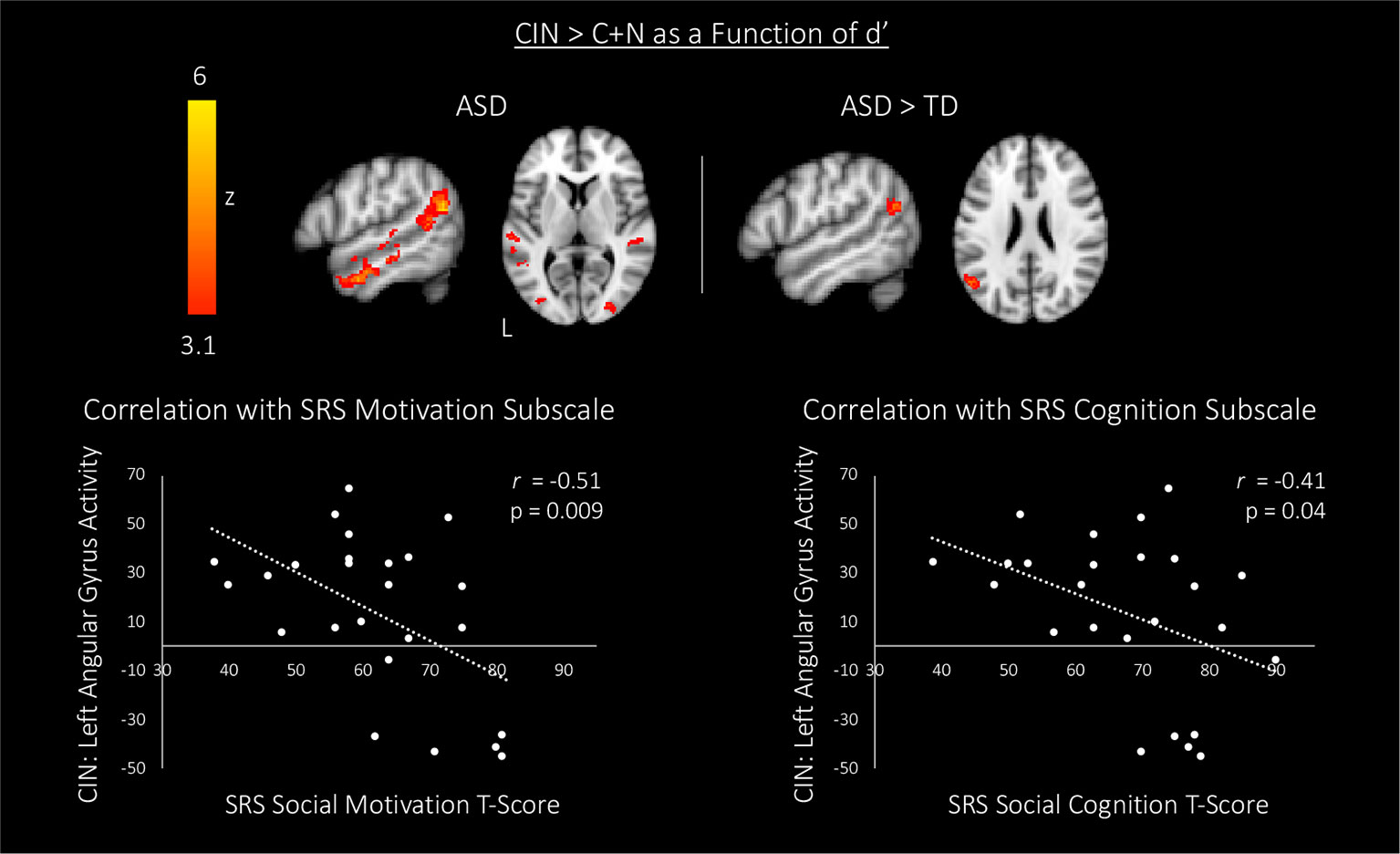

Brain Activity Predicting Post-Scan Performance

To identify the neural substrates of social attention, we assessed how brain activity during the fMRI scan predicted accuracy on the post-scan test by entering d' as a regressor of interest in bottom-up (whole-brain) regression analyses. We focused these analyses on our primary contrast of interest, CIN > C+N, in order to examine how d' related to brain activity specifically associated with processing conversation-in-noise, above and beyond activity associated with processing conversation and noise alone. Whereas TD youth with higher d' showed selective activation of left posterior superior temporal sulcus (pSTS; i.e., voice-selective cortex), ASD youth with higher d' showed widespread increased activity, primarily in language areas (Figure 4, Table 5). Direct between-group comparisons showed that, relative to TD youth, ASD youth with higher d' showed significantly greater activity in speech-processing cortex in the left angular gyrus; the reverse contrast yielded no significant results. To interpret this ASD > TD effect, we examined how activity in this speech-processing region while listening to conversation-in-noise related to social functioning in ASD youth. Parameter estimates for the CIN condition were extracted from this region and correlated with scores on the SRS subscales. In ASD youth, higher activity in this left speech-processing region was associated with lower (i.e., more typical) scores on the social motivation (r=−0.51, p=0.009) and social cognition (r=−0.41, p=0.04) SRS subscales.
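The reported correlations can be related to their p-values through the t-test for a Pearson coefficient, t = r·√((n − 2)/(1 − r²)) with n − 2 degrees of freedom. In this sketch, n = 26 is an assumption (the number of ASD participants with SRS data is not stated), and because the reported r values are rounded to two decimals, the computed p-values may differ slightly from those in the text.

```python
import math
from scipy import stats

def pearson_p_from_r(r: float, n: int) -> float:
    """Two-tailed p-value for a Pearson correlation r computed from n pairs (H0: rho = 0).

    Uses t = r * sqrt((n - 2) / (1 - r^2)) with n - 2 degrees of freedom.
    """
    df = n - 2
    t_stat = r * math.sqrt(df / (1.0 - r * r))
    return float(2.0 * stats.t.sf(abs(t_stat), df))

# Reported correlations; n = 26 (the full ASD sample) is an assumption,
# since the number of ASD participants with SRS data is not stated.
for subscale, r in [("social motivation", -0.51), ("social cognition", -0.41)]:
    print(subscale, round(pearson_p_from_r(r, n=26), 4))
```

Applied to raw parameter estimates and SRS t-scores, `scipy.stats.pearsonr` returns the same two-tailed p-value as this formula.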

Table 5 Montreal Neurological Institute (MNI) coordinates for brain activity associated with discriminative accuracy (d') for topics of conversation heard in the conversation-in-noise (CIN) condition.

Figure 4 Top: associations between brain activity (CIN > C+N) and discriminative accuracy (i.e., d') for topics of conversation heard in the CIN condition. Maps are thresholded at z > 3.1, corrected for multiple comparisons at the cluster level (p < 0.05). Bottom: correlations between blood oxygen level dependent (BOLD) signal response for the CIN condition and scores on two subscales of the SRS in autism spectrum disorder (ASD) youth. CIN, conversation-in-noise; N, noise; C, conversation; SRS, Social Responsiveness Scale.

Discussion

Here, we examined neural activity in response to ecologically valid social and non-social stimuli in youth with and without ASD to elucidate the neural mechanisms through which attention may be drawn away from socially relevant information by distracting sensory stimulation in individuals with ASD. To do so, we employed a novel paradigm in which participants heard naturalistic conversations against common environmental noises that often form the background of everyday social interactions. Overall, youth with ASD and typically developing youth showed similar patterns of brain activity in auditory and language networks when listening to conversations presented alone and conversations presented with background noise; further, minimal differences were observed between diagnostic groups when comparing brain activity during listening to conversations alone versus conversations shrouded in noise. When we focused on the neural mechanisms underlying the ability to later recognize the topics of conversations heard in the presence of background noise, we found that higher recognition accuracy was associated with greater activity in left-hemisphere voice-selective cortex in typically developing youth. In contrast, in youth with ASD, better recognition accuracy was associated with increased activity in a larger network of regions subserving language processing, with significantly greater activity in left speech-processing cortex relative to typically developing youth. Furthermore, increased activity in this left-hemisphere speech-processing region when listening to conversations masked by noise was related to better social motivation and social cognition in ASD youth.

At the behavioral level, youth with and without ASD were equally accurate at discriminating noises vs. foils (d'), regardless of whether these were presented alone or simultaneously with conversations. As expected, accuracy in discriminating what was heard during the conversations (vs. foils) was overall higher in typically-developing youth, compared to youth with ASD, both when the conversations were presented alone or in the context of background noise; however, these differences were not statistically significant. Notably, we deliberately did not alert participants to pay attention to what was heard in the MRI scanner, as we wanted our paradigm to have high ecological validity by mimicking situations encountered in everyday life, when we may overhear a conversation and are not asked to explicitly pay attention or remember what was said. By explicitly asking participants to carefully listen and try to remember the conversations, any differences in overall discriminative accuracy between diagnostic groups would have likely been further reduced. Indeed, previous studies where direct attentional cues were provided to ASD youth have shown increased brain activity and improved behavioral performance as compared to conditions where such instructions were not given (24, 41). Importantly, both neurotypical youth and youth with ASD had higher discriminative accuracy for conversations than noises when these were each presented alone, as well as higher discriminative accuracy when identifying conversations than noises when conversations and noises were presented simultaneously. In addition, both neurotypical and ASD youth showed the expected pattern whereby accuracy in identifying topics of conversation was poorer for conversations presented over background noise than for conversations presented alone. 
Although this latter difference was not statistically significant for d' collapsed across the easy (yes/no) and hard (multiple-choice) questions, percent accuracy on the harder multiple-choice questions was significantly lower for ASD youth in the conversation-in-noise condition than in the conversation-alone condition, a pattern not observed in TD youth. Overall, these findings agree with previous work in adults and adolescents with ASD showing that recall is poorer for sentences presented simultaneously with background sounds (22, 23). However, our finding of similar discriminative accuracy (d') in typically-developing and ASD youth when identifying conversations heard over background noise contrasts with previous work suggesting that individuals with ASD are poorer at discriminating speech-in-noise than their neurotypical counterparts (22, 23). This discrepancy may in part reflect our choice of noise stimuli, which were deliberately chosen to be only mildly aversive and, unlike those used in prior studies, also easily recognizable. This methodological choice may also explain why we did not observe between-group differences in brain regions previously implicated in processing aversive auditory stimuli (e.g., amygdala, thalamus, auditory cortex), differences that have been documented in ASD participants in prior work (24, 31, 32, 42). The lack of significant between-group differences in brain responses to mildly aversive noises may also in part reflect the more stringent statistical threshold employed here, in keeping with evolving standards in the neuroimaging field (43). Indeed, at more liberal thresholds we too observed greater activity in the amygdala and primary auditory cortex in ASD youth than in TD youth during exposure to mildly aversive noise.
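The behavioral comparisons above rest on the signal detection index d', which for a yes/no recognition judgment is the difference between the z-transformed hit rate and the z-transformed false-alarm rate, so that chance performance yields d' = 0. The short Python sketch below illustrates that computation. It is not the authors' scoring code: the function name, the example counts, and the log-linear correction (adding 0.5 to hits and false alarms and 1 to each trial count, which keeps rates of exactly 0 or 1 from producing infinite z-scores) are assumptions, and the article does not state how d' was derived for the multiple-choice items.

```python
from statistics import NormalDist


def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int) -> float:
    """Return d' = z(hit rate) - z(false-alarm rate) for a yes/no recognition task.

    Assumption (not stated in the article): a log-linear correction is applied,
    adding 0.5 to the hit and false-alarm counts and 1 to each trial total,
    so that perfect or zero rates still give finite z-scores.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = (hits + 0.5) / (hits + misses + 1)  # "old" items correctly called old
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)  # foils called old
    return z(hit_rate) - z(fa_rate)
```

With hypothetical counts, `d_prime(5, 5, 5, 5)` gives equal hit and false-alarm rates of 0.5 and therefore d' = 0, whereas a perfect pattern such as `d_prime(10, 0, 0, 10)` gives a large positive value that the correction keeps finite.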

At the neural level, typically-developing and ASD youth showed broadly similar patterns of brain activity when listening to conversations alone, noises alone, and conversations shrouded in noise. The only significant between-group difference emerged when comparing conversations presented simultaneously with environmental noises versus conversations alone: adding background noise to the conversations elicited greater activity in the precuneus and primary visual cortex in ASD relative to TD youth. The precuneus is a canonical hub of the default mode network, a set of brain regions implicated in thinking about the self and others (44) and in narrative comprehension in neurotypical adults (45, 46). Our finding of increased activity in visual cortex during auditory stimulation in ASD youth, relative to typically-developing youth, is consistent with previous reports of increased visual-system activity in individuals with ASD during semantic decision making (47) and auditory pitch discrimination (48), suggesting atypical integration of auditory and visual sensory systems in ASD (42, 49). Our findings thus suggest that similar behavioral performance may be achieved through different processing at the neural level, whereby the challenging task of listening to social interactions over background noise recruits additional brain regions in youth with ASD relative to neurotypical controls.

The ability to deploy attention to socially meaningful information rests on being able to divert attention away from less relevant, distracting stimuli; accordingly, to isolate the neural substrates of social attention, we next sought to identify brain activity related to successful encoding of the topics of conversation. Specifically, we examined how brain responses while participants listened to conversations in the context of background noise (above and beyond brain responses associated with attending to conversations and noises alone) predicted later recognition of what was heard. In both neurotypical youth and youth with ASD, greater accuracy in identifying the topics of conversations heard over background noise was predicted by greater activity in left-hemisphere voice-selective cortex. Previous work in neurotypical adults has shown that this voice-selective region responds preferentially to vocal stimuli and that its activity decreases when voices are masked by background noise (19, 20). Thus, heightened activity in this region when listening to conversations shrouded in common environmental noises may serve as a compensatory mechanism, allowing youth with and without ASD to focus their attention on socially-relevant information in the presence of distracting auditory stimuli. Importantly, better recognition accuracy in youth with ASD was also associated with greater activity in a wider network of brain regions implicated in language processing; relative to typically-developing youth, ASD youth showed significantly greater activity in the left angular gyrus. This region plays an important role in language comprehension (50–52), and prior work shows that disrupting activity in this area with transcranial magnetic stimulation reduces the comprehension benefit listeners normally gain from predictable context when speech is degraded (53).
The angular gyrus is also an important region for theory of mind (TOM), the ability to understand the actions and thoughts of others (54, 55). TOM is a critical skill for reasoning about others' mental states and plays a role in high-level language processing, including the use and understanding of language within a social environment (56). Thus, similar to the heightened response in the voice-selective region observed in both neurotypical and ASD youth, the increased activity in speech-processing cortex in youth with ASD could reflect compensatory processes that improve sensitivity to speech, thereby boosting these youths' ability to encode, and later accurately discriminate between, conversation topics heard over background noise. If this interpretation is correct, individual differences in responsivity in this region during our paradigm should be associated with a more general ability to home in on socially-relevant information, and ultimately with less severe social impairments. Consistent with this hypothesis, greater activity in this speech-processing region while participants listened to conversations shrouded in noise was associated with better social motivation and social cognition in youth with ASD.

This study has several limitations. First, given the correlational nature of fMRI data, although we hypothesize that increased activity in language-related and TOM regions allowed ASD youth to home in on socially relevant information, we cannot rule out the alternative account that greater activity in these regions merely resulted from more successful processing of language through noise. Second, atypical heightened sensitivity to sensory stimuli (known as sensory over-responsivity; SOR) affects over half of children with ASD (26, 27) and is an important contributor to altered processing of both social and non-social stimuli in youth with ASD (24, 31, 32, 42); however, given our small sample size, we were unable to directly compare ASD youth with and without SOR. More work is needed to understand how SOR may modulate neural responses to ecologically valid social and non-social stimuli in the environment. Importantly, recent work also suggests that there may be sex differences in the development of multisensory speech processing in TD and ASD youth (57); thus, examining the interaction between sex, sensory processing, and social cognition is an important direction for future research. In addition, participants in our study were all high-functioning individuals who had developed language and had verbal IQ in the normal range, making it more likely that they were able to home in on social stimuli compared to more severely affected individuals. Future studies will need to replicate these findings and extend this work to individuals with more severe ASD phenotypes, as well as to younger children on the autism spectrum. To this end, prospective studies of infants at high risk for developing ASD will be essential to track the longitudinal co-development of sensory responsivity, language acquisition, and ASD symptomatology.

To conclude, using a novel and ecologically valid paradigm, we sought to better understand the neural correlates of social attention. Our findings suggest that youth with ASD who successfully encoded socially-relevant information in the presence of distracting stimuli appeared to do so by up-regulating activity in neural systems supporting speech and language processing, which in turn suggests that attending to social and non-social stimuli simultaneously may be more challenging for ASD youth than for their neurotypical counterparts. This work underscores the importance of further examining the relationship between social attention and sensory processing atypicalities, particularly early in development, to shed new light on the onset of autism symptomatology and to inform the design of novel interventions.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by the University of California, Los Angeles Institutional Review Board. Written informed consent to participate in this study was provided by the participants' legal guardian/next of kin.

Author Contributions

This study was conceived and designed by LH, SG, KL, JL, SB, and MD. Data acquisition was performed by LH, KL, and JL. Data analysis was completed by LH and MI. All authors contributed to data interpretation and drafting of the manuscript, and provided critical feedback on the manuscript and its intellectual content.

Funding

This work was supported by the National Institute of Mental Health (grants R01MH100028 and K08 MH112871) and the Simons Foundation Autism Research Initiative (grant 345389). Some of the authors were supported by training grants/fellowships from the National Institute of Neurological Disorders and Stroke (F99 NS105206 to LH, T32 NS048004 to LH and KL), the National Institute of Mental Health (F32 MH105167 to SG, F31 MH110140 to KL), the National Institute of Child Health and Human Development (F31 HD088102 to JL), and the Roche/ARCS Foundation Scholar Award Program in the Life Sciences (KL and JL). The project was also in part supported by grants RR12169, RR13642, and RR00865 from the NIH National Center for Research Resources. We are also grateful for generous support from the Brain Mapping Medical Research Organization, Brain Mapping Support Foundation, Pierson-Lovelace Foundation, The Ahmanson Foundation, the William M. and Linda R. Dietel Philanthropic Fund at the Northern Piedmont Community Foundation, the Tamkin Foundation, the Jennifer Jones-Simon Foundation, the Capital Group Companies Charitable Foundation, the Robson Family, and the Northstar Fund. The contents of this paper are solely the responsibility of the authors and do not necessarily represent the official views of the National Institutes of Health.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 5th ed. Arlington, VA: American Psychiatric Publishing (2013).

2. Shultz S, Klin A, Jones W. Neonatal Transitions in Social Behavior and Their Implications for Autism. Trends Cogn Sci (2018) 22:452–69. doi: 10.1016/j.tics.2018.02.012

3. Klin A. Young Autistic Children's Listening Preferences in Regard to Speech: A Possible Characterization of the Symptom of Social Withdrawal. J Autism Dev Disord (1991) 21:29–42. doi: 10.1007/bf02206995

4. Kuhl PK, Coffey-Corina S, Padden D, Dawson G. Links between social and linguistic processing of speech in preschool children with autism: behavioral and electrophysiological measures. Dev Sci (2005) 8:F1–F12. doi: 10.1111/j.1467-7687.2004.00384.x

5. Ben-Sasson A, Hen L, Fluss R, Cermak SA, Engel-Yeger B, Gal EA. Meta-Analysis of Sensory Modulation Symptoms in Individuals with Autism Spectrum Disorders. J Autism Dev Disord (2009) 39:1–11. doi: 10.1007/s10803-008-0593-3

6. Piven J, Elison JT, Zylka MJ. Toward a conceptual framework for early brain and behavior development in autism. Mol Psychiatry (2017) 23:165. doi: 10.1038/mp.2017.131

7. Thye MD, Bednarz HM, Herringshaw AJ, Sartin EB, Kana RK. The impact of atypical sensory processing on social impairments in autism spectrum disorder. Dev Cogn Neurosci (2017) 29:151–67. doi: 10.1016/J.DCN.2017.04.010

8. Hamilton A. Sensory and social features of autism – can they be integrated? Dev Cogn Neurosci (2018) 29:1–3. doi: 10.1016/J.DCN.2018.02.009

9. Hernandez LM, Rudie JD, Green SA, Bookheimer S, Dapretto M. Neural signatures of autism spectrum disorders: Insights into brain network dynamics. Neuropsychopharmacology (2015) 40:171–89. doi: 10.1038/npp.2014.172

10. Lombardo MV, Pierce K, Eyler LT, Carter Barne C, Ahrens-Barbeau C, Solso S, et al. Different Functional Neural Substrates for Good and Poor Language Outcome in Autism. Neuron (2015) 86:567–77. doi: 10.1016/J.NEURON.2015.03.023

11. Dinstein I, Pierce K, Eyler L, Solso S, Malach R, Behrmann M, et al. Disrupted Neural Synchronization in Toddlers with Autism. Neuron (2011) 70:1218–25. doi: 10.1016/J.NEURON.2011.04.018

12. Gervais H, Belin P, Boddaert N, Leboyer M, Coez A, Sfaello I, et al. Abnormal cortical voice processing in autism. Nat Neurosci (2004) 7:801–2. doi: 10.1038/nn1291

13. Kleinhans NM, Müller RA, Cohen DN, Courchesne E. Atypical functional lateralization of language in autism spectrum disorders. Brain Res (2008) 1221:115–25. doi: 10.1016/J.BRAINRES.2008.04.080

14. Lai G, Pantazatos SP, Schneider H, Hirsch J. Neural systems for speech and song in autism. Brain (2012) 135:961–75. doi: 10.1093/brain/awr335

15. Eyler LT, Pierce K, Courchesne E. A failure of left temporal cortex to specialize for language is an early emerging and fundamental property of autism. Brain (2012) 135:949–60. doi: 10.1093/brain/awr364

16. Mody M, Belliveau JW. Speech and Language Impairments in Autism: Insights from Behavior and Neuroimaging. North Am J Med Sci (2013) 5:157–61. doi: 10.7156/v5i3p157

17. Tryfon A, Foster NEV, Sharda M, Hyde KL. Speech perception in autism spectrum disorder: An activation likelihood estimation meta-analysis. Behav Brain Res (2018) 338:118–27. doi: 10.1016/J.BBR.2017.10.025

18. Abrams DA, Lynch CJ, Cheng KM, Phillips J, Supekar K, Ryali S, et al. Underconnectivity between voice-selective cortex and reward circuitry in children with autism. Proc Natl Acad Sci USA (2013) 110:12060–65. doi: 10.1073/pnas.1302982110

19. Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature (2000) 403:309–12. doi: 10.1038/35002078

20. Vander Ghinst M, Bourguignon M, Op de Beeck M, Wens V, Marty B, Hassid S, et al. Left Superior Temporal Gyrus Is Coupled to Attended Speech in a Cocktail-Party Auditory Scene. J Neurosci (2016) 36:1596–606. doi: 10.1523/JNEUROSCI.1730-15.2016

21. Redcay E, Courchesne E. Deviant Functional Magnetic Resonance Imaging Patterns of Brain Activity to Speech in 2–3-Year-Old Children with Autism Spectrum Disorder. Biol Psychiatry (2008) 64:589–98. doi: 10.1016/J.BIOPSYCH.2008.05.020

22. Alcantara JI, Weisblatt EJL, Moore BCJ, Bolton PF. Speech-in-noise perception in high-functioning individuals with autism or Asperger's syndrome. J Child Psychol Psychiatry (2004) 45:1107–14. doi: 10.1111/j.1469-7610.2004.t01-1-00303.x

23. Dunlop WA, Enticott PG, Rajan R. Speech Discrimination Difficulties in High-Functioning Autism Spectrum Disorder Are Likely Independent of Auditory Hypersensitivity. Front Hum Neurosci (2016) 10:401. doi: 10.3389/fnhum.2016.00401

24. Green SA, Hernandez LM, Bowman HC, Bookheimer SY, Dapretto M. Sensory over-responsivity and social cognition in ASD: Effects of aversive sensory stimuli and attentional modulation on neural responses to social cues. Dev Cogn Neurosci (2018) 29:127–39. doi: 10.1016/j.dcn.2017.02.005

25. Tavassoli T, Miller LJ, Schoen SA, Nielsen DM, Baron-Cohen S. Sensory over-responsivity in adults with autism spectrum conditions. Autism (2014) 18:428–32. doi: 10.1177/1362361313477246

26. Bishop SL, Hus V, Duncan A, Huerta M, Gotham K, Pickles A, et al. Subcategories of restricted and repetitive behaviors in children with autism spectrum disorders. J Autism Dev Disord (2013) 43:1287–97. doi: 10.1007/s10803-012-1671-0

27. Marco EJ, Hinkley LBN, Hill SS, Nagarajan SS. Sensory processing in autism: a review of neurophysiologic findings. Pediatr Res (2011) 69:48R–54R. doi: 10.1203/PDR.0b013e3182130c54

28. Rosenhall U, Nordin V, Sandström M, Ahlsén G, Gillberg C. Autism and hearing loss. J Autism Dev Disord (1999) 29:349–57. doi: 10.1023/a:1023022709710

29. Khalfa S, Bruneau N, Rogé B, Georgieff N, Veuillet E, Adrien JL, et al. Increased perception of loudness in autism. Hearing Res (2004) 198:87–92. doi: 10.1016/J.HEARES.2004.07.006

30. O'Connor K. Auditory processing in autism spectrum disorder: A review. Neurosci Biobehav Rev (2012) 36:836–54. doi: 10.1016/J.NEUBIOREV.2011.11.008

31. Green SA, Hernandez L, Tottenham N, Krasileva K, Bookheimer SY, Dapretto M. Neurobiology of sensory overresponsivity in youth with autism spectrum disorders. JAMA Psychiatry (2015) 72:778–86. doi: 10.1001/jamapsychiatry.2015.0737

32. Green SA, Rudie JD, Colich NL, Wood JJ, Shirinyan D, Hernandez L, et al. Overreactive brain responses to sensory stimuli in youth with autism spectrum disorders. J Am Acad Child Adolesc Psychiatry (2013) 52:1158–72. doi: 10.1016/j.jaac.2013.08.004

33. Lord C, Rutter M, DiLavore P, Risi S, Gotham K, Bishop S. Autism Diagnostic Observation Schedule. 2nd ed. Torrance, CA, USA: Western Psychological Services (2012).

34. Lord C, Rutter M, Le Couteur A. Autism Diagnostic Interview-Revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. J Autism Dev Disord (1994) 24:659–85. doi: 10.1007/BF02172145

35. Wechsler D. Wechsler Abbreviated Scale of Intelligence. New York, NY, USA: The Psychological Corporation, Harcourt Brace & Company (1999).

36. Constantino JN, Gruber CP. Social Responsiveness Scale, Second Edition (SRS-2). Torrance, CA, USA: Western Psychological Services (2012).

37. Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, et al. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage (2004) 23:S208–19. doi: 10.1016/J.NEUROIMAGE.2004.07.051

38. Cox RW. AFNI: Software for Analysis and Visualization of Functional Magnetic Resonance Neuroimages. Comput Biomed Res (1996) 29:162–73. doi: 10.1006/cbmr.1996.0014

39. Jenkinson M, Bannister P, Brady M, Smith S. Improved Optimization for the Robust and Accurate Linear Registration and Motion Correction of Brain Images. NeuroImage (2002) 17:825–41. doi: 10.1006/NIMG.2002.1132

40. Smith SM. Fast robust automated brain extraction. Hum Brain Mapp (2002) 17:143–55. doi: 10.1002/hbm.10062

41. Wang AT, Lee SS, Sigman M, Dapretto M. Reading affect in the face and voice: Neural correlates of interpreting communicative intent in children and adolescents with autism spectrum disorders. Arch Gen Psychiatry (2007) 64:698–708. doi: 10.1001/archpsyc.64.6.698

42. Green SA, Hernandez L, Lawrence KE, Liu J, Tsang T, Yeargin J, et al. Distinct Patterns of Neural Habituation and Generalization in Children and Adolescents With Autism With Low and High Sensory Overresponsivity. Am J Psychiatry (2019) 176:1010–20. doi: 10.1176/appi.ajp.2019.18121333

43. Eklund A, Nichols TE, Knutsson H. Cluster failure: Why fMRI inferences for spatial extent have inflated false-positive rates. Proc Natl Acad Sci USA (2016) 113:7900–05. doi: 10.1073/pnas.1602413113

44. Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL. A default mode of brain function. Proc Natl Acad Sci USA (2001) 98:676–82. doi: 10.1073/pnas.98.2.676

45. Lerner Y, Honey CJ, Silbert L, Hasson U. Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J Neurosci (2011) 31:2906–15. doi: 10.1523/JNEUROSCI.3684-10.2011

46. Xu J, Kemeny S, Park G, Frattali C, Braun A. Language in context: Emergent features of word, sentence, and narrative comprehension. NeuroImage (2005) 25:1002–15. doi: 10.1016/j.neuroimage.2004.12.013

47. Gaffrey MS, Kleinhans NM, Haist F, Akshoomoff N, Campbell A, Courchesne E. Atypical participation of visual cortex during word processing in autism: An fMRI study of semantic decision. Neuropsychologia (2007) 45:1672–84. doi: 10.1016/j.neuropsychologia.2007.01.008

48. Jao Keehn RJ, Sanchez SS, Stewart CR, Zhao W, Grenesko-Stevens EL, Keehn B, et al. Impaired downregulation of visual cortex during auditory processing is associated with autism symptomatology in children and adolescents with autism spectrum disorder. Autism Res (2017) 10:130–43. doi: 10.1002/aur.1636

49. Stevenson RA, Segers M, Ferber S, Barense MD, Wallace MT. The impact of multisensory integration deficits on speech perception in children with autism spectrum disorders. Front Psychol (2014) 5:379. doi: 10.3389/fpsyg.2014.00379

50. Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex (2009) 19:2767–96. doi: 10.1093/cercor/bhp055

51. Obleser J, Kotz SA. Expectancy Constraints in Degraded Speech Modulate the Language Comprehension Network. Cereb Cortex (2010) 20:633–40. doi: 10.1093/cercor/bhp128

52. Seghier ML, Fagan E, Price CJ. Functional subdivisions in the left angular gyrus where the semantic system meets and diverges from the default network. J Neurosci (2010) 30:16809–17. doi: 10.1523/JNEUROSCI.3377-10.2010

53. Hartwigsen G, Golombek T, Obleser J. Repetitive transcranial magnetic stimulation over left angular gyrus modulates the predictability gain in degraded speech comprehension. Cortex (2015) 68:100–10. doi: 10.1016/j.cortex.2014.08.027

54. Baron-Cohen S. The development of a theory of mind in autism: deviance and delay? Psychiatr Clin North Am (1991) 14:33–51. doi: 10.1016/S0193-953X(18)30323-X

55. Frith U, Happé F. Theory of mind and self-consciousness: What is it like to be autistic? Mind Lang (1999) 14:82–9. doi: 10.1111/1468-0017.00100

56. Boucher J. Language development in autism. Int J Pediatr Otorhinolaryngol (2003) 67:S159–63.

Keywords: speech, autism, voice-selective, attention, conversation, noise, aversive, sensory

Citation: Hernandez LM, Green SA, Lawrence KE, Inada M, Liu J, Bookheimer SY and Dapretto M (2020) Social Attention in Autism: Neural Sensitivity to Speech Over Background Noise Predicts Encoding of Social Information. Front. Psychiatry 11:343. doi: 10.3389/fpsyt.2020.00343

Received: 03 December 2019; Accepted: 06 April 2020;

Published: 24 April 2020.

Edited by:

Kevin A. Pelphrey, University of Virginia, United States

Reviewed by:

Jeroen M. van Baar, Brown University, United States
Derek J. Dean, Vanderbilt University, United States

Copyright © 2020 Hernandez, Green, Lawrence, Inada, Liu, Bookheimer and Dapretto. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mirella Dapretto, mirella@ucla.edu
