BRIEF RESEARCH REPORT article

Front. Polit. Sci., 09 February 2024

Sec. Political Science Methodologies

Volume 6 - 2024 | https://doi.org/10.3389/fpos.2024.1327662

Understanding the dynamics of citizens' opinions, preferences, perceptions, and attitudes is pivotal in political science and essential for informed policymaking. Highly sophisticated tools have been developed for analyzing these dynamics through surveys. Outside the field of polarization studies, however, these analyses often focus on average responses and miss important information carried by other parameters of the response distribution. Our study aims to fill this gap by illustrating how analyzing the evolution of both the mean and the distribution shape of responses can offer complementary insights into opinion dynamics. Specifically, we explore this through the French public's perception of defense issues before and after the onset of the war in Ukraine. Our findings underscore how routinely combining classical approaches with existing tools for measuring distribution shapes can offer valuable perspectives to researchers and policymakers alike, by highlighting nuanced shifts in public opinion that traditional methods might overlook.

Longitudinal surveys are indispensable tools for examining the dynamics of opinions and attitudes over the short, medium, and long term, and they play a crucial role in both research and policymaking (Glynn et al., 1999; Eisinger, 2008; Burstein, 2010). Despite known limitations (Hube, 2008; Vannette and Krosnick, 2018), they are particularly valuable for understanding the fluid nature of citizens' opinions, which are influenced by factors such as context, information intake, policy shifts, and crises.

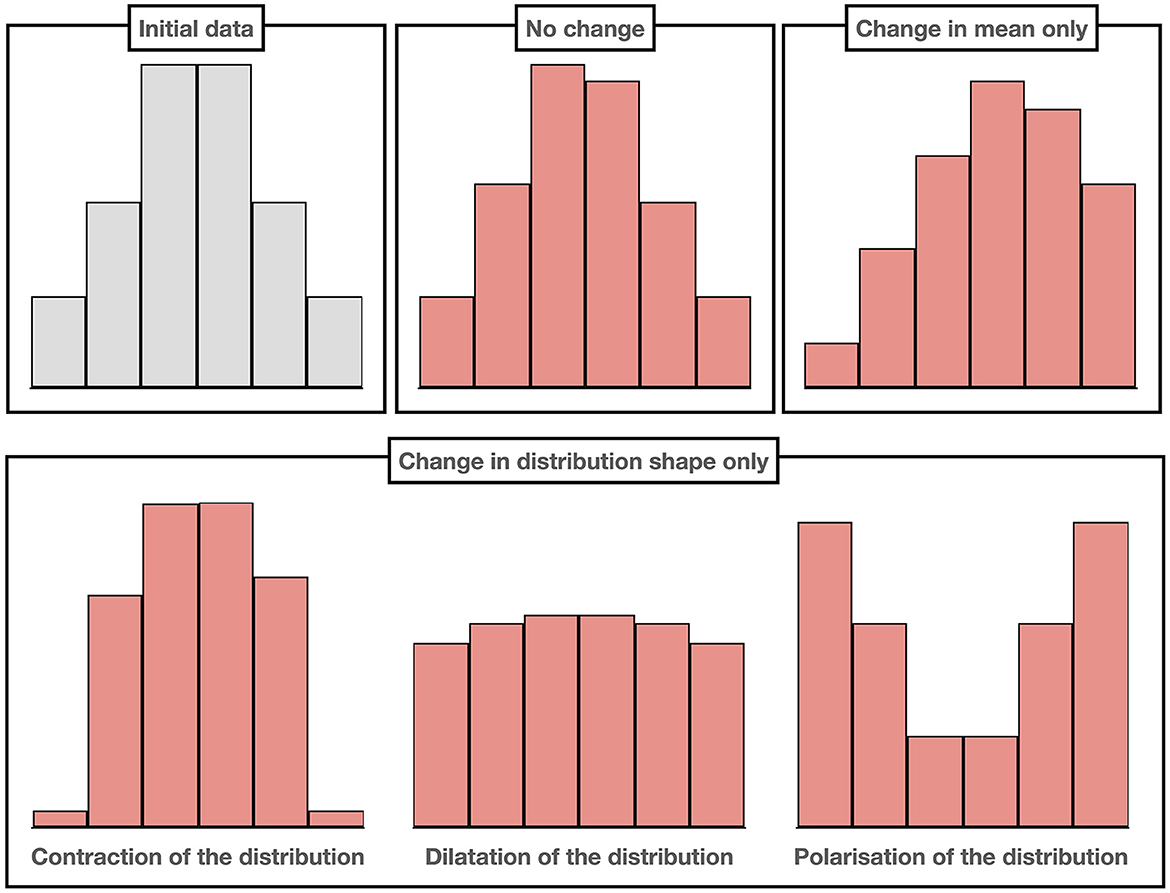

A key challenge in studying opinion dynamics is analyzing their variations over time. Traditionally, research has focused on the study of changes in average trends across survey waves. While information about response dispersion (e.g., standard deviation and variance) is systematically included in preliminary survey analyses, the standard approach overlooks the fact that the shape of the response distribution can also change over time. However, across different waves, responses can vary notably in the spread of their distribution or experience more dramatic shifts, changing from one type of distribution to another (e.g., from a normal to a bimodal distribution). Crucially, these changes can evolve independently from the mean, offering additional, non-redundant, and valuable insights. For instance, increased convergence around a specific value, heightened polarization, or a shift toward a uniform distribution can all occur in the absence of change in the population mean (Figure 1). Conversely, shifts in means can occur without corresponding changes in the distribution shape, or both aspects can evolve simultaneously over time.

Figure 1. Illustration of various changes in mean and distribution shape observable across survey waves. Compared with the initial data (in gray), follow-up surveys (in red) can reveal one of three outcomes. The first is no statistically significant change in mean or distribution shape. The second is a change in the mean only. The third is a change in distribution shape, including contraction around a value, dilation across the range of possible responses, or polarization.

Analyzing how the shape of response distributions evolves over time is more than just another statistical analysis available to political scientists. In the context of opinion change, examining the level of agreement within a population is crucial for understanding whether shifts in opinion reflect a uniform change across the population or divergent movements among specific groups. This distinction separates two kinds of situation. In the first, nearly all individuals move in the same direction (e.g., the entire population trusting an institution less). In the second, specific groups move in different directions (e.g., no change in the level of trust of the majority but a decrease in the level of trust of a minority). Decades of political science research have emphasized the importance of studying opinion agreement dynamics at the societal level, which underscores the significance of such an analysis. Polarization, for example, has been identified as a major threat to democracies (Dahl, 1967; Lipset, 1981; Poole and Rosenthal, 1984; McCoy et al., 2018; Axelrod et al., 2021). Research on crises has also highlighted that rally ‘round the flag effects often matter in response to acute threats (e.g., Kritzinger et al., 2021).

Directly addressing this issue, some studies have included specific questions about respondents' agreement with government decisions or public policy issues (see, e.g., Galasso et al., 2020 for a recent example of research on attitudes toward COVID-19-related restrictions). Concurrently, various statistical tools have been developed to analyze the level of agreement in opinions, attitudes, and perceptions within any survey data (DiMaggio et al., 1996; Evans et al., 2001; Mouw and Sobel, 2001; see, e.g., Bauer, 2019 for a non-exhaustive review of these measures; and Zhang and Kanbur, 2001 for an example of the differences between polarization measures). A simple approach involves analyzing classical dispersion measures such as variance or standard deviation, with less dispersion typically interpreted as more agreement (Mouw and Sobel, 2001). However, two distributions can have identical means and standard deviations yet differ in shape, potentially concealing crucial information about the existence of distinct groups.
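The claim that two distributions can share a mean and a standard deviation while differing in shape is easy to check. The Python sketch below uses two invented sets of six answers on a 1 to 6 scale. These are hypothetical data, not survey responses. One set is split into two clusters, and the other is peaked in the middle with thin tails. The helper name `summarize` is likewise hypothetical.

```python
from collections import Counter
from statistics import mean, pstdev


def summarize(responses, n_categories=6):
    """Return the mean, population SD, and counts per category on a 1..n_categories scale."""
    counts = Counter(responses)
    profile = [counts.get(category, 0) for category in range(1, n_categories + 1)]
    return mean(responses), pstdev(responses), profile


# Hypothetical answers on a 1-6 scale (illustrative only, not survey data).
two_clusters = [2, 2, 2, 5, 5, 5]   # answers split into two groups
central_peak = [1, 3, 3, 4, 4, 6]   # answers peaked in the middle, thin tails

if __name__ == "__main__":
    for label, data in [("two_clusters", two_clusters), ("central_peak", central_peak)]:
        m, sd, profile = summarize(data)
        print(f"{label}: mean={m}, sd={sd}, counts per category={profile}")
```

Both samples have a mean of 3.5 and a population standard deviation of 1.5. A comparison based only on these two summaries would therefore treat them as equivalent, yet the category counts reveal clearly different shapes.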

Therefore, a more nuanced approach focuses on the precise analysis of the shape of response distributions, particularly distinguishing between unimodal and bimodal distributions, with the former classically interpreted as indicating more agreement within the population. This bottom-up approach to exploring opinion can reveal convergence among distinct social groups and the emergence of new, context- or issue-specific cleavages. The simplest version of this approach, a qualitative description of distribution shapes, can oversimplify distributions into discrete categories and obscure subtle shifts in opinion dynamics. To address this, polarization studies widely use quantitative descriptions to assess the degree of bimodality of a distribution, such as those based on kurtosis, bimodality coefficients, or Hartigan's dip test (Hartigan and Hartigan, 1985; Evans et al., 2001; Lelkes, 2016; Menchaca, 2023).
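For readers unfamiliar with the bimodality coefficient, the sketch below implements one common sample formulation:

BC = (G1^2 + 1) / (G2 + 3(n-1)^2 / ((n-2)(n-3)))

Here G1 and G2 are the bias-corrected sample skewness and excess kurtosis. This is an illustration only, not the specification used in any of the cited studies. The 5/9 cut-off mentioned in the docstring is a widely used rule of thumb (the large-sample value for a uniform distribution), not a result of this article. The ratings in the example are hypothetical.

```python
def bimodality_coefficient(values):
    """Sample bimodality coefficient (one common formulation).

    BC = (G1**2 + 1) / (G2 + 3 * (n - 1)**2 / ((n - 2) * (n - 3))), where G1 and
    G2 are the bias-corrected sample skewness and excess kurtosis. Values above
    5/9 are often read as hinting at bimodality (rule of thumb, not a test).
    """
    n = len(values)
    if n < 4:
        raise ValueError("need at least four observations")
    mu = sum(values) / n
    # Central moments in population form.
    m2 = sum((x - mu) ** 2 for x in values) / n
    m3 = sum((x - mu) ** 3 for x in values) / n
    m4 = sum((x - mu) ** 4 for x in values) / n
    if m2 == 0:
        raise ValueError("all observations are identical")
    g1 = m3 / m2 ** 1.5          # sample skewness
    g2 = m4 / m2 ** 2 - 3        # sample excess kurtosis
    # Bias-corrected versions (G1 and G2 in the docstring).
    skew_adj = (n * (n - 1)) ** 0.5 / (n - 2) * g1
    kurt_adj = (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)
    return (skew_adj ** 2 + 1) / (kurt_adj + 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))


if __name__ == "__main__":
    # Hypothetical 1-6 ratings (illustrative only).
    polarized = [1] * 50 + [6] * 50
    peaked = [2] * 10 + [3] * 40 + [4] * 40 + [5] * 10
    print(round(bimodality_coefficient(polarized), 3))
    print(round(bimodality_coefficient(peaked), 3))
```

The split sample lands well above 5/9 and the centrally peaked one well below. However, like any bimodality measure, this statistic says little about other shape changes, such as a move toward a uniform distribution.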

However, the transition from a unimodal to a bimodal distribution is not the only noteworthy change in distribution shape, whether for studying polarization or for understanding opinion dynamics more broadly (see, e.g., Downey and Huffman, 2001 for a discussion on the importance of trimodal distributions). For instance, shifts from a uniform to a normal distribution can be particularly informative in survey analysis, as they may indicate the development of a specific attitude at the population level. These broader variations in distribution shape are captured by statistical tools specifically designed to measure the level of agreement within a population. Given that such tools are available and have already been implemented in standard statistical software for all common survey response modalities (i.e., binary choices, ordinal scales, and nominal scales), they can be readily applied to most surveys and thus offer new opportunities for analyzing both short- and long-term opinion dynamics and their political implications.

In the present study, we selected the index developed by Van der Eijk (2001) to examine the French public's perception of defense issues before and after the onset of the war in Ukraine. This index provides a unidimensional measure of response concentration for ordered scales, assessing how responses are distributed across the categories of a scale. For instance, if a survey question offers a range from “strongly disagree” to “strongly agree,” the index evaluates the spread of responses across these options. Importantly, Van der Eijk index values can be interpreted qualitatively: −1 indicates total polarization, 0 a uniform distribution, and +1 total consensus. The index is therefore particularly suited to obtaining a rapid overview of changes in response distribution shapes and to understanding the underlying dynamics. Given its applicability to ordinal data, its ease of interpretation, and its sensitivity to both polarization and consensus, it is a valuable tool in survey analysis.
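To make the index less of a black box, here is a minimal Python sketch based on our reading of the layer decomposition described by Van der Eijk (2001). The procedure has three steps:

- The frequency distribution is peeled into 0/1 layers.
- Each layer receives a score computed from its unimodality and from the number of categories it occupies.
- The layer scores are averaged, with weights proportional to the share of responses in each layer.

This is not the agrmt code, and its results should be checked against the `agreement()` function of the R agrmt package before being relied on. The function names are hypothetical, and the frequencies may be weighted counts.

```python
from itertools import combinations


def layer_agreement(pattern):
    """Agreement of one 0/1 layer: A = U * (1 - (S - 1) / (K - 1))."""
    k = len(pattern)
    s = sum(pattern)  # number of occupied categories in this layer
    tu = tdu = 0      # triplets agreeing with / deviating from unimodality
    for i, j, l in combinations(range(k), 3):
        triplet = (pattern[i], pattern[j], pattern[l])
        if triplet in ((1, 1, 0), (0, 1, 1)):
            tu += 1
        elif triplet == (1, 0, 1):
            tdu += 1
    # No informative triplets (one or all categories occupied): U is set to 1.
    if tu + tdu == 0:
        u = 1.0
    else:
        u = ((k - 2) * tu - (k - 1) * tdu) / ((k - 2) * (tu + tdu))
    return u * (1 - (s - 1) / (k - 1))


def van_der_eijk_agreement(frequencies):
    """Agreement in [-1, 1] for (possibly weighted) counts over K >= 3 ordered categories."""
    remaining = [float(f) for f in frequencies]
    if len(remaining) < 3:
        raise ValueError("need at least three ordered categories")
    if any(f < 0 for f in remaining) or sum(remaining) == 0:
        raise ValueError("frequencies must be non-negative with a positive total")
    total = sum(remaining)
    agreement = 0.0
    while any(r > 0 for r in remaining):
        pattern = [1 if r > 0 else 0 for r in remaining]
        smallest = min(r for r in remaining if r > 0)
        weight = smallest * sum(pattern) / total  # share of responses in this layer
        agreement += weight * layer_agreement(pattern)
        # Peel off the layer; at least one category drops to exactly zero.
        remaining = [r - smallest if r > 0 else 0.0 for r in remaining]
    return agreement


if __name__ == "__main__":
    print(van_der_eijk_agreement([0, 0, 100, 0, 0, 0]))      # all answers in one category
    print(van_der_eijk_agreement([10, 10, 10, 10, 10, 10]))  # uniform
    print(van_der_eijk_agreement([50, 0, 0, 0, 0, 50]))      # split between the extremes
```

On a six-point scale, the three calls give 1, 0, and -1 respectively (up to floating-point rounding), consistent with the qualitative reading of the index.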

To illustrate the importance of considering both changes in response averages and changes in the level of agreement when studying the evolution of attitudes, opinions, and behaviors, we analyzed the perception of defense issues before and after the outbreak of the war in Ukraine. The minimalist view holds that citizens generally lack interest in and knowledge of foreign policy, which leads to superficial, unstructured, and unstable opinions. Political scientists have advocated this perspective since the Almond-Lippmann consensus of the 1950s (Almond, 1950; Converse, 1964; La Balme, 2002; Drezner, 2008). Since the 1990s, however, it has faced sustained criticism (Holsti, 1992; Sniderman, 1998; Sinnott, 2000). Several studies have demonstrated that the public can indeed form rational opinions on foreign policy (Hurwitz and Peffley, 1987; Shapiro and Page, 1988; Aldrich et al., 1989, 2006; Holsti, 1992, 2004; Page and Shapiro, 1992). Shapiro and Page (1988) notably argued that public opinion is not just a collection of “non-attitudes” but reflects real, stable, and coherent preferences. They also argued that opinion changes are predictable and sensible, driven by new information or significant international events. Thiébaut (2015) further showed that French public opinion on European defense is stable and responsive to international events, in ways that make sense given how the media and elites present them. This responsiveness to significant international events sets the stage for our case study, which focuses on the French public's perception of defense issues in the context of a major international crisis: the war in Ukraine.

The conflict, triggered by the Russian invasion of Ukraine on 24 February 2022, brought diplomatic and military issues to the forefront of political and media agendas across Europe, including France. It has received extensive coverage in the French media, with major outlets like Le Monde and Libération dedicating their front pages to the conflict between 26 February and 16 March 2022. The war has also sparked numerous debates and analyses on defense issues, such as the nuclear threat and NATO expansion, which are typically less covered by the media. Given the salience of the war in French politics, our case study seeks to identify shifts in the French public's perception of defense issues during the early stages of the crisis.

To gauge the French population's perception of defense, we recruited French participants online, ensuring representative samples based on age, gender, level of education, and geographical location using the quota method (Supplementary Table 1). Overall, we collected data from three independent samples: in July 2021 (i.e., before the war in Ukraine), in March 2022 (i.e., at the start of this war), and in July 2022 (i.e., 4 months into the conflict).

In each sample, participants were asked to rate their current perception of various defense issues on four key issue attributes: emotional intensity, concreteness, obtrusiveness (i.e., personal concernedness), and social importance. Defense is a multidimensional policy area covering topics with varying public visibility (Richter, 2022), so our survey represented it with 20 different policy issues (Table 1). Four environmental and educational issues were also included to determine whether the evolution in the perception of defense issues was specific to that domain. Respondents rated each issue on each attribute on a six-point scale, ranging from “not at all” to “very” emotionally intense, personally concerning, concrete, or important for society. An option to indicate unfamiliarity with the policy issue (“I don't know what this issue refers to”) was also provided, and these responses were excluded from the analyses. The questions on the different issue attributes were presented in randomized blocks. Within each of the four blocks, each policy issue was displayed on a new page, again in random order for each respondent, to minimize potential question-order biases.

Respondents' evaluations were weighted to ensure representativeness of the French population before we computed our variables of interest, so all analyses and computations were performed on weighted response distributions. To obtain a first overview of how the perception of defense issues evolved, we ran separate mixed linear regressions for each issue attribute, taking participants' evaluations of each issue as data points. These regressions included the study wave and whether the issue belonged to the defense domain as predictors, with both participants and issues as random factors.
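Because every quantity in the study is computed on weighted distributions, a helper of the following kind is a natural first step. It is a sketch under stated assumptions. Each respondent is assumed to carry a precomputed survey weight, since the weighting procedure itself is not described here. “I don't know” answers are assumed to be coded as `None` and are dropped. The function names and example values are hypothetical.

```python
def weighted_shares(responses, weights, n_categories=6):
    """Weighted share of answers in each category 1..n_categories.

    Answers coded as None (assumed here to mark "I don't know") are dropped,
    so the shares sum to 1 over substantive answers only.
    """
    if len(responses) != len(weights):
        raise ValueError("responses and weights must have the same length")
    totals = [0.0] * n_categories
    for answer, weight in zip(responses, weights):
        if answer is None:
            continue
        if not 1 <= answer <= n_categories:
            raise ValueError(f"answer {answer!r} outside 1..{n_categories}")
        totals[answer - 1] += weight
    grand_total = sum(totals)
    if grand_total <= 0:
        raise ValueError("no substantive answers with positive weight")
    return [t / grand_total for t in totals]


def weighted_mean(shares):
    """Mean score on a 1..K scale from category shares that sum to 1."""
    return sum(score * share for score, share in enumerate(shares, start=1))


if __name__ == "__main__":
    # Hypothetical answers and survey weights for five respondents.
    answers = [1, 2, None, 2, 6]
    weights = [1.0, 0.5, 2.0, 0.5, 2.0]
    shares = weighted_shares(answers, weights)
    print(shares, weighted_mean(shares))
```

A weighted distribution of this kind can serve as the common input from which both the mean and an agreement index are derived. Keeping it as an explicit intermediate step makes it easy to check that both measures rest on the same data.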

We then conducted more precise analyses that accounted for the variability across defense issues. For each issue and each issue attribute (emotional intensity, personal concernedness, concreteness, and societal importance), we calculated the mean and the level of agreement, the latter using the Van der Eijk (2001) index. The index was computed with the R package agrmt (Ruedin, 2021). For each issue and issue attribute, we also assessed the statistical significance of changes in means using Wilcoxon rank tests. These compared Wave 1 (data collected in July 2021) with Wave 2 (data collected in March 2022), and Wave 1 with Wave 3 (data collected in July 2022).
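Because the waves are independent samples, we assume here that the relevant Wilcoxon test is the rank-sum (Mann-Whitney) version. The standard-library sketch below has these features:

- It uses midranks for ties.
- It applies the usual tie-corrected normal approximation.
- It omits any continuity correction.
- It ignores survey weights, whereas the study worked with weighted distributions.

For real analyses, an established implementation such as R's `wilcox.test` or SciPy's `scipy.stats.mannwhitneyu` is preferable. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum (Mann-Whitney) test, normal approximation.

    Uses midranks for ties and the tie-corrected variance of U; no continuity
    correction. Returns (U for sample x, z score, two-sided p-value).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = sorted(list(x) + list(y))
    n = n1 + n2
    midrank = {}   # value -> average rank of its tie group
    tie_term = 0   # sum of (t**3 - t) over tie groups
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        t = j - i                              # size of this tie group
        midrank[pooled[i]] = (i + 1 + j) / 2   # mean of ranks i+1..j
        tie_term += t ** 3 - t
        i = j
    u1 = sum(midrank[v] for v in x) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return u1, 0.0, 1.0  # every observation tied: no evidence of a shift
    z = (u1 - mean_u) / sqrt(var_u)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return u1, z, p


if __name__ == "__main__":
    # Hypothetical 1-6 ratings from two independent waves.
    wave_1 = [1, 2, 2, 3, 3, 4] * 20
    wave_3 = [2, 3, 3, 4, 4, 5] * 20
    print(rank_sum_test(wave_1, wave_3))
```

Heavy ties are unavoidable on a six-point scale, which is why the variance correction matters here.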

Similarly, we ran issue- and issue attribute-wise analyses for agreement. However, as no standard statistical test has been developed for assessing changes in the Van der Eijk agreement index, we used permutation tests. More precisely, we performed 10,000 permutations for each pair of waves (Waves 1 and 2, Waves 2 and 3, and Waves 1 and 3). For each pair, we randomly assigned evaluations of each issue on each issue attribute to either of the two considered waves. For each permutation, we calculated the difference in agreement between the two generated waves. We then computed a two-sided p-value by comparing these permuted differences in agreement with the observed difference in agreement in our data.
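The permutation procedure can be sketched generically in Python. The statistic is passed in as a function that maps a list of individual responses to a number. In the study, it would be the Van der Eijk index computed on the weighted response distribution. The example below instead uses the population standard deviation purely as a stand-in. The shuffle pools both waves and keeps each wave's size fixed. Several details are assumptions: the (count + 1) / (permutations + 1) p-value convention, the tolerance for floating-point ties, and the function and parameter names.

```python
import random
from statistics import pstdev


def permutation_test(wave_a, wave_b, statistic, n_permutations=10_000, seed=None):
    """Two-sided permutation test for a difference in statistic() between two waves.

    Responses from both waves are pooled and reshuffled; each permutation keeps the
    original wave sizes. Returns (observed difference b - a, p-value), using the
    (count + 1) / (n_permutations + 1) convention.
    """
    rng = random.Random(seed)
    observed = statistic(wave_b) - statistic(wave_a)
    pooled = list(wave_a) + list(wave_b)
    n_a = len(wave_a)
    as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        permuted = statistic(pooled[n_a:]) - statistic(pooled[:n_a])
        # Small tolerance so that floating-point ties count as "at least as extreme".
        if abs(permuted) >= abs(observed) - 1e-12:
            as_extreme += 1
    return observed, (as_extreme + 1) / (n_permutations + 1)


if __name__ == "__main__":
    # Hypothetical 1-6 ratings from two waves; pstdev stands in for an agreement index.
    wave_1 = [1] * 50 + [6] * 50
    wave_2 = [3] * 50 + [4] * 50
    diff, p_value = permutation_test(wave_1, wave_2, pstdev, n_permutations=2_000, seed=1)
    print(f"observed difference = {diff:.2f}, two-sided p = {p_value:.4f}")
```

No standard test exists for changes in the Van der Eijk index, so resampling under exchangeable wave labels avoids distributional assumptions. Swapping in any function that tabulates responses and returns an agreement score leaves the rest of the routine unchanged.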

Our mixed regression analyses showed that after an initial intensification of the perception of all issue attributes at the onset of the war in Ukraine (March 2022), there was a slight decrease in the intensity of defense perceptions by July 2022 (Supplementary Figure 1). However, these levels did not return to those observed before the war (July 2021), except for emotional intensity.

Issue-wise analyses subsequently revealed that this result on mean perceptions was driven by certain issues that may have received particular attention since the war's inception: diplomatic and military relations with Russia, international cooperation on defense, and nuclear deterrence (Table 1). More specifically, we observed a significant change in the mean perception of diplomatic and military relations with Russia and international cooperation within NATO and with the UN, with a marked increase in the mean intensity of all four attributes in July 2022 compared to July 2021 (Table 1). This result contrasts with the responses collected just after the war's onset (March 2022), which showed a significant intensification in the perception of most policy issues across all issue attributes (Table 1). These changes might reflect a normalization in the perception of the war in Ukraine after an initially strong reaction, where only issues directly impacting the French population continued to be perceived more intensely.

We also observed an initial general increase in the level of agreement from July 2021 to March 2022. On every issue attribute, agreement rose significantly for at least half of the issues (Table 1). This suggests the emergence of consensus on certain attitudes, possibly due to a massive information effect or a rally ‘round the flag effect in reaction to the war. However, comparing response distributions between July 2021 and July 2022 revealed two contrasting trends. On the one hand, agreement on the concreteness of most defense issues increased significantly (13 out of 20, Table 1), which can be interpreted as a general acquisition of information after the onset of the war. On the other hand, agreement on personal concernedness decreased significantly for about a third of the issues (seven out of 20, Table 1), suggesting varying personal reactions. Importantly, supplementary analyses confirmed that this was not merely due to differences in the age distribution across our samples (Supplementary Table 2).

Furthermore, we observed specific patterns in the evolution of means and agreement levels depending on the issue and issue attribute. For example, some perceptions, such as the concreteness of nuclear deterrence, showed a simultaneous increase in mean and level of agreement (Figure 2A). Other issues, such as the importance of cooperation within NATO, were perceived more intensely without any change in their level of agreement (Figure 2B). Conversely, respondents felt more concerned by issues such as diplomatic and military relations with Russia (Figure 2C) and defense cooperation within NATO and the UN. Their responses on these issues, however, showed lower levels of agreement in the most recent survey (July 2022 compared to July 2021; Table 1). Importantly, this decrease in agreement did not reflect polarization but a more uniform distribution of responses. For instance, in July 2021 the distribution of perceived concernedness about diplomatic and military relations with Russia was skewed toward the lowest ratings, with 46% of respondents choosing one of the two lowest scores. The overall increase by July 2022 did not produce a distribution skewed toward the other end. Instead, evaluations spread more evenly across the scale: 32% of respondents chose one of the two lowest scores, 41% one of the two middle scores, and 27% one of the two highest scores (Figure 2C). Contrary to what an analysis of means alone might suggest, this indicates that French citizens did not converge toward feeling more concerned about this issue. Rather, only some segments of the population began to feel more concerned about relations with Russia.

Figure 2. Examples of the observed changes in means and distribution shape after the beginning of the war in Ukraine. All possible combinations of changes in means and distribution shape were observed in our dataset, highlighting the importance of considering these two measures together to understand opinion dynamics. The histograms show data distribution of the evaluation of the specified issues on the indicated issue attributes, with responses collected before the war at the bottom in gray and those collected 4 months into the war at the top in red. The black bar represents the mean of each distribution. (A) Change in mean. Contraction of the distribution (increase in agreement). (B) Change in mean. No change in the distribution shape (no change in agreement). (C) Change in mean. Dilation of the distribution shape (decrease in agreement). (D) No change in mean. Contraction of the distribution (increase in agreement).

Our analyses also showed that some issues changed only in their level of agreement, not in their means. For instance, concernedness regarding civilian casualties during missions and operations exhibited an isolated increase in agreement (Figure 2D). This increase was due to specific changes at the lowest extreme of the distribution, resulting in a distribution more skewed toward higher concernedness. The non-significant change in mean concernedness regarding civilian casualties might have suggested indifference. Our analysis instead reveals that French opinion on this issue did evolve after the beginning of the war in Ukraine.

Finally, we ran simulation analyses to further check whether response means and levels of agreement provided non-redundant information in our data. To do so, we tested whether the observed change in the mean evaluation of perceived concernedness regarding diplomatic and military relations with Russia could coincide with other types of distributional shifts. Our analyses revealed that the observed change in mean could have occurred alongside various distributional shifts:

- a shift of the distribution without any change in its level of agreement;
- a dilation of the distribution, as is the case in our data;
- a contraction of the distribution around the new mean;
- the emergence of a bimodal distribution (Figure 3).

These findings underscore that the evolution of means and that of levels of agreement are independent of each other and of their initial conditions. This illustrates that the two measures provide complementary and non-redundant information about the evolution of opinions.

Figure 3. Examples of potential evolutions of distribution shape of concernedness about the relations with Russia that match the observed change in mean. Histograms represent actual (in gray for data collected in July 2021 and red for data collected in July 2022) and simulated data distribution (in blue), with the black bar indicating the mean of each variable. Data were simulated to match the observed change in mean between July 2021 and July 2022 but with different changes in agreement, illustrating that a given change in mean can be associated with any possible evolution of the distribution shape.

Our findings underscore the complexity of opinion shifts and the importance of analyzing both the mean and the distribution shape of responses to capture the full spectrum of public sentiment. Our study highlights the need to combine these analyses to gain an in-depth understanding of the dynamics of public opinion in France regarding defense issues, particularly in the context of the war in Ukraine.

Our analysis revealed that the perception of defense issues initially intensified following the onset of the war in Ukraine. This intensity then waned over time, returning to pre-war levels for emotional intensity. This trend suggests a normalization in the public's perception of the war, indicating a potential adaptation to the ongoing situation. However, our issue-wise analyses revealed a much more nuanced and contrasting picture of how the French perceived defense issues before and after the beginning of the war. This picture highlights the relevance of the information provided by the analysis of means and by the analysis of distributions, and shows that the two are complementary and non-redundant.

For instance, the analysis of means confirmed the expected information effects, with most issues now perceived as more concrete. Analyses of distribution shapes further strengthened the evidence for an information effect, with respondents agreeing more on the concreteness of defense issues after the beginning of the war in Ukraine. They also revealed a general increase in the level of agreement on most issues and dimensions in March 2022. This result highlights the emergence of consensus in certain attitudes, even without a change in average. This consensus can be attributed either to a massive information effect (as participants are exposed to similar information, their perceptions converge over time) or to a more specific reaction to the intensity of the war, which could reflect a rally ‘round the flag effect. Future studies analyzing the evolution of distributions could disentangle these two hypotheses and thus help to better understand opinion dynamics in times of crisis. Conversely, the decreased agreement in the perception of specific defense issues, such as nuclear deterrence and diplomatic relations with Russia, highlights the varied impact of international events on public opinion.

In summary, analyses focusing solely on mean values would have captured the general trend in average perceptions. Our dual analysis, which also incorporates changes in distribution shape, has provided deeper insight into the complexity and variability of public opinion. This approach notably helps prevent misinterpretation of non-significant changes in mean opinions. In other words, combining mean and distribution analyses not only identifies changes in public opinion that are not reflected in the means but also provides a better understanding of the dynamics underlying changes in means.

Despite these insights, three main limitations of our study must be acknowledged. First, our data were collected from three independent samples, which allows only a basic application of the methods presented here. Panel data would enable a more detailed exploration of opinion dynamics, potentially identifying the specific subgroups driving distribution changes. Additionally, randomized controlled trials and quasi-natural experiments could more conclusively establish causal links between external events and distribution changes (Dunning, 2008).

Second, while we selected a specific measure of agreement for our distribution analysis, other statistical tools are available for measuring opinion polarization across various scales (Bauer, 2019). As the polarization literature has discussed, different statistical tools suit different research questions, especially regarding the detection of cleavages. Researchers should therefore take care to select the most appropriate tool for their studies (see, e.g., Berger et al., 2017 for a discussion of polarization measures). Nonetheless, as demonstrated in our study, the Van der Eijk index can be a useful tool for obtaining a first, hypothesis-free overview of changes in response distributions. Combining mean and agreement analyses enables a rapid overview of changes in opinions and lays the groundwork for more precise analyses of how these opinions have changed over time.

Lastly, the limited number of non-responses in our study restricted our ability to analyze these data. However, analyzing non-responses could further enrich our understanding of attitude evolution and represents another promising direction for future research (Grichting, 1994; Purdam et al., 2020). This is particularly true as non-responses raise a series of methodological questions, both when designing survey questionnaires and when interpreting results (Durand and Lambert, 1988).

In conclusion, our study makes a significant contribution to the understanding of public opinion in the realm of defense policy. It demonstrates the importance of analyzing both mean values and distribution shapes in survey data, an approach whose value extends beyond the study of polarization. This method indeed provides a richer, more nuanced understanding of public opinion dynamics, which is crucial for both academic research and practical policy formulation, especially in the context of international crises.

The original contributions presented in the study are publicly available. The data can be found here: https://osf.io/vh6n9/.

Ethical approval was not required for the studies involving humans because of local legislation and institutional requirements. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

FR: Writing – original draft, Writing – review & editing, Conceptualization. CT: Writing – original draft, Writing – review & editing, Conceptualization, Formal analysis. LS: Writing – original draft, Writing – review & editing, Conceptualization, Formal analysis.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by the French National Research Agency (ANR) under the “Investissements d'Avenir” programme Labex LIEPP (ANR-11-LABX-0091 and ANR-11-IDEX-0005-02) and IdEx Université Paris Cité (ANR-18-IDEX-0001).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpos.2024.1327662/full#supplementary-material

Aldrich, J. H., Gelpi, C., Feaver, P., Reifler, J., and Sharp, K. T. (2006). Foreign policy and the electoral connection. Ann. Rev. Polit. Sci. 9, 477–502. doi: 10.1146/annurev.polisci.9.111605.105008

Aldrich, J. H., Sullivan, J. L., and Borgida, E. (1989). Foreign affairs and issue voting: do presidential candidates “waltz before a blind audience?” Am. Polit. Sci. Rev. 83, 123–141. doi: 10.2307/1956437

Axelrod, R., Daymude, J. J., and Forrest, S. (2021). Preventing extreme polarization of political attitudes. Proc. Natl. Acad. Sci. U. S. A. 118:e2102139118. doi: 10.1073/pnas.2102139118

Bauer, P. C. (2019). Conceptualizing and Measuring Polarization: A Review. Available online at: https://osf.io/preprints/socarxiv/e5vp8 (accessed October 2, 2023).

Berger, W. J., Bramson, A., Fisher, S., Flocken, C., Grim, P., Holman, B., et al. (2017). Understanding polarization: meanings, measures, and model evaluation. Philos. Sci. 84, 115–159. doi: 10.1086/688938

Burstein, P. (2010). “Public opinion, public policy, and democracy,” in Handbook of Politics: State and Society in Global Perspective, eds. K. T. Leicht and J. C. Jenkins (New York, NY: Springer), 63–79.

Converse, P. E. (1964). “The nature of belief systems in mass publics,” in Ideology and Discontent, ed. D. E. Apter (New York, NY: The Free Press of Glencoe), 206–261.

Dahl, R. A. (1967). Pluralist Democracy in the United States: Conflict and Consent. Chicago, IL: Rand McNally.

DiMaggio, P., Evans, J., and Bryson, B. (1996). Have Americans' social attitudes become more polarized? Am. J. Sociol. 102, 690–755. doi: 10.1086/230995

Downey, D. J., and Huffman, M. L. (2001). Attitudinal polarization and trimodal distributions: measurement problems and theoretical implications. Soc. Sci. Quart. 82, 494–505. doi: 10.1111/0038-4941.00038

Drezner, D. W. (2008). The realist tradition in American public opinion. Perspect. Polit. 6, 51–70. doi: 10.1017/S1537592708080067

Dunning, T. (2008). Improving causal inference: strengths and limitations of natural experiments. Polit. Res. Quart. 61, 282–293. doi: 10.1177/1065912907306470

Durand, R. M., and Lambert, Z. V. (1988). Don't know responses in surveys: analyses and interpretational consequences. J. Bus. Res. 16, 169–188. doi: 10.1016/0148-2963(88)90040-9

Eisinger, R. M. (2008). “The use of surveys by governments and politicians,” in The SAGE Handbook of Public Opinion Research, eds. W. Donsbach and M. W. Traugott (London: SAGE Publications Ltd), 487–495.

Evans, J. H., Bryson, B., and DiMaggio, P. (2001). Opinion polarization: important contributions, necessary limitations. Am. J. Sociol. 106, 944–959. doi: 10.1086/320297

Galasso, V., Pons, V., Profeta, P., Becher, M., Brouard, S., and Foucault, M. (2020). Gender differences in COVID-19 attitudes and behavior: panel evidence from eight countries. Proc. Natl. Acad. Sci. U. S. A. 117, 27285–27291. doi: 10.1073/pnas.2012520117

Glynn, C. J., Herbst, S., O'Keefe, G. J., and Shapiro, R. Y. (1999). Public Opinion. Boulder, CO: Westview Press.

Grichting, W. L. (1994). The meaning of “I don't know” in opinion surveys: indifference versus ignorance. Austr. Psychol. 29, 71–75. doi: 10.1080/00050069408257324

Hartigan, J. A., and Hartigan, P. M. (1985). The dip test of unimodality. Ann. Stat. 13, 70–84. doi: 10.1214/aos/1176346577

Holsti, O. R. (1992). Public opinion and foreign policy: challenges to the Almond-Lippmann Consensus. Int. Stud. Quart. 36, 439–466. doi: 10.2307/2600734

Holsti, O. R. (2004). Public Opinion and American Foreign Policy. Ann Arbor, MI: University of Michigan Press.

Hurwitz, J., and Peffley, M. (1987). How are foreign policy attitudes structured? A hierarchical model. Am. Polit. Sci. Rev. 81, 1099–1120. doi: 10.2307/1962580

Kritzinger, S., Foucault, M., Lachat, R., Partheymüller, J., Plescia, C., and Brouard, S. (2021). “Rally round the flag”: the COVID-19 crisis and trust in the national government. West Eur. Polit. 44, 1205–1231. doi: 10.1080/01402382.2021.1925017

La Balme, N. (2002). Partir En Guerre : Décideurs et Politiques Face à l'opinion Publique. Paris: Editions Autrement.

Lelkes, Y. (2016). Mass polarization: manifestations and measurements. Publ. Opin. Quart. 80, 392–410. doi: 10.1093/poq/nfw005

Lipset, S. M. (1981). Political Man: The Social Bases of Politics. Baltimore, MD: Johns Hopkins University Press.

McCoy, J., Rahman, T., and Somer, M. (2018). Polarization and the global crisis of democracy: common patterns, dynamics, and pernicious consequences for democratic polities. Am. Behav. Sci. 62, 16–42. doi: 10.1177/0002764218759576

Menchaca, M. (2023). Are Americans polarized on issue dimensions? J. Elect. Publ. Opin. Part. 33, 228–246. doi: 10.1080/17457289.2021.1910954

Mouw, T., and Sobel, M. E. (2001). Culture wars and opinion polarization: the case of abortion. Am. J. Sociol. 106, 913–943. doi: 10.1086/320294

Page, B. I., and Shapiro, R. Y. (1992). The Rational Public: Fifty Years of Trends in Americans' Policy Preferences. Chicago, IL: University of Chicago Press.

Poole, K. T., and Rosenthal, H. (1984). The polarization of American politics. J. Polit. 46, 1061–1079. doi: 10.2307/2131242

Purdam, K., Sakshaug, J., Bourne, M., and Bayliss, D. (2020). Understanding “don't know” answers to survey questions - an international comparative analysis using interview paradata. Innovation 2020, 1–23. doi: 10.1080/13511610.2020.1752631

Richter, F. (2022). Guns vs. Butter: The Politics of Attention in Defence Policy. Paris: Sciences Po. Available online at: https://www.theses.fr/2022IEPP0012 (accessed October 2, 2023).

Ruedin, D. (2021). An Introduction to the R Package agrmt. Available online at: https://CRAN.R-project.org/package=agrmt (accessed October 2, 2023).

Shapiro, R. Y., and Page, B. I. (1988). Foreign policy and the rational public. J. Confl. Resol. 32, 211–247. doi: 10.1177/0022002788032002001

Sinnott, R. (2000). Knowledge and the position of attitudes to a European foreign policy on the real to random continuum. Int. J. Publ. Opin. Res. 12, 113–137. doi: 10.1093/ijpor/12.2.113

Sniderman, P. (1998). Les nouvelles perspectives de la recherche sur l'opinion publique. Politix 41, 123–175. doi: 10.3406/polix.1998.1716

Thiébaut, C. (2015). Opinions, Information et Réception: La Réactivité Du Public Français Aux Représentations Médiatiques de l'Europe de La Défense (1991–2008). Paris: Paris 1. Available online at: https://theses.fr/2015PA010332 (accessed October 2, 2023).

Van Der Eijk, C. (2001). Measuring agreement in ordered rating scales. Qual. Quant. 35, 325–341. doi: 10.1023/A:1010374114305

Vannette, D. L., and Krosnick, J. A. (2018). The Palgrave Handbook of Survey Research. Cham: Springer International Publishing.

Keywords: opinion dynamics, survey data analysis, information effects, defense policy, Ukraine war

Citation: Richter F, Thiébaut C and Safra L (2024) Not just by means alone: why the evolution of distribution shapes matters for understanding opinion dynamics. The case of the French reaction to the war in Ukraine. Front. Polit. Sci. 6:1327662. doi: 10.3389/fpos.2024.1327662

Received: 25 October 2023; Accepted: 19 January 2024;

Published: 09 February 2024.

Edited by:

Naoki Masuda, University at Buffalo, United States

Reviewed by:

Virgilio Perez, University of Valencia, Spain

Copyright © 2024 Richter, Thiébaut and Safra. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Friederike Richter, friederike.richter@unibw.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.