- ¹Faculty of Social Sciences, University of Helsinki, Helsinki, Finland

- ²Political Science With Media and Communication, Åbo Akademi University, Vaasa, Finland

Affective polarization (AP) refers to people having favorable attitudes toward their preferred political parties, or inparties, and their supporters, along with negative attitudes toward other parties, or outparties, and their supporters. Originally an American concept, AP is attracting growing interest in European countries characterized by multiparty systems. So far, researchers have primarily focused on like-dislike ratings when measuring affect, which has relegated another important aspect, namely attitudes toward ordinary supporters of parties, to the background. Open questions also remain about how political ingroups and outgroups should be conceptualized when there are many relevant political parties. We examine these puzzles using data from an online panel in Finland. First, we measure partisan social distance, or feelings toward interacting with supporters of different parties, in addition to commonly used like-dislike ratings of parties. We find that social distance differs from party like-dislike ratings in that respondents are less likely to report animosity toward outparty supporters than toward the outparties themselves. Second, we measure multiple party identification based on party support and closeness, and find that people commonly have not one, but many potential inparties. Finally, we build two individual-level AP measures and apply them to both like-dislike ratings and social distance scales. One of the measures is based on identifying a single inparty, while the other takes the possibility of multiple inparties into account. We find that the choice of which type of attitude to measure is more consequential for the outcome than how partisanship is operationalized. Our results and discussion clarify relationships between AP and related constructs, and highlight the need to consider the political and social context when measuring AP and interpreting results.

Introduction

The concept of affective polarization first appeared in the context of the American two-party system (Iyengar et al., 2012). Since then, the term has been eagerly adopted by political scientists studying other countries, mainly in Europe, or doing cross-country comparisons. These researchers have presented evidence that affective polarization is present, and is often increasing, in many European countries (Lauka et al., 2018; Gidron et al., 2019; Reiljan, 2020; Boxell et al., 2021; Kekkonen and Ylä-Anttila, 2021; Wagner, 2021; Kawecki, 2022). Studies have also yielded many insights as to what is driving these developments, or what consequences they are having (e.g., Hernández et al., 2021; Reiljan and Ryan, 2021; Kawecki, 2022; Torcal and Carty, 2022). Some researchers have also looked for patterns in partisan affect in order to better understand the configurations that an affectively polarized multiparty system can take, and the lines of division that may emerge (Harteveld, 2021; Kekkonen and Ylä-Anttila, 2021).

As the study of affective polarization has moved beyond the US context, it has become necessary to find new ways to operationalize and measure affective polarization in settings where the division into two opposing political camps is not as evident. The resulting literature has been highly useful, but in the process, the focus of the field seems to have shifted in several subtle but important ways. Generally, European studies of affective polarization have focused on attitudes toward political parties themselves. Attitudes toward partisans, which have been central for American scholars (e.g., Iyengar et al., 2012; Druckman and Levendusky, 2019), have received much less attention. Researchers have grappled with the problem of identifying inparties and outparties in situations with a large number of parties, and have often either ignored the possibility of multiple inparties, or downplayed the role of party identification.

In this article, we focus on two puzzles related to understanding, operationalizing and measuring affective polarization in a multiparty setting. We regard affective polarization as a phenomenon that manifests at the individual level through a duality of feelings: favoritism toward the political ingroup in combination with animosity toward the political outgroup.

Our first puzzle relates to what is meant by ingroup favoritism and outgroup animosity when using identification (or the lack thereof) with political parties as the basis for group divisions. We are curious about how favoritism and animosity differ depending on whether the focus is on attitudes toward political parties themselves, or on attitudes toward the ordinary people who support the parties. The second puzzle is related to thinking of and defining the political ingroups and outgroups in settings where individuals can identify with multiple parties. We wonder if this possibility should be accounted for when conceptualizing affective polarization and constructing measures. In both cases, we are curious about whether choices made based on different theoretical assumptions matter in practice, as well as what all of this tells us about the nature of affective polarization in multiparty systems.

This study is based on survey data from Finland containing questions about liking and disliking political parties, social distance to partisans, and multiple party identifications. Finland is a case in which many of the problems associated with understanding and measuring affective polarization are present in full force: it has an extremely open multiparty system, and a large portion of its electorate does not have strong partisan identities. We present a descriptive analysis of our data, highlighting associations and important differences between items. We then construct individual-level measures of affective polarization based on both like-dislike ratings and social distance items, and on different operationalizations of partisanship. These measures are used as dependent variables in an illustrative analysis to investigate whether operationalizations stemming from different theoretical assumptions yield substantially different results.

Our analysis shows that party like-dislike ratings and partisan social distance are moderately correlated but only partially related: respondents who like a party are usually happy to associate with its supporters, but those who dislike a party are not always hostile to its supporters. Affective polarization measures based on the two types of attitudes differ, and measures based on social distance bring out ideological differences. We also find that multiple party identification is common and that individual attitudes form clusters of mutual favoritism and animosity. This suggests that in many cases, ingroups consisting of multiple parties could be more conceptually appropriate. Nevertheless, explicitly accounting for multiple party identities has no major effect on results, as both types of measures are strongly correlated and yield largely similar results when used as dependent variables in our illustrative analysis.

In our concluding discussion, we suggest that the field of affective polarization research should not only clarify its conceptual underpinnings, but also explain why affective polarization is an important area of research in European multiparty democracies, and outline and operationalize its concepts on this basis. We also caution researchers to be mindful of potential ideological or other biases regarding how they operationalize and measure their key constructs, such as social distance to partisans.

Theory

The first puzzle: Party attitudes and social distance as manifestations of affective polarization

Iyengar et al. (2012) argue for defining political polarization as the extent to which partisans view each other as a disliked out-group. These researchers assert that the basis of partisanship and partisan affect can be found in the social identity approach (Tajfel, 1970; Tajfel and Turner, 1979), which posits a tendency for individuals to show favoritism toward members of the group that they identify with, or the ingroup, and dislike toward outgroup members. In this tradition, affective polarization is, in essence, tied to the salience of partisanship as social identity; a perspective akin to the expressive account of partisanship (Huddy et al., 2018). This social identity, in turn, influences perceptions of political ingroups and outgroups.

Survey-based evidence for affective polarization in the US, on which our article focuses, was originally based on two types of data: attitudes toward political parties, as entities on their own or as represented by their elites and candidates (from now on referred to as party attitudes), and attitudes toward their supporters (henceforth, partisan-directed attitudes). Iyengar et al. (2012) pioneered the use of party thermometers, i.e., ratings of political parties on a numerical scale ranging from “cold” to “warm” feelings, to measure the difference in attitudes toward the “inparty” and the “outparty”. Evidence of inparty favoritism and outparty animosity has also been found in how partisans evaluate the candidates or political elites of these parties, in how they evaluate the personal qualities of partisans, and in how willing they are to associate socially with outparty partisans, i.e., partisan social distance¹ (Iyengar et al., 2012; Iyengar and Krupenkin, 2018).

Many European and comparative studies have used the Comparative Study of Electoral Systems (CSES) question on “liking” or “disliking” a party, which is very similar to the party thermometers used by Iyengar et al. (2012). There is evidence that when asked to evaluate “parties” in general terms, survey respondents tend to think of party elites, while attitudes toward partisans may be milder (Druckman and Levendusky, 2019). We should, therefore, expect like-dislike ratings to mostly reflect party attitudes. Data on partisan-directed attitudes, however, is comparatively limited. Knudsen (2020) and Renström et al. (2021) have used the familiar “happiness with outparty supporters as in-laws” question to measure affective polarization in Norway and Sweden, and found evidence of ingroup biases; the survey done in Sweden also employed trait ratings. Harteveld (2021) compared feeling thermometers directed at party supporters to a party sympathy item and found only moderate correlations, but also that some people—particularly those who care more about politics—are more prone to extending their party antipathy to partisans than others. Similarly, Torcal and Carty (2022) have used partisan feeling thermometers in their analysis of affective polarization and political trust.

Nevertheless, we know relatively little about partisan-directed attitudes in general, or about the connection between them and party attitudes in European multiparty democracies. We also know relatively little about the obstacles involved in measuring different types of attitudes. Many social distance or trait rating items are not straightforward to administer in settings with a large number of parties, and they raise the question of whether, or how, to incorporate party identification (discussed in more detail in the next section).

To give insight into this puzzle, we test a survey battery for measuring partisan-directed attitudes in the form of social distance and compare them to party attitudes in our case country, Finland. We further build and compare individual-level affective polarization measures based on both types of attitude items. In doing so, we also seek to take into account the possibility of multiple partisan identities, which we will discuss next.

The second puzzle: Multiple party identities and affective polarization

As alluded to in the previous section, early studies on affective polarization in the United States relied on categorizing a person as either a Republican or a Democrat, then measuring affect for the inparty and the outparty. Non-US studies have generally taken one of several approaches to measuring affective polarization. The first approach is to identify each respondent's inparty using, for instance, the Comparative Study of Electoral Systems (CSES) question on “feeling” close to a party (e.g., Gidron et al., 2019; Reiljan, 2020) or information about which party the respondent last voted for (e.g., Harteveld, 2021; Reiljan and Ryan, 2021), and then to compare attitudes toward this inparty with attitudes toward other parties.

A drawback of this approach is that, in multiparty systems, citizens can feel close to or support several parties (Garry, 2007). In some situations, a useful fix is to group parties according to external information, such as co-membership in a governing coalition (Knudsen, 2020). A second approach, which sidesteps this problem altogether, is to work directly with attitude items without explicitly considering inparties and outparties (Lauka et al., 2018; Wagner, 2021; Kawecki, 2022). The literature on partisanship features survey instruments designed to measure multiple party identities (Garry, 2007; Mayer and Schultze, 2019), but as far as we know, they have not yet been used in affective polarization research.

A subtle difference between the two approaches is that the first separates party identification from party attitudes, whereas the second does not. Greene (2002) argues for treating party belonging, i.e., self-identified membership in a group, and attitudes as separate aspects of partisanship.² The approach of Iyengar et al. (2012) reflects this, as partisan identity is treated separately from the items used to measure attitudes. Strategies that use information such as the “closest” party or party choice in elections seem similar to this in spirit. Nevertheless, although non-US studies have adopted key concepts such as “inparty” and “outparty” from US studies, these terms are often used and operationalized in ways that have no clear connection to social identity or to other accounts of partisanship.

Hence, there is considerable uncertainty about how best to deal with the question of inparties and outparties when studying affective polarization in multiparty settings. In essence, the major question is whether respondents should be considered to potentially have multiple partisan identities, or whether a single primary partisan identity should be assumed and all other parties regarded as outparties. Furthermore, the conceptual basis of these partisan identities or of party attachment is similarly unclear, as, ultimately, is the concept of affective polarization itself. Finally, due to the field's focus on party-related measures, discussed in the previous section, the hurdles related to operationalizing party attachment in conjunction with partisan social distance items remain largely unexplored.

To shed light on this puzzle, we tested a measure of multiple party identification and used it in conjunction with party and partisan attitude items to measure individual-level affective polarization. The next section describes our analytical strategy and data set in more detail.

Methods and data

Analytical strategy

As discussed above, our aim is to elucidate two puzzles: one concerning the relationship and differences between party attitudes and partisan-directed attitudes, and another concerning the relevance of multiple party identities and the basis of party attachment in studying affective polarization. We address these puzzles in tandem. Our survey data consist primarily of responses to three sets of items: party like-dislike ratings, which represent party attitudes; partisan social distance items, which represent partisan-directed attitudes; and an inventory of multiple party identification, which serves as the basis for identifying inparties and outparties.

We first describe our data, and outline associations and differences between our items. By doing so, we can show how similar or different like-dislike ratings, partisan social distance measures, and party identification scales are when used to measure a stance toward the same party. After that, we turn our attention to associations across parties, comparing the answers given to the same question for different parties, again relying on correlations and visual examinations. The goal is to see whether a different image of the configuration of the party system emerges when using one survey item or another.

We then present four affective polarization scores computed for each respondent. To get these scores, we use one measure based on a single, exclusive partisan identity and another measure that accounts for multiple partisan identities. These measures are then applied, first, using like-dislike scores toward parties, and, second, using social distance toward regular supporters of parties. This allows us to investigate the empirical consequences of basing an affective polarization measure on either like-dislike ratings or social distance items, as well as differentiating between single and multiple party ingroups.

Finally, we employ each of these measures as the dependent variable in a simple linear regression model. By doing so, we illustrate some of the ways in which basing a statistical analysis on different assumptions can lead to different conclusions about which types of individuals are polarized, and to what degree. Here, our interest is primarily in associations and differences between different approaches, rather than making generalizations about an entire population.

Data and context

Our study employs survey data from Finland collected in April and May 2021. The questionnaire was sent to 2,000 members of an online panel, among whom the response rate was 78%. Our data, therefore, contain responses from 1,561 participants: 52.2% of respondents were male, 47% were female, and 0.8% listed their gender as “other”. The mean age was 57 years. The highest level of education for 5.4% was a doctorate or a licentiate degree, for 52.6% it was a master's or bachelor's degree from a university or a university of applied sciences, for 36.9% it was the completion of upper secondary school or vocational school, and for 5% it was the completion of comprehensive school. Of the participants, 8.2% spoke Swedish as their first language, 91.4% Finnish, and 0.5% marked their first language as “other”. Around 90% of the sample had voted in the latest parliamentary election.

Compared to the general Finnish population, the sample is somewhat older, more highly educated, more likely to be Swedish-speaking, and more likely to have voted as well as more interested in politics. We perform all analyses on an unweighted sample, and deal with all missing data by removing an observation if it has a missing value in a relevant field, at each stage of analysis.
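The missing-data rule above amounts to listwise deletion applied separately at each stage of analysis, so the analysis sample can differ depending on which items a given step uses. A minimal Python sketch, assuming hypothetical respondent records in which a missing answer is coded as `None` (field names such as `like_SDP` are illustrative, not the survey's actual variable names):

```python
from typing import Dict, List, Optional

# Hypothetical respondent record: field name -> answer, None = missing.
Record = Dict[str, Optional[float]]


def complete_cases(records: List[Record], fields: List[str]) -> List[Record]:
    """Keep only records with a non-missing value in every field used by the
    current analysis step (listwise deletion, re-applied at each step)."""
    return [r for r in records if all(r.get(f) is not None for f in fields)]


records: List[Record] = [
    {"like_SDP": 7, "sd_SDP": 2},
    {"like_SDP": 3, "sd_SDP": None},
    {"like_SDP": None, "sd_SDP": -1},
]

# The same respondents yield different samples depending on the fields used.
like_only = complete_cases(records, ["like_SDP"])             # 2 records kept
both_items = complete_cases(records, ["like_SDP", "sd_SDP"])  # 1 record kept
```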

At the time the data was collected, there were 10 parties represented in the Finnish parliament, and a number of other parties with no parliamentary representation. We limit our analysis to the eight main parties that have received consistent representation in the most recent elections. All questions on party attitudes, partisan-directed attitudes, and identification with parties were asked about these eight parties. Together, these parties held 198 of the 200 seats in the unicameral Finnish parliament. In decreasing order of size, these were the Social Democratic Party (SDP); the Finns Party (FP); the National Coalition Party (NCP); the Centre Party of Finland (CPF); the Green League (GL); the Left Alliance (LA); the Swedish People's Party (SPP); and the Christian Democrats (CD).

Since the 1980s, the Finnish party system has been characterized by consensus politics and low levels of conflict. The system never gravitated toward bipolarization, as happened in neighboring Sweden, and no party has been big enough to reach a dominant position by itself. Government formation has thus often resulted in broad coalitions across traditional divides (Karvonen, 2014). However, new conflict dimensions have subsequently become more important, especially the EU-dimension and socio-cultural issues (Westinen et al., 2016).

From a comparative perspective, the level of affective polarization in Finland has been moderate (Reiljan, 2020; Wagner, 2021), but it reached a new high with the 2019 election (Kekkonen and Ylä-Anttila, 2021; Kawecki, 2022). At the time of this study, the electorate was split into blocs with partly overlapping attitudes and party loyalties (Westinen and Kestilä-Kekkonen, 2015; Im et al., 2019; Kekkonen and Ylä-Anttila, 2021). Mutual positive like-dislike evaluations could be used to distinguish three or four blocs in the Finnish electorate: a red-green bloc, favorable toward LA, GL, and SDP; a bourgeois bloc, aligned with NCP and CPF; a bloc aligned with the Finns Party, which was largely negative toward all other parties, but somewhat overlapped with the bourgeois bloc; and a significant proportion of people who could be viewed as “moderates” (Kekkonen and Ylä-Anttila, 2021).

Measuring party attitudes

Party (feeling) thermometers have been the standard way to measure attitudes toward political parties since Iyengar et al. (2012). We follow this convention and use the common type of question coming from the CSES. This asks respondents to state how much they like a party on a scale ranging from 0 (strongly dislike) to 10 (strongly like). From now on, we refer to these items as like-dislike ratings.

Measuring partisan-directed attitudes

To represent partisan-directed attitudes, we employ an inventory of partisan social distance. Items ultimately derived from Bogardus's (1947) social distance scale have featured prominently in the literature on affective polarization, such as the question about how comfortable a respondent would feel having an outparty supporter as an in-law or a neighbor (e.g., Iyengar et al., 2012; Druckman and Levendusky, 2019; Knudsen, 2020).

We use seven-point scales ranging from −3 to 3, with the endpoints and the neutral midpoint labeled. The first question asks respondents how willing they would be to be friends with someone who openly supports the party in question, on a scale ranging from not willing at all to very willing. The second asks how they would feel about being close coworkers with someone who openly supports the party in question, on a scale ranging from very uncomfortable to very comfortable. The two questions are combined into a thirteen-point item ranging from −6 to 6, which we call the social distance scale; large (positive) values indicate sympathy and small (negative) values indicate hostility.
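As a sketch of the scale construction, the Python snippet below assumes that the two seven-point items are summed, which yields exactly the thirteen values from −6 to 6; the text does not name the combination rule, and the function name is hypothetical.

```python
ITEM_VALUES = range(-3, 4)  # labeled seven-point items: -3, -2, ..., 3


def social_distance(friend: int, coworker: int) -> int:
    """Combine the 'friend' and 'close coworker' items into one scale.

    Assumption: the items are summed, so the result runs from -6 (hostility)
    to 6 (sympathy) and takes thirteen possible values.
    """
    for value in (friend, coworker):
        if value not in ITEM_VALUES:
            raise ValueError(f"item value {value} is outside -3..3")
    return friend + coworker


# Every combination of the two items maps onto the thirteen-point scale.
possible = sorted({social_distance(f, c) for f in ITEM_VALUES for c in ITEM_VALUES})
```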

Our approach is a compromise between practicability, tradition and local needs. An interval scale facilitates comparison with like-dislike ratings and allows for the construction of affective polarization measures. The design also makes it practical to ask the question about a large number of parties. We focus on friends and coworkers in the hope that these classes of acquaintances mean roughly the same thing to most people (in contrast, the meaning of in-laws, for example, may differ greatly between non-religious and highly religious people). Finally, our approach controls for social distance to inparty supporters to avoid conflating outparty animosity with a general distaste for open partisanship, as advised by Klar et al. (2018).

Measuring multiple party identification

We need a survey instrument that is suited for measuring attachment to multiple parties and fits with the partisanship-as-social-identity perspective. For this purpose, we adapt a two-item inventory from Garry (2007).³ The first of these asks respondents to indicate whether they feel close to or distant from a party, on a five-point scale from very distant to very close. The second asks whether they support or oppose a party, on a five-point scale from strongly oppose to strongly support. Both questions include a Don't know option, which we code as “missing”.

In line with Garry, we combine these items. We rescale the result to form a scale that ranges from 1 to 9. We will refer to it as the party identification (PID) scale, and to the whole battery as the multiple party identification (MPID) battery. PID scores between 1 and 5 are considered negative identification, and scores between 6 and 9 are considered positive identification.
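The text does not spell out how the two MPID items are combined, so the following Python sketch assumes the simplest option consistent with the stated ranges: summing two 1–5 items (range 2–10) and shifting the sum down by one to obtain the 1–9 PID scale. Under this assumption, a neutral answer on both items gives 5, which falls on the non-positive side of the threshold. Function names are hypothetical.

```python
from typing import Optional

POSITIVE_THRESHOLD = 6  # PID scores of 6-9 count as positive identification


def pid_score(closeness: Optional[int], support: Optional[int]) -> Optional[int]:
    """Combine closeness (1 = very distant ... 5 = very close) and support
    (1 = strongly oppose ... 5 = strongly support) into a 1-9 PID score.

    Assumption: the items are summed (2-10) and shifted down by one.
    A "Don't know" answer is coded as None and makes the score missing.
    """
    if closeness is None or support is None:
        return None
    for value in (closeness, support):
        if not 1 <= value <= 5:
            raise ValueError(f"item value {value} is outside 1..5")
    return closeness + support - 1


def identifies_positively(pid: Optional[int]) -> bool:
    """True for scores 6-9; scores 1-5 and missing scores are not positive."""
    return pid is not None and pid >= POSITIVE_THRESHOLD
```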

We use these scores as the basis for finding inparties and outparties for each respondent in two ways. To accommodate the possibility of multiple party identification, we consider all parties with a positive PID score to be inparties. To measure a more exclusive partisan identity, we look for the party with the highest PID score and use attitude items to break ties, as explained later.

Affective polarization measures

The literature on affective polarization features a number of measures that aggregate data on party attitudes into a single polarization score. These scores are often calculated on the individual level with the intention of aggregating scores into a system level index, and they have generally been based on like-dislike ratings.⁴ In our analysis, we use two measures: one that assumes a single inparty, and one that allows for many inparties. Both measures are calculated using both types of attitude items (like-dislike ratings and social distance scales) and seek to assess the difference between inparty and outparty attitudes. The split into inparties and outparties is done separately using the MPID battery.

To compute the multiple-inparty affective polarization (MIAP) measure, we first consider all parties with a positive PID score to be inparties, and the rest to be outparties. We then take the mean inparty attitude score and subtract from it the mean outparty attitude score. The measure is agnostic to the size of either group, and obtains its maximum value when attitudes toward all inparties, or their supporters, are extremely positive, and attitudes toward all outparties, or their supporters, are extremely negative. This measure thus attempts to solve the challenge posed by multiple potential inparties. Instead of using dispersion-based measures, as some others have done (Wagner, 2021; Kawecki, 2022), however, we strive to maintain the theoretical foundation in social identity theory and construct the measure as a distance between ingroup and outgroup attitudes.
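A minimal Python sketch of the MIAP computation for a single respondent, assuming dictionaries keyed by party abbreviation that hold the respondent's attitude scores (either like-dislike ratings or social distance scores) and PID scores, with the positive-identification threshold of 6 taken from the PID scale described above. The function name and input layout are illustrative.

```python
from statistics import mean
from typing import Dict, Optional

POSITIVE_THRESHOLD = 6  # PID scores of 6-9 mark an inparty


def miap(attitudes: Dict[str, float], pid: Dict[str, int]) -> Optional[float]:
    """Multiple-inparty affective polarization for one respondent.

    attitudes: party -> attitude score (like-dislike or social distance)
    pid:       party -> PID score on the 1-9 scale (same parties as attitudes)
    Returns None when there are no inparties or no outparties, since the
    inparty-outparty difference is then undefined.
    """
    inparties = [p for p in attitudes if pid[p] >= POSITIVE_THRESHOLD]
    outparties = [p for p in attitudes if pid[p] < POSITIVE_THRESHOLD]
    if not inparties or not outparties:
        return None
    # Mean over inparties minus mean over outparties: group sizes do not matter.
    return mean(attitudes[p] for p in inparties) - mean(attitudes[p] for p in outparties)


example = miap(
    {"SDP": 9, "GL": 8, "LA": 7, "NCP": 4, "FP": 0},
    {"SDP": 8, "GL": 7, "LA": 6, "NCP": 4, "FP": 1},
)  # inparty mean 8, outparty mean 2 -> 6
```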

The single-inparty affective polarization (SIAP) measure is computed as follows. We first look for the party with the highest PID score and assign it as the inparty. The SIAP score is then obtained by subtracting the mean outparty attitude score from the inparty attitude score. Respondents are maximally polarized when they have a maximally positive attitude toward the party, or the supporters of the party, that they identify with, and a maximally negative attitude toward the rest. The attempt to identify the inparty can yield multiple potential inparties, in which case we look for the one among them with the highest attitude score. If even this results in a tie, it does not matter which party is selected, so we pick one randomly.⁵ This measure is conceptually similar to Wagner's (2021) mean distance from the most-liked party approach. The most important difference is that we assign the inparty using the MPID inventory to ensure comparability with our other measure.
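The SIAP procedure, including the two-step tie-break, can be sketched in Python under the same assumptions about inputs (hypothetical party-keyed dictionaries and a positive-identification threshold of 6). A seeded `random.Random` instance can be passed in so that the random final tie-break is reproducible; because any parties still tied share the same attitude score, the resulting SIAP score is the same whichever of them is chosen.

```python
import random
from statistics import mean
from typing import Dict, Optional

POSITIVE_THRESHOLD = 6  # PID scores of 6-9 count as positive identification


def siap(
    attitudes: Dict[str, float],
    pid: Dict[str, int],
    rng: Optional[random.Random] = None,
) -> Optional[float]:
    """Single-inparty affective polarization for one respondent.

    The inparty is the party with the highest PID score; ties are broken by the
    highest attitude score and, failing that, at random. All other parties are
    outparties. Returns None if no party has a positive PID score.
    """
    rng = rng or random.Random()
    top_pid = max(pid[p] for p in attitudes)
    if top_pid < POSITIVE_THRESHOLD:
        return None
    candidates = [p for p in attitudes if pid[p] == top_pid]
    top_attitude = max(attitudes[p] for p in candidates)
    candidates = [p for p in candidates if attitudes[p] == top_attitude]
    inparty = rng.choice(candidates)
    outparty_mean = mean(attitudes[p] for p in attitudes if p != inparty)
    return attitudes[inparty] - outparty_mean
```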

In both cases, if no party has a positive PID score, we consider a respondent to have no inparties. Because calculating the difference between inparty and outparty attitudes would then make little sense, we discard such respondents from the analysis. For the MIAP measure, we additionally discard respondents whose PID score is positive for all parties (i.e., who have no outparties). Both measures range from −10 to 10 for like-dislike ratings and from −12 to 12 for social distance items. To ensure comparability, we rescale both social distance measures to range from −10 to 10. In practice, the final measures all range from 0 to 10.⁶
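The rescaling of the social distance measures maps the interval [−12, 12] onto [−10, 10]. Assuming a plain proportional rescaling (the text does not state the exact formula), this amounts to multiplying by 10/12, as in this small Python sketch with a hypothetical function name:

```python
def rescale_social_distance(score: float) -> float:
    """Map a social distance AP score from [-12, 12] onto [-10, 10].

    Assumption: proportional rescaling, which keeps 0 at 0 and maps the
    endpoints onto each other, so results compare with like-dislike scores.
    """
    if not -12 <= score <= 12:
        raise ValueError(f"score {score} is outside -12..12")
    return score * 10 / 12
```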

Results

Comparing party like-dislike ratings and partisan social distance items

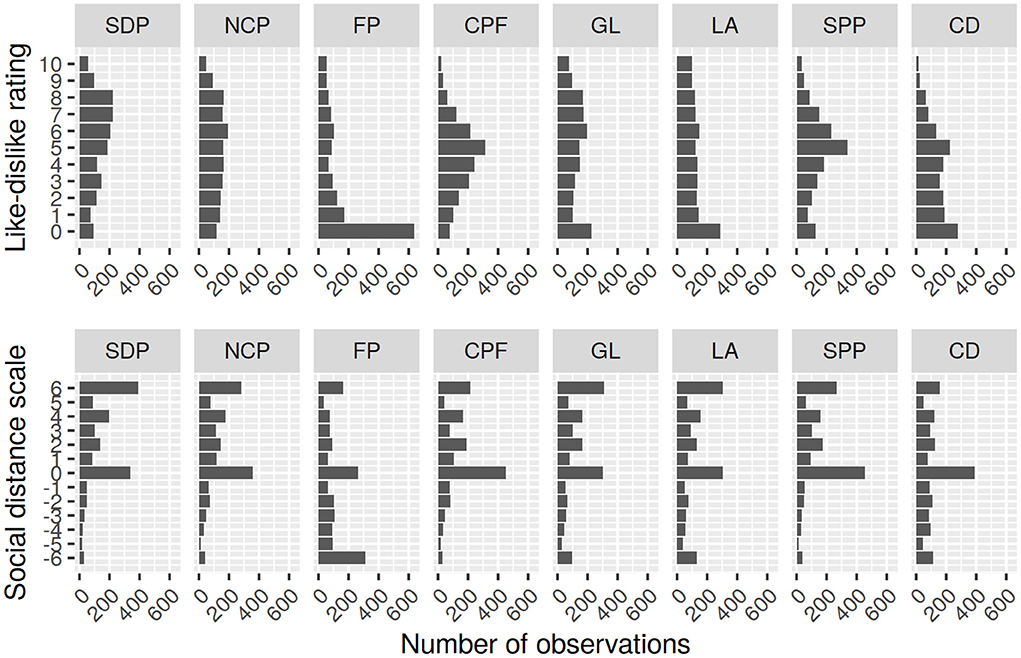

We begin by addressing our first puzzle with a comparison of like-dislike ratings and social distance items. At this stage, we use data from all respondents; respondents will appear in a figure even when responses to individual questions are missing. Figure 1 shows the distributions of both like-dislike ratings and social distance scales for all parties. The items clearly differ from each other: like-dislike ratings are more evenly distributed, whereas social distance scales reveal that for most parties, the most commonly picked answer is either the neutral option, or the most positive option. The items also operate differently for different parties. The Finns Party (FP) stands out in that a very large share of respondents report a strong dislike toward it. It is also the only party for which the most negative social distance score is the most common one.

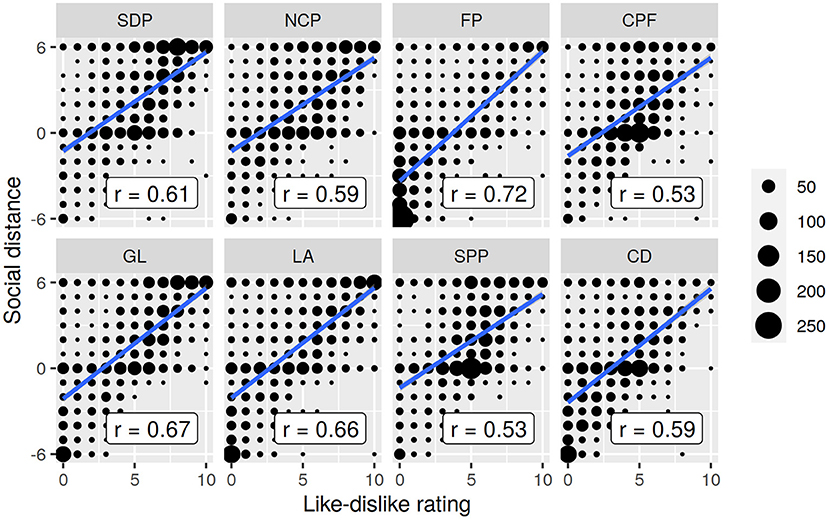

Figure 2 shows the variables plotted against each other along with estimated Pearson correlation coefficients. We can see that correlations are mostly of moderate strength. This finding aligns with earlier studies, which have also found a discrepancy between survey items designed to measure attitudes toward parties and attitudes toward partisans (Druckman and Levendusky, 2019; Knudsen, 2020; Harteveld, 2021). Yet, there are some interesting differences in how the scales operate when questions are asked about different parties. Correlations range from 0.53 for the Centre Party and the Swedish People's Party to 0.72 for the Finns Party, suggesting that the relationship is stronger for some parties than for others.

Figure 2. Like-dislike ratings plotted against social distance scales for all parties, along with respective Pearson correlation coefficients. Blue lines mark OLS regression lines.

Looking at the plots themselves, we can confirm this finding and note a pattern that is not immediately apparent from the correlation coefficients: one half of each plot is essentially empty. A negative value on the social distance scale is almost always accompanied by a low like-dislike rating. The converse is not true, as partisan-directed attitudes can be very positive even when there is significant hostility toward the party itself. The horizontal line of responses at the neutral midpoint reflects the earlier finding that the neutral option is popular. It also shows that respondents often combine negative party attitudes with neutral partisan-directed attitudes, and positive party attitudes with positive partisan-directed attitudes.
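For readers who want to reproduce per-party correlations of the kind shown in Figure 2, a dependency-free Python sketch of the Pearson coefficient between one party's like-dislike and social distance items follows. It assumes missing answers are coded as `None` and drops a respondent only if either of the two answers is missing, which is one way to apply the stage-wise missing-data rule described in the data section; the function name is hypothetical.

```python
from math import sqrt
from typing import Optional, Sequence


def pearson(x: Sequence[Optional[float]], y: Sequence[Optional[float]]) -> float:
    """Pearson correlation over respondents who answered both items.

    Pairs with a missing value (None) in either item are dropped first.
    """
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two complete pairs")
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    s_xx = sum((a - mean_x) ** 2 for a, _ in pairs)
    s_yy = sum((b - mean_y) ** 2 for _, b in pairs)
    return s_xy / sqrt(s_xx * s_yy)
```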

We can also make comparisons across the other dimension: how responses for different parties are correlated when using either of the two items. Doing so allows us to examine whether a different image of how a party system is configured emerges depending on which item we use. As described above, party attitudes in Finland (Kekkonen and Ylä-Anttila, 2021) and other multiparty systems (Harteveld, 2021) form predictable patterns. We should expect our data to match these earlier findings.

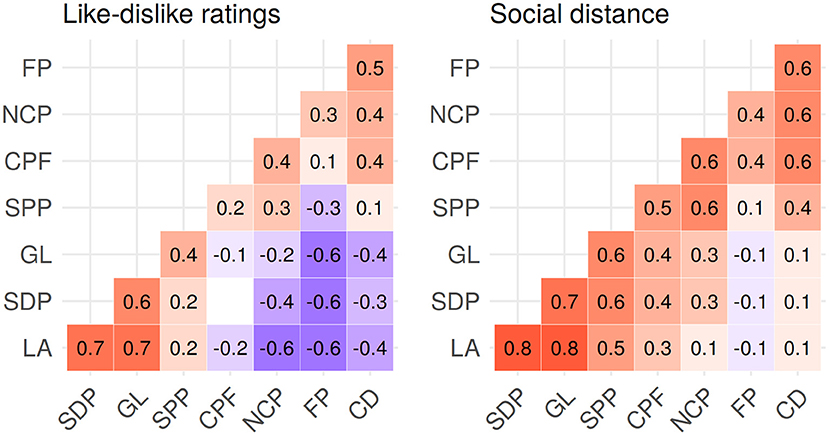

Figure 3 shows party-to-party correlations for both items. People who like one of the red-green parties (SDP, LA and GL) tend to also like the others. Correlations are also positive between CPF, NCP, FP and CD, although to a lesser extent, reflecting the earlier finding that these parties did not form as unified a bloc. Correlations between these two sets of parties are generally negative, though SPP and CPF stand somewhat apart from the other parties.

Figure 3. Pearson correlations across parties for the like-dislike and social distance batteries. Blank cells mark coefficients that are not distinguishable from 0 at the p < 0.01 significance level.

At a glance, the correlation matrix for social distance items looks quite different. However, a closer look reveals that the configuration of the party system appears essentially the same. Within blocs, social distance items are clearly positively correlated. Across blocs, however, the negative correlations from like-dislike ratings are generally replaced with weak positive correlations. Only attitudes toward FP supporters are weakly negatively correlated with attitudes toward supporters of red-green parties. Again, this suggests that although people do not display a reluctance to interact with partisans of any party, they do say that they would be particularly happy to interact with partisans of certain parties—and this happiness does not always extend to those who support disliked outparties.

Exploring party identification

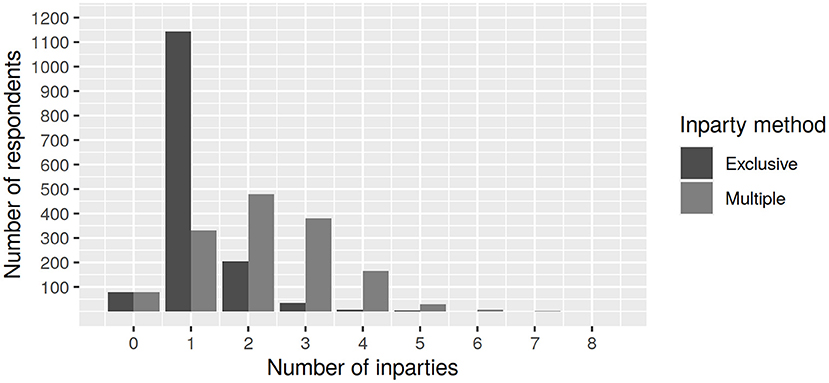

Next, we will investigate how the number of inparties identified for each respondent differs when a more or less exclusive operationalization of partisanship is used. For multiple partisanship, we consider all parties with a positive PID score to be inparties. For exclusive partisanship, we look for the parties with the highest PID score and attempt to break ties by looking for the highest like-dislike rating among them. If there are still ties, all of the remaining parties are considered inparties, for now; in the next section, when calculating the single-inparty affective polarization measure, we randomly pick one of them to be the inparty. From now on, we limit the analysis to those respondents with no missing values in the MPID battery. Out of a total of 1,474 such respondents, 78 do not identify positively with any parties and are considered to have 0 inparties using both approaches.
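The two inparty rules described above can be sketched as follows. The sketch assumes that each respondent's PID scores are stored in a dict keyed by party and are centred so that positive values mean positive identification; the function names are hypothetical, and the authors' own implementation may differ in detail.

```python
import random


def multiple_inparties(pid):
    """Multiple partisanship: every party with a positive PID score is an inparty."""
    return [party for party, score in pid.items() if score > 0]


def exclusive_inparties(pid, tiebreak):
    """Exclusive partisanship: parties with the highest PID score.

    Ties are broken by the highest tiebreak score (e.g. like-dislike rating);
    any parties still tied are all returned.
    """
    if not pid or max(pid.values()) <= 0:
        return []  # no positive identification: 0 inparties under both rules
    top = max(pid.values())
    candidates = [p for p, s in pid.items() if s == top]
    best = max(tiebreak[p] for p in candidates)
    return [p for p in candidates if tiebreak[p] == best]


def single_inparty(candidates, rng):
    """For the single-inparty AP measure, pick one remaining tied party at random."""
    return rng.choice(candidates) if candidates else None
```

Passing social distance scores instead of like-dislike ratings as `tiebreak` corresponds to the alternative tie-break discussed below, and skipping the tie-break step entirely corresponds to the "no tie-breaks" count.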

Figure 4 shows the distribution of the number of inparties obtained using the two approaches. With the multiple-inparty approach, we find 2 to be the most common number of inparties, and identification with 3 and 4 parties to also be relatively common. Slightly over 300 respondents have just one inparty, and only a handful of respondents identify positively with more than half of the parties. Using the more exclusive operationalization, where only the party with the highest PID score is considered to be the inparty, a unique inparty can be identified for around 1,150 respondents. To arrive at this number, we used like-dislike ratings to break ties. When social distance is used instead, a unique inparty is found for around 1,050 respondents. If no tie-breaks are used, the number drops to around 950. All in all, this suggests that for many respondents, it makes sense to operationalize the ingroup as consisting of more than just a single, closest and most supported party. The Appendix presents results of varying the threshold when performing the inparty-outparty split.

Figure 4. The number of respondents with a specified number of inparties according to a method that looks for a “primary” inparty, and a method that considers all parties with a positive identification score to be inparties.

Note that the PID scales are strongly correlated with the corresponding like-dislike scales (the strength of the correlation varies slightly from party to party) and moderately correlated with the social distance scales. Altogether, this points to a close empirical, and probably also conceptual, link between like-dislike ratings and the MPID inventory. While this suggests that the ingroup-outgroup splits could be performed on the basis of like-dislike ratings alone, in the rest of the analysis we follow our original plan: we use the MPID inventory to identify the ingroup and the outgroup, and like-dislike ratings and social distance scales to measure attitudes toward these groups. Additional explorations of the relationships between these batteries can be found in the Appendix.

Comparing individual-level measures of affective polarization

We now turn our attention to different ways of calculating individual-level affective polarization scores using like-dislike items and social distance scores. As previously described, we use two different approaches: one that seeks to account for multiple party identification based on the MPID battery, and one that assumes a more exclusive partisan identity. Using these two approaches, we will illustrate the ways in which the decision to focus the analysis on either like-dislike ratings or partisan social distance, and the choice of a single-inparty or multi-inparty measure, affect results. There are 13 respondents with at least one negative score; these are discarded from now on.
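The two individual-level measures are defined earlier in the article, and those definitions take precedence. As a rough illustration only, the sketch below assumes the simplest difference-of-means forms: SIAP as the inparty's score minus the mean score of all other parties, and MIAP as the mean score of the inparties minus the mean score of the outparties. The same functions apply to either battery. Function names are hypothetical. Note that under these assumed forms, a respondent who gives every party the same score gets exactly 0, which matches what the robustness checks below describe for straightlined social distance responses.

```python
from statistics import mean


def siap(scores, inparty):
    """Single-inparty AP (assumed form): inparty score minus the mean of all other parties."""
    if inparty is None:
        return None  # respondent has no inparty
    outparties = [s for p, s in scores.items() if p != inparty]
    if not outparties:
        return None
    return scores[inparty] - mean(outparties)


def miap(scores, inparties):
    """Multiple-inparty AP (assumed form): mean inparty score minus mean outparty score."""
    ins = [scores[p] for p in inparties]
    outs = [s for p, s in scores.items() if p not in inparties]
    if not ins or not outs:
        return None  # undefined with no inparties or no outparties
    return mean(ins) - mean(outs)


def keep_respondent(ap_scores):
    """Discard respondents with any missing or negative (outparty-favouring) score."""
    return all(s is not None and s >= 0 for s in ap_scores)
```

For example, with scores `{"A": 8, "B": 6, "C": 2, "D": 0}` and inparties A and B, the assumed MIAP is (8 + 6)/2 − (2 + 0)/2 = 6.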

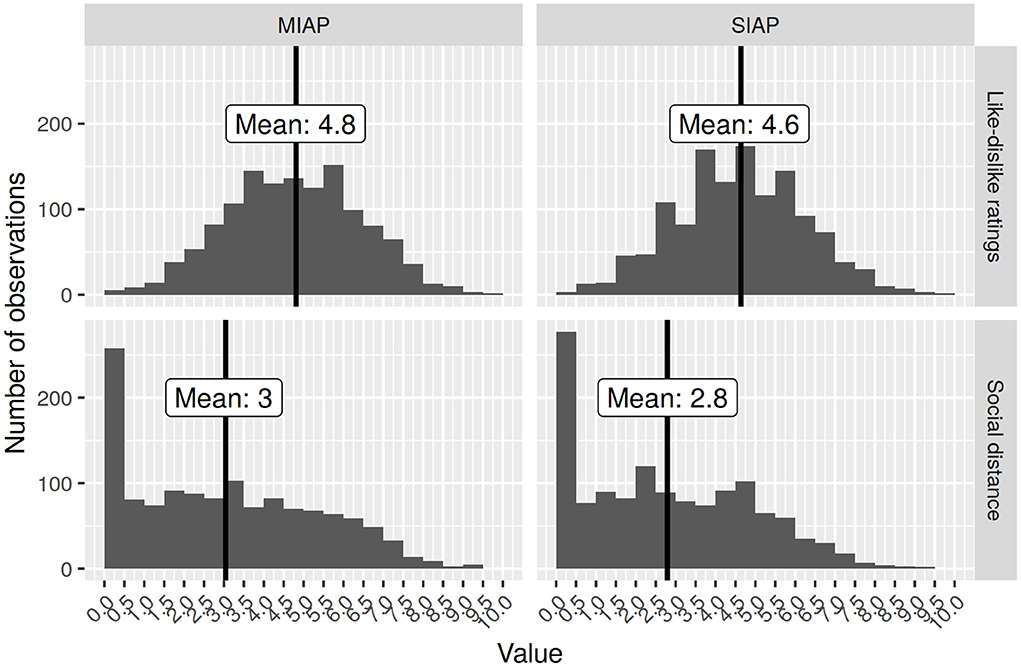

Figure 5 shows the distributions of the four combinations of measures and attitude dimensions. Both like-dislike measures follow a bell-shaped curve, whereas the social distance measures are concentrated at the low end of the scale, with a large number of respondents at or near zero. Above zero, the social distance measures follow a more regular distribution, though one still concentrated toward lower values. Consequently, affective polarization appears higher when looking at attitudes toward parties, though one should keep in mind that the response scales are also different.

Figure 5. Histograms of single-inparty and multiple-inparty affective polarization calculated based on both like-dislike and social distance batteries, along with respective mean values.

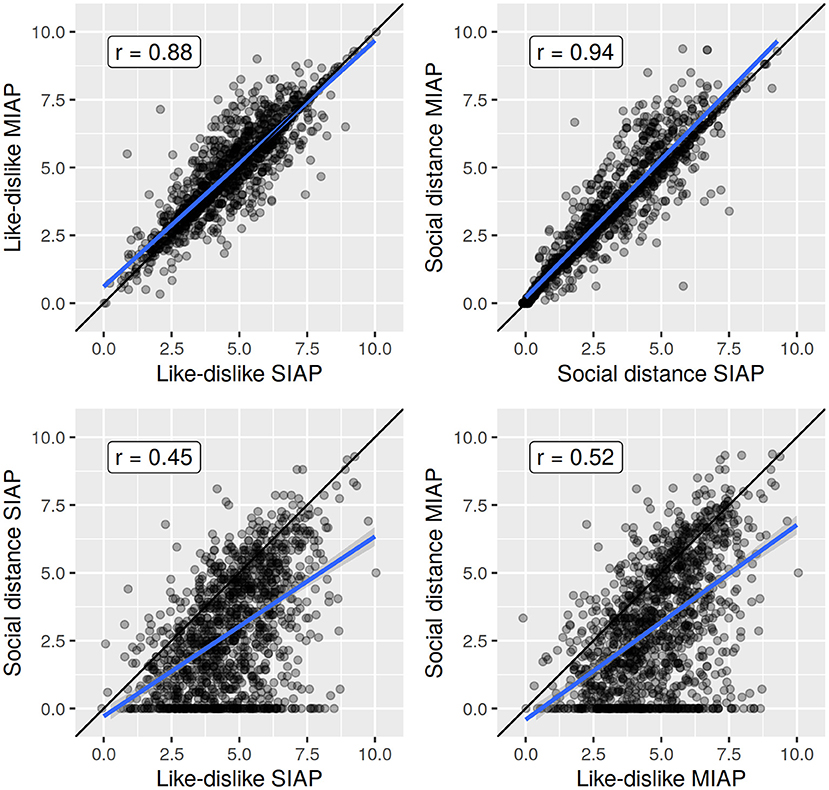

Previous research has found that different methods of aggregating like-dislike scores generally yield similar results (Wagner, 2021; Kawecki, 2022). We also find that different measures for the same type of attitude item are highly correlated, as illustrated in Figure 6. In the upper halves of the topmost panels, polarization is higher according to MIAP measures than SIAP measures; the opposite is true for the bottom halves. We can see that it is somewhat more common for respondents to have higher MIAP scores, but the reverse also occurs quite often. For the most part, SIAP and MIAP scores differ by at most a few points.

Figure 6. Scatter plots of different types of measure for the same type of attitude (upper row) and of the same type of measure for different types of attitude (lower row), along with respective Pearson correlation coefficients.

Associations across different attitude items, however, are less straightforward. Polarization scores based on social distance items are rarely higher than scores based on like-dislike ratings. However, even when like-dislike ratings suggest high polarization, social distance measures may yield low scores. The large number of social distance polarization scores of exactly zero is visible at the bottom of both plots, and these scores are accompanied by a wide range of like-dislike polarization scores.

Regression models for affective polarization measures

As a final assessment of the interplay between the components of our puzzles, we employ each of the four measures of individual-level affective polarization as dependent variables in a simple statistical model. By doing so, we examine whether any potential differences between the methods, built on slightly different assumptions or types of data, are large enough to substantially change what conclusions can be drawn from an analysis. This also allows us to highlight an interesting observation: a potential ideological bias in the common constructs we use to measure affective polarization.

Following previous findings of an association between ideological extremity and affective polarization (e.g., Reiljan and Ryan, 2021), our models include two measures of self-reported ideological placement as the main independent variables of interest. One of the questions is about placement on the (socio-economic) left-right dimension, and the other relates to the (socio-cultural) liberal-conservative axis. We also include a categorical variable indicating which party, if any, respondents voted for in the latest parliamentary election; the baseline is that the respondent did not vote or did not disclose a party. We use ordinary least squares (OLS) to estimate regression coefficients and also control for age, gender, first language (Finnish or Swedish), education and political interest. Aside from gender and first language, we treat controls as continuous. Respondents with missing data in any of the fields are set aside.
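For readers who want the model written out, the following is a sketch under stated assumptions rather than the authors' exact specification. Because the robustness checks below refer to first- and second-degree coefficients for the ideology scales, both scales are entered here as quadratics; because the figure captions hold both dimensions "at 0", the scales are assumed to be centred on 0. The symbols (LR for left-right, LC for liberal-conservative, Vote for the party choice dummies, z for the vector of controls) are our own notation, and the absence of an LR × LC interaction is a simplifying assumption.

$$
\mathrm{AP}_i = \beta_0 + \beta_1\,\mathrm{LR}_i + \beta_2\,\mathrm{LR}_i^2 + \beta_3\,\mathrm{LC}_i + \beta_4\,\mathrm{LC}_i^2 + \sum_k \delta_k\,\mathrm{Vote}_{ik} + \mathbf{z}_i^{\top}\boldsymbol{\gamma} + \varepsilon_i
$$

Under this form, the marginal effect of left-right position is

$$
\frac{\partial\,\mathrm{E}[\mathrm{AP}_i]}{\partial\,\mathrm{LR}_i} = \beta_1 + 2\beta_2\,\mathrm{LR}_i ,
$$

so a positive $\beta_2$ produces a U-shape with its minimum at $\mathrm{LR} = -\beta_1/(2\beta_2)$; a minimum slightly right of centre then implies $\beta_1 < 0$. The same expressions apply on the log-odds scale to the logistic model in the robustness checks.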

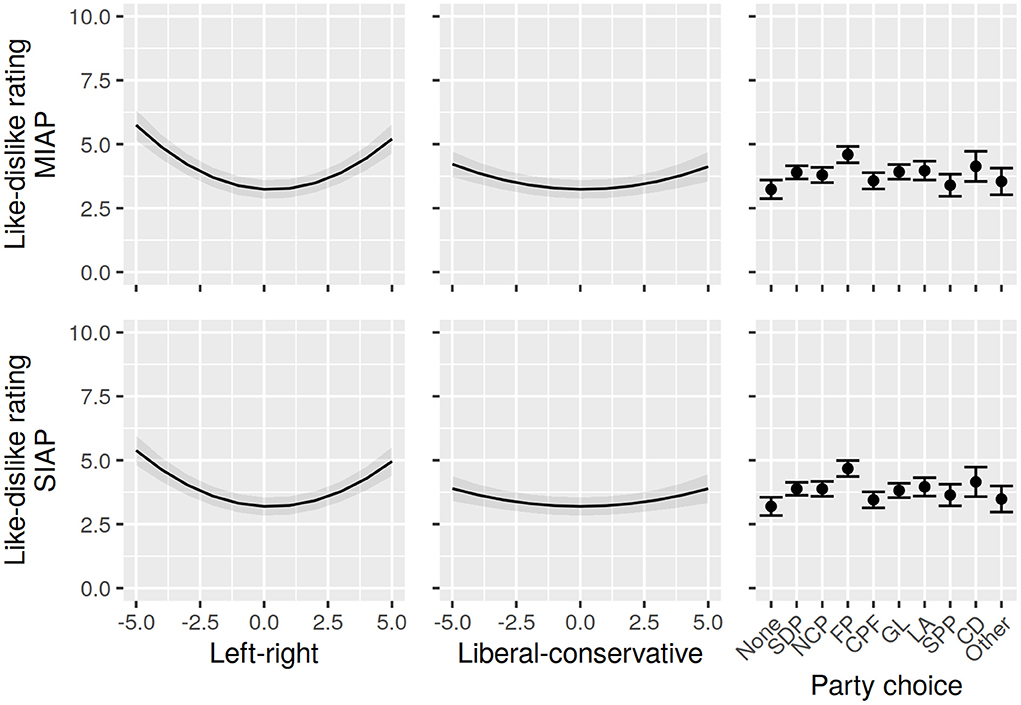

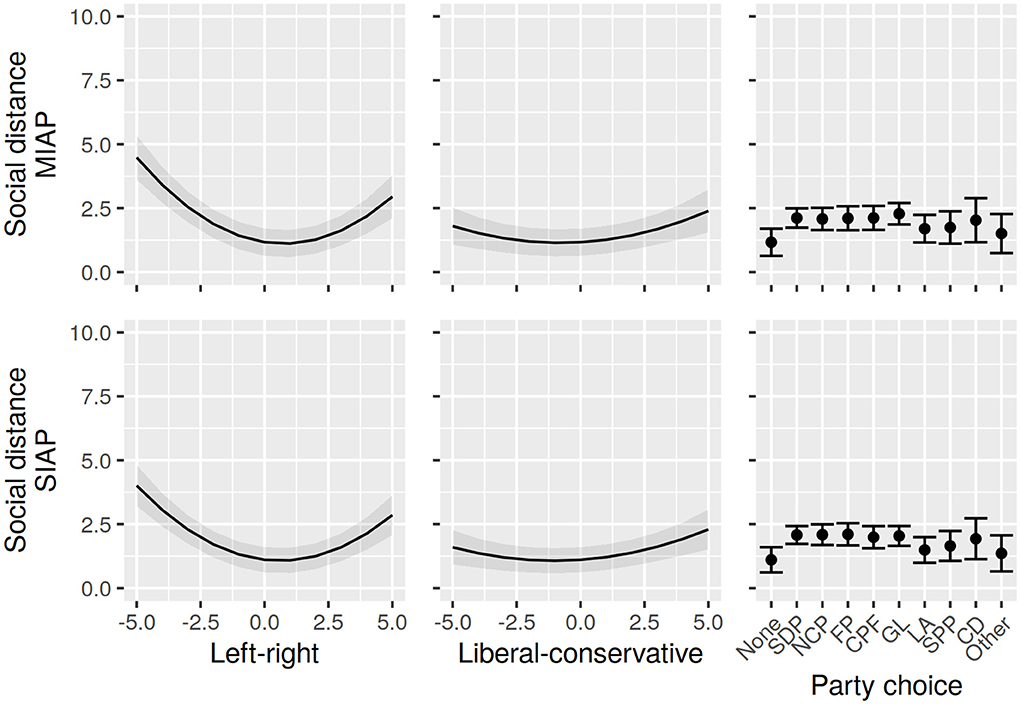

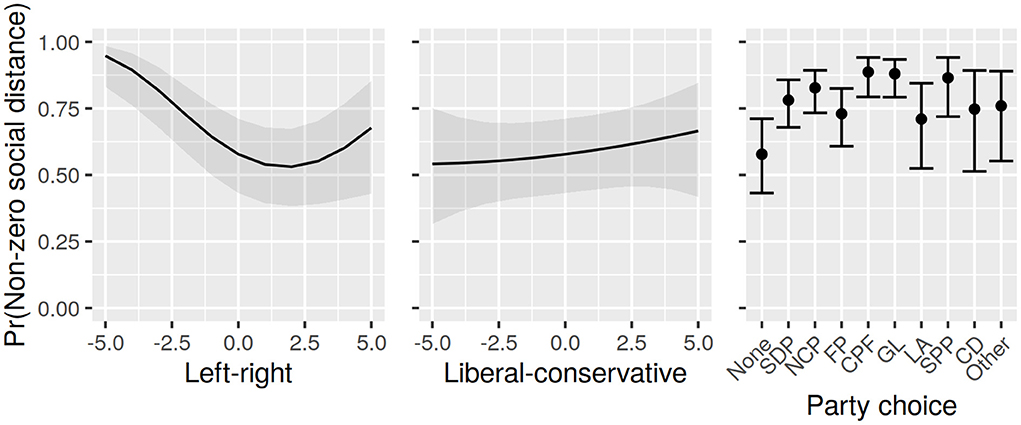

Figures 7 and 8 show the marginal effects of the main variables of interest; regression tables are given in the Appendix. All models indicate that extremism on either ideological dimension is associated with being more polarized. Left-right position matters more: holding liberal-conservative position constant and moving toward either edge of the left-right dimension increases polarization more than holding left-right position constant and moving toward the edges of the liberal-conservative dimension. All models also identify marginal effects for at least some party choice dummies. They point to differences between voters and non-voters, and also between partisan groups, as the size of the party dummy coefficients varies considerably. Most notably, the FP dummy has a large effect in both models that use like-dislike ratings. However, we do not analyze this aspect rigorously. The single-inparty and multiple-inparty models for the same type of attitude are highly similar: coefficients for individual parties move around slightly, but both measures essentially paint the same picture.

Figure 7. Marginal effects of the primary independent variables in models using like-dislike ratings. Both ideological dimensions are kept constant at 0.

Figure 8. Marginal effects of the primary independent variables in models using social distance scales. Both ideological dimensions are kept constant at 0.

Switching over to measures based on social distance items reveals some interesting differences. First, whereas there are hints in the like-dislike based models that left-wingers are more polarized than right-wingers, this effect is clearly visible in models based on social distance. Curiously, these models also suggest that conservatives are more polarized than liberals, but there are no traces of this in the like-dislike models. Nevertheless, the effect of the liberal-conservative dimension is again smaller than that of the left-right dimension, and the difference between liberals and conservatives is only clearly visible in the SIAP model. The effects of party dummies are also different. The large effect associated with the FP dummy is gone, its effect in social distance models being on par with a number of other parties. For the most part, the effects of the party dummies are similar to each other, and no clear conclusions about the marginal effects of vote choice can be drawn. Finally, the independent variables also explain a higher proportion of the outcome variable's variance in models based on like-dislike ratings.

Robustness checks

So far, we have glossed over one important feature of the social distance scales. As we have seen, for most parties, a perfectly neutral social distance score is the mode of the distribution. Indeed, our sample contains a significant number of respondents who straightlined both social distance batteries. They did not necessarily pick the same option for both batteries, but their responses do not differentiate between parties at all, which leads to a score of exactly 0 on both social distance affective polarization measures.

We could imagine there being a qualitative difference between these respondents and those whose responses indicate non-zero polarization, however small. We therefore create a separate binary variable, coded "0" if a respondent straightlined both social distance batteries and "1" otherwise. We then fit a logistic regression model with this variable as the dependent variable, using the same set of independent variables as before. This model is summarized in Figure 9.
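The coding rule for this binary variable can be sketched as below. The sketch assumes that each social distance battery is stored as a dict mapping party to response; the function name is hypothetical. Following the description above, the two batteries may use different options, and only variation across parties within each battery matters.

```python
def nonzero_sd_indicator(battery_a, battery_b):
    """Return 0 if the respondent straightlined both social distance
    batteries (no variation across parties within either), 1 otherwise."""
    straightlined_a = len(set(battery_a.values())) <= 1
    straightlined_b = len(set(battery_b.values())) <= 1
    return 0 if straightlined_a and straightlined_b else 1
```

For example, a respondent answering 0 for every party in one battery and 2 for every party in the other is coded 0, whereas any within-battery variation yields 1.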

Figure 9. Marginal effects of the primary independent variables in a logistic regression model. The outcome variable is 0 if a respondent's responses to the social distance items show no variation across parties, and 1 otherwise. Both ideological dimensions are kept constant at 0.

We see that left-wingers are more likely to have given answers that lead to non-zero social distance polarization. Although the relationship between position on the left-right dimension and the outcome variable is U-shaped, the lowest probability of belonging to the group with non-zero polarization is found among those slightly right of center, and those at the extreme right cannot be reliably distinguished from this group. Neither the first-degree nor the second-degree coefficient for the liberal-conservative axis can be distinguished from zero. Finally, the party dummies reveal that voters of some parties, most notably GL, CPF, NCP and SPP voters, are more likely than non-voters to belong to the non-zero group, whereas the other party dummies have no discernible effect.

Additionally, we repeated the regression analysis after removing respondents with zero social distance polarization. This should minimize the impact of a potential reluctance to answer the social distance battery, with the downside that the analysis is limited to more polarized respondents. Marginal plots and regression tables are given in the Appendix. In this subsample, models based on like-dislike ratings are quite similar to those in the full sample, while models based on social distance come to resemble their like-dislike counterparts more closely. Notably, the FP dummy has the strongest effect among the party dummies in all models, but party dummies generally have slightly weaker effects than in the full sample. Differences between left-wingers and right-wingers, and between liberals and conservatives, are no longer clearly visible, but there are still hints that left-wingers and conservatives are more polarized. Some of the changes may be due to the smaller sample size and the resulting wider confidence intervals. R² remains substantially lower in the social distance models.

The Appendix also contains descriptions of various other robustness checks and explorations.

Discussion

Recap of the puzzles

We set out to address two puzzles faced by researchers trying to measure and understand affective polarization in multiparty democracies. First, we wanted to learn more about affective polarization as manifested in attitudes toward political parties themselves, as well as their supporters. Second, we sought to examine the relevance of multiple partisan identities for understanding and measuring affective polarization. To conclude this article, we discuss these puzzles in light of our new evidence based on analyses of survey data from Finland. We outline some recommendations for researching affective polarization, and new avenues for thinking about it.

The puzzle of attitudes toward parties and attitudes toward partisans

Earlier studies have found only moderate correlations between attitudes toward political parties and attitudes toward the people who support them, and that the latter are generally less hostile (Druckman and Levendusky, 2019; Knudsen, 2020; Harteveld, 2021). Our analysis of party like-dislike ratings and partisan social distance items affirms these previous findings. However, our results also add nuance and present some challenges.

We found that a positive opinion of a party is usually accompanied by a positive attitude toward its supporters. In contrast, negative like-dislike ratings can be accompanied by a wide range of partisan social distance scores, and quite often people who dislike a party profess indifference toward its supporters. We have not seen this asymmetry reported by others who have investigated partisan-directed attitudes (e.g., Knudsen, 2020; Renström et al., 2021). We do not know whether this is because they have found it uninteresting or because it is a feature particular to our data. If it is a general feature of such scales, it presents challenges for their use and interpretation.

There is a strong possibility that respondents are unwilling to openly answer questions about partisan social distance. We solicited open feedback from the respondents, and several of them expressed discomfort with these questions. This is an indication that social desirability bias might skew the responses to such questions. In a sense, this is a good thing: it suggests that social distance items could be able to tap into a meaningful facet of polarization. The presence or absence of social norms against social discrimination based on partisanship could have significant implications, such as protecting civil society from polarizing due to overspill from partisan politics. We therefore believe that this is an important area for future research, both to find better methods of measuring the phenomenon with less bias, and as an interesting phenomenon in and of itself.

We also saw evidence that the reluctance to answer social distance questions might follow ideological splits, as people on the left seem both more likely to report social distance than people on the right, and to the extent they do, they also seem to be more polarized when using this indicator. This highlights the possibility that social distance questions might not be ideologically neutral and raises the question of whether the right is truly less polarized than the left, or merely more reluctant to reveal that they too judge people based on partisanship. In either case, failure to account for this could pose a serious problem for the validity of findings. Note that identifying inparties also means identifying outparties, and we could also envision affect as relating to the target: here the apparent tendency of FP and other populist right parties to be seen particularly negatively by others should be kept in mind (Westinen et al., 2020; Harteveld et al., 2021; Reiljan and Ryan, 2021). Follow-up research should be conducted to examine whether these findings can be replicated outside of the Finnish context.

The puzzle of multiple party identification and affective polarization

Using an inventory for measuring multiple party identification from a study often cited in affective polarization literature (Garry, 2007), we found that multiple partisanship is common. Indeed, our respondents commonly identified with between one and three parties. Furthermore, both the like-dislike battery and the MPID battery resulted in highly correlated evaluations of specific party clusters that match earlier observations (Kekkonen and Ylä-Anttila, 2021). This suggests that strategies that operationalize the “ingroup” as consisting of a single party are conceptually inappropriate for many people.

Nevertheless, individual-level affective polarization measures that assume one inparty or several inparties resulted in highly correlated scores. They also functioned very similarly in our illustrative regression analysis. This suggests that, in practical terms, seeking to account for multiple party identification is not highly consequential—a finding in line with that reported by Wagner (2021). More detailed analyses might be able to tease out situations where the distinction is more or less relevant. For instance, some partisan identities may be more likely to overlap with others, and others more likely to constitute a strong primary identity. When using research designs for which identifying inparties and outparties is necessary, caution should be taken to ensure that identification is operationalized purposefully and in an ideologically neutral fashion.

We sought to emphasize a difference between party identification (or belonging), and party attitudes, as advocated by Greene (2002). In practice, the MPID battery closely resembles like-dislike ratings, suggesting that we did not quite succeed in making this distinction. Perhaps there is none to be made. It could also be because the inventory we chose is still not an explicit measure of partisan social identity in the sense that an inventory based on the classic Identification with a Psychological Group scale (Mael and Tetrick, 1992), such as that introduced by Mayer and Schultze (2019), might be. In any event, our results suggest that more or less the same conclusions about degrees of attachment to parties could be made using like-dislike ratings.

Still, we feel that our endeavor can offer some interesting insights. As Wagner (2021) points out, the phrasing of the ubiquitous CSES like-dislike inventory is very ambiguous. Now we can say that “liking” a party appears similar to a combination of “feeling close to” and “supporting” it, suggesting an affective stance encompassing feelings of belonging and identity. To us, this also suggests that it would be fruitful to clarify the relationship of affective polarization to objects in adjacent fields. Political scientists have long talked not just about partisanship, but also positive and negative partisanship (e.g., Rose and Mishler, 1998; Bankert, 2020). Given the overlap of a common indicator of affective polarization with items intended for measuring party identification, we might ask: what is the additional benefit of talking about affective polarization, instead of simply partisanship or party identification?

We should note that not all multiparty systems are created equal, which limits generalizing from our Finnish context. Even Finland and Sweden, though similar in many ways, have important differences: in Sweden the major parties have long been divided into two opposing blocs along broad ideological lines, whereas in Finland such a system has not developed. The single-inparty and multiple-inparty approaches might differ more in less fragmented party systems, and results might also differ in places where parties form a more salient basis for social identities.

The future of survey-based affective polarization measures

We believe that the methods used in the study of affective polarization in European multiparty systems have, to a significant degree, been driven by the available data, most often in the form of like-dislike ratings from the CSES data sets. This has steered methodological development toward the kinds of aggregation methods that we have discussed. As the field matures, it would be advantageous to consider other approaches.

Here, further theorizing of party identification and other identities may prove worthwhile. For instance, we measured social distance with an interval scale and asked the same questions about all eight parties. Being laborious for respondents, this may have invited a response strategy where parties were compared to each other, instead of bringing out the kinds of gut instincts we had hoped to measure. An alternative could be measuring attitudes toward selected relevant groups, but for this a theoretical basis would be needed. The same would be true for substantially different analytical strategies, such as performing survey or laboratory experiments, which could be highly useful. This would require thinking clearly about the research problem at hand: is it interesting to look at affective reactions toward everyone who is considered either “one of us” or “one of them”, or perhaps reactions toward those who are either the closest or the most distant? Should the focus be on abstract evaluations of parties, indications of trust, social distance, or some other manifestation of affective polarization?

Particularly in contexts where partisanship is not a salient basis for social identities, it would also be important to take other relevant identities into account, as suggested by theories of expressive partisanship (Huddy et al., 2018). We could also imagine parties serving as political outlets for various political and non-political identities: in Finland, for instance, the Center Party has a strongly agrarian image, while the Greens enjoy support among educated liberal urbanites. Parties may serve as useful proxies for studying affective cleavages based on such identities. We feel that one advantage of our approach is that the MPID battery may be able to tap into this phenomenon indirectly, but it would also make sense to devise more direct ways to measure political identities other than parties, and related attitudes.

Finally, it would be useful for the field to devote some attention to the question not just of what we actually measure when we measure affective polarization, as Druckman and Levendusky (2019) asked, but also of why we want to measure it. As Watts (2017) argues social scientists should do more often, it could be worthwhile to think about which problems we would like to solve.

It seems to us that studies of affective polarization are often motivated, as ours has certainly been, by some kind of a worry that political polarization is increasing. Clarifying these worries might prove beneficial. For example, divergence in like-dislike ratings might be worrying if it manifests as, or serves as a proxy for, genuinely dangerous phenomena, such as the erosion of support for democratic norms (Kingzette et al., 2021). Partisan social distance, in turn, seems to measure something that is worrying in its own right: we hope that people do not start picking their friends on the basis of political allegiance. Affective polarization might manifest differently in different cultural and political contexts, and it is thus important to ask purposeful questions that suit the setting.

In conclusion, we believe that the field would benefit from these types of clarifications to guide the choice of which manifestations of affective polarization to measure, and which methodological choices to make when doing so.

Data availability statement

The dataset was collected from an online panel maintained by Åbo Akademi University. The data produced by the panel is primarily shared through the Finnish Social Science Data Archive (FSD), which guarantees that the data is properly anonymised and follows data protection guidelines. At the time of writing, the transfer process to FSD is still ongoing, and the dataset is therefore not yet publicly available. Requests to access the datasets should be directed to arto.kekkonen@iki.fi.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants' legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

AK had the main responsibility for the conceptualization of the study and writing the manuscript and performed all analyses and visualizations. AS managed data collection and contributed to the conceptualization of the study as well as writing and editing the manuscript. DK contributed to the conceptualization of the study as well as writing and editing the manuscript. KS supervised the project and commented and revised the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpos.2022.920567/full#supplementary-material

Footnotes

1. ^"Social distance", as used in the literature, has two slightly different meanings. Iyengar et al. (2012) use it to refer to partisan-directed attitudes more generally, whereas Druckman and Levendusky (2019) use the term more specifically to refer to feelings of comfort with having outparty partisans in one's social circles, i.e., an application of Bogardus's (1947) social distance scale. We employ the latter meaning in our usage of the term.

2. ^Greene (2002) acknowledges that party belonging and attitudes are undoubtedly correlated, but argues that they are psychologically distinct. He uses “Blacks” and “poor people” as examples of groups that one might see favorably without being a member of either.

3. ^We made a few modifications to the scale when translating it into Finland's national languages, Finnish and Swedish. First, Garry's second scale asks respondents to state whether they consider themselves to be supporters of, or opposed to, a party. Our scale simply refers to supporting or opposing a party, as this phrasing feels more natural in Finnish or Swedish. In a pilot study, we tested two versions with slightly different wordings but did not find substantial differences in responses. Second, whereas Garry uses a seven-point scale, ours has five points.

4. ^As noted in the introduction, we analyze affective polarization at the individual level as the difference between positive affect for the ingroup and negative affect for the outgroup. We do not attempt to aggregate individual scores at the system level, and discussions about techniques such as weighting results by party size are therefore outside the scope of this paper.

5. ^This is done so that we can properly illustrate the difference between assuming a single inparty and assuming multiple inparties. If the aim were to build a measure based on an assumption of exclusive, strong partisan identity, a more reasonable approach would perhaps be to keep all parties identified at this stage.

6. ^A negative score indicates outparty favoritism, which is unlikely both in theory and in practice. A handful of respondents have negative scores and are treated as outliers.

References

Bankert, A. (2020). Negative and positive partisanship in the 2016 U.S. presidential elections. Pol. Behav. 43, 1467–1485. doi: 10.1007/s11109-020-09599-1

Bogardus, E. S. (1947). Measurement of Personal-Group Relations. Sociometry 10, 306–311. doi: 10.2307/2785570

Boxell, L., Gentzkow, M., and Shapiro, J. M. (2021). Cross-Country Trends in Affective Polarization. National Bureau of Economic Research (Working paper). Available online at: https://www.nber.org/papers/w26669 (accessed July 09, 2022).

Druckman, J. N., and Levendusky, M. S. (2019). What do we measure when we measure affective polarization? Public Opin. Q. 83, 114–122. doi: 10.1093/poq/nfz003

Garry, J. (2007). Making "party identification" more versatile: operationalising the concept for the multiparty setting. Electoral Stud. 26, 346–358. doi: 10.1016/j.electstud.2006.07.003

Gidron, N., Adams, J., and Horne, W. (2019). Toward a comparative research agenda on affective polarization in mass publics. APSA-CP Newslett. 29, 30–36. Available online at: https://urn.fi/URN:NBN:fi:ELE-1753488

Greene, S. (2002). The Social-Psychological Measurement of Partisanship. Pol. Behav. 24, 171–197. doi: 10.1023/A:1021859907145

Harteveld, E. (2021). Fragmented foes: affective polarization in the multiparty context of the Netherlands. Electoral Stud. 71, 102332. doi: 10.1016/j.electstud.2021.102332

Harteveld, E., Mendoza, P., and Rooduijn, M. (2021). Affective Polarization and the Populist Radical Right: Creating the Hating? Govern. Oppos. 1–25. doi: 10.1017/gov.2021.31

Hernández, E., Anduiza, E., and Rico, G. (2021). Affective polarization and the salience of elections. Electoral Stud. 69, 102203. doi: 10.1016/j.electstud.2020.102203

Huddy, L., Bankert, A., and Davies, C. (2018). Expressive Versus Instrumental Partisanship in Multiparty European Systems. Pol. Psychol. 39, 173–199. doi: 10.1111/pops.12482

Im, Z., Kantola, A., Kauppinen, T., and Wass, H. (2019). Neljän kuplan kansa [A nation of four bubbles]. Available online at: https://bibu.fi/neljan-kuplan-kansa-miten-suomalaiset-aanestavat/ (accessed July 09, 2022).

Iyengar, S., and Krupenkin, M. (2018). Partisanship as Social Identity; Implications for the Study of Party Polarization. Forum 16, 23–45. doi: 10.1515/for-2018-0003

Iyengar, S., Sood, G., and Lelkes, Y. (2012). Affect, not ideology: A social identity perspective on polarization. Public Opin. Q. 76, 405–431. doi: 10.1093/poq/nfs038

Karvonen, L. (2014). Parties, Governments and Voters in Finland: Politics Under Fundamental Societal Transformation. Colchester, United Kingdom: ECPR Press.

Kawecki, D. (2022). End of Consensus? Ideology, Partisan Identity and Affective Polarization in Finland 2003–2019. Preprint (accessed April 8, 2022).

Kekkonen, A., and Ylä-Anttila, T. (2021). Affective blocs: Understanding affective polarization in multiparty systems. Electoral Stud. 72, 102367. doi: 10.1016/j.electstud.2021.102367

Kingzette, J., Druckman, J. N., Klar, S., Krupnikov, Y., Levendusky, M., Ryan, J. B., et al. (2021). How affective polarization undermines support for democratic norms. Public Opin. Q. 85, 663–677. doi: 10.1093/poq/nfab029

Klar, S., Krupnikov, Y., and Ryan, J. B. (2018). Affective Polarization or Partisan Disdain? Public Opin. Q. 82, 379–390. doi: 10.1093/poq/nfy014

Knudsen, E. (2020). Affective polarization in multiparty systems? Comparing affective polarization toward voters and parties in Norway and the United States. Scand. Pol. Stud. 44, 34–44. doi: 10.1111/1467-9477.12186

Lauka, A., McCoy, J., and Firat, R. B. (2018). Mass Partisan Polarization: Measuring a Relational Concept. Am. Behav. Sci. 62, 107–126. doi: 10.1177/0002764218759581

Mael, F. A., and Tetrick, L. E. (1992). Identifying Organizational Identification. Educ. Psychol. Measure. 52, 813–824. doi: 10.1177/0013164492052004002

Mayer, S. J., and Schultze, M. (2019). The effects of political involvement and cross-pressures on multiple party identifications in multi-party systems – evidence from Germany. J. Elect. Public Opin. Part. 29, 245–261. doi: 10.1080/17457289.2018.1466785

Reiljan, A. (2020). ‘Fear and loathing across party lines' (also) in Europe: Affective polarisation in European party systems. Eur. J. Pol. Res. 59, 376–396. doi: 10.1111/1475-6765.12351

Reiljan, A., and Ryan, A. (2021). Ideological tripolarization, partisan tribalism and institutional trust: the foundations of affective polarization in the Swedish multiparty system. Scand. Pol. Stud. 44, 195–219. doi: 10.1111/1467-9477.12194

Renström, E. A., Bäck, H., and Carroll, R. (2021). Intergroup threat and affective polarization in a multi-party system. J. Soc. Pol. Psychol. 9, 553–576. doi: 10.5964/jspp.7539

Rose, R., and Mishler, W. (1998). Negative and positive party identification in post-communist countries. Electoral Stud. 17, 217–234. doi: 10.1016/S0261-3794(98)00016-X

Tajfel, H. (1970). Experiments in intergroup discrimination. Sci. Am. 223, 96–102. doi: 10.1038/scientificamerican1170-96

Tajfel, H., and Turner, J. (1979). “An integrative theory of intergroup conflict,” in The Social Psychology of Intergroup Relations, eds W. G. Austin and S. Worchel (Monterey, CA: Brooks/Cole), 33–47.

Torcal, M., and Carty, E. (2022). Partisan sentiments and political trust: a longitudinal study of Spain. South Eur. Soc. Pol. 13, 1–26. doi: 10.1080/13608746.2022.2047555

Wagner, M. (2021). Affective polarization in multiparty systems. Electoral Stud. 69, 102199. doi: 10.1016/j.electstud.2020.102199

Watts, D. J. (2017). Should social science be more solution-oriented? Nat. Hum. Behav. 1, 0015. doi: 10.1038/s41562-016-0015

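Westinen, J., and Kestilä-Kekkonen, E. (2015). Perusduunarit, vihervasemmisto ja porvarit: suomalaisen äänestäjäkunnan jakautuminen ideologisiin blokkeihin vuoden 2011 eduskuntavaaleissa [Ordinary workers, the green left and the bourgeoisie: the division of the Finnish electorate into ideological blocs in the 2011 parliamentary elections]. Politiikka 57, 94–114.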

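Westinen, J., Kestilä-Kekkonen, E., and Tiihonen, A. (2016). “Äänestäjät arvo- ja asenneulottuvuuksilla [Voters on value and attitude dimensions],” in Poliittisen osallistumisen eriytyminen: Eduskuntavaalitutkimus 2015 [The differentiation of political participation: Finnish parliamentary election study 2015], eds K. Grönlund and H. Wass (Helsinki: Ministry of Justice), 273–297.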

Keywords: affective polarization, partisanship, multiparty systems, social distance, political polarization, Finland

Citation: Kekkonen A, Suuronen A, Kawecki D and Strandberg K (2022) Puzzles in affective polarization research: Party attitudes, partisan social distance, and multiple party identification. Front. Polit. Sci. 4:920567. doi: 10.3389/fpos.2022.920567

Received: 14 April 2022; Accepted: 04 July 2022;

Published: 22 July 2022.

Edited by:

Andres Reiljan, University of Tartu, Estonia

Reviewed by:

Lukas F. Stoetzer, Humboldt University of Berlin, Germany

Fabian Jonas Habersack, University of Innsbruck, Austria

Alexander Ryan, Mid Sweden University, Sweden

Copyright © 2022 Kekkonen, Suuronen, Kawecki and Strandberg. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Arto Kekkonen, arto.kekkonen@helsinki.fi

†These authors have contributed equally to this work

Arto Kekkonen, Aleksi Suuronen2†, Daniel Kawecki