- 1Department of Political Science, Colorado State University, Fort Collins, CO, United States

- 2Department of Political Science, Simon Fraser University, Burnaby, BC, Canada

A growing number of Americans stay informed about current events through social media. But using social media as a source of news is associated with an increased likelihood of being misinformed about important topics, such as COVID-19. The two most popular platforms, Facebook and YouTube, remain relatively understudied in comparison to Twitter, which tends to be used by elites but by less than a quarter of the American public. In this brief research report, we investigate how cognitive reflection can mitigate the potential effects of using Facebook, YouTube and Twitter for news on subsequent conspiracy theory endorsement. To do that, we rely on an original dataset of 1,009 survey responses collected during the first wave of the coronavirus pandemic in the United States, on March 31, 2020. We find that using Facebook and YouTube for news increases conspiracy belief (both general and COVID-19 specific), controlling for cognitive reflection, traditional news media use, use of web-based news media, partisanship, education, age, and income. We also find that the impact of Facebook use on conspiracy belief is moderated by cognitive reflection. Facebook use increases conspiracy belief among those with low cognitive reflection but has no effect among those with moderate levels of cognitive reflection. It might even decrease conspiracy belief among those with the highest levels of cognitive reflection.

Introduction

A growing number of Americans get their news online, and increasingly on social media platforms like Facebook. The number of people in the United States who fall into that category has doubled since 2013.1 It has become conventional wisdom in public discourse that misinformation and conspiracy theories have become more widespread since the advent and growth of social media platforms.2 Research has shown that social media is indeed ripe for spreading misinformation (Vosoughi et al., 2018; Wang et al., 2019; Swire-Thompson and Lazer, 2020), and getting one’s news on social media is associated with an increased likelihood of being misinformed about important topics, such as vaccines (Stecula et al., 2020). In addition to being more misinformed, social media users are more likely to be exposed to various conspiracy theories (Mitchell et al., 2020), and work has found that use of social media for news correlates with a conspiratorial worldview (Foley and Wagner, 2020). This has likely only been exacerbated during the global COVID-19 pandemic, during which seemingly countless conspiracy theories about the novel coronavirus, its origins, and COVID-19 vaccines have gone viral on various social media platforms.3

Most stories reinforcing COVID-19 conspiracy theories tend to originate from fringe online sources and social media posts (Papakyriakopoulos et al., 2020), and those who get their news on social media are more likely to be misinformed about basic facts surrounding COVID-19 (Baum et al., 2020; Bridgman et al., 2020). At the same time, as social media platforms like Facebook continue to grow their user bases, and as an increasing number of Americans use these platforms to inform themselves about current events, it is clear that not everyone who uses these platforms endorses conspiracy beliefs. This highlights the need to understand the heterogeneous effects that these platforms have on the different people using them. In this brief research report, we focus on one factor that might mitigate the effects of social media use: cognitive reflection.

A growing body of work has focused on the link between susceptibility to various forms of misinformation and broad “cognition,” as measured by various concepts including analytical thinking, numeracy skills, or various thinking styles (Pennycook et al., 2018; Guess et al., 2019; Roozenbeek et al., 2020). One particular strain of work focusing on thinking styles found that people who are more reflective (as operationalized by the Cognitive Reflection Test, described in more detail below) are less likely to believe misinformation and generally better at discerning between truth and falsehood (Pennycook and Rand, 2019; Ross et al., 2019; Bago et al., 2020; Pennycook and Rand, 2020).

Cognitive reflection is the capacity to override gut reactions. People engage in two distinct cognitive processes: those executed quickly with little conscious deliberation, and those that are slower and more reflective, sometimes called System one and System two thinking (Kahneman, 2013; Stanovich and West, 2000). System one thinking occurs spontaneously, is intuitive, and does not require attention, while System two thinking requires effort, motivation, and concentration. System one thinking employs mental shortcuts (heuristics) which under certain circumstances can lead to bias in information processing. It is what provides us with an intuitive or gut response to new information. System two thinking is logical and calculating, and can avoid the biases of System one thinking. From a neuroscientific perspective, System one thinking has been associated with activity in regions of the brain known as the Default Mode Network (DMN) (Gronchi and Giovannelli, 2018). The DMN is active during “unconstrained and internally focused cognitive processes” (Spreng, 2012). Activity in the DMN has been found to decrease when individuals engage in attention-demanding cognitive tasks. Simultaneously, parts of the brain known as the task-positive network are more active during such tasks (Fox et al., 2005), suggesting that this network is associated with System two thinking.4

The Cognitive Reflection Test (CRT) is a measure of the ability of individuals to override and ignore incorrect intuition (System one) and to instead engage in deeper reflection (System two) to find the correct answer (Frederick, 2005). Importantly, while CRT correlates with other measures of cognitive ability, cognitive reflection is more than intelligence or education (Frederick, 2005; Toplak et al., 2011). In other words, it measures something conceptually distinct from intelligence, as evidenced by the only moderate correlations between intelligence tests and the CRT: specifically, it captures the disposition to resist answering a question with the (incorrect) response that first comes to mind (Frederick, 2005).

In the context of this study, cognitive reflection is important because conspiracy theories explicitly prey on System one thinking. Most conspiracy theories are designed to appeal to emotions, intuitive thinking, and gut reactions (Hofstadter, 1966; Hibbing et al., 2014; Radnitz and Underwood, 2015; van Prooijen, 2018). This suggests that not all social media users will be affected by the content they encounter on social media in the same way. Those more cognitively reflective will be more resistant to the conspiratorial content that they might encounter on these platforms, because they are better equipped to resist the intuitively appealing conspiratorial claims, and apply System two cognitive resources to determining the veracity of the conspiratorial content. They are also less likely to encounter such content to begin with, because they likely are better at curating a more reliable information environment on Facebook, Twitter, or YouTube. Previous work suggests that higher levels of cognitive reflection were associated with increased ability to discern fake and real news, and generally more responsible social media use (Pennycook and Rand, 2019; Mosleh et al., 2021). Those less cognitively reflective, on the other hand, will likely be more receptive to these conspiracies, because they are more likely to succumb to the intuitive gut reactions that these conspiracies appeal to. They are also likely to be less skilled at curating a landscape with reliable sources of information, and are therefore more likely to be exposed to these stories on social media platforms. We test the potential mitigating effect of cognitive reflection on the relationship between social media use and conspiracy belief. This is our first contribution.

The most popular social media platforms in the United States for current affairs are Facebook, YouTube, and Twitter.5 However, mostly because of data availability issues, Twitter remains the platform most studied by researchers. Facebook and YouTube, despite being vastly more popular among average Americans,6 remain relatively understudied in comparison to Twitter, which tends to be used by both political and media elites, but by only about a quarter of the American public. Furthermore, survey-based research frequently combines social media usage into a single measure (e.g., Stecula et al., 2020), but looking at these platforms individually is important, given that the differences between them mean that different social media platforms could have different effects on people accepting conspiracy theories.

Research has shown that how information is presented (whether in text or in video form) affects how it is received (Neuman et al., 1992; Sydnor, 2018; Goldberg et al., 2019). Images (e.g., video) are processed automatically and fast, while the processing of text is controlled and slow (Powell et al., 2019). Most recent work has found that video is slightly more persuasive than text across different domains (Wittenberg et al., 2020). This suggests that different social media platforms might have different effects on their consumers. YouTube, for example, is a video platform, while Twitter and Facebook are primarily text based, although both allow for posting of photo and video content. At the same time, Twitter and Facebook are also different, in terms of length of an average post being longer on Facebook, but also in terms of the user base being much broader on Facebook. Given these differences, it is possible that their effects on conspiracy belief vary. It is also possible that the moderating effect of cognitive reflection differs across platforms. The ability to stop and override a gut reaction, and to engage in slow, effortful information processing may be easier when information is presented as text rather than a fast-paced video. This all highlights the need to examine the different social media platforms individually. In this research report, we disaggregate the effects of YouTube, Twitter and Facebook. This is our second contribution.

Method

Our data comes from an original survey of 1,009 adult Americans conducted using Lucid on March 31, 2020, during the first wave of the COVID-19 pandemic. The sample is generally representative of the United States population due to demographic (age, gender, ethnicity, and region) quotas employed by Lucid. Previous research has shown that Lucid provides a high-quality source of opinion data (Coppock and McClellan, 2019). To further ensure our sample is reflective of the general American public, we generated raking weights based on race, ethnicity, and educational attainment benchmarked to the United States Census’s Current Population Survey from February 2018.
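Raking (iterative proportional fitting) rescales respondent weights until the weighted sample margins match population targets. The following is a minimal sketch of that idea, not the authors' weighting code: the function names, the two variables, the toy sample, and the target shares are all hypothetical illustrations (the actual CPS benchmark values are not reported in the text).

```python
def rake(rows, targets, max_iter=100, tol=1e-8):
    """Iterative proportional fitting over categorical margins.

    rows:    one dict per respondent, e.g. {"educ": "degree", "race": "white"}
    targets: {variable: {category: population share}}, shares summing to 1
    Returns one weight per respondent, normalized to average 1.0.
    """
    weights = [1.0] * len(rows)
    for _ in range(max_iter):
        max_shift = 0.0
        for var, shares in targets.items():
            total = sum(weights)
            # Current weighted total in each category of this variable.
            current = {cat: 0.0 for cat in shares}
            for row, w in zip(rows, weights):
                current[row[var]] += w
            # Rescale so this variable's weighted margin hits its target.
            for i, row in enumerate(rows):
                factor = shares[row[var]] * total / current[row[var]]
                weights[i] *= factor
                max_shift = max(max_shift, abs(factor - 1.0))
        if max_shift < tol:
            break
    mean_w = sum(weights) / len(weights)
    return [w / mean_w for w in weights]


def weighted_share(rows, weights, var, cat):
    """Weighted proportion of respondents falling in one category."""
    hit = sum(w for row, w in zip(rows, weights) if row[var] == cat)
    return hit / sum(weights)


# Hypothetical toy sample and made-up targets (NOT the CPS benchmarks).
rows = (
    [{"educ": "degree", "race": "white"}] * 4
    + [{"educ": "degree", "race": "nonwhite"}] * 2
    + [{"educ": "no_degree", "race": "white"}] * 2
    + [{"educ": "no_degree", "race": "nonwhite"}] * 2
)
targets = {
    "educ": {"degree": 0.35, "no_degree": 0.65},
    "race": {"white": 0.60, "nonwhite": 0.40},
}
weights = rake(rows, targets)
```

In this toy sample, degree holders are over-represented relative to the made-up target, so raking assigns them weights below one.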

Our dependent variable is a measure of agreement with four conspiracies, two of which are about COVID-19 and two of which are more general in nature. Our strategy was to select prominent conspiracies that could have an appeal across the political spectrum. COVID-19 conspiracies are new, but emerged during the global pandemic, when an unprecedented number of people have been following the news. Conspiracies surrounding the 9/11 terrorist attacks have been relatively mainstream over the past two decades, and the Jeffrey Epstein suicide captivated the news media’s attention for several weeks. We asked: For each of the statements below, please indicate whether you agree or disagree with it:

1. Certain United States government officials planned the attacks of September 11, 2001, because they wanted the United States to go to war in the Middle East.

2. Jeffrey Epstein did not kill himself, but was murdered by powerful people who he had “dirt” on.

3. The Chinese government developed the coronavirus as a bioweapon.

4. There is a vaccine for the coronavirus that national governments and pharmaceutical companies won’t release.

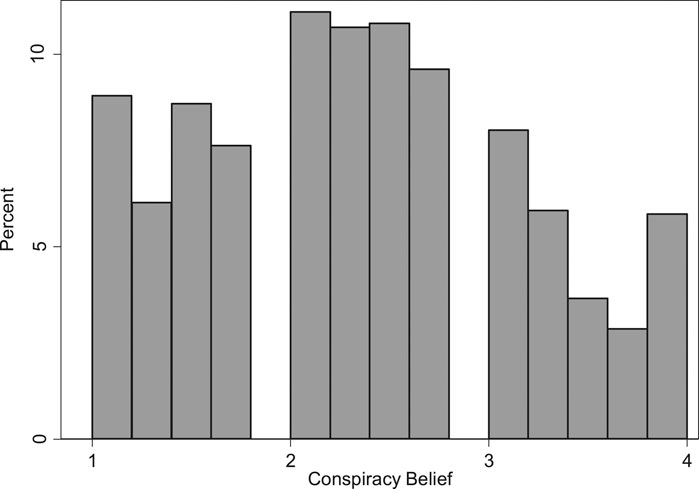

Response categories were coded: Strongly disagree (1), Somewhat disagree (2), Somewhat agree (3), Strongly agree (4). The percentages that strongly agreed with each conspiracy are 12, 26, 19, and 14%, respectively. The Epstein conspiracy is a bit of an outlier here, mostly due to the media salience of Jeffrey Epstein’s suicide and the plethora of conspiracies that emerged in light of it among both Republicans and Democrats. We use the average level of agreement across these four issues as the dependent variable. The Cronbach’s alpha coefficient is 0.80, highlighting that these four conspiracies do in fact “move together” and form a reliable scale. Figure 1 shows that the distribution of this variable is fairly uniform with a slight right-tail skew. It has a mean of 2.35 and a standard deviation of 0.83.
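The construction of the dependent variable and its reliability check can be sketched as follows. This is an illustration of the standard formulas only: the function names and the toy responses are hypothetical, and the snippet does not reproduce the survey data or the reported alpha of 0.80.

```python
from statistics import mean, variance


def belief_scale(responses):
    """Average agreement (coded 1-4) across the items, one value per respondent."""
    return [mean(r) for r in responses]


def cronbach_alpha(responses):
    """alpha = k / (k - 1) * (1 - sum of item variances / variance of total score)."""
    k = len(responses[0])
    items = list(zip(*responses))            # one tuple per item (column)
    item_var = sum(variance(col) for col in items)
    total_var = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - item_var / total_var)


# Hypothetical answers to the four items (9/11, Epstein, bioweapon, vaccine).
toy_responses = [
    (1, 2, 1, 1),
    (2, 3, 2, 2),
    (3, 3, 3, 2),
    (4, 4, 3, 4),
    (2, 2, 1, 2),
    (1, 1, 1, 1),
]
scores = belief_scale(toy_responses)
alpha = cronbach_alpha(toy_responses)
```

Alpha approaches 1 when respondents who agree with one item tend to agree with the others, which is the sense in which the four items "move together".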

Use of Twitter, Facebook and YouTube for news was measured by asking the following: “Some people follow politics closely while others don’t have time to do that or do not find it interesting. Now, thinking about your own news habits, how often do you get the news about current affairs from … ” Response categories were coded: Never (1), Hardly Ever (2), Sometimes (3), Often (4).

We measure cognitive reflection using the standard three-item Cognitive Reflection Test (CRT). The CRT measure is the number of correct responses to the three questions:

1. A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost? Please enter the number of cents below.

2. If it takes five machines 5 minutes to make five widgets, how long would it take 100 machines to make 100 widgets? Please enter the number of minutes below.

3. In a lake, there is a patch of lily pads. Every day, the patch doubles in size. If it takes 48 days for the patch to cover the entire lake, how long would it take for the patch to cover half of the lake? Please enter the number of days below.

This scale is a simple and widely used measure of the ability to reflect on a problem and resist providing the first response that comes to mind (Frederick, 2005). The resulting CRT scale has a range from 0 to 3. The mean (and standard deviation) are: 0.36 (0.74). The modal value is zero, with 77% of respondents getting none of the answers correct. A further 14% got one answer correct, 6% got two correct and 3% got all three correct.
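As a rough illustration, the CRT score is a count of correct answers, and the summary statistics can be approximately recovered from the reported percentages. The answer key below (5 cents, 5 minutes, 47 days) is the standard one for these items rather than something stated in the text, and the names are hypothetical.

```python
# Standard answer key (an assumption; not given in the text):
# ball = 5 cents, widgets = 5 minutes, lily pads = 47 days.
CRT_ANSWERS = (5, 5, 47)


def crt_score(responses):
    """Number of correct answers (0-3) for one respondent."""
    return sum(int(given == correct)
               for given, correct in zip(responses, CRT_ANSWERS))


# Reported share of respondents at each score.
shares = {0: 0.77, 1: 0.14, 2: 0.06, 3: 0.03}
mean_crt = sum(score * p for score, p in shares.items())
sd_crt = sum(p * (score - mean_crt) ** 2 for score, p in shares.items()) ** 0.5
```

The rounded percentages imply a mean of about 0.35 and a standard deviation of about 0.73, close to the reported 0.36 and 0.74; the small gap is plausibly due to rounding of the percentages or to the survey weights.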

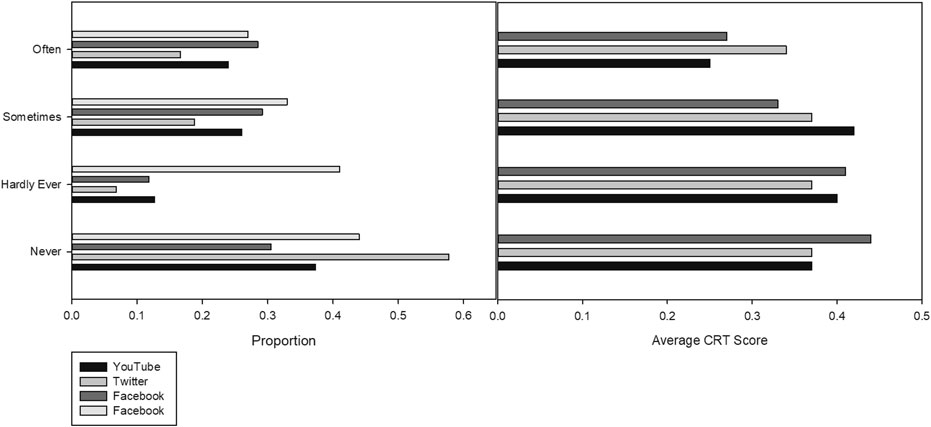

Figure 2 shows the distribution of social media use for news (in the left panel) and the average CRT score for individuals at each level of use (in the right panel). We see that use of Twitter is lower than that of YouTube and Facebook. A full 58% of individuals say they never use Twitter for news. The corresponding numbers for YouTube and Facebook are 37 and 31%. At the other end of the scale, only 17% of individuals say they often use Twitter for news, whereas 24 and 28% of individuals say they often use YouTube or Facebook, respectively.

We also see that the average CRT scores for those that never, hardly ever or sometimes use social media for news are equal to or higher than the average for the population as a whole. Those that often use Facebook or YouTube have lower CRT scores, on average. The difference between those that often use these social media platforms for news compared to those that never, hardly ever or sometimes use them is statistically significant (Facebook p-value = 0.035; YouTube p-value = 0.007). There is no such difference in CRT for those that often use Twitter.

In our models of conspiracy theory belief, we control for partisan identity (Democrat, Independent, Republican), traditional media use (average use of radio, national newspapers or magazines, local newspapers, national television news and local television news for news7), web-based news (use of websites such as Buzzfeed, Vice, or Vox), age (in years), education (university degree), and gender (binary). Partisanship is an important control, because even though people from across the political spectrum can believe in conspiracy theories, research has shown that the unique and highly politicized nature of COVID-19 conspiracies makes Republicans and conservatives more likely to endorse these specific theories (Uscinski et al., 2016; Uscinski et al., 2020). Partisanship might also influence the endorsement of the 9/11 and Epstein conspiracy theories. Furthermore, we control for traditional news consumption because previous work has found that consumers of such news sources are less likely to be misinformed, including about COVID-19 (Bridgman et al., 2020; Stecula et al., 2020).

Results

We begin by regressing conspiracy theory belief on CRT, social media use and our control variables. Because the dependent variable is bounded between 1 and 4, we estimate a Tobit model rather than a standard linear model (results using OLS are very similar and are provided in the Supplementary Information).8
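For readers unfamiliar with the approach, a Tobit model treats scores at the bounds as censored values of a latent normal variable. The sketch below shows the textbook two-limit Tobit log-likelihood contribution for a single respondent, with limits at 1 and 4. It is not the authors' estimation code; the function name is hypothetical, and `mu` stands for the linear predictor (the covariates times their coefficients).

```python
from math import log
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def tobit_loglik(y, mu, sigma, lower=1.0, upper=4.0):
    """Log-likelihood contribution of one observation in a two-limit Tobit.

    y:     observed score on the bounded 1-4 scale
    mu:    linear predictor for this respondent
    sigma: standard deviation of the latent error term
    """
    if y <= lower:
        # Censored from below: probability the latent score is <= lower.
        return log(STD_NORMAL.cdf((lower - mu) / sigma))
    if y >= upper:
        # Censored from above: probability the latent score is >= upper.
        return log(1.0 - STD_NORMAL.cdf((upper - mu) / sigma))
    # Interior observation: ordinary normal density of the residual.
    return log(STD_NORMAL.pdf((y - mu) / sigma) / sigma)
```

Summing this quantity over respondents and maximizing over the coefficients and `sigma` gives the Tobit estimates. When few observations sit exactly at the bounds, the result will be close to OLS, which is consistent with the similarity the text reports.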

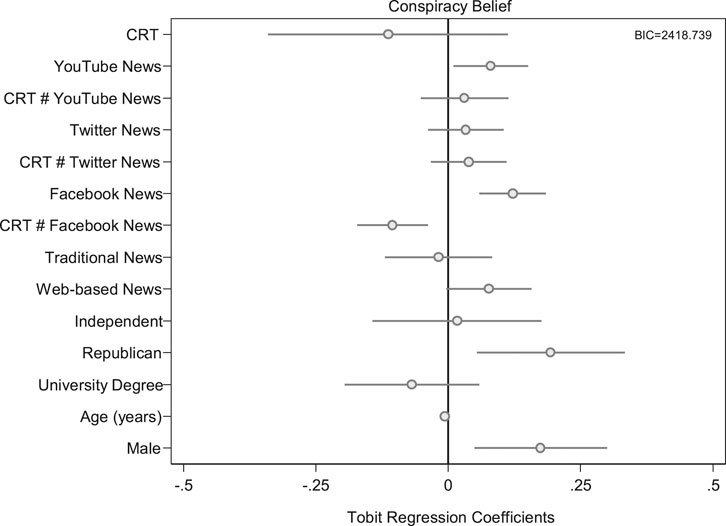

Looking at the results (Figure 3), we can immediately see that CRT has a statistically significant negative effect on conspiracy belief, and the use of YouTube and Facebook for news each have a statistically significant positive effect on conspiracy theory belief.9 Twitter does not have a statistically significant effect (p-value = 0.11). Identifying as Republican (as opposed to a Democrat) and being male (as opposed to female) have statistically significant positive effects, and age has a statistically significant negative effect. The magnitude of the CRT effect is that getting one additional question correct (out of 3) reduces conspiracy belief by 0.21 (p-value < 0.001) on the one to four belief scale. This is a decrease of one quarter of a standard deviation on the belief scale. The magnitude of the effect of increasing CRT by one on the 0 to 3 CRT scale is greater than that of being a Republican vs. a Democrat (0.18; p-value = 0.010), although the effects are in the opposite direction. This is notable as partisanship has been shown to have a substantively important effect on belief in some conspiracies (Uscinski et al., 2016; Uscinski et al., 2020).

An increase of one on the one to four social media use scale increases conspiracy belief by 0.09 (p-value = 0.005) and 0.08 (p-value = 0.004) for YouTube and Facebook, respectively. For example, an individual that never uses Facebook or YouTube for news has an expected score of 2.1 on the conspiracy belief scale. An individual that “somewhat disagrees” with each of the conspiracy theories would obtain such a score. An individual that often uses Facebook and YouTube for news has an expected score of 2.6 on the conspiracy scale. An individual would have to “somewhat agree” or “strongly agree” with at least one of the conspiracies to obtain such a score. This suggests that for an “average individual”, frequent use of Facebook and YouTube can mean the difference between disagreeing with each of the conspiracy theories and agreeing with at least one of the conspiracies. The social media effects are smaller than those for CRT but still potentially important.
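The expected scores quoted above follow directly from the reported coefficients. A small worked check, assuming the reported baseline of 2.1 applies when both platforms are at "Never" (1) and all other covariates are held fixed; the constant and function names are hypothetical:

```python
BASELINE = 2.1     # reported expected score with both platforms at "Never" (1)
B_YOUTUBE = 0.09   # reported effect of a one-step increase in YouTube use
B_FACEBOOK = 0.08  # reported effect of a one-step increase in Facebook use


def expected_belief(youtube_use, facebook_use):
    """Expected conspiracy belief for use levels on the 1 (Never) to 4 (Often) scale."""
    return (BASELINE
            + B_YOUTUBE * (youtube_use - 1)
            + B_FACEBOOK * (facebook_use - 1))


often_both = expected_belief(4, 4)  # 2.1 + 3 * 0.09 + 3 * 0.08
```

Moving from "Never" to "Often" is three steps on each scale, so the combined shift is 3 × (0.09 + 0.08) = 0.51, which takes the expected score from 2.1 to roughly 2.6. Since an average above 2 across four items requires at least one item scored 3 or higher, such a respondent must agree with at least one conspiracy.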

We next re-estimate our model including an interaction between CRT and social media use. Looking at the results (Figure 4), we see that the interaction between Facebook use and CRT is statistically significant but the Twitter and YouTube interactions are not. The negative Facebook interaction suggests that the effect of using Facebook as a source of news on conspiracy belief may be limited to those with low CRT. At the lowest level of CRT (obtained by 77% of individuals), an increase of one on the Facebook use scale increases conspiracy belief by 0.12 (p-value < 0.001). For example, an individual that never uses Facebook for news and has a CRT score of 0, has an expected score of 2.2 on the conspiracy belief scale. As before, an individual that somewhat disagrees with each of the conspiracy theories would obtain such a score. An individual that often uses Facebook for news and has a CRT score of 0, has an expected score of 2.6 on the conspiracy scale. Again, an individual would have to somewhat or strongly agree with at least one of the conspiracies to obtain such a score.

For those with higher CRT scores, the effect of social media use is mitigated. For those that had CRT scores of 1 or 2 (obtained by 20% of individuals), the effects of Facebook are substantively small or negative and not statistically significant. At the highest level of CRT (obtained by 3% of individuals), an increase of one on the Facebook use scale actually decreases conspiracy belief by 0.19 (p-value < 0.041). Meanwhile the effect of YouTube use on conspiracy belief is positive at all levels of CRT and the effect of Twitter is not significant at any level.
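To make the moderation pattern concrete, the conditional effect of Facebook use can be written as a function of CRT. The sketch below assumes that CRT enters the interaction linearly and backs out the implied interaction coefficient from the two conditional effects reported in the text (0.12 at CRT = 0 and -0.19 at CRT = 3). The coefficient itself is not reported in this section, so the derived values are illustrative only.

```python
EFFECT_AT_CRT0 = 0.12    # reported Facebook effect at CRT = 0
EFFECT_AT_CRT3 = -0.19   # reported Facebook effect at CRT = 3

# Implied slope of the Facebook effect per CRT point, assuming linearity.
IMPLIED_INTERACTION = (EFFECT_AT_CRT3 - EFFECT_AT_CRT0) / 3


def facebook_effect(crt):
    """Implied change in belief per one-step increase in Facebook use, given CRT (0-3)."""
    return EFFECT_AT_CRT0 + IMPLIED_INTERACTION * crt


effects = {crt: round(facebook_effect(crt), 3) for crt in range(4)}
```

Under this assumption the implied effects at CRT scores of 1 and 2 are a small positive value and a small negative value, respectively, which matches the description of those effects as substantively small or negative.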

Discussion

Consistent with previous work, we find that using social media as a source of news is associated with greater likelihood of endorsing various conspiracy theories. Importantly, and in line with our expectations, the effects vary for different social media platforms and different levels of cognitive reflection. The effects are limited to the two biggest social media platforms: Facebook and YouTube, but not Twitter. At the same time, cognitive reflection mitigates these effects for Facebook. In other words, among Facebook users, it is those who easily succumb to gut reactions that are significantly more likely to believe in conspiracy theories, while those high in cognitive reflection, who can slow down and resist the incorrect intuitive answers, are unaffected by Facebook use or even less likely to endorse these conspiracies.

These findings suggest that cognitive reflection is an important moderator that can mitigate the relationship between conspiracy theories and social media use. At the same time, they also highlight that different platforms might influence their users differently. Facebook is primarily a text-based social media platform that allows some photo and video content. Twitter is similar but with much shorter average text (and a smaller user base). These differences might explain why we do not see effects for Twitter use. YouTube, on the other hand, is a video platform. Video is processed automatically, while the processing of text is controlled (Powell et al., 2019), so watching a YouTube video is a more passive form of engagement than reading a Facebook post and, in general, video tends to be more persuasive than text (Wittenberg et al., 2020). This may be why cognitive reflection does not mitigate the effects of YouTube use. System two just does not have the same opportunity to engage on YouTube compared to Facebook. This has potentially important implications, as there are indications that Facebook’s user base in the United States is in decline, while the YouTube user base is increasing, and YouTube is more popular among young people.10 Future research should be mindful of the distinctions between platforms and explore these differences in more detail.

Our findings also have implications for the battle against conspiracy theory belief. On the one hand, there exists potential for social media platforms to motivate users to engage in more reflective thinking. As previous work has found, it is possible to prime reflective thinking (Deppe et al., 2015). Furthermore, recent research suggests that shifting attention to accuracy increases the quality of news that people share on Twitter (Pennycook et al., 2021). This is a kind of intervention that social media platforms could easily implement to increase users’ focus on accuracy (Pennycook et al., 2021).

On the individual level, even with the necessary cognitive resources (e.g., vocabulary, numeracy), cognitive reflection requires individuals to be aware of the need to override System one thinking in a given context (conflict detection), and to inhibit the intuitive response (sustained inhibition) long enough to deliberately apply cognitive resources to the situation (Bonnefon, 2018). Bonnefon (2018) suggests that sustained inhibition is the part of this process most in need of training. We suggest that media literacy courses and training at both the secondary and postsecondary levels be studied for their ability to teach the need to override intuitive responses, sustain inhibition and apply cognitive resources in the context of social media. This is particularly important given the potential consequences of conspiracy theories about COVID-19. The conspiracies surrounding the vaccine will likely proliferate as the efforts to vaccinate national populations across the world become more intense, potentially lowering vaccination rates (Lindholt et al., 2020).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the University of Pennsylvania IRB and the Simon Fraser University Research Ethics Board. The participants provided their written informed consent to participate in this study.

Author Contributions

Both authors designed the survey, collected the data, conceived of the manuscript, and wrote and edited the manuscript. MP conducted the analysis.

Funding

The authors are grateful for the support of the Social Sciences and Humanities Research Council of Canada (SSHRC) (Pickup’s grant no. 435-2016-1173; Stecula’s postdoctoral fellowship grant no. 756-2018-0168).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1https://www.digitalnewsreport.org/survey/2020/united-states-2020/.

2See, for example, https://www.forbes.com/sites/petersuciu/2020/09/11/conspiracy-theories-have-gained-traction-since-911-thanks-to-social-media/?sh=361413483ddb.

3One prominent example of this was the Plandemic video that went viral in early May on social media before being taken down–https://www.bbc.com/news/uk-53085640.

4It has been found that some tasks can activate both networks (Spreng 2012).

5https://www.digitalnewsreport.org/survey/2020/united-states-2020/.

6According to a 2020 report by the Pew Research Center, and consistent with its previous work, 25% of Americans use Twitter, while 68% use Facebook and 74% use YouTube. That does not mean that the user bases of these more popular platforms are without biases, but, on average, they are more widely used by Americans than Twitter.

7The question and response options for each source were the same as those for the social media platforms. The traditional media variable was created by taking the average across the traditional sources. The Cronbach’s alpha scale reliability coefficient is 0.76.

8A power test indicates that our sample size allows us to detect an effect as small as 0.0088 with 80% power.

9Figures include 95% confidence intervals. Statistical significance is determined at the 0.05 level (two-tailed test).

10https://www.digitalnewsreport.org/survey/2020/united-states-2020/

References

Bago, B., Rand, D. G., and Pennycook, G. (2020). Fake News, Fast and Slow: Deliberation Reduces Belief in False (But Not True) News Headlines. J. Exp. Psychol. Gen. 149 (8), 1608–1613. doi:10.1037/xge0000729

Baum, M. A., Ognyanova, K., Chwe, H., Quintana, A., Perlis, R., Lazer, D., et al. (2020). The State of the Nation: A 50-State COVID-19 Survey Report #14: Misinformation and Vaccine Acceptance. Available at: http://www.kateto.net/covid19/COVID19%20CONSORTIUM%20REPORT%2014%20MISINFO%20SEP%202020.pdf.

Bonnefon, J.-F. (2018). The Pros and Cons of Identifying Critical Thinking with System 2 Processing. Topoi 37, 113–119. doi:10.1007/s11245-016-9375-2

Bridgman, A., Merkley, E., Loewen, P. J., Owen, T., Ruths, D., Teichmann, L., et al. (2020). The Causes and Consequences of COVID-19 Misperceptions: Understanding the Role of News and Social media. Harv. Kennedy Sch. Misinformation Rev. 1 (3).

Coppock, A., and McClellan, O. A. (2019). Validating the Demographic, Political, Psychological, and Experimental Results Obtained from a New Source of Online Survey Respondents. Res. Polit. 6 (1), 2053168018822174. doi:10.1177/2053168018822174

Deppe, K. D., Gonzalez, F. J., Neiman, J. L., Jacobs, C., Pahlke, J., Smith, K. B., et al. (2015). Reflective Liberals and Intuitive Conservatives: A Look at the Cognitive Reflection Test and Ideology. Judgment Decis. Making 10 (4), 314–331.

Foley, J., and Wagner, M. (2020). How Media Consumption Patterns Fuel Conspiratorial Thinking. Available at: https://www.brookings.edu/techstream/how-media-consumption-patterns-fuel-conspiratorial-thinking/.

Fox, M. D., Snyder, A. Z., Vincent, J. L., Corbetta, M., Van Essen, D. C., and Raichle, M. E. (2005). From the Cover: The Human Brain Is Intrinsically Organized into Dynamic, Anticorrelated Functional Networks. Proc. Natl. Acad. Sci. 102 (27), 9673–9678. doi:10.1073/pnas.0504136102

Frederick, S. (2005). Cognitive Reflection and Decision Making. J. Econ. Perspect. 19 (4), 25–42. doi:10.1257/089533005775196732

Goldberg, M. H., van der Linden, S., Ballew, M. T., Rosenthal, S. A., Gustafson, A., and Leiserowitz, A. (2019). The Experience of Consensus: Video as an Effective Medium to Communicate Scientific Agreement on Climate Change. Sci. Commun. 41 (5), 659–673. doi:10.1177/1075547019874361

Gronchi, G., and Giovannelli, F. (2018). Dual Process Theory of Thought and Default Mode Network: A Possible Neural Foundation of Fast Thinking. Front. Psychol. 9, 1–4. doi:10.3389/fpsyg.2018.01237

Guess, A., Nagler, J., and Tucker, J. (2019). Less Than You Think: Prevalence and Predictors of Fake News Dissemination on Facebook. Sci. Adv. 5 (1), eaau4586. doi:10.1126/sciadv.aau4586

Hibbing, J. R., Smith, K. B., Peterson, J. C., and Feher, B. (2014). The Deeper Sources of Political Conflict: Evidence from the Psychological, Cognitive, and Neuro-Sciences. Trends Cogn. Sci. 18 (3), 111–113. doi:10.1016/j.tics.2013.12.010

Lindholt, M., Jørgensen, F., Bor, A., and Petersen, M. (2020). Willingness to Use an Approved COVID-19 Vaccine: Cross-National Evidence on Levels and Individual-Level Predictors. PsyArXiv. doi:10.31234/osf.io/8kn5f

Mitchell, A., Jurkowitz, M., Oliphant, J. B., and Shearer, E. (2020). Americans Who Mainly Get Their News on Social Media Are Less Engaged, Less Knowledgeable. Washington, DC: Pew Research Center’s Journalism Project.

Mosleh, M., Pennycook, G., Arechar, A. A., and Rand, D. G. (2021). Cognitive Reflection Correlates with Behavior on Twitter. Nat. Commun. 12 (1), 921. doi:10.1038/s41467-020-20043-0

Neuman, W. R., Just, M. R., and Crigler, A. N. (1992). Common Knowledge: News and the Construction of Political Meaning. 1st edition. Chicago: University of Chicago Press. doi:10.7208/chicago/9780226161174.001.0001

Papakyriakopoulos, O., Serrano, J. C. M., and Hegelich, S. (2020). The Spread of COVID-19 Conspiracy Theories on Social media and the Effect of Content Moderation. Harv. Kennedy Sch. Misinformation Rev. 1 (3).

Pennycook, G., Cannon, T. D., and Rand, D. G. (2018). Prior Exposure Increases Perceived Accuracy of Fake News. J. Exp. Psychol. Gen. 147 (12), 1865–1880. doi:10.1037/xge0000465

Pennycook, G., Epstein, Z., Mosleh, M., Arechar, A. A., Eckles, D., and Rand, D. G. (2021). Shifting Attention to Accuracy Can Reduce Misinformation Online. Nature 592, 590–595. doi:10.1038/s41586-021-03344-2

Pennycook, G., and Rand, D. G. (2019). Lazy, Not Biased: Susceptibility to Partisan Fake News Is Better Explained by Lack of Reasoning Than by Motivated Reasoning. Cognition 188, 39–50. doi:10.1016/j.cognition.2018.06.011

Pennycook, G., and Rand, D. G. (2020). The Psychology of Fake News. The Cognitive Science of Fake News. PsyArXiv. doi:10.31234/osf.io/ar96c

Powell, T. E., Boomgaarden, H. G., De Swert, K., and de Vreese, C. H. (2019). Framing Fast and Slow: A Dual Processing Account of Multimodal Framing Effects. Media Psychol. 22 (4), 572–600. doi:10.1080/15213269.2018.1476891

Radnitz, S., and Underwood, P. (2015). Is Belief in Conspiracy Theories Pathological? A Survey Experiment on the Cognitive Roots of Extreme Suspicion. Br. J. Polit. Sci. 47, 113–129. doi:10.1017/S0007123414000556

Roozenbeek, J., Schneider, C. R., Dryhurst, S., Kerr, J., Freeman, A. L. J., Recchia, G., et al. (2020). Susceptibility to Misinformation about COVID-19 Around the World. R. Soc. Open Sci. 7, 201199. doi:10.1098/rsos.201199

Ross, R. M., Rand, D., and Pennycook, G. (2019). Beyond “Fake News”: The Role of Analytic Thinking in the Detection of Inaccuracy and Partisan Bias in News Headlines. PsyArXiv Working Paper.

Spreng, R. N. (2012). The Fallacy of a “Task-Negative” Network. Front. Psychol. 3, 1–5. doi:10.3389/fpsyg.2012.00145

Stanovich, K. E., and West, R. F. (2000). Individual Differences in Reasoning: Implications for the Rationality Debate? Behav. Brain Sci. 23 (5), 645–665. doi:10.1017/s0140525x00003435

Stecula, D. A., Kuru, O., and Jamieson, K. H. (2020). How Trust in Experts and Media Use Affect Acceptance of Common Anti-vaccination Claims. Harv. Kennedy Sch. Misinformation Rev. 1 (1).

Swire-Thompson, B., and Lazer, D. (2020). Public Health and Online Misinformation: Challenges and Recommendations. Annu. Rev. Public Health 41 (1), 433–451. doi:10.1146/annurev-publhealth-040119-094127

Sydnor, E. (2018). Platforms for Incivility: Examining Perceptions across Different Media Formats. Polit. Commun. 35 (1), 97–116. doi:10.1080/10584609.2017.1355857

Toplak, M. E., West, R. F., and Stanovich, K. E. (2011). The Cognitive Reflection Test as a Predictor of Performance on Heuristics-And-Biases Tasks. Mem. Cogn. 39 (7), 1275–1289. doi:10.3758/s13421-011-0104-1

Uscinski, J. E., Enders, A. M., Klofstad, C., Seelig, M., Funchion, J., Everett, C., et al. (2020). Why Do People Believe COVID-19 Conspiracy Theories? Harv. Kennedy Sch. Misinformation Rev. 1 (3).

Uscinski, J. E., Klofstad, C., and Atkinson, M. D. (2016). What Drives Conspiratorial Beliefs? The Role of Informational Cues and Predispositions. Polit. Res. Q. 69 (1), 57–71. doi:10.1177/1065912915621621

van Prooijen, J.-W. (2018). The Psychology of Conspiracy Theories. Abingdon-on-Thames, England: Routledge.

Vosoughi, S., Roy, D., and Aral, S. (2018). The Spread of True and False News Online. Science 359 (6380), 1146–1151. doi:10.1126/science.aap9559

Wang, Y., McKee, M., Torbica, A., and Stuckler, D. (2019). Systematic Literature Review on the Spread of Health-Related Misinformation on Social Media. Soc. Sci. Med. 240, 112552. doi:10.1016/j.socscimed.2019.112552

Keywords: social media, cognitive reflection, conspiracy theories, COVID-19, misinformation

Citation: Stecula DA and Pickup M (2021) Social Media, Cognitive Reflection, and Conspiracy Beliefs. Front. Polit. Sci. 3:647957. doi: 10.3389/fpos.2021.647957

Received: 30 December 2020; Accepted: 21 May 2021; Published: 08 June 2021.

Edited by:

Nathalie Giger, Université de Genève, Switzerland

Reviewed by:

Pier Luigi Sacco, Università IULM, Italy

Jill Sheppard, Australian National University, Australia

Copyright © 2021 Stecula and Pickup. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dominik A. Stecula, dominik.stecula@colostate.edu
