ORIGINAL RESEARCH article

Front. Plant Sci. , 18 June 2024

Sec. Sustainable and Intelligent Phytoprotection

Volume 15 - 2024 | https://doi.org/10.3389/fpls.2024.1393592

The nonuniform distribution of fruit tree canopies in space poses a challenge for precision management. In recent years, with the development of Structure from Motion (SFM) technology, unmanned aerial vehicle (UAV) remote sensing has been widely used to measure canopy features in orchards to balance efficiency and accuracy. A pipeline of canopy volume measurement based on UAV remote sensing was developed, in which RGB and digital surface model (DSM) orthophotos were constructed from captured RGB images, the canopy was then segmented using U-Net, OTSU, and RANSAC methods, and the volume was calculated. The accuracy of the segmentation and of the canopy volume measurement was compared. The results show that the U-Net trained with RGB and DSM achieves the best accuracy in the segmentation task, with a mean intersection over union (MIoU) of 84.75% and a mean pixel accuracy (MPA) of 92.58%. However, in the canopy volume estimation task, the U-Net trained with DSM alone achieved the best accuracy, with a root mean square error (RMSE) of 0.410 m³, a relative root mean square error (rRMSE) of 6.40%, and a mean absolute percentage error (MAPE) of 4.74%. The deep learning-based segmentation method achieved higher accuracy in both the segmentation task and the canopy volume measurement task. For canopy volumes up to 7.50 m³, OTSU and RANSAC achieve an RMSE of 0.521 m³ and 0.580 m³, respectively. Therefore, when a manually labeled dataset is available, using U-Net to segment the canopy region can achieve higher accuracy of canopy volume measurement. If it is difficult to cover the cost of data labeling, ground segmentation using partitioned OTSU can yield more accurate canopy volumes than RANSAC.

Precise canopy management, such as pesticide application and pruning, is important for fruit yield and quality. Canopy volume can provide a reference for precise pesticide application and pruning. Several pesticide application models require canopy volume as an input variable (Gil et al., 2013; Nan et al., 2019; Sultan Mahmud et al., 2021). However, canopy volume is more difficult to measure accurately than tree height (Tsoulias et al., 2019). To accurately obtain canopy volume, traditional methods require a number of expensive manual measurements, which increases the management cost of the production process. With the development of sensor technology, LiDAR, ultrasonic sensors, and cameras are used for nondestructive and rapid measurement of canopy volume.

Terrestrial LiDAR has a wide range of applications in orchard phenology, such as canopy volume measurement and tree height measurement (Pfeiffer et al., 2018; Brede et al., 2019). Due to the long time required for a single scan and the need to scan as many locations as possible to avoid occlusions, a complete scan of a 1-ha orchard can take 3–6 days, even for an experienced team (Wilkes et al., 2017). Mobile LiDAR scanning with a real-time kinematic (RTK) receiver was developed to improve the efficiency of canopy point cloud collection (Karp et al., 2017; Wang et al., 2017; Gené-Mola et al., 2019; Mokroš et al., 2021). It has been shown that tree segmentation and canopy parameter extraction can also be achieved by LiDAR on UAV platforms, which reduce the effect of vibration and are easy to register (Yoshii et al., 2022; Yuan et al., 2022; Caras et al., 2024).

LiDAR still has a high cost compared to cameras. UAV remote sensing imagery has been widely used in the precision management of orchards (Stateras and Kalivas, 2020; Zhang et al., 2021; Pagliai et al., 2022; Sinha et al., 2022). With structure from motion (SFM) technology, three-dimensional information such as tree height and canopy volume in orchards can be obtained using drone imagery (Mu et al., 2018; Anifantis et al., 2019; Maimaitijiang et al., 2019; Ross et al., 2022; Vélez et al., 2022; Vinci et al., 2023). For some orchards, crown diameter can also be measured (Chang et al., 2020). Leaf area index (LAI) and leaf porosity can even be obtained using multispectral images (Raj et al., 2021; Zhang et al., 2024), while crop water stress index can also be assessed to inform precision irrigation (Chang et al., 2020). Combined with computer vision technology, UAV imagery can even enable fruit recognition to provide growers with yield information in the early stages of crop growth (Ariza-Sentís et al., 2023). The digital surface model (DSM) created from the images contains the height of the crop (Zarco-Tejada et al., 2014; Yurtseven et al., 2019; Lu et al., 2021; Tunca et al., 2024).

Furthermore, to obtain the volume of the canopy, the digital terrain model (DTM) needs to be separated from the DSM (Patrignani and Ochsner, 2015; Ali-Sisto et al., 2020; Ali et al., 2021), and the canopy height model (CHM) is created by taking the difference between the DSM and the DTM (Eitel et al., 2014; Walter et al., 2018). Next, canopy volume can be obtained by summing the CHM over voxels (Stovall et al., 2017; Wallace et al., 2017). For field crops, the DSM acquired before the crop is planted can readily serve as the DTM (Maimaitijiang et al., 2019). For orchards, however, accurate ground segmentation is required for canopy volume measurement. Algorithms that have been developed for ground segmentation include zone thresholding methods and plane fitting (Sithole and Vosselman, 2004; Oniga et al., 2023; Wen et al., 2023). The results of ground segmentation can significantly affect the measurement of canopy volume. Therefore, exploring different ground segmentation methods can improve the accuracy of canopy volume measurements.

This study presents a canopy volume measurement pipeline based on UAV remote sensing images, which first constructs the RGB and DSM of the target orchard, then segments the ground and canopy regions, and finally calculates the canopy volume based on the segmented masks using DSM. The effects of different segmentation algorithms on the accuracy of canopy volume measurements are also investigated.

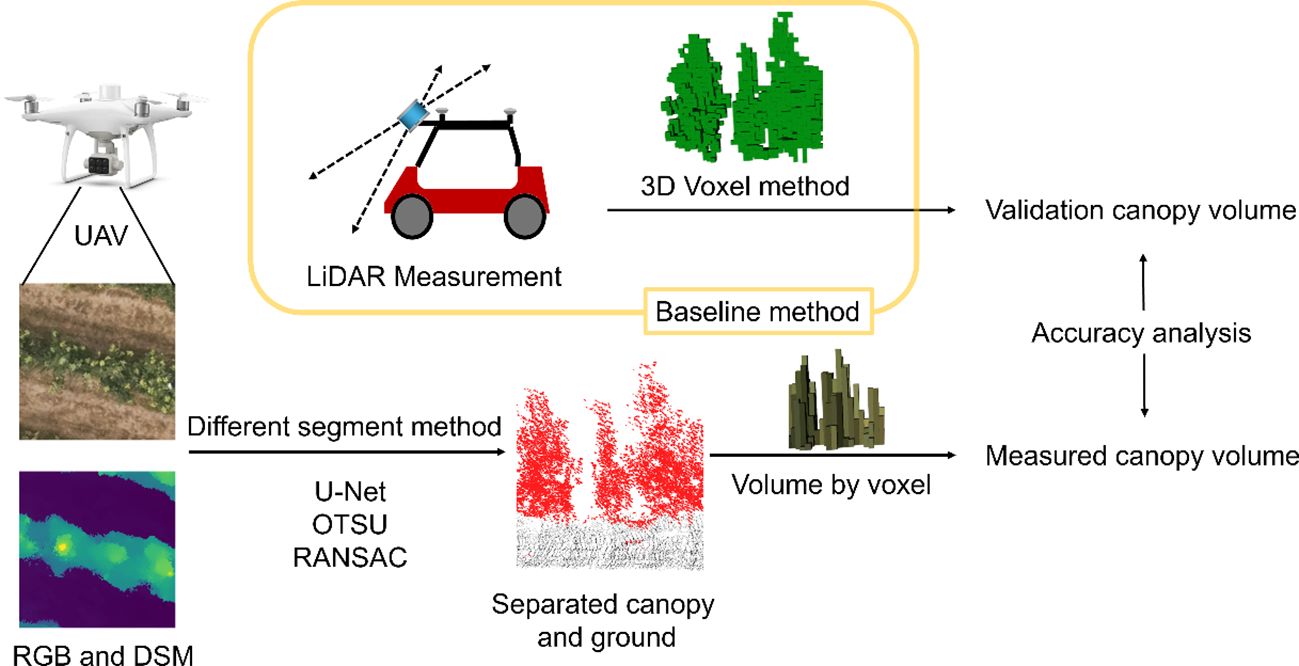

The point cloud acquired from the moving LiDAR scan was voxelized, the voxel volumes were summed, and the calculated canopy volume was taken as the true value. The RGB and DSM images acquired by the UAV were divided into plots, the canopy and ground were separated by different segmentation methods, and the volume of the canopy was calculated without the use of high-resolution DTM data. A diagram of the experimental design is shown in Figure 1.

Figure 1 Diagram of the experimental design, with LiDAR scanning as the baseline method for canopy volume measurement and a comparison of the effects of different ground segmentation methods on the accuracy of canopy volume measurement.

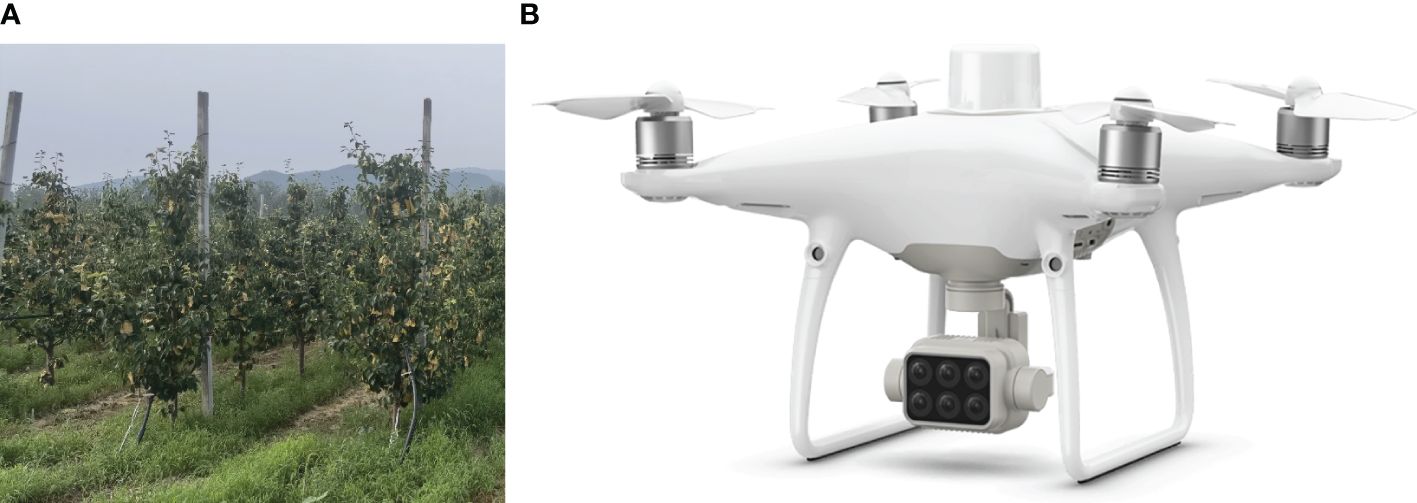

Field experiments were conducted in a pear (Pyrus bretschneideri ‘Zaosuhong’) orchard in Pinggu District, Beijing (40.18°N, 116.97°E, WGS-84). The orchard covered an area of about 3 ha, and the pear trees were at BBCH stage 91 when photographed. The rows were spaced 4.5 m apart (Figure 2A), and the trees within each row were spaced 1.5 m apart, with an average tree height of about 4 m.

Figure 2 Experimental site and UAV conducting experiment. (A) Pear orchard with a row-to-row distance of 4.5 m. (B) UAV used for image acquisition.

The P4 Multispectral (DJI Technology Inc., Shenzhen, China), which has one RGB camera and a multispectral camera array with five cameras covering blue, green, red, red edge, and near-infrared bands, all at 2 megapixels (MP) with global shutter, was used to acquire images (Figure 2B), and only its RGB channel (2 MP) was used in this experiment. The flight height was 30 m, resulting in a ground sample distance (GSD) of 0.016 m/pix. The head and side overlap were both 70%, and the images were taken at equal time intervals. Images were captured between 11:00 and 13:00 to ensure photograph quality. During the capture period, the weather was clear and windless, which eliminated the blur caused by swaying branches. During the flight, the network core service provided by Qianxun Inc. (Shanghai, China) was used to get more accurate RTK positioning.

Image processing was performed on a workstation running Windows 10 (64-bit) with 32 GB of RAM, an Intel Core i7-8700K CPU, and an NVIDIA GTX 1080 Ti GPU. The orthophotos and DSM were reconstructed with Terra (3.5.5, DJI Technology Inc., Shenzhen, China). A high reconstruction quality was selected to obtain high accuracy and resolution. The geographic coordinates were based on the WGS84 (EPSG: 4326) coordinate system in this study. As the P4M is equipped with an RTK receiver and the manufacturer supports reconstruction without image control points, no ground control point was set during the experiment.

The DSM of an orchard can be utilized to calculate the canopy volume (Mahmud et al., 2023). First, a segmentation operation was performed to extract the mask of the canopy region. Next, the volume of the region between the canopy and the ground was calculated as the final measured canopy volume. In this study, a U-Net-based deep learning method, grid-based OTSU, and RANSAC were used to segment the canopy, and the accuracy of the different segmentation methods was compared. The orchard is situated on a gentle hillside, so the ground in the orchard does not lie in a single plane. To avoid misclassification in the ground segmentation, it is important to segment the elevation data of the orchard area separately. In this study, the orchard was divided into multiple 4.5 m × 4.5 m plots. The ground within each plot was considered to lie in one plane.
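The plot division can be illustrated with a short sketch. This is not the authors' code: the function name `split_into_plots` is hypothetical, the DSM is assumed to be already loaded as a 2-D NumPy array of elevations, and dropping incomplete plots at the raster edge is an assumption. With a GSD of 0.016 m/pix, a 4.5 m plot corresponds to about 281 pixels per side.

```python
import numpy as np


def split_into_plots(dsm, gsd=0.016, plot_size_m=4.5):
    """Split a 2-D DSM array into square plots of plot_size_m metres.

    Incomplete plots at the right and bottom edges are dropped
    (an assumption of this sketch, not stated in the study).
    """
    side = int(round(plot_size_m / gsd))  # 4.5 / 0.016 = 281.25 -> 281 pixels
    rows, cols = dsm.shape
    plots = {}
    for r in range(0, rows - side + 1, side):
        for c in range(0, cols - side + 1, side):
            # Key each plot by its (row, column) index in the plot grid.
            plots[(r // side, c // side)] = dsm[r:r + side, c:c + side]
    return plots
```

Each returned tile can then be segmented independently, which is what allows the ground inside a tile to be treated as a single plane.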

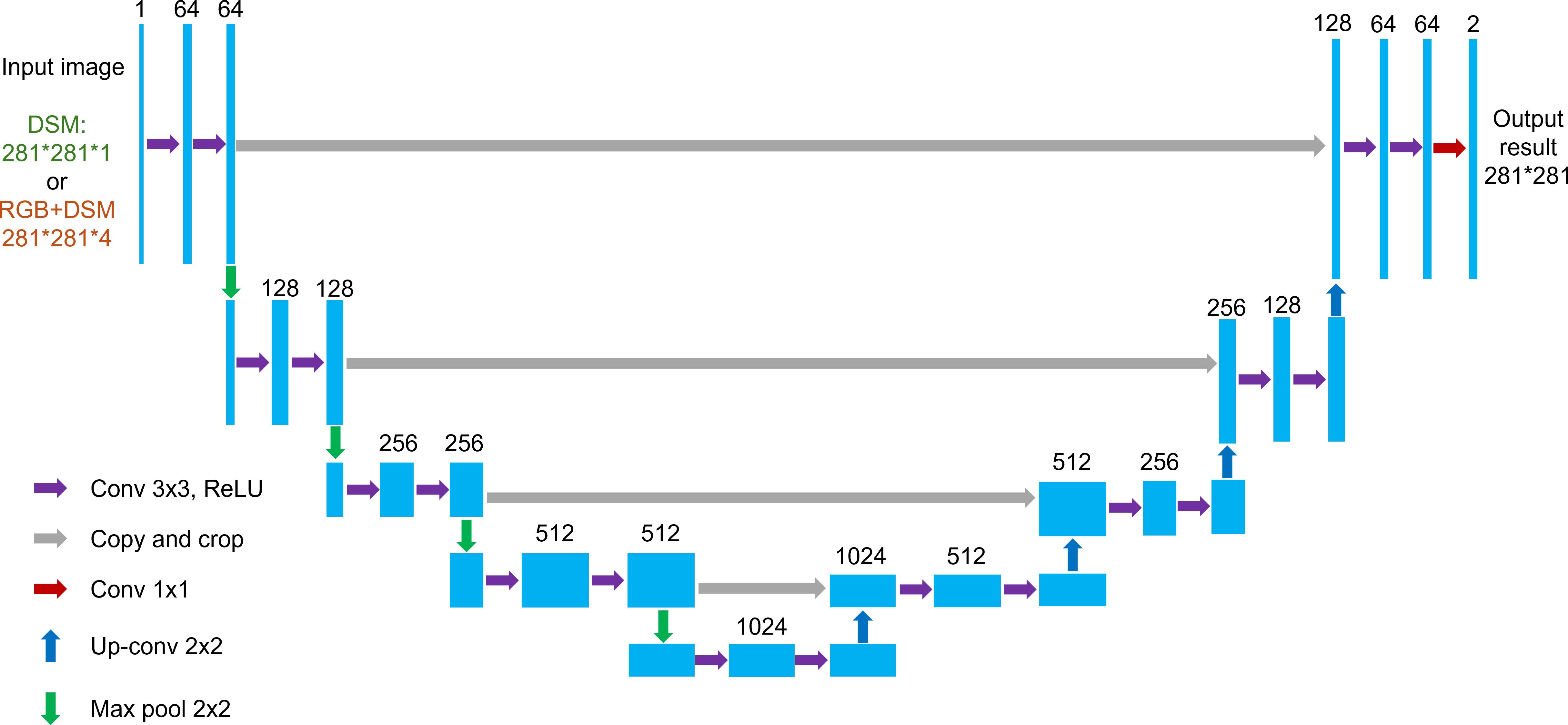

U-Net (Ronneberger et al., 2015) is a deep learning network widely used in remote sensing for efficient semantic segmentation of input images through an encoder and decoder. With its lightweight structure, the classical U-Net can produce segmentation results quickly on smaller datasets. In this study, the classical U-Net, containing 31 million parameters, was used directly. The input for training was either a four-channel image of 281 × 281 pixels (RGB and DSM) or a single-channel image of 281 × 281 pixels (DSM), and the output was the segmented mask image (Figure 3).

Figure 3 U-Net network structure used in the study. Blue boxes correspond to multichannel feature maps with a number of channels marked on the top of each box. Conv, convolution; up-conv, upconvolution; max pool, max pooling with the size of the convolution kernel.

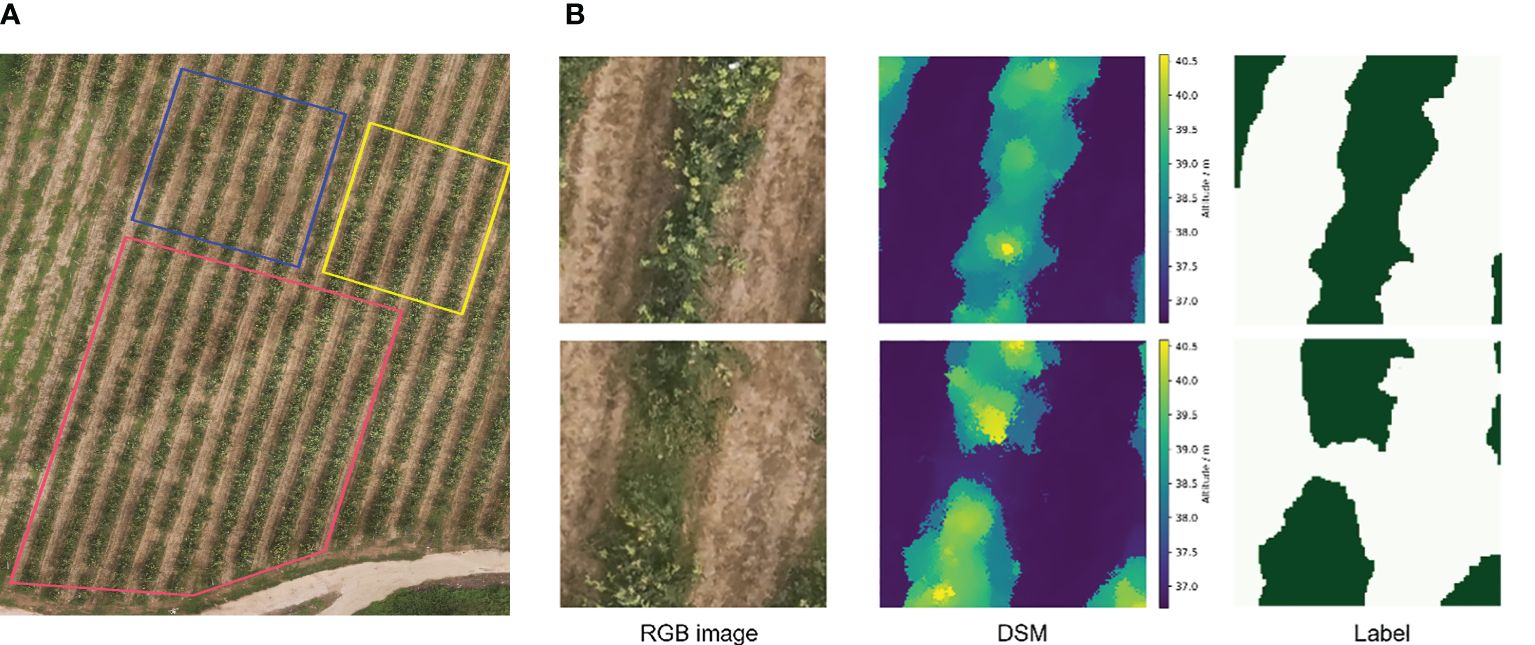

Three subfields from the orthophoto and the corresponding labeled files were divided as the sampling regions for the training set, validation set, and test set, with the number of samples being 400, 50, and 100, respectively (Figure 4). The validation set was used to adjust the epoch, batch size, and learning rate. Labels were created by an open-source annotation tool called labelme (V5.2.1).

Figure 4 Sampling region of the data in training and the RGB images, DSM images, and corresponding labels used. (A) The red subfield is the random sampling region for the training set, and the blue and yellow are the test and validation sets, respectively. (B) Sampled training images and corresponding labels with a total of four channels of RGB and DSM were fed into the network, where the dark-green color in the labels are the canopy and the light-green-colored regions are the ground.

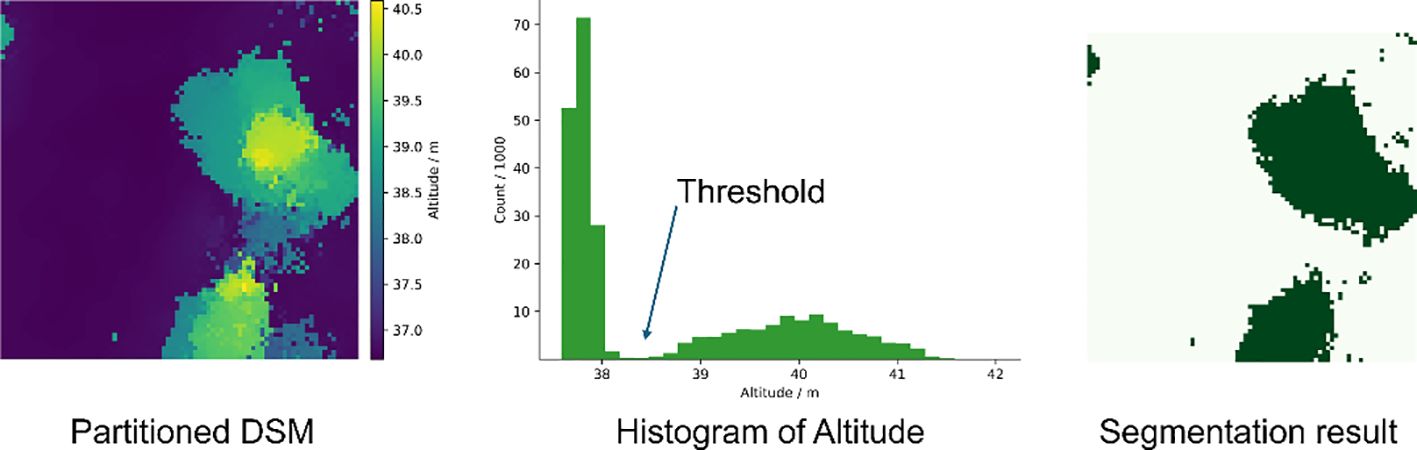

The OTSU threshold segmentation method is widely used in the field of remote sensing. The values of the background and the target have different distributions, and the OTSU method selects as the optimal threshold the value that maximizes the interclass variance. In the elevation histogram of the DSM, ground points had low elevations and canopy points had higher elevations, producing two peaks. The OTSU method was used to automatically find the threshold between the two peaks that maximizes the interclass variance of the ground and canopy elevation distributions. Points with elevation above the threshold were categorized as canopy, and the rest as ground. Figure 5 illustrates the segmentation process and the binarized mask map.

Figure 5 Segmentation thresholds for DSM obtained by OTSU and a mask of the canopy region, where dark green is the canopy region and light green is the ground.
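As an illustration of the thresholding step, the following is a minimal NumPy sketch of Otsu's method applied to the elevations of one plot. The function name `otsu_threshold` and the choice of 256 histogram bins are assumptions for this example; the study does not state which implementation was used.

```python
import numpy as np


def otsu_threshold(values, bins=256):
    """Return the elevation threshold that maximizes the interclass variance."""
    values = np.asarray(values, dtype=float).ravel()
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    weights = hist / hist.sum()

    w0 = np.cumsum(weights)                  # probability of the low (ground) class
    cum_mean = np.cumsum(weights * centers)  # cumulative first moment
    total_mean = cum_mean[-1]

    # Interclass variance for every candidate split; skip degenerate splits
    # where one class would be empty.
    denom = w0 * (1.0 - w0)
    between = np.zeros_like(denom)
    valid = denom > 1e-12
    between[valid] = (total_mean * w0[valid] - cum_mean[valid]) ** 2 / denom[valid]

    # Use the upper edge of the last bin assigned to the low class.
    return edges[np.argmax(between) + 1]
```

A canopy mask for a plot would then be `dsm_plot > otsu_threshold(dsm_plot)`, with the remaining pixels treated as ground.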

RANSAC is an iterative fitting method that estimates the parameters of a model by repeatedly fitting it to randomly sampled data points and keeping the candidate supported by the most inliers. Given its good robustness, it is often used to extract planes from a point cloud. Open3D is an open-source library that supports rapid development of software that deals with 3D data. Its plane fitting function was used in this study with empirically selected settings of 50 sampling points, 10,000 iterations (N), and a distance threshold of 0.2 m (D). The ground plane was fitted and used to separate the canopy from the ground (Figure 6).
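The plane-fitting idea can be sketched in plain NumPy. Note the differences from the study: the authors used the Open3D plane fitting function with 50 sampling points and 10,000 iterations, whereas this sketch samples the minimal three points per iteration and defaults to fewer iterations. The function name `ransac_ground_plane` is hypothetical, and `points` is assumed to be an N × 3 array of x, y, z coordinates in metres.

```python
import numpy as np


def ransac_ground_plane(points, distance_threshold=0.2, num_iterations=1000, seed=0):
    """Fit a plane a*x + b*y + c*z + d = 0 by RANSAC.

    Returns (plane, inlier_mask), where plane is [a, b, c, d] with a unit
    normal and inlier_mask marks points closer than distance_threshold.
    """
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_plane = None
    best_mask = np.zeros(len(points), dtype=bool)

    for _ in range(num_iterations):
        # Minimal sample: three points define a candidate plane.
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-12:  # collinear sample, no unique plane
            continue
        normal = normal / norm
        d = -normal @ sample[0]

        # Points within the distance threshold support this candidate.
        mask = np.abs(points @ normal + d) < distance_threshold
        if mask.sum() > best_mask.sum():
            best_plane, best_mask = np.append(normal, d), mask

    return best_plane, best_mask
```

In this sketch the inliers of the best plane are treated as ground and the remaining points as canopy. This only works when ground points dominate the plot, which is an assumption of the example.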

The positions of the tree base were measured using a tilt-featured RTK receiver (E500, Beijing UniStrong Science and Technology Co. Ltd., Beijing, China), and the true height of the tree was measured using a tower ruler with a height accuracy of ± 5 cm. The coordinates and tree heights of 20 trees were measured in the scanned area and used to analyze the accuracy of the tree height measurements. The tree height measured by the LiDAR or UAV was achieved by selecting points within 0.25 m from the root coordinates of the tree and calculating the difference between the maximum and minimum heights.
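The height extraction rule described above can be written as a few lines of NumPy. This is a sketch only: the function name `tree_height` is hypothetical, and `points` is assumed to be an N × 3 array of x, y, z coordinates (metres) in the same projected coordinate system as the measured tree base.

```python
import numpy as np


def tree_height(points, base_xy, radius=0.25):
    """Tree height as max(z) - min(z) of points within radius of the tree base."""
    points = np.asarray(points, dtype=float)
    horizontal = np.linalg.norm(points[:, :2] - np.asarray(base_xy, dtype=float), axis=1)
    z = points[horizontal <= radius, 2]
    if z.size == 0:
        raise ValueError("no points within the search radius of the tree base")
    return float(z.max() - z.min())
```

For a DSM, the same function could be applied after converting each pixel to an (x, y, elevation) triple.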

The volume of the canopy was accumulated from the volume of each pixel in the mask (Equation 1). The volume of a pixel was calculated as its area multiplied by its height, where the height is the difference between the altitude of the pixel and the mean altitude of the ground.

$$V_{canopy} = \sum_{pix \in mask} GSD^{2}\left(h_{pix} - \bar{h}_{ground}\right) \tag{1}$$

where $V_{canopy}$ is the final volume, $GSD$ is the resolution of the DSM (0.016 m/pix in this study), $h_{pix}$ is the altitude of each pixel, and $\bar{h}_{ground}$ is the mean altitude of the ground in the plot.
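The per-pixel summation described above can be sketched as follows. The function name `canopy_volume` is hypothetical; `dsm` is assumed to be the elevation array of one plot and `canopy_mask` a boolean array of the same shape produced by any of the segmentation methods, with all non-canopy pixels treated as ground.

```python
import numpy as np


def canopy_volume(dsm, canopy_mask, gsd=0.016):
    """Sum pixel area times height above the mean ground altitude of the plot."""
    dsm = np.asarray(dsm, dtype=float)
    canopy_mask = np.asarray(canopy_mask, dtype=bool)
    ground_mean = dsm[~canopy_mask].mean()      # mean ground altitude in the plot
    heights = dsm[canopy_mask] - ground_mean    # height of each canopy pixel
    return float(gsd ** 2 * heights.sum())      # pixel area is gsd * gsd
```

For example, 50 canopy pixels that sit 3 m above the ground give 50 × 3 × 0.016² = 0.0384 m³.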

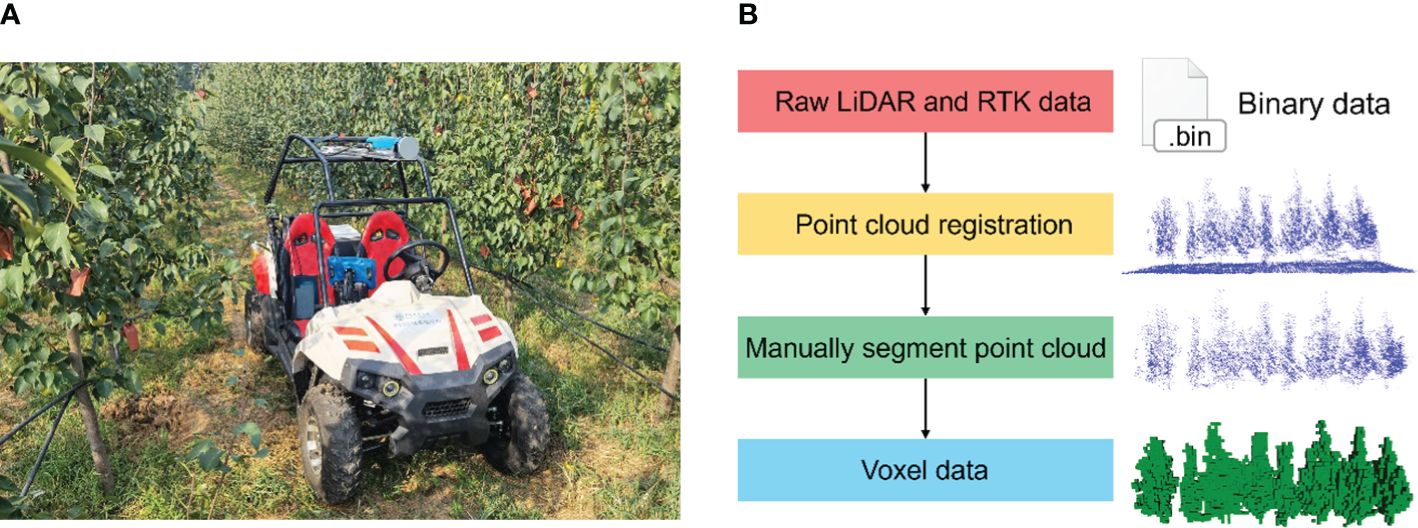

The true value of canopy volume is difficult to measure, and canopy calculations from moving LiDAR scans have typically been used as the true volume in previous studies (Li et al., 2017; Sultan Mahmud et al., 2021). A previously developed LiDAR-RTK fusion information acquisition system (Han et al., 2023) was used to acquire point clouds of the canopy. The LiDAR and RTK mobile stations were mounted on a frame on top of a vehicle, which allowed for smooth travel through the orchard (Figure 7A). Data acquisition was carried out during clear and windless hours, traveling at a speed of about 1 m/s.

Figure 7 Voxel volume of the canopy obtained by LiDAR. (A) Vehicle collecting canopy point cloud with LiDAR and RTK rover. (B) Processing pipeline of point cloud data acquired by mobile laser scanning.

The acquired point cloud was synchronized with the recorded RTK packets to obtain position and heading, and each frame of the point cloud was converted to a geographic coordinate system to obtain a complete point cloud of the scanned area. The ground portion was carefully removed from the complete point cloud manually with MeshLab software (2023.12). The remaining points were then converted to voxels with a size of 0.1 m. The measured canopy volume was calculated by multiplying the number of voxels by the volume of a single voxel (Figure 7B). The canopy volume calculated from the moving LiDAR-scanned point cloud was taken as the true value.
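The voxel counting step can be illustrated with a minimal sketch. The function name `voxel_volume` is hypothetical, and `points` is assumed to be an N × 3 array of canopy coordinates in metres with the ground already removed.

```python
import numpy as np


def voxel_volume(points, voxel_size=0.1):
    """Canopy volume as (number of occupied voxels) x (volume of one voxel)."""
    # Map every point to the integer index of the voxel that contains it.
    idx = np.floor(np.asarray(points, dtype=float) / voxel_size).astype(np.int64)
    occupied = np.unique(idx, axis=0)  # each occupied voxel is counted once
    return len(occupied) * voxel_size ** 3
```

With 0.1 m voxels, each occupied voxel contributes 0.001 m³ regardless of how many points fall inside it.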

Mean intersection over union (MIoU, Equation 2) and mean pixel accuracy (MPA, Equation 3) were used to evaluate the segmentation accuracy of the model. The data in the training set were segmented using OTSU and RANSAC, and the segmentation accuracy was also evaluated.

$$MIoU = \frac{1}{2}\left(\frac{TP}{TP + FP + FN} + \frac{TN}{TN + FN + FP}\right) \tag{2}$$

$$MPA = \frac{1}{k}\sum_{i=1}^{k} P_{i} \tag{3}$$

where TP is the number of correctly classified pixels in canopy samples, TN is the number of correctly classified pixels in ground samples, FP is the number of incorrectly classified pixels in canopy samples, FN is the number of incorrectly classified pixels in ground samples, $P_{i}$ is the proportion of correctly classified pixels in category $i$, and $k$ is the number of categories (two here: canopy and ground).
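A small sketch shows how these two metrics can be computed from a predicted mask and a label mask. It uses the common per-class definitions (intersection over union of each class, and the fraction of each class's labeled pixels that were predicted correctly), which is an assumption about the exact form used in the study. The function name `miou_mpa` is hypothetical, and both classes are assumed to be present in the label.

```python
import numpy as np


def miou_mpa(pred, label, num_classes=2):
    """Return (MIoU, MPA) for integer class masks, e.g. 0 = ground, 1 = canopy."""
    pred = np.asarray(pred).ravel()
    label = np.asarray(label).ravel()
    ious, accs = [], []
    for c in range(num_classes):
        p, l = pred == c, label == c
        inter = np.logical_and(p, l).sum()   # pixels correctly assigned to class c
        union = np.logical_or(p, l).sum()    # pixels predicted or labeled as class c
        ious.append(inter / union)
        accs.append(inter / l.sum())         # per-class pixel accuracy
    return float(np.mean(ious)), float(np.mean(accs))
```

Because the union in the IoU also counts pixels wrongly assigned to a class, noisy or oversized masks lower MIoU more than they lower MPA.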

In total, 50 zones of size 4.5 m × 4.5 m were selected from the area scanned by the LiDAR. The canopy volumes obtained by different methods were calculated, and the accuracy of the volumetric measurements was assessed using the moving LiDAR scans as the true values. Root mean square error (RMSE, Equation 4), relative root mean square error (rRMSE, Equation 5), and mean absolute percentage error (MAPE, Equation 6) were used to assess the error between the measured and true values.

$$RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(V_{i} - V_{i}^{true}\right)^{2}} \tag{4}$$

$$rRMSE = \frac{RMSE}{\bar{V}^{true}} \times 100\% \tag{5}$$

$$MAPE = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{V_{i} - V_{i}^{true}}{V_{i}^{true}}\right| \tag{6}$$

where $V_{i}$ is the measured volume, $V_{i}^{true}$ is the true volume (measured by moving LiDAR), $n$ is the sample number, and $\bar{V}^{true}$ is the mean value of the true volume.
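The three error measures can be computed together, as in the following sketch. The function name `volume_errors` is hypothetical; `measured` and `true` are assumed to be equal-length sequences of canopy volumes in m³, with the LiDAR-derived volumes as `true`.

```python
import numpy as np


def volume_errors(measured, true):
    """Return (RMSE, rRMSE in %, MAPE in %) of measured against true volumes."""
    measured = np.asarray(measured, dtype=float)
    true = np.asarray(true, dtype=float)
    diff = measured - true
    rmse = np.sqrt(np.mean(diff ** 2))
    rrmse = rmse / true.mean() * 100.0             # RMSE relative to the mean true volume
    mape = np.mean(np.abs(diff) / true) * 100.0    # mean of per-sample relative errors
    return float(rmse), float(rrmse), float(mape)
```

For measured volumes of 2 and 4 m³ against true volumes of 1 and 5 m³, this gives an RMSE of 1 m³, an rRMSE of about 33.3%, and a MAPE of 60%.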

Different hyperparameter settings affect the training results. In this study, by modifying the default parameters and pretraining, the finalized hyperparameters were 20 epochs, a batch size of 5, and a learning rate of 10⁻⁵. The losses of the U-Net networks trained with different input data are shown in Appendix A. Both losses dropped quickly in the first three epochs and stabilized after 10 epochs of training.

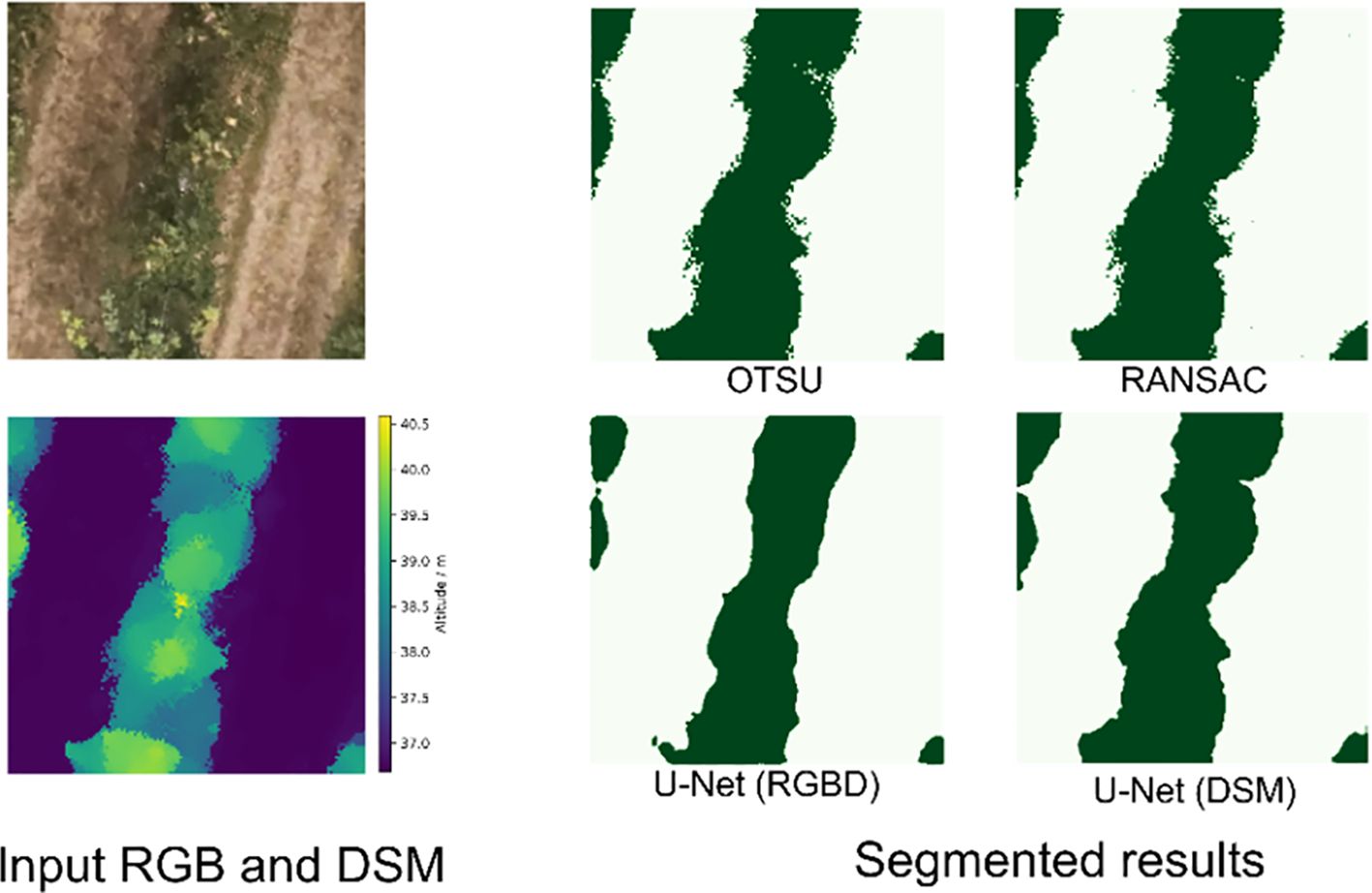

The data in the training set were segmented by the trained U-Net, OTSU, and RANSAC methods, and example results are shown in Figure 8. The deep learning-based segmentation methods produced smoother edges. The neural network trained on both RGB and DSM receives more input information, so its edges were smoother and fit the actual canopy region more closely. Because OTSU and RANSAC segment by simple thresholding, their edges contained considerable noise, which reduced recognition accuracy. In addition, unlike OTSU, RANSAC uses a fixed distance threshold, which incorrectly classified the ground as tree canopy in some areas.

Figure 8 Results of different segmentation methods for tree rows: dark green for the canopy and light green for the ground.

Table 1 shows the accuracy of different segmentation methods. U-Net trained with RGB images and DSM achieved the highest MIoU and MPA of 84.75% and 92.58%, respectively. RANSAC had the worst segmentation accuracy, with MIoU and MPA of 64.48% and 90.20%, respectively. The MPAs of the four methods were close to each other, indicating that the pixel-level classification accuracies of the different methods were similar for canopy and ground. The difference in MIoU, however, suggested that the segmentation methods differed in how well their masks overlapped the canopy region. The deep learning method had tidier edges and therefore higher pixel classification accuracy at the edges, so its overlap with the correct classification was higher, resulting in a higher MIoU. Although the DSM-trained U-Net, OTSU, and RANSAC methods all used only altitude data, the deep learning segmentation results were smoother and overlapped more with the actual canopy region.

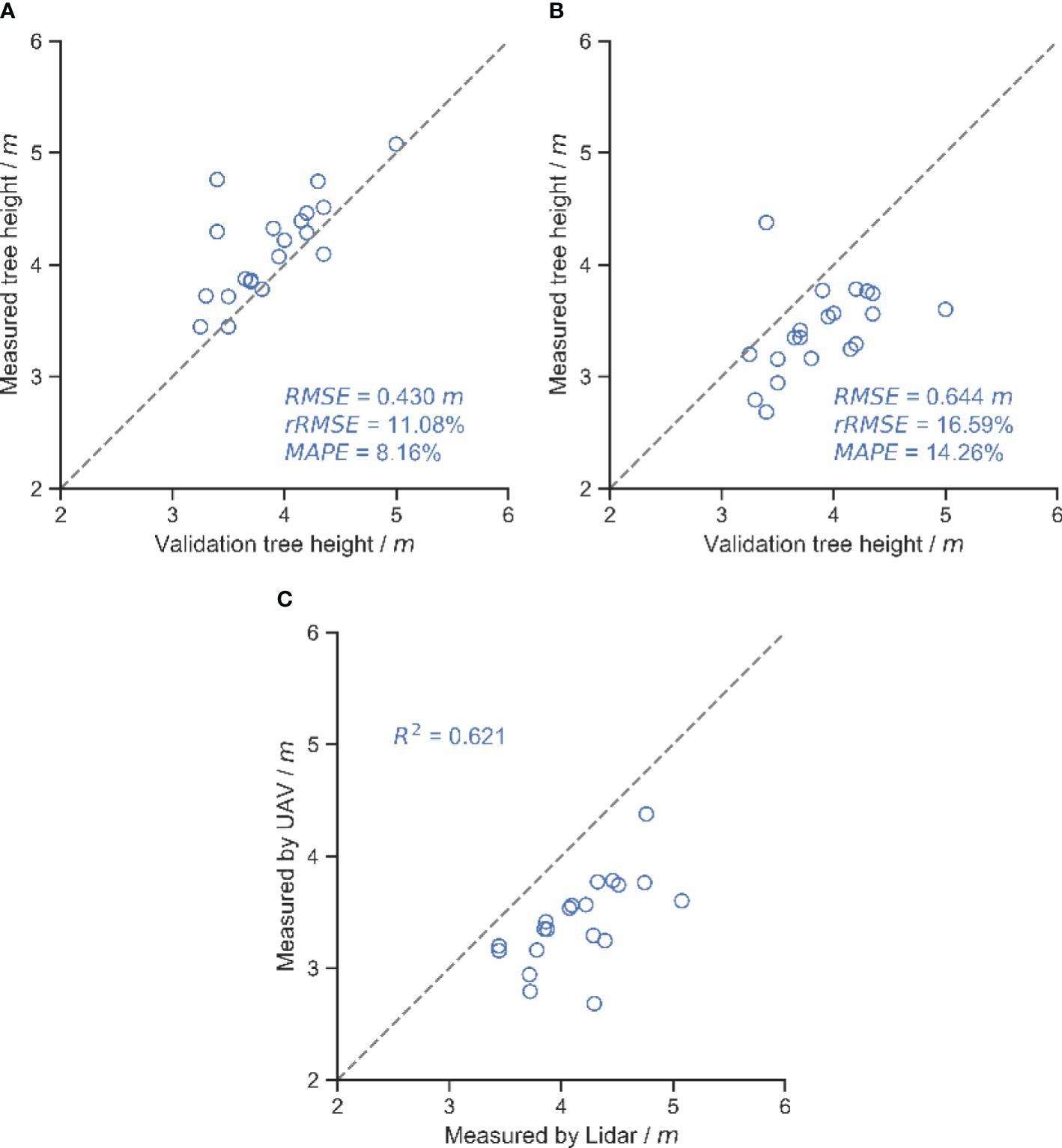

Figure 9 shows the results of tree height measurements using moving LiDAR scanning and a UAV. The RMSE of the tree height measured by LiDAR was 0.430 m and the MAPE was 8.16%, while the RMSE and MAPE of the UAV were 0.644 m and 14.26%, respectively. The UAV showed a greater error, which was probably due to errors introduced during DSM construction. Comparing the measurements of the two methods for the same tree showed that the UAV measurements were generally lower.

Figure 9 Results of different methods of measuring tree height. (A) Tree height measured by LiDAR. (B) Tree height measured by UAV. (C) Comparison of the results of the two methods of measuring tree height.

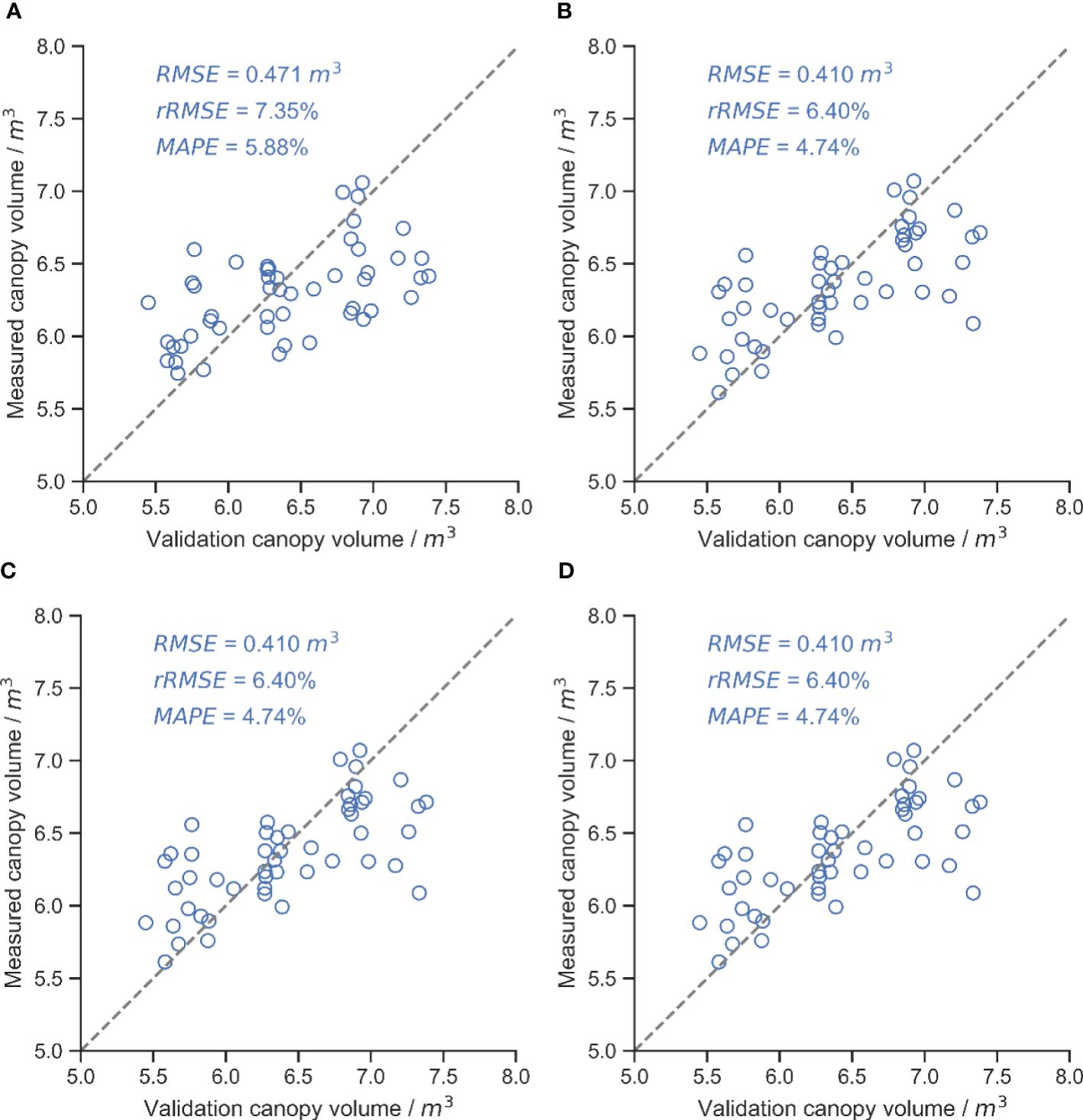

Figure 10 shows the accuracy of canopy volume measurements for different segmentation methods. U-Net trained with DSM obtained the highest canopy volume measurement accuracy, with an RMSE of 0.410 m³. In contrast, RANSAC segmentation had the worst canopy volume measurement accuracy, with an RMSE of 0.580 m³. The accuracy of deep learning-based segmentation approaches was higher than that of traditional methods, consistent with the results of segmentation accuracy. Of the two traditional methods, OTSU was more accurate than RANSAC.

Figure 10 Results of canopy volume measurements for different segmentation methods, with the gray dashed line in the figure being the 1:1 line. (A) U-Net (RGB and DSM). (B) U-Net (DSM). (C) OTSU. (D) RANSAC.

This study presented a pipeline for measuring canopy volume using UAVs and evaluated the impact of different ground segmentation methods on the accuracy of the measurements. The results indicated that the deep learning-based segmentation method had higher accuracy than the OTSU and RANSAC methods, whether trained with RGB and DSM or only with DSM. The U-Net model trained with an additional RGB channel input provided more color and texture information, improved the segmentation accuracy (Geirhos et al., 2018), and resulted in the highest segmentation accuracy with MIoU of 84.75%. Since the edges of manual labels were smooth, the neural network learned the feature of smooth edges, and the segmentation results do not need filtering operations.

Since the filtering operation on the mask image involved different filtering algorithms and hyperparameters, the output results were not filtered in this study but were directly used to calculate the canopy volume in the next step in order to evaluate the differences between the different algorithms themselves. The difference in the performance of OTSU and RANSAC at the edges might result in the masked area being larger than that manually labeled, which leads to a lower MIoU. At the same time, RANSAC incorrectly classified a small number of ground points as canopy, and some salt-and-pepper noise points were visible in the ground portion of the segmentation result. In the DSM constructed from UAV images, weeds were present on the ground, and the ground had a certain “thickness” owing to the limited accuracy of the SFM method. Because RANSAC uses a fixed distance threshold (0.2 m in this study), some of these ground points were misclassified as canopy (Figure 11). This might be the reason why RANSAC had the worst accuracy in both segmentation and canopy volume measurement.

LiDAR tended to give higher results than the manually measured true tree heights because the LiDAR-equipped vehicle wobbled slightly when traveling, leading to incorrect measurements of tree heights (Han et al., 2023). In contrast, the tree heights measured from the UAV-constructed DSM tended to be low. This might be due to the small area of the treetops, which makes it difficult to recognize features when constructing the DSM, so that the highest point of the tree canopy is not correctly identified. Considering the difference in measurement efficiency, measuring tree height with a UAV is nevertheless feasible.

The accuracy of canopy volume measurements did not follow the same order as the accuracy of canopy area segmentation due to multiple sources of error. The UAV-constructed DSM underestimated the canopy height compared to the point cloud acquired by the moving LiDAR (Figures 9C, 12), resulting in underestimated volumes, while the UAV was unable to access the structure of the inner and lower canopy (Brede et al., 2017; Schneider et al., 2019). Other studies have also reported underestimates in UAV measurements (Krause et al., 2019; Pourreza et al., 2022). Because the UAV captured images from overhead, higher leaves obscured details of the lower canopy, and the reconstructed DSM contained only the upper surface of the canopy, which could lead to an overestimation of volume. The two errors had opposite effects on the volume measurements. With the combination of these two factors, U-Net trained on DSM alone obtained the best canopy volume measurement, with an RMSE of 0.410 m³. The RMSE of the UAV-based tree height measurements in this study was 0.644 m. An RMSE of 0.51 m was also obtained in young trees (Vacca and Vecchi, 2024). In a previous study, an RMSE of 0.28 m was obtained using a high-resolution camera in a flat orchard (Mahmud et al., 2023), and an RMSE of about 0.3 m has been reported in similar studies (Wallace et al., 2016; Birdal et al., 2017; Krause et al., 2019). In this study, the treetop usually consisted of a single upward-extending branch, and the RGB camera had only 2 million pixels, both of which affected the accuracy of the reconstruction. There are various ways to measure canopy volume, such as the envelope polygon method and the voxel method. The voxel method was used in this study as a baseline method, and the RMSE of the canopy volume measured with the UAV was 0.410 m³. Using the envelope polygon method as a baseline and adjusting the different parameters, the RMSE of UAV measurement was between 0.33 m³ and 0.43 m³ (Mahmud et al., 2023).
Based on UAV measurements of canopy volume in apple orchards, the best measurements obtained at different flight heights had an RMSE of 1.41 m³, using the ellipsoid fitted by manual measurements as a baseline (Sun et al., 2019). In contrast, the reconstruction process in this study did not use ground control points. Given this combination of errors, the accuracy of canopy volume measurements was acceptable.

The canopy measurement process proposed in this study can be used for orchard phenology, pruning, and light interception estimation (Westling et al., 2020; Scalisi et al., 2021). The developed method can obtain the variability of the canopy in spatial distribution and provide prescription maps for precise pesticide spraying, pruning, and other field management work. Compared to LiDAR, the UAV-reconstructed DSM is missing branch details at the treetops, leading to an underestimation of tree height. Also, the ground control point-free reconstruction method may have affected the accuracy of tree height measurement. The UAV-based canopy volume testing process can balance efficiency and accuracy and is particularly suitable for larger orchards.

This study evaluated the effect of different canopy region segmentation methods on the accuracy of UAV-based canopy volume measurements. RGB and DSM orthophotos constructed from UAV images were used to segment the canopy by U-Net, OTSU, and RANSAC methods and to calculate the canopy volume. The results showed that U-Net trained with RGB and DSM achieved the best accuracy in the segmentation task, with 84.75% MIoU and 92.58% MPA. The MPA of segmentation by the OTSU and RANSAC methods was similar to that of the deep learning method, but their MIoU was 65.33% and 64.48%, respectively, lower than that of the deep learning method because the segmented regions overlapped less with the labels and the resulting canopy masks contained considerable noise. In tree height measurement, the RMSE of tree height measured by LiDAR was 0.430 m, while that of the UAV was 0.644 m. However, the canopy volume measurement task was less affected by the accuracy of tree height measurements. The U-Net trained using only DSM achieved the best accuracy, with an RMSE of 0.410 m³, an rRMSE of 6.40%, and a MAPE of 4.74%. In contrast, the RMSE of the U-Net segmentation method trained with RGB and DSM was 0.471 m³. The canopy volume measurement accuracy of the traditional OTSU and RANSAC methods was lower than that of the deep learning method, with RMSE of 0.521 m³ and 0.580 m³, respectively. Therefore, when manually labeled datasets are available, segmenting the canopy region with the deep learning approach can achieve higher accuracy of canopy volume measurement.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

LH: Conceptualization, Investigation, Methodology, Validation, Writing – original draft. ZW: Conceptualization, Investigation, Writing – review & editing. MH: Conceptualization, Investigation, Methodology, Validation, Writing – review & editing. XH: Funding acquisition, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the earmarked fund for CARS (CARS-28), the 2115 talent development program of China Agricultural University, National Natural Science Foundation of China No.31761133019 and the Sanya Institute of China Agricultural University Guiding Fund Project, Grant No. SYND-2021–06.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Ali, M. E. N. O., Taha, L. G. E.-D., Mohamed, M. H. A., Mandouh, A. A. (2021). Generation of digital terrain model from multispectral LiDar using different ground filtering techniques. Egypt. J. Remote Sens. Sp. Sci. 24, 181–189. doi: 10.1016/j.ejrs.2020.12.004

Ali-Sisto, D., Gopalakrishnan, R., Kukkonen, M., Savolainen, P., Packalen, P. (2020). A method for vertical adjustment of digital aerial photogrammetry data by using a high-quality digital terrain model. Int. J. Appl. Earth Obs. Geoinf. 84, 101954. doi: 10.1016/j.jag.2019.101954

Anifantis, A. S., Camposeo, S., Vivaldi, G. S., Santoro, F., Pascuzzi, S. (2019). Comparison of UAV photogrammetry and 3D modeling techniques with other currently used methods for estimation of the tree row volume of a super-high-density olive orchard. Agriculture 9, 233. doi: 10.3390/agriculture9110233

Ariza-Sentís, M., Baja, H., Vélez, S., Valente, J. (2023). Object detection and tracking on UAV RGB videos for early extraction of grape phenotypic traits. Comput. Electron. Agric. 211, 108051. doi: 10.1016/j.compag.2023.108051

Birdal, A. C., Avdan, U., Türk, T. (2017). Estimating tree heights with images from an unmanned aerial vehicle. Geomatics Nat. Hazards Risk 8, 1144–1156. doi: 10.1080/19475705.2017.1300608

Brede, B., Calders, K., Lau, A., Raumonen, P., Bartholomeus, H. M., Herold, M., et al. (2019). Non-destructive tree volume estimation through quantitative structure modelling: Comparing UAV laser scanning with terrestrial LIDAR. Remote Sens. Environ. 233, 111355. doi: 10.1016/j.rse.2019.111355

Brede, B., Lau, A., Bartholomeus, H., Kooistra, L. (2017). Comparing RIEGL RiCOPTER UAV LiDAR derived canopy height and DBH with terrestrial LiDAR. Sensors 17, 2371. doi: 10.3390/s17102371

Caras, T., Lati, R. N., Holland, D., Dubinin, V. M., Hatib, K., Shulner, I., et al. (2024). Monitoring the effects of weed management strategies on tree canopy structure and growth using UAV-LiDAR in a young almond orchard. Comput. Electron. Agric. 216, 108467. doi: 10.1016/j.compag.2023.108467

Chang, A., Yeom, J., Jung, J., Landivar, J. (2020). Comparison of canopy shape and vegetation indices of citrus trees derived from UAV multispectral images for characterization of citrus greening disease. Remote Sens. 12, 4122. doi: 10.3390/rs12244122

Eitel, J. U. H., Magney, T. S., Vierling, L. A., Brown, T. T., Huggins, D. R. (2014). LiDAR based biomass and crop nitrogen estimates for rapid, non-destructive assessment of wheat nitrogen status. F. Crop Res. 159, 21–32. doi: 10.1016/j.fcr.2014.01.008

Geirhos, R., Rubisch, P., Michaelis, C., Bethge, M., Wichmann, F. A., Brendel, W. (2018). ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness. Available online at: http://arxiv.org/abs/1811.12231.

Gené-Mola, J., Gregorio, E., Guevara, J., Auat, F., Sanz-Cortiella, R., Escolà, A., et al. (2019). Fruit detection in an apple orchard using a mobile terrestrial laser scanner. Biosyst. Eng. 187, 171–184. doi: 10.1016/j.biosystemseng.2019.08.017

Gil, E., Llorens, J., Llop, J., Fàbregas, X., Escolà, A., Rosell-Polo, J. R. (2013). Variable rate sprayer. Part 2 – Vineyard prototype: Design, implementation, and validation. Comput. Electron. Agric. 95, 136–150. doi: 10.1016/j.compag.2013.02.010

Han, L., Wang, S., Wang, Z., Jin, L., He, X. (2023). Method of 3D voxel prescription map construction in digital orchard management based on LiDAR-RTK boarded on a UGV. Drones 7, 242. doi: 10.3390/drones7040242

Karp, F. H. S., Colaço, A. F., Trevisan, R. G., Molin, J. P. (2017). Accuracy assessment of a mobile terrestrial laser scanner for tree crops. Adv. Anim. Biosci. 8, 178–182. doi: 10.1017/S2040470017000073

Krause, S., Sanders, T. G. M., Mund, J.-P., Greve, K. (2019). UAV-based photogrammetric tree height measurement for intensive forest monitoring. Remote Sens. 11, 758. doi: 10.3390/rs11070758

Li, S., Dai, L., Wang, H., Wang, Y., He, Z., Lin, S. (2017). Estimating leaf area density of individual trees using the point cloud segmentation of terrestrial LiDAR data and a voxel-based model. Remote Sens. 9, 1202. doi: 10.3390/rs9111202

Lu, J., Cheng, D., Geng, C., Zhang, Z., Xiang, Y., Hu, T. (2021). Combining plant height, canopy coverage and vegetation index from UAV-based RGB images to estimate leaf nitrogen concentration of summer maize. Biosyst. Eng. 202, 42–54. doi: 10.1016/j.biosystemseng.2020.11.010

Mahmud, M. S., He, L., Heinemann, P., Choi, D., Zhu, H. (2023). Unmanned aerial vehicle based tree canopy characteristics measurement for precision spray applications. Smart Agric. Technol. 4, 100153. doi: 10.1016/j.atech.2022.100153

Maimaitijiang, M., Sagan, V., Sidike, P., Maimaitiyiming, M., Hartling, S., Peterson, K. T., et al. (2019). Vegetation Index Weighted Canopy Volume Model (CVMVI) for soybean biomass estimation from Unmanned Aerial System-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 151, 27–41. doi: 10.1016/j.isprsjprs.2019.03.003

Mokroš, M., Mikita, T., Singh, A., Tomaštík, J., Chudá, J., Wężyk, P., et al. (2021). Novel low-cost mobile mapping systems for forest inventories as terrestrial laser scanning alternatives. Int. J. Appl. Earth Obs. Geoinf. 104, 102512. doi: 10.1016/j.jag.2021.102512

Mu, Y., Fujii, Y., Takata, D., Zheng, B., Noshita, K., Honda, K., et al. (2018). Characterization of peach tree crown by using high-resolution images from an unmanned aerial vehicle. Hortic. Res. 5, 74. doi: 10.1038/s41438-018-0097-z

Nan, Y., Zhang, H., Zheng, J., Bian, L., Li, Y., Yang, Y., et al. (2019). Estimating leaf area density of Osmanthus trees using ultrasonic sensing. Biosyst. Eng. 186, 60–70. doi: 10.1016/j.biosystemseng.2019.06.020

Oniga, V.-E., Loghin, A.-M., Macovei, M., Lazar, A.-A., Boroianu, B., Sestras, P. (2023). Enhancing LiDAR-UAS derived digital terrain models with hierarchic robust and volume-based filtering approaches for precision topographic mapping. Remote Sens. 16, 78. doi: 10.3390/rs16010078

Pagliai, A., Ammoniaci, M., Sarri, D., Lisci, R., Perria, R., Vieri, M., et al. (2022). Comparison of aerial and ground 3D point clouds for canopy size assessment in precision viticulture. Remote Sens. 14, 1145. doi: 10.3390/rs14051145

Patrignani, A., Ochsner, T. E. (2015). Canopeo: A powerful new tool for measuring fractional green canopy cover. Agron. J. 107, 2312–2320. doi: 10.2134/agronj15.0150

Pfeiffer, S. A., Guevara, J., Cheein, F. A., Sanz, R. (2018). Mechatronic terrestrial LiDAR for canopy porosity and crown surface estimation. Comput. Electron. Agric. 146, 104–113. doi: 10.1016/j.compag.2018.01.022

Pourreza, M., Moradi, F., Khosravi, M., Deljouei, A., Vanderhoof, M. K. (2022). GCPs-free photogrammetry for estimating tree height and crown diameter in Arizona cypress plantation using UAV-mounted GNSS RTK. Forests 13, 1905. doi: 10.3390/f13111905

Raj, R., Walker, J. P., Pingale, R., Nandan, R., Naik, B., Jagarlapudi, A. (2021). Leaf area index estimation using top-of-canopy airborne RGB images. Int. J. Appl. Earth Obs. Geoinf. 96, 102282. doi: 10.1016/j.jag.2020.102282

Ronneberger, O., Fischer, P., Brox, T. (2015). U-net: convolutional networks for biomedical image segmentation. Available online at: http://arxiv.org/abs/1505.04597.

Ross, C. W., Loudermilk, E. L., Skowronski, N., Pokswinski, S., Hiers, J. K., O’Brien, J. (2022). LiDAR voxel-size optimization for canopy gap estimation. Remote Sens. 14, 1054. doi: 10.3390/rs14051054

Scalisi, A., McClymont, L., Underwood, J., Morton, P., Scheding, S., Goodwin, I. (2021). Reliability of a commercial platform for estimating flower cluster and fruit number, yield, tree geometry and light interception in apple trees under different rootstocks and row orientations. Comput. Electron. Agric. 191, 106519. doi: 10.1016/j.compag.2021.106519

Schneider, F. D., Kükenbrink, D., Schaepman, M. E., Schimel, D. S., Morsdorf, F. (2019). Quantifying 3D structure and occlusion in dense tropical and temperate forests using close-range LiDAR. Agric. For. Meteorol. 268, 249–257. doi: 10.1016/j.agrformet.2019.01.033

Sinha, R., Quirós, J. J., Sankaran, S., Khot, L. R. (2022). High resolution aerial photogrammetry based 3D mapping of fruit crop canopies for precision inputs management. Inf. Process. Agric. 9, 11–23. doi: 10.1016/j.inpa.2021.01.006

Sithole, G., Vosselman, G. (2004). Experimental comparison of filter algorithms for bare-Earth extraction from airborne laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 59, 85–101. doi: 10.1016/j.isprsjprs.2004.05.004

Stateras, D., Kalivas, D. (2020). Assessment of olive tree canopy characteristics and yield forecast model using high resolution UAV imagery. Agriculture 10, 385. doi: 10.3390/agriculture10090385

Stovall, A. E. L., Vorster, A. G., Anderson, R. S., Evangelista, P. H., Shugart, H. H. (2017). Non-destructive aboveground biomass estimation of coniferous trees using terrestrial LiDAR. Remote Sens. Environ. 200, 31–42. doi: 10.1016/j.rse.2017.08.013

Sultan Mahmud, M., Zahid, A., He, L., Choi, D., Krawczyk, G., Zhu, H., et al. (2021). Development of a LiDAR-guided section-based tree canopy density measurement system for precision spray applications. Comput. Electron. Agric. 182, 106053. doi: 10.1016/j.compag.2021.106053

Sun, G., Wang, X., Ding, Y., Lu, W., Sun, Y. (2019). Remote measurement of apple orchard canopy information using unmanned aerial vehicle photogrammetry. Agronomy 9, 774. doi: 10.3390/agronomy9110774

Tsoulias, N., Paraforos, D. S., Fountas, S., Zude-Sasse, M. (2019). Estimating canopy parameters based on the stem position in apple trees using a 2D LiDAR. Agronomy 9, 740. doi: 10.3390/agronomy9110740

Tunca, E., Köksal, E. S., Öztürk, E., Akay, H., Taner, S.Ç. (2024). Accurate leaf area index estimation in sorghum using high-resolution UAV data and machine learning models. Phys. Chem. Earth Parts A/B/C 133, 103537. doi: 10.1016/j.pce.2023.103537

Vacca, G., Vecchi, E. (2024). UAV photogrammetric surveys for tree height estimation. Drones 8, 106. doi: 10.3390/drones8030106

Vélez, S., Vacas, R., Martín, H., Ruano-Rosa, D., Álvarez, S. (2022). A novel technique using planar area and ground shadows calculated from UAV RGB imagery to estimate pistachio tree (Pistacia vera L.) canopy volume. Remote Sens. 14, 6006. doi: 10.3390/rs14236006

Vinci, A., Brigante, R., Traini, C., Farinelli, D. (2023). Geometrical characterization of hazelnut trees in an intensive orchard by an unmanned aerial vehicle (UAV) for precision agriculture applications. Remote Sens. 15, 541. doi: 10.3390/rs15020541

Wallace, L., Hillman, S., Reinke, K., Hally, B. (2017). Non-destructive estimation of above-ground surface and near-surface biomass using 3D terrestrial remote sensing techniques. Methods Ecol. Evol. 8, 1607–1616. doi: 10.1111/2041-210X.12759

Wallace, L., Lucieer, A., Malenovský, Z., Turner, D., Vopěnka, P. (2016). Assessment of forest structure using two UAV techniques: A comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 7, 62. doi: 10.3390/f7030062

Walter, J., Edwards, J., McDonald, G., Kuchel, H. (2018). Photogrammetry for the estimation of wheat biomass and harvest index. Field Crops Res. 216, 165–174. doi: 10.1016/j.fcr.2017.11.024

Wang, H., Lin, Y., Wang, Z., Yao, Y., Zhang, Y., Wu, L. (2017). Validation of a low-cost 2D laser scanner in development of a more-affordable mobile terrestrial proximal sensing system for 3D plant structure phenotyping in indoor environment. Comput. Electron. Agric. 140, 180–189. doi: 10.1016/j.compag.2017.06.002

Wen, L., He, J., Huang, X. (2023). Mountain segmentation based on global optimization with the cloth simulation constraint. Remote Sens. 15, 2966. doi: 10.3390/rs15122966

Westling, F., Mahmud, K., Underwood, J., Bally, I. (2020). Replacing traditional light measurement with LiDAR based methods in orchards. Comput. Electron. Agric. 179, 105798. doi: 10.1016/j.compag.2020.105798

Wilkes, P., Lau, A., Disney, M., Calders, K., Burt, A., Gonzalez de Tanago, J., et al. (2017). Data acquisition considerations for Terrestrial Laser Scanning of forest plots. Remote Sens. Environ. 196, 140–153. doi: 10.1016/j.rse.2017.04.030

Yoshii, T., Matsumura, N., Lin, C. (2022). Integrating UAV-SfM and airborne lidar point cloud data to plantation forest feature extraction. Remote Sens. 14, 1713. doi: 10.3390/rs14071713

Yuan, W., Choi, D., Bolkas, D. (2022). GNSS-IMU-assisted colored ICP for UAV-LiDAR point cloud registration of peach trees. Comput. Electron. Agric. 197, 106966. doi: 10.1016/j.compag.2022.106966

Yurtseven, H., Akgul, M., Coban, S., Gulci, S. (2019). Determination and accuracy analysis of individual tree crown parameters using UAV based imagery and OBIA techniques. Measurement 145, 651–664. doi: 10.1016/j.measurement.2019.05.092

Zarco-Tejada, P. J., Diaz-Varela, R., Angileri, V., Loudjani, P. (2014). Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 55, 89–99. doi: 10.1016/j.eja.2014.01.004

Zhang, C., Chen, Z., Yang, G., Xu, B., Feng, H., Chen, R., et al. (2024). Removal of canopy shadows improved retrieval accuracy of individual apple tree crowns LAI and chlorophyll content using UAV multispectral imagery and PROSAIL model. Comput. Electron. Agric. 221, 108959. doi: 10.1016/j.compag.2024.108959

Zhang, C., Valente, J., Kooistra, L., Guo, L., Wang, W. (2021). Orchard management with small unmanned aerial vehicles: a survey of sensing and analysis approaches. Precis. Agric. 22, 2007–2052. doi: 10.1007/s11119-021-09813-y

Keywords: UAV, ground segmentation, canopy volume, OTSU, RANSAC

Citation: Han L, Wang Z, He M and He X (2024) Effects of different ground segmentation methods on the accuracy of UAV-based canopy volume measurements. Front. Plant Sci. 15:1393592. doi: 10.3389/fpls.2024.1393592

Received: 29 February 2024; Accepted: 30 May 2024; Published: 18 June 2024.

Edited by:

Wei Qiu, Nanjing Agricultural University, China

Reviewed by:

Renata Retkute, University of Cambridge, United Kingdom

Copyright © 2024 Han, Wang, He and He. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiongkui He, xiongkui@cau.edu.cn

†ORCID: Leng Han, orcid.org/0000-0003-0190-0919

ZhiChong Wang, orcid.org/0000-0002-9720-5496

Miao He, orcid.org/0009-0000-9064-2602

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
