
ORIGINAL RESEARCH article

Front. Plant Sci., 11 September 2023

Sec. Technical Advances in Plant Science

Volume 14 - 2023 | https://doi.org/10.3389/fpls.2023.1224385

This article is part of the Research Topic Control of Plant Diseases with Deep Learning.

Jitong Cai1,2, Renyong Pan1,2, Jianwu Lin1,2, Jiaming Liu1,2, Licai Zhang1,2, Xingtian Wen1,2, Xiaoyulong Chen2,3,4* and Xin Zhang1,2*

Introduction: Corn is one of the world's essential crops, and corn diseases significantly affect both the yield and the quality of corn. Accurate real-time identification of corn diseases is crucial to increasing crop yield and improving farmers' income. However, in real-world environments, complex backgrounds, irregular disease regions, large intraclass variation, and small interclass variation make it difficult for most convolutional neural network models to recognize diseases reliably. Additionally, the low accuracy of existing lightweight models forces farmers to compromise between accuracy and real-time performance.

Methods: To address these challenges, we propose FCA-EfficientNet. Built upon EfficientNet, its fully-convolution-based coordinate attention (FCA) module lets the network acquire spatial information through convolutional structures, which strengthens its focus on disease regions while mitigating interference from complex backgrounds. Furthermore, an adaptive fusion (AF) module fuses image information from different scales, further reducing background interference in disease recognition. Finally, through multiple experiments, we determined the network structure that achieves the best performance.

Results: Compared with other widely used deep learning models, the proposed model performs outstandingly in terms of accuracy, precision, recall, and F1 score. Furthermore, the model has 3.44M parameters and 339.74M Flops, lower than most lightweight network models. We designed and implemented a corn disease recognition application and deployed the model on an Android device, where it achieves an average recognition time of 92.88 ms, which meets users' needs.

Discussion: Overall, our model can accurately identify corn diseases in realistic environments, contributing to timely and effective disease prevention and control.

Corn is a globally important crop with high nutritional value and significant economic importance for farmers (Rosas-Castor et al., 2014). During cultivation, corn is affected by a variety of unfavorable factors, among which disease is the main threat to yield: diseased corn suffers significant yield reductions, causing economic losses to farmers. Therefore, timely detection of corn diseases is crucial. However, traditional diagnosis, which requires diagnosticians to physically enter the field and rely on preliminary diagnosis or quantitative analysis to determine the type of disease, is both time-consuming and labor-intensive. Agricultural experts and technicians often cannot reach the field in time, while most farmers lack the knowledge needed to diagnose diseases accurately, making problem solving slow, costly, and inefficient. In the field of plant disease recognition, existing research follows two main approaches: traditional machine learning and deep learning (DL).

Traditional image processing methods have improved the efficiency of corn disease prevention and control to some extent (Guruprasad, 2020; O’Mahony et al., 2020). Kai et al. (2011) segmented lesions based on the texture characteristics of corn diseases in the YCbCr color space, extracted lesion texture features with the spatial gray-level co-occurrence matrix, and classified corn diseases with a BP neural network, achieving an accuracy of 98%. Aravind et al. (2018) extracted bag-of-features and statistical-histogram-based texture features from the images and classified the diseases with a multi-class support vector machine, achieving a best average accuracy of 83.7%.

Recognizing crop diseases with machine learning algorithms requires manually designed features, which is time-consuming and laborious. In contrast, DL models learn target features from images autonomously and are therefore widely used in image recognition. Praveen et al. (2022) used FastAI with ResNet-32 to diagnose ductal carcinoma and achieved 93.60% recognition accuracy on the IDC dataset. Srinivasu et al. (2022) proposed an RNN model that integrates GRU and LSTM units with auxiliary memory components for predicting type 2 diabetes; the model achieved a correct recognition rate of 81.8%. For plant disease recognition, Nigam et al. (2023) used a fine-tuned EfficientNet-B4 model to recognize wheat diseases and obtained 99.35% recognition accuracy on their collected dataset. Al-Gaashani et al. (2023) proposed SANET, which combines the ResNet50 architecture with a kernel attention mechanism, for recognizing rice diseases, and obtained 98.71% recognition accuracy on a publicly available rice disease dataset.

In the above studies, the crop disease images were usually collected in the laboratory against simple backgrounds. In real environments, however, complex backgrounds make it difficult to distinguish disease features, so previous models struggle to identify specific diseases accurately. Max pooling can highlight the most salient features, help filter out some noise, and reduce interference from complex backgrounds. Sun et al. (2020) proposed a novel max pooling method for CNNs trained on noisy samples, and CNNs using this method classified noisy images much more accurately than those using traditional pooling methods. Lin et al. (2022b) proposed CAMFFNet for tobacco disease identification, whose multiple feature fusion module introduces additional max pooling branches for feature extraction at different locations in the network; it achieved a recognition accuracy of 89.71% on the test set.

At the same time, for complex background images, an attention mechanism can make the model focus on disease regions in a targeted manner, improving its ability to learn disease features and reducing the influence of noise, which improves accuracy. Hu et al. (2023) proposed a Class-Attention-based Lesion Proposal Convolutional Neural Network to locate disease objects against complex backgrounds and achieved a recognition accuracy of 92.56% on a self-constructed field strawberry disease dataset. Stephen et al. (2023) designed a self-attention-based ResNet architecture for rice disease classification that achieved an accuracy of 98.54% on four types of rice diseases.

The above studies clearly demonstrate the superiority of CNNs for plant disease identification. However, these models are heavyweight and require substantial computational resources, making them unsuitable for efficient deployment on mobile devices for timely disease identification. Therefore, lightweight networks that can be deployed on mobile devices are needed. Vishnoi et al. (2022) proposed a CNN model with fewer convolutional layers to reduce the computational burden, achieving 98% classification accuracy on four classes of apple diseases. Lin et al. (2022a) proposed a lightweight CNN model, GrapeNet, for recognizing specific grape diseases at different symptom stages, with a recognition accuracy of 97.85% on seven classes of grape diseases. Chen et al. (2022) proposed a lightweight CNN model, DFCANet, for recognizing corn diseases in real environments, with a classification accuracy of 98.47% on six classes of corn diseases.

Identifying corn leaf diseases in field environments faces several difficulties, such as complex background interference and the variability and irregularity of lesion areas. In addition, traditional CNN models for crop identification have a large number of parameters and require substantial computational resources, so they cannot be deployed on mobile devices and are difficult to scale widely.

To solve the above problems, we focus on corn diseases and propose FCA-EfficientNet, a lightweight CNN model with high recognition accuracy. On top of EfficientNet, we use an adaptive fusion (AF) module for shallow feature extraction, a fully-convolution-based coordinate attention (FCA) module to focus on disease areas in complex backgrounds, and improved normalization and activation functions to enhance recognition performance and convergence speed; we also remove structures that are redundant for the corn disease dataset to make the network easier to deploy. This study explores accurate recognition of corn disease images and provides new insights for plant disease recognition research. The main contributions of this study are as follows:

● Since field environments often contain complex background noise, we use a pooling layer to reduce the interference of the background with recognition results, and the AF module fuses the features of the pooling layer and the convolutional layer, so that the network extracts shallow information better and recognizes diseases more accurately.

● To focus the network on the lesion area while reducing the interference of background noise, we propose the FCA module, which uses a convolutional structure to extract spatial information, allowing the network to locate disease areas better and reducing recognition difficulty.

● To give the network better generalization ability, we adopt a more advanced normalization method and activation function that improve the robustness of the network.

● Because mobile devices have limited computing performance, we remove redundant structures from the model so that it infers faster and has fewer parameters, making it easier to deploy.

The paper is organized as follows. The “Introduction” section gives a brief overview of the research field, motivation, and objectives. The “Materials and Methods” section describes the source of the dataset and the methods used for data processing. The “Proposed method” section explains the technical details adopted in this paper. The next section presents the “Experimental results and analysis”. The “Conclusions” section draws conclusions and outlines future research directions. Finally, the last section lists the references cited in this paper.

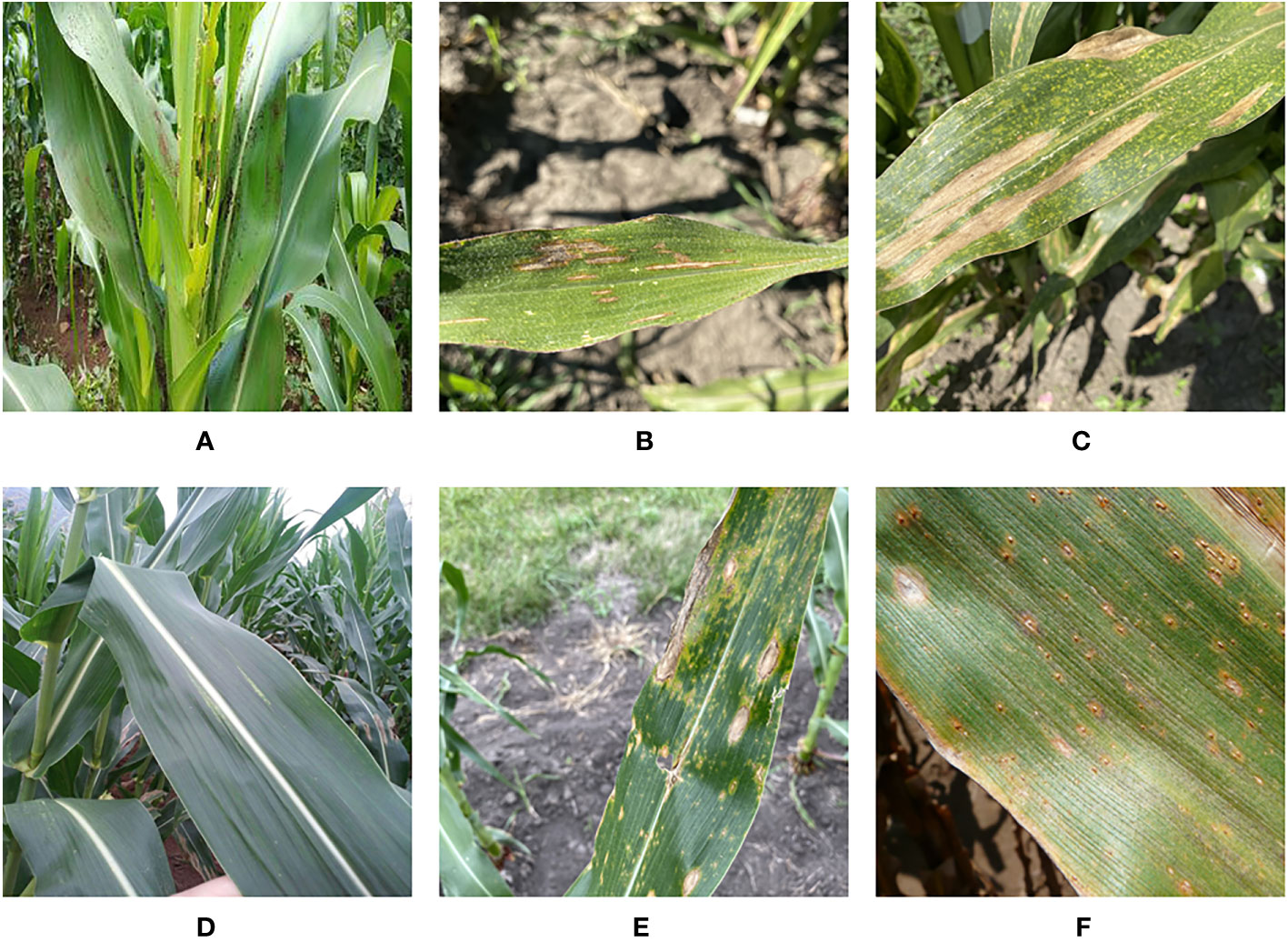

The corn disease data for this paper come from four sources. We obtained images of three corn diseases from the CD&S (Ahmad et al., 2021) dataset, namely northern leaf blight (NLB), gray leaf spot (GLS), and northern leaf spot (NLS). We obtained images of corn rust leaf (RL) under real conditions from the PlantDoc (Singh et al., 2020) dataset. In addition, we obtained images of corn leaves infected by fall armyworm from a public website (https://github.com/FXD96/Corn-Diseases). Finally, we collected images of healthy corn leaves (LH) and a few images of other diseases through web crawling. Figure 1 shows sample images from the corn disease dataset in a real environment.

Figure 1 Example of corn leaves: (A) Fall Armyworm. (B) Gray Leaf Spot. (C) Leaf Blight. (D) Leaf Healthy. (E) Northern Leaf Spot. (F) Rust Leaf.

In total, we collected 3258 images: 432 of Fall Armyworm, 613 of Gray Leaf Spot, 688 of Leaf Blight, 537 of Leaf Healthy, 551 of Northern Leaf Spot, and 437 of Rust Leaf. The images of each category were then divided into training, validation, and test sets in the ratio 8:1:1. Table 1 shows the distribution of the corn leaf disease images.
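A per-class (stratified) 8:1:1 split like the one described can be sketched as follows. The rounding rule (validation and test each receive ⌊n/10⌋ images of a class, training receives the rest), the shuffling seed, and the placeholder file names are assumptions of this sketch, so the per-split counts it produces may differ slightly from those in Table 1.

```python
import random

# Per-class image counts as reported in the text.
CLASS_COUNTS = {
    "Fall Armyworm": 432,
    "Gray Leaf Spot": 613,
    "Leaf Blight": 688,
    "Leaf Healthy": 537,
    "Northern Leaf Spot": 551,
    "Rust Leaf": 437,
}


def split_counts(n, ratios=(8, 1, 1)):
    """Return (train, val, test) sizes for n items; rounding leftovers go to train."""
    total = sum(ratios)
    n_val = n * ratios[1] // total
    n_test = n * ratios[2] // total
    return n - n_val - n_test, n_val, n_test


def stratified_split(items_by_class, ratios=(8, 1, 1), seed=0):
    """Shuffle each class independently and cut it into train/val/test by `ratios`."""
    rng = random.Random(seed)
    splits = {"train": [], "val": [], "test": []}
    for label, items in items_by_class.items():
        items = list(items)
        rng.shuffle(items)
        n_train, n_val, _ = split_counts(len(items), ratios)
        splits["train"] += [(path, label) for path in items[:n_train]]
        splits["val"] += [(path, label) for path in items[n_train:n_train + n_val]]
        splits["test"] += [(path, label) for path in items[n_train + n_val:]]
    return splits


# Hypothetical file names standing in for the real images.
dataset = {label: [f"{label}_{i}.jpg" for i in range(n)] for label, n in CLASS_COUNTS.items()}
splits = stratified_split(dataset)
print({name: len(pairs) for name, pairs in splits.items()})
# {'train': 2612, 'val': 323, 'test': 323}
```

Splitting each class separately keeps the class proportions of the full dataset in all three subsets, which matters here because the classes range from 432 to 688 images.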

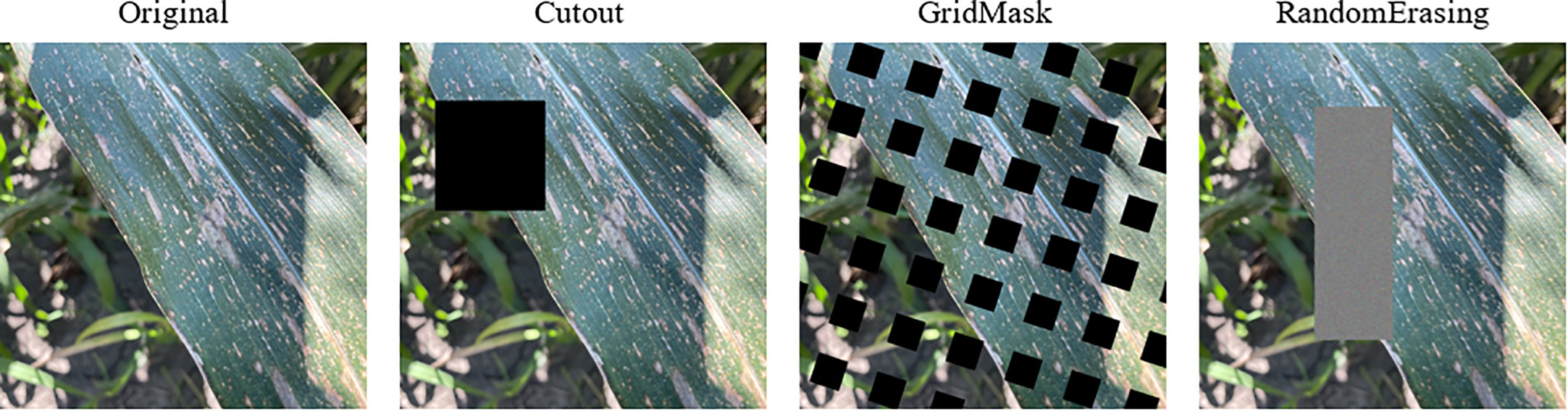

To achieve better generalization and robustness and avoid overfitting, DL models usually need a large amount of supporting data (Lin et al., 2023). Therefore, this paper uses three online data augmentation methods: Cutout (DeVries and Taylor, 2017), Grid Mask (Chen et al., 2020), and Random Erasing (Zhong et al., 2020). Figure 2 illustrates these online data augmentation methods.

Figure 2 Classical online data augmentation. The Cutout method randomly cuts out part of the sample and fills it with 0 pixel values. Grid Mask generates a mask with the same resolution as the original image, and then multiplies that mask with the original image. Random Erasing randomly selects a region and then overwrites it with a random value.
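The caption describes the three augmentations only in words. A minimal NumPy sketch of each follows, assuming uint8 images of shape (H, W, C). Every size, ratio, and range in it is an illustrative assumption, since the text does not give the settings used: the Random Erasing area and aspect-ratio ranges mirror the defaults of torchvision's `RandomErasing`, and `drop_frac` is this sketch's own parameterization of the grid rather than the notation of Chen et al.

```python
import numpy as np


def cutout(img, size, rng):
    """Cutout: zero a size x size square centred on a random pixel (clipped at borders)."""
    out = img.copy()
    h, w = img.shape[:2]
    cy, cx = rng.integers(h), rng.integers(w)
    out[max(cy - size // 2, 0):min(cy + size // 2, h),
        max(cx - size // 2, 0):min(cx + size // 2, w)] = 0
    return out


def grid_mask(img, d, drop_frac=0.5, offset=(0, 0)):
    """GridMask: build a 0/1 mask at the image resolution and multiply it in.
    In every d x d cell, a square of side drop_frac * d is set to 0."""
    h, w = img.shape[:2]
    side = int(round(d * drop_frac))
    rows = (np.arange(h) + offset[0]) % d < side
    cols = (np.arange(w) + offset[1]) % d < side
    mask = (~(rows[:, None] & cols[None, :])).astype(img.dtype)
    if img.ndim == 3:
        mask = mask[:, :, None]  # broadcast the mask over colour channels
    return img * mask


def random_erasing(img, rng, scale=(0.02, 0.33), ratio=(0.3, 3.3), max_tries=10):
    """Random Erasing: overwrite a random rectangle with random pixel values."""
    h, w = img.shape[:2]
    for _ in range(max_tries):
        area = rng.uniform(*scale) * h * w
        aspect = np.exp(rng.uniform(np.log(ratio[0]), np.log(ratio[1])))
        eh = int(round(np.sqrt(area * aspect)))
        ew = int(round(np.sqrt(area / aspect)))
        if 0 < eh < h and 0 < ew < w:
            y, x = rng.integers(h - eh + 1), rng.integers(w - ew + 1)
            out = img.copy()
            patch = out[y:y + eh, x:x + ew]  # view into `out`
            patch[...] = rng.integers(0, 256, size=patch.shape, dtype=np.uint8)
            return out
    return img.copy()  # no valid rectangle found; leave the image unchanged
```

Because all three functions return new arrays, they can be applied on the fly inside a data loader, which is what makes the augmentation "online": each epoch sees a differently masked copy of every training image.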

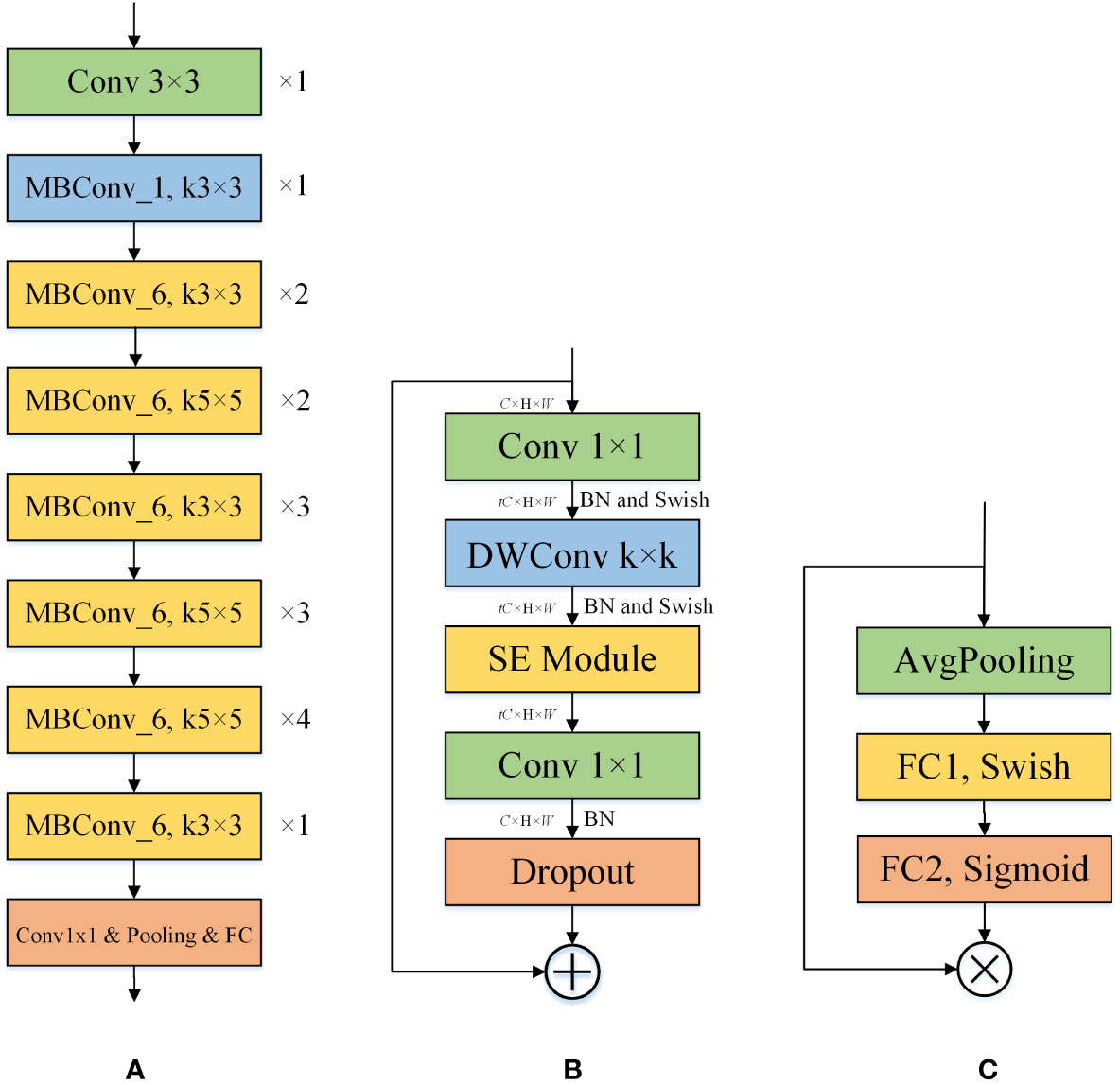

EfficientNet (Tan and Le, 2019) is a family of networks published by Google in May 2019, designed to improve model accuracy while keeping the parameter count relatively low. Considering deployment on mobile devices, we chose the EfficientNet-B0 architecture as the basis for our improvements. It consists of nine stages comprising convolutional layers, MBConv1 layers, MBConv6 layers, pooling layers, and a fully connected layer. The overall architecture of EfficientNet-B0 is shown in Figure 3.

Figure 3 The individual structures included in EfficientNet-B0. (A) The structure of EfficientNet-B0. ×n indicates that the layer is repeated n times, and kn×n indicates an n × n convolution kernel. (B) The structure of MBConv_t. k denotes the kernel size of the DWConv layer, and t indicates that the input channels are expanded by a factor of t. (C) The structure of SE, which enhances the channel features of the input feature map by learning the relationships between channels, improving model performance.
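Panel (C) describes SE only in words. A minimal NumPy sketch of the usual squeeze-and-excitation computation for a single feature map follows; as in EfficientNet, Swish sits between the two 1×1 convolutions (written here as matrix products) and a sigmoid produces the channel gates. The batch axis, BN, and the concrete reduced width C_r are left out, and the function and argument names are illustrative rather than taken from the paper.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def swish(x):
    return x * sigmoid(x)


def squeeze_excite(x, w1, b1, w2, b2):
    """SE block on one feature map x of shape (C, H, W).

    w1: (C_r, C) and w2: (C, C_r) play the role of the two 1x1 convolutions.
    """
    s = x.mean(axis=(1, 2))      # squeeze: global average pooling -> (C,)
    z = swish(w1 @ s + b1)       # reduce channels C -> C_r
    g = sigmoid(w2 @ z + b2)     # expand back to C; gates lie in (0, 1)
    return x * g[:, None, None]  # excite: rescale every channel by its gate
```

Each channel is multiplied by one scalar, so SE reweights channels but carries no information about where in the image a lesion lies; that limitation is what coordinate-style attention such as CA and FCA addresses.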

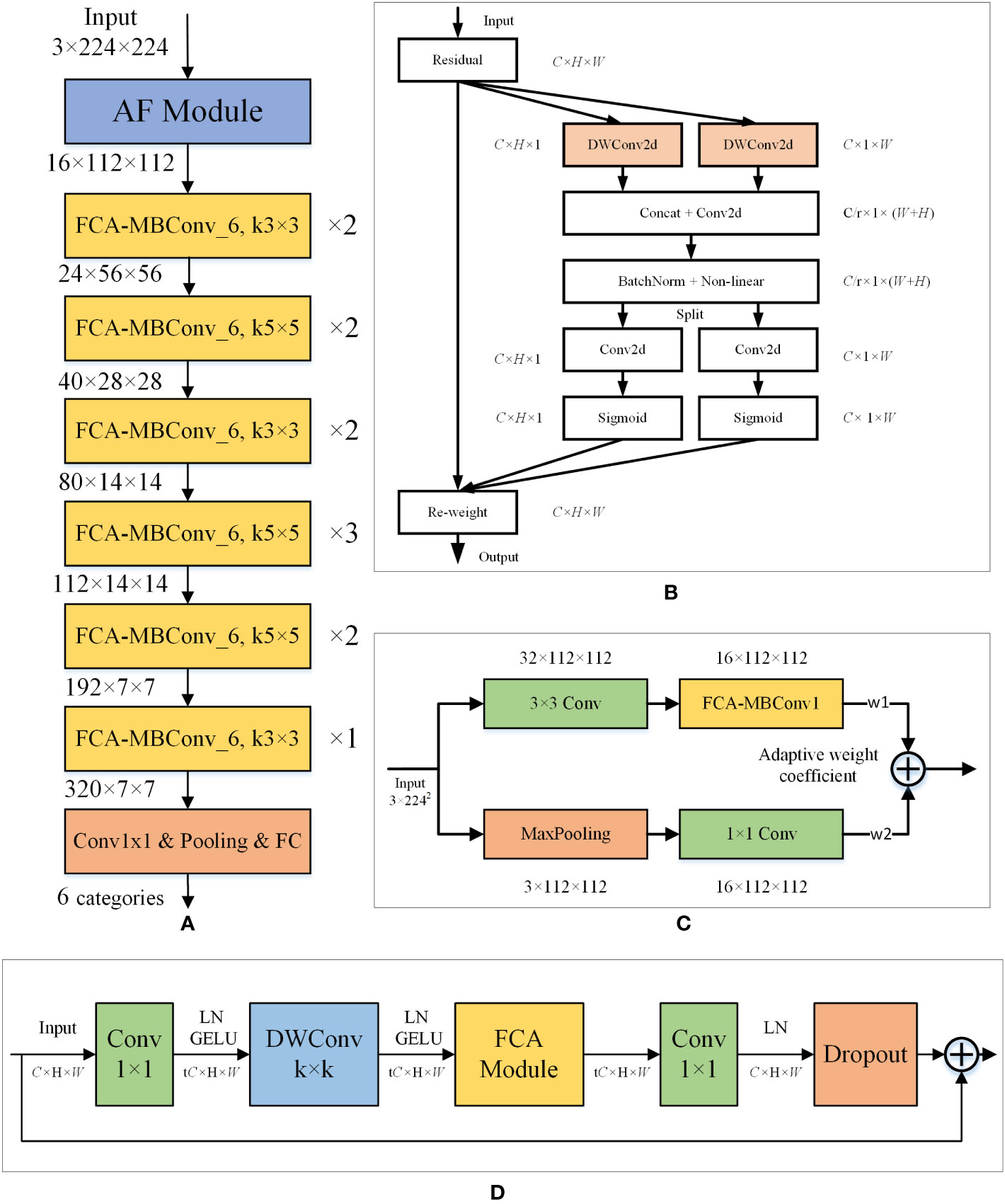

To identify corn disease images more accurately, we propose a new model based on the EfficientNet-B0 architecture, named FCA-EfficientNet. Table 2 provides a detailed layer-by-layer description of FCA-EfficientNet. The overall architecture of FCA-EfficientNet is shown in Figure 4.

Figure 4 Layered architecture diagram of FCA-EfficientNet. (A) The structure of FCA-EfficientNet. ×n indicates that the layer is repeated n times, and kn×n indicates an n × n convolution kernel. (B) The structure of the FCA module. Spatial-direction attention information is extracted through convolutions, allowing the network to focus better on the disease region and reduce interference from complex backgrounds. (C) The structure of the AF module. The AF module uses max pooling and a 1×1 convolution, which reduces the interference of complex backgrounds and allows the model to extract shallow features better. (D) The structure of FCA-MBConv_t. k denotes the kernel size of the DWConv layer, and t indicates that the input channels are expanded by a factor of t.

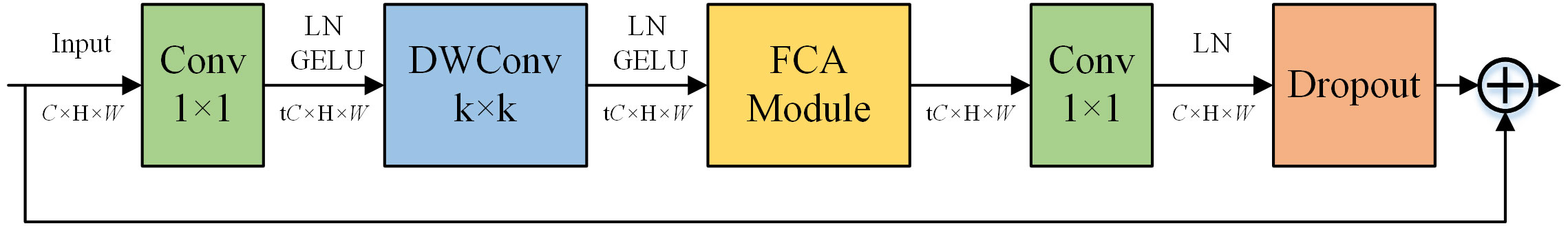

To avoid interference from complex backgrounds, we replace the SE module in the MBConv structure with the FCA module, which enables the network to focus better on the lesion area. Figure 5 shows the overall structure of the FCA-MBConv module.

Figure 5 The structure of the FCA-MBConv. k denotes the size of the convolution kernel of the DWConv layer. t indicates that the input channel is expanded by a factor of t.

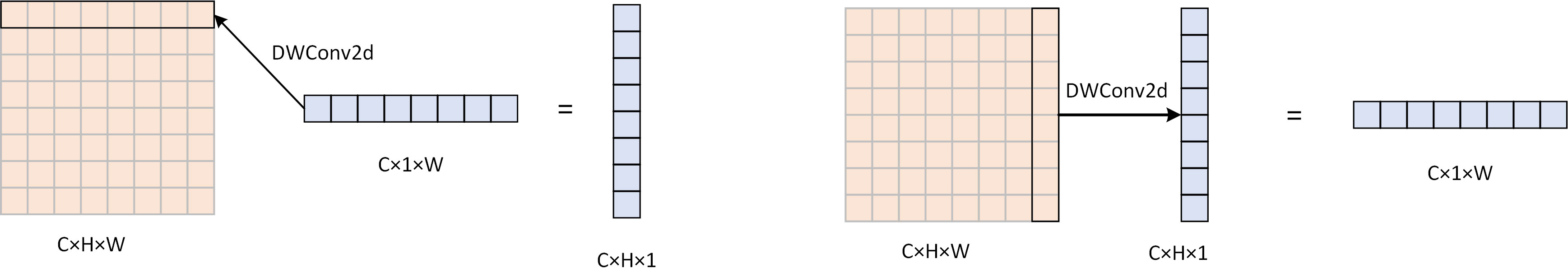

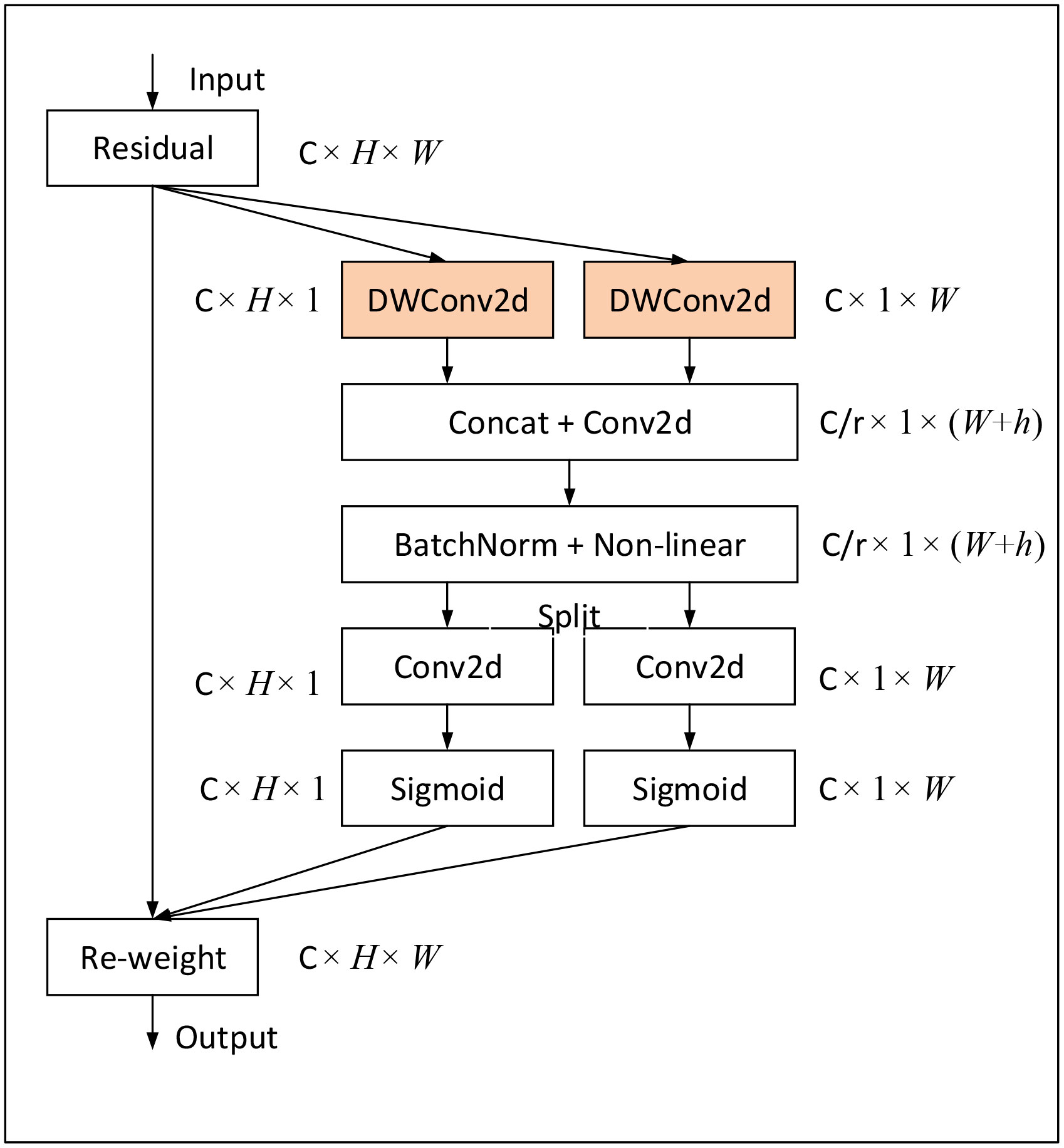

The FCA module is based on Coordinate Attention (CA) (Hou et al., 2021). CA employs global average pooling to aggregate features along the horizontal and vertical directions, but averaging fails to capture critical disease information in the feature map for fine-grained recognition tasks such as corn disease recognition, creating a bottleneck in recognition accuracy. To overcome this limitation, we remove the global average pooling layer and instead employ a depthwise convolution with a 1 × W kernel in the horizontal direction and one with an H × 1 kernel in the vertical direction, as shown in Figure 6. This modification enables the attention module to automatically capture and aggregate salient disease information along each spatial direction, strengthening its ability to capture long-range dependencies between disease features while preserving their precise locations. The structure of the FCA module is shown in Figure 7.

Figure 6 The computational procedure of DWConv2d. A DW convolution of size 1 × W aggregates feature information along the width direction of the image and yields an attention map of size H × 1 along the width direction. Similarly, a DW convolution of size H × 1 yields an attention map of size 1 × W along the height direction.

Figure 7 The structure of the FCA module. We use DWConv2d to replace the average pooling layer in CA; it captures critical disease information through convolution while being less susceptible to interference from complex backgrounds.

Let the input feature map be x_c, where c indexes the feature-map channels. New feature maps are obtained by two convolution operations for feature extraction.

Depthwise convolutions with 1 × W and H × 1 kernels are applied in the horizontal and vertical directions, respectively, yielding two output feature maps.

The two output feature maps are concatenated along the spatial dimension and passed through a 1×1 convolutional layer followed by a non-linear activation function δ to obtain the output feature map f.

f is split into two outputs along the spatial dimension, and each is transformed by a 1×1 convolution into a tensor with the same number of channels as the input. The two outputs are denoted g^h and g^w:

The output of the FCA module is:

Finally, a Conv layer reduces the feature dimension and a residual connection is applied; the output of the FCA-MBConv module is:
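The steps above can be sketched in NumPy for a single feature map (no batch axis). Several details are assumptions not stated in the text: no biases or BN, ReLU for δ (CA itself uses h-swish), sigmoid gates on g^h and g^w, and CA's multiplicative reweighting y_c(i, j) = x_c(i, j) · g^h_c(i) · g^w_c(j). The residual branch of FCA-MBConv is omitted, and all function names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def directional_dwconv(x, k_h, k_w):
    """Depthwise 1 x W and H x 1 convolutions (no padding) on x of shape (C, H, W).

    k_h: (C, W) one 1 x W kernel per channel -> z_h of shape (C, H)
    k_w: (C, H) one H x 1 kernel per channel -> z_w of shape (C, W)
    """
    z_h = np.einsum("chw,cw->ch", x, k_h)  # learned weighted sum along each row
    z_w = np.einsum("chw,ch->cw", x, k_w)  # learned weighted sum along each column
    return z_h, z_w


def fca(x, k_h, k_w, w1, w_h, w_w):
    """Hypothetical FCA forward pass; w1: (C_r, C), w_h and w_w: (C, C_r) act as 1x1 convs."""
    _, H, _ = x.shape
    z_h, z_w = directional_dwconv(x, k_h, k_w)
    f = np.concatenate([z_h, z_w], axis=1)  # join both directions along the spatial axis
    f = np.maximum(w1 @ f, 0.0)             # shared 1x1 conv, then delta (ReLU assumed)
    f_h, f_w = f[:, :H], f[:, H:]           # split back into height and width parts
    g_h = sigmoid(w_h @ f_h)                # (C, H) attention along the height
    g_w = sigmoid(w_w @ f_w)                # (C, W) attention along the width
    return x * g_h[:, :, None] * g_w[:, None, :]
```

With uniform kernels (every weight 1/W or 1/H), `directional_dwconv` reproduces CA's directional average pooling exactly, so CA is the special case of this learned aggregation in which every position counts equally. Note that the kernel sizes depend on the feature-map size, so in a framework implementation each FCA instance must be built for a fixed input resolution.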

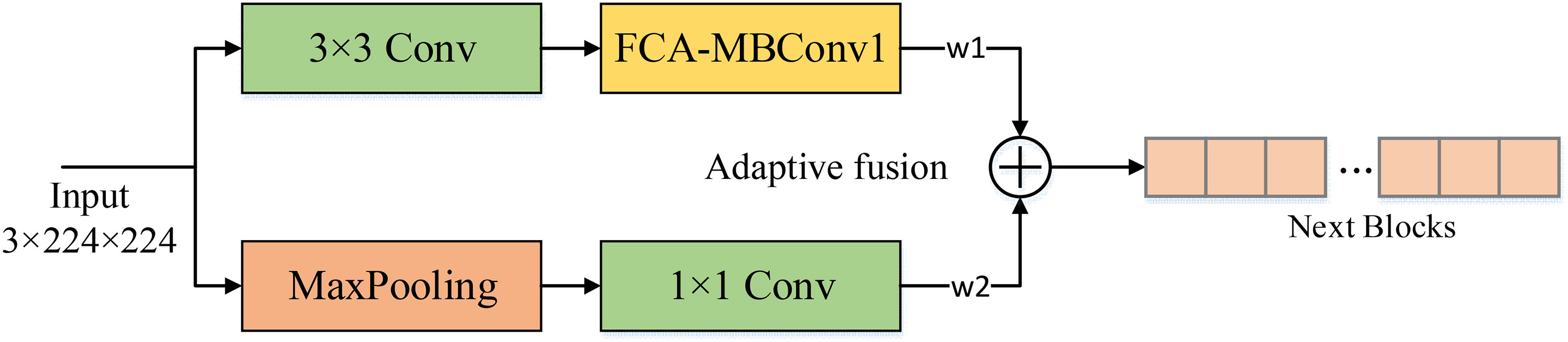

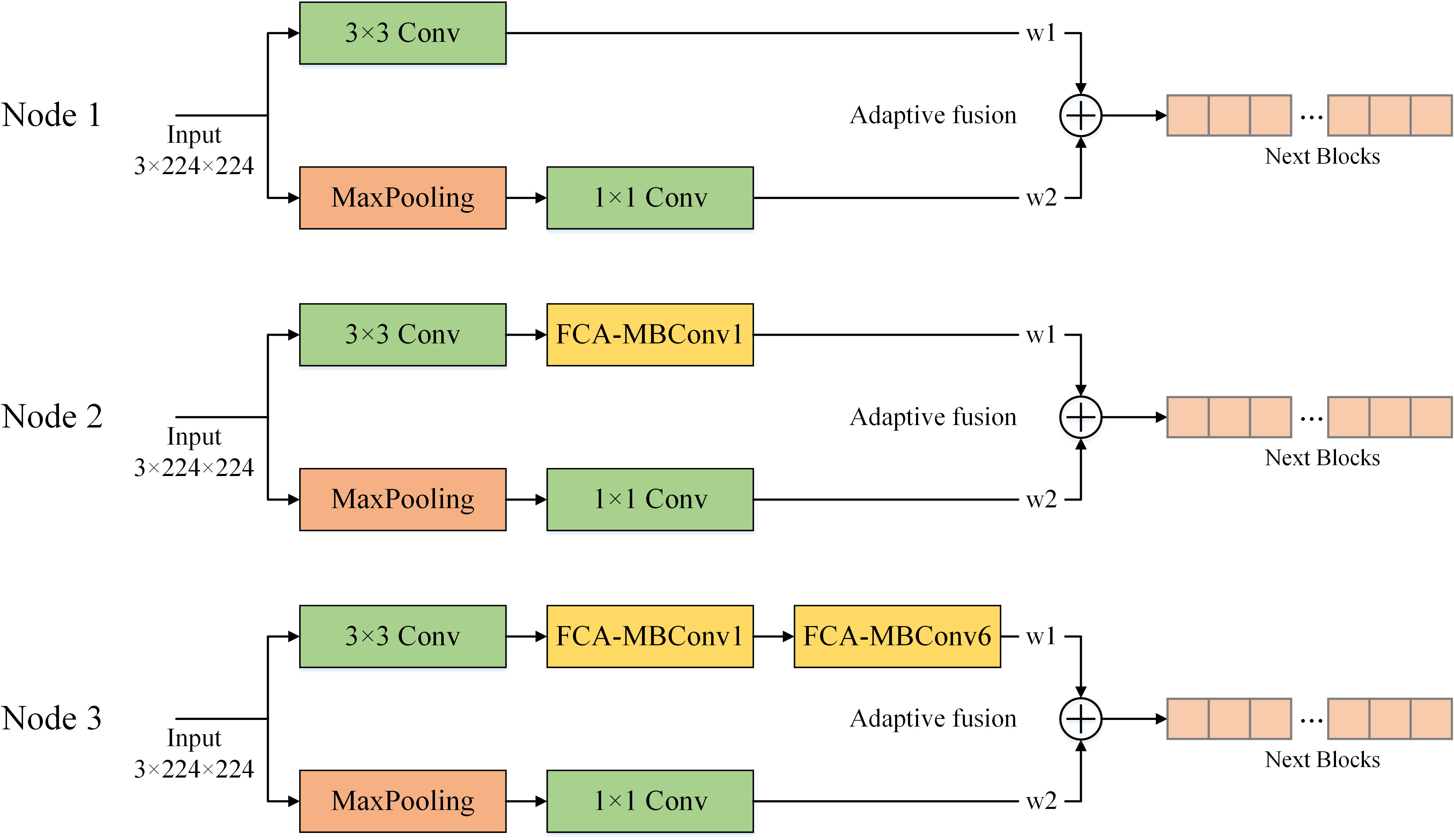

In real-world crop disease images, complex background noise often makes it difficult to extract shallow features with convolutional layers alone, which can significantly reduce the recognition accuracy of the model. To address this issue, we introduce a max-pooling layer to assist shallow feature extraction. Unlike conventional feature fusion approaches based on simple addition or concatenation, we adopt two learnable weighting coefficients to weigh the feature maps produced by the two paths. This lets the model dynamically allocate weights to the pooled and convolved feature maps according to the complexity of the background, extracting shallow features effectively while avoiding interference from complex background noise, which significantly improves the effectiveness and robustness of the model. The structure of the AF module is shown in Figure 8.

Figure 8 The structure of the AF module. The noise is filtered out using the maximum pooling layer and then dynamically introduced into the backbone network by adaptive parameters to reduce the effect of background noise on the model. w1, w2 are learnable weight coefficients.

Given the input image x, the max-pooling branch and the backbone produce two outputs, y_m and y_f, respectively. We weight them with the learnable parameters w1 and w2 to obtain the final output feature map y_AF:
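The equation did not survive in this copy; from the description, the fusion is presumably the weighted sum y_AF = w1 · y_m + w2 · y_f. The NumPy sketch below additionally assumes that the max-pooling branch uses non-overlapping 2 × 2 pooling followed by a 1 × 1 convolution (as Figure 4C suggests) so that y_m matches the backbone output y_f in shape, and that w1 and w2 are unconstrained scalars; in a trained model they would be learnable parameters. The names are illustrative.

```python
import numpy as np


def max_pool2d(x, k):
    """Non-overlapping k x k max pooling on (C, H, W); H and W must be divisible by k."""
    C, H, W = x.shape
    return x.reshape(C, H // k, k, W // k, k).max(axis=(2, 4))


def adaptive_fusion(x, y_f, w_proj, w1, w2, k=2):
    """AF sketch: y_AF = w1 * y_m + w2 * y_f.

    x:      input to the module, (C_in, H, W)
    y_f:    backbone output at the fusion point, (C_out, H // k, W // k)
    w_proj: (C_out, C_in) 1x1 convolution that matches the channel counts
    w1, w2: fusion weights (plain floats here; learnable in a real model)
    """
    y_m = np.einsum("oc,chw->ohw", w_proj, max_pool2d(x, k))  # max pooling + 1x1 conv branch
    return w1 * y_m + w2 * y_f
```

Learning w1 and w2 instead of fixing them lets training decide how much of the noise-suppressing pooled signal to mix into the backbone at this depth.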

In CNN models, normalization techniques are used to adjust the distribution of the input data to better fit the training process of the neural network. The formula for normalization is as follows.

where x denotes the input feature map and f(x) the output feature map after normalization.
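The formula itself is missing from this copy. The standard form shared by BN and LN, which the text presumably refers to, is given below, with learnable scale γ and shift β, a small constant ε for numerical stability, and the mean μ and variance σ² computed over the m values that Figure 9 assigns to each method (B × H × W per channel for BN, C × H × W per sample for LN):

$$
f(x) = \gamma \, \frac{x - \mu}{\sqrt{\sigma^{2} + \epsilon}} + \beta,
\qquad
\mu = \frac{1}{m} \sum_{k=1}^{m} x_k,
\qquad
\sigma^{2} = \frac{1}{m} \sum_{k=1}^{m} \left(x_k - \mu\right)^{2}
$$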

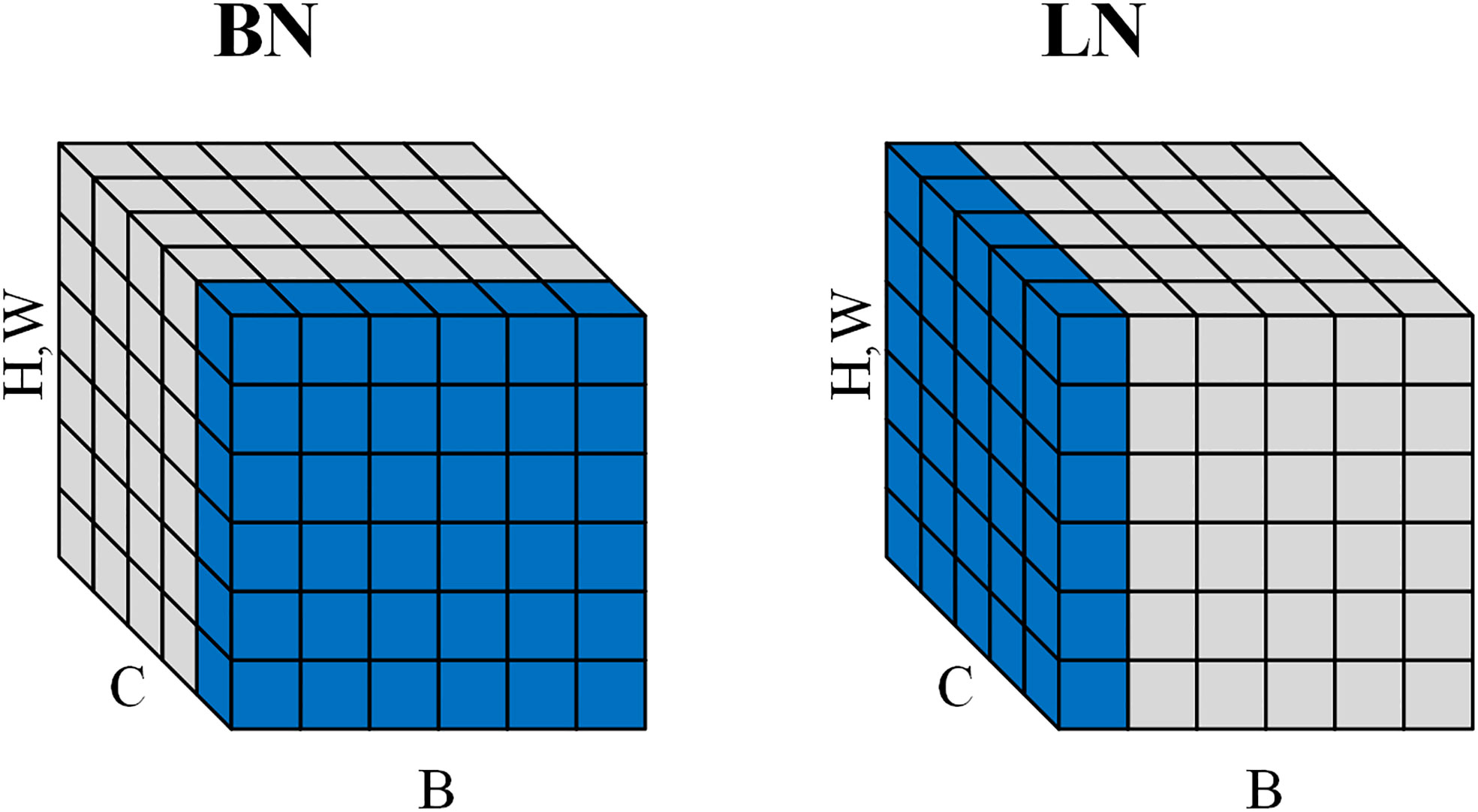

EfficientNet uses batch normalization (BN) (Ioffe and Szegedy, 2015), which accelerates convergence during training; however, BN behaves differently during training and testing (batch statistics versus running estimates), which may hurt model performance. Therefore, we adopt layer normalization (LN) (Ba et al., 2016) instead of BN; LN behaves identically during training and testing and is easier to implement. LN normalizes each sample using the mean and variance of that sample's own features, which reduces memory consumption during training and improves the generalization ability of the model. As shown in Figure 9, BN normalizes along the batch direction, whereas LN normalizes along the channel direction, which makes LN independent of batch size.

Figure 9 Normalization directions for BN and LN. BN does normalization along the batch direction and calculates the mean of B×H×W. LN does normalization along the channel direction and calculates the mean of C×H×W.
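The difference shown in Figure 9 comes down to which axes the statistics are computed over. The NumPy sketch below omits the learnable scale and shift and shows only training-mode BN (at test time BN switches to running averages, which is the train/test inconsistency noted above). In PyTorch, per-sample statistics over C × H × W like these are what `nn.GroupNorm(1, C)` computes; the text does not say which layer implementation the authors used.

```python
import numpy as np


def batch_norm(x, eps=1e-5):
    """BN (training mode): statistics per channel over the B, H, W axes. x: (B, C, H, W)."""
    mu = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def layer_norm(x, eps=1e-5):
    """LN: statistics per sample over the C, H, W axes. x: (B, C, H, W)."""
    mu = x.mean(axis=(1, 2, 3), keepdims=True)
    var = x.var(axis=(1, 2, 3), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)
```

Normalizing one image alone or inside a batch gives the same LN output, while the BN output changes with the other images in the batch; this is why LN is unaffected by batch size.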

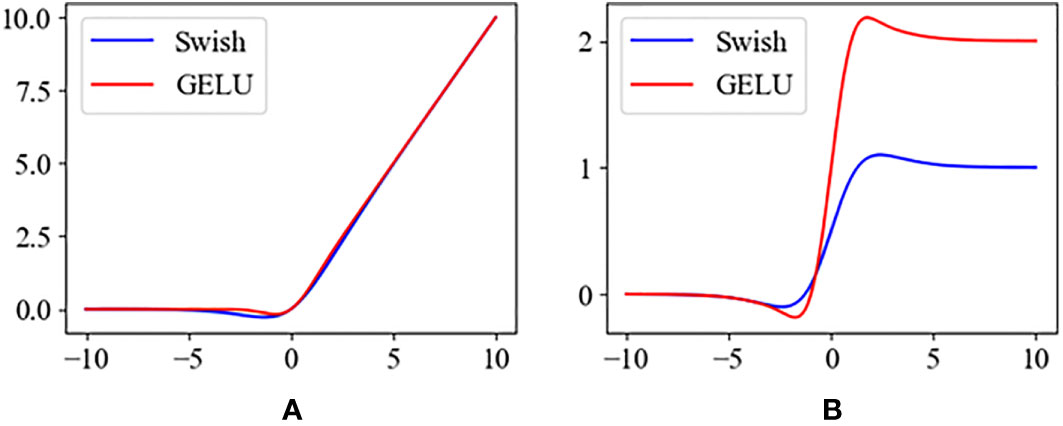

To further speed up convergence, we replaced Swish (Ramachandran et al., 2017) with the Gaussian error linear unit (GELU) (Hendrycks and Gimpel, 2016). Their formulas are as follows.

The plots of Swish and GELU functions and their derivatives are shown in Figure 10. Compared to Swish, GELU tends to converge faster, which makes it more suitable for model training.

Figure 10 (A) The activation functions of the Swish and the GELU. (B) The derivatives of Swish and GELU.
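The formulas referred to above are missing from this copy. The standard definitions are Swish(x) = x · σ(βx), which with β = 1 is the SiLU presumably meant in the later BN/LN comparison, and GELU(x) = x · Φ(x), where Φ is the standard normal CDF. The scalar Python sketch below evaluates both functions and their derivatives, the quantities Figure 10 plots. It uses the exact erf form of GELU rather than the tanh approximation and is meant for moderate inputs only (`math.exp` overflows for very large negative x).

```python
import math


def swish(x, beta=1.0):
    """Swish(x) = x * sigmoid(beta * x); beta = 1 gives SiLU."""
    return x / (1.0 + math.exp(-beta * x))


def gelu(x):
    """Exact GELU(x) = x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def swish_grad(x):
    """d/dx [x * sigmoid(x)] = s + x * s * (1 - s)."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s + x * s * (1.0 - s)


def gelu_grad(x):
    """d/dx [x * Phi(x)] = Phi(x) + x * phi(x), phi being the standard normal PDF."""
    phi = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0))) + x * phi


for v in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"x={v:+.1f}  swish={swish(v):+.4f}  gelu={gelu(v):+.4f}  "
          f"swish'={swish_grad(v):+.4f}  gelu'={gelu_grad(v):+.4f}")
```

Both curves pass through the origin with slope 0.5; GELU approaches the identity and zero more quickly on either side, so its gradient is steeper around the origin.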

EfficientNet contains multiple MBConv layers, and simply repeating them lets the model learn complex features and achieve better performance. However, EfficientNet was trained on a large dataset such as ImageNet, with many samples and categories, whereas our corn disease dataset has far fewer of both. As a result, the original network contains some redundant structures. Although residual connections limit their effect on accuracy, these structures make the model perform unnecessary computations, which slows recognition. Therefore, we remove these redundant structures to reduce the number of parameters and increase computation speed without affecting recognition accuracy, which facilitates deployment on mobile devices.
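To make the cost of these repeated blocks concrete, the sketch below tallies the learnable parameters of one SE-based MBConv block under EfficientNet's usual conventions: bias-free convolutions each followed by BN with two learnable values per channel (running statistics not counted), and SE with biases and a reduced width of a quarter of the block's input channels. It describes the original EfficientNet-B0 blocks, not FCA-MBConv, and the helper names are hypothetical.

```python
def conv_params(c_in, c_out, k, groups=1, bias=False):
    """Weights of a k x k convolution (plus an optional bias)."""
    return (c_in // groups) * c_out * k * k + (c_out if bias else 0)


def bn_params(c):
    return 2 * c  # learnable scale and shift per channel


def mbconv_params(c_in, c_out, expand, k, se_ratio=0.25):
    """Learnable parameters of one SE-based MBConv block in the EfficientNet style."""
    hidden = c_in * expand
    total = 0
    if expand != 1:  # MBConv1 blocks have no expansion layer
        total += conv_params(c_in, hidden, 1) + bn_params(hidden)                   # 1x1 expansion
    total += conv_params(hidden, hidden, k, groups=hidden) + bn_params(hidden)      # depthwise k x k
    se = max(1, int(c_in * se_ratio))
    total += conv_params(hidden, se, 1, bias=True) + conv_params(se, hidden, 1, bias=True)  # SE
    total += conv_params(hidden, c_out, 1) + bn_params(c_out)                       # 1x1 projection
    return total


# A repeated Stage-7 block of EfficientNet-B0: MBConv6, 5x5 kernel, 192 -> 192 channels.
print(mbconv_params(192, 192, expand=6, k=5))  # 587952
# A repeated Stage-5 block: MBConv6, 3x3 kernel, 80 -> 80 channels.
print(mbconv_params(80, 80, expand=6, k=3))  # 102900
```

Under these assumptions, one repeated Stage-7 block holds nearly six times as many parameters as a repeated Stage-5 block, almost all of them in the two 1×1 convolutions, so trimming the wider late stages saves the most parameters.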

Accuracy (Acc), precision (P), recall (R), and F1 score (F1) are used as the evaluation metrics in this study and are defined as follows:

Where TP, TN, FP, and FN are the abbreviations used to represent the count of true positive, true negative, false positive, and false negative samples, respectively. Furthermore, the parameter count of the model is also considered to evaluate its performance.
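The formulas themselves did not survive in this copy; the standard definitions are Acc = (TP + TN)/(TP + TN + FP + FN), P = TP/(TP + FP), R = TP/(TP + FN), and F1 = 2PR/(P + R). For the six-class problem, the sketch below assumes (the text does not say) that P, R, and F1 are computed per class in one-vs-rest fashion and then macro-averaged, and reports overall accuracy as the fraction of correctly classified images. The row/column convention of the confusion matrix is stated in the docstring.

```python
import numpy as np


def classification_metrics(cm):
    """Accuracy plus macro-averaged precision, recall and F1 from a confusion matrix.

    cm[i, j] = number of samples whose true class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k but actually another class
    fn = cm.sum(axis=1) - tp  # actually class k but predicted as another class
    precision = tp / np.maximum(tp + fp, 1e-12)
    recall = tp / np.maximum(tp + fn, 1e-12)
    f1 = 2 * precision * recall / np.maximum(precision + recall, 1e-12)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
    }
```

For example, `classification_metrics([[8, 2], [1, 9]])` gives an accuracy and a macro recall of 0.85, with per-class precisions of 8/9 and 9/11.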

This study used Python 3.7.13 and the PyTorch 1.12.1 deep learning framework for experimentation. All experiments were conducted on Windows 10 with an Intel(R) Xeon(R) W-1390 CPU and an NVIDIA RTX3090 GPU. The training hyperparameters for all models were set to the configurations listed in Table 3.

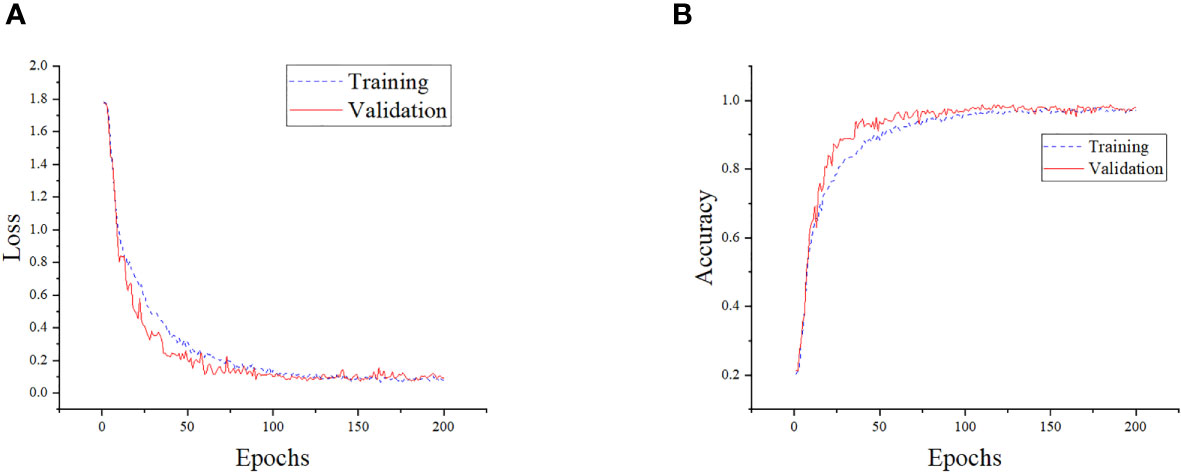

The performance of the model on the training and validation sets is shown in Figure 11. To assess the recognition performance of the model, we conducted a separate analysis of its recognition capabilities on various categories of disease images in the test set. By employing a comprehensive set of evaluation metrics, we performed a quantitative analysis and the experimental results are presented in Table 4. As shown in the table, our model achieved an accuracy of up to 100% in identifying various types of corn diseases, with none falling below 95%. This demonstrates the model’s precise recognition of corn diseases. Notably, the model’s performance in identifying Northern Leaf Spot images was exceptional, with all evaluation metrics reaching the highest levels. Conversely, the model’s recognition performance on Rust Leaf images was comparatively lower, possibly due to the similarity to images of other categories. Additionally, the average precision, recall, and F1 scores of 98.63%, 98.76%, and 98.68%, respectively, demonstrate the model’s superior overall performance, making it highly effective in accomplishing the corn disease classification task.

Figure 11 Training details of the model. (A) Training loss and validation loss of the model. (B) Training accuracy and validation accuracy of the model.

Figure 12 presents a confusion matrix that visualizes the recognition performance of the designed model on corn disease images. Its horizontal and vertical axes show the true and predicted classes, respectively, and the diagonal entries give the numbers of correct predictions. The confusion matrix shows that the proposed model effectively identifies multiple classes of corn disease images, as most images in the dataset are classified correctly. The observed misclassifications may be attributed to small inter-class differences between certain categories and to background interference. Notably, the model correctly classifies all images of the GLS, LH, and NLS categories while keeping the false-positive rate low for the other categories. These results underscore the model's precision and reliability in identifying corn diseases.

To determine the optimal fusion location, we experimented with three nodes in the shallow network: after the first convolutional layer, after the first FCA-MBConv module, and after the second FCA-MBConv module. Figure 13 shows the different fusion nodes, which were selected for their potential to improve the model's performance. The results in Table 5 indicate that adding AF at Node1, Node2, or Node3 improved various evaluation metrics while keeping a similar parameter count. Notably, applying AF at shallower layers yielded better recognition results than at deeper layers. The improvement was most significant when AF was applied at Node2, with a 0.92% increase in recognition accuracy and a 0.91% increase in F1-score. These findings suggest that incorporating AF at specific locations in the network can effectively enhance its performance.

Figure 13 Different nodes for adaptive feature fusion. Node 1 fuses after the convolution layer, node 2 after the features extracted by the first FCA-MBConv, and node 3 after the second FCA-MBConv layer.

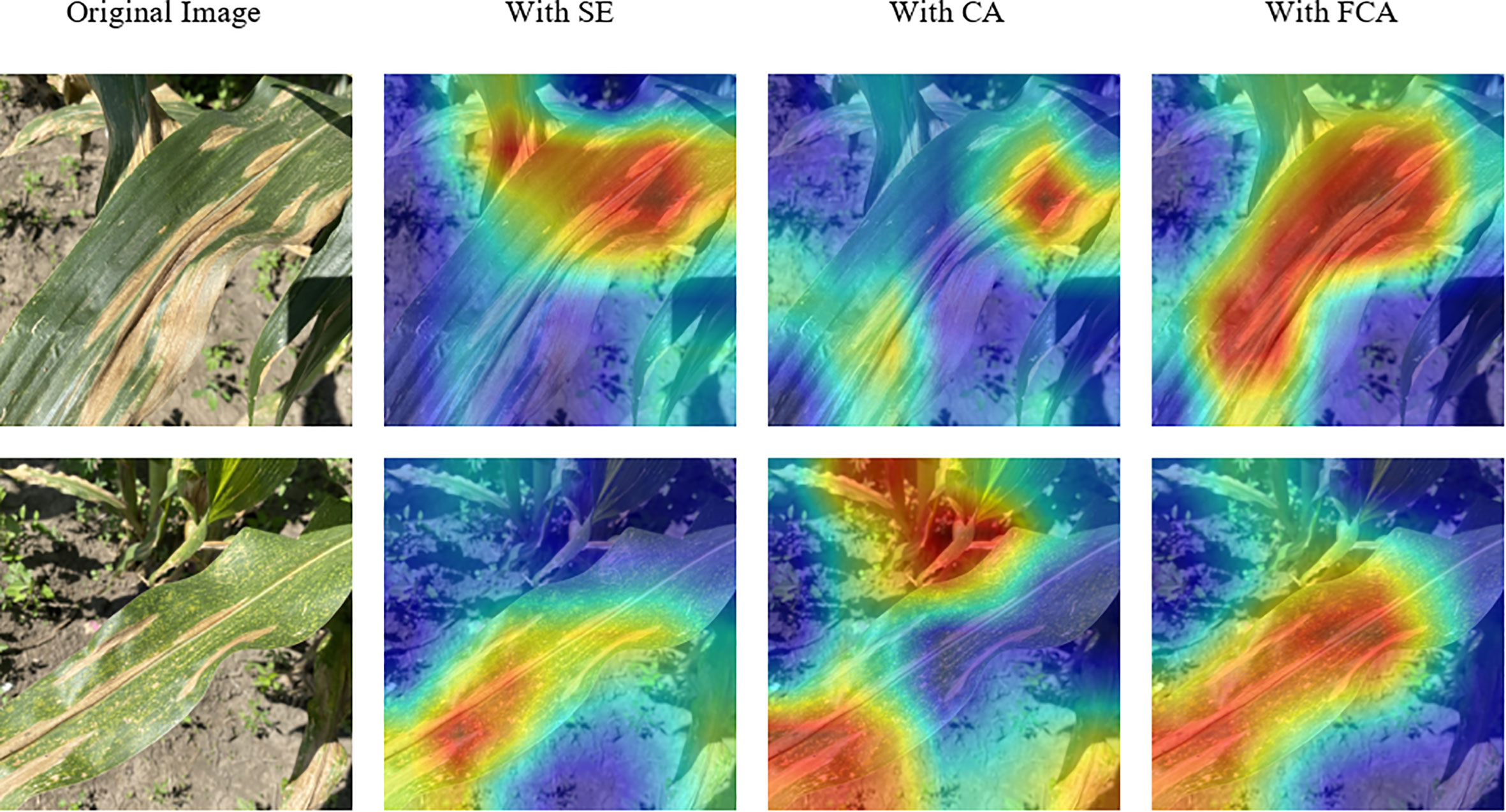

Different attention mechanisms significantly affect the performance of CNN models, and adding a suitable attention mechanism can improve recognition results. This study compared the effects of three attention modules on model performance: SE, CA, and our proposed FCA. As shown in Table 6, adding the CA module did not significantly improve the overall recognition performance of the model, and the F1 score even decreased. In contrast, adding the FCA module significantly improved the model's performance, increasing recognition accuracy by 0.93%. This result verifies that the FCA module effectively promotes the model's focus on disease information, leading to better recognition results.

To analyze how well models with different attention mechanisms learn disease information, we used Grad-CAM (Selvaraju et al., 2017) to generate class activation maps for visualization, as shown in Figure 14. The first column shows the original disease image, and the other columns show the visualization results of the different models. The first row shows that the FCA module lets the model focus more comprehensively on the primary diseased regions. The second row reveals significant disparities between the models: the model with the SE module does not attend adequately to the entire diseased area, and the model with the CA module focuses mainly on background information, whereas the FCA module precisely localizes the distinctive diseased feature region. These results indicate that the FCA module promotes the model's attention to disease information and is more suitable for identifying corn diseases.

Figure 14 Visualization results of models with different attention mechanisms. The red regions indicate the areas on which the model focuses.
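Grad-CAM (Selvaraju et al., 2017) weights each feature map of a chosen convolutional layer by the spatial mean of the class score's gradient with respect to that map, sums the weighted maps, and keeps only the positive part. A NumPy sketch of that final step follows. It assumes the activations and gradients have already been captured from the trained model (for example, through framework hooks), omits upsampling to the input resolution and the colour overlay, and does not reflect which layer the authors visualized.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map from one convolutional layer.

    activations: (K, H, W) feature maps A^k of the chosen layer
    gradients:   (K, H, W) d y^c / d A^k for the target class score y^c
    Returns an (H, W) map scaled to [0, 1].
    """
    alpha = gradients.mean(axis=(1, 2))  # channel weights: global-average-pooled gradients
    cam = np.maximum(np.tensordot(alpha, activations, axes=1), 0.0)  # ReLU(sum_k alpha_k A^k)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The ReLU keeps only regions that increase the target class score, which is why the red areas in Figure 14 can be read as evidence the model used for its decision.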

To investigate the impact of different normalization methods and activation functions on the model, we conducted comparative experiments combining BN or LN with SiLU or GELU. As shown in Table 7, replacing BN with LN reduces model complexity, as evidenced by the lower Flops, while the parameter count remains almost unchanged. In addition, the combination of LN and GELU yields the highest recognition accuracy and precision and the second-highest recall and F1-score. LN's consistent behavior during training and testing, together with the roughly 3% reduction in Flops, makes it more suitable for model deployment.

We removed some redundant layers in Stages 5, 6, and 7; the test-set performance of all resulting networks is shown in Table 8. Removing only one redundant layer does not significantly decrease the model's performance (rows 6, 9, and 11 in the table), and the model still performs well even after all redundant layers are removed (row 1). However, removing all redundant layers in the shallower stages while keeping the same number of layers in Stage 7 may lead to overfitting and a significant drop in performance (row 3). Considering the accuracy, parameter count, and computational complexity of the models, we finally selected 2 layers in Stage 5, 3 layers in Stage 6, and 2 layers in Stage 7 (row 4).

This study conducted ablation experiments to demonstrate how each proposed component improves model performance. Table 9 presents the results on the test set. Introducing the AF module, the FCA module, and the combination of LN and GELU improved accuracy by 0.9%, 0.9%, and 0.3%, respectively. Moreover, the optimized network structure improved accuracy on the test set by a further 0.3% while reducing the parameter count. Overall, the improved model has 0.58M fewer parameters than the original EfficientNet model and is 2.4% more accurate. These findings show that the designed model effectively learns disease feature information in corn disease images, achieving superior recognition performance with fewer parameters after optimization.

CNNs have shown superior performance in image classification, and CNN-based classification models have been tailored to many different domains. To demonstrate the classification performance of the designed model, this study compared it with existing classic CNN models on corn disease recognition under the same training conditions. The comparison covered models of varying sizes, including VGG16, ResNet50, ShuffleNetV2, and MobileNetV2; the accuracy curves of each model on the validation set during 200 epochs of training are shown in Figure 15. The validation curves show that, compared with these classic models, the proposed model reaches higher accuracy faster and with less oscillation, demonstrating better accuracy and stability.

Table 10 reports the recognition performance of the different models on the test set and compares them in terms of accuracy, precision, recall, F1-score, parameter count, FLOPs, and inference time. Among the existing classic CNN models, VGG16, ResNet50, and DenseNet169 all reach an accuracy of around 95%, while lightweight models such as MobileNet and ShuffleNet are lower, at around 94%. In contrast, our model achieves an accuracy of 98.77% with only 3.44M parameters. Its precision, recall, and F1-score all exceed 98% while it has the fewest parameters, indicating strong generalization ability and a clear advantage over the other models; this makes it well suited to recognizing corn disease images.

¹ Inference time measured on an NVIDIA RTX 3090.
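Table 10 does not state how precision, recall, and F1-score are averaged across the disease classes. A minimal sketch assuming macro averaging (the unweighted mean of per-class scores, as in scikit-learn's `average="macro"` option), computed from a confusion matrix; the function name and the toy matrix are hypothetical.

```python
from typing import Dict, List


def macro_metrics(confusion: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 from a confusion matrix.

    confusion[i][j] counts test images of true class i predicted as class j.
    A class with no predictions (or no samples) gets precision (or recall) 0.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted = sum(confusion[i][k] for i in range(n))  # column sum
        actual = sum(confusion[k])                          # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,  # mean of per-class F1 scores
    }


if __name__ == "__main__":
    toy = [[8, 2], [1, 9]]  # made-up two-class example, not data from Table 10
    for name, value in macro_metrics(toy).items():
        print(f"{name}: {value:.4f}")
```

With imbalanced classes, macro and weighted averages can differ noticeably, so stating the averaging scheme makes such comparisons easier to reproduce.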

To further verify the robustness of the model, we adjusted the contrast and brightness of the test-set images and measured recognition accuracy under different lighting conditions. The contrast or brightness of each image was scaled by one of four factors (0.5, 0.67, 1.5, and 2). Figure 16 shows images before and after adjustment.
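The text does not state which library performed the adjustments. A minimal pure-Python sketch, assuming the common definitions that torchvision's `adjust_brightness` and `adjust_contrast` also follow (brightness multiplies every intensity by the factor; contrast blends each pixel with the image's mean gray level), applied to a grayscale image stored as nested lists. Each factor is applied to brightness and to contrast separately. The helper names are hypothetical.

```python
from typing import Dict, List

Image = List[List[float]]  # grayscale image, intensities in [0, 1]

FACTORS = (0.5, 0.67, 1.5, 2.0)  # scaling factors listed in the text


def _clip(value: float) -> float:
    return min(1.0, max(0.0, value))


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale every intensity by factor: < 1 darkens, > 1 brightens."""
    return [[_clip(p * factor) for p in row] for row in img]


def adjust_contrast(img: Image, factor: float) -> Image:
    """Stretch (factor > 1) or compress (factor < 1) intensities around the image mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [[_clip(mean + factor * (p - mean)) for p in row] for row in img]


def perturbed_variants(img: Image) -> Dict[str, Image]:
    """One adjusted copy per (adjustment, factor) pair: 2 x 4 = 8 variants."""
    variants = {}
    for factor in FACTORS:
        variants[f"brightness x{factor}"] = adjust_brightness(img, factor)
        variants[f"contrast x{factor}"] = adjust_contrast(img, factor)
    return variants


if __name__ == "__main__":
    toy = [[0.2, 0.4], [0.6, 0.8]]
    print(adjust_brightness(toy, 0.5))
    print(adjust_contrast(toy, 2.0))
```

Clipping to [0, 1] means strong factors saturate parts of the image, which is one reason accuracy can drop sharply at factors of 0.5 and 2.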

Table 11 lists the recognition accuracies of the different networks on the adjusted test sets. Because these adjustments change the images substantially, the accuracy of every network drops considerably; however, the accuracy of our proposed network drops less than that of the other networks, which suggests that the model is more robust to changes in lighting conditions.
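The comparison rests on how far each network's accuracy falls relative to the unmodified test set. Two simple summaries, the mean and the worst-case drop across the adjusted test sets, make that comparison explicit. This is a sketch under the assumption that such summaries are appropriate; the function name is hypothetical and the accuracies in the usage example are invented for illustration, not taken from Table 11.

```python
from typing import Dict


def robustness_summary(clean_acc: float, perturbed_acc: Dict[str, float]) -> Dict[str, float]:
    """Mean and worst-case accuracy drop (percentage points) across perturbed test sets."""
    if not perturbed_acc:
        raise ValueError("need at least one perturbed test set")
    drops = [clean_acc - acc for acc in perturbed_acc.values()]
    return {"mean_drop": sum(drops) / len(drops), "worst_drop": max(drops)}


if __name__ == "__main__":
    # Hypothetical accuracies (%) for illustration only, not values from Table 11
    clean = 98.0
    perturbed = {"brightness x0.5": 90.0, "brightness x2": 93.0,
                 "contrast x0.5": 95.0, "contrast x2": 94.0}
    print(robustness_summary(clean, perturbed))
```

A smaller mean drop indicates better average robustness, while the worst-case drop shows how a network behaves under the harshest lighting change.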

To further evaluate the performance of the model, we compared it with related studies. Table 12 lists several studies on corn disease recognition, including the techniques used, the data sources, the disease categories and their number, and the recognition accuracy achieved on corn images.

In these studies, CNNs performed strongly, whereas conventional image processing techniques achieved low accuracy when recognizing multiple disease types (Aravind et al., 2018; Kusumo et al., 2018). CNNs also achieved good recognition performance on datasets with simple backgrounds (Ahila Priyadharshini et al., 2019; Lv et al., 2020; Yin et al., 2022), but their robustness decreased when they were applied to corn disease identification in real environments (Zeng et al., 2022a). Our image dataset is similar to those of Chen et al. (2022) and Zeng et al. (2022b), but our model achieves higher accuracy with fewer FLOPs. This indicates that FCA-EfficientNet is computationally efficient and has good potential for practical deployment. Therefore, FCA-EfficientNet offers performance advantages in corn disease image recognition.

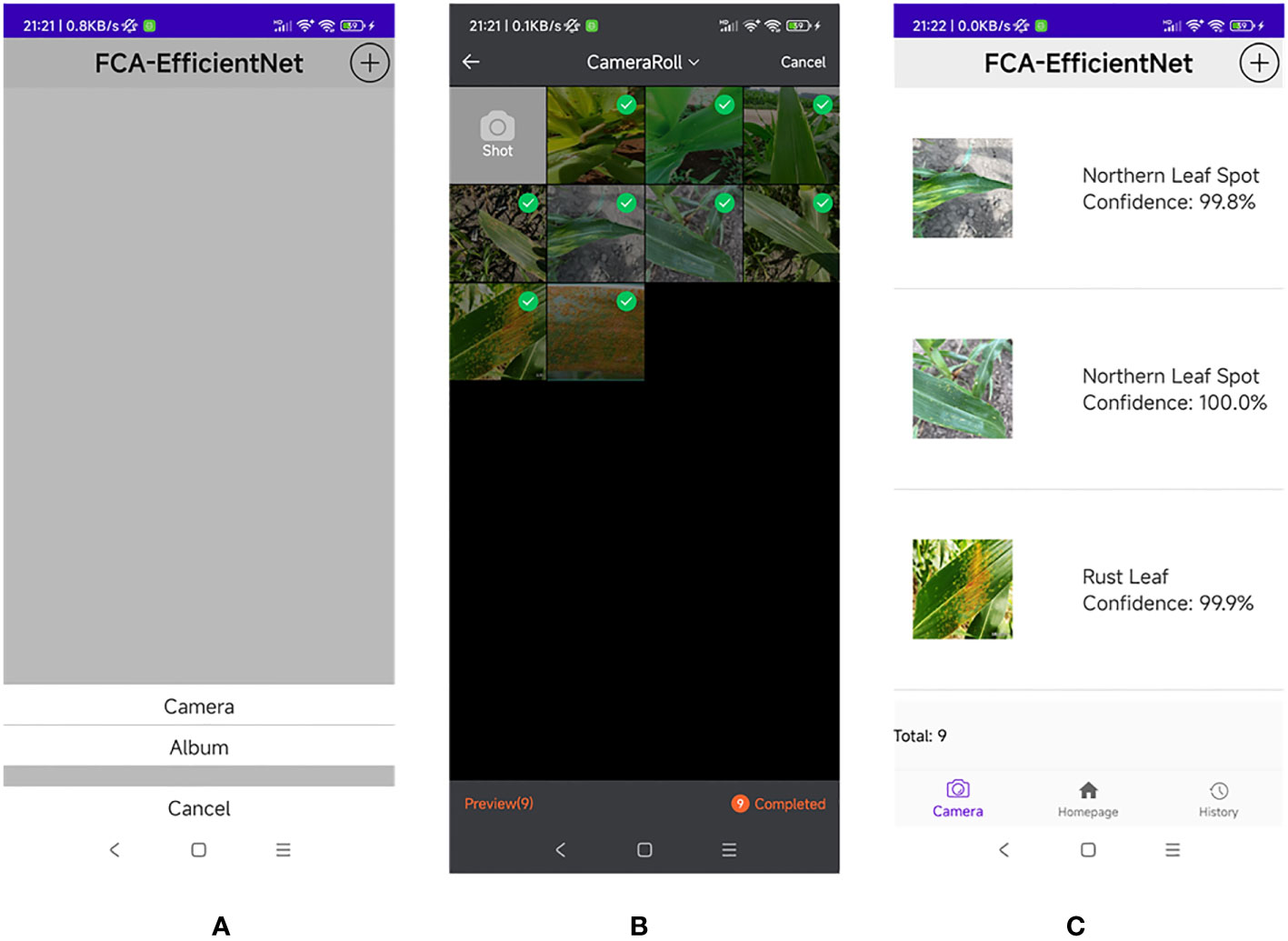

We deployed the model on a mobile device (MediaTek Dimensity 1100, 8 GB RAM, Android 12) to verify its performance. We ran five consecutive experiments on this device, each recognizing 1,000 images. The average per-image recognition times in the five experiments were 88.34 ms, 87.99 ms, 91.87 ms, 93.16 ms, and 93.02 ms, and the average recognition time across the five experiments was 92.88 ms. Based on these results, the model is fast enough for everyday recognition needs. Figure 17 shows how the application works.
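The measurement protocol above (five runs, each timing 1,000 images, then averaging) can be sketched as follows. This is an assumption about how such numbers are typically produced, not the authors' Android code: `infer` is a hypothetical stand-in for the on-device model call, and an untimed warm-up phase is added because first calls are often slower. Because every run times the same number of images, the overall per-image mean is simply the mean of the per-run means; applied to the five values listed above, that arithmetic gives about 90.88 ms, which differs from the 92.88 ms stated in the text.

```python
import statistics
import time
from typing import Callable, List, Sequence


def run_timing_experiments(
    infer: Callable[[object], object],
    images: Sequence[object],
    runs: int = 5,
    warmup: int = 10,
) -> List[float]:
    """Return the mean per-image latency (ms) of each run over the same image set."""
    if not images:
        raise ValueError("need at least one image")
    for image in images[:warmup]:
        infer(image)  # warm-up calls are not timed
    run_means = []
    for _ in range(runs):
        timings = []
        for image in images:
            start = time.perf_counter()
            infer(image)
            timings.append((time.perf_counter() - start) * 1000.0)
        run_means.append(statistics.mean(timings))
    return run_means


def overall_mean(run_means: Sequence[float]) -> float:
    """Runs are equal-sized, so the overall per-image mean is the mean of run means."""
    return statistics.mean(run_means)


if __name__ == "__main__":
    # Per-run averages listed in the text for the MediaTek Dimensity 1100 device (ms)
    listed = [88.34, 87.99, 91.87, 93.16, 93.02]
    print(f"mean of listed runs: {overall_mean(listed):.2f} ms")

    # Hypothetical stand-in model timed on 1,000 dummy inputs
    means = run_timing_experiments(lambda x: sum(range(1_000)), list(range(1_000)))
    print([f"{m:.4f}" for m in means])
```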

Figure 17 Application interface. (A) Open the application and select either “Camera” or “Album” to upload an image. (B) Open the photo gallery and select multiple disease images. (C) Obtain the disease image recognition results and their corresponding confidence levels.

In this study, we propose FCA-EfficientNet, an improved EfficientNet model for accurately identifying multiple corn diseases. To recognize corn diseases in the field, we designed the AF module to filter background noise and the FCA module to help the network focus on diseased areas. In addition, we used LN and GELU to improve the generalization ability of the network. Finally, we removed redundant network structures to make the model more lightweight. On the corn disease dataset, FCA-EfficientNet classifies six categories of corn images with an accuracy of 98.78%, and it outperforms the classic CNN models on all evaluation metrics in the comparison experiments, showing good generalization ability. FCA-EfficientNet has 3.44M parameters, which is small enough for deployment on mobile devices. Its average recognition time per image on the test device is 92.88 ms, which is fast enough to meet growers' recognition needs. However, the model can currently identify only a small number of common corn diseases and does not cover the full range of corn diseases. In the future, we plan to apply transfer learning from large-scale plant disease datasets to improve model performance and to collect images of more types of corn diseases ourselves, further improving the model's practical utility and providing technical support to growers.

The data analyzed in this study are subject to the following licenses/restrictions: the datasets for this manuscript are not publicly available because this research was carried out as part of a project funded by the Government of China. Requests to access these datasets should be directed to xzhang1@gzu.edu.cn.

XC and XZ designed the experiments. JC, RP, JWL, JML, LZ, and XW performed the experiments. JC, RP, and JWL analyzed data. JC drafted the manuscript. XC and XZ conducted visualization and proofreading of the manuscript. All authors contributed to the article and approved the submitted version.

This study was supported by the National Key Research and Development Program of China (2021YFE0107700), the National Natural Science Foundation of China (32360705; 31960555), Guizhou Provincial Science and Technology Program (2019-1410; HZJD[2022]001), Outstanding Young Scientist Program of Guizhou Province (KY2021-026), and Guizhou University Cultivation Project (2019-04). In addition, the study received support from the Program for Introducing Talents to Chinese Universities (111 Program, D20023).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Ahila Priyadharshini, R., Arivazhagan, S., Arun, M., Mirnalini, A. (2019). Maize leaf disease classification using deep convolutional neural networks. Neural Computing Appl. 31, 8887–8895. doi: 10.1007/s00521-019-04228-3

Ahmad, A., Saraswat, D., Gamal, A. E., Johal, G. (2021). CD&S dataset: Handheld imagery dataset acquired under field conditions for corn disease identification and severity estimation. arXiv preprint arXiv:2110.12084.

Al-Gaashani, M. S., Samee, N. A., Alnashwan, R., Khayyat, M., Muthanna, M. S. A. (2023). Using a ResNet50 with a kernel attention mechanism for rice disease diagnosis. Life 13, 1277. doi: 10.3390/life13061277

Aravind, K., Raja, P., Mukesh, K., Aniirudh, R., Ashiwin, R., Szczepanski, C. (2018). “Disease classification in maize crop using bag of features and multiclass support vector machine,” in 2018 2nd International Conference on Inventive Systems and Control (ICISC). (IEEE), 1191–1196.

Ba, J. L., Kiros, J. R., Hinton, G. E. (2016). Layer normalization. arXiv preprint arXiv:1607.06450.

Chen, Y., Chen, X., Lin, J., Pan, R., Cao, T., Cai, J., et al. (2022). DFCANet: A novel lightweight convolutional neural network model for corn disease identification. Agriculture 12, 2047. doi: 10.3390/agriculture12122047

Chen, P., Liu, S., Zhao, H., Jia, J. (2020). GridMask data augmentation. arXiv preprint arXiv:2001.04086.

DeVries, T., Taylor, G. W. (2017). Improved regularization of convolutional neural networks with cutout. arXiv preprint arXiv:1708.04552.

Guruprasad, P. (2020). “Overview of different thresholding methods in image processing,” in TEQIP Sponsored 3rd National Conference on ETACC.

Hendrycks, D., Gimpel, K. (2016). Gaussian error linear units (GELUs). arXiv preprint arXiv:1606.08415.

Hou, Q., Zhou, D., Feng, J. (2021). “Coordinate attention for efficient mobile network design,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 13713–13722.

Hu, X., Wang, R., Du, J., Hu, Y., Jiao, L., Xu, T. (2023). Class-attention-based lesion proposal convolutional neural network for strawberry diseases identification. Front. Plant Sci. 14, 1091600. doi: 10.3389/fpls.2023.1091600

Ioffe, S., Szegedy, C. (2015). “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in International conference on machine learning. (PMLR), 448–456.

Kai, S., Zhikun, L., Hang, S., Chunhong, G. (2011). “A research of maize disease image recognition of corn based on BP networks,” in 2011 third international conference on measuring technology and mechatronics automation (IEEE), 1, 246–249.

Kusumo, B. S., Heryana, A., Mahendra, O., Pardede, H. F. (2018). “Machine learning-based for automatic detection of corn-plant diseases using image processing,” in 2018 International conference on computer, control, informatics and its applications (IC3INA). (IEEE), 93–97.

Lin, J., Chen, X., Pan, R., Cao, T., Cai, J., Chen, Y., et al. (2022a). GrapeNet: A lightweight convolutional neural network model for identification of grape leaf diseases. Agriculture 12, 887. doi: 10.3390/agriculture12060887

Lin, J., Chen, Y., Pan, R., Cao, T., Cai, J., Yu, D., et al. (2022b). CAMFFNet: A novel convolutional neural network model for tobacco disease image recognition. Comput. Electron. Agric. 202, 107390. doi: 10.1016/j.compag.2022.107390

Lin, J., Yu, D., Pan, R., Cai, J., Liu, J., Zhang, L., et al. (2023). Improved YOLOX-Tiny network for detection of tobacco brown spot disease. Front. Plant Sci. 14.

Lv, M., Zhou, G., He, M., Chen, A., Zhang, W., Hu, Y. (2020). Maize leaf disease identification based on feature enhancement and DMS-Robust AlexNet. IEEE Access 8, 57952–57966. doi: 10.1109/ACCESS.2020.2982443

Mishra, S., Sachan, R., Rajpal, D. (2020). Deep convolutional neural network based detection system for real-time corn plant disease recognition. Proc. Comput. Sci. 167, 2003–2010. doi: 10.1016/j.procs.2020.03.236

Nigam, S., Jain, R., Marwaha, S., Arora, A., Haque, M. A., Dheeraj, A., et al. (2023). Deep transfer learning model for disease identification in wheat crop. Ecol. Inf. 75, 102068. doi: 10.1016/j.ecoinf.2023.102068

O’Mahony, N., Campbell, S., Carvalho, A., Harapanahalli, S., Hernandez, G. V., Krpalkova, L., et al. (2020). “Deep learning vs. traditional computer vision,” in Advances in Computer Vision: Proceedings of the 2019 Computer Vision Conference (CVC), (Springer) 1, 128–144.

Praveen, S. P., Srinivasu, P. N., Shafi, J., Wozniak, M., Ijaz, M. F. (2022). ResNet-32 and fastai for diagnoses of ductal carcinoma from 2d tissue slides. Sci. Rep. 12, 20804. doi: 10.1038/s41598-022-25089-2

Ramachandran, P., Zoph, B., Le, Q. V. (2017). Searching for activation functions. arXiv preprint arXiv:1710.05941.

Rosas-Castor, J. M., Guzmán-Mar, J. L., Hernández-Ramírez, A., Garza-González, M. T., Hinojosa-Reyes, L. (2014). Arsenic accumulation in maize crop (zea mays): a review. Sci. Total Environ. 488, 176–187. doi: 10.1016/j.scitotenv.2014.04.075

Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., Batra, D. (2017). “Grad-CAM: Visual explanations from deep networks via gradient-based localization,” in Proceedings of the IEEE international conference on computer vision, 618–626.

Singh, D., Jain, N., Jain, P., Kayal, P., Kumawat, S., Batra, N. (2020). “PlantDoc: A dataset for visual plant disease detection,” in Proceedings of the 7th ACM IKDD CoDS and 25th COMAD. 249–253.

Srinivasu, P. N., Shafi, J., Krishna, T. B., Sujatha, C. N., Praveen, S. P., Ijaz, M. F. (2022). Using recurrent neural networks for predicting type-2 diabetes from genomic and tabular data. Diagnostics 12, 3067. doi: 10.3390/diagnostics12123067

Stephen, A., Punitha, A., Chandrasekar, A. (2023). Designing self attention-based resnet architecture for rice leaf disease classification. Neural Computing Appl. 35, 6737–6751. doi: 10.1007/s00521-022-07793-2

Sun, S., Hu, B., Yu, Z., Song, X. (2020). A stochastic max pooling strategy for convolutional neural network trained by noisy samples. Int. J. Comput. Commun. Control 15 (1). doi: 10.15837/ijccc.2020.1.3712

Tan, M., Le, Q. (2019). “EfficientNet: Rethinking model scaling for convolutional neural networks,” in International conference on machine learning. (PMLR), 6105–6114.

Vishnoi, V. K., Kumar, K., Kumar, B., Mohan, S., Khan, A. A. (2022). Detection of apple plant diseases using leaf images through convolutional neural network. IEEE Access 11, 6594–6609. doi: 10.1109/ACCESS.2022.3232917

Yin, C., Zeng, T., Zhang, H., Fu, W., Wang, L., Yao, S. (2022). Maize small leaf spot classification based on improved deep convolutional neural networks with a multi-scale attention mechanism. Agronomy 12, 906. doi: 10.3390/agronomy12040906

Zeng, W., Li, H., Hu, G., Liang, D. (2022a). Identification of maize leaf diseases by using the SKPSNet-50 convolutional neural network model. Sustain. Computing: Inf. Syst. 35, 100695. doi: 10.1016/j.eswa.2020.114514

Zeng, W., Li, H., Hu, G., Liang, D. (2022b). Lightweight dense-scale network (LDSNet) for corn leaf disease identification. Comput. Electron. Agric. 197, 106943. doi: 10.1016/j.compag.2022.106943

Keywords: Convolutional Neural Network, corn leaf disease, real scene, lightweight model, fully-convolution-based coordinate attention

Citation: Cai J, Pan R, Lin J, Liu J, Zhang L, Wen X, Chen X and Zhang X (2023) Improved EfficientNet for corn disease identification. Front. Plant Sci. 14:1224385. doi: 10.3389/fpls.2023.1224385

Received: 17 May 2023; Accepted: 22 August 2023;

Published: 11 September 2023.

Edited by:

Muhammad Fazal Ijaz, Melbourne Institute of Technology, Australia

Reviewed by:

Parvathaneni Naga Srinivasu, Prasad V. Potluri Siddhartha Institute of Technology, India

Copyright © 2023 Cai, Pan, Lin, Liu, Zhang, Wen, Chen and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xin Zhang, xzhang1@gzu.edu.cn; Xiaoyulong Chen, chenxiaoyulong@sina.cn
