
ORIGINAL RESEARCH article

Front. Plant Sci., 31 March 2023

Sec. Sustainable and Intelligent Phytoprotection

Volume 14 - 2023 | https://doi.org/10.3389/fpls.2023.1158837

This article is part of the Research Topic Remote Sensing for Field-based Crop Phenotyping

Xinkai Sun1, Zhongyu Yang1, Pengyan Su1, Kunxi Wei1, Zhigang Wang1, Chenbo Yang1, Chao Wang1, Mingxing Qin2, Lujie Xiao1, Wude Yang1, Meijun Zhang1, Xiaoyan Song1, Meichen Feng1*

Leaf area index (LAI) is an essential indicator for crop growth monitoring and yield prediction. Real-time, non-destructive and accurate monitoring of crop LAI is of great significance for intelligent decision-making on crop fertilization and irrigation, as well as for predicting grain productivity and issuing early warnings. This study investigates the feasibility of using spectral and texture features from unmanned aerial vehicle (UAV) multispectral imagery, combined with machine learning, to estimate maize LAI. Remote sensing monitoring of maize LAI was carried out on a UAV high-throughput phenotyping platform, with different maize varieties as the research target. Firstly, spectral parameters and texture features were extracted from the UAV multispectral images, and the Normalized Difference Texture Index (NDTI), Difference Texture Index (DTI) and Ratio Texture Index (RTI) were constructed by linear calculation of texture features. Then, the correlations between LAI and the spectral parameters, texture features and texture indices were analyzed, and the image features with strong correlations were screened out. Finally, LAI estimation models with different types of input variables were constructed using machine learning methods, and the effect of combining image features on LAI estimation was evaluated. The results revealed that the vegetation indices based on the red (650 nm), red-edge (705 nm) and NIR (842 nm) bands had high correlation coefficients with LAI. The correlation between the linearly transformed texture features and LAI was significantly improved. In addition, the machine learning models combining spectral and texture features performed best. The Support Vector Machine (SVM) model built on vegetation and texture indices was the best in terms of fit, stability and estimation accuracy (R2 = 0.813, RMSE = 0.297, RPD = 2.084). The results of this study are conducive to improving the efficiency of maize variety selection and provide a reference for UAV high-throughput phenotyping technology in fine crop management at the field plot scale.

Maize is one of the world's essential food crops, and its production mode and planting area are closely tied to world food security (Tester and Langridge, 2010). Leaf area index (LAI) is the total green leaf area per unit land area (Ke et al., 2016), and it is one of the important indices for evaluating crop growth and for ecological environment research (Richardson et al., 2011). It is not only closely related to crop photosynthesis (Duncan, 1971) and transpiration (Kang et al., 2003), but is also often used as a basis for yield estimation (Duan et al., 2019; Fu et al., 2020). Therefore, efficient and precise monitoring of maize LAI is crucial for gaining insights into maize growth dynamics and optimizing maize breeding strategies.

However, the traditional methods of obtaining LAI mostly rely on destructive sampling, in which detached leaf blades are measured with a leaf area meter and LAI is calculated from the measurements (Yang et al., 2021). This approach is time-consuming, laborious and inefficient. Although non-destructive monitoring of LAI can be achieved using handheld instruments such as the SunScan Canopy Analyser (Oguntunde et al., 2012) and the LAI-2200 (Wilhelm et al., 2000), the data obtained with handheld instruments only represent LAI at a small scale, making rapid, non-destructive monitoring at the field scale difficult (Liu et al., 2016). Satellite remote sensing enables rapid and non-destructive monitoring of crop LAI at a regional scale (Chen et al., 2010). However, its susceptibility to adverse weather conditions and its low temporal and spatial resolution limit its ability to meet the quantitative monitoring requirements at the field and plot scales. Ground platforms are mainly suitable for small-scale crop growth monitoring; they are constrained by the scope of data acquisition and the cost of use, and cannot achieve rapid monitoring over larger spatial scales.

In recent years, the continuous development of UAV flight platforms and airborne sensor technology has promoted the application of UAV remote sensing in agricultural and forestry information monitoring. UAV remote sensing platforms offer low cost, simple structure, high mobility and high spatial and temporal resolution, which makes up for the shortcomings of satellite and ground-based remote sensing platforms (Xie and Yang, 2020). UAV platforms equipped with visible, multispectral and hyperspectral cameras are used to acquire image data. Image processing techniques are then applied to extract essential information, such as spectral features, texture and point clouds, which is subsequently used to build models for crop growth parameter monitoring and yield estimation (Liu et al., 2018; Sarkar et al., 2021; Jiang et al., 2022). The model construction process often involves a combination of non-linear relationships that affects the model’s universality. Machine learning methods can effectively handle such combinations of non-linear relationships and have been widely used in remote sensing monitoring. Wang and Jiang (2021) used UAV multispectral remote sensing data to monitor soybean leaf area index, and the support vector machine (SVM) gave better predictions than the linear model (R2 = 0.688, RMSE = 0.016). Qi et al. (2020) achieved accurate estimation of peanut LAI (R2 = 0.968, RMSE = 0.165) using a Back Propagation neural network (BPNN) algorithm combined with UAV spectral features. Kanning et al. (2018) extracted wheat canopy spectra from UAV hyperspectral images and constructed a model for monitoring wheat LAI and chlorophyll using Partial Least Squares Regression (PLSR), demonstrating the feasibility of UAV hyperspectral imaging for monitoring crop growth parameters at the field scale.
Although spectral features combined with machine learning algorithms can estimate crop LAI well, estimation models built from various vegetation indices show an “over-fitting” phenomenon when LAI is high (Hang et al., 2021). In addition, UAV multispectral images offer limited spectral information, and relying solely on spectral parameters such as reflectance or vegetation indices may result in cases where different objects share the same spectrum or the same object shows different spectra (Liu et al., 2018). Thus, crop growth monitoring should incorporate multiple data dimensions, such as time and space, to account for spectral, temporal and spatial resolutions.

Texture features are another type of image feature available from UAV remote sensing imagery (Yang et al., 2021), and are widely used for image classification and for monitoring physiological indicators of crop growth (Haralick et al., 1973; Coburn and Roberts, 2004; Li et al., 2019). Chen et al. (2019) used spectral and texture information to estimate the chlorophyll content of potatoes and found that fusing vegetation indices and texture features could significantly improve the estimation accuracy of the model. Zhu et al. (2022) developed a machine learning model for remote sensing monitoring of wheat scab using multispectral and texture features. Their results demonstrated that fusing vegetation indices and texture parameters improved the accuracy of wheat scab detection. However, little is known about field-scale remote sensing monitoring of maize LAI using spectral and texture features extracted from UAV multispectral imagery.

To address these problems, this study extracts and optimizes spectral and texture features from UAV multispectral images and combines the optimized features with SVM, Random Forest (RF), BPNN and PLSR to build field-scale maize LAI remote sensing monitoring models. We compared the effects of spectral features and texture features on LAI estimation. Furthermore, we explored how combining spectral and texture features with machine learning methods affects the potential for LAI estimation.

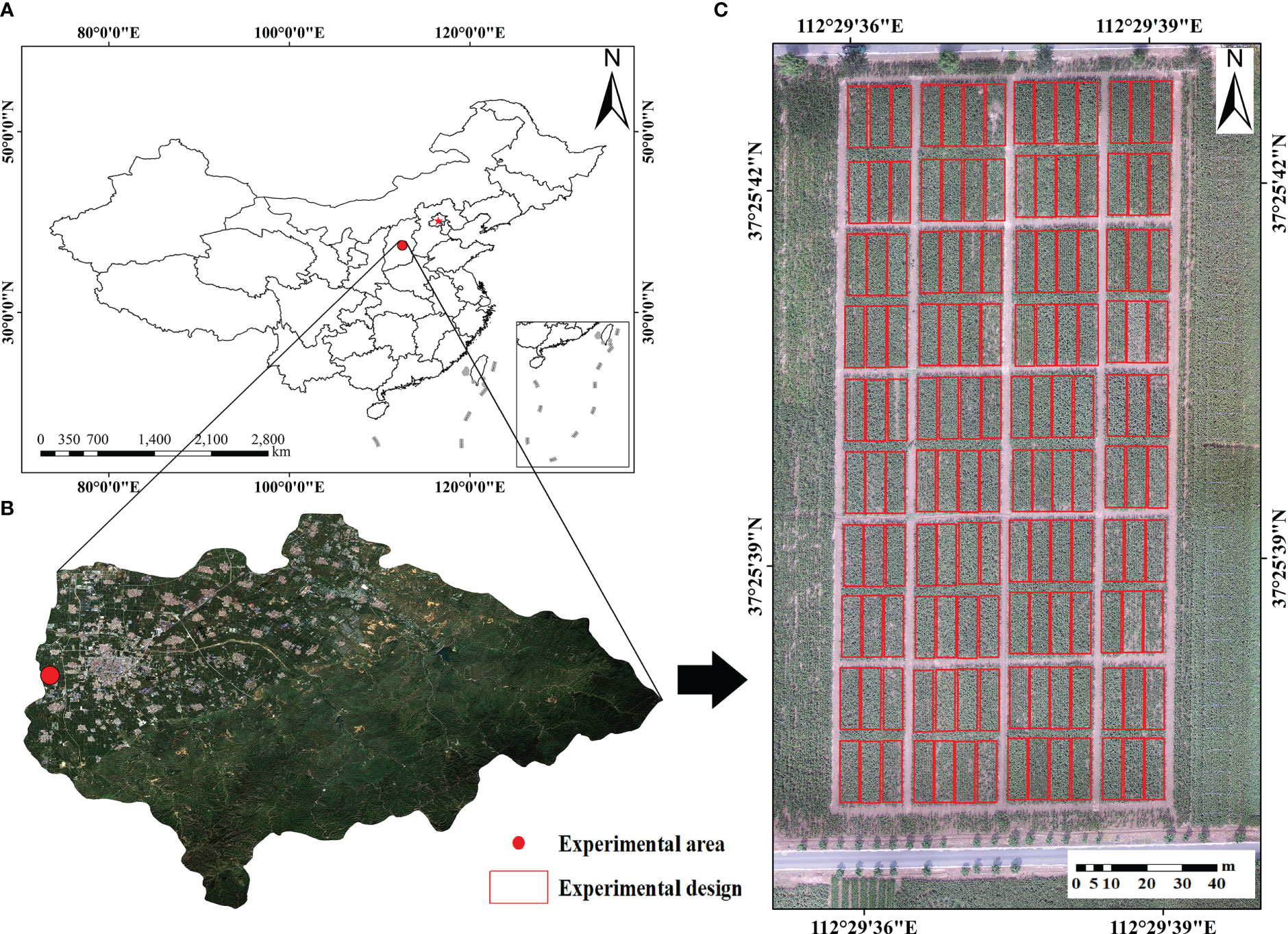

This experiment was conducted in the maize experimental field of Shanxi Agricultural University, Jinzhong City, Shanxi Province (37°25′ N, 112°29′ E) (Figure 1).

Figure 1 Overview of the study area. (A) Geographical location of Taigu District; (B) location of the experimental area; (C) design of the field experiment.

The experimental area is located in Taigu District at an average altitude of about 780 m. It has a temperate continental monsoon climate, with an average annual temperature of 6-10 °C, an average annual rainfall of 410-450 mm and a frost-free period of 160 days. The climatic conditions, such as light, heat and water, are suitable for maize growth.

The experiment used a single-factor design with 140 maize varieties (Xinyu 303, Jinfeng 278, RP818, etc.), each planted in a 75 m2 plot, for a total of 140 plots. LAI was measured in areas with high vegetation coverage and consistent growth at five key growth stages of maize: tasseling (24 July 2021), silking (4 August 2021), flowering (14 August 2021), grain filling (25 August 2021) and milk ripening (8 September 2021).

In this study, a Matrice 210 V2 (M210 V2) quadcopter UAV (DJI Innovations, Shenzhen, China) (Figure 2A) equipped with a RedEdge-MX imaging system (MicaSense, Seattle, WA, USA) (Figure 2B) was used as a high-throughput remote sensing platform to acquire multispectral images of maize during critical growth stages. The RedEdge-MX dual-camera imaging system has 10 spectral channels covering a spectral range of 444-842 nm and can simultaneously acquire 10 discrete multispectral images with a resolution of 1280 × 960 pixels. The detailed band parameters are shown in Table 1. Flights were carried out between 10:00 and 12:00 at an altitude of 60 m, with the forward and side overlap rates both set to 85%.

Figure 2 UAV near-ground remote sensing platform. (A) Quadcopter UAV; (B) multispectral imaging system; (C) calibration panel.

After the flight, the original multispectral images acquired by the UAV and the images of the calibration panel (Figure 2C) taken before takeoff were imported together into Pix4D mapper (Pix4D S.A., Lausanne, Switzerland) for image stitching and radiometric calibration. Once stitching was complete, the software automatically performed the radiometric calibration according to the DN values of the gray calibration panel and the fitted reflectance calibration equation. This processing yielded an orthoimage for each waveband. The orthoimages were then processed using ENVI Classic 5.5 (Harris Geospatial Solutions, Inc., Broomfield, CO, USA) for band fusion to obtain the multispectral image data.

In this study, we selected the SunScan Canopy Analyser to measure maize LAI data while acquiring UAV multispectral images. During the data collection process, maize leaf area index was measured by selecting three inter-row shaded locations of 1 meter in length at random within each plot. The average of the three measurements was used as the LAI value for the plot.

Vegetation indices, constructed by combining different bands linearly or nonlinearly, are indicative of dynamic changes in vegetation canopy information. They not only reduce the influence of atmospheric and soil background factors but also enhance the sensitivity of canopy reflectance to LAI. Following previous research (Qi et al., 2020; Hang et al., 2021), we selected eight commonly used vegetation indices to estimate LAI; the specific calculation formulas are shown in Table 2.
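As a rough illustration of how such indices are derived from band reflectance, the sketch below computes five of the indices named later in this study from the red (650 nm), red-edge (705 nm) and NIR (842 nm) reflectance. Table 2 is not reproduced in this text, so the formulas used are the commonly published definitions and are only an assumption about what Table 2 contains; the function name and the sample reflectance values are hypothetical.

```python
def vegetation_indices(red, red_edge, nir):
    """Return common vegetation indices from band reflectance (0-1 scale).

    The formulas are the widely used textbook definitions, assumed here
    because Table 2 is not available in this text.
    """
    return {
        "NDVI": (nir - red) / (nir + red),
        "RVI": nir / red,
        "DVI": nir - red,
        "NDRE": (nir - red_edge) / (nir + red_edge),
        "CIred_edge": nir / red_edge - 1.0,
    }


# Hypothetical plot-mean reflectance for a dense maize canopy.
vi = vegetation_indices(red=0.04, red_edge=0.12, nir=0.48)
for name, value in vi.items():
    print(f"{name}: {value:.3f}")
```

In practice the same function would be applied to the mean reflectance of each plot's region of interest, giving one value per index per plot and growth stage.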

To improve computational efficiency, we selected three bands, red (650 nm), red edge (705 nm) and near-infrared (842 nm), for texture feature extraction. The grey level co-occurrence matrix (GLCM) is one of the most widely used methods for texture feature extraction (Haralick et al., 1973) and is mainly applied in machine vision, image classification and image recognition (Kavdir and Guyer, 2004; Adjed et al., 2018; Vani et al., 2018). After radiometric correction and image fusion, eight texture features, namely mean (mean), variance (var), homogeneity (hom), contrast (con), dissimilarity (dis), entropy (ent), second moment (sm) and correlation (cor), were extracted from each band using the GLCM. This gave a total of 24 texture features, and the mean value extracted from the region of interest was used as the texture feature value for the corresponding image.
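To make the GLCM step more concrete, the following sketch builds a co-occurrence matrix for a tiny image patch and derives the eight statistics listed above. It is not the ENVI implementation used in the study: the pixel offset (one pixel to the right), the symmetric counting, the number of grey levels, the natural logarithm in the entropy term and the sample patch are all assumptions chosen for illustration.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised grey level co-occurrence matrix for one offset."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1.0
                counts[j][i] += 1.0  # count both directions (symmetric GLCM)
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Eight Haralick-style statistics from a normalised GLCM p[i][j]."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, j, v in cells)
    var = sum((i - mean) ** 2 * v for i, j, v in cells)
    feats = {
        "mean": mean,
        "var": var,
        "hom": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "con": sum((i - j) ** 2 * v for i, j, v in cells),
        "dis": sum(abs(i - j) * v for i, j, v in cells),
        "ent": -sum(v * math.log(v) for i, j, v in cells if v > 0),
        "sm": sum(v * v for i, j, v in cells),
    }
    # For a symmetric GLCM the row and column means and variances coincide.
    cov = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    feats["cor"] = cov / var if var > 0 else 0.0
    return feats


# Hypothetical 4x4 image patch already quantised to 4 grey levels.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
features = texture_features(glcm(patch, levels=4))
for name, value in features.items():
    print(f"{name}: {value:.4f}")
```

Running the same computation on each of the three bands gives the 3 × 8 = 24 texture feature values mentioned in the text.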

To fully explore the potential of texture features from UAV multispectral images for maize LAI estimation, this study used Matlab 2020a (MathWorks, Natick, Massachusetts, USA) to traverse all combinations of the eight texture feature values of the three-band images and to calculate three texture indices: the normalized difference texture index (NDTI) (Zheng et al., 2019), the ratio texture index (RTI) and the difference texture index (DTI) (Hang et al., 2021). The specific calculation formulas are as follows:

$$\mathrm{NDTI} = \frac{T_1 - T_2}{T_1 + T_2}$$

$$\mathrm{RTI} = \frac{T_1}{T_2}$$

$$\mathrm{DTI} = T_1 - T_2$$

In the formulas, T1 and T2 are texture feature values from any two bands.
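The traversal described above can be sketched in a few lines. The study used Matlab; this Python version is only an illustration and assumes the conventional definitions implied by the index names, that is NDTI = (T1 - T2) / (T1 + T2), RTI = T1 / T2 and DTI = T1 - T2. The function names and the sample feature values are hypothetical, and the sketch assumes non-zero denominators.

```python
from itertools import permutations


def texture_indices(t1, t2):
    """NDTI, RTI and DTI for one pair of texture feature values."""
    return {
        "NDTI": (t1 - t2) / (t1 + t2),
        "RTI": t1 / t2,
        "DTI": t1 - t2,
    }


def all_texture_indices(features):
    """Traverse every ordered pair of distinct texture features.

    `features` maps a name such as "mean705" to its plot-level value.
    """
    out = {}
    for (name1, t1), (name2, t2) in permutations(features.items(), 2):
        for kind, value in texture_indices(t1, t2).items():
            out[f"{kind}({name1}, {name2})"] = value
    return out


# Hypothetical values for three of the 24 texture features of one plot.
plot = {"mean705": 12.0, "ent705": 3.0, "con705": 6.0}
indices = all_texture_indices(plot)
print(indices["RTI(mean705, ent705)"])
print(indices["NDTI(mean705, ent705)"])
print(indices["DTI(mean705, con705)"])
```

With all 24 texture features there are 24 × 23 = 552 ordered pairs per index type; each resulting index can then be correlated with the measured LAI to find the most informative combinations.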

In this study, a total of 700 samples were collected from five key growth stages of maize (140 plots × 5 stages), each containing the ground-measured LAI and UAV image features such as vegetation indices and texture indices. To ensure that every sample was involved in both modeling and validation, the samples were randomly divided into 10 parts using ten-fold cross-validation, with 90% (630 samples) used for modeling and 10% (70 samples) for model validation in each fold. Each model was trained 10 times to ensure robustness.
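A minimal sketch of such a ten-fold split is shown below, using only the Python standard library. The function name, the random seed and the way folds are assigned are assumptions; the text does not specify how the random partition was generated.

```python
import random


def ten_fold_splits(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs so every sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


splits = list(ten_fold_splits(700))
print(len(splits))                          # number of folds
print(len(splits[0][0]), len(splits[0][1]))  # modeling and validation sizes
```

With 700 samples, every fold uses 630 samples for modeling and 70 for validation, and across the ten folds each sample appears in a validation set exactly once.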

We developed 12 LAI estimation models for multiple growth stages by combining the ground-measured LAI with three types of inputs and four machine learning algorithms, namely SVM, RF, BPNN and PLSR. The model inputs consisted of univariate and multivariate factors: the former comprised the vegetation indices or the texture indices alone, and the latter their combination.

(1) The support vector machine is a popular machine learning algorithm for pattern recognition and nonlinear regression (Cortes and Vapnik, 1995). In this study, we used the SVM algorithm with a radial basis function (RBF) kernel to construct maize LAI estimation models from various predictors. The SVM model requires tuning of the penalty factor c and the kernel function parameter g. After repeated testing, we determined the optimal values to be c = 1.00 and g = 3.03.

(2) Random forest is a nonlinear regression modeling method based on multiple decision trees. It combines two techniques, bootstrap sampling and random subspace selection (Breiman, 2001), and is effective in handling high-dimensional data and collinearity among variables, with strong noise resistance. The number of decision trees (ntrees) and the number of predictors randomly selected for each split (mtry) are the main parameters that need to be tuned to optimize the performance of a random forest model. After repeated testing, this study set ntrees = 200 and tuned mtry according to the type of input variables.

(3) The back propagation (BP) neural network consists of three parts: an input layer, a hidden layer and an output layer. To ensure monitoring accuracy, the BPNN model was trained several times and its parameters were iteratively tuned. Finally, a learning rate of 0.01, 10 hidden layers and 1 output layer were used as the best parameters.

(4) PLSR originates from the nonlinear iterative partial least squares (NIPALS) algorithm proposed by Herman Wold et al. (2001). The number of latent variables (LV) is one of the important factors determining the prediction accuracy of the PLSR model, and in this study the model automatically adjusted the number of latent variables according to the type of input variables.
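To give a feel for what the SVM kernel parameter g controls, the sketch below evaluates an RBF kernel with the reported value g = 3.03. It assumes the common parameterisation K(x, y) = exp(-g · ||x - y||²); the text does not state which SVM library or kernel convention was used, and the feature vectors are hypothetical, already normalised values.

```python
import math


def rbf_kernel(x, y, g=3.03):
    """RBF kernel exp(-g * ||x - y||^2); g defaults to the value reported in the text."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-g * sq_dist)


# Hypothetical normalised feature vectors (two index values per plot).
plot_a = [0.20, 0.50]
plot_b = [0.25, 0.55]  # close to plot_a
plot_c = [0.90, 0.10]  # far from plot_a

print(rbf_kernel(plot_a, plot_a))  # identical inputs give the maximum similarity
print(rbf_kernel(plot_a, plot_b))  # nearby plots stay highly similar
print(rbf_kernel(plot_a, plot_c))  # distant plots contribute little
```

A larger g makes the kernel narrower, so each training sample influences only its close neighbours, while the penalty factor c sets how strongly training errors are penalised relative to model smoothness.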

In this study, the coefficient of determination (R2), root mean square error (RMSE) and ratio of performance to deviation (RPD) were used to evaluate model performance. A model with higher R2 and RPD and lower RMSE in both the modeling and validation datasets has better goodness of fit, accuracy and stability.
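The three metrics can be computed as in the sketch below. The text does not give the formulas, so two assumptions are made: R2 is taken as 1 minus the ratio of residual to total sum of squares, and RPD as the sample standard deviation of the measured values divided by the RMSE, which is the usual chemometric definition. The function name and the LAI values are hypothetical.

```python
import math


def evaluate(observed, predicted):
    """Return R2, RMSE and RPD for paired observed and predicted LAI values."""
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    rmse = math.sqrt(ss_res / n)
    sd = math.sqrt(ss_tot / (n - 1))  # sample standard deviation of observations
    return {"R2": 1 - ss_res / ss_tot, "RMSE": rmse, "RPD": sd / rmse}


# Hypothetical measured and estimated LAI values for five plots.
measured = [2.0, 2.5, 3.0, 3.5, 4.0]
estimated = [2.2, 2.4, 3.1, 3.3, 4.1]
scores = evaluate(measured, estimated)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Under these definitions RPD grows as the RMSE shrinks relative to the spread of the measured data, which is why higher RPD values indicate a more reliable model.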

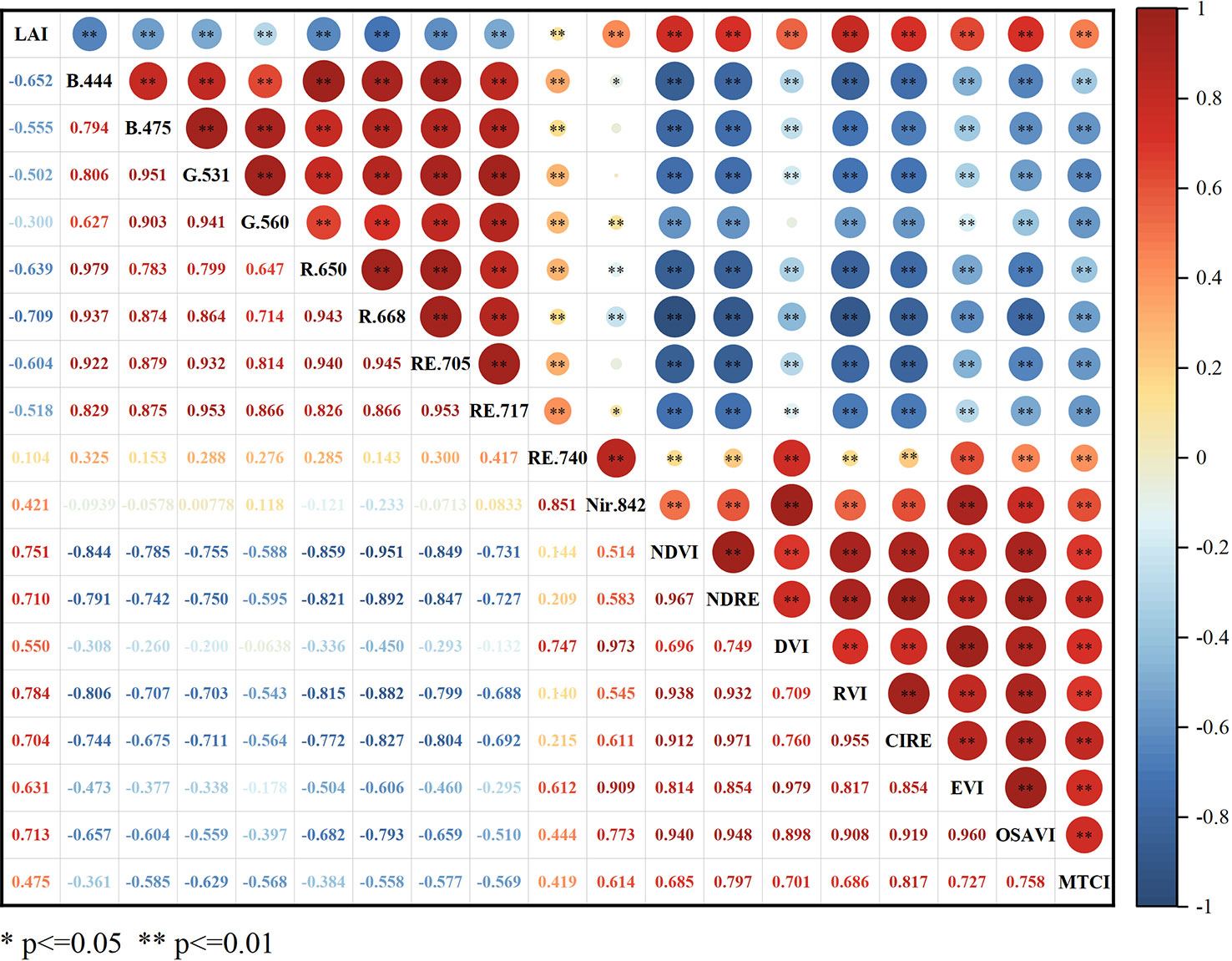

The correlation analysis of the 18 spectral parameters with LAI is shown in Figure 3. The correlation between LAI and canopy reflectance was significantly negative (p < 0.01) in the range of 444-717 nm and significantly positive (p < 0.01) in the range of 740-842 nm. All eight selected vegetation indices were highly significantly and positively correlated with LAI (p < 0.01). RVI had the strongest correlation with LAI (r = 0.784), and NDVI, OSAVI, NDRE and CIred edge were also strongly correlated with LAI, with correlation coefficients above 0.700. These five vegetation indices are built from the red, red-edge and NIR bands, indicating that combinations of these bands are well suited to maize LAI monitoring.
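The correlation coefficients reported here are presumably Pearson coefficients, although the text does not name the statistic. Under that assumption, the calculation for one spectral parameter looks like the sketch below; the LAI and reflectance values are hypothetical and only mimic the sign pattern described above (negative in the visible range, positive in the NIR).

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical plot-level values: LAI rises with NIR and falls with red reflectance.
lai = [1.8, 2.4, 2.9, 3.5, 4.1]
nir = [0.30, 0.36, 0.41, 0.45, 0.52]
red = [0.09, 0.07, 0.06, 0.05, 0.03]

print(round(pearson_r(lai, nir), 3))
print(round(pearson_r(lai, red), 3))
```

Repeating this for each band reflectance and vegetation index against the measured LAI produces a correlation table of the kind summarised in Figure 3.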

Figure 3 Correlation coefficients between spectral parameters and maize LAI. * and ** indicate significance at the 0.05 and 0.01 levels, respectively. The red area indicates positive correlation and the blue area indicates negative correlation. Darker colors and larger circles mean a stronger correlation between LAI and spectral parameters.

The correlation analysis of the texture features of the three bands with LAI (Table 3) showed that the mean texture values in the red and NIR bands were significantly and strongly correlated with LAI (r = -0.687 and -0.703, respectively).

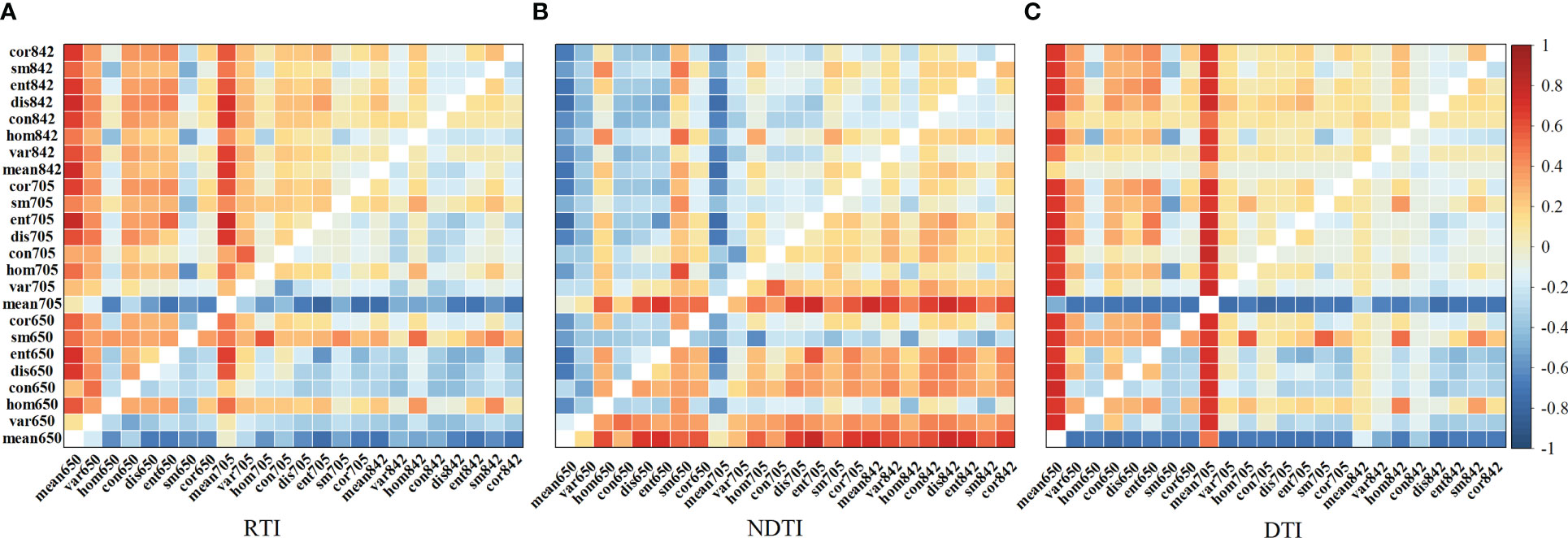

Due to the weak correlation between most texture features and LAI, this study constructed three texture indices composed of different texture feature values in order to improve the potential application of texture features in monitoring maize LAI. The results shown in Figure 4 indicated that the correlation between the linearly transformed texture features and LAI was significantly enhanced. Among them, the RTI (mean705, ent705) had the strongest correlation with LAI, with a correlation coefficient of -0.804, which was a 14.370% increase in the absolute value of the correlation coefficient compared to the red edge mean.

Figure 4 Correlation coefficient matrices between LAI and the three types of texture indices: (A) the ratio texture index, (B) the normalized difference texture index, (C) the difference texture index. Each cell represents the correlation coefficient between LAI and the texture index obtained by linearly transforming the original texture parameters given by the x and y coordinates of that cell.

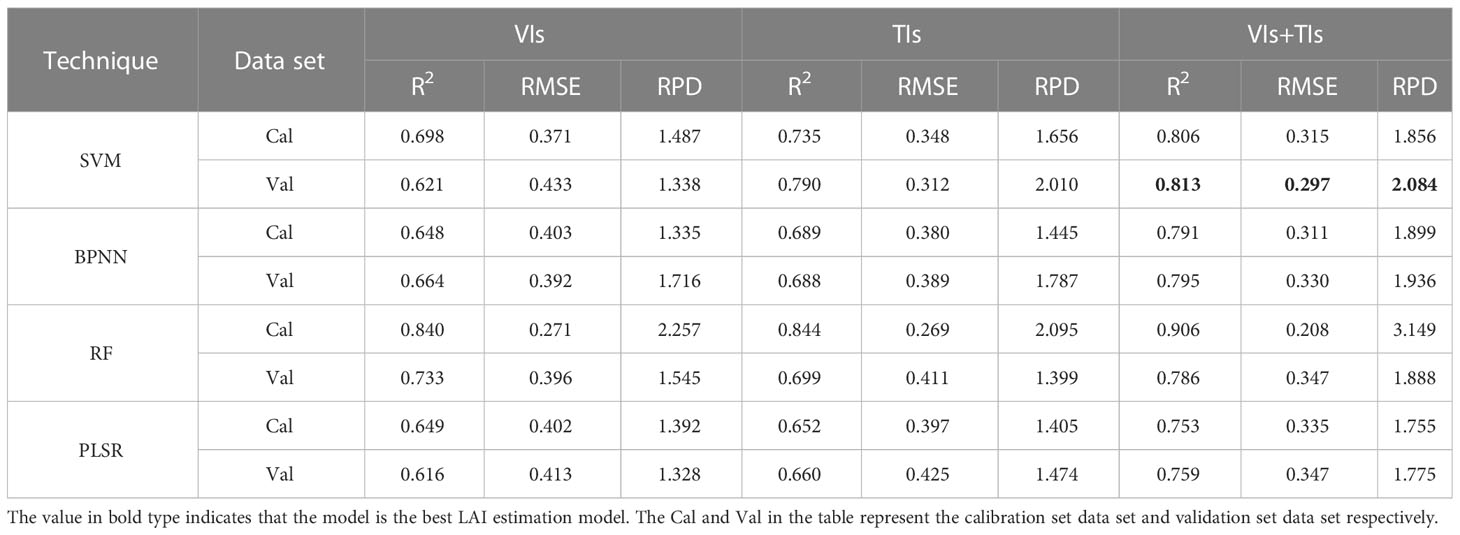

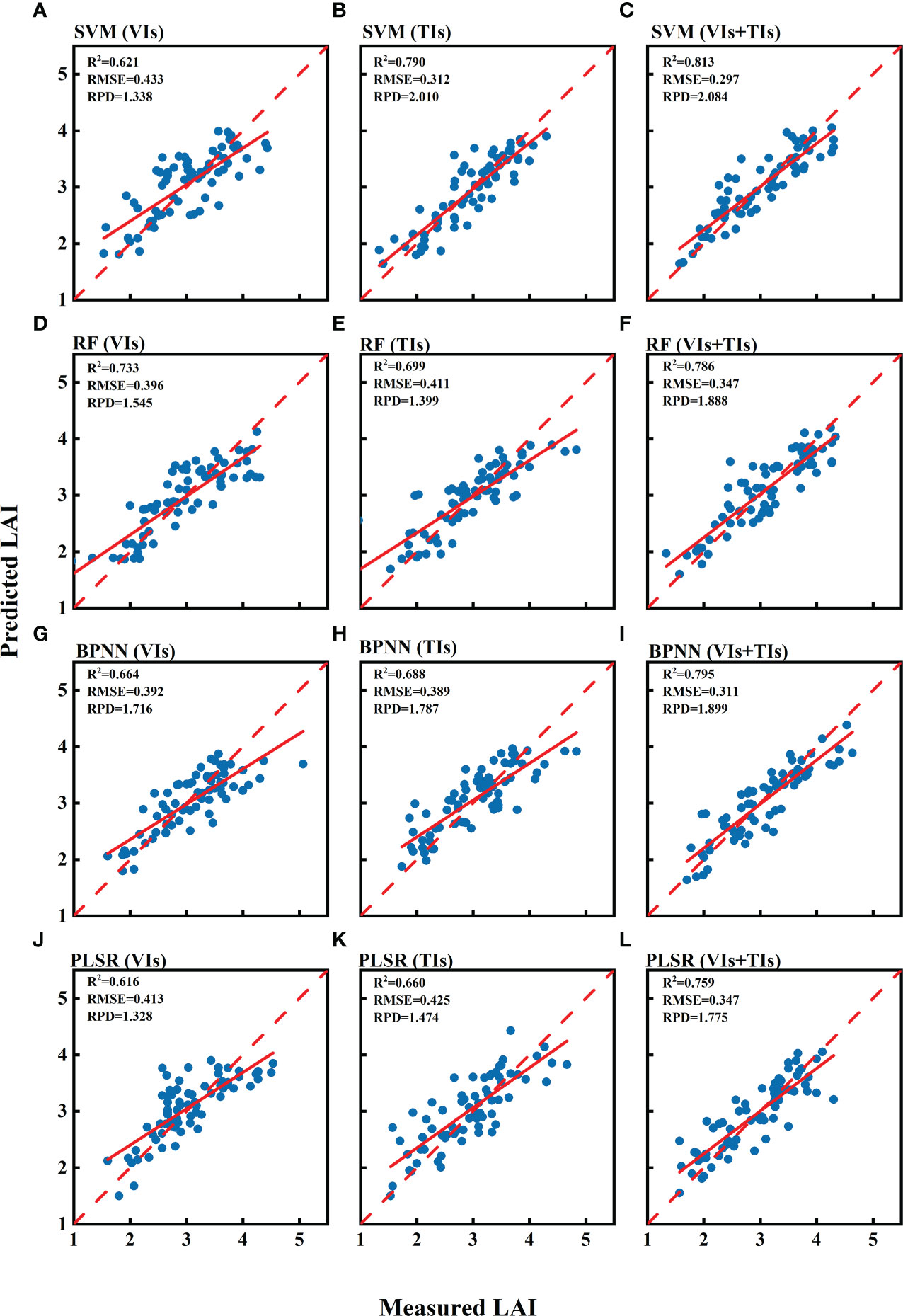

To fully investigate the potential of combining UAV spectral and texture features with machine learning algorithms for LAI estimation, we selected, based on the strength of the correlation between the different image features and LAI, five vegetation indices (RVI, NDVI, OSAVI, NDRE and CIred edge) and three texture indices (RTI (mean705, ent705), DTI (mean705, con705) and NDTI (mean650, ent705)) as independent variables, and constructed LAI estimation models using four machine learning algorithms: SVM, RF, BPNN and PLSR. Table 4 shows the training results of the machine learning models with different input variables. Among the single-variable models, from the perspective of modelling methods, RF performed best on the vegetation indices dataset and SVM performed best on the texture indices dataset; from the perspective of input variables, the estimation models based on the texture indices (TIs) performed better overall than those based on the vegetation indices (VIs) when the same modelling approach was used. On the whole, the SVM model based on TIs gave the best estimates (R2 = 0.790, RMSE = 0.312, RPD = 2.010).

Table 4 Summary of the results of estimating LAI by machine learning models based on different inputs.

Vegetation and texture indices were then used together as multivariate inputs to construct the LAI estimation models. From the perspective of the modelling method, the RF model performed best in the calibration set (R2 = 0.906, RMSE = 0.208, RPD = 3.149), with the SVM model second (R2 = 0.806, RMSE = 0.315, RPD = 1.856). However, in the validation set, the SVM model performed best (R2 = 0.813, RMSE = 0.297, RPD = 2.084). In contrast, the R2 of the RF model dropped from 0.906 to 0.786, its RMSE increased by 66.827% and its RPD decreased by 40.044%. Although BPNN and PLSR performed slightly worse than SVM and RF, their estimation accuracy and model stability were also good (R2 > 0.75, RPD > 1.75). These results show that the SVM model has the best estimation accuracy and stability, and that the other three models also give good predictions.
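The stability comparison rests on relative changes between the calibration and validation sets. As a small worked example, the sketch below applies the usual relative-change formula to the R2 values quoted in the text; the function name is hypothetical, and the formula is assumed to be the one behind the reported percentages.

```python
def percent_change(reference, value):
    """Relative change of `value` with respect to `reference`, in percent."""
    return (value - reference) / reference * 100.0


# R2 of the RF model: calibration set vs validation set (values from the text).
r2_rf = percent_change(0.906, 0.786)
print(f"RF R2 change: {r2_rf:.3f}%")

# R2 of the SVM model: calibration set vs validation set (values from the text).
r2_svm = percent_change(0.806, 0.813)
print(f"SVM R2 change: {r2_svm:.3f}%")
```

The RF model loses roughly 13% of its R2 when moving to the validation set, whereas the SVM value changes by less than 1%, which is the pattern the text describes as better stability.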

When analyzed from the perspective of the input variables, the machine learning models constructed by fusing the two types of indices explained significantly more of the variance in LAI than the models with single-factor inputs of vegetation or texture indices. Combining the VIs with the TIs resulted in mean R2 values of 0.817 and 0.788, mean RMSE values of 0.292 and 0.330, and mean RPD values of 2.165 and 1.921 for the calibration and validation sets, respectively. Compared with the models using vegetation indices alone, R2 increased by 14.810% and 19.757%, RMSE decreased by 19.337% and 19.118%, and RPD increased by 33.807% and 29.622%. Compared with the models using texture indices alone, R2 increased by 11.507% and 11.142%, RMSE decreased by 16.092% and 14.063%, and RPD increased by 31.212% and 15.237%. These results show that fusing different image features clearly improves the estimation and makes it more stable.

The scatter plot in Figure 5 showed good consistency between the predicted LAI values from the machine learning estimation model and the measured LAI values in the validation dataset, with an RMSE ranging from 0.297 to 0.433 and an RPD ranging from 1.328 to 2.084. Combining vegetation indices and texture indices resulted in the best estimation results among the four types of machine learning models.

Figure 5 Accuracy evaluation results of LAI estimation models based on vegetation indices (VIs), texture indices (TIs) and combined vegetation indices and texture indices (VIs+TIs) in the validation set. The models evaluated are SVM, RF, BPNN, and PLSR, shown respectively in (A–C), (D–F), (G–I), and (J–L).

UAV remote sensing has great potential in the process of crop phenotype information mining and analysis due to its high spatial and temporal resolution and simple operation (Xie and Yang, 2020). In this study, multispectral images of the study area were acquired by using a UAV with a multispectral camera. The non-destructive and rapid estimation of maize LAI at the plot scale was achieved by extracting different types of image features and combining them with machine learning algorithms.

Vegetation indices are widely used to estimate crop chlorophyll content (Jiang et al., 2022), LAI (Li et al., 2019) and biomass (Gnyp et al., 2014), and to predict yield (Fu et al., 2020; García-Martínez et al., 2020). Crop canopy reflectance in the visible bands is easily influenced by leaf pigmentation, which can lead to “oversaturation” of the vegetation indices (Hatfield et al., 2008). In contrast, red-edge and near-infrared reflectance are mainly influenced by canopy structure and have a stronger penetration effect. Hence, researchers usually choose red-edge and near-infrared bands to construct vegetation indices. Shi et al. (2022) demonstrated that vegetation indices based on red and near-infrared bands correlate well with the LAI and AGB of red and green beans, allowing growth monitoring of intercropped crops in tea plantations at the plot scale. Zheng et al. (2019) found that vegetation indices based on red-edge bands were important parameters for rice biomass estimation before tasseling. However, the estimation accuracy dropped significantly after tasseling, mainly because the red-edge bands were sensitive to canopy leaf biomass but not to stem biomass. Qi et al. (2020) used a fixed-wing UAV to monitor peanut growth and found that the red and near-infrared bands were sensitive to peanut LAI and could effectively predict its changes. In this study, we found strong correlations (r > 0.700) between maize LAI and five vegetation indices: RVI, NDVI, OSAVI, NDRE and CIred edge. These indices were identified as effective for monitoring LAI in various maize varieties. The results are in general agreement with those of previous studies, indicating that spectral indices based on the red, red-edge and near-infrared bands are well suited to crop monitoring and can achieve rapid and non-destructive monitoring of crop growth parameters.

In this study, DVI and MTCI performed poorly in estimating LAI, and the correlation coefficients were only 0.550 and 0.475. There may be two possible reasons for this result: (1) the influence of other disturbing factors such as soil background and vegetation shading on the multispectral reflectance; (2) the high LAI level in the middle and late stages of maize growth, resulting in an underestimation of some vegetation indices. In the process of monitoring crop growth using UAV multispectral imagery, the use of spectral features alone may not achieve satisfactory results (Zheng et al., 2019; Yang et al., 2021; Fei et al., 2022). The use of vegetation indices alone can only quantitatively analyze the structural characteristics, biochemical components and productivity trends of crop canopies from a spectral perspective. It cannot deeply explore the effective information of other data dimensions in UAV multispectral imagery.

Texture features can reveal changes in crop canopy information from the data dimension of image spatial features. Previous work has used texture parameters from satellite remote sensing data, combined with spectral and topographic features, to estimate above-ground biomass in forests, demonstrating the feasibility of applying texture parameters in agricultural remote sensing (Lu and Batistella, 2005; Li et al., 2008). In this study, most of the texture parameters had poor correlation with LAI. However, the correlation between the constructed texture indices and LAI was significantly improved after linear processing. The texture indices RTI (mean705, ent705), DTI (mean705, con705) and NDTI (mean650, ent705) effectively improved the estimation of LAI. Similar conclusions were obtained by Hang et al. (2021) and Fei et al. (2022) when using texture parameters for rice growth monitoring and wheat yield estimation. This is mainly because linearly combining and transforming the texture features reduces the influence of soil background, vegetation shading and topographic factors, which better highlights the changing patterns of the features.

The multi-input model based on vegetation and texture indices in this study was superior to the models with a single type of input variable, with significant improvements in fit, estimation accuracy and stability. In particular, the model combining UAV texture and spectral features outperformed the model using only the vegetation indices, with a 19.757% increase in R2, a 19.118% decrease in RMSE and a 29.622% increase in RPD in the validation set. The results of this study are similar to those of previous studies. Ma et al. (2022) used color indices and texture features from UAV RGB images to accurately estimate cotton yield, with the RF_ELM model based on color indices and texture features having the highest accuracy (R2 = 0.911). Yang et al. (2021) used vegetation indices and texture features to estimate the LAI of rice over the whole growth period, and the combination of spectral and texture features had greater predictive power than vegetation indices alone. In summary, combining UAV spectral features with texture features is an effective way to improve the accuracy of LAI estimation.

Machine learning algorithms combined with remote sensing data have been widely used in areas such as crop growth monitoring (Li et al., 2019; Zheng et al., 2019; Zhang et al., 2021), yield estimation (Fu et al., 2020; García-Martínez et al., 2020; Ma et al., 2022) and disease identification (Guo et al., 2021; Zhu et al., 2022). This study used four machine learning algorithms, SVM, RF, BPNN and PLSR, to construct LAI monitoring models for different maize varieties. The results show that the SVM model performed best overall, and both its training and validation results explained a high proportion of the variation in LAI. Among the machine learning models based on vegetation and texture indices, SVM had a higher validation R2 and RPD and a lower RMSE than the RF, PLSR and BPNN models, indicating the high performance of SVM modelling. Zhu et al. (2022) achieved high-precision monitoring of wheat scab by combining machine learning with UAV spectral and texture features, and the SVM model built on VIs + TFs provided the most accurate monitoring results. Omer et al. (2016) used WorldView-2 multispectral imagery combined with SVM and ANN algorithms to monitor the LAI of endangered forest tree species, where the SVM model showed excellent prediction accuracy and model stability. The excellent performance of the SVM model in LAI estimation may be related to the model structure: SVM uses the principle of structural risk minimization (Camps-Valls et al., 2006) to solve the nonlinear mapping problem between input variables and response variables. The unstable performance of the RF model has also been observed in other studies. Han et al. (2019) combined canopy structure information and spectral information with machine learning algorithms to estimate maize AGB, and found that the performance of the RF model differed considerably between the training set and the test set.
There are two main reasons for the instability of the RF model: (1) the validation set contains far fewer samples than the calibration set, and RF performs better with large samples; (2) outliers in the validation set caused by manual measurement errors reduce the stability of the model.

In this paper, all four machine learning models achieved good performance when estimating LAI from multivariate input variables, indicating that there is a non-linear relationship between the response variable and the various predictors. However, the input variables were chosen without in-depth feature selection, and the contribution of the different predictors to the LAI estimation model was not considered. To improve the monitoring accuracy of LAI, these shortcomings need to be addressed in future research.

Rapid and non-destructive plot-scale maize LAI estimation is important for UAV remote sensing monitoring of crop growth as well as for precise agricultural management. In this study, we used image analysis techniques to extract spectral and texture features from UAV multispectral images and used machine learning methods (SVM, RF, BPNN, PLSR) to achieve fast and accurate estimation of maize LAI. Most vegetation indices based on the red, red-edge and NIR bands exhibited strong correlation with LAI, whereas most texture features showed only limited association with LAI. After linear transformation, however, the resulting texture indices displayed a substantially enhanced correlation with LAI. Among the different types of estimation models, the SVM model combining vegetation indices and texture indices was the best for LAI estimation (R2 = 0.813, RMSE = 0.297, RPD = 2.084), which suggests a non-linear relationship between LAI and the spectral and texture parameters. The results of this study show that UAV near-ground remote sensing combined with image analysis techniques can achieve accurate monitoring of the growth of different maize varieties and provide guidance for maize variety selection.
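The linear transformation of texture features into texture indices can be illustrated with a short sketch. The formulas below are the commonly used forms of the three indices named in the abstract (NDTI as a normalized difference, DTI as a difference, RTI as a ratio of two texture features) and are assumed here rather than taken from the paper's equations; the function names, the two texture feature lists and the LAI values are hypothetical.

```python
import math

def texture_indices(t1, t2):
    """Return (NDTI, DTI, RTI) for two texture feature values t1 and t2."""
    ndti = (t1 - t2) / (t1 + t2)   # Normalized Difference Texture Index
    dti = t1 - t2                  # Difference Texture Index
    rti = t1 / t2                  # Ratio Texture Index
    return ndti, dti, rti

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)

# Hypothetical plot-level values of two texture features and measured LAI
texture_a = [12.0, 10.5, 9.0, 7.5, 6.0]
texture_b = [2.0, 2.5, 3.0, 3.5, 4.0]
lai = [2.1, 2.6, 3.0, 3.3, 3.9]

# Build one NDTI value per plot, then correlate it with LAI
ndti_values = [texture_indices(a, b)[0] for a, b in zip(texture_a, texture_b)]
print("r(NDTI, LAI) =", round(pearson_r(ndti_values, lai), 3))
```

In practice, such indices would be computed for every pair of texture features and bands, and only the combinations most strongly correlated with LAI would be retained as model inputs.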

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Conceptualization, XinS, WY and MF. Data curation, XinS, ZY, PS and ZW. Formal analysis, XinS and CY. Funding acquisition, WY and MF. Investigation, XinS, ZY, PS, KW and ZW. Project administration, WY and MF. Resources, WY and MF. Writing – original draft, XinS. Writing – review and editing, WY and MF. All authors contributed to the article and approved the submitted version.

This work was funded by the Key Research and Development Program of Shanxi Province, China (201903D211002-01, 201903D211002-05).

We are grateful to the Shanxi Academy of Agricultural Sciences for providing the trial site. We are also grateful to the editor and reviewers.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Adjed, F., Safdar Gardezi, S. J., Ababsa, F., Faye, I., Chandra Dass, S. (2018). Fusion of structural and textural features for melanoma recognition. IET Comput. Vis. 12, 185–195. doi: 10.1049/iet-cvi.2017.0193

Camps-Valls, G., Bruzzone, L., Rojo-Álvarez, J. L., Melgani, F. (2006). Robust support vector regression for biophysical variable estimation from remotely sensed images. IEEE Geosci Remote Sens Lett. 3, 339–343. doi: 10.1109/lgrs.2006.871748

Chen, P., Feng, H., Li, C., Yang, G., Yang, J., Yang, W., et al. (2019). Estimation of chlorophyll content in potato using fusion of texture and spectral features derived from UAV multispectral image. Trans. CSAE. 35, 63–74. doi: 10.11975/j.issn.1002-6819.2019.11.008

Chen, X., Meng, J., Du, X., Zhang, F., Zhang, M., Wu, B. (2010). Remote sensing monitoring model of winter wheat leaf area index based on environmental satellite CCD data. Remote Sens Land Resou 2, 55–58+62. doi: 1001-070X(2010)02-0055-04

Coburn, C., Roberts, A. C. (2004). A multiscale texture analysis procedure for improved forest stand classification. Int. J. Remote Sens. 25, 4287–4308. doi: 10.1080/0143116042000192367

Cortes, C., Vapnik, V. (1995). Support-vector networks. Mach. Learn. 20, 273–297. doi: 10.1007/BF00994018

Dash, J., Curran, P. (2004). The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 25, 5403–5413. doi: 10.1080/0143116042000274015

Duan, B., Fang, S., Zhu, R., Wu, X., Wang, S., Gong, Y., et al. (2019). Remote estimation of rice yield with unmanned aerial vehicle (UAV) data and spectral mixture analysis. Front. Plant Sci. 10. doi: 10.3389/fpls.2019.00204

Duncan, W. J. C. S. (1971). Leaf angles, leaf area, and canopy photosynthesis. Crop Sci. 11, 482–485. doi: 10.2135/cropsci1971.0011183x001100040006x

Fei, S., Hassan, M. A., Xiao, Y., Su, X., Chen, Z., Cheng, Q., et al. (2022). UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis Agric. 24, 187–212. doi: 10.1007/s11119-022-09938-8

Fu, Z., Jiang, J., Gao, Y., Krienke, B., Wang, M., Zhong, K., et al. (2020). Wheat growth monitoring and yield estimation based on multi-rotor unmanned aerial vehicle. Remote Sens. 12, 508. doi: 10.3390/rs12030508

García-Martínez, H., Flores-Magdaleno, H., Ascencio-Hernández, R., Khalil-Gardezi, A., Tijerina-Chávez, L., Mancilla-Villa, O. R., et al. (2020). Corn grain yield estimation from vegetation indices, canopy cover, plant density, and a neural network using multispectral and RGB images acquired with unmanned aerial vehicles. Agric 10, 277. doi: 10.3390/agriculture10070277

Gitelson, A. A., Merzlyak, M. N., Lichtenthaler, H. K. (1996). Detection of red edge position and chlorophyll content by reflectance measurements near 700 nm. J. Plant Physiol. 148, 501–508. doi: 10.1016/s0176-1617(96)80285-9

Gnyp, M. L., Miao, Y., Yuan, F., Ustin, S. L., Yu, K., Yao, Y., et al. (2014). Hyperspectral canopy sensing of paddy rice aboveground biomass at different growth stages. Field Crop Res. 155, 42–55. doi: 10.1016/j.fcr.2013.09.023

Guo, A., Huang, W., Dong, Y., Ye, H., Ma, H., Liu, B., et al. (2021). Wheat yellow rust detection using UAV-based hyperspectral technology. Remote Sens. 13, 123. doi: 10.3390/rs13010123

Han, L., Yang, G., Dai, H., Xu, B., Yang, H., Feng, H., et al. (2019). Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 15, 1–19. doi: 10.1186/s13007-019-0394-z

Hang, Y., Su, H., Yu, Z., Liu, H., Guan, H., Kong, F. (2021). Estimation of rice leaf area index combining UAV spectrum, texture features and vegetation coverage. Trans. CSAE. 37, 64–71. doi: 10.11975/j.issn.1002-6819.2021.09.008

Haralick, R. M., Shanmugam, K., Dinstein, I. (1973). Textural features for image classification. IEEE Trans. Syst. Man Cybern. SMC-3, 610–621. doi: 10.1109/tsmc.1973.4309314

Hatfield, J. L., Gitelson, A. A., Schepers, J. S., Walthall, C. L. (2008). Application of spectral remote sensing for agronomic decisions. Agron. J. 100, S-117–S-131. doi: 10.2134/agronj2006.0370c

Jiang, J., Johansen, K., Stanschewski, C. S., Wellman, G., Mousa, M. A., Fiene, G. M., et al. (2022). Phenotyping a diversity panel of quinoa using UAV-retrieved leaf area index, SPAD-based chlorophyll and a random forest approach. Precis Agric. 23, 961–983. doi: 10.1007/s11119-021-09870-3

Kang, S., Gu, B., Du, T., Zhang, J. (2003). Crop coefficient and ratio of transpiration to evapotranspiration of winter wheat and maize in a semi-humid region. Agric. Water Manage. 59, 239–254. doi: 10.1016/s0378-3774(02)00150-6

Kanning, M., Kühling, I., Trautz, D., Jarmer, T. (2018). High-resolution UAV-based hyperspectral imagery for LAI and chlorophyll estimations from wheat for yield prediction. Remote Sens. 10, 2000. doi: 10.3390/rs10122000

Kavdir, I., Guyer, D. (2004). Comparison of artificial neural networks and statistical classifiers in apple sorting using textural features. Biosyst. Eng. 89, 331–344. doi: 10.1016/j.biosystemseng.2004.08.008

Ke, L., Zhou, Q., Wu, W., Tian, X., Tang, H.-j. (2016). Estimating the crop leaf area index using hyperspectral remote sensing. J. Integr. Agric. 15, 475–491. doi: 10.1016/s2095-3119(15)61073-5

Li, M., Tan, Y., Pan, J., Peng, S. (2008). Modeling forest aboveground biomass by combining spectrum, textures and topographic features. Front. For China. 3, 10–15. doi: 10.1007/s11461-008-0013-z

Li, S., Yuan, F., Ata-Ul-Karim, S. T., Zheng, H., Cheng, T., Liu, X., et al. (2019). Combining color indices and textures of UAV-based digital imagery for rice LAI estimation. Remote Sens. 11, 1763. doi: 10.3390/rs11151763

Liang, D., Guan, Q., Huang, W., Huang, L., Yang, G. (2013). Remote sensing inversion of leaf area index based on support vector machine regression in winter wheat. Trans. CSAE. 29, 117–123. doi: 10.3969/j.issn.1002-6819.2013.07.015

Liu, C., Yang, G., Li, Z., Tang, F., Wang, J., Zhang, C., et al. (2018). Biomass estimation of winter wheat by fusing UAV spectral information with texture information. Sci. Agric. Sin. 51, 3060–3073. doi: 10.3864/j.issn.0578-1752.2018.16.003

Liu, J., Zhao, C., Yang, G., Yu, H., Zhao, X., Xu, B., et al. (2016). Review of field-based phenotyping by unmanned aerial vehicle remote sensing platform. Trans. CSAE. 32, 98–106. doi: 10.11975/j.issn.1002-6819.2016.24.013

Lu, D., Batistella, M. (2005). Exploring TM image texture and its relationships with biomass estimation in Rondônia, Brazilian Amazon. Acta Amazonica. 35, 249–257. doi: 10.1590/s0044-59672005000200015

Ma, Y., Ma, L., Zhang, Q., Huang, C., Yi, X., Chen, X., et al. (2022). Cotton yield estimation based on vegetation indices and texture features derived from RGB image. Front. Plant Sci. 13. doi: 10.3389/fpls.2022.925986

Naito, H., Ogawa, S., Valencia, M. O., Mohri, H., Urano, Y., Hosoi, F., et al. (2017). Estimating rice yield related traits and quantitative trait loci analysis under different nitrogen treatments using a simple tower-based field phenotyping system with modified single-lens reflex cameras. ISPRS J. Photogramm Remote Sens. 125, 50–62. doi: 10.1016/j.isprsjprs.2017.01.010

Oguntunde, P. G., Fasinmirin, J. T., Abiolu, O. A. (2012). Performance of the SunScan canopy analysis system in estimating leaf area index of maize. Agric. Eng. Int: CIGR Journal. 14, 1–7.

Omer, G., Mutanga, O., Abdel-Rahman, E. M., Adam, E. (2016). Empirical prediction of leaf area index (LAI) of endangered tree species in intact and fragmented indigenous forests ecosystems using WorldView-2 data and two robust machine learning algorithms. Remote Sens. 8, 324. doi: 10.3390/rs8040324

Prabhakara, K., Hively, W. D., McCarty, G. W. (2015). Evaluating the relationship between biomass, percent groundcover and remote sensing indices across six winter cover crop fields in Maryland, United States. Int. J. Appl. Earth Obs. 39, 88–102. doi: 10.1016/j.jag.2015.03.002

Qi, H., Zhu, B., Wu, Z., Liang, Y., Li, J., Wang, L., et al. (2020). Estimation of peanut leaf area index from unmanned aerial vehicle multispectral images. Sensors 20, 6732. doi: 10.3390/s20236732

Richardson, A. D., Dail, D. B., Hollinger, D. (2011). Leaf area index uncertainty estimates for model–data fusion applications. Agr For. Meteorol. 151, 1287–1292. doi: 10.1016/j.agrformet.2011.05.009

Rondeaux, G., Steven, M., Baret, F. (1996). Optimization of soil-adjusted vegetation indices. Remote Sens Environ. 55, 95–107. doi: 10.1016/0034-4257(95)00186-7

Rouse, J. W., Haas, R. W., Schell, J. A., Deering, D. W., Harlan, J. C. (1974). Monitoring the vernal advancement and retrogradation (Green wave effect) of natural vegetation. NASA/GSFCT type III final report no. NASA-CR-139243 (College Station, TX: Texas A&M University).

Sarkar, S., Cazenave, A.-B., Oakes, J., McCall, D., Thomason, W., Abbott, L., et al. (2021). Aerial high-throughput phenotyping of peanut leaf area index and lateral growth. Sci. Rep. 11, 1–17. doi: 10.1038/s41598-021-00936-w

Shi, Y., Gao, Y., Wang, Y., Luo, D., Chen, S., Ding, Z., et al. (2022). Using unmanned aerial vehicle-based multispectral image data to monitor the growth of intercropping crops in tea plantation. Front. Plant Sci. 13. doi: 10.3389/fpls.2022.820585

Tester, M., Langridge, P. (2010). Breeding technologies to increase crop production in a changing world. Science 327, 818–822. doi: 10.2307/40509897

Vani, K., Poongodi, S., Harikrishna, B. (2018). K-Means cluster based leaf disease identification in cotton plants. Indian J. Public Health Resea Dev. 9, 1117–1120. doi: 10.5958/0976-5506.2018.01288.3

Wang, J., Jiang, Y. (2021). Inversion of leaf area index of soybean based on UAV multispectral remote sensing. Chin. Agric. Sci. Bull. 37, 134–142. doi: casb2020-0229

Wilhelm, W., Ruwe, K., Schlemmer, M. R. (2000). Comparison of three leaf area index meters in a corn canopy. Crop Sci. 40, 1179–1183. doi: 10.2135/cropsci2000.4041179x

Wold, S., Sjöström, M., Eriksson, L. (2001). PLS-regression: a basic tool of chemometrics. Chemometr Intell. Lab. 58, 109–130. doi: 10.1016/s0169-7439(01)00155-1

Xie, C., Yang, C. (2020). A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron Agric. 178, 105731. doi: 10.1016/j.compag.2020.105731

Yang, K., Gong, Y., Fang, S., Duan, B., Yuan, N., Peng, Y., et al. (2021). Combining spectral and texture features of UAV images for the remote estimation of rice LAI throughout the entire growing season. Remote Sens. 13, 3001. doi: 10.3390/rs13153001

Zhang, J., Qiu, X., Wu, Y., Zhu, Y., Cao, Q., Liu, X., et al. (2021). Combining texture, color, and vegetation indices from fixed-wing UAS imagery to estimate wheat growth parameters using multivariate regression methods. Comput. Electron Agric. 185, 106138. doi: 10.1016/j.compag.2021.106138

Zheng, H., Cheng, T., Zhou, M., Li, D., Yao, X., Tian, Y., et al. (2019). Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis Agric. 20, 611–629. doi: 10.1007/s11119-018-9600-7

Keywords: UAV, multispectral images, leaf area index, spectral feature, texture feature, maize

Citation: Sun X, Yang Z, Su P, Wei K, Wang Z, Yang C, Wang C, Qin M, Xiao L, Yang W, Zhang M, Song X and Feng M (2023) Non-destructive monitoring of maize LAI by fusing UAV spectral and textural features. Front. Plant Sci. 14:1158837. doi: 10.3389/fpls.2023.1158837

Received: 04 February 2023; Accepted: 20 March 2023;

Published: 31 March 2023.

Edited by:

Jiangang Liu, Chinese Academy of Agricultural Sciences (CAAS), China

Reviewed by:

Wenjuan Li, Institute of Agricultural Resources and Regional Planning (CAAS), China

Copyright © 2023 Sun, Yang, Su, Wei, Wang, Yang, Wang, Qin, Xiao, Yang, Zhang, Song and Feng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Meichen Feng, Zm1jMTAxQDE2My5jb20=
