- ¹College of Mechanical and Electrical Engineering, Shihezi University, Shihezi, China

- ²Key Laboratory of Northwest Agricultural Equipment, Ministry of Agriculture and Rural Affairs, Shihezi, China

To accurately evaluate residual plastic film pollution in pre-sowing cotton fields, a method based on a modified U-Net model was proposed in this research. Images of pre-sowing cotton fields were collected by UAV at different heights and under different weather conditions. Residual films were manually labelled, and the degree of residual film pollution was defined by the residual film coverage rate. The modified U-Net model for evaluating residual film pollution was built by simplifying the U-Net framework and introducing the inception module, and its results were compared with those of the U-Net, SegNet, and FCN models. The segmentation results showed that the modified U-Net model had the best performance, with a mean intersection over union (MIOU) of 87.53%. Segmentation of images acquired on cloudy days was better than that of images acquired on sunny days, and accuracy gradually decreased with increasing image-acquiring height. The evaluation results of residual film pollution showed that the modified U-Net model outperformed the other models. The coefficient of determination (R2), root mean square error (RMSE), mean relative error (MRE), and average evaluation time per image of the modified U-Net model on the CPU were 0.9849, 0.0563, 5.33%, and 4.85 s, respectively. The results indicate that UAV imaging combined with the modified U-Net model can accurately evaluate residual film pollution. This study provides technical support for the rapid and accurate evaluation of residual plastic film pollution in pre-sowing cotton fields.

Introduction

Plastic film mulching is an agricultural technique that can raise soil temperature, reduce soil water loss, suppress weed growth, and improve crop water use efficiency, yield, and quality (Yan et al., 2014; Xue et al., 2017). However, much of the waste plastic film remains in the soil after harvesting. Plastic film, which is made from polyethylene, breaks down into residual film and microplastics over time under natural conditions (Qi et al., 2020; Zhang et al., 2022), but its complete decomposition in the soil requires 200 to 400 years (He et al., 2009). The increase in residual film in the soil has brought a series of serious problems, such as soil structure damage, decreased soil quality, and crop yield loss (Dong et al., 2015).

Cotton is one of the major cash crops in the world (Akter et al., 2018; Alves et al., 2020). China is one of the world’s leading cotton growers, and Xinjiang has become an important region for high-quality cotton production in China. In 2021, Xinjiang’s cotton production reached 5.129 million tons, accounting for approximately 89.5 percent of China’s total cotton output. Because of the arid climate in Xinjiang, farms have long used film mulching in cotton planting. However, the accumulation of plastic film waste has caused serious white pollution in farmland (Zhao et al., 2017).

Farmland residual film pollution control is a systematic project. In addition to the development of residual film recycling machines, it is of great significance to carry out efficient and accurate residual film pollution monitoring to provide reference for reducing residual film pollution in farmlands.

At present, residual film pollution is mostly evaluated by manual collection of residual films. For example, Zhang et al. (2016) studied the status and distribution characteristics of residual film in Xinjiang, and the results indicated that film thickness had a significant negative correlation with the amount of residual film. Wang et al. (2022) analyzed residual film pollution in northwest China and found that plastic debris residing in soil tends to be fragmented, which could make plastic film recovery more challenging and cause severe soil pollution. He et al. (2018) and Wang et al. (2018) used manual stratified sampling to monitor cotton fields with different durations of film mulching according to the weight and area of residual film. They found that residual film content increased year by year as film mulching continued, and that the residual film broke down and moved into the deep soil during crop cultivation. However, manual collection of residual films is labour-intensive and inefficient and cannot meet the requirement for rapid monitoring of residual film pollution. Therefore, an efficient method for evaluating farmland residual film pollution is urgently needed.

With the rapid development of UAV remote sensing and deep learning technology, UAV imaging combined with semantic segmentation has been increasingly widely used in agriculture. Zhao et al. (2019) collected UAV RGB and multispectral images of rice lodging and proposed a U-shaped network-based method for rice lodging identification, finding that the Dice coefficients for RGB and multispectral images were 0.9442 and 0.9284, respectively. Zou et al. (2021) proposed a weed density evaluation method using UAV imaging and modified U-Net, and the intersection over union (IOU) was 93.40%. Li et al. (2022) proposed a method for high-density cotton yield estimation based on low-altitude UAV imaging and CD-SegNet. They found that the segmentation accuracy reached 90%, and the average error of the estimated yield was 6.2%.

In recent years, some scholars have preliminarily explored UAV imaging-based detection of plastic film-mulched areas and identification of residual film. Zhu et al. (2019) proposed a method for extracting the plastic film-mulched area in farmlands from UAV remote sensing images; the white and black film-mulched areas were extracted with an accuracy of 94.84%. Sun et al. (2018) proposed an area estimation approach for plastic film-mulched areas based on UAV images and deep learning; five fully convolutional network (FCN) models were built by multiscale fusion, and the FCN-4s model achieved the best identification accuracy of 97%. Tarantino and Figorito (2012) used object-oriented nearest neighbour classification to extract mulching information from aerial images. Focusing on farmland residual film pollution, Wu et al. (2020) proposed a method for plastic film residue identification using UAV images and a segmentation algorithm. To overcome the influence of light on identification accuracy, a pulse-coupled neural network based on the S component was built, and the average identification rate was 87.49%. However, that research targeted farmland that had not been ploughed after the autumn harvest, where the residual film had good continuity and low fragmentation.

Rapid detection of residual film pollution in pre-sowing cotton fields is of great significance for monitoring whether farmland meets the conditions for sowing. Before sowing in spring, the agricultural mulch is broken into film fragments as the cotton field goes through a series of operations, such as straw crushing, ploughing, and field preparation. Compared with plastic film mulch area detection after sowing in spring and plastic film residue detection after harvest in autumn, residual film pollution evaluation in pre-sowing cotton fields is more difficult.

To detect the residual film coverage rate on the surface of pre-sowing cotton fields, Zhai et al. (2022) proposed a detection method based on pixel blocks and machine learning. However, the mean intersection over union (MIOU) was only 71.25%, and the images were acquired near the ground, which is not convenient for rapid monitoring of residual film pollution. Therefore, this study proposes a method for residual film pollution evaluation in pre-sowing cotton fields based on UAV imaging and a deep learning semantic segmentation algorithm, aiming to achieve rapid and accurate identification of residual films in pre-sowing cotton fields. This study provides a theoretical basis for further research on technology and equipment for the rapid and accurate evaluation of residual film pollution.

Materials and methods

Data acquisition

Residual film images were collected in Shihezi City, Xinjiang, China (43°26′–45°20′N, 84°58′–86°24′E; 450.8 m a.s.l.), which has a temperate continental climate. The main crop in this area is cotton, and drip irrigation under plastic film mulching has been widely adopted in cotton planting (Wang et al., 2021). The amount of mulch film (thickness: approximately 0.008 mm) used during sowing was between 75 and 120 kg·hm⁻². After harvesting in autumn, the straw was crushed and returned to the field, and the films were recovered. Ploughing and other operations were carried out in the cotton fields before sowing in spring.

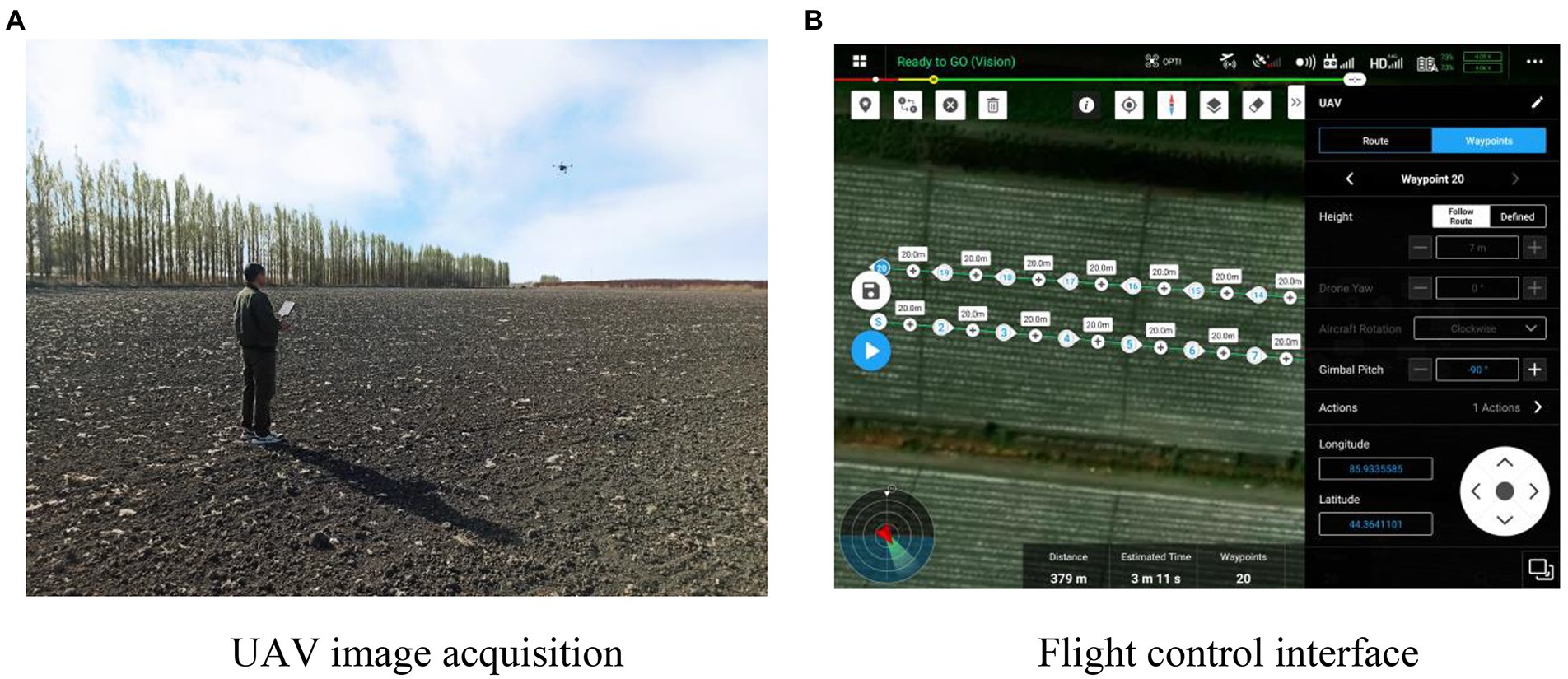

In this study, UAV images of 20 residual plastic film-polluted cotton fields were collected using a DJI M200 aircraft (DJI Innovation Technology Co., Ltd.) equipped with a Zenmuse X4S camera from 10:00 to 19:00 on sunny and cloudy days between April 5 and April 15, 2021. The image resolution was 5,472 × 3,078 pixels. As shown in Figure 1, images were acquired along waypoint flights. Each cotton field had 10 flight points in a straight line, and the distance between adjacent points was 20 m. The flight speed of the UAV was 3 m/s, the camera angle was 90° (perpendicular to the ground), and the image-acquiring heights were 5, 7, and 9 m. A total of 600 images were collected. The distribution of the original UAV images of residual film in cotton fields is shown in Table 1. The 600 images were divided into a training set (480), a validation set (60), and a test set (60).
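The acquisition design and the 480/60/60 split described above can be checked with a short sketch. The image naming scheme, the random assignment of images to sets, and the seed are assumptions made for illustration; the text does not state how images were assigned.

```python
import random

# Acquisition design stated in the text.
N_FIELDS = 20
POINTS_PER_FIELD = 10
HEIGHTS_M = (5, 7, 9)


def build_image_ids():
    """Enumerate one hypothetical image ID per (field, waypoint, height)."""
    return [
        f"field{f:02d}_pt{p:02d}_h{h}m"
        for f in range(1, N_FIELDS + 1)
        for p in range(1, POINTS_PER_FIELD + 1)
        for h in HEIGHTS_M
    ]


def split_dataset(ids, n_train=480, n_val=60, n_test=60, seed=0):
    """Shuffle and split IDs; random assignment and seed are assumptions."""
    if n_train + n_val + n_test != len(ids):
        raise ValueError("split sizes must sum to the number of images")
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


image_ids = build_image_ids()
train_ids, val_ids, test_ids = split_dataset(image_ids)
print(len(image_ids), len(train_ids), len(val_ids), len(test_ids))  # 600 480 60 60
```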

Image labelling and data enhancement

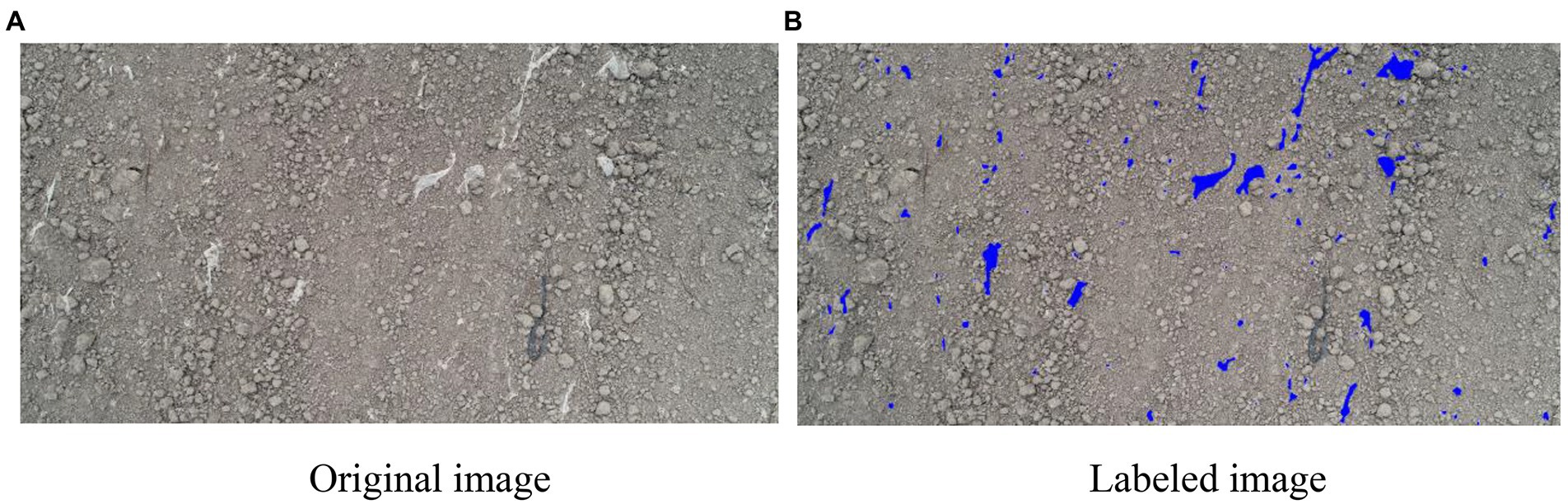

The images were manually annotated using Adobe Photoshop CS5 (Adobe Systems Inc., United States): all residual films were outlined by hand and filled with blue. Then, the threshold segmentation method was used for binarization. Residual film pixels were labelled as 1, and background pixels such as soil were labelled as 0. The annotation results are shown in Figure 2.
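The binarization step can be illustrated with a minimal sketch that maps blue-filled pixels to 1 and everything else to 0. The function name and both threshold values are illustrative assumptions, not the settings used in the study.

```python
def binarize_label(rgb_rows, blue_min=200, other_max=80):
    """Map an annotated RGB image (rows of (r, g, b) tuples) to a 0/1 mask.

    A pixel is treated as residual film (1) when it is close to pure blue.
    Both thresholds are illustrative assumptions.
    """
    return [
        [1 if (b >= blue_min and r <= other_max and g <= other_max) else 0
         for (r, g, b) in row]
        for row in rgb_rows
    ]


# Tiny 2 x 3 example: soil-like pixels and blue-filled film pixels.
annotated = [
    [(120, 100, 80), (0, 0, 255), (10, 20, 250)],
    [(200, 200, 200), (90, 85, 70), (0, 0, 255)],
]
label_mask = binarize_label(annotated)
print(label_mask)  # [[0, 1, 1], [0, 0, 1]]
```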

As the original images were too large to be used directly for training, they were resized to 1,200 × 600 pixels to accelerate model computation. In addition, the training set data were enhanced during model training. In each training epoch, random cropping (size: 1,024 × 512 pixels), random left-right flipping, random up-down flipping, and brightness adjustment were used for data enhancement. Each training epoch produced 480 new training samples, and 55 epochs of training were conducted. In total, 26,400 enhanced images were obtained and used for training.
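A minimal sketch of the per-epoch enhancement described above is given below. It works on nested lists of grey values for portability; the flip probability of 0.5 and the brightness range are assumptions, and a real pipeline would more likely apply the same operations to RGB tensors in TensorFlow.

```python
import random


def augment(image, mask, crop_h=512, crop_w=1024, max_delta=0.2, rng=random):
    """Apply one random crop, random flips and a brightness shift.

    `image` is a list of rows of grey values in [0, 1]; `mask` has the same
    shape with 0/1 labels. Geometric transforms are applied to both so that
    labels stay aligned; brightness is applied to the image only.
    """
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    img = [row[left:left + crop_w] for row in image[top:top + crop_h]]
    msk = [row[left:left + crop_w] for row in mask[top:top + crop_h]]

    if rng.random() < 0.5:  # left-right flip (probability is an assumption)
        img = [row[::-1] for row in img]
        msk = [row[::-1] for row in msk]
    if rng.random() < 0.5:  # up-down flip (probability is an assumption)
        img = img[::-1]
        msk = msk[::-1]

    delta = rng.uniform(-max_delta, max_delta)  # brightness shift
    img = [[min(1.0, max(0.0, v + delta)) for v in row] for row in img]
    return img, msk


# 480 training images re-augmented in each of 55 epochs.
print(480 * 55)  # 26400
```

Because the crop and flips are drawn once and applied to both arrays, each augmented image keeps a pixel-aligned label mask.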

Residual film images segmentation network structure

The U-Net model is a common semantic segmentation network with a “U” shape (Ronneberger et al., 2015; Zhou et al., 2020) (Figure 3A). The left part of the network, the “encoder,” repeatedly applies two convolution layers followed by one down-sampling layer. The right part of the network, the “decoder,” up-samples with a deconvolution layer and concatenates the result with the corresponding feature map output by the “encoder,” followed by two convolutions. Finally, a 1 × 1 convolution maps the channels to the desired number of categories. Based on the original U-Net model, a modified U-Net model was proposed in this research (Figure 3B) in which the number of convolution layers was reduced to shorten the running time. Moreover, the inception module was used to increase the generalization ability and learning ability of the neural network. In the down-sampling path, the inception module replaced the ordinary 3 × 3 convolutional layer, and a 1 × 1 convolution layer was connected after the inception module to reduce the amount of information passed on and the model size.

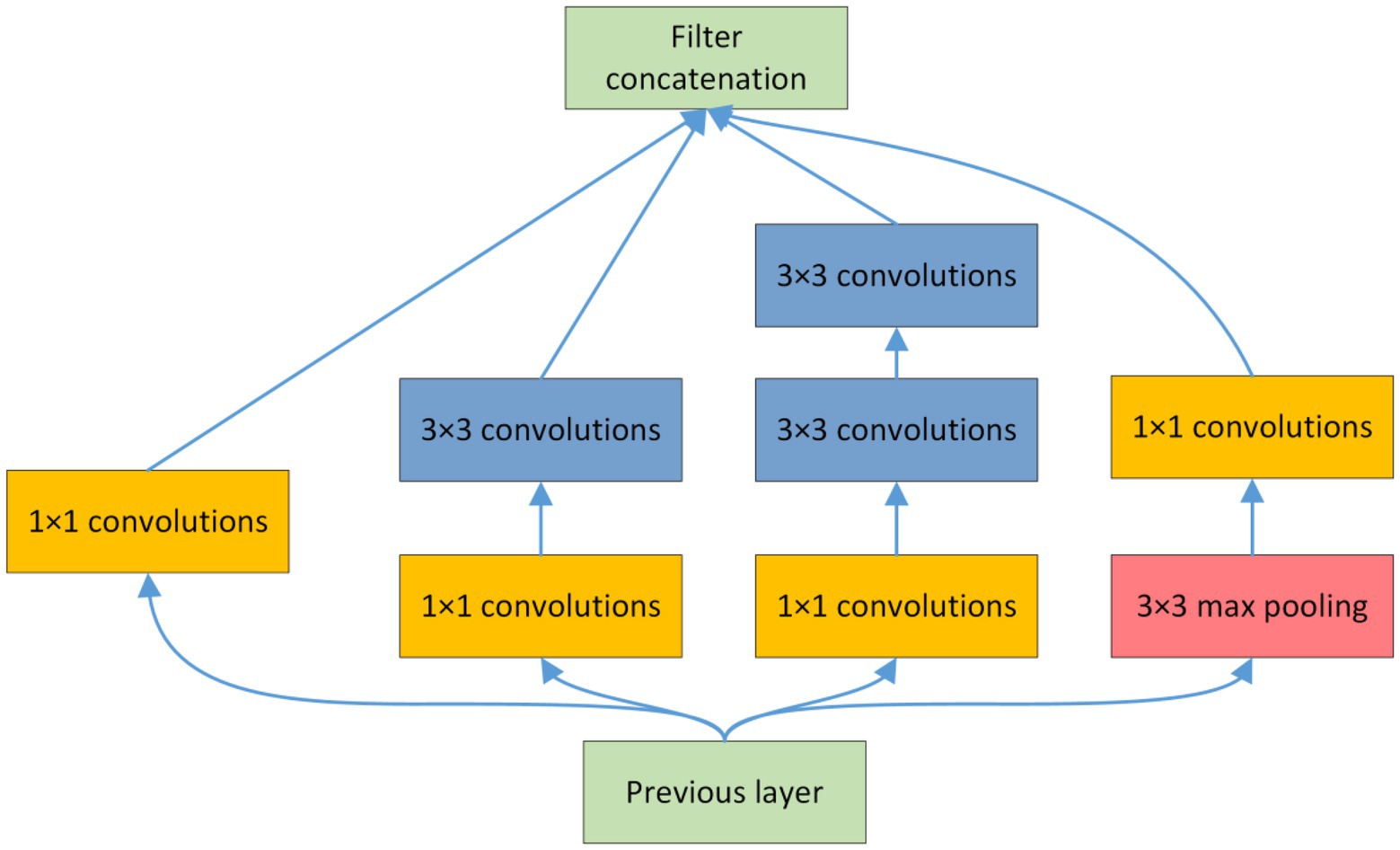

Depth and width are important parameters that affect convolutional neural networks. While increasing the network depth and width, the inception module also limits the growth in the number of parameters and reduces the amount of computation (Szegedy et al., 2015). The inception module used in this study is shown in Figure 4. Features of cotton field images at different scales were extracted using 1 × 1 and 3 × 3 convolutional layers. The multiscale inception module is therefore well suited to capturing the multimorphic, multiscale, and randomly distributed characteristics of residual films in pre-sowing cotton fields. In the inception module, branches with different scales and functions were fused by cascading (concatenating) their outputs, which realized the fusion of multiscale image features.
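To make the parameter-saving argument concrete, the sketch below counts weights and biases for a plain 3 × 3 convolution and for a two-branch inception-style block. All channel counts and the branch layout (a 1 × 1 branch plus a 1 × 1 reduction followed by a 3 × 3 convolution, in the style of Szegedy et al., 2015) are hypothetical; they are not the configuration shown in Figure 4.

```python
def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a 2-D convolution with a square kernel."""
    return kernel * kernel * c_in * c_out + c_out


# Hypothetical channel counts, chosen only for illustration.
C_IN = 64

# Plain 3 x 3 convolution producing 64 channels.
plain = conv_params(3, C_IN, 64)

# Two-branch inception-style block, also producing 32 + 32 = 64 channels:
#   branch 1: 1 x 1 convolution, 64 -> 32
#   branch 2: 1 x 1 reduction, 64 -> 16, then 3 x 3 convolution, 16 -> 32
branch_1 = conv_params(1, C_IN, 32)
branch_2 = conv_params(1, C_IN, 16) + conv_params(3, 16, 32)
inception_like = branch_1 + branch_2

print(plain, inception_like)  # 36928 7760
```

With these assumed sizes the two-branch block needs roughly one fifth of the parameters of the plain layer while still mixing 1 × 1 and 3 × 3 receptive fields.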

Figure 4. Structure of the inception module.

Training for residual film detection

The hardware used for deep learning model training consisted of an Intel(R) Xeon(R) W-2223 CPU @ 3.60 GHz with 128 GB of memory and an NVIDIA GeForce RTX 3090 graphics card with 24 GB of memory. The software environment was Windows 10, CUDA 11.2, cuDNN 8.1.1, Python 3.8, and TensorFlow-GPU 2.5.

To simulate the actual application scenario, the hardware and software for the residual film pollution evaluation included an Intel (R) Xeon (R) CPU E3-1230 V2 @ 3.30 GHz, without GPU acceleration, 16 GB memory, Windows 10 operating system, Python 3.7, and TensorFlow-CPU 2.3.

In the segmentation of residual films, each pixel is classified as either residual film or non-residual film. As in other binary classification networks, the “sparse categorical cross-entropy” function was used as the loss function. The networks were trained by gradient descent with the Adam optimizer, with an initial learning rate of 0.001 and a batch size of 6. During training, changes in accuracy and loss were recorded, and only the best model was saved. Training converged and was stopped after 55 epochs.
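The loss named above can be written out in a few lines. This is a plain-Python sketch of the standard definition (the mean negative log-probability of the true class), not the TensorFlow implementation; the clipping constant `eps` is an assumption added to avoid taking the logarithm of zero.

```python
import math


def sparse_categorical_cross_entropy(labels, probs, eps=1e-7):
    """Mean of -log(p[true class]) over pixels.

    `labels` holds integer class indices (0 = background, 1 = residual film);
    `probs` holds one list of per-class probabilities for each pixel.
    """
    if len(labels) != len(probs) or not labels:
        raise ValueError("labels and probs must be non-empty and equal length")
    total = 0.0
    for y, p in zip(labels, probs):
        total += -math.log(min(1.0, max(eps, p[y])))
    return total / len(labels)


# Two pixels: a film pixel predicted with p = 0.9, a background pixel with p = 0.8.
loss = sparse_categorical_cross_entropy([1, 0], [[0.1, 0.9], [0.8, 0.2]])
print(round(loss, 4))  # 0.1643
```

The "sparse" variant takes integer class indices directly, so the 0/1 label masks do not need to be one-hot encoded.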

Network segmentation performance evaluation

In this study, the accuracy, F1-score, and mean IOU (MIOU) were used to assess the segmentation performance. The F1-score combines precision and recall. The segmentation time and the number of model parameters were used to assess the segmentation speed and model size, respectively.

Where TP is true positive, TN is true negative, FP is false positive, and FN is false negative.
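For reference, the metrics named above can be computed from the four confusion-matrix counts. The sketch below uses the standard definitions of accuracy, precision, recall, and F1-score; taking MIOU as the mean of the residual film IoU and the background IoU is an assumption about how the two-class mean was formed.

```python
def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN for flat 0/1 sequences (1 = residual film)."""
    tp = tn = fp = fn = 0
    for p, t in zip(pred, truth):
        if p == 1 and t == 1:
            tp += 1
        elif p == 0 and t == 0:
            tn += 1
        elif p == 1 and t == 0:
            fp += 1
        else:
            fn += 1
    return tp, tn, fp, fn


def _ratio(num, den):
    """Safe division that returns 0.0 for an empty denominator."""
    return num / den if den else 0.0


def segmentation_metrics(tp, tn, fp, fn):
    """Standard pixel-wise metrics; MIOU averages film and background IoU."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "miou": (_ratio(tp, tp + fp + fn) + _ratio(tn, tn + fp + fn)) / 2,
    }


pred = [1, 1, 0, 0, 1, 0, 0, 0]
truth = [1, 0, 0, 0, 1, 1, 0, 0]
metrics = segmentation_metrics(*confusion_counts(pred, truth))
print(metrics["accuracy"])  # 0.75
```

Because background pixels dominate field images, accuracy can stay close to 100% even when the film class is segmented poorly, which is why F1-score and MIOU are the more informative figures.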

Evaluation of residual film pollution

The residual film coverage rate was used as the evaluation index of residual film pollution. For images with a size of M × N, the residual film coverage rate L is the ratio of the total number of residual film pixels [p (x, y) =1] to the total number of pixels in the image (Equation 6).
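The coverage rate described above is a simple ratio of pixel counts, as the following sketch shows. The function name is illustrative, and the mask is assumed to be a rectangular list of rows holding 0/1 values.

```python
def coverage_rate(mask):
    """Residual film coverage rate L for an M x N binary mask.

    L = (number of pixels with p(x, y) == 1) / (M * N)
    """
    rows = len(mask)
    cols = len(mask[0])
    film_pixels = sum(1 for row in mask for p in row if p == 1)
    return film_pixels / (rows * cols)


# 2 x 4 example with three residual film pixels.
example_mask = [
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
print(coverage_rate(example_mask))  # 0.375
```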

To test the accuracy of the modified U-Net in residual film pollution evaluation, the L values of the 60 test images were calculated. Then, the relationship between the predicted residual film coverage rate (L1) and the true residual film coverage rate (L2) was evaluated by regression analysis. The coefficient of determination (R2), root mean square error (RMSE), and mean relative error (MRE) were selected as the evaluation indexes.

Where L1 and L2 are the predicted and true L values of the i-th image, respectively, and N is the number of images.
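The three evaluation indexes can be sketched as follows. These are common textbook definitions and are an assumption about the exact forms used here: R2 is computed as one minus the ratio of the residual to the total sum of squares, and MRE as the mean of |L1 − L2| / L2, which requires every true value to be non-zero. The sample values are invented for illustration.

```python
import math


def evaluation_indexes(predicted, true):
    """Return (R2, RMSE, MRE) for paired predicted (L1) and true (L2) values."""
    if len(predicted) != len(true) or not true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(true)
    mean_true = sum(true) / n
    ss_res = sum((t - p) ** 2 for p, t in zip(predicted, true))
    ss_tot = sum((t - mean_true) ** 2 for t in true)
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mre = sum(abs(p - t) / t for p, t in zip(predicted, true)) / n
    return r2, rmse, mre


# Invented coverage rates, used only to exercise the function.
l1 = [0.11, 0.18, 0.42]  # predicted
l2 = [0.10, 0.20, 0.40]  # true
r2, rmse, mre = evaluation_indexes(l1, l2)
print(round(r2, 4), round(rmse, 4), round(mre, 4))
```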

Results

Training process of the modified U-Net

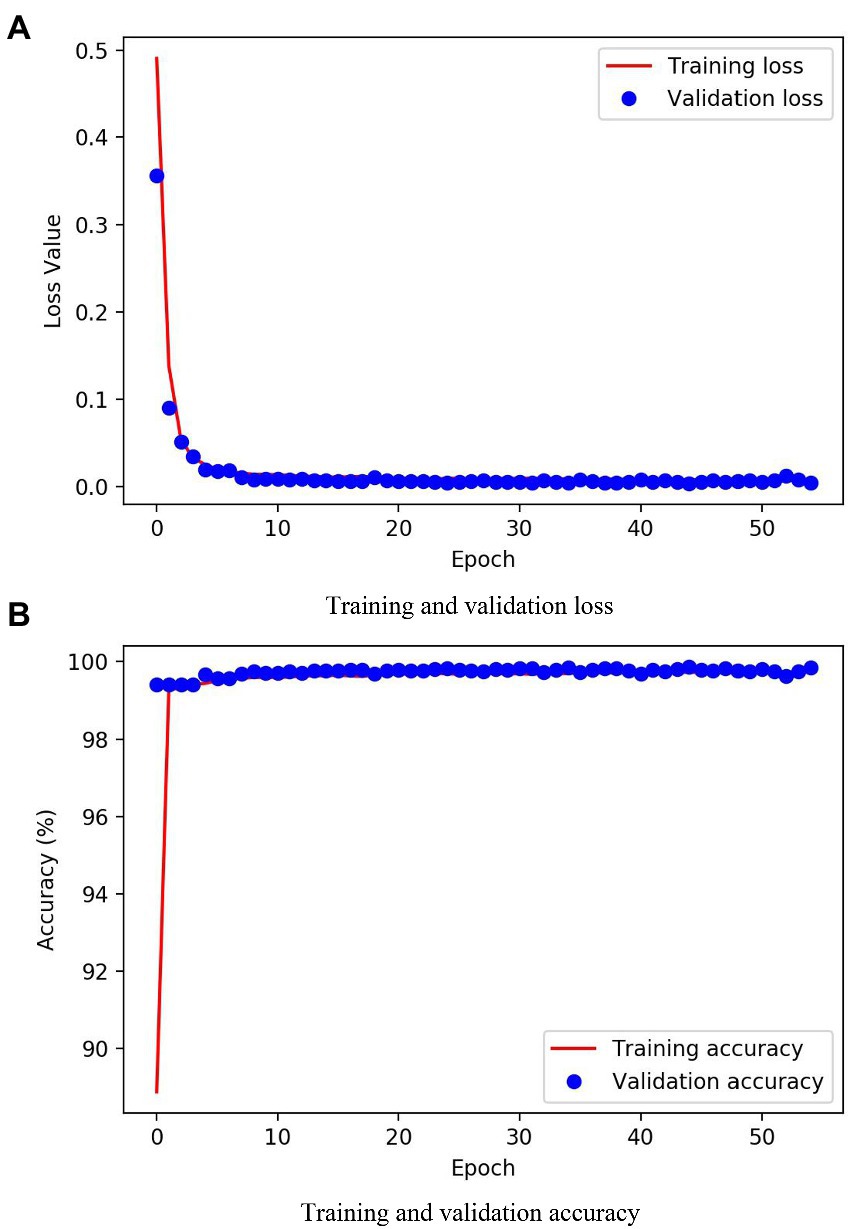

Figure 5 shows the change in loss and accuracy on the training and validation sets as the number of epochs increases during model training. The loss and accuracy of the training and validation sets showed the same trend: the loss dropped first and then remained stable, and the accuracy rose first and then remained stable. After approximately 10 epochs of training, both loss and accuracy were stable. Furthermore, there was no significant difference between the training and validation sets in either loss or accuracy, so the model was not over-fitted. After 55 epochs of training, both the loss and the accuracy had converged, indicating that the model was well trained. At the end of training, the loss and accuracy on the validation set were 0.0037 and 99.85%, respectively.

Figure 5. Loss and accuracy changes during training: (A) Training and validation loss; (B) Training and validation accuracy.

Residual film segmentation results

Segmentation results of different models

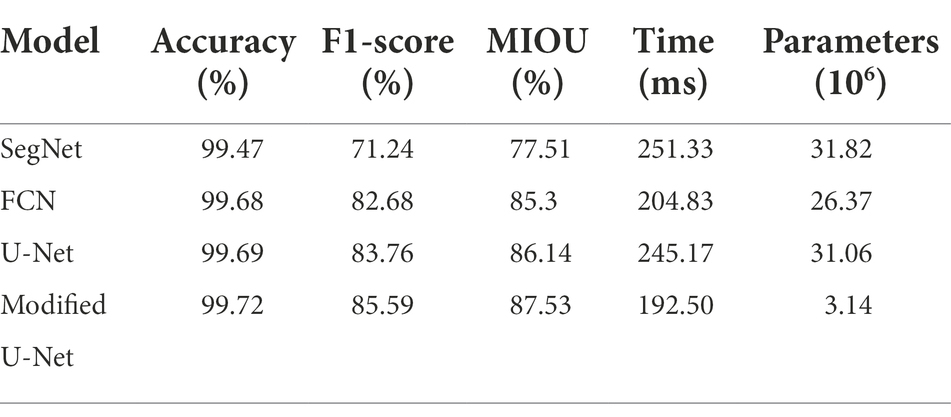

The modified U-Net model was compared with state-of-the-art methods such as SegNet, FCN, and U-Net. The segmentation results of the different models are shown in Table 2. The modified U-Net model had the best performance and prediction accuracy on the test set. The accuracy of the modified U-Net was 99.72%, which was 0.25, 0.04, and 0.03 percentage points higher than that of SegNet, FCN, and U-Net, respectively. The F1-score of the modified U-Net model was 85.59%, which was 14.35, 2.91, and 1.83 percentage points higher than that of SegNet, FCN, and U-Net, respectively. The MIOU of the modified U-Net model was 87.53%, which was 10.02, 2.23, and 1.39 percentage points higher than that of SegNet, FCN, and U-Net, respectively. In terms of segmentation speed, the average segmentation time per image of the modified U-Net model was 192.50 ms. Its number of parameters was the smallest, at 3.14 × 10^6, approximately 1/10 of that of the original U-Net model. Therefore, the modified U-Net model could improve the accuracy and speed of residual film segmentation, which facilitates the rapid and accurate identification of residual film.

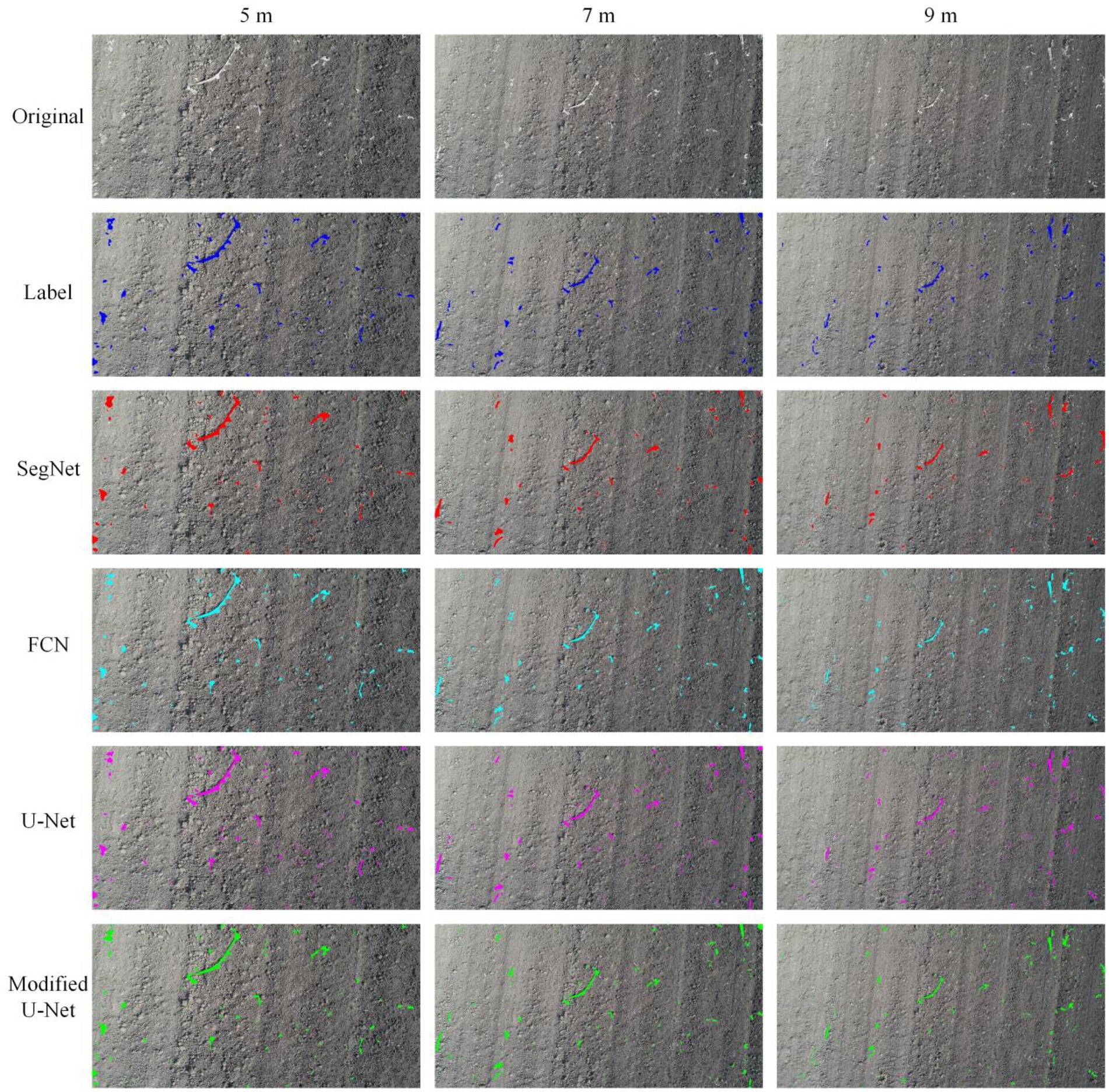

Residual film segmentation results in different weather conditions

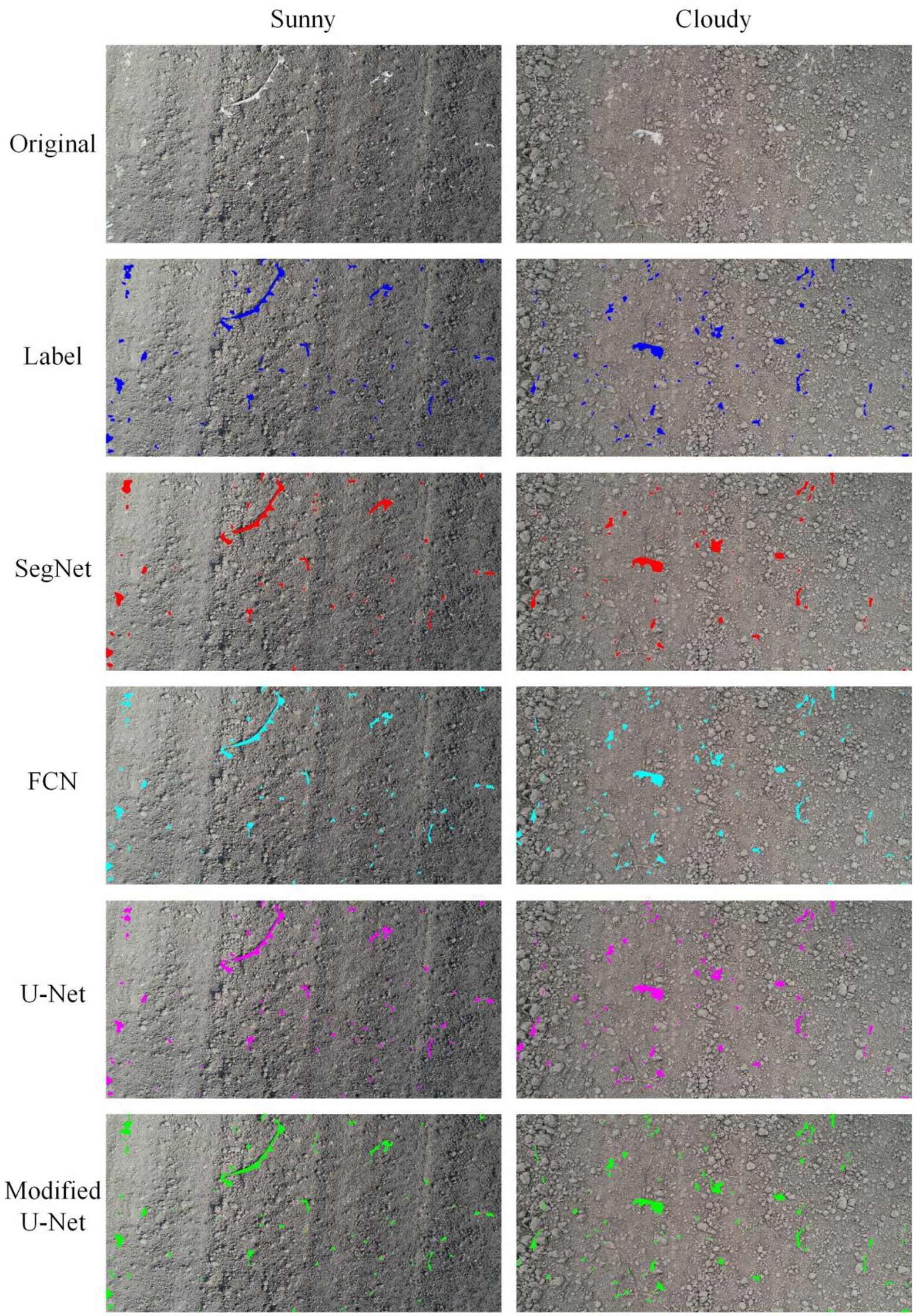

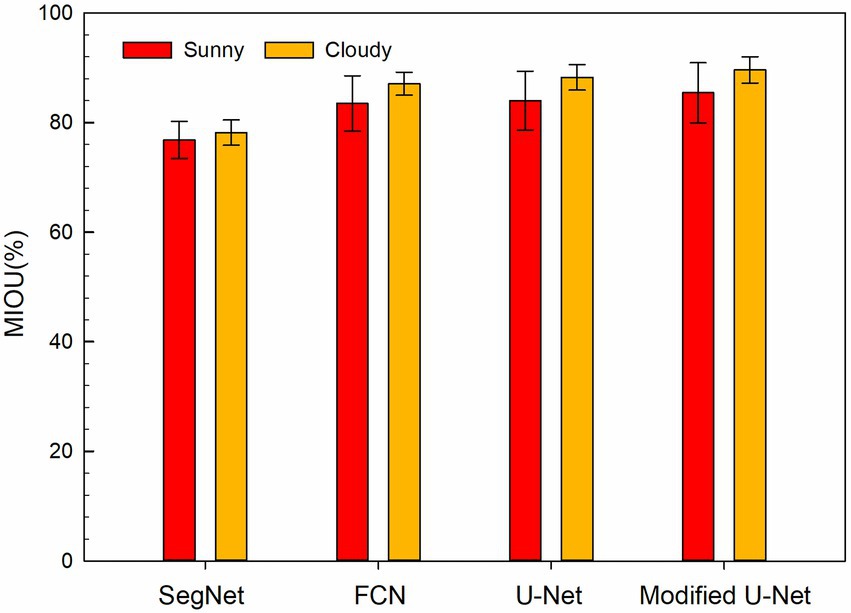

To study the influence of weather conditions on the segmentation results, the segmentation results of cotton field images acquired in sunny and cloudy weather were compared (Figure 6). Whichever model was used, the segmentation performance on images acquired on cloudy days was better than that on sunny days. Figure 7 shows the MIOU of the different models for images acquired in different weather conditions. Under the same weather conditions, the SegNet model had the worst segmentation performance, followed by the FCN and U-Net models. The modified U-Net model had the best performance, with MIOU reaching 85.44% and 89.63% on sunny and cloudy days, respectively.

Segmentation of images acquired at different heights

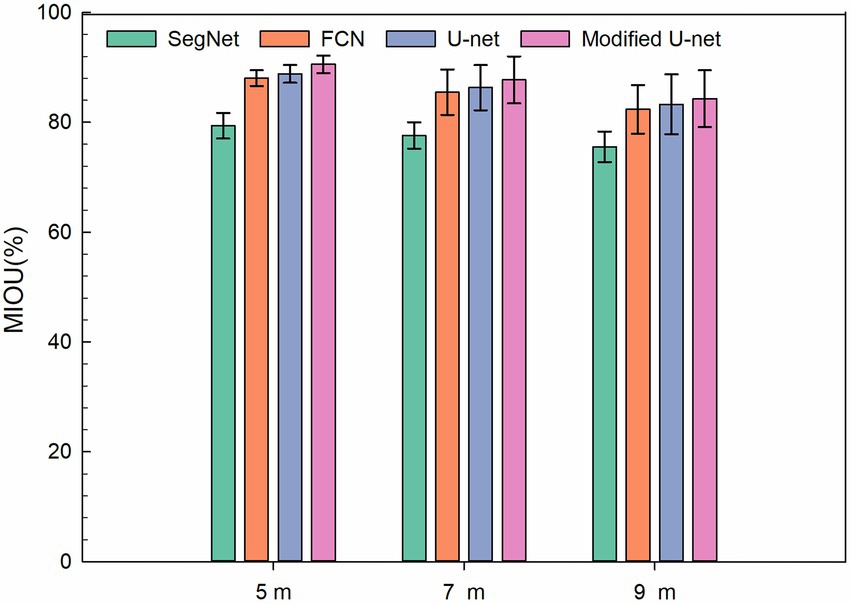

To study the effect of image-acquiring height on the residual film segmentation results, the segmentation results of images acquired at heights of 5, 7, and 9 m were compared (Figure 8). The segmentation performance gradually decreased as the height increased. Figure 9 shows the MIOU of the different models for images acquired at different heights. At the same height, the SegNet model had the worst identification results, followed by the FCN and U-Net models. The modified U-Net model had the best results, with MIOU reaching 90.55%, 87.72%, and 84.32% at 5, 7, and 9 m, respectively.

Residual film pollution evaluation results

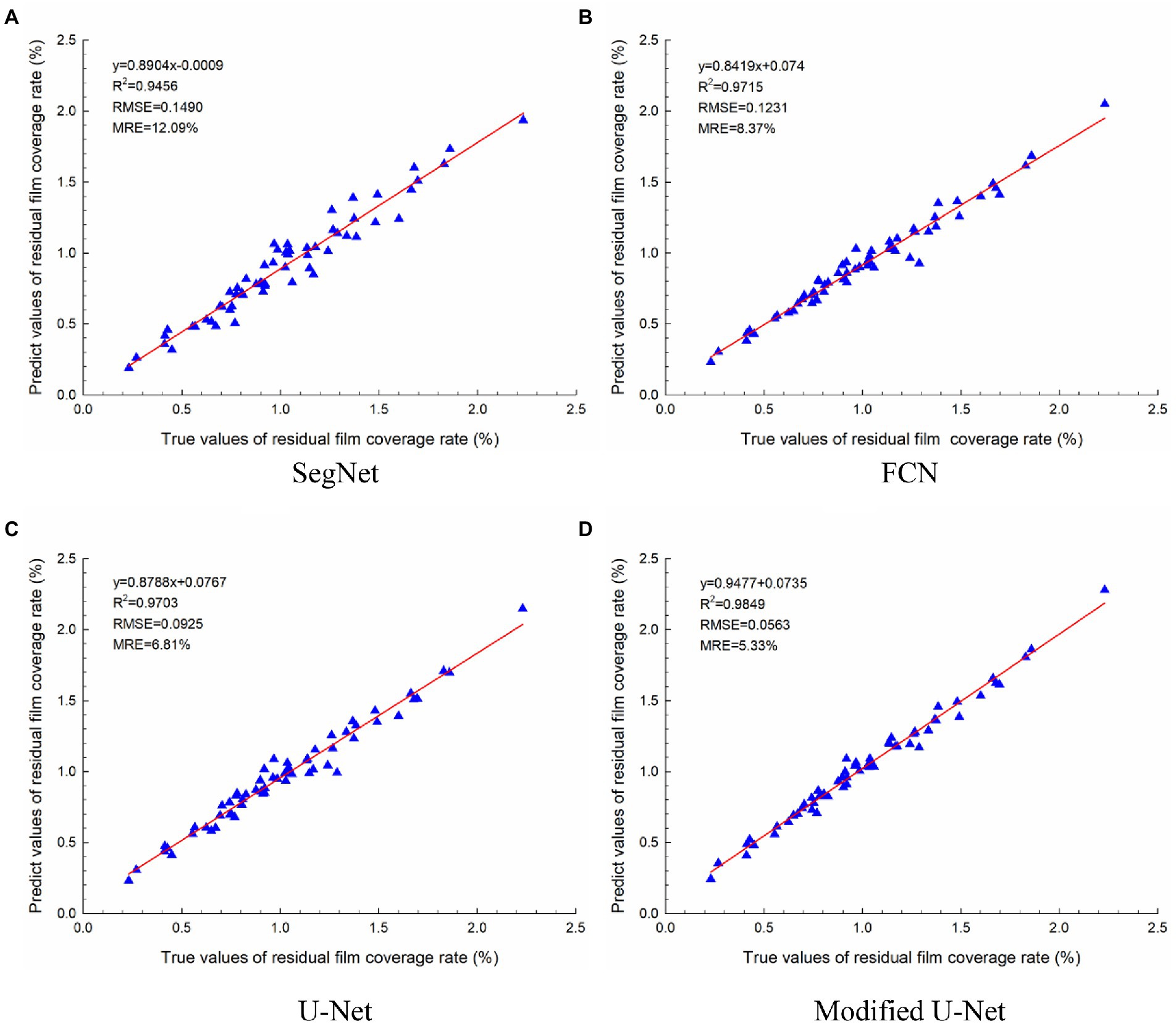

The regression analysis results of the UAV images-based evaluation and manual evaluation of different models are shown in Figure 10. The regression result of the modified U-Net model was slightly better than that of the other models, with a regression equation of y = 0.9477x + 0.7305. The R2, RMSE, and MRE were 0.9849, 0.0563, and 5.33%, respectively. Moreover, it was found that the intercept of the regression equations of different models was positive.
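A regression line of the form y = ax + b, such as the one reported above, can be obtained by ordinary least squares. The sketch below is a generic implementation; which quantity plays the role of x (here assumed to be the manual evaluation) and which of y (the UAV image-based evaluation) is an assumption, and the sample data are invented.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented points lying exactly on y = 2x + 1.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope, intercept)  # 2.0 1.0
```

A slope below 1 together with a positive intercept, as reported for the models here, means the fitted line overestimates low coverage rates and underestimates high ones relative to the 1:1 line.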

Figure 10. Regression analysis results of the UAV images-based evaluation and manual evaluation: (A) SegNet; (B) FCN; (C) U-Net; (D) Modified U-Net.

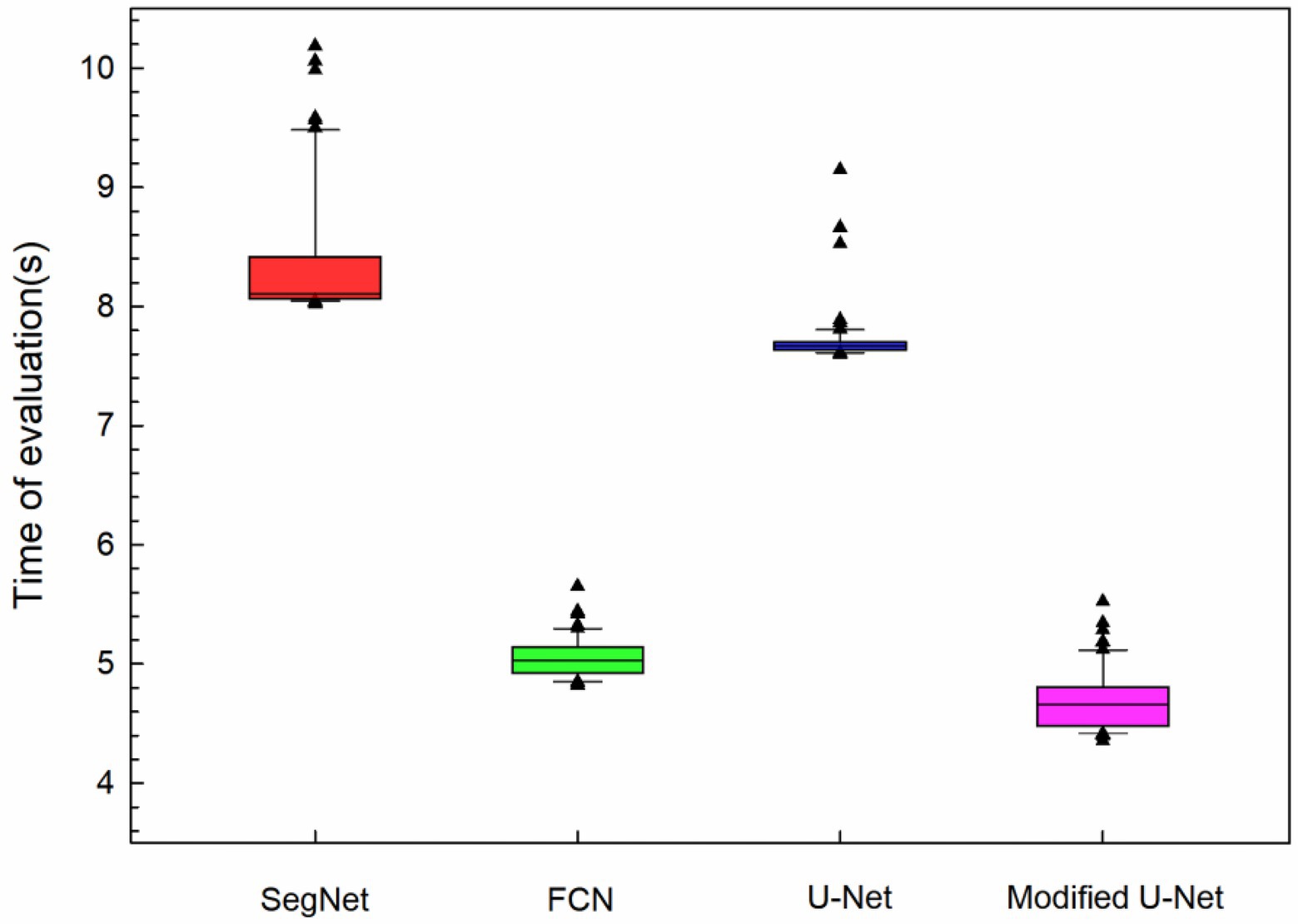

The evaluation times of the 60 test-set images on the CPU were statistically analyzed, and the evaluation time was found to vary slightly. The time required to evaluate residual film pollution on the CPU is shown in Figure 11. The modified U-Net model had the shortest average evaluation time, 4.85 s, which was 41.07% less than that of the U-Net model.
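The timing comparison can be sketched as follows. `mean_seconds_per_image` is a hypothetical helper that times any evaluation callable, and the U-Net baseline used in the arithmetic is derived from the two reported figures (4.85 s and 41.07%) rather than taken from the text.

```python
import time


def mean_seconds_per_image(evaluate, images):
    """Average wall-clock time of `evaluate(image)` over all images."""
    start = time.perf_counter()
    for image in images:
        evaluate(image)
    return (time.perf_counter() - start) / len(images)


def relative_reduction(baseline_s, new_s):
    """Fraction by which `new_s` is shorter than `baseline_s`."""
    return (baseline_s - new_s) / baseline_s


# Derived, not reported: a 41.07% reduction to 4.85 s implies this baseline.
implied_unet_s = 4.85 / (1 - 0.4107)
print(round(implied_unet_s, 2))  # 8.23
print(round(relative_reduction(implied_unet_s, 4.85), 4))  # 0.4107
```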

Discussion

This study identified residual film and evaluated residual film pollution in cotton fields before sowing using low-altitude UAV imaging and deep learning. Based on the traditional U-Net model, a residual film semantic segmentation model with a modified U-Net structure was proposed. This model could effectively segment the residual film from UAV images. The MIOU of the residual film recognition results reached 87.53%, which was 16.28 percentage points higher than that of the residual film pixel block identification method (Zhai et al., 2022). In this study, the residual film coverage rate was used to evaluate residual film pollution, and a rapid and accurate evaluation of residual film pollution was achieved based on the residual film semantic segmentation results. The results showed that the R2 of the modified U-Net model was 0.9849, the RMSE was 0.0563, the MRE was 5.33%, and the average evaluation time per image was 4.85 s on the CPU. These results indicate that the modified U-Net model can rapidly and accurately evaluate residual film pollution.

The residual film pollution evaluation method proposed in this study was mainly designed to identify residual films on the surface of cotton fields before sowing and to evaluate the degree of residual film pollution based on the proportion of residual film pixels. In principle, a multi-class neural network model could be used to identify residual film, soil, straw, etc. However, because the surface of cotton fields includes residual film, soil, straw, drip irrigation belts, etc., it is very difficult to label each item pixel by pixel. Therefore, in the labelling process, only residual films (1) were manually labelled one by one, and soil, straw, and other items were marked as non-residual films (0). Soil attached to the surface of the residual film, reflection from soil blocks, and other factors resulted in false positive (FP) and false negative (FN) detections in this study. An FP means that the segmentation model mistakenly identified soil, straw, or other samples as residual film; an FN means that the segmentation model mistakenly identified residual film as soil, straw, etc.

This study proposed a model for residual film semantic segmentation based on a modified U-Net model. The image segmentation in this study is a binary classification that distinguishes residual films from non-residual films. Therefore, the feature extraction of the traditional U-Net model was simplified to reduce the number of parameters and speed up computation. Moreover, the multiscale feature extraction inception module was introduced to achieve accurate segmentation of residual films of different sizes by fusing multiscale image features. The modified network model may not perform as well on other, more complex images, but on this task it outperforms several traditional semantic segmentation models, including U-Net, SegNet, and FCN.

This study compared the identification performance on sunny and cloudy days and found that the identification performance on cloudy days was better than that on sunny days. This may be because, on sunny days, reflection from soil blocks causes them to be misjudged as residual films. In addition, a comparison of different image-acquiring heights showed that the lower the height, the better the residual film segmentation. This may be because images acquired at lower heights have higher definition. However, when the height was too low, wind from the UAV’s rotors could blow away residual films, affecting the residual film pollution evaluation. Therefore, in practical applications, the flight height of the UAV should be chosen to avoid disturbing the films while ensuring image definition.

The residual film pollution evaluation method in this paper has application value for the control of residual film pollution. This evaluation system can achieve a rapid and accurate evaluation of residual film pollution. Moreover, rapid evaluation of the degree of residual film pollution can provide a reference for the objective evaluation of the seeding suitability of cotton fields during the spring sowing stage. In addition, this study provides theoretical support for detecting residual film pollution in the plough layer of cotton fields using UAV imaging: rapid prediction of plough-layer pollution could be realized by studying the correlation between residual film pollution on the surface and in the plough layer. Compared with manual sampling to monitor residual film pollution, the approach in this study saves manpower and reduces time costs.

Conclusion

In this paper, images of residual film pollution in pre-sowing cotton fields were collected with a UAV imaging system. A more suitable residual film segmentation model was built by modifying the U-Net model. Finally, residual film pollution was evaluated based on the residual film coverage rate. Analysis of the test results showed that:

(1) The modified U-Net model was proposed by simplifying the U-Net model and introducing an inception module, which can realize the accurate segmentation of residual film from cotton fields before sowing. The MIOU of segmentation reached 87.53%.

(2) The identification performance on cloudy days was better than that on sunny days. The identification performance of residual films gradually decreased with increasing image-acquiring height.

(3) The modified U-Net model outperformed other models in residual film pollution evaluation, with R2 of 0.9849, RMSE of 0.0563, MRE of 5.33% and the average evaluation time per image of 4.85 s on the CPU.

(4) This study provides a theoretical reference for further development of evaluation technology and equipment for residual film pollution based on UAV imaging.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

ZZ: methodology, model design, data analysis, and original manuscript writing. FQ: data collection and data analysis. QM: data analysis and revision. JY: data collection and revision. HW: data collection. XC and RZ: methodology, editing, revision, supervision, and funding. All authors contributed to the article and approved the submitted version.

Funding

The authors gratefully acknowledge the financial support provided by the National Natural Science Foundation of China (32060412), the High-level Talents Research Initiation Project of Shihezi University (CJXZ202104), the earmarked fund for China Agriculture Research System (CARS-15-17), and the Graduate Education Innovation Project of Xinjiang Autonomous Region (XJ2022G082).

Acknowledgments

The authors would also like to thank Mengyun Zhang and Fengjie Cai for their assistance in the experiment.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer GY declared a shared affiliation with the authors to the handling editor at the time of review.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Akter, T., Islam, A. K. M. A., Rasul, M. G., and Kundu, S. (2018). Evaluation of genetic diversity in short duration cotton (Gossypium hirsutum L.). J. Cotton Res. 1, 15–20. doi: 10.1186/s42397-018-0018-6

Alves, A. N., Souza, W. S. R., and Borges, D. L. (2020). Cotton pests classification in field-based images using deep residual networks. Comput. Electron. Agric. 174:105488. doi: 10.1016/j.compag.2020.105488

Dong, H., Liu, T., Han, Z., Sun, Q. M., and Li, R. (2015). Determining time limits of continuous film mulching and examining residual effects on cotton yields and soil properties. J. Environ. Biol. 36, 677–684.

He, H. J., Wang, Z. H., Guo, L., Zheng, X. R., Zhang, J. Z., Li, W. B., et al. (2018). Distribution characteristics of residual film over a cotton field under long-term film mulching and drip irrigation in an oasis agroecosystem. Soil Tillage Res. 180, 194–203. doi: 10.1016/j.still.2018.03.013

He, W. Q., Yan, C. R., Zhao, C. X., Chang, R. Q., Liu, Q., and Liu, S. (2009). Study on the pollution by plastic mulch film and its countermeasures in China. J. Agro-Environ. Sci. 28, 533–538.

Li, F., Bai, J. Y., Zhang, M. Y., and Zhang, R. Y. (2022). Yield estimation of high-density cotton fields using low-altitude UAV imaging and deep learning. Plant Methods 18:55. doi: 10.1186/s13007-022-00881-3

Qi, R. M., Jones, D. L., Li, Z., Liu, Q., and Yan, C. R. (2020). Behavior of microplastics and plastic film residues in the soil environment: a critical review. Sci. Total Environ. 703:134722. doi: 10.1016/j.scitotenv.2019.134722

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-net: convolutional networks for biomedical image segmentation. In 18th International Conference on Medical Image Computing and Computer-Assisted Intervention, 9351, 234–241.

Sun, Y., Han, J. Y., Chen, Z. B., Shi, M. C., Fu, H. P., and Yang, M. (2018). Monitoring method for UAV image of greenhouse and plastic-mulched landcover based on deep learning. Trans. Chinese Soc. Agri. Machinery 49, 133–140. doi: 10.6041/j.issn.1000-1298.2018.02.018

Szegedy, C., Liu, W., Jia, Y. Q., Sermanet, P., Reed, S., Anguelov, D., et al. (2015). Going deeper with convolutions. IEEE Confer Comp. Vision Patter. Recog. 2015, 1–9. doi: 10.1109/CVPR.2015.7298594

Tarantino, E., and Figorito, B. (2012). Mapping rural areas with widespread plastic covered vineyards using true color aerial data. Remote Sens. 4, 1913–1928. doi: 10.3390/rs4071913

Wang, S. Y., Fan, T. L., Cheng, W. L., Wang, L., Zhao, G., Li, S. Z., et al. (2022). Occurrence of macroplastic debris in the long-term plastic film-mulched agricultural soil: a case study of Northwest China. Sci. Total Environ. 831:154881. doi: 10.1016/j.scitotenv.2022.154881

Wang, Z. H., He, H. J., Zheng, X. R., Zhang, J. Z., and Li, W. H. (2018). Effect of cotton stalk returning to fields on residual film distribution in cotton fields under mulched drip irrigation in typical oasis area in Xinjiang. Trans. Chinese Soc. Agri. Engineer. 34, 120–127. doi: 10.11975/j.issn.1002-6819.2018.21.015

Wang, S., Wang, X. J., Ji, C. R., Guo, Y. Y., Hu, Q. R., and Yang, M. F. (2021). Effects of climate change on cotton growth and development in Shihezi in recent 40 years. Agric. Eng. 11, 132–136.

Wu, X. M., Liang, C. J., Zhang, D. B., Yu, L. H., and Zhang, F. G. (2020). Identification method of plastic film residue based on UAV remote sensing images. Trans. Chinese Soc. Agri. Machinery 51, 189–195. doi: 10.6041/j.issn.1000-1298.2020.08.021

Xue, Y. H., Cao, S. L., Xu, Z. Y., Jin, T., Jia, T., and Yan, C. R. (2017). Status and trends in application of technology to prevent plastic film residual pollution. J. Agro-Environ. Sci. 36, 1595–1600. doi: 10.11654/jaes.2017-0298

Yan, C. R., Liu, E. K., Shu, F., and Liu, Q. (2014). Review of agricultural plastic mulching and its residual pollution and prevention measures in China. J. Agricu. Resour. Environ. 31, 95–102. doi: 10.13254/j.jare.2013.0223

Zhai, Z. Q., Chen, X. G., Qiu, F. S., Meng, Q. J., Wang, H. Y., and Zhang, R. Y. (2022). Detecting surface residual film coverage rate in pre-sowing cotton fields using pixel block and machine learning. Trans. Chinese Soc. Agri. Engineer. 38, 140–147. doi: 10.11975/j.issn.1002-6819.2022.06.016

Zhang, Z. Q., Cui, Q. L., Li, C., Zhu, X. Z., Zhao, S. L., Duan, C. J., et al. (2022). A critical review of microplastics in the soil-plant system: distribution, uptake, phytotoxicity and prevention. J. Hazard. Mater. 424:127750. doi: 10.1016/j.jhazmat.2021.127750

Zhang, D., Liu, H. B., Hu, W. L., Qin, X. H., Ma, X. W., Yan, C. R., et al. (2016). The status and distribution characteristics of residual mulching film in Xinjiang. J. Integrat. Agricul. 15, 2639–2646. doi: 10.1016/S2095-3119(15)61240-0

Zhao, Y., Chen, X. G., Wen, H. J., Zheng, X., Niu, Q., and Kang, J. M. (2017). Research status and prospect of control technology for residual plastic film pollution in farmland. Trans. Chinese Soc. Agri. Machinery 48, 1–14. doi: 10.6041/j.issn.1000-1298.2017.06.001

Zhao, X., Yuan, Y. T., Song, M. D., Ding, Y., Lin, F. F., Liang, D., et al. (2019). Use of unmanned aerial vehicle imagery and deep learning UNet to extract rice lodging. Sensors 19:3859. doi: 10.3390/s19183859

Zhou, D. Y., Li, M., Li, Y., Qi, J. T., Liu, K., Cong, X., et al. (2020). Detection of ground straw coverage under conservation tillage based on deep learning. Comput. Electron. Agric. 172:105369. doi: 10.1016/j.compag.2020.105369

Zhu, X. F., Li, S. B., and Xiao, G. F. (2019). Method on extraction of area and distribution of plastic-mulched farmland based on UAV images. Trans. Chinese Soc. Agri. Engineer. 35, 106–113. doi: 10.11975/j.issn.1002-6819.2019.04.013

Keywords: UAV imaging, deep learning, cotton field, residual film, pollution

Citation: Zhai Z, Chen X, Zhang R, Qiu F, Meng Q, Yang J and Wang H (2022) Evaluation of residual plastic film pollution in pre-sowing cotton field using UAV imaging and semantic segmentation. Front. Plant Sci. 13:991191. doi: 10.3389/fpls.2022.991191

Edited by:

Muhammad Naveed Tahir, Pir Mehr Ali Shah Arid Agriculture University, Pakistan

Reviewed by:

Rui Xu, University of Georgia, Georgia

Baohua Zhang, Nanjing Agricultural University, China

Guang Yang, Shihezi University, China

Jiangbo Li, Beijing Academy of Agriculture and Forestry Sciences, China

Copyright © 2022 Zhai, Chen, Zhang, Qiu, Meng, Yang and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ruoyu Zhang, zryzju@gmail.com

Zhiqiang Zhai

Xuegeng Chen (1,2)

Ruoyu Zhang

Fasong Qiu