- National Key Laboratory of Crop Genetic Improvement, Agricultural Bioinformatics Key Laboratory of Hubei Province, National Center of Plant Gene Research, College of Engineering, Huazhong Agricultural University, Wuhan, China

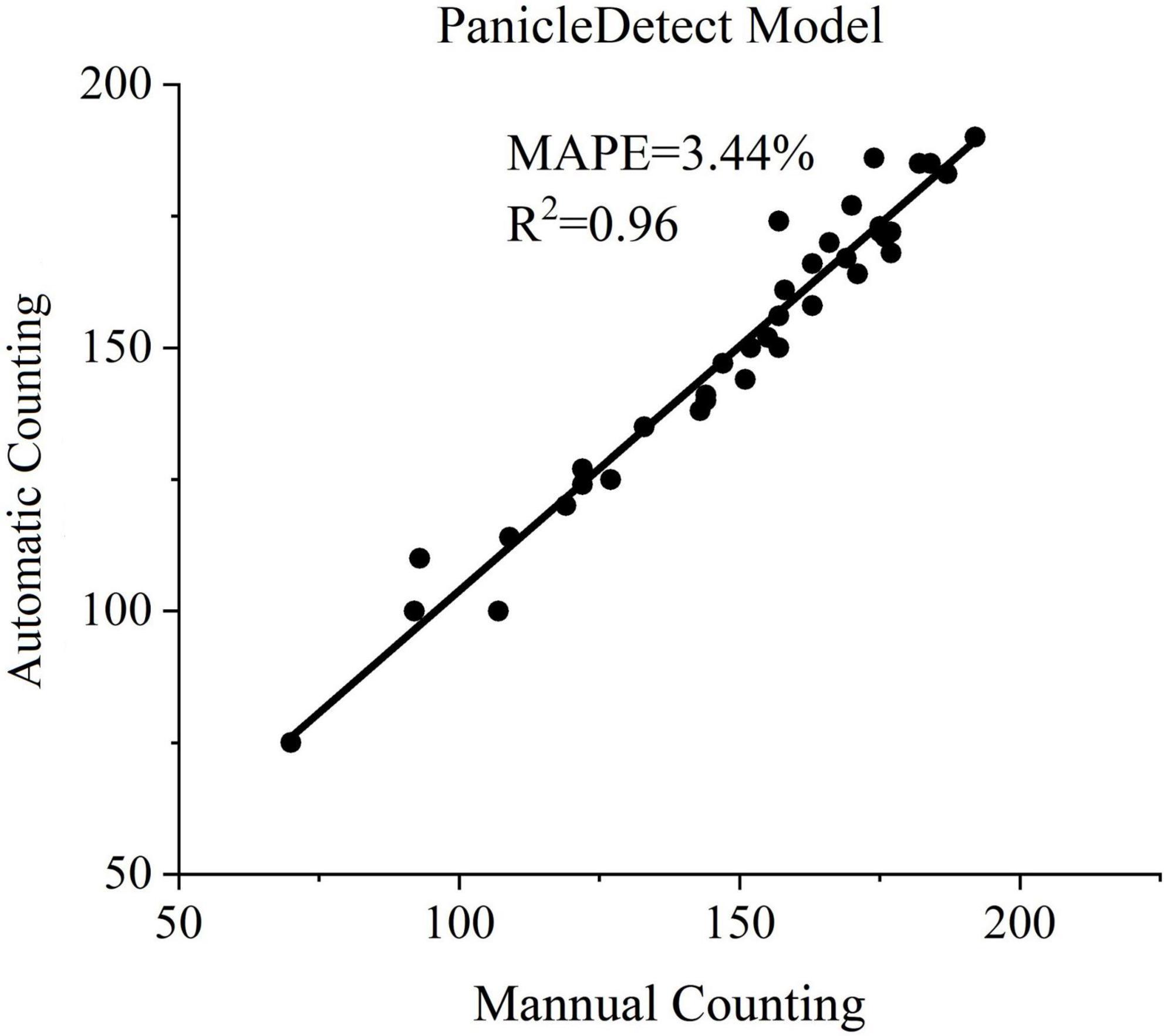

Panicle number is directly related to rice yield, so panicle detection and counting has long been an important research topic. Panicle counting is challenging because of high density, heavy occlusion, and large variation in size, shape, and posture. Deep learning provides state-of-the-art performance in object detection and counting. Large images generally need to be resized to fit into video memory. However, small panicles can be missed when an extremely large field rice image is resized. In this paper, we propose a deep-learning-based rice panicle detection and counting method designed specifically for rice field images with large image size. Different object detectors were compared, and YOLOv5 was selected, with a MAPE of 3.44% and an accuracy of 92.77%. We also propose a new method for removing repeated detections and show that it outperforms the existing NMS methods. The proposed method proved robust and accurate for counting panicles in field rice images with different illumination, rice accessions, and image input sizes. It also performed well on UAV images. In addition, an open-access and user-friendly web portal was developed so that rice researchers can use the proposed method conveniently.

Introduction

Rice is one of the most important cereal crops in the world, especially in Asia. The yield of cereal crops is related to the number of panicles per square meter, grains per panicle and grain size (Slafer et al., 2014; Lu et al., 2015; Ferrante et al., 2017; Jin et al., 2017). Panicle counting is therefore an appropriate basis for predicting rice yield. However, manual counting is labor-intensive, time-consuming and error-prone. In addition, studies on yield prediction tend to process large in-field images (Fiorani and Schurr, 2013). It is therefore necessary to develop a fast, accurate and automatic method for counting panicles in field images.

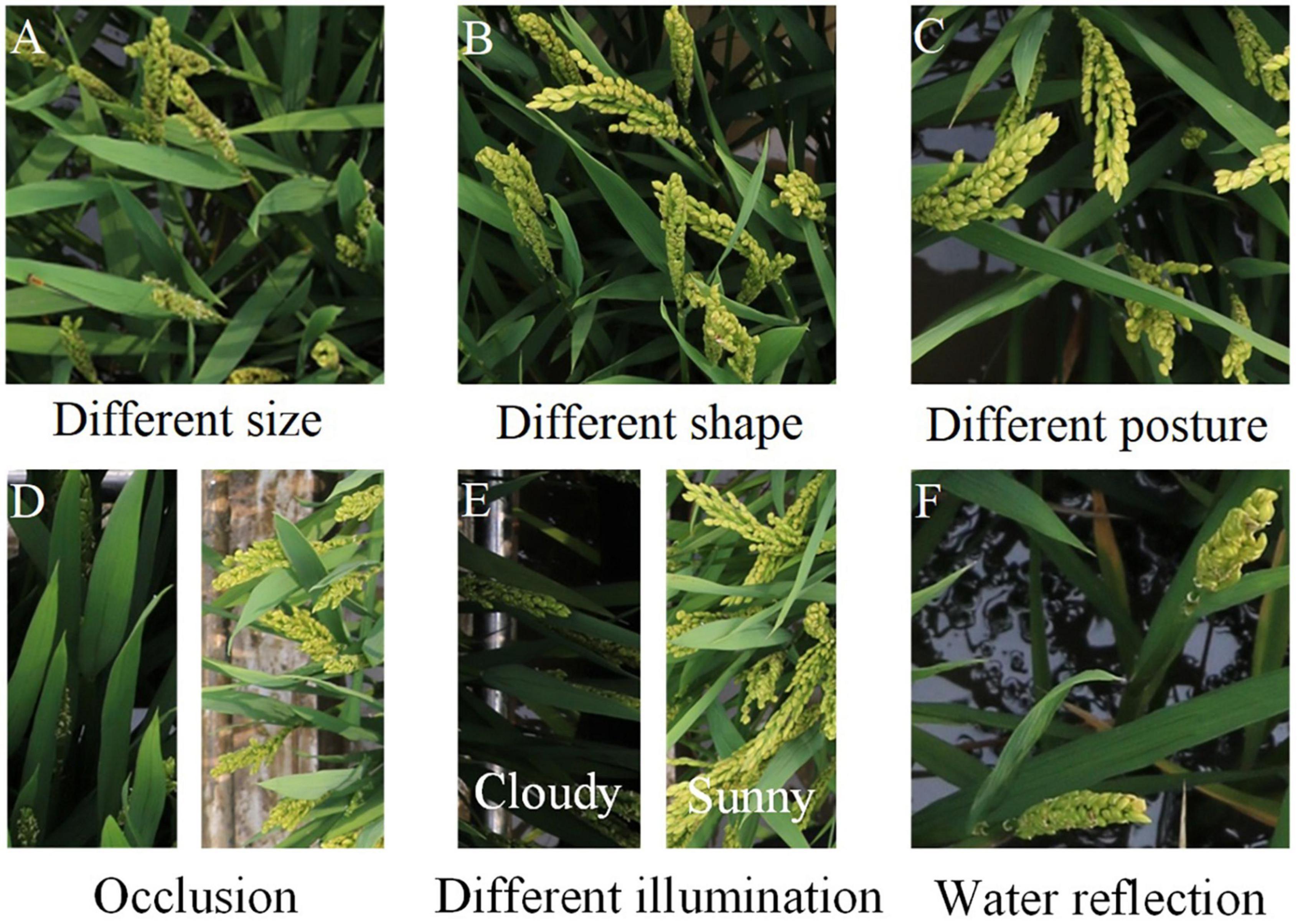

However, automatic panicle counting is an enormous challenge. The complexity of the field environment brings many difficulties for panicle detection, as shown in Figure 1: different sizes, shapes and postures, serious occlusion, different illumination and water reflection. With the development of artificial intelligence and machine vision technology, many studies have used machines to count fruits for crop yield prediction, for example in cotton (Singh et al., 2021), corn (Khaki et al., 2021), sugar beet (Barreto et al., 2021) and citrus (Dorj et al., 2017). Current studies on cereal panicle counting fall mainly into three categories: image segmentation, object detection, and direct counting through a regression network.

Figure 1. Challenges in rice panicle detection. (A) Different size. (B) Different shape. (C) Different posture. (D) Occlusion. (E) Different illumination. (F) Water reflection.

Image segmentation separates the panicles from the background based on phenotypic characteristics such as color and texture. Combined with a counting method, it yields the number of panicles. Xiong et al. (2017) proposed an algorithm to segment panicles based on superpixel region generation, CNN classification and superpixel optimization, and the F-measure was 76.73%. Hayat et al. (2020) proposed an algorithm for rice panicle segmentation based on unsupervised Bayesian learning, and the mean F1 score was 82.10%. Ma et al. (2020) proposed EarSegNet, based on semantic segmentation, for winter wheat ear segmentation, and the F1 score was 87.25%. Yang et al. (2020) used an FPN-Mask model to segment panicles during the grain filling stage, and the pixel accuracy was 0.99. Misra et al. (2020) proposed SpikeSegNet for wheat spike detection and counting, and the average accuracy for spike counting was 95%. Wang et al. (2020) proposed an algorithm using 3D point clouds to obtain agricultural crop dimensions, which was suitable for panicle counting at high density. However, this method was designed for indoor images and could not be directly generalized to the field. Besides panicle number, panicle shape, size, position, color and other traits can also be obtained after panicle segmentation, which is convenient for further phenotypic analysis. However, the counting accuracy depends largely on the accuracy of panicle segmentation. When rice panicles occlude each other, it is hard to separate them. Moreover, panicle segmentation must be combined with a counting method to obtain the panicle number, which leads to error accumulation.

Object detection is a common method for counting by detecting objects and drawing bounding boxes. Besides panicle number, object detection can also provide information about panicle size and position. Ji et al. (2021) proposed a detection method that combined light saturation correction and an Itti saliency-based system for detecting candidate areas with feature extraction and an LS-SVM classifier for eliminating false detections. The F1 score of this method was 88.36%. However, without deep learning, this method might be difficult to apply directly to other applications because of its comparatively limited learning ability. Among studies on object detection using deep learning, some resized the images directly to meet the input requirements of the networks. Zhou et al. (2019) proposed an improved R-FCN for rice panicle detection, and the F-measure was 87.4%. Yang et al. (2021) proposed an improved YOLOv4 for the detection of wheat spikes, and the accuracy for wheat spikes with different density distributions was 94%, 96.04% and 93.11%. However, both algorithms resized the images directly before feeding them to the model. When the original image is large, resizing greatly shrinks small panicles or makes them disappear altogether, so many small panicles may be missed.

When object detection is used to count panicles in large images, sliding windows and image cutting are two commonly used methods. However, repeated detections between adjacent sub-images bring new challenges. Desai et al. (2019) used a sliding window to detect flowering regions based on deep learning. However, this method predicted the panicle number by counting the regions containing panicles, which was not suitable for densely growing rice panicles of different sizes. Xu et al. (2020) proposed an algorithm named multi-scale hybrid window panicle detect (MHW-PD) for rice panicle counting. For images with a large number of panicles, this algorithm cut the images into non-overlapping sub-images and detected panicles in the sub-images with a convolutional neural network. If two bounding boxes in adjacent sub-images were close and the sum of their areas was close to the average size of a panicle, the two boxes were merged. This algorithm was not suitable for panicles of different sizes. In addition, the authors noted that for denser and more occluded rice panicles, the accuracy of the method decreased and more panicles were missed. For images with 71-80 panicles, the counting accuracy of this algorithm was 86.7%. Lyu et al. (2021) also split large images into small tiles and used the DBSCAN algorithm to remove the repeated detections. The average error of this counting method was 33.98%.

Counting directly through a regression network is another commonly used method for object counting. Lu et al. (2017) proposed a regression network, TasselNet, to count tassels directly. However, this method might be less robust than an object detector in the later growth stages (Madec et al., 2019). TasselNetV2 and TasselNetV2+ were subsequently proposed by the same research group to improve counting accuracy and efficiency (Xiong et al., 2019; Lu and Cao, 2020). Compared with other deep convolutional neural networks, TasselNetV2+ reduced video memory use and was able to analyze large images efficiently. Similarly, Khaki et al. (2022) proposed WheatNet for wheat head counting, and its overall prediction error was 8.7%. One disadvantage of direct counting through a regression network is that it yields only the panicle number, which makes a more specific phenotypic analysis of the panicles after counting difficult.

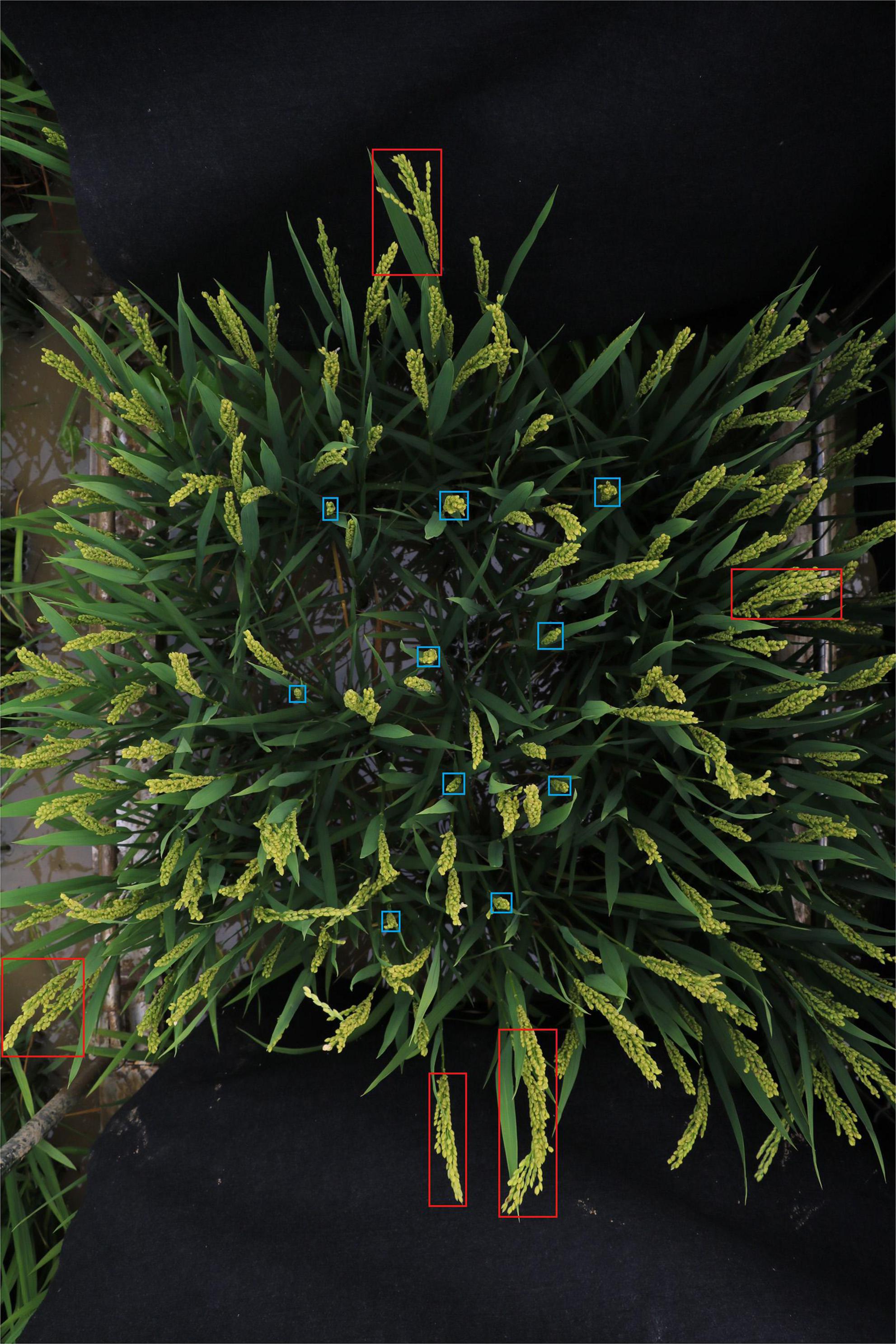

The size of panicles varies greatly even in the same plot. Some panicles would be extremely small (for instance, blue boxes in Figure 2). If the original large size image was directly resized before feeding to the detection network, small panicles would be missed in detection.

Figure 2. Example of rice field image. The size of rice panicles varies greatly. Red boxes show examples of large panicles and blue boxes show examples of small panicles.

To detect and count panicles in rice field images with large image size, an algorithm based on deep learning is proposed in this paper. First, the original high-resolution image was cut into several sub-images in an overlapping manner to ensure that each panicle appeared completely in at least one sub-image. Then, the sub-images were fed into the panicle detection network, and the detection results of the sub-images were merged. Three object detectors, namely YOLOv3, YOLOv5 and Faster R-CNN, were used and compared in this study. The repeated detections in the overlapping areas of adjacent sub-images were then removed using two indicators. To validate the proposed algorithm, panicle detection was tested on field rice images taken by a ground-based imaging system with different illumination, rice accessions and spatial resolution. To further investigate the robustness of the proposed method, panicle detection was also tested on field rice images taken by UAV.

Materials and methods

Rice cultivation and image acquisition

In this study, the experimental paddy field was located in Wuhan, Hubei province, China (30.5°N, 114.3°E). Rice (O. sativa) seeds were sown and germinated during the summer of 2017. Each field plot (96 cm × 80 cm) had 20 rice plants of the same accession, planted in 5 rows and 4 columns. The spacing between plants was 16 cm × 16 cm and the spacing between plots was 32 cm. To account for the edge effect, a guard row of rice plants was planted on the boundary between two adjacent plots. Rice plants in different plots belonged to different accessions. In total, 104 rice accessions were used for training and testing in this work. All these accessions came from core germplasm resources of Japonica rice in China. The names of the 104 rice accessions are listed in Supplementary Table 1. The panicle number of each field plot varied from 75 to 190. For each plot, a top-view image was acquired. A ground-based imaging bracket was used to obtain the rice plot images. The camera (Canon EOS 760D, 18 mm focal length lens, 6000 × 4000 pixels) was mounted at the top of the imaging bracket. A wireless shutter was used to trigger the camera while the imaging bracket was moved manually through the paddy field.

Main flow of rice panicle detection algorithm

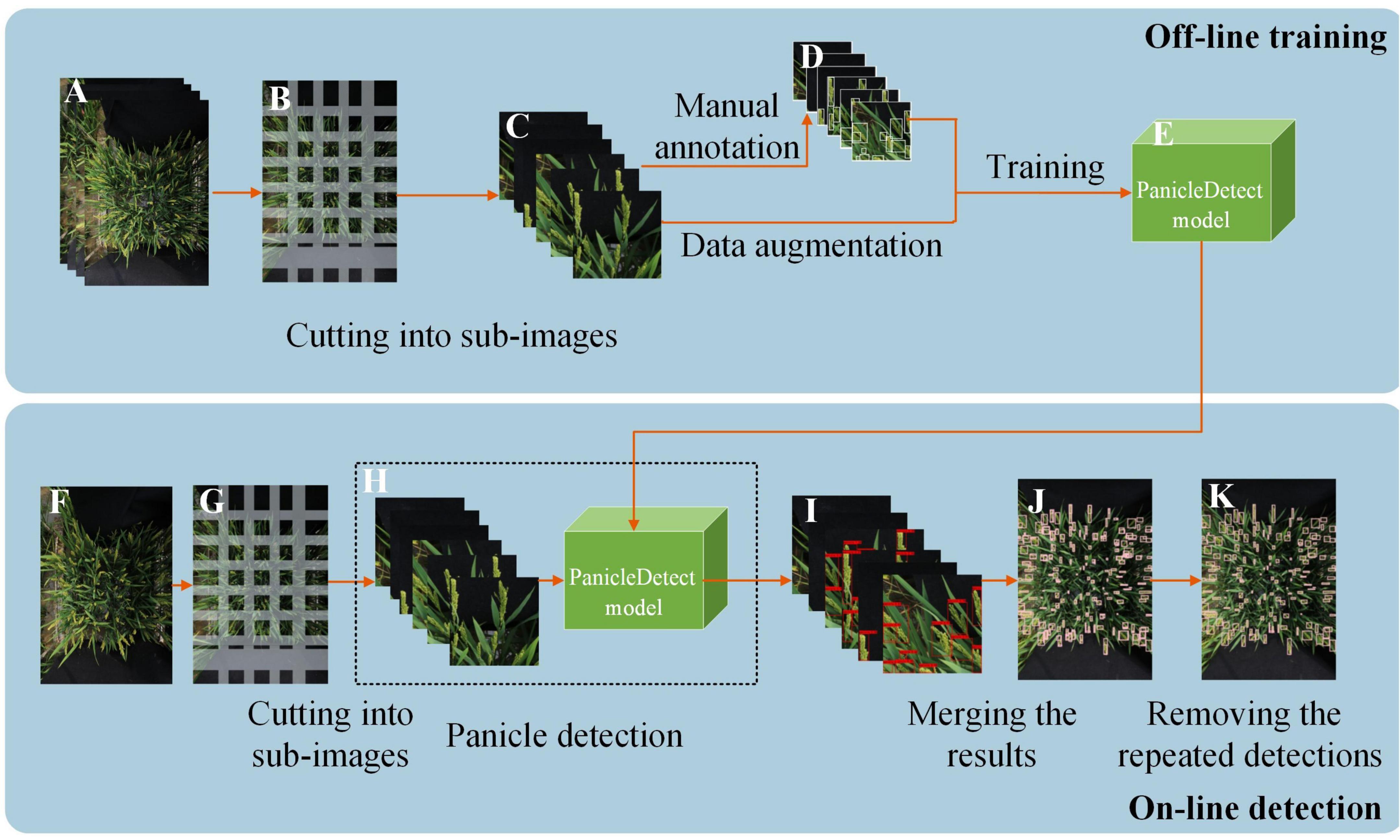

The rice panicle detection algorithm included off-line training and on-line detection (Figure 3).

Figure 3. The flow diagram of the panicle detection algorithm. (A) Original high-resolution image. (B) Cutting into sub-images in an overlapping manner. (C) Sub-images. (D) Manual annotation and data augmentation. (E) Panicle detection model generation. (F) Testing sample. (G) Cutting the testing sample into sub-images in an overlapping manner. (H) Feeding the testing sub-images into the Panicle detection model. (I) Panicle detection results of the sub-images. (J) Panicle detection result after merging the detection results of the sub-images. (K) The final detection results of the original image after deleting the repeated detections in the overlapping area of the adjacent sub-images.

In total, 104 field rice images (each belonging to a different accession) with a resolution of 6000 × 4000 pixels were used for training and testing our panicle detection algorithm. The 104 images were randomly split into 2 sets: 67 images for training and 37 images for testing.

The off-line training mainly contained 3 steps: (1) The original training images were divided into sub-images of appropriate size using sliding windows in an overlapping manner (Figure 3C); (2) The sub-images were annotated using the LabelImg software (Figure 3D); (3) The data were augmented and the PanicleDetect model was trained (Figure 3E).

The on-line detection stage mainly included 4 steps: (1) An original testing image was divided into sub-images of appropriate size using sliding windows in an overlapping manner (Figure 3G); (2) All sub-images corresponding to the original image were fed into the pre-trained PanicleDetect model (Figure 3H); (3) The detection results of the sub-images (Figure 3I) were merged (Figure 3J); (4) Repeated detections in the overlapping areas of adjacent sub-images were deleted (Figure 3K).
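Step (3) amounts to mapping each detected box from sub-image coordinates back into the original image. The following is a minimal sketch of that mapping, not the authors' implementation. It assumes boxes are given as (x1, y1, x2, y2) in pixels of the resized network input (1056 → 416 in this study) and that the top-left origin of each sub-image in the original image is known; the function names and the box layout are illustrative.

```python
def to_full_image(box, origin, sub_size=1056, net_size=416):
    """Map a box from resized sub-image coordinates to original-image coordinates.

    box      -- (x1, y1, x2, y2) in pixels of the resized network input
    origin   -- (ox, oy), top-left corner of the sub-image in the original image
    sub_size -- side length of the cropped sub-image (1056 in this study)
    net_size -- side length after resizing for the network (416 in this study)
    """
    ox, oy = origin
    x1, y1, x2, y2 = box

    def rescale(v):
        # Undo the resize that was applied before detection.
        return v * sub_size / net_size

    return (ox + rescale(x1), oy + rescale(y1), ox + rescale(x2), oy + rescale(y2))


def merge_detections(per_tile):
    """Collect the detections of all sub-images in original-image coordinates.

    per_tile -- iterable of (origin, boxes) pairs, one pair per sub-image
    """
    merged = []
    for origin, boxes in per_tile:
        merged.extend(to_full_image(b, origin) for b in boxes)
    return merged
```

After this step, the same panicle can still be represented by several boxes wherever sub-images overlap, which is why step (4) is needed.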

An open-access and user-friendly web portal was developed so that rice researchers can use the proposed method conveniently. The detailed operation of the website is illustrated in Supplementary Video 1. Users can upload a single image or multiple images at a time. The detection results, including the resultant images and a text file recording the panicle number in each image, can be downloaded.

Training of the PanicleDetect model

From the collected 104 images, 67 images were randomly selected, and each original image was divided into sub-images using sliding windows in an overlapping manner. The overlap between adjacent windows (sub-image size minus stride) was determined by the average size of the large panicles to ensure that most panicles appeared completely in at least one sub-image. The size of the sub-image was determined by the panicle size, the selected network and the video memory. In this study, the sub-image size was set to 1056 × 1056 pixels and the stride to 756 pixels, giving an overlap of 300 pixels. Therefore, each field rice image was divided into 40 sub-images. In total, 67 × 40 = 2680 sub-images were obtained for training the PanicleDetect model. The 2680 sub-images were then randomly split in an 8:2 ratio into 2144 images for training and 536 images for validation.
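The count of 40 sub-images per image follows from the window size and stride. The sketch below reproduces it for a 6000 × 4000 image. How the last window in each row and column is handled at the image border is not stated in the text; shifting it back so that it ends exactly at the border is an assumption made here (padding the image instead would give the same count). The function names are illustrative.

```python
def window_starts(length, window, stride):
    """Start offsets of overlapping windows that cover [0, length)."""
    if length <= window:
        return [0]
    starts = list(range(0, length - window, stride))
    # Assumption: the final window is shifted back to end at the image border.
    starts.append(length - window)
    return starts


def tile_image(width, height, window=1056, stride=756):
    """Top-left corners (x, y) of all sub-images, in row-major order."""
    return [(x, y)
            for y in window_starts(height, window, stride)
            for x in window_starts(width, window, stride)]


tiles = tile_image(6000, 4000)
print(len(tiles))  # 8 columns x 5 rows = 40
```

With these settings the horizontal start offsets are 0, 756, ..., 4536 and 4944, and the vertical ones are 0, 756, 1512, 2268 and 2944.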

The PanicleDetect model was built on YOLOv5x, a fully convolutional network. In the backbone of YOLOv5x, the input image is down-sampled 5 times, and each down-sampling halves the image size. Therefore, the input image size should be a multiple of 32. In this study, all the sub-images were resized to 416 × 416 pixels before being fed to YOLOv5x.
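The multiple-of-32 constraint follows from five successive halvings (2^5 = 32). A short sketch of the resulting sizes along one image side, with a hypothetical helper name:

```python
def feature_map_sizes(input_size, n_downsamples=5):
    """Side lengths after each halving, starting from the input size."""
    factor = 2 ** n_downsamples
    if input_size % factor != 0:
        raise ValueError(f"input size must be a multiple of {factor}")
    sizes = [input_size]
    for _ in range(n_downsamples):
        sizes.append(sizes[-1] // 2)
    return sizes


print(feature_map_sizes(416))  # [416, 208, 104, 52, 26, 13]
```

Both 416 (13 × 32) and the sub-image size 1056 (33 × 32) satisfy the constraint.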

During training of the object detector, the data were augmented using image resizing, image blurring, image flipping and rotation, and transformation of hue, saturation and value. The training was run on the Windows 10 operating system (16-core i7 CPU, 2.5 GHz per CPU core, 16 GB of memory, and an NVIDIA GeForce RTX 2070 SUPER graphics card). The network was pre-trained on the COCO-Train2017 dataset, and the generated weight file was loaded as the initial weights. The SGD optimizer (Song et al., 2013) was used in training, and the momentum (He et al., 2019) was set to 0.937. Training was divided into two stages of 50 epochs each. In the first stage, the parameters of the YOLOv5x backbone were frozen. In the second stage, all the parameters of YOLOv5x were trained.

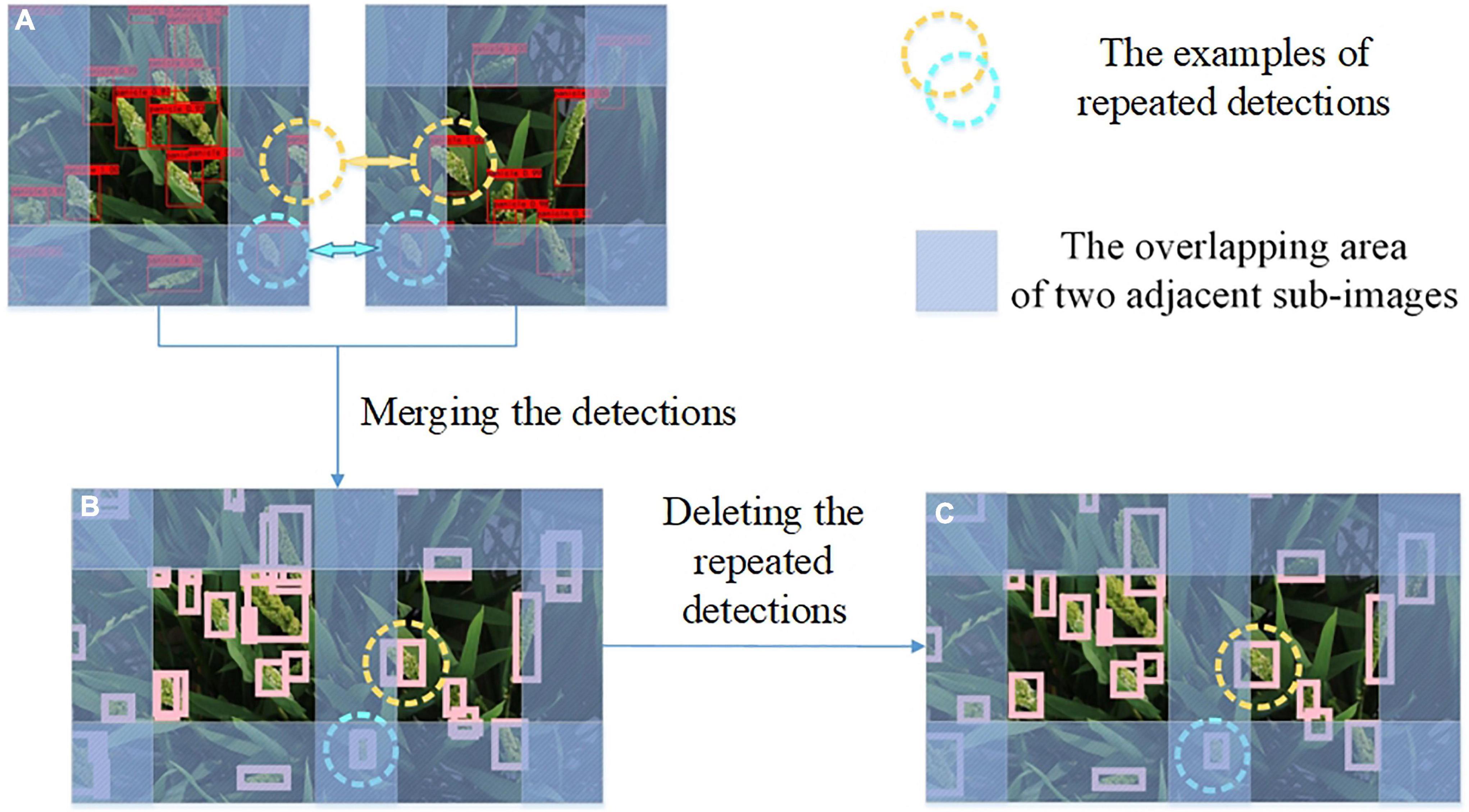

Removing the repeated detections in the overlapping areas

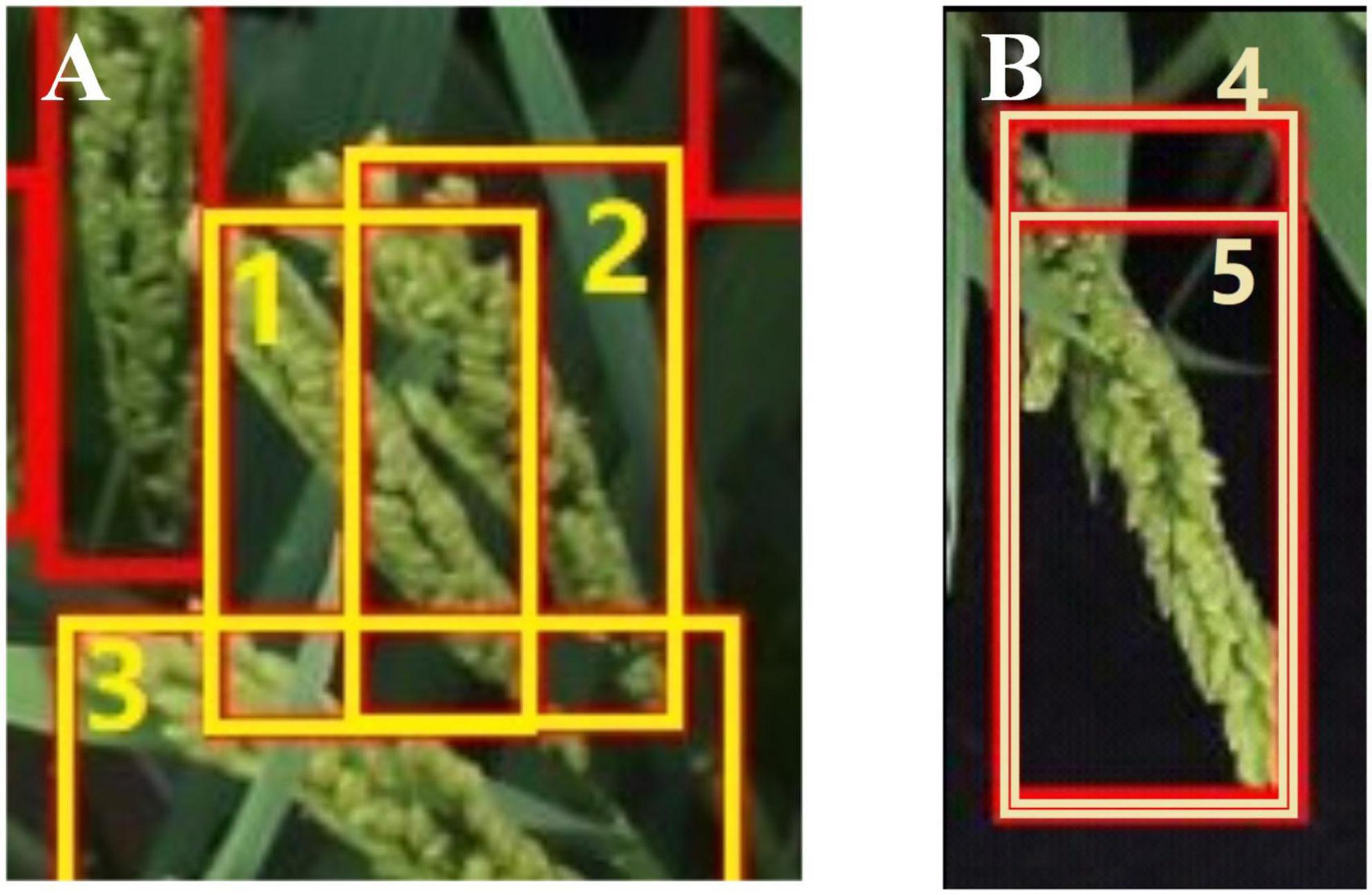

The specific processing steps of removing the repeated detections are illustrated in Figure 4. There were two types of overlapping boxes: overlapped panicles that should be retained (Figure 5A) and repeated detections that should be removed (Figure 5B).

Figure 4. The specific processing steps of deleting the repeated detections. (A) Repeated detections in the overlapping area of two adjacent sub-images. (B) Merging results directly. (C) Deleting the repeated detections in two adjacent sub-images.

Figure 5. Examples of two overlapping situations. (A) high density panicles (1,2,3) that should be retained. (B) Repeated detection (4,5) that should be deleted.

Non-maximum suppression (NMS) method using Intersection over Union (IOU) was the most widely used method to quantify and remove the overlapping detection boxes. Furthermore, methods similar to NMS for removing overlapping results have also been proposed, such as GIOU (Rezatofighi et al., 2019) and DIOU (Zheng et al., 2019). The definitions of IOU, GIOU and DIOU are provided in Eqs. (1)–(3).
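For reference, the standard definitions of these three measures can be written as follows. The symbols $A$ and $B$ for the two boxes are introduced here for illustration, and the equation numbers are assigned to match the order given in the text.

```latex
\mathrm{IOU} = \frac{|A \cap B|}{|A \cup B|} \tag{1}

\mathrm{GIOU} = \mathrm{IOU} - \frac{A_c - |A \cup B|}{A_c} \tag{2}

\mathrm{DIOU} = \mathrm{IOU} - \frac{\rho^2}{c^2} \tag{3}
```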

where Ac is the smallest enclosing box area, ρ is the Euclidean distance between the center points of two boxes, and c is the diagonal length of the smallest enclosing box.

When the areas of the overlapping boxes are similar, NMS methods can remove the repeated boxes correctly. However, when the areas of the overlapping boxes differ greatly, the union of the overlapping boxes is very close to the bigger box, so some NMS methods may not work. In this work, the original large rice image was divided into small sub-images in an overlapping manner, so a panicle could appear in several sub-images. In particular, a small part of a panicle may appear in one sub-image while the complete panicle appears in another. Therefore, the areas of the overlapping boxes of repeated detections can differ greatly, and the NMS methods may not be suitable. To remove the repeated detections while retaining the overlapped panicles, two parameters, namely IOB and BOU (defined by Eqs. (4) and (5)), were introduced in this manuscript to quantify the overlapping mode and degree between two overlapping boxes. If IOB exceeded its threshold and BOU exceeded its threshold, the bounding box with the smaller area of the two overlapping boxes was removed.
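One reading of Eqs. (4) and (5) that is consistent with the parameter names and with the behaviour described in this section is given below. It is an assumption: IOB is taken as the intersection over the smaller box, and BOU as the bigger box over the union, with $|\cdot|$ denoting area.

```latex
\mathrm{IOB} = \frac{|Box_{smaller} \cap Box_{bigger}|}{|Box_{smaller}|} \tag{4}

\mathrm{BOU} = \frac{|Box_{bigger}|}{|Box_{smaller} \cup Box_{bigger}|} \tag{5}
```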

where Boxsmaller is the box with the smaller area of the two overlapping boxes and Boxbigger is the box with the bigger area of the two overlapping boxes.
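The removal rule can be sketched as below. This is an illustration under stated assumptions rather than the authors' code: IOB is read as intersection over the smaller box and BOU as the bigger box over the union, boxes are (x1, y1, x2, y2) tuples in original-image coordinates, the two threshold values are placeholders because the text does not give them, and the pairwise rule is applied greedily from the largest box to the smallest.

```python
def area(box):
    """Area of an (x1, y1, x2, y2) box; zero for degenerate boxes."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def intersection(a, b):
    """Area of the overlap between two boxes."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, w) * max(0.0, h)


def iob_bou(a, b):
    """Return (IOB, BOU) under the assumed definitions."""
    inter = intersection(a, b)
    smaller, bigger = sorted((area(a), area(b)))
    union = smaller + bigger - inter
    if smaller == 0 or union == 0:
        return 0.0, 0.0
    return inter / smaller, bigger / union


def remove_repeated(boxes, iob_thr=0.5, bou_thr=0.5):
    """Drop the smaller box of every pair that exceeds both thresholds.

    iob_thr and bou_thr are hypothetical placeholder values.
    """
    kept = []
    # Visit boxes from largest to smallest, so each candidate is only
    # compared against boxes that are at least as large as itself.
    for box in sorted(boxes, key=area, reverse=True):
        repeated = False
        for k in kept:
            iob, bou = iob_bou(box, k)
            if iob > iob_thr and bou > bou_thr:
                repeated = True
                break
        if not repeated:
            kept.append(box)
    return kept
```

For example, `remove_repeated([(0, 0, 100, 100), (10, 10, 40, 40), (200, 200, 300, 300)])` keeps the first and third boxes and drops the small box that lies entirely inside the first one.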

Performance evaluation using 6 indicators

To test the detection algorithm, 37 rice field images were selected. Six indicators, namely the mean absolute percentage error (MAPE), Precision, Recall, F-measure, coefficient of determination (R²) and Accuracy, were adopted to evaluate the detection performance. Among them, MAPE and Accuracy were used to evaluate the detection accuracy of the algorithm. The lower the MAPE and the higher the Accuracy, the more accurate the detection.

Precision is the proportion of the panicles detected by the algorithm that match ground-truth annotations. Recall is the proportion of the panicles identified by human experts that are detected by the algorithm. In practice, Precision and Recall trade off against each other, so the two indicators need to be balanced. The F-measure was therefore used to evaluate the detection performance more comprehensively. A high F-measure means that the rice panicle detection algorithm performs well. In addition, the coefficient of determination (R²) was used to test how well the machine counting results fit the manual counting results. The definitions of MAPE, Precision, Recall, F-measure and Accuracy are provided in Eqs. (6)–(10).
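In their standard forms, which agree with the symbol definitions given in the text, these five indicators can be written as follows. The equation numbers are assigned to match the order listed above.

```latex
\mathrm{MAPE} = \frac{1}{n} \sum_{i=1}^{n} \frac{|y_i - \hat{y}_i|}{y_i} \times 100\% \tag{6}

\mathrm{Precision} = \frac{TP}{TP + FP} \tag{7}

\mathrm{Recall} = \frac{TP}{TP + FN} \tag{8}

\text{F-measure} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{9}

\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{10}
```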

where n is the number of test images, $y_i$ is the panicle number counted manually, and $\hat{y}_i$ is the panicle number counted by our algorithm. TP, TN, FP, and FN represent the numbers of true positives, true negatives, false positives, and false negatives, respectively. In this paper, the true positive (TP) is the number of bounding boxes that correctly detect rice panicles. The true negative (TN) is always taken to be zero because background is not determined for object detection in this study. The false positive (FP) is the number of bounding boxes that falsely detect background as rice panicles. The false negative (FN) is the number of ground-truth rice panicles that are not detected by the algorithm.
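A minimal sketch of how these indicators can be computed for one image from its TP, FP and FN counts, and how MAPE can be computed over a set of images, using the standard formulas with TN fixed at zero as described above. The function names are illustrative.

```python
def detection_metrics(tp, fp, fn, tn=0):
    """Precision, Recall, F-measure and Accuracy from detection counts.

    tn defaults to 0 because background is not counted in this setting.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f_measure = 2 * precision * recall / (precision + recall)
    else:
        f_measure = 0.0
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall,
            "f_measure": f_measure, "accuracy": accuracy}


def mape(manual_counts, predicted_counts):
    """Mean absolute percentage error (in percent) over a set of images."""
    if len(manual_counts) != len(predicted_counts) or not manual_counts:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [abs(y - y_hat) / y
              for y, y_hat in zip(manual_counts, predicted_counts)]
    return 100.0 * sum(errors) / len(errors)
```

With TN equal to zero, Accuracy reduces to TP / (TP + FP + FN), so it is always less than or equal to both Precision and Recall.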

Robustness evaluation of the PanicleDetect model

To evaluate the robustness of the PanicleDetect model to different rice accessions and illumination, 37 field rice images belonging to 37 different rice accessions were tested for panicle detection. In addition, the illumination of the different images varied due to the outdoor environment.

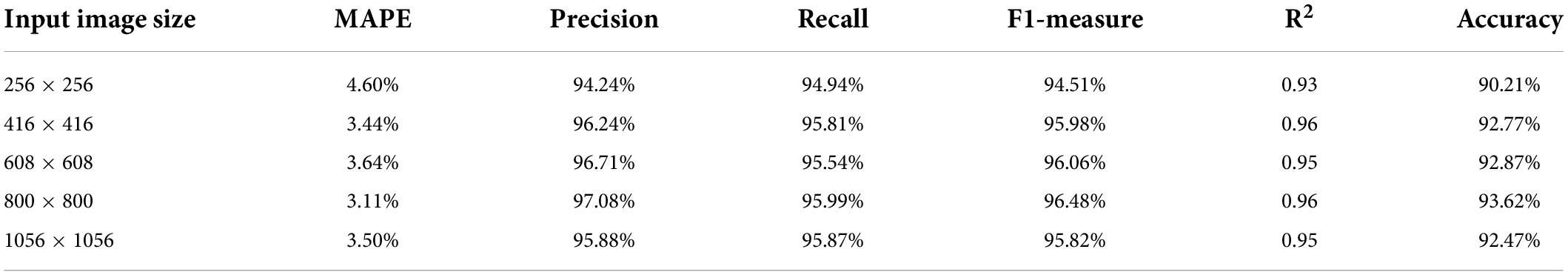

In order to improve the robustness of the model, the height and width of the input images were randomly scaled in data augmentation at the training stage. To evaluate the robustness of the model to different image size and spatial resolution, the sub-images (1056 × 1056) of the test images were resized to 256 × 256, 416 × 416, 608 × 608, 800 × 800 and 1056 × 1056, respectively, in the testing stage. Subsequently, the sub-images were detected by the model trained with sub-images of 416 × 416 pixels.

To further investigate the generality of the proposed method, panicle detection was also tested on field rice images taken by UAV. The tested UAV images were taken by a camera (FUJIFILM GFX 100, 63 mm focal length lens) installed on a UAV platform (DJI M600 Pro, 20 m flight altitude, 1 m/s flight speed). Before detection, the height and width of the test images were magnified 3.5 times because of the large difference in spatial resolution between the UAV images and the training set. The spatial resolution of the UAV images was about 2 mm per pixel, while that of the training images was about 0.2 mm per pixel.

Comparison with other methods for panicle counting

Panicle-SEG (Xiong et al., 2017) is an algorithm for rice panicle segmentation. By combining Panicle-SEG with an appropriate image processing method, the number of panicles in an image can be obtained by counting the connected components. For the binary images obtained by Panicle-SEG, opening and closing operations with a 5 × 5 kernel were performed to remove noise and separate occluded rice panicles. Then the number and area of the connected components were obtained. To deal with occluded rice panicles, the median area of the connected components in each image was calculated. If the area of a component was larger than twice the median area, the component's area was divided by the median area and rounded up to an integer, which was taken as the number of rice panicles in that component. Every other connected component was regarded as one rice panicle. The panicle number for each image was then computed.
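The counting rule in this paragraph can be sketched as follows, assuming the areas of the connected components (in pixels) have already been extracted from the binary mask after the opening and closing operations. The function name is illustrative.

```python
import math
from statistics import median


def count_panicles(component_areas):
    """Estimate the panicle number from connected-component areas of one image.

    A component larger than twice the median area is treated as several
    occluded panicles: ceil(area / median). Every other component counts as one.
    """
    if not component_areas:
        return 0
    med = median(component_areas)
    total = 0
    for a in component_areas:
        if a > 2 * med:
            total += math.ceil(a / med)
        else:
            total += 1
    return total


print(count_panicles([90, 100, 100, 100, 250]))  # 4 single panicles + ceil(2.5) = 7
```

Because the estimate relies on the median area, it tends to degrade when panicle sizes within one image vary widely.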

MHW-PD (Xu et al., 2020) is an advanced algorithm for rice panicle counting and is similar to the proposed method. For comparison, we calculated the mean counting accuracy in the same manner as Xu et al. (2020), which is shown in Eq. (11).
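Given the symbol definitions that accompany it, Eq. (11) can be read as the ratio of correctly detected panicles to actual panicles. The form below is reconstructed from those definitions and expressed as a percentage:

```latex
P_c = \frac{N_{cor}}{N_{real}} \times 100\% \tag{11}
```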

where Pc is the counting accuracy, Ncor is the correct (true positive) number of rice panicles detected by the model and Nreal is the actual number of the rice panicles in the test set.

Results and discussion

We tested the proposed panicle detection algorithm using 37 field rice images. Each rice image belonged to a different accession. A convolutional neural network, YOLOv5x, was trained for panicle detection. The results of YOLOv5x are shown in Figure 6. The mean values of the MAPE, Precision, Recall, F-measure, R² and Accuracy were 3.44%, 96.24%, 95.81%, 95.98%, 0.96, and 92.77%, respectively.

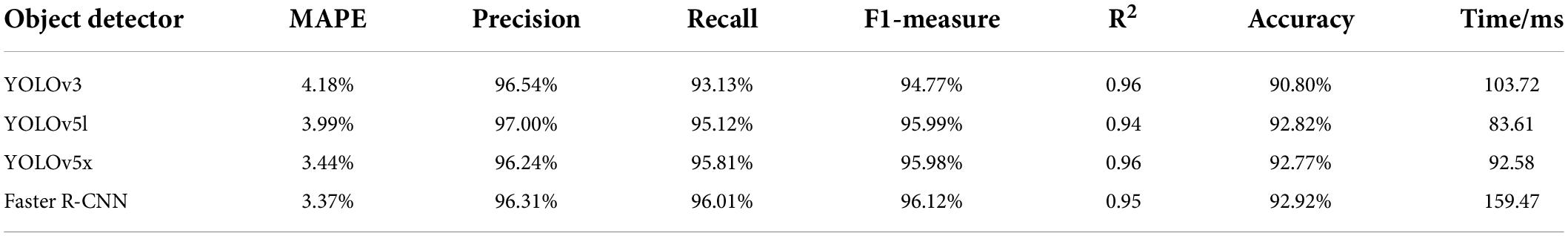

Comparison of different object detection models for panicle counting

Four object detection models, namely YOLOv3 (Redmon and Farhadi, 2018), YOLOv5l, YOLOv5x and Faster R-CNN (Ren et al., 2017), were tested and compared for panicle counting (Table 1). The average time taken to detect one sub-image was also computed. The detection was run in the same environment as the training.

The results showed that the proposed counting method had good adaptability to different object detection networks. Furthermore, YOLOv5x and Faster R-CNN outperformed the other two networks. Considering the detection efficiency, YOLOv5x was selected as the optimal network for the PanicleDetect algorithm.

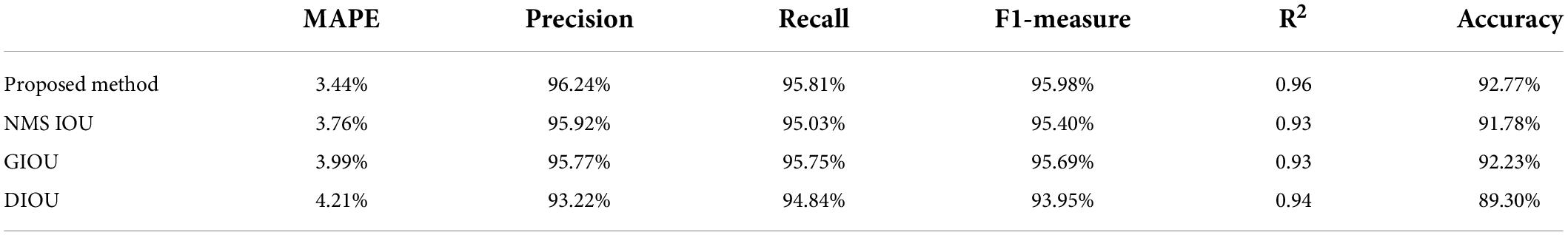

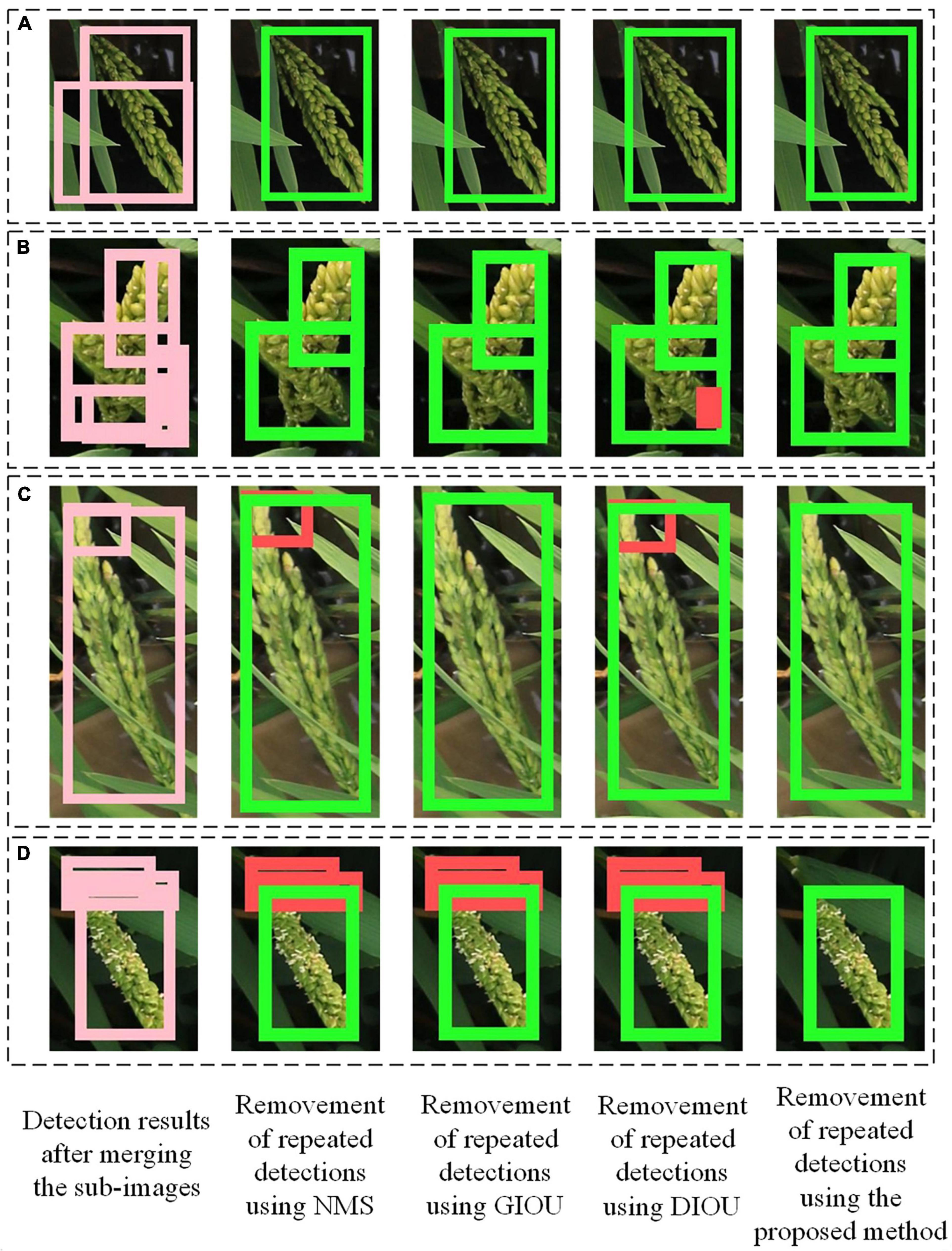

Comparison of different methods for removing repeated detections in the adjacent sub-images

The proposed method using IOB and BOU was compared with the NMS methods based on IOU, GIOU and DIOU for removing the repeated detections. Six indicators were used to evaluate the results, which are shown in Table 2. The overall performance of the proposed method was better than that of the other methods. The main reason is illustrated in Figure 7. When the areas of the overlapping boxes were similar, a high degree of overlap led to high IOU, GIOU, DIOU, IOB and BOU, so all 4 methods removed the repeated boxes correctly (Figure 7A). However, when the areas of the overlapping boxes differed greatly, the IOU, GIOU and DIOU were low even if the degree of overlap was very high, for instance when one box completely covered the other. In contrast, the IOB and BOU remained high. In this case, the repeated boxes might be kept by the other methods but were removed by the proposed method (Figures 7B–D). Thus, the proposed method using IOB and BOU performed better than the other methods.
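A small worked example illustrates the argument. Suppose a 40 × 40 box lies entirely inside a concentric 100 × 100 box, so the intersection is 1600, the union and the smallest enclosing box are both 10000, and the center distance $\rho$ is 0. The values below use the standard IOU, GIOU and DIOU definitions and assume that IOB is the intersection over the smaller box and BOU is the bigger box over the union; the box sizes are made up for illustration.

```latex
\begin{aligned}
\mathrm{IOU}  &= \frac{1600}{10000} = 0.16, \\
\mathrm{GIOU} &= 0.16 - \frac{10000 - 10000}{10000} = 0.16, \\
\mathrm{DIOU} &= 0.16 - \frac{0^2}{c^2} = 0.16, \\
\mathrm{IOB}  &= \frac{1600}{1600} = 1, \\
\mathrm{BOU}  &= \frac{10000}{10000} = 1.
\end{aligned}
```

Under these assumptions, the three NMS measures stay low and the small box could survive suppression, whereas IOB and BOU both reach their maximum and flag it as a repeated detection.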

Figure 7. Detection results of rice image processed by the proposed method and other methods. (A) The areas of the overlapping boxes were similar. (B–D) The areas of the overlapping boxes varied greatly. In the detection results, green boxes are correct bounding boxes and red boxes are FP boxes.

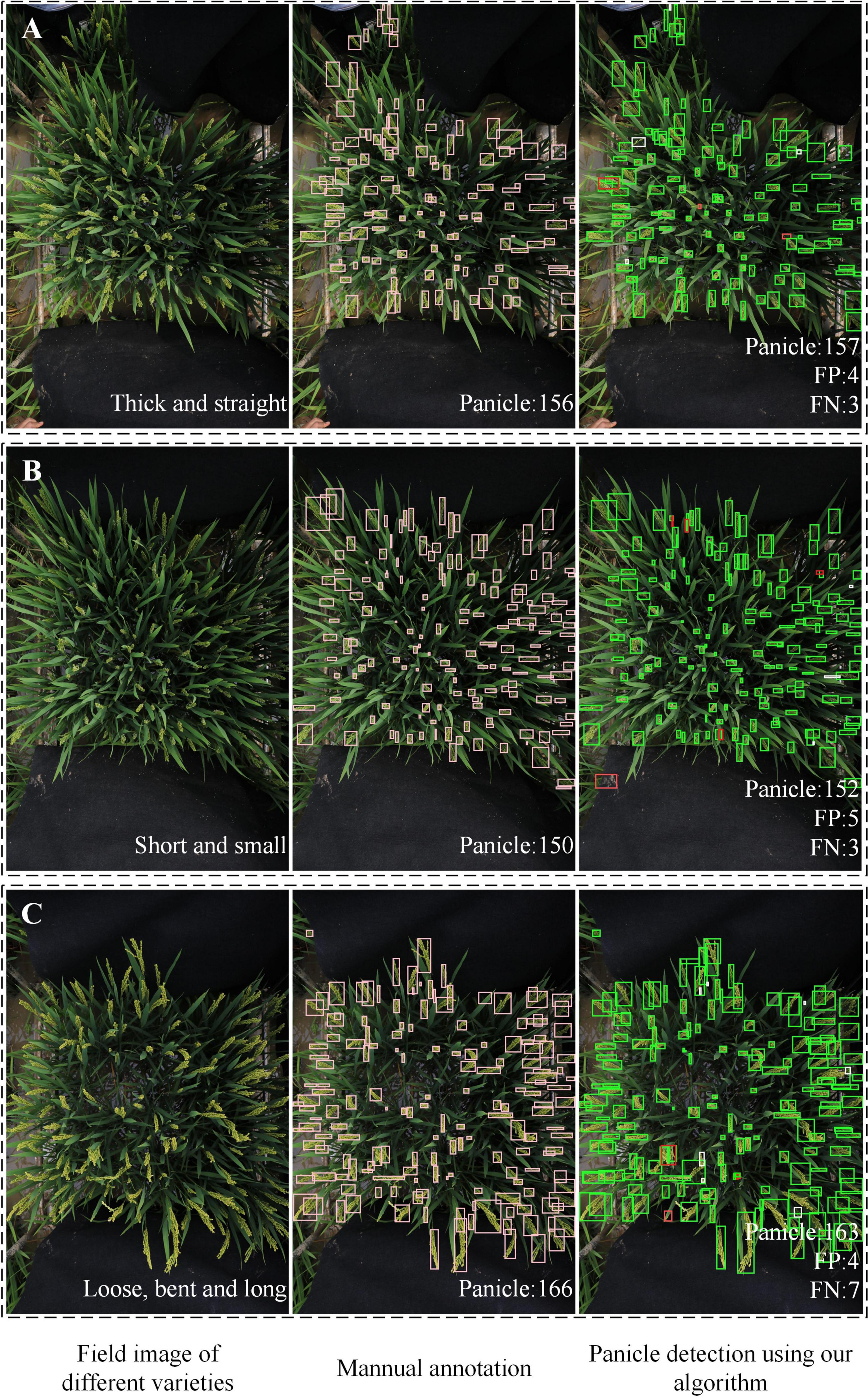

Detection results under different rice accessions and illumination environments

The appearance of rice panicles varies greatly among rice accessions. For example, the panicles were thick and straight in Figure 8A, short and small in Figure 8B, and loose, bent and long in Figure 8C. In addition, high growth density causes occlusion between panicles or between leaves and panicles. The results showed that the proposed method was robust for detecting panicles of different rice accessions.

Figure 8. Examples of rice field images with different rice accessions. (A) Thick and straight. (B) Short and small. (C) Loose, bent and long. In the detection results, green boxes are correct bounding boxes; white boxes are FN boxes; red boxes are FP boxes.

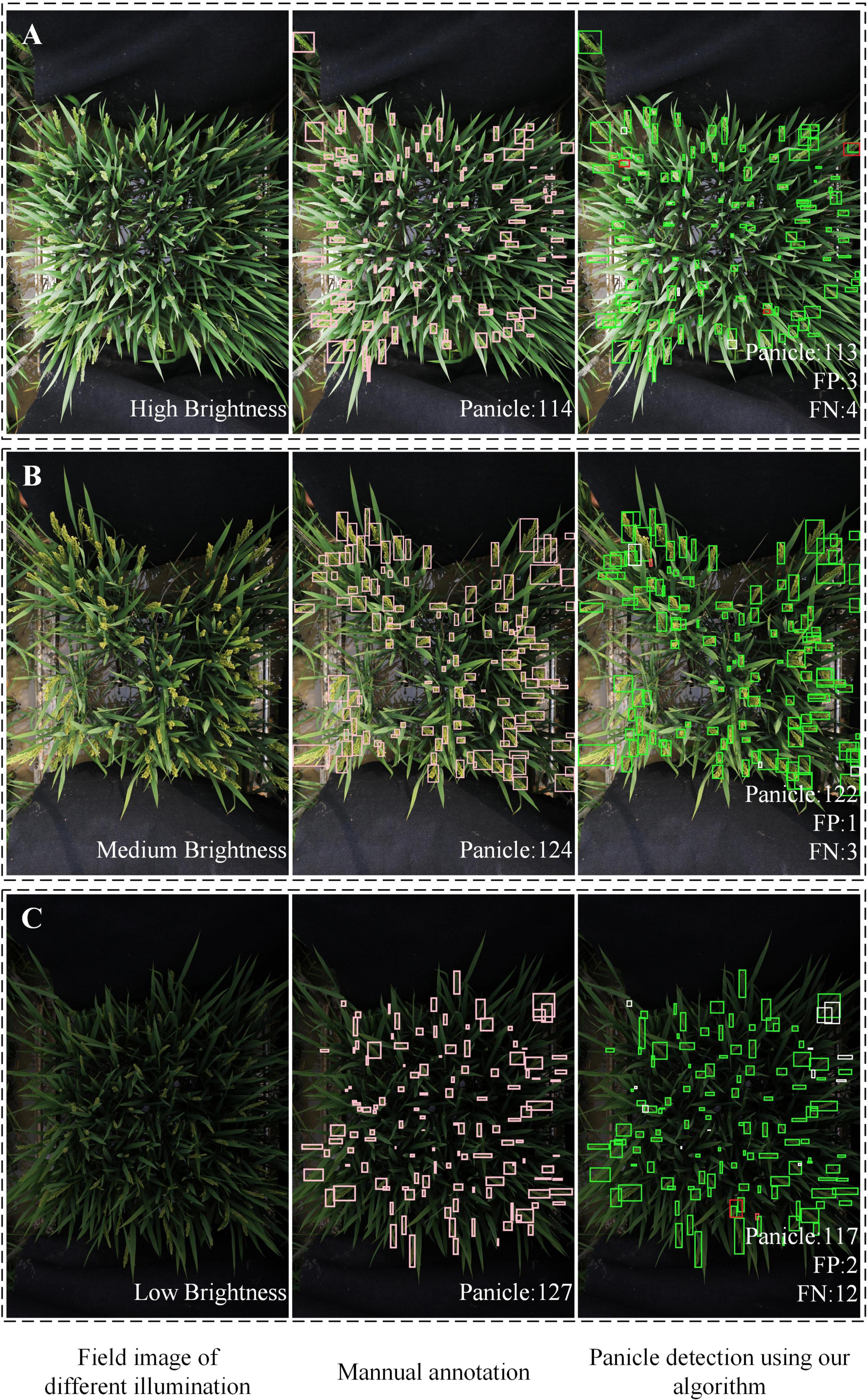

The illumination of the images varied because they were taken outdoors. Therefore, it was important for the model to detect panicles accurately under different illumination conditions. As illustrated in Figure 9, for the high-brightness image (Figure 9A), the FP was 3 and the FN was 4. For the medium-brightness image (Figure 9B), the FP was 1 and the FN was 3. For the low-brightness image (Figure 9C), the FP was 2 and the FN was 12. These results indicate that the model performed best on the medium-brightness image and remained robust across images with different brightness. In particular, for images with extremely low brightness, such as Figure 9C, manual annotation was error-prone, labor-intensive and inefficient, yet the proposed algorithm still performed well.

Figure 9. Examples of rice field images with different illumination. (A) High brightness. (B) Medium brightness. (C) Low brightness. In the detection results, green boxes are correct bounding boxes; white boxes are FN boxes; red boxes are FP boxes.

Panicle detection of images with different image size and spatial resolution

Different devices and methods are used for capturing rice field images, which may cause differences in spatial resolution. Therefore, it is important that the proposed counting algorithm is robust to images with different spatial resolution. Table 3 shows the performance of the PanicleDetect model with different input sizes and spatial resolutions. When the input image was enlarged or reduced by a factor of nearly two, the MAPE of the detection results remained within 5%, indicating that the proposed algorithm was robust to different spatial resolutions.

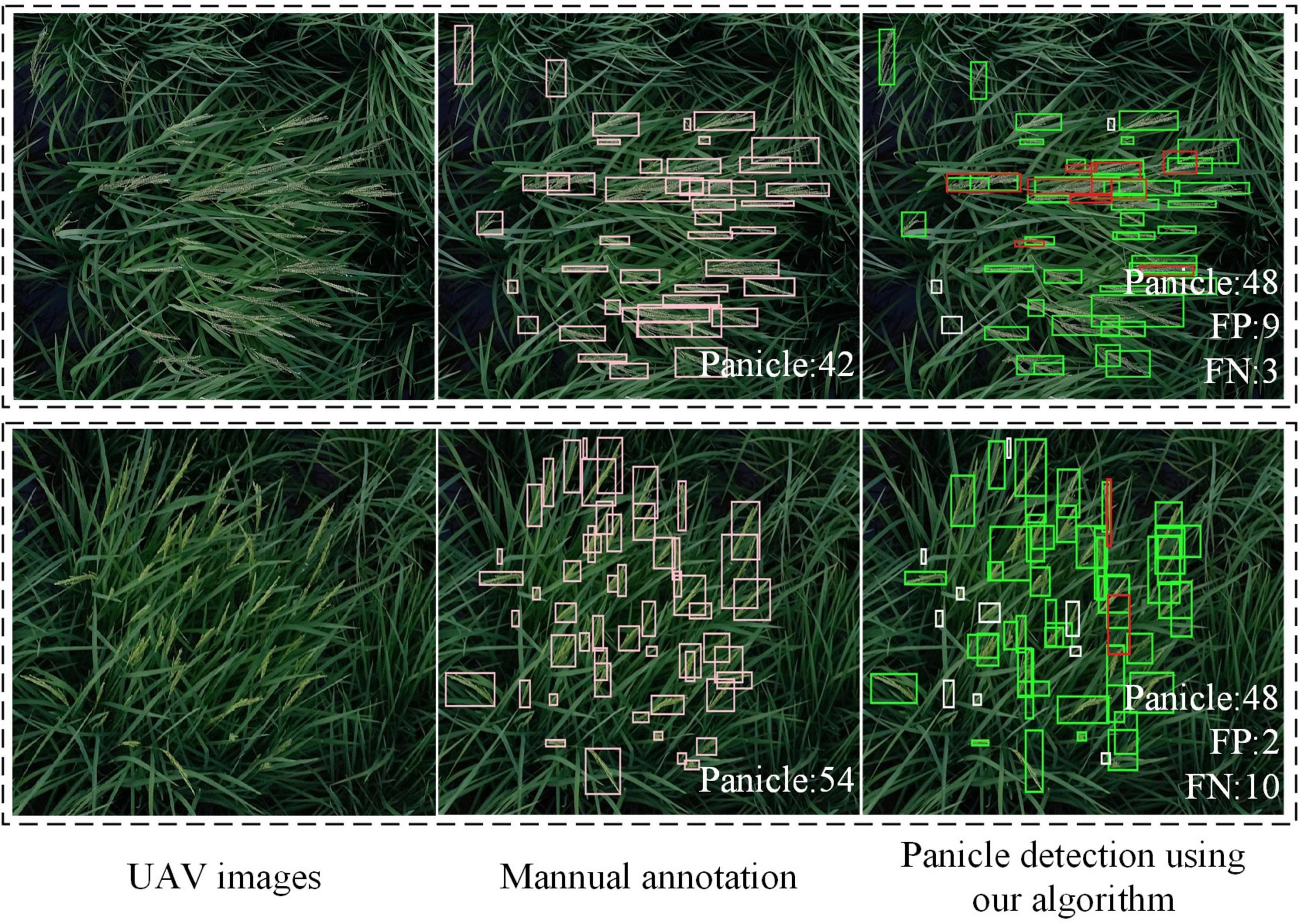

Panicle detection of the field images taken by unmanned aerial vehicle

Capturing rice field images with a UAV is convenient and efficient, so it is valuable for the proposed algorithm to detect panicles accurately in such images. However, images taken by UAV may suffer from low resolution or defocus blur, which makes rice panicle detection more challenging. The PanicleDetect model was trained with image blur as part of the data augmentation, which made it more robust in this situation. Figure 10 shows the detection results for two representative UAV images of about 1000 × 1000 pixels. The results showed that the proposed algorithm had a relatively high accuracy for counting panicles in UAV images.

Figure 10. Detection results of field images taken by UAV. In the detection results, green boxes are correct bounding boxes; white boxes are FN boxes; red boxes are FP boxes.

Comparison with other methods for panicle counting

Panicle-SEG (Xiong et al., 2017) is an algorithm for rice panicle segmentation. By combining Panicle-SEG with an appropriate image processing method, the number of panicles in an image can be obtained by counting the connected components. The counting method is described in detail in the Materials and methods section. The same testing set was used to evaluate panicle counting with this segmentation method, and the mean values of the MAPE, Precision, Recall, F-measure, R² and Accuracy were 13.59%, 79.39%, 80.66%, 79.49%, 0.68, and 71.79%, respectively. This counting method has difficulty dealing with occluded rice panicles and with rice panicles of different sizes. Therefore, its accuracy was relatively low.

MHW-PD (Xu et al., 2020) is an advanced algorithm for rice panicle counting and is similar to the proposed method. Specifically, this algorithm first cuts the images into non-overlapping sub-images, then detects the panicles in the sub-images using Faster R-CNN and fuses the results. The panicle counting accuracy of MHW-PD was about 93% for images with 0∼30 panicles per image, and about 87% for images with 31∼80 panicles per image. For comparison, we calculated the mean counting accuracy in the same manner as Xu et al. (2020). The proposed method reached an accuracy of 95.81% for images with 75∼190 panicles per image. In conclusion, the proposed method can process images with a much higher number of rice panicles while maintaining a higher accuracy.

Conclusion

It is challenging and meaningful to measure panicle number accurately in the field. This paper proposed a rice panicle counting algorithm designed specifically for field images with extremely large image size. Because the images are neither greatly resized nor cut without overlap, small panicles are preserved intact. The algorithm enables object detection networks, which are designed for relatively small inputs, to detect small objects in large images. For field images of 6000 × 4000 pixels with an average of 140 panicles per image, the MAPE of this algorithm was 3.44%. The proposed method proved robust and accurate for counting panicles in field rice images with different illumination, rice accessions, and spatial resolution. It also performed well on UAV images. One limitation of this work is that the proposed method was tested at only a single planting density, which was slightly higher than the typical planting density used for rice cultivation. Panicle detection at different planting densities will be tested in our future work. Overall, the method was robust and especially useful for panicle detection in extremely large images. In addition, the algorithm is available online, so researchers can use it to obtain panicle numbers conveniently.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

XW and LD developed the detection method, conducted the experiments, and wrote the manuscript. QL, WY, CH, and XL assisted with the experiments. GC and LX contributed rice materials and rice management. All authors read and approved the final manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (32170411, 31701317, and U21A20205), the Foundation of Hubei Hongshan Laboratory (2021hskf005), the Fundamental Research Funds for the Central Universities (2662020GXPY007 and 2021ZKPY006), the Key Projects of the Natural Science Foundation of Hubei Province (2021CFA059), and the HZAU-AGIS Cooperation Fund (SZYJY2022014).

Acknowledgments

We would like to thank all the colleagues in the Crop Phenotyping Center, Huazhong Agricultural University, for their help with the rice field experiments.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2022.966495/full#supplementary-material

Supplementary Video 1 | The instruction of the website for rice panicle counting.

Abbreviations

CNN, convolutional neural network; UAV, unmanned aerial vehicle; TP, true positive; TN, true negative; FP, false positive; FN, false negative; NMS, non-maximum suppression; IOU, intersection over union.

References

Barreto, A., Lottes, P., Ispizua Yamati, F. R., Baumgarten, S., Wolf, N. A., Stachniss, C., et al. (2021). Automatic UAV-based counting of seedlings in sugar-beet field and extension to maize and strawberry. Comput. Electron. Agric. 191:106493. doi: 10.1016/j.compag.2021.106493

Desai, S. V., Balasubramanian, V. N., Fukatsu, T., Ninomiya, S., and Guo, W. (2019). Automatic estimation of heading date of paddy rice using deep learning. Plant Methods 15:76. doi: 10.1186/s13007-019-0457-1

Dorj, U.-O., Lee, M., and Yun, S. (2017). An yield estimation in citrus orchards via fruit detection and counting using image processing. Comput. Electron. Agric. 140, 103–112. doi: 10.1016/j.compag.2017.05.019

Ferrante, A., Cartelle, J., Savin, R., and Slafer, G. A. (2017). Yield determination, interplay between major components and yield stability in a traditional and a contemporary wheat across a wide range of environments. Field Crops Res. 203, 114–127. doi: 10.1016/j.fcr.2016.12.028

Fiorani, F., and Schurr, U. (2013). Future Scenarios for Plant Phenotyping. Annu. Rev. Plant Biol. 64, 267–291. doi: 10.1146/annurev-arplant-050312-120137

Hayat, M. A., Wu, J., and Cao, Y. (2020). Unsupervised Bayesian learning for rice panicle segmentation with UAV images. Plant Methods 16:18. doi: 10.1186/s13007-020-00567-8

He, K., Fan, H., Wu, Y., Xie, S., and Girshick, R. (2019). Momentum Contrast for Unsupervised Visual Representation Learning. arXiv [Preprint]. doi: 10.48550/arXiv.1911.05722

Ji, M., Yang, Y., Zheng, Y., Zhu, Q., Huang, M., and Guo, Y. (2021). In-field automatic detection of maize tassels using computer vision. Inf. Process. Agric. 8, 87–95. doi: 10.1016/j.inpa.2020.03.002

Jin, X., Liu, S., Baret, F., Hemerlé, M., and Comar, A. (2017). Estimates of plant density of wheat crops at emergence from very low altitude UAV imagery. Remote Sens. Environ. 198, 105–114.

Khaki, S., Pham, H., Han, Y., Kuhl, A., Kent, W., and Wang, L. (2021). DeepCorn: A semi-supervised deep learning method for high-throughput image-based corn kernel counting and yield estimation. Knowl. Based Syst. 218:106874. doi: 10.1016/j.knosys.2021.106874

Khaki, S., Safaei, N., Pham, H., and Wang, L. (2022). WheatNet: A lightweight convolutional neural network for high-throughput image-based wheat head detection and counting. Neurocomputing 489, 78–89. doi: 10.1016/j.neucom.2022.03.017

Lu, H., and Cao, Z. (2020). TasselNetV2+: A Fast Implementation for High-Throughput Plant Counting From High-Resolution RGB Imagery. Front. Plant Sci. 11:541960. doi: 10.3389/fpls.2020.541960

Lu, H., Cao, Z., Xiao, Y., Fang, Z., Zhu, Y., and Xian, K. (2015). Fine-grained maize tassel trait characterization with multi-view representations. Comput. Electron. Agric. 118, 143–158. doi: 10.1016/j.compag.2015.08.027

Lu, H., Cao, Z., Xiao, Y., Zhuang, B., and Shen, C. (2017). TasselNet: Counting maize tassels in the wild via local counts regression network. Plant Methods 13:79. doi: 10.1186/s13007-017-0224-0

Lyu, S., Noguchi, N., Ospina, R., and Kishima, Y. (2021). Development of phenotyping system using low altitude UAV imagery and deep learning. Int. J. Agric. Biol. Eng. 14, 207–215. doi: 10.25165/j.ijabe.20211401.6025

Ma, J., Li, Y., Liu, H., Du, K., Zheng, F., Wu, Y., et al. (2020). Improving segmentation accuracy for ears of winter wheat at flowering stage by semantic segmentation. Comput. Electron. Agric. 176:105662. doi: 10.1016/j.compag.2020.105662

Madec, S., Jin, X., Lu, H., Solan, B. D., Liu, S., Duyme, F., et al. (2019). Ear density estimation from high resolution RGB imagery using deep learning technique. Agric. For. Meteorol. 264, 225–234. doi: 10.1016/j.agrformet.2018.10.013

Misra, T., Arora, A., Marwaha, S., Chinnusamy, V., Rao, A. R., Jain, R., et al. (2020). SpikeSegNet-a deep learning approach utilizing encoder-decoder network with hourglass for spike segmentation and counting in wheat plant from visual imaging. Plant Methods 16:40. doi: 10.1186/s13007-020-00582-9

Redmon, J., and Farhadi, A. (2018). Yolov3: An incremental improvement. arXiv [Preprint]. doi: 10.48550/arXiv.1804.02767

Ren, S., He, K., Girshick, R., and Sun, J. (2017). Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39, 1137–1149. doi: 10.1109/TPAMI.2016.2577031

Rezatofighi, H., Tsoi, N., Gwak, J., Sadeghian, A., Reid, I., and Savarese, S. (2019). “Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), (Piscataway), 658–666. doi: 10.1109/CVPR.2019.00075

Singh, N., Tewari, V. K., Biawas, P. K., Pareek, C. M., and Dhruw, L. K. (2021). Image processing algorithms for in-field cotton boll detection in natural lighting conditions. Artif. Intell. Agric. 5, 142–156. doi: 10.1016/j.aiia.2021.07.002

Slafer, G. A., Savin, R., and Sadras, V. O. (2014). Coarse and fine regulation of wheat yield components in response to genotype and environment. Field Crops Res. 157, 71–83. doi: 10.1016/j.fcr.2013.12.004

Song, S., Chaudhuri, K., and Sarwate, A. D. (2013). “Stochastic gradient descent with differentially private updates,” in 2013 IEEE Global Conference on Signal and Information Processing, (Austin, TX), 245–248. doi: 10.1109/GlobalSIP.2013.6736861

Wang, F., Mohan, V., Thompson, A., and Dudley, R. (2020). “Dimension fitting of wheat spikes in dense 3D point clouds based on the adaptive k-means algorithm with dynamic perspectives,” in IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), (Trento: IEEE), 144–148. doi: 10.1109/MetroAgriFor50201.2020.9277611

Xiong, H., Cao, Z., Lu, H., Madec, S., Liu, L., and Shen, C. (2019). TasselNetv2: In-field counting of wheat spikes with context-augmented local regression networks. Plant Methods 15:150. doi: 10.1186/s13007-019-0537-2

Xiong, X., Duan, L., Liu, L., Tu, H., Yang, P., Wu, D., et al. (2017). Panicle-SEG: A robust image segmentation method for rice panicles in the field based on deep learning and superpixel optimization. Plant Methods 13:104. doi: 10.1186/s13007-017-0254-7

Xu, C., Jiang, H., Yuen, P., Ahmad, K. Z., and Chen, Y. (2020). MHW-PD: A robust rice panicles counting algorithm based on deep learning and multi-scale hybrid window. Comput. Electron. Agric. 173:105375. doi: 10.1016/j.compag.2020.105375

Yang, B., Gao, Z., Gao, Y., and Zhu, Y. (2021). Rapid Detection and Counting of Wheat Ears in the Field Using YOLOv4 with Attention Module. Agronomy 11:1202. doi: 10.3390/agronomy11061202

Yang, Z., Gao, S., Xiao, F., Li, G., Ding, Y., Guo, Q., et al. (2020). Leaf to panicle ratio (LPR): A new physiological trait indicative of source and sink relation in japonica rice based on deep learning. Plant Methods 16:117. doi: 10.1186/s13007-020-00660-y

Zheng, Z., Wang, P., Liu, W., Li, J., Ye, R., and Ren, D. (2019). Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. Comput. Vis. Pattern Recognit. 34, 12993–13000. doi: 10.48550/arXiv.1911.08287

Keywords: field rice, panicle detection, panicle counting, large image size, deep learning

Citation: Wang X, Yang W, Lv Q, Huang C, Liang X, Chen G, Xiong L and Duan L (2022) Field rice panicle detection and counting based on deep learning. Front. Plant Sci. 13:966495. doi: 10.3389/fpls.2022.966495

Received: 11 June 2022; Accepted: 26 July 2022; Published: 12 August 2022.

Edited by:

Marcos Egea-Cortines, Universidad Politécnica de Cartagena, Spain

Reviewed by:

Sijia Yu, Rutgers, The State University of New Jersey – Busch Campus, United States

Qing Liu, Guangdong Academy of Agricultural Sciences (GDAAS), China

Copyright © 2022 Wang, Yang, Lv, Huang, Liang, Chen, Xiong and Duan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lingfeng Duan, duanlingfeng@mail.hzau.edu.cn
