- ¹College of Engineering, Anhui Agricultural University, Anhui, China

- ²College of Engineering, Northeast Agricultural University, Harbin, China

- ³Institute of Agricultural Facilities and Equipment, Jiangsu Academy of Agricultural Sciences (JAAS), Jiangsu, China

Atrazine is one of the most widely used herbicides in weed management. However, its widespread use has accelerated the evolution of weed resistance mechanisms. In precision agriculture, identifying resistant weeds early, before visible symptoms of atrazine application appear on weeds in actual field environments, contributes to crop protection. New developments in unmanned aerial vehicle (UAV) platforms and sensor technologies enable cost-effective collection of multi-modal data at very high spatial and spectral resolution. In this study, we obtained multispectral and RGB images using UAVs, increased the available information with the help of image fusion technology, and developed a weed spectral resistance index, WSRI = (RE-R)/(RE-B), based on the difference between susceptible and resistant weed biotypes. A deep convolutional neural network (DCNN) was applied to evaluate the potential for identifying resistant weeds in the field. Comparing the WSRI introduced in this study with previously published vegetation indices (VIs) shows that the WSRI is better at classifying susceptible and resistant weed biotypes. Fusing multispectral and RGB images improved the resistance identification accuracy, and the DCNN achieved high field accuracies of 81.1% for barnyardgrass and 92.4% for velvetleaf. Time series and weed density both influenced resistance identification: 4 days after application (4DAA) was identified as a watershed timeframe, and different weed densities changed the classification accuracy. Multispectral imaging and deep learning proved to be effective phenotyping techniques that can thoroughly analyze the dynamic response of weed resistance and provide valuable methods for high-throughput phenotyping and accurate field management of resistant weeds.

Introduction

Weeds are one of the major factors affecting crop growth and are the most significant contributors to yield loss globally (Quan et al., 2021). Overreliance on commonly used chemical herbicides has resulted in the appearance of several herbicide-resistant weed biotypes (Colbach et al., 2017). Developing a method that can indicate herbicide resistance within an acceptable timeframe after an application can potentially help growers manage their fields more effectively (Krähmer et al., 2020).

Atrazine (chemical name: 2-chloro-4-ethylamino-6-isopropylamino-1,3,5-triazine) belongs to the s-triazine class of herbicides and blocks the electron flow between photosystems (Foyer and Mullineaux, 1994). Atrazine can significantly reduce photosynthesis by inhibiting photosystem II (Sher et al., 2021) and is widely used in maize fields to control broadleaf and grassy weeds (Williams et al., 2011). Its widespread use has also accelerated the evolution of weed resistance mechanisms (Kelly et al., 1999; Williams et al., 2011; Perotti et al., 2020).

However, high-throughput herbicide resistance phenotyping remains a technical bottleneck, limiting the ability to effectively manage weeds in the field. Before herbicide application, there is no significant difference in the visual appearance of susceptible and resistant weeds of the same species (Eide et al., 2021a). Laboratory determination of various enzymes present within plant leaves can identify atrazine resistance but is impractical to use in large-scale applications (Liu et al., 2018). Hyperspectral systems to detect differences between resistant and susceptible biotypes have shown potential in controlled environments (Shirzadifar et al., 2020b), but their effectiveness is drastically reduced once introduced into field conditions (Shirzadifar et al., 2020a). The unstable performance of thermal imagery further suggested that canopy temperature data were likewise not a reliable predictor of weed resistance (Eide et al., 2021b). Outdoor resistance identification methods include whole-plant dose–response assay tests (Huan et al., 2011), but their investigation area is fixed and limited, resulting in high deployment expense and poor timeliness. Thus, current phenotypic analysis methods can hardly satisfy the high-throughput survey requirements for resistant weeds in the field.

Field-based fast, accurate, and robust phenotyping methods are essential for atrazine-resistant weed investigation. Atrazine applications reduce the efficiency of the photosynthetic mechanism and affect chlorophyll and other pigments, which changes the spectral reflectance of plants in the visible/near-infrared range (Sher et al., 2021). Therefore, it is assumed that the spectral characteristics of susceptible weeds should follow a different trajectory from those of resistant weeds after herbicide application. These physiological changes induced by herbicide stress have laid the foundation for monitoring resistance using vegetation indices (VIs) (Duddu et al., 2019). Multispectral bands and the normalized difference vegetation index (NDVI) provide improved glyphosate resistance classification (Eide et al., 2021a). Therefore, VI-based high-throughput phenotyping methods have the potential to be applied to atrazine-resistant weed investigation in the field.

Unmanned aerial vehicles (UAVs) are a popular remote sensing platform successfully used to obtain high-resolution aerial images for weed detection and mapping (Su et al., 2022) because they can be equipped with various imaging sensors to collect high-spatial, -spectral, and -temporal resolution images (Yang et al., 2017, 2020). For example, UAVs have been used for physiological and geometric plant characterization (Zhang et al., 2020; Meiyan et al., 2022), as well as for pest and disease classification (Dai et al., 2020; Xia et al., 2021) and resistant weed identification (Eide et al., 2021a). In addition, remote sensing imagery is linked to specific farm problems through deep learning for the identification of biological and non-biological stresses in crops (Francesconi et al., 2021; Ishengoma et al., 2021; Jiang et al., 2021; Zhou et al., 2021), segmentation, and classification (He et al., 2021; Osco et al., 2021; Vong et al., 2021). These studies show that the combination of UAV remote sensing and deep learning provides the scope for large-scale resistant weed evaluation (Krähmer et al., 2020; Wang et al., 2022).

This study explores the potential for using multispectral images collected by UAVs in crop fields for identifying resistant weeds and proposes an effective method to identify resistant weeds in real field environments. We propose a weed spectral resistance index called WSRI = (RE-R)/(RE-B) to investigate resistant weeds by analyzing the canopy spectral response of barnyardgrass and velvetleaf. Multispectral and RGB images are fused to combine canopy spectral and texture feature information, and a deep convolutional neural network (DCNN) is applied to evaluate the potential for identifying resistant weeds in the field based on their dynamic response.

Materials and Methods

Test Site and Experimental Setup

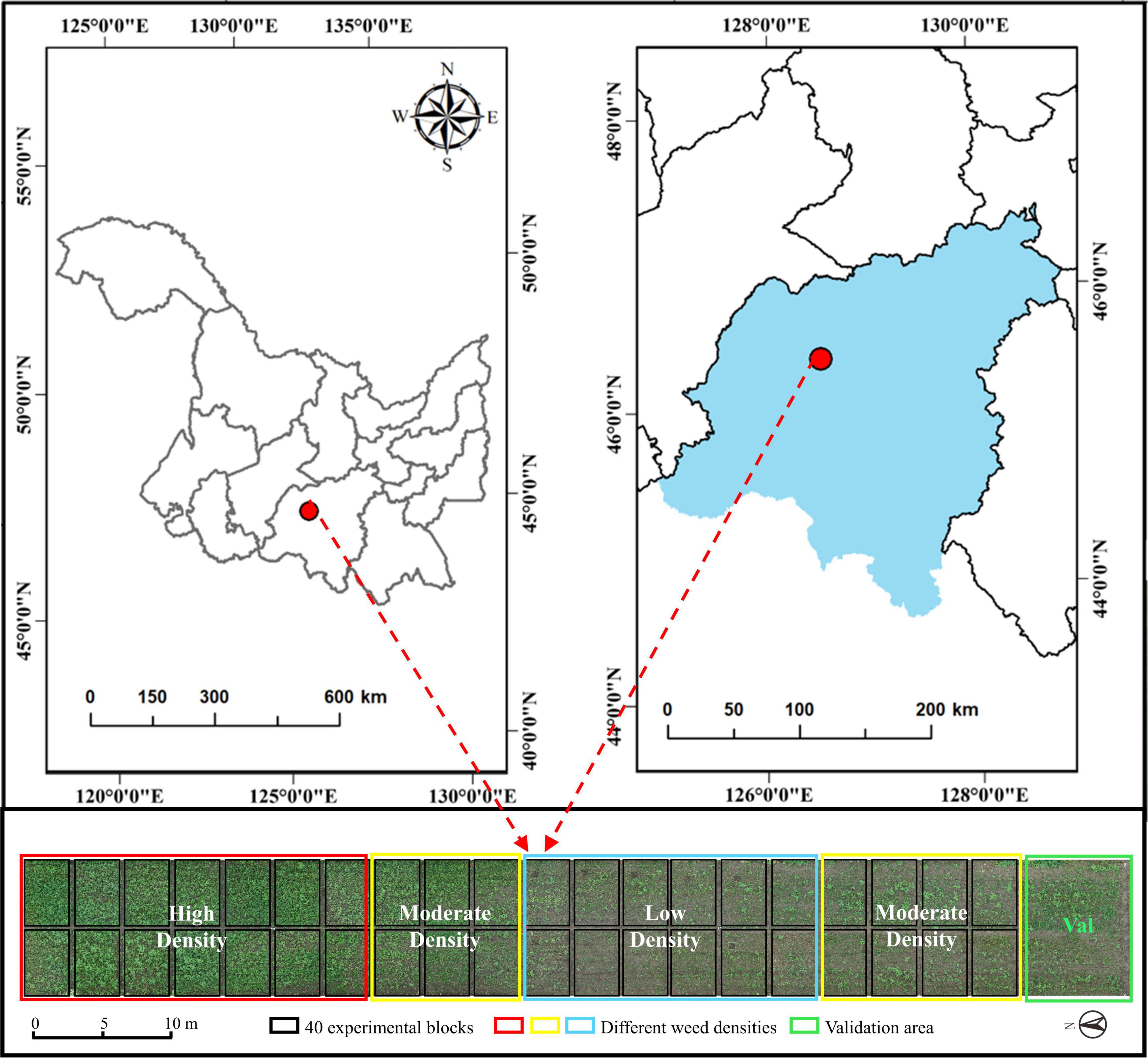

The weed resistance experiment was conducted at the Xiangyang Farm, Northeast Agricultural University, Harbin, Heilongjiang, China (45°61′ N, 126°97′ E), as shown in Figure 1. The region has a cold-temperate continental climate, with average annual precipitation of 400–600 mm and an average annual effective temperature of 2,800°C. The experimental soil is black soil; its tillage layer has a nitrogen content of 0.07–0.11%, a fast-acting phosphorus content of 20.5–55.8 mg/kg, and a fast-acting potassium content of 116.6–128.1 mg/kg.

Two different weed species were selected for this study. Common broadleaf and grassy weeds in the Heilongjiang region include barnyardgrass (Echinochloa crusgalli (L.) Beauv) and velvetleaf (Abutilon theophrasti Medicus). Weed seeds were collected from 20 different fields in Heilongjiang and confirmed to be atrazine-susceptible and -resistant biotypes (Liu et al., 2018). The seeds were air-dried and stored at 4°C. The field was treated with glufosinate at 0.45 kg active ingredient (AI) ha⁻¹ plus pendimethalin at 1.12 kg AI ha⁻¹ before planting to kill existing vegetation and provide residual weed control 1 week before crop planting.

In this trial, maize seeds were first sown in black soil on May 13. The weed seeds were mixed with sand, dropped on the soil surface, and then harrowed in immediately after maize sowing. Weed seeds were dropped at three densities (low, 40 seeds m⁻²; moderate, 160 seeds m⁻²; high, 320 seeds m⁻²). After maize germination, light spray irrigation was applied to the whole field to accelerate weed germination. The herbicide atrazine (Ji Feng Pesticide Co., Jilin, China) was then sprayed at a uniform rate on 1st June when the maize reached the three-leaf stage.

In the experimental field, 40 plots were divided into three weed density treatments (Figure 2B). Each treatment consisted of 12 or 14 plots measuring 3 m × 5 m in six rows with a 0.6-m row spacing. A 1-m-wide protection plot surrounded the entire field to reduce edge effects. Ground-truth data were first surveyed before the atrazine application day. The manual ground-truth measurements consisted of the survival status of the two weed species and their geographical coordinates after application.

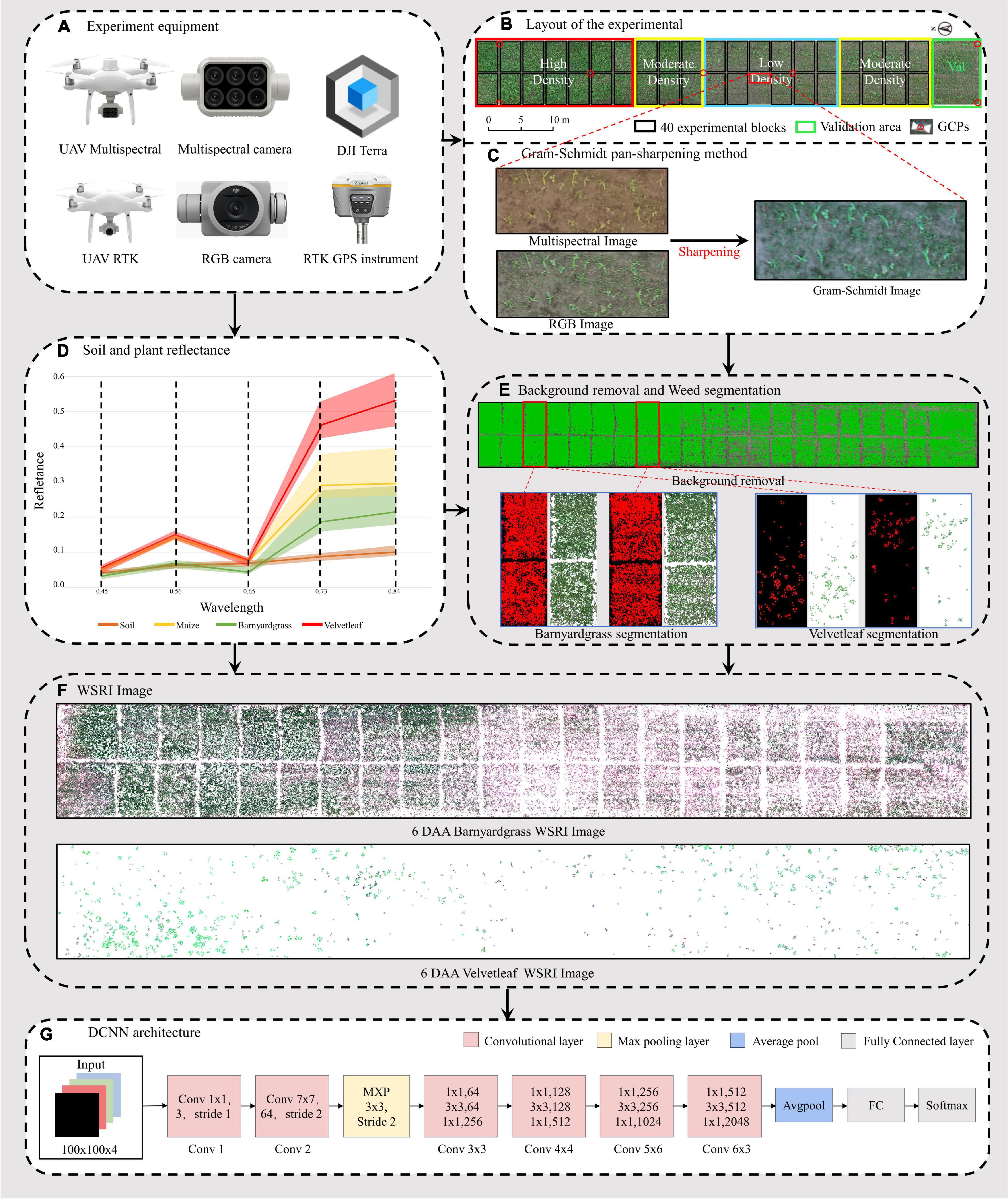

Figure 2. Workflow of the unmanned aerial vehicle (UAV) high-throughput field weed resistance approach. (A) DJI Phantom 4 Multispectral, DJI Phantom 4 RTK, DJI Terra, and RTK GPS instrument for collecting field images. (B) Digital orthophoto maps (DOM) of three maize field densities (low, moderate, high) for weed resistance research. (C) Gram–Schmidt sharpening for improving spectral image information. (D) Reflectance values of four objects in the orthophoto (soil, maize, barnyardgrass, and velvetleaf). (E) Soil and maize removal and two types of weed segmentation, including barnyardgrass and velvetleaf. (F) Two weed image datasets from 6 days after atrazine application (6 DAA) used in the classification models. (G) Deep convolutional neural network (DCNN) architecture.

Data Acquisition

Unmanned Aerial Vehicle Image Collection

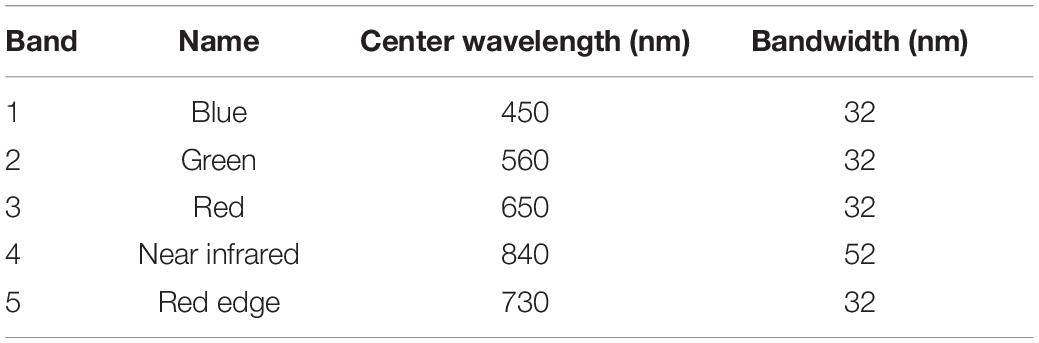

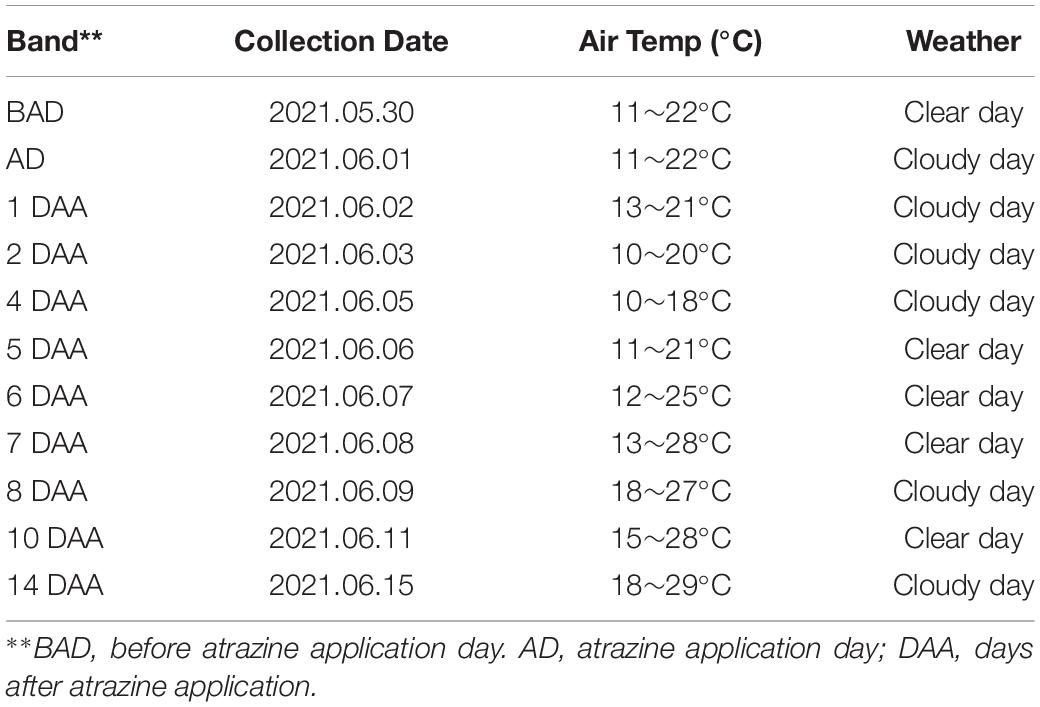

Multispectral and RGB images were collected with DJI Phantom 4 Multispectral and DJI Phantom 4 RTK UAVs (SZ DJI Technology Co., Ltd., Shenzhen, China), as shown in Figure 2A. The UAVs are equipped with centimeter-level navigation and positioning systems. The DJI Phantom 4 Multispectral camera simultaneously acquires images in blue (B), green (G), red (R), red edge (RE), and near-infrared (NIR) bands (Table 1) at a resolution of 1600 × 1300 pixels. The DJI Phantom 4 RTK has a camera with an FC6310R lens (f = 8.8 mm) and a resolution of 4864 × 3648 pixels. Based on a UAV flight test with manually controlled height varying from 10 to 30 m above ground, the UAV altitude was finally set to 15 m, at which the leaves were not disturbed. The ground sampling distances (GSDs) of the multispectral and RGB images were 0.79 and 0.41 cm pixel⁻¹, respectively. UAV flights were conducted in the field on 6th and 20th May 2021 to collect the early season information needed for the study. On each flight day, RGB images were acquired first, followed by multispectral images. The mean forward overlap of the photographs was 80%, and the mean sidelap was 70%. The UAV observations covered the complete experimental range (Table 2). However, some data are missing because of weather conditions.
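The relation between flight altitude and GSD can be checked with a short calculation. The sketch below assumes the simple rule of thumb GSD = H / k (H in meters, GSD in cm per pixel); the divisors 18.9 (multispectral camera) and 36.5 (RGB camera) are assumptions based on commonly quoted manufacturer figures and are not given in this study.

```python
def ground_sampling_distance_cm(altitude_m: float, divisor: float) -> float:
    """Return the GSD in cm/pixel from the rule of thumb GSD = H / divisor."""
    if altitude_m <= 0 or divisor <= 0:
        raise ValueError("altitude and divisor must be positive")
    return altitude_m / divisor


# Assumed camera constants (not stated in the text).
MULTISPECTRAL_DIVISOR = 18.9
RGB_DIVISOR = 36.5

flight_altitude_m = 15.0  # altitude reported in the text
gsd_ms = ground_sampling_distance_cm(flight_altitude_m, MULTISPECTRAL_DIVISOR)
gsd_rgb = ground_sampling_distance_cm(flight_altitude_m, RGB_DIVISOR)
print(f"multispectral: {gsd_ms:.2f} cm/pixel, RGB: {gsd_rgb:.2f} cm/pixel")
```

Under these assumed constants, a 15 m flight altitude reproduces the reported GSDs of 0.79 and 0.41 cm per pixel.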

Image Preprocessing

Approximately 2,000 images per flight were processed photogrammetrically in DJI Terra software (SZ DJI Technology Co., Ltd., Shenzhen, China) to obtain images of the entire experimental area. To obtain accurate geographical references, seven ground control points (GCPs) were measured with a global navigation satellite system (GNSS) real-time kinematic (RTK) receiver (RTK GPS instrument i50, CHC Navigation Co., Ltd., Shanghai, China). Reflectance correction and radiometric calibration used a 3 m² carpet reference and the Spectronon software (Resonon Inc., Bozeman, MT, United States). The empirical line method was then used to convert the image’s digital number (DN) values to reflectance values (Figure 2D).
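The empirical line method fits a linear relation, reflectance = gain × DN + offset, from targets of known reflectance and applies it to every pixel of a band. The following is a minimal sketch of that idea; the calibration values are hypothetical, and the use of several targets (rather than the single carpet reference described above) is an assumption made so that a line can be fitted.

```python
from statistics import mean


def fit_empirical_line(dn_values, reflectances):
    """Least-squares fit of reflectance = gain * DN + offset for one band."""
    if len(dn_values) != len(reflectances) or len(dn_values) < 2:
        raise ValueError("need at least two matching (DN, reflectance) pairs")
    dn_mean = mean(dn_values)
    ref_mean = mean(reflectances)
    sxx = sum((d - dn_mean) ** 2 for d in dn_values)
    if sxx == 0:
        raise ValueError("DN values must not all be identical")
    sxy = sum((d - dn_mean) * (r - ref_mean)
              for d, r in zip(dn_values, reflectances))
    gain = sxy / sxx
    offset = ref_mean - gain * dn_mean
    return gain, offset


def dn_to_reflectance(dn, gain, offset):
    """Convert one digital number to reflectance with the fitted line."""
    return gain * dn + offset


# Hypothetical calibration targets for a single band.
panel_dn = [5000.0, 20000.0, 40000.0]
panel_reflectance = [0.05, 0.20, 0.40]
gain, offset = fit_empirical_line(panel_dn, panel_reflectance)
print(dn_to_reflectance(30000.0, gain, offset))
```

In practice the fit would be repeated per band, since each sensor channel has its own gain and offset.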

Development of Specific Indices Identifying Atrazine-Resistant Weeds

Canopy spectral reflectance differs between weed species, and some spectral regions may better identify atrazine resistance status. Sample selection was based on weed survival 14 days after application. The reflectance of the susceptible and resistant biotypes of the two weed species was extracted from the multispectral images acquired 2 days after application.

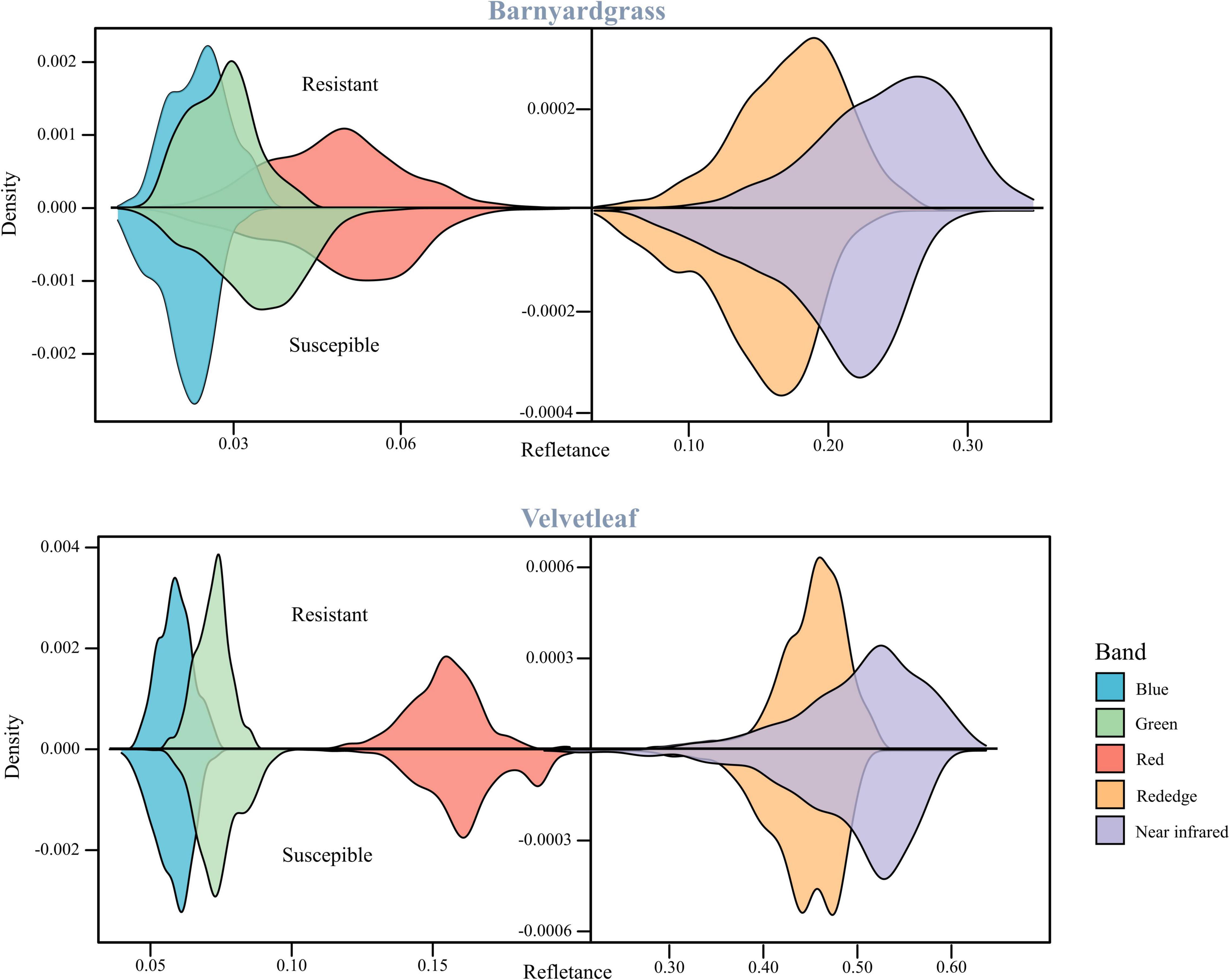

Figure 3 shows barnyardgrass and velvetleaf reflectance density maps for five bands extracted from multispectral images of susceptible and resistant biotype regions. Slight differences between susceptible and resistant biotypes were observed in the green, red, red edge, and near-infrared bands, and the differences between the red (650 nm) and red edge (780 nm) bands show greater stability (Jin et al., 2020). Including part of the blue (450 nm) band was observed to reduce the differences in leaf surface reflectance, thereby improving the correlation between the vegetation index and leaf pigment content (Sims and Gamon, 2002). Therefore, we proposed a weed spectral resistance index, WSRI = (RE-R)/(RE-B), to evaluate resistant weeds in actual field environments and tested it in this study (Figure 2F).
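As an illustration, the WSRI can be computed per pixel from the red edge, red, and blue reflectance values. The sketch below uses plain Python lists for portability; the function names, the `eps` guard, and the choice to return NaN when the denominator vanishes are assumptions of this example, not part of the original method.

```python
import math


def wsri(red_edge: float, red: float, blue: float, eps: float = 1e-9) -> float:
    """Weed spectral resistance index: (RE - R) / (RE - B)."""
    denominator = red_edge - blue
    if abs(denominator) < eps:
        # Assumption: undefined pixels are flagged with NaN.
        return float("nan")
    return (red_edge - red) / denominator


def wsri_image(red_edge_band, red_band, blue_band):
    """Apply the index pixel by pixel to three equally sized 2D bands."""
    return [
        [wsri(re_val, r_val, b_val)
         for re_val, r_val, b_val in zip(re_row, r_row, b_row)]
        for re_row, r_row, b_row in zip(red_edge_band, red_band, blue_band)
    ]


# Hypothetical 1 x 2 reflectance patch.
example = wsri_image([[0.4, 0.3]], [[0.2, 0.1]], [[0.0, 0.3]])
print(example)
```

The second pixel has equal red edge and blue reflectance, so it is reported as NaN rather than dividing by zero.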

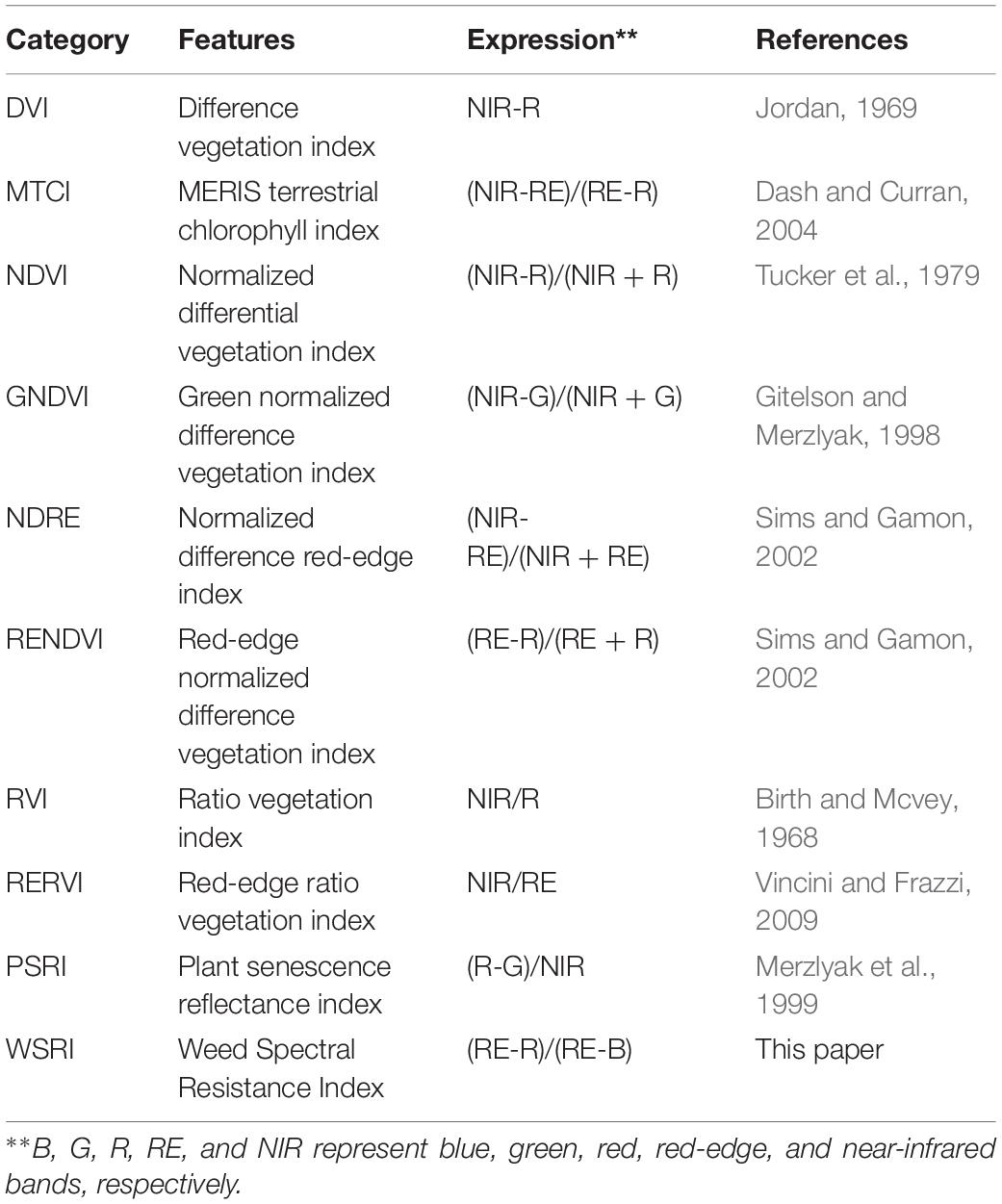

Many VIs have similar effects when dealing with classification problems, differing in their index form expressions. Simple vegetation index forms, such as the NDVI and ratio vegetation index (RVI), are universal to the problem and reflect vegetation information well in many cases. In this study, we entered our multispectral image data into nine previously published VIs (Table 3) and the WSRI to evaluate and compare their weed resistance classification accuracies.
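For comparison, a few of the simple index forms mentioned here can be written in the same style. The definitions below are the standard textbook forms of the NDVI, RVI, and DVI; the NDVI-RE form, with the red edge band taking the place of the NIR band, is an assumption of this sketch, since Table 3 is not reproduced here.

```python
def safe_ratio(numerator: float, denominator: float) -> float:
    """Divide, returning NaN instead of raising on a zero denominator."""
    return numerator / denominator if denominator != 0 else float("nan")


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index: (NIR - R) / (NIR + R)."""
    return safe_ratio(nir - red, nir + red)


def rvi(nir: float, red: float) -> float:
    """Ratio vegetation index: NIR / R."""
    return safe_ratio(nir, red)


def dvi(nir: float, red: float) -> float:
    """Difference vegetation index: NIR - R."""
    return nir - red


def ndvi_re(red_edge: float, red: float) -> float:
    """Assumed NDVI-RE form: (RE - R) / (RE + R)."""
    return safe_ratio(red_edge - red, red_edge + red)


# Hypothetical reflectance values for one vegetation pixel.
print(ndvi(0.5, 0.1), rvi(0.5, 0.1), dvi(0.5, 0.1), ndvi_re(0.3, 0.1))
```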

Image Fusion

The multispectral images with low spatial resolution used for classification lost almost all texture features. However, susceptible and resistant biotype differences are expressed in the texture information. The high spatial resolution of RGB images compensated for the lost texture information in the multispectral images, so image fusion using the Gram–Schmidt pan-sharpening method in ENVI 5.4.1 (EXELIS, Boulder, CO, United States) was used (Figure 2C). The fusion images have five bands: blue, green, red, red-edge, and near-infrared.

The Gram–Schmidt pan-sharpening method is based on Gram–Schmidt (GS) orthogonalization, which orthogonalizes matrix data or digital image bands (Laben and Brower, 2000). The method first creates a simulated low-resolution panchromatic band as a weighted linear combination of the multispectral bands. Then, GS orthogonalization is performed on all bands, including the simulated panchromatic and multispectral bands, with the simulated panchromatic band as the first band. After all bands have been made orthogonal, the high-spatial-resolution panchromatic band replaces the first GS band. Last, an inverse GS transform creates the pan-sharpened bands (Laben and Brower, 2000; Ehlers et al., 2010).
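The steps above can be sketched in a simplified form. Carrying out the forward transform, the substitution, and the inverse transform is commonly described as equivalent to adding the panchromatic detail to each band with a gain equal to cov(band, simulated pan) / var(simulated pan); the sketch below uses that closed form. It is not the ENVI implementation: the equal band weights, the mean and variance matching of the panchromatic band, and the flattened pixel lists (already resampled to the panchromatic grid) are assumptions of this example.

```python
from statistics import mean, pvariance


def covariance(xs, ys):
    """Population covariance of two equally long sequences."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


def gs_pansharpen(ms_bands, pan, weights=None):
    """Simplified Gram-Schmidt style sharpening on flattened pixel lists."""
    n_bands = len(ms_bands)
    if weights is None:
        weights = [1.0 / n_bands] * n_bands  # assumption: equal weights
    # Step 1: simulate a low-resolution panchromatic band.
    sim_pan = [sum(w * band[i] for w, band in zip(weights, ms_bands))
               for i in range(len(pan))]
    sim_mean, sim_var = mean(sim_pan), pvariance(sim_pan)
    pan_mean, pan_var = mean(pan), pvariance(pan)
    if sim_var == 0 or pan_var == 0:
        return [list(band) for band in ms_bands]  # nothing to inject
    # Step 2: match the real pan band to the simulated one (mean, variance).
    scale = (sim_var / pan_var) ** 0.5
    pan_adj = [(p - pan_mean) * scale + sim_mean for p in pan]
    detail = [p - s for p, s in zip(pan_adj, sim_pan)]
    # Step 3: inject the detail into each band with its GS gain.
    sharpened = []
    for band in ms_bands:
        gain = covariance(band, sim_pan) / sim_var
        sharpened.append([b + gain * d for b, d in zip(band, detail)])
    return sharpened


# Hypothetical two-band image with four pixels and a sharper pan band.
ms = [[0.1, 0.2, 0.3, 0.4], [0.2, 0.4, 0.6, 0.8]]
pan = [0.10, 0.35, 0.40, 0.65]
print(gs_pansharpen(ms, pan))
```

A useful sanity check is that a panchromatic band carrying no extra detail (any linear rescaling of the simulated band) leaves the multispectral bands unchanged.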

Background Removal and Weed Segmentation

Because of the reflectance differences between soil and plants (Figure 2D), Otsu’s thresholding algorithm (Otsu, 1979) was used to separate vegetation from the soil. The algorithm finds an optimal threshold value for segmentation, which was then adjusted, if necessary, to improve the separation of the plants from the soil (Figure 2E; Liao et al., 2020).
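Otsu’s method picks the threshold that maximizes the between-class variance of the two resulting pixel groups. The sketch below is a minimal histogram-based version; the input is assumed to be a flat list of per-pixel values in which vegetation is brighter than soil (for example, a vegetation-sensitive band or index), and the sample values are hypothetical.

```python
def otsu_threshold(values, bins: int = 256) -> float:
    """Return the threshold maximizing between-class variance."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return lo  # constant image: no meaningful split
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)
        hist[idx] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_var, best_idx = -1.0, 0
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):
        w0 += hist[i]            # pixels at or below bin i
        sum0 += i * hist[i]
        w1 = total - w0          # pixels above bin i
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_idx = var_between, i
    return lo + (best_idx + 1) * width


# Hypothetical pixel values: dark soil and bright vegetation.
pixels = [0.05] * 50 + [0.10] * 50 + [0.60] * 50 + [0.70] * 50
threshold = otsu_threshold(pixels)
vegetation_mask = [v > threshold for v in pixels]
print(threshold, sum(vegetation_mask))
```

The returned value can serve as a starting point that is then adjusted manually, as described above.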

Manual segmentation of maize and weeds has higher accuracy but is expensive and time-consuming. UAV multispectral and RGB images were therefore segmented into maize, barnyardgrass, and velvetleaf using the support vector machine (SVM) classifier (Cortes et al., 1995). At the four-leaf stage, the maize did not shade the weeds significantly, which made it easier to separate the maize from the weeds. A binary mask layer was created to segment the maize and the two weed types from the UAV images’ extracted spectral and texture features for further processing (Figure 2E). The binary mask layer was generated in ENVI based on manually tagged template data.

The performance of the SVM classifier was evaluated using the confusion matrix and accuracy statistics, with the overall accuracy based on randomly selected independent test samples. The overall accuracy of the SVM classification was 94.4%, which meets the experimental requirements. The zonal statistics were obtained using ArcPy and the Python 2.7 programming language to remove soil and maize and segment the barnyardgrass and velvetleaf.

Dataset Production

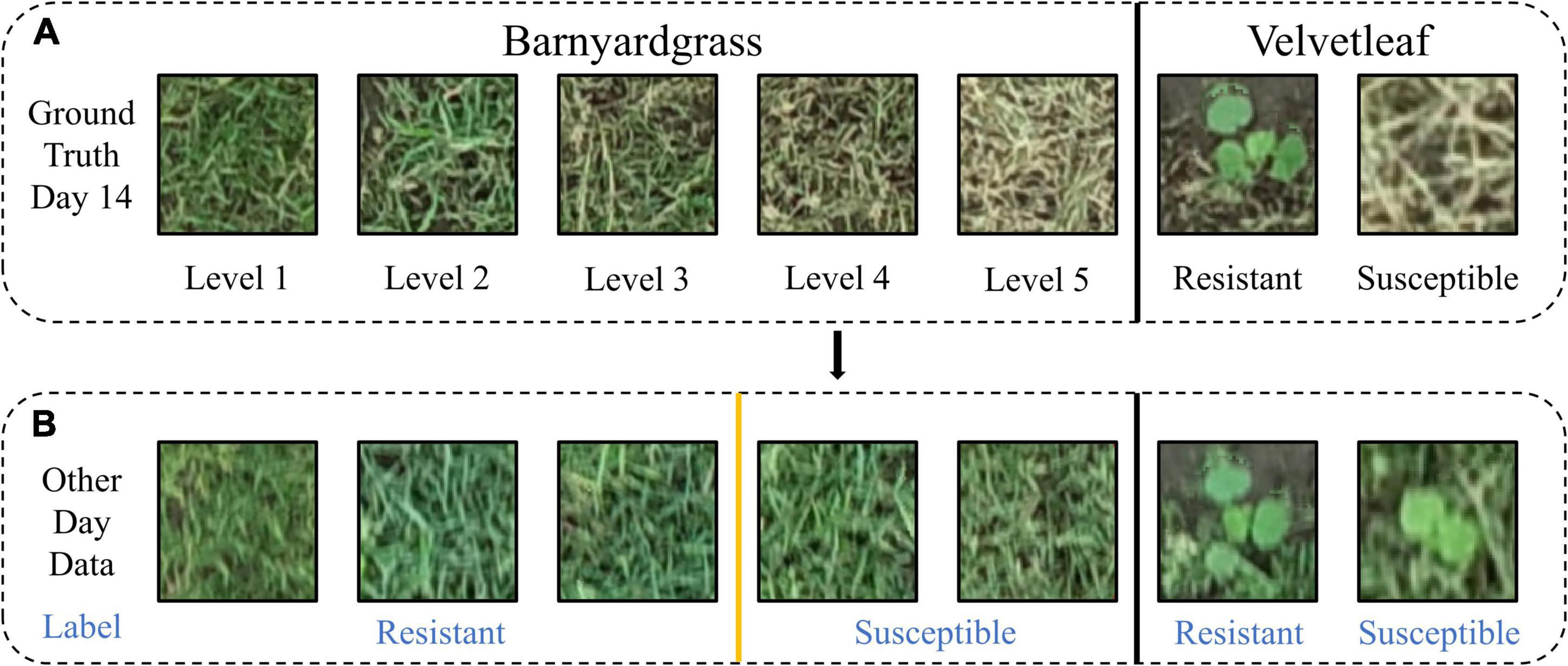

Different application effects were observed in the experimental area, and a training template was created for individual velvetleaf plants based on survival status 14 days after application (Figure 4A). The training template contained two classes: susceptible velvetleaf and resistant velvetleaf (Figure 4B).

Figure 4. Two weeds belonging to blocks were evaluated as susceptible and resistant. (A) Manual resistance level and label based on weed death coverage 14 days after atrazine application. (B) Visualization of the data labels on other days.

Because barnyardgrass grows densely and is mostly aggregated, it is not easy to separate into individual plants (Maun and Barrett, 1986). In this study, the resistance level was set according to the death rate of barnyardgrass in the same area 14 days after application. Figure 4A shows example plants from blocks at different resistance levels (barnyardgrass in high-density areas). Resistance level 1 is defined as 0–25% death of barnyardgrass; resistance level 2 is 26–50% death; resistance level 3 is 51–75% death; resistance level 4 is 76–95% death; and resistance level 5 indicates an entirely dead barnyardgrass block. Blocks with resistance levels less than or equal to 3 were considered resistant (Figure 4B) because these blocks exceeded the farmland weed control threshold (Anru and Cuijuan, 2014).
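The labeling rule for barnyardgrass blocks can be expressed as a small lookup. The sketch below follows the level boundaries given above; how death rates between 95% and 100% are handled is not specified in the text, so assigning them to level 5 is an assumption of this example, as are the function names.

```python
def resistance_level(death_percent: float) -> int:
    """Map a block's death rate (percent, 14 DAA) to resistance level 1-5."""
    if not 0 <= death_percent <= 100:
        raise ValueError("death_percent must be between 0 and 100")
    if death_percent <= 25:
        return 1
    if death_percent <= 50:
        return 2
    if death_percent <= 75:
        return 3
    if death_percent <= 95:
        return 4
    # Assumption: anything above 95% is treated as an entirely dead block.
    return 5


def is_resistant_block(death_percent: float) -> bool:
    """Blocks at level 3 or below are labeled resistant."""
    return resistance_level(death_percent) <= 3


# Hypothetical death rates for five blocks.
for rate in (10, 40, 75, 90, 100):
    print(rate, resistance_level(rate), is_resistant_block(rate))
```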

The image patch of each weed plot must be cropped from the barnyardgrass WSRI fusion segmentation image to build the dataset for DCNN modeling. Thus, a region of interest (ROI) shapefile was created in ArcMap 10.3 (Esri Inc., Redlands, CA, United States), and rectangles measuring around 0.5 m × 0.5 m were drawn. The cropped patch sizes were approximately 100 × 100 pixels. All datasets have four bands, combining the WSRI image with the RGB image.

The other UAV images acquired throughout and after application were also processed to generate time-series image patches for dynamic weed resistance classification. Rotation-based image augmentation was applied to represent the different shapes and directions of the weeds in the field. Four clockwise rotations (0°, the original data; 90°; 180°; and 270°) were performed. For the barnyardgrass dataset, the original 1,750 observations were increased fourfold, with 3,128 observations representing resistant blocks and 3,872 observations representing susceptible blocks, for 7,000 observations each day and 28,000 total observations. For the velvetleaf dataset, the original 480 observations were increased fourfold, with 1,136 observations representing resistant plants and 784 observations representing susceptible plants, for 1,920 observations each day and 7,680 total observations. Before the data augmentation, all data were randomly split into training and validation sets in an 8:2 ratio. The model performance was tested using a validation area (Figure 4B) to illustrate the model’s generality and robustness.
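The rotation augmentation and the resulting dataset sizes can be illustrated with a short sketch. Patches are represented here as nested lists for a single band, which is an assumption of this example; a multi-band patch would be rotated band by band in the same way.

```python
def rotate_90_clockwise(patch):
    """Rotate a 2D patch (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*patch[::-1])]


def augment_with_rotations(patch):
    """Return the 0, 90, 180, and 270 degree clockwise rotations."""
    rotations = [[list(row) for row in patch]]
    for _ in range(3):
        rotations.append(rotate_90_clockwise(rotations[-1]))
    return rotations


# Hypothetical 2 x 2 single-band patch.
patch = [[1, 2],
         [3, 4]]
for rotated in augment_with_rotations(patch):
    print(rotated)

# Dataset sizes reported in the text: fourfold increase per day.
barnyardgrass_per_day = 1750 * 4
velvetleaf_per_day = 480 * 4
print(barnyardgrass_per_day, velvetleaf_per_day)
```

The per-day totals of 7,000 and 1,920 match the sums of the resistant and susceptible observations reported above, and the overall totals of 28,000 and 7,680 correspond to four acquisition days each.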

Deep Convolutional Neural Network for Resistant Weed Classification

A DCNN (Figure 2G) for classifying resistant weeds was constructed using MATLAB R2021a (MathWorks Inc., Natick, MA, United States). The model was trained and tested on an NVIDIA 2080Ti GPU with 48-GB RAM on a 64-bit Windows 10 operating system with CUDA version 11.4.

The network was built based on the ResNet-50 model (He et al., 2016) and transfer learning (Kieffer et al., 2017). This study used the ResNet-50 model pre-trained on ImageNet (Krizhevsky et al., 2012) without fully connected (FC) layers for transfer learning. The input size was changed to 100 × 100 × 4 to match the size of the image patches. Then a convolutional layer (size of 3 × 3 × 3) was added after the 4-band input to reduce the dimension of the data. A ReLU activation layer was appended after the convolutional layer to add non-linear characteristics. The dropout regularization method was deployed after the FC layer to reduce overfitting (Srivastava et al., 2014), and the dropout rate was set at 30%.

An Adam optimizer (Kingma and Ba, 2014) was used with a learning rate of 10⁻⁴ and a decay of 10⁻³ to adaptively optimize the training process. The batch size was set to 128, and the data generator generated each batch with real-time data augmentation. The model was trained for 300 epochs with 10 batches per epoch. The accuracy of each classification was observed using a confusion matrix. Accuracy metrics were averaged from five repeats of randomized holdback cross-validation.
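The accuracy computation described here can be sketched as follows. The confusion matrices are hypothetical counts (rows are true classes, columns are predicted classes, in the order resistant, susceptible); they only illustrate how overall accuracy is read from a confusion matrix and averaged over repeats.

```python
from statistics import mean


def overall_accuracy(confusion):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


def mean_accuracy(confusions):
    """Average overall accuracy across repeated holdback runs."""
    return mean(overall_accuracy(c) for c in confusions)


# Hypothetical confusion matrices from five repeats.
repeats = [
    [[40, 10], [5, 45]],
    [[42, 8], [6, 44]],
    [[41, 9], [7, 43]],
    [[39, 11], [4, 46]],
    [[43, 7], [8, 42]],
]
print(overall_accuracy(repeats[0]), mean_accuracy(repeats))
```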

Results

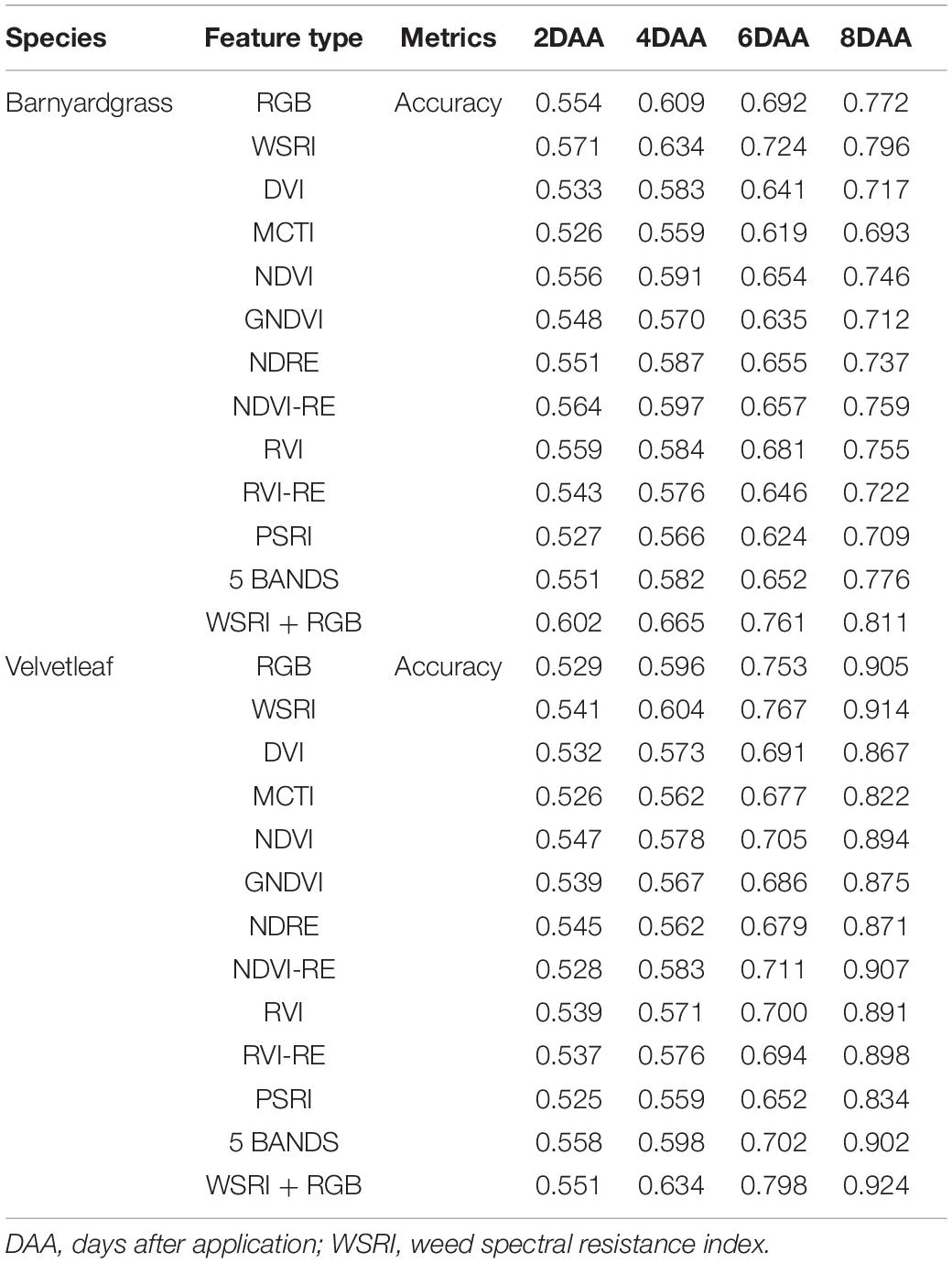

The DCNN was applied to classify weed resistance using the canopy spectral and textural information extracted from the UAV multispectral and RGB sensors, and the results are shown in Table 4.

Contribution of Spectral Bands and Vegetation Indices in the Resistant Weed Classification

The susceptible and resistant biotype reflectance densities of barnyardgrass and velvetleaf after atrazine application are shown in Figure 3. Spectral band differences between susceptible and resistant biotypes are related to the chlorophyll content and cell wall structure of the weed species.

Atrazine-resistant weed biotypes showed a slightly lower reflectance than susceptible weed biotypes in the visible light region. The differences between susceptible and resistant biotypes were more significant in the red edge and near-infrared regions, and the resistant biotypes showed increased spectral reflectance. These effects are related to the low chlorophyll content of susceptible biotypes, corresponding to plant stress response (Gomes et al., 2016). The main reason is that the application of atrazine reduces photosynthesis and destroys the pigments (Hess, 2000; Zhu et al., 2009). The red band is the main chlorophyll absorption band (Tros et al., 2021). The red edge position, which is the slope inflection point between red absorption and near-infrared reflectance, is usually used to estimate the chlorophyll content (Horler et al., 1983; Zarco-Tejada et al., 2019). Thus, the red and red edge bands are stable for classifying atrazine-resistant weed biotypes.

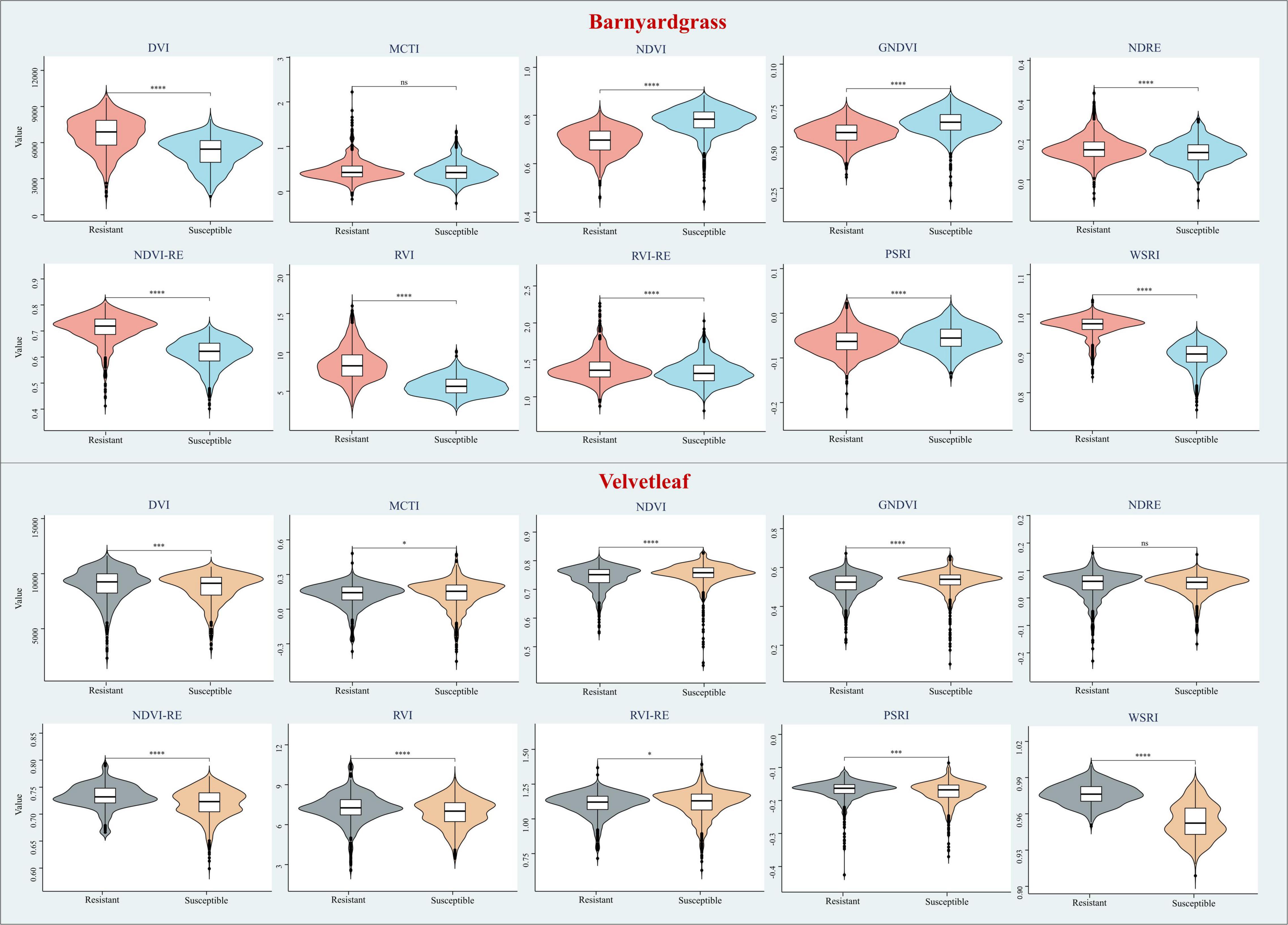

This study selected commonly used VIs, including some stress indices, and compared their DCNN results with those of the WSRI (Table 4). These indices respond to the stress and pigmentation changes that result from atrazine application. To further explore the differences in the vegetation index distributions of susceptible and resistant biotypes, violin plots of barnyardgrass and velvetleaf for the 10 VIs 2 days after application are shown in Figure 5.

Figure 5. Violin plots of different vegetation indices for susceptible and resistant biotypes of barnyardgrass and velvetleaf 2 days after application, where *p < 0.05; **p < 0.01; ***p < 0.001; and ****p < 0.0001 indicate significant differences between the susceptible and resistant biotypes, and ns indicates no significant difference.
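The star notation in the caption maps p-values to labels as in the following small sketch; the function name is hypothetical, and the statistical test that produces the p-values is not shown.

```python
def significance_label(p_value: float) -> str:
    """Return the star label used in Figure 5 for a given p-value."""
    if not 0 <= p_value <= 1:
        raise ValueError("p_value must be between 0 and 1")
    if p_value < 0.0001:
        return "****"
    if p_value < 0.001:
        return "***"
    if p_value < 0.01:
        return "**"
    if p_value < 0.05:
        return "*"
    return "ns"


# Hypothetical p-values.
for p in (0.2, 0.03, 0.004, 0.0005, 0.00001):
    print(p, significance_label(p))
```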

The results in Figure 5 show that the WSRI, DVI, NDVI, NDVI-RE, and RVI distinguish between susceptible and resistant barnyardgrass, with a common trait of these indices being that they all contain the red band. The difference vegetation index (DVI) and the RVI show little difference between susceptible and resistant biotypes because they do not integrate multi-band information well. The NDVI-RE uses the red-edge band in place of the NIR band, resulting in a slightly better classification than the NDVI.

The WSRI retained the numerator structure of the NDVI-RE index and added the blue band to the denominator to eliminate the spectral interference between pigments and achieve a better classification result. However, the WSRI classification of susceptible and resistant velvetleaf was very poor compared with barnyardgrass at the early stage of application, and the NDVI-RE and WSRI provided only partial classification. The WSRI makes the resistant weed data more concentrated and the susceptible weed data more dispersed, widening their differences. The average spectral response of barnyardgrass shows a more prominent separation than that of velvetleaf, possibly because lower herbicide uptake at the cuticular level causes velvetleaf to respond more slowly to herbicide stress (Couderchet and Retzlaff, 1995). In addition, velvetleaf has a higher reflectance than barnyardgrass, resulting in spectral changes that are more difficult to represent effectively.

Contribution of Spectra and RGB in Resistant Weed Classification

Spectral information with the DCNN resulted in the highest weed resistance classification accuracy when using a single sensor. The single-band WSRI vegetation information surpasses the RGB texture information, even though the RGB image resolution is about 10 times higher than that of the spectral images. The difference in accuracy between them was largest 6 days after application, while the difference was smallest 8 days after application.

As shown in Table 4, the combination of WSRI spectral and RGB structural information improved accuracy compared to using only a single sensor. RGB-derived detailed texture features, such as slight leaf discoloration and rolling, are not obtained from spectral features (Rischbeck et al., 2016; Stanton et al., 2017). In addition, canopy structure information can overcome the asymptotic saturation problems inherent to spectral features to some extent (Wallace, 2013; Maimaitijiang et al., 2020). Therefore, the combination of spectral and textural information improves classification accuracy. It should be noted that the accuracy improvement from combining multispectral and RGB information was not substantial, which is likely attributable to information homogeneity and redundancy among canopy spectral and textural features (Pelizari et al., 2018; Maimaitijiang et al., 2020).

Impacts of Different Times in Resistant Weed Classification

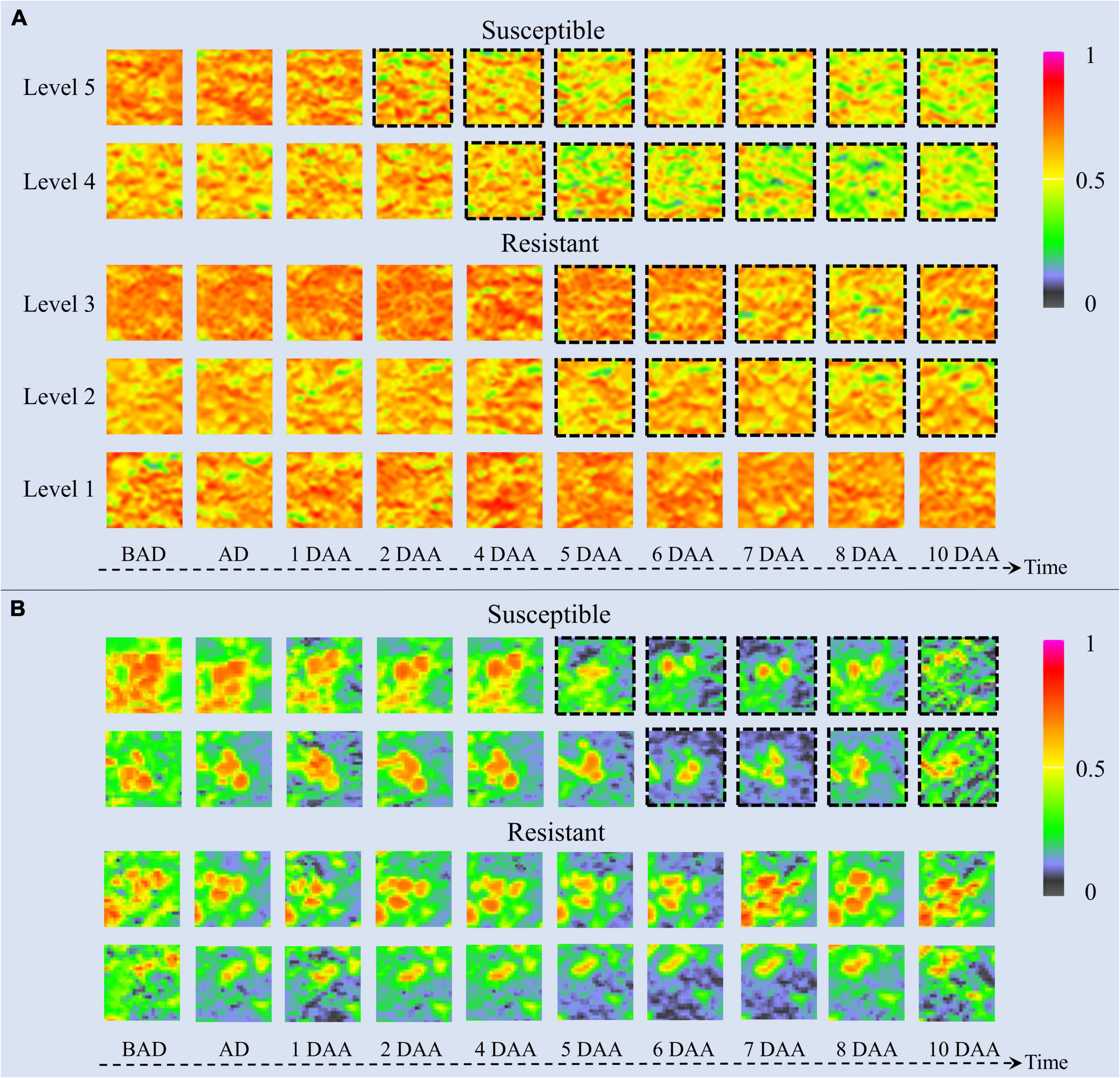

The time-series NDVI image patches of barnyardgrass and velvetleaf during the atrazine application stage are visualized in Figure 6. NDVI images reflect vegetation health status and nutrient information (Eide et al., 2021a). The color of the plant areas in the NDVI images represents the plant health status, where NDVI values close to 1 and redder plant regions mean healthier plants. As time passed, herbicide stress became more severe, and differences in resistance levels among weed blocks became increasingly evident. As shown in Figure 6A for barnyardgrass, the susceptible biotypes changed rapidly under herbicide application. About 3 days after application, the leaves in the NDVI image changed from red to yellow or even green, meaning that the vitality of the susceptible biotypes gradually diminished.

Figure 6. UAV-based visualization of two weed species with dynamic changes in NDVI images. (A) Image patches of five resistance levels of barnyardgrass under herbicide stress, where dashed black borders around the patches indicate significant changes. (B) Image patches of two susceptible and two resistant velvetleaf under herbicide stress, where dashed black borders around the patches indicate significant changes. BAD is before application day; AD is application day; DAA is days after application.

By contrast, the resistant biotypes changed slowly and with low amplitude under the herbicide application. About 5 days after application, the leaves in the NDVI image changed slightly from red to yellow. The higher the resistance level, the smaller the change toward yellow. The highest resistance level showed only signs of halted growth and then completely recovered to normal growth about 4 days after application.

The change rate under herbicide stress conditions and the recovery speed after the application reflect resistance at different stages. The higher the resistance level of the barnyardgrass plots, the later the changes appeared. The large number of resistant barnyardgrasses surrounding susceptible barnyardgrasses made it difficult to observe changes in high-resistance level plots. A significant difference between susceptible and resistant plots in later stages is that the recovery of many resistant barnyardgrasses in the resistant plots compensated for the death of susceptible barnyardgrasses.

The dynamics of velvetleaf herbicide stress are easier to analyze because of their individual plant growth characteristics. Velvetleaf had longer herbicide stress response times than barnyardgrass, and the susceptibility and resistance of velvetleaf were not directly related to size, as shown in Figure 6B. The mechanism of prolonged plant death generated by sink tissue toxicity in velvetleaf may be the main reason (Fuchs et al., 2002). Atrazine caused gradual inhibition of photosynthesis in velvetleaf leaves that increased over several days and was nearly complete by 5 days (Qi et al., 2018). Therefore, the difference in the spectral response of velvetleaf is smaller than that of barnyardgrass 2 days after application.

About 5 days after application, the susceptible velvetleaf began to change significantly. The NDVI images show large red leaf area reductions with relatively little activity. By contrast, the NDVI images of the resistant velvetleaf leaves changed slightly from red to yellow about 5 days after application. However, the formerly red areas began recovering about 7 days after application, sometimes even before the herbicide application, indicating that the resistant velvetleaf had resumed growth.

Both susceptible and resistant velvetleaf biotypes showed growth inhibition at the beginning of herbicide stress, but the spectral information was still better classified by inhibition differences, demonstrating the potential of spectral information for the study of resistant weeds.

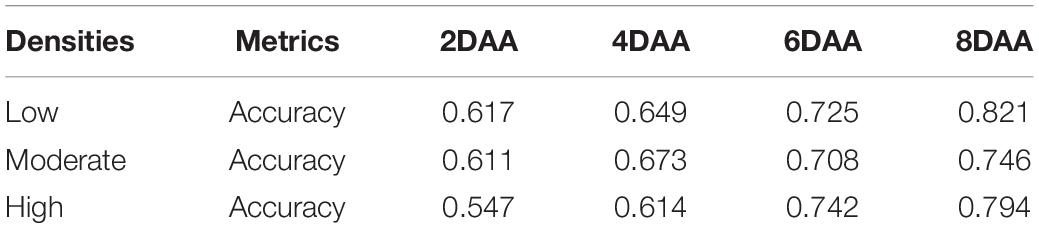

Impacts of Different Densities in Resistant Weed Classification

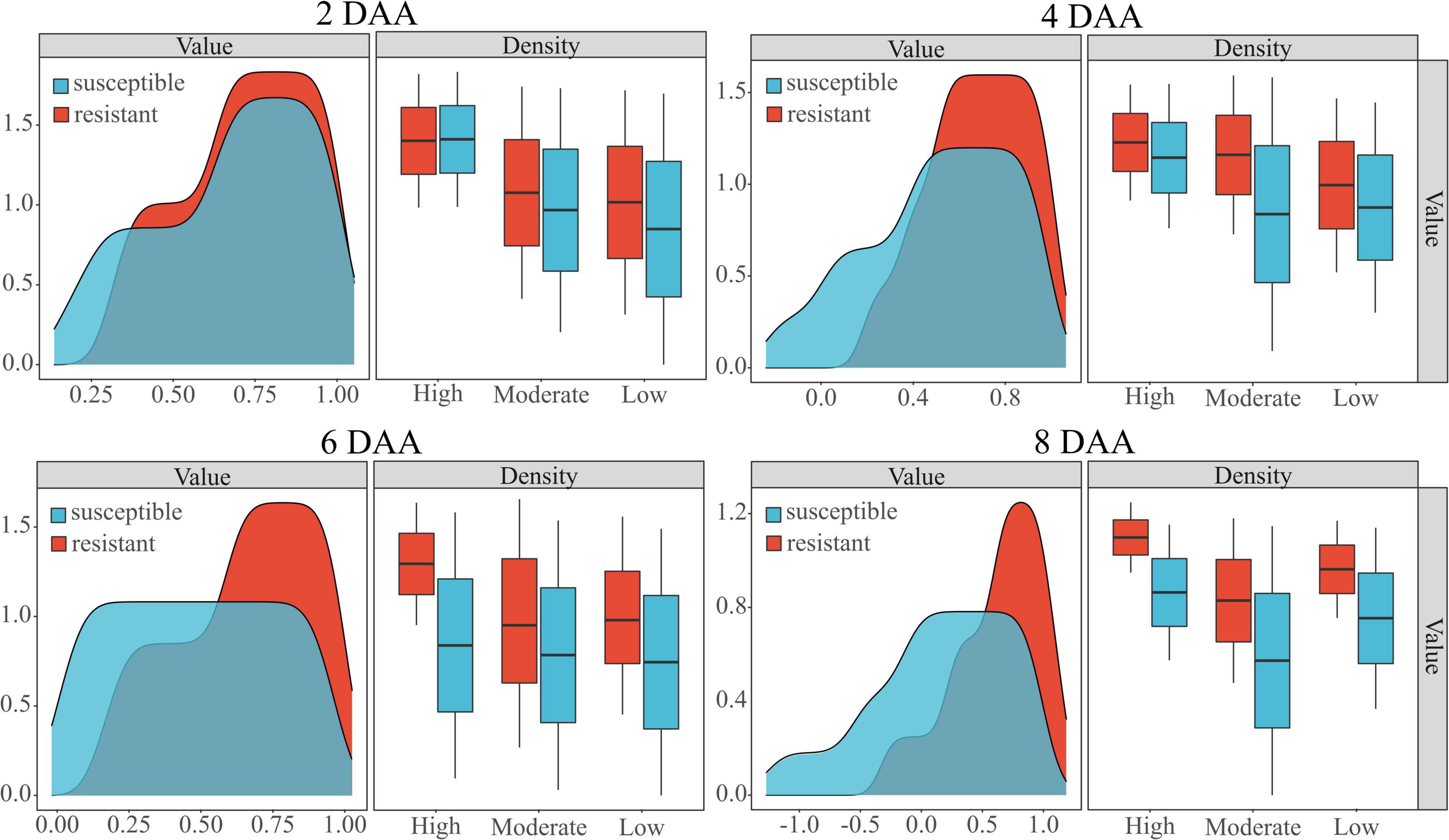

Barnyardgrass in a real farmland environment is distributed in clusters (Maun and Barrett, 1986). Therefore, studies were conducted for different weed densities. The classification model was applied to different barnyardgrass densities to evaluate its reliability and adaptability. As shown in Table 5, the classification accuracy after herbicide application reached maximum values of 0.794 for low-density weeds, 0.736 for medium-density weeds, and 0.821 for high-density weeds. However, the velvetleaf distribution was rarely clustered, and different velvetleaf densities had almost no effect on the classification model.

The performance of the WSRI was evaluated using random samples from each plot at different densities. Figure 7 shows the WSRI density plots for susceptible and resistant barnyardgrass in the sample areas at different times after application and the box plots at different weed densities. The results show gaps between susceptible and resistant biotypes at different densities, and the gaps gradually increased over time.

Figure 7. Classification of susceptible and resistant barnyardgrass biotypes at different densities (high, moderate, low) by the WSRI. DAA is days after application.

In summary, under high-density barnyardgrass conditions, atrazine spraying can encounter problems such as shading and uneven coverage, potentially leading to overestimated resistance levels in susceptible areas. Moreover, areas where resistant and susceptible barnyardgrass grow together affect the spectral response values, resulting in low classification accuracy shortly after application. The low- and moderate-density areas contain few weeds. As some susceptible weeds died over time after application, the spectral response gaps in these areas fluctuated only slightly, and accuracy improved only slightly. By contrast, the massive death of susceptible weeds over time in high-density areas widened the gap and improved the classification accuracy.

Model Validation

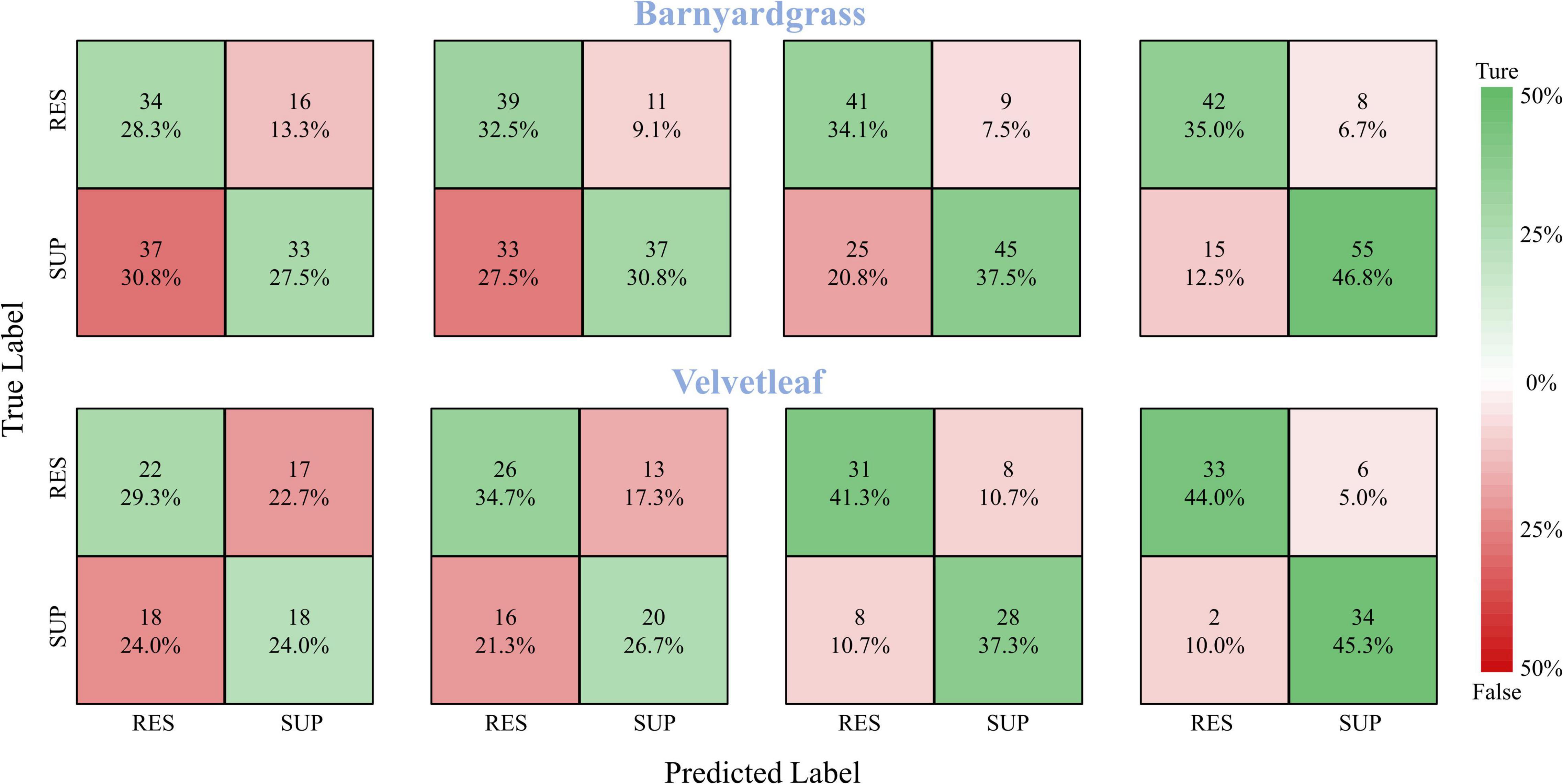

A robust model should be able to generalize to new datasets and still perform well. Therefore, the DCNN model was used to classify the susceptible and resistant weeds in the validation area, and the confusion matrices of the classification results are shown in Figure 8. At 8 days after application, the DCNN provided the highest classification accuracy, with 81.8% for barnyardgrass and 89.3% for velvetleaf. At 6 days after application, the accuracy was lower, with 71.6% for barnyardgrass and 78.6% for velvetleaf. The test results support the robustness and generality of the WSRI and the DCNN model for further application.

Figure 8. Confusion matrices for barnyardgrass and velvetleaf in the validation area. RES are resistant weeds. SUP are susceptible weeds.
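For readers who want to relate a confusion matrix such as those in Figure 8 to a single accuracy figure, the sketch below shows the standard calculation. The counts are hypothetical, and the layout (rows as true classes, columns as predicted classes) is an assumption, since the orientation of the published matrices is not stated here.

```python
import numpy as np


def accuracy_from_confusion(cm):
    """Overall accuracy: correctly classified samples / all samples."""
    cm = np.asarray(cm, dtype=float)
    return np.trace(cm) / cm.sum()


def per_class_recall(cm):
    """Recall per true class, assuming rows index the true class."""
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=1)


# Hypothetical counts (not from the study).
# Assumed layout: rows = true class (RES, SUP), columns = predicted class.
cm_example = np.array([[45, 5],
                       [10, 40]])

overall = accuracy_from_confusion(cm_example)
recall = per_class_recall(cm_example)
print(overall, recall)
```

Per-class recall is worth reporting alongside overall accuracy because a model can reach a high overall value while still missing most samples of the rarer biotype.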

Discussion

Impacts of Different Information in Resistant Weed Classification

Spectral information can reflect the physiological properties of the plant (Rajcan and Swanton, 2001), and physiological properties express differences between resistant and susceptible biotypes faster than appearance. Therefore, multispectral images can assess weed resistance faster and better than RGB images at the early stage of application. As reported in many previous works, spectral information such as VIs has become the primary remote sensing indicator for plant phenotypes because of their stable and superior performance (Ballester et al., 2017). RGB canopy structure information yielded slightly lower, but still comparable, performance than spectral information, indicating that canopy structure information is a promising alternative to commonly used VIs.

The results of this study indicate that the choice of bands is critical when constructing a vegetation index. The red and red edge bands had a significant influence on the classification of resistant weeds, and the reflectance changes of these two bands correlated with the degree of herbicide stress. The study results confirmed that the WSRI, defined as (RE-R)/(RE-B), performed well in classifying resistant weeds. The WSRI combines the effects of blue, red, and red-edge wavelengths to provide a comprehensive picture of weed dynamics after application and displayed better performance than other indices. Therefore, it provides powerful support for monitoring and investigating resistant weeds over a large canopy area using UAVs or satellites.
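The index itself is a simple per-pixel band ratio. The following minimal sketch computes WSRI = (RE-R)/(RE-B) from three reflectance arrays. The function name `wsri`, the `eps` threshold, and the choice to mask pixels with a near-zero denominator as NaN are assumptions made for illustration; the source does not describe how such pixels were handled, and the reflectance values are hypothetical.

```python
import numpy as np


def wsri(red_edge, red, blue, eps=1e-6):
    """Weed spectral resistance index, (RE - R) / (RE - B), per pixel."""
    red_edge = np.asarray(red_edge, dtype=float)
    red = np.asarray(red, dtype=float)
    blue = np.asarray(blue, dtype=float)
    denom = red_edge - blue
    # Assumption: pixels where RE is (almost) equal to B are set to NaN
    # instead of dividing by zero.
    safe = np.abs(denom) > eps
    out = np.full(denom.shape, np.nan)
    np.divide(red_edge - red, denom, out=out, where=safe)
    return out


# Hypothetical reflectance values for a 2 x 2 patch (not data from the study).
re_band = np.array([[0.40, 0.30], [0.25, 0.10]])
r_band = np.array([[0.10, 0.15], [0.20, 0.05]])
b_band = np.array([[0.05, 0.05], [0.05, 0.10]])

index = wsri(re_band, r_band, b_band)
print(index)
```

Because the denominator RE-B can approach zero over soil or shadow, some explicit handling of those pixels is needed before the index map is passed to a classifier.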

Time series of susceptible and resistant weed biotypes are dynamic expressions of herbicide stress. In this study, 4 days after application (4DAA) was the watershed timeframe for studying resistant weeds. Choosing the right time to investigate resistant weeds matters for efficient farmland management. The rates of change and recovery after herbicide stress begins are key to classifying susceptible and resistant weed biotypes. Differences between susceptible and resistant biotypes are expressed at different times in different weed species. The classification of barnyardgrass was better than that of velvetleaf shortly after application because of differences in their shape, physiology, and distribution characteristics. As the time after application increased, the classification of velvetleaf became better than that of barnyardgrass.

Weed density is another factor influencing the study of resistant weeds. For clustered weeds, resistance is better investigated separately at different weed densities. Training the DCNN separately for different weed densities may increase the accuracy of susceptible and resistant barnyardgrass classification. It is worth noting that higher densities mean the possibility of more resistant weeds, and untimely treatment multiplies the damage to the crop (Alipour et al., 2022).

Effectiveness and Limitations of Unmanned Aerial Vehicle Traits in Resistant Weed Investigation

This study is the first to propose the multispectral image-derived WSRI to classify susceptible and resistant weeds in real farmland environments. For resistant weed investigation, it took at least 2 h for three raters to manually measure the distribution of resistant weeds in 40 plots. The UAV field flights took less than 15 min, which was fast enough to capture accurate data while avoiding fluctuations in environmental factors such as cloud or wind. More importantly, the high efficiency of UAV phenotyping makes dynamic monitoring with high temporal resolution possible. Therefore, UAVs have shown great potential in the emerging study of resistant weeds in the field.

However, the WSRI has some limitations. First, changes at the early application stage may not adequately reflect the overall weed resistance because resistant weeds grow slowly under herbicide stress. This is supported by the fact that differences between susceptible and resistant biotypes were not significant at the early stages, so 4 days after herbicide application is the optimal time to investigate resistant weeds. Additionally, the WSRI is an unstable measure that is easily affected by temperature, humidity, and light conditions in the field. Therefore, resistant weeds in the field should continue to be the subject of in-depth study and discussion.

Conclusion

The proposed UAV–WSRI phenotypic method investigates the potential of fused multispectral and RGB image data combined with deep learning for resistant weed identification in the field. Compared with imaging chambers and expensive unmanned ground vehicle platforms, the UAV platform is more flexible and efficient to deploy for high-throughput phenotyping under field conditions. In addition, the timeliness of UAVs guarantees the reliability of phenotypic traits for resistant weed identification in the field.

The WSRI introduced in this study showed better consistency with actual data for atrazine-resistant weeds in maize fields than previously published spectral VIs. The WSRI provides better classification results than high-resolution RGB data, and the fusion of the two data types further improves the results. The robust deep learning model (DCNN) makes it possible to monitor the dynamic response of resistant weeds in the field precisely, regardless of complex environmental factors.

Our results also show that time series and weed density are closely related to resistant weed identification. The UAV–WSRI phenotypic method could be extended to evaluate the resistance response of other field weeds under herbicide stress, providing a valuable step for further field weed resistance studies.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

FX: conceptualization, methodology, software, formal analysis, investigation, and writing—review and editing. LQ: conceptualization, writing—review and editing, supervision, and project administration. ZL and DS: visualization. HL: data curation, conceptualization, and supervision. XL: data curation. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (52075092) and the Provincial Postdoctoral Landing Project (LBH-Q19007).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

UAV, unmanned aerial vehicle; DCNN, deep convolutional neural network; VIs, vegetation indices; WSRI, weed spectral resistance index; B, blue band; G, green band; R, red band; NIR, near-infrared band; RE, red edge band; RTK, real-time kinematic; GCPs, ground control points; GS, Gram–Schmidt; DOM, digital orthophoto maps; BAD, before application day; AD, application day; DAA, days after application; RES, resistant weeds; SUP, susceptible weeds.

References

Alipour, A., Karimmojeni, H., Zali, A. G., Razmjoo, J., and Jafari, Z. (2022). Weed management in Allium hirtifolium L. production by herbicides application. Ind. Crops Prod. 177:114407. doi: 10.1016/j.indcrop.2021.114407

Ballester, C., Hornbuckle, J., Brinkhoff, J., Smith, J., and Quayle, W. (2017). Assessment of in-season cotton nitrogen status and lint yield prediction from unmanned aerial system imagery. Remote Sens. 9:1149. doi: 10.3390/rs9111149

Birth, G. S., and Mcvey, G. R. (1968). Measuring the color of growing turf with a reflectance spectrophotometer. Agron. J. 60, 640–643. doi: 10.2134/agronj1968.00021962006000060016x

Colbach, N., Fernier, A., Le Corre, V., Messéan, A., and Darmency, H. (2017). Simulating changes in cropping practises in conventional and glyphosate-tolerant maize. I. Effects on weeds. Environ. Sci. Pollut. Res. 24, 11582–11600. doi: 10.1007/s11356-017-8591-7

Cortes, C., and Vapnik, V. (1995). Support-vector networks. Mach. Learn. 20, 273–297. doi: 10.1007/BF00994018

Couderchet, M., and Retzlaff, G. (1995). Daily changes in the relative water content of velvetleaf (Abutilon theophrasti Medic.) may explain its rhythmic sensitivity to bentazon. J. Plant Physiol. 145, 501–506. doi: 10.1016/S0176-1617(11)81778-5

Dai, Q., Cheng, X., Qiao, Y., and Zhang, Y. (2020). Crop leaf disease image super-resolution and identification with dual attention and topology fusion generative adversarial network. IEEE Access 8, 55724–55735. doi: 10.1109/ACCESS.2020.2982055

Dash, J., and Curran, P. J. (2004). MTCI: the meris terrestrial chlorophyll index. Int. J. Remote Sens. 25, 151–161. doi: 10.1109/IGARSS.2004.1369009

Duddu, H. S. N., Johnson, E. N., Willenborg, C. J., and Shirtliffe, S. J. (2019). High-throughput UAV image-based method is more precise than manual rating of herbicide tolerance. Plant Phenomics 2019:6036453. doi: 10.34133/2019/6036453

Ehlers, M., Klonus, S., Åstrand, P., and Rosso, P. (2010). Multi-sensor image fusion for pansharpening in remote sensing. Int. J. Image Data Fusion 1, 25–45. doi: 10.1080/19479830903561985

Eide, A., Koparan, C., Zhang, Y., Ostlie, M., Howatt, K., and Sun, X. (2021a). UAV-assisted thermal infrared and multispectral imaging of weed canopies for glyphosate resistance detection. Remote Sens. 13:4606. doi: 10.3390/rs13224606

Eide, A., Zhang, Y., Koparan, C., Stenger, J., Ostlie, M., Howatt, K., et al. (2021b). Image based thermal sensing for glyphosate resistant weed identification in greenhouse conditions. Comput. Electron. Agric. 188:106348. doi: 10.1016/j.compag.2021.106348

Foyer, C. H., and Mullineaux, P. M. (1994). Causes of photooxidative stress and amelioration of defense systems in plants. Environ. Agric. 44, 522–538. doi: 10.1201/9781351070454

Francesconi, S., Harfouche, A., Maesano, M., and Balestra, G. M. (2021). UAV-based thermal, rgb imaging and gene expression analysis allowed detection of fusarium head blight and gave new insights into the physiological responses to the disease in durum wheat. Front. Plant Sci. 12:628575. doi: 10.3389/fpls.2021.628575

Fuchs, M. A., Geiger, D. R., Reynolds, T. L., and Bourque, J. E. (2002). Mechanisms of glyphosate toxicity in velvetleaf (Abutilon theophrasti medikus). Pestic. Biochem. Physiol. 74, 27–39. doi: 10.1016/S0048-3575(02)00118-9

Gitelson, A. A., and Merzlyak, M. N. (1998). Remote sensing of chlorophyll concentration in higher plant leaves. Adv. Space Res. 22, 689–692. doi: 10.1016/S0273-1177(97)01133-2

Gomes, M. P., Manac’H, S. G. L., Maccario, S., Labrecque, M., and Juneau, P. (2016). Differential effects of glyphosate and aminomethylphosphonic acid (AMPA) on photosynthesis and chlorophyll metabolism in willow plants. Pestic. Biochem. Physiol. 130, 65–70. doi: 10.1016/j.pestbp.2015.11.010

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceeding of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (Piscataway, NJ: IEEE), doi: 10.1109/CVPR.2016.90

He, Z., Wu, H., and Wu, G. (2021). Spectral-spatial classification of hyperspectral images using label dependence. IEEE Access 9, 119219–119231. doi: 10.1109/ACCESS.2021.3107976

Horler, D. N. H., Dockray, M., and Barber, J. (1983). The red edge of plant leaf reflectance. Int. J. Remote Sens. 4, 273–288. doi: 10.1080/01431168308948546

Huan, Z.-B., Zhang, H.-J., Hou, Z., Zhang, S.-Y., Zhang, Y., Liu, W.-T., et al. (2011). Resistance level and metabolism of barnyard-grass (Echinochloa crusgalli (L.) Beauv.) populations to quizalofop-p-ethyl in heilongjiang province, China. Agric. Sci. China 10, 1914–1922. doi: 10.1016/S1671-2927(11)60192-2

Ishengoma, F. S., Rai, I. A., and Said, R. N. (2021). Identification of maize leaves infected by fall armyworms using UAV-based imagery and convolutional neural networks. Comput. Electron. Agric. 184:106124. doi: 10.1016/j.compag.2021.106124

Jiang, Z., Tu, H., Bai, B., Yang, C., Zhao, B., Guo, Z., et al. (2021). Combining UAV-RGB high-throughput field phenotyping and genome-wide association study to reveal genetic variation of rice germplasms in dynamic response to drought stress. New Phytol. 232, 440–455. doi: 10.1111/nph.17580

Jin, X., Li, S., Zhang, W., Zhu, J., and Sun, J. (2020). Prediction of soil-available potassium content with visible near-infrared ray spectroscopy of different pretreatment transformations by the boosting algorithms. Appl. Sci. 10:1520. doi: 10.3390/app10041520

Jordan, C. F. (1969). Derivation of leaf area index from light quality of the forest floor. Ecology 50, 663–666. doi: 10.2307/1936256

Kelly, S. T., Coats, G. E., and Luthe, D. S. (1999). Mode of resistance of triazine-resistant annual bluegrass (Poa annua). Weed Technol. 13, 747–752. doi: 10.1614/WT-03-148R1

Kieffer, B., Babaie, M., Kalra, S., and Tizhoosh, H. R. (2017). “Convolutional neural networks for histopathology image classification: training vs. using pre-trained networks,” in Proceeding of the 2017 7th International Conference on Image Processing Theory, Tools and Applications (IPTA), (Piscataway, NJ: IEEE), doi: 10.1109/IPTA.2017.8310149

Kingma, D., and Ba, J. (2014). Adam: a method for stochastic optimization. Comput. Sci. doi: 10.48550/arXiv.1412.6980

Krähmer, H., Andreasen, C., Economou-Antonaka, G., Holec, J., Kalivas, D., Kolářová, M., et al. (2020). Weed surveys and weed mapping in Europe: state of the art and future tasks. Crop Prot. 129:105010. doi: 10.1016/j.cropro.2019.105010

Krizhevsky, A., Sutskever, I., and Hinton, G. (2012). ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 84–90.

Laben, C. A., and Brower, B. V. (2000). Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. Technical Report US Patent No: 6,011,875. Rochester, NY: Eastman Kodak Company.

Liao, J., Wang, Y., Zhu, D., Zou, Y., Zhang, S., and Zhou, H. (2020). Automatic segmentation of crop/background based on luminance partition correction and adaptive threshold. IEEE Access 8, 202611–202622. doi: 10.1109/ACCESS.2020.3036278

Liu, Y., Tang, X., Liu, L., Zhu, J., and Zhang, J. (2018). Study on resistances of Echinochloa crusgalli (L.) beauv to three herbicides of corn field in Heilongjiang Province. J. Northeast Agric. Univ. 49, 29–39.

Maimaitijiang, M., Sagan, V., Sidike, P., Hartling, S., Esposito, F., and Fritschi, F. B. (2020). Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 237:111599. doi: 10.1016/j.rse.2019.111599

Maun, M. A., and Barrett, S. (1986). The biology of canadian weeds: 77. Echinochloa crus-galli (L.) beauv. Revue Canadienne De Phytotechnie 66, 739–759. doi: 10.4141/cjps86-093

Meiyan, S., Mengyuan, S., Qizhou, D., Xiaohong, Y., Baoguo, L., and Yuntao, M. (2022). Estimating the maize above-ground biomass by constructing the tridimensional concept model based on UAV-based digital and multi-spectral images. Field Crops Res. 282:108491. doi: 10.1016/j.fcr.2022.108491

Merzlyak, M. N., Gitelson, A. A., Chivkunova, O. B., and Rakitin, V. Y. (1999). Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 106, 135–141. doi: 10.1034/j.1399-3054.1999.106119.x

Osco, L. P., Marcato Junior, J., Marques Ramos, A. P., de Castro Jorge, L. A., Fatholahi, S. N., de Andrade Silva, J., et al. (2021). A review on deep learning in UAV remote sensing. Int. J. Appl. Earth Obs. Geoinf. 102:102456. doi: 10.1016/j.jag.2021.102456

Otsu, N. (1979). A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9, 62–66. doi: 10.1109/TSMC.1979.4310076

Pelizari, P. A., Spröhnle, K., Geiß, C., Schoepfer, E., and Taubenböck, H. (2018). Multi-sensor feature fusion for very high spatial resolution built-up area extraction in temporary settlements. Remote Sens. Environ. 209, 793–807. doi: 10.1016/j.rse.2018.02.025

Perotti, V. E., Larran, A. S., Palmieri, V. E., Martinatto, A. K., and Permingeat, H. R. (2020). Herbicide resistant weeds: a call to integrate conventional agricultural practices, molecular biology knowledge and new technologies. Plant Sci. 290:110255. doi: 10.1016/j.plantsci.2019.110255

Qi, Y., Li, J., Fu, G., Zhao, C., Guan, X., Yan, B., et al. (2018). Effects of sublethal herbicides on offspring germination and seedling growth: redroot pigweed (Amaranthus retroflexus) vs. velvetleaf (Abutilon theophrasti). Sci. Total Environ. 645, 543–549. doi: 10.1016/j.scitotenv.2018.07.171

Quan, L., Li, H., Li, H., Jiang, W., Lou, Z., and Chen, L. (2021). Two-stream dense feature fusion network based on RGB-D data for the real-time prediction of weed aboveground fresh weight in a field environment. Remote Sens. 13:2288. doi: 10.3390/rs13122288

Rajcan, I., and Swanton, C. J. (2001). Understanding maize–weed competition: resource competition, light quality and the whole plant. Field Crops Res. 71, 139–150. doi: 10.1016/S0378-4290(01)00159-9

Rischbeck, P., Elsayed, S., Mistele, B., Barmeier, G., Heil, K., and Schmidhalter, U. (2016). Data fusion of spectral, thermal and canopy height parameters for improved yield prediction of drought stressed spring barley. Eur. J. Agron. 78, 44–59. doi: 10.1016/j.eja.2016.04.013

Sher, A., Mudassir Maqbool, M., Iqbal, J., Nadeem, M., Faiz, S., Noor, H., et al. (2021). The growth, physiological and biochemical response of foxtail millet to atrazine herbicide. Saudi J. Biol. Sci. 28, 6471–6479. doi: 10.1016/j.sjbs.2021.07.002

Shirzadifar, A., Bajwa, S., Nowatzki, J., and Shojaeiarani, J. (2020b). Development of spectral indices for identifying glyphosate-resistant weeds. Comput. Electron. Agric. 170:105276. doi: 10.1016/j.compag.2020.105276

Shirzadifar, A., Bajwa, S., Nowatzki, J., and Bazrafkan, A. (2020a). Field identification of weed species and glyphosate-resistant weeds using high resolution imagery in early growing season. Biosyst. Eng. 200, 200–214. doi: 10.1016/j.biosystemseng.2020.10.001

Sims, D. A., and Gamon, J. A. (2002). Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 81, 337–354. doi: 10.1016/S0034-4257(02)00010-X

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., and Salakhutdinov, R. (2014). Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15, 1929–1958. doi: 10.5555/2627435.2670313

Stanton, C., Starek, M. J., Elliott, N., Brewer, M., Maeda, M. M., and Chu, T. (2017). Unmanned aircraft system-derived crop height and normalized difference vegetation index metrics for sorghum yield and aphid stress assessment. J. Appl. Remote Sens. 11:026035. doi: 10.1117/1.JRS.11.026035

Su, J., Yi, D., Coombes, M., Liu, C., Zhai, X., McDonald-Maier, K., et al. (2022). Spectral analysis and mapping of blackgrass weed by leveraging machine learning and UAV multispectral imagery. Comput. Electron. Agric. 192:106621. doi: 10.1016/j.compag.2021.106621

Tros, M., Mascoli, V., Shen, G., Ho, M.-Y., Bersanini, L., Gisriel, C. J., et al. (2021). Breaking the red limit: efficient trapping of long-wavelength excitations in chlorophyll-f-containing photosystem I. Chem 7, 155–173. doi: 10.1016/j.chempr.2020.10.024

Tucker, C. J., Elgin, J. H., McMurtrey, J. E., and Fan, C. J. (1979). Monitoring corn and soybean crop development with hand-held radiometer spectral data. Remote Sens. Environ. 8, 237–248. doi: 10.1016/0034-4257(79)90004-X

Vincini, M., and Frazzi, E. (2009). Active sensing of the N status of wheat using optimized wavelength combination: impact of seed rate, variety and growth stage. Precision Agric. 9, 23–30.

Vong, C. N., Conway, L. S., Zhou, J., Kitchen, N. R., and Sudduth, K. A. (2021). Early corn stand count of different cropping systems using UAV-imagery and deep learning. Comput. Electron. Agric. 186:106214. doi: 10.1016/j.compag.2021.106214

Wallace, L. O. (2013). “Assessing the stability of canopy maps produced from UAV-LiDAR data,” in Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, (Piscataway, NJ: IEEE), doi: 10.1109/IGARSS.2013.6723679

Wang, S., Han, Y., Chen, J., He, X., Zhang, Z., Liu, X., et al. (2022). Weed density extraction based on few-shot learning through UAV remote sensing RGB and multispectral images in ecological irrigation area. Front. Plant Sci. 12:735230. doi: 10.3389/fpls.2021.735230

Williams, M. M., Boydston, R. A., Peachey, R. E., and Robinson, D. (2011). Performance consistency of reduced atrazine use in sweet corn. Field Crops Res. 121, 96–104. doi: 10.1016/j.fcr.2010.11.020

Xia, L., Zhang, R., Chen, L., Li, L., and Xie, C. (2021). Evaluation of deep learning segmentation models for detection of pine wilt disease in unmanned aerial vehicle images. Remote Sens. 13:3594. doi: 10.3390/rs13183594

Yang, G., Liu, J., Zhao, C., Li, Z., Huang, Y., Yu, H., et al. (2017). Unmanned aerial vehicle remote sensing for field-based crop phenotyping: current status and perspectives. Front. Plant Sci. 8:1111. doi: 10.3389/fpls.2017.01111

Yang, M., Hassan, M. A., Xu, K., Zheng, C., Rasheed, A., Zhang, Y., et al. (2020). Assessment of water and nitrogen use efficiencies through UAV-based multispectral phenotyping in winter wheat. Front. Plant Sci. 11:927.

Zarco-Tejada, P. J., Hornero, A., Beck, P. S. A., Kattenborn, T., Kempeneers, P., and Hernández-Clemente, R. (2019). Chlorophyll content estimation in an open-canopy conifer forest with Sentinel-2A and hyperspectral imagery in the context of forest decline. Remote Sens. Environ. 223, 320–335.

Zhang, N., Su, X., Zhang, X., Yao, X., Cheng, T., Zhu, Y., et al. (2020). Monitoring daily variation of leaf layer photosynthesis in rice using UAV-based multi-spectral imagery and a light response curve model. Agric. For. Meteorol. 291:108098. doi: 10.1016/j.agrformet.2020.108098

Zhou, J., Zhou, J., Ye, H., Ali, M. L., Chen, P., and Nguyen, H. T. (2021). Yield estimation of soybean breeding lines under drought stress using unmanned aerial vehicle-based imagery and convolutional neural network. Biosyst. Eng. 204, 90–103. doi: 10.1016/j.biosystemseng.2021.01.017

Keywords: atrazine-resistant weed, multispectral reflectance, vegetation indices (VIs), unmanned aerial vehicle (UAV), deep convolutional neural networks (DCNNs)

Citation: Xia F, Quan L, Lou Z, Sun D, Li H and Lv X (2022) Identification and Comprehensive Evaluation of Resistant Weeds Using Unmanned Aerial Vehicle-Based Multispectral Imagery. Front. Plant Sci. 13:938604. doi: 10.3389/fpls.2022.938604

Received: 07 May 2022; Accepted: 10 June 2022;

Published: 05 July 2022.

Edited by:

Ruirui Zhang, Beijing Academy of Agricultural and Forestry Sciences, China

Reviewed by:

Pei Wang, Southwest University, China

Lang Xia, Institute of Agricultural Resources and Regional Planning (CAAS), China

Jianli Song, China Agricultural University, China

Copyright © 2022 Xia, Quan, Lou, Sun, Li and Lv. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Longzhe Quan, quanlongzhe@163.com
