- ¹College of Mechanical and Electrical Engineering, Shihezi University, Shihezi, China

- ²Key Laboratory of Modern Agricultural Machinery of Xinjiang Production and Construction Corps, Shihezi, China

Magnetized water and fertilizer liquid can exert the biological effects of a magnetic field on crops, but its residual magnetic field strength is difficult to express quantitatively in real time, and predicting it accurately helps to define the scope of action of liquid magnetization. This paper presents a prediction model for liquid magnetization series data that combines a conditional generative adversarial network (CGAN) with the projected gradient descent (PGD) algorithm. First, the real training dataset is used as the input of the PGD attack algorithm to generate antagonistic (adversarial) samples, which are added to the training of the CGAN as true samples for data enhancement. Second, the training dataset is used as the conditional input of both the generator and the discriminator of the CGAN to constrain the model and capture the distribution of the real data. Third, a network model with three CNN layers is built and trained inside the CGAN, and the input is constructed in the form of a two-dimensional convolution model to predict data. Lastly, the performance of the model is evaluated by the error between the final generated predicted values and the real values, and the model is compared with other prediction models. The experimental results show that, with limited data samples, combining the PGD attack with the CGAN captures the distribution of the real data more accurately and generates data that meet actual needs.

Introduction

In recent years, with the emergence of large-scale time-series data and the improvement of computing power, time-series data prediction has become increasingly important in many fields, such as weather (Hewage et al., 2020; Karevan and Suykens, 2020), energy consumption (Divina et al., 2019), financial indicators (Zhang et al., 2019), retail (Beheshti-Kashi et al., 2015), medical monitoring (Xia et al., 2022), anomaly detection (Munir et al., 2018), and traffic prediction (Li et al., 2015). Deep neural networks show great potential in mapping complex non-linear feature interactions. When processing time-series data, they can automatically learn the temporal correlation of the series and adapt directly to the data without any prior assumptions. They have been shown to solve the problem of time-series data prediction successfully (Sen et al., 2019; Wan et al., 2019).

Time-series data are a series of data indexed by the time dimension. This kind of data describes the measured value of a subject at each time point in a time range; that is, a one-dimensional correspondence is constructed between the data and the time nodes. If the notion of time-series data is extended, the data collected at different time nodes generalize to data collected at different detection nodes. A detection node is a one-dimensional physical variable that plays a core role in the detection data, that is, a physical quantity that advances in the same way as the time node, such as magnetic field strength, voltage, or current. Such physical quantities reflect the change trend of the detection data along that dimension. In this way, many machine learning models applied to time-series data prediction can be used to predict a wider range of series data, such as the prediction and deployment of physical and chemical parameters (Nie et al., 2021a; Wang et al., 2022). This helps to improve the ability of data mining based on series data.

The biological effect of a magnetic field refers to the dominant or recessive effect on the growth and metabolism of organisms under the action of a magnetic field environment (Nyakane et al., 2019; Radhakrishnan, 2019). In agricultural production, the integrated management and distribution of crop water and fertilizer supply can be realized with integrated water and fertilizer irrigation equipment. A steady-state controllable magnetic field can be generated by energizing an excitation coil, and a water and fertilizer mixed liquid with different residual magnetic field strengths can be obtained by changing the magnetic field parameters. The influence of the magnetic field is applied to crops through drip irrigation equipment, producing a series of magnetic field biological effects that improve the yield and quality of crops (Nie et al., 2021b). The residual magnetic field strength of the magnetized water and fertilizer mixed liquid is the fundamental factor affecting the biological effect of the magnetic field on crops. Therefore, this paper aims to predict the magnetization series data of the water and fertilizer mixed liquid, so as to lay a foundation for further clarifying the degree of the biological effects of the magnetic field on crops.

The residual magnetic field strength of liquid magnetization is affected by the magnetic field strength of the magnetization space, the magnetization time, the change of permeability caused by different water and fertilizer ratios, and other factors. The ratio of water and fertilizer required by crops in a given growth period is fixed, and when the water and fertilizer liquid passes through the magnetization space at a given flow rate, the magnetization time is the same. Therefore, the magnetic field strength of the magnetization space is the core factor influencing the residual magnetic field strength of the magnetized liquid. The liquid magnetization series data have the same characteristics as other time-series data, with the following mapping: the residual magnetic field strength corresponds to the quantity of interest in a time series, such as weather temperature, retail sales, or energy consumption, and the magnetic field strength of the magnetization space corresponds to the time index. All series data face the same problem: limited by equipment and conditions, only a limited number of data samples can be obtained. How to carry out in-depth data mining on this basis is a problem worth exploring.

Applying machine learning to the mining of series data requires solving the problem of scarce data samples. Few-shot learning does not rely on large-scale training data and can be generalized quickly to a new task containing only a small number of supervised samples, avoiding the high cost of collecting data (Li and Chao, 2020; Wang et al., 2020; Li and Yang, 2021; Yang et al., 2022). Few-shot learning has many excellent algorithm models in image classification (Liu et al., 2018; Li et al., 2020) and text classification tasks (Yan et al., 2018). In addition, few-shot learning shows good generalization ability in tasks such as oral comprehension (Kumar and Baghel, 2021), image extraction (Li et al., 2021), and disease diagnosis (Zhong et al., 2020; Li and Chao, 2021a). The idea of learning an effective model from a small amount of data can greatly improve the capability of deep learning in agricultural production, and it has achieved good results in applications where data samples are hard to obtain or the sample size is small, such as pest control (Li and Yang, 2020) and crop counting and positioning (Karami et al., 2020). At the same time, how to balance sample quantity and sample quality has also attracted the attention of researchers. Li et al. proposed distance entropy as an effective indicator for distinguishing the quality of sample data from an information perspective, using an embedding range judgment (ERJ) method in the feature space (Li and Chao, 2021b,c; Li et al., 2022). They confirmed that a small number of well-chosen data can achieve the same performance as the full training data.

How to optimize a prediction model with reasonable data expansion methods when the sample data are limited is a problem that needs to be solved. Few-shot learning based on data enhancement improves the diversity of samples. When series data are scarce, generating effective samples from the existing few-shot data and enhancing the target domain samples has become a new direction. The generative adversarial network (GAN) is one of the most promising data-enhancement-driven algorithms of recent years for unsupervised learning on complex distributions. Through the mutual game learning of a generative model and a discriminant model, it can produce quite good output, which shows great potential for inferring physical phenomena. Although GAN has achieved some success in generating data, it still has defects such as mode collapse and unstable training. To address these problems, researchers have proposed adding an antagonistic (adversarial) attack to GAN. Liu and Hsieh showed that GAN and antagonistic attack can enhance each other when integrated (Liu and Hsieh, 2019). Adding an antagonistic attack such as the projected gradient descent (PGD) attack to GAN not only improves the defense success rate of the discriminator against antagonistic samples, but also accelerates network convergence and guides the training of better generators. Because the data generation of the original GAN is too unconstrained, it cannot fully capture the distribution of the real data or produce data that fully meet the needs. The conditional generative adversarial network (CGAN) is therefore formed by adding conditional constraints to the original GAN. CGAN can be seen as an improvement that turns the unsupervised GAN into a supervised model. Under the guidance of the conditional constraints, it improves the quality of the generated data and thus better meets the needs of researchers.

This paper addresses the prediction of the residual magnetic field strength of water and fertilizer liquid when the series data samples are limited. PGD antagonistic samples are added to the training of CGAN as true samples for data enhancement, and a prediction modeling method based on CGAN data enhancement is proposed. First, the real training dataset is used as the input of the PGD attack algorithm to generate antagonistic samples, and these samples are added to the training of CGAN as true samples for data enhancement. Then, the training dataset is used as the conditional input of both the generator and the discriminator of CGAN to constrain the model and capture the distribution of the real detection data; the antagonistic samples improve the ability of the discriminator, which in turn improves the ability of the generator to generate realistic data. Finally, a three-layer convolutional neural network (CNN) model is established and trained inside CGAN, and the input is constructed in the form of a two-dimensional convolution model to predict data. The performance of the model is evaluated by the error between the final generated predicted values and the real values, and the model is compared with other prediction models. This study can provide some implications for the future prediction of series data with limited samples.

Materials and Methods

Dataset

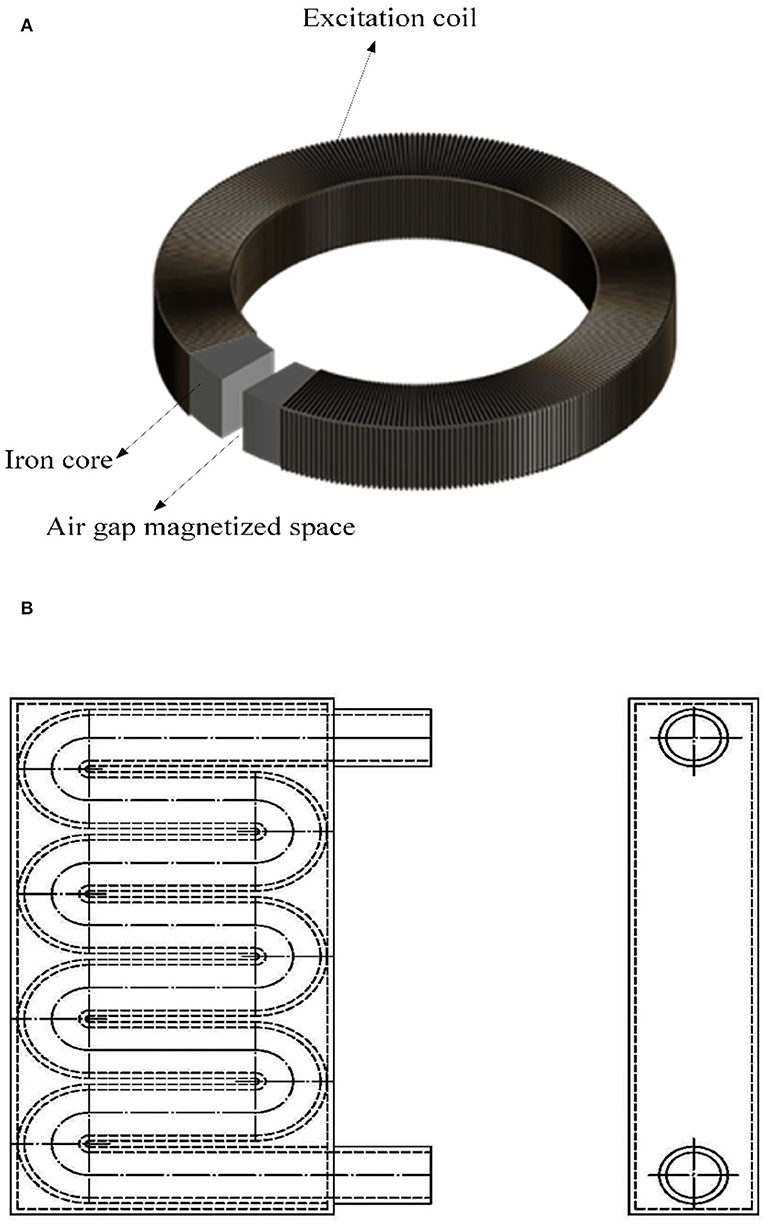

The training and test datasets used in this study consist of residual magnetic field strength data collected on a liquid magnetization test platform after the magnetization of water and fertilizer liquids at different flow rates. The platform consists of a magnetizer and a liquid magnetization space pipeline. The liquid magnetizer consists of an iron core, an excitation coil, and an air gap magnetization space, as shown in Figure 1A. The iron core is annular and made of cold-rolled non-oriented silicon steel B50A470; the excitation coil uses round copper-coated wire with insulation grade F and a nominal diameter of 1.50 mm; the reserved air gap magnetization space measures 100 × 40 × 200 mm; and the excitation mode is adjustable DC excitation. To fully magnetize the liquid as it passes through the air gap magnetization space, the magnetization space pipeline is designed as a closed S-shaped composite pipe structure, which is placed in the air gap magnetization space as shown in Figure 1B. During magnetization, the liquid flows in through the upper tube opening and out through the lower tube opening.

Many input variables affect the magnetization of liquids. Ignoring secondary variables, the magnetic field strength in the air gap magnetization space and the liquid velocity are selected as the main variables affecting the magnetization effect, and the residual magnetic field strength of the magnetized liquid is selected as the index for measuring that effect. When test data are collected, the current of the excitation coil is adjusted with an adjustable regulated DC power supply to change the magnetic field strength in the air gap magnetization space. The magnetic field strength at the center point of the air gap magnetization space is measured with a Tesla meter, and the flow rate is measured with a velocity meter placed at the water inlet of the liquid magnetization space pipeline. Sampling holes are set at fixed intervals at the water outlet, where the magnetized liquid is collected and its residual magnetic field strength is measured with a Tesla meter.

To improve the generalization of the model and avoid modeling only within local data intervals, data are collected by uniform sampling within the magnetization working interval of the liquid magnetizer. By adjusting the excitation current of the magnetizer, the residual magnetic field strength series data at the same distance can be obtained for liquids with different flow rates. Assume that n liquids with different flow rates pass through the liquid magnetization space pipeline. For the flow rate of vi liquid after magnetization by a magnetizer, under the action of j different air gap magnetization space magnetic field strength, there is n set of detection data, which is recorded as .

Methods

CGAN

GAN is a data modeling algorithm that generates a set of samples from the data probability distribution pdata, providing a way to learn deep representations without a large amount of training data. This method, proposed by Goodfellow et al. (2015), has rapidly become a research hotspot and is one of the most interesting ideas in machine learning in recent years. The most successful applications of GAN are in image processing and computer vision, such as image super-resolution (Bulat et al., 2018), image synthesis and manipulation (Dong et al., 2018; Wang et al., 2018), and video processing (Liao et al., 2019). GAN processes series data by learning the probability distribution of a given dataset and generating synthetic data that conform to the same distribution; it can therefore synthesize realistic artificial data. The application of GAN to series data has mainly focused on discrete problems, such as text generation tasks (Xu et al., 2018), while in the continuous case GAN has been used to generate auditory data (Liu et al., 2021). Beyond these data types, GAN has also been applied to medical time-series data generation (Kiyasseh et al., 2020), wind speed probability prediction (Cheng et al., 2018), and composite time series in the smart grid (Zhang et al., 2018). However, there are few studies on the application of GAN to the probability prediction of sensor detection data. In fact, the detected data have the same mapping relationship as time-series data. Based on the limited data of the residual magnetic field strength of water and fertilizer liquids, this paper uses the GAN method to predict its change trend.

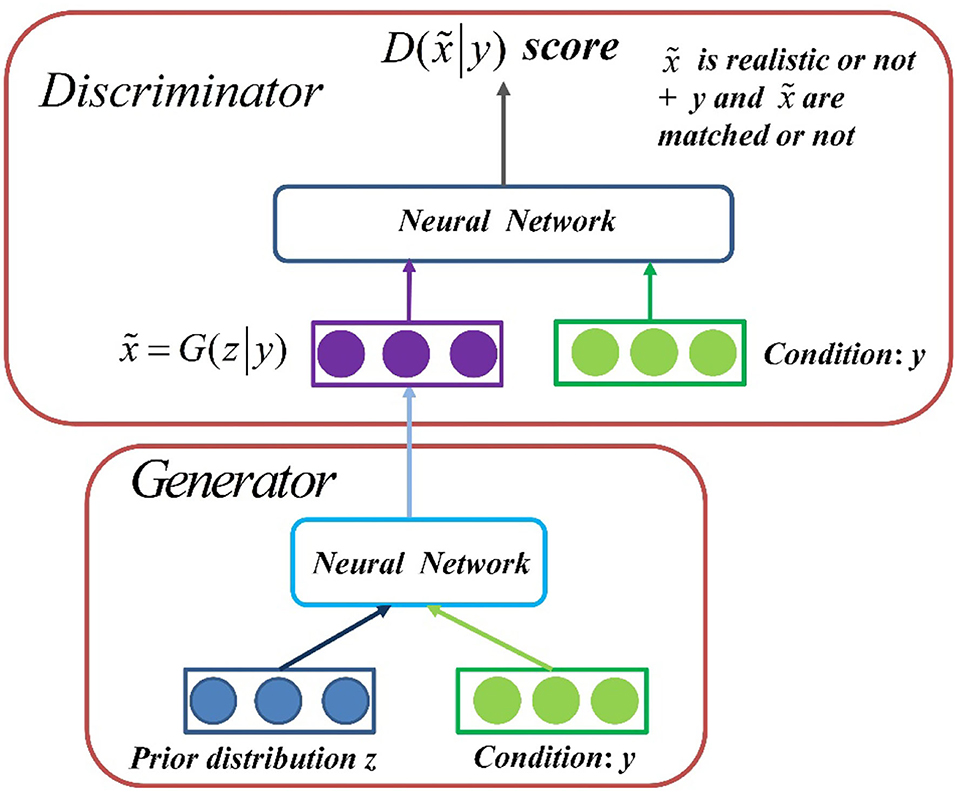

GAN is an unsupervised learning method that improves the quality of generated data through a game between a generator and a discriminator. This competitive approach no longer requires a hypothesized data distribution but samples directly from a distribution, so that in theory it can truly approach the real data, which is the greatest advantage of GAN. However, feeding in a random vector and obtaining a resulting object in this way is too unconstrained to control what kind of object is produced. Therefore, CGAN is formed by adding conditional constraints to the original GAN. CGAN is an extension of GAN that conditions the models on some extra information y. This information can be any type of ancillary information, such as category labels or data from other modalities. CGAN imposes conditional constraints on the generated object by feeding the additional information y to both the discriminant model and the generation model as part of the input layer.

CGAN contains two adversarial models: a generation model G, which captures the data distribution, and a discrimination model D, which estimates the probability that a sample comes from the real data rather than from the synthetic samples. The generator receives two inputs at the same time, a sample z drawn from a prior distribution and a condition y, and outputs the generated data G(z|y). To learn a distribution over x that follows the real data distribution pdata(x), a noise vector z is sampled from a known prior distribution pnoise(z) (usually Gaussian or uniform); the generator takes z together with the condition y as input and produces sampled data whose distribution follows pdata(x). In other words, a mapping function G(z|y) is constructed from pnoise(z) and y to the data space, and in the generation model the prior input noise pnoise(z) and the conditional information y form a joint hidden-layer representation. The discriminator also has two inputs, the condition y and the data G(z|y) produced by the generator, and outputs a scalar score D(G(z|y)). This score evaluates two things: whether G(z|y) is real, and whether G(z|y) and y match. Models G and D are trained simultaneously: with the discrimination model D fixed, the parameters of G are adjusted to minimize the expectation of log(1−D(G(z|y))); with the generation model G fixed, the parameters of D are adjusted to maximize the expectation of log D(x|y) + log(1−D(G(z|y))). This optimization process can be reduced to a “minimax two-player game” with the objective function shown in Equation (1):

$$\min_{G}\max_{D} V(D,G)=\mathbb{E}_{x\sim p_{data}(x)}\big[\log D(x|y)\big]+\mathbb{E}_{z\sim p_{noise}(z)}\big[\log\big(1-D(G(z|y))\big)\big] \tag{1}$$

where E represents the mathematical expectation; x represents a sample drawn from the real data distribution pdata(x), which corresponds to the collected training set data in this study; z represents the noise sampled from the prior distribution pnoise(z); D(x|y) represents the probability that the discrimination model judges the real data to be true under the constraint of condition y; D(G(z|y)) represents the probability that the discrimination model judges the data generated from random noise z and condition y to be true; and V(D, G) represents the value function of the discrimination model and the generation model. Ideally, the discrimination model drives the value function toward its maximum, while the generation model drives it toward its minimum.
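The value function V(D, G) described above can be estimated from a batch of discriminator outputs. The following is a minimal sketch, assuming the discriminator scores for real samples, D(x|y), and for generated samples, D(G(z|y)), are already available as numbers in (0, 1); the function name `cgan_value` and the example scores are illustrative and do not come from the original study.

```python
import numpy as np

def cgan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(D, G) from discriminator scores in (0, 1).

    d_real: scores D(x|y) on real samples.
    d_fake: scores D(G(z|y)) on generated samples.
    """
    # Clip to avoid log(0) when a score saturates at 0 or 1.
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))

# Hypothetical scores: a discriminator that separates real from generated data
# yields a larger value than one that outputs 0.5 for everything.
v_confident = cgan_value([0.9, 0.95], [0.05, 0.1])
v_fooled = cgan_value([0.5, 0.5], [0.5, 0.5])
print(v_confident, v_fooled)
```

A discriminator that cannot tell the two apart gives a value of −2·log 2, which is the value the generator pushes toward while the discriminator tries to raise it.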

The network architecture of CGAN is shown in Figure 2. The generator takes the sample z drawn from the prior distribution and the condition y as input to generate the data G(z|y). The discriminator feeds both the condition y and the generated data G(z|y) into a network to obtain a score that measures whether the object is true and whether it matches the constraint y.

Projected Gradient Descent Attack

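An adversarial attack constructs input samples by adding subtle disturbances to the original samples, which makes the network output a wrong prediction. For a sample x with target value y, the basic idea of adversarial training is as follows:

$$\min_{\theta}\ \mathbb{E}_{(x,y)\sim D}\Big[\max_{r_{adv}\in S} L\big(\theta,\ x+r_{adv},\ y\big)\Big] \tag{2}$$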

The inner part of Equation (2) finds a group of antagonistic samples in sample space D that maximizes the loss function L. Each antagonistic sample is obtained by combining the original sample x with a disturbance term radv, and the disturbance term radv lies in the disturbance space S. The outer part states that, given the set of antagonistic samples constructed in this way, the expected loss of the model on that set should be minimized by updating the model parameter θ.

Adversarial learning has achieved good results in the image field (Chen et al., 2019), and this kind of adversarial attack training can be transferred to series data. How to add the disturbance is a thorny problem in the prediction of series data. The PGD attack algorithm improves on the fast gradient method (FGM); its essence is to find the optimal disturbance through multiple iterations, which makes it well suited to discrete non-linear series data models. In this study, the antagonistic samples are generated with this algorithm: starting from the real data, the disturbance radv is optimized through multiple iterations of the PGD attack algorithm. The calculation is shown in Equation (3).

$$r_{adv}=\arg\max_{\|r\|_{2}\le\varepsilon} L\big(f_{\theta}(x+r),\ y\big) \tag{3}$$

where f represents the network parameterized by weight θ, and L represents the loss function. The purpose of Equation (3) is to find the disturbance radv that maximizes the loss at the point xadv := x + radv, so that this point is most likely to become an antagonistic sample. Specifically, forward and backward propagation are carried out repeatedly: the loss is calculated in the forward pass, the gradient is calculated in the backward pass, and the disturbance radv is updated according to the gradient and added to the input again at each step. If the disturbance exceeds the given range, it is mapped back into that range. Finally, the gradient calculated in the last step is accumulated on the original gradient; that is, the original gradient is updated by adding the gradient corresponding to the disturbance at step t. With g denoting the gradient of the loss with respect to the disturbance at step t, the iterative process for the disturbance radv is shown in Equations (4) and (5).

$$r_{adv}^{t+1}=\Pi_{\|r_{adv}\|_{2}\le\varepsilon}\left(r_{adv}^{t}+\alpha\,\frac{g\big(r_{adv}^{t}\big)}{\big\|g\big(r_{adv}^{t}\big)\big\|_{2}}\right) \tag{4}$$

$$g\big(r_{adv}^{t}\big)=\nabla_{r_{adv}} L\big(f_{\theta}(x+r_{adv}^{t}),\ y\big) \tag{5}$$

where ||radv||2 ≤ ε is the constraint space of the disturbance, α is a small step size, and ∏ denotes projection onto the ε-ball. If the disturbance amplitude becomes too large, the disturbance is pulled back to the boundary of the ball, which ensures that the accumulated disturbance stays inside the ball after multiple iterations.
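The iteration described above (a normalized gradient ascent step of size α followed by projection onto the ε-ball) can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the network f is replaced by a hypothetical linear model f(x) = w·x with squared-error loss so that the gradient can be written by hand, and the values of `eps`, `alpha`, and `steps` are arbitrary.

```python
import numpy as np

def project_l2(r, eps):
    """Project r onto the L2 ball of radius eps (pull back to the boundary)."""
    norm = np.linalg.norm(r)
    return r if norm <= eps else r * (eps / norm)

def loss(x, y, w):
    """Squared-error loss of the assumed linear model f(x) = w . x."""
    return 0.5 * (np.dot(w, x) - y) ** 2

def pgd_perturbation(x, y, w, eps=0.1, alpha=0.03, steps=5):
    """Iteratively search for a disturbance r_adv that increases the loss."""
    r = np.zeros_like(x, dtype=float)
    for _ in range(steps):
        # Gradient of the loss with respect to the input at x + r.
        g = (np.dot(w, x + r) - y) * w
        g_norm = np.linalg.norm(g)
        if g_norm == 0.0:  # flat point: no ascent direction available
            break
        # Normalized ascent step, then projection back into the eps-ball.
        r = project_l2(r + alpha * g / g_norm, eps)
    return r

# Hypothetical data, for illustration only.
w = np.array([0.5, -1.0, 2.0])
x = np.array([1.0, 2.0, 3.0])
y = 4.0
r_adv = pgd_perturbation(x, y, w)
x_adv = x + r_adv  # antagonistic sample
print(loss(x, y, w), loss(x_adv, y, w))
```

In this toy setting the disturbance ends on the boundary of the ε-ball and the loss at `x_adv` is larger than at `x`, which is the behavior the projection step is meant to guarantee.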

In this study, the antagonistic samples generated by the PGD attack algorithm are used to expand the original dataset and are added to the model training, which amounts to a form of data expansion. The purpose of adversarial attack training here is no longer to defend against gradient-based malicious attacks, but rather to act as a kind of regularization that improves the generalization ability of the model.

CGAN Data Enhancement Model Framework

Overall Framework

In this paper, the PGD attack algorithm is combined with CGAN. For the magnetization series data of the irrigation water and fertilizer mixture, and under the condition of limited data samples, a prediction model is constructed that uses CGAN to enhance the original data. The original sample data are attacked by the PGD attack algorithm to generate antagonistic sample data. The PGD antagonistic samples are added to the training as true samples to enhance the data, improve the defense success rate against antagonistic samples, and enable CGAN to generate series data closer to the distribution of the real samples.

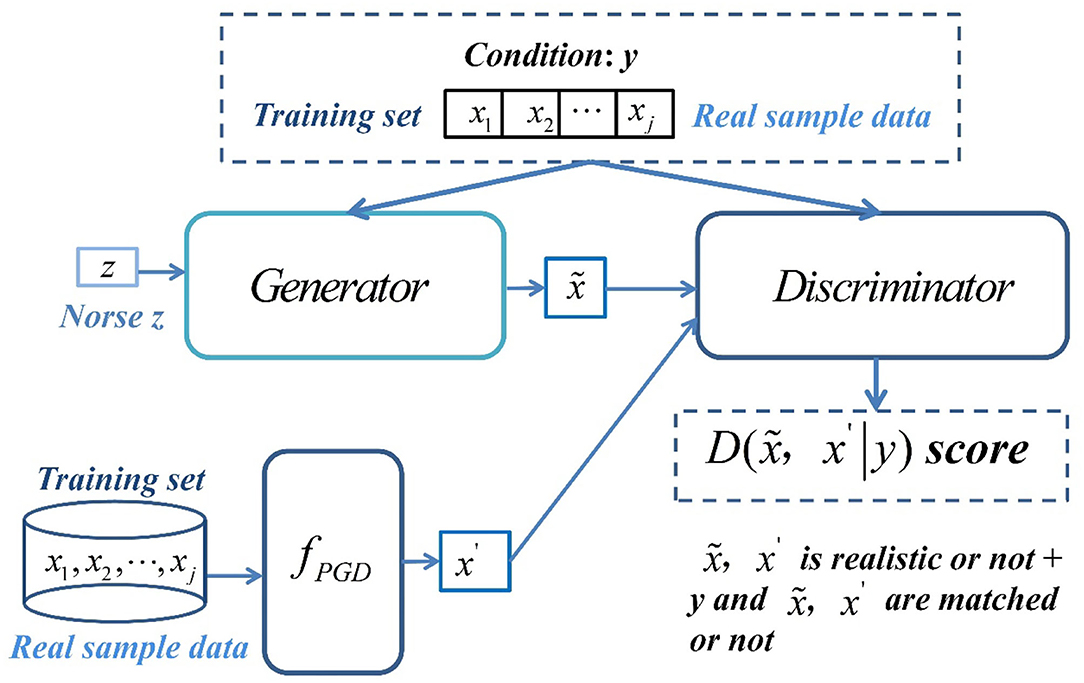

The CGAN data enhancement model is mainly composed of a generator, a discriminator, the attack algorithm fPGD, and the conditional dataset y. Its overall framework is shown in Figure 3. The fPGD algorithm is responsible for generating antagonistic samples. Its input dataset consists entirely of the sampled real data, and the generated antagonistic samples are used as the extended training dataset of the discriminator. The generator converts random noise into realistic data with semantic information, and the discriminator distinguishes, as accurately as possible, whether the input data are true.

The overall procedure of the model is as follows. A random noise vector z is given to the generator, and the training dataset containing the real sample data is used as the conditional dataset y to impose conditional constraints, so that the generator produces the synthetic sample G(z|y). The training samples, that is, the real samples (x1, x2, …, xj−1, xj), are used as the input of the PGD attack algorithm to generate the antagonistic sample x′. The discriminator receives the synthetic sample G(z|y) and the antagonistic sample x′ at the same time and uses the condition y as the basis for judging whether G(z|y) and x′ are true and match the condition y. The generator and the discriminator are trained alternately under the guidance of the discrimination loss, gradually strengthening the ability of the discriminator to handle antagonistic samples, so that the sample data generated by the generator become more realistic.

Generator and Discriminator Network Framework

In this paper, a convolutional neural network (CNN) is introduced to construct the internal structure of the generator and the discriminator. CNN has a powerful ability to extract features through multiple hidden layers, and introducing it can improve the stability, convergence speed, and data quality of CGAN. A one-dimensional convolution model tends to overfit during training, and its noise robustness is not as good as that of a two-dimensional convolution model. Therefore, to enhance the generalization ability and robustness of the whole model, the input is constructed in the form of a two-dimensional convolution model. This requires transforming the one-dimensional series data into an equivalent two-dimensional representation, arranged as an n×n matrix for convolution, which is more conducive to feature extraction. To ensure the integrity of the sliding sampling of the two-dimensional convolution kernel, both the input data and the output data form an n×n matrix. The random noise and the conditional data are spliced into an n×n matrix and input into the CNN. To allow the network to learn a suitable spatial sampling on its own, spatial pooling is not used; instead, strided convolutions are used so that the network learns its own spatial downsampling. Batch normalization is used between layers to accelerate convergence and mitigate overfitting, so that gradients propagate through deeper layers. The tanh activation function is used in the output layer, and the other layers are activated by ReLU. Finally, the network generates the prediction data.

The generator is composed of three CNN layers, and its structure is shown in Figure 4. The random noise and the conditional data are spliced into a matrix and then input. Convolution layer C1 uses 32 convolution kernels of size 6 × 6, convolution layer C2 uses 64 convolution kernels of size 6 × 6, and convolution layer C3 uses a single 6 × 6 convolution kernel to obtain and output the prediction data. The sliding stride is set to 2.
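To illustrate how a strided convolution replaces pooling as the downsampling step, the sketch below applies one 6 × 6 kernel with stride 2 to an 8 × 8 input. It is a single-channel, single-layer illustration only; the zero padding of 2 is an assumption (the text does not state the padding), chosen here because it maps an 8 × 8 input to a 4 × 4 output, and the averaging kernel is a placeholder for learned weights.

```python
import numpy as np

def conv2d_strided(x, kernel, stride=2, pad=2):
    """Single-channel 2-D convolution (cross-correlation) on a square input.

    pad is an assumed zero padding; the original text does not specify it.
    """
    xp = np.pad(x, pad, mode="constant")
    k = kernel.shape[0]
    out = (xp.shape[0] - k) // stride + 1
    y = np.empty((out, out))
    for i in range(out):
        for j in range(out):
            # Window whose top-left corner moves by `stride` each step.
            patch = xp[i * stride:i * stride + k, j * stride:j * stride + k]
            y[i, j] = np.sum(patch * kernel)
    return y

x = np.arange(64, dtype=float).reshape(8, 8)  # placeholder 8 x 8 input
kernel = np.ones((6, 6)) / 36.0               # placeholder for learned weights
y = conv2d_strided(x, kernel)
print(y.shape)
```

With these assumed settings the spatial size follows (8 + 2·2 − 6) / 2 + 1 = 4, so the stride alone halves the resolution without any pooling layer.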

The discriminator splices the conditional data, the generated samples, and the antagonistic samples into a matrix as the input of the convolution layers. The discrimination model is composed of three CNN layers, and its hidden layers use the Leaky ReLU function as the activation function. Finally, a fully connected layer and the sigmoid activation function are used to judge true and false, so that the result is mapped into (0, 1). In the discrimination model, convolution layer C1 uses 32 convolution kernels of size 6 × 6, convolution layer C2 uses 64 convolution kernels of size 6 × 6, convolution layer C3 uses 128 convolution kernels of size 6 × 6, and the sliding stride is set to 2. The fully connected layer then outputs the discrimination result. The structure is shown in Figure 5.

When CGAN is trained, the generation model and the discrimination model are trained alternately. When the generation model is trained, its weights are updated according to the deviation between the prediction data it generates and the real data, the condition deviation, and the discrimination result of the discrimination model. When the discrimination model is trained, the conditional data, the data generated by the generation model, and the antagonistic samples are input into the discrimination model. The discrimination model estimates the probability that the input data are real detection data conforming to the conditional distribution and updates its own parameters according to the discrimination deviation. During training, the stochastic gradient descent algorithm is used to update the discrimination model once and then update the generation model.

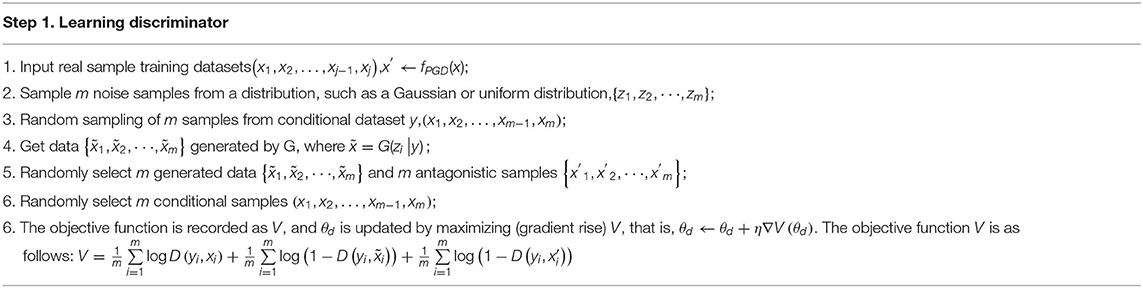

Algorithm

First, the parameter θd of the discriminator and the parameter θg of the generator are initialized, the generator is fixed, and the discriminator is trained. Several noise vectors are randomly sampled from a Gaussian or uniform distribution. At the same time, m samples are randomly selected from the conditional dataset y and input into the generator to obtain the corresponding generated data, which are input into the discriminator. In parallel, antagonistic samples are generated from the training samples, that is, the real samples, by the fPGD attack algorithm and sent to the discriminator to improve the generalization ability of the model. The discriminator also randomly selects m samples from the conditional dataset y and learns to distinguish the real data from the generated data, giving scores that are as high as possible to the real data and as low as possible to the generated data; its parameters are updated by a regression task during training. The algorithm for training the discriminator is shown in Table 1.

Then, the discriminator is fixed and the parameters of the generator are adjusted. The goal is for the data generated by the generator to deceive the discriminator and obtain scores that are as high as possible. The algorithm for training the generator is shown in Table 2.
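The two alternating objectives can be written as a pair of loss functions on discriminator scores. The sketch below is an assumption-laden illustration rather than a transcription of Tables 1 and 2: it treats the antagonistic samples as the true samples seen by the discriminator, uses the log-loss form of the minimax objective, and the names `discriminator_loss` and `generator_loss` and all score values are hypothetical.

```python
import numpy as np

def _clip(p, eps=1e-12):
    """Keep scores strictly inside (0, 1) so the logarithms stay finite."""
    return np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)

def discriminator_loss(d_true, d_generated):
    """Loss minimized when D is updated (generator fixed).

    d_true: scores on samples treated as true (here, antagonistic samples).
    d_generated: scores on generator outputs G(z|y).
    """
    return float(-np.mean(np.log(_clip(d_true)))
                 - np.mean(np.log(1.0 - _clip(d_generated))))

def generator_loss(d_generated):
    """Loss minimized when G is updated (discriminator fixed): log(1 - D(G(z|y)))."""
    return float(np.mean(np.log(1.0 - _clip(d_generated))))

# Hypothetical scores for a batch of m = 3 samples.
good_d = discriminator_loss([0.9, 0.8, 0.95], [0.1, 0.2, 0.05])
poor_d = discriminator_loss([0.5, 0.4, 0.6], [0.5, 0.6, 0.4])
fooled = generator_loss([0.9, 0.8, 0.95])    # generator deceives D
exposed = generator_loss([0.1, 0.2, 0.05])   # generator is detected
print(good_d, poor_d, fooled, exposed)
```

In an alternating schedule, one update step that lowers `discriminator_loss` would be followed by one step that lowers `generator_loss`, matching the update order described in the text.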

Results

Experimental Data Preparation

This study simulates the integrated management of water and fertilizer for cotton. The drip irrigation pipeline network is laid out in a one-film, two-tube, four-row pattern with a main, branch, and capillary network structure, and the water and fertilizer liquid magnetizer is placed at the head of the capillary network. The capillary outer diameter is ϕ20 mm, and the capillary flow is . There will be differences in different growth stages of cotton, and the corresponding liquid flow rate is . In the process of data collection, the flow rate of liquid was set as , increasing by 1 m/s. The residual magnetic field strength data of the water and fertilizer liquid after passing through the magnetization space pipeline at different flow rates were collected in n = 6 groups. Each group of data was sampled from to 20 mT with uniform increment of gap magnetized space magnetic field strength. There were j = 40 data samples in each flow rate group, so N = n×j = 240 series data were obtained. The water-fertilizer fusion liquid consisted of 1,000 kg of water with 50 kg of urea (46% N), 25 kg of calcium superphosphate (64% P2O5), and 25 kg of potassium sulfate (50% K2O) added.

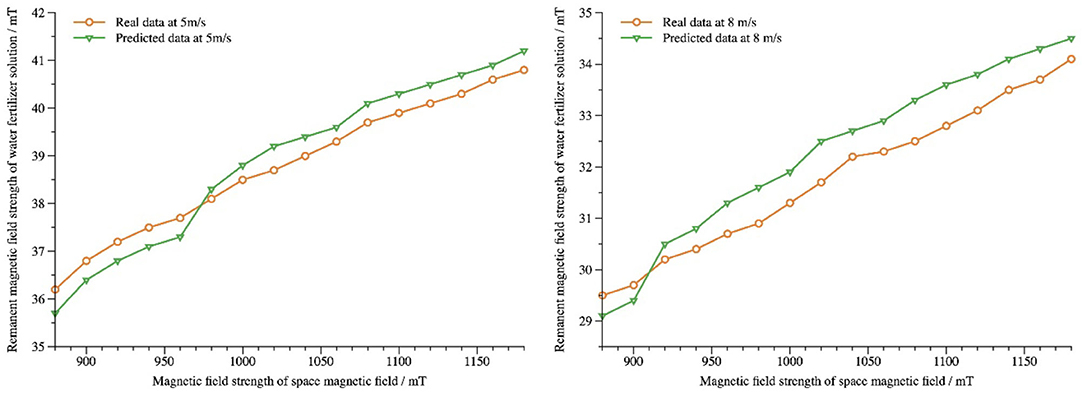

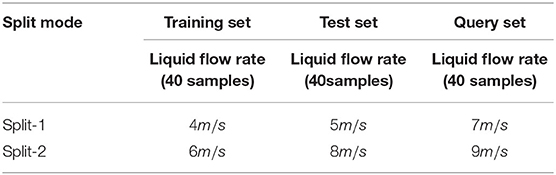

The dataset is divided into a training set, a test set, and a query set, with no intersection among the three parts. The training set contains the residual magnetic field strength data of the water and fertilizer liquid measured at one flow rate, and the test set contains the data measured at another flow rate. To compare the performance of the prediction model proposed in this study with that of other models, the query set contains detection data at flow rates different from those of the training set and the test set. To verify the correctness and generalization of the proposed model for predicting the magnetic field strength of water and fertilizer liquids, the dataset is divided into training, test, and query sets in two different ways; the details are shown in Table 3.
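A split by flow rate group with no overlap between the three sets can be sketched as follows. The 6 × 40 array layout follows the n = 6 groups of j = 40 samples stated above, but the data values are random placeholders, the group indices are arbitrary, and the function `split_by_flow_group` is hypothetical; the actual assignments are those listed in Table 3.

```python
import numpy as np

def split_by_flow_group(data, train_group, test_group, query_groups):
    """Select whole flow rate groups for each set and enforce disjointness."""
    groups = [train_group, test_group, *query_groups]
    if len(set(groups)) != len(groups):
        raise ValueError("training, test, and query groups must not overlap")
    return data[train_group], data[test_group], data[list(query_groups)]

# Placeholder measurements: one row per flow rate group, one column per
# magnetization field strength setting. Not real experimental data.
rng = np.random.default_rng(0)
data = rng.random((6, 40))

train, test, query = split_by_flow_group(data, 0, 1, [2, 3])
print(train.shape, test.shape, query.shape)
```

Splitting by whole groups rather than by individual samples keeps every flow rate entirely inside one set, which is what guarantees that the three sets do not intersect.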

In both divisions of the dataset, the training dataset serves as the conditional dataset for the entire model. It consists of 40 conditional data, all of which are real measured series data. They constrain the data generated by the generator and serve as the basis on which the discriminator decides whether the input data are real and whether their distribution is reasonable. The training dataset is also used as input to the fPGD attack algorithm to generate antagonistic samples.

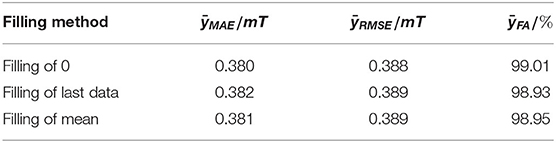

The input to the generation model consists of conditional data and noise data. The conditional data are one division of the training dataset, comprising 40 series data at one flow rate. From the input conditional data, the generation model produces 16 prediction data, which form a 4 × 4 matrix. To maintain the correspondence between the noise data and the output of the generation model, 16 random noise data are generated as input. The generation model splices the 16 noise data and the 40 conditional data, pads the edge, and appends eight "0" values at the end to form an 8 × 8 input matrix. To match the amount of data produced by the generation model, the fPGD attack algorithm also generates 16 antagonistic samples from each set of training data. The discrimination model splices the 16 antagonistic samples generated by the fPGD attack algorithm, the 16 prediction data produced by the generator, and the 40 conditional data, and appends nine "0" values at the end to form a 9 × 9 input matrix. The only purpose of expanding the generator and discriminator input data is to fill the required input matrix. In this study, the input matrix is padded with "0", with the last data value, or with the mean. Padding the edge has no effect on the extraction of sample features. The prediction errors and accuracy of the models with the different padding modes are compared in Table 4. The arrangement of the generator's output data, the generator's input data, and the discriminator's input data is shown in Figure 6.
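The element counts above (16 + 40 + 8 = 64 for the generator and 16 + 16 + 40 + 9 = 81 for the discriminator) can be checked with a minimal Python sketch. The splicing order, the row-by-row layout, the choice to take the mean over the spliced values, and all function names are assumptions for illustration; the actual arrangement is the one shown in Figure 6.

```python
def pad_to_square(values, side, mode="zero"):
    """Pad a flat list to side * side values and reshape it row by row."""
    missing = side * side - len(values)
    if missing < 0:
        raise ValueError("too many values for the requested matrix")
    if mode == "zero":
        fill = 0.0
    elif mode == "last":
        fill = values[-1]
    elif mode == "mean":
        fill = sum(values) / len(values)  # assumed: mean of the spliced values
    else:
        raise ValueError(f"unknown padding mode: {mode}")
    flat = list(values) + [fill] * missing
    return [flat[r * side:(r + 1) * side] for r in range(side)]


def generator_input(noise, condition, mode="zero"):
    # 16 noise + 40 conditional + 8 padding values = 64 = 8 x 8
    return pad_to_square(list(noise) + list(condition), 8, mode)


def discriminator_input(antagonistic, generated, condition, mode="zero"):
    # 16 antagonistic + 16 generated + 40 conditional + 9 padding = 81 = 9 x 9
    spliced = list(antagonistic) + list(generated) + list(condition)
    return pad_to_square(spliced, 9, mode)


# Placeholder values stand in for noise, samples, and measurements.
g_in = generator_input([0.1] * 16, [1.0] * 40)
d_in = discriminator_input([0.2] * 16, [0.3] * 16, [1.0] * 40, mode="last")
```

With zero padding, the last row of the 8 × 8 generator matrix consists entirely of padding, which is why the padding mode is a natural parameter to vary when comparing models.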

Evaluation Index

To evaluate the prediction performance of the model, the mean absolute error yMAE, the root mean square error yRMSE, and the prediction accuracy yFA are used as evaluation criteria, and the formulas are defined as follows:

In these formulas, xreal(j) is the jth true test data; xpred(j) is the jth prediction data; and N is the number of predictions. Smaller yMAE and yRMSE values and larger yFA values indicate that the predicted residual magnetic field strength of the water and fertilizer liquid is closer to the measured value, that is, the prediction accuracy is higher.
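As a point of reference, the three criteria can be sketched in Python. The mean absolute error and root mean square error follow their standard definitions. The form used for the prediction accuracy (one minus the mean relative absolute error) is an assumption, as are all function names; the paper's exact expression for yFA may differ.

```python
import math


def _check(real, pred):
    if len(real) != len(pred) or not real:
        raise ValueError("real and pred must be non-empty and equally long")


def mae(real, pred):
    """Mean absolute error: (1/N) * sum |x_real(j) - x_pred(j)|."""
    _check(real, pred)
    return sum(abs(r - p) for r, p in zip(real, pred)) / len(real)


def rmse(real, pred):
    """Root mean square error: sqrt((1/N) * sum (x_real(j) - x_pred(j))^2)."""
    _check(real, pred)
    return math.sqrt(sum((r - p) ** 2 for r, p in zip(real, pred)) / len(real))


def forecast_accuracy(real, pred):
    """Assumed form: 1 - (1/N) * sum |x_real(j) - x_pred(j)| / |x_real(j)|.

    Requires every real value to be nonzero.
    """
    _check(real, pred)
    rel = sum(abs(r - p) / abs(r) for r, p in zip(real, pred)) / len(real)
    return 1.0 - rel


# Placeholder values, not measured data.
real = [2.0, 4.0]
pred = [1.0, 5.0]
scores = (mae(real, pred), rmse(real, pred), forecast_accuracy(real, pred))
```

All three functions compare the series point by point, so they measure closeness of individual predictions rather than similarity of the overall distributions.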

Experimental Results and Comparison

During model training, the fPGD attack algorithm generates antagonistic samples. These samples improve the model's defense success rate against antagonistic samples, strengthen the adversarial game between the generator and the discriminator, improve the generalization ability of the model, and enable CGAN to generate series data closer to the true sample distribution.
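For readers unfamiliar with projected gradient descent, the following Python sketch shows the general idea: each iteration takes a signed gradient step that increases the loss and then projects the sample back into a small neighborhood of the original. The toy linear scorer, the logistic loss, the step size `alpha`, the radius `eps`, and the step count are all assumptions for illustration and are not the network or settings used in this study.

```python
import math


def sign(v):
    return (v > 0) - (v < 0)


def loss_and_grad(x, w, b):
    """Logistic loss of a toy linear scorer for the label 'real',
    together with the gradient of that loss with respect to x."""
    s = sum(wi * xi for wi, xi in zip(w, x)) + b
    loss = math.log1p(math.exp(-s))
    coeff = -1.0 / (1.0 + math.exp(s))  # derivative of the loss in s
    return loss, [coeff * wi for wi in w]


def pgd_attack(x0, w, b, eps=0.1, alpha=0.02, steps=10):
    """Return a perturbed copy of x0 within an L-infinity ball of radius eps."""
    x = list(x0)
    for _ in range(steps):
        _, grad = loss_and_grad(x, w, b)
        # Ascent step: move each coordinate in the direction that raises the loss.
        x = [xi + alpha * sign(g) for xi, g in zip(x, grad)]
        # Projection step: clip back into [x0 - eps, x0 + eps].
        x = [min(max(xi, oi - eps), oi + eps) for xi, oi in zip(x, x0)]
    return x


# Placeholder sample and weights, not measured data.
x0 = [1.0, 2.0, 3.0]
w, b = [0.5, -0.3, 0.2], 0.0
x_adv = pgd_attack(x0, w, b)
```

The projection keeps every antagonistic sample close to a real measurement, which is what allows such samples to be mixed with true data during training.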

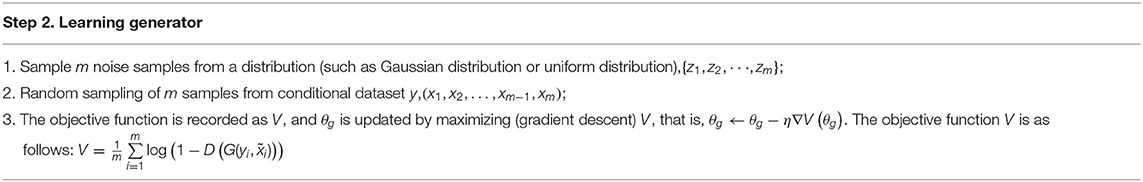

In this study, the dataset is divided in two different ways (Table 3). The residual magnetic field strength data of liquid passing through the magnetized space pipeline at flow velocities of 4 m/s and 6 m/s are selected as the training datasets. The data at velocities of 5 m/s and 8 m/s are used as the testing datasets: 16 prediction data are generated and compared with the real detection data for performance evaluation. The comparison between the predicted and real data for flow velocities of 5 m/s and 8 m/s is shown in Figure 7. The graph shows that the prediction model captures the distribution of the real data and predicts it well.

The yMAE, yRMSE, and yFA values of the predicted data for the dynamic water and fertilizer liquid, magnetized under spatial magnetic field strengths with a uniform increment of 20 mT, were calculated at flow rates of 5 m/s and 8 m/s, respectively, and are shown in Table 5. The table shows that the model can accurately predict the residual magnetic field strength of the dynamic water and fertilizer liquid.

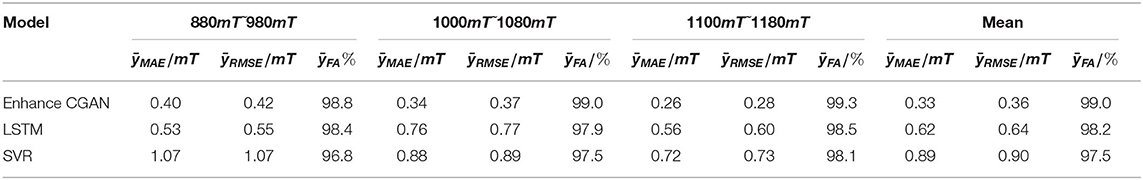

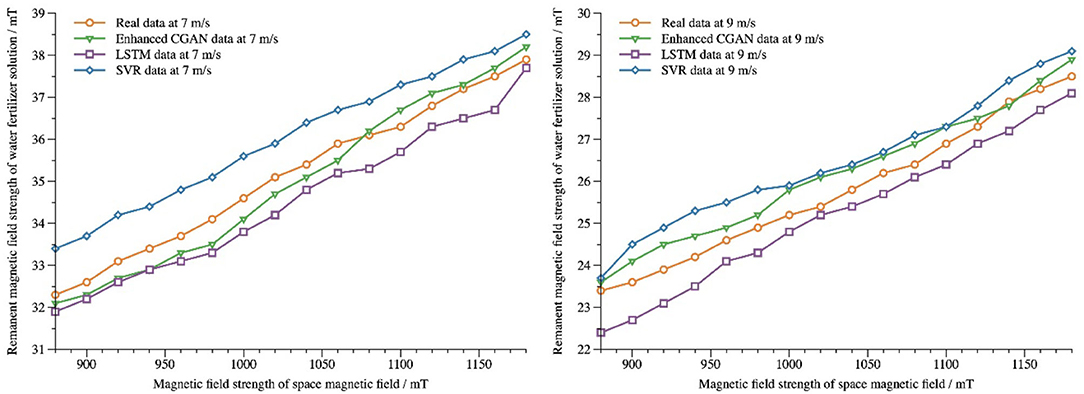

The residual magnetic field strength data from the query datasets at 7 m/s and 9 m/s are tested, and the results are compared with those of LSTM and SVR models with optimized parameters. The LSTM model uses a learning rate of 0.01, 1,000 iterations, 128 cells in the first hidden layer, and 64 cells in the second hidden layer. The SVR model uses a radial basis function kernel, a penalty factor C = 10, and a kernel parameter γ = 0.25. Figure 8 shows the predicted residual magnetic field strength of water and fertilizer liquids at flow rates of 7 m/s and 9 m/s and compares the results of the different prediction models. The diagram shows that all three models predict the trend of the residual magnetic field strength well. The predictions of the model presented in this paper agree most closely with the measured data and are the best of the three.
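To make the role of the SVR kernel parameter concrete, the sketch below evaluates a radial basis function kernel in plain Python. It assumes that the kernel parameter 0.25 quoted above is the RBF coefficient usually written γ, so that k(x, z) = exp(−γ‖x − z‖²); the function names and sample points are hypothetical. The penalty factor C = 10 belongs to the SVR optimization problem and does not enter the kernel itself.

```python
import math

GAMMA = 0.25  # kernel parameter from the text, assumed to be the RBF coefficient


def rbf_kernel(x, z, gamma=GAMMA):
    """k(x, z) = exp(-gamma * ||x - z||^2) for equally long vectors."""
    if len(x) != len(z):
        raise ValueError("x and z must have the same length")
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def gram_matrix(points, gamma=GAMMA):
    """Pairwise kernel values for a list of points."""
    return [[rbf_kernel(p, q, gamma) for q in points] for p in points]


# Placeholder one-dimensional inputs, not measured data.
points = [[0.0], [2.0], [4.0]]
K = gram_matrix(points)
```

A smaller γ makes the kernel decay more slowly with distance, so each training point influences predictions over a wider range of inputs.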

Table 6 compares the prediction errors and precision of the three models at a flow rate of 7 m/s under different spatial magnetic field strengths in the air gap. For every interval of the gap spatial magnetic field strength, the model presented in this paper has the smallest mean absolute error and root mean square error and the highest prediction precision of the three models.

Table 6. Comparison of prediction error and precision under different magnetization conditions (7m/s velocity).

Discussion

Current prediction algorithms focus mainly on time-series data, yet agricultural production involves many series data that are only weakly correlated with time, such as soil humidity and salinity, pH value, and the surface tension of irrigation water. These series data are often influenced by a variety of factors, but once the major variables are identified, they change with the same rhythm as time series. It is therefore a meaningful attempt to apply machine learning models designed for time-series prediction to the prediction of other series data. This will help to improve data mining based on limited detection series data.

To verify the superiority of the proposed model fully, we further compare the prediction errors and accuracy of different prediction models for magnetized liquid series data. The long short-term memory (LSTM) neural network is widely used in time-series data prediction, such as weather forecasting (Karevan and Suykens, 2020), finance (Cao et al., 2019), and oil production forecasting (Song et al., 2020). The architecture of LSTM makes it easy to capture patterns in series data. It can learn and remember long sequences and does not rely on pre-specified window observations as input. Therefore, LSTM tends to do a better job of predicting unstable time series with more fixed components. Maldonado et al. (2019) introduced a power load forecasting method based on support vector regression (SVR). The method follows a backward variable elimination process based on gradient descent optimization and iteratively adjusts the widths of an anisotropic Gaussian kernel. In the model comparison of this paper, the LSTM and SVR model structures are used as references for predicting the series data of magnetized liquid. The comparison results show that the enhanced CGAN model proposed in this paper predicts these data more accurately. On the one hand, the addition of antagonistic samples in this model improves the performance of the discriminator and pushes the generator to generate better data. On the other hand, the real training data are taken as the conditional input of the model so that the model can capture the real data distribution. However, the LSTM and SVR models used for comparison did not undergo detailed parameter optimization in this study; their parameters were simply set. At the same time, because LSTM combines long-term memory with short-term memory, it selectively forgets some secondary information and captures the main characteristics of the data distribution, so it performs better than the SVR model in this experiment.

Because the proposed enhanced CGAN model further improves the prediction accuracy of liquid magnetization series data, it can help agricultural production managers to adjust the spatial magnetic field strength more effectively during magnetized water and fertilizer irrigation, so that the magnetic biological effect produced by the water and fertilizer liquid is more conducive to crop growth and improves yield and quality. This study introduces adversarial-attack defense and CGAN-based generation of prediction data into the field of liquid magnetization series data prediction in order to improve the precision of integrated agricultural water and fertilizer management. Agricultural producers will benefit more if real-time data acquisition technology and the computing power of field hardware can be improved. However, the internal network of CGAN in this study uses only a CNN sub-model. For relatively dense series data, the prediction accuracy does not improve much, so some limitations remain.

Conclusion

Making accurate predictions from a small amount of detection data and providing a basis for future decision-making is of great significance to producers and researchers. We propose to combine the PGD attack algorithm with CGAN to predict series data and to capture the true data distribution as closely as possible. On the one hand, the limited series data are expanded. On the other hand, the generated antagonistic samples improve the defense success rate of the discriminator in CGAN, which guides the generator to generate data that better follow the conditional distribution. Experiments show that the proposed prediction model can accurately predict the residual magnetic field strength of water and fertilizer liquids. Comparison with other prediction models also shows that the model proposed in this paper has advantages in prediction precision.

This paper evaluates model performance by point-by-point error, which is incomplete because such error measures do not fully reflect the distribution similarity between the predicted data and the real data. In addition, the dataset used has a single trend. Subsequent work will analyze the characteristics of detection data with complex changing trends, build a comprehensive and accurate evaluation index for how well the data distribution is reproduced, and further improve the prediction precision and universality of the model.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

JN: methodology and writing—original draft. NW: conceptualization and software. JL: writing—review. YW: visualization. KW: editing. All authors read and approved the final manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 31860333).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Beheshti-Kashi, S., Karimi, H. R., Thoben, K. D., Lütjen, M., and Teucke, M. (2015). A survey on retail sales forecasting and prediction in fashion markets. Syst. Sci. Control Eng. 3, 154–161. doi: 10.1080/21642583.2014.999389

Bulat, A., Yang, J., and Tzimiropoulos, G. (2018). “To learn image super-resolution, use a gan to learn how to do image degradation first,” in Proceedings of the European Conference on Computer Vision (ECCV) (Munich: Springer), 185–200. doi: 10.1007/978-3-030-01231-1_12

Cao, J., Li, Z., and Li, J. (2019). Financial time series forecasting model based on CEEMDAN and LSTM. Phys. A Stat. Mech. Applic. 519, 127–139. doi: 10.1016/j.physa.2018.11.061

Chen, L., Bentley, P., Mori, K., Misawa, K., Fujiwara, M., and Rueckert, D. (2019). “Intelligent image synthesis to attack a segmentation CNN using adversarial learning,” in International Workshop on Simulation and Synthesis in Medical Imaging (Cham: Springer), 90–99. doi: 10.1007/978-3-030-32778-1_10

Cheng, L., Zang, H., Ding, T., Sun, R., Wang, M., Wei, Z., et al. (2018). Ensemble recurrent neural network based probabilistic wind speed forecasting approach. Energies 11, 1958. doi: 10.3390/en11081958

Divina, F., Garcia Torres, M., Goméz Vela, F. A., and Vazquez Noguera, J. L. (2019). A comparative study of time series forecasting methods for short term electric energy consumption prediction in smart buildings. Energies 12, 1934. doi: 10.3390/en12101934

Dong, H., Liang, X., Gong, K., Lai, H., Zhu, J., and Yin, J. (2018). Soft-gated warping-gan for pose-guided person image synthesis. Adv. Neural Inf. Process. Syst. 31, 1–17. doi: 10.48550/arXiv.1810.11610

Goodfellow, I. J., Shlens, J., and Szegedy, C. (2015). Explaining and harnessing adversarial examples. arXiv [Preprint]. arXiv: 1412.6572v3. doi: 10.48550/arXiv.1412.6572

Hewage, P., Behera, A., Trovati, M., Pereira, E., Ghahremani, M., Palmieri, F., et al. (2020). Temporal convolutional neural (TCN) network for an effective weather forecasting using time-series data from the local weather station. Soft Comput. 24, 16453–16482. doi: 10.1007/s00500-020-04954-0

Karami, A., Crawford, M., and Delp, E. J. (2020). Automatic plant counting and location based on a few-shot learning technique. IEEE J. Selected Top. Appl. Earth Observ. Remote Sens. 13, 5872–5886. doi: 10.1109/JSTARS.2020.3025790

Karevan, Z., and Suykens, J. A. (2020). Transductive LSTM for time-series prediction: an application to weather forecasting. Neural Netw. 5, 1–9. doi: 10.1016/j.neunet.2019.12.030

Kiyasseh, D., Tadesse, G. A., Thwaites, L., Zhu, T., and Clifton, D. (2020). PlethAugment: GAN-based PPG augmentation for medical diagnosis in low-resource settings. IEEE J. Biomed. Health Informatics 24, 3226–3235. doi: 10.1109/JBHI.2020.2979608

Kumar, N., and Baghel, B. K. (2021). Intent focused semantic parsing and zero-shot learning for out-of-domain detection in spoken language understanding. IEEE Access 9, 165786–165794. doi: 10.1109/ACCESS.2021.3133657

Li, L., Su, X., Zhang, Y., Lin, Y., and Li, Z. (2015). Trend modeling for traffic time series analysis: an integrated study. IEEE Trans. Intell. Transport. Syst. 16, 3430–3439. doi: 10.1109/TITS.2015.2457240

Li, Y., and Chao, X. (2020). ANN-based continual classification in agriculture. Agriculture 10, 178. doi: 10.3390/agriculture10050178

Li, Y., and Chao, X. (2021a). Semi-supervised few-shot learning approach for plant diseases recognition. Plant Methods 17, 1–10. doi: 10.1186/s13007-021-00770-1

Li, Y., and Chao, X. (2021b). Distance-entropy: an effective indicator for selecting informative data. Front. Plant Sci. 12, 818895. doi: 10.3389/fpls.2021.818895

Li, Y., and Chao, X. (2021c). Toward sustainability: trade-off between data quality and quantity in crop pest recognition. Front. Plant Sci. 12. doi: 10.3389/fpls.2021.811241

Li, Y., Chao, X., and Ercisli, S. (2022). Disturbed-entropy: a simple data quality assessment approach. ICT Express. doi: 10.1016/j.icte.2022.01.006

Li, Y., Nie, J., and Chao, X. (2020). Do we really need deep CNN for plant diseases identification? Comput. Electron. Agric. 178, 105803. doi: 10.1016/j.compag.2020.105803

Li, Y., and Yang, J. (2020). Few-shot cotton pest recognition and terminal realization. Comput. Electron. Agric. 169, 105240. doi: 10.1016/j.compag.2020.105240

Li, Y., and Yang, J. (2021). Meta-learning baselines and database for few-shot classification in agriculture. Comput. Electron. Agric. 182, 106055. doi: 10.1016/j.compag.2021.106055

Li, Y., Yang, J., and Wen, J. (2021). Entropy-based redundancy analysis and information screening. Digital Commun. Netw. doi: 10.1016/j.dcan.2021.12.001

Liao, K., Lin, C., Zhao, Y., and Gabbouj, M. (2019). DR-GAN: automatic radial distortion rectification using conditional GAN in real time. IEEE Trans. Circuits Syst. Video Technol. 30, 725–733. doi: 10.1109/TCSVT.2019.2897984

Liu, B., Yu, X., Yu, A., Zhang, P., Wan, G., and Wang, R. (2018). Deep few-shot learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 57, 2290–2304. doi: 10.1109/TGRS.2018.2872830

Liu, S., Li, S., and Cheng, H. (2021). Towards an end-to-end visual-to-raw-audio generation with GAN. IEEE Trans. Circuits Syst. Video Technol. 32, 1299–1312. doi: 10.1109/TCSVT.2021.3079897

Liu, X., and Hsieh, C. J. (2019). “Rob-gan: generator, discriminator, and adversarial attacker,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Long Beach, CA: IEEE), 11234–11243. doi: 10.1109/CVPR.2019.01149

Maldonado, S., Gonzalez, A., and Crone, S. (2019). Automatic time series analysis for electric load forecasting via support vector regression. Appl. Soft Comput. 83, 105616. doi: 10.1016/j.asoc.2019.105616

Munir, M., Siddiqui, S. A., Dengel, A., and Ahmed, S. (2018). DeepAnT: a deep learning approach for unsupervised anomaly detection in time series. IEEE Access 7, 1991–2005. doi: 10.1109/ACCESS.2018.2886457

Nie, J., Wang, N., Li, J., Wang, K., and Wang, H. (2021a). Meta-learning prediction of physical and chemical properties of magnetized water and fertilizer based on LSTM. Plant Methods 17, 1–13. doi: 10.1186/s13007-021-00818-2

Nie, J., Wang, N., Wang, K., Li, Y., Chao, X., and Li, J. (2021b). “Effect of drip irrigation with magnetised water and fertiliser on cotton nutrient absorption,” in IOP Conference Series: Earth and Environmental Science, Vol. 697 (Guangzhou: IOP Publishing), 012009. doi: 10.1088/1755-1315/697/1/012009

Nyakane, N. E., Markus, E. D., and Sedibe, M. M. (2019). The effects of magnetic fields on plants growth: a comprehensive review. Int. J. Food Eng. 5, 79–87. doi: 10.18178/ijfe.5.1.79-87

Radhakrishnan, R. (2019). Magnetic field regulates plant functions, growth and enhances tolerance against environmental stresses. Physiol. Mol. Biol. Plants 25, 1107–1119. doi: 10.1007/s12298-019-00699-9

Sen, R., Yu, H. F., and Dhillon, I. S. (2019). Think globally, act locally: A deep neural network approach to high-dimensional time series forecasting. Adv. Neural Inf. Process. Syst. 32, 1–14. doi: 10.48550/arXiv.1905.03806

Song, X., Liu, Y., Xue, L., Wang, J., Zhang, J., Wang, J., et al. (2020). Time-series well performance prediction based on Long Short-Term Memory (LSTM) neural network model. J. Petroleum Sci. Eng. 186, 106682. doi: 10.1016/j.petrol.2019.106682

Wan, R., Mei, S., Wang, J., Liu, M., and Yang, F. (2019). Multivariate temporal convolutional network: a deep neural networks approach for multivariate time series forecasting. Electronics 8, 876. doi: 10.3390/electronics8080876

Wang, N., Nie, J., Li, J., Wang, K., and Ling, S. (2022). A compression strategy to accelerate LSTM meta-learning on FPGA. ICT Express. doi: 10.1016/j.icte.2022.03.014

Wang, T. C., Liu, M. Y., Zhu, J. Y., Tao, A., Kautz, J., and Catanzaro, B. (2018). “High-resolution image synthesis and semantic manipulation with conditional gans,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Salt Lake City, UT: IEEE), 8798–8807. doi: 10.1109/CVPR.2018.00917

Wang, Y., Yao, Q., Kwok, J. T., and Ni, L. M. (2020). Generalizing from a few examples: a survey on few-shot learning. ACM Comput. Surv. 53, 1–34. doi: 10.1145/3386252

Xia, Z., Qin, L., Ning, Z., and Zhang, X. (2022). Deep learning time series prediction models in surveillance data of hepatitis incidence in China. PLoS ONE 17, e0265660. doi: 10.1371/journal.pone.0265660

Xu, J., Ren, X., Lin, J., and Sun, X. (2018). “Diversity-promoting GAN: a cross-entropy based generative adversarial network for diversified text generation,” in Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing (Brussels: Association for Computational Linguistics), 3940–3949. doi: 10.18653/v1/D18-1428

Yan, L., Zheng, Y., and Cao, J. (2018). Few-shot learning for short text classification. Multimed. Tools Appl. 77, 29799–29810. doi: 10.1007/s11042-018-5772-4

Yang, J., Guo, X., Li, Y., Marinello, F., Ercisli, S., and Zhang, Z. (2022). A survey of few-shot learning in smart agriculture: developments, applications, and challenges. Plant Methods 18, 1–12. doi: 10.1186/s13007-022-00866-2

Zhang, C., Kuppannagari, S. R., Kannan, R., and Prasanna, V. K. (2018). “Generative adversarial network for synthetic time series data generation in smart grids,” in 2018 IEEE International Conference on Communications, Control, and Computing Technologies for Smart Grids (SmartGridComm) (Aalborg: IEEE), 1–6. doi: 10.1109/SmartGridComm.2018.8587464

Zhang, M., Jiang, X., Fang, Z., Zeng, Y., and Xu, K. (2019). High-order Hidden Markov Model for trend prediction in financial time series. Phys. A Stat. Mech. Applic. 517, 1–12. doi: 10.1016/j.physa.2018.10.053

Keywords: series data, liquid magnetization, data enhancement, prediction, biological effect of magnetic field

Citation: Nie J, Wang N, Li J, Wang Y and Wang K (2022) Prediction of Liquid Magnetization Series Data in Agriculture Based on Enhanced CGAN. Front. Plant Sci. 13:929140. doi: 10.3389/fpls.2022.929140

Received: 26 April 2022; Accepted: 16 May 2022;

Published: 17 June 2022.

Edited by:

Jiachen Yang, Tianjin University, China

Reviewed by:

Zhilong Chen, Taiyuan University of Technology, China

Paul Kumar, Melbourne Institute of Technology, Australia

Copyright © 2022 Nie, Wang, Li, Wang and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jingbin Li
