ORIGINAL RESEARCH article

Front. Plant Sci., 30 June 2022

Sec. Technical Advances in Plant Science

Volume 13 - 2022 | https://doi.org/10.3389/fpls.2022.927832

This article is part of the Research Topic State-of-the-art Technology and Applications in Crop Phenomics, Volume II

The currently available methods for evaluating most biochemical traits in plant phenotyping are destructive and have extremely low throughput. Hyperspectral techniques, however, can non-destructively obtain the spectral reflectance characteristics of plants, which carry abundant biophysical and biochemical information. Plant spectra combined with machine learning algorithms can therefore be used to predict plant phenotyping traits. However, raw spectral reflectance contains noise and redundant information, which can easily degrade the robustness of models developed with multivariate analysis methods. In this study, two end-to-end deep learning models based on a 2D convolutional neural network (2DCNN) and a fully connected neural network (FCNN), named Deep2D and DeepFC, respectively, were developed to rapidly and non-destructively predict the phenotyping traits of lettuces from spectral reflectance. Models based on three linear and two nonlinear multivariate analysis methods were developed to benchmark the performance of the deep learning models. The models based on multivariate analysis methods require a series of manual feature extraction steps, such as pretreatment and wavelength selection, whereas the proposed models automatically extract the features related to phenotyping traits. A visible near-infrared hyperspectral camera was used to image lettuce plants grown in the field, and the spectra extracted from the images were used to train the networks. The proposed models achieved good performance with a determination coefficient of prediction (

Plant phenotyping is an interdisciplinary field of research that collects and analyzes plant phenotyping traits, such as biophysical, biochemical, and physiological traits, using non-destructive imaging and sensor-derived time-series data (Rebetzke et al., 2019; Roitsch et al., 2019; Grzybowski et al., 2021). Rapid progress has recently been made in quantifying traits of interest using hyperspectral imaging (HSI). HSI has been used to estimate biophysical traits, such as plant height and biomass (Aasen et al., 2015; Yue et al., 2017); biochemical traits, such as water content, chlorophyll, and nitrogen (Quemada et al., 2014; Zhou et al., 2018; Xie and Yang, 2020); and physiological traits, such as salt, heat, and drought stress tolerance and photosynthesis (Zarco-Tejada et al., 2013; Mo et al., 2017). HSI is a non-destructive, rapid, remote sensing method of plant phenotyping that simultaneously captures spectral and spatial information relevant to overall plant health. Hyperspectral images contain hundreds or even thousands of continuous wavebands in the visible near-infrared (Vis–NIR) range, so the spectral information obtained is rich. However, image processing is complex, and the spectra contain redundant information (Xie and Yang, 2020).

Lettuce (Lactuca sativa L. var. longifolia) is one of the most popular vegetables in the world and is rich in vitamins, carotenoids, dietary fiber, and other trace elements (Kim et al., 2016; Xin et al., 2020). Among its biochemical traits, soluble solids content (SSC) and pH are key indicators of lettuce taste and harvest time and are thus crucial to the lettuce growing industry (Eshkabilov et al., 2021). Because demand for lettuce has risen in recent years and the crop loses moisture quickly at room temperature, rapid strategies for lettuce quality assessment are needed (Mo et al., 2015; De Corato, 2020; Eshkabilov et al., 2021). Lettuce quality is evaluated based on nutrient content, appearance, and shelf life (Eshkabilov et al., 2021). Traditional evaluation methods are mainly visual or destructive; they also require specialists and are time-consuming and costly (Simko et al., 2018; Simko and Hayes, 2018). It is therefore important to develop a method that can rapidly and non-destructively assess lettuce quality in phenotyping trait analysis.

Predictive models for some lettuce phenotyping traits have been developed using spectral reflectance obtained via hyperspectral techniques combined with machine learning methods. Eshkabilov et al. (2021) detected the nutrient content of lettuces using partial least squares regression (PLSR) and principal component analysis (PCA). Wavelet transform (WT) and PLSR have been used to assess the moisture content of lettuce leaves (Zhou et al., 2018). ANOVA, artificial neural networks (ANN), competitive adaptive reweighted sampling (CARS), random forest (RF), the successive projections algorithm (SPA), and least squares support vector regression (LSSVR) have also been used to study the responses of lettuce to biotic and abiotic stresses, such as worms, water, and pesticides (Mo et al., 2017; Osco et al., 2019). Most studies have focused on lettuce at the leaf scale and only a few at the canopy scale, even though high-throughput plant phenotyping (HTPP) platforms can extract canopy-scale features (Nyonje et al., 2021). In addition, some studies used fewer than 100 samples and only one cultivar (Mo et al., 2015, 2017; Sun et al., 2018; Eshkabilov et al., 2021). Lettuce spectra differ between varieties, and within a single variety they also differ between growth states. An insufficient sample size and number of cultivars can therefore compromise the robustness of models established to predict plant phenotyping traits. Additionally, the raw spectra used to develop models contain noise and redundant information, limiting their application on HTPP platforms. As a result, pretreatment and wavelength selection are usually conducted before modeling with multivariate analysis methods (Gao et al., 2021). The choice of pretreatment method strongly influences the resulting models and can sometimes even degrade them. Applying multivariate analysis methods on HTPP platforms may therefore reduce both throughput and prediction accuracy.

Recent advances in machine learning have shown that deep learning algorithms can automatically learn to extract features from raw data and significantly improve modeling performance in many spectral analysis tasks (Singh et al., 2018; Kanjo et al., 2019; Rehman et al., 2020). Sun et al. (2019, 2021) employed a deep belief network to estimate the cadmium and lead contents of lettuces with high accuracy. Rehman et al. (2020) developed a modified Inception module to predict the relative water content (RWC) of maize leaves and achieved a determination coefficient (R²) of 0.872. Aich and Stavness (2017) used deep convolutional and deconvolutional networks for leaf counting and obtained a mean and standard deviation of absolute count difference of 1.62 and 2.30, respectively. Wang et al. (2019) developed the SegRoot model based on convolutional neural networks (CNN) to segment roots from complex soil backgrounds, with an R² of 0.9791. Wang et al. (2017) employed a deep VGG16 model to evaluate apple black rot with an accuracy of 90.4%. Deep learning models include CNN and fully connected neural network (FCNN) models (Furbank et al., 2021). The purposes of this study were: (1) to develop two end-to-end deep learning models for the rapid and non-destructive prediction of the phenotyping traits of lettuce canopies in hyperspectral applications; (2) to use raw reflectance spectra directly as model input to predict biochemical traits, such as SSC and pH, among lettuce phenotyping traits; and (3) to compare the performance of the deep learning models with that of models built using various linear and nonlinear multivariate analysis methods. Specifically, the developed FCNN model (DeepFC) and two-dimensional CNN model (Deep2D) directly use the raw average spectral reflectance as input to predict the SSC and pH of lettuce canopies. The models automatically learn to extract abstract features related to SSC and pH and thus require no pretreatment or dimensionality reduction. Our models predicted SSC and pH better than the multivariate analysis methods and are suitable for application on HTPP platforms.

In this study, three fast-growing, high-quality annual bolting lettuce cultivars (Butter, Leaf, and Roman) were planted under open-field conditions at the Research Center of Information Technology, Beijing Academy of Agriculture and Forestry Sciences (39.9438°N, 116.2876°E) on April 12, 2021. A field HTPP platform (LQ-FieldPheno, Beijing, China) was deployed at the experimental site (Figure 1). The plants were grown on raised beds with furrows between the beds (Figure 1). The growing conditions were as follows: (1) no fertilizer was applied to the soil; (2) drip irrigation was conducted under professional supervision; and (3) water treatment was the same for all plants. On May 15, 20, and 25, 2021, forty-five lettuces of each cultivar were selected, transferred into flowerpots, and taken to a laboratory near the field for HSI. After each lettuce was imaged, its juice was obtained as follows: (1) the roots were removed to obtain the leaves; (2) a hand-crank juicer (LKM-ZZ01, Like-me Technology Co., Ltd., China) was used to extract the juice; and (3) the juice was stored in a centrifuge tube (capacity: 50 ml). Because the labels of eighteen samples were lost during the experiment, a total of 387 lettuce juices (3 cultivars × 3 dates × 45 plants − 18 = 387) were analyzed (Supplementary Table S1). A digital refractometer (PAL-1, ATAGO Co., Ltd., Japan) and a pH meter (206pH1, Testo, Germany) were used to measure the SSC and pH of the lettuce juices, respectively.

The Vis–NIR hyperspectral images of lettuce plants were acquired in reflectance mode in a dark room (length: 1.5 m, width: 1.5 m, height: 2.5 m). The HSI system consisted of the following modules: (1) a spectrograph (GaiaField-V10E, Dualix Spectral imaging, China), (2) a CCD camera, (3) four tungsten halogen lamps, and (4) a computer (Ins 15-7510-R1645S, Dell, United States) with image acquisition software (Specview, Dualix Spectral imaging, China). The spectrograph uses built-in push-broom line scanning and covers a spectral range of 400–1,000 nm with 256 spectral bands at a spectral resolution of 2.8 nm. The CCD camera has a spatial resolution of 696 pixels per scan line and was equipped with a 23 mm lens. The lamps provide 350–2,500 nm light with a power of 50 W. The distance between the lettuce plants and the lens was set to 1 m, and the angle between the lamps and the camera was set to 45° to illuminate the imaging area sufficiently. The images (dimensions: 775 × 696 × 256) were acquired with an exposure time of 30 ms, a frame rate of 14 frames per second, a gain of 1, a spatial binning of 2, and a spectral binning of 4.

The raw hyperspectral images were distorted because the system images in push-broom mode and the non-planar camera lens and the spectrometer are separate components. The raw images were therefore lens-corrected using the lens correction function provided by Specview. The corrected images were then calibrated to remove the effects of uneven light distribution and sensor dark current (Mishra et al., 2019; Gao et al., 2021). A flat polyvinyl chloride (PVC) board with 99% reflectivity was scanned as the white reference to correct for illumination variation, and the dark current of the hyperspectral sensor was removed using a dark reference. The following calibration formula was used:

$$R = \frac{R_{raw} - R_d}{R_w - R_d}$$

where R, Rraw, Rw, and Rd represent the calibrated image, the raw hyperspectral image, the white reference image acquired from the PVC board with 99% reflectance, and the dark reference image obtained by switching off the lamps and covering the camera lens, respectively.
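The flat-field calibration R = (Rraw − Rd)/(Rw − Rd) is applied pixel by pixel and band by band. The NumPy sketch below assumes the cube is stored as (rows, columns, bands) and that the white and dark references are single scan lines that broadcast over all rows; the function name and the small `eps` guard on the denominator are illustrative choices, not part of the Specview workflow described in the text.

```python
import numpy as np


def calibrate_reflectance(raw, white, dark, eps=1e-12):
    """Flat-field calibration R = (Rraw - Rd) / (Rw - Rd).

    raw:   raw cube, shape (rows, cols, bands)
    white: white reference, broadcastable to raw, e.g. shape (1, cols, bands)
    dark:  dark reference, broadcastable to raw
    eps:   floor on the denominator to avoid division by zero (assumption)
    """
    raw = np.asarray(raw, dtype=np.float64)
    white = np.asarray(white, dtype=np.float64)
    dark = np.asarray(dark, dtype=np.float64)
    denominator = np.maximum(white - dark, eps)
    return (raw - dark) / denominator


# Synthetic 2 x 3 pixel cube with 4 bands (illustrative numbers only)
raw = np.full((2, 3, 4), 600.0)
white = np.full((1, 3, 4), 1100.0)
dark = np.full((1, 3, 4), 100.0)
R = calibrate_reflectance(raw, white, dark)
print(R[0, 0])  # → [0.5 0.5 0.5 0.5]
```

A pixel equal to the dark reference maps to 0 and one equal to the white reference maps to 1. Some workflows also multiply by the board's nominal reflectance (0.99 here); the formula in the text does not include that factor.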

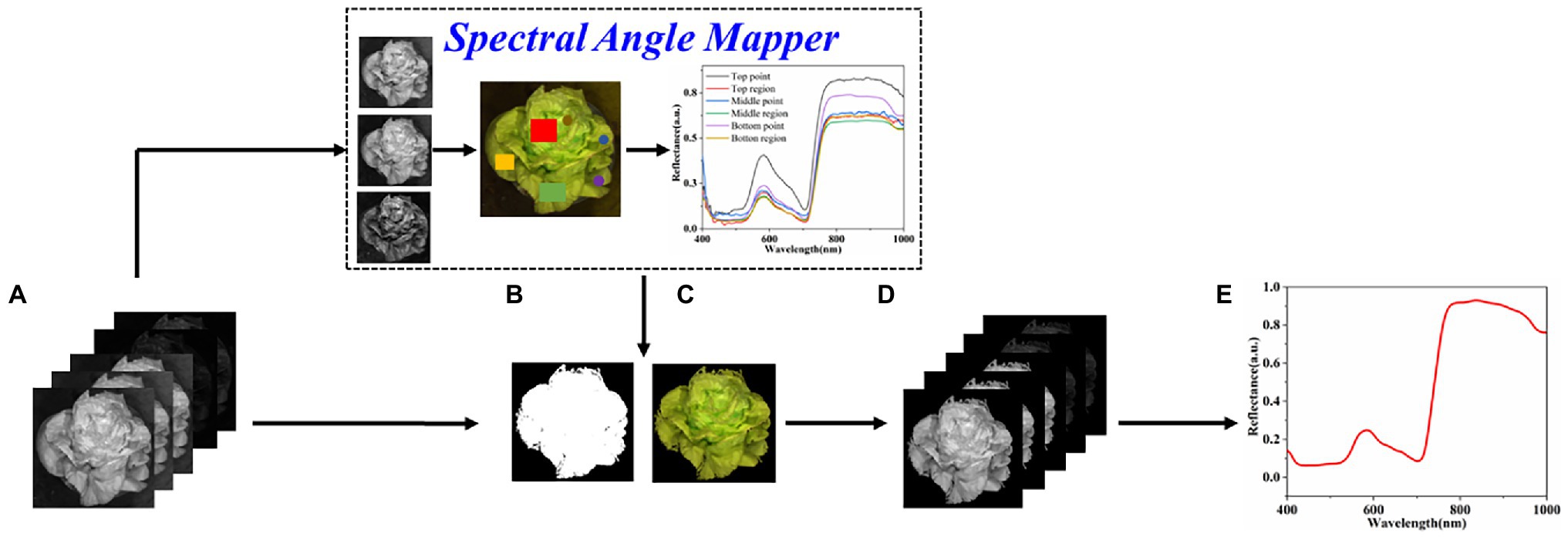

Differences in plant height cause significant differences in spectral reflectance and brightness across plant parts, so common threshold segmentation methods cannot adequately extract the region of interest (ROI) of the entire canopy. In this study, the ROI mask was obtained from the calibrated hyperspectral image using the spectral angle mapper (SAM; Kim, 2021). SAM was conducted in ENVI 5.3 software (ITT Visual Information Solutions, Boulder, CO, United States) based on six reference spectra extracted from the top point, top region, middle point, middle region, bottom point, and bottom region of the plant (Figure 2). The mask was multiplied by the calibrated image to obtain the ROI image, and the mean spectrum was calculated by averaging the spectra of all pixels in the ROI for further modeling analysis.

Figure 2. The process of plant spectra extraction. (A) Calibrated hyperspectral images, (B) binary plant mask, (C) masked RGB plant, (D) 256 bands corresponding to the ROI, and (E) extracted plant mean reflectance spectrum.
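The SAM masking and averaging steps can be sketched in NumPy as follows. SAM compares the angle between spectra rather than their magnitude, which is why it tolerates the brightness differences between upper and lower canopy parts. The threshold `max_angle = 0.1` rad, the rule of accepting a pixel if it is close to any reference, and the synthetic cube are assumptions for illustration; the study performed this step in ENVI 5.3.

```python
import numpy as np


def spectral_angles(cube, references):
    """Angle (radians) between every pixel spectrum and each reference spectrum.

    cube: (rows, cols, bands); references: (n_refs, bands).
    Returns an array of shape (rows, cols, n_refs).
    """
    rows, cols, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(np.float64)
    refs = np.asarray(references, dtype=np.float64)
    dots = pixels @ refs.T
    norms = np.linalg.norm(pixels, axis=1, keepdims=True) * np.linalg.norm(refs, axis=1)
    cosines = np.clip(dots / np.maximum(norms, 1e-12), -1.0, 1.0)
    return np.arccos(cosines).reshape(rows, cols, -1)


def sam_roi(cube, references, max_angle=0.1):
    """Binary plant mask (pixel within max_angle of ANY reference), the masked
    cube, and the mean spectrum over the ROI pixels."""
    mask = (spectral_angles(cube, references) <= max_angle).any(axis=-1)
    roi = cube * mask[..., None]             # mask multiplied by the calibrated cube
    mean_spectrum = cube[mask].mean(axis=0)  # average over plant pixels only
    return mask, roi, mean_spectrum


# Synthetic 2 x 2 cube, 3 bands: two "plant" pixels with the same spectral shape
# but different brightness, and two flat "soil" pixels
cube = np.array([[[0.1, 0.5, 0.4], [0.3, 0.3, 0.3]],
                 [[0.2, 1.0, 0.8], [0.3, 0.3, 0.3]]])
references = np.array([[0.1, 0.5, 0.4]])  # stand-in for the six canopy references
mask, roi, mean_spectrum = sam_roi(cube, references)
print(mask.tolist())  # → [[True, False], [True, False]]
print(mean_spectrum)
```

The brighter plant pixel is still accepted because it is exactly parallel to the reference, whereas an intensity threshold tuned for the darker pixel could treat it differently.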

Deep learning models are useful in the HTPP field owing to their excellent information-mining ability (Wang et al., 2019). For instance, they have been used for leaf counting (Aich and Stavness, 2017), image segmentation (Song et al., 2020), and quantitative or qualitative analysis (Wang et al., 2017; Kerkech et al., 2020). However, deep learning combined with spectroscopy has rarely been used to quantify plant phenotyping traits. Herein, models based on two networks (2DCNN and FCNN) were developed for SSC and pH analysis of lettuces to assess the prediction accuracy of different network architectures for plant phenotyping traits.

A CNN emulates the visual perception mechanisms of living organisms (Fu et al., 2020) and can learn features from data with a grid-like topology, such as image pixels and audio. Because convolution kernel parameters are shared within each hidden layer and inter-layer connections are sparse, the computational cost is small; CNNs are therefore stable and require no additional feature engineering. In a preliminary investigation, a model built by linearly stacking convolutional layers performed poorly, possibly because it extracted the raw spectral features insufficiently. Herein, an improved version of the naïve Inception module was introduced (Figures 3A,B). The CNN and FCNN layers of Deep2D used ELU and linear activation functions, respectively. Deep2D used the Adam optimizer, root mean square error (RMSE) as the loss, and mean absolute error (MAE) as the metric. The Inception module was introduced because: (1) convolutional kernels of different sizes have receptive fields of different sizes, enabling features to be learned at multiple scales; (2) the final concatenation operation fuses the multi-scale features; and (3) the depth and width of the network are well balanced, preventing the network from falling into saturation. As shown in Figure 3B, features at three scales were extracted and then concatenated. Because the concatenated features were high-dimensional, a fully connected module was used to reduce the feature dimension and improve the robustness of Deep2D.
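The multi-scale idea behind the Inception module can be illustrated with a small NumPy sketch: parallel convolutions with 1 × 1, 3 × 3, and 5 × 5 kernels see receptive fields of different sizes, and their outputs are concatenated into one multi-scale feature map. The text does not state how a 1-D spectrum is arranged as 2-D input, so reshaping the 256 bands into a 16 × 16 grid is purely an assumption here. Each branch has a single random, untrained filter; the actual Deep2D layers and hyperparameters are listed in Supplementary Table S2.

```python
import numpy as np


def conv2d_same(x, kernel):
    """Single-channel 2-D cross-correlation with zero 'same' padding, as in CNN layers."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(x, ((ph, ph), (pw, pw)))
    out = np.empty(x.shape, dtype=np.float64)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out


def elu(x, alpha=1.0):
    """ELU activation; expm1 on the clipped input avoids overflow warnings."""
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))


def multiscale_block(x, kernels):
    """Inception-style block: parallel branches with different kernel sizes,
    each followed by ELU, then concatenated along a channel axis."""
    return np.stack([elu(conv2d_same(x, k)) for k in kernels], axis=-1)


rng = np.random.default_rng(0)
spectrum = rng.random(256)          # stand-in for one mean reflectance spectrum
image = spectrum.reshape(16, 16)    # ASSUMPTION: 256 bands arranged as 16 x 16
kernels = [rng.normal(size=(s, s)) for s in (1, 3, 5)]  # three receptive-field sizes
features = multiscale_block(image, kernels)
print(features.shape)  # → (16, 16, 3)
```

In a trained network each branch would hold many learned filters (the study used TensorFlow 2.4.1), but the concatenation step works the same way: the channel axis grows with the number of branches.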

The FCNN model is a multi-layer perceptron (Scabini and Bruno, 2021). The principle of the perceptron is to find the most logical and robust hyperplane between classes. As shown in Figure 4A, unlike a traditional perceptron, each node of the FCNN model is connected to all nodes in the next layer. An FCNN usually has multiple hidden layers. Although adding hidden layers can better separate data features, too many hidden layers increase training time and can cause overfitting. Herein, dropout was introduced to prevent overfitting. The structure of DeepFC is shown in Figure 4B: the feature dimension is first scaled up from the input layer and then scaled down. Dropout was applied to the first two layers after the input. The linear activation function was applied to the hidden layers of DeepFC, and its optimizer, loss, and metric were the same as those of Deep2D.
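A minimal NumPy sketch of a DeepFC-style forward pass shows the three features described above: fully connected layers whose width first grows and then shrinks, linear activations, and dropout on the first two layers after the input. The layer widths and the dropout rate of 0.2 are hypothetical (the real values are in Supplementary Table S3). The dropout is the common "inverted" variant, as in Keras's `Dropout` layer, which rescales the kept units during training so that inference needs no correction.

```python
import numpy as np

rng = np.random.default_rng(42)


def dense(x, w, b):
    """Fully connected layer with a linear activation."""
    return x @ w + b


def dropout(x, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest
    during training; identity at inference."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


# HYPOTHETICAL widths: 256 input bands scaled up, then down to one output (SSC or pH)
widths = [256, 512, 1024, 256, 64, 1]
params = [(rng.normal(scale=0.02, size=(m, n)), np.zeros(n))
          for m, n in zip(widths[:-1], widths[1:])]


def deepfc_forward(x, training=False, rate=0.2):
    h = x
    for idx, (w, b) in enumerate(params):
        h = dense(h, w, b)
        if idx < 2:  # dropout on the first two layers after the input
            h = dropout(h, rate, training, rng)
    return h


batch = rng.random((4, 256))       # batch size 4, as selected in the study
print(deepfc_forward(batch).shape)  # → (4, 1)
```

Calling `deepfc_forward(batch, training=True)` activates dropout, so repeated calls give different outputs; with `training=False` the output is deterministic.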

Three linear multivariate analysis methods [PLSR, locally weighted regression (LWR), and multiple linear regression (MLR)] and two nonlinear methods [ANN and support vector regression (SVR)] were used to establish models for comparison with the proposed deep learning models (Deep2D and DeepFC).

PLSR models multiple dependent variables Y against multiple independent variables X. It extracts principal components from X and Y while maximizing the covariance between the components extracted from X and those extracted from Y (Yu et al., 2015). Herein, X and Y represent the spectra and the predicted values (SSC or pH), respectively. LWR is a nonparametric method for local regression analysis: it divides the samples into cells, performs polynomial fitting on the samples in each cell, and repeats the process to obtain weighted regression curves in the various cells. Finally, the centers of these regression curves are connected to form a complete regression curve (Raza and Zhong, 2019). MLR models the predicted values as a weighted sum of the features. Because practical problems usually involve multiple features, MLR is more suitable than univariate linear regression for practical work (Chung et al., 2021).
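PLSR and MLR can be made concrete with a NumPy sketch: PLS1 (a single response, SSC or pH) fitted with the textbook NIPALS algorithm, and MLR as ordinary least squares with an intercept. This is a generic formulation on synthetic data, not the MATLAB implementation used in the study; LWR is omitted because the text does not give its weighting or neighborhood settings.

```python
import numpy as np


def pls1_fit(X, y, n_components):
    """PLS1 regression via NIPALS. Returns (x_mean, y_mean, coefficients)."""
    X = np.asarray(X, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xk, yk = X - x_mean, y - y_mean  # centred, then deflated each round
    W, P, Q = [], [], []
    for _ in range(n_components):
        w = Xk.T @ yk
        w /= np.linalg.norm(w)       # weight: direction of maximum covariance with y
        t = Xk @ w                   # scores
        tt = t @ t
        p = Xk.T @ t / tt            # X loadings
        q = (yk @ t) / tt            # y loading
        Xk = Xk - np.outer(t, p)     # deflate X and y
        yk = yk - q * t
        W.append(w)
        P.append(p)
        Q.append(q)
    W, P, Q = np.array(W).T, np.array(P).T, np.array(Q)
    coef = W @ np.linalg.solve(P.T @ W, Q)  # B = W (P^T W)^-1 q
    return x_mean, y_mean, coef


def pls1_predict(model, X):
    x_mean, y_mean, coef = model
    return y_mean + (np.asarray(X, dtype=np.float64) - x_mean) @ coef


def mlr_fit_predict(X_train, y_train, X_new):
    """MLR: ordinary least squares with an intercept column."""
    A = np.column_stack([np.ones(len(X_train)), X_train])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return np.column_stack([np.ones(len(X_new)), X_new]) @ coef


rng = np.random.default_rng(0)
X = rng.normal(size=(40, 6))  # synthetic "spectra": 40 samples, 6 bands
y = X @ np.array([1.0, -2.0, 0.5, 0.0, 3.0, 1.5]) + 0.1 * rng.normal(size=40)
pls_full = pls1_fit(X, y, n_components=6)  # as many components as bands
print(np.allclose(pls1_predict(pls_full, X), mlr_fit_predict(X, y, X)))
```

With as many components as bands, PLS reproduces the MLR fit. With fewer components (normally tuned on the validation set) it regularizes the solution, which matters here because there are more wavelengths (256) than calibration samples (219), so ordinary least squares has no unique solution.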

An artificial neural network abstracts the information processing of biological neural networks into simple models, and different networks are formed by different connection patterns. It is a computational model consisting of many interconnected nodes: each node applies a specific output function (activation function), and each connection between two nodes carries a weight applied to the signal passing through it (Osco et al., 2019). SVR is a key application branch of the support vector machine. It seeks an optimal hyperplane that minimizes the total deviation of all sample points from the hyperplane and then fits the data with this hyperplane (Zhang et al., 2017).
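The text does not name the SVR variant. Assuming the common ε-SVR formulation, the "deviation" from the hyperplane is measured with the ε-insensitive loss sketched below in pure Python: errors inside a ±ε tube cost nothing, and larger errors are penalized linearly. Full ε-SVR also penalizes the weight norm and is solved as a quadratic program, both omitted here; the ε value is hypothetical.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Mean epsilon-insensitive loss of epsilon-SVR: deviations inside the
    +/- epsilon tube cost nothing; larger deviations are penalized linearly."""
    losses = [max(0.0, abs(t - p) - epsilon) for t, p in zip(y_true, y_pred)]
    return sum(losses) / len(losses)


# Reference values vs. synthetic predictions: only the last two fall outside the tube
print(epsilon_insensitive_loss([1.0, 2.0, 3.0], [1.05, 2.5, 2.0], epsilon=0.1))
```

Because small errors are ignored, only the samples on or outside the tube (the support vectors) shape the fitted hyperplane.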

Uneven illumination and background interference introduce noise into the reflectance spectra extracted from hyperspectral images. Moreover, high-dimensional spectra include wavelengths unrelated to SSC and pH, and adjacent wavelengths are highly correlated. These factors can reduce the accuracy of models developed with multivariate analysis methods. Spectral pretreatment methods, such as moving window smoothing (MWS), the Savitzky–Golay filter (SG), the first-order derivative (FDR), the second-order derivative (SDR), and WT, together with wavelength selection methods such as CARS, may address these problems and improve model performance.

MWS moves a smoothing window along each spectrum and averages the values within it, thereby denoising the spectrum. SG is a filter based on a local least-squares polynomial fit along the spectrum; its most important property is that it preserves the shape and width of spectral features while filtering out noise. Derivative spectra, such as FDR and SDR, can effectively eliminate background interference and improve the signal-to-noise ratio of the spectra. CARS uses the absolute values of the regression coefficients as an index of wavelength importance and can effectively select the optimal combination of wavelengths.
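MWS, SG smoothing, and derivative pretreatment can be sketched in NumPy as below, assuming odd window widths, edge padding by repeating the end values, and derivatives taken per band index. The window sizes are hypothetical, and the text does not say whether FDR and SDR were computed by simple differencing or with SG derivatives; the SG-derivative variant is used here. For production work, `scipy.signal.savgol_filter` implements the same filter with more careful edge handling.

```python
import math

import numpy as np


def moving_window_smooth(spectrum, window=5):
    """MWS: each band becomes the mean of a centred window (odd width).
    Edges are padded by repeating the end values (a simplifying assumption)."""
    half = window // 2
    padded = np.pad(np.asarray(spectrum, dtype=np.float64), half, mode="edge")
    return np.convolve(padded, np.ones(window) / window, mode="valid")


def savgol_coefficients(window, polyorder, deriv=0):
    """Least-squares polynomial weights for the centre point of the window."""
    half = window // 2
    offsets = np.arange(-half, half + 1)
    vander = np.vander(offsets, polyorder + 1, increasing=True)  # columns x^0 .. x^polyorder
    # Row `deriv` of the pseudo-inverse estimates the x^deriv coefficient;
    # multiplying by deriv! gives the deriv-th derivative at the centre.
    return np.linalg.pinv(vander)[deriv] * math.factorial(deriv)


def savgol(spectrum, window=7, polyorder=2, deriv=0):
    """SG smoothing (deriv=0) or SG derivative (deriv=1 for FDR, 2 for SDR),
    per band index (unit spacing)."""
    half = window // 2
    coeffs = savgol_coefficients(window, polyorder, deriv)
    padded = np.pad(np.asarray(spectrum, dtype=np.float64), half, mode="edge")
    # np.convolve flips its kernel, so reverse the weights to get a sliding dot product
    return np.convolve(padded, coeffs[::-1], mode="valid")


rng = np.random.default_rng(1)
bands = np.linspace(400, 1000, 256)                 # 256 bands, 400-1,000 nm
clean = 0.4 + 0.3 * np.tanh((bands - 720) / 20)     # toy red-edge-like curve
noisy = clean + 0.01 * rng.normal(size=bands.size)
mws = moving_window_smooth(noisy, window=5)
sg = savgol(noisy, window=7, polyorder=2)
fdr = savgol(noisy, window=7, polyorder=2, deriv=1)
sdr = savgol(noisy, window=7, polyorder=2, deriv=2)
print(mws.shape, sg.shape, fdr.shape, sdr.shape)
```

Away from the edges, an SG filter of polynomial order 2 reproduces any quadratic exactly, which is the shape-preserving property the text refers to.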

The reflectance spectra of the 387 lettuces were divided into calibration and prediction sets at a ratio of 2:1 using the Kennard–Stone (KS) algorithm (Saptoro et al., 2012), and 15% of the calibration set was then randomly selected as the validation set. The calibration, validation, and prediction sets therefore contained 219, 39, and 129 samples, respectively. The SSC and pH values of the 387 lettuces are shown in Table 1. For the models established via multivariate analysis methods, the data sets were divided after pretreatment or wavelength selection. Spectra of 219 lettuces were used to develop the models, spectra of 39 lettuces to optimize model parameters, and spectra of 129 lettuces to evaluate the performance of the established models.
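The 2:1 Kennard–Stone split and the subsequent 15% validation draw can be sketched in NumPy as follows, with synthetic spectra standing in for the measured ones. The sketch also checks the sample arithmetic: 387 × 2/3 = 258 calibration candidates and 129 prediction samples; 15% of 258 ≈ 39 validation samples, leaving 219 for calibration. Euclidean distance on the spectra and the random seed are assumptions; the text does not state the distance metric.

```python
import numpy as np


def kennard_stone(X, n_select):
    """Kennard–Stone selection. Returns (selected_indices, remaining_indices)."""
    X = np.asarray(X, dtype=np.float64)
    sq = np.sum(X ** 2, axis=1)
    dist = np.sqrt(np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0))
    i, j = np.unravel_index(np.argmax(dist), dist.shape)  # the two most distant samples
    selected = [int(i), int(j)]
    remaining = [k for k in range(len(X)) if k not in selected]
    while len(selected) < n_select:
        # distance from each remaining sample to its nearest already-selected sample
        nearest = dist[np.ix_(remaining, selected)].min(axis=1)
        chosen = remaining[int(np.argmax(nearest))]  # the one farthest from the set
        selected.append(chosen)
        remaining.remove(chosen)
    return np.array(selected), np.array(remaining)


n_total = 387
n_cal_initial = round(n_total * 2 / 3)  # 2:1 split -> 258 calibration, 129 prediction
n_val = round(0.15 * n_cal_initial)     # 15% of 258 = 38.7 -> 39 validation
rng = np.random.default_rng(0)
spectra = rng.random((n_total, 256))    # synthetic stand-in for the mean spectra
cal_idx, pred_idx = kennard_stone(spectra, n_cal_initial)
val_idx = rng.choice(cal_idx, size=n_val, replace=False)
train_idx = np.setdiff1d(cal_idx, val_idx)
print(len(train_idx), len(val_idx), len(pred_idx))  # → 219 39 129
```

Kennard–Stone picks calibration samples that span the spectral space evenly, so the prediction set consists of samples lying inside that space rather than at its extremes.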

The performance of all established models was evaluated using the coefficient of determination and root mean square error for the calibration set (R²c, RMSEC), validation set (R²v, RMSEV), and prediction set (R²p, RMSEP), and the residual predictive deviation of the prediction set (RPD). The formulas for R², RMSE, and RPD were as follows:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_{p,i} - y_{r,i}\right)^2}{\sum_{i=1}^{n}\left(y_{r,i} - \bar{y}\right)^2}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_{p,i} - y_{r,i}\right)^2}$$

$$\mathrm{RPD} = \frac{\mathrm{SD}}{\mathrm{RMSEP}}$$

where yp, yr, and ȳ are the predicted values, the reference values, and the mean of the reference values, respectively; n is the number of samples; and SD is the standard deviation of the reference values of the prediction set. Smaller RMSEC, RMSEV, and RMSEP values and larger R²c, R²v, R²p, and RPD values indicate better model performance. Deep2D and DeepFC were implemented in Python 3.7.10 with TensorFlow 2.4.1. The multivariate analysis methods, spectral pretreatment, and wavelength selection were conducted in MATLAB 2020 (MathWorks, Inc., United States). All experiments were conducted on a DELL OptiPlex 7080 (Dell, Inc., United States) equipped with a 2.90 GHz Intel® Core™ i7-10700 processor, 32 GB of random-access memory, and an Nvidia Quadro P2200 graphics processing unit, running the Windows® 10 Home Edition 20H2 operating system.
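The evaluation metrics can be computed with the NumPy sketch below, which assumes the conventional definitions R² = 1 − Σ(yp − yr)²/Σ(yr − ȳ)², RMSE = √(Σ(yp − yr)²/n), and RPD = SD/RMSEP. Using the sample standard deviation (ddof = 1) for SD is an assumption; the text does not specify it. The results section treats an RPD above 3 as excellent.

```python
import numpy as np


def r2(y_ref, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_ref = np.asarray(y_ref, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    ss_res = np.sum((y_pred - y_ref) ** 2)
    ss_tot = np.sum((y_ref - y_ref.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def rmse(y_ref, y_pred):
    """Root mean square error over n samples."""
    y_ref = np.asarray(y_ref, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    return float(np.sqrt(np.mean((y_pred - y_ref) ** 2)))


def rpd(y_ref, y_pred):
    """SD of the reference values divided by the RMSE (RMSEP on the prediction set).
    Sample SD (ddof=1) is an assumption; the text does not specify it."""
    return float(np.std(y_ref, ddof=1) / rmse(y_ref, y_pred))


y_ref = [1.0, 2.0, 3.0, 4.0]   # synthetic reference values
y_pred = [1.5, 2.5, 2.5, 3.5]  # synthetic predictions, each off by 0.5
print(r2(y_ref, y_pred), rmse(y_ref, y_pred), round(rpd(y_ref, y_pred), 4))
```

For these toy values SS_res = 1.0 and SS_tot = 5.0, so R² = 0.8, RMSE = 0.5, and RPD = √(5/3)/0.5 ≈ 2.58.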

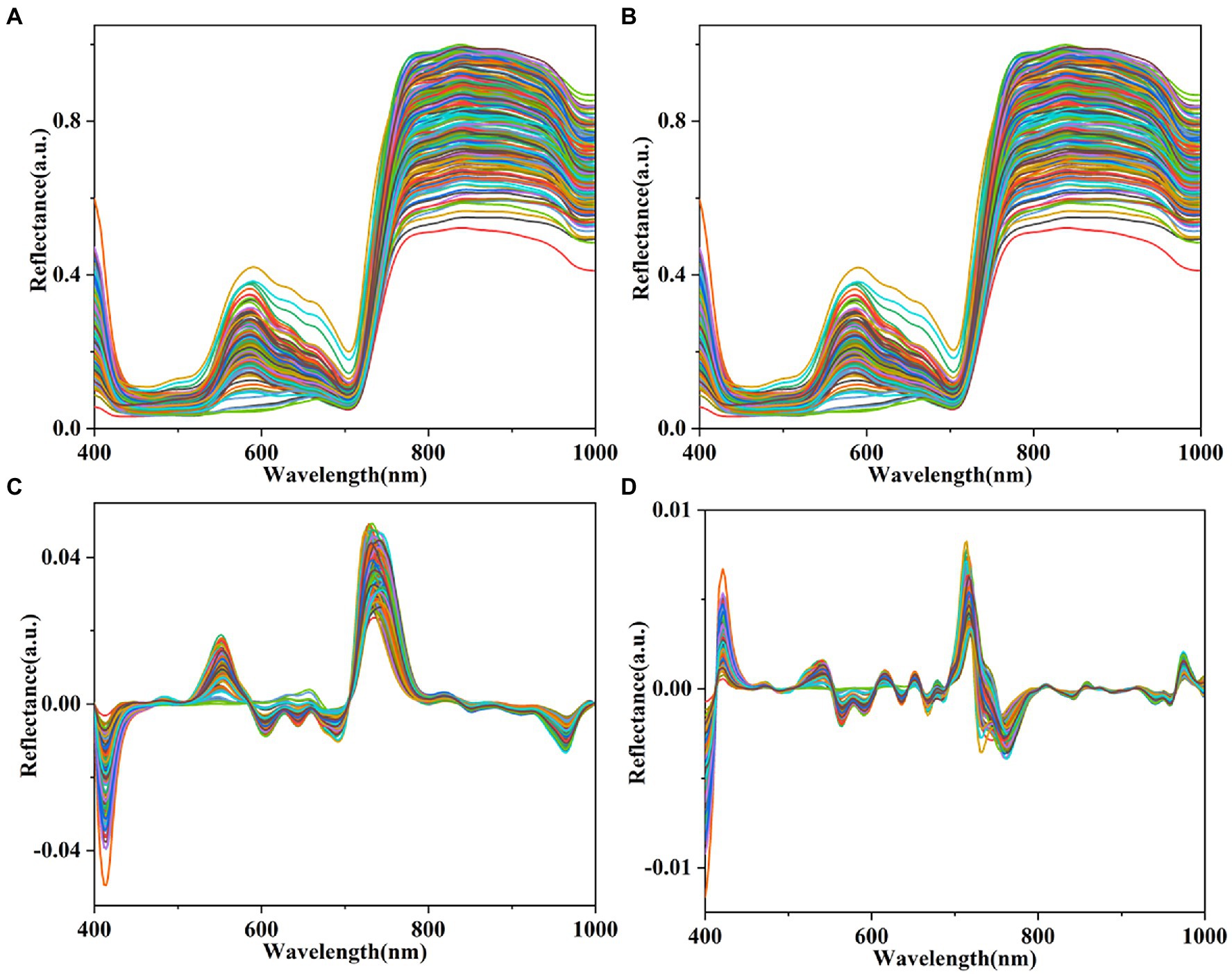

The spectral reflectance of the lettuces in the 400–1,000 nm range obtained from the hyperspectral images is shown in Figure 5A. The spectral reflectance signature of plants provides information on biophysical, physiological, and chemical features (Rehman et al., 2020). The band around 580 nm is related to xanthophylls (Pacumbaba and Beyl, 2011). The 710–760 nm (red-edge) band and the band around 700 nm are related to chlorophyll (ElMasry et al., 2007; Pacumbaba and Beyl, 2011). The band around 980 nm in the NIR region is related to the O–H bonds of water (Zhang et al., 2018). The spectra of all lettuce plants pretreated using MWS, FDR, and SDR are shown in Figures 5B–D. Four wavelength regions (400–435 nm, 515–650 nm, 690–780 nm, and 960–1,000 nm) show distinct variation across all plants. The MWS-pretreated reflectance values differed little from the raw values, whereas the variation of the FDR- and SDR-pretreated values was markedly enhanced relative to the raw values, especially for SDR. A previous study reported similar results (Eshkabilov et al., 2021).

Figure 5. Mean spectral reflectance of plants. (A) Raw spectra and spectra pretreated by MWS (B), FDR (C), and SDR (D).

The spectral reflectance of lettuces with different SSC and pH values is shown in Figure 6. Reflectance differed markedly among lettuces with different SSC and pH, indicating that reflectance spectroscopy can be used to detect SSC and pH in lettuce. In the 600–800 nm region, reflectance changed irregularly with increasing SSC and pH. In the 400–500 nm and 800–1,000 nm regions, reflectance first increased, then decreased, and finally increased again with increasing SSC. In contrast, reflectance in the 800–1,000 nm region showed the opposite trend with increasing pH.

The SSC and pH of lettuces were predicted using regression models developed with Deep2D and DeepFC. The RMSE of the calibration, validation, and prediction sets was used as the criterion for optimizing hyperparameters such as batch size and learning rate. A batch size of 4 and a learning rate of 0.0001 gave relatively low RMSE and were selected to train the models. The detailed hyperparameters of Deep2D and DeepFC are shown in Supplementary Tables S2 and S3, and the SSC and pH results are shown in Table 2.

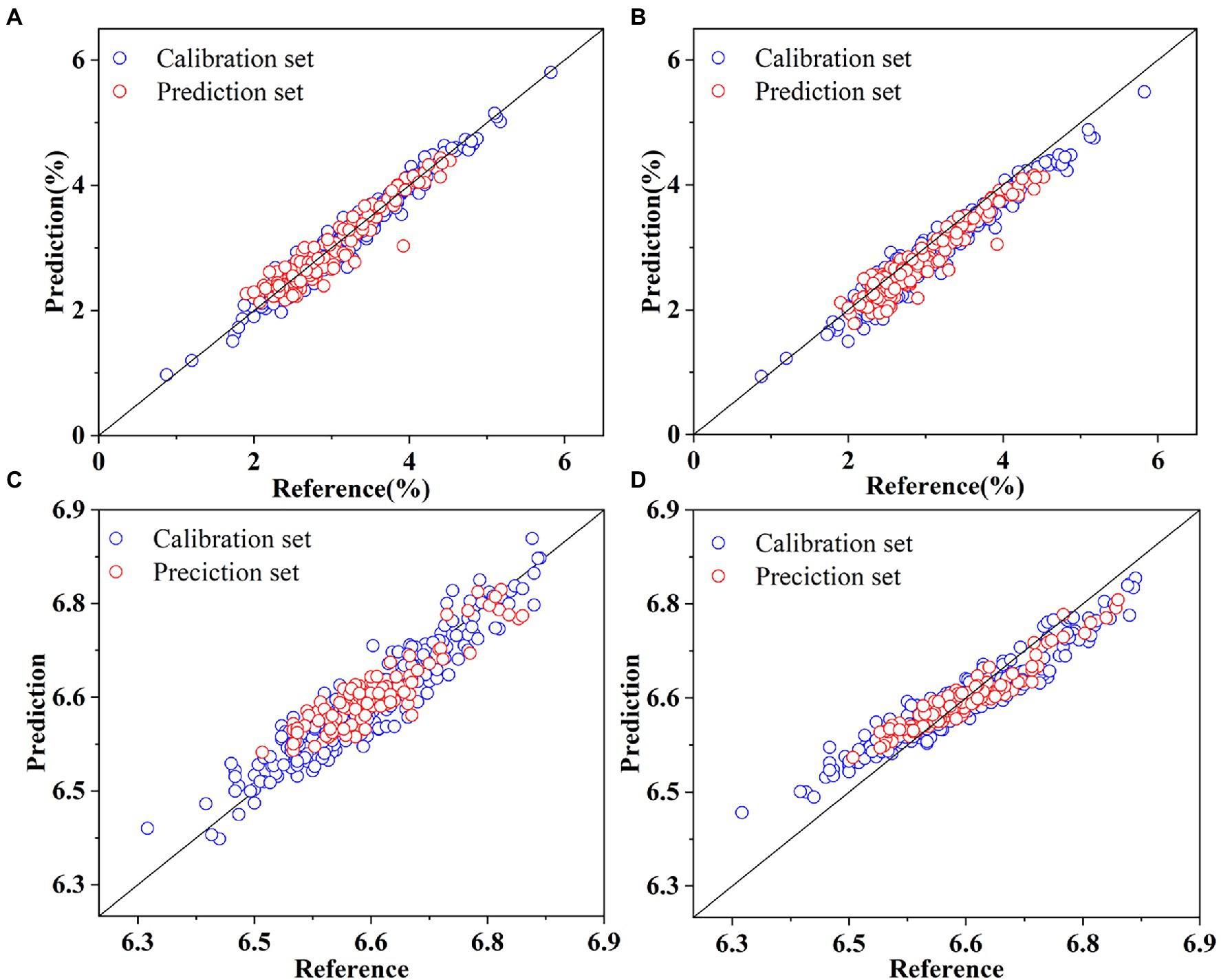

Deep2D obtained better results for SSC prediction than DeepFC (R²c: 0.9642, RMSEC: 0.1500, R²v: 0.8974, RMSEV: 0.1671, R²p: 0.9030, RMSEP: 0.1969, and RPD: 3.2237). With an RPD greater than 3, Deep2D performed excellently in predicting lettuce SSC. This suggests that the multi-scale SSC-related features extracted by Deep2D contain richer information than the single-scale features extracted by DeepFC. The robustness of Deep2D and DeepFC was similar in terms of the difference between R²c and R²p. However, DeepFC was more accurate for pH (R²c: 0.8670, RMSEC: 0.0342, R²v: 0.8674, RMSEV: 0.0257, R²p: 0.8490, RMSEP: 0.0260, and RPD: 2.5839). The multi-scale pH-related features extracted by Deep2D may contain redundancy that reduces the robustness of the model; DeepFC was more robust than Deep2D because it had a smaller difference between R²c and R²p. The predictions for SSC and pH in the calibration and prediction sets based on Deep2D and DeepFC are shown in Figure 7. For SSC, the values predicted by Deep2D were generally closer to the reference values. For pH, Deep2D had smaller errors in the calibration set, whereas DeepFC had smaller errors in the prediction set, and the prediction-set error matters more in practical applications.

Figure 7. Prediction of SSC based on Deep2D (A) and DeepFC (B), and pH based on Deep2D (C) and DeepFC (D).

The two deep learning models differed markedly in their ability to predict different phenotyping traits. Follow-up studies should therefore identify network structures suited to predicting different phenotyping traits, and a general network structure should be sought for simultaneous, accurate prediction of multiple traits. Many studies have combined deep learning models with HSI to predict plant phenotyping traits with good results. For instance, Rehman et al. (2020) used an end-to-end deep model to predict the RWC of maize plants and obtained an R² of 0.872. Zhou et al. (2020) detected heavy metals in lettuce using a stacked convolutional autoencoder (R²p: 0.9418, RMSEP: 0.04123, and RPD: 3.214). Sun et al. (2019) estimated the cadmium content of lettuce leaves using a deep belief network and obtained optimal performance (R²p = 0.9234, RMSEP = 0.5423, and RPD = 3.5894). Most previous studies were conducted at the leaf scale of lettuce and obtained satisfactory results. Although the phenotyping traits of the lettuce canopy are also crucial for actual production and consumer choice, studies at the canopy scale are rare. This study and the studies above demonstrate that deep learning models combined with HSI can effectively predict plant phenotyping traits.

Multivariate analysis methods (PLSR, LWR, MLR, ANN, and SVR) were used to analyze the SSC and pH of the lettuces to benchmark the performance of Deep2D and DeepFC. Compared with deep learning models, multivariate analysis methods are much less able to separate noise from useful information in spectra. Multiple pretreatment methods (MWS, SG, FDR, SDR, and WT) were therefore applied to the spectra to reduce these negative effects before the regression models were built. The SSC and pH prediction results are shown in Tables 3 and 4, and the corresponding parameter settings are shown in Supplementary Tables S4 and S5.

For SSC analysis, the FDR- and SDR-pretreated spectra gave better results than the other spectra across the multivariate analysis methods, possibly because FDR and SDR enhanced the differences between spectra of lettuces with different SSC. All pretreatment methods improved the precision of the MLR models, and SDR gave the best MLR results (R²c: 0.9476, RMSEC: 0.1801, R²v: 0.9320, RMSEV: 0.1987, R²p: 0.8148, RMSEP: 0.2910, and RPD: 2.3327). The SVR models performed poorly compared with the other models; only the FDR spectra yielded an R² above 0.8. The PLSR and LWR models were relatively robust, and the LWR model with SDR spectra gave the best results (R²c = 0.9183, RMSEC = 0.2209, R²v = 0.9249, RMSEV = 0.2040, R²p = 0.8587, RMSEP = 0.2657, and RPD = 2.6705). However, the best SSC results obtained by multivariate analysis methods were still lower than those of Deep2D.

The ANN models performed worst for pH prediction. Compared with the raw spectra, only SG improved their accuracy, giving an R²c of 0.9090, RMSEC of 0.0279, R²v of 0.8476, RMSEV of 0.0316, R²p of 0.6724, RMSEP of 0.0396, and RPD of 1.7546. Although all pretreatment methods enhanced the robustness of the MLR models, the R²p of the best MLR model was below 0.7. The PLSR and LWR models performed relatively well, and the results of PLSR, LWR, and MLR were similar to their results for SSC. MWS, SG, and WT improved the accuracy of both the PLSR and LWR models, and the LWR model with MWS spectra gave the best results (R²c: 0.8734, RMSEC: 0.0329, R²v: 0.8511, RMSEV: 0.0313, R²p: 0.7380, RMSEP: 0.0354, and RPD: 1.9619).

The results of the models established by multivariate analysis methods showed that different pretreatment methods did not always improve the same model, and that the same pretreatment method affected different models differently. It is therefore important to select appropriate multivariate analysis and pretreatment methods. Unlike deep learning models, multivariate analysis methods require manual feature engineering before modeling, which reduces data-processing throughput on HTPP platforms. The optimal SSC and pH prediction results based on multivariate analysis methods are shown in Figure 8; fewer data points are concentrated near the fitted line than in the optimal results shown in Figure 7. In summary, the models developed with multivariate analysis methods performed considerably worse than the proposed deep learning models, indicating that deep learning algorithms can automatically learn to extract features from raw data and develop more accurate models than multivariate analysis methods. Some previous studies reported similar results (Singh et al., 2018; Kanjo et al., 2019; Rehman et al., 2020).

The reflectance spectra extracted from hyperspectral images have narrow bands and high dimensionality, so adjacent wavelengths are highly correlated and many wavelengths carry information unrelated to SSC and pH (Tian et al., 2018). This can decrease the precision of models established via multivariate analysis methods and increase computational complexity. Herein, CARS was used to select key wavelengths from the pretreated spectra to obtain relatively better results. The three preferred pretreatment methods for each trait were used for wavelength selection (SG, FDR, and SDR for SSC; MWS, SG, and WT for pH). Models were then established with the better-performing multivariate analysis methods (PLSR and LWR), and their results were compared with those obtained from the full-range spectra.
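CARS couples Monte Carlo sampling of calibration subsets with an exponentially decreasing function (EDF) that shrinks the number of retained wavelengths at each run, dropping those with the smallest absolute PLS regression coefficients. The pure-Python sketch below covers only the deterministic part: the EDF schedule r_i = a·e^(−k·i), with a and k chosen so that all P wavelengths are kept at the first run and only 2 at the last, plus the ranking by coefficient magnitude. The Monte Carlo subsets, the PLS fits, the adaptive reweighted sampling step, and the cross-validated choice of the final subset are omitted, and the run count of 50 is hypothetical.

```python
import math


def cars_retention_schedule(n_wavelengths, n_runs):
    """EDF of CARS: ratio of wavelengths retained after Monte Carlo run i (1-based),
    r_i = a * exp(-k * i), with a and k chosen so that r_1 = 1 and r_N = 2 / P."""
    a = (n_wavelengths / 2) ** (1 / (n_runs - 1))
    k = math.log(n_wavelengths / 2) / (n_runs - 1)
    return [a * math.exp(-k * i) for i in range(1, n_runs + 1)]


def edf_step(coefs, retained, ratio, n_total):
    """EDF-enforced elimination: keep the round(ratio * n_total) retained wavelengths
    with the largest |regression coefficient| (the random ARS step is omitted)."""
    n_keep = max(2, round(ratio * n_total))
    order = sorted(range(len(retained)), key=lambda idx: abs(coefs[idx]), reverse=True)
    return sorted(retained[idx] for idx in order[:n_keep])


schedule = cars_retention_schedule(n_wavelengths=256, n_runs=50)
counts = [max(2, round(r * 256)) for r in schedule]
print(counts[:5], counts[-3:])
```

For scale, the roughly 90% reduction in variables reported for CARS corresponds to keeping about 26 of the 256 bands.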

The results are shown in Table 5, and the specific parameter settings and selected wavelengths are shown in Supplementary Tables S6 and S7. For SSC, only the LWR model with SG-pretreated spectra improved (R²c: 0.9210, RMSEC: 0.2225, R²v: 0.8865, RMSEV: 0.2233, R²p: 0.8450, RMSEP: 0.2475, and RPD: 2.5400), while the number of variables decreased by about 90% after CARS. The other SSC models performed slightly worse with the selected wavelengths than with the full-range spectra. For pH, the PLSR models performed better with the wavelengths selected by CARS; the WT-pretreated spectra yielded the fewest wavelengths and better results (R²c: 0.7739, RMSEC: 0.0449, R²v: 0.7589, RMSEV: 0.0331, R²p: 0.7404, RMSEP: 0.0322, and RPD: 1.9626). Meanwhile, the LWR model with WT spectra achieved the highest R²p and RPD (R²c = 0.7628, RMSEC = 0.0459, R²v = 0.6461, RMSEV = 0.0382, R²p = 0.7477, RMSEP = 0.0330, and RPD = 2.0532) and was the only pH model based on multivariate analysis methods with an RPD > 2. However, DeepFC still outperformed the best model based on multivariate analysis methods. These results show that CARS selected different numbers of bands, and different specific bands, for different pretreated spectra and models. Compared with models based on multivariate analysis methods, deep learning algorithms therefore reduce the time and failure rate of manual feature extraction and are more suitable for application on HTPP platforms.

In this study, two end-to-end deep learning models based on 2DCNN and FCNN were proposed to predict the SSC and pH of the lettuce canopy, adding to research on canopy-scale phenotyping trait prediction in lettuce. In previous studies on lettuce phenotyping traits, manual feature engineering, such as pretreatment and wavelength selection, was required before the spectra were input into the model. In contrast, the proposed models take the raw mean reflectance spectra extracted from the hyperspectral images as input and directly output the SSC and pH of the lettuce canopy. The performance of the proposed models was compared with that of five multivariate analysis methods (PLSR, SVR, MLR, ANN, and LWR). For these five methods, various pretreatments (MWS, SG, FDR, SDR, and WT) were used to denoise the spectra, and CARS was used to remove redundant variables to improve model performance.
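As an illustration of the manual pretreatment step that the proposed models avoid, the sketch below implements moving-window smoothing and Savitzky-Golay (SG) smoothing and differentiation with NumPy. It assumes that MWS is a simple moving average and that FDR and SDR denote first- and second-derivative spectra, here computed with SG derivative filters. The window lengths, polynomial order, and edge padding are hypothetical choices, and the wavelet transform (WT) is not covered.

```python
import math

import numpy as np


def moving_window_smooth(spectrum, window=5):
    """Moving-average smoothing with edge padding (output length = input length)."""
    m = window // 2
    padded = np.pad(np.asarray(spectrum, dtype=float), m, mode="edge")
    return np.convolve(padded, np.ones(window) / window, mode="valid")


def savgol_coeffs(window, polyorder, deriv=0):
    """Savitzky-Golay weights from a least-squares polynomial fit over a centred window."""
    m = window // 2
    offsets = np.arange(-m, m + 1, dtype=float)
    A = np.vander(offsets, polyorder + 1, increasing=True)  # columns: 1, j, j**2, ...
    # Row `deriv` of pinv(A) yields the fitted coefficient of j**deriv;
    # the deriv-th derivative at the window centre is deriv! times that coefficient.
    return math.factorial(deriv) * np.linalg.pinv(A)[deriv]


def savgol(spectrum, window=11, polyorder=2, deriv=0, delta=1.0):
    """SG smoothing (deriv=0) or derivative spectra (deriv=1 or 2)."""
    m = window // 2
    padded = np.pad(np.asarray(spectrum, dtype=float), m, mode="edge")
    coeffs = savgol_coeffs(window, polyorder, deriv)
    # np.correlate slides the weights across the padded spectrum without flipping them
    return np.correlate(padded, coeffs, mode="valid") / delta ** deriv
```

In practice, `scipy.signal.savgol_filter` offers an equivalent, well-tested implementation with additional edge-handling modes. Each choice of window, order, and derivative is another setting that must be tuned by hand for every trait and model.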

The proposed Deep2D and DeepFC models outperformed all multivariate analysis methods. Deep2D gave the best results for SSC (R²P: 0.9030, RMSEP: 0.1969, and RPD: 3.2237), whereas DeepFC gave the best results for pH (R²P: 0.8490, RMSEP: 0.0260, and RPD: 2.5839). In addition, a previous study used PCA to predict the SSC and pH of lettuce with R² values of 0.88 and 0.81, respectively (Supplementary Table S8), and the proposed deep learning models also performed better than these PCA models. These results indicate that Deep2D and DeepFC do not require pretreatment or dimensionality reduction, because they can automatically extract features associated with SSC and pH from the raw reflectance spectra. Deep learning models can therefore predict SSC and pH better than multivariate analysis methods while reducing the time and error rate of feature selection in the analysis of plant phenotyping traits. However, suitable network structures, whether trait-specific or general, still need to be determined for the quantitative analysis of other plant phenotyping traits.
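To make the idea of an end-to-end model concrete, the sketch below trains a small fully connected network that maps raw reflectance spectra directly to a single trait value, with no pretreatment or wavelength selection. It is written in plain NumPy with full-batch gradient descent and a mean-squared-error loss. The function names, layer sizes, learning rate, and number of epochs are hypothetical, and the sketch does not reproduce the architecture or training settings of DeepFC or Deep2D.

```python
import numpy as np


def forward(Ws, bs, X):
    """Forward pass; returns the input and every layer's activation (ReLU hidden, linear output)."""
    acts = [X]
    for i, (W, b) in enumerate(zip(Ws, bs)):
        z = acts[-1] @ W + b
        acts.append(z if i == len(Ws) - 1 else np.maximum(z, 0.0))
    return acts


def train_fcnn(X, y, hidden=(64, 32), lr=1e-3, epochs=500, seed=0):
    """Fit a ReLU MLP to raw spectra X (samples x wavelengths) by full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    sizes = [X.shape[1], *hidden, 1]
    # He initialisation for ReLU layers
    Ws = [rng.normal(0.0, np.sqrt(2.0 / m), size=(m, k)) for m, k in zip(sizes[:-1], sizes[1:])]
    bs = [np.zeros(k) for k in sizes[1:]]
    target = np.asarray(y, dtype=float).reshape(-1, 1)
    losses = []
    for _ in range(epochs):
        acts = forward(Ws, bs, X)
        err = acts[-1] - target
        losses.append(float(np.mean(err ** 2)))   # MSE before this update
        grad = 2.0 * err / len(X)                  # dLoss/dOutput
        for i in reversed(range(len(Ws))):
            gW, gb = acts[i].T @ grad, grad.sum(axis=0)
            if i > 0:                              # back-propagate through the ReLU of layer i
                grad = (grad @ Ws[i].T) * (acts[i] > 0)
            Ws[i] -= lr * gW
            bs[i] -= lr * gb
    return Ws, bs, losses


def predict(Ws, bs, X):
    """Predicted trait value for each spectrum."""
    return forward(Ws, bs, X)[-1].ravel()
```

In practice a framework such as PyTorch or TensorFlow would normally be used, with mini-batches, an adaptive optimizer, and a held-out validation set for early stopping. The point here is only that the network's first layer receives every raw wavelength and learns its own weighting of them.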

Future studies should examine how plant phenotyping traits change over time in order to determine the optimal harvest time. In addition, because lettuce varieties differ in morphology, image features could be integrated into the input of the proposed models to evaluate their effect on model performance.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding authors.

SY, JF, and SS designed the research. SY, JF, XL, and WW conducted the experiments. SY analyzed the data and wrote the manuscript. SY and JF revised the manuscript. JF, XG, and CZ obtained project funding. All authors contributed to the article and approved the submitted version.

This research was funded by the Construction of Collaborative Innovation Center of Beijing Academy of Agricultural and Forestry Sciences (KJCX201917), Beijing Nova Program (Z211100002121065), and Science and Technology Innovation Special Construction Funded Program of Beijing Academy of Agriculture and Forestry Sciences (KJCX20210413).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2022.927832/full#supplementary-material

Aasen, H., Burkart, A., Bolten, A., and Bareth, G. (2015). Generating 3D hyperspectral information with lightweight UAV snapshot cameras for vegetation monitoring: From camera calibration to quality assurance. ISPRS J. Photogramm. Remote Sens. 108, 245–259. doi: 10.1016/j.isprsjprs.2015.08.002

Aich, S., and Stavness, I. (2017). Leaf counting with deep convolutional and deconvolutional networks. Proceedings of the IEEE International Conference on Computer Vision Workshops. IEEE. 2080–2089.

Chung, J., Son, M., Lee, Y., and Kim, S. (2021). Estimation of soil moisture using Sentinel-1 SAR images and multiple linear regression model considering antecedent precipitations. Korean J. Remote Sens. 37, 515–530. doi: 10.7780/kjrs.2021.37.3.12

De Corato, U. (2020). Improving the shelf-life and quality of fresh and minimally-processed fruits and vegetables for a modern food industry: a comprehensive critical review from the traditional technologies into the most promising advancements. Crit. Rev. Food Sci. Nutr. 60, 940–975. doi: 10.1080/10408398.2018.1553025

ElMasry, G., Wang, N., ElSayed, A., and Ngadi, M. (2007). Hyperspectral imaging for nondestructive determination of some quality attributes for strawberry. J. Food Eng. 81, 98–107. doi: 10.1016/j.jfoodeng.2006.10.016

Eshkabilov, S., Lee, A., Sun, X., Lee, C. W., and Simsek, H. (2021). Hyperspectral imaging techniques for rapid detection of nutrient content of hydroponically grown lettuce cultivars. Comput. Electron. Agric. 181:105968. doi: 10.1016/j.compag.2020.105968

Fu, L., Majeed, Y., Zhang, X., Karkee, M., and Zhang, Q. (2020). Faster R–CNN–based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosyst. Eng. 197, 245–256. doi: 10.1016/j.biosystemseng.2020.07.007

Furbank, R. T., Silva-Perez, V., Evans, J. R., Condon, A. G., Estavillo, G. M., He, W., et al. (2021). Wheat physiology predictor: predicting physiological traits in wheat from hyperspectral reflectance measurements using deep learning. Plant Methods 17, 1–15. doi: 10.1186/s13007-021-00806-6

Gao, D., Li, M., Zhang, J., Song, D., Sun, H., Qiao, L., et al. (2021). Improvement of chlorophyll content estimation on maize leaf by vein removal in hyperspectral image. Comput. Electron. Agric. 184:106077. doi: 10.1016/j.compag.2021.106077

Grzybowski, M., Wijewardane, N. K., Atefi, A., Ge, Y., and Schnable, J. C. (2021). Hyperspectral reflectance-based phenotyping for quantitative genetics in crops: progress and challenges. Plant Commun. 2:100209. doi: 10.1016/j.xplc.2021.100209

Kanjo, E., Younis, E. M. G., and Ang, C. S. (2019). Deep learning analysis of mobile physiological, environmental and location sensor data for emotion detection. Inform. Fusion 49, 46–56. doi: 10.1016/j.inffus.2018.09.001

Kerkech, M., Hafiane, A., and Canals, R. (2020). Vine disease detection in UAV multispectral images using optimized image registration and deep learning segmentation approach. Comput. Electron. Agric. 174:105446. doi: 10.1016/j.compag.2020.105446

Kim, Y. S. (2021). Detection of ecosystem distribution plants using drone Hyperspectral Spectrum and spectral angle mapper. J. Env. Sci. Int. 30, 173–184. doi: 10.5322/JESI.2021.30.2.173

Kim, M. J., Moon, Y., Tou, J. C., Mou, B., and Waterland, N. L. (2016). Nutritional value, bioactive compounds and health benefits of lettuce (Lactuca sativa L.). J. Food Compos. Anal. 49, 19–34. doi: 10.1016/j.jfca.2016.03.004

Mishra, P., Nordon, A., Mohd Asaari, M. S., Lian, G., and Redfern, S. (2019). Fusing spectral and textural information in near-infrared hyperspectral imaging to improve green tea classification modelling. J. Food Eng. 249, 40–47. doi: 10.1016/j.jfoodeng.2019.01.009

Mo, C., Kim, G., Kim, M. S., Lim, J., Lee, S. H., Lee, H. S., et al. (2017). Discrimination methods for biological contaminants in fresh-cut lettuce based on VNIR and NIR hyperspectral imaging. Infrared Phys. Technol. 85, 1–12. doi: 10.1016/j.infrared.2017.05.003

Mo, C., Kim, G., Lim, J., Kim, M., Cho, H., and Cho, B. K. (2015). Detection of lettuce discoloration using hyperspectral reflectance imaging. Sensors 15, 29511–29534. doi: 10.3390/s151129511

Nyonje, W. A., Schafleitner, R., Abukutsa-Onyango, M., Yang, R. Y., Makokha, A., and Owino, W. (2021). Precision phenotyping and association between morphological traits and nutritional content in vegetable Amaranth (Amaranthus spp.). J. Agri. Food Res. 5:100165. doi: 10.1016/j.jafr.2021.100165

Osco, L. P., Ramos, A. P. M., Moriya, É. A. S., Bavaresco, L. G., Lima, B. C., Estrabis, N., et al. (2019). Modeling hyperspectral response of water-stress induced lettuce plants using artificial neural networks. Remote Sens. 11:2797. doi: 10.3390/rs11232797

Pacumbaba, R. O. Jr., and Beyl, C. A. (2011). Changes in hyperspectral reflectance signatures of lettuce leaves in response to macronutrient deficiencies. Adv. Space Res. 48, 32–42. doi: 10.1016/j.asr.2011.02.020

Quemada, M., Gabriel, J. L., and Zarco-Tejada, P. (2014). Airborne hyperspectral images and ground-level optical sensors as assessment tools for maize nitrogen fertilization. Remote Sens. 6, 2940–2962. doi: 10.3390/rs6042940

Raza, A., and Zhong, M. (2019). Lane-based short-term urban traffic parameters forecasting using multivariate artificial neural network and locally weighted regression models: a genetic approach. Can. J. Civ. Eng. 46, 371–380. doi: 10.1139/cjce-2017-0644

Rebetzke, G. J., Jimenez-Berni, J., Fischer, R. A., Deery, D. M., and Smith, D. J. (2019). High-throughput phenotyping to enhance the use of crop genetic resources. Plant Sci. 282, 40–48. doi: 10.1016/j.plantsci.2018.06.017

Rehman, T. U., Ma, D., Wang, L., Zhang, L., and Jin, J. (2020). Predictive spectral analysis using an end-to-end deep model from hyperspectral images for high-throughput plant phenotyping. Comput. Electron. Agric. 177:105713. doi: 10.1016/j.compag.2020.105713

Roitsch, T., Cabrera-Bosquet, L., Fournier, A., Ghamkhar, K., Jiménez-Berni, J., Pinto, F., et al. (2019). New sensors and data-driven approaches—A path to next generation phenomics. Plant Sci. 282, 2–10. doi: 10.1016/j.plantsci.2019.01.011

Saptoro, A., Tadé, M. O., and Vuthaluru, H. (2012). A modified Kennard-stone algorithm for optimal division of data for developing artificial neural network models. Chem. Prod. Process. Model. 7:1645. doi: 10.1515/1934-2659.1645

Scabini, L. F. S., and Bruno, O. M. (2021). Structure and performance of fully connected neural networks: emerging complex network properties. arXiv [Preprint]. arXiv:2107.14062. doi: 10.48550/arXiv.2107.14062

Simko, M., Chupac, M., and Gutten, M. (2018). Thermovision measurements on electric machines. International Conference on Diagnostics in Electrical Engineering (Diagnostika). IEEE, 1–4.

Simko, I., and Hayes, R. J. (2018). Accuracy, reliability, and timing of visual evaluations of decay in fresh-cut lettuce. PLoS One 13:e0194635. doi: 10.1371/journal.pone.0194635

Singh, A. K., Ganapathysubramanian, B., Sarkar, S., and Singh, A. (2018). Deep learning for plant stress phenotyping: trends and future perspectives. Trends Plant Sci. 23, 883–898. doi: 10.1016/j.tplants.2018.07.004

Song, Z., Zhang, Z., Yang, S., Ding, D., and Ning, J. (2020). Identifying sunflower lodging based on image fusion and deep semantic segmentation with UAV remote sensing imaging. Comput. Electron. Agric. 179:105812. doi: 10.1016/j.compag.2020.105812

Sun, J., Cao, Y., Zhou, X., Wu, M., Sun, Y., and Hu, Y. (2021). Detection for lead pollution level of lettuce leaves based on deep belief network combined with hyperspectral image technology. J. Food Saf. 41:e12866. doi: 10.1111/jfs.12866

Sun, J., Cong, S., Mao, H., Wu, X., and Yang, N. (2018). Quantitative detection of mixed pesticide residue of lettuce leaves based on hyperspectral technique. J. Food Process Eng. 41:e12654. doi: 10.1111/jfpe.12654

Sun, J., Wu, M., Hang, Y., Lu, B., Wu, X., and Chen, Q. (2019). Estimating cadmium content in lettuce leaves based on deep brief network and hyperspectral imaging technology. J. Food Process Eng. 42:e13293. doi: 10.1111/jfpe.13293

Tian, X., Li, J., Wang, Q., Fan, S., and Huang, W. (2018). A bi-layer model for nondestructive prediction of soluble solids content in apple based on reflectance spectra and peel pigments. Food Chem. 239, 1055–1063. doi: 10.1016/j.foodchem.2017.07.045

Wang, T., Rostamza, M., Song, Z., Wang, L., McNickle, G., Iyer-Pascuzzi, A. S., et al. (2019). SegRoot: a high throughput segmentation method for root image analysis. Comput. Electron. Agric. 162, 845–854. doi: 10.1016/j.compag.2019.05.017

Wang, G., Sun, Y., and Wang, J. (2017). Automatic image-based plant disease severity estimation using deep learning. Comput. Intell. Neurosci. 2017, 1–8. doi: 10.1155/2017/2917536

Xie, C., and Yang, C. (2020). A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 178:105731. doi: 10.1016/j.compag.2020.105731

Xin, Z., Jun, S., Yan, T., Quansheng, C., Xiaohong, W., and Yingying, H. (2020). A deep learning based regression method on hyperspectral data for rapid prediction of cadmium residue in lettuce leaves. Chemom. Intell. Lab. Syst. 200:103996. doi: 10.1016/j.chemolab.2020.103996

Yu, L., Hong, Y., Geng, L., et al. (2015). Hyperspectral estimation of soil organic matter content based on partial least squares regression. Trans. Chinese Soc. Agr. Engin. 31, 103–109. doi: 10.11975/j.issn.1002-6819.2015.14.015

Yue, J., Yang, G., Li, C., Li, Z., Wang, Y., Feng, H., et al. (2017). Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models. Remote Sens. 9:708. doi: 10.3390/rs9070708

Zarco-Tejada, P. J., Catalina, A., González, M. R., and Martín, P. (2013). Relationships between net photosynthesis and steady-state chlorophyll fluorescence retrieved from airborne hyperspectral imagery. Remote Sens. Environ. 136, 247–258. doi: 10.1016/j.rse.2013.05.011

Zhang, N., Liu, X., Jin, X., Li, C., Wu, X., Yang, S., et al. (2017). Determination of total iron-reactive phenolics, anthocyanins and tannins in wine grapes of skins and seeds based on near-infrared hyperspectral imaging. Food Chem. 237, 811–817. doi: 10.1016/j.foodchem.2017.06.007

Zhang, D., Xu, L., Liang, D., Xu, C., Jin, X., and Weng, S. (2018). Fast prediction of sugar content in dangshan pear (Pyrus spp.) using hyperspectral imagery data. Food Anal. Methods 11, 2336–2345. doi: 10.1007/s12161-018-1212-3

Zhou, X., Sun, J., Mao, H., Wu, X., Zhang, X., and Yang, N. (2018). Visualization research of moisture content in leaf lettuce leaves based on WT-PLSR and hyperspectral imaging technology. J. Food Process Eng. 41:e12647. doi: 10.1111/jfpe.12647

Keywords: plant phenotyping, hyperspectral imaging, deep learning, lettuce, SSC, pH

Citation: Yu S, Fan J, Lu X, Wen W, Shao S, Guo X and Zhao C (2022) Hyperspectral Technique Combined With Deep Learning Algorithm for Prediction of Phenotyping Traits in Lettuce. Front. Plant Sci. 13:927832. doi: 10.3389/fpls.2022.927832

Received: 25 April 2022; Accepted: 13 June 2022;

Published: 30 June 2022.

Edited by:

Chu Zhang, Huzhou University, China

Reviewed by:

Chengquan Zhou, Zhejiang Academy of Agricultural Sciences, China

Copyright © 2022 Yu, Fan, Lu, Wen, Shao, Guo and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xinyu Guo, guoxy@nercita.org.cn; Chunjiang Zhao, zhaocj@nercita.org.cn

†These authors have contributed equally to this work
