
ORIGINAL RESEARCH article

Front. Plant Sci., 24 June 2022

Sec. Technical Advances in Plant Science

Volume 13 - 2022 | https://doi.org/10.3389/fpls.2022.886804

This article is part of the Research Topic Computer Vision in Plant Phenotyping and Agriculture.

Remote sensing using unmanned aerial vehicles (UAVs) and structure from motion (SfM) is useful for the sustainable and cost-effective management of agricultural fields. Ground control points (GCPs) are typically used for the high-precision monitoring of plant height (PH). Additionally, an extra UAV flight is required when off-season images are processed to obtain the ground altitude (GA). In this study, four variables, namely, camera angle, real-time kinematic (RTK) positioning, GCPs, and the method for obtaining GA, were compared in terms of their effect on the predictive performance for maize PH. Linear regression models for PH prediction were validated on targets and flights different from those used for training ("different-targets-and-different-flight" cross-validation). PH prediction using UAV-SfM at a camera angle of –60° with RTK, GCPs, and GA obtained from an off-season flight achieved a high coefficient of determination and a low mean absolute error (MAE) on the validation data (R²val = 0.766, MAE = 0.039 m in the vegetative stage; R²val = 0.803, MAE = 0.063 m in the reproductive stage). The low-cost case (LC) method, conducted at a camera angle of –60° without RTK, GCPs, or an extra off-season flight, achieved comparable predictive performance (R²val = 0.794, MAE = 0.036 m in the vegetative stage; R²val = 0.749, MAE = 0.072 m in the reproductive stage), suggesting that this method can achieve low-cost and high-precision PH monitoring.

Remote sensing is a key technology for the sustainable management of agricultural fields. Agricultural management based on remote sensing strengthens food production and reduces natural resource use. Accordingly, remote sensing technologies have been applied to growth monitoring, irrigation management, weed detection, and yield prediction (Sishodia et al., 2020), and their agricultural applications have gained widespread attention in recent years (Weiss et al., 2020).

Unmanned aerial vehicles (UAVs) are commonly used for the remote sensing of agricultural fields owing to their high-resolution imagery and cost-effectiveness. Sensors (e.g., RGB or multispectral cameras and laser scanning devices) and processing strategies (e.g., vegetation index calculation, machine learning, and 3D structure analysis) have been combined to solve problems in remote sensing applications (Tsouros et al., 2019).

Three-dimensional (3D) structural analysis is useful for determining plant height (PH) and volume, which reflect the growth and biomass of crops (Yao et al., 2019). Strategies for 3D structure analysis include generating 3D models from multiview aerial images of UAVs using structure from motion (SfM) algorithms or obtaining 3D point clouds with light detection and ranging (LiDAR) systems (Paturkar et al., 2021). The SfM approach with UAV imagery (UAV-SfM) can be conducted at a relatively low cost using normal RGB cameras to suit the requirements of agricultural applications. The UAV-SfM approach for monitoring growth or biomass has been applied to various crops, such as wheat (Holman et al., 2016; Madec et al., 2017; Volpato et al., 2021), barley (Bendig et al., 2013, 2014), rice (Jiang et al., 2019; Kawamura et al., 2020; Lu et al., 2022), and maize (Li et al., 2016; Ziliani et al., 2018; Tirado et al., 2020).

However, the UAV-SfM approach involves a trade-off between precision and cost. During the SfM process, features shared across multiple images are matched, camera positions are estimated, and dense point clouds are generated (Westoby et al., 2012). Ground control points (GCPs), which are points whose coordinates are known from ground surveys, are often used to correct the estimated camera positions. The camera position of each aerial image, measured with the global navigation satellite system (GNSS), is commonly recorded in the image metadata and can also be used in the SfM process. SfM analysis without GCPs often suffers from coordinate errors owing to GNSS uncertainty (Wu et al., 2020) or from an SfM-specific distortion termed the central "doming" effect (Rosnell and Honkavaara, 2012). Yet installing and maintaining GCPs on the ground requires time and effort. Additionally, annotating GCPs on aerial images is time-consuming when weeds or sunlight reflections interfere with automatic GCP detection.

An orthomosaic (an orthographic image composed of geometrically corrected aerial images) and a digital surface model (DSM; a representation of elevation on the 3D model) are constructed from the point clouds. When aerial images of an on-season agricultural field are processed, the resulting DSM represents an elevation that includes the PH. To extract PH from the DSM, ground altitude (GA) data, such as those obtained from a digital terrain model (DTM; a digital topographic map of the bare ground surface), are necessary.

Ground altitude is mainly obtained either by processing off-season (pre-germination or post-harvest) images to create a DTM (Roth and Streit, 2018; Ziliani et al., 2018; Jiang et al., 2019; Kawamura et al., 2020) or by extracting the altitude of the soil surface in the on-season DSM (Tirado et al., 2020). The former method (processing off-season images) requires an extra flight and an additional SfM process. In addition, the models obtained from different flights generally deviate from each other owing to the coordinate uncertainty and 3D model distortion mentioned above. Such deviations cause errors in PH prediction and reduce reproducibility; therefore, correction with GCPs is crucial for this method. The latter method (extracting soil altitude) requires bare soil to be visible in the on-season DSM, which may be difficult when the ground is fully covered by plants. To obtain GA beneath plant cover, extracted soil coordinates have been interpolated to generate a DTM (Murakami et al., 2012; Gillan et al., 2014; Iqbal et al., 2017). Such interpolation methods can achieve low-cost and accurate PH prediction when enough soil coordinates are extracted for the interpolation algorithm. Although other sources, such as airborne laser scanning (ALS), can provide terrain altitude (Li et al., 2016), their applicability is limited by equipment costs and the time required for aerial scanning.

The precision of UAV-SfM analysis depends on the camera angle, real-time kinematic (RTK) positioning, and GCPs. SfM point clouds generated from aerial images taken at diagonal camera angles can be less affected by the "doming" effect (James and Robson, 2014). Furthermore, UAVs equipped with high-precision RTK-GNSS positioning have become increasingly popular, although the initial and operational costs of RTK-UAVs remain higher. Finally, GCPs are generally used to correct camera positions, as mentioned above. In addition to these three variables, the method for obtaining GA must be considered for PH prediction. Although comparative studies have examined one or a few of these variables (Holman et al., 2016; Xie et al., 2021; Lu et al., 2022), their effects on the precision of PH monitoring have not been fully investigated.

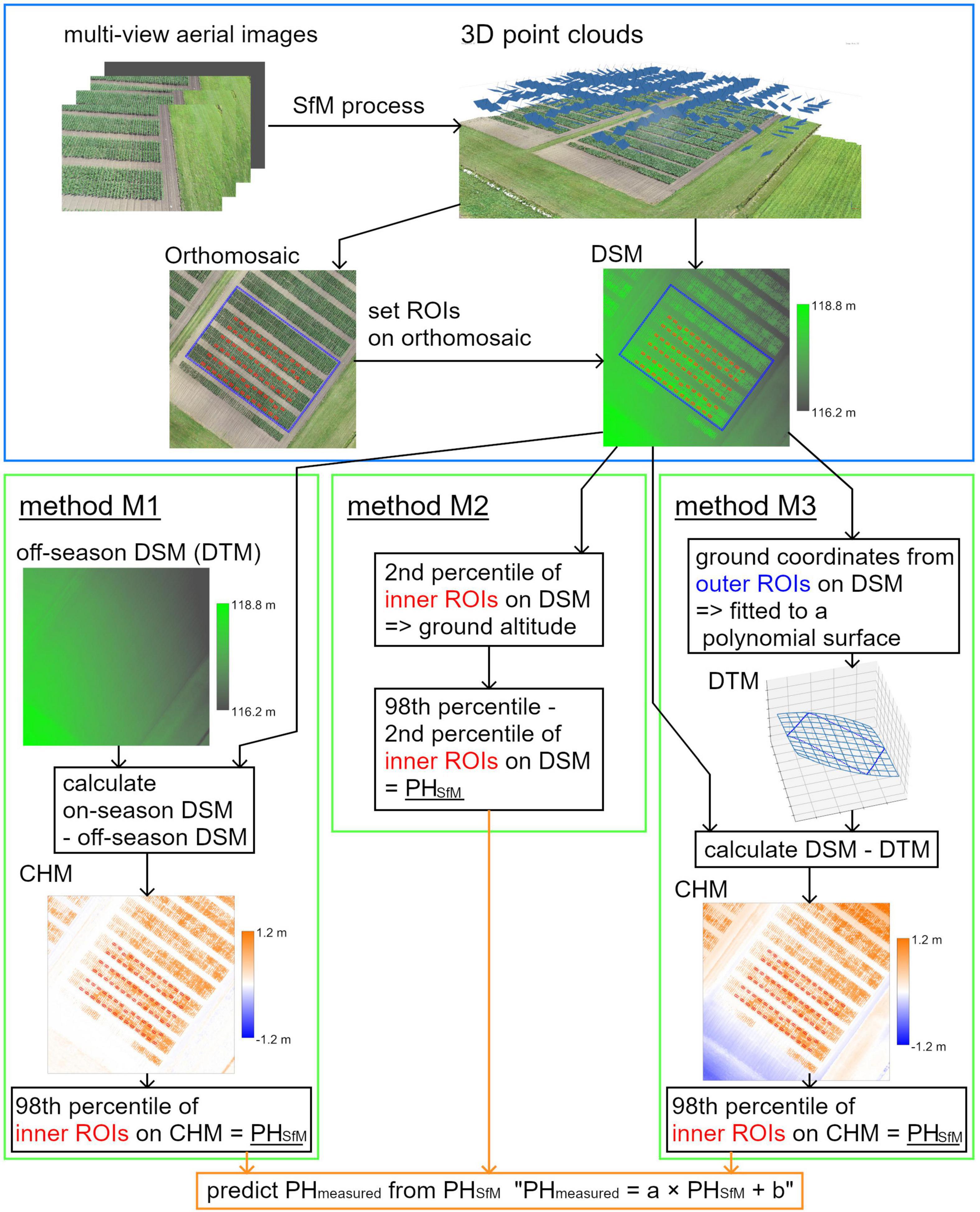

In this study, four variables, namely, camera angle, RTK, GCPs, and the method for obtaining GA, were compared for PH prediction in maize. Two existing methods for obtaining GA, using off-season DSMs (method M1) and extracting the altitude of the soil surface (method M2), were tested (Figure 1). In addition, an interpolation method for obtaining GA (method M3) was considered, wherein the coordinates of the terrain around the field were obtained and fitted to a polynomial surface. The surface can then be used as a DTM, and subtracting the DTM from a DSM yields a crop height model (CHM) representing the PH of the crop (Chang et al., 2017). This method was expected to achieve high precision without GCPs, even when the inside of the field is covered with plants.

Figure 1. An overview of the SfM process and three methods for obtaining ground altitude (GA): methods M1, M2, and M3. A DSM is a digital surface model that represents elevation on the 3D model, a DTM is a digital terrain model that represents elevation without plants, and a CHM is a crop height model that represents the plant height of the crop.

The data were collected from the Hokkaido Agricultural Research Center (Hokkaido, Japan). Two maize fields (Fields 1 and 2) under variety tests were used in this study. An overview of these two fields is presented in Figure 2. Field 1 was used for method M3, and Field 2 was used for validation of PH prediction under all conditions of camera angles, RTK, GCPs, and methods for obtaining GA. There were 42 plots (14 varieties) in Field 1 and 84 plots (21 varieties) in Field 2. Each plot had four rows, and each row contained 18 plants. The row spacing was 0.75 m and the in-row plant spacing was 0.18 m (7.41 plants per square meter) in both fields.

Figure 2. An overview of the two maize fields used in this study. Light blue (Field 1) and orange (Field 2) solid lines show the plots. Dashed lines show the analysis region of each field. White circles around or in Field 2 show the locations of GCPs. Red rectangles show ROIs on rows (inner ROIs). Blue rectangles show ROIs around the field (outer ROIs).

Nine checkerboard square markers used as GCPs for the SfM process were placed in Field 2. Eight GCPs were located around the field and one GCP was located at the center of the field. The locations of the GCPs are shown in Figure 2. The coordinates of the GCPs were surveyed using D-RTK 2 (SZ DJI Technology, Nanshan, Shenzhen, China).

The ground truth of PH was measured with rulers as the height from the ground to the highest point of the plant at two growth stages. During the vegetative stage, the highest point was the apex of the top leaf, while during the reproductive stage, it was the apex of the tassel. Measurements were conducted in the middle two of the four rows in each plot. The PH of five consecutive plants in each row was measured and averaged, and the average from one row was regarded as one sample (PHmeasured). In total, PHmeasured was obtained from 84 rows in Field 1 and 168 rows in Field 2. The schedule of the PH measurements and UAV image acquisition is presented in Table 1.

A DJI Phantom 4 RTK (SZ DJI Technology) with a mounted camera (lens: 8.8 mm focal length; sensor: 1-inch CMOS, 20 MP) was used for image acquisition. The analysis region (35.5 m × 54 m) for the SfM process in each field was determined as shown in Figure 2. The flight plan was generated automatically using DJI GS RTK (SZ DJI Technology) to cover the analysis region of each field with an adequate margin. The flight height was 25 m, the forward overlap was 80%, and the side overlap was 60%. For the flights in Field 1, the camera angle (the angle of the camera's viewing direction from the horizontal plane) was –90°, and RTK was not used. For the flights in Field 2, the camera angle was set to –60° or –90° as shown in Figure 3, and RTK was switched on or off; thus, four flight conditions were applied. The details of UAV image acquisition are shown in Supplementary Table 1 (Field 1) and Supplementary Table 2 (Field 2).
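The overlap settings translate into a spacing between exposures and between flight lines. The Python sketch below shows that arithmetic for a nadir (–90°) image only. The sensor dimensions (13.2 × 8.8 mm, a nominal 1-inch 3:2 sensor) and the alignment of the image's short side with the flight direction are assumptions, not values reported in this study, and DJI GS RTK performs its own planning. Oblique (–60°) images have a larger, trapezoidal footprint that this sketch ignores.

```python
def ground_extent(altitude_m: float, sensor_mm: float, focal_mm: float) -> float:
    """Ground distance covered by one sensor dimension in a nadir (-90 deg) image."""
    return altitude_m * sensor_mm / focal_mm


def exposure_spacing(extent_m: float, overlap: float) -> float:
    """Distance between neighboring exposures (or flight lines) for a given overlap fraction."""
    return extent_m * (1.0 - overlap)


# Assumed nominal dimensions of a 1-inch, 3:2 sensor (not taken from the article).
SENSOR_LONG_MM, SENSOR_SHORT_MM = 13.2, 8.8
FOCAL_MM = 8.8
ALTITUDE_M = 25.0

# Assumption: the short side of the image is aligned with the flight direction.
along_track = ground_extent(ALTITUDE_M, SENSOR_SHORT_MM, FOCAL_MM)   # about 25 m
across_track = ground_extent(ALTITUDE_M, SENSOR_LONG_MM, FOCAL_MM)   # about 37.5 m

forward_step = exposure_spacing(along_track, 0.80)   # 80% forward overlap
line_spacing = exposure_spacing(across_track, 0.60)  # 60% side overlap
print(f"exposure every {forward_step:.1f} m, flight lines {line_spacing:.1f} m apart")
```

Under these assumptions, an exposure roughly every 5 m and flight lines roughly 15 m apart would be needed at 25 m altitude.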

In Field 1, three flight repetitions were conducted at each growth stage. For Field 2, image acquisition was conducted in three stages, namely, the pre-germination stage (for obtaining off-season data of method M1), the vegetative stage, and the reproductive stage. At each stage, 12 flights (four conditions with three flight repetitions) were arranged using a randomized block design.
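A flight order of this kind can be generated as follows. This is a hypothetical Python sketch, not the procedure used in the study: each block (flight repetition) contains each of the four Field 2 conditions exactly once, in an independently shuffled order. The function name and the seed are illustrative.

```python
import random
from itertools import product

# The four Field 2 flight conditions: camera angle (degrees) x RTK on/off.
CONDITIONS = list(product((-60, -90), (True, False)))


def randomized_block_schedule(conditions, n_blocks, seed=None):
    """Return (block, condition) pairs; each block holds every condition once, in random order."""
    rng = random.Random(seed)
    schedule = []
    for block in range(1, n_blocks + 1):
        order = list(conditions)  # copy so the master list is not shuffled in place
        rng.shuffle(order)
        schedule.extend((block, cond) for cond in order)
    return schedule


schedule = randomized_block_schedule(CONDITIONS, n_blocks=3, seed=2021)
for block, (angle, rtk) in schedule:
    print(f"block {block}: camera {angle} deg, RTK {'on' if rtk else 'off'}")
```

Randomizing within blocks keeps every condition represented in each repetition while spreading time-of-day effects (light, wind) across conditions.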

The SfM process was conducted using Agisoft Metashape Professional 1.7.3 (Agisoft LLC, St. Petersburg, Russia). Three-dimensional point clouds were generated from the image sets, and orthomosaic images and DSMs were constructed. The parameters selected for the process are listed in Table 2. From each image set of Field 2, a second Metashape project file was created for the GCP-corrected analysis. In this project file, markers were placed at the locations of the nine GCPs, the ground-surveyed GCP coordinates were entered, and the SfM process was conducted in the same manner. The conditions for the SfM products are summarized in Supplementary Table 3.

Each analysis row in each field was divided into three blocks. Using QGIS Desktop 3.16.8, a polygon enclosing each field (Field 1 or 2) and polygons enclosing the blocks were created on each orthomosaic image and saved to a shapefile (the locations of the polygons are shown in Supplementary Figure 1). Regions of interest (ROIs) on rows (inner ROIs, used for all methods) and around the field (outer ROIs, used for method M3) were determined from the shapefile. For the inner ROIs (red rectangles in Figure 2), the coordinates were calculated from the locations of the plants measured in each block. For the outer ROIs (blue rectangles), the coordinates of 180 rectangular areas of approximately 1 × 0.5 m (0.5 × 0.5 m at the corners) surrounding each field were calculated. The coordinates were saved as CSV files. For visualization, the ROIs are drawn on an orthomosaic in Figure 2 according to these coordinates.
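The ROI coordinates saved as CSV files must be mapped onto raster pixels before statistics can be computed. The article does not describe this step in code, so the following Python function is only a hedged sketch. It assumes a north-up raster without rotation, described by a GDAL-style geotransform `(x_origin, pixel_width, 0, y_origin, 0, pixel_height)`, and axis-aligned rectangular ROIs. Reading the raster itself (e.g., with GDAL or rasterio) is omitted, and `extract_roi` is a hypothetical helper.

```python
import numpy as np


def world_to_pixel(x, y, geotransform):
    """Map projected (x, y) to fractional (col, row) on a north-up raster without rotation."""
    x0, pixel_w, _, y0, _, pixel_h = geotransform  # GDAL ordering; pixel_h < 0 for north-up
    return (x - x0) / pixel_w, (y - y0) / pixel_h


def extract_roi(raster, geotransform, xmin, ymin, xmax, ymax):
    """Return the raster cells whose centers fall inside an axis-aligned rectangle."""
    c0, r0 = world_to_pixel(xmin, ymax, geotransform)  # upper-left corner
    c1, r1 = world_to_pixel(xmax, ymin, geotransform)  # lower-right corner
    # Snap to the nearest cell edge and clip to the raster extent.
    r0, c0 = max(int(round(r0)), 0), max(int(round(c0)), 0)
    r1 = min(int(round(r1)), raster.shape[0])
    c1 = min(int(round(c1)), raster.shape[1])
    return raster[r0:r1, c0:c1]


dsm = np.arange(100 * 100, dtype=float).reshape(100, 100)
gt = (0.0, 0.5, 0.0, 100.0, 0.0, -0.5)  # 0.5 m cells, upper-left corner at (0, 100)
roi = extract_roi(dsm, gt, xmin=5.0, ymin=90.0, xmax=10.0, ymax=95.0)
print(roi.shape)
```

Because the ROIs in this study were drawn on each orthomosaic, the same helper would simply be called with each flight's own geotransform.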

For method M1 (Field 2 only), an off-season DSM was used as the DTM and subtracted from the on-season DSM. Each on-season DSM was paired with the off-season DSM obtained under the same conditions and repetition; thus, one CHM was obtained per on-season DSM (24 CHMs for Field 2).

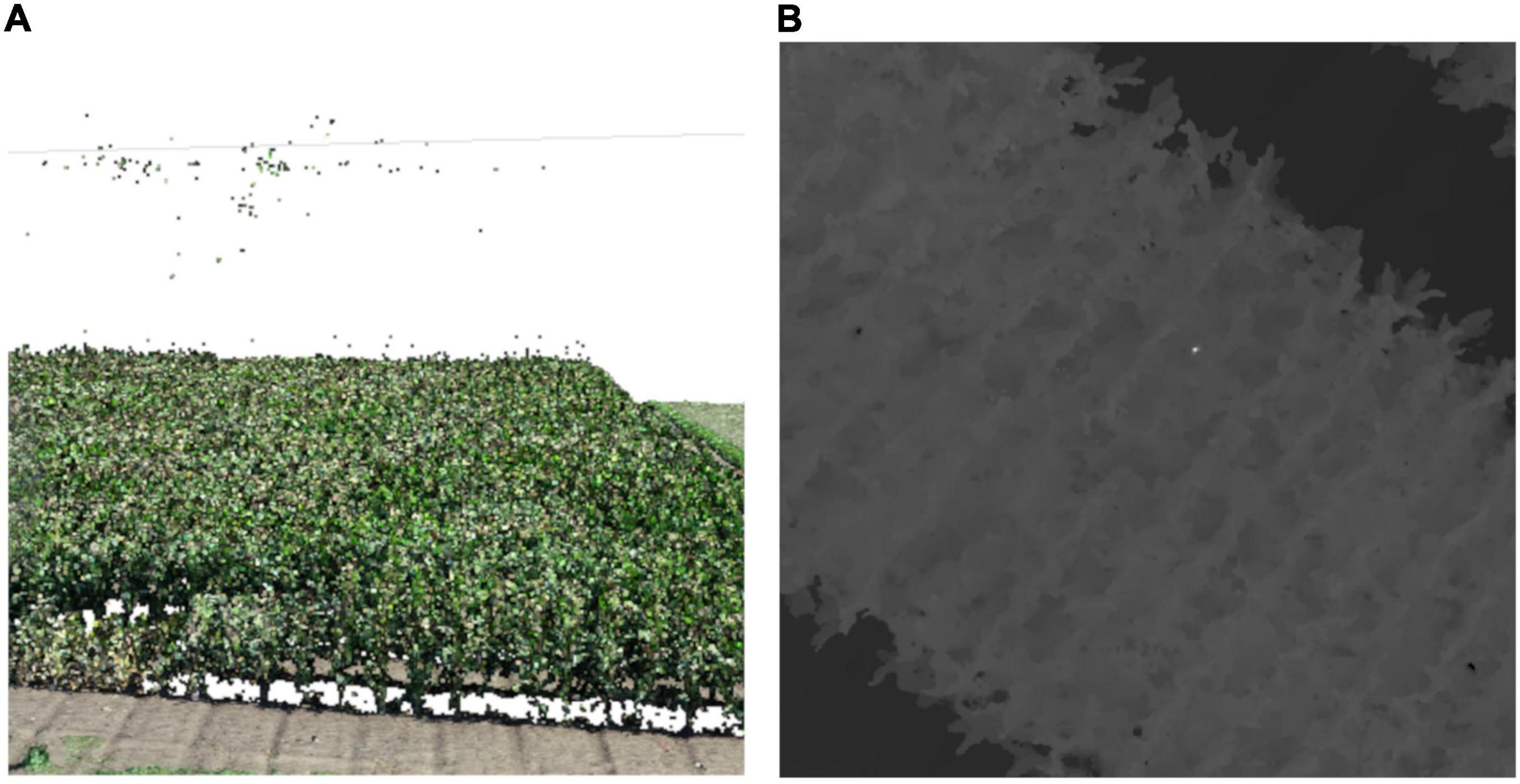

The 90th–99th percentiles have often been used as representative values of PH (Holman et al., 2016; Malambo et al., 2018; Tirado et al., 2020), as they represent height (the altitude of the highest point) better than the mean and are less sensitive to noise than the maximum. In this study, an inner ROI contained approximately 2,500 pixels, and noise appeared on the DSMs as blobs of 30 or fewer adjacent pixels (Figure 4). Therefore, the 98th percentile was used so that noise blobs smaller than 2% of the ROI (50 pixels) would be excluded. For the CHMs, the 98th percentile within each inner ROI was calculated as PHSfM.
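The reasoning behind the 98th percentile can be checked numerically. In the synthetic Python example below (all values are invented for illustration), an ROI of 2,500 pixels contains a 30-pixel noise blob. Because the blob occupies less than 2% of the pixels, the 98th percentile of the CHM stays on the canopy, whereas the maximum is taken by the noise. `ph_from_chm` is a hypothetical helper that computes CHM = DSM − DTM and then the percentile, as in method M1.

```python
import numpy as np


def ph_from_chm(dsm_roi, dtm_roi, q=98.0):
    """PH_SfM for one inner ROI: the q-th percentile of CHM = DSM - DTM."""
    chm = np.asarray(dsm_roi, dtype=float) - np.asarray(dtm_roi, dtype=float)
    return float(np.nanpercentile(chm, q))


# Synthetic 50 x 50 ROI (2,500 pixels): ground at 100 m, canopy heights 0-1 m.
heights = np.linspace(0.0, 1.0, 2500).reshape(50, 50)
dsm = 100.0 + heights
dsm.flat[:30] = 104.0          # a 30-pixel noise blob floating 4 m above the ground
dtm = np.full_like(dsm, 100.0)

print(round(ph_from_chm(dsm, dtm), 3))   # stays near the canopy top
print(float((dsm - dtm).max()))          # the maximum is captured by the noise blob
```

A blob larger than 50 pixels would start to pull the 98th percentile upward, which is why the percentile choice is tied to the ROI size.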

Figure 4. An example of noise in point clouds. (A) A point cloud with noise (some points floating above the plants). (B) A DSM with noise (an extremely high area near the center). The DSM is shown in grayscale; when the pixel is white, the altitude is high.

For method M2 (Field 2 only), the 2nd and 98th percentiles of the DSM within each inner ROI were taken as the altitudes of the ground and the plant apex, respectively. These percentiles were chosen for the same reason as above. The difference between the 98th and 2nd percentiles was used as PHSfM.
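Method M2 can be sketched in the same way. The hypothetical Python helper below takes the 2nd and 98th percentiles of DSM altitudes in one inner ROI; the data are invented. The second case illustrates the limitation discussed later: when less than about 2% of the ROI shows bare soil, the 2nd percentile falls on the canopy and PH is underestimated.

```python
import numpy as np


def ph_from_dsm_m2(dsm_roi, low=2.0, high=98.0):
    """Method M2: PH_SfM = 98th percentile minus 2nd percentile of DSM altitudes in an inner ROI."""
    ground, apex = np.nanpercentile(np.asarray(dsm_roi, dtype=float), [low, high])
    return float(apex - ground)


# Synthetic ROI: 10% bare soil at 100 m, canopy surface between 100.5 and 101.0 m.
soil = np.full(250, 100.0)
canopy = np.linspace(100.5, 101.0, 2250)
roi_with_soil = np.concatenate([soil, canopy])
print(round(ph_from_dsm_m2(roi_with_soil), 3))   # soil defines the 2nd percentile

# If the canopy hides the soil, the 2nd percentile lands on leaves and PH is underestimated.
roi_closed = np.linspace(100.5, 101.0, 2500)
print(round(ph_from_dsm_m2(roi_closed), 3))
```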

For method M3 (Fields 1 and 2), a DTM was obtained by fitting a polynomial to the coordinates of the terrain around the field on an on-season DSM. For each outer ROI, the median altitude was used as the z-coordinate, and the centroid of the rectangle gave the x- and y-coordinates. The resulting 180 points (x, y, z) were fitted to a polynomial surface of dimension (degree) n, Eq. (1), using the least squares method:

$$z = \sum_{k=0}^{n} \sum_{i=0}^{k} a_{ki}\, x^{k-i} y^{i} \qquad (1)$$

where $n$ is the dimension of the polynomial surface, $k$ and $i$ are the summation indices, and $a_{ki}$ are the parameters to be estimated. This polynomial surface was used as the DTM and subtracted from the original DSM to obtain a CHM. On the CHMs, the 98th percentile within each inner ROI was calculated as PHSfM (PH obtained from UAV-SfM analysis).
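The polynomial surface $z = \sum_{k=0}^{n}\sum_{i=0}^{k} a_{ki} x^{k-i} y^{i}$ is linear in the coefficients $a_{ki}$, so fitting it is an ordinary linear least-squares problem. The Python sketch below builds one design-matrix column per term $x^{k-i}y^{i}$ and solves it with `numpy.linalg.lstsq`. The function names are hypothetical, and centering the coordinates before fitting is an added precaution rather than a step reported in the article. The synthetic check places 180 points on the boundary of a 35 m × 54 m rectangle, loosely mimicking the outer ROIs.

```python
import numpy as np


def design_matrix(x, y, n):
    """Columns x**(k-i) * y**i for k = 0..n and i = 0..k (the terms of the polynomial surface)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    y = np.atleast_1d(np.asarray(y, dtype=float))
    return np.column_stack([x ** (k - i) * y ** i for k in range(n + 1) for i in range(k + 1)])


def fit_polynomial_dtm(x, y, z, n):
    """Least-squares fit of a degree-n polynomial surface to outer-ROI points (x, y, z).

    Coordinates are centered first (an added assumption, not from the article) so that
    large projected coordinates do not make the high-order columns ill-conditioned.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x0, y0 = x.mean(), y.mean()
    A = design_matrix(x - x0, y - y0, n)
    coef, *_ = np.linalg.lstsq(A, np.asarray(z, dtype=float), rcond=None)
    return coef, x0, y0, n


def evaluate_dtm(model, x, y):
    """Altitude of the fitted DTM at (x, y); subtracting it from the DSM gives a CHM."""
    coef, x0, y0, n = model
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    z = design_matrix(x.ravel() - x0, y.ravel() - y0, n) @ coef
    return z.reshape(x.shape)


# Synthetic check: 180 points on the boundary of a 35 m x 54 m rectangle,
# terrain tilted with a mild twist (a degree-2 surface, so n = 3 can represent it).
t = np.linspace(0.0, 1.0, 45, endpoint=False)
a, b = 35.0, 54.0
x = np.concatenate([a * t, np.full(45, a), a * (1 - t), np.zeros(45)])
y = np.concatenate([np.zeros(45), b * t, np.full(45, b), b * (1 - t)])


def terrain(x, y):
    return 100.0 + 0.01 * x - 0.02 * y + 1e-4 * x * y


model = fit_polynomial_dtm(x, y, terrain(x, y), n=3)
print(float(evaluate_dtm(model, 17.5, 27.0)), terrain(17.5, 27.0))  # interior point
```

One observation, offered only as a hedged aside: if the sample points lay exactly on the edges of a rectangle with corners (0, 0) and (a, b), the quartic term $x(x-a)y(y-b)$ would be zero at every point, so the outer ROIs could not constrain it and a 4 dim fit could bend freely inside the field. This may contribute to the distortion of the 4 dim DTM shown in Figure 5, although the article does not analyze it.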

The dimension of the polynomial (n) was set to 0–4 for data from Field 1. The dimension that achieved the strongest correlation between the measured PH (PHmeasured) and PHSfM in Field 1 was applied for a comparative study of Field 2.

The analyses in this and the following sections were conducted using Python 3.6.8 and QGIS.

The Pearson correlation coefficient (r) between PHmeasured and PHSfM and the bias (PHSfM − PHmeasured) were calculated for each dataset. For each condition, the three correlation coefficients obtained from the flight repetitions were averaged.
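The per-condition summary can be sketched as follows. The Python helpers are hypothetical. The bias is assumed to be the mean of PHSfM − PHmeasured over rows, and both r and bias are averaged arithmetically over flight repetitions (the text states this averaging for r; applying it to the bias is an assumption).

```python
import numpy as np


def r_and_bias(ph_sfm, ph_measured):
    """Pearson r between PH_SfM and PH_measured, and bias as the mean of PH_SfM - PH_measured."""
    ph_sfm = np.asarray(ph_sfm, dtype=float)
    ph_measured = np.asarray(ph_measured, dtype=float)
    r = np.corrcoef(ph_sfm, ph_measured)[0, 1]
    bias = np.mean(ph_sfm - ph_measured)
    return float(r), float(bias)


def summarize_condition(repetitions):
    """Average r and bias over flight repetitions; `repetitions` holds (ph_sfm, ph_measured) pairs."""
    stats = np.array([r_and_bias(s, m) for s, m in repetitions])
    mean_r, mean_bias = stats.mean(axis=0)
    return float(mean_r), float(mean_bias)


measured = np.array([0.80, 0.95, 1.05, 1.10])
reps = [(measured - 0.08, measured), (measured - 0.09, measured)]  # synthetic repetitions
mean_r, mean_bias = summarize_condition(reps)
print(f"mean r = {mean_r:.3f}, mean bias = {mean_bias:.3f} m")
```

A constant offset leaves r unchanged but appears fully in the bias, which is why both are reported.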

Cross-validation of linear regression models predicting PHmeasured from PHSfM was conducted using data from Field 2. Because SfM point clouds from different flights can deviate from each other, validation on different targets and on a different flight's point cloud ("different-targets-and-different-flight" validation) was needed to ensure that a regression model trained on data from one flight can be applied to unknown data from another flight. The 168 data points from Field 2 were divided into three groups (the grouping is shown in Figure 2). A linear regression model, Eq. (2), was fitted with the least-squares method using data from two groups (112 training data points):

$$\mathrm{PH}_{\mathrm{measured}} = a \cdot \mathrm{PH}_{\mathrm{SfM}} + b \qquad (2)$$

where $a$ and $b$ are the parameters to be estimated (slope and intercept, respectively). The remaining group (56 data points), taken from a different flight repetition, was used for validation. There were six combinations of training and validation flight repetitions and three choices of validation group; thus, 18 validation sets were evaluated for each condition (all combinations of flight repetitions and sample groups are listed in Supplementary Table 4). The coefficient of determination (R²), mean absolute error (MAE), root mean squared error (RMSE), and mean absolute percentage error (MAPE) were calculated on the validation data (the equations for these metrics are given in Supplementary Table 5).
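The "different-targets-and-different-flight" scheme can be written as two nested loops: one over ordered pairs of distinct flight repetitions (training flight, validation flight) and one over the held-out group. The Python sketch below assumes that rows are aligned across flights and that R² is computed as 1 − SS_res/SS_tot. The exact definitions used in the study are in Supplementary Table 5, and the data here are synthetic. Each synthetic flight receives its own vertical offset, showing how flight-to-flight deviation becomes validation error even when each flight on its own fits perfectly.

```python
import itertools

import numpy as np


def evaluation_metrics(y_true, y_pred):
    """R2 (1 - SS_res/SS_tot), MAE, RMSE and MAPE (%) on validation data."""
    resid = y_true - y_pred
    return {
        "R2": float(1.0 - np.sum(resid ** 2) / np.sum((y_true - y_true.mean()) ** 2)),
        "MAE": float(np.mean(np.abs(resid))),
        "RMSE": float(np.sqrt(np.mean(resid ** 2))),
        "MAPE": float(100.0 * np.mean(np.abs(resid / y_true))),
    }


def different_target_different_flight_cv(ph_sfm_by_rep, ph_measured, groups):
    """Train on two groups of one flight, validate on the third group of another flight.

    ph_sfm_by_rep: sequence of arrays, PH_SfM per flight repetition (rows aligned with ph_measured)
    groups: array of group labels (e.g. 0, 1, 2), one per row
    """
    results = []
    for train_rep, val_rep in itertools.permutations(range(len(ph_sfm_by_rep)), 2):
        for g in np.unique(groups):
            train, val = groups != g, groups == g
            # Fit PH_measured = a * PH_SfM + b on the training flight and groups.
            a, b = np.polyfit(ph_sfm_by_rep[train_rep][train], ph_measured[train], 1)
            pred = a * ph_sfm_by_rep[val_rep][val] + b
            results.append(evaluation_metrics(ph_measured[val], pred))
    return results


rng = np.random.default_rng(0)
ph_measured = rng.uniform(0.6, 1.2, 168)
groups = np.repeat([0, 1, 2], 56)
# Synthetic flights: PH_SfM underestimates PH; each flight has its own vertical offset.
offsets = [0.00, 0.03, -0.03]
ph_sfm_by_rep = [0.9 * ph_measured - 0.05 + off for off in offsets]

results = different_target_different_flight_cv(ph_sfm_by_rep, ph_measured, groups)
print(len(results), round(np.mean([m["MAE"] for m in results]), 3))
```

With three repetitions, `itertools.permutations` yields six (training, validation) flight pairs, and three held-out groups give the 18 validation sets per condition.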

A summary of the measured PH (PHmeasured) is provided in Table 3. The range of the measured PH was 0.632–1.190 m in the vegetative stage and 2.18–3.14 m in the reproductive stage. The standard deviations in Fields 1 and 2 were 0.075 and 0.102 m in the vegetative stage and 0.126 and 0.181 m in the reproductive stage, respectively. These results indicate high variability in PH in the fields.

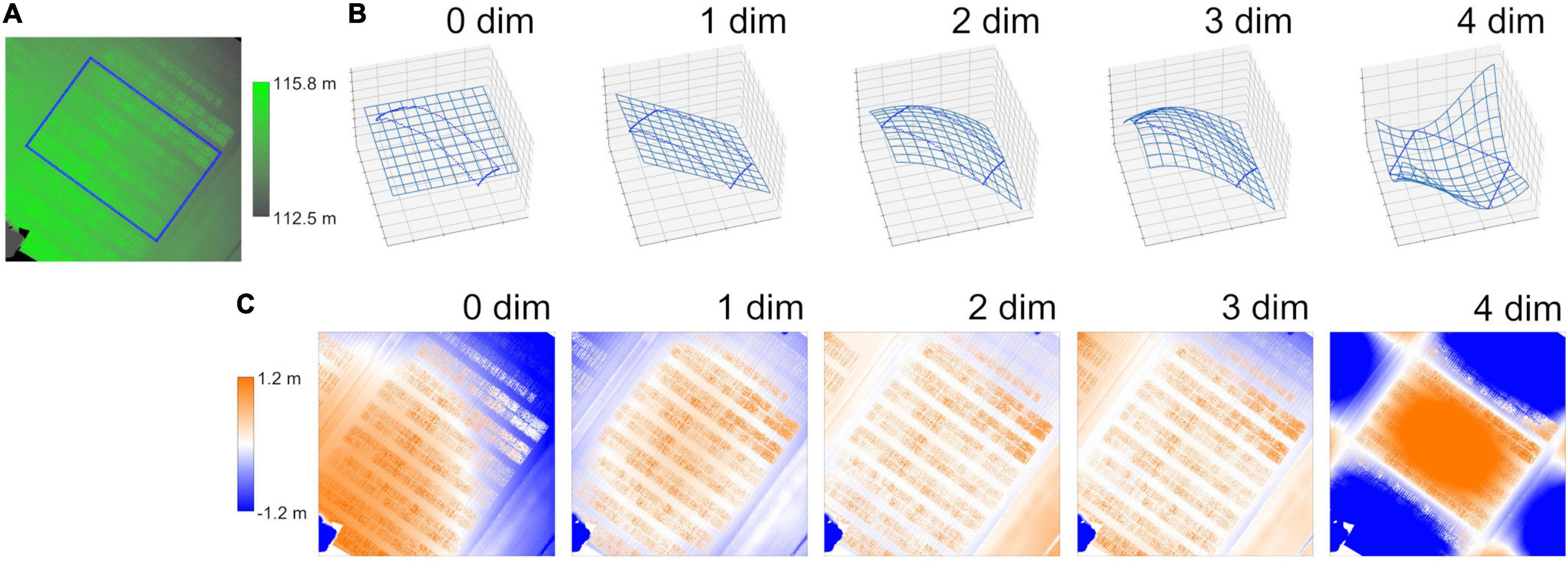

The DSM of Field 1 shows a ground inclination, with the northeast lower and the southwest higher (Figure 5). The CHM calculated from the 0 dim (flat) DTM retained the inclination, as a 0 dim DTM cannot model a tilted plane. The CHM calculated from the 1 dim (plane) DTM removed the inclination but retained a central bulge. The 2 and 3 dim DTMs fitted the true terrain better, and the CHMs calculated from them showed neither the inclination nor the bulge inside the ground ROIs. The 4 dim DTM, however, overfitted the ground sample points, and the CHM calculated from it was strongly distorted.

Figure 5. An example of the process involved in method M3. (A) A digital surface model (DSM) of Field 1. Blue rectangles show ROIs around the field (outer ROIs). (B) Digital terrain models (DTMs) fitted to polynomial surfaces. Blue points show coordinates of the outer ROIs and meshes show the fitted DTMs. “n dim” shows the dimension of the polynomial surface. (C) Crop height models (CHMs) calculated as the difference between DSM and DTMs. These figures were created with the dataset of rep. 1 on Field 1 in the vegetative stage.

The mean correlation coefficients (r) between PHmeasured and PHSfM on the CHMs of Field 1 from the three flight repetitions are summarized in Table 4. The correlation was strongest with the 3 dim DTM in both growth stages; thus, the 3 dim DTM was applied to method M3 in the validation with Field 2.

PHSfM of Field 2 was calculated for 24 conditions that differed in the four parameters, namely, camera angles, RTK, GCPs, and methods for obtaining GA (method M1: using off-season DSM, M2: extracting altitude of the soil surface, and M3: fitting coordinates of the terrain around the field to a polynomial surface). The correlation coefficients (r) between PHmeasured and PHSfM were compared at each growth stage (vegetative stage, Table 5; reproductive stage, Table 6).

In the vegetative stage (Table 5), the correlation was stronger when a camera angle of −60° (diagonal) and method M3 were applied, even without RTK or GCPs. With method M1, the correlation was stronger when RTK or GCPs were used. With method M2, the correlation was strong at a −90° (nadir) camera angle but weak at −60°. The bias (PHSfM − PHmeasured) was negative (−0.07 to −0.09 m) for the four conditions with the highest correlation coefficients; that is, PHSfM tended to be lower than PHmeasured.

In the reproductive stage (Table 6), the correlation was strong for both methods M1 and M3 with a −60° camera angle and RTK. When neither RTK nor GCPs were applied, the correlation was stronger with method M3. With method M2, the correlation was weak. PHSfM also tended to be lower than PHmeasured in the reproductive stage (bias of approximately −0.2 to −0.3 m under the conditions with higher correlation coefficients).

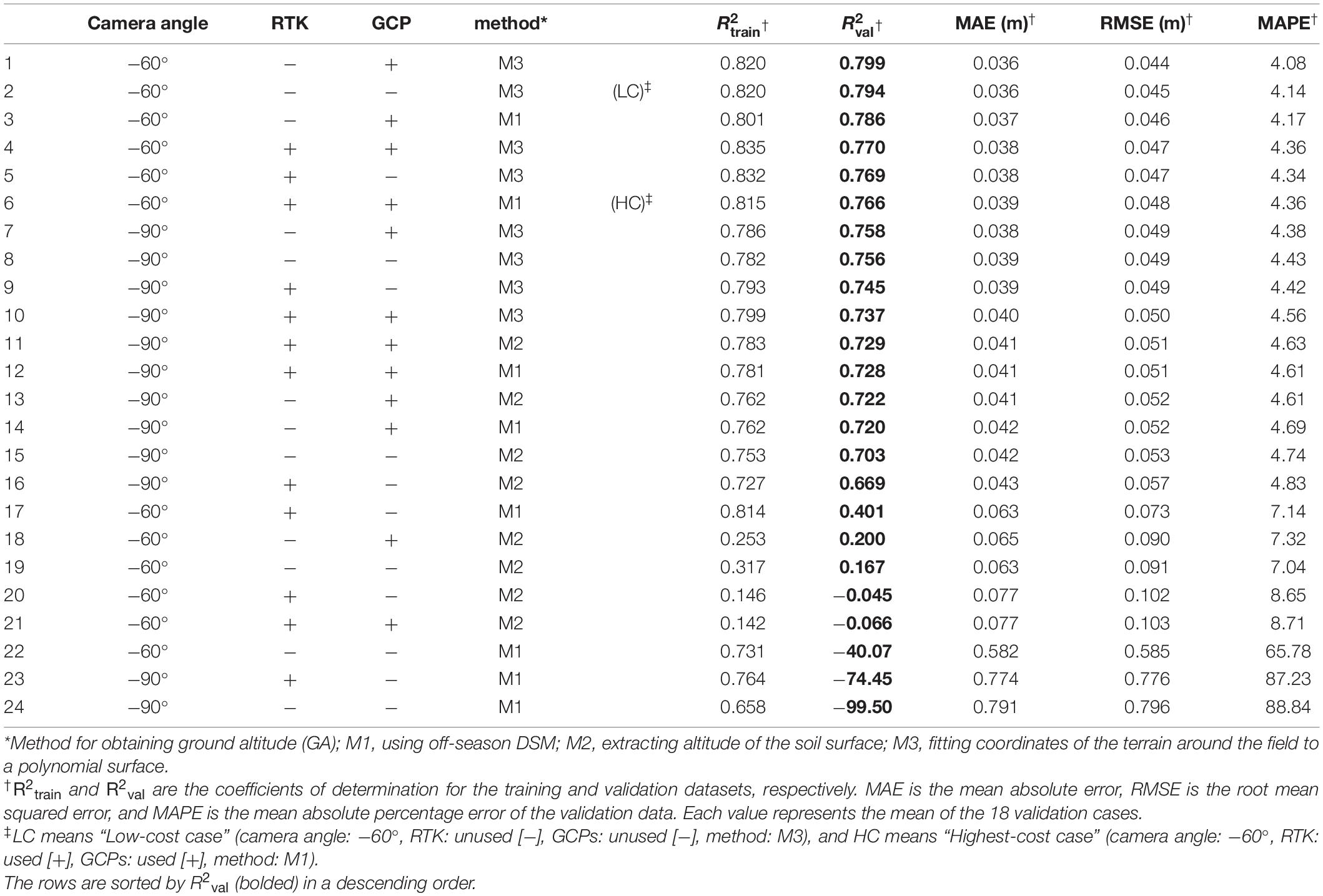

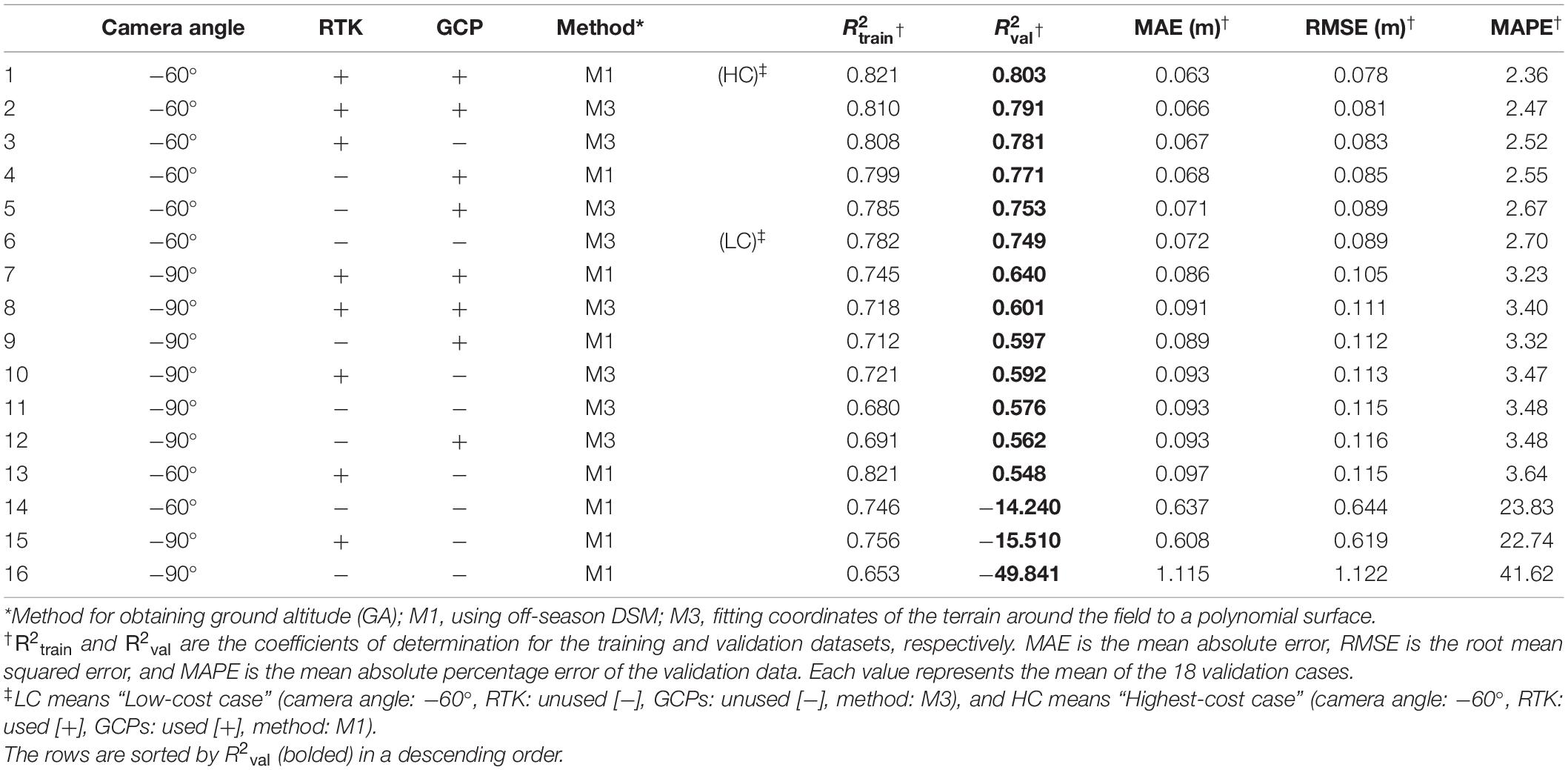

A linear regression model was necessary for PH prediction with UAV-SfM because PHSfM tended to be lower than PHmeasured. Simple regression models for PH prediction were trained and the “different-targets-and-different-flight” cross-validation was conducted under all conditions (vegetative stage: Table 7, reproductive stage: Table 8). In the reproductive stage, method M2 was omitted because the correlation between PHmeasured and PHSfM was weak (Table 6).

Table 7. Evaluation metrics on cross-validation of PH regression models in the vegetative stage of Field 2.

Table 8. Evaluation metrics on cross-validation of PH regression models in the reproductive stage of Field 2.

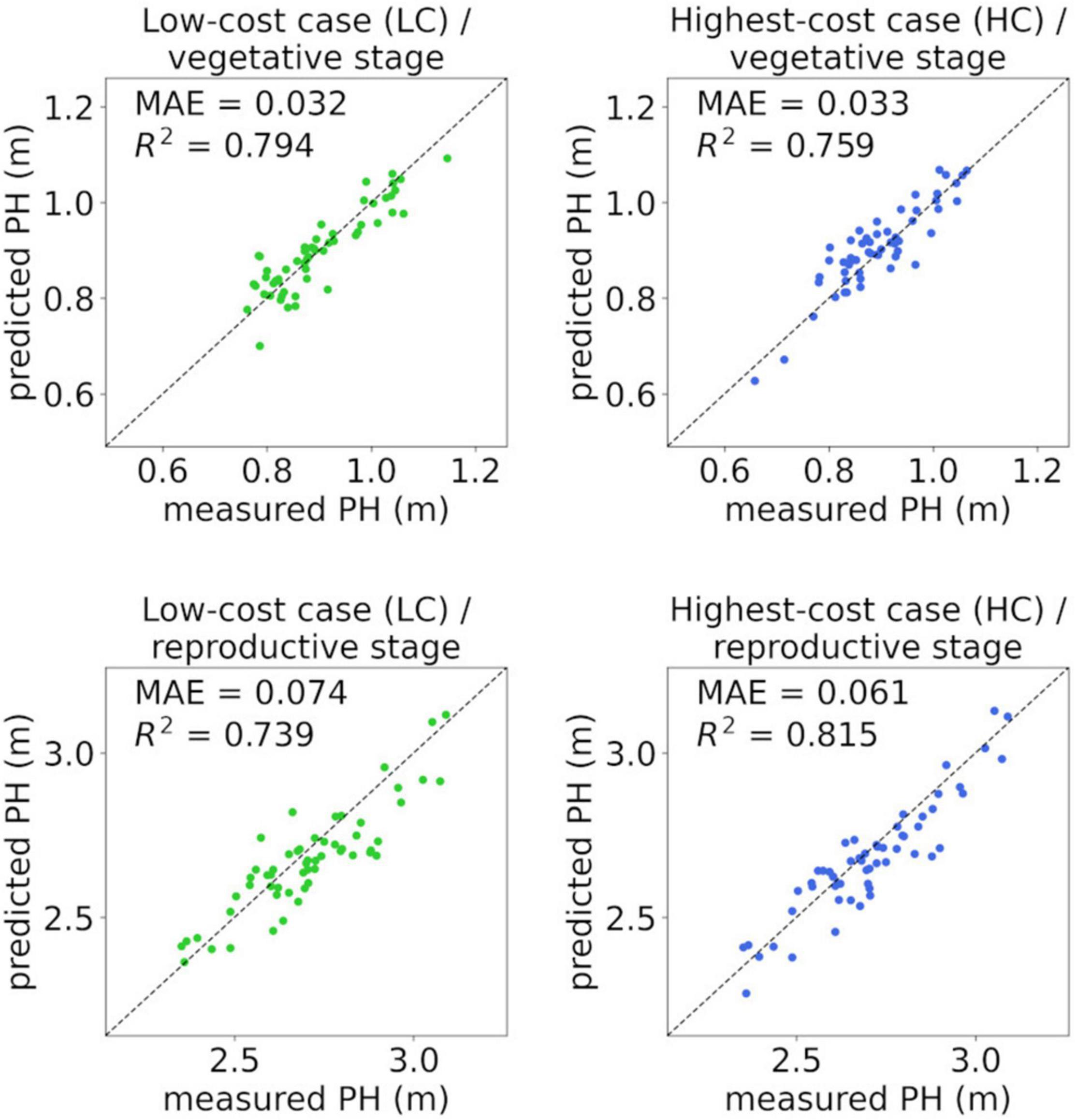

In the vegetative stage (Table 7), the coefficient of determination on the validation data (R²val) was high when the −60° camera angle and method M3 were applied, consistent with the correlation coefficients between PHmeasured and PHSfM (Table 5). Even in the "Low-cost case (LC)" (camera angle: −60°, RTK: unused [−], GCPs: unused [−], method: M3), which can be conducted with minimal equipment and without an extra flight, the regression model showed high predictive performance (R²val = 0.794, MAE = 0.036 m). In the "Highest-cost case (HC)" (camera angle: −60°, RTK: used [+], GCPs: used [+], method: M1), which would be expected to achieve high-precision sensing with method M1, R²val was 0.766, lower than that of LC. With method M1, R²val was high only when GCPs were used.

The predictive performance of HC was the highest in the reproductive stage (Table 8) (R²val = 0.803, MAE = 0.063 m). R²val was also high when the camera angle was −60° and method M3 was applied, including LC (R²val = 0.749, MAE = 0.072 m). With method M1, the predictive performance was low when GCPs were not used, as in the vegetative stage. Although the mean absolute errors on the validation data (MAEs) in the reproductive stage were larger than those in the vegetative stage, the mean absolute percentage errors (MAPEs) in the reproductive stage were smaller (MAPE = 2–3% for the six conditions with the highest R²val).

Examples of cross-validation, that is, scatterplots of the measured PH (PHmeasured) against the PH predicted by the regression model from PHSfM on the validation data, are shown in Figure 6. For each condition, the validation case whose R²val was nearest to the mean of the 18 cases was selected for the figure.

Figure 6. Scatterplots of measured PH (PHmeasured) against PH predicted by the linear regression model from PHSfM on validation data. "Low-cost case (LC)" is the condition with camera angle: −60°, RTK: unused [−], GCPs: unused [−], method: M3; and "Highest-cost case (HC)" is the condition with camera angle: −60°, RTK: used [+], GCPs: used [+], method: M1. For each combination of analysis condition (LC or HC) and stage (vegetative or reproductive), the validation case with R²val nearest to the mean of the 18 validation cases is shown.

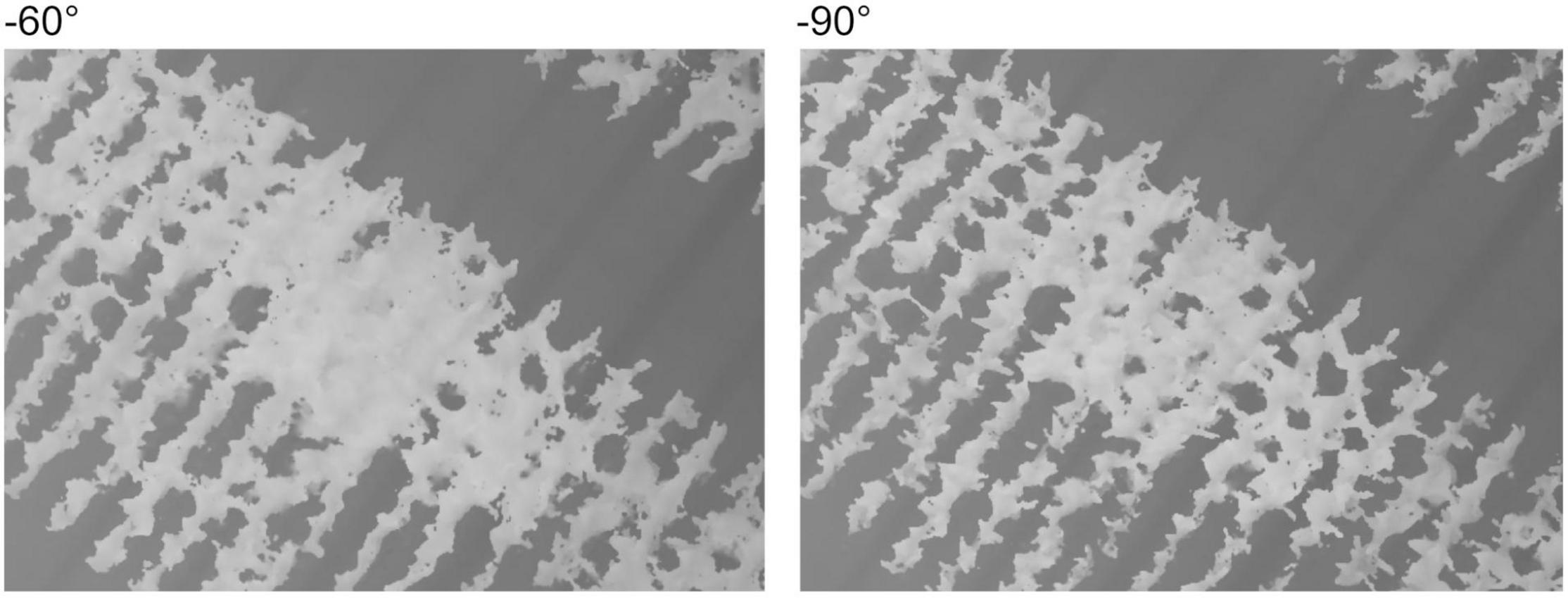

The correlation between PHmeasured and PHSfM was stronger with a −60° camera angle than with −90°, except for method M2 (Tables 5, 6). This tendency was observed even when GCPs were used. This suggests that the diagonal camera angle can both suppress the "doming" effect and capture the 3D structure of the plants well through a lateral view. However, in the vegetative stage with method M2, the soil surface in some plots was difficult to capture at the diagonal camera angle, and the resulting low accuracy of the GA appeared to cause the weak correlation. In some cases, the DSM from −60° images showed wider plant areas and less exposed soil than the DSM from −90° images (Figure 7). In the reproductive stage, the inner ROIs were mostly covered with plants; thus, M2 was difficult to apply at both −60° and −90° camera angles.

Figure 7. A comparison of DSMs obtained at camera angles of −60° and −90°. The DSMs are shown in grayscale; when the pixel is white, the altitude is high.

The rankings of the regression models differed from those of the correlation coefficients (r) between PHmeasured and PHSfM (Tables 5–8). For example, in the vegetative stage, the condition with camera angle: −60°, RTK: used [+], GCPs: unused [−], and method: M1 scored a high correlation coefficient (r = 0.903, rank = 6; Table 5) and thus a high goodness of fit on the training data (R² = 0.814; Table 7). However, the regression models had low predictive performance on the "different-targets-and-different-flight" validation data (R²val = 0.401, rank = 17; Table 7). Thus, although a strong correlation within one flight leads to a high goodness of fit on the training data, the model may still perform poorly on unknown data from different flights.

The predictive performance on unknown data was higher with method M1 combined with GCPs, or with method M3 (Tables 7, 8). With method M1, GCPs seemed to prevent deviation between the 3D models from different flights and thereby contributed to the high predictive performance. With method M3, the predictive performance was not affected by such deviation, even without GCPs, because the ground surface was determined separately for each on-season flight, which reduced the effect of the overall deviation.

The contribution of RTK positioning to the precision of PH monitoring was limited in this study; only small effects were observed. With RTK used [+], GCPs unused [−], and method M1 applied, R²val was relatively low despite some improvement from the diagonal (−60°) camera angle (Tables 7, 8). Although on-board RTK positioning enables centimeter-level precision on a DSM (Forlani et al., 2018), the PH differences of interest here are also at the centimeter level. Moreover, SfM photogrammetry based on RTK positioning has larger vertical errors than horizontal errors (Forlani et al., 2018; Štroner et al., 2020). A previous study on a paddy field under similar UAV image acquisition conditions reported a vertical coordinate error (MAE) of 0.031 m with a −60° camera angle and 2.10 m with a −90° camera angle for UAV-SfM point clouds with RTK and without GCPs (Fujiwara et al., in press). A slight vertical deviation of point clouds with RTK positioning may therefore cause low predictive performance on data from different flights. For reproducibility of UAV-SfM between flights, GCPs seem more reliable than RTK.

In this study, the regression models for the two growth stages were trained separately. In contrast, in several studies, a common model across growth stages scored a high coefficient of determination (R²) (Madec et al., 2017; Tirado et al., 2020; Lu et al., 2022). Because data pooled from multiple growth stages have high variance, the proportion of the variation explained by the model can be large. That is, when data from an early stage (e.g., PH = 0.5–1 m) and from a later stage (e.g., PH = 2–3 m) are mixed and fitted to one model, a high coefficient of determination is expected. However, errors such as MAE and RMSE may be larger than those of stage-specific models. Stage-specific models are therefore important when plants at the same growth stage are evaluated simultaneously. In this study, separating the models for the vegetative and reproductive stages enabled PH prediction with centimeter-level accuracy. The modeling strategy should be chosen according to the target and the required accuracy.
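The effect of pooling growth stages on R² can be illustrated with synthetic data. The ranges, offsets, and noise levels below are invented and only loosely resemble Table 3. Each stage on its own yields a moderate R², while the pooled fit yields a much higher R², because the between-stage difference in PH dominates the total variance. The pooled line cannot reduce the squared error below that of the two stage-specific lines on the same data, since each stage-specific line is already the least-squares optimum for its stage.

```python
import numpy as np


def linear_fit_stats(x, y):
    """R2 and sum of squared errors of a least-squares line predicting y from x."""
    a, b = np.polyfit(x, y, 1)
    resid = y - (a * x + b)
    sse = float(np.sum(resid ** 2))
    return 1.0 - sse / float(np.sum((y - y.mean()) ** 2)), sse


rng = np.random.default_rng(1)
# Synthetic stages: PH_SfM underestimates PH with stage-specific offsets and scatter.
veg_true = rng.uniform(0.6, 1.2, 168)
rep_true = rng.uniform(2.2, 3.1, 168)
veg_sfm = veg_true - 0.08 + rng.normal(0.0, 0.10, 168)
rep_sfm = rep_true - 0.25 + rng.normal(0.0, 0.13, 168)

r2_veg, sse_veg = linear_fit_stats(veg_sfm, veg_true)
r2_rep, sse_rep = linear_fit_stats(rep_sfm, rep_true)
r2_pooled, sse_pooled = linear_fit_stats(np.concatenate([veg_sfm, rep_sfm]),
                                         np.concatenate([veg_true, rep_true]))

n_total = veg_true.size + rep_true.size
rmse_stagewise = np.sqrt((sse_veg + sse_rep) / n_total)
rmse_pooled = np.sqrt(sse_pooled / n_total)
print(f"R2: vegetative {r2_veg:.2f}, reproductive {r2_rep:.2f}, pooled {r2_pooled:.2f}")
print(f"RMSE: stage-specific {rmse_stagewise:.3f} m, pooled {rmse_pooled:.3f} m")
```

In this sketch, the high pooled R² does not come with smaller errors, which is the point made above about reporting MAE and RMSE alongside R².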

With method M3, terrain coordinates are extracted only around a field; thus, the method is applicable to a field covered with plants. Although the trial fields in this study had passages without plants (Figure 2), these passages were not used for GA, because production fields without such passages were assumed. The results show that method M3 worked when the inside of the field was covered with plants.

In this study, all outer ROIs were used for polynomial fitting because bare soil was visible in all of them (i.e., there were few weeds). When bare soil is unavailable in some areas around a field, it may be better to exclude the corresponding outer ROIs. For a field completely covered with plants without any margin, method M3 would be difficult to apply; in such a situation, method M1 with RTK, GCPs, or both may be more suitable.

The shapefiles for ROIs were created on each orthomosaic in this study; thus, the horizontal deviation of the 3D models did not affect the predictive performance. However, when common ROIs in a field are used for different flights, such horizontal deviations can cause errors. To reduce the cost of creating ROIs on time-series datasets, high-precision positioning with GCPs or RTK could be beneficial, regardless of the method used to obtain GA.

Three-dimensional structural analysis with UAV-SfM is applicable to PH monitoring, yield prediction (Bendig et al., 2014; Li et al., 2016; Roth and Streit, 2018; Jiang et al., 2019; Karunaratne et al., 2020), and lodging detection (Chu et al., 2017; Yang et al., 2017). Moreover, PH data from UAV-SfM, such as the mean, percentiles, and coefficient of variation, can be combined with RGB and multispectral data for crop-monitoring systems (Li et al., 2016; Jiang et al., 2019; Karunaratne et al., 2020). The PH obtained using a high-precision and low-cost method is the basis for advanced demonstrations. The UAV-SfM methods demonstrated in this study can be applied to various targets and analytical strategies.

In this study, a "different-targets-and-different-flight" cross-validation was conducted to evaluate predictive performance on unknown data from another flight. The results suggest that method M1 with GCPs and method M3 can build regression models that fit unknown data well. In particular, method M3 achieved high predictive performance in the LC condition without GCPs or RTK. Therefore, it could serve as a high-precision and low-cost method for general analysis based on UAV-SfM. Three-dimensional structural analysis using this method may prove useful for remote sensing of production fields.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author/s.

RF, TK, HS, and YA designed this study and performed the experiments. RF contributed new analytical tools and analyzed the data. RF and YA wrote the manuscript. All authors contributed to the manuscript and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We thank K. Ushijima, M. Suzuki, and K. Uesaka for their assistance in conducting the measurements.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2022.886804/full#supplementary-material

CHM, crop height model; DSM, digital surface model; DTM, digital terrain model; GA, ground altitude; GCP, ground control point; GNSS, global navigation satellite system; HC, highest-cost case; LC, low-cost case; LiDAR, light detection and ranging; MAE, mean absolute error; MAPE, mean absolute percentage error; PH, plant height; RMSE, root mean squared error; ROI, region of interest; RTK, real-time kinematic; SfM, structure from motion; UAV, unmanned aerial vehicle.

Bendig, J., Bolten, A., and Bareth, G. (2013). UAV-based imaging for multi-temporal, very high resolution crop surface models to monitor crop growth variability. PFG Photogramm Fernerkund Geoinformation 6, 551–562. doi: 10.1127/1432-8364/2013/0200

Bendig, J., Bolten, A., Bennertz, S., Broscheit, J., Eichfuss, S., and Bareth, G. (2014). Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 6, 10395–10412. doi: 10.3390/rs61110395

Chang, A. J., Jung, J. H., Maeda, M. M., and Landivar, J. (2017). Crop height monitoring with digital imagery from Unmanned Aerial System (UAS). Comput. Electron. Agric. 141, 232–237. doi: 10.1016/j.compag.2017.07.008

Chu, T. X., Starek, M. J., Brewer, M. J., Murray, S. C., and Pruter, L. S. (2017). Assessing lodging severity over an experimental maize (Zea mays L.) field using UAS images. Remote Sens. 9:923. doi: 10.3390/rs9090923

Forlani, G., Dall’Asta, E., Diotri, F., Morra di Cella, U., Roncella, R., and Santise, M. (2018). Quality assessment of DSMs produced from UAV flights georeferenced with On-Board RTK positioning. Remote Sens. 10:311. doi: 10.3390/rs10020311

Fujiwara, R., Yasuda, H., Saito, M., Kikawada, T., Matsuba, S., Sugiura, R., et al. (in press). Investigation of a method to estimate culm length of rice based on aerial images using an unmanned aerial vehicle (UAV) equipped with high-precision positioning system. Breed. Res. doi: 10.1270/jsbbr.21J09

Gillan, J. K., Karl, J. W., Duniway, M., and Elaksher, A. (2014). Modeling vegetation heights from high resolution stereo aerial photography: an application for broad-scale rangeland monitoring. J. Environ. Manage. 144, 226–235. doi: 10.1016/j.jenvman.2014.05.028

Holman, F. H., Riche, A. B., Michalski, A., Castle, M., Wooster, M. J., and Hawkesford, M. J. (2016). High throughput field phenotyping of wheat plant height and growth rate in field plot trials using UAV based remote sensing. Remote Sens. 8:1031. doi: 10.3390/rs8121031

Iqbal, F., Lucieer, A., Barry, K., and Wells, R. (2017). Poppy crop height and capsule volume estimation from a single UAS flight. Remote Sens. 9:647. doi: 10.3390/rs9070647

James, M. R., and Robson, S. (2014). Mitigating systematic error in topographic models derived from UAV and ground-based image networks. Earth Surf. Process. Landf. 39, 1413–1420. doi: 10.1002/esp.3609

Jiang, Q., Fang, S. H., Peng, Y., Gong, Y., Zhu, R. S., Wu, X. T., et al. (2019). UAV-based biomass estimation for rice-combining spectral, TIN-based structural and meteorological features. Remote Sens. 11:890. doi: 10.3390/rs11070890

Karunaratne, S., Thomson, A., Morse-McNabb, E., Wijesingha, J., Stayches, D., Copland, A., et al. (2020). The fusion of spectral and structural datasets derived from an airborne multispectral sensor for estimation of pasture dry matter yield at paddock scale with time. Remote Sens. 12:2017. doi: 10.3390/rs12122017

Kawamura, K., Asai, H., Yasuda, T., Khanthavong, P., Soisouvanh, P., and Phongchanmixay, S. (2020). Field phenotyping of plant height in an upland rice field in Laos using low-cost small unmanned aerial vehicles (UAVs). Plant Prod. Sci. 23, 452–465. doi: 10.1080/1343943X.2020.1766362

Li, W., Niu, Z., Chen, H. Y., Li, D., Wu, M. Q., and Zhao, W. (2016). Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 67, 637–648. doi: 10.1016/j.ecolind.2016.03.036

Lu, W., Okayama, T., and Komatsuzaki, M. (2022). Rice height monitoring between different estimation models using UAV photogrammetry and multispectral technology. Remote Sens. 14:78. doi: 10.3390/rs14010078

Madec, S., Baret, F., de Solan, B., Thomas, S., Dutartre, D., Jezequel, S., et al. (2017). High-throughput phenotyping of plant height: comparing unmanned aerial vehicles and ground LiDAR estimates. Front. Plant Sci. 8:2002. doi: 10.3389/fpls.2017.02002

Malambo, L., Popescu, S. C., Murray, S. C., Putman, E., Pugh, N. A., Horne, D. W., et al. (2018). Multitemporal field-based plant height estimation using 3D point clouds generated from small unmanned aerial systems high-resolution imagery. Int. J. Appl. Earth Obs. Geoinf. 64, 31–42. doi: 10.1016/j.jag.2017.08.014

Murakami, T., Yui, M., and Amaha, K. (2012). Canopy height measurement by photogrammetric analysis of aerial images: application to buckwheat (Fagopyrum esculentum Moench) lodging evaluation. Comput. Electron. Agric. 89, 70–75. doi: 10.1016/j.compag.2012.08.003

Paturkar, A., Sen Gupta, G., and Bailey, D. (2021). Making use of 3D models for plant physiognomic analysis: a review. Remote Sens. 13:2232. doi: 10.3390/rs13112232

Rosnell, T., and Honkavaara, E. (2012). Point cloud generation from aerial image data acquired by a Quadrocopter type micro unmanned aerial vehicle and a digital still camera. Sensors (Basel) 12, 453–480. doi: 10.3390/s120100453

Roth, L., and Streit, B. (2018). Predicting cover crop biomass by lightweight UAS-based RGB and NIR photography: an applied photogrammetric approach. Precis. Agric. 19, 93–114. doi: 10.1007/s11119-017-9501-1

Sishodia, R. P., Ray, R. L., and Singh, S. K. (2020). Applications of remote sensing in precision agriculture: a review. Remote Sens. 12:3136. doi: 10.3390/rs12193136

Štroner, M., Urban, R., Reindl, T., Seidl, J., and Brouček, J. (2020). Evaluation of the georeferencing accuracy of a photogrammetric model using a Quadrocopter with onboard GNSS RTK. Sensors (Basel) 20:2318. doi: 10.3390/s20082318

Tirado, S. B., Hirsch, C. N., and Springer, N. M. (2020). UAV-based imaging platform for monitoring maize growth throughout development. Plant Direct 4:e00230. doi: 10.1002/pld3.230

Tsouros, D. C., Bibi, S., and Sarigiannidis, P. G. (2019). A review on UAV-based applications for precision agriculture. Information 10:349. doi: 10.3390/info10110349

Volpato, L., Pinto, F., González-Pérez, L., Thompson, I. G., Borém, A., Reynolds, M., et al. (2021). High throughput field phenotyping for plant height using UAV-based RGB imagery in wheat breeding lines: feasibility and validation. Front. Plant Sci. 12:591587. doi: 10.3389/fpls.2021.591587

Weiss, M., Jacob, F., and Duveiller, G. (2020). Remote sensing for agricultural applications: a meta-review. Remote Sens. Environ. 236:111402. doi: 10.1016/j.rse.2019.111402

Westoby, M. J., Brasington, J., Glasser, N. F., Hambrey, M. J., and Reynolds, J. M. (2012). ‘Structure-from-Motion’ photogrammetry: a low-cost, effective tool for geoscience applications. Geomorphology 179, 300–314. doi: 10.1016/j.geomorph.2012.08.021

Wu, W., Guo, F., and Zheng, J. (2020). Analysis of Galileo signal-in-space range error and positioning performance during 2015–2018. Satell. Navig. 1, 1–13. doi: 10.1186/s43020-019-0005-1

Xie, T. J., Li, J. J., Yang, C. H., Jiang, Z., Chen, Y. H., Guo, L., et al. (2021). Crop height estimation based on UAV images: methods, errors, and strategies. Comput. Electron. Agric. 185:106155. doi: 10.1016/j.compag.2021.106155

Yang, M. D., Huang, K. S., Kuo, Y. H., Tsai, H. P., and Lin, L. M. (2017). Spatial and spectral hybrid image classification for rice lodging assessment through UAV imagery. Remote Sens. 9:583. doi: 10.3390/rs9060583

Yao, H., Qin, R. J., and Chen, X. Y. (2019). Unmanned aerial vehicle for remote sensing applications—a review. Remote Sens. 11:1443. doi: 10.3390/rs11121443

Keywords: unmanned aerial vehicle, structure from motion, remote sensing, plant height, 3D structure analysis, maize

Citation: Fujiwara R, Kikawada T, Sato H and Akiyama Y (2022) Comparison of Remote Sensing Methods for Plant Heights in Agricultural Fields Using Unmanned Aerial Vehicle-Based Structure From Motion. Front. Plant Sci. 13:886804. doi: 10.3389/fpls.2022.886804

Received: 01 March 2022; Accepted: 20 May 2022;

Published: 24 June 2022.

Edited by:

Hanno Scharr, Julich Research Center, Helmholtz Association of German Research Centres (HZ), Germany

Reviewed by:

Mario Fuentes Reyes, Earth Observation Center, German Aerospace Center (DLR), Germany

Copyright © 2022 Fujiwara, Kikawada, Sato and Akiyama. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yukio Akiyama, akky@affrc.go.jp

†Present address: Hisashi Sato, Kyushu-Okinawa Agricultural Research Center, NARO, Koshi, Japan
