ORIGINAL RESEARCH article

Front. Plant Sci., 20 May 2022

Sec. Sustainable and Intelligent Phytoprotection

Volume 13 - 2022 | https://doi.org/10.3389/fpls.2022.791018

This article is part of the Research Topic: Intelligent Plant Protection Utilizing Emerging Technology and Artificial Intelligence

Remote sensing and machine learning (ML) could help growers, stakeholders, and plant pathologists identify plant diseases resulting from viral, bacterial, and fungal infections. Spectral vegetation indices (VIs) have been shown to be helpful for the indirect detection of plant diseases. The purpose of this study was to utilize ML models and identify VIs for the detection of downy mildew (DM) disease in watermelon in several disease severity (DS) stages, including low, medium (levels 1 and 2), high, and very high. Hyperspectral images of leaves were collected in the laboratory by a benchtop system (380–1,000 nm) and in the field by a UAV-based imaging system (380–1,000 nm). Two classification methods, multilayer perceptron (MLP) and decision tree (DT), were implemented to distinguish between healthy and DM-affected plants. The best classification rates were recorded by the MLP method; however, only 62.3% accuracy was observed at low disease severity. The classification accuracy increased as the disease severity increased (e.g., 86–90% for the laboratory analysis and 69–91% for the field analysis). The best wavelengths to differentiate between the DS stages were found at 531 nm and in the 700–900 nm band. The most significant VIs for DS detection were the chlorophyll green (Cl green), photochemical reflectance index (PRI), and normalized phaeophytinization index (NPQI) for the laboratory analysis, and the ratio analysis of reflectance spectral chlorophyll-a, b, and c (RARSa, RARSb, and RARSc) and the Cl green for the field analysis. Spectral VIs and ML could enhance disease detection and monitoring for precision agriculture applications.

Florida is the second-largest watermelon producer in the United States behind Texas. Based on the information from USDA Economic Research Service in 2017, more than 113,000 acres were cultivated throughout the United States, producing 40 million pounds of watermelon. The USA’s annual total watermelon budget was $578.8 million in 2016. Several diseases adversely impact watermelon production in Florida, and accurate disease identification is critical to implementing timely and effective management tactics. One of the significant diseases of watermelon is downy mildew (DM) caused by the fungal-like oomycete (Pseudoperonospora cubensis). Symptoms of DM are found on the leaves where the lesions begin as chlorotic (yellow) areas that become necrotic (brown/black) areas surrounded by a chlorotic halo. Under humid conditions, dense sporulation of the pathogen on the underside of the leaves within the lesions appears fuzzy. Severely affected leaves become crumpled and brown and may appear scorched. Downy mildew infection spreads quickly and, if left unchecked, can destroy an entire planting within days, hence the nickname “wildfire” for both the rapid disease development and scorched leaf appearance. Downy mildew does not affect stems or fruit directly; however, defoliation due to DM leaves fruit exposed to sunburning, making the fruit non-marketable. Therefore, successful field scouting for diseases would improve the yield production by implementing timely and effective management actions.

Because of the rapid and destructive occurrence of the disease, early detection and preventative fungicide applications are critical to its management. Non-destructive methods have been utilized as remote sensing tools for identifying and evaluating diseases occurring over a season (Immerzeel et al., 2008). Bagheri (2020) applied aerial multispectral imagery for the detection of fire blight infected pear trees by utilizing unmanned aerial vehicle (UAV), and several vegetation indices (VIs) (IPI, RDVI, MCARI1, MCARI2, TVI, MTVI1, MTVI2, TCARI, PSRI, and ARI) were evaluated for disease detection. The support vector machine (SVM) method was used to detect diseased trees with an accuracy of 95%. In another example, cotton root rot disease was detected by using UAV remote sensing and three automatic classification methods to delineate cotton root rot-contaminated areas (Wang et al., 2020). Ye et al. (2020) developed a technique for detecting and monitoring Fusarium wilt disease by utilizing UAV multispectral imagery. Eight VIs associated with pigment concentration and plant development changes were selected to determine the plants’ biophysical and biochemical characteristics during the disease progress development stages (Ye et al., 2020). Unmanned aerial vehicles can cover large crop areas by employing aerial photography to monitor the progress of a disease over time. Dense period sequence analysis can provide additional information on the timing of plant field changes and enhance the quality and accuracy of information derived from remote sensing (Woodcock et al., 2020).

One of the benefits of aerial imaging using UAVs is providing information on disease hot spots. Remote sensing (e.g., UAV-based hyperspectral imagery) can detect plants with diseases in asymptomatic and early disease development stages, which are critical for timely disease management (Hariharan et al., 2019). Abdulridha et al. (2019, 2020a) successfully detected different disease development stages of laurel wilt in avocado and bacterial and target spots in tomatoes with high classification accuracies utilizing remote sensing and machine learning. Lu et al. (2018) utilized spectral reflectance data to detect three different diseases in tomatoes, late blight, target spot, and bacterial spot, and several VIs were extracted to distinguish between healthy and diseased plants. Only a few studies utilized hyperspectral data for watermelon disease detection, which were conducted mainly in laboratories. Blazquez and Edwards (1986) utilized a spectroradiometer technique to measure the spectral reflectance of healthy watermelon and distinguish it from two diseases (Fusarium wilt and downy mildew). They found significant differences between the disease categories, especially in the NIR region (700–900 nm). Kalischuk et al. (2019) used UAV-based multispectral imaging and several VIs to detect watermelons infected with gummy stem blight, anthracnose, Fusarium wilt, Phytophthora fruit rot, Alternaria leaf spot, and cucurbit leaf crumple virus in the field.

However, all the benefits of remote sensing for disease detection are wasted if detection is not followed by timely control measures. If early disease detection is achieved and management practices are applied in time, it is possible to limit the disease spread throughout the field and minimize economic losses. One of the main goals of precision agriculture is to optimize fungicide and pesticide usage by detecting diseased areas (hotspots) and performing site-specific spraying. Sensor-based detection and mapping of stress symptoms in crops are required to accomplish spatially precise applications. Some recent studies have focused on sensor-based detection of pathogen infections in crops to implement site-specific fungicide applications (Abdulridha et al., 2020b,c). High-throughput phenotyping tools, the internet of things, and a smart environment can be used to observe the heterogeneity of crop vigor and could help optimize agricultural input usage through improved on-the-spot decisions and accurate timing and dosing of chemical applications (West et al., 2003; Almalki et al., 2021; Saif et al., 2021; Alsamhi et al., 2022).

Early disease detection is key to limiting the spread, reducing the severity, and minimizing crop damage. Accurate disease identification at the beginning of an outbreak is essential for implementing effective management tactics. To the best of our knowledge, a high-throughput technique for detecting and monitoring DM severity stages in watermelon fields has not yet been developed. In this study, hyperspectral images were collected in the field (via UAVs) and in the laboratory (via a benchtop system) to (i) train ML models for the detection and monitoring of the DM for several DS stages in watermelon, and (ii) select the best bands and VIs to distinguish between a healthy and a DM-affected watermelon plant. To the best of our knowledge, we are the first to develop a UAV-based hyperspectral imaging technique to detect DM-affected watermelon plants in several disease severity (DS) stages.

The experiments were conducted at the University of Florida’s Southwest Florida Research and Education Center in Immokalee, FL, United States. Guidelines established by the University of Florida were followed for land preparation, fertility, irrigation, weed management, and insect control. The beds were 0.81 m wide with 3.66 m centers covered with black polyethylene film. Four-week-old watermelon transplants “Crimson Sweet” were planted on 9 March 2019 into the soil (Immokalee fine sand) in a complete randomized block treatment design with four replicates. Each plot consisted of 10 plants spaced 0.91 m apart within an 8.23 m row with 3.05 m between each plot. The plants were infected naturally by DM.

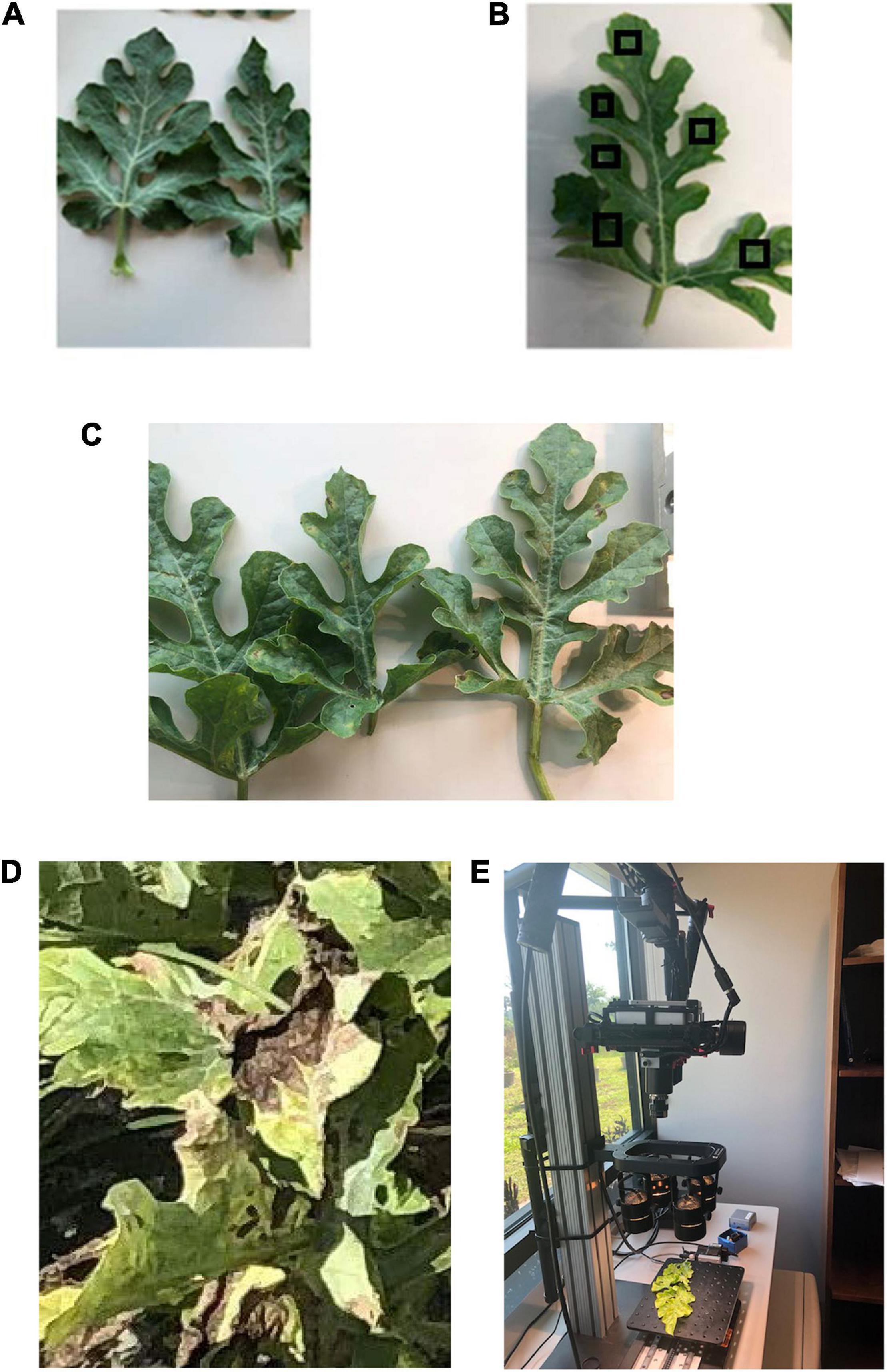

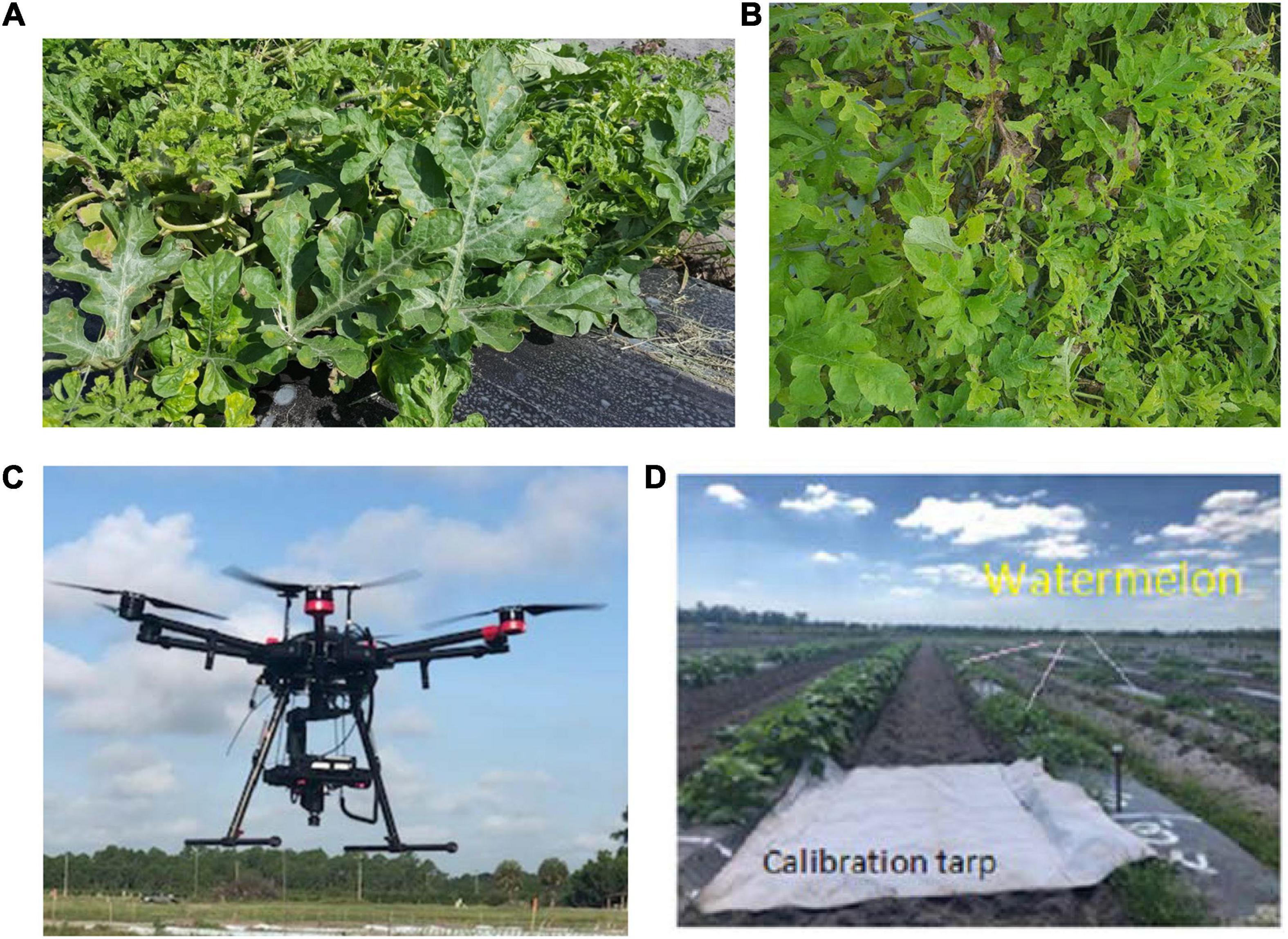

Healthy watermelon leaves were collected on April 10, 2019. After the first detection of DM, leaves were collected from DM-affected plants in five disease severity (DS; percentage of leaf tissue affected) stages. For the laboratory analysis, leaves were collected first in the low DS stage (few lesions) and then in the medium 1, medium 2, high, and very high DS stages. The grading system of DM severity was as follows: the low DS stage had 5–10% severity; as the disease progressed, the percentage of infection gradually increased through the medium 1 and medium 2 DS stages (11–20% and 21–30% severity, respectively); and severity then increased sharply in the high and very high DS stages (31–50% and 51–75% severity, respectively) (Figure 1). In this study, spectral data were not collected when the DS was more than 75%, because the leaves were badly damaged and desiccated. In the field (UAV-based) study, DS was categorized in two stages: low and high (Figure 2). The number of DS stages differed between the laboratory and the field because each leaf was analyzed as a sample in the laboratory, whereas an entire plant was used as a sample in the field. It was easier to quantify the DS stage of a single leaf than of an entire plant, which can include leaves in different DS stages.
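
The grading bands above can be written as a small lookup. The following is a minimal sketch, assuming inclusive integer bounds and rounding to the nearest whole percent; the names `DS_STAGES` and `grade_severity` are hypothetical and are not part of the study's workflow.

```python
# Severity bands (percent of leaf tissue affected) as stated in the text.
# Bounds are inclusive; the structure and names are this sketch's own.
DS_STAGES = [
    ("low", 5, 10),
    ("medium 1", 11, 20),
    ("medium 2", 21, 30),
    ("high", 31, 50),
    ("very high", 51, 75),
]


def grade_severity(percent_affected):
    """Return the DS stage for a severity percentage, or None if ungraded."""
    # Assumption: round to the nearest whole percent so that a value such as
    # 10.4 does not fall into the gap between two integer-bounded bands.
    pct = round(percent_affected)
    for stage, lower, upper in DS_STAGES:
        if lower <= pct <= upper:
            return stage
    # Below 5% (including healthy leaves) or above 75% (not sampled here).
    return None
```

For example, `grade_severity(25)` falls in the medium 2 band, while a leaf with more than 75% affected tissue returns `None`, mirroring the fact that such leaves were not scanned.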

Figure 1. (A) Healthy watermelon leaves and downy mildew infected leaves in different severity stages (as examples): (B) low (this image includes examples of regions of interest, RoIs); (C) medium; and (D) high. (E) Hyperspectral data collection in the laboratory by a Pika L 2.4 (Resonon Inc., Bozeman, MT, United States) hyperspectral camera.

Figure 2. Downy mildew severity stages in the field: (A) low; (B) high; (C) UAV-based hyperspectral imaging system; and (D) a calibration tarp.

Spectral data were collected using a benchtop hyperspectral imaging system, Pika L 2.4 (Resonon Inc., Bozeman MT, United States) (Figure 1E). The Pika L 2.4 was equipped with a 23-mm lens, which has a spectral range of 380–1,030 nm, 15.3° field of view, and a spectral resolution of 2.1 nm. The same hyperspectral camera was utilized in the laboratory (Figure 1E) and field (Figure 2C) after changing lenses, which covered the same spectral range. Resonon’s hyperspectral imagers (RHI), known as push-broom imagers, are line-scan imagers, which have 281 spectral channels. The system is made up of a linear stage assembly, which is shifted by a stage motor. In the laboratory, controlled broadband halogen lighting sources were set up above the linear stage to produce ideal situations for conducting spectral scans. The hyperspectral imaging system was arranged in a way that the lens’ distance from the linear stage was 0.5 m. The lights were positioned at the same level as the lens on a parallel plane. All scans were performed using the Spectronon Pro (Resonon Inc., Bozeman, MT, United States) software, which was connected to the camera system using a USB cable. Before performing the scans of the leaves, dark current noise was removed using the software. Then, the camera was calibrated by using a white tile (reflectance reference), provided by the manufacturer, and placed under the same conditions as used for performing scans. The selection of the regions of interest (RoIs) (e.g., Figure 1B) was done manually by picking six spectral scan regions per leaf (10 leaves per DS stage) to prevent the occurrence of any bias. The total spectral scans (RoIs) selected for each DS stage was 60. Regions of interest were selected in such a way that they included both the affected and unaffected areas of leaf tissue. The pixel number of each spectral scan selected was between 800 and 900 pixels. 
The average of the 60 spectral scans was used to form an overall spectral signature curve for each DS stage. The Spectronon Pro software, which is a post-processing data analysis software, was used to analyze the spectral data of each leaf scan. Several areas containing the symptomatic and non-symptomatic regions on the leaves were selected using the selection tool, and the spectrum was generated. For the healthy and DM-affected plants (five DS stages), several random spots on leaves were selected, and the average spectral reflectance was calculated and used to form the spectral signature curves.
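
The averaging step can be illustrated with a short sketch. It assumes each RoI scan has already been exported as a list of reflectance values over the same bands; the function name `mean_signature` and the toy values are hypothetical and do not come from Spectronon Pro.

```python
def mean_signature(scans):
    """Average equally long reflectance spectra band by band."""
    if not scans:
        raise ValueError("at least one spectral scan is required")
    n_bands = len(scans[0])
    if any(len(scan) != n_bands for scan in scans):
        raise ValueError("all scans must cover the same bands")
    # zip(*scans) groups the values of all scans for each band in turn.
    return [sum(band_values) / len(scans) for band_values in zip(*scans)]


# Toy example: three RoI scans over four bands (made-up reflectance values).
roi_scans = [
    [0.05, 0.10, 0.40, 0.50],
    [0.07, 0.12, 0.42, 0.54],
    [0.06, 0.14, 0.44, 0.52],
]
signature = mean_signature(roi_scans)
```

In the study, the same idea would apply to the 60 scans of one DS stage, giving one signature curve per stage.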

Spectral data were collected in the field by using a UAV (Matrice 600 Pro, Hexacopter, DJI Inc., Shenzhen, China) and the same hyperspectral camera (Pika L 2.4). The UAV-based imaging system included (Figure 2C): (i) a Resonon Pika L 2.4 hyperspectral camera; (ii) a visible-NIR (V-NIR) objective lens for the Pika L camera with a focal length of 17 mm, field of view (FOV) of 17.6 degrees, and an instantaneous field of view (IFOV) of 0.71 mrad; (iii) a Global Navigation Satellite System (GNSS) (Tallysman 33-2710NM-00-3000, Tallysman Wireless Inc., Ontario, Canada)/Inertial Measurement Unit (IMU) (Ellipse N, SBG Systems S.A.S., France) flight control system for multirotor aircraft to record sensor position and orientation; and (iv) the Resonon hyperspectral data analysis software (Spectronon Pro, Resonon, Bozeman, MT, United States), which is capable of rectifying the GPS/IMU data using a georectification plugin. The data were collected at 30 m above the ground at a flight speed of 1.5 m s⁻¹. In the produced map, the pixel size is a function of the working distance (distance between the camera lens and the scanning stage/field) and FOV, so it varies with the flight parameters. In this study, it was around 35 mm per pixel. A gray tarp (Group VIII Technologies) with a reflectance of 36% was used to convert the data from radiance to reflectance. Radiometric calibration was performed by using a calibrated integrating sphere. The manufacturer took 100 lines of spectral data and built a radiometric calibration file that contains a lookup table with all combinations of integration times and frame rates. These data were used to convert raw camera data (digital numbers) to physical units of radiance in microflicks. The Pika L 2.4 camera is a “pushbroom” or line-scan type imager that produces a 2-D image, where every pixel in the image contains a continuous reflectance spectrum.
A calibration tarp was used to calibrate the data for various illumination conditions in the field (Figure 2D). The RoIs were randomly handpicked for each plant, and several spectral scans were done to cover the entire canopy. Each RoI contained four pixels, and four RoIs were selected for each plant. The total sample size for each DS stage was 20 plants. The RoIs were then transferred as a text file and processed using the SPSS software (SPSS 13.0, Inc., Chicago; Microsoft Corp., Redmond, WA, United States).
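
The text does not spell out how the tarp was applied, so the following is only a sketch of one common single-target approach: each band of a radiance spectrum is divided by the tarp's radiance in that band and scaled by the tarp's known reflectance (36%). The function name and the toy radiance values are hypothetical, and the actual correction in Spectronon Pro may differ.

```python
TARP_REFLECTANCE = 0.36  # reflectance of the gray tarp, from the text


def radiance_to_reflectance(target_radiance, tarp_radiance,
                            tarp_reflectance=TARP_REFLECTANCE):
    """Scale a radiance spectrum by the tarp's radiance, band by band."""
    if len(target_radiance) != len(tarp_radiance):
        raise ValueError("spectra must cover the same bands")
    reflectance = []
    for target, tarp in zip(target_radiance, tarp_radiance):
        if tarp <= 0:
            raise ValueError("tarp radiance must be positive in every band")
        # Assumed linear model: reflectance is proportional to radiance
        # under the same illumination as the tarp.
        reflectance.append(tarp_reflectance * target / tarp)
    return reflectance


# Toy example: a pixel half as bright as the tarp in band 1 and equally
# bright in band 2 (made-up radiance values in arbitrary units).
pixel_reflectance = radiance_to_reflectance([50.0, 100.0], [100.0, 100.0])
```

Under this assumption, a pixel as bright as the tarp gets a reflectance of 0.36 and one half as bright gets 0.18.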

Vegetation indices could serve as indicators to identify DS stages based on defects in plant function, such as physiochemical defects that affect photosynthetic, metabolic, and nutritional processes. The factors that are most affected by diseases and that could be measured are chlorophyll content, cell structure, cell sap, the presence and relative abundance of pigments, water content, and carbon, as expressed in the solar-reflected optical spectrum (400–2,500 nm) (Xiao et al., 2014). A multilayer perceptron neural network was used to select the best VIs that could identify DM disease and its DS. The SPSS software was used for data analysis and for the evaluation of all VIs considered in this study (Table 1). The correlation coefficient was another parameter that was utilized in this study to evaluate each VI's performance in detecting DM severity stages.
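
As an illustration of how a VI is derived from a reflectance spectrum, the sketch below computes two normalized-difference indices. The PRI band pair (531 and 570 nm) is the one named later in this article; the NPQI band pair (415 and 435 nm) is the commonly published definition and is an assumption here, since Table 1 is not reproduced in this text. The helper names and the toy spectrum are hypothetical.

```python
def band_value(wavelengths, spectrum, target_nm):
    """Reflectance at the band whose centre is closest to target_nm."""
    index = min(range(len(wavelengths)),
                key=lambda i: abs(wavelengths[i] - target_nm))
    return spectrum[index]


def normalized_difference(wavelengths, spectrum, first_nm, second_nm):
    """(R_first - R_second) / (R_first + R_second)."""
    first = band_value(wavelengths, spectrum, first_nm)
    second = band_value(wavelengths, spectrum, second_nm)
    return (first - second) / (first + second)


def pri(wavelengths, spectrum):
    # Photochemical reflectance index: 531 and 570 nm, as named in the text.
    return normalized_difference(wavelengths, spectrum, 531, 570)


def npqi(wavelengths, spectrum):
    # Assumed band pair (415 and 435 nm); check against the study's Table 1.
    return normalized_difference(wavelengths, spectrum, 415, 435)


# Toy spectrum with made-up reflectance values at four band centres (nm).
wavelengths = [415, 435, 531, 570]
spectrum = [0.04, 0.06, 0.10, 0.15]
pri_value = pri(wavelengths, spectrum)
npqi_value = npqi(wavelengths, spectrum)
```

Picking the nearest band centre is a simplification; with a sensor resolution of about 2 nm, averaging a few neighbouring bands would be a reasonable alternative.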

A decision tree (DT) is a non-parametric supervised learning method used for classification and regression. The objective of a DT is to produce a model that predicts the value of a target variable by learning simple decision rules inferred from the data features. A decision tree can handle data measured on different scales without any assumptions about the frequency distributions of the data in each class, and it offers flexibility and the ability to handle non-linear relationships between features and classes (Friedl and Brodley, 1997). Decision trees can be trained rapidly and are quick in execution. The DT is a widely used technique in image processing for detecting several plant diseases. In this study, the classification was carried out between healthy and DM-affected watermelon plants in several DS stages. The dataset was split into 70% training and 30% testing.
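
To make the idea of learned decision rules and the 70/30 split concrete, the sketch below fits a one-level decision tree (a decision stump) on a single made-up feature. It is not the DT configuration used in the study, which was run in SPSS; the function names, the seed, and the toy data are hypothetical.

```python
import random


def split_70_30(samples, seed=0):
    """Shuffle and split samples into 70% training and 30% testing."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = (7 * len(shuffled)) // 10
    return shuffled[:cut], shuffled[cut:]


def fit_stump(train):
    """Find the threshold on one feature that best separates two classes.

    Each sample is (feature_value, label) with label 0 (healthy) or
    1 (DM-affected). The learned rule is: predict 1 if value > threshold.
    """
    values = sorted({value for value, _ in train})
    # Candidate thresholds: midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_threshold, best_correct = None, -1
    for threshold in candidates:
        correct = sum((value > threshold) == bool(label)
                      for value, label in train)
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


def accuracy(threshold, samples):
    """Fraction of samples classified correctly by the threshold rule."""
    hits = sum((value > threshold) == bool(label) for value, label in samples)
    return hits / len(samples)


# Made-up, well-separated feature values for 10 healthy and 10 affected plants.
healthy = [((10 + i) / 100, 0) for i in range(10)]
affected = [((30 + i) / 100, 1) for i in range(10)]
train, test = split_70_30(healthy + affected)
threshold = fit_stump(train)
test_accuracy = accuracy(threshold, test)
```

A full decision tree repeats this threshold search recursively on each resulting subset and across all features; the stump shows only the first split.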

Multilayer perceptron (MLP), which is a deep artificial neural network, was applied to identify the difference between healthy and several DM disease severity stages. Like other neural networks, the MLP is a function of predictors (also called inputs or independent variables) that minimizes the prediction error of target variables (also called outputs). These models can learn by example. Thus, when using a neural network, there is no need to program how the output is obtained for a given input; rather, the neural network uses a learning algorithm to estimate the relationship between input and output, which is then utilized to predict the output for new input values. The neural network creates a fitted model in an analytical form, where the parameters are weight, bias, and network topology. The multilayer perceptron is a fully connected multilayer feed-forward supervised learning network trained by the back-propagation algorithm to minimize a quadratic error criterion; no values are fed back to earlier layers. The multilayer perceptron is composed of an input layer, a hidden layer, and an output layer. The input and output layers are not weighted, and the transfer functions on the hidden layer nodes are radially symmetric. The full dataset was randomly split into two datasets by partitioning the active dataset into training (70%) and testing (30%) samples. After learning, the MLP model was run on the test set, which provided an unbiased estimate of the generalization error.
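
The structure described above (input layer, one hidden layer, output layer, quadratic error) can be sketched as a single forward pass. The sketch below does not reproduce the SPSS model: the sigmoid transfer function, the weights, and the two-input layout are assumptions chosen for illustration, and the back-propagation update itself is omitted.

```python
import math


def sigmoid(x):
    """Logistic transfer function (an assumption of this sketch)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """One forward pass through a single-hidden-layer perceptron."""
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    output = sigmoid(sum(w * h for w, h in zip(output_weights, hidden))
                     + output_bias)
    return hidden, output


def squared_error(prediction, target):
    """Quadratic error criterion for one sample."""
    return 0.5 * (prediction - target) ** 2


# Made-up example: two VI values as inputs, two hidden nodes, one output.
vi_values = [0.2, -0.1]
hidden, probability = forward(
    vi_values,
    hidden_weights=[[1.0, -1.0], [0.5, 0.5]],
    hidden_biases=[0.0, 0.0],
    output_weights=[1.0, -1.0],
    output_bias=0.0,
)
error = squared_error(probability, target=1.0)
```

Training would adjust the weights and biases to reduce `error` over the 70% training partition; the 30% test partition is then passed through `forward` only.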

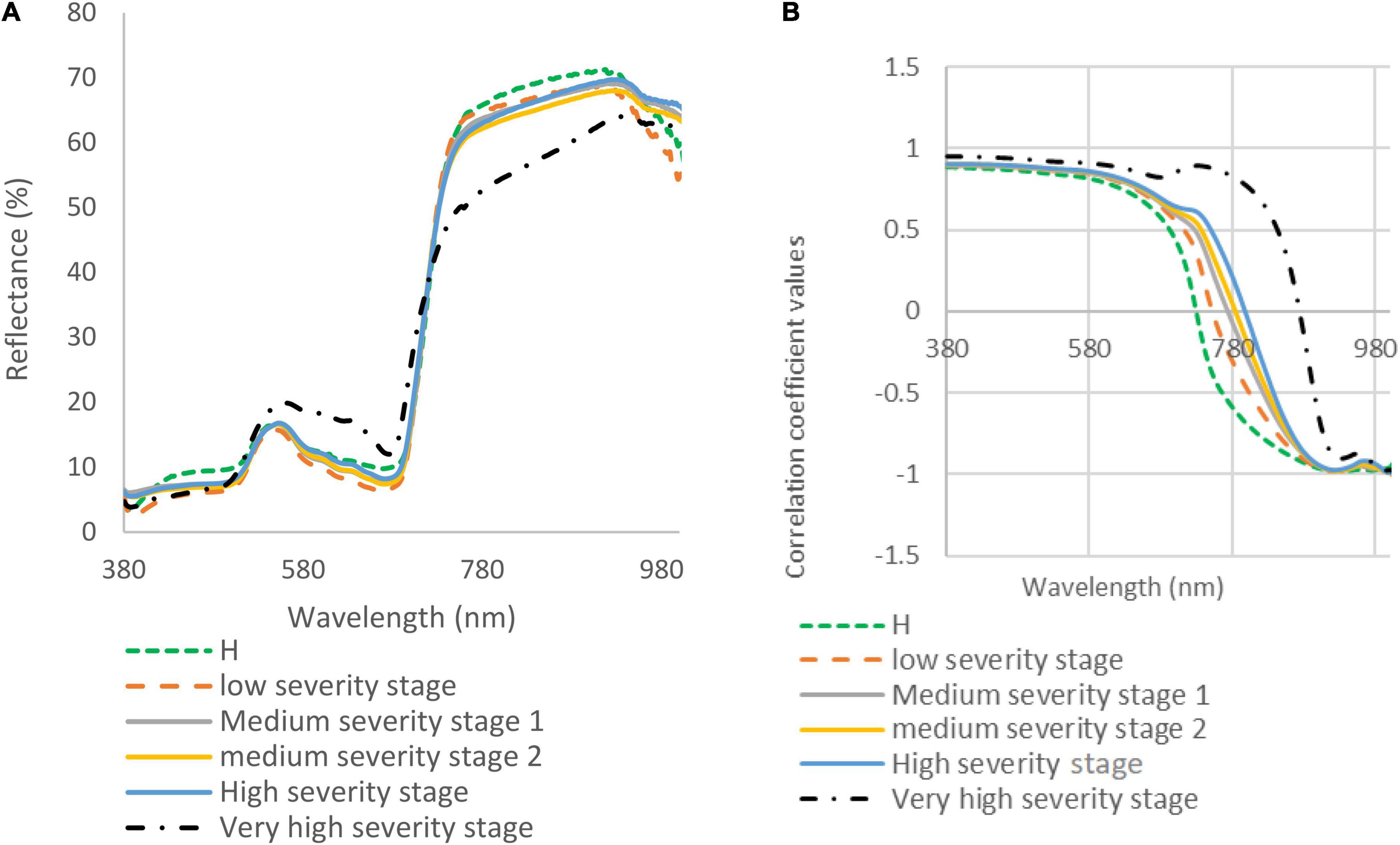

During the spectral data collection under optimal light and temperature conditions in the laboratory, the spectral reflectance of five DM disease severity stages was taken. The spectral signatures and correlation coefficient at different disease severities were measured and compared (Figure 3). The spectral reflectance of the very high DS stage showed a significant increase in the green and red range (450–650 nm), while other stages showed lower spectral reflectance in the visible range (380–700 nm). The spectral reflectance values of the very high DS stage were decreased in the NIR range, and the spectral reflectance in the red edge diverged from other stages. The leaves of watermelon in the low and medium DS stages had very few symptoms; therefore, there were only slight differences between the spectral reflectance for these stages. The spectral reflectance signature is strongly related to the severity of the expected DM pathogen symptom (Figure 3A). In the visible range (380–700 nm), there were not many differences in the correlation coefficient signature of all DS stages (Figure 3B). The signatures were identical until the red-edge range (700 nm), where it gradually showed differences between DS stages (especially the high and very high severity stages). It is obvious from Figure 3B that the correlation coefficient showed wide differences in the NIR range as the DS increased.

Figure 3. (A) Spectral reflectance signatures (collected in the laboratory) of downy mildew affected watermelon leaves in five disease severity (DS) stages; and (B) correlation coefficient for watermelon leaves in healthy (H) and five DS stages.

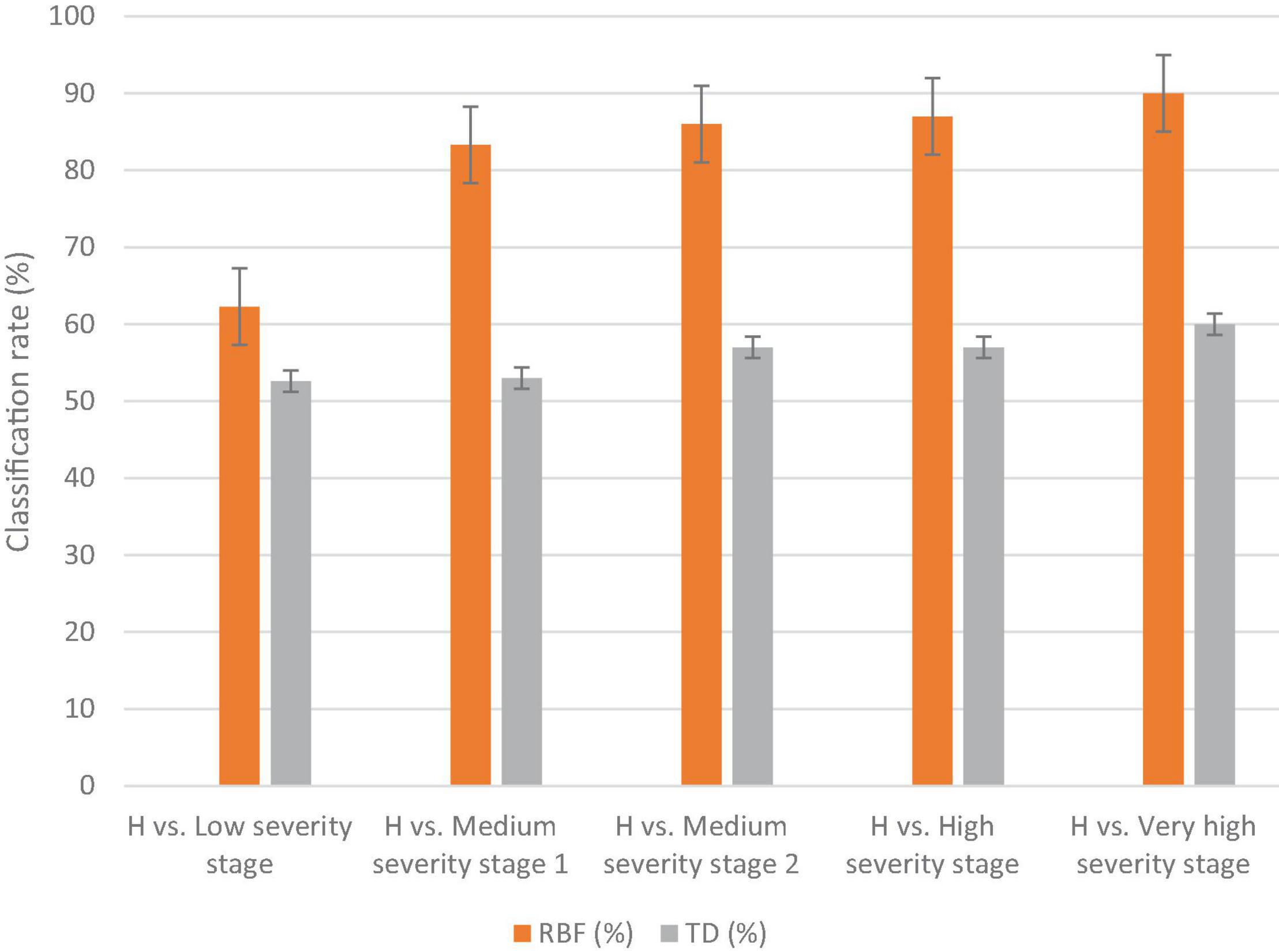

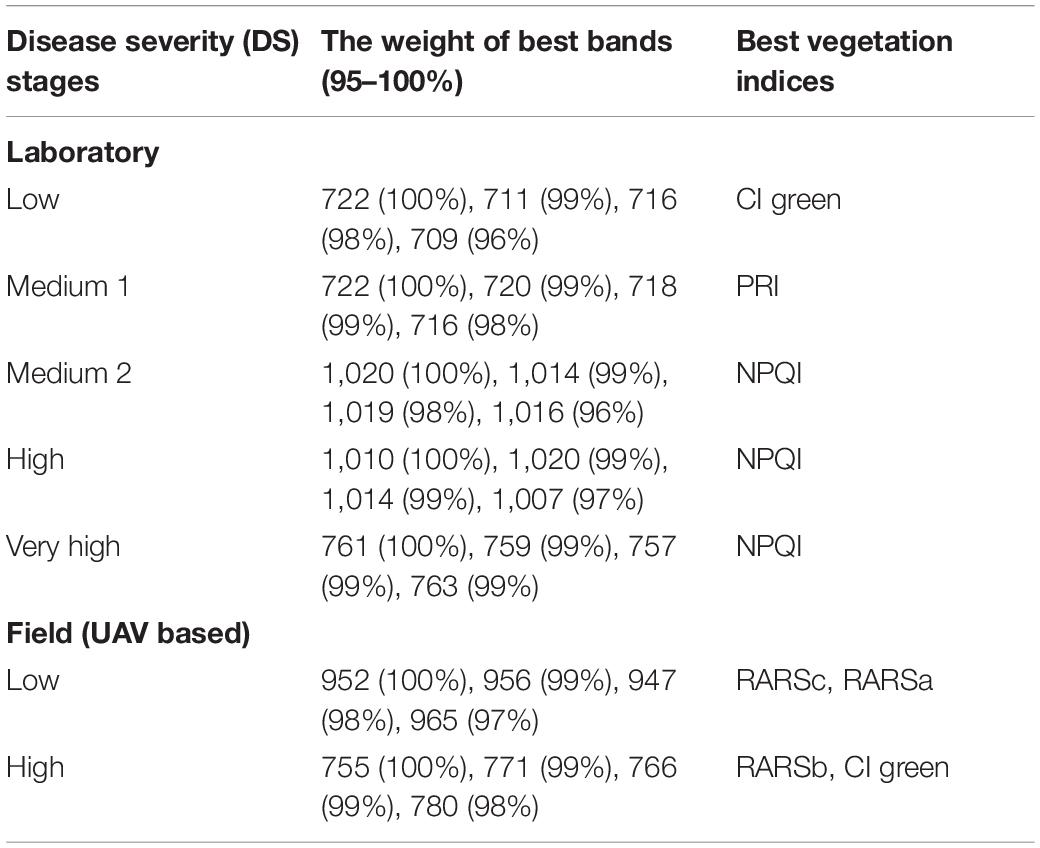

The classification results varied with disease severity: the classification accuracy in the low DS stage was lower than in the other stages (e.g., 62.3 and 52.6% for MLP and DT, respectively). The classification rate increased as the severity of disease symptoms increased (Figure 4). The highest classification value of MLP was in the very high DS stage at 90%. The DT classification method had lower detection accuracies than the MLP classification method for all DS stages (Figure 4). Hence, the MLP method was selected as the best classification method for DM detection and DS stage classification, and it was used for the selection of the best wavebands and VIs for disease detection. The best wavebands for detecting the low and medium 1 DS stages were found in the red edge (711–722 nm), those for the medium 2 and high DS stages were found at 1,007–1,020 nm, and those for the very high DS stage were found in the red edge (759–761 nm). The best VIs, selected by using the MLP classification method, were the Cl green and PRI for the low and medium 1 DS stages, respectively, and the NPQI for the medium 2, high, and very high DS stages (Table 2).

Figure 4. The classification results of the MLP and DT methods to distinguish healthy (H) against several disease severity stages of downy mildew disease in watermelon in the laboratory. The vertical lines on the columns are error bars.

Table 2. Best wavebands and vegetation indices measured in the laboratory and field for detecting different disease severity stages of downy mildew.

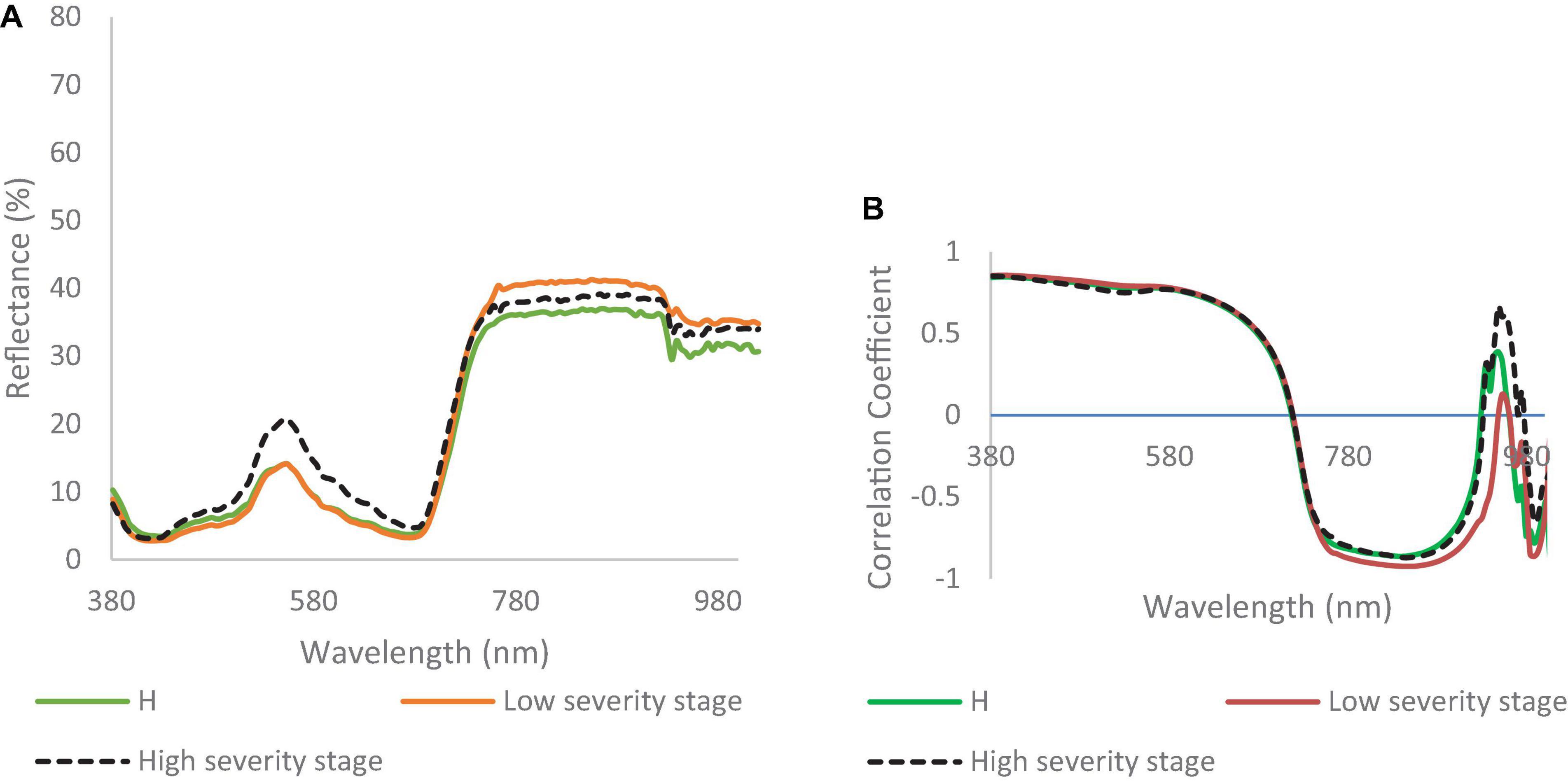

Figure 5A shows the UAV-based spectral signatures of healthy and DM-affected plants. In the visible range, the spectral reflectance values of healthy plants and DM-affected plants in the low DS stage were lower than in the high DS stage, which had a peak value of 20% at the green band. The spectral reflectance values of healthy plants were lower than the low and high DS stages in the NIR range. The spectral reflectance of the low DS stage was higher than the high DS stage, especially in the range of 750–915 nm. Major differences cannot be seen in the red edge, where both DS stages had almost identical signatures. In the NIR range (700–1,000 nm), the spectral reflectance values of the low and high DS stages were higher than the spectral reflectance values of healthy plants.

Figure 5. (A) Spectral reflectance signatures developed from hyperspectral imaging collected in the field; and (B) correlation coefficient for watermelon plant in healthy (H), low, and high downy mildew severity stages.

The correlation coefficients for healthy, low, and high DS stages were almost identical in the visible range (Figure 5B). In the range of 750–1,000 nm, there were some differences recorded between the correlation coefficient values of the two DS stages (Figure 5B). Figure 5B shows differences between the low and high DS stages at 750 and 1,000 nm.

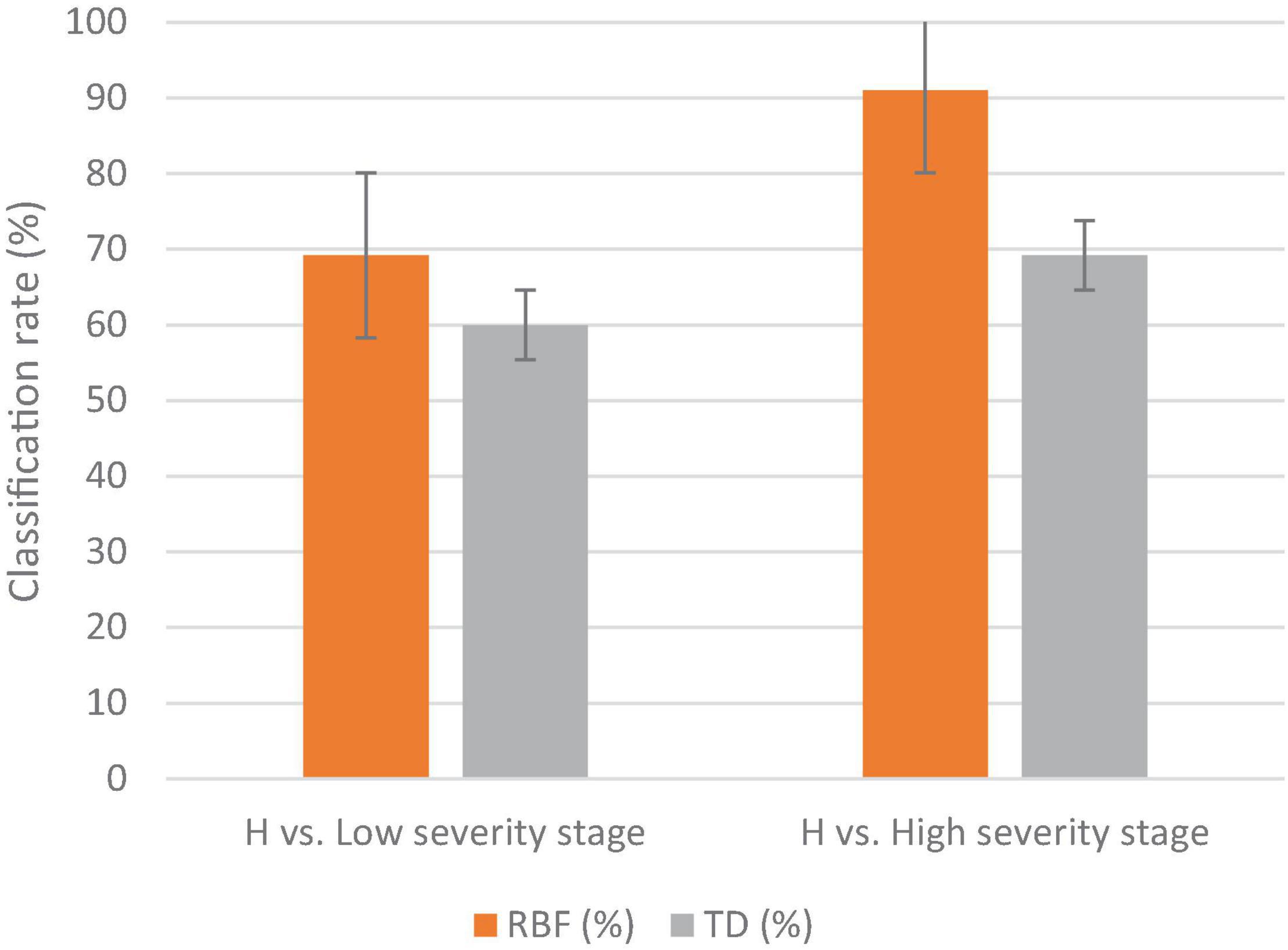

The MLP method gave a higher classification accuracy than the DT method; in the low DS stage, the classification accuracy of the MLP was 69%, while the classification accuracy of the DT was 60% (Figure 6). The highest classification accuracy was achieved in the high DS stage at 91% for MLP, while it was 69% for DT. The best wavebands for low DS stage classification were between 952 and 965 nm, while in the high DS stage the best wavebands were in the red edge (755–780 nm). The best VIs for disease detection were the RARSc and RARSa for the low DS stage, and the RARSb and CI green for the high DS stage (Table 2).

Figure 6. The classification results of the MLP and DT methodologies for detecting several disease severity stages of downy mildew in watermelon plants in the field against healthy plants (H). The vertical lines on the columns are error bars.

Watermelon plants are very susceptible to diseases; therefore, several studies developed non-destructive and high-throughput techniques to detect diseases like anthracnose, leaf blight, and leaf spot (He et al., 2021). Blazquez and Edwards (1986) developed a laboratory-based technique to detect watermelon infected with Fusarium wilt, downy mildew, and watermelon mosaic. All three diseases had significant differences in the near infra-red range (700–900 nm). Kalischuk et al. (2019) applied UAV multispectral imaging to identify, using NDVI, gummy stem blight, anthracnose, Fusarium wilt, Phytophthora fruit rot, Alternaria leaf spot, and cucurbit leaf crumple disease. Disease incidence and severity ratings were significantly different between conventional scouting and UAV-assisted scouting. There is no other UAV-based system available for the detection and monitoring of DM in the field.

The differences between our laboratory and field results (i.e., the DS detection accuracy of the models and the best wavelengths and VIs for DS detection) can be explained by the different environmental conditions and data collection procedures. For example, in the laboratory, data are collected from single leaves at a close distance and with artificial light, in contrast to the field, where data are collected by a UAV (30 m above ground) from entire plants. Hence, the results from the laboratory and field analysis cannot be directly compared.

The DM-affected watermelon leaves showed varied spectral reflectance signatures for each DS stage (both in the laboratory and field). However, in the visible range, there were no significant differences between the healthy and the low DS stage (5–10% infection), which indicates that it is very difficult to distinguish among these stages with visual observation. For that reason, hyperspectral imaging and machine learning can be used for a more efficient and rapid early plant disease and severity detection (compared to visual detection methods).

Laboratory measurements showed that the spectral reflectance of healthy plants was higher than the other DS stages in the blue range. The main differences between healthy and infected plants in the spectral signatures, both in the laboratory and field measurements, were found in the high severity stage in the green, red edge, and NIR range (700–1,000 nm), while in the NIR, the spectral reflectance signature of healthy plants was higher than the other DS stages. The high DS stage had a unique signature that can be used to distinguish this stage from others (and healthy plants). Although we were able to visually observe the change in leaf color for some of the DS stages, not all stages showed the same level of change.

In the field, the results did not show significant differences between the low and high DS stages in the visible range; only slight differences were observed. However, those minor differences in the spectral reflectance in the visible range can still be considered an indication of color change in the leaves. The leaves and the plant canopy in the low DS stage showed very few visual symptoms, and the classification accuracy was low, both in the laboratory and field. As the chlorophyll content and water content decrease, the leaf cell damage increases (Barnawal et al., 2017), and that helps the classification methods to better classify the DS stages, especially in the medium and very high DS stages.

The common practice for selecting significant wavelengths for DS detection or for new VIs is to correlate them with a biochemical or biophysical trait, for example, the chlorophyll a + b content, the leaf structure parameter, the water content, and so on (Gitelson and Merzlyak, 1996; Hatfield et al., 2008). Often, fluctuations in spectral reflectance can lead to the detection of plants under stress without specifying what type of stress causes the damage to the plant (Carter and Miller, 1994; Gitelson and Merzlyak, 1994). For example, as mentioned earlier, several factors could reduce the chlorophyll content, some of which are physiological or biochemical changes caused by DM, and this reduction might influence photosynthetic activity.

In both experimental conditions, the best vegetation indices (e.g., the PRI, which is more related to the green range, and the CI green) were able to discriminate between healthy and DM-affected plants in the low and the high DS stages. The PRI, which is produced by normalizing the reflectance at 531 and 570 nm, basically relies on the green range. Any deficiency or disorder in chlorophyll will influence the PRI value; the PRI has increasingly been used as an indicator of photosynthetic efficiency (Garbulsky et al., 2011) and as an indicator of water stress (Suarez et al., 2008). One of the first changes in a DM-affected plant is the reduction of the chlorophyll concentration that affects the process of photosynthesis in the infected leaf, and some VIs associated with chlorophyll content could be used to detect these changes (Mandal et al., 2009; Bellow et al., 2013; Barnawal et al., 2017). Our findings suggest the same.

The selected best spectral VIs resulted in high specificity and sensitivity for the detection and identification of downy mildew disease in different stages of severity. Lower classification results were achieved in the low DS stage, because of the minor changes in leaf composition (compared to a healthy plant). The highest classification results were obtained from the MLP method in high and very high DS stages (87–90%), while the DT method recorded lower classification results (compared to MLP) for all DS stages. Some VIs can be used for disease detection and classification of the DS stages. The use of hyperspectral imaging for identifying the most significant VIs to detect and identify several DS stages will further enhance the understanding and specificity of disease detection. Future work includes the development of a simple and inexpensive UAV-based sensor, based on previous research and developments, that only measures spectral reflectance at narrow bands (e.g., customized multispectral camera), centered at specific wavelengths for early DM detection in the field.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

JA, YA, JQ, and PR conceived, designed, processed, analyzed, and interpreted the experiments. JA, YA, and PR acquired the data. JA and YA analyzed the data and prepared the manuscript. JQ and PR edited the manuscript. All authors contributed to the article and approved the submitted version.

This material was made possible, in part, by a Cooperative Agreement from the U.S. Department of Agriculture’s Agricultural Marketing Service through grant AM190100XXXXG036.

The contents are solely the responsibility of the authors and do not necessarily represent the official views of the USDA.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdulridha, J., Ehsani, R., Abd-Elrahman, A., and Ampatzidis, Y. (2019). A remote sensing technique for detecting laurel wilt disease in avocado in presence of other biotic and abiotic stresses. Comput. Electron. Agric. 156, 549–557. doi: 10.1016/j.compag.2018.12.018

Abdulridha, J., Ampatzidis, Y., Kakarla, S. C., and Roberts, P. (2020a). Detection of target spot and bacterial spot disease in tomato using UAV-based and benchtop-based hyperspectral imaging techniques. Precis. Agric. 21, 955–978. doi: 10.1007/s11119-019-09703-4

Abdulridha, J., Ampatzidis, Y., Qureshi, J., and Roberts, P. (2020b). Laboratory and UAV-based identification and classification of tomato yellow leaf curl, bacterial spot, and target spot diseases in tomato utilizing hyperspectral imaging and machine learning. Remote Sens. 12:2732. doi: 10.3390/rs12172732

Abdulridha, J., Ampatzidis, Y., Roberts, P., and Kakarla, S. C. (2020c). Detecting powdery mildew disease in squash at different stages using UAV-based hyperspectral imaging and artificial intelligence. Biosyst. Eng. 197, 135–148. doi: 10.1016/j.biosystemseng.2020.07.001

Almalki, F. A., Soufiene, B., Alsamhi, S. H., and Sakli, H. (2021). A Low-Cost Platform for Environmental Smart Farming Monitoring System Based on IoT and UAVs. Sustainability 13:5908. doi: 10.3390/su13115908

Alsamhi, S. H., Almalki, F. A., Afghah, F., Hawbani, A., Shvetsov, A. V., Lee, B., et al. (2022). Drones’ Edge Intelligence Over Smart Environments in B5G: blockchain and Federated Learning Synergy. IEEE Trans. Green Commun. Netw. 6, 295–312. doi: 10.1109/tgcn.2021.3132561

Babar, M. A., Reynolds, M. P., Van Ginkel, M., Klatt, A. R., Raun, W. R., and Stone, M. L. (2006). Spectral reflectance to estimate genetic variation for in-season biomass, leaf chlorophyll, and canopy temperature in wheat. Crop Sci. 46, 1046–1057. doi: 10.2135/cropsci2005.0211

Bagheri, N. (2020). Application of aerial remote sensing technology for detection of fire blight infected pear trees. Comput. Electron. Agric. 168:105147. doi: 10.1016/j.compag.2019.105147

Barnawal, D., Pandey, S. S., Bharti, N., Pandey, A., Ray, T., Singh, S., et al. (2017). ACC deaminase-containing plant growth-promoting rhizobacteria protect Papaver somniferum from downy mildew. J. Appl. Microbiol. 122, 1286–1298. doi: 10.1111/jam.13417

Barnes, J. D., Balaguer, L., Manrique, E., Elvira, S., and Davison, A. W. (1992). A Reappraisal of the Use of DMSO for the Extraction and Determination of Chlorophylls-A and Chlorophylls-B in Lichens and Higher-Plants. Environ. Exp. Bot. 32, 85–100. doi: 10.1016/0098-8472(92)90034-y

Bellow, S., Latouche, G., Brown, S. C., Poutaraud, A., and Cerovic, Z. G. (2013). Optical detection of downy mildew in grapevine leaves: daily kinetics of autofluorescence upon infection. J. Exp. Bot. 64, 333–341. doi: 10.1093/jxb/ers338

Blackburn, G. A. (1998). Spectral indices for estimating photosynthetic pigment concentrations: a test using senescent tree leaves. Int. J. Remote Sens. 19, 657–675. doi: 10.1080/014311698215919

Blazquez, C. H., and Edwards, G. J. (1986). Spectral reflectance of healthy and diseased watermelon leaves. Ann. Appl. Biol. 108, 243–249. doi: 10.1111/j.1744-7348.1986.tb07646.x

Broge, N. H., and Leblanc, E. (2001). Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 76, 156–172. doi: 10.1016/s0034-4257(00)00197-8

Carter, G. A., and Miller, R. L. (1994). Early detection of plant stress by digital imaging within narrow stress-sensitive wavebands. Remote Sens. Environ. 50, 295–302. doi: 10.1016/0034-4257(94)90079-5

Chappelle, E. W., Kim, M. S., and McMurtrey, J. E. (1992). Ratio analysis of reflectance spectra (RARS): an algorithm for the remote estimation of the concentrations of chlorophyll-a, chlorophyll-b, and carotenoids in soybean leaves. Remote Sens. Environ. 39, 239–247. doi: 10.1016/0034-4257(92)90089-3

Friedl, M. A., and Brodley, C. E. (1997). Decision tree classification of land cover from remotely sensed data. Remote Sens. Environ. 61, 399–409. doi: 10.1016/s0034-4257(97)00049-7

Gamon, J. A., Penuelas, J., and Field, C. B. (1992). A narrow-waveband spectral index that tracks diurnal changes in photosynthetic efficiency. Remote Sens. Environ. 41, 35–44. doi: 10.1016/0034-4257(92)90059-S

Garbulsky, M. F., Penuelas, J., Gamon, J., Inoue, Y., and Filella, I. (2011). The photochemical reflectance index (PRI) and the remote sensing of leaf, canopy and ecosystem radiation use efficiencies: a review and meta-analysis. Remote Sens. Environ. 115, 281–297. doi: 10.1016/j.rse.2010.08.023

Gitelson, A., and Merzlyak, M. N. (1994). Quantitative estimation of chlorophyll-a using reflectance spectra - experiments with autumn chestnut and maple leaves. J. Photochem. Photobiol. B Biol. 22, 247–252. doi: 10.1016/1011-1344(93)06963-4

Gitelson, A. A., Gritz, Y., and Merzlyak, M. N. (2003). Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 160, 271–282. doi: 10.1078/0176-1617-00887

Gitelson, A. A., Kaufman, Y. J., Stark, R., and Rundquist, D. (2002). Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 80, 76–87. doi: 10.1016/s0034-4257(01)00289-9

Gitelson, A. A., and Merzlyak, M. N. (1996). Signature analysis of leaf reflectance spectra: algorithm development for remote sensing of chlorophyll. J. Plant Physiol. 148, 494–500. doi: 10.1016/s0176-1617(96)80284-7

Gitelson, A. A., Merzlyak, M. N., and Chivkunova, O. B. (2001). Optical properties and nondestructive estimation of anthocyanin content in plant leaves. Photochem. Photobiol. 74, 38–45. doi: 10.1562/0031-8655(2001)074<0038:opaneo>2.0.co;2

Haboudane, D., Miller, J. R., Pattey, E., Zarco-Tejada, P. J., and Strachan, I. B. (2004). Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: modeling and validation in the context of precision agriculture. Remote Sens. Environ. 90, 337–352. doi: 10.1016/j.rse.2003.12.013

Haboudane, D., Miller, J. R., Tremblay, N., Zarco-Tejada, P. J., and Dextraze, L. (2002). Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 81, 416–426. doi: 10.1016/s0034-4257(02)00018-4

Hariharan, J., Fuller, J., Ampatzidis, Y., Abdulridha, J., and Lerwill, A. (2019). Finite difference analysis and bivariate correlation of hyperspectral data for detecting laurel wilt disease and nutritional deficiency in avocado. Remote Sens. 11:1748. doi: 10.3390/rs11151748

Hatfield, J. L., Gitelson, A. A., Schepers, J. S., and Walthall, C. L. (2008). Application of spectral remote sensing for agronomic decisions. Agron. J. 100, S117–S131.

He, X., Fang, K., Qiao, B., Zhu, X. H., and Chen, Y. N. (2021). Watermelon Disease Detection Based on Deep Learning. Int. J. Pattern Recogn. Artificial Intelligence 35:2152004. doi: 10.1142/s0218001421520042

Immerzeel, W. W., Gaur, A., and Zwart, S. J. (2008). Integrating remote sensing and a process-based hydrological model to evaluate water use and productivity in a south Indian catchment. Agric. Water Manag. 95, 11–24. doi: 10.1016/j.agwat.2007.08.006

Jordan, C. F. (1969). Derivation of leaf area index from quality of light on the forest floor. Ecology 50, 663–666. doi: 10.2307/1936256

Kalischuk, M., Paret, M. L., Freeman, J. H., Raj, D., Da Silva, S., Eubanks, S., et al. (2019). An Improved Crop Scouting Technique Incorporating Unmanned Aerial Vehicle-Assisted Multispectral Crop Imaging into Conventional Scouting Practice for Gummy Stem Blight in Watermelon. Plant Dis. 103, 1642–1650. doi: 10.1094/PDIS-08-18-1373-RE

Lu, J. Z., Ehsani, R., Shi, Y. Y., de Castro, A. I., and Wang, S. (2018). Detection of multi-tomato leaf diseases (late blight, target and bacterial spots) in different stages by using a spectral-based sensor. Sci. Rep. 8:2793. doi: 10.1038/s41598-018-21191-6

Mandal, K., Saravanan, R., Maiti, S., and Kothari, I. L. (2009). Effect of downy mildew disease on photosynthesis and chlorophyll fluorescence in Plantago ovata Forsk. J. Plant Dis. Prot. 116, 164–168. doi: 10.1007/bf03356305

Merton, R. (1998). “Monitoring Community Hysteresis Using Spectral Shift Analysis and the Red-Edge Vegetation Stress Index,” in JPL Airborne Earth Science Workshop, (Pasadena, CA: NASA, Jet Propulsion Laboratory).

Penuelas, J., Baret, F., and Filella, I. (1995). Semiempirical indexes to assess carotenoids chlorophyll-a ratio from leaf spectral reflectance. Photosynthetica 31, 221–230.

Penuelas, J., Pinol, J., Ogaya, R., and Filella, I. (1997). Estimation of plant water concentration by the reflectance water index WI (R900/R970). Int. J. Remote Sens. 18, 2869–2875. doi: 10.1080/014311697217396

Raun, W. R., Solie, J. B., Johnson, G. V., Stone, M. L., Lukina, E. V., Thomason, W. E., et al. (2001). In-season prediction of potential grain yield in winter wheat using canopy reflectance. Agron. J. 93, 131–138. doi: 10.2134/agronj2001.931131x

Roujean, J. L., and Breon, F. M. (1995). Estimating PAR Absorbed by Vegetation from Bidirectional Reflectance Measurements. Remote Sens. Environ. 51, 375–384. doi: 10.1016/0034-4257(94)00114-3

Saif, A. K., Dimyati, K., Noordin, A. N. S., Mohd Shah, S., Alsamhi, H., and Abdullah, Q. (2021). “Energy Efficient Tethered UAV Development in B5G for Smart Environment and Disaster Recovery,” in 1st International conference on Emerging smart technology, IEEE, (Piscataway: IEEE).

Suarez, L., Zarco-Tejada, P. J., Sepulcre-Canto, G., Perez-Priego, O., Miller, J. R., Jimenez-Munoz, J. C., et al. (2008). Assessing canopy PRI for water stress detection with diurnal airborne imagery. Remote Sens. Environ. 112, 560–575. doi: 10.1016/j.rse.2007.05.009

Wang, T. Y., Thomasson, J. A., Yang, C. H., Isakeit, T., and Nichols, R. L. (2020). Automatic Classification of Cotton Root Rot Disease Based on UAV Remote Sensing. Remote Sens. 12:21.

West, J. S., Bravo, C., Oberti, R., Lemaire, D., Moshou, D., and McCartney, H. A. (2003). The potential of optical canopy measurement for targeted control of field crop diseases. Annu. Rev. Phytopathol. 41, 593–614. doi: 10.1146/annurev.phyto.41.121702.103726

Woodcock, C. E., Loveland, T. R., Herold, M., and Bauer, M. E. (2020). Transitioning from change detection to monitoring with remote sensing: A paradigm shift. Remote Sens. Environ. 238:111558. doi: 10.1016/j.rse.2019.111558

Xiao, Y. F., Zhao, W. J., Zhou, D. M., and Gong, H. L. (2014). Sensitivity Analysis of Vegetation Reflectance to Biochemical and Biophysical Variables at Leaf, Canopy, and Regional Scales. IEEE Trans. Geosci. Remote Sens. 52, 4014–4024. doi: 10.1109/tgrs.2013.2278838

Keywords: artificial intelligence, hyperspectral imaging, plant disease, remote sensing, UAV

Citation: Abdulridha J, Ampatzidis Y, Qureshi J and Roberts P (2022) Identification and Classification of Downy Mildew Severity Stages in Watermelon Utilizing Aerial and Ground Remote Sensing and Machine Learning. Front. Plant Sci. 13:791018. doi: 10.3389/fpls.2022.791018

Received: 07 October 2021; Accepted: 25 April 2022;

Published: 20 May 2022.

Edited by:

Glen C. Rains, University of Georgia, United States

Reviewed by:

Xiangming Xu, National Institute of Agricultural Botany (NIAB), United Kingdom

Copyright © 2022 Abdulridha, Ampatzidis, Qureshi and Roberts. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jaafar Abdulridha, ftash@ufl.edu; Yiannis Ampatzidis, i.ampatzidis@ufl.edu

