- 1 Key Laboratory of Advanced Process Control for Light Industry, Ministry of Education, Jiangnan University, Wuxi, Jiangsu, China

- 2 Visual Algorithm R&D Department, XINJE Electronic Limited Company, Wuxi, Jiangsu, China

To improve the stability of hand-eye calibration in fruit picking scenarios, a simple hand-eye calibration method for picking robots based on optimization combined with a TOF (Time of Flight) camera is proposed. The TOF depth camera is fixed at the end of the robot; the robot is operated to photograph the calibration board from different poses, and the photographing poses are recorded, ensuring that each group of images is clear and complete. Imaging the calibration board with the TOF depth camera yields multiple sets of calibration board depth maps and corresponding point cloud data, that is, the “eye” data. A circle center extraction and positioning algorithm extracts the circle centers on each calibration plate, and a circle center sorting method based on vector angles and centroid coordinates is designed to solve the out-of-order extraction of circle centers caused by factors such as lens distortion, uneven illumination and different photographing poses. Using the tool center point of the actuator, the circle centers at the four corners of each calibration plate are then located in turn in the robot end coordinate system, giving the “hand” data. The hand-eye matrix is solved with the SVD method; according to the resulting point residuals, the weight coefficients of the marker points are redistributed and the hand-eye parameters are optimized iteratively, which improves the accuracy and stability of hand-eye calibration. The proposed method is also better able to locate gross errors in the presence of large gross errors. To verify the feasibility of the method, an indoor picking experiment was simulated in which peaches were identified and positioned by combining deep learning and 3D vision.
The JAKA six-axis robot and TuYang depth camera were used to build the experimental platform. The experimental results show that the method is simple to operate and stable, that the calibration plate is easy to manufacture and low in cost, and that the method meets the accuracy requirements of picking operations.

1. Introduction

In recent years, the output of agricultural products in China has increased year by year; by 2022, China's output of fruits and vegetables ranked first in the world. At present, fruits and vegetables are mainly picked by hand. However, urbanization has exacerbated labor shortages, substantially increasing the cost of fruit and vegetable picking. The development of picking robots that improve production efficiency and reduce picking costs is therefore an inevitable trend in fruit and vegetable production.

Since the 1980s, systematic theoretical research on picking robots has been carried out in China and abroad (Willigenburg et al., 2004; Ji et al., 2011; Gan et al., 2018; Li and Tian, 2018; Tian et al., 2019). The working environment of picking robots is complex and full of uncertain factors, which makes picking difficult, so an efficient, fast and stable fruit and vegetable picking robot system is urgently needed. Picking robots now benefit from technologies that were unavailable in earlier work, including large advances in computing power and speed, advanced machine vision and imaging systems, image processing techniques, artificial intelligence techniques, and improved gripping and handling. As a result, Bogue (2020) anticipated that picking robots would face a highly competitive market in 2020–2021, with many large companies introducing new, reliable and efficient picking robots. Studies have shown that the association and precise positioning between vision and the robot, that is, hand-eye calibration, is the key technology and prerequisite for active vision and automatic picking by picking robots (Tian et al., 2019). The main purpose of hand-eye calibration is to obtain the transformation from the camera to the end of the robot, so that the robot arm can be controlled to complete the corresponding task. Research on hand-eye calibration is therefore of great significance to picking robots.

There are two ways to associate vision with robots: Eye-to-Hand and Eye-in-Hand. Research on picking robots has found that active vision measurement has clear advantages in outdoor picking. Compared with the Eye-to-Hand configuration, in which the camera is fixed, the Eye-in-Hand configuration binds the camera to the end of the robotic arm, so the shooting pose changes with the arm; this helps overcome uncertain factors in the field such as uneven ground, wind disturbance and illumination changes (Mo et al., 2017). The Eye-in-Hand system is the main way for picking robots to achieve active vision (Xu et al., 2008).

Beginning in the late 1980s, Tsai, Daniilidis and others (Tsai and Lenz, 1989; Chen, 1991; Daniilidis, 1999; Malm and Heyden, 2000) proposed classic hand-eye calibration methods for the Eye-in-Hand configuration, which are mainly divided into the “two-step method” and the “one-step method”; their mathematical model reduces to solving the matrix equation AX=XB. Since then, many more efficient hand-eye calibration algorithms and mathematical models have been proposed (Malti, 2013; Nobre and Heckman, 2019; Koide and Menegatti, 2019; Deng et al., 2021; Fei et al., 2021; Liu et al., 2021; Pedrosa et al., 2021; Wang and Min, 2021; Wu et al., 2021). In China, there has also been research on hand-eye calibration for picking robots. For example, Mo Yuda of South China Agricultural University (Mo et al., 2017) proposed an optimization method for solving the homogeneous transformation matrix equation that addresses the error propagation of the “two-step method”, and applied it to a litchi picking robot; Jin Yucheng (Jin et al., 2021) proposed a deep visual servo hand-eye coordination planning strategy to address the low picking accuracy and efficiency of existing picking robots. Abroad, Wageningen University developed a new eye-in-hand sensing and servo control framework and applied it to picking robots in dense vegetation (Barth et al., 2016).

In recent years, with the development of depth cameras, they have been increasingly applied to visual picking (Wang et al., 2018; Zhang et al., 2021; Pan and Ahamed, 2022), improving the recognition ability and efficiency of fruit and vegetable picking. Depth cameras have also been applied to hand-eye calibration (Đurović et al., 2017; Du et al., 2018; Ge et al., 2022). A TOF (Time of Flight) depth camera is a camera with an active vision measurement function. It continuously emits light pulses toward the target, receives the light returned from the object with a sensor, and obtains the target distance from the round-trip flight time of the light pulses. Compared with other 3D cameras, it has the advantages of low price, small size, low power consumption, strong resistance to ambient light interference and fast computation of depth information (Li et al., 2021), which makes it well suited to fruit picking in the field.
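To give a sense of the timescales behind the TOF principle, the idealized pulse relation d = c·t/2 can be evaluated directly. This is a minimal sketch of the textbook principle only, with hypothetical function names; many commercial TOF cameras estimate the round-trip time indirectly from the phase shift of modulated light, and nothing here models the TM460-E2 specifically.

```python
SPEED_OF_LIGHT = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance(round_trip_s: float) -> float:
    """Distance to the target (m) from a pulse's round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def round_trip_time(distance_m: float) -> float:
    """Round-trip time (s) of a light pulse for a target at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    for d in (0.1, 1.0, 2.4):  # metres; 0.1-2.4 m is the camera's stated working range
        print(f"{d:4.1f} m -> {round_trip_time(d) * 1e9:6.3f} ns")
    # Timing change corresponding to a 1 mm change in depth:
    print(f"1 mm -> {round_trip_time(0.001) * 1e12:.2f} ps")
```

A 1 mm depth step corresponds to only about 6.7 ps of round-trip time, which illustrates why TOF depth accuracy (5–20 mm in Z for the camera used in Section 4) is far coarser than the robot's ±0.02 mm repeatability.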

This paper studies a hand-eye calibration method that uses a depth camera. It adopts the Eye-in-Hand configuration: the TOF depth camera photographs fixed feature points, and TCP contact measurement provides the corresponding robot-side data, so that the hand-eye problem becomes solving the equation AX=B. The hand-eye parameters are then optimized iteratively by changing the weight coefficients of the marker points to obtain the final hand-eye calibration matrix, and the method is verified in an indoor simulated peach picking experiment. The main contributions are as follows:

1) The traditional circular calibration plate is modified to facilitate contour extraction and center positioning of the circular targets, and to help the tool center point (TCP) of the robot end effector accurately locate the marked center points.

2) A circle center sorting method based on the vector angle and the center of mass coordinates of the circular calibration plate is proposed, which can solve the problem of out-of-order extraction of circle centers and specify the sorting direction.

3) Combined with the SVD algorithm, the hand-eye matrix is solved, the residuals of the points are estimated, the weight coefficients of the marker points are redistributed, and the hand-eye parameters are iteratively optimized, which improves the accuracy and stability of the hand-eye calibration of the picking robot.

4) A peach identification and localization method combining a deep network model with the TOF camera is designed to verify the reliability of the hand-eye calibration method.

2. Hand-eye calibration scheme of picking robot

2.1. Hand-eye calibration model

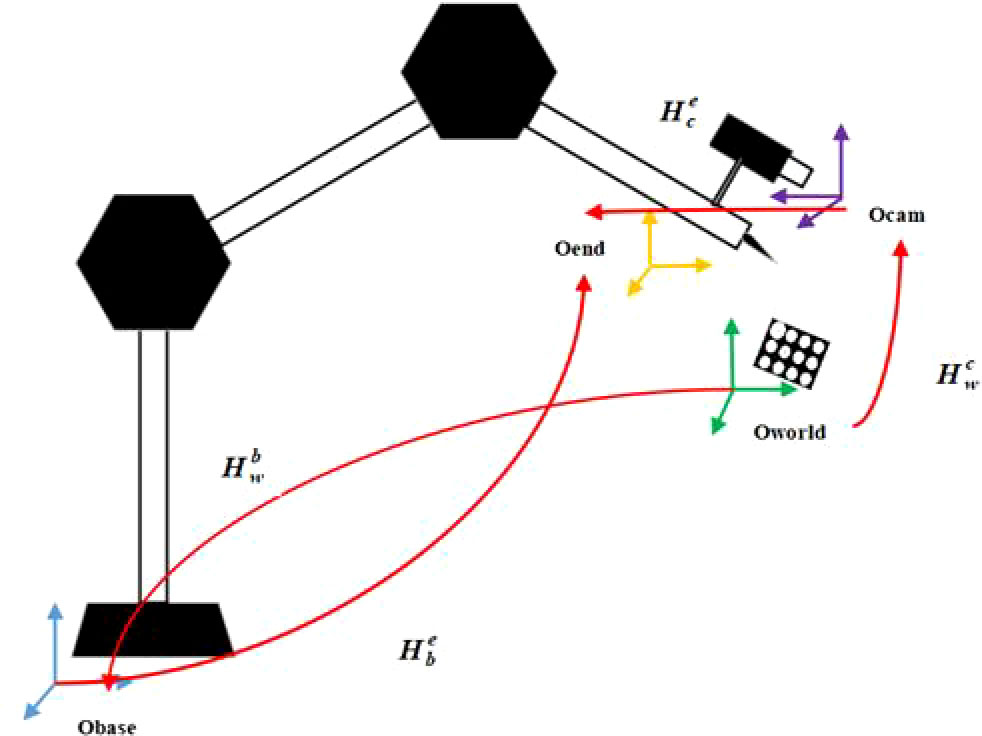

This paper studies the Eye-in-Hand calibration model, in which the camera is fixed at the end of the robotic arm. As shown in Figure 1, Obase is the base coordinate system of the robot, Oend is the execution end coordinate system of the robot, Ocam is the coordinate system of the camera installed at the end of the robot, and Oworld is the world coordinate system (the calibration board coordinate system) of the calibration board fixed in the robot's field of view. These four coordinate systems and the transformations between them constitute the mathematical model of the picking robot's hand-eye vision system. The relationship between the calibration board and the robot base coordinate system remains fixed throughout hand-eye calibration.

Writing ${}^{B}T_{A}$ for the homogeneous matrix that maps coordinates expressed in frame A into frame B: the TOF camera provides the transformation ${}^{Cam}T_{World}$ from the world coordinate system Oworld to the camera coordinate system Ocam; ${}^{Base}T_{World}$ is the transformation from Oworld to the robot base coordinate system Obase, from which the position of the calibration board in the base coordinate system is obtained; the robot's motion feedback gives ${}^{Base}T_{End}$, the pose of the execution end coordinate system Oend in Obase; and ${}^{End}T_{Cam}$ is the transformation from Ocam to Oend, i.e., the hand-eye matrix to be calibrated. These four transformation matrices are the key information for positioning and guiding the robot.
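The four transformations close a loop, and writing it out shows where both the classic AX=XB form and the point-based form used in this paper come from. The derivation below is a sketch in the notation ${}^{B}T_{A}$ (maps frame-A coordinates into frame B), with $X = {}^{End}T_{Cam}$ as the unknown and the subscripts i, j indexing two robot poses; it relies only on the calibration board being fixed relative to the base.

```latex
% Closed loop at pose i (board fixed relative to the base):
{}^{Base}T_{World} = {}^{Base}T_{End,i}\; X\; {}^{Cam}T_{World,i}, \qquad X = {}^{End}T_{Cam}

% Equating poses i and j eliminates the unknown board pose (classic AX = XB):
\underbrace{\left({}^{Base}T_{End,j}\right)^{-1}\, {}^{Base}T_{End,i}}_{A}\; X
  \;=\; X\; \underbrace{{}^{Cam}T_{World,j}\,\left({}^{Cam}T_{World,i}\right)^{-1}}_{B}

% Point form (AX = B style): a marker located by the TCP (P_End) and seen by the camera (P_Cam)
P_{End,k} = X\, P_{Cam,k}, \qquad k = 1, \ldots, n
```

The point form avoids estimating ${}^{Cam}T_{World}$ for every pose, which is why contact-measured marker points can be paired directly with the camera's point cloud.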

2.2. Design of hand-eye calibration method

First, TCP calibration is carried out for the robot: the four-point calibration method (Liu et al., 2012) is used to calibrate the position of the TCP in the robot end coordinate system. Then, the robot end is operated to touch the fixed marker points on the calibration board, and the TOF depth camera photographs the calibration board from different poses. The calibration procedure is as follows:

1) First, teach the robot poses so that the camera can capture a complete and clear image of the calibration board at each pose. Then the host computer sends the “start calibration” command, the robot photographs the calibration board at each taught pose, and m (m ∈ [5, 10]) groups of depth images, point cloud data and robot poses at the moments of capture are obtained.

2) Move the robot end effector (needle tip) to touch the circle centers at the four corners of the calibration plate in the fixed order upper left, upper right, lower left, lower right, obtaining a set of homogeneous coordinates PBase of the center points in the robot base coordinate system Obase;

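3) The depth image of each calibration plate collected by the TOF camera is processed with the circle center positioning algorithm; combining the point cloud coordinates with the index numbers of the circle centers at the four corners of the calibration plate gives a set of three-dimensional homogeneous center coordinates in the camera coordinate system. At the same time, the current robot pose PT = (xT, yT, zT, RxT, RyT, RzT) is read from the robot controller, where xT, yT, zT give the position of the origin of the tool end coordinate system in the robot base coordinate system, and RxT, RyT, RzT are the Euler angles describing the orientation of the tool end coordinate system at this pose;

4) For each pose, the three-dimensional homogeneous coordinates of the circle centers in the robot base coordinate system Obase are transformed into the execution end coordinate system Oend by formula (1):

$$P_{End} = \left({}^{Base}T_{End}\right)^{-1} P_{Base} \tag{1}$$

Here ${}^{Base}T_{End}$, the pose of Oend in Obase built from PT, is decomposed into a rotation part $R_T$ (3×3) and a translation part $t_T = (x_T, y_T, z_T)^{\mathsf T}$ (3×1). The rotation part is given by formula (2) as the product of the elementary rotations $R_x$, $R_y$ and $R_z$, taken in the Euler-angle order defined by the robot controller.

In formula (2), Rx, Ry and Rz are respectively:

$$R_x = \begin{bmatrix}1&0&0\\0&\cos R_{xT}&-\sin R_{xT}\\0&\sin R_{xT}&\cos R_{xT}\end{bmatrix},\quad R_y = \begin{bmatrix}\cos R_{yT}&0&\sin R_{yT}\\0&1&0\\-\sin R_{yT}&0&\cos R_{yT}\end{bmatrix},\quad R_z = \begin{bmatrix}\cos R_{zT}&-\sin R_{zT}&0\\\sin R_{zT}&\cos R_{zT}&0\\0&0&1\end{bmatrix}$$

${}^{Base}T_{End}$ is then obtained by formula (3):

$${}^{Base}T_{End} = \begin{bmatrix} R_T & t_T \\ \mathbf{0}^{\mathsf T} & 1 \end{bmatrix} \tag{3}$$

5) Collect the homogeneous coordinates of the circle centers in the point clouds of all calibration plates in the camera coordinate system Ocam, PCamAll, and the homogeneous coordinates of all circle centers in the end coordinate system Oend obtained by formula (1), PEndAll; each set contains n = 4m points.

The transformation ${}^{End}T_{Cam}$ from the camera coordinate system Ocam to the execution end coordinate system Oend is then solved from the point pairs (PCamAll, PEndAll); it can be decomposed into a rotation matrix R (3×3) and a translation vector T (3×1), as shown in formula (4):

$${}^{End}T_{Cam} = \begin{bmatrix} R & T \\ \mathbf{0}^{\mathsf T} & 1 \end{bmatrix} \tag{4}$$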
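Steps 3) and 4) can be sketched in plain Python. The Euler-angle order is an assumption: the sketch uses R_T = Rz · Ry · Rx (fixed-axis X-Y-Z, angles in degrees, positions in mm), which must be checked against the convention of the actual robot controller; the function names are hypothetical. Formula (1) is applied without forming the 4×4 inverse, using the fact that the inverse of a rigid transform is R_T^T (p − t_T).

```python
import math


def _rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def _rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def _rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def _matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def pose_to_rt(x, y, z, rx, ry, rz):
    """Robot pose (mm, degrees) -> rotation R_T and translation t_T of Oend in Obase.

    ASSUMPTION: R_T = Rz @ Ry @ Rx (fixed-axis X-Y-Z); verify against the controller.
    """
    R = _matmul3(_rot_z(math.radians(rz)),
                 _matmul3(_rot_y(math.radians(ry)), _rot_x(math.radians(rx))))
    return R, [x, y, z]


def base_to_end(p_base, pose):
    """Formula (1): map a point from Obase into Oend, p_end = R_T^T (p_base - t_T)."""
    R, t = pose_to_rt(*pose)
    d = [p_base[i] - t[i] for i in range(3)]
    # (R^T d)_i = sum_k R[k][i] * d[k]
    return [sum(R[k][i] * d[k] for k in range(3)) for i in range(3)]
```

Applying `base_to_end` to the four touched centers PBase with each of the m recorded poses yields the 4m points of PEndAll, each paired with the camera-frame center extracted at the same pose.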

3. Hand-eye calibration algorithm and optimization

3.1. Calibration plate design

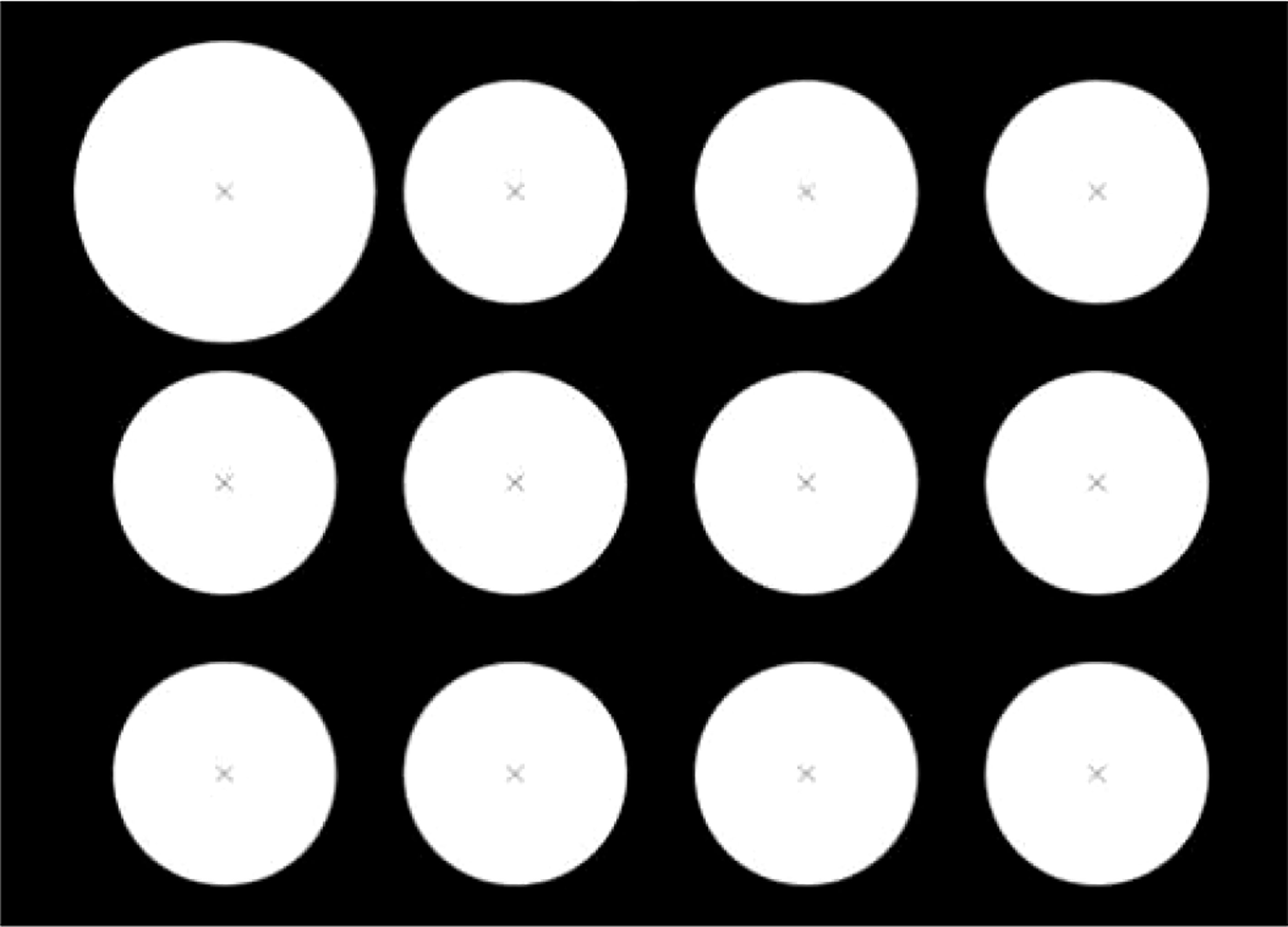

Compared with the traditional circular calibration board, the board designed in this paper is simple to make and low in cost: a single printed A4 sheet is sufficient, and high-precision circle spacing is not required. It also helps the TCP locate the circle centers and keeps the order of extracted circle centers consistent. The improvements are as follows:

1) Ensure that the circular target in the upper left corner is significantly larger than other circular targets in any photographing posture, which is helpful for the subsequent sorting of marker points;

2) The circular targets must stand out from the background with strongly contrasting colors, so that their outlines can be extracted quickly;

3) The size of the calibration plate should be such that when the camera is shooting at close range, the camera field of view can cover the entire calibration plate;

4) Draw a “×” symbol at the center of each circular target to help the end effector locate the contact center precisely.

The designed calibration board is shown in Figure 2.

3.2. Algorithm for center positioning and sorting

A circular calibration plate generally takes the circle centers as feature points, located by a center detection and positioning algorithm: the image of the calibration plate is first preprocessed, Canny edge detection is then performed, the resulting contours are screened by conditions such as roundness and area, and finally least-squares ellipse fitting gives the circle center. The method is relatively slow, but it is robust to external influences and precise, reaching sub-pixel accuracy.
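The screening and center-estimation stages can be sketched as follows, taking contours as lists of (x, y) points that an edge detector has already produced (Canny and contour tracing are left to an imaging library). Two things are swapped in for brevity and should be read as assumptions: the roundness measure 4πA/P² with illustrative thresholds, and an algebraic (Kåsa) least-squares circle fit in place of the paper's least-squares ellipse fit. Because a tilted circle projects to an ellipse, the ellipse fit is the better choice at oblique photographing poses.

```python
import math


def polygon_area(contour):
    """Area of a closed contour [(x, y), ...] by the shoelace formula."""
    n = len(contour)
    s = sum(contour[i][0] * contour[(i + 1) % n][1] - contour[(i + 1) % n][0] * contour[i][1]
            for i in range(n))
    return abs(s) / 2.0


def polygon_perimeter(contour):
    n = len(contour)
    return sum(math.dist(contour[i], contour[(i + 1) % n]) for i in range(n))


def roundness(contour):
    """4*pi*A / P^2: 1.0 for a perfect circle, about 0.785 for a square."""
    p = polygon_perimeter(contour)
    return 4.0 * math.pi * polygon_area(contour) / (p * p)


def keep_contour(contour, min_area, max_area, min_roundness=0.85):
    """Area/roundness screening; thresholds are illustrative, not the paper's values."""
    a = polygon_area(contour)
    return min_area <= a <= max_area and roundness(contour) >= min_roundness


def fit_circle(points):
    """Algebraic (Kasa) least-squares fit of x^2 + y^2 + D x + E y + F = 0 -> (cx, cy, r)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n  # centre the data for numerical stability
    my = sum(p[1] for p in points) / n
    xs = [p[0] - mx for p in points]
    ys = [p[1] - my for p in points]
    zs = [x * x + y * y for x, y in zip(xs, ys)]
    sxy = sum(x * y for x, y in zip(xs, ys))
    # Normal equations M @ [D, E, F] = v
    M = [[sum(x * x for x in xs), sxy, sum(xs)],
         [sxy, sum(y * y for y in ys), sum(ys)],
         [sum(xs), sum(ys), float(n)]]
    v = [-sum(x * z for x, z in zip(xs, zs)), -sum(y * z for y, z in zip(ys, zs)), -sum(zs)]

    def det3(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

    d = det3(M)
    sol = []
    for col in range(3):  # Cramer's rule
        Mc = [row[:] for row in M]
        for r in range(3):
            Mc[r][col] = v[r]
        sol.append(det3(Mc) / d)
    D, E, F = sol
    cx, cy = -D / 2.0, -E / 2.0
    return cx + mx, cy + my, math.sqrt(cx * cx + cy * cy - F)
```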

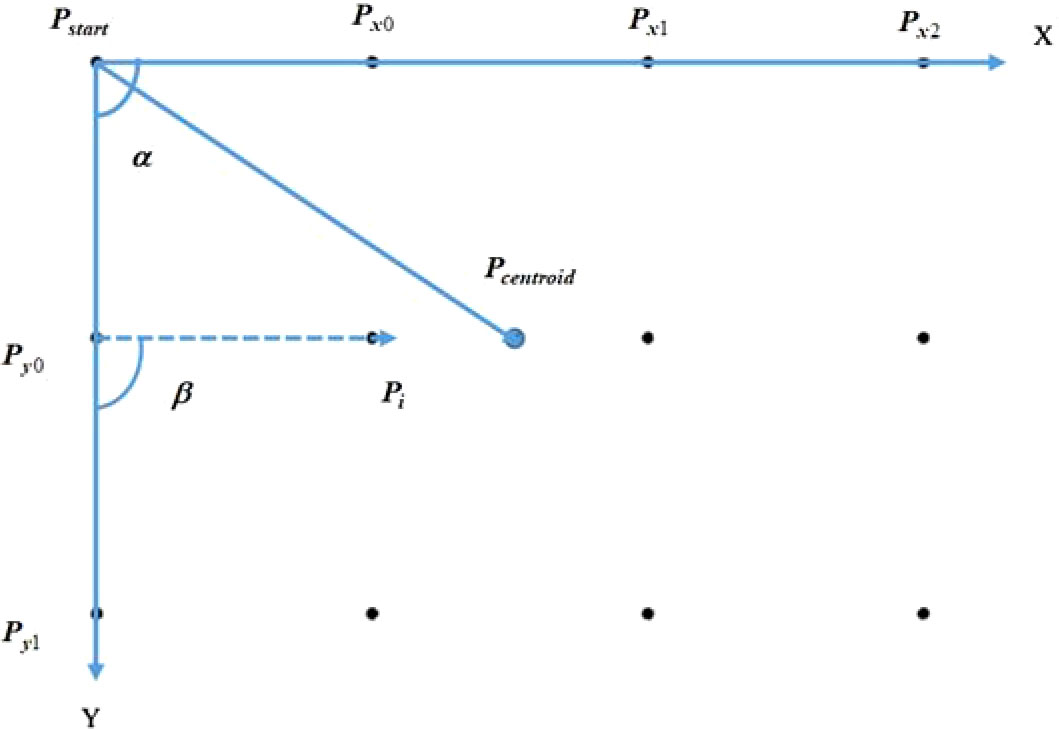

To address out-of-order extraction of circle centers caused by factors such as lens distortion, uneven illumination and different photographing poses, a center sorting method based on vector angles and centroid coordinates is adopted, which makes the sorting of the center lattice rotation invariant. Circle centers are ordered first along the X direction and then along the Y direction. The sorting scheme is shown in Figure 3, and the method is as follows:

1) Determine the initial point: among the circular contours obtained by Canny edge detection and contour screening, find the contour with the most sub-pixel points and fit it with a least-squares ellipse to obtain the initial point Pstart;

2) Calculate the Euclidean distances from the other circle centers to the initial point, sort them in ascending order, and select the two nearest points, namely Px0 and Py0;

3) Compute the centroid Pcentroid of all circle centers and form three direction vectors starting from Pstart: $\vec{v}_1 = P_{x0} - P_{start}$, $\vec{v}_2 = P_{y0} - P_{start}$ and $\vec{v}_c = P_{centroid} - P_{start}$. Formula (5) is used to judge the positional relationship between two vectors $\vec{a} = (a_x, a_y)$ and $\vec{b} = (b_x, b_y)$:

$$\vec{a} \times \vec{b} = a_x b_y - a_y b_x \tag{5}$$

If $\vec{a} \times \vec{b} > 0$, $\vec{b}$ is in the counterclockwise direction of $\vec{a}$; if $\vec{a} \times \vec{b} < 0$, $\vec{b}$ is in the clockwise direction of $\vec{a}$; if $\vec{a} \times \vec{b} = 0$, $\vec{b}$ is collinear with $\vec{a}$ (this holds for a frame whose y axis points up; in image coordinates, with y pointing down, the visual sense of rotation is reversed). From this, the positional relationship among $\vec{v}_1$, $\vec{v}_2$ and $\vec{v}_c$ is determined. In this article, the nearest-neighbor vector lying counterclockwise of $\vec{v}_c$ represents the X direction, and the one lying clockwise of $\vec{v}_c$ represents the Y direction;

4) Calculate the angle α between the X direction and the vector joining the starting point to each other circle center. The centers whose angle meets the threshold condition are the Y-direction reference points; sorting them by Euclidean distance from the initial point in ascending order gives the ordered Y-direction reference points;

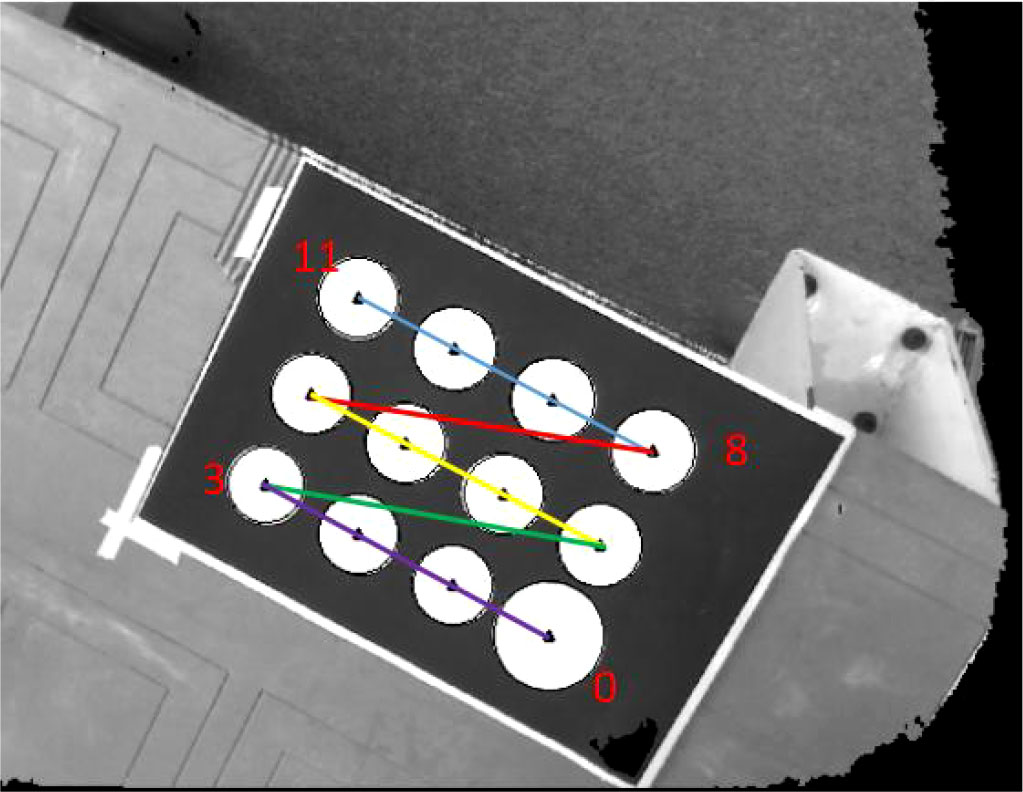

5) Taking each Y-direction reference point in turn, calculate the angle β between the Y direction and the vector joining this reference point to each other circle center; using an angle threshold, keep the centers collinear with the X direction and sort them by Euclidean distance from the reference point in ascending order. The order of the circle centers after sorting is shown in Figure 4.
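The five sorting steps can be sketched in Python as follows. This is a simplified illustration under stated assumptions: centers are pixel coordinates (x to the right, y downward), the caller supplies the index of the largest circle from step 1, and rows and columns are collected by comparing directly against the X and Y directions with a single angular threshold `tol_deg` (a hypothetical parameter with an illustrative default), which matches the paper's α/β tests when the two directions are close to perpendicular.

```python
import math


def _sub(p, q):
    return (p[0] - q[0], p[1] - q[1])


def _cross(a, b):
    """z-component of the 2-D cross product, as in formula (5)."""
    return a[0] * b[1] - a[1] * b[0]


def _angle_deg(a, b):
    """Unsigned angle between two non-zero 2-D vectors, in degrees."""
    cos_ab = (a[0] * b[0] + a[1] * b[1]) / (math.hypot(*a) * math.hypot(*b))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_ab))))


def sort_centers(centers, start_idx, tol_deg=8.0):
    """Return circle centers in row-major order (along X first, then along Y).

    centers: list of (x, y) pixel coordinates, y pointing down.
    start_idx: index of the start point (the largest circle, step 1).
    tol_deg: hypothetical angular threshold for the collinearity tests.
    """
    start = centers[start_idx]
    others = [p for i, p in enumerate(centers) if i != start_idx]

    # Step 2: the two centers nearest to the start point.
    a, b = sorted(others, key=lambda p: math.dist(p, start))[:2]

    # Step 3: decide which neighbour is X and which is Y using the centroid vector.
    n = len(centers)
    centroid = (sum(p[0] for p in centers) / n, sum(p[1] for p in centers) / n)
    v_c = _sub(centroid, start)
    v_a, v_b = _sub(a, start), _sub(b, start)
    # With y pointing down, cross < 0 means "counterclockwise as seen on screen".
    v_x, v_y = (v_a, v_b) if _cross(v_c, v_a) < 0 else (v_b, v_a)

    # Step 4: first column = start point plus centers along the Y direction.
    column = [start] + sorted(
        (p for p in others if _angle_deg(_sub(p, start), v_y) < tol_deg),
        key=lambda p: math.dist(p, start))

    # Step 5: each row = its column point plus centers along the X direction.
    ordered = []
    for ref in column:
        row = sorted(
            (p for p in centers if p != ref and _angle_deg(_sub(p, ref), v_x) < tol_deg),
            key=lambda p: math.dist(p, ref))
        ordered.extend([ref] + row)
    return ordered
```

Because one fixed threshold is compared against directions measured at the start point, strong perspective tilt (which makes distant rows converge toward a vanishing point) or lens distortion can push genuine row members past `tol_deg`; in that case the threshold has to be loosened or the row direction re-estimated for each row.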

After sorting, a set of sub-pixel coordinates is obtained, and the three-dimensional coordinates of each marker point must then be located precisely in the point cloud coordinate system. Assuming the sub-pixel coordinates of an extracted marker point are P = (u, v), the four surrounding integer pixels are P0 = (u0, v0), P1 = (u0+1, v0), P2 = (u0, v0+1) and P3 = (u0+1, v0+1), where u0 = ⌊u⌋ and v0 = ⌊v⌋. The method proposed by Cheng Qi (Cheng et al., 2021), which uses the reciprocal of the sub-pixel area ratio as the weight for spatial point interpolation, is then used to accurately estimate the point cloud coordinates of the marker points.
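A minimal sketch of this interpolation step is shown below using standard bilinear weighting, in which each neighbor P0 to P3 is weighted by the area of the sub-rectangle opposite it. This is a common scheme swapped in for illustration and may differ in detail from the weighting of Cheng et al. (2021). The organized-cloud layout (`cloud[row][col]` holding an (X, Y, Z) tuple, or None where the TOF depth is invalid) and the renormalization over valid neighbors are assumptions.

```python
import math


def interpolate_point(cloud, u, v):
    """Bilinear estimate of the 3-D point at sub-pixel (u, v).

    cloud[row][col] holds an (X, Y, Z) tuple, or None where depth is invalid.
    u is the column (x) coordinate and v the row (y) coordinate.
    Invalid neighbours are skipped and the remaining weights renormalised;
    returns None if no valid neighbour carries weight.
    """
    u0, v0 = math.floor(u), math.floor(v)
    du, dv = u - u0, v - v0
    # P0..P3 as in the text, each weighted by the area of the opposite sub-rectangle
    neighbours = [
        ((u0, v0), (1.0 - du) * (1.0 - dv)),       # P0
        ((u0 + 1, v0), du * (1.0 - dv)),           # P1
        ((u0, v0 + 1), (1.0 - du) * dv),           # P2
        ((u0 + 1, v0 + 1), du * dv),               # P3
    ]
    acc = [0.0, 0.0, 0.0]
    wsum = 0.0
    for (col, row), w in neighbours:
        if w == 0.0:
            continue  # also avoids indexing past the border at integer coordinates
        p = cloud[row][col]
        if p is None:
            continue
        for k in range(3):
            acc[k] += w * p[k]
        wsum += w
    if wsum == 0.0:
        return None
    return tuple(a / wsum for a in acc)
```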

3.3. Solving the initial hand-eye calibration matrix

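The hand-eye calibration method used in this paper converts data from the camera coordinate system Ocam to the execution end coordinate system Oend. This process can be regarded as a rigid-body transformation of a point cloud, which can be solved accurately and efficiently by SVD-based least squares. The mathematical model is:

$$\min_{R,T}\ \sum_{i=1}^{n} w_i \left\lVert P_{EndAll,i} - \left(R\,P_{CamAll,i} + T\right)\right\rVert^2 \tag{6}$$

In the formula, R is the rotation matrix, T is the translation vector, $w_i$ is the weight coefficient of the i-th marker point (initial value 1), and n is the number of marker points; $P_{CamAll,i}$ and $P_{EndAll,i}$ denote the three-dimensional parts of the i-th homogeneous point pair. Setting the partial derivative of formula (6) with respect to T to zero gives:

$$T = \bar{P}_{End} - R\,\bar{P}_{Cam} \tag{7}$$

where $\bar{P}_{Cam}$ and $\bar{P}_{End}$ are the weighted centroids of the two point sets, defined as:

$$\bar{P}_{Cam} = \frac{\sum_{i=1}^{n} w_i P_{CamAll,i}}{\sum_{i=1}^{n} w_i}, \qquad \bar{P}_{End} = \frac{\sum_{i=1}^{n} w_i P_{EndAll,i}}{\sum_{i=1}^{n} w_i}$$

Substituting equation (7) into (6) eliminates T:

$$\min_{R}\ \sum_{i=1}^{n} w_i \left\lVert P'_{EndAll,i} - R\,P'_{CamAll,i}\right\rVert^2$$

where $P'_{CamAll,i} = P_{CamAll,i} - \bar{P}_{Cam}$ and $P'_{EndAll,i} = P_{EndAll,i} - \bar{P}_{End}$. Because R is orthogonal, the squared norms of the centered points do not depend on R, so the optimization problem reduces to:

$$\max_{R}\ \sum_{i=1}^{n} w_i\, P'^{\mathsf T}_{EndAll,i}\, R\, P'_{CamAll,i}$$

which can be written as a trace maximization:

$$\max_{R}\ \operatorname{tr}(R H)$$

Let $H = \sum_{i=1}^{n} w_i\, P'_{CamAll,i}\, P'^{\mathsf T}_{EndAll,i}$ and perform SVD on H:

$$H = U \Lambda V^{\mathsf T}$$

where U is the matrix of left singular vectors, V is the matrix of right singular vectors, and Λ is a diagonal matrix of singular values.

Finally:

$$R = V U^{\mathsf T}$$

In the formula, U and V are orthogonal matrices and Λ is diagonal; the translation then follows from equation (7):

$$T = \bar{P}_{End} - R\,\bar{P}_{Cam}$$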
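Formulas (6) and (7) and the SVD step condense into a short routine. This is a sketch, not the authors' implementation: it assumes NumPy is available, takes the 3-D parts of PCamAll and PEndAll as (n, 3) arrays, and adds a determinant check that flips the last singular direction when V Uᵀ would be a reflection, a standard safeguard in SVD point registration that the text does not mention.

```python
import numpy as np


def weighted_rigid_transform(p_cam, p_end, w=None):
    """Weighted SVD solution of min sum_i w_i * ||p_end_i - (R p_cam_i + T)||^2.

    p_cam, p_end: (n, 3) arrays of corresponding 3-D points.
    w: optional (n,) non-negative weights; all ones by default.
    Returns (R, T): a 3x3 rotation matrix and a length-3 translation.
    """
    p_cam = np.asarray(p_cam, dtype=float)
    p_end = np.asarray(p_end, dtype=float)
    w = np.ones(len(p_cam)) if w is None else np.asarray(w, dtype=float)
    w = w / w.sum()

    c_cam = w @ p_cam                      # weighted centroids
    c_end = w @ p_end
    q_cam = p_cam - c_cam                  # centred point sets
    q_end = p_end - c_end

    H = (q_cam * w[:, None]).T @ q_end     # H = sum_i w_i q_cam_i q_end_i^T
    U, _, Vt = np.linalg.svd(H)            # H = U @ diag(S) @ Vt
    V = Vt.T
    # Reflection guard: force det(R) = +1.
    d = 1.0 if np.linalg.det(V @ U.T) >= 0 else -1.0
    R = V @ np.diag([1.0, 1.0, d]) @ U.T
    T = c_end - R @ c_cam                  # equation (7)
    return R, T


def to_homogeneous(R, T):
    """Assemble the 4x4 hand-eye matrix [[R, T], [0, 1]] of formula (4)."""
    X = np.eye(4)
    X[:3, :3] = R
    X[:3, 3] = T
    return X
```

The weight vector `w` is where the reweighting of Section 3.4 plugs in: a point with weight zero drops out of both the centroids and H.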

3.4. Weighted iterative method to optimize hand-eye calibration parameters

During calibration, the camera is easily affected by the environment (light, temperature, humidity, pressure, etc.), which reduces measurement accuracy. Human factors can also cause large measurement errors: for example, if the operator guides the robot end to the circle center with a large deviation, a large gross error is introduced into the feature points. If equal weights are then used in the coordinate transformation, the stability of the SVD algorithm decreases. The allocation of weight coefficients therefore needs to be considered in the algorithm.

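The point residual of each marker point is:

$$e_i = \left\lVert P_{EndAll,i} - \left(R\,P_{CamAll,i} + T\right)\right\rVert, \qquad i = 1, \ldots, n$$

At this time, the mean absolute value of the point residuals is:

$$\bar{e} = \frac{1}{n}\sum_{i=1}^{n} \lvert e_i \rvert$$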

The weight coefficients are redistributed according to the residual of each marker point, so that measurement points with larger errors receive lower weights, which increases the stability of the SVD algorithm. Here, the Danish weight function (Wang, 2006) is adopted:

In the formula, u_i = e_i/σ, σ is the point position error, and k0 is the tuning coefficient of the weight function, usually 1.0 to 2.5; this paper takes k0 = 2.5. The weight coefficient matrix corrected according to equation (19) is substituted back into equation (6) for the next iteration, and the iteration terminates when the maximum number of iterations is reached or the error falls below a threshold.
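One reweighting step can be sketched as follows. Two assumptions are made loudly: the Danish function is taken in the commonly used form w(u) = 1 for |u| ≤ k0 and exp(1 − (u/k0)²) otherwise, since equation (19) is not shown above; and σ is estimated robustly as 1.4826 × median(|e_i|), a swapped-in choice that may differ from the paper's estimator of the point position error. Function names are hypothetical.

```python
import math
import statistics


def danish_weight(u, k0=2.5):
    """Danish weight in a commonly used form (assumed).

    Full weight for |u| <= k0, exponential decay beyond it; continuous at k0.
    """
    u = abs(u)
    return 1.0 if u <= k0 else math.exp(1.0 - (u / k0) ** 2)


def mean_abs_residual(residuals):
    """Mean absolute value of the point residuals."""
    return sum(abs(e) for e in residuals) / len(residuals)


def reweight(residuals, k0=2.5):
    """New weight coefficients from the point residuals e_i.

    ASSUMPTION: sigma = 1.4826 * median(|e_i|), a robust scale estimate chosen
    for this sketch; the paper's own estimator of the point error may differ.
    """
    sigma = 1.4826 * statistics.median([abs(e) for e in residuals])
    if sigma == 0.0:
        return [1.0] * len(residuals)  # perfect fit: keep equal weights
    return [danish_weight(e / sigma, k0) for e in residuals]
```

A full iteration alternates three steps: solve (R, T) with the current weights via the weighted SVD of Section 3.3, compute the residuals e_i, and update the weights with `reweight`; it stops after a maximum number of iterations or when `mean_abs_residual` changes by less than a chosen tolerance. A robust σ matters here: with only a few points, a σ computed from all residuals is inflated by the gross error itself, so u for that point can stay below k0 and the point keeps full weight.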

4. Experimental results and analysis

4.1. Experimental equipment

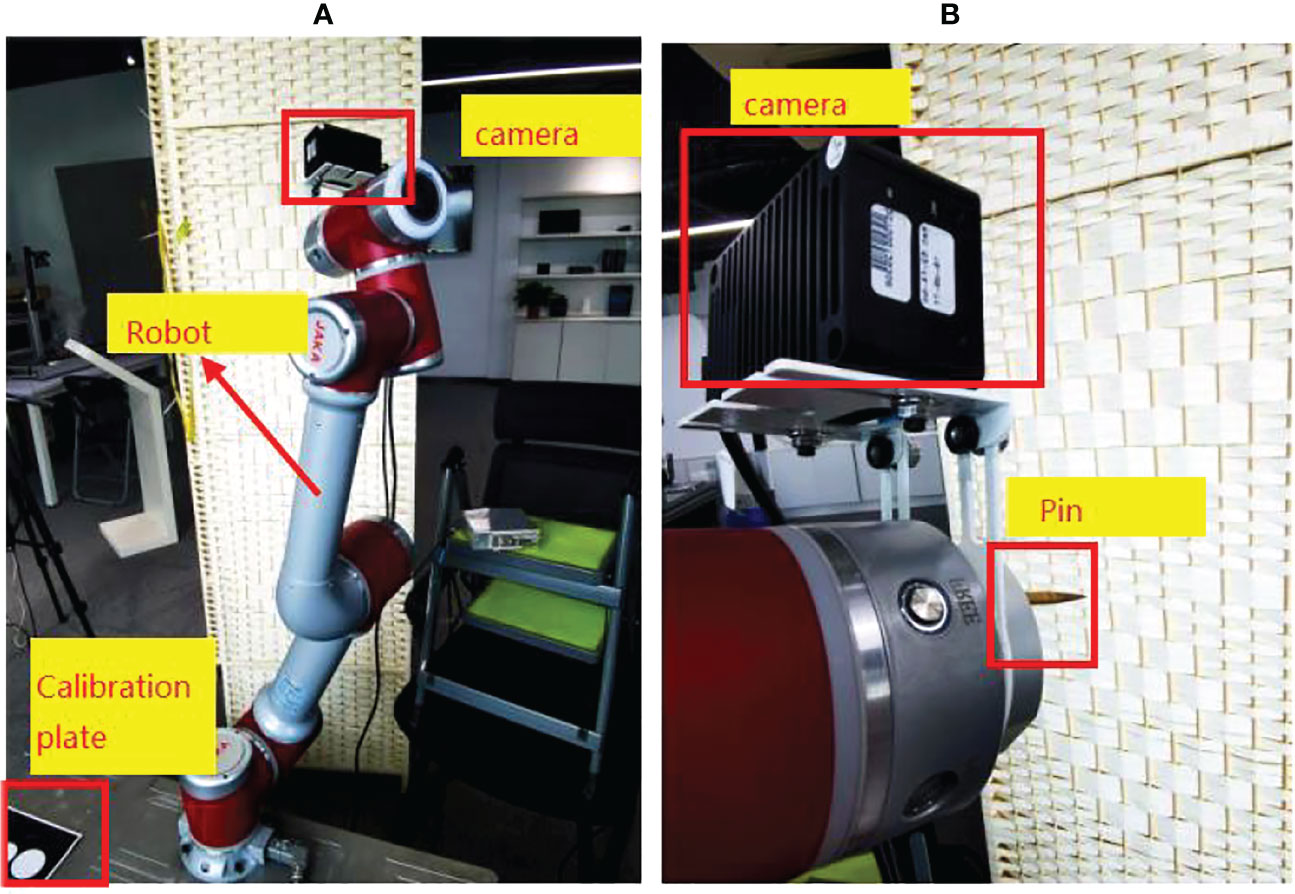

The experimental platform is shown in Figure 5. The image acquisition device is a TuYang TM460-E2 TOF camera with a working distance of 0.1–2.4 m, a size of 87.4 mm × 51.5 mm × 38 mm, a weight of 257 g and a depth image resolution of 640 × 480; its small size and light weight make it well suited to outdoor picking. The picking robot is a JAKA Zu 5 with an end load of 5 kg and a repeat positioning accuracy of ±0.02 mm. The vision software was developed in-house in C++ with Visual Studio.

4.2. Experimental results and analysis of hand-eye calibration

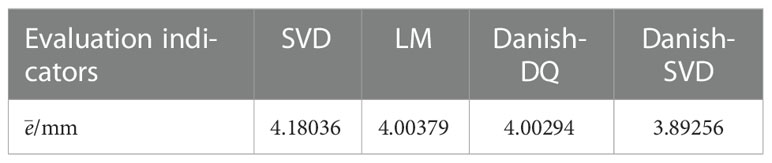

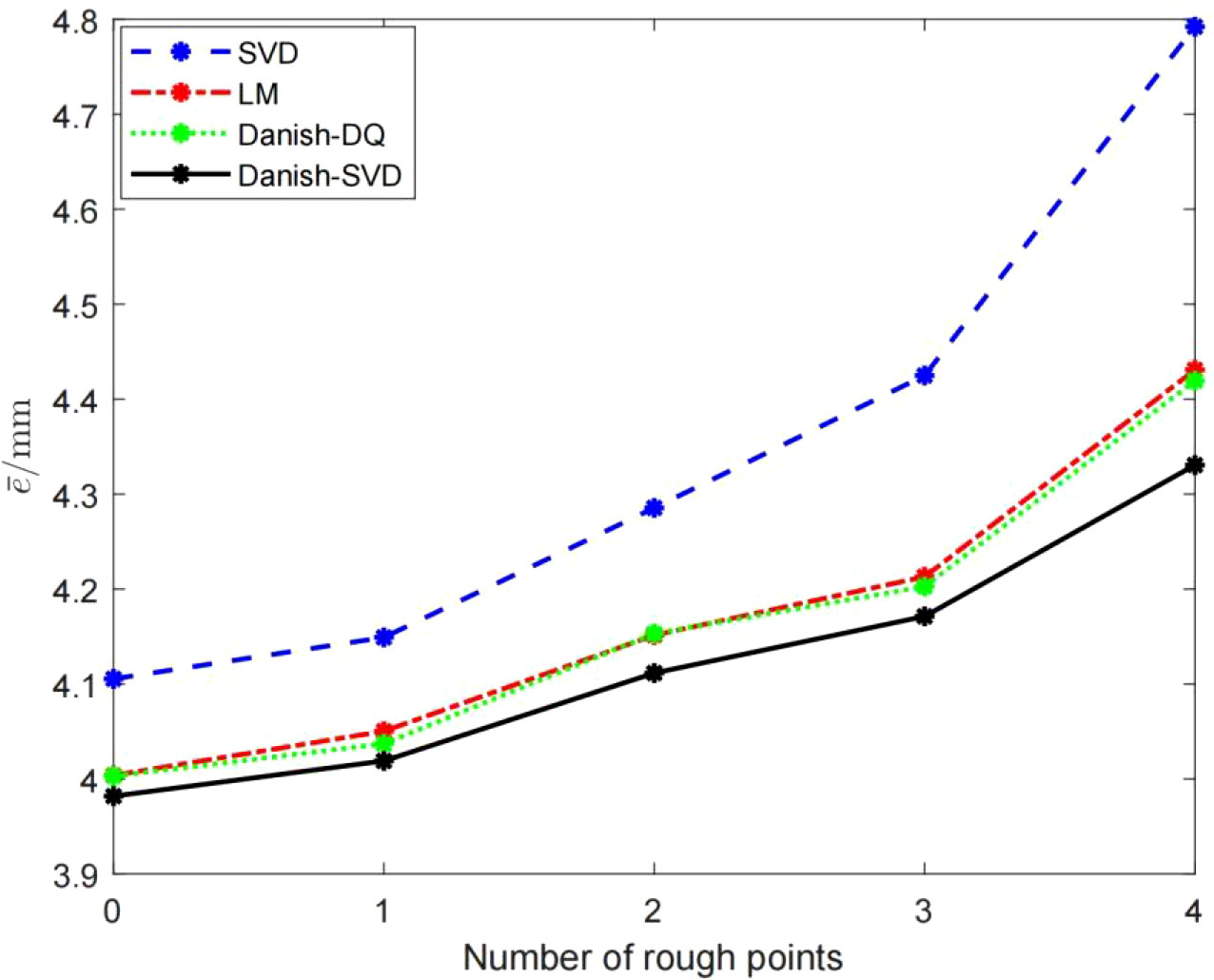

To test the effectiveness and robustness of the proposed weighted SVD method, comparison experiments were carried out under two conditions: without and with gross errors. The compared methods are the SVD algorithm, the LM algorithm, Danish-DQ (Cheng et al., 2021), and the Danish-SVD method proposed in this paper. Without noise or gross errors, the mean absolute point errors are shown in Table 1:

It can be seen from Table 1 that the LM, Danish-DQ and proposed Danish-SVD algorithms all improve the accuracy of hand-eye calibration compared with the plain SVD algorithm, and the proposed algorithm performs best.

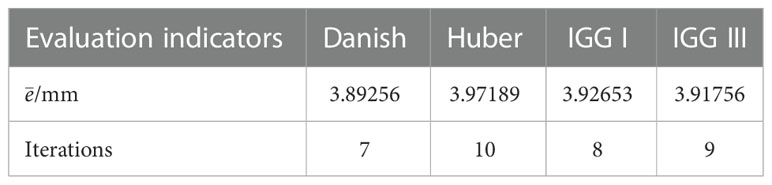

The Danish weight function used in this paper reduces the influence of gross errors by reweighting the residuals. To verify its advantages, comparative experiments were conducted with other common weight functions, namely the Huber, IGG I and IGG III functions. The experimental results are shown in Table 2:
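For comparison, two of the alternative weight functions can be written in the same standardized-residual form u = e/σ. The forms below are the commonly cited Huber and IGG III functions; the constants are illustrative defaults, not the settings used in the experiments, and IGG I is omitted for brevity.

```python
def huber_weight(u, k=1.5):
    """Huber weight: 1 inside [-k, k], k/|u| outside (k is illustrative)."""
    u = abs(u)
    return 1.0 if u <= k else k / u


def igg3_weight(u, k0=1.5, k1=3.0):
    """IGG III weight: full weight, a tapering zone, then rejection (k0, k1 illustrative)."""
    u = abs(u)
    if u <= k0:
        return 1.0
    if u <= k1:
        return (k0 / u) * ((k1 - u) / (k1 - k0)) ** 2
    return 0.0


if __name__ == "__main__":
    for u in (0.5, 2.0, 4.0, 10.0):
        print(f"u={u:5.1f}  Huber={huber_weight(u):.3f}  IGG III={igg3_weight(u):.3f}")
```

Huber only scales large residuals down in proportion to 1/|u| and never rejects a point, whereas IGG III assigns exactly zero weight beyond k1 and the Danish function decays exponentially toward zero.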

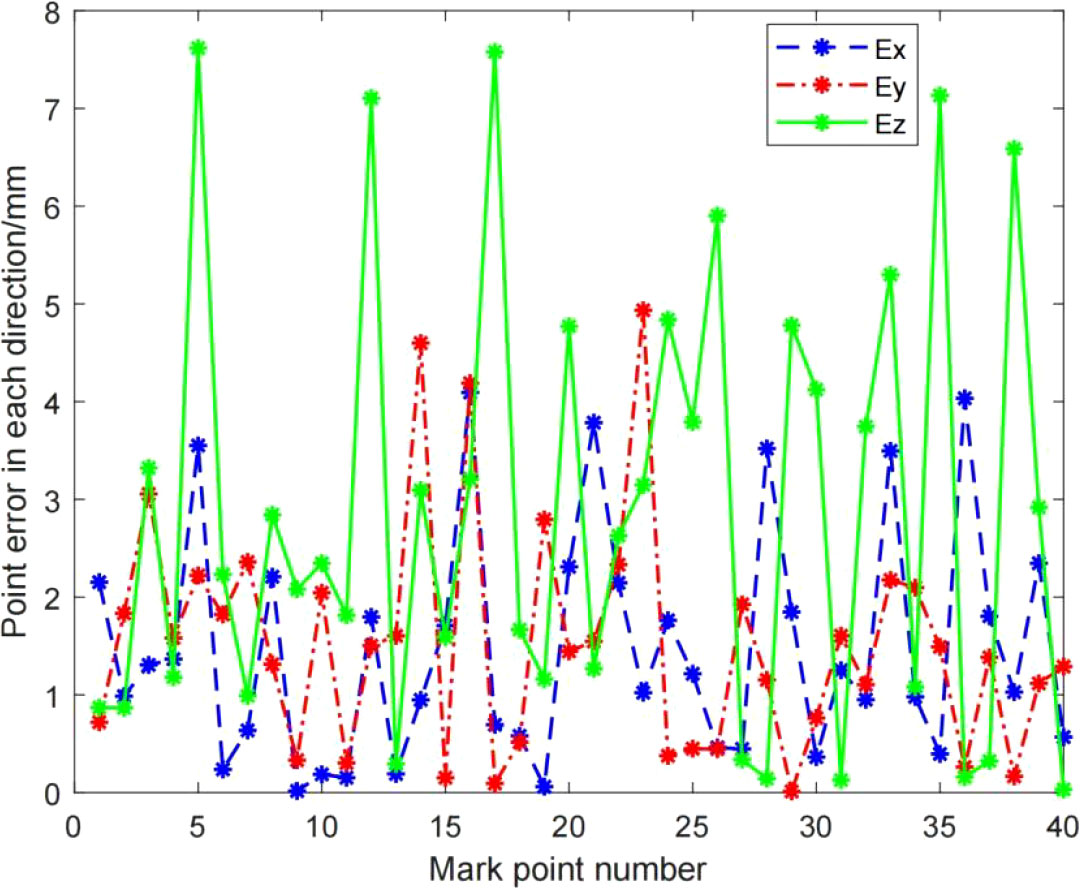

As can be seen from Table 2, the Danish weight function used in this paper outperforms the other weight functions in both precision and number of iterations. However, the overall mean absolute error remains relatively large. To analyze the cause, the absolute errors of each marker point along the X, Y and Z axes were examined for the proposed Danish-SVD algorithm.

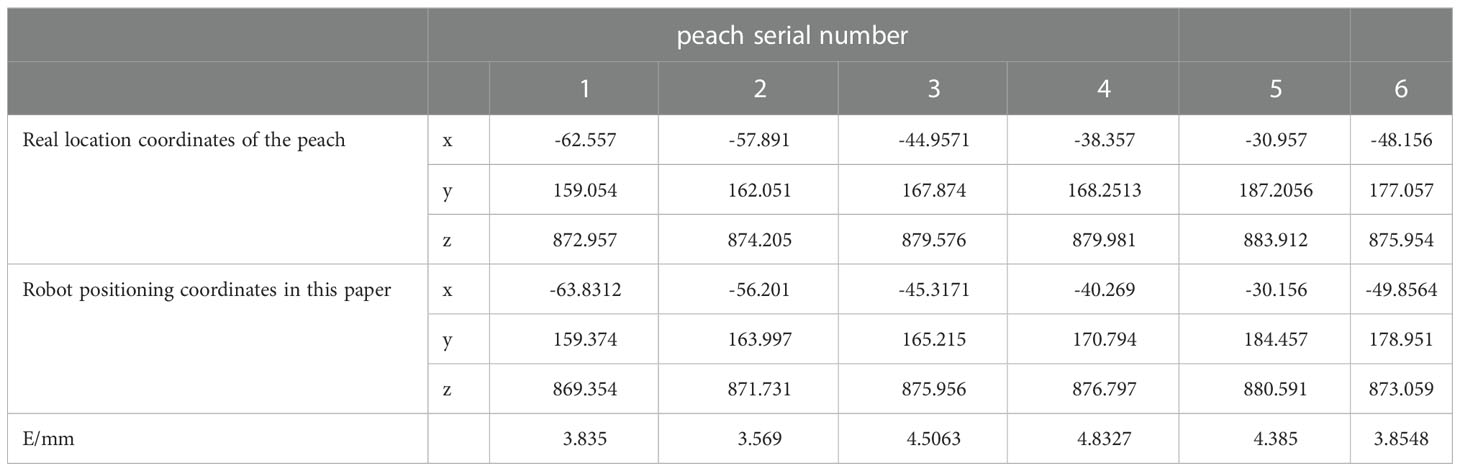

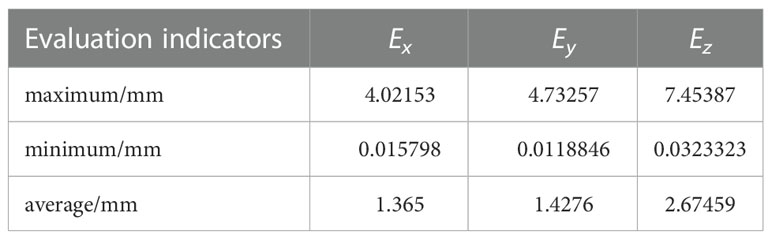

As can be seen from Figure 6 and Table 3, the absolute error of the marker points is larger in the Z-axis direction, and the Z-direction accuracy of the TM460-E2 depth camera is 5–20 mm. Combining the working principle of the TOF camera with an analysis of the data in Table 4, it is concluded that the higher the camera pose during hand-eye calibration, the greater the absolute error. Therefore, when teaching the camera poses, the camera should be kept as low as possible while still capturing the complete circular calibration plate. In addition, the resolution of this TOF camera is only 640 × 480, and this low resolution is also a main reason for the large mean absolute error.

Table 3 The maximum value, minimum value and average value of the absolute error of the mark point in the X, Y, Z axis directions.

Table 4 The maximum value, minimum value and average value of the absolute error of the marker points in the Z-axis direction and the value of at different heights.

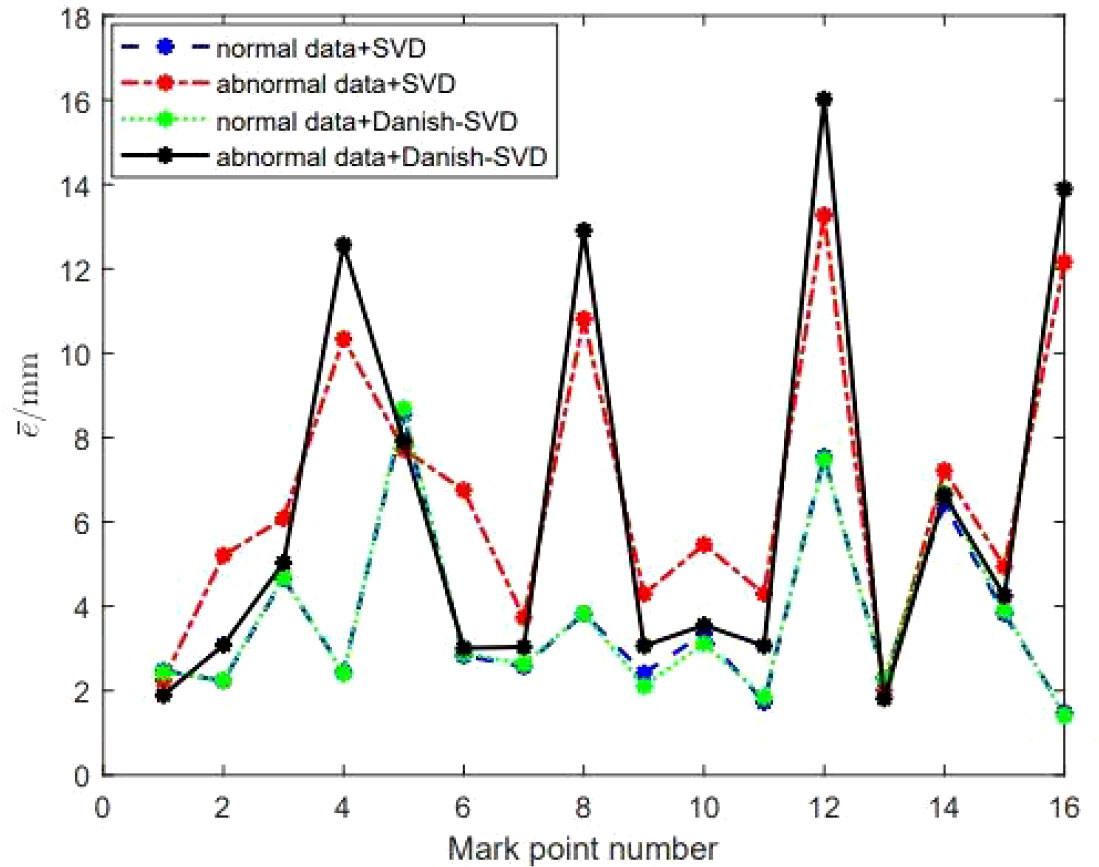

Considering the robustness that field hand-eye calibration requires for outdoor picking, gross errors of (1, 0, 1), (-1, 0, 1), (0, -1, 0) and (1, -1, 0) mm were added, in the contact-positioning order, to the marker points in the point set PBase, simulating gross errors introduced by mis-operation during contact positioning. The absolute point errors of each algorithm under different numbers of gross-error points are shown in Figure 7. The LM, Danish-DQ and proposed Danish-SVD algorithms all resist gross errors better than the SVD method, and the proposed method clearly outperforms the SVD method.

To test the ability of the proposed Danish-SVD method to locate gross errors, a gross error of (10, 10, 10) mm was added to the marker point with serial number 3 in the point set PBase, and the marker points of the first four groups of calibration plates were used. As Figure 8 shows, the absolute errors of the other three marker points remain close to those of the SVD method on normal (interference-free) data, and the position of the gross error is located more effectively. This indicates that the proposed Danish-SVD algorithm suppresses the influence of a single interfering data point more effectively and has a strong ability to identify gross errors.

Figure 8 Performance comparison of SVD and Danish-SVD algorithms under the condition of introducing large gross errors.

4.3. Experimental results and analysis of peach picking

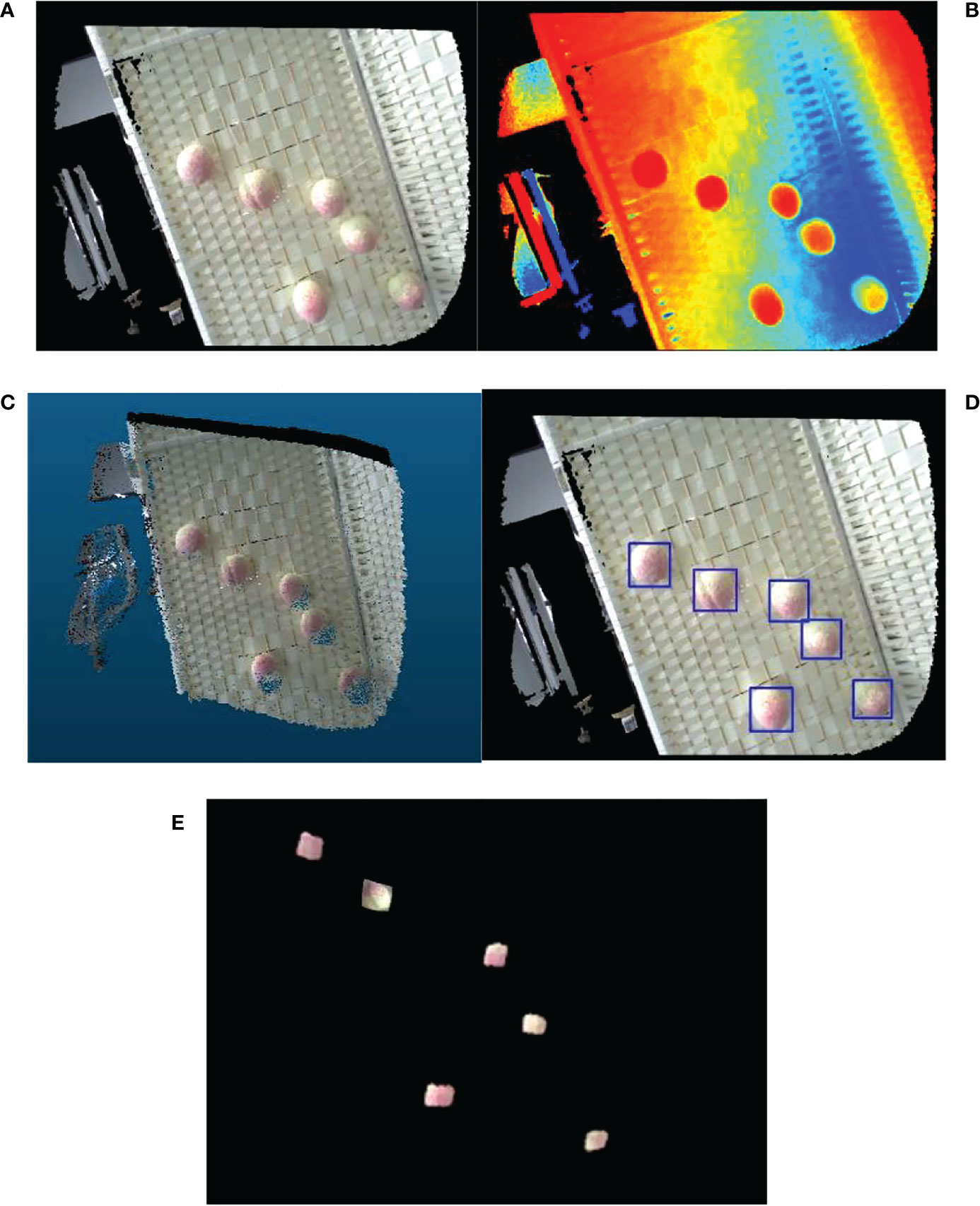

The peach picking experiment is simulated in an indoor environment, and the reliability of the hand-eye calibration method proposed in this paper is verified according to the positioning accuracy of peach picking. The experimental design is as follows:

1) Collect peach images, label them with the MVTec Deep Learning Tool (DLT), and train a HALCON deep learning model on the labeled images to obtain the trained model;

2) Perform hand-eye calibration on the robot to obtain the conversion relationship from the camera coordinate system Ocam to the robot execution end coordinate system Oend;

3) Photograph the peaches to be picked (Figure 9A, B), perform correction and registration to obtain a colored point cloud (Figure 9C), process the color image of the peaches with the trained model to identify and locate them (Figure 9D), map the positioning coordinates to the point cloud, and segment the peach point cloud (Figure 9E);

4) Using the hand-eye transformation, convert the coordinates of the peach point cloud to the execution end coordinate system Oend and compute its centroid, which serves as the positioning and grasping coordinates of the peach; then evaluate the positioning accuracy.
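Step 4) reduces to a rigid transform of the segmented points followed by a centroid. A minimal sketch, assuming the hand-eye matrix is available as a 4×4 nested list mapping Ocam to Oend and the segmented peach cloud as (x, y, z) tuples in Ocam; the function names are hypothetical.

```python
import math


def apply_transform(T, points):
    """Apply a 4x4 homogeneous matrix T (nested lists) to 3-D points."""
    return [tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))
            for x, y, z in points]


def centroid(points):
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def locate_peach(hand_eye, peach_cloud_cam):
    """Grasp point in Oend: centroid of the segmented cloud after the Ocam -> Oend mapping."""
    return centroid(apply_transform(hand_eye, peach_cloud_cam))


def positioning_error(estimate, truth):
    """Euclidean distance between estimated and true positions (same units, e.g. mm)."""
    return math.dist(estimate, truth)
```

Because the camera sees only the side of the fruit facing it, the centroid of the visible surface lies somewhat closer to the camera than the true fruit center; this sketch does not correct for that offset.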

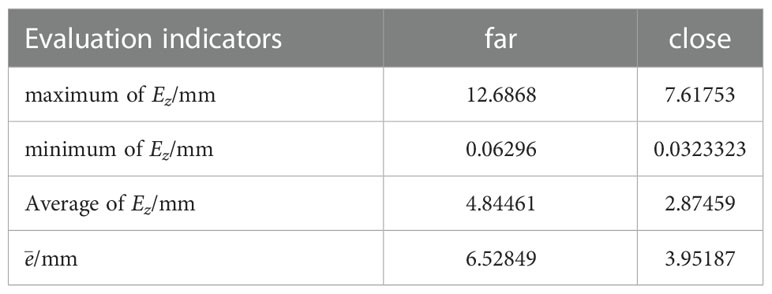

Comparing the peach positioning results in Figure 9 with the true values gives the positioning error of the peaches. As shown in Table 5, the positioning error is basically within 5 mm, which meets the accuracy requirements of peach picking operations.

Figure 9 Peaches identification and localization (A) Peach color map (B) Peach depth map (C) Color point cloud image of peach (D) Identification map of peach (E) Peach point cloud segmentation map.

5. Summary

To address the strong environmental disturbances of outdoor picking, this paper proposes a hand-eye calibration method for a six-axis robot based on a TOF depth camera. The hand-eye data are obtained by photographing and extracting the fixed marker points on the calibration board and by contact positioning, and the hand-eye calibration matrix is then solved. A weighting scheme is proposed to optimize the hand-eye parameters, and hand-eye calibration and peach picking experiments are carried out on the experimental platform. The experiments show that the proposed method has good stability, reliability and calibration accuracy, and meets the requirements of peach picking operations.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

All persons who meet authorship criteria are listed as authors, and all authors certify that they have participated sufficiently in the work to take public responsibility for the content, including participation in the concept, design, analysis, writing, or revision of the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of interest

Author GL is employed by XINJE Electronic Limited Company.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Barth, R., Hemming, J., Henten, E. V. (2016). Design of an eye-in-hand sensing and servo control framework for harvesting robotics in dense vegetation. Biosyst. Eng. 146, 71–84. doi: 10.1016/j.biosystemseng.2015.12.001

Chen, H. H. (1991). “A screw motion approach to uniqueness analysis of head-eye geometry,” in Proceedings of the 1991 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 145–151. doi: 10.1109/CVPR.1991.139677

Cheng, Q., Pan, F., Yuan, Y. J. (2021). Hand-eye calibration method of gantry robot based on 3D vision sensor. Opto-Electronic Eng. 48 (04), 30–38. doi: 10.12086/oee.2021.200239

Daniilidis, K. (1999). Hand-eye calibration using dual quaternions. Int. J. Robot Res. 18 (3), 286–298. doi: 10.1177/02783649922066213

Deng, S. C., Mei, F., Yang, L., Liang, C. G., Jiang, Y., Gaoxing, Y., et al. (2021). Research on the hand-eye calibration method based on monocular robot. J. Physics: Conf. Ser. 1820 (1), 012007. doi: 10.1088/1742-6596/1820/1/012007

Du, H. B., Song, G., Zhao, Y., Han, J. D. (2018). Hand-eye calibration method for manipulator and RGB-d camera using 3D-printed ball. Robot 40 (06), 835–842. doi: 10.13973/j.cnki.robot.170642

Đurović, P., Grbić, R., Cupec, R. (2017). Visual servoing for low-cost SCARA robots using an RGB-d camera as the only sensor. Automatika 58 (4), 495–505. doi: 10.1080/00051144.2018.1461771

Fei, Z. G., Wu, Z. Y., Xiao, Y. Q., Wang, C. D., Fu, J. X., Li, P. T. (2021). Adam-Based hand-eye calibration method for robot with binocular vision. Mach. Tool & Hydraulics 49 (11), 26–30. doi: 10.3969/j.issn.1001-3881.2021.11.006

Gan, H., Lee, W. S., Alchanatis, V., Ehsani, R., Schueller, J. K. (2018). Immature green citrus fruit detection using color and thermal images. Comput. Electron. Agric. 152, 117–125. doi: 10.1016/j.compag.2018.07.011

Ge, S. Q., Yang, Y. H., Zhou, Z. W. (2022). Research and application of robot hand-eye calibration method based on 3D depth camera. Modern Electron. Technique 45 (02), 172–176. doi: 10.16652/j.issn.1004-373x.2022.02.033

Ji, C., Feng, Q. C., Yuan, T., Tan, Y. Z., Li, W. (2011). Development and performance analysis on cucumber harvesting robot system in greenhouse. Robot 33 (6), 146–158. doi: 10.3724/SP.J.1218.2011.00726

Jin, Y. C., Gao, Y., Liu, J. Z., Hu, C. H., Zhou, Y., Li, P. P. (2021). Hand-eye coordination planning with deep visual servo for harvesting robot. Trans. Chin. Soc. Agric. Machinery 52 (06), 18–25+42. doi: 10.6041/j.issn.1000-1298.2021.06.002

Koide, K., Menegatti, E. (2019). General hand–eye calibration based on reprojection error minimization. IEEE Robot. Autom. Lett. 4 (2), 1021–1028. doi: 10.1109/LRA.2019.2893612

Li, Y., Tian, L. (2018). Fruit recognition method and path planning for fruit picking manipulator. Comput. Measurement Control 26 (11), 267–271. doi: 10.16526/j.cnki.11-4762/tp.2018.11.058

Liu, C. Y., Li, W. G., Ma, S. G., Zhi, J. B., Liu, G. L., Wu, H. (2012). A robot tool frame calibration method. Shandong Sci. 25 (01), 69–74. doi: 10.3976/j.issn.1002-4026.2012.01.015

Liu, Z. Y., Liu, X., Duan, G. F., Tan, J. (2021). Precise hand-eye calibration method based on spatial distance and epipolar constraints. Robotics Autonomous Syst. 145, 103868. doi: 10.1016/j.robot.2021.103868

Li, Z., Yan, S. H., Liu, Y., Guo, Y. S., Hong, T. S., Lv, S. L. (2021). Error analysis and compensation method for TOF depth-sensing camera. Modern Electron. Technique 44 (07), 50–55. doi: 10.16652/j.issn.1004-373x.2021.07.010

Malm, H., Heyden, A. (2000). “A new approach to hand-eye calibration,” in Proceedings 15th International Conference on Pattern Recognition 1525. doi: 10.1109/ICPR.2000.905391

Malti, A. (2013). Hand-eye calibration with epipolar constraints: Application to endoscopy. Robotics Autonomous Syst. 61 (2), 161–169. doi: 10.1016/j.robot.2012.09.029

Mo, Y. D., Zou, X. J., Ye, M., Situ, W. M., Luo, S. F., Wang, C. L., et al. (2017). Hand-eye calibration method based on sylvester equation deformation for lychee harvesting robot. Trans. Chin. Soc. Agric. Eng. 33 (4), 47–54. doi: 10.11975/j.issn.1002-6819.2017.04.007

Nobre, F., Heckman, C. (2019). Learning to calibrate: Reinforcement learning for guided calibration of visual–inertial rigs. Int. J. Robotics Res. 38 (12-13). doi: 10.1177/0278364919844824

Pan, S., Ahamed, T. (2022). Pear recognition in an orchard from 3D stereo camera datasets to develop a fruit picking mechanism using Mask R-CNN. Sensors (Basel) 22 (11), 4187. doi: 10.3390/s22114187

Pedrosa, E., Oliveira, M., Lau, N., Santos, V. (2021). A general approach to hand–eye calibration through the optimization of atomic transformations. IEEE Trans. Robotics 37 (5), 1619–1633. doi: 10.1109/TRO.2021.3062306

Tian, Y. N., Yang, G. D., Wang, Z., Wang, H., Li, E., Liang, Z. Z. (2019). Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 157, 417–426. doi: 10.1016/j.compag.2019.01.012

Tsai, R. Y., Lenz, R. K. (1989). A new technique for fully autonomous and efficient 3D robotics hand/eye calibration. IEEE Trans. Robot. Autom. 5 (3), 345–358. doi: 10.1109/70.34770

Wang, L., Min, H. S. (2021). Dual quaternion hand-eye calibration algorithm based on LMI optimization. Mach. Tool & Hydraulics 49 (21), 8–14. doi: 10.3969/j.issn.1001-3881.2021.21.002

Wang, L., Xu, W., Du, K. W., Lu, W., Zhu, J. H., Zhang, J. (2018). Portabella mushrooms measurement in situ based on SR300 depth camera. Trans. Chin. Soc. Agric. Machinery 49 (12), 13–19+108. doi: 10.6041/j.issn.1000-1298.2018.12.002

Willigenburg, L., Hol, C., Henten, E. (2004). On-line near minimum-time path planning and control of an industrial robot for picking fruits. Comput. Electron. Agric. 44 (3), 223–237. doi: 10.1016/j.compag.2004.05.004

Wu, Q. H., Li, Z. Q., Li, Z. Y., Qiu, J. F. (2021). Robot hand-eye calibration algorithm based on quaternion multiplication. Modular Mach. Tool Automatic Manufacturing Technique (08), 48–51. doi: 10.13462/j.cnki.mmtamt.2021.08.012

Xu, H. X., Wang, Y. N., Wan, Q., Zhu, J. (2008). A self-calibration approach to hand-eye relation of robot. Robot 30 (4), 1–8. doi: 10.3901/JME.2008.10.089

Keywords: fruit picking, picking robot, hand-eye calibration, TOF camera, point cloud

Citation: Zhang X, Yao M, Cheng Q, Liang G and Fan F (2023) A novel hand-eye calibration method of picking robot based on TOF camera. Front. Plant Sci. 13:1099033. doi: 10.3389/fpls.2022.1099033

Received: 15 November 2022; Accepted: 22 December 2022;

Published: 17 January 2023.

Edited by:

Ning Yang, Jiangsu University, China

Reviewed by:

Xianfang Wang, Henan Institute of Science and Technology, China

Bin Xin, Beijing Institute of Technology, China

Copyright © 2023 Zhang, Yao, Cheng, Liang and Fan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiangsheng Zhang, zxs@jiangnan.edu.cn