
ORIGINAL RESEARCH article

Front. Plant Sci., 19 December 2022

Sec. Sustainable and Intelligent Phytoprotection

Volume 13 - 2022 | https://doi.org/10.3389/fpls.2022.1077568

This article is part of the Research Topic Precision Control Technology and Application in Agricultural Pest and Disease Control.

Md. Ashraful Haque1*, Sudeep Marwaha1*, Alka Arora1, Chandan Kumar Deb1, Tanuj Misra2, Sapna Nigam1 and Karambir Singh Hooda3

Maydis leaf blight (MLB) of maize (Zea mays L.), a serious fungal disease, can cause up to 70% damage to the crop under severe conditions. Disease severity is an important factor for proper crop management and overall crop yield, so it is essential to identify the disease at the earliest possible stage to minimize yield loss. In this study, we created an image database of maize crop, MDSD (Maydis leaf blight Disease Severity Dataset), containing 1,760 digital images of MLB disease collected from different agricultural fields and categorized into four groups, viz. healthy, low, medium and high severity. We then proposed a lightweight convolutional neural network (CNN) to identify the severity stages of MLB disease. The proposed network is a simple CNN framework augmented with two modified Inception modules, which makes it a lightweight and efficient multi-scale feature extractor. It achieved approximately 99.13% classification accuracy, with an f1-score of 98.97%, on the test images of MDSD. The class-wise accuracy was 100% for healthy samples, 98% for low severity samples and 99% for both medium and high severity samples. In addition, our network outperformed popular pretrained models, viz. VGG16, VGG19, InceptionV3, ResNet50, Xception, MobileNetV2, DenseNet121 and NASNetMobile, on the MDSD image database. These experimental findings show that the proposed lightweight network accurately identifies the severity stages of MLB disease in images despite complicated background conditions.

In India, maize (Zea mays L.) is the third most important cereal grain crop. It is grown in both the kharif and rabi seasons across the country (Kaur et al., 2020). Maize is known as the 'Queen of Cereals' because of its many uses: it is a staple food for humans, feed and fodder for livestock, a raw material for several processed foods and industrial products, a rich source of starch, and so on. Around 31.65 million tonnes of maize were produced in the country during 2020-2021 (ICAR-IIMR, 2021). Every year, around 13.2% of the total crop yield is lost to disease-causing pathogens (Aggarwal et al., 2021). Among these diseases, Maydis leaf blight or MLB (also known as southern corn leaf blight) is a serious fungal disease across the maize-growing regions of India, where the warm and humid climate is highly favorable for its development (Malik et al., 2018). MLB is caused by the fungus Bipolaris maydis (Nisik. & Miyake) Shoemaker 1959. In the early stages, its symptoms appear as small, oval to diamond-shaped, necrotic to brown lesions on the leaf surface; these lesions elongate as the disease progresses (Aggarwal et al., 2021). Under severe conditions, this disease alone can reportedly cause losses of approximately 70% of the total crop yield (Hooda et al., 2018). Disease severity, which measures the intensity of disease symptoms on the affected portion of the crop, is also a crucial parameter for disease management (Hooda et al., 2018). Therefore, the foremost aim should be to identify and control the disease at the earliest possible stage of severity to minimize the risk of yield loss. However, the conventional approach to identifying severity stages relies on visual observation and laboratory analysis, which require highly trained and experienced personnel and are therefore often impractical. Hence, there is a strong need for a precise, quick, cost-effective and automated approach to identifying disease severity stages in field conditions.

In recent years, computer vision techniques have been applied to many challenging agricultural problems (Kamilaris and Prenafeta-Boldú, 2018). Convolutional neural networks (CNNs), in particular, are considered the benchmark for image-based problems in the agriculture domain. CNNs simplify image recognition by extracting features from images automatically, whereas traditional machine learning approaches rely on hand-engineered features (LeCun et al., 2015). For diagnosing diseases and their severity stages, CNNs have shown substantially better results than traditional image processing and machine learning techniques. However, very few works have been reported on diagnosing disease severity stages in maize using in-field images. Therefore, we propose a novel lightweight CNN for identifying the severity stages of MLB disease in maize. This network could be a practical and viable solution for the country's farming community. The main contributions of this study are as follows:

● Created an image database, MDSD (Maydis leaf blight Disease Severity Dataset), containing digital images of maize leaves infected with MLB disease and covering all severity stages. The images were collected in a non-destructive manner, with natural field backgrounds, from different agricultural fields.

● Proposed a lightweight and efficient convolutional neural network (CNN) model augmented with modified inception modules. The proposed network is trained and validated on the images of the MDSD database for automatic identification of severity stages of MLB disease.

● Compared the prediction performance of the proposed network with that of several popular state-of-the-art pretrained networks to evaluate its effectiveness.

This article is organized into six sections. Section 1 (the present section) highlights the importance of maize, the devastating effect of MLB disease, the constraints of conventional approaches to disease recognition and management, and the importance of computer vision-based technologies; Section 2 briefly discusses related works relevant to the present study; Section 3 explains the materials and methods used in the study; Section 4 reports and discusses the experimental results and findings; Section 5 presents the ablation studies; and Section 6 concludes the study, highlighting its impact and crucial findings and outlining future perspectives.

In this section, we briefly discuss methodologies proposed by researchers across the globe for recognizing diseases as well as their severity stages. In recent years, deep learning-based techniques have gained momentum for identifying diseases of several crops. Several authors, such as Mohanty et al. (2016); Sladojevic et al. (2016); Ferentinos (2018); Barbedo (2019) and Atila et al. (2021), focused on identifying the diseases of many crops at once by applying a variety of deep learning models, such as state-of-the-art networks, transfer learning models, custom-defined models and hybrid CNN models. These works targeted the diseases of multiple crops with a single deep learning model. Most reported works, however, addressed crop-specific disease identification, for example for rice (Lu et al., 2017; Chen et al., 2020; Rahman et al., 2020), wheat (Lu et al., 2017; Picon et al., 2019; Nigam et al., 2021), tomato (Fuentes et al., 2018; Zhang et al., 2018) and maize (DeChant et al., 2017; Priyadharshini et al., 2019; Lv et al., 2020; Haque et al., 2021; Haque et al., 2022a; Haque et al., 2022b). These works reported significant results by employing various CNN-based networks to identify diseases from color images. Some of them used lab-based images of crop diseases, such as those in PlantVillage, for model development, while others used in-field images.

The identification of disease severity stages has also attracted researchers' attention, and significant work has been carried out on identifying severity stages from digital images. Wang et al. (2017) applied transfer learning with popular deep CNN models to diagnose disease severity in apple plants and obtained more than 94% classification accuracy on the test dataset; they used publicly available images and assigned them to four severity categories. Liang et al. (2019) proposed a robust deep learning approach for disease diagnosis and disease severity estimation across several crops. Verma et al. (2020) worked on tomato late blight disease, and Prabhakar et al. (2020) estimated the severity stages of tomato early blight disease using deep CNN models. More recently, Sibiya and Sumbwanyambe (2021) used deep CNN models to classify images of common rust disease of maize into four severity levels, applying fuzzy logic-based techniques to categorize the diseased images automatically. Nigam et al. (2021) classified stem rust disease of wheat into four severity categories using deep convolutional neural networks. Chen et al. (2021) estimated the severity of rice bacterial leaf streak disease using a segmentation-based approach. Wang et al. (2021) proposed an image segmentation-based approach integrating a deep CNN model to recognize the severity stages of downy mildew, powdery mildew and viral diseases of cucumber. Ji and Wu (2022) proposed a fuzzy logic-integrated deep learning model for detecting the severity levels of grape black measles disease. Liu et al. (2022) developed a two-stage CNN model for diagnosing the severity of Alternaria leaf blotch disease of apple. A summary of these previous works is provided in Table 1.

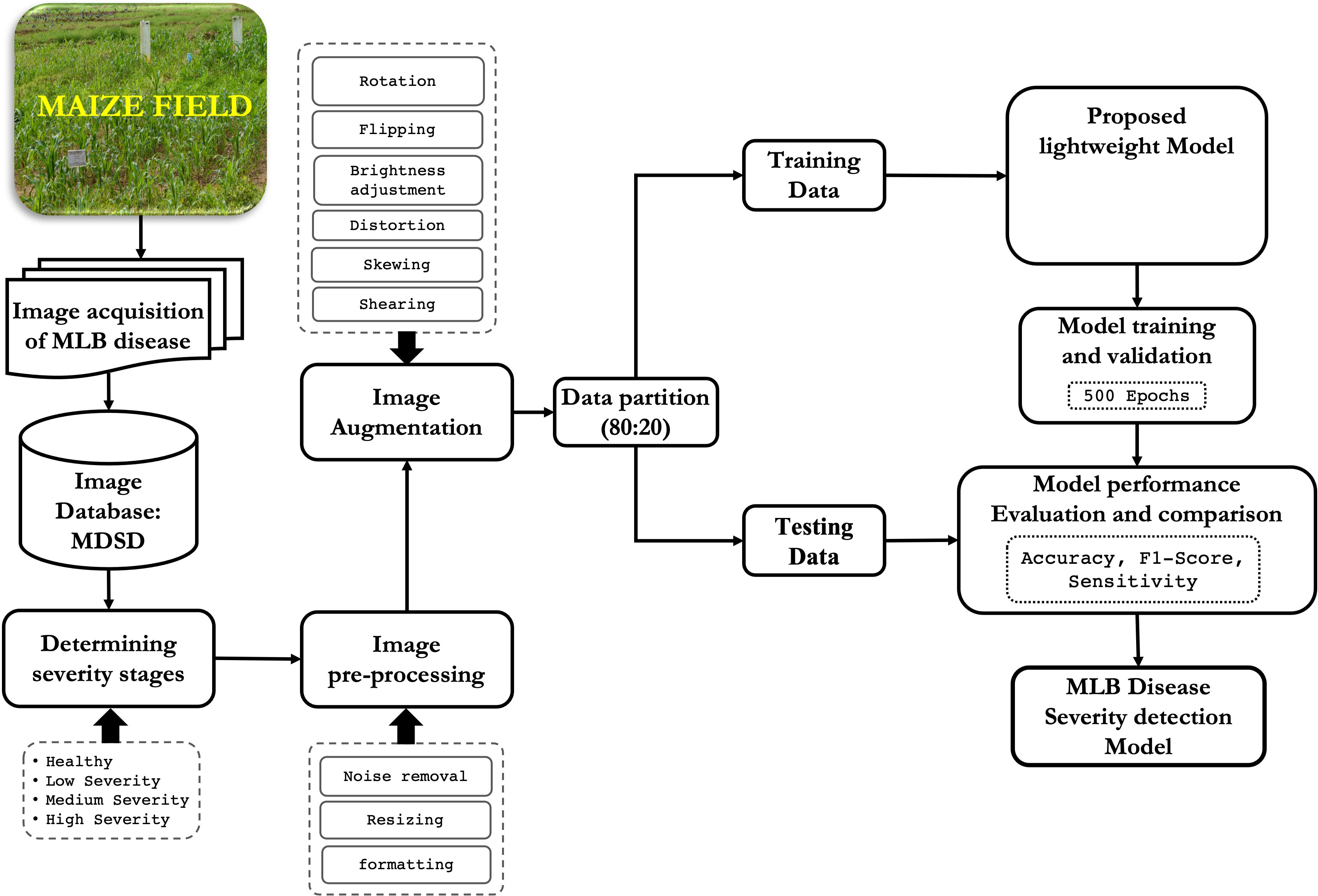

Figure 1 depicts the workflow of the proposed disease severity identification approach. First, digital images of MLB disease of maize were captured in the fields to create the MDSD image database. Next, the images were labelled into their respective severity categories based on domain experts' observations and saved into corresponding folders on disk. The images were then pre-processed and augmented to enlarge the dataset. After that, the whole image dataset was split into training and testing sets, and the proposed CNN model was trained and validated. Finally, based on the performance evaluation, the MLB disease severity identification model was finalized and its architecture was saved to disk. These phases are described in detail in the following sections.

Figure 1 Overall framework of the proposed approach for recognition of severity stages of MLB disease.

In this study, we created an image database, MDSD, containing digital images of maize leaves affected by MLB disease. The images were collected in a non-destructive manner from several agricultural plots located at Bidhan Chandra Krishi Visvavidyalaya, Kalyani (22.9920° N, 88.4495° E) and ICAR-Indian Agricultural Research Institute, New Delhi (28.6331° N, 77.1525° E) during 2018-2020. Digital cameras (Nikon D3500 W/AF) and smartphones (Redmi Y2 and Asus Max Pro M1) were used to capture the images under normal daylight conditions. The images were captured by focusing the camera on the symptomatic portions of the leaves, from the stage of disease incidence to the highest severity stage, against complex field backgrounds.

The images of MLB disease were thoroughly verified and categorized into four groups based on their symptomatic characteristics viz. healthy (no symptoms), low severity, medium severity and high severity stages as provided in Table 2. The categorization into the severity groups was done under the strict supervision of subject matter specialists (domain experts) of maize pathology at ICAR-IIMR, Ludhiana, India. Sample images of each category of MLB disease are shown in Figure 2.

Figure 2 Sample images of MLB disease of maize crop grouped into four categories (A) Healthy (B) Low Severity (C) Medium Severity and (D) High Severity.

Before training, the raw images required some pre-processing. First, unwanted images, such as duplicate, noisy, out-of-focus and blurred images, were discarded. The remaining images were then resized to 256 × 256 pixels, taking hardware constraints into account and giving the proposed model inputs of a uniform size.
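The pre-processing step can be sketched as follows. The paper does not say how duplicates were detected or which interpolation was used for resizing, so this is a minimal, dependency-free sketch under two assumptions: exact duplicates are found by hashing file contents with SHA-256, and images (stored as lists of pixel rows) are resized with nearest-neighbor sampling. Detecting noisy, out-of-focus or blurred images is not shown, and in practice an image library such as Pillow or OpenCV, typically with bilinear or area interpolation, would do the resizing. The file names and pixel values are hypothetical.

```python
import hashlib

def remove_exact_duplicates(files):
    """Keep the first of any files whose raw bytes are identical.

    `files` is a list of (name, data) pairs, where `data` is the file content as bytes.
    Near-duplicates (e.g. re-encoded or slightly cropped copies) are NOT detected.
    """
    seen = set()
    unique = []
    for name, data in files:
        digest = hashlib.sha256(data).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append((name, data))
    return unique

def resize_nearest(img, out_h=256, out_w=256):
    """Nearest-neighbor resize of an image given as a list of pixel rows."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]

# Usage with toy data (hypothetical file names and pixel values).
files = [("a.jpg", b"\x01\x02"), ("b.jpg", b"\x01\x02"), ("c.jpg", b"\x03")]
print([name for name, _ in remove_exact_duplicates(files)])  # ['a.jpg', 'c.jpg']
print(resize_nearest([[0, 1], [2, 3]], 4, 4))
# [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]]
```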

To increase the number of images available for model training, synthetic images were generated and added to the original dataset using two techniques: geometric transformation and brightness adjustment. The overall summary of images in the MDSD database is provided in Table 2.

Geometric transformations change the orientation and spatial layout of the images. In this study, we randomly applied several geometric transformations to generate artificial images: rotation (90°, 180° and 270°), flipping (top-down and left-right), skewing and zooming. These transformations were applied using the 'Augmentor' library (Bloice et al., 2019) and help make the model robust to changes in the orientation and position of the leaves.
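As an illustration of the rotation and flip transformations listed above, the following pure-Python sketch applies them to an image stored as a list of pixel rows. It does not use the Augmentor library that the study relied on, and it omits skewing and zooming, which require interpolation; the transform names and the `augment` helper are hypothetical.

```python
import random

def rotate90(img):
    """Rotate an image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def flip_top_bottom(img):
    """Mirror the image vertically (reverse the order of rows)."""
    return [list(row) for row in img[::-1]]

def flip_left_right(img):
    """Mirror the image horizontally (reverse each row)."""
    return [list(row[::-1]) for row in img]

# Rotations by 90, 180 and 270 degrees plus the two flips used in the study.
TRANSFORMS = {
    "rot90": rotate90,
    "rot180": lambda im: rotate90(rotate90(im)),
    "rot270": lambda im: rotate90(rotate90(rotate90(im))),
    "flip_tb": flip_top_bottom,
    "flip_lr": flip_left_right,
}

def augment(img, n, seed=0):
    """Return n synthetic images, each made by one randomly chosen transform."""
    rng = random.Random(seed)
    names = rng.choices(list(TRANSFORMS), k=n)
    return [(name, TRANSFORMS[name](img)) for name in names]

leaf = [[1, 2], [3, 4]]
print(rotate90(leaf))         # [[3, 1], [4, 2]]
print(flip_left_right(leaf))  # [[2, 1], [4, 3]]
```

Because rotations and flips only rearrange pixels, they create new training views without changing the lesion appearance, which is why they are safe choices for severity labels.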

Because the images were captured with different devices and at different times, their illumination was not homogeneous, and the light intensity of the diseased images strongly affects the performance of computer vision techniques. Hence, we applied a gamma function to our images to generate synthetic images with different brightness levels. The gamma function is an image processing technique that applies a non-linear adjustment to individual pixel values to encode and decode the luminance of an image. It can be defined mathematically by the following formula (eq. 1):

$$i_{out} = a \cdot i_{in}^{1/\gamma} \tag{1}$$

where $i_{in}$ is the input image with pixel values scaled from [0, 255] to [0, 1], $\gamma$ is the gamma value, $i_{out}$ is the output image (scaled back to [0, 255]) and $a$ is a constant (usually equal to 1). Gamma values $\gamma < 1$ shift the image towards the darker end of the spectrum, gamma values $\gamma > 1$ make the image brighter, and $\gamma = 1$ leaves the input image unchanged.
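A minimal sketch of the brightness adjustment, assuming the common gamma-correction form i_out = a · i_in^(1/γ), which matches the behavior described above (γ < 1 darkens, γ > 1 brightens, γ = 1 leaves the image unchanged). The γ values used in the study are not stated, so the ones below are illustrative. Building a 256-entry lookup table once and applying it to every 8-bit pixel is a common, efficient way to implement this; for RGB images the same table would be applied to each channel.

```python
def gamma_lut(gamma, a=1.0):
    """Lookup table mapping 8-bit values v to 255 * a * (v / 255) ** (1 / gamma)."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    table = []
    for v in range(256):
        out = 255.0 * a * (v / 255.0) ** (1.0 / gamma)  # scale to [0, 1], adjust, scale back
        table.append(min(255, max(0, round(out))))      # clip to the valid 8-bit range
    return table

def adjust_gamma(img, gamma, a=1.0):
    """Apply gamma adjustment to an 8-bit single-channel image (list of rows)."""
    lut = gamma_lut(gamma, a)
    return [[lut[p] for p in row] for row in img]

patch = [[0, 128, 255]]
print(adjust_gamma(patch, 0.5))  # darker:   [[0, 64, 255]]
print(adjust_gamma(patch, 2.0))  # brighter: [[0, 181, 255]]
```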

In this study, we propose a lightweight convolutional neural network (CNN) to identify the severity stages of MLB disease of maize. The network incorporates modified Inception modules into a simple CNN framework to enhance its fine-grained, multi-scale feature extraction capability. The proposed model is composed of several computational modules, which are discussed in the following subsections:

The CRB layer is the most important layer of the proposed lightweight model. It combines three common operations, viz. Convolution, ReLU and Batch normalization (hence the name CRB), as shown in Figure 3. The main function of the CRB layer is to extract patterns from the images in the form of feature maps.

The convolution operation extracts inherent features (feature maps) from the input images using a set of kernels (filters) (LeCun et al., 1998). The kernels are smaller than the input images, e.g., 3 × 3 or 1 × 1. Mathematically, the convolution operation is expressed by eq. 2:

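$$z_k^{l} = w^{l} * x_k^{l} + b^{l} \tag{2}$$

where,

$z_k^{l}$ denotes the output feature map of the k-th input at the l-th layer of the model

$x_k^{l}$ denotes the k-th input feature map at the l-th layer of the model

$*$ denotes the convolution operation

$w^{l}$ and $b^{l}$ denote the weights and bias at the l-th layer of the model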
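To make eq. 2 concrete, the sketch below computes one output feature map from a single-channel input with a single kernel and no padding ("valid" padding is an assumption, since the padding scheme is not stated in this section). Like most deep learning libraries, it actually computes a cross-correlation, i.e. the kernel is not flipped; in a real CRB layer each output map would also sum this result over all input channels.

```python
def conv2d(x, w, b=0.0, stride=1):
    """Valid 2-D convolution (cross-correlation) of feature map x with kernel w, plus bias b."""
    kh, kw = len(w), len(w[0])
    out_h = (len(x) - kh) // stride + 1
    out_w = (len(x[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = b
            for u in range(kh):
                for v in range(kw):
                    s += w[u][v] * x[i * stride + u][j * stride + v]
            row.append(s)
        out.append(row)
    return out

x = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
print(conv2d(x, [[1, 0], [0, -1]]))  # 2 x 2 map: [[-4.0, -4.0], [-4.0, -4.0]]
print(conv2d(x, [[2]], b=1.0))       # 1 x 1 kernel: each value doubled, plus 1
```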

ReLU (Rectified Linear Unit) is a widely used activation function in CNN models that introduces non-linearity into the feature maps (Haque et al., 2021). ReLU is cheap to compute, which speeds up the overall training process; it typically converges faster than saturating activation functions such as sigmoid and tanh, and it induces sparsity in the feature maps. It is expressed by the following equation (eq. 3):

$$f(z_k) = \max(0, z_k) \tag{3}$$

where $z_k$ denotes the output feature map of the k-th input feature map
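The sparsity-inducing effect of eq. 3 is easy to see on a small example: every negative activation becomes exactly zero. The `sparsity` helper below, which reports the fraction of zero entries, is a hypothetical addition purely for illustration.

```python
def relu(z):
    """Element-wise ReLU, f(z) = max(0, z), on a feature map given as a list of rows."""
    return [[max(0.0, v) for v in row] for row in z]

def sparsity(fmap):
    """Fraction of entries in a feature map that are exactly zero."""
    values = [v for row in fmap for v in row]
    return sum(1 for v in values if v == 0) / len(values)

z = [[-1.5, 2.0], [0.3, -0.7]]
a = relu(z)
print(a)            # [[0.0, 2.0], [0.3, 0.0]]
print(sparsity(a))  # 0.5
```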

Batch normalization transforms the activations over a mini-batch (of, say, m images) to have zero mean and unit variance, and then applies a learnable scale and offset. It speeds up training and reduces the internal covariate shift of the input feature maps (Ioffe, 2017). Batch normalization is expressed by the following equations (eq. 4 and eq. 5):

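$$\hat{z}_i = \frac{z_i - E(z_i)}{\sqrt{\mathrm{var}(z_i) + \epsilon}} \tag{4}$$

$$y_i = \gamma \hat{z}_i + \beta \tag{5}$$

where,

$y_i$ denotes the output feature map

$\hat{z}_i$ is the normalized input feature map

$E(z_i)$ denotes the mean of the input feature map $z_i$

$\mathrm{var}(z_i)$ denotes the variance of the input feature map $z_i$

$\epsilon$ is a small constant added for numerical stability

$\gamma$ and $\beta$ are trainable scaling and offset factors of the network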
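Eqs. 4 and 5 can be sketched for a single feature over a mini-batch of m values, as below. This is the training-time computation only; in a CNN the statistics are computed per channel over the batch and the spatial positions, and at inference time frameworks use running averages of the mean and variance instead. The default ε = 1e-5 is an assumption.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a mini-batch of activations (eq. 4), then scale and shift (eq. 5)."""
    m = len(batch)
    mean = sum(batch) / m                               # E(z_i)
    var = sum((z - mean) ** 2 for z in batch) / m       # var(z_i)
    z_hat = [(z - mean) / math.sqrt(var + eps) for z in batch]
    return [gamma * zh + beta for zh in z_hat]          # y_i = gamma * z_hat_i + beta

y = batch_norm([1.0, 2.0, 3.0, 4.0])
mean_y = sum(y) / len(y)
var_y = sum((v - mean_y) ** 2 for v in y) / len(y)
print(round(var_y, 4))  # 1.0 (the mean of y is approximately 0)
```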

The max-pooling operation extracts the maximum element from each region of the feature map covered by the pooling kernel (Chollet, 2021). The max-pooling layer retains the most prominent features of its input without adding any trainable parameters to the network. In the proposed model, we applied max-pooling with a kernel size of 3 × 3 and strides of 1 and 2.
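The following sketch shows 3 × 3 max-pooling with the two strides mentioned above on a single feature map. It assumes "valid" pooling (no padding), so an n × n input gives an output of size ⌊(n − 3)/s⌋ + 1 for stride s; with "same" padding the output sizes would differ. The layer has nothing to learn: it only selects maxima.

```python
def max_pool2d(x, k=3, stride=2):
    """Valid k x k max-pooling of a feature map given as a list of rows."""
    out_h = (len(x) - k) // stride + 1
    out_w = (len(x[0]) - k) // stride + 1
    return [
        [max(x[i * stride + u][j * stride + v] for u in range(k) for v in range(k))
         for j in range(out_w)]
        for i in range(out_h)
    ]

fmap = [[r * 5 + c for c in range(5)] for r in range(5)]  # values 0..24
print(max_pool2d(fmap, stride=2))  # [[12, 14], [22, 24]]
print(max_pool2d(fmap, stride=1))  # [[12, 13, 14], [17, 18, 19], [22, 23, 24]]
```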

The Inception module of the Inception networks approximates an optimal local sparse structure using readily available dense components (Szegedy et al., 2015; Szegedy et al., 2016). In this study, we propose a modified Inception module that changes the kernel sizes, number of filters and parallel convolutions. In the proposed module, symmetric (1 × 1) and asymmetric convolution kernels are applied in parallel with a max-pooling operation. Here, we factorized convolutions with n × n spatial filters (n = 3 or 5) into asymmetric convolutions with filter sizes n × 1 and 1 × n (i.e., 3 × 1 and 1 × 3; 5 × 1 and 1 × 5). Each asymmetric convolution is preceded by a 1 × 1 convolution, which reduces the number of channels and thus the computational cost of the network. We also applied ReLU after each convolution to induce sparsity in the feature maps (as shown in Figure 4). Finally, the outputs of all parallel convolution and max-pooling branches are concatenated and passed to the next layer of the network.
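The benefit of factorizing an n × n convolution into n × 1 and 1 × n convolutions, and of the preceding 1 × 1 reduction, can be checked with simple parameter arithmetic. The channel counts below (64, 256 and 32) are hypothetical and chosen only for illustration; the actual filter numbers of the proposed model are listed in Table 3. Each convolution is assumed to have one bias per output channel.

```python
def conv_params(kh, kw, c_in, c_out, bias=True):
    """Trainable parameters of a kh x kw convolution from c_in to c_out channels."""
    return kh * kw * c_in * c_out + (c_out if bias else 0)

def factorized_params(n, c_in, c_out):
    """n x 1 convolution followed by 1 x n convolution (c_out channels in between)."""
    return conv_params(n, 1, c_in, c_out) + conv_params(1, n, c_out, c_out)

def reduced_factorized_params(n, c_in, c_reduce, c_out):
    """1 x 1 reduction to c_reduce channels, then the factorized n x n convolution."""
    return conv_params(1, 1, c_in, c_reduce) + factorized_params(n, c_reduce, c_out)

for n in (3, 5):
    print(n, conv_params(n, n, 64, 64), factorized_params(n, 64, 64))
# 3 36928 24704
# 5 102464 41088

print(conv_params(5, 5, 256, 64), reduced_factorized_params(5, 256, 32, 64))
# 409664 39072
```

In this example, factorization alone removes about a third of the parameters for 3 × 3 kernels and about 60% for 5 × 5 kernels, and adding the 1 × 1 reduction shrinks the 5 × 5 branch by roughly a factor of ten.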

Global Average Pooling (GAP) generates a feature vector by computing the average of each feature map, so that each channel is summarized by a single scalar. It aggressively summarizes the presence of a feature in an image by downsampling the entire feature map to a single value (Lin et al., 2013). The GAP layer was used to reduce the chance of overfitting, since it adds no learnable parameters to the network.
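Global average pooling reduces each feature map to its mean, so C feature maps of any spatial size become a vector of length C with no trainable weights. A minimal sketch, with feature maps stored as lists of rows (the values are hypothetical):

```python
def global_average_pool(feature_maps):
    """Map a list of C feature maps (each a list of rows) to a length-C vector of means."""
    return [
        sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
        for fmap in feature_maps
    ]

maps = [
    [[1, 2], [3, 4]],  # channel 0
    [[0, 0], [0, 8]],  # channel 1
]
print(global_average_pool(maps))  # [2.5, 2.0]
```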

A softmax layer was added at the end of the proposed CNN model, with the same number of nodes as the number of classes in the dataset under study. The softmax function converts the non-normalized output feature vector of the network into a probability distribution over the output classes (Bouchard, 2007). Mathematically, the softmax function is expressed by the following equation (eq. 6):

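$$\mathrm{softmax}(z)_j = \frac{e^{z_j}}{\sum_{c=1}^{C} e^{z_c}} \tag{6}$$

where $z_j$ denotes the j-th item of the output feature vector and $C$ is the number of classes (four for MDSD)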
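Eq. 6 can be implemented in a numerically stable way by subtracting the largest logit before exponentiating, which leaves the result unchanged but avoids overflow. In this sketch, the class order and the logit values are hypothetical.

```python
import math

CLASSES = ["healthy", "low", "medium", "high"]  # hypothetical label order

def softmax(z):
    """Convert a list of logits into probabilities that sum to 1 (eq. 6)."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]  # shifting by max(z) avoids overflow
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1, -1.0])
print(CLASSES[probs.index(max(probs))])  # healthy
print(softmax([1000.0, 1000.0]))         # [0.5, 0.5] without overflow
```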

Figure 5 shows the overall framework of the proposed network, and Table 3 provides a detailed layer-wise description, including layer names, kernel sizes, strides, output shapes, number of kernels and number of trainable parameters.

We evaluated the prediction performance of the proposed CNN model on the testing images. We computed the confusion matrix (CM), which summarizes the model's predictions in tabular form: rows denote the actual classes and columns denote the predicted classes. The diagonal elements of the CM represent correct predictions, while the off-diagonal elements represent incorrect predictions. For each class, the true positives (TP), true negatives (TN), false positives (FP) and false negatives (FN) are obtained from the CM by treating that class as positive and all other classes as negative. We also computed the relevant evaluation metrics: accuracy, recall, precision and f1-score.
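The sketch below builds a confusion matrix with actual classes as rows and predicted classes as columns, matching the convention described above, and derives TP, FP, FN and TN for one class by treating it as positive and the rest as negative. The label encoding and the toy predictions are hypothetical.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows = actual class, columns = predicted class."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

def one_vs_rest_counts(cm, k):
    """TP, FP, FN, TN for class k, treating every other class as negative."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # class k predicted as something else
    fp = sum(row[k] for row in cm) - tp  # other classes predicted as k
    tn = total - tp - fn - fp
    return tp, fp, fn, tn

# 0 = healthy, 1 = low, 2 = medium, 3 = high (toy labels)
y_true = [0, 0, 1, 1, 2, 2, 3, 3]
y_pred = [0, 0, 1, 2, 2, 2, 3, 1]
cm = confusion_matrix(y_true, y_pred, 4)
print(cm)                         # [[2, 0, 0, 0], [0, 1, 1, 0], [0, 0, 2, 0], [0, 1, 0, 1]]
print(one_vs_rest_counts(cm, 1))  # (1, 1, 1, 5)
```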

Classification Accuracy: Classification accuracy (or simply accuracy) is the proportion of correct predictions out of all predictions. It is measured by the following expression:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Recall (Sensitivity): Recall, or sensitivity, is the proportion of actual positives that are correctly predicted as positive. It is calculated by the following expression:

$$\text{Recall} = \frac{TP}{TP + FN}$$

Precision: Precision is the proportion of predicted positives that are actually positive. It is calculated by the following expression:

$$\text{Precision} = \frac{TP}{TP + FP}$$

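f1-Score: The f1-score is the harmonic mean of precision and recall; because it balances the two, it indicates the robustness of the model. It is calculated by the following expression:

$$\text{f1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$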
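Given a multi-class confusion matrix (rows = actual, columns = predicted), the metrics above can be computed per class and then averaged. The paper reports an "average" f1-score without saying which average, so the unweighted (macro) mean used here is an assumption. With several classes, overall accuracy is usually computed as the sum of the diagonal divided by the total number of samples, which is what `accuracy` does below; the toy matrix is hypothetical.

```python
def per_class_metrics(cm):
    """(precision, recall, f1) for each class of a confusion matrix."""
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp
        fp = sum(cm[r][k] for r in range(n)) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results

def accuracy(cm):
    """Correct predictions (diagonal) divided by all predictions."""
    return sum(cm[k][k] for k in range(len(cm))) / sum(sum(row) for row in cm)

def macro_f1(cm):
    """Unweighted mean of the per-class f1-scores."""
    scores = per_class_metrics(cm)
    return sum(f1 for _, _, f1 in scores) / len(scores)

cm = [[2, 0, 0, 0],
      [0, 1, 1, 0],
      [0, 0, 2, 0],
      [0, 1, 0, 1]]
print(accuracy(cm))            # 0.75
print(round(macro_f1(cm), 4))  # 0.7417
```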

In this study, 1,760 images of MLB disease of maize were collected from agricultural fields for the MDSD database and then augmented to 14,389 images. The MDSD image database is categorized into four groups, viz. healthy, low severity, medium severity and high severity, based on the intensity of the disease symptoms on the leaves. We randomly split the whole dataset into training and testing sets in the ratio 80:20. The proposed convolutional neural network (CNN) was trained and tested on the MDSD dataset for automated diagnosis of the severity stages of MLB disease. Several combinations of CRB layers and Inception modules were tried, and the network with 10 CRB layers and 2 modified Inception modules gave the best classification performance. To further assess the effectiveness of the proposed model, we also employed several state-of-the-art pretrained models, viz. VGG16, VGG19, InceptionV3, ResNet50, Xception, MobileNetV2, DenseNet121 and NASNetMobile. All the models were trained and tested with similar hyperparameters and configurations, as shown in Table 4. All model architectures were implemented in Python using TensorFlow, an open-source deep learning framework. All experiments were run on Tesla V100 GPUs in NVIDIA DGX servers.
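A random 80:20 split of the 14,389 images can be sketched as follows. The seed, the rounding and the absence of stratification are assumptions, since the paper does not state them; here integers stand in for image file paths. The last line also shows the number of training steps per epoch at the batch size of 128 used in this study.

```python
import math
import random

def train_test_split(items, test_ratio=0.2, seed=42):
    """Shuffle a copy of `items` and return (train, test) lists."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]

train, test = train_test_split(range(14389))
print(len(train), len(test))        # 11511 2878
print(math.ceil(len(train) / 128))  # 90 training steps per epoch at batch size 128
```

A stratified or group-aware variant (keeping each original image together with its augmented copies in the same subset) would be a straightforward extension of this sketch.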

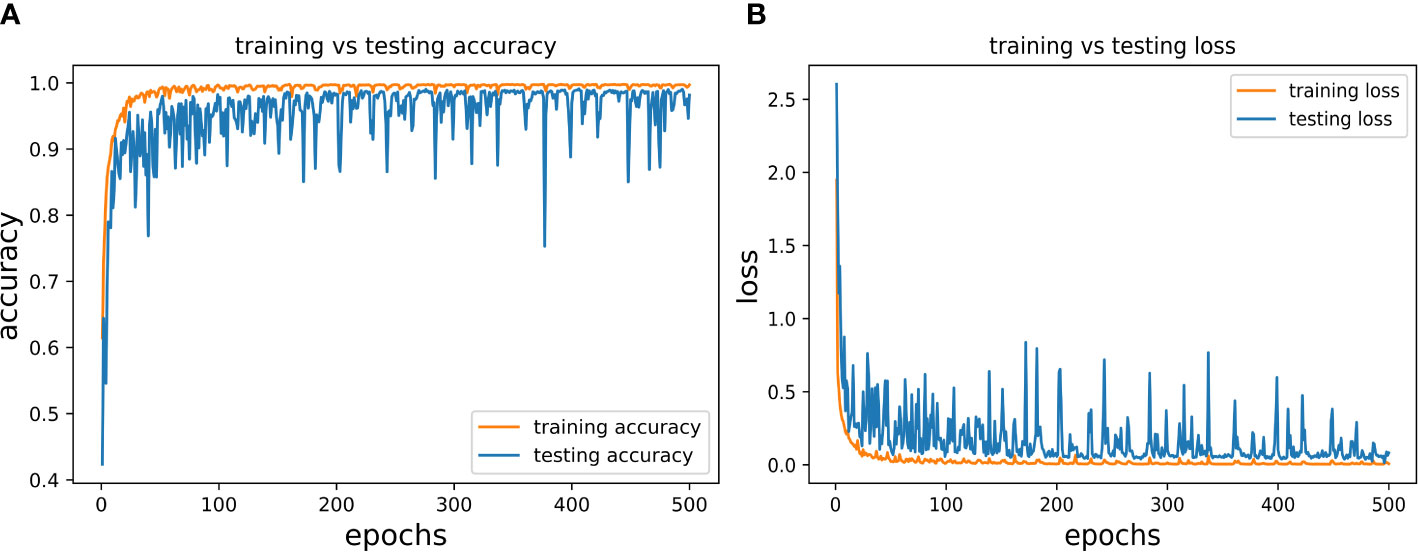

We trained and validated the proposed CNN model on the MDSD database for 500 epochs with a batch size of 128 (chosen according to the hardware constraints). The model achieved a training accuracy of 99.78% with a loss of 0.046 and a testing accuracy of 99.13% with a loss of 0.0317. Figures 6A, B show the epoch-wise training and testing behavior (classification accuracy and loss) of the proposed model on the MDSD images.

Figure 6 Epoch-wise behaviour of training and testing of the proposed CNN model (A) Classification accuracy: training vs testing and (B) Loss: training vs testing.

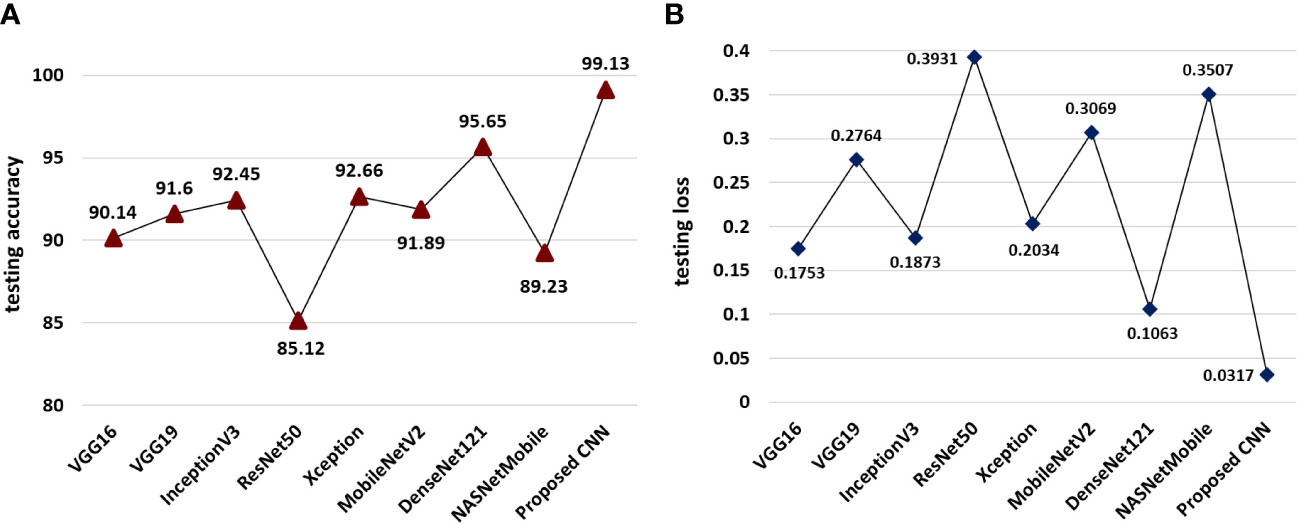

The experimental results on the testing set of the MDSD image database show that the proposed model achieved an overall classification accuracy of 99.13%, far higher than that of the pretrained networks employed (Figures 7A, B). Among the state-of-the-art pretrained models, DenseNet121 achieved the highest accuracy, 95.65%, on the test dataset (Figure 7A), and the remaining models achieved accuracies between 85% and 92%. The proposed model also obtained the lowest testing loss of all (0.0317), while DenseNet121 reached 0.1063 (Figure 7B). These results demonstrate the superiority and effectiveness of the proposed model over the popular pretrained models.

Figure 7 Comparative performance of the proposed model and pretrained models (A) model-wise classification accuracy on test data and (B) model-wise testing loss.

Evaluating the models on classification accuracy and loss alone would not be sufficient. Hence, we calculated the average f1-score of each model for a more balanced evaluation. Figure 8 presents the f1-scores of all the models (proposed and pretrained) and shows that the proposed model obtained a higher f1-score than all the pretrained models on the testing dataset of MLB disease. This result implies that the proposed CNN model can correctly classify previously unseen images of the MDSD database into their respective severity classes.

To better understand the prediction performance of the proposed model, we present its confusion matrix in Figure 9. The model was 100% accurate in predicting healthy samples, 98% accurate for low severity samples and 99% accurate for both medium and high severity samples. We also computed recall, precision and f1-score to present the class-wise prediction performance of the proposed model (Table 5); the model obtained high scores (approximately 99%) for all three metrics. The confusion matrix and these performance metrics show that the model performed very well for all severity classes of MLB disease in the MDSD database, not only for healthy or high severity images but also for low severity images, in which the disease symptoms are very mild. This result supports the usefulness of the proposed CNN model in recognizing the severity levels of unseen images of MLB disease of maize.

From the overall analysis of all the employed models, it is apparent that the proposed lightweight CNN model outperforms the popular pretrained models in identifying the severity stages of MLB disease. Importantly, the proposed model can identify the severity of MLB disease even in images with complex backgrounds. This makes it an effective and cost-effective approach for researchers, subject matter specialists and farmers to identify disease severity stages in field conditions.

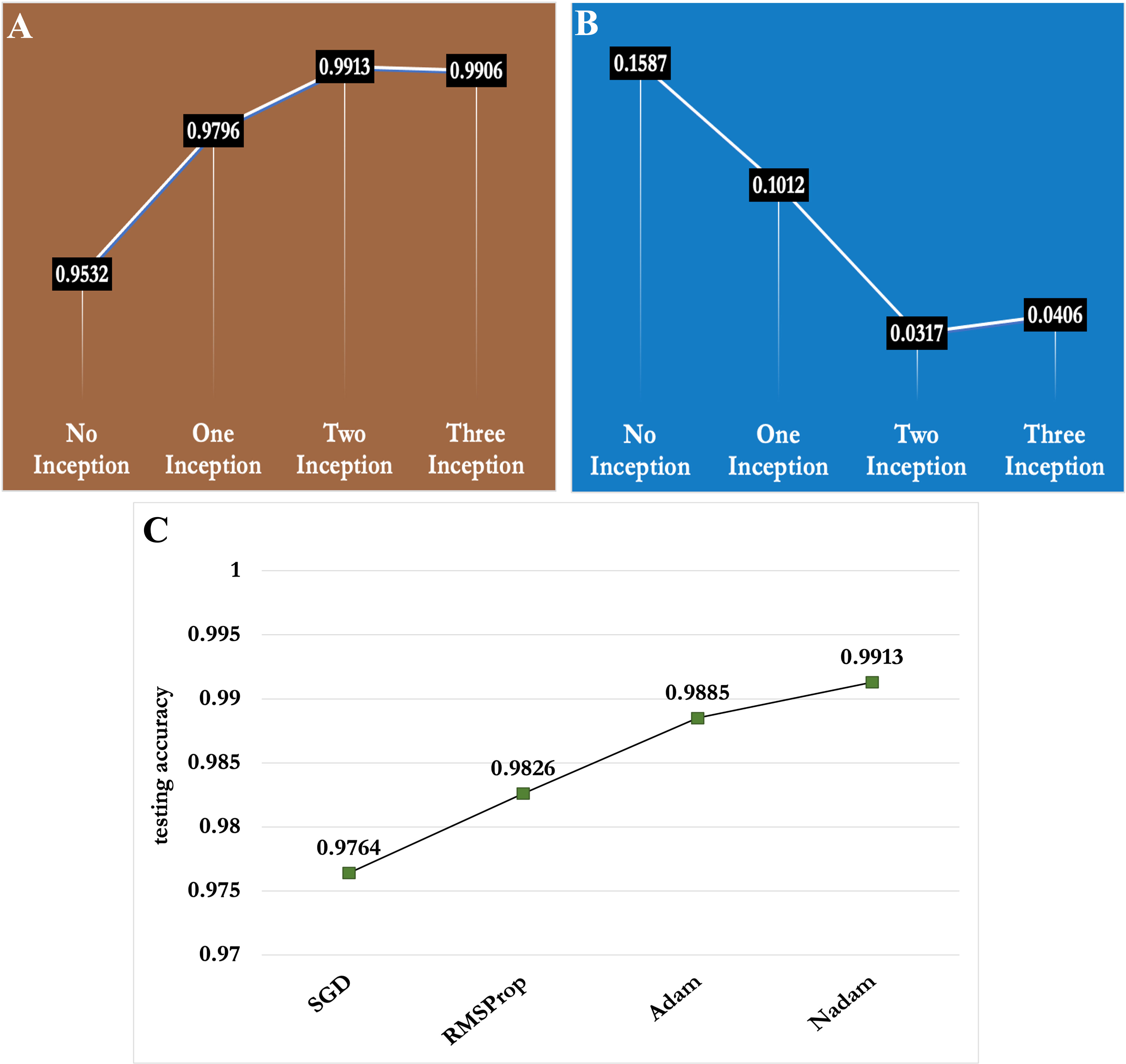

In this section, we present ablation studies for selecting the optimal number of Inception modules and the best optimization function for the proposed model. First, we trained the proposed CNN model with 0, 1, 2 and 3 Inception modules. As Figures 10A, B show, the proposed CNN framework achieved around 95% testing accuracy without any Inception module, and the accuracy increased as Inception modules were added, peaking at 99.13% with two Inception modules (Figure 10A). Similarly, the testing loss decreased as Inception modules were added, reaching its lowest value (0.0317) with two Inception modules (Figure 10B). Hence, two Inception modules were selected for the proposed CNN model.

Figure 10 Depiction of the effect of the number of Inception modules and optimization functions on the performance of the proposed CNN model (A) Number of Inception modules vs classification accuracy (B) Number of Inception modules vs testing loss and (C) Optimization functions vs classification accuracy.

We also experimented with different optimization functions, which play a major role in model convergence and feature learning. Four optimization functions, viz. stochastic gradient descent (SGD), RMSProp, Adam and Nadam, were tested in the proposed model, and the results are presented in Figure 10C. Among them, Nadam showed the best performance on the MLB disease severity dataset of maize.

In this study, we addressed a major issue of crop management, namely identifying disease severity stages, by proposing a deep learning-based diagnosis approach. We created an image database, MDSD, containing images of MLB disease in four categories, viz. healthy, low severity, medium severity and high severity. We then proposed a novel lightweight CNN model to identify the severity stages of MLB disease using the MDSD images. The basic framework of the proposed model is a stack of computational layers, such as the CRB layer (Convolution, ReLU and Batch normalization), augmented with two modified Inception modules. On the test dataset, the proposed model achieved 99.13% classification accuracy with an f1-score of 98.97%, which is superior to the popular state-of-the-art pretrained models evaluated in this study. Furthermore, the overall experimental analysis demonstrated that the proposed CNN model efficiently captures the salient features of images with complex backgrounds and classifies them into their respective severity classes. Therefore, this automated approach to identifying the severity stages of MLB disease would be feasible and cost-effective for the farming community and subject matter specialists. However, the proposed CNN model currently applies only to MLB disease of maize. In future, the study can be extended to identify the severity stages of other major diseases of maize and of diseases of other crops, subject to the availability of image datasets.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

MH: Conceptualization, Methodology, Investigation, Writing - Original Draft, Visualization; SM: Conceptualization, Supervision, Investigation; AA: Supervision, Writing - Review and Editing; CD: Conceptualization, Supervision, Writing - Original Draft, Visualization; TM: Writing - Review and Editing; SN: Writing - Original Draft, Visualization; KH: Field data generation, Data curation. All authors contributed to the article and approved the submitted version.

The authors acknowledge the support and resources provided by ICAR-Indian Agricultural Statistics Research Institute, New Delhi; ICAR-Indian Institute of Maize Research, Ludhiana; and ICAR-Indian Agricultural Research Institute, New Delhi for carrying out this research work. The authors also acknowledge the resources provided by the National Agricultural Science Fund (NASF), ICAR and the National Agricultural Higher Education Project (NAHEP), ICAR.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aggarwal, S. K., Gogoi, R., Rakshit, S. (2021). Major diseases of maize and their management (Ludhiana, Punjab 141004: ICAR-IIMR), pp 27. Available at: https://iimr.icar.gov.in/publications-category/technical-bulletins/.

Atila, Ü., Uçar, M., Akyol, K., Uçar, E. (2021). Plant leaf disease classification using EfficientNet deep learning model. Ecol. Inf. 61, 101182. doi: 10.1016/j.ecoinf.2020.101182

Barbedo, J. G. A. (2019). Plant disease identification from individual lesions and spots using deep learning. Biosyst. Eng. 180, 96–107. doi: 10.1016/j.biosystemseng.2019.02.002

Bloice, M. D., Roth, P. M., Holzinger, A. (2019). Biomedical image augmentation using augmentor. Bioinformatics 35 (21), 4522–4524. doi: 10.1093/bioinformatics/btz259

Bouchard, G. (2007). “Efficient bounds for the softmax function, applications to inference in hybrid models,” in NIPS Workshop for Approximate Bayesian Inference in Continuous/Hybrid Systems. (Whistler, CA).

Chen, J., Zhang, D., Nanehkaran, Y. A., Li, D. (2020). Detection of rice plant diseases based on deep transfer learning. J. Sci. Food Agric. 100 (7), 3246–3256. doi: 10.1002/jsfa.10365

Chen, S., Zhang, K., Zhao, Y., Sun, Y., Ban, W., Chen, Y., et al. (2021). An approach for rice bacterial leaf streak disease segmentation and disease severity estimation. Agriculture 11 (5), 420. doi: 10.3390/agriculture11050420

DeChant, C., Wiesner-Hanks, T., Chen, S., Stewart, E. L., Yosinski, J., Gore, M. A., et al. (2017). Automated identification of northern leaf blight-infected maize plants from field imagery using deep learning. Phytopathology 107 (11), 1426–1432. doi: 10.1094/PHYTO-11-16-0417-R

Ferentinos, K. P. (2018). Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 145, 311–318. doi: 10.1016/j.compag.2018.01.009

Fuentes, A. F., Yoon, S., Lee, J., Park, D. S. (2018). High-performance deep neural network-based tomato plant diseases and pests diagnosis system with refinement filter bank. Front. Plant Sci. 9, 1162. doi: 10.3389/fpls.2018.01162

Haque, M. A., Marwaha, S., Arora, A., Paul, R. K., Hooda, K. S., Sharma, A., et al. (2021). Image-based identification of maydis leaf blight disease of maize (Zea mays) using deep learning. Indian J. Agric. Sci. 91 (9), 1362–1369. doi: 10.56093/ijas.v91i9.116089

Haque, M. A., Marwaha, S., Deb, C. K., Nigam, S., Arora, A. (2022b). Recognition of diseases of maize crop using deep learning models. Neural Computing Appl., 1–15. doi: 10.1007/s00521-022-08003-9

Haque, M. A., Marwaha, S., Deb, C. K., Nigam, S., Arora, A., Hooda, K. S., et al. (2022a). Deep learning-based approach for identification of diseases of maize crop. Sci. Rep. 12 (1), 6334. doi: 10.1038/s41598-022-10140-z

Hooda, K. S., Bagaria, P. K., Khokhar, M., Kaur, H., Rakshit, S. (2018). Mass screening techniques for resistance to maize diseases (Ludhiana 141004: ICAR-Indian Institute of Maize Research, PAU Campus), pp 93. Available at: https://iimr.icar.gov.in/publications-category/technical-bulletins/.

ICAR-IIMR (2021). Annual report (Ludhiana 141004: ICAR-Indian Institute of Maize Research, Punjab Agricultural University campus). Available at: https://iimr.icar.gov.in/publications-category/annual-reports/.

Ioffe, S. (2017). Batch renormalization: Towards reducing minibatch dependence in batch-normalized models. arXiv preprint arXiv:1702.03275. doi: 10.48550/arXiv.1702.03275

Ji, M., Wu, Z. (2022). Automatic detection and severity analysis of grape black measles disease based on deep learning and fuzzy logic. Comput. Electron. Agric. 193, 106718. doi: 10.1016/j.compag.2022.106718

Kamilaris, A., Prenafeta-Boldú, F. X. (2018). Deep learning in agriculture: A survey. Comput. Electron. Agric. 147, 70–90. doi: 10.1016/j.compag.2018.02.016

Kaur, H., Kumar, S., Hooda, K. S., Gogoi, R., Bagaria, P., Singh, R. P., et al. (2020). Leaf stripping: an alternative strategy to manage banded leaf and sheath blight of maize. Indian Phytopathol. 73 (2), 203–211. doi: 10.1007/s42360-020-00208-z

LeCun, Y., Bengio, Y., Hinton, G. (2015). Deep learning. Nature 521 (7553), 436–444. doi: 10.1038/nature14539

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P. (1998). Gradient-based learning applied to document recognition. Proc. IEEE 86 (11), 2278–2324. doi: 10.1109/5.726791

Liang, Q., Xiang, S., Hu, Y., Coppola, G., Zhang, D., Sun, W. (2019). PD2SE-net: Computer-assisted plant disease diagnosis and severity estimation network. Comput. Electron. Agric. 157, 518–529. doi: 10.1016/j.compag.2019.01.034

Lin, M., Chen, Q., Yan, S. (2013). Network in network. arXiv preprint arXiv:1312.4400. doi: 10.48550/arXiv.1312.4400

Liu, B. Y., Fan, K. J., Su, W. H., Peng, Y. (2022). Two-stage convolutional neural networks for diagnosing the severity of alternaria leaf blotch disease of the apple tree. Remote Sensing 14 (11), 2519. doi: 10.3390/rs14112519

Lu, J., Hu, J., Zhao, G., Mei, F., Zhang, C. (2017). An in-field automatic wheat disease diagnosis system. Comput. Electron. Agric. 142, 369–379. doi: 10.1016/j.compag.2017.09.012

Lu, Y., Yi, S., Zeng, N., Liu, Y., Zhang, Y. (2017). Identification of rice diseases using deep convolutional neural networks. Neurocomputing 267, 378–384. doi: 10.1016/j.neucom.2017.06.023

Lv, M., Zhou, G., He, M., Chen, A., Zhang, W., Hu, Y. (2020). Maize leaf disease identification based on feature enhancement and DMS-robust alexnet. IEEE Access 8, 57952–57966. doi: 10.1109/ACCESS.2020.2982443

Malik, V. K., Singh, M., Hooda, K. S., Yadav, N. K., Chauhan, P. K. (2018). Efficacy of newer molecules, bioagents and botanicals against maydis leaf blight and banded leaf and sheath blight of maize. Plant Pathol. J. 34 (2), 121. doi: 10.5423/PPJ.OA.11.2017.0251

Mohanty, S. P., Hughes, D. P., Salathé, M. (2016). Using deep learning for image-based plant disease detection. Front. Plant Sci. 7, 1419. doi: 10.3389/fpls.2016.01419

Nigam, S., Jain, R., Marwaha, S., Arora, A. (2021). Wheat rust disease identification using deep learning (Berlin: De Gruyter), 239–250. doi: 10.1515/9783110691276-012

Nigam, S., Jain, R., Prakash, S., Marwaha, S., Arora, A., Singh, V. K., Prakasha, T. L. (2021). “Wheat disease severity estimation: A deep learning approach,” in International conference on Internet of things and connected technologies (Cham: Springer), 185–193.

Picon, A., Alvarez-Gila, A., Seitz, M., Ortiz-Barredo, A., Echazarra, J., Johannes, A. (2019). Deep convolutional neural networks for mobile capture device-based crop disease classification in the wild. Comput. Electron. Agric. 161, 280–290. doi: 10.1016/j.compag.2018.04.002

Prabhakar, M., Purushothaman, R., Awasthi, D. P. (2020). Deep learning based assessment of disease severity for early blight in tomato crop. Multimedia Tools Appl. 79 (39), 28773–28784. doi: 10.1007/s11042-020-09461-w

Priyadharshini, R. A., Arivazhagan, S., Arun, M., Mirnalini, A. (2019). Maize leaf disease classification using deep convolutional neural networks. Neural Computing Appl. 31 (12), 8887–8895. doi: 10.1007/s00521-019-04228-3

Rahman, C. R., Arko, P. S., Ali, M. E., Iqbal Khan, M. A., Apon, S. H., Nowrin, F., et al. (2020). Identification and recognition of rice diseases and pests using convolutional neural networks. Biosyst. Eng. 194, 112–120. doi: 10.1016/j.biosystemseng.2020.03.020

Sibiya, M., Sumbwanyambe, M. (2021). Automatic fuzzy logic-based maize common rust disease severity predictions with thresholding and deep learning. Pathogens 10 (2), 131. doi: 10.3390/pathogens10020131

Sladojevic, S., Arsenovic, M., Anderla, A., Culibrk, D., Stefanovic, D. (2016). Deep neural networks based recognition of plant diseases by leaf image classification. Comput. Intell. Neurosci. 2016, 6. doi: 10.1155/2016/3289801

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., et al. (2015). “Going deeper with convolutions,” in Proceedings of IEEE conference on computer vision and pattern recognition (IEEE), 1–9.

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z. (2016). “Rethinking the inception architecture for computer vision,” in Proceedings of IEEE conference on computer vision and pattern recognition (IEEE), 2818–2826.

Verma, S., Chug, A., Singh, A. P. (2020). Application of convolutional neural networks for evaluation of disease severity in tomato plant. J. Discrete Math. Sci. Cryptography 23 (1), 273–282. doi: 10.1080/09720529.2020.1721890

Wang, C., Du, P., Wu, H., Li, J., Zhao, C., Zhu, H. (2021). A cucumber leaf disease severity classification method based on the fusion of DeepLabV3+ and U-net. Comput. Electron. Agric. 189, 106373. doi: 10.1016/j.compag.2021.106373

Wang, G., Sun, Y., Wang, J. (2017). Automatic image-based plant disease severity estimation using deep learning. Comput. Intell. Neurosci. 2017, 1–8. doi: 10.1155/2017/2917536

Keywords: maydis leaf blight disease, maize crop, disease severity stages, MDSD image database, convolutional neural network, inception module

Citation: Haque MA, Marwaha S, Arora A, Deb CK, Misra T, Nigam S and Hooda KS (2022) A lightweight convolutional neural network for recognition of severity stages of maydis leaf blight disease of maize. Front. Plant Sci. 13:1077568. doi: 10.3389/fpls.2022.1077568

Received: 23 October 2022; Accepted: 01 December 2022;

Published: 19 December 2022.

Edited by:

Yunchao Tang, Zhongkai University of Agriculture and Engineering, China

Reviewed by:

Jana Shafi, Prince Sattam Bin Abdulaziz University, Saudi Arabia

Copyright © 2022 Haque, Marwaha, Arora, Deb, Misra, Nigam and Hooda. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sudeep Marwaha, sudeep@icar.gov.in; Md. Ashraful Haque, ashraful.haque@icar.gov.in

