
ORIGINAL RESEARCH article

Front. Plant Sci., 02 December 2022

Sec. Sustainable and Intelligent Phytoprotection

Volume 13 - 2022 | https://doi.org/10.3389/fpls.2022.1051348

This article is part of the Research Topic Sustainable and Intelligent Plant Health Management in Asia (2022)

Cheng-Feng Tsai1†, Chih-Hung Huang2,3†, Fu-Hsing Wu4†, Chuen-Horng Lin5, Chia-Hwa Lee2,6, Shyr-Shen Yu7, Yung-Kuan Chan1,3* and Fuh-Jyh Jan2,3,6*

Phalaenopsis orchids are among Taiwan's most important export commodities. Most orchids are planted and grown in greenhouses, and early detection of orchid diseases is highly valuable to growers during cultivation. At present, orchid viral diseases are generally identified by manual observation based on the grower's experience. The most commonly used assays for virus identification are nucleic acid amplification and serology; however, these assays are neither time- nor cost-efficient. Therefore, this study aimed to create a system that automatically identifies common viral diseases of orchids from orchid images. Our method includes the following steps: image preprocessing by color space transformation and gamma correction, leaf detection by a U-net model, removal of non-leaf fragment areas by connected component labeling, acquisition of leaf texture features, and disease identification by a two-stage model that integrates a random forest model and an Inception network (deep learning) model. The proposed system achieved accuracies of 0.9707 for the image segmentation of orchid leaves and 0.9180 for disease identification. Furthermore, for the easily misidentified categories [cymbidium mosaic virus (CymMV) and odontoglossum ringspot virus (ORSV)], the system outperformed naked-eye identification, with an accuracy of 0.842 for the two-stage model versus 0.667 for naked-eye identification. This system could benefit orchid disease recognition in Phalaenopsis cultivation.

Orchids are popular and well-received flowering house plants worldwide. Phalaenopsis accounted for 79% of the global orchid market in 2018 (Yuan et al., 2021) and is an important export flower for Taiwan, whose Phalaenopsis exports reached 160 million US dollars in 2021 (https://www.coa.gov.tw/). To meet the demand of a growing global orchid market, it is imperative to develop better techniques for quality control and management and to optimize production costs (Shaharudin et al., 2021; Yuan et al., 2021).

Phalaenopsis are propagated by tissue culture and often densely planted in greenhouses in Taiwan, a practice that can easily lead to the spread of viral diseases (Lee et al., 2021). Although the greenhouses provide suitable temperature, humidity, daylight, irrigation, and fertilization, the close planting environment is also well suited to virus spread (Koh et al., 2014). A virus may infect whole plants under cultivation or even spread to most orchids in the entire cultivation area, and virus-infected orchids lose much of their commercial value. Hence, developing better protocols for detecting viral diseases has always been an essential task for quality control in orchid cultivation (Koh et al., 2014).

Nearly 60 viruses have been reported to infect orchids, of which odontoglossum ringspot virus (ORSV) and cymbidium mosaic virus (CymMV) are the most common worldwide (Huang et al., 2019; Lee et al., 2021). Coinfection of orchids by ORSV and CymMV commonly produces a synergistic effect on symptoms. Capsicum chlorosis virus (CaCV), previously found on Phalaenopsis, was once known as “Taiwan virus”; it belongs to the genus Orthotospovirus and is transmitted by thrips. These viruses frequently appear on orchid farms in Taiwan. Plants suffering from viral diseases grow slowly and eventually lose their economic value (Zheng et al., 2008; Lee et al., 2021). Early detection and removal of diseased plants is therefore a prerequisite for ensuring orchid quality. The most commonly used detection methods for orchid viruses rely on nucleic acid amplification and serology, for instance, the enzyme-linked immunosorbent assay (ELISA), immunostrip tests, reverse transcription-polymerase chain reaction (RT-PCR), polymerase chain reaction (PCR), and reverse transcription-loop-mediated isothermal amplification (RT-LAMP) (Eun et al., 2002; Chang, 2018; Lee et al., 2021). However, these methods are labor-intensive and expensive. Moreover, some early-stage symptoms on orchid leaves infected by one of the common viruses are subtle and not easily identified by the naked eye. Therefore, an automated and precise intelligent image analysis system for identifying Phalaenopsis viral diseases could be a powerful tool for better management of orchid viral diseases.

During the past decade, artificial intelligence (AI) methods that use image data or big data to predict or detect plant diseases have become very popular globally (Chan et al., 2018; Wu et al., 2022). For example, Ali et al. (2017) detected citrus diseases using two machine learning (ML) models, k-nearest neighbor (KNN) and support vector machine (SVM), with color histogram and texture features of infected leaf images. Soybean leaf diseases were classified using a convolutional neural network (CNN, a deep learning model) combined with segmentation of the soybean leaf from the image background and data augmentation (image translation and rotation) to increase the number of training cases (Karlekar and Seal, 2020). A deep learning model for tomato leaf disease classification, which integrated DenseNet121 and transfer learning with a conditional generative adversarial network (C-GAN) (Mirza and Osindero, 2014) for data augmentation, achieved high accuracy (Abbas et al., 2021).

A recent review (Dhaka et al., 2021) reported that the AI models proposed in 20 articles for classifying different plant types (apple, cucumber, tomato, radish, wheat, rice, or maize in individual studies) reached good or excellent accuracies (0.8598-0.9970). ML-based approaches have been adopted for identifying plant growth stages, taxonomic classification of leaf images, and plant image segmentation (reported in 6 studies), and deep learning (DL) architectures with transfer learning have been applied to crop and weed segmentation, weed identification, disease classification of 12 plant species, identification of biotic and abiotic stress, and leaf counting (reported in 6 studies) (Nabwire et al., 2021).

AI models designed to identify plant leaf diseases reached fair, good, or excellent accuracies (0.590-0.9975) in 32 articles (Dhaka et al., 2021). These studies focused on identifying leaf diseases of one of the following plant types: banana, apple, 14 crop species, 6 plant species, tomato, cucumber, rice, 25 plant species, olive, wheat, radish, 14 plant species, potato, cassava, maize, radish, grapevine, and tea (Dhaka et al., 2021). Ghorai et al. (2021) reviewed various feature extraction methods reported in 9 studies on image-based plant disease detection. Texture and/or color features were adopted in most of these studies, which addressed disease detection in soybean, maize seedlings, grape, bean, tomato, and rice, as well as one study on orchids (Huang, 2007; Ghorai et al., 2021).

A review by Vishnoi et al. (2021) reported that the top three crops in studies of plant disease detection using image processing techniques during 2009-2020 were rice (11%), tomato (11%), and corn (7%). Only 1% of the studies involved orchids, and very few of those used AI methods to identify common orchid diseases from orchid images (Vishnoi et al., 2021). We therefore aimed to develop an AI system that uses images of orchid seedlings to accurately detect common viral diseases of orchids. The system is intended to detect these diseases more accurately than the naked eye and to be suitable for early detection, which in turn can reduce economic loss. Detection is non-destructive, and the system can be developed toward automatic and fast detection that could replace nucleic acid amplification and serological assays, which are labor-intensive and neither time- nor cost-efficient for comprehensive detection. The proposed intelligent system can thereby benefit the orchid cultivation industry.

The Phalaenopsis images and methods described in this section were prepared and designed for accurate identification of common viral diseases of orchids. Orchid virus inoculation and Phalaenopsis image acquisition are described in section 2.1. The design flow of the proposed AI system, together with the adopted image processing methods and AI models, is described step by step in section 2.2.

Leaves of ORSV- or CaCV-infected N. benthamiana and CymMV-infected C. quinoa were ground in phosphate buffer (0.1 M potassium phosphate buffer, pH 7.0), and the resulting saps were inoculated onto Phalaenopsis seedlings. Inoculated plants were maintained for symptom development in a growth chamber set at 25°C at National Chung Hsing University (NCHU, Taichung, Taiwan).

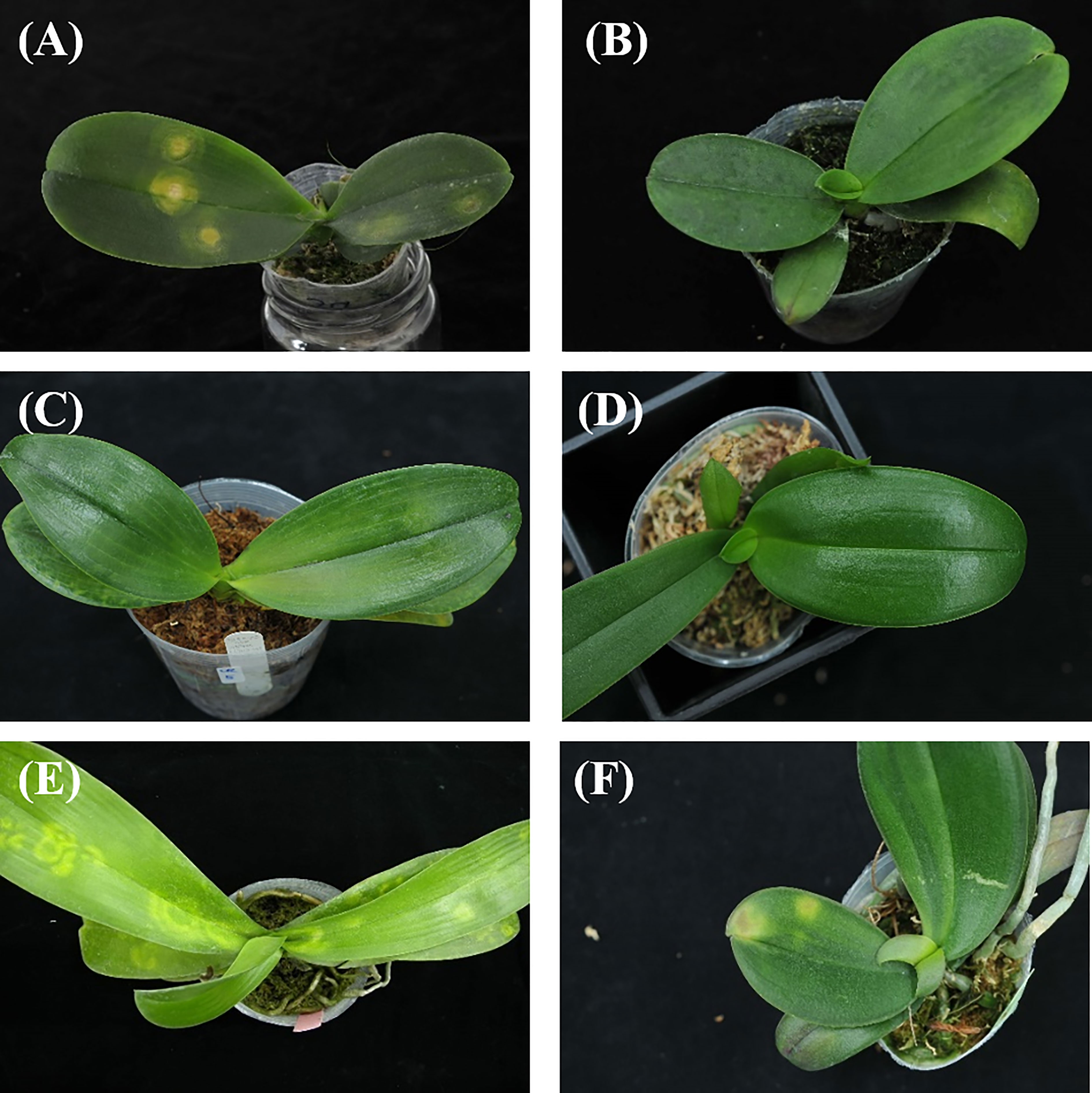

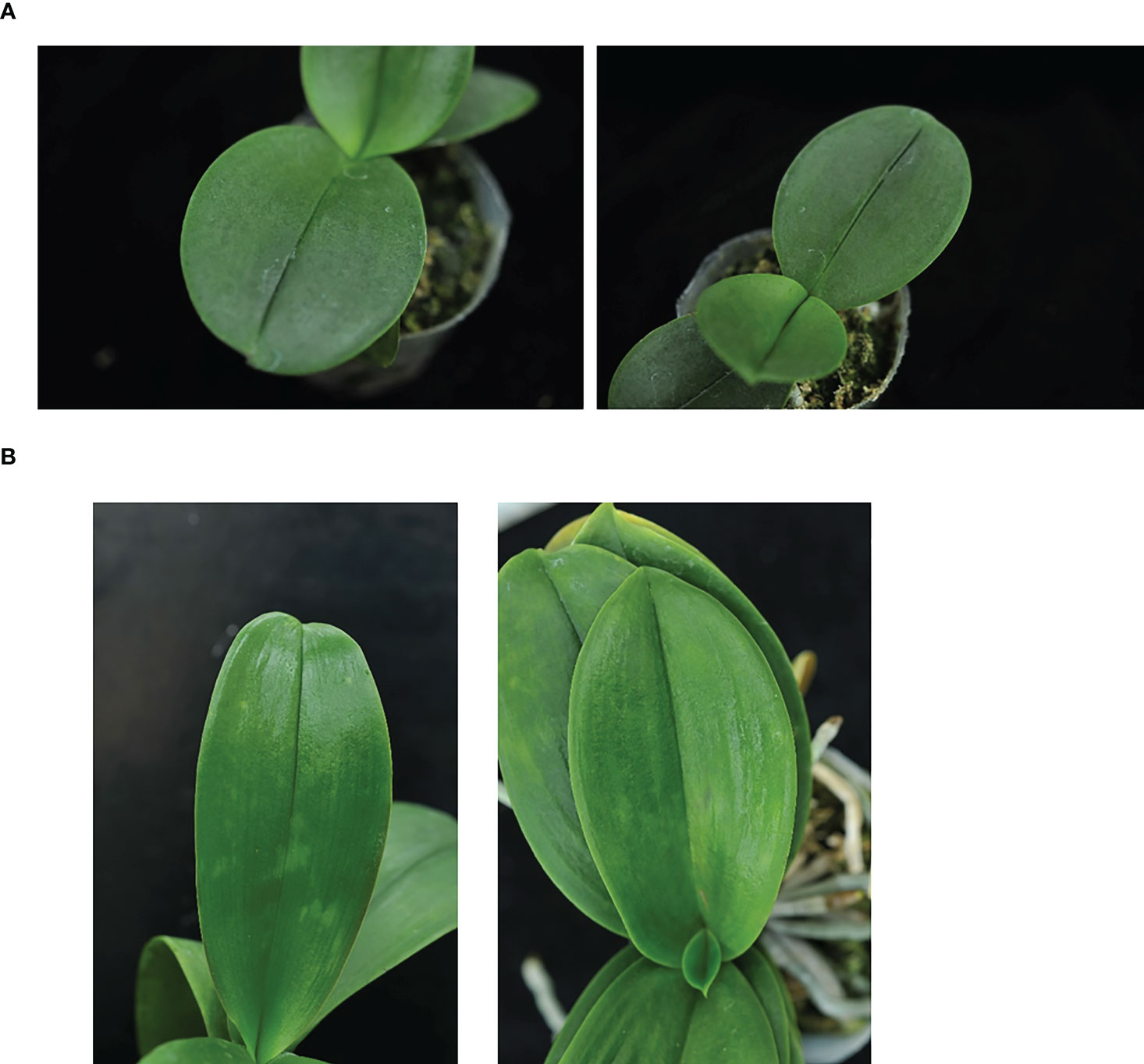

A total of 1888 Phalaenopsis images in five categories (CymMV infection, ORSV infection, CaCV infection, healthy, and others) were collected. The photographic samples included plants from various orchid farms and artificially inoculated Phalaenopsis. The plants were placed on black cloth and photographed from different angles with a digital single-lens reflex (DSLR) camera (Nikon D300). Figure 1 shows examples of these images.

The study viruses were inoculated into Phalaenopsis mechanically. ORSV-infected plants exhibited chlorotic mosaic symptoms on the apical leaves, CymMV-infected plants exhibited mosaic symptoms on the apical leaves, and CaCV-infected plants exhibited chlorotic ringspot symptoms on the inoculated leaves. ORSV infection induced chlorotic mosaic, mosaic, chlorotic arch, and chlorotic spot symptoms on Phalaenopsis leaves; CymMV infection induced chlorotic necrosis, mosaic, necrotic streaks, and necrotic spots that turned dark; and CaCV infection induced chlorotic ringspots of different sizes. In Taiwan, eight viruses have been reported to infect orchids, and the most common orchid viral diseases are caused by ORSV, CymMV, and CaCV. Phalaenopsis orchids showing virus-like symptoms were collected in Taiwan, and all samples, both inoculated and collected, were tested by ELISA for the presence of viruses. Leaves with virus-like symptoms in which no virus was detected, that is, symptomatic leaves other than CymMV-, ORSV-, and CaCV-infected leaves, were assigned to the category “others”.

Figure 1 Image examples of orchids: (A) Capsicum chlorosis virus (CaCV) infection, (B) Cymbidium mosaic virus (CymMV) infection, (C) Odontoglossum ringspot virus (ORSV) infection, (D) healthy, (E) and (F) others.

The system architecture for identifying Phalaenopsis orchid diseases (Figure 2) consists of two major parts: leaf segmentation and disease identification. Leaf segmentation keeps only the leaf regions of a Phalaenopsis orchid image and removes all other parts; it can be regarded as image preprocessing for disease identification. Disease identification, which follows leaf segmentation, identifies the five categories described in section 2.1 using a two-stage AI model with the segmented leaf image and the leaf texture features acquired from the Phalaenopsis orchid image. The proposed AI system performs the procedures shown in the 8 blocks of Figure 2 sequentially. The detailed procedures and methods of the two major parts, leaf segmentation and disease identification, are described in section 2.2.1 and section 2.2.2, respectively, with each subsection title corresponding to a block in Figure 2. For example, the subsection “Contrast enhancement”, which follows the first paragraph of section 2.2.1, corresponds to the second block from the left in Figure 2. The identification system, including the AI models and image processing approaches, was developed in Python 3.6.10 (with TensorFlow 1.15 and Keras) under Windows 10 Pro and run on an Intel i7-9700K 8-core CPU and an Nvidia RTX 2080 Ti GPU.

The first half of the system architecture, illustrated in the upper half of Figure 2, performs leaf segmentation on each Phalaenopsis orchid image in three steps: contrast enhancement (CE) using color space transformations and gamma correction to solve the non-uniform brightness of input images; leaf region prediction using a U-net model to select the leaf regions in the image; and removal of non-leaf fragment areas (RNLFA) using connected component labeling to remove small fragment areas and keep the desired leaf regions. Technical details of these procedures are explained as follows.

Non-uniform brightness of input images is a common issue in image processing. In this study, contrast enhancement with color space transformations was adopted as the image preprocessing method for brightness adjustment. A color image is usually expressed in RGB format, with the red, green, and blue components of each pixel each ranging from 0 to 255. The RGB format can be transformed into the YUV space using Equation (1) (wikipedia.org, 2022). In the YUV space, the Y component represents the luminance (brightness), and the U and V components are the two chrominance (color-difference) components. The YUV space can be transformed back to the RGB space using Equation (2) (wikipedia.org, 2022).
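The RGB-to-YUV transformation of Equations (1) and (2) can be sketched in Python with NumPy. Equations (1) and (2) are only available as an image here, so the coefficients below are an assumption: the analog BT.601 (SDTV) values listed on Wikipedia's YUV page, which may differ slightly from those the authors used.

```python
import numpy as np

# Assumed BT.601 (SDTV) coefficients as listed on Wikipedia's YUV page;
# the paper's Equations (1)-(2) may use slightly different values.
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],        # Y: luminance
    [-0.14713, -0.28886, 0.436],  # U: blue-difference chrominance
    [0.615, -0.51499, -0.10001],  # V: red-difference chrominance
])
YUV_TO_RGB = np.array([
    [1.0, 0.0, 1.13983],
    [1.0, -0.39465, -0.58060],
    [1.0, 2.03211, 0.0],
])


def rgb_to_yuv(rgb):
    """Convert an (H, W, 3) RGB array with values in 0-255 to YUV (Equation (1))."""
    return np.asarray(rgb, dtype=float) @ RGB_TO_YUV.T


def yuv_to_rgb(yuv):
    """Convert an (H, W, 3) YUV array back to RGB (Equation (2))."""
    return np.asarray(yuv, dtype=float) @ YUV_TO_RGB.T
```

Because the published coefficients are rounded, a round trip reproduces the original RGB values only to within a few hundredths of a gray level.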

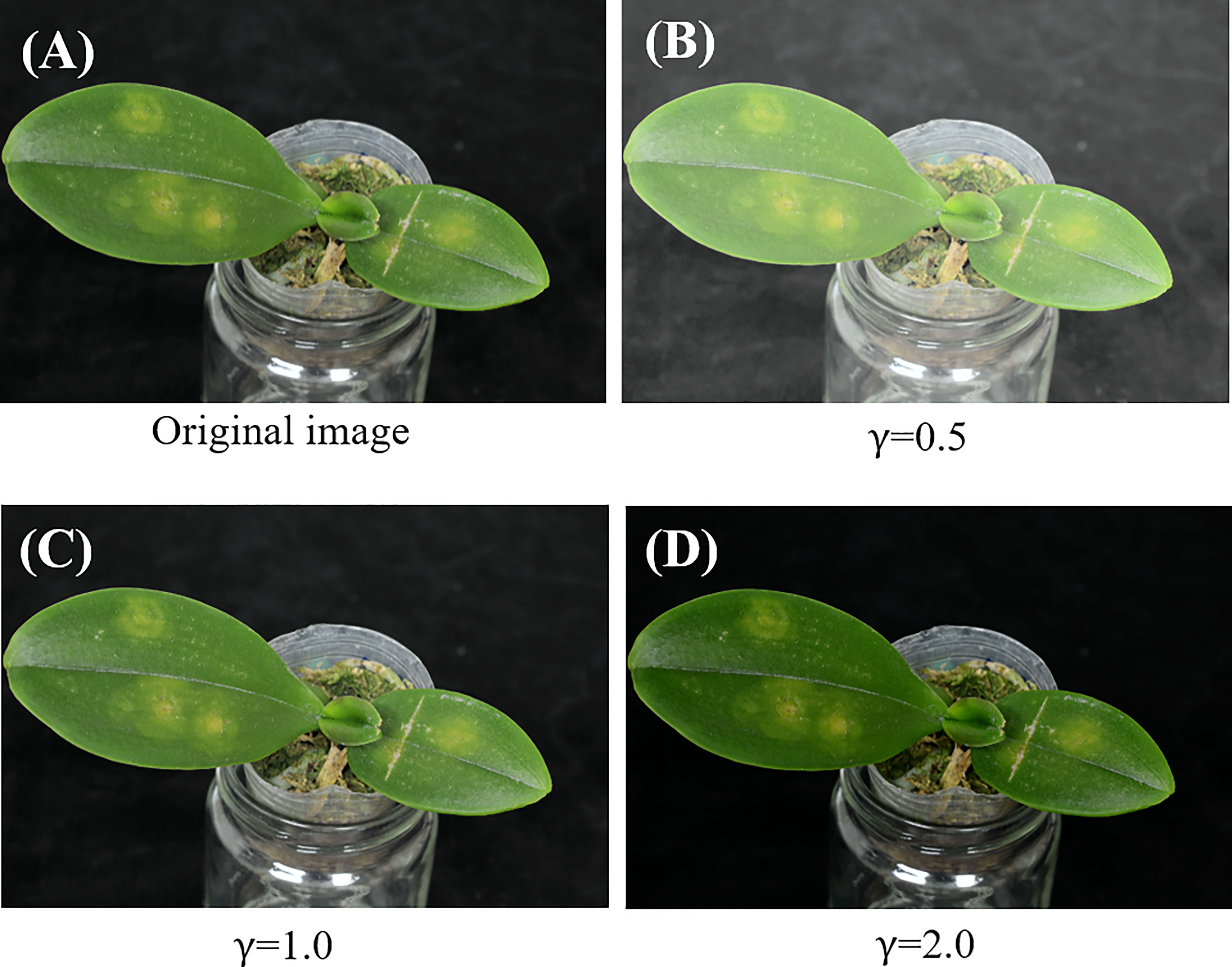

The gamma correction, expressed in Equation (3), adjusts the brightness levels within an image by raising the normalized brightness of the Y component, $I_Y(x,y)$ (the brightness value of the pixel at $(x,y)$), to the power $\gamma$. If $\gamma$ is smaller than 1, details in dark regions are enhanced; if $\gamma$ is larger than 1, details in bright regions are enhanced.
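As a worked illustration, assume Equation (3) takes the common normalized power-law form $I_Y'(x,y) = 255\,\bigl(I_Y(x,y)/255\bigr)^{\gamma}$ (the exact equation is only available as an image). On the normalized 0-1 scale, a dark value of 0.25 and a bright value of 0.81 map as follows:

$$
\begin{aligned}
\gamma = 0.5 &: \quad 0.25^{0.5} = 0.5, \qquad 0.81^{0.5} = 0.9,\\
\gamma = 1.5 &: \quad 0.25^{1.5} = 0.125, \qquad 0.81^{1.5} = 0.729.
\end{aligned}
$$

With $\gamma = 0.5$ the dark interval [0, 0.25] is stretched to [0, 0.5], so dark details gain contrast; with $\gamma = 1.5$ the bright interval [0.81, 1] is stretched to [0.729, 1], so bright details gain contrast.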

The brightness of the input Phalaenopsis orchid images may not be uniform: some images are bright and some are dark, as shown in Figures 3A and 3B, respectively. Therefore, each color Phalaenopsis orchid image was read in and then contrast-enhanced. The contrast enhancement transforms the input image from RGB space, as in Figure 4A, into YUV space using Equation (1) and then applies gamma correction (Equation (3)) to obtain the enhanced Y component $I_Y'$. The U and V (chrominance) components were left unchanged to preserve the color information of each orchid image, including the colors and textures of the symptoms on each leaf. Figures 4B–D show the images after contrast enhancement with different gamma values.
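The full contrast-enhancement step can be sketched as follows. This is an illustration under two assumptions: the BT.601 coefficients from Wikipedia stand in for Equations (1)-(2), and Equation (3) is taken to be $I_Y' = 255\,(I_Y/255)^{\gamma}$. The inverse matrix is computed numerically so that $\gamma = 1$ leaves the image unchanged; the default $\gamma = 1.5$ is the value reported as best for leaf segmentation in the Results.

```python
import numpy as np

# Assumed BT.601 RGB -> YUV matrix (Wikipedia); stands in for Equation (1).
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],
    [-0.14713, -0.28886, 0.436],
    [0.615, -0.51499, -0.10001],
])
# Exact numerical inverse, standing in for Equation (2).
YUV_TO_RGB = np.linalg.inv(RGB_TO_YUV)


def contrast_enhance(rgb, gamma=1.5):
    """Gamma-correct only the Y channel of an 8-bit RGB image; U and V are kept."""
    yuv = np.asarray(rgb, dtype=float) @ RGB_TO_YUV.T
    y = np.clip(yuv[..., 0], 0.0, 255.0)          # luminance in 0-255
    yuv[..., 0] = 255.0 * (y / 255.0) ** gamma    # assumed form of Equation (3)
    out = yuv @ YUV_TO_RGB.T                      # back to RGB
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

Keeping U and V untouched is what preserves symptom colors: only the brightness channel is reshaped before the image goes to the U-net.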

Figure 4 (A) An original orchid image and (B-D) images after contrast enhancement with different gamma values.

To prevent objects unrelated to leaf symptoms, such as the soil and pots, from affecting the subsequent disease identification model, we separated the Phalaenopsis orchid leaf regions from the image using a U-net model before disease identification.

U-net (Ronneberger et al., 2015) is a very popular network architecture for image segmentation; it is named for its U-shaped architecture. U-net consists of two parts, an encoder and a decoder. The encoder extracts image features at multiple scales through successive convolution and downsampling blocks. The decoder upsamples these features, concatenates them with the encoder features of the same level, and generates the predicted segmentation mask. U-net can perform image segmentation with a relatively small number of training images.

The U-net model adopted in this study is illustrated in Figure 5. To prepare the training data, the leaf region and background region of each image were labeled in white and black, respectively (Figure 6), using an image labeling tool. The trained U-net model then outputs the leaf region and background region of an input image. A total of 1504 and 377 images, randomly split from 1881 Phalaenopsis orchid images, were used as the training (80%) and testing (20%) datasets, respectively, for designing the U-net model. The U-net settings were: image size 256 × 256, 3 channels, batch size 8, 200 epochs, the Adam optimizer, and a learning rate of 0.0001.
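A compact U-net can be sketched in tf.keras as below. The depth and filter counts are hypothetical (the exact layers of Figure 5 are not given in the text), and the code targets TensorFlow 2.x rather than the TensorFlow 1.15 the authors used; only the input size, the Adam optimizer, and the learning rate come from this section.

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def conv_block(x, filters):
    """Two 3x3 convolutions with ReLU at one U-net level."""
    x = layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
    return layers.Conv2D(filters, 3, padding="same", activation="relu")(x)


def build_unet(input_shape=(256, 256, 3), base_filters=16, depth=4):
    """Hypothetical U-net: per-pixel leaf (1) vs background (0)."""
    inputs = layers.Input(shape=input_shape)
    skips, x = [], inputs
    for d in range(depth):  # encoder: features at decreasing resolution
        x = conv_block(x, base_filters * 2 ** d)
        skips.append(x)
        x = layers.MaxPooling2D(2)(x)
    x = conv_block(x, base_filters * 2 ** depth)  # bottleneck
    for d in reversed(range(depth)):  # decoder: upsample, then concatenate same-level encoder features
        x = layers.Conv2DTranspose(base_filters * 2 ** d, 2, strides=2, padding="same")(x)
        x = layers.Concatenate()([x, skips[d]])
        x = conv_block(x, base_filters * 2 ** d)
    outputs = layers.Conv2D(1, 1, activation="sigmoid")(x)  # per-pixel leaf probability
    model = models.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),  # learning rate 0.0001
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Training would then follow the stated settings, for example `model.fit(x_train, y_train, batch_size=8, epochs=200)`, where `y_train` holds the white/black leaf masks scaled to 1/0.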

For each candidate value of $\gamma$, the YUV image gamma-corrected using Equation (3) was transformed back to RGB space using Equation (2) and input to the U-net model, and the value of $\gamma$ giving the best segmentation performance was selected.

The performance of segmenting the leaf region from the background region in a Phalaenopsis orchid image using the U-net model was quantified by the metrics formulated in Equations (4)-(7), where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.
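Equations (4)-(7) are only shown as an image. Assuming they are the standard accuracy, precision, recall, and F1-score (the four metrics reported in Table 1), computed pixel-wise with the leaf as the positive class, they can be sketched from a predicted and a ground-truth binary mask as follows.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Pixel-wise accuracy, precision, recall, and F1 for binary leaf masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)      # leaf predicted as leaf
    tn = np.sum(~pred & ~truth)    # background predicted as background
    fp = np.sum(pred & ~truth)     # background predicted as leaf
    fn = np.sum(~pred & truth)     # leaf predicted as background
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": float(accuracy), "precision": float(precision),
            "recall": float(recall), "f1": float(f1)}
```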

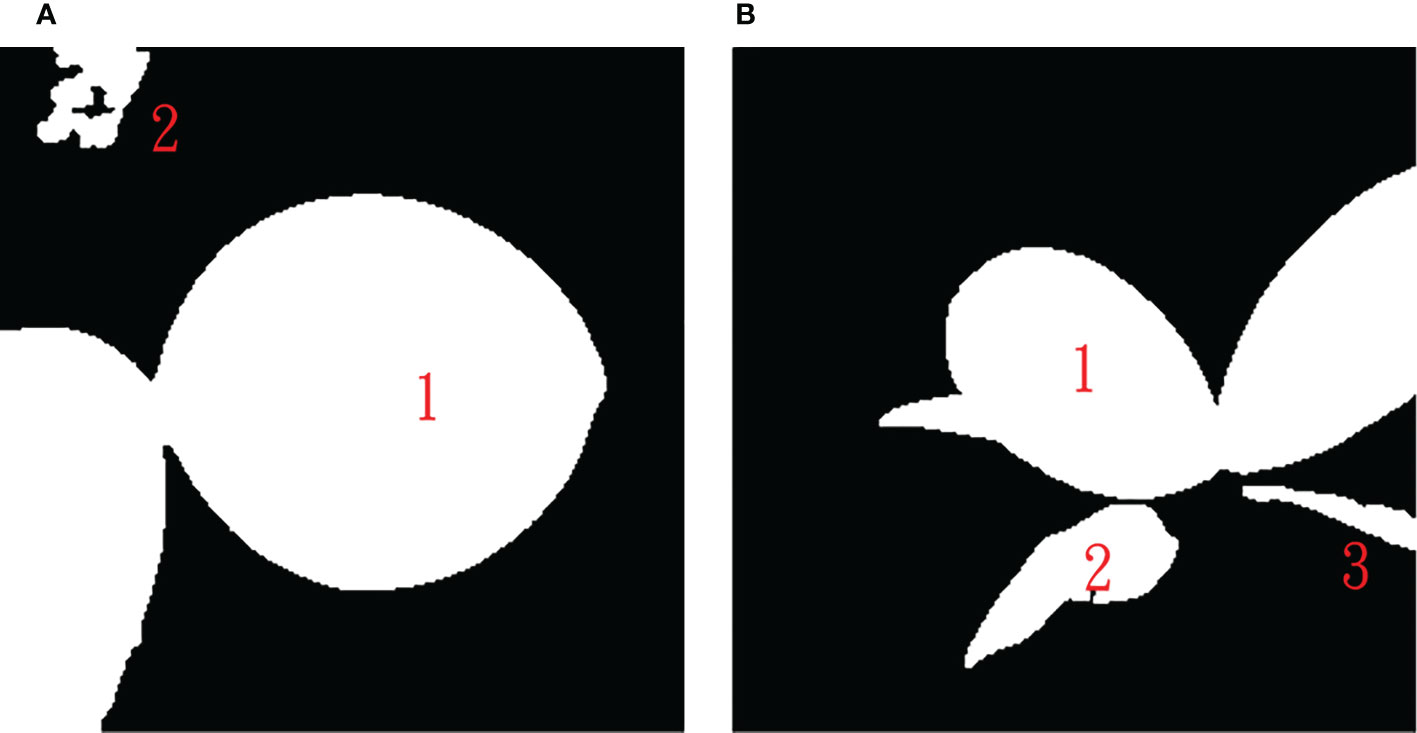

After contrast enhancement and leaf region prediction using the U-net model, the generated mask may include non-leaf regions, such as the white mask region indicated by the red oval in the right panel of Figure 7A. In addition, the leaf regions in an image may not be connected, as shown in Figure 7B. Furthermore, a leaf is sometimes photographed from the side, as in the left panel of Figure 7C, so its top-view leaf texture cannot be observed; the predicted mask of such a side-view leaf may be less helpful for disease identification.

The leaf regions (masks) predicted by U-net, such as those in the right three panels of Figure 7, were further processed using connected component labeling with 8-neighbor connectivity (Chen et al., 2018) to identify each connected region in a Phalaenopsis orchid image. Figure 8 shows two examples of connected regions automatically labeled with consecutive Arabic numerals after connected component labeling.

Figure 8 Results after performing the connected component labeling with (A) two connected regions and (B) three connected regions.

The area of the largest connected region, $max_{area}$, was computed (excluding the background region). Any other connected region whose area was smaller than a threshold area $TH_{area}$ (a ratio of $max_{area}$) was regarded as a non-leaf fragment area and removed from the predicted leaf region. Experimental results in this study suggested that $TH_{area} = max_{area}/20$ gave the highest accuracy for the automatic removal of non-leaf fragment areas and for leaf segmentation.
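The labeling and fragment-removal steps can be sketched with SciPy's `ndimage.label`, using a 3 × 3 structuring element for 8-neighbor connectivity and $TH_{area} = max_{area}/20$ by default. The function name is hypothetical, and this is an illustration rather than the authors' code.

```python
import numpy as np
from scipy import ndimage


def remove_non_leaf_fragments(mask, ratio=1 / 20):
    """Keep connected regions whose area >= ratio * max_area (8-connectivity)."""
    mask = np.asarray(mask, dtype=bool)
    eight_connectivity = np.ones((3, 3), dtype=int)
    labels, n_regions = ndimage.label(mask, structure=eight_connectivity)
    if n_regions == 0:
        return mask.copy()
    areas = np.bincount(labels.ravel())[1:]  # pixel count of regions 1..n (0 = background)
    th_area = ratio * areas.max()            # TH_area as a fraction of max_area
    keep = np.zeros(n_regions + 1, dtype=bool)
    keep[1:] = areas >= th_area              # background (label 0) stays False
    return keep[labels]
```

Indexing `keep` with the label image maps every pixel to its region's keep/remove decision in one vectorized step.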

The second half of the system architecture, illustrated in the lower half of Figure 2, performs disease identification from the segmented leaf images in three sequential steps: image augmentation to obtain more images and balance their numbers across categories, leaf texture feature acquisition to extract and quantify symptom features from each leaf image, and a two-stage identification model for accurate disease identification. Technical details of these procedures are described as follows.

The numbers of Phalaenopsis orchid images in the five categories collected in this study were imbalanced, as shown in Figure 9. Data augmentation was performed to address this imbalance and to avoid overfitting when designing the identification model. For the smaller categories, ORSV and “others”, images were randomly selected and rotated by ±15°, increasing the numbers of images in these categories by 0.5-fold and 2-fold, respectively. As a result, more than 400 images were obtained for each category.
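A minimal sketch of the rotation-based augmentation using `scipy.ndimage.rotate`. It assumes that each selected image is rotated by exactly +15° or -15° (chosen at random), that images are sampled with replacement so a 2-fold increase is possible, and that corners exposed by the rotation are filled with black to match the black-cloth background; the function name is hypothetical.

```python
import numpy as np
from scipy import ndimage


def augment_by_rotation(images, n_new, angle=15.0, seed=0):
    """Append n_new copies of randomly chosen images rotated by +angle or -angle degrees."""
    rng = np.random.default_rng(seed)
    picks = rng.choice(len(images), size=n_new, replace=True)  # allows more than 1-fold growth
    signs = rng.choice([-1.0, 1.0], size=n_new)
    rotated = [
        ndimage.rotate(images[i], s * angle, axes=(1, 0), reshape=False,
                       order=1, mode="constant", cval=0.0)  # black fill, like the black cloth
        for i, s in zip(picks, signs)
    ]
    return list(images) + rotated
```

For a category of N images, `n_new = N // 2` would give the 0.5-fold increase and `n_new = 2 * N` the 2-fold increase described above.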

The texture features of the Phalaenopsis orchid leaf were computed and extracted using two techniques: the method of rotation invariant local binary pattern (LBPri) (Ojala et al., 2002) and the method of gray level co-occurrence matrix (GLCM) (Haralick et al., 1973).

The LBPri technique provides rotation-invariant features based on the local binary pattern (LBP) (Ojala et al., 2002), as computed in Equation (8). For a pixel with $P$ neighbors on a circle of radius $R$, LBP compares each neighbor with the center pixel and encodes the resulting circular pattern as a $P$-bit binary number. LBPri rotates this binary pattern through all $P$ positions and selects the minimum value over all rotations as the LBP value of the pattern center. For $R = 1$ and $P = 8$, there are 36 rotation-invariant patterns, which were used as 36 leaf texture features in the current study.

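$$LBP_{P,R}^{ri} = \min\{\, ROR(LBP_{P,R},\, k) \mid k = 0, 1, \ldots, P-1 \,\} \qquad (8)$$

where $ROR(LBP_{P,R}, k)$ rotates the circular $P$-bit pattern $LBP_{P,R}$ (with $P$ neighbors at radius $R$) clockwise by $k$ bits (Ojala et al., 2002).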
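A NumPy sketch of LBPri with $P = 8$ and $R = 1$ follows. For simplicity it samples the 8 pixels of the 3 × 3 neighborhood directly instead of interpolating points on a circle as in Ojala et al. (2002), and it assumes the 36 features are the normalized histogram of rotation-invariant codes over the image; both are assumptions rather than details stated in the text.

```python
import numpy as np

P = 8  # number of neighbors; R = 1
# Neighbor offsets (row, col) in counter-clockwise order around the center:
# a square-grid approximation of the radius-1 circle (no interpolation).
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def ror(code, k, p=P):
    """Circular right rotation of a p-bit pattern by k bits (direction does not affect the minimum)."""
    return ((code >> k) | (code << (p - k))) & ((1 << p) - 1)


# Lookup table: raw 8-bit LBP code -> minimum over all rotations (Equation (8)).
RI_LUT = np.array([min(ror(c, k) for k in range(P)) for c in range(1 << P)])
RI_PATTERNS = np.unique(RI_LUT)  # the rotation-invariant patterns (36 for P = 8)


def lbp_ri_histogram(gray):
    """Normalized histogram of LBPri codes over the interior pixels of a 2-D image."""
    g = np.asarray(gray, dtype=float)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    code = np.zeros(center.shape, dtype=int)
    for bit, (dy, dx) in enumerate(OFFSETS):
        neighbor = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        code |= (neighbor >= center).astype(int) << bit  # threshold at the center value
    ri_codes = RI_LUT[code]
    index = np.searchsorted(RI_PATTERNS, ri_codes)
    hist = np.bincount(index.ravel(), minlength=len(RI_PATTERNS)).astype(float)
    return hist / hist.sum()
```

Because rotating the image by 90° only shifts the neighbor order by two bits, the histogram of an image and of its 90° rotation are identical.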

The GLCM method can extract and quantify multiple texture features of a gray-level image (Haralick et al., 1973; Ozdemir et al., 2008; Zulpe and Pawar, 2012; Hall-Beyer, 2017; Andono et al., 2021). In the current study, six features (contrast, dissimilarity, homogeneity, angular second moment (ASM), energy, and correlation) were computed from the GLCM using Equations (9)-(14) (Haralick et al., 1973; Ozdemir et al., 2008; Zulpe and Pawar, 2012; Hall-Beyer, 2017; Andono et al., 2021). Each GLCM element $P_{d,\theta}(i,j)$ represents the relative frequency of the gray-level pair $(i, j)$ among all pixel pairs separated by distance $d$ at relative angle $\theta$ in a gray-level image.

where $\mu_x$, $\mu_y$, $\sigma_x$, and $\sigma_y$ are the means and standard deviations of the marginal distributions $p_x$ and $p_y$ of the GLCM, respectively.

Each of the six features was computed for four angles ($\theta$ = 0°, 45°, 90°, and 135°) with distance $d = 1$, as in the GLCM method (Haralick et al., 1973), and the maximum value over the four angles was taken as the value of that feature, as shown in Equation (15).
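The six GLCM features and the maximum over the four angles (Equation (15)) can be sketched in NumPy as below. Equations (9)-(14) are not reproduced in the text, so the formulas are an assumption: standard Haralick-style definitions (homogeneity as $\sum P/(1+(i-j)^2)$, energy as $\sqrt{ASM}$), computed on a non-symmetric GLCM with 256 gray levels and pixel pairs taken only in the given offset direction.

```python
import numpy as np

# (row, col) offsets for distance d = 1 at 0, 45, 90 and 135 degrees
# (rows increase downward, so "up" is a row offset of -1).
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm(gray, dr, dc, levels=256):
    """Normalized, non-symmetric GLCM P_{d,theta}(i, j) for one offset."""
    g = np.asarray(gray, dtype=np.intp)
    h, w = g.shape
    r0, r1 = max(0, -dr), min(h, h - dr)
    c0, c1 = max(0, -dc), min(w, w - dc)
    ref = g[r0:r1, c0:c1]                       # reference pixels
    nbr = g[r0 + dr:r1 + dr, c0 + dc:c1 + dc]   # neighbors at offset (dr, dc)
    counts = np.zeros((levels, levels))
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1)
    return counts / counts.sum()


def haralick_six(p):
    """Contrast, dissimilarity, homogeneity, ASM, energy, correlation of a GLCM."""
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    dissimilarity = np.sum(p * np.abs(i - j))
    homogeneity = np.sum(p / (1.0 + (i - j) ** 2))
    asm = np.sum(p ** 2)
    energy = np.sqrt(asm)
    mu_x, mu_y = np.sum(i * p), np.sum(j * p)
    sd_x = np.sqrt(np.sum(p * (i - mu_x) ** 2))
    sd_y = np.sqrt(np.sum(p * (j - mu_y) ** 2))
    if sd_x > 0 and sd_y > 0:
        correlation = np.sum(p * (i - mu_x) * (j - mu_y)) / (sd_x * sd_y)
    else:
        correlation = 1.0  # assumed value for a constant image
    return np.array([contrast, dissimilarity, homogeneity, asm, energy, correlation])


def glcm_features(gray, levels=256):
    """Each feature's maximum over the four angles (Equation (15))."""
    per_angle = [haralick_six(glcm(gray, dr, dc, levels)) for dr, dc in OFFSETS.values()]
    return np.max(per_angle, axis=0)
```

Concatenating these 6 values with the 36 LBPri values gives the 42 texture features per gray-level image.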

In total, 42 leaf texture features (36 from LBPri and 6 from GLCM) were extracted from each Phalaenopsis orchid image, and each feature was normalized to the range [0, 1].

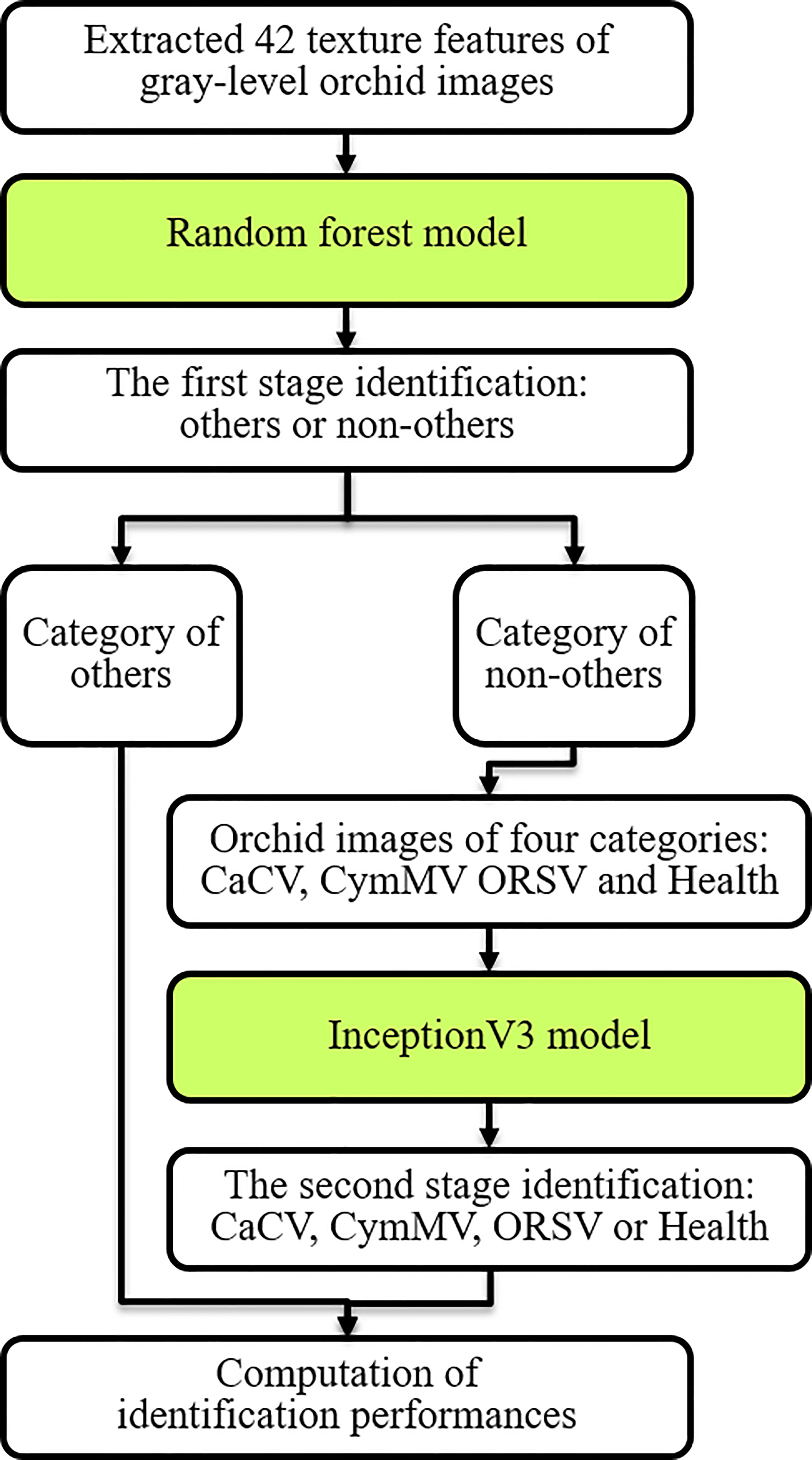

We propose the two-stage model shown in Figure 10 for Phalaenopsis orchid disease identification. First, a random forest (RF) model (Breiman, 2001) was designed using the 42 leaf texture features obtained with LBPri and GLCM to preliminarily classify the 5 categories (described in section 2.1) into 2 categories: “others” and “non-others”. Second, an InceptionV3 model (Bal et al., 2021), a deep learning model illustrated in Figure 11, was built using the Phalaenopsis orchid images to identify whether an input image shows a CaCV-infected, CymMV-infected, ORSV-infected, or healthy leaf.
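The control flow of the two-stage model can be sketched as below. The two classifiers are passed in as plain callables, hypothetical stand-ins for the trained RF model and InceptionV3 model, and the order of the four second-stage classes is an assumption.

```python
import numpy as np

# Assumed label order of the second-stage (InceptionV3) softmax output.
STAGE2_CLASSES = ("CaCV", "CymMV", "ORSV", "healthy")


def two_stage_predict(texture_features, images, stage1_is_others, stage2_probs):
    """Stage 1 screens out "others"; stage 2 labels the remaining images.

    stage1_is_others: callable(features) -> bool, e.g. a wrapped RF model.
    stage2_probs: callable(image) -> 4 class probabilities, e.g. a wrapped CNN.
    """
    labels = []
    for feats, image in zip(texture_features, images):
        if stage1_is_others(feats):
            labels.append("others")
        else:
            probs = np.asarray(stage2_probs(image))
            labels.append(STAGE2_CLASSES[int(np.argmax(probs))])  # highest confidence wins
    return labels
```

Routing only “non-others” images to the CNN means the second stage solves a 4-class problem, while the texture-based RF handles the category whose feature distribution differs most.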

Figure 10 Procedures of the two-stage identification model for identifying orchid diseases using extracted leaf features and orchid images.

The random forest model (Breiman, 2001; Athey et al., 2019; Musolf et al., 2021; Singh et al., 2021; Yang et al., 2021; Al-Mamun et al., 2022) is a popular machine learning model for classification. Before the first stage of the identification model, the R, G, and B components of each Phalaenopsis orchid image were each treated as a separate gray-level image, and the LBPri and GLCM methods described above were used to obtain the texture features of each gray-level image. There were 1841 (80%) and 462 (20%) images for training and testing, respectively. Three datasets of texture features, corresponding to the gray-level images of the R, G, and B components of all these images, were extracted. Each of the three datasets was used to design and verify the identification performance of a separate random forest model with 300 decision trees.
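Training one RF model per color channel could look like the following scikit-learn sketch with 300 trees and an 80/20 split. The stratified split, the fixed random seed, and fitting the 0-1 min-max normalization on the training data only are assumptions; tree splits are insensitive to monotone feature scaling, so the normalization does not change the RF's decisions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler


def fit_channel_rf(features, labels, seed=0):
    """Train and test one 300-tree RF on the 42 texture features of one channel."""
    X_tr, X_te, y_tr, y_te = train_test_split(
        features, labels, test_size=0.2, stratify=labels, random_state=seed)
    scaler = MinMaxScaler().fit(X_tr)  # 0-1 normalization, fit on training data only
    rf = RandomForestClassifier(n_estimators=300, random_state=seed)
    rf.fit(scaler.transform(X_tr), y_tr)
    accuracy = rf.score(scaler.transform(X_te), y_te)  # mean accuracy on the 20% test split
    return rf, scaler, accuracy
```

The function would be called three times, once each with the R-, G-, and B-channel feature matrices, and the resulting accuracies compared as in Table 2.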

Feature importance from RF models has been increasingly reported in AI applications (Musolf et al., 2021; Algehyne et al., 2022; Loecher, 2022). The Gini importance (mean decrease in impurity) provided by the Python scikit-learn package was used to compute the feature importance of the RF model (Menze et al., 2009; Wei et al., 2018; Płoński, 2020). Furthermore, principal component analysis (PCA) (Bro and Smilde, 2014; Zhao et al., 2019) was adopted to reduce the dimension of the features and to analyze and visualize the distribution of the most important features among all categories, which guided the category selection in the first-stage design.
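The Gini-importance ranking and the PCA projection can be sketched with scikit-learn's `feature_importances_` attribute and `PCA`. Taking the top 4 features and projecting them onto 2 components follows the Results section; the function name and seed are hypothetical.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestClassifier


def top_features_pca(X, y, n_top=4, n_components=2, seed=0):
    """Rank features by Gini importance, then project the top ones with PCA."""
    rf = RandomForestClassifier(n_estimators=300, random_state=seed).fit(X, y)
    ranking = np.argsort(rf.feature_importances_)[::-1]  # mean decrease in impurity, descending
    top = ranking[:n_top]
    projected = PCA(n_components=n_components).fit_transform(X[:, top])
    return top, projected  # the two columns play the role of feature_A and feature_B
```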

InceptionV3, the third generation of the GoogLeNet Inception architecture, can effectively extract detailed image information and features, reduce the number of training parameters, and mitigate overfitting through its modified network structures (Gunarathna and Rathmayaa, 2020; Jayswal and Chaudhari, 2020; Bal et al., 2021; Xiao et al., 2022). In the second stage of the disease identification model, an InceptionV3 model was designed with the structure shown in Figure 11. Its feature extraction part includes convolution layers, pooling layers, and Inception module layers A-E, as illustrated in Figure 11, and is followed by classification layers: two fully connected layers (FC1 with L2 normalization, and FC2), a dropout layer (to reduce overfitting during training), and a third fully connected layer (FC3) with the softmax activation function (Bal et al., 2021). A flatten layer (Bal et al., 2021) connects the feature extraction part to the classification layers.

An InceptionV3 model pretrained on the ImageNet dataset (which includes 1000 object categories) was used, through transfer learning, to initialize the weights of the InceptionV3 model illustrated in Figure 11 (Russakovsky et al., 2015; Chen et al., 2019; Gunarathna and Rathmayaa, 2020; Morid et al., 2021). In this study, 1841 (80%) and 462 (20%) images were used for further training and testing the InceptionV3 model. The output category for each image was the category with the highest confidence among the four categories. The optimized InceptionV3 model used an input image size of 224 × 224, 3 channels, batch size 16, 500 epochs, the Adam optimizer, and a learning rate of 0.000005.
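A tf.keras sketch of the second-stage classifier: an ImageNet-pretrained InceptionV3 backbone without its original head, a flatten layer, FC1 and FC2, dropout, and a softmax FC3 over the four “non-others” classes, trained with Adam at a learning rate of 0.000005. The layer widths, dropout rate, and ReLU activations are hypothetical, “L2 normalization” on FC1 is interpreted here as an L2 kernel regularizer, and the code targets TensorFlow 2.x.

```python
import tensorflow as tf
from tensorflow.keras import layers, models, regularizers


def build_stage2_model(num_classes=4, input_shape=(224, 224, 3), weights="imagenet",
                       fc_units=(256, 128), dropout_rate=0.5, l2_weight=1e-4):
    """Hypothetical second-stage classifier: CaCV, CymMV, ORSV, healthy."""
    # Feature extraction part: InceptionV3 without its ImageNet classification head.
    base = tf.keras.applications.InceptionV3(
        include_top=False, weights=weights, input_shape=input_shape)
    x = layers.Flatten()(base.output)  # connects features to the classification layers
    x = layers.Dense(fc_units[0], activation="relu",
                     kernel_regularizer=regularizers.l2(l2_weight))(x)  # FC1 (L2 assumed as regularizer)
    x = layers.Dense(fc_units[1], activation="relu")(x)  # FC2
    x = layers.Dropout(dropout_rate)(x)  # reduces overfitting during training
    outputs = layers.Dense(num_classes, activation="softmax")(x)  # FC3
    model = models.Model(base.input, outputs)
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=5e-6),
                  loss="categorical_crossentropy", metrics=["accuracy"])
    return model
```

Inputs would be scaled with `tf.keras.applications.inception_v3.preprocess_input`, and training would use a batch size of 16 and 500 epochs as stated above. Passing `weights=None` builds the same architecture without downloading the ImageNet weights.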

The performance of leaf segmentation with contrast enhancement (described in section 2.2.1) is shown in Figure 12 for different values of $\gamma$. Accuracy peaked at 0.9707 when $\gamma$ = 1.5, and the corresponding precision, recall, and F1-score were also excellent, as listed in the CE + U-net row of Table 1. Only three images produced predicted masks with larger errors (accuracy < 0.9), as shown in the right panels of Figure 13.

The performance of leaf segmentation using CE, the U-net model, and removal of non-leaf fragment areas (RNLFA), listed in the CE + U-net + RNLFA row of Table 1, was also excellent. We therefore adopted this integrated leaf segmentation method to exclude non-leaf areas before disease identification.

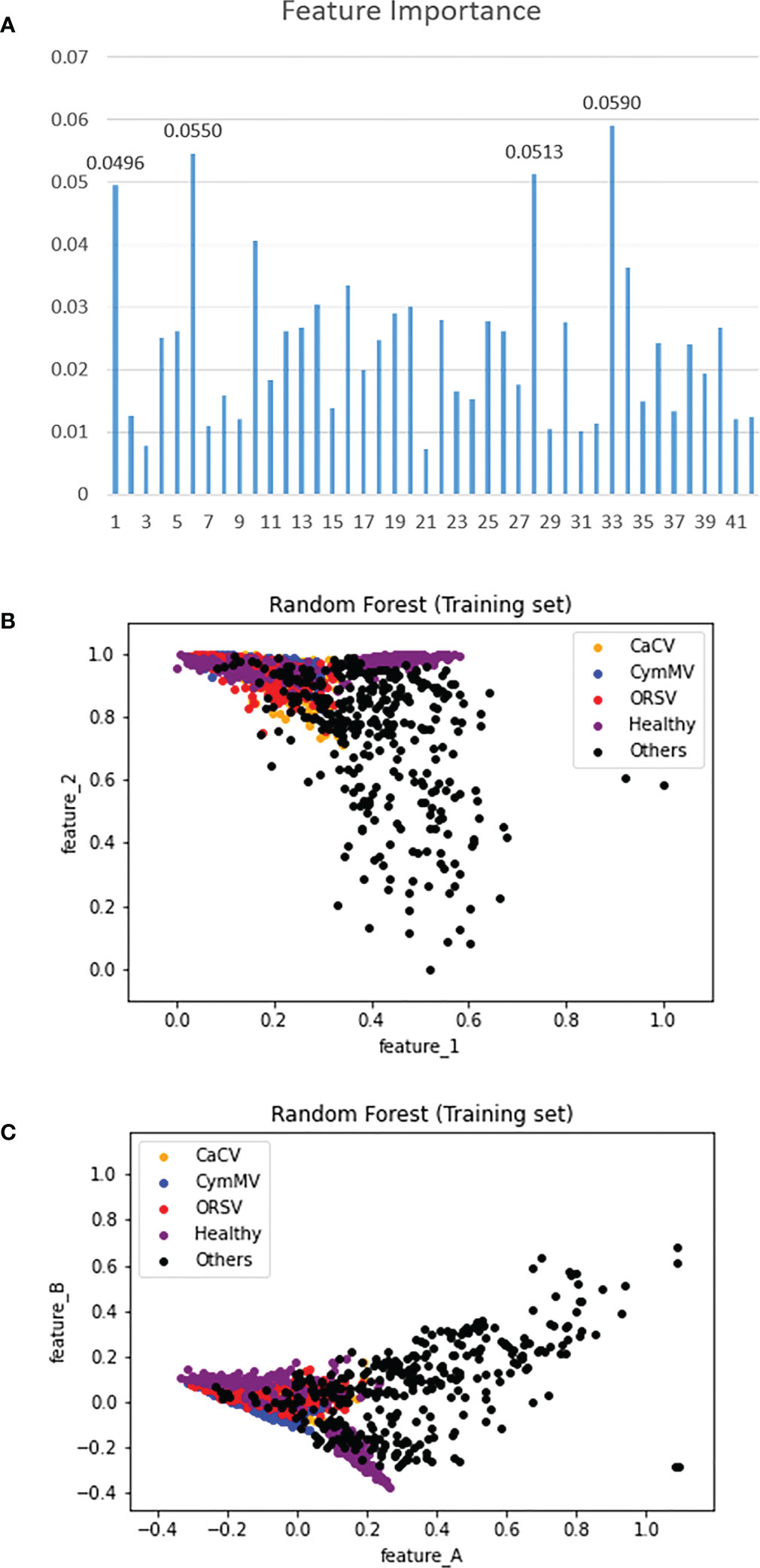

The relative importance of the 42 features is illustrated in Figure 14A. The numbers on the horizontal axis represent the 42 features extracted from the leaf texture using GLCM and LBPri: contrast, dissimilarity, homogeneity, ASM, energy, and correlation, followed by the 36 LBPri features. The top four features were the GLCM contrast and correlation and the 22nd and 27th LBPri features. Figure 14B presents the distribution of the five categories of orchid leaves with respect to the top two features. Furthermore, Figure 14C shows the category distribution with respect to two features (feature_A and feature_B) obtained by reducing the top 4 features in Figure 14A with PCA (described in section 2.2.2). Figures 14B and 14C show that the distribution of the category “others” differs most from those of the other four categories. This is why the first stage of the proposed two-stage model (Figure 10) classifies the categories into “others” and “non-others”.

Figure 14 (A) The importance of 42 extracted features, (B) the category distribution in the plane of the top-2 features, and (C) the category distribution related to 2 features obtained using PCA with the top 4 features.

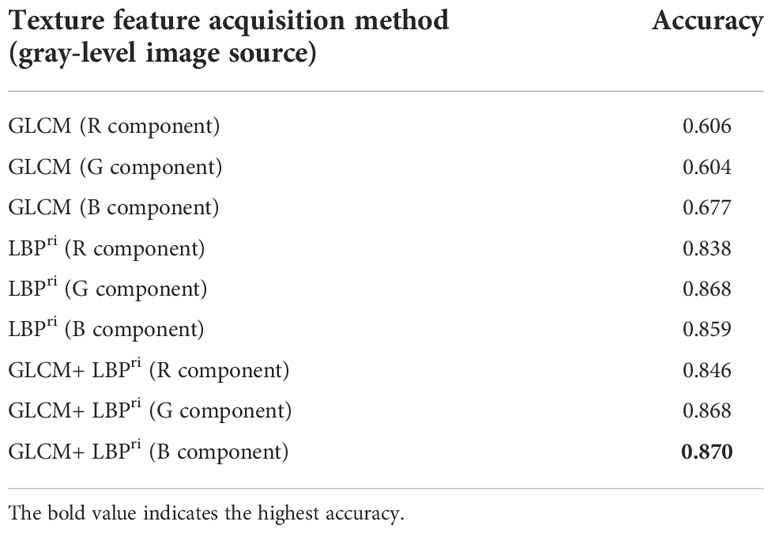

Table 2 shows the accuracies of single-stage random forest models for identifying the five categories (listed in section 2.1), designed with different leaf texture feature acquisition methods (GLCM and/or LBPri) and different gray-level images (obtained from the R, G, or B component of each segmented leaf image). The models designed with GLCM + LBPri clearly performed best (the last three rows of Table 2), which is why GLCM + LBPri was used to extract leaf texture features in this study. The random forest model designed with GLCM + LBPri on the gray-level image of the B component (the last row of Table 2) achieved the highest accuracy (0.87).

Table 2 Identification performances of single-stage RF models designed with different combinations of texture feature acquisition methods and gray-level images (obtained from the R, G, or B component).

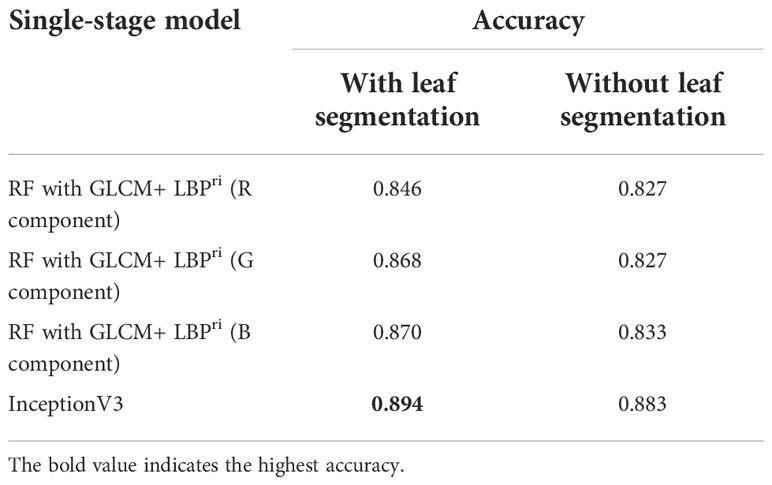

Identification accuracies of four single-stage models with or without the leaf segmentation are listed in Table 3. Furthermore, Table 4 shows the values of recall and precision by category using single-stage models with leaf segmentation in identifying leaves with the CaCV infection, CymMV infection, ORSV infection, health status, and other diseases.

Table 3 Identification accuracies of single-stage models (for identifying five categories of leaf diseases) with or without leaf segmentation.

Table 5 shows the identification performance for leaf diseases using the proposed two-stage architecture shown in Figures 2 and 10 and described in section 2.2. Each bold value in Table 5 outperformed the corresponding results of the single-stage models in Tables 3 and 4, except for the category “others”, which performed equally to the RF model with B-component features in Table 4. This equal performance arises because the first stage of the two-stage model uses this same RF model with B-component features for the preliminary identification of “others” versus “non-others”.

Table 5 Identification performances (the recall, precision and mean accuracy) of the proposed two-stage model.

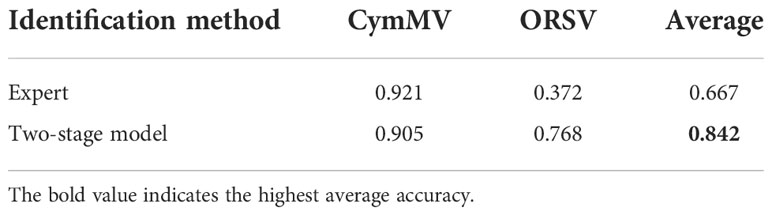

Based on the experimental results (Table 5), the identification performances for the CymMV and ORSV categories were the lowest among the five categories. This is mainly because the symptoms of these two categories differed only subtly and in some cases were even similar, although the labels of both categories were confirmed by ELISA. Examples of misclassified CymMV and ORSV cases are shown in Figure 15. The identification accuracy for these two categories was compared between the proposed two-stage model and an expert. The results in Table 6 show that the proposed two-stage model outperformed expert identification for these easily misidentified categories (CymMV and ORSV).

Figure 15 Misidentified examples between two categories, cymbidium mosaic virus (CymMV) and odontoglossum ringspot virus (ORSV). (A) ORSV cases misidentified as CymMV. (B) CymMV cases misidentified as ORSV.

Table 6 The accuracy comparison between the proposed two-stage model and expert identifications for the CymMV and ORSV categories.

Contrast enhancement based on Equations (1)-(3), applied to the Y component of the YUV color space via the corresponding color space transformations, was adopted for brightness adjustment to address the non-uniform brightness issue in this study. The effect of the brightness adjustment with different values of gamma γ is shown in Figures 4B, D. Moreover, the accuracy, precision, recall, and F1 score of leaf segmentation (illustrated in Figure 12) all remained excellent (>0.962) for gamma values from 0.1 to 2. These results indicate, directly or indirectly, that the adopted contrast enhancement method effectively addressed the non-uniform brightness issue. Besides YUV, other color spaces that include a brightness, intensity, or lightness component (such as YCbCr, HSI, Lab, and HSL) could also be used, with the corresponding color space transformations, to implement the contrast enhancement (Banerjee et al., 2016; Sabzi et al., 2017; Shi et al., 2019; Tan and Isa, 2019; Xiong and Shang, 2020).
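Brightness adjustment on the Y component can be sketched as below. This is a minimal sketch under stated assumptions: it uses BT.601-style analog YUV coefficients and a plain power law Y' = Y^γ on normalized luma, and leaves U and V unchanged. Equations (1)-(3) are not reproduced in this section, so the authors' exact transform and normalization may differ; the function name is hypothetical.

```python
import numpy as np


def gamma_correct_luma(rgb, gamma):
    """Apply power-law gamma correction to the Y (luma) component only, keeping U and V."""
    x = np.asarray(rgb, dtype=float) / 255.0
    r, g, b = x[..., 0], x[..., 1], x[..., 2]
    # Forward RGB -> YUV transform (BT.601 analog YUV coefficients, assumed).
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    # Gamma < 1 brightens dark regions, gamma > 1 darkens them; Y stays in [0, 1].
    y = np.power(y, gamma)
    # Inverse YUV -> RGB transform with the same coefficients.
    r2 = y + v / 0.877
    b2 = y + u / 0.492
    g2 = (y - 0.299 * r2 - 0.114 * b2) / 0.587
    out = np.stack([r2, g2, b2], axis=-1)
    # Shifting luma can push saturated channels out of range, so clip before converting back.
    return (np.clip(out, 0.0, 1.0) * 255.0).round().astype(np.uint8)
```

With γ = 1 the image is returned unchanged, and sweeping γ over the 0.1-2 range mentioned above shows how strongly dark and bright regions are rebalanced before segmentation.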

Only three predicted masks in this study had a segmentation accuracy below 0.9; they are shown in the three right panels of Figures 13A–C. Their larger errors arose because (a) one of the leaves was confused with green objects inside the pot, (b) the pot color was close to the leaf color, and (c) the leaf was severely injured (only two images in the entire dataset contained such severely injured leaves), respectively.
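Segmentation metrics like those reported above can be computed from a predicted and a ground-truth mask. A minimal sketch, assuming pixel-wise counting with leaf pixels as the positive class (the section does not spell out the exact definition used); the function name is hypothetical.

```python
import numpy as np


def mask_metrics(pred, truth):
    """Pixel-wise accuracy, precision, recall and F1 of a binary leaf mask (leaf = positive)."""
    p = np.asarray(pred, dtype=bool)
    t = np.asarray(truth, dtype=bool)
    if p.shape != t.shape:
        raise ValueError("masks must have the same shape")
    tp = int(np.sum(p & t))    # leaf pixels correctly predicted as leaf
    tn = int(np.sum(~p & ~t))  # background pixels correctly predicted as background
    fp = int(np.sum(p & ~t))   # background pixels wrongly marked as leaf
    fn = int(np.sum(~p & t))   # leaf pixels that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / p.size, "precision": precision, "recall": recall, "f1": f1}
```

Masks such as those in Figures 13A–C would be the ones for which `mask_metrics(pred, truth)["accuracy"] < 0.9`.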

In the single-stage identification experiments (Table 3), every model with leaf segmentation (accuracy = 0.846-0.894) outperformed its counterpart without leaf segmentation (accuracy = 0.827-0.883), and the InceptionV3 model performed best (accuracy = 0.894). These results confirm the importance of leaf segmentation for accurate identification.

Table 4 shows that all three RF models (using gray-level images of the R, G, or B component) outperformed the InceptionV3 model in recall and precision for the category “others”. The RF model with 42 texture features extracted from the gray-level image of the B component performed best for this category. This is why the RF model with B-component texture features was adopted as the first stage of the proposed two-stage model (Figure 10), to accurately separate the two categories “others” and “non-others”.

For the “non-others” categories listed in Table 5, the proposed two-stage model outperformed all single-stage models listed in Tables 3 and 4, with one exception: the recall of the health category in Table 5 (0.957) was slightly lower than that of the single-stage InceptionV3 model in Table 4 (0.967). For the category “others”, the two-stage model performed as well as the RF model with B-component features, because, as mentioned above, that RF model serves as the first stage of the proposed two-stage model (Figure 10).

As shown in Table 5, the overall accuracy of the proposed two-stage model (RF + InceptionV3) for identifying the five categories of Phalaenopsis orchid leaf status was 0.918, higher than that of the best single-stage model, InceptionV3 (0.894), listed in Table 3.

Among the five categories identified by the two-stage model (Table 5), CaCV-infected leaf symptoms had both the highest recall (0.972) and the highest precision (0.981), whereas ORSV-infected leaf symptoms had both the lowest recall (0.768) and the lowest precision (0.797). The confusion matrix of the two-stage model's identification results shows that most misclassifications occurred between the CymMV and ORSV categories. Symptoms caused by viral infection in Phalaenopsis vary and often depend on plant genotype, environment, cultivation practices, and virus species and isolates. CymMV and ORSV infections are often misidentified by the naked eye because their symptoms are relatively inconspicuous and similar in the early stage of infection or in some Phalaenopsis cultivars (Wong et al., 1996; Seoh et al., 1998; Koh et al., 2014). Figure 15 shows four examples of cases misclassified by the two-stage model between the CymMV-infected and ORSV-infected categories; as these examples illustrate, CymMV- and ORSV-infected leaves often exhibit mild symptoms in the early stage of infection or in some Phalaenopsis cultivars.
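Per-category recall and precision, the overall accuracy, and the observation that most errors fall between two particular categories can all be read off a confusion matrix. A minimal sketch follows; the paper's confusion matrix is not reproduced in this section, so any counts passed in are the reader's own, and the function name and return layout are our choices. Rows are assumed to be true classes and columns predicted classes, with at least two classes.

```python
import numpy as np


def summarize_confusion(cm, labels):
    """Per-class recall/precision, overall accuracy, and the most-confused class pair.

    cm[i][j] counts images whose true class is labels[i] and predicted class is labels[j].
    """
    cm = np.asarray(cm, dtype=float)
    diag = np.diag(cm)
    true_totals = cm.sum(axis=1)  # row sums: images per true class
    pred_totals = cm.sum(axis=0)  # column sums: images per predicted class
    recall = np.divide(diag, true_totals, out=np.zeros_like(diag), where=true_totals > 0)
    precision = np.divide(diag, pred_totals, out=np.zeros_like(diag), where=pred_totals > 0)
    accuracy = diag.sum() / cm.sum()
    # Count misclassifications in both directions for every unordered pair of classes.
    n = len(labels)
    pairs = {(labels[i], labels[j]): cm[i, j] + cm[j, i] for i in range(n) for j in range(i + 1, n)}
    most_confused = max(pairs, key=pairs.get)
    per_class = {
        lab: {"recall": float(r), "precision": float(p)}
        for lab, r, p in zip(labels, recall, precision)
    }
    return per_class, float(accuracy), most_confused
```

Applied to the two-stage model's confusion matrix, `most_confused` would be expected to return the CymMV/ORSV pair described above.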

Table 6 shows that the proposed two-stage model (accuracy = 0.842) outperformed expert identification (accuracy = 0.667) for the easily misidentified CymMV and ORSV categories. The identification performances shown in Tables 5 and 6 demonstrate that the proposed identification system (architecture shown in Figure 2) can support automatic and accurate disease identification from Phalaenopsis orchid leaf images, which in turn would benefit the orchid cultivation industry.

Future studies may focus specifically on more precise identification of CymMV- and ORSV-infected leaves by adding a third stage to the model. Identifying more infection classes individually and precisely may also be worth exploring.

In this study, plants were placed on a black cloth for photographing to verify the feasibility of the developed recognition system. The following options may be considered for building a working system for real practice in the greenhouse. First, an automatic conveyor system (usually with a black conveyor belt) may be built to photograph orchids for disease detection alongside other cultivation activities, by placing the orchid seedlings or plants on the belt one after another. This would be easy to realize but is neither smart nor fully automatic. Second, each orchid seedling or plant may be cultivated in a pot surrounded by a black supporting mechanism, and the developed disease detection method may be integrated into an app on a smart device with an autofocus function for photographing orchids. All (or sampled) orchids in a greenhouse could then be photographed sequentially by a person or a robot (or a movable robotic arm system). Third, a robot (or a movable robotic arm system) with an automatic orchid detection function could sequentially screen all (or sampled) orchid seedlings or plants in a greenhouse. The robot could automatically and sequentially place a black cloth or a similar object around each target orchid pot and then move the camera to a suitable position to photograph the seedling or plant for disease detection. Fourth, similarly, a robot (or a movable robotic arm system) with an automatic orchid detection function could sequentially screen all orchid seedlings or plants in a greenhouse and automatically move the camera to a suitable position to photograph each one. In this case, the non-orchid area (the area containing neither orchid leaves nor the pot in an orchid image) could be identified and removed using an AI model with image processing techniques before disease identification.

Any of these four options may be considered for integrating the disease identification method proposed in this study, together with a suitable app on a smart device, into routine cultivation activities.
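One image-processing building block for removing unwanted regions from a mask is connected component labeling, which this study also uses to remove non-leaf fragment areas after segmentation. A minimal sketch, assuming 4-connectivity and a hypothetical `min_fraction` rule (keep components whose area is at least 10% of the largest); the study's actual removal criterion is not given in this section, and the function names are ours.

```python
from collections import deque

import numpy as np


def label_components(mask):
    """4-connected component labeling by breadth-first search; returns (labels, count)."""
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=np.int32)
    h, w = mask.shape
    count = 0
    for r in range(h):
        for c in range(w):
            if not mask[r, c] or labels[r, c]:
                continue
            count += 1  # start a new component at this unlabeled foreground pixel
            labels[r, c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = count
                        queue.append((ny, nx))
    return labels, count


def remove_small_fragments(mask, min_fraction=0.1):
    """Keep only components whose area is at least min_fraction of the largest component."""
    labels, count = label_components(mask)
    if count == 0:
        return np.zeros(labels.shape, dtype=bool)
    areas = np.bincount(labels.ravel(), minlength=count + 1)[1:]  # skip background label 0
    keep = np.flatnonzero(areas >= min_fraction * areas.max()) + 1
    return np.isin(labels, keep)
```

For full-resolution images, `scipy.ndimage.label` performs the same labeling step (with 4-connectivity by default in 2-D) much faster than this pure-Python loop.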

As mentioned in section 1.1, many previous studies have applied AI models or image processing techniques to plant disease identification. For example, a review (Vishnoi et al., 2021) reported that, among studies using image processing or AI methods during 2009-2020, the three most studied plants were rice (11%), tomato (11%), and corn (7%), whereas orchid disease identification accounted for only 1% (Vishnoi et al., 2021). Other review articles (Dhaka et al., 2021; Ghorai et al., 2021) and our literature survey likewise indicate that very few orchid disease identification studies have used AI models with leaf images.

Huang (2007) used an AI model (a back-propagation neural network, BPNN, with lesion area segmentation and texture features) to detect and classify Phalaenopsis seedling diseases into four categories: bacterial soft rot (BSR), bacterial brown spot (BBS), Phytophthora black rot (PBR), and “OK”, reaching an average accuracy of 0.896 (Huang, 2007). These diseases (BSR, BBS, and PBR) differ from those identified in this study (CaCV infection, CymMV infection, ORSV infection, and others). Furthermore, the image symptoms and texture features of CymMV- and ORSV-infected leaves (Figures 1B, C) are often less obvious than those of BSR, BBS, and PBR (Huang, 2007), and are often not easily identified by the naked eye even when the infection has been confirmed by ELISA. The mean identification accuracy of the current study (0.918) exceeds that of the previous study (0.896; Huang, 2007). Moreover, the previous study neither reported recall and precision nor included an “others” disease category (Huang, 2007).

The main differences between the proposed algorithm and other state-of-the-art algorithms, and their importance, are as follows. As described above and in section 1.1, many AI models with image processing methods have been proposed for detecting diseases in different plants (Dhaka et al., 2021; Ghorai et al., 2021; Vishnoi et al., 2021), with fair, good, or excellent accuracies (0.590-0.9975) reported across 32 articles (Dhaka et al., 2021). However, around 99% of these studies did not address orchid diseases (Vishnoi et al., 2021), and their image acquisition and detection conditions differed considerably. For orchid diseases specifically, the mean accuracy of the current study (0.918) exceeds that of the previous study (0.896; Huang, 2007).

In the aforementioned study, the AI model used for orchid disease detection was a traditional BPNN, applied to two bacterial and one Phytophthora infection symptoms that were obviously identifiable even by the naked eye (Huang, 2007). Moreover, many of its images were taken after fixing the leaves onto a plane with pushpins, which could limit its usage in real practice (Huang, 2007). In contrast, our study designed a more advanced AI model to detect common viral diseases of orchid seedlings whose symptoms are less noticeable and often cannot easily be identified by the naked eye.

In addition, the proposed system reached an accuracy of 0.842, outperforming the human expert (accuracy = 0.667), in distinguishing the easily misidentified CymMV and ORSV categories, which often show no noticeable symptoms in the early stage of infection. Therefore, the proposed method should help detect common viral diseases of orchid seedlings early, so that preventive measures can be taken in time to avoid the spread of infection and consequent losses.

Phalaenopsis orchid cultivation is often hindered by viral diseases. The proposed system architecture for identifying Phalaenopsis orchid viral diseases was successfully implemented and achieved excellent identification performance. The system comprises a U-net model for leaf segmentation with contrast enhancement, followed by removal of non-leaf fragment areas, and a two-stage model (a random forest model and an InceptionV3 model) built with image augmentation and leaf texture feature acquisition from segmented leaf images. The system reached an identification accuracy of 0.918 for the five categories of orchid leaves. Moreover, it achieved an accuracy of 0.842, outperforming the human expert (0.667), in identifying the easily misidentified CymMV and ORSV categories. In the future, we will continue to add images to the database to improve the recognition accuracy for the CymMV and ORSV categories. We believe this outcome will serve as a solid foundation for developing accurate, automatic, and cost-effective disease identification systems to support the orchid cultivation industry.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

F-JJ and Y-KC conceived the study. Y-KC and F-JJ designed the approach and performed the computational analysis with C-FT. C-HL, S-SY, Y-KC, and F-JJ supervised the work and tested the program. F-HW, C-FT, Y-KC, C-WL, and F-JJ wrote the manuscript. C-HL, C-HH, C-FT, and F-JJ collected the data. C-FT, C-HL, C-HH, F-HW, C-HL, S-SY, Y-KC, and F-JJ contributed to analyzing the experimental studies. All authors read and approved the final manuscript. C-FT, C-HH, and F-HW contributed equally and share first authorship. Y-KC and F-JJ contributed equally and are the corresponding authors. All authors contributed to the article and approved the submitted version.

This research was funded by the Advanced Plant Biotechnology Center from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE) in Taiwan.

We are grateful to Dr Chung-Jan Chang, Professor Emeritus of the University of Georgia, for his critical review of the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbas, A., Jain, S., Gour, M., Vankudothu, S. (2021). Tomato plant disease detection using transfer learning with c-GAN synthetic images. Comput. Electron. Agric. 187, 106279. doi: 10.1016/j.compag.2021.106279

Algehyne, E. A., Jibril, M. L., Algehainy, N. A., Alamri, O. A., Alzahrani, A. K. (2022). Fuzzy neural network expert system with an improved gini index random forest-based feature importance measure algorithm for early diagnosis of breast cancer in Saudi Arabia. Big Data Cogn. Computing 6, 13. doi: 10.3390/bdcc6010013

Ali, H., Lali, M., Nawaz, M. Z., Sharif, M., Saleem, B. (2017). Symptom based automated detection of citrus diseases using color histogram and textural descriptors. Comput. Electron. Agric. 138, 92–104. doi: 10.1016/j.compag.2017.04.008

Al-Mamun, H. A., Dunne, R., Tellam, R. L., Verbyla, K. (2022). Detecting epistatic interactions in genomic data using random forests. bioRxiv. doi: 10.1101/2022.04.26.488110

Andono, P. N., Rachmawanto, E. H., Herman, N. S., Kondo, K. (2021). Orchid types classification using supervised learning algorithm based on feature and color extraction. Bull. Electrical Eng. Inf. 10, 2530–2538. doi: 10.11591/eei.v10i5.3118

Athey, S., Tibshirani, J., Wager, S. (2019). Generalized random forests. Ann. Stat. 47, 1148–1178. doi: 10.1214/18-AOS1709

Bal, A., Das, M., Satapathy, S. M., Jena, M., Das, S. K. (2021). BFCNet: a CNN for diagnosis of ductal carcinoma in breast from cytology images. Pattern Anal. Appl. 24, 967–980. doi: 10.1007/s10044-021-00962-4

Banerjee, J., Ray, R., Vadali, S. R. K., Shome, S. N., Nandy, S. (2016). Real-time underwater image enhancement: An improved approach for imaging with AUV-150. Sadhana 41, 225–238. doi: 10.1007/s12046-015-0446-7

Bro, R., Smilde, A. K. (2014). Principal component analysis. Analytical Methods 6, 2812–2831. doi: 10.1039/C3AY41907J

Chan, Y.-K., Chen, Y.-F., Pham, T., Chang, W., Hsieh, M.-Y. (2018). Artificial intelligence in medical applications. J. Healthc. Eng. 2018, 4827875. doi: 10.1155/2018/4827875

Chang, C.-A. (2018). “Virus detection,” in Orchid Propagation: From Laboratories to Greenhouses—Methods and Protocols. Eds. Lee, Y.-I., Yeung, E.-T. (New York, NY: Humana Press), 331–344.

Chen, Q., Hu, S., Long, P., Lu, F., Shi, Y., Li, Y. (2019). A transfer learning approach for malignant prostate lesion detection on multiparametric MRI. Technol. Cancer Res. Treat. 18, 1533033819858363. doi: 10.1177/1533033819858363

Chen, J., Nonaka, K., Sankoh, H., Watanabe, R., Sabirin, H., Naito, S. (2018). Efficient parallel connected component labeling with a coarse-to-fine strategy. IEEE Access 6, 55731–55740. doi: 10.1109/ACCESS.2018.2872452

Dhaka, V. S., Meena, S. V., Rani, G., Sinwar, D., Ijaz, M. F., Woźniak, M. (2021). A survey of deep convolutional neural networks applied for prediction of plant leaf diseases. Sensors 21, 4749. doi: 10.3390/s21144749

Eun, A. J.-C., Huang, L., Chew, F.-T., Fong-Yau Li, S., Wong, S.-M. (2002). Detection of two orchid viruses using quartz crystal microbalance-based DNA biosensors. Phytopathology 92, 654–658. doi: 10.1094/PHYTO.2002.92.6.654

Ghorai, A., Mukhopadhyay, S., Kundu, S., Mandal, S., Barman, A. R., De Roy, M., et al. (2021). Image processing based detection of diseases and nutrient deficiencies in plants. Available at: https://www.researchgate.net/profile/Ankit-Ghorai/publication/349707825_Image_Processing_Based_Detection_of_Diseases_and_Nutrient_Deficiencies_in_Plants/links/603dd839299bf1e0784d1416/Image-Processing-Based-Detection-of-Diseases-and-Nutrient-Deficiencies-in-Plants.pdf.

Gunarathna, M. M., Rathmayaa, R. M. K. T., Kandegama, W. M. W. (2020). Identification of an efficient deep learning architecture for tomato disease classification using leaf images. Journal of Food and Agriculture 13, 33–53. doi: 10.4038/jfa.v13i1.5230

Hall-Beyer, M. (2017). GLCM texture: A tutorial v. 3.0. Available at: https://prism.ucalgary.ca/handle/1880/51900.

Haralick, R. M., Shanmugam, K., Dinstein, I. H. (1973). Textural features for image classification. IEEE Trans. Syst. Man Cybernetics 6, 610–621. doi: 10.1109/TSMC.1973.4309314

Huang, K.-Y. (2007). Application of artificial neural network for detecting phalaenopsis seedling diseases using color and texture features. Comput. Electron. Agric. 57, 3–11. doi: 10.1016/j.compag.2007.01.015

Huang, C.-H., Tai, C.-H., Lin, R.-S., Chang, C.-J., Jan, F.-J. (2019). Biological, pathological, and molecular characteristics of a new potyvirus, dendrobium chlorotic mosaic virus, infecting dendrobium orchid. Plant Dis. 103, 1605–1612. doi: 10.1094/PDIS-10-18-1839-RE

Jayswal, H. S., Chaudhari, J. P. (2020). Plant leaf disease detection and classification using conventional machine learning and deep learning. Int. J. Emerging Technol. 11, 1094–1102.

Karlekar, A., Seal, A. (2020). SoyNet: Soybean leaf diseases classification. Comput. Electron. Agric. 172, 105342. doi: 10.1016/j.compag.2020.105342

Koh, K. W., Lu, H.-C., Chan, M.-T. (2014). Virus resistance in orchids. Plant Sci. 228, 26–38. doi: 10.1016/j.plantsci.2014.04.015

Lee, C.-H., Lee, G.-B. V., Wang, G.-J., Jan, F.-J. (2021). “Development of novel techniques to detect orchid viruses,” in Orchid Biotechnology IV. Eds. Chen, W.-H., Chen, H.-H. (Singapore: World Scientific), 207–218.

Loecher, M. (2022). Unbiased variable importance for random forests. Commun. Statistics-Theory Methods 51, 1413–1425. doi: 10.1080/03610926.2020.1764042

Menze, B. H., Kelm, B. M., Masuch, R., Himmelreich, U., Bachert, P., Petrich, W., et al. (2009). A comparison of random forest and its gini importance with standard chemometric methods for the feature selection and classification of spectral data. BMC Bioinf. 10, 1–16. doi: 10.1186/1471-2105-10-213

Mirza, M., Osindero, S. (2014). Conditional generative adversarial nets. Available at: https://arxiv.org/abs/1411.1784.

Morid, M. A., Borjali, A., Del Fiol, G. (2021). A scoping review of transfer learning research on medical image analysis using ImageNet. Comput. Biol. Med. 128, 104115. doi: 10.1016/j.compbiomed.2020.104115

Musolf, A. M., Holzinger, E. R., Malley, J. D., Bailey-Wilson, J. E. (2022). What makes a good prediction? feature importance and beginning to open the black box of machine learning in genetics. Hum. Genet. 141, 1515–1528. doi: 10.1007/s00439-021-02402-z

Nabwire, S., Suh, H.-K., Kim, M. S., Baek, I., Cho, B.-K. (2021). Application of artificial intelligence in phenomics. Sensors 21, 4363. doi: 10.3390/s21134363

Ojala, T., Pietikainen, M., Maenpaa, T. (2002). Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 24, 971–987. doi: 10.1109/TPAMI.2002.1017623

Ozdemir, I., Norton, D. A., Ozkan, U. Y., Mert, A., Senturk, O. (2008). Estimation of tree size diversity using object oriented texture analysis and aster imagery. Sensors 8, 4709–4724. doi: 10.3390/s8084709

Płoński, P. (2020). Random forest feature importance computed in 3 ways with Python. Available at: https://mljar.com/blog/feature-importance-in-random-forest/.

Ronneberger, O., Fischer, P., Brox, T. (2015). “U-Net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Eds. Navab, N., Hornegger, J., Wells, W., Frangi, A. (Cham: Springer), 234–241. doi: 10.1007/978-3-319-24574-4_28

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., et al. (2015). Imagenet large scale visual recognition challenge. Int. J. Comput. Vision 115, 211–252. doi: 10.1007/s11263-015-0816-y

Sabzi, S., Abbaspour-Gilandeh, Y., Javadikia, H. (2017). Machine vision system for the automatic segmentation of plants under different lighting conditions. Biosyst. Eng. 161, 157–173. doi: 10.1016/j.biosystemseng.2017.06.021

Seoh, M.-L., Wong, S.-M., Zhang, L. (1998). Simultaneous TD/RT-PCR detection of cymbidium mosaic potexvirus and odontoglossum ringspot tobamovirus with a single pair of primers. J. Virol. Methods 72, 197–204. doi: 10.1016/S0166-0934(98)00018-4

Shaharudin, M. R., Hotrawaisaya, C., Abdul, N. R. N., Rashid, S. S., Adzahar, K. A. (2021). Collaborative, planning, forecasting and replenishment in orchid supply chain: A conceptual model. International Journal of Academic Research in Business and Social Sciences 11(11), 1459–1468.

Shi, M., Zhao, X., Qiao, D., Xu, B., Li, C. (2019). Conformal monogenic phase congruency model-based edge detection in color images. Multimedia Tools Appl. 78, 10701–10716. doi: 10.1007/s11042-018-6617-x

Singh, A. K., Chourasia, B., Raghuwanshi, N., Raju, K. (2021). Green plant leaf disease detection using K-means segmentation, color and texture features with support vector machine and random forest classifier. J. Green Eng. 11, 3157–3180.

Tan, S. F., Isa, N. A. M. (2019). Exposure based multi-histogram equalization contrast enhancement for non-uniform illumination images. IEEE Access 7, 70842–70861. doi: 10.1109/ACCESS.2019.2918557

Vishnoi, V. K., Kumar, K., Kumar, B. (2021). Plant disease detection using computational intelligence and image processing. J. Plant Dis. Prot. 128, 19–53. doi: 10.1007/s41348-020-00368-0

Wei, G., Zhao, J., Yu, Z., Feng, Y., Li, G., Sun, X. (2018). “An effective gas sensor array optimization method based on random forest,” in Proceedings of the 2018 IEEE SENSORS (New Delhi, India), 1–4.

wikipedia.org (2022). YUV. Available at: https://zh.wikipedia.org/zh-tw/YUV.

Wong, S.-M., Chng, C., Lee, Y., Lim, T. (1996). An appraisal of the banded and paracrystalline cytoplasmic inclusions induced in cymbidium mosaic potexvirus- and odontoglossum ringspot tobamovirus-infected orchid cells using confocal laser scanning microscopy. Arch. Virol. 141, 231–242. doi: 10.1007/BF01718396

Wu, F.-H., Lai, H.-J., Lin, H.-H., Chan, P.-C., Tseng, C.-M., Chang, K.-M., et al. (2022). Predictive models for detecting patients more likely to develop acute myocardial infarctions. J. Supercomputing 78, 2043–2071. doi: 10.1007/s11227-021-03916-z

Xiao, K., Zhou, L., Yang, H., Yang, L. (2022). Phalaenopsis growth phase classification using convolutional neural network. Smart Agric. Technol. 2, 100060. doi: 10.1016/j.atech.2022.100060

Xiong, X., Shang, Y. (2020). An adaptive method to correct the non-uniform illumination of images. Opt. Sens. Imag. Technol. 11567, 173–179. doi: 10.1117/12.2575590

Yang, X., Baskin, C. C., Baskin, J. M., Pakeman, R. J., Huang, Z., Gao, R., et al. (2021). Global patterns of potential future plant diversity hidden in soil seed banks. Nat. Commun. 12, 1–8. doi: 10.1038/s41467-021-27379-1

Yuan, S.-C., Lekawatana, S., Amore, T. D., Chen, F.-C., Chin, S.-W., Vega, D. M., et al. (2021). “The global orchid market,” in The Orchid Genome. Eds. Chen, F.-C., Chin, S.-W. (Cham, Switzerland: Springer), 1–28.

Zhao, H., Zheng, J., Xu, J., Deng, W. (2019). Fault diagnosis method based on principal component analysis and broad learning system. IEEE Access 7, 99263–99272. doi: 10.1109/ACCESS.2019.2929094

Zheng, Y.-X., Chen, C.-C., Yang, C.-J., Yeh, S.-D., Jan, F.-J. (2008). Identification and characterization of a tospovirus causing chlorotic ringspots on phalaenopsis orchids. Eur. J. Plant Pathol. 120, 199–209. doi: 10.1007/s10658-007-9208-7

Zulpe, N., Pawar, V. (2012). GLCM textural features for brain tumor classification. Int. J. Comput. Sci. Issues (IJCSI) 9, 354–359. Available at: https://www.cocsit.org.in/download/publications/nsz/nsz_glcm_textural_features_for_btc.pdf.

Keywords: orchid disease, texture feature, deep learning, U-net, random forest, inception network

Citation: Tsai C-F, Huang C-H, Wu F-H, Lin C-H, Lee C-H, Yu S-S, Chan Y-K and Jan F-J (2022) Intelligent image analysis recognizes important orchid viral diseases. Front. Plant Sci. 13:1051348. doi: 10.3389/fpls.2022.1051348

Received: 22 September 2022; Accepted: 11 November 2022;

Published: 02 December 2022.

Edited by:

Kuo-Hui Yeh, National Dong Hwa University, Taiwan

Reviewed by:

Xiaoyi Jiang, University of Münster, Germany

Copyright © 2022 Tsai, Huang, Wu, Lin, Lee, Yu, Chan and Jan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fuh-Jyh Jan, fjjan@nchu.edu.tw; Yung-Kuan Chan, ykchan@nchu.edu.tw

†These authors have contributed equally to this work and share first authorship

