ORIGINAL RESEARCH article

Front. Plant Sci., 07 October 2022

Sec. Plant Bioinformatics

Volume 13 - 2022 | https://doi.org/10.3389/fpls.2022.1031748

This article is part of the Research Topic Recent Advances in Big Data, Machine, and Deep Learning for Precision Agriculture

Muhammad Shoaib1†, Tariq Hussain2, Babar Shah3, Ihsan Ullah4, Sayyed Mudassar Shah5, Farman Ali6*† and Sang Hyun Park4*

Plants contribute significantly to the global food supply. Various plant diseases can result in production losses, which can be avoided by maintaining vigilance. However, manual monitoring of plant diseases by agricultural experts and botanists is time-consuming, challenging, and error-prone. Machine vision technology (i.e., artificial intelligence) can play a significant role in reducing disease severity: combining computer technologies with human cooperation can diminish the severity of disease and eliminate the disadvantages of manual observation. In this work, we propose a deep learning-based system that detects tomato plant diseases from leaf image data. We use a recently developed convolutional neural network architecture, the Inception Net model, trained with supervised learning on 18,161 segmented and non-segmented tomato leaf images to detect and recognize various tomato diseases. For the detection and segmentation of disease-affected regions, two state-of-the-art semantic segmentation models, U-Net and Modified U-Net, are used. The model classifies each leaf-image pixel as either Region of Interest (ROI) or background. We also examine the performance of binary classification (healthy and diseased leaves), six-class classification (healthy and other groups of diseased leaves), and ten-class classification (healthy and other types of diseased leaves) models. The Modified U-Net segmentation model outperforms the simple U-Net segmentation model, with 98.66% accuracy, a 98.5% IoU score, and a 98.73% Dice score. InceptionNet1 achieves 99.95% accuracy for binary classification and 99.12% for six-class classification of segmented images; InceptionNet achieved higher accuracy than the Modified U-Net model. The experimental results of our proposed method for classifying plant diseases demonstrate that it outperforms the methods currently available in the literature.

Humans have been domesticating animals and cultivating crops for centuries, and agriculture made this possible. Plant infections are a primary cause of food insecurity (Chowdhury et al., 2020; Global Network Against Food Crisis, 2022), which is one of the gravest problems humanity faces. One study indicates that plant diseases account for approximately 16 percent of global harvest yield losses. Global pest and disease losses for wheat and soybean are estimated at approximately 50 percent and 26 to 29 percent, respectively (Prospects and Situation, 2022). Plant pathogens are classified as fungi, fungus-like organisms, bacteria, viruses, virus-like organisms, nematodes, protozoa, algae, and parasitic plants. Artificial intelligence and machine vision have benefited numerous applications, including power forecasting from renewable sources (Khandakar et al., 2019; Touati et al., 2020) and biomedical uses (M. H. Chowdhury et al., 2019; Chowdhury et al., 2020). AI has been used worldwide for the identification of lung-related diseases, and it has also been applied to prediction tasks for the virus (Chowdhury et al., 2021). Using comparable state-of-the-art techniques, plant diseases can be identified at an early stage. Because physically inspecting plants and detecting diseases is laborious and tiring, manual inspection is prone to error. AI and computer vision are therefore advantageous for the detection and analysis of plant infections: they require little labor and reduce the likelihood of error. Chouhan et al. (2018) used a bacterial foraging optimization based radial basis function neural network (BRBFNN) to identify and classify plant diseases with an accuracy of 83.07 percent.

The convolutional neural network (CNN) is a well-known neural network architecture that has been successfully applied to a variety of computer vision tasks (Lecun and Haffner, 1999). Researchers have used different CNN architectures to classify and identify plant diseases. For instance, Sunayana et al. compared various CNN architectures for recognizing potato and mango leaf infections, with AlexNet achieving 98.33 percent accuracy and shallow CNN models achieving 90.85 percent accuracy (Arya and Singh, 2019). Using the VGG16 model, Guan et al. predicted the disease severity of apple plants with a precision rate of 90.40 percent (Wang, 2017; Arya and Singh, 2019). Jihen et al. employed a LeNet model to identify healthy and diseased banana leaves with a 99.72 percent accuracy rate (Amara et al., 2017).

Tomato is one of the most commonly consumed fruits. Because tomatoes are used in condiments such as ketchup, sauce, and puree, their global consumption is high. Tomatoes constitute approximately 15% of all vegetables and fruits consumed, with an annual per capita consumption of 20 kilograms. An individual in Europe consumes approximately 31 kilograms of tomatoes per year. In North America the figure is higher still, at approximately 42 kilograms per person annually (Laranjeira et al., 2022). The high demand for tomatoes necessitates the development of early detection technologies for bacterial and viral infections. Several studies have applied artificial intelligence-based technologies to improve the protection of tomato plants against disease. Manpreet et al. classified seven tomato diseases with 98.8 percent accuracy (Gizem Irmak and Saygili, 2020) using the Residual Network (ResNet), which is built on the CNN architecture. Rahman et al. (2019) proposed a network with 99.25 percent accuracy for distinguishing bacterial spot, late blight, and Septoria leaf spot in tomato leaf images. Fuentes et al. (2017) employed three types of detectors to differentiate ten diseases in images of tomato leaves: the Faster Region-based Convolutional Neural Network (Faster R-CNN), the Region-based Fully Convolutional Network (R-FCN), and the Single Shot Multibox Detector (SSD). These detectors were combined with a variety of deep feature extractor variants. The Tomato Leaf Disease Detection (ToLeD) model, proposed by Agarwal et al., is a CNN-based technique for classifying ten infections from images of tomato leaves with an accuracy of 91.2%. Durmus et al. (2017) classified ten infections from images of tomato leaves with 95.5% accuracy using the AlexNet and SqueezeNet architectures. Although disease classification and identification in plant leaves have been studied extensively for tomatoes, few studies segment the leaf images from their background. The same applies to other plant leaves, where segmentation of leaves from their background has not been studied. Since lighting conditions can drastically alter an image, improved segmentation techniques could help AI models focus on the area of interest rather than the background.

U-Net derives its name from its U-shaped network design. It is a state-of-the-art deep learning architecture for image segmentation, originally designed for biomedical images (Navab et al., 2015). Unlike conventional CNN models, U-Net includes convolutional layers that up-sample and recombine feature maps into a complete image. The experimental results reported in (Rahman et al., 2020) demonstrated promising segmentation performance using the U-Net model (Navab et al., 2015). Inception Net, in contrast, is a more recent classification network; here it is used to predict the health condition of the crop from tomato leaf data (Louis, 2013). The main contributions of this work can be summarized as follows:

1) Different U-net versions were explored to choose the optimum segmentation model by comparing the segmented model mask with the images of ground truth masks.

2) Several CNN architectures were compared on three classification tasks for tomato diseases: (a) binary classification of healthy and diseased leaves; (b) six-class classification of healthy leaves and five groups of diseased leaves; (c) ten-class classification of healthy leaves and nine disease categories.

3) The results achieved in this study outperform the most current state-of-the-art studies in this field in terms of accuracy and precision.

The remainder of the paper is organized as follows. Section 1 contains a detailed introduction, a review of the literature, and the study's motivation. Section 2 describes the kinds of pathogens that attack tomato plants. Section 3 describes the study's methodology and techniques, including the dataset, pre-processing techniques, and experimental details. Section 4 reports the study's findings, which are discussed in Section 5, followed by Section 6.

Tan et al. (Louis, 2013) introduced the Inception Net CNN model. We applied transfer learning with this model to detect various tomato leaf diseases. The developers of the Inception Net CNN model ensured that the model is balanced in all aspects, i.e., width, resolution, and depth. Moreover, they were the first to establish the relationship between all three dimensions, whereas other CNN scaling techniques scale a single dimension.

The authors built their baseline architecture in the manner of MnasNet (Sevilla et al., 2022), using a multi-objective neural architecture search that optimizes both model accuracy and FLOPS. The resulting baseline network is the same as MnasNet with one difference: it is slightly larger, because Inception Net's FLOPS target is higher. Its crucial building block is the mobile inverted bottleneck MBConv (Sandler et al., 2018), extended with squeeze-and-excitation optimization (Hu et al., 2020). Finally, we employed the compound scaling method, which uses a compound coefficient σ to scale the network width, depth, and resolution uniformly, as given in the equation below:
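The equation itself does not appear in this text. A reconstruction, assuming the standard compound scaling formulation that the surrounding description matches, is given here. The mapping of a, b, and c to depth, width, and resolution, and the resource constraint in the last line, are assumptions taken from that standard formulation rather than from this article:

$$
\begin{aligned}
\text{depth: } & d = a^{\sigma} \\
\text{width: } & w = b^{\sigma} \\
\text{resolution: } & r = c^{\sigma} \\
\text{subject to } & a \cdot b^{2} \cdot c^{2} \approx 2, \quad a \ge 1,\ b \ge 1,\ c \ge 1
\end{aligned}
\tag{1}
$$

Under this constraint, increasing σ by one roughly doubles the FLOPS of the network, which is consistent with the statement that σ = 1 corresponds to twice as many resources.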

Here a, b, and c are constants that can be determined by a small grid search. The compound coefficient σ is specified by the user and controls how many additional resources are used for scaling up the model, while a, b, and c determine how these additional resources are assigned to the network's width, depth, and resolution.

Scaling up the baseline network with fixed constants a, b, and c and different values of σ creates the Inception Net family listed in Table 1. InceptionNet1 achieves 97.1 percent top-5 accuracy on ImageNet while being 6.1 times faster at inference and 8.4 times smaller than the best existing ConvNets, such as SENet (Hu et al., 2020) and GPipe (Huang et al., 2019).

Our design uses the InceptionNet1, InceptionNet2, and InceptionNet3 models. A global average pooling (GAP) layer was added after the network's last layer to increase accuracy while also reducing overfitting. After the GAP layer we added a dense layer of size 1024 with a 25% dropout rate, followed by another dense layer. A SoftMax layer is then applied to produce the probability estimates for identifying tomato leaf diseases. Starting from Inception Net as a baseline, we scale it up in two steps using the compound scaling method:

The first step is to fix σ = 1, assuming twice as many resources are available, and to search for the best values of a, b, and c. The best values found are a = 1.2, b = 1.1, and c = 1.15.

Second, we fix the constants a, b, and c and use Equation (1) with different values of σ to scale up the baseline network and obtain the other Inception Net variants.

In some instances, searching for the three constants directly around a large model can provide even better results, but the cost of the search becomes prohibitive for larger models. The method addresses this issue by performing a single search on a small baseline network (step 1) and then scaling all other models with the same scaling coefficients (step 2).
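The two-step procedure can be illustrated with a minimal sketch. It uses the constants reported in the text (1.2, 1.1, 1.15) and assumes, as in the standard compound scaling formulation, that a scales depth, b scales width, c scales resolution, and that FLOPS grow with a·b²·c². The function name `compound_scale` is hypothetical.

```python
# Constants reported in the text for the sigma = 1 search (step 1).
A, B, C = 1.2, 1.1, 1.15


def compound_scale(sigma, a=A, b=B, c=C):
    """Return scaling multipliers for a given compound coefficient sigma.

    Assumption: a -> depth, b -> width, c -> resolution, and the FLOPS of
    a ConvNet grow roughly with depth * width^2 * resolution^2.
    """
    return {
        "depth": a ** sigma,
        "width": b ** sigma,
        "resolution": c ** sigma,
        "flops": (a * b ** 2 * c ** 2) ** sigma,
    }


# Step 2: keep a, b, c fixed and vary sigma to obtain larger variants.
for sigma in range(4):
    s = compound_scale(sigma)
    print(
        f"sigma={sigma}: depth x{s['depth']:.2f}, width x{s['width']:.2f}, "
        f"resolution x{s['resolution']:.2f}, FLOPS x{s['flops']:.2f}"
    )
```

With these constants the FLOPS multiplier at σ = 1 is about 1.92, i.e., close to the factor of two mentioned in the first step.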

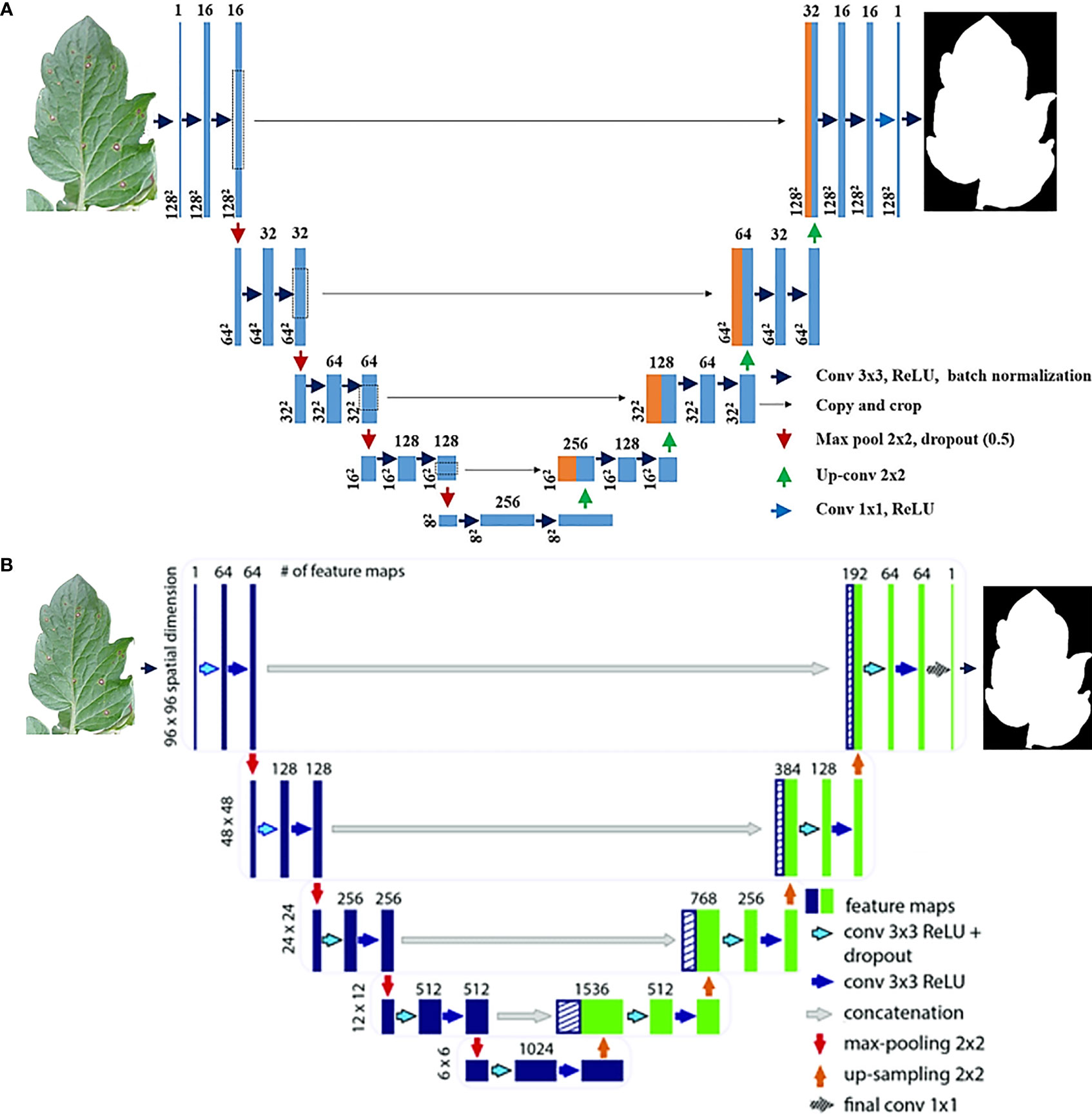

Many segmentation designs can be built on the U-Net architecture. This research compared two versions of the original U-Net (Navab et al., 2015) and two versions of the Modified U-Net (Navab, 2020) to see which performed best. The original U-Net design and the Modified U-Net design are shown in Figure 1. The U-Net comprises two pathways: a contracting path and an expanding path. Along the contracting path, unpadded 3 x 3 convolutions are applied repeatedly, each followed by a ReLU, and each stage ends with a pooling operation with stride 2 for down-sampling. At each stage of the expanding path, the feature map is up-sampled and combined with the corresponding feature map from the contracting path, and two 3 x 3 convolutions are applied, each followed by a ReLU. In total, the network has 23 convolutional layers.

Figure 1 (A) Original baseline U-Net architecture, (B) Modified (improved) U-Net deep neural network architecture (Livne et al., 2019).

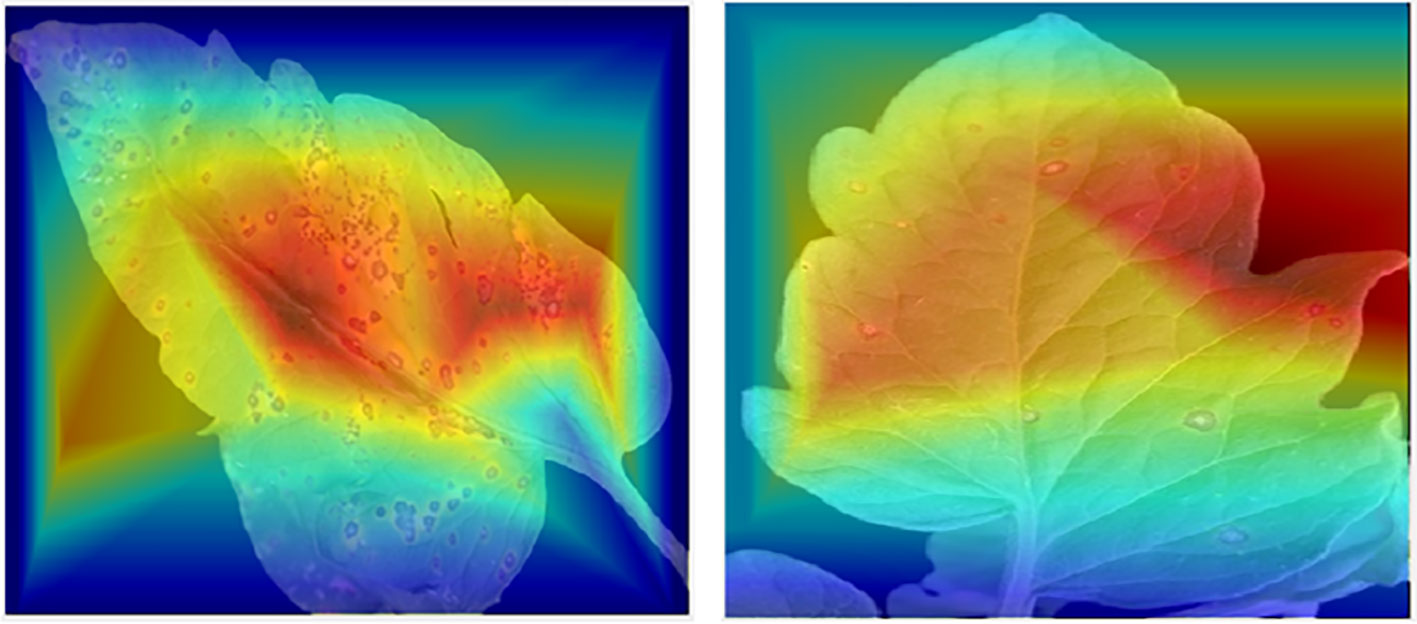

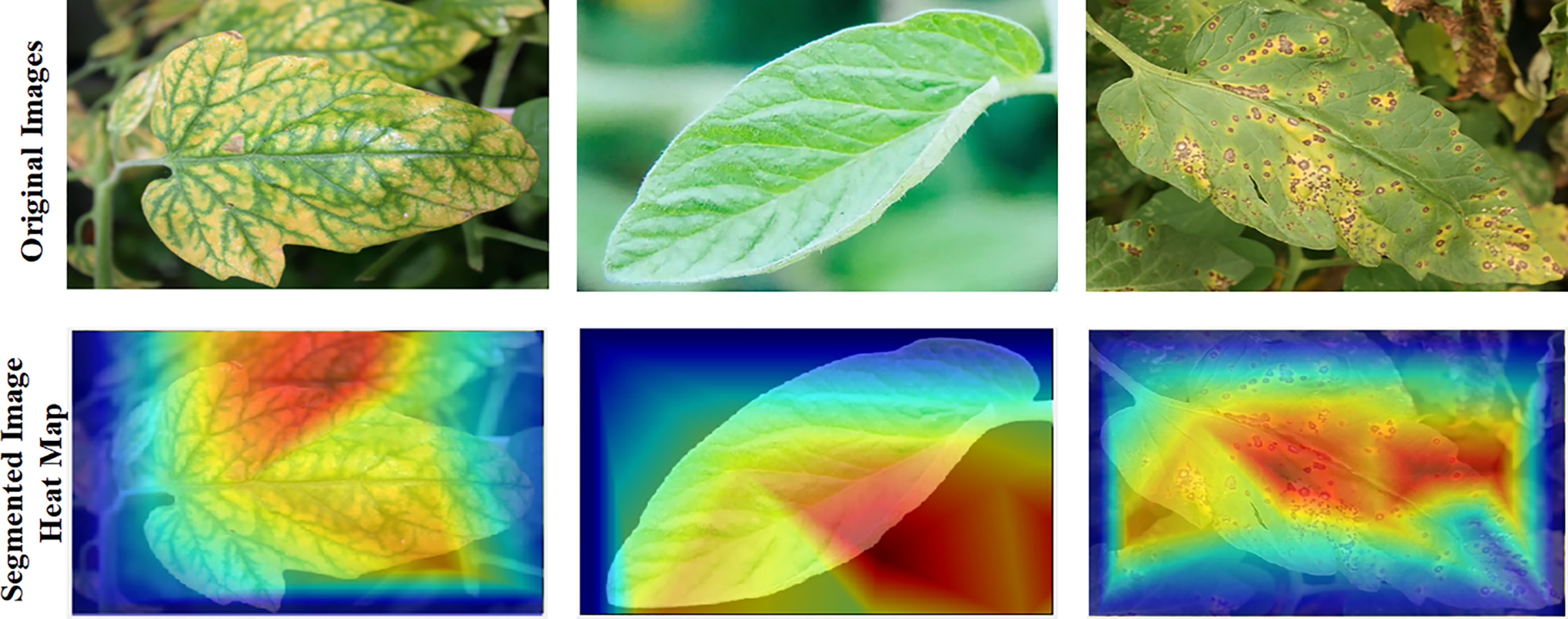

Figure 2 Visualization of tomato leaf images using the Score-CAM tool, showing the affected regions on which the CNN classifier bases most of its decisions.

This research used a modified U-Net (Manjunath and Kwadiki, 2022) model, which makes modest changes to the decoding part of the network. The U-Net consists of a contracting path with four encoding blocks followed by an expanding path with four decoding blocks. The decoding block of the modified U-Net uses three convolutional layers rather than two, which results in a substantial improvement in decoding performance. Each encoding block consists of two 3x3 convolutional layers. Each decoding block begins with an up-sampling step, followed by two 3x3 convolutional layers, one concatenation layer, and another 3x3 convolutional layer. All convolutional layers are followed by batch normalization and ReLU activation. At the final layer, a 1x1 convolution is applied to the output of the last decoding block, and a pixel-wise SoftMax classifies each pixel as background or object.

There is growing interest in the internal mechanics of CNNs, and visualization approaches have been developed to aid in understanding them. Visualization methods help explain how a CNN reaches its decisions. They also make the model more understandable to people, which helps increase trust in the findings of neural networks. Several visualization techniques are available, such as SmoothGrad (Smilkov et al., 2017), Grad-CAM (Selvaraju et al., 2020), Grad-CAM++ (Chattopadhay et al., 2018), and Score-CAM (Wang et al., 2020). Score-CAM (Chen and Zhong, 2022) was employed in this investigation because of its good output. The weight for each activation map is based on its forward-pass score for the target class, and the outcome is the linear combination of weights and activation maps. Because the weights come from forward-pass scores, Score-CAM removes the need for gradients. In Figure 2, the heat map shows that the leaf areas dominated the CNN's decisions. This visualization helps users understand how the network makes decisions, which increases end-user confidence.
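The weighting scheme described above can be sketched in a few lines. This is a simplified illustration, not the implementation used in the study: it assumes the activation maps have already been up-sampled to the input size, and `score_fn` is a hypothetical stand-in for a forward pass that returns the target-class score.

```python
import math


def normalize(activation):
    """Min-max normalize a 2D activation map to [0, 1] so it can act as a mask."""
    lo = min(min(row) for row in activation)
    hi = max(max(row) for row in activation)
    if hi == lo:
        return [[0.0 for _ in row] for row in activation]
    return [[(v - lo) / (hi - lo) for v in row] for row in activation]


def score_cam(image, activation_maps, score_fn):
    """Gradient-free class activation map for a 2D image.

    Each activation map masks the input; the target-class score of the
    masked input (softmax-normalized across maps) becomes that map's weight.
    """
    masks = [normalize(a) for a in activation_maps]
    scores = []
    for mask in masks:
        masked = [[p * m for p, m in zip(p_row, m_row)]
                  for p_row, m_row in zip(image, mask)]
        scores.append(score_fn(masked))
    # Softmax over the forward-pass scores gives the map weights.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    weights = [e / sum(exps) for e in exps]
    # Weighted sum of activation maps followed by ReLU.
    height, width = len(image), len(image[0])
    cam = [[max(0.0, sum(w * a[i][j] for w, a in zip(weights, activation_maps)))
            for j in range(width)] for i in range(height)]
    return cam, weights


# Toy example: the hypothetical "model" only looks at the top-left pixel.
image = [[1.0, 0.0], [0.0, 0.0]]
maps = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
cam, weights = score_cam(image, maps, score_fn=lambda img: img[0][0])
```

In the toy example, the first map keeps the pixel the model relies on, so it receives the larger weight and dominates the resulting heat map.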

Septoria leaf spot, early blight, target spot, and leaf mold are some of the plant diseases caused by fungi. Fungi may infect plants in a variety of ways, for example via seeds and soil. Pathogenic fungi can spread between plants via animals, human contact, equipment, and soil contamination. Early blight of tomato plants is caused by a fungal pathogen that infects the plant's leaves. Fruit rot, stem lesion, and collar rot are all terms used to describe this condition. When fighting early blight, cultural control, which includes fungicidal pesticides and good soil and nutrient management, is essential. Septoria leaf spot is caused by a fungus that grows on tomato plants and produces the enzyme tomatinase, which breaks down steroidal glycoalkaloids in the tomato plant. Target spot is a fungal disease that affects tomato plants and manifests as necrotic lesions with a light brown center. As the lesions progress, early defoliation occurs (Pernezny et al., 2018; Abdulridha et al., 2020).

Target spot also causes direct damage to the fruit. Leaf mold, another fungal disease, develops when leaves remain moist for a lengthy period. Bacteria are also plant pathogens. Bacteria enter plants through wounds such as insect bites, pruning, and cuts. Temperature, humidity, nutrient availability, weather conditions, ventilation, and soil conditions are crucial for bacterial development and plant harm. Bacterial spot is a disease caused by bacteria (Louws et al., 2001; Qiao et al., 2020). Molds can also cause disease in plants. A mold causes late blight in tomato and potato plants, in which stems and leaf tips may show dark, irregular blemishes. The Tomato Yellow Leaf Curl (TYLC) virus causes disease in tomatoes; it is transmitted to the plant by an insect. In this study, the tomato leaf images are divided into ten classes. In the second experiment, several groups of unhealthy and healthy leaf images were classified.

Some investigations show that beans, peppers, eggplant, and tobacco can also be harmed by the virus. Because of the disease's extensive geographical range, combating yellow leaf curl disease is a current priority. Tomato Mosaic Virus (ToMV) is another pathogen that affects tomato plants (Ghanim et al., 1998; Ghanim and Czosnek, 2000; Choi et al., 2020; He et al., 2020). This virus is prevalent everywhere and affects several plants, including tomatoes. Necrotic blemishes and twisted, fern-like stems characterize ToMV infection (Broadbent, 1976; Xu et al., 2021).

The proposed framework is summarized in Figure 3. The dataset used in this research comes from the Plant Village benchmark dataset (Hughes and Salathe, 2015; SpMohanty, 2018), which consists of leaf images and their segmented mask images. As discussed in the sections above, this work uses three different classification strategies:

(1) binary classification, which classifies the leaves into healthy and unhealthy classes;

(2) six-class classification of segmented leaf images, with five unhealthy classes and one healthy class;

(3) ten-class classification, with nine unhealthy classes and one healthy class.

The paper also investigates which U-Net segmentation model is most effective at separating the leaf from the background. The segmented tomato leaf images are then used to verify the Score-CAM visualization, which has proved trustworthy in several applications.

The proposed models are evaluated using the Plant Village dataset, which consists of 18,161 images and their segmented binary mask images (Hughes and Salathe, 2015). Plant Village is a widely used benchmark dataset for training and validating classification and segmentation models.

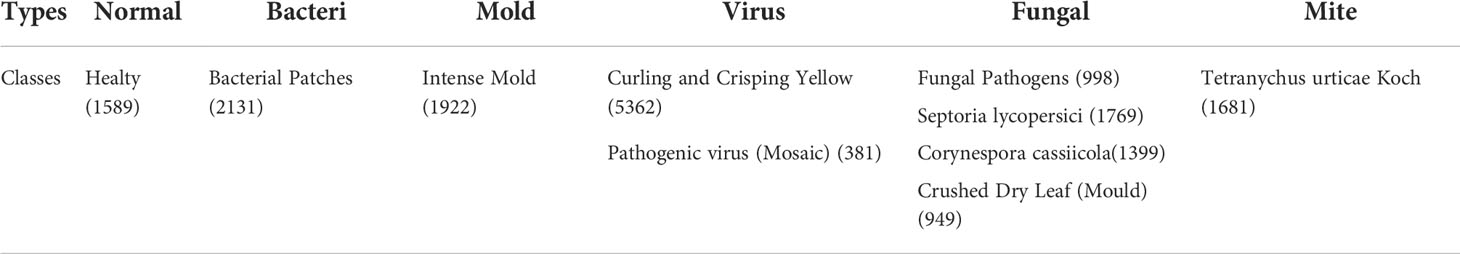

This dataset was also used to train the segmentation and classification models for tomato leaves. The images were divided into ten classes, one healthy and nine unhealthy (e.g., bacterial spot, early blight, leaf mold, and yellow leaf curl virus), and the nine unhealthy classes were further grouped into five subgroups (i.e., bacteria, viruses, fungi, molds, and mites). Figure 4 shows some examples of segmented tomato leaf and mask images for the healthy and unhealthy classes. Table 2 gives a detailed breakdown of the number of images in the dataset, which is useful for the classification tasks discussed in the following sections.

Table 2 Total number of healthy and disease-affected tomato leaf images in the Plant Village dataset.

The required input image size varies between the CNN architectures used for segmentation and classification. All images used to train the U-Net models were resized to 256x256x3, while for InceptionNet (1, 2, and 3) the images were resized to 299x299x3. All images in the dataset were normalized using z-score normalization, where the z-score is computed from the mean and standard deviation (SD) of the training images.
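A minimal sketch of z-score normalization follows, assuming pixel values are available as a flat list. The key point is that the mean and SD are fitted on the training images only and then reused for every other image. The helper names `fit_zscore` and `apply_zscore` and the sample pixel values are hypothetical.

```python
import statistics


def fit_zscore(train_pixels):
    """Compute the mean and (population) standard deviation of the training pixels."""
    mean = statistics.mean(train_pixels)
    sd = statistics.pstdev(train_pixels)
    return mean, sd


def apply_zscore(pixels, mean, sd):
    """Normalize pixel values with statistics fitted on the training set."""
    return [(p - mean) / sd for p in pixels]


# Hypothetical pixel intensities standing in for the training images.
train_pixels = [0, 64, 128, 192, 255]
mean, sd = fit_zscore(train_pixels)
normalized = apply_zscore(train_pixels, mean, sd)

# Validation and test images reuse the same mean and SD.
test_normalized = apply_zscore([10, 200], mean, sd)
```

In practice the statistics would typically be computed per color channel rather than over all pixels at once.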

Because the dataset is imbalanced and does not contain the same number of images in each class, training on it may lead to overfitting or underfitting. The number of images in all classes is therefore kept equal by augmenting the images (increasing the quantity of image data). With an equal number of images in every class (a balanced dataset), a more reliable model with better accuracy can be trained (M. H. Chowdhury et al., 2019; Chowdhury et al., 2020; Rahman et al., 2020; Rahman et al., 2020; Tahir et al., 2022).

Three types of augmentation are applied to the image data to create a balanced dataset: rotation, translation, and scaling. For rotation, the training images were rotated clockwise and anti-clockwise by an angle of 5 to 15 degrees. Scaling zooms an image in or out; in our case, images were scaled up or down by 2.5 to 10 percent. Translation shifts the location of objects in an image; the leaf region was translated horizontally and vertically by 5 to 15 percent.
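The balancing step can be sketched as a plan that draws random augmentation parameters within the stated ranges until every class reaches the size of the largest class. This only generates the parameters; applying them to images is left to an image library. The class names and counts below are hypothetical, and balancing to the largest class is an assumption.

```python
import random


def sample_augmentation(rng):
    """Draw one augmentation with parameters in the ranges given in the text."""
    kind = rng.choice(["rotate", "scale", "translate"])
    if kind == "rotate":
        # Clockwise or anti-clockwise rotation by 5 to 15 degrees.
        return {"kind": kind, "angle_deg": rng.choice([-1, 1]) * rng.uniform(5, 15)}
    if kind == "scale":
        # Zoom in or out by 2.5 to 10 percent.
        return {"kind": kind, "factor": 1 + rng.choice([-1, 1]) * rng.uniform(2.5, 10) / 100}
    # Horizontal and vertical shift by 5 to 15 percent of the image size.
    return {
        "kind": kind,
        "dx_frac": rng.choice([-1, 1]) * rng.uniform(5, 15) / 100,
        "dy_frac": rng.choice([-1, 1]) * rng.uniform(5, 15) / 100,
    }


def augmentation_plan(class_counts, rng):
    """For each class, list the augmentations needed to match the largest class."""
    target = max(class_counts.values())
    return {
        label: [sample_augmentation(rng) for _ in range(target - count)]
        for label, count in class_counts.items()
    }


# Hypothetical class counts; the real counts are those in Table 2.
counts = {"healthy": 5, "early_blight": 3, "leaf_mold": 2}
plan = augmentation_plan(counts, random.Random(0))
balanced = {label: counts[label] + len(plan[label]) for label in counts}
```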

To select the best leaf segmentation model, various U-Net segmentation models were trained. K-fold cross-validation was applied to split the data into a training set and a test set. The value of K is 5, which means that each model is trained and validated five times; in each fold, 80% of the data (leaf and segmented mask images) is used for training the model while the remaining 20% is used for testing (Table 3).

The class distribution is the same in the training set and the test set. In each fold, 80% of the data is available for model training. Of this 80%, 90% is used for training proper, while the remaining 10% is used for validation, which helps the model avoid overfitting. In this research, three loss functions, i.e., Binary Cross-Entropy (BCE), Mean-Squared Error (MSE), and Negative Log-Likelihood (NLL), were compared, and the evaluation metrics were used to select the best segmentation model for tomato leaves.
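The split described above can be sketched with plain index lists. This is a simplified illustration that shuffles indices without stratifying by class, whereas the study keeps the class distribution equal across splits. The function name `five_fold_splits` is hypothetical.

```python
import random


def five_fold_splits(n_samples, k=5, val_fraction=0.10, seed=0):
    """Yield (train, val, test) index lists for k-fold cross-validation.

    Each fold holds out 1/k of the data for testing; val_fraction of the
    remaining data is set aside for validation during training.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        rest = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        n_val = round(len(rest) * val_fraction)
        yield rest[n_val:], rest[:n_val], test


# 18,161 is the dataset size reported in the text.
splits = list(five_fold_splits(18161))
for fold_no, (train, val, test) in enumerate(splits, start=1):
    print(f"fold {fold_no}: train={len(train)} val={len(val)} test={len(test)}")
```

Each fold therefore uses roughly 72% of all images for training, 8% for validation, and 20% for testing.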

Moreover, an early stopping criterion reported in recent research work was used: if the validation loss does not improve for five consecutive epochs, model training is stopped (Chowdhury et al., 2020; Rahman et al., 2020; Tahir et al., 2022).
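A minimal sketch of this stopping rule is shown below, assuming the validation loss is recorded once per epoch. The function name `stop_epoch` and the loss values are hypothetical.

```python
def stop_epoch(val_losses, patience=5):
    """Return the 1-based epoch at which training stops, or None if it never does.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Hypothetical validation losses: the best value (0.40) is reached at epoch 3.
losses = [0.90, 0.50, 0.40, 0.41, 0.42, 0.40, 0.43, 0.44]
stopped_at = stop_epoch(losses)
```

In a real training loop, the model weights from the best epoch would usually be saved and restored once training stops.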

This study explored a deep learning-based system that uses a recently developed convolutional neural network, Inception Net, to classify segmented images of diseased tomato leaves and improve disease detection accuracy. Three distinct image classification experiments were conducted as part of this study. As shown in Table 4, the images used to train the classification models on segmented leaf images were taken from different sources. Table 5 summarizes the experimental parameters used for the image classification and segmentation models.

Table 5 List of hyperparameters, loss functions, and optimizers used for training the classification and segmentation models.

InceptionNet is a family of deep neural networks built from the Inception module. The first network in this series, GoogLeNet, is 22 layers deep. The Inception module is built on the idea that neurons with a shared objective (such as feature extraction) should learn together. Most early convolutional architectures focused on adjusting the kernel size to obtain the most relevant features. In contrast, InceptionNet's architecture emphasizes parallel processing and the simultaneous extraction of several different feature maps. This is the trait that most distinguishes InceptionNet from other image classification models.

Inception v2 and Inception v3 were presented in the same paper. They build on the initial Inception v1 architecture and are widely used in the literature as transfer learning models for various problems. In the Inception part of the network, there are three standard Inception modules at the 35 × 35 grid size, each with 288 filters. This is reduced to a 17 × 17 grid with 768 filters using a grid reduction technique. It is followed by five factorized Inception modules, whose output is reduced to an 8 × 8 × 1280 grid using the grid reduction technique. At the coarsest 8 × 8 level, Inception v3 has two Inception modules, each with a concatenated output filter bank size of 2048. The detailed architecture of the network, including the size of the filter banks in the Inception modules, is given in the base research paper (Szegedy et al., 2014).

The intuition is that a neural network performs better when the convolutions do not drastically change the input dimensionality. Too much dimensionality reduction may lead to information loss, called a "representation bottleneck". Using suitable factorization methods, convolution can be made more efficient in terms of computational complexity. To increase computational speed, the 5x5 convolution is factorized into two 3x3 convolutions. Although this may seem counterintuitive, a 5x5 convolution costs 25/9 ≈ 2.78 times as much as a 3x3 convolution, so stacking two 3x3 convolutions is cheaper than a single 5x5 convolution.
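The arithmetic behind this claim can be checked with a short sketch. It counts multiplications per output position and assumes the same number of input and output channels in every layer; the channel count of 64 is an arbitrary illustrative choice.

```python
def conv_cost(kernel_size, channels):
    """Multiplications per output position for a kernel_size x kernel_size
    convolution with `channels` input channels and `channels` output channels."""
    return kernel_size * kernel_size * channels * channels


CHANNELS = 64  # arbitrary illustrative value; the ratios do not depend on it

cost_5x5 = conv_cost(5, CHANNELS)
cost_3x3 = conv_cost(3, CHANNELS)
cost_two_3x3 = 2 * cost_3x3

ratio = cost_5x5 / cost_3x3           # 25 / 9
saving = 1 - cost_two_3x3 / cost_5x5  # 1 - 18 / 25

print(f"5x5 vs one 3x3: {ratio:.2f}x")
print(f"two 3x3 vs 5x5: {saving:.0%} fewer multiplications")
```

Two stacked 3x3 convolutions cover the same 5x5 receptive field as one 5x5 convolution while using 18 instead of 25 weights per channel pair, a saving of 28 percent.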

All experiments were performed on a 9th-generation Intel Core i7 CPU with 64 GB of RAM and an NVIDIA RTX 2080 Ti GPU (11 GB GDDR6), using Python 3.7 and the PyTorch deep learning framework.

Performance metrics: Segmentation of tomato leaves: The performance evaluation metrics for the proposed segmentation model are listed below (2)–(4).
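The equations themselves do not appear in this text. Assuming the standard pixel-wise definitions of the three segmentation metrics reported in the results (accuracy, IoU, and Dice), where TP, TN, FP, and FN count true positive, true negative, false positive, and false negative pixels, they can be written as:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{2}
$$

$$
\text{IoU} = \frac{TP}{TP + FP + FN} \tag{3}
$$

$$
\text{Dice} = \frac{2\,TP}{2\,TP + FP + FN} \tag{4}
$$

The assignment of equation numbers (2) to (4) to these particular metrics is an assumption based on the order in which the results are reported.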

Classification of segmented tomato leaves: The performance evaluation metrics for leaf classification are listed below (5)–(9).

False positive (FP) and false negative (FN) denote the numbers of healthy and diseased tomato leaf images that were misclassified. True positive (TP) is the number of correctly classified healthy leaf images, while true negative (TN) is the number of correctly classified diseased leaf images. Additionally, the image segmentation and classification models are compared using Equation (9), which gives the time T required to test a single image,

where t′ denotes the time at which a segmentation or classification model starts to process an image I, and t″ denotes the time at which image I has been segmented or classified.
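The metrics discussed in this section can be computed from the four confusion counts, as in the following sketch. It assumes the standard definitions of IoU, Dice, accuracy, sensitivity, and specificity, and takes the per-image test time to be the difference between the completion and start times. All function names and the toy labels are hypothetical.

```python
import time


def confusion_counts(predicted, truth):
    """Count TP, TN, FP, FN for binary labels (1 = positive, 0 = negative)."""
    tp = sum(1 for p, t in zip(predicted, truth) if p == 1 and t == 1)
    tn = sum(1 for p, t in zip(predicted, truth) if p == 0 and t == 0)
    fp = sum(1 for p, t in zip(predicted, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(predicted, truth) if p == 0 and t == 1)
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    return (tp + tn) / (tp + tn + fp + fn)


def sensitivity(tp, fn):
    return tp / (tp + fn)


def specificity(tn, fp):
    return tn / (tn + fp)


def iou(tp, fp, fn):
    return tp / (tp + fp + fn)


def dice(tp, fp, fn):
    return 2 * tp / (2 * tp + fp + fn)


def time_per_image(model_fn, image):
    """Elapsed time between the start and the end of processing one image."""
    t_start = time.perf_counter()
    model_fn(image)
    t_end = time.perf_counter()
    return t_end - t_start


# Toy labels: for segmentation these would be flattened mask pixels.
predicted = [1, 1, 0, 0]
truth = [1, 0, 1, 0]
tp, tn, fp, fn = confusion_counts(predicted, truth)
elapsed = time_per_image(lambda img: sum(img), predicted)
```

For the multi-class experiments, these counts would be computed per class and then averaged; how the averaging is weighted is not specified in this text.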

This section details the performance evaluation of different neural network architectures (Segmentation & Classification) in various experiments.

To segment the tomato leaf images, two deep learning-based segmentation models were employed: the U-Net (Navab et al., 2015) and the Modified U-Net (Navab, 2020). Both networks were trained and validated using images of tomato leaves.

Table 6 compares the performance of the two segmentation architectures under different loss functions (NLL, MSE, and BCE). Notably, the Modified U-Net with the NLL loss function surpassed the original U-Net in both the quantity and the quality of the segments produced for the ROI (leaf region) across all images.

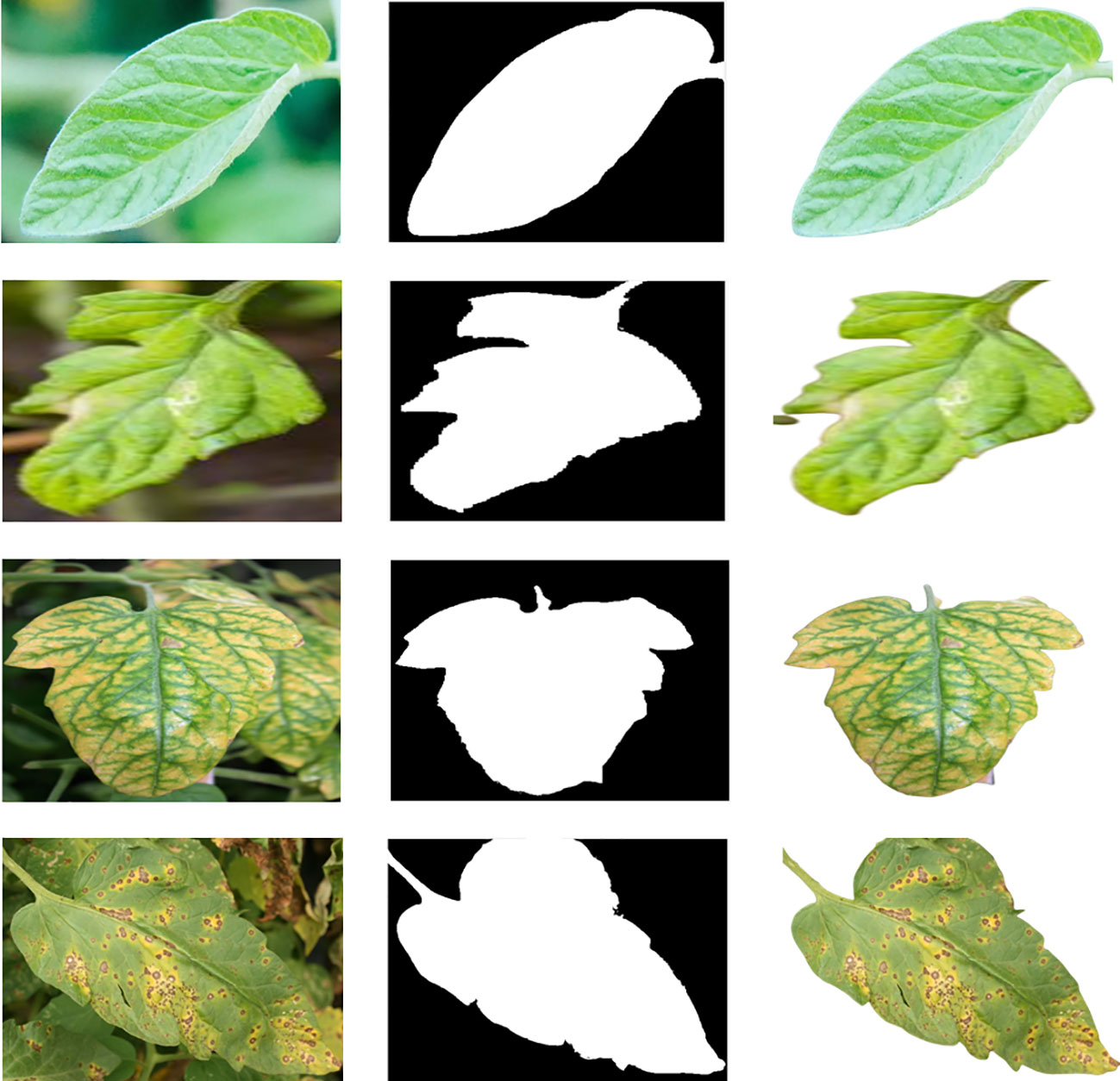

The Modified U-Net with the Negative Log-Likelihood loss function was used to segment the leaf regions. Four quantities were computed: validation loss, validation accuracy, IoU, and Dice. For the Modified U-Net model with the Negative Log-Likelihood loss function, these were 0.0076, 98.66, 98.5, and 98.73, respectively. Figure 5 shows example test leaf images from the Plant Village dataset with their ground truth masks, together with the segmented ROI images produced by the Modified U-Net model with the Negative Log-Likelihood loss function, which was trained on the Plant Village dataset.

Figure 5 Original Tomato Leaf Images, Ground Truth Mask and Segmented Leaf using Modified U-Net CNN Model.

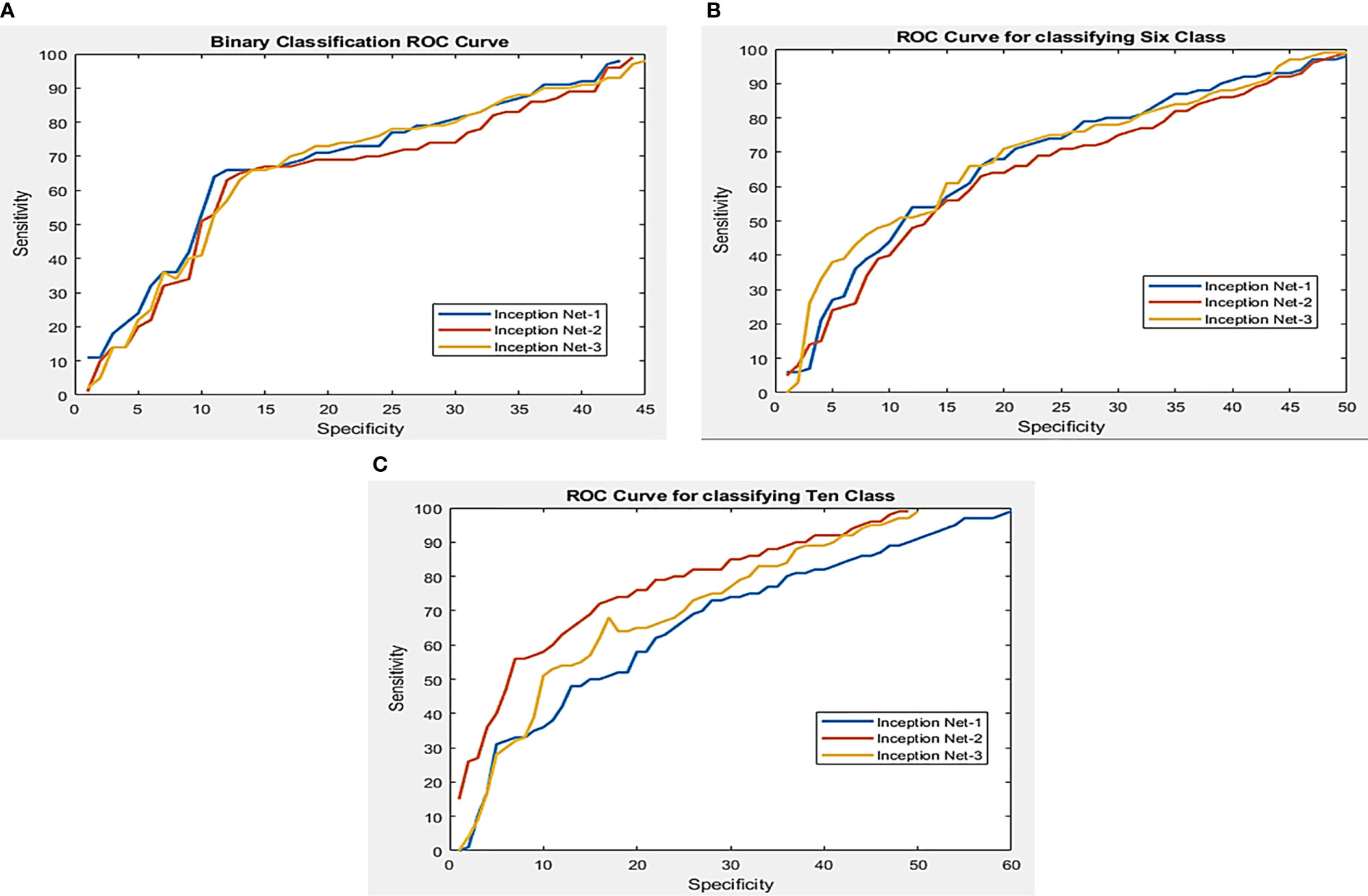

Figure 6 Receiver operating characteristic (ROC) curves for (A) binary classification, (B) six-class classification, and (C) ten-class classification of segmented leaf images.

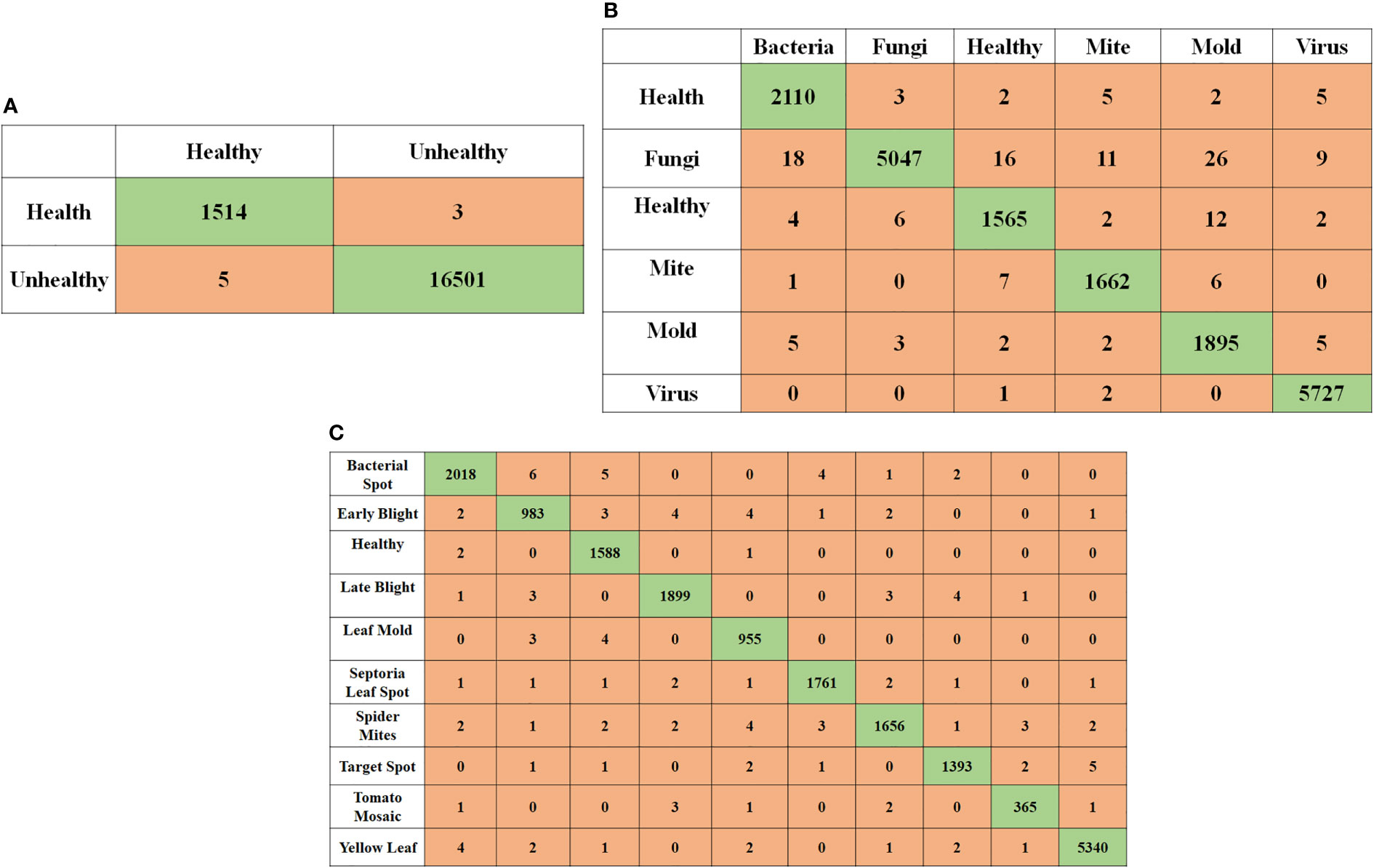

In this work, three separate experiments were performed using segmented tomato leaf images. Table 7 compares the performance of three Inception Net variants, InceptionNet1, InceptionNet2, and InceptionNet3, in classifying the segmented leaf images. As shown in Figure 7, the pre-trained models perform exceptionally well in identifying healthy and diseased tomato leaf images in the two-class, six-class, and ten-class problems. In addition, the results were superior when non-segmented images were used.

Figure 7 Image classification using compound scaling CNN-based models of healthy and diseased tomato leaves for segmented leaf images (A) for 2 class classification, (B) for 6 class classification, and (C) for 10 class classification.

Except for the ten-class problem, where InceptionNet3 performed marginally better than InceptionNet1, InceptionNet1 outperformed the other trained models on the two-class and six-class problems, both with and without segmented leaf images. We conducted extensive testing on several versions of Inception Net and observed that performance increases as the depth, width, and resolution of the network are increased. Because the depth, width, and resolution are scaled up as the Inception Net model grows, the testing time (T) also grows. In contrast, as the classification scheme becomes more complex, the performance of the scaled versions of Inception Net does not appear to increase substantially.

On the segmented tomato leaf images, InceptionNet1 outperformed the other models on the two-class and six-class problems, achieving 99.95% accuracy, 99.95% sensitivity, and 99.77% specificity for the two-class problem and 99.12% accuracy, 99.11% sensitivity, and 99.81% specificity for the six-class problem. InceptionNet3 achieved the highest accuracy, sensitivity, and specificity in the ten-class test, with 99.999% accuracy and 99.44% sensitivity. Figure 5 illustrates that increasing the number of parameters in a network results in marginally better performance for the 2-, 6-, and 10-class problems. On the other hand, deeper networks can give a more significant performance gain for the two-class and six-class problems. Figure 6 depicts the Receiver Operating Characteristic (ROC) curves for the two-class, six-class, and ten-class problems using segmented tomato leaf images.
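Accuracy, sensitivity, and specificity of the kind reported above are derived from the four confusion-matrix counts. The sketch below uses the standard definitions; the counts passed in are hypothetical round numbers chosen for illustration and are not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, sensitivity, and specificity from confusion counts.

    tp/tn: correctly classified positive/negative images
    fp/fn: incorrectly classified negative/positive images
    """
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),   # true positive rate
        "specificity": tn / (tn + fp),   # true negative rate
    }


# Hypothetical counts (assumption, not taken from Table 7).
metrics = classification_metrics(tp=95, tn=90, fp=10, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

With heavily imbalanced classes, as in a dataset with far more diseased than healthy images, accuracy alone can look high even when one class is poorly recognized, which is why sensitivity and specificity are reported alongside it.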

Figure 5 shows the masks produced by the model with the NLL loss function (2nd left), the segmented leaves with the matching segmentation (2nd right), and the ground truth images of tomato leaves (right).

Table 7 Results of tomato leaf disease classification using segmented and original leaf images (italicized values denote the best results).

The confusion matrices of the best-performing networks were examined for the different classification tasks on tomato leaf images. In the two-class problem, the best-performing network, InceptionNet1, misclassified only six of the 16,570 unhealthy tomato leaf images as healthy; the healthy class comprised 1591 images.

The six-class problem comprised one healthy class and five distinct unhealthy classes, covering 1591 healthy and 16,570 diseased tomato leaf images. Only three misclassified images were found among the healthy tomato leaf images in the six-class problem. Only one tomato leaf was misclassified in the six-class problem, which consisted of healthy and unhealthy classes of one hundred and ninety-nine different types. For the ten-class problem, the best network was InceptionNet3, which misclassified only four healthy images and 105 unhealthy images.

The reliability of the trained networks was evaluated using visualization tools based on five distinct Score-CAM categories, covering images classified as either healthy or diseased. For the ten-class problem, heat maps were created from segmented tomato leaf images. Figure 8 depicts the original tomato leaf samples and the heat maps generated on the segmented leaves, and illustrates that the networks learn from the leaf region of the segmented images, which increases the reliability of the network’s decisions. This helps to counter the criticism that CNNs make decisions based on irrelevant regions and are therefore untrustworthy (Schlemper et al., 2019). Figure 9 further illustrates how segmentation has assisted classification, with the network learning from the region of interest produced by segmentation. This reliable learning helped to correctly classify images that would otherwise have been misclassified. Comparing segmented with non-segmented images, we found that segmentation helped the network learn and make decisions from the relevant regions (see Figure 10).

Figure 8 Accurate classification and visualization of ROI using the Score-CAM tool: the red intensity indicates the severity of the lesion.
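The idea behind Score-CAM heat maps can be sketched in a few lines. Each activation map is normalized to [0, 1] and used to mask the input, the masked input is scored by the model for the target class, the score gains are turned into weights with a softmax, and the weighted sum of the maps is passed through a ReLU. The sketch below is a simplified illustration, not the implementation used in this study: it assumes the activation maps are already at input resolution, uses an all-zero image as the baseline, and takes a hypothetical `score_fn` in place of a trained network.

```python
import math


def normalize(amap):
    """Scale a 2D activation map to the range [0, 1]."""
    flat = [v for row in amap for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in amap]
    return [[(v - lo) / (hi - lo) for v in row] for row in amap]


def score_cam(image, activation_maps, score_fn):
    """Return (heat_map, weights) for one image and one target class."""
    h, w = len(image), len(image[0])
    baseline = score_fn([[0.0] * w for _ in range(h)])  # assumed baseline
    gains = []
    for amap in activation_maps:
        mask = normalize(amap)
        masked = [[image[i][j] * mask[i][j] for j in range(w)] for i in range(h)]
        gains.append(score_fn(masked) - baseline)
    # Softmax over the score gains gives one weight per activation map.
    top = max(gains)
    exps = [math.exp(g - top) for g in gains]
    weights = [e / sum(exps) for e in exps]
    # Weighted sum of maps followed by ReLU.
    heat = [[max(0.0, sum(wk * amap[i][j]
                          for wk, amap in zip(weights, activation_maps)))
             for j in range(w)] for i in range(h)]
    return heat, weights


# Toy example: the hypothetical score depends only on the top-left pixel.
image = [[1.0, 1.0], [1.0, 1.0]]
maps = [[[1.0, 0.0], [0.0, 0.0]],   # highlights the top-left pixel
        [[0.0, 0.0], [0.0, 1.0]]]   # highlights the bottom-right pixel
heat, weights = score_cam(image, maps, lambda img: img[0][0])
print(weights, heat)
```

In the toy example the map covering the pixel that drives the score receives the larger weight, so the heat map is brightest there, which mirrors how the heat maps concentrate on the leaf regions the network relies on.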

Plant diseases pose a substantial danger to the global food supply. The agricultural industry requires cutting-edge technology for disease control, which is currently unavailable. The application of artificial intelligence-based technologies to the identification of plant diseases is currently the subject of intensive research. The popularity of computer vision-based disease detection systems can be attributed to their robustness, ease of data collection, and quick turnaround time. In this study, classification and segmentation of tomato leaf images are used to evaluate the performance of model-scaling CNN-based architectures relative to their predecessors. Three classification schemes were employed: a two-class classification (Healthy and Unhealthy); a six-class classification (Healthy, Fungi, Bacteria, Mold, Virus, and Mite); and a ten-class classification (Healthy, Early blight, Septoria leaf spot, Target spot, Leaf mold, Bacterial spot, Late blight, Tomato Yellow Leaf Curl Virus, Tomato Mosaic Virus, and Two-spotted spider mite). InceptionNet1 was the most successful model overall: using segmented images, it outperformed all other models in both binary and six-class classification, while InceptionNet3 performed best in the ten-class classification.

InceptionNet1 has an overall accuracy of 99.95% when using segmented images to classify diseased and healthy tomato leaves into two classes, and an overall accuracy of 99.12% for six-class classification. InceptionNet3 demonstrated an overall accuracy of 99.89% on the ten-class classification of segmented images. Table 8 compares the findings of this article with the current state of the art. The Plant Village dataset utilized in this study consists of images captured in a variety of environments; however, it was collected in a specific location and contains images of only specific tomato varieties. A study using a dataset of images of tomato plant varieties from around the world should therefore be conducted to develop a more robust framework for the early detection of disease in tomato plants. In addition, lighter CNN models with non-linearity in the feature extraction layers may be worth investigating for portable solutions.

This study presents the outcomes of a CNN built on the recently proposed Inception Net architecture. The CNN model was effective and accurately assigned a class label, healthy or unhealthy, to tomato leaf images. The reported results were obtained using the publicly available benchmark Plant Village dataset (Hughes and Salathe, 2015), demonstrating that our model outperforms a number of current deep learning techniques. Compared to other architectures, the Modified U-net was superior at separating leaf images from the background. In addition, InceptionNet1 was superior to other designs in extracting high-priority features from images.

In addition, when the networks were trained with a greater number of parameters, their overall performance improved significantly. Trained models may allow for the automated and early detection of plant diseases. Professionals require years of training and experience to diagnose a disease through visual examination, but anyone can use our methodology, regardless of their level of experience or expertise. For any new user, the network operates in the background, receiving input from the camera and immediately reporting the result so that the appropriate action can be taken. As a result, preventative measures may be taken sooner rather than later. Combined with new technologies such as intelligent drone cameras, advanced mobile phones, and robotics, this research could aid in the early and automated detection of diseases in tomato crops. By combining the proposed framework with a feedback system that provides recommendations, cures, control measures, and disease management advice, it is possible to increase crop yields. Future work will evaluate the performance of the proposed method in an embedded system and a camera-based real-time application. The real-time system will be a hardware product that, after training with deep learning, will monitor and predict the health of plants.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

MS conceptualized this study, conducted experiments, wrote the original draft, and revised the manuscript. TH wrote the manuscript and performed the experiments. BS made the experimental plan, supervised the work, and revised the manuscript. IU performed the data analysis and revised the manuscript. SS made the experimental plan and revised the manuscript. FA evaluated the developed technique and revised the manuscript. SP designed the experimental plan, supervised the work, and revised the manuscript. All authors have read and agreed to the published version of the manuscript.

This work was supported by the DGIST R&D program of the Ministry of Science and ICT of KOREA (22-KUJoint-02, 19-RT-01, and 21-DPIC-08), and the National Research Foundation of Korea (NRF) grant funded by the Korean Government (MSIT) (No. 2019R1C1C1008727). This research work was also supported by the Cluster grant R20143 of Zayed University, UAE.

We thank all the authors for their contribution.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdulridha, J., Ampatzidis, Y., Kakarla, S. C., Roberts, P. (2020). Detection of target spot and bacterial spot diseases in tomato using UAV-based and benchtop-based hyperspectral imaging techniques. Precis. Agric. 21 (5), 955–978. doi: 10.1007/s11119-019-09703-4

Agarwal, M., Singh, A., Arjaria, S., Sinha, A., Gupta, S. (2020). ToLeD: Tomato leaf disease detection using convolution neural network. Proc. Comput. Sci. 167 (2019), 293–301. doi: 10.1016/j.procs.2020.03.225

Amara, J., Bouaziz, B., Algergawy, A. (2017). “A deep learning-based approach for banana leaf diseases classification’, lecture notes in informatics (LNI),” in Proceedings - series of the gesellschaft fur informatik (GI) (Germany: Digital library), vol. 266, 79–88.

Arya, S., Singh, R. (2019). A comparative study of CNN and AlexNet for detection of disease in potato and mango leaf’. IEEE Int. Conf. Issues Challenges Intelligent Computing Techniques ICICT 2019 1, 1–6. doi: 10.1109/ICICT46931.2019.8977648

Broadbent, L. (1976). Epidemiology and control of tomato mosaic virus. Annual review of Phytopathology, 14 (1), 75–96. Available at: https://www.annualreviews.org/doi/pdf/10.1146/annurev.py.14.090176.000451

Chattopadhay, A., Sarkar, A., Howlader, P., Balasubramanian, V. N. (2018). “Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks” in Proceedings - 2018 IEEE winter conference on applications of computer vision (USA: WACV 2018), 839–847. doi: 10.1109/WACV.2018.00097

Chen, Y., Zhong, G. (2022). Score-CAM++: Class discriminative localization with feature map selection. J. Physics: Conf. Ser. 2278 (1), 1–11. doi: 10.1088/1742-6596/2278/1/012018

Choi, H., Jo, Y., Cho, W. K., Yu, J., Tran, P. T., Salaipeth, L., et al. (2020). Identification of viruses and viroids infecting tomato and pepper plants in vietnam by metatranscriptomics. Int. J. Mol. Sci. 21 (20), 1–16. doi: 10.3390/ijms21207565

Chouhan, S. S., Kaul, A., Singh, U. P., Jain, S. (2018). Bacterial foraging optimization based radial basis function neural network (BRBFNN) for identification and classification of plant leaf diseases: An automatic approach towards plant pathology. IEEE Access 6, 8852–8863. doi: 10.1109/ACCESS.2018.2800685

Chowdhury, M. E. H., Khandakar, A., Alzoubi, K., Mansoor, S., Tahir, M., Reaz, A., et al. (2019). Real-time smart-digital stethoscope system for heart diseases monitoring. Sensors (Switzerland) 19 (12), 1–22. doi: 10.3390/s19122781

Chowdhury, M. H., Shuzan, M. N.I., Chowdhury, M. E., Mahbub, Z. B., Uddin, M. M., Khandakar, A., et al. (2020). Estimating blood pressure from the photoplethysmogram signal and demographic features using machine learning techniques. Sensors (Switzerland) 20 (11), 1–24. doi: 10.3390/s20113127

Chowdhury, M. E. H., Rahman, T., Khandakar, A., Al-Madeed, S., Zughaier, S. M., Hassen, H., et al. (2021). An early warning tool for predicting mortality risk of COVID-19 patients using machine learning. Cogn. Comput. 13, 1–16. doi: 10.1007/s12559-020-09812-7

Chowdhury, M. E. H., Khandakar, A., Ahmed, S., Al-Khuzaei, F., Hamdalla, J., Haque, F., et al. (2020). Design, construction and testing of iot based automated indoor vertical hydroponics farming test-bed in qatar. Sensors (Switzerland) 20 (19), 1–24. doi: 10.3390/s20195637

Chowdhury, M. E. H., Rahman, T., Khandakar, A., Mazhar, R., Kadir, M. A., Mahbub, Z. B., et al. (2020). Can AI help in screening viral and COVID-19 pneumonia? IEEE Access 8, 132665–132676. doi: 10.1109/ACCESS.2020.3010287

Chowdhury, M. E. H., Rahman, T., Khandakar, A., Ayari, M. A., Khan, A. U., Khan, M. S., et al. (2021). Automatic and reliable leaf disease detection using deep learning techniques. AgriEngineering 3.2, 294–312.11. doi: 10.3390/agriengineering3020020

Dookie, M., Ali, O., Ramsubhag, A., Jayaraman, J. (2021). ‘Flowering gene regulation in tomato plants treated with brown seaweed extracts’. Scientia Hortic. 276, 109715. doi: 10.1016/j.scienta.2020.109715

Durmus, H., Gunes, E. O., Kirci, M. (2017). ‘Disease detection on the leaves of the tomato plants by using deep learning’, 2017 6th international conference on agro-geoinformatics. Agro-Geoinformatics 2017, 1–5. doi: 10.1109/Agro-Geoinformatics.2017.8047016

Fuentes, A., Yoon, S., Kim, S. C., Park, D. S. (2017). A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors (Switzerland) 17 (9), 1–21. doi: 10.3390/s17092022

Ghanim, M., Czosnek, H. (2000). Tomato yellow leaf curl geminivirus (TYLCV-is) is transmitted among whiteflies ( bemisia tabaci ) in a sex-related manner. J. Virol. 74 (10), 4738–4745. doi: 10.1128/jvi.74.10.4738-4745.2000

Ghanim, M., Morin, S., Zeidan, M., Czosnek, H. (1998). Evidence for transovarial transmission of tomato yellow leaf curl virus by its vector, the whitefly bemisia tabaci. Virology 240 (2), 295–303. doi: 10.1006/viro.1997.8937

Global Network Against Food Crisis (2022). ‘FSIN food security information network’. (FSIN food security information network) 11–60. Available at: https://www.fsinplatform.org/.

He, Y. Z., Wang, Y. M., Yin, T. Y., Fiallo-Olivé, E., Liu, Y. Q., Hanley-Bowdoin, L., et al. (2020). A plant DNA virus replicates in the salivary glands of its insect vector via recruitment of host DNA synthesis machinery. Proc. Natl. Acad. Sci. United States America 117 (29), 16928–16937. doi: 10.1073/pnas.1820132117

Huang, Y., et al. (2019). “GPipe: Efficient training of giant neural networks using pipeline parallelism” in Advances in neural information processing systems (Canada: Neural information processing system), vol. 32. , 1–11.

Hu, J., Shen, L., Albanie, S., Sun, G., Wu, E. (2020). Squeeze-and-Excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. 42 (8), 2011–2023. doi: 10.1109/TPAMI.2019.2913372

Hughes, D. P., Salathe, M. (2015) ‘An open access repository of images on plant health to enable the development of mobile disease diagnostics’. Available at: http://arxiv.org/abs/1511.08060.

Irmak, G., Saygili, A. (2020). “Tomato leaf disease detection and classification using convolutional neural networks” in Proceedings - 2020 innovations in intelligent systems and applications conference (Turkey: ASYU 2020), 2–4. doi: 10.1109/ASYU50717.2020.9259832

Khandakar, A., Chowdhury, M. E. H., Kazi, M. K., Benhmed, K., Touati, F., Al-Hitmi, M., et al. (2019). Machine learning based photovoltaics (PV) power prediction using different environmental parameters of Qatar. Energies 12 (14), 1–19. doi: 10.3390/en12142782

Laranjeira, T., Costa, A., Faria-Silva, C., Ribeiro, D., Ferreira de Oliveira, J. M. P., Simões, S., et al. (2022). Sustainable valorization of tomato by-products to obtain bioactive compounds: Their potential in inflammation and cancer management. Molecules 27 (5), 1–19. doi: 10.3390/molecules27051701

LeCun, Y., Haffner, P., Bottou, L., Bengio, Y. (1999). Object recognition with gradient-based learning. In Shape, contour and grouping in computer vision. (Berlin, Heidelberg: Springer), 319–345. doi: 10.1007/3-540-46805-6_19

Livne, M., Rieger, J., Aydin Orhun, U., Taha Abdel, A., Akay Ela, M., Kossen, T., et al (2019). A U-Net deep learning framework for high performance vessel segmentation in patients with cerebrovascular disease. Front. Neurosci (2019) 13, 97. doi: 10.3389/fnins.2019.00097

Louis, M. (2013). ‘20:21’, Canadian journal of emergency medicine, (Canada: Cambridge) Vol. 15. 190. doi: 10.2310/8000.2013.131108

Louws, F. J., Wilson, M., Campbell, H. L., Cuppels, D. A., Jones, J. B., Shoemaker, P. B., et al. (2001). Field control of bacterial spot and bacterial speck of tomato using a plant activator. Plant Dis. 85 (5), 481–488. doi: 10.1094/PDIS.2001.85.5.481

Manjunath, R. V., Kwadiki, K. (2022). ‘Modified U-NET on CT images for automatic segmentation of liver and its tumor’. Biomed. Eng. Adv. 4, 100043. doi: 10.1016/j.bea.2022.100043

Navab, N. (2020) Medical image computing and computer-assisted intervention-MICCAI 2015. (Germany: Springer) Available at: https://github.com/imlab-uiip/lung-segmentation-2d#readme.

Navab, N., Hornegger, J., Wells, W. M., Frangi, A. F. (2015). ‘Medical image computing and computer-assisted intervention - MICCAI 2015: 18th international conference Munich, Germany, October 5-9, 2015 proceedings, part III’,” in Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), vol. 9351. , 12–20. doi: 10.1007/978-3-319-24574-4

Pernezny, K., Stoffella, P., Collins, J., Carroll, A., Beaney, A. (2018). ‘Control of target spot of tomato with fungicides, systemic acquired resistance activators, and a biocontrol agent’. Plant Prot. Sci. 38 (No. 3), 81–88. doi: 10.17221/4855-pps

Prospects, C., Situation, F. (2022). Crop prospects and food situation #2, July 2022. (Food and Agriculture Organization). doi: 10.4060/cc0868en

Qiao, K., Liu, Q., Huang, Y., Xia, Y., Zhang, S. (2020). ‘Management of bacterial spot of tomato caused by copper-resistant xanthomonas perforans using a small molecule compound carvacrol’. Crop Prot. 132, 105114. doi: 10.1016/j.cropro.2020.105114

Rahman, T., Chowdhury, M. E. H., Khandakar, A. (2020). Transfer learning with deep convolutional neural network (CNN) for pneumonia detection using chest X-ray. Appl. Sci. 10 (9), 3233. doi: 10.3390/app10093233

Rahman, M. A., Islam, M. M., Mahdee, G. M. S., Ul Kabir, M. W. (2019). ‘Improved segmentation approach for plant disease detection’, 1st international conference on advances in science Vol. pp (Bangladesh: Engineering and Robotics Technology 2019, ICASERT 2019), 1–5. doi: 10.1109/ICASERT.2019.8934895

Rahman, T., Khandakar, A., Kadir, M. A., Islam, K. R., Islam, K. F., Mazhar, R., et al. (2020). Reliable tuberculosis detection using chest X-ray with deep learning, segmentation and visualization. IEEE Access 8, 191586–191601. doi: 10.1109/ACCESS.2020.3031384

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., Chen, L. C. (2018). “MobileNetV2: Inverted residuals and linear bottlenecks” in Proceedings of the IEEE computer society conference on computer vision and pattern recognition, USA: IEEE vol. pp. , 4510–4520. doi: 10.1109/CVPR.2018.00474

Schlemper, J., Oktay, O., Schaap, M., Heinrich, M., Kainz, B., Glocker, B., et al. (2019). Attention gated networks: Learning to leverage salient regions in medical images. Med. Image Anal. 53, 197–207. doi: 10.1016/j.media.2019.01.012

Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., Batra, D. (2020). Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vision 128 (2), 336–359. doi: 10.1007/s11263-019-01228-7

Sevilla, J., et al. (2022) ‘Compute trends across three eras of machine learning’. Available at: http://arxiv.org/abs/2202.05924.

Smilkov, D., et al. (2017) ‘SmoothGrad: removing noise by adding noise’. Available at: http://arxiv.org/abs/1706.03825.

SpMohanty (2018) PlantVillage-dataset. Available at: https://github.com/spMohanty/PlantVillage-Dataset (Accessed 21 January 2021).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.. (2016). Rethinking the inception architecture for computer vision;. In Proceedings of the IEEE conference on computer vision and pattern recognition. (USA: IEEE), 2818–2826. doi: 10.1109/CVPR.2016.308

Tahir, A. M., Qiblawey, Y., Khandakar, A., Rahman, T., Khurshid, U., Musharavati, F., et al. (2022). ‘Deep learning for reliable classification of COVID-19, MERS, and SARS from chest X-ray images’. Cogn. Comput. 2019, 17521772. doi: 10.1007/s12559-021-09955-1

Touati, F., Khandakar, A., Chowdhury, M. E. H., Gonzales, A. J. S. P., Sorino, C. K., Benhmed, K. (2020). ‘Photo-voltaic (PV) monitoring system, performance analysis and power prediction models in Doha, qatar’ in Renewable energy. rijeka. Eds. Taner, T., Tiwari, A., Ustun, T. S. (IntechOpen). doi: 10.5772/intechopen.92632

Wang, H., Wang, Z., Du, M., Yang, F., Zhang, Z., Ding, S., et al. (2020). ‘Score-CAM: Score-weighted visual explanations for convolutional neural networks’. IEEE Comput. Soc. Conf. Comput. Vision Pattern Recognition Workshops, 111–119. doi: 10.1109/CVPRW50498.2020.00020

Wang, G., Sun, Y., Wang, J. (2017). Automatic image-based plant disease severity estimation using deep learning. Comput. Intell. Neurosci. 2017, 1–9. doi: 10.1155/2017/2917536

Xu, Y., Zhang, S., Shen, J., Wu, Z., Du, Z., Gao, F. (2021). ‘The phylogeographic history of tomato mosaic virus in eurasia’. Virology 554, 42–47. doi: 10.1016/j.virol.2020.12.009

Keywords: plant disease detection, deep learning, U-Net CNN, inception-net, object detection and recognition

Citation: Shoaib M, Hussain T, Shah B, Ullah I, Shah SM, Ali F and Park SH (2022) Deep learning-based segmentation and classification of leaf images for detection of tomato plant disease. Front. Plant Sci. 13:1031748. doi: 10.3389/fpls.2022.1031748

Received: 30 August 2022; Accepted: 15 September 2022;

Published: 07 October 2022.

Edited by:

Marcin Wozniak, Silesian University of Technology, Poland

Reviewed by:

Moazam Ali, Bahria University, Pakistan

Copyright © 2022 Shoaib, Hussain, Shah, Ullah, Shah, Ali and Park. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sang Hyun Park; Farman Ali

†These authors have contributed equally to this work and share first authorship
