REVIEW article

Front. Plant Sci., 09 April 2021

Sec. Technical Advances in Plant Science

Volume 12 - 2021 | https://doi.org/10.3389/fpls.2021.616689

This article is part of the Research Topic: Hyperspectral Imaging Technology: A Novel Method for Agricultural and Biosecurity Diagnostics

Biomass is an important indicator for evaluating crops. The rapid, accurate and nondestructive monitoring of biomass is the key to smart agriculture and precision agriculture. Traditional detection methods are based on destructive measurements. Although satellite remote sensing, manned airborne equipment, and vehicle-mounted equipment can nondestructively collect measurements, they are limited by low accuracy, poor flexibility, and high cost. As nondestructive remote sensing equipment with high precision, high flexibility, and low-cost, unmanned aerial systems (UAS) have been widely used to monitor crop biomass. In this review, UAS platforms and sensors, biomass indices, and data analysis methods are presented. The improvements of UAS in monitoring crop biomass in recent years are introduced, and multisensor fusion, multi-index fusion, the consideration of features not directly related to monitoring biomass, the adoption of advanced algorithms and the use of low-cost sensors are reviewed to highlight the potential for monitoring crop biomass with UAS. Considering the progress made to solve this type of problem, we also suggest some directions for future research. Furthermore, it is expected that the challenge of UAS promotion will be overcome in the future, which is conducive to the realization of smart agriculture and precision agriculture.

Agriculture plays an important role in maintaining all human activities. By 2050, population and socioeconomic growth are expected to double the current food demand (Niu et al., 2019). To solve the increasingly complex problems in the agricultural production system, the development of smart agriculture and precision agriculture provides important tools for meeting the challenges of sustainable agricultural development (Sharma et al., 2020). Biomass is a basic agronomic parameter in field investigations and is often used to indicate crop growth status, the effectiveness of agricultural management measures and the carbon sequestration ability of crops (Bendig et al., 2015; Li W. et al., 2015). Fast, accurate and nondestructive monitoring of biomass is the key to smart agriculture and precision agriculture (Lu et al., 2019; Yuan et al., 2019).

Traditional biomass measurement methods are destructive: crops must be manually harvested (Gnyp et al., 2014), weighed and recorded, which makes the measurements time-consuming, laborious and difficult to apply over large areas or long periods (Boschetti et al., 2007; Yang et al., 2017). In other research areas, many studies have used satellite remote sensing to monitor biomass. Navarro et al. (2019) used Sentinel-1 and Sentinel-2 data to monitor the aboveground biomass (AGB) of a mangrove plantation. However, meteorological conditions have a great influence on satellite images, such as cloud and aerosol interference, surface glare and poor synchrony with tides (Tait et al., 2019). In addition, satellite data cannot provide sufficient data resolution for precision agricultural applications (Jiang et al., 2019; Song et al., 2020), and it is difficult to obtain timely and reliable data (Prey and Schmidhalter, 2019). Similar to satellite remote sensing, manned airborne equipment can cover a wide range, but the data are not detailed enough (Sofonia et al., 2019; ten Harkel et al., 2020). Meanwhile, although vehicle-mounted equipment can guarantee high accuracy, it has poor flexibility and slow speed (Selbeck et al., 2010; Tian et al., 2020). Unmanned aerial systems (UAS) represent a noncontact and nondestructive measurement method that can obtain the spectral, structural, and texture features of the target at different spatiotemporal scales (Jiang et al., 2019). These systems have the ability to obtain high spatial and temporal resolution data and have great application potential (Niu et al., 2019; Ramon Saura et al., 2019).

To date, most reviews of UAS in the field of agriculture are general reviews involving multiple fields in agriculture, and the description of biomass monitoring is not detailed enough (Hassler and Baysal-Gurel, 2019; Kim et al., 2019; Maes and Steppe, 2019). Reviews of remote sensing for crop biomass monitoring are rare and mainly introduce satellite remote sensing, while the application of UAS in crop biomass monitoring is rarely introduced (Chao et al., 2019). Therefore, the motivation of our study was to conduct a comprehensive review of almost all UAS-related studies in the field of crop biomass monitoring, including information on the equipment used in the field of crop biomass monitoring, biomass indices, and data processing and analysis methods. Finally, the relevant applications are reviewed according to different development directions.

Unmanned aerial systems consist of unmanned aerial vehicle (UAV) platforms, autopilot systems, navigation sensors, mechanical steering components, data acquisition sensors, and other components (Jeziorska, 2019), among which the most important are the data acquisition sensors (Toth and Jozkow, 2016). Meanwhile, the type of UAV platforms and flight conditions will have a great impact on the data acquisition process of sensors, which need to be considered (Domingo et al., 2019; ten Harkel et al., 2020).

The most commonly used platforms in crop biomass monitoring are fixed-wing drones and rotor drones (Hassler and Baysal-Gurel, 2019). Hogan et al. (2017) summarized the characteristics of fixed-wing aircraft and rotorcraft. Fixed-wing aircraft usually have a larger payload capacity, faster flight speed, longer flight time, and longer range than rotorcraft. For these reasons, fixed-wing systems are particularly useful for collecting data over large areas. However, fixed-wing aircraft have poor maneuverability, need more space to land, and are more expensive than rotor UAVs. Rotor UAVs are very maneuverable and can hover, rotate and take pictures at almost any angle. Although expensive models exist, many low-cost models are now on the market. Compared with fixed-wing aircraft, the main disadvantage of rotor UAVs is their short range and flight time. Figure 1 shows a DJI Inspire 2 rotor drone and an eBee X fixed-wing drone.

The flight planning of a fixed-wing UAV is very similar to that of a manned aircraft, while a rotor UAV can meet almost any trajectory requirements, including hover, slow motion and attitude control (Toth and Jozkow, 2016). These features enable rotor UAVs to perform extremely accurate tasks (Kim et al., 2019). Therefore, rotor UAVs are more commonly used in biomass monitoring than fixed-wing aircraft.

Unmanned aerial systems usually obtain data through spectral sensors and depth sensors (Toth and Jozkow, 2016). Spectral sensors mainly include RGB sensors, multispectral sensors, and hyperspectral sensors, which can obtain color and texture information from the crop surface (Li et al., 2019). The difference between these three types of sensors is their ability to sense the spectrum (Shentu et al., 2018; Zhong et al., 2018; Kelly et al., 2019). Light detection and ranging (LiDAR) is a typical example of a depth sensor and can clearly obtain the three-dimensional structure and height information of crops (Wijesingha et al., 2019). Figure 2 shows several UAV-mounted sensor types.

Based on the same imaging principle, RGB, multispectral, and hyperspectral sensors all capture images by sensing spectral bands but have abilities to sense different spectral bands.

RGB sensors are a type of visible light camera that can detect three bands of color: red (R), green (G), and blue (B) (Shentu et al., 2018). The data from the three bands represent the intensity of R, G, and B in each pixel (Tait et al., 2019). Although RGB sensors are less accurate than other sensors because they collect spectral data from only three bands, they are much cheaper. Because the large-scale use of UAS for crop biomass monitoring requires low cost, RGB sensors have received increasing attention (Calou et al., 2019; Lu et al., 2019; Yue et al., 2019). The combination of RGB data with better biomass indices and advanced algorithms can obtain high accuracy at a low cost (Acorsi et al., 2019; Lu et al., 2019; Yue et al., 2019). Therefore, in the field of crop biomass monitoring by UAS, RGB sensors play an irreplaceable role.

Since spectral information is lost during the process of color image recording, the use of RGB input obviously limits the amount of information to extract from the highlighted area (Kelly et al., 2019). Compared with three-channel RGB imaging, multispectral images contain more imaging bands (Shentu et al., 2018).

Multispectral image data containing several near-infrared (NIR) spectral regions are superior to RGB data (Cen et al., 2019), but the disadvantage is that the cost of multispectral sensors is higher than that of RGB sensors (Costa et al., 2020). Different spectral bands can reflect the characteristics of different plants and can be used to effectively distinguish different crops (Xu et al., 2019). Song and Park (2020) used the RedEdge multispectral camera from MicaSense to analyze the spectral characteristics of aquatic plants and found that waterside plants exhibited the highest reflectivity in the NIR band, while floating plants had high reflectivity in the red-edge band.

Hyperspectral sensors can obtain more abundant spectral information than multispectral sensors (Zhong et al., 2018). Yue et al. (2018) used the UHD 185 Firefly (UHD 185 Firefly, Cubert GmbH, Ulm, Baden-Württemberg, Germany) hyperspectral sensor to collect panchromatic images with radiation records of 1000 × 1000 (1 band) and hyperspectral cubes of 50 × 50 (125 bands), with rich texture and spectral information. The disadvantage is that the cost of hyperspectral sensors is higher than that of multispectral sensors. In addition, the spatial resolution of hyperspectral images is lower than that of ordinary images, which may cause the loss of detail information for small targets. Meanwhile, more spectral information may not be useful in some cases. Tao et al. (2020) used hyperspectral sensors to study the correlation between different vegetation indices (VIs) and red-edge parameters and crop biomass. It was found that using too many spectral features as independent variables will lead to overfitting of the model, so it is necessary to use an appropriate number of spectral features that are highly related to biomass.

How to improve the reliability of spectral data is an unavoidable problem when using spectral sensors to collect data. First, the image resolution will affect the results of AGB monitoring, and the higher the image resolution is, the higher the prediction accuracy (Domingo et al., 2019). Yue et al. (2019) found that using image texture information to estimate the best image resolution for AGB monitoring depends on the size and row spacing of the crop canopy. Second, fisheye lenses may have an advantage over flat lenses. Calou et al. (2019) coupled a 16-megapixel plane lens with a 12-megapixel fisheye lens on a UAV for data collection, and the results showed that the fisheye lens estimation was the most accurate at an altitude of 30 m. Finally, at present, some applications using spectral data are processed without accurate or rough calibration. Guo et al. (2019) proposed a general calibration equation that is suitable for images under clear sky conditions and even under a small amount of clouds. The method needs to be further verified.

Although RGB sensors can only collect spectral data from the R, G, and B bands, the equipment is inexpensive. Although hyperspectral sensors can collect spectral information from many bands, the equipment is expensive. Unless there are special requirements for detailed hyperspectral images and the equipment is inexpensive, a multispectral sensor that balances the richness of spectral bands and equipment costs exhibits the highest cost performance and should be the default imaging choice (Hassler and Baysal-Gurel, 2019; Niu et al., 2019).

Spectral data have poor robustness in the case of target overlap, occlusion, large illumination changes, shadows, and complex scenes. Depth data that do not change with brightness and color can provide additional useful information for complex scenes (Shuqin et al., 2016). At present, the depth sensors on UAV platforms are mainly LiDAR (Wallace et al., 2012; Qiu et al., 2019; Tian et al., 2019; Wang D.Z. et al., 2019; Yan et al., 2020). LiDAR has become an important information source for the evaluation of the vegetation canopy structure, which is especially suitable for species that limit artificial and destructive sampling (Brede et al., 2017). Figure 3 shows a schematic illustration of the difference between LiDAR and spectral data (Zhu Y.H. et al., 2019).

Light detection and ranging is an active remote sensing technology that accurately measures distance by emitting laser pulses and analyzing the returned energy (Calders et al., 2015). With the development of global positioning system (GPS), inertial measurement unit (IMU), laser and computing technology, which make it possible to use LiDAR more inexpensively and accurately, a LiDAR system based on a UAV platform has become possible (Lohani and Ghosh, 2017). Compared with spectral sensors, LiDAR tends to provide accurate results of biomass prediction (Acorsi et al., 2019) because spectral data tend to be saturated in the middle and high canopy (Féret et al., 2017), and LiDAR can improve this through depth information (Hassler and Baysal-Gurel, 2019).

However, when it is difficult to estimate plant height, it is difficult to accurately monitor biomass through LiDAR. ten Harkel et al. (2020) used a VUX-SYS laser scanner to monitor the biomass of potato, sugar beet, and winter wheat. The researchers achieved good results in monitoring the biomass of sugar beet (R2 = 0.68, RMSE = 17.47 g/m2) and winter wheat (R2 = 0.82, RMSE = 13.94 g/m2), but the reliability for monitoring potato biomass was low. The reason for this result is that potatoes have complex canopy structures and grow on a ridge, and the other two crops have vertical structures and uniform heights. Therefore, for potatoes, it is difficult to visually determine the highest point of a specific position.

Finally, how to improve data reliability by adjusting LiDAR parameters has not yet been sufficiently studied. For instance, the sampling intensity of LiDAR has an impact on the accuracy of monitoring biomass (Wang D.Z. et al., 2020), but the current findings in this area need to be further verified.

The combination of data obtained from multiple sensors is an effective method to improve the accuracy of biomass estimation. On the one hand, the density of LiDAR point clouds has been improved with increased data resolution and penetrability (Wallace et al., 2012; Yan et al., 2020), which can improve the disadvantage that spectral data collected by RGB, multispectral and hyperspectral sensors are easily saturated in the middle and high canopy (Gitelson, 2004; Féret et al., 2017). On the other hand, the texture and spectral features that can be collected by RGB, multispectral, and hyperspectral sensors are also beyond the reach of LiDAR (Liu et al., 2019; Yue et al., 2019; Zheng et al., 2019). A variety of sensors with different characteristics are used to collect data, and the data that can reflect different characteristics of target crops are combined to provide more effective characteristics that are not cross-correlated that are needed for data analysis with a regression algorithm (Niu et al., 2019) to improve the accuracy of biomass estimation.

Wang et al. (2017) first evaluated the application of the fusion of hyperspectral and LiDAR data in maize biomass estimation. The results show that the fusion of hyperspectral and LiDAR data can provide better estimates of maize biomass than using LiDAR or hyperspectral data alone. Different from the previous methods of using LiDAR and optical remote sensing data to predict AGB separately or in combination, Zhu Y.H. et al. (2019) divided the estimation of maize AGB into two parts: aboveground leaf biomass (AGLB) and aboveground stem biomass (AGSB). AGLB was measured with multispectral data, which are sensitive to the vegetation canopy. AGSB was measured with LiDAR point cloud data, which are sensitive to the vegetation structure. Compared with using LiDAR data alone or using multispectral data alone, the combination of LiDAR data and multispectral data can more accurately estimate AGB, in which the R2 increases by 0.13 and 0.30, the RMSE decreases by 22.89 and 54.92 g/m2, and the NRMSE decreases by 4.46 and 7.65%.
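The accuracy figures quoted throughout this review (R2, RMSE, NRMSE) compare predicted biomass with biomass measured in the field. The following is a minimal sketch of how such metrics are commonly computed. The sample values are hypothetical, and the normalisation of NRMSE by the mean observation is an assumption, since some studies normalise by the range instead and some report R2 as a squared correlation coefficient.

```python
import math


def rmse(observed, predicted):
    """Root mean square error, in the units of the measurements."""
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def nrmse_percent(observed, predicted):
    """RMSE as a percentage of the mean observation (assumed normalisation)."""
    return 100.0 * rmse(observed, predicted) / (sum(observed) / len(observed))


# Hypothetical AGB samples in g/m2, not taken from any cited study.
observed = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 310.0, 390.0]

print("RMSE:", rmse(observed, predicted))
print("R2:", r_squared(observed, predicted))
print("NRMSE (%):", nrmse_percent(observed, predicted))
```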

Other researchers have also carried out many studies in the field of multisensor data fusion and obtained the same results in studies on the monitoring of crop biomass, such as rice (Cen et al., 2019) and soybean (Maimaitijiang et al., 2020). In addition, different crops have different characteristics, and the same crop will show different characteristics under different growth conditions (Johansen et al., 2019; ten Harkel et al., 2020), which requires the use of different sensors to collect crop information comprehensively and screen out some information most related to biomass. The combination of data from multiple sensors is an effective method to improve the accuracy of biomass estimation.

To ensure the most accurate results for biomass monitoring, further tests should be carried out before experiments to determine the optimal flight parameters, such as altitude, speed, location of flight lines, and overlap (Domingo et al., 2019). Increasing the UAV flight height will reduce image resolution (Lu et al., 2019), and the sensitivity of the accuracy of biomass monitoring to the image spatial resolution is an important reference for the configuration of a UAV flight height. The estimation of the other flight parameters did not exhibit much different effects on the overall effect of biomass monitoring from that of standard flight parameters, but different flight parameters can lead to different point densities and distributions, which have a greater impact on biomass monitoring than altitude and velocity. A better crossover model and a closer flight path may improve biomass monitoring overall (ten Harkel et al., 2020). The images need to be overlapped sufficiently to improve the accuracy of biomass monitoring (Borra-Serrano et al., 2019). Therefore, the UAV flight plan should be wide enough. Domingo et al. (2019) found that reducing side overlap from 80 to 70% while maintaining a fixed forward overlap of 90% may be an option to reduce flight time and procurement costs. For specific species, such as rice, due to the physiological characteristics of rice, the analysis of the solar elevation angle during the creation of a flight plan is very important to avoid the influence of sun glint and hotspot effects (Jiang et al., 2019).
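The trade-offs between flight height, image resolution and overlap can be illustrated with the standard pinhole-camera relation for ground sampling distance. This is a rough sketch that assumes a nadir-pointing camera over flat terrain; the camera parameters are hypothetical and the function names are ours, not taken from any of the cited studies.

```python
def ground_sampling_distance(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Ground size of one pixel in metres (nadir view, flat terrain assumed)."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def flight_line_spacing(footprint_width_m, side_overlap):
    """Distance between adjacent flight lines; side_overlap is a fraction (0-1)."""
    return footprint_width_m * (1.0 - side_overlap)


# Hypothetical camera: 13.2 mm wide sensor, 8.8 mm lens, 5472 px wide images.
SENSOR_MM, FOCAL_MM, WIDTH_PX = 13.2, 8.8, 5472

gsd_30m = ground_sampling_distance(SENSOR_MM, FOCAL_MM, WIDTH_PX, altitude_m=30.0)
gsd_60m = ground_sampling_distance(SENSOR_MM, FOCAL_MM, WIDTH_PX, altitude_m=60.0)

# Across-track ground coverage of a single image at 30 m.
footprint_30m = gsd_30m * WIDTH_PX

spacing_80 = flight_line_spacing(footprint_30m, side_overlap=0.80)
spacing_70 = flight_line_spacing(footprint_30m, side_overlap=0.70)

print("GSD at 30 m (m/px):", gsd_30m)
print("GSD at 60 m (m/px):", gsd_60m)
print("Line spacing at 80% side overlap (m):", spacing_80)
print("Line spacing at 70% side overlap (m):", spacing_70)
```

Under these assumed values, doubling the altitude doubles the pixel size on the ground, and lowering the side overlap from 80 to 70% widens the line spacing from about 9 m to about 13.5 m, so fewer flight lines are needed to cover the same field.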

It is a common method in biomass monitoring to use biomass indices to obtain data directly related to biomass. Common biomass indices include VIs and crop height (CH), which are extracted from images or three-dimensional point clouds. There are also relevant studies that do not use biomass indices but directly use images or three-dimensional point clouds to conduct correlation analysis with biomass (Nevavuori et al., 2019).

A variety of VIs from remote sensing images can be used to monitor the state of vegetation on the ground and to quantitatively evaluate the richness, greenness and vitality of vegetation. After years of development, many VIs with different calculation methods have been proposed, among which the most commonly used is the normalized difference vegetation index (NDVI) proposed by Rouse et al. (1974). The NDVI is usually used to reflect information such as vegetation cover and growth, and its calculation formula is as follows:

$$\mathrm{NDVI} = \frac{NIR - R}{NIR + R}$$

where NIR is the reflectance in the NIR band and R is the reflectance in the red band. The value of NDVI ranges from −1 to 1. It is generally believed that an NDVI value less than 0 represents no vegetation coverage, while a value greater than 0.1 represents vegetation coverage (Li Z. et al., 2015). Since the index is positively correlated with the density of vegetation, the higher the NDVI value is, the higher the vegetation coverage will be.
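As an illustration, NDVI is the difference between NIR and red reflectance divided by their sum, computed pixel by pixel. The sketch below applies this to small nested lists standing in for two co-registered bands. The reflectance values are hypothetical, and returning NaN where both bands are zero is our own convention.

```python
import math


def ndvi(nir, red):
    """NDVI from NIR and red reflectance; NaN where both bands are zero."""
    total = nir + red
    if total == 0:
        return math.nan
    return (nir - red) / total


def ndvi_image(nir_band, red_band):
    """Apply ndvi() cell by cell to two equally sized 2-D lists."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical reflectances: dense canopy, sparse canopy, bare soil, water.
nir_band = [[0.50, 0.30], [0.25, 0.02]]
red_band = [[0.05, 0.10], [0.20, 0.05]]

index = ndvi_image(nir_band, red_band)
for row in index:
    print([round(value, 3) for value in row])
```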

Different VIs have unique characteristics, and more spectral features can be identified by using multiple VIs to obtain high monitoring accuracy. Marino and Alvino (2020) used the soil adjusted vegetation index (SAVI), NDVI and OSAVI to characterize 10 winter wheat varieties in a field at different growth stages and obtained optimal biomass monitoring results. Villoslada et al. (2020) combined 13 VIs to obtain the highest accuracy.
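To make the multi-index idea concrete, the sketch below computes NDVI together with SAVI and OSAVI for one pixel and collects them into a feature vector of the kind a regression model could take as input. The formulas are the commonly published forms; the soil factor of 0.5 for SAVI and the constant 0.16 for OSAVI are the usual defaults, but some authors multiply OSAVI by 1.16, so treat the exact form as an assumption. The reflectance values are hypothetical.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index."""
    return (nir - red) / (nir + red)


def savi(nir, red, soil_factor=0.5):
    """Soil adjusted vegetation index with soil brightness factor L."""
    return (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def osavi(nir, red):
    """Optimized SAVI in its form without the (1 + 0.16) multiplier."""
    return (nir - red) / (nir + red + 0.16)


def vi_features(nir, red):
    """Stack several VIs into one feature vector for a single pixel or plot."""
    return [ndvi(nir, red), savi(nir, red), osavi(nir, red)]


# Hypothetical canopy reflectance values.
features = vi_features(nir=0.5, red=0.1)
print([round(value, 4) for value in features])
```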

Vegetation indices can be built not only on the basis of spectral information but also on the basis of texture information. Texture is an important characteristic for identifying objects or image areas of interest. In several texture algorithms, the gray level co-occurrence matrix (GLCM), which includes variance (VAR), entropy (EN), data range (DR), homogeneity (HOM), second moment (SE), dissimilarity (DIS), contrast (CON), and correlation (COR), which are based on Haralick et al. (1973), is often used to test the effects of texture analysis from UAS data on biomass estimation potential (Zheng et al., 2019).
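A GLCM counts how often pairs of gray levels occur at a fixed pixel offset, and the texture measures are weighted sums over that matrix. The following is a simplified sketch for a single horizontal offset on a tiny quantised image. In practice the measures are usually computed in a moving window for each band, and the exact feature definitions (for example, the homogeneity denominator or the logarithm base for entropy) vary between software packages, so the forms below are assumptions.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised gray level co-occurrence matrix for one offset."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1  # pair seen in the forward direction
                counts[j][i] += 1  # and counted again in reverse (symmetry)
    total = sum(sum(row) for row in counts)
    return [[value / total for value in row] for row in counts]


def texture_features(p):
    """Contrast, dissimilarity, homogeneity, second moment and entropy."""
    feats = {"contrast": 0.0, "dissimilarity": 0.0, "homogeneity": 0.0,
             "second_moment": 0.0, "entropy": 0.0}
    for i, row in enumerate(p):
        for j, value in enumerate(row):
            feats["contrast"] += value * (i - j) ** 2
            feats["dissimilarity"] += value * abs(i - j)
            feats["homogeneity"] += value / (1 + (i - j) ** 2)
            feats["second_moment"] += value * value
            if value > 0:
                feats["entropy"] -= value * math.log(value)
    return feats


# Hypothetical 4 x 4 image quantised to four gray levels (0-3).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
matrix = glcm(image, levels=4)
features = texture_features(matrix)
print({name: round(value, 4) for name, value in features.items()})
```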

Vegetation indices based on image texture are usually combined with VIs based on spectral information to monitor crop biomass, and this combination can improve the accuracy of monitoring biomass significantly (Liu et al., 2019; Yue et al., 2019; Zheng et al., 2019). Zheng et al. (2019) predicted rice AGB using stepwise multiple linear regression (SMLR) in combination with VIs and image texture, and the results showed that the combination of texture information and spectral information significantly improved the accuracy of rice biomass estimations compared with the use of spectral information alone (R2 = 0.78, RMSE = 1.84 t/ha).

Previously, as the required data were obtained by satellites, the spectral data collected would be affected by clouds. When there was cloud cover in the observation area, the information received by the satellite-borne sensor would be all cloud information, instead of reflecting the local vegetation cover (Feng et al., 2009). The low altitude and flexibility of UAS solve this problem, making VIs more widely used. At present, VIs have become indispensable biomass indices for monitoring crop biomass. Common VIs are shown in Table 1.

Crop height is an important indicator to characterize the vertical structure, and CH is usually strongly correlated with biomass (Scotford and Miller, 2004; Prost and Jeuffroy, 2007; Salas Fernandez et al., 2009; Montes et al., 2011; Hakl et al., 2012; Alheit et al., 2014). The crop surface model (CSM) is an effective CH information extraction technique and has been widely used for different crops (Han et al., 2019).

Crop height data can be obtained using RGB sensors and multispectral sensors. Cen et al. (2019) established a CSM to determine the CH (Tilly et al., 2014) based on spliced RGB images. First, structure from motion (SfM) was used to generate a point cloud, and the specific steps can be found in the study of Tomasi and Kanade (1992). Point clouds consist of matching points between overlapping images such as crop canopies and topographic surfaces. A digital elevation model (DEM) and digital terrain model (DTM) were obtained by classifying the point clouds. The DEM was based on a complete dense point cloud representing the height of the crop canopy, while the DTM was developed from only the surface dense point cloud. The CSM could be obtained by subtracting the DTM from the DEM by importing the two models into ArcGIS software (ArcGIS, Esri Inc., Redlands, CA, United States). Hassan et al. (2019) used a Sequoia 4.0 multispectral camera with the same method to measure the CH of wheat, and the results showed that the correlation between the CH data from UAS and the actual height was very high (R2 = 0.96).
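The subtraction step that yields the CSM can be sketched on two small co-registered elevation grids. This is only an illustration with hypothetical elevations in metres. Clamping negative differences to zero and skipping missing cells are our own assumptions, not steps reported by the cited authors, who performed the subtraction in ArcGIS.

```python
def crop_surface_model(dem, dtm):
    """Per-cell crop height: canopy elevation (DEM) minus ground elevation (DTM)."""
    csm = []
    for dem_row, dtm_row in zip(dem, dtm):
        row = []
        for top, ground in zip(dem_row, dtm_row):
            if top is None or ground is None:
                row.append(None)  # keep gaps where either model has no data
            else:
                row.append(max(top - ground, 0.0))  # assumed: no negative heights
        csm.append(row)
    return csm


def mean_height(csm):
    """Mean crop height over all cells that contain data."""
    values = [v for row in csm for v in row if v is not None]
    return sum(values) / len(values)


# Hypothetical elevations in metres above a common datum.
dem = [[101.5, 101.0], [100.4, None]]
dtm = [[100.5, 100.5], [100.5, 100.5]]

csm = crop_surface_model(dem, dtm)
print(csm)
print("Mean crop height (m):", mean_height(csm))
```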

Crop height data can also be obtained using LiDAR. Zhu W.X. et al. (2019) used CloudCompare open-source software to construct CH raster data from the LiDAR point cloud and studied the effects of CH on monitoring the AGB of crops. The results showed that CH is a robust indicator that can be used to estimate biomass, and the high spatial resolution of the CH data set was helpful to improve the effect of maize AGB estimation.

The monitoring of crop biomass by a single biomass index is sometimes unreliable. On the one hand, it is difficult to obtain reliable CH data from LiDAR in some cases. Johansen et al. (2019) found that dust storms can cause tomato plants to flatten and that once the tomato fruits become large and heavy, the weight may cause the branches to bend downward, thereby reducing the height of the plants. ten Harkel et al. (2020) found that potatoes have complex canopy structures and grow on ridges, so it is difficult to visually determine the highest point of a specific position. In the above cases, VIs can achieve better results than other measurements. On the other hand, CH data can better reflect the three-dimensional information of crops and can more accurately reflect the biomass of crops in the scene of target overlap, occlusion, large changes in light, shadow, and complex scenes. In addition, the information collected by UAS includes not only target crops but also other interference information. If this interference information cannot be effectively eliminated, it will have a negative impact on the monitoring of crop biomass, which can be improved by the combination of multiple biomass indices (Niu et al., 2019). Therefore, the combination of multiple models for biomass estimation is an effective method to improve the accuracy of biomass estimation.

The combination of multiple models for biomass estimation is an effective method to improve the accuracy of biomass estimation. The combination of spectral and textural features to construct VIs or the combination of VIs and CH has been shown to improve the results of biomass estimation.

Based on the idea of combining VIs with CH, Cen et al. (2019) used a biomass model that combined VIs and CH to monitor rice biomass under different nitrogen treatments. The results showed that the CH extracted by the CSM exhibited a high correlation with the actual CH. The monitoring model that incorporated RGB and multispectral image data with random forest regression (RFR) significantly improved the prediction results of AGB, in which the RMSEP decreased by 8.33–16.00%, R2 = 0.90, RMSEP = 0.21 kg/m2, and RRMSE = 14.05%.

Relevant studies have proven that a biomass model combined with VIs and CH can also improve the biomass estimation accuracy for corn (Niu et al., 2019), wheat (Lu et al., 2019), ryegrass (Borra-Serrano et al., 2019), and other crops. These cases prove that the combination of VIs and CH is an effective way to build a biomass model. However, Niu et al. (2019) pointed out that the fusion of CH data derived from RGB images in the VIs model, which was based on MLR, did not significantly improve the estimation of the VI model, which may be caused by the clear correlation between VIs and CH in this crop (Schirrmann et al., 2016). Therefore, it is necessary to combine biomass indices reasonably for different crops.

According to the idea of combining spectral information with image texture to build VIs, Liu et al. (2019) used a linear regression model to convert the digital number (DN) of the original image into surface reflectance. The reflectivity obtained from the gain and offset values of each band was used to calculate the VIs and image texture. The results showed that the introduction of image texture into the partial least squares regression (PLSR) and RFR models could estimate winter rape AGB more accurately than a model based on VIs alone. The accuracy of the prediction of AGB by the RFR model using VIs and texture measurements (RMSE = 274.18 kg/ha) was slightly higher than that of the PLSR model (RMSE = 284.09 kg/ha). The same idea has also obtained good results in applications to winter wheat (Yue et al., 2019), rice (Zheng et al., 2019), soybean (Maimaitijiang et al., 2020), and other crops. Biomass models combined with VIs and image texture have great potential in the estimation of crop biomass.
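The DN-to-reflectance conversion described above amounts to fitting a gain and an offset for each band. A minimal sketch follows, assuming calibration targets of known reflectance are visible in the image and that ordinary least squares is used for the fit. The panel values are hypothetical, and the source does not state how Liu et al. (2019) obtained their calibration points.

```python
def fit_gain_offset(dn_values, reflectances):
    """Least-squares fit of reflectance = gain * DN + offset for one band."""
    n = len(dn_values)
    mean_dn = sum(dn_values) / n
    mean_ref = sum(reflectances) / n
    sxy = sum((d - mean_dn) * (r - mean_ref)
              for d, r in zip(dn_values, reflectances))
    sxx = sum((d - mean_dn) ** 2 for d in dn_values)
    gain = sxy / sxx
    offset = mean_ref - gain * mean_dn
    return gain, offset


def dn_to_reflectance(dn, gain, offset):
    """Convert a raw digital number to surface reflectance."""
    return gain * dn + offset


# Hypothetical calibration panels for one band: mean DN and known reflectance.
panel_dn = [20.0, 120.0, 220.0]
panel_reflectance = [0.05, 0.30, 0.55]

gain, offset = fit_gain_offset(panel_dn, panel_reflectance)
print("gain:", gain, "offset:", offset)
print("reflectance at DN 100:", dn_to_reflectance(100.0, gain, offset))
```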

Data analysis is the key link to build the relationship between the data obtained from UAS and the actual crop biomass, and it is an important part of UAS. The data obtained from UAS often contain different noises, and the information is highly correlated. Generally, effective data analysis methods are needed to interpret the data and establish a robust prediction model (Cen et al., 2019). Therefore, scientific and systematic data analysis methods often play an important role.

Since the data collected by UAS cannot be directly used to monitor biomass, a series of preprocessing steps is needed for the data. When spectral sensors are used, an indispensable step is geometric correction and mosaicking of the image. Common software includes Pix4DMapper and Agisoft Photoscan.

Pix4DMapper software (Pix4D, S.A., Lausanne, Switzerland) performs geometric correction and mosaicking of UAS photographs based on feature matching and SfM photogrammetry. Initially, images are processed in an arbitrary model space to create three-dimensional point clouds. The point clouds can be transformed into a real-world coordinate system using either direct geolocation techniques to estimate the camera’s location or ground control point (GCP) techniques for automatic identification within the point cloud. The point cloud is then used to generate the DTM required for image correction. The georeferenced images are subsequently linked together to form a mosaic of the study area (Turner et al., 2012).

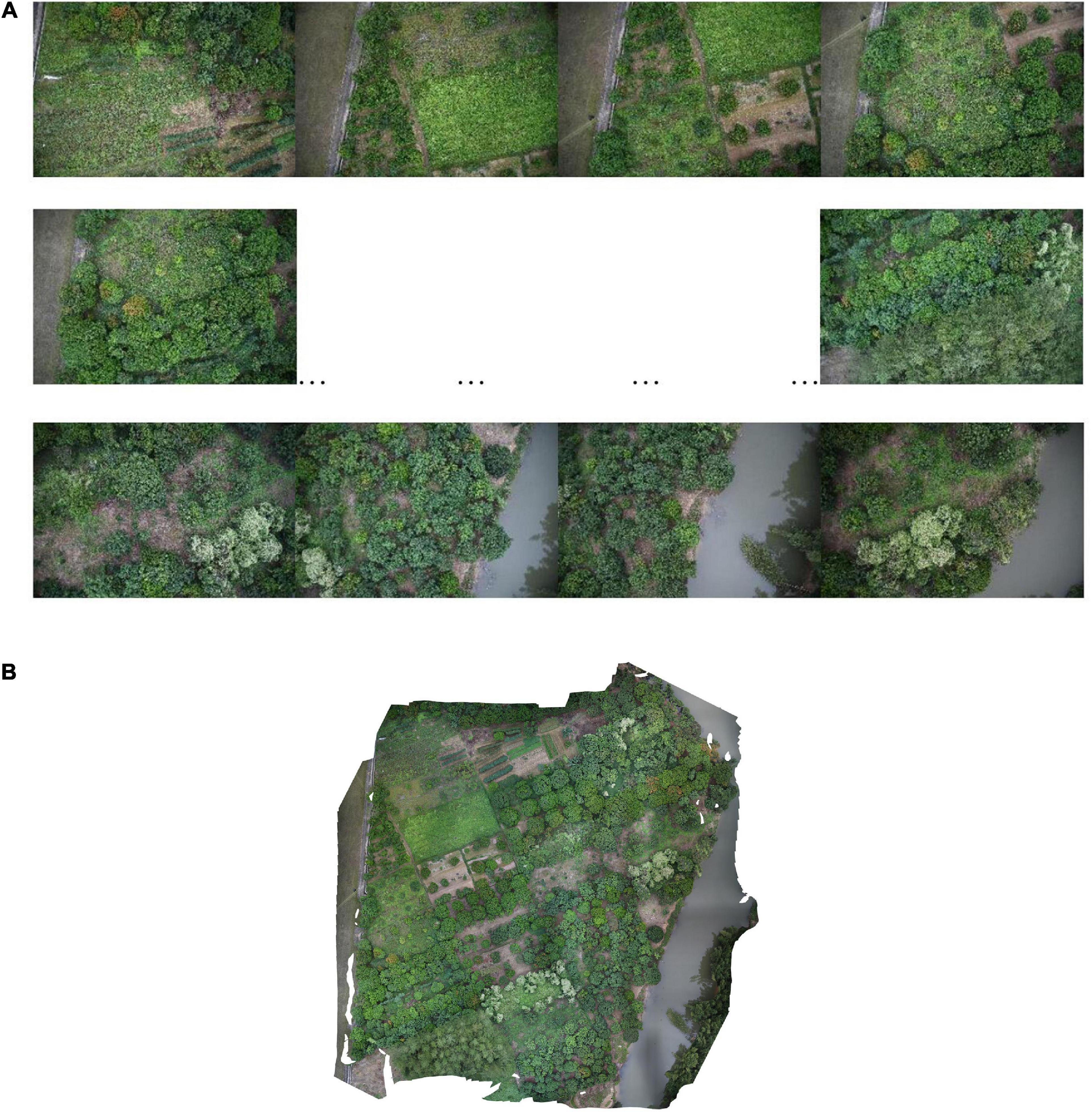

Agisoft PhotoScan software (Agisoft LLC, St. Petersburg, Russia) is also a common UAS data preprocessing software. The processing procedure is similar to that of Pix4DMapper. Finally, the UAS image is exported to TIFF image format for subsequent analysis (Acorsi et al., 2019; Lu et al., 2019). Figure 4 shows RGB imagery datasets processed using Agisoft PhotoScan (Sun et al., 2019).

Figure 4. RGB imagery datasets were processed using the software Agisoft PhotoScan. (A) High-resolution proof images of the acquisition area. (B) Overall map of research area processed by Agisoft PhotoScan.

Machine learning algorithms are widely used to process biomass information. According to whether the input dataset is labeled, machine learning algorithms can be divided into supervised learning algorithms and unsupervised learning algorithms (Dike et al., 2018). Supervised learning algorithms depend on a labeled dataset. Classification algorithms and regression algorithms are the two output forms of supervised learning. In crop biomass monitoring, regression algorithms are used more often than classification algorithms because the expected biomass results are usually continuous rather than discrete. Unsupervised learning does not rely on a labeled dataset. It is often used when the cost of labeled datasets is unacceptable (Sapkal et al., 2007). This is also a common method to reduce the dimensionality of the data. Most unsupervised learning algorithms are in the form of cluster analysis. Figure 5 shows the types of machine learning algorithms.

Biomass monitoring is a typical regression problem that can be solved by supervised learning algorithms. A sufficient amount of labeled data is the basis of supervised learning. Actual biomass data are usually obtained through destructive sampling (Jiang et al., 2019; Yue et al., 2019). Because field trials are limited by the area of cropland and the crop growing season, sufficiently large datasets are often not available. Properly dividing the data into training and validation datasets is therefore a challenge when training supervised learning algorithms. To reduce generalization error, Jiang et al. (2019) used fivefold cross validation, Han et al. (2019) used repeated 10-fold cross validation, and Zhu W.X. et al. (2019) used leave-one-out cross validation (LOOCV). Fivefold and repeated 10-fold cross validation are both forms of k-fold cross validation, and LOOCV is the special case in which the number of folds equals the number of instances (Wong, 2015). k-fold cross validation divides the dataset into k folds, treats each fold in turn as the validation dataset and uses the other k−1 folds as the training dataset (Wong and Yang, 2017). When k-fold cross validation is applied to classification algorithms, the number of folds can be large and the number of replications should be small (Wong and Yeh, 2020).
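The fold construction described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the procedure of any cited study; the function names `k_fold_splits` and `loocv_splits` and the shuffling seed are assumptions made for the example.

```python
import random


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) for k-fold cross validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Shuffle once so that folds are not tied to the sampling order.
    random.Random(seed).shuffle(indices)
    # Striding gives k disjoint folds whose sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        # The remaining k-1 folds together form the training set.
        training = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield training, validation


def loocv_splits(n_samples):
    """LOOCV is k-fold cross validation with k equal to the number of samples."""
    return k_fold_splits(n_samples, k=n_samples)


if __name__ == "__main__":
    for train_idx, val_idx in k_fold_splits(n_samples=10, k=5):
        print(sorted(val_idx), "held out;", len(train_idx), "used for training")
```

Each sample is held out exactly once, so every destructive biomass measurement contributes to both training and validation, which matters when field datasets are small.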

Support vector regression (SVR) is a boundary detection algorithm for identifying/defining multidimensional boundaries (Sharma et al., 2020). It solves the regression problem by using appropriate kernel functions to map the training data into a new, higher-dimensional feature space, in which the multidimensional regression problem becomes a linear regression problem (Navarro et al., 2019). In an analysis of VIs and image texture using SVR, Duan et al. (2019) found that SVR itself can find a suitable combination of different reflectance bands, which showed that SVR adapts well to complex data and is suitable for data analysis in biomass monitoring. Yang et al. (2019) compared PLSR and SVR and found that SVR was more accurate than PLSR, and that optimizing SVR with particle swarm optimization (PSO) yielded more appropriate parameters and further improved model accuracy.
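Two building blocks of SVR can be written down directly: a kernel function that measures similarity in the implicit feature space, and the epsilon-insensitive loss that ignores small residuals. The sketch below is illustrative only. The radial basis function (RBF) kernel is one common choice and is an assumption here, since the studies above do not all state which kernel they used; the parameter values `gamma` and `epsilon` are likewise placeholders.

```python
import math


def rbf_kernel(x, z, gamma=1.0):
    """RBF kernel: exp(-gamma * squared Euclidean distance between x and z)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Residuals smaller than epsilon cost nothing; larger ones cost linearly."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


if __name__ == "__main__":
    # Two hypothetical feature vectors (e.g., a VI and a texture measure per plot).
    plot_a = [0.62, 0.31]
    plot_b = [0.58, 0.40]
    print(rbf_kernel(plot_a, plot_b, gamma=2.0))
    print(epsilon_insensitive_loss(1.00, 1.05), epsilon_insensitive_loss(1.00, 1.50))
```

Tuning `gamma`, `epsilon` and the regularization strength is the parameter search that an optimizer such as PSO would automate.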

Random forest regression (RFR) is a data analysis and statistical method that is widely used in machine learning and remote sensing research (Viljanen et al., 2018). Compared with artificial neural networks (ANNs), RFR does not overfit as more trees are added, owing to the law of large numbers, and the injection of suitable randomness makes random forests precise regressors (Breiman, 2001). The random forest algorithm makes full use of all input data and can tolerate outliers and noise (Jiang et al., 2019). This algorithm has the advantages of high prediction accuracy, no need for feature selection and insensitivity to overfitting (Tewes and Schellberg, 2018; Viljanen et al., 2018).
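The averaging effect behind this robustness can be illustrated with bootstrap aggregation (bagging) of very small regression trees. The sketch below is deliberately simplified and is not a full random forest: it uses a single input feature and one-split trees, whereas a real random forest also draws a random subset of features at each split and grows deeper trees. All function names and the toy data are hypothetical.

```python
import random


def fit_stump(xs, ys):
    """Fit a one-split regression tree; returns (threshold, left_mean, right_mean)."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # no valid threshold between identical feature values
        threshold = (pairs[i][0] + pairs[i - 1][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:
        # All feature values identical: fall back to predicting the mean.
        mean = sum(ys) / len(ys)
        return (float("inf"), mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_bagged_stumps(xs, ys, n_trees=50, seed=0):
    """Train each stump on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        sample = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([xs[i] for i in sample], [ys[i] for i in sample]))
    return forest


def predict_forest(forest, x):
    """The ensemble prediction is the average over all trees."""
    return sum(predict_stump(stump, x) for stump in forest) / len(forest)


if __name__ == "__main__":
    vi = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]       # hypothetical vegetation index values
    biomass = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]  # hypothetical biomass values
    forest = fit_bagged_stumps(vi, biomass)
    print(predict_forest(forest, 0.15), predict_forest(forest, 0.85))
```

Because each tree sees a different resample and the predictions are averaged, the error contributed by any single unusual sample is diluted, which is the intuition behind the tolerance to outliers and noise noted above.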

An ANN is an information processing paradigm inspired by the way biological nervous systems, such as the brain, process information (Awodele and Jegede, 2013). It is a nonparametric nonlinear model that passes information between layers of a neural network and simulates the reception and processing of information by the human brain (Zha et al., 2020). In individual cases, the results of ANN are no better than those of the multiple linear regression (MLR) method. The reason may be that the small sample sets in these applications do not meet the needs of ANN (Han et al., 2019; Zhu W.X. et al., 2019; Zha et al., 2020). Compared with RFR, ANN needs large datasets and a large number of repetitions to generate an appropriate nonlinear mapping and obtain the optimal neural network (Devia et al., 2019; Han et al., 2019), whereas RFR can still be applied to a small amount of sample data (Han et al., 2019; Liu et al., 2019). As a result, RFR is used more frequently in biomass monitoring. Before the development of deep neural networks (DNNs), the remote sensing field, including UAS studies, therefore shifted the focus of data analysis methods from ANN to SVR and RFR (Ma et al., 2019).

The appearance of DNNs and a series of methods for mitigating overfitting improved the performance of ANN (Maimaitijiang et al., 2020). Nevavuori et al. (2019) used a convolutional neural network (CNN) to predict the biomass of wheat and barley. To improve the monitoring effect, the researchers tested how the choice of training algorithm, the depth of the network, the regularization strategy, the tuning of hyperparameters, and other aspects of the CNN influenced prediction efficiency. This study showed that if enough information can be collected to increase the number of samples and the overfitting problem is solved, ANN will perform no worse than RFR (Zhang et al., 2019).

Multiple linear regression (Borra-Serrano et al., 2019; Devia et al., 2019; Han et al., 2019; Zhu W.X. et al., 2019), SMLR (Lu et al., 2019; Zheng et al., 2019) and PLSR (Borra-Serrano et al., 2019; Liu et al., 2019; Yue et al., 2019) are also commonly used multiple regression algorithms. However, with the gradual progress of SVR, RFR, and ANN, these algorithms increasingly serve as baselines against which SVR, RFR, and ANN are compared and are no longer the main focus of data analysis.

Devia et al. (2019) described an MLR equation for monitoring rice biomass with VIs. In general, there was a linear relationship between the accumulation of biomass and VIs. However, the relationship between biomass and VIs in other crops at maturity can be nonlinear, and MLR is not suitable for such nonlinear relationships. Zheng et al. (2019) used SMLR to establish the relationship between rice biomass and remote sensing variables (VIs, image texture, and the combination of VIs and image texture). Although the estimation accuracy was high, the model was complex and difficult to generalize. Moeckel et al. (2018) tested the ability of PLSR, RFR, and SVR to predict the CH of eggplant, tomato and cabbage. PLSR did not exceed the performance of RFR and SVR, so it was excluded first.

The monitoring of biomass is a typical nonlinear problem (Zha et al., 2020). Conventional regression techniques such as MLR, SMLR, and PLSR are more suitable for data showing linear or exponential relationships between remote sensing variables and crop parameters (Atzberger et al., 2010; Jibo et al., 2018; Lu et al., 2019). They are therefore often not as good as SVR, RFR, and ANN in the monitoring of biomass.

Constructing a high-performance monitoring model based on advanced algorithms (such as machine learning algorithms) is a good way to improve crop biomass monitoring (Niu et al., 2019). The monitoring of biomass is a typical multi-feature nonlinear problem (Zha et al., 2020), and machine learning algorithms (such as SVR, RFR, and ANN) exhibit superior results in solving these types of problems (Breiman, 2001; Navarro et al., 2019; Maimaitijiang et al., 2020). Comparisons with RFR have shown that ANN is often superior when dealing with large sample sizes and complex, nonlinear, and redundant datasets (LeCun et al., 2015; Schmidhuber, 2015; Kang and Kang, 2017; Zhang et al., 2018; Maimaitijiang et al., 2020). However, with small sample sizes, the lack of samples often leads to overfitting, and RFR achieves better results than ANN owing to its stronger robustness and generalization ability (Zhang and Li, 2014; Yao et al., 2015; Yuan et al., 2017; Yue et al., 2017; Zheng et al., 2018; Zhu W.X. et al., 2019; Zha et al., 2020).

Accuracy is an important indicator for evaluating the performance of UAS in crop biomass estimation. In addition, reducing costs to promote the large-scale application of UAS in this field is a difficult problem. To improve the accuracy of crop biomass estimation, multisensor data fusion, multi-index fusion, the consideration of a variety of features not directly related to the monitoring of biomass, and the use of advanced algorithms are feasible directions (Maimaitijiang et al., 2020). To promote large-scale application, an effective research path is to use low-cost sensors and improve their estimation accuracy by combining suitable models and algorithms, rather than to use more expensive sensors (Acorsi et al., 2019; Lu et al., 2019; Niu et al., 2019; Yue et al., 2019). RGB sensors are currently the most widely used low-cost sensors (Lussem et al., 2019), and studies based on RGB sensors are expected to promote the large-scale application of UAS in crop biomass monitoring.

RGB sensors cannot provide NIR band data. Therefore, VIs that rely on NIR bands cannot be used, which inhibits the enhancement of vegetation vitality contrast (Lu et al., 2019) and may affect the accuracy of biomass estimation. Lu et al. (2019) used a combination of advanced algorithms and multi-index fusion to compensate for this deficiency. Yue et al. (2019) fused image texture and VIs to obtain the most accurate estimate of AGB (R² = 0.89, MAE = 0.67 t/ha, RMSE = 0.82 t/ha). These studies show that low-cost sensors can deliver accurate biomass estimates and are expected to promote large-scale applications.
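The accuracy measures quoted in this section (coefficient of determination, MAE and RMSE) are computed from paired observed and predicted biomass values. The following is a minimal sketch, assuming the coefficient of determination is defined as one minus the ratio of residual to total sum of squares; some studies instead report the squared correlation coefficient, which can differ. The function name and the sample values are illustrative and are not taken from any cited study.

```python
import math


def regression_metrics(observed, predicted):
    """Return the coefficient of determination, MAE and RMSE for paired values."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("observed and predicted must be non-empty and equally long")
    residuals = [o - p for o, p in zip(observed, predicted)]
    ss_res = sum(r * r for r in residuals)
    mean_observed = sum(observed) / n
    ss_tot = sum((o - mean_observed) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values are constant; R2 is undefined")
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "mae": sum(abs(r) for r in residuals) / n,  # same unit as the input
        "rmse": math.sqrt(ss_res / n),              # same unit as the input
    }


if __name__ == "__main__":
    observed_agb = [2.1, 3.4, 5.0, 6.2]   # hypothetical field measurements, t/ha
    predicted_agb = [2.4, 3.1, 4.6, 6.5]  # hypothetical model estimates, t/ha
    print(regression_metrics(observed_agb, predicted_agb))
```

MAE and RMSE carry the unit of the biomass measurements, so values reported in t/ha and in g/m² are only comparable after unit conversion.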

Solar elevation angle (Jiang et al., 2019), meteorological conditions (Devia et al., 2019; Wang F. et al., 2019), rainfall (Liu et al., 2019; Rose and Kage, 2019), soil characteristics (Acorsi et al., 2019; Vogel et al., 2019), the spatial distribution of multiple plants in a block (Han et al., 2019), and other characteristics not directly related to biomass monitoring also affect the accuracy of biomass estimations. The monitoring accuracy of low-cost sensors can be improved by considering the characteristics that are not directly related to biomass monitoring.

Jiang et al. (2019) calculated the solar elevation angle to avoid sun glint and hotspot effects. In addition, growing degree days (GDD) were incorporated into the model as a meteorological feature to estimate rice AGB. Models incorporating meteorological features achieved better estimation accuracy (R² = 0.86, RMSE = 178.37 g/m², MAE = 127.34 g/m²) than models that did not use these features (R² = 0.64, RMSE = 286.79 g/m², MAE = 236.49 g/m²).
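As an illustration of how a GDD feature can be derived from daily weather records, the sketch below uses the common averaging definition, in which the daily contribution is the mean of the maximum and minimum temperature minus a base temperature, floored at zero. The exact formulation used by Jiang et al. (2019) is not reproduced here; the base temperature of 10 °C, the function names and the temperature values are assumptions for the example.

```python
def growing_degree_days(t_max, t_min, t_base=10.0):
    """Daily GDD by the averaging method; t_base is an assumed base temperature."""
    return max(0.0, (t_max + t_min) / 2.0 - t_base)


def accumulated_gdd(daily_temperatures, t_base=10.0):
    """Running GDD total for a sequence of (t_max, t_min) pairs in degrees Celsius."""
    total = 0.0
    running = []
    for t_max, t_min in daily_temperatures:
        total += growing_degree_days(t_max, t_min, t_base)
        running.append(total)
    return running


if __name__ == "__main__":
    # Hypothetical daily maximum and minimum air temperatures.
    season = [(30.0, 20.0), (12.0, 4.0), (25.0, 15.0)]
    print(accumulated_gdd(season))
```

The accumulated value on each flight date can then be appended to the spectral and structural features as an additional model input.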

Other studies have also demonstrated the importance of considering features that are not directly related to monitoring biomass. Borra-Serrano et al. (2019) also took GDD as a meteorological feature and obtained the best estimate by combining CH, VIs and meteorological data variables in an MLR model (R² = 0.81) to monitor ryegrass dry-matter biomass. Devia et al. (2019) also mentioned the influence of solar elevation angle and indicated that weather conditions (sunny and cloudy) can affect the quality of the data, especially in lowland crops where moisture reflection changes the appearance of the image. The above studies showed that the accuracy of biomass estimation can be improved by considering meteorological characteristics and solar elevation angle. More sample points must be obtained from multiple research sites and under different environmental conditions in future studies to train a more robust multivariate model (Liu et al., 2019). A summary of relevant studies is shown in Table 2.

As high-precision, highly flexible and nondestructive remote sensing systems, UAS have been widely used to monitor crop biomass. This article reviewed the application of UAS in the monitoring of crop biomass in recent years. Four kinds of data acquisition equipment (LiDAR, RGB sensor, multispectral sensor, and hyperspectral sensor), two biomass indices (VIs and CH) and three data analysis methods (SVR, RFR, and ANN) were introduced.

Despite the rapid progress in this area, difficulties remain. First, the speed of data acquisition and processing needs to be improved. Although multisensor data fusion improves the accuracy of evaluation, it makes data collection more complex and data sorting more difficult, and it reduces the speed of monitoring. In addition, although advanced algorithms improve the evaluation accuracy, they require long training times. Second, there is no universal method that can be applied to all crops in all cases. Different crops, and even the same crop in different environments, have different characteristics. This requires researchers to carefully distinguish the characteristics of crops, use appropriate sensors to capture these characteristics, and test multiple indices to determine the best biomass indices. Third, the high cost of equipment hinders the large-scale use of UAS in crop biomass monitoring. Although research on low-cost sensors has appeared, how to improve the estimation accuracy of low-cost sensors still requires further research. Adopting multi-index fusion, considering features not directly related to monitoring biomass, and adopting advanced algorithms are expected to effectively improve the performance of low-cost sensors in monitoring crop biomass, and this is a direction for future development.

Because of their high precision, flexibility and nondestructive nature, UAS have the potential to become an important method for monitoring crop biomass. Multisensor fusion, multi-index fusion, the consideration of features that are not directly related to biomass monitoring and the adoption of advanced algorithms are effective methods and development directions for improving the accuracy of crop biomass estimation by UAS. Because of their low cost, RGB sensors have become an effective means of promoting the large-scale application of UAS in the field of crop biomass monitoring. UAS remain highly attractive in the field of biomass monitoring, and the number of UAS-based studies on crop biomass monitoring is increasing. Furthermore, it is expected that the challenges of UAS promotion will be overcome in the future, which is conducive to the realization of smart agriculture and precision agriculture.

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

The work in this article was supported by the Key Research and Development Program of Nanning (20192065), the National Natural Science Foundation for Young Scientists of China (31801804), the projects subsidized by special funds for Science Technology Innovation and Industrial Development of Shenzhen Dapeng New District (Grant No. PT202001-06), and the National Innovation and Entrepreneurship Training Plan for College Students (201910593010).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors thank a native English-speaking expert from the editing team of American Journal Experts for polishing our article.

Acorsi, M. G., Miranda, F. D. A., Martello, M., Smaniotto, D. A., and Sartor, L. R. (2019). Estimating biomass of black oat using UAV-based RGB imaging. Agronomy Basel 9:14. doi: 10.3390/agronomy9070344

Alheit, K. V., Busemeyer, L., Liu, W., Maurer, H. P., Gowda, M., Hahn, V., et al. (2014). Multiple-line cross QTL mapping for biomass yield and plant height in triticale (x Triticosecale Wittmack). Theor. Appl. Genet. 127, 251–260. doi: 10.1007/s00122-013-2214-6

Atzberger, C., Guerif, M., Frederic, B., and Werner, W. (2010). Comparative analysis of three chemometric techniques for the spectroradiometric assessment of canopy chlorophyll content in winter wheat. Comput. Electron. Agric. 73, 165–173. doi: 10.1016/j.compag.2010.05.006

Awodele, O., and Jegede, O. (2013). “Neural networks and its application in engineering,” in Proceedings of the Insite: Informing Science + It Education Conference (Macon, USA).

Bendig, J., Yu, K., Aasen, H., Bolten, A., Bennertz, S., Broscheit, J., et al. (2015). Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Observ. Geoinform. 39, 79–87. doi: 10.1016/j.jag.2015.02.012

Borra-Serrano, I., De Swaef, T., Muylle, H., Nuyttens, D., Vangeyte, J., Mertens, K., et al. (2019). Canopy height measurements and non-destructive biomass estimation of Lolium perenne swards using UAV imagery. Grass Forage Sci. 74, 356–369. doi: 10.1111/gfs.12439

Boschetti, M., Bocchi, S., and Brivio, P. A. (2007). Assessment of pasture production in the Italian Alps using spectrometric and remote sensing information. Agric. Ecosyst. Environ. 118, 267–272. doi: 10.1016/j.agee.2006.05.024

Brede, B., Lau, A., Bartholomeus, H. M., and Kooistra, L. (2017). Comparing RIEGL RiCOPTER UAV LiDAR derived canopy height and DBH with terrestrial LiDAR. Sensors 17:16. doi: 10.3390/s17102371

Calders, K., Newnham, G., Burt, A., Murphy, S., Raumonen, P., Herold, M., et al. (2015). Nondestructive estimates of above-ground biomass using terrestrial laser scanning. Methods Ecol. Evol. 6, 198–208. doi: 10.1111/2041-210x.12301

Calou, V. B. C., Teixeira, A. D., Moreira, L. C. J., Neto, O. C. D., and da Silva, J. A. (2019). Estimation of maize biomass using unmanned aerial vehicles. Engenharia Agricola 39, 744–752. doi: 10.1590/1809-4430-Eng.Agric.v39n6p744-752/2019

Cen, H. Y., Wan, L., Zhu, J. P., Li, Y. J., Li, X. R., Zhu, Y. M., et al. (2019). Dynamic monitoring of biomass of rice under different nitrogen treatments using a lightweight UAV with dual image-frame snapshot cameras. Plant Methods 15:16. doi: 10.1186/s13007-019-0418-8

Chao, Z., Liu, N., Zhang, P., Ying, T., and Song, K. (2019). Estimation methods developing with remote sensing information for energy crop biomass: a comparative review. Biomass Bioenergy 122, 414–425. doi: 10.1016/j.biombioe.2019.02.002

Costa, L., Nunes, L., and Ampatzidis, Y. (2020). A new visible band index (vNDVI) for estimating NDVI values on RGB images utilizing genetic algorithms. Comput. Electron. Agric. 172:13. doi: 10.1016/j.compag.2020.105334

Daughtry, C. S. T., Walthall, C. L., Kim, M. S., de Colstoun, E. B., and McMurtrey, J. E. (2000). Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 74, 229–239. doi: 10.1016/S0034-4257(00)00113-9

Devia, C. A., Rojas, J. P., Petro, E., Martinez, C., Mondragon, I. F., Patino, D., et al. (2019). High-throughput biomass estimation in rice crops using UAV multispectral imagery. J. Intell. Robot. Syst. 96, 573–589. doi: 10.1007/s10846-019-01001-5

Dike, H. U., Zhou, Y., Deveerasetty, K. K., and Wu, Q. (2018). “Unsupervised learning based on artificial neural network: a review,” in Proceedings of the 2018 IEEE International Conference on Cyborg and Bionic Systems (CBS), (Shenzhen: IEEE), 322–327.

Domingo, D., Orka, H. O., Naesset, E., Kachamba, D., and Gobakken, T. (2019). Effects of UAV image resolution, camera type, and image overlap on accuracy of biomass predictions in a tropical woodland. Remote Sens. 11:17. doi: 10.3390/rs11080948

Duan, B., Liu, Y. T., Gong, Y., Peng, Y., Wu, X. T., Zhu, R. S., et al. (2019). Remote estimation of rice LAI based on Fourier spectrum texture from UAV image. Plant Methods 15:12. doi: 10.1186/s13007-019-0507-8

Feng, L., Yue, D., and Guo, X. (2009). A review on application of normal different vegetation index. For. Inventory Plan. 34, 48–52. doi: 10.3969/j.issn.1671-3168.2009.02.013

Féret, J. B., Gitelson, A. A., Noble, S. D., and Jacquemoud, S. (2017). PROSPECT-D: towards modeling leaf optical properties through a complete lifecycle. Remote Sens. Environ. 193, 204–215. doi: 10.1016/j.rse.2017.03.004

Gitelson, A., and Merzlyak, M. N. (1997). Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 18, 2691–2697. doi: 10.1080/014311697217558

Gitelson, A., Viña, A., Ciganda, V., Rundquist, D., and Arkebauer, T. (2005). Remote estimation of canopy chlorophyll in crops. Geophys. Res. Lett. 32:L08403. doi: 10.1029/2005GL022688

Gitelson, A. A. (2004). Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 161, 165–173. doi: 10.1078/0176-1617-01176

Gitelson, A. A., Gritz, Y., and Merzlyak, M. N. (2003). Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 160, 271–282. doi: 10.1078/0176-1617-00887

Gnyp, M. L., Bareth, G., Li, F., Lenz-Wiedemann, V. I. S., Koppe, W., Miao, Y., et al. (2014). Development and implementation of a multiscale biomass model using hyperspectral vegetation indices for winter wheat in the North China Plain. Int. J. Appl. Earth Observ. Geoinform. 33, 232–242. doi: 10.1016/j.jag.2014.05.006

Guo, Y., Senthilnath, J., Wu, W., Zhang, X., Zeng, Z., and Huang, H. (2019). Radiometric calibration for multispectral camera of different imaging conditions mounted on a UAV platform. Sustainability 11, 1–24. doi: 10.3390/su11040978

Haboudane, D., Miller, J. R., Tremblay, N., Zarco-Tejada, P. J., and Dextraze, L. (2002). Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 81, 416–426. doi: 10.1016/S0034-4257(02)00018-4

Hakl, J., Hrevušová, Z., Hejcman, M., and Fuksa, P. (2012). The use of a rising plate meter to evaluate lucerne (Medicago sativa L.) height as an important agronomic trait enabling yield estimation. Grass Forage Sci. 67, 589–596. doi: 10.1111/j.1365-2494.2012.00886.x

Han, L., Yang, G. J., Dai, H. Y., Xu, B., Yang, H., Feng, H. K., et al. (2019). Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 15:19. doi: 10.1186/s13007-019-0394-z

Haralick, R. M., Shanmugam, K., and Dinstein, I. (1973). Textural features for image classification. IEEE Trans. Syst. Man Cybern. SMC-3, 610–621. doi: 10.1109/TSMC.1973.4309314

Hassan, M. A., Yang, M., Fu, L., Rasheed, A., Zheng, B., Xia, X., et al. (2019). Accuracy assessment of plant height using an unmanned aerial vehicle for quantitative genomic analysis in bread wheat. Plant Methods 15:37. doi: 10.1186/s13007-019-0419-7

Hassan, M. A., Yang, M., Rasheed, A., Jin, X., Xia, X., Xiao, Y., et al. (2018). Time-series multispectral indices from unmanned aerial vehicle imagery reveal senescence rate in bread wheat. Remote Sens. 10:809. doi: 10.3390/rs10060809

Hassler, S. C., and Baysal-Gurel, F. (2019). Unmanned aircraft system (UAS) technology and applications in agriculture. Agronomy Basel 9:21. doi: 10.3390/agronomy9100618

Hogan, S., Kelly, M., Stark, B., and Chen, Y. (2017). Unmanned aerial systems for agriculture and natural resources. Calif. Agric. 71, 5–14. doi: 10.3733/ca.2017a0002

Huete, A., Didan, K., Miura, T., Rodriguez, E. P., Gao, X., and Ferreira, L. G. (2002). Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 83, 195–213. doi: 10.1016/S0034-4257(02)00096-2

Huete, A. R. (1988). A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 25, 295–309. doi: 10.1016/0034-4257(88)90106-X

Jeziorska, J. (2019). UAS for wetland mapping and hydrological modeling. Remote Sens. 11:39. doi: 10.3390/rs11171997

Jiang, Q., Fang, S. H., Peng, Y., Gong, Y., Zhu, R. S., Wu, X. T., et al. (2019). UAV-based biomass estimation for rice-combining spectral, TIN-based structural and meteorological features. Remote Sens. 11:19. doi: 10.3390/rs11070890

Jiang, Z., Huete, A. R., Didan, K., and Miura, T. (2008). Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 112, 3833–3845. doi: 10.1016/j.rse.2008.06.006

Jibo, Y., Feng, H., Guijun, Y., and Li, Z. (2018). A comparison of regression techniques for estimation of above-ground winter wheat biomass using near-surface spectroscopy. Remote Sens. 10:66. doi: 10.3390/rs10010066

Johansen, K., Morton, M. J. L., Malbeteau, Y., Aragon, B., Al-Mashharawi, S., Ziliani, M., et al. (2019). “Predicting biomass and yield at harvest of salt-stressed tomato plants using UAV imagery,” in Proceedings of the 4th ISPRS Geospatial Week 2019, June 10, 2019 – June 14, 2019, Vol. 42, (Enschede: International Society for Photogrammetry and Remote Sensing), 407–411.

Kang, H. W., and Kang, H. B. (2017). Prediction of crime occurrence from multi-modal data using deep learning. PLoS One 12:19. doi: 10.1371/journal.pone.0176244

Kelly, J., Kljun, N., Olsson, P.-O., Mihai, L., Liljeblad, B., Weslien, P., et al. (2019). Challenges and best practices for deriving temperature data from an uncalibrated UAV thermal infrared camera. Remote Sens. 11:7098. doi: 10.3390/rs11050567

Kim, J., Kim, S., Ju, C., and Son, H. I. (2019). Unmanned aerial vehicles in agriculture: a review of perspective of platform, control, and applications. IEEE Access 7, 105100–105115. doi: 10.1109/access.2019.2932119

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

Li, S. Y., Yuan, F., Ata-Ui-Karim, S. T., Zheng, H. B., Cheng, T., Liu, X. J., et al. (2019). Combining color indices and textures of UAV-based digital imagery for rice LAI estimation. Remote Sens. 11:21. doi: 10.3390/rs11151763

Li, W., Niu, Z., Huang, N., Wang, C., Gao, S., and Wu, C. Y. (2015). Airborne LiDAR technique for estimating biomass components of maize: a case study in Zhangye city, Northwest China. Ecol. Indic. 57, 486–496. doi: 10.1016/j.ecolind.2015.04.016

Li, Z., Hu, D., Zhao, D., and Xiang, D. (2015). Research advance of broadband vegetation index using remotely sensed images. J. Yangtze River Sci. Res. Inst. 32, 125–130. doi: 10.3969/j.issn.1001-5485.2015.01.026

Liu, Y. N., Liu, S. S., Li, J., Guo, X. Y., Wang, S. Q., and Lu, J. W. (2019). Estimating biomass of winter oilseed rape using vegetation indices and texture metrics derived from UAV multispectral images. Comput. Electron. Agric. 166:10. doi: 10.1016/j.compag.2019.105026

Lohani, B., and Ghosh, S. (2017). Airborne LiDAR technology: a review of data collection and processing systems. Proc. Natl. Acad. Sci. India Sec. A Phys. Sci. 87, 567–579. doi: 10.1007/s40010-017-0435-9

Lu, N., Zhou, J., Han, Z. X., Li, D., Cao, Q., Yao, X., et al. (2019). Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 15:16. doi: 10.1186/s13007-019-0402-3

Lussem, U., Bolten, A., Menne, J., Gnyp, M. L., Schellberg, J., and Bareth, G. (2019). Estimating biomass in temperate grassland with high resolution canopy surface models from UAV-based RGB images and vegetation indices. J. Appl. Remote Sens. 13:034525. doi: 10.1117/1.Jrs.13.034525

Ma, L., Liu, Y., Zhang, X. L., Ye, Y. X., Yin, G. F., and Johnson, B. A. (2019). Deep learning in remote sensing applications: a meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 152, 166–177. doi: 10.1016/j.isprsjprs.2019.04.015

Maes, W. H., and Steppe, K. (2019). Perspectives for remote sensing with unmanned aerial vehicles in precision agriculture. Trends Plant Sci. 24, 152–164. doi: 10.1016/j.tplants.2018.11.007

Maimaitijiang, M., Sagan, V., Sidike, P., Hartling, S., Esposito, F., and Fritschi, F. B. (2020). Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 237:20. doi: 10.1016/j.rse.2019.111599

Marino, S., and Alvino, A. (2020). Agronomic traits analysis of ten winter wheat cultivars clustered by UAV-derived vegetation indices. Remote Sens. 12:16. doi: 10.3390/rs12020249

Masjedi, A., Crawford, M. M., Carpenter, N. R., and Tuinstra, M. R. (2020). Multi-temporal predictive modelling of sorghum biomass using UAV-based hyperspectral and lidar data. Remote Sens. 12:3587. doi: 10.3390/rs12213587

Moeckel, T., Dayananda, S., Nidamanuri, R. R., Nautiyal, S., Hanumaiah, N., Buerkert, A., et al. (2018). Estimation of vegetable crop parameter by multi-temporal UAV-borne images. Remote Sens. 10:18. doi: 10.3390/rs10050805

Montes, J. M., Technow, F., Dhillon, B. S., Mauch, F., and Melchinger, A. E. (2011). High-throughput non-destructive biomass determination during early plant development in maize under field conditions. Field Crops Res. 121, 268–273. doi: 10.1016/j.fcr.2010.12.017

Navarro, J. A., Algeet, N., Fernandez-Landa, A., Esteban, J., Rodriguez-Noriega, P., and Guillen-Climent, M. L. (2019). Integration of UAV, Sentinel-1, and Sentinel-2 data for mangrove plantation aboveground biomass monitoring in Senegal. Remote Sens. 11:23. doi: 10.3390/rs11010077

Nevavuori, P., Narra, N., and Lipping, T. (2019). Crop yield prediction with deep convolutional neural networks. Comput. Electron. Agric. 163:9. doi: 10.1016/j.compag.2019.104859

Niu, Y. X., Zhang, L. Y., Zhang, H. H., Han, W. T., and Peng, X. S. (2019). Estimating above-ground biomass of maize using features derived from UAV-based RGB imagery. Remote Sens. 11:21. doi: 10.3390/rs11111261

Prey, L., and Schmidhalter, U. (2019). Simulation of satellite reflectance data using high-frequency ground based hyperspectral canopy measurements for in-season estimation of grain yield and grain nitrogen status in winter wheat. ISPRS J. Photogram. Remote Sens. 149, 176–187. doi: 10.1016/j.isprsjprs.2019.01.023

Prost, L., and Jeuffroy, M.-H. (2007). Replacing the nitrogen nutrition index by the chlorophyll meter to assess wheat N status. Agron. Sustain. Dev. 27, 321–330. doi: 10.1051/agro:2007032

Qiu, P. H., Wang, D. Z., Zou, X. Q., Yang, X., Xie, G. Z., Xu, S. J., et al. (2019). Finer resolution estimation and mapping of mangrove biomass using UAV LiDAR and WorldView-2 data. Forests 10:21. doi: 10.3390/f10100871

Ramon Saura, J., Reyes-Menendez, A., and Palos-Sanchez, P. (2019). Mapping multispectral digital images using a cloud computing software: applications from UAV images. Heliyon 5:e01277. doi: 10.1016/j.heliyon.2019.e01277

Raper, T. B., and Varco, J. J. (2015). Canopy-scale wavelength and vegetative index sensitivities to cotton growth parameters and nitrogen status. Precis. Agric. 16, 62–76. doi: 10.1007/s11119-014-9383-4

Rondeaux, G., Steven, M., and Baret, F. (1996). Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 55, 95–107. doi: 10.1016/0034-4257(95)00186-7

Rose, T., and Kage, H. (2019). The contribution of functional traits to the breeding progress of Central-European winter wheat under differing crop management intensities. Front. Plant Sci. 10:1521. doi: 10.3389/fpls.2019.01521

Rouse, J., Haas, R., Schell, J., and Deering, D. (1974). Monitoring vegetation systems in the Great Plains with ERTS. NASA Special Publication 351:309.

Salas Fernandez, M. G., Becraft, P. W., Yin, Y., and Lubberstedt, T. (2009). From dwarves to giants? Plant height manipulation for biomass yield. Trends Plant Sci. 14, 454–461. doi: 10.1016/j.tplants.2009.06.005

Sapkal, S. D., Kakarwal, S. N., and Revankar, P. S. (2007). “Analysis of classification by supervised and unsupervised learning,” in Proceedings of the International Conference on Computational Intelligence and Multimedia Applications (ICCIMA 2007), Vol. 1, (Sivakasi: IEEE), 280–284.

Schirrmann, M., Giebel, A., Gleiniger, F., Pflanz, M., Lentschke, J., and Dammer, K.-H. (2016). Monitoring agronomic parameters of winter wheat crops with low-cost UAV imagery. Remote Sens. 8:706. doi: 10.3390/rs8090706

Schmidhuber, J. (2015). Deep learning in neural networks: an overview. Neural Networks 61, 85–117. doi: 10.1016/j.neunet.2014.09.003

Scotford, I. M., and Miller, P. C. H. (2004). Combination of spectral reflectance and ultrasonic sensing to monitor the growth of winter wheat. Biosyst. Eng. 87, 27–38. doi: 10.1016/j.biosystemseng.2003.09.009

Selbeck, J., Dworak, V., and Ehlert, D. (2010). Testing a vehicle-based scanning lidar sensor for crop detection. Can. J. Remote Sens. 36, 24–35. doi: 10.5589/m10-022

Sharma, R., Kamble, S. S., Gunasekaran, A., Kumar, V., and Kumar, A. (2020). A systematic literature review on machine learning applications for sustainable agriculture supply chain performance. Comput. Oper. Res. 119:17. doi: 10.1016/j.cor.2020.104926

Shentu, Y. C., Wu, C. F., Wu, C. P., Guo, Y. L., Zhang, F., Yang, P., et al. (2018). “Improvement of underwater color discriminative ability by multispectral imaging,” in Proceedings of the OCEANS 2018 MTS, (Charleston, SC: IEEE).

Shuqin, T., Yueju, X., Yun, L., Ning, H., and Xiao, Z. (2016). Review on RGB-D image classification. Laser Optoelectron. Progr. 53, 29–42. doi: 10.3788/lop53.060003

Sofonia, J., Shendryk, Y., Phinn, S., Roelfsema, C., Kendoul, F., and Skocaj, D. (2019). Monitoring sugarcane growth response to varying nitrogen application rates: a comparison of UAV SLAM LIDAR and photogrammetry. Int. J. Appl. Earth Observ. Geoinform. 82:15. doi: 10.1016/j.jag.2019.05.011

Song, B., and Park, K. (2020). Detection of aquatic plants using multispectral UAV imagery and vegetation index. Remote Sens. 12:16. doi: 10.3390/rs12030387

Song, P., Zheng, X., Li, Y., Zhang, K., Huang, J., Li, H., et al. (2020). Estimating reed loss caused by Locusta migratoria manilensis using UAV-based hyperspectral data. Sci. Total Environ. 719:137519. doi: 10.1016/j.scitotenv.2020.137519

Sun, Z., Jing, W., Qiao, X., and Yang, L. (2019). Identification and monitoring of blooming Mikania micrantha outbreak points based on UAV remote sensing. Trop. Geogr. 39, 482–491.

Tait, L., Bind, J., Charan-Dixon, H., Hawes, I., Pirker, J., and Schiel, D. (2019). Unmanned aerial vehicles (UAVs) for monitoring macroalgal biodiversity: comparison of RGB and multispectral imaging sensors for biodiversity assessments. Remote Sens. 11:18. doi: 10.3390/rs11192332

Tao, H., Feng, H., Xu, L., Miao, M., Long, H., Yue, J., et al. (2020). Estimation of crop growth parameters using UAV based hyperspectral remote sensing data. Sensors 20:1296. doi: 10.3390/s20051296

ten Harkel, J., Bartholomeus, H., and Kooistra, L. (2020). Biomass and crop height estimation of different crops using UAV-based LiDAR. Remote Sens. 12:18. doi: 10.3390/rs12010017

Tewes, A., and Schellberg, J. (2018). Towards remote estimation of radiation use efficiency in maize using UAV-based low-cost camera imagery. Agronomy Basel 8:15. doi: 10.3390/agronomy8020016

Tian, H., Wang, T., Liu, Y., Qiao, X., and Li, Y. (2020). Computer vision technology in agricultural automation: a review. Inform. Process. Agric. 7, 1–19. doi: 10.1016/j.inpa.2019.09.006

Tian, J. Y., Wang, L., Li, X. J., Yin, D. M., Gong, H. L., Nie, S., et al. (2019). Canopy height layering biomass estimation model (CHL-BEM) with full-waveform LiDAR. Remote Sens. 11:13. doi: 10.3390/rs11121446

Tilly, N., Hoffmeister, D., Cao, Q., Huang, S., Lenz-Wiedemann, V., Miao, Y., et al. (2014). Multitemporal crop surface models: accurate plant height measurement and biomass estimation with terrestrial laser scanning in paddy rice. J. Appl. Remote Sens. 8:083671. doi: 10.1117/1.Jrs.8.083671

Tomasi, C., and Kanade, T. (1992). Shape and motion from image streams under orthography: a factorization method. Int. J. Comput. Vis. 9, 137–154. doi: 10.1007/BF00129684

Toth, C., and Jozkow, G. (2016). Remote sensing platforms and sensors: a survey. ISPRS J. Photogram. Remote Sens. 115, 22–36. doi: 10.1016/j.isprsjprs.2015.10.004

Tucker, C. J. (1979). Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 8, 127–150. doi: 10.1016/0034-4257(79)90013-0

Turner, D., Lucieer, A., and Watson, C. (2012). An automated technique for generating georectified mosaics from ultra-high resolution unmanned aerial vehicle (UAV) imagery, based on structure from motion (SfM) point clouds. Remote Sens. 4, 1392–1410. doi: 10.3390/rs4051392

Viljanen, N., Honkavaara, E., Nasi, R., Hakala, T., Niemelainen, O., and Kaivosoja, J. (2018). A novel machine learning method for estimating biomass of grass swards using a photogrammetric canopy height model, images and vegetation indices captured by a drone. Agric. Basel 8:28. doi: 10.3390/agriculture8050070

Villoslada, M., Bergamo, T. F., Ward, R. D., Burnside, N. G., Joyce, C. B., Bunce, R. G. H., et al. (2020). Fine scale plant community assessment in coastal meadows using UAV based multispectral data. Ecol. Indic. 111:13. doi: 10.1016/j.ecolind.2019.105979

Vogel, S., Gebbers, R., Oertel, M., and Kramer, E. (2019). Evaluating soil-borne causes of biomass variability in grassland by remote and proximal sensing. Sensors 19:4593. doi: 10.3390/s19204593

Wallace, L., Lucieer, A., Watson, C., and Turner, D. (2012). Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 4, 1519–1543. doi: 10.3390/rs4061519

Wang, C., Nie, S., Xi, X., Luo, S., and Sun, X. (2017). Estimating the biomass of maize with hyperspectral and LiDAR data. Remote Sens. 9:11. doi: 10.3390/rs9010011

Wang, D. Z., Wan, B., Liu, J., Su, Y. J., Guo, Q. H., Qiu, P. H., et al. (2020). Estimating aboveground biomass of the mangrove forests on northeast Hainan Island in China using an upscaling method from field plots, UAV-LiDAR data and Sentinel-2 imagery. Int. J. Appl. Earth Observ. Geoinform. 85:16. doi: 10.1016/j.jag.2019.101986

Wang, D. Z., Wan, B., Qiu, P. H., Zuo, Z. J., Wang, R., and Wu, X. C. (2019). Mapping height and aboveground biomass of mangrove forests on Hainan Island using UAV-LiDAR sampling. Remote Sens. 11:25. doi: 10.3390/rs11182156

Wang, F., Wang, F., Zhang, Y., Hu, J., Huang, J., and Xie, J. (2019). Rice yield estimation using parcel-level relative spectra variables from UAV-based hyperspectral imagery. Front. Plant Sci. 10:453. doi: 10.3389/fpls.2019.00453

Wijesingha, J., Moeckel, T., Hensgen, F., and Wachendorf, M. (2019). Evaluation of 3D point cloud-based models for the prediction of grassland biomass. Int. J. Appl. Earth Observ. Geoinform. 78, 352–359. doi: 10.1016/j.jag.2018.10.006

Wong, T., and Yang, N. (2017). Dependency analysis of accuracy estimates in k-fold cross validation. IEEE Trans. Knowl. Data Eng. 29, 2417–2427. doi: 10.1109/TKDE.2017.2740926

Wong, T., and Yeh, P. (2020). Reliable accuracy estimates from k-fold cross validation. IEEE Trans. Knowl. Data Eng. 32, 1586–1594. doi: 10.1109/TKDE.2019.2912815

Wong, T.-T. (2015). Performance evaluation of classification algorithms by k-fold and leave-one-out cross validation. Pattern Recogn. 48, 2839–2846. doi: 10.1016/j.patcog.2015.03.009

Xu, R., Li, C., and Paterson, A. H. (2019). Multispectral imaging and unmanned aerial systems for cotton plant phenotyping. PLoS One 14:e0205083. doi: 10.1371/journal.pone.0205083

Yan, W. Q., Guan, H. Y., Cao, L., Yu, Y. T., Li, C., and Lu, J. Y. (2020). A self-adaptive mean shift tree-segmentation method using UAV LiDAR data. Remote Sens. 12:14. doi: 10.3390/rs12030515

Yang, B. H., Wang, M. X., Sha, Z. X., Wang, B., Chen, J. L., Yao, X., et al. (2019). Evaluation of aboveground nitrogen content of winter wheat using digital imagery of unmanned aerial vehicles. Sensors 19:18. doi: 10.3390/s19204416

Yang, S., Feng, Q., Liang, T., Liu, B., Zhang, W., and Xie, H. (2017). Modeling grassland above-ground biomass based on artificial neural network and remote sensing in the Three-River Headwaters Region. Remote Sens. Environ. 204, 448–455. doi: 10.1016/j.rse.2017.10.011

Yao, X., Huang, Y., Shang, G., Zhou, C., Cheng, T., Tian, Y., et al. (2015). Evaluation of six algorithms to monitor wheat leaf nitrogen concentration. Remote Sens. 7, 14939–14966. doi: 10.3390/rs71114939

Yuan, H., Yang, G., Li, C., Wang, Y., Liu, J., Yu, H., et al. (2017). Retrieving soybean leaf area index from unmanned aerial vehicle hyperspectral remote sensing: analysis of RF, ANN, and SVM regression models. Remote Sens. 9:309. doi: 10.3390/rs9040309

Yuan, M., Burjel, J. C., Isermann, J., Goeser, N. J., and Pittelkow, C. M. (2019). Unmanned aerial vehicle-based assessment of cover crop biomass and nitrogen uptake variability. J. Soil Water Conserv. 74, 350–359. doi: 10.2489/jswc.74.4.350

Yue, J., Feng, H., Jin, X., Yuan, H., Li, Z., Zhou, C., et al. (2018). A comparison of crop parameters estimation using images from UAV-mounted snapshot hyperspectral sensor and high-definition digital camera. Remote Sens. 10:1138. doi: 10.3390/rs10071138

Yue, J., Yang, G., Li, C., Li, Z., Wang, Y., Feng, H., et al. (2017). Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models. Remote Sens. 9:708. doi: 10.3390/rs9070708

Yue, J. B., Yang, G. J., Tian, Q. J., Feng, H. K., Xu, K. J., and Zhou, C. Q. (2019). Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogram. Remote Sens. 150, 226–244. doi: 10.1016/j.isprsjprs.2019.02.022

Zha, H. N., Miao, Y. X., Wang, T. T., Li, Y., Zhang, J., Sun, W. C., et al. (2020). Improving unmanned aerial vehicle remote sensing-based rice nitrogen nutrition index prediction with machine learning. Remote Sens. 12:22. doi: 10.3390/rs12020215

Zhang, H., and Li, D. (2014). Applications of computer vision techniques to cotton foreign matter inspection: a review. Comput. Electron. Agric. 109, 59–70. doi: 10.1016/j.compag.2014.09.004

Zhang, L., Shao, Z., Liu, J., and Cheng, Q. (2019). Deep learning based retrieval of forest aboveground biomass from combined LiDAR and Landsat 8 data. Remote Sens. 11:1459. doi: 10.3390/rs11121459

Zhang, N., Rao, R. S. P., Salvato, F., Havelund, J. F., Moller, I. M., Thelen, J. J., et al. (2018). MU-LOC: machine-learning method for predicting mitochondrially localized proteins in plants. Front. Plant Sci. 9:634. doi: 10.3389/fpls.2018.00634

Zheng, H., Li, W., Jiang, J., Liu, Y., Cheng, T., Tian, Y., et al. (2018). A comparative assessment of different modeling algorithms for estimating leaf nitrogen content in winter wheat using multispectral images from an unmanned aerial vehicle. Remote Sens. 10:2026. doi: 10.3390/rs10122026

Zheng, H. B., Cheng, T., Zhou, M., Li, D., Yao, X., Tian, Y. C., et al. (2019). Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 20, 611–629. doi: 10.1007/s11119-018-9600-7

Zhong, Y. F., Wang, X. Y., Xu, Y., Wang, S. Y., Jia, T. Y., Hu, X., et al. (2018). Mini-UAV-borne hyperspectral remote sensing from observation and processing to applications. IEEE Geosci. Remote Sens. Mag. 6, 46–62. doi: 10.1109/mgrs.2018.2867592

Zhu, W. X., Sun, Z. G., Peng, J. B., Huang, Y. H., Li, J., Zhang, J. Q., et al. (2019). Estimating maize above-ground biomass using 3D point clouds of multi-source unmanned aerial vehicle data at multi-spatial scales. Remote Sens. 11:22. doi: 10.3390/rs11222678

Keywords: unmanned aerial systems, unmanned aerial vehicle, remote sensing, crop biomass, smart agriculture, precision agriculture

Citation: Wang T, Liu Y, Wang M, Fan Q, Tian H, Qiao X and Li Y (2021) Applications of UAS in Crop Biomass Monitoring: A Review. Front. Plant Sci. 12:616689. doi: 10.3389/fpls.2021.616689

Received: 13 October 2020; Accepted: 18 March 2021; Published: 09 April 2021.

Edited by:

Penghao Wang, Murdoch University, Australia

Reviewed by:

Lea Hallik, University of Tartu, Estonia

Copyright © 2021 Wang, Liu, Wang, Fan, Tian, Qiao and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xi Qiao, qiaoxi@caas.cn; Yanzhou Li, lyz197916@126.com

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
