- 1Division of BioMedical Sciences, Faculty of Medicine, Memorial University of Newfoundland, St. John’s, NL, Canada

- 2Department of Computer Science, Faculty of Science, Memorial University of Newfoundland, St. John’s, NL, Canada

- 3Cancer Science Institute of Singapore, National University of Singapore, Singapore, Singapore

Confocal microscopy has evolved to be a widely adopted imaging technique in molecular biology and is frequently utilized to achieve accurate subcellular localization of proteins. Applying colocalization analysis on image z-stacks obtained from confocal fluorescence microscopes is a dependable method of revealing the relationship between different molecules. In addition, despite the established advantages and growing adoption of 3D visualization software in various microscopy research domains, there have been few systems that can support colocalization analysis within a user-specified region of interest (ROI). In this context, several broadly employed biological image visualization platforms are meticulously explored in this study to understand the current landscape. It has been observed that while these applications can generate three-dimensional (3D) reconstructions for z-stacks, and in some cases transfer them into an immersive virtual reality (VR) scene, there is still little support for performing quantitative colocalization analysis on such images based on a user-defined ROI and thresholding levels. To address these issues, an extension called ColocZStats (pronounced Coloc-Zee-Stats) has been developed for 3D Slicer, a widely used free and open-source software package for image analysis and scientific visualization. With a custom-designed user-friendly interface, ColocZStats allows investigators to conduct intensity thresholding and ROI selection on imported 3D image stacks. It can deliver several essential colocalization metrics for structures of interest and produce reports in the form of diagrams and spreadsheets.

1 Introduction

Compared with conventional fluorescence microscopes, the most significant advantage of confocal microscopes is that they can exclude the out-of-focus light from either above or below the current focal plane (Jonkman et al., 2020). This capability facilitates precise detection of the specific organelle in which the target molecule is present. In addition, the confocal microscope’s features of sharpening fluorescent images and reducing haze contribute to enhancing image clarity (Collazo et al., 2005). Many of these two-dimensional (2D) image slices can be continuously collected from focal planes at different depths along the z-dimension and eventually assembled to produce a z-stack comprising the specimen’s entire three-dimensional (3D) data (Collazo et al., 2005; Theart et al., 2017). The resulting z-stacks are digitized volumetric representations of cellular structures arranged in a 3D grid of voxels, where each voxel value corresponds to the amount detected of a particular stained structure or molecule at the voxel’s location. The number of bits representing each voxel value is called the “bit-depth” of the stack.

Examining interactions between different proteins or molecular structures holds significant importance in biological sciences. Biologists often perform colocalization analysis on confocal image z-stacks to better understand the roles and interactions of proteins (Pompey et al., 2013; Bolte and Cordelières, 2006). Colocalization detects the spatial overlap between distinct fluorescent labels with different emission wavelengths to determine whether the fluorophores are close or within the same region (Lacoste et al., 2000; Adler and Parmryd, 2013). Colocalization involves two aspects: co-occurrence and correlation. “Co-occurrence” refers to the simple spatial intersection of different fluorophores. “Correlation” refers to distinct fluorophores co-distributed proportionally with a more apparent statistical relationship (Adler and Parmryd, 2013; Dunn et al., 2011). Typical application examples of colocalization analysis include confirming whether a specific protein associates with microtubules (Bassell et al., 1998; Nicolas et al., 2008) or mitochondria (Lynch et al., 1996), or verifying whether different proteins are associated with identical plasma membrane domains (Lachmanovich et al., 2003).

Visualizing superimposed fluorescence micrographs is the most common method for assessing colocalization. Generally, the acquired images or stacks are grayscale for each channel captured which are then assigned a pseudo-color during visualization. When the images of each fluorescence label are thus merged, their combined contribution can be indicated by the color of the microstructure’s appearance. For instance, because of the combined effects of channels displayed as green and red, the colocalization of fluorescein and rhodamine is recognized in yellow structures in Dunn et al. (2011). When analyzing z-stacks, a popular visualization method involves performing 3D reconstruction using techniques such as volume rendering, which transforms the data into 3D semi-translucent voxels to enhance the user’s perception when observing samples (Theart et al., 2017; Lucas et al., 1996; Liu and Chiang, 2003). Another cutting-edge visualization technology is virtual reality (VR). VR is a digitally created immersive 3D simulated environment that closely resembles reality. While fully immersed in this environment, users can navigate through and interact with virtual objects. It is noteworthy that as VR technology has developed, the characteristic it supplies that allows immersive observation of biological samples has led to this technology being continuously combined with a variety of visual analysis methods in recent years, enabling biologists to obtain a more realistic 3D awareness in the process of scientific exploration (Patil and Batra, 2019; Sommer et al., 2018). Although the application of VR in various subfields of bioinformatics has been steadily increasing, Theart et al. (2017) revealed that no applications had previously provided the capability for colocalization analysis in VR. Nevertheless, this study, along with follow-up research (Theart et al., 2018), collectively demonstrated the substantial potential and advantages of performing colocalization analysis for z-stacks based on the generated volume-rendered images in an immersive VR environment—that the efficiency of conducting such analysis and the precision of inspecting and assessing biological samples can be significantly improved.

Given this background, several well-known applications with a focus on 3D graphics systems with VR functionalities were investigated during the study period to gain an overview of the current status of these commonly accepted bioimaging visualization and analysis platforms for visualizing confocal microscopy data and evaluating its degree of colocalization.

ExMicroVR (Immersive Science LLC, 2024b) (https://www.immsci.com/home/exmicrovr) is a VR tool created for the immersive visualization and manipulation of multi-channel confocal image stacks. Its VR environment and easy-to-use user interface allow it to considerably expand microscopic samples, permitting biologists to view and explore the molecules’ structures in greater detail. Another software, ConfocalVR (Immersive Science LLC, 2024a) (https://www.immsci.com/home/confocalvr), in its current form is an upgraded version of ExMicroVR. Not only does it have more added interactive features, but it also provides a range of relatively advanced image analysis capabilities, such as adding markers in 3D scenes, counting the number of interesting objects, and measuring the distance between them, allowing researchers to further investigate the complexity of cellular structures. ChimeraX (Goddard et al., 2018; Pettersen et al., 2021) (https://www.cgl.ucsf.edu/chimerax) is an interactive platform for visualizing diverse types of data, including atomic structures, sequences, and 3D multi-channel microscopy data. It supplies approximately 100 different analysis functionalities. ChimeraX VR (https://www.cgl.ucsf.edu/chimerax/docs/user/vr.html) is the VR extension of ChimeraX; it enables users to interact with cellular protein structures with stereo depth perception. When activated, a floating panel, precisely the same as the desktop interface, is available in the VR scene to help users utilize controllers to perform all necessary manipulations on the images. 3D Slicer (Fedorov et al., 2024) (https://www.slicer.org) is a broadly recognized, accessible, and open-source platform that provides numerous biomedical image processing and visualization features (Kikinis et al., 2013). Similarly, SlicerVR (https://github.com/KitwareMedical/SlicerVirtualReality) is the VR extension within 3D Slicer. Thanks to the capabilities of the 3D Slicer ecosystem, SlicerVR offers seamless VR integration in this popular image computing application (Lasso et al., 2018) so that observers can quickly transfer images displayed in desktop mode to the VR scenario. Additionally, it supports multi-user collaboration, allowing images within the exact scene to be manipulated synchronously (Pinter et al., 2020).

This investigation found that all these platforms are capable of reading datasets generated by fluorescence confocal microscopy and converting them into 3D semi-translucent voxels that can be delivered to VR scenes for visualization. The 3D rendering of these voxels can aid researchers in visually assessing the level of colocalization between channels. However, for more comprehensive colocalization analysis, sole reliance on subjective identification of the relative distribution of different molecules from a visual perspective is insufficient. Non-overlapping channels may also display merged colors when they fall on the same line of sight, which could lead to false impressions and affect the accuracy of the analysis. Therefore, objective and quantitative analysis is crucial. Despite the commendable image visualization and diverse analytical capabilities inherent in these platforms, there is still room for them to improve in obtaining colocalization statistics for confocal stacks. As indicated by Stefani et al. (2018), developing a dedicated tool to gain objective colocalization measurements remains one of the goals and challenges for ConfocalVR.

To address this limitation and take advantage of the comparatively superior high extensibility of 3D Slicer, which supports the creation of interactive and batch-processing tools for various purposes (Kapur et al., 2016), a free, open-source extension called ColocZStats has been developed for 3D Slicer. ColocZStats is currently designed as a desktop application which enables users to visually observe the spatial relationship between different biological microstructures while performing thresholding and ROI selection on the channels’ 3D volumetric representations via an easy-to-use graphical user interface (GUI) and then acquiring critical colocalization metrics with one mouse click. This proposed tool contributes to supplementing the capabilities of 3D Slicer in visualizing multi-channel confocal image stacks and quantifying colocalization, thereby broadening its scope as an integrated image analysis platform.

2 Materials and equipment

2.1 Description of sample data source

To showcase the capabilities of ColocZStats discussed here, we predominantly utilized confocal z-stack data collected during a study on the colocalization of DSS1 nuclear bodies with other nuclear body types. DSS1, also known as SEM1, is a gene that encodes a protein crucial for various cellular processes, most notably the function of the 26S proteasome complex in protein degradation. The relevant z-stack imaging was performed using a Zeiss LSM800 confocal microscope with Airyscan. The above process utilized three distinct dyes to specifically label DSS1 nuclear bodies (red), promyelocytic leukemia (PML) nuclear bodies (green), and the nucleus (blue). The data file was named “Sample Image Stack.tif”, and the sampling step in z was 0.15 µm. An additional z-stack was used along with these data for the description in the Case Study. Please refer to the Supplementary Material for a detailed description of these image stacks.

2.2 Description of VR equipment

All screenshots of the VR environments presented in the upcoming section were captured using an HTC Vive Cosmos Elite VR System. The VR system’s head-mounted display (HMD) provides an approximate field of view (FoV) of approximately 110° through two displays refreshed at a rate of 90 Hz.

3 Methods

3.1 ColocZStats development

High-quality 3D reconstruction or rendering is an essential prerequisite for accurate colocalization analysis on confocal z-stacks as it can reveal more detail in structures, enhance users’ recognition of colocalization areas, and elevate colocalization analysis sensitivity.

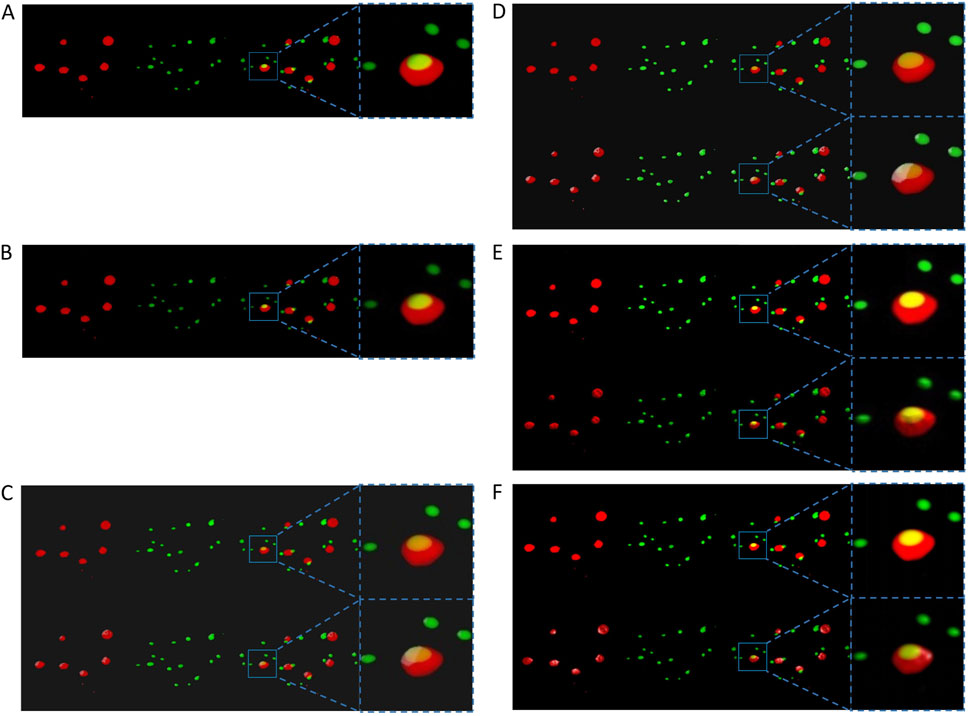

Figure 1 shows two channels of the same sample dataset and their combination displayed as colored semi-transparent voxels in ChimeraX desktop, ChimeraX VR, ExMicroVR, ConfocalVR, SlicerVR, and 3D Slicer desktop separately. Although 3D Slicer supports volume rendering, before the development of ColocZStats it could not automatically split multi-channel confocal z-stack channels and color them, nor did it provide specialized GUI widgets for separately manipulating each channel or navigating between them. Therefore, the FIJI image processing package (Schindelin et al., 2012) was initially utilized to save the channels as separate multi-page z-stack files. Subsequently, these files were selected and imported as scalar volumes into the Volume Rendering module of 3D Slicer (National Alliance for Medical Image Computing, 2024) for rendering. Their colors were then manually configured to ensure consistency with the others. Currently, ChimeraX desktop and ChimeraX VR do not support the application of lighting to volumetric renderings but only to surfaces and meshes (Goddard, 2024). However, adjustable highlights or shadows are necessary for showing 3D semi-translucent voxels as this can help create refined volumetric representations and allow viewers to distinguish intricate topologies and distance relationships more easily (The American Society for Cell Biology, 2024). Unlike them, the remaining programs in Figure 1 possess the capability of adding lighting and shadows to such renderings. For example, the Volume Rendering module of 3D Slicer provides many options for calculating shading effects, which enables finer adjustments to the rendered volume’s appearance. Regarding the rendering effects, it was observed that the quality produced by 3D Slicer desktop or SlicerVR is not inferior to that of other visualization software programs.

Figure 1. Screenshots displaying the visualization of the same specimen’s channels from an identical perspective across all mentioned programs. For each sub-figure, from left to right, two separate channels of the sample stack, their superposition, and a magnification of a specific region in the superposition are shown. (A) The volumetric rendering of the two channels in ChimeraX’s desktop version. (B) The same series of scenes as (A). They were captured from a VR environment created by ChimeraX VR. The first rows in (C–F) respectively show the scenes without external lighting in the ExMicroVR, ConfocalVR, SlicerVR, and the desktop viewport of 3D Slicer. The second row of (C–F) shows the scenes with external lighting turned on.

More importantly, researchers have discovered that they can efficiently develop and assess new methods and add more capabilities through custom modules by benefiting from the highly extendable features of 3D Slicer. Thus, developers in 3D Slicer do not need extra time to redevelop primary data import/export, visualization, or interaction functionalities. Instead, they can easily call or integrate these characteristics and focus on developing new required features (Fedorov et al., 2024; Vipiana and Crocco, 2023). Although ChimeraX is also extensible, many of its functional application programming interfaces (APIs) were not documented during tool development, and many existing APIs were experimental. In contrast, 3D Slicer has provided relatively extensive API documentation and developer tutorials. Moreover, the 3D Slicer community has offered a large and active forum for developers, where considerable issues related to tool development can be identified, discussed, or raised for timely feedback. ConfocalVR and ExMicroVR, on the other hand, have not provided publicly available APIs. All the above factors constitute the rationale for developing a 3D Slicer tool.

ColocZStats is a scripted extension that utilizes the 3D Slicer APIs (Slicer Community, 2024) and is implemented using Python 3.11.6. The code was written in PyCharm (JetBrains s.r.o., 2024), an integrated development environment (IDE) designed explicitly for Python programming. The fundamental organizational structures of ColocZStats are “classes”, which represent independent code blocks containing a group of functions and methods.

Once a multi-channel confocal z-stack is loaded into ColocZStats, the stack’s metadata in the program’s background will be parsed to extract individual Numpy arrays of each channel. Simultaneously, several methods from a base module, “util.py” and a core class, “vtkMRMLDisplayNode,” of 3D Slicer will be iteratively invoked to establish separate scalar volume nodes for each channel’s Numpy array and assign individual pseudo-colors to them. In addition, the rendering method from 3D Slicer’s Volume Rendering module (Fedorov et al., 2024) is applied to all individual channels separately, eventually presenting their merged visual appearance. Like most open-source medical imaging systems, such as MITK (Nolden et al., 2013), itksNAP (Yushkevich et al., 2006), and CustusX (Askeland et al., 2016), the volume rendering back-end of 3D Slicer is based on the Visualization Toolkit (VTK) (Drouin and Collins, 2018). VTK is a powerful cross-platform library that supports a variety of visualization and image-processing techniques, leading to its wide adoption in open-source and commercial visualization software (Bozorgi and Lindseth, 2015). The Volume Rendering module provides three volume rendering methods: (i) VTK CPU ray casting, (ii) VTK GPU ray casting, and (iii) VTK multi-volume. VTK GPU ray casting is the default rendering method because graphics hardware can significantly accelerate rendering (National Alliance for Medical Image Computing, 2024).

Moreover, the moment a stack is loaded, a set of separate GUI elements is created for each channel to adjust channel threshold values or reveal any number of channels in the scene by controlling their visibility. The GUIs in ColocZStats are provided by the Qt toolkit (The Qt Company, 2024). Numerous modules in 3D Slicer offer a suite of reusable, modifiable GUI widgets, allowing developers to seamlessly integrate them into custom user interfaces via Qt Designer. Qt Designer is a tool for crafting and constructing GUIs with Qt Widgets. For instance, in ColocZStats, the widget integrated for controlling each channel’s threshold range is the “qMRMLVolumeThresholdWidget,” which is also a widget within 3D Slicer’s Volumes module (3D Slicer, 2024b).

By integrating and leveraging several existing classes and methods in 3D Slicer, the initial need for a series of manual operations to create appropriate volumetric representations for channels has evolved into the current state where all these steps can be automatically completed, with each channel equipped with individually controllable GUI widgets. The above process is also an illustrative example of how the high extensibility of 3D Slicer can be exploited to fulfill certain specific requirements of the tool.

3.2 Statistical analysis of colocalization

As previously mentioned in the Introduction above, ColocZStats not only helps researchers identify the relative distribution of different cellular molecules but can also generate effective metrics for quantifying colocalization to help investigate the presence of spatial relationship between different channels. These coefficients are now described in detail.

3.2.1 Pearson’s correlation coefficient

In the field of colocalization analysis, intensity correlation coefficient-based (ICCB) analysis methods constitute one of the primary categories for assessing colocalization events. They depend on the image’s channel intensity information, which provides a powerful way of quantifying the degree of spatial overlapping of two channels (Georgieva et al., 2016). A large number of colocalization analysis tools employing ICCB methods have been widely integrated into various image analysis applications (Bolte and Cordelières, 2006). Pearson’s correlation coefficient (PCC) is one of the most commonly employed ICCB methods (Bolte and Cordelières, 2006; Dunn et al., 2011). It originated in the 19th century and has been extensively used to evaluate the linear correlation between two datasets (Adler and Parmryd, 2010). When analyzing colocalization, PCC is employed to quantify the linear relationship between the signal intensities in one channel and the related values in another (Aaron et al., 2018). The PCC can be considered a normalized assessment of two channels’ covariance (Aaron et al., 2018). The formula for PCC applied in ColocZStats is defined below as Equation 1, with the signal intensities of two channels at each voxel included in the calculation:

For any pair of selected channels in the tool, the

The range of PCC is between +1 and −1. Two channels entirely linearly correlated are indicated by +1, and −1 means that the two channels are perfectly but inversely correlated. A zero-value means that the distributions of the two channels are uncorrelated (Dunn et al., 2011). Although PCC is theoretically not influenced by thresholds, they are incorporated into the tool’s computation to handle specified threshold settings uniformly across all coefficients computed by ColocZStats. The specific approach of setting thresholds for calculating PCC is similar to that of the Colocalization Analyzer in the Huygens Essential software (Scientific Volume Imaging B.V, 2024a; 2024b). This widely acknowledged desktop software visualizes and analyzes microscopic images (Pennington et al., 2022; Davis et al., 2023; Volk et al., 2022). The distinction between the two is that, in ColocZStats, not only the value of the lower threshold but also that of the upper threshold can be specified for each channel. The purpose of allowing the setting for channels’ upper threshold values is analogous to the design of ConfocalVR’s previous Cut Range Max widget or its latest Max Threshold widget, which is used to filter out oversaturated voxels that may be occasionally observed by observers in some particular circumstances (Stefani et al., 2018). During the calculation process, if a particular channel is set with lower and upper thresholds, voxel intensities greater than the upper threshold will be set to 0. Based on that, the lower threshold will be subtracted from the remaining voxel intensities. If any negative voxel values occur after the subtraction, they will be set to 0.

3.2.2 Intersection coefficients

In distinction from the PCC, which is calculated based on actual voxel intensities, more straightforward coefficients can be calculated based only on the presence or absence of signals in a voxel, regardless of its actual intensity value. All intersection coefficients calculated by ColocZStats are examples of such coefficients, and the computation methods were borrowed from the Huygens Colocalization Analyzer (Scientific Volume Imaging B.V., 2024a). Similarly, the difference is that the upper threshold value for each selected channel is included in ColocZStats’s calculation. More specifically, once a voxel’s intensity value is within a specific intensity range bounded by upper and lower thresholds, it can be regarded as having some meaningful signal. If so, its value could be considered 1 regardless of its actual intensity and 0 otherwise. This indicates that a binary image

Based on the definition of

The numerator refers to the intersecting voxels’ total volume. For each voxel, the overlapping contribution is defined as the product of

Another contribution of ColocZStats is that it allows researchers to choose up to three channels in a z-stack for statistical analysis. Extending from the above-mentioned formulas, the intersection coefficients’ formulas tailored to this scenario have also been proposed. In this case, the formula for

Likewise, the three channels’ intersecting volume of a given voxel

4 Results

4.1 Comprehensive comparison of visualization tools

The related features of the programs mentioned in the Introduction section, including ColocZStats, for visualizing confocal z-stacks and measuring their colocalization are summarized in Table 1. This table divides these features into four categories that encompass the most meaningful comparable features for the execution of colocalization analysis. Consequently, although certain programs may have many other functionalities, they are not included in this table. Regarding the choice of programs, ChimeraX VR and SlicerVR are VR extensions of ChimeraX and 3D Slicer, correspondingly, and their image processing and analysis capabilities almost entirely depend on the specifics of each platform. As a result, only ChimeraX and 3D Slicer are listed in this table for comparison. For each feature in the table, if the programs themselves or any plugins or modules they incorporate offer matching functionality, the corresponding cell is marked with a check. For instance, in the case of 3D Slicer, its Volume Rendering module supports loading multi-page Z-stack files; thus, the corresponding cell is checked. Another module in 3D Slicer, ImageStacks, allows loading the image sequence of z-stack slices, resulting in the respective cell also being checked. It is worth noting that because the ImageStacks module is designed specifically for working with image stacks, all the listed image formats in the “Supported image format” for 3D Slicer are consistent with those supported by the ImageStacks module.

The Confocal Image Z-stack Observation category summarizes the general practical features for meticulous observation of the channels in confocal z-stacks. Most of them are common to several tools in this comparison. Among these, as for “ExMicroVR” or “ConfocalVR”, if one intends to manipulate individual channels separately, a necessary step is to extract each channel of the original z-stack as a single multi-page z-stack file using FIJI and save all of them into a designated directory for loading. Therefore, while both tools support users in individually manipulating channels, they do not have the functionality to automatically separate all channels of the z-stack to enable this operation. Before the completion of ColocZStats development, as indicated by the 3D Slicer community, 3D Slicer still needs specialized tools for properly visualizing multi-channel confocal z-stacks and manipulating channels separately. Therefore, no features in the table related to operating z-stack channels corresponding to 3D Slicer have been checked. As a side note, in the context of the third and seventh items within this category, ‘Delete’ refers to removing channel rendering and matching GUI widgets from the scene rather than deleting any associated files from the file system.

The information presented in Table 1 indicates that in contrast to other platforms, ColocZStats not only retains several necessary control options for channels of confocal z-stacks but can also objectively analyze the colocalization in such stacks. Significantly, this comparison also reveals some limitations in the current functionalities of ColocZStats.

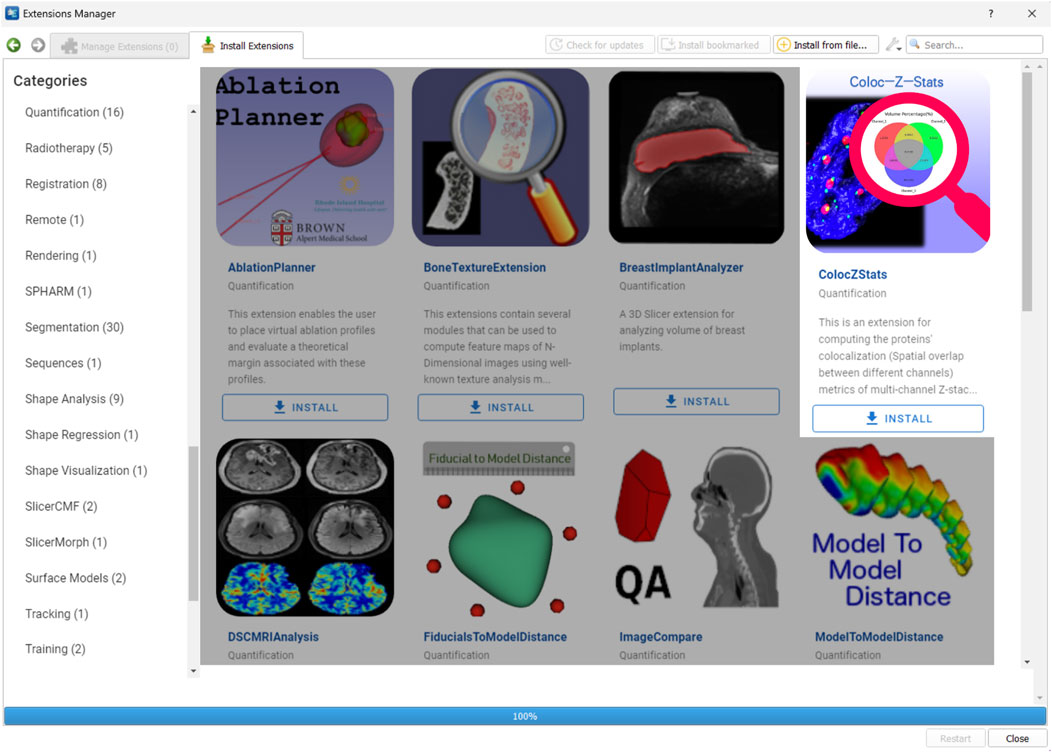

4.2 Availability of ColocZStats

ColocZStats is freely available under an MIT license and requires the stable version of 3D Slicer (3D Slicer., 2024a) for compatibility. The Slicer community maintains a website called the “Slicer Extensions Catalog” for finding and downloading extensions; ColocZStats is available there (3D Slicer., 2024c). The Extensions Manager in 3D Slicer provides direct access to the website, facilitating easy installation, updating, or uninstallation of extensions with a few clicks in the application. As shown in Figure 2, ColocZStats can be found within the Quantification category in the catalog. After installing ColocZStats, it will be presented to users as a built-in extension. More information about installing and using ColocZStats can be found on the homepage of its repository on GitHub (https://github.com/ChenXiang96/SlicerColoc-Z-Stats).

Figure 2. Screenshot showing the Slicer Extensions Catalog via the Extensions Manager in 3D Slicer. ColocZStats is offered within the Quantification category.

4.3 Input image file

For compatibility with ColocZStats, each input image file must be a 3D multi-channel confocal image z-stack in TIFF format that maintains the original intensity values, with each channel in grayscale. Each channel must possess the same dimensions, image order, and magnification. The bit-depth (number of bits dedicated for each voxel) of the input image file can be 8-, 16-, or 32-bit. Even though ColocZStats supports loading z-stacks containing up to 15 channels, only up to three can be specified for each colocalization computation because the statistical analysis and the possible interactions between channels become more complex with each additional channel.

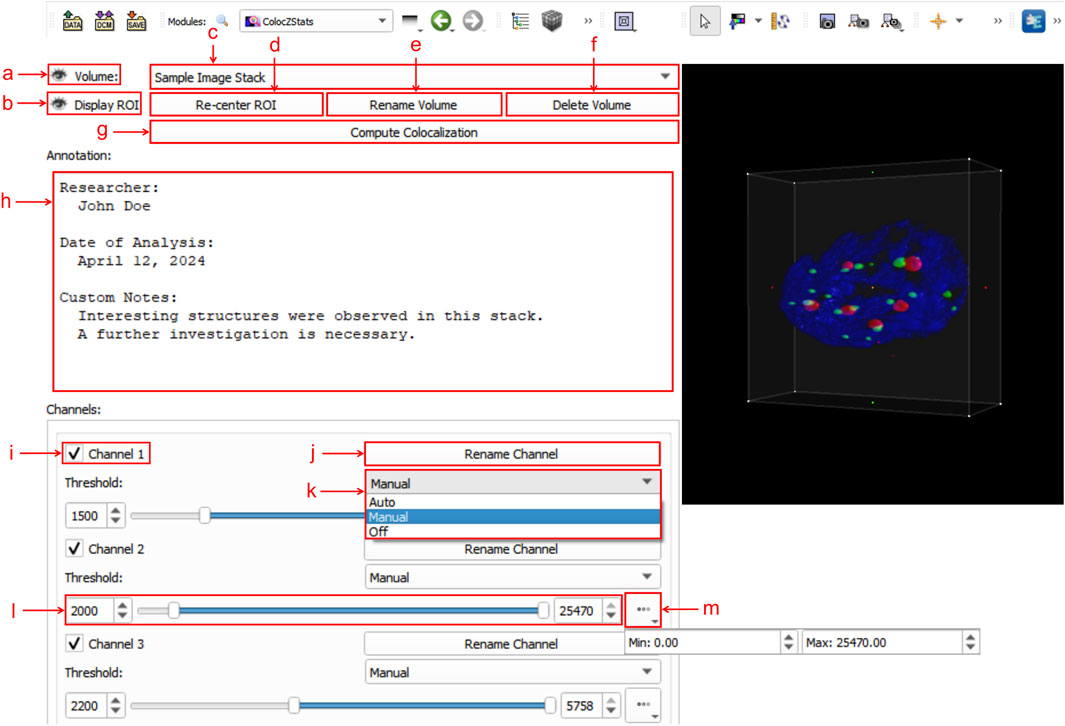

4.4 User interface

Figure 3 shows an example of ColocZStats’s user interface, which consists of two separate areas: a control panel on the left side that provides a series of interactive widgets, and a 3D view on the right that displays the volumetric rendering of the loaded sample image stack. Users can adjust these volume-rendered images and calculate the corresponding colocalization metrics by manipulating the widgets on the control panel. To display the details of these widgets clearly, a close-up of the control panel is shown in Figure 3. The following subsection describes a typical workflow for ColocZStats.

Figure 3. Graphical user interface (GUI) of ColocZStats. (a) Clickable eye icon for managing the visibility of the entire image stack’s rendering. (b) Clickable eye icon for managing the visibility of the adjustable box for selecting ROI regions. (c) Combo box for switching between multiple loaded image stacks, displaying the stack’s filename without extensions by default. (d) Button for repositioning the rendering within the ROI box to the 3D view’s center. (e) Button for triggering a pop-up text box for customizing the displayed name on the combo box. (f) Button for deleting the current stack from the scene, along with its associated annotation and GUI widgets. (g) Button for performing colocalization analysis. (h) Text field for adding a customized annotation for the current stack. (i) Checkbox for managing the visibility of each channel. The indices in these default channel name labels start from “1”. (j) Button for triggering a pop-up text box for customizing the corresponding channel’s name label. (k) Drop-down list for selecting the channel threshold control mode, comprising three options: “Auto,” “Manual,” and “Off”. (l) Adjustable sliders for setting lower and upper thresholds. Both values can also be specified in the two input fields. (m) Drop-down box for displaying the initial threshold boundaries of the associated channel.

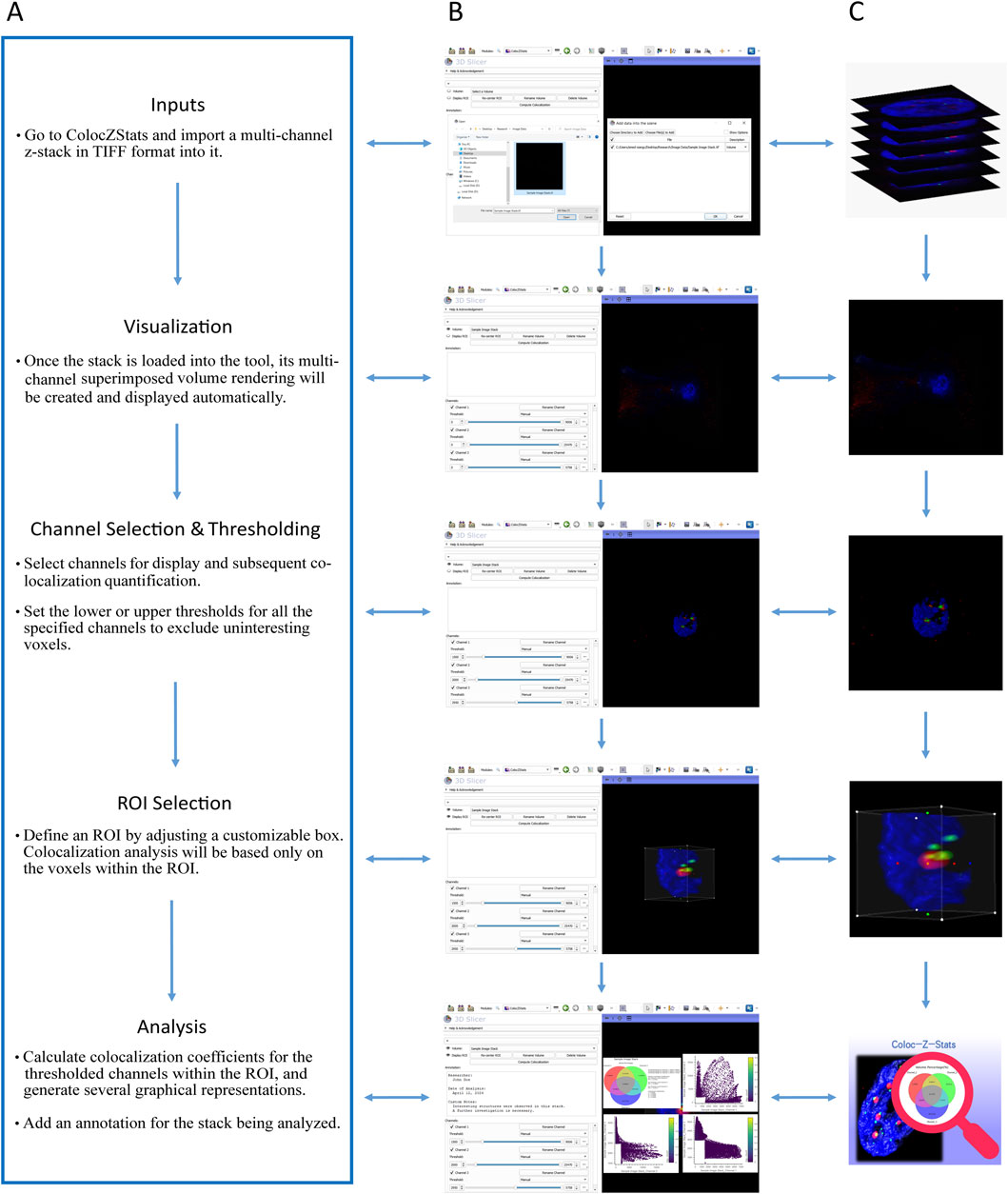

4.5 The workflow of ColocZStats

A typical workflow of ColocZStats is shown in Figure 4A, comprising five steps: (i) inputs, (ii) visualization, (iii) channel selection and thresholding, (iv) ROI selection, and (v) analysis. The sequence of certain steps in this workflow can be slightly adjusted based on particular conditions or personal preferences. In 3D Slicer, a file browser can be opened by clicking the “DATA” button at the upper-left corner to load a multi-channel confocal z-stack file into the scene. All channels’ colored volume-rendered images will immediately appear in the 3D view by default when the stack is loaded into ColocZStats, and they can be moved and rotated arbitrarily with mouse clicks and movements.

Figure 4. (A) Typical workflow of ColocZStats. (B) Example operation scenarios in ColocZStats corresponding to the steps in the workflow. (C) Visual abstractions corresponding to the steps in the workflow.

The widgets in the Channels sub-panel will also be displayed concurrently upon loading the input file. The checkbox in front of each channel’s name label is not only used for managing the visibility of the channel’s rendered volume but also for determining whether the channel will be included in the colocalization analysis. Figures 4B and C represent an example where all three channels of the sample data are selected. After channel selection, threshold segmentation is often necessary because it is helpful for extracting interesting voxels from the image. For individual channels, as any slider for controlling the thresholds is adjusted, the display range of the channel’s volumetric representation will be changed synchronously, facilitating users’ observation and decision-making for the following analysis. By default, when a confocal z-stack is imported, the checkboxes of all its channels are turned on, and the threshold ranges for all channels are displayed as their original ranges.

The ROI box integrated into ColocZStats facilitates researchers’ analysis of any parts of the loaded stack. By default, there is no ROI box for the imported stack in the scene. Upon the first click on the eye icon beside “Display ROI”, an ROI box will be created; after that, its visibility can be customized to be toggled on or off. While the ROI box is displayed, depth peeling will automatically be applied to the volumetric rendering to produce a better translucent appearance. The handle points on the ROI box can be dragged to crop the rendered visualization with six planes. As any handle point is dragged, the volumetric rendering outside the box will disappear synchronously. The final analysis will only include the voxels inside the ROI box. When there is a need to analyze the entire stack, the ROI box should be adjusted to enclose it completely. The functionality to extract the voxels inside the ROI is enabled by the Crop Volume module, and the related methods will be called in the back end when a calculation is executed. The Crop Volume module is a built-in loadable module of 3D Slicer that allows the extraction of a rectangular sub-volume from a scalar volume. With the above control options, users can conveniently configure voxel intensity thresholds and ROI for all channels to be analyzed while intuitively focusing on the critical structures to obtain the final colocalization metrics.

The “Analysis” step is performed when clicking the “Compute Colocalization” button; all thresholded channels and overlapping areas inside the ROI will be identified in the program’s background process. Subsequently, the colocalization coefficients described previously will be computed. Meanwhile, a series of graphical representations will pop up on the screen, such as a Venn diagram illustrating volume percentages and 2D histograms showing the combinations of intensities for all possible pairs of selected channels. The following subsection provides detailed descriptions of these generated graphical representations. In addition, users can add a custom annotation for the associated image stack at any step after it is loaded to record any necessary information.

4.6 Graphical representations produced

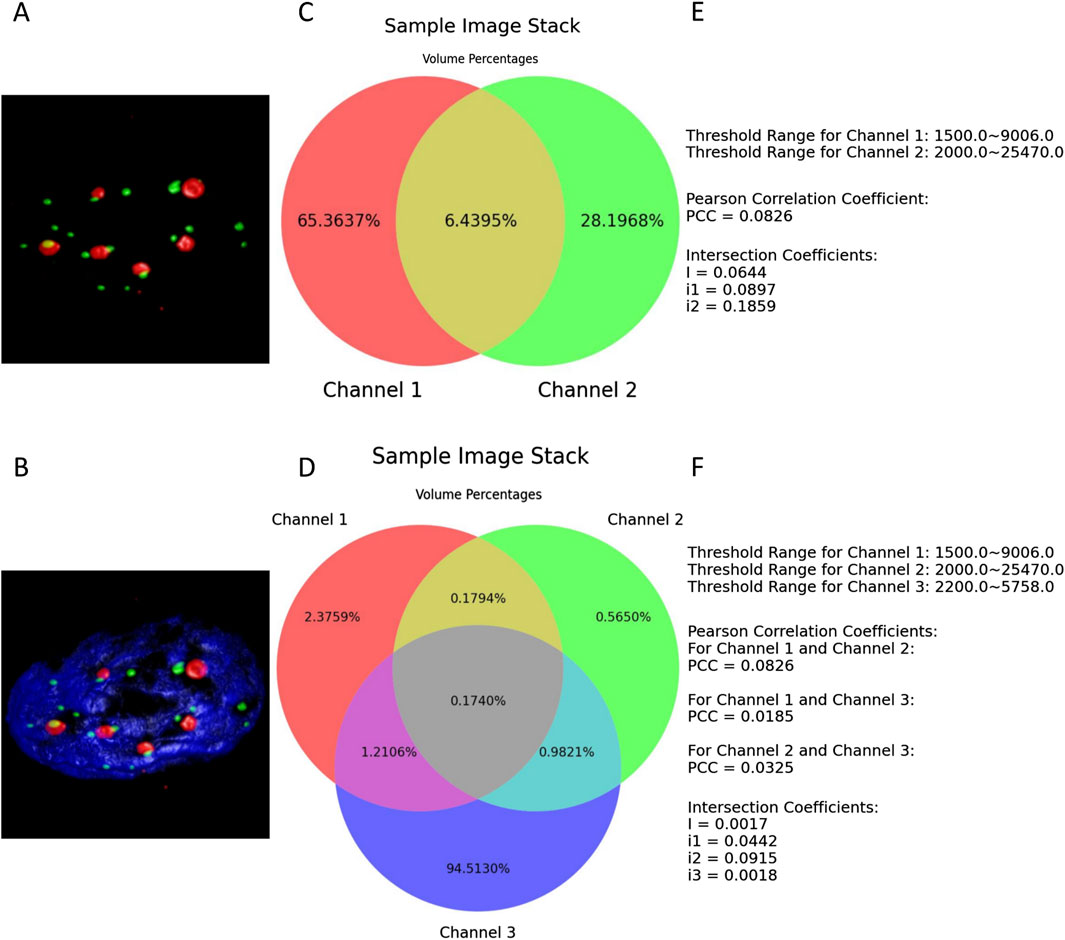

4.6.1 Venn diagrams

ColocZStats allows two or three channels to be selected simultaneously to perform colocalization measurements. The Venn diagrams generated from the above two scenarios allow researchers to quickly recognize the volume percentages of overlapping regions along with that of the remaining parts. The popular Python package “matplotlib-venn” was utilized to implement this functionality while leveraging its “venn2_unweighted” and “venn3_unweighted” functions to create the two kinds of Venn diagrams consisting of two or three circles without area weighting, respectively.

In the Venn diagrams generated, the colors in these circles match the colors in the volume-rendered images, and the area where all circles intersect signifies the part where all specified channels intersect, with the displayed percentage corresponding to the result obtained by multiplying the global intersection coefficient by 100 and retaining four decimal places. Based on this, the percentages of the remaining parts of these channels can be derived and displayed in the other areas of the Venn diagrams. Moreover, the Venn diagram’s title matches the specified name on the GUI’s combo box, and the channel names shown there correspond to the channel name labels defined on the interface.

The examples shown in Figure 5 demonstrate a typical scenario of setting lower thresholds for each channel and configuring their upper thresholds to their maximum values. Figures 5E and F are also components of the results’ illustrations composed together with the two Venn diagrams; for clarity, they were extracted as two separate figures.

Figure 5. (A, B) present the thresholded volume renderings when two or three channels of the entire sample image stack are selected. (C, D) depict the respective resulting Venn diagrams. (E, F) illustrate all the defined thresholds, with the colocalization metrics results retained to four decimal places.

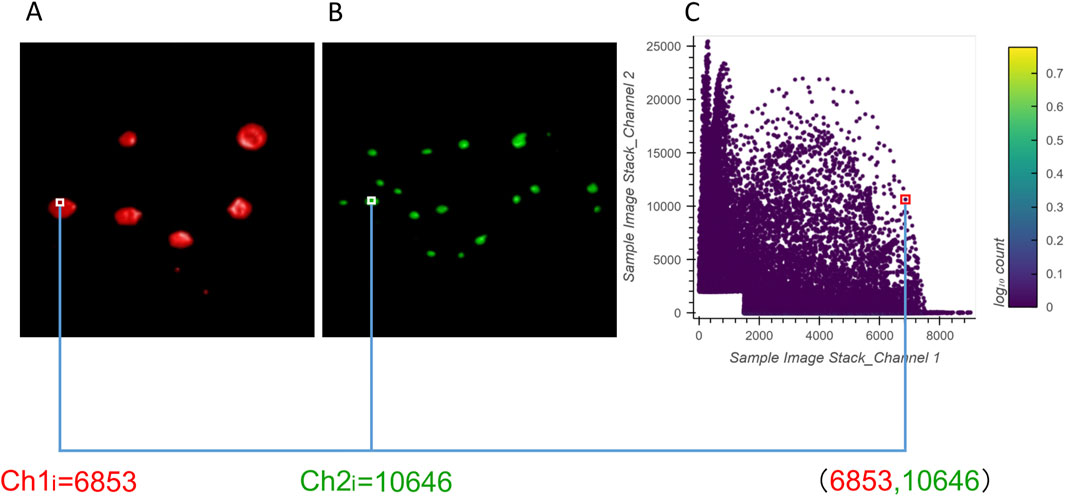

4.6.2 2D histograms

The 2D histograms produced by ColocZStas serve as a supplementary tool for visually assessing colocalization, providing a qualitative indication. The feature of generating 2D histograms has become a basic functionality of most colocalization analysis software. One specific application of 2D histograms is that they can be employed to identify populations within different compartments (Brown et al., 2000; Wang et al., 2001). For any two channels, a 2D histogram illustrates the connection of intensities between them, where the x-axis corresponds to the intensities of the first channel and the y-axis corresponds to the intensities of the second channel. The histogram’s points can be closely observed to be gathering along a straight line if the two channels are highly correlated. The line’s slope indicates the two channels’ fluorescence ratio. Following Caltech’s “Introduction to Data Analysis in the Biological Sciences” course in 2019, a Python library in ColocZStats called “Holoviews,” (Stevens et al., 2015) was applied to plot the 2D histograms. With the scenario corresponding to Figure 5A, Figure 6 demonstrates the generation of a 2D histogram. For any precise position within the channels, the related intensities of both channels are combined to define a coordinate in the 2D histogram. Simultaneously, the count of points at this coordinate is incremented by one. As shown in Figure 6C, the blank area in the lower-left corner represents the background. For any background’s voxel, the intensity values of its two channels are both outside the respective valid channel threshold ranges, so such voxels will not be plotted as points in the 2D histogram. The definition of the valid threshold ranges mentioned in this context is consistent with those applied when calculating the intersection coefficients. The color bar on the histogram’s right indicates the number of points with the same intensity combinations. The 2D histograms generated by ColocZStats will be saved as static images and interactive HTML files that can be viewed in more detail.

Figure 6. Visual explanation of a 2D histogram’s generation process. (A) Volume rendering of channel 1 and an example intensity at a specific position. (B) Volume rendering of channel 2 and an example intensity at the same position. (C) Coordinates in the 2D histogram are generated by the combination of those intensities.

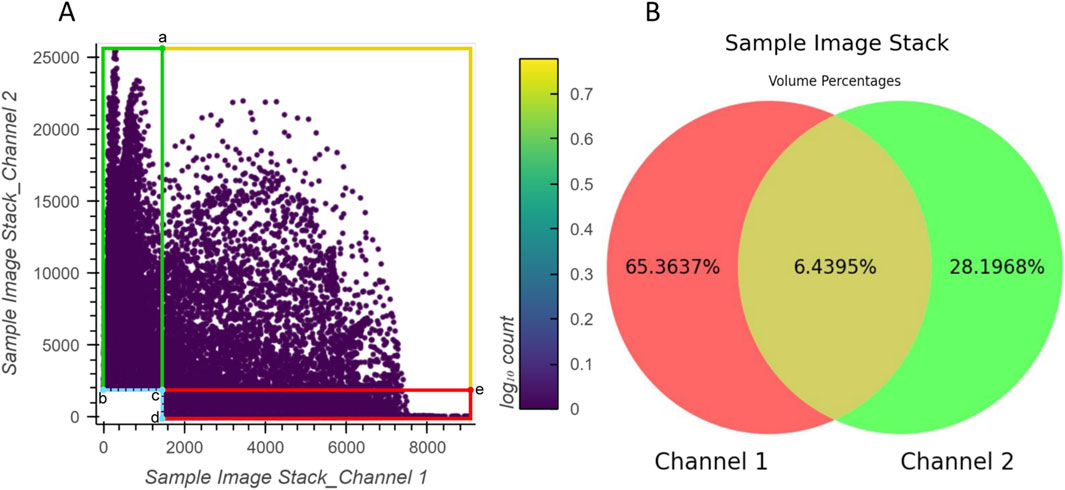

The correspondence between the example 2D histogram in Figure 6 and its related Venn diagram is depicted in Figure 7. The defined threshold value boundaries for the channels delineate four regions in Figure 7A. In this 2D histogram, the colored outlines, which are aligned with the boundaries, and the rectangular regions they enclose represent all possible distribution positions of points. All points along the yellow outline and those within the region enclosed by the outline and points a, c, and e represent all the voxels that contain valid signals in both channels. The percentage of all these points aligns with the percentage displayed in the yellow area of Figure 7B. Likewise, all points along the red outline and those distributed in the region enclosed by it and points c and d and all points along the green outline and those within the region enclosed by it and points b and c represent the voxels with exclusively valid signals in channels 1 or 2, respectively. The percentages of these points are consistent with the values shown in the red and green areas of the Venn diagram, respectively. Furthermore, no points are plotted on coordinates corresponding to b, c, and d or the boundaries formed between them as they lie outside the defined threshold ranges.

Figure 7. Relationship between the example 2D histogram (A) and its corresponding Venn diagram (B). Coordinate of point (a): (1500,25470); coordinate of point (b): (0,2000); coordinate of point (c): (1500,2000); coordinate of point (d): (1500,0); coordinate of point (e): (9006,2000).

4.7 Results spreadsheet

After clicking the “Compute Colocalization” button (Figure 3G), a comprehensive results spreadsheet is automatically saved for researchers’ further reference or sharing. It contains all graphical representations, coefficient results, and the ROI-related information generated from each calculation. Please refer to the Supplementary Material for a detailed description of the spreadsheet.

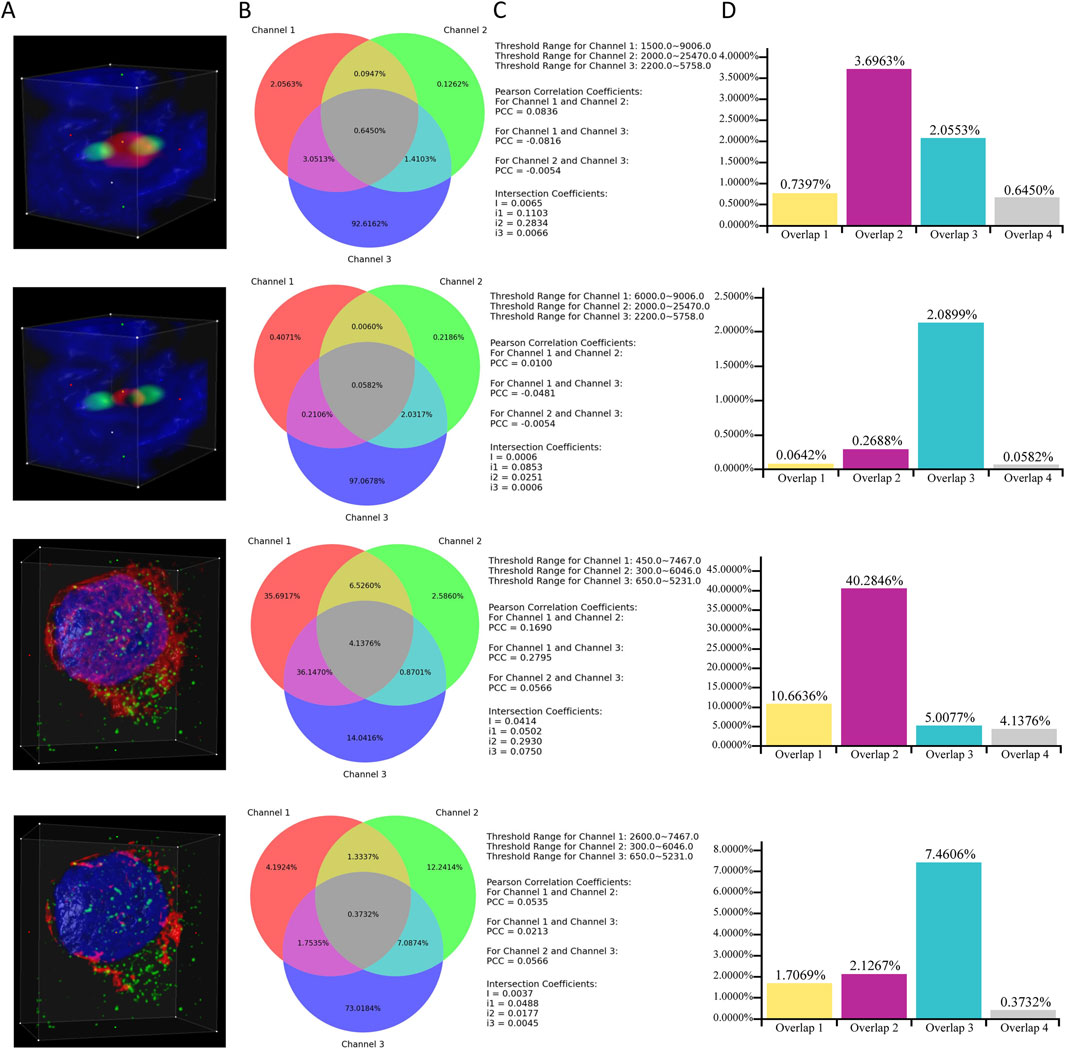

4.8 Case study

This subsection provides an example that demonstrates a specific scenario of applying ColocZStats to perform a colocalization analysis task. It primarily focuses on how changes in the threshold range of an individual channel influence the objective quantitative colocalization indicators and the variations in colocalization degree that can be revealed during this process. In Figure 8, two image stacks are included. For each stack, the ROI box remains unchanged throughout the two corresponding cases, and the red channel is individually assigned two distinct lower threshold values. In contrast, the threshold range for the other channels remains unchanged. By observing the 3D rendering appearances provided by this tool, a continuous change can be found; that is, for each stack, as the red channel’s lower threshold gradually increases, the volume of its overlap with other channels shows an evident decreasing trend. This impression can then be verified by pressing the “Compute Colocalization” button to obtain objective measurements that confirm the visual impressions. Combining a series of objective quantifications in this way will aid in validating these subjective visual impressions and obtaining a more reliable assessment at the end of the analysis process.

Figure 8. Case Study. (A) Volume rendering of channels within ROI boxes. (B) Venn diagrams corresponding to the cases. (C) Associated thresholds and colocalization metrics’ results. (D) Additional histograms illustrating the proportions of overlaps. They were created based on the data derived directly from the Venn diagrams. “Overlap 1” between Channels 1 and 2. “Overlap 2” between Channels 1 and 3. “Overlap 3” between Channels 2 and 3. “Overlap 4” of all three channels.

Through Figure 8C, a noticeable phenomenon regarding the variation of colocalization coefficients is exposed: for each stack, as the lower threshold value of channel 1 increases, the PCCs between channel 1 and the other two channels gradually approach 0, indicating a diminishing linear correlation between channel 1 and the other two channels. Meanwhile, as demonstrated by the additional histograms that are based on the Venn diagrams, with the reduction in the threshold range of channel 1 for each stack, the proportions of overlapping regions between channel 1 and the other two channels also decrease. Integrating the above observations effectively validates a biological fact: for each stack, the reduction of channel 1 leads to a decline in its colocalization with the other two channels. In addition, the values of the global intersection coefficient and its three derived coefficients also gradually decrease, implying a decline in the overall colocalization degree of the three channels. This example also demonstrates how the visual representations and coefficients produced by this tool can provide multiple perspectives for assisting colocalization analysis.

Although ColocZStats can provide researchers with objective quantitative results for further analysis, many critical factors can affect the reliability of colocalization analysis. One such factor is setting appropriate threshold ranges for the channels, as this will determine which signals should be separated from the background and identified as foreground, which significantly affects subsequent quantification (Adler and Parmryd, 2014). Generally, the background noise level for confocal images can often reach up to 30% of the maximum intensity (Zinchuk et al., 2007). Nevertheless, broadly applying such thresholding standards to a more significant number of images remains challenging.

To make it easier for analysts to select appropriate thresholds when using ColocZStats, the use of image processing techniques that help reduce background noise before analysis is strongly recommended to improve the signal-to-noise ratio (SNR) of the stacks being analyzed, resulting in the effective signals becoming more prominent. For example, earlier studies have successfully utilized lowpass, median, and Gaussian filters to achieve this (Landmann, 2002). Additionally, given the user interface features offered by 3D Slicer, users can freely rotate 3D image stacks to explore their structure from various angles. During this process, the shadows created by external lighting change with different viewing angles. This enhanced spatial awareness has the potential to help users distinguish between signals and backgrounds more efficiently, leading to more accurate threshold settings.

Moreover, it would be helpful to incorporate automated thresholding methods to more effectively avoid potential bias in threshold settings due to subjectivity. This will be discussed in more detail in the following section.

5 Discussion

Through a comprehensive examination of several widely recognized biological image visualization applications, all with VR functionalities and some already with extensive analytical features, it has been determined that these tools lack dedicated built-in options and user interfaces for 3D graphics to perform the quantitative colocalization analysis for multi-channel z-stacks generated by confocal microscopes. The development of an open-source 3D Slicer extension named ColocZStats has contributed to expanding potential solutions for addressing this challenge. Certain functionalities from multiple modules within 3D Slicer were reasonably utilized and integrated by ColocZStats, enabling the effective presentation of confocal microscopy images’ merged multi-channel volumetric appearances. This endeavor aims to support researchers in quickly discerning spatial relationships between molecular structures within organisms. In addition, ColocZStats can generate colocalization metrics for thresholded channels within ROIs of samples, which has positive significance for biologists who now have a tool to gain detailed and objective insights into biological processes.

More specifically, ColocZStats allows users to select up to three channels of each z-stack concurrently for analysis. It permits customized control over channels’ lower or upper threshold limits according to researchers’ specific needs and obtains metrics such as PCCs for all possible channel pairwise combinations and intersection coefficients. The above distinctive characteristics distinguish ColocZStats from most tools which typically restrict users from performing colocalization analysis between two channels at a time and only allow setting the lower threshold for each channel. Via the Venn diagram it generates, the ratios of all parts, including the part where all channels intersect, can be clearly displayed. Moreover, users can further enhance their understanding of the intensity relationship between different channels through the 2D histograms generated. For each calculation, all the results and diagrams are conveyed to researchers through a supplementary spreadsheet, making it easy for them to share or compare data. In summary, with the ultimate goal of merging with SlicerVR, ColocZStats presently functions as a desktop extension for 3D Slicer, incorporating an intuitive GUI that allows users to customize ROIs and define the threshold ranges for all stack channels while supporting the one-click generation and saving of colocalization analysis results. More importantly, the development of the ColocZStats extension further enhances the comprehensiveness of 3D Slicer, which means that its extensive audience can now seamlessly perform colocalization analysis for confocal stacks without frequently switching between different tools.

In colocalization analysis, crosstalk and bleed-through between fluorescent channels are significant factors that affect the accuracy of the results. The coefficients, such as PCC, are highly sensitive to these issues and may account for false-positive colocalization signals. Crosstalk occurs when different fluorochromes are excited with the same wavelength at a time (simultaneous scan) due to the partial overlap of their excitation spectra. Thus, to prevent crosstalk in laser scanning microscopy, it is strongly advised to perform sequential scan acquisitions by exciting one fluorochrome at a time while switching between detectors simultaneously. Similarly, bleed-through may result from detecting fluorescence emission in an inappropriate detection channel from an overlap of emission spectra. For example, when FITC and Cy3 are excited sequentially at 488 nm and 543 nm, the lower energy portion of the FITC emission may bleed through and be detected as overlapping with the emission peak of Cy3 in the Cy3 detection channel. Therefore, it is crucial to have single-labeled controls for each fluorochrome to check for bleed-through on the detector unless the emission of both fluorochromes used is far apart (Bolte and Cordelières, 2006).

Additionally, in fluorescence microscopy, the source of the collected image sampling affects how accurately the biological tissues are represented. Hence, to faithfully reproduce images, adhering to the Nyquist–Shannon sampling theorem is often considered the gold standard (Pawley, 2006). According to this theorem, the specified sampling rate should be as high as twice the resolutions of the highest spatial frequency in the image to prevent aliasing effects (Huang et al., 2009). It is advisable to stringently move toward proper Nyquist sampling in the context of super-resolution microscopy when aiming to obtain spatial resolutions that go beyond the diffraction limit of light microscopy, thereby ensuring the accurate reconstruction of fine details in the sample and avoiding the occurrence of artifacts (Shroff et al., 2008).

At present, as a purpose-built tool for colocalization analysis, ColocZStats requires prioritized improvements in the following aspects. First, there is a need to expand the variety of colocalization metrics. In addition to the existing PCC, other coefficients belonging to the ICCB methodology that are also extensively employed, such as Manders’ coefficients, Spearman’s coefficient, and the overlap coefficient, could be integrated into the extension to further enhance its analytical capabilities. As more colocalization coefficients become incorporated into ColocZStats, enhancing the capability of image pre-processing will become increasingly essential. The need for this enhancement arises from the potential impact of excessive noise in microscopic images which affects the correlation between distinct signals and leads to an underestimation of colocalization analysis results (Adler et al., 2008). The filtering techniques mentioned in the Case Study involve convolving the image with a filter kernel, which can result in a loss of resolution (Landmann, 2002). In contrast, deconvolution is a more advanced and effective method for image filtering and restoration (Landmann and Marbet, 2004). Integrating this technique into ColocZStats could significantly help eliminate image noise, improve image quality (Wu et al., 2012), and consequently improve the accuracy of subsequent analysis.

Meanwhile, to further increase the coverage of ColocZStats for a wider range of input images and the efficiency analysis of multi-channel image stacks, it is anticipated that features allowing compatibility with stacks containing more channels and permitting the simultaneous selection of more channels in a single computation will be implemented.

Moreover, as discussed in the User Interface subsection above, ColocZStats supports changing channel thresholds by manually adjusting the sliders or entering values in the input fields to help biologists customize structures of interest. Nonetheless, relying on the visual inspection of images to estimate suitable thresholds can be challenging and may result in inconsistent outcomes. Figure 3K, as one of the components of the “qMRMLVolumeThresholdWidget” provided by 3D Slicer, includes an “Auto” option that can be used to automatically assign a threshold range for any channel based on the principle of excluding the top/bottom 0.1% of all values of image intensity. Incorporating more reliable, robust, and objective automated threshold methods will undoubtedly further enhance the functionality of ColocZStats. The method developed by Costes et al. (2004) to determine the appropriate threshold value for background identification is expected to be appropriately integrated into ColocZStats. The Costes method is founded on the linear fitting of the 2D histograms of channels (Costes et al., 2004; Pike et al., 2017) and has been proven to be a robust and reproducible approach that can be readily automated (Dunn et al., 2011). Additional ways to improve the histograms produced would be to add lines guiding the eye to show the background levels and rescaling of the color bars. Since image segmentation via global thresholding might not always be appropriate or sufficiently accurate, another venue for future work could include providing other segmentation methods or support importing into ColocZStats segmented masks or segmented objects from other programs to perform colocalization analysis of the imported items.

Furthermore, allowing users to utilize more diverse ROI selection tools is another significant potential development direction that can enhance the robustness of the analysis. Future development plans include integrating more ROI shapes such as ellipsoids and cylinders, and more complex selection methods such as freehand drawing or automated ROI selection based on image intensity or structural features. This enhancement will further improve the flexibility of the ROI selection process, enabling users to capture more useful information and thereby increasing the accuracy of the analysis.

The colocalization analysis in ColocZStats is conducted on a PC (desktop or laptop). Enabling users to utilize ColocZStats within an immersive VR environment is a primary objective for future work. In general, VR images would not lose contrast during rendering, but their resolutions differ from those of 2D desktop displays and may lose resolution compared with some high-resolution PC displays. The visual effect of the change in resolution will largely depend on the VR platform used for deploying ColocZStats. For instance, state-of-the-art VR headsets such as the Meta Quest 3 feature high resolutions of 2,064 × 2,208 pixels (approximately 5 megapixels) per eye, offering a realistic visual experience. Another device, the Apple Vision Pro, has a higher resolution of 3,660 × 3,200 pixels (approximately 11.7 megapixels) per eye. Therefore, the quality of visual detail that users experience will largely be influenced by the hardware in the VR headset.

Developers of 3D Slicer have been working on improving an extension called “SlicerVR”. Once this extension is in place and provides the functionality announced by the designers of 3D slicer, the desktop interface will be available within the VR environment, and thus the complete workflow that is PC-based at this time will be available (NA-MIC Project Weeks, 2024; Pinter et al., 2020). For instance, it is foreseeable that with this extension working properly, users will be able to use the ColocZStats GUI widgets in the VR environment to make real-time adjustments to 3D volume rendering and analyze the graphical representations produced. This work highlights the significant potential for extending ColocZStats into the immersive scene offered by Slicer VR, which will provide valuable assets and support for this forthcoming endeavor. Through these and other such improvements, ColocZStats will be continually enhanced to become a more efficient and flexible software tool.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

Ethics statement

Ethical approval was not required for the studies on humans in accordance with local legislation and institutional requirements because only commercially available established cell lines were used.

Author contributions

XC: conceptualization, data curation, formal analysis, investigation, methodology, software, validation, visualization, writing–original draft, and writing–review and editing. TT: data curation, resources, and writing–review and editing. AJ: data curation, resources, and writing–review and editing. TB: conceptualization, data curation, funding acquisition, investigation, methodology, project administration, resources, software, supervision, validation, visualization, writing–original draft, and writing–review and editing. OM-P: conceptualization, data curation, funding acquisition, investigation, methodology, project administration, resources, software, supervision, validation, visualization, writing–original draft, writing–review and editing, and formal analysis.

Funding

The authors declare that financial support was received for the research, authorship, and/or publication of this article. This research was undertaken, in part, thanks to funding from the Canada Research Chairs program (TB), the Faculty of Medicine of Memorial University of Newfoundland (TB), the NSERC Discovery Grant Program (OM-P), and the School of Graduate Studies of Memorial University of Newfoundland (OM-P, XC).

Acknowledgments

We thank the Memorial University of Newfoundland’s School of Graduate Studies, the Memorial University of Newfoundland’s Department of Computer Science, and the Memorial University of Newfoundland’s Faculty of Medicine for their financial and equipment support. Finally, we would like to thank Dedy Sandikin at the National University of Singapore for his assistance during final edits and for providing relevant information regarding the sample datasets being published.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fphys.2024.1440099/full#supplementary-material

References

3D Slicer (2024a). 3D Slicer image computing platform homepage. Available at: https://www.slicer.org/ (Accessed April 10, 2024).

3D Slicer (2024b). Slicer API documentation. Available at: https://apidocs.slicer.org/master/ (Accessed April 10, 2024).

3D Slicer (2024c). Slicer extensions manager. Available at: https://extensions.slicer.org/catalog/Quantification/32448/win (Accessed April 10, 2024).

Aaron J. S., Taylor A. B., Chew T. L. (2018). Image co-localization–co-occurrence versus correlation. J. cell Sci. 131, jcs211847. doi:10.1242/jcs.211847

Adler J., Pagakis S., Parmryd I. (2008). Replicate-based noise corrected correlation for accurate measurements of colocalization. J. Microsc. 230, 121–133. doi:10.1111/j.1365-2818.2008.01967.x

Adler J., Parmryd I. (2010). Quantifying colocalization by correlation: the pearson correlation coefficient is superior to the mander’s overlap coefficient. Cytom. Part A 77, 733–742. doi:10.1002/cyto.a.20896

Adler J., Parmryd I. (2013). Colocalization analysis in fluorescence microscopy. Cell Imaging Tech. Methods Protoc. 931, 97–109. doi:10.1007/978-1-62703-056-4_5

Adler J., Parmryd I. (2014). Quantifying colocalization: thresholding, void voxels and the hcoef. PloS one 9, e111983. doi:10.1371/journal.pone.0111983

Askeland C., Solberg O. V., Bakeng J. B. L., Reinertsen I., Tangen G. A., Hofstad E. F., et al. (2016). Custusx: an open-source research platform for image-guided therapy. Int. J. Comput. assisted radiology Surg. 11, 505–519. doi:10.1007/s11548-015-1292-0

Bassell G. J., Zhang H., Byrd A. L., Femino A. M., Singer R. H., Taneja K. L., et al. (1998). Sorting of beta-actin mRNA and protein to neurites and growth cones in culture. J. Neurosci. 18, 251–265. doi:10.1523/JNEUROSCI.18-01-00251.1998

Bolte S., Cordelières F. P. (2006). A guided tour into subcellular colocalization analysis in light microscopy. J. Microsc. 224, 213–232. doi:10.1111/j.1365-2818.2006.01706.x

Bozorgi M., Lindseth F. (2015). Gpu-based multi-volume ray casting within vtk for medical applications. Int. J. Comput. assisted radiology Surg. 10, 293–300. doi:10.1007/s11548-014-1069-x

Brown P. S., Wang E., Aroeti B., Chapin S. J., Mostov K. E., Dunn K. W. (2000). Definition of distinct compartments in polarized madin–darby canine kidney (mdck) cells for membrane-volume sorting, polarized sorting and apical recycling. Traffic 1, 124–140. doi:10.1034/j.1600-0854.2000.010205.x

Chen S. G. (2014). “Reduced recursive inclusion-exclusion principle for the probability of union events,” in 2014 IEEE international conference on industrial engineering and engineering management, 11–13.

Collazo A., Bricaud O., Desai K. (2005). “Use of confocal microscopy in comparative studies of vertebrate morphology,” in Methods in enzymology (Philadelphia: Elsevier), 521–543.

Costes S. V., Daelemans D., Cho E. H., Dobbin Z., Pavlakis G., Lockett S. (2004). Automatic and quantitative measurement of protein-protein colocalization in live cells. Biophysical J. 86, 3993–4003. doi:10.1529/biophysj.103.038422

Davis J., Meyer T., Smolnig M., Smethurst D. G., Neuhaus L., Heyden J., et al. (2023). A dynamic actin cytoskeleton is required to prevent constitutive vdac-dependent mapk signalling and aberrant lipid homeostasis. Iscience 26, 107539. doi:10.1016/j.isci.2023.107539

Drouin S., Collins D. L. (2018). Prism: an open source framework for the interactive design of gpu volume rendering shaders. PLoS One 13, e0193636. doi:10.1371/journal.pone.0193636

Dunn K. W., Kamocka M. M., McDonald J. H. (2011). A practical guide to evaluating colocalization in biological microscopy. Am. J. Physiology-Cell Physiology 300, C723–C742. doi:10.1152/ajpcell.00462.2010

[Dataset] Fedorov A., Beichel R., Kalpathy-Cramer J., Finet J., Fillion-Robin J.-C., Pujol S., et al. (2024). Slicer - multi-platform, free open source software for visualization and image computing. Available at: https://github.com/Slicer/Slicer (Accessed April 10, 2024).

Georgieva M., Cattoni D. I., Fiche J.-B., Mutin T., Chamousset D., Nollmann M. (2016). Nanometer resolved single-molecule colocalization of nuclear factors by two-color super resolution microscopy imaging. Methods 105, 44–55. doi:10.1016/j.ymeth.2016.03.029

Goddard T. D., Brilliant A. A., Skillman T. L., Vergenz S., Tyrwhitt-Drake J., Meng E. C., et al. (2018). Molecular visualization on the holodeck. J. Mol. Biol. 430, 3982–3996. doi:10.1016/j.jmb.2018.06.040

Goddard, T. (2024). Visualizing 4D light microscopy with ChimeraX. [Dataset] Available at: https://www.rbvi.ucsf.edu/chimera/data/light-apr2017/light4d.html. [Accessed April 10, 2024]

Huang B., Bates M., Zhuang X. (2009). Super-resolution fluorescence microscopy. Annu. Rev. Biochem. 78, 993–1016. doi:10.1146/annurev.biochem.77.061906.092014

Immersive Science LLC (2024a). ConfocalVR: an immersive multimodal 3D bioimaging viewer. Available at: https://www.immsci.com/home/confocalvr/ (Accessed April 10, 2024).

Immersive Science LLC (2024b). ExMicroVR: 3D image data viewer. Available at: https://www.immsci.com/home/exmicrovr/ (Accessed April 10, 2024).

JetBrains s.r.o (2024). PyCharm: the Python IDE for data science and web development. Available at: https://www.jetbrains.com/pycharm/ (Accessed April 10, 2024).

Jonkman J., Brown C. M., Wright G. D., Anderson K. I., North A. J. (2020). Tutorial: guidance for quantitative confocal microscopy. Nat. Protoc. 15, 1585–1611. doi:10.1038/s41596-020-0313-9

Kapur T., Pieper S., Fedorov A., Fillion-Robin J.-C., Halle M., O’Donnell L., et al. (2016). Increasing the impact of medical image computing using community-based open-access hackathons: the na-mic and 3d slicer experience. Med. Image Anal. 33, 176–180. doi:10.1016/j.media.2016.06.035

Kikinis R., Pieper S. D., Vosburgh K. G. (2013). “3d slicer: a platform for subject-specific image analysis, visualization, and clinical support,” in Intraoperative imaging and image-guided therapy (New York, NY: Springer), 277–289.

Lachmanovich E., Shvartsman D., Malka Y., Botvin C., Henis Y., Weiss A. (2003). Co-localization analysis of complex formation among membrane proteins by computerized fluorescence microscopy: application to immunofluorescence co-patching studies. J. Microsc. 212, 122–131. doi:10.1046/j.1365-2818.2003.01239.x

Lacoste T. D., Michalet X., Pinaud F., Chemla D. S., Alivisatos A. P., Weiss S. (2000). Ultrahigh-resolution multicolor colocalization of single fluorescent probes. Proc. Natl. Acad. Sci. 97, 9461–9466. doi:10.1073/pnas.170286097

Landmann L. (2002). Deconvolution improves colocalization analysis of multiple fluorochromes in 3d confocal data sets more than filtering techniques. J. Microsc. 208, 134–147. doi:10.1046/j.1365-2818.2002.01068.x

Landmann L., Marbet P. (2004). Colocalization analysis yields superior results after image restoration. Microsc. Res. Tech. 64, 103–112. doi:10.1002/jemt.20066

Lasso A., Nam H. H., Dinh P. V., Pinter C., Fillion-Robin J.-C., Pieper S., et al. (2018). Interaction with volume-rendered three-dimensional echocardiographic images in virtual reality. J. Am. Soc. Echocardiogr. 31, 1158–1160. doi:10.1016/j.echo.2018.06.011

Liu Y.-C., Chiang A.-S. (2003). High-resolution confocal imaging and three-dimensional rendering. Methods 30, 86–93. doi:10.1016/s1046-2023(03)00010-0

Lucas L., Gilbert N., Ploton D., Bonnet N. (1996). Visualization of volume data in confocal microscopy: comparison and improvements of volume rendering methods. J. Microsc. 181, 238–252. doi:10.1046/j.1365-2818.1996.117397.x

Lynch R. M., Carrington W., Fogarty K. E., Fay F. S. (1996). Metabolic modulation of hexokinase association with mitochondria in living smooth muscle cells. Am. J. Physiology-Cell Physiology 270, C488–C499. doi:10.1152/ajpcell.1996.270.2.C488

[Dataset] NA-MIC Project Weeks (2024). SlicerVR: interactive UI panel in the VR environment. Available at: https://projectweek.na-mic.org/PW35_2021_Virtual/Projects/SlicerVR/ (Accessed April 10, 2024).

[Dataset] National Alliance for Medical Image Computing (2024). The user guide of 3D Slicer’s Volume Rendering module. Available at: https://slicer.readthedocs.io/en/latest/user_guide/modules/volumerendering.html (Accessed April 10, 2024).

Nicolas C. S., Park K.-H., El Harchi A., Camonis J., Kass R. S., Escande D., et al. (2008). I ks response to protein kinase a-dependent kcnq1 phosphorylation requires direct interaction with microtubules. Cardiovasc. Res. 79, 427–435. doi:10.1093/cvr/cvn085

Nolden M., Zelzer S., Seitel A., Wald D., Müller M., Franz A. M., et al. (2013). The medical imaging interaction toolkit: challenges and advances: 10 years of open-source development. Int. J. Comput. assisted radiology Surg. 8, 607–620. doi:10.1007/s11548-013-0840-8

Patil P., Batra T. (2019). “Virtual reality in bioinformatics,” in Open access journal of science (Edmond, OK: MedCrave Group, LLC), 63–70.

Pawley J. (2006). Handbook of biological confocal microscopy. Madison, WI: Springer Science and Business Media.

Pennington K. L., McEwan C. M., Woods J., Muir C. M., Pramoda Sahankumari A., Eastmond R., et al. (2022). Sgk2, 14-3-3, and huwe1 cooperate to control the localization, stability, and function of the oncoprotein ptov1. Mol. Cancer Res. 20, 231–243. doi:10.1158/1541-7786.MCR-20-1076

Pettersen E. F., Goddard T. D., Huang C. C., Meng E. C., Couch G. S., Croll T. I., et al. (2021). Ucsf chimerax: structure visualization for researchers, educators, and developers. Protein Sci. 30, 70–82. doi:10.1002/pro.3943

Pike J. A., Styles I. B., Rappoport J. Z., Heath J. K. (2017). Quantifying receptor trafficking and colocalization with confocal microscopy. Methods 115, 42–54. doi:10.1016/j.ymeth.2017.01.005

Pinter C., Lasso A., Choueib S., Asselin M., Fillion-Robin J.-C., Vimort J.-B., et al. (2020). Slicervr for medical intervention training and planning in immersive virtual reality. IEEE Trans. Med. robotics bionics 2, 108–117. doi:10.1109/tmrb.2020.2983199

Pompey S. N., Michaely P., Luby-Phelps K. (2013) “Quantitative fluorescence co-localization to study protein–receptor complexes,” in Protein-ligand interactions: methods and applications, 439–453doi. doi:10.1007/978-1-62703-398-5_16

Schindelin J., Arganda-Carreras I., Frise E., Kaynig V., Longair M., Pietzsch T., et al. (2012). Fiji: an open-source platform for biological-image analysis. Nat. methods 9, 676–682. doi:10.1038/nmeth.2019

[Dataset] Scientific Volume Imaging B.V. (2024a). Huygens colocalization analyzer with unique 3D visualization. Available at: https://svi.nl/Huygens-Colocalization-Analyzer (Accessed April 10, 2024).

[Dataset] Scientific Volume Imaging B.V. (2024b). Huygens essential home page. Available at: https://svi.nl/Huygens-Essential (Accessed April 10, 2024).

Shroff H., Galbraith C. G., Galbraith J. A., Betzig E. (2008). Live-cell photoactivated localization microscopy of nanoscale adhesion dynamics. Nat. methods 5, 417–423. doi:10.1038/nmeth.1202

Slicer Community (2024). Slicer developer guide. Available at: https://slicer.readthedocs.io/en/latest/developer_guide (Accessed April 10, 2024).

Sommer B., Baaden M., Krone M., Woods A. (2018). From virtual reality to immersive analytics in bioinformatics. J. Integr. Bioinforma. 15, 20180043. doi:10.1515/jib-2018-0043

Stefani C., Lacy-Hulbert A., Skillman T. (2018). Confocalvr: immersive visualization for confocal microscopy. J. Mol. Biol. 430, 4028–4035. doi:10.1016/j.jmb.2018.06.035

Stevens J.-L. R., Rudiger P. J., Bednar J. A. (2015). “Holoviews: building complex visualizations easily for reproducible science,” in SciPy, 59–66.

The American Society for Cell Biology (2024). Seeing beyond sight: new computational approaches to understanding cells. Available at: https://www.ascb.org/science-news/seeing-beyond-sight-new-computational-approaches-to-understanding-cells/(Accessed April 10, 2024).

Theart R. P., Loos B., Niesler T. R. (2017). Virtual reality assisted microscopy data visualization and colocalization analysis. BMC Bioinforma. 18, 64–16. doi:10.1186/s12859-016-1446-2

Theart R. P., Loos B., Powrie Y. S., Niesler T. R. (2018). Improved region of interest selection and colocalization analysis in three-dimensional fluorescence microscopy samples using virtual reality. PloS one 13, e0201965. doi:10.1371/journal.pone.0201965

The Qt Company (2024). Qt designer manual. Available at: https://doc.qt.io/qt-6/qtdesigner-manual.html (Accessed April 10, 2024).

Vipiana F., Crocco L. (2023). Electromagnetic imaging for a novel generation of medical devices: fundamental issues, methodological challenges and practical implementation. Switzerland, Cham: Springer Nature.

Volk L., Cooper K., Jiang T., Paffett M., Hudson L. (2022). Impacts of arsenic on rad18 and translesion synthesis. Toxicol. Appl. Pharmacol. 454, 116230. doi:10.1016/j.taap.2022.116230

Wang E., Pennington J. G., Goldenring J. R., Hunziker W., Dunn K. W. (2001). Brefeldin a rapidly disrupts plasma membrane polarity by blocking polar sorting in common endosomes of mdck cells. J. cell Sci. 114, 3309–3321. doi:10.1242/jcs.114.18.3309

Wu Y., Zinchuk V., Grossenbacher-Zinchuk O., Stefani E. (2012). Critical evaluation of quantitative colocalization analysis in confocal fluorescence microscopy. Interdiscip. Sci. Comput. Life Sci. 4, 27–37. doi:10.1007/s12539-012-0117-x

Yushkevich P. A., Piven J., Hazlett H. C., Smith R. G., Ho S., Gee J. C., et al. (2006). User-guided 3d active contour segmentation of anatomical structures: significantly improved efficiency and reliability. Neuroimage 31, 1116–1128. doi:10.1016/j.neuroimage.2006.01.015

Keywords: confocal microscopy, colocalization analysis, confocal microscopy visualization, 3D slicer, virtual reality, volume rendering

Citation: Chen X, Thakur T, Jeyasekharan AD, Benoukraf T and Meruvia-Pastor O (2024) ColocZStats: a z-stack signal colocalization extension tool for 3D slicer. Front. Physiol. 15:1440099. doi: 10.3389/fphys.2024.1440099

Received: 29 May 2024; Accepted: 12 August 2024;

Published: 04 September 2024.

Edited by:

Cristiana Corsi, University of Bologna, ItalyReviewed by:

Anca Margineanu, Helmholtz Association of German Research Centers (HZ), GermanyMarilisa Cortesi, University of Bologna, Italy

Copyright © 2024 Chen, Thakur, Jeyasekharan, Benoukraf and Meruvia-Pastor. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Oscar Meruvia-Pastor, b3NjYXJAbXVuLmNh; Touati Benoukraf, dGJlbm91a3JhZkBtdW4uY2E=

†These authors share last authorship

Xiang Chen

Xiang Chen Teena Thakur

Teena Thakur Anand D. Jeyasekharan3

Anand D. Jeyasekharan3 Touati Benoukraf

Touati Benoukraf Oscar Meruvia-Pastor

Oscar Meruvia-Pastor