- ¹Tissue Repair and Translational Physiology Research Program, School of Biomedical Sciences and Institute of Health and Biomedical Innovation, Queensland University of Technology, Brisbane, QLD, Australia

- ²Sport Performance Innovation and Knowledge Excellence, Queensland Academy of Sport, Brisbane, QLD, Australia

- ³Movement Neuroscience and Injury Prevention Program, Institute of Health and Biomedical Innovation, Queensland University of Technology, Brisbane, QLD, Australia

- ⁴Clinical and Sports Consulting Services, Providence, RI, United States

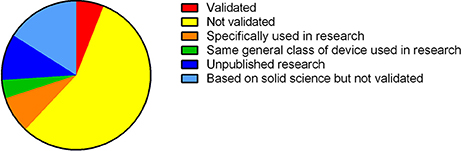

The commercial market for technologies to monitor and improve personal health and sports performance is ever-expanding. A wide range of smart watches, bands, garments, and patches with embedded sensors, small portable devices, and mobile applications now exist to record, and provide users with feedback on, many different physical performance variables. These variables include cardiorespiratory function, movement patterns, sweat analysis, tissue oxygenation, sleep, emotional state, and changes in cognitive function following concussion. In this review, we have summarized the features and evaluated the characteristics of a cross-section of technologies for health and sports performance according to what each technology is claimed to do, whether it has been validated and is reliable, and whether it is suitable for general consumer use. Consumers who are choosing new technology should consider whether it (1) produces desirable (or non-desirable) outcomes, (2) has been developed based on real-world need, and (3) has been tested and proven effective in applied studies in different settings. Among the technologies included in this review, more than half have not been validated through independent research, and only 5% have been formally validated. Around 10% of technologies have been developed for and used in research. The value of such research-grade technologies for consumer use is debatable, however, because they may require extra time to set up and to interpret the data they produce. Looking to the future, the rapidly expanding market of health and sports performance technology has much to offer consumers. To gain a competitive advantage, companies producing health and performance technologies should consult with consumers to identify real-world need, and invest in research to prove the effectiveness of their products.
To get the best value, consumers should carefully select such products, not only based on their personal needs, but also according to the strength of supporting evidence and effectiveness of the products.

Introduction

The number and availability of consumer technologies for evaluating physical and psychological health, training emotional awareness, monitoring sleep quality, and assessing cognitive function have increased dramatically in recent years. This technology is at various stages of development: some has been independently tested to determine its reliability and validity, whereas some has not been properly tested. Consumer technology is moving beyond basic measurement and telemetry of standard vital signs, and beyond predictive algorithms based on static, population-based information, toward miniaturized sensors, integrated computing, and artificial intelligence. In this way, technology is becoming “smarter” and more personalized, with the potential to provide real-time feedback to users (Sawka and Friedl, 2018). Technology development has typically been driven by bioengineers. However, effective validation of technology for the “real world” and development of effective methods for processing data require collaboration with mathematicians and physiologists (Sawka and Friedl, 2018).

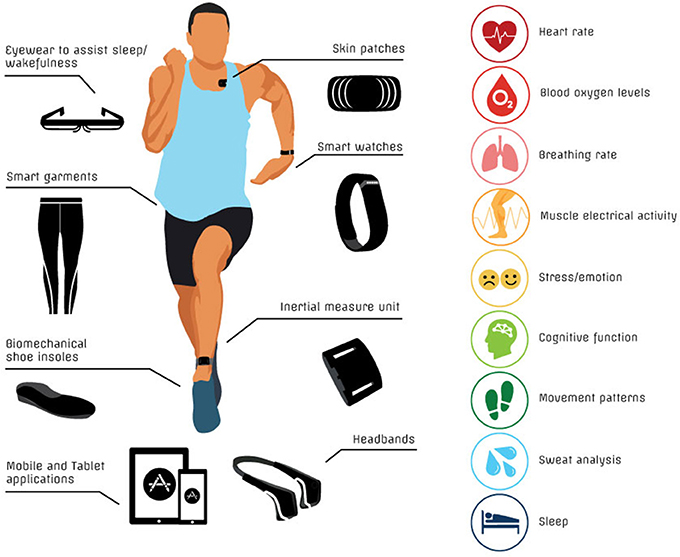

Although related technologies overlap to some extent, they also differ, and each has its own strengths and weaknesses. Various academic reviews have summarized existing technologies (Düking et al., 2016; Halson et al., 2016; Piwek et al., 2016; Baron et al., 2017). However, the number and diversity of portable devices, wearable sensors, and mobile applications is ever increasing and evolving, so regular technology updates are warranted. In this review, we describe and evaluate emerging technologies that may be of potential benefit for dedicated athletes, so-called “weekend warriors,” and others with a general interest in tracking their own health. To undertake this task, we compiled a list of known technologies for monitoring physiology, performance, and health, including concussion. Devices for inclusion in the review were identified by searching the internet and databases of scientific literature (e.g., PubMed) using key terms such as “technology,” “hydration,” “sweat analysis,” “heart rate,” “respiration,” “biofeedback,” “muscle oxygenation,” “sleep,” “cognitive function,” and “concussion.” We examined the websites of commercial technologies for links to research and, where applicable, sourced the published research literature. We broadly divided the technologies into the following categories (Figure 1):

• devices for monitoring hydration status and metabolism

• devices, garments, and mobile applications for monitoring physical and psychological stress

• wearable devices that provide physical biofeedback (e.g., muscle stimulation, haptic feedback)

• devices that provide cognitive feedback and training

• devices and applications for monitoring and promoting sleep

• devices and applications for evaluating concussion.

Figure 1. Summary of current technologies for monitoring health/performance and targeted physical measurements.

Our review investigates four key issues: (a) what the technology is claimed to do; (b) whether the technology has been independently validated against accepted standard(s); (c) whether the technology is reliable and requires any calibration; and (d) whether it is commercially available or still under development. Based on this information, we have evaluated a range of technologies and provided unbiased critical comments. The list of products in this review is not exhaustive; it is intended to provide a cross-sectional summary of what is available in different technology categories.

Devices for Monitoring Hydration Status and Metabolism

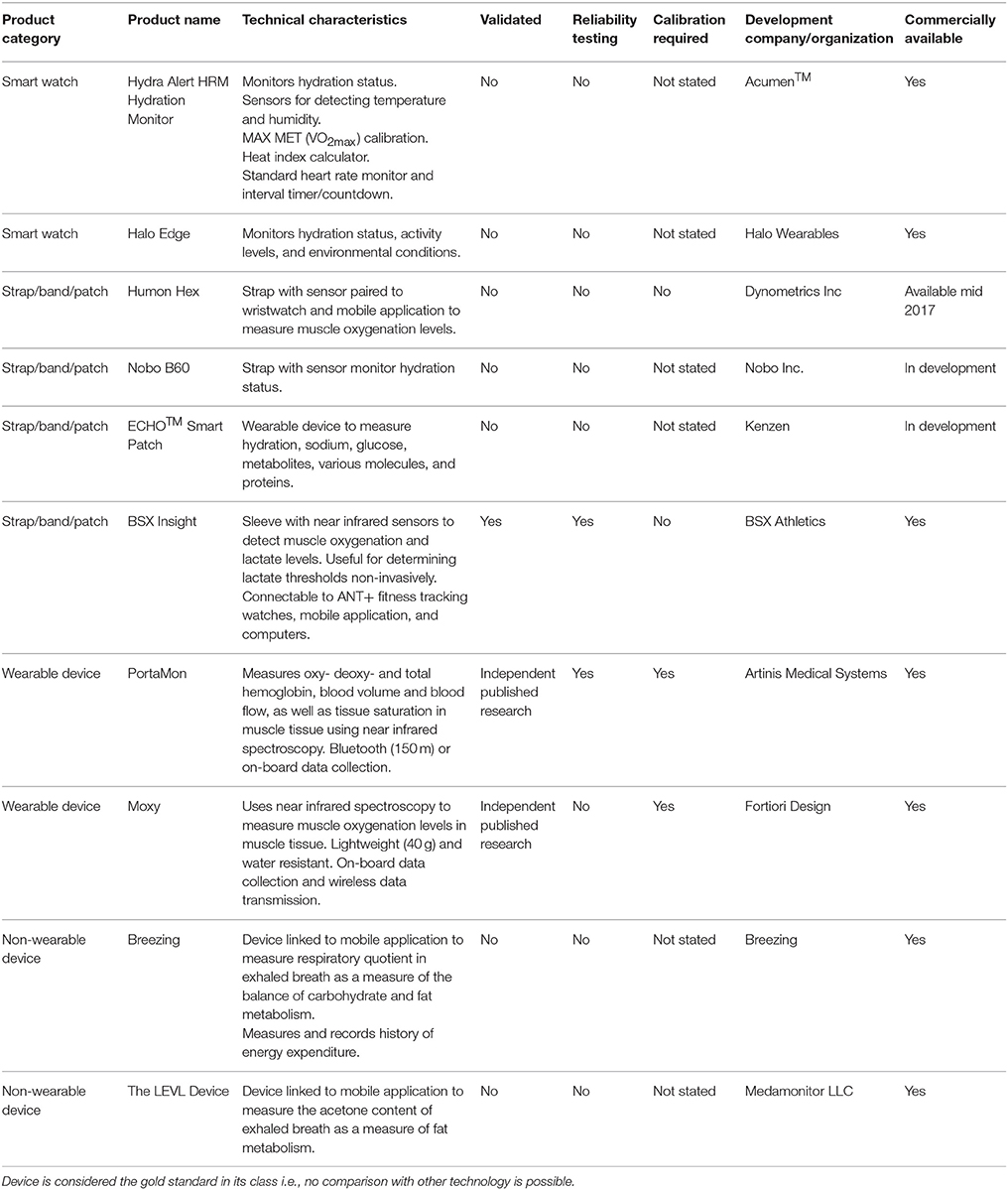

Several wearable and portable hardware devices have been developed to assess hydration status and metabolism, as described below and in Table 1. Very few of these devices have been independently validated to determine their accuracy and reliability. The Moxy device measures oxygen saturation in skeletal muscle, and the PortaMon device measures oxy-, deoxy-, and total hemoglobin in skeletal muscle. Both devices are based on the principles of near-infrared spectroscopy. The PortaMon device has been validated against phosphorus magnetic resonance spectroscopy (³¹P-MRS) (Ryan et al., 2013). A similar device (Oxymon) produced by the same company has been shown to produce reliable and reproducible measurements of muscle oxygen consumption both at rest (coefficient of variation 2.4%) and after exercise (coefficient of variation 10%) (Ryan et al., 2012). Another study using the Oxymon device to measure resting cerebral oxygenation reported good reliability in the short term (coefficient of variation 12.5%) and long term (coefficient of variation 15%) (Claassen et al., 2006). The main limitation of these devices is that some expertise is required to interpret the data they produce. Also, although these devices are based on the same scientific principles, the data they produce differ (McManus et al., 2018).
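The coefficients of variation quoted above express how much repeated measurements scatter around their mean. As a minimal illustration of the arithmetic (our own sketch, not the procedure used by Ryan et al. or Claassen et al.), the snippet below computes the simplest single-participant form, the sample standard deviation divided by the mean; published reliability studies often pool a within-subject coefficient of variation across participants instead. The readings are hypothetical.

```python
import statistics


def coefficient_of_variation(measurements: list[float]) -> float:
    """Return the coefficient of variation (%) of repeated measurements.

    CV = sample standard deviation / mean x 100. Lower values mean that
    repeated measurements of the same quantity agree more closely.
    """
    if len(measurements) < 2:
        raise ValueError("need at least two repeated measurements")
    mean = statistics.mean(measurements)
    if mean == 0:
        raise ValueError("CV is undefined when the mean is zero")
    return statistics.stdev(measurements) / mean * 100.0


# Hypothetical repeated resting readings from one participant (arbitrary units).
readings = [10.0, 12.0, 14.0]
print(f"CV = {coefficient_of_variation(readings):.1f}%")
```

With these readings the function returns about 16.7%; a device with a lower value would be producing more reproducible measurements.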

The BSX Insight wearable sleeve has been tested independently (Borges and Driller, 2016). Compared with blood lactate measurements during a graded exercise test, this device shows high to very high agreement (intraclass correlation coefficient >0.80). It also has very good reliability (intraclass correlation coefficient 0.97; coefficient of variation 1.2%) (Borges and Driller, 2016). This device likely offers some useful features for monitoring muscle oxygenation and lactate non-invasively during exercise. However, one limitation is that the sleeve that houses the device is currently designed only for placement on the calf, so it may not be usable for measuring muscle oxygenation in other muscle groups. The Humon Hex is a similar device for monitoring muscle oxygenation that is promoted for guiding warm-ups and for monitoring exercise thresholds and recovery. For these devices, it is unclear how the reference limits for such functions are established.
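Agreement statistics such as the intraclass correlation coefficient (ICC) depend on which ICC form is used, and the form is not stated here. The sketch below assumes the two-way random-effects, absolute-agreement, single-measure form, often labeled ICC(2,1) in the Shrout and Fleiss scheme, with one row per participant and one column per method (for example, criterion blood lactate versus a wearable estimate). The function name and the data are illustrative assumptions, not a reproduction of the analysis by Borges and Driller (2016).

```python
def icc_2_1(data: list[list[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measures.

    `data` holds one row per participant and one column per method
    (e.g., column 0 = criterion, column 1 = wearable device).
    """
    n = len(data)      # participants (rows)
    k = len(data[0])   # methods (columns)
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a complete n x k table with n, k >= 2")

    grand_mean = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(data[i][j] for i in range(n)) / n for j in range(k)]

    # Partition the total sum of squares into participants, methods, and residual.
    ss_total = sum((x - grand_mean) ** 2 for row in data for x in row)
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical pairs: criterion vs. a device that always reads 1 unit high.
pairs = [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0], [4.0, 5.0]]
print(round(icc_2_1(pairs), 3))
```

For these hypothetical pairs the two methods are perfectly correlated, yet the absolute-agreement ICC is about 0.77 (10/13) because the constant 1-unit offset counts as disagreement. A consistency-type ICC would ignore the offset, which is one reason the ICC form should be reported alongside its value.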

Other non-wearable devices for monitoring metabolism, such as Breezing and the LEVL device, only provide static measurements, and are therefore unlikely to be useful for measuring metabolism in athletes while they exercise. Sweat pads/patches have been developed at academic institutions for measuring skin temperature, pH, electrolytes, glucose, and cortisol (Gao et al., 2016; Koh et al., 2016; Kinnamon et al., 2017). These devices have potential applications for measuring heat stress, dehydration and metabolism in athletes, soldiers, firefighters, and industrial laborers who exercise or work in hot environments. Although these products are not yet commercially available, they likely offer greater validity than existing commercial devices because they have passed through the rigorous academic peer review process for publication. Sweat may be used for more detailed metabolomic profiling, but there are many technical and practical issues to consider before this mode of bioanalysis can be adopted routinely (Hussain et al., 2017).

Technologies for Monitoring Training Loads, Movement Patterns, and Injury Risks

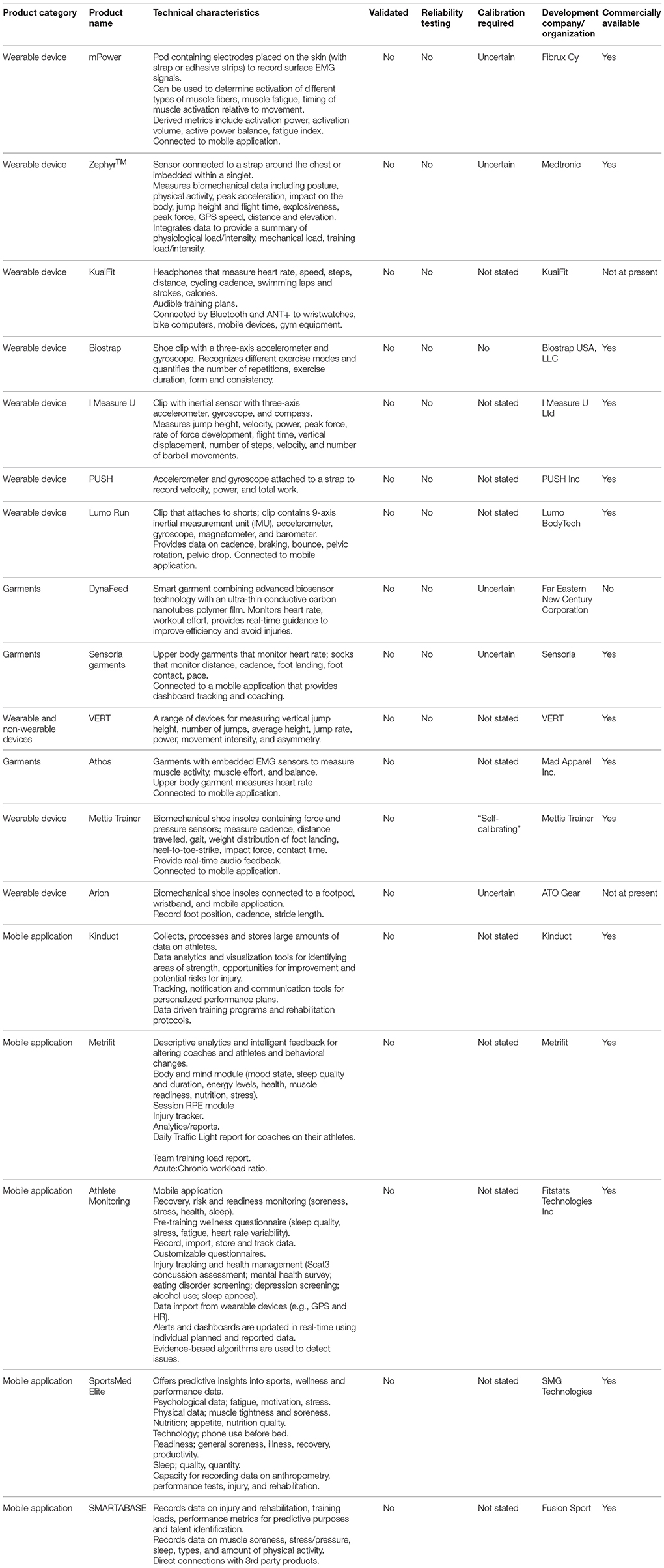

A wide range of small attachable devices, garments, shoe insoles, equipment, and mobile applications have been developed to monitor biomechanical variables and training loads (Table 2). Many biomechanical sensors are based on accelerometer and gyroscope technology. Some of the devices that attach to the body provide basic information about body position, movement velocity, jump height, force, power, work, and rotational movement. Biomechanists and ergonomists can use these data to evaluate movement patterns, assess musculoskeletal fatigue profiles, identify potential risk factors for injury, and adjust technique during walking, running, jumping, throwing, and lifting. Thus, these devices have applications in sporting, military, and occupational settings.
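Jump height is one of the simpler variables an attachable inertial sensor can report. One common approach, assumed here because we do not know how any particular device in Table 2 computes it, estimates flight time from the period during which the accelerometer reads close to free fall (about 0 m/s²) and then applies projectile motion: if take-off and landing occur in the same body position, the rise takes half the flight time t, so h = g·t²/8. The function names, the 2 m/s² free-fall threshold, and the synthetic signal are hypothetical.

```python
G = 9.81  # gravitational acceleration (m/s^2)


def flight_time_from_accel(accel_magnitude: list[float], sample_rate_hz: float,
                           free_fall_threshold: float = 2.0) -> float:
    """Return the longest run of near-free-fall samples, in seconds.

    An accelerometer in free fall reads close to 0 m/s^2, so samples whose
    magnitude is below `free_fall_threshold` (hypothetical) count as airborne.
    """
    longest = current = 0
    for a in accel_magnitude:
        current = current + 1 if abs(a) < free_fall_threshold else 0
        longest = max(longest, current)
    return longest / sample_rate_hz


def jump_height_from_flight_time(flight_time_s: float) -> float:
    """Jump height (m) from flight time (s): h = 0.5 * g * (t / 2)**2 = g * t**2 / 8."""
    if flight_time_s < 0:
        raise ValueError("flight time must be non-negative")
    return G * flight_time_s ** 2 / 8.0


# Synthetic 100-Hz trace: standing (~1 g), 0.5 s airborne (~0 g), landing (~1 g).
signal = [9.8] * 20 + [0.3] * 50 + [9.8] * 20
t_flight = flight_time_from_accel(signal, sample_rate_hz=100.0)
print(f"flight time {t_flight:.2f} s, jump height {jump_height_from_flight_time(t_flight):.3f} m")
```

This prints a flight time of 0.50 s and a jump height of 0.307 m. In practice, landing posture, sensor placement, and signal noise all bias flight-time estimates, which is one reason independent validation of such devices matters.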

Among the devices listed in Table 2, the I Measure U device is lightweight and compact, and offers the greatest versatility. Other devices and garments provide information about muscle activation and basic training metrics (e.g., steps, speed, distance, cadence, strokes, and repetitions). The mPower is a pod placed on the skin that measures electromyographic (EMG) activity, providing a simple, wireless alternative to more complex EMG equipment. Likewise, the Athos garments contain EMG sensors, but the garments have not been properly validated. It is debatable whether the Sensoria and Dynafeed garments offer any more benefits than other devices. The Mettis Trainer insoles (and the Arion insoles in development) could provide some useful feedback on running biomechanics in the field. None of these devices has been independently tested to determine its validity or reliability. Until such validity and reliability data become available, these devices should arguably be used in combination with more detailed motion-capture video analysis.

Various mobile applications have been developed for recording and analyzing training loads and injury records (Table 2). These applications include a wide range of metrics that incorporate aspects of both physical and psychological load. The Metrifit application provides users with links to related unpublished research on evaluating training stress. Many of the applications record and analyze similar metrics, so it is difficult to differentiate between them, and the choice of a particular application will most likely be dictated by individual preferences. With such a variety of metrics, which are generally recorded indirectly, it is difficult to perform rigorous validation studies on these products. Another limitation of some of these applications is the large volume of data they record, which users may find difficult to interpret.
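Because these applications combine many indirectly recorded metrics, it can help to see how two widely used load metrics are derived. The sketch below assumes session-RPE load (a rating of perceived exertion multiplied by session duration in minutes, in arbitrary units) and an acute:chronic ratio computed from simple 7-day and 28-day rolling means. We do not know which metrics any application in Table 2 actually uses; the function names, window lengths, and example loads are assumptions.

```python
def session_load(rpe: float, duration_min: float) -> float:
    """Session-RPE training load in arbitrary units: RPE x duration (min)."""
    return rpe * duration_min


def acute_chronic_ratio(daily_loads: list[float], acute_days: int = 7,
                        chronic_days: int = 28) -> float:
    """Mean load over the last `acute_days` divided by mean load over the last `chronic_days`."""
    if len(daily_loads) < chronic_days:
        raise ValueError("need at least `chronic_days` of daily loads")
    acute = sum(daily_loads[-acute_days:]) / acute_days
    chronic = sum(daily_loads[-chronic_days:]) / chronic_days
    if chronic == 0:
        raise ValueError("chronic load is zero, so the ratio is undefined")
    return acute / chronic


# Hypothetical athlete: three weeks of 50-min sessions at RPE 6, then a week at RPE 9.
loads = [session_load(6, 50)] * 21 + [session_load(9, 50)] * 7
print(round(acute_chronic_ratio(loads), 2))
```

Here the final week's load is a third higher than the four-week average (ratio of about 1.33). Like the applications themselves, this ratio is a descriptive summary; the sketch does not establish that it predicts injury.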

Technologies for Monitoring Heart Rate, Heart Rate Variability, and Breathing Patterns

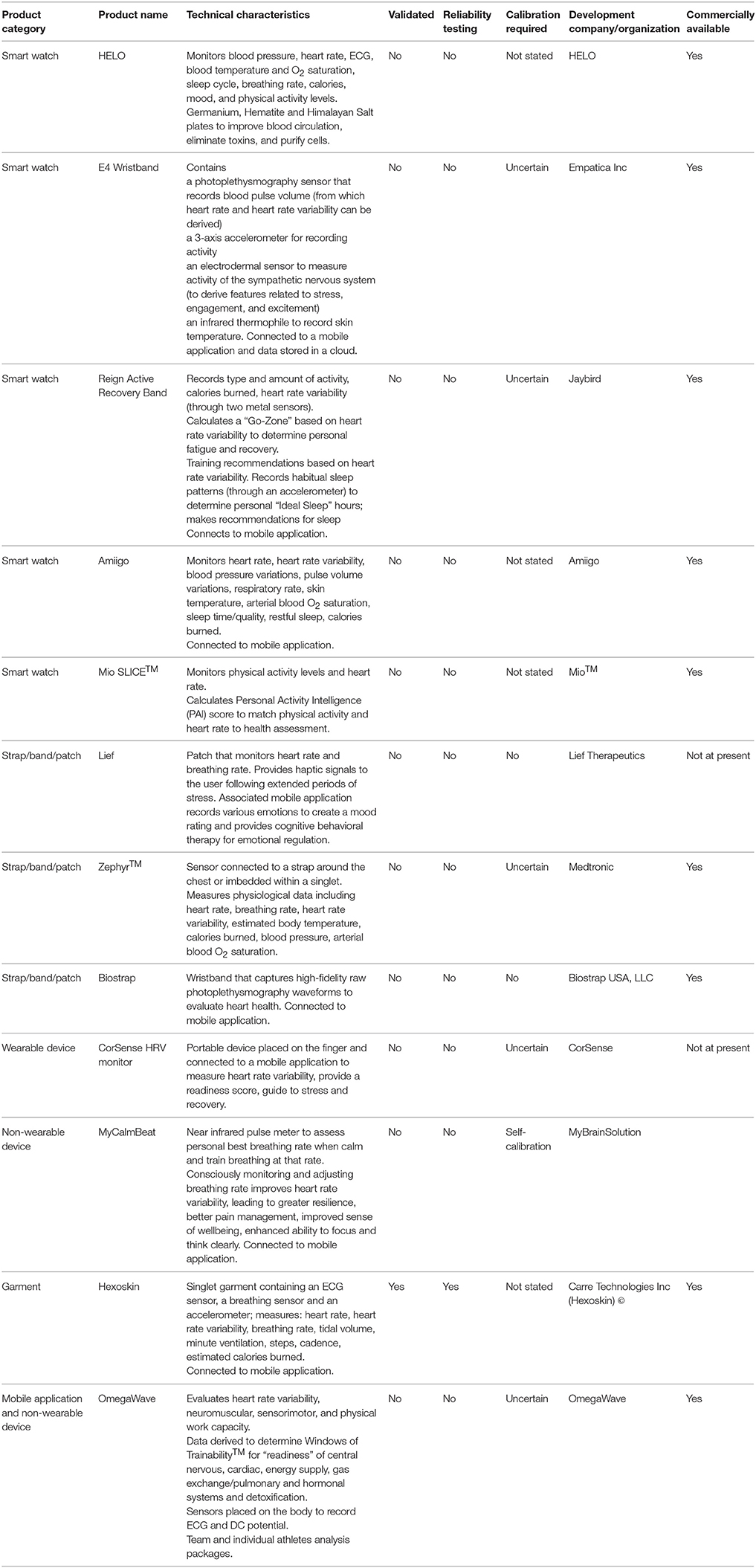

Various devices and mobile applications have been developed for monitoring physiological stress and workloads during exercise (Table 3). The devices offer some potential advantages and functionality over traditional heart rate monitors to assess demands on the autonomic nervous system and the cardiovascular system during and after exercise. They can therefore be used by athletes, soldiers and workers involved in physically demanding jobs (e.g., firefighters) to monitor physical strain while they exercise/work, and to assess when they have recovered sufficiently.

Among the devices listed in Table 3, the OmegaWave offers the advantage of directly recording objective physiological data, such as the electrocardiogram (ECG) as a measure of cardiac stress and the direct current (DC) potential as a measure of the activity of functional systems in the central nervous system. However, one limitation of the OmegaWave is that some of the data it provides (e.g., energy supply, hormonal function, and detoxification) are not measured directly. Accordingly, the validity and meaningfulness of such data are uncertain.

The Zephyr sensor, E4 wristband and Reign Active Recovery Band offer a range of physiological and biomechanical data, but these devices have not been validated independently. The E4 wristband is also very expensive for what it offers. The Mio SLICE™ wristband integrates heart rate and physical activity data with an algorithm to calculate the user's Personal Activity Intelligence score. Over time, the user can employ this score to evaluate their long-term health status. Although this device itself has not been validated, the Personal Activity Intelligence algorithm has been tested in a clinical study (Nes et al., 2017). The results of this study demonstrated that individuals with a Personal Activity Intelligence score ≥100 had a 17–23% lower risk of death from cardiovascular diseases.

The HELO smart watch measures heart rate, blood pressure, and breathing rate. The manufacturer also claims more dubious health benefits, none of which are supported by published, peer-reviewed clinical studies. One useful feature of the HELO smart watch is that it can be programmed to send an emergency message to others if the user is ill or injured.

The Biostrap smart watch measures heart rate. Although there is no clear evidence that it has been validated, the company provides a link to research opportunities using its products, which suggests confidence in those products and a willingness to engage in research. The Lief patch measures stress levels through heart rate variability (HRV) and breathing rate, and provides haptic feedback to the user in the form of vibrations to help them adjust their emotional state. The option of real-time feedback without connection to other technology may provide some advantages. If the patch is worn continuously, it is uncertain whether or how this device (and others) distinguishes between changes in breathing rate and HRV associated with “resting” stress and those associated with exercise stress (Dupré et al., 2018). However, it is probably safe to assume that users will be aware of what they are doing (i.e., resting or exercising) during monitoring periods. Other non-wearable equipment is available for monitoring biosignals relating to autonomic function and breathing patterns. MyCalmBeat is a pulse meter that attaches to a finger to assess and train breathing rate, with the goal of improving emotional control. The CorSense HRV device will be available in the future and is intended for athletes, providing a guide to training readiness and fatigue through measurements of HRV. It is unclear how data from finger-based devices such as these, which rely on photoplethysmography, compare with data from systems such as OmegaWave, which records the ECG directly.
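HRV can be summarized in many ways, and the devices above do not state which index they report. As an assumed example, the sketch below computes RMSSD (root mean square of successive differences), a common time-domain index of short-term variability, from a series of beat-to-beat intervals. With photoplethysmography the intervals are pulse-to-pulse rather than ECG R-R intervals, which they approximate but do not exactly match. Artifact and ectopic-beat correction, which real devices need, are omitted; the function names and intervals are hypothetical.

```python
import math


def rmssd(intervals_ms: list[float]) -> float:
    """Root mean square of successive differences between beat-to-beat intervals (ms)."""
    if len(intervals_ms) < 2:
        raise ValueError("need at least two intervals")
    diffs = [later - earlier for earlier, later in zip(intervals_ms, intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate(intervals_ms: list[float]) -> float:
    """Mean heart rate (beats/min) implied by the intervals."""
    return 60000.0 / (sum(intervals_ms) / len(intervals_ms))


intervals = [800.0, 810.0, 790.0, 800.0]  # hypothetical intervals (ms)
print(f"RMSSD {rmssd(intervals):.1f} ms at {mean_heart_rate(intervals):.0f} beats/min")
```

This prints an RMSSD of 14.1 ms at 75 beats/min. Because RMSSD generally also falls as heart rate rises during exercise, a given value can reflect either psychological stress at rest or physical work, which illustrates the ambiguity noted above for continuously worn devices.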

A range of garments with integrated biosensor technology have been developed. The Hexoskin garment measures cardiorespiratory function and physical activity levels, and it has been independently validated (Villar et al., 2015). It shows very high agreement with heart rate measured by ECG (intraclass correlation coefficient >0.95; coefficient of variation <0.8%), very high agreement with respiration rate measured by turbine respirometer (intraclass correlation coefficient >0.95; coefficient of variation <1.4%), and moderate to very high agreement with hip motion intensity measured using a separate accelerometer placed on the hip (intraclass correlation coefficient 0.80 to 0.96; coefficient of variation <6.4%). This device therefore appears to offer good value for money. Other garments, including Athos and DynaFeed, appear to perform similar functions and are integrated with smart textiles, but have not been validated.

Technologies for Monitoring and Promoting Better Sleep

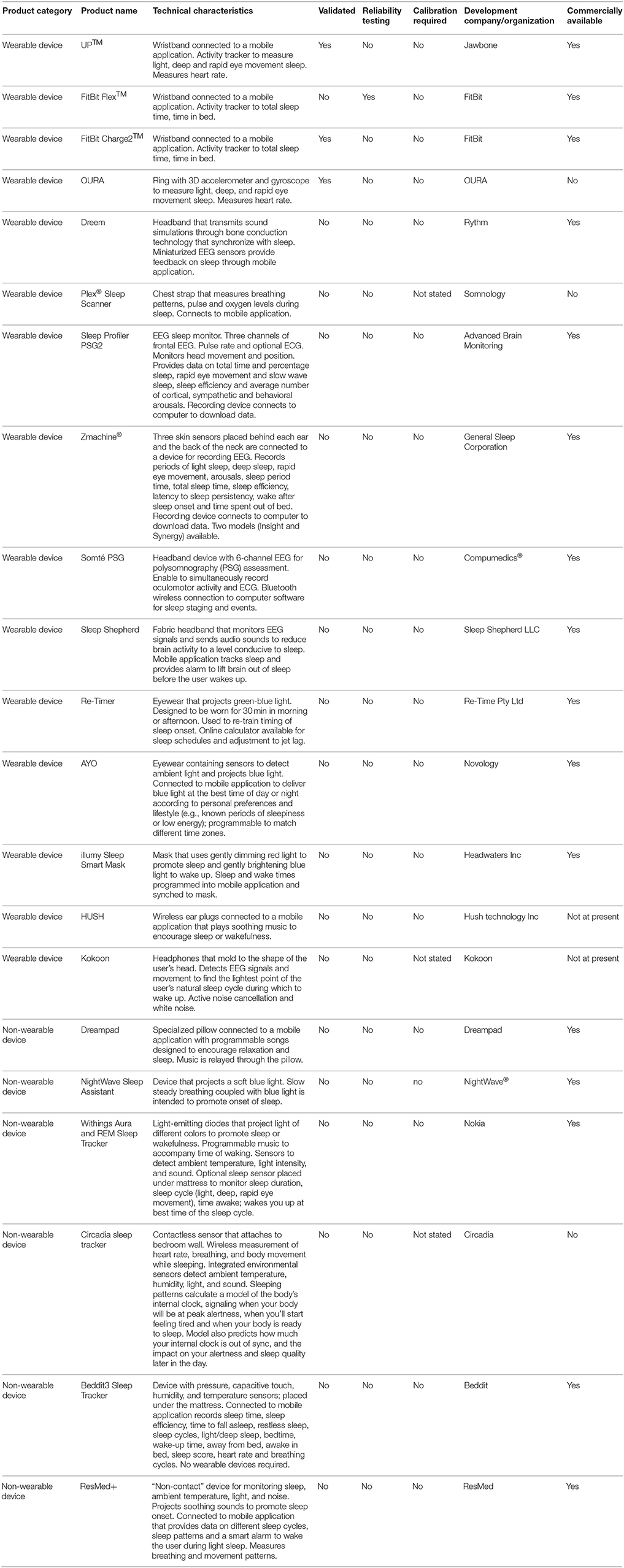

Many devices have been designed to monitor and/or promote sleep (Table 4); Baron et al. (2017) have previously published an excellent review of these devices. Sleep technologies offer benefits for anyone experiencing sleep problems arising from chronic disease (e.g., sleep apnea), anxiety, depression, medication, travel/work schedules, or environmental factors (e.g., noise, light, ambient temperature). The gold standard for sleep measurement is polysomnography. However, polysomnography typically requires expensive equipment and technical expertise to set up, and it is therefore not appropriate for regular use in a home environment.

The Advanced Brain Monitoring Sleep Profiler and Zmachine Synergy have been approved by the US Food and Drug Administration. Both devices monitor various clinical metrics related to sleep architecture, but both are also quite expensive for consumers to purchase, and the disposable sensor pads required to measure electroencephalogram (EEG) signals add an extra ongoing cost. The Somté PSG device offers the advantage of Bluetooth wireless technology for recording EEG during sleep, without the need for cables.

A large number of wearable devices are available that measure various aspects of sleep, and several of these have been validated against gold-standard polysomnography. The UP™ and Fitbit Flex™ devices are wristbands connected to a mobile application. One study reported that, compared with polysomnography, the UP device has high sensitivity for detecting sleep (0.97) but low specificity for detecting wake (0.37); it overestimates total sleep time (26.6 ± 35.3 min) and sleep onset latency (5.2 ± 9.6 min), and underestimates wake after sleep onset (31.2 ± 32.3 min) (de Zambotti et al., 2015). Another study reported that measurements obtained using the UP device correlated with total sleep time (r = 0.63) and time in bed (r = 0.79), but did not correlate with measurements of deep sleep, light sleep, or sleep efficiency (Gruwez et al., 2017). Several studies have reported similar findings for the Fitbit Flex™ device (Montgomery-Downs et al., 2012; Mantua et al., 2016; Kang et al., 2017). A validation study of the OURA ring showed that it recorded total sleep time, sleep onset latency, and wake after sleep onset similar to those measured by polysomnography, and that it had high sensitivity for detecting sleep (0.96). However, it had lower sensitivity for detecting light sleep (0.65), deep sleep (0.51), and rapid eye movement sleep (0.61), and relatively poor specificity for detecting wake (0.48). It also underestimated deep sleep by about 20 min and overestimated rapid eye movement sleep by about 17 min (de Zambotti et al., 2017b). Similar results were recently reported for the Fitbit Charge2™ device (de Zambotti et al., 2017a). These devices therefore offer benefits for monitoring some aspects of sleep, but they also have some technical deficiencies.
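The sensitivity and specificity values above come from comparing a device with polysomnography epoch by epoch. A minimal sketch, assuming both recordings have already been aligned and reduced to 30-s epochs scored only as sleep ('S') or wake ('W'), is shown below; the validation studies cited also compare individual sleep stages and use agreement analyses that are not reproduced here. The function name and hypnograms are hypothetical.

```python
EPOCH_MIN = 0.5  # 30-s scoring epochs


def epoch_agreement(psg: list[str], device: list[str]) -> dict[str, float]:
    """Epoch-by-epoch comparison of a device hypnogram with polysomnography (PSG).

    sensitivity:  share of PSG sleep epochs that the device also scores as sleep
    specificity:  share of PSG wake epochs that the device also scores as wake
    tst_bias_min: device total sleep time minus PSG total sleep time (min)
    """
    if len(psg) != len(device):
        raise ValueError("hypnograms must cover the same epochs")
    on_psg_sleep = [d for p, d in zip(psg, device) if p == "S"]
    on_psg_wake = [d for p, d in zip(psg, device) if p == "W"]
    if not on_psg_sleep or not on_psg_wake:
        raise ValueError("PSG must contain both sleep and wake epochs")
    return {
        "sensitivity": on_psg_sleep.count("S") / len(on_psg_sleep),
        "specificity": on_psg_wake.count("W") / len(on_psg_wake),
        "tst_bias_min": (device.count("S") - psg.count("S")) * EPOCH_MIN,
    }


psg = list("WWSSSSSSWS")
device = list("WSSSSSSSSS")  # a hypothetical device that rarely scores wake
print(epoch_agreement(psg, device))
```

A device that seldom scores wake reproduces the pattern reported above: sensitivity of 1.0, specificity of only 1/3, and an overestimate of total sleep time (here by 1 min).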

Various other devices are available that play soft music or emit light of certain colors to promote sleep or wakefulness, and similar devices are currently in commercial development. Although devices such as the Withings Aura and REM Sleep Tracker, Re-Timer, and AYO have not been independently validated, other scientific research supports the benefits of applying blue light to improve sleep quality (Viola et al., 2008; Gabel et al., 2013; Geerdink et al., 2016). The NightWave Sleep Assistant is appealing because of its relatively low price, the Withings Aura and REM Sleep Tracker also records sleep patterns, and the Re-Timer device is useful because it is portable.

Some devices also monitor temperature, noise and light in the ambient environment to identify potential impediments to restful sleep. The Beddit3 Sleep Tracker does not require the user to wear any equipment. The ResMed S+ and Circadia devices are entirely non-contact, but it is unclear how they measure sleep and breathing patterns remotely.

Technologies for Monitoring Psychological Stress and Evaluating Cognitive Function

The nexus between physiological and psychological stress is attracting increasing interest. Biofeedback on emotional state can assist in modifying personal appraisal of situations, understanding motivation to perform, and informing emotional development. This technology has applications for monitoring the health of people who work in mentally stressful situations, such as military personnel in combat, medical doctors, emergency service personnel (e.g., police, paramedics, firefighters), and traffic controllers. Considering the strong connection between physiology and psychology in competitive sport, this technology may also provide new explanations for athletic “underperformance” (Dupré et al., 2018).

Technology such as the SYNC application designed by Sensum measures emotions by combining biometric data from third-party smartwatches/wristbands, medical devices for measuring skin conductance and heart rate, and other equipment (e.g., cameras, microphones) (Dupré et al., 2018). The Spire device is a clip that attaches to clothing to measure breathing rate and provide feedback on emotional state through a mobile application. Although this device has not been formally validated in the scientific literature, it was developed through an extended period of university research. The Feel wristband monitors emotion and provides real-time coaching about emotional control.

In addition to the mobile applications and devices that record and evaluate psychological stress, various applications and devices have been developed to measure EEG activity and cognitive function (Table 5). Much of this technology has been extensively engineered, making it highly functional. Although the technology has not been validated against gold standards, the broader scientific literature supports the benefits of biofeedback technology for reducing stress and anxiety (Brandmeyer and Delorme, 2013). The Muse™ device produced by InterAxon is a standalone EEG biofeedback device, but it has also been coupled with other biofeedback devices and mobile applications (e.g., Lowdown Focus, Opti Brain™). The integration of these technologies highlights the central value of measuring EEG and the versatility of the Muse™ device. The NeuroTracker application is based on the concept of multiple object tracking, which was introduced as a research tool 30 years ago (Pylyshyn and Storm, 1988). NeuroTracker has since been developed as a training tool to improve cognitive functions including attention, working memory, and visual processing speed (Parsons et al., 2016). This technology has potential applications for testing and training cognitive function in athletes (Martin et al., 2017) and individuals with concussion (Corbin-Berrigan et al., 2018), and for improving biological motion perception in older adults (Legault and Faubert, 2012). The NeuroTracker application itself has not been validated.
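EEG-based biofeedback commonly summarizes the signal as power in conventional frequency bands. We do not know the proprietary processing used by the Muse™ device or the applications coupled with it; as an assumed illustration, the sketch below computes relative alpha power (8 to 12 Hz as a share of 1 to 40 Hz) with a naive discrete Fourier transform, which is adequate only for short epochs. The function names, band limits, and synthetic signals are hypothetical.

```python
import cmath
import math


def band_power(signal: list[float], fs: float, low_hz: float, high_hz: float) -> float:
    """Sum of squared DFT magnitudes for frequency bins within [low_hz, high_hz]."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if low_hz <= freq <= high_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(centered))
            power += abs(coeff) ** 2
    return power


def relative_alpha_power(signal: list[float], fs: float) -> float:
    """Alpha-band (8-12 Hz) power as a fraction of 1-40 Hz power."""
    total = band_power(signal, fs, 1.0, 40.0)
    return band_power(signal, fs, 8.0, 12.0) / total if total > 0 else 0.0


fs = 256.0
t = [i / fs for i in range(512)]  # 2 s of synthetic "EEG"
alpha_rich = [math.sin(2 * math.pi * 10 * s) + 0.2 * math.sin(2 * math.pi * 20 * s) for s in t]
print(round(relative_alpha_power(alpha_rich, fs), 2))
```

For this synthetic signal, whose 10-Hz component has five times the amplitude of its 20-Hz component, about 96% of the power falls in the alpha band. Real EEG would first need artifact rejection (for example, for blinks and muscle activity), which is omitted here.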

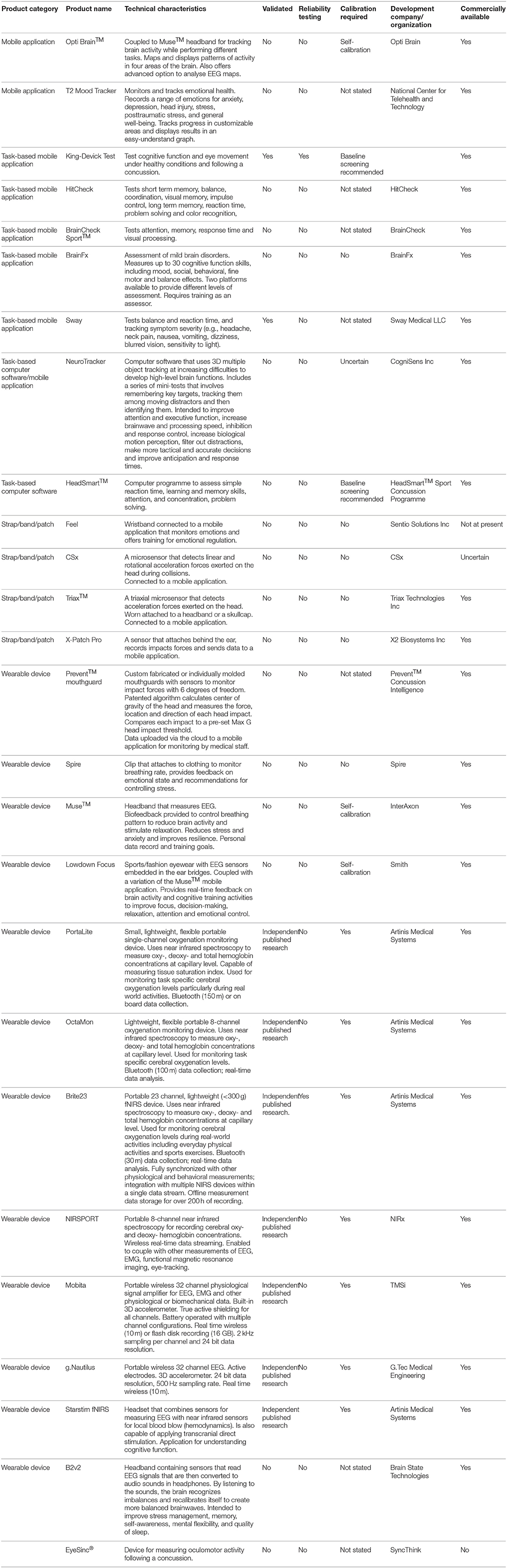

Table 5. Wearable devices and mobile applications for monitoring psychological stress, brain activity, and cognitive function.

In the fields of human factors and ergonomics, there is increasing interest in methods to assess cognitive load. Understanding cognitive load has important implications for concentration, attention, task performance, and safety (Mandrick et al., 2016). The temporal association between neuronal activity and regional cerebral blood flow (so-called “neurovascular coupling”) is recognized as fundamental to evaluating cognitive load, and it can be assessed by combining ambulatory functional neuroimaging techniques such as EEG and functional near-infrared spectroscopy (fNIRS) (Mandrick et al., 2016). Research exists on cognitive load while walking in healthy young and older adults (Mirelman et al., 2014; Beurskens et al., 2016; Fraser et al., 2016), but there does not appear to be any research to date evaluating cognitive load in athletes. A number of portable devices listed in Table 5 measure fNIRS, and some also measure EEG and EMG. Because they measure physiological signals directly from the brain and other parts of the body, these integrated platforms for assessing multiple physiological systems are potentially valuable for various applications. Research using these devices has demonstrated agreement between fNIRS measurements and those obtained with the gold standard, functional magnetic resonance imaging (Mehagnoul-Schipper et al., 2002; Huppert et al., 2006; Sato et al., 2013; Moriguchi et al., 2017). These devices require some expertise and specialist training.
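Both the muscle oximeters in Table 1 and the fNIRS devices in Table 5 convert changes in detected light into hemoglobin concentrations, typically through the modified Beer–Lambert law: at each wavelength λ, ΔOD(λ) = [ε_HbO2(λ)·Δ[HbO2] + ε_HHb(λ)·Δ[HHb]]·d·DPF(λ), where ε are extinction coefficients, d is the source–detector distance, and DPF is the differential pathlength factor. With two wavelengths this gives two equations in two unknowns. The sketch below solves them by Cramer's rule; the function name, extinction coefficients, distance, and DPF values are placeholders, not published constants or any manufacturer's settings.

```python
def mbll_two_wavelengths(delta_od: tuple[float, float],
                         extinction: tuple[tuple[float, float], tuple[float, float]],
                         distance_cm: float,
                         dpf: tuple[float, float]) -> tuple[float, float, float]:
    """Solve the modified Beer-Lambert law for concentration changes.

    delta_od:    (dOD at wavelength 1, dOD at wavelength 2)
    extinction:  ((eps_HbO2, eps_HHb) at wavelength 1, (eps_HbO2, eps_HHb) at wavelength 2)
    distance_cm: source-detector separation
    dpf:         differential pathlength factor at each wavelength
    Returns (dHbO2, dHHb, dTotalHb) in the concentration units implied by eps.
    """
    # Divide out the effective optical path length (d x DPF) at each wavelength.
    y1 = delta_od[0] / (distance_cm * dpf[0])
    y2 = delta_od[1] / (distance_cm * dpf[1])
    (e11, e12), (e21, e22) = extinction
    det = e11 * e22 - e12 * e21
    if det == 0:
        raise ValueError("extinction matrix is singular; choose other wavelengths")
    d_hbo2 = (y1 * e22 - e12 * y2) / det  # Cramer's rule
    d_hhb = (e11 * y2 - e21 * y1) / det
    return d_hbo2, d_hhb, d_hbo2 + d_hhb


# Placeholder coefficients: wavelength 1 absorbed more by HHb, wavelength 2 more by HbO2.
EPS = ((0.6, 1.5), (1.2, 0.8))
# Forward-simulate dOD for a known change, then recover it.
true_hbo2, true_hhb = 0.010, -0.005
od = tuple((e_o * true_hbo2 + e_d * true_hhb) * 3.0 * 6.0 for e_o, e_d in EPS)
print(mbll_two_wavelengths(od, EPS, distance_cm=3.0, dpf=(6.0, 6.0)))
```

Recovering the simulated changes (up to floating-point rounding) confirms the algebra. Total hemoglobin is simply the sum of the two; devices that report tissue saturation need additional modeling that this sketch does not cover.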

Concussion is a common occurrence in sport, combat situations, the workplace, and in vehicular accidents. There is an ever-growing need for simple, valid, reliable, and objective methods to evaluate the severity of concussion, and to monitor recovery. A number of mobile applications and wearable devices have been designed to meet this need. These devices are of potential value for team doctors, physical trainers, individual athletes, and parents of junior athletes.

The King-Devick Test® is a mobile application based on monitoring oculomotor activity, contrast sensitivity, and eye movement to assess concussion. It has been tested extensively in various clinical settings and shown to be easy to use, reliable, valid, sensitive, and accurate (Galetta et al., 2011; King et al., 2015; Seidman et al., 2015; Walsh et al., 2016). Galetta et al. (2011) examined the value of the King-Devick Test® for assessing concussion in boxers and found that worsening King-Devick Test® scores were restricted to boxers with head trauma. These scores also correlated (ρ = 0.90; p = 0.0001) with scores from the Military Acute Concussion Evaluation, and showed high test–retest reliability (intraclass correlation coefficient 0.97 [95% confidence interval 0.90–1.0]). Other studies have reported a very similar level of reliability (King et al., 2015). Performance in the King-Devick Test® is significantly impaired in American football players (Seidman et al., 2015), rugby league players (King et al., 2015), and combat soldiers (Walsh et al., 2016) experiencing concussion. Because the King-Devick Test® is simple to use and does not require any medical training, it is suitable for use in the field by anyone.
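The correlation above is reported as ρ, the conventional symbol for Spearman's rank correlation. Spearman's ρ is Pearson's r computed on ranks, with tied values given the average of the ranks they occupy, so it captures any monotonic relationship rather than only a linear one. The sketch below implements both from first principles; the function names and paired scores are hypothetical, not data from Galetta et al. (2011).

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # positions start..end hold ranks start+1..end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared_rank
        start = end + 1
    return ranks


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation coefficient."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x: list[float], y: list[float]) -> float:
    """Spearman's rank correlation: Pearson's r on average ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Hypothetical paired scores from two concussion tests in six athletes.
test_a = [42.1, 45.3, 39.8, 50.2, 47.5, 44.0]
test_b = [25, 23, 27, 20, 21, 23]
print(round(spearman_rho(test_a, test_b), 2))
```

For these hypothetical scores ρ is close to −1, because higher scores on one test consistently accompany lower scores on the other. The same pearson_r function applies directly to the Pearson correlations reported for the Sway application below.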

The EyeSync® device employs a simple test that records eye movement during a 15-s circular visual stimulus and provides data on prediction variability within 60 s. It is not yet commercially available and has not been validated. The BrainCheck Sport™ mobile application employs the Flanker and Stroop Interference tests to assess reaction time, the Digit Symbol Substitution test to evaluate general cognitive performance, the Trail Making test to measure visual attention and task switching, and a Coordination test. It has not been independently validated, but it is quick and uses an array of common cognitive assessment tools.

The Sway mobile application tests balance and reaction time. Its balance measurements have been validated in small-scale studies (Patterson et al., 2014a,b). Performance in the Sway test was inversely correlated (r = −0.77; p < 0.01) with performance in the Balance Error Scoring System test (Patterson et al., 2014a) and positively correlated (r = 0.63; p < 0.01) with performance in the Biodex Balance System SD (Patterson et al., 2014b). Further testing is needed to confirm these results. One limitation of this test is the risk of bias if individuals intentionally underperform during baseline testing, so that their baseline scores are lower than those they may attain following a concussion (and they avoid time out of competition after concussion).

Various microsensors have been developed for measuring impact forces associated with concussion (Table 5). Some of these microsensors attach to the skin, whereas others are built into helmets, headwear, or mouth guards. The X-Patch Pro device attaches behind the ear. Although it has not been scientifically validated against any gold standard, it has been used in published concussion research projects (Swartz et al., 2015; Reynolds et al., 2016), which suggests that it is sensitive to head impact forces. The Prevent™ mouth guard is a new device for measuring the impact of head collisions; its benefits include objective and quantitative data on the external force applied to the head. Many of these sensors vary in accuracy, and they record only linear and rotational acceleration. Because many sports involve constant changes of direction, sensors that capture movement in multiple planes are likely to provide the most accurate data. A study by Siegmund et al. (2016) reported that the Head Impact Telemetry System (HITS) sensors detected 861 of 896 impacts (96.1%); a detection rate above 95% indicates good reliability. However, sports in which helmets are not worn have fewer options for obtaining such accurate and actionable data.
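A single detection rate such as 861 of 896 impacts carries sampling uncertainty. As an illustration of our own (not an analysis reported by Siegmund et al., 2016), the sketch below attaches a Wilson score 95% confidence interval, one standard choice for a binomial proportion, to a detection rate. The function name is hypothetical.

```python
import math


def detection_rate_wilson(detected: int, total: int,
                          z: float = 1.96) -> tuple[float, float, float]:
    """Return (proportion, lower, upper) using the Wilson score interval."""
    if total <= 0 or not 0 <= detected <= total:
        raise ValueError("need total > 0 and 0 <= detected <= total")
    p = detected / total
    denom = 1 + z * z / total
    centre = (p + z * z / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return p, centre - half_width, centre + half_width


rate, low, high = detection_rate_wilson(861, 896)
print(f"{rate:.1%} detected (95% CI {low:.1%} to {high:.1%})")
```

For the HITS figures this gives 96.1% with an interval of roughly 94.6% to 97.2%, so the lower bound sits just below the 95% level mentioned above.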

Considerations and Recommendations

In a brief yet thought-provoking commentary on mobile applications and wearable devices for monitoring sleep, Van den Bulck makes some salient observations that are applicable to all forms of consumer health technology (Van den Bulck, 2015). Most of these technologies are not labeled as medical devices, yet they convey explicit or implicit value statements about our standard of health. There is a need to determine whether and how using technology influences people's knowledge of and attitudes toward their own health. The ever-expanding public interest in health technologies also raises several ethical issues (Van den Bulck, 2015). First, self-diagnosis based on self-gathered data could be inconsistent with clinical diagnoses provided by medical professionals. Second, although self-monitoring may reveal undiagnosed health problems, such monitoring at the population level is likely to produce many false positives. Last, the use of these technologies may create an unhealthy (or even harmful) obsession with personal health among the individuals who use them or their family members (Van den Bulck, 2015). Increasing public awareness of the limitations of technology, and advocating health technologies that are both specific and sensitive to certain aspects of health, may alleviate these issues to some extent, but not entirely.

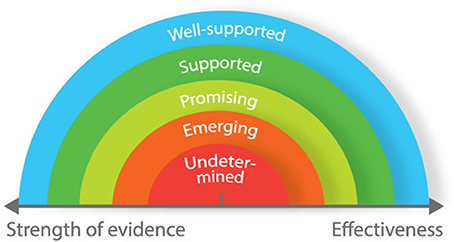

For consumers who want to evaluate technologies for health and performance, we propose a matrix based around two dimensions: strength of evidence (weak to strong) and effectiveness (low to high) (Figure 2). This matrix is based on a continuum that was developed for use in a different context (Puddy and Wilkins, 2011), but is nonetheless appropriate for evaluating technology. When assessing the strength of evidence for any given technology, consumers should consider the following questions: (i) how rigorously has the device/technology been evaluated? (ii) how strong is the evidence in determining that the device/technology is producing the desired outcomes? (iii) how much evidence exists to determine that something other than this device/technology is responsible for producing the desired outcomes? When evaluating the effectiveness of technologies, consumers should consider whether the device/technology produces desirable or non-desirable outcomes. Applying the matrix in Figure 2, undetermined technologies would include those that have not been developed according to any real-world need and display no proven effect. Conversely, well-supported technologies would include those that have been used in applied studies in different settings, and proven to be effective.

Most of the health and performance technologies that we have reviewed have been developed based on real-world needs, yet only a small proportion has been proven effective through rigorous, independent validation (Figure 3). Many of the technologies described in this review should therefore be classified as “emerging” or “promising.” Independent scientific validation provides the strongest level of support for a technology. However, it is not always possible to attain this standard of validation. For example, cognitive function is underpinned by many different neurological processes, so it is difficult to select a single neurological measurement to serve as a reference standard. Some technologies included in this review have not been independently validated per se; but through regular use in academic research, it has become accepted that they provide reliable and specific data on the variables of interest. Even without formal independent validation, it is unlikely (in most instances at least) that researchers would continue using such technologies if they did not provide reliable and specific data. In the absence of independent validation, we therefore propose that technologies that have not been validated against a gold standard, but are regularly used in research, should be considered “well-supported.” Other technical factors for users to consider include whether the devices require calibration or specialist training to set up and interpret data; the portability and physical range for signal transmission/recording; Bluetooth/ANT+ and real-time data transfer capabilities; and on-board or cloud data storage capacity and security.
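To make the decision logic of the evidence matrix concrete, the Python sketch below encodes the criteria described in the text as yes/no questions and maps them to the tiers named there (undetermined, emerging, promising, well-supported). The field names, the order of the checks and the collapsing of each dimension into booleans are our own simplifying assumptions. The sketch does not model undesirable outcomes and is not a reproduction of Figure 2, which places technologies along continuous dimensions.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    UNDETERMINED = "undetermined"
    EMERGING = "emerging"
    PROMISING = "promising"
    WELL_SUPPORTED = "well-supported"


@dataclass(frozen=True)
class EvidenceProfile:
    """Hypothetical yes/no summary of what is known about one technology."""
    meets_real_world_need: bool            # developed in response to a genuine user need
    some_evidence_of_effect: bool          # e.g. a pilot or single-setting study
    effective_in_applied_settings: bool    # proven effective across different settings
    validated_against_gold_standard: bool  # independent validation
    regularly_used_in_research: bool       # accepted through sustained research use


def classify(p: EvidenceProfile) -> Tier:
    # Strongest support: gold-standard validation, sustained research use,
    # or demonstrated effectiveness in applied studies across settings.
    if (p.validated_against_gold_standard
            or p.regularly_used_in_research
            or p.effective_in_applied_settings):
        return Tier.WELL_SUPPORTED
    # Some evidence of the desired outcome, but not yet shown in practice.
    if p.some_evidence_of_effect:
        return Tier.PROMISING
    # Addresses a real need but has no proven effect yet.
    if p.meets_real_world_need:
        return Tier.EMERGING
    # No real-world need and no proven effect.
    return Tier.UNDETERMINED


# Example: a wearable built around a clear user need but with no published evaluation.
gadget = EvidenceProfile(True, False, False, False, False)
print(classify(gadget).value)  # emerging
```

Ordering the checks from strongest to weakest evidence means a technology is always placed in the highest tier it qualifies for.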

Figure 3. Classification of technologies based on whether they have been validated and/or used in research.

From a research perspective, consumer health technologies can be categorized according to whether they have been used in validation studies, observational studies, screening for health disorders, or intervention studies (Baron et al., 2017). For effective screening of health disorders, and to detect genuine changes in health outcomes after lifestyle interventions, it is critical that consumer health technologies provide valid, accurate and reliable data (Van den Bulck, 2015). Another key issue for research into consumer health technologies is whether study populations match the intended use of the technologies. If technologies have been designed to monitor particular health conditions (e.g., insomnia), then studies should include individuals from the target population (as well as healthy individuals for comparison). Scientific validation may be more achievable in healthy populations than in populations with certain health conditions (Baron et al., 2017). Commercial technology companies could also benefit from creating registries of people who use their devices. This approach would help to collect large amounts of data, which would in turn provide companies with useful information about the frequency and setting (e.g., home vs. clinic) of device use, the typical demographics of regular users, and feedback from users about the devices. Currently, very few companies have established such registries, and companies do not consistently publish data in scientific journals. Proprietary data-processing algorithms, the lack of data access for independent scientists, and non-random assignment of device use are further factors that currently restrict open engagement between the technology industry and the public (Baron et al., 2017).
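Reliability matters because a change in an individual's data can only be interpreted as genuine if it exceeds the device's measurement noise. One common way to express this, taken from test–retest reliability analysis rather than from the sources cited here, is the minimal detectable change: SEM = SD × √(1 − ICC) and MDC95 = 1.96 × √2 × SEM. The Python sketch below applies these formulas; the device, its between-subject SD and its intraclass correlation coefficient (ICC) are hypothetical.

```python
import math


def standard_error_of_measurement(between_subject_sd: float, icc: float) -> float:
    """SEM = SD * sqrt(1 - ICC), using test-retest reliability (ICC)."""
    return between_subject_sd * math.sqrt(1.0 - icc)


def minimal_detectable_change(sem: float, z: float = 1.96) -> float:
    """MDC = z * sqrt(2) * SEM; sqrt(2) because a change score combines two noisy measurements."""
    return z * math.sqrt(2.0) * sem


def is_genuine_change(before: float, after: float, mdc: float) -> bool:
    """Treat an individual's change as real only if it exceeds the MDC."""
    return abs(after - before) > mdc


# Hypothetical sleep tracker: total sleep time (min), between-subject SD 40 min, ICC 0.80.
sem = standard_error_of_measurement(40.0, 0.80)
mdc95 = minimal_detectable_change(sem)
print(f"SEM = {sem:.1f} min, MDC95 = {mdc95:.1f} min")  # SEM = 17.9 min, MDC95 = 49.6 min
print(is_genuine_change(390.0, 420.0, mdc95))           # False: a 30-min gain is within noise
```

With these assumed values, a 30-min gain in sleep after an intervention would fall within the device's measurement noise for a single user, so it could not be distinguished from day-to-day error.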

It would seem advisable for companies producing health and performance technologies to consult with consumers to identify real-world needs and to invest in research to prove the effectiveness of their products. However, this appears to be relatively rare. Budget constraints may prevent some companies from engaging in research. Alternatively, some companies may not want their products tested independently, out of a desire to avoid public scrutiny of their validity. In the absence of rigorous testing, consumers should therefore consider carefully, before purchasing health and performance technologies, whether such technologies are likely to be genuinely useful and effective.

Author Contributions

JP and JS conceived this review. JP, GK, and JS searched the literature and wrote the manuscript. JP designed the figures. JP, GK, and JS edited and approved the final version of the manuscript.

Funding

This work was supported by funding from Sport Performance Innovation and Knowledge Excellence at the Queensland Academy of Sport, Brisbane, Australia.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We acknowledge assistance from Bianca Catellini in preparing the figures.

References

Baron, K. G., Duffecy, J., Berendsen, M. A., Cheung Mason, I., Lattie, E. G., and Manalo, N. C. (2017). Feeling validated yet? A scoping review of the use of consumer-targeted wearable and mobile technology to measure and improve sleep. Sleep Med. Rev. doi: 10.1016/j.smrv.2017.12.002. [Epub ahead of print].

Beurskens, R., Steinberg, F., Antoniewicz, F., Wolff, W., and Granacher, U. (2016). Neural correlates of dual-task walking: effects of cognitive versus motor interference in young adults. Neural Plast. 2016:8032180. doi: 10.1155/2016/8032180

Borges, N. R., and Driller, M. W. (2016). Wearable lactate threshold predicting device is valid and reliable in runners. J. Strength Cond. Res. 30, 2212–2218. doi: 10.1519/JSC.0000000000001307

Brandmeyer, T., and Delorme, A. (2013). Meditation and neurofeedback. Front. Psychol. 4:688. doi: 10.3389/fpsyg.2013.00688

Claassen, J. A., Colier, W. N., and Jansen, R. W. (2006). Reproducibility of cerebral blood volume measurements by near infrared spectroscopy in 16 healthy elderly subjects. Physiol. Meas. 27, 255–264. doi: 10.1088/0967-3334/27/3/004

Corbin-Berrigan, L. A., Kowalski, K., Faubert, J., Christie, B., and Gagnon, I. (2018). Three-dimensional multiple object tracking in the pediatric population: the NeuroTracker and its promising role in the management of mild traumatic brain injury. Neuroreport 29, 559–563. doi: 10.1097/WNR.0000000000000988

de Zambotti, M., Claudatos, S., Inkelis, S., Colrain, I. M., and Baker, F. C. (2015). Evaluation of a consumer fitness-tracking device to assess sleep in adults. Chronobiol. Int. 32, 1024–1028. doi: 10.3109/07420528.2015.1054395

de Zambotti, M., Goldstone, A., Claudatos, S., Colrain, I. M., and Baker, F. C. (2017a). A validation study of Fitbit Charge 2 compared with polysomnography in adults. Chronobiol. Int. 35, 465–476. doi: 10.1080/07420528.2017.1413578

de Zambotti, M., Rosas, L., Colrain, I. M., and Baker, F. C. (2017b). The sleep of the ring: comparison of the OURA sleep tracker against polysomnography. Behav. Sleep Med. doi: 10.1080/15402002.2017.1300587. [Epub ahead of print].

Düking, P., Hotho, A., Holmberg, H. C., Fuss, F. K., and Sperlich, B. (2016). Comparison of non-invasive individual monitoring of the training and health of athletes with commercially available wearable technologies. Front. Physiol. 7:71. doi: 10.3389/fphys.2016.00071

Dupré, D., Bland, B., Bolster, A., Morrison, G., and McKeown, G. (2018). “Dynamic model of athletes' emotions based on wearable devices,” in Advances in Human Factors in Sports, Injury Prevention and Outdoor Recreation, ed T. Ahram (Cham: Springer International Publishing), 42–50.

Fraser, S. A., Dupuy, O., Pouliot, P., Lesage, F., and Bherer, L. (2016). Comparable cerebral oxygenation patterns in younger and older adults during dual-task walking with increasing load. Front. Aging Neurosci. 8:240. doi: 10.3389/fnagi.2016.00240

Gabel, V., Maire, M., Reichert, C. F., Chellappa, S. L., Schmidt, C., Hommes, V., et al. (2013). Effects of artificial dawn and morning blue light on daytime cognitive performance, well-being, cortisol and melatonin levels. Chronobiol. Int. 30, 988–997. doi: 10.3109/07420528.2013.793196

Galetta, K. M., Barrett, J., Allen, M., Madda, F., Delicata, D., Tennant, A. T., et al. (2011). The King-Devick test as a determinant of head trauma and concussion in boxers and MMA fighters. Neurology 76, 1456–1462. doi: 10.1212/WNL.0b013e31821184c9

Gao, W., Emaminejad, S., Nyein, H. Y. Y., Challa, S., Chen, K., Peck, A., et al. (2016). Fully integrated wearable sensor arrays for multiplexed in situ perspiration analysis. Nature 529, 509–514. doi: 10.1038/nature16521

Geerdink, M., Walbeek, T. J., Beersma, D. G., Hommes, V., and Gordijn, M. C. (2016). Short blue light pulses (30 min) in the morning support a sleep-advancing protocol in a home setting. J. Biol. Rhythms 31, 483–497. doi: 10.1177/0748730416657462

Gruwez, A., Libert, W., Ameye, L., and Bruyneel, M. (2017). Reliability of commercially available sleep and activity trackers with manual switch-to-sleep mode activation in free-living healthy individuals. Int. J. Med. Inform. 102, 87–92. doi: 10.1016/j.ijmedinf.2017.03.008

Halson, S. L., Peake, J. M., and Sullivan, J. P. (2016). Wearable technology for athletes: information overload and pseudoscience? Int. J. Sports Physiol. Perform. 11, 705–706. doi: 10.1123/IJSPP.2016-0486

Huppert, T. J., Hoge, R. D., Diamond, S. G., Franceschini, M. A., and Boas, D. A. (2006). A temporal comparison of BOLD, ASL, and NIRS hemodynamic responses to motor stimuli in adult humans. Neuroimage 29, 368–382. doi: 10.1016/j.neuroimage.2005.08.065

Hussain, J. N., Mantri, N., and Cohen, M. M. (2017). Working up a good sweat - the challenges of standardising sweat collection for metabolomics analysis. Clin. Biochem. Rev. 38, 13–34.

Kang, S. G., Kang, J. M., Ko, K. P., Park, S. C., Mariani, S., and Weng, J. (2017). Validity of a commercial wearable sleep tracker in adult insomnia disorder patients and good sleepers. J. Psychosom. Res. 97, 38–44. doi: 10.1016/j.jpsychores.2017.03.009

King, D., Hume, P., Gissane, C., and Clark, T. (2015). Use of the King-Devick test for sideline concussion screening in junior rugby league. J. Neurol. Sci. 357, 75–79. doi: 10.1016/j.jns.2015.06.069

Kinnamon, D., Ghanta, R., Lin, K. C., Muthukumar, S., and Prasad, S. (2017). Portable biosensor for monitoring cortisol in low-volume perspired human sweat. Sci. Rep. 7:13312. doi: 10.1038/s41598-017-13684-7

Koh, A., Kang, D., Xue, Y., Lee, S., Pielak, R. M., Kim, J., et al. (2016). A soft, wearable microfluidic device for the capture, storage, and colorimetric sensing of sweat. Sci. Transl. Med. 8, 366ra165. doi: 10.1126/scitranslmed.aaf2593

Legault, I., and Faubert, J. (2012). Perceptual-cognitive training improves biological motion perception: evidence for transferability of training in healthy aging. Neuroreport 23, 469–473. doi: 10.1097/WNR.0b013e328353e48a

Mandrick, K., Chua, Z., Causse, M., Perrey, S., and Dehais, F. (2016). Why a comprehensive understanding of mental workload through the measurement of neurovascular coupling is a key issue for neuroergonomics? Front. Hum. Neurosci. 10:250. doi: 10.3389/fnhum.2016.00250

Mantua, J., Gravel, N., and Spencer, R. M. (2016). Reliability of sleep measures from four personal health monitoring devices compared to research-based actigraphy and polysomnography. Sensors 16:E646. doi: 10.3390/s16050646

Martín, A., Sfer, A. M., D'Urso Villar, M. A., and Barraza, J. F. (2017). Position affects performance in multiple-object tracking in rugby union players. Front. Psychol. 8:1494. doi: 10.3389/fpsyg.2017.01494

McManus, C. J., Collison, J., and Cooper, C. E. (2018). Performance comparison of the MOXY and PortaMon near-infrared spectroscopy muscle oximeters at rest and during exercise. J. Biomed. Opt. 23, 1–14. doi: 10.1117/1.JBO.23.1.015007

Mehagnoul-Schipper, D. J., van der Kallen, B. F., Colier, W. N., van der Sluijs, M. C., van Erning, L. J., Thijssen, H. O., et al. (2002). Simultaneous measurements of cerebral oxygenation changes during brain activation by near-infrared spectroscopy and functional magnetic resonance imaging in healthy young and elderly subjects. Hum. Brain Mapp. 16, 14–23. doi: 10.1002/hbm.10026

Mirelman, A., Maidan, I., Bernad-Elazari, H., Nieuwhof, F., Reelick, M., Giladi, N., et al. (2014). Increased frontal brain activation during walking while dual tasking: an fNIRS study in healthy young adults. J. Neuroeng. Rehabil. 11:85. doi: 10.1186/1743-0003-11-85

Montgomery-Downs, H. E., Insana, S. P., and Bond, J. A. (2012). Movement toward a novel activity monitoring device. Sleep Breath. 16, 913–917. doi: 10.1007/s11325-011-0585-y

Moriguchi, Y., Noda, T., Nakayashiki, K., Takata, Y., Setoyama, S., Kawasaki, S., et al. (2017). Validation of brain-derived signals in near-infrared spectroscopy through multivoxel analysis of concurrent functional magnetic resonance imaging. Hum. Brain Mapp. 38, 5274–5291. doi: 10.1002/hbm.23734

Nes, B. M., Gutvik, C. R., Lavie, C. J., Nauman, J., and Wisløff, U. (2017). Personalized activity intelligence (PAI) for prevention of cardiovascular disease and promotion of physical activity. Am. J. Med. 130, 328–336. doi: 10.1016/j.amjmed.2016.09.031

Parsons, B., Magill, T., Boucher, A., Zhang, M., Zogbo, K., Bérubé, S., et al. (2016). Enhancing cognitive function using perceptual-cognitive training. Clin. EEG Neurosci. 47, 37–47. doi: 10.1177/1550059414563746

Patterson, J., Amick, R., Pandya, P., Hankansson, N., and Jorgensen, M. (2014a). Comparison of a mobile technology application with the balance error scoring system. Int. J. Athl. Ther. Train. 19, 4–7. doi: 10.1123/ijatt.2013-0094

Patterson, J. A., Amick, R. Z., Thummar, T., and Rogers, M. E. (2014b). Validation of measures from the smartphone Sway Balance application: a pilot study. Int. J. Sports Phys. Ther. 9, 135–139.

Piwek, L., Ellis, D. A., Andrews, S., and Joinson, A. (2016). The rise of consumer health wearables: promises and barriers. PLoS Med. 13:e1001953. doi: 10.1371/journal.pmed.1001953

Puddy, R., and Wilkins, N. (2011). Understanding Evidence Part 1: Best Available Research Evidence. A Guide to the Continuum of Evidence of Effectiveness. Atlanta, GA: Centers for Disease Control and Prevention.

Pylyshyn, Z. W., and Storm, R. W. (1988). Tracking multiple independent targets: evidence for a parallel tracking mechanism. Spat. Vis. 3, 179–197. doi: 10.1163/156856888X00122

Reynolds, B. B., Patrie, J., Henry, E. J., Goodkin, H. P., Broshek, D. K., Wintermark, M., et al. (2016). Practice type effects on head impact in collegiate football. J. Neurosurg. 124, 501–510. doi: 10.3171/2015.5.JNS15573

Ryan, T. E., Erickson, M. L., Brizendine, J. T., Young, H. J., and McCully, K. K. (2012). Noninvasive evaluation of skeletal muscle mitochondrial capacity with near-infrared spectroscopy: correcting for blood volume changes. J. Appl. Physiol. 113, 175–183. doi: 10.1152/japplphysiol.00319.2012

Ryan, T. E., Southern, W. M., Reynolds, M. A., and McCully, K. K. (2013). A cross-validation of near-infrared spectroscopy measurements of skeletal muscle oxidative capacity with phosphorus magnetic resonance spectroscopy. J. Appl. Physiol. 115, 1757–1766. doi: 10.1152/japplphysiol.00835.2013

Sato, H., Yahata, N., Funane, T., Takizawa, R., Katura, T., Atsumori, H., et al. (2013). A NIRS-fMRI investigation of prefrontal cortex activity during a working memory task. Neuroimage 83, 158–173. doi: 10.1016/j.neuroimage.2013.06.043

Sawka, M. N., and Friedl, K. E. (2018). Emerging wearable physiological monitoring technologies and decision aids for health and performance. J. Appl. Physiol. 124, 430–431. doi: 10.1152/japplphysiol.00964.2017

Seidman, D. H., Burlingame, J., Yousif, L. R., Donahue, X. P., Krier, J., Rayes, L. J., et al. (2015). Evaluation of the King-Devick test as a concussion screening tool in high school football players. J. Neurol. Sci. 356, 97–101. doi: 10.1016/j.jns.2015.06.021

Siegmund, G. P., Guskiewicz, K. M., Marshall, S. W., DeMarco, A. L., and Bonin, S. J. (2016). Laboratory validation of two wearable sensor systems for measuring head impact severity in football players. Ann. Biomed. Eng. 44, 1257–1274. doi: 10.1007/s10439-015-1420-6

Swartz, E. E., Broglio, S. P., Cook, S. B., Cantu, R. C., Ferrara, M. S., Guskiewicz, K. M., et al. (2015). Early results of a helmetless-tackling intervention to decrease head impacts in football players. J. Athl. Train. 50, 1219–1222. doi: 10.4085/1062-6050-51.1.06

Van den Bulck, J. (2015). Sleep apps and the quantified self: blessing or curse? J. Sleep Res. 24, 121–123. doi: 10.1111/jsr.12270

Villar, R., Beltrame, T., and Hughson, R. L. (2015). Validation of the Hexoskin wearable vest during lying, sitting, standing, and walking activities. Appl. Physiol. Nutr. Metab. 40, 1019–1024. doi: 10.1139/apnm-2015-0140

Viola, A. U., James, L. M., Schlangen, L. J., and Dijk, D. J. (2008). Blue-enriched white light in the workplace improves self-reported alertness, performance and sleep quality. Scand. J. Work Environ. Health 34, 297–306. doi: 10.5271/sjweh.1268

Keywords: health, performance, stress, emotion, sleep, cognitive function, concussion

Citation: Peake JM, Kerr G and Sullivan JP (2018) A Critical Review of Consumer Wearables, Mobile Applications, and Equipment for Providing Biofeedback, Monitoring Stress, and Sleep in Physically Active Populations. Front. Physiol. 9:743. doi: 10.3389/fphys.2018.00743

Received: 13 November 2017; Accepted: 28 May 2018;

Published: 28 June 2018.

Edited by:

Billy Sperlich, Universität Würzburg, Germany

Reviewed by:

Filipe Manuel Clemente, Polytechnic Institute of Viana do Castelo, Portugal

Nicola Cellini, Università degli Studi di Padova, Italy

Copyright © 2018 Peake, Kerr and Sullivan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jonathan M. Peake, jonathan.peake@qut.edu.au
