- 1School of Electronic Information Engineering, Foshan University, Foshan, China

- 2Guangdong Provincial Key Laboratory of Industrial Intelligent Inspection Technology, Foshan University, Foshan, China

- 3School of Mechatronic Engineering and Automation, Foshan University, Foshan, China

Regular pipeline inspection is essential for long-term safe and stable operation, and rapid 3D reconstruction of constant-diameter straight pipelines (CDSP) from monocular images plays a crucial role in tasks such as positioning and navigation for pipeline inspection drones and defect detection on the pipeline surface. Most traditional 3D reconstruction methods for pipelines rely on marker poses or the circular contours of end faces, which makes them complex and difficult to apply, while some existing contour-based methods suffer from slow reconstruction. To address these issues, this paper proposes a rapid 3D reconstruction method for CDSP. Given a known radius, the method solves for the spatial pose of the pipeline axis from the geometric constraints between the projected contour lines and the axis. These constraints are derived from the single-view perspective projection imaging model of CDSP. Compared with traditional methods, the proposed method improves the reconstruction speed by 99.907% while maintaining similar accuracy.

1 Introduction

Pipelines are crucial and ubiquitous transportation infrastructure in industries such as oil, natural gas, and chemicals. As their years of service increase, issues such as corrosion, wear, and cracks often arise [1, 2]. Regular pipeline inspection and surface defect detection are therefore mandatory to prevent accidents such as leakage of the transmission medium, reduce economic losses, and extend the service life of pipelines [3–5]. Traditional manual inspection methods can no longer fully meet the safety and efficiency demands of pipeline operation. Therefore, scholars at home and abroad have conducted extensive research on 3D reconstruction methods based on computer vision technology. This technology is also widely used in tasks such as positioning and navigation of pipeline inspection drones [6] and surface defect detection of pipelines [7].

At present, 3D reconstruction methods are divided into contact and non-contact methods according to their data sources [8]. Contact methods generally use specific instruments, such as coordinate measuring machines [9], to measure the scene directly and obtain 3D information. Since such methods require physical contact with the surface to be measured, they are difficult to implement in many scenes [10]. By contrast, non-contact 3D reconstruction methods based on visual feature extraction have received widespread attention. Common visual 3D reconstruction methods are mainly divided into active vision and passive vision methods [11]. Laser scanning [12] and structured light [13] are commonly used active vision methods, but the equipment cost is high and the operation is complex. Monocular vision, a type of passive vision method, has the advantages of simple structure, low cost, and a wide range of applications. Research on 3D reconstruction of constant-diameter straight pipelines (CDSP) can be divided into two categories according to the source of feature information: methods based on surface markers and methods based on contour edges.

(1) Methods based on surface markers

Methods based on surface markers determine the 3D pose of the object to be measured by estimating the pose of markers. Hwang et al. [14] proposed a method for estimating the 3D pose of a catheter with markers based on single-plane perspective. This method uses the center points of the three marker bands on the catheter surface and their spacing to solve for the catheter’s direction and position, thereby achieving catheter pose estimation. Zhang et al. [15] proposed a method that uses a hybrid marker to estimate the pose of a cylinder. This method estimates the cylinder’s pose by solving for the pose of circle points and chessboard corner points using the PnP algorithm. Lee et al. [16] proposed a method for estimating the pose of a cylinder by constructing a rectangular standard marker. This method uses the edge features of the label as input to find two pairs of points that form a rectangular standard marker, and then estimates the cylinder pose from the features of the two pairs of points and the geometric characteristics of the label. Since markers are not allowed in many scenes, the above methods cannot be widely used.

(2) Methods based on contour edges

Methods based on contour edges estimate the 3D pose by extracting the edge features of the object to be measured. Shiu et al. [17] proposed a method to determine the pose of a cylinder using elliptical projection and lateral projection. This method determines the pose of the cylinder by solving for the center of the end face circle and the intersection points of the end face circle and line features. However, since the circular contour of the end face cannot be extracted from a long-distance transmission pipeline, this method is not suitable for the 3D reconstruction of long-distance transmission pipelines. Zhang et al. [18] proposed a 3D reconstruction method for pipelines based on multi-view stereo vision. This method divides the extracted projection axis into line segments and arc segments, performs NURBS curve fitting, and then completes the 3D reconstruction of the curve control points. Because it regards the centerline of the contour in the image plane as the projection axis, this approximation introduces significant systematic errors. Doignon et al. [19] proposed a 3D pose estimation method for cylinders based on degenerate conic fitting. This method first performs degenerate conic fitting on the edge feature points of the cylinder and then calculates the pose of the cylinder axis using algebraic methods. Cheng et al. [20] proposed a 3D reconstruction method for pipeline perspective projection models based on coupled point pairs. This method completes the 3D reconstruction of the pipeline axis based on the geometric constraints between the coupled point pairs on the cross-sectional circle and the center of the cross-section. Since the edge features require iteration, the 3D reconstruction process is relatively slow.

To address the above issues, this paper proposes a method for rapid 3D reconstruction of CDSP under perspective projection. The method does not require adding markers to the outer surface of the pipeline or extracting the circular contours of the end faces. It only needs the contour lines of the pipeline, which makes the operation simpler. While ensuring reconstruction accuracy, the method reconstructs faster, effectively solving the problem of low efficiency in the reconstruction process. This paper first analyzes the perspective projection imaging process of CDSP and then derives a fast solution for the axis pose under the premise of a known radius. Finally, the experimental results show that the proposed method achieves faster reconstruction than traditional methods.

The organization of this paper is as follows: Section 2 introduces the single-view perspective projection imaging model of CDSP. Section 3 details the steps of our proposed rapid 3D reconstruction method of CDSP. In Section 4, we conduct experiments using both simulated and real data to validate the effectiveness of our method and compare with traditional methods. Finally, Section 5 presents the conclusion of this paper.

2 The single-view perspective projection imaging model of CDSP

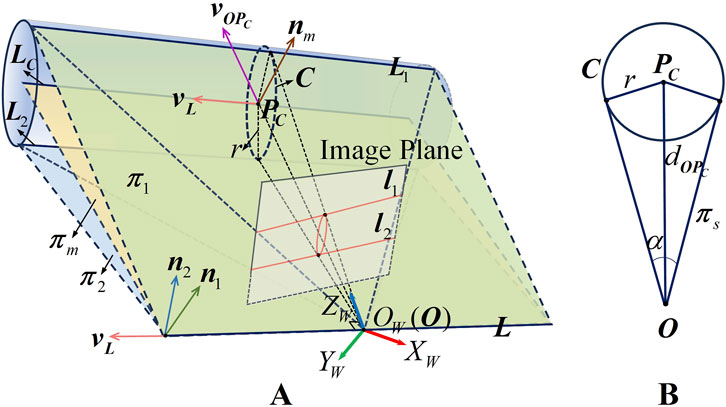

To address the 3D reconstruction problem of CDSP, this paper first establishes the perspective projection imaging model of single-view CDSP, as shown in Figure 1. Figure 1A illustrates the perspective projection imaging process of single-view CDSP. A world coordinate system

Figure 1. The perspective projection imaging model of single-view CDSP. (A) The perspective projection imaging process of CDSP, (B) The support plane of cross section circle

For any CDSP in space, it can be considered as being composed of a stack of constant-diameter cross section circles that are perpendicular to the axis

where

Let

Let

Considering that radius

We assume that the camera coordinate system coincides with the world coordinate system. At this point, the rotation matrix is a 3 × 3 identity matrix and the translation vector is a zero vector.

Based on the geometric constraints derived from any CDSP perspective projection imaging model in the above, and with a known radius of r, the 3D pose of the CDSP axis can be determined by point

3 The rapid 3D reconstruction method of CDSP

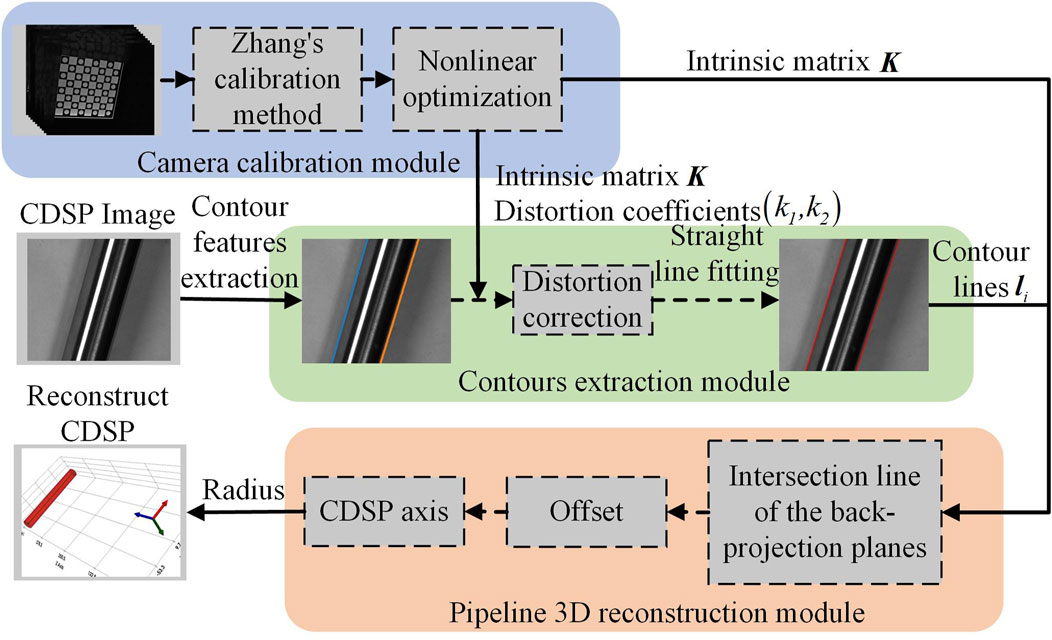

Based on the above single-view perspective projection imaging model of CDSP, the rapid 3D reconstruction method process of CDSP designed in this paper is shown in Figure 2.

The above process includes a camera calibration module, a contours extraction module, and a pipeline 3D reconstruction module. In the camera calibration module, we use

3.1 Camera calibration module

Camera calibration is a fundamental issue in visual technology, a key step in linking 2D image information with 3D spatial information, and a necessary condition for 3D reconstruction. The checkerboard target is not only easy to make, but also provides rich and easily detectable feature points in the image. Therefore, this paper uses a checkerboard target with clear corner features for calibration. The parameters that need to be calibrated include the camera intrinsic matrix

where
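To illustrate the role of the calibrated intrinsic matrix, the following minimal sketch projects 3D points given in the camera frame onto the image with an ideal pinhole model. It is an illustration only: lens distortion is ignored, and the function name `project_points` and the numerical values of `K` are assumptions, not the calibration results of this paper.

```python
import numpy as np

def project_points(K, points_cam):
    """Project 3D points in the camera frame (N, 3) to pixel coordinates (N, 2).

    Assumes an ideal pinhole camera (no lens distortion) and points with Z > 0.
    """
    uvw = points_cam @ K.T               # rows are (u*Z, v*Z, Z)
    return uvw[:, :2] / uvw[:, 2:3]      # divide by depth to get pixels

# Hypothetical intrinsic matrix: focal lengths and principal point in pixels.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 512.0],
              [0.0, 0.0, 1.0]])
pixels = project_points(K, np.array([[0.0, 0.0, 2.0], [0.1, -0.2, 2.0]]))
```

A point on the optical axis maps to the principal point, which is a quick sanity check for any calibrated `K`.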

3.2 Contours extraction module

The edge contour of CDSP comprises circular contours of end faces and straight contours. The method presented in this paper completes 3D reconstruction based on the constraint relationship between the contour lines and the axis of CDSP. Therefore, this method does not require detecting the circular contours of end faces of CDSP and can achieve 3D reconstruction for CDSP of any length. In this paper, we use the subpixel edge detection method proposed by Trujillo-Pino [23] to obtain the pixel coordinates

where

Because the apparent contours of CDSP in the image are two straight lines, we use the RANSAC algorithm [24] to estimate one of the straight line models by randomly selecting sample points from the contour feature points. Then, the distance from the other points to this model is calculated to determine whether they belong to the model’s feature points. After selecting the points that comply, we obtain the feature points set

where

Similarly, the system of equations, which is constructed using the homogeneous coordinates of the feature points of the

For each of the two systems of equations above, the least-squares solution is the eigenvector corresponding to the smallest eigenvalue of the product of the transposed coefficient matrix and the coefficient matrix itself. Equivalently, we can perform singular value decomposition (SVD) on the coefficient matrix and take the right singular vector corresponding to the smallest singular value as the optimal solution. After solving Equation 10 and Equation 11, we obtain two contour lines
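The contour-line estimation described in this module can be sketched as follows. This is a minimal illustration, not the implementation used in this paper: the function names `fit_line_svd` and `ransac_line`, the inlier threshold, the iteration count, and the fixed random seed are all assumptions.

```python
import numpy as np

def fit_line_svd(points):
    """Homogeneous least-squares line (a, b, c) through 2D points of shape (N, 2), N >= 3.

    Minimises ||A l|| subject to ||l|| = 1, where each row of A is (x, y, 1).
    The result is rescaled so that a^2 + b^2 = 1.
    """
    A = np.column_stack([points, np.ones(len(points))])
    _, _, vt = np.linalg.svd(A, full_matrices=False)
    line = vt[-1]                        # right singular vector of the smallest singular value
    return line / np.linalg.norm(line[:2])

def ransac_line(points, threshold=1.0, iterations=200, seed=0):
    """Select the inliers of one dominant line with RANSAC, then refit with SVD."""
    rng = np.random.default_rng(seed)
    homogeneous = np.column_stack([points, np.ones(len(points))])
    best = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        i, j = rng.choice(len(points), size=2, replace=False)
        candidate = np.cross(homogeneous[i], homogeneous[j])   # line through two samples
        scale = np.linalg.norm(candidate[:2])
        if scale == 0.0:                 # the two samples coincide
            continue
        inliers = np.abs(homogeneous @ candidate) / scale < threshold
        if inliers.sum() > best.sum():
            best = inliers
    return fit_line_svd(points[best]), best
```

Running `ransac_line` once on all contour points and once more on the remaining outliers would yield the two contour lines. In practice the pixel coordinates may need to be normalised before the SVD for better conditioning.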

3.3 Pipeline 3D reconstruction module

In this section, based on the geometric constraints provided by the perspective projection imaging model of CDSP, the process of solving the axis 3D pose using the contour lines of CDSP in the image as input is derived. According to the contour lines

where

Since

Besides, since the radius

According to the distance

where

Based on the above derivation, under the premise that the radius
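The geometric idea of this module can be sketched with the standard tangent-plane construction. This is a minimal illustration under stated assumptions, not necessarily the exact derivation of this paper: each contour line back-projects to a plane through the camera centre that is tangent to the pipe, so the axis is parallel to both planes and lies at distance r from each. The function name `axis_from_contour_lines` and the argument `m` (a pixel known to lie between the two contours, used only to fix the sign of each line) are hypothetical.

```python
import numpy as np

def axis_from_contour_lines(l1, l2, K, r, m):
    """Return (p, d): the axis point closest to the camera centre and the unit axis direction.

    l1, l2 : homogeneous image lines (3,) of the two apparent contours
    K      : 3x3 camera intrinsic matrix (camera frame = world frame)
    r      : known pipe radius
    m      : homogeneous pixel (u, v, 1) lying between the two contours
    The result is undefined if the two back-projected planes coincide.
    """
    m = np.asarray(m, dtype=float)
    normals = []
    for line in (l1, l2):
        line = np.asarray(line, dtype=float)
        if line @ m < 0:                 # orient the line so the pipe side is positive
            line = -line
        n = K.T @ line                   # normal of the back-projected tangent plane
        normals.append(n / np.linalg.norm(n))
    n1, n2 = normals
    d = np.cross(n1, n2)                 # the axis is parallel to both tangent planes
    d /= np.linalg.norm(d)
    # The axis point p = a*n1 + b*n2 must satisfy n1.p = r and n2.p = r.
    c = n1 @ n2
    a, b = np.linalg.solve(np.array([[1.0, c], [c, 1.0]]), np.array([r, r]))
    return a * n1 + b * n2, d
```

The solution is closed-form (one cross product and one 2 × 2 linear solve), which is consistent with the speed advantage of a direct method over iterative ones. The sign of `d` is arbitrary, since a line direction is defined only up to sign.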

4 Experiments

To verify the effectiveness of the rapid 3D reconstruction method of CDSP, this section conducts a simulation experiment and a real experiment. Specifically, the simulation experiment is designed to validate the correctness of the method, while the real experiment is aimed at verifying its feasibility. The experiments are run on a computer equipped with an Intel Core i5-8250U CPU and 8 GB of RAM, and the time taken for 3D reconstruction is used as the speed evaluation metric. In the simulation experiment, we reconstruct a pipe and calculate the root mean square error (RMSE) between the distance from each point in the reconstructed pipe axis to the ideal axis and the true distance

where

Assuming

A parallelogram is formed with

Meanwhile, according to the parallelogram area formula, the area

Combining Equation 18, Equation 19 and Equation 20, the distance
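The error metric described above can be sketched as follows. This is a minimal illustration that assumes the usual parallelogram construction: the area spanned by the axis direction and the vector from a point on the ideal axis to the reconstructed point, divided by the length of the direction, gives the point-to-line distance. The names `point_to_line_distance` and `rmse`, and the choice of 0 as the default true distance, are assumptions.

```python
import numpy as np

def point_to_line_distance(points, a, d):
    """Distance from each 3D point (N, 3) to the line through point a with direction d."""
    area = np.linalg.norm(np.cross(points - a, d), axis=1)   # parallelogram areas
    return area / np.linalg.norm(d)                          # area / base = height

def rmse(distances, true_distance=0.0):
    """Root mean square error between measured distances and the true distance."""
    return np.sqrt(np.mean((np.asarray(distances) - true_distance) ** 2))

# Example: ideal axis along the x-axis, two reconstructed axis points.
a = np.zeros(3)
d = np.array([1.0, 0.0, 0.0])
pts = np.array([[0.0, 3.0, 4.0], [2.0, 0.0, 1.0]])
dist = point_to_line_distance(pts, a, d)
error = rmse(dist)
```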

4.1 Simulation experiment

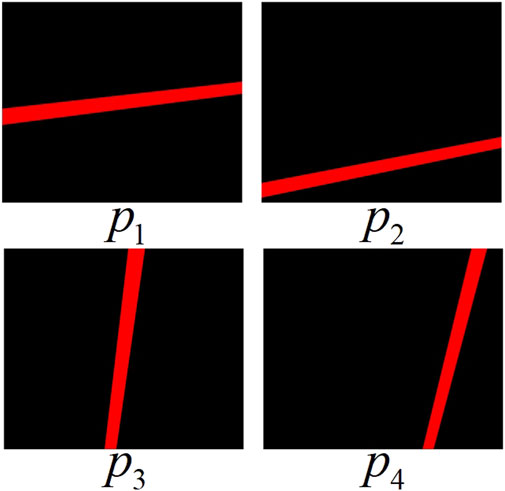

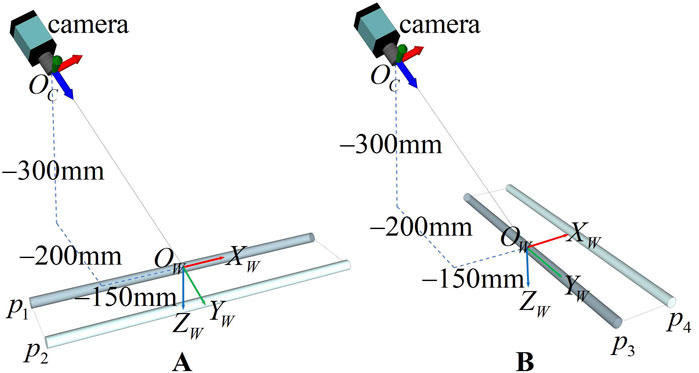

In this part, the proposed method is tested on simulated data. The cell size is set as

In addition, the radius and length of CDSP are set as

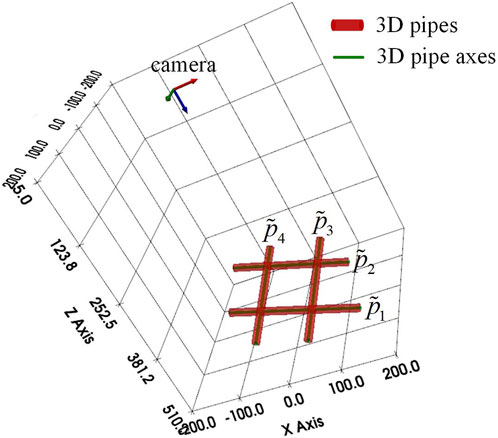

Figure 3. The scene of simulation experiment. (A) The experimental scene of

Based on the scene of the above simulation experiment, the images of

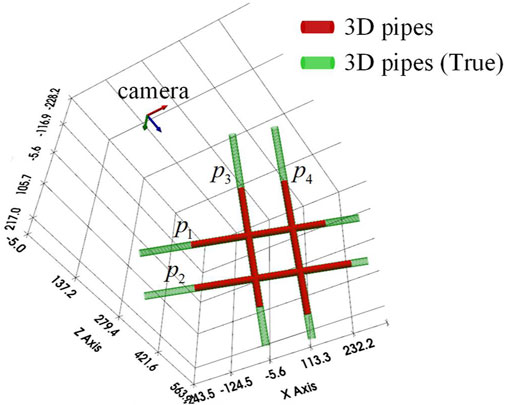

In order to verify the correctness of the proposed method, the projected contour lines of CDSP are obtained from the simulated data through the cylindrical target perspective projection model [25]. Then, we use the proposed method to achieve 3D reconstruction of four pipes

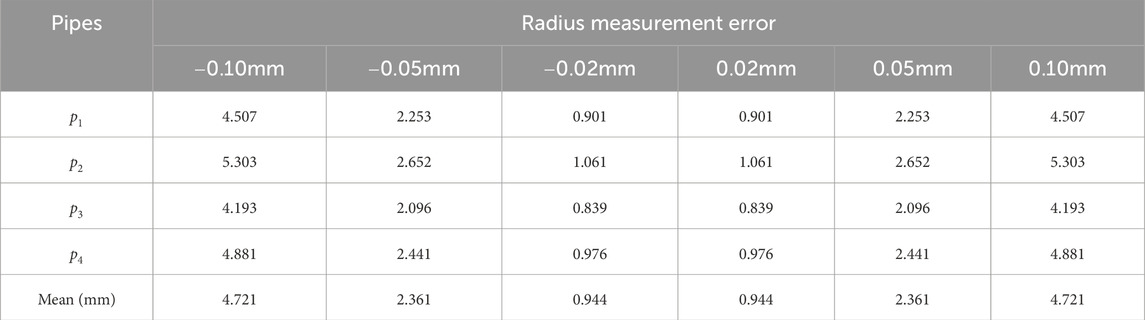

In order to consider the impact of radius measurement errors on reconstruction, we set the radius measurement errors to

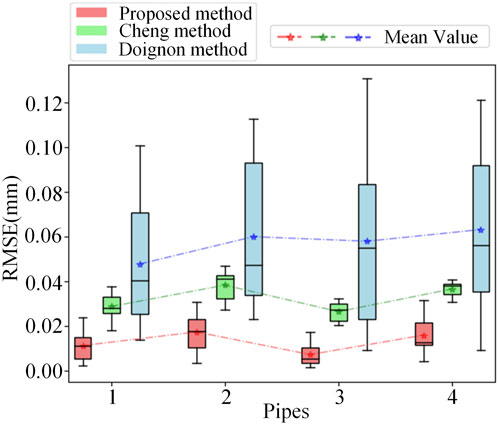

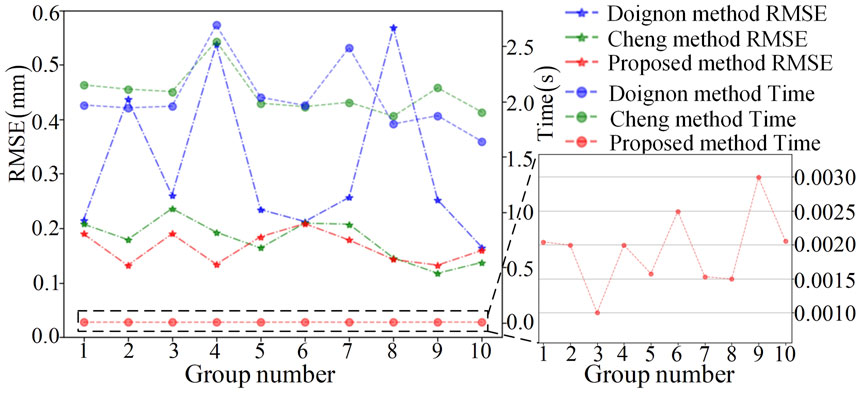

In addition, to approximate actual experimental conditions more closely, we add Gaussian random noise with a mean of 0 and a noise level of 0.1 to the contour feature points, and then compare the Doignon [19] method, the Cheng [20] method, and the proposed method in terms of accuracy and speed. In the accuracy comparison, each method was tested 10 times and the RMSE was calculated using Equation 17. The results are shown in Figure 6, which displays the RMSE of the 10 reconstructions of each pipe for the three methods, together with the average RMSE over the 10 runs. The average RMSE for reconstructing the four pipes is 0.013 mm for the proposed method, 0.057 mm for the Doignon [19] method, and 0.033 mm for the Cheng [20] method. The proposed method therefore has the lowest RMSE of the three, which indicates that it is less sensitive to noise. This is because the proposed method adopts a simple straight-line fitting model, which has low sensitivity to noise. In the Cheng [20] method, noise degrades the matching accuracy of the coupled point pairs, which in turn degrades the accuracy of the reconstruction results. The Doignon [19] method fits a curve model whose parameter estimation is easily affected by noise, which in turn affects the accuracy of the pose parameters. Overall, compared with the other two methods, the proposed method is faster and less sensitive to noise.

In terms of applicability, the Cheng [20] method can achieve 3D reconstruction of constant-diameter pipelines, while the proposed method and the Doignon [19] method are applicable only to constant-diameter straight pipelines. This is a potential drawback of the proposed method and an area for improvement in our future work.

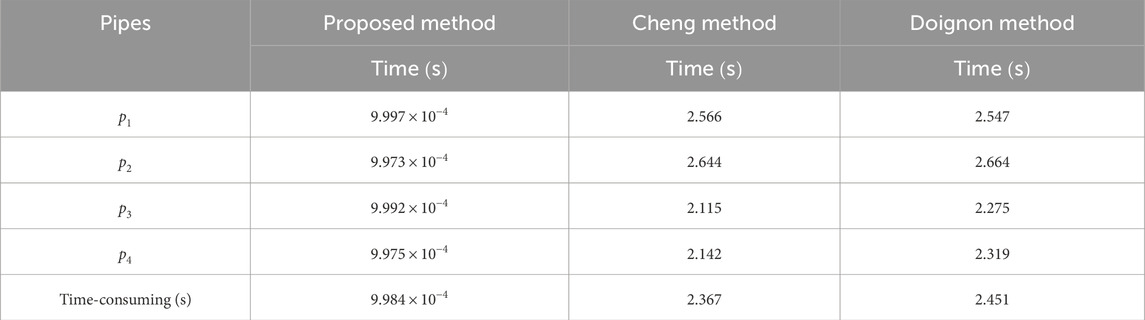

Afterwards, we calculate the time taken by the three methods to complete the reconstruction of pipes

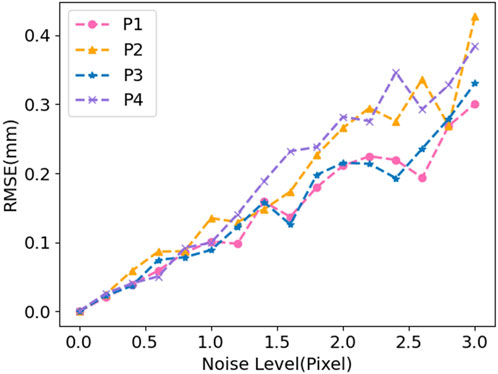

To test the noise resistance of the proposed method, we added Gaussian noise with a mean of 0 and a standard deviation ranging from 0 to 3 pixels (in steps of 0.2 pixels) to the feature point coordinates of the synthesized image, and conducted 10 independent experiments at each noise level. After each reconstruction, we calculated the RMSE; the results for each noise level are shown in Figure 7. As can be seen from Figure 7, the accuracy gradually decreases as the noise level increases, and the RMSE has a linear relationship with the noise level. For an accuracy requirement of 0.2 mm, up to 1.5 pixels of noise can be tolerated; for an accuracy requirement of 0.4 mm, up to 3 pixels of noise can be tolerated.
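The noise protocol above (16 noise levels, 10 independent trials each) can be sketched as follows. This is an illustration of the experimental loop only: it measures the error of a fitted image line in pixels rather than the 3D RMSE in millimetres reported in Figure 7, and the synthetic line, the helper names `fit_line` and `noise_sweep`, and the random seed are assumptions.

```python
import numpy as np

def fit_line(points):
    """Homogeneous least-squares line through 2D points, scaled so that a^2 + b^2 = 1."""
    A = np.column_stack([points, np.ones(len(points))])
    line = np.linalg.svd(A, full_matrices=False)[2][-1]
    return line / np.linalg.norm(line[:2])

def noise_sweep(clean_points, sigmas, trials=10, seed=0):
    """Mean line-fit error (pixels) over `trials` runs for each noise standard deviation."""
    rng = np.random.default_rng(seed)
    clean_h = np.column_stack([clean_points, np.ones(len(clean_points))])
    results = []
    for sigma in sigmas:
        errors = []
        for _ in range(trials):
            noisy = clean_points + rng.normal(0.0, sigma, clean_points.shape)
            line = fit_line(noisy)
            # RMS distance of the noise-free points to the fitted line
            errors.append(np.sqrt(np.mean((clean_h @ line) ** 2)))
        results.append(np.mean(errors))
    return np.array(results)

sigmas = np.linspace(0.0, 3.0, 16)            # 0 to 3 pixels in steps of 0.2 pixels
x = np.linspace(0.0, 500.0, 200)
clean = np.column_stack([x, 0.3 * x + 40.0])  # hypothetical contour line
errors = noise_sweep(clean, sigmas)
```

Using `np.linspace` with 16 samples avoids the floating-point end-point ambiguity of stepping by 0.2 with `np.arange`.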

4.2 Real experiment

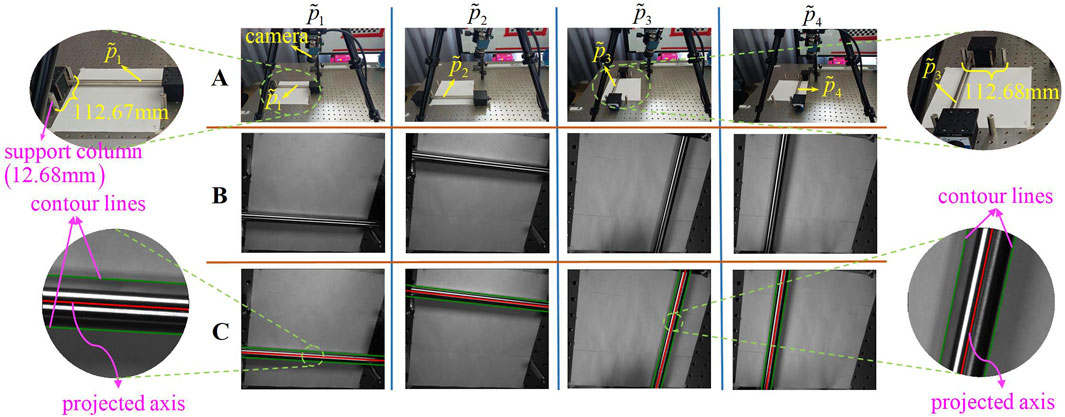

The above simulation experiment has verified the correctness of the proposed method. To further verify its feasibility, this section conducts a real experiment based on real data. The camera used in the experiment is a Daheng MER-503-23 GM-P camera with a resolution of

Figure 8. (A) The scene of real experiment, (B) The images collected in the experiment, (C) The images with contour lines and projected axis.

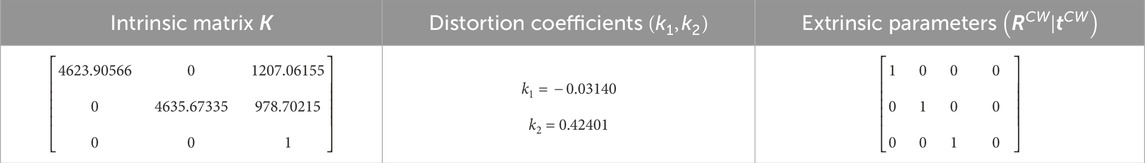

Based on the scene of the above real experiment, we first calibrate the camera used in the experiment. The calibration parameters obtained using the method in the camera calibration module are shown in Table 3.

Then, we collect images of

In addition, based on the scene of the above real experiment, we collect five groups of images for

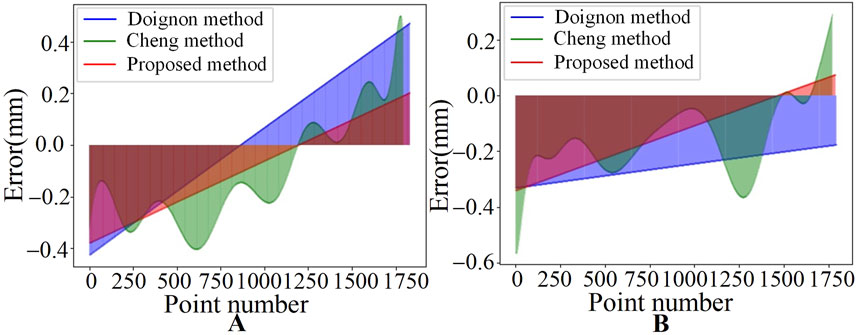

Figure 10. Comparison of reconstruction error results for one group of experiments. (A) The reconstruction error results for

After completing the 3D reconstruction of 10 groups of images using the three methods, we calculate the RMSE of each group using Equation 17. Meanwhile, we measure the time taken to reconstruct each pipe and use the average over the two pipes as the time taken for each group of experiments. In the experimental results shown in Figure 11, the x-axis represents the group number, and the y-axis shows the RMSE of the reconstructed pipe and the average reconstruction time for each group. The average RMSE for the 10 groups using the proposed method is

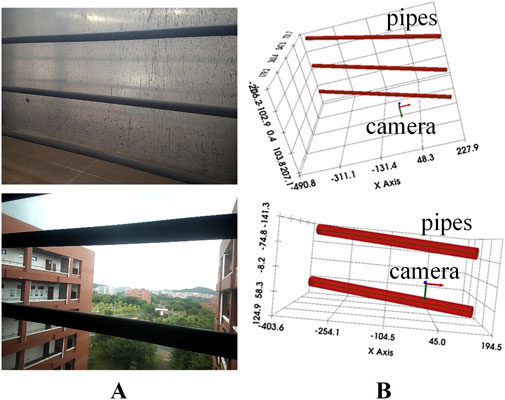

To examine the robustness of the proposed method under different lighting conditions and backgrounds, we collected CDSP images under such conditions for experiments. For more complex scenes, we use manual filtering to extract the contour features, and then use the proposed method for reconstruction. The collected images and their reconstruction effects are shown in Figure 12. Figure 12A shows the collected CDSP images, and Figure 12B shows the 3D reconstruction effect of CDSP. The experimental results show that the proposed method can effectively achieve 3D reconstruction of CDSP under different lighting conditions and backgrounds, provided that the contour features can be accurately extracted.

Figure 12. Collected CDSP images and their reconstruction effects. (A) the collected CDSP images, (B) the 3D reconstruction effect of CDSP.

5 Conclusion

This paper proposes a rapid 3D reconstruction method of CDSP based on the single-view perspective projection imaging model to address the inefficiency of 3D pipeline reconstruction in tasks such as positioning and navigation for pipeline inspection drones and pipeline surface defect detection. This method first establishes a single-view perspective projection imaging model of CDSP, and under the premise of known radius, the geometric constraints of this model provide a direct method to solve the 3D pose of the CDSP axis. Subsequently, the results of the simulation experiment indicate that the reconstructed pipeline overlaps with the simulated pipeline, and under low noise conditions in the simulated images, the proposed method achieves an average reconstruction accuracy of

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

JY: Conceptualization, Formal Analysis, Methodology, Visualization, Writing–original draft. XC: Conceptualization, Funding acquisition, Methodology, Project administration, Writing–review and editing. HT: Methodology, Supervision, Writing–review and editing. XL: Supervision, Writing–review and editing. HZ: Methodology, Writing–review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research was supported by the National Natural Science Foundation of China (62201151, 62271148).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Wu Y, Gao L, Chai J, Li Z, Ma C, Qiu F, et al. Overview of health-monitoring technology for long-distance transportation pipeline and progress in DAS technology application. Sensors (2024) 24(2):413. doi:10.3390/s24020413

2. Nguyen HH, Park JH, Jeong HY. A simultaneous pipe-attribute and PIG-Pose estimation (SPPE) using 3-D point cloud in compressible gas pipelines. Sensors (2023) 23(3):1196. doi:10.3390/s23031196

3. Lyu F, Zhou X, Ding Z, Qiao X, Song D. Application research of ultrasonic-guided wave technology in pipeline corrosion defect detection: a review. Coatings (2024) 14(3):358. doi:10.3390/coatings14030358

4. Cirtautas D, Samaitis V, Mažeika L, Raišutis R, Žukauskas E. Selection of higher order lamb wave mode for assessment of pipeline corrosion. Metals (2022) 12(3):503. doi:10.3390/met12030503

5. Hussain M, Zhang T, Chaudhry M, Jamil I, Kausar S, Hussain I. Review of prediction of stress corrosion cracking in gas pipelines using machine learning. Machines (2024) 12(1):42. doi:10.3390/machines12010042

6. Chen X, Zhu X, Liu C. Real-time 3D reconstruction of UAV acquisition system for the urban pipe based on RTAB-Map. Appl Sci (2023) 13(24):13182. doi:10.3390/app132413182

7. Cheng X, Zhong B, Tan H, Qiao J, Yang J, Li X. Correction for geometric distortion in the flattened representation of pipeline external surface. Eng Res Express (2024) 6(2):025218. doi:10.1088/2631-8695/ad4cb1

8. Varady T, Martin RR, Cox J. Reverse engineering of geometric models—an introduction. Computer-aided Des (1997) 29(4):255–68. doi:10.1016/s0010-4485(96)00054-1

9. He WM, Sato H, Umeda K, Sone T, Tani Y, Sagara M, et al. A new methodology to evaluate error space in CMM by sequential two points method. In: Mechatronics for safety, security and dependability in a new era. Elsevier (2007). p. 371–6. doi:10.1016/B978-008044963-0/50075-8

10. Zhou L, Wu G, Zuo Y, Chen X, Hu H. A comprehensive review of vision-based 3D reconstruction methods. Sensors (2024) 24(7):2314. doi:10.3390/s24072314

11. Isgro F, Odone F, Verri A. An open system for 3D data acquisition from multiple sensor. In: Seventh international workshop on computer architecture for machine perception (CAMP'05). IEEE (2005) p. 52–7. doi:10.1109/camp.2005.13

12. Huang Z, Li D. A 3D reconstruction method based on one-dimensional galvanometer laser scanning system. Opt Lasers Eng (2023) 170:107787. doi:10.1016/j.optlaseng.2023.107787

13. Al-Temeemy AA, Al-Saqal SA. Laser-based structured light technique for 3D reconstruction using extreme laser stripes extraction method with global information extraction. Opt Laser Technology (2021) 138:106897. doi:10.1016/j.optlastec.2020.106897

14. Hwang S, Lee D. 3D pose estimation of catheter band markers based on single-plane fluoroscopy. In: 2018 15th international conference on ubiquitous robots (UR). IEEE (2018) p. 723–8. doi:10.1109/urai.2018.8441789

15. Zhang L, Ye M, Chan PL, Yang GZ. Real-time surgical tool tracking and pose estimation using a hybrid cylindrical marker. Int J Comput Assist Radiol Surg (2017) 12:921–30. doi:10.1007/s11548-017-1558-9

16. Lee JD, Lee JY, You YC, Chen CH. Determining location and orientation of a labelled cylinder using point-pair estimation algorithm. Int J Pattern Recognition Artif Intelligence (1994) 8(01):351–71. doi:10.1142/s0218001494000176

17. Shiu H. Pose determination of circular cylinders using elliptical and side projections. In: IEEE 1991 international conference on systems engineering. IEEE (1991) p. 265–8. doi:10.1109/icsyse.1991.161129

18. Zhang T, Liu J, Liu S, Tang C, Jin P. A 3D reconstruction method for pipeline inspection based on multi-vision. Measurement (2017) 98:35–48. doi:10.1016/j.measurement.2016.11.004

19. Doignon C, Mathelin MD. A degenerate conic-based method for a direct fitting and 3-d pose of cylinders with a single perspective view. In: Proceedings 2007 IEEE international conference on robotics and automation. IEEE (2007) p. 4220–5. doi:10.1109/robot.2007.364128

20. Cheng X, Sun J, Zhou F, Xie Y. Shape from apparent contours for bent pipes with constant diameter under perspective projection. Measurement (2021) 182:109787. doi:10.1016/j.measurement.2021.109787

21. Zhang Z. A flexible new technique for camera calibration. IEEE Trans pattern Anal machine intelligence (2000) 22(11):1330–4. doi:10.1109/34.888718

22. Levenberg K. A method for the solution of certain non-linear problems in least squares. Q Appl Mathematics (1944) 2(2):164–8. doi:10.1090/qam/10666

23. Trujillo-Pino A, Krissian K, Alemán-Flores M, Santana-Cedrés D. Accurate subpixel edge location based on partial area effect. Image Vis Comput (2013) 31(1):72–90. doi:10.1016/j.imavis.2012.10.005

24. Fischler MA, Bolles RC. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun ACM (1981) 24(6):381–95. doi:10.1145/358669.358692

Keywords: monocular vision, 3D reconstruction, constant-diameter straight pipeline, apparent contour, geometric constraint

Citation: Yao J, Cheng X, Tan H, Li X and Zhao H (2024) Rapid 3D reconstruction of constant-diameter straight pipelines via single-view perspective projection. Front. Phys. 12:1477381. doi: 10.3389/fphy.2024.1477381

Received: 07 August 2024; Accepted: 26 November 2024; Published: 16 December 2024.

Edited by:

Zhe Guang, Georgia Institute of Technology, United States

Reviewed by:

Shengwei Cui, Hebei University, China

Youchang Zhang, California Institute of Technology, United States

Copyright © 2024 Yao, Cheng, Tan, Li and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaoqi Cheng, chexqi@163.com; Haishu Tan, tanhs@163.com

Jiasui Yao1,2, Xiaoqi Cheng, Xiaosong Li