- ¹Centro Brasileiro de Pesquisas Físicas, Rio de Janeiro, RJ, Brazil

- ²Petróleo Brasileiro S.A., Centro de Pesquisas Leopoldo Miguez de Mello, Rio de Janeiro, Brazil

Recent advances in quantum computing and quantum-inspired algorithms have sparked renewed interest in binary optimization. These hardware and software innovations promise to drastically reduce solution times for complex problems. In this work, we propose a novel method for solving linear systems. Our approach leverages binary optimization, making it particularly well-suited for problems with large condition numbers. We transform the linear system into a binary optimization problem in a way that draws on the geometry of the original problem and resembles the conjugate gradient method. The resulting conjugate directions significantly accelerate the algorithm’s convergence. Furthermore, we demonstrate that partial knowledge of the problem’s intrinsic geometry allows the original problem to be decomposed into smaller, independent sub-problems, which can be solved efficiently with either quantum or classical solvers. Although determining the problem’s geometry introduces some additional computational cost, this investment is outweighed by substantial performance gains over existing methods.

1 Introduction

Quadratic unconstrained binary optimization (QUBO) problems [1] are an equivalent formulation of a specific class of combinatorial optimization problems, in which one (or a few) particular configurations are sought within a finite but very large space of possible configurations. This configuration maximizes the gain (or minimizes the cost) of a real function

The sought optimal solution

The QUBO problem is NP-hard and is equivalent to finding the ground state of a general Ising model with arbitrary interaction strengths and an arbitrary number of interactions, a model commonly used in condensed matter physics [2, 3]. The ground state of the related quantum Hamiltonian encodes the optimal configuration and can be reached from a general initial Hamiltonian through a quantum evolution protocol. This is the essence of quantum computation by quantum annealing [4], in which the optimal solution is encoded in the ground state of a physical quantum Ising system. Hybrid quantum–classical methods, digital-analog algorithms, and classical algorithms inspired by quantum computation are promising Ising solvers (see [5]).
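To make the QUBO–Ising equivalence concrete, the following Python sketch (assuming NumPy; the function names are hypothetical, not taken from this work) applies the standard substitution x_i = (1 + s_i)/2, which turns the binary cost xᵀQx into an Ising energy with couplings J_ij, local fields h_i and a constant offset, and then checks the mapping exhaustively on a small random instance.

```python
import itertools
import numpy as np

def qubo_to_ising(Q):
    """Map E(x) = x^T Q x with x in {0,1}^n to an Ising model via x = (1 + s) / 2.

    Returns (J, h, offset) such that, for s in {-1,+1}^n,
    E(s) = sum_{i<j} J[i, j] s_i s_j + sum_i h[i] s_i + offset.
    """
    Qs = 0.5 * (Q + Q.T)                 # x^T Q x only depends on the symmetric part
    J = np.triu(0.5 * Qs, k=1)           # couplings stored in the strict upper triangle
    h = 0.5 * Qs.sum(axis=1)             # local fields
    offset = 0.25 * (Qs.sum() + np.trace(Qs))
    return J, h, offset

def qubo_energy(Q, x):
    return float(x @ Q @ x)

def ising_energy(J, h, offset, s):
    return float(s @ J @ s + h @ s + offset)

# Check the mapping on every configuration of a small random instance.
rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 4))
J, h, offset = qubo_to_ising(Q)
for bits in itertools.product([0, 1], repeat=4):
    x = np.array(bits, dtype=float)
    s = 2 * x - 1
    assert abs(qubo_energy(Q, x) - ising_energy(J, h, offset, s)) < 1e-9
```

Only the symmetric part of Q contributes to the cost, which is why the sketch symmetrizes Q first; minimizing the Ising energy is then the same problem as minimizing the QUBO cost.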

Important classes of problems, not necessarily combinatorial, can also be handled with QUBO solvers. For example, solving systems of linear equations has previously been studied in the context of quantum annealing [6–9], and the complexity and usefulness of this approach were discussed in [10, 11]. These studies suggest that quantum annealing is promising for solving linear systems, even when they are ill-conditioned or when the number of rows far exceeds the number of columns.

In another context, QUBO formulations were recently developed to train machine learning models, with the expectation that quantum annealing could solve this type of hard problem more efficiently [12]. Machine learning algorithms, and quantum-inspired formulations of them in the quantum circuit approach, have grown substantially in recent years; see, for example, [13–18] and references therein. Linear algebra is a fundamental tool at the core of these formulations. Therefore, studying and improving QUBO formulations of linear problems may benefit the use of quantum annealing in machine learning. Another recent example is the study of simplified binary models of inverse problems, in which the QUBO matrix is a quadratic approximation of the forward non-linear problem (see [19]). Note that solving systems of linear equations is also an essential step in classical inverse problems.

In this work, we propose a new method to improve the convergence rate of an iterative algorithm for solving a system of linear equations with an arbitrary condition number. At each stage, the algorithm maps the linear problem to a QUBO problem and finds suitable configurations using a QUBO solver, either classical or quantum. In previous implementations, the feasibility of the method depended on the specific binary approximation used: generally, as the condition number increases, more bits are required, which increases the dimension of the QUBO problem. Our contribution is to show that total or partial knowledge of the intrinsic geometry of the problem helps reformulate the QUBO problem, stabilizing convergence to the solution and therefore improving the performance of the algorithm. With full knowledge of the geometry, we show that the associated QUBO problem is trivial. If the geometry is only partially known, we show that the resulting QUBO problems are small and can be solved with a binary approximation that uses few bits.

The remainder of this paper is organized as follows: Section 2 briefly describes how to convert the problem of solving a system of linear equations into a QUBO problem. The conventional algorithm for this problem is presented and illustrated with examples. Subsequently, we analyze the geometrical structure of the linear problem

2 System of linear equations

2.1 Writing a system of equations as a QUBO problem

Solving a system of

where

Define the vector

where

where

of length

To construct the QUBO problem associated with solving the linear system, we provide a concrete example with

The solution

with

where

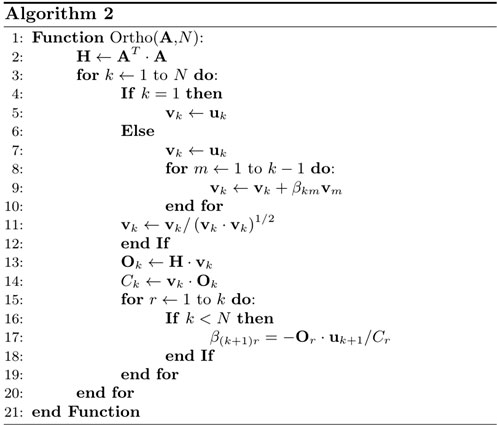

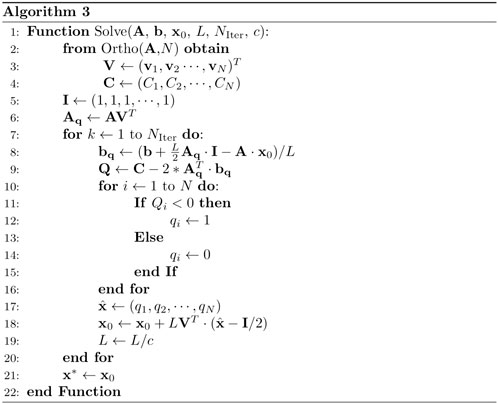

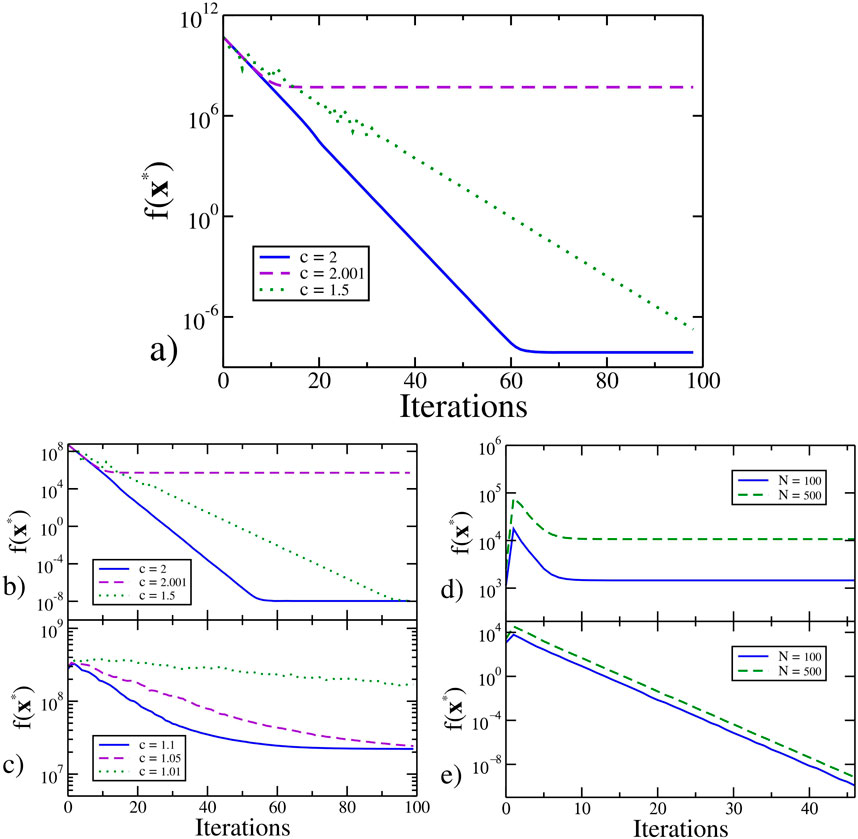

Figure 1. Performance of the original algorithm for solving linear equation systems. (A)

In our particular case, we have

To construct the QUBO matrix used in Equation 1, we expand

where

The binary vector

Once the vector

For our concrete example

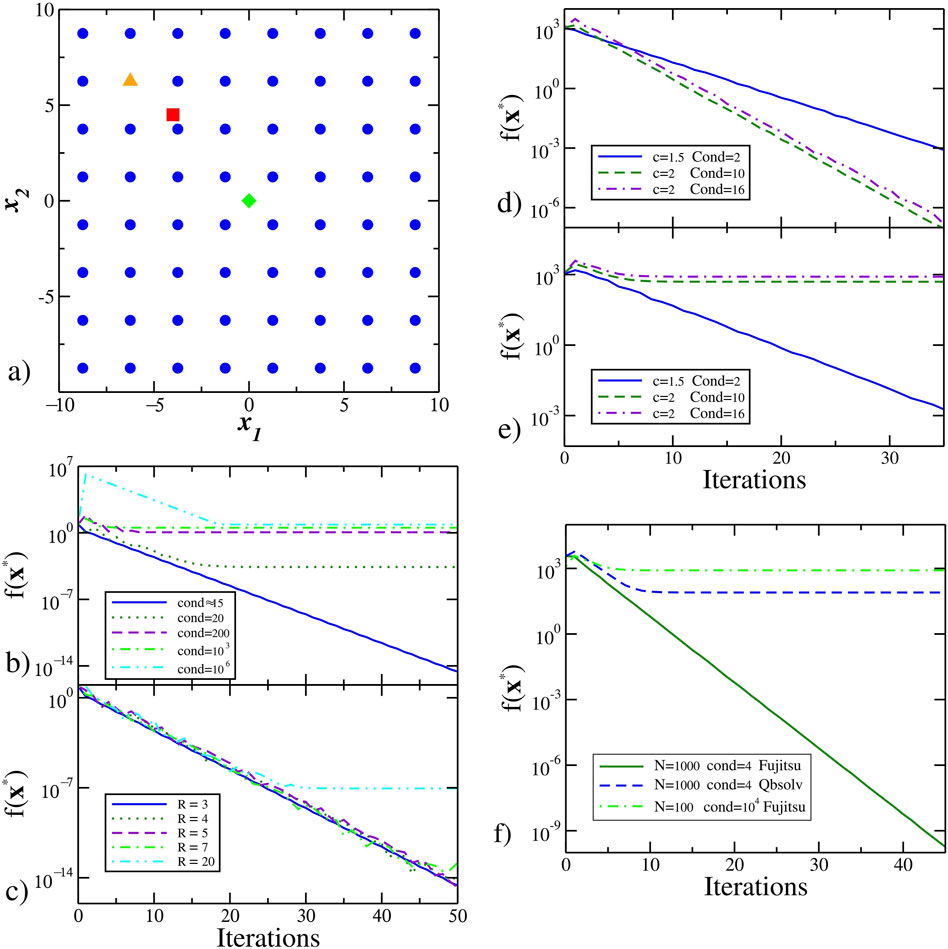

Other algorithms for QUBO problems are reviewed in [1]. Once a QUBO solver has been chosen, the iterative process can be used to find the solution of the system of linear equations. We implement this procedure in Algorithm 1, shown in Figure 2.
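As a rough illustration of such an iterative process, the Python sketch below uses a deliberately simple encoding that is an assumption, not necessarily the one used in Algorithm 1: one bit per unknown, x = c + L(2q − 1), so that each configuration is a corner of a square (hypercube) box of half-width L around the current center c. Expanding ‖Ax − b‖² and using q_i² = q_i gives the QUBO matrix. An exhaustive search stands in for the QUBO solver (usable only for a handful of variables), the shrink factor 1/2 is a hypothetical choice, and all function names are hypothetical.

```python
import itertools
import numpy as np

def build_qubo(A, b, c, L):
    """QUBO for min ||A x - b||^2 with the one-bit encoding x = c + L (2q - 1).

    Returns (Q, const) with ||A x - b||^2 = q^T Q q + const for q in {0,1}^n.
    """
    n = A.shape[1]
    r0 = A @ (c - L * np.ones(n)) - b      # residual at the all-zero configuration
    Q = 4 * L**2 * (A.T @ A)               # quadratic part
    Q += np.diag(4 * L * (A.T @ r0))       # linear part moved to the diagonal (q_i^2 = q_i)
    return Q, float(r0 @ r0)

def brute_force_qubo(Q):
    """Exhaustive QUBO solver: a stand-in for an annealer, feasible only for small n."""
    n = Q.shape[0]
    best_q, best_e = None, np.inf
    for bits in itertools.product([0, 1], repeat=n):
        q = np.array(bits, dtype=float)
        e = q @ Q @ q
        if e < best_e:
            best_q, best_e = q, e
    return best_q

def iterative_qubo_solve(A, b, c0, L0, iterations=30, shrink=0.5):
    """Recenter on the best configuration, then shrink the search box."""
    c, L = np.asarray(c0, dtype=float), float(L0)
    for _ in range(iterations):
        Q, _ = build_qubo(A, b, c, L)
        q = brute_force_qubo(Q)
        c = c + L * (2 * q - 1)
        L *= shrink
    return c
```

For a diagonal matrix A the QUBO has no couplings and this iteration converges for any condition number, provided the solution starts inside a box of half-width 2L0 around c0: each bit simply records on which side of the center the solution lies. Coupled matrices are the case in which the square geometry is not adapted to the problem.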

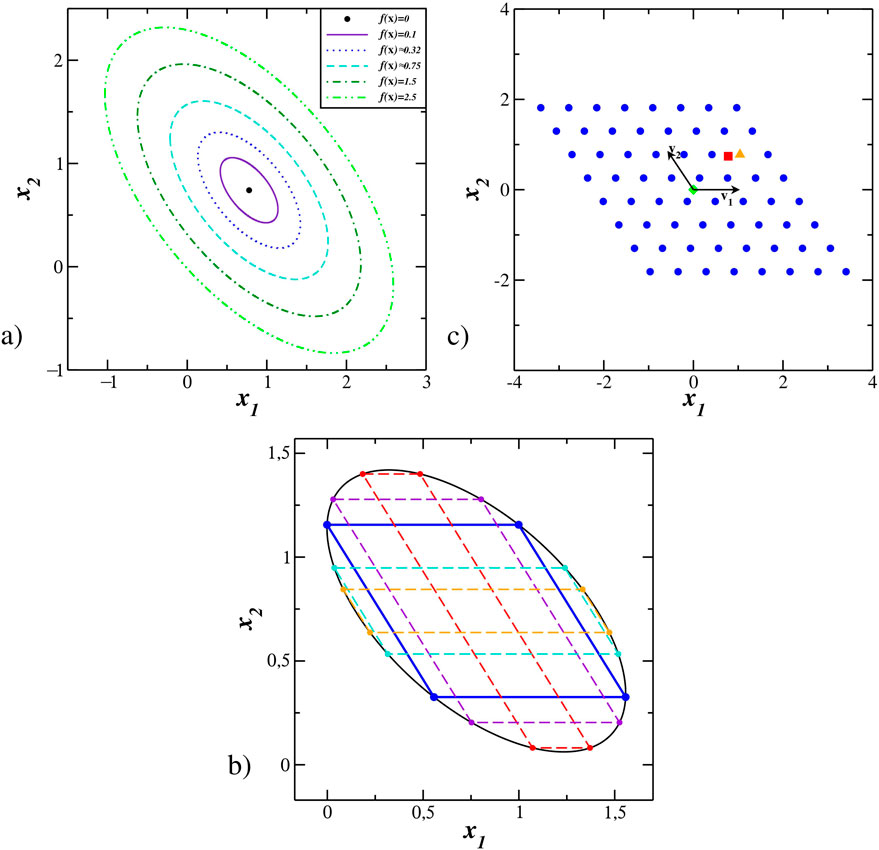

Figure 2. Preparation of the QUBO problem to solve a linear system of equations

3 Methods

After developing the appropriate mathematical tools, we implemented three methods in Python to solve the associated QUBO problem.

The coefficients of the linear systems that we studied were randomly generated. After transforming these coefficients into a QUBO format, we converted them into JavaScript Object Notation (JSON) for efficient data exchange. The resulting JSON data were then sent to the Fujitsu system for optimization. Inquiries regarding the implementation details or the code itself can be directed to the authors.
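The JSON step can be sketched as follows. The schema below (num_variables, constant, and a list of upper-triangular terms) is a hypothetical stand-in chosen for illustration. It is not the Fujitsu Digital Annealer request format, which is not reproduced here, and the function names are also hypothetical. Folding Q_ji into Q_ij for i < j halves the number of stored coefficients without changing the energy xᵀQx.

```python
import json
import numpy as np

def qubo_to_json(Q, constant=0.0):
    """Serialize x^T Q x as upper-triangular (i, j, coefficient) terms.

    The schema ("num_variables", "constant", "terms") is hypothetical:
    a real solver service defines its own request format.
    """
    n = Q.shape[0]
    # Fold Q[j, i] into Q[i, j] for i < j; the diagonal keeps Q[i, i].
    upper = np.triu(Q + Q.T) - np.diag(np.diag(Q))
    terms = [
        {"i": int(i), "j": int(j), "coefficient": float(upper[i, j])}
        for i, j in zip(*np.nonzero(upper))
    ]
    return json.dumps({"num_variables": n, "constant": float(constant), "terms": terms})

def qubo_from_json(payload):
    """Rebuild an upper-triangular QUBO matrix from a qubo_to_json payload."""
    data = json.loads(payload)
    n = data["num_variables"]
    Q = np.zeros((n, n))
    for term in data["terms"]:
        Q[term["i"], term["j"]] += term["coefficient"]
    return Q, data["constant"]
```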

4 Results

Section 4.1 presents the performance of the algorithm in Figure 2 applied to problems with a small condition number. The algorithm works well in this case, but if we increase the condition number, convergence is only obtained by increasing the factor

In Section 4.2, the previous issue is addressed by determining the geometry of the hypersurfaces with

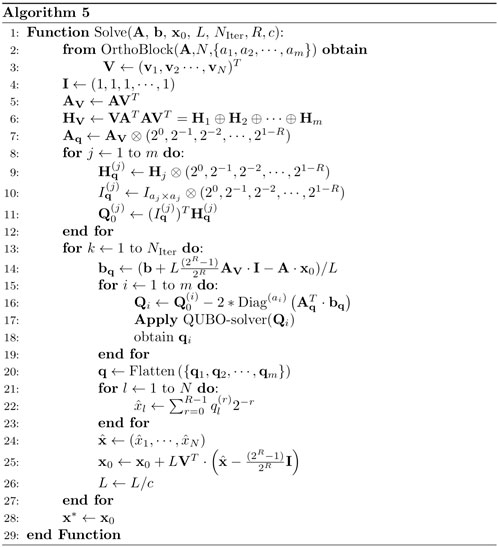

Section 4.3 shows that partial knowledge of the geometry simplifies the QUBO approach. In particular, for a large problem whose condition number causes the algorithm in Figure 2 to fail, we show that the original problem can be decomposed into many independent QUBO sub-problems, each with a condition number amenable to the algorithm in Figure 2. This decomposition requires only partial knowledge of the geometry.

4.1 Convergence of the conventional algorithm

The performance of Algorithm 1 strongly depends on the type of matrix

Another option is to increase the factor

In Figures 1D, E, we solve three different systems of linear equations with

Figure 1F shows that for

The Fujitsu digital annealer enhances the well-known simulated annealing algorithm with other physics-inspired strategies that resemble quantum annealing (see [24]). In our setting, which involves large matrices, binary approximations with few bits, and small condition numbers, the Fujitsu system appears to be very efficient. Large QUBO problems can be solved with the Fujitsu system (QUBO with dimensions up to
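For readers without access to the digital annealer, a plain single-flip simulated annealing solver for min xᵀQx is sketched below in Python. It is a generic textbook variant (Metropolis acceptance and a geometric cooling schedule whose default parameters are hypothetical), not the Digital Annealer algorithm, which adds further physics-inspired moves described in [24]. The function name is also hypothetical.

```python
import numpy as np

def simulated_annealing_qubo(Q, sweeps=2000, t_start=10.0, t_end=0.01, seed=None):
    """Single-bit-flip simulated annealing for min x^T Q x, x in {0,1}^n.

    Generic textbook variant (Metropolis acceptance, geometric cooling);
    it is NOT the Fujitsu Digital Annealer algorithm.
    Returns the best configuration seen and its energy.
    """
    rng = np.random.default_rng(seed)
    Qs = 0.5 * (Q + Q.T)
    n = Qs.shape[0]
    x = rng.integers(0, 2, size=n).astype(float)
    energy = float(x @ Qs @ x)
    best_x, best_e = x.copy(), energy
    for t in np.geomspace(t_start, t_end, sweeps):
        for i in rng.permutation(n):
            d = 1.0 - 2.0 * x[i]                       # +1 flips 0 -> 1, -1 flips 1 -> 0
            delta = 2.0 * d * (Qs[i] @ x) + Qs[i, i]   # exact energy change of this flip
            if delta <= 0.0 or rng.random() < np.exp(-delta / t):
                x[i] += d
                energy += delta
                if energy < best_e:
                    best_x, best_e = x.copy(), energy
    # Recompute the energy of the best state to avoid accumulated rounding.
    return best_x, float(best_x @ Qs @ best_x)
```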

4.2 Rhombus geometry applied to the problem

4.2.1 Geometry of the problem

The QUBO is defined on the entire discrete set of possible configurations. In general, this set has little structure. However, because the problem is formulated in the language of vector spaces, it has a robust mathematical structure that can be used to improve the performance of existing algorithms. It is not difficult to observe that the subset of

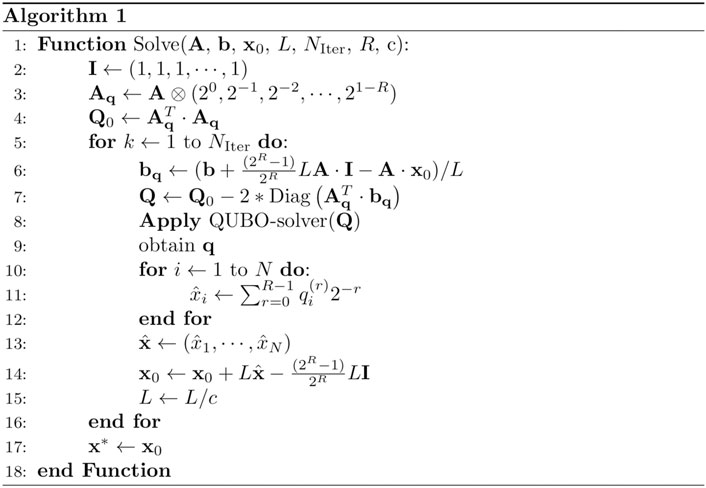

Figure 3. Geometry of the matrix problem associated with the solution of a linear system. In (A), concentric ellipsoidal geometry in the inversion problem (5) for a particular case when

All the ellipses in Figure 3A are concentric and similar, so a single representative suffices. Each ellipse contains a family of parallelograms of different sizes but with congruent angles; see Figure 3B. In Figure 1A, the problem is formulated using a square lattice geometry. However, nothing prevents us from using other geometries, especially ones better suited to the problem. We can instead choose a lattice with parallelogram geometry; in particular, we choose the parallelogram with equal-length sides (a rhombus). Figure 3C illustrates how the possible configurations are chosen using the rhombus geometry.
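A minimal Python sketch of why a conjugate geometry helps, under stated assumptions: the cost is ‖Ax − b‖², whose level sets are the concentric ellipses of M = AᵀA, and configurations are encoded with one bit per direction as x = c + L D(2q − 1), where the columns of D are M-conjugate (DᵀMD diagonal). Every off-diagonal QUBO coefficient then vanishes, each bit can be chosen independently, and the shrinking search region behaves like the uncoupled diagonal case whatever the condition number. For brevity the sketch takes D from the eigenvectors of M, which are conjugate but also orthogonal. The rhombus used in this work has non-orthogonal unit vectors as sides. The encoding, shrink factor and function names are assumptions.

```python
import numpy as np

def conjugate_qubo(A, b, c, L, D):
    """QUBO matrix for min ||A x - b||^2 with the encoding x = c + L D (2q - 1).

    If the columns of D are conjugate w.r.t. M = A^T A (D^T M D diagonal),
    all off-diagonal coefficients vanish and the QUBO is trivial.
    """
    AD = A @ D
    r0 = A @ (c - L * D @ np.ones(D.shape[1])) - b   # residual at the all-zero configuration
    return 4 * L**2 * (AD.T @ AD) + np.diag(4 * L * (AD.T @ r0))

def solve_uncoupled_qubo(Q):
    """Minimizer of q^T Q q when Q is (numerically) diagonal: q_i = 1 iff Q_ii < 0."""
    return (np.diag(Q) < 0).astype(float)

def conjugate_iterative_solve(A, b, c0, L0, D, iterations=40, shrink=0.5):
    """Recenter on the best configuration and shrink the search region each iteration."""
    c, L = np.asarray(c0, dtype=float), float(L0)
    for _ in range(iterations):
        q = solve_uncoupled_qubo(conjugate_qubo(A, b, c, L, D))
        c = c + L * D @ (2 * q - 1)
        L *= shrink
    return c
```

Setting D to the identity recovers the square-lattice encoding, for which the QUBO matrix is generally dense and solve_uncoupled_qubo would no longer be valid.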

This choice of geometry improves the efficiency of the final algorithm: with the rhombus geometry, only a few iterations are needed to converge to the solution. Given an initial guess

We emphasize that the square geometry used in previous works only coincides with the matrix inversion geometry when the matrix

4.2.2

The ellipsoid form in the matrix inversion problem is given by the symmetric matrix

Given the

The coefficients

This procedure is implemented in Algorithm 2, shown in Figure 4. The computed non-orthogonal unit vectors (in the standard scalar product)
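A generalized Gram–Schmidt procedure that produces M-conjugate unit vectors from the canonical basis can be sketched as below in Python with NumPy. This is the textbook conjugation step used to build conjugate directions (see [25]); whether Algorithm 2 proceeds in exactly this way is an assumption, and the function name is hypothetical. Normalizing each direction to unit Euclidean length gives equal-length sides (a rhombus rather than a general parallelogram), and caching M d_j for each accepted direction keeps the total cost at O(n³).

```python
import numpy as np

def conjugate_gram_schmidt(M, V=None):
    """Generalized Gram-Schmidt: make the columns of V conjugate w.r.t. SPD M.

    d_k = v_k - sum_{j<k} (d_j^T M v_k / d_j^T M d_j) d_j (computed below in the
    numerically safer "modified" form), then d_k is rescaled to unit Euclidean
    length, so all directions have equal length but are, in general, not
    orthogonal in the standard scalar product.
    """
    n = M.shape[0]
    V = np.eye(n) if V is None else np.asarray(V, dtype=float)
    D = np.zeros_like(V)
    MD = np.zeros_like(V)              # cache M d_j so each coefficient costs O(n)
    for k in range(V.shape[1]):
        d = V[:, k].copy()
        for j in range(k):
            # remove the M-component of d along the already-conjugate d_j
            d -= (MD[:, j] @ d) / (MD[:, j] @ D[:, j]) * D[:, j]
        D[:, k] = d / np.linalg.norm(d)
        MD[:, k] = M @ D[:, k]
    return D
```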

4.2.3 Modified search region

Considering the intrinsic rhombus geometry, the iterative algorithm converges exponentially fast with respect to the number of iterations, making it sufficient to use

where

and

From Equation 7 and the

Figure 5. Modified iterative algorithm using the rhombus geometry. The

4.2.4 Implementation of the algorithm

Algorithm 3 works whenever the rhombus that contains the QUBO configurations also includes the exact solution

Figure 6. Performance of the new method for solving linear systems. In (A), we show the modified iterative QUBO algorithm applied to a linear system with 5000 variables and 5000 equations and

In the last section of this work, we show that partial knowledge of the conjugate vectors

4.3 Solving large systems of equations using binary optimization

4.3.1 Decomposing QUBO matrices in smaller sub-problems

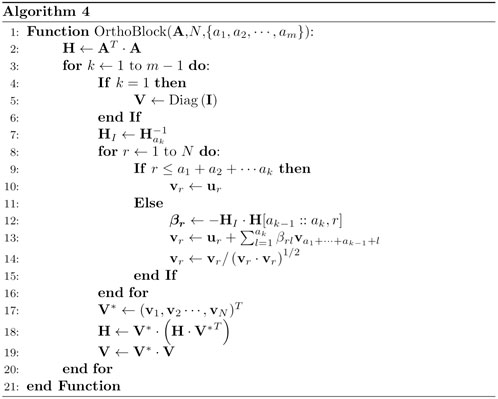

In the previous section, we showed that knowing the conjugate vectors that generate the rhombus geometry simplifies the QUBO resolution and improves the rate of convergence to the exact solution. However, the computation of these vectors in Algorithm 2 has approximately

Another interesting possibility is to use the notion of

Decomposition into sub-problems is a standard part of the search process in some QUBO solvers. A notable example is Qbsolv, a heuristic hybrid algorithm that decomposes the original problem into many QUBO sub-problems, which can be tackled with classical Ising or quantum QUBO solvers. The solution of each sub-problem is projected back into the full space to provide better initial guesses for the classical heuristic (tabu search); see [26] for details. Our algorithm decomposes the original QUBO problem associated with

To illustrate how the decomposition works, we apply the generalized Gram–Schmidt orthogonalization only between different groups of vectors. We choose

For the other vectors, we use

We also require that the first group of

This last condition determines all the coefficients

where

where

and

To determine the new set of

where

Repeating the same process another

and

We use the notation

Figure 7. Block diagonal transformation of the matrix

To effectively decompose a large matrix
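The idea of conjugating only between groups can be sketched as follows in Python, assuming a symmetric positive-definite M and the canonical basis as starting vectors; the function name and the group sizes are hypothetical. Each vector in a group has its M-projection onto the span of all earlier groups removed, while vectors inside a group stay coupled, so DᵀMD becomes block diagonal. This is the structure that allows each block to be treated as a separate QUBO sub-problem.

```python
import numpy as np

def block_conjugate(M, group_sizes, V=None):
    """Conjugate the columns of V w.r.t. SPD M only *between* groups.

    Each vector of a group has its M-projection onto the span of all earlier
    groups removed; vectors in the same group stay coupled, so D^T M D is
    block diagonal with blocks of the given sizes.
    """
    n = M.shape[0]
    V = np.eye(n) if V is None else np.asarray(V, dtype=float)
    if sum(group_sizes) != V.shape[1]:
        raise ValueError("group sizes must add up to the number of vectors")
    D = np.zeros_like(V)
    start = 0
    for size in group_sizes:
        block = V[:, start:start + size].copy()
        if start > 0:
            P = D[:, :start]                              # directions of earlier groups
            MP = M @ P
            # solve (P^T M P) C = P^T M block, then subtract the M-projection P C
            coeffs = np.linalg.solve(P.T @ MP, MP.T @ block)
            block -= P @ coeffs
        D[:, start:start + size] = block / np.linalg.norm(block, axis=0)
        start += size
    return D
```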

4.3.2 Implementation of the algorithm

Suppose that the matrix

Figure 8. Modified iterative algorithm using the block diagonal decomposition of
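Once the QUBO matrix is block diagonal, the sub-problems are independent. The total energy is the sum of the block energies, so the global minimum is the concatenation of the block minima. The Python sketch below illustrates this with an exhaustive solver per block. In practice each block could be sent to any classical or quantum QUBO solver, possibly in parallel. The function names are hypothetical.

```python
import itertools
import numpy as np

def brute_force_qubo(Q):
    """Exhaustive QUBO solver returning (best configuration, best energy)."""
    n = Q.shape[0]
    best_q, best_e = None, np.inf
    for bits in itertools.product([0, 1], repeat=n):
        q = np.array(bits, dtype=float)
        e = float(q @ Q @ q)
        if e < best_e:
            best_q, best_e = q, e
    return best_q, best_e

def solve_block_diagonal_qubo(Q, group_sizes, solver=brute_force_qubo):
    """Solve each diagonal block independently and concatenate the bit strings.

    Valid only when Q has no couplings between blocks, so that the energy
    is the sum of the block energies.
    """
    parts, total, start = [], 0.0, 0
    for size in group_sizes:
        q_block, e_block = solver(Q[start:start + size, start:start + size])
        parts.append(q_block)
        total += e_block
        start += size
    return np.concatenate(parts), total
```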

5 Discussion

It has recently been conjectured that quantum technologies could improve the learning process in machine learning models. In the standard quantum circuit paradigm, many proposals and generalizations exist that promise better performance with the advent of quantum computers. Formulating machine learning problems as QUBO problems is another strategy that may benefit from the development of quantum annealing hardware. In such cases, addressing linear algebra problems through QUBO problems is of general interest, because linear algebra is one of the natural languages of machine learning. In this work, we proposed a new method to solve systems of linear equations using binary optimizers. Our approach guarantees that the optimal configuration is the one closest to the exact solution. Additionally, we demonstrated that partial knowledge of the problem’s geometry allows the problem to be decomposed into a series of independent sub-problems that can be solved with conventional QUBO solvers. The solutions of the sub-problems are then aggregated, enabling an optimal solution to be determined rapidly. We show that the original QUBO formulation is efficient only when the condition number of the associated matrix

However, identifying the vectors that determine the sub-problem decomposition incurs computational costs that affect the overall performance of the algorithm. Nevertheless, two developments could lead to significant improvements: better methods for identifying the vectors associated with the geometry, and faster QUBO solvers. In our study, when using a QUBO solver such as the Fujitsu digital annealer, we focus on finding elite QUBO solutions, because when the condition number is small, the associated configuration is guaranteed to be very close to the solution of the problem. Finding elite solutions to large QUBO problems is very costly because the problem is NP-hard. However, the only requirement for convergence of Algorithm 1 is to obtain a configuration in the same quadrant as the solution of the linear system. The number of configurations in each quadrant (there are

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

EC: conceptualization, data curation, formal analysis, investigation, methodology, software, validation, visualization, writing–original draft, and writing–review and editing. EM: conceptualization, investigation, methodology, project administration, resources, software, validation, visualization, and writing–review and editing. RS: methodology, resources, supervision, validation, and writing–review and editing. AS: conceptualization, formal analysis, investigation, methodology, resources, software, supervision, validation, visualization, and writing–review and editing. IO: conceptualization, funding acquisition, methodology, project administration, resources, supervision, visualization, and writing–review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Brazilian National Institute of Science and Technology for Quantum Information (INCT-IQ) (Grant No. 465469/2014-0), the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior—Brasil (CAPES)—Finance Code 001, Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq), and PETROBRAS: Projects 2017/00486-1, 2018/00233-9, and 2019/00062-2. AMS acknowledges support from FAPERJ (Grant No. 203.166/2017). ISO acknowledges FAPERJ (Grant No. 202.518/2019).

Conflict of interest

Author EM was employed by Petróleo Brasileiro S.A.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fphy.2024.1443977/full#supplementary-material

References

1. Kochenberger G, Hao JK, Glover F, Lewis M, Lü Z, Wang H, et al. The unconstrained binary quadratic programming problem: a survey. J Comb Optim (2014) 28:58–81. doi:10.1007/s10878-014-9734-0

2. Barahona F. On the computational complexity of Ising spin glass models. J Phys A: Math Gen (1982) 15:3241–53. doi:10.1088/0305-4470/15/10/028

3. Lucas A. Ising formulations of many NP problems. Front Phys (2014) 2:1–15. doi:10.3389/fphy.2014.00005

4. Kadowaki T, Nishimori H. Quantum annealing in the transverse Ising model. Phys Rev E (1998) 58:5355–63. doi:10.1103/PhysRevE.58.5355

5. Mohseni N, McMahon PL, Byrnes T. Ising machines as hardware solvers of combinatorial optimization problems. Nat Rev Phys (2022) 4:363–79. doi:10.1038/s42254-022-00440-8

6. O’Malley D, Vesselinov VV. Toq.jl: a high-level programming language for D-Wave machines based on Julia. In: IEEE conference on high performance extreme computing. Waltham, MA, United States: D-Wave (2016). p. 1–7. doi:10.1109/HPEC.2016.7761616

7. Pollachini GG, Salazar JPLC, Góes CBD, Maciel TO, Duzzioni EI. Hybrid classical-quantum approach to solve the heat equation using quantum annealers. Phys Rev A (2021) 104:032426. doi:10.1103/PhysRevA.104.032426

8. Rogers ML, Jr RLS. Floating-point calculations on a quantum annealer: division and matrix inversion. Front Phys (2020) 8:265. doi:10.3389/fphy.2020.00265

9. Souza AM, Martins EO, Roditi I, Sá N, Sarthour RS, Oliveira IS. An application of quantum annealing computing to seismic inversion. Front Phys (2021) 9:748285. doi:10.3389/fphy.2021.748285

10. Borle A, Lomonaco SJ. Analyzing the quantum annealing approach for solving linear least squares problems. In: WALCOM: algorithms and computation. Springer (2019). p. 289–301. doi:10.1007/978-3-030-10564-8_23

11. Borle A, Lomonaco SJ. How viable is quantum annealing for solving linear algebra problems? arXiv:2206.10576 (2022). doi:10.48550/arXiv.2206.10576

12. Date P, Arthur D, Pusey-Nazzaro L. QUBO formulations for training machine learning models. Sci Rep (2021) 11:10029. doi:10.1038/s41598-021-89461-4

13. Gong C, Zhou N-R, Xia S, Huang S. Quantum particle swarm optimization algorithm based on diversity migration strategy. Fut Gen Comp Syst (2024) 157:445–58. doi:10.1016/j.future.2024.04.008

14. Gong L-H, Ding W, Li Z, Wang Y-Z, Zhou N-R. Quantum k-nearest neighbor classification algorithm via a divide-and-conquer strategy. Adv Quan Technol (2024) 7:2300221. doi:10.1002/qute.202300221

15. Gong L-H, Pei J-J, Zhang T-F, Zhou N-R. Quantum convolutional neural network based on variational quantum circuits. Opt Commun (2024) 550:129993. doi:10.1016/j.optcom.2023.129993

16. Huang S-Y, An W-J, Zhang D-S, Zhou N-R. Image classification and adversarial robustness analysis based on hybrid quantum–classical convolutional neural network. Opt Commun (2023) 533:129287. doi:10.1016/j.optcom.2023.129287

17. Wu C, Huang F, Dai J, Zhou N-R. Quantum SUSAN edge detection based on double chains quantum genetic algorithm. Phys A: Statis Mech Its Appl (2022) 605:128017. doi:10.1016/j.physa.2022.128017

18. Zhou N-R, Zhang T-F, Xie X-W, Wu J-Y. Hybrid quantum–classical generative adversarial networks for image generation via learning discrete distribution. Sign Proc Ima Commun (2023) 110:116891. doi:10.1016/j.image.2022.116891

19. Greer S, O’Malley D. Early steps toward practical subsurface computations with quantum computing. Front Comput Sci (2023) 5:1235784. doi:10.3389/fcomp.2023.1235784

20. Alkhamis TM, Hasan M, Ahmed MA. Simulated annealing for the unconstrained binary quadratic pseudo-boolean function. Eur J Oper Res (1998) 108:641–52. doi:10.1016/S0377-2217(97)00130-6

21. Dunning I, Gupta S, Silberholz J. What works best when? A systematic evaluation of heuristics for Max-Cut and QUBO. INFORMS J Comput (2018) 30:608–24. doi:10.1287/ijoc.2017.0798

22. Hauke P, Katzgraber HG, Lechner W, Nishimori H, Oliver WD. Perspectives of quantum annealing: methods and implementations. Rep Prog Phys (2020) 83:054401. doi:10.1088/1361-6633/ab85b8

23. Booth M, Berwald J, Uchenna Chukwu JD, Dridi R, Le D, Wainger M, et al. QCI qbsolv delivers strong classical performance for quantum-ready formulation. arXiv (2020). doi:10.48550/arXiv.2005.11294

24. Aramon M, Rosenberg G, Valiante E, Miyazawa T, Tamura H, Katzgraber HG. Physics-inspired optimization for quadratic unconstrained problems using a digital annealer. Front Phys (2019) 7:48. doi:10.3389/fphy.2019.00048

25. Shewchuk JR. An introduction to the conjugate gradient method without the agonizing pain. Pittsburgh, PA, USA: Carnegie-Mellon University, Department of Computer Science (1994).

26. Booth M, Reinhardt SP, Roy A. Partitioning optimization problems for hybrid classical/quantum execution. Burnaby, BC, Canada: D-Wave The Quantum Computing Company (2017).

Keywords: linear algebra algorithms, quadratic unconstrained binary optimization formulation, digital annealing, conjugate geometry approach, convergence analysis

Citation: Castro ER, Martins EO, Sarthour RS, Souza AM and Oliveira IS (2024) Improving the convergence of an iterative algorithm for solving arbitrary linear equation systems using classical or quantum binary optimization. Front. Phys. 12:1443977. doi: 10.3389/fphy.2024.1443977

Received: 04 June 2024; Accepted: 26 August 2024;

Published: 27 September 2024.

Edited by:

Nanrun Zhou, Shanghai University of Engineering Sciences, China

Reviewed by:

Lihua Gong, Shanghai University of Engineering Sciences, China

Mengmeng Wang, Qingdao University of Technology, China

Zhao Dou, Beijing University of Posts and Telecommunications, China

Copyright © 2024 Castro, Martins, Sarthour, Souza and Oliveira. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Erick R. Castro, erickc@cbpf.br

Erick R. Castro, Eldues O. Martins, Roberto S. Sarthour, Alexandre M. Souza and Ivan S. Oliveira