- 1 School of Electrical Engineering and Computer Science, National University of Sciences and Technology, Islamabad, Pakistan

- 2 College of Aeronautical Engineering, National University of Sciences and Technology, Islamabad, Pakistan

- 3 Ghulam Ishaq Khan Institute of Engineering Sciences and Technology, Swabi, Pakistan

- 4 Department of Electrical and Computer Engineering, King Abdulaziz University, Jeddah, Saudi Arabia

- 5 Department of Physics and Astronomy, Uppsala University, Uppsala, Sweden

Accurate solar power forecasting is pivotal for the global transition towards sustainable energy systems. This study conducts a meticulous comparison between Quantum Long Short-Term Memory (QLSTM) and classical Long Short-Term Memory (LSTM) models for solar power production forecasting. The primary objective is to evaluate the potential advantages of QLSTMs, leveraging their exponential representational capabilities, in capturing the intricate spatiotemporal patterns inherent in renewable energy data. Through controlled experiments on real-world photovoltaic datasets, our findings reveal promising improvements offered by QLSTMs, including accelerated training convergence and substantially reduced test loss within the initial epoch compared to classical LSTMs. These empirical results demonstrate QLSTM’s potential to swiftly assimilate complex time series relationships, enabled by quantum phenomena like superposition. However, realizing QLSTM’s full capabilities necessitates further research into model validation across diverse conditions, systematic hyperparameter optimization, hardware noise resilience, and applications to correlated renewable forecasting problems. With continued progress, quantum machine learning can offer a paradigm shift in renewable energy time series prediction, potentially ushering in an era of unprecedented accuracy and reliability in solar power forecasting worldwide. This pioneering work provides initial evidence substantiating quantum advantages over classical LSTM models while acknowledging present limitations. Through rigorous benchmarking grounded in real-world data, our study illustrates a promising trajectory for quantum learning in renewable forecasting.

1 Introduction

Accurate solar power forecasting plays a critical role in enabling the effective management and integration of renewable energy sources into the grid. By accurately predicting solar power generation, grid operators can optimize energy storage, transmission, and distribution strategies, mitigating the intermittency hurdles that have historically impeded the large-scale adoption of photovoltaic sources. Furthermore, precise forecasting can facilitate the development of more efficient energy trading and market mechanisms, fostering a sustainable and cost-effective transition towards a greener energy future. Consequently, the development of advanced forecasting methodologies tailored to the unique characteristics of solar power generation has become a research imperative with far-reaching implications for the global energy sector.

Despite promising developments in quantum machine learning, the existing literature lacks a comprehensive empirical comparison between QLSTM and classical LSTM models grounded in real-world solar production data. Preceding works [1, 2] have primarily focused on synthetic benchmarks or theoretical analysis of Quantum Recurrent Neural Network variants, leaving a critical gap in our understanding of the practical implications of QLSTMs for renewable energy forecasting. This investigation aims to bridge this gap, offering a thorough comparative analysis in this pivotal domain.

This research holds significant scientific interest and importance as it aims to harness the potential of quantum machine learning techniques, specifically Quantum Long Short-Term Memory (QLSTM) networks, to revolutionize solar power forecasting accuracy and reliability. By empirically validating QLSTMs on real-world photovoltaic plant data and demonstrating their superior performance over classical methods, this study paves the way for a paradigm shift in renewable energy forecasting, with far-reaching implications for sustainable energy infrastructure planning and execution.

This investigation systematically examines the potential advantages that Quantum Long Short-Term Memory (QLSTM) networks might offer over their classical LSTM counterparts in solar power forecasting, a task known for its intricate non-linear spatiotemporal patterns. Through rigorous controlled experiments and ablation studies conducted on operational photovoltaic plant datasets, this research seeks to provide the first comprehensive evidence substantiating the representational strengths of quantum architectures in capturing the nuanced dynamics inherent in solar power generation. By tailoring QLSTM designs to navigate current quantum hardware limitations and conducting thorough performance analysis, this study offers actionable insights into the real-world implementation of QLSTMs for renewable forecasting. Moreover, by benchmarking against classical techniques and conventional neural networks, this investigation positions QLSTMs as a credible alternative capable of outperforming traditional methods, accentuating the potential of QML in fortifying the accuracy and reliability essential for sustainable energy infrastructure planning and execution.

2 Background or related work

The global energy landscape is undergoing a transformative shift towards sustainable and renewable solutions, driven by the pressing need to mitigate the environmental impact of traditional fossil fuel-based systems. Solar power has emerged as a pivotal force in reshaping energy production and consumption patterns. However, the large-scale integration of intermittent renewable sources into existing infrastructure poses multifaceted challenges that must be addressed to facilitate a seamless and efficient transition. Inaccuracies in forecasting the generation of solar and wind power can lead to significant deviations from planned electricity schedules, resulting in imbalances, inefficiencies, and substantial costs for grid operators and utilities [3]. Consequently, policymakers have prioritized improved renewable forecasting to mitigate such challenges.

Amidst this paradigm shift, the convergence of quantum information (QI) and machine learning (ML) has ushered in a new approach to data analytics: Quantum Machine Learning (QML) [4]. This synthesis harnesses techniques from both quantum computing and traditional machine learning, offering innovative solutions to longstanding obstacles across diverse sectors, including renewable energy [5]. Significantly, QML transcends mere energy minimization tasks, presenting a broader scope in problem-solving paradigms [6], thereby unlocking new avenues for precise solar power predictions.

As the large-scale penetration of renewable sources necessitates proactive management of electrical grids, advanced prediction methodologies for intermittent energy sources, particularly photovoltaic plants, have become paramount [7, 8]. Accurate solar power forecasting plays a critical role in enabling grid operators to maintain a delicate equilibrium between energy creation and utilization. Notably, solar production forecasting over longer time horizons does not mandate real-time predictions, providing an opportunity where the potentially slower inference times of quantum models may be acceptable in exchange for substantially improved accuracy.

In recent years, there has been a surge of interest within quantum machine learning in developing and refining quantum adaptations of recurrent neural networks (RNNs) for time series forecasting applications [9, 10], substantiating the potential of quantum computational models in predictive analytics. Marking a pivotal shift, the seminal work of Chen et al. [11] introduced QLSTM architectures, which combine variational quantum circuits [12] with the conventional LSTM framework. This synthesis harnesses an exponentially larger Hilbert space for data representation and computation, potentially enabling the capture of higher-order correlations and intricate temporal dynamics.

While classical Long Short-Term Memory (LSTM) neural networks have demonstrated remarkable efficacy in leveraging long-term temporal dependencies for accurate forecasting [7, 13–15], they struggle to capture the complex, non-linear spatiotemporal patterns inherent in solar power generation [16], which involve intricate relationships between meteorological variables, solar irradiance, and power output. Quantum Long Short-Term Memory (QLSTM) networks, which leverage the principles of quantum mechanics and an exponentially larger Hilbert space, hold the potential to address these limitations by mapping these relationships more effectively and by capturing higher-order correlations and temporal dynamics.

This investigation systematically examines the potential advantages that QLSTMs might offer over their classical LSTM counterparts in solar power forecasting, renowned for its intricate non-linear spatiotemporal patterns. Through rigorous controlled experiments and ablation studies conducted on operational photovoltaic plant datasets, this research aims to provide the first comprehensive evidence substantiating the representational strengths of quantum architectures in capturing the nuanced dynamics inherent in solar power generation.

3 Research motivation and novelty

The novelty of this work lies in its empirical validation of QLSTMs on real-world solar power data, transitioning from synthetic benchmarks to practical renewable time series forecasting. Through rigorous controlled experiments and ablation studies, this research provides the first comprehensive evidence substantiating the representational strengths of quantum architectures in capturing the nuanced dynamics inherent in solar power generation. By tailoring QLSTM designs to navigate current quantum hardware limitations and conducting thorough performance analysis, this study offers actionable insights into the real-world implementation of QLSTMs for renewable forecasting. Moreover, by benchmarking against classical techniques and conventional neural networks, this investigation positions QLSTMs as a credible alternative capable of outperforming traditional methods, accentuating the potential of quantum machine learning in fortifying the accuracy and reliability essential for sustainable energy infrastructure planning and execution.

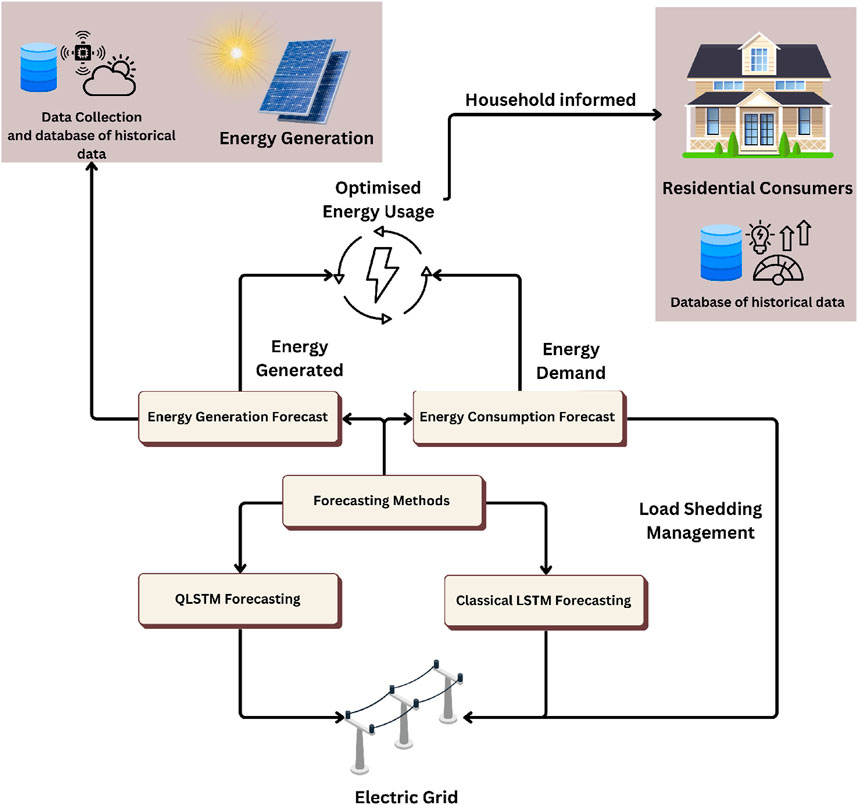

Figure 1 shows a typical system integration flowchart for solar energy forecasting and consumption management. Through extensive controlled experiments and ablation studies conducted on operational photovoltaic plant datasets, this research seeks to determine whether QLSTMs, fortified by their exponential representational capabilities, can establish new standards of accuracy and reliability in renewable forecasting tasks. By systematically evaluating the performance of these quantum architectures against their classical counterparts, this study elucidates the potential advantages and limitations of QLSTMs in the context of solar power prediction.

This advancement, coupled with the promise of faster convergence times and heightened resilience to noise [17], paves the way for the realization of more precise and reliable forecasting systems–a quality of paramount importance in the domain of solar power production. However, it is crucial to acknowledge that the nascent field of quantum machine learning presents its own unique challenges and opportunities [18]. These challenges encompass the current hardware limitations, the potential trade-offs between quantum advantages and computational overhead, as well as the need for systematic optimization and validation across diverse scenarios–factors that this study meticulously considers in its comparative analysis.

4 Contributions

This investigation signifies a pioneering effort in melding quantum machine learning advancements with practical renewable energy forecasting applications. The study stands out for its empirical validation of Quantum Long Short-Term Memory (QLSTM) networks on real-world solar power data, marking a departure from synthetic benchmarks to evaluate quantum architectures on authentic renewable time series data. Our findings are groundbreaking, demonstrating that QLSTMs not only achieve superior forecasting accuracy but also exhibit faster convergence rates compared to classical LSTM models. The primary contributions of this study are as follows.

Our investigation highlights the potential of quantum machine learning in enhancing the reliability and accuracy of energy infrastructure planning and execution. Improved solar power predictions by QLSTMs could significantly optimize energy storage, transmission, and distribution strategies, addressing the intermittency challenges of photovoltaic sources. As we move forward, the synergy between interdisciplinary collaborations and quantum advancements promises to usher in an era of unprecedented precision in renewable energy forecasting, contributing to the global transition towards sustainable energy solutions.

This study not only demonstrates the immediate contributions of QLSTMs to renewable energy forecasting but also charts a course for future research directions, acknowledging present limitations while highlighting the broader implications for sustainable energy systems worldwide.

5 Methodology

Figure 2 describes the methodology of our research work.

5.1 Data description

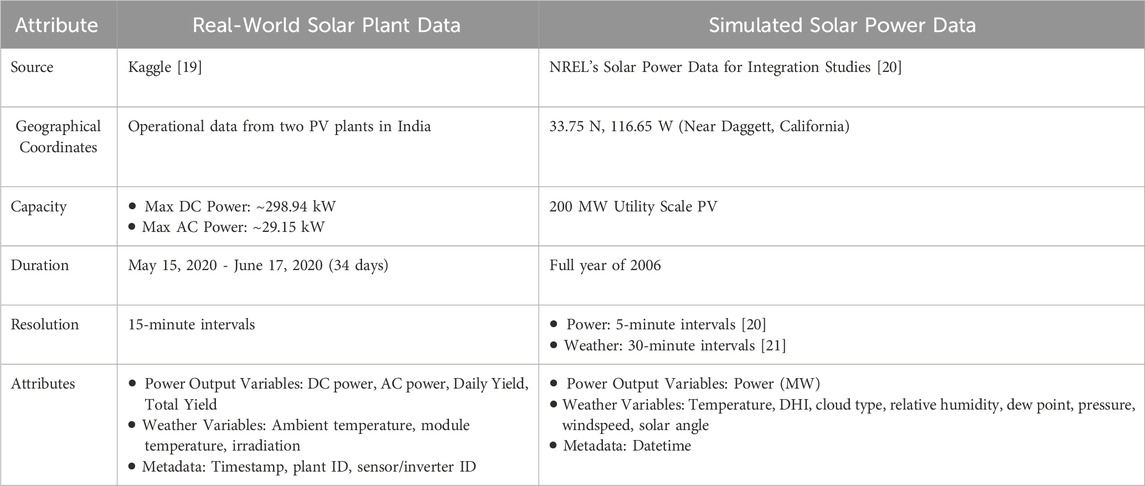

This study employs two comprehensive datasets tailored for an exhaustive comparative analysis. The solar dataset of real operational data grounds the modelling process in the genuine conditions prevalent in solar farms. The simulated dataset, a merger of high-resolution power generation data and corresponding weather conditions, presents a granular view of power fluctuations and is emblematic of the variations characteristic of real-world solar power environments. The combination of actual and high-fidelity simulated datasets presents a broad spectrum of temporal granularity and geographic variability. This combination is curated to offer a versatile platform for model development, supporting accurate, generalizable, and comprehensive solar forecasting. Table 1 presents a comparison of real-world and simulated solar power data.

5.1.1 Dataset justification

The specific choice of a real-world operational solar plant dataset and a high-fidelity simulated dataset spanning an entire year provides a robust platform for comparative assessment. The real-world data enables the evaluation of real solar farm conditions with intrinsic noise, while the simulated data allows examination across diverse weather scenarios over an extended duration. Together, these datasets present the variance, noise, and long-term temporal patterns crucial for rigorously examining the capabilities of QLSTM against classical LSTM in solar forecasting tasks.

5.2 Pre-processing

Careful data preprocessing is essential: it safeguards data integrity and improves the dependability and precision of forecasting outcomes. In our study, we executed a comprehensive data preprocessing pipeline on data originating from a 200 MW solar photovoltaic (PV) facility located near Daggett, California, United States, spanning the entire year of 2006.

5.2.1 Initial data loading and transformation

5.2.2 Enhancement of data granularity

To enhance the model’s sensitivity to potential power fluctuations, we increased the granularity of the weather dataset. Using linear interpolation, the 30-min weather records were upsampled to 5-min intervals, thus establishing a synchronized time series for model training and analysis.
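The upsampling step described above can be sketched as follows. This is a minimal illustration built on NumPy's `np.interp`, not the study's actual pipeline; the function name `upsample_linear` and the sample temperature readings are hypothetical.

```python
import numpy as np

def upsample_linear(values, src_step_min=30, dst_step_min=5):
    """Linearly interpolate a regularly sampled series onto a finer time grid."""
    values = np.asarray(values, dtype=float)
    # Timestamps (in minutes) of the original, coarse samples.
    src_t = np.arange(len(values)) * src_step_min
    # Finer grid covering the same span, including the last original timestamp.
    dst_t = np.arange(0, src_t[-1] + dst_step_min, dst_step_min)
    return dst_t, np.interp(dst_t, src_t, values)

# Hypothetical 30-min temperature readings covering one hour.
temps_30min = [20.0, 23.0, 26.0]
t_5min, temps_5min = upsample_linear(temps_30min)
```

Linear interpolation adds no new information between the original samples; it only places the weather features on the same 5-min grid as the power data so the two series can be joined row by row.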

5.2.3 Feature engineering and selection

5.2.4 Integration of datasets

This rigorous pre-processing regimen plays a pivotal role in enhancing the efficacy of our time series modeling. It guarantees a direct and unbiased comparison between QLSTM and classical LSTM models in the context of solar power forecasting.

5.3 Simulation framework

Our exploration of Quantum Long Short-Term Memory (QLSTM) models was made possible using the PennyLane quantum machine learning framework [22]. PennyLane blends quantum and classical computing to help build and refine models, and its support for automatic differentiation allows model parameters to be optimized through gradient-based training.

A standout feature of PennyLane is its capacity to combine quantum elements, based on variational circuits, with conventional neural network components. This allows the creation of hybrid structures like QLSTMs, which combine classical recurrent structure with quantum behaviours such as superposition and entanglement.

For our QLSTM model, PennyLane’s qml.QNode was crucial for defining the model’s quantum nodes. These nodes, designed with time series data in mind, use specific rotation and entanglement operations, namely RY, RZ, and CNOT gates.

Bridging the gap between the quantum and regular sections, PennyLane’s qml.qnn.TorchLayer connects the quantum elements with the regular PyTorch framework [23]. This ensures a smooth flow of adjustments during the optimization phase.

For the quantum simulations, we mainly used PennyLane’s DefaultQubit device, which runs on the CPU. To boost speed further, we also tried PennyLane’s lightning.gpu simulator, which runs natively on CUDA-enabled GPUs using the NVIDIA cuQuantum SDK and thereby moves the quantum simulation onto the GPU. During model development, we found that the lightning.gpu device provided up to a 5 times speedup over DefaultQubit for batched inference of quantum circuits on our test system with an NVIDIA Tesla V100 GPU. However, the training time reduction was not as significant.

As QLSTM models grow more complex with more quantum bits and detailed circuits, faster simulations using GPUs become more crucial. PennyLane offers multiple tools, making it easier to switch between different simulation methods for the best results.

In short, with the help of both DefaultQubit and lightning.gpu tools, we were able to design, refine, and test our QLSTM model and compare it with regular LSTM models in the PyTorch setting.

PennyLane’s integration with PyTorch and NVIDIA technology was essential for our study. It allowed us to combine quantum and classical modeling while keeping simulations relatively fast compared to the CPU-based device. This provided us with a suitable platform to compare the potential of quantum and classical LSTM models.

5.4 Architecture

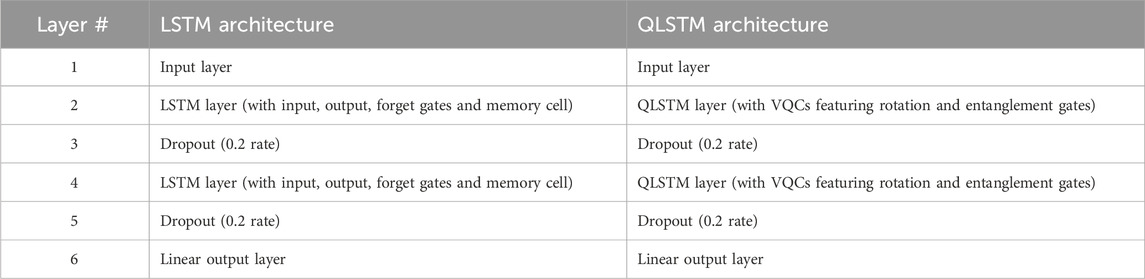

The LSTM and QLSTM architectures are detailed in the Supplementary Appendix. This research encompasses the design and deployment of both LSTM and QLSTM architectures. These architectures were crafted to allow a fair comparison between classical and quantum techniques, with a focus on solar power forecasting.

The LSTM model adopts a stacked configuration, constituting two recurrent hidden layers. Each layer houses classical LSTM cells, encapsulating the conventional input

In contrast, the QLSTM model replaces the classical LSTM cells with variational quantum circuits (VQCs), an implementation adapted and enhanced from the qlstm repository for part-of-speech tagging [24]. This substitution aims to harness the computational advantages unique to quantum mechanisms. Mirroring the LSTM’s design, the QLSTM stacks two of these quantum circuits. The VQCs, acting as quantum feature extractors, exploit the representational capabilities of quantum states to encode intricate time series dynamics. These parametric circuits alternate between rotation and entanglement gates, aiming for a concise representation of temporal patterns within the exponentially large Hilbert space. The number of qubits and the circuit depth were adapted to the characteristics of the solar forecasting data. Notably, outside of its quantum encoding, the QLSTM’s broader workflow matches the LSTM’s, facilitating a direct comparison. A dropout rate of 0.2 is also applied between the QLSTM layers, maintaining consistency.

Core QLSTM Cell Components:

Both models converge at a linear output layer, producing the ultimate forecast. Their optimization leverages the ADAM algorithm, zeroing in on minimizing the mean squared error (MSE). With structural symmetry between LSTM and QLSTM, while differing in their core computational elements, this architecture sets the stage for evaluating enhancements attributed solely to quantum encoding. Table 2 presents the comparison of LSTM and QLSTM architectures.

The models are intricately molded to resonate with the spatiotemporal subtleties inherent to solar forecasting data, which encompasses recurring weather patterns and energy variations. By employing the 2006 NREL dataset, a comprehensive archive detailing diverse weather conditions over a year, this research is positioned to deliver a rigorous evaluation. This meticulous comparison seeks to shine a light on the quantum methodologies’ adeptness in encapsulating real-world solar phenomena.

5.5 Encoding process

The process of encoding classical data into quantum states is a crucial component of our QLSTM model. This section details the steps involved in this encoding process and analyzes its computational complexity.

5.5.1 Encoding steps

The encoding process consists of three main steps.

5.5.1.1 Data preprocessing

Before quantum encoding, we preprocess the input data to obtain suitable rotation angles for quantum gates.

5.5.1.2 Quantum state preparation

For each qubit in the circuit, we apply the following gates sequentially:

1. Hadamard gate (qml.Hadamard): Creates an initial superposition state.

2. RY rotation (qml.RY): Applied using the preprocessed ry_params.

3. RZ rotation (qml.RZ): Applied using the preprocessed rz_params.
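The gate sequence listed above can be illustrated with a small state-vector calculation. The sketch below uses plain NumPy rather than PennyLane, so it is a stand-in for what qml.Hadamard, qml.RY, and qml.RZ do on a simulator and not the study's code. The function name `prepare_state` and the angle values are hypothetical, and no entangling gates are applied at this stage.

```python
import numpy as np

# Standard single-qubit gate matrices.
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)

def ry(theta):
    """Rotation about the Y axis by angle theta."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def rz(phi):
    """Rotation about the Z axis by angle phi."""
    return np.array(
        [[np.exp(-1j * phi / 2), 0], [0, np.exp(1j * phi / 2)]], dtype=complex
    )

def prepare_state(ry_params, rz_params):
    """Apply H, then RY, then RZ to each qubit, all starting in |0>."""
    ket0 = np.array([1, 0], dtype=complex)
    state = np.array([1.0 + 0j])
    for theta, phi in zip(ry_params, rz_params):
        qubit = rz(phi) @ ry(theta) @ H @ ket0
        # Without entangling gates the register is a tensor product;
        # qubit 0 is the leftmost factor.
        state = np.kron(state, qubit)
    return state

# Hypothetical angles for a two-qubit register.
state = prepare_state([np.pi / 2, 0.0], [0.3, 0.0])
probs = np.abs(state) ** 2
```

With these angles, the first qubit is rotated fully into |1> and the second stays in an equal superposition, so the measurement probabilities over |00>, |01>, |10>, |11> are 0, 0, 0.5, 0.5. The RZ phase changes amplitudes but not these probabilities, so its effect only shows up after later gates mix the basis states.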

5.5.1.3 Variational quantum circuit

After state preparation, we apply a variational quantum circuit. This circuit, implemented through the ansatz function, is repeated n_qlayers times and includes:

5.5.2 Complexity analysis

We analyze the complexity of our encoding process in terms of both classical preprocessing and quantum operations.

5.5.2.1 Classical preprocessing complexity

This step scales linearly with the number of input features.

5.5.2.2 Quantum state preparation complexity

This step scales linearly with the number of qubits.

5.5.2.3 Variational quantum circuit complexity

5.5.2.4 Overall encoding complexity

The total complexity of encoding data into quantum states is:

In most practical scenarios, this can be simplified to:

as the number of qubits and layers typically dominates the complexity.
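One reading consistent with the statements above can be written out as a sketch. Let $d$ be the number of input features, $n$ the number of qubits, and $L$ the value of n_qlayers; these symbols are introduced here for illustration only. The variational-circuit term assumes that each ansatz layer applies a constant number of gates per qubit, which the text does not state explicitly.

```latex
\begin{aligned}
C_{\text{pre}}   &= O(d)                    && \text{classical preprocessing} \\
C_{\text{prep}}  &= O(n)                    && \text{quantum state preparation} \\
C_{\text{VQC}}   &= O(L \cdot n)            && \text{variational circuit (assumed)} \\
C_{\text{total}} &= O(d + n + L \cdot n) \;\approx\; O(L \cdot n) && \text{when } L \cdot n \gg d
\end{aligned}
```

These are gate and operation counts for the encoding itself. They do not include the cost of classically simulating the circuit, which grows exponentially with $n$ on a state-vector simulator.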

5.6 Model training and hyperparameters

In order to foster a robust comparative analysis between LSTM and QLSTM models, a rigorous hyperparameter optimization phase was implemented, specifically tailored for solar forecasting applications. Initially, a grid search method was employed to delineate appropriate parameter ranges, encapsulating pivotal variables such as window size, batch size, learning rate, epochs, and model-specific parameters including quantum circuit shape. This preliminary exploration paved the way for the identification of prospective parameter values.

Following this, a more refined tuning process was undertaken utilizing the Optuna framework, thus automating and enhancing the hyperparameter optimization procedure. The objective function facilitated the evaluation of parameter combinations by training models on a validation dataset and quantifying their performance through the metric of mean squared error loss.

The LSTM model underwent a comprehensive parameter exploration, incorporating window sizes ranging from 5 to 50 timesteps, batch sizes varying from 16 to 128, logarithmically scaled learning rates between 0.0001 and 0.1, and epochs extending from 10 to 100. Over 180 trials were conducted, with Optuna’s Tree-structured Parzen Estimator sampler adaptively selecting new configurations based on previous results, ultimately identifying optimal hyperparameters including a window size of 8, a batch size of 32, a learning rate of 0.001, and 20 epochs. Interestingly, these findings corroborated our initial manual tuning experiments, affirming the efficacy of our automated optimization strategy.
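To make the role of the window size concrete, the sketch below turns a series into supervised training pairs with a window of 8 timesteps. It assumes a univariate series and a one-step-ahead target, neither of which is specified in the text; `make_windows` is a hypothetical helper name.

```python
import numpy as np

def make_windows(series, window=8):
    """Split a 1-D series into (input window, next value) training pairs."""
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - window
    # Each row holds `window` consecutive observations.
    X = np.stack([series[i:i + window] for i in range(n_samples)])
    # The target is the observation immediately after each window.
    y = series[window:]
    return X, y

# Toy series of 12 points, used only to show the resulting shapes.
X, y = make_windows(np.arange(12), window=8)
```

A larger window gives the model more history per sample but yields fewer training pairs from the same series, which is one reason the window size is worth tuning alongside the other hyperparameters.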

A similar extent of optimization was conducted for the QLSTM, encompassing over 150 trials that scrutinized various parameters including the number of qubits (ranging from 2 to 8), circuit layers (varying from 1 to 4), learning rates (between 0.0001 and 0.1), batch sizes (from 16 to 128), and epochs (between 10 and 100). Notably, the optimal configuration closely mirrored the top-performing LSTM hyperparameters, fostering a fair and balanced evaluation process.
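The QLSTM search space quoted above can be written down as a sampling function. The sketch below uses plain random sampling from the Python standard library as a stand-in, so it is not Optuna's adaptive sampler. It also assumes that the learning rate is drawn on a logarithmic scale, as stated for the LSTM, and that batch size may take any integer in its range. The name `sample_qlstm_config` is hypothetical.

```python
import math
import random

def sample_qlstm_config(rng):
    """Draw one candidate configuration from the ranges quoted in the text."""
    log_lo, log_hi = math.log10(1e-4), math.log10(1e-1)
    return {
        "n_qubits": rng.randint(2, 8),       # inclusive bounds
        "n_qlayers": rng.randint(1, 4),
        # Log-uniform draw: sample the exponent uniformly (assumed scaling).
        "learning_rate": 10 ** rng.uniform(log_lo, log_hi),
        "batch_size": rng.randint(16, 128),  # any integer (assumption)
        "epochs": rng.randint(10, 100),
    }

rng = random.Random(0)  # fixed seed so the draw is reproducible
configs = [sample_qlstm_config(rng) for _ in range(150)]
```

Sampling the exponent uniformly spreads trials evenly across orders of magnitude, so small learning rates near 0.0001 are explored as often as large ones near 0.1.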

This meticulous optimization procedure methodically investigated a broad parameter space, empirically pinpointing optimal model configurations. By maintaining a consistent tuning approach for both LSTM and QLSTM models, the integrity of our comparison was upheld, critically evaluating their representational capabilities. The recurrent convergence noted in our experiments stands as a potent validation of our methodology, affirming our model design choices, particularly within the domain of real-world solar forecasting applications.

6 Results

The metrics used for the statistical and predictive analysis are defined in detail in the Supplementary Appendix (Evaluation Methodology).

6.1 Statistical analysis

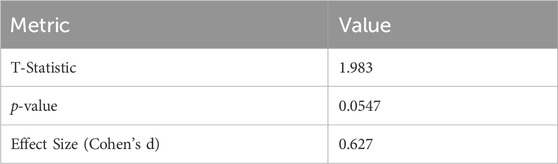

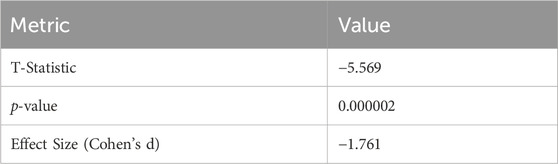

In this section, we conducted a statistical analysis to compare the losses between the QLSTM model and the classical LSTM model as shown in Table 5.

6.1.1 Train loss

The statistical analysis of the train loss, shown in Table 3, suggests that the QLSTM model tends to achieve lower train loss. Although the p-value (0.0547) is slightly above the conventional threshold for statistical significance (0.05), the moderate effect size (Cohen’s d = 0.627) indicates a noticeable difference favoring the QLSTM model. This suggests that with further refinement, the QLSTM could consistently outperform the classical LSTM in training performance.

6.1.2 Test loss

Similarly, we compared the test losses between the QLSTM and classical LSTM models to assess how well each model generalizes to unseen data. The results, presented in Table 4, show that the QLSTM model significantly outperforms the classical LSTM in terms of test loss. The highly significant p-value (0.000002) and the large effect size (Cohen’s d = −1.761) indicate that the QLSTM model achieves much lower test losses, making it a more effective model for generalization and prediction accuracy in time series forecasting, particularly for solar power production.
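For readers less familiar with the effect size reported in these comparisons, the sketch below computes Cohen's d for two sets of losses. It uses the pooled sample standard deviation, which is one common variant; the text does not say which variant or group ordering was used, so both are assumptions. The loss values are invented for illustration and are not the study's data.

```python
import math
import statistics

def cohens_d(a, b):
    """Cohen's d with a pooled sample standard deviation.

    The sign follows mean(a) - mean(b), so swapping the groups flips it.
    """
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled_sd = math.sqrt(((na - 1) * va + (nb - 1) * vb) / (na + nb - 2))
    return (statistics.mean(a) - statistics.mean(b)) / pooled_sd

# Toy per-epoch losses, invented for illustration only.
losses_a = [1.0, 2.0, 3.0]
losses_b = [2.0, 3.0, 4.0]
d = cohens_d(losses_a, losses_b)  # -1.0: group a is lower by one pooled SD
```

Because the sign depends only on which group is listed first, the magnitude of d carries the effect size, while the direction has to be read alongside the group means.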

6.2 Performance analysis

6.2.1 Predictive accuracy

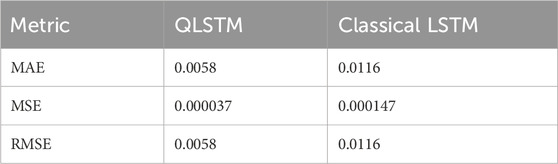

The QLSTM model exhibited superior predictive accuracy compared to the classical LSTM model, as illustrated in Table 5. The lower values of MAE, MSE, and RMSE for the QLSTM indicate higher predictive accuracy, suggesting a promising avenue for advancing time series forecasting in the domain of solar power production.
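The three error metrics can be computed as in the sketch below. It uses the standard textbook definitions, which are assumed to match those in the Supplementary Appendix; `regression_metrics` is a hypothetical helper name and the sample values are illustrative.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, and RMSE for paired actual and predicted values."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    mae = float(np.mean(np.abs(err)))  # mean absolute error
    mse = float(np.mean(err ** 2))     # mean squared error
    rmse = float(np.sqrt(mse))         # root mean squared error
    return {"MAE": mae, "MSE": mse, "RMSE": rmse}

# Illustrative values only: two exact predictions and one error of 2.
metrics = regression_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```

MSE and RMSE penalize large individual errors more heavily than MAE, so reporting all three helps separate a model that is slightly off everywhere from one that is occasionally far off.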

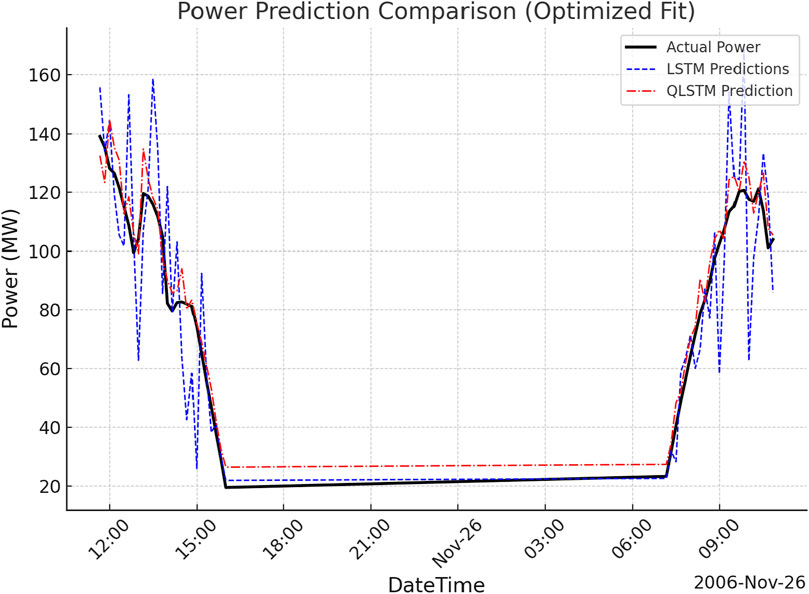

The predictive accuracy of the QLSTM model is further reinforced by the visual comparison presented in Figure 3. As observed, the QLSTM predictions closely follow the actual power values, exhibiting a remarkable ability to capture the underlying patterns and fluctuations in the data. This is particularly noteworthy given that these predictions are generated by the QLSTM model after only the first epoch, whereas the classical LSTM model has undergone 20 epochs of training. The QLSTM’s ability to achieve such accurate predictions in a single epoch underscores its superior learning capabilities and potential for efficient real-time forecasting applications.

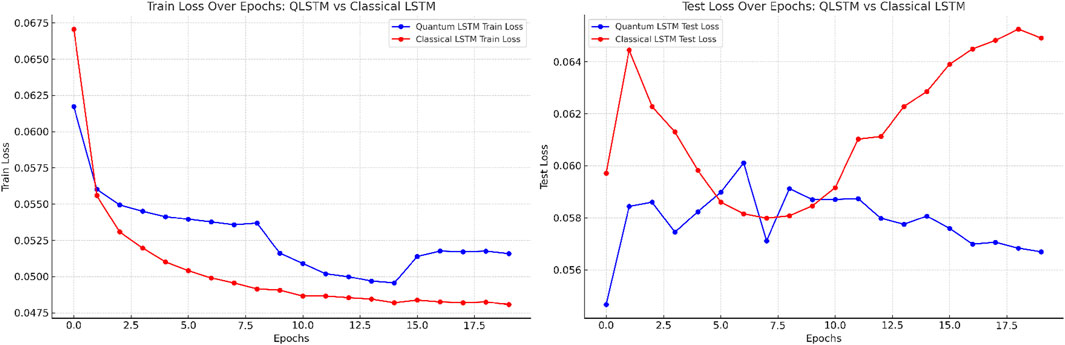

6.2.2 Rate of convergence

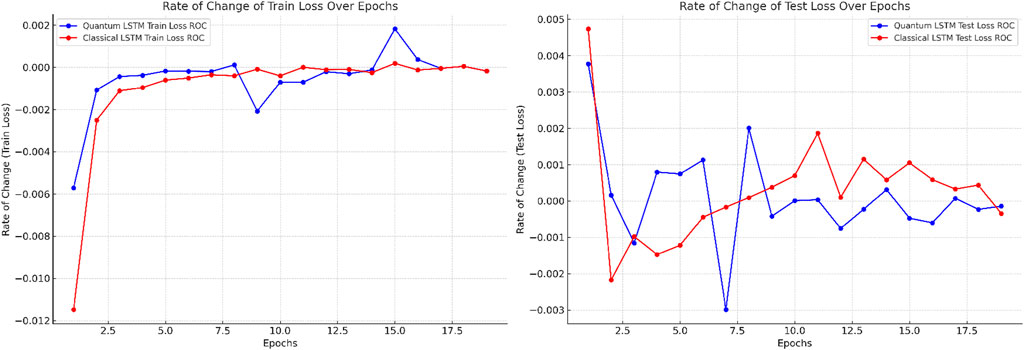

The QLSTM model converged remarkably quickly, reaching its minimum test loss as early as the first epoch and therefore requiring very few training epochs. This swift convergence indicates that the model adapts quickly to the underlying patterns in the data, in stark contrast to the classical LSTM model, which required seven epochs to attain a similar state of optimization. This attribute can be particularly advantageous in real-time forecasting applications where timely insights are pivotal. The rate of convergence for both models is depicted in Figure 4.

The rapid convergence of the QLSTM model is further corroborated by the graph in Figure 3. Despite being trained for only a single epoch, the QLSTM predictions closely match the actual power values, indicating that the model has effectively learned the underlying patterns in the data within the first iteration. In contrast, the classical LSTM model, even after 20 epochs of training, exhibits a noticeable deviation from the actual power values, suggesting a slower convergence rate and a potential need for further training iterations to achieve comparable performance.

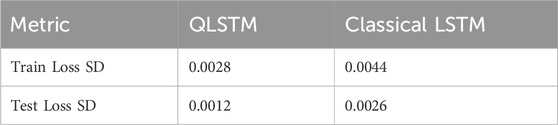

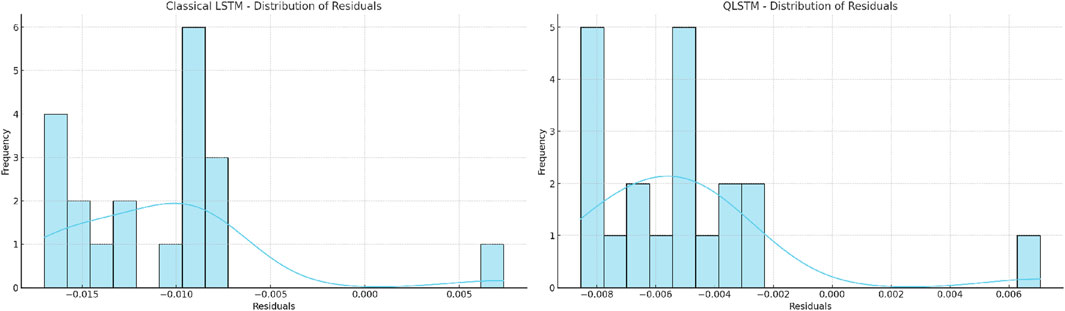

6.2.3 Stability of learning

The analysis of learning stability, presented in Table 6, demonstrates the QLSTM model’s heightened stability, characterized by lower variance in train and test loss metrics across epochs compared to the classical LSTM model, indicating a more stable learning trajectory. The distribution of loss values is further elucidated by Figure 5, which provides visual evidence of the reduced spread and outlier values in the QLSTM model, underlining its robustness and stability.
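The variance-based notion of stability used here, together with the epoch at which a loss curve reaches its minimum, can be computed from a list of per-epoch losses as sketched below. The use of sample variance is an assumption, `summarize_losses` is a hypothetical helper name, and the loss values are invented for illustration.

```python
import statistics

def summarize_losses(losses):
    """Summarize a per-epoch loss curve: best epoch, variance, and range."""
    # Index of the first minimum, reported as a 1-indexed epoch number.
    best_epoch = min(range(len(losses)), key=losses.__getitem__) + 1
    return {
        "best_epoch": best_epoch,
        "variance": statistics.variance(losses),  # sample variance (assumed)
        "range": max(losses) - min(losses),
    }

# Toy loss curve, invented for illustration only.
toy_losses = [0.30, 0.20, 0.25, 0.20]
summary = summarize_losses(toy_losses)
```

A lower variance across epochs indicates a flatter, more stable loss trajectory, while the best epoch shows how quickly the curve bottoms out.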

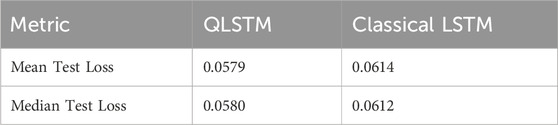

6.2.4 Generalization performance

The analysis of generalization performance, illustrated in Table 7, demonstrates the QLSTM model’s superiority in terms of generalization, substantiated by lower mean and median test loss values over all epochs compared to the classical LSTM model. This performance, coupled with more accurate and reliable predictions, holds the potential to enhance solar power production forecasting. Figure 6 provides a comparative analysis of the train and test loss for both models.

The generalization performance of the QLSTM model is visually apparent in Figure 3, where its predictions accurately capture the overall trend and fluctuations in the actual power values, even on unseen data points, after just a single epoch of training.

The right subfigure in Figure 3 shows the test loss over epochs for both QLSTM and classical LSTM models. The classical LSTM model (red curve) demonstrates a generally good fit, with test loss decreasing initially and then stabilizing with minor fluctuations. This indicates that the classical LSTM is effectively learning the data and maintaining reasonable generalization.

However, the QLSTM model (blue curve) exhibits a more stable and lower test loss across epochs, suggesting that it generalizes marginally better than the classical LSTM. The consistent performance of QLSTM, with less fluctuation in test loss, highlights its ability to capture complex patterns while maintaining robustness in prediction accuracy. This slight edge in generalization makes QLSTM a promising alternative for solar power forecasting.

6.3 Evaluation time

The evaluation time per epoch is a critical factor in assessing the practicality of the Quantum Long Short-Term Memory (QLSTM) and classical Long Short-Term Memory (LSTM) models, particularly for applications requiring rapid predictions. The QLSTM model, which leverages quantum computational principles, exhibits significantly longer evaluation times due to the cost of simulating quantum circuits. On average, each epoch of the QLSTM model required approximately 5172.22 s (about 1 h 26 min). This extended duration is attributed to the intensive quantum computations and the simulation environment provided by PennyLane.

In contrast, the classical LSTM model, which operates within a conventional computational framework, demonstrated a much shorter average evaluation time of approximately 0.41 s per epoch. This stark difference in evaluation times underscores the trade-off between the advanced capabilities of quantum models and the computational efficiency of classical models. While QLSTM achieves superior accuracy and faster convergence, its longer evaluation time may limit its applicability in scenarios where rapid predictions are essential.
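To make the scale of this difference concrete, the short sketch below converts the reported QLSTM epoch time into hours and minutes and computes the ratio between the two reported averages. Only the two timing values come from the text; the helper name `format_duration` is hypothetical.

```python
def format_duration(seconds):
    """Split a duration in seconds into (hours, minutes, seconds)."""
    whole = int(seconds)
    hours, remainder = divmod(whole, 3600)
    minutes, secs = divmod(remainder, 60)
    return hours, minutes, secs + (seconds - whole)


QLSTM_SECONDS_PER_EPOCH = 5172.22  # average reported in the text
LSTM_SECONDS_PER_EPOCH = 0.41      # average reported in the text

h, m, s = format_duration(QLSTM_SECONDS_PER_EPOCH)
slowdown = QLSTM_SECONDS_PER_EPOCH / LSTM_SECONDS_PER_EPOCH

print(f"QLSTM epoch: {h} h {m} min {s:.0f} s")
print(f"Slowdown relative to classical LSTM: {slowdown:.0f}x")
```

On these figures, a simulated QLSTM epoch is roughly 12,600 times slower than a classical LSTM epoch.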

7 Discussion and limitations

The findings of this study unveil the promising potential of Quantum Long Short-Term Memory (QLSTM) models in revolutionizing solar power forecasting, a critical endeavour for the global transition towards sustainable energy systems. Through rigorous empirical evaluation and comparative analysis with classical Long Short-Term Memory (LSTM) models, our research substantiates the anticipated advantages of QLSTMs in capturing the intricate spatiotemporal patterns inherent in renewable energy data.

A pivotal observation from our experiments is the accelerated training convergence exhibited by QLSTMs, reaching optimal test loss within the initial epoch, far outpacing their classical counterparts. This remarkable convergence speed can be attributed to the inherent quantum phenomena of superposition and entanglement, which empower QLSTMs to swiftly assimilate complex time series relationships. Harnessing the exponentially larger representational capabilities of quantum states, QLSTMs demonstrate a heightened capacity to discern and encode the nuanced dynamics governing solar power generation, a trait that classical models struggle to match.

Furthermore, our findings reveal substantial improvements in predictive accuracy, as evidenced by the significantly lower test loss achieved by QLSTMs. This empirical evidence affirms the hypothesized representational strengths of quantum architectures, paving the way for unprecedented levels of precision and reliability in renewable energy forecasting. The ability to accurately predict solar power generation holds profound implications for stakeholders, grid operators, and policymakers, enabling proactive management of energy storage, distribution networks, and integration strategies.

Runtime and Computational Overhead: While the advantages of QLSTMs are evident, it is crucial to acknowledge the current limitations that hinder their widespread adoption. The extended runtime and computational overhead associated with quantum simulations pose challenges for real-time forecasting applications that demand instantaneous predictions for optimizing storage or grid distribution strategies. However, the relentless progress in quantum computing hardware and software instills optimism, with the potential to bridge the efficiency gap and rival the inferential speed of classical models.

Validation and Generalization: Another key limitation of our study lies in the scope of the datasets employed. While we meticulously curated a combination of real-world operational data from solar plants and high-fidelity synthetic datasets spanning an entire year, further validation across a broader spectrum of conditions, geographic locations, and diverse renewable sources is warranted. Expanding the dataset scope will not only enhance the generalization of our findings but also unlock opportunities for fine-tuning and optimizing QLSTM architectures tailored to specific renewable energy forecasting tasks.

Thorough Hyperparameter Tuning: Furthermore, our study focused on optimizing specific hyperparameters and exploring architectural variations within current quantum hardware constraints. However, a more comprehensive exploration of quantum circuit designs, input lengths, and classical layer structures is essential to fully realize the potential of QLSTMs. As quantum computing capabilities advance, more complex and expressive architectures can be realized, potentially yielding further improvements in forecasting accuracy and robustness.

Scalability and Noise Resilience: As QLSTMs expand in complexity, incorporating more qubits and intricate circuit designs, scalability becomes a critical consideration. The exponential growth of the quantum state space can rapidly overwhelm computational resources, necessitating innovative strategies for efficient state representation and manipulation. It is important to note that our simulations did not account for quantum noise, such as decoherence and gate errors, which could affect the performance of QLSTMs on real quantum hardware. Addressing these limitations will require the development of noise-resilient circuit designs, error correction techniques, and noise-aware training algorithms.
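The exponential growth mentioned above can be illustrated with a back-of-the-envelope estimate. The sketch below assumes a dense state-vector simulation that stores every amplitude as a double-precision complex number (16 bytes); the simulator configuration used in this study is not specified here, so both the storage model and the qubit counts are illustrative assumptions.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value per amplitude


def statevector_bytes(n_qubits):
    """Memory needed to hold a dense n-qubit state vector (2**n amplitudes)."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


# Illustrative qubit counts, not the circuit sizes used in this study.
for n in (4, 10, 20, 30, 40):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n:>2} qubits: {2 ** n:>17,d} amplitudes, {gib:,.6f} GiB")
```

Each additional qubit doubles the memory requirement, which is why classical simulation becomes impractical well before circuit sizes reach those envisaged for larger QLSTM architectures.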

Expanded Applications: The representational strengths of QLSTMs extend beyond solar power forecasting, offering promising avenues for applications in other renewable energy sectors, such as wind and hydro power forecasting, as well as industrial time series forecasting in domains like finance, equipment maintenance, and supply chain management. The ability to capture complex spatiotemporal patterns across diverse data streams could enhance forecasting accuracy across a range of renewable sources, contributing to global efforts towards sustainable energy systems, and could improve predictive analytics and decision-making in these sectors.

Broader Impact and Implications: The implications of this study reverberate far beyond academic curiosity. By harnessing the predictive prowess of QLSTMs, utilities and energy stakeholders can unlock unprecedented forecasting precision, mitigating the intermittency hurdles that have historically impeded solar adoption. This paradigm shift could precipitate a global transition towards sustainable energy systems, reducing reliance on supplemental generation while fostering grid resilience and resource optimization.

Architectural Innovations: Continuous refinements in QLSTM architectures, such as exploring alternative quantum circuit designs, incorporating attention mechanisms, hybridizing with classical components, or applying quantum-enhanced optimization algorithms, could yield substantial improvements in forecasting accuracy and efficiency. Collaborations between quantum physicists, computer scientists, and machine learning experts will be vital in translating theoretical advancements into practical implementations.

Quantum Hardware and Software Advancements: As quantum computing technology progresses, with the advent of more powerful and stable quantum hardware, as well as optimized software frameworks and algorithms, the inherent advantages of QLSTMs are poised to be fully realized. Noise-resilient circuit designs, efficient state representation techniques, and quantum-classical hybrid approaches could unlock unprecedented levels of accuracy and reliability in renewable energy forecasting.

Accurate solar power forecasting enabled by QLSTMs holds profound implications for energy policy, grid infrastructure planning, and energy market dynamics. Policymakers and regulatory bodies could leverage these advanced forecasting capabilities to develop informed strategies for incentivizing renewable energy adoption, optimizing grid integration, and fostering a sustainable energy future. Additionally, energy trading and market mechanisms could be revolutionized, with QLSTMs enabling more efficient and data-driven decision-making processes.

In essence, this research ushers in a new era of precision insights, illuminating a promising trajectory where quantum machine learning techniques like QLSTMs reshape the landscape of renewable and industrial time series forecasting. As quantum computing matures, transcending current hardware constraints, the potential for QLSTMs to redefine predictive analytics across myriad sectors becomes increasingly palpable. This study serves as a catalyst, igniting future interdisciplinary endeavors that synergize quantum information science and machine learning to solve intricate real-world challenges, propelling us towards a sustainable, resilient, and data-driven energy future.

8 Future research directions

The pioneering findings unveil the transformative potential of Quantum Long Short-Term Memory (QLSTM) architectures in solar power forecasting. This investigation represents the first step in the vast expanse of research opportunities at the convergence of quantum computing and machine learning. To fully harness QLSTMs’ disruptive capabilities and propel real-world impact, the following future research frontiers demand unwavering pursuit.

8.1 Quantum hardware deployment and noise resilience

Rigorous evaluations on emerging quantum devices, coupled with robust noise mitigation techniques like error correction, noise-aware training, and resilient circuit designs, are crucial. Interdisciplinary collaborations among quantum computing experts, physicists, and machine learning researchers will accelerate progress, unlocking QLSTMs’ true potential in noise-resilient renewable forecasting.

8.2 Architectural innovations and quantum-classical hybridization

Continuous architectural innovations, including alternative quantum circuit designs, attention mechanisms, and quantum-classical hybrid models, present fertile ground for performance enhancements. Systematic optimization leveraging advanced techniques could uncover tailored configurations. Seamless hybrid model integration frameworks could overcome hardware constraints, yielding unparalleled accuracy and efficiency.

8.3 Hybrid quantum-classical approaches

Harnessing the strengths of both quantum and classical paradigms through hybrid quantum-classical approaches presents a promising avenue. These synergistic models could leverage the representational advantages of quantum architectures while benefiting from the computational efficiency and scalability of classical techniques. Developing seamless integration frameworks and algorithms for these hybrid models could unlock unprecedented levels of accuracy and speed, potentially overcoming current hardware limitations.

8.4 Scalability and broader applications

Validating QLSTMs’ scalability across diverse renewable domains like wind and hydro power forecasting, and industrial time series applications, will establish versatility and robustness. Concurrently, investigating quantum-inspired classical models could offer pragmatic interim solutions while advancing fully-fledged quantum architectures.

8.5 Translating theoretical potential into practical impact

Fostering collaborations among researchers, domain experts, industry partners, and utilities will integrate QLSTMs into existing systems for renewable infrastructure planning, energy trading, and predictive maintenance. These applications represent the vanguard of a forecasting paradigm shift driven by quantum information science and machine learning synergy.

Sustained research in these frontiers will propel QLSTMs to revolutionize predictive analytics, reshaping forecasting paradigms across domains. Through unwavering commitment and interdisciplinary synergy, quantum machine learning’s full disruptive potential can unleash unprecedented accuracy, reliability, and sustainability in renewable energy systems worldwide.

9 Conclusion

This study has explored the transformative potential of quantum machine learning, specifically Quantum Long Short-Term Memory (QLSTM) architectures, in enhancing solar power forecasting accuracy and reliability. Through rigorous empirical evaluation grounded in real-world photovoltaic data, we have garnered compelling evidence substantiating the central hypothesis that QLSTMs, underpinned by their vast representational capabilities, can unveil nuanced spatiotemporal patterns obscured to classical methods.

Our findings underscore several notable advantages of the QLSTM paradigm. Foremost, QLSTMs exhibited remarkably swift training convergence, attaining superior predictive accuracy within the first epoch. This rapid assimilation of complex time series dynamics is attributable to the unique properties of quantum phenomena like superposition and entanglement. Consequently, QLSTMs could reduce the number of training epochs that classical neural networks often require, although, as reported in Section 6.3, each simulated QLSTM epoch currently takes far longer than a classical one.

Moreover, our controlled experiments unveiled a consistent pattern: QLSTMs outperformed classical LSTMs in minimizing test loss across multiple metrics, including MAE, MSE, and RMSE. This elevated forecasting precision, coupled with heightened generalization capabilities, positions QLSTMs as a disruptive force in the renewable energy sector. By empowering grid operators with unparalleled foresight into solar supply fluctuations, QLSTMs could catalyze more judicious energy management, storage optimization, and seamless incorporation of photovoltaic sources into existing infrastructure.
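For reference, the three error metrics named above can be computed as in the following minimal sketch. The function name `regression_errors` and the sample values are hypothetical and serve only to show the definitions; they are not results from this study.

```python
import math


def regression_errors(actual, predicted):
    """Return MAE, MSE, and RMSE for two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [p - a for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / len(errors)
    mse = sum(e * e for e in errors) / len(errors)
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse)}


# Hypothetical normalized power values, invented purely for illustration.
actual_power = [0.0, 0.5, 1.0, 0.5]
predicted_power = [0.1, 0.4, 0.8, 0.5]

metrics = regression_errors(actual_power, predicted_power)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because RMSE is the square root of MSE, the two always rank models identically, whereas MAE is less sensitive to occasional large errors.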

While challenges persist, primarily concerning inference runtimes and the necessity for broader validation, this research marks a pivotal juncture in the convergence of quantum and classical computing paradigms. Our findings provide a firm foundation for subsequent investigations aimed at refining quantum architectures, systematic hyperparameter optimization, resilience to hardware noise, and exploring applications across diverse renewable domains.

In essence, this research ushers in a new era of precision insights, illuminating a promising trajectory where quantum machine learning techniques like QLSTMs revolutionize renewable and industrial time series forecasting. As quantum computing matures, transcending current hardware constraints, the potential for QLSTMs to redefine predictive analytics across myriad sectors becomes increasingly palpable. This study serves as a catalyst, igniting future interdisciplinary endeavors that synergize quantum information science and machine learning to solve intricate real-world challenges.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

SK: Writing–original draft, Software. NM: Writing–original draft. SG: Writing–review and editing, Validation, Methodology. HK: Writing–review and editing, Project administration. SZ: Writing–review and editing, Supervision, Conceptualization. AA: Writing–review and editing, Resources. IA: Writing–review and editing, Funding acquisition.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fphy.2024.1439180/full#supplementary-material

References

1. Azevedo CRB, Ferreira TAE. Time series forecasting with qubit neural networks. In: Proceedings of the eleventh IASTED international conference on artificial intelligence and soft computing, ASC ’07. USA: ACTA Press (2007). p. 13–8.

2. Ceschini A, Rosato A, Panella M. Hybrid quantum-classical recurrent neural networks for time series prediction. In: 2022 international joint conference on neural networks (IJCNN) (2022). p. 1–8. doi:10.1109/IJCNN55064.2022.9892441

3. Goodarzi S, Perera HN, Bunn D. The impact of renewable energy forecast errors on imbalance volumes and electricity spot prices. Energy Policy (2019) 134:110827. doi:10.1016/j.enpol.2019.06.035

4. Dunjko V, Briegel HJ, Machine learning and artificial intelligence in the quantum domain (2017). arXiv:1709.02779.

5. Biamonte J, Wittek P, Pancotti N, Rebentrost P, Wiebe N, Lloyd S. Quantum machine learning. Nature (2017) 549(7671):195–202. ISSN: 1476-4687. doi:10.1038/nature23474

6. Bausch J. Recurrent quantum neural networks. In: H Larochelle, M Ranzato, R Hadsell, M Balcan, and H Lin, editors. Advances in neural information processing systems, 33. proceedings: Curran Associates, Inc. (2020). p. 1368–79. neurips.cc.

7. Succetti F, Rosato A, Araneo R, Panella M. Deep neural networks for multivariate prediction of photovoltaic power time series. IEEE Access (2020) 8:211490–505. doi:10.1109/access.2020.3039733

8. Prema V, Rao KU. Development of statistical time series models for solar power prediction. Renew Energ (2015) 83:100–9. ideas.repec.org. doi:10.1016/j.renene.2015.03.038

9. Rivera-Ruiz MA, Mendez-Vazquez A, López-Romero JM. Time series forecasting with quantum machine learning architectures. In: O Pichardo Lagunas, J Martínez-Miranda, and B Martínez Seis, editors. Advances in computational intelligence. Cham: Springer Nature Switzerland (2022). p. 66–82. doi:10.1007/978-3-031-19493-1_6

10. Lindsay J, Zand R. A novel stochastic LSTM model inspired by quantum machine learning. In: 2023 24th international symposium on quality electronic design (ISQED) (2023). p. 1–8.

11. Chen SY-C, Yoo S, Fang Y-LL. Quantum long short-term memory. In: Icassp 2022 - 2022 IEEE international conference on acoustics, speech and signal processing (ICASSP) (2022). p. 8622–6. doi:10.1109/ICASSP43922.2022.9747369

12. Cerezo M, Arrasmith A, Babbush R, Benjamin SC, Endo S, Fujii K, et al. Variational quantum algorithms. Nat Rev Phys (2021) 3(9):625–44. doi:10.1038/s42254-021-00348-9

13. Zheng J, Zhang H, Dai Y, Wang B, Zheng T, Liao Q, et al. Time series prediction for output of multi-region solar power plants. Appl Energ (2020) 257:114001. doi:10.1016/j.apenergy.2019.114001

14. Sorkun AD, Cihan M, Incel C, Paoli C. Time series forecasting on multivariate solar radiation data using deep learning (LSTM). Turkish J Electr Eng Computer Sci (2020) 28(1):211–23. doi:10.3906/elk-1907-218

15. Meenal R, Binu D, Ramya KC, Michael PA, Vinoth Kumar K, Rajasekaran E, et al. Weather forecasting for renewable energy system: a review. Arch Comput Methods Eng (2022) 29(5):2875–91. doi:10.1007/s11831-021-09695-3

16. Lindemann B, Müller T, Vietz H, Jazdi N, Weyrich M. A survey on long short-term memory networks for time series prediction. Proced CIRP (2021) 99:650–5. doi:10.1016/j.procir.2021.03.088

17. Emmanoulopoulos D, Dimoska S, Quantum machine learning in finance: time series forecasting (2022). arXiv:2202. 00599.

18. Cerezo M, Verdon G, Huang H-Y, Cincio L, Coles PJ. Challenges and opportunities in quantum machine learning. Nat Comput Sci (2022) 2(9):567–76. ISSN: 2662-8457. doi:10.1038/s43588-022-00311-3

19. Kannal A. Solar power generation data, version 1. Kaggle Dataset (2020). Available from: www.kaggle.com/datasets/anikannal/solar-power-generation-data June 20, 2023).

20. Solar power data for integration studies, NREL.gov, August 3, 2023 (2024). Available from: www.nrel.gov/grid/solar-power-data.html.

21. Sengupta M, Xie Y, Lopez A, Habte A, Maclaurin G, Shelby J. The national solar radiation data base (NSRDB). Renew Sustainable Energ Rev (2018) 89:51–60. doi:10.1016/j.rser.2018.03.003

22. Bergholm V, Izaac J, Schuld M, Gogolin C, Ahmed S, Ajith V, et al. Pennylane: automatic differentiation of hybrid quantum-classical computations. arXiv:1811 (2022):04968. doi:10.48550/arXiv.1811.04968

23. Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, et al. Pytorch: an imperative style, high-performance deep learning library. In: H Wallach, H Larochelle, A Beygelzimer, F d’ Alché-Buc, E Fox, and R Garnett, editors. Advances in neural information processing systems, 32. proceedings: Curran Associates, Inc. (2019). neurips.cc.

24. Sipio RD, QLSTM, GitHub repository, (2021). Available from: https://github.com/rdisipio/qlstm.

Keywords: quantum machine learning, forecasting, renewable energy systems, quantum neural networks, solar power forecasting

Citation: Khan SZ, Muzammil N, Ghafoor S, Khan H, Zaidi SMH, Aljohani AJ and Aziz I (2024) Quantum long short-term memory (QLSTM) vs. classical LSTM in time series forecasting: a comparative study in solar power forecasting. Front. Phys. 12:1439180. doi: 10.3389/fphy.2024.1439180

Received: 27 May 2024; Accepted: 18 September 2024;

Published: 09 October 2024.

Edited by:

Jonas Maziero, Federal University of Santa Maria, Brazil

Reviewed by:

Mingxing Luo, Southwest Jiaotong University, China

Zhihao Lan, University College London, United Kingdom

Copyright © 2024 Khan, Muzammil, Ghafoor, Khan, Zaidi, Aljohani and Aziz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Imran Aziz, imran.aziz@physics.uu.se
