- 1School of Computer Science and Engineering, Vellore Institute of Technology, Vellore, India

- 2School of Computer Science Engineering and Information Systems, Vellore Institute of Technology, Vellore, India

This article explores deep learning models in the field of malware detection in cyberspace, aiming to provide insights into their relevance and contributions. The primary objective of the study is to investigate the practical applications and effectiveness of deep learning models in detecting malware. By carefully analyzing the characteristics of malware samples, these models gain the ability to accurately categorize them into distinct families or types, enabling security researchers to swiftly identify and counter emerging threats. Papers were selected following the PRISMA 2020 guidelines, and the review covers studies published from January 2015 to December 2023. Various deep learning models, such as Recurrent Neural Networks, Deep Autoencoders, LSTM, Deep Neural Networks, Deep Belief Networks, Deep Convolutional Neural Networks, Deep Generative Models, Deep Boltzmann Machines, Deep Reinforcement Learning, Extreme Learning Machine, and others, are thoroughly evaluated. The review highlights their individual strengths and real-world applications in the domain of malware detection in cyberspace. It also emphasizes that deep learning algorithms consistently demonstrate exceptional performance, exhibiting high accuracy and low false positive rates in real-world scenarios. Thus, this article aims to contribute to a better understanding of the capabilities and potential of deep learning models in enhancing cybersecurity efforts.

1 Introduction

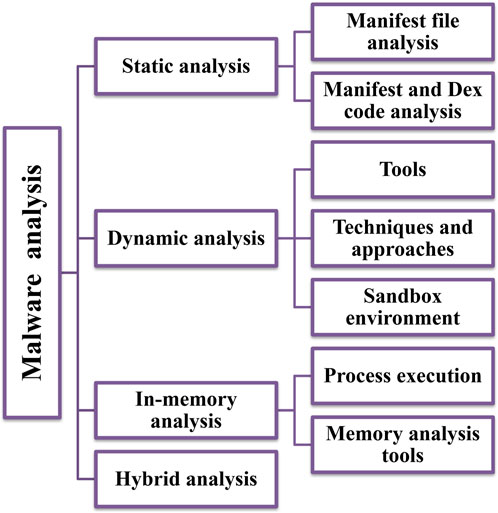

This comprehensive review delves into the burgeoning role of deep learning (DL) models in the face of the ever-evolving menace of malware in cyberspace. Malware represents a continuously evolving cybersecurity threat, and traditional detection technologies often struggle to keep up with the rapid creation of new malware types [1]. However, deep learning models have gained significance in this field due to their ability to automatically learn features from large datasets [2]. Deep learning models also possess the remarkable capability to adapt to emerging threats by learning from extensive and diverse datasets [3]. They excel in extracting intricate and subtle features within malware samples, a task that may be challenging for rule-based or signature-based systems. This feature extraction prowess contributes to heightened accuracy in distinguishing between benign and malicious files, thereby reducing false positives that can disrupt legitimate operations. Moreover, deep learning models offer speed, efficiency, scalability, and continuous improvement, making them invaluable tools for real-time or near-real-time detection and response in the dynamic landscape of cybersecurity [4]. Figure 1 illustrates different categories in malware analysis.

DL models can reliably categorize malware samples into numerous families or types by analyzing their individual properties, assisting security researchers and practitioners in recognizing and responding to emerging threats more efficiently [5]. This review aims to provide an in-depth understanding of various DL architectures utilized in this field, including Recurrent Neural Networks (RNNs), Deep Autoencoders (DAEs), Long Short-Term Memory (LSTM) networks, Deep Neural Networks (DNNs), Deep Belief Networks (DBNs), Deep Convolutional Neural Networks (CNNs), and Deep Generative Models (such as Generative Adversarial Networks or GANs). RNNs are designed for sequential data processing and can capture dependencies in data over time. They are commonly used in tasks like natural language processing and speech recognition. DAEs are utilized for unsupervised learning and data compression. They comprise an encoder and a decoder and find applications in feature learning and anomaly detection. LSTMs, a type of RNN, have specialized memory cells that capture long-term dependencies in data. They are particularly effective in sequential tasks where retaining context is crucial. DNNs consist of multiple layers of interconnected neurons and are employed for supervised learning tasks like image and speech recognition. They form the core of many deep learning applications. DBNs are generative models composed of multiple layers of stochastic, latent variables. They are used in tasks such as feature learning, collaborative filtering, and dimensionality reduction. CNNs are designed for processing grid-like data, such as images, and use convolutional layers to automatically learn spatial hierarchies of features. They find wide applications in image and video analysis. Deep Generative Models, including GANs, are capable of generating data rather than classifying it. GANs, for example, consist of a generator and a discriminator that compete in a game, resulting in the generation of realistic data. They are often used in image generation and data augmentation. This review investigates these models in terms of their unique capabilities and applications in the field of cybersecurity.

1.1 Limitations of previous reviews

In recent research, various issues and challenges related to malware detection using data mining have been extensively explored [6]. One significant challenge is the imbalance of classes within datasets, which affects the accuracy and robustness of malware detection models. Additionally, the need for open and public benchmarks, the emergence of concept drift, and the concerns surrounding adversarial learning techniques all pose significant obstacles to the effectiveness of these detection mechanisms. Furthermore, the interpretability of models remains a critical concern, impeding the deployment of reliable and understandable solutions. When it comes to Cyber-Physical System (CPS) malware detection, the complexity of different malware classes and their numerous variants makes detection even more challenging [7]. The rise of Advanced Persistent Threats (APTs) adds another layer of sophistication, demanding advanced strategies to combat coordinated and purposeful attacks. Analyzing malware, including static and dynamic aspects, presents difficulties in understanding and identifying malware, necessitating robust detection strategies. While signature-based and behavior-based methods offer distinct advantages, they also face challenges related to accuracy and efficiency in classifying programs as malicious or benign [6].

An examination of the strengths and weaknesses of signature-based and behavior-based malware detection reveals that each method has its own merits and shortcomings [6]. Signature-based detection is fast and efficient but struggles to detect polymorphic malware, whereas behavior-based detection excels in identifying unconventional attacks but faces challenges regarding storage and time complexity. Ransomware detection and prediction techniques have received significant attention, particularly in the context of machine learning methods [8]. However, there has been a lack of emphasis on predicting ransomware, and identified shortcomings in real-time protection and 0-day ransomware identification highlight the need for more comprehensive approaches. Adversarial machine learning exploitation and concept drift further complicate the landscape of machine learning models in this domain.

Deep Learning (DL)-based malware detection frameworks encounter several challenges, including data imbalance, interpretability issues, susceptibility to adversarial attacks, the need for regular updates, and difficulties in achieving cross-platform detection [9]. Efficient feature extraction techniques and the recognition of new characteristics in 0-day malware add further complexity to the development and deployment of DL models. Deep learning for 0-day malware detection and classification focuses on learning paradigms, feature types, benchmark datasets, and evaluation metrics. API/System calls are the most common type of feature, and prevalent benchmark datasets include Drebin. Evaluation metrics encompass Accuracy, Precision, Recall, F1-score, False Positive Rate, False Negative Rate, Area Under the Curve, and Evasion rate.
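To make the evaluation metrics listed above concrete, the following minimal sketch computes them from the four confusion-matrix counts of a binary detector. It assumes that "positive" means malware and "negative" means benign; the function name `detection_metrics` and the counts are hypothetical and are not taken from any cited study. Area Under the Curve and evasion rate are omitted because they require score distributions or adversarial samples rather than a single confusion matrix.

```python
def detection_metrics(tp, fp, tn, fn):
    """Compute common binary-detection metrics from confusion-matrix counts.

    Assumed convention: positive = malware, negative = benign.
    """
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # False positive rate: benign files wrongly flagged as malware.
        "fpr": fp / (fp + tn) if (fp + tn) else 0.0,
        # False negative rate: malware wrongly passed as benign.
        "fnr": fn / (fn + tp) if (fn + tp) else 0.0,
    }


# Hypothetical counts, for illustration only.
metrics = detection_metrics(tp=90, fp=5, tn=95, fn=10)
```

On imbalanced datasets, accuracy alone can be misleading, which is one reason the false positive and false negative rates are usually reported alongside it.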

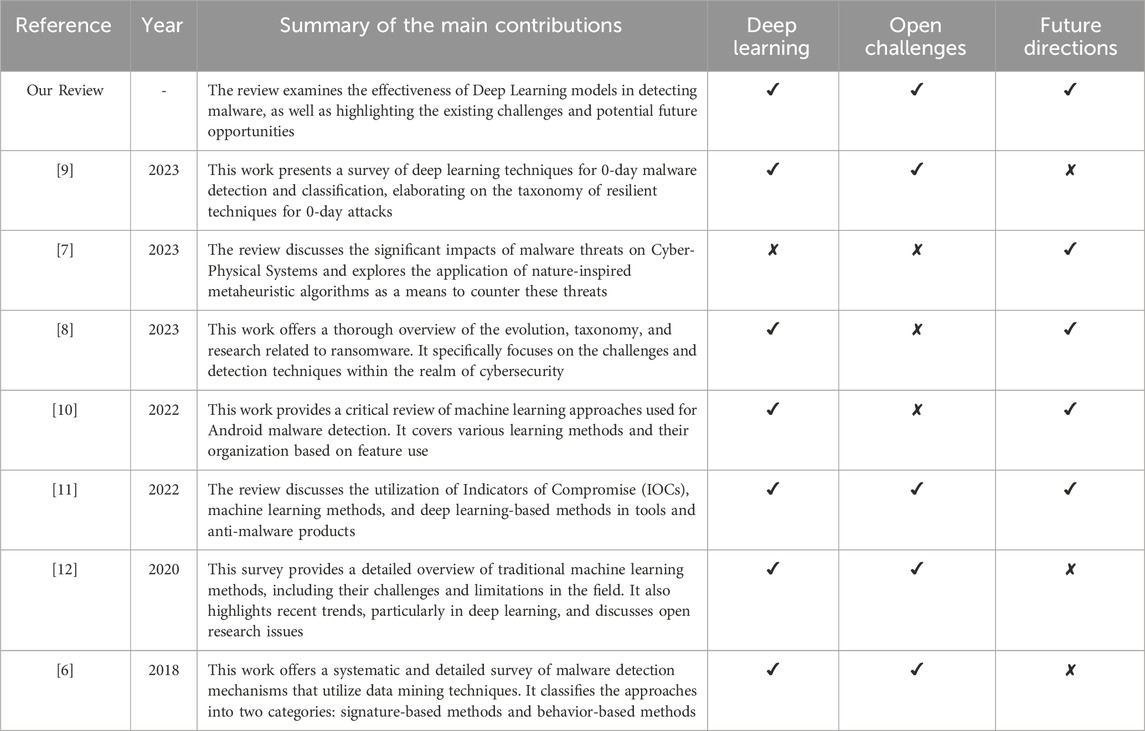

In the domain of Android malware detection using machine learning, challenges and advancements in static analysis have been explored [10]. Machine learning techniques applied to features extracted through static analysis have shown varying degrees of success, relying on tools like APK Tool and Androguard for decompiling and analyzing APK files. The challenges posed by adversarial attacks for PE (Portable Executable) malware are multifaceted. Adversarial attacks in both feature-space and problem-space encounter difficulties in maintaining the format, executability, and maliciousness of PE files. The taxonomy of attacks includes white-box attacks, where the attacker has full knowledge of the model, and black-box attacks, where limited or no knowledge of the model’s internals presents additional challenges [11]. Feature-space attacks involve direct manipulation of features, while problem-space attacks entail altering the actual inputs, such as PE files. These challenges highlight the need for robust defenses against adversarial threats in the context of malware detection. Table 1 provides a comparison with previous review papers with a similar focus.

1.2 Motivation and objectives of this review

The rapidly evolving landscape of cybersecurity presents an ongoing challenge, particularly in the realm of malware detection. Traditional methods struggle to keep pace with the relentless creation of new malware variants, which makes deep learning’s ability to autonomously extract features from vast datasets crucial. Deep learning models excel in discerning intricate patterns within malware, offering scalability, efficiency, and continuous improvement. As a result, there is a pressing need for innovative solutions that can adapt to emerging threats and provide robust protection against cyberattacks.

This review aims to delve into the burgeoning role of deep learning models in combating malware threats in cyberspace. It provides a thorough exploration of various deep learning architectures and their applications in malware detection. By analyzing the strengths and limitations of each model, the review offers valuable insights to researchers and practitioners seeking to harness deep learning techniques for cybersecurity. Recognizing the dynamic nature of cyber threats, the review also sheds light on the evolving landscape of malware and the increasing sophistication of cyber-attacks. It identifies future research directions, emphasizing the need for innovative DL-based solutions that can adapt to dynamic malware behavior and effectively counter adversarial attacks.

1.3 Contributions of this review

The main contributions of this article are as follows:

a) This review provides a critical assessment of the existing literature in the field of deep learning-powered malware detection in cyberspace. Further, it helps to identify gaps and areas for improvement, guiding future research directions and ensuring a more comprehensive understanding of the subject matter.

b) This review extends the scope of traditional approaches to encompass the rapidly growing threat landscape targeting mobile devices. This expansion of focus ensures that the review remains relevant and up-to-date with emerging trends in cybersecurity, providing insights into the unique challenges and opportunities presented by mobile malware.

c) By including recent tools in malware analysis and detection, this review offers readers a comprehensive overview of the current state-of-the-art technologies and methodologies available for combating malware threats. This enables researchers and practitioners to stay abreast of the latest advancements in the field and make informed decisions when selecting and implementing detection tools and techniques.

d) By incorporating a diverse range of tools in malware detection, including behavioral analysis tools, threat intelligence platforms, deception tools, and memory forensic tools, this review provides a holistic perspective on the multifaceted nature of malware detection. This ensures that readers gain insights into the various approaches and methodologies employed in the detection and analysis of malware, enhancing their understanding of the complexities involved in combating cyber threats.

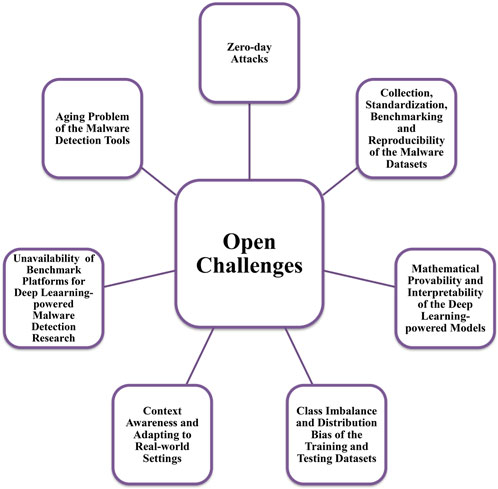

e) By highlighting open challenges in the field of malware detection using deep learning, this review identifies areas where further research and development are needed to address existing gaps and limitations. This stimulates discussion and collaboration within the research community, fostering innovation and driving progress towards more effective and robust solutions for malware detection using deep learning techniques.

2 Survey methodology

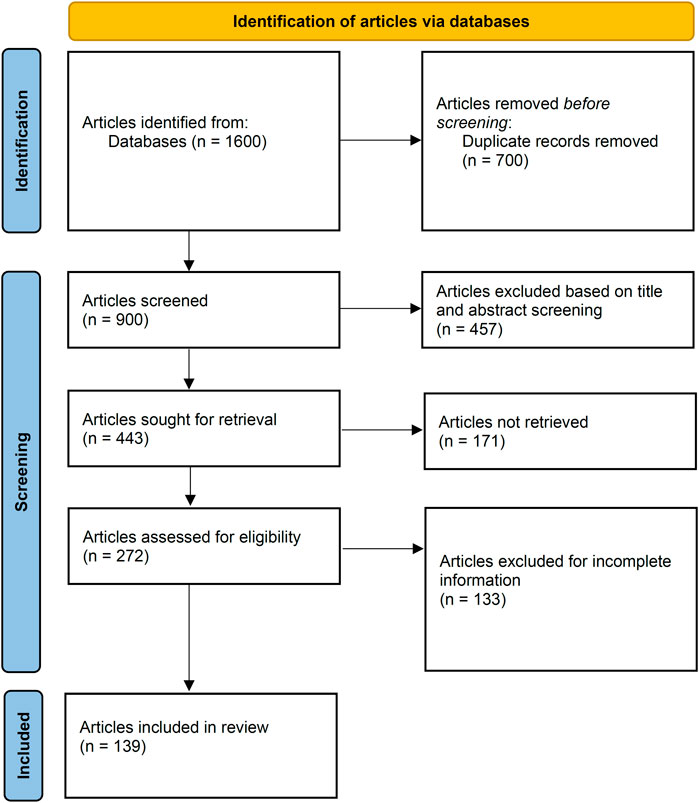

Figure 2 illustrates the process of article selection for this review, adhering to the PRISMA guidelines [13]. A comprehensive search for deep learning models in malware analysis and detection was conducted in three databases, namely, Google Scholar, Scopus, and Web of Science, spanning from January 2015 to December 2023. The search string “Cyberspace, Deep Learning, and Malware Detection” was employed to collect relevant articles. Inclusion and exclusion criteria were applied to determine the articles to be included in the review. Specifically, the articles had to be written in English, published in peer-reviewed journals or conferences, and relevant to both malware analysis and detection and deep learning. During the initial stage, 900 non-duplicate articles were obtained, as depicted in Figure 2. Following the screening of titles and abstracts, 457 articles were excluded. Subsequently, 171 articles for which full-text reports could not be retrieved were also removed from consideration. The remaining 272 articles were assessed for eligibility, leading to the removal of 133 articles with incomplete information. Finally, a total of 139 articles met all the criteria and were selected for this review.
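The selection counts reported above can be checked with simple arithmetic. The short sketch below reproduces the flow using only the numbers stated in the text; the variable names are illustrative.

```python
# Counts as reported in the text above.
identified = 900             # non-duplicate records
excluded_on_screening = 457  # removed after title/abstract screening
not_retrieved = 171          # full-text report unavailable
excluded_incomplete = 133    # removed for incomplete information

sought = identified - excluded_on_screening   # 443 reports sought for retrieval
assessed = sought - not_retrieved             # 272 assessed for eligibility
included = assessed - excluded_incomplete     # 139 included in the review
```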

3 Deep learning-powered malware detection in cyberspace

Deep learning (DL) models are highly proficient in autonomously learning features from extensive datasets, making them particularly suitable for detecting malware in the digital realm. By thoroughly analyzing malware samples, DL models acquire the capability to accurately categorize them into distinct families or types.

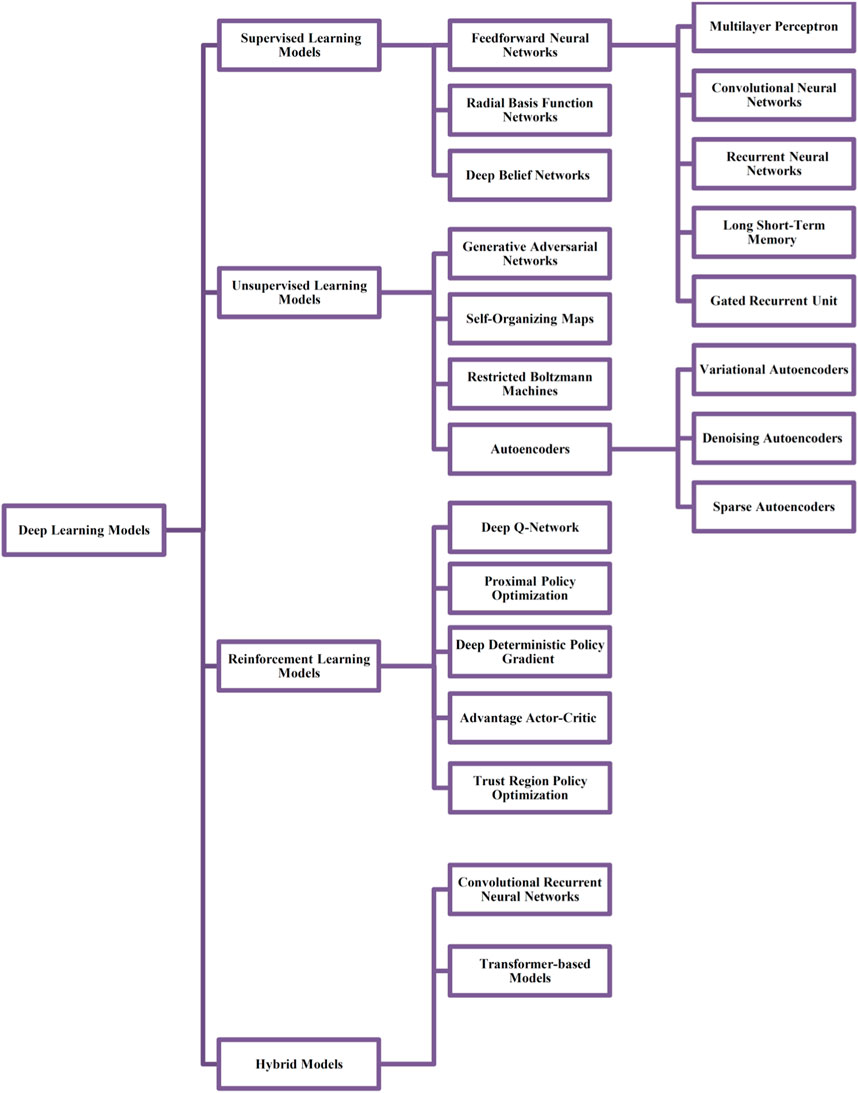

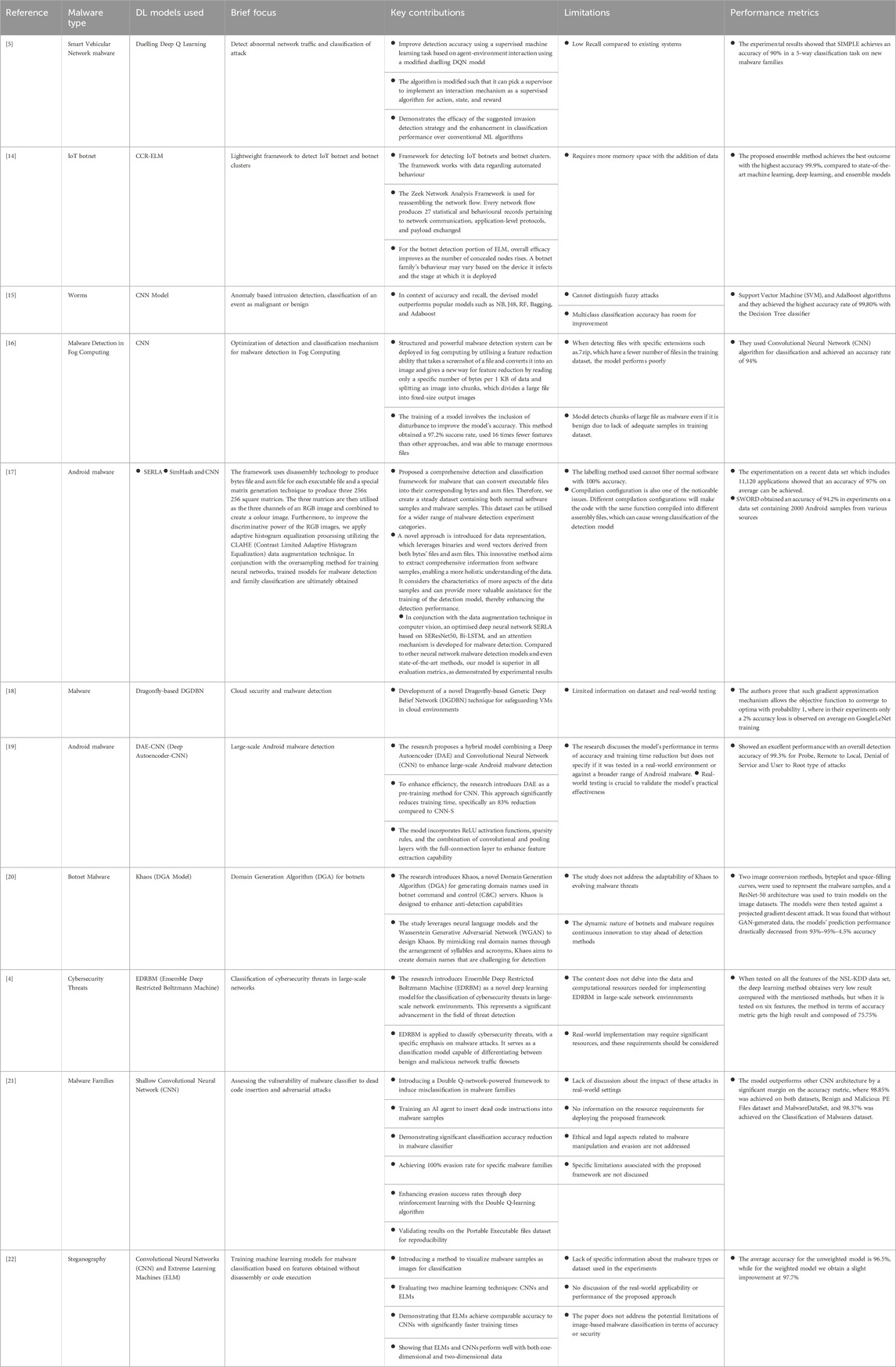

DL models undergo training using comprehensive sets of extracted attributes, including elements such as opcode sequences, API calls, and system calls. This training empowers the models to differentiate intricate patterns that distinguish malware from benign software. Consequently, these well-trained models can be deployed to classify new and previously unknown samples, providing a powerful tool for robust detection and in-depth analysis of malware. Figure 3 illustrates the current taxonomy of deep learning models for malware detection in cyberspace. Additionally, Table 2 provides a summary of research conducted on deep learning models for malware detection in cyberspace.

3.1 Recurrent neural networks

Recurrent Neural Networks (RNNs) play a significant role in the field of malware detection in cyberspace due to their ability to handle sequential input data. In the context of malware detection, RNNs are useful for assessing system calls, API calls, and network traffic generated by software applications to identify potentially harmful activities. System calls provide insights into a program’s actions within the system, allowing the detection of deviations from normal software behavior that may indicate malicious activity. API calls reveal how a program interacts with the underlying system, enabling the identification of specific APIs used for malicious purposes, such as modifying system settings. Network traffic data is crucial for detecting malware that communicates with external servers, attempts data exfiltration, or engages in suspicious data exchange over the network.

RNNs excel at analyzing sequences of data and capturing temporal dependencies in the behavior of potentially malicious software. They are particularly effective at processing sequential data encountered in malware analysis, such as sequences of system calls, API calls, or network traffic generated by software. By being exposed to sequential data, RNNs become adept at discerning correlations and patterns that indicate malware behavior. They take sequential data as input, working through it one element at a time and updating their internal state based on the observed data. This mechanism allows RNNs to capture temporal dependencies and patterns in the data, which is essential for understanding the dynamic nature of malware. The hidden state within RNNs serves as a form of memory, retaining information about previous observations and enabling the contextualization of past events while predicting the current one. However, these strengths are counterbalanced by limitations in reported applications. In the study reported in [1], the Kaspersky malware family classification criteria applied to the analyzed samples may not be accurate. In addition, only the types and order of the API calls made by the malware were considered when extracting and evaluating API call patterns. Because API calls are higher-level than machine code or assembly, performance might improve if semantic criteria and semantic distinctions among malware API calls were also extracted [1].
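The element-by-element state update described in this section can be sketched with a vanilla (Elman) RNN forward pass. This is a minimal illustration with NumPy, not the architecture of any cited study: the weights are random rather than trained, and the sizes and API-call IDs are hypothetical.

```python
import numpy as np


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return every hidden state."""
    h = np.zeros(W_hh.shape[0])          # initial hidden state (the "memory")
    states = []
    for x_t in inputs:                   # one sequence element at a time
        # New state depends on the current input AND the previous state.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
vocab_size, hidden_size = 5, 4           # hypothetical sizes
W_xh = rng.normal(scale=0.5, size=(hidden_size, vocab_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

call_ids = [0, 3, 3, 1]                  # hypothetical API-call IDs
one_hot = np.eye(vocab_size)[call_ids]   # shape (4, vocab_size)
states = rnn_forward(one_hot, W_xh, W_hh, b_h)
```

Note that the second and third inputs are identical, yet their hidden states differ because each state also carries information from the preceding calls. This is the sense in which the hidden state acts as memory.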

To train RNNs for malware detection, historical data is used to adjust their internal parameters. Backpropagation through time is employed to update the model’s weights and biases based on prediction errors, allowing them to learn patterns associated with malware. Additionally, their temporal modeling capabilities enable the identification of anomalous behavior trends within an application over time, facilitating the early detection of novel or previously undiscovered strains of malware. The ability of RNNs to capture nuanced and evolving behavioral patterns makes them a valuable tool in malware detection. They enhance security by providing a dynamic, adaptable, and context-aware approach to identifying malicious software, especially in the face of rapidly changing cybersecurity threats. RNNs are effective at identifying evasive and polymorphic malware, which employ techniques to avoid detection and continually change their code to generate different variants. RNNs can tackle these challenges by recognizing deviations from normal behavior and analyzing the evolving patterns in the code.

Addressing data imbalance in training RNNs demands a strategic approach. One method involves data augmentation, wherein synthetic data is generated by introducing variations to the existing minority class samples, thereby enriching the dataset. Additionally, employing sampling techniques such as oversampling (replicating minority class samples) or undersampling (reducing the number of majority class samples) can help balance the dataset distribution. Moreover, integrating cost-sensitive learning proves effective by assigning varying costs to misclassification errors across different classes, thereby accommodating the imbalance and enhancing model performance. These strategies collectively empower RNNs to navigate the challenges posed by skewed data distributions, ultimately fostering more robust and accurate predictions.
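As an illustration of the oversampling strategy mentioned above, the following sketch replicates minority-class samples at random until every class is as large as the largest one. The function name `random_oversample` and the toy data are hypothetical; in practice, oversampling would normally be applied to the training split only, so that replicated samples do not leak into evaluation.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Replicate minority-class samples until all classes match the largest."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(group) for group in by_class.values())

    out_samples, out_labels = [], []
    for label, group in by_class.items():
        # Draw extra copies (with replacement) for under-represented classes.
        extra = [rng.choice(group) for _ in range(target - len(group))]
        for sample in group + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels


# Hypothetical toy data: six benign call sequences, two malicious ones.
samples = [[1, 2], [2, 3], [1, 3], [2, 2], [3, 1], [1, 1], [9, 9], [8, 9]]
labels = ["benign"] * 6 + ["malware"] * 2
bal_samples, bal_labels = random_oversample(samples, labels)
counts = Counter(bal_labels)
```

Undersampling is the mirror image: instead of adding copies to the minority class, a random subset of the majority class is kept.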

In the study reported in [1], experiments were conducted with 787 malware samples belonging to nine families. Representative API call patterns of the nine families were extracted from 551 samples used as a training set, and classification was performed on the remaining 236 samples used as a test set. Classification accuracy using the API call patterns extracted by the RNN averaged 71%. These results indicate the feasibility of using an RNN to extract representative API call patterns of malware families for malware family classification. In the evaluation, the representative API call patterns extracted for each family were first compared with the API call sequences of the malware in the test set. Then, for each test sample, the three representative patterns with the highest similarity were selected, and the corresponding top three families were compared with the correct answer. The Jaccard similarity coefficient was used as the similarity measure [1].
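The top-three matching step can be sketched as follows. This is only an illustration under stated assumptions: API call patterns are treated as sets (the exact representation used in [1] is not specified here), and the family names, patterns, and sample are hypothetical.

```python
def jaccard(a, b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| of two collections, as sets."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def top_k_families(sample_calls, family_patterns, k=3):
    """Return the k families whose pattern is most similar to the sample."""
    scored = sorted(
        family_patterns.items(),
        key=lambda item: jaccard(sample_calls, item[1]),
        reverse=True,
    )
    return [family for family, _ in scored[:k]]


# Hypothetical representative patterns, one per family.
family_patterns = {
    "family_a": ["CreateFileW", "WriteFile", "CloseHandle"],
    "family_b": ["RegOpenKeyExW", "RegSetValueExW", "RegCloseKey"],
    "family_c": ["socket", "connect", "send"],
    "family_d": ["CreateFileW", "ReadFile", "send"],
}
sample = ["CreateFileW", "WriteFile", "send", "CloseHandle"]
ranked = top_k_families(sample, family_patterns)
# ranked == ["family_a", "family_d", "family_c"]
```

A prediction counts as correct under this scheme when the true family appears among the three returned candidates.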

In [23], experiments on a balanced dataset yielded 83% accuracy and a loss of 0.44, outperforming the baseline model in terms of minimum loss. On the imbalanced dataset, accuracy was 98% and the loss was 0.10, exceeding the accuracy of the state-of-the-art model. These results indicate that the proposed model handles malware classification well [23].

One successful application of RNNs in malware detection is dynamic behavioral analysis. This involves analyzing software behavior, such as file operations, system calls, registry accesses, and network interactions, to identify potentially malicious activities. They can create behavioral profiles of software by observing and analyzing its interactions with the operating system and external resources. These profiles can be compared against known malware behavior patterns to identify potential threats. RNNs excel in anomaly detection, crucial for identifying malware that exhibits unusual or unexpected behavior. By continuously learning and adapting to evolving malware behavior, RNN-based systems can update their models to detect novel threats, making them effective against 0-day attacks. They also reduce false positives by focusing on behavioral analysis, prioritizing potential threats for security professionals to investigate. RNNs can consider the context of each action within a software’s behavior, distinguishing legitimate activities from malicious ones. Given their proficiency in processing ordered data, RNNs are well-suited for tasks involving time series data. They adapt to various application domains, including speech recognition, language modeling, translation, and image captioning, where sequential data analysis is crucial [1].

3.2 Deep autoencoder

Deep Autoencoders (DAEs) have ascended as potent tools in the fight against malware, particularly within the realm of unsupervised learning. DAEs are a type of neural network that encodes high-dimensional input into a lower-dimensional representation and then decodes it back into its original format. They serve as valuable tools for uncovering the inherent characteristics and patterns exhibited by benign software applications. By training on extensive datasets of benign applications, DAEs learn and internalize the defining traits of harmless programs.

Labeled datasets are crucial in training DAEs for malware detection. These datasets play a pivotal role in imparting the model with the ability to discern nuanced patterns that distinguish benign software from malicious counterparts. When operating within a supervised learning framework, DAEs are trained on input-output pairs derived from labeled datasets. Each input represents features extracted from both benign and malicious samples, enabling the model to comprehend the distinctive characteristics associated with each class. This process contributes to the model’s generalization ability, allowing it to recognize common patterns indicative of malware across different variations and instances, ensuring effectiveness on previously unseen data.

Addressing data imbalance in training DAEs and variational autoencoders (VAEs) involves employing several strategic approaches. One method involves leveraging the inherent generative capacity of VAEs to counter data scarcity by generating synthetic data. This technique helps balance the class distribution, enhancing the model’s ability to learn from underrepresented classes. Additionally, adversarial training can be utilized to foster robustness against imbalances. By exposing the model to adversarial examples, it learns to create more resilient representations, mitigating the impact of data imbalance. These strategies collectively empower these models to handle skewed datasets effectively, improving their capacity to generalize and learn meaningful representations across all classes.

When presented with novel applications, DAEs can assess whether they deviate significantly from the learned benign patterns. This assessment is made possible by evaluating the reconstruction error generated during the decoding process. A high reconstruction error indicates a substantial departure from the expected benign behavior, raising suspicion of potential malicious activity. They can also be seamlessly integrated with other machine learning algorithms, enhancing the comprehensiveness of malware detection strategies.
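The reconstruction-error test described above can be sketched in a few lines. To keep the example small and runnable, a linear autoencoder fitted by PCA (via singular value decomposition) stands in for a deep autoencoder; this is a deliberate simplification, not the method of any cited study. The data are synthetic, and the choice of the 99th percentile of benign training error as the threshold is an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical benign feature vectors lying near a 2-D subspace of a 6-D space.
basis = rng.normal(size=(2, 6))
benign_train = (rng.normal(size=(200, 2)) @ basis
                + 0.01 * rng.normal(size=(200, 6)))

# "Training": learn a 2-D linear code from benign data only (PCA via SVD).
mean = benign_train.mean(axis=0)
_, _, vt = np.linalg.svd(benign_train - mean, full_matrices=False)
components = vt[:2]                       # tied encoder/decoder weights


def reconstruction_error(x):
    """Squared error between each row of x and its encode-decode round trip."""
    code = (x - mean) @ components.T      # encode to 2 dimensions
    x_hat = code @ components + mean      # decode back to 6 dimensions
    return np.sum((x - x_hat) ** 2, axis=-1)


# Assumed thresholding rule: a high percentile of the benign training error.
threshold = np.percentile(reconstruction_error(benign_train), 99)

benign_new = rng.normal(size=(1, 2)) @ basis   # follows the benign structure
outlier = rng.normal(size=(1, 6)) * 3.0        # does not follow it

candidates = np.vstack([benign_new, outlier])
is_suspicious = reconstruction_error(candidates) > threshold
```

With a deep autoencoder the encode and decode steps become nonlinear networks, but the decision rule stays the same: flag a sample when its reconstruction error exceeds a threshold calibrated on benign data.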

In [24], the Bi-LSTM model alone, without the autoencoder, achieved 99% precision and 99% recall in detecting malicious activities, whereas the model with the autoencoder achieved an average precision and recall of 93% [24].

The architectural framework of DAEs consists of two pivotal stages: encoding and decoding. In the encoding phase, the high-dimensional input is compressed into a lower-dimensional representation. In the decoding phase, the compressed representation is reconstructed back to its original form, with each network layer performing a distinctive transformation on the input data. Their adaptability benefits applications like natural language processing (NLP), image recognition, identity verification, and data reduction.

While DAEs have ascended as potent tools in the fight against malware, deploying them for real-world malware detection comes with various challenges. Scalability is a significant challenge, as training and deploying DAEs at scale can strain organizational infrastructure. Efficient scaling becomes essential as datasets grow in size and models become more complex. The demand for computational resources, especially during training, poses another challenge. Organizations must address the need for processing power, which can lead to longer inference times and increased operational costs. Real-time analysis, crucial for timely malware identification, can be challenging with DAEs, particularly those with complex architectures that struggle to achieve low-latency predictions. Imbalances in real-world malware datasets pose challenges related to biased models favoring the majority class, resulting in suboptimal detection of less common or emerging malware variants. The interpretability and explainability of DAEs, often seen as black-box models, become critical in a production setting to gain the trust of security analysts and stakeholders [24].

In malware detection, a comparative analysis reveals distinctive strengths and weaknesses among DAEs, traditional machine learning algorithms, and other deep learning approaches. DAEs excel in unsupervised feature learning and automatically capture intricate representations vital for complex tasks. Their ability to detect anomalies without labeled data is advantageous for identifying new malware variants. Traditional machine learning algorithms, like Decision Trees, offer superior interpretability and computational efficiency but rely on manual feature engineering and have limitations in anomaly detection [19]. Deep learning approaches, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), excel in spatial and temporal contexts but require large labeled datasets and can be computationally intensive. Factors like interpretability, data availability, and analysis requirements play a crucial role in choosing among DAEs, traditional algorithms, or other deep learning models. DAEs, with their focus on unsupervised feature learning and anomaly detection, stand out in the malware detection toolkit, although each approach presents unique strengths suited to particular demands of the cybersecurity landscape.

Deep Autoencoders (DAEs) find practical application in unsupervised learning for discerning inherent traits within benign software. They leverage extensive datasets of benign applications to learn characteristic features. Through this learning process, DAEs become adept at identifying deviations from these established norms, effectively flagging potential malware presence. By analyzing and detecting anomalies in software behavior, DAEs serve as a valuable tool in the continuous battle against cybersecurity threats, enabling proactive identification of suspicious activities and potential threats [25].

Unsupervised learning presents a promising avenue for discerning the inherent traits of malware, and its efficacy lies in the ability to perform effective feature learning without relying on labeled data. However, despite its potential, DAEs encounter notable challenges. Scalability poses a significant hurdle during both training and deployment phases, demanding innovative solutions to handle the complexities of large-scale data. Additionally, the vulnerability to adversarial attacks presents a pressing concern, necessitating robust defense mechanisms to fortify these models. Furthermore, integrating unsupervised learning methodologies with existing security infrastructure proves to be a challenging task, demanding a concerted effort to harmonize these disparate elements effectively. Thus, while holding considerable promise, the practical implementation of unsupervised learning in identifying malware characteristics necessitates a strategic approach to mitigate these formidable challenges.

3.3 LSTM

The Long Short-Term Memory (LSTM) architecture has demonstrated its effectiveness in malware detection due to its ability to identify long-term dependencies within sequential data. LSTMs are a type of recurrent neural network (RNN) that use memory cells and gates to control the flow of information within the network. This makes them valuable for analyzing sequences of system calls, API calls, or network traffic generated by applications, especially in the context of malware detection in cyberspace. By processing sequential data using LSTM networks, patterns and correlations indicative of malicious behavior can be discovered. The following techniques are used in this scenario:

a) Sequence Encoding: System call sequences are encoded into numerical vectors, where each system call is represented as an integer or a one-hot encoded vector. This encoding enables effective processing by the LSTM network.

b) Sequence Padding: Sequences are often padded or truncated to a fixed length to ensure uniform input lengths. This step is crucial for creating consistent input for the LSTM.

c) LSTM Architecture: LSTM layers are utilized to capture the temporal dependencies and the order of system calls within the encoded sequences. LSTMs excel at modeling long-range dependencies, making them well-suited for this task.

d) Output Classification: The output of the LSTM layer is typically connected to a classification layer responsible for distinguishing between benign and malicious behavior based on the patterns learned from the system call sequences.
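Steps (a) and (b) above can be sketched in plain Python as follows. The system-call names, the reserved IDs for padding and unknown calls, and the maximum length are all illustrative assumptions. Steps (c) and (d) would then feed the padded integer batch into an embedding or one-hot layer, an LSTM layer, and a classification layer in a deep learning framework, which is not shown here.

```python
PAD_ID, UNK_ID = 0, 1   # reserved IDs (assumed convention)


def build_vocab(sequences):
    """Map each distinct call name to an integer ID, starting at 2."""
    vocab = {}
    for seq in sequences:
        for call in seq:
            vocab.setdefault(call, len(vocab) + 2)
    return vocab


def encode_and_pad(seq, vocab, max_len):
    """Integer-encode a call sequence, then truncate or right-pad to max_len."""
    ids = [vocab.get(call, UNK_ID) for call in seq][:max_len]
    return ids + [PAD_ID] * (max_len - len(ids))


def one_hot(ids, vocab_size):
    """Alternative encoding: one binary vector of length vocab_size per call."""
    return [[1 if i == j else 0 for j in range(vocab_size)] for i in ids]


# Hypothetical system-call traces of different lengths.
traces = [
    ["open", "read", "close"],
    ["open", "mmap", "write", "write", "close", "exit"],
]
vocab = build_vocab(traces)
batch = [encode_and_pad(t, vocab, max_len=5) for t in traces]
# batch == [[2, 3, 4, 0, 0], [2, 5, 6, 6, 4]]
```

The first trace is padded with zeros and the second is truncated, so both rows have the same length and can be stacked into a single input tensor.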

Like system call analysis, LSTM networks can be used for analyzing sequences of API calls to detect malware in cyberspace. The techniques involved are similar to those used in system call analysis, including sequence encoding, padding, LSTM architecture, and output classification. Additionally, they can also be utilized to model the behavior of an application over time, enabling the identification of anomalous activities that may indicate the presence of a new or previously undiscovered malware strain [25]. For enhanced malware detection strategies, LSTMs can also be seamlessly integrated with other machine learning techniques, such as Convolutional Neural Networks (CNNs).

The development of the LSTM architecture was primarily motivated by the need to address the vanishing gradient problem present in standard recurrent neural networks [26]. This problem arises when the error gradients propagated backward through many time steps shrink toward zero, so the weights that govern early inputs receive almost no update, leading to training difficulties. As a type of RNN, LSTMs leverage memory cells and gating mechanisms to effectively control the flow of information, making them well-suited for the analysis of sequences involving system calls, API calls, or network traffic while identifying patterns and correlations indicative of malicious behavior.
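The vanishing gradient effect can be made concrete with a small numerical sketch. It assumes a deliberately simplified scalar recurrent unit, h_t = tanh(w * h_{t-1}), with hypothetical values for `w` and `h0`; it is not an LSTM and is not drawn from the cited work.

```python
import math


def gradient_through_time(w: float, h0: float, steps: int) -> float:
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1}).

    By the chain rule the gradient is a product of one factor per step,
    w * (1 - h_t**2), so it shrinks geometrically when each factor is < 1.
    """
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)
    return grad


for steps in (5, 20, 50):
    print(steps, gradient_through_time(w=0.5, h0=0.8, steps=steps))
```

With |w| < 1 every factor is below one, so the influence of the first input on the final state decays geometrically with sequence length. The additive cell-state update and gates of an LSTM are designed to mitigate this decay.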

A high-quality dataset consisting of 2,060 benign and memory-resident programs was created. In other words, the dataset contains 1,287,500 labeled sub-images cut from the MRm-DLDet transformed ultra-high resolution RGB images. MRm-DLDet was implemented for Windows 10, and it performs better than the latest methods, with a detection accuracy of up to 98.34%. Twelve different neural networks were trained, with an F-measure of up to 99.97% [27].

To address the challenges posed by data imbalance in LSTM training, several strategies are employed. One approach involves employing data augmentation techniques, which entail generating synthetic data by introducing variations to the existing minority class samples. This method aids in balancing the dataset and providing the model with more diverse instances to learn from. Another valuable strategy involves leveraging sampling techniques such as oversampling or undersampling. Oversampling involves replicating minority class samples to balance the class distribution, while undersampling focuses on reducing the number of majority class samples. These methods help create a more equitable representation of classes within the dataset, enabling the LSTM to learn effectively from both the majority and minority classes. Furthermore, adopting cost-sensitive learning techniques proves beneficial. By assigning varying costs to misclassification errors in different classes, the model can account for the imbalance and prioritize accurate classification of the minority class. This approach ensures that the LSTM places appropriate emphasis on correctly identifying instances from both the majority and minority classes, thereby enhancing overall performance despite data imbalances [26].
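Two of these strategies, random oversampling and cost-sensitive class weights, can be sketched as follows. The toy labels and the inverse-frequency weighting formula are assumptions chosen for illustration; the reviewed studies may use other schemes.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def random_oversample(
    samples: Sequence, labels: Sequence[int], seed: int = 0
) -> Tuple[List, List[int]]:
    """Replicate minority-class samples until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for cls, n in counts.items():
        pool = [x for x, y in zip(samples, labels) if y == cls]
        for _ in range(target - n):
            out_x.append(rng.choice(pool))
            out_y.append(cls)
    return out_x, out_y


def inverse_frequency_weights(labels: Sequence[int]) -> Dict[int, float]:
    """Cost-sensitive weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


# Toy data: 8 benign traces (label 0) and 2 malicious traces (label 1).
X = [f"trace_{i}" for i in range(10)]
y = [0] * 8 + [1] * 2
X_bal, y_bal = random_oversample(X, y)
weights = inverse_frequency_weights(y)
print(Counter(y_bal), weights)
```

Oversampling changes the data the model sees, whereas class weights leave the data untouched and instead scale each sample's contribution to the loss. Oversampling should be applied to the training split only, so that duplicated samples do not leak into the evaluation data.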

LSTM’s mastery in capturing temporal dependencies enables a deep comprehension of malware’s dynamic behavior over time. However, this strength comes with inherent challenges. The resource-intensive nature of training poses a significant obstacle, while vulnerability to adversarial attacks is a critical concern. Additionally, the constant need for updates to align with the ever-evolving array of malware variants presents an ongoing demand. Despite these hurdles, the methodology’s proficiency in unraveling intricate data sequences remains a promising frontier in the realm of deciphering and combating malware conduct.

3.4 Deep neural network

Deep Neural Networks (DNNs) have gained prominence in virus detection due to their remarkable capacity to comprehend complex patterns and data characteristics. DNNs represent a class of artificial neural networks characterized by numerous interconnected layers of nodes. These layers collaboratively process incoming data, ultimately yielding predictions or classifications. In the realm of malware detection in cyberspace, DNNs are trained on extensive datasets encompassing both benign and malicious programs, enabling them to discern the fundamental attributes and patterns inherent to each class.

Leveraging the knowledge acquired from this training, DNNs can effectively categorize new applications as either benign or malicious based on their intrinsic characteristics. They exhibit versatility in assessing diverse forms of input data, spanning system calls, API calls, and network traffic, making them adaptable to various malware detection scenarios. For instance, in API call pattern recognition, Convolutional Neural Networks (CNNs), a type of DNN, can effectively analyze sequences for anomaly detection or malware identification by representing each API call as a feature vector. In network traffic analysis, DNNs, including CNN architectures, excel at detecting spatial patterns within data for intrusion detection, often extracting features from packet headers or payloads. Additionally, CNNs prove valuable in image-based malware detection, where they process images of executable files to identify malicious code patterns. Furthermore, the ability of DNNs to integrate multiple forms of input data in multimodal threat analysis, combining features from system calls, API patterns, and network traffic, highlights their capability for comprehensive threat assessment [28].

The utilization of transfer learning allows DNNs trained on one malware classification task to be fine-tuned for related challenges, showcasing their adaptability and knowledge transfer capabilities in cybersecurity applications. The training process for DNNs typically involves backpropagation, a technique that computes the gradients used by gradient descent to minimize the loss function. Training proceeds in several key stages, starting with the initialization of weights and biases, a critical step that establishes the foundation for effective learning [29]. As the input data undergoes forward propagation, traversing through the network’s layers, activations are computed, and the output is generated based on the current parameters. The loss function then calculates the disparity between the predicted output and actual labels, providing a quantifiable metric for the network’s performance. Backpropagation follows, utilizing the chain rule to compute gradients and propagate errors backward through the network. This process enables the network to discern the contribution of each weight to the overall error. Subsequently, gradient descent optimization adjusts the weights and biases to minimize the loss function, guiding the network toward optimal configurations for proficient malware detection. The entire sequence of forward propagation, loss calculation, backpropagation, and weight updates iterates over multiple epochs, allowing the network to progressively refine its parameters. These iterative adjustments enhance the network’s capacity to generalize and effectively identify previously unseen malware variants, underscoring its effectiveness in the realm of cybersecurity.

When the training data is imbalanced, resampling methods such as oversampling, undersampling, or techniques like SMOTE (Synthetic Minority Over-sampling Technique) can rebalance the dataset, ensuring equal representation of classes. Additionally, adjusting class weights during training serves as a means to penalize misclassifications of the minority class more heavily, allowing the model to prioritize learning from the underrepresented data. These strategies collectively aim to mitigate the impact of data imbalance, enabling DNNs to better generalize and make more accurate predictions across all classes in the dataset.
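The training cycle described above (initialization, forward propagation, loss calculation, backpropagation, and gradient descent updates repeated over epochs) can be sketched with NumPy. The two-feature toy data, the network size, the learning rate, and the epoch count are all assumptions for illustration and do not reproduce any reviewed model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy features (e.g. normalized API-call counts); 1 = malicious.
X = np.array([[0.9, 0.8], [0.8, 0.9], [0.1, 0.2], [0.2, 0.1]])
y = np.array([[1.0], [1.0], [0.0], [0.0]])

# Initialization of weights and biases.
W1, b1 = rng.normal(0, 0.5, (2, 4)), np.zeros((1, 4))
W2, b2 = rng.normal(0, 0.5, (4, 1)), np.zeros((1, 1))


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(inputs):
    """Forward propagation: hidden activations and output probability."""
    h = np.tanh(inputs @ W1 + b1)
    return h, sigmoid(h @ W2 + b2)


def bce(p, target):
    """Binary cross-entropy loss averaged over the batch."""
    eps = 1e-12
    return float(-np.mean(target * np.log(p + eps)
                          + (1 - target) * np.log(1 - p + eps)))


losses, lr = [], 0.5
for epoch in range(500):
    h, p = forward(X)                      # forward propagation
    losses.append(bce(p, y))               # loss calculation
    # Backpropagation via the chain rule.
    dz2 = (p - y) / len(X)                 # gradient at the output pre-activation
    dW2, db2 = h.T @ dz2, dz2.sum(axis=0, keepdims=True)
    dz1 = (dz2 @ W2.T) * (1 - h ** 2)      # tanh'(z) = 1 - tanh(z)^2
    dW1, db1 = X.T @ dz1, dz1.sum(axis=0, keepdims=True)
    # Gradient descent update of weights and biases.
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(losses[0], losses[-1])
```

In practice a framework with automatic differentiation would replace the hand-written gradients; they are written out here only to show where the chain rule enters.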

A deep neural network-based malware detection system developed by Invincea achieves a usable detection rate at an extremely low false positive rate and scales to real-world training example volumes on commodity hardware. The system achieves a 95% detection rate at a 0.1% false positive rate (FPR), based on more than 400,000 software binaries sourced directly from the company's customers and internal malware databases [28].
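A figure such as "detection rate at a given FPR" is obtained by choosing a score threshold that keeps false positives within budget and then measuring how much malware is still caught. The following sketch shows one way to compute it; the scores and labels are made up for illustration and are unrelated to the cited system.

```python
from typing import List, Tuple


def tpr_at_fpr(
    scores: List[float], labels: List[int], max_fpr: float
) -> Tuple[float, float]:
    """Return (threshold, detection rate) for the lowest threshold whose
    false positive rate stays at or below max_fpr.

    A sample is flagged as malware when its score is >= threshold.
    labels: 1 = malicious, 0 = benign.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one sample of each class")
    best = (float("inf"), 0.0)  # flag nothing: FPR = 0, detection rate = 0
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, l in zip(scores, labels) if s >= thr and l == 1)
        fp = sum(1 for s, l in zip(scores, labels) if s >= thr and l == 0)
        if fp / n_neg > max_fpr:
            break  # lowering the threshold further only adds false positives
        best = (thr, tp / n_pos)
    return best


# Hypothetical classifier scores for 4 malicious and 6 benign samples.
scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1, 0.05]
labels = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(tpr_at_fpr(scores, labels, max_fpr=0.0))
print(tpr_at_fpr(scores, labels, max_fpr=0.2))
```

Tightening the FPR budget raises the threshold and lowers the detection rate, which is why the two numbers are only meaningful when reported together.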

To generate predictions or classifications, DNNs meticulously process data through their interconnected layers of nodes. When applied to malware detection, these networks can be trained on extensive datasets containing both benign and malicious programs, equipping them with the knowledge needed to distinguish between the two categories. By synergizing DNNs with other machine learning techniques, such as Deep Autoencoders (DAEs) or Long Short-Term Memory (LSTM) networks, a more comprehensive and robust approach to malware identification can be achieved. Deep Autoencoders, as unsupervised models, play a pivotal role in feature learning and extraction, providing compact and meaningful representations of input data, particularly valuable in high-dimensional spaces like raw system call sequences or network traffic patterns. This enhances the model’s robustness against various malware variants by capturing latent features and anomalies during pre-training on unlabeled data. On the other hand, Long Short-Term Memory Networks excel in capturing temporal dependencies and sequences, crucial for understanding dynamic aspects of malware behavior over time. Integrated into a DNN architecture, LSTMs contribute temporal context awareness, enabling the model to discern evolving patterns exhibited by sophisticated malware. Ensemble learning techniques, such as stacking or bagging, further amplify the model’s robustness by combining the strengths of DNNs, DAEs, and LSTMs [28]. The ensemble approach leverages the diversity of information captured by each component, resulting in a more accurate and resilient model less sensitive to noise and outliers. Additionally, the utilization of transfer learning facilitates knowledge transfer from related tasks, such as feature learning or sequential modeling, enhancing the DNN’s generalization performance in malware identification.

While leveraging DNNs has significantly propelled the field of malware detection towards greater accuracy and efficiency, their application comes with inherent limitations and challenges. Firstly, scalability issues pose a substantial hurdle, as training large-scale DNNs demands significant computational resources and can be financially burdensome. The complexity of DNN architectures, coupled with the extensive data required for effective training, exacerbates this challenge, particularly for organizations with limited computational capabilities. Secondly, interpretability challenges impede the widespread adoption of DNNs in malware detection in cyberspace. DNNs are often considered black-box models, lacking transparency in their decision-making processes. In intricate tasks like malware detection, understanding the rationale behind a specific decision is crucial for building trust and ensuring alignment with the expectations of security experts. Adversarial attacks constitute another formidable challenge. DNNs are susceptible to intentional manipulations of input data by malicious actors, leading to misclassifications and compromising the reliability of malware detection systems. Such attacks pose a significant security risk, requiring robust defenses to mitigate their impact [29]. Furthermore, the issue of data imbalance within malware datasets complicates the generalization performance of DNNs. Imbalances, where certain types of malware are underrepresented, can result in model biases towards prevalent classes, leading to suboptimal detection of less common or emerging malware variants. Lastly, the lack of explainability in DNNs’ decision-making processes hinders their integration into security workflows. The opacity of these models makes it challenging for security analysts to comprehend the basis for a classification, impeding effective collaboration between automated systems and human experts.

3.5 Deep Belief Network

Deep Belief Networks (DBNs) are powerful tools in the realm of malware detection in cyberspace. These neural networks excel at capturing intricate patterns and features within vast datasets, making them invaluable for identifying malicious software. DBNs are particularly effective in analyzing software behavior and identifying anomalies or suspicious activities. They have the ability to autonomously discover relevant features, which is advantageous in the context of rapidly evolving malware. By processing various aspects of software behavior, such as system calls, API calls, or network traffic patterns, DBNs can differentiate between normal software operations and potentially harmful ones. This approach allows for the detection of previously unseen malware strains or novel attack techniques, making them a critical component of modern cybersecurity systems. The versatility and adaptability of DBNs in handling large and diverse datasets make them an essential tool for protecting against the ever-growing landscape of malware threats [2].

End-to-end deep learning architectures, specifically Bidirectional Long Short-Term Memory (BiLSTM) neural networks, are employed for the static behavior analysis of Android bytecode. Unlike conventional malware detectors that rely on handcrafted features, this system autonomously extracts insights from opcodes. This approach demonstrates the superiority of deep learning models over traditional machine learning methods, offering a promising solution to safeguard Android users from malicious applications [30].

Researchers have also explored the suitability of deep learning models for mobile malware detection. They utilize a deep neural network (DNN) implementation called DeepLearning4J (DL4J), which successfully identifies mobile malware with high accuracy rates. The study suggests that adding more layers to the DNN models improves their accuracy in detecting mobile malware, showcasing the feasibility of using DNNs for continuous learning and anticipating new types of attacks [22].

An anti-malware system built on customized, sufficiently deep, end-to-end deep learning architectures reports an accuracy of 0.999 and an F1-score of 0.996 on a large dataset of more than 1.8 million Android applications [2]. The SOFS-OGCNMD system achieves an average accuracy of 98.28%, an average precision of 98.65%, a recall of 98.53%, and an F1-Score of 98.47% [22].

In addition, a method has been proposed to address the challenges of malware detection in Cyber-Physical Systems (CPS) within the Internet of Things (IoT). The model, called Snake optimizer-based feature selection with optimum graph convolutional network for malware detection (SOFS-OGCNMD), demonstrates remarkable results in accuracy, precision, recall, and F1-Score, outperforming recent models and contributing to the protection of CPS and IoT systems from evolving cyber threats [18].

Furthermore, a system has been designed to enhance the security of power systems through a Deep Belief Network (DBN)-based malware detection system. This system deconstructs malicious code into opcode sequences, extracts feature vectors, and utilizes DBN classifiers to categorize malicious code. It effectively utilizes unlabeled data for training and outperforms other classification algorithms in terms of accuracy. The research showcases the potential of DBNs for enhancing malware detection accuracy and reducing feature vector dimensions, thereby contributing to safeguarding power systems from cyber threats.

3.6 Deep convolutional neural network

The realm of malware detection in cyberspace, particularly in the context of evolving cyber threats, is experiencing a surge in innovative approaches driven by deep learning and convolutional neural networks (CNNs). Deep Convolutional Neural Networks (DCNNs) have emerged as robust and efficient technologies for detecting malware. Their ability to automatically extract complex features from various forms of data makes them exceptionally well-suited for this task. In the context of malware analysis, CNNs excel at processing and identifying malicious patterns within binary code, enabling the detection of known malware strains and even the discovery of novel threats. These networks are particularly effective in detecting malware through the analysis of file content and structure, which includes identifying suspicious code segments and unusual behaviors. Deep CNNs offer a significant advantage in terms of adaptability as they can be trained on diverse and evolving datasets to keep up with the continuous evolution of malware. Additionally, their capacity to handle large-scale data and discern subtle variations in binary files enables the identification of both prominent and more subtle malicious patterns. They are at the forefront of malware detection, contributing to the defense against the ever-growing sophistication of cyber threats [3].

DCNNs have proven to be highly effective in tasks related to image processing, excelling in capturing intricate spatial hierarchies and patterns. Their performance, often measured through precision, recall, and F1-score, is particularly notable in image classification tasks, especially when trained on extensive and diverse datasets. When compared to traditional machine learning models like SVM or Random Forest, DCNNs consistently outshine them in image-related tasks, showcasing a superior ability to discern complex patterns. Transfer learning models, such as VGG16 or ResNet, compete strongly with them, benefiting from pre-trained networks on large datasets. However, DCNNs, especially those incorporating transfer learning architectures, often emerge as leaders, demonstrating heightened precision, recall, and F1-score by leveraging their effective feature extraction capabilities. In tasks involving sequential data, where Recurrent Neural Networks (RNNs) excel in capturing temporal dependencies, DCNNs maintain their superiority in scenarios where spatial features hold more significance, as seen in image-related tasks. Ensemble models, combining various techniques, present a competitive alternative, sometimes matching or exceeding DCNNs’ performance, particularly in cases where diverse models contribute to improved generalization. These models find a significant application in the financial sector, particularly in credit scoring. In credit scoring, financial institutions aim to predict the probability of a loan applicant defaulting on a loan. This prediction is based on a myriad of factors including credit history, income, employment status, and others. Ensemble models combine various machine learning models like Decision Trees, Logistic Regression, and Neural Networks to assess these factors. 
In the real world, this translates to more accurate credit scoring, which helps financial institutions in reducing the risk of loan defaults while approving more loans for credit-worthy applicants.

The evaluation of DCNNs on diverse datasets is indispensable for gauging their generalizability and robustness across various malware types. Assessing these models on multiple datasets provides crucial insights into their adaptability and real-world performance. Key considerations include exploring malware variability, addressing imbalances in datasets, accounting for temporal aspects in malware evolution, cross-domain evaluation to assess adaptability, examining scenarios involving transfer learning, evaluating resilience against adversarial attacks, accounting for geographical variations in malware prevalence, ensuring versatility in handling different feature representations, and maintaining consistent evaluation metrics such as precision, recall, F1-score, and area under the ROC curve. This comprehensive approach to evaluation enables researchers and practitioners to develop DCNN models that can effectively navigate the dynamic and complex landscape of malware detection, ensuring their efficacy across diverse and evolving cybersecurity scenarios.
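For reference, the threshold-dependent metrics named above follow directly from the confusion-matrix counts. The sketch below uses made-up labels and predictions; area under the ROC curve is omitted because it requires scores rather than hard predictions.

```python
from typing import Dict, Sequence


def detection_metrics(
    y_true: Sequence[int], y_pred: Sequence[int]
) -> Dict[str, float]:
    """Precision, recall, F1-score and FPR for binary labels (1 = malicious)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}


# Hypothetical ground truth and predictions for ten samples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
metrics = detection_metrics(y_true, y_pred)
print(metrics)
```

Reporting the same set of metrics on every dataset, as the paragraph above recommends, is what makes results comparable across studies.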

An advanced intelligent IoT malware detection model based on deep learning and ensemble learning algorithms, called DEMD-IoT, achieves the highest accuracy, 99.9%, compared to state-of-the-art machine learning, deep learning, and ensemble models [3]. The 4L-DNN model outperforms other DNN architectures by a significant margin on the accuracy metric: 98.85% was achieved on both the Benign and Malicious PE Files dataset and the Malware Dataset, and 98.37% was achieved on the Classification of Malwares dataset [31].

To address the challenges related to malware detection, the DEMD-IoT model leverages the power of deep learning and ensemble learning techniques. It comprises a stack of three one-dimensional convolutional neural networks (1D-CNNs) tailored to analyze IoT network traffic patterns. The model also features a meta-learner, utilizing the Random Forest algorithm, to integrate results and produce the final prediction. DEMD-IoT’s advantages lie in its ensemble strategy to enhance performance and the use of hyperparameter optimization to fine-tune base learners. Notably, it employs 1D-CNNs, avoiding the complexity of preprocessing phases. Empirical evaluation on the IoT-23 dataset demonstrates that this ensemble method outperforms other models, achieving a remarkable accuracy of 99.9% [31].
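The operation underlying a 1D-CNN layer can be sketched in a few lines. This is not the DEMD-IoT architecture: the input values and the hand-picked kernel are assumptions for illustration, whereas in a trained network the kernel weights are learned.

```python
from typing import List


def conv1d(signal: List[float], kernel: List[float]) -> List[float]:
    """Valid-mode 1D cross-correlation, the core operation of a 1D-CNN layer."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values: List[float]) -> List[float]:
    """Element-wise rectified linear activation."""
    return [max(0.0, v) for v in values]


def global_max_pool(values: List[float]) -> float:
    """Keep the strongest response regardless of where it occurred."""
    return max(values)


# Hypothetical per-interval traffic feature with a short burst in the middle.
signal = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0]
kernel = [-1.0, 2.0, -1.0]  # responds to a local peak

feature_map = relu(conv1d(signal, kernel))
print(feature_map, global_max_pool(feature_map))
```

Because the kernel slides directly over the raw sequence, no conversion to a two-dimensional image is required, which is consistent with the reduced preprocessing noted for 1D-CNNs above.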

Another study introduces a web-based malware detection system centered on deep learning, specifically a one-dimensional convolutional neural network (1D-CNN). Unlike traditional methods, it focuses on static features within portable executable files, making it ideal for real-time detection. The 1D-CNN architecture, tailored for these executable files, facilitates efficient feature extraction. Comparisons with state-of-the-art methods across diverse datasets confirm the model’s superiority. As malware poses a significant security threat, this web-based system offers user-friendly malware detection, reducing vulnerability to cyberattacks and benefiting individuals and organizations alike. The study emphasizes the importance of deploying deep learning models in web-based applications to enhance usability and accessibility [32].

In another paper, the authors propose an efficient neural network model, EfficientNetB1, for classifying malware families using image representations of malware at the byte level. By employing computer vision techniques, they aim to detect sophisticated and evolving malware. The evaluation of various pretrained CNN models highlights the importance of minimizing computational resource consumption during training and testing. EfficientNetB1 achieves an impressive accuracy of 99% in classifying malware classes, requiring fewer network parameters compared to other models. This work contributes to the field of cybersecurity by providing a novel approach that combines efficient neural network models and diverse image representation methods for accurate and resource-efficient malware classification [33].
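A byte-level image representation of this kind can be sketched as follows. The row width, the zero-padding of the last row, and the sample bytes are assumptions for illustration; the cited work may use a different layout.

```python
from typing import List


def bytes_to_image(data: bytes, width: int) -> List[List[int]]:
    """Reshape raw bytes into rows of `width` grayscale pixels (values 0-255).

    The last row is zero-padded so that every row has the same length.
    """
    if width <= 0:
        raise ValueError("width must be positive")
    padded = data + bytes(-len(data) % width)
    return [list(padded[i:i + width]) for i in range(0, len(padded), width)]


# Made-up bytes standing in for the start of an executable file.
sample = bytes([0x4D, 0x5A, 0x90, 0x00, 0x03, 0xFF, 0x10])
image = bytes_to_image(sample, width=4)
print(image)
```

The resulting two-dimensional array can then be resized and passed to a pretrained CNN in the same way as an ordinary grayscale image.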

To address the persistent challenge of malware detection in Windows systems, a Convolutional Neural Network (CNN)-based approach is used. It leverages the execution-time behavioral features of Portable Executable (PE) files to identify and classify elusive malware. The approach was evaluated using a dataset comprising MIST files, generating images from N-grams selected by various Feature Selection Techniques. Results from 10-fold cross-validation tests show high malware detection accuracy, particularly when employing N-grams recommended by the Relief Feature Selection Technique. This CNN-based approach outperforms other machine learning-based classifiers, offering a promising solution to enhance malware detection in Windows systems.
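N-gram extraction from a behavioral trace can be sketched as below. The instruction names are hypothetical and the MIST format itself is not reproduced; the feature selection step (for example Relief) would operate on counts like these.

```python
from collections import Counter
from typing import List


def ngram_counts(sequence: List[str], n: int) -> Counter:
    """Count every contiguous run of n items in the sequence."""
    return Counter(
        tuple(sequence[i:i + n]) for i in range(len(sequence) - n + 1)
    )


# Hypothetical behavioral trace of one executable.
trace = ["load", "open", "read", "open", "read", "close"]
bigrams = ngram_counts(trace, 2)
print(bigrams.most_common(2))
```

Each distinct N-gram becomes one candidate feature, so the feature space grows quickly with n, which is why a selection technique is applied before the images are generated.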

3.7 Deep generative models

Deep Generative Models offer a promising avenue for enhancing malware detection techniques in cyberspace. These models operate by generating synthetic data that mimics the characteristics of malicious code, thereby providing an innovative approach to detect malware. By leveraging techniques such as Variational Autoencoders (VAEs) or Generative Adversarial Networks (GANs), deep generative models can create artificial malware samples to diversify training datasets. This augmentation helps improve the robustness of malware detection systems, enabling them to recognize new and evolving threats. These models can also be employed in anomaly detection, identifying deviations from normal software behavior, which often indicates the presence of malware. Furthermore, they can generate features that enhance feature-based malware detection in cyberspace. Their adaptability and ability to generate data like malicious code samples contribute to strengthening the overall cybersecurity landscape, offering a proactive approach to identifying and combating malware threats [34].
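For reference, the standard GAN training objective can be written as a two-player game between a generator $G$ and a discriminator $D$. This is the general formulation rather than the loss of any specific system reviewed here; $p_{\text{data}}$ denotes the distribution of real samples and $p_z$ the noise prior.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is rewarded for telling real samples from generated ones, while the generator is rewarded for producing samples the discriminator accepts. This is the mechanism that yields synthetic malware-like samples for data augmentation.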

In contrast, state-of-the-art methods and alternative approaches encompass signature-based detection, heuristic-based detection, and traditional machine learning models. Signature-based methods are efficient in identifying known malware through predefined patterns but face limitations in detecting novel threats. Heuristic approaches rely on rules and behavioral patterns, demonstrating adaptability but may produce false positives or negatives. Traditional machine learning models, while interpretable and computationally efficient, are constrained by the need for manual feature engineering and may struggle with high-dimensional data. Interpretability favors signature-based and heuristic-based methods, as well as certain traditional machine learning models, over deep generative models. However, the adaptability to novel threats is shared by deep generative models and heuristic-based approaches, distinguishing them from the limitations of signature-based methods. Deep generative models, with their strengths in unsupervised learning and anomaly detection, offer a promising avenue for addressing challenges posed by evolving and novel malware threats.

The two features extracted from the data, each with its respective characteristics, are concatenated and fed into the malware detector of a hybrid deep generative model. By using both features, the proposed model achieves an accuracy of 97.47%, resulting in state-of-the-art performance [34]. A model verified by extensive experiments on the benchmark datasets KDD’99 and NSL-KDD effectively identifies normal and abnormal network activities. It achieves 99.73% accuracy on the KDD’99 dataset and 99.62% on the NSL-KDD dataset [35].

To tackle the challenge of detecting obfuscated malware, which often employs techniques like null value insertion and code reordering to evade traditional detection methods, a deep generative model is proposed. This model combines both global and local features by transforming malware into images to capture global characteristics efficiently and extracting local features from binary code sequences. By fusing these two types of features, the model achieves an impressive accuracy of 97.47% [36]. A novel approach is also introduced that leverages generative adversarial networks (GANs) for plausible malware training and augmentation. By training a discriminator using malware images generated by GAN models, the framework enhances the robustness of detection against 0-day malware. This eliminates the need for inefficient malware signature analysis, reducing signature complexity. The study emphasizes the importance of understanding 0-day malware features through explainable AI techniques and suggests future work on expanding the framework’s applicability [35]. Despite the inherent black-box nature of these models, Explainable AI (XAI) provides a set of methodologies to shed light on their decision-making processes. Layer-wise Relevance Propagation (LRP) assigns relevance scores to input features, aiding in the identification of crucial patterns for 0-day malware features. Saliency maps highlight significant regions in input data, offering interpretability by emphasizing key areas in images or sequences. Integrated Gradients calculates feature attribution, providing nuanced insights into how variations in input features contribute to the identification of 0-day malware characteristics. Local Interpretable Model-agnostic Explanations (LIME) generates faithful interpretations by perturbing input instances, creating surrogate models for better understanding. 
Attention mechanisms focus on relevant parts of input sequences, aiding in the interpretation of the importance of different elements, particularly beneficial for sequential data. Counterfactual explanations generate alternative instances, showcasing the impact of input feature variations on model predictions, enhancing understanding of 0-day malware identification. Rule-based explanations extract decision rules approximating the behavior of deep generative models, offering a simplified representation for accessibility and understanding by security analysts. These explainability methods collectively contribute to a more transparent and interpretable framework, allowing analysts to dissect and comprehend the decision-making processes of deep generative models in the complex domain of 0-day malware detection.
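Of the attribution methods listed above, Integrated Gradients is simple enough to sketch directly. The example assumes a hypothetical logistic scoring model with hand-picked weights standing in for a trained detector, an all-zero baseline, and a midpoint Riemann sum with 200 steps.

```python
import math
from typing import List


def model(x: List[float], w: List[float], b: float) -> float:
    """Hypothetical stand-in classifier: logistic score of 'malicious'."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def gradient(x: List[float], w: List[float], b: float) -> List[float]:
    """Analytic gradient of the logistic score with respect to each input."""
    p = model(x, w, b)
    return [p * (1.0 - p) * wi for wi in w]


def integrated_gradients(
    x: List[float], baseline: List[float], w: List[float], b: float,
    steps: int = 200,
) -> List[float]:
    """Midpoint Riemann-sum approximation of Integrated Gradients."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        for i, g in enumerate(gradient(point, w, b)):
            total[i] += g
    return [(xi - bi) * t / steps for xi, bi, t in zip(x, baseline, total)]


w, b = [2.0, -1.0, 0.0], -0.5
x, baseline = [1.0, 0.5, 3.0], [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline, w, b)
print(attributions)
print(sum(attributions), model(x, w, b) - model(baseline, w, b))
```

The attributions sum (up to numerical error) to the difference between the score at the input and the score at the baseline, which gives analysts a sanity check on the explanation. The third feature receives zero attribution because the stand-in model ignores it.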

To tackle network intrusion detection, where high-dimensional data, the scarcity of labeled samples, and real-time detection pose challenges, the proposed solution utilizes deep learning. It employs a multichannel Simple Recurrent Unit (SRU) model that outperforms traditional LSTM algorithms in efficiency and accuracy. To address the scarcity of labeled samples, a generative adversarial model (DCGAN) is used to generate training data, significantly improving system detection rates and reducing false alarms. The paper introduces efficient data preprocessing and demonstrates an impressive detection accuracy of 99.73% on KDD datasets. The SRU-based approach offers real-time intrusion detection capabilities and enhances network security [20].

While offering unique strengths, Deep Generative Models also come with several drawbacks in the context of malware detection in cyberspace. One key limitation lies in their interpretability, as these models are often perceived as black-box systems, making it challenging for security analysts to comprehend and trust their decision-making processes. Moreover, the computational intensity required for training, stemming from complex architectures and large datasets, poses practical challenges for deployment, particularly in resource-constrained environments. Data dependency is another drawback, with deep generative models relying on substantial amounts of labeled data for effective training. Acquiring diverse and representative datasets for various malware types can be logistically challenging, considering the dynamic nature of the cybersecurity landscape. Additionally, these models are vulnerable to adversarial attacks, similar to other deep learning approaches, which pose a threat to their reliability in real-world scenarios. The need for large-scale training data is a practical concern, as optimal performance often hinges on access to extensive and diverse datasets. Adapting to the dynamic nature of cybersecurity threats is another limitation, requiring frequent updates and retraining to effectively address new malware variants. Incorporating domain knowledge or expert-defined rules into the learning process can be difficult for deep generative models, hindering their ability to leverage human expertise in refining malware detection.

3.8 Deep Boltzmann machine

Deep Boltzmann Machines (DBMs) are powerful tools in malware detection in cyberspace. These deep learning algorithms excel in capturing intricate patterns within large datasets. When used for malware detection, they analyze binary code or behavioral data to identify malicious patterns and anomalies. By modeling the complex relationships between features, they effectively distinguish between benign and malicious software. DBMs offer the advantage of unsupervised learning, making them adept at uncovering novel and previously unseen malware variants. They can identify subtle and evolving threat vectors, making them crucial in the battle against constantly changing malware. Additionally, DBMs can be used for feature extraction, reducing data dimensionality and enhancing the efficiency of other detection algorithms.

Compared to other malware detection techniques, including traditional machine learning algorithms, Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs), each approach brings its unique attributes. Traditional algorithms are known for their interpretability but may require manual feature engineering. CNNs excel in spatial feature extraction for image-based tasks. RNNs outperform DBMs in handling sequential data and capturing temporal dependencies.

In the field of cybersecurity, DBMs play a pivotal role in bolstering defenses and ensuring the early identification of emerging malware threats [37]. One study proposes a multi-objective Restricted Boltzmann Machine (RBM) model that aims to improve robustness and data classification accuracy. The study addresses challenges such as dataset imbalance, the complexity of deep learning network models, and the need to optimize multiple objectives. It uses the Non-dominated Sorting Genetic Algorithm II (NSGA-II) to handle imbalanced malware families. Combined with NSGA-II, the proposed model significantly enhances data classification accuracy within heterogeneous networks (HetNets), demonstrating its effectiveness in safeguarding data fusion processes [38].

To tackle dimensionality, Subspace-based Restricted Boltzmann Machines (SRBM) introduce a novel approach that combines RBMs with subspace learning. SRBM efficiently reduces feature dimensionality while considering non-linear feature relationships. Compared to other methods like PCA and Stacked Auto Encoder (SAE), SRBM stands out with significant improvements in performance metrics, enhancing efficiency and accuracy in Android malware detection [4].
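To make the idea of RBM-based dimensionality reduction concrete, here is a minimal sketch of a plain Bernoulli-Bernoulli RBM trained with one-step contrastive divergence (CD-1). It is not the SRBM of [4], which adds subspace learning, and it is not a full multi-layer DBM. The class name, the toy 6-bit feature vectors, and the hyperparameters are assumptions chosen purely for illustration.

```python
import math
import random


def sigmoid(x):
    # Numerically safe logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class TinyRBM:
    """Bernoulli-Bernoulli RBM trained with one-step contrastive divergence."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.n_visible = n_visible
        self.n_hidden = n_hidden
        self.w = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.a = [0.0] * n_visible  # visible biases
        self.b = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        return [sigmoid(self.b[j] + sum(v[i] * self.w[i][j]
                                        for i in range(self.n_visible)))
                for j in range(self.n_hidden)]

    def visible_probs(self, h):
        return [sigmoid(self.a[i] + sum(h[j] * self.w[i][j]
                                        for j in range(self.n_hidden)))
                for i in range(self.n_visible)]

    def cd1_step(self, v0, lr=0.1):
        # Positive phase: hidden activations driven by the data.
        ph0 = self.hidden_probs(v0)
        h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]
        # Negative phase: one Gibbs step back to a (probabilistic) reconstruction.
        v1 = self.visible_probs(h0)
        ph1 = self.hidden_probs(v1)
        for i in range(self.n_visible):
            for j in range(self.n_hidden):
                self.w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.a[i] += lr * (v0[i] - v1[i])
        for j in range(self.n_hidden):
            self.b[j] += lr * (ph0[j] - ph1[j])

    def fit(self, data, epochs=50, lr=0.1):
        for _ in range(epochs):
            for v in data:
                self.cd1_step(v, lr)
        return self


# Hypothetical 6-bit feature vectors (for example, presence of permissions or API calls).
samples = [
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1, 0],
]
rbm = TinyRBM(n_visible=6, n_hidden=2).fit(samples)
codes = [rbm.hidden_probs(v) for v in samples]  # 6 features reduced to 2
```

The hidden-unit probabilities in `codes` serve as the reduced representation, which a downstream classifier could consume in place of the raw features.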

To explore the application of deep learning in the detection of Denial of Service (DoS) attacks, a deep Gaussian-Bernoulli-type RBM is introduced with additional layers, optimizing hyperparameters for improved detection accuracy. This deep RBM model supports continuous data and demonstrates superior accuracy when compared to alternative RBM models, such as Bernoulli-Bernoulli RBM. The study underscores the importance of developing systems capable of detecting malicious behavior within network traffic, particularly in the context of DoS attacks [39].
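The difference between the two RBM variants named above lies in how the visible units enter the energy function. In one common parameterization (the symbols are standard notation, not taken from [39]), with visible units $v_i$, hidden units $h_j$, biases $a_i$ and $b_j$, weights $W_{ij}$, and per-feature standard deviations $\sigma_i$, the energies can be written as:

```latex
% Bernoulli-Bernoulli RBM: binary visible and hidden units
E_{\mathrm{BB}}(v, h) = -\sum_i a_i v_i - \sum_j b_j h_j - \sum_{i,j} v_i W_{ij} h_j

% Gaussian-Bernoulli RBM: real-valued visible units, binary hidden units
E_{\mathrm{GB}}(v, h) = \sum_i \frac{(v_i - a_i)^2}{2\sigma_i^2}
                        - \sum_j b_j h_j
                        - \sum_{i,j} \frac{v_i}{\sigma_i} W_{ij} h_j
```

The quadratic term in the Gaussian-Bernoulli form is what allows real-valued inputs, such as continuous network traffic statistics, to be modeled directly.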

To confirm the effect of the proposed method (RBM + NSGA-II) on data classification accuracy, its recall rate is compared with those of five other methods: GIST + KNN, GIST + SVM, GLCM + KNN, GLCM + SVM, and DRBA, whose recall rates are 91.7, 91.4, 92.3, 93, and 94.5 percent, respectively [37]. The proposed method achieves a recall rate of 95.83 percent. A further comparison of loss, recall rate, and false alarm rate found that the proposed multi-objective RBM model’s loss values (0.083, 0.080, and 0.086) and recall rates (88.64, 93.48, and 95.83 percent) are better at all three resolutions, whereas its FPR at 50 × 50 resolution is slightly worse (12.5 percent versus 11.50 percent) [37].
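For reference, the recall and false positive rate (FPR) figures quoted above follow the standard confusion-matrix definitions. The short sketch below computes both; the counts are hypothetical values chosen only so that the output matches the 95.83 percent recall and 12.5 percent FPR mentioned in the text, and they are not taken from [37].

```python
def recall(tp, fn):
    """Fraction of malicious samples that were detected: TP / (TP + FN)."""
    return tp / (tp + fn)


def false_positive_rate(fp, tn):
    """Fraction of benign samples that were wrongly flagged: FP / (FP + TN)."""
    return fp / (fp + tn)


# Hypothetical confusion-matrix counts (illustrative only).
tp, fn = 23, 1   # malicious samples: detected vs missed
fp, tn = 3, 21   # benign samples: wrongly flagged vs correctly passed

recall_pct = round(100 * recall(tp, fn), 2)
fpr_pct = round(100 * false_positive_rate(fp, tn), 2)
print(f"recall = {recall_pct}%, FPR = {fpr_pct}%")
```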

Obtaining and labeling appropriate training data for Deep Boltzmann Machines (DBMs) in malware detection presents multifaceted challenges with implications for model generalizability and real-world applicability. Firstly, imbalances in class distribution within malware datasets pose a challenge, potentially leading to biased model training and diminished effectiveness in detecting less common malware types. Annotating malware samples is resource-intensive, and the dynamic cybersecurity landscape introduces new variants regularly, contributing to limitations in dataset size and timeliness. This can hinder the model’s capacity to generalize to evolving threats. Moreover, inherent biases in malware datasets from different sources create potential limitations. Models trained on biased datasets may struggle to generalize across different contexts, impacting performance when faced with malware variants from underrepresented sources or regions. The active involvement of malicious actors in crafting adversarial samples further complicates the training process. Adversarial samples, intentionally manipulated to deceive the model, can compromise the robustness and reliability of the DBM in real-world scenarios. Additionally, the heterogeneous nature of malware, ranging from simple to highly sophisticated attacks, presents a challenge in capturing this diversity within a single training dataset. A lack of diversity may result in a model that struggles to identify novel and sophisticated malware types, further constraining its efficacy in practical, real-world scenarios.

3.9 Deep reinforcement learning

Deep reinforcement learning (DRL) contributes to malware detection by introducing new ways to address evolving cybersecurity challenges. The technique trains intelligent agents that learn to make decisions through interactions with malware samples. In adversarial settings, such agents can determine sequences of actions that modify malware so that it becomes harder for anti-malware engines to detect. DRL is particularly useful where traditional machine learning approaches struggle, especially in dealing with adversarial attacks. Because the agents interact with malware iteratively, they show how evasive a sample can become, and the resulting variants expose weaknesses in existing detectors. This allows researchers and cybersecurity professionals to proactively combat cyber threats, adapt to new evasion techniques, and continuously strengthen their malware detection systems [40].

In comparison to established methods, signature-based detection techniques prove effective in identifying known malware patterns, offering computational efficiency and a well-established presence in cybersecurity practices. Heuristic-based approaches leverage rules and behavioral patterns, adapting to new threats through heuristic updates. While computationally efficient, heuristics may generate false positives or negatives based on predefined rules. Traditional machine learning models, such as Support Vector Machines (SVMs) or Random Forests, provide interpretability and efficiency but may struggle with complex relationships in data due to their reliance on manual feature engineering. Analysis of these approaches reveals the superiority of DRL techniques in sequential decision-making tasks and adaptability to dynamic environments, addressing limitations seen in signature-based and traditional machine learning methods.

One method explores the evolution from traditional signature-based methods to machine learning-based algorithms for malware detection. While machine learning approaches have significantly improved detection accuracy, they remain vulnerable to adversarial attacks. The study delves into the creation of adversarial samples to test the resilience of these systems, particularly focusing on binary file modification. It discusses the complexities involved in avoiding corruption of the binary and the need to strengthen the defenses of machine learning models. The research highlights the ongoing need to enhance the robustness of malware classifiers against adversarial attacks. Another study introduces a novel framework called DQEAF (Deep Q-Learning for Evading Anti-Malware Engines), which employs DRL to bypass anti-malware engines. This framework trains an artificial intelligence agent to iteratively interact with malware samples and determine optimal sequences of non-destructive actions that modify the samples, enabling them to evade detection. The study emphasizes the effectiveness of this approach, achieving a 75% success rate in evading detection by anti-malware engines, particularly in the context of Portable Executable (PE) samples [40].

Alternative approaches delve into network security and leverage Software-Defined Networking (SDN) to optimize traffic analysis through Deep Packet Inspection (DPI). One such approach utilizes deep reinforcement learning, specifically Deep Deterministic Policy Gradient (DDPG), to intelligently allocate sampling resources in SDN-capable networks. The goal is to capture malicious network flows while minimizing the load on multiple traffic analyzers. The study showcases the efficacy of this approach in achieving more efficient traffic monitoring and cyber threat detection, highlighting the importance of data-driven decisions in traffic sampling [41]. Additionally, the vulnerability of a leading malware classifier to dead code insertion is explored, and a framework employing deep reinforcement learning, specifically a Double Q-network, is introduced to induce misclassification in the classifier. An intelligent agent, trained through a convolutional Q-network, strategically inserts NOP instructions into malware code sequences. The results demonstrate a significant reduction in the classifier’s accuracy, showcasing the potential for evasion using the dead code insertion technique.

A primary performance metric for DRL is the reward earned over time, which reflects the agent’s learning progress as it maximizes cumulative reward through interactions with an environment. In [41], the values reported for the performance comparison were averaged over 500 iterations and given with a 95% confidence interval. The approach incurred lower traffic steering overheads than other methods while keeping the load balancing of the traffic analyzers above 88% [41].
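Averaging a metric over repeated runs and attaching a 95% confidence interval can be sketched as follows. This is a generic illustration using a normal approximation (the 1.96 multiplier); the function name and the simulated reward values are assumptions, not data or code from [41].

```python
import math
import random
import statistics


def mean_with_ci95(values):
    """Return (mean, lower, upper) using a normal-approximation 95% interval."""
    n = len(values)
    mean = statistics.fmean(values)
    # Standard error of the mean from the sample standard deviation.
    sem = statistics.stdev(values) / math.sqrt(n)
    half_width = 1.96 * sem
    return mean, mean - half_width, mean + half_width


# Simulated per-iteration rewards (hypothetical), one value per iteration.
rng = random.Random(0)
rewards = [rng.gauss(10.0, 2.0) for _ in range(500)]
mean, lower, upper = mean_with_ci95(rewards)
print(f"mean reward = {mean:.2f}, 95% CI = [{lower:.2f}, {upper:.2f}]")
```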

DRL models exhibit remarkable adaptability to new and unknown malware samples, making them valuable assets in the ever-changing landscape of cybersecurity. Their adaptability arises from the models’ ability to learn optimal strategies through dynamic interactions with their environment, mirroring real-world cybersecurity scenarios effectively. In the realm of malware detection, DRL models such as Deep Q Networks (DQN) or Proximal Policy Optimization (PPO) excel in sequential decision-making tasks. This capability proves vital in scenarios where the identification process involves a series of actions and responses, enabling these models to learn optimal sequences of actions for effective detection and response to emerging threats. The models exhibit a remarkable feature learning capability, automatically extracting relevant patterns from raw input data. This reduces the reliance on predefined features or signatures, facilitating adaptability as the models can discover novel patterns associated with new malware samples without explicit feature engineering.
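The sequential decision-making described above rests on a value update that is easiest to show in tabular form. The sketch below is a minimal tabular Q-learning loop on a toy five-state chain. It is a simplified stand-in for DQN (which replaces the table with a neural network) and is not a malware detector; the environment, reward, and hyperparameters are all illustrative assumptions.

```python
import random

N_STATES = 5        # states 0..4; state 4 is terminal and rewarding
ACTIONS = (0, 1)    # 0 = step left, 1 = step right


def step(state, action):
    """Toy deterministic environment: reward 1.0 only on reaching the last state."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[state])          # exploit, breaking ties at random
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            nxt, reward, done = step(state, action)
            # Q-learning target: immediate reward plus discounted best next value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q_table = train()
# Greedy policy for the non-terminal states.
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

After training, the greedy policy moves right in every non-terminal state, which is the shortest path to the reward in this toy setting.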

DRL models are well suited to zero-day threat detection, that is, to identifying previously unseen and unknown threats. By learning from the dynamics of the environment and the underlying patterns of normal and malicious behavior, DRL models can adapt to emerging threats that lack historical data or predefined signatures. Support for continuous learning allows the models to stay current with the evolving threat landscape. DRL agents can dynamically adjust their policies based on feedback from the environment, so the model updates its knowledge as it encounters new malware samples. This dynamic policy adjustment significantly enhances the model’s ability to handle unknown threats.

Tackling data imbalance is pivotal for effective model training. One key strategy involves reward balancing, a technique aimed at adjusting reward mechanisms to address the imbalance between minority and majority classes. This approach seeks to ensure that the learning process does not disproportionately favor the majority class while neglecting the minority. By fine-tuning the reward system, the algorithm can be guided to allocate appropriate attention to underrepresented scenarios, encouraging the model to learn from these instances as rigorously as from the dominant ones. This balance fosters a more comprehensive understanding of the environment, enabling the reinforcement learning agent to make informed decisions across diverse situations. By strategically adjusting reward structures, DRL algorithms can overcome data imbalance challenges, ultimately enhancing their adaptability and performance in complex real-world scenarios.
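One simple way to realize the reward balancing described above is to scale the reward magnitude for each class by the inverse of its frequency. The sketch below shows this under stated assumptions: the function names, the 90/10 class split, and the symmetric plus/minus reward are all hypothetical choices, not a scheme taken from a cited study.

```python
from collections import Counter


def balanced_rewards(labels):
    """Reward magnitude per class, inversely proportional to class frequency."""
    counts = Counter(labels)
    largest = max(counts.values())
    # The most frequent class gets magnitude 1.0; rarer classes get more.
    return {cls: largest / n for cls, n in counts.items()}


def reward(predicted, actual, magnitudes):
    """Positive reward for a correct decision, equal-sized penalty for a wrong one."""
    m = magnitudes[actual]
    return m if predicted == actual else -m


# Hypothetical imbalanced training labels: 90 benign samples, 10 malware samples.
labels = ["benign"] * 90 + ["malware"] * 10
magnitudes = balanced_rewards(labels)

print(magnitudes)
print(reward("benign", "malware", magnitudes))  # penalty for missing a malware sample
```

With this weighting, a mistake on the rare malware class costs the agent nine times as much as a mistake on a benign sample, which counteracts the tendency to favor the majority class.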

While DRL holds promise for malware detection in cyberspace, its practical use faces notable challenges. High computational requirements, especially for complex models like Deep Q Networks and Proximal Policy Optimization, are a constraint, particularly in resource-constrained environments. DRL is also sample-inefficient: it demands substantial training data, which hurts performance when labeled malware samples are limited. Lengthy training times, particularly for deep neural networks, can hinder timely deployment in dynamic cybersecurity scenarios. The black-box nature of DRL models presents interpretability challenges, making it difficult to understand the decision-making processes and the features crucial for malware detection. Moreover, sensitivity to hyperparameters and difficulties in generalizing across diverse malware variants further limit the effectiveness of DRL. Vulnerability to adversarial attacks adds another layer of concern, as intentional manipulations could compromise the reliability of the model. Deploying DRL models at scale in complex network environments requires addressing scalability challenges. Ethical considerations, especially regarding privacy and potential misuse, necessitate compliance with regulatory frameworks for responsible deployment. Addressing these challenges is crucial for unlocking the full potential of DRL in the realm of cybersecurity.

3.10 Extreme learning machine

Extreme Learning Machines (ELMs) are increasingly used in malware detection due to their versatility and efficiency. ELMs excel in feature extraction, making them suitable for processing the various data types that malware analysis relies on. An ELM has a single hidden layer whose weights are assigned randomly, which lets it process many features quickly and benefits comprehensive malware detection in cyberspace. The trade-off is that it may not capture complex relationships and dependencies in the data as effectively as models with multiple hidden layers and optimized weights. ELMs are particularly favored for their fast training process, which involves no iterative weight optimization. This speed and their ability to handle diverse data types make ELMs a valuable tool in the ongoing battle against malware [42].
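The training procedure outlined above (random, fixed hidden weights and no iterative optimization) can be sketched in a few lines. In this minimal illustration the output weights are obtained in closed form by regularized least squares; the small ridge term is an added assumption for numerical stability, and the class name, toy feature vectors, and labels are hypothetical rather than taken from [42].

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


class TinyELM:
    def __init__(self, n_inputs, n_hidden, ridge=1e-6, seed=0):
        rng = random.Random(seed)
        # Hidden weights and biases are drawn once and never trained.
        self.w = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                  for _ in range(n_hidden)]
        self.b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.ridge = ridge
        self.beta = [0.0] * n_hidden  # output weights, set by fit()

    def hidden(self, x):
        return [math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b)
                for w, b in zip(self.w, self.b)]

    def fit(self, xs, ys):
        h = [self.hidden(x) for x in xs]
        k = len(self.b)
        # Normal equations of ridge regression: (H^T H + ridge * I) beta = H^T y.
        hth = [[sum(row[i] * row[j] for row in h) + (self.ridge if i == j else 0.0)
                for j in range(k)] for i in range(k)]
        hty = [sum(row[i] * y for row, y in zip(h, ys)) for i in range(k)]
        self.beta = solve(hth, hty)
        return self

    def predict(self, x):
        score = sum(bj * hj for bj, hj in zip(self.beta, self.hidden(x)))
        return 1 if score >= 0.0 else -1


# Hypothetical binary feature vectors; +1 = malicious, -1 = benign.
xs = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]]
ys = [1, 1, -1, -1]
elm = TinyELM(n_inputs=4, n_hidden=8).fit(xs, ys)
predictions = [elm.predict(x) for x in xs]
```

Training reduces to a single linear solve, which is the source of the speed advantage. It is also the reason for the limited capacity, since the hidden layer itself is never adapted to the data.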