- 1 International Research Center of Spectroscopy and Quantum Chemistry (IRC SQC), Siberian Federal University, Krasnoyarsk, Russia

- 2 Department of Computer Science and Engineering, Bharati Vidyapeeth’s College of Engineering, New Delhi, India

- 3 Department of Physics and Astronomy, Uppsala University, Uppsala, Sweden

- 4 Department of Information Technology, Vishwakarma Institute of Information Technology, Pune, India

- 5 Laboratory of Theory and Optimization of Chemical and Technological Processes, University of Tyumen, Tyumen, Russia

- 6 Laboratory of Crystal Physics, Kirensky Institute of Physics, Federal Research Center KSC SB RAS, Krasnoyarsk, Russia

- 7 Department of Computational Intelligence, SRM Institute of Science and Technology, Kattankulathur, India

- 8 Centre for Machine Intelligence and Data Science, Indian Institute of Technology Bombay, Mumbai, India

- 9 Department of Physics, Pondicherry University, Puducherry, India

In the context of the 21st century and the fourth industrial revolution, the substantial proliferation of data has established it as a valuable resource, fostering enhanced computational capabilities across scientific disciplines, including physics. The integration of Machine Learning stands as a prominent solution for unravelling the intricacies inherent in scientific data. While diverse machine learning algorithms find utility in various branches of physics, a systematic framework for applying Machine Learning to the field is still needed. This review offers a comprehensive exploration of the fundamental principles and algorithms of Machine Learning, with a focus on their implementation within distinct domains of physics. It examines contemporary trends in the application of Machine Learning to condensed matter physics, biophysics, astrophysics, and materials science, and addresses emerging challenges. The potential for Machine Learning to revolutionize the comprehension of intricate physical phenomena is underscored. Nevertheless, persisting challenges, such as the development of more efficient and precise algorithms, are acknowledged within this review.

1 Introduction

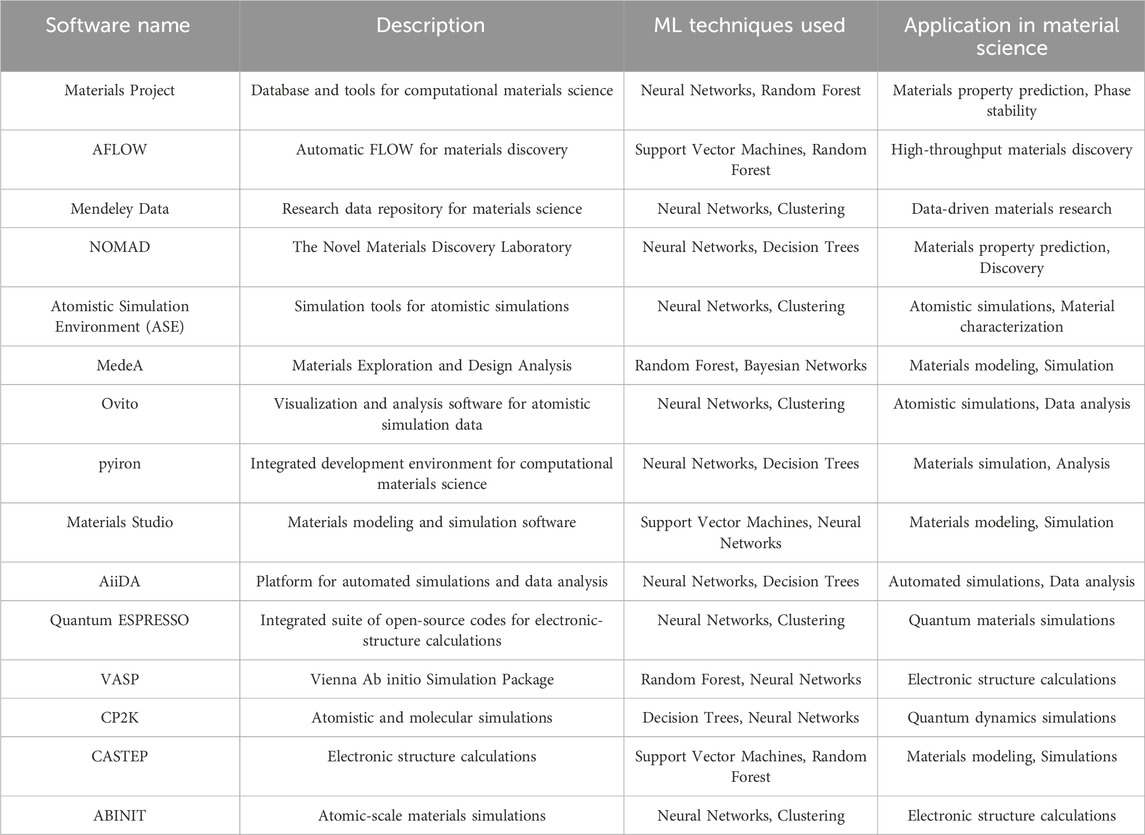

The evolution of programming languages within the context of machine learning techniques is marked by significant milestones. Notably, Alan Turing’s publication of “Computing Machinery and Intelligence” introduced the Turing test, laying the groundwork for AI exploration through human-computer textual interaction [1]. Moreover, the pioneering work of Marvin Minsky and Dean Edmonds in developing the Stochastic Neural Analog Reinforcement Calculator (SNARC), the first artificial neural network (ANN), which employed 3,000 vacuum tubes to simulate a 40-neuron network, stands as a seminal moment in machine learning history. Additionally, the coining of the term ‘artificial intelligence’ by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, among other pivotal events, played a foundational role in the emergence of AI. Newell and Simon’s 1956 creation, the Logic Theorist [2], marked a pivotal achievement: it was a computer program capable of proving theorems in symbolic logic from Principia Mathematica. This groundbreaking program simulated human problem-solving abilities and significantly influenced the burgeoning field of information-processing psychology, shaping foundational principles that remain integral to cognitive psychology and human factors studies today. Frank Rosenblatt’s development of the perceptron, an early form of ANN [3–5], revolutionized machine learning by introducing a model capable of learning from input data and adjusting its parameters to make predictions. Although limited to linearly separable problems, the perceptron laid the foundation for modern neural networks, inspiring subsequent advancements in artificial intelligence and pattern recognition [6].
Oliver Selfridge’s 1959 paper, “Pandemonium: A Paradigm for Learning,” [7] introduced a revolutionary model in machine learning, presenting a framework of interconnected ‘demons’ responsible for cognitive tasks like pattern recognition. This hierarchical model emphasized the collaboration of simpler components to achieve complex cognitive functions, influencing the evolution of neural networks and significantly impacting the fields of artificial intelligence and cognitive psychology. These milestones collectively define the trajectory of programming languages in shaping the landscape of machine learning advancements. In general, ML algorithms are divided into supervised and unsupervised learning [8] as shown in Table 1 and Figures 1 and 2.
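To make the perceptron's learning rule and its linear-separability limit concrete, a minimal pure-Python sketch is given below. It assumes ±1 labels, zero initial weights, a learning rate of 1, and a fixed epoch budget; it illustrates the classic update rule rather than reproducing Rosenblatt's original hardware implementation.

```python
# Minimal sketch of the classic perceptron learning rule (pure Python).
# Assumptions: labels are +1/-1, weights start at zero, learning rate 1.0.

def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Return (weights, bias) learned with the perceptron update rule."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            y_hat = 1 if activation >= 0 else -1
            if y_hat != y:
                # Shift the decision boundary toward the misclassified sample.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                errors += 1
        if errors == 0:  # every training sample is classified correctly
            break
    return w, b

def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

X = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Logical AND is linearly separable, so the rule converges.
y_and = [-1, -1, -1, 1]
w, b = train_perceptron(X, y_and)
print([predict(w, b, x) for x in X])  # [-1, -1, -1, 1]

# XOR is not linearly separable: no (w, b) classifies all four points.
y_xor = [-1, 1, 1, -1]
w_xor, b_xor = train_perceptron(X, y_xor)
print([predict(w_xor, b_xor, x) for x in X])  # at least one point is wrong
```

The XOR case is exactly the limitation mentioned above; multilayer networks trained with backpropagation remove it.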

FIGURE 1. Classification of machine learning algorithms [9].

Apart from supervised and unsupervised learning, reinforcement learning [10] is widely used in different aspects of robotics. In reinforcement learning, an algorithm receives rewards based on the actions it takes in an environment, and it learns on its own by pursuing the goal of maximizing those rewards. Reinforcement learning makes it easier to obtain complex behaviours whose frameworks and rules would be hard to hand-engineer, yet are necessary for robots to perform a task. Writing such rules is difficult because the real environments that robots face are not controllable, so a robot trained in a controlled environment with deterministic rules will perform poorly in real-world situations. Through trial-and-error interactions with its surroundings, a robot can instead learn the best behaviour independently through reinforcement learning (RL) [11]. In the rest of this section, we discuss each of these learning paradigms, along with the corresponding algorithms, in detail.
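The reward-driven, trial-and-error loop described above can be sketched with tabular Q-learning, one standard RL algorithm (chosen here for illustration; the text does not prescribe a specific one). The five-state "corridor" environment, the reward of 1 at the goal, and the values of alpha, gamma, and epsilon are all hypothetical choices.

```python
import random

N_STATES = 5         # states 0..4 of a hypothetical corridor; state 4 is the goal
ACTIONS = (-1, +1)   # index 0 = move left, index 1 = move right

def step(state, action):
    """Toy deterministic environment: reward 1.0 only when the goal is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit
            # (ties are broken toward "right").
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s2, r, done = step(s, ACTIONS[a])
            # Temporal-difference update toward r + gamma * max_a' Q(s', a').
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q

q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(N_STATES - 1)]
print(policy)  # ['right', 'right', 'right', 'right']
```

No rule such as "always move right" is programmed in; the agent discovers it from the rewards alone, which is the property that makes RL attractive when rules are hard to hand-engineer.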

In Supervised Learning [12], the model is provided with the target output for every input pattern. The goal of these algorithms is to map input x to output y. The weights used by the model are updated during training so that the model produces outputs close to the actual output. As evident from Table 1, supervised learning is used for regression and classification problems. The goal of regression is to predict one or more continuous target outputs from a given input vector [13]. Like regression, classification is one of the most common tasks in ML. Here the discriminant is a function that takes the input and maps it to one of the classes, i.e., to discrete outputs [14]. It should be noted that input vectors can also contain continuous and/or discrete parameters. Continuous input data can be treated easily by ML, but discrete data must either be encoded numerically or be handled by a method such as the Decision Tree that accepts it directly. There are many predefined models in ML following different methodologies; e.g., Decision Trees follow a divide-and-conquer strategy [15], the Naïve Bayes algorithm [16] follows a probabilistic approach, and so on. The simplest classification model is logistic regression [17], whose parameters are typically estimated with gradient descent. Depending on whether there are two classes or more, classifiers are divided into binary and multiclass classifiers. A comparative analysis of supervised ML techniques is provided in Table 2. However, some methods, although powerful, have drawbacks that make them fail in certain scenarios. One such technique is the Decision Tree, which has several limitations. The first significant limitation of the Decision Tree method is its comparatively low model accuracy when compared to other methods using the same data set [18–20].
The number of samples plays a crucial role in determining the depth of the Decision Tree, which in turn affects the accuracy of its predictions. Furthermore, both the root and each node of the Decision Tree divide the samples in the feature space into two groups using a plane perpendicular to a feature-parameter axis, resulting in a rather coarse division. These factors hinder the widespread application of Decision Trees in various domains. The second limitation is overfitting. Decision Trees can be prone to overfitting, especially if they are very deep; the model may then fit the training data too closely, leading to poor generalization on new data [18]. The third is sensitivity to noise. Because Decision Trees aim to create rules that best separate the training examples, noise or outliers in the data can lead them to create incorrect splits [18–20]. The fourth is inefficiency with large datasets: building and using Decision Trees can be computationally expensive for large volumes of data, which can limit their practical use in some cases. The last is multicollinearity. Decision Trees may struggle when features in the data are highly correlated with each other, which can result in incorrect splits and reduced model effectiveness [18–21]. While Naive Bayes presents a viable classification approach, its assumption that the features are independent of one another (given the class) poses limitations in practical scenarios. Moreover, if a particular feature value never occurs together with a given class in the training data, the estimated conditional probability for that combination is zero, which forces the predicted probability of that class to zero regardless of the other features. This makes the model unreliable in real-life applications where the training data might not cover all possible scenarios. This issue is commonly referred to as the ‘zero probability/frequency problem’ in the Naive Bayes model [22].
Future research efforts must focus on mitigating this challenge to enhance the algorithm’s applicability in real-world prediction tasks.
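The zero-frequency problem, and additive (Laplace) smoothing, a standard partial remedy, can be seen in a small categorical Naive Bayes sketch. The toy dataset of (lattice type, dopant) pairs and the class labels "A" and "B" are hypothetical and serve only to produce a feature value that never co-occurs with one class.

```python
from collections import Counter, defaultdict

def fit_categorical_nb(X, y):
    """Count class frequencies and, per (feature, class), the frequency of each value."""
    class_counts = Counter(y)
    value_counts = defaultdict(Counter)  # (feature_index, class) -> Counter of values
    distinct = defaultdict(set)          # feature_index -> set of observed values
    for xs, c in zip(X, y):
        for j, v in enumerate(xs):
            value_counts[(j, c)][v] += 1
            distinct[j].add(v)
    return class_counts, value_counts, distinct

def class_scores(model, xs, alpha=1.0):
    """Unnormalised P(c) * prod_j P(x_j | c) with additive smoothing alpha."""
    class_counts, value_counts, distinct = model
    n = sum(class_counts.values())
    scores = {}
    for c, n_c in class_counts.items():
        score = n_c / n
        for j, v in enumerate(xs):
            k = len(distinct[j])  # number of distinct values of feature j
            score *= (value_counts[(j, c)][v] + alpha) / (n_c + alpha * k)
        scores[c] = score
    return scores

# Hypothetical toy data: (lattice type, dopant) -> class label.
X = [("cubic", "none"), ("cubic", "Na"), ("hex", "none"), ("hex", "Mg"), ("cubic", "Mg")]
y = ["A", "A", "B", "B", "B"]
model = fit_categorical_nb(X, y)

query = ("cubic", "Mg")  # "Mg" never co-occurs with class A in the training data
print(class_scores(model, query, alpha=0.0))  # class A collapses to exactly 0.0
print(class_scores(model, query, alpha=1.0))  # with smoothing, class A stays above 0
```

Without smoothing, the single unseen pair wipes out the evidence from the matching "cubic" feature; smoothing only softens this, so the underlying limitation noted above remains.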

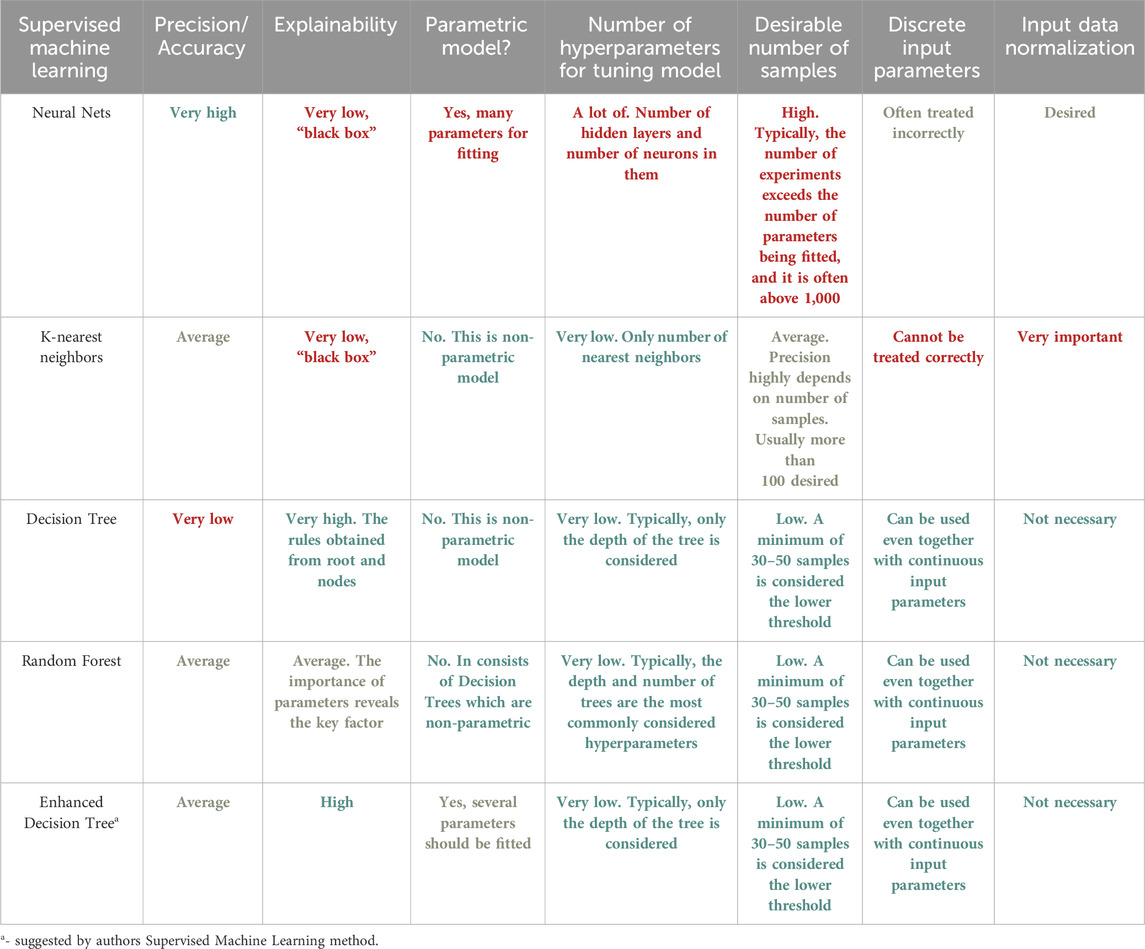

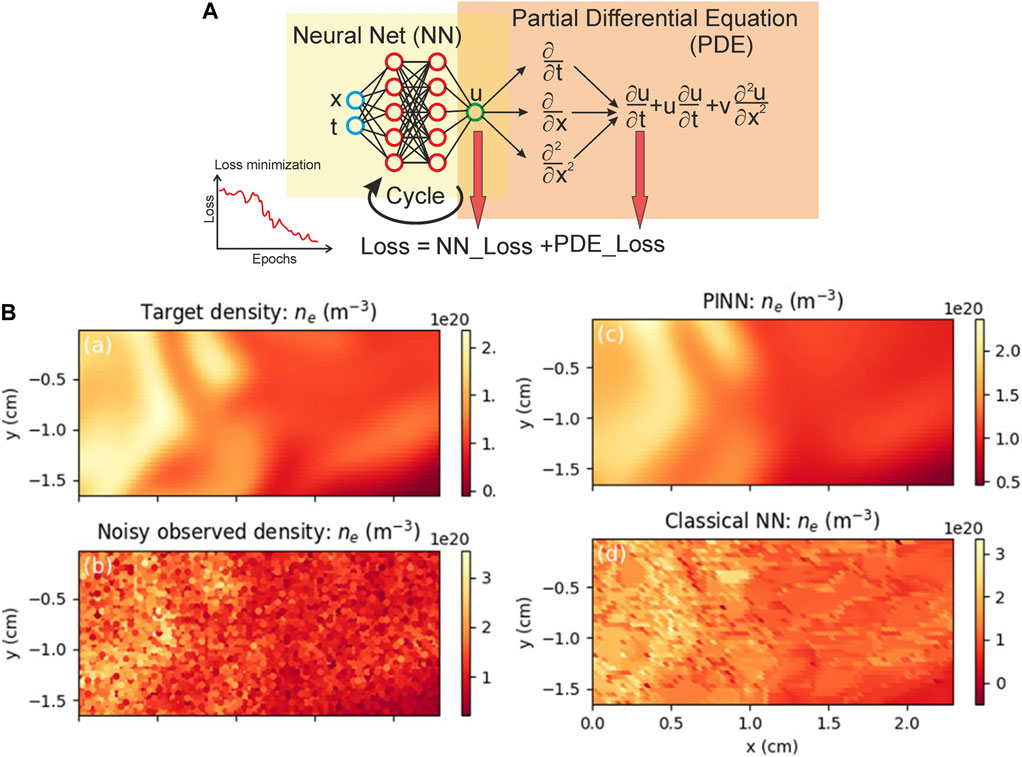

TABLE 2. Comparative analysis of various Supervised Machine Learning Techniques. Green marks an excellent feature, orange an average feature, and red a poor feature.

In unsupervised learning, the available data consist solely of the inputs. Here, the aim is to find regularities and (dis)similarities in the input data [23]. This is also called descriptive learning or knowledge discovery. Unsupervised learning is widely used to discover clusters [15], latent factors [24], and graph structures [25]. Clusters can be built with algorithms such as K-means [26] and hierarchical clustering [27]. In image analysis, the high dimensionality of images strongly increases the time complexity of algorithms. To reduce it, we can use Principal Component Analysis (PCA) [28], an unsupervised technique that condenses high-dimensional image data into a lower-dimensional space. PCA identifies and captures the key variations within the dataset, streamlining subsequent computational tasks such as feature extraction and classification by retaining the crucial image information while reducing computational overhead.
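The core of PCA, finding the direction of largest variance and projecting onto it, can be sketched in pure Python with power iteration on the sample covariance matrix. This sketch computes only the leading component, and the synthetic 3-D data (mostly spread along the direction (1, 1, 0)/√2) are a hypothetical stand-in for flattened image features. Production code would normally use an SVD routine from a numerical library instead.

```python
import math
import random

def pca_first_component(data, iters=200):
    """Return (mean, unit vector) of the leading principal component via power iteration."""
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    centred = [[row[j] - mean[j] for j in range(d)] for row in data]
    # Sample covariance matrix C = X^T X / (n - 1) of the centred data.
    cov = [[sum(r[a] * r[b] for r in centred) / (n - 1) for b in range(d)]
           for a in range(d)]
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]  # repeated multiplication by C converges to its top eigenvector
    return mean, v

def project(data, mean, v):
    """One coordinate per sample: its position along the principal component."""
    return [sum((row[j] - mean[j]) * v[j] for j in range(len(v))) for row in data]

rng = random.Random(0)
pts = []
for _ in range(500):
    t = rng.gauss(0, 3.0)  # large spread along the hidden direction
    pts.append([t + rng.gauss(0, 0.1), t + rng.gauss(0, 0.1), rng.gauss(0, 0.1)])

mean, v = pca_first_component(pts)
print([round(abs(x), 2) for x in v])  # close to [0.71, 0.71, 0.0]
z = project(pts, mean, v)             # 3-D points compressed to one number each
```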

In physics, the choice between supervised and unsupervised learning techniques hinges on the nature of the available data and the specific objectives of the analysis. In scenarios where labelled datasets are abundant and well defined, supervised learning proves to be a powerful tool [29]. For instance, in experimental setups where outcomes are known and categorized, such as particle identification in high-energy physics experiments, supervised learning algorithms like convolutional neural networks can efficiently classify and predict outcomes based on training data [29]. On the other hand, unsupervised learning techniques shine where the data lack clear labels or predetermined classifications. In experimental investigations where patterns or anomalies are sought without prior knowledge, clustering algorithms like k-means or hierarchical clustering can uncover hidden structures within datasets [30,31]. One example is the analysis of cosmic microwave background radiation maps, where unsupervised techniques can reveal subtle patterns or anomalies that might elude human intuition. Tables 2 and 3 compare supervised and unsupervised techniques, respectively.
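How k-means uncovers structure without labels can be sketched with Lloyd's algorithm, which alternates between assigning points to the nearest centroid and moving each centroid to the mean of its points. The two Gaussian blobs below are hypothetical data, and random initialisation from data points is one common choice among several (k-means++ is another).

```python
import math
import random

def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k distinct data points
    clusters = []
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            i = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[i].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new = []
        for c, members in enumerate(clusters):
            if members:
                new.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new.append(centroids[c])  # leave an empty cluster's centroid in place
        if new == centroids:  # assignments no longer change: converged
            break
        centroids = new
    return centroids, clusters

rng = random.Random(0)
# Hypothetical unlabelled data: two 2-D blobs centred at (0, 0) and (5, 5).
points = [(rng.gauss(0, 0.5), rng.gauss(0, 0.5)) for _ in range(50)]
points += [(rng.gauss(5, 0.5), rng.gauss(5, 0.5)) for _ in range(50)]

centroids, clusters = kmeans(points, k=2)
print(sorted((round(cx, 1), round(cy, 1)) for cx, cy in centroids))  # near (0, 0) and (5, 5)
print(sorted(len(c) for c in clusters))  # [50, 50]
```

Note that k must be chosen in advance; hierarchical clustering avoids this by building a full tree of merges instead.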

TABLE 3. Comparative analysis of various Unsupervised Machine Learning Techniques. Green marks an excellent feature, orange an average feature, and red a poor feature.

In reinforcement learning, the learner is a decision-making agent that takes actions in an environment and receives a reward for each action while trying to solve a problem. After a series of trial-and-error runs, it learns the policy that maximizes the reward. In the modern era, however, Deep Learning has become a widely popular tool. The major reason for preferring Deep Learning over classical ML is its ability to extract features automatically [32,33]; its major requirement, however, is a large amount of data. There exists a diverse array of Deep Learning algorithms, such as Multilayer Perceptrons [21], Recurrent Neural Networks [22], Convolutional Neural Networks [23], Generative Adversarial Networks (GANs), Long Short-Term Memory networks (LSTMs), Autoencoders, Transformer networks, deep reinforcement learning models [34–38] such as Deep Q-Networks (DQN), Capsule Networks (CapsNets), and various other architectures designed to address specific tasks, each offering unique capabilities and applications [39–41]. Deep Learning has also become attractive in recent years owing to better activation functions, optimization methods, and regularization techniques.

A Multilayer Perceptron [39,42,43] is a class of feed-forward neural network consisting of an input layer, an output layer, and one or more hidden layers. It is a supervised learning technique that uses nonlinear activation functions and is trained with the backpropagation algorithm [43]. The major advantage of Convolutional Neural Networks [41] is their ability to extract features automatically, which removes the need to extract features with multiple image processing algorithms. Given enough training data, CNNs can be the best option in many settings, including image captioning [44], image classification [45], and object localization [46]. For time series, Recurrent Neural Networks (RNNs) [47] are widely used. They are a special type of neural network designed for sequential data: the hidden state computed at one time step is fed back into the network at the next step, and the same parameters are shared across all time steps. With some modifications, this algorithm can be used in many applications, such as weather forecasting [48] and the prediction of missing data [49]. High Throughput Computation (HTC) involves using distributed computing facilities, typically clusters and workstations, for tasks requiring high computational power [50]. Tasks on HTC systems can take from a few weeks to a few months. In science, HTC is widely used in materials science [51–53].
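Backpropagation in a Multilayer Perceptron can be illustrated with a tiny network that learns XOR, the problem a single perceptron cannot solve. This is a minimal pure-Python sketch under stated assumptions: one hidden layer of 4 sigmoid units, a sigmoid output, squared-error loss, online updates, and hypothetical values for the learning rate, epoch count, and random seed. Convergence to a perfect XOR solution is typical but depends on the initialisation.

```python
import math
import random

XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Forward pass: sigmoid hidden layer followed by a single sigmoid output unit."""
    W1, b1, W2, b2 = params
    h = [sigmoid(W1[i][0] * x[0] + W1[i][1] * x[1] + b1[i]) for i in range(len(b1))]
    y = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)
    return h, y

def mse(params):
    return sum((forward(params, x)[1] - t) ** 2 for x, t in XOR_DATA) / len(XOR_DATA)

def train_mlp(hidden=4, epochs=5000, lr=0.5, seed=1):
    """Online gradient descent on E = 0.5 * (y - t)^2 using backpropagation."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    params = [W1, b1, W2, 0.0]  # params[3] is the output bias
    initial_loss = mse(params)
    for _ in range(epochs):
        for x, t in XOR_DATA:
            h, y = forward(params, x)
            # Error signal at the output pre-activation: dE/dz_out.
            delta_out = (y - t) * y * (1 - y)
            # Propagate it back through the output weights and hidden sigmoids
            # (computed before any weight is changed).
            delta_h = [delta_out * W2[i] * h[i] * (1 - h[i]) for i in range(hidden)]
            for i in range(hidden):
                W2[i] -= lr * delta_out * h[i]
                W1[i][0] -= lr * delta_h[i] * x[0]
                W1[i][1] -= lr * delta_h[i] * x[1]
                b1[i] -= lr * delta_h[i]
            params[3] -= lr * delta_out
    return params, initial_loss

params, initial_loss = train_mlp()
print(initial_loss, mse(params))                              # loss before vs after training
print([round(forward(params, x)[1], 2) for x, _ in XOR_DATA])  # ideally close to [0, 1, 1, 0]
```

The hidden layer is what allows a nonlinear decision boundary; deep learning frameworks compute the same gradients automatically rather than by hand.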

In spite of ML being a popular tool with several applications in physics and chemistry, it is not as widespread in these fields as it should be. The problem seems to be associated with the complexity and diversity of ML methods, each of which has its own hidden disadvantages and specificities. As a result, there is a large gap between ML and physics: a physicist knows how to collect the correct data but not how to treat it correctly, and the reverse holds for ML specialists. We think that to make a real ML revolution, physicists should use ML as a mandatory tool. The current review aims to spread ML in physics by presenting important tools and highlighting the “underwater rocks” (hidden pitfalls) of ML for non-specialists. Additionally, the review highlights problems specific to physics (for example, insufficient data; mixed discrete and real parameters as input data; obtaining the simplest rules from the model) and some ways in which they can be resolved. The review will hence also be of interest to ML specialists seeking to understand how to improve some methods and write code. Last but not least, the results of ML models usually cannot be interpreted well even by ML specialists, and interpretability is important in physics. The current review shows physicists, as concisely as possible, which methods should be used, and why, in order to obtain an interpretable model.

2 History

It is difficult to pinpoint exactly when ML was first used, but its history has been shaped by several important events. The entire timeline of the evolution of ML is neatly summarised in these articles [54–56]. The Bayes theorem dates back to 1763 [57] and was followed by various statistical techniques such as the least squares method in 1805 [58] and Markov chains in 1913 [59]. In 1943, Walter Pitts, a logician and cognitive psychologist, and Warren McCulloch, an American neurophysiologist, wrote a paper on human cognition in which they quantitatively mapped out mental processes and decision-making; it is considered the first neural model [60]. Furthermore, Alan Turing’s 1950 paper [1], which proposed the Turing test and discussed machines that could learn on their own, was one of the most significant events. This work piqued the curiosity of many academics, and it played an essential role in developing the field into what it is today.

The success of ML in recent decades has been boosted by advances in technology and computational capacity. As ML grows in prominence, more scientists and researchers are becoming interested in its applications in a variety of disciplines. As noted by Carleo et al., physics and ML have similar approaches to solving problems but differ in how the results are interpreted [61]. In physics, results are obtained by scientists applying their knowledge and intuition to solve problems, whereas in ML, algorithms supply the essential “intelligence” by identifying underlying patterns in data. Consequently, while some advocate applying ML in physics, others remain skeptical because it is unclear how the results are obtained. The integration of ML as a tool in physics is a relatively recent concept. The link between statistical mechanics and learning theory began in the mid-1980s, when statistical learning from examples overtook logic- and rule-based AI, owing in large part to contributions by statistical physicists. Two key papers drove this shift: Hopfield’s neural-network model of associative memory [62], which prompted the rich application of notions from spin glass theory to neural-network models, and Valiant’s theory of the learnable [63], which paved the path for rigorous statistical learning in AI.

3 Recent trends in machine learning in physics

The field of Machine Learning is a versatile domain at the frontier of computer science that allows us to push the limits of computing in the service of science. Today, we are surrounded by, generate, and record an immense amount of data every second, which has paved the way for advanced AI algorithms that analyse trends by drawing information from data in science, education, manufacturing, healthcare, telecommunications, marketing, transportation, social networking, and physics [64,65]. Although it is a relatively young field, Machine Learning is highly researched, with new algorithms and strategies arising to tackle different problems and to support both theoretical and experimental physics through simulation, trend analysis, and various other models, which have considerably deepened our understanding of the universe [66,67]. Advances in physics can, in turn, accelerate the growth and effectiveness of Machine Learning itself, both through research and through the investigation of specific domains that directly impact computing technology; for instance, research in quantum computing has helped to accelerate Machine Learning in a world where Moore’s law is approaching its limits [61,68]. While futuristic technologies like quantum computing hold immense promise for revolutionizing computational capabilities, this review primarily focuses on contemporary advancements in machine learning within physics. Nevertheless, the intersection of quantum computing and machine learning is a compelling direction for future applications to complex computational challenges in physics.

3.1 Automated machine learning

Many different Machine Learning approaches have been formulated to identify and solve problems across various fields. Most problems can be divided into classification analysis, regression analysis, data clustering, association rule learning, feature engineering for dimensionality reduction, and deep learning methods [64]. As discussed above, unsupervised learning is a part of ML in which the algorithm identifies trends in data by itself, without the need for labels. A related recent development, which likewise reduces the need for human input, is Automated Machine Learning, or AutoML. The goal of AutoML is to provide techniques that construct appropriate Machine Learning models with little to no human involvement [69]. AutoML focuses on automating the construction and training of Machine Learning models, including pre-processing, algorithm selection, and hyperparameter tuning [3]. Automating these steps has led to the development of various techniques, such as automated feature engineering, which revolves around selecting the most discriminant features for a particular Machine Learning problem [69,70]. Meta-learning is an AutoML practice consisting of a series of methods that use available metadata, and metadata generated from a problem (types of datasets, algorithms, benchmark numbers, and other statistical figures), to help automate the optimization of Machine Learning algorithms and model comparison [71]. For example, learning curve prediction allows a machine to predict the performance of Machine Learning models on given problems and to compare the performances of chosen models beforehand [72]. Architecture search is yet another AutoML method, which attempts to find the architecture and model best suited to a given problem [69,73].
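At its simplest, the hyperparameter-tuning step that AutoML automates amounts to a search over candidate settings scored on held-out data. The sketch below is a toy stand-in, not an AutoML system: it grid-searches the number of neighbours k of a k-nearest-neighbour classifier on hypothetical noisy 1-D data (label 1 when x > 0.5, flipped with probability 0.2).

```python
import random

def knn_predict(train, x, k):
    """Majority vote of the k nearest training points (1-D feature, binary label)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if votes * 2 > k else 0  # k is odd, so there are no ties

def accuracy(train, val, k):
    return sum(knn_predict(train, x, k) == y for x, y in val) / len(val)

def grid_search(train, val, candidates):
    """Score every candidate on the validation set; ties go to the earliest candidate."""
    scores = {k: accuracy(train, val, k) for k in candidates}
    best = max(candidates, key=lambda k: scores[k])
    return best, scores

rng = random.Random(0)

def sample(n):
    """Hypothetical data: label is 1 when x > 0.5, flipped with probability 0.2."""
    out = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < 0.2:
            y = 1 - y
        out.append((x, y))
    return out

train, val = sample(200), sample(100)
best_k, scores = grid_search(train, val, candidates=[1, 3, 5, 9, 15, 31])
print(best_k, scores[best_k])
```

AutoML methods replace the exhaustive grid with smarter strategies (e.g. meta-learning or learning-curve prediction, as described above) and extend the search to pre-processing and model choice.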
The likelihood function assesses a statistical model’s fit to observed data across various parameter values, while the log-likelihood function simplifies computations by converting products into sums and enhancing numerical stability. These functions are pivotal in optimizing parameters for smoother algorithm convergence and in choosing the most suitable models for the given data [74].
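Both points can be checked numerically. The first part of the sketch below shows why log-likelihoods are preferred: a product of 400 probabilities of 0.01 underflows to 0.0 in double precision, while the sum of their logarithms is easily representable. The second part fits a 1-D logistic regression by gradient ascent on the average log-likelihood; the generating model p(y = 1 | x) = sigmoid(2x − 0.5), the learning rate, and the step count are hypothetical choices.

```python
import math
import random

# Part 1: products of many probabilities underflow; sums of logs do not.
probs = [0.01] * 400
product = 1.0
for p in probs:
    product *= p                           # 1e-800 is below the float range -> 0.0
log_sum = sum(math.log(p) for p in probs)  # 400 * ln(0.01)
print(product, round(log_sum, 2))          # 0.0 -1842.07

# Part 2: maximum-likelihood fit of a 1-D logistic regression.
def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def softplus(z):
    """log(1 + exp(z)) computed without overflow."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def log_likelihood(w, b, data):
    # log p(y|x) = y log sigmoid(z) + (1 - y) log(1 - sigmoid(z)), with
    # log sigmoid(z) = -softplus(-z) and log(1 - sigmoid(z)) = -softplus(z).
    return sum(-y * softplus(-(w * x + b)) - (1 - y) * softplus(w * x + b)
               for x, y in data)

def fit_logistic(data, lr=0.5, steps=1000):
    """Gradient ascent on the average log-likelihood."""
    w = b = 0.0
    n = len(data)
    for _ in range(steps):
        gw = sum((y - sigmoid(w * x + b)) * x for x, y in data) / n
        gb = sum(y - sigmoid(w * x + b) for x, y in data) / n
        w += lr * gw
        b += lr * gb
    return w, b

rng = random.Random(0)
data = []
for _ in range(300):
    x = rng.uniform(-3, 3)
    data.append((x, 1 if rng.random() < sigmoid(2 * x - 0.5) else 0))

w, b = fit_logistic(data)
print(round(w, 2), round(b, 2))  # estimates near the generating values 2 and -0.5
print(log_likelihood(0.0, 0.0, data), log_likelihood(w, b, data))  # the fit raises the log-likelihood
```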

3.2 Explainable AI

Although there has been substantial progress in Machine Learning methods over the past years, leading to many algorithms being developed and adopted for solving problems, this progress has also made cutting-edge Machine Learning algorithms highly complex, both syntactically and architecturally. Explainable AI (XAI) is a branch of AI that aims to lower this barrier of complexity and allow better human understanding of Machine Learning and AI models in general [75]. Implementing XAI models can lead to a move from “black-box” models toward more transparent Machine Learning models and hence expand the scope for applying Machine Learning to other domains [76,77]. In physics, there is considerable debate about adopting Machine Learning for computationally intensive tasks and for the resolution of new science, because of the potentially agnostic nature of such models and their “black box” character, which make them ‘opaque’ to human understanding [61]. XAI is a frontrunner in resolving this ‘opaqueness’ and revealing the scientific underpinnings hidden within the workings of a model applied to a research problem, which can also lead to the discovery of new science [78]. Through XAI and interpretable models, we can achieve more clarity in how models are used and applied to problems, significantly boosting the propagation and development of research, especially in the core sciences.

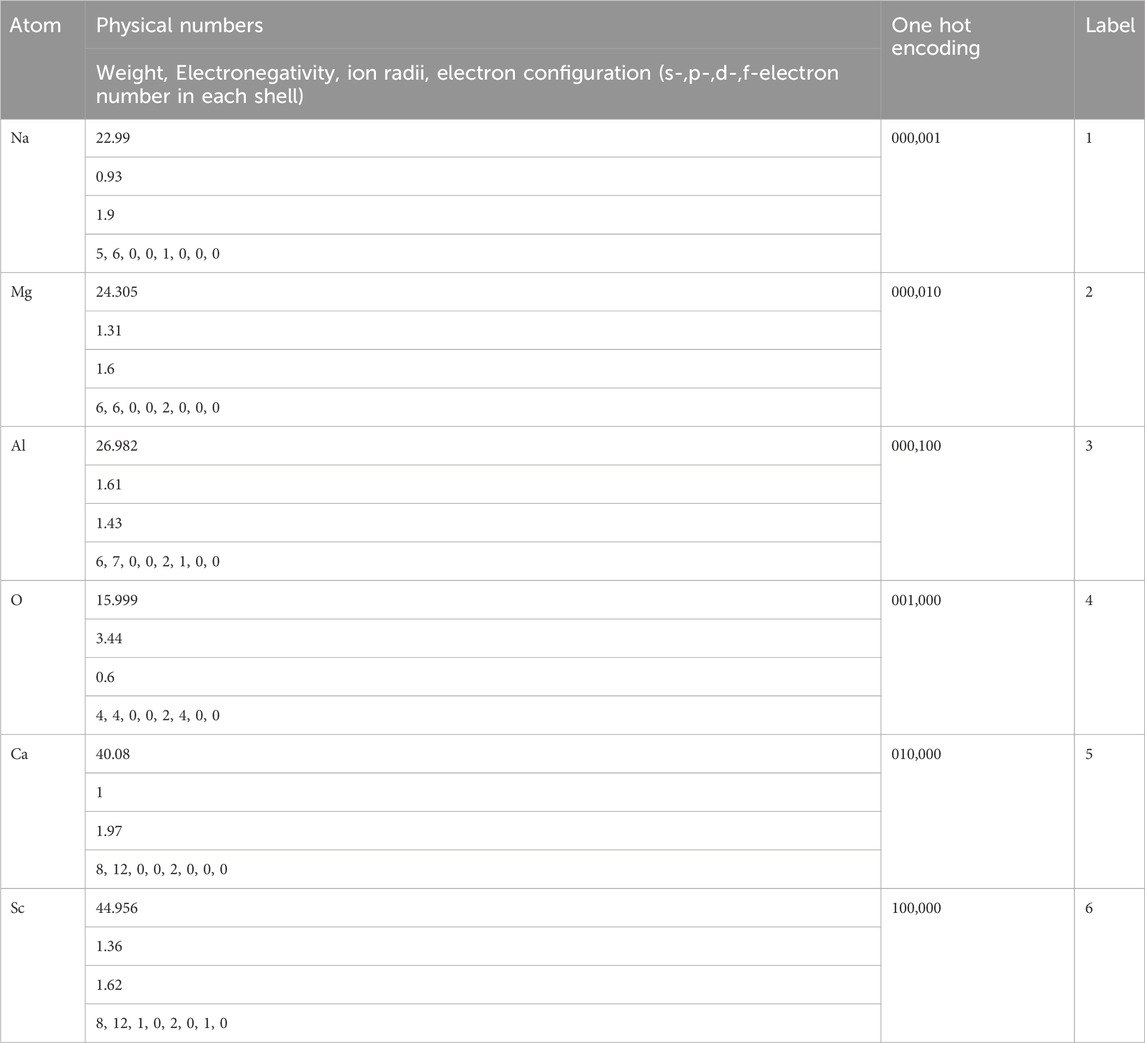

XAI aims not only to “get the rules” but also to understand where the model works and where it does not. Investigators cannot trust a model if they do not understand its uses and the cases where it most often fails. Even 1% of really bad forecasts can limit the use of a model in medicine or in driving a car, that is, in areas where human lives are at stake. XAI should resolve this mistrust and increase the pace of technological development in the ML field. Physics, chemistry, and medicine offer devices and materials that can endanger the life of a person or even the whole of humanity. However, along the way we find several problems that prevent us from obtaining an XAI model. One of them is that these fields contain numerous discrete feature parameters, e.g., types of medication and treatment regimens in medicine, types of chemical compounds dissolved in solution in chemistry, and atom types in physics, none of which can easily be represented as numbers. For example, there are several ways to enumerate Na, Mg, Al, O, Ca, and Sc atoms (Table 4).

Using atomic weight, electronegativity, ionic radius, and electron configuration seems most plausible, but in this case each atom in the chemical formula may carry 11 or more real-valued parameters, many of which are strongly correlated with one another yet cannot easily be reduced; the feature vector therefore becomes very large, which undermines explainability. One-hot encoding also leads to a large number of feature parameters, and the subsequent interpretation of the ‘1’s and ‘0’s is very hard. Label encoding is not valid at all, because there is no reason why the O ion should receive a greater value than Na but a lower one than Ca. Consequently, many ML algorithms cannot be applied to discrete parameters in a way that yields XAI. At least one ML method, the Decision Tree, can work with discrete parameters as they are, without transforming them into real numbers. It is the most interpretable ML algorithm, with the highest XAI performance, but it suffers from low prediction accuracy. An ensemble of Decision Trees, the Random Forest, aims to increase accuracy but drastically loses explainability. Therefore, the overall mathematical goal is to find a method that can still work with discrete parameters as they are while giving both high accuracy and explainability. Until such a method is found, Decision Trees seem to be the most appropriate choice in some cases.
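The three encodings discussed above can be compared side by side for the six elements of Table 4. This sketch assumes that the labels follow the order in which the elements are listed (which reproduces the O-between-Na-and-Ca ordering criticised above) and uses only two physical descriptors, atomic number and Pauling electronegativity, where a real model would use many more.

```python
ATOMS = ["Na", "Mg", "Al", "O", "Ca", "Sc"]

# Label encoding: an arbitrary integer per element (here, the listing order).
label = {el: i for i, el in enumerate(ATOMS)}

# One-hot encoding: one binary column per element.
def one_hot(el):
    return [1 if el == other else 0 for other in ATOMS]

# Property-based encoding: (atomic number, Pauling electronegativity).
PROPS = {
    "Na": (11, 0.93), "Mg": (12, 1.31), "Al": (13, 1.61),
    "O": (8, 3.44), "Ca": (20, 1.00), "Sc": (21, 1.36),
}

for el in ATOMS:
    print(f"{el:>2}  label={label[el]}  one_hot={one_hot(el)}  props={PROPS[el]}")

# The label encoding silently imposes an order with no physical meaning:
print(label["Na"] < label["O"] < label["Ca"])  # True
```

Each row shows the trade-off from the text: the label is compact but meaningless as a magnitude, the one-hot vector grows with the number of elements, and the property vector grows with the number of descriptors.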

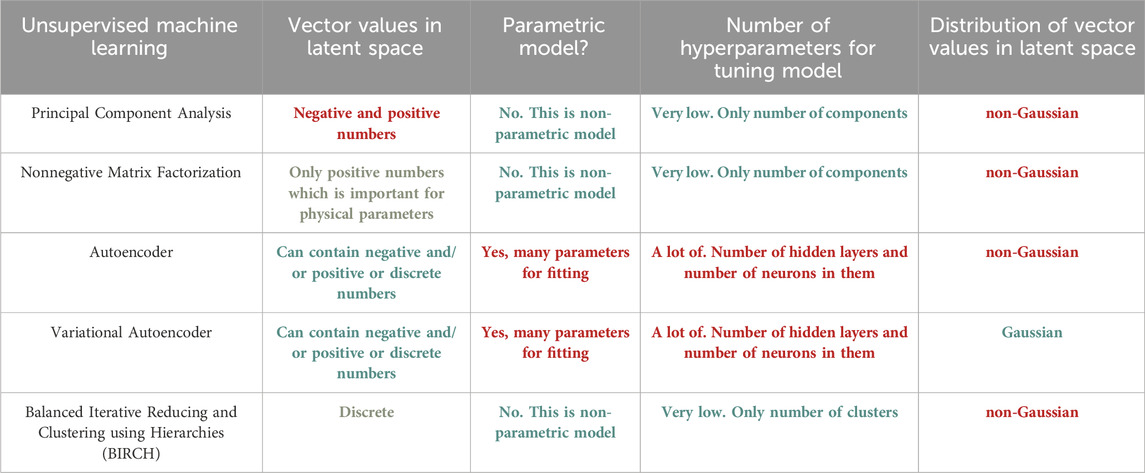

Further improvement of the Decision Tree model seems to lie in enhancing the data sorting and segregation procedure. Currently, continuous feature data

FIGURE 3. (A) Workflow of y = red/blue class segregation with continuous x1, x2 feature parameters using DT and Enhanced DT. (B) Workflow of y = red/blue class segregation with discrete x feature parameters (star, circle, square, pentagon) using DT and Enhanced DT.

The same improvement can be done with DT which treats discrete feature parameters

It is expected that Enhanced DT can give impetus to the creation of XAI models in many areas, including physics and chemistry, where explicit rules and an understanding of how the model works are preferred.
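For reference, the two kinds of split that a standard (not Enhanced) Decision Tree node performs in Figure 3 can be sketched as follows: an axis-aligned threshold on a continuous feature, and a test on a discrete feature used as-is. The Gini impurity criterion and the one-vs-rest form of the categorical split (x == v) are assumptions made for this sketch; CART-style trees may instead search over subsets of categories. The Enhanced DT itself is not reproduced here, and the toy data are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 for a pure or empty node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def weighted_gini(left, right):
    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)

def best_threshold_split(xs, ys):
    """Axis-aligned split x <= t on a continuous feature; returns (impurity, t)."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # candidate threshold halfway between neighbouring values
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = weighted_gini(left, right)
        if best is None or score < best[0]:
            best = (score, t)
    return best

def best_category_split(xs, ys):
    """One-vs-rest split x == v on a discrete feature, with no numeric encoding."""
    best = None
    for v in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x == v]
        right = [y for x, y in zip(xs, ys) if x != v]
        score = weighted_gini(left, right)
        if best is None or score < best[0]:
            best = (score, v)
    return best

# Continuous feature (cf. Figure 3A): red below about 0.5, blue above.
x1 = [0.1, 0.4, 0.35, 0.8, 0.9, 0.7]
y1 = ["red", "red", "red", "blue", "blue", "blue"]
print(best_threshold_split(x1, y1))  # impurity 0.0 with a threshold between 0.4 and 0.7

# Discrete feature (cf. Figure 3B): shapes used directly as categories.
x2 = ["star", "circle", "star", "square", "pentagon", "circle"]
y2 = ["red", "blue", "red", "blue", "blue", "blue"]
print(best_category_split(x2, y2))  # (0.0, 'star')
```

Each split is a human-readable rule ("x1 <= t" or "shape is star"), which is the source of the interpretability discussed above.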

3.3 Physics-informed machine learning

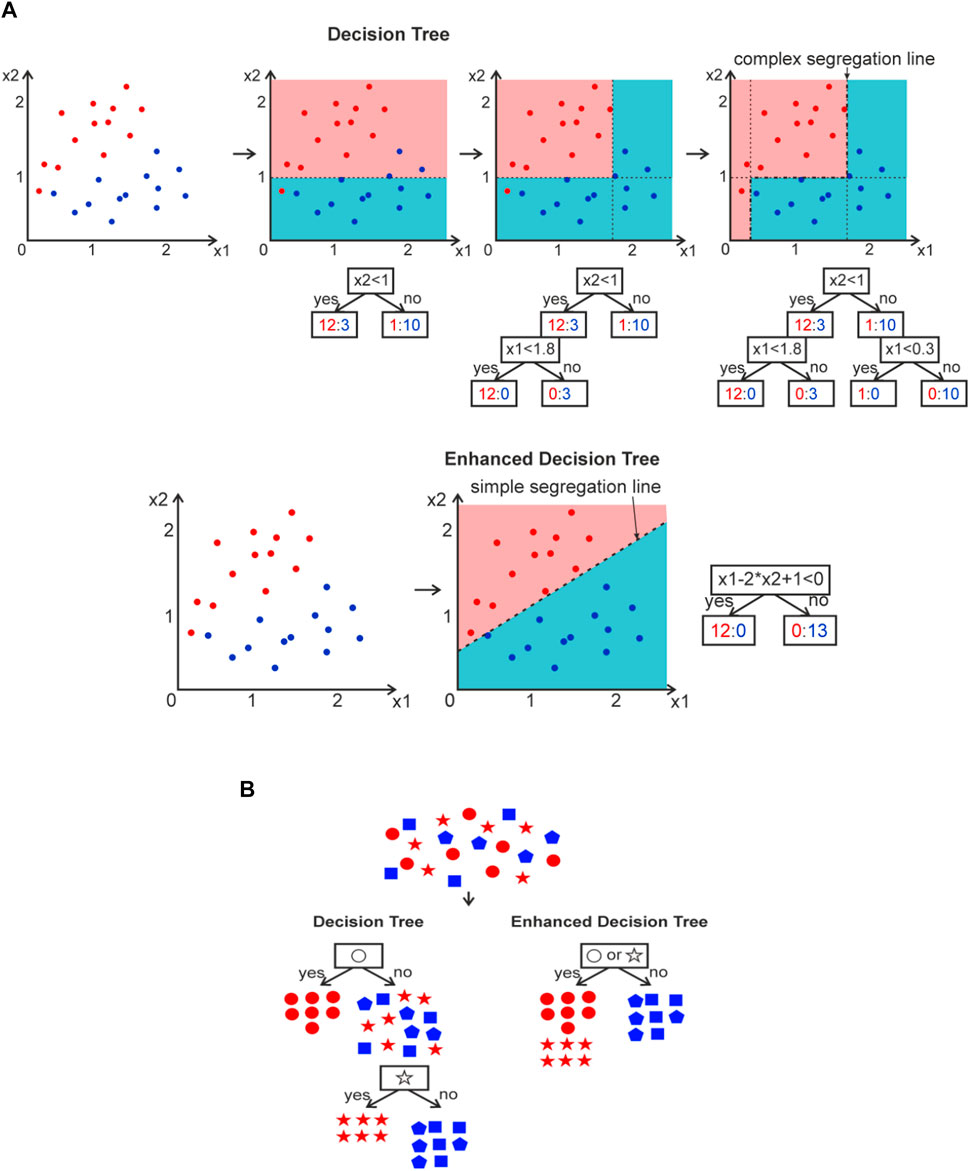

To date, modelling and predicting the dynamics of multiphysics and multiscale systems have made great strides by numerically solving partial differential equations (PDEs) using finite differences, finite elements, spectral, and even meshless methods. However, modelling and predicting the evolution of nonlinear multiscale systems with inhomogeneous cascades of scales inevitably faces severe challenges and introduces prohibitive costs and multiple sources of uncertainty [79]. Another problem is posed by physical tasks with missing or noisy boundary conditions, which cannot be solved with traditional approaches. Machine learning methods that exploit many observational datasets can identify multi-dimensional correlations and handle such problems, but their predictions may be physically inconsistent or implausible, even for well-fitted, purely data-driven models. To address this problem, G. E. Karniadakis and collaborators proposed physics-informed neural networks (PINNs): neural networks trained to solve supervised learning tasks while respecting any given law of physics described by general nonlinear partial differential equations [79,80]. The core idea of a PINN is to construct a neural network (NN) u(x, t; θ), where θ is the set of trainable weights w and biases b. Next, the measurement data {x_i, t_i, u_i} for u and the residual points {x_j, t_j} for the PDE are specified, and the loss L is defined as the weighted sum of the data loss and the PDE loss. The NN is then trained to find the best parameters θ* by minimizing L (Figure 4B). This method was successfully applied to extract edge plasma behaviour in magnetic confinement fusion, which is important to reactor performance and operation (Figure 4A).
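Written out, the weighted loss described above takes the following form under the common mean-squared-error choice. The weights w_data and w_PDE, the counts N_d and N_r, and the residual operator F (the PDE written as F[u] = 0) are notation introduced here for illustration; the source does not state the exact form used.

```latex
\mathcal{L}(\theta)
  = w_{\text{data}}\,\frac{1}{N_d}\sum_{i=1}^{N_d}\bigl|u(x_i,t_i;\theta)-u_i\bigr|^{2}
  + w_{\text{PDE}}\,\frac{1}{N_r}\sum_{j=1}^{N_r}\bigl|\mathcal{F}[u](x_j,t_j;\theta)\bigr|^{2},
\qquad
\theta^{*} = \arg\min_{\theta}\,\mathcal{L}(\theta)
```

For example, for the viscous Burgers equation one would take F[u] = u_t + u u_x − ν u_xx, with the derivatives of the network output obtained by automatic differentiation. The first term fits the measurements, while the second penalises violations of the physics at points where no measurements exist.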

FIGURE 4. (A) Physics-informed neural networks (PINNs) were used to accurately reconstruct the unknown turbulent electric field (middle panel) and underlying electric potential (right panel) directly from partial observations of the plasma’s electron density and temperature from a single test discharge (left panel). The top row shows the reference target solution, while the bottom row depicts the PINN model’s prediction [81]. (B) Schematic of a physics-informed neural network (PINN) [81].

4 Applications of machine learning in physics

4.1 Astronomy and astrophysics

The field of astrophysics is very data-intensive, with huge amounts of data suitable for computational analysis being produced by instruments around the globe. For example, Gaia Data Release 3 (DR3) alone contains more than 1.812 billion light sources with five- to six-parameter solutions (Brown et al. 2021). Such an immense amount of data holds great potential for Machine and Deep Learning applications, which may help reveal what is not initially apparent. ML is gradually becoming commonplace in astronomy for automating data filtering and significantly speeding up workflows.

4.1.1 Gravitational wave detection

The detection of gravitational waves from the GW150914 black-hole merger (BHM) event using the Laser Interferometer Gravitational-wave Observatory (LIGO) [83] made waves in the astrophysics community. It was followed by the detection of more high-energy events, including BHMs with objects of nearly 50 M☉ and beyond [84,85]. Gravitational wave (GW) detections provided crucial evidence validating yet another aspect of Einstein’s General Relativity, made possible by rigorous astrophysical simulations of very massive objects such as black holes [86]. Observing such events also yields massive amounts of data, allowing us to deduce the nature of the event in question as well as the astronomical parameters of the objects involved.

LIGO currently employs the “matched-filtering” algorithm as its primary GW detection method. However, according to various investigations, this matched-filtering approach behaves inconsistently [87] and may overlook GW signals produced by smaller events, black-hole binaries, and other compact binary interactions. Several promising deep learning methods have been able to replicate the results of a matched-filtering algorithm. Gabbard et al. (2017) proposed a deep learning approach that takes whitened time series of measured gravitational-wave strain as input, using simulated binary black hole mergers as training and testing data [88]. The training dataset consists of 4 × 10⁵ independent time series, 50% of which contain a signal embedded in noise while the rest contain only noise. A CNN was used and yielded results close in accuracy to matched filtering [89]. Later, Yan et al. (2022) proposed MNet-Shallow and MNet-Deep, neural-network equivalents of the matched-filtering method that exceed the previous strategy in computational efficiency when detecting GWs in LIGO noise [90,91]. MNet-Shallow is a shallow neural network, while MNet-Deep is a deep learning model. The L1 strain data from the LIGO O2 run are used as noise data after downsampling and dividing the data into 0.6 s segments.
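The principle of matched filtering, sliding a known template along the data and looking for a correlation peak, can be sketched as follows. This is a toy illustration under strong assumptions: the noise is white Gaussian (so the whitening step is the identity), the noise level (0.3) and injection offset are hypothetical, and the Hann-windowed chirp is a stand-in for, not a physical model of, a GW waveform. Real pipelines work in the frequency domain with a bank of templates.

```python
import math
import random

def chirp_template(n=64):
    """Hypothetical chirp-like template: rising frequency, Hann window (not a real GW waveform)."""
    h = []
    for i in range(n):
        phase = 2 * math.pi * (0.1 * i + 0.0015 * i * i)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1))
        h.append(window * math.sin(phase))
    return h

def matched_filter(data, template):
    """Correlate a unit-norm template with the data at every offset.
    Assumes white noise, so no whitening is applied first."""
    norm = math.sqrt(sum(v * v for v in template))
    m = len(template)
    return [sum(data[k + i] * template[i] for i in range(m)) / norm
            for k in range(len(data) - m + 1)]

sigma = 0.3                      # assumed known noise standard deviation
rng = random.Random(42)
template = chirp_template()
true_offset = 150
data = [rng.gauss(0.0, sigma) for _ in range(400)]  # simulated white noise
for i, v in enumerate(template):
    data[true_offset + i] += v                      # inject the signal

response = matched_filter(data, template)
peak = max(range(len(response)), key=lambda k: response[k])
print(peak, round(response[peak] / sigma, 1))  # peak offset and its approximate SNR
```

The peak should fall at (or within a sample of) the injection offset, even though the signal is hard to see in the raw samples. Matched filtering requires a template for every possible source, which is one motivation for the learned detectors described above.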

Other deep-learning methods, such as deep filtering, which employs GPU-accelerated CNNs trained on GW signals injected into simulated noise with a high signal-to-noise ratio (>90%), have shown promise in outperforming current GW detection methods [92,93]. Because it reduces the waiting time from CPU hours, this accelerated method allows detection results to be verified by conventional matched filtering in real time. Deep filtering also shows promise for making automated GW detection faster. Shen et al. (2017) performed an experiment on gravitational-wave denoising using variational autoencoders (VAEs) [94–98], introducing the Staired Multi-Timestep Denoising Autoencoder (SMTDAE), based on a sequence-to-sequence bi-directional long short-term memory recurrent neural network (LSTM-RNN) [37,95,99–102]. The model, trained on white Gaussian noise, can remove noise from LIGO input data as well as simulated noise, and the authors report excellent performance in both scenarios. Later, Wei and Huerta (2020) also proposed a DL-based GW denoising approach that applies WaveNet to noise-contaminated binary black hole merger waveforms [103,104]. The study also finds that CNNs are best suited to removing noise from binary black hole GW events [41,93]. The tuned WaveNet model denoises signals embedded in simulated Gaussian noise as well as in raw LIGO noise, obtaining consistent results for binary black hole merger signals with moderate signal-to-noise ratios [105–107]. Recently, Powell et al. (2023) applied generative adversarial networks (GANs) to generate artificial noise artefacts and inject them into GW merger signals, providing test data for studies such as that of Wei and Huerta (2020) mentioned above [103,108].
The study also benchmarks how accurately the GANs simulate real noise and glitches similar to those observed at the LIGO, KAGRA and Virgo detectors, by classifying the generated glitches into glitch types with CNNs, achieving a classification accuracy of over 99% across 22 glitch types [93,108–110].

4.1.2 Transit event vetting

Exoplanets can be identified through continuous photometry of candidate stars over a long time period, looking for periodic dips in the observed flux. After processing, the captured flux for each system can be plotted over time and analysed as a light curve. Several space- and ground-based missions have been deployed to observe many stars simultaneously. Kepler performed highly sensitive photometry on ∼500,000 stars in Cygnus and Lyra with a very large field of view (FOV) of 115 square degrees until it was retired in 2018 [111]. The Kepler Science Processing Pipeline compiled the Threshold Crossing Events (TCEs) identified by Kepler into data releases [112]. However, these data releases required human intervention to remove false positives. Later, the Transiting Exoplanet Survey Satellite (TESS) used four wide-field optical CCD cameras surveying the sky in a 600–1,000 nm bandpass [113], each covering 24° × 24°, for a total observing sector of 24° × 96°. TESS went on to survey about 75% of the night sky and identified candidates named TESS Objects of Interest (TOIs), which are potential or confirmed exoplanet-hosting systems. TOIs are usually released after passing diagnostic and filtering tests that separate exoplanet candidates from false positives, followed by further data validation through close inspection by dedicated vetting teams [114]. The TESS Science Office (TSO) has released a list of 2,545 objects, including 120+ confirmed exoplanets and 757 objects with planetary radius r_p < R⊕ [115]. Data from Kepler and, later, TESS contained false positives from various sources, a large share of which were attributed to eclipsing binaries. Manual screening of light curves is therefore impractical because of the time humans need to validate each transit event.
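The periodic-dip search described above can be illustrated with a brute-force box search over trial periods, in the spirit of box least squares (BLS). The sketch below is a deliberately simplified assumption, not the Kepler or TESS pipeline: it phase-folds a synthetic light curve at each trial period, slides a box of fixed duration across phase, and keeps the period with the deepest mean dip. All light-curve parameters are hypothetical.

```python
import numpy as np

def box_search(time, flux, periods, duration, oversample=4):
    """Grid search for a periodic box-shaped dip (a simplified BLS-style search).

    For each trial period the light curve is phase-folded and a box of the
    given duration is slid across phase; the depth is the mean out-of-box flux
    minus the mean in-box flux. Boxes that wrap past phase 1 are skipped.
    """
    best_period, best_depth = None, -np.inf
    for p in periods:
        phase = (time % p) / p                       # fold onto [0, 1)
        width = duration / p                         # box width in phase units
        for start in np.arange(0.0, 1.0 - width, width / oversample):
            inside = (phase >= start) & (phase < start + width)
            if inside.sum() < 3:
                continue
            depth = flux[~inside].mean() - flux[inside].mean()
            if depth > best_depth:
                best_period, best_depth = p, depth
    return best_period, best_depth

# Synthetic light curve (all values hypothetical): 30 days sampled every 0.02 d
rng = np.random.default_rng(1)
time = np.arange(0.0, 30.0, 0.02)
true_period, t0, duration, depth = 3.2, 0.7, 0.2, 0.01
in_transit = ((time - t0) % true_period) < duration
flux = 1.0 - depth * in_transit + 0.002 * rng.standard_normal(time.size)

periods = np.arange(2.0, 5.0, 0.01)
best_period, best_depth = box_search(time, flux, periods, duration)
print(f"best period {best_period:.2f} d, depth {best_depth:.4f}")
```

A detection like this is only a threshold crossing: eclipsing binaries and instrumental effects also produce periodic dips, which is why the vetting step discussed next is needed.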

Automatic vetting of transit data, also called auto-vetting, has been explored to some degree in the past few years, and many auto-vetting methods have been developed using Machine Learning (ML) and Deep Learning (DL) algorithms. McCauliff et al. developed a Random Forest-based model that classifies TCEs into Planet Candidates (PCs) or False Positives (FPs), achieving an error rate of 5.85% [116]. Robovetter, by Coughlin et al., was the first major algorithm capable of entirely replacing human-aided vetting: it produced fully automated catalogues from the Kepler transit data pipeline, classifying TCEs as PCs or FPs and using several flags to identify the source of each non-transit-like event [117]. Robovetter used a Locality Preserving Projection (LPP) metric, defined by Thompson et al. in 2015, for dimensionality reduction, and k-nearest neighbours for classification [118].
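The pipeline of dimensionality reduction followed by k-nearest-neighbour classification can be sketched in NumPy. Because LPP is not available in NumPy, principal component analysis (PCA) is swapped in here for the dimensionality-reduction step; the U-shaped and V-shaped toy dips, the noise level and k = 5 are hypothetical, chosen only to mimic a planet-versus-eclipsing-binary distinction.

```python
import numpy as np

def pca_fit(X, n_components):
    """Return the data mean and the leading principal axes (as rows)."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]

def pca_transform(X, mean, components):
    return (X - mean) @ components.T

def knn_predict(train_z, train_y, test_z, k=5):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    d = np.linalg.norm(test_z[:, None, :] - train_z[None, :, :], axis=-1)
    nearest = np.argsort(d, axis=1)[:, :k]
    return (train_y[nearest].mean(axis=1) > 0.5).astype(int)

# Toy folded light curves: label 1 = planet-like U-shaped dip, 0 = V-shaped dip
rng = np.random.default_rng(2)
x = np.linspace(-1, 1, 50)
u_shape = -(np.abs(x) < 0.3).astype(float)
v_shape = -np.clip(1 - np.abs(x) / 0.3, 0, None)

def make_set(n):
    y = rng.integers(0, 2, n)
    X = np.where(y[:, None] == 1, u_shape, v_shape)
    return X + 0.3 * rng.standard_normal((n, x.size)), y

X_train, y_train = make_set(200)
X_test, y_test = make_set(100)
mean, comps = pca_fit(X_train, n_components=3)
y_pred = knn_predict(pca_transform(X_train, mean, comps), y_train,
                     pca_transform(X_test, mean, comps))
accuracy = np.mean(y_pred == y_test)
print(f"test accuracy: {accuracy:.2f}")
```

Unlike PCA, LPP is designed to preserve local neighbourhood structure, which suits a nearest-neighbour classifier; the overall reduce-then-vote structure is the same.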

Later, several projects brought promising results for classification on TESS data releases. Osborn et al. (2019), using a neural network model by Ansdell et al., obtained 97% precision and 92% accuracy on simulations created by the Lilith model [119]. Further, Stefano et al. applied a transfer learning approach, with a model pre-trained on Kepler DR24 data, to TESS ExoFOP data and obtained significant results [120]. Agnes et al. (2022) proposed the models ExoSGAN and ExoACGAN, which use semi-supervised and auxiliary classifier GANs: artificial exoplanet transit data are generated, and the discriminator model is trained against them. The ExoACGAN model achieved an accuracy of 99.8% with an F-score of 97.6%, with only 8 of 5,050 non-exoplanet stars misclassified as exoplanet systems [109,121,122].

4.2 Fluid systems and dynamics

The theory of fluid systems shows that the transport of conserved quantities, or the evolution of observed phenomena, can often be described by a small number of coherent structures or dynamic processes [123]. This motivates extracting these essential mechanisms from measurements. Statistical tools such as variance analysis, conditional averaging, and principal component analysis or proper orthogonal decomposition (POD) (Figure 5) [123,124] have been used to describe complex fluid behaviour.

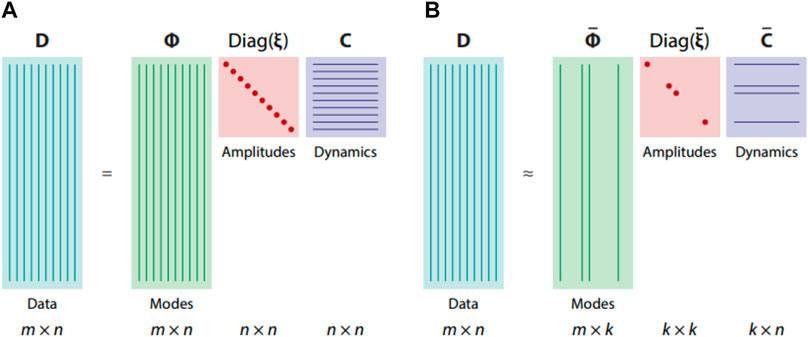

FIGURE 5. Example of factorizing a data matrix D into modes Φ, amplitudes diag(ξ), and dynamics C, for (A) spectral analysis and (B) model reduction [123].
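A minimal sketch of POD, assuming snapshots are stored as the columns of the data matrix D: the singular value decomposition of the mean-subtracted snapshots gives spatial modes (Φ), amplitudes (the singular values, playing the role of diag(ξ) in Figure 5) and temporal coefficients (C). The synthetic field below, with two coherent structures and weak noise, is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
x = np.linspace(0, 2 * np.pi, 200)            # spatial grid
t = np.linspace(0, 10, 300)                   # snapshot times
# Hypothetical field: two coherent structures plus weak measurement noise
D = (np.outer(np.sin(x), np.cos(2 * t))
     + 0.5 * np.outer(np.sin(2 * x), np.sin(5 * t))
     + 0.01 * rng.standard_normal((x.size, t.size)))

D_mean = D.mean(axis=1, keepdims=True)        # subtract the temporal mean field
U, s, Vt = np.linalg.svd(D - D_mean, full_matrices=False)
energy = s**2 / np.sum(s**2)                  # variance fraction per POD mode

r = 2
Phi, xi, C = U[:, :r], s[:r], Vt[:r]          # modes, amplitudes, temporal dynamics
D_r = D_mean + Phi @ np.diag(xi) @ C          # rank-r reconstruction
rel_err = np.linalg.norm(D - D_r) / np.linalg.norm(D)
print(f"energy in first {r} modes: {energy[:r].sum():.4f}, relative error: {rel_err:.3f}")
```

Two modes capture almost all the variance here only because the field was built that way; for measured flow data, the decay of `energy` is what guides the choice of the truncation rank `r`.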

4.2.1 Dynamic mode decomposition in fluid systems

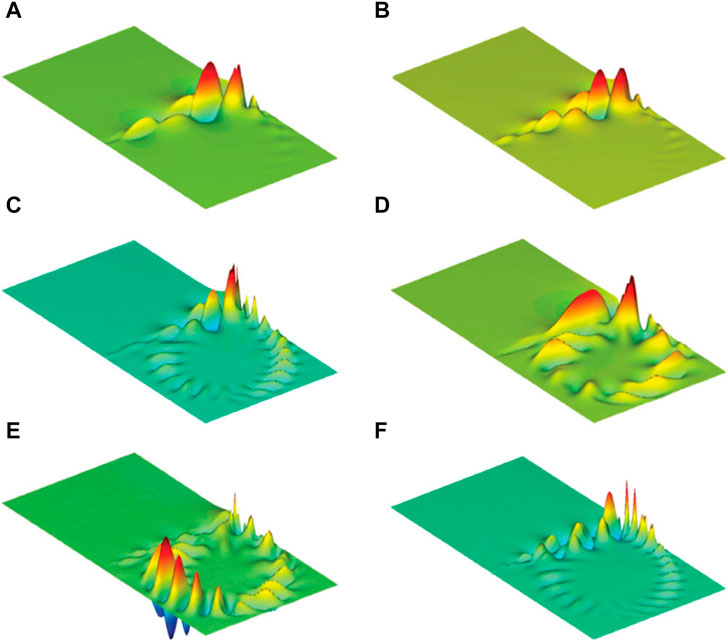

Other data-driven approaches currently under active development complement the main model-building methods for fluid systems. For example, dynamic mode decomposition (DMD), a dimensionality reduction technique for sequential data streams, can extract spectral information (Figure 6) from observed data sequences; reference [123] discusses its various extensions and generalizations. DMD is a factorization and dimensionality reduction technique for data sequences, i.e., an unsupervised ML method, first introduced by Schmid as a numerical procedure for extracting dynamical features from flow data [125].

FIGURE 6. Example of applying DMD to extract representative dynamic modes. (A) Most unstable dynamic mode, (B–D) dynamic modes from the unstable branch, (E, F) dynamic modes from the stable branch [125].
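The DMD factorization can be sketched with the widely used exact-DMD formulation: project the best-fit linear operator that maps each snapshot to the next onto the leading POD modes, then take its eigendecomposition. The NumPy code below assumes evenly spaced snapshots; the damped-rotation test system lifted into 64 dimensions is hypothetical, chosen because its DMD eigenvalues (magnitude 0.95, phase ±0.3) are known in advance.

```python
import numpy as np

def dmd(X, Y, r):
    """Exact DMD: eigenvalues and modes of the best-fit linear map Y ≈ A X.

    X, Y: (n_features, n_snapshots), with Y holding the snapshots one step later.
    r: truncation rank for the SVD of X.
    """
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U, s, V = U[:, :r], s[:r], Vh[:r].conj().T
    A_tilde = U.conj().T @ Y @ V / s          # r x r projection of A (columns scaled by 1/s)
    eigvals, W = np.linalg.eig(A_tilde)
    modes = (Y @ V / s) @ W                   # exact DMD modes in the full space
    return eigvals, modes

# Hypothetical data: a damped rotation (0.95 * R(0.3)) embedded in 64 dimensions
rng = np.random.default_rng(4)
theta, rho = 0.3, 0.95
A_latent = rho * np.array([[np.cos(theta), -np.sin(theta)],
                           [np.sin(theta),  np.cos(theta)]])
z = np.empty((2, 40))
z[:, 0] = [1.0, 0.0]
for k in range(39):
    z[:, k + 1] = A_latent @ z[:, k]
snapshots = rng.standard_normal((64, 2)) @ z  # lift to a 64-dim "flow field"

eigvals, modes = dmd(snapshots[:, :-1], snapshots[:, 1:], r=2)
dt = 1.0                                      # assumed time step between snapshots
omega = np.log(eigvals) / dt                  # continuous-time growth rates and frequencies
print(np.abs(eigvals), np.angle(eigvals))
```

Eigenvalue magnitudes below one indicate decaying modes and the phase gives the oscillation frequency; `omega` expresses both in continuous time, which is one way to separate growing from decaying modes.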

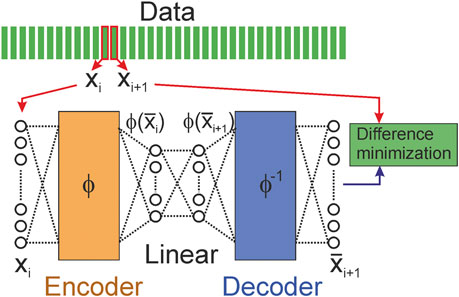

Extended DMD (EDMD) [123] augments DMD with a larger set of observable functions to obtain more accurate approximations. EDMD can be viewed as analogous to a higher-order Taylor series expansion near equilibrium points, whereas standard DMD captures only the linear term. Recently, Machine Learning techniques have been used to optimize the methodology [123]. For example, autoencoders [94,96–98,126] (Figure 7) have been used to solve the central problem of EDMD, namely how to choose a set of nonlinear observables.

FIGURE 7. Example of autoencoder (the encoder and decoder parts consist of multiple layers of neural nets) which can be used in EDMD [123].
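The benefit of a richer set of observables can be seen on a small nonlinear map whose dynamics close exactly on the dictionary [x1, x2, x1²]. In this hypothetical NumPy sketch, standard DMD fits a linear map on the raw state and leaves a large residual, whereas EDMD fits a linear map on the lifted observables with essentially zero residual and recovers the eigenvalues a, b and a². The dictionary here is hand-chosen; the autoencoder approach described above aims to learn such observables instead.

```python
import numpy as np

a, b, c = 0.9, 0.5, 1.0                 # hypothetical map parameters

def step(x):
    """Nonlinear map: x1 -> a*x1, x2 -> b*x2 + c*x1**2."""
    return np.column_stack([a * x[:, 0], b * x[:, 1] + c * x[:, 0] ** 2])

def dictionary(x):
    """Chosen observables psi(x) = [x1, x2, x1^2]; the x1^2 term closes the dynamics."""
    return np.column_stack([x[:, 0], x[:, 1], x[:, 0] ** 2])

rng = np.random.default_rng(5)
X = rng.uniform(-1, 1, size=(200, 2))   # sampled states
Y = step(X)                             # their images one step later

# Standard DMD: best linear map on the raw state only (row convention Y ≈ X A)
A_lin, res_lin, *_ = np.linalg.lstsq(X, Y, rcond=None)
# EDMD: best linear map on the lifted observables, Psi(Y) ≈ Psi(X) K
K, res_edmd, *_ = np.linalg.lstsq(dictionary(X), dictionary(Y), rcond=None)

print("DMD residual:", res_lin.sum(), " EDMD residual:", res_edmd.sum())
print("EDMD eigenvalues:", np.sort(np.linalg.eigvals(K).real))
```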

4.2.2 Sparse identification of nonlinear dynamics

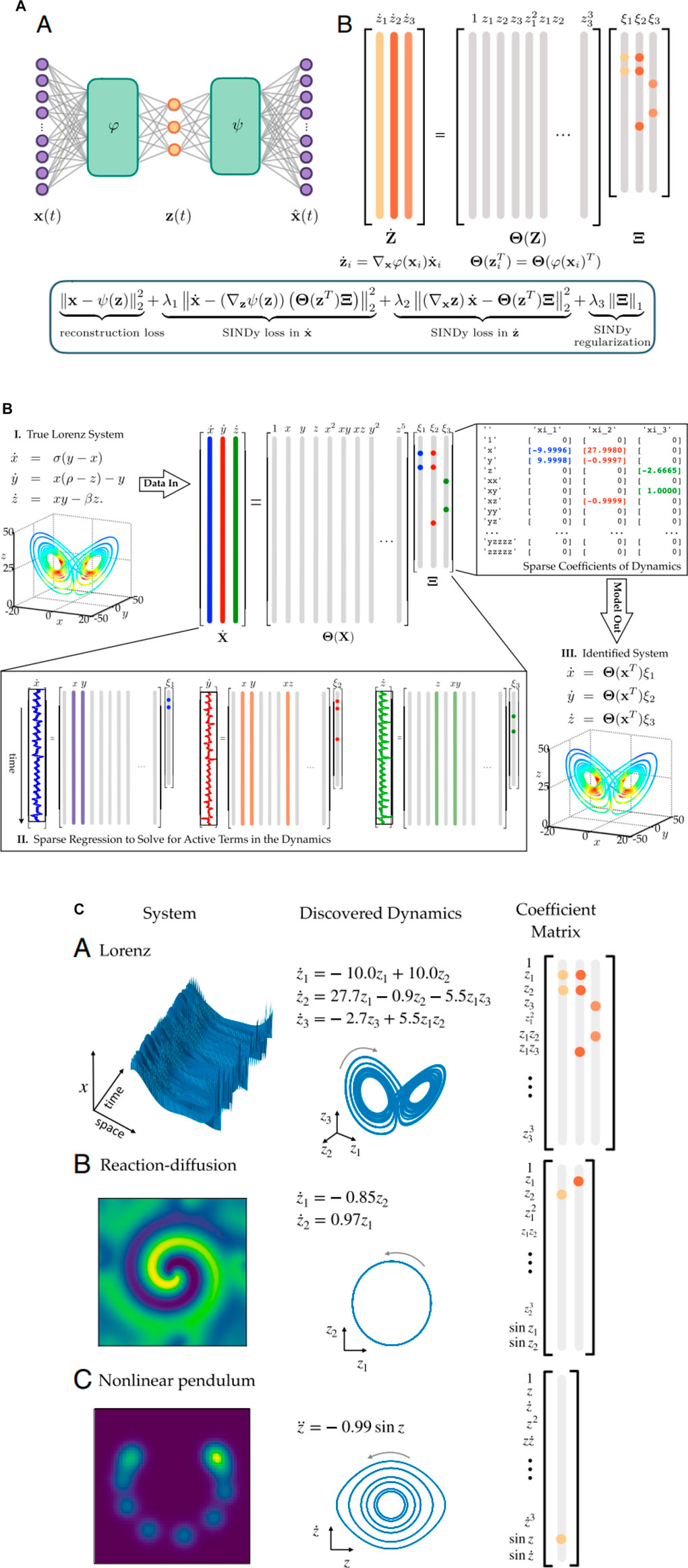

One of the pressing tasks in nonlinear dynamics is discovering governing equations from data, and advances in sparse regression allow the structure and parameters of a nonlinear dynamical system to be extracted from data [127]. Sparse regression selects the smallest number of terms that describe the dynamics without losing important information, in line with Occam's razor. S. L. Brunton and K. Champion proposed and demonstrated how deep neural networks combined with sparse identification of nonlinear dynamics (SINDy) can solve this complex task [127,128] (Figure 8A). The main idea is to use a SINDy autoencoder [94,96–98,126] to discover coordinates and parsimonious dynamics simultaneously (Figure 8B): the autoencoder learns intrinsic coordinates z from high-dimensional input data x, and a SINDy model captures the dynamics of these intrinsic coordinates. The active terms in the dynamics are identified by the nonzero elements of the coefficient matrix Ξ, which is learned as part of the neural-network training. The time derivatives of z are calculated from the derivatives of x and the gradient of the encoder ϕ [127]. The detailed scheme for applying this method to the Lorenz system is shown in Figure 8C, together with several equations discovered from data in this way.

FIGURE 8. (A) Example of autoencoder architecture: the encoder ϕ(x) maps the input data to the intrinsic coordinates z, and the decoder ψ(z) reconstructs x from the intrinsic coordinates; both consist of multiple neural-network layers [128]. (B) Schematic of the SINDy algorithm, demonstrated on the Lorenz equations [127]. (C) Several discovered models as examples: equations, SINDy coefficients Ξ, and attractors for the Lorenz (a), reaction–diffusion (b), and nonlinear pendulum (c) systems [128].
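The sparse-regression core of SINDy can be sketched without the autoencoder: build a library of candidate terms Θ(x), estimate derivatives from data, and solve dX ≈ Θ(X)Ξ by sequentially thresholded least squares, repeatedly zeroing small coefficients and refitting. The NumPy code below applies this to a simulated Lorenz trajectory with the standard parameters (σ = 10, ρ = 28, β = 8/3); the quadratic library, the threshold of 0.1 and the finite-difference derivatives are illustrative assumptions rather than the exact settings of [127,128].

```python
import numpy as np

def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    x, y, z = state
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])

def rk4(f, x0, dt, n_steps):
    """Classical fourth-order Runge-Kutta integration."""
    traj = np.empty((n_steps + 1, len(x0)))
    traj[0] = x0
    for k in range(n_steps):
        s = traj[k]
        k1 = f(s)
        k2 = f(s + 0.5 * dt * k1)
        k3 = f(s + 0.5 * dt * k2)
        k4 = f(s + dt * k3)
        traj[k + 1] = s + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return traj

def library(X):
    """Candidate terms: constant, linear and quadratic monomials of (x, y, z)."""
    x, y, z = X.T
    names = ["1", "x", "y", "z", "x^2", "xy", "xz", "y^2", "yz", "z^2"]
    Theta = np.column_stack([np.ones_like(x), x, y, z,
                             x * x, x * y, x * z, y * y, y * z, z * z])
    return Theta, names

def stlsq(Theta, dX, threshold=0.1, n_iter=10):
    """Sequentially thresholded least squares: zero small terms, refit the rest."""
    Xi = np.linalg.lstsq(Theta, dX, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(Xi) < threshold
        Xi[small] = 0.0
        for j in range(dX.shape[1]):
            keep = ~small[:, j]
            Xi[keep, j] = np.linalg.lstsq(Theta[:, keep], dX[:, j], rcond=None)[0]
    return Xi

dt = 0.001
traj = rk4(lorenz, np.array([-8.0, 8.0, 27.0]), dt, 10_000)
dX = (traj[2:] - traj[:-2]) / (2 * dt)        # central finite-difference derivatives
Theta, names = library(traj[1:-1])
Xi = stlsq(Theta, dX)

for j, lhs in enumerate(["dx/dt", "dy/dt", "dz/dt"]):
    terms = [f"{Xi[i, j]:+.3f} {names[i]}" for i in np.flatnonzero(Xi[:, j])]
    print(lhs, "=", " ".join(terms))
```

With these choices the recovered model should keep only the seven terms of the Lorenz equations; in the SINDy autoencoder, the same kind of sparse regression acts on the learned coordinates z rather than on the measured state.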

4.3 Nuclear and particle physics

With the completion of the Standard Model through the discovery of the Higgs boson [129], high-energy physics is entering a new phase led by well-funded, large-scale experiments such as the Large Hadron Collider (LHC). These experiments produce large amounts of data, opening opportunities for ML methods to probe observations not yet explained by the Standard Model.

4.3.1 Nuclear mass prediction

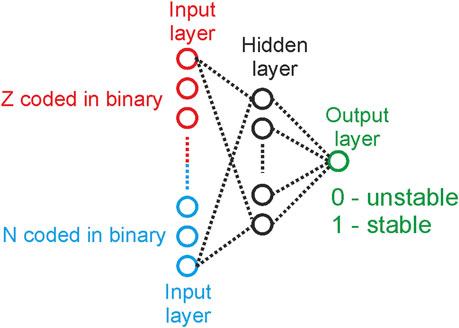

The application of ML in nuclear physics is not new. Gazula et al. (1992) applied artificial neural networks to predict nuclear mass excesses and nuclear stability and to analyse neutron separation energies with a feedforward neural network (Figure 9) [130]. Further, feedforward neural networks were trained to learn atomic masses and nuclear spins and parities, generating highly accurate predictions for test nuclei [131]. As ML algorithms progressed, new strategies were adopted to study nuclear spins and parities as well as beta decays. Clark and Li (2006) reported a study that applied SVMs to the atomic and mass numbers of nuclei to predict nuclear masses and beta-decay lifetimes and to deduce the spins and parities of nuclear ground states [132].

FIGURE 9. (A) Neural network taught to distinguish between stable and unstable nuclides. (B) Deviation M_exp − M_calc of the learned atomic masses from their experimental values [130].

More recently, Carnini et al. (2020) substantially improved nuclear mass prediction by using decision trees to enhance the accuracy of the liquid drop mass model and the Duflo–Zuker mass model, noting that even simple algorithms such as decision trees show promise in improving the description of nuclear masses [133]. Later, kernel regression (KR) models and artificial neural networks (ANNs) were applied to liquid drop mass models [134,135].
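The liquid drop (Bethe–Weizsäcker) binding energy that such ML corrections start from is B(Z, A) = a_V A − a_S A^(2/3) − a_C Z(Z−1)/A^(1/3) − a_A (A−2Z)²/A + δ, where δ is a pairing term. The sketch below uses one common textbook set of coefficients; fitted values differ between references, so the numbers are illustrative assumptions. A common way to combine the two, assumed here rather than taken from [133–135], is to train a decision tree, kernel regression or neural network on the residual between measured and model values and add its prediction back to B(Z, A).

```python
import numpy as np

# One common textbook coefficient set (MeV); fitted values vary between sources.
A_V, A_S, A_C, A_A, A_P = 15.8, 18.3, 0.714, 23.2, 12.0

def ldm_binding_energy(Z, A):
    """Semi-empirical (liquid drop) binding energy in MeV for Z protons, A nucleons."""
    N = A - Z
    B = (A_V * A                              # volume term
         - A_S * A ** (2 / 3)                 # surface term
         - A_C * Z * (Z - 1) / A ** (1 / 3)   # Coulomb repulsion
         - A_A * (A - 2 * Z) ** 2 / A)        # neutron-proton asymmetry
    if Z % 2 == 0 and N % 2 == 0:
        B += A_P / np.sqrt(A)                 # pairing: even-even nuclei more bound
    elif Z % 2 == 1 and N % 2 == 1:
        B -= A_P / np.sqrt(A)                 # pairing: odd-odd nuclei less bound
    return B

B_fe = ldm_binding_energy(26, 56)
print(f"Fe-56: B = {B_fe:.1f} MeV, B/A = {B_fe / 56:.2f} MeV per nucleon")
```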

4.3.2 Event selection and classification in high-energy particle collisions

ML is an integral part of modern high-energy experiments. It has been highly successful at automating event selection among the multitude of signals produced in a high-energy collision and at distinguishing the desired particle signals from the background. Byron et al. (2004) used boosted decision trees for particle identification (PID) at Fermilab, after which ML found a wide range of applications in particle physics and PID [136]. Jet classification, the classification of the collimated sprays of particles produced in high-energy collisions, encompasses a wide range of problems, such as identifying jets originating from heavy and light quarks, gluons, and W, Z and H bosons [137]. Precise and effective data analysis is very important for such events. Baldi et al. (2014) found that deep networks (DNs) using low-level features outperformed shallower networks on a HIGGS benchmark [138], and went on to investigate a DL-based supersymmetric particle search to improve the PID power of particle detector experiments, noting that the DN can also be used as a standalone module inside a larger neural-network classifier [139]. ALICE at the LHC, an important experiment for PID, uses a random forest approach to identify particles in ultrarelativistic heavy-ion collisions across a broad momentum range [140]. RNNs were used as the first tools for flavour tagging, the classification of particle jets as originating from light- or heavy-flavour quarks; the two can be discriminated spatially because heavy-flavour hadrons decay after roughly a picosecond, leaving displaced decay vertices [137,141]. E. Boos used the JETNET package, with a feedforward model, for flavour tagging at LEP to classify b and c quark jets [4,142].

Perhaps one of the most important uses of ML-based classification in high-energy physics is the Toolkit for Multivariate Analysis (TMVA) in the ROOT analysis package, a library developed by Hoecker et al. (2009) and used, for instance, by the CMS collaboration. It provides several ML techniques, most notably the widely used Boosted Decision Tree (BDT) classifier, which in the CMS study was trained on one million simulated events from reconstructions of the CMS detector [143,144]. The classification categories are split based on the momentum of the dimuon pair and the presence of high-invariant-mass dijet pairs, targeting vector-boson fusion events. The sample was split 75% for training and 25% for testing. The experiment optimizes for maximum signal strength using multivariate ML and reported a 23% increase in signal sensitivity [136,143–146].
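The boosted-decision-tree idea behind TMVA-style classifiers can be sketched in NumPy with AdaBoost on depth-one trees (decision stumps): each new tree is fitted to event weights that emphasize the events the previous trees misclassified, and the final score is a weighted vote. This is a simplified stand-in, not TMVA's implementation; the two-feature Gaussian "signal" and "background" events are hypothetical.

```python
import numpy as np

def fit_stump(X, y, w):
    """Best depth-one tree (single cut on one feature) under sample weights w."""
    best = (np.inf, 0, 0.0, 1)                    # (weighted error, feature, cut, sign)
    for f in range(X.shape[1]):
        for cut in np.quantile(X[:, f], np.linspace(0.02, 0.98, 49)):
            for sign in (1, -1):
                pred = np.where(X[:, f] > cut, sign, -sign)
                err = w[pred != y].sum()
                if err < best[0]:
                    best = (err, f, cut, sign)
    return best

def train_bdt(X, y, n_trees=30):
    """AdaBoost with stumps; labels y must be +1 (signal) or -1 (background)."""
    w = np.full(len(y), 1.0 / len(y))
    forest = []
    for _ in range(n_trees):
        err, f, cut, sign = fit_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)
        alpha = 0.5 * np.log((1 - err) / err)     # weight of this tree in the vote
        pred = np.where(X[:, f] > cut, sign, -sign)
        w = w * np.exp(-alpha * y * pred)         # boost misclassified events
        w /= w.sum()
        forest.append((alpha, f, cut, sign))
    return forest

def bdt_score(forest, X):
    """Weighted vote of all trees; larger means more signal-like."""
    return sum(a * np.where(X[:, f] > c, s, -s) for a, f, c, s in forest)

# Hypothetical events with two kinematic features each
rng = np.random.default_rng(6)

def make_events(n):
    y = np.where(rng.random(n) < 0.5, 1, -1)
    X = rng.standard_normal((n, 2)) + 1.5 * y[:, None]
    return X, y

X_train, y_train = make_events(1000)
X_test, y_test = make_events(1000)
forest = train_bdt(X_train, y_train)
accuracy = np.mean(np.sign(bdt_score(forest, X_test)) == y_test)
print(f"test accuracy: {accuracy:.3f}")
```

In an analysis, a cut on `bdt_score` rather than its sign would be tuned to maximize the expected signal sensitivity.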

Later, the ATLAS Collaboration (Aad et al.) produced updated results for this analysis using XGBoost, again with 14 kinematic variables and 12 categories, aiming to maximize the contrast of the signal strength with the background [147,148]. The project achieved a 50% higher expected sensitivity than the previous ATLAS result, attributed to the increase in luminosity together with algorithmic and analysis refinements. The study also describes a search for Higgs boson decays that analysed two jet topologies, resolved jets and merged jets, based on CERN data (2016) [145,149]. A gradient-boosted BDT is used to enhance the separation between signal and background for the resolved-jet topology, with 4 classification categories and 25 input variables during training [146]. There has been a paradigm shift from BDTs towards DL, as newer studies opt for deep neural network implementations for multivariable classification of particles and jets [150]. Another experiment by the CMS collaboration (2017), measuring top quark-antiquark pairs produced with a Higgs boson, used feedforward neural networks with three hidden layers for classification in the single-lepton channel, and BDTs in the dilepton channel. This experiment provided the first evidence for ttH production resulting from

In another attempt, Guest et al. (2016) proposed a deep-learning solution using a database of training samples for three classes of jets (light-flavour, charm and heavy-flavour quarks), trained with feedforward networks, Long Short-Term Memory (LSTM) networks and Outer Recursive Networks (ORNs). The feedforward network, with 9 fully connected layers and a learning rate of 0.01, achieved an area under the ROC curve (AUC; a larger AUC indicates better performance) of 0.939. The study found that the LSTM models best suited to the jet classification problem have a small hidden-state size. The LSTM and ORN produced AUCs of 0.939 and 0.937, respectively [100–102,153]. Recently, several studies have also explored CNN-based image classification for particle jets, because the segmented cells of HEP detectors resemble image pixels, with a few outperforming shallow neural networks at jet detection [93,154,155]. Experiments at the LHC may also share scope for ML-based cooperation with other particle detection experiments, such as neutrino detectors and dark matter searches [156].
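Since the AUC values above are easy to misread as accuracies, a short sketch may help: the area under the ROC curve equals the probability that a randomly chosen signal jet receives a higher classifier score than a randomly chosen background jet, with ties counting one half. The NumPy function below computes it directly from that definition (quadratic in memory, so only for illustration); the scores in the usage lines are hypothetical.

```python
import numpy as np

def roc_auc(scores, labels):
    """Area under the ROC curve via the Mann–Whitney rank statistic.

    Equals the probability that a random positive (e.g. signal jet) scores
    higher than a random negative; tied pairs count one half.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return (greater + 0.5 * ties) / (pos.size * neg.size)

print(roc_auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0]))   # perfectly separated
print(roc_auc([0.9, 0.4, 0.6, 0.1], [1, 1, 0, 0]))   # one mis-ordered pair
```

An AUC of 0.5 corresponds to random guessing and 1.0 to perfect separation, so values near 0.94 indicate strong but imperfect discrimination between jet classes.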

Keck (2017) proposed FastBDT, a Boosted Decision Tree (BDT) implementation for multivariate classification in the Belle II experiment at the SuperKEKB collider. The author benchmarks FastBDT against conventional stochastic gradient-boosted decision tree implementations, finding similar classification strength with less CPU time [157–159]. Hong et al. (2021) proposed a BDT-based implementation to keep up with the high data rates and volumes of future LHC experiments [137,160,161]. The solution is a software package called FWXMACHINA, applied to two classification problems: distinguishing photons from electrons, and separating Higgs bosons produced by vector boson fusion from multijet background [129]. The study also offers optimization strategies, implemented in FWXMACHINA in six sequential steps, that reduce latency to the nanosecond scale, achieving a latency of 10 ns.

4.3.3 Fast simulation in particle collisions

Simulation is a powerful tool in the physical sciences: it allows physicists to study complex systems that may be difficult or impossible to observe directly, and to visualize and present important findings or theories. Simulations have proven a reliable way of computationally addressing many open questions in physics, since they can test and predict the outcomes of a theory or experiment virtually, quickly and repeatedly. Simulation is essential in particle physics, where it allows physicists to model particle interactions to very high precision based on acquired data. However, this task is computationally very demanding and often requires millions of CPU hours. The GEANT4 simulation toolkit is a staple library for simulating Monte Carlo samples of high-energy particle interactions, with visualization tools, but it has a large computational footprint (Rahmat et al.; Agostinelli et al. 2003; Allison et al. 2006; Aad et al. 2008, 2010). The ATLAS experiment at the LHC required billions of CPU hours, consuming a significant portion of the computational resources allotted to the LHC, with Monte Carlo particle simulations taking up more than 50% of the Worldwide LHC Computing Grid (WLCG) workload [167–170]. Simulating such large numbers of events, on the order of 10¹⁷ background interactions, requires substantial computational time [169,171].

To reduce the computational footprint and time of Monte Carlo simulations in particle physics, de Oliveira, Paganini et al. (2017) developed Location-Aware Generative Adversarial Networks (LAGANs). LAGANs successfully reconstruct jet images, which are two-dimensional representations of the radiation pattern from the scattering of quarks and gluons at high energy [172,173]. LAGAN uses two-dimensional convolutional layers with leaky rectified linear activations to accurately simulate the location-dependent data from high-energy particle jets [174]. Paganini et al. (2017) then extended the LAGAN approach to CaloGAN, a deep neural network (DNN) model that uses GANs to produce electromagnetic calorimeter simulations about 100,000 times faster than the conventional Monte Carlo approach [169,172,175]. CaloGAN learns an implicit probability distribution f that approximates the true data-generating process, ensuring realistic simulations. In one experiment, CaloGAN was used to learn and simulate GEANT4 data distributions of γ, e⁺ and π⁺, using a training dataset of images that represent the pixel-wise energy depositions in the calorimeter layers [176]. Notably, the model penalizes any absolute deviation between the nominal and reconstructed energy, |E₀ − Ê|.
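The energy term mentioned above can be written as an auxiliary loss added to the usual adversarial loss. The NumPy sketch below assumes Ê is the total energy summed over all cells and layers of a generated shower and uses a hypothetical penalty weight; in practice such a loss would be computed inside an automatic-differentiation framework so the generator can be trained on it, and CaloGAN's exact formulation may differ.

```python
import numpy as np

def energy_penalty(showers, e_nominal):
    """Mean |E0 - Ê|, with Ê taken as the summed energy in all calorimeter cells."""
    e_reco = showers.reshape(len(showers), -1).sum(axis=1)
    return np.mean(np.abs(e_nominal - e_reco))

def generator_loss(d_fake, showers, e_nominal, penalty_weight=0.05):
    """Non-saturating adversarial loss plus the energy term (weight is hypothetical)."""
    adversarial = -np.mean(np.log(np.clip(d_fake, 1e-7, 1.0)))
    return adversarial + penalty_weight * energy_penalty(showers, e_nominal)

# Two generated "showers" of 3 layers x 4 cells, each depositing 12 energy units
showers = np.ones((2, 3, 4))
print(energy_penalty(showers, np.array([12.0, 12.0])))   # energies match
print(energy_penalty(showers, np.array([10.0, 14.0])))   # off by 2 on average
```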

Further research and development at CERN, documented by Vallecorsa (2018) and Carminati et al. (2020) for the GeantV project (the successor of GEANT4, aimed at faster and more accessible simulation of particle showers), describes a three-dimensional GAN application for simulating 3-D particle showers in high-energy physics. The studies evaluate the GeantV 3-D GAN as a proof of concept for using GANs to simulate particles at desired energies [163,172,177,178]. The model uses DNNs and CNNs for classification, energy regression and fast simulation of particles in high-energy collision environments, in order to cope with the high data volumes of future projects such as the High Luminosity LHC cycle projected for 2025 [93,150,160]. The discriminator and generator of the deep convolutional GAN consist of multiple 3-D convolution layers, with batch normalization layers to improve performance [179,180]. The size and number of filters are optimized for generating the transverse and longitudinal shower shapes, allowing three-dimensional image reconstruction of particle showers. The study also stresses the performance leap that GANs bring to fast simulation of particle showers, approximately 6 orders of magnitude, with the GAN-based approach taking about O(10⁻³) ms per simulated event compared with several minutes for conventional approaches.

Ghosh (2019) explores various Variational Autoencoder (VAE) [94,96–98,126] and GAN methods for fast simulation comparable to full simulation with Geant4 [95,181,182]. The VAE consists of two stacked neural networks, made up of four hidden layers, that act as its encoder and decoder. The model is conditioned on the energy of the incident particle, which allows it to control the energy at which particle showers are generated. The encoder and decoder work in tandem in this latent-variable model: the encoder compresses the input into a lower-dimensional latent space, and the decoder reconstructs the input from this latent representation by learning the inverse mapping. This allows the decoder to generate new data samples independently of the encoder [182].
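The conditioning and latent-variable mechanics described above can be sketched with single linear layers in NumPy (the cited model uses deeper networks): the incident energy is concatenated to the encoder and decoder inputs, the reparameterization z = μ + σ·ε is used for sampling, and the loss adds a reconstruction term to the closed-form KL divergence from a standard normal prior. All weights are random placeholders and the 25-cell "showers" are hypothetical.

```python
import numpy as np

def encode(x, energy, W_mu, W_logvar):
    """Toy linear encoder conditioned on the incident energy."""
    h = np.concatenate([x, energy[:, None]], axis=1)
    return h @ W_mu, h @ W_logvar                  # mean and log-variance of q(z | x, E)

def reparameterize(mu, logvar, rng):
    """z = mu + sigma * eps keeps sampling differentiable w.r.t. mu and logvar."""
    return mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)

def decode(z, energy, W_dec):
    """Toy linear decoder, also conditioned on the incident energy."""
    return np.concatenate([z, energy[:, None]], axis=1) @ W_dec

def kl_divergence(mu, logvar):
    """KL(q(z|x) || N(0, I)) per sample, in closed form for diagonal Gaussians."""
    return -0.5 * np.sum(1 + logvar - mu**2 - np.exp(logvar), axis=1)

rng = np.random.default_rng(9)
n, d_cells, d_latent = 8, 25, 4                # batch, flattened cells, latent size
W_mu = 0.1 * rng.standard_normal((d_cells + 1, d_latent))
W_logvar = 0.1 * rng.standard_normal((d_cells + 1, d_latent))
W_dec = 0.1 * rng.standard_normal((d_latent + 1, d_cells))

showers = rng.random((n, d_cells))            # stand-in for calorimeter images
energy = rng.uniform(0.1, 1.0, n)             # incident energies, rescaled to [0.1, 1)
mu, logvar = encode(showers, energy, W_mu, W_logvar)
recon = decode(reparameterize(mu, logvar, rng), energy, W_dec)
loss = np.mean(np.sum((showers - recon) ** 2, axis=1) + kl_divergence(mu, logvar))

# Generation needs only the decoder: sample the prior and choose the energy
new_showers = decode(rng.standard_normal((3, d_latent)), np.array([0.2, 0.5, 0.8]), W_dec)
print(loss, new_showers.shape)
```

The last lines show the property noted above: once trained, new showers at a chosen energy are produced by sampling z from the prior and running only the decoder.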

Graph Neural Networks (GNNs), Machine Learning models that learn from a set of elements and their pairwise relations, have also provided interesting solutions to problems in HEP [183,184]. Qasim et al. (2019) used GNNs for calorimeter particle shower reconstruction through two distance-weighted graph models, GarNet and GravNet. GravNet connects each node to its nearest neighbours in a learned latent space, whereas GarNet uses n additional aggregator nodes in the graph. The networks output, for each calorimeter cell, the energy that corresponds to each particle [183–185]. Kieseler (2020) proposed a loss formulation through the object condensation method, which offers a simplified approach to particle reconstruction and particle-flow applications through graph reduction: the information from the many points belonging to a particle is condensed into a single representative point carrying that particle's properties [185,186]. The object condensation method may also be applied to overlapping particles or to objects without clear spatial boundaries [187]. The author evaluates the algorithm in a much larger LHC-like environment, where it produces lower fake-particle rates and higher efficiency [184,186]. Hariri et al. (2021) proposed a Graph Variational Autoencoder (GVAE) model that combines the properties of GNNs and VAEs and learns compressed data representations for particle reconstruction in high-energy environments. The authors also explore adding spatial graph convolutional layers to compress the graphs into representative nodes, and benchmark GVAE on several GPUs to assess performance scaling [183,184,188].
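A much-simplified NumPy sketch of a GravNet-style layer, based on the description above: each hit is projected into a learned low-dimensional space, its k nearest neighbours there are found, neighbour features are weighted by a decreasing function of distance and pooled (mean and max), and the result is concatenated to the input. The Gaussian distance weighting, the pooling choices and the random projection matrices are assumptions for illustration, not the reference implementation of [183–185].

```python
import numpy as np

def gravnet_like_layer(features, W_s, W_f, k=4):
    """Simplified GravNet-style message passing over calorimeter hits.

    features: (n_hits, d) inputs per hit (e.g. energy and position).
    W_s: (d, d_s) projection to learned coordinates where neighbours are found.
    W_f: (d, d_f) projection to the features exchanged between neighbours.
    """
    s = features @ W_s
    f = features @ W_f
    d2 = np.sum((s[:, None, :] - s[None, :, :]) ** 2, axis=-1)
    np.fill_diagonal(d2, np.inf)                     # a hit is not its own neighbour
    nbrs = np.argsort(d2, axis=1)[:, :k]             # k nearest hits in learned space
    weights = np.exp(-np.take_along_axis(d2, nbrs, axis=1))   # assumed Gaussian weighting
    messages = weights[..., None] * f[nbrs]          # (n_hits, k, d_f)
    pooled = np.concatenate([messages.mean(axis=1), messages.max(axis=1)], axis=1)
    return np.concatenate([features, pooled], axis=1)

rng = np.random.default_rng(10)
hits = rng.standard_normal((10, 5))                  # hypothetical hit features
W_s, W_f = rng.standard_normal((5, 2)), rng.standard_normal((5, 3))   # learned in practice
out = gravnet_like_layer(hits, W_s, W_f)
print(out.shape)
```

Because neighbours are defined in a learned space rather than by the detector geometry, the layer is permutation-equivariant: reordering the hits simply reorders the output rows.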

4.4 Material science

Materials science is a data-driven field that deals with physical and chemical constants on a scale that most other branches of physics do not. This has produced large datasets and motivated efforts to automate the prediction of material properties and material discovery from known data. Machine learning plays a crucial role in advancing materials science by changing the way materials are discovered, designed and optimized. Traditional methods for exploring new materials were time-consuming and labour-intensive, relying heavily on trial and error [189]. Machine learning has transformed this approach by efficiently analysing vast datasets and identifying complex patterns within them. Through predictive modelling, machine learning accelerates the identification of novel materials with desired properties, such as strength, conductivity or thermal resistance [190]. It also helps reveal the relationships between the atomic or molecular structure of materials and their resulting characteristics, enabling scientists to make informed decisions during materials design.

High-throughput computing (HTC) in materials science is a transformative approach that leverages advanced computational methods to accelerate the discovery, design and optimization of materials, and it is recognized as an emerging area in computational materials design. It combines advanced thermodynamic and electronic-structure methods with intelligent data management and analysis techniques, enhancing the understanding and development of new materials [191]. Integrating HTC with data science has shown significant potential for accelerating the discovery and design of novel materials: the vast amount of data generated by HTC [191,192], alongside density functional theory calculations, is increasingly used with machine learning techniques, making materials discovery and design more efficient and data-driven [193]. HTC enables material screening over large parameter spaces that would be impractical to explore manually. This is made possible by effective HTC systems based on first-principles calculations, which provide a practical way to screen materials for applications such as magnetic materials [194], biomaterials [195], Li-ion batteries [196], catalysis [197], optoelectronics [198] and many others.

4.4.1 Material discovery and prediction of material properties

The use of ML for synthesizing new materials and assessing their properties dates back to the 1960s with the Dendritic Algorithm project (DENDRAL) [199,200]. DENDRAL employed an expert system for organic molecular structure synthesis using a constrained generator (CONGEN). Modelling and predicting the chemical or molecular properties of known or unknown compounds through ML may take two routes: the first incorporates the physical laws governing atoms and chemistry into ML, while the second directly predicts the physical properties and structure of a given material, the latter usually being more computationally intensive [201]. Earlier techniques included random sampling and simulated annealing algorithms employing Monte Carlo simulations [202], as well as genetic algorithms, such as those studied by Bush et al., which successfully predicted the crystal structure of Li₃RuO₄ [203], and by Gottwald et al. (2005), who used genetic algorithms to calculate zero-temperature phase diagrams and predict candidate crystal structures into which a given fluid may freeze. However, predictions of valid organic or crystal structures were either inefficient or inaccurate, mainly because of time and computing constraints. Recent developments in ML, together with the increased availability of domain-specific data, have sparked several successful studies in this field. ML, an approach to this problem pioneered by Corey and Wipke in 1969 through expert systems [204], offers a promising solution. Coley et al. (2017) advanced structure prediction by training a network on a dataset of experimental reaction records from over 15,000 patents; the network ranks the reactions most likely to produce a given compound by predicting a small set of atoms and bonds in the reaction centre and then enumerating all possible candidate bond configurations [205].

Ren et al. (2018) studied an iterative, high-throughput ML-based approach to discovering metallic glasses [206,207]. The study uses four different supervised ML models, including a retuned version of the model by Ward et al. (2016) and several models trained on sputtering data, benchmarked against Ward's model [208]. The ML model is trained on 6,789 melt-spinning experiments [209]. The model also uncovered a previously unexplored Co–V–Zr ternary system containing a large region of metallic glasses. K. Schütt et al. (2014) presented an ML model to predict the electronic density of states at the Fermi energy, trained on a spin-density dataset [210]. Later, in 2018, Schütt et al. presented SchNet, a deep-learning model built from continuous-filter CNNs (O'Shea and Nash 2015; Schütt et al. 2018). SchNet is a variation of the Deep Tensor Neural Network (DTNN) architecture in which the tensor layers are replaced by continuous-filter convolutions with filter-generating networks [212–214]. The model learns atom-wise embeddings, refines them through successive layers, and predicts material properties as a sum of atom-wise contributions (or, where appropriate, their average). SchNet was trained on the QM9 dataset of over 1.31 × 10⁵ organic molecules, with a learning rate decayed by a factor of 0.96 every 10,000 steps [215–217]. It performs poorly when predicting the electronic spatial extent and polarizability of molecules, but does well on the other 8 properties predicted. Interestingly, SchNet required only 750 epochs to converge in the study. SchNet can also learn and predict the formation energies of materials, achieving a mean absolute error of 0.127 eV/atom when trained on the Materials Project repository [218]. Finally, the model is used to study the molecular dynamics of the C20 fullerene to resolve basic properties of the molecular system, achieving errors of about 0.5%/nm in nuclear quantum effects and highly accurate energy predictions.
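The continuous-filter convolution at the heart of SchNet can be sketched in NumPy: interatomic distances are expanded in Gaussian radial basis functions, a small filter-generating network maps them to filters W(r_ij), and each atom's features are updated with the elementwise product of its neighbours' features and these filters, x_i' = Σ_j x_j ∘ W(r_ij). The single interaction block, layer sizes, RBF grid and random untrained weights are simplifying assumptions, not the published architecture.

```python
import numpy as np

def ssp(x):
    """Shifted softplus ln(0.5*e^x + 0.5), the activation used in SchNet."""
    return np.logaddexp(x, 0.0) - np.log(2.0)

def rbf_expand(r, centers, gamma=10.0):
    """Expand interatomic distances on a grid of Gaussian radial basis functions."""
    return np.exp(-gamma * (r[..., None] - centers) ** 2)

def cfconv(x, pos, W1, W2, centers):
    """Continuous-filter convolution: x_i' = sum_{j != i} x_j * W(r_ij), elementwise."""
    r = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    filters = ssp(ssp(rbf_expand(r, centers) @ W1) @ W2)   # filter-generating network
    mask = 1.0 - np.eye(len(x))                            # drop self-interaction
    return np.einsum("ij,ijf,jf->if", mask, filters, x)

def predict_energy(Z, pos, params):
    """Embed atoms, apply one interaction update, sum atom-wise contributions."""
    embedding, W1, W2, w_out, centers = params
    x = embedding[Z]                          # (n_atoms, n_features)
    x = x + cfconv(x, pos, W1, W2, centers)   # residual interaction update
    return float(np.sum(ssp(x) @ w_out))      # total = sum over atoms

# Random, untrained weights: this only demonstrates the structure and symmetries
rng = np.random.default_rng(8)
n_feat, centers = 8, np.linspace(0.0, 5.0, 20)
params = (rng.standard_normal((10, n_feat)),           # embeddings for Z < 10
          0.1 * rng.standard_normal((centers.size, n_feat)),
          0.1 * rng.standard_normal((n_feat, n_feat)),
          rng.standard_normal(n_feat),
          centers)
Z = np.array([6, 1, 1, 1, 1])                          # a CH4-like set of atoms
pos = rng.standard_normal((5, 3))
print(predict_energy(Z, pos, params))
```

Because the filters depend only on interatomic distances and the readout is a sum over atoms, the predicted total is unchanged by rotating, translating or reordering the atoms, a key design choice of this architecture.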

Behler et al. (2016) applied kernel regression to successfully predict the electronic properties of metal oxides and the elastic properties (shear and bulk moduli) of 1,173 crystals [219]. Improving on that, de Jong et al. (2016) used a gradient boosting framework based on multivariate local regression to predict the bulk and shear moduli of inorganic compounds, using a catalogue of over 1,900 compounds as their database with the aim of finding super-hard materials. The study yielded a relative error below 10% and a root mean square (RMS) error of 0.075 log(GPa) (Figure 10) [200,220]. The use of ordinal networks, combined with deep convolutional neural networks (CNNs) and complexity-entropy methods, is an innovative approach to exploring the physical and optical properties of liquid crystals. This integrative methodology helps decode intricate patterns within liquid crystal structures, offering deeper insight into their characteristics and behaviors [221–223].

FIGURE 10. Comparison of DFT training data with predictions of the elastic bulk modulus K (A) and shear modulus G (B). The training set consists of 65 unary, 1,091 binary, 776 ternary, and 8 quaternary compounds [220].
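The kernel regression and the log-scale error metric mentioned above can be sketched as Gaussian-kernel ridge regression fitted to log10 of a modulus, with the RMS error reported in log10(GPa) units. The descriptors and moduli below are synthetic, reading "log(GPa)" as a base-10 logarithm is an assumption, and the hyperparameters `lam` and `length_scale` are illustrative; this is not the model of either cited study.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0):
    # Gaussian kernel k(a, b) = exp(-|a - b|^2 / (2 * length_scale^2))
    sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * length_scale**2))

def fit_krr(x, y, lam=1e-3, length_scale=1.0):
    # Solve (K + lam * I) alpha = y for the dual coefficients
    k = rbf_kernel(x, x, length_scale)
    return np.linalg.solve(k + lam * np.eye(len(x)), y)

def predict_krr(x_train, alpha, x_new, length_scale=1.0):
    return rbf_kernel(x_new, x_train, length_scale) @ alpha

def rmse_log10(pred_log10, true_gpa):
    # RMS error measured in log10(GPa) units
    return float(np.sqrt(np.mean((pred_log10 - np.log10(true_gpa)) ** 2)))

# Synthetic stand-in for a modulus dataset
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(200, 3))                  # 3 hypothetical descriptors
modulus_gpa = 10 ** (1.5 + 0.5 * np.sin(2.0 * x[:, 0]) + 0.3 * x[:, 1])
train, test = np.arange(160), np.arange(160, 200)

y_log = np.log10(modulus_gpa[train])
y_mean = y_log.mean()                                      # center targets before fitting
alpha = fit_krr(x[train], y_log - y_mean)
pred_log = predict_krr(x[train], alpha, x[test]) + y_mean
rmse = rmse_log10(pred_log, modulus_gpa[test])
baseline = rmse_log10(np.full(test.size, y_mean), modulus_gpa[test])   # predict-the-mean
```

Fitting in log space makes the error relative rather than absolute, which suits moduli that span more than an order of magnitude.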

Qiao et al. developed OrbNet, an ML method built on a graph neural network (GNN) architecture that incorporates rules from quantum mechanics. This allows it to outperform previous models in predicting chemical synthesis and electronic-structure energies with very high accuracy, achieving a 33% improvement in prediction accuracy over the second-best method [224,225]. Choudhary et al. (2020) introduced the Joint Automated Repository for Various Integrated Simulations through Machine Learning (JARVIS-ML) to accelerate materials discovery [226,227]. This package was used in 2019 to discover materials for building solar cells; the lack of suitable materials for solar cells is a critical hurdle to sustainable and inexpensive renewable energy. JARVIS-ML was used to predict materials with a high spectroscopic limited maximum efficiency (SLME), identifying over 1,900 potential materials with an SLME above 10% [228]. More recently, Chen et al. (2021) used random forests on a training dataset of over 10,000 compounds described by over 60 attributes to predict elastic properties for the discovery of potential super-hard materials, and substantiated their results through evolutionary structure prediction and density functional theory [229].

Several machine learning tools have emerged from the abundance of large datasets and the development of predictive models such as those described above. PyMatGen [230] is a cornerstone among them, playing a pivotal role in advancing research and discovery. Written in Python [231], PyMatGen offers a comprehensive suite of functionalities for materials analysis, particularly in crystallography and electronic structure [232,233]. Its applications range from the generation and manipulation of crystal structures to the calculation of electronic and thermodynamic properties.
Researchers widely adopt PyMatGen for its robustness in automating repetitive tasks, facilitating high-throughput computations, and enabling the systematic exploration of materials databases [234,235]. PyMatGen is closely linked to other essential tools in materials informatics, forming a synergistic ecosystem. Integration with databases such as the Materials Project [218] and MatCloud [236] provides access to a vast repository of materials data. Furthermore, combining PyMatGen with machine learning libraries such as scikit-learn [237] or TensorFlow [238] enables the development of predictive models, improving the efficiency of property prediction and materials discovery. Close ties with visualization tools such as VESTA [239] improve the interpretability of complex crystal structures, giving researchers a comprehensive toolkit for materials exploration and design [240].
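The screening workflow used in the JARVIS-ML and random-forest studies above (train a surrogate on known compounds, then keep candidates whose predicted property passes a cutoff, as with the SLME > 10% criterion) can be sketched with a small random forest. To keep it short, each tree here is a single split (a stump) fitted on a bootstrap resample with a random subset of features. The data, the three features, and the cutoff of 5.0 are hypothetical, and this is not the model used by Chen et al. or by JARVIS-ML.

```python
import numpy as np

def fit_stump(x, y, feature):
    """Best depth-1 regression split on one feature: (sse, feature, threshold, left, right)."""
    best = (float(((y - y.mean()) ** 2).sum()), feature, None, y.mean(), y.mean())
    for t in np.unique(x[:, feature])[:-1]:          # candidate thresholds
        left = x[:, feature] <= t
        yl, yr = y[left], y[~left]
        sse = float(((yl - yl.mean()) ** 2).sum() + ((yr - yr.mean()) ** 2).sum())
        if sse < best[0]:
            best = (sse, feature, t, yl.mean(), yr.mean())
    return best

def fit_forest(x, y, n_trees=50, max_features=2, seed=0):
    rng = np.random.default_rng(seed)
    forest = []
    for _ in range(n_trees):
        idx = rng.integers(0, len(x), size=len(x))                        # bootstrap resample
        feats = rng.choice(x.shape[1], size=max_features, replace=False)  # random feature subset
        stumps = [fit_stump(x[idx], y[idx], f) for f in feats]
        forest.append(min(stumps, key=lambda s: s[0])[1:])                # keep the best split
    return forest

def predict_forest(forest, x):
    preds = [np.full(len(x), lv) if t is None else np.where(x[:, f] <= t, lv, rv)
             for f, t, lv, rv in forest]
    return np.mean(preds, axis=0)                                         # average over trees

# Synthetic training set: the hypothetical target jumps when feature 0 exceeds 0.5
rng = np.random.default_rng(1)
x_train = rng.uniform(0.0, 1.0, size=(200, 3))
y_train = 10.0 * (x_train[:, 0] > 0.5) + x_train[:, 1]
forest = fit_forest(x_train, y_train)

candidates = np.array([[0.9, 0.5, 0.5], [0.1, 0.5, 0.5], [0.8, 0.2, 0.9]])
scores = predict_forest(forest, candidates)
shortlist = np.flatnonzero(scores > 5.0)   # screening cutoff, analogous to SLME > 10%
```

Shortlisted candidates would then be checked with more expensive methods, as Chen et al. did with evolutionary structure prediction and DFT.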

Matminer [241], a powerful Python library tailored for data mining in materials science, has found widespread utility across various domains within the field. One prominent application is in the extraction and analysis of materials data from diverse sources [242,243]. Researchers employ Matminer to seamlessly retrieve information from databases, research papers, and experimental datasets, streamlining the process of aggregating data for materials informatics. Additionally, Matminer facilitates the pre-processing and featurization of raw data, enabling efficient utilization in machine learning workflows [244]. In the realm of property prediction, Matminer is instrumental in constructing and fine-tuning predictive models, allowing scientists to forecast material behaviors and characteristics. Its versatility extends to applications such as high-throughput screening, where the library aids in the systematic exploration of large materials databases to identify promising candidates for specific applications.
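The featurization step mentioned above turns a chemical formula into fixed-length numerical descriptors. Below is a minimal standard-library sketch that computes the fraction-weighted mean and the range of Pauling electronegativity, in the spirit of composition-based featurizers. It is not Matminer's API (in practice one would use Matminer's composition featurizers, such as `ElementProperty`); the element table is a small illustrative subset, and the parser does not handle parentheses or hydrates.

```python
import re

# Pauling electronegativities for a small, illustrative subset of elements
ELECTRONEGATIVITY = {"Li": 0.98, "O": 3.44, "Na": 0.93, "Cl": 3.16, "Fe": 1.83, "Ti": 1.54}

def parse_formula(formula):
    """Return {element: atomic fraction} for simple formulas such as 'Fe2O3'."""
    counts = {}
    for element, amount in re.findall(r"([A-Z][a-z]?)(\d*\.?\d*)", formula):
        counts[element] = counts.get(element, 0.0) + (float(amount) if amount else 1.0)
    total = sum(counts.values())
    return {el: n / total for el, n in counts.items()}

def featurize(formula):
    """Fraction-weighted mean and range of electronegativity."""
    fractions = parse_formula(formula)
    values = [ELECTRONEGATIVITY[el] for el in fractions]
    mean = sum(f * ELECTRONEGATIVITY[el] for el, f in fractions.items())
    return {"mean_en": mean, "range_en": max(values) - min(values)}

features = {f: featurize(f) for f in ("NaCl", "Fe2O3", "Li2O", "TiO2")}
```

For Fe2O3 the fractions are 0.4 Fe and 0.6 O, so the weighted mean is 0.4 × 1.83 + 0.6 × 3.44 = 2.796; such descriptor vectors are what a downstream regression model is trained on.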

ElemNet has emerged as a transformative tool in various facets of material science research due to its unique ability to automatically learn material properties from elemental compositions using deep learning [245]. In the realm of materials informatics, ElemNet has been instrumental in predicting the stability of crystal structures, offering a departure from traditional machine learning approaches that necessitate manual feature engineering. Researchers leverage ElemNet to analyze vast datasets, such as those from the Open Quantum Materials Database, enabling efficient identification of stable compounds and the exploration of previously uncharted material compositions. Furthermore, ElemNet finds application in the rapid screening of material candidates, facilitating combinatorial investigations across a wide composition space. Its speed and accuracy advantages over conventional ML models make ElemNet particularly valuable in accelerating the materials discovery process. Beyond stability predictions, ElemNet has been adopted for tasks like property optimization, aiding researchers in tailoring materials with specific engineering properties.
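ElemNet's input is simply the vector of elemental fractions of a compound, so no hand-crafted descriptors are needed. The sketch below builds such a vector over a short, hypothetical element list and passes it through a small, untrained fully connected network with ReLU activations. The real ElemNet uses a much longer element list and a deeper, trained network; the layer sizes here are illustrative only.

```python
import numpy as np

ELEMENTS = ["H", "Li", "O", "Na", "Cl", "Fe"]   # hypothetical short element list

def composition_vector(amounts, elements=ELEMENTS):
    """Map {element: amount} to a normalized vector of atomic fractions."""
    v = np.zeros(len(elements))
    for element, amount in amounts.items():
        v[elements.index(element)] = amount
    return v / v.sum()

def init_mlp(sizes, seed=0):
    # He-style random initialization; the weights are untrained
    rng = np.random.default_rng(seed)
    return [(rng.normal(scale=np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    for k, (w, b) in enumerate(params):
        x = x @ w + b
        if k < len(params) - 1:
            x = np.maximum(x, 0.0)   # ReLU on hidden layers, linear output
    return x

x = np.stack([composition_vector({"Na": 1, "Cl": 1}), composition_vector({"Fe": 2, "O": 3})])
params = init_mlp([len(ELEMENTS), 32, 16, 1])
out = forward(params, x)   # one (untrained) scalar prediction per compound
```

Training would fit these weights to a target such as computed formation energies; the point here is only the composition-only input representation.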

DeepChem [246], a powerful open-source deep learning framework, has made significant contributions to material science research across diverse applications. One key application lies in its role in molecular property prediction, where DeepChem’s deep learning models analyze and predict various molecular properties, such as binding affinities, electronic structures, and chemical reactivities. The framework facilitates the development of accurate and efficient models for drug discovery and materials design [247]. DeepChem is also instrumental in the field of cheminformatics, enabling the analysis of chemical datasets, structure-activity relationships, and the identification of novel compounds with specific properties. Furthermore, in materials informatics, DeepChem supports the prediction of material properties based on molecular structures, aiding researchers in the exploration and optimization of materials for various applications, including catalysis, energy storage, and electronic devices. Its flexibility and versatility make DeepChem a valuable tool for researchers seeking to leverage the capabilities of deep learning in unraveling the complexities of materials science.

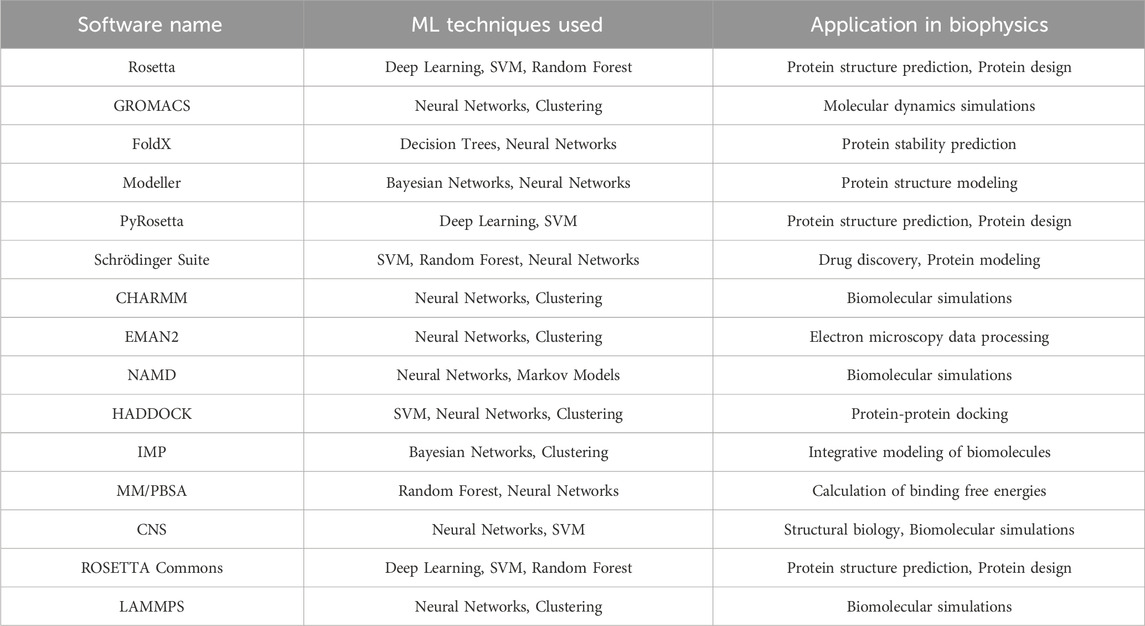

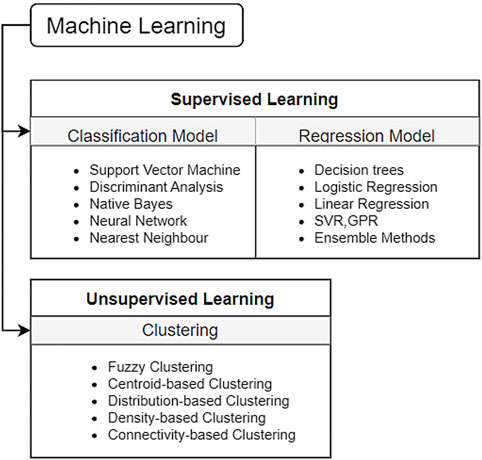

Citrination, the materials data platform developed by Citrine Informatics, has become an invaluable resource for advancing materials science across various domains. Its applications span a wide range of areas, one of its key contributions being materials discovery and design [248]. Citrination serves as a centralized hub for materials data, allowing researchers to access and analyze an extensive collection of experimental and computational data. This facilitates the rapid identification of trends, correlations, and patterns, enabling scientists to make informed decisions in materials research. A few other software packages that harness ML are listed in Table 5.

4.5 Nanophysics

The last few decades have seen an increase in the use of AI tools in nanotechnology research [249]. The scale of nanotechnology is a double-edged sword: it enables major technological breakthroughs, but the sheer smallness of the devices makes their development, design, and manufacture difficult and costly. The physical effects that dominate at this scale also differ from those relevant in macroscopic situations [249,250].

4.5.1 Design of nanoscale computation systems

Nanoscale devices (nanodevices) are highly valued today because of the space constraints on computing devices. As ever more computational power is packed into ever smaller volumes, Moore’s law is approaching its limits for traditional transistors because quantum mechanical effects come into play [68,249]. Nanocomputers are a leading and promising solution to this problem [251], and there have been several initial attempts at constructing them [252,253]. In an early attempt, Lawson (2004) applied reinforcement learning to program randomly placed “nano-electric components”, with the aim of reducing manufacturing costs for highly detailed small computing devices that use transistors [254]. Optimization techniques for nanoscale circuits have also emerged: Bahar et al. (2003) used Markov random fields (MRFs) to optimize circuit frameworks. Building on that, Kumawat et al. (2005) proposed probabilistic modelling approaches for optimizing nanocircuit designs, aiming to make nanocomputers more reliable and to remove defects using MRFs and Probabilistic Decision Diagrams (PDDs); PDDs had the lowest time complexity among the approaches featured in the study [255]. More recently, ML has also been used to design computers capable of high-throughput calculations and of solving complex optimization problems [249].
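The MRF idea behind the probabilistic circuit designs above can be illustrated with a toy calculation: node values follow a Gibbs distribution P(x) ∝ exp(−U(x)/kT), where kT acts as a noise level, and a gate is reliable when most of the probability mass sits on logically valid input/output combinations. The sketch assumes an energy of −1 for every valid state and 0 otherwise; this simplified energy and the function names are assumptions, not the exact formulation of Bahar et al. or Kumawat et al.

```python
import itertools
import math

def gibbs_reliability(gate, n_inputs, kT):
    """Probability that the output equals gate(inputs) under P(x) ∝ exp(-U(x)/kT).

    Toy energy (assumption): U = -1 for logically valid states, 0 otherwise.
    """
    valid_weight = total_weight = 0.0
    for bits in itertools.product([0, 1], repeat=n_inputs + 1):
        *inputs, out = bits
        valid = out == gate(*inputs)
        weight = math.exp((1.0 if valid else 0.0) / kT)   # exp(-U/kT)
        total_weight += weight
        if valid:
            valid_weight += weight
    return valid_weight / total_weight

def inverter(a):
    return 1 - a

def nand(a, b):
    return 1 - (a & b)

# Reliability of an inverter at several noise levels
reliability = {kT: gibbs_reliability(inverter, 1, kT) for kT in (0.1, 0.5, 2.0)}
```

Under this assumption the reliability is e^(1/kT)/(e^(1/kT) + 1) for both gates, tending to 1 as kT → 0 and to 0.5 (a random output) as kT grows.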

4.5.2 Finding and analysing nanomaterials

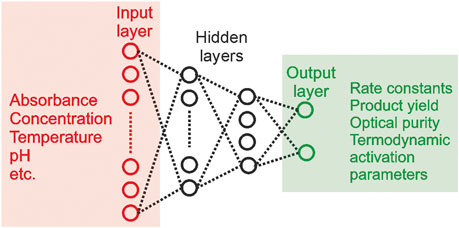

As in materials science, ML models have been used to classify and predict nanomaterial properties. For example, artificial neural networks (ANNs) have been popular for resolving the properties of thin-film nanomaterials because they can model nonlinear relationships [249]. Xu B. et al. (2004) used an ANN with the Levenberg-Marquardt algorithm as the optimizer to predict Poisson’s ratio, Young’s modulus, and other elastic properties of a thin-film substrate by feeding surface displacement responses into the neural network. Of the 96 samples used in the study, 80 formed the training data and the remaining 16 were used as testing data, yielding relative errors of 4.0% for Poisson’s ratio, 0.48% for Young’s modulus, and 4.9% for density thickness [256]. Jiangong et al. (2007) also used an ANN to calculate the dispersion curves of a functionally graded material (FGM) plate; like the previous study, it used the Levenberg-Marquardt algorithm to speed up training [257]. Beyond thin-film nanomaterials, Natalia et al. (2022) recently constructed kinetic models for steam in naphtha surrogates over a NiMgAl catalyst using ANNs trained for kinetic model discrimination [258], building on a study by Amato F. et al. The model demonstrated an overall accuracy of 74.9% on a test set of 800 samples used to evaluate kinetic data [5] (Figure 11).

FIGURE 11. Typical Neural Net architecture for application in kinetics [5].
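Both thin-film studies trained their networks with the Levenberg-Marquardt (LM) algorithm, which interpolates between Gauss-Newton and gradient descent through a damping factor λ. As a hedged illustration, the sketch below applies the same update, (JᵀJ + λ·diag(JᵀJ))Δp = −Jᵀr, to a two-parameter nonlinear least-squares fit rather than to a network. The saturating model, the synthetic data, and the damping schedule are assumptions; only the 80/16 train/test split mirrors the study above.

```python
import numpy as np

def levenberg_marquardt(residual, jacobian, p0, n_iter=100, lam=1e-2):
    """Minimize sum(residual(p)**2) with an adaptive damping factor lam."""
    p = np.asarray(p0, dtype=float)
    cost = np.sum(residual(p) ** 2)
    for _ in range(n_iter):
        r, j = residual(p), jacobian(p)
        a = j.T @ j
        # Damped Gauss-Newton step: (J^T J + lam * diag(J^T J)) dp = -J^T r
        step = np.linalg.solve(a + lam * np.diag(np.diag(a)), -j.T @ r)
        new_cost = np.sum(residual(p + step) ** 2)
        if new_cost < cost:      # success: accept, behave more like Gauss-Newton
            p, cost, lam = p + step, new_cost, lam * 0.3
        else:                    # failure: reject, behave more like gradient descent
            lam *= 10.0
    return p

def model(p, x):
    a, b = p
    return a * (1.0 - np.exp(-b * x))    # hypothetical saturating response

def jacobian_of(x):
    def jac(p):
        a, b = p
        return np.column_stack([1.0 - np.exp(-b * x), a * x * np.exp(-b * x)])
    return jac

rng = np.random.default_rng(0)
x = np.linspace(0.2, 5.0, 96)                          # 96 synthetic samples
y = model([2.0, 1.3], x) + rng.normal(scale=0.01, size=x.size)
idx = rng.permutation(x.size)
train, test = idx[:80], idx[80:]                       # 80 training / 16 testing

p_fit = levenberg_marquardt(lambda p: model(p, x[train]) - y[train],
                            jacobian_of(x[train]), [1.0, 0.5])
rel_err = np.abs(model(p_fit, x[test]) - y[test]) / np.abs(y[test])
```

For an ANN the parameter vector p holds all weights and J is the Jacobian of the network outputs with respect to them, which is why LM suits small networks and datasets like these.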

NanoSolveIT [259] is a research project dedicated to advancing the safety assessment of engineered nanomaterials (ENMs). Leveraging advanced computational models, the project focuses on predictive toxicology to anticipate and understand potential risks associated with nanomaterials. These computational tools simulate various scenarios, incorporating physicochemical properties, exposure conditions, and toxicological outcomes. NanoSolveIT also emphasizes data integration, consolidating information from diverse sources into a comprehensive database; this integrated approach provides a holistic understanding of nanomaterial behavior.

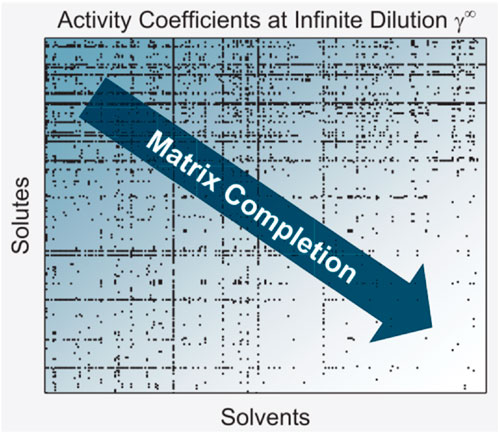

4.6 Thermodynamics

Thermodynamics is the science of the relationships between heat, work, temperature, and energy [260]. In its broadest sense, it is concerned with the transfer of energy from one location to another and from one form to another. Heat can be defined as an interaction distinguishable from work; it involves transfers of both energy and entropy [261]. The thermodynamic properties of complex systems are critical in the development of chemical engineering processes, yet predicting the thermodynamic parameters of such systems over a wide range of conditions and describing the behaviour of their ions and molecules remain difficult. Because it can capture complicated relationships beyond the reach of standard mathematical functions, ML has emerged as a powerful tool for addressing this challenge. ML can be applied in three major areas of molecular thermodynamics: first, predicting the thermodynamic properties of a broad spectrum of systems from known data; second, integrating ML with molecular simulations to accelerate the discovery of materials; and third, developing ML force fields that bridge the gap between quantum mechanics and all-atom molecular dynamics simulations. Applications in these three areas illustrate the potential of ML in the molecular thermodynamics of chemical engineering [262].

4.6.1 Machine learning assisted thermodynamical simulations

ML approaches are effective at automatically distinguishing different phases of matter, and they offer a fresh perspective on the study of physical phenomena. When a restricted Boltzmann machine (RBM) is trained on spin configurations generated by Monte Carlo simulations of the Ising Hamiltonian at various temperatures and external magnetic fields, the flow generated by the trained RBM approaches spin configurations with the maximum feasible specific heat, which correspond to the near-critical region of the Ising model. In the special case of a vanishing magnetic field, the trained RBM flow converges to the critical point of the renormalization group (RG) flow of the lattice model. Rather than linking the recognition mechanism directly to the RG flow and its fixed points, the findings indicate that the machine recognizes physical phase transitions by identifying particular attributes of the configurations, such as maximal specific heat, which highlights the value of ML methods for studying phase transitions [263].
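Training data of the kind described above can be generated with Metropolis Monte Carlo sampling of the 2D Ising model. The sketch below assumes J = 1, k_B = 1, periodic boundaries, and single-spin updates; it returns sampled configurations and estimates the specific heat from energy fluctuations, C = (⟨E²⟩ − ⟨E⟩²)/(N T²). Training the RBM on these configurations is not shown, and the lattice size, temperatures, and sweep counts are illustrative.

```python
import numpy as np

def ising_energy(spins, h=0.0):
    """H = -J * sum over nearest-neighbour bonds s_i s_j - h * sum s_i, with J = 1."""
    bonds = spins * np.roll(spins, 1, axis=0) + spins * np.roll(spins, 1, axis=1)
    return float(-bonds.sum() - h * spins.sum())

def metropolis_ising(L, T, h=0.0, n_sweeps=300, n_burn=100, seed=0):
    rng = np.random.default_rng(seed)
    spins = rng.choice([-1, 1], size=(L, L))
    energies = []
    for sweep in range(n_sweeps):
        for _ in range(L * L):
            i, j = rng.integers(0, L, size=2)
            nb = (spins[(i + 1) % L, j] + spins[(i - 1) % L, j]
                  + spins[i, (j + 1) % L] + spins[i, (j - 1) % L])
            d_e = 2.0 * spins[i, j] * (nb + h)    # energy change if spin (i, j) flips
            if d_e <= 0.0 or rng.random() < np.exp(-d_e / T):
                spins[i, j] = -spins[i, j]
        if sweep >= n_burn:                       # record energies after burn-in
            energies.append(ising_energy(spins, h))
    return spins, np.array(energies)

def specific_heat(energies, T, n_spins):
    # C = (<E^2> - <E>^2) / (N T^2)
    return float(np.var(energies) / (n_spins * T ** 2))

L = 8
temperatures = [1.5, 2.3, 3.5]
configs, heat = [], []
for k, T in enumerate(temperatures):
    spins, energies = metropolis_ising(L, T, seed=k)
    configs.append(spins.copy())                  # one training configuration per temperature
    heat.append(specific_heat(energies, T, L * L))
```

Configurations sampled near T_c = 2/ln(1 + √2) ≈ 2.269, where the specific heat peaks in the thermodynamic limit, are the near-critical states that the trained RBM flow was found to approach.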