95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

REVIEW article

Front. Phys. , 27 July 2023

Sec. Statistical and Computational Physics

Volume 11 - 2023 | https://doi.org/10.3389/fphy.2023.1195562

This article is part of the Research Topic Advances in Information Geometry: Beyond the Conventional Approach View all 4 articles

Geoffrey Wolfer1*

Geoffrey Wolfer1* Shun Watanabe2

Shun Watanabe2Information geometry and Markov chains are two powerful tools used in modern fields such as finance, physics, computer science, and epidemiology. In this survey, we explore their intersection, focusing on the theoretical framework. We attempt to provide a self-contained treatment of the foundations without requiring a solid background in differential geometry. We present the core concepts of information geometry of Markov chains, including information projections and the pivotal information geometric construction of Nagaoka. We then delve into recent advances in the field, such as geometric structures arising from time reversibility, lumpability of Markov chains, or tree models. Finally, we highlight practical applications of this framework, such as parameter estimation, hypothesis testing, large deviation theory, and the maximum entropy principle.

Markov chains are stochastic models that describe the probabilistic evolution of a system over time and have been successfully used in a wide variety of fields, including physics, engineering, and computer science. Conversely, information geometry is a mathematical framework that provides a geometric interpretation of probability distributions and their properties, with applications in diverse areas such as statistics, machine learning, and neuroscience. By combining the insights and methods from both fields, researchers have, in recent years, developed novel approaches for analyzing and modeling systems with time dependencies.

As the fields of information geometry and Markov chains are broad, it is not possible to review all topics exhaustively, and we had to confine the scope of our survey to certain basic topics. Our focus will be on time-discrete, time-homogeneous Markov chains that take values from a finite alphabet. In particular, we will not cover time-continuous Markov chains [1, 2] nor discuss quantum information geometry or hidden Markov models [3, 4]. Our introduction to information geometry in the distribution setting will be limited to the basics. For a more comprehensive treatment, we recommend referring to the monographs [5, 6].

This survey is structured into five sections.

Section 1 is a brief introduction that provides an outline, lists the main concepts and results found in this survey, and clarifies its scope.

In Section 2, we lay out the notation that will be used throughout this paper and provide a primer on irreducible Markov chains and information geometry in the context of distributions. Along the way, we recall how to extend notions of entropy and Kullback–Leibler (KL) divergence from distributions to Markov chains.

In Section 3, following Nagaoka [7], we introduce a Fisher metric and a pair of dual affine connections on the set of irreducible stochastic matrices, which allows us to define the orthogonality of curves and parallel transport. We then proceed to define exponential families (e-families) and mixture families (m-families) of Markov chains. Importantly, the set of irreducible stochastic matrices is shown to form both an e-family and m-family, endowing it with the structure of a dually flat manifold. We explore minimality conditions for exponential families and chart transition maps between their natural and expectation parameters. Additionally, we define geodesics and their generalizations and conclude the section with a discussion on information projections and decomposition theorems. Specifically, similar to the distribution setting, the dual affine connections induce two notions of convexity, leading to Pythagorean identities.

In Section 4, we explore some recent developments in the field. First, we list and analyze the geometric properties of important subfamilies of stochastic matrices, such as symmetric or bistochastic Markov chains. The highlights of this section include the analysis of geometric properties induced by the time reversibility of Markov chains. This analysis leads to the establishment of the em-family structure of the reversible set, the derivation of closed-form expressions for reversible information projections, and the characterization of the reversible set as geodesic hulls of contained families. We continue this section by discussing some notable advancements in the context of data processing of Markov chains. Mirroring congruent embeddings in a distribution setting, we present a construction of embeddings of families of stochastic matrices that are congruent with respect to the lumping operation of Markov chains. These embeddings preserve the Fisher metric, the pair of dual affine connections, and the e-family structure. Additionally, we explore the establishment of a foliation structure on the manifold of lumpable stochastic matrices. Lastly, we conclude this section by presenting results in the context of tree models.

Section 5 is devoted to applications of the information geometry framework to large deviations, estimation theory, hypothesis testing, and the maximum entropy principle.

Let

and

A time-discrete, time-homogeneous Markov chain is a random process

with

As we assume

It will also be convenient to define

In particular,

is called the edge measure pertaining to P. Observe that the map from an irreducible transition matrix P to its edge measure is one-to-one (see, e.g., [8]) and that the set of all edge measures

We refer the reader to Levin et al. [9] for a thorough treatment of Markov chains.

Let us first recall the definition of the Shannon entropy of a random variable. We let

and where by convention 0 log 0 = 0. The entropy rate of a stationary stochastic process

where for any

where Q is the edge measure pertaining to P. In other words, the entropy rate of the process is computed from P only. We can thus overload H to define

For two random variables X ∼ μ, X′ ∼ μ′ with

Extending the aforementioned definition to Markov processes, the information divergence rate [10] (see also [73, Section 3.5]) of

which is also agnostic on initial distributions, inviting us to lift the definition of D to stochastic matrices:

We briefly introduce basic concepts related to information geometry in the context of distributions. The central idea is to regard

where

where

In addition to

In the parametrization θ, the connections are specified by their coefficients (Christoffel symbols):

where

As a consequence, the curvature tensors associated with ∇(e), ∇(m) vanish simultaneously. In particular, they vanish for

Similar to the distributional setting, we regard

Our first order of business is to establish a dually flat structure on the set of stochastic matrices, following Nagaoka [7]. A smooth manifold structure can be established on

Recall the definition of the information divergence from one stochastic matrix

and regard

(i)

(ii)

(iii)

(iv)

We call

the dual divergence of D.

From any divergence function D on a manifold

where the Riemannian metric

As the metric and connections are derived from the KL divergence, they all depend solely on the transition matrices and are, in particular, agnostic of initial distributions. From calculations, we obtain the Fisher metric [7, (9)]:

and the coefficients for the pair of torsion-free affine connections ∇(e) (e-connection) and ∇(m) (m-connection) [7, (19, 20)]:

On the one hand, the metric encodes notions of distance and angles on the manifold. In particular, the information divergence D locally corresponds to the Fisher metric. In other words, for

Consider two curves

and we will say that the two curves are orthogonal at P0 when the inner product is null. On the other hand, affine connections define notions of straightness on the manifold. The fact that the connections are coupled with the metric

Recall from (2) that a stationary Markovian trajectory has a probability described by the path measure Q(n). For every

where

Similar to the distribution setting, we proceed to define exponential families (e-families) and mixture families (m-families) of stochastic matrices.

Definition 3.1. (e-family of stochastic matrices [7]). Let

is an exponential family (e-family) of stochastic matrices with natural parameter θ, when there exist functions

For some fixed θ ∈ Θ, we may write for convenience ψθ for ψ(θ) and Rθ for

Note that R and ψ are analytic functions of θ and that ψ is a convex potential function. R and ψ are completely determined from g1, …, gd and K by the Perron–Frobenius (PF) theory, and we can introduce a stochastic rescaling mapping [7, 13]:

where ρ and v are, respectively, the PF root and right PF eigenvector of

where exp is understood to be entry-wise. In particular,

The basis is given by

and the parameters are

We can alternatively define e-families as e-autoparallel submanifolds of

We define the set of functions [7, 13, 15]

and observe that we can endow

of dimension

where ∘ stands here for function composition. Essentially, there is a one-to-one correspondence between vector subspaces of

Theorem 3.1. ([7, Theorem 2]). A submanifold

As a corollary [7, Corollary 1],

In the stochastic matrix setting, the notion of a mixture family is naturally defined in terms of edge measures.

Definition 3.2. (m-family of stochastic matrices [15]). We say that a family of irreducible stochastic matrices

where

It is easy to verify that

For an exponential family

where Qθ is the edge measure corresponding to the stochastic matrix at coordinates θ. When

Theorem 3.2. [15, Lemma 4.1] The following statements are equivalent:

(i) The functions g1, …, gd are linearly independent in

(ii) The mappings θ∘η−1 and η∘θ−1 are one-to-one.

(iii) The Hessian matrix

(iv) The Hessian matrix

(v) The parametrization

Defining the Shannon negentropy3 potential function

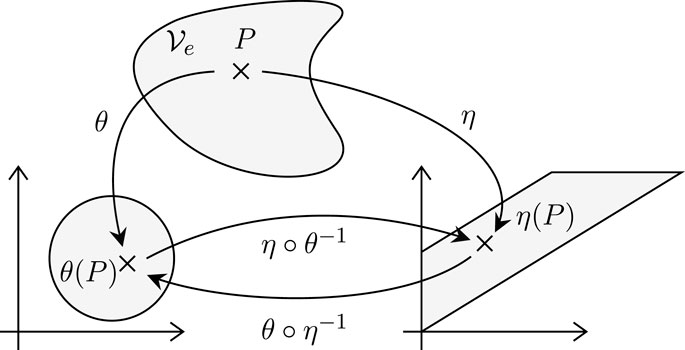

we can express [7, Theorem 4] the chart transition maps (see Figure 1) between the expectation [ηi] and natural [θi] parameters of the e-family

where we wrote ∂i⋅ = ∂ ⋅/∂ηi. We can also obtain the counterpart [13, Lemma 5] of (16) for θ◦η−1,

FIGURE 1. Natural and expectation parametrizations of an e-family

A straightforward computation shows that all the e-connection coefficients

which is sometimes called the fundamental theorem of information geometry [79, Theorem 3]. In other words, e-families and m-families are both e-flat and m-flat [7, Theorem 5], and for any

Thus, φ is also strictly convex, and the coordinate systems [θi] and [ηi] are mutually dual with respect to

An affine connection ∇ defines a notion of the straightness of curves. Namely, a curve γ is called a ∇-geodesic whenever it is ∇-autoparallel,

and the m-geodesic [7, Theorem 7] by

where

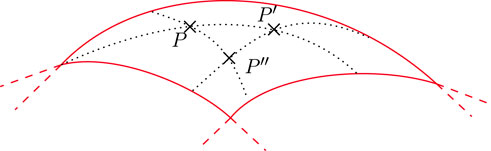

FIGURE 2. E-hull

Definition 3.3. (Exponential hull [13, Definition 7]). Let

where

Definition 3.4. (Mixture hull [13, Definition 8]). Let

where Q (resp., Qi) is the edge measure that pertains to P (resp., Pi).

When a family

The projection of a point onto a surface is among the most natural geometric concepts. In Euclidean geometry, projecting on a connected convex body leads to a unique closest solution point. However, the dually flat geometry on

For a continuously differentiable and strictly convex function

When we let

As ψ and φ are convex conjugate,

where we used the shorthands η = η(θ) and η′ = η(θ′); hence, the KL divergence is the Bregman divergence associated with the Shannon negentropy function, and as any Bregman divergence, it verifies the law of cosines:

which can be re-expressed [7, (23)] as

for γ an m-geodesic going through Pθ and Pθ′ and σ an e-geodesic going through Pθ′ and Pθ″.

One may naturally wonder whether it is possible to recover the divergence D defined at (6) from

where η = η(P) and θ′ = θ(P′). One can verify from (21) that we indeed recover the expression at (6).

Geodesic convexity is a natural generalization of convexity in Euclidean geometry for subsets of Riemannian manifolds and functions defined on them. As straight lines are defined with respect to an affine connection ∇, a subset

However, for

Unlike in the distribution setting, where the KL divergence is jointly m-convex, this property does not hold true for stochastic matrices [21, Remark 4.2].

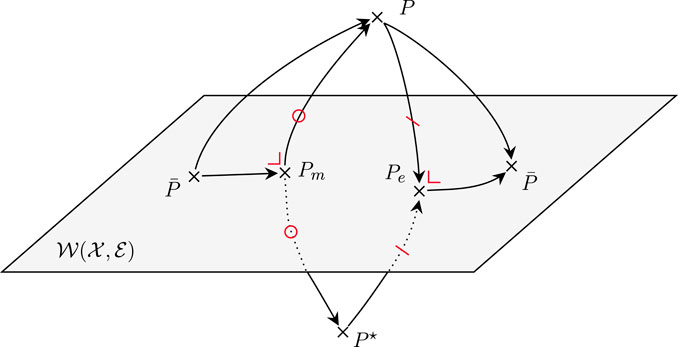

In the more familiar Euclidean geometry, projecting a point P onto a subset

and the m-projection onto

For a point P in context, we simply write Pe = Pe(P) and Pm = Pm(P).

Theorem 3.3. (Pythagorean inequalities for geodesic e-convex [21, Proposition 4.2], m-convex sets [23, Lemma 1]). The following statements hold.

(i) Pe exists in the sense where the minimum is attained for a unique element in

(ii) For

(iii) Pm exists in the sense where the minimum is attained for a unique element in

(iv) For

Inequalities become equalities when projecting onto e-families and m-families.

Theorem 3.4. (Pythagorean theorem for e-families, m-families [19], [15, Section 4.4]). The following statements hold.

(i) Pe exists in the sense where the minimum is attained for a unique element in

(ii) For

(iii) Pm exists in the sense where the minimum is attained for a unique element in

(iv) For

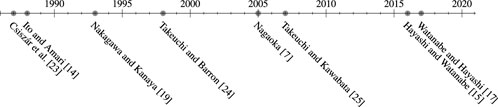

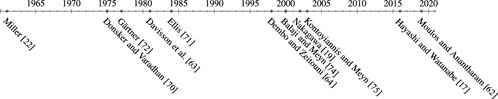

The construction of the conjugate connection manifold from a general contrast function in Section 3.1.1 and Section 3.1.2 follows the general scheme of Eguchi [11, 12], which can also be found in [79, Definition 5, Theorem 4]. The expression for the Fisher metric at (Eq. 8) and the conjugate affine connections at (Eq. 8) were introduced by Nagaoka [7, (9), (19), (20)]. One-dimensional e-families of stochastic matrices were first introduced by Nakagawa and Kanaya [19], whereas the general construction in the multi-dimensional setting was done by Nagaoka [7], who also established the characterization in Theorem 3.1 of minimal e-families in terms of affine structures of in [7, Theorem 2]. Curved exponential families of transition matrices and mixture families make their first named appearances in Hayashi and Watanabe [15; Section 8.3; Section 4.2]. See also [13, Definition 1] for two alternative equivalent definitions of an m-family. The expectation parameter for exponential families in (16) and its expression as the gradient of the potential function were discussed on multiple occasions [7, Theorem 4], [19, (28)], [15, Lemma 5.1]. Theorem 3.2 was taken from [15, Lemma 4.1]. The expression for the chart transition map from expectation to natural parameters in (17) was obtained from [13, Lemma 5]. Geodesics discussed in Section 3.2.6 were introduced in one-dimension in [19] and multiple dimensions in [7], whereas mixture and exponential hulls of sets first appeared in [13]. Nagaoka [7] established the dual flatness of the manifold discussed in Section 3.2.5 and matched the information divergence with the canonical divergence. The expression of the informational divergence and entropy for exponential families in (21) was given in [15, 17]. The law of cosines was also mentioned by Adamčík [20] for general Bregman projections. The convexity properties of the divergence appeared in Hayashi and Watanabe [15, Theorem 3.3] and Hayashi and Watanabe [15, Lemma 4.5], and their strict version was discussed in [21, Section 4] together with the case

The idea of tilting or exponential change of measure, which gives rise to e-families in the context of distributions, can be traced back to Miller [22]. However, in this section, we focused on the milestones toward the geometric construction of Nagaoka [7], and we deferred the history of the development of the large deviation theory to Section 5.2. The first to recognize the exponential family structure of stochastic matrices is Csiszár et al. [23] by considering information projections onto linearly constrained sets and inferring exponential families as the solution to the maximum entropy problem, as discussed in more detail in Section 5.1. The notion of an asymptotic exponential family was implicitly described by Ito and Amari [14] and was formalized by Takeuchi and Barron [24] and Takeuchi and Kawabata [25]. A later result by Takeuchi and Nagaoka [26] proved that asymptotic exponential families and their non-asymptotic counterparts are in fact equivalent.

Some alternative definitions of exponential families of Markov chains include [27–32]. However, they do not enjoy the same geometric properties as the one of Definition 3.1. Thus, we do not discuss them in detail.

One area of recent progress has been the analysis of the geometric properties of significant submanifolds of

In this section, we briefly survey known geometric properties of notable submanifolds of

We say that a stochastic matrix

for

Lemma 4.1. ([13, Lemma 7, Lemma 8]). The two following statements hold:

(i)

(ii)

Recall the parametrization of Ito and Amari [14], reported in (13). Coefficients θij in the expression represent memory in the process, and thus vanish. For

where for i ∈ [m], i ≠ x *,

Bistochastic matrices, also called doubly stochastic matrices, are row- and column-stochastic. In other words,

Lemma 4.2. The two following statements hold:

(i)

(ii) For

A symmetric stochastic matrix P satisfies P(x, x′) = P(x′, x) for any pair of states

Lemma 4.3. ([13, Lemma 9, Lemma 10]). The two following statements hold:

(i)

(ii) For

In Section 4.2.1, we begin by briefly introducing time reversals and time reversibility in the context of Markov chains. In Section 4.2.2, we proceed to analyze geometric structures that are invariant under the time reversal operation. In Section 4.2.3, we inspect the e-family and m-family nature of the submanifold of reversible stochastic matrices and reversible edge measures. In Section 4.2.4 and Section 4.2.5, we, respectively, discuss reversible information projections and how to generate the reversible set as a geodesic hull of structured subfamilies.

Consider a Markov chain

We write

Time-reversibility is a central concept across a myriad of scientific fields, from computer science (queuing networks [33], storage models, Markov Chain Monte Carlo algorithms [34], etc.) to physics (many classical or quantum natural laws appear as being time-reversible [35]). The theory of reversibility for Markov chains was originally developed by Kolmogorov [36, 37], and we refer the reader to [38] for a more complete historical exposition.

Reversible Markov chains enjoy a particularly rich mathematical structure. Perhaps first and foremost, reversibility implies self-adjointness of P with respect to the Hilbert space ℓ

2(π) of real functions over

The time reversal operation is known to preserve some geometric properties of families of transition matrices. Consider

Lemma 4.4. ([13, Proposition 1]). Let

Moreover, the time reversal operation leaves the divergence between stochastic matrices unchanged [80, Proof of Proposition 2]:

When

Lemma 4.5. ([13, Theorem 2]). Let

The class of functions

where

It is possible to verify that

where Δ is the diffeomorphism defined in (15). The following result is then a consequence of Theorem 3.1.

Theorem 4.1. ([13, Theorem 3, Theorem 5, Theorem 6]).

where

Theorem 4.2. ([13, Theorem 4, Theorem 5]). Let

For

forms a basis for

and we can write P as a member of the e-family,

when

Let

There are known closed-form expressions for P

m

and P

e

. Moreover, the fact that

FIGURE 4. Information projections onto

Theorem 4.3. ([13, Theorem 7, Proposition 2]). Let

where

Furthermore, the following bisection property holds

Finally, we mention that the entropy production σ(P) for a Markov chain with transition matrix P, which plays a central role in discussing irreversible phenomena in non-equilibrium systems, can be expressed in terms of the canonical divergence [81, (22)] as follows:

It is known that the set of bistochastic matrices—also known as the Birkhoff polytope—is the convex hull of the set of permutation matrices (theorem of Birkhoff and von Neumann [42–44]). By recalling from Section 3.2.6 the definition of geodesic hulls (Definition 3.3, Definition 3.4) of families of stochastic matrices, results in a similar spirit are known for generating the positive and reversible family as geodesic hulls of particular subfamilies.

Theorem 4.4. ([13, Theorem 9, Theorem 10]). It holds that

(i)

where

(ii) For

where

In the context of distributions, Čencov [45] introduced Markov morphisms in an axiomatic manner as the natural mappings to consider for statistics. The Fisher information metric can then be characterized as the unique invariant metric tensor under Markov morphisms [45–47]. In the context of stochastic matrices, we saw that the metric and connections introduced in Section 3 were asymptotically consistent with Markov models. This section connects with the axiomatic approach of Čencov and proposes a class of data processing operations that are arguably natural in the Markov setting.

We briefly recall lumpability in the context of distributions and data processing. Consider a distribution

When

Crucially, in the independent and identically distributed setting, the lumping operation can be understood both as a form of processing of the stream of observations and as an algebraic manipulation of the distribution that generated the random process.

For Markov chains, the concept of lumpability is vastly richer. The first fact one must come to terms with is that a Markov chain may lose its Markov property after a processing operation on the data stream [48, 49], even for an operation as basic as a lumping. A chain is said to be lumpable [50] with respect to a lumping map

Theorem 4.5. ([50, Theorem 6.3.2]). Let

The subset of

Embeddings of stochastic matrices that correspond to conditional models were proposed and analyzed in [51–53]. However, the question of Markov chains, where one considers the stochastic process, was only recently explored in [21]. Looking at reverse operations to lumping, we are interested in embedding an irreducible family of chains

A.1 Morphisms should preserve the Markov property.

A.2 Morphisms should be expressible as algebraic operations on stochastic matrices.

A.3 Morphisms should have operational meaning on trajectories of observations.

The following definition of a Markov morphism was proposed in [21].

Definition 4.1. (Markov morphism for stochastic matrices [21, Definition 3.2]). A map

where

The constraints on the function Λ in Definition 4.1 ensure that the objects produced by λ are stochastic matrices and are κ-lumpable. Furthermore, given the full description of P and Λ, one can directly compute the embedded λ(P), thereby satisfying A.1 and A.2. Alternatively, when given a sequence of observations

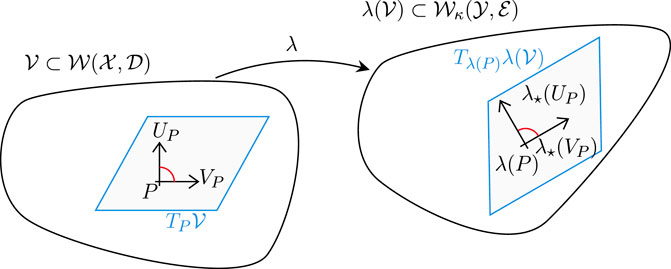

As a consequence, the Fisher metric and affine connections are preserved [21, Lemma 3.1] (see Figure 5), in the sense where for

and for any vector fields

where

defined by

FIGURE 5. Markov morphisms (Definition 4.1) preserve the Fisher metric and the pair of dual affine connections.

However, they are no m-geodesic affine, which means that generally

A more restricted class of embeddings, termed memoryless embeddings, preserve m-geodesics [21, Lemma 3.6], whereas e-geodesics are even preserved by the more general class of exponential embeddings [21, Theorem 3.2]. The concept of lumpability is easily extended to bivariate functions [21, Definition 3.3].

Definition 4.2. (κ-lumpable function).

The set of all κ-lumpable functions is denoted as

Lumpable functions

Definition 4.3. (Linear congruent embedding). A linear map

Theorem 4.6. (Characterization of Markov morphisms as congruent linear embeddings). Let

(i) ϕ is a κ-congruent linear embedding.

(ii) ϕ is a κ-compatible Markov morphism.

Theorem 4.6 is a counterpart for a similar fact for finite measure spaces in the distribution setting, which can be found in Ay et al. [6, Example 5.2].

As Markov morphisms and linear congruent embeddings can be identified, it will be convenient to refer to them simply as Markov embeddings. We proceed to give two examples of embeddings.

Let

Suppose a given stochastic matrix

is such that

There is generally no left inverse for a lumping map κ. However, for any κ-lumpable

For fixed

Less tersely,

It is not hard to show that the submanifold

Theorem 4.7. ([21, Theorem 5.1]). Let

The following Pythagorean identity [21, Theorem 5.2] follows as a direct application of Theorem 4.7. For

and P

0 is both the e-projection onto

For a finite alphabet

For a string

Definition 4.4. (Tree model). For a given tree T and

let us consider the set

The tree model induced by the tree T is

The tree model is a well-studied model of Markov sources in the context of data compression [55, 56], and it can be categorized based on the structure of the underlying tree as follows:

Definition 4.5. (Finite State Machine X (FSMX) model). For a tree model

Theorem 4.8. ([25, 57]). A tree model

In this section, we give details of some application domains of the geometric perspective.

Recall that the maximum entropy probability distribution over a fixed alphabet

It is known [23] that the e-projection (Section 3.3) of an arbitrary

and write ψ(ξ) for the logarithm of the PF root of

and observing that for P = U the maxentropic chain

In other words, the e-projection onto

The topic of large deviation theory is the study of the probabilities of rare events or fluctuations in stochastic systems, where the likelihood of these events occurring is exponentially small in the system parameters. In this context, we provide a concise overview of the classical asymptotic results and offer references to recent developments of finite sample upper bounds for the probability of large deviations. For X

1, …, X

n

, a Markov chain started from an initial distribution μ and with transition matrix P, a function

Similar in spirit to the heart of the approach taken in the iid setting, we proceed with an exponential change of measure (also known as tilting or twisting) of P and define for

We denote by ρ

θ

the Perron–Frobenius root of the matrix

The large deviation rate is given by the convex conjugate (Fenchel–Legendre dual) of the log-Perron–Frobenius eigenvalue of the matrix

Theorem 5.1. ([64, Theorem 3.1.2]). For

Theorem 5.2. ([75, Theorem 6.3]). When

is achieved for θ = θ * , as n → ∞,

where

Moulos and Anantharam [62] achieved the most recent and tightest result. They established a finite sample bound with a prefactor that does not depend on the deviation η, which holds for a large class of Markov chains, surpassing the earlier results [17, 63, 64].

Theorem 5.3. ([62, Theorem 1]). Let

with

Lastly, the subsequent uniform multiplicative ergodic theorem is known to hold.

Theorem 5.4. ([62, Theorem 3]). For

where ψ n is the scaled log-moment-generating-function,

and C(P, f) is the constant defined in Theorem 5.3.

For a more detailed exposition of the aforementioned results in a broader context, please refer to [62].

Let

The statistical behavior of

Furthermore, defining the asymptotic variance of f as

the following Markov chain version of the central limit theorem [65] holds

Although asymptotic analysis may be of mathematical interest, for modern tasks, it is crucial to have a finite sample theory that explains the behavior of the sample mean. With regard to the original bivariate function problem, the sample mean for a sliding window of pairs of observations can be defined as follows:

One can construct by exponential tilting the following one-dimensional parametric family of transition matrices:

where R

θ

and ψ are fixed using the PF theory (see Section 3.2). Essentially,

Defining the asymptotic variance for the bivariate g as

it follows that

Note that it coincides with the reciprocal of the Fisher information with respect to the expectation parameter; see Eq. 18. Essentially, this establishes that the sample mean evaluated on pairs of observations

We let

We interpret

We write

Then, 1 − β is called the power of the test. Fixing

(i)

(ii)

The Neyman–Pearson lemma asserts the existence of a test, which can be achieved through the likelihood ratio test.

Lemma 5.1. [78]. There exist

(i)

(a)

(b)

(ii) If

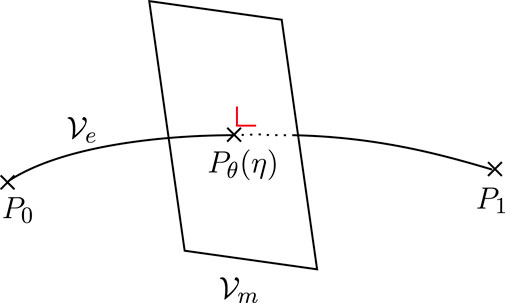

If we ignore the effect of the initial distribution that is negligible asymptotically, the Neyman–Pearson accepts the null hypothesis if

for a threshold η and observation (x 1, …, x n ). Employing the large deviation bound (e.g., [17, Section 8]), we can evaluate the Neyman-Pearson test’s performance in terms of rare events as follows:

where

is the exponential family passing through P

0 and P

1 (see Figure 8), and

FIGURE 8. Geometric interpretation of the Neyman–Pearson test as the orthogonal bisector to the e-geodesic passing through both the null and alternative hypotheses.

Note that the e-family

for any

In fact, it can be proved that D(P θ(η(r))‖P 1) is the optimal attainable exponent of the type II error probability among any tests such that the type I error probability is less than e −nr . Furthermore, it also holds that

and the optimal exponential trade-off between the type I and type II error probability can be attained by the so-called Hoeffding test. For a more detailed derivation of these results and finite length analysis, see [17, 19].

Binary hypothesis testing is one of the well-studied problems in information theory. The use of the Perron–Frobenius theory in this context can be traced back to the 1970s and 1980s [63, 66–68]. The geometrical interpretation of the binary hypothesis testing for Markov chains was first studied in [19]. More recently, the finite length analysis of the binary hypothesis testing for Markov chains was developed in [17] using tools from the information geometry. The binary hypothesis testing is also well studied for quantum systems; for results on quantum systems with memory, see [69].

GW drafted the initial version, which was subsequently reviewed and edited by both authors. All authors contributed to the article and approved the submitted version.

GW was supported by the Special Postdoctoral Researcher Program (SPDR) of RIKEN and the Japan Society for the Promotion of Science KAKENHI under Grant 23K13024. SW was supported in part by JSPS KAKENHI under Grant 20H02144.

The authors are thankful to the referees for their numerous comments, which helped improve the quality of this manuscript, and for bringing reference [81] to their attention.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1 As is customary in the literature, θ denotes both the coordinates of a point in context and the corresponding chart map.

2

In our definition of m-family, we do not allow a redundant choice of Q

0, Q

1, …, Q

d

to express

3 The reason for this name will become clear in (21).

4 When discussing geodesic convexity in this section, we only consider the section of the geodesic joining the two points, achieved for parameter t ∈ [0, 1], not the entire geodesic.

5

For

6 Note that log U is of the form f(x′) − f(x) + c for some function f and constant c.

7 The fact that the second derivative of ψ(θ) coincides with the asymptotic variance was clarified in [15].

8

Note that Nakagawa and Kanaya [19] used a different notation convention, where

1. Diaconis P, Miclo L. On characterizations of Metropolis type algorithms in continuous time. ALEA: Latin Am J Probab Math Stat (2009) 6:199–238.

2. Choi MCH, Wolfer G. Systematic approaches to generate reversiblizations of non-reversible Markov chains (2023). arXiv:2303.03650.

3. Hayashi M. Local equivalence problem in hidden Markov model. Inf Geometry (2019) 2, 1–42. doi:10.1007/s41884-019-00016-z

4. Hayashi M. Information geometry approach to parameter estimation in hidden Markov model. Bernoulli (2022) 28, 307–42. doi:10.3150/21-BEJ1344

7. Nagaoka H. The exponential family of Markov chains and its information geometry. In: The proceedings of the symposium on information theory and its applications, 28-2 (2005). p. 601–604.

8. Vidyasagar M. An elementary derivation of the large deviation rate function for finite state Markov chains. Asian J Control (2014) 16:1–19. doi:10.1002/asjc.806

9. Levin DA, Peres Y, Wilmer EL. Markov chains and mixing times. second edition. American Mathematical Soc. (2009).

10. Rached Z, Alajaji F, Campbell LL. The Kullback-Leibler divergence rate between Markov sources. IEEE Trans Inf Theor (2004) 50:917–21. doi:10.1109/TIT.2004.826687

11. Eguchi S. Second order efficiency of minimum contrast estimators in a curved exponential family. Ann Stat (1983) 11:793–803. doi:10.1214/aos/1176346246

12. Eguchi S. A differential geometric approach to statistical inference on the basis of contrast functionals. Hiroshima Math J (1985) 15:341–91. doi:10.32917/hmj/1206130775

13. Wolfer G, Watanabe S. Information geometry of reversible Markov chains. Inf Geometry (2021) 4:393–433. doi:10.1007/s41884-021-00061-7

14. Ito H, Amari S. Geometry of information sources. In: Proceedings of the 11th symposium on information theory and its applications. SITA ’88 (1988). p. 57–60.

15. Hayashi M, Watanabe S. Information geometry approach to parameter estimation in Markov chains. Ann Stat (2016) 44:1495–535. doi:10.1214/15-AOS1420

16. Bregman LM. The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming. USSR Comput Math Math Phys (1967) 7:200–17. doi:10.1016/0041-5553(67)90040-7

17. Watanabe S, Hayashi M. Finite-length analysis on tail probability for Markov chain and application to simple hypothesis testing. Ann Appl Probab (2017) 27:811–45. doi:10.1214/16-AAP1216

18. Matumoto T Any statistical manifold has a contrast function—On the C3-functions taking the minimum at the diagonal of the product manifold. Hiroshima Math J (1993) 23:327–32. doi:10.32917/hmj/1206128255

19. Nakagawa K, Kanaya F. On the converse theorem in statistical hypothesis testing for Markov chains. IEEE Trans Inf Theor (1993) 39:629–33. doi:10.1109/18.212294

20. Adamčík M. The information geometry of Bregman divergences and some applications in multi-expert reasoning. Entropy (2014) 16:6338–81. doi:10.3390/e16126338

21. Wolfer G, Watanabe S. Geometric aspects of data-processing of Markov chains (2022). arXiv:2203.04575.

22. Miller H. A convexity property in the theory of random variables defined on a finite Markov chain. Ann Math Stat (1961) 32:1260–70. doi:10.1214/aoms/1177704865

23. Csiszár I, Cover T, Choi B-S. Conditional limit theorems under Markov conditioning. IEEE Trans Inf Theor (1987) 33:788–801. doi:10.1109/TIT.1987.1057385

24. Takeuchi J-i., Barron AR. Asymptotically minimax regret by Bayes mixtures. In: Proceedings 1998 IEEE International Symposium on Information Theory (Cat No 98CH36252). IEEE (1998). p. 318.

25. Takeuchi J, Kawabata T. Exponential curvature of Markov models. In: Proceedings. 2007 IEEE International Symposium on Information Theory; June 2007; Nice, France. IEEE (2007). p. 2891–5.

26. Takeuchi J, Nagaoka H. On asymptotic exponential family of Markov sources and exponential family of Markov kernels (2017). [Dataset].

27. Feigin PD Conditional exponential families and a representation theorem for asympotic inference. Ann Stat (1981) 9:597–603. doi:10.1214/aos/1176345463

28. Küchler U, Sørensen M. On exponential families of Markov processes. J Stat Plann inference (1998) 66:3–19. doi:10.1016/S0378-3758(97)00072-4

29. Hudson IL. Large sample inference for Markovian exponential families with application to branching processes with immigration. Aust J Stat (1982) 24:98–112. doi:10.1111/j.1467-842X.1982.tb00811.x

30. Stefanov VT. Explicit limit results for minimal sufficient statistics and maximum likelihood estimators in some Markov processes: Exponential families approach. Ann Stat (1995) 23:1073–101. doi:10.1214/aos/1176324699

31. Küchler U, Sørensen M. Exponential families of stochastic processes: A unifying semimartingale approach. Int Stat Review/Revue Internationale de Statistique (1989) 57:123–44. doi:10.2307/1403382

32. Sørensen M. On sequential maximum likelihood estimation for exponential families of stochastic processes. Int Stat Review/Revue Internationale de Statistique (1986) 54:191–210. doi:10.2307/1403144

34. Brooks S, Gelman A, Jones G, Meng X-L. Handbook of Markov chain Monte Carlo. Chapman & Hall/CRC Press (2011).

35. Schrödinger E. Über die umkehrung der naturgesetze. Sitzungsberichte der preussischen Akademie der Wissenschaften, physikalische mathematische Klasse (1931) 8:144–53.

36. Kolmogorov A. Zur theorie der Markoffschen ketten. Mathematische Annalen (1936) 112:155–60. doi:10.1007/BF01565412

37. Kolmogorov A. Zur umkehrbarkeit der statistischen naturgesetze. Mathematische Annalen (1937) 113:766–72. doi:10.1007/BF01571664

38. Dobrushin RL, Sukhov YM, Fritz J. A.N. Kolmogorov - the founder of the theory of reversible Markov processes. Russ Math Surv (1988) 43:157–82. doi:10.1070/RM1988v043n06ABEH001985

39. Hsu D, Kontorovich A, Levin DA, Peres Y, Szepesvári C, Wolfer G. Mixing time estimation in reversible Markov chains from a single sample path. Ann Appl Probab (2019) 29:2439–80. doi:10.1214/18-AAP1457

40. Pistone G, Rogantin MP. The algebra of reversible Markov chains. Ann Inst Stat Math (2013) 65:269–93. doi:10.1007/s10463-012-0368-7

41. Diaconis P, Rolles SW. Bayesian analysis for reversible Markov chains. Ann Stat (2006) 34:1270–92. doi:10.1214/009053606000000290

42. König D. Theorie der endlichen und unendlichen Graphen: Kombinatorische Topologie der Streckenkomplexe, 16. Akademische Verlagsgesellschaft mbh (1936).

44. Von Neumann J. A certain zero-sum two-person game equivalent to the optimal assignment problem. Contrib Theor Games (1953) 2:5–12. doi:10.1515/9781400881970-002

45. Čencov NN. Statistical decision rules and optimal inference, Transl. Math. Monographs, 53. Providence-RI: Amer. Math. Soc. (1981).

46. Campbell LL. An extended Čencov characterization of the information metric. Proc Am Math Soc (1986) 98:135–41. doi:10.1090/S0002-9939-1986-0848890-5

47. Lê HV. The uniqueness of the Fisher metric as information metric. Ann Inst Stat Math (2017) 69:879–96. doi:10.1007/s10463-016-0562-0

48. Burke C, Rosenblatt M. A Markovian function of a Markov chain. Ann Math Stat (1958) 29:1112–22. doi:10.1214/aoms/1177706444

51. Lebanon G. An extended Čencov-Campbell characterization of conditional information geometry. In: Proceedings of the 20th conference on Uncertainty in artificial intelligence; July 2004 (2004). p. 341–8.

52. Lebanon G. Axiomatic geometry of conditional models. IEEE Trans Inf Theor (2005) 51:1283–94. doi:10.1109/TIT.2005.844060

53. Montúfar G, Rauh J, Ay N. On the Fisher metric of conditional probability polytopes. Entropy (2014) 16:3207–33. doi:10.3390/e16063207

54. Wolfer G, Watanabe S. A geometric reduction approach for identity testing of reversible Markov chains. In: Geometric Science of Information (to appear): 6th International Conference, GSI 2023; August–September, 2023; Saint-Malo, France. Springer (2023). Proceedings 6.

55. Weinberger MJ, Rissanen J, Feder M. A universal finite memory source. IEEE Trans Inf Theor (1995) 41:643–52. doi:10.1109/18.382011

56. Willems F, Shtar’kov Y, Tjalkens T. The context tree weighting method: Basic properties. IEEE Trans Inf Theor (1995) 41:653–64. doi:10.1109/18.382012

57. Takeuchi J, Nagaoka H. Information geometry of the family of Markov kernels defined by a context tree. In: 2017 IEEE Information Theory Workshop (ITW). IEEE (2017). p. 429–33.

58. Spitzer F. A variational characterization of finite Markov chains. Ann Math Stat (1972) 43:303–7. doi:10.1214/aoms/1177692723

59. Justesen J, Hoholdt T. Maxentropic Markov chains (corresp). IEEE Trans Inf Theor (1984) 30:665–7. doi:10.1109/TIT.1984.1056939

60. Duda J. Optimal encoding on discrete lattice with translational invariant constrains using statistical algorithms (2007). arXiv preprint arXiv:0710.3861.

61. Burda Z, Duda J, Luck J-M, Waclaw B. Localization of the maximal entropy random walk. Phys Rev Lett (2009) 102:160602. doi:10.1103/PhysRevLett.102.160602

62. Moulos V, Anantharam V. Optimal chernoff and hoeffding bounds for finite state Markov chains (2019). arXiv preprint arXiv:1907.04467.

63. Davisson L, Longo G, Sgarro A. The error exponent for the noiseless encoding of finite ergodic Markov sources. IEEE Trans Inf Theor (1981) 27:431–8. doi:10.1109/TIT.1981.1056377

65. Jones GL. On the Markov chain central limit theorem. Probab Surv (2004) 1:299–320. doi:10.1214/154957804100000051

66. Boza LB. Asymptotically optimal tests for finite Markov chains. Ann Math Stat (1971) 42:1992–2007. doi:10.1214/aoms/1177693067

67. Vašek K. On the error exponent for ergodic Markov source. Kybernetika (1980) 16:318–29. doi:10.1109/TIT.1981.1056377

68. Natarajan S. Large deviations, hypotheses testing, and source coding for finite Markov chains. IEEE Trans Inf Theor (1985) 31:360–5. doi:10.1109/TIT.1985.1057036

69. Mosonyi M, Ogawa T. Two approaches to obtain the strong converse exponent of quantum hypothesis testing for general sequences of quantum states. IEEE Trans Inf Theor (2015) 61:6975–94. doi:10.1109/TIT.2015.2489259

70. Donsker MD, Varadhan SS. Asymptotic evaluation of certain Markov process expectations for large time, i. Commun Pure Appl Math (1975) 28:1–47. doi:10.1109/TIT.2015.2489259

71. Ellis RS. Large deviations for a general class of random vectors. Ann Probab (1984) 12:1–12. doi:10.1214/aop/1176993370

72. Gärtner J. On large deviations from the invariant measure. Theor Probab Its Appl (1977) 22:24–39. doi:10.1137/1122003

74. Balaji S, Meyn SP. Multiplicative ergodicity and large deviations for an irreducible Markov chain. Stochastic Process their Appl (2000) 90:123–44. doi:10.1016/S0304-4149(00)00032-6

75. Kontoyiannis I, Meyn SP. Spectral theory and limit theorems for geometrically ergodic Markov processes. Ann Appl Probab (2003) 13:304–62. doi:10.1214/aoap/1042765670

77. Nakagawa K. The geometry of m/d/1 queues and large deviation. Int Trans Oper Res (2002) 9:213–22. doi:10.1111/1475-3995.00351

78. Neyman J, Pearson ES. Ix. on the problem of the most efficient tests of statistical hypotheses. Philosophical Trans R Soc Lond Ser A, Containing Pap a Math or Phys Character (1933) 231:289–337. doi:10.1098/rsta.1933.0009

79. Nielsen F. An elementary introduction to information geometry. Entropy (2020) 22:1100. doi:10.3390/e22101100

80. Čencov NN. Algebraic foundation of mathematical statistics. Ser Stat (1978) 9:267–76. doi:10.1080/02331887808801428

Keywords: Markov chains (60J10), data processing, information geometry, congruent embeddings, Markov morphisms

Citation: Wolfer G and Watanabe S (2023) Information geometry of Markov Kernels: a survey. Front. Phys. 11:1195562. doi: 10.3389/fphy.2023.1195562

Received: 28 March 2023; Accepted: 08 June 2023;

Published: 27 July 2023.

Edited by:

Jun Suzuki, The University of Electro-Communications, JapanReviewed by:

Antonio Maria Scarfone, National Research Council (CNR), ItalyCopyright © 2023 Wolfer and Watanabe. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Geoffrey Wolfer, Z2VvZmZyZXkud29sZmVyQHJpa2VuLmpw

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.