- Stephenson Technologies Corporation, Baton Rouge, LA, United States

In the context of human-machine teaming, we are observing new kinds of automated and “intelligent” applications that effectively model and manage both producer and consumer aspects of information presentation. Information produced by the application can be easily accessed by the user at multiple levels of abstraction, depending on the user’s current context and necessity. The research described in this article applies this concept of information abstraction to complex command and control systems in which distributed autonomous systems are managed by multiple human teams. We explore three multidisciplinary and foundational concepts that can be used to design information flow in human-machine teaming situations: 1) formalizing a language we call “RECITAL” (Rules of Engagement, Commander’s Intent, and Transfer of Authority Language), which defines the information flow based on concepts of intent, rules, and delegated authority; 2) applying this language to well-established models of human-machine distributed teams represented as a systemic control hierarchy; and 3) applying construal level theory from social psychology as a means to guide the producer-consumer model of the information abstractions. All three of these are integrated into a novel user interface concept designed to make information available to both human and machine actors based on task-oriented decision criteria. In this research, we describe a conceptual model for future information design to inform shared control and decision-making across distributed human and machine teams. We describe the theoretical components of the concepts and present the conceptual approach to designing such systems. Using the concept, we describe a prototype user interface to situationally manage the information in a mission application.

1 Introduction

Future command and control (C2) systems will feature sophisticated software capabilities with varying degrees of autonomy. In some applications these systems will work closely with humans; in others, operations will be largely autonomous. In the near future, distributed teams of humans will likely work with distributed teams of autonomous systems, with relationships that can change dynamically. Evolution of effective human command and control is crucial to the success of future autonomous systems, robots, artificial intelligence (AI), and embedded machine intelligence. The increased reliance on these intelligent technologies creates challenges and opportunities to improve C2 functions, particularly operational situational awareness, to better realize operator and leadership intent. The opportunity of particular interest is to develop better ways for humans to formally express rules, intent, and related decision authorities when interacting with intelligent machines and other humans. This includes the initial creation and subsequent editing of this information, its strategic and tactical use in the system, and the monitoring of performance during testing, training, and operations.

When humans communicate with one another in complex machine-aided tasks, the machines provide a combination of natural language (visual and aural), graphs, spatial maps or three-dimensional (3D) renderings, and other images. Comprehension of these elements is partly dependent on the training, experience, and general knowledge of the people involved. Machines are improving their ability to understand and communicate across these different media using machine learning (ML) and related technologies. Even if the natural language and image processing capabilities of machines greatly improve, such machines are not expected in the near term to possess the faculty to understand nuances of context, history, and unspoken contingencies in a manner equivalent to trained and experienced humans. These nuances are difficult enough for human-to-human communication and are often managed by formalizing and training hierarchical communication concepts and general language structures to coordinate activities. Using concepts from hierarchical communication models (specifically command and control models), we can define and create a language that will be used when humans interact with and through such machines. As with human-to-human communication, this language will necessarily exist at multiple abstraction levels and comprise some (constrained) natural language, annotated maps or renderings, graphs and equations, and images. The language will necessarily be rooted in defined data structures and service definitions and will require human-machine interfaces to support creating, editing, querying, and monitoring functions.

2 The RECITAL language and an example

The structure of distributed human-machine teams can be viewed as a control hierarchy. In a complex control hierarchy, some of the operations are explicitly defined and some are left to interpretation. In human enterprises, hierarchical control is often guided by formal and semi-formal expressions of rules, intent, and decisional authority to act. In the military, these expressions are formally defined as Rules of Engagement (RE), Commander’s Intent (CI), and Transfer of Authority (TA). For human-machine teams, which are still evolving, an equivalent language does not yet exist. We call our proposed language “RECITAL,” using the nested acronym “RE-CI-TA-Language.”

The primary objective of this research is to define the data, services, and user interfaces needed for humans to create, edit, query, and comprehend expressions of complex operational tasks such as rules, intent, decisional authority to act, and related control actions when interacting with each other and with intelligent machines. The primary outcome of this research is an information model and specification of the engineering methods required to support these expressions. In this work we explore three multidisciplinary and foundational concepts that can be used to design information flow in human-machine teaming situations: 1) formalizing the language we call “RECITAL,” which defines the information flow based on concepts of intent, rules, and delegated authority; 2) applying this language to well-established models of human-machine distributed teams represented as a systemic control hierarchy; and 3) applying construal level theory from social psychology as a means to guide the producer-consumer model of the information abstractions. We conceptualize a standard information model intended to inform intentional design of human-machine teams. Here is a relevant example of the need for a new conceptual model for this information flow in the context of a single human-machine team:

In November 2021, a Tesla automobile in Full Self-Driving mode was involved in an accident during a lane change maneuver. Although the details of the incident are not fully public, the driver claimed, “The car went into the wrong lane and I was hit by another driver in the lane next to my lane … ‘I tried to turn the wheel but the car took control and … forced itself into the incorrect lane, creating an unsafe maneuver’…” [1]. According to Tesla’s “Autosteer” instructions, once enabled, the vehicle will automatically change lanes when the turn signal is engaged. Autosteer requires the driver to maintain hands on the steering wheel. According to Tesla’s “Navigate on Autopilot” instructions, when using Autosteer, fully automated route-based and speed-based lane changes can be enabled. This mode defaults to the driver engaging a maneuver using the turn signal, but the mode can be set to allow the vehicle to do this autonomously. Once enabled, speed-based lane changes can then be separately disabled or set to operate in a conservative (MILD) or aggressive (MAD MAX) mode [2]. The manual does not discuss how a driver might overcome a vehicle-initiated lane change while hands are on the steering wheel, although Tesla separately indicates driver movement of the steering wheel or brake pressure will always disengage autopilot activities.

Without knowledge of the design of this mode, we will not speculate on whether or where an error in machine design or human operation may have occurred; we use this example only to introduce the language in the context of a human-machine team. With respect to RECITAL, enabling the Navigate on Autopilot mode and disabling the default turn signal confirmation is a Transfer of Authority for complex passing maneuvers from human to machine. Selecting the desired lane change operations and defaults reflects human intent and also defines machine intent. The instructions in the Tesla manual define rules of engagement for the selected mode. The research questions illustrated by this example are related specifically to the information transfer in this human-machine team and generalization of a language for that transfer. Generalization of this language will be discussed in part 3. The relationships between intent, authority, and rules also include both constraints in the machine design and constraints in human operation developed via training and experience. These relationships are based on how humans interpret the information present, which will be discussed in part 4.

In complex operations, human decision-making depends on the information operators can access; their knowledge, skills, and abilities associated with the context of the tasks and related tools; and what the tools (machines) allow them to do. In the design of related systems, the information requirements associated with both human and machine tasks at differing levels of the command, control, or team structure are subject to misinterpretation and error. The Tesla example might be considered a simple case of a single operator and single machine. The same case would be common to any Tesla vehicle operating with the same design configuration and software, although human behavior will vary. In parts 3 and 4, we look more broadly at multiple operators managing control of multiple machines of differing capabilities and design, as subject to changes in constructs related to operational mission, rules, intent, or environment.

Humans have operational freedom to express these constructs at whatever level of specificity they desire, subject to constraints levied on them by the systems they are operating and communicating with and within. Likewise, human designers of intelligent machines have design freedom but in much more constrained environments. Human-machine teams must consider both an ontology as determined by domain and experience, and an ontology as constrained by the communication and machine control systems. Ability to interact at different levels of control will remain a primarily human function, but better design of human/machine interfaces can greatly reduce errors of interpretation and improve the flexibility of human and machine tasks. A standard informational design framework and methodology is needed. This work proposes one such approach.

3 RECITAL as a general information model in hierarchical systems

Rules of Engagement, Commander’s Intent, and Transfer of Authority have a well-specified purpose and relatively standardized language in a military control hierarchy. For background the reader should refer to references [3–5]. In non-military enterprises, these information structures almost always exist but in a less well-specified form. There is no formal research that relates this language to non-military domains although components often appear in organizational leadership coaching [6, 7]. Here is a simple non-military example:

Steve is CEO of a growing services company that is learning to use data and artificial intelligence to improve customer service. Steve decides he needs to hire an Executive to manage corporate data collection and analytics processes to improve competitiveness. Steve directs his Human Resources (HR) Director to find candidates and hire this person within the next 2 months. Steve asks the HR Director to assemble a search team and bring him the top 3 candidates for his review and selection within 30 days. The Vice President (VP)-Engineering and HR Director proceed with the hiring process. Based on the level of hire and the urgency they decide to use an executive search firm known to the HR Director for both its candidate networks and its speed. They provide the search firm a draft position description and a list of selection criteria they would like to emphasize.

In this example, intent is clearly communicated, although it must be interpreted from the language used (hire an Executive to improve customer service, within 30 days). Transfer of authority (directs his Human Resources Director) is explicit. Rules of engagement are not present in the narrative, but one can assume they are present within the enterprise’s human resources organization (rules are normally defined separately). In practice, the fact that intent, rules, and authorities are almost always independent information flows is a common cause of control system failures. The RECITAL language attempts to define an integration framework for these.

3.1 Semantic representation of RECITAL

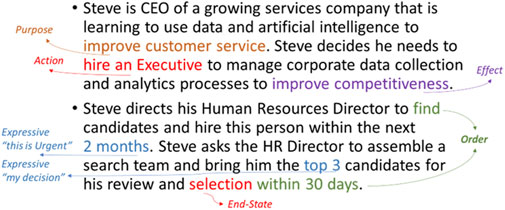

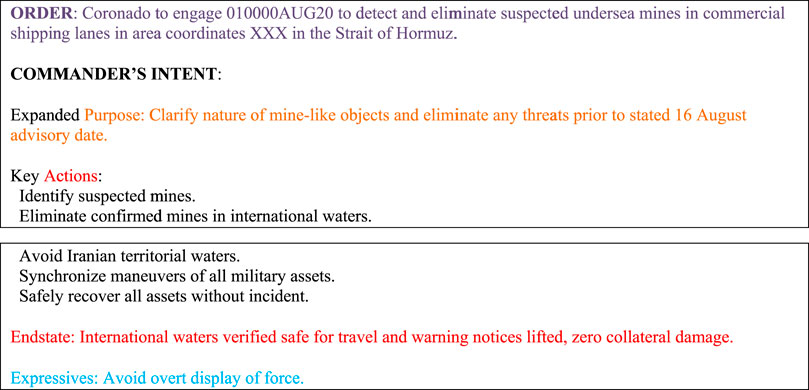

Gustavsson et al. proposed a standardized language representation of Commander’s Intent to aid in machine interpretation [8]. CI is transferred down the military command hierarchy in a written set of orders describing the situation, the desired mission, how the mission should be executed, and supporting mission information. These orders exist alongside military doctrine and rules of engagement which exist separately from the order. CI is embedded within a military order, and directs a change from a current state to an end state by describing actions and intended effects that the commander determines will produce that end state. Gustavsson et al. further define a semantic construct for CI as an expansion on the purpose of the order, key actions to be performed, desired end state, and a set of “expressives” that convey additional intent [8]. Figure 1 shows how these semantic constructs appear using the previous non-military example.
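To make the semantic constructs concrete, the following is a minimal Python sketch of how purpose, key actions, end state, and expressives might be held in a data structure. The class and field names are hypothetical and are not taken from Gustavsson et al.; the values are our own reading of the hiring narrative and do not reproduce the content of Figure 1.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CommandersIntent:
    """Hypothetical container for the semantic constructs named in the text."""
    purpose: str                # why the order is given
    key_actions: List[str]      # actions expected to be performed
    end_state: str              # the desired end state
    expressives: List[str] = field(default_factory=list)  # additional intent


@dataclass
class Order:
    """An order that embeds intent; issuer and recipient are illustrative fields."""
    issuer: str
    recipient: str
    intent: CommandersIntent


# The non-military hiring example, encoded by hand from the narrative (assumed mapping).
hiring_order = Order(
    issuer="CEO",
    recipient="HR Director",
    intent=CommandersIntent(
        purpose="Improve competitiveness through data collection and analytics",
        key_actions=[
            "Assemble a search team",
            "Present the top 3 candidates within 30 days",
            "Hire the executive within 2 months",
        ],
        end_state="An executive is hired to manage data collection and analytics",
        expressives=["The hire is urgent"],
    ),
)


def summarize(order: Order) -> str:
    """Render a one-line summary of who ordered what, and why."""
    intent = order.intent
    return f"{order.issuer} -> {order.recipient}: {intent.end_state} ({intent.purpose})"
```

Keeping intent as a separate object embedded in the order mirrors the description of CI as embedded within a military order, while rules of engagement would live in a separate structure.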

3.2 RECITAL representation in a control hierarchy model

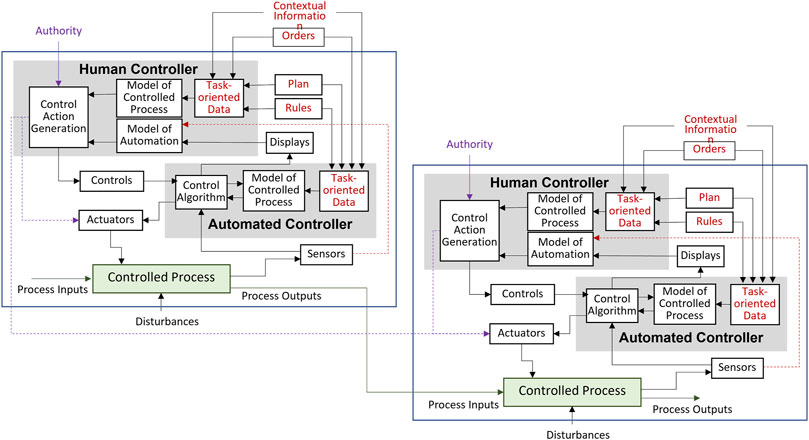

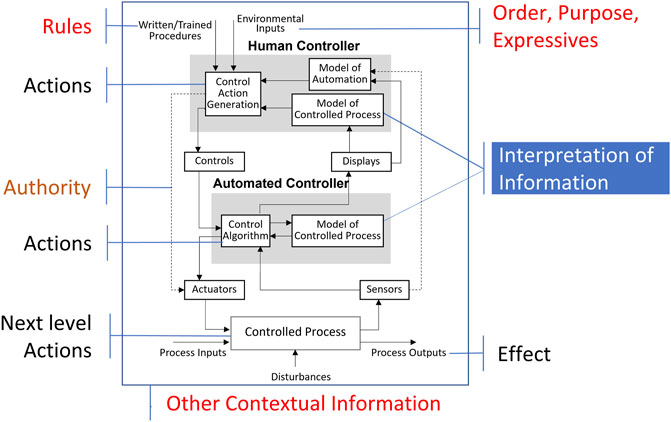

The semantic constructs of order, purpose, action, effect, end-state, and expressives exist universally in human control hierarchies and, as can be seen from the earlier Tesla example, are beginning to influence human-machine control hierarchies. Leveson’s System-Theoretic Process Analysis (STPA) provides a means to formally model these information flows in human and machine control structures [9]. In STPA, a system is represented as a hierarchy of controlled processes, each of which can have a human and machine controller, a model of the controlled process, and a set of information and explicit control flows. Figure 2 provides a depiction of this control structure with the RECITAL ontology overlaid as adapted from [10].

FIGURE 2. STPA control structure overlaid with the RECITAL ontology. Adapted from Cockcroft [11].

3.3 RECITAL integration framework

In this process model, one can define the order, purpose, expressives, and rules as inputs into the control hierarchy; actions, authorities, and effects as implicit in the design of the controller; and the interpretation of this information as a model of the controlled process. Other contextual information that would disturb the controlled processes is noted as coming in from the bottom of the model. In C2 systems, we are particularly interested in events that disturb the normal control process flow and how they affect the interpretation of information. A more complete example of this will be provided in section 6. Each layer of hierarchy in this system might require a change in the abstraction level of the information. RECITAL attempts to resolve errors related to incorrect abstraction of information provided versus that consumed at a level of control hierarchy.

Figure 3 provides a generalized model reflecting two levels of hierarchy. In human-machine distributed teams, one must model how information flows into human and automated machine controllers at any level of a hierarchy. It is expected (at least in the foreseeable future) that authority will be transferred between humans and machines as a human-generated control action. We add “task-oriented data” to the model of Figure 2 because both the data that will be available and how that data is interpreted will affect the operation of the control loops. Most tasks in these systems will be at least partly defined by software and related task-oriented data, and data will be used as a selection process for various aspects of a control process. Orders, rules, planning information, and other contextual information can be made available in a consistent way to all human and machine controllers in the control hierarchy. The question then becomes: how is the right data provided and selected for each task? The answer requires understanding and modeling of both producer (what data is available) and consumer (how will it be interpreted) views of data. In addition, much of this information becomes more subjective as one moves from rules and plans, to orders, to contextual factors. The information processing needs are different at different levels. A framework is needed to manage the data and information abstractions and related decision processes in each controller.

4 Construal level theory and application to RECITAL

Hierarchical control systems become constrained by limitations on the information produced and consumed at various levels of the hierarchy. Typically, changes in context and related information come in at the top of the control hierarchy (i.e., combatant command, vehicle driver) and information is lost or incorrectly abstracted as it progresses down the hierarchy. The additional detail needed by some users may not be present, requiring queries or speculative interpretations to get the needed detail. We desire to design future distributed human-machine systems with more flexibility in decision processes at each level of hierarchy, including more flexibility in decisions made by machine-machine teams. We would like to define the information model so that the multiple levels of detail coexist at each level of hierarchy in the information structure and can be extracted according to user needs.

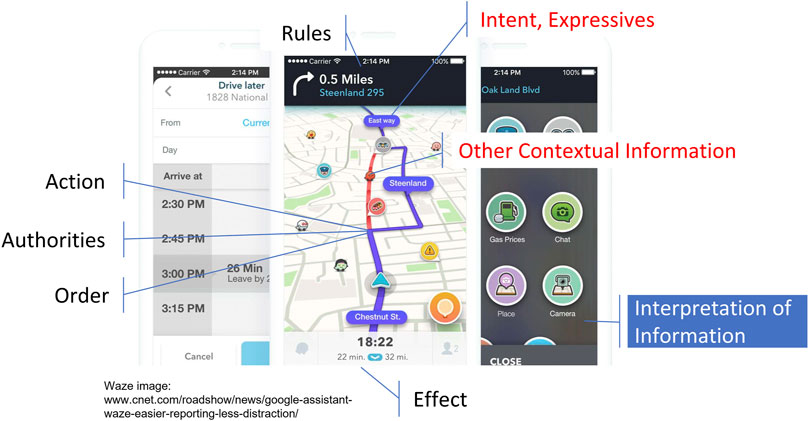

There are a number of AI-based applications appearing today that provide such flexibility by intentionally managing the consumed abstraction hierarchies, providing the human controller greater versatility in selection of contextual information. Figure 4 provides an example of our RECITAL language overlaid on the popular “Waze” road navigation application.

FIGURE 4. Waze application overlaid with RECITAL ontology [12].

In Waze, the driver’s intent is expressed by the initial selection of the route, which is generally determined by either shortest time, shortest distance, or an acceptance of the recommended route. The driver’s order is expressed as selection of the route and clicking “start.” At this point, authority is transferred to the application to manage the route. Waze can sense changes in contextual information, such as other driver reports of accidents ahead, and open up a reassignment of authority to the driver to select a new route (or not). What is most interesting about this human-machine user interface is the way in which the Waze application presents new contextual information to the driver at different abstraction levels. The driver can just select the new route, can view the location and nature of the incident ahead before deciding, or can even see the comments from other drivers about the incident. The use of a progressive disclosure concept to manage the abstraction level of consumed information is a well-known approach to consumer-driven information design [13]. It has primarily been applied as a means to reduce complexity in human-machine interface design [14], not as an informational design tool in the context of RECITAL. Hence, a generalized model and associated research will need to be developed to determine its effectiveness in the context of human-machine teaming.
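The authority flow in the Waze example can be sketched as a small state model. This is an illustrative Python sketch under our own assumptions: the class, method, and enum names are hypothetical and do not describe how Waze is actually implemented.

```python
from enum import Enum


class Holder(Enum):
    DRIVER = "driver"
    APP = "app"


class RouteSession:
    """Hypothetical model of who holds route-management authority."""

    def __init__(self) -> None:
        self.authority = Holder.DRIVER  # the driver holds authority before starting
        self.route = None
        self.log = []                   # record of every authority change

    def start(self, route: str) -> None:
        """Driver selects a route (intent) and starts (order): authority moves to the app."""
        self.route = route
        self.authority = Holder.APP
        self.log.append(("transfer", Holder.DRIVER, Holder.APP))

    def context_change(self, report: str) -> None:
        """The app senses new context and reopens the decision to the driver."""
        if self.authority is Holder.APP:
            self.authority = Holder.DRIVER
            self.log.append(("reassign", Holder.APP, Holder.DRIVER, report))

    def decide(self, new_route=None) -> None:
        """Driver keeps the route or picks a new one, then hands authority back."""
        if self.authority is not Holder.DRIVER:
            raise RuntimeError("only the current authority holder may decide")
        if new_route is not None:
            self.route = new_route
        self.authority = Holder.APP
        self.log.append(("transfer", Holder.DRIVER, Holder.APP))


# Example run: an accident report reopens the route decision to the driver.
session = RouteSession()
session.start("highway")
session.context_change("accident reported ahead")
session.decide("side road")
```

Logging each change of authority is a design choice of this sketch; it makes the transfer of authority an explicit, queryable part of the information flow rather than an implicit side effect.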

In this research we investigated construal level theory (CLT) as a potential underlying theoretical basis for this type of hierarchical information design in applications of human-machine teaming. CLT is used in social psychology to describe the extent to which people prefer information about a topic to be abstract versus concrete as a function of psychological distance [15, 16]. Psychological distance can be defined as a function of separation in time, space, task relevance, or other interest [17]. The degree to which information is abstract versus concrete may manifest as a combination of comprehensiveness of the information elements presented, and level of detail about a given information element.

Tasks performed by different users, in which information about intent, rules, or decisional authority is needed to perform the task to a standard, have common information requirements but differing needs for detail. Differing levels of detail can be supported by these emerging user interface concepts that use AI to monitor information and then provide progressive disclosure. Text-based user interfaces (structured or unstructured) can be formatted so that top-level information is presented in outline or title form, and interested users can progressively expand the text to access the desired level of detail. Similarly, pictograms and annotated maps can be structured so that top-level information is presented, and a “show more”/“show less” structure can be provided to allow a drill-down into the various levels of detail. A narrated story approach, which combines multiple media, may be the most straightforward way to mediate the need to provide different levels of detail to different users (or to the same user at different points in time), as it combines both mission and task level aspects. The narrated story has the additional advantage of easing the cognitive burden placed on the user.

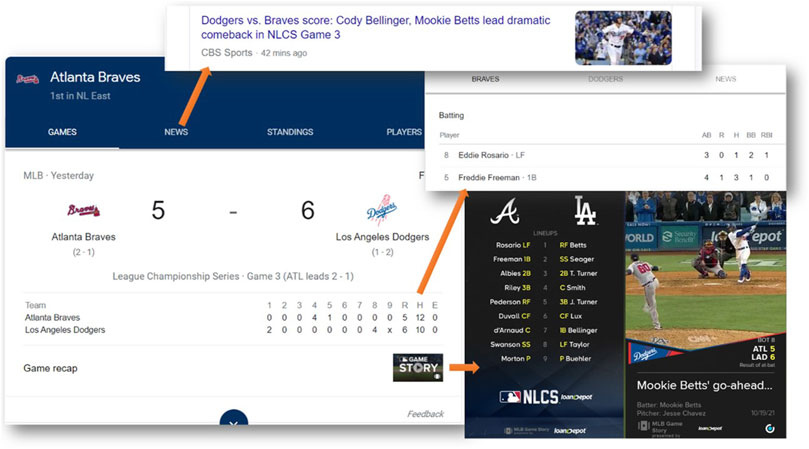

These approaches, though not explicitly identified as design approaches, are also regularly used today to structure information about such diverse topics as movies, sporting events, recreational activities, and other forms of entertainment. For example, an account of a baseball game can be represented simply as the final score, as a box score with statistics for individual participants, as a “highlights” summary showing key plays, as a play-by-play account, and of course, as the full pitch-by-pitch account of the game. These different levels of detail are of interest to different types of consumers. Some baseball followers will simply want to know the final score. Others may also be interested in key facts, such as the impact on pennant races or personal milestones achieved by participants. Some may be interested in scoring plays only, while others may want to track certain participants throughout the course of the game. Relatively few would be interested in a pitch-by-pitch account after the game (though quite a few might be interested in this during the game itself); those interested in pitch-by-pitch after the game might be analysts trying to find certain trends. Figure 5 shows how Google manages progressive disclosure in their search results for a baseball score [18].

In a C2 environment one can draw similar analogies. At the command level, commander’s intent is provided to set the highest level desired outcomes and constraints for a mission. The commander might only want to know mission results and related “box score” information as long as the mission was successful. Planning teams would want additional contextual and operational information including the mission concept of operations, areas of regard, resources available, and rules of engagement. Even different planning activities may demand different levels of detail. For example, the details of order of battle and route planning would differ between an aircraft mission at altitude and one in terrain. Tactical operators would want more of the “play-by-play,” but would only want to be burdened with higher level contextual information when a mission goes off-plan. The ability to selectively add or subtract information at different abstraction or construal levels, only when needed for decision making, will be quite useful in complex missions or tasks.

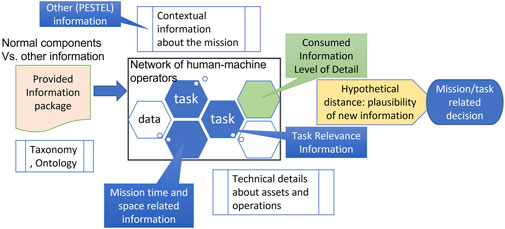

CLT provides a basis for structuring information in advanced user interface concepts, using information representations that can be provided and then selectively engaged to provide more or less detailed information to the interested consumer of the information. We used CLT and the RECITAL language model to structure an operational model of the information flow desired in distributed human-machine teams. A conceptual view of this model is shown in Figure 6.

Given a set of tasks performed by a network of human-machine operators, there are two selection processes: the selection of information provided by the system design; and the selection of information consumed by the operator(s). Future information systems design should attempt to work both aspects of the information selection process. Data analysis, artificial intelligence, and machine learning methods and tools are making great progress in inferring context from large corpuses of data. To design effective queries in more complex tasks, explicitly modeling human construal levels is a promising approach.

A core aspect of achieving effective mission/task related decisions can be related to “plausibility” of the consumed information as related to the operator’s beliefs. The logic follows formal definitions of plausibility from Dempster-Shafer Theory [19] although in our use formal mathematical bounds on plausibility may not be possible. This is an area for further research. We define plausibility as the operator’s perceived probability of occurrence of an event based on hypothetical distance between current state and end state, based on explicit, implicit, and other contextual information. Defining an “estimated proximity function” for that distance would require:

1. Direct access to information and user interfaces that allow an operator to situationally retrieve additional contextual information (either historical or predicted future) based on proximity of that information to the task at hand and hypothetical distance between that information and the task situation,

2. A design for organization of that information based on operator construal level. From CLT, we can initially organize information by Task relevance, Spatial relevance, Temporal relevance, and Other contextual information relevant to the task at hand,

3. Measurement of operator task situational awareness. Endsley [20] defines situational awareness as an operator’s perception of the information, comprehension of the information, and projection of that information onto their task at hand, and

4. The “proximity function” that rates effectiveness of the user interface in relating the information to tasks so as to improve operator situational awareness.

If we are able to define such a function, we can use CLT in practice to evaluate five categories of contextual information in an operational environment:

1. How people perceive, comprehend, and project temporal information

2. How people perceive, comprehend, and project spatial information

3. Relevance of this information to their tasks (or not)

4. Other contextual information that may be relevant (political, social, etc.)

5. Hypothetical distance (plausibility) between their interpretation of the information and their tasks

Temporal and spatial information are related to the operators’ mission; task relevance and hypothetical distance are related to operators’ actions in tasks; and other contextual information may affect both. Again, the Waze user interface is a good example of how mission-relevant and task-relevant information can be combined into the human-machine interface.
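As background for the plausibility notion used in this section, the standard Dempster-Shafer definitions of belief and plausibility can be computed from a mass function as in the following Python sketch. The frame of discernment and the mass values are illustrative assumptions only, and the sketch does not implement the operator-perceived plausibility or the proximity function discussed above, which remain open research questions.

```python
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def belief(mass: Mass, hypothesis: FrozenSet[str]) -> float:
    """Bel(A): total mass of non-empty focal sets fully contained in A."""
    return sum(m for focal, m in mass.items() if focal and focal <= hypothesis)


def plausibility(mass: Mass, hypothesis: FrozenSet[str]) -> float:
    """Pl(A): total mass of focal sets that intersect A."""
    return sum(m for focal, m in mass.items() if focal & hypothesis)


# Illustrative numbers only: evidence about whether a mission stays on plan.
frame = frozenset({"on_plan", "off_plan"})
mass: Mass = {
    frozenset({"on_plan"}): 0.5,
    frozenset({"off_plan"}): 0.2,
    frame: 0.3,  # mass left uncommitted between the two outcomes
}

on_plan = frozenset({"on_plan"})
bel_on_plan = belief(mass, on_plan)
pl_on_plan = plausibility(mass, on_plan)
```

With these assumed masses, belief in "on plan" is 0.5 and plausibility is 0.8; the gap between the two reflects the evidence that is not committed to either outcome.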

5 A formal model of construal levels

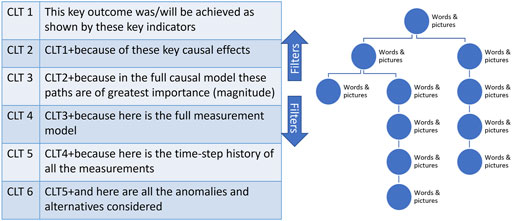

Based on an evaluation of CLT, existing applications, and the full distributed human-machine teaming case study to be described in section 6, we formalized a six-layer model linking construal levels to related information abstractions. This model is shown conceptually as a progressive measurement information model in Figure 7, where increasing CLT layer number denotes progressively increasing detail of information. The tree structure at the right of the table depicts that there is a hierarchy of information (words and pictures) that is added to and progressively disclosed at each increasing CLT layer.

If one were to define a causal model that relates the produced information to the consumed information and then to task decisional effectiveness, that would generally form CLT layer 3.
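The layered tree described above can be sketched as a data structure in which each information element is tagged with the CLT layer at which it is first disclosed. This is a minimal Python sketch under our own assumptions: the class and function names are hypothetical, and the assignment of baseball content to layers is illustrative rather than taken from Figure 7.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InfoNode:
    """One piece of produced information, tagged with the CLT layer where it appears."""
    layer: int  # 1 (most abstract) to 6 (most detailed)
    text: str
    children: List["InfoNode"] = field(default_factory=list)


def disclose(node: InfoNode, max_layer: int, depth: int = 0) -> List[str]:
    """Return indented lines for every node whose layer does not exceed max_layer."""
    if node.layer > max_layer:
        return []
    lines = ["  " * depth + node.text]
    for child in node.children:
        lines.extend(disclose(child, max_layer, depth + 1))
    return lines


# Placeholder content following the earlier baseball analogy (assumed layer mapping).
game = InfoNode(1, "Final score", [
    InfoNode(2, "Box score", [InfoNode(4, "Play-by-play account")]),
    InfoNode(3, "Highlights of key plays"),
])

summary_view = disclose(game, 1)  # most abstract view
detail_view = disclose(game, 4)   # adds every element up to layer 4
```

Because all layers coexist in one structure, the same produced information can serve consumers at different construal levels simply by changing the requested layer.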

5.1 Descriptive measurement model

The following provides a descriptive application of construal levels into an information feed consisting of both narrative information and visual images. We have defined six construal levels as appropriate standards in this work. A given application might need fewer levels.

CLT 1: Executive summary. This level is generally composed of one visual and two to three sentences of text, and is no more than 10 s in duration. This is the most abstract level. For a future planned event, this level presents the main claim: this [key outcome] will achieve intent as shown by these [key indicators]. For a past event, this level declares overall success or the lack thereof: this [key outcome] achieved (or did not achieve) intent as shown by these [key indicators].

As a baseball analogy example, imagine the manager of a baseball team interacting with an app that has statistical information on all of the players. The manager’s intent is most certainly to win the game. In the future example, the manager’s level 1 construal might be: “these players in the 8-9th batting order positions are most likely to produce the runs needed to win the game.” In the past example: “the difference in the win was the production of those two additional runs from our 8-9th batting positions.”

CLT 2: Mission overview. This level is composed of perhaps two or three visuals, and the text is no more than 30 s in duration. This is the “elevator speech” level of abstraction. It is more specific about intent and related considerations for rules of engagement. For a future planned event, this level presents the main reason intent is expected to be achieved: this [key outcome] will achieve intent as shown by these [key indicators], due to these [primary causal effects]. For a past event, this level declares overall success or the lack thereof, and gives the key reasons why: this [key outcome] achieved or did not achieve intent as shown by these [key indicators], because of these [primary causal effects].

In the baseball analogy, a future example at this level might be: “these players in the 8-9th batting order positions are most likely to produce the runs needed to win the game. Joe Baseball and Jim Stealer have matched up well against their starter Mike Pitcher in our previous two meetings. Our statistics indicate we can count on at least two runs from the bottom of the order with these players.” A past example might be: “the difference in the win was the production of those two additional runs from our 8-9th batting positions. Joe Baseball has been productive in the 8th spot all year.”
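The bracketed templates for CLT 1 and CLT 2 can be read as fill-in patterns. The following Python sketch shows one possible rendering; the function name, parameters, and sample values are hypothetical, and the wording simply follows the templates given in the text.

```python
from typing import List, Optional


def render_claim(
    key_outcome: str,
    key_indicators: List[str],
    causal_effects: Optional[List[str]] = None,
    past: bool = False,
    achieved: bool = True,
) -> str:
    """Fill the CLT 1 template, or the CLT 2 template when causal effects are given."""
    if past:
        verb = "achieved" if achieved else "did not achieve"
    else:
        verb = "will achieve"
    claim = f"{key_outcome} {verb} intent as shown by {', '.join(key_indicators)}"
    if causal_effects:
        # CLT 2 adds the primary causal effects to the CLT 1 claim.
        connector = "because of" if past else "due to"
        claim += f", {connector} {', '.join(causal_effects)}"
    return claim + "."


# CLT 1, past event (sample values are placeholders).
clt1_past = render_claim(
    "Run production from the bottom of the order",
    ["two additional runs"],
    past=True,
)

# CLT 2, future event: same claim plus the primary causal effects.
clt2_future = render_claim(
    "Run production from the bottom of the order",
    ["at least two expected runs"],
    causal_effects=["favorable matchups against the starting pitcher"],
)
```

Treating CLT 2 as CLT 1 plus an optional causal clause reflects how each layer adds to, rather than replaces, the layer above it.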

CLT 3: Mission summary. Although there are no specific constraints on duration, this level is limited to describing events as a sequence of actions. The narration of each step is succinct. For a typical operation, the duration would be less than 5 min. For a future planned event, this level provides a top-level description of how the planned operation will apply rules and authorities to achieve intent and why success is predicted. It conveys the overall timeline for the operation, describes all of the major actions, and identifies the specific actors. The narration describes the key parameters of actors, resources, and activities that are essential to success (i.e., the primary causal paths in an underlying mission model). For a past event, this level describes the actual sequence of actions and events that determined the success (or lack of success) of the operation.

For a future planned event, the step-by-step activities are explored and selected by evaluating multiple scenarios for the mission and perhaps running simulations. These steps are similar to how the Waze app might calculate multiple alternative routes with varying time estimates around an accident, with that information displayed in different colors, and presented to the driver to accept. In baseball, this analogy might reflect how a manager evaluates batter substitutions due to an opponent’s pitching changes. In a more complex mission, human and machine-aided planners and tools might test different courses of action (COAs) before selecting the best COA to give the operators as their baseline plan.

In the course of a mission, the RECITAL concept dictates that all or many of those scenarios remain present as part of the produced information, to be selected by operators or automated tools based on disruptions to the mission control flow. Instead of simple route changes, the operators have access to more complex alternative mission descriptions based on their spatial, temporal, task-driven, or other information needs. This access may require them to explore information at the next construal levels to aid in deciding on an alternate future mission success strategy. The mechanization of this capability will be presented further in section 6.

CLT 4: Mission brief. There are no specific constraints on narrative duration at this level. The emphasis shifts to substantive completeness rather than brevity. Content at this level should cover the major points of background and context (to address why), major contingencies, and elaboration on rules of engagement as appropriate. Authorities to execute the mission are explicit but include multiple scenarios where different authority levels may be assigned. This level would include any significant political/environmental/social/other considerations. For a typical operation, the duration would be less than 30 min. For a future planned event, this level is similar to briefing the mission plan to the next higher-level authority. There should be enough detail to cover what actions are planned, the key timeline for those actions, and the key contingencies that are recognized and covered by the plan. For a past event, this level constitutes an after-action report presented to the next higher-level authority. It states whether the intent was achieved and covers the actions that were taken and the timeline associated with those actions. It also describes contingencies that occurred and the reaction to each, and any anomalies that impacted the outcome. The narration includes reference to rules of engagement that governed the reaction to contingencies or anomalies.

CLT 5: Mission plan/report. Again, there are no specific constraints on narrative duration for this level, but the intent is to include all elements of the mission plan. Key political/environmental/social/other parameters are typically included, even if benign. For a typical operation, the duration would be less than 60 min. For a future planned operation, the content should cover all relevant points of background and context, all contingencies that are reasonable to expect, and key technical parameters or details (to address how). This level is similar to reviewing a detailed mission plan with the crew that will execute it. The contingencies covered by the plan may be considered unlikely but are of enough significance to merit explicit planning. The key milestones on the mission timeline are covered at this level, as they were at Level 4. At this level the impact of contingencies on the mission timeline should be addressed, especially if time itself becomes a forcing function in the presence of certain contingencies. For example, available fuel may limit the route selected by the driver/Waze teaming (or be integrated into the app). Factors that may not be a significant concern in the nominal mission plan should be included as various contingencies, and hence should be a topic covered at this level of detail. Any maintenance-related concerns that will potentially impact the mission should be described at this level as well.

For a past operation, the content is similar to a mission report. It repeats the relevant information about context, to help explain why the operation was conducted, and narrates the sequence of events and actions from beginning to end. It includes narration about contingencies that were realized, and anomalies that occurred that were consequential to the outcome. A user interface described in section 6, which we call “UxBook,” is used to store multiple past mission reports at this level of detail in order to learn and inform future missions.

CLT 6: Mission details/logs. There are no specific constraints on duration at this level. The content is a point-by-point elaboration on Level 5, adding more detail “on demand.” It is not expected that any one individual would be interested in all of the detail. Examples of additional detail that is made available at this level include additional contingencies, additional information on the technical parameters or principles of operation, recent maintenance history relevant to the operation, and full environmental data and estimates.
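The levels above can be summarized as a small lookup table. The following Python sketch is illustrative only and covers Levels 2 through 6 as characterized above. The `ConstrualLevel` class, its field names, and the `deepest_level_within` helper are assumptions introduced here for illustration and are not part of the RECITAL concept itself. The durations are the typical-operation limits stated in the text, and `None` marks a level with no stated limit.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ConstrualLevel:
    number: int
    name: str
    typical_max_seconds: Optional[int]  # None means no stated duration limit


# Names and typical durations follow the level descriptions in the text.
LEVELS: List[ConstrualLevel] = [
    ConstrualLevel(2, "Mission overview", 30),
    ConstrualLevel(3, "Mission summary", 5 * 60),
    ConstrualLevel(4, "Mission brief", 30 * 60),
    ConstrualLevel(5, "Mission plan/report", 60 * 60),
    ConstrualLevel(6, "Mission details/logs", None),
]


def deepest_level_within(seconds_available: int) -> Optional[ConstrualLevel]:
    """Return the most detailed level whose typical duration fits the time budget.

    Hypothetical selection rule: levels without a stated limit are never
    chosen automatically, because they are meant to be explored on demand.
    """
    fitting = [
        level
        for level in LEVELS
        if level.typical_max_seconds is not None
        and level.typical_max_seconds <= seconds_available
    ]
    return max(fitting, key=lambda level: level.number) if fitting else None
```

Under this rule, an operator with ten minutes available would be offered the Level 3 mission summary, while Level 6 content would be reached only through explicit requests for more detail.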

5.2 Informational forms

The presentation of information in the RECITAL concept will contextually blend different forms of information based on differing spatial/temporal/task-driven/other needs. The selection of form is critical to the appropriate operator consumption of information and must be selected to situationally reduce psychological distance. Informational forms include structured and unstructured text, pictograms, annotated maps or other visual renderings, and narrated story.

5.2.1 Unstructured text

Unstructured text is visual or auditory content that cannot be readily mapped onto standard database fields. Information about intent, rules, decision authority, and control tasking is most often expressed in unstructured text. There are no constraints on how the constructs are expressed. Errors in comprehension arise both from differences between the language used and how it is comprehended, and from differences between the information transferred and the information needed to perform the operation. Using unstructured text to convey these constructs to automated software systems is not practical, as it would require advanced natural language processing capabilities far in excess of what is currently available. However, most human-to-human information exchange is unstructured or only partially structured as codes or standard terms, so informational concepts must address this form.

5.2.2 Structured text

Constraints on information exchange in hierarchical control systems are governed by standard formats that structure the information into defined fields, with limited use of unstructured text in some of those fields. Information appears in a specified order, and for many of the fields is restricted to certain values (or range of values) to be valid. For some messages there is a field that allows unstructured text, typically of constrained length, and perhaps labeled “notes” or “remarks”. In practice, these unstructured fields may contain significant information relevant to the operational task, which must be interpreted based on very limited expressions adapted to fit into a message structure. Representing context is critical for decision making in operational environments, and requires richer forms of communication.
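As a minimal sketch of what such a structured format implies, the Python example below defines a message with fixed fields, restricted values, and a length-limited free-text field. The message type, the field names, the allowed action codes, the priority range, and the 80-character limit are all hypothetical and do not correspond to any actual military message standard.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical constraints, chosen only to illustrate the idea.
ALLOWED_ACTIONS = {"SEARCH", "NEUTRALIZE", "TRANSIT", "HOLD"}
PRIORITY_RANGE = range(1, 6)   # valid priorities are 1 through 5
MAX_REMARKS_CHARS = 80         # constrained length for unstructured remarks


@dataclass(frozen=True)
class TaskingMessage:
    sender: str
    action: str
    priority: int
    remarks: str = ""  # the only field that accepts unstructured text


def validate(message: TaskingMessage) -> List[str]:
    """Return a list of validation errors; an empty list means the message is valid."""
    errors: List[str] = []
    if message.action not in ALLOWED_ACTIONS:
        errors.append(f"action '{message.action}' is not an allowed value")
    if message.priority not in PRIORITY_RANGE:
        errors.append("priority must be between 1 and 5")
    if len(message.remarks) > MAX_REMARKS_CHARS:
        errors.append(f"remarks exceed {MAX_REMARKS_CHARS} characters")
    return errors
```

The validator can confirm that the remarks field fits the format, but it cannot interpret what the remarks mean, which is the limitation described above.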

5.2.3 Pictograms

A pictogram is a depiction of relatively abstract information in caricature form. (The term is not universally used and is not tightly defined; “pictograph” is also used in some contexts.) As used here, a pictogram is a graphical depiction of an action, constraint, or other attribute with minimal reliance on text. The Waze screen in Figure 4 is a typical example. The pictogram relies on some degree of visual similarity to the object, action, or other attribute that is represented. A pictogram is generally static, and a sequence of pictograms may be used to depict temporal order. An animated pictogram is a brief succession of images that supports perception of motion or other action in the context of the pictogram. Icons are one example of simple pictograms; they are used to represent certain ideas, things, or categories, signal certain conditions, or direct attention in a quick and easy manner. Pictograms have the putative advantage of not requiring language proficiency in order to comprehend meaning, although in practice pictograms may be dependent on labels and familiarity with cultural stereotypes in order to be effective.

Pictograms can be used to convey certain actions that are allowed or prohibited, or end states that are intended or unintended. Pictograms thereby convey information about intent, rules, and authorities. Animation of the pictogram may aid comprehension of actions depicted by the pictogram. Pictograms overlaid on an actual operator’s visual scene, such as with augmented reality devices, might also be used.

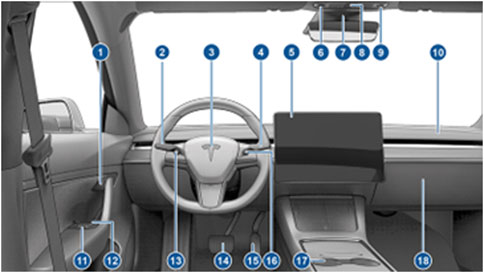

5.2.4 Annotated map

An annotated map uses spatial information overlaid with supplemental annotated information. The Waze image in Figure 4 provides an implemented example. An annotated map is particularly useful for visualizing the spatial context of any operational activity. Annotation on a map may include information that does not have a strict spatial referent, such as the time at which something occurred (or is planned to occur), or an outcome that was achieved (or is intended). Annotated maps may be referenced to an external context, such as a geographic map, or a system context, such as the pictogram image of the Tesla cockpit shown in Figure 8. Many operations are predicated on a coordinated movement of items or people over time and in space. Thus, the annotated map may show the relative positions of participants and objects at the beginning of the operation, along with their intended movements.

FIGURE 8. An example of an image as an annotated map [2].

5.2.5 Narrated story

The narrated story uses visual information (such as still images, full motion video, text, and graphics) accompanied by an audible narration (which may be captioned in some contexts) to create the perception of a story. The story usually has standard elements such as setting, actors, and plot.

The effectiveness of the narrated story is dependent on the extent to which the story builds natural interest and corresponding comprehension in the recipient (user). Unlike annotated maps, which require the user to actively engage in the material, the narrated story allows the user to remain relatively passive as comprehension is created by its presentation. This process eases the cognitive burden on the user and reduces the probability that incorrect inferences will be drawn from the presentation.

An illustration of the narrated story concept associated with this research, with the visuals and accompanying narratives, appears in section 6. The narrated story may be the approach that is best suited for application of the CLT constructs, and it may also benefit from requiring less cognitive effort by the user in achieving required levels of comprehension.

A story, as narrated at CLT Level 6, may include a considerable amount of supporting detail. A single thread of narration may not be practical. Instead, it may be more effective to provide the narrative at Level 5 with a way for the user to request more detail from Level 6 for topics of interest to him or her. The user interface (UI) mechanisms by which such detail can be requested include a list of topics (“more information”), attributes on icons or other symbols on the display, and/or spoken prompts that state an action to take to get more information on a certain topic. Such conventions are not meant to be restricted to Level 5 presentations. They can be used at higher levels, certainly Level 4, but even at Levels 1, 2 and 3.
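The “more information” mechanism can be sketched as a simple lookup from Level 5 topics to optional Level 6 detail. The Python example below is a hedged illustration only: the dictionary names, the `more_information` and `topics_with_detail` helpers, and all of the narrative strings are placeholders invented for this sketch and are not drawn from the prototype.

```python
from typing import Dict, List

# Placeholder Level 5 narrative: one short statement per topic, in narration order.
LEVEL_5_NARRATIVE: Dict[str, str] = {
    "timeline": "Placeholder statement of the key mission milestones.",
    "maintenance": "Placeholder statement of maintenance-related concerns.",
    "environment": "Placeholder statement of key environmental parameters.",
}

# Placeholder Level 6 detail, available on demand for some topics only.
LEVEL_6_DETAIL: Dict[str, List[str]] = {
    "maintenance": [
        "Placeholder: recent maintenance history relevant to the operation.",
        "Placeholder: open maintenance items and their expected impact.",
    ],
    "environment": [
        "Placeholder: full environmental data and estimates.",
    ],
}


def topics_with_detail() -> List[str]:
    """List the Level 5 topics that offer a 'more information' option."""
    return [topic for topic in LEVEL_5_NARRATIVE if topic in LEVEL_6_DETAIL]


def more_information(topic: str) -> List[str]:
    """Return the Level 6 detail for a topic, or an empty list if none exists."""
    return LEVEL_6_DETAIL.get(topic, [])
```

Keeping the detail in a separate structure keyed by topic means the Level 5 narration stays a single thread, and the same lookup could be attached to a topic list, an icon attribute, or a spoken prompt.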

6 Practical example: Distributed autonomy in a mine warfare mission

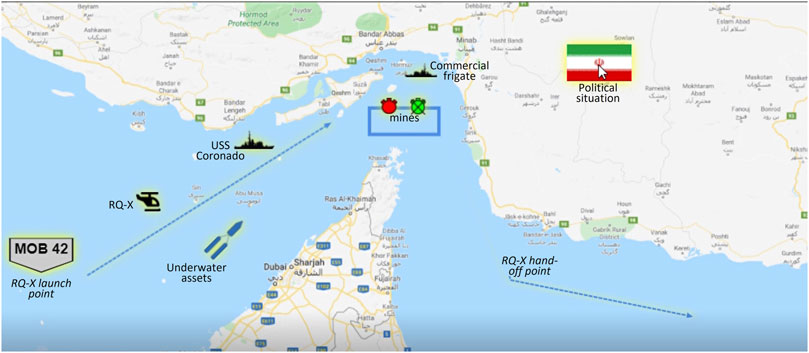

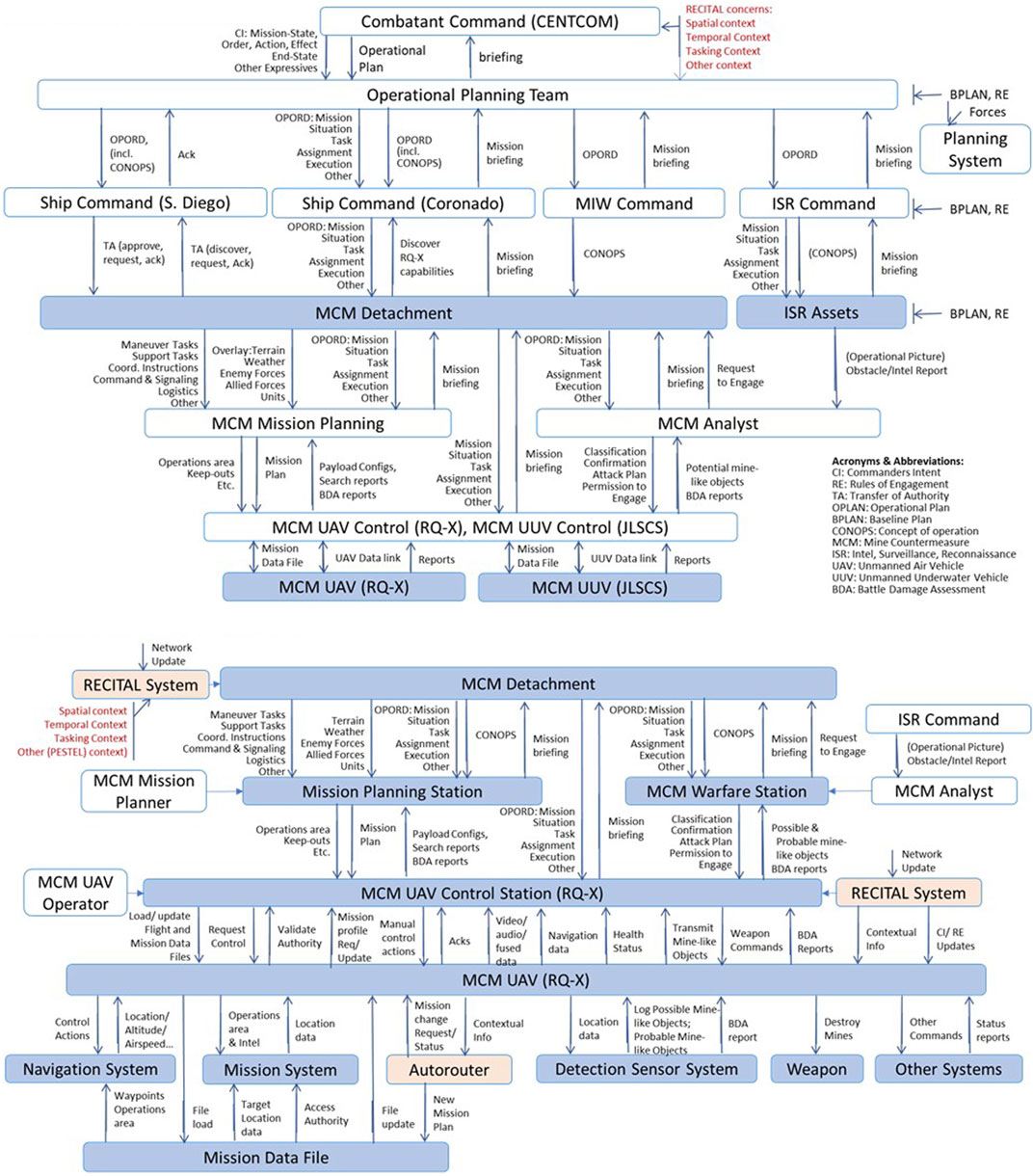

We present an example of the modeling process and development of a future planning system using a military mission scenario associated with undersea mine countermeasure (MCM) operations. In this scenario, military commanders’ intent, rules of engagement, and decision authorities are represented down to a set of operators who are conducting mine search and destroy operations using unmanned airborne and underwater vehicles (UAVs and UUVs) with a number of automation capabilities. Control of mission activities can be distributed between the human planners, the operators, and the vehicles, as well as vehicle to vehicle. In particular, the scenario assesses the information flows associated with transfer of control of the UAV platforms between operators, a process known as Transfer of Tactical Control (ToTC). In this specific scenario, a failure in the UAV associated with one ship, the USS Coronado, requires a transfer of the mission to another UAV known as RQ-X, currently under the control of another ship, the USS San Diego. The alternate UAV is an experimental platform with automated mine search and neutralization capability. The ToTC process is executed so the RQ-X is managed by the USS Coronado during the operational mission, then returned to the San Diego at a designated handoff point. Figure 13 provides a visual overview of the mission.

We would like to develop a system that allows the decision authority in that transfer to be made at the operator level, with operator decision data that situationally includes both intent and application of rules of engagement as annotated through various hierarchies of command. This process requires a rapid transfer of authority, a re-evaluation of rules of engagement, and a revision to mission planning. As this transfer involves a type of UAV that is less familiar to the Coronado’s operators, the scenario presents a narrative-driven planning and rehearsal capability where the operator(s) can review planning information at multiple construal levels. The appropriate construal level for a particular operator would vary based on both their familiarity with the RQ-X and the mission operational context. In the present day, these decision data are normally expressed in unstructured text.

In the definition and analysis process using this methodology, we begin with a mission task analysis (MTA) that defines the sequence of human and machine tasks to be performed in the control hierarchy. The MTA methodology consists of defining a set of design reference scenarios, from which a hierarchical functional breakout can be derived. That functional breakout leads to identification of human and machine tasks, and the information requirements associated with those tasks. The information requirements are a key point of interest. A functional analysis process adopted from Chaal, et al. [21] is used in discussions with operators to make sure all tasks/functions and information needs are captured in the control structure at every level. At this point vignettes are used to identify control actions that need to be defined or modified in response to not only disruptions but also changing mission context, orders, rules, or authorities. We then use the standard STPA analysis flow of identifying losses, related human or machine operator hazards, and control actions of interest to reason about the additional information needs (either additional detail or context) the operator requires to successfully perform a task. The difference in our use of STPA in this work is a focus on any disruptive change instead of just accidents. For example, a mission loss can occur if a change in political situation requires a mission to be aborted and the operators fail to successfully abort. A sample vignette for our MCM mission is described below:

As the RQ-X conducts its mine search and neutralize pattern, a suspected mine-like object is found at a location near the politically mandated keep-out zone. The local operator and RQ-X geographic information systems do not have sufficient resolution to determine whether the mine lies in the operational area or the keep-out area, and the RQ-X is allowed to proceed to this location and neutralize the mine. Higher accuracy satellite geographic information indicates the mine is actually in a keep-out area. Both the human operators and the RQ-X fail to access this additional information and cause an international incident.

In an implementation of a RECITAL system, the mine in question would show up as an alert on the operator’s screen (likely a visual map) indicating the need to query more detailed information. A similar alert would cause the RQ-X to transfer control back to the human operator for that particular segment of the mission.
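One simple way to express the trigger for such an alert is to compare a contact’s estimated distance from the keep-out boundary with the positional uncertainty of the local geographic data. The Python sketch below assumes that rule for illustration only. The `Contact` class, its fields, the action labels, and the numeric values used with it are hypothetical and are not taken from the RECITAL prototype.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contact:
    contact_id: str
    # Estimated distance from the contact to the keep-out boundary, in meters,
    # according to local data (positive means outside the keep-out zone).
    distance_to_keep_out_m: float
    # Positional uncertainty of the local geographic data, in meters.
    position_uncertainty_m: float


def needs_escalation(contact: Contact) -> bool:
    """True when local data cannot rule out that the contact is inside the keep-out zone."""
    return contact.distance_to_keep_out_m <= contact.position_uncertainty_m


def next_action(contact: Contact) -> str:
    """Choose between continuing autonomously and alerting the operator."""
    if needs_escalation(contact):
        # Hypothetical behavior: alert the operator, who queries more detailed
        # information, and return control of this mission segment to the human.
        return "ALERT_OPERATOR_AND_TRANSFER_CONTROL"
    return "PROCEED"
```

With these assumed semantics, a contact estimated to be 50 m from the boundary under a 200 m uncertainty would trigger the alert, while a contact 500 m away would not.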

We can model this information flow in a system-theoretic approach at multiple levels using the STPA concept of a control model. Figure 9 shows a control model for a complex MCM mission using the RQ-X. In the lower half of the figure, the “RECITAL System” is a simplified black-box function representing the set of applications that would scan external context and provide relevant information to the operators at the appropriate construal levels.

FIGURE 9. Top and bottom halves of the hierarchical control model focused on mission level information transfers in the MCM mission involving the RQ-X UAV and JLSCS UUV. The bottom half of the hierarchical control model is just for the RQ-X UAV.

A number of innovative user interface (UI) concepts were identified in this research as alternatives to using text to convey CI and RE. These include combinations of pictograms, annotated maps, and narrated stories. The narrated story concept proved particularly adept at supporting the different levels of detail needed across users. A UI concept rooted in current social media platforms, called the “UxBook” concept, was developed to provide a way to feature structured and unstructured text, pictograms, annotated maps, and narrated stories. The narrated story formed an initial conceptual model of an implementable system, focused on scenarios. System operational and information modeling was identified as providing a useful framework to understand interoperability requirements in information exchanges involving both humans and intelligent systems, and the effort developed an initial approach to capture these information exchanges in a commercial model-based systems engineering (MBSE) tool.

Figure 10 is a potential representation of formal CI based on a typical military concept of operations transfers, presented as unstructured text. This is color coded to reflect the intent and effects model of Figure 1.

FIGURE 10. An example unstructured statement of commander’s intent in a typical military operational order.

Note that a statement of intent generally describes the context of the mission and end state but not the resources or plans required to accomplish it. Resources and plans can be provided in textual format but are also more richly represented as pictograms or maps. The following section describes an illustration of CLT levels in a simulation tool that utilizes annotated maps as the primary user interface.

6.1 Illustration of a narrated story using annotated maps

Our narrated story uses visual information (such as still images, full motion video, text, and graphics) accompanied by an audible narration (which may be captioned in some contexts) to create the perception of a story. The story usually has standard elements such as setting, actors, and plot. The narrated story supports different levels of detail by providing different forms (or versions) of the story.

The narration of the story is provided by natural language, perhaps implemented by a text-to-speech function. (Automatic generation of narrative is a topic currently under investigation by multiple researchers and is showing considerable promise. Future updates to this research will contain a review of this progress.) The narration may feature multiple voices, perhaps to distinguish different sources or points of view, or to represent different functions supported by the information. There is no practical limit to the number of individual human voices that a person can discriminate, but using two to four distinct voices within a given story is likely to be sufficient. Using one male and one female voice is readily discriminable and can be used to distinguish between primary information and supporting information. Narration can also be presented as captions or transcripts if necessary. The following describes an example of a narrated story reflecting the vignette at each CLT level.

6.1.1 CLT level 1

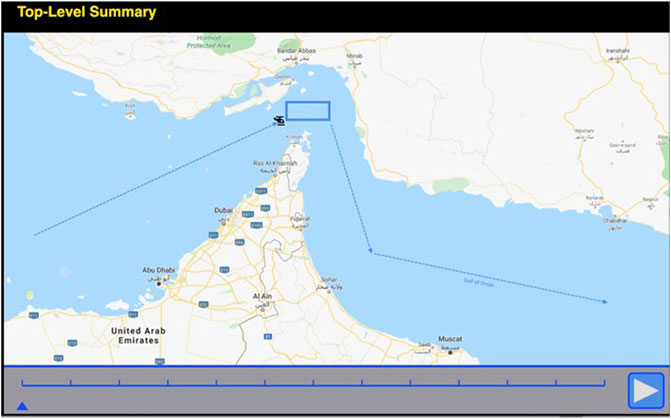

The top-level presentation of the story (construal level 1) is illustrated in Figure 11. (In these figures, the narration appears below the figure caption.) As the narration is played, the helicopter icon moves on the screen and the pointer moves across the timeline at the bottom of the map. Note the timeline at the bottom of the map; multiple static panels can be used to depict changes in the position of participants (and other aspects of the operation) at different times, where the small caret below the timeline shows the time in question. The large arrow at the right of the timeline is the “play” button.

Narration. Voice 1: The RQ-X will find and destroy shallow mines in the Strait of Hormuz on 15 August 2020. It will not enter the Iranian No Fly Zone.

6.1.2 CLT level 2

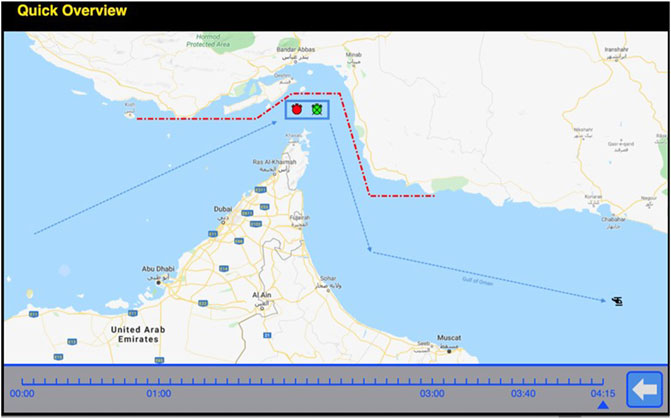

The presentation of the story at construal level 2, the quick overview level, is illustrated in Figure 12. The narration of this panel adds information about purpose and more detail about the time of the operation. This view is at the end of the mission timeline (4 h, 15 min), showing both successful (green circle) and unsuccessful (red circle) mine destruction by the RQ-X. The red dotted line is the keep-out or “no-fly” zone.

Narration. Voice 1: To reduce the threat from mines and to support open sea lines of communication, the RQ-X will find and destroy shallow mines in the Strait of Hormuz on 15 August 2020 commencing at time zero one zero zero Zulu. It will not enter the Iranian No Fly Zone.

Figure 13 is a panel that depicts the full mission from the starting point. The icons on this panel can be selected to show more detail about a particular player in the mission (these icons have been annotated with titles in this figure). Note: the USS San Diego is not shown in this panel. The narration adds the details of the timeline.

Narration. Voice 1: Control of the RQ-X will be transferred from USS San Diego to USS Coronado at zero zero zero hours Zulu. Transit time to the OPAREA is 1 h. In the OPAREA, RQ-X will find and plot mines down to depths of 25 m. If mines are detected at depths of 10 m or less, RQ-X will engage and detonate them. After a maximum of 2 h on station, RQ-X will depart the OPAREA. Tactical control will be transferred back to San Diego no later than zero three forty. Maximum endurance requires RQ-X to be recovered no later than zero four fifteen by San Diego. (Note: “OPAREA” refers to the operational area of the mission.)
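The timeline in this narration can be checked with simple arithmetic. The Python sketch below is a worked example only: the times and durations come from the narration, the calendar date is the mission date stated in the earlier narration, and the variable names and the `zulu` helper are assumptions introduced here.

```python
from datetime import datetime, timedelta, timezone

# Times and durations as stated in the narration (all Zulu, 15 August 2020).
transfer_to_coronado = datetime(2020, 8, 15, 0, 0, tzinfo=timezone.utc)
transit_time = timedelta(hours=1)
max_time_on_station = timedelta(hours=2)
handback_deadline = datetime(2020, 8, 15, 3, 40, tzinfo=timezone.utc)
recovery_deadline = datetime(2020, 8, 15, 4, 15, tzinfo=timezone.utc)

# Derived milestones.
arrive_oparea = transfer_to_coronado + transit_time
latest_departure = arrive_oparea + max_time_on_station
departure_to_handback = handback_deadline - latest_departure
handback_to_recovery = recovery_deadline - handback_deadline
total_mission_time = recovery_deadline - transfer_to_coronado


def zulu(moment: datetime) -> str:
    """Format a time as a four-digit Zulu time group."""
    return moment.strftime("%H%MZ")


print("Arrive OPAREA:", zulu(arrive_oparea))                  # 0100Z
print("Latest departure:", zulu(latest_departure))            # 0300Z
print("Departure to handback deadline:", departure_to_handback)  # 0:40:00
print("Handback to recovery deadline:", handback_to_recovery)    # 0:35:00
print("Total mission time:", total_mission_time)              # 4:15:00
```

The derived arrival time of 0100 Zulu agrees with the commencement time given in the preceding narration, and the total of 4 h 15 min agrees with the end of the mission timeline shown in Figure 12.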

6.1.3 CLT level 3

As the construal levels progress, more detail is added. Complete presentation of this detail is not practical in the format of this article but the concept has been completely developed in a simple simulation tool. Instead, the increasing levels of detail are illustrated in how the story opens at each level. At construal level 3, the story begins with the most salient background point, thereby explaining the motivation for the mission. At this level, a second voice is added to provide background and supplemental information, and the first voice provides the primary information. The visuals are a sequence of map views, accompanied by the following narration:

Narration. Voice 1: To reduce the threat from mines and to support open sea lines of communication, the RQ-X will find and destroy shallow mines in the Strait of Hormuz on 15 August 2020 commencing at zero one zero zero Zulu.

Voice 2: Overhead assets indicated the potential presence of mine-like objects in the Strait of Hormuz. Analysis of those images suggested a mine field with mine-like objects dispersed at multiple depths. USS Coronado was on patrol in the Persian Gulf, configured with its countermine warfare mission module.

Voice 1: USS Coronado received orders from CENTCOM to reconnoiter the area of the suspected mine field and to clear it of mines within 24 h. Coronado prepared a plan to launch its UAV to begin surveillance for shallow mines while its UUV assets were being prepared to continue surveillance and perform mine neutralization.

Voice 2: As Coronado began preparing its unmanned air and underwater systems to surveil the OPAREA, it discovered maintenance issues that threatened the completion of the mission within the specified time. Mission planners aboard Coronado discovered the presence of the RQ-X UAV, equipped with its airborne countermine system, under tactical control of the USS San Diego, located at mobile operating base 42. Mission planners determined that RQ-X was capable of finding and neutralizing shallow mines in the OPAREA.

6.1.4 CLT level 4

At construal level 4, additional detail is added to provide a justification for why the operation is warranted. The visuals are a sequence of map views and image intelligence accompanied by the following narration:

Narration. Voice 1: To reduce the threat from mines and to support open sea lines of communication, the RQ-X will find and destroy shallow mines in the Strait of Hormuz on 15 August 2020 commencing at zero one zero zero Zulu.

Voice 2: On 13 August, an Iranian-flagged surface vessel registered as a nautical research platform was observed executing a pattern consistent with laying a mine field in the straits. As this was happening, the Islamic Republic of Iran issued a general statement asserting its right to control traffic through the Strait of Hormuz. Overhead assets indicated the potential presence of mine-like objects in the straits. Advisories were issued to commercial ships planning to transit the area.

Voice 1: CENTCOM tasked USS Coronado to reconnoiter the area of the suspected mine field and to clear it of mines within 24 h. Rules of engagement specify no UAV reconnaissance below ten thousand feet within 10 nautical miles of the Iranian coastline. (Note: “CENTCOM” refers to Central Command.)

Note that the last set of Voice 2 narration is the same as at level 3.

6.1.5 CLT level 5

At construal level 5, still more detail is added in the introduction. For example, details about information from overhead assets are expanded to include which assets were used and the contribution each made. Additional detail is added about the hostile pronouncements by the adversary and the involvement of key coalition partners. The visuals continue to be a series of maps and image intelligence captures, now also supplemented by a video clip of a speech by the Iranian president and a copy of a notice to mariners issued by the United Kingdom. This narration is included in its entirety to provide context for the full mission. Refer to Figure 15 for the players.

Narration. Voice 1: To reduce the threat from mines and to support open sea lines of communication, the RQ-X will find and destroy shallow mines in the Strait of Hormuz on 15 August 2020 commencing at zero one zero zero Zulu. It will launch from Mobile Operating Base 42, transit to the op area, find and plot mines down to 25 m, neutralize mines down to 10 m, and then transit for handoff to the USS San Diego for recovery.

Voice 2: On 13 August, an Iranian-flagged surface vessel registered as a nautical research platform was observed executing a pattern consistent with laying a mine field in the straits. The vessel is the Khalije Fars Voyager, registered to the Iranian Defense Ministry’s Marine Industries Organization, which is affiliated with the Iranian National Institute for Oceanography and Atmospheric Science. It is equipped with a data transfer system that uses satellite communication, and is capable of deploying a precise pattern of bathymetric buoys. This capability can also be used to automatically deploy a wide variety of mines.

Voice 2: The vessel was tracked by Triton, as part of routine maritime surveillance. The Triton mission crew at NAS Jacksonville noticed an anomaly in the AIS report from the vessel. The AIS transmission indicated a planned route along the coast, consistent with normal bathymetry scans. The route as executed deviated from the planned route and followed the same general pattern observed in previous mine warfare training missions conducted by the Iranian Navy. The most recent of these missions was conducted by the Konarak, a Hendijan-class support vessel outfitted with anti-ship missiles and mine laying systems, in December 2019. It departed from the Iranian Navy port in its namesake city, Konarak, proceeded to the straits where it laid a diagonal pattern of dummy mines, then returned to base. (Note: “NAS” refers to Naval Air Station and “AIS” refers to the Automatic Identification System on the ship.)

Voice 2: In response to the AIS anomaly report from Triton, an EA-18 Growler was diverted from routine patrol and tasked to do a specific emitter identification collection on the Iranian vessel. The maritime navigation radar and satellite communications data link transmitters were identified as the Khalije Fars Voyager, which was also the visible hull marking. But the AIS transmitter and an encrypted UHF line of sight radio were identified as from the Konarak. The Konarak was severely damaged in a friendly fire accident in May 2020, and repairs have not been completed. ONI assesses that these components from the Konarak were retrofitted onto the Khalije Fars Voyager to help provide deception regarding the nature of the mine laying mission.

Voice 2: As this was happening, the Islamic Republic of Iran issued a general statement asserting its right to control traffic through the Strait of Hormuz. The Iranian President reminded the world that the body of water is called the Persian Gulf for good reason. As part of a speech on regional tensions, the president stated that Iranian patience and tolerance for intrusion in its territorial waters was strained by repeated provocations from the Gulf states, from Britain, and from the United States. The Iranian foreign minister released a statement addressed to 42 ambassadors warning of severe consequences if the provocations from their nations continue.

Voice 2: Overhead assets indicated the potential presence of mine-like objects in the straits. Triton descended below 45,000 feet and collected detailed hyperspectral images. These images from Triton indicated the presence of potential shallow mines. A geosynchronous KH-11 satellite was tasked to perform a multi-spectral collection on the area. Analysis of those images suggested a mine field with mine-like objects dispersed at multiple depths.

Voice 2: Advisories were issued to commercial ships planning to transit the area. The United Kingdom Maritime Trade Operations office issued a Notice to Mariners regarding the heightened threat level in what was already categorized as a high risk area. This notice contained an estimate that the situation might be resolved by 16 August 2020, about 48 h after the notice was issued.

Voice 1: US Central Command reviewed and assented to the notice before it was sent.

Voice 2: USS Coronado was on routine patrol in the Persian Gulf. The Coronado was configured with its countermine warfare mission package, which includes a UAV platform with a sensor suite capable of detecting shallow mines, and UUV assets capable of detecting deeper mines. Other UUV assets on Coronado can neutralize many mines.

Voice 1: CENTCOM tasked USS Coronado to reconnoiter the area of the suspected mine field and to clear it of mines within 24 h. Coronado prepared a plan to launch its UAV to begin surveillance for shallow mines while its UUV assets were being prepared to continue surveillance and perform mine neutralization.

Voice 1: Rules of engagement specify no UAV reconnaissance below 10,000 feet within 10 nautical miles of the Iranian coastline.

Voice 2: Use of the sensor to detect mines by the Coronado’s UAV requires operation at a maximum altitude of 2,000 feet, and better performance is obtained at altitudes of 500 feet or below. The northwest corner of the OPAREA lies approximately nine and one-half nautical miles from the coast of the island of Qeshm.

Voice 2: As Coronado began preparing its unmanned air and underwater systems to surveil the OPAREA, it discovered maintenance issues that threatened the completion of the mission within the specified time of 24 h. Mission planners aboard Coronado discovered the presence of the RQ-X UAV, equipped with its airborne countermine system, under tactical control of the USS San Diego. The RQ-X is an experimental platform undergoing a technology demonstration phase in live operations. The San Diego has been operating the RQ-X since 1 August. When Coronado discovered the RQ-X, it was located at a mobile operating base, MOB 42.

Voice 2: MOB 42 is currently located on the island of Zirku, which is part of the United Arab Emirates. A private commercial airfield on the island allows MOB 42 to use its runways and other support facilities. The RQ-X landed there for routine maintenance and refueling. It was scheduled to remain there for approximately 24 h, awaiting a landing slot back on the San Diego.

Voice 2: The RQ-X is capable of detecting mines down to a depth of 25 m, and neutralizing them at depths of no more than 10 m. To detect the mines, RQ-X uses a COTS sensor with three pulsed lasers. In littoral waters, the TRW sensor can detect mines down to about 25 m.

Voice 2: The RQ-X is also capable of neutralizing shallow mines using a directed energy weapon developed by the Naval Research Laboratory. In littoral waters, the weapon is effective against most mines down to a depth of 10 m, although it is most effective against mines floating on or very near the surface.

Voice 2: After the directed energy weapon attempts to destroy the mine, the TRW sensor system is re-engaged to determine whether the mine-like object is still present in the water.
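The narration above sets a rule of engagement (no UAV reconnaissance below ten thousand feet within 10 nautical miles of the Iranian coastline) against a sensor that only works at or below 2000 feet, in an operating area whose northwest corner lies about nine and one-half nautical miles from the coast. The following minimal sketch shows how such a rule could be expressed in a machine-checkable form. It is an illustration only and not part of the RECITAL prototype; the names `AltitudeRule`, `permits`, and `sensor_conflicts_with_rule` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AltitudeRule:
    """Hypothetical encoding of the ROE: no flight below
    min_altitude_ft within standoff_nmi of the coastline."""
    min_altitude_ft: float
    standoff_nmi: float

    def permits(self, altitude_ft: float, distance_to_coast_nmi: float) -> bool:
        # The rule only restricts flight inside the standoff distance.
        if distance_to_coast_nmi >= self.standoff_nmi:
            return True
        return altitude_ft >= self.min_altitude_ft


def sensor_conflicts_with_rule(rule: AltitudeRule,
                               sensor_max_altitude_ft: float,
                               distance_to_coast_nmi: float) -> bool:
    """True when even the sensor's highest usable altitude breaks the rule."""
    return not rule.permits(sensor_max_altitude_ft, distance_to_coast_nmi)


# Values taken from the narration.
ROE = AltitudeRule(min_altitude_ft=10_000, standoff_nmi=10.0)
SENSOR_MAX_ALTITUDE_FT = 2_000
NW_CORNER_DISTANCE_NMI = 9.5

conflict = sensor_conflicts_with_rule(
    ROE, SENSOR_MAX_ALTITUDE_FT, NW_CORNER_DISTANCE_NMI
)
print("Rule conflict at northwest corner:", conflict)
```

A check of this kind only flags the conflict. Whether to request a change to the rule, delegate authority, or replan the survey remains a command decision.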

6.1.6 CLT level 6

At construal level 6, additional supporting details are added for the interested consumer. Details about how the sensors and weapons will operate are of interest to few users, but these may be germane for those users to assess whether the asset can provide the necessary capabilities. Examples include the following narration, accompanied by appropriate imagery.

Voice 2: This sensor was originally developed by a company called TRW. It performs an alternating circular versus raster scan with the three beams to detect solid objects in the water, and to estimate object size. Objects detected that are within the range of sizes for mine-like objects are further probed by the sensor in a lidar mode, to estimate depth. The depth estimate is more accurate if the sensor is directly above the object.

Voice 2: This weapon, not yet nomenclatured, focuses a coherent beam of energy on the object to find a centroid, then successively adds more coherent beams every 5 s until the object begins to splinter, usually from premature detonation or from melting. If the object does not show signs of disintegration after 45 s, the weapon will attempt to find an alternate centroid point and repeat the attack. A maximum of three attempts will be made. Some mines may be neutralized by the attack even though they may not disintegrate. The directed energy attack may defeat the sensor, the fuse, or the other control circuitry in the mine.
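The attack sequence in this narration (add a beam every 5 seconds, give up on a centroid after 45 s, make at most three attempts) can be read as a simple timed retry loop. The sketch below is a toy simulation under that reading, not a model of any real weapon. The `shows_disintegration` callback is a hypothetical stand-in for the sensor observation, and the choice to check the object once at the start of each attempt is an assumption.

```python
from typing import Callable, NamedTuple

BEAM_INTERVAL_S = 5      # a beam is added every 5 s
ATTEMPT_TIMEOUT_S = 45   # switch centroid after 45 s without effect
MAX_ATTEMPTS = 3         # at most three attempts are made


class EngagementResult(NamedTuple):
    disintegrated: bool
    attempts: int
    elapsed_s: int  # time spent in the final attempt


def engage(shows_disintegration: Callable[[int, int], bool]) -> EngagementResult:
    """Run the attack loop.

    shows_disintegration(attempt, beams) is a hypothetical observation:
    it returns True once the object begins to splinter.
    """
    for attempt in range(1, MAX_ATTEMPTS + 1):
        beams = 1    # the first beam locates the centroid
        elapsed = 0
        while elapsed <= ATTEMPT_TIMEOUT_S:
            if shows_disintegration(attempt, beams):
                return EngagementResult(True, attempt, elapsed)
            elapsed += BEAM_INTERVAL_S
            beams += 1
        # Timed out: the next iteration stands for an alternate centroid.
    return EngagementResult(False, MAX_ATTEMPTS, ATTEMPT_TIMEOUT_S)


# Example: an object that splinters once four beams are focused on it.
result = engage(lambda attempt, beams: beams >= 4)
print(result)
```

A result with `disintegrated=False` does not imply the mine is still live. As the narration notes, the attack may defeat the mine's sensor, fuse, or control circuitry without visible disintegration, which is why the sensor is re-engaged afterwards.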

6.1.7 Simulation organization

One key to creating and maintaining interest in the story is the match between the construal level of the user and the level of detail in the story as presented. Too much unwanted detail can prompt users to lose interest, and not enough detail can produce frustration, especially if the missing details are needed for task performance. We created a simple user interface using concepts from the popular Facebook application to provide background information on the capabilities of the systems involved and reference to historical missions. As was shown in Figure 13, the user could also select icons to gain more detail about selected players or mission steps (effectively drilling down into the CLT level 6 narrative). Future research will automate information feeds so that we can evaluate automated pop-up of detail as mission events change.
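The matching of story detail to the user's construal level can be sketched as a filter over narrative segments tagged by level, with drill-down as a bounded step toward level 6. This is a hedged illustration of progressive disclosure, not the interface described in the article. The `Segment` type, the `visible` and `drill_down` functions, the abridged segment text, and the assignment of the earlier narration to level 5 are all assumptions.

```python
from dataclasses import dataclass
from typing import List

MAX_CLT_LEVEL = 6  # the article describes six construal levels


@dataclass(frozen=True)
class Segment:
    level: int  # lowest construal level at which the segment is shown
    text: str


# Abridged narration; tagging the first two segments as level 5 is an assumption.
STORY = [
    Segment(5, "The RQ-X can detect mines down to a depth of 25 m."),
    Segment(5, "The RQ-X can neutralize shallow mines with a directed energy weapon."),
    Segment(6, "The sensor alternates circular and raster scans with three beams."),
    Segment(6, "The weapon adds coherent beams until the object begins to splinter."),
]


def visible(story: List[Segment], level: int) -> List[Segment]:
    """Segments shown to a user at the given level (detail is cumulative)."""
    return [seg for seg in story if seg.level <= level]


def drill_down(level: int) -> int:
    """Move one level deeper, stopping at the most detailed level."""
    return min(level + 1, MAX_CLT_LEVEL)


level = 5
for seg in visible(STORY, level):
    print(f"[L{seg.level}] {seg.text}")

level = drill_down(level)  # the user selects an icon for more detail
for seg in visible(STORY, level):
    print(f"[L{seg.level}] {seg.text}")
```

Treating detail as cumulative follows the article's description of level 6 as adding supporting details. An interface could equally show only the segments for the selected level.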

7 Discussion

In this work we applied three new conceptual approaches to design and manage information flow in human-machine teaming situations. We applied construal level theory as an organizing approach to managing information detail in complex mission situations. We formalized the language we call “RECITAL” to constrain that subjective and objective information based on concepts of intent, rules, and delegated authority. To design the information flow, we modeled the human-machine distributed teams as a systemic control hierarchy. The combination of these approaches was used to design and demonstrate a simple command and control user interface operating at six CLT levels using progressive disclosure concepts.

In a complex command and control hierarchy, there is an inherent risk of operators misperceiving and incorrectly abstracting or adapting to the information disseminated. The application of CLT provides a novel approach to the structure and presentation of such information in complex mission environments. By infusing CLT into a UI design, a better fit to the operator’s mental representation of the information can be realized, and communication and comprehension in a C2 hierarchy can be improved based on an individual’s specific level of psychological distance from the information and context. In this initial work, a UI concept was developed for representing difficult ideas such as intent, rules, control, and outcomes in a simulatable model. Such a model is the foundation for an advanced UI that uses CLT to disseminate mission information in the most efficient possible form.

In this work we present a novel approach to address the subjective nature of expressions of intent, rules, and authorities in complex missions. These expressions are typically composed of unstructured text, delivered from multiple systems to multiple command levels, with various interpretations that gradually make the context of the order seem more distal to an operator. Today, it would not be possible for a machine to process this unstructured text as a means to make real-time decisions, because so much of the contextual information is inferred by operators as a function of training and experience. However, many increasingly “intelligent” machine platforms are making progress with this type of inference by mining additional information in the external context.

Additional research is ongoing to model the RECITAL hierarchical information flows and to define a set of applications that would deliver that information to the various planners and operators at different levels of command. At this point, the provision of contextual information is modeled only as a single black box entity in the control flow. Eventually this would be a set of software applications. We envision that these applications would present data in a rich narrative form similar to the stories presented in Section 6. Research that uses artificial intelligence to automate narrative generation is being explored as a means to scale the approach. This work provides a conceptual platform for additional research on machine learning approaches to search for and select the contextual information, as well as to learn individual user preferences that help to contextually manage CLT. Finally, the conceptual approach is being extended to a set of additional mission scenarios with more complex distributed autonomy to further evaluate and generalize its applicability and benefits.

8 Conclusion

This research is highly conceptual at this time but is being published because it represents a novel approach to understanding information flows in human-machine teaming. While many prevailing narratives about distributed automation reflect automation of inefficient human tasks, this work addresses automation of information flows, particularly contextual information, that enable human (and perhaps machine) operators to make better task-related decisions. This mirrors the concepts being observed in popular automation platforms like Google and Waze.

This research makes several fundamental hypotheses about task-related activities in human-machine teams. The first is that expressions of intent, rules, and transfer of authority are present in the interaction of human-machine teams, just as they are in human-human teams. The second is that these interactions tend to follow information produced and consumed in hierarchical control structures, and that this information can be modeled as a control flow. The third is that the produced/consumed information interaction between humans and machines can be designed using construal level theory, and that there are six observable levels that recur in these interactions. Finally, the research found that visual information combined with narratives is effective at representing construal level information.

Data availability statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Author contributions

DF developed the basic RECITAL concept, applied CLT to the concept, and developed the initial UI demonstration. A key aspect of this work was the use of narrative at different construal layers, leading to the theory that six recognizable construal levels exist. TM contributed the systems engineering approach including the use of STPA and the hierarchical information flows.

Funding

This work was partially funded by the Office of Naval Research (ONR), under contract N00014-20-C-2004. Dr. Jeffrey Morrison, ONR Code 34, is the ONR Program Officer.

Acknowledgments

Thank you to C Jay Battle, Jake Baumbaugh, Zachary Harshaw, and Matthew Schmouder who all contributed to the development of the user interface concepts described in this article. Thank you to Kayla Noble for reviewing and editing this article.

Conflict of interest

Authors TM and DF were employed by Stephenson Technologies Corporation.

The handling editor declared a past co-authorship with one of the authors, TM.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Associated Press, “Tesla driver’s complaint being looked into by US regulators”, 2021. Available at: https://apnews.com/article/technology-business-traffic-9c3d3c75d9c668dbb1a8cdbbe883ff88 (Accessed 22 May 2022).

2. Tesla Corporation, Tesla 3 owners manual. Available at: https://www.tesla.com/ownersmanual/model3/en_nz/GUID-0535381F-643F-4C60-85AB-1783E723B9B6.html (Accessed 22 May 2022).

3. U.S. Department of Defense Joint Publication 3-0. Joint operations (2018). Available at: https://www.jcs.mil/Doctrine/Joint-Doctrine-Pubs/3-0-Operations-Series/ (Accessed 22 May 2022).

4. U.S. Department of Defense Joint Publication 5-0. Joint planning (2020). Available at: https://www.jcs.mil/Doctrine/Joint-Doctrine-Pubs/5-0-Planning-Series/ (Accessed 22 May 2022).

5. International Institute of Humanitarian Law. Rules of engagement handbook (2009). Available at: https://iihl.org/wp-content/uploads/2017/11/ROE-HANDBOOK-ENGLISH.pdf (Accessed 22 May 2022).

6. Larson E (2017). “Use these three military lessons to be decisive in business.” Forbes. Available at: https://www.forbes.com/sites/eriklarson/2017/04/25/three-things-the-military-taught-me-about-being-decisive-in-business/?sh=584262a372ff (Accessed 1 October 2022).

7. Colan L. Why you need to lay down ground rules for a high-performing team. New Delhi: Inc (2014). Available at: https://www.inc.com/lee-colan/rules-of-engagement-for-high-performing-teams.html (Accessed 1 October 2022).

8. Gustavsson PM, Hieb M, Eriksson P, More P, Niklasson L. Machine interpretable representation of commander's intent. In: 13th International Command and Control Research and Technology Symposium; June 17-19, 2009; Bellevue, Washington, USA.

9. Leveson NG. Engineering a safer world: Systems thinking applied to safety. United States: MIT Press (2012).

10. Cockcroft A, “COVID-19 hazard analysis using STPA,” 2020. Available at: https://adrianco.medium.com/covid-19-hazard-analysis-using-stpa-3a8c6d2e40a9 (Accessed 22 May 2022).

11. Leveson NG, Thomas JP, STPA handbook, p. 179, 2018. Available at: http://psas.scripts.mit.edu/home/get_file.php?name=STPA_handbook.pdf (Accessed 22 May 2022).

12. CNET. Waze image. Available at: www.cnet.com/roadshow/news/google-assistant-waze-easier-reporting-less-distraction/ (Accessed 22 May 2022).

13. Nielsen J. Progressive disclosure. California, United States: Nielsen Norman Group (2006). Available at: https://www.nngroup.com/articles/progressive-disclosure/ (Accessed 22 May 2022).

14. Spillers F (2004). Progressive disclosure- the best interaction design technique? Experience dynamics. Available at: https://www.experiencedynamics.com/blog/2004/03/progressive-disclosure-best-interaction-design-technique (Accessed 22 May 2022).

15. Trope Y, Liberman N. Construal-level theory of psychological distance. Psychol Rev (2010) 117(2):440–63. doi:10.1037/a0018963

16. Trope YL. Construal level theory. In: Van Lange PK, editor. Handbook of theories of social psychology. Washington DC: Sage Publications Ltd (2012). p. 118–34.

17. Bar-Anan Y, Liberman N, Trope Y. The association between psychological distance and construal level: Evidence from an implicit association test. J Exp Psychol Gen (2006) 135(4):609–22. doi:10.1037/0096-3445.135.4.609

18. Google. Available at: google.com/search?q=braves+score (Accessed 22 May 2022).