- Institute of Cognitive Sciences and Technologies, National Research Council of Italy, Rome, Italy

In this paper, we investigate the primitives of collaboration, which are also useful for conflicting and neutral interactions, in a world populated by both artificial and human agents. In particular, we analyze the dependence network of a set of agents and enrich the connections of this network with the beliefs that agents have regarding the trustworthiness of their interlocutors. Thanks to a structural theory of the kinds of beliefs involved, it is possible not only to answer important questions about the power of agents in a network, but also to understand the dynamical aspects of relational capital. In practice, we are able to define the basic elements of an extended sociality (including human and artificial agents). In future research, we will address autonomy.

1 Introduction

In this paper we develop an analysis that aims to identify the basic elements of social interaction. In particular, we are interested in investigating the primitives of collaboration in a world populated by both artificial and human agents.

Social networks are studied extensively in the social sciences both from a theoretical and empirical point of view [1–3] and investigated in their various facets and uses. These studies have shown how relevant the structure of these networks is for their active or passive use by different phenomena (from the transmission of information to that of diseases, etc.). These networks can provide us with interesting characteristics of the collective and social phenomena they represent. For example, the paper [4] shows how the collaboration networks of scientists in biology and medicine “seem to constitute a ''small world'' in which the average distance between scientists via a line of intermediate collaborators varies logarithmically with the size of the relevant community” and “it is conjectured that this smallness is a crucial feature of a functional scientific community”. Other studies on social networks have tried to characterize subsets by properties and criteria for their definition: for example, the concept of “community” [5].

The primitives of these networks in which we are interested, which are essential both for collaborative behaviors and for neutral or conflicting interactions, serve to determine what we call an “extended sociality”, i.e., one extended to artificial agents as well as human agents. For this to be possible, artificial agents, like humans, must be endowed with a capacity that refers to a “theory of mind” [6], so that they can reason not only about the objective data of reality but also about the cognitive processing of other agents (in simpler words: the ability to acquire knowledge about other agents’ beliefs and desires is also relevant).

In this sense, a criticism must be raised against the theory of organization, which has not sufficiently reflected on the relevance of beliefs in relational and social capital [7–11]: what transforms a relationship into a capital is not simply the structure of the network objectively considered (who is connected with whom and how directly, with the consequent potential benefits for the interlocutors) but also the level of trust [12, 13] that characterizes the links in the network (who trusts whom and how much). Since trust is based on beliefs, including the believed dependence (who needs whom), it should be clear that relational capital is a form of capital that can be manipulated by manipulating beliefs.

Thanks to a structural theory of the kinds of beliefs involved, it is possible not only to answer important questions about agents’ power in the network but also to understand the dynamical aspects of relational capital. In particular, it is possible to evaluate how the differences in beliefs (between trustor and trustee) relating to dependence between agents allow them to pursue behaviors, both strategic and reactive, with respect to the goals that the different interlocutors want to achieve.

2 Agents and Powers

2.1 Agent’s Definition

Let us consider the theory of intelligent agents and multi-agent systems as the reference field of our analysis, and in particular the BDI model of the rational agent [14–17]. In the following we will present our theory in a semi-formal way. The goal is to develop a conceptual and relational apparatus capable of providing, beyond the strictly formal aspects, a rational, convincing and well-defined perspective that can be understood and appropriately translated into computational form.

We define an agent through its characteristics: a repertoire of actions, a set of mental attitudes (goals, beliefs, intentions, etc.), an architecture of the agent (i.e., the way of relating its characteristics with its operation). In particular, let a set of agents1:

We can associate to each agent Agi∈Agt:

(a set of beliefs representing what the agent believes to be true in the world);

(a set of goals representing states of the world that the agent wishes to obtain; that is, states of the world that the agent wants to be true);

(a set of actions representing the elementary actions that Agi is able to perform and that affect the real world; in general, with each action are associated preconditions - states of the world that guarantee its feasibility - and results, that is, states of the world resulting from its performance);

(the Agi’s plan library: a set of rules/prescriptions for aggregating agent actions); and

(a set of resources representing the tools or capacities available to the agent, consisting of a material reserve).
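As a minimal sketch of how this definition might translate into a data structure, an agent can be represented by its five sets. The class name, field names and sample values below are illustrative assumptions, not notation from the text.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Illustrative container for the five sets associated with an agent."""
    name: str
    beliefs: set = field(default_factory=set)    # what the agent holds to be true
    goals: set = field(default_factory=set)      # world states it wants to obtain
    actions: set = field(default_factory=set)    # elementary actions it can perform
    plans: dict = field(default_factory=dict)    # plan name -> ordered list of actions
    resources: set = field(default_factory=set)  # tools/capacities available to it


def can_execute(agent: Agent, plan_name: str) -> bool:
    """A plan is executable only if it exists and every step is in the repertoire."""
    steps = agent.plans.get(plan_name)
    return steps is not None and all(step in agent.actions for step in steps)


# Hypothetical example agent.
ag_i = Agent(
    name="Ag_i",
    beliefs={"door_is_locked"},
    goals={"door_open"},
    actions={"turn_key", "push_door"},
    plans={"open_door": ["turn_key", "push_door"]},
    resources={"key"},
)
```

Here `can_execute(ag_i, "open_door")` holds because both steps of the plan belong to the agent's action repertoire, whereas a plan absent from the library is never executable.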

Of course, the same belief, goal, action, plan or resource can belong to different agents (i.e., shared), unless we introduce intrinsic limits to these notions2. For example, for the goals we can say that gk could be owned by Agi or by Agj and we would have: $g_k^{Ag_i}$ or $g_k^{Ag_j}$.

We can say that an agent is able to obtain on its own behalf (at a certain time, t, in a certain environmental context, c3) its own goal, $g_x^{Ag_i}$, if it possesses the mental and practical attitudes to achieve that goal. In this case we can say that it has the power to achieve the goal $g_x^{Ag_i}$ by applying the plan $p_x^{Ag_i}$ (which can also coincide with a single elementary action).

In general, as usual [12, 13], we define a task τ as a pair τ = (α, g): in practice, we combine the goal g with the action α necessary to obtain g, which may or may not be defined (in fact, indicating the achievement of a state of the world always implies also the application of some action).

2.2 Agent’s Powers

Given the above agent’s definition, we introduce the operator

that means that Agx has the ability (physical and cognitive) and the internal and/or external resources to achieve (or maintain) the state of the world corresponding with the goal g through the (elementary or complex) action (α or p) in the context c at the time t. We can similarly define an operator (lack of power: LoPow) in case it does not have this power:

As we have just seen, we define the power of an agent with respect to a task τ, that is, with respect to the pair (action, state of the world). In this way we take into account, on the one hand, the fact that in many cases this pair is inseparable, i.e., the achievement of a certain state of the world is expected to be bound to the execution of a certain specific action (α) and to the possession of the resources (r1,..,rn) necessary for its execution. On the other hand, in this way we also take into consideration the case in which it is possible to predict the achievement of that state of the world with an action not necessarily defined a priori (therefore, in this case the action α in the pair τ would turn out to be undefined a priori). In the second case it would be possible to assign that power to the agent if it is able to obtain the indicated state of the world (g) regardless of the foreseeable (or expected) action to be applied (for example, it may be able to take different alternative actions to do this).

In any case,

It is important to emphasize that arguing that Agx has the power to perform a certain task τ means attributing to that agent the possession of certain characteristics and the consequent possibility of exercising certain specific actions. This leads to the indication of a high probability of success but not necessarily to the certainty of the desired result. In this regard we introduce a Degree of Ability (DoA), i.e., a number between 0 and 1 which expresses (given the characteristics possessed by the agent, the state of the world to be achieved and the context in which this takes place) the probability of successful realization of the task.

So, we can generally say that if Agx has the power

Where

In words: if

Similarly, we can define the absence of power in the realization of the task τ, by introducing a lower threshold (?), for which:

In the cases in which

We will see later the need to introduce probability thresholds.
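The role of the two thresholds on the Degree of Ability can be sketched as follows. The threshold names and values (`upper`, `lower`), the strict inequalities, and the label for the intermediate zone are assumptions made for illustration; they are not taken from the formulas of the text.

```python
def classify_power(doa: float, upper: float = 0.8, lower: float = 0.2) -> str:
    """Map a Degree of Ability in [0, 1] to a qualitative power attribution.

    upper and lower are hypothetical threshold values chosen for this sketch.
    """
    if not 0.0 <= doa <= 1.0:
        raise ValueError("DoA must lie between 0 and 1")
    if not lower < upper:
        raise ValueError("the lower threshold must be below the upper one")
    if doa > upper:
        return "power"          # success is sufficiently probable
    if doa < lower:
        return "lack_of_power"  # success is sufficiently improbable
    return "undetermined"       # between the thresholds: neither attribution holds
```

With the default values, a DoA of 0.9 is read as power, 0.1 as lack of power, and 0.5 falls in the intermediate zone.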

3 Social Dependence

3.1 From Personal Powers to Social Dependence

Sociality presupposes a “common world”, hence “interference”: the action of one agent can favor (positive interference) or hamper/compromise the goals of another agent (negative interference). Since agents have limited personal powers, and compete for achieving their goals, they need social powers (that is, to have the availability of some of the powers collected from other agents). They also compete for resources (both material and social) and for having the power necessary for their goals.

3.2 Objective Dependence

Let us introduce the relevant concept of objective dependence [20–22]. Given

where

It is the combination of a lack of Power (LoPow) of one agent (

In words: an agent Agi has an Objective Dependence Relationship with agent Agj with respect to a task τk if realizing τk requires, regardless of Agi’s awareness, actions, plans and/or resources that are owned by Agj and not owned (or not available, or less convenient to use) by Agi.

More generally, Agi has an Objective Dependence Relationship with Agj if achieving at least one of its tasks τk, with gk ∈ GOALAgi, requires actions, plans and/or resources that are owned by Agj and not owned (or not available, or less convenient to use) by Agi.
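The definition above combines a lack of power of one agent with the power of another. A minimal sketch follows, assuming powers are given as plain sets of task labels and ignoring the "less convenient to use" case. The dictionaries and function names are hypothetical.

```python
# Hypothetical data: tasks each agent has the power to accomplish,
# and tasks each agent wants accomplished (simplification: task = goal).
powers = {"Ag_i": {"t1"}, "Ag_j": {"t1", "t2"}}
wanted = {"Ag_i": {"t2"}, "Ag_j": {"t1"}}


def obj_dep(dependent: str, provider: str, task: str) -> bool:
    """Objective dependence on one task: the dependent lacks the power, the provider has it."""
    return task not in powers[dependent] and task in powers[provider]


def depends_on(dependent: str, provider: str) -> bool:
    """General objective dependence: it holds for at least one of the dependent's tasks."""
    return any(obj_dep(dependent, provider, t) for t in wanted[dependent])
```

In this toy setting Ag_i depends on Ag_j for `t2`, while Ag_j does not depend on Ag_i because it can accomplish `t1` on its own. Note that neither function consults any belief: the relation holds whether or not the agents are aware of it.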

3.3 Awareness as Acquisition or Loss of Powers

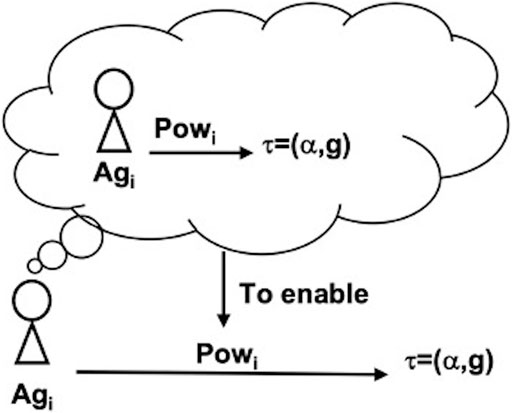

Given that to decide to pursue a goal, a cognitive agent must believe/assume (at least with some degree of certainty) that it has that power (sense of competence, self-confidence, know-how and expertise/skills), then it does not really have that power if it does not know it has that power (Figure 1). Thus, the meta-cognition of agents’ internal powers and the awareness of their external resources empower them (enable them to make their “power” usable).

FIGURE 1. For Agi to really have the power to accomplish the task τ, it must believe that it possesses that power. This belief actually enables its real power to act.

This awareness also allows an agent to use this power for other agents in the dependence networks, i.e., as social power (with respect to those who could depend on it: power relations over others, relational capital, exchanges, collaborations, etc.).

Acquiring power and therefore autonomy (on that dimension) and power over other agents can therefore simply be due to the awareness of this power and not necessarily to the acquisition of external resources or skills and competences (learning): in fact, it is a cognitive power.

3.4 Types of Objective Dependence

A very relevant distinction is the case of a two-way dependence between agents (bilateral dependence). There are two possible kinds of bilateral dependence (to simplify, we make the task coincide with the goal: τk = gk):

- Reciprocal Dependence, in which Agi depends on Agj for its goal $g_1^{Ag_i}$, and Agj depends on Agi for its own goal $g_2^{Ag_j}$ (with g1 ≠ g2).

- Mutual Dependence, in which Agi depends on Agj for its goal $g_k^{Ag_i}$, and Agj depends on Agi for the same goal $g_k^{Ag_i}$ (both have the goal gk). They have a common goal, and they depend on each other for this shared goal. When this situation is known by Agi and Agj, it becomes the basis of true cooperation. Agi and Agj are co-interested in the success of the goal of the other (instrumental to gk). Agi helps Agj to pursue its own goal, and vice versa. In this condition, to defect is not rational; it is self-defeating.
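The two kinds of bilateral dependence can be told apart mechanically. The sketch below assumes dependence relations are stored as triples `(dependent, provider, goal)`. This representation and the returned labels are illustrative choices.

```python
def bilateral_kind(deps: set, a: str, b: str) -> str:
    """Classify the dependence between agents a and b.

    deps is a set of triples (x, y, g) read as: x depends on y for goal g.
    """
    a_on_b = {g for (x, y, g) in deps if x == a and y == b}
    b_on_a = {g for (x, y, g) in deps if x == b and y == a}
    if not a_on_b or not b_on_a:
        return "not_bilateral"  # dependence in one direction at most
    if a_on_b & b_on_a:
        return "mutual"         # at least one goal is shared
    return "reciprocal"         # each depends on the other for a different goal
```

For instance, `{("Ag_i", "Ag_j", "g1"), ("Ag_j", "Ag_i", "g2")}` is classified as reciprocal, while the same pair of relations on a single goal `gk` is classified as mutual.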

In the case in which an agent Agi depends on more than one other agent, it is possible to identify several typical objective dependence patterns. Just to name a few relevant examples, very interesting are the OR-Dependence, a disjunctive composition of dependence relations, and the AND-dependence, a conjunction of dependence relations.

In the first pattern (OR-Dependence) the agent Agi can potentially achieve its goal through the action of just one of the agents with which it is in that relationship. In the second pattern (AND-dependence) the agent Agi can potentially achieve its goal only through the action of all the agents with which it is in that relationship (Agi needs all the other agents in that relationship).
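The difference between the two patterns amounts to a disjunction versus a conjunction over the agents involved. In the following sketch the function and parameter names are hypothetical.

```python
def goal_reachable(pattern: str, providers: set, acting: set) -> bool:
    """Check whether Ag_i's goal is reachable given which providers actually act.

    pattern   -- "OR" (one provider suffices) or "AND" (all providers are needed)
    providers -- agents Ag_i depends on for this goal
    acting    -- agents that actually perform the required action
    """
    if not providers:
        raise ValueError("a dependence pattern needs at least one provider")
    if pattern == "OR":
        return any(p in acting for p in providers)
    if pattern == "AND":
        return all(p in acting for p in providers)
    raise ValueError(f"unknown pattern: {pattern!r}")
```

If Agi depends on Ag_1 and Ag_2 and only Ag_2 acts, the goal is reachable under OR-Dependence but not under AND-dependence.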

The Dependence Network determines and predicts partnership and coalition formation, competition, cooperation, exchange, functional structure in organizations, rational and effective communication, and negotiation power. Dependence networks are very dynamic and unpredictable. In fact, they change when an individual goal changes; when individual resources or skills change; with the exit or entrance of an agent (open world); through acquaintance and awareness (see later); and through indirect power acquisition.

3.5 Objective and Subjective Dependence

Objective Dependence constitutes the basis of all social interaction, the reason for society; it motivates cooperation in its different kinds. But objective dependence relationships, which are the basis of adaptive social interactions, are not enough for predicting them. Subjective dependence is needed (that is, the dependence relationships that the agents know or at least believe).

We introduce the

where

When we introduce the concept of subjective view of dependence relationships, as we have just done with the SubjDep, we are considering what our agent believes and represents about its own dependence on others. Vice versa, it should also be analyzed what our agent believes about the dependence of other agents in the network (how it represents the dependencies of other agents). We can therefore formally introduce the formula for each Agi in potential relationship with other agents of the AGT set:

where

1) The objective dependence says who needs whom for what in each society (although the agents may be unaware of it). This dependence already has the power of establishing certain asymmetric relationships in a potential market, and it determines the actual success or failure of the reliance and transaction.

2) The subjective (believed) dependence says who is believed to be needed by whom. This dependence is what potentially determines relationships in a real market and establishes the negotiation power (see Section 4); but it might be illusory and wrong, and one might rely upon unable agents, even while being autonomously able to do what is needed.

If world knowledge were perfect for all the agents, the above-described objective dependence would be a common belief (a belief possessed by all agents) about the real state of the world: there would be no distinction between objective and subjective dependence.

In fact, however, the important relationship is the network of dependence believed by each agent. In other words, we cannot only associate to each agent a set of goals, actions, plans and resources, but we must evaluate these sets as believed by each agent (the subjective point of view), also considering that they may be partial, different from each other, sometimes wrong, with different degrees and values, and so on. In more practical terms, each agent will have a different (subjective) representation of the dependence network and of its own position in it: it is this subjective view of the world that guides the actions and decisions of the agents.

So, we introduce the

In a first approximation each agent should correctly believe the sets it has, while it could mismatch the sets of other agents4. In formulas:

We define

For each couple (

For each couple (

For each couple (

The three relational levels indicated (objective, subjective and subjective dependence believed by others) in the network of dependence defined above, determine the basic relationships to initiate even minimally informed negotiation processes. The only level always present is the objective one (even if the fact that the agents are aware of it is decisive). The others may or may not be present (and their presence or absence determines different behaviors in the achievement of the goals by the various agents and consequent successes or failures).

3.6 Relevant Relationships within a Dependence Network

The dependence network (Formula 20) collecting all the indicated relationships represents a complex articulation of objective situations and subjective points of view of the various agents that are part of it, with respect to the reciprocal powers to obtain tasks. However, it is interesting to investigate the situations of greatest interest within the defined network. Let’s see some of them below.

3.6.1 Comparison Between Agent’s Point of View and Reality

A first consideration concerns the coincidence or otherwise of the subjective points of view of the agents with respect to reality (objective dependence).

That is, given two agents,

the subjective dependence believed by Agi with respect to Agj coincides with reality, that is, it is objective; or

the subjective dependence believed by Agi with respect to Agj does not coincide with reality, that is, it is not objective.6

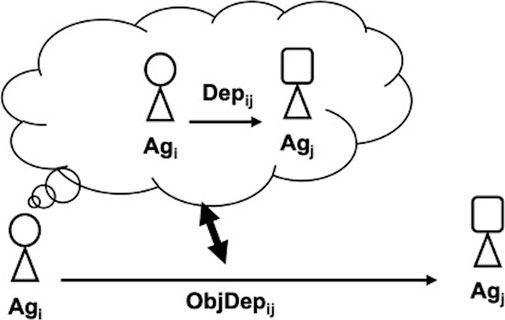

By defining A

FIGURE 2. Dependence of Agi on Agj for the task τ. Comparison between how it is believed by Agi and objective reality.

3.6.2 Comparison Among Points of View of Different Agents

What Agi believes about Agj’s potential subjective dependencies (from various agents in the network, including Agk third-party agents, and on various tasks in

And vice versa, what Agj believes about Agi’s subjective dependence (on the various agents in the network, including Agk third-party agents, and on various tasks in

Comparison between what Agi believes about the dependence of Agi by Agj

In the first case

In the second case,

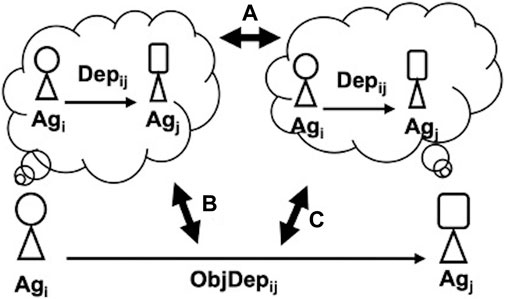

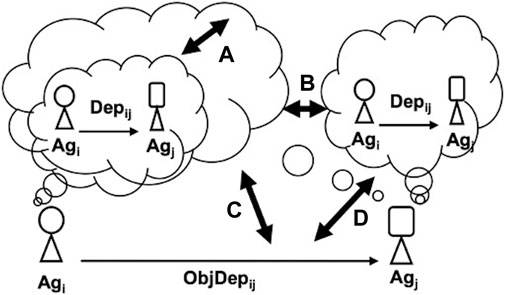

That is, the comparisons are in this case expressed by (see Figure 3):

FIGURE 3. Dependence of Agi on Agj for the task τ. (A) comparison between how it is believed by Agi and by Agj; (B) comparison between how it is believed by Agi and objective reality; (C) comparison between how it is believed by Agj and objective reality.

Another case is the comparison between Agj’s subjective dependence on Agi for a task τ’

For both of these situations we can further compare these two cases with objective reality.

In the first case,

In the second case,

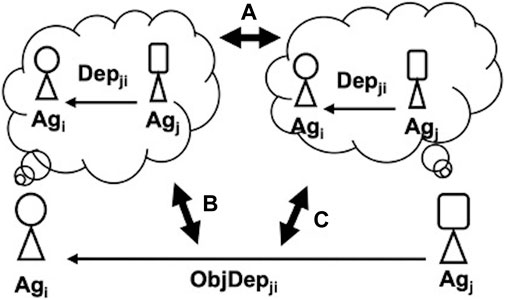

That is, the comparisons are in this case expressed by (see Figure 4):

FIGURE 4. Dependence of Agj on Agi for the task τ'. (A) comparison between how it is believed by Agi and by Agj; (B) comparison between how it is believed by Agi and objective reality; (C) comparison between how it is believed by Agj and objective reality.

3.6.3 Comparison Among Agents’ Points of View on Others’ Points of View and Reality

Another interesting situation is the comparison between what Agi believes of Agj’s subjective dependence on itself:

In the first case (

In the second case

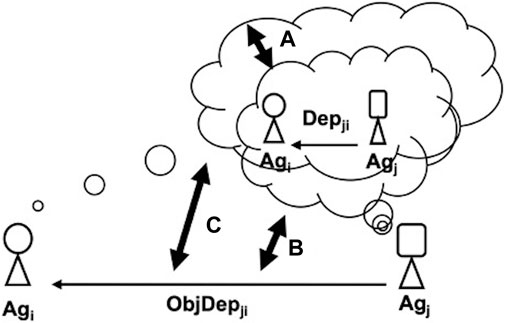

That is, the comparisons are in this case expressed by (see Figure 5):

FIGURE 5. Dependence of Agj on Agi for the task τ'. (A) comparison between how it is believed by Agj and how Agi believes it is believed by Agj; (B) comparison between how it is believed by Agj and objective reality; (C) comparison between how Agi believes it is believed by Agj and objective reality.

In the same but reversed situation, it is interesting to compare Agi’s subjective dependence on Agj

In the first case, this situation may coincide with reality

In the second case, the point of view of Agi may coincide with reality

That is, the comparisons are in this case expressed by (see Figure 6):

FIGURE 6. Dependence of Agi on Agj for the task τ. (A) comparison between how it is believed by Agi and how Agj believes it is believed by Agi; (B) comparison between how it is believed by Agi and objective reality; (C) comparison between how Agj believes it is believed by Agi and objective reality.

3.6.4 More Complex Comparisons

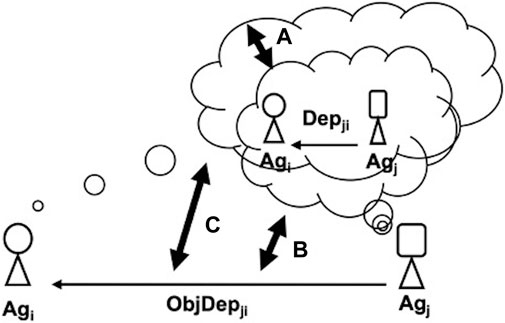

In this case we consider the comparison between the subjective dependence of Agi on Agj

In both cases the comparison with the real situation is also of interest (see Figure 7):

FIGURE 7. Dependence of Agi on Agj for the task τ. (A) comparison between how it is believed by Agi and how Agj believes it is believed by Agi; (B) comparison between how it is believed by Agj and objective reality; (C) comparison between how Agj believes it is believed by Agi and how it is believed by Agj; (D) comparison between how Agj believes it is believed by Agi and objective reality.

This relational schema can be analyzed by considering Agj’s point of view. It can compare what both Agi and Agj itself believe of the dependency relationship (

We will see later how the use of the various relationships in the dependency network produces accumulations of “dependency capital” (truthful and/or false) and the phenomena that can result from them.

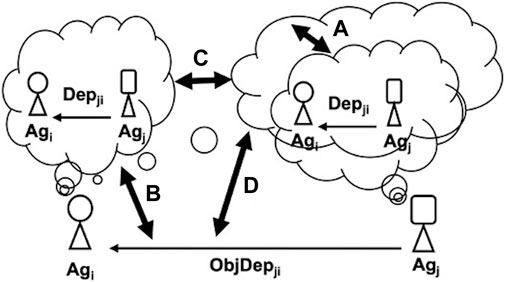

Finally, we consider the comparison between the subjective dependence of Agj from Agi

In both cases, the comparisons with the reality are also of interest (see Figure 8):

FIGURE 8. Dependence of Agj on Agi for the task τ'. (A) comparison between how it is believed by Agj and how Agi believes it is believed by Agj; (B) comparison between how it is believed by Agi and objective reality; (C) comparison between how Agi believes it is believed by Agj and how it is believed by Agi; (D) comparison between how Agi believes it is believed by Agj and objective reality.

3.6.5 Reasoning on the Dependence Network

As can be understood from the very general analyses just shown, the cross-dependence relationships between agents can take different ratios, degrees and dimensions. In this sense we must consider that what we have defined as the “power to accomplish a certain task” can refer to different actions (AZ), resources (R) and contexts (

Not only that, but we also associate the “power of” (

Agents may have beliefs about their dependence on other agents in the network, whether or not they match objective reality. This can happen in two main ways:

- In the first case, looking at (formula 24), we can say that there is some task τ for which Agi does not believe it is dependent on some agent Agj while there is instead (precisely for that task and on that agent) an objective dependency relationship. In formulas:

Evaluating how that belief can be denied, given that

i) Thinking of having a power that it does not have

ii) Thinking that Agj does not have that required power

iii) Believing both above as opposed to objective reality.

- In the second case, we can say that there is some task τ for which Agi believes it is dependent on some agent Agj while there is no objective dependency relationship (precisely for that task and on that agent). In formulas:

believing this dependence may mean confirming one or both hypotheses that are denied in reality, namely:

i) Thinking (on the part of Agi) that it does not have a power

ii) Thinking (on Agi’s part) that Agj has that required power

iii) Believing both above as opposed to objective reality.
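The two ways in which a dependence belief can diverge from reality, each with its possible causes, can be enumerated explicitly. The sketch below reduces powers to booleans for a single task and assumes that dependence, objective or believed, is the conjunction "Agi lacks the power and Agj has it". The function name and the returned labels are illustrative.

```python
def diagnose(real_pow_i: bool, real_pow_j: bool,
             bel_pow_i: bool, bel_pow_j: bool):
    """Compare Ag_i's believed dependence on Ag_j with objective dependence.

    real_pow_* are the actual powers; bel_pow_* are the powers as believed by Ag_i.
    Returns a label and the list of wrong beliefs behind the mismatch.
    """
    objective = (not real_pow_i) and real_pow_j
    believed = (not bel_pow_i) and bel_pow_j
    if objective == believed:
        return "dependence belief matches reality", []
    errors = []
    if objective:  # first case: a real dependence is not believed
        if bel_pow_i:
            errors.append("Ag_i thinks it has a power it does not have")
        if not bel_pow_j:
            errors.append("Ag_i thinks Ag_j does not have the required power")
        return "ignored dependence", errors
    # second case: a dependence is believed but does not exist
    if real_pow_i:
        errors.append("Ag_i thinks it does not have a power it has")
    if not real_pow_j:
        errors.append("Ag_i thinks Ag_j has a power it does not have")
    return "false dependence", errors
```

Whenever there is a mismatch the error list contains one or two entries, matching cases i), ii) and iii) of each bullet.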

Going deeper, we can say that the meaning concerning the belief of having or not having the “power” to carry out a certain task,

that is, Agi believes that the application of the action α (and the possession of the resources for its execution) produces the state of the world g (with a high probability of success, let’s say above a rather high threshold).

that is, Agi believes it has the action α in its repertoire. And:

that is, in addition to having the power to obtain the task τ, the Agi agent should also have the state of the world g among the active goals it wants to achieve (we said previously that having the power implies the presence of the goal in potential form). We established (for simplicity) that an agent knows the goals/needs/duties that it possesses, while it may not know the goals of the other agents.

Given the conditions indicated above, there are cases of ignorance with respect to actually existing dependencies or of evaluations of false dependencies. As we have seen above, the beliefs of the agent Agi must also be compared with those of the agent with whom the interaction is being analyzed (Agj). So back to the belief:

putting it from the point of view of Agj we analogously have:

The divergence or convergence of the beliefs of the two agents (Agi, Agj) on the dependence of Agi with respect to Agj can be completely insignificant. What matters for the pursuit of the task and for its eventual success is what Agi believes and whether what it believes is also true in reality

Another interesting analysis concerns agents’ fallacious beliefs, inconsistent with objective reality, about the dependence of other agents in the network on them.

That is, Agi may or may not believe that Agj is dependent on it. And this may or may not coincide with reality. There are four possible combinations:

As we have seen, the belief of dependence implies attribution of powers and lack of powers (and the denial of the dependence belief in turn determines similar and inverted attributions). Compared to the previous case, since here an agent does not necessarily know the goals of its interlocutor, it is also possible to misunderstand these goals: for example, considering that

An interesting point is that there are cases where one can believe that another agent has no power to achieve a task not because of its inability to perform an action (or its lack of resources for that execution) but because the task’s goal is not included among its goals.

4 Dependence and Negotiation Power

Given a Dependence Network (DN, see formula 20) and an agent in this Network (

The same Agi (if it has the appropriate skills) could be included among these agents.

We define Objective Potential for Negotiation of

So, the agents Agl are all included in

In words, m represents the number of agents (Agl) who can carry out the task

psl is the number of agents in AGT who need a different task (τq) from Agl, in competition with the request by Agi (in the same context and at the same time, and being able to offer it help on one of Agl’s tasks in return). We are considering that these parallel requests cause a reduction in availability, as our agent Agl has to contribute to multiple requests (psl + 1) at the same time.
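The discounting effect of competing requests can be illustrated numerically. The sketch below assumes, purely for illustration, that each of the m solvers Agl contributes a share 1/(psl + 1) of its availability and that these shares are simply added up. The function name, the aggregation by plain sum and the sample values are assumptions, not the definition given in the text.

```python
def negotiation_potential_sketch(competing_requests: dict) -> float:
    """Sum the availability shares of the agents able to carry out the task.

    competing_requests maps each solver Ag_l to ps_l, the number of other agents
    requesting something from it at the same time (hypothetical input format).
    """
    total = 0.0
    for solver, ps in competing_requests.items():
        if ps < 0:
            raise ValueError(f"ps must be non-negative for {solver}")
        total += 1.0 / (ps + 1)  # Ag_l splits its availability over ps + 1 requests
    return total


# Three hypothetical solvers with 0, 1 and 3 competing requests respectively.
example = negotiation_potential_sketch({"Ag_1": 0, "Ag_2": 1, "Ag_3": 3})
```

Under these assumptions, a solver with no competing requests counts fully, while one already facing three requests contributes only a quarter, so the value grows with the number of solvers and shrinks with the competition on each of them.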

We can therefore say that every other agent in Agi’s network of dependence (either reciprocal or mutual) contributes to

If we indicate with PSD all the agents included in PS with objective dependence equal to 1, so:

we can say that:

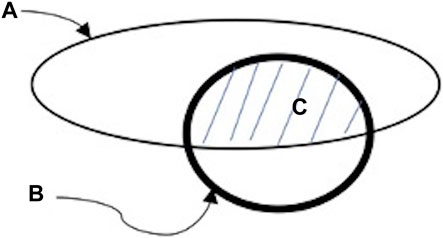

In Figure 9 we represent the objective dependence of Agi: considering the areas of spaces A, B and C proportional to the number of agents they represent, we can say that: A represents the set of agents (Agv) who depend on Agi for some task of theirs

FIGURE 9. Area A is proportional to the number of agents dependent on Agi for τk; Area B is proportional to the number of agents on which Agi depends for τs; Area C is the intersection of A and B.
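The three areas of Figure 9 correspond to simple set operations on the dependence relations. In this sketch the relations are stored as triples `(dependent, provider, task)` and the agents and tasks are invented for the example.

```python
# Hypothetical dependence relations: (dependent, provider, task).
deps = {
    ("Ag_1", "Ag_i", "tk"),
    ("Ag_2", "Ag_i", "tk"),
    ("Ag_i", "Ag_2", "ts"),
    ("Ag_i", "Ag_3", "ts"),
}


def dependents_of(agent: str, task: str) -> set:
    """Area A: agents that depend on `agent` for `task`."""
    return {x for (x, y, t) in deps if y == agent and t == task}


def providers_for(agent: str, task: str) -> set:
    """Area B: agents on which `agent` depends for `task`."""
    return {y for (x, y, t) in deps if x == agent and t == task}


area_a = dependents_of("Ag_i", "tk")
area_b = providers_for("Ag_i", "ts")
area_c = area_a & area_b  # agents in a bilateral dependence with Ag_i
```

In this toy network only Ag_2 falls in area C: it needs Agi for `tk` and Agi needs it for `ts`.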

However, as we have seen above, the negotiation power of Agi also depends on the possible alternatives (psl) that its potential partners (Agv) have: the fewer alternatives to Agi they have, the greater its negotiation power (see below)9. Not only that, the power of negotiation should also take into account the abilities of the agents in carrying out their respective tasks (

The one just described is the objective potential for negotiating agents. But, as we have seen in the previous paragraphs, the operational role of dependence is established by being aware of (or at least by believing) such dependence on the part of the agents.

We now want to consider the set of agents with whom Agi can negotiate to get its own task (

We also define the Real Objective Potential for Negotiation (ROPN) of

As can be seen also ROPN, like OPN, depends on the objective dependence of the selected agents. In this case, however, the selection is based on the beliefs of the two interacting agents. We have:

We have made reference above to the believed (by Agi and Agv) dependence relations (not necessarily true in the world). This is sufficient to define

Analogously, we can interpret Figure 9 as the set of believed relationships by the agents.

In case Agi has to carry out the task

- based on the reciprocity task to be performed: the most relevant, the most pleasing, the cheapest, the simplest, and so on.

- based on the agent with whom it is preferred to enter into a relationship: usefulness, friendship, etc.

- based on the trustworthiness of the other agent with respect to the task delegated to it.

This last point leads us to the next paragraph.

5 The Trust Role in Dependence Networks

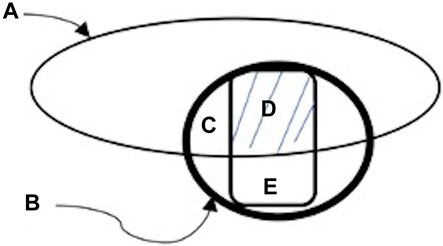

Let us introduce trust relationships into the dependence network. In fact, although it is important to consider the dependence relationships between agents in a society, there will be no exchange in the market if there is no trust to enforce these connections. Considering the analogy with Figure 9, we will now have the representation given in Figure 10 (where we introduced the rectangle that represents the trustworthy agents with respect to the task

FIGURE 10. The rectangle introduced with respect to Figure 9 represents the trustworthy agents with respect to τs.

The potential agents for negotiation are the ones in the dashed part D: they are trustworthy on the task

While part E includes agents who are trustworthy by Agi on the task

Therefore, not only does the decision to trust presuppose a belief of being dependent, but a dependence belief in turn implies a piece of trust. In fact, to believe oneself to be dependent means:

- (

- (

Notice that

So, starting from the objective dependence of the agents, we must include the motivational aspects. In particular, we have a new set of interesting agents, called Potential Trustworthy Solvers (PTS):

Where

where

For Agv to be successful in the

We must now move from the objective value of PTS to what Agi believes about it (Potential Trustworthy Solvers (PTS) believed by Agi):

In fact,

One of the main reasons why Agv is motivated (i.e.,

So, an interesting case is when:

That is, Agv’s motivation to carry out the task

We have therefore defined the belief conditions of the two agents (Agi, Agv) in interaction so that they can negotiate and start a collaboration in which each one can achieve its own goal. These conditions show the need to be in the presence not only of bilateral dependence of Agi and Agv but also of their bilateral trust.

5.1 The Point of View of the Trustee: Towards Trust Capital

Let us now explicitly recall the cognitive ingredients of trust and reformulate them from the point of view of the trusted agent [23]. In order to do this, it is necessary to limit the set of trusted entities. It has in fact been argued that trust is a mental attitude, a decision and a behavior that only a cognitive agent endowed with both goals and beliefs can have, make and perform. But it has also been underlined that the entity that is trusted is not necessarily a cognitive agent. When a cognitive agent trusts another cognitive agent, we talk about social trust. As we have seen, the set of actions, plans and resources owned by or available to an agent can be useful for achieving a set of tasks (

We take now the point of view of the trustee agent in the dependence network: so, we present a cognitive theory of trust as a capital, which is, in our view, a good starting point to include this concept in the issue of negotiation power. That is to say what really matters are not the skills and intentions declared by the owner, but those actually believed by the other agents. In other words, it is on the trustworthiness perceived by other agents that our agent’s real negotiating power is based.

We call Objective Trust Capital (OTC) of

With

We can therefore determine, on the basis of (OTC), the set of agents in Agi’s DN that potentially consider Agi reliable for the task

We are talking about “generic task” as the gS goal is not necessarily included in

As shown in [13], we call Degree of Trust of the agent Agv in the agent Agi about the task

We call the Subjective Trust Capital (STC) of

In words, the cumulated trust capital of an agent Agi with respect to a task

We can therefore determine, on the basis of (STC), the set of agents in Agi’s DN that Agi believes may be potential trustors of Agi itself for the task

We can call Believed Degree of Trust (BDoT) of the agent Agv in the agent Agi, as believed by the agent Agi, about the task

In the same way, we can also define the Self-Trust (ST) of the agent Agi about the task

From the comparison between OTC(Agi, τs), STC(Agi, τs), DoT(Agv, Agi, τs) and ST(Agi, τs), the agents can derive a set of interesting actions and decisions (as we will see in the following sections).
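To make the relation between these quantities concrete, the following sketch treats trust capital as the sum of the degrees of trust of the agents in Agi’s dependence network, and the sets of potential trustors as the agents whose degree of trust reaches a threshold. The function names, the numeric values, the range [0, 1] and the threshold of 0.5 are all assumptions made for illustration; the sketch is one possible reading of the definitions above, not the authors’ formalization.

```python
def trust_capital(degrees):
    """Cumulated trust for one task: sum of degrees of trust in [0, 1].

    Pass the actual DoT values for an OTC-like figure, or the values
    believed by the trustee (BDoT) for an STC-like figure.
    """
    return sum(degrees.values())


def potential_trustors(degrees, threshold=0.5):
    """Agents whose (actual or believed) degree of trust reaches the threshold."""
    return {agent for agent, d in degrees.items() if d >= threshold}


# Hypothetical data for the task tau_s in Agi's dependence network.
dot = {"Agv1": 0.8, "Agv2": 0.4, "Agv3": 0.6}    # what the others actually believe
bdot = {"Agv1": 0.9, "Agv2": 0.7, "Agv3": 0.6}   # what Agi believes they believe

otc = trust_capital(dot)          # objective trust capital (assumed additive)
stc = trust_capital(bdot)         # subjective trust capital (assumed additive)
pot = potential_trustors(dot)     # actual potential trustors
pbt = potential_trustors(bdot)    # potential trustors as believed by Agi

print(otc, stc, sorted(pot), sorted(pbt))
```

In this made-up example Agi overestimates its capital and wrongly counts Agv2 among its potential trustors, which is the kind of gap between objective and subjective capital discussed later in the paper.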

6 Dynamics of Relational Capital

An important consideration is that a dependence network is mainly based on the set of actions, plans and resources owned by the agents and necessary for achieving the agents’ goals (we considered a set of tasks each agent is able to achieve). The dependence network is therefore closely tied to the dynamics of these sets (actions, plans, resources, goals), that is, to their modification over time. This holds in particular for the dynamics of the agents’ goals: the emergence of new ones, the disappearance of old ones, the increasing demand for a subset of them, and so on. As these sets change, so do the role and relevance of each agent in the dependence network, and with them the trust capital of the agents.

As for the dynamical aspects of this kind of capital, it is possible to make hypotheses on how it can increase or be wasted, depending on how each of the basic beliefs involved in trust is manipulated. In the following, let us consider what kinds of strategies Agi can adopt to reinforce the other agents’ dependence beliefs and their beliefs about Agi’s competence/motivation.

6.1 Reducing Agl’s Power

Agi can make the other agent (Agl) dependent on it by making Agl lack some resource or skill (or at least by inducing Agl to believe so).

We can say that there is at least one action (

Where

So:

6.2 Inducing Goals in Agl

Agi can make Agl dependent on it by activating or inducing in Agl a new goal (need, desire) on which Agl is not autonomous (or believes so): effectively introducing a new bond of dependence.

We can say that there is at least one action (

And at the same time the following holds:

6.3 Reducing Other Agents’ Competition

Agi could work to reduce the value, as believed by Agl, of the ability/motivation of each of Agi’s possible competitors (pkl in number) on the specific task τk.

We can say that there are actions (

In practice, the application of the action

In the two cases just indicated (§6.1 and §6.2) the effects on the beliefs of Agl could derive not from the action of Agi but from other causes produced in the world (by third-party agents, by Agl or by environmental changes).
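The competition-reducing strategy of §6.3 can be sketched as a change in Agl’s believed degrees of trust. Two assumptions are introduced here purely for illustration and are not taken from the paper: Agl delegates the task to the agent it trusts most, and Agi’s action scales down the believed value of every competitor by a common factor. The names `preferred_partner` and `reduce_competitors` are hypothetical.

```python
def preferred_partner(believed_dot):
    """Agent that Agl would choose: the one with the highest believed degree of trust."""
    return max(believed_dot, key=believed_dot.get)


def reduce_competitors(believed_dot, agent, factor):
    """Return Agl's beliefs after `agent` has discredited its competitors.

    Every agent other than `agent` has its believed value multiplied by
    `factor` (0 <= factor <= 1); the original mapping is left untouched.
    """
    return {
        name: value if name == agent else value * factor
        for name, value in believed_dot.items()
    }


# Hypothetical beliefs of Agl about who can perform tau_k.
before = {"Agi": 0.6, "Agz1": 0.8, "Agz2": 0.7}
after = reduce_competitors(before, "Agi", factor=0.5)

print(preferred_partner(before), preferred_partner(after))
```

The same change in Agl’s beliefs could of course be produced by third parties or by events in the world rather than by Agi; the sketch only shows the effect on Agl’s choice.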

6.4 Increasing its Own Features

Competition with other agents can also be reduced by inducing Agl to believe that Agi is more capable and motivated. We can say that there are actions (

where t1 is the time interval in which the action was carried out, while t0 is the time interval prior to its realization. Remembering that

6.5 Signaling its Own Presence and Qualities

Since dependence beliefs are strictly related to the possibility for the others to see the agent in the network and to know its ability in performing useful tasks, the goal of an agent who wants to improve its own relational capital will be to signal its presence, its skills, and its trustworthiness on those tasks [24–26]. While to show its presence it might have to shift its position (either physically or figuratively, for instance by changing its field), to communicate its skills and its trustworthiness it might have to hold and show something that can be used as a signal (such as a certificate, social status, etc.). This implies, in its plan of actions, several necessary sub-goals for producing a signal. These sub-goals are costly to reach, and the cost the agent has to pay to reach them can be taken as the evidence that makes the signals credible (of course without considering cheating in building signals). It is important to underline that using these signals often implies the participation of a third subject in the process of building trust as a capital: a third party which must itself be trusted. We would say that the more the third party is trusted in the society, the more expensive it will be for the agent to acquire signals to show, and the more these signals will work in increasing the agent’s relational capital.

Obviously, Agi’s previous performances are also ‘signals’ of trustworthiness, and this information is also provided by the circulating reputation of Agi [27].

6.6 Strategic Behavior of the Trustee

As we have seen, there are different points of view for assessing the trustworthiness and trust capital of a specific agent (Agi) with respect to a specific task (τs). In particular:

- its Real Trustworthiness (RT), that which is actually and objectively assessable regardless of what is believed by the same agent (Agi) and by the other agents in its world:

- its own perceived trustworthiness, that is what we have called the Self-Trust (ST):

- there is, therefore, the Objective Trust Capital (OTC) of Agi, i.e. the accumulation of trust that Agi can boast of what other agents in its world objectively believe:

to which corresponds the set of agents (POT) who are potential trustors of Agi:

- And finally, there is the Subjective Trust Capital (STC) of Agi, i.e. the accumulation of trust that Agi believes it can boast with respect to other agents in its world, that is, based on its own beliefs with respect to how other agents deem it trustworthy:

to which corresponds the set of agents (PBT) who are believed by Agi to be potential trustors of Agi:

In fact, there is often a difference between how the others actually trust an agent and what the agent believes about it (difference between OTC/POT and STC/PBT), but also between these and the level of trustworthiness that the agent perceives in itself (difference between OTC/POT and ST, or between STC/PBT and ST).

The subjective aspects of trust are fundamental in the process of managing this capital, since it is possible that the capital is there but the agent does not know that it has it (or vice versa).

At the base of the possible discrepancy in the subjective valuation of trustworthiness there is the perception of how trustworthy an agent feels in a given task (ST) and the agent’s valuation of how much the others trust it for that task (STC/PBT). In addition, this perception can change and come closer to the objective level while the task is performed (the relationship of ST with both RT and OTC/POT): the agent can either find out that it is more or less trustworthy than it believed, or realize that the others’ perception was wrong (either positively or negatively). All these factors must be taken into account and studied together with the different components of trust, in order to build hypotheses on the strategic actions the agent will perform to manage its own relational capital. We must then consider what these discrepancies imply in terms of strategic actions: how can they be identified and evaluated? How will the trusted agent react when it becomes aware of them? It can either try to acquire competences to reduce the gap between the others’ valuation and its own, or exploit the existence of this discrepancy, taking economic advantage of its reputation for capability and counting on the others’ scarce ability to monitor and test its real skills and/or motivations. In practice, it is on the basis of this comparison between reality and subjective beliefs that the most varied behavioral strategies of agents develop, in the attempt to make the best use of the dependence network in which they are immersed, a network that represents the most effective way to realize the goals they want to achieve.
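The discrepancies discussed in this section can be made explicit with a small comparison routine. This is only a sketch under stated assumptions: RT and ST are taken as values in [0, 1], OTC and STC as cumulated values on a common scale, and the tolerance `tol` below which two values count as aligned is arbitrary. The names `compare` and `trustee_situation` are hypothetical.

```python
def compare(believed, actual, tol=0.05):
    """Classify a subjective value against the corresponding objective one."""
    if believed > actual + tol:
        return "overestimated"
    if believed < actual - tol:
        return "underestimated"
    return "aligned"


def trustee_situation(rt, st, otc, stc, tol=0.05):
    """Summarize the trustee's two main discrepancies for one task.

    rt/st: real trustworthiness and self-trust of the agent;
    otc/stc: objective and subjective trust capital of the agent.
    """
    return {
        "self_trust_vs_real": compare(st, rt, tol),
        "believed_vs_actual_capital": compare(stc, otc, tol),
    }


# Hypothetical agent: overconfident about itself, unaware of part of its capital.
print(trustee_situation(rt=0.5, st=0.8, otc=2.0, stc=1.2))
```

An agent in the situation printed above holds more trust capital than it knows of, while judging itself more trustworthy than it really is; which strategy it then adopts (acquiring competences or exploiting the gap) is outside the scope of the sketch.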

7 Conclusion

With the expansion of the capabilities of intelligent autonomous systems and their pervasiveness in the real world, there is a growing need to equip these systems with autonomy and collaborative properties of an adequate level for intelligent interaction with humans. In fact, the complexity of the levels of interaction and the risks of inappropriate or even harmful interference are growing. A theoretical approach to the basic primitives of social interaction and to the articulated outcomes that can derive from it is therefore fundamental.

This paper tries to define some basic elements of dependence relationships, enriched through attitudes of trust, in a network of cognitive agents (regardless of their human or artificial nature).

We have shown how, on the basis of the powers attributable to the various agents, objective relationships of dependence emerge between them. At the same time, we have seen that what really matters is the dependence believed by social agents, thus highlighting the need to consider cognition as a decisive element for systems that are highly adaptive to social interactions.

The articulation of the possibilities of confrontation within the network of dependence between the different interpretations that can arise from them, in a spirit of collaboration or at least of avoidance of conflicts, highlights the need for a clear ontology of social interaction.

By introducing, in the spirit of emulation of truly operational autonomies [28], also the dimension of intentionality and of priority choice on this basis, the attitude of trust becomes particularly relevant, both from the point of view of those who must choose a partner to trust with a task and from the point of view of those who offer their availability to solve the task. In this sense we have introduced concepts such as relational capital and trust capital.

The future developments of this work will go on the one hand in the direction of further theoretical investigations: on the basis of the model introduced we will define with precision the various and articulated forms of autonomy that derive from it; we will tackle the problem of the “degree of dependence” that derives from many and varied dimensions such as: the value of the goal to be achieved; the number of available and reliable alternative agents that can be contacted; the degree of ability/reliability required for the task to be delegated; and so on.

In parallel, we will try to develop a simulative computational model of the trusted dependence networks that we have introduced, with the ambition of obtaining feedback on the basic conceptual scheme and, at the same time, of verifying its operability in a concrete way.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author Contributions

RF and CC have equally contributed to the theoretical model; RF developed most of the formalization.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1We introduce the symbol A

2For a more complete and detailed discussion of actions and plans (on their preconditions and results; on how the contexts may affect their effects; on their explicit or implicit conflicts, etc.), please refer to [18, 19].

3The context c defines the boundary conditions that can influence the other parameters of the indicated relationship. Different contexts can determine different outcomes of the actions, affect the agent’s beliefs and even the agent’s goals (for example, determine new ones or change their order of priority). To give a trivial example: being in different meteorological conditions or with a different force of gravity, so to speak, could strongly affect the results of the agent’s actions, and/or have an effect on the agent’s beliefs and/or on its own goals (changing their mutual priority or eliminating some and introducing new ones). In general, standard conditions are considered, i.e. default conditions that represent the usual situation in which agents operate: and the parameters (actions, beliefs, goals, etc.) to which we refer are generally referred to these standard values.

4Our beliefs can be considered with true/false values or included in a range (0,1). In this second case it will be relevant to consider a threshold value beyond which the belief will be considered valid even if not completely certain.

5Of course, it can also happen that an agent does not have a good perception of its own characteristics/beliefs/goals/etc.

6The fact of being aware of one’s own goals is of absolute importance for an agent as it determines its subjective dependence which, as we will see, is the basis of its behavior.

7As we have defined the dependence, this non-coincidence may depend on different factors: wrong attribution of one’s own powers or the powers of the other agent.

8The comparison operator (

9If it were aware of it.

10Obviously, this is a possible hypothesis, linked to a particular model of agent and of interaction between agents. We could also foresee different agency hypotheses.

11Of course, the success or failure of these negotiations will also depend on how true the beliefs of the various agents are.

12We assume, for simplicity, that if Agi has the beliefs

13Being both

References

4. Newman MEJ. The Structure of Scientific Collaboration Networks. Proc Natl Acad Sci U.S.A (2001) 98:404–9. doi:10.1073/pnas.98.2.404

5. Radicchi F, Castellano C, Cecconi F, Loreto V, Parisi D. Defining and Identifying Communities in Networks. Proc Natl Acad Sci (2004). doi:10.1073/pnas.0400054101

8. Putnam RD. Making Democracy Work. Civic Traditions in Modern Italy. Princeton NJ: Princeton University Press (1993).

9. Putnam RD. Bowling Alone. The Collapse and Revival of American Community. New York: Simon & Schuster (2000).

10. Coleman JS. Social Capital in the Creation of Human Capital. Am J Sociol (1988) 94:S95–S120. doi:10.1086/228943

11. Bourdieu P. Forms of Capital. In: JC Richards, editor. Handbook of Theory and Research for the Sociology of Education. New York: Greenwood Press (1983).

12. Castelfranchi C, Falcone R. Principles of Trust for MAS: Cognitive Anatomy, Social Importance, and Quantification. In: Proceedings of the International Conference of Multi-Agent Systems (ICMAS'98). Paris: July (1998). p. 72–9.

13. Castelfranchi C, Falcone R. Trust Theory: A Socio-Cognitive and Computational Model. John Wiley & Sons (2010).

14. Bratman M. Intentions, Plans and Practical Reason. Cambridge, Massachusetts: Harvard U. Press (1987).

15. Cohen P, Levesque H. Intention Is Choice with Commitment. Artif Intelligence (1990)(42). doi:10.1016/0004-3702(90)90055-5

16. Rao A, Georgeff M. Modelling Rational Agents within a Bdi-Architecture (1991). Available at: http://citeseer.ist.psu.edu/122564.html.

18. Pollack ME. Intentions in Communication. In: J Morgan, and ME Pollack, editors. Plans As Complex Mental Attitudes in Cohen. USA: MIT press (1990). p. 77–103.

19. Bratman ME, Israel DJ, Pollack ME. Plans and Resource-Bounded Practical Reasoning. Hoboken, New Jersey: Computational Intelligence (1988).

20. Sichman J, Conte R, Castelfranchi C, Demazeau Y. A Social Reasoning Mechanism Based on Dependence Networks. In: Proceedings of the 11th ECAI (1994).

21. Castelfranchi C, Conte R. The Dynamics of Dependence Networks and Power Relations in Open Multi-Agent Systems. In: Proc. COOP’96 – Second International Conference on the Design of Cooperative Systems, Juan-Les-Pins, France, June, 12-14. Valbonne, France: INRIA Sophia-Antipolis (1996). p. 125–37.

22. Falcone R, Pezzulo G, Castelfranchi C, Calvi G. Contract Nets for Evaluating Agent Trustworthiness. Spec Issue “Trusting Agents Trusting Electron Societies” Lecture Notes Artif Intelligence (2005) 3577:43–58. doi:10.1007/11532095_3

23. Castelfranchi C, Falcone R, Marzo F. Trust as Relational Capital: Its Importance, Evaluation, and Dynamics. In: Proceedings of the Ninth International Workshop on “Trust in Agent Societies”. Hokkaido (Japan): AAMAS 2006 Conference (2006).

25. Bird RB, Alden SE. Signaling Theory, Strategic Interaction, and Symbolic Capital. Curr Anthropology (2005) 46–2. doi:10.1086/427115

27. Conte R, Paolucci M. Reputation in Artificial Societies. In: Social Beliefs for Social Order. Amsterdam (NL): Kluwer (2002). doi:10.1007/978-1-4615-1159-5

Keywords: dependence network, trust, autonomy, agent architecture, power

Citation: Falcone R and Castelfranchi C (2022) Grounding Human Machine Interdependence Through Dependence and Trust Networks: Basic Elements for Extended Sociality. Front. Phys. 10:946095. doi: 10.3389/fphy.2022.946095

Received: 17 May 2022; Accepted: 23 June 2022;

Published: 09 September 2022.

Edited by:

William Frere Lawless, Paine College, United States

Reviewed by:

Giancarlo Fortino, University of Calabria, Italy

Luis Antunes, University of Lisbon, Portugal

Copyright © 2022 Falcone and Castelfranchi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rino Falcone, rino.falcone@istc.cnr.it
