- 1 European Organization for Nuclear Research (CERN), Organisation Européenne pour la Recherche Nucléaire (CERN), Geneva, Switzerland

- 2 Department of Physics and Astronomy, University of Pittsburgh, Pittsburgh, PA, United States

- 3 Department of Physics, University of Wisconsin, Madison, WI, United States

- 4 Sezione di Torino, Istituto Nazionale di Fisica Nucleare (INFN), Torino, Italy

- 5 Fermi National Accelerator Laboratory (FNAL), Batavia, IL, United States

- 6 Oak Ridge National Laboratory, Oak Ridge, TN, United States

- 7 Physics Division, Lawrence Berkeley National Laboratory (LBNL), Berkeley, CA, United States

- 8 Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany

- 9 Department of Physics, The University of Warwick, Coventry, United Kingdom

- 10 Deutsches Elektronen-Synchrotron DESY, Hamburg, Germany

- 11 Department of Radiation Oncology, Massachusetts General Hospital and Harvard Medical School, Boston, MA, United States

Detector simulation is a key component of studies of prospective future high-energy colliders, of the design, optimization, testing and operation of particle physics experiments, and of the analysis of the data they collect to perform physics measurements. This review starts from the current state-of-the-art technology applied to detector simulation in high-energy physics and elaborates on the evolution of the software tools developed to address the challenges posed by future accelerator programs beyond the HL-LHC era, into the 2030–2050 period. New accelerator, detector, and computing technologies set the stage for an exercise in how detector simulation will serve the needs of the high-energy physics programs of the mid-21st century, and its potential impact on other research domains.

1 Introduction

Simulation is an essential tool to design, build, and commission the sophisticated accelerator facilities and particle detectors used in experimental high energy physics (HEP). In this context, simulation refers to a software workflow consisting of a chain of modules that starts with particle generation, for example, of the final-state particles from a proton-proton collision. A second module simulates the passage of these particles through the detector geometry and electromagnetic fields, as well as their physics interactions with the detector materials. Its output contains the times, positions, and energy deposits of the particles as they traverse the readout-sensitive components of the detector. In most modern experiments, this module is based on the Geant4 software toolkit [1–3], but other packages such as FLUKA [4, 5] and MARS [6] are also widely used, depending on the application. A third module generates the electronic signals from the readout components in response to the simulated interactions, outputting these data in the same format as the real detector system. As such, the datasets generated through simulation may be input to the same algorithms used to reconstruct physics observables from real data. Simulation is thus not only vital in designing HEP experiments; it also plays a fundamental role in the interpretation, validation, and analysis of the large and complex datasets collected by experiments to produce physics results, and its impact here should not be underestimated [7].
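As a rough illustration of how the three modules hand data to each other, the Python sketch below wires toy versions of them together. All names (`Particle`, `Hit`, `generate`, `transport`, `digitize`) and the ADC parameters are hypothetical and the physics is a placeholder; in a real experiment these stages would be an event generator, a Geant4-based transport application, and experiment-specific digitization code.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    pdg: int       # PDG particle code
    energy: float  # GeV


@dataclass
class Hit:
    cell: int      # index of a readout-sensitive cell
    time_ns: float
    edep: float    # deposited energy in GeV


def generate(rng, n=3):
    """Module 1 (toy): produce a few final-state photons."""
    return [Particle(pdg=22, energy=rng.uniform(1.0, 50.0)) for _ in range(n)]


def transport(particles, n_cells=10):
    """Module 2 (toy): deposit each particle's full energy in one cell.
    A real transport code steps particles through geometry and fields."""
    return [Hit(cell=i % n_cells, time_ns=1.0 + 0.1 * i, edep=p.energy)
            for i, p in enumerate(particles)]


def digitize(hits, adc_per_gev=100.0, adc_max=4095):
    """Module 3 (toy): sum deposits per cell and convert them to saturating
    ADC counts, i.e. records shaped like those of the real readout."""
    per_cell = {}
    for h in hits:
        per_cell[h.cell] = per_cell.get(h.cell, 0.0) + h.edep
    return {cell: min(int(e * adc_per_gev), adc_max) for cell, e in per_cell.items()}


raw = digitize(transport(generate(random.Random(42))))
```

Because the last module emits the same kind of record as the detector, its output can be fed to the same reconstruction code as real data, which is the property emphasized above.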

With many unanswered questions remaining in particle physics and the end of the Large Hadron Collider (LHC) program expected in the late 2030s, plans and ideas for the next big facilities of the 2030s–2050s are gaining momentum. As these facilities intend to explore ever higher energy scales and luminosities, the size of the simulated data samples needed to design the detectors and their software, and to analyze the physics results, will grow correspondingly. Simulation codes will thus face challenges in scaling both their throughput and accuracy to meet these sample-size requirements with finite, but ever-evolving, computational facilities [8]. The LHC era has already seen a significant evolution of simulation methods from “full”, detailed, history-based algorithms to a hybrid of full and “fast” parameterized or machine-learning-based algorithms for the most computationally expensive parts of detectors [9]. A hybrid simulation strategy, combining full and fast techniques, will play a major role for future collider experiments, but full simulation will still be required to develop and validate the fast algorithms, as well as to support searches and analyses of rare processes. The goal of this article is to discuss how detector simulation codes may evolve to meet these challenges in the context of the second and third elements of the above simulation chain, that is, the modeling of the detector, excluding the generation of initial particles. An overview of the computational challenges involved may be found in [8].
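At its core, a hybrid strategy is a per-particle routing decision. The sketch below shows one possible policy, assuming (hypothetically) that electrons, positrons and photons above 1 GeV inside a calorimeter region labeled `"ecal"` are handed to a fast parameterized or ML shower model, while everything else is fully simulated. Region labels, thresholds and function names are illustrative only; Geant4 exposes this kind of hook through its fast-simulation interface (`G4VFastSimulationModel`), whose actual API differs from this sketch.

```python
from dataclasses import dataclass

EM_PDG = {11, -11, 22}  # electrons, positrons, photons


@dataclass
class Track:
    pdg: int
    energy_gev: float
    region: str  # detector region the track is currently in (hypothetical labels)


def choose_engine(track, fast_regions=frozenset({"ecal"}), min_energy_gev=1.0):
    """Return 'fast' when a parameterized/ML shower model should take over,
    'full' when the particle must be transported in detail (illustrative policy)."""
    if (track.region in fast_regions
            and track.pdg in EM_PDG
            and track.energy_gev >= min_energy_gev):
        return "fast"
    return "full"
```

Keeping the policy in one place makes it easy to rerun the same events with fast simulation switched off, which is how full simulation can serve to validate the fast models.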

Section 2 presents the design parameters of future accelerators and detectors relevant to their simulation, such as colliding particle types, beam parameters, and backgrounds. Challenges in the description and implementation of complex detector geometries, and in particle navigation through rapidly varying magnetic fields and detector elements of different shapes and materials, are discussed in Section 3, while the physics models needed to describe the passage of particles through the detector material in the energy ranges of the colliders under consideration are discussed in Section 4. Beam backgrounds from particle decays or multiple hard collisions are another important topic, particularly for beams of particles that decay or emit synchrotron radiation, and are discussed in Section 5. Section 6 focuses on readout modeling in the context of the opportunities and challenges posed by new detector technology, including novel materials and new-generation electronics. Section 7 looks forward to the computing landscape anticipated in the era of future colliders, and how these technologies could help improve the physics and computing performance of detector simulation software, and even shape its future evolution. Section 8 discusses the evolution of simulation software toolkits, including how they might adjust to new computing platforms, experiment software frameworks, and programming languages, the potential success of speculative ideas, and the features that would be needed to satisfy the requirements of future collider physics programs. For decades, HEP has collaborated with other communities, such as medical and nuclear physics, and space science, on detector simulation codes, resulting in valuable sharing of research and resources. Section 9 presents examples of applications of detector simulation tools originating in HEP, in particular in the medical field, and how the challenges for future HEP simulation may overlap.

This article is one of the first reviews of the role and potential evolution of detector simulation in far-future HEP collider physics programs. We hope it helps highlight its strategic importance for both HEP and other fields, as well as the need to preserve and grow its invaluable community of developers and experts.

2 Future Accelerators and Detectors in Numbers

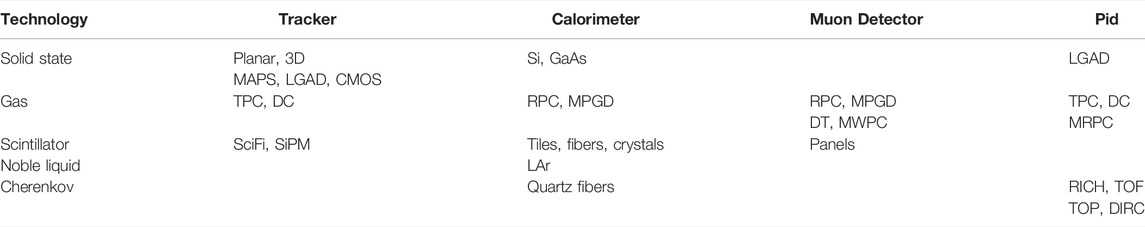

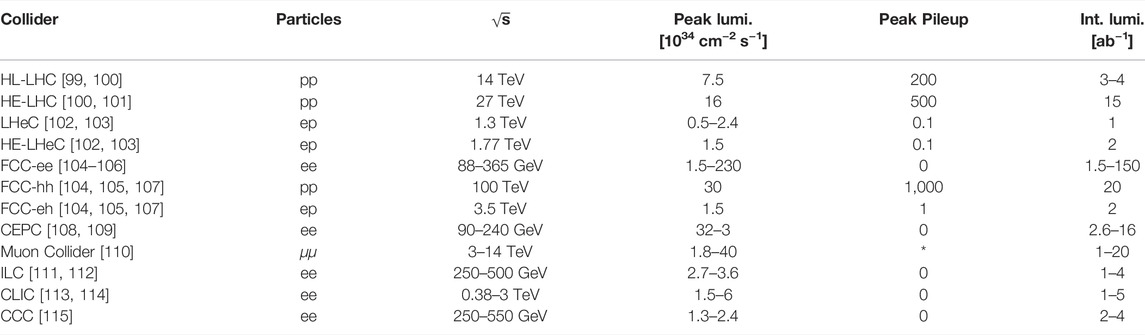

There are several designs for future particle accelerators, each with its strengths and challenges. This section focuses on the accelerator and detector design parameters and issues relevant for software modeling. In particular, we survey a number of the most mature proposals, including the high luminosity LHC (HL-LHC), the high energy LHC (HE-LHC), the Large Hadron-electron Collider (LHeC) and its high luminosity upgrade (HL-LHeC), the Future Circular Collider (FCC) program of ee (electron-positron), hh (hadron-hadron), and eh (electron-hadron) colliders, the Circular Electron Positron Collider (CEPC), the Muon Collider, the International Linear Collider (ILC), the Compact Linear Collider (CLIC), and the Cool Copper Collider (CCC). Table 1 summarizes the parameters of these proposed future accelerators, including design values for maximum energy, peak luminosity, and integrated luminosity, as well as references for each proposal. Other potential future colliders are still being designed, including the Super Proton-Proton Collider (SPPC) [10], an electron-muon collider [11], a muon-proton collider [12], and a muon-ion collider [13].

TABLE 1. The parameters of various future accelerators. * Muon colliders face beam-induced backgrounds, which have different properties from pileup at ee or pp colliders.

Modern particle physics accelerators operate with bunched beams and reach peak luminosities higher than 1–2 × 10³⁴ cm⁻² s⁻¹, exceeding the initial LHC design specification. The luminosity of future hadron colliders, such as the HL-LHC, is limited by the maximum number of simultaneous proton-proton collisions in the same bunch crossing, or pileup, under which the detectors can operate effectively. For circular lepton colliders at higher energies, the luminosity is limited by beamstrahlung (synchrotron radiation induced by the deflection of particles in the field of the opposing bunch), and “top-up” or “top-off” schemes that inject additional particles during beam circulation are expected to be necessary to extend beam lifetime [14]. For linear machines, design parameters such as the beam size, beam power, beam currents, and repetition rates drive the peak luminosity.
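The dependence of peak luminosity on beam parameters, and the link between luminosity and pileup, can be made explicit with two textbook relations for head-on collisions of Gaussian bunches: L = F f n_b N² / (4π σ_x σ_y) and μ = L σ_inel / (n_b f), where N is the number of particles per bunch, n_b the number of bunches, f the revolution frequency, σ_x and σ_y the transverse beam sizes at the interaction point, F ≤ 1 a geometric reduction factor, and σ_inel the inelastic cross-section. The inputs below are approximate, LHC-like values chosen for illustration only; for a linear collider, f n_b would be replaced by the repetition rate times the number of bunches per train.

```python
import math

MILLIBARN_CM2 = 1.0e-27  # 1 mb in cm^2


def peak_luminosity(n_per_bunch, n_bunches, f_rev_hz, sigma_x_cm, sigma_y_cm, reduction=1.0):
    """Peak luminosity in cm^-2 s^-1 for head-on Gaussian bunches."""
    return (reduction * f_rev_hz * n_bunches * n_per_bunch ** 2
            / (4.0 * math.pi * sigma_x_cm * sigma_y_cm))


def mean_pileup(lumi_cm2_s, sigma_inel_cm2, n_bunches, f_rev_hz):
    """Mean number of inelastic collisions per bunch crossing."""
    return lumi_cm2_s * sigma_inel_cm2 / (n_bunches * f_rev_hz)


# Approximate LHC design-like inputs: 1.15e11 protons/bunch, 2808 bunches,
# 11.245 kHz revolution frequency, 16.7 um beam size, F = 1 (crossing angle ignored).
lumi = peak_luminosity(1.15e11, 2808, 11245.0, 16.7e-4, 16.7e-4)

# Pileup for an assumed leveled luminosity of 5e34 cm^-2 s^-1 and sigma_inel = 80 mb.
mu = mean_pileup(5.0e34, 80.0 * MILLIBARN_CM2, 2748, 11245.0)
```

With these inputs the first relation gives about 1.2 × 10³⁴ cm⁻² s⁻¹ before the crossing-angle reduction, and the second a mean pileup of roughly 130, showing how quickly the number of overlapping collisions per simulated event grows with luminosity at a fixed bunch structure.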

Proton-proton collisions offer the greatest energy reach, but they are limited by construction costs and the availability of high-field magnets. The largest proposed center-of-mass collision energy comes from the FCC-hh, at 100 TeV. Lepton colliders can also push the energy frontier to multiple TeV. The muon collider requires R&D to reduce the transverse and longitudinal beam emittance via cooling and to accelerate the beams to collision energies, all within the muon’s 2.2 μs lifetime [15]. However, it offers an exciting path to collision energies of up to a few tens of TeV, because synchrotron radiation is strongly suppressed for muons relative to electrons. The beam-induced background (BIB) created by beam muons decaying in flight places new and unique demands on simulation [16]. Wakefield acceleration also offers a possibility of reaching high energies more compactly in the more distant future [17].
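Time dilation is what makes a muon collider feasible, but it does not remove the decays. A back-of-the-envelope estimate using the standard muon mass and lifetime illustrates why BIB is unavoidable: the mean lab-frame decay length is βγcτ, so a bunch of N muons loses on average N/(βγcτ) muons per metre of beam line. The 1.5 TeV beam energy and the bunch population of 2 × 10¹² muons are assumptions chosen for illustration, not design parameters of any specific proposal.

```python
MUON_MASS_GEV = 0.1056584
MUON_CTAU_M = 658.64  # c * tau for the muon in metres (tau ~ 2.197 us)


def decay_length_m(energy_gev):
    """Mean lab-frame decay length beta*gamma*c*tau of a muon with the given energy."""
    gamma = energy_gev / MUON_MASS_GEV
    beta_gamma = (gamma ** 2 - 1.0) ** 0.5
    return beta_gamma * MUON_CTAU_M


def decays_per_metre(n_muons, energy_gev):
    """Mean number of in-flight decays per metre of beam line for one bunch."""
    return n_muons / decay_length_m(energy_gev)


length = decay_length_m(1500.0)       # 1.5 TeV beam (3 TeV collider)
rate = decays_per_metre(2e12, 1500.0)  # assumed 2e12 muons per bunch
```

For these inputs the decay length is about 9 × 10³ km, yet each bunch still produces roughly 2 × 10⁵ decays per metre; the resulting high-energy electrons and their secondaries are the source of the BIB that the simulation must model.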

The proposed detector technologies for the next generations of experiments at colliders are growing in breadth, as indicated by the summary in Table 2. These increases in technological variety are driven by both physics goals and experimental conditions. In addition, new detectors will be increasingly complex and granular. The interplay between instrumentation and computing is therefore increasingly important, as detectors become more challenging to simulate. One example is the upcoming High Granularity Calorimeter (HGCAL) at the Compact Muon Solenoid (CMS) experiment [18]. With roughly six million channels, it will be the most granular calorimeter built to date. This massively increases the geometry complexity, leading to a ∼40–60% increase in the time to simulate the detector [19]; in addition, the increased precision of the detector is expected to require correspondingly more precise physical models, which may further double the simulation time in existing software [20]. The incorporation of precision timing information may also place more demands on the accuracy of the simulation.

The HL-LHC is the nearest-term collider surveyed here, and most of the more distant colliders aim at higher-precision measurements or present even more difficult environments. Therefore, detector complexity should be expected to continue to increase to support the physics programs and measurements of these new colliders. More than ever before, increasingly energetic and potentially heavier particles will interact with the detector materials, and massive increases in accumulated luminosity will enable physicists to explore the tails of relevant kinematic distributions very precisely. New technologies will pose their own challenges, such as the muon collider BIB, or new materials whose electromagnetic and nuclear interactions may not be fully characterized. This motivates the continued development of detector simulation software, to ensure that its computational performance and physical accuracy keep up with the bold next steps of experimental high energy physics.

3 Geometry Description and Navigation

Geometric modeling is a core component of particle transport simulation. It describes both the material properties of detector components, which determine the particle interactions, and their geometric boundaries. Particles are transported through these geometries in small spatial steps, requiring fast and accurate computation of distances to boundaries and of the new geometry location after crossing a volume boundary. This task uses a significant fraction of the total simulation time even for the current LHC experiments [8], making performance a general concern for the evolution of geometry modeling tools. As discussed in Section 2, future detectors will have higher granularity and, in some cases, will need to handle higher interaction rates than at the LHC, requiring the geometry modeling and navigation software to increase the throughput of these calculations despite the increased complexity. Providing the navigation precision necessary to achieve the required physics accuracy will likely be challenged by the presence of very thin detectors placed far away from the interaction point.
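The interplay between physics and geometry in step-based transport can be sketched in a few lines. At each step, the transport proposes a physics step length sampled from the material's mean free path, and the geometry supplies the distance to the next boundary; the particle advances by the smaller of the two and must be relocated whenever it lands on a boundary. The one-dimensional layered "detector" below, its boundaries and its mean free paths are hypothetical placeholders; a real navigator answers the same two queries (distance to boundary, locate point) for a full 3D volume hierarchy.

```python
import math
import random

# Hypothetical 1D detector along x (cm): slab i spans BOUNDARIES[i]..BOUNDARIES[i + 1].
BOUNDARIES = [0.0, 0.03, 10.0, 10.03, 12.0, 50.0]
MATERIALS = ["Si", "air", "Si", "Pb", "Fe"]
# Placeholder mean free paths (cm) for a single toy process; not real physics data.
MEAN_FREE_PATH_CM = {"Si": 9.4, "air": 3.0e4, "Pb": 0.56, "Fe": 1.8}


def locate(x):
    """Index of the slab containing x, or None outside the world volume."""
    for i in range(len(MATERIALS)):
        if BOUNDARIES[i] <= x < BOUNDARIES[i + 1]:
            return i
    return None


def transport(x0, rng):
    """Move a particle along +x. Each step is the smaller of a sampled physics
    step and the distance to the next boundary. Returns (x, n_steps, slab),
    with slab=None if the particle left the world without interacting."""
    x, n_steps, slab = x0, 0, locate(x0)
    while slab is not None:
        to_boundary = BOUNDARIES[slab + 1] - x
        # Exponential sampling of the distance to the next interaction.
        physics_step = -MEAN_FREE_PATH_CM[MATERIALS[slab]] * math.log(1.0 - rng.random())
        n_steps += 1
        if physics_step < to_boundary:
            return x + physics_step, n_steps, slab  # interaction inside this slab
        x = BOUNDARIES[slab + 1]                     # geometry-limited step
        slab = locate(x)                             # relocate after crossing
    return x, n_steps, None
```

Every geometry-limited step triggers a boundary crossing and a relocation, so thin, finely segmented layers multiply the number of geometry queries per track; this is how the granularity trends of Section 2 translate directly into navigation cost.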

The detector geometry description of a HEP experiment goes through several processing steps between the initial computer-aided design (CAD) models [21] and the in-memory representation used by the simulation. These transformations primarily reduce the complexity and level of detail of the CAD model to increase computing performance without compromising the required physics accuracy. The detector design study phase is particularly important for future collider experiments: essential detector parameters concerning solid-angle acceptance, material composition or engineering constraints need to be optimized in a tight cycle involving full simulation. DD4hep [22] has emerged as a commonly used detector description front-end for future accelerator experiments, providing an internal model representation independent of the geometry modeler back-end, and interfaces for importing the geometry representation from many sources. This facilitates not only the design optimization cycle, but also the handling of multiple geometry versions and the integration of important detector conditions, such as alignment, which affect the geometry during the experiment’s operation.

Although the geometry models at the core of today’s HEP detector simulation were designed in the 1960s, Geant geometry implementations [1, 23] have enjoyed continuous success over many generations of CPU architectures thanks to a number of features that reduce both the memory footprint and the algorithmic complexity. Multiple volume placements, replication using regular patterns, and hierarchies of non-overlapping ‘container’ volumes enable efficient simulation of very complex setups comprising tens of millions of components. However, creating the model description for such setups is often a long and arduous process, and the resulting geometry is very difficult to update and optimize.

The most popular 3D models used in simulation nowadays are based on primitive solid representations such as boxes, tubes, or trapezoids, supporting arbitrarily complex Boolean combinations of these building blocks. Different simulation packages use different constructive solid geometry (CSG) flavors [24], providing a number of features and model constraints to enhance descriptive power and computational efficiency. However, performance can be severely degraded by overuse of some of these features, such as unbalanced hierarchies of volumes or overly complex Boolean solids. Using such inefficient constructs in high-occupancy detector regions near the interaction point generally leads to significant performance degradation.
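A minimal model of CSG shows where the cost comes from: primitives answer point-containment queries analytically, and Boolean nodes combine the answers of their children, so every query on a composite solid walks its expression tree. The classes below are a hypothetical Python sketch that only implements a simplified inside/outside test, with all solids centred at the origin and no placement transforms; real toolkits such as Geant4 also distinguish points on the surface and compute distances.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    dx: float  # half-lengths along x, y, z
    dy: float
    dz: float

    def inside(self, p):
        x, y, z = p
        return abs(x) <= self.dx and abs(y) <= self.dy and abs(z) <= self.dz


@dataclass(frozen=True)
class Tube:
    rmin: float
    rmax: float
    dz: float  # half-length along z

    def inside(self, p):
        x, y, z = p
        return self.rmin <= math.hypot(x, y) <= self.rmax and abs(z) <= self.dz


@dataclass(frozen=True)
class Union:
    a: object
    b: object

    def inside(self, p):
        return self.a.inside(p) or self.b.inside(p)


@dataclass(frozen=True)
class Subtraction:
    a: object
    b: object

    def inside(self, p):
        return self.a.inside(p) and not self.b.inside(p)


# A plate with a hole drilled through it, plus a ring-shaped collar around the hole.
plate = Subtraction(Box(10.0, 10.0, 1.0), Tube(0.0, 2.0, 1.0))
bracket = Union(plate, Tube(3.0, 4.0, 3.0))
```

Each `inside` call on `bracket` may evaluate up to three primitive tests; a detailed solid built from dozens of nested Boolean operations pays a proportionally larger cost on every query, which is why such constructs hurt in high-occupancy regions.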

The current geometry implementations have a very limited ability to optimize such inefficient constructs adaptively, mainly due to the high complexity of the model building blocks. Geometry queries can only be decomposed to the granularity of solid primitives, so user-defined constructs cannot be internally simplified. This calls for investigating surface models as alternatives to today’s geometry representations. Adopting boundary representation (BREP) models [25] composed of first- and second-order algebraic surfaces would allow navigation tasks to be decomposed into simple surface queries. An appropriate choice of BREP flavor, in which surface queries are independent of each other, could greatly benefit the highly parallel workflows of the future.
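To illustrate why first- and second-order surfaces decompose navigation into simple, independent queries, the sketch below computes the distance along a straight line to a plane (first order) and to an infinite cylinder (second order, which reduces to a quadratic in the path length), and obtains the exit distance from a closed cylindrical section as a minimum over independent surface queries. The function names are hypothetical, directions are assumed to be unit vectors, and a production BREP navigator would also need surface trimming, tolerances and inside/outside classification.

```python
import math


def distance_to_plane(p, d, z0):
    """First-order surface z = z0: path length along unit direction d, or inf."""
    if d[2] == 0.0:
        return math.inf
    t = (z0 - p[2]) / d[2]
    return t if t > 0.0 else math.inf


def distance_to_cylinder(p, d, r):
    """Second-order surface x^2 + y^2 = r^2: smallest positive root of
    a t^2 + 2 b t + c = 0, with a = dx^2 + dy^2, b = x dx + y dy, c = x^2 + y^2 - r^2."""
    a = d[0] ** 2 + d[1] ** 2
    if a == 0.0:
        return math.inf  # moving parallel to the cylinder axis
    b = p[0] * d[0] + p[1] * d[1]
    c = p[0] ** 2 + p[1] ** 2 - r * r
    disc = b * b - a * c
    if disc < 0.0:
        return math.inf  # the line misses the surface
    sq = math.sqrt(disc)
    for t in ((-b - sq) / a, (-b + sq) / a):
        if t > 0.0:
            return t
    return math.inf


def distance_to_exit_cylinder_section(p, d, r, half_z):
    """Exit distance from inside a closed cylinder |z| <= half_z, x^2 + y^2 <= r^2:
    independent surface queries followed by a min-reduction."""
    return min(distance_to_cylinder(p, d, r),
               distance_to_plane(p, d, half_z),
               distance_to_plane(p, d, -half_z))
```

The three calls in the last function share no state, so in a batched or GPU setting they could be evaluated in parallel for many tracks and reduced with a minimum.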

Developing automatic conversion tools from surface-based CAD models to the Geant4 simulation geometry has proved too challenging in the past. DD4hep provides a conversion path for complex surfaces into tessellated bodies usable directly in simulation [26]. A conversion procedure relying exclusively on tessellation would, however, introduce significant memory and performance overheads during simulation. Supporting surface representations directly in the simulation geometry would make such conversions more efficient. It would also provide a simpler transition from the engineering designs to the simulation geometry, with fewer intermediate representations, and make it easier to implement transparent build-time optimizations for inefficient user constructs.
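The overhead of relying only on tessellation can be estimated from elementary geometry: approximating a circle of radius r by an inscribed regular polygon with N sides leaves a maximum gap (sagitta) of r(1 − cos(π/N)). The sketch below inverts this relation to find the number of facets needed for a given tolerance; the 1 m radius and 10 μm tolerance are illustrative choices, not requirements of any experiment.

```python
import math


def sagitta(radius, n_facets):
    """Maximum gap between a circle and its inscribed regular polygon."""
    return radius * (1.0 - math.cos(math.pi / n_facets))


def facets_for_tolerance(radius, tol):
    """Smallest number of polygon sides keeping the sagitta at or below tol."""
    return math.ceil(math.pi / math.acos(1.0 - tol / radius))


n = facets_for_tolerance(1000.0, 0.010)  # 1 m radius, 10 um tolerance (lengths in mm)
```

About 700 side facets are needed to approximate a single cylinder that one analytic surface describes exactly, and since 1 − cos x ≈ x²/2 the facet count grows roughly as π√(r/2s), i.e. as the inverse square root of the tolerance s.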

Successive upgrades to adapt to new computing paradigms, such as object-oriented or parallel design, have not touched the main modeling concepts described above, which served their purpose for decades of CPU evolution but are quickly becoming a limiting factor for accelerated computing hardware. Recent R&D studies [27, 28] have shown that today’s state-of-the-art Geant-derived geometry codes, such as VecGeom [29], represent a bottleneck for vectorized or massively parallel workflows. Deep polymorphic code stacks, low branch predictability, and incoherent memory access are some of the most important reasons for performance degradation when coherent instruction execution is a hardware constraint. This is intrinsic to the model being used, which combines in the same query algorithms of very different complexity, called in an unpredictable manner and unfriendly to compiler optimizations. These studies also indicate the need to simplify the geometry models being used, greatly reducing or eliminating unnecessary abstractions.

Performance optimization is particularly important for common geometry navigation tasks, such as detecting collisions of simulated particle trajectories with the geometry setup and relocating particles after they cross volume boundaries. Navigation helpers use techniques such as voxelization [30] or bounding volume hierarchies (BVH) [31] to achieve logarithmic complexity in setups with several million objects. Adopting efficient optimization strategies will become even more relevant for the more complex detectors of the future.
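A bounding volume hierarchy makes point location logarithmic by grouping volumes into nested axis-aligned bounding boxes (AABBs) and pruning every subtree whose box does not contain the query point. The sketch below builds a simple median-split BVH and uses it to locate a point among 1000 hypothetical unit cells. It is a didactic example, not the data structure of any particular navigator, and for brevity it returns candidates based on bounding boxes only, leaving the exact solid test to the caller.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AABB:
    lo: Vec3
    hi: Vec3

    def contains(self, p: Vec3) -> bool:
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))


def enclose(boxes: List[AABB]) -> AABB:
    """Smallest AABB containing all given boxes."""
    lo = tuple(min(b.lo[i] for b in boxes) for i in range(3))
    hi = tuple(max(b.hi[i] for b in boxes) for i in range(3))
    return AABB(lo, hi)


@dataclass
class Node:
    box: AABB
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    items: Optional[list] = None  # leaf payload: list of (volume_id, AABB)


def build(items, leaf_size=2) -> Node:
    """Recursively split (volume_id, AABB) pairs at the median centre along
    the widest axis of their enclosing box."""
    box = enclose([b for _, b in items])
    if len(items) <= leaf_size:
        return Node(box, items=items)
    axis = max(range(3), key=lambda i: box.hi[i] - box.lo[i])
    items = sorted(items, key=lambda it: it[1].lo[axis] + it[1].hi[axis])
    mid = len(items) // 2
    return Node(box, build(items[:mid], leaf_size), build(items[mid:], leaf_size))


def locate(node: Node, p: Vec3) -> List[int]:
    """Ids of volumes whose bounding box contains p; subtrees that miss are pruned."""
    if not node.box.contains(p):
        return []
    if node.items is not None:
        return [vid for vid, b in node.items if b.contains(p)]
    return locate(node.left, p) + locate(node.right, p)


cells = [(i, AABB((float(i), 0.0, 0.0), (i + 1.0, 1.0, 1.0))) for i in range(1000)]
root = build(cells)
```

Each level roughly halves the candidate set, so a query descends on the order of log₂(1000) ≈ 10 levels instead of testing all 1000 cells.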

The same problem of collision detection is addressed by graphics systems, in particular ray-tracing (RT) engines such as NVIDIA OptiX [32] that make use of dedicated hardware acceleration. Adapting HEP detector simulations to use such an engine was implemented in the Opticks library [33], which demonstrated speedups of more than two orders of magnitude compared with CPU-based Geant4 simulations of optical photon transport in large liquid-argon detectors. This required not only adapting the complete optical photon simulation workflow to GPUs, but also simplifying and transforming the geometry description to match OptiX requirements. Generalizing this approach for future HEP detector simulation would require a major re-engineering effort, in particular for the geometry description. How exactly RT technology evolves will likely have a big impact on the solutions adopted for detector geometry modeling. As the use of RT acceleration proliferates in the gaming industry, APIs supported by dedicated languages and libraries will most probably be made publicly available. Combined with larger on-chip caches, future low-latency graphics chips may allow geometry to be externalized as an accelerated service for simulation. Such a service could provide an important boost, but would be conditional on simplifying the geometry description and adding support for batched multi-track workflows.
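The primitive operation that RT hardware accelerates is the intersection of a ray with an axis-aligned bounding box, commonly done with the "slab" method: entry and exit parameters are computed for each pair of parallel planes and then intersected. A plain Python version is sketched below to show how short and regular this operation is compared with the general solid queries of a CSG navigator; it is illustrative only and says nothing about how OptiX or Opticks implement it internally.

```python
import math


def ray_aabb(origin, direction, lo, hi):
    """Slab test: return (t_enter, t_exit) along the ray for an axis-aligned box,
    or None if the ray misses it. Rays parallel to a slab are handled explicitly."""
    t_enter, t_exit = -math.inf, math.inf
    for o, d, l, h in zip(origin, direction, lo, hi):
        if d == 0.0:
            if o < l or o > h:
                return None  # parallel to this slab and outside it
            continue
        t1, t2 = (l - o) / d, (h - o) / d
        t_enter = max(t_enter, min(t1, t2))
        t_exit = min(t_exit, max(t1, t2))
    if t_enter > t_exit or t_exit < 0.0:
        return None  # slabs do not overlap, or the box is behind the ray
    return t_enter, t_exit
```

A negative entry parameter means the ray starts inside the box; a navigator would then use the exit parameter as the distance to leave that bounding volume.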

Evolution in computing technology will most probably present game-changing opportunities to improve simulation software, as described in Section 7. For example, tensor cores [34] provide a high density of FLOPs, albeit at reduced precision. Geometry step calculations cannot use half-precision floating point (FP16) directly, because rounding errors would become too large and affect both physics precision and transportation over large distances in the detector. Some optimizations may, however, be delegated to an FP16-based navigation system using ML inference, for instance to prioritize candidate searches. Single-precision (FP32) geometry distance computation deserves more weight in the context of reduced-precision, accelerator-based hardware, because reduced precision fulfils physics requirements in most cases. Furthermore, it would provide a significant performance boost on such architectures owing to fewer memory operations. Recent studies report performance gains as large as 40% for certain GPU-based simulation workflows [28], making R&D in this area a good investment as long as memory operations remain the dominant bottleneck, even if chips evolve to provide more FLOPs at FP32 precision or better. The reduced-precision option is, however, not suitable for, e.g., micron-thin sensors, where propagation rounding errors become comparable to the sensor thickness. Addressing this will require supporting different precision settings depending on the detector region.
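The scale of the rounding problem can be checked directly. For a binary floating-point format with p explicit mantissa bits, adjacent representable values near x are 2^(⌊log₂|x|⌋ − p) apart, with p = 10 for FP16 and p = 23 for FP32. The sketch below evaluates this spacing for a coordinate 10 m (10⁴ mm) from the origin, an illustrative choice, and uses Python's standard `struct` module, whose `'e'` and `'f'` formats pack IEEE half and single precision, to round values through each format.

```python
import math
import struct


def spacing(x, mantissa_bits):
    """Distance between adjacent representable numbers near |x|
    (valid for normal, non-zero values)."""
    return 2.0 ** (math.floor(math.log2(abs(x))) - mantissa_bits)


def round_through(fmt, x):
    """Round a double through a packed IEEE format: 'e' = FP16, 'f' = FP32."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


POSITION_MM = 10_000.0                  # 10 m from the origin, in mm
fp16_gap_mm = spacing(POSITION_MM, 10)  # half precision: 10 mantissa bits
fp32_gap_mm = spacing(POSITION_MM, 23)  # single precision: 23 mantissa bits
```

Near 10 m, FP16 can only resolve positions to 8 mm, which rules it out for stepping, while FP32 resolves about 1 μm: adequate for most of a detector but comparable to the thickness of micron-thin sensors, hence the need for region-dependent precision.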

4 Physics Processes and Models

As mentioned in Section 1, Geant4 has emerged as the primary tool to model particle physics detectors. Geant4 offers a comprehensive list of physics models [35] combined with the continuous deployment of new features and improved functionality, as well as rigorous code verification and physics validation against experimental data.

4.1 Current Status

During the first two periods of data taking in 2010–2018, the LHC experiments produced, reconstructed, stored, transferred, and analyzed tens of billions of simulated events. The physics quality of these Geant4-based Monte Carlo samples produced at unprecedented speed was one of the critical elements enabling these experiments to deliver physics measurements with greater precision and faster than in previous hadron colliders [7, 36]. Future accelerator programs will, however, require the implementation of additional physics processes and continuous improvements to the accuracy of existing ones. A quick review of the current status of physics models in Geant4 will precede a discussion of future needs.

Physics processes in Geant4 are subdivided into several domains, the most relevant for HEP being particle decay, electromagnetic (EM) interactions, hadronic processes, and optical photon transport. The precision of the modeling has to be such that it does not become a limiting factor for the potential offered by detector technology. EM interactions of e−/e+/γ with the detector material, producing EM showers in calorimeters, consume a large fraction of the computing resources at the LHC experiments. Reproducing the response, resolution, and shower shape at the level of a few per mille requires modeling particle showers down to keV energies, which involves a large number of low-energy secondary particles that need to be produced and transported through magnetic fields. This level of accuracy is required to distinguish EM particles from hadronic jets and to efficiently identify overlapping showers. Highly accurate models for energy deposition in thin calorimeter layers are also essential for the reconstruction of charged particles and muons. Simulation of tracking devices requires accurate modeling of multiple scattering and backscattering at low and high energies, coupled with very-low-energy delta electrons. Geant4 delivers on all these requirements by modeling EM processes for all particle types in the 1 keV to 100 TeV energy range. The accuracy of Geant4 EM showers has been verified by the CMS [37] and ATLAS [38] experiments.
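Why EM showers are so expensive to simulate can be seen from the textbook Heitler model, in which the number of shower particles doubles every splitting length until their energies reach the critical energy E_c. In this approximation the shower maximum lies at a depth of about ln(E₀/E_c) radiation lengths X₀ and holds about E₀/E_c particles, and the total charged track length is roughly (E₀/E_c)X₀. The sketch evaluates these estimates for a 100 GeV electron in lead, taking E_c ≈ 7.4 MeV and X₀ ≈ 0.56 cm as approximate inputs; it is a crude model, and tracking secondaries down to keV energies, as required for few-per-mille accuracy, only adds to the work.

```python
import math


def heitler_estimates(e0_mev, e_crit_mev, x0_cm):
    """Heitler/Rossi-style estimates for an EM shower of initial energy e0.
    Returns (depth of maximum in X0, particles at maximum, total track length in cm)."""
    ratio = e0_mev / e_crit_mev
    depth_max_x0 = math.log(ratio)   # shower maximum in radiation lengths
    n_at_max = ratio                 # number of particles at the maximum
    track_length_cm = ratio * x0_cm  # approximate total charged track length
    return depth_max_x0, n_at_max, track_length_cm


depth, n_max, track = heitler_estimates(100_000.0, 7.43, 0.5612)
```

Roughly 75 m of charged track length per 100 GeV electron, spread over thousands of particles, has to be stepped through the calorimeter geometry and magnetic field, which is consistent with the large share of computing resources taken by EM physics.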

Geant4 models physics processes for leptons, long-lived hadrons, and hadronic resonances. Simulation of particle decay follows recent PDG data. The decay of b-, c-quark hadrons and τ-leptons is outsourced to external physics generators via predefined decay mechanisms.
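Outsourcing the decays of heavy-flavor hadrons and τ leptons requires deciding, for each particle, which decay engine to use. A minimal sketch of such routing is shown below, based on the PDG Monte Carlo numbering scheme, in which the last digits of a hadron code encode its quark content. The function names and the two-way "internal"/"external" split are hypothetical and do not reflect the actual interface between Geant4 and external generators.

```python
TAU_PDG = 15


def contains_quark(pdg, q):
    """True if the hadron with PDG code `pdg` contains quark flavor q (1-6).
    Uses the quark digits nq3 (tens), nq2 (hundreds) and nq1 (thousands)."""
    code = abs(pdg)
    if code < 100 or code >= 1_000_000_000:  # quarks, leptons, bosons, nuclei
        return False
    code %= 10_000  # drop excitation/radial digits
    return q in (code // 10 % 10, code // 100 % 10, code // 1000 % 10)


def decay_handler(pdg):
    """Hypothetical routing: b and c hadrons and tau leptons go to an external
    decay generator; all other particles use the built-in decay tables."""
    if abs(pdg) == TAU_PDG or contains_quark(pdg, 5) or contains_quark(pdg, 4):
        return "external"
    return "internal"
```

For example, the B⁺ (521), D⁻ (−411) and Λ_b (5122) are routed externally, while pions (211), protons (2212) and muons (13) stay with the built-in tables.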

Simulation of optical photon production and transport is also provided by Geant4. The main accuracy limitation arises from the large compute time required to model the large number of photons and the many reflections that may occur within the detector. Various methods to speed up optical photon transport are available, depending on the tolerance to physics approximations.

Hadron-nuclear interaction physics models are needed to simulate hadronic jets in calorimeters, hadronic processes in thin layers of tracking devices, and shower leakage into the muon chambers. Geant4 hadronic physics is based on theory-driven models tuned on thin-target data [3]. This approach provides more reliable predictive power than parametric models for the wide range of materials, particle types and energy ranges for which measurements are not available. Parameter tuning and model extensions are necessary to describe all particle interactions at all energies [2]. Geant4 has adopted the approach of combining several models, each fitting the data best in a different energy range, since it is unrealistic to expect a single model to cover the full kinematic range of interest. This is done by providing several predefined “physics lists”, which are combinations of EM and hadronic processes and models. Experiments need to identify the list most suitable for their own physics program by performing the necessary physics validation studies and possibly applying calibration corrections [37–40].
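The combination of models in a physics list can be pictured as an energy-dependent selection with overlapping validity ranges, so that no single energy shows an abrupt change of model. The sketch below uses a linear blending in the overlap, choosing the higher-energy model with a probability that rises from 0 to 1 across it. The model names and the 3 to 12 GeV overlap are hypothetical placeholders, loosely inspired by lists that pair an intra-nuclear cascade with a string model, and are not the boundaries of any actual Geant4 physics list.

```python
import random

# Hypothetical (name, min energy, max energy) in GeV; the ranges overlap.
MODELS = [("cascade", 0.0, 12.0), ("string", 3.0, 1.0e5)]


def pick_model(e_gev, rng, models=MODELS):
    """Select the model for one inelastic interaction at energy e_gev."""
    active = sorted((m for m in models if m[1] <= e_gev <= m[2]), key=lambda m: m[1])
    if not active:
        raise ValueError(f"no model covers {e_gev} GeV")
    if len(active) == 1:
        return active[0][0]
    low, high = active[0], active[1]  # assumes at most two models overlap
    overlap_lo, overlap_hi = high[1], low[2]
    w_high = (e_gev - overlap_lo) / (overlap_hi - overlap_lo)
    return high[0] if rng.random() < w_high else low[0]
```

Rerunning the same events with an alternative high-energy model is one way the spread between models can be turned into a systematic uncertainty, as discussed in Section 4.2.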

4.2 Future Needs

The large data volumes to be collected by the HL-LHC experiments will reduce statistical uncertainties, therefore demanding more accurate simulation to help reduce systematic uncertainties in background estimation and calibration procedures. The next generation of HEP detectors to be commissioned at the LHC and designed to operate in future lepton and hadron colliders will have finer granularity and incorporate novel materials, requiring simulation physics models with improved accuracy and precision, as well as broader kinematic coverage. Materials and magnetic fields will also need to be described in more detail to keep systematic uncertainties small. Moreover, new technologies [41–43] will allow detectors to sample particle showers with a time resolution of the order of tens of picoseconds, which will need to be matched in simulation. Consequently, the simulation community has launched an ambitious R&D effort to upgrade physics models to improve accuracy and speed, re-implementing them from the ground up when necessary (e.g., GeantV [27], AdePT [28], Celeritas [44]). Special attention will be needed to extend accurate physics simulation to the O(100) TeV domain, including the new processes and models required to support the future collider programs.

Achieving an optimal balance between accuracy and software performance will be particularly challenging for EM physics, given that the corresponding software module is one of the largest consumers of compute power [36]. Reviews of EM physics model assumptions, approximations and limitations, including those for hadrons and ions, will be needed to support the HL-LHC and FCC programs. The Geant4 description of multiple scattering [45] of charged particles provides predictions in good agreement with data collected at the LHC. Nevertheless, the higher spatial resolution of new detectors [41, 46–48] may require even higher accuracy to reproduce measured track and vertex resolutions. Excellent modeling of single-particle scattering and backscattering of low-energy electrons across Geant4 volume boundaries is critical for accurate descriptions of shower shapes in calorimeters, such as the CMS high-granularity hadronic calorimeter. At the very high energies of the FCC, nuclear size effects must be taken into account, and elastic scattering models must be extended to include nuclear form factors in the highest energy range. The description of form factors may affect EM processes at high energies in ways that alter shower shapes and the behavior of high-energy muons. A theoretical description of the Landau-Pomeranchuk-Migdal (LPM) effect, significant at high energies, is included in the Geant4 models of the bremsstrahlung and pair-production processes. For the latter, introducing the LPM effect leads to differences in cross-sections at very high energies that will need to be understood when data become available. A relativistic pair-production model is essential for simulation accuracy at the FCC. Rare EM processes such as γ conversion to muon and hadron pairs also become important at very high energies and will have to be added. This is also essential to properly model beam background effects in the collision region of a Higgs factory.
For the FCC and for dark matter search experiments, the description of pair production will need to be extended to include the ejection of a nearby orbital electron (triplet production) and to take nuclear recoil effects into account. Finally, γ radiative corrections in EM physics may significantly affect the accuracy of measurements at Higgs factories and will need to be added to the models. All these rare processes must be added to the simulation to improve the accuracy in the tails of the physics distributions, where backgrounds become important. These corrections must be implemented such that they are invoked only as needed, so as not to increase the computing cost of EM modeling. At the FCC collision energy, the proximity of tracking devices to the interaction points will also require widening the range of physics models for short-lived particles. This will be particularly important for high-precision heavy-flavor measurements, as non-negligible fractions of beauty and charm hadrons will survive long enough to intercept beam pipes and the first detector layers. Describing the interaction of such particles with matter may already be required in the HL-LHC program because of the reduced distance between the trackers and the interaction point [41, 46]. A review of how detector simulation interfaces with dedicated decay generators during particle transport may be necessary.
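Two of the effects above can be quantified with short calculations. The LPM energy of a material is E_LPM = (m_e c²)² α X₀ / (4π ħc), about 7.7 TeV per centimetre of radiation length, and bremsstrahlung of photons below roughly E²/E_LPM is suppressed, so in dense absorbers such as lead (E_LPM of a few TeV) the effect is already relevant for TeV-scale electrons. For short-lived particles, the probability of flying farther than a distance d is exp(−d/(βγcτ)) with βγ = p/m. The sketch evaluates both, using approximate B⁰ properties and a hypothetical 20 mm flight distance to the beam pipe, measured along the flight direction and ignoring the polar angle.

```python
import math

ME_C2_MEV = 0.51099895          # electron rest energy
ALPHA = 1.0 / 137.035999        # fine-structure constant
HBAR_C_MEV_CM = 1.97326980e-11  # hbar * c in MeV * cm


def lpm_energy_tev(x0_cm):
    """LPM energy E_LPM = (m_e c^2)^2 * alpha * X0 / (4 pi hbar c), in TeV."""
    return ME_C2_MEV ** 2 * ALPHA * x0_cm / (4.0 * math.pi * HBAR_C_MEV_CM) / 1.0e6


def survival_probability(p_gev, mass_gev, ctau_mm, distance_mm):
    """Probability of travelling farther than distance_mm before decaying."""
    decay_length_mm = (p_gev / mass_gev) * ctau_mm  # beta*gamma*c*tau
    return math.exp(-distance_mm / decay_length_mm)


B0_MASS_GEV, B0_CTAU_MM = 5.280, 0.455  # approximate B0 values

e_lpm_lead = lpm_energy_tev(0.5612)  # lead, X0 ~ 0.56 cm
p_survive_50 = survival_probability(50.0, B0_MASS_GEV, B0_CTAU_MM, 20.0)
p_survive_1000 = survival_probability(1000.0, B0_MASS_GEV, B0_CTAU_MM, 20.0)
```

A B⁰ with 50 GeV momentum almost never reaches 20 mm, but at 1 TeV about 80% of them do, so the hadron itself crosses the beam pipe and first tracking layers and its interactions with matter must be simulated.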

In hadronic interactions, more than one model is needed to describe QCD physics processes accurately over the whole energy range. Typically, a hadronic interaction is initiated when a high-energy hadron collides with a nucleon in a nucleus of the material. This is followed by the propagation of the produced secondary particles through the nucleus, and by the subsequent de-excitation of the remnant nucleus and particle evaporation, until the nucleus reaches its ground state. Different sets of models map naturally to these phases depending on the initial energy of the collision: a parton string model for energies above a few GeV, and an intra-nuclear cascade model below that threshold. Pre-compound and de-excitation models are used to simulate the last steps in the evolution of the interaction. A reliable description of showers in hadronic calorimeters requires accurate descriptions of all these processes.

Geant4 offers two main physics lists to describe hadronic physics in high-energy collider experiments. The main difference between the two lies in the choice of the model describing the initial quark-parton phase mentioned above: either a quark-gluon string model or a Fritiof model [3]. Having more than one model allows one to estimate the systematic uncertainties arising from the approximations they use. Unfortunately, neither of them is accurate enough to describe the hadronic interactions at multi-TeV energies occurring at the FCC. New processes will need to be implemented in the hadronic physics simulation suite to address this higher energy domain, taking inspiration from those available in the EPOS generator [49], used by the cosmic ray and heavy ion physics communities.

Another essential element of hadronic physics simulation is the precise calculation of interaction cross-sections. At the highest energies, Geant4 uses a general approach based on Glauber theory [50], while at lower energies cross-sections are evaluated from tables obtained from the Particle Data Group [51]. This approach profits from the latest thin-target experiment measurements and provides cross-sections for any type of projectile particle. The precision of cross-section calculations for different types of particles will need to be improved as more particle types become relevant to particle flow reconstruction in granular calorimeters.
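To make the Glauber approach concrete, the sketch below evaluates the textbook optical-limit formula for the hadron-nucleus inelastic cross-section, σ_inel = ∫d²b {1 − [1 − σ_hN T(b)]^A}, where T(b) is the nuclear thickness function normalized to unit integral. It is only an illustration under stated assumptions: a Woods–Saxon density with an assumed radius parametrization (1.12 A^(1/3) fm) and diffuseness (0.54 fm), and a hadron-nucleon cross-section supplied by the caller. The toolkit's own implementation is more elaborate, and the function names are hypothetical.

```python
import math


def woods_saxon(r_fm: float, radius_fm: float, diffuseness_fm: float) -> float:
    """Unnormalised Woods-Saxon nuclear density profile."""
    return 1.0 / (1.0 + math.exp((r_fm - radius_fm) / diffuseness_fm))


def glauber_inelastic_xs(mass_number: int, sigma_hn_fm2: float,
                         diffuseness_fm: float = 0.54, n_steps: int = 200) -> float:
    """Optical-Glauber hadron-nucleus inelastic cross-section in fm^2 (1 fm^2 = 10 mb).

    sigma_inel = integral d^2b {1 - [1 - sigma_hN * T(b)]^A},
    with T(b) normalised so that integral d^2b T(b) = 1.
    """
    radius = 1.12 * mass_number ** (1.0 / 3.0)   # assumed radius parametrisation
    b_max = radius + 10.0 * diffuseness_fm       # density is negligible beyond this
    step = b_max / n_steps                       # same grid spacing in b and z

    def thickness(b: float) -> float:
        # T(b) = 2 * integral_0^zmax rho(sqrt(b^2 + z^2)) dz, trapezoidal rule.
        total = 0.0
        for k in range(n_steps + 1):
            w = 0.5 if k in (0, n_steps) else 1.0
            total += w * woods_saxon(math.hypot(b, k * step), radius, diffuseness_fm)
        return 2.0 * total * step

    bs = [i * step for i in range(n_steps + 1)]
    ts = [thickness(b) for b in bs]
    ws = [0.5 if i in (0, n_steps) else 1.0 for i in range(n_steps + 1)]

    # Normalise T(b) to unit integral over the transverse plane.
    norm = sum(w * 2.0 * math.pi * b * t for w, b, t in zip(ws, bs, ts)) * step
    xs = 0.0
    for w, b, t in zip(ws, bs, ts):
        p_no_interaction = (1.0 - sigma_hn_fm2 * t / norm) ** mass_number
        xs += w * 2.0 * math.pi * b * (1.0 - p_no_interaction)
    return xs * step
```

For a very small σ_hN the result tends to A·σ_hN (no shadowing), which is a convenient consistency check of the normalization; for realistic values the nucleons shadow each other and the cross-section grows much more slowly than A.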

A correct description of particle multiplicity within hadronic showers is also needed to model the physics performance of highly granular calorimeters (e.g., CMS [18]), and is also essential to simulate high-precision tracking devices (e.g., the LHCb spectrometer). The parameters describing hadronic models must be tuned to describe all available thin-target test beam data, and the models expanded to provide coverage for as many beam particles and target nuclei as possible. For flavor physics, it is important to take into account the differences in hadronic cross-sections between particle and anti-particle projectiles.

5 Beam Backgrounds and Pileup

The main categories of beam backgrounds at ee colliders are machine-induced and luminosity-induced [52]. The former are due to accelerator operation and include Synchrotron Radiation (SR) and beam gas interactions. The latter arise from the interaction of the two beams close to the interaction point of the experiment.

The SR that may affect the detector comes from the bending and focusing magnets closest to it. Although the detectors will be shielded, a significant fraction of photons may still scatter in the interaction region and be detected. This is expected to be one of the dominant sources of background in the FCC-ee detector [53]. Beam gas effects result from collisions between the beam and residual hydrogen, oxygen and carbon gases in the beam pipe inside the interaction region.

The luminosity-induced background is generated by the electromagnetic interaction between the two approaching bunches, which leads to the production of hard bremsstrahlung photons. These photons may interact with the opposing beam and produce e+e− pairs, or collide with each other, which can result in hadrons, and potentially jets, in the detector. Stray electrons due to scattering between beam particles in the same bunch can also hit the detector.

The main background at pp colliders is the large number of inelastic proton–proton collisions that occur simultaneously with the hard-scatter process, collectively known as pileup. This usually results in a number of soft jets overlapping with the hard-scatter event. The number of interactions per bunch crossing at future colliders is expected to exceed 1,000, compared to no more than 200 at the end of the HL-LHC era. An additional source of luminosity-induced background is the cavern background: neutrons may propagate through the experimental cavern for a few seconds before they are thermalized, thus producing a neutron-photon gas. This gas produces a constant background, consisting of low-energy electrons and protons from spallation.

Machine-induced backgrounds at pp colliders are similar to the ee ones [38]. Besides beam gas, the beam halo is a background resulting from interactions between the beam and upstream accelerator elements. In general, pileup dominates over beam gas and beam halo.

Muon colliders are special in that the accelerated particles are not stable. Decays of primary muons and the interactions of their decay products with the collider and detector components [54] constitute the main source of beam background. Compared to ee colliders, this represents an additional source of background, resulting in a large number of low-momentum particles that may not be stopped by shielding and may enter the interaction region of the detector. Additionally, this type of background needs to be simulated with higher precision outside of the interaction region.

An important consideration is the detector response and readout time, which is often longer than the time between collisions. In-time and out-of-time pileup should therefore be considered separately. In-time pileup arises from additional collisions in the same bunch crossing as the hard scatter, while out-of-time pileup comes from collisions in earlier or later bunch crossings that still contribute to the readout of the hard-scatter event because of the detector's finite response time.
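The distinction between in-time and out-of-time pileup maps naturally onto how pileup is sampled: the number of interactions in each bunch crossing is commonly drawn from a Poisson distribution with mean μ, and the crossings kept are those inside the detector's response window. The sketch below assumes an illustrative μ and a hypothetical window of crossings −2 to +1; the function names are invented for illustration.

```python
import math
import random


def sample_poisson(mean: float, rng: random.Random) -> int:
    """Knuth's multiplication method; adequate for means up to a few hundred.

    (For much larger means exp(-mean) underflows and another method is needed.)
    """
    limit, k, p = math.exp(-mean), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def sample_pileup(mu: float, first_bx: int, last_bx: int, rng: random.Random) -> dict:
    """Number of pileup interactions for each bunch-crossing offset in [first_bx, last_bx].

    Offset 0 is the hard-scatter crossing, so its entry is the in-time pileup;
    non-zero offsets are out-of-time crossings still inside the response window.
    The hard-scatter interaction itself is not counted.
    """
    return {bx: sample_poisson(mu, rng) for bx in range(first_bx, last_bx + 1)}


rng = random.Random(42)
counts = sample_pileup(60.0, -2, 1, rng)     # illustrative mu and window
in_time = counts[0]
out_of_time = sum(n for bx, n in counts.items() if bx != 0)
print(in_time, out_of_time)
```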

5.1 Bottlenecks in Computational Performance

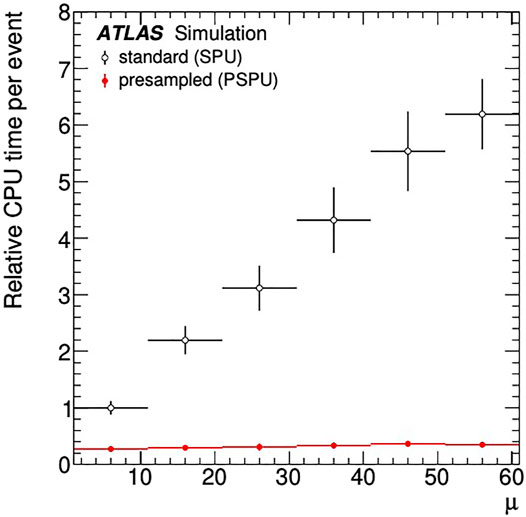

The biggest bottleneck in modeling pileup at a pp collider is the number of interactions per bunch crossing. As shown by the black open circles in Figure 1, the CPU time requirement depends very steeply on this parameter, which needs to match data-taking conditions. A second issue can be the slow response time of the detectors, which requires a large number of out-of-time bunch crossings to be simulated. This can be mitigated by simulating each detector only when needed, as not all detectors have the same sensitive time range. Improvements in the detector technologies used in future experiments may make these times short enough not to cause a significant overhead.
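Simulating each detector only when needed amounts to mapping every bunch crossing to the subdetectors whose sensitive window it can reach. A minimal sketch, assuming LHC-like 25 ns bunch spacing and purely hypothetical subdetector windows (which are taken to already include time of flight and response); none of these names or numbers describe a real experiment.

```python
import math

BUNCH_SPACING_NS = 25.0  # LHC-like spacing, assumed here

# Hypothetical sensitive time windows (ns) relative to the hard-scatter crossing.
SENSITIVE_WINDOWS_NS = {
    "pixel": (-25.0, 25.0),
    "calorimeter": (-600.0, 100.0),
    "muon_chambers": (-800.0, 200.0),
}


def crossings_to_simulate(window_ns: tuple, spacing_ns: float = BUNCH_SPACING_NS) -> range:
    """Bunch-crossing offsets whose collisions can leave signal inside the window."""
    lo, hi = window_ns
    return range(math.ceil(lo / spacing_ns), math.floor(hi / spacing_ns) + 1)


def detectors_per_crossing(windows: dict = SENSITIVE_WINDOWS_NS) -> dict:
    """For each crossing offset, the subdetectors that must see its pileup."""
    needed: dict = {}
    for detector, window in windows.items():
        for bx in crossings_to_simulate(window):
            needed.setdefault(bx, []).append(detector)
    return needed


plan = detectors_per_crossing()
print(plan[0])    # ['pixel', 'calorimeter', 'muon_chambers']
print(plan[-30])  # ['muon_chambers']
```

With these assumed windows, the pixel system needs only 3 crossings while the slowest system needs 41, so digitizing every detector for every crossing would waste most of the work.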

FIGURE 1. Comparison of the average CPU time per event in the standard ATLAS pileup digitization (black open circles) and the pre-sampled pileup digitization (red filled circles) as a function of the number of pp collisions per bunch crossing (μ). The CPU time is normalized to the time taken for the standard pileup for the lowest μ bin. Taken from Ref. [55].

Traditionally, each in-time or out-of-time interaction is sampled individually and taken into account at the digitization step, when the detector's digital response is emulated. To reduce computational time, experiments instead pre-sample pileup events and reuse them across different samples [55, 56]. While the pre-sampling itself is subject to the same CPU limitations, the cost of using the pre-sampled events depends only weakly on the amount of pileup (red filled circles in Figure 1), although it can put larger stress on storage. Thresholds are applied to the analogue signals at digitization to significantly reduce the number of saved digits, at the cost of reduced precision when two sets of digital signals are later merged. Pre-sampling thresholds thus need to be tuned for each individual detector, and computing resources can only be saved by reusing pre-sampled events, which requires a compromise between CPU savings and increased storage that maintains optimal physics performance.
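The precision loss from thresholding before merging can be seen in a toy example with two channels that are each below threshold on their own but above it once summed. The channel numbers, ADC values, and thresholds are invented for illustration, and the functions are hypothetical helpers rather than any experiment's digitization code.

```python
def digitize(analog: dict, threshold: float) -> dict:
    """Keep only channels whose signal exceeds the threshold."""
    return {ch: e for ch, e in analog.items() if e > threshold}


def merge(*layers: dict) -> dict:
    """Sum signals channel by channel."""
    total: dict = {}
    for layer in layers:
        for ch, e in layer.items():
            total[ch] = total.get(ch, 0.0) + e
    return total


hard_scatter = {1: 50.0, 2: 8.0}          # analogue signals, arbitrary ADC units
pileup = {2: 6.0, 3: 4.0, 4: 7.0}
readout_threshold = 10.0

# Reference: merge analogue signals first, then apply the readout threshold once.
reference = digitize(merge(hard_scatter, pileup), readout_threshold)
# Pre-sampled pileup stored with the same threshold: channel 2 is lost.
presampled = digitize(merge(hard_scatter, digitize(pileup, 10.0)), readout_threshold)
# A lower pre-sampling threshold recovers the reference here, at the cost of more stored digits.
low_threshold = digitize(merge(hard_scatter, digitize(pileup, 5.0)), readout_threshold)

print(reference)   # {1: 50.0, 2: 14.0}
print(presampled)  # {1: 50.0}
```

The lower pre-sampling threshold stores more pileup digits, which illustrates the storage-versus-precision trade-off described above.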

Another option, which fully avoids the CPU bottleneck of pileup pre-sampling, is to use pileup events from data. The main difficulties here are non-constant detector conditions and alignment. Re-initializing the simulated geometry adds overheads, which may be mitigated by averaging conditions over long periods, although at the cost of reproducing data less precisely. Furthermore, detector readout only provides digital information above some thresholds, which are usually tuned for primary collisions and are thus relatively high. This reduces precision when merging the information with the simulated hard-scatter event.

While other types of background are much smaller at pp colliders and their simulation can usually be skipped, this is not the case at ee colliders. Some of those backgrounds, e.g., beam gas effects, synchrotron radiation and intra-beam scattering, originate outside the detector cavern. They are simulated by the accelerator team, as they also affect beam operations. To avoid re-simulating the same type of background, the simulation output can be shared with the experiment as a list of particles entering the interaction region [57], though this still leaves a large number of low-momentum particles to simulate. Experiments thus also use randomly triggered collision events for background estimation, although this approach suffers from the same threshold effects.

5.2 Optimal Strategy for Future Colliders

During the development stage of future experiments, detailed simulation of all types of beam backgrounds is of utmost importance. Simulation provides estimates of the physics impact of backgrounds and helps optimize the detector design to minimize them [58]. Some backgrounds can be parametrized or even neglected entirely. One example is cavern background neutrons at hadron colliders: in most cases their contribution is orders of magnitude smaller than that of pileup, although the outer muon chambers would require a detailed description if high precision is needed. As low-momentum neutron simulation is very slow, it can be performed only once and used to derive parametrized detector responses, which can then be injected at the digitization stage.
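Injecting a parametrized response at digitization can be as simple as drawing a Poisson number of uncorrelated hits per chamber from a stored rate. A minimal sketch, assuming hypothetical hit rates and chamber areas (standing in for the output of the one-off detailed neutron simulation) and a uniform spatial distribution of hits; a real parametrization would carry position and energy dependence.

```python
import math
import random

# Hypothetical parametrised response, derived once from a detailed (slow)
# low-energy neutron simulation and then reused: hit rate per unit area.
HIT_RATE_HZ_PER_CM2 = {"inner_muon_chamber": 50.0, "outer_muon_chamber": 5.0}
CHAMBER_AREA_CM2 = {"inner_muon_chamber": 2.0e4, "outer_muon_chamber": 8.0e4}


def poisson(mean: float, rng: random.Random) -> int:
    """Knuth's multiplication method (small means only)."""
    limit, k, p = math.exp(-mean), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def expected_hits(chamber: str, window_ns: float) -> float:
    """Mean number of cavern-background hits in one readout window."""
    return HIT_RATE_HZ_PER_CM2[chamber] * CHAMBER_AREA_CM2[chamber] * window_ns * 1e-9


def inject_cavern_hits(window_ns: float, rng: random.Random) -> dict:
    """Draw uncorrelated hits at digitisation time, with no particle transport.

    Each hit is (time within the window in ns, relative position along the
    chamber in [0, 1)); a uniform spatial distribution is assumed.
    """
    hits = {}
    for chamber in HIT_RATE_HZ_PER_CM2:
        n = poisson(expected_hits(chamber, window_ns), rng)
        hits[chamber] = [(rng.uniform(0.0, window_ns), rng.random()) for _ in range(n)]
    return hits


print(inject_cavern_hits(1000.0, random.Random(7)))
```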

As discussed earlier in this section, separate simulation of beam backgrounds and pre-digitization saves computing resources and has a negligible impact on physics performance when the pre-digitized events are reused randomly between samples. With the increased background rates expected at future colliders, iterative mixing and merging of background contributions will become an essential technique. Detector readout thresholds must be set low enough to allow digital signals to be merged multiple times with negligible loss of accuracy. This would enable iterative pileup pre-sampling, in which several events with a low number of interactions are merged into one event with a high number of interactions. It would also allow merging different types of backgrounds prepared independently. Furthermore, a special set of lower background thresholds could be set up in the actual detector to enable the use of real data events as background sources, which would yield a smaller performance degradation than with current detectors.
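Iterative pre-sampling relies on the fact that a sum of independent Poisson counts is again Poisson, so merging k events with mean μ/k yields the correct multiplicity for mean μ. The toy below also shows why thresholds matter: each merging stage drops sub-threshold deposits that would have survived had they been summed first. The deposit model (one uniform deposit in a random channel per interaction), the channel count, μ, and the threshold are all illustrative assumptions, and the functions are hypothetical.

```python
import math
import random


def poisson(mean: float, rng: random.Random) -> int:
    """Knuth's multiplication method (fine for means up to a few hundred)."""
    limit, k, p = math.exp(-mean), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def presampled_event(mu: float, threshold: float, rng: random.Random, n_channels: int = 500):
    """Toy pre-sampled event: each interaction deposits U(0, 1) in a random channel;
    only channels above threshold are stored as digits."""
    analog: dict = {}
    n_int = poisson(mu, rng)
    for _ in range(n_int):
        ch = rng.randrange(n_channels)
        analog[ch] = analog.get(ch, 0.0) + rng.random()
    digits = {ch: e for ch, e in analog.items() if e > threshold}
    return n_int, sum(analog.values()), digits


def merge_iteratively(mu_target: float, n_pieces: int, threshold: float, rng: random.Random):
    """Build one high-mu event from n_pieces events with mu_target / n_pieces each.

    Returns (interactions, true energy, energy surviving all thresholds)."""
    n_total, true_energy, merged = 0, 0.0, {}
    for _ in range(n_pieces):
        n_int, energy, digits = presampled_event(mu_target / n_pieces, threshold, rng)
        n_total += n_int
        true_energy += energy
        for ch, e in digits.items():
            merged[ch] = merged.get(ch, 0.0) + e
    kept = sum(e for e in merged.values() if e > threshold)  # final readout threshold
    return n_total, true_energy, kept


rng = random.Random(3)
print(merge_iteratively(200.0, 10, 0.0, rng))  # zero threshold: kept equals true energy
print(merge_iteratively(200.0, 10, 0.5, rng))  # finite threshold: part of the energy is lost
```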

Above all, the beam background simulation strategy depends on physics accuracy requirements. As mentioned in Section 1, current experiments are moving towards more frequent use of fast simulation methods, based either on parametrized detector responses or on machine learning technologies. The latter could be used to choose the precision of the simulation algorithm depending on the event properties, or to generate the background entirely on the fly. Regardless of the strategy chosen to simulate large volumes of physics samples, detailed modeling as provided by full simulation will always be needed, if only to derive and tune the faster methods.

6 Electronic Signal Modeling

The ambitious physics program at future accelerator-based experiments requires detectors which can perform very accurate measurements and handle high occupancy at the same time. To achieve these goals, it is of paramount importance to collect as much information from each individual detector channel as possible, including the three spatial coordinates, time and energy.

For simplicity, this section focuses on the two classes of detectors that pose the greatest challenges from a computational point of view: tracking detectors and calorimeters. These usually have the largest number of electronic readout channels, so their behavior needs to be simulated in detail.

New-generation calorimeters are designed to act as tracking devices as well as to provide energy deposition information, in the form of the five-dimensional measurement (three spatial coordinates, time and energy) described above. These capabilities, which extend beyond traditional calorimetric observables, challenge the simulation effort, since modeling must describe all these observables accurately and simultaneously. Additionally, calorimeters will often operate in a high-occupancy environment in which sensor and electronics performance degrades rapidly as a consequence of radiation damage.

The digitization step of the simulation takes as input the analogue signals generated by Geant4 in the detector. The first step of the digitization process accumulates this input and groups it by individual readout element. This is done in a number of time slots that define the integration time of the detector. Beyond this step, modeling is highly detector dependent. It is driven by detailed descriptions of the readout electronics, including noise, cross-talk, and the readout logic, which involves shaping the signal and digitizing the pulse. Finally, a digit is recorded when the signal is above a predefined threshold.
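The generic chain just described (accumulate per readout element, sample in time slots, shape, add noise, convert to ADC counts, apply a threshold) can be sketched in a few lines. This assumes a CR-RC shaper and made-up constants for slot width, number of slots, noise, gain, and threshold; cross-talk is omitted, and noise is only added to channels that received a deposit, whereas a real digitizer also generates noise-only digits.

```python
import math
import random
from collections import defaultdict

SAMPLE_NS = 25.0        # hypothetical time-slot width
N_SAMPLES = 5           # hypothetical integration window (number of slots)
SHAPING_TAU_NS = 20.0   # hypothetical CR-RC shaping time constant
NOISE_ADC = 1.5         # Gaussian electronics noise (ADC counts)
ADC_PER_UNIT = 10.0     # gain: ADC counts per unit of deposited energy
THRESHOLD_ADC = 8       # digit is recorded only above this value


def crrc(t_ns: float, tau: float = SHAPING_TAU_NS) -> float:
    """CR-RC shaper impulse response, normalised to a peak of 1 at t = tau."""
    return 0.0 if t_ns < 0 else (t_ns / tau) * math.exp(1.0 - t_ns / tau)


def digitize(hits, rng: random.Random) -> dict:
    """hits: iterable of (channel, time_ns, energy). Returns {channel: ADC samples}."""
    # 1) Accumulate: group deposits by readout element.
    per_channel = defaultdict(list)
    for channel, t_ns, energy in hits:
        per_channel[channel].append((t_ns, energy))
    digits = {}
    for channel, deposits in per_channel.items():
        samples = []
        for k in range(N_SAMPLES):
            t_sample = k * SAMPLE_NS
            # 2) Shaping: superpose the shaped pulse of every deposit.
            signal = sum(e * crrc(t_sample - t) for t, e in deposits)
            # 3) Noise and 4) ADC conversion to integer counts, clipped at zero.
            adc = round(signal * ADC_PER_UNIT + rng.gauss(0.0, NOISE_ADC))
            samples.append(max(adc, 0))
        # 5) Threshold: record a digit only if the peak sample is above threshold.
        if max(samples) > THRESHOLD_ADC:
            digits[channel] = samples
    return digits


print(digitize([(7, 5.0, 3.0), (7, 30.0, 0.5), (9, 0.0, 0.05)], random.Random(0)))
```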

6.1 Tracking Detectors

Various types of tracking detectors are currently employed in HEP experiments at colliders [51], with the most widely used being silicon, gaseous (RPC, MDT, Micromegas, etc.), transition-radiation, and scintillation detectors. Of these, silicon-based detectors are among the most challenging and computationally expensive to simulate, given the large number of channels and observables involved.

In silicon detectors, traversing charged particles create electron-hole pairs, which are collected with a certain efficiency, amplified, digitized, and recorded. When the sensor is biased by a voltage difference, its response to the passage of ionizing particles is characterized by its charge collection efficiency (CCE) and its leakage current (Ileak). As the sensors are operated well above their full depletion voltage, the CCE is expected to be high. Current digitization models for silicon detectors use either parametric or bottom-up approaches. In parametric approaches, the overall simulated energy deposit is split across readout channels using a purely parametric function derived from either detailed simulations or data; in bottom-up approaches, the energy deposit is used to generate multiple electron-hole pairs that are then propagated through a detailed simulation of the electric field and used to compute the expected signal induced on the electrodes. Several models are employed to split the overall deposited energy. They range from simple models that split it equally along the expected trajectory to more complex models [59], offering increasing accuracy at an increasing computational cost.
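The simplest parametric model mentioned above, equal splitting along the trajectory, is sketched below for a one-dimensional strip sensor: the straight path between entry and exit points is cut into equal segments, and each segment's share goes to the strip containing its midpoint. The pitch, segment count, and function names are illustrative assumptions; diffusion, Lorentz drift, and non-uniform energy loss are ignored. The conversion to charge uses the commonly quoted value of about 3.6 eV per electron-hole pair in silicon.

```python
import math
from collections import Counter

EV_PER_PAIR = 3.6  # approximate mean energy per electron-hole pair in silicon


def equal_split_charge(x_in_um: float, x_out_um: float, energy_kev: float,
                       pitch_um: float = 50.0, n_segments: int = 20) -> dict:
    """Split a deposit equally along the straight path projected onto the strip
    coordinate; each segment's share goes to the strip containing its midpoint."""
    per_strip: Counter = Counter()
    share = energy_kev / n_segments
    for i in range(n_segments):
        x_mid = x_in_um + (i + 0.5) / n_segments * (x_out_um - x_in_um)
        per_strip[math.floor(x_mid / pitch_um)] += share
    return dict(per_strip)


def to_electron_hole_pairs(energy_kev: float) -> float:
    """Number of electron-hole pairs corresponding to a deposited energy."""
    return energy_kev * 1000.0 / EV_PER_PAIR


strips = equal_split_charge(30.0, 130.0, 80.0)
print(strips)  # {0: 16.0, 1: 40.0, 2: 24.0}
print({s: round(to_electron_hole_pairs(e)) for s, e in strips.items()})
```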

Exposure to radiation causes lattice displacements and ionization damage, creating defects that both generate charge carriers and trap them. These effects contribute to a reduction of the CCE and an increase of Ileak. The increase in instantaneous luminosity projected for the HL-LHC has challenged experiments to implement simulation models able to predict the reduced CCE expected in the presence of radiation damage. A detailed simulation of the electric field is combined with more refined models describing the probability of charge trapping and the resulting reduced CCE [60–62]. These models tend to be heavy on computing resources, which has prompted the development of parametric simulation approaches as well.
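A parametric treatment of radiation damage can be illustrated with two widely used linear scalings: the effective trapping rate 1/τ_eff = β·Φ and the leakage-current increase ΔI = α·Φ·V. The sketch assumes illustrative values of β and α, a fixed drift velocity, charge created uniformly through a 300 µm sensor, and it ignores signal induction (weighting fields) and hole trapping; it is not one of the models cited above.

```python
import math

BETA_CM2_PER_NS = 4.0e-16   # illustrative effective trapping-damage constant
ALPHA_A_PER_CM = 4.0e-17    # illustrative current-related damage rate


def trapping_time_ns(fluence_neq_cm2: float) -> float:
    """Effective trapping time from 1 / tau_eff = beta * fluence (fluence > 0)."""
    return 1.0 / (BETA_CM2_PER_NS * fluence_neq_cm2)


def cce(fluence_neq_cm2: float, thickness_cm: float = 0.03,
        drift_velocity_cm_per_ns: float = 0.01) -> float:
    """Mean surviving charge fraction for carriers created uniformly in depth.

    Drift times are then uniform in [0, T], and averaging exp(-t / tau) over
    that range gives (1 - exp(-x)) / x with x = T / tau.
    """
    if fluence_neq_cm2 <= 0:
        return 1.0
    x = (thickness_cm / drift_velocity_cm_per_ns) / trapping_time_ns(fluence_neq_cm2)
    return -math.expm1(-x) / x   # expm1 keeps precision when x is tiny


def leakage_increase_a(fluence_neq_cm2: float, volume_cm3: float) -> float:
    """Leakage-current increase Delta I = alpha * fluence * volume, in amperes."""
    return ALPHA_A_PER_CM * fluence_neq_cm2 * volume_cm3


for phi in (1e14, 1e15, 1e16):
    print(f"{phi:.0e} neq/cm2: CCE = {cce(phi):.2f}")
```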

Detector designs for future colliders differ substantially depending on the type of environment they will have to withstand. Detectors at moderate- to high-energy e+e− colliders will see clean events and moderate levels of radiation. For such detectors, a detailed simulation strategy is crucial for high-precision physics measurements; however, the demand for large simulated samples makes a hybrid approach including parametrizations most likely. Silicon-based tracking detectors are also the technology of choice at muon colliders. The radiation environment within this machine poses unique challenges due to the high level of beam-induced backgrounds (BIBs). Real-time selection of the measurements most likely to come from the interaction point rather than from BIBs will probably rely on detailed analyses of the signal shapes in neighboring pixels, as well as on possible correlations across closely spaced layers [63]. A hybrid approach will likely be needed, consisting of a detailed simulation of the detector layers where the raw signal multiplicity is highest and needs to be reduced, together with a fast simulation approach for the rest of the tracking detector. For detectors at future hadron colliders, the extreme radiation environment near the interaction point will make it mandatory to implement radiation damage effects in the simulation. For this, a parametrized approach would also be the most realistic path to keep computational costs under control.

6.2 Calorimeters

Calorimeters may be broadly classified into two types. In homogeneous calorimeters, the entire volume is sensitive and contributes a signal through the generation of light from scintillation or Cherenkov emission. These photons are collected, amplified, digitized and recorded. In sampling calorimeters, the material that produces the particle shower is distinct from the material that measures the deposited energy. Particles traversing the active layers of sampling calorimeters lose energy through ionization and atomic excitation. The charge of the resulting products (electrons and ions) is subsequently collected, amplified, digitized and recorded. In homogeneous calorimeters, modeling photon transport to the photo-transducers is CPU intensive and is typically implemented as a parametrization tuned to predictions obtained from a specialized simulation package [33, 64]. Increasingly, the simulation of optical photons is offloaded to GPUs to mitigate computing costs, taking advantage of the high level of parallelism achievable in the transport of these photons. The photon transmission coefficient is affected by radiation damage through the formation of color centers in the medium, so an assumption must be made about the distribution of color centers in the medium. The light output, L(d), after receiving a radiation dose d, is described by an exponential function of the dose:

$$L(d) = L_0\,e^{-\mu d},$$

where L_0 is the light output before irradiation and the parameter μ is a property of the material that depends on the dose rate. The radiation damage parametrizations are typically calibrated with data from a monitoring system. The radiation dose and the neutron fluence (flux integrated over time) are estimated using an independent simulation of the detector setup.
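Calibrating the damage parametrization from monitoring data can be sketched as a one-parameter fit, assuming the exponential form L(d) = L_0 exp(−μd): taking the logarithm of the relative light output L/L_0 gives a straight line through the origin, whose least-squares slope is μ. Because μ depends on the dose rate, one would presumably fit it separately for regions with different dose rates. The doses, units, and function names below are hypothetical.

```python
import math


def light_output(dose: float, mu: float, l0: float = 1.0) -> float:
    """Exponential light-output model L(d) = L0 * exp(-mu * d)."""
    return l0 * math.exp(-mu * dose)


def fit_mu(doses: list, relative_light: list) -> float:
    """Least-squares mu from ln(L / L0) = -mu * d (a line through the origin)."""
    numerator = sum(d * math.log(r) for d, r in zip(doses, relative_light))
    denominator = sum(d * d for d in doses)
    return -numerator / denominator


# Hypothetical monitoring points for one dose-rate region (dose in kGy).
doses = [0.0, 1.0, 2.0, 5.0, 10.0]
measured = [1.0, 0.93, 0.87, 0.71, 0.50]
print(f"fitted mu = {fit_mu(doses, measured):.3f} per kGy")
```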

The next step in the simulation chain for calorimeters is the treatment of the photo-transducer, the most commonly used type being silicon photomultipliers. These devices also suffer from time-dependent effects related to radiation exposure: a decrease in photo-statistics (fewer photons reaching the device) and an increase of the noise coming from dark currents. The noise increases with the square root of the fluence, and the dark current also scales with the sensor area. Signal simulation in silicon photomultipliers involves emulating photo-statistics with a Poisson distribution, distributing the photo-electrons according to the pulse shape, and adjusting the signal arrival time, as well as modeling the dark current (thermal emission of photo-electrons), the cross-talk induced in pixels neighboring the fired ones, the pixel recovery time after firing, and the saturation effect for large signals, when several photo-electrons fall on the same pixel. An exponential function accurately describes the recharge of a pixel as a function of time, while cross-talk can be modeled as a branching Poisson process. The Borel distribution [65, 66] gives an analytical expression for the probability distribution of the total number of cells fired through this process.
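The cross-talk, recovery, and saturation ingredients can be made concrete as follows. In a branching Poisson process where each fired cell triggers on average λ < 1 neighbors, the total number of fired cells per primary avalanche follows the Borel distribution P(n) = (λn)^(n−1) e^(−λn) / n!, with mean 1/(1 − λ). The saturation and recovery formulas assume uniform illumination and a single exponential recharge time. The value of λ, the device sizes, and the function names are illustrative assumptions.

```python
import math
import random


def poisson(mean: float, rng: random.Random) -> int:
    """Knuth's multiplication method (small means only)."""
    limit, k, p = math.exp(-mean), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def borel_pmf(n: int, lam: float) -> float:
    """P(n cells fire in total | one primary avalanche), for n >= 1 and 0 < lam < 1.

    Evaluated in log space to avoid overflow of (lam*n)^(n-1) and n!."""
    return math.exp((n - 1) * math.log(lam * n) - lam * n - math.lgamma(n + 1))


def fired_cells_with_crosstalk(lam: float, rng: random.Random) -> int:
    """Branching Poisson process: every fired cell triggers Poisson(lam) neighbours."""
    total, pending = 1, 1
    while pending:
        pending -= 1
        new = poisson(lam, rng)
        total += new
        pending += new
    return total


def fired_pixels_with_saturation(n_photoelectrons: float, n_pixels: int) -> float:
    """Expected fired pixels when photo-electrons are spread uniformly over the device."""
    return -n_pixels * math.expm1(-n_photoelectrons / n_pixels)


def recovered_gain_fraction(dt_ns: float, tau_recovery_ns: float) -> float:
    """Exponential recharge: fraction of full gain dt_ns after a pixel fired."""
    return -math.expm1(-dt_ns / tau_recovery_ns)


lam = 0.2
print(sum(n * borel_pmf(n, lam) for n in range(1, 200)))  # close to 1 / (1 - lam) = 1.25
```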

Finally, the simulation of the readout electronics includes the readout gain, adjusted to obtain an acceptable signal-to-noise ratio throughout the lifetime of the detector; the electronics noise, with contributions from the leakage current in the detector and the resistors shunting the input to the readout chip, together with the implementation of so-called common-mode subtraction; and the ADC pulse shape, which determines the fraction of charge that leaks into neighboring bunch crossings. Zero suppression is also modeled, keeping only the digits that cross a threshold in the time sample corresponding to the bunch crossing of interest.
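Common-mode subtraction and zero suppression are simple to emulate once pedestals are known. The sketch assumes the common-mode shift of a readout chip is estimated as the median of its pedestal-subtracted channels (a robust choice when a few channels carry real signal; actual chips and experiments may use other estimators) and that zero suppression tests a single sample index. Values and function names are illustrative.

```python
import statistics


def common_mode_subtract(adc_counts: list, pedestals: list) -> list:
    """Remove per-channel pedestals, then the chip-wide common-mode shift,
    estimated here as the median over the chip's channels."""
    corrected = [a - p for a, p in zip(adc_counts, pedestals)]
    common_mode = statistics.median(corrected)
    return [c - common_mode for c in corrected]


def zero_suppress(samples_per_channel: dict, threshold: float, sample_of_interest: int) -> dict:
    """Keep a channel only if its sample for the bunch crossing of interest exceeds the threshold."""
    return {ch: s for ch, s in samples_per_channel.items() if s[sample_of_interest] > threshold}


raw = [103, 104, 102, 150, 103, 105]   # one chip, channel 3 carries a real signal
print(common_mode_subtract(raw, [100] * 6))  # [-0.5, 0.5, -1.5, 46.5, -0.5, 1.5]
print(zero_suppress({0: [0, 1, 0], 1: [2, 40, 10]}, threshold=5, sample_of_interest=1))
```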

At future colliders, the simulation of silicon-based calorimeters will face challenges similar to those described in the previous section for tracking devices. Parametrizations of time-consuming photon transport may be replaced with detailed modeling processed on hardware accelerators. Radiation damage will be more pronounced in high-background environments such as high-energy hadron colliders and muon colliders, introducing a time-dependent component throughout the signal simulation chain, which will need to be measured from data and modeled in detail.

7 Computing

Non-traditional heterogeneous architectures, such as GPUs, have recently begun to dominate the design of new High Performance Computing centers, and are also increasingly prevalent in data centers and cloud computing resources. Transitioning HEP software to run on modern systems is proving to be a slow and challenging process, as described in Section 7.3. However, on the timescale of future colliders, this evolution in the computing landscape offers tremendous opportunities to HEP experiments. The predicted increase in compute power, the capability to offload different tasks to specialized hardware in hybrid systems, and the option to run machine learning inference as a service in remote locations together open the field of HEP simulation to a world in which simulated data volumes could grow several-fold while physics models and detector descriptions are preserved or improved.

7.1 Projection of Hardware Architecture Evolution

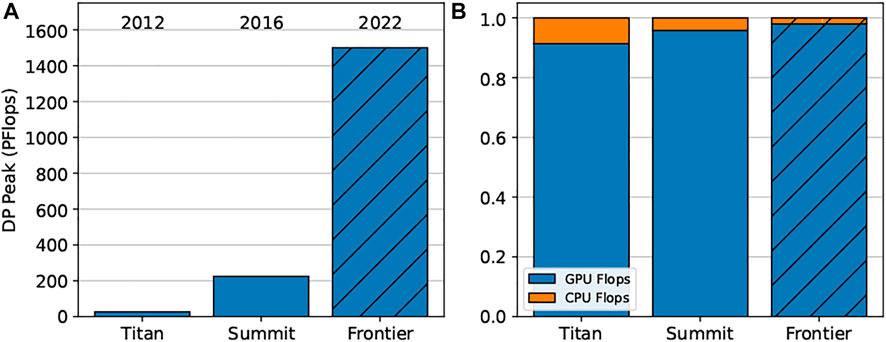

For example, the U.S. Department of Energy (USDOE) will be setting up three new GPU-accelerated, exascale platforms in 2023–2024 at the Oak Ridge Leadership Computing Facility (OLCF [67]), Argonne Leadership Computing Facility (ALCF [68]), and Lawrence Livermore National Laboratory. Additionally, the National Energy Research Scientific Computing Center (NERSC [69]) is deploying an NVIDIA-based GPU system for basic scientific research. Figure 2 shows peak performance in Flops for machines deployed at the OLCF between 2012 and 2023. In addition to the projected

FIGURE 2. Peak performance in Flops (A) and fraction of Flops provided by GPU and CPU (B) for GPU-accelerated systems deployed at the Oak Ridge Leadership Computing Facility (OLCF). The peak performance for Frontier is projected.

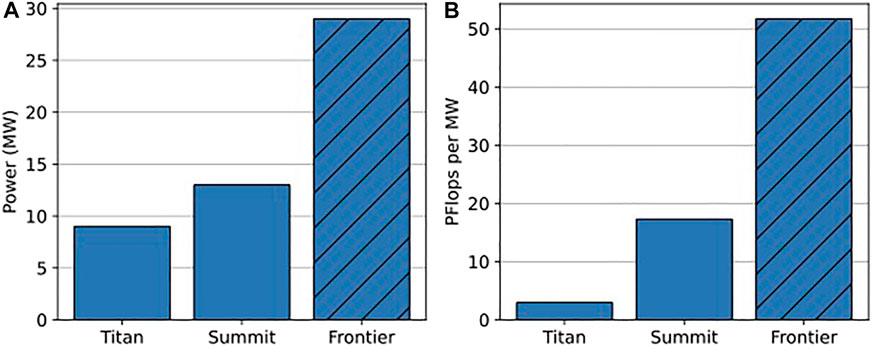

The primary driver of this evolution is the power requirements of high-performance computing. Figure 3 shows power consumption for OLCF machines from 2012 to 2022: a 3× increase in total power consumption is accompanied by a 17-fold increase in Flops per MW.
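Since peak performance is the product of power and efficiency, the two ratios quoted above combine multiplicatively. Taking them at face value (both are rounded), the implied growth in peak Flops over the period is roughly fifty-fold:

$$
\frac{\mathrm{Flops}_{2022}}{\mathrm{Flops}_{2012}}
= \frac{P_{2022}}{P_{2012}} \times
  \frac{(\mathrm{Flops}/\mathrm{MW})_{2022}}{(\mathrm{Flops}/\mathrm{MW})_{2012}}
\approx 3 \times 17 \approx 51 .
$$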

FIGURE 3. Power consumption (A) and Flops per MW (B) for GPU-accelerated systems deployed at the Oak Ridge Leadership Computing Facility (OLCF). The power requirements for Frontier are projected.

It is difficult to predict the exact nature of the hardware landscape beyond 5 years or so, but undoubtedly we will see evolutionary rather than revolutionary changes to current hardware, since a failed product can now cost billions of dollars due to design and fabrication costs. Core counts will continue to go up as transistor feature sizes decrease, with increasing use of multi-chip and 3D-stacked solutions needed to avoid overly large dies. It is also likely that vendors will devote larger sections of silicon to specialized functions, as we see with Tensor and Ray Tracing cores in current GPUs. FPGA and ASIC vendors are now offering specialized component layouts for domain-specific applications, and this level of customization will likely increase. We are also beginning to see the combination of multiple different types of cores, such as high- and low-power CPUs and FPGAs, in the same silicon die or chiplet array, leading to more integrated heterogeneous architectures with faster communication channels between the various components and much quicker offload speeds.

7.2 Description of Heterogeneous Architectures

Heterogeneous architectures such as GPUs and FPGAs are fundamentally different from traditional CPU architectures. CPUs typically possess a small number of complex cores that excel at branch prediction and instruction prefetching. They have multiple levels of large, fast caches, and typically have very low access latencies. GPUs, on the other hand, have a very large number of simple cores (thousands on modern GPUs, running hundreds of thousands of concurrent threads) that do not handle branching gracefully. Threads that are grouped together in a warp (or wavefront) execute in lockstep, all processing the same instruction. When threads in such a group take different branches, the branches are executed one after the other with the inactive threads idle, greatly decreasing throughput. GPUs often have much more silicon devoted to lower- and mixed-precision operations than to the double-precision calculations heavily used in High Energy Physics. GPUs are optimized for a Single Instruction Multiple Data (SIMD) style of operation, in which sequential threads access sequential memory locations; randomized memory access causes significant performance degradation. Finally, GPUs have very high access latencies compared to CPUs: it can take tens of microseconds to offload a kernel from a host to a GPU. The combination of massive parallelism, memory access patterns, and high latencies of GPUs requires a fundamentally different programming model from that of CPUs.
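Two of these effects, memory coalescing and branch divergence, can be captured with a crude cost model. The sketch below assumes a 32-thread warp, 4-byte loads, and 128-byte aligned memory segments, which loosely follows NVIDIA hardware; actual coalescing rules and divergence handling vary between architectures and generations, and the branch costs are invented "cycle" counts for illustration.

```python
WARP_SIZE = 32        # threads executing in lockstep (assumed)
SEGMENT_BYTES = 128   # size of one aligned memory transaction (assumed)


def memory_transactions(addresses: list, segment_bytes: int = SEGMENT_BYTES) -> int:
    """Number of distinct aligned segments one warp's loads touch."""
    return len({addr // segment_bytes for addr in addresses})


def divergent_warp_cost(branch_of_thread: list, cost_of_branch: dict) -> int:
    """Cost of one warp step when threads follow different branches: each distinct
    branch taken by at least one thread is executed in turn, with the other threads masked."""
    return sum(cost_of_branch[b] for b in set(branch_of_thread))


# Coalesced: thread i reads float i, so the whole warp hits one 128-byte segment.
print(memory_transactions([4 * i for i in range(WARP_SIZE)]))    # 1
# Strided: thread i reads float 32*i, so every thread needs its own segment.
print(memory_transactions([128 * i for i in range(WARP_SIZE)]))  # 32

costs = {"electron": 100, "photon": 80}  # hypothetical per-branch costs
print(divergent_warp_cost(["electron"] * WARP_SIZE, costs))          # 100
print(divergent_warp_cost(["electron", "photon"] * 16, costs))       # 180
```

Mixing particle types within a warp therefore costs nearly as much as processing each type separately, which is one motivation for grouping similar tracks together in GPU transport codes.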

The architecture of FPGAs is considerably simpler than that of more general-purpose GPUs and CPUs, consisting of discrete sets of simple logic and I/O blocks linked by programmable interconnects. Programming an FPGA consists of mapping the program flow of the code onto the logic layout of the device and activating the appropriate interconnects. The concept of directly encoding operations into hardware has gained traction over the last 5 years, and current compute GPUs have significant operations encoded directly into the hardware, including mixed-precision matrix-matrix multiplication (tensor cores) and ray tracing, for AI and graphics applications respectively. FPGAs potentially offer greater promise in this regard because they can be configured for domain-specific operations, whereas tensor cores have limited utility outside the deep-learning AI space. This strength is also a weakness when it comes to deploying integrated FPGA hardware in large compute centers: developing code for FPGAs is considerably more challenging than for GPUs, because the programming languages are less flexible and compilation times are several orders of magnitude longer, making the development cycle much more difficult. Thus, no major FPGA-based large systems are currently in development, and we expect FPGA usage to remain restricted to local deployments in the near-to-medium term.

7.3 Challenges for Software Developers

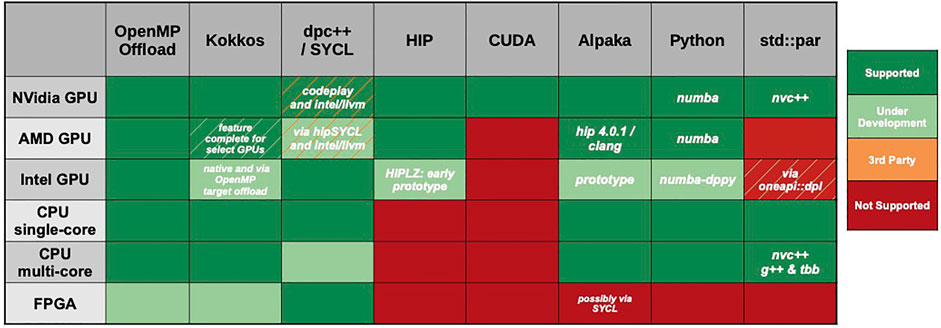

Each GPU manufacturer promotes its own software stack: NVIDIA uses CUDA, AMD promotes HIP, and Intel employs oneAPI. Other heterogeneous architectures such as FPGAs also rely on their own tools, such as the hardware description language Verilog or high-level synthesis (HLS). The vast majority of current HEP software is written in C++ and is supported by physicists who are usually not professional developers. Typical HEP workflows encompass millions of lines of code, with hundreds to thousands of kernels, none of which dominates the computation. In order to target the current diverse range of GPUs and FPGAs, we would have to rewrite a very large fraction of the HEP software stack in multiple languages. Given the limited available workforce, and the extremely challenging nature of validating code that executes differently on multiple architectures, experiments would have to make very difficult choices as to which hardware they could target, ignoring large amounts of available computing power. Fortunately, a number of portability solutions have recently started to emerge, such as Kokkos, RAJA, Alpaka, and SYCL, which are able to target more than one hardware backend (see Figure 4). Furthermore, hardware vendors have seen the benefits of cross-platform compatibility and are working to develop standards that they are trying to incorporate into the C++ standard. Ideally, a single language or API able to target both CPUs and all available heterogeneous architectures would be the preferred solution.

Currently, mapping computational physics and data codes to GPU architectures requires significant effort and profiling. Most HEP code bases are not easily vectorizable or parallelizable, and many HEP applications are characterized by random memory access patterns. They tend to follow sequential paradigms, with many conditional branch points, which makes them challenging to adapt to GPUs. Even tasks such as particle transport, which in high-luminosity environments such as the HL-LHC seemingly offer very high levels of parallelism, are in fact very difficult to run efficiently on GPUs because of rapid thread divergence caused by non-homogeneous geometry and magnetic field constraints.

One avenue that offers some hope for easier adoption of GPUs is the use of Machine Learning (ML) techniques to solve physics problems. We are seeing increasing acceptance of ML algorithms for pattern recognition and feature discrimination tasks in HEP, as well as for more novel tasks such as generative models for energy depositions in calorimeter simulations. ML backends for all GPU and other heterogeneous architectures already exist, and are often supported directly by the hardware manufacturers, which greatly eases the burden for HEP developers.

8 Software Toolkits

The evolution of simulation software toolkits will depend greatly on the hardware, whose evolution on a 10-year timescale is uncertain, as discussed in Section 7. Today's leading toolkit, Geant4 [3], used for detector simulation by most large experiments, as well as the particle transport tools FLUKA [5] and MARS15 [6], used to assess radiation effects, are large and complex, and have evolved over 30 years of CPU-centric computation.

8.1 Computing Hardware Accelerator Usage

Whether current simulation toolkits can be adapted to profit adequately from a variety of hardware accelerators, principally GPUs, or whether new accelerator-centric codes should be created and then interfaced to existing toolkits, is a key research question. The cost-effectiveness of the conversion also depends on the effort required to develop production-level code and on the cost of creating GPU-capable applications. The latter is under active exploration.

The research into GPU usage is inspired by efforts in related particle transport applications in HEP and other fields. As discussed in Secs. 3 and 6, the Opticks project [33] offloads the simulation of optical photons to NVIDIA GPUs and demonstrates methods to deal with complex, specialized geometries on these devices, specifically those with many repetitive structures. MPEXS, a CUDA-based application for medical physics [77] using Geant4-derived physics models, has also demonstrated efficient use of GPU resources for regular ‘voxelised’ geometries. However, the general problem of modeling particles over a large range of energies combined with the full complexity of modern detector geometries has not been tackled yet. Solving these general problems is the domain of two ongoing R&D efforts, the Celeritas project [44] and the AdePT prototype [28]. Both started by creating CUDA-based proof-of-concept implementations of electromagnetic physics, and particularly showering, in complex detector geometries on GPUs. Key goals of the projects include identifying and solving major performance bottlenecks, and providing a first template for efficiently extracting energy deposits, track passage data, and similar user-defined data. Initially, both target the simulation of electron, photon, and positron showers in complex geometrical structures, currently described by deep hierarchies with many repetitions of volumes at different levels. They have identified the need for a geometry modeller adapted to GPUs and other accelerators, and sufficiently capable of handling these complex structures (see Section 3), and are in the process of defining and developing solutions for such a geometry modeller.

The limited bandwidth and the latency of communication between the CPU and the accelerator are important constraints on the use of GPUs and other accelerators for particle transport simulation, and on the overall application. Minimising the amount of data exchanged between the CPU and the accelerator, such as input particles and output hits, is therefore an important design constraint for GPU-based particle transport, and it may determine the types of detectors for which such transport is suitable. Contention for this resource may also constrain the overall application, which integrates particle transport and showering with event generation, signal generation, and further reconstruction.
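One common way to shrink the output traffic is to reduce step-level hits to per-cell sums before they leave the accelerator. The sketch below illustrates the arithmetic in plain Python; the `StepHit` record, the byte sizes, and the function names are hypothetical and not taken from AdePT, Celeritas, or any experiment's hit format.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical on-wire record sizes in bytes, for illustration only.
STEP_HIT_BYTES = 4 + 8 + 8   # cell id (int32) + energy (float64) + time (float64)
CELL_SUM_BYTES = 4 + 8       # cell id (int32) + summed energy (float64)


@dataclass(frozen=True)
class StepHit:
    cell_id: int      # readout cell that received the deposit
    edep_mev: float   # energy deposited in this step (MeV)
    time_ns: float    # global time of the step (ns)


def sum_per_cell(hits):
    """Reduce step-level hits to one summed energy per readout cell.

    In a GPU code this reduction would run on the device, so that only
    the per-cell sums cross the CPU-accelerator link.
    """
    sums = defaultdict(float)
    for hit in hits:
        sums[hit.cell_id] += hit.edep_mev
    return dict(sums)


def bytes_to_transfer(hits, reduce_on_device):
    """Size of the payload sent back to the CPU, with or without reduction."""
    if reduce_on_device:
        return len(sum_per_cell(hits)) * CELL_SUM_BYTES
    return len(hits) * STEP_HIT_BYTES
```

For 10,000 steps spread over 100 cells, the payload drops from 200,000 to 1,200 bytes. The reduction also discards the per-step time, which a detector relying on precise timing information would still need. This is one way in which the suitability of GPU transport can depend on the detector type.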

Existing prototypes such as AdePT and Celeritas strongly focus on keeping computation on the accelerator and moving back to the CPU only the absolute minimum of data and work. When only a selected region of a geometry is accelerated, a particle that escapes that region must be returned to the CPU, as must particle tracks that undergo (rare) interactions not currently simulated in GPU code, e.g., photo-nuclear interactions. The largest and most critical data transferred out of the accelerator are, of course, the experiment hit records (or processed signal sum values) and other user information, such as truth information.
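The hand-back rule described above can be written as a small routing function. The following is a minimal sketch. The region name `ECAL` and the set of device-side processes are assumptions chosen for illustration; the process labels are modelled on Geant4 process names. The sketch does not describe how either prototype actually implements this.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Destination(Enum):
    DEVICE = auto()   # keep transporting on the accelerator
    HOST = auto()     # hand back to the CPU toolkit


# Hypothetical accelerated region and GPU-implemented EM processes.
ACCELERATED_REGIONS = frozenset({"ECAL"})
DEVICE_PROCESSES = frozenset({"eIoni", "eBrem", "compt", "phot", "conv", "annihil"})


@dataclass
class Track:
    pdg: int            # particle code (11 = electron, 22 = photon)
    region: str         # geometry region the track is currently in
    next_process: str   # process selected for the next interaction


def route(track):
    """Decide whether a track stays on the device or returns to the host."""
    if track.region not in ACCELERATED_REGIONS:
        return Destination.HOST     # escaped the accelerated region
    if track.next_process not in DEVICE_PROCESSES:
        return Destination.HOST     # e.g. photo-nuclear, not implemented on device
    return Destination.DEVICE


def partition(tracks):
    """Split tracks so that host-bound ones can be sent back in one batch."""
    device, host = [], []
    for t in tracks:
        (device if route(t) is Destination.DEVICE else host).append(t)
    return device, host
```

Collecting host-bound tracks into a single batch, rather than returning them one by one, follows the same goal of moving back only the minimum of data.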

Early-phase exploration of the potential of FPGAs for particle transport is being conducted for medical physics simulation [78]. However, the challenges involved appear more daunting, because a complex tool must be compiled into hardware. This approach will likely be investigated only after implementations have been built from ‘simpler’ building blocks on GPUs. These may profit from leveraging implementations created for portable programming frameworks.

Based on current trends, except in situations where ultimate performance is required for time-critical applications, we expect the emerging portable programming paradigms (Kokkos, Alpaka, SYCL) to slowly supplant the established vendor-specific libraries (CUDA, HIP, DPC++). Within a few years, we expect a convergence on standard-supported languages and libraries, such as the C++ standard library's parallel execution policy std::execution::par. Given the importance of portability between hardware of different vendors, it is critical to identify and invest in cross-vendor solutions, and potentially in paradigms that can be used to investigate alternative hardware platforms, as mentioned above for FPGAs.

8.2 Opportunities for Parallelism

We expect applications and future toolkits will need to expose multiple levels of parallelism in order to manage resources and to coordinate with other computations, such as reconstruction and event generation. Such levels could entail parallel processing of different events as well as parallel processing of multiple algorithms or even multiple particles within an event. A detector simulation toolkit cannot assume that it controls all resources, but must cooperate with other ongoing tasks in the experiment application. At this point, it is unclear how to accomplish this cooperation efficiently.
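One simple way for a simulation component to avoid assuming that it owns all resources is to accept an executor from the surrounding framework instead of creating its own thread pool. The sketch below is only an illustration of that structure. The names `simulate_event` and `run_events` are hypothetical, and the toy "event" is just a seeded random sum.

```python
import random
from concurrent.futures import Executor, ThreadPoolExecutor


def simulate_event(seed):
    """Stand-in for the detector simulation of one event (hypothetical)."""
    rng = random.Random(seed)   # independent, reproducible stream per event
    return sum(rng.random() for _ in range(1000))


def run_events(seeds, executor: Executor):
    """Run event-level parallelism on a pool provided by the caller.

    The experiment framework decides how many workers simulation may use,
    so the same pool can be shared with reconstruction or event generation.
    """
    return list(executor.map(simulate_event, seeds))


if __name__ == "__main__":
    with ThreadPoolExecutor(max_workers=2) as pool:
        print(run_events(range(4), pool))
```

Because each event draws from its own seeded stream, the results do not depend on how the pool schedules the events. With CPython threads, this shows the ownership structure rather than a speedup; a `ProcessPoolExecutor` could be passed in the same way. Parallelism within an event would require a further level below this one.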

Exposing massive parallelism, with thousands or tens of thousands of active particles, is challenging in detector simulation. The GeantV project [27] explored the potential of CPU SIMD parallelism by marshalling similar work (‘event-based’ in the parlance of neutron simulation), e.g., waiting until many particles had entered a particular volume before propagating them through that volume. The project concluded that the speedup potential was modest, a factor of between 1.2 and 2.0.
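The marshalling idea can be sketched as a scheduler that collects particles per volume and transports a whole basket at once. This toy version is written for illustration only. The class name, the basket size, and the flush policy are assumptions and do not reproduce the GeantV implementation.

```python
from collections import defaultdict


class BasketScheduler:
    """Group particles by volume and transport a basket once it is full."""

    def __init__(self, basket_size, transport_basket):
        self.basket_size = basket_size
        self.transport_basket = transport_basket   # callback(volume, particles)
        self.baskets = defaultdict(list)

    def add(self, volume, particle):
        basket = self.baskets[volume]
        basket.append(particle)
        if len(basket) >= self.basket_size:
            self._flush(volume)

    def _flush(self, volume):
        particles = self.baskets.pop(volume)
        # One call per basket: this is where SIMD-vectorised transport would run.
        self.transport_basket(volume, particles)

    def flush_all(self):
        """At the end of an event, partially filled baskets must still be processed."""
        for volume in list(self.baskets):
            self._flush(volume)


if __name__ == "__main__":
    sched = BasketScheduler(2, lambda vol, ps: print(vol, ps))
    for vol, p in [("A", 1), ("B", 2), ("A", 3)]:
        sched.add(vol, p)
    sched.flush_all()
```

The sketch also makes one cost visible. Particles wait in partially filled baskets, and `flush_all` must eventually drain baskets that never reached full size.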

The ability to execute many concurrent, independent kernels on recent GPUs is clearly of crucial interest to HEP, because it avoids the need for the very fine-grained, thread-level parallelism that the GeantV project aimed for. It is difficult for a single kernel to exploit the full parallelism available on modern GPUs, so being able to execute many kernels performing different tasks will be invaluable.

8.3 Parametrized Simulation

In parallel with the need for a full, detailed simulation capability to meet the physics requirements of future colliders, there is a growing focus on developing techniques that replace the most CPU-intensive components of the simulation with faster methods (so-called “fast simulation” techniques) while maintaining adequate physics accuracy. This category includes optimization and biasing techniques that tune parameters of simulation constituents, such as the geometry or physics models, and which are strictly experiment specific. It also includes the parametrization of parts of the simulation (e.g., electromagnetic shower development in calorimeters) by combining different machine learning techniques. R&D efforts are ongoing in all the major LHC experiments to apply cutting-edge generative modelling techniques based on deep learning, e.g., GANs, VAEs, and normalizing flows, to the description of electromagnetic showers.
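To make "parametrizing shower development" concrete, the sketch below uses the classic gamma-function description of the mean longitudinal profile of an electromagnetic shower, rather than an ML model. It uses dE/dt = E0 b (bt)^(a-1) e^(-bt) / Γ(a), with depth t in radiation lengths. The peak depth t_max = ln(E0/Ec) + C is set with C = -0.5 for electrons and +0.5 for photons, and b ≈ 0.5 is assumed. The function names, the 1 X0 layer thickness, and the critical energy used in the example are illustrative assumptions. The generative models mentioned above aim to reproduce far more detail, such as fluctuations and lateral shape.

```python
import math


def gamma_profile(t, e0, a, b):
    """Mean energy deposited per radiation length at depth t (same units as e0)."""
    if t <= 0.0:
        return 0.0
    return e0 * b * (b * t) ** (a - 1.0) * math.exp(-b * t) / math.gamma(a)


def shape_parameters(e0_mev, ec_mev, electron=True, b=0.5):
    """Choose (a, b) so that the profile peaks at t_max = (a - 1) / b = ln(E0/Ec) + C."""
    c = -0.5 if electron else 0.5
    t_max = math.log(e0_mev / ec_mev) + c
    return b * t_max + 1.0, b


def layer_deposits(e0_mev, ec_mev, n_layers, layer_x0=1.0, electron=True, substeps=20):
    """Mean energy per calorimeter layer, integrating the profile by the midpoint rule."""
    a, b = shape_parameters(e0_mev, ec_mev, electron)
    h = layer_x0 / substeps
    deposits = []
    for i in range(n_layers):
        t0 = i * layer_x0
        deposits.append(sum(gamma_profile(t0 + (k + 0.5) * h, e0_mev, a, b) * h
                            for k in range(substeps)))
    return deposits
```

A parametrized model replaces many thousands of tracked secondaries with a single function evaluation per layer. This is the source of the CPU savings, at the cost of the accuracy that the detailed simulation provides.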

We expect the bulk production of Monte Carlo simulation data to be performed with a combination of detailed and parametrized simulation techniques. To this end, the ability to combine fast and full simulation tools flexibly is of crucial importance. Along these lines, we expect Geant4 to evolve coherently by providing tools that allow ML techniques to be integrated, with efficient and smooth interleaving of different types of simulation.
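Interleaving fast and full simulation amounts to deciding, track by track, which kind of simulation handles it. The toy dispatcher below is loosely modelled on the applicability/trigger/action split used by Geant4's fast-simulation models. All names, the `ECAL` region, and the 1 GeV threshold are hypothetical, and this is not the Geant4 API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Particle:
    pdg: int            # 11 = electron, 22 = photon, 13 = muon
    energy_mev: float
    region: str


@dataclass
class FastSimModel:
    """Toy fast-simulation model attached to one geometry region."""
    region: str
    is_applicable: Callable[[Particle], bool]   # which particle types it handles
    model_trigger: Callable[[Particle], bool]   # per-track condition, e.g. energy
    do_it: Callable[[Particle], str]            # parametrized (e.g. ML) response


def simulate(particle, fast_models: List[FastSimModel], full_sim):
    """Use the first fast model that accepts the particle, else detailed transport."""
    for m in fast_models:
        if (particle.region == m.region and m.is_applicable(particle)
                and m.model_trigger(particle)):
            return m.do_it(particle)
    return full_sim(particle)


em_shower = FastSimModel(
    region="ECAL",
    is_applicable=lambda p: abs(p.pdg) in (11, 22),
    model_trigger=lambda p: p.energy_mev > 1000.0,
    do_it=lambda p: "fast",
)
```

Keeping the trigger separate from the applicability test lets low-energy tails of a shower fall back to detailed transport, where a parametrization is usually least reliable.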

8.4 Future of Geant4

Due to its versatility, its large number of physics modeling options, and the investment of many experiments, including the LHC experiments, we expect an evolved Geant4 to be a key component of detector simulation for both ongoing and near-future experiments well into the 2030s. Over the next decade, we expect Geant4’s capabilities to evolve to include two kinds of options: parametrized simulation using machine learning, and acceleration for specific configurations (geometry, particles, and interactions) on selected hardware. Both should significantly increase simulation throughput. These enhanced capabilities will, however, come with significant constraints, due to the effort required to adapt user code to the accelerator/heterogeneous computing paradigm. Furthermore, substantial speedup or throughput improvements must be demonstrated before experiments can undertake such an investment in adapting their applications. Full utilization of accelerators may not be required, as offloading some work to accelerators should free up CPU cores to do additional work at the same time, thereby improving throughput. In addition, some HPC sites may require applications to make some use of GPUs in order to run there. Even a modest use of GPUs by simulation may therefore allow experiments to run on such HPC resources, reducing the total time needed for large-scale simulation workflows.
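The claim that partial offloading can pay off without saturating the accelerator can be checked with a back-of-the-envelope model. It assumes that the offloaded work overlaps perfectly with the remaining CPU work. Under that assumption, a node is limited either by its CPU cores or by the accelerator's capacity. The function names and all numbers below (64 cores, 10 s of CPU per event, 0.05 s of GPU per event) are hypothetical.

```python
def node_throughput(n_cores, cpu_s_per_event, offload_fraction, gpu_s_per_event):
    """Events per second for a node whose cores share one accelerator.

    Assumes the offloaded fraction of CPU work is replaced by gpu_s_per_event
    of accelerator time, fully overlapped with the remaining CPU work.
    """
    if not 0.0 <= offload_fraction < 1.0:
        raise ValueError("offload_fraction must be in [0, 1)")
    cpu_limit = n_cores / (cpu_s_per_event * (1.0 - offload_fraction))
    gpu_limit = float("inf") if gpu_s_per_event == 0.0 else 1.0 / gpu_s_per_event
    return min(cpu_limit, gpu_limit)


def gpu_utilization(n_cores, cpu_s_per_event, offload_fraction, gpu_s_per_event):
    """Fraction of time the accelerator is busy at the achieved throughput."""
    rate = node_throughput(n_cores, cpu_s_per_event, offload_fraction, gpu_s_per_event)
    return rate * gpu_s_per_event
```

With these numbers, offloading half of the CPU work doubles node throughput from 6.4 to 12.8 events/s while the GPU is busy only 64% of the time. Offloading 90% makes the accelerator the bottleneck at 20 events/s.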

9 Applications of HEP Tools to Medical Physics and Other Fields

After the initial development of Monte Carlo (MC) methods for the Manhattan Project, the tools became available to the wider research community after declassification in the 1950s. Among the early adopters of MC methods were physicists in radiation therapy. They were eager to predict the dose in patients more accurately and to design and simulate detectors for quality assurance and radiation protection. The simulations were done mainly with in-house codes, with some low-energy codes modeling photons up to 20 MeV either developed in-house or transferred from basic physics applications [35, 79]. The use of MC tools from the HEP domain mainly started with heavy charged particle therapy, first using protons and helium ions and later heavier ions such as carbon ions. Early research here was also done with in-house codes, mostly studying scattering in inhomogeneous media. In the early 1990s, more and more high-energy physicists entered the field of medical physics and brought their expertise and codes with them. This marked the start of the use in radiation therapy of general-purpose MC codes that were initially developed for high-energy physics applications, such as Geant4 and FLUKA. Fruitful collaborations were also established with the space physics field. There, HEP-developed toolkits were applied to particle detector design as well as to the related areas of dosimetry and radiation damage [80].

9.1 Beam Line Design and Shielding Calculations

Beam line design and shielding calculations are done prior to installing a treatment device. These applications of MC are no different from the HEP use case except for the beam energies studied. Beam line transport is typically simulated by the machine manufacturers, often with specialized codes such as Beam Delivery Simulation (BDSIM) [35]. Shielding calculations, on the other hand, aim at a conservative estimate with limited required accuracy and mostly use analytical methods.
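A typical analytical shielding estimate uses tenth-value layers (TVLs), where each TVL of material reduces the dose by a factor of 10. The sketch below assumes a single, constant TVL and illustrative numbers (a 40 cm TVL and a required reduction from 20 to 0.02 dose units). Real shielding design uses published TVLs that depend on the material, particle type, and energy, and often distinguishes the first TVL from the equilibrium one.

```python
import math


def required_transmission(dose_limit, unshielded_dose):
    """Fraction of the unshielded dose that may pass through the barrier."""
    return dose_limit / unshielded_dose


def barrier_thickness(transmission, tvl):
    """Thickness giving the requested transmission, assuming one constant TVL.

    n = log10(1 / transmission) tenth-value layers are needed.
    """
    if not 0.0 < transmission <= 1.0:
        raise ValueError("transmission must be in (0, 1]")
    return math.log10(1.0 / transmission) * tvl


def transmitted_fraction(thickness, tvl):
    """Inverse relation: dose fraction transmitted through a given thickness."""
    return 10.0 ** (-thickness / tvl)
```

Reducing the dose by a factor of 1000 requires three TVLs, i.e. 120 cm for a 40 cm TVL. Conservative choices of the TVL and workload make such estimates quick and adequate for their purpose.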

Shielding calculations are also critical in both manned and unmanned space missions to determine the radiation environment for humans [81] and instrumentation, as well as detector backgrounds [82].

9.2 Detector Design Studies

Hardware developments in nuclear and high-energy physics frequently find applications in radiation therapy and space missions, because the requirements on sensors and real-time data processing are similar. These detectors are less complex than those in HEP, but the components used in the simulations are very similar; the differences lie in the particles and energy regions of interest. As in HEP, MC simulations are a powerful tool to optimize detectors and treatment devices [83, 84]. In fact, for radiation therapy and diagnostic imaging, MC simulations are employed not only by researchers but also by vendors to optimize their equipment.

9.3 Dose Calculation

Predicting the dose in patients is arguably the most important task in radiation therapy and has therefore been the most active MC topic [85]. Dose prediction is similarly important in space physics, for estimating dose rates to astronauts and to materials and electronics [81, 86].

Despite its accuracy, MC dose calculation has not found widespread use in treatment planning in medicine. However, vendors of commercial planning systems have now developed very fast MC codes for treatment planning. These must simulate millions of histories in thick targets within minutes or seconds in a very complex geometry, i.e., the patient as imaged with CT [87]. For this task, these specialized codes have replaced multi-purpose MC codes, which are often less efficient. Multi-purpose codes are, however, used as a gold standard in place of measurements that are not feasible in humans. In addition, they are often used to commission treatment planning and delivery workflows. Because the targets are biological samples such as patients, the required scoring functionality often goes beyond what is typically used in HEP. Examples include scoring phase spaces on irregularly shaped surfaces and handling time-dependent geometries.

9.4 Diagnostic Medical Imaging

MC has long been used in the design of imaging systems such as positron emission tomography (PET) and computed tomography (CT) scanners [88]. As in therapy, HEP codes are applied to imaging, either directly or tailored to these low-energy applications [89]. Time-of-flight as well as optical simulations are done using MC. In recent years, MC has increasingly been used to understand interactions in patients as well. As radiation therapy pursues image-guided treatment, imaging devices are also being incorporated into treatment machines. This gives rise to problems that are studied using MC, such as the interaction between magnetic resonance imaging (MRI) and radiation therapy, either conventional (photon based) or magnetically scanned proton treatments.

9.5 Simulation Requirements for Non-HEP Applications

9.5.1 Physics Models and Data for Energy Ranges of Interest

Medical and many space applications typically fall under low-energy rather than high-energy physics. HEP tools might therefore not simulate some effects accurately, or their standard settings may not be applicable at low energies and may have to be adjusted and potentially validated separately [90]. Measurements of fragmentation cross-sections and attenuation curves are needed for MC applications in clinical environments. Most cross-sections and codes are indeed not very accurate for applications outside HEP, because the materials and energy regions of interest are very different. In fact, the cross-sections needed for medical physics applications mostly date back to experiments performed in the 1970s and are no longer of interest to the basic physics community. For instance, considerable uncertainties in nuclear interaction cross-sections in biological targets are particularly apparent in the simulation of isotope production [91]. Furthermore, high-energy physics is mainly interested in thin targets, whereas medical physics needs accurate representations of thick-target physics to determine energy loss in patients or devices, including Coulomb scattering and the nuclear halo.

9.5.2 Computational Efficiency (Variance Reduction)

In the future we may see two types of MC tools in medical physics. The first is high-efficiency MC algorithms that focus solely on dose calculation for treatment planning. The second is multi-purpose codes from high-energy physics, used for research and development. The latter can and will increasingly be used to replace difficult or cumbersome experiments, such as detector design studies for dosimetry and imaging. Nevertheless, thick-target simulations are often time consuming. The variance reduction techniques developed in medical physics to address this [92] may also be applicable to high-energy physics applications, as discussed in Section 8, allowing cross-fertilization between the two fields.
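As a minimal illustration of why thick targets need variance reduction, consider the toy problem of estimating the uncollided photon transmission e^(-μL) through a slab. With μL = 10, an analog simulation scores only about 4 or 5 of every 100,000 histories. The sketch below compares analog sampling with path-length biasing, a form of importance sampling. Free paths are drawn from a stretched exponential with a smaller attenuation coefficient, and each history carries a likelihood-ratio weight, which keeps the estimator unbiased. The toy ignores scattered photons. The function names and the biased coefficient of 0.1 are illustrative choices, not a method taken from [92].

```python
import math
import random


def analog_transmission(mu, thickness, n, rng):
    """Analog MC: fraction of photons whose first interaction lies beyond the slab."""
    hits = sum(1 for _ in range(n) if rng.expovariate(mu) > thickness)
    return hits / n


def biased_transmission(mu, thickness, n, rng, mu_biased):
    """Path-length biasing with mu_biased < mu; returns (estimate, standard error).

    Each sampled free path s carries the weight f(s) / g(s) =
    (mu / mu_biased) * exp(-(mu - mu_biased) * s), so the mean score
    is still exp(-mu * thickness).
    """
    total = 0.0
    total_sq = 0.0
    for _ in range(n):
        s = rng.expovariate(mu_biased)
        if s > thickness:
            w = (mu / mu_biased) * math.exp(-(mu - mu_biased) * s)
            total += w
            total_sq += w * w
    mean = total / n
    variance = total_sq / n - mean * mean
    return mean, math.sqrt(max(variance, 0.0) / n)
```

With 100,000 histories, μ = 1, L = 10, and a biased coefficient of 0.1, the biased estimator reaches a relative standard error of roughly 1%. The analog estimate has a relative error of order 50%.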

9.6 Future Role of MC Tools Outside of HEP