- 1 School of Automation Engineering, University of Electronic Science and Technology of China, Chengdu, China

- 2 School of Computer Science, Sichuan Normal University, Chengdu, China

- 3 Chengdu Research Base of Giant Panda Breeding, Sichuan Key Laboratory of Conservation Biology for Endangered Wildlife, Chengdu, China

Three-dimensional (3D) reconstruction of giant pandas plays an important role in giant panda conservation research. By analyzing a giant panda video image sequence (GPVS), we show that it has long-range-dependent characteristics. This article proposes an algorithm that exploits these characteristics to reconstruct the giant panda 3D model accurately. First, the algorithm uses the skinned multi-animal linear (SMAL) model to obtain an initial 3D model of the giant panda, and the 3D model for each single-frame image is reconstructed by adjusting the shape and pose parameters. Then, we use the coherence information carried by the long-range dependence between video frames to construct a smoothing energy function that corrects errors in the 3D model. From this error, we can judge whether the 3D reconstruction result is consistent with the real structural characteristics of the giant panda. The algorithm addresses both the low accuracy of 3D reconstruction and its sensitivity to occlusion and interference, and thus achieves accurate reconstruction of the giant panda 3D model.

1 Introduction

In recent years, computer-based three-dimensional reconstruction has been used increasingly in animal protection to study animal morphology. Giant pandas are China’s national treasures and first-class protected animals, and China has established giant panda breeding research bases in many places for their protection and study. Three-dimensional reconstruction of giant pandas enables non-contact body measurement, including body height, body length, chest circumference, and weight. It also helps conservation workers track growth status, such as changes in height, body length, weight, and obesity, so that they can further analyze the living environment and health of giant pandas and improve the level of protection. At the same time, rapid three-dimensional reconstruction and three-dimensional display give the public a more intuitive and comprehensive understanding of the species and further raise awareness of animal protection, which benefits both animal protection and society as a whole.

Since the giant panda is a nonrigid target, traditional rigid-body 3D reconstruction algorithms, such as structure-from-motion (SFM) [1], are not suitable for modeling it. Non-rigid structure-from-motion (NRSFM) extends SFM to deforming objects. In 2000, Bregler et al. first posed the question of how to recover a 3D nonrigid shape model from a single-view video sequence [2]. In 2013, Garg et al. proposed a variational approach to dense 3D reconstruction of nonrigid surfaces from monocular video sequences [3], which formulates NRSFM as a global variational energy minimization problem and can reconstruct highly deformed smooth surfaces. The method in reference [4] recovers the dynamic motion of nonrigid objects from long monocular video sequences; it exploits the fact that many deformed shapes recur over time and thereby reduces NRSFM to a rigid problem.

In 2015, Loper et al. proposed SMPL (skinned multi-person linear model), a three-dimensional model of the human body parameterized by body shape and pose [5]. In 2018, Kanazawa et al. proposed an end-to-end network framework for recovering a 3D human model from a 2D human image [6]. This method infers 3D mesh parameters directly from image features and thus combines 3D reconstruction with deep learning.

Because the behavior of giant pandas and other animals cannot be controlled, three-dimensional modeling algorithms designed for the human body do not achieve high accuracy on them. In 2017, Silvia Zuffi et al. designed a method for 3D modeling of animals from a single image, using the 3D shape and pose of animals [7] to build a statistical shape model analogous to SMPL, called SMAL (skinned multi-animal linear model) [8, 9]. So far, the model has achieved satisfactory results in the three-dimensional reconstruction of several kinds of quadrupeds, such as dogs, horses, and cattle.

In this study, we found that the accuracy of a giant panda 3D model reconstructed from a single image depends on the result of SMAL-based 3D pose modeling. Because single-frame reconstruction does not consider the temporal relationship between frames, the motion sequence assembled from single-frame pose results is uneven and not smooth. Such errors are difficult to correct automatically within a single-frame algorithm, so the 3D reconstruction result is usually unsatisfactory.

This article is organized as follows. In Section 2, we define the autocorrelation function (ACF) of the GPVS (giant panda video image sequence) and discuss its long-range dependence by analyzing the Hurst exponent. In Section 3, we present the giant panda 3D model. In Section 4, we introduce a motion smoothing constraint that uses frame-to-frame relationships to improve 3D accuracy. In Section 5, we present the experimental results of 3D modeling, and in Section 6, we give the conclusion.

2 Long-Range Dependence of GPVS

2.1 ACF of GPVS

If the autocorrelation function (ACF) of a sequence is nonzero at large lags or decays with a long tail, the sequence may be long-range dependent [10].

Theorem: Let

where

Proof. Let the mean

Let the autocovariance

where

Substituting Eq. 2.3 into Eq. 2.4, we get

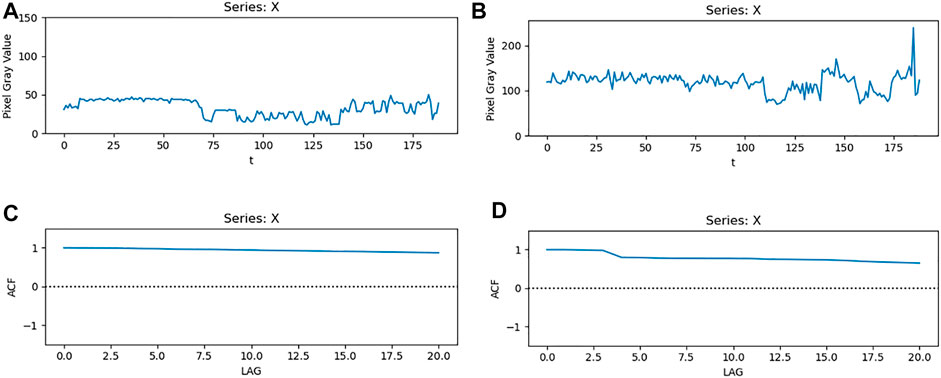

This finishes the proof.

Figure 1 shows the 1st, 60th, and 1,800th frames of the GPVS. Figure 2 shows that the ACF of the GPVS does not vanish and that the ACF curve has a long tail, which indicates that the GPVS may be a long-range-dependent sequence.
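The ACF check described in this section can be illustrated with a minimal sketch. It assumes that each video frame has already been reduced to one scalar (for example, its mean gray level), which is an assumption made here for illustration; the function name `sample_acf` and the synthetic series are hypothetical and are not taken from the article.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelation of a 1-D series for lags 0..max_lag."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                      # remove the mean
    var = np.dot(x, x)                    # lag-0 autocovariance (unnormalized)
    n = len(x)
    return np.array([np.dot(x[:n - k], x[k:]) / var
                     for k in range(max_lag + 1)])

# Synthetic stand-in for a per-frame statistic (assumption, not GPVS data).
rng = np.random.default_rng(0)
series = np.cumsum(rng.standard_normal(2000))
acf = sample_acf(series, max_lag=100)
# A slowly decaying acf curve (a long tail) hints at long-range dependence.
```

An ACF that stays clearly away from zero over many lags is only an indication, which is why the next subsection estimates the Hurst exponent.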

2.2 Hurst Exponent of GPVS

A Hurst exponent (

When

Through the analysis in Section 2.1, we can see that the GPVS sequence may have long-range–dependent characteristics. Next, we use the

(1) Divide the sequence

(2) Calculate the mean value

(3) Calculate deviation

(4) Calculate cumulative deviation

(5) Calculate range

(6) Calculate standard deviation

(7) Get value

The average value

Finally,

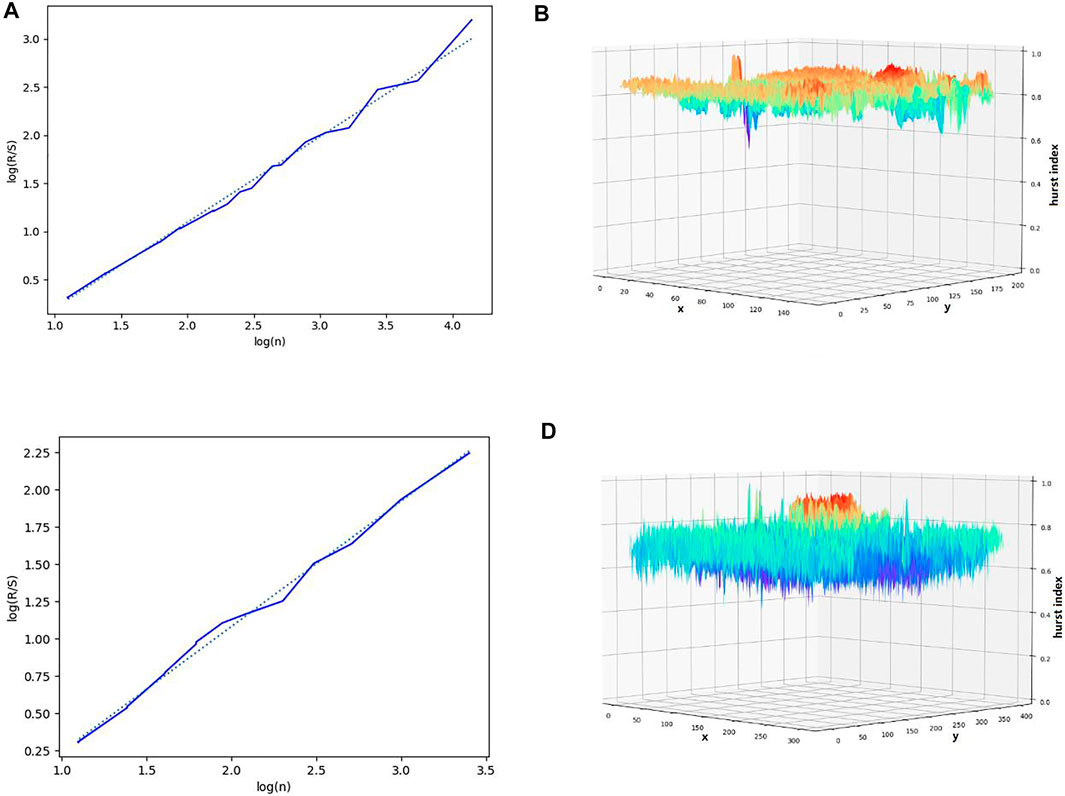
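The seven steps above follow the pattern of classical rescaled-range (R/S) analysis, which can be sketched as follows. This is an illustrative sketch under that assumption, not the authors' implementation: the function names, the window sizes, and the synthetic input are hypothetical. The Hurst exponent is estimated as the slope of log(R/S) against log(n), and a value between 0.5 and 1 is commonly read as a sign of long-range dependence.

```python
import numpy as np

def rescaled_range(segment):
    """R/S statistic of one subsequence (steps 2 to 7)."""
    seg = np.asarray(segment, dtype=float)
    dev = seg - seg.mean()                # steps 2-3: mean and deviation
    cum = np.cumsum(dev)                  # step 4: cumulative deviation
    r = cum.max() - cum.min()             # step 5: range
    s = seg.std()                         # step 6: standard deviation
    return r / s if s > 0 else np.nan     # step 7: R/S value

def hurst_rs(x, window_sizes):
    """Estimate the Hurst exponent as the slope of log(R/S) vs. log(n)."""
    x = np.asarray(x, dtype=float)
    log_n, log_rs = [], []
    for n in window_sizes:
        m = len(x) // n                   # step 1: number of subsequences
        if m < 1:
            continue
        rs = [rescaled_range(x[i * n:(i + 1) * n]) for i in range(m)]
        mean_rs = np.nanmean(rs)          # average R/S over subsequences
        if np.isfinite(mean_rs) and mean_rs > 0:
            log_n.append(np.log(n))
            log_rs.append(np.log(mean_rs))
    slope, _intercept = np.polyfit(log_n, log_rs, 1)
    return slope

# Synthetic check (assumption, not GPVS data): white noise vs. a random walk.
rng = np.random.default_rng(0)
noise = rng.standard_normal(4096)
sizes = [16, 32, 64, 128, 256, 512]
h_noise = hurst_rs(noise, sizes)
h_walk = hurst_rs(np.cumsum(noise), sizes)
```

On such synthetic data, the strongly correlated random walk yields a larger estimate than uncorrelated noise, which is the behavior the analysis relies on.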

Figure 3A shows the sequence

FIGURE 3. Hurst exponent. (A) Hurst exponent of GPVS1 (300,400), (B) Hurst exponent of GPVS1, (C) Hurst exponent of GPVS2 (600,800), and (D) Hurst exponent of GPVS2.

Figure 3C shows the sequence

From Figure 3, we can see that the

3 Giant Panda 3D Modeling

3.1 Giant Panda Skeleton

The structure formed by a series of joints and bones is called the skeleton. Each joint can correspond to one or more bones and can have multiple subjoints. A correct skeleton structure ensures that the giant panda has a realistic and correct motion structure after three-dimensional modeling, which makes it a key component of the giant panda model.

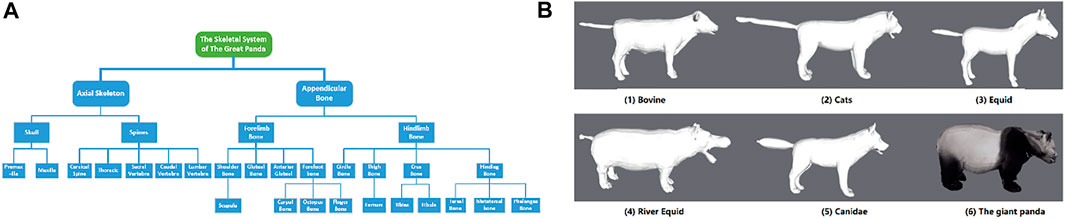

The selection of bones and joints affects the deformation of the three-dimensional model of the giant panda, so analyzing the skeleton structure of the giant panda is essential for building the model. Figure 4A shows the detailed skeleton system of the giant panda. The SMAL model usually uses 33 joints to represent five kinds of quadrupeds with different attributes: cats, dogs, equines, cattle, and hippopotamuses [9, 14]. The giant panda shares certain similarities with these quadrupeds, so most of the bones and joints in the skeleton, such as the head, spine, and leg bone structures, can be reused.

FIGURE 4. Giant panda skeleton. (A) Giant panda skeleton system. (B) Differences between giant panda skeleton and other animals.

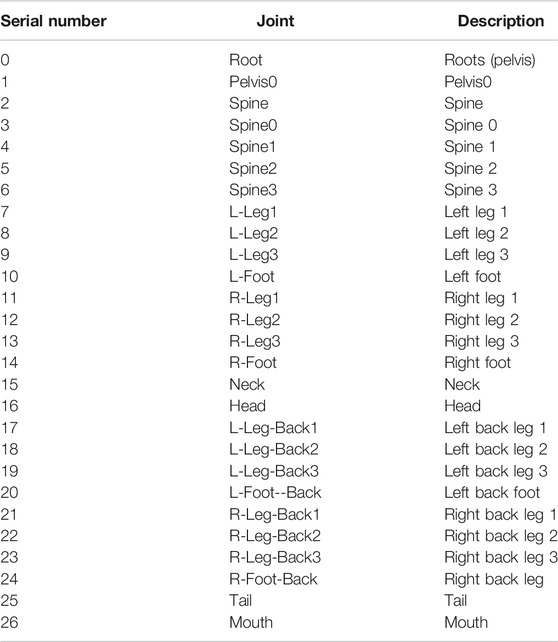

However, the skeleton of the giant panda also differs from that of other quadrupeds, as shown in Figure 4B. The tail of the adult giant panda is very short: the body length is about 120–180 cm, while the tail length is only about 10–12 cm. Some tails are shorter than a rabbit's tail, so the tail of the giant panda is barely noticeable. Taking these structural characteristics into account, we merged the seven tail joints in the motion structure and used 27 joints as the basic skeleton structure of the giant panda, as shown in Table 1.

3.2 Giant Panda SMAL Model

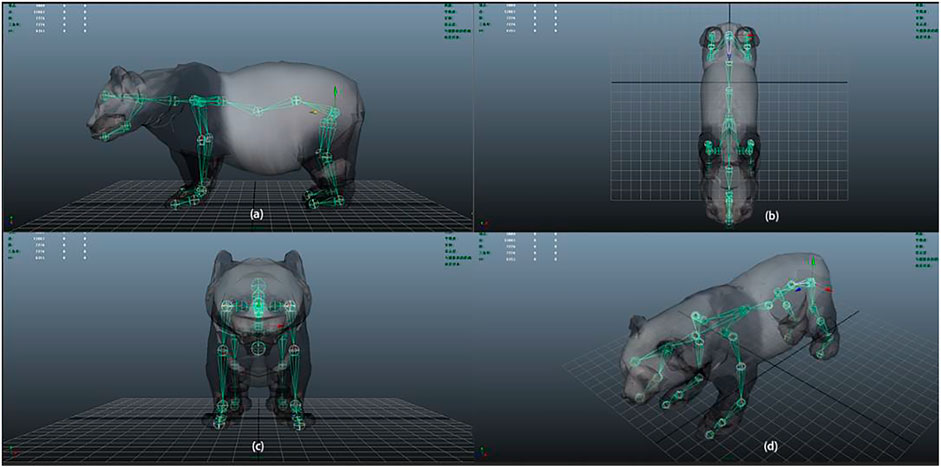

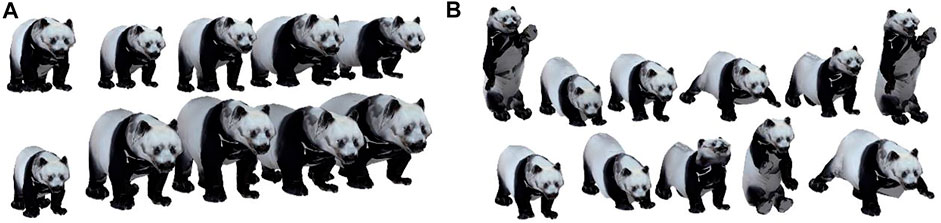

Since it is impossible to obtain a three-dimensional model of the giant panda by scanning a living animal, this article binds the skeleton to a manually designed three-dimensional model of the giant panda [15], as shown in Figure 5. The SMAL model is given in Eq. 3.1. Linear blend skinning (LBS) is used to generate giant panda models with different shapes and poses, so as to build the giant panda shape data set and pose data set, as shown in Figure 6.

where

FIGURE 5. 3D model of a giant panda bound to the giant panda skeleton. (A) Side view, (B) top view, (C) front view, and (D) oblique view.

FIGURE 6. Giant panda SMAL model. (A) Giant panda shapes obtained by SMAL. (B) Panda poses obtained by SMAL.
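To make the role of the shape parameters and of linear blend skinning more concrete, the following minimal sketch shows a linear shape blend followed by LBS. It is a generic illustration, not the article's Eq. 3.1: the function names, array layouts, and the assumption that each joint transform is already expressed relative to the rest pose are all hypothetical choices made here.

```python
import numpy as np

def shape_blend(template, shape_dirs, beta):
    """Rest-pose vertices: template (V,3) plus shape_dirs (V,3,B) times beta (B,)."""
    return template + shape_dirs @ beta

def linear_blend_skinning(vertices, weights, transforms):
    """Pose vertices with LBS.

    vertices:   (V, 3) rest-pose vertex positions
    weights:    (V, J) skinning weights, each row summing to 1
    transforms: (J, 4, 4) per-joint rigid transforms relative to the rest pose
    """
    ones = np.ones((len(vertices), 1))
    v_h = np.concatenate([vertices, ones], axis=1)           # homogeneous (V,4)
    blended = np.einsum("vj,jab->vab", weights, transforms)  # per-vertex (V,4,4)
    posed = np.einsum("vab,vb->va", blended, v_h)            # apply transform
    return posed[:, :3]

# Tiny example: two vertices, two joints; joint 1 is translated by 2 along x.
verts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
w = np.array([[1.0, 0.0], [0.5, 0.5]])
T = np.tile(np.eye(4), (2, 1, 1))
T[1, 0, 3] = 2.0
posed = linear_blend_skinning(verts, w, T)
```

A vertex that is fully bound to an unmoved joint stays in place, while a vertex shared equally between two joints receives the average of their motions, which is what produces smooth skin deformation around joints.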

4 Motion Smoothing Process

The 3D model of the giant panda reconstructed from a single video frame takes into account neither the temporal relationship between frames nor the long-range-dependent characteristics of the video image sequence. When the target is occluded or the image is noisy, relatively large 3D reconstruction errors occur, such as the left leg being matched to the right leg and vice versa. Moreover, the motion sequence assembled from single-frame 3D modeling results will not be smooth [16].

For video image sequences, long-range dependence captures the correlation between pixels of distant frames in the sequence, which helps in recognizing and judging image information. In Section 2.2, we showed that the GPVS has long-range-dependent characteristics, so we use these characteristics to improve the giant panda 3D modeling.

To improve the accuracy of the single-frame pose modeling algorithm, we exploit the long-range-dependent characteristics of the RGB video sequence. That is, the movement and limb rotation angles of the giant panda should not change abruptly between frames. We use this property to correct errors in the 3D modeling results, so that the 3D modeling results of the giant panda become smoother and more accurate.

First, the body shape parameters β and pose parameters θ of the giant panda in each video frame are solved by Eq. 3.1. Then, the energy function in Eq. 4.1 adds a temporal smoothing term to improve motion fluency and 3D reconstruction accuracy.

where

(1) 3D attitude error

where dist is the distance between the SMAL 3D model and the 3D model after rotation.

This term slightly adjusts the first three-dimensional vector of the SMAL pose parameters, which controls the rotation angle of the model, while keeping the projection of the 3D pose of the model consistent with the detected 2D joint points. It thereby improves the accuracy of pose modeling and suppresses some cases of incorrect pose estimation.

(2) Motion smoothing

where

This term uses the similarity of giant panda actions in adjacent video frames to constrain changes in the 3D bone joints of the model. The more the model pose changes from the previous frame, the larger the energy of this term. When image feature mismatch or over-fitting causes a large error, this term penalizes it and can correct errors such as leg exchange or pose inversion.
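The effect of the motion smoothing term can be illustrated with a minimal sketch. It assumes the term is a sum of squared Euclidean distances between corresponding 3D joints in consecutive frames; this form, the function name `smoothness_energy`, and the toy two-joint data are assumptions made here for illustration and are not the article's Eq. 4.1.

```python
import numpy as np

def smoothness_energy(joints):
    """Temporal smoothness of a joint trajectory.

    joints: (T, J, 3) array of 3D joint positions over T frames.
    Returns the total energy and the per-transition energies (T-1,).
    """
    joints = np.asarray(joints, dtype=float)
    diffs = joints[1:] - joints[:-1]              # frame-to-frame change
    per_step = np.sum(diffs ** 2, axis=(1, 2))    # squared change per step
    return per_step.sum(), per_step

# Toy sequence: a left and a right paw moving forward along x (hypothetical).
T = 5
clean = np.array([[[0.1 * t, 0.0, 0.5], [0.1 * t, 0.0, -0.5]]
                  for t in range(T)])
swapped = clean.copy()
swapped[2] = swapped[2][::-1]                     # simulate a leg exchange

e_clean, _ = smoothness_energy(clean)
e_swapped, steps_swapped = smoothness_energy(swapped)
```

In this toy case, the frame with exchanged legs produces two large transition energies, into and out of the faulty frame, so minimizing the term pushes the solution back toward a consistent left/right assignment.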

5 Discussion

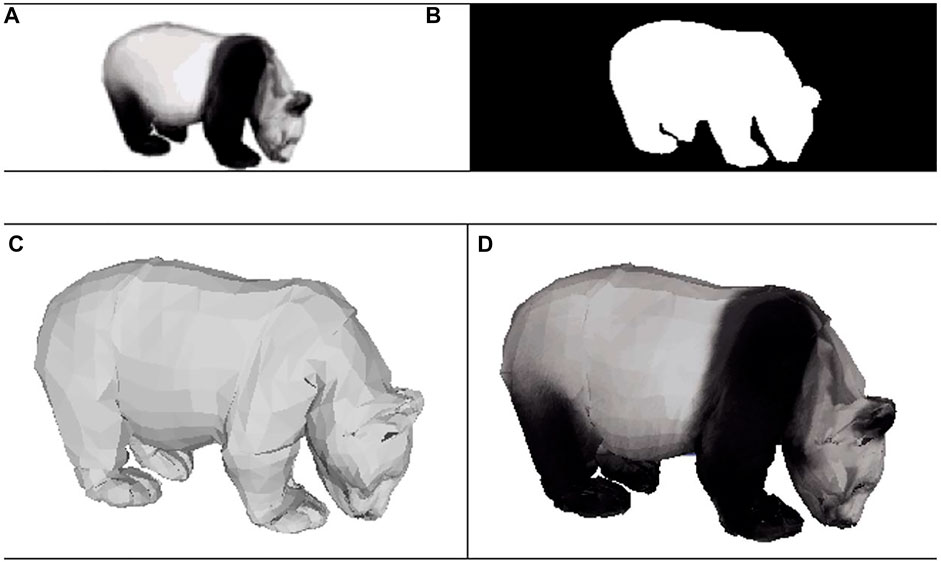

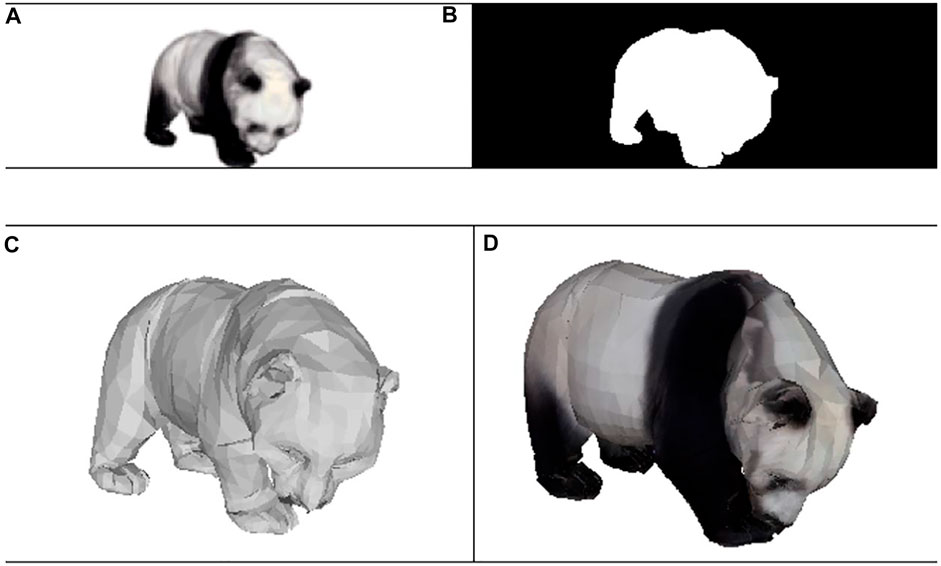

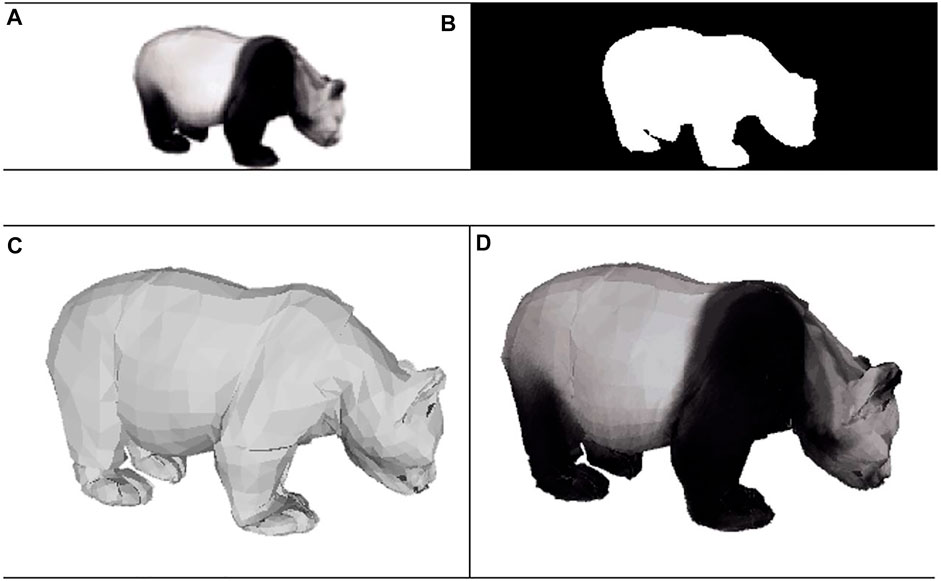

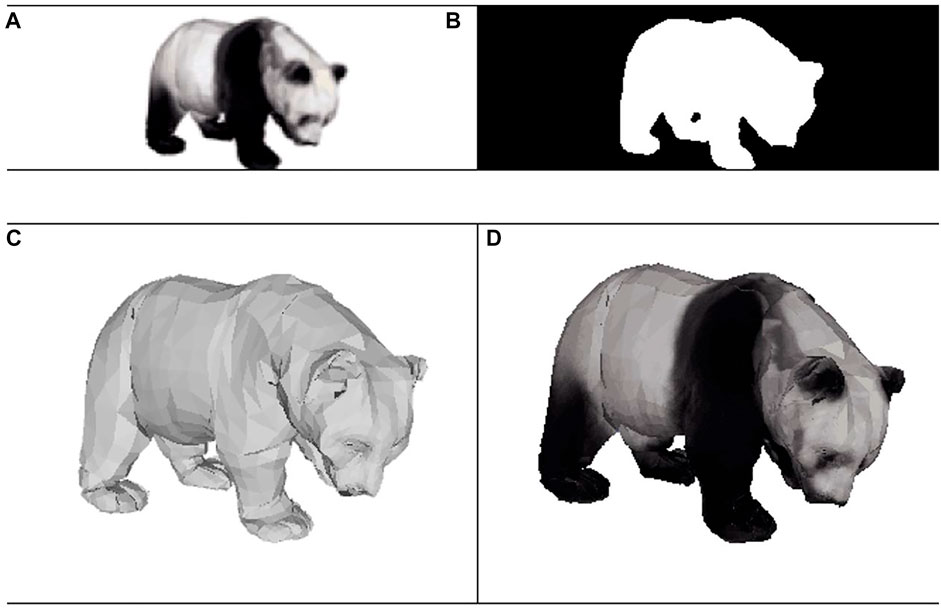

Our experiments are based on the prior information of the giant panda SMAL model. Given two-dimensional images of the giant panda, the algorithm uses Eq. 3.1 to obtain the shape, pose, and other parameters of the 3D model and reconstructs a 3D model of the giant panda. Eq. 4.1 is then used to improve both the 3D reconstruction accuracy and the motion fluency of the 3D model. The 3D reconstruction results are shown in Figures 7–10.

FIGURE 7. 3D reconstruction of GPVS in frame 10. (A) Original image, (B) contour silhouette after segmentation, (C) reconstruction effect of the 3D model of the giant panda without skin texture, and (D) reconstruction effect of the 3D model of the giant panda with skin texture.

FIGURE 8. 3D reconstruction of GPVS in frame 30. (A) Original image, (B) contour silhouette after segmentation, (C) reconstruction effect of the 3D model of the giant panda without skin texture, and (D) reconstruction effect of the 3D model of the giant panda with skin texture.

FIGURE 9. 3D reconstruction of GPVS in frame 60. (A) Original image, (B) contour silhouette after segmentation, (C) reconstruction effect of the 3D model of the giant panda without skin texture, and (D) reconstruction effect of the 3D model of the giant panda with skin texture.

FIGURE 10. 3D reconstruction of GPVS in frame 100. (A) Original image, (B) contour silhouette after segmentation, (C) reconstruction effect of the 3D model of the giant panda without skin texture, and (D) reconstruction effect of the 3D model of the giant panda with skin texture.

Figures 7–10 show the results of the algorithm in restoring the SMAL giant panda 3D model from the image. In each group, the upper left image is the original image, the upper right image is the segmented contour silhouette, and the lower left and lower right images are the reconstructed giant panda 3D model without and with skin texture, respectively. The restored three-dimensional model fits the image well.

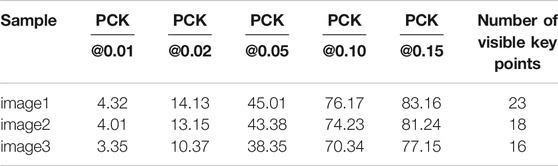

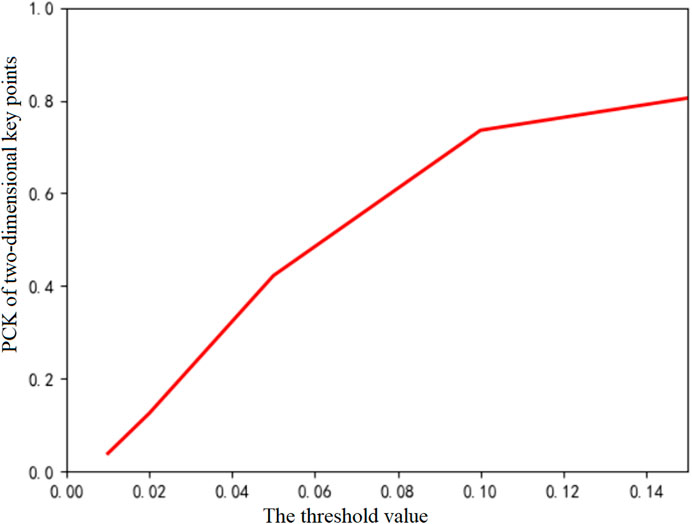

Table 2 shows the PCK (percentage of correct key points) values under different thresholds for some image examples. Figure 11 visualizes the mean PCK between the key points projected from the restored giant panda 3D model and the ground-truth key points annotated on the input image. At PCK@0.15 (corresponding to 0.15 times the image pixels), the accuracy reaches 80.51%. The experiments therefore show that our method can obtain a giant panda 3D model with good reconstruction quality even in the presence of occlusion or insufficient key points.
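For readers unfamiliar with the metric, the following sketch shows how a PCK value at a given threshold is typically computed. The normalization length is passed in as a parameter because the article does not specify it precisely; `norm_length`, the function name `pck`, and the toy coordinates are assumptions used only for illustration.

```python
import numpy as np

def pck(pred, gt, norm_length, threshold=0.15, visible=None):
    """Fraction of key points whose error is within threshold * norm_length.

    pred, gt:    (K, 2) projected and annotated 2D key points
    norm_length: reference length in pixels (assumed; e.g., an image or
                 object size chosen by the evaluation protocol)
    visible:     optional (K,) boolean mask of annotated key points
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    dist = np.linalg.norm(pred - gt, axis=-1)     # per-key-point pixel error
    correct = dist <= threshold * norm_length
    if visible is not None:
        correct = correct[np.asarray(visible, dtype=bool)]
    return float(correct.mean())

# Toy example: errors of 0, 10, and 100 pixels with a 100-pixel reference.
pred_pts = [[0, 0], [10, 0], [100, 0]]
gt_pts = [[0, 0], [0, 0], [0, 0]]
score = pck(pred_pts, gt_pts, norm_length=100, threshold=0.15)
```

Here two of the three key points fall within 15 pixels of their annotations, so the score is two thirds; masking out unannotated key points with `visible` keeps occluded joints from being counted as failures.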

6 Conclusion

Through the analysis of the GPVS, we show that it has long-range-dependent characteristics [17, 18]. We propose a method that uses the coherence information carried by the long-range dependence between video frames to construct a smoothing energy function that corrects the 3D model error. From this error, we can judge whether the 3D reconstruction result differs from the real structure of the giant panda. Finally, the experimental results show that our algorithm obtains a more accurate 3D reconstruction model of the giant panda.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Materials; further inquiries can be directed to the corresponding author.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This research is supported by the Chengdu Research Base of Giant Panda Breeding (Nos. 2020CPB-C09 and CPB2018-01).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Schönberger JL, Frahm JM. Structure-from-Motion Revisited. In: IEEE Conference on Computer Vision & Pattern Recognition; 2016 Jun 27–30; Las Vegas, Nevada (2016).

2. Bregler C, Hertzmann A, Biermann H. Recovering Non-Rigid 3D Shape from Image Streams. In: IEEE Conference on Computer Vision & Pattern Recognition; 2000 Jun 13–15; Hilton Head, SC. IEEE (2000).

3. Garg R, Roussos A, Agapito L. Dense Variational Reconstruction of Non-Rigid Surfaces from Monocular Video. In: IEEE Conference on Computer Vision and Pattern Recognition; 2013 Jun 23–28; NW Washington, DC. IEEE (2013).

4. Li X, Li H, Joo H, Liu Y, Sheikh Y. Structure from Recurrent Motion: From Rigidity to Recurrency. Salt Lake City, UT: IEEE CVPR (2018).

5. Loper M, Mahmood N, Romero J, Black MJ. SMPL: A Skinned Multi-Person Linear Model. Kobe, Japan: ACM Transactions on Graphics TOG (2015).

6. Kanazawa A, Black MJ, Jacobs DW, Malik J. End-to-end Recovery of Human Shape and Pose. Salt Lake City, UT: IEEE CVPR (2018).

7. Zuffi S, Kanazawa A, Jacobs D, Black MJ. 3D Menagerie: Modeling the 3D Shape and Pose of Animals. In: IEEE Conf. on Computer Vision and Pattern Recognition (CVPR); 2017 Jul 21–26; Hawaii Convention Center, Hawaii (2017). doi:10.1109/cvpr.2017.586

8. Vicente S, Agapito L. Balloon Shapes: Reconstructing and Deforming Objects with Volume from Images. In: International Conference on 3d Vision-3dv; 2013 Jun 29–Jul 01; Seattle, WA. IEEE (2013).

9. Biggs B, Roddick T, Fitzgibbon A, Cipolla R. Creatures Great and SMAL: Recovering the Shape and Motion of Animals from Video. In: Asian Conference on Computer Vision; 2018 Dec 04–06; Perth, Australia (2018).

10. Li M. Fractal Time Series—A Tutorial Review. Math Probl Eng (2010) 2010:157264. doi:10.1155/2010/157264

11. Li M. Modified Multifractional Gaussian Noise and its Application. Physica Scripta (2021) 96(12):125002. doi:10.1088/1402-4896/ac1cf6

12. Li M. Generalized Fractional Gaussian Noise and its Application to Traffic Modeling. Physica A (2021) 579(22):126138. doi:10.1016/j.physa.2021.126138

13. Peltier RF, Levy-Vehel J. Multifractional Brownian Motion: Definition and Preliminaries Results. INRIA TR 2645 (1995).

14. Zuffi S, Kanazawa A, Black M. Lions and Tigers and Bears: Capturing Non-Rigid, 3D, Articulated Shape from Images. Salt Lake City, UT: IEEE CVPR (2018).

15. Bogo F, Kanazawa A, Lassner C, Gehler P, Romero J, Black MJ. Keep it SMPL: Automatic Estimation of 3D Human Pose and Shape from a Single Image. In: European Conference on Computer Vision; 2016 Oct 11–14; Amsterdam, Netherlands (2016).

16. Zhang H. Research on 3D Human Modeling and its Application Based on SML Model. Xian, Shaanxi, China: Shaanxi University of Science & Technology (2020).

17. He J, George C, Wu J, Li M, Leng J. Spatiotemporal BME Characterization and Mapping of Sea Surface Chlorophyll in Chesapeake Bay (USA) Using Auxiliary Sea Surface Temperature Data. Sci Total Environ (2021) 794(1):148670. doi:10.1016/j.scitotenv.2021

Keywords: time series, long-range dependent, 3D reconstruction, SMAL, Hurst

Citation: Hu S, Liao Z, Hou R and Chen P (2022) Giant Panda Video Image Sequence and Application in 3D Reconstruction. Front. Phys. 10:839582. doi: 10.3389/fphy.2022.839582

Received: 20 December 2021; Accepted: 12 January 2022;

Published: 23 February 2022.

Edited by:

Ming Li, Zhejiang University, China

Copyright © 2022 Hu, Liao, Hou and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Peng Chen
