95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

REVIEW article

Front. Phys. , 18 November 2022

Sec. Optics and Photonics

Volume 10 - 2022 | https://doi.org/10.3389/fphy.2022.1064693

This article is part of the Research Topic Silicon-Based Optoelectronics and Microelectronics Integration Technology View all 7 articles

A correction has been applied to this article in:

Corrigendum: Optoelectronic integrated circuits for analog optical computing: Development and challenge

Over the past 2 decades, researches in artificial neural networks (ANNs) and deep learning have flourished and enabled the applications of artificial intelligence (AI) in image recognition, natural language processing, medical image analysis, molecular and material science, autopilot and so on. As the application scenarios for AI become more complex, massive perceptual data need to be processed in real-time. Thus, the traditional electronic integrated chips for executing the calculation of ANNs and deep learning algorithms are faced with higher requirements for computation speed and energy consumption. However, due to the unsustainability of Moore’s Law and the failure of the Dennard’s scaling rules, the growth of computing power of the traditional electronic integrated chips based on electronic transistors and von Neumann architecture could difficultly match the rapid growth of data volume. Enabled by silicon-based optoelectronics, analog optical computing can support sub-nanosecond delay and ∼fJ energy consumption efficiency, and provide an alternative method to further greatly improve computing resources and to accelerate deep learning tasks. In Chapter 1, the challenges of electronic computing technologies are briefly explained, and potential solutions including analog optical computing are introduced. Then, separated by four photonic platforms, including coherent integration platform, incoherent integration platform, space-propagation optical platform, and optical fiber platform, the recent important research progresses in analog optical computing are outlined in Chapter 2. Then, the nonlinearity and training algorithm for analog optical computing are summarized and discussed in Chapter 3. In Chapter 4, the prospects and challenges of analog optical computing are pointed out.

As one of the most important algorithms of artificial intelligence (AI), due to its analogy inspired by parallel signal processing in human brain, artificial neural network (ANN) has been proposed more than 60 years [1]. However, until 2006, Hinton [2] demonstrated that the deep ANN model can be effectively trained, the powerful capability of deep ANNs has begun to be truly liberated and Hinton’s study has excited the third development wave of ANNs. Over the past 2 decades, deep learning (DL) has replaced some traditional computing models and successfully shown great superiority in solving the practical problems of pattern recognition, natural language processing, prediction and intelligent recommendation, medical image analysis, molecular and material science, autopilot, intelligent robot and so on. Matrix computation/multiply accumulate computation (MAC), as the fundamental and most heavy computation load in the ANN model, used to be processed by the centralized processing unit (CPU), which sequentially performs all operations specified by the program’s instructions and a separate memory. Because CPUs require a lot of space to place storage units and control units and computing units only occupy a small part, the parallel computing efficiency of the CPU is poor and it is quickly replaced by the graphics processing unit (GPU), which contains massive parallel computing units to accelerate the matrix computation. However, due to the continuous increase of information capacity under the era of big data, it is also insufficient for GPUs to process AI tasks in complex application scenarios [3]. Therefore, researchers have developed new hardware architectures, for instance, field-programmable gate arrays (FPGAs) [4], application specific integrated circuits (ASICs) [5], neural network processing units (NPUs) [6], and neuromorphic electronics [7–11], to improve energy efficiency and computation speed for ANN and DL tasks. However, with the slowing down and end of Moore’s Law and the failure of the Dennard’s scaling rules [12], these traditional von Neumann architectures and CMOS-based electronic components would suffer from the internal bottlenecks of electronics, such as the clock frequency, latency, energy efficiency, and harsh trade-offs between bandwidth and interconnectivity [3, 13–15]. Therefore, it can be inferred that the growth of computing power of the traditional electronic integrated chips based on von Neumann architecture and electronic transistors would not meet the demand of super-high-speed and low-latency processing of massive data [16] in the foreseeable future.

Benefitting from the high speed, broad bandwidth resources, and highly parallel processing capability, optics has unmatched advantages for interconnections and communications [17–23], which can overcome the bandwidth and interconnectivity trade-offs [24]. Six decades ago, researchers have already recognized the potential of optics to process information and have tried to develop optical devices to implement some fundamental computations [25, 26], which is named “optical computing” nowadays. Based on the internal difference of computing method, optical computing can be classified into two categories: the digital optical computing and the analog optical computing [25, 27, 28]. The digital optical computing aims to construct optical transistors which have the similar mechanism as the general electronic computer to process Boolean operation, and has been developed more than 30 years [29, 30]. Driven by the intrinsic merits of optics, such as high bandwidth, negligible heat generation, and ultra-fast response, the digital optical computing was considered as a competitive approach to replace the digital computer to implement efficient computation [31]. However, the criteria for practical optical logic, including cascadability, fan-out, logic-level restoration, input/output isolation, absence of critical biasing, and logic level independent of loss, have not yet been systematically achieved under the current technologies [32]. On the other hand, the analog optical computing opened up an alternative direction to obtain competitive performance against the state-of-art electronic computers. Firstly, the analog optical computing can “freely” perform arithmetic or mathematical operations, such as convolution, matrix-vector multiplications (MVM), Fourier transforms (FT), and random projection, as a byproduct of the light–matter interaction or light propagation [33]. Thus, the energy consumption can be efficiently reduced due to the low propagation loss and avoiding the consumption for logic-level restoration existing in logic circuits [24]. In recent decades, the great progress in silicon-based optoelectronics and the largely increasing of the integration density of photonic devices [34, 35] provides a possible platform that supports sub-nanosecond delay and ∼fJ energy consumption efficiency to implement these operations [15, 36, 37]. Moreover, the broad bandwidth resources are easy to be applied in extending the parallel processing of the analog optical computing by using the wavelength division multiplexing (WDM) [18]. Although the analog optical computing is also faced with the challenges of high-bit accuracy, low-power-consumption nonlinearity, large-scale integration and so on. With the development of optoelectronics, it is hopeful that these problem can be solved one after another and the analog optical computing is still one of the most competitive candidates for super-high-speed, low-energy-consumption, and low-latency massive data processing in the post-Moore era [16, 24, 26, 33, 36, 38, 39].

In this article, the challenges of electronic computing technologies are briefly explained and potential solutions, including the analog optical computing, are introduced in Chapter 1. Then, separated into four photonic platforms, including coherent integration platform, incoherent integration platform, space-propagation optical platform, and optical fiber platform, the recent research progresses in analog optical computing are outlined in Chapter 2. Then, the nonlinearity and training algorithms for analog optical computing are summarized and discussed in Chapter 3. In Chapter 4, the prospects and challenges of analog optical computing are pointed out.

The analog optical computing is explored to directly implement arithmetic/mathematical operations such as dot product [40, 41], MVM [42–44], FT [45, 46], and other operations [47–50] due to its potential possibilities of high parallelism and high energy efficiency. In general, the implementation of these arithmetic/mathematical computations depends on the physical mechanisms behind optical phenomena, for instance, they can be realized by interference, diffraction, optical absorption, and optical nonlinearity, combined with photonics techniques (such as multiplexing technology, optical modulation, and optical detection). Based on the realization of these operations, typical analog optical computing models, including the optical neural network (ONN) [44, 51, 52], optical reservoir computing (ORC) [50, 53, 54], and optical Ising machine (OIM) [49, 55, 56], have been demonstrated via various schemes. To have a coherent description of these implementations, the basic principles of these three computation models are firstly explained in this Chapter, and they are not mentioned anymore in the following implementations. Then, the recent progresses in analog optical computing are summarized and introduced sequentially by classifying them into four dependent optical platforms, including the coherent integration platform, incoherent integration platform, space-propagation platform, and optical fiber platform.

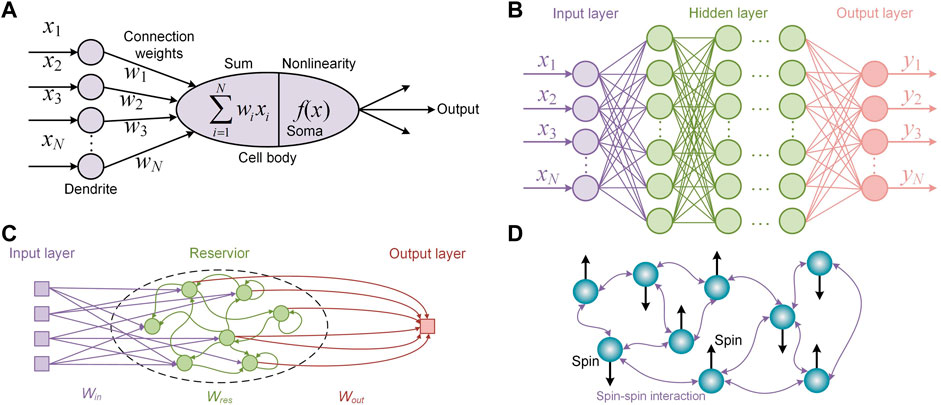

Artificial neural network (ANN) is a kind of parallel distributed processing model inspired by the information processing in biological neurons. Due to its outstanding energy efficiency and computation power, ANN has become one of the most important computation models in the field of AI [57]. The basic computing unit of ANN is the neuron, as shown in Figure 1A. After studying the biological mechanism of neurons and simplifying their functionality by researchers [1, 58], the information processing of neuron can be divided into three steps: the first step is that the dendrite of the neuron performs the weighting operation on the input signals (x1, x2, … , xN) collected from the previous layer of neurons; the second step is that the cell body of neuron performs weighted addition as a combiner; the third step is that the Soma of neuron performs the nonlinear process f(x) on the combined signal. As shown in Figure 1B, by cascading multilayer of neurons and fully connecting each neuron, a kind of fundamental ANN named “fully connected neural network” is constructed. In this network, the connection weight of neurons can learn the “pattern” behind massive of data by being trained with learning algorithms (such as backpropagation) to implement complex processing tasks, for instance, prediction [59], clustering [60], pattern recognition [61, 62]. In general, the data loaded into the input layer of ANN is a vector and the connection weight of neurons between two successive layers can be represented as a weight matrix. Thus, the major computations of ANN are the linear operation/MVM and the nonlinear operation (nonlinear activation in vector). The MVM operation will be very time-consuming and power-consuming for traditional electronic devices when the matrix dimension is very large [39]. However, the optics is very competitive to process large-scale MVM operations because of its high speed, parallel processing capability and low energy consumption [18, 44, 48, 63, 64]. Thus, the optical analog hardware implementation of ANN (ONNs) is an attractive prospect and has motivated many researchers in recent years. In general, ONNs are consisted of optical linear operation and optical/optoelectronic nonlinear operation. The optical linear operation can be implemented through interference [44], diffraction [63], optical absorption [48] and so on. And the nonlinear operation can be implemented through photoelectric effect [63], electro-optic modulation [53], nonlinear gain [65], Kerr effect [66], nonlinear absorption [64] and so on. Until now, the ONNs have been demonstrated competitive computing speed, accuracy, and power-consumption against ANNs operated in the state-of-art computers [44, 48, 63].

FIGURE 1. (A) Schematic of a biological neuron. (B) The model of fully connected neural network. (C) Schematic of a standard RC. (D) Schematic of an Ising machine model.

Reservoir computing (RC), derived from the concept of liquid-state machines [67] and echo state networks [68], belongs to a kind of novel computation model of recurrent neural network (RNN). Same as ANN, reservoir computing model consists of three parts, named as input layer, reservoir (hidden layer), and output layer, as shown in Figure 1C. However, the weight matrix

where fNL is the nonlinear activation function, u(t) is the input signal at the current time step, x(t) and x(t-1) are the reservoir’s internal states at the current time step and the last time step, respectively. The readout y(t) at the current time step is calculated following

when performing off-line training for RC, ridge regression is usually used to get the readout weights [69].

where

Ising machine (IM) is a kind of efficient model to solve the combinatorial optimization problems and nondeterministic polynomial time (NP)-hard/NP-complete problems. Solving these problems are important tasks for various application areas, including operations and scheduling, drug discovery, finance, circuit design, sensing, and manufacturing [80, 81]. However, due to the exponential growth of complexity with the problem size, these problems are very difficult to be solved on conventional von Neumann-based computers. Ising model provides an alternative method to efficiently solve these NP-hard or NP-complete problems by mapping them onto ground-state search problems of the Ising model with polynomial resources [82]. As shown in Figure 1D, the illustrated Ising model has nine spins and each spin occupies one spin state, either spin-up (σi = + 1) or spin-down (σi = − 1). The spin-spin interaction is denoted by Ji,j. The Ising Hamiltonian of the Ising model without an external magnetic field is given by

where N is the total number of spins. When the configuration of spins minimizes the Ising Hamiltonian, the mapped NP problem is solved. Benefiting from the nanophotonic hardware of parallel, low-energy, and high-speed computations [44, 55, 83], the photonic implementation of Ising model (OIM) is one of the most promising candidates to simulate the Ising Hamiltonian. The fundamental of implementing OIMs is to construct optical spin nodes and their interactions. For example, the optical pulse in optical fiber systems [49, 84–86], the spatial mode in free-space systems [87, 88], and the amplitude of coherent light in integrated systems [55, 56] can be used to represent the spin nodes of Ising model. And these systems have demonstrated the advantages of OIMs, such as parallelism, low latency, and nearly free of environment noise.

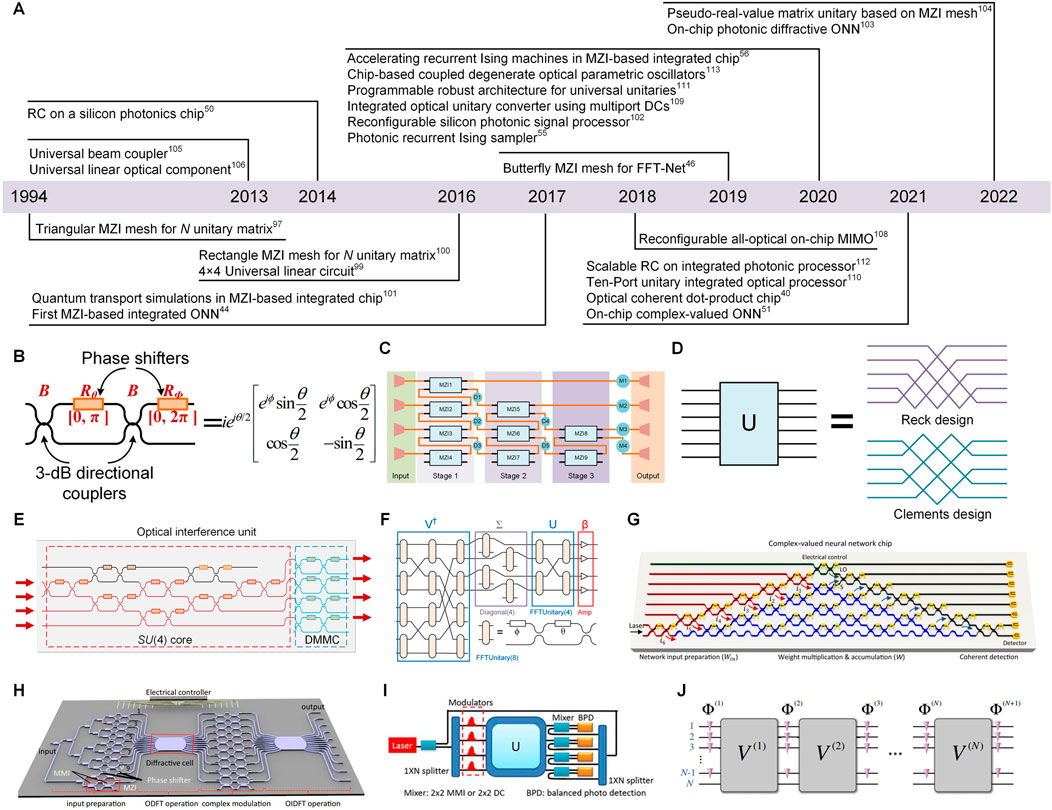

The silicon photonics, by patterning silicon-on-insulator (SOI) or bulk silicon wafers using lithographic technology, the same silicon substrate can heterogeneously integrate electronic and photonic devices. It provides a wide-bandwidth, high-speed, low-loss, low energy-consumption, and highly compact integration platform for optical signal processing and computing [26, 89, 90]. Due to the low-loss, stable, and anti-interference propagation in silicon waveguides, the silicon integration platform is very suitable to control stable interferences to implement various optical devices, such as modulators [91, 92], logic gates [93], optical switches [94], polarization splitters [95], mode converters [96]. Moreover, benefiting from great advances in silicon-based integration technology, the analog optical computing based on silicon photonic integration has flourished in recent years. Figure 2A summarizes the development history and milestones of the analog optical computing based on the integration platform with coherent photonics. In 1994, Reck et al. proposed an experimental implementation for realizing N × N arbitrary unitary transformations, U(N), in a triangular array of Mach-Zehnder interferometers (MZIs) [97]. Figure 2B shows the schematic of a 2 × 2 reconfigurable MZI, which is the building block for realizing N × N arbitrary unitary transformations. The 2 × 2 reconfigurable MZI consists of two 3-dB (50: 50) directional couplers with one phase shifter (θ) on one of the internal arms of the MZI and another phase shifter (ϕ) at one of the outputs after the second directional coupler of the MZI. The unitary transformation matrix of the MZI, namely UMZI, can be described by the product of the transformation matrices of two 3-dB directional couplers and two phase shifters as the following

where B is the transformation matrix of the 3-dB directional coupler.

FIGURE 2. (A) The progresses of analog optical computing in the coherent integration platform. (B) Schematic of a Mach–Zehnder interferometer (MZI). (C) Schematic of a 4 × 4-port universal linear circuit [99]. (D) Schematic of Reck design and Clements design for the unitary implementation [100]. (E) Schematic of the optical interference unit (OIU) [44]. (F) Schematic of the “FFTUnitary” [46]. (G) Schematic of the complex-valued ONN [51]. (H) Schematic of the integrated chip diffractive neural network [103]. (I) Schematic of the pseudo-real-value matrix unitary MZI mesh [104]. (J) A robust architecture for universal unitary [111]. (G) Reprinted from Ref. [51] with permission from Springer Nature: Nature Communications. (H) Reprinted from Ref. [103] with permission from Springer Nature: Nature Communications. (I) Reprinted from Ref. [104] with permission from De Gruyter: Nanophotonics. (J) Reprinted from Ref. [111] with permission from American Physical Society: Physical Review Letters.

In 2013, Miller et al. proposed that MZIs can be organized into a mesh to implement universal beam couplers [105] and universal linear optical components [106]. At the same time, a kind of self-configuration method was proposed to progressively configure these universal beam couplers and linear optical components, requiring no global optimization and continually adjusting itself against changing conditions [106]. It further promoted the application of using the reconfigurable linear optical components for optical computing. Afterwards, based on Miller’s self-configuration method, Ref. [99] demonstrated a silicon implementation of a 4 × 4-port universal linear circuit consisting of a network of thermally tunable symmetric MZIs. The schematic of the integrated circuit is shown in Figure 2C, by the electronic control of phase shifters of MZIs and software feedback, this MZI-based circuit can perform any linear operation between its four input ports and output ports. On the other hand, Clements et al. proposed a brand new architecture of MZIs network for implementing general unitary matrix transformation [100]. The basic idea behind the design was similar to the Reck design [97] that implemented successive SU(2) transformation of MZI components to perform arbitrary U(N) transformation. As shown in Figure 2D, this new design depended on a new mathematical decomposition and achieved the shallower optical depth, requiring roughly half the depth of the triangular design [97], which can effectively minimize optical losses and reduce fabrication resources. Moreover, the rectangular symmetry of this new design greatly improved the robustness to fabrication errors caused by mismatched optical losses. Since then, the analog optical computing (such as ONNs and OIMs) based on MZI-networks has developed rapidly. In 2017, Shen et al. proposed a programmable nanophotonic processor featuring a cascaded array of 56 programmable MZIs in a silicon photonic integrated circuit for a fully ONN [44]. The schematic of the core of the 4-port programmable nanophotonic processor, namely the optical interference unit (OIU) is shown in Figure 2E. The red-part MZIs array performed SU(4) transformation in terms of Reck decomposition principle [97] and performed complete U(N) transformation by cascading the blue-part diagonal matrix multiplication core (DMMC). Based on singular value decomposition, the arbitrary real matrix M can be decomposed into M = U Σ V†. Where U, V† are unitary matrices that can be achieved by OIU, and Σ is a diagonal matrix that can be realized by the DMCC of OIU. By tuning the phase shifters integrated in the OIU, MVM operation of ANN can be passively performed at the speed of light. This ONN architecture demonstrated an enhancement in computational speed and power efficiency over advanced electronics for conventional inference tasks and motivated the attentions on ONN field. In the same year, this type of MZI-based nanophotonic processor was used to simulate the quantum transport [101]. The low-loss and high-fidelity programmable transformations of the integrated processor showed its potential advantages for many-boson quantum simulation tasks. Soon, Fang et al. proposed another better fault tolerance architecture named “FFTUnitary” to implement ONN [46]. As shown in Figure 2F, compared to Reck and Clements design [97, 100], “FFTUnitary” was composed of butterfly-mesh MZIs, which had been demonstrated to realize the discrete Fourier transform (DFT) unitary transformation by Cooley-Tukey FFT algorithm [107]. Despite being non-universal and lacking a decomposition algorithm, “FFTUnitary” can reduce the depth of the unitary multipliers from N to log2(N) so that the robustness of “FFTUnitary” to fabrication errors was improved and the overall noise and loss in the network were reduced. Afterwards, in 2020, the MZI-based silicon photonic integrated circuit was demonstrated as a fully reconfigurable signal processor [102]. A self-configurating method was proposed to program the MZIs without any information about the inner structure. By using this method, the MZI-based integrated circuit can implement various functions, including multichannel optical switching, optical MIMO descrambler, and tunable optical filter. Besides, the above mentioned MZI-based unitary implementation was applied in the photonic recurrent Ising sampler (PRIS) [55, 56], which was a heuristic method tailored for parallel architectures allowing fast and efficient sampling from distributions of arbitrary Ising problems. Because the recurrent photonic transformation of PRIS is a fixed function, the machine was compatible with GHz clock-rate optoelectronic devices that can achieve orders-of-magnitude speedups in solving NP-hard problems.

Afterwards, some researches for improving the ability of information expressivity of the single-layer MZI mesh and reducing the complexity of the MZI-based network have been demonstrated [51, 103, 104]. In 2021, Zhang et al. proposed complex-valued ONN with MZI-based networks [51]. To recover the natural complex-valued operation ability of optical computing, on-chip coherent detection method based on phase-diversity homodyne detection was utilized to determine the relative phase of the output signal to the input signal. As shown in Figure 2G, the red marked MZIs were used for the input preparation, the blue marked MZIs performed the MVM operation, the green marked MZIs separated the reference light that will later be used for coherent detection, and the MZIs marked in grey were used for on-chip coherent detection. The benchmark results showed that the complex-valued calculation can provide stronger learning capabilities, including high accuracy, fast convergence, and the capability to construct nonlinear decision boundaries. In 2022, Zhu et al. [103] demonstrated an integrated diffractive neural network and realized typical computing operations (convolution and matrix multiplication). As shown in Figure 2H, two ultracompact diffractive cells were used to implement optical discrete Fourier transform (ODFT) operation and optical inverse discrete Fourier transform (OIDFT) operation. Between the two diffractive cells, the N-array MZIs were used to achieve the complex-valued modulation. Similar to the principle in Figure 2F, the overall can perform convolution and matrix multiplication through programming phase shifters of MZIs. This implementation resulted in reducing the component number from N2 to N so that a ∼10-fold reduction in both footprint and energy consumption was achieved compared to previous MZI-based ONNs [103]. Besides, Tian et al. proposed another MZI-based implementation that can reduce half of components of unitary multipliers [104]. The previous MZI-based ONN mainly relied on SVD algorithm, the real-value weight matrix of ONN was decomposed into two unitary matrices and one diagonal matrix. Actually, the real part of single unitary matrix had enough freedom to express the real-value weight matrix. The schematic of the pseudo-real-value matrix unitary MZI mesh for matrix expression is shown in Figure 2I, U represented a kind of MZI-based unitary implementation [100], a beam of reference light was split from the input light and then distributed into N output branches, the output light of U interfered with the reference light by the mixer and then was detected by the balanced photodetector to determine the real part of the multiply result. By employing the real-part of a unitary mesh to learn the real-value matrix, the requirement of MZIs was reduced least to O(Nlog2N) level. As the requirement of MVM scale gets larger, low level complexity component design will be more competitive in robustness and power efficiency [46, 103].

On the other hand, due to the natural sensitiveness of MZI-based networks to the manufacturing errors, researcher tried to develop other robust architectures to realize unitary matrix transformation [108–111]. In 2018, Tang et al. proposed a new integrated architecture [108] consisting of phase shifter layers and multimode interference (MMI) coupler layers to perform multi-input-multi-output (MIMO) demultiplexing. This combination of mode mixing component (MMIs and multiport directional couplers [109, 110]) and single-mode phase shifter revealed an alternative method to perform the unitary transformation or linear transformation and demonstrated stronger robustness for expressing the unitary matrix [111]. Besides, Saygin et al. proposed a multichannel-block robust architecture to implement universal unitary transformations [111]. As shown in Figure 2J, the unitary implementation was composed of multiple phase layers Φ(N) and mixing layers V(N) that introduced interaction between the channels to realize multichannel interference. They numerically demonstrated that enough multi-layer Φ(N) and V(N) can always construct the desired unitary matrix whatever the unitary matrix of the mixing layer was. Moreover, compared to that based on the network of two-channel blocks [100], this architecture was more robust to the fabrication errors. In conclusion, these new architectures improved the robustness of the network to the manufacturing errors but lacked mathematical programming algorithms for the rapid reconfiguration.

Except for the MZI-based analog optical computing, the coherent integrated platform with other types of components can also support the implementation of ONNs [40], ORCs [50, 112], and OIMs [113]. In 2014, Vandoorne et al. firstly demonstrated a 16-node parallel reservoir on a silicon photonics chip consisting of feedback loops with a combination of 1 × 2 and 2 × 2 multimode interferometers and delay lines with shallow-etched spiral waveguides [50]. The passive photonic silicon reservoir was used to perform both digital and analogue computational tasks to show its capacity as a generic integrated computational platform for wide applications. Afterwards, in 2020, Okawachi et al. [113] demonstrated an integrated silicon-nitride photonic circuit consisting of spatial-multiplexed degenerate optical parametric oscillators (DOPOs) that can be used to realize a hybrid temporally multiplexed coherent Ising machine to solve NP-hard problems [49, 84, 85]. Then, in 2021, Xu et al. demonstrated a silicon-based optical coherent dot-product chip (OCDC) capable of completing deep learning regression tasks [40]. The weighting operation was finished by the independent modulation of on-chip split coherent light and the summation completed when all branches matched in phase. Meanwhile, the OCDC implemented operations in the complete real-value domain instead of in only the positive domain by introducing the reference light. In the same year, Nakajima et al. demonstrated a scalable on-chip implementation of RC on an integrated coherent linear photonic processor [112]. Compared to previous approaches, the input and recurrent weights of RC were both encoded in the spatiotemporal domain by the integrated circuit consisting of 1: N splitters, delay lines, MZIs, phase shifters, and variable optical attenuators. And the footprint of the input circuit and reservoir circuit were 41 × 46 mm2 and 28 × 47 mm2, respectively.

In above mentioned coherent analog computing architectures, it is often difficult to independently adjust the elements of the computation matrix as they depend on the overall dependent parameters. For example, the transmission matrix of the MZI-based network is associated with the configuration of all the MZIs [100]. An alternative method is to express the elements of the computation matrix with different wavelengths. Due to avoiding the interference between different wavelengths, the calibration of the computation matrix can be discretely implemented. Thus, the incoherent matrix computation method based on the wavelength division multiplexing (WDM) has been widely applied in analog optical computing [41, 43, 48, 52, 64, 114–118].

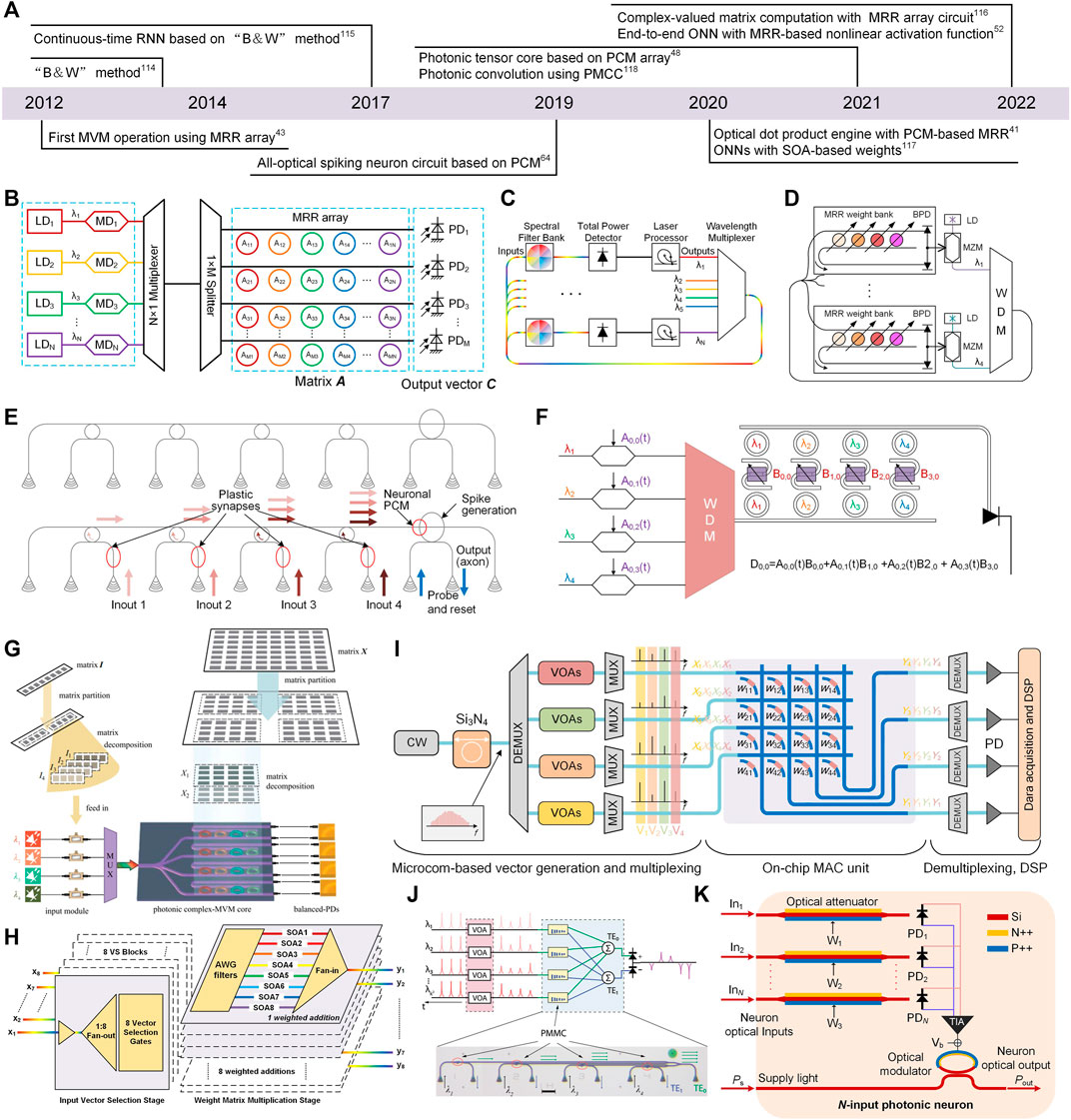

Figure 3A summarizes the timeline of advances in analog optical computing based on the incoherent integration platform. In 2012, Fang et al. first experimentally demonstrated MVM operation using a 4 × 4 silicon microring (MRR) modulator array [43]. As shown in Figure 3B, the input vector B was modulated on the optical power of N × 1 LD-array with different wavelengths and the matrix A was represented by the transmissivity of the M × N MRR modulator array. Then, the input vector B was multiplexed and broadcasted into each row of MRR array. In the end, independent modulation of each MRR executed the multiplication and the photodetector performed the accumulation process. As the footprint of MRR was more compact (a diameter of only a few microns) [43] than that of the MZI (over 10,000 μm2) [44, 51] so that it was promising to use the broad spectrum resource (hundreds of channels) [17] to extend the computing density. After that, many MRR-based analog computing architectures have been proposed [41, 64, 114–116].

FIGURE 3. (A) The progresses of analog optical computing in the incoherent integration platform. (B) Schematic of MRR-based optical matrix-vector multiplier [43]. (C) Schematic of an optical B&W network [114]. (D) An experimental setup concept of B&W network [115]. (E) Schematic of the all-optical spiking neural circuit [64]. (F) Schematic of the dot-product engine based on MRR [41]. (G) The principle of MRR-based complex-valued MVM [116]. (H) Schematic of the SOAbased weighted addition operation circuits [117]. (I) Schematic of the photonic tensor core [48]. (J) Schematic of the convolutional photonic computing core using PMCC array [118]. (K) The schematic of an N-input photonic neuron in the integrated end-to-end photonic deep neural network chip [52]. (G) Reprinted from Ref. 116 with permission from Springer: Frontiers of Optoelectronics. (J) Reprinted from Ref. 118 with permission from Springer Nature: Nature Communications.

In 2014, Tait et al. proposed a protocol named “Broadcast and Weight (B&W)” [114] that can be applied in scalable photonic spike processing and optical computing [37, 115]. An optical implementation of B&W protocol on the neural network model is illustrated in Figure 3C. The multiplexed signal collected from each neuron node (laser processor) was equally split and broadcasted into the weight bank (spectral filter bank) of every neuron node. Then, the weight bank operated independent weighting for each wavelength signal belonging to its unique neuron node and the total power detector yielded the sum of the weighted signals. In the end, the neuron node performed the nonlinear activation function of artificial neurons or spiking neurons. This protocol provided a promising way to construct parallel and scalable interconnections between photonic neurons for neuromorphic processing and optical computing. Followed by the protocol, in 2018, Tait et al. demonstrated a recurrent silicon photonic neural network based on the B&W protocol [115]. In this implementation shown in Figure 3D, the weight bank was realized by the MRR array and the photonic neuron was represented by the voltage-driven Mach-Zehnder modulator (MZM). After that, in 2019, Feldmann et al. proposed an all-optical spiking neuron circuit with phase-change material (PCM)-embedded plastic synapses and neurons [64]. As shown in Figure 3E, the previous-layer output spikes were labelled by different wavelengths and weighted through the PCM-embedded waveguide. Then, the weighted signals were multiplexed together by the MRR array. Next, the multiplexed signal was injected into a big ring resonator with PCM embedded at the crossing. In this architecture, the weighting operation was performed by the differential absorption for light under the different phase states (amorphous state or crystalline state) of PCM. And the MRR-based wavelength-division multiplexing technology was used to implement the sum operation. Lastly, the nonlinearity of neurons derived from the PCM-embedded ring resonator. Benefitting from the nonvolatile weights denoted by PCM and all-optical nonlinearity, this type of optical network can process information under ultra-low power consumption. Moreover, combined with the WDM technology, dense integrated MRRs and on-chip optical frequency comb technology [17] provide an ideal platform for large-scale expansion of networks. Similarly, Miscuglio et al. proposed photonic tensor cores by utilizing the dot product engine [41]. The schematic of the dot product engine is shown in Figure 3F. The input vector was loaded on the WDM signals modulated by high-speed modulators (MZMs) and then weighted by the PCM between two cascaded MRRs. The weighted WDM signals were incoherently summed up using a photodetector, which completed the dot product operation. The numerical simulations showed that the photonic tensor core unit had two to three orders higher performance over electrical tensor core units. Afterwards, in 2022, Cheng et al. improved the MRR-based MVM to perform complex-valued matrix computation and demonstrated Walsh-Hardmard transform, discrete cosine transform, discrete Fourier transform, and image convolutional processing in a 4 × 4 MRR array circuit [116]. The working principle of complex-valued MVM is shown in Figure 3G. In order to process full complex MVM, the input matrix I was divided into four matrices, defined as the positive real, positive imaginary, negative real, and negative imaginary parts of the matrix I. The weight matrix X was also divided into the real and imaginary parts and loaded on the MRR array. Moreover, the balanced photodetectors (PDs) were used between the add-drop port and drop port to cover the real number field expressed by the transmission coefficient of MRRs.

Except for using MRRs and B&W to realize matrix calculation, the PCM, SOA, and optical attenuator have been used to implement MVM operations and ONNs [48, 52, 117, 118]. In 2020, Shi et al. proposed another integrated weighting component (semiconductor optical amplifier, SOA) to perform the MVM operation in a WDM network [117]. The schematic diagram for the SOA-based weighted addition operation circuit is shown in Figure 3H. In the input vector selection stage, WDM inputs were fan-out to multiple weight matrix multiplication blocks, followed by an input vector selection unit. Then each arrayed waveguide grating (AWG) of the weighted addition block de-multiplexed the selected WDM inputs and assigned each wavelength channel to an independent SOA to perform the weighting operation, followed by a fan-in unit to addition operation. Compared to MRR-based weight matrix multiplication, SOA avoided the complicated calibration procedure because of the thermal cross-talk between adjacent elements, and monolithic or hybrid integration of gain and non-linear components was very promising to realize the on-chip nonlinearity and all-optical connectivity [119]. Afterwards, in 2021, Feldmann et al. demonstrated a highly parallelized integrated photonic hardware accelerator (tensor core) that operated at speed of 1012 MAC operations per second [48]. The schematic of the tensor core for performing four parallel MVM operations is shown in Figure 3I. The input vectors (V1 to Vn) were modulated by variable optical attenuators (VOAs) on the frequency combs generated by a high-Q Si3N4 photonic-chip-based microresonator and then de-multiplexed into four channels. Combs (X1 to Xn) belonging to the same group of vectors (in the same color as Vn) were parallelly input into the on-chip MAC unit consisting of a 4 × 4 crossing Si3N4 waveguide array and side-coupled PCM array. Four columns of PCMs (weight matrix) synchronously performed the dot product operation with the input vectors (V1 to Vn) so that four complete MVM operations finished at the same time. Then, the four output vectors were demultiplexed and detected in the following procedures. Due to the nonvolatile and zero-energy maintained features of PCM, the tensor core can achieve parallel, fast, and efficient photonic in-memory computing as the optical analogue of an application-specific integrated circuit (ASIC). In the same year, Wu et al. proposed another PCM-based convolution computing scheme by using phase-change metasurface mode converters (PMMCs) to express the matrix element [118]. PMCC was a programmable waveguide mode converter (TE0 to TE1) controlled by the tunable material phase of phase-gradient metasurface (GST). The schematic of a PMMC array for convolution is shown in Figure 3J. A patch of pixels of an image was encoded as optical pulses and input into k2 optical channels. The weight element was mapped into the mode contrast value of PMCC. The output in TE0 and TE1 were summed incoherently and measured by PDs to calculate the convolution result. By measuring the mode contrast, the value of weight can reach a 6-bit resolution, including both positive and negative values, which had an improvement compared to that of measuring the transmission of the optical input data through the programmed PCM [64]. In 2022, Ashtiani et al. demonstrated an integrated end-to-end photonic deep neural network (PDNN) that consisted of the whole functionality of artificial neurons [52]. The schematic of an N-input photonic neuron in PDNN is shown in Figure 3K. The array of P-doped–intrinsic–N-doped (PIN) current-controlled attenuators was used to individually weight the input signal. Then, the outputs of attenuators were converted into photocurrents by PDs and combined as the weighted sum of the neuron inputs. The weighted sum current drove a PN junction MRR modulator to perform the nonlinear activation function. In the on-chip PDNN, the linear operation was performed optically and the nonlinear operation was realized opto-electronically. Moreover, the inference time for the classification task was under 570 ps which was comparable with a single clock cycle of state-of-the-art digital platforms. In conclusion, the incoherently driving analog optical computing mainly depends on the mechanism that different wavelength channels perform independent multiplication and WDM technology performs the fan-out of inputs and the fan-in of outputs. Due to the rich spectrum resources and stability for independently controlling the weights, the WDM-based incoherent integrated circuit is an alternative platform to implement reconfigurable and scalable analog optical computing.

Apart from modulating information-carrying light in waveguides to realize analog optical computing, the propagation and interconnection of light in free space can be utilized to achieve high-speed, high-parallelism optical linear operation for analog optical computing. Specially, the density of interconnection can be easily extended to hundreds and thousands. Based on various space optics components, such as the lens, mirror, spatial light modulator (SLM), diffractive-optical element (DOE), digital micro-mirror device (DMD), diffractive layer, most analog optical computing models, including ONNs [47, 63, 120–125], ORCs [74], and OIMs [88], have been widely implemented.

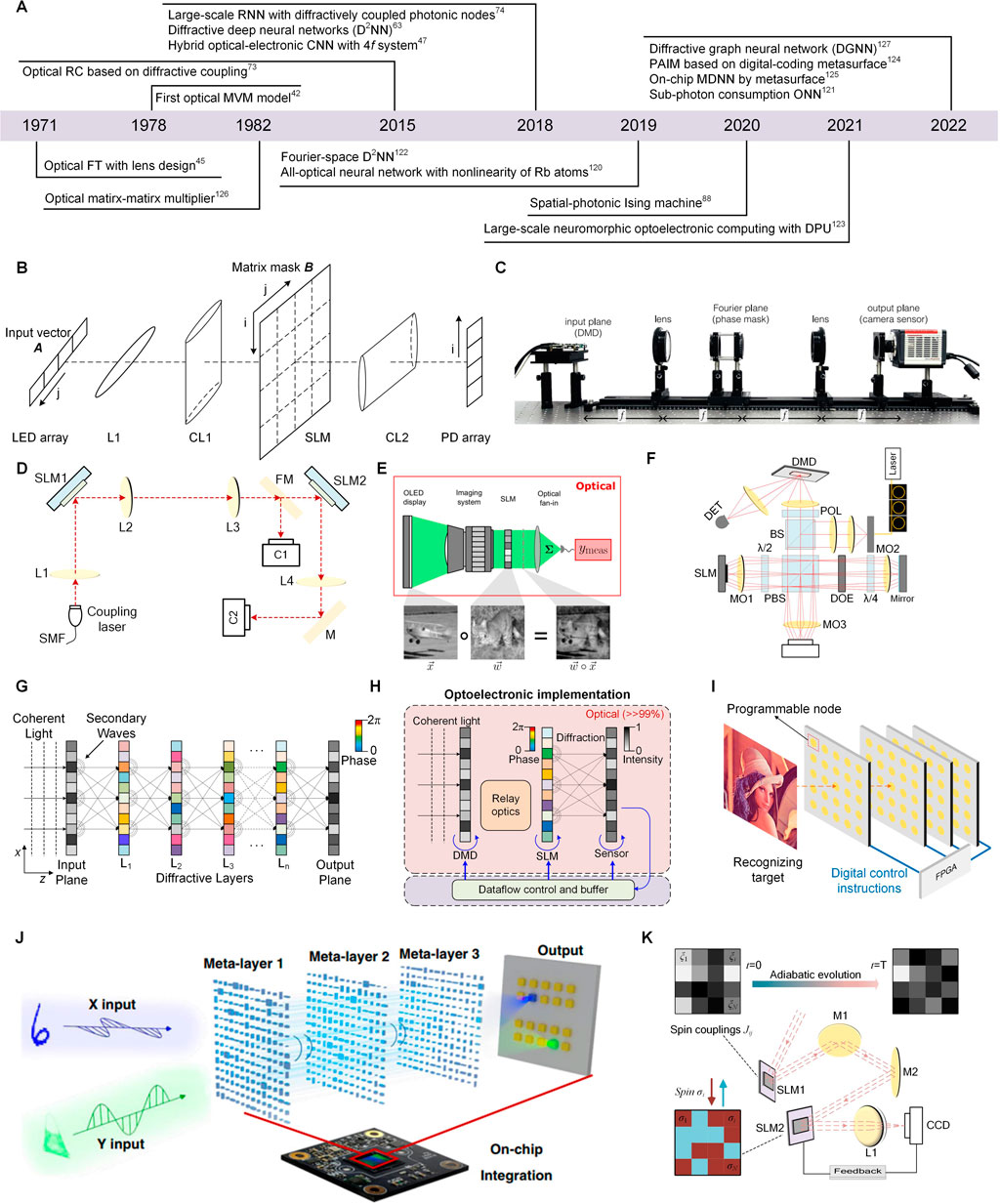

Figure 4A summarizes the timeline of advances in analog optical computing based on the space-propagation optical platform. In 1971, Bieren et al. firstly demonstrated that the Fourier transform operations can be performed without restriction in optical lens system [45]. After few years, a fully parallel, high-speed optical MVM (discrete Fourier transforms) system model was first proposed by Goodman [42]. The principle of optical MVM is depicted in Figure 4B. Firstly, the input vector A was modulated on the intensity of N light-emitting diodes (LED’s) array. Then, the input light beams were collimated by L1 and duplicated in the vertical direction by the cylinder lens (CL1). After passing through the CL1, the duplicated input vectors parallelly performed multiplication operation with each raw vector of the matrix mask B (SLM). Next, the beams from a given raw passed through the lens CL2 and converged (addition operation) on one element of the vertical photodetectors array. By copying the input vector and mapping the duplicates on the matrix mask, the MVM operation was naturally performed in the propagation process. Afterwards, Athale et al. proposed three implementations using outer product decomposition to realize optical matrix-matrix multiplier [126]. These methods mainly utilized the light source, the light modulators (electro-optic modulations, direct driven LED array, and acoustooptic Bragg cells), and the 2-D detector array to construct the product operation. However, the optical Fourier transform was not fully explored to be applied in optical computing in the past. In recent years, due to the activity of analog optical computing, optical Fourier transform has flourished again and promoted its applications in analog optical computing [47, 103, 120–122].

FIGURE 4. (A) The progresses of analog optical computing in the space-propagation optical platform. (B) Schematic of the optical MVM model. (C) Schematic of the optical convolutional layer based on 4f system [47]. (D) Schematic of the linear operation in the all-optical neural network [120]. (E) The procedure for characterizing optical vectorvector dot products [121]. (F) Schematic of the optical recurrent neural network using SLM and DOE [74]. (G) Schematic of the diffractive deep neural network [63]. (H) Schematic of the reconfigurable diffractive processing unit (DPU) [123]. (I) An array of programmable metasurfaces for constructing the programmable artificial intelligence machine [124]. (J) The working principle of the onchip multiplexed diffractive neural network [125]. (K) Principle of the spatial-photonic Ising machine [88]. (C) Reprinted from Ref. 47 with permission from Springer Nature: Scientific Reports. (E) Reprinted from Ref. 121 with permission from Springer Nature: Nature Communications. (J) Reprinted from Ref. 125 with permission from Springer Nature: Light|Science and Applications.

In 2018, Chang et al. proposed a hybrid optical-electronic convolutional neural networks with 4f system implementing optical convolution [47]. Based on the convolution theorem, the convolution of two-dimensional continuous functions in the space domain can be obtained by the inverse transformation of the product of their corresponding two Fourier transforms. The optical convolutional layer design based on 4f system is depicted in Figure 4C. The input image was encoded on the intensity of coherent light by the DMD and converted into the Fourier space after passing through the first lens. The convolution kernel was mapped into the point spread function (PSF) of the phase mask that placed on the common Fourier plane of two lenses. When the input beam passed through the phase mask and the second lens, the convolution result was naturally imaged on the camera sensor. By tiling the multiple kernels, multiple parallel convolutions were performed simultaneously so that the computational burden in CNN was greatly reduced. After that, in 2019, Zuo et al. proposed an all-optical neural network using SLM and Fourier lenses for linear operation and using laser-cooled 85Rb atoms for nonlinear operation [120]. The schematic of the linear operation is shown in Figure 4D, the input coupling laser beam was collimated and illuminated onto the first SLM (SLM1), which selectively reflected separate beam spots as the input vector Xj. The flip mirror (FM) and the first camera (C1) were used to monitor and measure Xj. Then, the incident light beam Xj was split and modulated by SLM2 into different directions i with weight Wij. In the end, the Fourier lens L4 summed all diffracted beams in the same direction onto a spot at its front focal plane as the linear summation Yi = ∑j Wij Xj. After the linear operation, the laser-cooled 85Rb atoms in a dark-line two-dimensional magneto-optical trap (MOT) implemented an electromagnetically induced transparency (EIT) nonlinear activation function. Under the motivation of figuring out the clean and quantitative investigation of the limits of optical energy consumption in large-scale ONNs. In 2022, Wang et al. demonstrated a sub-photon consumption ONN using spatial mode to perform the optical dot product [121]. The schematic for implementing sub-photon optical vector-vector dot product is shown in Figure 4E. The elements of the input vector were encoded in the intensity of independent spatial modes illuminated by an organic light-emitting diode (OLED). And the SLM was used to represent the weight by encoding the transmissivity of the modulator pixel. The scalar multiplication was performed when the emitting beam passed through the SLM. Lastly, a lens was used to focus the transmitted light onto a detector, where the total number of photons impinging on the detector was proportional to the dot product result. The result of the sub-photon consumption successfully proved that the energy consumption of ONNs can reach to an extreme low level.

Different from using optical Fourier lens to implement MVM operation, the direct phase/amplitude modulation on the diffractive lights also can implement highly parallel MVM operation. In 2015, Brunner et al. proposed a diffractive-optical network consisted of diffractive orders of a diffractive-optical element (DOE), imaging lens, and vertical-cavity surface-emitting lasers (VCSELs) array, allowing for constructing the parallel ORC [73]. The diffractive optical coupling in this diffractive-optical network was used to achieve the connection of different neuron in the reservoir and the connection weight was implemented by the coupling between individual lasers of the VCSEL. Based on this concept, in 2018, Brunner’s team further proposed a large scale recurrent neural network with 2025 diffractively coupled photonic nodes by using a SLM and DOE [74]. The schematic of the recurrent neural network is shown in Figure 4F. Firstly, the beam of the illumination laser passed through the beam splitter (BS) and focused on the first microscope objective’s (MO1) back focal plane and illuminated the SLM. Then, the pixel of SLM operated the intensity modulation on the illumination field, which represented encoding the RNN state. After that, the transmitted field was imaged on a mirror through MO2, then imaged on the camera after a double-pass through the λ/4-plate and the reflection of the PBS. The DOE adding to the beam path was used to implement the internal connection weight WDOE in RNN. The network’s new state was denoted by the intensity transmitted through the PBS. Lastly, the computational result was read out after summing the network’s state according to weight matrix WDMD, which was loaded on the DMD. After learning the readout weight of WDMD, the 900-node recurrent neural network can implement low-error chaotic Mackey–Glass sequence prediction despite the Boolean readout weights.

In 2018, an important novel spatial optical computing architecture named “diffractive deep neural network (D2NN)” was proposed by Lin et al. [63]. This research opened up the study direction of using the successive modulation of diffractive plane layers to implement MVM operation and ONNs. As shown in Figure 4G, the D2NN was consisted of multiple diffractive layers, whose every pixel acted as a neuron of artificial neural network, with a complex-valued transmission (or reflection) coefficient. According to the Huygens-Fresnel’ equation, the diffraction of wave between the successive layers can represent the fully connection of neurons. These transmission/reflection coefficients of each layer would be fixed and the diffractive layers would be physically fabricated after training the whole network by simulation, then the D2NN can passively perform the computing function in the speed of light. Due to the dense connections (millions of neurons and billions of connections), the phase-only modulated D2NN can realize 91.75% and 81.1% classification accuracy for MNIST and Fashion-MNIST, respectively, without implementing nonlinear activation function. Next year, Yan et al. demonstrated that the D2NN placed in the Fourier space can obtain better performance in advanced computer vision tasks, including all-optical saliency detection and high-accuracy object classification [122]. Compared to the real-space D2NN, the Fourier-space D2NN was more natural to preserve the spatial correspondence by incorporating a dual 2f optical system, which was helpful for those tasks that required an image-to-image mapping. Moreover, a photorefractive crystal (SBN:60) was used after D2NN to further improve the ability of the network to extract features. After training, the Fourier-space D2NN realized 98.6% and 91.1% classification accuracy for the MNIST and Fashion-MNIST dataset, respectively. In conclusion, the D2NN framework provided a unique all-optical processing platform that efficiently operated at the speed of light using passive components and optical diffraction. Meanwhile, the scale of D2NNs can be easily extended to provide extreme parallelism by using high-throughput and large-area 3D fabrication methods or on-chip integration. After that, in 2021, Zhou et al. [123] proposed using the reconfigurable diffractive processing unit to implement large-scale neuromorphic optoelectronic computing. The basic computing unit for constructing different types of ANN architectures was a reconfigurable diffractive processing unit (DPU) whose schematic is depicted in Figure 4H. Here, the design was used to process large-scale visual signals that feed in images and videos. Thus, the DMD and SLM were used as the modulators to implement the input nodes due to its high data throughput. Different input nodes were physically connected to individual output neurons by the diffraction, where the connection weights were determined by the diffractive modulation of the wavefront. The COMS sensor was used to as the photodetector to implement the optoelectronic neurons. By temporally multiplexing these DPUs, three complex ANN architectures were demonstrated, including the diffractive deep neural network (D2NN), diffractive network in network (D-NIN-1), and diffractive recurrent neural network (D-RNN). Soon after, Yan et al. proposed to realize all-optical graph representation learning by using integrated diffractive photonic computing units (DPUs) [127]. The DPU was consisted of the successive diffractive layers to transform the node attributes of graph network into optical neural messages. The proposed diffractive graph neural network (DGNN) opened up a new direction for designing application-specific integrated photonic circuits for high-efficiency processing large-scale graph data structures.

On the other hand, improving the reconfigurability and integration of D2NN became another promising research direction nowadays. In 2022, Liu et al. proposed a new kind of diffractive deep neural network by using multi-layer digital-coding metasurface array, which was named as the programmable artificial intelligence machine (PAIM) [124]. As shown in Figure 4I, the pre-designed diffractive layers in Ref. [63] were replaced by digital-coding metasurfaces consisting of multiple programmable nodes. By using field-programmable gate arrays (FPGAs) to control these nodes, the metasurfaces can manipulate reflected or transmitted electromagnetic waves in real time. Compared to previous diffractive deep neural network, the PAIM was fully reprogrammable and re-trainable owing to its weight-reprogrammable nodes, which facilitated the flexible configuration in different applications. In the same year, Luo et al. proposed on-chip multiplexed diffractive neural network (MDNN) by metasurface [125]. The schematic of the MDNN for polarization-dependent object classification task is depicted in Figure 4J. The input light was encoded with different information of a handwritten digit and a fashion product in x-polarization and y-polarization, respectively. By tuning the structural parameters of each meta-unit, the metasurface can implement polarization-dependent phase responses in x-polarization and y-polarization. Then, the final diffractive results of different polarizations converged on the corresponding photoelectric detection region on the CMOS chip. The polarization multiplexing scheme opened up a novel way to implement massively parallel computing tasks.

Besides, the space-propagation optical platform can be used to implement OIMs. In 2020, Pierangeli et al. proposed a spatial-photonic Ising machine by using SLM and demonstrated the adiabatic evolution of frustrated Ising models [88]. The experimental setup of the spatial-photonic Ising machine is shown in Figure 4K. The SLM2 encoded Ising spins σi = ±1 on a continuous beam by 0-π phase-delay values. The SLM1 was used to implement the intensity modulation on ξi to control spin interaction. The CDD was used to measure the difference between the pre-determined target image and the image detected. Based on the feedback of CDD, the system was firstly optimized to reach the minimum of a Hamiltonian with homogeneous couplings, then the adiabatic evolution was simulated. This spatial-photonic Ising machine based on SLM provided an alternative method to support large-scale systems consisting of millions of spins.

As above mentioned, many integrated optoelectronic devices have been demonstrated in recent decades, including optical filters [128], optical couplers [129], logic gates [130], modulators [91, 131], polarization splitters [95], mode converters [96], photodetectors [132] and so on, and they have been applied in analog optical computing systems [44, 64, 103, 115]. However, the monolithic integration or heterogeneous integration of passive and active devices is still challenging [133, 134]. Meanwhile, the technological path and architecture design for analog optical computing just have started to flourish and still been developed. Thus, innovative ideas are still being encouraged compared to mature prototypes for commercial products. Due to the flexibility for restructuring with the optoelectronic devices and measuring instruments in the optical fiber communication field, many computation prototypes based on the optical fiber platform also have been proposed for realizing high-performance analog optical computing [18, 49, 53, 54, 65, 78, 79, 84, 85, 135].

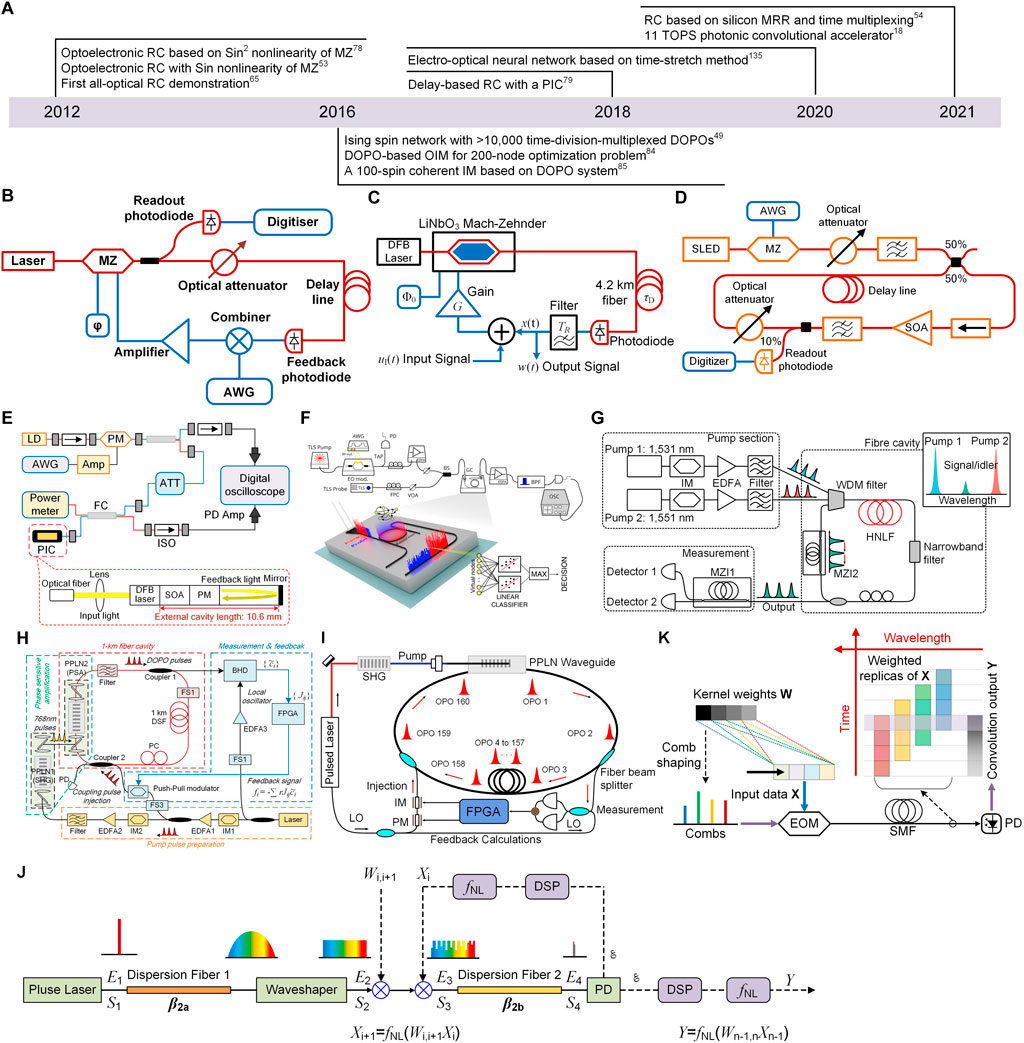

The development history of the analog optical computing based on the optical fiber platform is summarized in Figure 5A. These researches mainly focus on the implementation of ORCs and OIMs, and fewer are related to ONNs. In 2012, three associated researches of optoelectronic and all-optical RCs by using a single nonlinear node subject to a delayed feedback were successively proposed [53, 65, 78]. At first, Paquot et al. demonstrated an optoelectronic implementation of RC [53] based on a previous proposed similar architecture [136] that consisted of a single nonlinear node and a delay line. The experimental setup of the optoelectronic RC is shown in Figure 5B. The core of this RC implementation was the closed loop consisting of Lithium Niobate Mach-Zehnder modulator (M-Z) and a fiber spool, which performed as a nonlinear node to provide a sine nonlinearity and acted as a memory to store the delayed states of the nonlinearity, respectively. Besides, the input signal was fed into the system using arbitrary waveform generator (AWG), the response of the system was recorded through the readout photodiode, and the feedback signal was converted from optical field to electronic field by the feedback photodiode and was rejected into the system combined with input signal after being scaled by optical attenuator. By using computer to optimize the readout weight, the performance of the optoelectronic RC in nonlinear channel equalization and speech recognition tasks was comparable to state-of-the-art digital implementations. Soon, Larger et al. demonstrated a similar experimental scheme for optical information processing using a nonlinear optoelectronic oscillator subject to the delayed feedback [78]. The schematic of the optoelectronic implementation of RC is shown in Figure 5C. It can be seen the major component of the setup was similar to that of Ref. [53] but the nonlinearity derived from the Mach-Zehnder modulator turned into a sin2-function. After employing spoken digit recognition and time series prediction tasks as benchmarks, the optoelectronic RC also achieved competitive performance, which proved that the particular type of the nonlinearity seemed not to be crucial for RC. After that, Duport et al. improved the optoelectronic RC [78] and demonstrated the first all-optical experimental implementation [65]. The experimental set-up of the all-optical RC is shown in Figure 5D. The all-optical nonlinear feedback loop was consisted of an isolator, a SOA, a variable optical attenuator, and a fiber spool that acted as delay line. Different from the design of Ref. [78], the nonlinearity was provided by the saturation of the optical gain in SOA, where the characteristics of nonlinearity can be adjusted by controlling the injection currents of the SOA. The utilization of all-optical nonlinearity avoided the loss of velocity suffered from the conversion from optical field to electronic field. Thus, this implementation constituted a significant step towards the possible development of analog optical computing.

FIGURE 5. (A) The progresses of analog optical computing in the optical fiber platform. (B) Experimental set-up of the optoelectronic RC based on a single non-linear node and a delay line [53]. (C) Schematic of the optoelectronic implementation of RC [78]. (D) Schematic of the alloptical RC using the SOA as non-linearity [65]. (E) Schematic of the setup for delay-based RC using a photonic integrated circuit as nonlinearity [79]. (F) The experimental setup of the MRR-based RC [54]. (G) The principle of generating artificial Ising spins based on DOPOs [49]. (H) Schematic of the setup of the coherent Ising machine with measurement and feedback [84]. (I) Schematic of the coherent Ising machine by using PPLN to form the timedivision-multiplexed pulsed DOPOs [85]. (J) The principle of the time-stretch electro-optical neural network [135]. (K) The working principle of the photonic convolutional accelerator [18]. (F) Reprinted from Ref. 54 with permission from Springer Nature: Scientific Reports.

Afterwards, some researchers aimed to the integration of ORC and demonstrated some methods. In 2018, Takano et al. proposed a compact delay-based RC [79] by using a photonic integrated circuit (PIC). Here, the nonlinearity of RC was implemented by the nonlinear dynamics in the PIC with short external cavities. The structure of the RC and PIC is demonstrated in Figure 5E. The reservoir was implemented through the PIC with time-delayed optical feedback, and the input signal was injected into the reservoir using a semiconductor laser diode (LD) and a phase modulator (PM). The output signal was sampled from the temporal waveforms of the PIC using a photodetector and digital oscilloscope. As shown in Figure 5E (bottom), the PIC was consisted of a distributed-feedback (DFB) semiconductor laser, a SOA, a PM, a passive waveguide, and an external mirror for optical feedback. By the optical fiber connected to the PIC through a lens, the output of the PIC can be detected and input signal can be injected into the PIC. As the delay time of the PIC-based feedback loop was very small, two method were proposed to increase the number of virtual nodes of RC, namely reducing of the node interval and using of multiple delay times. After training, this RC with the PIC demonstrated successful performance in time-series prediction and nonlinear channel equalization tasks. After that, Borghi et al. demonstrated an implementation of RC based on a silicon MRR and time multiplexing [54]. The schematic of the experimental setup is depicted in Figure 5F. The input signal was encoded in the intensity of a pump laser and resonantly coupled to the input port of an MRR. Then, the input information was nonlinearly transferred from the pump light to a continuous wave probe laser by generating carriers through two photo absorption (TPA) and free carrier dispersion in the MRR. In this implementation, there was no external feedback and the virtual nodes were realized by time-multiplexing method. Besides, the nonlinearity was naturally provided by the TPA and carrier dynamics served as the connections between the virtual nodes. After computing, the probe light carried the results and exited from the drop port of the MRR. The reservoir achieved a minimum detectable bit error rate (BER) of 1.4 × 10–3 for bitrates up to 30 MHz in 1-bit delayed XOR task and 99.3% accuracy in the classification of the Iris dataset. In conclusion, the proposed method of using a single nonlinear node subject to delayed feedback for implementing RCs [53, 54, 65, 78, 79, 136, 137] was an inspiring attempt to reduce the complexity of the reservoir and the difficulty for realizing the nonlinearity in optics. However, the method creates many virtual nodes by using time dimension to exchange space dimension so that the bandwidth of components is challenging compared to that of RCs with parallel nodes.

On the other hand, OIMs based on the degenerate optical parametric oscillator (DOPO) attracted much attention and many researches have been reported [49, 84, 85]. In 2016, three important related works were successively reported. Inagaki et al. demonstrated >10,000 time-division-multiplexed DOPOs and simulated a one-dimensional Ising model [49]. The experiential setup by using DOPOs to generate Ising spins is shown in Figure 5G. As mentioned in Eq. 4, the elements with a binary degree of freedom is required to model the spins of Ising machine and the coupling between spins can be programmable in some way. In this implementation, the stable artificial spin was realized by a DOPO that took only the 0 or π phase relative to the pump phase. To obtain larger number of spins, dual-pump four-wave mixing (FWM) in a highly nonlinear fibre (HNLF) placed in a fibre cavity was utilized. As the number of independent DOPO was proportional to the cavity roundtrip time, increasing the pump repetition frequency or by increasing the cavity roundtrip time can get larger number of spins. Moreover, the spin–spin interaction can be simply implemented with mutual injections of DOPO lights using delay interferometers. Then, Inagaki et al. improved the coherent Ising machine (CIM) [49] and increased the number of spins to 2048 with full spin-spin couplings [84]. And the measurement and feedback (MFB) scheme was used to implement all possible connections among 2048 spins, which provided the foundation to solve 200-node maximum cut problems on arbitrary graph topologies. The schematic of the CIM with MFB is shown in Figure 5H. The periodically poled lithium niobate (PPLN) waveguide placed in a 1-km fiber cavity was used as a phase-sensitive amplifier (PSA) to generate DOPO pulses. The coupler one extracted the DOPO pulses into the balanced homodyne detection (BPD) to measure the phase components {

Besides, the optical fiber platform has inspired some novel ideas to implement efficient MVM operations for ONNs. In 2020, Zang et al. proposed an electro-optical neural network using time-stretch method [135]. The time-stretch method was applied to optically perform the linear operation (MVM) in the electro-optical neural network and the nonlinear operation was implemented after converting into the electronic signal. The principle of the time-stretch method is depicted in Figure 5J. Firstly, the ultrashort periodic pulses generated by a mode-locked laser was broadened by the dispersion fiber 1. Then, the broadened pulses were reshaped to flatten by the waveshaper. Afterwards, the flattened broadened pulses were modulated with each row of elements from weight matrix and the input vector in succession. After the modulated pulses passed through the dispersion fiber two and PD, energy of each pulse that implied the result of multiplication of each row of elements from the weight matrix and the input vector was accumulated and then processed with the DSP. By circularly using the setup to implement MVMs and performing nonlinear activations by post-processing, a three-layer electro-optical neural network was constructed and tested in the handwriting digit recognition task with 88% accuracy under considerable noise. In 2021, Xu et al. demonstrated a photonic convolutional accelerator (CA) [18] operating at more than 10 TOPS (trillions of operations per second) by using the dispersion of optical fiber and time multiplexing. The operation principle of the CA is shown in Figure 5K. As first, the input vector X to be processed was modulated by the electro-optical Mach–Zehnder modulator (EOM) on the optical power of multiple frequency combs, whose initial powers were independently reshaped according to the elements of the convolution kernel W. Then, these modulated sequence replicas were delayed at regular intervals after passing through the standard single mode fibre. Ingeniously, the delayed interval caused by the dispersion was set as same with the symbol period of the modulation. Thus, each time slot yielded a convolution between X and W for a given convolution window when the delayed and weighted replicas were summed via high-speed photodetection. Except for the convolution, the CA can realize MVM operations by multichannel wavelength division multiplexing. This architecture sufficiently utilized the advantages of the high-speed modulation of EOMs by simultaneously multiplexing the wavelength dimension and time dimension. Based on the CA, an optical CNN was constructed and showed an accuracy of 88% of the handwritten digit MNIST dataset. In conclusion, although the computing systems based on the optical fiber platform are bulky, these prototypes play an important role in the fast validation of innovative ideas, which is essential for the develop of the analog optical computing.

In the previous section, the principle of typical applications (ONNs, ORCs, and OIMs) of analog optical computing was briefly explained and their implementations were discussed when introducing the development history and milestones of analog optical computing by four platforms. In general, the computation of analog optical computing includes two-part operations, namely the linear operation and nonlinear operation. As previous section mentioned, the linear operation can be realized by the coherent interference [44, 51, 103], incoherent summation in WDM system [43, 48, 64, 115], 4f system [47], diffraction [63, 123, 125] and so on. However, the implementation of the nonlinear operation, particularly for ONNs, is not emphatically discussed. In fact, the nonlinearity is crucial for enhancing the computing power of ONNs and accelerating the convergence speed of the network. For example, multiple hidden layers in ONNs are equivalent to a single linear layer without nonlinear activation function so that ONNs cannot learn the nonlinear models and problems. Besides, the nonlinearity in ORCs enables the ability to process sequence problems and complex classification tasks. In recent years, the attention on studying the optical nonlinearity activation functions is focused and many computing architectures with all-optical nonlinearity have been demonstrated [64–66, 120, 122, 138, 139]. On the other hand, the training is a crucial and indispensable step for ONNs and ORCs. By applying the training process to adjust the internal connections and parameters of the network, the network shows the adaptation for different computation tasks. In this section, the implementations of optical nonlinearity in analog optical computing are summarized. Then, the training algorithms used in analog optical computing, particularly in ONNs, are discussed.

In analog optical computing, the implementation methods of nonlinear activation functions can be into two categories, namely optical-electrical-optical (OEO) method and all-optical method. Due to the flexibility and simple implementing, the OEO method was widely applied [37, 140–144]. However, the requirement for the computing speed and power consumption has promoted all-optical nonlinear activation functions in recent years [66, 139, 145–150]. Here, we summarized the implementations of the OEO and all-optical nonlinear activation functions in recent years, and the characteristics of each implementation are depicted in Table 1, including the physical mechanism, the type of nonlinearity function, power consumption/activation threshold, reconfigurability, and integratability.

For the OEO nonlinear activation functions, photodetector (PD) is a common device to realize a quadratic nonlinear function with I = |E|2 (I: intensity of light; E: electric field intensity) when encoding the information on the electric field intensity [63, 74]. Besides, the nonlinear transfer function of electro-optic modulations is widely applied in ONNs and ORCs [52, 53, 78, 140–142, 151]. For example, the transfer function (sin-function and sin2-function) of MZMs was used to implement the nonlinearity of RCs [53, 78]. As the requirement for the type of nonlinearity is not specific, the unchangeable but stable transfer function of MZMs is suitable to consider as the nonlinearity of RC. At the same time, MZMs can provide GHz of bandwidth to implement highly parallel computation. However, the power consumption of maintaining the bias voltage of modulators and loading the modulation signal is relatively higher. Besides, other modulators such as electro-absorption modulators (EAMs) and silicon microring modulators (MRMs) have been used to implement nonlinear activation functions in ONNs [52, 140–142, 151]. In Ref. [140], Amin et al. demonstrated an EAM based on an indium tin oxide (ITO) layer monolithically integrated into silicon photonic waveguides, and its dynamic range was used as the nonlinear activation function in ONNs. The weighted optical signal was converted into photovoltage through a balanced photodiode and then drove the EAM to nonlinearly modulate the laser power mimicking an activation function. Moreover, the ONN based on the nonlinear activation function of the ITO modulator achieved an accuracy of 97% in handwritten classification prediction tasks. In Ref. [142], Tait et al. proposed a silicon photonic modulator neuron consisting of two PDs connected electrically to an MRM. By setting different biasing conditions, the modulator neuron showed six response shapes, including sigmoid shapes widely used in RNNs, ReLU shapes used in feedforward-machine-learning networks, i.e., in multilayer perceptrons (MLPs) and convolutional neural networks (CNNs), radial basis functions (RBFs) applied in machine learning based on support-vector machines, and quadratic transfer functions. This nonlinear configurability demonstrated the potential of the modulator neuron to be applied in a wide variety of neural-processing tasks. In Ref. [52], the MRM was used to implement the ReLU activation function in an on-chip photonic deep neural network, and facilitated 93.8% and 89.8% accuracies in two-class and four-class classification of handwritten letters, respectively. Meanwhile, Williamson et al. proposed an OEO scheme for realizing reprogrammable nonlinear activation functions for ONNs [143]. In this implementation, a silicon MZI was used to modulate the weighted signal by splitting part of the weighted signal and then converting to electrical field to control the modulation phase of the MZI. By adjusting the electrical transfer function, the ReLU-like response and clipped response can be obtained via the interference of MZI. Besides, laser systems can be used to implement the OEO and all-optical nonlinearity via the nonlinear dynamics. In Refs. [37, 152, 153], the excitable dynamics (threshold characteristics) were demonstrated in graphene-based lasers, distributed feedback lasers, and Fano lasers, respectively, which can be applied in optical neuromorphic computing.