- 1Unidad de Gestión Clínica de Radiofísica Hospitalaria, Hospital Regional Universitario de Málaga, Málaga, Spain

- 2Departamento de Física Atómica, Molecular y Nuclear, Universidad de Granada, Granada, Spain

- 3Facultat de Física (FQA and ICC), Universitat de Barcelona, Barcelona, Spain

After a brief description of the essentials of Monte Carlo simulation methods and the definition of simulation efficiency, the rationale for variance-reduction techniques is presented. Popular variance-reduction techniques applicable to Monte Carlo simulations of radiation transport are described and motivated. The focus is on those techniques that can be used with any transport code, irrespective of the strategies used to track charged particles; they operate by manipulating either the number and weights of the transported particles or the mean free paths of the various interaction mechanisms. The considered techniques are 1) splitting and Russian roulette, with the ant colony method as builder of importance maps, 2) exponential transform and interaction-forcing biasing, 3) Woodcock or delta-scattering method, 4) interaction forcing, and 5) proper use of symmetries and combinations of different techniques. Illustrative results from analog simulations (without recourse to variance-reduction) and from variance-reduced simulations of various transport problems are presented.

1 Introduction

Monte Carlo simulation has become the tool of choice for solving the Boltzmann linear transport equation for high-energy radiation (particles) in complex material structures. As compared with alternative deterministic finite-difference methods, Monte Carlo simulation has several distinct advantages. Firstly, it can describe arbitrary interaction processes, including those with cross sections that are rapidly varying functions of the physical variables (e.g., the atomic photoelectric effect, whose total cross section presents sharp absorption edges). Secondly, Monte Carlo simulation can easily follow particles through material systems with complex geometries, where deterministic methods would find great difficulties even to define the appropriate boundary conditions. Finally, the stochastic nature of Monte Carlo methods permits a straightforward evaluation of statistical (class A) uncertainties of simulation results, while finite-difference methods allow only rough estimations of accumulated numerical errors. Although Monte Carlo codes have reached a high degree of sophistication, simulation suffers from the drawback of requiring very large computation times, particularly for fast charged particles and neutrons, which experience a very large number of interactions before being brought to rest.

Generally, a Monte Carlo simulation involves a radiation source with specified characteristics, which emits primary particles in various initial states. The state variables of a particle are the particle’s kind k (defined by its mass and charge), the kinetic energy E (energy in the case of photons), position r and direction of motion

For a given material, each interaction mechanism (int) of particles of kind k and energy E is characterized by a molecular differential cross section (DCS). Because of the assumed isotropy of the material, the DCSs depend only on the polar angle of scattering, θ, and the energy transfer W. The DCSs are conveniently expressed as

where σk,int(E) is the total (integrated) cross section and pk,int(E; W, cos θ) is the joint probability distribution function (PDF) of the energy loss and the angular deflection cos θ. In the case of polarized particles, the DCS may also depend on the azimuthal scattering angle ϕ [1], although polarization does not affect the total cross section. The product

The length s of the free flight of a particle to its next interaction is a random variable with PDF

where

is the total inverse mean free path. The kind of interaction that occurs at the end of a free flight, and the angular deflection and the energy transfer in the interaction are random variables with PDFs determined by the total cross sections and the DCSs of the active interaction mechanisms.

A simulation code generates the trajectory of a particle as a sequence of free flights, each ending with an interaction where the particle changes its direction of flight, loses energy, and may induce the emission of secondary particles. A Monte Carlo calculation consists of the generation of a large number N of showers by numerical random sampling from the relevant PDFs (see e.g., Ref. [1] and references therein). The sought numerical information on the transport process is obtained as an average over the simulated showers.

A number of general-purpose Monte Carlo codes for simulation of the coupled transport of photons and charged particles are available (e.g., penelope [1], mcnp [2], geant4 [3], fluka [4], egsnrc [5], egs5 [6], tripoli-4 [7], and phits [8]). They perform detailed event-by-event simulation for photons, while charged particles are simulated by means of a combination of class I and class II schemes (see Ref. [9]). In class I or “condensed” simulation schemes, the trajectory of a charged particle is split into segments of predefined length and the cumulative energy loss and angular deflection resulting from the interactions along each segment are sampled from approximate multiple scattering theories. Class II, or “mixed”, schemes simulate individual hard interactions (i.e., interactions with energy loss or polar angular deflection larger than certain cut-offs Wc and θc) from their restricted DCSs, and the effect of the soft interactions (with W or θ less than the corresponding cut-offs) between each pair of hard interactions is described by means of multiple-scattering approximations. Class II schemes describe hard interactions accurately (i.e., according to the adopted DCSs) and also provide a better description of soft events (because multiple scattering approximations are more accurate when applied to soft collisions only).

The present article deals with strategies to speed up transport simulations, generally known as variance-reduction techniques (VRTs). Some Monte Carlo codes allow applying various of these techniques automatically, while other codes may require some extra coding by the user. Our aim here is to offer a general perspective of the VRTs and of their capabilities. For the sake of simplicity, we consider analog Monte Carlo simulations, in which the transport process retains its Markovian character. In these simulations, when a particle reaches an interface separating two different materials, we stop the particle at the interface, and proceed with the simulation in the next material by using the appropriate interaction DCSs. In addition, we only consider VRTs that are independent of the geometry, unless otherwise indicated.

In Section 2 we summarize the essentials of Monte Carlo simulation. The basic ideas leading to the formulation of VRTs for transport simulations are presented in Section 3, followed by the description of various VRTs that are applicable to any Monte Carlo transport algorithm. Results from illustrative simulation examples are presented in Section 4. These were generated by using the Monte Carlo code system penelope [1, 10], which simulates the coupled transport of electrons/positrons and photons.

2 Statistical Uncertainty, Efficiency, and Variance Reduction

Formally, any Monte Carlo simulation is equivalent to the evaluation of one or several integrals. This equivalence permits a formal foundation for Monte Carlo techniques, which is best illustrated by considering the calculation of the one-dimensional integral of a function F(x) over an interval (a, b),

To introduce randomness into this deterministic problem, we consider an arbitrary PDF, p(x), such that.

and

and we express the integral in the form of an expectation value:

with

Then, the integral can be evaluated by generating a large number N of random values xi from p(x) and, by virtue of the law of large numbers, we have

The integral that defines the variance of f,

can be evaluated in a similar way:

Actually, in a Monte Carlo simulation run, the number N of random values generated is finite and, if we repeat the calculation a number of times (with “independent” seeds of the random number generator) we get different values of the estimator

which fluctuate about the mean

with variance

Here the properties of the expectation and the variance have been used. The central limit theorem then implies that, for sufficiently large N, the probability distribution of

is an unbiased estimator for

It is worth noticing that the PDF p(x) can be selected arbitrarily, with the proviso that it complies with Eq. 5a. It is to be expected that Monte Carlo calculations with different PDFs would yield estimates

where T is the computer (CPU) time spent in the calculation. Although during the calculation the value of ϵ fluctuates randomly, the amplitude of its fluctuations decreases when N increases and ϵ tends to a constant value when N → ∞, because

The variance-reduction techniques (VRTs) are strategies aimed at increasing the efficiency of the calculation of the integral without modifying its expectation, i.e., aimed at reducing the relative statistical uncertainty attained after a given CPU time. They normally operate by modifying the PDF p(x) to lessen the variance. It is worth pointing out that a reduction of the variance implies an increase in efficiency only when the sampling process remains simple enough not to outweigh the reduction of variance.

3 VRTs in Transport Simulations

A Monte Carlo simulation of radiation transport can be regarded as the simultaneous evaluation of a number of integrals of the type

where Q is the quantity of interest, q(x) is the contribution of an individual shower, the random variable x (usually an array of random variables) characterizes each individual shower, and p(x) is the PDF for the occurrence of that particular shower. For example, Q may be the average energy deposited into a certain volume

The Monte Carlo estimator of the quantity Q is obtained by generating a sufficiently large number N of showers and setting.

and

where qi is the contribution (deposited energy in the above example) of the i-th shower. Generally, a shower consists of a number n of particles, and each of these particles may contribute to the score, that is

where qij stands for the contribution of the j-th particle of the shower.

In radiation transport simulations, VRTs are implemented by assigning each particle a weight w, which is a factor, usually real and non-negative, that multiplies all the contributions of that particle to the scored quantities. Primary particles emitted from unbiased sources are usually assigned a weight equal to unity. Biased sources can also be considered by assigning appropriate weights to the emitted particles. Generally, secondary particles inherit the weight of the parent particle that induced their emission.

In a very general way (see e. g., Ref. [11]), VRTs can be classified as sppliting-based and importance-sampling-based techniques. The latter modify the interaction PDFs, p(s) and pk,int(E; W, cos θ), while the former manipulate the numbers and weights of transported particles without altering the interaction PDFs. Most VRTs are based on the following considerations.

Let

and

with the respective associated variances.

and

It is then possible that with a proper selection of weights wk, and the associated contributions qik, we can keep the result unbiased, i.e., such that

Unfortunately, VRTs are extremely problem-dependent, and general recipes to optimize efficiency cannot be given. We limit our considerations to simple VRTs that can be readily implemented in a generic transport code, with no specific requirements about the simulation geometry, to reduce the variance of a given quantity Q, keeping the estimators of other quantities, of lesser interest, unbiased. More elaborate VRTs, such as the DXTRAN method implemented in MCNP [2, 12], which rely on partially-deterministic methods, will not be considered.

3.1 Splitting and Russian Roulette

These two techniques, which are normally used in conjunction, are effective in problems where interest is focused on a limited volume in the space of state variables

Splitting consists of transforming a particle, with weight w0 and in a certain state, into a number

Splitting reduces the variance, but one should avoid using splitting factors that are too large, because the extra work needed for tracking the various split particles may reduce the simulation efficiency and increases the computation time. By contrast, Russian roulette increases the variance (because it produces fewer contributions with higher weights) and reduces the CPU time. Russian roulette can be used for avoiding the simulation of low-weight particles, which would spend the same CPU time as for particles with large weights to produce very small contributions to the scores.

The effectiveness of these VRTs relies on the adopted values of the parameters

3.1.1 Consistent Adjoint Driven Importance Sampling Method

Elaborate VR schemes (see, e.g., Ref. [15]) combine source biasing and weight-window strategies, by considering an importance function that measures the likelihood of particles in that cell to contribute to the score. Approximate importance functions can be inferred from previous simulations, or from a deterministic discrete-ordinate transport calculation.

Global strategies for automatically determining importance functions have been developed, mostly for photon and neutron transport because these particles have relatively large mean free paths. Thus, the Consistent Adjoint Driven Importance Sampling (CADIS) method, determines the importance function from a deterministic adjoint calculation [16]. The FW-CADIS method [17] uses a similar strategy starting from a deterministic forward calculation. These are hybrid methods, in the sense that they combine Monte Carlo simulation with a deterministic (discrete-ordinate) calculation. They have been implemented in various codes (e.g., mcnp and tripoli-4) for coupled neutron-gamma simulations and shielding calculations [18, 19].

3.1.2 Ant Colony Method

A simpler, and easier to implement, procedure to progressively build an importance function from information acquired from the simulation itself is provided by the ant colony method [20]. Ant colony algorithms were first proposed by Dorigo et al. [21] and are based on the collective behavior of ant colonies in their searching for food: ants that find food sources return to the nest laying down trails of pheromone. Paths to abundant sources are followed by a greater number of ants and the pheromone level increases, guiding other ants to these sources.

The ant colony method is applied to particles within a limited volume of the particle-state space

The ant colony algorithm described here is consistent as long as all particles that enter the cell structure from outside, or that start their trajectories from within the structure, have weights equal to a power of 2. If this is not the case, i.e., if a particle enters or starts its journey in the cell structure with a weight w such that 2n−1 < w < 2n, Russian roulette with killing probability

The importance map is determined from information gathered either from preliminary simulations or in the course of the simulation run. Let

characterizes the relevance of the cell. Evidently, Pi ranges between 0 (none of the particles that pass cell i, nor its descendants, reach the RoI) and 1 (all particles passing the cell i arrive, themselves or their descendants, to the RoI). The importance of cell i is defined as

where [κi] denotes the closest integer to κi, and

The quantity P0 is the probability that a primary particle, or one of its descendants, arrives in the RoI. Notice that the importance defined in Eq. (21) is positive and increases with the likelihood that particles passing the i-th cell contribute to the scores. As a matter of fact, the definition of κi is somewhat arbitrary; other increasing functions of Pi and such that κi(P0) = 0 would do the job. The numerical coefficients in the definition of Eq. (22) yield values of the exponent [κi] between −5 and 12. Practical experience indicates that moderate variations of those coefficients do not produce significant improvements of the effectiveness of the method.

Once a suitable importance map is acquired, splitting and Russian roulette are activated as follows. When a transported particle having weight w moves from the cell i to the cell f,

• if w If > 1, the particle is split into

• if w If < 1, Russian roulette is applied with killing probability

• if w If = 1, no action is taken.

The definition of Ii as a power of 2, Eq. (21), combined with this strategy, implies that particles that passed a given cell have the same weight,

The ant colony method has been proven to be effective in simulations of clinical linear electron accelerators [22, 23], in a study of the response of MOSFET dosimeters [24], in dosimetry calculations of radiosurgery treatments [25], in the optimization of certain radiotherapy procedures [26], and in calculations of specific absorption fractions in Nuclear Medicine [20].

3.2 Path-Length Biasing

As mentioned above, the path length s to the next interaction is a random variable whose PDF is given by Eq. (2). The VRTs described in this Subsection operate by sampling s from a modified PDF, p′(s), and, to keep the simulation results unbiased, they replace the weight w of the particle with a new value w′ such that [15].

that is

3.2.1 Exponential Transform

There are situations in which one is mostly interested on the transport properties in localized spatial regions. For instance, in shielding calculations we wish to evaluate the dose in deep volumes of irradiated objects, while in backscattering studies our interest is focused on the surface region where the local dose varies quite abruptly (build-up effect). In those situations, the VRT of exponential transform [27] allows concentrating the simulated interactions near or within the RoI. This technique consists of modifying the value of the mean free path λ, which is replaced with

where a is a positive constant. That is, the PDF of the free-flight length to the next interaction is replaced with

and random values of the path length s are sampled by the inverse-transform method, which gives the familiar sampling formula

Here ξ is a random number distributed uniformly between 0 and 1. The modified weight

must be used in all subsequent contributions to the scores.

When a > 1 the interactions occur at smaller path lengths, if a = 1 the simulation is analog, and when 0 < a < 1 the path lengths between interactions are stretched. In the case of photon beams impinging on thick material blocks, the exponential transform with a < 1 is useful in shielding calculations, while with a > 1 helps in determining the dose in the build-up zone, near the entrance surface of the beam. Naturally, the “constant” a can be made direction-dependent (see, e.g., Ref. [2])

In principle the exponential transform is only valid when particles move in a homogeneous body surrounded with vacuum. It may not be applicable in complex geometries, where the transported particle may enter a different material before reaching the position of the next interaction, except when specific interface crossing strategies are adopted.

3.2.2 Forced-Interaction Biasing

Calculations of the dose in low-density gas volumes, or of the emission of secondary particles from thin material bodies use to have low efficiencies in analog simulations because the probability of having an interaction in those volumes and bodies is exceedingly small. A simple VRT that is very effective in these cases consists in forcing an interaction in a restricted path length interval, say between 0 and a given maximum length L. This is accomplished by sampling the path length s to the next interaction from the PDF [2].

limited to the interval (0, L). The path length s can then be sampled by the inverse transform method, which leads to the sampling formula

Notice that when L → ∞, this formula reduces to the familiar form of Eq. (26). To compensate for the effect of forcing the interaction, the weight w of the particle is replaced with the new weight

Since both the exponential transform and the forced-interaction biasing modify the particle weight, they may produce particles with very large or very small weights. It is then convenient to combine these VRTs with splitting and Russian roulette so as to keep the weights between reasonable limits.

3.3 Woodcock Method

This VRT, also known as the delta scattering method [28–30], is helpful in simulations of photon beams. It takes advantage of the high penetration of photons to simplify their tracking through material systems with complex geometries. This is made possible by assuming that, in addition to the physical interactions, the transported photons may undergo delta interactions, i.e., fake interactions that do not modify the state variables of the particle. Photons are transported freely across the system using an augmented inverse mean free path, Λ−1, which is required to be larger than the actual total inverse mean free paths in all the materials crossed by a trajectory ray. The event at the end of each free flight is assumed to be either a real interaction or a delta interaction (which does nothing). Delta interactions occur with probability 1 − λ−1/Λ−1, where λ−1 is the actual total inverse mean free path in the current material. Thus, the probability of real interactions per unit path length, which is equal to λ−1, remains unaltered.

This procedure avoids the need for computing intersections of particle rays with interfaces at the expense of having to determine which material is at the end of each free flight. Hence delta scattering will improve the efficiency for geometries where locating a particle (i.e., finding the material at its current position) is faster than normal tracking. It is particularly effective in calculations of dose distributions from photon beams in voxelized structures such as those obtained from computerized tomography. The Woodcock method is also applicable to fast neutrons, and to any kind of particles that have relatively large mean free paths. Unfortunately, the efficiency gain from this method is small when secondary charged particles are also tracked.

3.4 Interaction Forcing

Sometimes, a high variance results from an extremely low interaction probability. Consider, for instance, the simulation of the energy spectrum of bremsstrahlung photons emitted by medium energy (∼ 100 keV) electrons in a thin foil of a certain material. As radiative events are much less probable than elastic and inelastic scattering, the uncertainty of the simulated photon spectrum will be relatively large. Another difficult situation is found in the calculation of dose from photon beams on thin foils, where the interaction probability is very small. In such cases, an efficient VRT is to artificially increase the interaction probability of the process A of interest, i.e., to force interactions of type A to occur more frequently than for the real process. Our practical implementation of interaction forcing consists of replacing the mean free path λA of the real process by a shorter one,

For the sake of programming simplicity, the length of the free jump to the next A interaction (real or forced) of the transported particle is sampled from the exponential distribution with the reduced mean free path λA,f. To keep the simulation unbiased, we must manipulate the weights of the particles. Let w be the weight of the transported particle. We correct for the introduced distortion of the mean free path as follows:

• A weight

• Interactions of type A (real and forced) are simulated to determine the energy loss and the possible emission of secondary particles, but the state variables of the transported particle are altered only when the interaction is real. As the probability of having a real A interaction is

Of course, interaction forcing should be applied only to interactions that are dynamically allowed, i.e., for particles with energy above the corresponding “reaction” threshold. Evidently, quantities directly related to forced interactions will have a reduced statistical uncertainty due to the increase in number of these interactions. However, for a given simulation time, other quantities may exhibit standard deviations larger than those of the analog simulation because of the time spent in simulating the forced interactions. It is worth noticing that our implementation of interaction forcing introduces forced interactions randomly along the particle trajectory, independently of the geometry, and it keeps the weight of the transported particle unaltered. This is at odds with the VRT of forced-interaction biasing (frequently referred to also with the name of interaction forcing), where forced events alter the weight of the transported particle and occur with probabilities that need to be specified in accordance with the local geometry.

Interaction forcing can be activated locally, at any stage of the trajectory of a particle. Evidently, repeated application of interaction forcing may produce particles with very small weights. In practice this may be avoided by using this VRT only for particles within a given weight window (wl, wu). For instance, if interaction forcing is the only VRT applied and if primary particles are assigned unit weights, a window (0.5,1.5) ensures that interaction forcing with

Interaction forcing has been efficiently used in simulations of electron-probe microanalysis [31, 32], photon beams from medical electron accelerators [33], the response of ionization chambers [34], and the calculation of doses absorbed in small organs in Nuclear Medicine treatments [20].

Although this VRT effectively reduces the statistical uncertainties of results involving the emission of secondary particles and the energy deposition in very thin volumes, it violates energy conservation (because the sum of energies deposited along a track differs from the energy lost by the projectile) and, therefore, yields energy deposition spectra that are biased. Consequently, it cannot be used, e.g., in simulations of energy spectra from scintillation or semiconductor detectors, which require computing the distribution of total energy deposited by all particles in a shower into the sensitive volume of the detector. Because it is very difficult to avoid this kind of bias, many simulations of energy-deposition spectra are purely analog.

3.5 Other Methods

Exploitation of local symmetries present in the simulation is often very useful in reducing the variance [33]. For instance, when the radiation beam and the geometry are locally symmetric under rotations about an axis, splitting can be made more effective if the position and the direction of each of the

Splitting is also useful to favor the emission of secondary particles by taking advantage of the emission symmetries of these particles. The method can be applied, e.g., in simulations of x-ray emission spectra from targets irradiated by electrons with energies of the order of 100 keV and smaller. These electrons emit bremsstrahlung photons and x rays with quite small probabilities. The energy of bremsstrahlung photons depends basically of the polar emission angle and it is quite costly to sample. It is then practical to split each emitted bremsstrahlung photon by assigning to the split ones random values of the azimuthal emission angle, in order to increase the likelihood that one of these photons reaches the detector. Similarly, the number of x rays released in the relaxation of ionized atoms may be increased by splitting them and assigning to the split x rays independent random directions. These VRTs are frequently referred to as directional bremsstrahlung or x-ray splitting; they have been employed, e.g., in simulations of microanalysis measurements [32] and clinical linear accelerators [36], and in dosimetry calculations of radiosurgery [25], usually accompanied with Russian roulette to reduce the number of photons not moving towards the RoI [37]. It is also worth noticing that directional splitting can be applied in combination with interaction forcing.

As a last example, we quote the so-called “range rejection” method, which simply consists of absorbing a particle when it (and its possible descendants) cannot leave (or reach) the RoIs. Range rejection is useful, e.g., when computing the total energy deposition of charged particles in a given spatial RoI. When the residual range of a particle (and its possible descendants) is less than the distance to the nearest limiting surface of the RoI, the particle will deposit all its energy either inside or outside the considered RoI (depending on its current position) and simulation of the track can be stopped. Range rejection is not adequate for photon transport simulation because the concept of photon range is not well defined (or, to be more precise, because photon path length fluctuations are very large). Care must also be exercised when applying range rejection to high-energy electrons or positrons because of the possibility that bremsstrahlung photons emitted by these particles leave or reach the RoI. It is worth mentioning that this technique does not modify the weights of the particles involved.

As a final comment, we would like pointing out that wery frequently, an effective increase of efficiency may be obtained by simply avoiding unnecessary calculations. This is usually true for simulation codes that incorporate general-purpose geometry packages. In the case of simple (e.g., planar, spherical, cylindrical) geometries the program may be substantially simplified and this may speed up the simulation appreciably. In general, the clever use of possible symmetries of the problem under consideration may lead to spectacular variance reductions. For example, when both the source and the material system are symmetric under rotations about an axis, the dose distribution also has that symmetry and it can be tallied by using cylindrical bins. This amounts to removing one spatial coordinate (the azimuthal angle) and leads to an effective reduction of the variance of the calculated local dose.

4 Simulation Examples and Practical Aspects

We present here examples of simulations that have low efficiencies when formulated in analog form and where VRTs prove to be effective. As already mentioned, using a VRT allows increasing the efficiency of the calculation of a certain quantity or a set of related quantities, at the expense of worsening the efficiencies of other quantities. Once a quantity of interest is identified, the user selects the VRTs to be applied among those offered by the simulation code, or those that can be coded additionally, and sets the values of the parameters that define the adopted VRTs. In principle, the optimal parameter values (i.e., those giving the highest efficiency) can only be determined from trial simulations. In many cases, knowledge of the energy-dependent mean free paths, and the CSDA ranges of charged particles, allows estimating appropriate values of the VRT parameters, avoiding the burden of performing trial simulations.

It is not obvious how to quantify the efficiency of calculated continuous distributions (such as energy spectra or dose distributions), which are delivered in the form of histograms with multiple bins. In such cases, the effectiveness of VRTs is best appreciated from a plot of the simulated histogram with statistical uncertainties displayed as error bars.

The simulations reported here were all performed by using the penelope code running on an Intel Core i7-8550U computer at 1.99 GHz. The parameters of the adopted VRTs were fixed beforehand, guided by previous experience. They may not be optimal, i.e., the efficiency could be improved further by varying those parameter values. The following examples show that even a blind setting of the VRT parameters may save quite a fraction of the computer time.

4.1 Electron-Induced Photon Emission

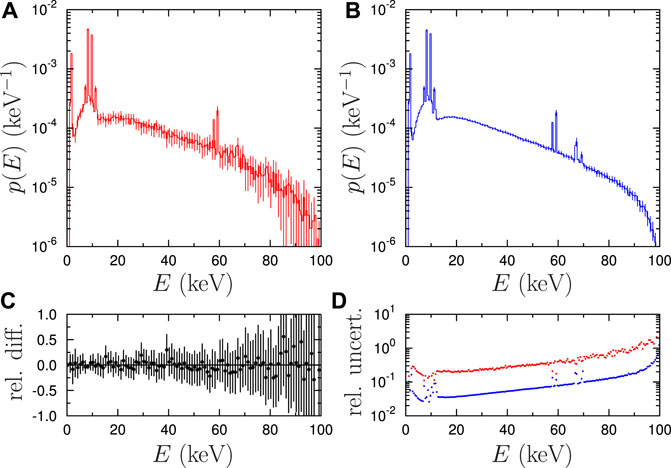

High-energy electrons emit bremsstrahlung photons and induce the emission of x-rays from atoms ionized by electron impact. These are the photons released, e.g., from x-ray generators and from the target in electron-probe microanalysis measurements [32]. The difficulty of simulations of photon emission by electrons with energies of the order of, or lower than 100 keV is the low probability with which these electrons induce the emission of photons. The VRT of interaction forcing has been proven to be effective to cope with this problem. Figure 1 displays results from simulations of 100 keV electrons impinging normally on a foil of tungsten with a thickness equal to the CSDA range of the electrons (R = 1.526 × 10–3 cm). The displayed histograms are energy distributions of photons emitted from the irradiated surface of the foil obtained from 1) an analog simulation (without any VRT applied) run for half an hour (left plot), and 2) a 15-min simulation using interaction forcing of inner-shell ionization with

FIGURE 1. X-ray emission spectra from a tungsten target bombarded by 100 keV electrons at normal incidence. Error bars represent statistical uncertainties with a coverage factor k = 3. The upper left plot (A) is the result from a 30 min analog simulation. The upper right plot (B) was generated in a run of 15 min of the same code by using the VRTs of interaction forcing and emission splitting of bremsstrahlung photons and x-rays, as described in the text. The lower plots display the relative difference between these results (C) and a comparison of the relative uncertainties of each simulation (D).

The forcing factors were determined by setting the mean free paths λA,f of the forced interactions of primary electrons (E = 100 keV) equal to a fraction of the CSDA range. With forcing factors determined in this way, we ensure that each primary electron undergoes on average a certain number of forced interactions along its trajectory. In the present simulations we took λA,f = R/25 for both bremsstrahlung and x-ray emission. Splitting proves to be useful when the photon detector covers a small solid angle [32]; in our case, all photons that leave the irradiated surface are counted and, consequently, using a larger splitting factor would not improve the efficiency appreciably.

The lower left plot in Figure 1 displays the relative differences between the analog and variance-reduced simulations, which average to zero within statistical uncertainties as expected. The lower right plot shows the relative uncertainties of the two simulation results. Notice that, because of the different forcing factors for bremsstrahlung emission and inner-shell ionization, the relative uncertainties of the x-ray lines differ from the continuous trend of the bremsstrahlung background. The combined use of interaction forcing and photon-emission splitting is seen to effectively increase the efficiency of simulations of photon spectra, without altering the reliability of the results.

4.2 Dose in Thin Material Bodies

Another difficult calculation is that of the deposited energy in bodies having thicknesses much less than the total mean free paths of the transported particles. This situation is encountered, e.g., in simulations of the response to photon beams of ionization chambers, where the active gas is almost completely transparent to photons. Again, interaction forcing provides an effective practical solution in this case.

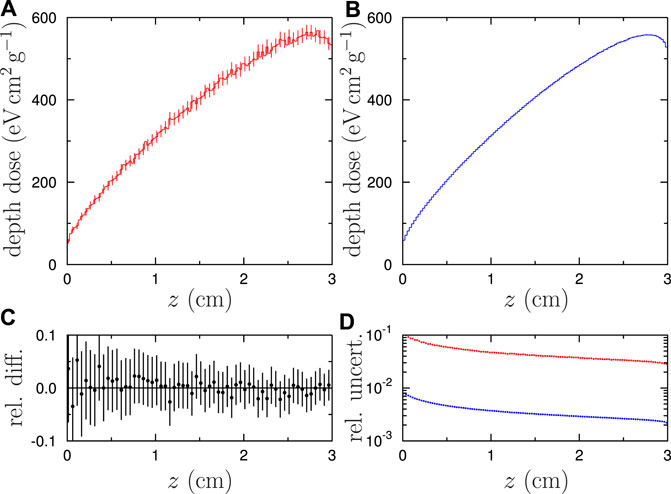

Results from analog and variance-reduced simulations of the spatial dose distribution from a 1.25 MeV photon beam in a 3 cm-thick air layer at normal incidence are displayed in Figure 2. The analog simulation lasted for 83 min and involved the generation of 2 × 109 showers (a part of them involving no interactions at all). In the variance-reduced simulation, we applied interaction forcing to the interactions of photoabsorption, Compton scattering and pair production, all them with

FIGURE 2. Depth-dose distribution (energy deposited per unit mass thickness) in a 3 cm air layer irradiated by 1.25 MeV photons at normal incidence. (A) Result from an analog simulation, after 83 min of CPU time. (B) Result from the variance-reduced simulation (interaction forcing with

4.3 Energy Deposition in Complex Geometries

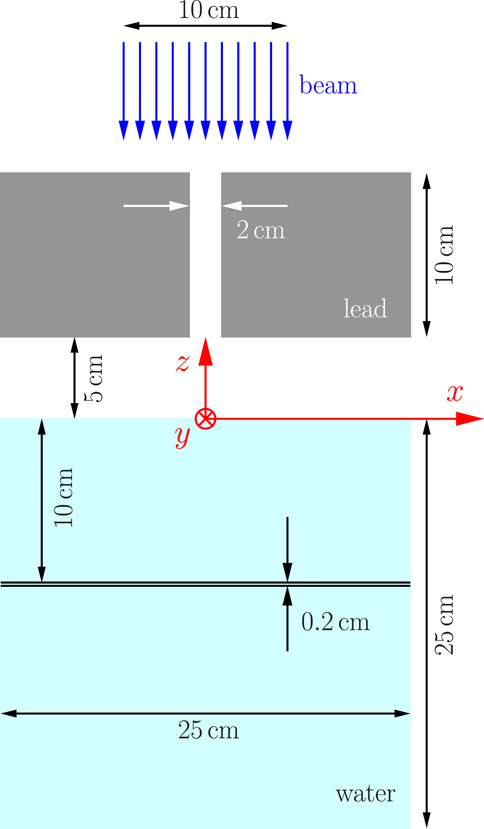

The application of VRTs in cases with complex geometries and small RoIs may require careful planing and even modifications of the simulation code. It is in these cases where the ant colony method proves to be effective, mostly when combined with interaction forcing. As an example, we consider the situation described in Figure 3: a 10 cm by 10 cm parallel beam of 6 MeV photons impinges normally on a collimator followed by a cubic water phantom. The collimator is a 10 cm thick lead block with a cylindrical hole in the direction of the beam and 1 cm radius. The simulation is designed to determine the lateral dose profile (in the direction of the x axis) at a depth of 10 cm in the water phantom by scoring the energy deposited in a 0.2 cm thick layer split into bins of 0.1 and 0.2 cm in the directions of the x and y axes, respectively. The analog simulation is slow partially because of the work spent in following secondary radiation that is generated and absorbed within the lead collimator.

In the variance-reduced simulation we applied the ant colony method. The geometry volume was partitioned by means of a uniform ortogonal mesh of planes into cubic cells of 0.5 cm side, each one identified by the three indexes (kx, ky, kz). An additional index, kp, designated the particle kind (= 1 for electrons and positrons, and = 2 for photons). Finally, a fifth index, kE, indicated the energy bin: the energy range covered by the simulation, from 0 to 6 MeV, was split into 3 cells of 2 MeV width. That is, the adopted importance map was defined as a five-dimensional matrix I(kx, ky, kz, kp, kE).

The RoI has been defined as the set of cells where the lateral dose profile is tallied (i.e., a row of cells parallel to the x axis). Initially, no variance reduction technique is applied, and the importance map is built from the progressing analog simulation until the gathered information is sufficient to switch on the VRT. It has been considered that after 1,000 showers have contributed to the RoI scores, the information in the importance map is detailed anough to activate splitting and Russian roulette guided by the ant colony algorithm described in Section 3.1.2. As the building of the importance map continues during all the simulation, its “quality” improves progressively. Of course, the importance map can be stored and reused in subsequent runs.

Figure 4 displays transverse dose profiles resulting from the analog (upper left panel) and the variance-reduced simulation (upper right panel) runs. Notice that the analog run was ten times longer than the variance-reduced one. The difference between the magnitude of the respective uncertainties is evident. While the profile obtained from the variance-reduced simulation is nearly symmetric, as expected, the result from analog simulation still shows a slight asymmetry. The lower left panel displays the relative differences between both calculations, which average to zero within statistical uncertainties. Finally, the lower right panel shows the relative uncertainties of the two simulation results. Although there is not much difference between the relative uncertainties, consideration of CPU times shows that the use of the ant colony method increases the efficiency by a factor of about 100.

FIGURE 4. Dose profile of a collimated 6 MeV photon beam in water using the geometry shown in Figure 3. (A) Result from an analog simulation, after 720 min of CPU time. (B) Result from the variance-reduced simulation (ant colony method, as described in the text), generated after 72 min CPU time. Other details are the same as in Figure 1.

5 Concluding Comments

We have described a set of relatively simple VRTs that operate either by manipulating the numbers and weights of the transported particles or by modifying the mean free paths for the relevant interaction processes. The ant-colony method (Section 3.1.2), in spite of its conceptual simplicity, has proven to be effective in focusing the radiation flux towards small RoIs in complex geometries. The VRT of interaction forcing (Section 3.4), has been shown to be useful in simulations with penelope of processes with intrinsic small probabilities, such as calculations of absorbed doses in thin material bodies and the emission of photons from samples irradiated by electron beams.

The examples in the last Section evidence the usefulness of VRTs to speed up simulations of radiation transport in difficult situations. The effectiveness of these techniques is mostly determined by the edequacy of the adopted VRTs and their defining parameters. Although optimal parameters may be determined from trial simulations, a great deal of exploratory work can be saved by considering the dimensions of the material system relative to the mean free paths of the relevant interaction processes, and to the CSDA ranges of charged particles. General-purpose Monte Carlo codes should provide tables of these quantities, as functions of the energy of the particles, calculated from the DCSs adopted in the code. The auxiliary program

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

Financial support from the Spanish Ministerio de Ciencia, Innovación y Universidades/Agencia Estatal de Investigación/European Regional Development Fund (ERDF) of European Union (projects nos. RTI2018-098117-B-C22 and PID2019-104888GB-I00) and the Junta de Andalucía (projects nos. FQM387 and P18-RT-3237) is gratefully acknowledged.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We are thankful to the reviewers for their thorough analysis of the original manuscript and for many comments and suggestions.

Footnotes

1We use the term secondary to qualify particles emitted as a result of interactions

2It is worth noticing that the finite size of the phase-space files implies a “latent” uncertainty, which sets a lower limit to the uncertainty attainable by splitting [14].

References

1. Salvat F. PENELOPE-2018: A Code System for Monte Carlo Simulation of Electron and Photon Transport. Document NEA/MBDAV/R(2019)1. Boulogne-Billancourt: OECD Nuclear Energy Agency (2019).

2.X-5 Monte Carlo Team. MCNP–A General Monte Carlo N-Particle Transport Code, Version 5. Report LA-UR-03-1987. Los Alamos: Los Alamos National Laboratory (2003).

3. Allison J, Amakoca K, Apostolakisd J, Arce P, Asaif M, Asog T, et al. Recent Developments in Geant4. Nucl Instrum Meth A (2016) 835:186–225. doi:10.1016/j.nima.2016.06.125

4. Ferrari A, Sala PR, Fassò A, Ranft J. Fluka: a Multi-Particle Transport Code. In: Tech. Rep. CERN-2005-00X, INFN TC 05/11, SLAC-R-773. Geneva: CERN (2005). doi:10.2172/877507

5. Kawrakow I, Rogers DWO. The EGSnrc Code System: Monte Carlo Simulation of Electron and Photon Transport. In: Tech. Rep. PIRS-701. Ottawa: National Research Council of Canada (2001).

6. Hirayama H, Namito Y, Bielajew AF, Wilderman SJ, Nelson WR. The EGS5 Code System. In: Tech. Rep. SLAC-R-730 (KEK 2005-8). Menlo Park: Stanford Linear Accelerator Center (2006).

7. Brun E, Damian F, Diop CM, Dumonteil E, Hugot FX, Jouanne C, et al. TRIPOLI-4, CEA, EDF and AREVA Reference Monte Carlo Code. Ann Nucl Energ (2015) 82:151–60. doi:10.1016/j.anucene.2014.07.053

8. Sato T, Iwamoto Y, Hashimoto S, Ogawa T, Furuta T, Abe S-I, et al. Features of Particle and Heavy Ion Transport Code System (PHITS) Version 3.02. J Nucl Sci Technol (2018) 55:684–90. doi:10.1080/00223131.2017.1419890

9. Berger MJ. Monte Carlo Calculation of the Penetration and Diffusion of Fast Charged Particles. In: B Alder, S Fernbach, and M Rotenberg, editors. Methods in Computational Physics, Vol. 1. New York: Academic Press (1963). p. 135–215.

10. Baró J, Sempau J, Fernández-Varea JM, Salvat F. PENELOPE: An Algorithm for Monte Carlo Simulation of the Penetration and Energy Loss of Electrons and Positrons in Matter. Nucl Instr Methods Phys Res Section B: Beam Interactions Mater Atoms (1995) 100:31–46. doi:10.1016/0168-583x(95)00349-5

11. Rubino G, Tuffin B. Rare Event Simulation Using Monte Carlo Methods. Hoboken, NJ: John Wiley & Sons (2009).

12. Booth TE. Monte Carlo Variance Reduction Approaches for Non-boltzmann Tallies. Report LA-12433. Los Alamos: Los Alamos National Laboratory (1992).

13. Capote R, Jeraj R, Ma CM, Rogers DW, Sánchez-Doblado F, Sempau J, et al. Phase-space Database for External Beam Radiotherapy. Summary Report of a Consultants’ Meeting. In: Tech. Rep. INDC (NDS)-0484. Vienna: International Atomic Energy Agency (2006).

14. Sempau J, Sánchez-Reyes A, Salvat F, Tahar HOb., Jiang SB, Fernández-Varea JM. Monte Carlo Simulation of Electron Beams from an Accelerator Head Using PENELOPE. Phys Med Biol (2001) 46:1163–86. doi:10.1088/0031-9155/46/4/318

16. Wagner JC, Haghighat A. Automated Variance Reduction of Monte Carlo Shielding Calculations Using the Discrete Ordinates Adjoint Function. Nucl Sci Eng (1998) 128:186–208. doi:10.13182/nse98-2

17. Wagner JC, Peplow DE, Mosher SW. FW-CADIS Method for Global and Regional Variance Reduction of Monte Carlo Radiation Transport Calculations. Nucl Sci Eng (2014) 176:37–57. doi:10.13182/nse12-33

18. Petit O, Lee Y-K, Diop CM. Variance Reduction Adjustment in Monte Carlo TRIPOLI-4 Neutron Gamma Coupled Calculations. Prog Nucl Sci Tech (2014) 4:408–12. doi:10.15669/pnst.4.408

19. Nowak M, Mancusi D, Sciannandrone D, Masiello E, Louvin H, Dumonteil E. Accelerating Monte Carlo Shielding Calculations in TRIPOLI-4 with a Deterministic Adjoint Flux. Nucl Sci Eng (2019) 193:966–81. doi:10.1080/00295639.2019.1578568

20. Díaz-Londoño G, García-Pareja S, Salvat F, Lallena AM. Monte Carlo Calculation of Specific Absorbed Fractions: Variance Reduction Techniques. Phys Med Biol (2015) 60:2625–44. doi:10.1088/0031-9155/60/7/2625

21. Dorigo M, Maniezzo V, Colorni A. Ant System: Optimization by a colony of Cooperating Agents. IEEE Trans Syst Man Cybern B (1996) 26:29–41. doi:10.1109/3477.484436

22. García-Pareja S, Vilches M, Lallena AM. Ant colony Method to Control Variance Reduction Techniques in the Monte Carlo Simulation of Clinical Electron Linear Accelerators. Nucl Instr Methods Phys Res Section A: Acc Spectrometers, Detectors Associated Equipment (2007) 580:510–3. doi:10.1016/j.nima.2007.05.217

23. García-Pareja S, Vilches M, Lallena AM. Ant colony Method to Control Variance Reduction Techniques in the Monte Carlo Simulation of Clinical Electron Linear Accelerators of Use in Cancer Therapy. J Comput Appl Maths (2010) 233:1534–41. doi:10.1016/j.cam.2008.03.052

24. Carvajal MA, García-Pareja S, Guirado D, Vilches M, Anguiano M, Palma AJ, et al. Monte Carlo Simulation Using the PENELOPE Code with an Ant colony Algorithm to Study MOSFET Detectors. Phys Med Biol (2009) 54:6263–76. doi:10.1088/0031-9155/54/20/015

25. García-Pareja S, Galán P, Manzano F, Brualla L, Lallena AM. Ant colony Algorithm Implementation in Electron and Photon Monte Carlo Transport: Application to the Commissioning of Radiosurgery Photon Beams. Med Phys (2010) 37:3782–90. doi:10.1118/1.3456108

26. Cenizo E, García-Pareja S, Galán P, Bodineau C, Caudepón F, Casado FJ. A Jaw Calibration Method to Provide a Homogeneous Dose Distribution in the Matching Region when Using a Monoisocentric Beam Split Technique. Med Phys (2011) 38:2374–81. doi:10.1118/1.3581377

27. Clark FH. The Exponential Transform as an Importance-Sampling Device – A Review. In: Document ORNL-RSIC-14. Oak Ridge: Oak Ridge National Laboratory (1966).

28. Woodcock E, Murphy T, Hemmings P, Longworth S. Techniques Used in the GEM Code for Monte Carlo Neutronics Calculations in Reactors and Other Systems of Complex Geometry. In: Proc. Conf. On Applications of Computing Methods to Reactor Problems. Tech. Rep. ANL-7050. Argonne: Argonne National Laboratories (1965).

29. Coleman WA. Mathematical Verification of a Certain Monte Carlo Sampling Technique and Applications of the Technique to Radiation Transport Problems. Nucl Sci Eng (1968) 32:76–81. doi:10.13182/nse68-1

30. Sempau J, Wilderman SJ, Bielajew AF. DPM, a Fast, Accurate Monte Carlo Code Optimized for Photon and Electron Radiotherapy Treatment Planning Dose Calculations. Phys Med Biol (2000) 45:2263–91. doi:10.1088/0031-9155/45/8/315

31. Tian L, Zhu J, Liu M, An Z. Bremsstrahlung Spectra Produced by Kilovolt Electron Impact on Thick Targets. Nucl Instr Methods Phys Res Section B: Beam Interactions Mater Atoms (2009) 267:3495–9. doi:10.1016/j.nimb.2009.08.009

32. Llovet X, Salvat F. PENEPMA: A Monte Carlo Program for the Simulation of X-ray Emission in Electron Probe Microanalysis. Microsc Microanal (2017) 23:634–46. doi:10.1017/s1431927617000526

33. Brualla L, Salvat F, Palanco-Zamora R. Efficient Monte Carlo Simulation of Multileaf Collimators Using Geometry-Related Variance-Reduction Techniques. Phys Med Biol (2009) 54:4131–49. doi:10.1088/0031-9155/54/13/011

34. Benmakhlouf H, Sempau J, Andreo P. Output Correction Factors for Nine Small Field Detectors in 6 MV Radiation Therapy Photon Beams: A PENELOPE Monte Carlo Study. Med Phys (2014) 41:041711. doi:10.1118/1.4868695

35. Bush K, Zavgorodni SF, Beckham WA. Azimuthal Particle Redistribution for the Reduction of Latent Phase-Space Variance in Monte Carlo Simulations. Phys Med Biol (2007) 52:4345–60. doi:10.1088/0031-9155/52/14/021

36. Rodriguez M, Sempau J, Brualla L. A Combined Approach of Variance-Reduction Techniques for the Efficient Monte Carlo Simulation of Linacs. Phys Med Biol (2012) 57:3013–24. doi:10.1088/0031-9155/57/10/3013

Keywords: Monte Carlo simulation, statistical uncertainties, variance-reduction methods, splitting and Russian roulette, ant colony algorithms, interaction forcing, delta scattering

Citation: García-Pareja S, Lallena AM and Salvat F (2021) Variance-Reduction Methods for Monte Carlo Simulation of Radiation Transport. Front. Phys. 9:718873. doi: 10.3389/fphy.2021.718873

Received: 01 June 2021; Accepted: 06 October 2021;

Published: 27 October 2021.

Edited by:

Susanna Guatelli, University of Wollongong, AustraliaReviewed by:

Cindy Le Loirec, CEA Cadarache, FranceMauro Menichelli, Istituto Nazionale di Fisica Nucleare di Perugia, Italy

Marc Verderi, UMR7638 Laboratoire Leprince-Ringuet (LLR), France

Copyright © 2021 García-Pareja, Lallena and Salvat. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Salvador García-Pareja, c2FsdmFkb3IuZ2FyY2lhLnNzcGFAanVudGFkZWFuZGFsdWNpYS5lcw==

Salvador García-Pareja

Salvador García-Pareja Antonio M. Lallena

Antonio M. Lallena Francesc Salvat

Francesc Salvat