95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Phys. , 09 September 2020

Sec. Statistical and Computational Physics

Volume 8 - 2020 | https://doi.org/10.3389/fphy.2020.00367

This article is part of the Research Topic Analytical and Numerical Methods for Differential Equations and Applications View all 11 articles

In this paper Extrapolated Stabilized Explicit Runge-Kutta methods (ESERK) are proposed to solve nonlinear partial differential equations (PDEs) in right triangles. These algorithms evaluate more times the function than a standard explicit Runge–Kutta scheme (nt times per step), and these extra evaluations do not increase the order of convergence but the stability region grows with . Hence, the total computational cost is times lower than with a traditional explicit algorithm. Thus, these algorithms have been traditionally considered to solve stiff PDEs in squares/rectangles or cubes. In this paper, for the first time, ESERK methods are considered in a right triangle. It is demonstrated that such type of codes keep the convergence and the stability properties under certain conditions. This new approach would allow to solve nonlinear parabolic PDEs with stabilized explicit Runge–Kutta schemes in complex domains, that would be decomposed in rectangles and right triangles.

Let us suppose that we have to solve a nonlinear PDE with dominating diffusion:

subject to traditional initial and Dirichlet boundary conditions:

and

These types of problems are very common in a large amount of areas such as atmospheric phenomena, biology, chemical reactions, combustion, financial mathematics, industrial engineering, laser modeling, malware propagation, medicine, mechanics, molecular dynamics, nuclear kinetics, etc., see [1–9], to mention a few.

A widely-used approach for solving these time-dependent and multi-dimensional PDEs is to first discretize the space variables (with finite difference or spectral methods) to obtain a very large system of ODEs of the form

where , t ≥ t0, and f(t, y) takes value in ℝn, this procedure is well-known as the method of lines (MOL). But these systems of ODEs not only have a huge dimension, additionally they might become very stiff problems.

Hence, traditional explicit methods are usually very slow, due to absolute stability, it is necessary to use very small length steps, see [6, 7] and references therein. Therefore, these schemes are not usually considered.

Implicit schemes based on BDF and Runge–Kutta methods have much better stability properties. However, since the dimension of the ODE system is very high, then it is necessary to solve very large nonlinear systems at each iteration.

Other numerous techniques have also been proposed based on ETD schemes (but it is necessary to approximate operators including matrix exponentials), alternating direction implicit methods (they have limitations on the order of convergence) and explicit-implicit algorithms. However, in any case the number of operations is huge when the system dimension is high.

For those cases where it is known that the Jacobian eigenvalues of the function are all real negative or are very close to this semi-axis, there is another option: stabilized explicit Runge–Kutta methods (they are also called Runge-Kutta-Chebyshev methods). This happens, for example, when diffusion dominates in the PDE, when the Laplacian is discretized using finite differences or some spectral techniques, then the associated matrix has this type of eigenvalues.

These types of algorithms are totally explicit, but they have regions of stability extended along the real negative axis. These schemes typically have order 2 or 4 [8, 10–16]. Recently, we propose a new procedure combined with Richardson extrapolation to obtain methods with other orders of convergence [17, 18], but in all these methods, these integrators have many more stages than the order of convergence. Most of these extra stages seek to extend as much as possible the region of stability along the negative real axis. Regions of stability increase quadratically with the number of stages. Thus, the cost per step is greater than in a classic Runge–Kutta, because it is necessary to evaluate the function in Equation (4) nt times. However, the number of steps reduce proportionally with , thus the total computational cost is reduced proportionally with nt.

These schemes have been traditionally considered in squares/rectangles or cubes. But this makes difficult to apply them in PDEs with complex geometries, which happens in most of the cases. Some different strategies have been proposed to apply them when the original domain is not a square nor a cube (see [3–5]). They implemented stabilized Runge–Kutta methods after using adaptive multiresolution techniques or fixed mesh codes in space. But simulations in complex geometries constitute a very challenging problem, see (section 4, [5]), where they stated for their results based on adaptive multiresolution techniques that they “will only present here 2D and 3D simulations in simplified geometries for the sake of assessing our results and perspectives in the field.”

As far as we know, stabilized explicit Runge–Kutta methods have not been tested in triangles yet. For this reason, in this paper, we are analysing how ESERK methods can be employed to solve nonlinear PDEs in these types of regions and their convergence.

In this paper, a summary on ESERK4 methods is provided in section 2. The major advance of our contribution is given in section 3: it is explained how ESERK4 can be utilized for (1) when Ω is a right triangle. After some linear transformations and spatial discretizations ESERK4 is numerically stable and fourth-order convergence in time, and second-order in space is obtained. This allows a new way to numerically approach parabolic nonlinear PDEs in complex domains in the plane, which can be easier decomposed in a sum of triangles and rectangles. Finally, some conclusions and future goals are outlined.

In [17], ESERK4 schemes were developed for nonlinear PDEs in several dimensions with good stability properties and numerical results in squares and cubes. The idea is quite simple: first-order stabilized explicit Runge–Kutta (SERK) methods are derived using Chebyshev polynomials of the first kind:

s being the number of stages of the first-order method.

If we consider

then |Rs(z)| oscillates between −λ4 and λ4 (for a value 0 < λ4 = 0.311688 < 1 that we will calculate later) in a region which is O(s2), and .

We can construct Runge–Kutta schemes with |Rs(z)| as stability functions by just changing (and considering that 1, Ts(x) and are the stability functions of Identity operator, gs, and hf(·), and writing Rs(z) as a linear combination of the Chebyshev polynomials, see Theorem 1 [12] for more details).

Once first-order SERK methods have been derived, they approximate the solution of the initial value problem (4), by performing ni steps with constant step size hi at x0 + h, i.e., yhi(x0 + h): = Si, 1, with step sizes h1 > h2 > h3 > … (taking hi = h/ni, ni = 1, …, 4).

Finally

is a fourth-order approximation with

as its stability function. Additionally, we have that

The positive real solution of

is λ4 = 0.311688. Hence, whenever |Rs(z)| < 0.311688, then |P4s(z)| < 0.95. Taking , it can be checked numerically that |Rs, 4(z)| ≤ 0.311688 for z ∈ [−s2, −1] and 9 ≤ s ≤ 4000, and therefore the ESERK4 methods derived in this way are fourth-order approximations and numerically stable in a region including [−s2, 0].

In [17], we constructed a variable-step and number of stages algorithm combining all the schemes derived there, with s up to 4,000. The idea is quite simple: (i) First, we select the step size in order to control the local error; the best results were obtained using techniques considered for standard extrapolation methods (see Equations (8–11) in [17]). (ii) Later the minimum s is chosen so that the absolute stability is satisfied.

Recently, we are working developing the parallel version of this code (see [19]). Using 4 threads, CPU times are up to 2.5 times smaller than in the previous sequential algorithm. The new parallel code also has a decreasing memory demand, and therefore it is possible to solve problems with higher dimension in regular PCs.

Complex geometric shapes are ubiquitous in our natural environment. In this paper, we are interested in numerically solving partial differential equations (PDEs) in such types of geometries, which are very common in problems related with human bodies, materials, or simply a complicated engine in classical engineering applications.

One very well-known strategy, within a finite element context, is to build the necessary modifications in the vicinity of the boundary. Such an approach is studied in the composite finite element method (FEM). Those methods based on finite element are usually proposed only for linear PDEs. FEM is a numerical method for solving problems of engineering and mathematical physics (typical problems include structural analysis, heat transfer, fluid flow, mass transport, or electromagnetic potential, because these problems generally require numerically approximating the solution of linear partial differential equations). The finite element method allows the transformation of the problem in a system of algebraic equations. Unfortunately, it is more difficult to employ these techniques with nonlinear parabolic PDEs in several dimensions, although some results have been obtained to know when the resulting discrete Galerkin equations have a unique solution in [20]. However, for some problems, some of these techniques are not easy to be employed numerically, they are computationally very expensive because they require solving nonlinear systems with huge dimension at every step, or it is difficult to demonstrate that the numerical schemes have unique solution in a general case.

On the other hand, Implicit–Explicit (IMEX) methods have been employed to solve a very stiff nonlinear system of ODEs coming from the spatial discretization of nonlinear parabolic PDEs that appeared in the modelization of an ischemic stroke in [5]. The authors employed an adaptive multiresolution approach and a finite volume strategy for the spatial discretizations. And a Strang splitting method in time, combining ROCK4, an explicit Stalized Explicit Runge–Kutta scheme for the diffusion part, and Radau5, an implicit A-stable method for the reaction. These methods were previously analyzed in [3] for streamer discharge simulations, and the authors demonstrated second-order convergence in time. Later, they employed similar strategies for different physical problems in [4, 21]. As the authors state, some of these procedures are complicated except in simple domains like squares and cubes, and only second-order convergence in time is possible. However, there are complex problems where nonlinear terms have potentially large stiffness, and at the same time, it is necessary to efficiently solve the model with small errors. This motivates to derive high-order schemes with good internal stability properties.

In what follows we will explain a new strategy to numerically solve the nonlinear parabolic PDE given by Equation (1) where Ω is any right triangle, and therefore any researcher can combine the theory (utilized with FEM) to spatially decomposed any complex geometry into triangles (since any acute triangle and obtuse triangle can be decomposed into two right triangles), and later employing the method described in this paper. Additionally, schemes proposed in this work are fourth-order ODE solvers (in time), and numerical spatial approximations will be second-order (although fourth-order formulae can be explored except for the closest points to vertices).

Without loss of generality we can consider that our right triangle is TR, the one with vertices (0, 1), (0, 0), (1, 0). Otherwise we first use a linear transformation of the right triangle with vertices , , [where is the vertex of the right angle]:

where the parameters a1, a2, b1, b2 can be computed easily as

where

and it is easy to check that Det ≠ 0 if and only the three points are not in a line (but we always have a triangle).

The main reason of decomposing our general region Ω∈R2 into right triangles (and not other triangles) is, that after this linear transformation given by Equations (9) and (10), our PDE given by Equation (1) transforms into the Equation

subject to (traditional) initial and Dirichlet boundary conditions. Therefore, let us first study Equation (12), together with

and

where ∂(TR) is the border of the triangle with vertices (0, 1), (0, 0), (1, 0). One positive issue is that, after the traditional spatial discretization described below, the matrix obtained from the diffusion term has all the eigenvalues real, and therefore we can utilize the ESERK methods proposed in the previous section 2.

Now, let us define the spatial discretization of our continuous problem provided by Equation (12), the problem domain TR is discretized by the grid points (xi, yj), where

since xi+yj ≤ 1.

With this semidiscretizations we will approximate uxx and uyy at point (xi, yj) with the following second-order formulae:

After the linear transformation given by Equations (9) and (10), our PDE given by Equation (1) may transform into one Equation where one term in uxy would appear. Normally, this term can be approximated in the square or the rectangle through the formula

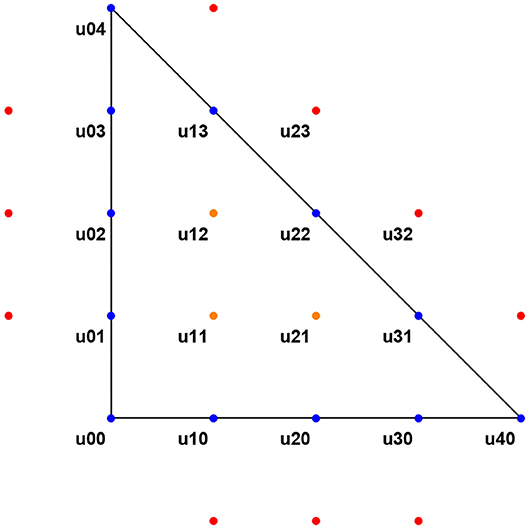

however, in TR, we can obtain that the point (xi, yj) is in TR, i.e., xi + yj < 1, but the point (xi + 1, yj + 1) might not satisfy that xi + 1 + yj + 1 ≤ 1, and therefore we cannot employ these finite difference formulae if a term in uxy appears. Fortunately, we will check that this term cancels after this transformation [given by Equations (9 and 10)] whenever the original triangle with vertices is a right triangle and is the vertex of the right angle. This fact is explained in Figure 1. If we would need to approximate , then it would be necessary to obtain an approximation of u3, 2, but this point is outside of the TR, the region of study.

Figure 1. Spatial discretization in the right triangle TR. We need to approximate partial derivatives of u in the interior (orange points), and therefore we have obtained in the previous steps approximations of the function in the interior, and the points in the border (blue points), but we do not have these values outside of TR (red points).

In this work, we are employing only second-order approximations in space. In other works, for example [13], we have also employed SERK codes after higher-order discretizations in space, but in rectangles. Normally, in rectangles, we can use formulae similar to

and in the lower edge

However, in the triangle, again we can observe in Figure 1, that we would need to approximate the solution in points outside TR before we can calculate (19) near the vertex (0, 1). Obviously, one possible idea for the future is considering the decomposition of complex regions into bigger rectangles in the interior, and small right triangles near the border of the complex region where it is necessary to solve the PDE.

Now, we are ready to understand why we chose right triangles in the decomposition of complex regions. The main reason is, that simple calculations give us [after linear transformations given by Equations (9–11)]:

and therefore, after this linear transformation, has the following term in uxy

If we change a1, a2, b1, and b2 for their values given by Equation (10)

which is 0 if and only if the vectors and are orthogonal, i.e., if they form a right angle at P0.

Thus, if the original triangle has a right angle at P0, there is not a term in uxy, and we can use the second-order approximations in space, with the spatial discretization described above. Additionally, the following theorem guarantee that ESERK methods can be employed (with numerical stability and good results) in this right triangle to solve the PDE given by Equations (1)–(3) after the linear transformation given by Equations (9)–(11) and the spatial discretization given by Equation (15):

Theorem: Let Equations (1)–(3) be the PDE to be solved, and Ω a right triangle with a right angle at P0. After linear transformation given by Equations (9) and (10), this PDE transforms into Equations (12)–(14), which can be discretized by Equations (15) and (16), transforming into the system of ODEs

F(t, xi, yj, uij) being the sum of f(t, x, y, u) at the grid points plus the function given by the spatial discretization of the derivatives at the boundary.

The associate matrix, A, to the terms c1uxx + c2uyy (with c1, c2 ≥ 0) is real and symmetric, and therefore all the eigenvalues of this matrix are negative and real. Hence, Extrapolated Stabilized Explicit Runge–Kutta are numerically stable whenever ∂u[F(t, x, y, u)] does not modify this type of eigenvalues (real and negative) in the Jacobian function and (μ being and ). Therefore, ESERK4 methods can solve Equations (1)–(3) with a fourth-order convergence in time, and second in space.

Proof: It only remains to study the associate matrix A.

But simple calculations allow us to obtain that

where Bi is the square matrix with dimension i

0i, j is the i × j matrix with all the values equal 0, Idi is the identity matrix of dimension i, and , and therefore A is a symmetric real matrix.

Finally, it is well-known that all the eigenvalues of any symmetric real matrix A are real. Let us suppose that (λ, v) is a complex pair of A, i.e., an eigenvector v = x+ yi ∈ℂn, where x, y ∈ ℝn and λ = a + bi ∈ ℂ is the corresponding eigenvalue with a, b ∈ ℝ. Therefore,

Hence, equalizing real and imaginary parts, we have

and therefore

In this way we can conclude that

and, since ||x||2+||y||2≠0, then b = 0 and λ = a∈ℝ

Additionally, since σ, μ ≥ 0, the Gershgoring theorem guarantees for all the eigenvalues of A that 4(μ + σ) ≤ λi ≤ 0.

Therefore, whenever the nonlinear part does not modify this type of eigenvalues (real and negative) in the Jacobian function, a bound of the spectral radius of the Jacobian is 4(μ+σ), and we merely need to use an ESERK method with to guarantee numerical stability.

Let us now study the numerical behavior of ESERK methods in a right triangle with one example. We will consider

where

Ω is the triangle with vertices (−1, 1), (−3, 2), and (0, 3) and initial and boundary conditions are taken such that is its solution.

Hence, we first consider the linear transformation given by Equations (9) and (10), i.e., a1 = −2/5, a2 = 1/5, b1 = 1/5, b2 = 2/5. In this way Equation (30) transforms into the Equation

where

and initial and boundary conditions are taken such that u(t, x, y) = e−tsin(πx) is its solution.

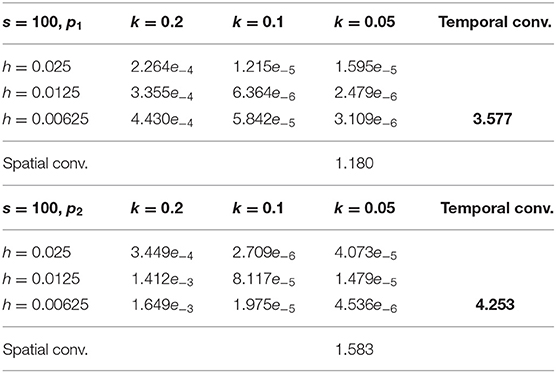

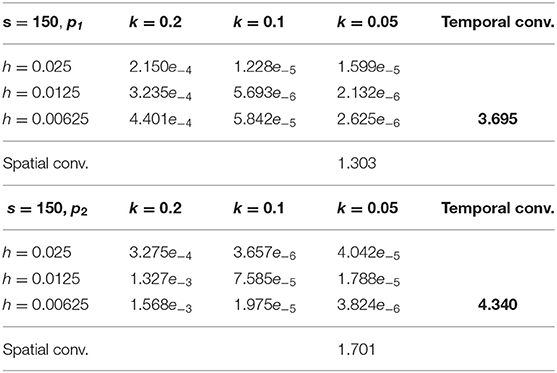

Now, it is possible to utilize second-order approximations in space, as it was explained in the previous section. ESERK4 with s = 100 and 150 where considered for this numerical experiment with different values of h = Δx = Δy and k = Δt. Numerical convergence at several points was analyzed with both methods, and numerical errors at two points [p1 = (t, x, y) = (1, 0.15, 0.15) and p2 = (t, x, y) = (1, 0.5, 0.25)] are shown in Tables 1, 2.

Table 1. Analysis of the numerical convergence at points p1 = (t, x, y) = (1, 0.15, 0.15) (top) and p2 = (t, x, y) = (1, 0.5, 0.25) (bottom) for the ESERK4 algorithm with s = 100 with k = Δt = 0.2, 0.1 and 0.05, and h = Δx = Δy = 0.025, 0.0125, and 0.00625.

Table 2. Analysis of the numerical convergence at points p1 = (t, x, y) = (1, 0.15, 0.15) (top) and p2 = (t, x, y) = (1, 0.5, 0.25) (bottom) for the ESERK4 algorithm with s = 150 with k = Δt = 0.2, 0.1 and 0.05, and h = Δx = Δy = 0.025, 0.0125, and 0.00625.

First of all, we calculated all the eigenvalues of the matrix A after spatial discretization. As it was demonstrated in Theorem 23, they are real and negative, and they are inside the intervals [−1, 292, 0] for h = 0.025; [−5, 183, 0] for h = 0.0125; and [−20, 746, 0] for h = 0.00625. In the three cases, the bound 4(μ+σ) given by Gershgoring theorem is a good approximation for the spectral radius [4(μ+σ) is 1296.91 for h = 0.025, 5187.64 for h = 0.0125, and 20750.6 for h = 0.00625, less than a 1% over the real values].

ESERK4 schemes are stable in [−s2, 0] therefore any ESERK method with (since our bigger k = 0.2) is stable in this numerical example.

In both Tables 1, 2, if we take k = 0.2 (also with k = 0.1), we can observe that errors are similar with the three different values h = 0.025, 0.0125, and 0.00625 at many of the points. In this case, most of the error is due to the temporal discretization. Actually, in L2 norm, errors with constant k = 0.2 grow when h decrease for the three step lengths in space, this is because there are more points and they are close to the border.

If we take h = 0.025 constant, and we vary k = 0.2, 0.1, and k = 0.05, in general we observe that errors in most of the points decrease between k = 0.2 and 0.1, however, if we only compare the errors with h = 0.025, k = 0.1 and k = 0.05, errors are similar at most points (and also in L2 norm). Obviously, this is because, with h = 0.025, k = 0.1, or k = 0.05, part of the error is due to the spatial discretization.

Therefore, it is not so easy to observe 4−th order convergence in time and 2−nd in space. If we choose h = 0.00625, then most of the error with k1 = 0.2, k2 = 0.1, and k3 = 0.05 is due to temporal discretization. Hence, calculating (these values are called Temporal convergence in Tables 1, 2, err1 being the error with k1, and err3 being the error with k3) we can observe numerical rates in the range 3.6–4.3 in general, which gives us a good idea of the fourth-order convergence in time of ESERK4 schemes.

Now, if we fix k = 0.05, and we repeat the process with h1 = 0.025, h2 = 0.0125, and h3 = 0.00625, we observe that between h1 and h2 errors divide (more or less) by 4 which gives us a good idea of the second order in space of the discretization proposed for the right triangle. However, with k = 0.05, and h3 a part of the error is due to the temporal discretization. Thus, if we calculate (these values are called Spatial convergence in Tables 1, 2, err1 being the error with h1, and err3 being the error with h3), we observe numerical rates in the range 1.2–1.7.

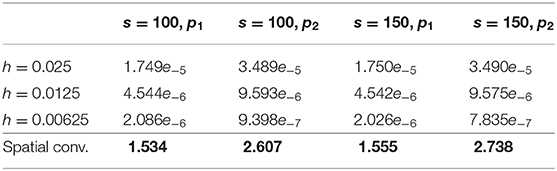

Since, part of the error with k = 0.05 is due to the temporal discretization, and the temporal convergence is fourth-order, let us choose a smaller k4 = 0.025, and repeat the process with this length step in time. In Table 3, errors with both methods (s = 100 and s = 150), and h = Δx = Δy = 0.025, 0.0125, and 0.00625 are shown at both points, p1 and p2.

Table 3. Analysis of the numerical convergence at points p1 = (t, x, y) = (1, 0.15, 0.15), and p2 = (t, x, y) = (1, 0.5, 0.25) for the ESERK4 algorithm withs = 100 and s = 150 with k = Δt = 0.025, and h = Δx = Δy = 0.025, 0.0125, and 0.00625.

Now, most of the errors are because of the spatial discretization, and we can observe that the numerical spatial convergence rates are in the range 1.5–2.7. They suggest that the numerical convergence rate is 2 as it was expected from the previous theoretical analysis.

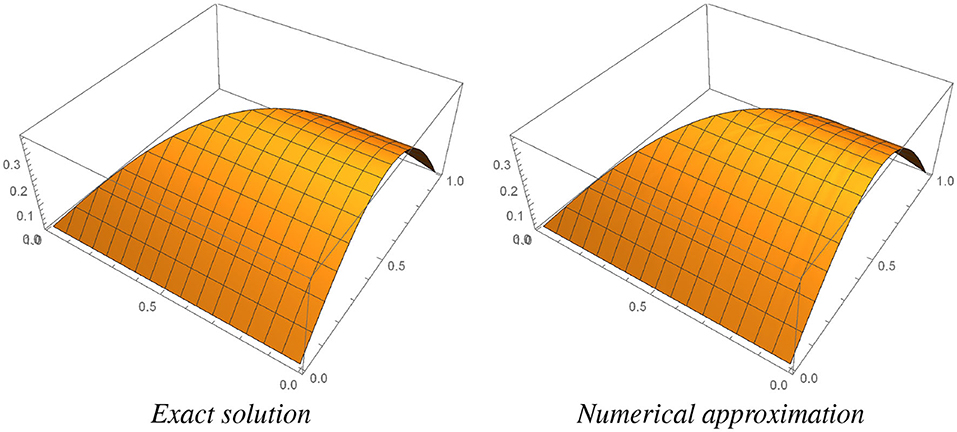

In Figure 2, the exact solution and the numerical approximation obtained with ESERK4 with s = 150, k = Δt = 0.025, h = Δx = Δy = 0.00625 are shown. We can check that both plots look identical.

Figure 2. Exact and numerical solutions in the right triangle TR. Numerical approximation is obtained with ESERK4 with s = 150, k = Δt = 0.025, h = Δx = Δy = 0.00625.

In this paper, for the first time, ESERK schemes are proposed to solve nonlinear partial differential equations (PDEs) in right triangles. These codes are explicit, they do not require to solve very large systems of linear nor nonlinear equations at each step. It is demonstrated that such type of codes are able to solve nonlinear PDEs in right triangles. They keep the order of convergence and the absolute stability property under certain conditions. Hence, this paper opens a new line of research, because this new approach will allow, in the future, to solve nonlinear parabolic PDEs with stabilized explicit Runge–Kutta schemes in complex domains, that would be decomposed in rectangles and right triangles.

Additionally, we consider that this procedure can be extended to tetrahedron and other simplixes for the solution of multi-dimensional nonlinear PDEs in complex regions in ℝn.

The datasets generated for this study are available on request to the corresponding author.

The author confirms being the sole contributor of this work and has approved it for publication.

The authors acknowledges support from the University of Salamanca through its own Programa Propio I, Modalidad C2 grant 18.KB2B.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

2. Cubillos-Moraga MA. General-Domain Compressible Navier-Stokes Solvers Exhibiting Quasi-Unconditional Stability and High-Order Accuracy in Space and Time. PhD thesis. Pasadena, CA: California Institute of Technology (2015). Available online at: https://thesis.library.caltech.edu/8851/

3. Duarte M, Bonaventura Z, Massot M, Bourdon A, Descombes S, Dumont T. A new numerical strategy with space-time adaptivity and error control for multi-scale streamer discharge simulations. J Comput Phys. (2012) 231:1002–19. doi: 10.1016/j.jcp.2011.07.002

4. Duarte M, Descombes S, Tenaud C, Candel S, Massot M. Time-space adaptive numerical methods for the simulation of combustion fronts. Combust Flame. (2013) 160:1083–101. doi: 10.1016/j.combustflame.2013.01.013

5. Dumont T, Duarte M, Descombes S, Dronne MA, Massot M, Louvet V. Simulation of human ischemic stroke in realistic 3D geometry. Commun Nonlin Sci Numer Simul. (2013) 18:1539–57. doi: 10.1016/j.cnsns.2012.10.002

6. Hairer E, Wanner G. Solving Ordinary Differential Equations. II: Stiff and Differential-Algebraic Problems. Berlin: Springer (1996). doi: 10.1007/978-3-642-05221-7

7. Hundsdorfer WH, Verwer JG. Numerical Solution of Time-Dependent Advection-Diffusion-Reaction Equations. Berlin; Heidelberg: Springer (2007).

8. Martín-Vaquero J, Khaliq AQM, Kleefeld B. Stabilized explicit Runge-Kutta methods for multi-asset American options. Comput Math Appl. (2014) 67:1293–308. doi: 10.1016/j.camwa.2014.01.018

9. Zlatev Z. Computer Treatment of Large Air Pollution Models. Dordrecht: Environmental Science and Technology Library; Springer Netherlands (2012). Available online at: https://www.springer.com/gp/book/9780792333289

10. Abdulle A, Medovikov AA. Second order Chebyshev methods based on orthogonal polynomials. Numer Math. (2001) 90:1–18. doi: 10.1007/s002110100292

11. Abdulle A. Fourth order Chebyshev methods with recurrence relation. SIAM J Sci Comput. (2001) 23:2041–54. doi: 10.1137/S1064827500379549

12. Kleefeld B, Martín-Vaquero J. SERK2v2: A new second-order stabilized explicit Runge-Kutta method for stiff problems. Numer Methods Part Differ Equat. (2013) 29:170–85. doi: 10.1002/num.21704

13. Kleefeld B, Martín-Vaquero J. SERK2v3: solving mildly stiff nonlinear Partial Differential Equations. J Comput Appl Math. (2016) 299:194–206. doi: 10.1016/j.cam.2015.11.045

14. Martín-Vaquero J, Janssen B. Second-order stabilized explicit Runge-Kutta methods for stiff problems. Comput Phys Commun. (2009) 180:1802–10. doi: 10.1016/j.cpc.2009.05.006

15. Medovikov AA. High order explicit methods for parabolic equations. BIT Numer Math. (1998) 38:372–90. doi: 10.1007/BF02512373

16. Sommeijer BP, Shampine LF, Verwer JG. RKC: An explicit solver for parabolic PDEs. J Comput Appl Math. (1997) 88:315–26. doi: 10.1016/S0377-0427(97)00219-7

17. Martín-Vaquero J, Kleefeld B. Extrapolated stabilized explicit Runge-Kutta methods. J Comput Phys. (2016) 326:141–155. doi: 10.1016/j.jcp.2016.08.042

18. Martín-Vaquero J, Kleefeld A. ESERK5: A fifth-order extrapolated stabilized explicit Runge- Kutta method. J Comput Appl Math. (2019) 356:22–36. doi: 10.1016/j.cam.2019.01.040

19. Martín-Vaquero J, Kleefeld A. Solving nonlinear parabolic PDEs in several dimensions: parallelized ESERK codes. J. Comput. Phys. (2020) 109771. doi: 10.1016/j.jcp.2020.109771 Available online at: https://www.sciencedirect.com/science/article/pii/S0021999120305453

20. Farago I. Finite element method for solving nonlinear parabolic equations. Comput Math Appl. (1991) 21:59–69. doi: 10.1016/0898-1221(91)90231-R

Keywords: complex geometries, higher-order codes, multi-dimensional partial differential equations, nonlinear PDEs, Stabilized Explicit Runge-Kutta methods

Citation: Martín-Vaquero J (2020) ESERK Methods to Numerically Solve Nonlinear Parabolic PDEs in Complex Geometries: Using Right Triangles. Front. Phys. 8:367. doi: 10.3389/fphy.2020.00367

Received: 15 February 2020; Accepted: 29 July 2020;

Published: 09 September 2020.

Edited by:

Poom Kumam, King Mongkut's University of Technology Thonburi, ThailandReviewed by:

Abdullahi Yusuf, Federal University Dutse, NigeriaCopyright © 2020 Martín-Vaquero. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jesús Martín-Vaquero, amVzbWFydmFAdXNhbC5lcw==

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.