ORIGINAL RESEARCH article

Front. Pediatr., 11 September 2020

Sec. Neonatology

Volume 8 - 2020 | https://doi.org/10.3389/fped.2020.00544

Background: Frequent simulation-based education is recommended to improve health outcomes during neonatal resuscitation but is often inaccessible due to time, resource, and personnel requirements. Digital simulation presents a potential alternative; however, its effectiveness and reception by healthcare professionals (HCPs) remain largely unexplored.

Objectives: This study explores HCPs' attitudes toward a digital simulator, technology, and mindset to elucidate their effects on neonatal resuscitation performance in simulation-based assessments.

Methods: The study was conducted from April to August 2019 with 2-month (June–October 2019) and 5-month (September 2019–January 2020) follow-up at a tertiary perinatal center in Edmonton, Canada. Of 300 available neonatal HCPs, 50 participated. Participants completed a demographic survey, a pretest, two practice scenarios using the RETAIN neonatal resuscitation digital simulation, a posttest, and an attitudinal survey (100% response rate). Participants repeated the posttest scenario in 2 months (86% response rate) and completed another posttest scenario using a low-fidelity, tabletop simulator (80% response rate) 5 months after the initial study intervention. Participants' survey responses were collected to measure attitudes toward digital simulation and technology. Knowledge was assessed at baseline (pretest), acquisition (posttest), retention (2-month posttest), and transfer (5-month posttest).

Results: Fifty neonatal HCPs participated in this study (44 females and 6 males; 27 nurses, 3 nurse practitioners, 14 respiratory therapists, and 6 doctors). Most participants reported technology in medical education as useful and beneficial. Three attitudinal clusters were identified by a hierarchical clustering algorithm based on survey responses. Although participants exhibited diverse attitudinal paths, they all improved neonatal resuscitation performance after using the digital simulator and successfully transferred their knowledge to a new medium.

Conclusions: Digital simulation improved HCPs' neonatal resuscitation performance. Medical education may benefit by incorporating technology during simulation training.

It is estimated that annually more than 10% of newborns around the globe require assistance to breathe on their own to make the fetal-to-neonatal transition, mainly due to drastic physiological changes from an intrauterine to extrauterine environment (1, 2). Healthcare professionals (HCPs) are expected to perform a series of complex interventions quickly and precisely, including suction, drying, and respiratory support, to help these newborns make the successful fetal-to-neonatal transition. To meet the high expectations for HCPs on neonatal resuscitation tasks under high-pressure conditions, frequent neonatal training is recommended to help HCPs master and retain knowledge and skills and to minimize human errors in the delivery room (1). However, in traditional medical education, students and HCPs have limited access to hands-on experiences that involve complex and rarely occurring high-risk scenarios, such as neonatal resuscitation (3–5).

The advancement of technology enables medical educators to overcome challenges of traditional medical education, including time constraints, space, and clinical duties. It also helps create teaching opportunities for medical students and HCPs to perform high-stakes, sophisticated medical procedures using computer-based simulations (6–8). Many programs have introduced new media technologies to implement learning and assessment tools, including board games (9, 10), video games (11–15), mobile learning platforms (6, 16–18), virtual environments (19–23), and simulations (24–29). Of the limited studies that have examined HCPs' or medical students' perceptions toward the use of technology in their programs (18, 30–33), few have investigated the relationship between attitudes and performance regarding game-based assessments (34–37).

This study analyzes the survey responses of 50 HCPs to gain insight into their perceptions of the RETAIN digital training simulator for neonatal resuscitation, their attitudes toward technology, and their fixed and growth mindset. The objectives of the study are to (1) probe the validity of the survey instrument employed in this study, (2) examine whether HCPs' demographic information and previous experiences of video games and technology impact their attitudes, (3) identify different clusters of HCPs that hold similar attitudes using an unsupervised machine learning algorithm, and (4) trace the identified clusters' long-term patterns of performance on the four tests to reveal different performance pathways through the digital simulator. The relationship between attitudinal survey responses and the game-based assessment performance is investigated to determine whether attitudes toward technology hinder or enhance HCPs' performance in a neonatal resuscitation digital simulator.

Results from the present study could inform HCPs' general conceptions of game-based simulations, technology, and mindset. Further, the revealed relationship between perceptions and performance could assist in understanding whether attitudes toward technology hinder or enhance students' learning in medical education so that instructors can appropriately incorporate new media and technology into their instruction.

Fifty HCPs (44 females and 6 males) were recruited from the Neonatal Intensive Care Unit (NICU) at the Royal Alexandra Hospital, Edmonton, Canada. Anonymized demographic information was collected prior to the study. The study was approved by the Human Research Ethics Board at the University of Alberta (Pro00064234). Informed consent was obtained from all HCPs prior to participation.

The study was conducted using the RETAIN (REsuscitation TrAINing for healthcare professionals) digital simulator at the simulation lab at the Centre for the Studies of Asphyxia and Resuscitation (http://retainlabsmedical.com/index.html, RETAIN Labs Medical Inc., Edmonton, Canada). RETAIN was designed to help HCPs practice their knowledge of neonatal resuscitation through digital and tabletop simulators (37). In RETAIN, participants are asked to assume the role of an HCP and to perform interventions using the given tools, including action cards, adjustable monitors, and equipment pieces, during simulated neonatal resuscitation scenarios.

Participants completed a demographic-information questionnaire and the RETAIN digital simulator tutorial (i.e., a guided neonatal resuscitation scenario involving an apneic 24-week infant). Then, participants completed a pretest (difficult neonatal resuscitation scenario of an apneic infant with fetal bradycardia) using the RETAIN digital simulator to measure baseline performance. After completing two practice scenarios using the RETAIN digital simulator, participants repeated the assessment scenario (difficult neonatal resuscitation scenario of an apneic infant with fetal bradycardia) as a posttest to measure immediate performance change after training. Then, participants completed a questionnaire about HCPs' attitudes toward the digital simulator, technology and gaming habits, and mindset. The survey's construct validity is detailed in the Appendix (Supplementary Material). Two delayed posttests were administered to probe whether participants retained neonatal resuscitation knowledge over time. One was administered 2 months after the immediate posttest, and it was identical to the immediate posttest. The other posttest was administered 5 months after the immediate posttest (i.e., an extremely difficult scenario of an apneic infant with thick meconium) using a tabletop simulator rather than the digital simulator to examine whether HCPs transferred their neonatal resuscitation knowledge to another medium. Performance and behaviors within the digital simulator (i.e., keystrokes and mouse input automatically saved as .txt files) and tabletop simulator (i.e., ordered checklist completed by the supervising researcher) were recorded and scored.

The performance measures in the current study are the scores of the four game-based tests that assessed HCPs' neonatal resuscitation knowledge. Participants' performance on each assessment was scored using the 7th edition Neonatal Resuscitation Program (NRP) guidelines (38). A binary score was assigned to each performance measure: A score of one represented 100% adherence to NRP guidelines, and a score of zero represented <100% adherence to NRP guidelines.
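The all-or-nothing scoring rule described above can be sketched as follows. This is an illustrative Python sketch, not the authors' scoring code: the per-step checklist representation and the function name `binary_score` are assumptions introduced here, and the actual rubric is defined by the NRP guidelines.

```python
from typing import Sequence


def binary_score(steps_correct: Sequence[bool]) -> int:
    """Return 1 only if every required step was performed correctly, else 0.

    `steps_correct` is a hypothetical checklist with one entry per required
    step; it stands in for whatever rubric the scorer actually applied.
    """
    if not steps_correct:
        raise ValueError("at least one step is required")
    return 1 if all(steps_correct) else 0


# Full adherence scores 1; a single missed step scores 0.
perfect = binary_score([True, True, True, True])
one_miss = binary_score([True, False, True, True])
```

Because a single deviation yields a score of zero, this measure is strict: it cannot distinguish a near-perfect attempt from a poor one.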

Participants' conceptions and attitudes toward game-based assessment, technology, and their fixed and growth mindset were surveyed in the questionnaire after the immediate posttest. Growth mindset is an incremental theory of intelligence representing the belief that intelligence or ability can be improved through effort, whereas fixed mindset is an entity theory of intelligence representing the belief that intelligence or ability cannot change (39). The mindset scale includes four items using a 5-point Likert scale (1: strongly disagree, 2: disagree, 3: neutral, 4: agree, and 5: strongly agree). All items are positively stated except for Fixed Mindset 1 and 2. Reverse coding was conducted for the two items so that items on fixed mindset and growth mindset loaded on the same latent construct. All the survey questions are included in the Appendix (Supplementary Material).
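Reverse coding on a 5-point Likert scale maps 1 to 5, 2 to 4, and so on, so that higher values always indicate stronger growth-mindset endorsement. A minimal Python sketch follows; the function name and the example responses are hypothetical and are not taken from the study data.

```python
def reverse_code(response: int, scale_min: int = 1, scale_max: int = 5) -> int:
    """Reverse-code one Likert response on a scale_min..scale_max scale."""
    if not scale_min <= response <= scale_max:
        raise ValueError(f"response {response} is outside the scale")
    return scale_min + scale_max - response


# Hypothetical raw answers to a fixed-mindset item.
fixed_mindset_raw = [1, 2, 3, 4, 5]
fixed_mindset_recoded = [reverse_code(r) for r in fixed_mindset_raw]
```

After recoding, "strongly disagree" with a fixed-mindset statement (raw value 1) is stored as 5 and so aligns with "strongly agree" on a growth-mindset statement.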

Participants' demographic information was collected from the questionnaire at the start of the study. It was included in the analysis to investigate whether an HCP's level of education, occupational position, and previous experience of digital games and multimedia technologies impact their attitudes toward educational games, technology in education, and mindset.

All analyses were performed on version 3.6.3 of the open statistical platform, R (40). The study is guided by the following steps.

Before any analysis was conducted on the questionnaire response, an exploratory factor analysis (EFA) was performed to confirm the construct validity of the instrument. More specifically, we set out to validate that three latent factors (digital games, technology, and growth mindset) emerge from the survey responses as intended and that each item loads on the corresponding latent constructs. We used the R psych package to conduct the EFA (41), the parallel analysis, and the very simple structure (VSS) test.

Descriptive statistics from the participants' demographic information and survey response categories were reported to describe the general trend of HCPs' attitudes on the three dimensions: digital games, technology, and growth mindset. Then, a partial credit model (PCM) was employed to estimate the psychometric properties of the survey items to reveal the participants' levels of endorsement on each statement. Next, a PCM with person-level covariates was fitted to examine the impact of demographic information and digital game experience on participants' attitudes. The models were fitted using the eirm package that enables a generalized linear mixed model (GLMM) analysis (conducted using the glmer function in the lme4 package) (42) on a binomial dependent variable with a multilevel structure, thus overcoming the difficulty of modeling multilevel Likert scales.
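To make the PCM concrete, the sketch below computes response-category probabilities for one item under the standard partial credit formulation, in which the probability of category k is proportional to the exponential of the cumulative sum of (theta minus each step threshold) up to k. This is an illustration in Python, not the eirm/lme4 estimation used in the study; the person parameter and the threshold values are hypothetical.

```python
import math
from typing import List, Sequence


def pcm_probabilities(theta: float, thresholds: Sequence[float]) -> List[float]:
    """Category probabilities for one item under the partial credit model.

    theta      -- person's level on the latent attitude (hypothetical value)
    thresholds -- step parameters; a 5-point item has 4 of them
    """
    # Category 0 has an empty sum (0); category k adds (theta - delta_k).
    cumulative = [0.0]
    for delta in thresholds:
        cumulative.append(cumulative[-1] + (theta - delta))
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(cumulative)
    weights = [math.exp(c - peak) for c in cumulative]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical 5-point Likert item with evenly spaced step thresholds.
probs = pcm_probabilities(theta=0.5, thresholds=[-1.5, -0.5, 0.5, 1.5])
```

Raising `theta` shifts probability mass toward the higher (more agreeing) categories, which is how the model expresses a participant's level of endorsement.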

The unsupervised machine learning algorithm, agglomerative hierarchical clustering, was employed using the hclust function from the R stats package (40) to group the participants based on their survey responses. Centroids of identified clusters were reported to demonstrate the group characteristics on the three dimensions we aimed to measure. In addition, the group performances on the four tests were reported to observe the trajectories of test performance over time.
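The clustering step can be illustrated with a small pure-Python sketch of agglomerative clustering. It assumes Euclidean distance and complete linkage (the default method of R's hclust; the article does not state which linkage was used), and the six respondents' scores on three dimensions are invented for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def euclidean(a: Point, b: Point) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def agglomerative_clusters(points: Sequence[Point], n_clusters: int) -> List[List[int]]:
    """Merge the two closest clusters until n_clusters remain (complete linkage)."""
    if not 1 <= n_clusters <= len(points):
        raise ValueError("n_clusters must be between 1 and the number of points")
    clusters = [[i] for i in range(len(points))]  # start with singletons
    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Complete linkage: distance between the two farthest members.
                d = max(euclidean(points[p], points[q])
                        for p in clusters[i] for q in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


def centroid(points: Sequence[Point], members: Sequence[int]) -> Point:
    """Per-dimension mean of the points belonging to one cluster."""
    dims = len(points[0])
    return tuple(sum(points[m][d] for m in members) / len(members) for d in range(dims))


# Hypothetical mean scores per respondent: (gaming, growth mindset, technology).
responses = [(4.8, 4.9, 4.5), (4.6, 4.7, 4.4), (3.1, 3.0, 3.2),
             (3.0, 3.2, 2.9), (4.5, 4.9, 1.8), (4.4, 5.0, 2.0)]
clusters = agglomerative_clusters(responses, n_clusters=3)
centroids = [centroid(responses, members) for members in clusters]
```

Each centroid summarizes a cluster's typical attitude profile, which is the quantity reported for the identified clusters.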

Two generalized linear mixed model (GLMM) repeated-measures analyses were conducted to track the growth of participant group performances on the four tests over time using the glmer function of the R lme4 package (43).

The first GLMM model was fitted using the entire data set to test the effects of Time and Group Membership on the binary test performance (Test score). The within-subject repeated variable was Time with four levels (pretest, immediate posttest, posttest after 2 months, and posttest after 5 months), and the between-subjects variable was Group Membership categorized by agglomerative hierarchical clustering. The second GLMM model was fitted with data per group to identify the trajectories of performance growth within each cluster. Time was the within-subject repeated predictor variable. Participant ID was introduced as a random effect in both models.

The sample consisted of 27 registered nurses, 14 respiratory therapists, 6 doctors (including clinical fellows, residents, and clinical assistants), and 3 nurse practitioners. Participants were recruited from different educational backgrounds (diploma: 3, bachelor's degree: 24, master's degree: 4, medical degree: 6, and after degree: 4).

Figure 1 shows the survey responses on the binary questions and Likert scales. Of the 50 participants, 48 (96%) reported that the length of time and pacing during the digital simulation were appropriate to retain information of basic resuscitation steps, 37 (74%) agreed that the terminology used did not impede their ability to complete the steps, and 42 (84%) reported that they could make quick decisions in the simulation-based tasks. However, 30 (60%) participants found it difficult to quickly and easily find and select the actions while playing. Participant mean years of experience in clinical neonatal care was 10.71 years (SD = 6.76), their average years of video-gaming experience was 7.46 years (SD = 9.87), and the average overall time spent on playing mobile/video games in a typical month was 6.26 (SD = 14.4). The results show that participant experience of and interest in video gaming vary widely.

Also, participants endorsed items related to Growth Mindset the most (100%), followed by Technology Helps Career (96%), Video Game Benefits NRP Training (94%), and Learning about Technology (88%). In contrast, most participants disagreed with items related to Fixed Mindset (98%), Enjoy Reading about Technology (30%), and Computers at Home (8%). In sum, most participants acknowledged the usefulness and potential of digital games and technology in career development, medical training, and education. Despite this, they still showed some reluctance regarding technology. Moreover, participants exhibited a high level of agreement with the growth mindset and disagreement with the fixed mindset. Participants' response categories are detailed in the Appendix (Supplementary Material).

The parallel analysis conducted as part of the EFA indicated that three factors should be retained. The VSS criterion determined that the VSS complexity of 1 (i.e., where all except the greatest absolute loading for an item are ignored) achieved a maximum of 0.66 with three factors, and the VSS complexity of 2 (i.e., where all except the greatest two loadings are ignored) achieved a maximum of 0.75 with three factors. Therefore, we chose the three-factor model for our data set. The factor loadings of each item and the model summaries of the EFA are presented in Table 1. Three factors were identified with sum of squared loadings (SS loadings) of 3.52, 2.74, and 1.12, respectively. All three factors are well identified as all the SS loadings are >1. Items 1–4, which relate to digital simulators and game-based assessments, load mainly on Factor 3. Items 5–8, which relate to growth mindset, load mainly on Factor 2. Last, Items 9–15, which measure attitudes toward technology, load mainly on Factor 1. Thus, we assigned the labels Technology, Growth Mindset, and Digital Gaming to Factors 1, 2, and 3, respectively. The correlation between Technology and Growth Mindset is 0.14 (p > 0.05), between Technology and Digital Gaming is 0.08 (p > 0.05), and between Growth Mindset and Digital Gaming is 0.07 (p > 0.05). The weak correlations further support the construct validity of our instrument, as the included items measure distinct dimensions.
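For readers unfamiliar with SS loadings, the quantity is simply the column sum of squared factor loadings. The Python sketch below shows the arithmetic on a made-up two-factor loading matrix; the values are hypothetical and are not those of Table 1.

```python
from typing import List, Sequence


def ss_loadings(loadings: Sequence[Sequence[float]]) -> List[float]:
    """Sum of squared loadings per factor (rows are items, columns are factors)."""
    n_factors = len(loadings[0])
    return [sum(row[f] ** 2 for row in loadings) for f in range(n_factors)]


# Hypothetical loadings for four items on two factors.
hypothetical_loadings = [
    (0.8, 0.1),
    (0.7, 0.2),
    (0.1, 0.9),
    (0.2, 0.6),
]
ss = ss_loadings(hypothetical_loadings)
well_identified = all(value > 1 for value in ss)
```

A value above 1 means the factor accounts for more variance than a single standardized item would, which is the rule of thumb applied in the text.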

A summary of item psychometric properties from the questionnaire is presented at the top of Table 2. In accordance with results from the descriptive analysis, participant endorsement of items related to Growth Mindset 1 (Estimate = 0.668, p = 0.015) and Growth Mindset 2 (Estimate = 0.830, p = 0.004) is significantly higher than that of other items.

The effects of participants' demographic information and previous experience of video gaming and neonatal resuscitation on their attitudes toward technology and the RETAIN digital simulator are shown at the bottom of Table 2. Results reveal that, regardless of educational levels, occupational positions, years of neonatal care experience, and video-gaming experiences, all participants show similar attitudes in the survey with no significant differences.

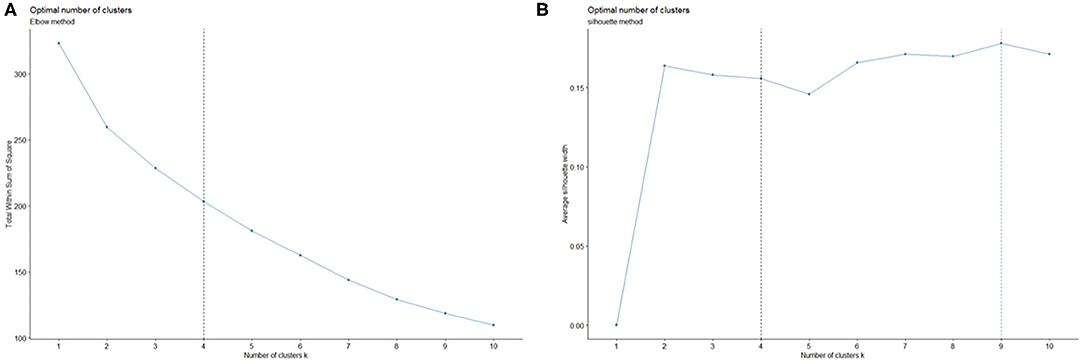

The unsupervised learning algorithm, hierarchical clustering, was used to cluster participants based on their survey responses. We used two methods to evaluate the optimal number of clusters. Both the elbow method and the silhouette method returned four as the optimal number of clusters as shown in Figure 2. However, when we set four as the number of clusters, one cluster contained only one participant, and the Euclidean distance between the cluster and the nearest cluster was too small. Therefore, we set three as the number of clusters for the present sample, which also aligns with the three-factor result of the EFA. The cluster dendrogram (Figure 3) provides us with a graphical representation of the similarity in participants' attitudes and shows each participant's cluster membership and the distances between clusters.
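The silhouette criterion mentioned above can be sketched in a few lines of Python. For each point, a is the mean distance to the other members of its own cluster and b is the smallest mean distance to the members of any other cluster; the silhouette width is (b - a) / max(a, b), and the mean width across points is compared for different cluster counts. The toy points and labels below are hypothetical, and scoring a singleton cluster as 0 is the usual convention, assumed here.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def euclidean(a: Point, b: Point) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_silhouette(points: Sequence[Point], labels: Sequence[int]) -> float:
    """Average silhouette width of a clustering (higher means better separated)."""
    clusters: Dict[int, List[int]] = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    widths = []
    for idx, label in enumerate(labels):
        own = [m for m in clusters[label] if m != idx]
        if not own:
            widths.append(0.0)  # singleton cluster: width defined as 0
            continue
        a = sum(euclidean(points[idx], points[m]) for m in own) / len(own)
        b = min(
            sum(euclidean(points[idx], points[m]) for m in members) / len(members)
            for other, members in clusters.items() if other != label
        )
        denom = max(a, b)
        widths.append((b - a) / denom if denom > 0 else 0.0)
    return sum(widths) / len(widths)


# Two visibly separated groups; compare a sensible and a poor labeling.
toy_points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
good = mean_silhouette(toy_points, [0, 0, 1, 1])
poor = mean_silhouette(toy_points, [0, 1, 0, 1])
```

A cluster with a single member contributes a width of 0 under this convention, so a high average silhouette does not by itself rule out the kind of one-participant cluster described above; inspecting cluster sizes remains necessary.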

Figure 2. Optimal number of clusters (k) decided by the Elbow method (A) and the Silhouette method (B).

The red circle refers to cluster 1 with 15 participants, the green circle next to cluster 1 is cluster 2 with 20 participants, and the blue circle is cluster 3 with 14 participants. One participant did not complete the survey and, thus, was excluded from the clustering analysis. To further explore attitude differences among clusters, we extracted the centroids (i.e., the middle or the average of a cluster) of each cluster to represent their characteristics on the dimensions we measured as shown at the top of Table 3. The cluster dendrogram and details regarding this method can be found in the Appendix (Supplementary Material).

Cluster 1 shows the highest endorsement on most items in the survey compared with the other two clusters, and it shows positive attitudes toward the digital simulator, strong endorsement of growth mindset, and great interest and openness to new technology. Cluster 2 shows neutral attitudes on most items. Cluster 3 shows the highest levels of agreement on items related to growth mindset and Game Benefits NRP Training. Although cluster 3 endorses the highest growth mindset and supports the idea of technology helping careers, it shows the least endorsement of using new technology.

We fitted two GLMMs: The first model probed the effects of Time as a within-subject factor and of Cluster Membership as a between-subjects factor on participants' test performance. The second model fit the data from different clusters with only Time as a within-subject repeated measure to observe each cluster's long-term performance trajectories.

The first model [summarized in the Appendix (Supplementary Material)] reveals that participants performed significantly better on all posttests (immediate: Estimate = 2.05, p < 0.02; 2-month: Estimate = 1.84, p < 0.05; 5-month: Estimate = 2.38, p < 0.05) compared with their performance on the pretest. However, there are no significant performance differences among the three clusters. The performance growth trajectories per cluster (Figure 4) are estimated by the marginal means. The results show that, after the simulation-based training for neonatal resuscitation, participants from the three clusters all showed knowledge gains in the transfer test (the posttest after 5 months) regardless of their attitudinal pathways.
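If the GLMM used a logit link (the default for a binomial model in glmer, assumed here because the article does not state the link function), each estimate is a log-odds difference relative to the pretest, and exponentiating it gives an odds ratio. The short Python sketch below performs that conversion for the three reported estimates.

```python
import math

# Fixed-effect estimates reported in the text, assumed to be on the log-odds scale.
estimates = {
    "immediate posttest": 2.05,
    "2-month posttest": 1.84,
    "5-month posttest": 2.38,
}

# exp(beta) is the odds ratio of full NRP adherence relative to the pretest.
odds_ratios = {label: math.exp(beta) for label, beta in estimates.items()}

for label, ratio in odds_ratios.items():
    print(f"{label}: odds ratio = {ratio:.2f}")
```

Under that assumption, the odds of a fully adherent performance were roughly 6 to 11 times higher on the posttests than on the pretest. These are conditional odds ratios (holding the participant-level random effect and cluster membership fixed), not differences in the proportion of participants passing.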

Results of the GLMM for the three clusters can be found at the bottom of Table 3, which shows that cluster 1 participants' performance was significantly better on the immediate posttest compared with the pretest (Estimate = 1.79, p = 0.03) but slightly decreased after 2 months (with no significant difference from the pretest) and increased the most on the last posttest (Estimate = 2.11, p = 0.02), which was delayed 5 months. Cluster 2 participants scored significantly higher on the immediate posttest compared with the pretest, and their performance declined slightly on the following tests. However, on the last transfer test, their scores were still significantly higher than the pretest scores (Estimate = 1.76, p = 0.03). Importantly, cluster 3 scored the lowest on the pretest (M = 0.36) and the highest on the last posttest (M = 0.91). Moreover, participants from cluster 3 did not achieve a significantly higher performance compared to the pretest until the last transfer test. This indicates that the tabletop simulator picks up participants' knowledge that may not have been totally captured by the tests in the digital simulator.

Simulation-based neonatal resuscitation training and game-based assessments can provide HCPs with more opportunities to practice their procedural knowledge with real-life scenarios in a low-stakes environment (7). Few studies have examined HCPs' attitudes toward digital simulations, technology, and mindset, and even fewer have linked HCPs' attitudes to knowledge acquisition, retention, and transfer. This study examines 50 HCPs' perceptions of a digital simulator, their attitudes toward technology, and also their self-reported theories of intelligence (i.e., mindsets). Results show that most participants agree that technology and digital simulators can benefit neonatal resuscitation training and career development regardless of their levels of education, positions, years of neonatal care experience, and video-game experience. Moreover, all participants demonstrate strong endorsement of a growth mindset.

In addition, we used unsupervised machine learning to cluster participants based on their attitudes. Three clusters were identified: high endorsement on most items (cluster 1), neutral attitudes toward all survey items (cluster 2), and the highest levels of agreement with growth mindset concomitant with the lowest interest and enjoyment regarding technology despite their acknowledgments of the benefits of digital simulators for neonatal resuscitation and of technology for career development (cluster 3).

To further examine the three clusters' performance growth and knowledge acquisition trajectories, we fitted the data into a GLMM to observe whether the participants learned and retained the neonatal resuscitation knowledge after the simulation-based training program. Results reveal that, although the three clusters underwent disparate attitudinal paths, all participants learned, retained, and transferred neonatal resuscitation knowledge after the digital simulator intervention. More specifically, participants from cluster 1 with high motivation and interest in games and technology showed significant performance progress on the immediate posttest, and although their scores slightly declined on the first delayed posttest, they improved their performance again on the transfer test. Cluster 2 shows a neutral attitude on most survey items compared with the other two clusters. Similar to cluster 1, participants from cluster 2 improved their performance significantly on the immediate posttest. However, they failed to improve more on the following tests. Last, cluster 3 started with the lowest scores among the three groups and did not improve their performance significantly on the immediate posttest or on the test after 2 months, which were both administered using the digital simulator. However, they improved the performance significantly on the posttest after 5 months that was administered using a tabletop simulator, achieving the highest performance on the last posttest that measured knowledge transfer compared with the other clusters.

Although all three clusters show that participants improved their performance over time and transferred their knowledge to a new medium (from digital to traditional board games), the results for cluster 3 show that enjoyment of technology is not a prerequisite for performance gain from a digital simulator and that there may be different pathways to achieving better performance, as these participants gained the most from the pretest to the last posttest. The low interest in and enjoyment of technology may have weakened their performances on the computer-based assessments. However, the intervention from the digital simulation-based training helped the participants learn and retain knowledge. They were able to greatly improve their performance when the assessment medium was changed even when the assessment content was more difficult than in previous tests. Although cluster 3 participants were less likely to enjoy technology or digital simulators, they still benefited from using the technology-based digital simulation and transferred their knowledge to the tabletop simulator, perhaps due to their high endorsement of a growth mindset. Moreover, an individual's growth mindset could be a pathway to achieving high performance on neonatal resuscitation, especially when learners do not embrace technology; therefore, mindset interventions could be useful in this domain. Negative attitudes toward digital simulators and technology may hinder participant performance in the short term, but their growth mindset may help them learn and transfer their knowledge in the long term. Overall, this study suggests that novel simulators (digital or tabletop) may be a viable alternative to traditional training in neonatal resuscitation.

With the pervasiveness of multimedia and technology, it is important to examine individuals' attitudes and understand how digital simulators could promote neonatal resuscitation training with lower costs and risks. Results show that, although most participants acknowledge the benefits of digital simulators and technology in neonatal resuscitation training, they are reluctant to embrace technology. Three clusters were identified based on participants' attitudes toward digital simulators, technology, and mindset. Further, participants learned, retained, and transferred neonatal resuscitation knowledge over time even if they followed different learning paths determined by their attitudes. Results indicate the potential of digital simulators in training and assessing HCPs that can capture performance expressed through different learning paths.

All datasets generated for this study are included in the article/Supplementary Material.

The studies involving human participants were reviewed and approved by the Human Research Ethics Board at the University of Alberta (Pro00064234). All participants provided written informed consent prior to participation in this study.

GS, SG, MC, and CL conceptualized and designed the study, drafted the initial manuscript, and critically reviewed and revised the manuscript. All authors approved the final manuscript as submitted and agree to be accountable for all aspects of the work.

We would like to thank the public for donating money to our funding agencies: SG was a recipient of the CIHR CGS-M, the Maternal and Child Health (MatCH) Scholarship (supported by the University of Alberta, Stollery Children's Hospital Foundation, Women and Children's Health Research Institute, and the Lois Hole Hospital for Women), University of Alberta Department of Pediatrics Graduate Recruitment Scholarship, and Faculty of Medicine and Dentistry/Alberta Health Services Graduate Student Recruitment Studentship. MC was a recipient of the SSHRC Insight, SSHRC Insight Development, NSERC Discovery, and Killam Grants. GS was a recipient of the Heart and Stroke Foundation/University of Alberta Professorship of Neonatal Resuscitation, a National New Investigator of the Heart and Stroke Foundation Canada, and an Alberta New Investigator of the Heart and Stroke Foundation Alberta.

GS is an owner of RETAINLabs Medical Inc., Edmonton, Canada (http://retainlabsmedical.com), which is distributing the game.

GS has registered the RETAIN table-top simulator (Tech ID 2017083) and the RETAIN digital simulator (Tech ID 2017086) under Canadian copyright.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We would like to thank the participants for their time and availability for repeated testing over 5 months.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fped.2020.00544/full#supplementary-material

HCP, Healthcare professional; NICU, Neonatal Intensive Care Unit; NRP, Neonatal Resuscitation Program; RETAIN, REsuscitation TrAINing for healthcare professionals; SD, Standard Deviation; SE, Standard Error; Est, estimate; M, mean; GLMM, generalized linear mixed model; PCM, partial credit model; VSS, very simple structure; EFA, exploratory factor analysis.

1. Perlman JM, Wyllie J, Kattwinkel J, Wyckoff MH, Aziz K, Guinsburg R, et al. Part 7: Neonatal resuscitation. Circulation. (2015) 132:S204–S41. doi: 10.1161/CIR.0000000000000276

2. Wyckoff MH, Aziz K, Escobedo MB, Kapadia VS, Kattwinkel J, Perlman JM, et al. Part 13: Neonatal resuscitation: 2015 American Heart Association guidelines update for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation. (2015) 132(18 Suppl 2):543. doi: 10.1161/CIR.0000000000000267

3. Scalese RJ, Obeso VT, Issenberg SB. Simulation technology for skills training and competency assessment in medical education. J Gen Intern Med. (2008) 23:46–49. doi: 10.1007/s11606-007-0283-4

4. Janssen A, Shaw T, Goodyear P, Kerfoot BP, Bryce D. A little healthy competition: using mixed methods to pilot a team-based digital game for boosting medical student engagement with anatomy and histology content. BMC Med Educ. (2015) 15:173. doi: 10.1186/s12909-015-0455-6

5. Friedman CP. The marvelous medical education machine or how medical education can be 'unstuck' in time. Med Teach. (2000) 22:496–502. doi: 10.1080/01421590050110786

6. Tews M, Brennan K, Begaz T, Treat R. Medical student case presentation performance and perception when using mobile learning technology in the emergency department. Med Educ Online. (2011) 16:7327. doi: 10.3402/meo.v16i0.7327

7. Jowett N, LeBlanc V, Xeroulis G, MacRae H, Dubrowski A. Surgical skill acquisition with self-directed practice using computer-based video training. Am J Surg. (2007) 193:237–42. doi: 10.1016/j.amjsurg.2006.11.003

8. Nousiainen M, Brydges R, Backstein D, Dubrowski A. Comparison of expert instruction and computer-based video training in teaching fundamental surgical skills to medical students. Surgery. (2008) 143:539–44. doi: 10.1016/j.surg.2007.10.022

9. Fukuchi SG, Offutt LA, Sacks J, Mann BD. Teaching a multidisciplinary approach to cancer treatment during surgical clerkship via an interactive board game. Am J Surg. (2000) 179:337–40. doi: 10.1016/S0002-9610(00)00339-1

10. Moy JR, Rodenbaugh DW, Collins HL, DiCarlo SE. Who wants to be a physician? An educational tool for reviewing pulmonary physiology. Adv Physiol Educ. (2000) 24:30–7. doi: 10.1152/advances.2000.24.1.30

11. Kanthan R, Senger J. The impact of specially designed digital games-based learning in undergraduate pathology and medical education. Arch Pathol Lab Med. (2011) 135:135–42. doi: 10.1043/2009-0698-OAR1.1

12. Mann BD, Eidelson BM, Fukuchi SG, Nissman SA, Robertson S, Jardines L. The development of an interactive game-based tool for learning surgical management algorithms via computer. Am J Surg. (2002) 183:305–8. doi: 10.1016/S0002-9610(02)00800-0

13. Bigdeli S, Kaufman D. Digital games in medical education: key terms, concepts, and definitions. Med J Islam Repub Iran. (2017) 31:52. doi: 10.14196/mjiri.31.52

14. Akl EA, Pretorius RW, Sackett K, Erdley WS, Bhoopathi PS, Alfarah Z, et al. The effect of educational games on medical students' learning outcomes: a systematic review: BEME guide no 14. Med Teach. (2010) 32:16–27. doi: 10.3109/01421590903473969

15. Jalink MB, Goris J, Heineman E, Pierie JEN, ten Cate Hoedemaker Henk O. The effects of video games on laparoscopic simulator skills. Am J Surg. (2014) 208:151–6. doi: 10.1016/j.amjsurg.2013.11.006

16. Davies BS, Rafique J, Vincent TR, Fairclough J, Packer MH, Vincent R, et al. Mobile medical education (MoMEd) - how mobile information resources contribute to learning for undergraduate clinical students - a mixed methods study. BMC Med Educ. (2012) 12:1. doi: 10.1186/1472-6920-12-1

17. Short SS, Lin AC, Merianos DJ, Burke RV, Upperman JS. Smartphones, trainees, and mobile education: implications for graduate medical education. J Grad Med Educ. (2014) 6:199–202. doi: 10.4300/JGME-D-13-00238.1

18. Kron FW, Gjerde CL, Sen A, Fetters MD. Medical student attitudes toward video games and related new media technologies in medical education. BMC Med Educ. (2010) 10:50. doi: 10.1186/1472-6920-10-50

19. Makransky G, Bonde MT, Wulff JS, Wandall J, Hood M, Creed PA, et al. Simulation based virtual learning environment in medical genetics counseling: An example of bridging the gap between theory and practice in medical education. BMC Med Educ. (2016) 16:98. doi: 10.1186/s12909-016-0620-6

20. Schijven MP, Jakimowicz JJ. Validation of virtual reality simulators: Key to the successful integration of a novel teaching technology into minimal access surgery. Minim Invasive Ther Allied Technol. (2005) 14:244–6. doi: 10.1080/13645700500221881

21. Huang H, Liaw S, Lai C. Exploring learner acceptance of the use of virtual reality in medical education: a case study of desktop and projection-based display systems. Interact Learn Environ. (2016) 24:3–19. doi: 10.1080/10494820.2013.817436

22. Herron J. Augmented reality in medical education and training. J Electron Resour Med Lib. (2016) 13:51–5. doi: 10.1080/15424065.2016.1175987

23. Dyer E, Swartzlander BJ, Gugliucci MR. Using virtual reality in medical education to teach empathy. J Med Libr Assoc. (2018) 106:498–500. doi: 10.5195/JMLA.2018.518

24. Schreuder HWR, Oei G, Maas M, Borleffs JCC, Schijven MP. Implementation of simulation in surgical practice: minimally invasive surgery has taken the lead: the dutch experience. Med Teach. (2011) 33:105–15. doi: 10.3109/0142159X.2011.550967

25. McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med. (2011) 86:706–11. doi: 10.1097/ACM.0b013e318217e119

26. Ziv A, Wolpe PR, Small SD, Glick S. Simulation-based medical education: an ethical imperative. Simul Healthc. (2006) 1:252–6. doi: 10.1097/01.SIH.0000242724.08501.63

27. Okuda Y, Bryson EO, DeMaria S, Jacobson L, Quinones J, Shen B, et al. The utility of simulation in medical education: what is the evidence? Mt Sinai J Med. (2009) 76:330–43. doi: 10.1002/msj.20127

28. Bradley P. The history of simulation in medical education and possible future directions. Med Educ. (2006) 40:254–62. doi: 10.1111/j.1365-2929.2006.02394.x

29. Dankbaar MEW, Alsma J, Jansen EEH, van Merrienboer JJG, van Saase JLCM, Schuit SCE. An experimental study on the effects of a simulation game on students' clinical cognitive skills and motivation. Adv Health Sci Educ. (2016) 21:505–21. doi: 10.1007/s10459-015-9641-x

30. Carroll AE, Christakis DA. Pediatricians' use of and attitudes about personal digital assistants. Pediatrics. (2004) 113:238–42. doi: 10.1542/peds.113.2.238

31. Beylefeld DAA, Struwig MC. A gaming approach to learning medical microbiology: students' experiences of flow. Med Teach. (2007) 29:933–40. doi: 10.1080/01421590701601550

32. Dørup J. Experience and attitudes towards information technology among first-year medical students in denmark: longitudinal questionnaire survey. J Med Internet Res. (2004) 6:e10. doi: 10.2196/jmir.6.1.e10

33. Ghoman SK, Patel SD, Cutumisu M, von Hauff P, Jeffery T, Brown MRG, et al. Serious games, a game changer in teaching neonatal resuscitation? A review. Arch Dis Child Fetal Neonatal Ed. (2020) 105:98–7. doi: 10.1136/archdischild-2019-317011

34. Boeker M, Andel P, Vach W, Frankenschmidt A. Game-based E-learning is more effective than a conventional instructional method: a randomized controlled trial with third-year medical students. PLoS One. (2013) 8:e0082328. doi: 10.1371/journal.pone.0082328

35. Kerfoot BP, Baker H, Pangaro L, Agarwal K, Taffet G, Mechaber AJ, et al. An online spaced-education game to teach and assess medical students: a multi-institutional prospective trial. Acad Med. (2012) 87:1443–9. doi: 10.1097/ACM.0b013e318267743a

36. Rondon S, Sassi FC, Furquim de Andrade CR. Computer game-based and traditional learning method: a comparison regarding students' knowledge retention. BMC Med Educ. (2013) 13:30. doi: 10.1186/1472-6920-13-30

37. Ghoman SK, Schmölzer GM. The RETAIN simulation-based serious Game-a review of the literature. Healthcare. (2020) 8:3. doi: 10.3390/healthcare8010003

38. Weiner GM, Zaichkin J. Textbook of Neonatal Resuscitation (NRP). 7th ed. Elk Grove Village, IL: American Academy of Pediatrics (2016).

39. Dweck CS. Self-Theories: Their Role in Motivation, Personality, and Development. Philadelphia, PA: Psychology Press (2000).

42. Stanke L, Bulut O. Explanatory item response models for polytomous item responses. Int J Assess Tools Educ. (2019) 6:259–78. doi: 10.21449/ijate.515085

Keywords: education, training, simulation, resuscitation, table-top simulator, serious games, digital simulator, medical education

Citation: Lu C, Ghoman SK, Cutumisu M and Schmölzer GM (2020) Unsupervised Machine Learning Algorithms Examine Healthcare Providers' Perceptions and Longitudinal Performance in a Digital Neonatal Resuscitation Simulator. Front. Pediatr. 8:544. doi: 10.3389/fped.2020.00544

Received: 26 May 2020; Accepted: 29 July 2020;

Published: 11 September 2020.

Edited by:

Yogen Singh, Cambridge University Hospitals National Health Service Foundation Trust, United Kingdom

Reviewed by:

Vikranth Bapu Anna Venugopalan, Sandwell and West Birmingham Hospitals National Health Service Trust, United Kingdom

Copyright © 2020 Lu, Ghoman, Cutumisu and Schmölzer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Georg M. Schmölzer, georg.schmoelzer@me.com

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
