- University Eye Clinic Maastricht, Maastricht University Medical Center, Maastricht, Netherlands

Fundus cameras are widely used by ophthalmologists for monitoring and diagnosing retinal pathologies. Unfortunately, no optical system is perfect, and the visibility of retinal images can be greatly degraded due to the presence of problematic illumination, intraocular scattering, or blurriness caused by sudden movements. To improve image quality, different retinal image restoration/enhancement techniques have been developed, which play an important role in improving the performance of various clinical and computer-assisted applications. This paper gives a comprehensive review of these restoration/enhancement techniques, discusses their underlying mathematical models, and shows how they may be effectively applied in real-life practice to increase the visual quality of retinal images for potential clinical applications including diagnosis and retinal structure recognition. All three main topics of retinal image restoration/enhancement techniques, i.e., illumination correction, dehazing, and deblurring, are addressed. Finally, some considerations about challenges and the future scope of retinal image restoration/enhancement techniques will be discussed.

1 Introduction

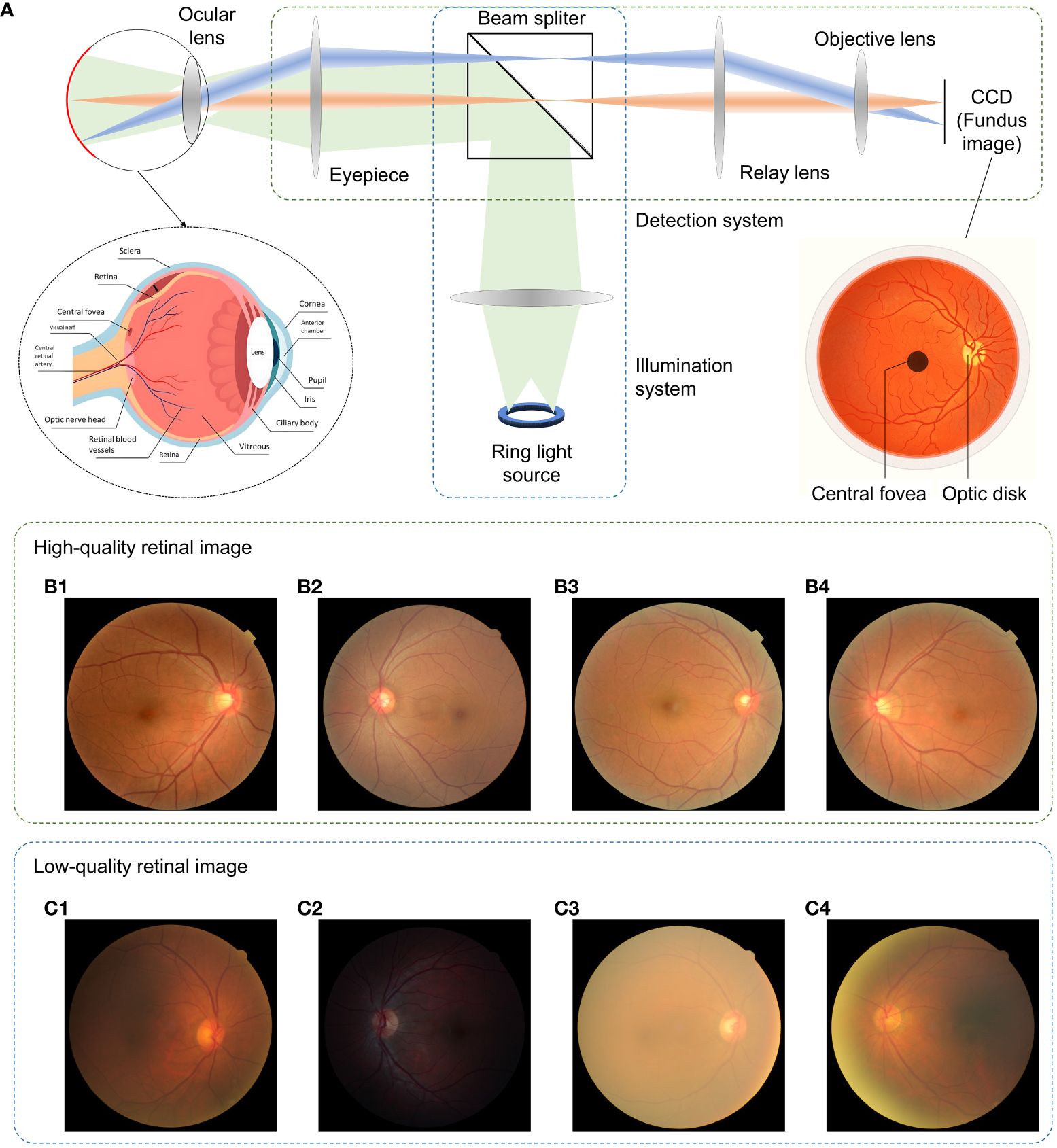

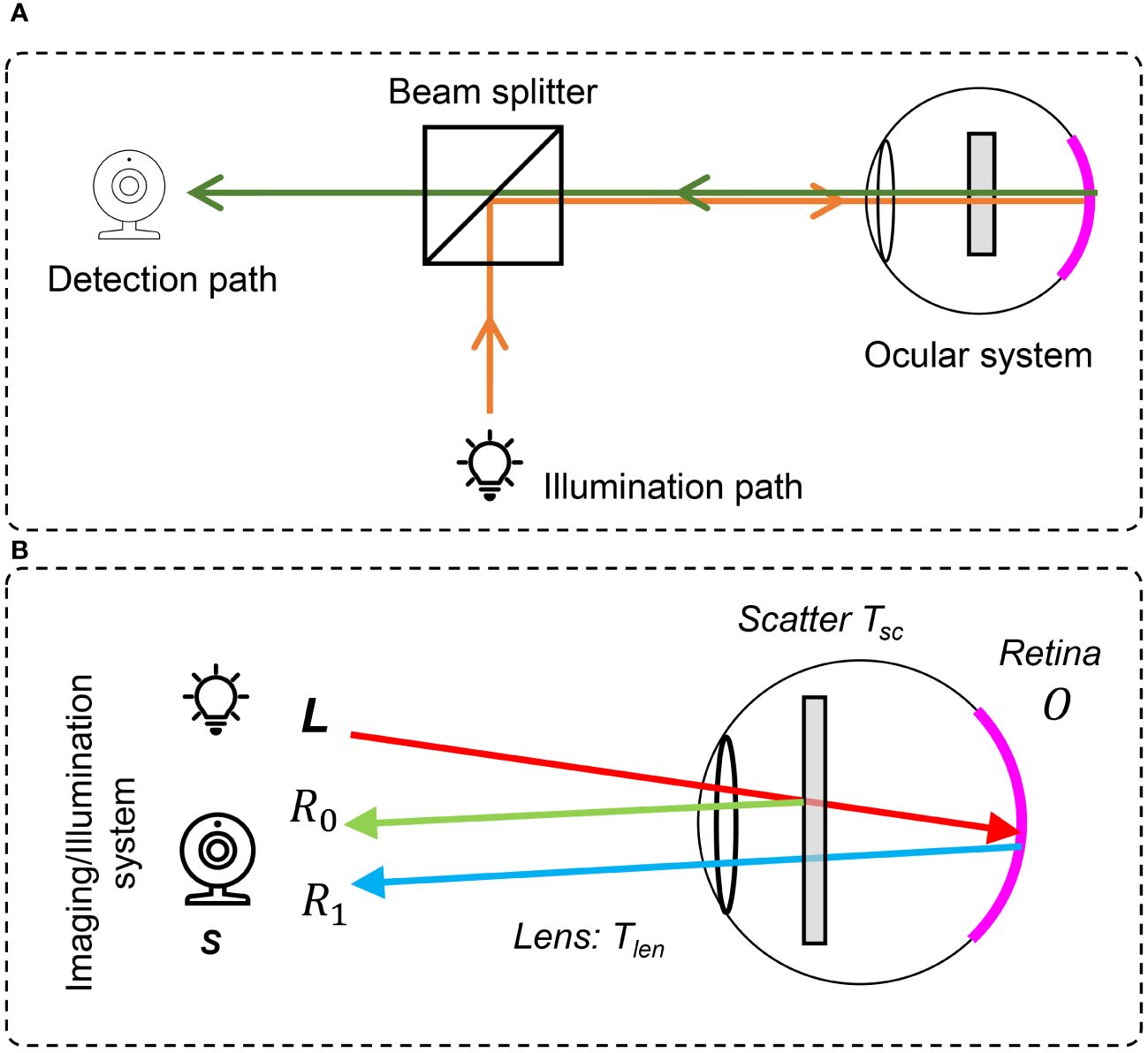

The introduction of the ophthalmoscope by Helmholtz (1) allowed one to view the retina and put ophthalmology on the map as a separate sub-area of medicine. In his design, the ophthalmoscope, the subject’s eye, and the examiner’s eye together form two optical systems, which became the classical design of its successor, the fundus camera, where the examiner’s eye is replaced by a camera sensor. A typical retinal imaging platform can thus be regarded as two coupled imaging systems, as shown in Figure 1A (2, 3). One is the ocular system, and the other is a reflective imaging system that normally illuminates the fundus through the pupil and collects the light reflected from the retina, forming the image on the camera sensor. Ophthalmologists have been using retinal images for the early detection, diagnosis, and monitoring of ocular diseases and their progression. Morphologic changes due to eye diseases like diabetic retinopathy (4–7), solar retinopathy (8), glaucoma (9–11), and age-related macular degeneration (12–14) can be directly observed in these images. In addition, neurological diseases such as stroke and cognitive dysfunction can also be diagnosed through retinal images (15, 16), and cardiovascular risk factors can be assessed (17–19). Diagnostic efficiency and precision depend strongly on the quality of retinal imaging: the higher the image clarity, the more detailed information can be observed, and the better the diagnostic capability. Figures 1B1–B4 show fundus images with good visual quality. However, not every retinal image is perfect, and low-quality images are not a minor problem. Heaven et al. found 9.5% of all acquired images to be entirely unsatisfactory in a prospective study of 981 patients with diabetic retinopathy (20). In a study of 3,650 patients with diabetes, Scanlon and Stephen found ungradable image rates of 19.7% for nonmydriatic photography and 3.7% for mydriatic photography (21).

Figure 1 Fundus camera and demonstration of retinal images of good and low quality. (A) Sketch of optical design of a fundus camera. (B1–B4) are sample high-quality images. (C1) Low-quality retinal image with haze and uneven illumination. (C2) Insufficient illumination. (C3) Haze effect. (C4) Uneven illumination and blurriness.

Retinal images can be severely degraded by opacities in the optical media of cataractous eyes (22–24), as shown in Figures 1C1–C4, and retinal images of non-cataract subjects can be degraded by poor illumination conditions, including uneven or insufficient illumination. The quality of retinal imaging can be improved by high-end fundus cameras, such as adaptive optics and laser-based fundus cameras that tackle optical aberrations (25) and media opacities, but these increase financial pressure and limit access to healthcare, since not all clinics have such high-end equipment. In contrast, image enhancement processing offers an affordable and efficient solution to digitally increase image quality, for example, to correct illumination artifacts (26–28), to enhance contrast (29–31), and to apply dehazing algorithms to cataractous images (32–34).

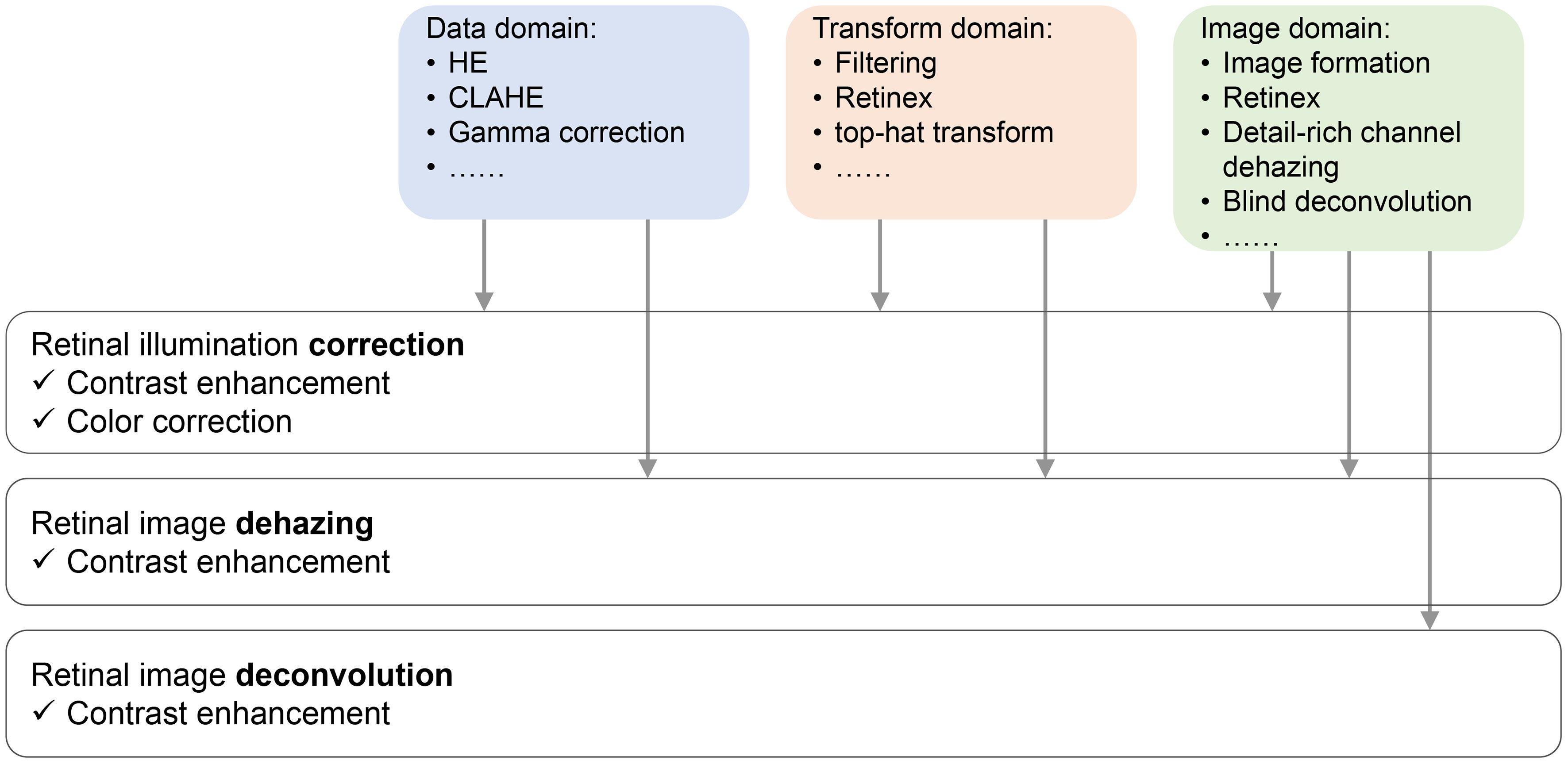

To restore image clarity, several contrast-enhancement methods have been proposed, which can be divided into two major categories: data domain methods and restored model methods (35). Data domain methods are further divided into two types based on their algorithms. The first type is the transform-domain algorithm, which transforms a raw image into a function of other parameters, such as the spatial-frequency domain via the Fourier transform (36) or the structure feature domain via the top-hat transform (37, 38). The image is processed in the transformed domain and then transformed back, resulting in a new image with enhanced contrast. Transform-domain algorithms make it possible to modify, globally or locally, the weights of different structures within the image. However, because of their computational cost, image-domain algorithms, the second type of data domain methods, are generally favored.

The core idea of the image-domain algorithm is gray-level adjustment. Histogram equalization (HE) and its improved version, contrast-limited adaptive histogram equalization (CLAHE), are usually used for quick retinal image enhancement (29). Other histogram modification methods, such as q-quantile (39) and the gray-scale global spatial entropy method, also show promising results in improving image contrast (10, 40). Global gray-level adjustment methods, including the gamma map (41) and α-rooting (42), use a fixed function to convert the global gray-level distribution and thereby adjust the brightness of retinal images.

Another group of image-domain algorithms uses filters to enhance contrast. These algorithms are similar to transform-domain algorithms but use a convolution kernel to separate the background and foreground information of an image (42, 43). The foreground information usually corresponds to the detailed structures of an image; by modifying the weights between background and foreground, the contrast of the detailed structures can be enhanced. In general, data domain methods are pure signal (image) processing techniques that give little consideration to the physics of image formation and enhancement.

To obtain self-consistent methods for retinal image enhancement, restored model methods have been developed that digitally invert the process by which a degraded image was formed. These restored model methods share the idea of computational imaging: a physical model describes the optical process of forming an image under the influence of degradation agents, which could be optical aberrations, unstable vibrations, or limited optical resolution. By directly or indirectly measuring the optical properties of these degradation agents, one can compensate for them by digitally mimicking the propagation of the optical wave and modifying the wavefront of light (44–47). Imaging through scattering media, for example, is a well-known application of computational imaging (46, 47).

Unlike computational imaging, restored models for image enhancement do not measure the optical properties of the degradation agents; instead, they estimate them from statistical properties of the image in optical or visual terms. These estimates can be regarded as rough approximations of the degradation agents and can be compensated for by applying them to the image formation model, resulting in enhanced images.

Restored model methods are widely used for image dehazing (48, 49), underwater image enhancement, and night image enhancement (50); however, only a few studies have reported their use in retinal imaging enhancement. To our knowledge, the first publication about the application of restored model methods in retinal image enhancement can be traced back to 1989 (22), where the model for imaging the retina in photographs taken through intraocular scatter is considered similar to the model used to represent imaging of the earth from a satellite in the presence of light cloud cover. Here, scattering was removed (or suppressed) by using the Retinex theory.

Based on an image formation model, Xiong et al. (35) used intensity correction and histogram adjustment to preprocess the image, after which a transmission map was generated from the intensity of the preprocessed image in each color channel and used to suppress the haze by dehazing. Although their approach performs well, it relies on statistical and empirical properties of a particular retinal imaging database to determine the algorithm parameters, which makes it hard to apply to different databases. A subsequent study (26) used the illumination-reflectance model of image formation to correct the illumination of retinal images and showed that illumination correction is mathematically equivalent to dehazing when the color of the image is inverted. Accordingly, the color-inverted dark-channel prior (DCP), also known as the bright-channel prior (51), was employed, yielding efficient illumination correction. Following the haze image formation model and dehazing, Mitra et al. (52) proposed a “thin layer of cataracts” model to achieve contrast enhancement for cataract fundus images.

Gaudio et al. (53) demonstrated a pixel color amplification method for retinal image enhancement that performs well in enhancing the detailed structures of retinal images. Figure 2 summarizes the categories of fundus image enhancement algorithms and their applications.

Figure 2 Sketch of retinal image restoration tasks, their solutions, and the effect they bring to the enhanced images.

In this review paper, we first revisit the mathematical models used for retinal image restoration, their physical/mathematical insight, and how they are related to each other in Section 2. We then show how these image formation models are applied to retinal image restoration for illumination correction, dehazing, and deblurring in Section 3, where deep-learning-based methods are also briefly introduced. In Section 4, the significance and benefits of the clinical applications of retinal image restoration techniques are discussed, and the social impact of retinal image enhancement is described in Section 5. Concluding remarks and future scope are presented in Section 6.

2 Mathematical models for retinal image restoration

2.1 Pixel value stretch model

Enhancing the quality of the retinal image can be achieved by manipulating pixel values. For example, one can enlarge pixel values if the original values are too small to be noticed or decrease them if they are too bright.

Accordingly, gamma correction, given in Equation (1), provides a straightforward way to adjust pixel values and is widely used in medical image analysis (54):

$$ \hat{s}(x) = s(x)^{\gamma} \tag{1} $$

where $s(x)$ is the input image, normalized to [0, 1], and $\hat{s}(x)$ denotes the output image after gamma correction. When γ < 1, this nonlinear mapping raises small pixel values so that the image becomes brighter; when γ > 1, small values are further suppressed.
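A minimal NumPy sketch of Equation (1), assuming the image has already been scaled to [0, 1]; the function name `gamma_correct` is illustrative and not taken from the cited works.

```python
import numpy as np


def gamma_correct(s, gamma):
    """Equation (1): raise each normalized pixel value to the power gamma."""
    s = np.clip(np.asarray(s, dtype=np.float64), 0.0, 1.0)  # keep inputs inside [0, 1]
    return s ** gamma


# gamma < 1 brightens dark pixels; gamma > 1 suppresses them further.
dark_pixels = np.array([0.05, 0.218, 0.5])
print(gamma_correct(dark_pixels, 1 / 2.2))  # brighter values
print(gamma_correct(dark_pixels, 2.2))      # darker values
```

Because values are clipped to [0, 1], the end points 0 and 1 are fixed for any γ, and only the mid-tones move.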

Another method for pixel adjustment is HE. Taking the image in Figure 3A as an example, when an image is represented by a narrow range of intensity values, HE redistributes the intensities over the full dynamic range, as shown in Figures 3B, E. To avoid the over- and underexposure effects of HE, CLAHE (55) was proposed; it performs HE adaptively according to the local contrast in sub-blocks of the image and clips the local histograms to limit contrast amplification.

Figure 3 Sketch of retinal image restoration tasks and their solutions. (A) Raw image. (B) Output image enhanced by HE. (C) Output image enhanced by CLAHE. (D), (E), and (F) Histograms of pixel values for the images in (A), (B), and (C), respectively.

HE and its improved version, CLAHE (Figures 3C, F), are widely used as preprocessing methods for retinal image enhancement; however, research has shown that image formation model-based methods achieve better image restoration results than HE-based methods.
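For reference, global HE can be sketched in a few lines of NumPy for an 8-bit gray image (for a color fundus image one would typically apply it to an intensity channel). This is a textbook CDF-mapping sketch under those assumptions, not the implementation behind Figure 3. CLAHE additionally operates on tiles and clips each local histogram; OpenCV exposes it through `cv2.createCLAHE(clipLimit=..., tileGridSize=...)`.

```python
import numpy as np


def histogram_equalize(img):
    """Global histogram equalization of an 8-bit grayscale image."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)          # gray-level histogram
    cdf = np.cumsum(hist).astype(np.float64)                # cumulative distribution
    cdf_min = cdf[np.nonzero(cdf)[0][0]]                    # CDF at the darkest occupied level
    span = max(cdf[-1] - cdf_min, 1.0)                      # avoid division by zero for flat images
    lut = np.clip(np.round((cdf - cdf_min) / span * 255.0), 0, 255).astype(np.uint8)
    return lut[img]                                         # map each pixel through the lookup table


rng = np.random.default_rng(0)
narrow = rng.integers(100, 120, size=(64, 64), dtype=np.uint8)  # low-contrast synthetic image
wide = histogram_equalize(narrow)
print(narrow.min(), narrow.max(), "->", wide.min(), wide.max())
```

The darkest occupied level maps to 0 and the brightest to 255, while the ordering of gray levels is preserved.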

2.2 Image formation model

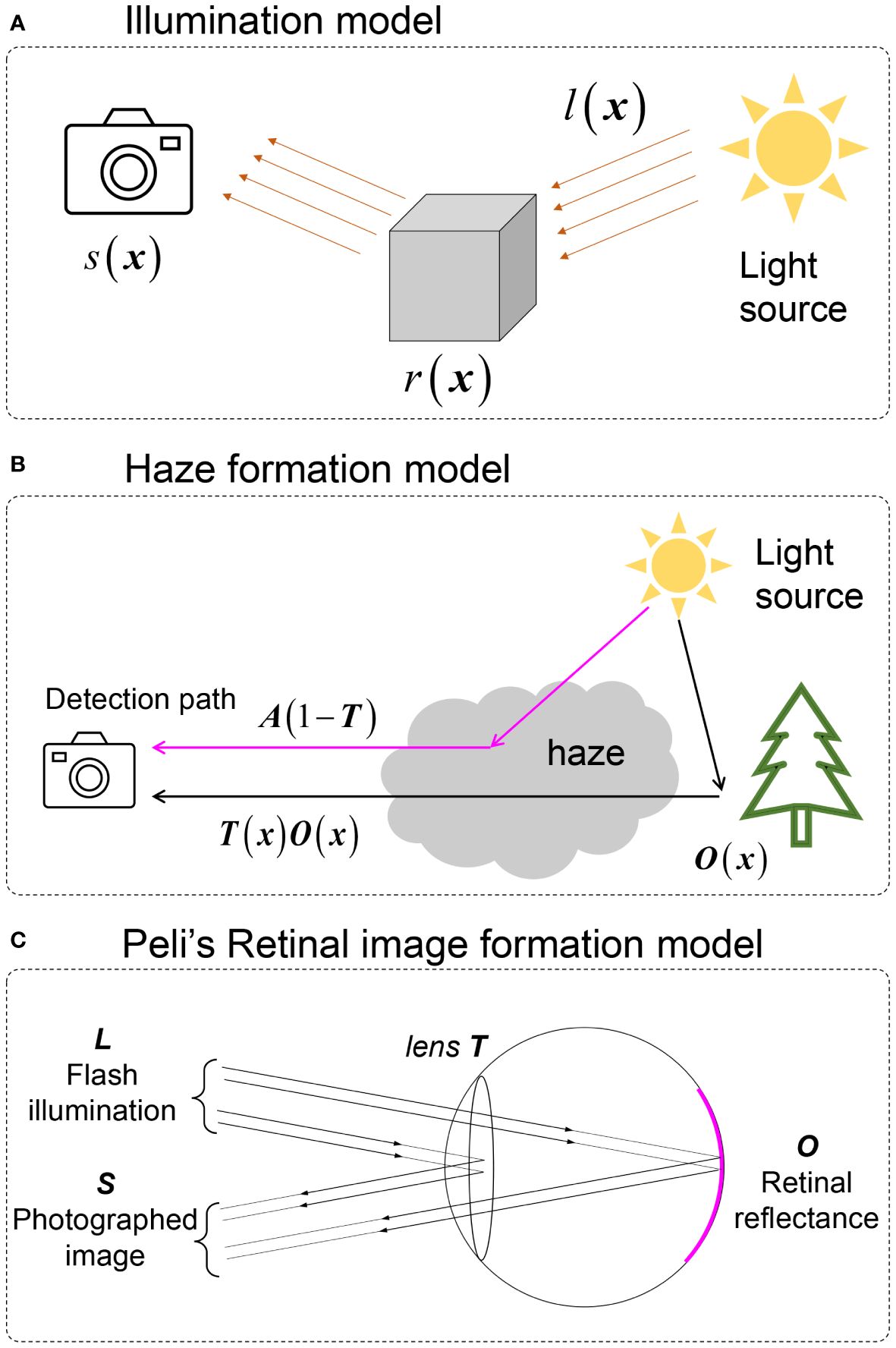

A widely used image formation model for retinal image enhancement is the illumination model, shown in Figure 4A, given by

$$ s(x) = l(x) \cdot r(x) \tag{2} $$

Figure 4 Image formation models involved in retinal image enhancement. (A) illumination model. (B) Natural scene haze formation model. (C) Peli’s retinal image formation model.

Here, · denotes elementwise multiplication, x denotes the spatial coordinates, s is the image captured by the camera, l is the illumination pattern from the light source, which is assumed to vary slowly in space, and r is the retinal reflectance.

To tackle the haze effect caused by intraocular scattering in retinal imaging, a haze formation model is adopted. The early-stage model was directly adopted from Koschmieder and McCartney’s model (56, 57) of hazy natural scenes, shown in Figure 4B and given by

$$ s(x) = o(x) \cdot t(x) + a \, [1 - t(x)] \tag{3} $$

Here, o(x) is the haze-free image, and t(x) is the transmission matrix of the haze medium, describing the portion of the light that is not scattered and reaches the camera; a is the global atmospheric light, and s(x) is the observed image. Although a large number of natural scene dehazing studies are based on Equation (3) (58), this model was developed for natural scenes and is not the optimal choice for fundus imaging, because it ignores the double-pass property of fundus photography.
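The sketch below simulates Equation (3) on synthetic data and inverts it when t(x) and a are known. In practice both must be estimated (for example, with the DCP in Section 3.2.1); the lower bound `t_min` is an assumed safeguard against dividing by very small transmission values, and the function names are illustrative.

```python
import numpy as np


def haze_forward(o, t, a):
    """Equation (3): s = o * t + a * (1 - t); t broadcasts over color channels."""
    return o * t + a * (1.0 - t)


def haze_invert(s, t, a, t_min=0.1):
    """Solve Equation (3) for o, flooring t to limit noise amplification."""
    t = np.maximum(t, t_min)
    return (s - a) / t + a


rng = np.random.default_rng(0)
o = rng.uniform(0.0, 1.0, size=(16, 16, 3))   # synthetic haze-free RGB image
t = rng.uniform(0.3, 0.9, size=(16, 16, 1))   # synthetic transmission map
a = np.array([0.9, 0.85, 0.8])                # assumed atmospheric light per channel
s = haze_forward(o, t, a)
o_rec = haze_invert(s, t, a)
print(np.abs(o_rec - o).max())  # close to zero: exact inversion when t and a are known
```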

To establish an image formation model for retinal imaging, Peli et al. (22) developed an optical model for imaging the retina through cataracts, which is

$$ s(x) = \alpha \, l \, r(x) \, t(x) + l \, [1 - t(x)] \tag{4} $$

where l is considered to be the flash illumination of the fundus camera and α is the attenuation of retinal illumination due to the cataract; both l and α are considered constant. Unlike Equation (3), Equation (4) reveals that the illumination pattern also affects the quality of retinal imaging. However, because l is constant, Equation (4) cannot correct uneven (spatially varying) illumination in retinal imaging. In addition, the parameter α reflects the double-pass property, in which the illumination light interacts twice with the cataract layer (when the light enters the eye and when it is reflected out from the fundus).

In a previous study (33), we proposed the double-pass fundus reflection (DPFR) model, which describes image formation in retinal imaging as shown in Figures 5A, B. This DPFR model is given by

$$ s(x) = l(x) \cdot r(x) \cdot t^{2}(x) + l(x) \cdot [1 - t(x)] \tag{5} $$

Figure 5 The double-pass fundus reflection model. (A) Optical path in a fundus camera. (B) Sketch of double-pass fundus reflection.

where l(x) is the illumination from outside the eye, delivered by the illumination system of the fundus camera. Unlike the natural scene haze formula in Equation (3), the transmission matrix t is squared, denoting the double-pass feature of fundus imaging (59, 60), in which the light passes through the pupil twice.

We ignore the color of the fundus camera’s illumination light and assume that the retina is illuminated by ideal white light (identical values in the R, G, and B channels), which may nevertheless have an uneven and insufficient illumination pattern. t(x) is the transmission matrix of intraocular scatter, including the ocular lens and cataract layers. Equation (5) reveals that the degradation of the retinal image is mainly due to three parts: (1) an uneven illumination condition, (2) filtering by the human lens, and (3) intraocular scattering.

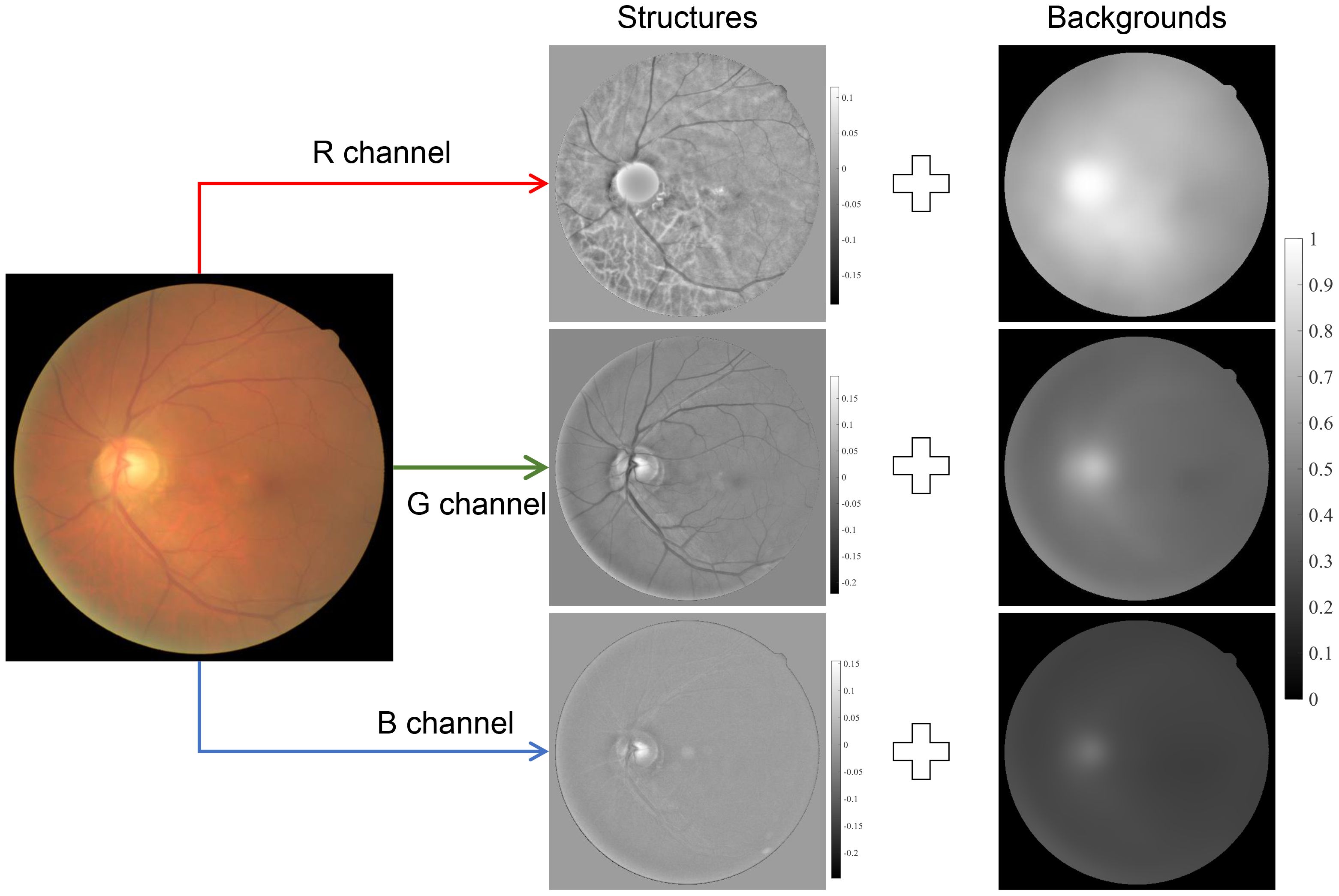

2.3 Image structures model

Besides the image formation model, image structure models are also used for retinal image enhancement (31, 42, 43, 61); they can be summarized as

$$ s(x) = s_b(x) + s_d(x) \tag{6} $$

where $s_b(x)$ is the background information of the observed image, corresponding to the low-frequency components, while $s_d(x)$ denotes the detailed information, i.e., the detailed structures and textures of the image, as shown in Figure 6. By giving a large weight to $s_d(x)$ and suppressing $s_b(x)$, one can obtain a contrast-enhanced image. The background component, $s_b(x)$, can be obtained by low-pass filtering of s(x) (42, 61) or by total variation regularization (31), while $s_d(x)$ can be obtained by high-pass filtering of s(x) or by subtracting $s_b(x)$ from s(x). Note that Equation (6) is not based on the optical process of image formation, and its physical insight differs from that of Equations (2) and (4).
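A minimal sketch of Equation (6), assuming a Gaussian low-pass filter (applied in the Fourier domain, hence with periodic borders) for $s_b(x)$ and the residual for $s_d(x)$. The weights `w_b` and `w_d` and the filter width `sigma` are illustrative choices, not values from the cited studies.

```python
import numpy as np


def gaussian_lowpass(img, sigma):
    """Gaussian low-pass filter of a 2D image, applied in the Fourier domain (periodic borders)."""
    fy = np.fft.fftfreq(img.shape[0])[:, None]   # vertical frequencies (cycles/pixel)
    fx = np.fft.fftfreq(img.shape[1])[None, :]   # horizontal frequencies (cycles/pixel)
    # Fourier transform of a unit-area Gaussian with standard deviation sigma (pixels)
    otf = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * otf))


def structure_enhance(s, sigma=15.0, w_b=0.5, w_d=2.0):
    """Re-weight the background s_b and detail s_d components of Equation (6)."""
    s_b = gaussian_lowpass(s, sigma)   # background: low-frequency content
    s_d = s - s_b                      # detail: high-frequency residual
    return w_b * s_b + w_d * s_d
```

With `w_b = w_d = 1` the function returns its input unchanged, which is a convenient sanity check; raising `w_d` amplifies vessels and other fine structures, but also noise.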

2.4 Retinex theory

It is worth noting that the illumination model [Equation (2)] and the image structure model [Equation (6)] can be unified by the Retinex theory, which was first developed to explain the Land effect from a visual perspective (62) and was later applied to uneven illumination correction in computer vision. Retinex methods can be categorized into several types, including variational Retinex (63–65), PDE Retinex (66–68), threshold Retinex (69), and center/surround Retinex (also known as filtering-based Retinex) (70, 71); of these, filtering-based Retinex has attracted much research interest owing to its computational efficiency and simple implementation. Taking the logarithm of both sides of Equation (2), we obtain

$$ \log s(x) = \log l(x) + \log r(x) \tag{7} $$

which has the same mathematical form as Equation (6): the illumination component becomes a linear term added to the reflectance component. Since l(x) is assumed to vary slowly in space, a good estimate of l(x) is given by low-pass filtering of s(x). The reflectance r(x) is then given by

$$ r(x) = \exp\{\log s(x) - \log[F(x) \otimes s(x)]\} \tag{8} $$

where F is a low-pass filter, also known as the surround function, and ⊗ denotes 2D convolution. In practical implementations, pixel value normalization should be applied to the output of Equation (8) to avoid distorted pixel intensities.
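The filtering-based (single-scale) Retinex of Equation (8) can be sketched as follows, assuming a Gaussian surround F and min-max normalization of the log-reflectance as the normalization step; `sigma` and `eps` are illustrative parameters. Because the filter is applied in the Fourier domain, image borders are treated as periodic, which a practical implementation would handle with padding.

```python
import numpy as np


def gaussian_lowpass(img, sigma):
    """Surround function F: Gaussian low-pass applied in the Fourier domain (periodic borders)."""
    fy = np.fft.fftfreq(img.shape[0])[:, None]
    fx = np.fft.fftfreq(img.shape[1])[None, :]
    otf = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * otf))


def single_scale_retinex(s, sigma=30.0, eps=1e-6):
    """Equation (8): log r = log s - log(F ⊗ s), followed by min-max normalization to [0, 1]."""
    s = np.asarray(s, dtype=np.float64) + eps                    # avoid log(0)
    illumination = np.maximum(gaussian_lowpass(s, sigma), eps)   # estimate of l(x)
    log_r = np.log(s) - np.log(illumination)
    span = max(log_r.max() - log_r.min(), eps)
    return (log_r - log_r.min()) / span
```

On a synthetic image formed as a smooth illumination times a random texture, the output tracks the texture much more closely than the raw input does.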

3 Retinal image restoration

3.1 Intensity correction

Retinal image intensity correction is a very important task in retinal image restoration. Statistical analysis shows that many retinal images suffer from problematic, uneven, and insufficient illumination, which is closely related to the skill of the photographer, imperfect head/eye positioning of the subject, and a potentially poorly designed illumination path of the fundus camera.

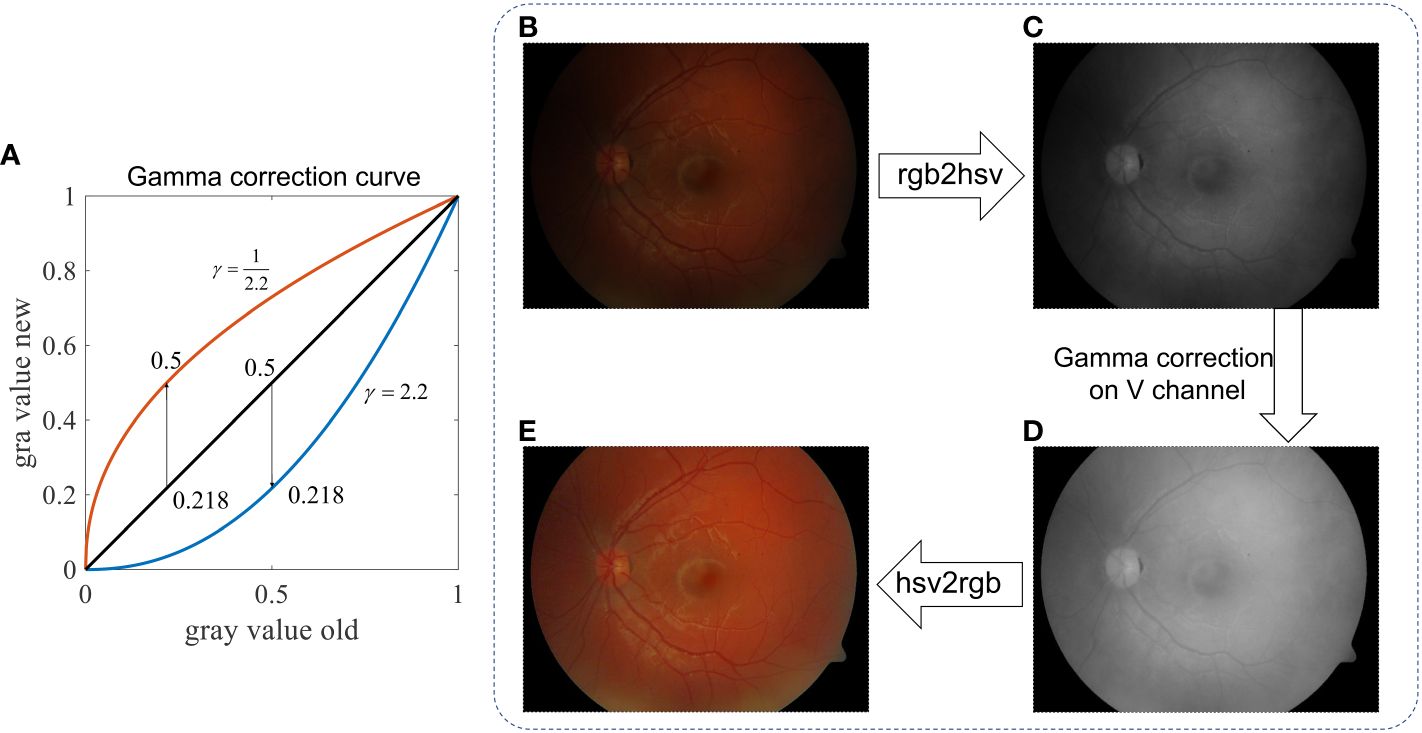

Since human visual assessment of image quality is closely related to image brightness, intensity correction of a retinal image can significantly improve its quality for visual assessment. In this section, we briefly introduce two solutions based on Section 2, namely gamma correction and the Retinex method, for retinal image intensity correction, and demonstrate their output on sample fundus images.

3.1.1 Gamma correction

Intensity correction can be achieved by gamma correction with γ < 1 in Equation (1). As shown in Figure 7A, when γ = 1/2.2, a small value such as 0.218 becomes 0.5 after gamma correction. Accordingly, we can transform the input RGB retinal image shown in Figure 7B to the HSV color space and then perform gamma correction on its V-channel (Value). The image is then transformed back to the RGB color space, resulting in the illumination-corrected image shown in Figure 7E. By adjusting the value of γ, one can achieve different strengths of illumination correction, although the image contrast is not significantly improved.

Figure 7 Retinal image intensity correction using Gamma correction. (A) Gray value curve shows the mapping of gamma correction. (B) Raw image. (C) V-channel of image in the HSV color space. (D) V-channel after Gamma correction. (E) Enhanced image.
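The V-channel gamma correction described above can be sketched without an explicit color-space round trip. In HSV, V = max(R, G, B), while hue and saturation depend only on channel ratios, so multiplying all three channels of a pixel by V_new / V changes V alone. This equivalent shortcut is an assumption of the sketch; a pipeline could just as well convert to HSV and back with a library routine.

```python
import numpy as np


def gamma_on_v_channel(rgb, gamma=1 / 2.2, eps=1e-8):
    """Gamma-correct the HSV V-channel of an RGB image in [0, 1], keeping hue and saturation."""
    rgb = np.clip(np.asarray(rgb, dtype=np.float64), 0.0, 1.0)
    v = rgb.max(axis=-1, keepdims=True)                  # V = max(R, G, B)
    v_new = v ** gamma                                   # Equation (1) applied to V only
    # Per-pixel gain V_new / V; black pixels (V = 0) stay black.
    gain = np.where(v > eps, v_new / np.maximum(v, eps), 0.0)
    return np.clip(rgb * gain, 0.0, 1.0)


pixel = np.array([[[0.218, 0.10, 0.05]]])  # a dim reddish pixel (synthetic)
print(gamma_on_v_channel(pixel))            # V rises from 0.218 to about 0.5
```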

3.1.2 Center-surrounded Retinex

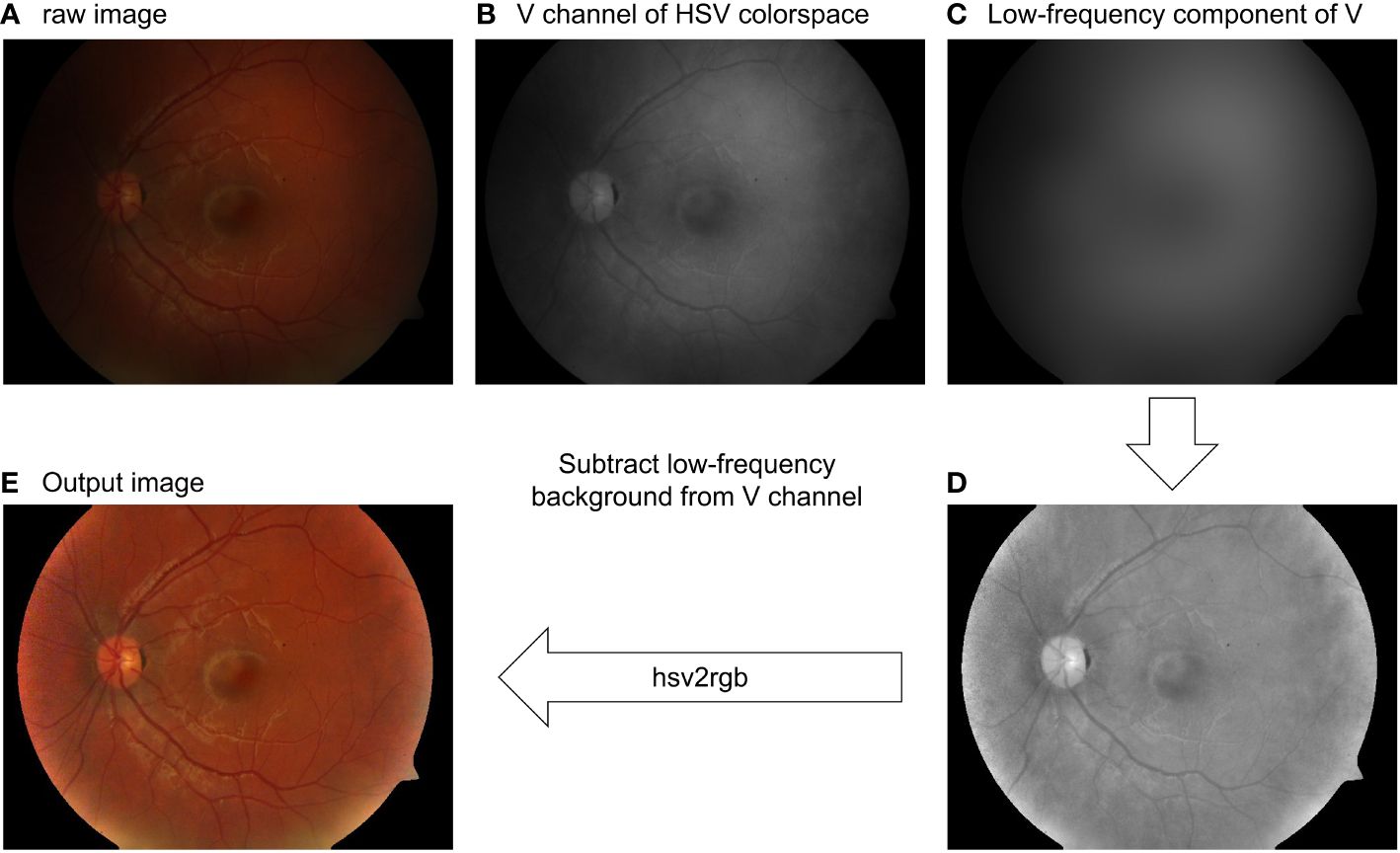

As mentioned in Section 2.4, intensity correction can also be achieved using Retinex. The low-frequency component of the V-channel is a good estimate of the illumination pattern, as shown in Figures 8A–C. After subtracting the illumination pattern from the original V-channel in the logarithmic domain, following Equation (8), and normalizing the intensity to lie between 0 and 1, we obtain the corrected V-channel (Figure 8D) and the corresponding RGB image (Figure 8E), in which the uneven pattern is corrected.

Figure 8 Retinal image intensity correction using the Gaussian filtering Retinex method. (A) Raw image. (B) V-channel of the raw image. (C) Low-pass filtering of (B). (D) Intensity-corrected V-channel. (E) Output image.

Besides the Gaussian-filtering Retinex method shown above, researchers have developed more complex frameworks, such as variational Retinex (63–65) with different regularizations and non-local Retinex (72), to achieve illumination correction. Their application to retinal images, and how retinal images can benefit from Retinex methods, is likely to attract considerable research interest. It is also worth noting that the Retinex theory links image illumination correction and image dehazing through simple algebra; this property is further discussed in Section 3.2.2.

3.2 Dehazing

In the case of intraocular scattering, the captured image may show a haze-like effect similar to the haze occurring in natural scenes. In such cases, a dehazing process is needed to enhance the quality of retinal images.

3.2.1 Dehazing using dark-channel prior

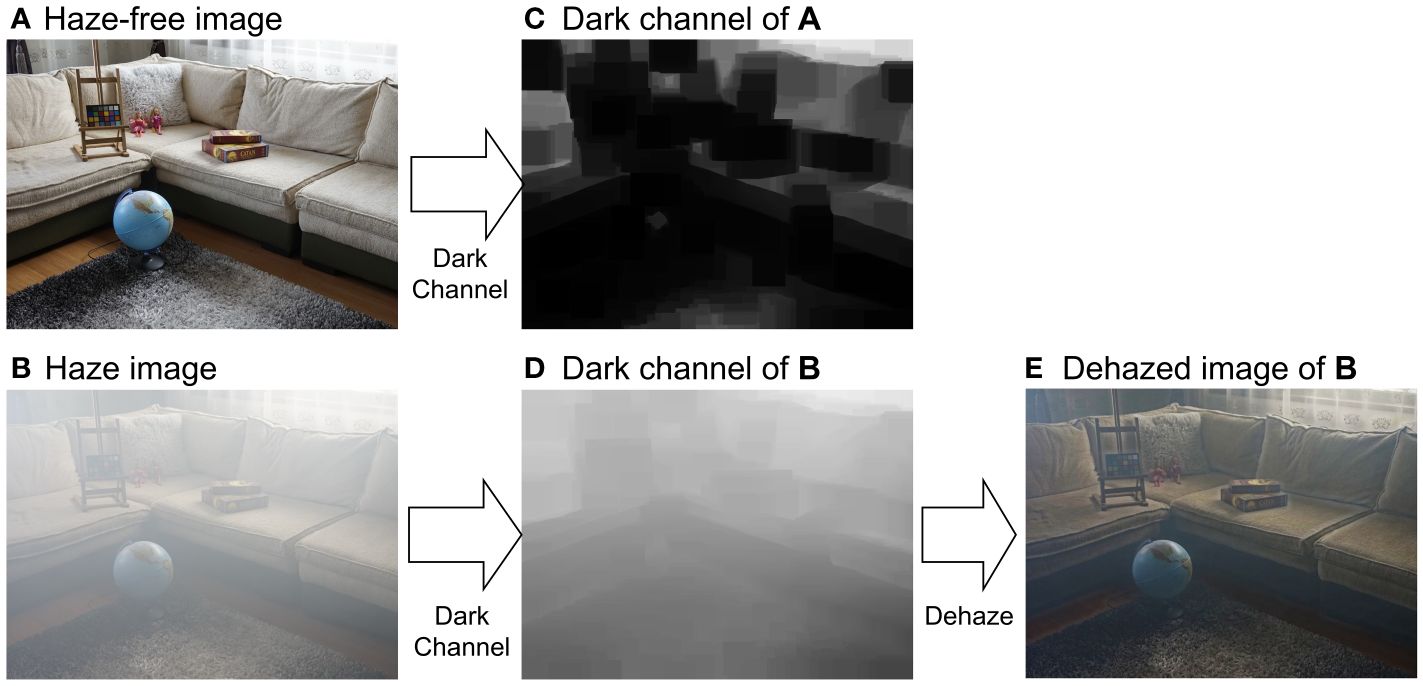

The DCP (73) has been widely used for natural scene dehazing, including underwater image enhancement and haze removal even in thick fog. The dark channel is obtained by first filtering each of the three color channels of the image with a local minimum filter over a window of w × w pixels and then taking the minimum value across the three color channels.

The principle of DCP states that in most local patches of a haze-free image (in RGB color space), as shown in Figure 9A, at least one pixel has very low (close to zero) intensity in at least one color channel, so that the dark channel is nearly zero, as shown in Figure 9C. Haze raises the dark channel, so the transmission map of a hazy image (Figure 9B) can be estimated from its dark channel, as shown in Figure 9D. The dehazed image can then be calculated by inverting the image formation model in Equation (3).

Figure 9 Dehazing using the dark-channel prior. (A) Haze-free image. (B) Hazy image of (A). (C, D) Dark channels of (A, B), respectively. (E) Dehazed image.

Although DCP dehazing results are promising, the performance of DCP on retinal image dehazing is limited, especially for thick cataracts, because the color statistics of retinal images differ from those of natural scene images. DCP fails to estimate the transmission map of retinal images in the RGB color space; however, it works in the intensity domain, since DCP is valid for gray-scale image dehazing (74).
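A compact sketch of DCP dehazing in the spirit of He et al. (73), assuming an image scaled to [0, 1]. The atmospheric-light rule (mean color of the brightest 0.1% of dark-channel pixels), `omega = 0.95`, `t0 = 0.1`, and the window size `w` are common choices assumed here; the transmission refinement step of the original method is omitted. It relies on `sliding_window_view`, available in NumPy 1.20 and later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def min_filter(img2d, w):
    """Local minimum over w x w windows (w odd) with edge padding."""
    padded = np.pad(img2d, w // 2, mode="edge")
    return sliding_window_view(padded, (w, w)).min(axis=(-2, -1))


def dark_channel(img, w=15):
    """Minimum over color channels and over a local w x w window (the two minima commute)."""
    return min_filter(img.min(axis=-1), w)


def dcp_dehaze(s, w=15, omega=0.95, t0=0.1, a=None):
    """Dark-channel-prior dehazing of an (H, W, C) image s in [0, 1]."""
    if a is None:
        # Atmospheric light: mean color of the brightest 0.1% of dark-channel pixels.
        dark = dark_channel(s, w)
        n = max(1, dark.size // 1000)
        brightest = np.argsort(dark.ravel())[-n:]
        a = s.reshape(-1, s.shape[-1])[brightest].mean(axis=0)
    a = np.asarray(a, dtype=np.float64)
    # Transmission estimate: t = 1 - omega * dark_channel(s / a), floored at t0.
    t = 1.0 - omega * dark_channel(s / np.maximum(a, 1e-6), w)
    t = np.maximum(t, t0)[..., None]
    # Invert Equation (3): o = (s - a) / t + a.
    return np.clip((s - a) / t + a, 0.0, 1.0)
```

For the intensity-domain variant, a single channel rescaled to [0, 1] (for example, a CIE-LAB L-channel divided by 100) can be passed as an array of shape (H, W, 1).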

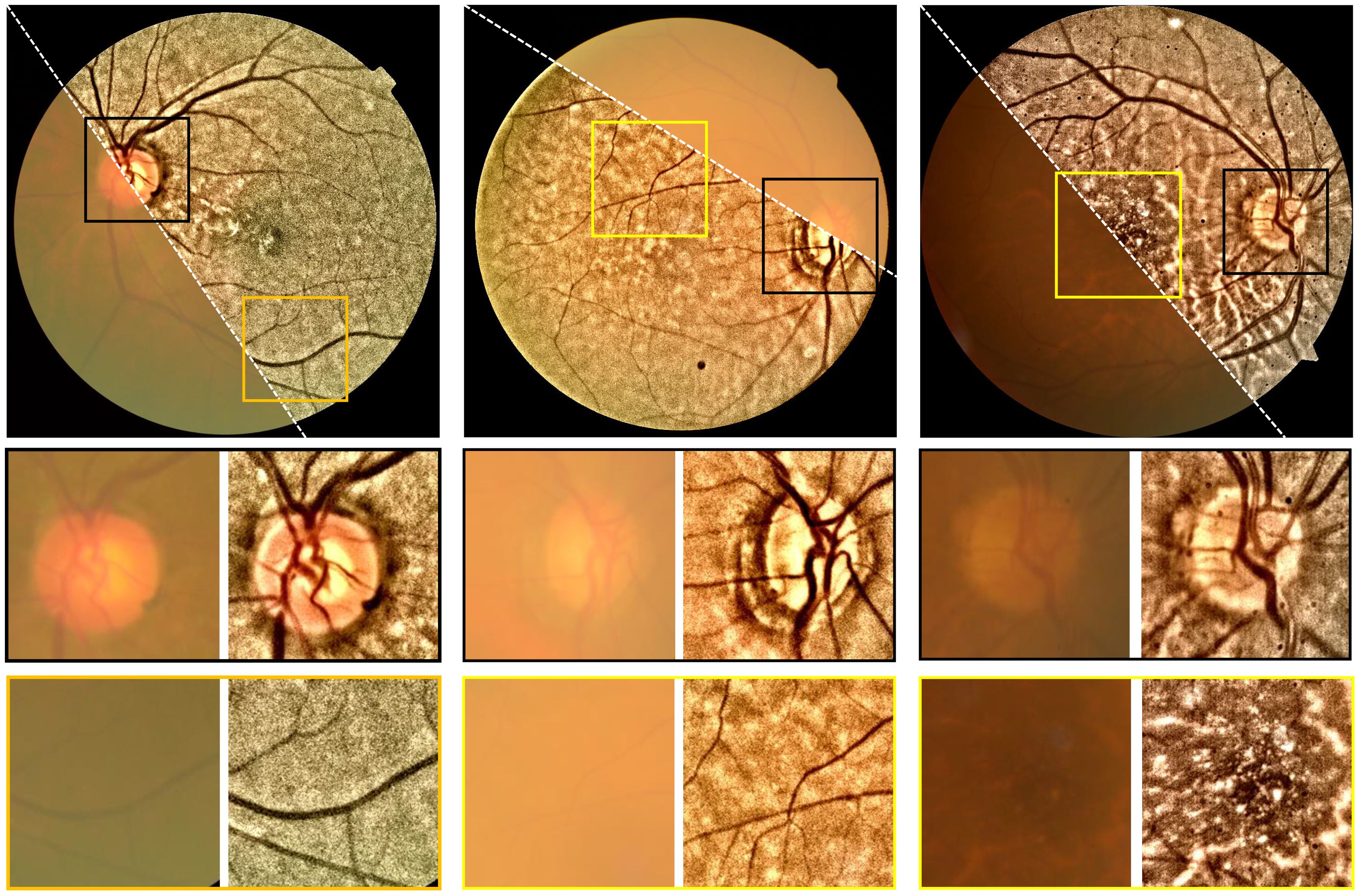

Accordingly, one can convert the retinal image from the RGB color space to, for example, the CIE-LAB color space and then perform dehazing on the L-channel (intensity channel) to obtain the dehazed retinal image. Figure 10 shows the dehazing results on cataractous retinal images after illumination correction was applied. The haze effect is significantly suppressed.

Figure 10 Restoration of cataractous retinal images. First row: raw images. Second row: restored images. The image was dehazed using the DCP after the illumination correction was performed.

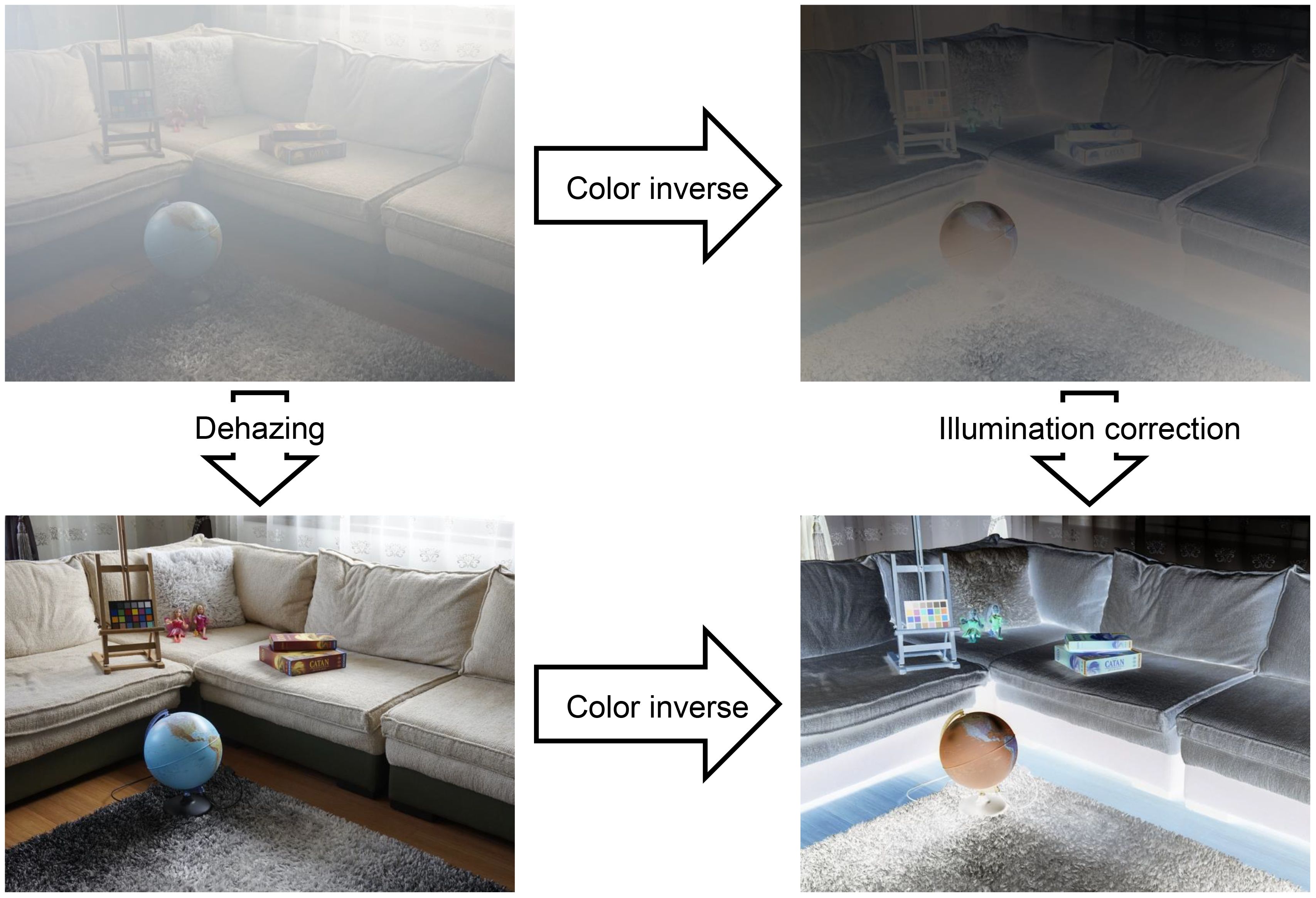

3.2.2 The duality between intensity correction and dehazing

Natural scene image dehazing seems to be unrelated to intensity correction, since the two deal with different problems. However, as pointed out in Ref. (69), they are connected by an algebraic modification of the haze formation model in Equation (3), assuming that the input image is globally white-balanced, that is, $a = 1$. With some algebra, Equation (3) can be rewritten as $1 - s(x) = t(x) \cdot [1 - o(x)]$. By regarding $1 - s(x)$ as the observed image and setting $l(x) = t(x)$ and $r(x) = 1 - o(x)$, we can convert the haze formation model into the illumination model in Equation (2). This implies an interesting phenomenon: the color-inverted hazy image looks like an image suffering from insufficient illumination, as shown in Figure 11.

Figure 11 Dehazing task can be converted into an intensity correction task in color-inversed domain.
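The duality can be checked numerically. The snippet below uses synthetic data and assumes white atmospheric light a = 1; it verifies that the color-inverted hazy image equals t(x) times the color-inverted haze-free image, which is the illumination model of Equation (2) with l = t and r = 1 − o.

```python
import numpy as np

rng = np.random.default_rng(1)
o = rng.uniform(0.0, 1.0, size=(32, 32, 3))   # synthetic haze-free image
t = rng.uniform(0.3, 0.9, size=(32, 32, 1))   # synthetic transmission map
s = o * t + 1.0 * (1.0 - t)                   # Equation (3) with white atmospheric light a = 1

# Color inversion turns the haze model into the illumination model s' = l * r
s_inv, o_inv = 1.0 - s, 1.0 - o
print(np.allclose(s_inv, t * o_inv))          # True
```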

According to the Retinex theory, by assuming that t is spatially slow-varying and using Equations (2) and (3), we have

$$ o(x) = 1 - \exp\{\log[1 - s(x)] - \log(F(x) \otimes [1 - s(x)])\} \tag{9} $$

This result is also derived in (75) and shows that the dehazing task can be accomplished within the Retinex framework. According to Equation (9), the Retinex theory bridges image dehazing and image illumination correction (76). The application of the Retinex theory in retinal image dehazing shares a similar idea with the image structure model and filtering-based Retinex: the haze layer is regarded as the slow-varying background component of the retinal image, and the dehazed image is obtained by subtracting the background component from the hazy one (42, 61).
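Following Equation (9), a hypothetical `retinex_dehaze` can reuse filtering-based Retinex on the color-inverted image: invert, divide by the low-pass estimate of the transmission, normalize, and invert back. The Gaussian surround, `sigma`, and the min-max normalization (mirroring the normalization advice for Equation (8)) are assumptions of this single-channel sketch.

```python
import numpy as np


def gaussian_lowpass(img, sigma):
    """Gaussian low-pass in the Fourier domain (periodic borders), used as the surround F."""
    fy = np.fft.fftfreq(img.shape[0])[:, None]
    fx = np.fft.fftfreq(img.shape[1])[None, :]
    otf = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * otf))


def retinex_dehaze(s, sigma=30.0, eps=1e-6):
    """Dehaze one channel s in [0, 1] via Retinex in the color-inverted domain."""
    s_inv = 1.0 - np.asarray(s, dtype=np.float64) + eps        # inverted image ~ t * (1 - o)
    t_est = np.maximum(gaussian_lowpass(s_inv, sigma), eps)    # slow-varying part ~ t (up to a constant)
    o_inv = s_inv / t_est                                      # exp(log s_inv - log(F ⊗ s_inv))
    o_inv = (o_inv - o_inv.min()) / max(o_inv.max() - o_inv.min(), eps)  # normalize to [0, 1]
    return 1.0 - o_inv                                         # invert back
```

Because the transmission is only estimated up to a constant factor, the normalization step is what brings the result back to a displayable range.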

Generally speaking, algorithms for retinal image illumination correction and dehazing vary in their definitions, implementations, underlying structures, and relationships, so a concise overview is needed to decide which to use. Table 1 lists some state-of-the-art publications on non-deep-learning methods of single retinal image enhancement.

Table 1 List of publications on non-deep-learning based methods of single retinal image enhancement.

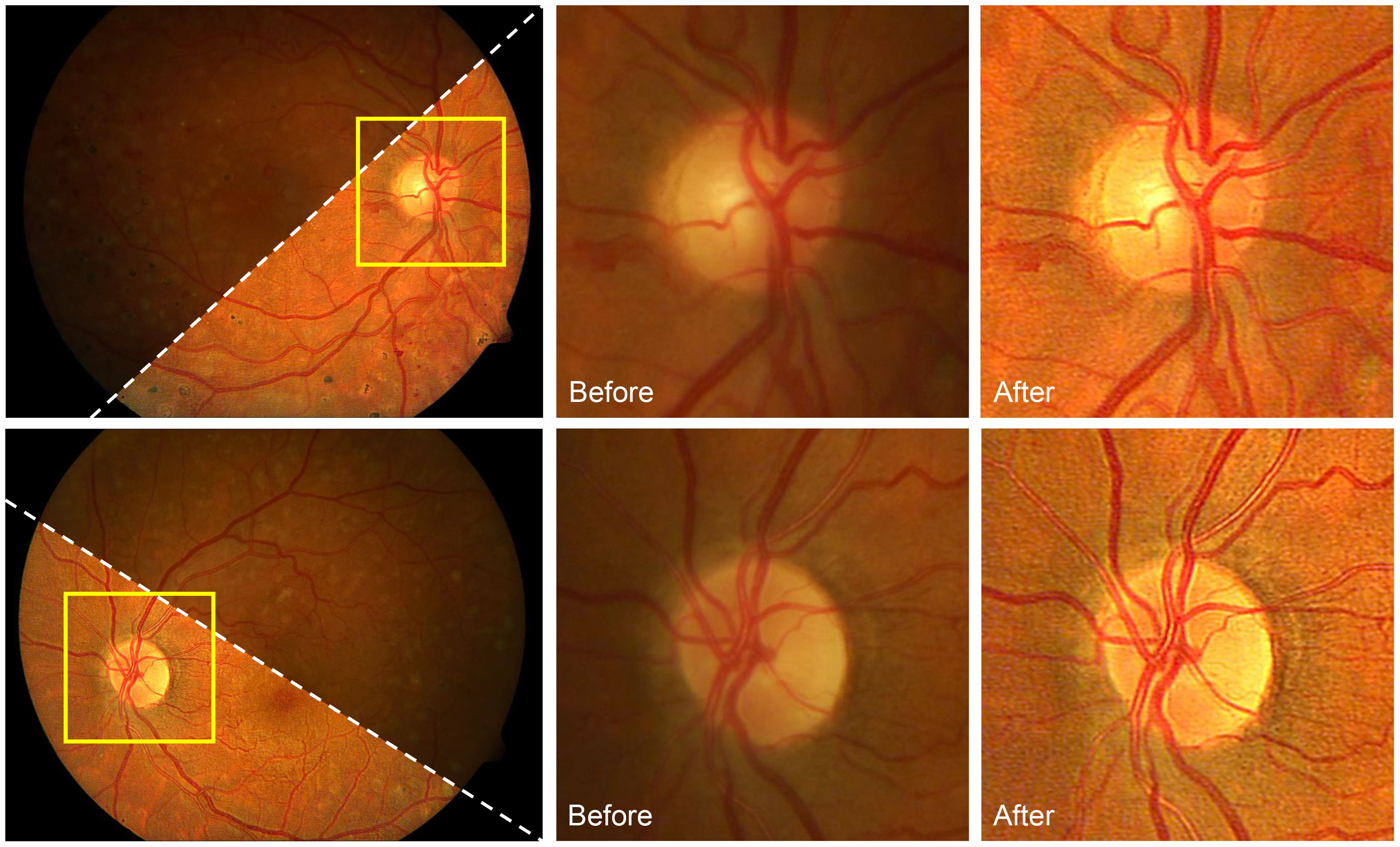

3.3 Deblurring

Image blind deconvolution has been developed mainly for natural scene image deblurring (83, 84). Various priors, including but not limited to the heavy-tailed prior (85, 86), the gradient L0 prior (87), the dark-channel prior (88), and the local maximum gradient prior (89), have been explored to facilitate single-image blind deconvolution. Nevertheless, blind deconvolution of retinal images remains problematic and challenging, since a large number of retinal images suffer from poor illumination conditions that hide the structural (edge) information essential for proper deconvolution. To the best of our knowledge, only a few studies have reported single retinal image blind deconvolution (90–93); they aimed to correct blur caused by aberrations and motion during image capture.
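To make the deconvolution setting concrete, the sketch below simulates the blur model s = k ⊗ o with a Gaussian kernel k and inverts it with a Wiener filter. Note that this is non-blind deconvolution: the kernel is assumed known, whereas the blind methods discussed here must estimate it jointly with the latent image. The noise-to-signal ratio `nsr` is an illustrative regularization constant.

```python
import numpy as np


def gaussian_otf(shape, sigma):
    """Frequency response (OTF) of a centered, unit-area Gaussian blur kernel."""
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    return np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))


def blur(o, otf):
    """Forward model s = k ⊗ o (circular convolution via the FFT)."""
    return np.real(np.fft.ifft2(np.fft.fft2(o) * otf))


def wiener_deconvolve(s, otf, nsr=1e-3):
    """Non-blind Wiener deconvolution with an assumed noise-to-signal power ratio."""
    g = np.conj(otf) / (np.abs(otf) ** 2 + nsr)
    return np.real(np.fft.ifft2(np.fft.fft2(s) * g))


rng = np.random.default_rng(2)
o = rng.uniform(0.0, 1.0, size=(64, 64))   # synthetic latent image
otf = gaussian_otf(o.shape, 1.5)
s = blur(o, otf)
o_hat = wiener_deconvolve(s, otf)
print(np.mean((s - o) ** 2), np.mean((o_hat - o) ** 2))  # error before vs. after deconvolution
```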

Andrés et al. proposed a two-step retinal image blind deconvolution method (91): the first step estimates and compensates for the uneven illumination using a fourth-order polynomial, and the second step performs blind deconvolution with TV regularization, corresponding to the heavy-tailed prior used in natural scene deblurring. However, this method requires at least two paired retinal images of the same subject. Francisco et al. restrict the convolution kernel to a Gaussian shape and perform a line search to determine the size of the Gaussian kernel corresponding to the peak image quality score (92). This method does not correct the illumination pattern of the retinal image; moreover, not all retinal images are degraded by a simple Gaussian kernel.

In (93), a differentiable non-convex cost function based on the DPFR image formation model was developed to jointly achieve illumination correction and blind deconvolution. Figure 12 shows the results of this approach on retinal image deconvolution, where both the uneven illumination and the blurriness are corrected. Nevertheless, the method has limitations. First, the model parameters must be manually adjusted, a common drawback of non-learning-based blind deconvolution methods. Second, blind deconvolution can be time-consuming, as it requires several iterations to solve for the latent image, especially for high-resolution retinal images. Third, deconvolution does not significantly increase image contrast as CLAHE does. Combining retinal image deconvolution with contrast enhancement could further improve the image quality of deconvolution methods.

3.4 Deep-learning-based retinal image restoration

With the growth of computational power, deep-learning-based retinal image enhancement has attracted a lot of interest (94). Because paired real retinal images of good and degraded quality are lacking, most recently published learning-based retinal image restoration methods can be categorized as extensions of generative adversarial networks (GANs). These methods convert the retinal image restoration task into a style-transfer task that transforms the image style from a bad-quality retinal image to a good-quality one. To mitigate the risk of GANs introducing unexpected artifacts, many of them focus on preserving information fidelity.

Since there are no paired real retinal images, researchers use synthetic/simulated degraded retinal images to train the networks. For instance, based on the image formation model proposed by Peli et al. (22), Luo et al. (32) trained an unpaired GAN to dehaze retinal images of mild cataract cases. Li et al. (34) proposed an annotation-free GAN for cataractous retinal image restoration. Based on the natural scene haze formation model, Yang et al. (95) trained a modified cycle-GAN for artifact reduction and structure retention in retinal image enhancement. Shen et al. (96) proposed a new mathematical model to formulate the image degradation process of fundus imaging and trained a network for retinal image restoration. Others have modified the network structures or loss functions to improve the performance of the networks (97, 98).

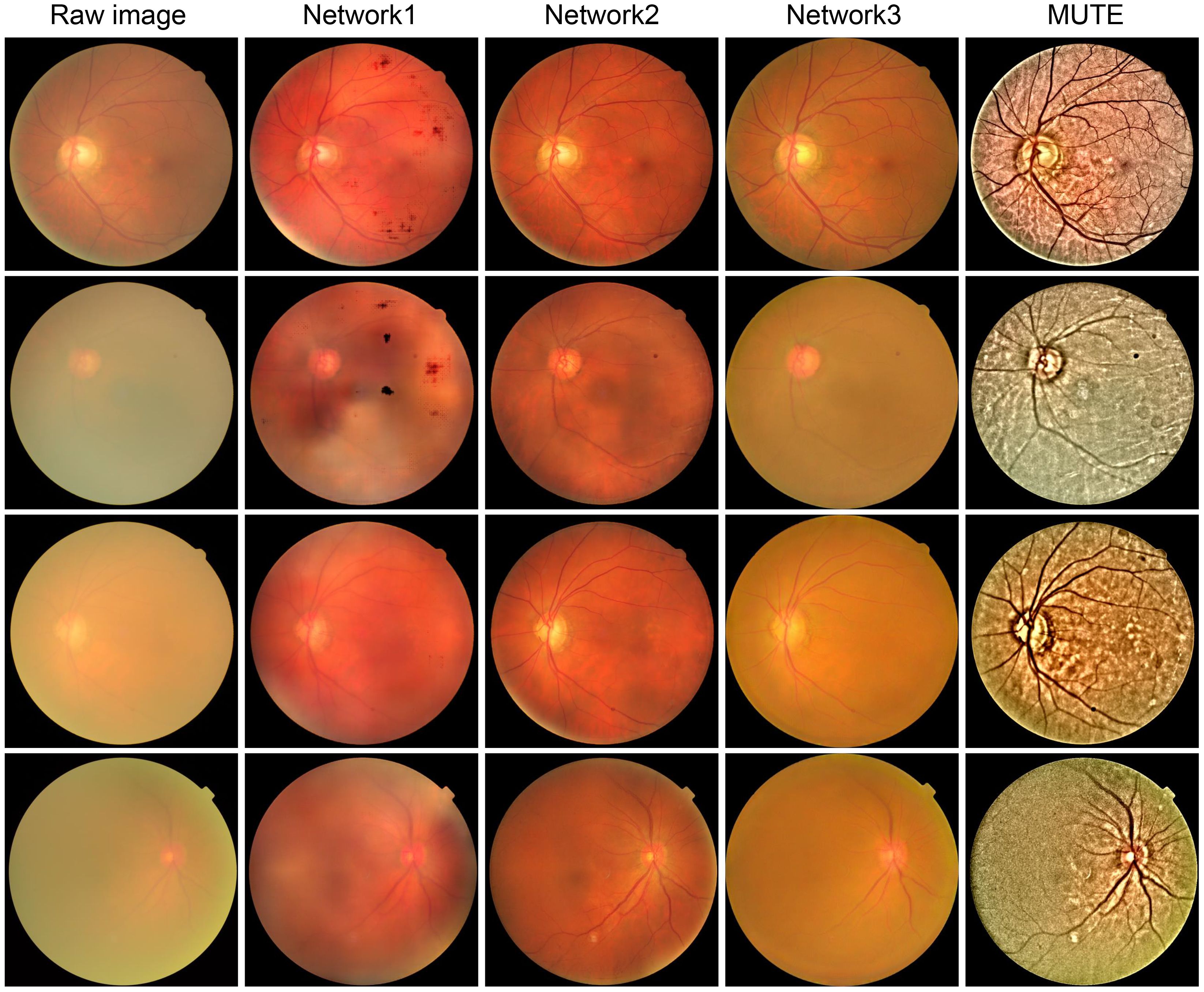

While these learning-based methods produce impressive restoration results in both quality and naturalness preservation, they have limitations. Over-fitting to synthetic data and lack of generalization are potential issues, as we will show in the experimental sections. Additionally, the performance of trained networks is limited by the input image resolution (typically 512 × 512), which is too small for clinical applications, where image resolution is generally larger than 2,000 × 1,000 (99). Furthermore, these methods lack interpretability and may introduce unexpected artifacts or eliminate important retinal structures, as shown in Figure 13, which can be detrimental to clinical applications. Thus, there is still a long way to go in both the technical and ethical aspects of learning-based retinal image enhancement methods (102).

Figure 13 Cataractous retinal image enhancement using three network methods and one non-learning method: Network 1 (34), Network 2 (100), Network 3 (95), and MUTE (101).

3.5 Retinal image quality metrics

Although the enhanced images will ultimately be evaluated by specialists in support of clinical applications, evaluating image quality objectively is important for understanding and analyzing the performance of different restoration algorithms. Image quality can be calculated objectively using well-designed programs, known as quality metrics, in either a reference-based or a non-reference-based way. Some metrics have been widely used in the field of image processing, and new metrics are still being developed. This subsection gives a brief introduction to the quality metrics used in retinal image analysis.

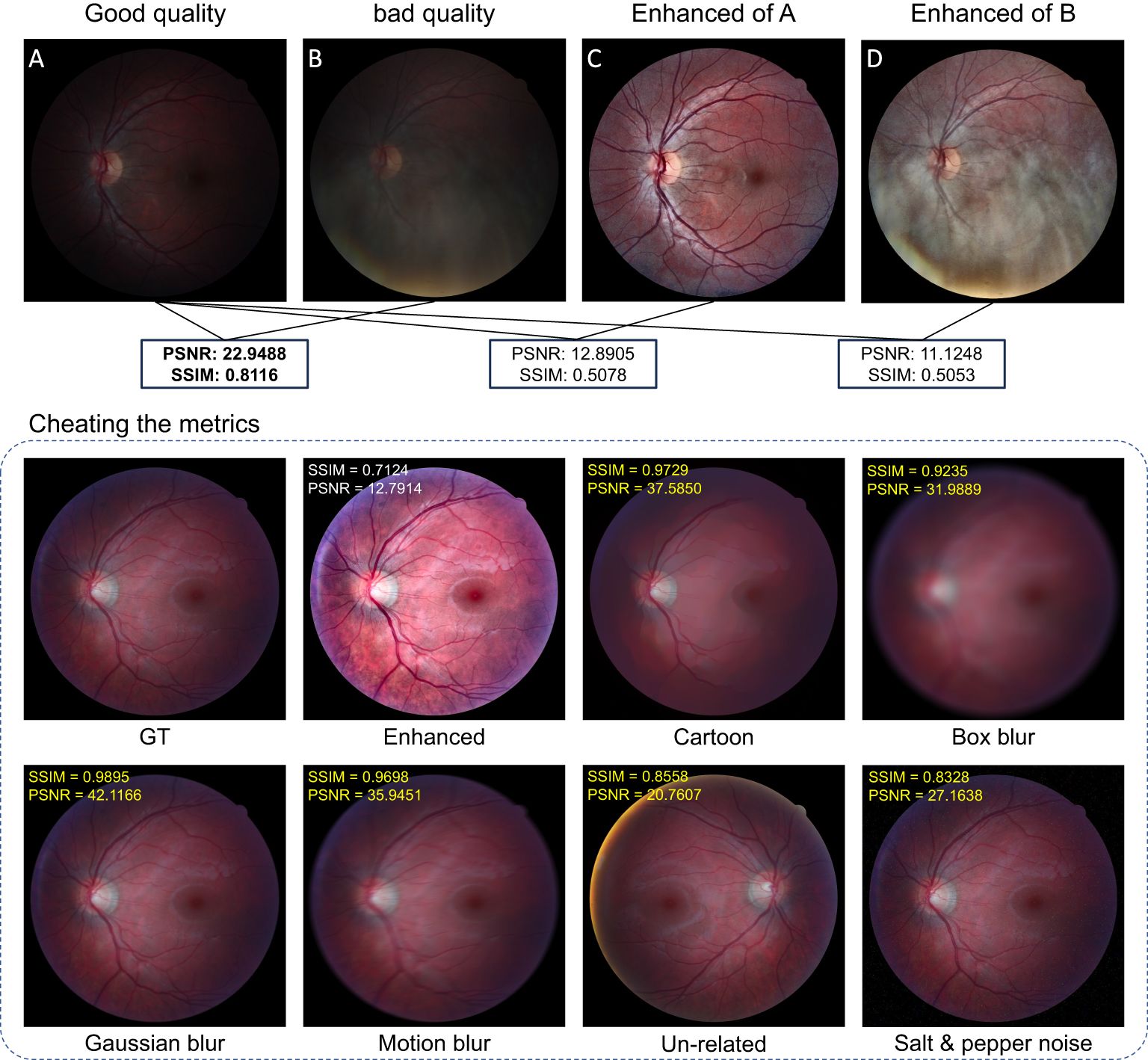

The peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM) are reference-based metrics that can evaluate image quality when the ground truth is known (73, 103–105). One drawback of these metrics is that they are not consistent with human visual perception; they sometimes produce evaluation results that contradict human assessment. To illustrate the problem, we collected good-quality (GQ) images (18 images) and the corresponding bad-quality (BQ) images (18 images) from the HRF dataset (106). We also performed illumination correction and contrast enhancement using CLAHE on both GQ and BQ images. One example is shown in Figure 14.

We calculated the PSNR and SSIM between (1) GQ images and BQ images, (2) GQ images and enhanced GQ images, and (3) GQ images and enhanced BQ images, as listed in Figure 14. In all these calculations, the GQ images were used as the reference images (Figure 14A). The metrics imply that Figures 14C, D have worse image quality than Figure 14B, which conflicts with human visual assessment. In this case, the decrease in PSNR and SSIM is largely due to the change in the image's intensity level; SSIM is particularly affected, because its luminance term compares the mean intensities of the given image and the reference image. Since uneven and insufficient illumination are common problems in fundus images, using PSNR and SSIM to assess the performance of enhancement models lacks fairness and practicality, especially for medical images, where the real ground truth is not well-defined. Additional examples that demonstrate the unpredictable behavior of SSIM and PSNR are shown in Figure 14. Even when an image is degraded by major types of distortion such as Gaussian blur, motion blur, and noise, its SSIM and PSNR scores can be higher than those of the enhanced image, which clearly contradicts human visual perception. More related work showing the drawbacks of SSIM and PSNR can be found in (73, 107–109).
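The intensity-level effect can be reproduced on a toy example. The sketch below is a minimal illustration under stated assumptions, not the experiment behind Figure 14. The reference is a synthetic dim, smooth image with one-pixel dark lines standing in for thin vessels (not HRF data). The "enhanced" image is a hypothetical linear brightening that keeps every structure, and the "degraded" image is a 7 × 7 box blur. SSIM is simplified to a single global window (the SSIM of (73) averages the same expression over local windows), with the usual constants K1 = 0.01 and K2 = 0.03.

```python
import numpy as np


def psnr(ref: np.ndarray, img: np.ndarray, data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB (infinite for identical images)."""
    mse = np.mean((ref.astype(np.float64) - img.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def global_ssim(ref: np.ndarray, img: np.ndarray, data_range: float = 1.0) -> float:
    """SSIM evaluated with one window spanning the whole image.

    Simplification: standard SSIM averages this expression over local
    windows; the global version keeps the same luminance, contrast and
    structure terms.
    """
    x = ref.astype(np.float64)
    y = img.astype(np.float64)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)


def box_blur(img: np.ndarray, k: int = 7) -> np.ndarray:
    """Circular k x k mean filter built from np.roll (preserves the mean)."""
    r = k // 2
    out = np.zeros(img.shape, dtype=np.float64)
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            out += np.roll(img, shift=(dy, dx), axis=(0, 1))
    return out / (k * k)


# Hypothetical "good-quality" reference: dim, smooth background with
# one-pixel dark lines standing in for thin vessels (synthetic data).
yy, xx = np.mgrid[0:128, 0:128] / 128.0
ref = 0.3 + 0.1 * np.sin(2 * np.pi * xx) * np.cos(2 * np.pi * yy)
ref[::16, :] -= 0.1

enhanced = np.clip(1.3 * ref + 0.1, 0.0, 1.0)  # brighter; all structures kept
blurred = box_blur(ref, k=7)                    # thin lines largely erased

for name, img in [("enhanced", enhanced), ("blurred", blurred)]:
    print(f"{name:9s} PSNR = {psnr(ref, img):5.1f} dB  SSIM = {global_ssim(ref, img):.3f}")
```

On this synthetic case the blurred image, whose thin lines keep only about one seventh of their original contrast, scores higher on both metrics than the brightened image, because the brightening shifts the mean intensity and every pixel contributes to the error.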

Reference-based metrics are usually not applicable, since a good reference for a medical image usually does not exist or is hard to obtain. Therefore, non-reference metrics have been developed to score image quality in line with human visual perception. The underwater image quality metrics (109) are good candidates to adapt. They include the Underwater Image Sharpness Measure (UISM) and the Underwater Image Contrast Measure (UIConM). Neither UISM nor UIConM relies on the statistical properties of images, so both can be applied to retinal images despite the statistical differences between retinal and underwater images. Moreover, image entropy (IE) describes the randomness of the image's intensity distribution, and its value indicates the amount of information in the image (76, 110). The multi-scale contrast of the image, CRAMM, is calculated with a pyramidal multi-resolution representation of luminance (111). Lastly, the fog-aware density evaluator (FADE) (112, 113) numerically predicts perceptual haze density and can be used to evaluate the image quality of cataractous retinal images.
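Two of the non-reference measures above are simple enough to sketch. Image entropy is the Shannon entropy of the grey-level histogram of an 8-bit image. For multi-scale contrast, the sketch follows only the general idea described for (111), local contrast averaged over an image pyramid; the 8-neighbour contrast, the 2 × 2 averaging pyramid, the equal weighting of levels and the use of a single luminance channel are assumptions, so this is not a reference implementation of CRAMM. UISM, UIConM and FADE are more involved and are not reproduced here.

```python
import numpy as np


def image_entropy(img: np.ndarray) -> float:
    """Shannon entropy (bits) of the grey-level histogram of an 8-bit image."""
    hist, _ = np.histogram(img, bins=256, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]                                  # 0 * log(0) is taken as 0
    return float(np.sum(p * np.log2(1.0 / p)))


def local_contrast(lum: np.ndarray) -> float:
    """Mean absolute difference between each interior pixel and its
    8 neighbours (expects a float array)."""
    h, w = lum.shape
    centre = lum[1:-1, 1:-1]
    diffs = []
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue
            neighbour = lum[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
            diffs.append(np.abs(centre - neighbour))
    return float(np.mean(diffs))


def downsample2(lum: np.ndarray) -> np.ndarray:
    """Halve the resolution by averaging non-overlapping 2 x 2 blocks."""
    h, w = (lum.shape[0] // 2) * 2, (lum.shape[1] // 2) * 2
    x = lum[:h, :w]
    return 0.25 * (x[0::2, 0::2] + x[1::2, 0::2] + x[0::2, 1::2] + x[1::2, 1::2])


def multiscale_contrast(lum: np.ndarray, levels: int = 4) -> float:
    """Average local contrast over a simple image pyramid (hypothetical
    simplification of a pyramidal contrast measure)."""
    lum = lum.astype(np.float64)                  # avoid uint8 wrap-around
    scores = []
    for _ in range(levels):
        if min(lum.shape) < 3:                    # need an interior pixel
            break
        scores.append(local_contrast(lum))
        lum = downsample2(lum)
    return float(np.mean(scores)) if scores else 0.0


rng = np.random.default_rng(0)
noisy = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
print(image_entropy(noisy), multiscale_contrast(noisy))
```

A flat image scores zero on both measures, while images with more grey-level variety or more local structure score higher; as with any non-reference metric, a higher value does not by itself guarantee that no artifacts were introduced.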

4 Potential applications

4.1 Diagnosis

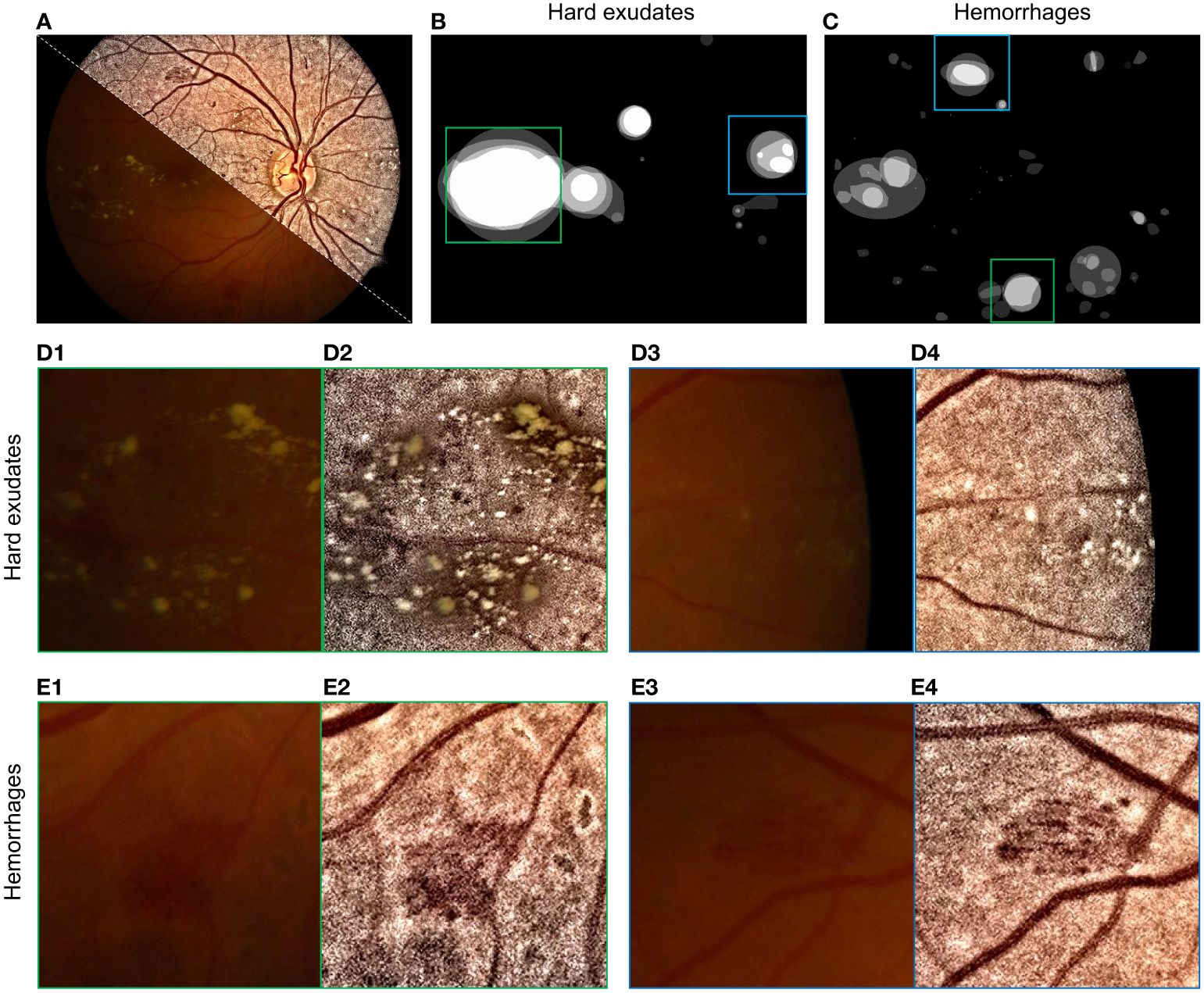

Restored/enhanced retinal images can potentially increase diagnostic accuracy. An example is diabetic retinopathy with hard exudates and hemorrhages, as shown in Figures 15A–C. The enhanced images improve the visual quality of the retinopathy areas without introducing unexpected artifacts, thereby preserving structural fidelity, as shown in Figures 15D1–D4.

Figure 15 Enhancement of retinopathy areas using (101). (A) Montage of raw and enhanced images. (B) Labels of hard exudate areas. (C) Labels of hemorrhage areas. (D1–D4) are enlarged parts of raw and enhanced images corresponding to green and blue boxes in (B). (E1–E4) are enlarged parts of (C).

Some hard exudates that were barely observable in the raw image (Figure 15D3) can be clearly seen in the enhanced image (Figure 15D4) owing to the increased contrast. The enhanced images also have high visual quality in areas with hemorrhages, as shown in Figures 15E1–E4, because the contrast between hemorrhage areas and the background increases after enhancement (see Figures 15E2–E4).
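The lesion-to-background contrast gain described above can be quantified once a lesion has been delineated, for instance from labels such as those in Figures 15B, C. The sketch below uses Weber contrast, (mean inside the lesion - mean outside) / (mean outside), as one simple choice. This measure, the synthetic patch and the linear "enhancement" are assumptions for illustration only; they are not the metric or the method used for Figure 15.

```python
import numpy as np


def weber_contrast(img: np.ndarray, lesion: np.ndarray) -> float:
    """Weber contrast of a lesion region against all remaining pixels.

    img    : 2-D intensity image (e.g. the green channel), any numeric dtype
    lesion : boolean mask of the same shape, True inside the lesion
    Negative values mean the lesion is darker than its surroundings.
    """
    img = img.astype(np.float64)
    lesion = lesion.astype(bool)
    mean_lesion = img[lesion].mean()
    mean_bg = img[~lesion].mean()
    return float((mean_lesion - mean_bg) / mean_bg)


# Hypothetical patch: a dim background with a slightly darker disc
# playing the role of a hemorrhage (synthetic values, not patient data).
yy, xx = np.mgrid[0:64, 0:64]
lesion = (yy - 32) ** 2 + (xx - 32) ** 2 < 10 ** 2
raw = np.where(lesion, 0.25, 0.30)

# Hypothetical enhancement: brighten and stretch intensities about 0.3.
enhanced = np.clip(0.5 + 2.0 * (raw - 0.3), 0.0, 1.0)

print(f"raw:      {weber_contrast(raw, lesion):+.3f}")
print(f"enhanced: {weber_contrast(enhanced, lesion):+.3f}")
```

Because Weber contrast is normalized by the background level, it rewards enhancements that separate the lesion from its surroundings rather than ones that merely brighten the whole patch.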

It is important to check whether algorithms introduce unexpected artifacts or erase important structures from the image. To do this, one can collect cataractous retinal images before and after cataract surgery, apply the algorithm to the preoperative image, and check whether any structures have been added or removed by comparing the result with the actual postoperative image.
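Once structures (e.g., vessels or lesions) have been segmented in both the enhanced preoperative image and the postoperative image, the comparison step can be summarized numerically. The sketch below assumes the two binary masks are already registered and treats any pixel-level disagreement as a change; the function name, the masks and the choice of pixel fractions plus a Dice overlap are hypothetical illustrations, not a protocol taken from the source. In practice some spatial tolerance would likely be needed, since registration is never perfect.

```python
import numpy as np


def structure_changes(pred_mask: np.ndarray, post_mask: np.ndarray) -> dict:
    """Compare structures segmented in an enhanced pre-operative image
    (pred_mask) with those segmented in the post-operative image (post_mask).

    Both inputs are boolean masks of the same shape, ASSUMED to be registered.
    added_fraction   : share of pred_mask pixels absent after surgery
                       (possible artifacts introduced by enhancement)
    removed_fraction : share of post_mask pixels missing from pred_mask
                       (possible structures erased by enhancement)
    dice             : overlap between the two masks (1.0 = identical)
    """
    a = pred_mask.astype(bool)
    b = post_mask.astype(bool)
    added = np.logical_and(a, ~b).sum()
    removed = np.logical_and(~a, b).sum()
    overlap = np.logical_and(a, b).sum()
    total = a.sum() + b.sum()
    return {
        "added_fraction": float(added / max(a.sum(), 1)),
        "removed_fraction": float(removed / max(b.sum(), 1)),
        "dice": float(2 * overlap / total) if total else 1.0,
    }


# Hypothetical masks: two "vessels" visible after surgery; the enhanced
# pre-operative image recovers one of them and adds a spurious blob.
post = np.zeros((32, 32), dtype=bool)
post[:, 8] = True
post[:, 20] = True
pred = np.zeros_like(post)
pred[:, 8] = True
pred[4:8, 26:30] = True
print(structure_changes(pred, post))
```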

4.2 Blood vessel tracking

Retinal image blood vessel segmentation allows parameterization of blood vessels, which is important for clinical diagnosis as morphological changes of blood vessels are biomarkers for diseases such as lacunar stroke (114), cognitive dysfunction (115), cardiovascular risk (116), diabetes (117), and glaucoma (118).

Blood vessel segmentation can be performed either by human specialists or by computer software. The former provides accurate results but is time-consuming; the latter is fast but less accurate than human specialists. Moreover, because of the poor contrast of cataractous retinal images, manual segmentation is even more time-consuming, and automatic segmentation of hazy retinal images can be error-prone. Enhancing retinal images improves their visual quality and thereby allows blood vessel segmentation to be performed more reliably (119, 120).

4.3 Retinal image registration

Image registration is an important application in computer vision, pattern recognition, and medical image analysis (121–123). Retinal image registration aligns two or more retinal images to provide a comprehensive overall view (121). It relies on precise detection and matching of features in the images to be registered. Registration of cataractous retinal images can be difficult because the features used for registration may be obscured by haze or low-contrast pixels. With enhanced image contrast, the registration algorithm can more easily find paired features for accurate registration.
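The paragraph above describes feature-based registration (detecting and matching keypoints). As a compact, self-contained stand-in, the sketch below uses a different and much simpler technique, FFT phase correlation, which recovers only a pure integer translation between two images; rotation, scaling and the non-rigid distortions typical of retinal image pairs are not handled. The function name is hypothetical and the test images are random arrays rather than fundus photographs.

```python
import numpy as np


def phase_correlation_shift(fixed: np.ndarray, moving: np.ndarray) -> tuple:
    """Estimate the integer translation (dy, dx) such that
    np.roll(moving, (dy, dx), axis=(0, 1)) best matches `fixed`.
    Assumes a pure circular translation between the two images.
    """
    F = np.fft.fft2(fixed.astype(np.float64))
    M = np.fft.fft2(moving.astype(np.float64))
    cross = F * np.conj(M)
    cross /= np.abs(cross) + 1e-12           # keep phase only
    corr = np.fft.ifft2(cross).real          # ideally a single sharp peak
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = fixed.shape
    # Peaks beyond half the image size correspond to negative shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)


rng = np.random.default_rng(0)
fixed = rng.random((64, 64))
moving = np.roll(fixed, shift=(5, -3), axis=(0, 1))
dy, dx = phase_correlation_shift(fixed, moving)
aligned = np.roll(moving, shift=(dy, dx), axis=(0, 1))
print(dy, dx, np.allclose(aligned, fixed))
```

Because only the phase of the cross-power spectrum is kept, a uniform positive scaling of intensities does not move the correlation peak; spatially varying haze and illumination, however, are not covered by this argument.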

4.4 Ultra-wide field retinal image enhancement

Ultra-wide field (UWF) imaging systems allow the capture of 200 degrees of the retina (approximately 82% of the retinal surface area) in a single shot. They provide non-contact, high-resolution images for clinicians to analyze retinal disorders (124, 125). UWF images can also suffer from illumination problems and haze effects due to imperfect imaging conditions. Here, we demonstrate the application of InQue to enhancing UWF images.

Figure 16A shows a raw image with insufficient illumination and prominent haze. The enhanced image is shown in Figure 16B, and zoomed-in views of retinal structures, including the optic disc and blood vessels, are shown in Figures 16C1–F2. The clarity of the enhanced image is markedly improved, and blood vessels can be clearly observed.

Figure 16 Enhancement of a UWF retinal image. (A) Raw image. (B) Enhanced image. (C1, C2) are zoomed-in images of the blue box. (D1, D2) are zoomed-in images of the green box. (E1, E2) are zoomed-in images of the purple box, and (F1, F2) are zoomed-in images of the white box.

5 Impact of retinal image restoration

5.1 Early detection of eye diseases

Retinal image enhancement algorithms improve image quality, allowing early signs of eye diseases such as glaucoma, macular degeneration, and diabetic retinopathy to be identified more accurately (126, 127). Early detection of findings in cataractous retinal images may improve the outcome of treatment of retinal diseases. It may also support surgical decision-making, specifically in combined cases of cataract and retinal disease, helping to prevent unnecessary interventions (3).

5.2 Access to healthcare

The high cost of equipment and the lack of trained professionals can limit access to retinal imaging, particularly in low-income and rural areas. Low-cost fundus cameras (128, 129) combined with novel image processing algorithms can make retinal imaging accessible and affordable for patients in these areas.

6 Conclusion and prospects

We discussed different image formation models and methods for retinal image enhancement/restoration, and how they can benefit clinical applications such as blood vessel segmentation, image registration, and diagnosis. These algorithms vary in their definitions, implementations, underlying structures, and mathematical relationships. Two seemingly unrelated topics, namely retinal image illumination correction and dehazing, are closely related in their mathematical formulation; thus, a clear understanding of this relationship is needed when choosing between them for retinal image enhancement.
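One way to see this relationship, following the duality between Retinex and dehazing described by Galdran et al. (75), is sketched below in notation assumed here (it may differ from the symbols used in earlier sections). Write the haze model as $I = Jt + A(1 - t)$, with scene radiance $J$, transmission $t$ and atmospheric light $A$, and the Retinex illumination model as $I = L \cdot R$, with illumination $L$ and reflectance $R$. Assuming intensities normalized to $[0, 1]$ and white atmospheric light, $A = 1$, the inverted hazy image takes the Retinex product form:

```latex
\begin{aligned}
I     &= J\,t + (1 - t) \\
1 - I &= t - J\,t = t\,(1 - J) \\
      &= L \cdot R, \qquad L = t, \quad R = 1 - J \\
J     &= 1 - \mathcal{R}\{1 - I\}
\end{aligned}
```

Here $\mathcal{R}\{\cdot\}$ denotes a generic Retinex operator returning the reflectance estimate, so an illumination-correction method applied to the inverted image acts as a dehazing method; the last line holds only to the extent that this reflectance estimate is exact.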

Ongoing research in retinal image restoration focuses on developing more robust and generalizable methods. This includes improving the performance of non-learning-based methods, which usually distort the naturalness of retinal images after enhancement; this can negatively affect clinical applications, since some forms of retinopathy are closely associated with the color of the tissues.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

SZ: Conceptualization, Validation, Methodology, Writing – original draft, Writing – review & editing. CW: Formal analysis, Project administration, Supervision, Validation, Writing – review & editing. TB: Conceptualization, Investigation, Project administration, Supervision, Validation, Writing – review & editing, Formal analysis.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research is supported by the China Scholarship Council (CSC) (201908340078).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Berendschot TTJM, DeLint PJ, van Norren D. Fundus reflectance—historical and present ideas. Prog Retinal Eye Res. (2003) 22:171–200. doi: 10.1016/S1350-9462(02)00060-5

2. DeHoog E, Schwiegerling J. Fundus camera systems: a comparative analysis. Appl Optics. (2009) 48:221–8. doi: 10.1364/AO.48.000221

3. Iqbal S, Khan TM, Naveed K, Naqvi SS, Nawaz SJ. Recent trends and advances in fundus image analysis: A review. Comput Biol Med. (2022) 151:106277. doi: 10.1016/j.compbiomed.2022.106277

4. Zhang J, Dashtbozorg B, Huang F, Berendschot TT, ter Haar Romeny BM. (2018). Analysis of retinal vascular biomarkers for early detection of diabetes, in: VipIMAGE 2017: Proceedings of the VI ECCOMAS Thematic Conference on Computational Vision and Medical Image Processing Porto, Portugal, October 18–20, 2017. pp. 811–7. Springer.

5. Sinthanayothin C, Boyce JF, Williamson TH, Cook HL, Mensah E, Lal S, et al. Automated detection of diabetic retinopathy on digital fundus images. Diabetic Med. (2002) 19:105–12. doi: 10.1046/j.1464-5491.2002.00613.x

6. Sopharak A, Uyyanonvara B, Barman S. Automatic exudate detection from non-dilated diabetic retinopathy retinal images using fuzzy c-means clustering. Sensors. (2009) 9:2148–61. doi: 10.3390/s90302148

7. Faust O, Acharya UR, Ng EYK, Ng KH, Suri JS. Algorithms for the automated detection of diabetic retinopathy using digital fundus images: a review. J Med Syst. (2012) 36:145–57. doi: 10.1007/s10916-010-9454-7

8. Borroni D, Erts R, Vallabh NA, Bonzano C, Sepetiene S, Krumina Z, et al. Solar retinopathy: a new setting of red, green, and blue channels. Eur J Ophthalmol. (2021) 31:1261–6. doi: 10.1177/1120672120914852

9. Barros DMS, Moura JCC, Freire CR, Taleb AC, Valentim RAM, Morais PSG. Machine learning applied to retinal image processing for glaucoma detection: review and perspective. Biomed Eng Online. (2020) 19:20. doi: 10.1186/s12938-020-00767-2

10. Chalakkal RJ, Abdulla WH, Hong SC. Fundus retinal image analyses for screening and diagnosing diabetic retinopathy, macular edema, and glaucoma disorders. Diabetes Fundus. (2020) 2020:59–111. doi: 10.1016/B978-0-12-817440-1.00003-6

11. Saba T, Bokhari STF, Sharif M, Yasmin M, Raza M. Fundus image classification methods for the detection of glaucoma: A review. Microscopy Res Technique. (2018) 81:1105–21. doi: 10.1002/jemt.23094

12. Miura M, Yamanari M, Iwasaki T, Elsner A, Makita S, Yatagai T, et al. Imaging polarimetry in age-related macular degeneration. Invest Ophthalmol Visual Sci. (2008) 49:2661–7. doi: 10.1167/iovs.07-0501

13. Trieschmann M, van Kuijk F, Alexander R, Hermans P, Luthert P, Bird A, et al. Macular pigment in the human retina: histological evaluation of localization and distribution. Eye. (2008) 22:132–7. doi: 10.1038/sj.eye.6702780

14. Theelen T, Berendschot T, Hoyng C, Boon C, Klevering B. Near-infrared reflectance imaging of neovascular age-related macular degeneration. Graefes Arch Clin Exp Ophthalmol. (2009) 247:1625–33. doi: 10.1007/s00417-009-1148-9

15. Ding J, Patton N, Deary IJ, Strachan MW, Fowkes F, Mitchell RJ, et al. Retinal microvascular abnormalities and cognitive dysfunction: a systematic review. Br J Ophthalmol. (2008) 92:1017–25. doi: 10.1136/bjo.2008.141994

16. Zafar S, McCormick J, Giancardo L, Saidha S, Abraham A, Channa R. Retinal imaging for neurological diseases: "a window into the brain". Int Ophthalmol Clinics. (2019) 59:137–54. doi: 10.1097/IIO.0000000000000261

17. De Boever P, Louwies T, Provost E, Panis LI, Nawrot TS. Fundus photography as a convenient tool to study microvascular responses to cardiovascular disease risk factors in epidemiological studies. JoVE (Journal Visualized Experiments). (2014):e51904. doi: 10.3791/51904

18. Poplin R, Varadarajan AV, Blumer K, Liu Y, McConnell MV, Corrado GS, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. (2018) 2:158–64. doi: 10.1038/s41551-018-0195-0

19. Chang J, Ko A, Park SM, Choi S, Kim K, Kim SM, et al. Association of cardiovascular mortality and deep learning-funduscopic atherosclerosis score derived from retinal fundus images. Am J Ophthalmol. (2020) 217:121–30. doi: 10.1016/j.ajo.2020.03.027

20. Heaven C, Cansfield J, Shaw K. The quality of photographs produced by the non-mydriatic fundus camera in a screening programme for diabetic retinopathy: a 1 year prospective study. Eye. (1993) 7:787–90. doi: 10.1038/eye.1993.185

21. Scanlon PH, Foy C, Malhotra R, Aldington SJ. The influence of age, duration of diabetes, cataract, and pupil size on image quality in digital photographic retinal screening. Diabetes Care. (2005) 28:2448–53. doi: 10.2337/diacare.28.10.2448

22. Peli E, Peli T. Restoration of retinal images obtained through cataracts. IEEE Trans Med Imaging. (1989) 8:401–6. doi: 10.1109/42.41493

23. Yang CW, Liang XU, Wang S, Yang H. The evaluation of screening for cataract needed surgery with digital nonmydriatic fundus camera. Ophthalmol China. (2010) 19:46–9.

24. Xiong L, Li H, Xu L. An approach to evaluate blurriness in retinal images with vitreous opacity for cataract diagnosis. J Healthcare Eng. (2017) 2017:1–16. doi: 10.1155/2017/5645498

25. Burns SA, Elsner AE, Sapoznik KA, Warner RL, Gast TJ. Adaptive optics imaging of the human retina. Prog Retin Eye Res. (2019) 68:1–30. doi: 10.1016/j.preteyeres.2018.08.002

26. Savelli B, Bria A, Galdran A, Marrocco C, Molinara M, Campilho A, et al. Illumination correction by dehazing for retinal vessel segmentation. IEEE 30th Int Symposium Computer-Based Med Syst. (2017). doi: 10.1109/CBMS.2017.28

27. Foracchia M, Grisan E, Ruggeri A. Luminosity and contrast normalization in retinal images. Med Image Anal. (2005) 9:179–90. doi: 10.1016/j.media.2004.07.001

28. Ma Y, Liu J, Liu Y, Fu H, Hu Y, Cheng J, et al. Structure and illumination constrained GAN for medical image enhancement. IEEE Trans Med Imaging. (2021) 40:3955–67. doi: 10.1109/TMI.2021.3101937

29. Setiawan AW, Mengko TR, Santoso OS, Suksmono AB. (2013). Color retinal image enhancement using CLAHE, in: International Conference on ICT for Smart Society. doi: 10.1109/ICTSS.2013.6588092

30. Cao L, Li H. Detail-richest-channel based enhancement for retinal image and beyond. Biomed Signal Process Control. (2021) 69:102933. doi: 10.1016/j.bspc.2021.102933

31. Wang J, Li YJ, Yang KF. Retinal fundus image enhancement with image decomposition and visual adaptation. Comput Biol Med. (2021) 128:104116. doi: 10.1016/j.compbiomed.2020.104116

32. Luo Y, Chen K, Liu L, Liu J, Mao J, Ke G, et al. Dehaze of cataractous retinal images using an unpaired generative adversarial network. IEEE J Biomed Health Inf. (2020) 3374–83. doi: 10.1109/JBHI.6221020

33. Zhang S, Webers CA, Berendschot TT. A double-pass fundus reflection model for efficient single retinal image enhancement. Signal Process. (2022) 192:108400. doi: 10.1016/j.sigpro.2021.108400

34. Li H, Liu H, Hu Y, Fu H, Zhao Y, Miao H, et al. An annotation-free restoration network for cataractous fundus images. IEEE Trans Med Imaging. (2022) 1699–710. doi: 10.1109/TMI.2022.3147854

35. Xiong L, Li H, Xu L. An enhancement method for color retinal images based on image formation model. Comput Methods Programs Biomedicine. (2017) 143:137–50. doi: 10.1016/j.cmpb.2017.02.026

36. Mukherjee J, Mitra SK. Enhancement of color images by scaling the DCT coefficients. IEEE Trans Image Process. (2008) 17:1783–94. doi: 10.1109/TIP.2008.2002826

37. Bai X, Zhou F, Xue B. Image enhancement using multi scale image features extracted by top-hat transform. Optics Laser Technol. (2012) 44:328–36. doi: 10.1016/j.optlastec.2011.07.009

38. Liao M, Zhao YQ, Wang XH, Dai PS. Retinal vessel enhancement based on multi-scale top-hat transformation and histogram fitting stretching. Optics Laser Technol. (2014) 58:56–62. doi: 10.1016/j.optlastec.2013.10.018

39. Gupta B, Tiwari M. Color retinal image enhancement using luminosity and quantile based contrast enhancement. Multidimensional Syst Signal Process. (2019) 30:1829–37. doi: 10.1007/s11045-019-00630-1

40. Celik T. Spatial entropy-based global and local image contrast enhancement. IEEE Trans Image Process. (2014) 23:5298–5308. doi: 10.1109/TIP.2014.2364537

41. Zhou M, Jin K, Wang S, Ye J, Qian D. Color retinal image enhancement based on luminosity and contrast adjustment. IEEE Trans Biomed Eng. (2018) 521–7. doi: 10.1109/TBME.10

42. Cao L, Li H, Zhang Y. Retinal image enhancement using low-pass filtering and α-rooting. Signal Process. (2020) 170:107445. doi: 10.1016/j.sigpro.2019.107445

43. Dai P, Sheng H, Zhang J, Li L, Wu J, Fan M. Retinal fundus image enhancement using the normalized convolution and noise removing. Int J Biomed Imaging. (2016) 2016:1–12. doi: 10.1155/2016/5075612

44. Shemonski ND, South FA, Liu YZ, Adie SG, Scott Carney P, Boppart SA. Computational high-resolution optical imaging of the living human retina. Nat Photonics. (2015) 9:440–3. doi: 10.1038/nphoton.2015.102

45. Chung J, Martinez GW, Lencioni KC, Sadda SR, Yang C. Computational aberration compensation by coded-aperture-based correction of aberration obtained from optical Fourier coding and blur estimation. Optica. (2019) 6:647–61. doi: 10.1364/OPTICA.6.000647

46. Arias A, Artal P. Wavefront-shaping-based correction of optically simulated cataracts. Optica. (2020) 7:22–7. doi: 10.1364/OPTICA.7.000022

47. Dutta R, Manzanera S, Gambín-Regadera A, Irles E, Tajahuerce E, Lancis J, et al. Single-pixel imaging of the retina through scattering media. Biomed Optics Express. (2019) 10:4159–67. doi: 10.1364/BOE.10.004159

48. Singh D, Kumar V. A comprehensive review of computational dehazing techniques. Arch Comput Methods Eng. (2018) 1395–413. doi: 10.1007/s11831-018-9294-z

49. Senthilkumar K, Sivakumar P. A review on haze removal techniques. Comput aided intervention diagnostics Clin Med images. (2019), 113–23.

50. Banerjee S, Chaudhuri SS. Nighttime image-dehazing: A review and quantitative benchmarking. Arch Comput Methods Eng. (2020) 2943–75. doi: 10.1007/s11831-020-09485-3

51. Wang Y, Zhuo S, Tao D, Bu J, Li N. Automatic local exposure correction using bright channel prior for under-exposed images. Signal Process. (2013) 93:3227–38. doi: 10.1016/j.sigpro.2013.04.025

52. Mitra A, Roy S, Roy S, Setua SK. Enhancement and restoration of non-uniform illuminated fundus image of retina obtained through thin layer of cataract. Comput Methods Programs Biomedicine. (2018) 156:169–78. doi: 10.1016/j.cmpb.2018.01.001

53. Gaudio A, Smailagic A, Campilho A. (2020). Enhancement of retinal fundus images via pixel color amplification, in: International Conference on Image Analysis and Recognition. pp. 299–312. Springer.

54. Somasundaram K, Kalavathi P. Medical image contrast enhancement based on gamma correction. Int J Knowl Manag e-learning. (2011) 3:15–8.

55. Pizer SM, Amburn EP, Austin JD, Cromartie R, Geselowitz A, Greer T, et al. Adaptive histogram equalization and its variations. Comput vision graphics image Process. (1987) 39:355–68. doi: 10.1016/S0734-189X(87)80186-X

56. Koschmieder H. Luftlicht und sichtweite. Naturwissenschaften. (1938) 26:521–8. doi: 10.1007/BF01774261

57. McCartney EJ. Optics of the atmosphere: Scattering by molecules and particles. New York. (1976), 408.

58. Mujbaile D, Rojatkar D. (2020). Model based dehazing algorithms for hazy image restoration – a review, in: 2020 2nd International Conference on Innovative Mechanisms for Industry Applications (ICIMIA). pp. 142–8. IEEE.

59. Artal P, Iglesias I, López-Gil N. Double-pass measurements of the retinal-image quality with unequal entrance and exit pupil sizes and the reversibility of the eye’s optical system. J Opt. Soc Am A. (1995) 12:2358–66. doi: 10.1364/JOSAA.12.002358

60. Artal P, Marcos S, Navarro R, Williams DR. Odd aberrations and double-pass measurements of retinal image quality. J Opt. Soc Am A. (1995) 12:195–201. doi: 10.1364/JOSAA.12.000195

61. Cao L, Li H. Enhancement of blurry retinal image based on non-uniform contrast stretching and intensity transfer. Med Biol Eng Computing. (2020) 59:483–96. doi: 10.1007/s11517-019-02106-7

62. Land EH, McCann JJ. Lightness and retinex theory. JOSA. (1971) 61:1–11. doi: 10.1364/JOSA.61.000001

63. Kimmel R, Elad M, Shaked D, Keshet R, Sobel I. A variational framework for retinex. Int J Comput Vision. (2003) 52:7–23. doi: 10.1023/A:1022314423998

64. Ma W, Osher S. A TV Bregman iterative model of retinex theory. Inverse Problems Imaging. (2012) 6:697. doi: 10.3934/ipi.2012.6.697

65. Park S, Yu S, Moon B, Ko S, Paik J. Low-light image enhancement using variational optimization-based retinex model. IEEE Trans Consumer Electron. (2017) 63:178–84. doi: 10.1109/TCE.2017.014847

66. Morel JM, Petro AB, Sbert C. A PDE formalization of retinex theory. IEEE Trans Image Process. (2010) 19:2825–37. doi: 10.1109/TIP.2010.2049239

67. Limare N, Petro AB, Sbert C, Morel JM. Retinex poisson equation: a model for color perception. Image Process On Line. (2011) 1:39–50. doi: 10.5201/ipol

68. Pu YF, Siarry P, Chatterjee A, Wang ZN, Yi Z, Liu YG, et al. A fractional-order variational framework for retinex: fractional-order partial differential equation-based formulation for multi-scale nonlocal contrast enhancement with texture preserving. IEEE Trans Image Process. (2017) 27:1214–29. doi: 10.1109/TIP.2017.2779601

69. Blake A. Boundary conditions for lightness computation in mondrian world. Comput vision graphics image Process. (1985) 32:314–27. doi: 10.1016/0734-189X(85)90054-4

70. Jobson DJ, Rahman Z, Woodell GA. Properties and performance of a center/surround retinex. IEEE Trans Image Process. (1997) 6:451–62. doi: 10.1109/83

71. Rahman Z, Jobson DJ, Woodell GA. Retinex processing for automatic image enhancement. J Electronic Imaging. (2004) 13:100–10. doi: 10.1117/1.1636183

72. Zosso D, Tran G, Osher S. A unifying retinex model based on non-local differential operators. Comput Imaging XI (SPIE). (2013) 8657:865702.

73. Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process. (2004) 13:600–12. doi: 10.1109/TIP.2003.819861

74. He K, Sun J, Tang X. Single image haze removal using dark channel prior. IEEE Trans Pattern Anal Mach Intell. (2010) 33:2341–53.

75. Galdran A, Alvarez-Gila A, Bria A, Vazquez-Corral J, Bertalmío M. (2018). On the duality between retinex and image dehazing, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 8212–21.

76. Wang J, Lu K, Xue J, He N, Shao L. Single image dehazing based on the physical model and MSRCR algorithm. IEEE Trans Circuits Syst Video Technol. (2017) 28:2190–9. doi: 10.1109/TCSVT.2017.2728822

77. Bandara AMRR, Giragama PWGRMPB. (2017). A retinal image enhancement technique for blood vessel segmentation algorithm, in: 2017 IEEE International Conference on Industrial and Information Systems. 1–5.

78. Sonali, Sahu S, Singh AK, Ghrera SP, Elhoseny M. An approach for de-noising and contrast enhancement of retinal fundus image using CLAHE. Optics Laser Technol. (2019) 110:87–98. doi: 10.1016/j.optlastec.2018.06.061

79. Singh N, Kaur L, Singh K. Histogram equalization techniques for enhancement of low radiance retinal images for early detection of diabetic retinopathy. Eng Sci Technology an Int J. (2019) 22:736–45. doi: 10.1016/j.jestch.2019.01.014

80. Qureshi I, Ma J, Shaheed K. A hybrid proposed fundus image enhancement framework for diabetic retinopathy. Algorithms. (2019) 12:14. doi: 10.3390/a12010014

81. Kumar R, Kumar Bhandari A. Luminosity and contrast enhancement of retinal vessel images using weighted average histogram. Biomed Signal Process Control. (2022) 71:103089. doi: 10.1016/j.bspc.2021.103089

82. Han R, Tang C, Xu M, Liang B, Wu T, Lei Z. Enhancement method with naturalness preservation and artifact suppression based on an improved retinex variational model for color retinal images. J Optical Soc America A. (2023) 40:155–64. doi: 10.1364/JOSAA.474020

83. Fergus R, Singh B, Hertzmann A, Roweis ST, Freeman WT. Removing camera shake from a single photograph. ACM SIGGRAPH 2006 Papers. (2006). pp. 787–94.

84. Qiu J, Wang X, Maybank SJ, Tao D. (2019). World from blur, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8493–504.

85. Kotera J, Šroubek F, Milanfar P. (2013). Blind deconvolution using alternating maximum a posteriori estimation with heavy-tailed priors, in: Computer Analysis of Images and Patterns: 15th International Conference, CAIP 2013, York, UK, August 27–29, 2013, Proceedings, Part II 15. pp. 59–66. Springer.

86. Levin A, Weiss Y, Durand F, Freeman WT. (2009). Understanding and evaluating blind deconvolution algorithms, in: 2009 IEEE Conference on Computer Vision and Pattern Recognition. pp. 1964–71. IEEE.

87. Xu L, Zheng S, Jia J. (2013). Unnatural L0 sparse representation for natural image deblurring, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 1107–14.

88. Pan J, Sun D, Pfister H, Yang MH. (2016). Blind image deblurring using dark channel prior, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 1628–36.

89. Chen L, Fang F, Wang T, Zhang G. (2019). Blind image deblurring with local maximum gradient prior, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 1742–50.

90. Qidwai U, Qidwai U. Blind deconvolution for retinal image enhancement. IEEE EMBS Conf Biomed Eng Sci. (2010), 20–5. doi: 10.1109/IECBES.2010.5742192

91. Marrugo AG, Šorel M, Šroubek F, Millán MS. Retinal image restoration by means of blind deconvolution. J Biomed Optics. (2011) 16:116016–6. doi: 10.1117/1.3652709

92. Ávila FJ, Ares J, Marcellán MC, Collados MV, Remón L. Iterative-trained semiblind deconvolution algorithm to compensate straylight in retinal images. J Imaging. (2021) 7:73.

93. Zhang S, Webers CAB, Berendschot TTJM. Luminosity rectified blind richardson-lucy deconvolution for single retinal image restoration. Comput Methods Programs Biomedicine. (2023) 229:107297. doi: 10.1016/j.media.2021.101971

94. Li T, Bo W, Hu C, Kang H, Liu H, Wang K, et al. Applications of deep learning in fundus images: A review. Med Image Anal. (2021) 69:101971. doi: 10.1016/j.media.2021.101971

95. Yang B, Zhao H, Cao L, Liu H, Wang N, Li H. Retinal image enhancement with artifact reduction and structure retention. Pattern Recognition. (2023) 133:108968. doi: 10.1016/j.patcog.2022.108968

96. Shen Z, Fu H, Shen J, Shao L. Modeling and enhancing low-quality retinal fundus images. IEEE Trans Med Imaging. (2021) 40:996–1006. doi: 10.1109/TMI.2020.3043495

97. Wan C, Zhou X, You Q, Sun J, Shen J, Zhu S, et al. Retinal image enhancement using cycle-constraint adversarial network. Front Med. (2021) 8:793726. doi: 10.3389/fmed.2021.793726

98. Chen S, Qian Z, Hua Z. A novel unsupervised GAN for fundus image enhancement with classification prior loss. Electronics. (2022) 11:1000. doi: 10.3390/electronics11071000

99. Bernardes R, Serranho P, Lobo C. Digital ocular fundus imaging: a review. Ophthalmologica. (2011) 226:161–81. doi: 10.1159/000329597

100. Li H, Liu H, Fu H, Shu H, Zhao Y, Luo X, et al. (2022). Structure-consistent restoration network for cataract fundus image enhancement, in: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 487–96. Springer.

101. Zhang S, Mohan A, Webers CA, Berendschot TT. MUTE: A multilevel-stimulated denoising strategy for single cataractous retinal image dehazing. Med Image Anal. (2023) 88:102848. doi: 10.1016/j.media.2023.102848

102. Tsima K. The reproducibility issues that haunt health-care AI. Nature. (2023) 613. doi: 10.1038/d41586-023-00023-2

103. Hore A, Ziou D. Image quality metrics: PSNR vs. SSIM. 20th International Conference on Pattern Recognition, IEEE. (2010). pp. 2366–9.

104. Winkler S, Mohandas P. The evolution of video quality measurement: From PSNR to hybrid metrics. IEEE Trans Broadcasting. (2008) 54:660–8. doi: 10.1109/TBC.2008.2000733

105. Wang Z, Simoncelli EP, Bovik AC. (2003). Multiscale structural similarity for image quality assessment, in: The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, Vol. 2. pp. 1398–402. IEEE.

106. Budai A, Bock R, Maier A, Hornegger J, Michelson G. Robust vessel segmentation in fundus images. Int J BioMed Imaging. (2013) 2013:154860. doi: 10.1155/2013/154860

107. Kotevski Z, Mitrevski P. (2009). Experimental comparison of PSNR and SSIM metrics for video quality estimation, in: International Conference on ICT Innovations. pp. 357–66. Springer.

108. Sharif M, Bauer L, Reiter MK. (2018). On the suitability of Lp-norms for creating and preventing adversarial examples, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. pp. 1605–13.

109. Video Processing and Quality Research Group. Ways of cheating on popular objective metrics: blurring, noise, super-resolution and others. (2021).

110. Hong S, Kim M, Kang M. Single image dehazing via atmospheric scattering model-based image fusion. Signal Process. (2021) 178:107798. doi: 10.1016/j.sigpro.2020.107798

111. Rizzi A, Algeri T, Medeghini G, Marini D. A proposal for contrast measure in digital images. (2004).

112. Choi LK, You J, Bovik AC. Referenceless prediction of perceptual fog density and perceptual image defogging. IEEE Trans Image Process. (2015) 24:3888–901. doi: 10.1109/TIP.2015.2456502

113. Choi LK, You J, Bovik AC. Live image defogging database. IEEE Trans Image Process. (2015), 3888–901. doi: 10.1109/TIP.2015.2456502

114. Doubal F, MacGillivray T, Patton N, Dhillon B, Dennis M, Wardlaw J. Fractal analysis of retinal vessels suggests that a distinct vasculopathy causes lacunar stroke. Neurology. (2010) 74:1102–7. doi: 10.1212/WNL.0b013e3181d7d8b4

115. Cheung CY, Ong S, Ikram MK, Ong YT, Chen CP, Venketasubramanian N, et al. Retinal vascular fractal dimension is associated with cognitive dysfunction. J Stroke Cerebrovasc Dis. (2014) 23:43–50. doi: 10.1016/j.jstrokecerebrovasdis.2012.09.002

116. Zhu P, Huang F, Lin F, Li Q, Yuan Y, Gao Z, et al. The relationship of retinal vessel diameters and fractal dimensions with blood pressure and cardiovascular risk factors. PloS One. (2014) 9:e106551. doi: 10.1371/journal.pone.0106551

117. Huang F, Dashtbozorg B, Zhang J, Bekkers E, Abbasi-Sureshjani S, Berendschot TT, et al. Reliability of using retinal vascular fractal dimension as a biomarker in the diabetic retinopathy detection. J Ophthalmol. (2016) 2016:6259047. doi: 10.1155/2016/6259047

118. Ciancaglini M, Guerra G, Agnifili L, Mastropasqua R, Fasanella V, Cinelli M, et al. Fractal dimension as a new tool to analyze optic nerve head vasculature in primary open angle glaucoma. In Vivo. (2015) 29:273–9.

119. van den Berg NJ, Zhang S, Smets BM, Berendschot TT, Duits R. (2023). Geodesic tracking of retinal vascular trees with optical and TV-flow enhancement in SE(2), in: International Conference on Scale Space and Variational Methods in Computer Vision. pp. 525–37. Springer.

120. Yang B, Cao L, Zhao H, Li H, Liu H, Wang N. Adaptive enhancement of cataractous retinal images for contrast standardization. Med Biol Eng Comput. (2023) 525–37. doi: 10.1007/s11517-023-02937-5

121. Chanwimaluang T, Fan G, Fransen SR. Hybrid retinal image registration. IEEE Trans Inf Technol Biomed. (2006) 10:129–42. doi: 10.1109/TITB.2005.856859

122. Chen J, Tian J, Lee N, Zheng J, Smith RT, Laine AF. A partial intensity invariant feature descriptor for multimodal retinal image registration. IEEE Trans Biomed Eng. (2010) 57:1707–18. doi: 10.1109/TBME.2010.2042169

123. Wang G, Wang Z, Chen Y, Zhao W. Robust point matching method for multimodal retinal image registration. Biomed Signal Process Control. (2015) 19:68–76. doi: 10.1016/j.bspc.2015.03.004

124. Midena E, Marchione G, Di Giorgio S, Rotondi G, Longhin E, Frizziero L, et al. Ultra-wide-field fundus photography compared to ophthalmoscopy in diagnosing and classifying major retinal diseases. Sci Rep. (2022) 12:19287. doi: 10.1038/s41598-022-23170-4

125. Kumar V, Surve A, Kumawat D, Takkar B, Azad S, Chawla R, et al. Ultra-wide field retinal imaging: A wider clinical perspective. Indian J Ophthalmol. (2021) 69:824–35. doi: 10.4103/ijo.IJO_1403_20

126. MacGillivray T, Trucco E, Cameron J, Dhillon B, Houston J, Van Beek E. Retinal imaging as a source of biomarkers for diagnosis, characterization and prognosis of chronic illness or long-term conditions. Br J Radiol. (2014) 87:20130832. doi: 10.1259/bjr.20130832

127. Sisodia DS, Nair S, Khobragade P. Diabetic retinal fundus images: Preprocessing and feature extraction for early detection of diabetic retinopathy. Biomed Pharmacol J. (2017) 10:615–26. doi: 10.13005/bpj

128. Wintergerst MW, Jansen LG, Holz FG, Finger RP. Smartphone-based fundus imaging–where are we now? Asia-Pacific J Ophthalmol. (2020) 9:308–14. doi: 10.1097/APO.0000000000000303

Keywords: retinal image, image enhancement, image restoration, illumination correction, dehazing, deblurring, diagnosis

Citation: Zhang S, Webers CAB and Berendschot TTJM (2024) Computational single fundus image restoration techniques: a review. Front. Ophthalmol. 4:1332197. doi: 10.3389/fopht.2024.1332197

Received: 02 November 2023; Accepted: 19 April 2024;

Published: 12 June 2024.

Edited by:

Wolf Harmening, University Hospital Bonn, Germany

Reviewed by:

Davide Borroni, Riga Stradiņš University, Latvia
Robert Cooper, Marquette University, United States

Copyright © 2024 Zhang, Webers and Berendschot. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shuhe Zhang, shuhe.zhang@maastrichtuniversity.nl

Shuhe Zhang, Carroll A. B. Webers, Tos T. J. M. Berendschot